Abstract

It is well established that movement planning recruits motor-related cortical brain areas in preparation for the forthcoming action. Given that an integral component of the control of action is the processing of sensory information throughout movement, we predicted that movement planning might also modulate early sensory cortical areas, readying them for sensory processing during the unfolding action. To test this hypothesis, we performed 2 human functional magnetic resonance imaging studies involving separate delayed movement tasks and focused on premovement neural activity in early auditory cortex, given the area’s direct connections to the motor system and evidence that it is modulated by motor cortex during movement in rodents. We show that effector-specific information (i.e., movements of the left vs. right hand in Experiment 1 and movements of the hand vs. eye in Experiment 2) can be decoded, well before movement, from neural activity in early auditory cortex. We find that this motor-related information is encoded in a subregion of auditory cortex separate from the one that encodes sensory-related information and is present even when movements are cued visually instead of auditorily. These findings suggest that action planning, in addition to preparing the motor system for movement, involves selectively modulating primary sensory areas based on the intended action.

Keywords: action, auditory, grasping, motor, planning

Introduction

Most theories of motor control distinguish between the planning of a movement and its subsequent execution. Research examining the neural basis of movement planning has commonly used delayed movement tasks—in which instructions about what movement to perform are separated in time from the instruction to initiate that movement—and has focused on delay period activity in motor-related brain areas. The main focus of this work has been on understanding how planning-related neural activity relates to features of the forthcoming movement to be executed (Tanji and Evarts 1976; Messier and Kalaska 2000), and more recently, how the dynamics of these neural activity patterns prepare the motor system for movement (Churchland et al. 2012). However, in addition to generating appropriate muscle commands, a critical component of skilled action is the prediction of the sensory consequences of movement (Wolpert and Flanagan 2001), which is thought to rely on internal models (Wolpert and Miall 1996). For example, the sensorimotor control of object manipulation tasks involves predicting the sensory signals associated with contact events, which define subgoals of the task (Flanagan et al. 2006). Importantly, these signals can occur in multiple sensory modalities, including tactile, proprioceptive, visual, and auditory (Johansson 2009). By comparing the predicted to actual sensory outcomes, the brain can monitor task progression, detect performance errors, and quickly launch appropriate, task-protective corrective actions as needed (Johansson 2009). For instance, when lifting an object that is heavier than expected, anticipated tactile events, associated with liftoff, fail to occur at the expected time, triggering a corrective response. Likewise, similar compensatory behavior has also been shown to occur during action-related tasks when anticipated auditory events fail to occur at the predicted time (Safstrom et al. 2014). 
Sensory prediction is also critical in sensory cancellation, such as the attenuation of predictable sensory events that arise as a consequence of movement. Such attenuation is thought to allow the brain to disambiguate sensory events due to movement from events due to external sources (Schneider and Mooney 2018).

At the neural level, anticipation of the sensory consequences of movement has been shown to rely on a corollary discharge signal or efference copy of outgoing motor commands being sent, in parallel, to early sensory systems (von Holst et al. 1950; Crapse and Sommer 2008). Consistent with this idea, work in crickets, rodents, songbirds and nonhuman primates has reported modulations in neural activity in early sensory areas such as auditory (Poulet and Hedwig 2002; Eliades and Wang 2008; Mandelblat-Cerf et al. 2014; Schneider et al. 2014) and visual cortex (Saleem et al. 2013; Lee et al. 2014; Leinweber et al. 2017) during self-generated movements. Critically, these movement-dependent modulations are distinct from modulations related to sensory reafference and have even been shown to occur when sensory reafference is either masked (Schneider et al. 2014; Leinweber et al. 2017) or removed entirely (Keller et al. 2012; Keck et al. 2013; Saleem et al. 2013). This importantly demonstrates that these modulations result from automatic mechanisms that are motor in origin (Leinweber et al. 2017; Schneider and Mooney 2018). Given the functional importance of predicting task-specific sensory consequences, we hypothesized that action planning, in addition to preparing motor areas for execution (Churchland et al. 2010; Shenoy et al. 2013), involves the automatic preparation of primary sensory areas for processing task-specific sensory signals. Given that these sensory signals will generally depend on the precise action being performed, this hypothesis predicts that neural activity in early sensory areas will represent specific motor-related information prior to the movement being executed, during action preparation.

One sensory system that is particularly well suited to directly testing this hypothesis is the auditory cortex. The mammalian auditory system exhibits an extensive, highly interconnected web of feedback projections, providing it with access to the output of information processing across multiple distributed brain areas (Hackett 2015). To date, this feedback architecture has been mainly implicated in supporting auditory attention and working memory processes (Linke and Cusack 2015; Kumar et al. 2016). However, recent work in rodents has also demonstrated that the auditory cortex receives significant direct projections from ipsilateral motor cortex (Nelson et al. 2013; Schneider et al. 2014, 2018). Consistent with this coupling between the motor and auditory systems, recent studies in both humans and rodents have shown that auditory cortex is functionally modulated by top-down motor inputs during movement execution (Reznik et al. 2014, 2015; Schneider et al. 2014, 2018). While the focus of this prior work has been on the real-time attenuation, during movement execution, of the predictable auditory consequences of movement, it did not selectively focus on the movement planning process itself or the broader function of the motor system in modulating early auditory activity in preparation for action.

Here we show, using functional magnetic resonance imaging (fMRI) and 2 separate delayed movement experiments involving object manipulation, that the movement effector to be used in an upcoming action can be decoded from delay period activity in early auditory cortex. Critically, this delay period decoding occurred despite the fact that any auditory consequences associated with movement execution (i.e., the sounds associated with object lifting and replacement) were completely masked both by the loud magnetic resonance imaging (MRI) scanner noise and the headphones that participants wore to protect their hearing. This indicates that the premovement decoding in the auditory cortex is automatic in nature (i.e., occurs in the absence of any sensory reafference) and of motor origin. Beyond real-time sensory attenuation, these findings suggest that, during movement preparation, the motor system selectively changes the neural state of early auditory cortex in accordance with the specific motor action being prepared, likely readying it for the processing of sensory information that normally arises during subsequent movement execution.

Materials and Methods

Overview

To test our hypothesis that motor planning modulates the neural state of early auditory cortex, we performed 2 separate fMRI experiments that used delayed movement tasks. This allowed us to separate motor planning-related modulations from the later motor- and somatosensory-related modulations that occur during movement execution. In effect, these delayed movement tasks allowed us to ask whether the motor command being prepared (but not yet executed) can be decoded from neural activity patterns in early auditory cortex. Moreover, because auditory reafference (i.e., sounds related to object lifting, replacement, etc.) is masked in our experiments both by the loud scanner noise and the headphones participants wear to protect their hearing (and allow the delivery of auditory commands), we are able to test the extent to which modulations in auditory cortex are automatic and motor related in origin (Schneider et al. 2014).

In the first of these experiments, in each trial we had participants first prepare and then execute (after a jittered delay interval) either a left or right hand object lift-and-replace action, with these actions being cued by 2 nonsense auditory commands (“Compty” or “Midwig”; Fig. 1). Importantly, halfway through each experimental run, participants were required to switch the auditory command-to-hand mapping (i.e., if Compty cued a left hand object lift-and-replace action in the first half of the experimental run, then Compty would cue a right hand object lift-and-replace action in the second half of the experimental run; Fig. 1B). Critically, this design allowed us to examine early auditory cortex activity during the planning of 2 distinct hand actions (left vs. right hand movements), with invariance to the actual auditory commands (e.g., Compty) used to instruct those hand actions. In the second of these experiments, in each trial we had participants first prepare and then execute (after a fixed delay interval) either a right hand object lift-and-replace action or a target-directed eye movement (Fig. 4). Unlike in Experiment 1, both of these movements were instructed via a change in the color of the central fixation light, thus allowing us to examine early auditory cortex activity during the planning of 2 distinct effector movements (hand vs. eye) in the absence of any direct auditory input (i.e., no auditory commands). As such, any neural differences in the auditory cortex prior to movement in both of these experiments are likely to reflect modulations related to motor, and not bottom-up sensory, processing.

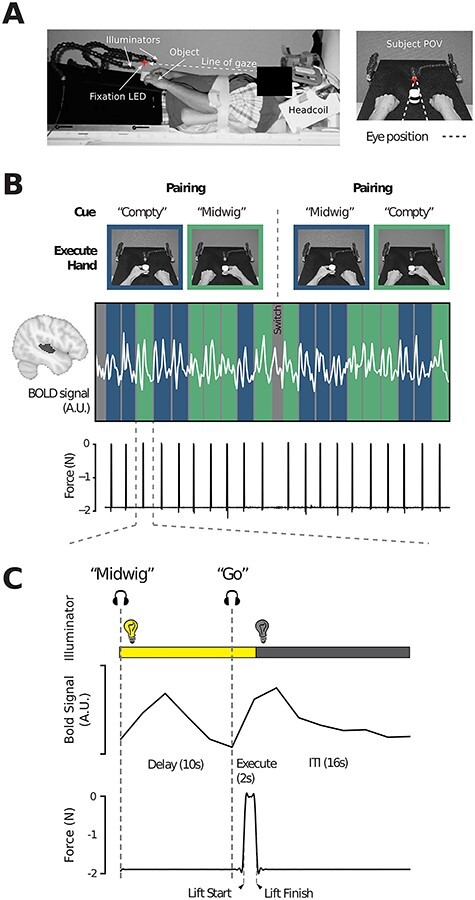

Figure 1.

Experiment 1 setup and task overview. (A) MRI setup (left) and subject point-of-view (right) of the experimental workspace. Red star indicates the fixation LED placed above the object. Illuminator LEDs, attached to the flexible stalks, are shown on the left and right. (B) Example fMRI run of 20 task trials. Color-coded columns (blue = left hand, green = right hand) demarcate each trial, and the associated time-locked BOLD activity from STG (shaded in dark gray on cortex, left) of an exemplar subject is indicated by the overlaid white trace. Pairings between auditory cue (Compty or Midwig) and hand (left or right) are indicated in the pictures above and were reversed halfway through each run following a “Switch” auditory cue, such that each hand is paired with each auditory cue in every experimental run (see Materials and Methods). The corresponding force sensor data, used to track object lifting, are shown below. (C) Sequence of events and corresponding single-trial BOLD and force sensor data of an exemplar trial from a representative participant in which Midwig cued a right-handed movement. Each trial begins with the hand workspace being illuminated while, simultaneously, participants receive the auditory cue (Compty or Midwig). This is then followed by a jittered 6–12 s Delay interval (10 s in this exemplar trial). Next, an auditory Go cue initiates the start of the 2 s Execute epoch, during which the subject grasps and lifts the object (shown by the force trace; arrows indicate the start of the lift and object replacement). Following the 2 s Execute epoch, illumination of the workspace is extinguished and subjects then wait a fixed 16-s ITI prior to onset of the next trial. See also Supplemental Figure 1 for a more detailed overview of the trial sequence and the data obtained from a separate behavioral training session.

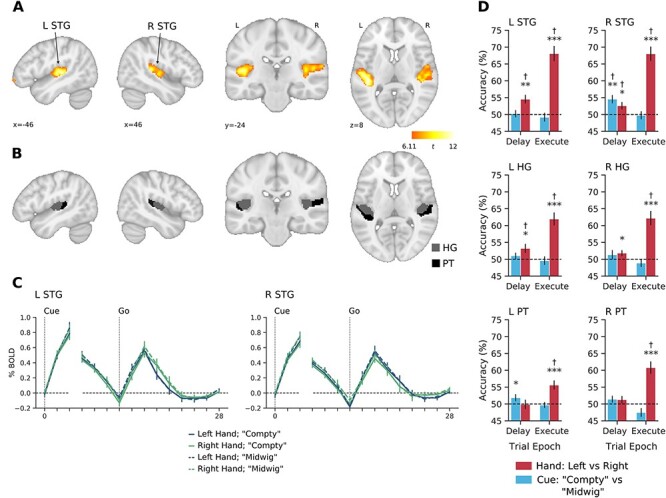

Figure 4.

Experiment 2 shows effector-specific decoding in (contralateral) left auditory cortex and that it occurs despite baseline levels of auditory neural activity during the delay period. (A) Subject point of view of the experimental workspace in Delay and Execute epochs (top) and trial flow (bottom). Red star indicates the fixation illuminator LED. (B) Group-averaged BOLD timecourses for the Grasp (gray) and Eye movement (“Look”; black) conditions in left and right STG. Note the absence of any net change in BOLD activity during the delay epoch for both trial types, which is consistent with the absence of any auditory input. (C) Decoding accuracies for Grasp versus Look conditions during the Delay and Execute epochs in all auditory ROIs. Decoding accuracies for each epoch were tested against chance decoding (50%) using 1-sample t-tests. Error bars show ±1 SE of mean. *P < 0.05, **P < 0.01, ***P < 0.001, †FDR-corrected q < 0.05. (D) Purple clusters show searchlight results of significant decoding for hand versus eye information in contralateral (left) auditory cortex during the Delay epoch. As in Experiment 1, searchlight analyses were restricted to a mask defined by significant voxels in an Intact Speech > Rest contrast using the independent auditory localizer task data (gray-traced regions; see Materials and Methods). Group-level searchlight maps were thresholded at t > 2.65 (1-tailed P < 0.01) and cluster-corrected at P < 0.05. (E) Significant decoding in the cerebellum, for the same analysis as in D.

Participants

Sixteen healthy right-handed subjects (8 females, 21–25 years of age) participated in Experiment 1, which involved 1 behavioral testing session followed by 2 fMRI testing sessions (a localizer testing session and then the experimental testing session, performed on separate days ~1–2 weeks apart). A separate cohort of 15 healthy right-handed subjects (8 females, 20–32 years of age) participated in Experiment 2 approximately a year after Experiment 1, which involved 1 behavioral testing session and 1 fMRI testing session. Right handedness was assessed with the Edinburgh handedness questionnaire (Oldfield 1971). Informed consent and consent to publish were obtained in accordance with ethical standards set out by the Declaration of Helsinki (1964) and with procedures cleared by the Queen’s University Health Sciences Research Ethics Board. Subjects were naïve with respect to the hypotheses under evaluation and received monetary compensation for their involvement in the study. Data from 1 subject in Experiment 1 and from 2 subjects in Experiment 2 were excluded from further analyses due to data collection problems in the experimental testing sessions, resulting in final sample sizes of 15 and 13 subjects, respectively. Meanwhile, all 16 subjects from Experiment 1 were used for the localizer testing session.

Experiment 1

Experimental Apparatus

The experimental setup for both the localizer and experimental testing sessions was a modification of that used in some of our previous fMRI studies (Gallivan et al. 2011, 2013a; Hutchison and Gallivan 2018) and consisted of a black platform placed over the waist and tilted away from the horizontal at an angle (~15°) to maximize comfort and target visibility. The MRI head coil was tilted slightly (~20°) and foam cushions were used to give an approximate overall head tilt of 30°. To minimize limb-related artifacts, subjects had the right and left upper arms braced, limiting movement of the arms to the elbow and thus creating an arc of reachability for each hand. The exact placement of object stimuli on the platform was adjusted to match each subject’s arm length such that all required actions were comfortable and required only movement of the forearm, wrist, and fingers. The platform was illuminated by 2 bright white light-emitting diodes (LEDs) attached to flexible plastic stalks (Loc-Line, Lockwood Products) located to the left and right of the platform. To control for eye movements, a small red fixation LED, attached to a flexible plastic stalk, was positioned above the hand workspace and located ~5 cm beyond the target object position (such that the object appeared in the subject’s lower visual field). Experimental timing and lighting were controlled with in-house software created with C++ and MATLAB (The Mathworks). Throughout fMRI testing, the subject’s hand movements were monitored using an MR-compatible infrared-sensitive camera (MRC Systems GmbH), optimally positioned on one side of the platform and facing towards the subject. The videos captured during the experiment were analyzed offline to verify that the subjects were performing the task as instructed and to identify error trials.

Auditory Localizer Task

A separate, block-design localizer task was collected to independently identify auditory cortex and higher-order language regions of interest (ROIs) for use in the analyses of the main experimental task. This auditory localizer task included 3 conditions: 1) Intact speech trials (Intact), which played one of 8 unique excerpts of different speeches; 2) scrambled speech trials (Scrambled), which were incoherent signal-correlated noise versions of the speech excerpts (i.e., applying an amplitude envelope of the speech to uniform Gaussian white noise, ensuring that the noise level was utterance specific and exactly intense enough at every moment to mask the energy of the spoken words); and 3) rest trials (Rest), in which no audio was played (subjects thus only heard background MRI scanner noise during this time). Trials lasted 20 s each and alternated, in pseudo-random order, between Intact Speech, Scrambled Speech, and Rest for a total of 24 trials in each experimental run. In addition, a 20-s baseline block was placed at the beginning of each experimental run. Each localizer run totaled 500 s and participants completed 2 of these runs during testing (resulting in 16 repetitions per experimental condition per subject). To encourage participants to maintain attention throughout this auditory localizer run, they were required to monitor each of the Intact speeches and let the experimenter know, following the run, whether any of them were repeated. This repeat happened in only one of the experimental runs, and every subject correctly identified the repeat and nonrepeat run (100% accuracy).
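As an illustration of the Scrambled condition, the following is a minimal sketch of how signal-correlated noise could be generated by imposing the amplitude envelope of a speech waveform on Gaussian white noise. The envelope estimator (a moving root-mean-square window), the 20-ms window length, the RMS matching step, and the function name `signal_correlated_noise` are all assumptions made for this example; the stimuli used in the study may have been produced differently.

```python
import numpy as np


def signal_correlated_noise(speech, sample_rate, window_s=0.02, seed=0):
    """Impose the amplitude envelope of `speech` on Gaussian white noise.

    The envelope is a moving RMS (window length is an assumed value).
    """
    speech = np.asarray(speech, dtype=float)
    win = max(1, int(round(window_s * sample_rate)))
    kernel = np.ones(win) / win
    # Moving mean of the squared signal, then square root -> RMS envelope.
    power = np.convolve(speech ** 2, kernel, mode="same")
    envelope = np.sqrt(np.maximum(power, 0.0))
    # Gaussian white noise, scaled sample by sample by the envelope.
    rng = np.random.default_rng(seed)
    scrambled = envelope * rng.standard_normal(speech.shape[0])
    # Match the overall RMS level of the original excerpt (assumed step).
    target_rms = np.sqrt(np.mean(speech ** 2))
    current_rms = np.sqrt(np.mean(scrambled ** 2))
    if current_rms > 0:
        scrambled *= target_rms / current_rms
    return scrambled


# Toy stand-in for a speech excerpt: a 220-Hz tone with a silent gap.
fs = 8000
t = np.arange(fs) / fs
toy = np.sin(2 * np.pi * 220 * t)
toy[3000:5000] = 0.0
scn = signal_correlated_noise(toy, fs)
```

Because the noise is scaled by the envelope, it is silent wherever the original excerpt is silent and loudest where the excerpt is loudest, which is what makes the noise level utterance specific.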

Motor Localizer Task

Four experimental runs of a motor localizer task were also collected alongside the auditory localizer task, which constituted a block-design protocol that alternated between subtasks designed to localize 8 separate motor functions. Task set up and details for all 8 conditions are described in Supplemental Figure 2. The hand grasping condition from this localizer task was used to define dorsal premotor cortex (PMd), which we used as a basis for comparison with our auditory cortex decoding findings (see Results).

The motor and auditory localizer testing session lasted approximately 2 h and included setup time, one 7.5-min high-resolution anatomical scan and 6 functional scanning runs, wherein subjects alternated between performing 2 runs of the motor localizer task and 1 run of the auditory localizer, twice. A brief (~10 min) practice session was carried out before the localizer testing session in the MRI control room in order to familiarize participants with the localizer tasks.

Main Experimental Task

In our experimental task (Fig. 1), we used a delayed movement paradigm wherein, on each individual trial, subjects were first auditorily cued (via headphones) to prepare either a left or right hand object grasp-and-lift action on a centrally located cylindrical object (1.9 N weight). Then, following a variable delay period, they were prompted to execute the prepared hand action (Gallivan et al. 2013b, 2013c). At the start of each event-related trial (Fig. 1C), simultaneously with the LED illuminator lights going on (and the subject’s workspace being illuminated), subjects received one of 2 nonsense speech cues, “Compty” or “Midwig.” For a given trial, each nonsense speech cue was paired with a corresponding hand action (e.g., subjects were instructed that, for a predetermined set of trials, Compty cued a left hand movement, whereas Midwig cued a right hand movement). [Note that nonsense speech commands were chosen because semantically meaningful words such as “left” and “right” would already have strong cognitive associations for participants.] Following the delivery of the auditory command, there was a jittered delay interval of 6–12 s (a Gaussian random jitter with a mean of 9 s), after which the verbal auditory command “Go” was delivered, prompting subjects to execute the prepared grasp-and-lift action. For the execution phase of the trial, subjects were required to precision grasp-and-then-lift the object with their thumb and index finger (~2 cm above the platform, via a rotation of the wrist), hold it in midair for ~1 s, and then replace it. Subjects were instructed to keep the timing of each hand action as similar as possible throughout the study. Two seconds following the onset of this Go cue, the illuminator lights were extinguished, and subjects then waited 16 s for the next trial to begin (intertrial interval, ITI). Throughout the entire time course of the trial, subjects were required to maintain gaze on the fixation LED.

These event-related trials were completed in 2 separate blocks per experimental run. At the beginning of each experimental run, the experimenter informed subjects of the auditory-hand mapping to be used for the first 10 event-related trials of the experimental run (e.g., Compty for left hand movements, Midwig for right hand movements; 5 intermixed trials of each type). After the 10th trial, the illuminator was turned on (for a duration of 6 s) and subjects simultaneously heard the auditory command “Switch” (followed by a 16-s delay). This indicated that the auditory-hand mapping would now be reversed for the remaining 10 event-related trials (i.e., Compty would now cue a right hand movement, whereas Midwig would now cue a left hand movement). The sequential ordering of this auditory-hand mapping was counterbalanced across runs and resulted in a total of 4 different auditory-hand mappings (and thus, trial types) per experimental run: Compty-left hand, Compty-right hand, Midwig-left hand, and Midwig-right hand (with 5 repetitions each; 20 trials in total per run). With the exception of the blocked nature of these trials, these trial types were pseudorandomized within a run and counterbalanced across all runs so that each trial type was preceded and followed equally often by every other trial type across the entire experiment.
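The block structure of a run can be sketched as follows. This is only an illustrative reconstruction: the function name `build_run` is hypothetical, and a plain random shuffle within each block stands in for the pseudorandomization and across-run counterbalancing of trial order described above, which this sketch does not reproduce.

```python
import random
from collections import Counter


def build_run(first_mapping, seed=None):
    """Return a list of 20 (cue, hand) trials for one run.

    first_mapping: dict such as {"Compty": "left", "Midwig": "right"},
    used for trials 1-10; the mapping is reversed for trials 11-20
    (i.e., after the "Switch" cue).
    """
    rng = random.Random(seed)
    flip = {"left": "right", "right": "left"}
    second_mapping = {cue: flip[hand] for cue, hand in first_mapping.items()}
    trials = []
    for mapping in (first_mapping, second_mapping):
        # 5 intermixed trials of each cue per block of 10 trials.
        cues = ["Compty"] * 5 + ["Midwig"] * 5
        rng.shuffle(cues)  # simplification of the actual ordering scheme
        trials.extend((cue, mapping[cue]) for cue in cues)
    return trials


run = build_run({"Compty": "left", "Midwig": "right"}, seed=1)
counts = Counter(run)  # 4 trial types, 5 repetitions each
```

Each hand is therefore paired with each auditory cue within every run, which is what allows hand decoding to be assessed independently of the cue that was heard.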

Separate practice sessions were carried out before the actual fMRI experiment to familiarize subjects with the delayed timing of the task. One of these sessions was conducted before subjects entered the scanner (see Behavioral Control Experiment in the Supplemental Material) and another was conducted during the anatomical scan (collected at the beginning of the experimental testing session). The experimental testing session for each subject lasted approximately 2 h and included setup time, one 7.5-min high-resolution anatomical scan (during which subjects could practice the task) and 8 functional scanning runs (for a total of 160 trials; 40 trials for each auditory–motor mapping). Each functional run (an example run shown in Fig. 1B) had a duration of 576 s, with a 30–60 s break between runs. Lastly, a resting state functional scan, in which subjects lay still (with no task) and only maintained gaze on the fixation LED, was performed for 12 min (data not analyzed here).

During MRI testing, we also tracked subjects’ behavior using an MRI-compatible force sensor located beneath the object (Nano 17 F/T sensors; ATI Industrial Automation), and attached to our MRI platform. This force sensor, which was capped with a flat circular disk (diameter of 7.5 cm), supported the object. The force sensor measured the vertical forces exerted by the object (signals sampled at 500 Hz and low-pass filtered using a fifth order, zero-phase lag Butterworth filter with a cutoff frequency of 5 Hz), allowing us to track both subject reaction time, which we define as the time from the onset of the Go cue to object contact (mean = 1601 ms, standard deviation [SD] = 389 ms), and movement time, which we define as the time from object lift to replacement (mean = 2582 ms, SD = 662 ms), as well as generally monitor task performance. Note that we did not conduct eye tracking during this or any of the other MRI scan sessions because of the difficulties in monitoring gaze in the head-tilted configuration with standard MRI-compatible eye trackers (due to occlusion from the eyelids) (Gallivan et al. 2014, 2016, 2019).
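The force-signal filtering described above can be sketched with SciPy as shown here. The sampling rate, cutoff, and order come from the text; the use of `butter` with second-order sections and `sosfiltfilt` for forward-backward (zero-phase) filtering is an assumption about one reasonable implementation, not a description of the software actually used. Note that forward-backward filtering applies the filter twice, so whether "fifth order" refers to the single-pass design (as assumed here) or to the effective response is not specified.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_HZ = 500.0      # sampling rate of the force signal (from the text)
CUTOFF_HZ = 5.0    # low-pass cutoff frequency (from the text)
ORDER = 5          # filter order; assumed to be the single-pass order


def lowpass_force(force, fs=FS_HZ, cutoff=CUTOFF_HZ, order=ORDER):
    """Zero-phase low-pass Butterworth filter of a vertical force trace."""
    sos = butter(order, cutoff, btype="low", fs=fs, output="sos")
    # Forward-backward filtering cancels the phase lag of a single pass.
    return sosfiltfilt(sos, force)


# Synthetic example: the 1.9 N object weight plus 60-Hz interference.
t = np.arange(0, 4.0, 1.0 / FS_HZ)
raw = 1.9 + 0.2 * np.sin(2 * np.pi * 60.0 * t)
smooth = lowpass_force(raw)
```

A zero-phase filter matters here because reaction and movement times are read off the filtered trace; a causal filter would delay the contact and liftoff events and bias both measures.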

Experiment 2

This study and experimental setup were similar to Experiment 1, with the exception that: 1) participants performed either a right hand object grasp-and-lift action on a centrally located cylindrical object (1.9 N weight) or a target-directed eye movement toward that same object (i.e., 2 experimental conditions), 2) the Delay epoch was a fixed duration (12 s), and 3) subjects were cued about the upcoming movement to be executed via a 0.5-s change in the fixation LED color (from red to either blue or green, with the color-action mapping being counterbalanced across subjects; i.e., an LED change to blue cued a grasp action in half the subjects and cued an eye movement in the other half of subjects). The eye movement action involved the subject making a saccadic eye movement from the fixation LED to the target object, holding that position until the illuminator LEDs were extinguished and then returning their gaze to the fixation LED. The 2 trial types, with 5 repetitions per condition per run (10 trials total), were pseudorandomized as in Experiment 1. Each subject participated in at least 8 functional runs.

Data Acquisition and Analysis

Subjects were scanned using a 3-Tesla Siemens TIM MAGNETOM Trio MRI scanner located at the Centre for Neuroscience Studies, Queen’s University (Kingston, Ontario, Canada). An identical imaging protocol was used for both Experiments 1 and 2, with the exception of slice thickness (Experiment 1 = 4 mm; Experiment 2 = 3 mm). In both experiments, MRI volumes were acquired using a T2*-weighted single-shot gradient-echo echo-planar imaging acquisition sequence (time to repetition = 2000 ms, in-plane resolution = 3 × 3 mm, time to echo = 30 ms, field of view = 240 × 240 mm, matrix size = 80 × 80, flip angle = 90°, and acceleration factor (integrated parallel acquisition technologies) = 2 with generalized auto-calibrating partially parallel acquisitions reconstruction). Each volume comprised 35 contiguous (no gap) oblique slices acquired at a ~30° caudal tilt with respect to the plane of the anterior and posterior commissure. Subjects were scanned in a head-tilted configuration, allowing direct viewing of the hand workspace. We used a combination of imaging coils to achieve a good signal-to-noise ratio and to enable direct object workspace viewing without mirrors or occlusion. Specifically, we tilted (~20°) the posterior half of the 12-channel receive-only head coil (6 channels) and suspended a 4-channel receive-only flex coil over the anterior–superior part of the head. An identical T1-weighted ADNI MPRAGE anatomical scan was also collected for both Experiments 1 and 2 (time to repetition = 1760 ms, time to echo = 2.98 ms, field of view = 192 × 240 × 256 mm, matrix size = 192 × 240 × 256, flip angle = 9°, 1-mm isotropic voxels).

fMRI Data Preprocessing

Preprocessing of functional data collected in the localizer testing session, and Experiments 1 and 2, was performed using fMRIPrep 1.4.1 (Esteban et al. 2018), which is based on Nipype 1.2.0 (Gorgolewski et al. 2011; Gorgolewski et al. 2019).

Anatomical Data Preprocessing

The T1-weighted (T1w) image was corrected for intensity nonuniformity with N4BiasFieldCorrection (Tustison et al. 2010), distributed with ANTs 2.2.0 (Avants et al. 2008), and used as T1w-reference throughout the workflow. The T1w-reference was then skull-stripped with a Nipype implementation of the antsBrainExtraction.sh workflow (from ANTs), using OASIS30ANTs as target template. Brain tissue segmentation of cerebrospinal fluid, white-matter, and gray matter (GM) was performed on the brain-extracted T1w using fast (FSL 5.0.9, Zhang et al. 2001). Brain surfaces were reconstructed using recon-all (FreeSurfer 6.0.1, Dale et al. 1999), and the brain mask estimated previously was refined with a custom variation of the method to reconcile ANTs-derived and FreeSurfer-derived segmentations of the cortical GM of Mindboggle (Klein et al. 2017). Volume-based spatial normalization to standard space (voxel size = 2 × 2 × 2 mm) was performed through nonlinear registration with antsRegistration (ANTs 2.2.0), using brain-extracted versions of both T1w reference and the T1w template. The following template was selected for spatial normalization: FSL’s MNI ICBM 152 nonlinear 6th Generation Asymmetric Average Brain Stereotaxic Registration Model (Evans et al. 2012; TemplateFlow ID: MNI152NLin6Asym).

Functional Data Preprocessing

For each blood oxygen level–dependent (BOLD) run per subject (across all tasks and/or sessions), the following preprocessing was performed. First, a reference volume and its skull-stripped version were generated using a custom methodology of fMRIPrep. The BOLD reference was then co-registered to the T1w reference using bbregister (FreeSurfer), which implements boundary-based registration (Greve and Fischl 2009). Co-registration was configured with 9 degrees of freedom to account for distortions remaining in the BOLD reference. Head-motion parameters with respect to the BOLD reference (transformation matrices, and 6 corresponding rotation and translation parameters) were estimated before any spatiotemporal filtering using mcflirt (FSL 5.0.9, Jenkinson et al. 2002). BOLD runs were slice-time corrected using 3dTshift from AFNI 20160207 (Cox and Hyde 1997). The BOLD time-series were normalized by resampling into standard space. All resamplings were performed with a single interpolation step by composing all the pertinent transformations (i.e., head-motion transform matrices and co-registrations to anatomical and output spaces). Gridded (volumetric) resamplings were performed using antsApplyTransforms (ANTs), configured with Lanczos interpolation to minimize the smoothing effects of other kernels (Lanczos 1964).

Many internal operations of fMRIPrep use Nilearn 0.5.2 (Abraham et al. 2014), mostly within the functional processing workflow. For more details of the pipeline, see the section corresponding to workflows in fMRIPrep’s documentation.

Postprocessing

Additional postprocessing was performed for specific analyses. Normalized functional scans were temporally filtered using a high-pass filter (cutoff = 0.01 Hz) to remove low-frequency noise (e.g., linear scanner drift) as part of general linear models (GLMs) (see below). For the localizer data, normalized functional scans were spatially smoothed (6-mm full-width at half-maximum [FWHM] Gaussian kernel; SPM12) prior to GLM estimation to facilitate subject overlap. [Note that no spatial smoothing was performed on the experimental task data sets, wherein multivoxel pattern analyses were performed.]

Error Trials

Error trials were identified offline from the videos recorded during the experimental testing session and were excluded from analysis by assigning these trials predictors of no interest. Error trials included those in which the subject performed the incorrect instruction (Experiment 1: 9 trials, 4 subjects; Experiment 2: 1 trial, 1 subject) or contaminated the delay phase data by slightly moving their limb or moving too early (Experiment 1: 7 trials, 4 subjects; Experiment 2: 1 trial, 1 subject). The fact that subjects made so few errors when considering the potentially challenging nature of the tasks (e.g., in Experiment 1 having to remember whether Compty cued a left hand or right hand movement on the current trial) speaks to the fact that subjects were fully engaged during experimental testing and very well practiced at the task prior to participating in the experiment.

Statistical Analyses

General Linear Models

For the localizer task analyses, we carried out subject-level analysis using SPM12’s first-level analysis toolbox to create a GLM for each task (auditory and motor). Each GLM featured condition predictors created from boxcar functions convolved with a double-gamma hemodynamic response function (HRF), which were aligned to the onset of each action/stimulus block with durations dependent on block length (i.e., 10 imaging volumes for both localizer tasks). Temporal derivatives of each predictor and subjects’ 6 motion parameters obtained from motion correction were included as additional regressors. The Baseline/Fixation epochs were excluded from the model; therefore, all regression coefficients (betas) were defined relative to the baseline activity during these time points.

In the experimental tasks, we employed a least-squares separate procedure (Mumford et al. 2012) to extract beta coefficient estimates for decoding analyses. This procedure generated separate GLM models for each individual trial’s Delay and Execute epochs (e.g., in Experiment 1: 20 trials × 2 epochs × 8 runs = 320 GLMs). The regressor of interest in each model consisted of a boxcar regressor aligned to the start of the epoch of interest. The duration of the regressor was set to the duration of the cue that initiates the epoch (0.5 s): the auditory command cue (“Compty” or “Midwig”) and the visual cue (fixation LED color change) for the Delay epoch in Experiments 1 and 2, respectively, and the auditory Go cue for the Execute epoch in both experiments. For each GLM, we included a second regressor that comprised all remaining trial epochs in the experimental run. Each regressor was then convolved with a double-gamma HRF, and temporal derivatives of both regressors were included along with subjects’ 6 motion parameters obtained from motion correction. Isolating the regressor of interest in this single-trial fashion reduces regressor collinearity and has been shown to be advantageous in estimating single-trial voxel patterns and for multivoxel pattern classification (Mumford et al. 2012).
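
The least-squares separate logic described above can be illustrated with a minimal NumPy sketch. This is not the SPM12 pipeline used in the study: the HRF shape parameters, the 0.1-s convolution grid, the 2-s TR, the function names, and the synthetic data are all assumptions made for illustration, and the temporal derivatives and motion regressors are omitted for brevity.

```python
import math
import numpy as np

def double_gamma_hrf(dt, length_s=32.0):
    """Double-gamma HRF sampled every `dt` seconds (shape parameters are assumed)."""
    t = np.arange(0.0, length_s, dt)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)            # gamma pdf, shape 6
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)    # gamma pdf, shape 16
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()

def convolved_boxcar(onsets_s, duration_s, n_scans, tr, dt=0.1):
    """Boxcar(s) built on a fine time grid, convolved with the HRF, sampled once per TR."""
    steps_per_tr = int(round(tr / dt))
    n_fine = n_scans * steps_per_tr
    boxcar = np.zeros(n_fine)
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = int(round((onset + duration_s) / dt))
        boxcar[start:stop] = 1.0
    convolved = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    return convolved[::steps_per_tr]

def lss_betas(bold, onsets_s, duration_s, tr):
    """One GLM per event: [event of interest, all other events, intercept].

    bold has shape (n_scans, n_voxels); returns (n_events, n_voxels) betas.
    """
    n_scans = bold.shape[0]
    betas = []
    for i, onset in enumerate(onsets_s):
        others = [o for j, o in enumerate(onsets_s) if j != i]
        design = np.column_stack([
            convolved_boxcar([onset], duration_s, n_scans, tr),
            convolved_boxcar(others, duration_s, n_scans, tr),
            np.ones(n_scans),
        ])
        coef, *_ = np.linalg.lstsq(design, bold, rcond=None)
        betas.append(coef[0])  # keep only the beta for the event of interest
    return np.array(betas)

# Hypothetical noise-free run: 5 events with 0.5-s cues and 3 voxels with different gains.
tr, n_scans = 2.0, 120
onsets = [10.0, 50.0, 90.0, 130.0, 170.0]
voxel_gain = np.array([1.0, 2.0, -0.5])
bold = 100.0 + np.outer(convolved_boxcar(onsets, 0.5, n_scans, tr), voxel_gain)
betas = lss_betas(bold, onsets, 0.5, tr)
```

Each row of `betas` is one single-event voxel pattern of the kind passed to the classifiers. Under the design described above, this loop would run once per trial epoch, which is how the 320 models per subject in Experiment 1 arise.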

ROI Selection

ROIs were identified based on second-level (group) analyses of first-level contrast images from each subject. Early auditory cortex ROIs were identified for both Experiments 1 and 2 by thresholding a Scrambled Speech > Rest group contrast at an uncorrected voxelwise threshold of P < 10⁻⁵. This procedure identified tight superior temporal gyrus (STG) activation clusters in left and right Heschl’s gyrus (HG), the anatomical landmark for primary (core) auditory cortex (Morosan et al. 2000, 2001; Rademacher et al. 2001; Da Costa et al. 2011), and more posteriorly on the superior temporal plane (planum temporale, PT). We verified these locations by intersecting region masks for HG and PT obtained from the Harvard–Oxford anatomical atlas (Desikan et al. 2006) with the masks of left and right STG clusters. This allowed us to define, for each participant, voxels that were active for sound that fell in anatomically defined HG and PT. We considered HG and PT separately since they are at different stages of auditory processing: HG is the location of the core, whereas the PT consists of belt and probably parabelt regions, as well as possibly other types of cortical tissue (Hackett et al. 2014). Since our PT activity is just posterior to HG, we suspect that this is probably in belt or parabelt cortex, 1 or 2 stages of processing removed from core. Lastly, a more expansive auditory and speech processing network was obtained using an Intact Speech > Rest contrast with an uncorrected height threshold of P < 0.001 and cluster-extent correction threshold of P < 0.05. Together, these were used as 3-dimensional binary masks to constrain our analyses and interpretations of motor-related effects in the auditory system.

Multivoxel Pattern Analysis (MVPA)

For the experimental task, MVPA was performed with in-house software using Python 3.7.1 with Nilearn v0.6.0 and Scikit-Learn v0.20.1 (Abraham et al. 2014). All analyses implement support vector machine (SVM) binary classifiers (libSVM) using a radial-basis function kernel and with fixed regularization parameter (C = 1) and gamma parameter (automatically set to the reciprocal of the number of voxels) in order to compute a hyperplane that best separated the trial responses. The patterns of voxel beta coefficients from the single-trial GLMs, which provided voxel patterns for each trial’s Delay and Execute epochs, were used as inputs into the binary classifiers. These values were standardized across voxels such that each voxel pattern had a mean of 0 and SD of 1. Therefore, the mean univariate signal for each pattern was removed in the ROI.
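
As a rough illustration of the classifier settings and the within-pattern standardization described above, the following sketch uses scikit-learn's `SVC`, where `gamma="auto"` corresponds to the reciprocal of the number of features (here, voxels). The helper name and the synthetic patterns are hypothetical and do not reproduce the in-house software.

```python
import numpy as np
from sklearn.svm import SVC

def zscore_within_pattern(patterns):
    """Standardize each trial's pattern across voxels (rows: trials, columns: voxels)."""
    mean = patterns.mean(axis=1, keepdims=True)
    sd = patterns.std(axis=1, keepdims=True)
    return (patterns - mean) / sd

# Hypothetical data: 40 trials x 50 voxels, 2 classes.
rng = np.random.default_rng(0)
n_trials, n_voxels = 40, 50
labels = np.repeat([0, 1], n_trials // 2)
patterns = rng.normal(size=(n_trials, n_voxels))
patterns[labels == 1, :10] += 1.0                    # class-specific spatial pattern
patterns += 5.0 * rng.normal(size=(n_trials, 1))     # trial-wise mean (univariate) offset

z_patterns = zscore_within_pattern(patterns)         # removes each trial's mean signal

# RBF kernel, C = 1, gamma = 1 / n_voxels (scikit-learn's "auto" setting).
classifier = SVC(kernel="rbf", C=1.0, gamma="auto")
classifier.fit(z_patterns, labels)
predictions = classifier.predict(z_patterns)
```

Because every row of `z_patterns` has a mean of 0 and SD of 1, any trial-wise difference in overall signal level is unavailable to the classifier, so only the spatial pattern across voxels can drive classification.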

Decoding accuracies for each subject were computed as the average classification accuracy across train-and-test iterations using a “leave-one-run-out” cross-validation procedure. This procedure was performed separately for each ROI, trial epoch (Delay and Execute), and pairwise discrimination (left hand vs. right hand movements and Compty vs. Midwig in Experiment 1 and hand vs. eye movements in Experiment 2). We statistically assessed decoding significance at the group-level using 1-tailed t-tests versus 50% chance decoding. To control for the problem of multiple comparisons within each ROI (i.e., the number of pairwise comparisons tested), we applied a Benjamini–Hochberg false-discovery rate (FDR) correction of q < 0.05.
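
The three steps in this paragraph (leave-one-run-out cross-validation, a 1-tailed t-test against chance, and Benjamini–Hochberg correction) can be sketched as follows. The function names, the synthetic data, and the example accuracies and P values are hypothetical and serve only to show the mechanics.

```python
import numpy as np
from scipy import stats
from sklearn.svm import SVC

def leave_one_run_out_accuracy(patterns, labels, runs):
    """Train on all runs but one, test on the held-out run, and average over folds."""
    fold_accuracies = []
    for held_out in np.unique(runs):
        test = runs == held_out
        classifier = SVC(kernel="rbf", C=1.0, gamma="auto")
        classifier.fit(patterns[~test], labels[~test])
        fold_accuracies.append(classifier.score(patterns[test], labels[test]))
    return float(np.mean(fold_accuracies))

def one_tailed_t_vs_chance(accuracies, chance=0.5):
    """One-sample t-test of group accuracies against chance (upper tail)."""
    acc = np.asarray(accuracies, dtype=float)
    n = acc.size
    t_stat = (acc.mean() - chance) / (acc.std(ddof=1) / np.sqrt(n))
    return t_stat, stats.t.sf(t_stat, df=n - 1)

def benjamini_hochberg(p_values, q=0.05):
    """Boolean array marking which tests survive FDR correction at level q."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    passed = p[order] <= q * np.arange(1, m + 1) / m
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        last = np.max(np.nonzero(passed)[0])
        reject[order[:last + 1]] = True
    return reject

# Hypothetical subject: 8 runs x 20 trials, 30 voxels, 2 balanced classes per run.
rng = np.random.default_rng(1)
runs = np.repeat(np.arange(8), 20)
labels = np.tile(np.repeat([0, 1], 10), 8)
patterns = rng.normal(size=(160, 30))
patterns[labels == 1, :10] += 1.0
subject_accuracy = leave_one_run_out_accuracy(patterns, labels, runs)

# Hypothetical group accuracies and P values (not taken from the study).
t_stat, p_value = one_tailed_t_vs_chance([0.55, 0.60, 0.50, 0.65, 0.58])
survives = benjamini_hochberg([0.001, 0.04, 0.03, 0.2])
```

For the hypothetical P values above, only the smallest survives correction, since 0.03 and 0.04 exceed their rank-scaled thresholds of 0.025 and 0.0375.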

Significant decoding accuracies were further verified against null distributions constructed by a 2-step permutation approach based on Stelzer et al. (2013). In the first step, distributions of chance decoding accuracies were independently generated for each subject using 100 iterations. In each iteration, class labels were randomly permuted within each run, and a decoding accuracy was computed by taking the average classification accuracy following leave-one-run-out cross-validation. In the second step, the subject-specific distributions of decoding accuracies were used to compute a distribution of 10 000 group-average decoding accuracies. Here, in each iteration, a decoding accuracy was randomly selected from each subject distribution and the mean decoding accuracy across subjects was calculated. The distribution of group-average decoding accuracies was then used to compute the probability of the actual decoding accuracy.
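
A minimal sketch of this 2-step procedure is given below. The per-subject chance accuracies are simulated rather than produced by re-running the classifier on permuted labels, and the "+1" form of the permutation P value is a common convention assumed here, not a detail confirmed by the text. All names are hypothetical.

```python
import numpy as np

def permute_within_runs(labels, runs, rng):
    """Step 1 helper: shuffle class labels separately inside each run."""
    shuffled = labels.copy()
    for run in np.unique(runs):
        in_run = np.nonzero(runs == run)[0]
        shuffled[in_run] = rng.permutation(labels[in_run])
    return shuffled

def group_null_distribution(subject_nulls, n_draws=10_000, seed=0):
    """Step 2: draw one chance accuracy per subject and average, n_draws times.

    subject_nulls has shape (n_subjects, n_permutations).
    """
    rng = np.random.default_rng(seed)
    subject_nulls = np.asarray(subject_nulls, dtype=float)
    n_subjects, n_permutations = subject_nulls.shape
    picks = rng.integers(0, n_permutations, size=(n_draws, n_subjects))
    sampled = subject_nulls[np.arange(n_subjects), picks]   # (n_draws, n_subjects)
    return sampled.mean(axis=1)

def permutation_p_value(observed, null):
    """Proportion of null group means at least as large as the observed mean."""
    return (np.sum(null >= observed) + 1) / (null.size + 1)

rng = np.random.default_rng(2)

# Step 1 (illustration): labels stay balanced within each run after shuffling.
runs = np.repeat(np.arange(8), 20)
labels = np.tile(np.repeat([0, 1], 10), 8)
shuffled_labels = permute_within_runs(labels, runs, rng)

# Simulated stand-in for 15 subjects x 100 permuted-label decoding accuracies.
subject_nulls = rng.binomial(20, 0.5, size=(15, 100)) / 20.0

group_null = group_null_distribution(subject_nulls)
p_high = permutation_p_value(0.90, group_null)   # far above chance
p_low = permutation_p_value(0.00, group_null)    # every null value is >= 0
```

In a full implementation, each entry of `subject_nulls` would come from repeating the leave-one-run-out classification with labels shuffled by `permute_within_runs`.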

Searchlight Pattern-Information Analyses

To complement our MVPA ROI analyses in both Experiments 1 and 2, we also performed a pattern analysis in each subject using the searchlight approach (Kriegeskorte et al. 2006). Given the scope of this paper (i.e., to examine the top-down modulation of auditory cortex during planning), we constrained this searchlight analysis to the auditory network mask defined by the Intact Speech > Rest contrast using the independent auditory localizer data (allowing us to localize both lower-order and higher-order auditory regions). In this procedure, the SVM classifier moved through each subject’s localizer-defined auditory network in a voxel-by-voxel fashion, whereby, at each voxel, a sphere of surrounding voxels (radius of 4 mm; 33 voxels) was extracted, z-scored within pattern (see above), and input into the SVM classifier. The decoding accuracy for that sphere of voxels was then written to the central voxel. This searchlight procedure was performed separately with beta coefficient maps for the Delay and Execute epochs based on the GLM procedure described above, which yielded separate Delay and Execute whole-brain decoding maps. To allow for group-level analyses, the decoding maps were smoothed (6-mm FWHM Gaussian kernel) in each subject, which is particularly pertinent given the known neuroanatomical variability in human auditory cortex (Hackett et al. 2001; Zoellner et al. 2019; Ren et al. 2020). Then, for each voxel, we assessed statistical significance using a 1-tailed t-test versus 50% chance decoding.
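
The sphere geometry can be checked with a short sketch. The voxel size is not given in this section; assuming 2-mm isotropic voxels, a 4-mm radius yields the 33 voxels quoted above. The function names and the toy volume are hypothetical.

```python
import numpy as np

def sphere_offsets(radius_mm, voxel_size_mm):
    """Integer voxel offsets whose centers lie within radius_mm of the central voxel."""
    reach = int(np.floor(radius_mm / voxel_size_mm))
    grid = np.arange(-reach, reach + 1)
    i, j, k = np.meshgrid(grid, grid, grid, indexing="ij")
    offsets = np.column_stack([i.ravel(), j.ravel(), k.ravel()])
    distance_mm = np.linalg.norm(offsets * voxel_size_mm, axis=1)
    return offsets[distance_mm <= radius_mm]

def searchlight_pattern(betas, mask, center, offsets):
    """Extract the (n_trials, n_sphere_voxels) pattern around `center` inside `mask`.

    betas has shape (n_trials, X, Y, Z); mask is a boolean (X, Y, Z) array.
    """
    coords = np.asarray(center) + offsets
    inside = np.all((coords >= 0) & (coords < np.array(mask.shape)), axis=1)
    coords = coords[inside]
    coords = coords[mask[coords[:, 0], coords[:, 1], coords[:, 2]]]
    return betas[:, coords[:, 0], coords[:, 1], coords[:, 2]]

# Assumed 2-mm isotropic voxels with the 4-mm radius from the text.
offsets = sphere_offsets(radius_mm=4.0, voxel_size_mm=2.0)

# Toy volume: 12 trials in a 10 x 10 x 10 grid, with every voxel inside the mask.
rng = np.random.default_rng(3)
betas = rng.normal(size=(12, 10, 10, 10))
mask = np.ones((10, 10, 10), dtype=bool)
interior = searchlight_pattern(betas, mask, (5, 5, 5), offsets)
corner = searchlight_pattern(betas, mask, (0, 0, 0), offsets)
```

Spheres centered near the edge of the mask contain fewer voxels than interior spheres, which is one reason decoding accuracies near the boundary of a restricted searchlight mask are based on smaller patterns.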

Group-level decoding maps for Delay and Execute epochs were thresholded at P < 0.01 and cluster corrected to P < 0.05 using Monte-Carlo style permutation tests with AFNI’s 3dClustSim algorithm (Cox 1996; Cox et al. 2017). Cluster correction was additionally validated using a nonparametric approach (see Supplemental Fig. 3). We note that this latter approach resulted in more liberal cluster correction thresholds in comparison with 3dClustSim; we therefore opted to use the more conservative thresholds obtained from 3dClustSim.

Results

Experiment 1

Delay Period Decoding of Hand Information From Early Auditory Cortex

To determine whether signals related to hand movement planning influence early auditory cortex activity, we extracted the trial-related voxel patterns (beta coefficients) associated with the Delay (as well as Execute) epochs from early auditory cortex. To this end, we first functionally identified, using data from an independent auditory localizer task (see Materials and Methods), fMRI activity in the left and right STG. To provide greater specificity with regards to the localization of potential motor planning-related effects, we further delineated these STG clusters based on their intersections with HG and the PT, 2 adjacent human brain regions associated with primary and higher-order cortical auditory processing, respectively (Poeppel et al. 2012) (see Fig. 2A,B for our basic approach). Next, for each of these 3 regions (STG and its subdivisions into HG and PT) we used their z-scored Delay epoch voxel activity patterns as inputs to a SVM binary classifier. In order to derive main effects of hand information (i.e., examine decoding of upcoming left hand vs. right hand movements) versus auditory cue information (examine decoding of Compty vs. Midwig cues) and to increase the data used for classifier training, we performed separate analyses wherein we collapsed across auditory cue or hand trials, respectively. Our logic is that, when collapsing across auditory cue (i.e., relabelling all trials based on the hand used), if we can observe decoding of hand information in auditory cortex during the Delay phase (prior to movement), then this information is represented with invariance to the cue and thus sensory input (and vice versa).

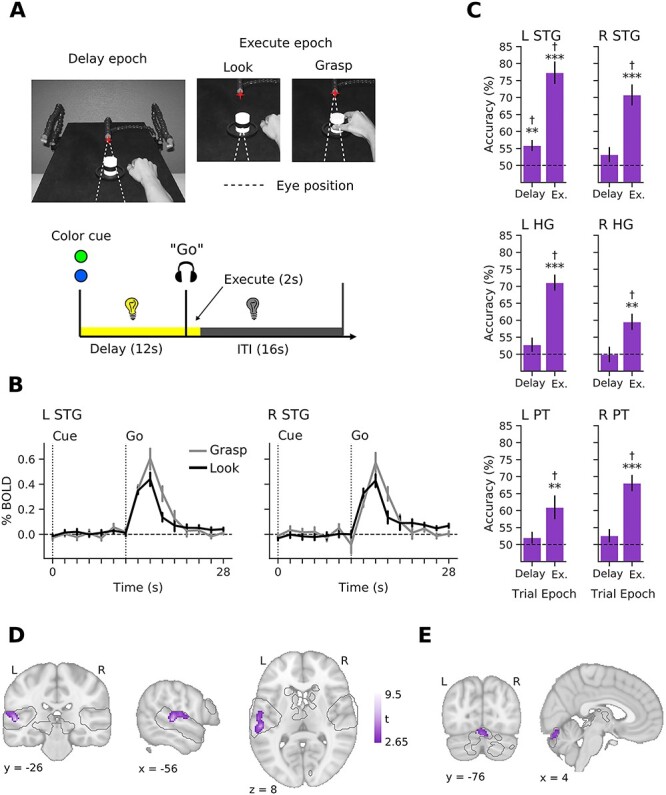

Figure 2.

Decoding of hand information in early auditory cortex in Experiment 1. (A) Left and right STG clusters defined at the group level (N = 16) with the independent auditory localizer task using the contrast of Scrambled Speech > Rest (see Materials and Methods). (B) Delineation of HG and PT, within each STG cluster from A. (C) Group-averaged percent-signal change BOLD time courses for each trial type in left and right STG. The first 3 time points are time-locked to the onset of the Delay epoch and remaining time points are time-locked to onset of the Execute epoch. There is a high degree of overlap amongst the time courses for the different trial types. Note the separate increases in activity associated with delivery of the auditory cue (Compty or Midwig) and Go signal. (D) Decoding accuracies for hand (red) and cue (blue) information. Hand and cue decoding accuracies were analyzed separately in each epoch (Delay and Execute) using 1-sample t-tests (1 tailed) against chance level (50%). Error bars show ±1 standard error (SE) of mean. *P < 0.05, **P < 0.01, ***P < 0.001, †FDR-corrected q < 0.05.

Our analysis on the resulting classification accuracies (Fig. 2D) revealed that information related to the upcoming hand actions to be performed (i.e., during the Delay epoch) was present in bilateral STG (left: t14 = 3.55, P = 0.002; right: t14 = 2.34, P = 0.017) and left HG (t14 = 2.43, P = 0.014). A significant effect was also found in right HG, but it did not survive FDR correction (t14 = 2.06, P = 0.029). These findings were additionally confirmed using follow-up permutation analyses (Supplemental Fig. 4A). Meanwhile, no significant decoding was found in left (t14 = −0.074, P = 0.529) or right (t14 = 1.17, P = 0.131) PT. By contrast, during the Execute epoch, we found that hand decoding was robust in all 3 areas in both hemispheres (all P < 0.001, Fig. 2D; all P < 0.001 in permutation analyses). Because our task did not pair the execution of hand movements to sound generation, and subjects would not have heard the auditory consequences associated with movement (e.g., object lifting and replacement) due to their wearing of headphones and the loud background noise of the scanner, these Execution results suggest that the modulation of auditory cortex activity is automatic and motor related in nature (Schneider et al. 2014). Importantly, our finding of decoding in bilateral STG during the Delay period further suggests that this automatic and motor-related modulation also occurs well before execution, during movement preparation. An additional behavioral control experiment, performed prior to MRI testing (see Supplementary Material), suggests that the emergence of these hand-related effects is unlikely to be driven by systematic differences in eye position across trials (Werner-Reiss et al. 2003), since our trained participants exhibited highly stable fixations throughout the task (Supplemental Fig. 1).

In contrast to our motor-related hand decoding results, our analysis on the resulting classification accuracies for the sensory-related auditory cue (Compty vs. Midwig) revealed that, during the Delay epoch, information related to the delivered verbal cue was present only in right STG (t14 = 3.71, P = 0.001, Fig. 2D). Left PT also showed significant decoding (t14 = 1.79, P = 0.048), although this did not survive FDR correction. Subsequent permutation analyses replicated this pattern of results (Supplemental Fig. 4B). No cue decoding was found in the remaining ROIs (all P > 0.10). Critically, consistent with the fact that this auditory cue information was delivered to participants only during the Delay epoch (i.e., participants always received a Go cue at the Execute epoch, regardless of trial identity), we also observed no evidence of cue decoding during the Execute epoch (all P > 0.10).

Delay Period Decoding From Auditory Cortex Mirrors That Found in the Motor System

To provide a basis for comparing and interpreting the above hand-related decoding effects in the auditory cortex, we also used the data from our experimental task to examine Delay epoch decoding in a positive control region, the PMd. This region, which we independently identified using a separate motor localizer task in our participants (see Materials and Methods), is well known to differentiate limb-related information during movement planning in both humans and nonhuman primates (Cisek et al. 2003; Gallivan et al. 2013b). As shown in Supplemental Figure 2C, we found a remarkably similar profile of limb-specific decoding in this motor-related region to that observed in the auditory cortex (area STG). In summary, this PMd-result allows for 2 important observations. First, similar levels of action-related information can be decoded from early auditory cortex as from PMd, the latter area known to have a well-established role in motor planning (Weinrich et al. 1984; Kaufman et al. 2010; Lara et al. 2018). Second, this Delay period decoding suggests that the representation of hand-related information evolves in a similar fashion prior to movement onset in both STG and PMd.

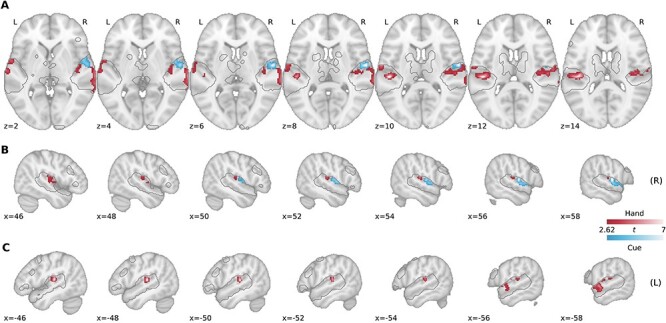

Searchlight Analyses Reveal the Representation of Hand Information in Early Auditory Cortex Prior to Movement

To complement our above ROI analyses, we also performed a group-level searchlight analysis within the wider auditory processing network, localized using our independent auditory localizer data (see Materials and Methods, Supplemental Fig. 5A). During the Delay epoch (Fig. 3), 2 hand-related decoding clusters were identified in left STG: a cluster centered on HG (212 voxels; peak, x = −48, y = −24, z = 10, t14 = 8.31, P < 0.001) and a cluster spanning anterolateral portions of left STG (321 voxels; peak, x = −60, y = −2, z = −2, t14 = 5.72, P < 0.001). In the right hemisphere, 1 large cluster was revealed, which broadly spans across STG and superior temporal sulcus (519 voxels; peak, x = 50, y = −22, z = 8, t14 = 6.06, P < 0.001). Notably, when we examined cue-related decoding during the Delay epoch (i.e., decoding the auditory command “Compty” vs. “Midwig”), we found 1 cluster in right STG (272 voxels; peak, x = 58, y = −6, z = 8, t14 = 8.27, P < 0.001), which did not overlap with the hand decoding clusters. This result suggests that, rather than a multiplexing of hand-related and cue-related information within a common region of auditory cortex, separate subregions of the auditory system are modulated by motor-related (hand) versus sensory-related (auditory cue) information. In addition, the overlap of hand decoding clusters on bilateral HG and STG, as well as a cue decoding cluster in right STG, replicates our basic pattern of ROI-based results presented in Figure 2.

Figure 3.

The representation of motor-related (hand) information during the Delay epoch in Experiment 1 is spatially distinct from the representation of sensory-related (auditory cue) information within STG. Searchlight analyses were restricted to a mask defined by significant voxels in an Intact Speech > Rest contrast using an independent auditory localizer task (gray-traced regions; see Materials and Methods). Group-level searchlight maps were thresholded at t > 2.62 (1-tailed P < 0.01) and cluster-corrected at P < 0.05. (A) Transverse slices of significant decoding clusters for hand (red) and auditory cue (blue) in early auditory areas during the Delay epoch. (B) Sagittal slices of the right hemisphere. (C) Sagittal slices of left hemisphere.

A searchlight analysis using the Execute epoch data revealed a far more extensive pattern of hand decoding throughout the auditory network, with significant decoding extending all along the superior and middle temporal gyri bilaterally, and even into the basal ganglia and medial temporal cortex (Supplemental Fig. 5B). By contrast, and in line with our ROI-based results, no cue decoding clusters were detected during the Execute epoch. These searchlight findings, when considered jointly with our ROI-based results, provide initial evidence that movement preparation selectively modulates neural activity patterns in early auditory cortex.

Experiment 2

Our analyses from Experiment 1 demonstrate that hand-related information (left vs. right hand) is represented bilaterally in early auditory cortex well prior to movement. What remains undetermined from this first study, however, is the extent to which these modulations in auditory cortex purely reflect a motor-specific process. Indeed, one alternative explanation of the Experiment 1 results is that the decoding of limb-specific information could reflect some type of auditory working-memory and/or rehearsal process, whereby, during the delay period, participants translate the auditory command (e.g., “Compty”) into the corresponding auditory instruction (e.g., subvocalize to themselves “Right hand”). If true, then what we interpret here as “hand-related” decoding may instead reflect a subvocalization process that recruits auditory cortex. Indeed, prior fMRI work has shown that both the rehearsal (Paulesu et al. 1993) and maintenance (Kumar et al. 2016) of auditory information involve the activation of bilateral auditory cortex, and in Experiment 1, we are unable to disentangle such rehearsal/maintenance effects from the increase in BOLD activity related to the actual auditory cue delivered to participants at the onset of Delay epoch (Compty and Midwig; Fig. 2C). Thus, what is needed to disentangle these effects is a delayed movement task in which no auditory cues are used to instruct movement at the onset of the Delay epoch. This would allow us to examine whether we still observe an increase in BOLD activity during the delay period (as in Fig. 2C), which would be consistent with the alternative explanation of our results that the “hand-related” decoding observed instead reflects an auditory rehearsal/maintenance process.

To rule out the aforementioned potential confound and to replicate and extend the effects of Experiment 1 to different effector systems, in a second experiment we modified a classic task from primate neurophysiology used to dissociate motor- versus sensory-related coding during action planning (Snyder et al. 1997; Cui and Andersen 2007). In our version of this delayed movement task, in each trial, participants either grasped an object with their right hand or made an eye movement toward it (Fig. 4A). Importantly, unlike in Experiment 1 (wherein we used auditory commands to instruct actions at the onset of the Delay epoch), here we cued the 2 movements via a change in the color of the fixation LED (Fig. 4A). As such, we could examine whether, in the absence of any auditory input at the onset of the Delay period, we still find increased activity in bilateral auditory cortex. Such an observation would be consistent with an auditory rehearsal/maintenance process (Paulesu et al. 1993; Kumar et al. 2016). By contrast, if we were to instead find that the decoding of the upcoming action to be performed (i.e., hand vs. eye movement) occurs in the absence of any net change in auditory activity, then this would suggest that such decoding is linked to a top-down motor-related process.

Delay Period Decoding From Early Auditory Cortex Is Unlikely to Reflect Auditory Rehearsal

As in Experiment 1, a pattern classification analysis on Delay period voxel patterns revealed that information related to the upcoming effector to be used (hand vs. eye) could be decoded from the STG (Fig. 4C). Notably, we found that this decoding during the Delay epoch was observed only in the contralateral (left), and not the ipsilateral (right), STG (left: t12 = 3.58, P = 0.001; right: t12 = 1.42, P = 0.091); the left STG effect was also significant in follow-up permutation analyses (P = 0.001; Supplemental Fig. 6A). Decoding in the remaining ROIs during the Delay epoch was not significant (all P > 0.10). During the Execute epoch, as in Experiment 1, we found that effector-related decoding was robust in all 3 areas and in both hemispheres (all P < 0.008; all permutation analyses P < 0.001). Together, these results support the key observation from Experiment 1 that the auditory cortex contains effector-specific information prior to movement onset. In addition, this second experiment does not display the expected characteristics of auditory rehearsal/working memory processes (Paulesu et al. 1993; Kumar et al. 2016), in that 1) the effector-related modulation we observe occurs in the absence of any BOLD activation prior to movement during the Delay period (Fig. 4B shows the baseline levels of activity in both left and right STG) and that 2) this effector-specific information is represented in the auditory cortex contralateral to the (right) hand being used, rather than in bilateral auditory cortex. With respect to this latter finding, if auditory cortex is modulated in a top-down fashion via the motor system, then we might expect, given the contralateral organization of the motor system (Porter and Lemon 1995) and the existence of direct within-hemispheric projections from motor to auditory cortex (Nelson et al. 2013), that these modulations should be primarily contralateral (to the hand) in nature.

As in Experiment 1, it is useful to interpret these above decoding effects in left STG with respect to a positive control region, like PMd, known to distinguish upcoming hand versus eye movements during the Delay epoch in humans (Gallivan et al. 2011). As shown in Supplemental Figure 6B, we find a similar level of decoding of effector-specific information in left PMd (t12 = 2.78, P = 0.008) as that observed in left STG above. This suggests that motor-related information is about as decodable from the auditory cortex as it is from a motor-related region, like PMd.

Searchlight Analyses Reveal the Representation of Effector-Specific Information in Contralateral Auditory Cortex Prior to Movement

To complement our Experiment 2 ROI analyses and bolster our observations in Experiment 1, we again performed a group-level searchlight analysis within the wider auditory processing network (denoted by the gray traces in Fig. 4D,E). During the Delay epoch (Fig. 4D), we identified a large effector-specific (hand vs. eye) decoding cluster in left STG (308 voxels; peak, x = −54, y = −28, z = 4, t13 = 9.49, P < 0.00001), as well as a smaller cluster in cerebellum (150 voxels; peak, x = 6, y = −78, z = −18, t13 = 4.47, P < 0.00026, Fig. 4E). An uncorrected searchlight map (Supplemental Fig. 7A) also reveals no major subthreshold clusters in right STG, reinforcing the notion that decoding in Experiment 2 is contralateral in nature. A searchlight analysis using the Execute epoch data replicated our general observations from Experiment 1 that movement execution elicits far more widespread activity throughout the wider auditory network (Supplemental Fig. 7). These searchlight results, when considered jointly with our ROI-based findings, provide strong evidence that movement preparation primarily modulates neural activity in the contralateral auditory cortex.

Discussion

Here we have shown, using fMRI and 2 separate experiments involving delayed movement tasks, that effector-specific information (i.e., left vs. right hand in Experiment 1, and hand vs. eye in Experiment 2) can be decoded from premovement neural activity patterns in early auditory cortex. In Experiment 1, we showed that effector-specific decoding was invariant to the auditory cue used to instruct the participant on which hand to use. Separately, with our searchlight analyses, we also found that the decoding of this hand-related information occurred in a separate subregion of auditory cortex than the decoding of the auditory cue that instructed the motor action. In Experiment 2, we showed that effector-specific decoding in the auditory cortex occurs even in the absence of any auditory cues (i.e., we cued movements via a visual cue) and even when there is no net increase in BOLD activity during the Delay period. Moreover, we found through both our ROI-based and searchlight-based analyses that this decoding in auditory cortex was contralateral to the hand being used. Taken together, the findings from these 2 experiments suggest that a component of action planning, beyond preparing motor areas for the forthcoming movement, involves modulating early sensory cortical regions. Such modulation may enable these areas to more effectively participate in the processing of task-specific sensory signals that normally arise during the unfolding movement itself.

“Motor” Versus “Sensory” Plans

With respect to motor-related brain areas (e.g., the primary, premotor, and supplementary motor cortices), several hypotheses have been proposed about the role of planning-related activity. Some researchers have suggested that planning activity codes a variety of different movement parameters (e.g., direction, speed), with a view that it represents some subthreshold version of the forthcoming movement (Tanji and Evarts 1976; Riehle and Requin 1989; Hocherman and Wise 1991; Shen and Alexander 1997; Messier and Kalaska 2000; Churchland et al. 2006; Pesaran et al. 2006; Batista et al. 2007). More recent work, examining populations of neurons in motor areas, has instead suggested that movement planning involves setting up the initial state of the population, such that movement execution can unfold naturally through transitory neural dynamics (Churchland et al. 2010, 2012; Shenoy et al. 2013; Sussillo et al. 2015; Pandarinath et al. 2017; Lara et al. 2018). In the context of this newer framework, our results suggest that motor planning may also involve setting up the initial state of primary sensory cortical areas. Whereas the neural activity patterns that unfold during movement execution in motor areas are thought to regulate the timing and nature of descending motor commands (Churchland et al. 2012; Shenoy et al. 2013), such activity in primary sensory areas could instead regulate the timing and nature of the processing of incoming sensory signals.

One idea is that motor preparation signals originating from the motor system could tune early sensory areas for a role in sensory prediction. Prediction of the sensory consequences of action is essential for the accurate sensorimotor control of movement, per se, and also provides a mechanism for distinguishing between self-generated and externally generated sensory information (Wolpert and Flanagan 2001). The critical role of prediction in sensorimotor control has been well documented in the context of object manipulation tasks (Flanagan et al. 2006; Johansson 2009), like those used in our experiments. The control of such tasks centers around “contact events,” which give rise to discrete sensory signals in multiple modalities (e.g., auditory, tactile) and represent subgoals of the overall task. Thus, in the grasp, lift, and replace task that our participants performed in both Experiments 1 and 2, the brain automatically predicts the timing and nature of discrete sensory signals associated with contact between the digits and object, as well as the breaking, and subsequent making, of contact between the object and surface; events that signify successful object grasp, liftoff, and replacement, respectively. By comparing predicted and actual sensory signals associated with these events, the brain can monitor task progression and launch rapid corrective actions if mismatches occur (Wolpert et al. 2011). These corrective actions are themselves quite sophisticated and depend on both the phase of the task and the nature of the mismatch (Flanagan et al. 2006). Thus, outside the motor system, the preparation of manipulation tasks clearly involves forming what could be referred to as a “sensory plan”; that is, a series of sensory signals that, during subsequent movement execution, can be predicted based on knowledge of object properties and information related to outgoing motor commands (Johansson 2009).

In the context of the experimental tasks and results presented here, it would make sense that such “sensory plans” be directly linked to the acting limb (and thus, decodable at the level of auditory cortex). This is because successful sensory prediction, error detection, and rapid motor correction would necessarily require direct knowledge of the hand being used. There is evidence from both songbirds and rodents to suggest that the internal motor-based estimate of auditory consequences is established in auditory cortex (Canopoli et al. 2014; Schneider et al. 2018). This provides a putative mechanism through which auditory cortex itself can generate sensory error signals that can be used to update ongoing movement. However, for the latter to occur, auditory cortex must be able to relay this sensory error information back to the motor system, either through direct connections or through some intermediary brain area. In principle, this could be achieved through known projections from auditory to frontal cortical regions. Neuroanatomical tracing studies in nonhuman primates have identified bidirectional projections between regions in auditory and frontal cortex (Petrides and Pandya 1988, 2002; Deacon 1992; Romanski et al. 1999), and in humans, the arcuate and uncinate fasciculi fiber tracts are presumed to allow the bidirectional exchange of information between the auditory and motor cortices (Schneider and Mooney 2018). However, any auditory-to-motor flow of information must account for the fact that the motor system, unlike the auditory system, has a contralateral organization (Porter and Lemon 1995). That is, given that the auditory sensory reafference associated with movements of a single limb (e.g., right hand) is processed in bilateral auditory cortex, then there must be a mechanism by which resulting sensory auditory errors can be used to selectively update the contralateral motor (e.g., left) cortex.

One possibility, supported by our finding that effector-related information could only be decoded from the contralateral auditory cortex (in Experiment 2), is that the motor hemisphere involved in movement planning (i.e., contralateral to the limb) may only exert a top-down modulation on the auditory cortex within the same hemisphere. This would not only be consistent with current knowledge in rodents that motor-to-auditory projections are within hemisphere only (Nelson et al. 2013), but it would also provide a natural cortical mechanism by which sensory errors computed in auditory cortex can be directly tagged to the specific motor effector being used. For example, if the motor cortex contralateral to the acting hand is providing an internal motor-based estimate of the consequences of movement to the auditory cortex within the same hemisphere, then only that auditory cortex would be able to compute the sensory error signal. In this way, the intrinsic within-hemispheric wiring of motor-to-auditory connections could provide the key architecture through which auditory error signals can be linked to (and processed with respect to) the acting hand and thus also potentially used to update motor commands directly.

One caveat to the above speculation is that, due to the loud MRI scanner environment, subjects would never have expected to hear any of the auditory consequences associated with their movements. On the one hand, this bolsters the view that the modulations we describe in auditory cortex are “automatic” in nature (i.e., they are context-invariant and occur outside the purview of cognitive control). On the other hand, it might instead suggest that, rather than there being an interaction between hand dependency and expected auditory consequences (as speculated in the previous paragraph), there is an effector-dependent global gating mechanism associated with movement planning. Moreover, given that in Experiment 2 we show that the effector decoding occurs in contralateral auditory cortex during planning, whereas it occurs in bilateral auditory cortex during execution (Supplemental Fig. 7), this could suggest different gating mechanisms for action planning versus execution. Further studies will be needed to address these possibilities.

Sensory Predictions for Sensory Cancellation Versus Sensorimotor Control

The disambiguation of self- and externally generated sensory information is thought to rely on canceling, or attenuating, the predictable sensory consequences of movements (Wolpert and Flanagan 2001; Schneider and Mooney 2018). Such sensory predictions for use in “sensory cancellation” are generally thought to be represented in primary sensory areas. According to this view, an efference copy of descending motor commands, associated with movement execution, is transmitted in a top-down fashion to early sensory cortices in order to attenuate self-generated sensory information (von Holst et al. 1950; Crapse and Sommer 2008). By contrast, sensory predictions for use in “sensorimotor control” are thought to be represented in the same frontoparietal circuits involved in movement planning and execution (Scott 2012, 2016). According to this view, incoming sensory information, associated with movement execution, is transmitted in a bottom-up fashion from early sensory areas to frontoparietal circuits wherein processes associated with mismatch detection and subsequent movement correction are performed (Desmurget et al. 1999; Tunik et al. 2005; Jenmalm et al. 2006). Although here we observe our task-related modulations in early sensory (auditory) cortex, we think it is unlikely that they purely reflect a sensory cancellation process per se. First, sensory attenuation responses in primary auditory cortex have been shown to occur about 200 ms prior to movement onset (Schneider et al. 2014, 2018), whereas the effector-specific modulations of auditory cortex we report here occurred, at minimum, several seconds prior to movement onset (as revealed through our analyses linked to the onset of the Delay epoch). Second, as already noted above, any sensory attenuation would be expected to occur in bilateral auditory cortex (Schneider et al. 2014), which contrasts with our finding of effector-related decoding in only the contralateral auditory cortex in Experiment 2. Given these considerations, a more likely explanation of our results is that they reflect the motor system preparing the state of auditory cortex, in a top-down fashion, to process future auditory signals for a role in forthcoming “sensorimotor control.”

Effector-Specific Representations in Auditory Cortex Are Likely Motor-Related in Origin

There are 3 lines of support for the notion that the effector-specific decoding observed here in auditory cortex is the result of a top-down, motor-related modulation. First, as noted above, in the MRI scanner environment (i.e., loud background noise, subjects’ wearing of headphones), our participants could not have heard the auditory consequences of their actions; for example, sounds associated with contacting, lifting, and replacing the object. This argues that our reported modulation of auditory cortex is automatic in nature and not linked to any sensory reafference or attentional processes (Otazu et al. 2009; Schneider et al. 2014). Second, our searchlight analyses in Experiment 1 identified separate clusters in STG that decoded hand information (in early auditory cortex) from those that decoded auditory cue information (in higher-order auditory cortex). The fact that different subregions of auditory cortex are modulated prior to movement for motor (i.e., discriminating left vs. right hand) versus auditory language information (i.e., discriminating Compty vs. Midwig) is consistent with the notion that our hand-related decoding effects are invariant to sensory input. This finding, along with others presented in our paper (see Results), also argues against the idea that our hand-related decoding results can be attributed to some subvocalization process, as such a process would be expected to recruit the same region of auditory cortex as when actually hearing the commands (Paulesu et al. 1993; McGuire et al. 1996; Shergill et al. 2001). Third, in Experiment 2 the decoding of effector-specific information was found to be contralateral to the hand being used in the task. As already noted, such lateralization is a main organizational feature of movement planning and control throughout the motor system (Porter and Lemon 1995) and is consistent with neuroanatomical tracing work showing that motor cortex has direct projections to auditory cortex within the same hemisphere (Nelson et al. 2013). By contrast, no such organization exists in the cortical auditory system, with lateralization instead thought to occur along different dimensions (e.g., language processing; Hackett 2015). Notably, the contralaterality observed in our Experiment 2 also rules out the possibility that our results are merely the byproduct of an auditory working-memory/rehearsal process, as such processes have been shown to recruit bilateral auditory cortex (Kumar et al. 2016). Indeed, we find through both our ROI-based and searchlight-based analyses that the decoding of motor effector information occurs in bilateral auditory cortex when both hands are interchangeably used in the task (Experiment 1) and find only contralateral decoding of motor effector information when only one of the hands is used in the task (Experiment 2). These observations, when taken together, support the notion that the signals being decoded from the auditory cortex prior to movement have a motor, rather than sensory, origin. We appreciate, however, that we are only able to infer that the modulation in auditory cortex arises via the motor system, as in the current study we do not assess any circuit-level mechanisms related to directionality or causality, as has been done in nonhuman work (Nelson et al. 2013; Schneider et al. 2014).

Representation of Predicted Tactile Input in Auditory Cortex?

Prior work has demonstrated that tactile input alone is capable of driving auditory cortex activity (Foxe et al. 2002; Kayser et al. 2005; Schürmann et al. 2006; Lakatos et al. 2007), indicating a potential role for auditory cortex in multisensory integration. As noted above, the control of object manipulation tasks involves accurately predicting discrete sensory events that arise in multiple modalities, including tactile and auditory (Johansson 2009). It is plausible then that some of the premovement auditory cortex modulation described here reflects the predicted tactile events arising from our task (e.g., object contact, liftoff, and replacement), which we would also expect to be linked to the acting hand (and thus, decodable). Though we cannot disentangle this possibility in the current study, it does not undercut our main observation that early auditory cortex is selectively modulated as a function of the movement being prepared, nor does it undercut our interpretation that such modulation is likely linked to sensorimotor prediction.

Conclusions

Here we show that, prior to movement onset, neural activity patterns in early auditory cortex carry information about the movement effector to be used in the upcoming action. This result supports the hypothesis that sensorimotor planning, which is critical in preparing neural states ahead of movement execution (Lara et al. 2018), occurs not only in motor areas but also in primary sensory areas. While further work is required to establish the precise role of this movement-related modulation, our findings add to a growing line of evidence indicating that early sensory systems are directly modulated by sensorimotor computations performed in higher-order cortex (Chapman et al. 2011; Steinmetz and Moore 2014; Gutteling et al. 2015; Gallivan et al. 2019) and are not merely low-level relayers of incoming sensory information (Scheich et al. 2007; Matyas et al. 2010; Weinberger 2011; Huang et al. 2019).

Funding

Canadian Institutes of Health Research (CIHR) (MOP126158 to J.R.F. and J.P.G.); Natural Sciences and Engineering Research Council (NSERC) Discovery Grant to J.P.G.; Canadian Foundation for Innovation to J.P.G.; R.S. McLaughlin Fellowship to D.J.G.; NSERC Graduate Fellowship to D.J.G. and C.N.A.

Notes

The authors would like to thank Martin York, Sean Hickman, and Don O’Brien for technical assistance. Conflict of Interest: None declared.

Supplementary Material

References

- Abraham A, Pedregosa F, Eickenberg M, Gervais P, Mueller A, Kossaifi J, Gramfort A, Thirion B, Varoquaux G. 2014. Machine learning for neuroimaging with scikit-learn. Front Neuroinform. 8:14.

- Avants BB, Epstein CL, Grossman M, Gee JC. 2008. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med Image Anal. 12:26–41.

- Batista AP, Santhanam G, Yu BM, Ryu SI, Afshar A, Shenoy KV. 2007. Reference frames for reach planning in macaque dorsal premotor cortex. J Neurophysiol. 98:966–983.

- Canopoli A, Herbst JA, Hahnloser RHR. 2014. A higher sensory brain region is involved in reversing reinforcement-induced vocal changes in a songbird. J Neurosci. 34:7018–7026.

- Chapman CS, Gallivan JP, Culham JC, Goodale MA. 2011. Mental blocks: fMRI reveals top-down modulation of early visual cortex when obstacles interfere with grasp planning. Neuropsychologia. 49:1703–1717.

- Churchland MM, Cunningham JP, Kaufman MT, Foster JD, Nuyujukian P, Ryu SI, Shenoy KV. 2012. Neural population dynamics during reaching. Nature. 487:51–56.

- Churchland MM, Cunningham JP, Kaufman MT, Ryu SI, Shenoy KV. 2010. Cortical preparatory activity: representation of movement or first cog in a dynamical machine? Neuron. 68:387–400.

- Churchland MM, Santhanam G, Shenoy KV. 2006. Preparatory activity in premotor and motor cortex reflects the speed of the upcoming reach. J Neurophysiol. 96:3130–3146.

- Cisek P, Crammond DJ, Kalaska JF. 2003. Neural activity in primary motor and dorsal premotor cortex in reaching tasks with the contralateral versus ipsilateral arm. J Neurophysiol. 89:922–942.

- Cox RW. 1996. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 29:162–173.

- Cox RW, Chen G, Glen DR, Reynolds RC, Taylor PA. 2017. FMRI clustering in AFNI: false-positive rates redux. Brain Connect. 7:152–171.

- Cox RW, Hyde JS. 1997. Software tools for analysis and visualization of fMRI data. NMR Biomed. 10:171–178.

- Crapse TB, Sommer MA. 2008. Corollary discharge across the animal kingdom. Nat Rev Neurosci. 9:587–600.

- Cui H, Andersen RA. 2007. Posterior parietal cortex encodes autonomously selected motor plans. Neuron. 56:552–559.

- Da Costa S, Zwaag W, Marques JP, Frackowiak RSJ, Clarke S, Saenz M. 2011. Human primary auditory cortex follows the shape of Heschl’s gyrus. J Neurosci. 31:14067–14075.

- Dale AM, Fischl B, Sereno MI. 1999. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage. 9:179–194.

- Deacon TW. 1992. Cortical connections of the inferior arcuate sulcus cortex in the macaque brain. Brain Res. 573:8–26.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, et al. 2006. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 31:968–980.

- Desmurget M, Epstein CM, Turner RS, Prablanc C, Alexander GE, Grafton ST. 1999. Role of the posterior parietal cortex in updating reaching movements to a visual target. Nat Neurosci. 2:563–567.