Abstract

Objectives:

To assess the diagnostic accuracy of two smartphone cephalometric analysis apps compared with Viewbox software.

Materials and Methods:

Pretreatment digital lateral cephalograms of 50 consecutive orthodontic patients (20 males, 30 females; mean age, 19.1 years; SD, 11.7) were traced twice using two apps (ie, CephNinja and OneCeph), with Viewbox used as the gold standard computer software program. Seven angular and two linear measurements, originally derived from Steiner cephalometric analysis, were performed.

Results:

Regarding validity, intraclass correlation coefficients (ICCs) ranged from .903–.983 and .786–.978 for OneCeph vs Viewbox and CephNinja vs Viewbox, respectively. The ICC values for intratool reliability ranged from .647–.993. None of the CephNinja measurements was below the recommended cutoff values of ICCs for reliability.

Conclusions:

OneCeph has a high validity compared with Viewbox, while CephNinja is the best alternative to Viewbox regarding reliability. Smartphone apps may have a great potential in supplementing traditional cephalometric analysis.

Keywords: Orthodontic diagnosis, Cephalometrics, Apps, Teledentistry

INTRODUCTION

Cephalometrics is an integral component of clinical orthodontics and orthognathic surgery aiming to evaluate dentofacial proportions, to clarify the anatomic basis for a malocclusion, and to analyze growth- and treatment-related changes.1 Manual cephalometric analysis has been largely replaced by semiautomatic computer-based software,2 which enables direct landmark identification on screen-displayed digital images. Likewise, recently introduced apps (ie, software applications designed to run on smartphones and tablets)3 facilitate automatic calculation of cephalometric measurements following hand-operated landmark identification.

The adoption of mobile technologies by health care professionals has been associated with several advantages, including improved practice productivity and clinical decision making, rapid access to information and multimedia resources, and more accurate patient documentation.4 There is emerging evidence supporting the efficacy of teledentistry, that is, the combination of telecommunications and dentistry in the exchange of clinical information and images between distant locations, in remote dental consultation and treatment planning.5 In addition, technology-enhanced learning (TEL), ie, the use of smartphones, computers, apps, learning management systems, and discussion boards, is increasingly involved in education and training in health professions.6

Currently available orthodontic apps are targeted at either clinicians or patients and are intended to promote orthodontic news, meetings, products, diagnostics, and practice management or to serve as patient education materials, treatment simulators, progress trackers, and elastic wear reminders.7–9 Nevertheless, a systematic approach to evaluating the accuracy and evidence base of mobile apps is currently lacking.9 Most of the relevant studies refer to established criteria for assessing health care information displayed on websites and not specifically for apps.10 Consequently, a decision to embed a health care app in everyday practice should be thoroughly explored.11

Earlier research on the validity of cephalometric analysis apps operating on tablets and smartphones compared with manual and computerized cephalometric analysis has yielded contradictory results.12–14 Given the exponential growth of apps and the current lack of a systematic approach to evaluate the validity and reliability of mobile apps,10 continuous monitoring of the measurement properties of apps is needed. Therefore, the aim of this study was to assess the concurrent validity and reliability of cephalometric measurements generated by two popular, free apps, CephNinja (version 1.0, Naveen Madan, Bothell, Wash) and OneCeph (version beta 1.1, NXS, Hyderabad, Telangana, India), compared with Viewbox (Viewbox 4, dHAL Software, Kifissia, Greece) as the reference standard.

MATERIALS AND METHODS

Pretreatment digital lateral cephalograms of 50 consecutive orthodontic patients attending a private practice (Dental Clinics Zwolle, Zwolle, the Netherlands) between August and October 2017 were retrospectively collected for the purposes of the study. No selection criteria were applied in relation to patients' gender, age, and type of malocclusion. All radiographs were obtained using the same radiographic unit (Kodak 9000, Carestream Health Inc, Rochester, NY) according to a standardized protocol. Patient identifiers (ie, name, age, gender, and date of examination) were cropped out of the original lateral cephalograms to maintain patient privacy. The Medical Ethics Review Committee of the University Medical Center Groningen, Groningen, the Netherlands, provided a waiver for the study (M18.225513) upon request.

Tracing Techniques

The free versions of the CephNinja and OneCeph15 apps were downloaded from the Google Play Store (Google Inc, Mountain View, Calif) on March 30, 2018, onto a Samsung Galaxy S8 smartphone (Samsung Telecommunications, Suwon, South Korea). Viewbox, a CE-certified computerized cephalometric analysis program broadly used in orthodontic research,16–19 was installed on a laptop (Microsoft Surface Laptop Core, i8, 8–256 GB, Microsoft Corporation, Redmond, Wash) and served as the gold standard. To eliminate interobserver variability and to focus on the intertool variability, a single examiner (Dr Livas) traced the radiographs in random order, first using Viewbox, then OneCeph, and finally CephNinja. All tracings were repeated in random order in a second session, 2 weeks after the first one. Tracing periods were limited to 1 hour to prevent operator fatigue. At the time the study was conducted, the examiner had 15 years of clinical experience in orthodontics and more than 10 years of experience using Viewbox and had been previously calibrated.16–19 Prior to the study, a 3-hour training session for each app was carried out to allow the examiner to master the tracing method. As the vast majority of smartphones are not equipped with a stylus, identification of landmarks was performed directly on the touchscreen with a finger to represent mainstream use.

Cephalometric Measurements

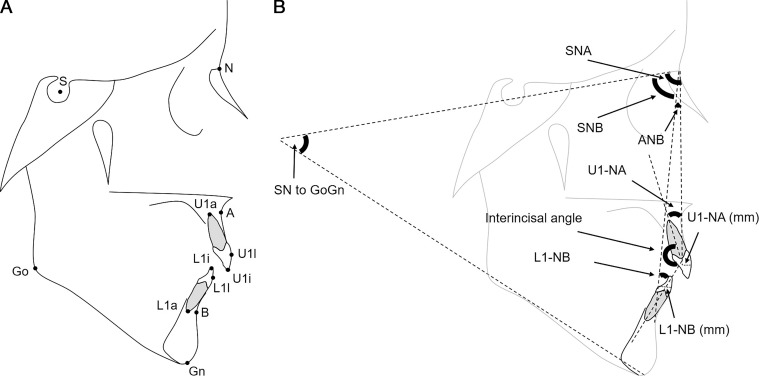

To define the cephalometric variables, a total of 12 landmarks were digitized (Figure 1A). Seven angular and two linear measurements originating from the Steiner cephalometric analysis,20 the prevailing cephalometric analysis in orthodontic practices,2 all available in the analysis protocols of Viewbox and both apps, were selected for the tracing procedures, namely, the angles SNA, SNB, ANB, SN to GoGn, upper incisor to NA (U1 to NA), lower incisor to NB (L1 to NB), interincisal angle, and the linear distances of the most prominent points of the labial surfaces of the upper and lower incisors perpendicular to NA and NB, respectively (Figure 1B).

Figure 1.

(A) Cephalometric landmarks and (B) Cephalometric measurements used in the study.
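To illustrate how angular values can be derived once landmarks have been placed, the following minimal Python sketch computes SNA, SNB, and ANB from two-dimensional landmark coordinates. The coordinates and the function name `angle_deg` are hypothetical and chosen only for illustration; the internal implementations of Viewbox, OneCeph, and CephNinja are not described in this study and may differ.

```python
import math


def angle_deg(p, vertex, q):
    """Unsigned angle (degrees) at `vertex` between the rays vertex->p and vertex->q."""
    v1 = (p[0] - vertex[0], p[1] - vertex[1])
    v2 = (q[0] - vertex[0], q[1] - vertex[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 on (|cross|, dot) is numerically stable for very small and very large angles
    return math.degrees(math.atan2(abs(cross), dot))


# Hypothetical landmark coordinates in mm (image axes: x to the right, y downward).
S = (0.0, 0.0)    # sella
N = (70.0, 0.0)   # nasion
A = (62.0, 55.0)  # A point
B = (50.0, 94.0)  # B point

sna = angle_deg(S, N, A)  # angle at N between S and A
snb = angle_deg(S, N, B)  # angle at N between S and B
anb = sna - snb           # ANB taken as the difference SNA - SNB

print(f"SNA = {sna:.1f} deg, SNB = {snb:.1f} deg, ANB = {anb:.1f} deg")
```

Because every angular variable depends on the digitized positions of two or three landmarks, a small displacement of a single point (for example, N) propagates to several measurements at once.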

Statistical Analysis

Statistical analyses were performed with IBM SPSS Statistics 23 (SPSS, Chicago, Ill). Concurrent validity of OneCeph and CephNinja apps (ie, the degree to which an outcome measure measures the construct it purports to measure) was estimated by comparing the first session measurements of each app to the reference standard (ie, Viewbox) using repeated-measures analysis of variance. Sphericity was checked using Mauchly's test. In case of significant deviations from sphericity, a Greenhouse-Geisser correction was applied. Intraclass correlation coefficients (ICCs; two-way mixed-effects model, single measures, absolute agreement) and the 95% confidence intervals (CIs) were calculated. When interpreting the results for research purposes (comparing groups), the ICC should be at least .7, and for clinical practice, the ICC should be at least .9.21 Plots were constructed to analyze differences in measurements between the apps and the reference standard. A clinically relevant difference was claimed when the angles and distances measured by the apps differed by >2° or >2 mm, respectively.22,23
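The single-measures, absolute-agreement ICC described above can be sketched in a few lines of Python. This is an illustrative reimplementation of the standard mean-squares formula (often written ICC(A,1)), not the SPSS procedure used in the study; the function names, the example values, and the helper `interpret_icc` are assumptions, and the 95% CI is not computed here.

```python
def icc_a1(data):
    """ICC for a two-way model, single measures, absolute agreement.

    `data` is a list of rows, one per patient, each row holding the values
    obtained with the k tools (or sessions) being compared.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between patients
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between tools
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_err = ss_total - ss_rows - ss_cols                    # residual

    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def interpret_icc(value):
    """Apply the cutoffs stated in the text: .7 for research, .9 for clinical practice."""
    if value >= 0.9:
        return "acceptable for clinical practice"
    if value >= 0.7:
        return "acceptable for research"
    return "below recommended cutoffs"


# Hypothetical SNA values (degrees): column 0 = reference software, column 1 = app.
sna_pairs = [[81.0, 80.6], [78.5, 78.9], [84.2, 83.8], [76.9, 77.4], [82.3, 82.0]]
icc = icc_a1(sna_pairs)
print(round(icc, 3), interpret_icc(icc))
```

Absolute agreement, unlike consistency, penalizes a constant offset between tools: two columns that differ by exactly one unit in every patient still correlate perfectly, yet their ICC falls below 1.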

Reliability (ie, the degree to which the measurement is free from measurement error) was determined using paired t-tests and the limits of agreement on measurements acquired by the three programs (session 1 vs session 2). In addition, the ICC and 95% CI were calculated. Bland-Altman plots were constructed to analyze differences in measurements between sessions for all three programs.
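The limits of agreement and the count of possibly clinically relevant differences can be sketched as follows. This Python example assumes the conventional Bland-Altman limits of mean difference ± 1.96 SD, which the text does not state explicitly; the function names and session values are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(first, second):
    """Return (bias, lower limit, upper limit) for paired measurements."""
    diffs = [a - b for a, b in zip(first, second)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample SD of the paired differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def n_clinically_relevant(first, second, threshold=2.0):
    """Count pairs differing by more than `threshold` (2 degrees or 2 mm in this study)."""
    return sum(1 for a, b in zip(first, second) if abs(a - b) > threshold)


# Hypothetical interincisal angles (degrees) from two tracing sessions with one tool.
session_1 = [80.0, 82.5, 79.0, 85.0]
session_2 = [81.0, 82.0, 82.0, 84.5]

bias, lower, upper = bland_altman(session_1, session_2)
count = n_clinically_relevant(session_1, session_2)
print(f"bias = {bias:.2f}, limits of agreement = [{lower:.2f}, {upper:.2f}]")
print(f"{count} of {len(session_1)} pairs differ by more than 2 units")
```

Plotting each difference against the corresponding mean (or, as in the validity analysis, against the reference value) then shows whether the disagreement grows with the magnitude of the measurement.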

RESULTS

In total, lateral cephalograms of 20 males and 30 females (mean age, 19.1 years; SD, 11.7) were traced. The distribution of clinical malocclusion types was as follows: 12 Class I, 8 Class II division 1, 29 Class II division 2, and 1 Class III. Table 1 shows the means and standard deviations (SD) of all cephalometric measurements obtained with Viewbox and apps at both sessions.

Table 1.

Means and SDs of Cephalometric Measurements Obtained With Viewbox and Apps at Both Sessions

| Measurement | Viewbox Session 1, Mean (SD) | Viewbox Session 2, Mean (SD) | OneCeph Session 1, Mean (SD) | OneCeph Session 2, Mean (SD) | CephNinja Session 1, Mean (SD) | CephNinja Session 2, Mean (SD) |
| --- | --- | --- | --- | --- | --- | --- |
| SNA, ° | 81.62 (4.66) | 81.64 (4.56) | 81.27 (4.38) | 81.36 (4.61) | 81.38 (4.74) | 81.17 (4.66) |
| SNB, ° | 77.78 (4.39) | 77.82 (4.30) | 77.57 (4.30) | 77.58 (4.34) | 77.61 (4.41) | 77.32 (4.31) |
| ANB, ° | 3.85 (2.38) | 3.82 (2.38) | 3.70 (2.30) | 3.79 (2.37) | 3.77 (2.44) | 3.84 (2.34) |
| SN to GoGn, ° | 29.64 (6.44) | 30.51 (6.61) | 31.17 (9.45) | 31.31 (6.68) | 30.86 (6.52) | 30.84 (6.38) |
| U1 to NA, ° | 22.42 (10.32) | 21.41 (10.15) | 21.80 (10.07) | 21.75 (9.69) | 21.63 (9.73) | 21.28 (9.84) |
| U1 to NA, mm | 6.26 (4.70) | 6.10 (4.63) | 5.91 (4.95) | 5.40 (4.48) | 5.61 (3.10) | 5.56 (2.98) |
| L1 to NB, ° | 27.75 (8.91) | 28.27 (8.60) | 26.55 (10.38) | 27.28 (8.81) | 28.16 (9.00) | 27.43 (8.80) |
| L1 to NB, mm | 6.54 (3.95) | 6.51 (3.70) | 6.05 (5.13) | 6.04 (3.59) | 5.18 (2.41) | 5.22 (2.47) |
| Interincisal angle, ° | 125.99 (15.11) | 126.44 (14.75) | 127.21 (15.05) | 127.20 (14.85) | 126.74 (15.37) | 127.79 (15.69) |

Validity

The variables SN to GoGn and L1 to NB (mm) as measured by both apps significantly differed from the Viewbox values. U1 to NA (mm) in OneCeph was significantly different compared with Viewbox (Table 2). The ICC of the comparison between OneCeph and Viewbox ranged from .903 to .983 (Table 2). The lower border of the 95% CI of SN to GoGn was below .7 and those of U1 to NA (mm) and L1 to NB (mm) were below .9. The ICC of the comparison of CephNinja to Viewbox ranged from .786 to .978 (Table 2). The ICCs and/or lower border of the 95% CI of U1 to NA (mm) and L1 to NB (mm) were below .7 and those of ANB as well as SN to GoGn were below .9. Plots did not reveal systematic bias in any of the measurements (Figure 2), except for U1 to NA (mm) and L1 to NB (mm). When measuring U1 to NA (mm) with OneCeph and when measuring L1 to NB (mm) with either OneCeph or CephNinja, measurements were systematically lower compared with those obtained from Viewbox. In addition, this discrepancy was larger for higher U1 to NA (mm) and L1 to NB (mm) measurements, as the linear trend shows (Figure 2).

Table 2.

Repeated-Measures Analysis of Variance and ICC of Measurements Obtained with Viewbox and Apps During the First Session With Viewbox as Reference

| Measurementa | OneCeph: n (%) Patients With Difference in Measurements Indicating Possible Clinically Relevant Differenceb | OneCeph: ICC [Lower–Upper 95% CI] | CephNinja: n (%) Patients With Difference in Measurements Indicating Possible Clinically Relevant Differenceb | CephNinja: ICC [Lower–Upper 95% CI] | Overall P Value | Post Hoc P Value: Viewbox vs OneCeph | Post Hoc P Value: Viewbox vs CephNinja |
| --- | --- | --- | --- | --- | --- | --- | --- |
| SNA, ° | 5 (10) | .971 [.950–.984] | 6 (12) | .961 [.933–.978] | .194 | .101 | .204 |
| SNB, ° | 3 (6) | .983 [.969–.990] | 1 (2) | .978 [.961–.987] | .215 | .086 | .203 |
| ANB, ° | 1 (2) | .949 [.911–.970] | 3 (6) | .935 [.889–.963] | .681 | .557 | .523 |
| SN to GoGn, ° | 22 (44) | .925 [.679c–.972] | 19 (38) | .934 [.831–.969] | <.001 | <.001 | <.001 |
| U1 to NA, ° | 25 (50) | .957 [.926–.976] | 22 (44) | .954 [.920–.974] | .089 | .102 | .064 |
| U1 to NA, mm | 20 (40) | .903 [.806–.948] | 22 (44) | .814 [.691c–.891] | .015 | .002 | .059 |
| L1 to NB, ° | 22 (44) | .956 [.923–.975] | 18 (36) | .958 [.928–.976] | .040 | .206 | .273 |
| L1 to NB, mm | 8 (16) | .940 [.883–.968] | 21 (42) | .786 [.414c–.905] | <.001 | .005 | <.001 |
| Interincisal angle, ° | 28 (56) | .966 [.938–.981] | 27 (54) | .970 [.948–.983] | .052 | .027 | .154 |

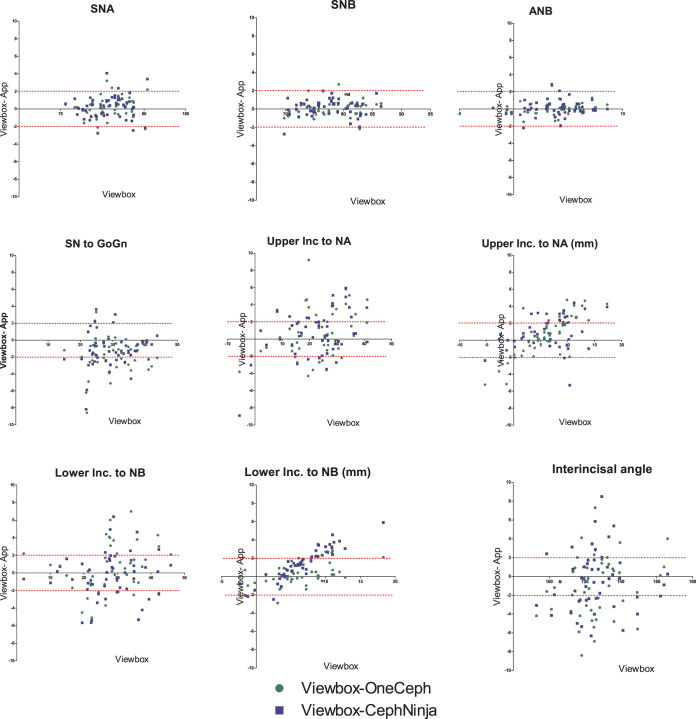

Figure 2.

Plots of the measurements obtained with Viewbox (reference standard) against the difference of the measurements obtained with Viewbox and each app (ie, Viewbox-OneCeph and Viewbox-CephNinja). The intermittent lines indicate the cutoff for clinically relevant differences, that is, >2° for angular and >2 mm for linear measurements.

Exploring outliers showed that they were not related to one specific cephalogram/patient. The percentage of patients for which a clinically relevant difference was found when compared with the reference values ranged from 2% for ANB in the case of OneCeph vs Viewbox and for SNB in the case of CephNinja vs Viewbox, to 56% and 54% for the interincisal angle for OneCeph and CephNinja, respectively (Table 2).

Intratool Reliability

Paired t-tests showed a significant difference between sessions when measuring SN to GoGn and U1 to NA (°) with Viewbox and when measuring ANB, L1 to NB (°), and the interincisal angle with CephNinja (Table 3). The ICC values for intratool reliability ranged from .647 to .993 (Table 3). For Viewbox, the lower border of the 95% CI of SN to GoGn was below .9. For OneCeph, ANB, SN to GoGn, U1 to NA (mm), L1 to NB (°), and L1 to NB (mm) presented with ICC and/or lower border of the 95% CI below the accepted cutoffs (Table 3). For CephNinja, none of the values were below .9. Plots did not reveal any pattern.

Table 3.

Paired t-Test and ICC of Measurements Obtained With All Three Software During the First and Second Session

| Software | Measurement | Number (%) of Patients With Difference in Measurements Indicating Possible Clinically Relevant Differencea | P Value | ICC [Lower–Upper 95% CI] |
| --- | --- | --- | --- | --- |
| Viewbox | SNA, ° | 1 (2) | .859 | .988 [.979–.993] |
| Viewbox | SNB, ° | 0 (0) | .543 | .993 [.987–.996] |
| Viewbox | ANB, ° | 1 (2) | .542 | .987 [.977–.992] |
| Viewbox | SN to GoGn, ° | 10 (20) | .002 | .951 [.897–.975] |
| Viewbox | U1 to NA, ° | 25 (50) | .005 | .968 [.936–.983] |
| Viewbox | U1 to NA, mm | 2 (4) | .222 | .982 [.968–.989] |
| Viewbox | L1 to NB, ° | 23 (46) | .186 | .951 [.915–.972] |
| Viewbox | L1 to NB, mm | 0 (0) | .733 | .987 [.977–.993] |
| Viewbox | Interincisal angle, ° | 24 (48) | .305 | .979 [.963–.988] |
| OneCeph | SNA, ° | 4 (8) | .536 | .972 [.952–.984] |
| OneCeph | SNB, ° | 2 (4) | .899 | .988 [.980–.993] |
| OneCeph | ANB, ° | 4 (8) | .536 | .921 [.866–.955] |
| OneCeph | SN to GoGn, ° | 14 (24) | .887 | .658b [.466b–.791] |
| OneCeph | U1 to NA, ° | 12 (24) | .862 | .974 [.955–.985] |
| OneCeph | U1 to NA, mm | 12 (24) | .330 | .701 [.529b–.818] |
| OneCeph | L1 to NB, ° | 13 (26) | .303 | .867 [.778–.922] |
| OneCeph | L1 to NB, mm | 8 (16) | .979 | .647b [.451b–.784] |
| OneCeph | Interincisal angle, ° | 17 (34) | .964 | .988 [.979–.993] |
| CephNinja | SNA, ° | 4 (8) | .206 | .967 [.943–.981] |
| CephNinja | SNB, ° | 4 (8) | .065 | .969 [.945–.983] |
| CephNinja | ANB, ° | 2 (4) | .047 | .955 [.923–.974] |
| CephNinja | SN to GoGn, ° | 13 (26) | .937 | .965 [.939–.980] |
| CephNinja | U1 to NA, ° | 19 (38) | .205 | .981 [.966–.989] |
| CephNinja | U1 to NA, mm | 2 (4) | .706 | .965 [.939–.980] |
| CephNinja | L1 to NB, ° | 12 (24) | .004 | .978 [.957–.989] |
| CephNinja | L1 to NB, mm | 1 (2) | .650 | .961 [.932–.977] |
| CephNinja | Interincisal angle, ° | 25 (50) | .007 | .984 [.968–.991] |

DISCUSSION

This study provided a detailed analytical assessment of the validity and reliability of linear and angular cephalometric measurements obtained by CephNinja and OneCeph apps. Overall, both cephalometric analysis apps performed satisfactorily, suggesting the potential use of easy-to-reach digital technology to make cephalometrics more readily accessible. Strictly looking at the number of app measurements below the acceptable cutoffs for research and clinical practice, OneCeph might be considered a slightly more valid alternative to Viewbox than CephNinja. On the other hand, fewer CephNinja measurements differed significantly from Viewbox, with SN to GoGn and L1 to NB (mm) differing significantly for both apps. Regarding reliability testing, in contrast to OneCeph and Viewbox, no CephNinja value fell below the recommended cutoffs for reliability.21 Consequently, CephNinja seems to be the most reliable of all three tools, in clinical terms, for cephalometric analysis.

The observed differences in ANB, SN to GoGn, U1 to NA (mm), and L1 to NB (mm) may reflect either the difficulty in locating the associated cephalometric points or technical discrepancies between the two apps. Inconsistencies in defining the landmarks N,24 Gn, Go, and lower incisor apex25–28 and the linear measurements U1 to NA and L1 to NB29 have been repeatedly reported for manual and computerized methods. Interestingly, CephNinja, unlike OneCeph, does not have incorporated features to assist the user with relocating points on the mobile touchscreen in case of wrong identification.

Evaluation of cephalometric measurements deriving from two apps installed on an iPad (ie, CephNinja and SmartCeph) compared with Pro Dolphin Imaging computer software showed statistically significant differences in 56.3–62.5% of the measurements.13 Other investigators who compared conventional manual cephalometric tracings with those acquired with CephNinja detected statistically significant differences in 9 of 13 variables.14 These authors, however, interpreted the results differently by either arbitrarily claiming clinically relevant differences13 or not.14 A third cephalometric study revealed high agreement for all measurements obtained with an iPad app (ie, SmileCeph), computer-aided software (ie, NemoCeph), and manual tracing.12 It must be emphasized that the thresholds for clinically relevant differences in cephalometric measurements vary greatly in the literature and are mostly empirically based. However, a difference of less than two units of measurement (millimeters or degrees) is deemed to be within clinically acceptable limits.22,23

Multidisciplinary consultation using smartphone cephalometric analysis apps may be beneficial in distant rural areas with a high need for orthodontic and orthognathic surgery care and rare or totally unavailable specialized oral health services. Providing orthodontic expertise to general dental practitioners serving disadvantaged children via teleconferencing has been proven to be successful at improving the accurate diagnoses of malocclusions and appropriate referrals.30–32

Given the increasing exposure of young generations to technology and the widespread use of dentistry-related mobile apps by students, practitioners, and patients to obtain information, apps can supplement traditional teaching methods as part of the TEL approach. In this way, training in cephalometrics can take place away from traditional learning locations. In addition, the flexibility of the mobile platform enables a more interactive and personalized education.33 In other words, residents and dental students can adjust learning to meet personal needs, revise when needed, deepen areas of special interest, and skip areas of prior knowledge.

Despite the plausible advantages of implementing smartphone cephalometric analysis apps in orthodontic/orthognathic practice and education, the current state of mobile health apps and, particularly, legislative and technical issues call for attention. For example, the existing laws for approving health-related apps are applicable only to a limited number of apps.34 The number of features, diversity of information, and rapid development of the mobile health app industry hinder timely and reliable certification.35 To address the absence of control mechanisms, several measures have been recommended, for example, formation of research groups to work on developing evaluation tools for mobile health apps, guidance from governmental organizations, or creation of internal app stores to promote the use of appropriately vetted apps.36 In this context, assessment based on usability scores33 and consulting peer review websites, blogs, and social networks for e-mobile practice updates9 have also been proposed. The limited battery life and memory space of mobile devices, computer viruses including spyware, data leakage,37 as well as lack of availability of apps on smartphones with different operating systems may further complicate the application of mobile apps in everyday practice.

Strengths and Limitations

The sample size, extent of the repeated measurements, robustness of statistical methods, and masking of patient identifiers applied in this investigation are deemed more advantageous compared with similar research.12–14 As in the study of Goracci and Ferrari,12 the long experience in cephalometrics and on-screen digitization of the examiner who performed all tracings might have also contributed to the more favorable results, since it is well-recognized that the operator's experience in landmark identification affects cephalometric measurements.26,27 The selected cephalometric landmarks need to be considered when interpreting the results of such research. While other authors included the most easily locatable points to further minimize errors,12 this study engaged variables from a widely used cephalometric analysis2 to resemble real-life practice and to test without distinctions the performance of the apps.

In accordance with previous studies,12–14 the involvement of one observer well-trained in digital cephalometric tracing was deliberately chosen to eliminate variability in results consequent to different observers, since the present study aimed to investigate the reliability of the different tools used for cephalometric analysis. Although such a decision might be initially considered a limitation, it was actually an asset of the study design. In the case of multiple observers, interindividual differences in competence in using mobile apps as well as in cephalometric experience would have influenced the results.

Recommendations for Future Research

Hypothetically, and regardless of the app design that allows image magnification and adjusting brightness/contrast, the larger viewing screen of tablets and the use of a stylus to digitize the landmarks may be more operator friendly compared with smartphones. Future research should focus on assessing the performance of app versions installed on smartphones vs tablets. App engineers need to optimize the ease of use of cephalometric analysis apps operating on smartphones, especially to simplify landmark relocation. To further generalize the current findings, it would be useful to run studies on the feasibility of app-based cephalometric analysis, namely, the time required to complete cephalometric analysis using apps compared with computer-aided software.

CONCLUSIONS

Smartphone cephalometric analysis apps perform satisfactorily in terms of validity and reliability.

OneCeph is highly valid when compared with Viewbox as a gold standard, while CephNinja is the most reliable one.

Further development of smartphone apps for cephalometrics may assist specialty training and interprofessional communication.

REFERENCES

- 1. Proffit WR, Fields HJ Jr. Cephalometric analysis. In: Proffit WR, Fields HJ Jr, Sarver DM, editors. Contemporary Orthodontics. 4th ed. St Louis, Mo: Mosby; 2007. p. 202.

- 2. Keim RG, Gottlieb EL, Vogels DS III, Vogels PB. 2014 JCO study of orthodontic diagnosis and treatment procedures, part 1: results and trends. J Clin Orthod. 2014;48:607–630.

- 3. Statista. Mobile app usage: statistics & facts. Statista. The Statistics Portal. Available at: https://www.statista.com/topics/1002/mobile-app-usage/ Accessed October 3, 2018.

- 4. Ventola CL. Mobile devices and apps for health care professionals: uses and benefits. P T. 2014;39:356–364.

- 5. Estai M, Kanagasingam Y, Tennant M, Bunt S. A systematic review of the research evidence for the benefits of teledentistry. J Telemed Telecare. 2018;24:147–156. doi: 10.1177/1357633X16689433.

- 6. Goodyear P, Retalis S. Technology-Enhanced Learning. Design Patterns and Pattern Languages. Rotterdam, the Netherlands: Sense Publishers; 2010.

- 7. Singh P. Orthodontic apps for smartphones. J Orthod. 2013;40:249–255. doi: 10.1179/1465313313Y.0000000052.

- 8. Baheti MJ, Toshniwal N. Orthodontic apps at fingertips. Prog Orthod. 2014;15:36. doi: 10.1186/s40510-014-0036-y.

- 9. Moylan HB, Carrico CK, Lindauer SJ, Tüfekçi E. Accuracy of a smartphone-based orthodontic treatment-monitoring application: a pilot study. Angle Orthod. In press.

- 10. Fiore P. How to evaluate mobile health applications: a scoping review. Stud Health Technol Inform. 2017;234:109–114.

- 11. Boudreaux ED, Waring ME, Hayes RB, Sadasivam RS, Mullen S, Pagoto S. Evaluating and selecting mobile health apps: strategies for healthcare providers and healthcare organizations. Transl Behav Med. 2014;4:363–371. doi: 10.1007/s13142-014-0293-9.

- 12. Goracci C, Ferrari M. Reproducibility of measurements in tablet-assisted, PC-aided, and manual cephalometric analysis. Angle Orthod. 2014;84:437–442. doi: 10.2319/061513-451.1.

- 13. Aksakallı S, Yılancı H, Görükmez E, Ramoğlu SI. Reliability assessment of orthodontic apps for cephalometrics. Turkish J Orthod. 2016;29:98–102. doi: 10.5152/TurkJOrthod.2016.1618.

- 14. Sayar G, Kilnic DD. Manual tracing versus smartphone application (app) tracing: a comparative study. Acta Odontol Scand. 2017;75:588–594. doi: 10.1080/00016357.2017.1364420.

- 15. Mamillapalli PK, Sesham VM, Neela PK, Mandaloju SP, Keesara S. A smartphone app for cephalometric analysis. J Clin Orthod. 2016;50:694–633.

- 16. Dvortsin DP, Sandham A, Pruim GJ, Dijkstra PU. A comparison of the reproducibility of manual tracing and on-screen digitization for cephalometric profile variables. Eur J Orthod. 2008;30:586–591. doi: 10.1093/ejo/cjn041.

- 17. Livas C, Halazonetis DJ, Booij JW, Katsaros C. Extraction of maxillary first molars improves second and third molar inclinations in Class II Division 1 malocclusion. Am J Orthod Dentofacial Orthop. 2011;140:377–382. doi: 10.1016/j.ajodo.2010.06.026.

- 18. Livas C, Halazonetis DJ, Booij JW, Pandis N, Tu YK, Katsaros C. Maxillary sinus floor extension and posterior tooth inclination in adolescent patients with Class II Division 1 malocclusion treated with maxillary first molar extractions. Am J Orthod Dentofacial Orthop. 2013;143:479–485. doi: 10.1016/j.ajodo.2012.10.024.

- 19. van der Plas MC, Janssen KI, Pandis N, Livas C. Twin Block appliance with acrylic capping does not have a significant inhibitory effect on lower incisor proclination. Angle Orthod. 2017;87:513–518. doi: 10.2319/102916-779.1.

- 20. Steiner CC. Cephalometrics for you and me. Am J Orthod. 1953;39:729–755.

- 21. Nunnally JC, Bernstein IH. Psychometric Theory. New York: McGraw-Hill; 1978.

- 22. Chen YJ, Chen SK, Yao JC, Chang HF. The effects of differences in landmark identification on the cephalometric measurements in traditional versus digitized cephalometry. Angle Orthod. 2004;74:155–161. doi: 10.1043/0003-3219(2004)074<0155:TEODIL>2.0.CO;2.

- 23. Akhare PJ, Dagab AM, Alle RS, Shenoyd U, Garla V. Comparison of landmark identification and linear and angular measurements in conventional and digital cephalometry. Int J Comput Dent. 2013;16:241–254.

- 24. Sekiguchi T, Savara BS. Variability of cephalometric landmarks used for face growth studies. Am J Orthod. 1972;61:603–618. doi: 10.1016/0002-9416(72)90109-1.

- 25. Houston WJB, Maher RE, McElroy D, Sherriff M. Sources of error in measurements from cephalometric radiographs. Eur J Orthod. 1986;8:149–151. doi: 10.1093/ejo/8.3.149.

- 26. Chen YJ, Chen SK, Chan HF, Chen KC. Comparison of landmark identification in traditional versus computer-aided digital cephalometry. Angle Orthod. 2000;70:387–392. doi: 10.1043/0003-3219(2000)070<0387:COLIIT>2.0.CO;2.

- 27. Gregston MD, Kula T, Hardman P, Glaros A, Kula K. Comparison of conventional and digital radiographic methods and cephalometric analysis software: I. Hard tissue. Semin Orthod. 2004;10:204–211.

- 28. Santoro M, Jarjoura K, Cangialosi TJ. Accuracy of digital and analogue cephalometric measurements assessed with the sandwich technique. Am J Orthod Dentofacial Orthop. 2006;129:345–351. doi: 10.1016/j.ajodo.2005.12.010.

- 29. Polat-Ozsoy O, Gokcelik A, Toygar Memikoglu TU. Differences in cephalometric measurements: a comparison of digital versus hand-tracing methods. Eur J Orthod. 2009;31:254–259. doi: 10.1093/ejo/cjn121.

- 30. Cook J, Edwards J, Mullings C, Stephens C. Dentists' opinions of an online orthodontic advice service. J Telemed Telecare. 2001;7:334–337. doi: 10.1258/1357633011936967.

- 31. Mandall NA, O'Brien KD, Brady J, Worthington HV, Harvey L. Teledentistry for screening new patient orthodontic referrals. Part 1: a randomised controlled trial. Br Dent J. 2005;199:659–662. doi: 10.1038/sj.bdj.4812930.

- 32. Mandall NA, Qureshi U, Harvey L. Teledentistry for screening new patient orthodontic referrals. Part 2: GDP perception of the referral system. Br Dent J. 2005;199:727–729. doi: 10.1038/sj.bdj.4812969.

- 33. Boulos MN, Brewer AC, Karimkhani C, Buller DB, Dellavalle RP. Mobile medical and health apps: state of the art, concerns, regulatory control and certification. Online J Public Health Inform. 2014;5:229. doi: 10.5210/ojphi.v5i3.4814.

- 34. Yasini M, Marchand G. Mobile health applications, in the absence of an authentic regulation, does the usability score correlate with a better medical reliability? Stud Health Technol Inform. 2015;216:127–131.

- 35. Chan SR, Misra S. Certification of mobile apps for health care. JAMA. 2014;312:1155–1156. doi: 10.1001/jama.2014.9002.

- 36. Mobasheri MH, King D, Johnston M, Gautama S, Purkayastha S, Darzi A. The ownership and clinical use of smartphones by doctors and nurses in the UK: a multicentre survey study. BMJ Innov. 2015;1:1–8.

- 37. Gandhi V. Are mobile apps a leaky tap in the enterprise? Zscaler. Available at: https://www.zscaler.com/blogs/research/are-mobile-apps-leaky-tap-enterprise Accessed October 5, 2018.