Summary

Across a range of motor and cognitive tasks, cortical activity can be accurately described by low-dimensional dynamics unfolding from specific initial conditions on every trial. These “preparatory states” largely determine the subsequent evolution of both neural activity and behavior, and their importance raises questions regarding how they are, or ought to be, set. Here, we formulate motor preparation as optimal anticipatory control of future movements and show that the solution requires a form of internal feedback control of cortical circuit dynamics. In contrast to a simple feedforward strategy, feedback control enables fast movement preparation by selectively controlling the cortical state in the small subspace that matters for the upcoming movement. Feedback but not feedforward control explains the orthogonality between preparatory and movement activity observed in reaching monkeys. We propose a circuit model in which optimal preparatory control is implemented as a thalamo-cortical loop gated by the basal ganglia.

Keywords: thalamo-cortical loop, movement preparation, optimal control, neural population dynamics, neural circuits, nullspace, manifold

Highlights

- Motor preparation is formalized as optimal control of M1 population dynamics
- Preparatory activity can vary in a large subspace without causing future motor errors
- A thalamo-cortical loop implements the optimal feedback control (OFC) solution
- OFC explains fast preparation and key features of monkey M1 activity during reaching

Optimal control theory has successfully explained aspects of motor cortex activity during but not before movement. Kao et al. formalize movement preparation as optimal feedback control of cortex. They show that optimal preparation can be realized in a thalamo-cortical loop, enables fast preparation, and explains prominent features of pre-movement activity.

Introduction

Fast ballistic movements (e.g., throwing) require spatially and temporally precise commands to the musculature. Many of these signals are thought to arise from internal dynamics in the primary motor cortex (M1; Figure 1A; Evarts, 1968; Todorov, 2000; Scott, 2012; Shenoy et al., 2013; Omrani et al., 2017). In turn, consistent with state trajectories produced by a dynamical system, M1 activity during movement depends strongly on the “initial condition” reached just before movement onset, and variability in initial condition predicts behavioral variability (Churchland et al., 2006; Afshar et al., 2011; Pandarinath et al., 2018). An immediate consequence of this dynamical systems view is the so-called optimal subspace hypothesis (Churchland et al., 2010; Shenoy et al., 2013): the network dynamics that generate movement must be seeded with an appropriate initial condition prior to each movement. In other words, accurate movement production likely requires fine adjustment of M1 activity during a phase of movement preparation (Figure 1B, green).

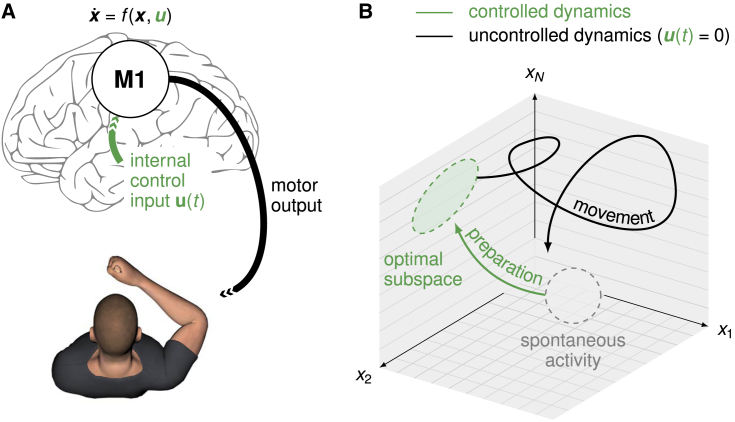

Figure 1.

Preparation and execution of ballistic movements

(A) Under a dynamical systems view of motor control (Shenoy et al., 2013), movement is generated by M1 dynamics. Prior to movement, the M1 population activity state must be controlled into an optimal, movement-specific subspace in a phase of preparation; this requires internally generated control inputs.

(B) Schematic state space trajectory during movement preparation and execution.

The optimal subspace hypothesis helps make sense of neural activity during the preparation epoch, yet several unknowns remain. What should the structure of the optimal preparatory subspace be? How does this structure depend on the dynamics of the cortical network during the movement epoch, and on downstream motor processes? Must preparatory activity converge to a single movement-specific state and be held there until movement initiation, or is some slack allowed? What are the dynamical processes and associated circuit mechanisms responsible for motor preparation? These questions can be (and have been partially) addressed empirically, for example, through analyses of neural population recordings in reaching monkeys (Churchland et al., 2010; Ames et al., 2014; Elsayed et al., 2016) or optogenetic dissection of circuits involved in motor preparation (Li et al., 2016; Guo et al., 2017; Gao et al., 2018; Sauerbrei et al., 2020). Yet for lack of an appropriate theoretical scaffold, it has been difficult to interpret these experimental results within the broader computational context of motor control.

Here, we bridge this gap by considering motor preparation as an integral part of motor control. We show that optimal control theory, which has successfully explained behavior (Todorov and Jordan, 2002; Scott et al., 2015) and neural activity (Todorov, 2000; Lillicrap and Scott, 2013) during the movement epoch, can also be brought to bear on motor preparation. Specifically, we argue that there is a prospective component of motor control that can be performed in anticipation of the movement (i.e., during preparation). This leads to a normative formulation of the optimal subspace hypothesis. Our theory specifies the control inputs that must be given to the movement-generating network during preparation to ensure that (1) any subsequent motor errors are kept minimal and (2) movements can be initiated rapidly. These optimal inputs can be realized by a feedback loop onto the cortical network.

This normative model provides a core insight: the “optimal subspace” is likely high dimensional, with many different initial conditions giving rise to the same correct movement. This has an important consequence for preparatory control: at the population level, only a few components of preparatory activity affect future motor outputs, and it is these components only that need active controlling. By taking this into account, the optimal preparatory feedback loop dramatically improves upon a simpler feedforward strategy. This holds for multiple classes of network models trained to perform reaches and whose movement-epoch dynamics are quantitatively similar to those of monkey M1. Moreover, optimal feedback inputs, but not feedforward inputs, robustly orthogonalize preparatory- and movement-epoch activity, thus accounting for one of the most prominent features of perimovement activity in reaching monkeys (Kaufman et al., 2014; Elsayed et al., 2016).

Finally, we propose a way in which neural circuits may implement optimal anticipatory control. In particular, we propose that cortex is actively controlled by thalamic feedback during motor preparation, with thalamic afferents providing the desired optimal control inputs. This is consistent with the causal role of thalamus in the preparation of directed licking in mice (Guo et al., 2017). Moreover, we posit that the basal ganglia operate an ON/OFF switch on the thalamo-cortical loop (Jin and Costa, 2010; Cui et al., 2013; Halassa and Acsády, 2016; Logiaco et al., 2019; Logiaco and Escola, 2020), thereby flexibly controlling the timing of both movement planning and initiation.

Beyond motor control, a broader set of cortical computations are also thought to rest on low-dimensional circuit dynamics, with initial conditions largely determining behavior (Pandarinath et al., 2018; Sohn et al., 2019). These computations, too, may hinge on careful preparation of the state of cortex in appropriate subspaces. Our framework, and control theory more generally, may provide a useful language for reasoning about putative algorithms and neural mechanisms (Kao and Hennequin, 2019).

Results

A model of movement generation

We begin with an inhibition-stabilized network (ISN) model of the motor cortex in which a detailed balance of excitation and inhibition enables the production of rich, naturalistic activity transients (STAR Methods; Hennequin et al., 2014; Figure 2A). This network serves as a pattern generator for the production of movement (we later investigate other movement-generating networks). Specifically, the network directly controls the two joint torques of a two-link arm, through a linear readout of the momentary network firing rates:

$$\mathbf{m}(t) = \mathbf{C}\,\mathbf{r}(t) \tag{1}$$

Here, $\mathbf{m}(t)$ is a vector containing the momentary torques, $\mathbf{C}$ is the readout matrix, and $\mathbf{r}(t)$ is the population firing rate vector (described below). The network has $N$ neurons, whose momentary internal activations $\mathbf{x}(t)$ evolve according to (Dayan and Abbott, 2001):

$$\tau \frac{d\mathbf{x}}{dt} = -\mathbf{x}(t) + \mathbf{W}\,\mathbf{r}(t) + \bar{\mathbf{h}} + \mathbf{h}(t) \tag{2}$$

$$\mathbf{r}(t) = \phi[\mathbf{x}(t)] \tag{3}$$

Here, τ is the single-neuron time constant, $\mathbf{W}$ is the synaptic connectivity matrix, and φ (applied to $\mathbf{x}$ element-wise) is a rectified-linear activation function converting internal activations into momentary firing rates. The network is driven by two different inputs shared across all movements: a constant input $\bar{\mathbf{h}}$ responsible for maintaining a heterogeneous spontaneous activity state and a transient input $\mathbf{h}(t)$ arising at movement onset and decaying through movement. The latter input models the dominant, condition-independent, timing-related component of monkey M1 activity during movement (Kaufman et al., 2016). We note that although the network model is generally nonlinear, it can be well approximated by a linear model, $\mathbf{r} = \mathbf{x}$, as only a small fraction of neurons are silent at any given time (Figure S5; Discussion). Our formal analyses here rely on linear approximations, but all simulations are based on Equations 2 and 3 with nonlinear φ.
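As an illustration of the rate dynamics and linear torque readout described above, the following minimal sketch integrates the network with a forward-Euler scheme. It is not the calibrated ISN: the function name `simulate_rate_network`, the random weights, the time constant, the step size, and the shape of the transient input are all placeholder assumptions chosen only to make the example run.

```python
import numpy as np

def simulate_rate_network(W, x0, h_const, h_transient, tau=0.15, dt=1e-3, T=0.5):
    """Euler-integrate tau * dx/dt = -x + W @ phi(x) + h_const + h_transient(t).

    Returns internal activations and firing rates, each of shape (n_steps + 1, N).
    """
    phi = lambda x: np.maximum(x, 0.0)          # rectified-linear activation
    n_steps = int(round(T / dt))
    x = np.array(x0, dtype=float)
    xs = np.empty((n_steps + 1, x.size))
    xs[0] = x
    for k in range(n_steps):
        drive = W @ phi(x) + h_const + h_transient(k * dt)
        x = x + (dt / tau) * (-x + drive)
        xs[k + 1] = x
    return xs, phi(xs)

# Toy usage with placeholder parameters (assumptions, not the paper's values).
rng = np.random.default_rng(0)
N = 50
W = 0.4 * rng.standard_normal((N, N)) / np.sqrt(N)   # weak random coupling
C = rng.standard_normal((2, N)) / np.sqrt(N)          # readout to two joint torques
h_const = np.ones(N)                                   # constant background input
h_move = lambda t: 0.5 * np.exp(-t / 0.2)              # decaying transient input
x0 = h_const + rng.standard_normal(N)                  # arbitrary initial condition

xs, rs = simulate_rate_network(W, x0, h_const, h_move)
torques = rs @ C.T                                     # linear readout of firing rates
```

Different choices of `x0` produce different torque trajectories from the same network, which is the sense in which the initial condition seeds the movement.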

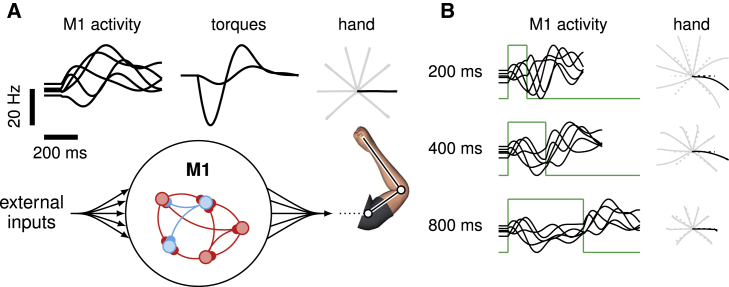

Figure 2.

Movement generation in a network model of M1

(A) Schematics of our M1 model of motor pattern generation. The dynamics of an excitation-inhibition network (Hennequin et al., 2014) unfold from movement-specific initial conditions, resulting in firing rate trajectories (left; five neurons shown), which are linearly read out into joint torques (middle), thereby producing hand movements (right). The model is calibrated for the production of eight straight center-out reaches (20 cm length); firing rates and torques are shown only for the movement colored in black. To help visualize initial conditions, firing rates are artificially clamped for the first 100 ms. See also Figure S1.

(B) Network activity and corresponding hand trajectories as in (A), for three different preparation lengths, under the naive feedforward strategy whereby a static input step (green) moves the fixed point of the dynamics to the desired initial condition.

We calibrated the model for the production of eight rapid straight reaches with bell-shaped velocity profiles (Figure S1). To perform this calibration, we noted that, in line with the dynamical systems view of movement generation (Shenoy et al., 2013), movements produced by our model depend strongly on the “initial condition,” that is, the cortical state just before movement onset (Churchland et al., 2010; Afshar et al., 2011). We thus “inverted” the model numerically by optimizing eight different initial conditions and a common readout matrix such that the dynamics of the nonlinear model (Equations 2 and 3), seeded with each initial condition, would produce the desired movement. Importantly, we constrained the readout matrix so that its nullspace contained the network’s spontaneous activity state, as well as all eight initial conditions. This constraint ensures that movement does not occur spontaneously or during late preparatory stages, when the network state has converged to one of these initial conditions. Nevertheless, these constraints are not sufficient to completely silence the network’s torque readout during preparation. Although such spurious output tended to be very small in our simulations (Figure S7), the two stages of integration of the torques by the arm’s mechanics led to substantial drift of the hand before the reach. To prevent drift without modeling spinal reflexes for posture control, we artificially set the torques to zero during movement preparation.

As we show below, our main results do not depend on the details of the model chosen to describe movement-generating M1 dynamics. Here, we chose the ISN model for its ability to produce activity similar to M1’s during reaching, both in single neurons (Figure 2A, top left) and at the population level as shown by jPCA and canonical correlations analysis (Figure S6; Churchland et al., 2012; Sussillo et al., 2015).

Optimal control as a theory of motor preparation

Having calibrated our network model of movement generation, we now turn to preparatory dynamics. The dynamical systems perspective of Shenoy et al. (2013) suggests that accurate movement execution likely requires careful seeding of the generator’s dynamics with an appropriate, reach-specific initial condition (Afshar et al., 2011). In our model, this means that the activity state of the cortical network must be steered toward the initial condition corresponding to the intended movement (Figure 1B, green). We assume that this process is achieved through additional movement-specific control inputs (Figure 1, green):

$$\tau \frac{d\mathbf{x}}{dt} = -\mathbf{x}(t) + \mathbf{W}\,\mathbf{r}(t) + \bar{\mathbf{h}} + \mathbf{h}(t) + \mathbf{u}(t) \tag{4}$$

The control inputs $\mathbf{u}(t)$ are then rapidly switched off to initiate movement.

A very simple way of achieving a desired initial condition $\mathbf{x}^\star$, which we call the “naive feedforward strategy,” is to use a static external input of the form

$$\mathbf{u}(t) = \mathbf{x}^\star - \mathbf{W}\,\phi[\mathbf{x}^\star] - \bar{\mathbf{h}} \tag{5}$$

This establishes $\mathbf{x}^\star$ as a fixed point of the population dynamics, as $d\mathbf{x}/dt = 0$ in Equation 4 when $\mathbf{x} = \mathbf{x}^\star$ (recall that the transient input $\mathbf{h}(t)$ only arises at movement onset and is therefore zero during preparation). In stable linear networks (and, empirically, in our nonlinear model too), these simple preparatory dynamics are guaranteed to achieve the desired initial state eventually (i.e., after sufficiently long preparation time). However, the network takes time to settle in the desired state (Figure 2B); in fact, in a linear network, the response to an input step (here, the feedforward input driving preparation) contains exactly the same timescales as the corresponding impulse response (here, the autonomous movement-generating response to the initial condition $\mathbf{x}^\star$). Thus, under the naive feedforward strategy, the duration of the movement itself sets a fundamental limit on how fast $\mathbf{x}(t)$ can approach $\mathbf{x}^\star$. This is at odds with experimental reports of preparation that is fast relative to the movement-epoch window over which neural activity is significantly modulated (Lara et al., 2018). Here, we argue that feedback control can be used to speed up preparation.
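The claim that step and impulse responses share the same timescales can be checked numerically in a toy linear network. The sketch below is illustrative only: it assumes a linear rate model (φ replaced by the identity), random placeholder weights, and hypothetical variable names. Under these assumptions, the error remaining during feedforward preparation is the exact mirror image of the autonomous response that follows withdrawal of the input.

```python
import numpy as np

rng = np.random.default_rng(1)
N, tau, dt, n_steps = 40, 0.15, 1e-3, 600
W = 0.4 * rng.standard_normal((N, N)) / np.sqrt(N)   # placeholder weights (stable)
h = rng.standard_normal(N)                            # constant background input
x_star = rng.standard_normal(N)                       # desired initial condition

x_sp = np.linalg.solve(np.eye(N) - W, h)              # spontaneous fixed point (no control)
u_ff = x_star - W @ x_star - h                        # static feedforward input (linear case)

def euler(x0, u):
    """Integrate tau dx/dt = -x + W x + h + u from x0 with a constant input u."""
    xs = [np.array(x0, dtype=float)]
    for _ in range(n_steps):
        x = xs[-1]
        xs.append(x + (dt / tau) * (-x + W @ x + h + u))
    return np.array(xs)

prep = euler(x_sp, u_ff)            # preparation: step input applied at rest
move = euler(x_star, np.zeros(N))   # movement: input withdrawn at x_star

prep_error = prep - x_star          # what remains to be corrected during preparation
move_response = move - x_sp         # autonomous response around the resting state
```

In this linear setting `prep_error` equals `-move_response` at every time step, so feedforward preparation cannot settle faster than the movement-epoch transient decays.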

How can preparation be sped up? An important first step toward formalizing preparatory control and unraveling putative circuit mechanisms is to understand how deviations from “the right initial condition” influence the subsequent movement. Are some deviations worse than others? Mathematical analysis reveals that depending on the direction in state space along which the deviation occurs, there may be strong motor consequences or none at all (Figure 3; STAR Methods). Some preparatory deviations are “prospectively potent”: they propagate through the dynamics of the generator network during the movement epoch, modifying its activity trajectories, and eventually leading to errors in torques and hand motion (Figure 3A, left). Other preparatory deviations are “prospectively readout-null”: they cause subsequent perturbations in cortical state trajectories, too, but these are correlated across neurons in such a way that they cancel in the readout and leave the movement unaltered (Figure 3A, center). Yet other preparatory perturbations are “prospectively dynamic-null”: they are outright rejected by the recurrent dynamics of the network, thus causing little impact on subsequent neuronal activity during movement, let alone on torques and hand motion (Figure 3A, right; Figures 3B and 3C).

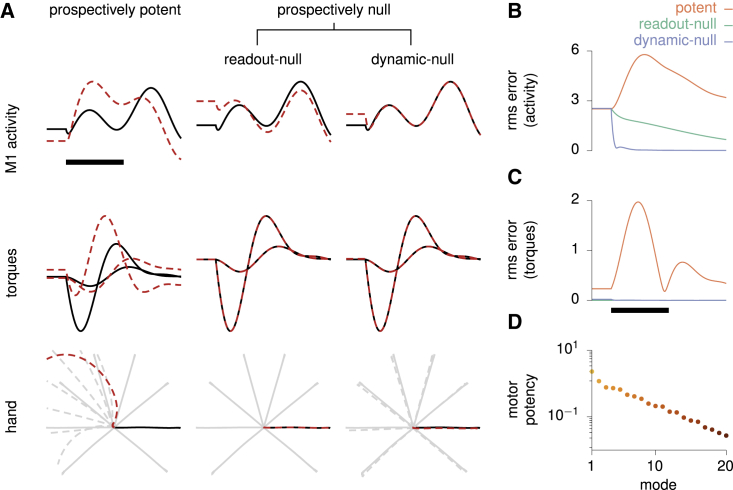

Figure 3.

Formalization of the optimal subspace hypothesis

(A) Effect of three qualitatively different types of small perturbations of the initial condition (prospectively potent, prospectively readout-null, prospectively dynamic-null) on the three processing stages leading to movement (M1 activity, joint torques, and hand position), as shown in Figure 2A. Unperturbed traces are shown as solid lines, perturbed ones as dashed red lines. Only one example neuron (top) is shown for clarity. Despite all having the same size here (Euclidean norm), these three types of perturbation on the initial state have very different consequences. Left: “prospectively potent” perturbations result in errors at every stage. Middle: “prospectively readout-null” perturbations cause sizable changes in internal network activity but not in the torques. Right: “prospectively dynamic-null” perturbations are inconsequential at all stages.

(B) Time course of the root-mean-square error in M1 activity across neurons and reach conditions, for the three different types of perturbations.

(C) Same as (B) for the root-mean-square error in torques.

(D) The motor potency of the top 20 most potent modes.

In (A)–(C), signals are artificially held constant in the first 100 ms for visualization, and black scale bars denote 200 ms from movement onset. See also Supplemental Math Note S1 and Figure S4.

We emphasize that the “prospective potency” of a preparatory deviation is distinct from its “immediate potency,” that is, from the direct effect such neural activity might have on the output torques (Kaufman et al., 2014; Vyas et al., 2020). For example, the initial state for each movement is prospectively potent by construction, as it seeds the production of movement-generating network responses. However, it does not itself elicit movement on the instant and so is immediately null. Having clarified this distinction, we will refer to prospectively potent/null directions simply as potent/null directions for succinctness.

The existence of readout-null and dynamic-null directions implies that, in fact, there is no such thing as “the right initial condition” for each movement. Rather, a multitude of initial conditions that differ along null directions give rise to the correct movement. To quantify the extent of this degeneracy, we measure the “prospective potency” of a direction by the integrated squared error in output torques induced during movement by a fixed-sized perturbation of the initial condition along that direction. We can then calculate a full basis of orthogonal directions ranking from most to least potent (an analytical solution exists for linear systems; STAR Methods; Kao and Hennequin, 2019). For our model with only two readout torques, the effective dimensionality of the potent subspace is approximately 8 (Figure 3D; STAR Methods). This degeneracy substantially lightens the computational burden of preparatory ballistic control: there are only a few potent directions in state space along which cortical activity needs active controlling prior to movement initiation. Thus, taking into account the energetic cost of neural control, preparatory dynamics should aim at preferentially eliminating errors in preparatory states along those few directions that matter for movement.
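For a linearized network, the ranking of directions by prospective potency can be sketched with a standard tool from linear systems theory, the observability Gramian. The example below is a minimal illustration under stated assumptions: random placeholder weights and readout rather than the trained model, and a Lyapunov-equation solution that we assume corresponds to the analytical solution mentioned above (see STAR Methods for the authors' exact formulation).

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

rng = np.random.default_rng(2)
N, tau = 40, 0.15
W = 0.4 * rng.standard_normal((N, N)) / np.sqrt(N)   # placeholder stable weights
C = rng.standard_normal((2, N)) / np.sqrt(N)          # placeholder torque readout
A = (W - np.eye(N)) / tau                             # linearized state matrix

# Q = integral_0^inf exp(A^T t) C^T C exp(A t) dt solves A^T Q + Q A = -C^T C.
Q = solve_continuous_lyapunov(A.T, -C.T @ C)
Q = 0.5 * (Q + Q.T)                                   # remove round-off asymmetry

# Eigenvectors of Q are orthogonal directions; eigenvalues are their potencies.
potency, modes = np.linalg.eigh(Q)
order = np.argsort(potency)[::-1]                     # most potent first
potency, modes = potency[order], modes[:, order]

def prospective_error(delta_x0):
    """Integrated squared torque error caused by an initial deviation delta_x0."""
    return float(delta_x0 @ Q @ delta_x0)
```

A unit-norm perturbation along `modes[:, 0]` incurs the largest integrated torque error, whereas perturbations along the trailing columns are nearly inconsequential.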

We now formalize these insights in a normative model of preparatory motor control. At any time t during preparation, we can assign a “prospective motor error” $\mathcal{C}(\mathbf{x})$ to the current cortical state $\mathbf{x}(t)$. This prospective error is the total error in movement that would result if movement was initiated at this time, that is, if control inputs were suddenly switched off and the generator network was left to evolve dynamically from $\mathbf{x}(t)$ (STAR Methods). Note that $\mathcal{C}$ is directly related to the measure of prospective potency described above. An ideal controller would supply the cortical network with such control inputs $\mathbf{u}(t)$ as necessary to lower the prospective motor error as fast as possible. This would enable accurate movement production in short order. We therefore propose the following cost functional:

$$J[\mathbf{u}(t)] = \int_0^\infty \left[ \mathcal{C}(\mathbf{x}(t)) + \lambda\,\mathcal{R}(\mathbf{u}(t)) \right] dt \tag{6}$$

where $\mathcal{R}(\mathbf{u})$ is an energetic cost that penalizes large control signals in excess of a baseline required to hold $\mathbf{x}(t)$ in the optimal subspace, and λ sets the relative importance of this energetic cost. Note that $\mathbf{x}(t)$ depends on $\mathbf{u}(t)$ via Equation 4.

Optimal preparatory control

When (1) the prospective motor error is quadratic in the output torques, (2) the energy cost is quadratic in the control inputs, and (3) the network dynamics are linear, then minimizing Equation 6 corresponds to the well-known linear quadratic regulator (LQR) problem in control theory (Skogestad and Postlethwaite, 2007). The optimal solution is a combination of a constant input and instantaneous (linear) state feedback,

$$\mathbf{u}_{\text{opt}}(t) = \mathbf{u}^\star + \mathbf{K}\,\delta\mathbf{x}(t) \tag{7}$$

where $\delta\mathbf{x}(t)$ is the momentary deviation of the network state from a valid initial condition for the desired movement (STAR Methods). In Equation 7, the constant input $\mathbf{u}^\star$ is movement-specific, but the optimal gain matrix $\mathbf{K}$ is generic; both can be derived in algebraic form. Thus, even though the actual movement occurs in “open loop” (without corrective sensory feedback), optimal movement preparation occurs in closed loop, with the state of the pattern generator being controlled via internal feedback in anticipation of the movement. Later in this section, we build a circuit model in which optimal preparatory feedback (Equation 7) is implemented as a realistic thalamo-cortical loop. Prior to that, we focus on the core predictions of the optimal control law as an algorithm, independent of its implementation.
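To make the LQR solution concrete, the following sketch computes a feedback gain for a toy linear network using SciPy's Riccati solver. It is a minimal illustration under explicit assumptions: placeholder random weights and readout, a prospective error taken to be the quadratic form given by the observability Gramian, an energy cost of λ times the squared norm of the control deviation, and a hypothetical penalty value `lam`. It should not be read as the authors' implementation.

```python
import numpy as np
from scipy.linalg import solve_continuous_are, solve_continuous_lyapunov

rng = np.random.default_rng(3)
N, tau, lam = 40, 0.15, 1e-2                          # lam: energy penalty (placeholder)
W = 0.4 * rng.standard_normal((N, N)) / np.sqrt(N)
C = rng.standard_normal((2, N)) / np.sqrt(N)
A = (W - np.eye(N)) / tau                             # d(dx)/dt = A dx + B du
B = np.eye(N) / tau

# Quadratic prospective motor error dx^T Q dx (observability Gramian of (A, C)).
Q = solve_continuous_lyapunov(A.T, -C.T @ C)
Q = 0.5 * (Q + Q.T)
R = lam * np.eye(N)                                   # quadratic energy cost lam * |du|^2

# Riccati solution P and feedback gain in the convention du = K dx.
P = solve_continuous_are(A, B, Q, R)
K = -np.linalg.solve(R, B.T @ P)
A_closed = A + B @ K                                  # closed-loop preparatory dynamics

# Total prospective error accumulated without feedback (du = 0) is dx0^T S dx0.
S = solve_continuous_lyapunov(A.T, -Q)
S = 0.5 * (S + S.T)

dx0 = rng.standard_normal(N)
cost_lqr = float(dx0 @ P @ dx0)        # optimal total cost (error plus energy)
cost_ff = float(dx0 @ S @ dx0)         # total cost with the constant input only
```

Because the constant-input strategy is one admissible policy with zero excess energy, `cost_lqr` can never exceed `cost_ff`; lowering `lam` widens the gap at the price of larger control inputs.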

Optimal control inputs (Equation 7) lead to multiphasic preparatory dynamics in the cortical network (Figure 4A, top). Single-neuron responses separate across movement conditions in much the same way as they do in the monkey M1 data (Figure 4C), with a transient increase in total across-movement variance (Figure 4D). The prospective motor error decreases very quickly to negligible values (Figure 4A, bottom; note the small green area under the curve) as the network state is driven into the appropriate subspace. After the preparatory feedback loop is switched off and movement begins, the system accurately produces the desired torques and hand trajectories (Figure 4A, middle). Indeed, movements are ready to be performed after as little as 50 ms of preparation (Figure 4B). We note, though, that it is possible to achieve arbitrarily fast preparation by decreasing the energy penalty factor λ in Equation 6 (Figure 4E). However, this comes at the price of large energetic costs (i.e., unrealistically large control inputs).

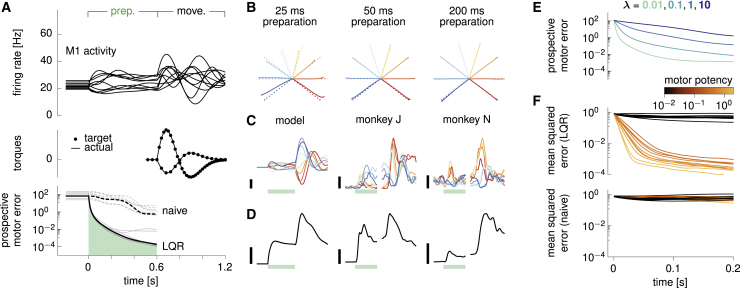

Figure 4.

Optimal preparatory control

(A) Dynamics of the model during optimal preparation and execution of a straight reach at a 144° angle. Optimal control inputs are fed to the cortical network during preparation and subsequently withdrawn to elicit movement. Top: firing rates of a selection of ten model neurons. Middle: generated torques (line), compared with targets (dots). Bottom: the prospective motor error quantifies the accuracy of the movement if it were initiated at time t during the preparatory phase. Under the action of optimal control inputs, this error decreases very fast, until it becomes small enough that an accurate movement can be triggered. The dashed line shows the evolution of the prospective cost for the naive feedforward strategy (see text). Gray lines denote the other seven reaches for completeness.

(B) Hand trajectories for each of the eight reaches (solid), following optimal preparation over windows of 25 ms (left), 50 ms (center), and 200 ms (right). Dashed lines show the target movements.

(C) Firing rate of a representative neuron in the model (left) and the two monkeys (center and right) for each movement condition (color-coded as in Figure 2B). Green bars mark the 500 ms preparation window, black scale bars indicate 20 Hz.

(D) Evolution of the average across-movement variance in single-neuron preparatory activity in the model (left) and the monkeys (center and right). Black scale bars indicate 16 Hz².

(E) Prospective motor error during preparation, averaged over the eight reaches, for different values of the energy penalty parameter λ.

(F) The state of the cortical network is artificially set to deviate randomly from the target movement-specific initial state just prior to movement preparation. The temporal evolution of the squared Euclidean deviation from target (averaged over trials and movements) is decomposed into contributions from the ten most and ten least potent directions, color-coded by their motor potency as in Figure 3D.

In (A)–(D) and (F), we used .

The neural trajectories under optimal preparatory control display a striking property, also observed in monkey M1 and dorsal premotor cortex (PMd) recordings (Ames et al., 2014; Lara et al., 2018): by the time movement is ready to be triggered (approximately 50 ms in the model), the firing rates of most neurons have not yet converged to the values they would attain after a longer preparation time (Figures 4A and 4C). Intuitively, this arises for the following reasons. First, the network reacts to the sudden onset of the preparatory input by following the flow of its internal dynamics, thus generating transient activity fluctuations. Second, the optimal controller is not required to suppress the null components of these fluctuations, which have negligible motor consequences. Instead, control inputs are used sparingly to steer the dynamics along potent directions only. Thus, the network becomes ready for movement initiation well before its activity has settled. To confirm this intuition, we artificially set the network state at preparation onset to randomly and isotropically deviate from the target initial condition. We then computed the expected momentary squared deviation along the spectrum of potent directions (shown in Figure 3D) during preparation. Errors are indeed selectively eliminated along directions with motor consequences, while they linger or even grow in other, inconsequential directions (Figure 4F, top).

Importantly, feedback control vastly outperforms the naive feedforward strategy of Figure 2B, which corresponds to the limit of zero input energy as it is defined in our framework (STAR Methods; it is also the optimal solution in the limit of λ → ∞ in Equation 6). Under the feedforward strategy, the decrease in prospective motor error is much slower than under LQR (Figure 4A, bottom) and is non-selective, with errors along potent and null directions being eliminated at the same rate (compare Figure 4F, top and bottom).

Preparatory control in other M1 models

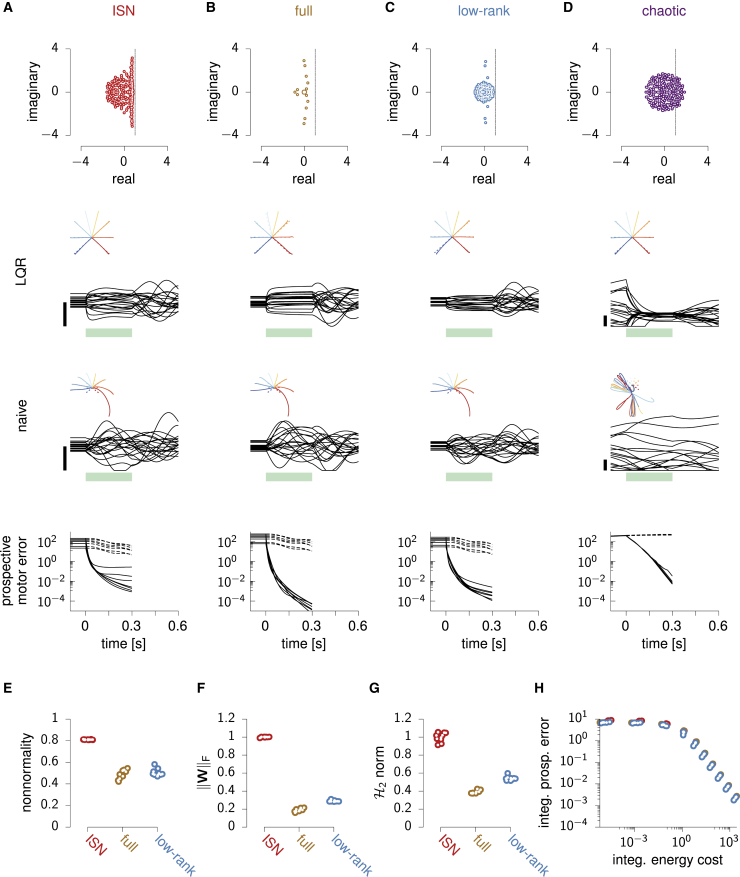

So far we have shown that feedback control is essential for rapid movement preparation in a specific model architecture. The ISN model we have used (Figures 2A and 5A) has strong internal dynamics, whose “nonnormal” nature (Figure 5E; Trefethen and Embree, 2005) gives rise to pronounced transient amplification of a large subspace of initial conditions (Hennequin et al., 2014). This raises the concern that the ISN might be unduly high dimensional and difficult to control. After all, feedback control might not be essential in other models of movement generation for which the naive feedforward strategy might be good enough. To address this concern, we implemented optimal control in two other classes of network models, which we trained to produce eight straight reaches in the same way as we trained the ISN, by optimizing the readout weights and the initial conditions (Figures 5B and 5C; STAR Methods). Importantly, we also optimized aspects of the recurrent connectivity: either all recurrent connections (“full” networks; Figure 5B) or a low-rank parameterization thereof (“low-rank” networks; Figure 5C; Sussillo and Abbott, 2009; Mastrogiuseppe and Ostojic, 2018). We trained ten instances of each network class and also produced ten new ISN networks for comparison. Empirically, we found that training was substantially impaired by the addition of the condition-independent movement-epoch input of Equation 2 in the full and low-rank networks. We therefore dispensed with this input in the training of all these networks, as well as in the newly trained ISNs for fair comparison (the results presented below also hold for ISNs trained with this input).

Figure 5.

Optimal preparatory control benefits other models of movement generation

(A) ISN model. Top: eigenvalues of the connectivity matrix. Middle: activity of 20 example neurons during optimal (LQR) and naive feedforward preparatory control and subsequent execution of one movement, with hand trajectories shown as an inset for all movements. The green bar marks the preparatory period. Bottom: prospective motor error under LQR (solid) and naive feedforward (dashed) preparation, for each movement.

(B)–(D) Same as (A), for a representative instance of each of the three other network classes (see text).

(E)–(G) Index of nonnormality (E), Frobenius norm of the connectivity matrix (F), and $\mathcal{H}_2$ norm (G) for the 10 network instances of each class (excluding chaotic networks for which these quantities are either undefined or uninterpretable). Dots are randomly jittered horizontally for better visualization. Both the Frobenius norm and the $\mathcal{H}_2$ norm are normalized by the ISN average.

(H) Quantification of controllability in the various networks. Optimal control cost (the time-integrated prospective motor error in Equation 6) against the associated control energy cost, for different values of the energy penalty parameter λ, and for each network (same colors as in E–G). Note that the naive feedforward strategy corresponds to the limit of zero energy cost (horizontal asymptote).

See also Figure S6.

The full and low-rank networks successfully produce the correct hand trajectories after training (Figures 5B and 5C, middle), relying on dynamics with oscillatory components qualitatively similar to the ISN’s (eigenvalue spectra in Figures 5A–5C, top). They capture essential aspects of movement-epoch population dynamics in monkey M1, to a similar degree as the ISN does (Figure S6). Nevertheless, the trained networks differ quantitatively from the ISN in ways that would seemingly make them easier to control. First, they are less nonnormal (Figure 5E). Second, they have weaker internal dynamics than the ISN, as quantified by the average squared magnitude of their recurrent connections (Figure 5F). Third, these weaker dynamics translate into smaller impulse responses overall, as quantified by the $\mathcal{H}_2$ norm (Figure 5G; STAR Methods; Hennequin et al., 2014; Kao and Hennequin, 2019).

Although these quantitative differences suggest that optimal feedback control might be superfluous in the full and low-rank networks, we found that this is not the case. In order to achieve a set prospective control performance, all networks require the same total input energy (Figure 5H). In particular, the feedforward strategy (limit of zero input energy) performs equally badly in all networks: preparation is unrealistically slow, with 300 ms of preparation still resulting in large reach distortions (Figures 5A–5C, bottom; recall also Figure 2B). In fact, we were able to show formally that the performance of feedforward control depends only on the target torques specified by the task but not on the details of how these targets are achieved through specific initial conditions, recurrent connectivity, and readout matrix (Supplemental Math Note S2). Stronger still, our derivations explain why the movement errors resulting from insufficiently long feedforward preparation are identical in every detail across all networks (Figures 5A–5C, bottom).

Finally, we also considered networks in the chaotic regime with fixed and strong random connection weights and the same threshold-linear activation function (“chaotic” networks; Figure 5D; Kadmon and Sompolinsky, 2015; Mastrogiuseppe and Ostojic, 2017). In these networks, as static inputs are unable to quench chaos, the naive feedforward strategy cannot even establish a fixed point, let alone a correct one (Figure 5D, bottom). However, by adapting the optimal feedback control solution to the nonlinear case (STAR Methods), we found that it successfully quenches chaos during preparation and enables fast movement initiation (Figure 5D, middle).

In summary, the need for feedback control during preparation is not specific to our particular ISN model but emerges broadly in networks of various types trained to produce reaches.

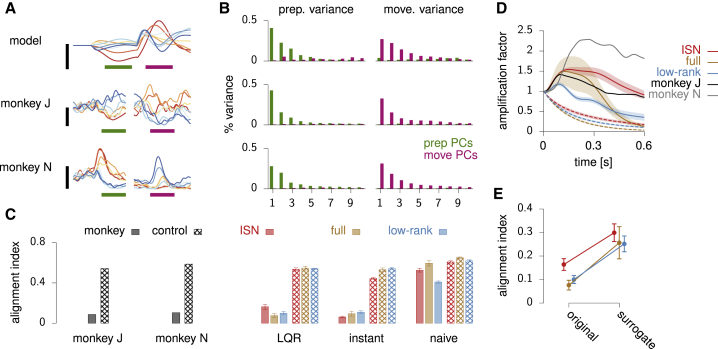

Orthogonal preparatory and movement subspaces

Optimal anticipatory control also accounts for a prominent feature of monkey motor cortex responses during motor preparation and execution: across time and reach conditions, activity spans orthogonal subspaces during the two epochs. To show this, we followed Elsayed et al. (2016) and performed principal component analysis (PCA) on trial-averaged activity during the two epochs separately, in both the ISN model of Figure 4 and the two monkey datasets (Figure 6A). We then examined the fraction of variance explained by both sets of principal components (prep-PCs and move-PCs) during each epoch. Activity in the preparatory and movement epochs is, respectively, approximately 4- and 6-dimensional for the model, 5- and 7-dimensional for monkey J, and 8- and 7-dimensional for monkey N (assessed by the “participation ratio”). Moreover, consistent with the monkey data, prep-PCs in the model account for most of the activity variance during preparation (by construction; Figure 6B, left), but account for little variance during movement (Figure 6B, right). Similarly, move-PCs capture little of the preparatory-epoch activity variance.
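The dimensionalities quoted above are assessed with the participation ratio. As a hedged illustration, the sketch below uses the standard definition of this quantity, the squared sum of the covariance eigenvalues divided by the sum of their squares; we assume, without confirmation from the text, that this matches the definition in STAR Methods. The function name and toy data are hypothetical.

```python
import numpy as np

def participation_ratio(X):
    """Participation ratio of activity X with shape (samples, neurons).

    Samples pool time points and conditions; PR = (sum_i s_i)^2 / sum_i s_i^2,
    where s_i are the eigenvalues of the neuron-by-neuron covariance matrix.
    """
    Xc = X - X.mean(axis=0, keepdims=True)
    s = np.linalg.eigvalsh(Xc.T @ Xc / (X.shape[0] - 1))
    s = np.clip(s, 0.0, None)                  # guard against round-off negatives
    return float(s.sum() ** 2 / np.sum(s ** 2))

# Toy check: activity confined to a single direction has dimensionality one.
rng = np.random.default_rng(4)
direction = np.zeros(10)
direction[0] = 1.0
X_line = np.outer(rng.standard_normal(200), direction)
pr_line = participation_ratio(X_line)
```

The measure interpolates smoothly between one (all variance along a single axis) and the number of neurons (variance spread evenly), which is why it can return non-integer values that are then rounded when reported.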

Figure 6.

Reorganization between preparatory and movement activity in model and monkey

(A) Example single-neuron PSTHs in model (top) and monkey M1/PMd (middle and bottom), for each reach (cf. Figure 2B).

(B) Fraction of variance (time and conditions) explained during movement preparation (left) and execution (right) by principal components calculated from preparatory (green) and movement-related (magenta) trial-averaged activity. Only the first ten components are shown for each epoch. Variance is across reach conditions and time in 300 ms prep. and move. windows indicated by green and magenta bars in (A). The three rows correspond to those of (A).

(C) Alignment index calculated as in Elsayed et al. (2016), for the two monkeys (left) and the three classes of trained networks (colors as in Figures 5A–5C) under three different preparation strategies (LQR, instant, naive; right). Here, preparation is long enough (500 ms) that even the naive feedforward strategy leads to the correct movements in all networks. Hashed bars show the average alignment index between random-but-constrained subspaces drawn as in Elsayed et al. (2016).

(D) Amplification factor, quantifying the growth of the centered population activity vector during the course of movement, relative to the pre-movement state (STAR Methods). It is shown here for the two monkeys (black and gray), as well as for the three classes of trained networks (solid) and their surrogate counterparts (dashed). Shaded region denotes ±1 SD around the mean across the ten instances of each network class. To isolate the autonomous part of the movement-epoch dynamics, here we set in Equation 2.

(E) Alignment index under the “instant” preparation strategy, for the original trained networks and their surrogates. In (C)–(E), error bars denote ±1 SD across the ten networks of each class.

To systematically quantify this (lack of) overlap for all the trained models of Figures 5A–5C and compare with monkey data, we used the “alignment index” of Elsayed et al. (2016). This is defined as the amount of preparatory-epoch activity variance captured by the top K move-PCs, further normalized by the maximum amount of variance that any K-dimensional subspace can capture. Here, K was chosen such that the top K prep-PCs capture of activity variance during the preparatory epoch. Both monkeys have a low alignment index (Figure 6C, left), much lower than a baseline expectation reflecting the neural activity covariance across both task epochs (“random” control in Elsayed et al., 2016). All trained models show the same effect under optimal preparatory control (Figure 6C, “LQR”). Importantly, alignment indices arising from the naive feedforward solution are much higher and close to the random control.
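The definition of the alignment index given above can be sketched as follows. This is a minimal, dependency-free illustration that assumes the top K move-PCs (as orthonormal vectors) and the eigenvalues of the preparatory-epoch covariance have already been obtained from any PCA routine; the function and argument names are hypothetical.

```python
def alignment_index(prep_cov, move_pcs, prep_eigvals):
    """Fraction of preparatory variance captured by the top-K move-PCs.

    prep_cov     : N x N covariance of preparatory-epoch activity (list of lists)
    move_pcs     : K orthonormal vectors of length N (top-K movement PCs)
    prep_eigvals : eigenvalues of prep_cov, in any order

    The numerator is sum_k q_k^T C_prep q_k; the denominator is the largest
    variance any K-dimensional subspace can capture, i.e., the sum of the
    K largest eigenvalues of C_prep.
    """
    k = len(move_pcs)
    captured = 0.0
    for q in move_pcs:
        # Matrix-vector product C_prep q, then the quadratic form q^T C_prep q.
        cq = [sum(c_ij * q_j for c_ij, q_j in zip(row, q)) for row in prep_cov]
        captured += sum(q_i * v for q_i, v in zip(q, cq))
    best = sum(sorted(prep_eigvals, reverse=True)[:k])
    return captured / best


# Toy example with a diagonal preparatory covariance (eigenvalues 4, 1, 0).
prep_cov = [[4.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
eigvals = [4.0, 1.0, 0.0]
print(alignment_index(prep_cov, [[0.0, 0.0, 1.0]], eigvals))  # orthogonal subspaces
print(alignment_index(prep_cov, [[1.0, 0.0, 0.0]], eigvals))  # fully aligned subspaces
```

An index near 0 indicates that movement-epoch PCs capture almost none of the preparatory variance, and an index of 1 indicates that they capture as much as the best possible K-dimensional subspace.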

To understand the origin of such orthogonality, we first note that the preparatory end states themselves tend to be orthogonal to movement-epoch activity in the models. Indeed, artificially clamping network activity to its end state during the whole preparatory epoch yields low alignment indices in all trained networks (Figure 6C, “instant”). We hypothesize that this effect arises from the fact that, as in monkeys, our trained networks all transiently amplify the initial condition at movement onset (Figure 6D). As the autonomous (near-linear) dynamics that drive these transients are stable, the initial growth of activity at movement onset must be accompanied by a rotation away from the initial condition. Otherwise the growth would continue, contradicting stability. To substantiate this interpretation, for each network that we trained, we built a surrogate network that achieved the same task without resorting to transient growth (STAR Methods). We thus predicted higher alignment indices in these surrogate networks. To construct them, we noted that by applying a similarity transformation simultaneously to , and the initial conditions, one can arbitrarily suppress transient activity growth at movement onset yet maintain performance in the task in the linear regime (a similarity transformation does not change the overall transfer function from initial condition to output torques). In particular, the similarity transformation that diagonalizes returns networks for which the magnitude of movement-epoch population activity can only decay during the course of movement, unlike that of the trained networks (Figure 6D, dashed). Consistent with our hypothesis, these surrogate networks have a higher “instant” alignment index (Figure 6E).
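The invariance argument used to build the surrogate networks can be written out for a generic linear system. The derivation below is a sketch under assumed notation that does not come from the text: A denotes the linearized movement-epoch dynamics, C the linear readout to torques, x_0 the initial condition, and T an arbitrary invertible matrix.

```latex
% Assumed notation (illustrative only): A = linearized movement-epoch dynamics,
% C = linear readout to torques, x_0 = initial condition, T = any invertible matrix.
\begin{align}
\dot{x} &= A x, \qquad y(t) = C x(t) = C e^{At} x_0, \\
\tilde{A} &= T^{-1} A T, \qquad \tilde{C} = C T, \qquad \tilde{x}_0 = T^{-1} x_0, \\
\tilde{y}(t) &= \tilde{C} e^{\tilde{A} t} \tilde{x}_0
  = C T \left(T^{-1} e^{At} T\right) T^{-1} x_0 = C e^{At} x_0 = y(t).
\end{align}
% If T diagonalizes A, so that \tilde{A} = \Lambda = \mathrm{diag}(\lambda_i):
\begin{equation}
|\tilde{x}_i(t)| = e^{\mathrm{Re}(\lambda_i)\, t}\, |\tilde{x}_i(0)|
\quad\Rightarrow\quad
\|\tilde{x}(t)\| \text{ is non-increasing whenever } \mathrm{Re}(\lambda_i) < 0 \text{ for all } i.
\end{equation}
```

The output is therefore unchanged by the transformation, whereas in the diagonalized coordinates each component of the state decays independently, so the population activity norm cannot grow transiently in a stable system.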

So far, we have shown that orthogonality between late preparatory and movement subspaces emerges generically in regularized trained networks. However, this does not fully explain why the alignment index is low under LQR in all models when early/mid-preparatory activity is considered instead, as in Elsayed et al. (2016). In fact, under the naive feedforward strategy, early preparatory activity can be shown to be exactly the negative image of movement-epoch activity, up to a relatively small constant offset (Supplemental Math Note S3). Thus, the temporal variations of early preparatory- and movement-epoch activity occur in a shared subspace, generically yielding a high alignment index (Figure 6C, “naive”). In contrast, optimal control rapidly eliminates preparatory errors along potent directions, which contribute significantly to movement-epoch activity: if during movement had no potent component, by definition the movement would stop immediately. Thus, one expects optimal control to remove a substantial fraction of overlap between preparatory- and movement-epoch activity. What is more, by quenching temporal fluctuations along potent directions early during preparation, optimal feedback also suppresses the subsequent transient growth of activity that these potent fluctuations would have normally produced under the naive strategy (and which are indeed produced during movement). The combination of these primary and secondary suppressive effects results in the lower alignment index that we observe (Figure 6C, “LQR”).

In summary, orthogonality between preparatory and movement activity in monkey M1 is consistent with optimal feedback control theory. In the ISN, as well as in other architectures trained on the same task, orthogonality arises robustly from optimal preparatory control, but not from feedforward control.

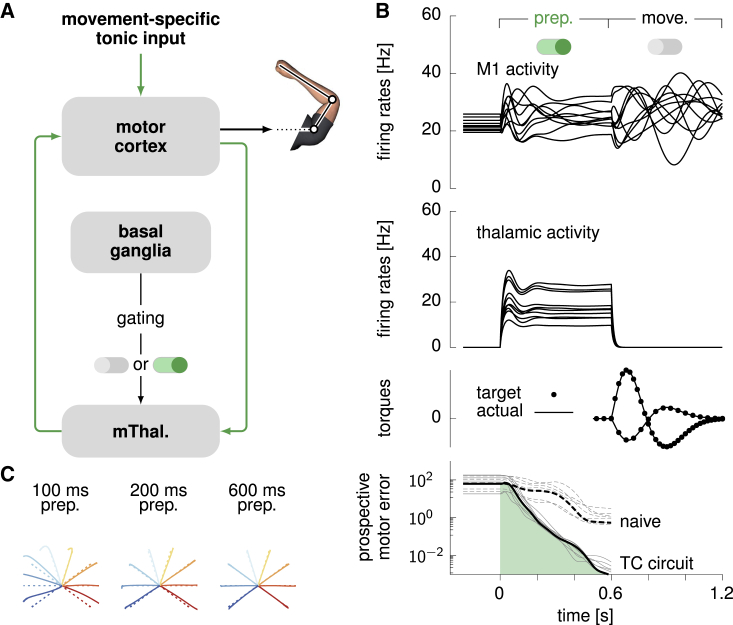

Circuit model for preparatory control: A gated thalamo-cortical loop

So far we have not discussed the source of optimal preparatory inputs , other than saying that they close a feedback loop from the cortex onto itself (Equation 7). Such a loop could in principle be absorbed in local modifications of recurrent cortical connectivity (Sussillo and Abbott, 2009). However, we are not aware of any mechanism that could implement near-instant ON/OFF switching of a select subset of recurrent synapses, as required by the model at onset of preparation (ON) and movement (OFF). If, instead, the preparatory loop were to pass through another brain area, fast modulation of excitability in that relay area would provide a rapid and flexible switch (Ferguson and Cardin, 2020). We therefore propose the circuit model shown in Figure 7A, in which the motor thalamus acts as a relay station for cortical feedback (Guo et al., 2017; Nakajima and Halassa, 2017). The loop is gated ON/OFF at preparation onset/offset by the (dis)-inhibitory action of basal ganglia outputs (Jin and Costa, 2010; Cui et al., 2013; Dudman and Krakauer, 2016; Halassa and Acsády, 2016; Logiaco et al., 2019; Logiaco and Escola, 2020). Specifically, cortical excitatory neurons project to 160 thalamic neurons, which make excitatory backprojections to a pool of 100 excitatory (E) and 100 inhibitory (I) neurons in cortex layer 4. In turn, these layer 4 neurons provide both excitation and inhibition to the main cortical network, thereby closing the control loop. Here, inhibition is necessary to capture the negative nature of optimal feedback. In addition to thalamic feedback, the cortical network also receives a movement-specific constant feedforward drive during preparation (analogous to in Equation 7 for the standard LQR algorithm; this could also come from the thalamus).

Figure 7.

Optimal movement preparation via a gated thalamo-cortical loop

(A) Proposed circuit architecture for the optimal movement preparation (cf. text).

(B) Cortical (top) and thalamic (upper middle) activity (ten example neurons), generated torques (lower middle), and prospective motor error (bottom) during the course of movement preparation and execution in the circuit architecture shown in (A). The prospective motor error for the naive strategy is shown as a dotted line as in Figure 4A. All black curves correspond to the same example movement (324° reach), and gray curves show the prospective motor error for the other seven reaches.

(C) Hand trajectories (solid) compared with target trajectories (dashed) for the eight reaches, triggered after 100 ms (left), 200 ms (middle), and 600 ms (right) of motor preparation.

See also Figure S3.

The detailed patterns of synaptic efficacies in the thalamo-cortical loop are obtained by solving the same control problem as above, based on the minimization of the cost functional in Equation 6 (STAR Methods). Importantly, the solution must now take into account some key biological constraints: (1) feedback must be based on the activity of the cortical E neurons only, (2) thalamic and layer 4 neurons have intrinsic dynamics that introduce lag, and (3) the sign of each connection is constrained by the nature of the presynaptic neuron (E or I).

The circuit model we have obtained meets the above constraints and enables flexible, near-optimal anticipatory control of the reaching movements (Figure 7B). Before movement preparation, thalamic neurons are silenced because of strong inhibition from basal ganglia outputs (not explicitly modeled), keeping the thalamo-cortical loop open (inactive) by default. At the onset of movement preparation, rapid and sustained disinhibition of thalamic neurons restores responsiveness to cortical inputs, thereby closing the control loop (ON/OFF switch in Figure 7B, top). This loop drives the cortical network into the appropriate preparatory subspace, rapidly reducing prospective motor errors as under optimal LQR feedback (Figure 7B, bottom). To trigger movement, the movement-specific tonic input to cortex is shut off, and the basal ganglia resume sustained inhibition of the thalamus. Thus, the loop re-opens, which sets off the uncontrolled dynamics of the cortical network from the right initial condition to produce the desired movement (Figure 7C).

The neural constraints placed on the feedback loop are a source of suboptimality with respect to the unconstrained LQR solution of Figure 4. Nevertheless, movement preparation by this thalamo-cortical circuit remains fast, on par with the shortest preparation times of primates in a quasi-automatic movement context (Lara et al., 2018) and much faster than the naive feedforward strategy (Figure 7B, bottom).

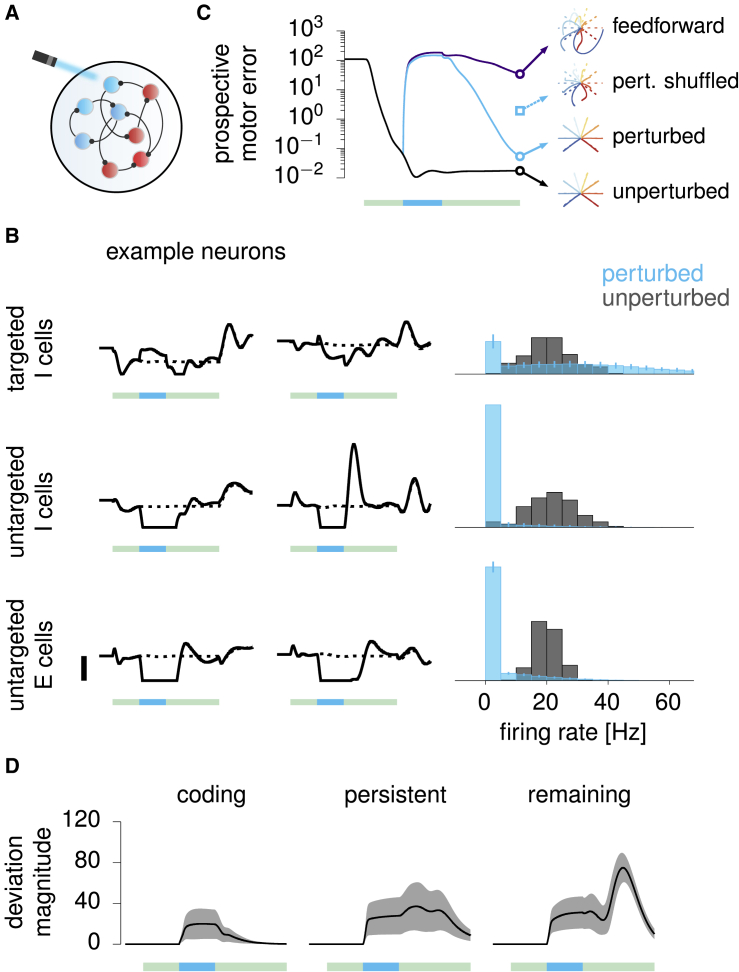

Model prediction: Selective elimination of preparatory errors following optogenetic perturbations

An essential property of optimal preparatory control is the selective elimination of preparatory errors along prospectively potent directions. As a direct corollary, the model predicts selective recovery of activity along those same directions following preparatory perturbations of the dynamics, consistent with results by Li et al. (2016) in the context of a delayed directional licking task in mice. Here, we spell out this prediction in the context of reaching movements in primates by simulating an experimental perturbation protocol similar to that of Li et al. (2016) and by applying their analysis of population responses. These concrete predictions should soon become testable with the advent of optogenetics techniques in primates (O’Shea et al., 2018).

As our E/I cortical circuit model operates in the inhibition-stabilized regime (Hennequin et al., 2014; Tsodyks et al., 1997; Ozeki et al., 2009; Sanzeni et al., 2020), we were able to use the same photoinhibition strategy as in Li et al. (2016) to silence the cortical network (Figure 8A) in the thalamo-cortical circuit of Figure 7. We provided strong excitatory input to a random subset (60%) of inhibitory neurons, for a duration of 400 ms starting 400 ms after preparation onset. We found that “photoinhibition” has mixed effects on the targeted neurons: some are caused to fire at higher rates, but many are paradoxically suppressed (Figure 8B, top). For E cells and untargeted I cells, though, the effect is uniformly suppressive, as shown in Figure 8B (middle and bottom).

Figure 8.

Testable prediction: Selective recovery from preparatory perturbations

(A) Illustration of perturbation via “photoinhibition”: a subset (60%) of I neurons in the model are driven by strong positive input.

(B) Left: firing rates (solid, perturbed; dashed, unperturbed) for a pair of targeted I cells (top), untargeted I cells (middle), and E cells (bottom). Green bars (1.6 s) mark the movement preparation epoch, and embedded turquoise bars (400 ms) denote the perturbation period. Right: histogram of firing rates observed at the end of the perturbation (turquoise) and at the same time in unperturbed trials (gray). Error bars show 1 SD across 300 experiments, each with a different random set of targeted I cells.

(C) Prospective motor error, averaged across movements and perturbation experiments, in perturbed (turquoise) versus unperturbed (black) conditions. Subsequent hand trajectories are shown for one experiment of each condition (middle and bottom insets; dashed lines show target trajectories). These are compared with the reaches obtained by randomly shuffling final preparatory errors across neurons and re-simulating the cortical dynamics thereafter (turquoise square mark). The purple line shows the performance of an optimal feedforward strategy, which pre-computes the inputs that would be provided to the cortex under optimal feedback control and subsequently replays those inputs at preparation onset without taking the state of the cortex into account anymore.

(D) Magnitude of the deviation caused by the perturbation in the activity of the network projected into the coding subspace (left), the persistent subspace (center), and the remaining subspace (right). Lines denote the mean across perturbation experiments, and shadings indicate ±1 SD. Green and turquoise bars as in (B).

The perturbation transiently resets the prospective motor error to pre-preparation level, thus nullifying the benefits of the first 400 ms of preparation (Figure 8C). Following the end of the perturbation, the prospective motor error decreases again, recovering to unperturbed levels (Figure 8C, solid versus dashed) and thus enabling accurate movement production (compare middle and bottom hand trajectories). This is due to the selective elimination of preparatory errors discussed earlier (Figure 4F): indeed, shuffling across neurons immediately prior to movement, thus uniformizing the distribution of errors in different state space directions, leads to impaired hand trajectories (Figure 8C, top right).

We next performed an analysis qualitatively similar to that of Li et al. (2016) (STAR Methods). We identified a “coding subspace” (CS) that accounts for most of the across-condition variance in firing rates toward the end of movement preparation in unperturbed trials. Similarly, we identified a “persistent subspace” (PS) that captures most of the activity difference between perturbed and unperturbed trials toward the end of preparation, regardless of the reach direction. Finally, we also constructed a third subspace, constrained to be orthogonal to the PS and the CS, and capturing most of the remaining variance across the different reaches and perturbation conditions (“remaining subspace” [RS]).

We found the CS and the PS to be nearly orthogonal (minimum subspace angle of 89°) even though they were not constrained to be so. Moreover, the perturbation causes cortical activity to transiently deviate from unperturbed trajectories nearly equally in each of the three subspaces (Figure 8D). Remarkably, however, activity recovers promptly along the CS but not in the other two subspaces. In fact, the perturbation even grows transiently along the PS during early recovery. Such selective recovery can be understood from optimal preparation eliminating errors along directions with motor consequences, but (because of energy constraints) not in other inconsequential modes. Indeed, the CS is by definition a prospectively potent subspace: its contribution to the preparatory state is what determines the direction of the upcoming reach. In contrast, the PS and the RS are approximately prospectively null (respectively 729 times and 8 times less potent than the CS, by our measure of motor potency in Figure 3D). This not only explains why the CS and PS are found to be near orthogonal but also why the thalamo-cortical dynamics implementing optimal preparatory control only quench perturbation-induced deviations in the CS.

In summary, optogenetic perturbations of M1 could be used to test a core prediction of optimal preparatory control, namely, the selective recovery of activity in subspaces that carry movement information, but not in others.

Finally, perturbation experiments can also help distinguish between optimal feedback control and feedforward control strategies. The naive feedforward strategy discussed previously can already be ruled out for being unrealistically slow. However, it is easy to conceive of other feedforward control mechanisms that would be more difficult to distinguish from feedback control. In particular, consider “optimal” feedforward control, which pre-computes the optimal feedback inputs once and for all, and provides them each time the same movement is to be prepared. By definition, this feedforward strategy is indistinguishable from optimal feedback control in the absence of noise or perturbation during preparation (compare black and purple lines in Figure 8C before the onset of perturbation). However, unlike optimal feedback, the optimal feedforward strategy cannot react to state perturbations during preparation as its inputs are pre-computed and thus unable to adapt to unexpected changes in the cortical state (compare purple and turquoise lines in Figure 8C).
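The distinction between optimal feedback and “optimal” feedforward control can be illustrated with a toy scalar system. The sketch below is not the cortical network model: the dynamics, the gain, and the additive state kick (standing in for the photoinhibition perturbation) are all hypothetical choices made for illustration.

```python
def final_error(strategy, kick=0.0, a=-0.5, k=5.0, dt=1e-3,
                t_end=2.0, t_kick=1.0):
    """Final preparatory error |x(t_end)| for a toy system dx/dt = a*x + u.

    strategy : "feedback"    -> u(t) = -k * x(t), reacts to the current state
               "feedforward" -> u(t) = -k * x_nominal(t), replayed from the
                                unperturbed closed-loop trajectory
    kick     : additive state perturbation applied at time t_kick
    All parameter values are hypothetical.
    """
    n_steps = int(round(t_end / dt))
    kick_step = int(round(t_kick / dt))
    # Pre-compute the unperturbed closed-loop trajectory (forward Euler).
    nominal = [1.0]
    for _ in range(n_steps):
        x = nominal[-1]
        nominal.append(x + dt * (a * x - k * x))
    # Simulate the (possibly perturbed) preparation.
    x = 1.0
    for step in range(n_steps):
        if step == kick_step:
            x += kick
        if strategy == "feedback":
            u = -k * x
        else:
            u = -k * nominal[step]
        x += dt * (a * x + u)
    return abs(x)


for strategy in ("feedback", "feedforward"):
    print(strategy, final_error(strategy), final_error(strategy, kick=1.0))
```

Without a perturbation the two strategies produce the same trajectory. After the kick, the feedback controller removes the deviation at the fast closed-loop rate, whereas under replayed inputs the deviation decays only at the slow intrinsic rate of the system and remains large at the end of preparation.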

Discussion

Neural population activity in cortex can be accurately described as arising from low-dimensional dynamics (Churchland et al., 2012; Mante et al., 2013; Carnevale et al., 2015; Seely et al., 2016; Barak, 2017; Cunningham and Yu, 2014; Michaels et al., 2016). These dynamics unfold from a specific initial condition on each trial, and indeed these “preparatory states” predict the subsequent evolution of both neural activity and behavior in single trials of the task (Churchland et al., 2010; Pandarinath et al., 2018; Remington et al., 2018; Sohn et al., 2019). In addition, motor learning may rely on these preparatory states partitioning the space of subsequent movements (Sheahan et al., 2016).

How are appropriate initial conditions reached in the first place? Here, we have formalized movement preparation as an optimal control problem, showing how to translate anticipated motor costs phrased in terms of muscle kinematics into costs on neural activity in M1. Optimal preparation minimizes these costs, and the solution is feedback control: the cortical network must provide corrective feedback to itself, on the basis of prospective motor errors associated with its current state. In other words, optimal preparation may rely on an implicit forward model predicting the future motor consequences of preparatory activity—not motor commands, as in classical theories (Wolpert et al., 1995; Desmurget and Grafton, 2000; Scott, 2012)—and feeding back these predictions for online correction of the cortical trajectory. Thus, where previous work has considered the motor cortex as a controller of the musculature (Todorov, 2000; Lillicrap and Scott, 2013; Sussillo et al., 2015), our work considers M1 and the body jointly as a compound system under preparatory control by other brain areas.

One of the key assumptions of this work is that the cortical dynamics responsible for reaching are at least partially determined by the initial state (we do not assume that they are autonomous). If, on the contrary, M1 activity were purely input driven, there would be no need for preparatory control, let alone an optimal one. On the one hand, perturbation experiments in mice suggest that thalamic inputs are necessary for continued movement generation and account for a sizable fraction (though not all) of cortical activity during movement production (Sauerbrei et al., 2020). On the other hand, experiments in non-human primates provide ample evidence that preparation does occur, irrespective of context (Lara et al., 2018), and that initial pre-movement states influence subsequent behavior (Churchland et al., 2010; Afshar et al., 2011; Shenoy et al., 2013). Thus, preparatory control is likely essential for accurate movement production.

Sloppy preparation for accurate movements

A core insight of our analysis is that preparatory activity must be constrained in a potent subspace affecting future motor output but is otherwise free to fluctuate in a nullspace. This readily explains two distinctive features of preparatory activity in reaching monkeys: (1) that pre-movement activity on zero-delay trials need not reach the state achieved for long movement delays (Ames et al., 2014) and (2) that nevertheless, movement is systematically preceded by activity in the same preparatory subspace irrespective of whether the reach is self-initiated, artificially delayed, or reactive and fast (Lara et al., 2018). In our model, preparatory activity converges rapidly in the subspace that matters, such that irrespective of the delay (above 50 ms), preparatory activity is always found to have some component in this common subspace, as in Lara et al. (2018). Moreover, exactly which of the many acceptable initial conditions is reached by the end of the delay depends on the delay duration. Thus, our model predicts that different preparatory end states will be achieved for short and long delays, consistent with the results of Ames et al. (2014). Finally, these end states also depend on the activity prior to preparation onset. This would explain why Ames et al. (2014) observed different pre-movement activity states when preparation started from scratch and when it was initiated by a change in target that interrupted a previous preparatory process. In the same vein, Sauerbrei et al. (2020) silenced thalamic input to mouse M1 early during movement and observed that M1 activity did not recover to the pre-movement activity seen in unperturbed trials, even when the movement was successfully performed following the perturbation. This result is expected in our model if the perturbation is followed by a new preparatory phase in which the effect of the perturbation rapidly vanishes along prospectively potent dimensions but subsists in the nullspace.

Preparing without moving

How can preparatory M1 activity not cause premature movement, if M1 directly drives muscles? Kaufman et al. (2014) argued that cortical activity may evolve in a coordinated way at the population level so as to remain in the nullspace of the muscle readout. Here, we have not explicitly penalized premature movements as part of our control objective (Equation 6). As a result, although preparatory activity enters the nullspace eventually (because we constrained the movement-seeding initial conditions to belong to it), optimal preparatory control yields small activity excursions outside the nullspace early during preparation (Figure S7), causing the hand to drift absent any further gating mechanism. Nevertheless, one can readily penalize such premature movements in our framework and obtain network models that prepare rapidly “in the nullspace” via closed-loop feedback (Figure S7; STAR Methods). Importantly, a naive feedforward control strategy is unable to contain the growth of movement-inducing activity during preparation.

Thalamic control of cortical dynamics

The mathematical structure of the optimal control solution suggested a circuit model based on cortico-cortical feedback. We have proposed that optimal feedback can be implemented as a cortico-thalamo-cortical loop, switched ON during movement preparation and OFF again at movement onset. The ON-switch occurs through fast disinhibition of those thalamic neurons that are part of the loop. Our model thus predicts a large degree of specificity in the synaptic interactions between cortex and thalamus (Halassa and Sherman, 2019; Huo et al., 2020), as well as a causal involvement of the thalamus in movement preparation (Guo et al., 2017; Sauerbrei et al., 2020). Furthermore, the dynamical entrainment of thalamus with cortex predicts tuning of thalamic neurons to task variables, consistent with a growing body of work showing specificity in thalamic responses (Nakajima and Halassa, 2017; Guo et al., 2017; Rikhye et al., 2018). For example, we predict that neurons in the motor thalamus should be tuned to movement properties, for much the same reasons that cortical neurons are (Todorov, 2000; Lillicrap and Scott, 2013; Omrani et al., 2017). Finally, we speculate that an ON/OFF switch on the thalamo-cortical loop is provided by one of the output nuclei of the basal ganglia (SNr [substantia nigra pars reticulata] or GPi [internal segment of the globus pallidus]), or a subset of neurons therein. Thus, exciting these midbrain neurons during preparation should prevent the thalamus from providing the necessary feedback to the motor cortex, thereby slowing down the preparatory process and presumably increasing reaction times. In contrast, silencing these neurons during movement should close the preparatory thalamo-cortical loop at a time when thalamus would normally disengage. This should modify effective connectivity in the movement-generating cortical network and impair the ongoing movement. These are predictions that could be tested in future experiments.

Thalamic control of cortical dynamics offers an attractive way of performing nonlinear computations (Sussillo and Abbott, 2009; Logiaco et al., 2019). Although both preparatory and movement-related dynamics are approximately linear in our model, the transition from one to the other (orchestrated by the basal ganglia) is highly nonlinear. Indeed, our model can be thought of as a switching linear dynamical system (Linderman et al., 2017). Moreover, gated thalamo-cortical loops are a special example of achieving nonlinear effects through gain modulation. Here, it is the thalamic population only that is subjected to abrupt and binary gain modulation, but changes in gain could also affect cortical neurons. This was proposed recently as a way of expanding the dynamical repertoire of a cortical network (Stroud et al., 2018).

Switch-like nonlinearities may have relevance beyond movement preparation (e.g., for movement execution). In our model, different movement patterns are produced by different initial conditions seeding the same generator dynamics. However, we could equally well have generated each reach using a different movement-epoch thalamo-cortical loop. Logiaco et al. (2019) recently explored this possibility, showing that gated thalamo-cortical loops provide an ideal substrate for flexible sequencing of multiple movements. In their model, each movement is achieved by its own loop (involving a shared cortical network), and the basal ganglia orchestrate a chain of thalamic disinhibitory events, each spatially targeted to activate those neurons that are responsible for the next loop in the sequence. Interestingly, their cortical network must still be properly initialized prior to each movement chunk, as it must in our model. For this, they proposed a generic preparatory loop similar to the one we have studied here (Logiaco et al., 2019; Logiaco and Escola, 2020). However, theirs does not take into account the degeneracies in preparatory states induced by prospective motor costs, which ours exploits. In sum, our model and theirs address complementary facets of motor control (preparation and sequencing) and could be combined into a single model.

To conclude, we have proposed a theory of movement preparation and studied its implications for various models of movement-generating dynamics in M1. Although these models capture several salient features of movement-epoch activity, they could be replaced by more accurate, data-driven models (Pandarinath et al., 2018) in future work. This would enable our theory to make detailed quantitative predictions of preparatory activity, which could be tested further in combination with targeted perturbation experiments. Our control-theoretic framework could help elucidate the role of the numerous brain areas that collectively control movement (Svoboda and Li, 2018) and make sense of their hierarchical organization in nested loops.

STAR★Methods

Key resources table

| REAGENT OR RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Experimental models: Organisms/strains | | |
| Rhesus macaque (Macaca mulatta) | N/A | Details of the two macaque monkeys used in this study were previously reported in Kaufman et al., 2014; requests for these datasets should be directed to Mark Churchland, Matthew Kaufman and Krishna Shenoy. |
| Software and algorithms | | |
| OCaml 4.10 | Open source | https://www.ocaml.org |
| Owl | Open source | https://ocaml.xyz |
| Python 3 | Open source | https://python.org |
| Gnuplot 5.2 | Open source | http://gnuplot.info |
| Custom code | Open source | https://github.com/hennequin-lab/optimal-preparation |

Resource availability

Lead contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Guillaume Hennequin (g.hennequin@eng.cam.ac.uk).

Materials availability

This study did not generate new unique reagents.

Data and code availability

The full datasets have not been deposited in a public repository because they were made available to the authors by Mark Churchland, Matthew Kaufman and Krishna Shenoy. Requests for these recordings should be directed to them. The code generated during this study is available at https://github.com/hennequin-lab/optimal-preparation.

Experimental model and subject details

In this study, we analyzed two primate datasets that were made available to us by Mark Churchland, Matthew Kaufman and Krishna Shenoy. Details of animal care, surgery, electrophysiological recordings, and behavioral task have been reported previously in Churchland et al. (2012) and Kaufman et al. (2014) (see in particular the details associated with the J and N “array” datasets). Briefly, the subjects of this study, J and N, were two adult male macaque monkeys (Macaca mulatta). The animal protocols were approved by the Stanford University Institutional Animal Care and Use Committee. Both monkeys were trained to perform a delayed reaching task on a fronto-parallel screen. At the beginning of each trial, they fixated on the center of the screen for some time, after which a target appeared on the screen. A variable delay period (0–1000 ms) ensued, followed by a go cue instructing the monkeys to reach toward the target. Recordings were made in the dorsal premotor and primary motor areas using a pair of implanted 96-electrode arrays.

Method details

The values of all the parameters mentioned in this section are listed in the tables below.

Parameters of the M1 circuit model

| symbol | value | unit | description |
|---|---|---|---|
| | 160 | - | number of E units |
| | 40 | - | number of I units |
| τ | 150 | ms | time constant of M1 dynamics |
| | 50 | ms | rise time constant of |
| | 500 | ms | decay time constant of |
| A | implicit | - | set so that has a maximum of 5 |

Parameters of the arm mechanics and hand trajectories

| symbol | value | unit | description |
|---|---|---|---|
| | 30 | cm | length of upper arm link |
| | 33 | cm | length of lower arm link |
| | 1.4 | kg | mass of upper arm link |
| | 1.0 | kg | mass of lower arm link |
| | 16 | cm | center of mass of lower link, away from elbow |
| | 0.025 | kg m² | moment of inertia of upper link |
| | 0.045 | kg m² | moment of inertia of lower link |
| | 10 | deg. | value of at rest |
| | 143.54 | deg. | value of at rest |
| | | deg. | reach angles |
| | 20 | cm | reach distance |
| | 120 | ms | time constant of reach velocity profile |

Parameters of the LQR algorithm

| symbol | value | unit | description |
|---|---|---|---|
| λ | 0.1 | - | input energy penalty in Equation 30 |

Parameters of the thalamo-cortical circuit model

| symbol | value | unit | description |
|---|---|---|---|
| λ | 0.01 | - | input energy penalty in Equation 30 |
| p | 0.2 | - | density of random connections from M1 to thalamus |
| | 100 | - | number of E units in M1 L4 |
| | 100 | - | number of I units in M1 L4 |
| | 10 | ms | neuronal time constant in thalamus |
| | 10 | ms | neuronal time constant in M1 L4 |

Parameters of the photoinhibition model

| symbol | value | unit | description |
|---|---|---|---|
| | 100 | - | number of M1 I units perturbed (60%) |
| | 3 | - | input to perturbed I units during photoinhibition |
| | 400 | ms | duration of photoinhibition |

A model for movement generation by cortical dynamics

Network dynamics

We model M1 as a network with two separate populations of excitatory (E) neurons and inhibitory (I) neurons, operating in the inhibition-stabilized regime (Tsodyks et al., 1997; Ozeki et al., 2009; Hennequin et al., 2014). We constructed its synaptic architecture using the algorithm we have previously described in Hennequin et al. (2014). Briefly, we iteratively updated the inhibitory synapses of a random network, with an initial spectral abscissa of 1.2, to minimize a measure of robust network stability. We implemented early stopping, terminating the stabilization procedure as soon as the spectral abscissa of the connectivity matrix dropped below .
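As a minimal illustration of the quantity being controlled here, the sketch below computes the spectral abscissa (the largest real part among the eigenvalues) of a connectivity matrix and rescales a random matrix so that its abscissa equals 1.2. It does not implement the stabilization procedure of Hennequin et al. (2014), nor any sign constraint on E and I weights; the Gaussian draw and the helper names `spectral_abscissa` and `rescale_to_abscissa` are assumptions made for illustration only.

```python
import numpy as np

def spectral_abscissa(w):
    """Largest real part among the eigenvalues of a square matrix."""
    return float(np.max(np.linalg.eigvals(w).real))

def rescale_to_abscissa(w, target):
    """Scale w uniformly so that its spectral abscissa equals `target`.

    Eigenvalues scale linearly with the matrix, so this works whenever
    the current abscissa is strictly positive.
    """
    alpha = spectral_abscissa(w)
    if alpha <= 0:
        raise ValueError("cannot rescale a matrix with non-positive abscissa")
    return w * (target / alpha)

rng = np.random.default_rng(0)
n = 200  # 160 E + 40 I units, as in the parameter table
w0 = rng.standard_normal((n, n)) / np.sqrt(n)  # hypothetical random draw
w_init = rescale_to_abscissa(w0, 1.2)
```

An iterative stabilization procedure would then monitor `spectral_abscissa` after each update and stop once it falls below the chosen threshold.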

We describe the dynamics of these neurons by a standard nonlinear rate equation. Specifically, the vector of internal neuronal “activations” obeys:

| (8) |

where τ is the single-neuron time constant, is the synaptic connectivity matrix, and is a static, rectified-linear nonlinearity – applied elementwise to – that converts internal activations into momentary firing rates. The input consists of three terms: an input held constant throughout all phases of the task to instate a heterogeneous set of spontaneous firing rates (elements drawn i.i.d. from ); a transient, movement-condition-independent and spatially uniform α-shaped input bump

| (9) |

kicking in at movement onset (Kaufman et al., 2016); and a preparatory control input (further specified below) whose role is to drive the circuit into a preparatory state appropriate for each movement.
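The rate dynamics described above can be sketched numerically as follows. This is an illustration under stated assumptions rather than the published implementation: the three input terms are taken to add up into a single function `h_of_t`, the input bump is assumed to be a difference of two exponentials normalized to its peak, and integration uses a plain forward-Euler scheme. The names `simulate`, `input_bump`, and `h_of_t` are hypothetical; the time constants and the peak value come from the parameter table.

```python
import numpy as np

TAU = 150.0        # ms, single-neuron time constant (parameter table)
TAU_RISE = 50.0    # ms, rise time constant (parameter table)
TAU_DECAY = 500.0  # ms, decay time constant (parameter table)
PEAK = 5.0         # maximum of the input bump (parameter table)

def relu(x):
    """Rectified-linear nonlinearity, applied elementwise."""
    return np.maximum(x, 0.0)

def input_bump(t):
    """Assumed double-exponential bump; t is time since movement onset (ms)."""
    t_peak = (TAU_RISE * TAU_DECAY / (TAU_DECAY - TAU_RISE)) * np.log(TAU_DECAY / TAU_RISE)
    peak_raw = np.exp(-t_peak / TAU_DECAY) - np.exp(-t_peak / TAU_RISE)
    t_pos = np.maximum(np.asarray(t, dtype=float), 0.0)  # zero before onset
    raw = np.exp(-t_pos / TAU_DECAY) - np.exp(-t_pos / TAU_RISE)
    return PEAK * raw / peak_raw

def simulate(w, h_of_t, x0, dt=1.0, t_end=1000.0):
    """Forward-Euler integration of tau dx/dt = -x + w @ relu(x) + h(t).

    h_of_t(t) returns the total input vector (constant, bump and control terms).
    """
    n_steps = int(round(t_end / dt))
    x = np.array(x0, dtype=float)
    traj = np.empty((n_steps + 1, x.size))
    traj[0] = x
    for i in range(n_steps):
        x = x + (dt / TAU) * (-x + w @ relu(x) + h_of_t(i * dt))
        traj[i + 1] = x
    return traj
```

With zero connectivity and a constant input, the state relaxes toward that input with time constant `TAU`, which gives a quick sanity check of the integrator.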

We assume that the uncontrolled dynamics of this network directly drives movement. To actuate the two-link arm model described in the next section, the activity of the network is read out into two joint torques:

| (10) |

where is such that its last columns are zero, i.e., only the excitatory neurons contribute directly to the motor output. Although our simulations show that the muscle readouts are very small during preparation, they do cause drift in the hand prior to movement onset (and therefore wrong movements afterward) as they are effectively integrated twice by the dynamics of the arm (see below and Figure S7). For this reason, we artificially set to zero during movement preparation.

Arm model

To simulate reaching movements, we used the planar two-link arm model previously described in Li and Todorov (2004). The upper arm and the lower arm are connected at the elbow (Figure S1). The two links have lengths and , masses and , and moments of inertia and respectively. The lower arm’s center of mass is located at a distance from the elbow. By considering the geometry of the upper and lower limb, we can write down the position of the hand as a vector given by

| (11) |

where the angles and are defined in Figure S1A. The joint angles evolve dynamically according to the differential equation

| (12) |

where is the momentary torque vector (the output of the neural network, cf. Equation 10), is the matrix of inertia, accounts for the centripetal and Coriolis forces, and is a damping matrix representing joint friction. These parameters are given by

| (13) |

| (14) |

with , , and .
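The arm model can be sketched in a few lines, under assumptions that should be checked against Li and Todorov (2004). The kinematics assume that the first angle is the shoulder angle measured from the x-axis and the second is the elbow angle measured relative to the upper arm. The inertia and Coriolis terms use the lumped-constant form commonly quoted for this planar two-link arm, and the entries of the friction matrix `B` are placeholders. All function and constant names are hypothetical; lengths, masses, and inertias come from the parameter table, converted to SI units.

```python
import math

# Values from the parameter table, converted to SI units.
L1, L2 = 0.30, 0.33      # m, upper and lower arm lengths
S2 = 0.16                # m, lower-arm center of mass, measured from the elbow
M2 = 1.0                 # kg, mass of lower arm link
I1, I2 = 0.025, 0.045    # kg m^2, moments of inertia

# Lumped constants in the form commonly quoted for this arm model (assumed).
A1 = I1 + I2 + M2 * L1 ** 2
A2 = M2 * L1 * S2
A3 = I2

# Placeholder joint-friction matrix; the source values are not reproduced here.
B = ((0.05, 0.025), (0.025, 0.05))

def hand_position(theta1, theta2):
    """Hand position (m) relative to the shoulder; angles in radians."""
    x = L1 * math.cos(theta1) + L2 * math.cos(theta1 + theta2)
    y = L1 * math.sin(theta1) + L2 * math.sin(theta1 + theta2)
    return x, y

def joint_acceleration(theta, theta_dot, torque):
    """Solve M(theta) acc = torque - X(theta, theta_dot) - B theta_dot."""
    d1, d2 = theta_dot
    c2, s2 = math.cos(theta[1]), math.sin(theta[1])
    m11, m12, m22 = A1 + 2 * A2 * c2, A3 + A2 * c2, A3
    x1 = -d2 * (2 * d1 + d2) * A2 * s2   # centripetal and Coriolis terms
    x2 = d1 ** 2 * A2 * s2
    r1 = torque[0] - x1 - (B[0][0] * d1 + B[0][1] * d2)
    r2 = torque[1] - x2 - (B[1][0] * d1 + B[1][1] * d2)
    det = m11 * m22 - m12 * m12
    return ((m22 * r1 - m12 * r2) / det, (m11 * r2 - m12 * r1) / det)

def euler_step(theta, theta_dot, torque, dt=0.001):
    """One forward-Euler step of the joint dynamics (dt in seconds)."""
    acc = joint_acceleration(theta, theta_dot, torque)
    new_theta = (theta[0] + dt * theta_dot[0], theta[1] + dt * theta_dot[1])
    new_dot = (theta_dot[0] + dt * acc[0], theta_dot[1] + dt * acc[1])
    return new_theta, new_dot

theta_rest = (math.radians(10.0), math.radians(143.54))
x_rest, y_rest = hand_position(*theta_rest)
```

Under this angle convention, the rest angles listed in the parameter table place the hand almost exactly on the y-axis, about 0.199 m from the shoulder.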

Target hand trajectories and initial setup

We generated a set of eight target hand trajectories, namely straight reaches of length cm going from the origin ( from the shoulder) into eight different directions, with a common bell-shaped scalar speed profile

| (15) |

where is adjusted such that the hand reaches the target. Given these target hand trajectories, we solved for the required time course of the torque vector through optimization, by backpropagating through the equations of motion of the arm (discretized using Euler’s method) to minimize the squared difference between actual and desired hand trajectories. We forced the initial torques at to be zero, and also included a roughness penalty in the form of average squared torque gradient.
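The construction of the target hand trajectories can be illustrated as follows. The speed profile used here, proportional to (t/τ)² exp(−(t/τ)²/2), is only one possible bell-shaped choice and is an assumption, as are the evenly spaced reach angles and the names `speed_shape` and `target_trajectory`. The reach distance and the time constant are taken from the parameter table. The scale factor plays the role of the adjustable amplitude that makes the hand land on the target.

```python
import math

REACH_CM = 20.0     # reach distance (parameter table)
TAU_REACH = 120.0   # ms, time constant of the velocity profile (parameter table)

def speed_shape(t):
    """Assumed unnormalized bell-shaped speed profile (t in ms)."""
    s = t / TAU_REACH
    return s * s * math.exp(-0.5 * s * s)

def target_trajectory(angle_deg, dt=1.0, t_end=1000.0):
    """Straight reach from the origin; returns a list of (x, y) points in cm."""
    n = int(round(t_end / dt))
    speeds = [speed_shape(i * dt) for i in range(n)]
    scale = REACH_CM / (sum(speeds) * dt)  # path length then equals REACH_CM
    ux = math.cos(math.radians(angle_deg))
    uy = math.sin(math.radians(angle_deg))
    dist, path = 0.0, [(0.0, 0.0)]
    for v in speeds:
        dist += scale * v * dt
        path.append((dist * ux, dist * uy))
    return path

# Hypothetical, evenly spaced reach angles (degrees).
trajectories = {a: target_trajectory(a) for a in range(0, 360, 45)}
```

The required torques would then be obtained by optimizing through a discretized arm model so that the simulated hand follows these paths.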

To calibrate the network for the production of the desired movement-specific torques , , we optimized a set of eight initial conditions , as well as the readout matrix , by minimizing the loss function:

| (16) |

where second and depends on and implicitly through the dynamics of the network. The first term of minimizes the squared difference between the actual and desired torque trajectories, while the second term penalizes 's squared Frobenius norm. We performed the optimization using the L-BFGS algorithm (Liu and Nocedal, 1989) and backpropagating through Equations 8 and 10. In addition, we parameterized the readout matrix in such a way that its nullspace automatically contains both the spontaneous activity vector and the movement-specific initial conditions , . This is to ensure that (i) there is no muscle output during spontaneous activity and (ii) the network does not unduly generate muscle output at the end of preparation, before movement. More specifically, prior to movement preparation and long enough after movement execution, the cortical state is in spontaneous activity . By ensuring that , we ensure that our model network does not elicit movement “spontaneously.” Similarly, control inputs drive the cortical state toward , which it will eventually reach late in the preparation epoch; therefore, if we did not constrain to be in 's null-space, premature movements would be elicited toward the end of preparation.
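One way to obtain a readout whose nullspace contains a prescribed set of vectors, shown here only as a sketch, is to multiply a free parameter matrix by the orthogonal projector onto the complement of their span. Whether the published parameterization takes this exact form is an assumption. The random stand-ins for the spontaneous state and the initial conditions, and the name `constrained_readout`, are hypothetical, and the additional constraint that inhibitory columns be zero is not enforced here.

```python
import numpy as np

def constrained_readout(c_free, null_vectors):
    """Return a readout whose nullspace contains every column of null_vectors.

    c_free: (n_out, n) unconstrained parameters.
    null_vectors: (n, k) matrix whose columns must be mapped to zero.
    """
    q, _ = np.linalg.qr(null_vectors)  # orthonormal basis of the span
    projector = np.eye(null_vectors.shape[0]) - q @ q.T
    return c_free @ projector

rng = np.random.default_rng(1)
n, n_out, n_moves = 200, 2, 8
x_sp = rng.standard_normal((n, 1))          # stand-in spontaneous state
x_init = rng.standard_normal((n, n_moves))  # stand-in initial conditions
c = constrained_readout(rng.standard_normal((n_out, n)), np.hstack([x_sp, x_init]))
```

Because the constraint is built into the parameterization, gradient-based optimization over `c_free` never leaves the set of admissible readouts.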

Training of other network classes

In Figures 5 and 6, we considered three other network types: ‘full’, ‘low-rank’, and ‘chaotic’ networks, which differed from the ISN in the way we constructed their synaptic connectivity matrices. We implemented 10 independent instances of each class.

For the chaotic networks, synaptic weights were drawn from a normal distribution with mean and variance ; this places the networks in the chaotic regime with a threshold-linear nonlinearity (Kadmon and Sompolinsky, 2015). We calibrated the chaotic networks for generating the desired hand trajectories by optimizing and as described above.

Unlike the ISNs and the chaotic networks, we optimized the connectivity matrix of the full and low-rank networks in addition to and , when we calibrated these networks for movement production. While we optimized every element of for the full networks, we parameterized the low-rank networks as

| (17) |

where is a rank-5 perturbation to a random connectivity matrix . Here, both and are free parameters, whereas is a fixed matrix with elements drawn anew for each network instance from . Following Sussillo et al. (2015), we regularized the ‘full’ and ‘low-rank’ networks by augmenting the loss function (Equation 16) with an additional term: for the ‘full’ networks and for the ‘low-rank’ networks.

We found that the addition of the reach-independent input during the movement epoch (Equation 2) made the ‘full’, ‘chaotic’, and ‘low-rank’ networks difficult to train: they either became unstable, entered a chaotic regime, or were unable to produce the desired movements altogether. Thus, we excluded for all these networks, as well as for the 10 other ISNs that we constructed as part of this multi-network comparison.

Similarity transformation of network solutions

A trained (linear) network’s solution is completely determined by the triplet , where is the state transition matrix, is the linear readout, and for is the set of movement-specific initial conditions. Using an invertible transformation , we can construct a new solution given by the triplet , where

| (18) |

and changed to to ensure the spontaneous state remains the same. To see that is also a solution to the task, we note that the output torques at any time t are equal to

| (19) |
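The invariance of the output under such a transformation can be checked numerically. The sketch below assumes one consistent convention (new transition matrix T⁻¹AT, new readout CT, new initial state T⁻¹x₀, with states expressed relative to the spontaneous state), which may differ from the convention of Equation 18. The matrices are random toys and the function `outputs` is hypothetical.

```python
import numpy as np

def outputs(a, c, x0, dt=0.01, n_steps=500):
    """Euler-integrate dx/dt = a @ x from x0 and return the readouts c @ x."""
    x, out = np.array(x0, dtype=float), []
    for _ in range(n_steps):
        out.append(c @ x)
        x = x + dt * (a @ x)
    return np.array(out)

rng = np.random.default_rng(2)
n = 6
a = rng.standard_normal((n, n)) / np.sqrt(n) - 2.0 * np.eye(n)  # stable toy dynamics
c = rng.standard_normal((2, n))
x0 = rng.standard_normal(n)  # initial state relative to the spontaneous state
t = np.eye(n) + 0.1 * rng.standard_normal((n, n))  # generic invertible transformation

t_inv = np.linalg.inv(t)
a_new, c_new, x0_new = t_inv @ a @ t, c @ t, t_inv @ x0

# The two triplets produce identical output time courses.
same = np.allclose(outputs(a, c, x0), outputs(a_new, c_new, x0_new))
```

The internal state trajectories of the two networks differ, but every readout sample coincides up to floating-point error.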

In Figure 6, we build the surrogate networks by applying the similarity transformation that (block-)diagonalizes , resulting in of the form:

| (20) |

where and are the sets of real and complex-conjugate eigenvalues of . The resulting is a “normal” matrix (; Trefethen and Embree, 2005), which—contrary to the original matrix —is unable to transiently amplify the set of movement-specific initial states during movement (Figure 6D). In order to be able to meaningfully compare and in Figure 6F, we scale so as to preserve the average magnitude of the initial states (relative to the spontaneous state):

| (21) |

We can always achieve this because for any .
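To make the notion of a normal surrogate concrete, the following sketch builds a real block-diagonal matrix with the same eigenvalues as a given matrix and verifies that it commutes with its transpose. It uses the standard real form in which each complex-conjugate pair becomes a 2×2 rotation-scaling block; whether Equation 20 orders or arranges the blocks in the same way is an assumption, and the rescaling of Equation 21 is not included. Function names are hypothetical.

```python
import numpy as np

def real_block_diagonal(a):
    """Real block-diagonal matrix with the same eigenvalues as `a`.

    Real eigenvalues sit on the diagonal; each complex-conjugate pair
    (re +/- i*im) becomes a 2x2 block [[re, im], [-im, re]].
    """
    eig = np.linalg.eigvals(a)
    n = a.shape[0]
    out = np.zeros((n, n))
    i = 0
    for lam in eig[np.abs(eig.imag) < 1e-12].real:
        out[i, i] = lam
        i += 1
    for lam in eig[eig.imag >= 1e-12]:  # one member of each conjugate pair
        out[i:i + 2, i:i + 2] = [[lam.real, lam.imag], [-lam.imag, lam.real]]
        i += 2
    return out

def departure_from_normality(m):
    """Frobenius norm of the commutator of m with its transpose."""
    return float(np.linalg.norm(m @ m.T - m.T @ m))

rng = np.random.default_rng(3)
a = rng.standard_normal((8, 8)) / np.sqrt(8) - 1.2 * np.eye(8)  # toy non-normal matrix
a_normal = real_block_diagonal(a)
```

For the surrogate, `departure_from_normality` is zero up to rounding, whereas a generic random matrix gives a clearly non-zero value.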

Formalization of anticipatory motor control

We formalize the notion of anticipatory control by asking: given an intended movement (indexed by k), and the current (preparatory) state of the network, how accurate would the movement be if it were to begin now? We measure this prospective motor error as the total squared difference between the time courses of the target torques and those that the network would generate (Equations 8 and 10) if left uncontrolled from time t onward, starting from initial condition :

| (22) |

(we will often drop the explicit reference to the movement index k to remove clutter, as we did in the main text). Thus, any preparatory state is associated with a prospective motor error .

The prospective error changes dynamically during movement preparation, as evolves under the action of control inputs. The aim of the control inputs is to rapidly decrease this prospective error, until it drops below an acceptably small threshold, or until movement initiation is forced. We formalize this as the minimization of the following control cost:

| (23) |

where is a regularizer (see below) and the average is over some distribution of states we expect the network to be found in at the time the controlled preparatory phase begins (we leave this unspecified for now as it turns out not to influence the optimal control strategy – see below). Thus, we want control inputs to rapidly steer the cortical network into states of low from which the movement can be readily executed, but these inputs should not be too large. The infinite-horizon summation expresses uncertainty about how long movement preparation will last, and indeed encourages the network to be “ready” as soon as possible.

Mathematically, is a functional of the spatiotemporal pattern of control input — indeed, depends on through Equation 8. The regularizer , or “control effort,” encourages small control inputs and will be specified later. Without regularization, the problem is ill-posed, as arbitrarily large control inputs could be used to instantaneously force the network into the right preparatory state in theory, leading to physically infeasible control solutions in practice. Also note that Equation 23 is an “infinite-horizon” cost, i.e., the integral runs from the beginning of movement preparation when control inputs kick in, until infinity. This does not mean, however, that the preparation phase must be infinitely long. In fact, good control inputs should (and will!) bring the integrand close to zero very fast, such that the movement is ready to begin after only a short preparatory phase (see e.g., Figure 4A in the main text).

To derive the optimal control law, we further assume that the dynamics of the network remain approximately linear during both movement preparation and execution. This is a good approximation provided that only a few neurons become silent in either phase (the saturation at zero firing rate is the only source of nonlinearity in our model, cf. in Equation 8; Figure S5). In this case, the prospective motor error of Equation 22 takes a simpler, interpretable form, which we now derive. In the linear regime, Equation 8 becomes

| (24) |

with an effective state transition matrix . The network output at time , starting from state at time and with no control input thereafter, has an analytical form given by

| (25) |

and similarly for with replaced by . The final term is a contribution from the external input: it does not depend on the initial condition, and is therefore the same in both cases. Thus, the prospective motor error (Equation 22) attached to a given preparatory state is

| (26) |
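The structure of this result can be verified numerically. Because the input-dependent term is shared by the actual and target outputs, their difference is the readout of a freely evolving deviation from the target initial condition, and the integrated squared error is a quadratic form in that deviation. The sketch below assumes linear dynamics written as dx/dt = a x (time constant absorbed into `a`), a linear readout `c`, and random toy matrices. It uses the standard fact that the matrix of this quadratic form solves a Lyapunov equation. All names are hypothetical.

```python
import numpy as np

def lyapunov_gramian(a, c):
    """Solve a.T @ q + q @ a + c.T @ c = 0 for q (a must be stable)."""
    n = a.shape[0]
    eye = np.eye(n)
    # Row-major vectorization: vec(a.T q) = kron(a.T, I) vec(q), vec(q a) = kron(I, a.T) vec(q).
    lhs = np.kron(a.T, eye) + np.kron(eye, a.T)
    q = np.linalg.solve(lhs, -(c.T @ c).reshape(-1))
    return q.reshape(n, n)

def prospective_error_numeric(a, c, delta, dt=1e-3, t_end=30.0):
    """Trapezoid estimate of the integral of |c @ expm(a t) @ delta|^2 over t."""
    lam, v = np.linalg.eig(a)  # assumes a is diagonalizable
    coeffs = np.linalg.solve(v, delta.astype(complex))
    times = np.arange(0.0, t_end + dt, dt)
    modes = np.exp(np.outer(times, lam)) * coeffs  # (T, n) modal amplitudes
    out = (modes @ (c @ v).T).real                 # (T, n_out) readout deviation
    f = np.sum(out ** 2, axis=1)
    return float(dt * (f.sum() - 0.5 * (f[0] + f[-1])))

rng = np.random.default_rng(4)
n = 6
a = rng.standard_normal((n, n)) / np.sqrt(n) - 2.0 * np.eye(n)  # stable toy dynamics
c = rng.standard_normal((2, n))
delta = rng.standard_normal(n)  # deviation from the target initial condition

q = lyapunov_gramian(a, c)
err_quadratic = float(delta @ q @ delta)
err_numeric = prospective_error_numeric(a, c, delta)
```

The two estimates agree to within the accuracy of the numerical integration, which illustrates why only the components of the deviation that are weighted strongly by `q` need to be corrected during preparation.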