Abstract

With the availability of cellular-resolution connectivity maps (connectomes) from the mammalian nervous system, it is an open question how informative such massive connectomic data can be for distinguishing between local circuit models in the mammalian cerebral cortex. Here, we investigated whether cellular-resolution connectomic data can in principle allow model discrimination for local circuit modules in layer 4 of mouse primary somatosensory cortex. We used approximate Bayesian model selection based on a set of simple connectome statistics to compute the posterior probability over proposed models given a to-be-measured connectome. We find that the investigated local cortical models can be reliably distinguished based on purely structural connectomic data with an accuracy of more than 90%, and that this distinction is robust to substantial errors in the connectome measurement. Furthermore, mapping only 10% of the local connectome is sufficient for connectome-based model distinction under realistic experimental constraints. Together, these results show for a concrete local circuit example that connectomic data allows model selection in the cerebral cortex, and they define the experimental strategy for obtaining such connectomic data.

Subject terms: Network models, Neural circuits

Large-scale connectomes from the mammalian brain are becoming available, but it remains unclear how informative these are for the distinction of circuit models. Here, the authors use connectome statistics to test competing models of local cortical circuits with approximate Bayesian computation.

Introduction

In molecular biology, the use of structural (X-ray crystallographic or single-particle electron microscopic) data to distinguish between kinetic models of protein function constitutes the gold standard (e.g.,1,2). In neuroscience, however, whether structural data of neuronal circuits is informative for computational interpretations is still heavily disputed3–6, with the extreme positions that cellular connectomic measurements are likely uninterpretable6 or indispensable5. Notably, structural circuit data has been decisive in resolving competing models for the computation of directional selectivity in the mouse retina7.

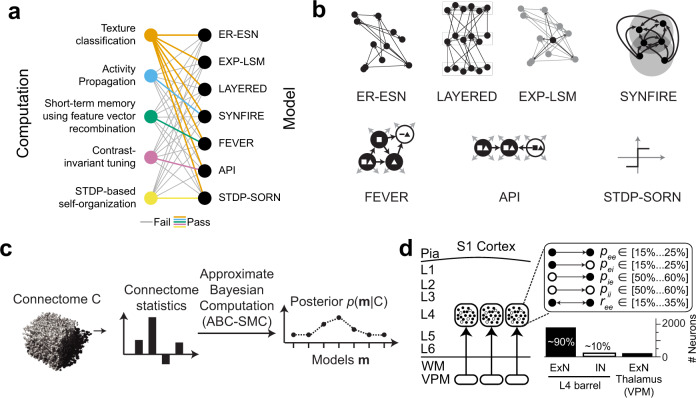

For the mammalian cerebral cortex, the situation is arguably more complicated: it can be argued that it is not even known which computation a given cortical area or local circuit module carries out. In this situation, hypotheses about the potentially relevant computations and about their concrete implementations have to be explored simultaneously. To complicate the investigation further, the relation between a given computation and its possible implementations is not unique. Take, for example, pattern distinction (of tactile or visual inputs) as a possible computation in layer 4 of sensory cortex. This computation can be carried out by multi-layer perceptrons8, but also by random pools of connected neurons in an “echo state network”9 (Fig. 1a, Supplementary Fig. 1a–g) and similarly by networks configured as “synfire chains”10 (Fig. 1a). If one considers different computational tasks, however, such as the maintenance of sensory representations over timescales of seconds (short-term memory) or the stimulus tuning of sensory representations, then the relation between computation and implementation becomes more distinct (Fig. 1a). Specifically, a network implementation of antiphase inhibition for stimulus tuning11 is not capable of performing the short-term memory task (Supplementary Fig. 1k, l), and a network proposed for a short-term memory task (FEVER12) fails to perform stimulus tuning (Fig. 1a, Supplementary Figs. 1–3). Together, this illustrates that, while computations cannot be uniquely equated with their possible circuit-level implementations, the ability to discriminate between proposed models would narrow down the hypothesis space both for computations and for their circuit-level implementations in the cortex.

Fig. 1. Relationship between models and possible computations in cortical circuits, and proposed strategy for connectomic model distinction in local circuit modules of the cerebral cortex.

a Relationship between computations suggested for local cortical circuits (left) and possible circuit-level implementations (right). Colored lines indicate successful performance in the tested computation; gray lines indicate failure to perform the computation (see Supplementary Fig. 1 for details). b Enumeration of candidate models possibly implemented in a barrel-circuit module. See text for details. c Flowchart of the connectomic model selection approach to obtain the posterior p(m|C) over hypothesized models m given a connectome C. ABC-SMC: approximate Bayesian computation using sequential Monte Carlo sampling. d Sketch of mouse primary somatosensory cortex with presumed circuit modules (“barrels”) in cortical input layer 4 (L4). Currently known constraints on pairwise connectivity and cell prevalence of excitatory (ExN) and inhibitory (IN) neurons: pairwise excitatory-excitatory connectivity30–33,36; pairwise excitatory-inhibitory connectivity31,33; pairwise inhibitory-inhibitory connectivity31,34; pairwise inhibitory-excitatory connectivity31,33,35; pairwise excitatory-excitatory reciprocity30,31,33.

With this background, it is of interest whether purely structural connectomic data is sufficiently informative to discriminate between several previously proposed models, and thus between a range of possible cortical computations.

Here we asked whether, for a concrete cortical circuit module (the “barrel” of a cortical column in mouse somatosensory cortex), the measurement of the local connectome can in principle serve as an arbiter between a set of possibly implemented local cortical models and their associated computations.

We developed and tested a model selection approach (approximate Bayesian computation with sequential Monte Carlo sampling, ABC-SMC13–15, Fig. 1c) on the main models proposed so far for local cortical circuits (Fig. 1b), ranging from pairwise random Erdős–Rényi (ER16) networks to highly structured “deep” layered networks used in machine learning17,18. We found that connectomic data alone is in principle sufficient to discriminate between the investigated models, using a surprisingly simple set of connectome statistics. The model discrimination is robust to substantial measurement noise, and even partially mapped connectomes already have high discriminative power.

Results

To develop our approach, we focus on a cortical module in mouse somatosensory cortex: a “barrel” in layer 4 (L4), a main input layer of the sensory cortex19–21. The spatial extent of this module (roughly db = 300 μm along each dimension) makes it a realistic target for experimentally mapped dense connectomes using state-of-the-art 3D electron microscopy22,23 and circuit reconstruction approaches24–27. A barrel is composed of about 2,000 neurons28,29, of which about 90% are excitatory and about 10% inhibitory28,29 (Fig. 1d); together, they establish a total of about 3 million chemical synapses within L4. The resulting average pairwise synaptic connectivity within a barrel has been estimated from paired whole-cell recordings30–35: excitatory neurons connect to about 15–25% of the other intra-barrel neurons; inhibitory neurons connect to about 50–60% of the other intra-barrel neurons (Fig. 1d). Moreover, the probability of a connection being reciprocated ranges between 15% and 35%29–31,33,36. Whether intracortical connections in L4 follow only such pairwise connection statistics or establish higher-order circuit structure is not known23,37–39. Furthermore, it is not understood whether the effect of layer 4 circuits is primarily the amplification of incoming thalamocortical signals30,40, or whether proper intracortical computations commence within L441–43. An L4 circuit module is therefore an appropriate target for model selection in local cortical circuits.
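To give a sense of the scale of the binary connectome that would have to be measured, the numbers quoted above can be turned into an expected count of directed connections between intra-barrel neurons. This is a back-of-the-envelope sketch under simplifying assumptions that are not part of the original analysis: exactly 2,000 neurons, a 90%/10% excitatory/inhibitory split, no self-connections, and the connectivity ranges applied uniformly to every presynaptic neuron of a type. The helper name `expected_connections` is hypothetical.

```python
# Back-of-the-envelope count of directed intra-barrel connections.
# Assumptions (illustrative only): exactly 2,000 neurons, a 90/10 excitatory/inhibitory
# split, no self-connections, and uniform connectivity per presynaptic cell type.
N_NEURONS = 2000
N_EXC = round(0.9 * N_NEURONS)      # ~1,800 excitatory neurons
N_INH = N_NEURONS - N_EXC           # ~200 inhibitory neurons
N_TARGETS = N_NEURONS - 1           # possible postsynaptic partners per neuron


def expected_connections(p_exc, p_inh):
    """Expected number of connected (pre -> post) neuron pairs within one barrel."""
    return N_EXC * N_TARGETS * p_exc + N_INH * N_TARGETS * p_inh


low = expected_connections(p_exc=0.15, p_inh=0.50)
high = expected_connections(p_exc=0.25, p_inh=0.60)
n_possible = N_NEURONS * N_TARGETS  # off-diagonal entries of the adjacency matrix
print(f"{low:,.0f} to {high:,.0f} connections out of {n_possible:,} possible pairs")
```

Under these assumptions, the connectome is a 2,000 × 2,000 binary matrix with roughly 0.7 to 1.1 million set entries.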

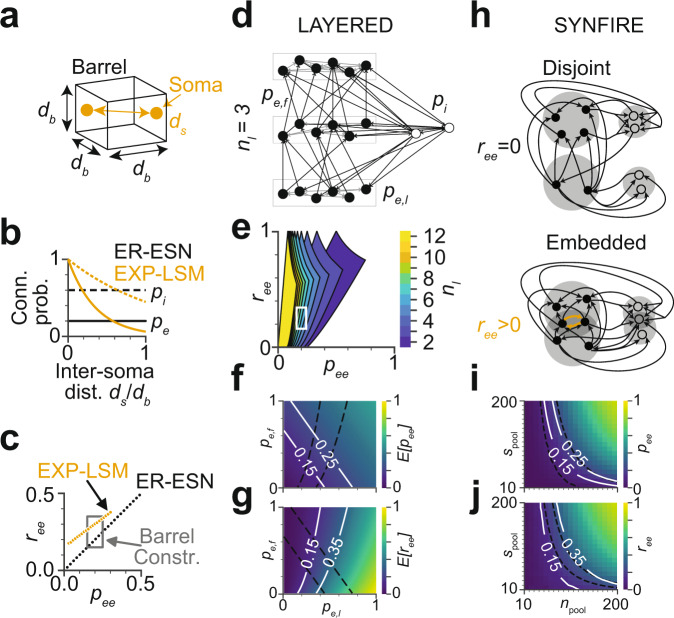

The simplest model of local cortical circuits assumes pairwise random connectivity between neurons, independent of their relative spatial distance in the cortex (Erdős–Rényi16, Fig. 2a–c). This model has been proposed as the basis of echo state networks (ESN9,44). As a slight modification, random networks with a pairwise connectivity that depends on the distance between the neurons’ cell bodies are the basis of liquid state machines (LSMs45,46, Fig. 2a–c). At the other extreme, highly structured layered networks, originally inspired by neuronal architecture, are successfully used in machine learning (multi-layer perceptrons8, Fig. 2d–g). Furthermore, embedded synfire chains have been studied (SYN10,47, Fig. 2h–j); these can be considered intermediate between random and layered connectivity. In addition to these rather general model classes, models for concrete cortical operations have been put forward that either make less explicit structural assumptions (the feature vector recombination network (FEVER12), proposed to achieve stimulus representation constancy on macroscopic timescales within a network; and antiphase inhibition (API11,48), proposed to achieve contrast-invariant stimulus tuning) or are based on local learning rules (spike timing-dependent plasticity/self-organizing recurrent neural network (STDP-SORN49,50)).
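The difference between the two random model classes can be sketched as connectome generators. This is a minimal illustration, not the parameterization used in the study: the uniform placement of somata in a (300 µm)³ cube, the exponential profile p(d) = p0 · exp(−d / λ), the length constant λ = 150 µm and the peak probabilities p0 are all assumptions chosen for illustration, and the function names are hypothetical. The convention a[i, j] = True means that neuron i connects to neuron j.

```python
import numpy as np


def er_connectome(p_pre, rng):
    """ER-ESN-style connectome: neuron i connects to any other neuron with
    probability p_pre[i], independent of distance (p_pre differs for E and I)."""
    n = len(p_pre)
    a = rng.random((n, n)) < p_pre[:, None]
    np.fill_diagonal(a, False)  # no self-connections
    return a


def exp_lsm_connectome(p0_pre, positions, length_const, rng):
    """EXP-LSM-style connectome: connection probability decays exponentially
    with Euclidean inter-soma distance, p = p0 * exp(-d / length_const)."""
    dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    prob = p0_pre[:, None] * np.exp(-dist / length_const)
    a = rng.random(prob.shape) < prob
    np.fill_diagonal(a, False)
    return a


rng = np.random.default_rng(0)
n_exc, n_inh = 450, 50                                   # scaled-down 90/10 split
is_exc = np.arange(n_exc + n_inh) < n_exc
positions = rng.uniform(0.0, 300.0, size=(n_exc + n_inh, 3))  # somata in µm

a_er = er_connectome(np.where(is_exc, 0.2, 0.55), rng)
a_exp = exp_lsm_connectome(np.where(is_exc, 0.4, 0.9), positions, 150.0, rng)
print(a_er[is_exc].mean(), a_exp[is_exc].mean())
```

In the limit of a very large length constant, the exponential factor approaches 1 and the EXP-LSM generator reduces to the ER-ESN generator.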

Fig. 2. Compliance of candidate models with the so-far experimentally determined pairwise barrel circuit constraints in L4 (see Fig. 1d).

a Illustration of a simplified cortical barrel of width db and somata separated by inter-soma distances. b Pairwise excitatory and inhibitory connection probabilities are constant over inter-soma distance in the Erdős–Rényi echo state network (ER-ESN) and decay with it in the exponentially decaying connectivity liquid state machine model (EXP-LSM). c Possible pairwise excitatory-excitatory connectivity and excitatory-excitatory reciprocity in the ER-ESN and EXP-LSM models satisfy the so-far determined barrel constraints (box). d–g Layered model: d example network with three layers (nl = 3), excitatory forward (between-layer) connectivity, excitatory lateral (within-layer) connectivity, and inhibitory connectivity. e Range of excitatory pairwise connectivity and reciprocity in the LAYERED model for varying number of layers (white box: barrel constraints as in c). f, g Expected excitatory pairwise connectivity and reciprocity as a function of forward and lateral connectivity for nl = 3. Isolines indicate barrel constraints; model parameters in compliance with these constraints lie in the area between intersecting isolines. Note that the constraints are fulfilled only for non-zero within-layer connectivity, refuting a strictly feedforward network. h–j Embedded synfire chain model (SYNFIRE). h Two subsequent synfire pools in the disjoint (top) and embedded (bottom) synfire chain. Since intra-pool connectivity is strictly zero, reciprocal connections do not exist in the disjoint case (zero reciprocity) but do exist in the embedded configuration. i, j Pairwise excitatory connectivity and pairwise excitatory reciprocity as a function of the number of pools and the pool size for a SYNFIRE network. Respective barrel constraints (white and dashed lines). See Supplementary Fig. 2 for an analogous analysis of the FEVER, API, and STDP-SORN models.

We first investigated whether the circuit constraints of local cortical modules in S1 cortex established experimentally so far (Fig. 1d; number of neurons, pairwise connectivity, and reciprocity; see above) were already sufficient to refute any of the proposed models.

Both the pairwise random ER model and the pairwise random but soma-distance-dependent EXP-LSM model are directly compatible with the measured constraints on pairwise connectivity and reciprocity (Fig. 2c). A strictly layered multilayer perceptron model, however, does not contain any reciprocal connections and would, in its strict form, have to be refuted for cortical circuit modules, in which reciprocity ranges from 0.15 to 0.35. Instead of rejecting such a “deep” layered model altogether, we studied a layered configuration of locally randomly connected ensembles (Fig. 2d). We found that models with up to ten layers are consistent with the circuit constraints of barrel cortex (Fig. 2e). In subsequent analyses, we considered configurations with 2–4 layers. In this regime, the connectivity is 0.2–0.6 within layers and 0.3–0.6 between layers (Fig. 2f, g; nl = 3 layers). Similarly, disjoint synfire chains10 (Fig. 2h) would have to be rejected for the considered circuits because they lack reciprocal connections. Embedded synfire chains (e.g., ref. 47), however, yield reciprocal connections among the neurons shared between successive pools (Fig. 2h). This yields a range of pool sizes for which the SYNFIRE model is compatible with the known circuit constraints (Fig. 2i, j). The other models were investigated analogously (Supplementary Fig. 2) and required either slight (API, Supplementary Fig. 2d–g) or substantial (FEVER, STDP-SORN, Supplementary Fig. 2a–c, h–m) modifications to become compatible with a local cortical circuit in L4. Notably, the FEVER model as originally proposed12 yields substantially too low connectivity and too high reciprocity to be realistic for local cortical circuits in L4 (Supplementary Fig. 2b). A modification in which the FEVER rules are applied to a pre-drawn random connectivity rescues this model (Supplementary Fig. 2a, b).
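Why a strictly feedforward layered network is incompatible with the measured reciprocity, while adding within-layer connections fixes this, can be checked numerically. The sketch below covers only the excitatory part of a LAYERED-style network with equally sized consecutive layers; the function names, the network size of 300 neurons and the probabilities 0.4 (forward) and 0.3 (lateral) are illustrative assumptions, not the study's parameters. Reciprocity is computed as the probability that a connection is reciprocated.

```python
import numpy as np


def layered_connectome(n, n_layers, p_forward, p_lateral, rng):
    """Excitatory LAYERED-style sketch: layer k -> layer k + 1 with p_forward,
    within-layer connections with p_lateral, no backward connections."""
    layer = np.arange(n) * n_layers // n                 # layer index per neuron
    forward = layer[None, :] == layer[:, None] + 1       # pre in k, post in k + 1
    lateral = layer[None, :] == layer[:, None]           # pre and post in same layer
    prob = p_forward * forward + p_lateral * lateral
    a = rng.random((n, n)) < prob
    np.fill_diagonal(a, False)
    return a


def connectivity_and_reciprocity(a):
    """Pairwise connectivity and probability that a connection is reciprocated."""
    n = a.shape[0]
    n_conn = a.sum()
    p = n_conn / (n * (n - 1))
    r = np.logical_and(a, a.T).sum() / n_conn if n_conn else 0.0
    return float(p), float(r)


rng = np.random.default_rng(1)
p_ff, r_ff = connectivity_and_reciprocity(layered_connectome(300, 3, 0.4, 0.0, rng))
p_lat, r_lat = connectivity_and_reciprocity(layered_connectome(300, 3, 0.4, 0.3, rng))
print(f"strictly feedforward: p={p_ff:.3f}, r={r_ff:.3f}")
print(f"with lateral connections: p={p_lat:.3f}, r={r_lat:.3f}")
```

Without lateral connections the reciprocity is exactly zero, because every connection points from one layer to the next; reciprocal pairs can only arise within a layer.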

Structural model discrimination via connectome statistics

We then asked whether these local cortical models could be distinguished on purely structural grounds, given a binary connectome of a barrel circuit.

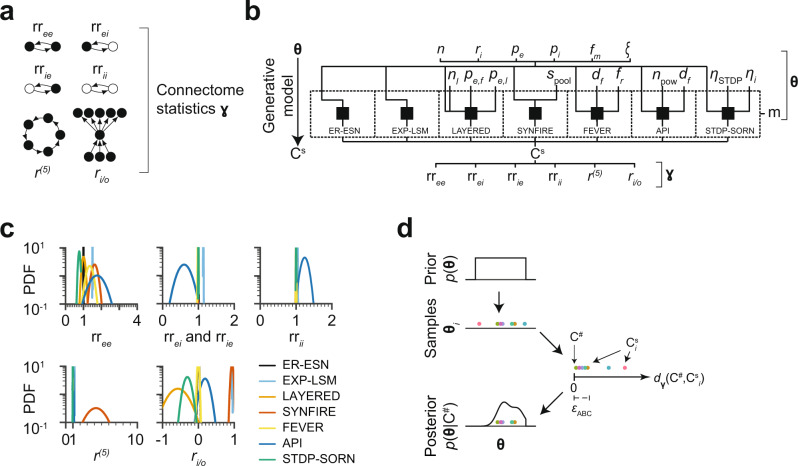

We first identified circuit statistics that could serve as potentially distinctive connectome descriptors (Fig. 3a). We started with the relative reciprocity of connections within (excitatory-excitatory and inhibitory-inhibitory) and across (excitatory-inhibitory and inhibitory-excitatory) the populations of excitatory and inhibitory neurons. Since we had already found that some of the models would likely differ in reciprocity (see above; Fig. 2c, g, j; Supplementary Fig. 2b, f, g), these statistics were attractive candidates. We further explored the network recurrency at a given cycle length, a measure of the number of cycles in a network (Fig. 3a). This measure can be seen as describing how much of the information flow in the network is fed back into the network itself. A LAYERED network would thus be expected to score low on this measure, whereas a highly recurrent network such as SYNFIRE would be expected to score high. We used a cycle length of 5, since for shorter cycles this measure becomes more similar to reciprocity, and for longer cycles it is numerically less stable. Moreover, we investigated the in/out-degree correlation of the excitatory population (Fig. 3a). This measure was motivated by the notion that it could point towards a separation of L4 into input and output subpopulations, as expected, for example, in the LAYERED model.
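The six statistics can be sketched as functions of a binary adjacency matrix a (a[i, j] = True if i connects to j). The exact definitions are given in the Methods, which are not part of this section, so the normalizations below are assumptions: relative reciprocity is taken as P(reverse | forward) divided by P(reverse), which is about 1 when the two directions are independent; cycles of length 5 are approximated by closed walks, trace(A⁵), relative to the Erdős–Rényi expectation (n·p)⁵; and the in/out-degree correlation is a Pearson correlation computed on the excitatory-excitatory subnetwork. All function and key names are hypothetical.

```python
import numpy as np


def relative_reciprocity(a, src, dst):
    """P(dst -> src | src -> dst) divided by P(dst -> src) (assumed normalization)."""
    fwd = a[np.ix_(src, dst)]            # fwd[i, j]: src[i] -> dst[j]
    bwd = a[np.ix_(dst, src)].T          # bwd[i, j]: dst[j] -> src[i]
    n_possible = fwd.size - (len(src) if np.array_equal(src, dst) else 0)
    if n_possible == 0 or fwd.sum() == 0 or bwd.sum() == 0:
        return float("nan")
    p_reverse = bwd.sum() / n_possible
    r = np.logical_and(fwd, bwd).sum() / fwd.sum()
    return float(r / p_reverse)


def relative_cycles(a, length=5):
    """Closed walks trace(A^length) relative to the ER expectation (n * p) ** length."""
    n, n_conn = a.shape[0], a.sum()
    if n_conn == 0:
        return float("nan")
    p = n_conn / (n * (n - 1))
    closed_walks = np.trace(np.linalg.matrix_power(a.astype(float), length))
    return float(closed_walks / (n * p) ** length)


def in_out_degree_correlation(a):
    """Pearson correlation between the in-degree and out-degree of each neuron."""
    k_in, k_out = a.sum(axis=0), a.sum(axis=1)
    if k_in.std() == 0 or k_out.std() == 0:
        return float("nan")
    return float(np.corrcoef(k_in, k_out)[0, 1])


def connectome_statistics(a, is_exc):
    """Six summary statistics of a binary connectome (hypothetical key names)."""
    exc, inh = np.flatnonzero(is_exc), np.flatnonzero(~is_exc)
    return {
        "r_ee": relative_reciprocity(a, exc, exc),
        "r_ei": relative_reciprocity(a, exc, inh),
        "r_ie": relative_reciprocity(a, inh, exc),
        "r_ii": relative_reciprocity(a, inh, inh),
        "cycles_5": relative_cycles(a, 5),
        "io_corr": in_out_degree_correlation(a[np.ix_(exc, exc)]),
    }
```

For example, a directed ring of five neurons has zero relative reciprocity but a high cycle score, whereas a two-layer input/output motif yields a negative in/out-degree correlation.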

Fig. 3. Connectome statistics and generative models for approximate Bayesian inference.

a Connectome statistics used for model distinction: relative excitatory-excitatory reciprocity, relative excitatory-inhibitory reciprocity, relative inhibitory-excitatory reciprocity, relative inhibitory-inhibitory reciprocity, relative number of cycles of length 5, and in/out-degree correlation of excitatory neurons. b Generative model for Bayesian inference: shared set of parameters (top: number of neurons, fraction of inhibitory neurons, excitatory connectivity, inhibitory connectivity, fractional connectome measurement, noise) and model-specific parameters (middle: model choice, number of layers, excitatory forward connectivity, excitatory lateral connectivity, pool size, STDP learning rate, intrinsic learning rate, feature space dimension, feverization ratio, selectivity; see Supplementary Fig. 4), which generate a sampled connectome Cs described by the summary statistics. c Gaussian fits of probability density functions (PDFs) of the connectome statistics (a) for all models (see Fig. 1b). d Sketch of the ABC-SMC procedure: given a measured connectome, parameters (colored dots) are sampled from the prior. Each parameter sample generates a connectome that has a certain distance to the measured connectome in the space defined by the connectome statistics (a). If this distance is below a threshold, the associated parameters are added as mass to the posterior distribution; otherwise, they are rejected.

For a first assessment of the distinctive power of these six connectome statistics, we sampled 50 L4 connectomes from each of the seven models (Fig. 3b). The free parameters of the models were drawn from their respective prior distributions (Fig. 3b; priors shown in Supplementary Fig. 4). For the LAYERED model, for example, the free parameters were the number of layers, the forward connectivity, and the lateral connectivity. The proposed network statistics (Fig. 3a) were then evaluated for each of the 350 sampled connectomes (Fig. 3b, c). While the statistics had some descriptive power for certain combinations of models (for example, one statistic seemed to separate API from EXP-LSM, Fig. 3c), none of the six statistics alone could discriminate between all the models (see the substantial overlap of their distributions, Fig. 3c), necessitating a more rigorous approach to model selection.

Discrimination via Bayesian model selection

We used an approximate Bayesian computation with sequential Monte Carlo sampling (ABC-SMC) model selection scheme13–15 to compute the posterior probability over a range of models given a to-be-measured connectome.

In this approach, example connectomes are generated from the models m in question using the priors over the model parameters (Fig. 3b, d; see Supplementary Fig. 4 for plots of all priors). For each sampled connectome, the dissimilarity to the measured connectome was computed as a distance between the two. The connectome distance was defined as an L1 norm over the six connectome statistics (Fig. 3a), with each statistic normalized by its range between the 20th and 80th percentiles (see Methods). If the sampled connectome was sufficiently similar to the measured connectome (i.e., their distance was below a preset threshold; see Methods), the sample was accepted and considered as evidence for the model that had generated it (Fig. 3d). In this way, an approximate sample from the posterior was obtained (Fig. 3d). The posterior was iteratively refined by resampling and perturbing the parameters of the accepted connectomes and by sequentially reducing the distance threshold.
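The procedure above can be sketched as a simplified ABC-SMC model selection loop. This is a minimal illustration in the style of refs. 13–15, not the authors' implementation. Assumptions: a uniform prior over models, uniform (box) priors over each model's parameters, Gaussian perturbation kernels whose width is based on twice the weighted variance of the previous population, no model-perturbation kernel, and percentile scales estimated from a reference set of prior-sampled statistics. The names `make_distance`, `abc_smc_model_selection`, the `models` dictionary layout and the two toy models are hypothetical.

```python
import numpy as np


def make_distance(reference_stats):
    """L1 distance over summary statistics, each scaled by its 20th-80th percentile range."""
    q20, q80 = np.percentile(reference_stats, [20, 80], axis=0)
    scale = np.where(q80 > q20, q80 - q20, 1.0)  # guard against a zero range

    def distance(s, s_obs):
        return float(np.sum(np.abs(np.asarray(s) - np.asarray(s_obs)) / scale))

    return distance


def abc_smc_model_selection(s_obs, models, distance, epsilons, n_particles, rng):
    """Toy ABC-SMC model selection; returns estimated posterior p(m | s_obs).

    models: list of dicts with 'low'/'high' (arrays of uniform prior bounds) and
    'simulate' (callable (theta, rng) -> vector of summary statistics).
    """
    n_models = len(models)
    prev = None  # per model: (particles, normalized weights, kernel widths)
    for t, eps in enumerate(epsilons):
        thetas = [[] for _ in range(n_models)]
        raw_w = [[] for _ in range(n_models)]
        alive = [m for m in range(n_models) if t == 0 or len(prev[m][0]) > 0]
        while sum(len(th) for th in thetas) < n_particles:
            m = alive[rng.integers(len(alive))]          # uniform over surviving models
            lo, hi = models[m]["low"], models[m]["high"]
            if t == 0:
                theta = rng.uniform(lo, hi)              # sample from the prior
            else:                                        # resample, then perturb
                parts, w, sig = prev[m]
                theta = parts[rng.choice(len(parts), p=w)] + rng.normal(0.0, sig)
                if np.any(theta < lo) or np.any(theta > hi):
                    continue                             # outside prior support
            if distance(models[m]["simulate"](theta, rng), s_obs) > eps:
                continue                                 # too dissimilar: reject
            if t == 0:
                weight = 1.0
            else:  # importance weight = prior density / perturbation-kernel mixture
                z = (theta - parts) / sig
                kernel = np.exp(-0.5 * np.sum(z ** 2, axis=1)) / np.prod(np.sqrt(2 * np.pi) * sig)
                weight = (1.0 / np.prod(hi - lo)) / np.sum(w * kernel)
            thetas[m].append(theta)
            raw_w[m].append(weight)
        total = sum(sum(ws) for ws in raw_w)
        posterior = np.array([sum(ws) / total for ws in raw_w])
        prev = []
        for m, model in enumerate(models):
            if not thetas[m]:                            # model died out
                prev.append((np.empty((0, len(model["low"]))), None, None))
                continue
            parts, w = np.array(thetas[m]), np.array(raw_w[m])
            w = w / w.sum()
            var = w @ (parts - w @ parts) ** 2
            sig = np.maximum(np.sqrt(2.0 * var), 1e-3 * (model["high"] - model["low"]))
            prev.append((parts, w, sig))
    return posterior


def _toy_simulator(theta, rng):
    # Hypothetical toy model: a single summary statistic = parameter + small noise.
    return np.array([theta[0] + rng.normal(0.0, 0.05)])


toy_models = [
    {"low": np.array([0.0]), "high": np.array([1.0]), "simulate": _toy_simulator},
    {"low": np.array([5.0]), "high": np.array([6.0]), "simulate": _toy_simulator},
]
dist = make_distance(np.array([[0.0], [1.0], [5.0], [6.0]]))
post = abc_smc_model_selection(np.array([0.5]), toy_models, dist, [0.5, 0.2, 0.1], 200,
                               np.random.default_rng(0))
print(post)  # all posterior mass on the first toy model
```

In the toy run, the second model never produces a statistic within the first threshold, so it dies out in the first generation and receives zero posterior mass; in a real application, the summary statistics would be the six connectome statistics.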

We then tested our approach on simulated connectomes. These were again generated from the different model classes (as in Fig. 3b); however, the ABC method used only the distances between the sampled connectomes and the simulated connectomes (Fig. 3d). It was therefore not clear a priori whether the statistics are sufficiently descriptive to distinguish between the models, and whether this would be the case for all or only some of the models.

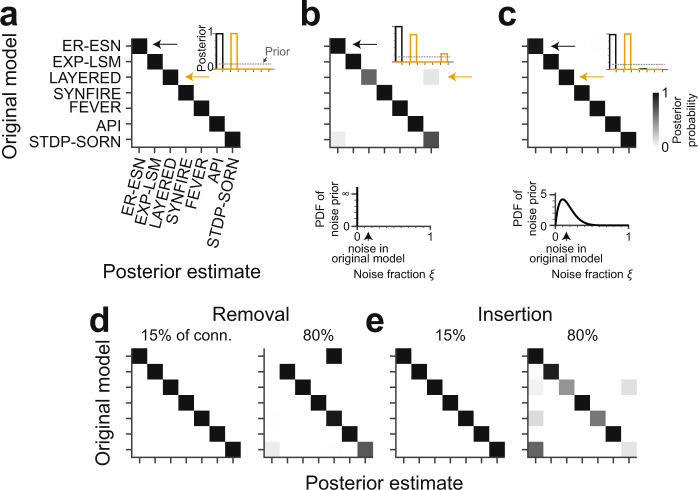

We first considered the hypothetical case of a dense, error-free connectomic reconstruction of a barrel circuit generated by the ER-ESN model. The ABC-SMC scheme correctly identified this model: the posterior probability mass was fully concentrated on it rather than on any of the other models (Fig. 4a). ABC-SMC inference was repeated n = 3 times for the ER-ESN model, resulting in three consistent posterior distributions. Similarly, connectomes obtained from all other investigated models yielded posterior probability distributions concentrated at the correct originating model (Fig. 4a). Thus, the six connectome statistics together with ABC-based model selection were indeed able to distinguish between the tested set of models given binary connectomes.

Fig. 4. Identification of models using Bayesian model selection under ideal and noisy connectome measurements.

a Confusion matrix reporting the posteriors over models given example connectomes. Example connectomes were sampled from each model class (rows; Fig. 3b) and then exposed to the ABC-SMC method (Fig. 3d) using only the connectome statistics (Fig. 3a). Note that all model classes are uniquely identified from the connectomes (inset: average posteriors for ER-ESN and LAYERED connectomes, respectively; n = 3 repetitions). b Posteriors over models given example connectomes to which random noise of 15% (inset, dashed line) was added before applying the ABC-SMC method. The generative model (Fig. 3b) was ignorant of this noise (n = 3 repetitions; bottom: noise prior). c Same analysis as in b, but including a noise prior in the generative model (n = 3 repetitions; bottom: noise prior). Note that in most connectome measurements, the level of reconstruction errors is quantifiable, such that the noise can be incorporated rather faithfully into the noise prior (see text). Model identification is again accurate under these conditions (compare c and a). d Confusion matrix when simulating split errors in neuron reconstructions by randomly removing 15% (left) or 80% (right) of connections before ABC-SMC inference. e Confusion matrix when simulating merge errors in neuron reconstructions by inserting an additional 15% (left) or 80% (right) of the original number of connections at random locations in the connectome before ABC-SMC inference. d, e The noise prior during ABC-SMC inference was of the same type as the simulated reconstruction errors (n = 1 repetition). Color bar in c applies to all panels.

Discrimination of noisy connectomes

We next explored the robustness of our approach for connectome measurements simulated to contain noise from biological sources or errors resulting from connectomic reconstruction inaccuracies. The latter would be caused by remaining errors when reconstructing neuronal wires in dense nerve tissue24,26,27,51 and by remaining errors in synapse detection, especially when using automated synapse classifiers52–57. To emulate such connectome noise, we first randomly removed 15% of the connections in the connectome and reinserted them at random positions. The posterior computed on such noisy connectomes was indeed less stable (Fig. 4b; shown is the average of n = 3 repetitions with accuracies of 83.0%, 99.8%, and 100.0%, respectively).
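The noise model described above (remove 15% of the connections, then reinsert the same number at random) can be sketched as follows. Assumptions not stated in the text: reinsertion is restricted to currently unconnected, non-self positions, and these candidate positions include the ones just vacated, so a removed connection may occasionally land back in place. The function name `rewire_noise` and the 200-neuron test connectome are hypothetical.

```python
import numpy as np


def rewire_noise(a, frac, rng):
    """Remove a fraction `frac` of existing connections and reinsert the same
    number at random unconnected, non-self positions (total count preserved)."""
    a = a.copy()
    n = a.shape[0]
    edges = np.flatnonzero(a)
    n_move = int(round(frac * edges.size))
    a.flat[rng.choice(edges, size=n_move, replace=False)] = False
    free = np.flatnonzero(~a & ~np.eye(n, dtype=bool))   # candidate positions
    a.flat[rng.choice(free, size=n_move, replace=False)] = True
    return a


rng = np.random.default_rng(0)
a = rng.random((200, 200)) < 0.2
np.fill_diagonal(a, False)
a_noisy = rewire_noise(a, 0.15, rng)
print(a.sum(), a_noisy.sum(), (a & a_noisy).sum() / a.sum())
```

Because the total number of connections is preserved, this noise type leaves the overall connectivity unchanged and acts only on the structure captured by the connectome statistics.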

However, in this setting, we had pretended to be ignorant of the fact that the connectome measurement was noisy (see noise prior in Fig. 4b) and had assumed a noise-free measurement. In realistic settings, however, the rate of certain reconstruction errors can be quantitatively estimated. For example, automated synapse detection57 and neurite reconstructions with quantified error rates24,26,27,58–60 provide such error rates explicitly. We therefore next investigated whether prior knowledge about the reconstruction error rates would improve the model posterior (Fig. 4c). For this, we changed our prior assumption about reconstruction errors from noise-free (Fig. 4b) to a distribution with substantial probability mass at 0–30% noise (Fig. 4c). When we applied the posterior computation again to connectomes with 15% reconstruction noise, model discrimination was now as accurate as in the noise-free case (Fig. 4c; cf. Fig. 4a, b).

To further investigate the effect of biased noise, we also tested conditions in which synaptic connections were only randomly removed or only randomly added (corresponding to reconstructions biased towards neurite splits (Fig. 4d) or neurite mergers (Fig. 4e)), as well as conditions in which errors were concentrated in one part of the connectome (corresponding to cases in which certain neuronal connections are more difficult to reconstruct than others; Supplementary Fig. 5a). These experiments indicate a rather stable range of faithful model selection under various types of measurement errors.
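The two biased error types can be sketched as separate operations on a binary connectome, following the description of Fig. 4d, e: split errors remove a fraction of the existing connections, and merge errors add a number of new connections equal to a fraction of the original count. Restricting added connections to unconnected, non-self positions is an assumption, and the function names and the 200-neuron example are hypothetical.

```python
import numpy as np


def split_noise(a, frac, rng):
    """Emulate split errors: randomly remove a fraction `frac` of the connections."""
    a = a.copy()
    edges = np.flatnonzero(a)
    a.flat[rng.choice(edges, size=int(round(frac * edges.size)), replace=False)] = False
    return a


def merge_noise(a, frac, rng):
    """Emulate merge errors: add `frac` times the original number of connections
    at random unconnected, non-self positions."""
    a = a.copy()
    n = a.shape[0]
    n_add = int(round(frac * a.sum()))
    free = np.flatnonzero(~a & ~np.eye(n, dtype=bool))
    a.flat[rng.choice(free, size=n_add, replace=False)] = True
    return a


rng = np.random.default_rng(1)
a = rng.random((200, 200)) < 0.2
np.fill_diagonal(a, False)
for frac in (0.15, 0.8):                         # error levels used in Fig. 4d, e
    print(frac, a.sum(), split_noise(a, frac, rng).sum(), merge_noise(a, frac, rng).sum())
```

Unlike the rewiring noise, these error types change the overall connectivity, which is one reason a matching noise prior matters during inference.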

Incomplete connectome measurement

In addition to reconstruction noise, a second serious practical limitation of connectomic measurements is their high resource consumption, quantified in human work hours: a full barrel reconstruction currently requires 90,000–180,000 h, assuming a reconstruction speed of 1.5 mm/h, a path length of 5–10 km per cubic millimeter, and a barrel volume of (300 µm)³ (refs. 24,61). Mapping connectomes for model discrimination would become substantially more feasible if measuring only a fraction of the connectome were already sufficient. We therefore next investigated the robustness of our discrimination method under two types of fractional measurements (Fig. 5).
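The work-hour estimate follows directly from the three quantities given above. The sketch below reproduces it; the additional parameter `f_m` assumes, for illustration, that reconstruction effort scales linearly with the fraction of neurons reconstructed, which is consistent with the 18,000 vs. 180,000 h comparison made later in this section. The function name is hypothetical.

```python
def reconstruction_hours(path_km_per_mm3, side_um=300.0, speed_mm_per_h=1.5, f_m=1.0):
    """Human work hours to trace the neurite path length in a cubic volume.

    f_m: fraction of neurons reconstructed (assumed linear scaling of effort).
    """
    volume_mm3 = (side_um / 1000.0) ** 3          # (300 µm)^3 = 0.027 mm^3
    path_mm = path_km_per_mm3 * 1e6 * volume_mm3  # 1 km = 1e6 mm
    return f_m * path_mm / speed_mm_per_h


for density in (5, 10):                            # km of path length per mm^3
    full = round(reconstruction_hours(density))
    tenth = round(reconstruction_hours(density, f_m=0.1))
    print(f"{density} km/mm^3: {full:,} h (full), {tenth:,} h (10% of neurons)")
```

With 5 and 10 km/mm³, this gives 90,000 and 180,000 h for a complete barrel, and one tenth of that when only 10% of the neurons are traced.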

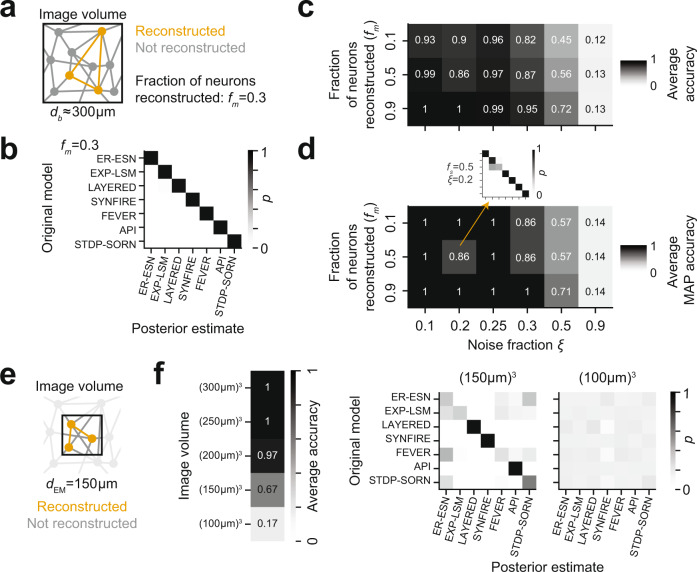

Fig. 5. Model selection for partially measured and noisy connectomes.

a Fractional (incomplete) connectome measurement when reconstructing only a fraction fm of the neurons in a given circuit, thus obtaining a fraction fm² of the complete connectome. b Effect of incomplete connectome measurement on model selection performance for fm = 0.3 (no noise; n = 1 repetition). Note that model selection is still faithfully possible. c, d Combined effects of noisy and incomplete connectome measurements on model selection accuracy, reported as average posterior probability (c; n = 1 repetition per entry) and maximum-a-posteriori accuracy (d; n = 1 repetition per entry). Note that model selection is highly accurate down to 10% fractional connectome measurement at up to 25% noise, providing an experimental design for model distinction that is realistic with current connectome measurement techniques (see text). Model selection used a fixed noise prior. More informative noise priors result in more accurate model selection (Supplementary Fig. 5b). e Fractional dense circuit reconstruction: locally dense connectomic reconstruction of the neurons and their connections in a circuit subvolume. f Effect of partial imaging and dense reconstruction of the circuit subvolume on average model selection accuracy (left; n = 1 repetition per entry). Note that model selection based on dense reconstruction of a (150 μm)³ volume (12.5% of the circuit volume) is substantially less accurate than model selection based on complete reconstructions of 10% of the neurons in the complete circuit volume (see c). Right: posterior distributions over models for image volumes of (150 μm)³ and (100 μm)³, respectively (n = 1 repetition each).

We first tested whether reconstruction of only fm = 30% of neurons and their connectivity is sufficient for model selection (Fig. 5a). We found model discrimination to be 100% accurate in the absence of reconstruction errors (Fig. 5b). This reconstruction assumes 3D EM imaging of a tissue volume that comprises an entire barrel, followed by a fractional circuit reconstruction (see sketch in Fig. 5a). Such an approach is realistic, since the speed of 3D EM imaging has increased more quickly than that of connectomic reconstruction61–64.
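The fractional measurement of Fig. 5a can be sketched as selecting a random subset of neurons and keeping all connections among them; the retained submatrix then covers a fraction fm² of all neuron pairs. The random choice of neurons, the function name and the 1,000-neuron example connectome are assumptions for illustration.

```python
import numpy as np


def fractional_measurement(a, f_m, rng):
    """Keep a random fraction f_m of the neurons and all connections among them;
    the result covers a fraction of f_m**2 of the adjacency-matrix entries."""
    n = a.shape[0]
    keep = np.sort(rng.choice(n, size=int(round(f_m * n)), replace=False))
    return a[np.ix_(keep, keep)], keep


rng = np.random.default_rng(0)
a = rng.random((1000, 1000)) < 0.2
np.fill_diagonal(a, False)
sub, keep = fractional_measurement(a, 0.3, rng)
print(sub.shape, sub.size / a.size)   # 300 x 300 submatrix, i.e. 0.3**2 of the entries
```

Reconstructing 30% of the neurons thus yields only 9% of the pairwise connectome, which is why the quadratic dependence on fm matters for experimental design.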

We then screened our approach for robustness against both measurement noise and incomplete connectome measurement by applying our method to connectomes with varying noise rates and measurement fractions fm, using a fixed noise prior. To evaluate classification performance, we used two approaches: first, we averaged the model posterior along the diagonal of the classification matrix (e.g., Fig. 5b), yielding the average accuracy for a given combination of noise and fractional measurement (Fig. 5c). Second, we evaluated the quality of the maximum-a-posteriori (MAP) classification, which takes the peak of the posterior as a binary classification result (Fig. 5d). The MAP connectome classification was highly accurate even when only 10% of the connectome was mapped (fm = 0.1) and at a substantial reconstruction error rate of 25%. This implies that the presented model distinction can be performed on a partially mapped barrel connectome consuming 18,000 instead of 180,000 work hours24,57,61 (Fig. 5c, d). This turns a currently unrealistic reconstruction into a feasible one (for comparison, the largest reconstructions to date consumed 14,000–25,000 human work hours58–60,65).
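The two evaluation measures can be sketched for a posterior matrix in which row i holds the model posterior obtained for a connectome generated by model i. The 3 × 3 example matrix is made up for illustration and is not data from the study; it shows how the MAP accuracy can exceed the average posterior accuracy when the correct model wins without receiving most of the probability mass.

```python
import numpy as np


def average_accuracy(posteriors):
    """Mean posterior probability assigned to the true model (diagonal average).
    posteriors[i, j]: posterior of model j for a connectome generated by model i."""
    return float(np.mean(np.diag(posteriors)))


def map_accuracy(posteriors):
    """Fraction of connectomes whose maximum-a-posteriori model is the true model."""
    true_model = np.arange(posteriors.shape[0])
    return float(np.mean(np.argmax(posteriors, axis=1) == true_model))


example = np.array([            # hypothetical posteriors for three models
    [0.6, 0.4, 0.0],
    [0.3, 0.5, 0.2],
    [0.1, 0.5, 0.4],
])
print(average_accuracy(example), map_accuracy(example))
```

Here the average accuracy is 0.5, while two of the three connectomes are classified correctly by their MAP model.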

We then asked whether complete connectomic reconstructions of small EM image volumes27 could serve as an alternative to the fractional reconstruction of large image volumes (Fig. 5e, f). This would reduce image acquisition effort and thereby make it realistic to rapidly compare brain regions, species, or disease states in terms of circuit models. To simulate locally dense reconstructions, we first restricted the complete noise-free connectome to the neurons whose somata were located within the imaged barrel subvolume (Fig. 5e). Importantly, connections between these remaining neurons may be established outside the image volume. To account for the loss of these connections, we further subsampled the remaining connections. We found model selection from dense connectomic reconstruction of a (150 µm)³ volume (12.5% of the barrel volume) to be unstable (67% average accuracy; Fig. 5f) owing to confusion between the ER-ESN, EXP-LSM, FEVER, and STDP-SORN models (Fig. 5f). For the dense reconstruction of (100 µm)³, model selection accuracy was close to chance level for all models (17% average accuracy; Fig. 5f). Our tests thus indicate that an experimental approach in which the image volume comprises an entire local cortical circuit module (barrel), but reconstruction is carried out only for a subset of about 10–15% of neurons, is favored over a dense reconstruction of only 12.5% of the barrel volume. Since imaging increasingly large volumes of the mammalian brain with 3D EM is becoming feasible64,66, while their reconstruction is still a major burden, these results propose a realistic experimental setting for connectomic model selection in the cortex.
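The simulation of a locally dense reconstruction can be sketched in two steps, as described above: restrict the connectome to neurons with somata inside the imaged subvolume, then subsample the remaining connections to mimic synapses formed outside the image volume. The centered placement of the subvolume, the uniform soma positions and the retention probability `keep_frac` = 0.5 are hypothetical choices; the text does not specify the subsampling rate.

```python
import numpy as np


def locally_dense_subconnectome(a, positions, sub_side, keep_frac, rng, side=300.0):
    """Restrict the connectome to neurons with somata inside a centered cubic
    subvolume of edge length sub_side (µm), then keep each remaining connection
    with probability keep_frac to mimic connections formed outside the volume."""
    lo = (side - sub_side) / 2.0
    inside = np.all((positions >= lo) & (positions < lo + sub_side), axis=1)
    idx = np.flatnonzero(inside)
    sub = a[np.ix_(idx, idx)] & (rng.random((idx.size, idx.size)) < keep_frac)
    return sub, idx


rng = np.random.default_rng(0)
positions = rng.uniform(0.0, 300.0, size=(2000, 3))     # soma positions in µm
a = rng.random((2000, 2000)) < 0.2
np.fill_diagonal(a, False)
sub, idx = locally_dense_subconnectome(a, positions, 150.0, keep_frac=0.5, rng=rng)
print(idx.size / 2000, (150.0 / 300.0) ** 3)   # fraction of somata vs. volume fraction
```

With uniformly placed somata, a (150 µm)³ subvolume contains roughly 12.5% of the neurons, so only about 1.6% of the neuron pairs (0.125²) remain, before any further loss of connections.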

Incomplete set of hypotheses

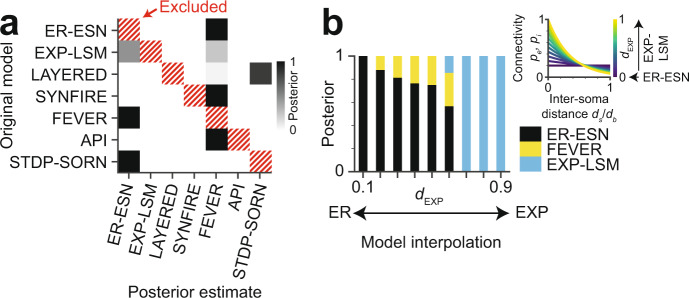

Bayesian analyses can only compare evidence for hypotheses known to the researcher. But what if the true model is missing from the set of tested hypotheses? To investigate this question, we excluded the originating model during inference of the posterior distribution from a complete noise-free barrel connectome (Fig. 6a). In these settings, rather than obtaining uniformly distributed posteriors, we found that the probability mass of the posterior distributions was concentrated at one or two of the other models. The FEVER model, for example, which is derived from pairwise random connectivity (ER-ESN) while imposing additional local constraints that result in heightened relative excitatory-excitatory reciprocity, resembles the EXP-LSM model (see Fig. 3c). Accordingly, these three models (ER-ESN, EXP-LSM, FEVER) were readily confused with one another when the originating model was excluded during ABC-SMC (Fig. 6a). This may indicate that our Bayesian model selection approach assigns the posterior probability mass to the most similar tested models, thus providing a ranking of the hypotheses. Notably, models with zero posterior probability in the confusion experiment (Fig. 6a) were almost exclusively those at the largest distance from the originating model. As a consequence, rejecting the models with zero posterior probability mass may provide falsification power even when the “true” model is not among the hypotheses.

Fig. 6. Effect of incomplete hypothesis space and of model interpolation on Bayesian model selection.

a Confusion matrix reporting the posterior distribution when excluding the true model (hatched) from the set of tested model hypotheses (n = 1 repetition). Note that posterior probability is non-uniformly distributed and concentrated at plausibly similar models even when the true model is not part of the hypothesis space. b Posterior distributions for connectome models interpolated between ER-ESN and EXP-LSM (n = 1 repetition per bar). Inset: Space constant dEXP acts as interpolation parameter between ER-ESN (dEXP = 0) and EXP-LSM (dEXP = 1). Note that the transition between the two models is captured by the estimated model posterior, with an intermediate (non-dominant) confusion with the FEVER model.

In order to investigate whether our approach provided sensible model interpolation in cases of mixed or weak model evidence (Fig. 6b), we considered the following example. The EXP-LSM model turns into an ER-ESN model in the limit of large decay constants of pairwise connectivity (which is modeled to depend on inter-soma distance, see inset Fig. 6b). This allowed us to test our approach on connectomes that were sampled from models interpolated between these two model classes. When we exposed such “mixed” connectomes to our model discrimination approach, the resulting posterior had most of its mass at the EXP-LSM model for samples with dEXP close to 1 and much of its mass at the ER-ESN model for samples with dEXP close to 0. For intermediate model mixtures, the Bayesian model selection approach indeed yielded interpolated posterior probability distributions. This result indicated that the approach is to some degree stable against model mixing.

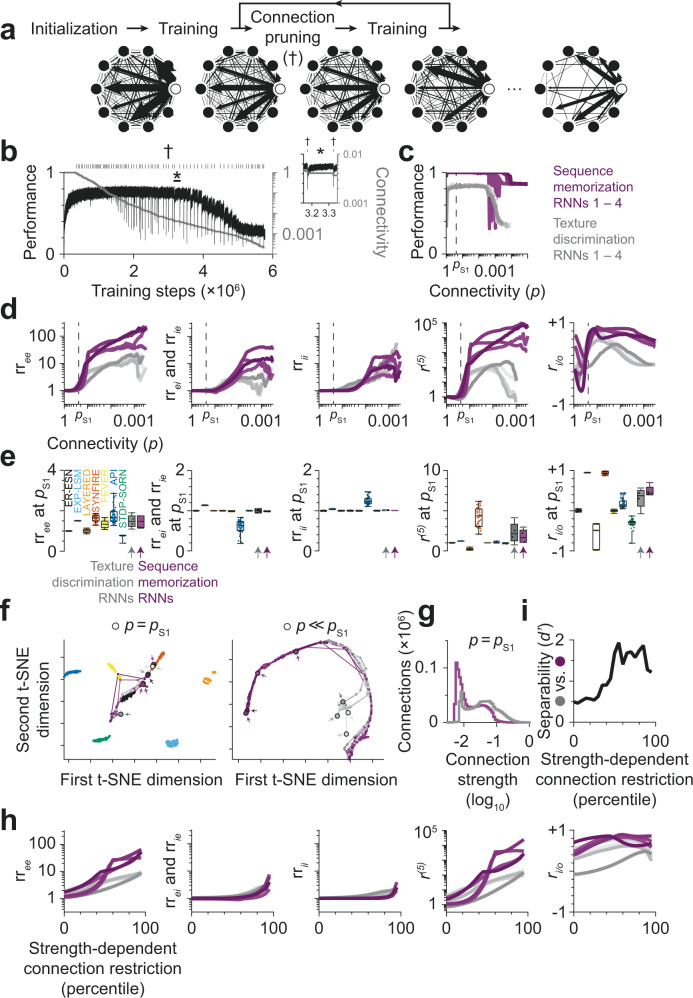

Connectomic separability of sparse recurrent neural networks trained on different tasks

Finally, we asked whether recurrent neural networks (RNNs) that were randomly initialized and then trained on different tasks could be distinguished by the proposed model selection procedure based on their connectomes after training. To address this question, we trained RNNs on either a texture discrimination task or a sequence memorization task. Initially, all RNNs were fully connected with random connection strengths (Fig. 7a). During training, connection strengths were modified by error back-propagation to maximize performance on the task. At the same time, we needed to reduce the connectivity p of the RNNs to a realistic level of sparsity (pS1 ∈ [0.15, 0.25], see Fig. 1d) and used the following strategy: Whenever task performance saturated, we interrupted the training to identify the weakest 10% of connections and permanently pruned them from the RNN (Fig. 7b). This training-pruning cycle then continued on the remaining connections. As a result, connectivity within an RNN was constrained only by the task used for training.
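The training-pruning cycle can be illustrated with a minimal NumPy sketch of a single pruning step. It assumes a dense weight matrix `W` and a boolean mask `alive` of surviving connections (both names are hypothetical, not taken from the study's code) and ranks connections by absolute strength; the criterion used to detect saturated task performance is not modeled.

```python
import numpy as np


def prune_weakest(W: np.ndarray, alive: np.ndarray, fraction: float = 0.1) -> np.ndarray:
    """Return a new mask with the weakest `fraction` of surviving connections removed.

    W     : (n, n) weight matrix; the sign encodes the presynaptic neuron type.
    alive : (n, n) boolean mask of connections that have not been pruned yet.
    """
    idx = np.flatnonzero(alive)                   # flat indices of surviving connections
    n_prune = int(round(fraction * idx.size))     # how many connections to drop this cycle
    new_alive = alive.copy()
    if n_prune == 0:
        return new_alive
    strength = np.abs(W.ravel()[idx])             # rank by magnitude, ignoring the sign
    weakest = idx[np.argsort(strength, kind="stable")[:n_prune]]
    new_alive.flat[weakest] = False               # permanent removal
    return new_alive
```

After each step, the surviving weights would be masked (for example `W *= new_alive`) before training resumes. Since each step keeps 90% of the surviving connections, a fully connected network reaches p ≈ 0.9^k after k steps, so roughly 13 to 14 steps (0.9^13 ≈ 0.254, 0.9^14 ≈ 0.229) bring it to the range of pS1 = 24%.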

Fig. 7. Connectomic separability of recurrent neural networks (RNNs) with similar initialization, but trained on different tasks.

a Overview of training process: RNNs were initially fully connected. Whenever task performance saturated during training, the weakest 10% of connections were pruned (†) to obtain a realistic level of sparsity. b Task performance (black) and network connectivity (gray) of a texture discrimination RNN during training. Ticks indicate the pruning of connections. Inset (*): Connection pruning causes a decrease in task performance, which is (partially) compensated by further training of the remaining connections. c Task performance as a function of network connectivity (p). Performance defined as: Accuracy (Texture discrimination RNNs, gray); 1 – mean squared error (Sequence memorization RNNs, magenta). Note that maximum observed performance was achieved in a wide connectivity regime including connectivity consistent with experimental data (pS1 = 24%; dashed line). Task performance started to decay after pruning at least 99.6% of connections. d Connectome statistics of RNNs over iterative training and pruning of connections (cf. Fig. 3a). e Distribution of connectome statistics at p = pS1 for RNNs and structural network models. Note that structural network models and structurally unconstrained RNNs exhibit comparable variance in connectome statistics (rree: 0.088 vs. 0.15 for API; rrei and rrie: 0.0019 vs. 0.026 for API; rrii: 9.35 × 10−7 vs. 8.17 × 10−3 for API; r(5): 1.54 vs. 1.51 for SYNFIRE; ri/o: 0.057 vs. 0.061 for LAYERED; cf. Fig. 3c). RNNs trained on different tasks did not differ significantly in terms of connectome statistics (rree: 1.48 ± 0.30 vs. 1.46 ± 0.29, p = 0.997; rrei and rrie: 1.00 ± 0.04 vs. 0.99 ± 0.01, p = 0.534; rrii: 1.01 ± 0.01 vs. 1.01 ± 0.00, p = 0.107; r(5): 2.28 ± 1.24 vs. 1.84 ± 0.80, p = 0.997; ri/o: 0.31 ± 0.24 vs. 0.49 ± 0.12, p = 0.534; mean ± std for n = 4 texture discrimination vs. sequence memorization RNNs, each; two-sided Kolmogorov-Smirnov test without correction for multiple comparisons). 
Boxes: center line is median; box limits are quartiles; whiskers are minimum and maximum; all data points shown. f Similarity of RNNs based on connectome statistics (lines) as connectivity approaches biologically plausible connectivity pS1 (circles and arrows, left) and for connectivity range from 100% to 0.04% (circles and arrows, right). Note that connectome statistics at ≤11% connectivity separate texture discrimination and sequence memorization RNNs into two clusters. g Distribution of connection strengths at p = pS1 for two RNNs trained on different tasks. h Connectome statistics of RNNs with pS1 connectivity when ignoring weak connections. i Separability of texture discrimination and sequence memorization RNNs with biologically plausible connectivity based on statistics derived from weighted connectome.

Maximum task performance was reached early in training while connectivity was still high (p ≈ 80%) and started to decay only after pruning more than 99.6% of connections (p < 0.4%). Within this connectivity range (80% ≥ p ≥ 0.4%), task performance substantially exceeded chance level (approx. 82.8–83.8% vs. 14.3% accuracy for n = 4 texture discrimination RNNs; 0.000–0.002 vs. 0.125 mean squared error for n = 4 sequence memorization RNNs; range of measurements vs. chance level; Fig. 7c). Importantly, task performance was at the highest achieved level also at realistic connectivity of pS1 = 24%.

We then computed the connectome statistics of the RNNs over the course of training (Fig. 7d). We wanted to address the following two questions: First, how strongly are connectome statistics constrained by the training task? In particular, is the variance of connectome statistics in trained RNNs much larger than in network models that are primarily defined by their structure (e.g., LAYERED or SYNFIRE)? Second, does training of RNNs on different tasks result in different connectomic structures? And if so, are the connectome statistics sensitive enough to distinguish RNNs trained on different tasks based only on their structure?

At 24% connectivity, we found the variance of the connectome statistics to be comparable to that of the structural network models (Fig. 7e; cf. Fig. 3c). However, the connectome statistics of RNNs trained on different tasks were statistically indistinguishable (Fig. 7e), and RNNs trained on different tasks were thus only poorly separable (sensitivity index d’ = 0.495; Fig. 7f). We did notice a separation into two clusters when RNNs were trained and further sparsified to a connectivity of p ≤ 11% (d’ = 1.45 ± 0.23, mean ± std; Fig. 7f).

To further study the effect of sparsification of a trained RNN, we investigated whether additional information about the strength of connections (Fig. 7g) could improve the separability of RNNs trained on different tasks. We started with the weighted connectomes of RNNs that were trained and sparsified to 24% connectivity. For the evaluation of connectome statistics, we then restricted the RNNs to strong connections (Fig. 7h). When ignoring the weakest 50% of connections of each RNN, the texture discrimination and sequence memorization RNNs differed significantly in their relative excitatory→excitatory reciprocity (3.60 ± 0.99 vs. 7.83 ± 1.98, p = 0.011) and relative prevalence of cycles (22.19 ± 12.69 vs. 118.16 ± 40.86, p = 0.011; Fig. 7h). As a result, RNNs trained on different tasks could be separated by the six connectome statistics with 85 ± 3% accuracy (Fig. 7i, separability d’ = 1.61 ± 0.24, mean ± std). We concluded that RNNs with biologically plausible connectivity that were trained on different tasks could be distinguished based on the proposed statistics derived from weighted connectomes, in which only the strongest connections were used for connectome analysis.

Discussion

We report a probabilistic method to use a connectome measurement as evidence for the discrimination of local models in the cerebral cortex. We show that the approach is robust to experimental errors, and that a partial reconstruction of the connectome suffices for model distinction. We furthermore demonstrate the applicability to large cortical connectomes consisting of thousands of neurons. Surprisingly, a set of rather simple connectome statistics is sufficient for the discrimination of a large range of models. These results show that, and how, connectomes can function as arbiters of local cortical models5 in the cerebral cortex.

Previous work on the classification of connectomes addressed smaller networks, consisting of up to 100 neurons, in which the identity of each neuron was explicitly defined. For these settings, the graph matching problem was approximately solved67. However, such approaches are currently computationally infeasible for larger unlabeled networks67,68, which are found in the cerebral cortex.

As an alternative, the occurrence of local circuit motifs has been used for the analysis of local neuronal networks69–71. Four of our connectome statistics (Fig. 3a) could be interpreted as such motifs: the relative reciprocity within and across the excitatory and inhibitory neuron populations, whose prevalence we could calculate exactly. The key challenge of these descriptive approaches is the interpretation of the observed motifs. The Bayesian approach as proposed here provides a way to use such data as relative, discriminating evidence for possible underlying circuit models.

One approach for the analysis of neuronal connectivity data is the extraction of descriptive graph properties (for example, the clustering coefficient72, small-worldness73, and closeness and betweenness centrality74), followed by a functional interpretation of these measures. Such discovery-based approaches have been applied successfully, especially for the analysis of macroscopic whole-brain connectivity data75,76.

The relationship between (static) network architecture and task performance was previously studied in feed-forward models of primate visual object recognition77,78, in which networks with higher object recognition performance were shown to yield better prediction of neuronal responses to visual stimuli. Our study considered recurrent neural networks, accounting for the substantial reciprocity in cortical connectivity, and investigated the structure-function relationship for static recurrent network architectures on a texture classification task (Supplementary Fig. 1), as well as for sparse recurrent neural networks in which both network architecture and task performance were jointly optimized (Fig. 7).

Pre-hoc connectome analyses, in which the circuit models are defined before connectome reconstruction, offer several advantages over exploratory analyses, in which the underlying circuit model is constructed after the fact. First, the statistical power of a test with pre-hoc defined endpoints is substantially higher79,80, which is why pre-hoc endpoint definition is standard, for example, in the design of clinical studies79. Statistical power is a particular concern because, so far, microscopic dense connectomes have mostly been obtained and interpreted from a single sample (n = 1)23,58,81,82; a pre-hoc defined analysis relieves some of this statistical burden. Moreover, the pre-hoc analysis allowed us to determine an experimental design for the to-be-measured connectome, defining bounds on reconstruction and synapse errors and the required connectome measurement density (Fig. 5c, d). Given the substantial challenge of data analysis in connectomics61, this is a relevant practical advantage.

We considered it rather unexpected that a 10% fractional reconstruction, and reconstruction errors up to 25% would be tolerable for the selection of local circuit models. One possible reason for this is the homogeneity of the investigated network models. For each model, the (explicit or implicit) structural connectivity rules are not defined per neuron individually, but apply to a whole sub-population of neurons. For example, the ER-ESN model implies one connectivity rule for all excitatory neurons and a second one for all inhibitory neurons; the layered model defines one connectivity rule for each layer. Hence, the model properties were based on the wiring statistics of larger populations, permitting low fractional reconstruction and substantial wiring errors. If, on the contrary, the network models were to define for each neuron a very specific connectivity structure, a different experimental design would likely be favorable, in which the precise reconstruction of few individual neurons could suffice to refute hypotheses.

How critical were the particular circuit constraints that we considered for initial model validation (Fig. 1d)? What if, for example, pairwise excitatory connectivity in L4 were lower than concluded from pairwise recordings in slices (Fig. 1d)28–36, for example 10% rather than 15–25%? The results on the discriminability of trained RNNs (Fig. 7), which was higher for sparser networks, may indicate that model identification would even improve in lower overall connectivity regimes. Also, such a setting would shift the model priors to a different range (Supplementary Fig. 4; for example, the layered network with four layers would imply a pairwise forward connectivity of 27% instead of 53%). Circuit measurements that already clearly refute any of the hypothesized models based on simple pairwise connectivity descriptors would of course reduce the model space a-priori. Once a full connectomic measurement is available, the connectivity constraints (Fig. 1d) can be updated, the model hypothesis space can be reduced where warranted, and our model selection approach can then be applied.

The choice of summary statistics in ABC is generally not unique, and poorly chosen statistics may bias model selection83–85. Our use of emulated reconstruction experiments with known originating models was therefore required to verify ABC performance (Figs. 4–6). These results also indicate that it was sufficient to use summary statistics that were constrained to operate on unweighted graphs. More detailed summary statistics that also make use of indicators of synaptic weights accessible in 3D EM data (such as size of post-synaptic density, axon-spine interface, or spine head volume86–88) may allow further distinction of plasticity models with subtle differences in neuronal activity history27. In fact, we found that weighted connectomes were necessary to distinguish between circuit models that were subject to identical structural constraints and that only differed in the tasks that they performed (Fig. 7).

The proposed Bayesian model selection also has a number of drawbacks.

First, likelihood-free model inference using ABC-SMC depends on efficient simulation of the models. Computationally expensive models, such as recurrent neural networks trained by stochastic gradient descent (Fig. 7), make sequential Monte Carlo sampling prohibitively costly. However, the proposed connectome statistics and the resulting connectomic distance function provide a quantitative measure of similarity even for individual samples (Fig. 7i). Furthermore, a rough estimate of the posterior distribution over models can already be obtained by a single round of ABC-SMC with a small sample size.

Second, an exhaustive enumeration of all hypotheses is needed for Bayesian model selection. What if none of the investigated models was correct? This problem cannot be escaped in principle, and it has been argued that Bayesian approaches have the advantage of explicitly and transparently accounting for this lack of prior knowledge rather than implicitly ignoring it89. Nevertheless, this caveat strongly emphasizes the need for a proper choice of investigated models. Our results (Fig. 6) indicate that models close to but not identical to any of the investigated ones are still captured in the posterior by reporting their relative similarity to the remaining investigated models. We argue that rejection of models without posterior probability mass provides valuable scientific insights, even when the set of tested hypotheses is incomplete.

Third, we assumed a flat prior over the investigated models, considering each model equally likely a-priori. Preconceptions about cortical processing could strongly alter this prior model belief. If one assumes a non-uniform model prior, the posterior computed in our approach can simply be multiplied by this prior and renormalized. Therefore, the computed posterior can in turn be interpreted as a quantification of how much more likely a given model would have to be considered a-priori in order to become the classification result, enabling a quantitative assessment of a-priori beliefs about local cortical models.
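Because the posterior under a flat prior is proportional to the model evidence, reweighting by a different prior amounts to elementwise multiplication followed by renormalization. The sketch below illustrates this; the function names are hypothetical, and the numbers in the usage note are illustrative only, not results of this study.

```python
import numpy as np


def reweight_posterior(flat_posterior: dict, prior: dict) -> dict:
    """Apply a non-uniform model prior to a posterior computed under a flat prior.

    Under a flat prior, P(M | D) is proportional to P(D | M), hence
    P'(M | D) = P(D | M) P'(M) / sum_k P(D | M_k) P'(M_k).
    """
    models = list(flat_posterior)
    post = np.array([flat_posterior[m] for m in models], dtype=float)
    pri = np.array([prior[m] for m in models], dtype=float)
    unnorm = post * pri
    total = unnorm.sum()
    if total == 0:
        raise ValueError("the prior assigns zero mass to every model with posterior support")
    return dict(zip(models, unnorm / total))


def required_prior_ratio(flat_posterior: dict, winner: str, challenger: str) -> float:
    """Prior odds P'(challenger) / P'(winner) at which the two models would tie."""
    return flat_posterior[winner] / flat_posterior[challenger]
```

For example, a flat-prior posterior of 0.6 (ER-ESN), 0.3 (EXP-LSM), and 0.1 (FEVER) combined with a prior of 0.2, 0.6, and 0.2 yields approximately 0.375, 0.5625, and 0.0625, so EXP-LSM would become the classification result; `required_prior_ratio` gives 2.0 for this pair, the prior odds at which the two models would tie.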

Together, we show that connectomic measurement carries substantial distinctive power for the discrimination of models in local circuit modules of the cerebral cortex. The concrete experimental design for the identification of the most likely local model in cortical layer 4, proposed pre-hoc, will make the mapping of this cortical connectome informative and efficient. Our methods are more generally applicable for connectomic comparison of possible models of the nervous system.

Methods

Circuit constraints

The following circuit constraints were shared across all cortical network models. A single barrel was assumed to consist of 1800 excitatory and 200 inhibitory neurons28,29. The excitatory connectivity , i.e. the probability of an excitatory neuron to project to any other neuron was assumed to be 30–33,36, the excitatory-excitatory reciprocity , i.e., the probability of also observing a bidirectional connection given one connection between two excitatory neurons, was assumed to lie in the range 29–31,33,36. The inhibitory connectivity , i.e., the probability of an inhibitory neuron to project onto any other neuron, was assumed as 31,33–35. Self-connections were not allowed.

Estimates of reconstruction time and synapse number

Neurite path length density was assumed to be , barrel volume was assumed to be V = (300 µm)³, annotation speed was taken as 24 together yielding the total annotation time .

The total number of synapses in a barrel was calculated as with the average number of synapses per connection30 and the total number of synaptically connected pairs of neurons.
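Both estimates reduce to products of the assumed quantities. A minimal sketch, assuming that the annotation time is the total neurite path length (path length density × barrel volume) divided by the annotation speed, and approximating the number of connected pairs by the expected number of directed connections implied by the connectivity constraints; the function names are hypothetical, and the parameter values of the study are not reproduced here. For the stated barrel volume, V = (300 µm)³ = 2.7 × 10⁷ µm³.

```python
# V = (300 um)^3 = 2.7e7 um^3, as stated in the text.
BARREL_VOLUME_UM3 = 300.0 ** 3


def total_annotation_time(path_length_density, volume, annotation_speed):
    """Total neurite path length in the volume divided by the tracing speed.

    Units must be consistent: e.g. density in m/um^3 and volume in um^3 give a
    path length in m; with the speed in m/h the result is in hours.
    """
    total_path_length = path_length_density * volume
    return total_path_length / annotation_speed


def expected_directed_connections(n_exc, n_inh, p_exc, p_inh):
    """Expected number of directed connections if every neuron may project to each
    of the n - 1 other neurons (no self-connections).

    Assumption: this stands in for the number of synaptically connected pairs;
    whether the study counted ordered or unordered pairs is not stated here.
    """
    n = n_exc + n_inh
    return (n_exc * p_exc + n_inh * p_inh) * (n - 1)


def total_synapses(synapses_per_connection, n_connections):
    """Average number of synapses per connection times the number of connections."""
    return synapses_per_connection * n_connections
```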

Implementations of cortical network models

Seven cortical models were implemented: the Erdős–Rényi echo state network (ER-ESN9,16), the exponentially decaying connectivity - liquid state machine model (EXP-LSM45,46), the layered model (LAYERED8,90), the synfire chain model (SYNFIRE10,11,48), the feature vector recombination model (FEVER12), the antiphase inhibition model (API) and the spike timing-dependent plasticity self-organizing recurrent neural network model (STDP-SORN49,50).

The Erdős–Rényi echo state network (ER-ESN) model was a directed Erdős–Rényi random graph. Each possible excitatory projection was realized with a probability equal to the excitatory connectivity, and each possible inhibitory projection with a probability equal to the inhibitory connectivity.
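A minimal NumPy sketch of the ER-ESN construction. It assumes a boolean adjacency matrix `A[pre, post]` with the excitatory neurons listed first, so that the sign of a connection follows from the row of its presynaptic neuron; the connectivity values are passed in as arguments, and the function name is hypothetical.

```python
import numpy as np


def er_esn(n_exc, n_inh, p_exc, p_inh, rng=None):
    """Directed Erdos-Renyi graph: A[i, j] is True for a connection i -> j.

    Neurons 0..n_exc-1 are excitatory and project with probability p_exc;
    the remaining n_inh neurons are inhibitory and project with probability p_inh.
    """
    rng = np.random.default_rng(rng)
    n = n_exc + n_inh
    p_row = np.where(np.arange(n) < n_exc, p_exc, p_inh)   # probability per presynaptic neuron
    A = rng.random((n, n)) < p_row[:, None]                 # independent Bernoulli draws
    np.fill_diagonal(A, False)                              # no self-connections
    return A
```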

For the exponentially decaying connectivity - liquid state machine model (EXP-LSM), excitatory and inhibitory neurons were assumed to be uniformly and independently distributed in a cubic volume of equal side lengths. The excitatory and inhibitory pairwise connection probabilities and were functions of the Euclidean distance of a neuron pair according to , , , . The length scale parameters were adjusted to match an overall connectivity of in the excitatory case () and a connectivity of in the inhibitory case ().
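A sketch of the EXP-LSM construction. The exact distance dependence is not reproduced in this section, so the code assumes the hypothetical form p(d) = exp(−d/λ) with neurons placed uniformly in a unit cube, and fits the length scale λ by bisection so that the mean connection probability matches the target connectivity; bisection works because the mean of exp(−d/λ) increases monotonically with λ. The adjacency convention is `A[pre, post]` with excitatory neurons first; all names are hypothetical.

```python
import numpy as np


def fit_length_scale(dist, target, lo=1e-6, hi=1e3, iters=60):
    """Bisection for lam such that mean(exp(-dist / lam)) equals `target`."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if np.exp(-dist / mid).mean() < target:
            lo = mid      # connections too sparse: increase the length scale
        else:
            hi = mid
    return 0.5 * (lo + hi)


def exp_lsm(n_exc, n_inh, p_exc, p_inh, rng=None):
    """Distance-dependent random graph; A[i, j] is True for a connection i -> j."""
    rng = np.random.default_rng(rng)
    n = n_exc + n_inh
    pos = rng.random((n, 3))                                    # uniform in the unit cube
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    off_diag = ~np.eye(n, dtype=bool)
    A = np.zeros((n, n), dtype=bool)
    for rows, target in ((slice(0, n_exc), p_exc), (slice(n_exc, n), p_inh)):
        lam = fit_length_scale(dist[rows][off_diag[rows]], target)  # fit on non-self pairs
        prob = np.exp(-dist[rows] / lam)
        A[rows] = rng.random(prob.shape) < prob
    np.fill_diagonal(A, False)                                  # no self-connections
    return A
```

Note that the pairwise distance matrix for 2000 neurons is built in memory at once, which is simple but not memory-efficient.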

The layered model (LAYERED) consisted of excitatory layers. Lateral excitatory-excitatory connections were realized within one layer with connection probability . Forward connections from one layer to the next layer were realized with probability . Inhibitory neurons were not organized in layers but received excitatory projections uniformly and independently from all excitatory neurons with probability and projected onto any other neuron uniformly and independently with probability .
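A sketch of the LAYERED construction. The number of layers and all connection probabilities are arguments, because their values are not given in this section; dividing the excitatory neurons into layers of equal size is an assumption. The adjacency convention is `A[pre, post]` with excitatory neurons first; the function name is hypothetical.

```python
import numpy as np


def layered(n_exc, n_inh, n_layers, p_lat, p_fwd, p_ei, p_inh, rng=None):
    """A[i, j] is True for a connection i -> j; neurons 0..n_exc-1 are excitatory."""
    rng = np.random.default_rng(rng)
    n = n_exc + n_inh
    layer = np.arange(n_exc) * n_layers // n_exc         # layer index of each excitatory neuron
    same = layer[:, None] == layer[None, :]              # pre and post in the same layer
    nxt = layer[None, :] == layer[:, None] + 1           # post lies in the next layer
    P = np.zeros((n, n))
    P[:n_exc, :n_exc] = np.where(same, p_lat, np.where(nxt, p_fwd, 0.0))
    P[:n_exc, n_exc:] = p_ei                             # excitatory -> inhibitory, layer-independent
    P[n_exc:, :] = p_inh                                 # inhibitory -> any other neuron
    A = rng.random((n, n)) < P
    np.fill_diagonal(A, False)                           # no self-connections
    return A
```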

The synfire chain (SYNFIRE) implementation used in this work followed47. The inhibitory pool size was proportional to the excitatory pool size . The network was constructed as follows: (1) An initial excitatory source pool of size was chosen uniformly from the excitatory population. (2) An excitatory target pool of size and an inhibitory target pool of size were chosen uniformly. The excitatory source and target pools were allowed to share neurons, i.e., neurons were drawn with replacement. (3) The excitatory source pool was connected all-to-all to the excitatory and inhibitory target pools but no self-connections were allowed. (4) The excitatory target pool was chosen to be the excitatory source pool for the next iteration. Steps (2) to (4) were repeated times, with denoting the nearest integer. Inhibitory neurons projected uniformly to any other neuron with probability .
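A sketch of steps (1) to (4) of the SYNFIRE construction. Pool sizes, the number of iterations, and the inhibitory probability are arguments because their values are not given in this section; reading the description as "neurons within a pool are distinct, while consecutive pools may overlap" is an assumption. The adjacency convention is `A[pre, post]` with excitatory neurons first; the function name is hypothetical.

```python
import numpy as np


def synfire(n_exc, n_inh, pool_exc, pool_inh, n_iter, p_inh, rng=None):
    """A[i, j] is True for a connection i -> j; neurons 0..n_exc-1 are excitatory."""
    rng = np.random.default_rng(rng)
    n = n_exc + n_inh
    A = np.zeros((n, n), dtype=bool)
    source = rng.choice(n_exc, size=pool_exc, replace=False)            # step (1)
    for _ in range(n_iter):
        target_exc = rng.choice(n_exc, size=pool_exc, replace=False)    # step (2), may overlap source
        target_inh = n_exc + rng.choice(n_inh, size=pool_inh, replace=False)
        targets = np.concatenate([target_exc, target_inh])
        A[np.ix_(source, targets)] = True                                # step (3): all-to-all
        source = target_exc                                              # step (4)
    A[n_exc:] |= rng.random((n_inh, n)) < p_inh                          # random inhibitory projections
    np.fill_diagonal(A, False)                                           # no self-connections
    return A
```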

The feature vector recombination model (FEVER) network was constructed from an initial ER random graph with initial pairwise connection probabilities for with the feverization, the feature space dimension and the number of neurons. The outgoing projections of neuron were obtained from according to the sparse optimization problem , where the were the feature vectors drawn uniformly and independently from a unit sphere of feature space dimension and denoted the initial outgoing projections of neuron as given by and if neuron was excitatory, otherwise. The sparse optimization was performed with scikit-learn91 using the “sklearn.linear_model.Lasso” optimizer with the options “positive = True” and “max_iter = 100000” for the excitatory and the inhibitory population individually. The parameter , was fitted to match the excitatory and inhibitory connectivity of and respectively.

In the antiphase inhibition model (API), a feature vector was associated with each neuron . The feature vectors were drawn uniformly and independently from a unit sphere with feature space dimension . The cosine similarity between the feature vectors of neuron and were transformed into connection probabilities between neuron and according to , where if neuron was excitatory and if neuron was inhibitory. The coefficients with were fitted to match the excitatory and inhibitory connectivity constraints. The coefficient was in the range (Supplementary Fig. 4f11).

The spike timing dependent plasticity self-organizing recurrent neural network model (STDP-SORN) network was constructed as follows: An initial random matrix with pairwise connection probabilities for was drawn. Let denote the sum of all excitatory incoming weights of neuron and similarly denote the sum of all inhibitory incoming weights of neuron . Each weight was normalized according to and each weight according to such that for each neuron the sum of all incoming excitatory weights was and the sum of all incoming inhibitory weights was . No self-connections were allowed. The resulting matrix was the initial adjacency matrix . The initial vector of firing thresholds was initialized to . The neuron state and the past neuron state were initialized as zero vectors.

After initialization, for each of the simulation time points, the following steps were repeated50: (1) Propagation, (2) Intrinsic plasticity, (3) Normalization, (4) STDP, (5) Pruning and (6) Structural plasticity as follows:

Propagation. The neuron state was updated , where was noise with iid., and .

Intrinsic plasticity. The firing thresholds were updated where was the target firing rate and the intrinsic plasticity learning rate.

Normalization. The excitatory incoming weights were normalized to : If then

STDP (Spike timing dependent plasticity). Weights were updated according to for . Finally the past neuron state was also updated

Pruning. Weak synapses were removed: If then .

Structural plasticity. It was attempted to add synapses randomly, with the number of excitatory synapses currently present in the network. For each of these attempts two integers were chosen randomly and independently. If and then .

The STDP-SORN model was implemented in Cython and OpenMP.

All code was verified using a set of unit tests with 91% code coverage.

Reconstruction errors and network subsampling

Reconstruction errors were implemented by randomly rewiring connections: A fraction of the edges of the network was randomly removed, ignoring their signs. The same number of edges was then randomly reinserted and the signs were adjusted to match the sign of the new presynaptic neuron. Partial connectomic reconstruction was implemented by network subsampling: A fraction of the neurons was uniformly drawn. The subgraph induced by these neurons was preserved, its complement discarded.
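The two perturbations can be sketched as follows, assuming a boolean adjacency `A[pre, post]` with excitatory neurons listed first, so that a reinserted edge automatically takes the sign of its new presynaptic neuron. Whether reinserted edges could land on just-removed positions is not stated; this sketch allows it. The function names are hypothetical.

```python
import numpy as np


def rewire(A, fraction, rng=None):
    """Remove `fraction` of the edges at random and reinsert the same number
    at random empty off-diagonal positions."""
    rng = np.random.default_rng(rng)
    A = A.copy()
    edges = np.flatnonzero(A)
    k = int(round(fraction * edges.size))
    A.flat[rng.choice(edges, size=k, replace=False)] = False        # random removal
    empty = np.flatnonzero(~A & ~np.eye(A.shape[0], dtype=bool))    # no self-connections
    A.flat[rng.choice(empty, size=k, replace=False)] = True         # random reinsertion
    return A


def subsample(A, fraction, rng=None):
    """Keep the subgraph induced by a uniformly drawn fraction of the neurons."""
    rng = np.random.default_rng(rng)
    n = A.shape[0]
    keep = np.sort(rng.choice(n, size=int(round(fraction * n)), replace=False))
    return A[np.ix_(keep, keep)], keep
```

Because `keep` is sorted, the excitatory neurons remain first in the subsampled matrix; their number is `(keep < n_exc).sum()`.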

Connectomic cortical network measures

The following measures (Fig. 3a) were computed: (1) relative excitatory-excitatory reciprocity, (2) relative excitatory-inhibitory reciprocity, (3) relative inhibitory-excitatory reciprocity, (4) relative inhibitory-inhibitory reciprocity, (5) relative excitatory recurrency, and (6) excitatory in/out-degree correlation. All measures were calculated on binarized networks as follows:

Reciprocity with , e = excitatory, i = inhibitory, was defined as the number of reciprocally connected neuron pairs between neurons of population and divided by the total number of directed connections from to . If the number of connections from to was zero then was set to zero. Hence was an estimate for the conditional probability of observing the reciprocated edge of a connection from to , given a connection from to . The relative excitatory-inhibitory reciprocity was defined as . I.e., relative reciprocities were obtained by dividing the reciprocity of a network by the expected reciprocity of an ER network with the same connectivity.
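A sketch of the reciprocity measures on a boolean adjacency `A[pre, post]` with excitatory neurons first. Two points are assumptions: reciprocated connections are counted per directed edge, which makes the ratio an estimate of the conditional probability described above, and the ER expectation used for normalization is taken to be the connectivity in the reverse direction, because in an ER network the reverse edge is present independently with that probability. The function names are hypothetical.

```python
import numpy as np


def _populations(n, n_exc):
    return {"e": np.arange(n_exc), "i": np.arange(n_exc, n)}


def reciprocity(A, n_exc, pre, post):
    """Fraction of connections pre -> post that are reciprocated (0 if there are none)."""
    idx = _populations(A.shape[0], n_exc)
    fwd = A[np.ix_(idx[pre], idx[post])]           # fwd[a, b]: pre neuron a -> post neuron b
    bwd = A[np.ix_(idx[post], idx[pre])].T         # bwd[a, b]: post neuron b -> pre neuron a
    n_conn = fwd.sum()
    return (fwd & bwd).sum() / n_conn if n_conn else 0.0


def connectivity(A, n_exc, pre, post):
    """Fraction of possible pre -> post connections present (self-connections excluded)."""
    idx = _populations(A.shape[0], n_exc)
    block = A[np.ix_(idx[pre], idx[post])]
    m_pre, m_post = block.shape
    possible = m_pre * (m_post - 1) if pre == post else m_pre * m_post
    return block.sum() / possible


def relative_reciprocity(A, n_exc, pre, post):
    """Reciprocity divided by the reverse-direction connectivity (assumed ER expectation)."""
    expected = connectivity(A, n_exc, post, pre)
    return reciprocity(A, n_exc, pre, post) / expected if expected else 0.0
```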

Relative excitatory recurrency was defined as , where was the excitatory submatrix and denoted the trace of the matrix. The cycle length parameter was set to .
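tr(A^n) counts the closed walks of length n in the excitatory subgraph. The normalization is not given in this section, so the sketch below assumes a relative measure analogous to the relative reciprocities: the trace divided by its mean over random reference graphs with the same number of excitatory neurons and edges. The default n = 5 is also an assumption, chosen to match the r(5) label in the Fig. 7 caption; the function names are hypothetical.

```python
import numpy as np


def closed_walks(A_ee, n):
    """tr(A_ee^n): number of closed walks of length n (float to avoid integer overflow)."""
    return np.trace(np.linalg.matrix_power(A_ee.astype(float), n))


def relative_recurrency(A, n_exc, n=5, n_ref=3, rng=None):
    """Closed-walk count of the excitatory subgraph relative to random graphs
    with the same number of neurons and edges (hypothetical normalization)."""
    rng = np.random.default_rng(rng)
    A_ee = A[:n_exc, :n_exc]
    m = int(A_ee.sum())
    off = np.flatnonzero(~np.eye(n_exc, dtype=bool))   # candidate positions, no self-connections
    ref = []
    for _ in range(n_ref):
        R = np.zeros(n_exc * n_exc, dtype=bool)
        R[rng.choice(off, size=m, replace=False)] = True
        ref.append(closed_walks(R.reshape(n_exc, n_exc), n))
    return closed_walks(A_ee, n) / np.mean(ref)
```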

The excitatory in/out-degree correlation was the Pearson correlation coefficient of the in- and out-degrees of neurons of the excitatory subpopulation. Let denote the in-degree of neuron and the out-degree of neuron . Let and , with the total number of excitatory neurons. Then .
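A sketch of the in/out-degree correlation on a boolean adjacency `A[pre, post]` with excitatory neurons first. It assumes that degrees are counted within the excitatory subgraph and returns NaN when a degree vector has zero variance, one way in which the measure can be undefined. The function name is hypothetical.

```python
import numpy as np


def in_out_degree_correlation(A, n_exc):
    """Pearson correlation of in- and out-degrees of the excitatory neurons."""
    A_ee = A[:n_exc, :n_exc]
    k_out = A_ee.sum(axis=1).astype(float)   # row sums: outgoing connections
    k_in = A_ee.sum(axis=0).astype(float)    # column sums: incoming connections
    k_in -= k_in.mean()
    k_out -= k_out.mean()
    denom = np.sqrt((k_in ** 2).sum() * (k_out ** 2).sum())
    return np.nan if denom == 0 else (k_in * k_out).sum() / denom
```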

Bayesian model selection

Bayesian model selection was performed on networks sampled from the seven models as follows: First, a noise-free network with 2000 neurons was drawn from one of the network models . Second, this noise-free network was perturbed with noise of strength as described above. Then, a fraction of the network was subsampled, yielding .

The Bayesian posterior was then calculated on the noisy subnetwork using an approximate Bayesian computation with sequential Monte Carlo (ABC-SMC) method. The implemented ABC-SMC algorithm followed the ABC-SMC procedure proposed by92 with slight modifications to ensure termination of the algorithm, as described below. The ABC-SMC algorithm was implemented as a custom Python library (see Supplementary Code file and https://gitlab.mpcdf.mpg.de/connectomics/discriminatEM).

The network measures described above were used as summary statistics for the ABC-SMC algorithm. The distance between two networks and was defined as , where the sum over was taken over the six network measures. The quantities and were the 80% and 20% percentiles of the measure , evaluated on an initial sample from the prior distribution of size 2000; the particle number, i.e., the number of samples per generation, was set to 2000. If a particle of the initial sample contained an undefined measure (e.g., in-/out-degree correlation), it was discarded. When and were equal, the corresponding normalization constant of the distance function was set to the machine epsilon instead. The initial acceptance distance was the median of the distances as obtained from the same initially sampled connectomes .
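A sketch of the distance function and of the initial acceptance distance. The formula is not reproduced in this section; the code assumes a sum of absolute differences over the six measures, each divided by the difference between the 80th and 20th percentiles of that measure in the prior sample, and it assumes that the initial distances are measured to the observed connectome. The function names are hypothetical.

```python
import numpy as np


def make_distance(prior_stats):
    """prior_stats: (n_samples, n_measures) statistics of connectomes drawn from the prior.

    Rows with an undefined (NaN) measure are discarded before computing the scales.
    """
    S = prior_stats[~np.isnan(prior_stats).any(axis=1)]
    scale = np.percentile(S, 80, axis=0) - np.percentile(S, 20, axis=0)
    scale[scale == 0] = np.finfo(float).eps          # degenerate measure: use machine epsilon

    def distance(x, y):
        return np.sum(np.abs(np.asarray(x) - np.asarray(y)) / scale)

    return distance, S


def initial_epsilon(distance, S, observed):
    """Median distance between the prior samples and the observed statistics."""
    return float(np.median([distance(s, observed) for s in S]))
```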

After each generation, for the following generation was set to the median of the error distances of the particles in the current generation. Particles were perturbed hierarchically. First, a model was drawn from the current approximating posterior model distribution. With probability 0.85 the model was kept; with probability 0.15 it was redrawn uniformly from all models. Second, given the sampled model, a single particle was sampled from the model-specific particles. The sampled particle was perturbed according to a multivariate normal kernel with twice the variance of the particles in the current population of the given model. The perturbed particle was accepted if the error distance was below . To obtain again 2000 particles for the next population, 2000 particle perturbation tasks were run in parallel. However, to ensure termination of the algorithm, each of the 2000 tasks was allowed to terminate without returning a new particle if more than 2000 perturbation attempts within the task were not successful. Model selection was stopped if only a single model was left, the maximum number of 8 generations was reached, the minimum acceptance distance was reached, or fewer than 1000 accepted particles were obtained for a population. See Supplementary Code for implementation details.
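The hierarchical perturbation and the acceptance-distance schedule can be sketched as follows. The 0.85/0.15 model kernel and the factor of two on the particle covariance follow the text, while representing particles as rows of a parameter array is an assumption, and the function names are hypothetical. Models that have lost all particles would need separate handling, which is not shown.

```python
import numpy as np


def perturb_model(current_model_probs, rng, keep_prob=0.85):
    """Draw a model from the current posterior, then keep it with probability
    keep_prob or redraw it uniformly from all models."""
    models = np.arange(len(current_model_probs))
    m = rng.choice(models, p=current_model_probs)
    if rng.random() >= keep_prob:
        m = rng.choice(models)          # uniform redraw, may return the same model
    return m


def perturb_particle(particles, rng):
    """Pick one particle of the chosen model and apply a multivariate normal kernel
    whose covariance is twice the empirical covariance of that model's particles."""
    particles = np.atleast_2d(particles)
    base = particles[rng.integers(len(particles))]
    cov = 2.0 * np.atleast_2d(np.cov(particles, rowvar=False))
    return rng.multivariate_normal(base, cov)


def next_epsilon(accepted_distances):
    """Acceptance distance for the next generation: median of the current distances."""
    return float(np.median(accepted_distances))
```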

Functional testing

The ER-ESN, EXP-LSM, and LAYERED models were trained to discriminate natural texture classes, each of which was represented by one natural image. Samples of length 500 pixels were taken from these images at random locations. These samples were then fed into LAYERED networks via a single input neuron projecting to the first layer of the network. In the ER-ESN and EXP-LSM cases, the input neuron projected to all neurons in the network. Within the recurrent network, the dynamical model was given by , where was the adjacency matrix, the input, the activation, the leak rate and . Readout was a softmax layer with seven neurons , one for each class. Adam93 was used to train all forward connections with the exception of the input connections. The loss was the categorical cross-entropy accumulated over the last 250 time steps , where denoted the sample and the ground truth class of sample . At prediction time, the predicted class was . The model was implemented in Theano (https://deeplearning.net/software/theano) and Keras (https://keras.io) as a custom recurrent layer and run on Tesla M2090 GPUs. See Supplementary Code for details of the implementation.

In the SYNFIRE model, a conductance based spiking model was used with membrane potential with , inhibitory reversal potential , excitatory reversal potential , resting potential , spiking threshold , inter pool delay , excitatory intra pool jitter inhibitory intra pool jitter , excitatory refractory period and inhibitory refractory period . On spiking of presynaptic neuron the membrane potential of postsynaptic neuron was increased by where denoted the presynaptic efficacy, the presynaptic reversal potential and the postsynaptic membrane potential. The excitatory synaptic efficacy and the inhibitory synaptic efficacy were functions of the pool size and were obtained by interpolating , and linearly.

The fractional chain activation was calculated as follows: Let denote the number of active neurons of pool between time and , with . Let the maximal activation be and define the pool activity indicator . Let the cumulative activity be and . The number of activated pools was and the fractional chain activation in which was the chain length. Fractional pool activation at time was the fraction of neurons in a pool that exceeded a threshold activity between time and , with .

Additional functional testing of the models was performed. SYNFIRE, FEVER, API, and STDP-SORN networks were also trained to discriminate textures, analogous to the ER-ESN and EXP-LSM models. The test previously applied to the SYNFIRE model was not applied to the remaining models because the SYNFIRE model was the only integrate-and-fire model. The recombination memory test, originally proposed as part of the FEVER model, was also applied to the API model and, vice versa, the antiphase inhibition test, originally proposed as part of the API model, was also applied to the FEVER model. These two tests were not applied to the remaining models because these lacked feature vectors. The test for uncorrelated and equally distributed activity, originally proposed as part of the STDP-SORN model, was likewise not applied to the remaining models because they did not feature binary threshold neurons. If a model was unable to carry out a given task due to its inherent properties, such as the absence of feature vectors, the model was considered to fail that task.

Training, sparsification, and connectomic separability of recurrent neural networks trained on different tasks

Architecture and initialization of recurrent neural networks

Recurrent neural networks (RNNs) consisting of 1800 excitatory neurons, 200 inhibitory neurons, and a single input neuron were trained on either a texture discrimination or a sequence memorization task (Fig. 7). Each of the 2000 neurons in the RNN received synaptic inputs from the input neuron and from all other RNN neurons. The total input to neuron $i$ at time $t$ was given by $I_{i,t} = \sum_{j=1}^{2000} W_{i,j} A_{j,t-1} + v_i u_t + b_i$, where $W_{i,j}$ is the strength of the connection from neuron $j$ to neuron $i$. Connections originating from excitatory neurons were non-negative, while connections from inhibitory neurons were non-positive. Self-innervation was prohibited ($W_{i,i} = 0$ for all $i$). The activation of neuron $j$ at time $t-1$ was $A_{j,t-1} = \max(0, \min(2, I_{j,t-1}))$. The input signal $u_t$ was projected to neuron $i$ by a connection of strength $v_i$, and $b_i$ was a neuron-specific bias.
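A single update step of this network, together with a check of its sign constraints, can be sketched in NumPy as follows. The sketch assumes only the input equation and the clipped activation max(0, min(2, I)) given above, with `W[i, j]` holding the connection from neuron `j` to neuron `i` and the excitatory neurons occupying the first `n_exc` columns; the function names are hypothetical.

```python
import numpy as np

def rnn_step(A_prev, W, v, u_t, b):
    """One time step: I_t = W @ A_{t-1} + v * u_t + b, then A_t = max(0, min(2, I_t))."""
    I_t = W @ A_prev + v * u_t + b
    return np.clip(I_t, 0.0, 2.0)

def satisfies_sign_constraints(W, n_exc):
    """Excitatory columns non-negative, inhibitory columns non-positive, no self-connections."""
    return bool(
        np.all(W[:, :n_exc] >= 0)
        and np.all(W[:, n_exc:] <= 0)
        and np.all(np.diag(W) == 0)
    )

def run(W, v, b, inputs):
    """Iterate rnn_step over an input sequence, starting from zero activations (A_0 = 0)."""
    A = np.zeros(W.shape[0])
    history = []
    for u_t in inputs:
        A = rnn_step(A, W, v, u_t, b)
        history.append(A)
    return np.array(history)   # shape (T, N)
```

Clipping at 2 bounds every activation, so a single strong input cannot dominate the recurrent dynamics.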

Prior to training, RNNs were initialized as follows (Fig. 7a): Neuronal activations $A_{i,0}$ were set to zero. Internal connection strengths $W_{j,i}$ were sampled from a truncated normal distribution (values with an absolute value greater than two were resampled). Where necessary, the sign of $W_{j,i}$ was inverted to satisfy the sign constraints of excitatory and inhibitory neurons. Connections from inhibitory neurons were then rescaled such that $\langle W_{j} \rangle = 0$, that is, the average incoming connection strength of each neuron $j$ was zero, where $\langle \cdot \rangle$ denotes the average. Finally, connection strengths were rescaled to a standard deviation of $(2/2001)^{1/2}$ (ref. 94). Connections from the input neuron were initialized by the same procedure. Neuronal biases were set to $b_i = -\langle v \rangle \langle u \rangle$.
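The initialization steps can be sketched in NumPy as below, for a network with `n_exc` excitatory and `n_inh` inhibitory neurons (`W[i, j]` again connects neuron `j` to neuron `i`). This is a sketch under assumptions: the zero average is interpreted as a zero average incoming connection strength per neuron, reached by one multiplicative factor on each neuron's inhibitory inputs; the final rescaling uses a single global factor, which keeps those averages at zero; the input weights are rescaled to the same standard deviation; and all function names are hypothetical. The denominator 2001 in the text presumably corresponds to `n + 1` here (2000 RNN neurons plus the input neuron).

```python
import numpy as np

def truncated_normal(shape, rng, bound=2.0):
    """Standard normal samples; values with |x| > bound are redrawn until none remain."""
    x = rng.standard_normal(shape)
    bad = np.abs(x) > bound
    while bad.any():
        x[bad] = rng.standard_normal(int(bad.sum()))
        bad = np.abs(x) > bound
    return x

def init_recurrent_weights(n_exc, n_inh, rng):
    """Sign-constrained, balanced recurrent weights (requires n_inh >= 2)."""
    n = n_exc + n_inh
    W = np.abs(truncated_normal((n, n), rng))  # invert signs where necessary ...
    W[:, n_exc:] *= -1.0                       # ... so inhibitory columns are non-positive
    np.fill_diagonal(W, 0.0)                   # no self-innervation
    # Scale each neuron's inhibitory inputs so that its incoming weights average to zero.
    exc_sum = W[:, :n_exc].sum(axis=1)
    inh_sum = W[:, n_exc:].sum(axis=1)         # strictly negative if n_inh >= 2
    W[:, n_exc:] *= (exc_sum / -inh_sum)[:, None]
    # One global factor sets the standard deviation and preserves the zero row means.
    W *= np.sqrt(2.0 / (n + 1)) / W.std()
    return W

def init_input_weights_and_bias(n, mean_u, rng):
    """Non-negative input weights v and biases b_i = -<v><u>."""
    v = np.abs(truncated_normal((n,), rng))    # the input neuron is excitatory
    v *= np.sqrt(2.0 / (n + 1)) / v.std()
    b = np.full(n, -v.mean() * mean_u)
    return v, b

# Usage on a small network (the text uses n_exc=1800, n_inh=200).
rng = np.random.default_rng(0)
W = init_recurrent_weights(18, 4, rng)
v, b = init_input_weights_and_bias(22, mean_u=2.0, rng=rng)
```

Choosing the bias as $-\langle v \rangle \langle u \rangle$ cancels the average input drive, so that at initialization the mean total input to a neuron is close to zero.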

Texture discrimination task

RNNs were trained to discriminate between seven different natural textures. The activity of the input neuron, ut, was given by the intensity values of 100 consecutive pixels in a texture image. For each texture, a different excitatory neuron was randomly chosen as output neuron. The RNNs were trained to activate an output neuron if and only if the input signal was sampled from the corresponding natural texture.

The texture images were split into training (top half), validation (third quarter), and test sets (bottom quarter). Input sequences were sampled by random uniform selection of a texture image, of a row therein, and of a pixel offset. The sequences were reversed with 50% probability. The excitatory character of the input neuron was emulated by normalizing the intensity values within each gray-scale image, clamping the values to two standard deviations and adding a bias of two.
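The input preprocessing and sequence sampling can be sketched as follows. Assumptions: images are 2D NumPy arrays of gray values, normalization is a z-score over the whole image applied before the row split, and the names `preprocess_texture`, `split_rows`, and `sample_sequence` are hypothetical. Clamping to ±2 standard deviations and adding a bias of two maps every input into [0, 4], so the input neuron's activity is never negative.

```python
import numpy as np

def preprocess_texture(img):
    """Z-score within the image, clamp to +/-2 SD, add a bias of 2 (values in [0, 4])."""
    img = np.asarray(img, dtype=float)
    z = (img - img.mean()) / img.std()
    return np.clip(z, -2.0, 2.0) + 2.0

def split_rows(img):
    """Training: top half; validation: third quarter; test: bottom quarter of the rows."""
    h = img.shape[0]
    return img[: h // 2], img[h // 2 : 3 * h // 4], img[3 * h // 4 :]

def sample_sequence(images, rng, length=100):
    """Uniformly pick an image, a row, and a pixel offset; reverse with 50% probability."""
    k = rng.integers(len(images))          # texture index = class label
    img = images[k]
    row = rng.integers(img.shape[0])
    offset = rng.integers(img.shape[1] - length + 1)
    seq = img[row, offset : offset + length]
    if rng.random() < 0.5:
        seq = seq[::-1]
    return int(k), seq

# Usage with a synthetic 20 x 20 "texture".
p = preprocess_texture(np.arange(400).reshape(20, 20))
train, val, test = split_rows(p)
label, seq = sample_sequence([train], np.random.default_rng(1), length=10)
```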

The RNNs were trained by minimizing the cross-entropy loss on mini-batches of 128 sequences using Adam93 (learning rate: 0.0001, β1: 0.9, β2: 0.999). The gradient was clipped to a norm of at most 1. Every ten gradient steps, the RNN was evaluated on a mini-batch from the validation set. If the running median of the last 100 validation losses did not decrease for 20,000 consecutive gradient steps, the connectivity matrix W was saved for offline analysis and then sparsified (Fig. 7a). Following95, connections with an absolute strength below the 10th percentile were pruned and could not be regained thereafter. The validation loss and gradient step counter were reset before training of the sparsified RNN continued (Fig. 7a).
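The stopping criterion and the pruning step can be sketched as follows. This assumes that "did not decrease" means the running median did not reach a new minimum, that the 10th percentile is taken over the connections still present, and that a boolean mask keeps pruned connections at zero during further training (for example by multiplying `W` with the mask after every gradient step). The class and function names are hypothetical.

```python
from collections import deque

import numpy as np

class PlateauDetector:
    """Running-median plateau criterion for validation losses."""

    def __init__(self, window=100, patience=20_000, eval_every=10):
        self.window, self.patience, self.eval_every = window, patience, eval_every
        self.reset()

    def reset(self):
        """Forget the loss history and the step counter (done after each pruning)."""
        self.losses = deque(maxlen=self.window)
        self.best = np.inf
        self.steps_without_improvement = 0

    def update(self, val_loss):
        """Record one validation loss; return True once the plateau criterion is met."""
        self.losses.append(val_loss)
        median = float(np.median(self.losses))
        if median < self.best:
            self.best = median
            self.steps_without_improvement = 0
        else:
            self.steps_without_improvement += self.eval_every
        return self.steps_without_improvement >= self.patience

def prune_weakest(W, mask, fraction=0.10):
    """Remove connections whose |W| lies below the `fraction` quantile of present ones."""
    threshold = np.quantile(np.abs(W[mask]), fraction)
    new_mask = mask & (np.abs(W) >= threshold)
    return W * new_mask, new_mask
```

Keeping the mask separate from `W` is what makes pruning irreversible: a gradient step may push a pruned weight away from zero, but re-applying the mask resets it.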

Four RNNs were trained with different sets of initial parameters and different training sequence orders. Each RNN was trained for around 5 days and 21 h, corresponding to roughly 5.75 million training steps (Python 3.6.8, NumPy 1.16.4, TensorFlow 1.12, CUDA 9.0, CuDNN 7.4, Nvidia Tesla V100 PCIe; Fig. 7b, c).

Sequence memorization task

In the sequence memorization task, RNNs were trained to output learned sequences on command of the input signal. The sequences were 100-sample-long whisker traces from96. The input signal determined the onset time and type of sequence to generate: the activity of the input neuron, $u_t$, was initially zero ($u_0 = 0$) and switched to either +1 or −1 at a random point in time. The RNN was trained to output zero while the input was zero, to start producing sequence one at the positive edge, and to start producing sequence two at the negative edge in $u_t$. The whisker traces were drift-corrected such that they started and ended at zero, and their amplitudes were subsequently divided by twice their standard deviation.
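Trial generation for this task can be sketched as follows. The sketch assumes that drift correction subtracts the straight line through the first and last sample (one simple way to make a trace start and end at zero) and that the target returns to zero once a sequence has been emitted; the names `preprocess_trace` and `make_trial` are hypothetical.

```python
import numpy as np

def preprocess_trace(trace):
    """Drift-correct a whisker trace and divide it by twice its standard deviation."""
    trace = np.asarray(trace, dtype=float)
    # Assumed drift model: a straight line from the first to the last sample.
    drift = np.linspace(trace[0], trace[-1], trace.size)
    corrected = trace - drift              # now starts and ends at exactly zero
    return corrected / (2.0 * corrected.std())

def make_trial(seq_pos, seq_neg, onset, sign, total_len):
    """Build the input u_t and the target output for one trial.

    u_t is 0 before `onset` and `sign` (+1 or -1) afterwards; the target is 0 before
    the edge, then the sequence selected by the edge's direction, then 0 again.
    """
    u = np.zeros(total_len)
    u[onset:] = sign
    seq = np.asarray(seq_pos if sign > 0 else seq_neg, dtype=float)
    target = np.zeros(total_len)
    n = min(seq.size, total_len - onset)   # truncate if the trial ends early
    target[onset:onset + n] = seq[:n]
    return u, target

# Usage with toy sequences of length 3 instead of 100.
p = preprocess_trace([0.0, 1.0, 3.0, 2.0, 5.0])
u, y = make_trial(np.ones(3), -np.ones(3), onset=2, sign=-1, total_len=7)
```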

Training proceeded as for texture discrimination, except that the mean squared error was used as the loss function. Four RNNs with different random initializations and different training sequence orders were each trained for roughly 15 days and 22 h, corresponding to 18.5 million training steps.

Analysis of RNN connectomes

Connectivity matrices were quantitatively analyzed in terms of the relative excitatory-excitatory reciprocity ($rr_{ee}$), the relative excitatory-inhibitory reciprocity ($rr_{ei}$), the relative inhibitory-excitatory reciprocity ($rr_{ie}$), the relative inhibitory-inhibitory reciprocity ($rr_{ii}$), the relative prevalence of cycles of length 5 ($r(5)$), and the in-out degree correlation ($r_{i/o}$) (Fig. 7d–i). The connectome statistics were then further processed using MATLAB R2017b. Equality of connectome statistics across different tasks was tested using the two-sample Kolmogorov-Smirnov test. To visualize the structural similarity of neural networks in two dimensions, t-SNE97 was applied to the six connectome statistics. As a quantitative measure of the structural separability of RNNs, the connectomic distance $d_\gamma(C_i, C_j)$ (see "Bayesian model selection") was computed for all pairs of RNNs. The criterion $d_\gamma(C_i, C_j) < \theta$ was used to predict whether RNNs $i$ and $j$ were trained on the same task. The performance of this predictor was evaluated in terms of the area under the receiver operating characteristic (ROC) curve ($A$) and accuracy. The sensitivity index $d'$ was computed as $d' = \sqrt{2}\, Z(A)$, where $Z$ is the inverse of the cumulative distribution function of the standard normal distribution.
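The pairwise separability analysis can be sketched in Python as below. It assumes a precomputed symmetric matrix `D` of connectomic distances $d_\gamma(C_i, C_j)$ (the distance itself is defined in "Bayesian model selection" and is not reimplemented here) and computes the ROC area of the rule $d_\gamma < \theta$ over all thresholds as the probability that a same-task pair is closer than a different-task pair, with ties counted as one half. The function names and the distance values in the usage lines are hypothetical; the inverse normal CDF comes from Python's standard `statistics.NormalDist`.

```python
import math
from statistics import NormalDist

import numpy as np

def same_task_auc(D, labels):
    """ROC area of the rule 'd(C_i, C_j) < theta  =>  same task' over all thresholds."""
    D = np.asarray(D, dtype=float)
    labels = np.asarray(labels)
    i, j = np.triu_indices(len(labels), k=1)   # every unordered pair of RNNs once
    d = D[i, j]
    same = labels[i] == labels[j]
    d_same, d_diff = d[same], d[~same]
    # P(same-task pair is closer than different-task pair), ties counted as 1/2.
    closer = (d_same[:, None] < d_diff[None, :]).mean()
    ties = (d_same[:, None] == d_diff[None, :]).mean()
    return float(closer + 0.5 * ties)

def sensitivity_index(auc):
    """d' = sqrt(2) * Z(A), with Z the inverse standard normal CDF (needs 0 < A < 1)."""
    return math.sqrt(2.0) * NormalDist().inv_cdf(auc)

# Usage: four RNNs, two per task, with a hypothetical distance matrix.
D = np.array([
    [0.0, 1.0, 2.0, 4.0],
    [1.0, 0.0, 5.0, 6.0],
    [2.0, 5.0, 0.0, 3.0],
    [4.0, 6.0, 3.0, 0.0],
])
auc = same_task_auc(D, labels=["texture", "texture", "sequence", "sequence"])  # 0.875
d_prime = sensitivity_index(auc)
```

A perfectly separable set of networks gives $A = 1$, for which $d'$ is infinite; the sketch leaves that case to the caller rather than clipping $A$.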

Whether information about connection strength helps to distinguish texture discrimination and sequence memorization RNNs (Fig. 7g–i) was tested as follows: for each RNN, the saved configuration with average connectivity closest to 24% was further sparsified by discarding the weakest 5, 10, 15, ..., 95% of its connections before computing the connectome statistics. Separability of texture discrimination and sequence memorization networks based on these connectome statistics was quantified as above.
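The strength-based sparsification sweep can be sketched as follows. Assumptions: connectivity is the fraction of possible non-self connections that are nonzero, "weakest" refers to absolute connection strength among existing connections, and the function names are hypothetical. Each sparsified matrix would then be passed to the connectome-statistics and separability analysis described above.

```python
import numpy as np

def connectivity(W):
    """Fraction of possible connections (self-connections excluded) that are nonzero."""
    n = W.shape[0]
    off_diagonal = ~np.eye(n, dtype=bool)
    return np.count_nonzero(W[off_diagonal]) / (n * (n - 1))

def closest_configuration(saved_Ws, target=0.24):
    """Pick the saved connectivity matrix whose connectivity is closest to `target`."""
    return min(saved_Ws, key=lambda W: abs(connectivity(W) - target))

def sparsify_by_strength(W, fraction):
    """Discard the weakest `fraction` of existing connections (by absolute strength)."""
    strengths = np.abs(W[W != 0])
    threshold = np.quantile(strengths, fraction)
    return np.where(np.abs(W) > threshold, W, 0.0)

def strength_sweep(W, fractions=np.arange(5, 100, 5) / 100):
    """Return {fraction: sparsified matrix} for 5%, 10%, ..., 95%."""
    return {round(float(f), 2): sparsify_by_strength(W, f) for f in fractions}

# Usage with a toy 3 x 3 matrix (all six possible connections present).
W = np.array([[0.0, 1.0, 2.0], [3.0, 0.0, 4.0], [5.0, 6.0, 0.0]])
W_half = sparsify_by_strength(W, 0.5)
chosen = closest_configuration([W, W_half])
sweep = strength_sweep(chosen)
```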

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Acknowledgements

We thank Till Kretschmar for investigation of connectome metrics in an early phase of the project, Jan Hasenauer for discussions, Robert Gütig and Andreas Schaefer for comments on an earlier version of the manuscript, Fabian Fröhlich for performing an independent reproduction experiment, and Christian Guggenberger and Stefan Heinzel at the Max Planck Compute Center Garching for excellent support of the high-performance computing environment.

Author contributions

Conceived, initiated, and supervised the study: M.H.; supervised the study: C.M., F.T.; carried out simulations, developed methods, analyzed data: E.K. and A.M. with contributions from all authors; wrote the paper: E.K., M.H., and A.M. with input from all authors.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Data availability

The data that support the findings of this study are available at https://discriminatEM.brain.mpg.de.

Code availability

All methods were implemented in Python 3 (compatible with version 3.7), unless noted otherwise. All code is available under the MIT license in the Supplementary Code file and at https://gitlab.mpcdf.mpg.de/connectomics/discriminatEM. To install and run discriminatEM, please follow the instructions in the readme.pdf provided within discriminatEM_v2.zip. Detailed API and tutorial-style documentation is also provided within discriminatEM_v2.zip in HTML format (doc/index.html).

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information Nature Communications thanks Michael Reimann and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

10/26/2021

The Funding information section was missing from this article and should have read ‘Open Access funding enabled and organized by Projekt DEAL’. The original article has been corrected.

Contributor Information

Fabian J. Theis, Email: fabian.theis@helmholtz-muenchen.de

Moritz Helmstaedter, Email: mh@brain.mpg.de

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-021-22856-z.

References

- 1. Doyle DA, et al. The structure of the potassium channel: molecular basis of K+ conduction and selectivity. Science. 1998;280:69–77. doi: 10.1126/science.280.5360.69.

- 2. Nogales E. The development of cryo-EM into a mainstream structural biology technique. Nat. Methods. 2016;13:24–27. doi: 10.1038/nmeth.3694.

- 3. Bargmann CI, Marder E. From the connectome to brain function. Nat. Methods. 2013;10:483–490. doi: 10.1038/nmeth.2451.

- 4. Morgan JL, Lichtman JW. Why not connectomics? Nat. Methods. 2013;10:494–500. doi: 10.1038/nmeth.2480.

- 5. Denk W, Briggman KL, Helmstaedter M. Structural neurobiology: missing link to a mechanistic understanding of neural computation. Nat. Rev. Neurosci. 2012;13:351–358. doi: 10.1038/nrn3169.

- 6. Jonas E, Kording KP. Could a Neuroscientist Understand a Microprocessor? PLoS Comput Biol. 2017;13:e1005268. doi: 10.1371/journal.pcbi.1005268.

- 7. Briggman KL, Helmstaedter M, Denk W. Wiring specificity in the direction-selectivity circuit of the retina. Nature. 2011;471:183–188. doi: 10.1038/nature09818.

- 8. Rosenblatt F. Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms. Spartan Books; 1962.

- 9. Jaeger H, Haas H. Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science. 2004;304:78–80. doi: 10.1126/science.1091277.

- 10. Abeles M. Local Cortical Circuits. Springer; 1982.

- 11. Troyer TW, Krukowski AE, Priebe NJ, Miller KD. Contrast-invariant orientation tuning in cat visual cortex: thalamocortical input tuning and correlation-based intracortical connectivity. J. Neurosci. 1998;18:5908–5927. doi: 10.1523/JNEUROSCI.18-15-05908.1998.

- 12. Druckmann S, Chklovskii DB. Neuronal circuits underlying persistent representations despite time varying activity. Curr. Biol. 2012;22:2095–2103. doi: 10.1016/j.cub.2012.08.058.

- 13. Beaumont MA, Zhang W, Balding DJ. Approximate Bayesian computation in population genetics. Genetics. 2002;162:2025–2035. doi: 10.1093/genetics/162.4.2025.

- 14. Sisson SA, Fan Y, Tanaka MM. Sequential Monte Carlo without likelihoods. Proc. Natl Acad. Sci. 2007;104:1760–1765. doi: 10.1073/pnas.0607208104.

- 15. Toni T, Welch D, Strelkowa N, Ipsen A, Stumpf MPH. Approximate Bayesian computation scheme for parameter inference and model selection in dynamical systems. J. R. Soc. Interface. 2009;6:187–202. doi: 10.1098/rsif.2008.0172.

- 16. Erdős P, Rényi A. On random graphs. Publicationes Mathematicae Debr. 1959;6:290–297.

- 17. Schmidhuber J. Learning complex, extended sequences using the principle of history compression. Neural Comput. 1992;4:234–242. doi: 10.1162/neco.1992.4.2.234.

- 18. El Hihi S, Bengio Y. Hierarchical recurrent neural networks for long-term dependencies. In: Advances in Neural Information Processing Systems 8. MIT Press; 1996.

- 19. Binzegger T, Douglas RJ, Martin KAC. Cortical architecture. In: Brain, Vision, and Artificial Intelligence. Springer; 2005.

- 20. Bruno RM, Sakmann B. Cortex is driven by weak but synchronously active thalamocortical synapses. Science. 2006;312:1622–1627. doi: 10.1126/science.1124593.

- 21. Meyer HS, et al. Number and laminar distribution of neurons in a thalamocortical projection column of rat vibrissal cortex. Cereb. Cortex. 2010;20:2277–2286. doi: 10.1093/cercor/bhq067.

- 22. Denk W, Horstmann H. Serial block-face scanning electron microscopy to reconstruct three-dimensional tissue nanostructure. PLoS Biol. 2004;2:e329. doi: 10.1371/journal.pbio.0020329.

- 23. Kasthuri N, et al. Saturated reconstruction of a volume of neocortex. Cell. 2015;162:648–661. doi: 10.1016/j.cell.2015.06.054.

- 24. Boergens KM, et al. webKnossos: efficient online 3D data annotation for connectomics. Nat. Methods. 2017;14:691–694. doi: 10.1038/nmeth.4331.

- 25. Berning M, Boergens KM, Helmstaedter M. SegEM: efficient image analysis for high-resolution connectomics. Neuron. 2015;87:1193–1206. doi: 10.1016/j.neuron.2015.09.003.

- 26. Januszewski M, et al. High-precision automated reconstruction of neurons with flood-filling networks. Nat. Methods. 2018;15:605–610. doi: 10.1038/s41592-018-0049-4.

- 27. Motta A, et al. Dense connectomic reconstruction in layer 4 of the somatosensory cortex. Science. 2019;366:1093.