Abstract

Autism spectrum disorder (ASD) is a highly prevalent neurodevelopmental disorder. Although numerous evidence-based practices (ASD-EBPs) are available to treat ASD, ASD community-based organizations (ASD-CBOs) underutilize or inconsistently utilize them. Nevertheless, ASD-CBOs regularly implement changes to their practices. Understanding ASD-CBOs’ implementation-as-usual (IAU) processes may help in developing strategies that facilitate ASD-EBP adoption, implementation and sustainment. A convergent mixed methods (quan+QUAL) design was utilized. Twenty ASD-CBO agency leaders (ALs) and 26 direct providers (DPs), from 21 ASD-CBOs, completed the Autism Model of Implementation Survey Battery, including demographic and agency IAU process questions. Surveys were analyzed through descriptive and content analyses. A subset of 10 ALs provided qualitative interview data that were analyzed using coding, consensus and comparison methods to allow for a more comprehensive understanding of the IAU process within their ASD-CBOs. Quantitative analyses and qualitative coding were merged utilizing a joint display and compared. Results suggest that the IAU process follows some phases identified in the Exploration, Preparation, Implementation, Sustainment (EPIS) framework but is conducted in an informal manner, lacking specificity, structure and consistency across and within ASD-CBOs. Moreover, the data suggest adding a specific adoption decision phase to the framework. Nonetheless, most ALs felt previous implementation efforts were successful. IAU processes were explored to determine whether the implementation process may be an area for intervention to increase ASD-EBP utilization in ASD-CBOs. Developing a systematized implementation process may facilitate broader utilization of high quality ASD-EBPs within usual care settings, and ultimately improve the quality of life for individuals with ASD and their families.

Individuals with autism spectrum disorder (ASD) need comprehensive, evidence-based practices (EBPs) across their lifespan. EBPs developed for individuals with ASD (ASD-EBPs) may reduce the prevalence, severity and interference to daily life related to ASD symptoms, comorbid psychiatric disorders and co-occurring challenging behaviors, and may substantially improve social communication and adaptive skills (National Autism Center, 2015; Wong et al., 2015). Overall, EBPs lead to positive health outcomes for many individuals receiving these practices (Wong et al., 2015), with the intended outcomes including reduced symptoms and improved quality of life among individuals with ASD and their families. Yet, evidence suggests that many individuals with ASD do not receive ASD-EBPs (Brookman-Frazee et al., 2012; Pickard et al., 2018). This may be due to the multiple service systems involved (either sequentially or simultaneously) in the care of individuals with ASD (Dingfelder & Mandell, 2011; Hyman et al., 2020). Further, differences in training and varied educational backgrounds of providers can impact provider knowledge of, exposure to, and attitude toward ASD-EBPs, which may reduce EBP utilization (McLennan et al., 2008; Aarons, 2004; Paytner & Keen, 2015). In fact, although 28 ASD-EBPs addressing a range of behavioral or health outcomes have been identified through recent large-scale systematic reviews (cf. Steinbrenner et al., 2020; Wong et al., 2015), ASD-EBPs continue to be underutilized, particularly in community-based settings (Brookman-Frazee et al., 2012; Dingfelder & Mandell, 2011).

Community-based organizations specializing in the delivery of ASD services (ASD-CBOs) are a common provider of services for individuals with ASD and their families (Brookman-Frazee et al., 2010; BLINDED; BLINDED). Recent studies have found that CBOs, including ASD-CBOs, have insufficient capacity to adopt, implement and sustain EBPs (Ramanadhan et al., 2017). Specifically, barriers at the organizational level (e.g., organizational culture) and the individual provider level (e.g., knowledge, attitudes) can limit the delivery of ASD services, generally, and ASD-EBPs, specifically (Paytner & Keen, 2015). For example, ASD-CBOs vary in their funding structure, which has been associated with the services that are provided through these organizations (Bachman et al., 2012; Wang et al., 2013).

Additionally, self-report and observational data demonstrate that providers who deliver ASD-EBPs to individuals with ASD also commonly use preference-based practices with unestablished or undetermined evidence for use (Brookman-Frazee et al., 2010; Pickard et al., 2018; Kerns et al., 2019). This lack of appropriately trained providers contributes to the gap in EBP service availability (Baller et al., 2016; Chiri & Warfield, 2012; Paytner & Keen, 2015), thereby exacerbating observed health disparities of individuals with ASD (Benevides et al., 2016).

Further, even when providers use ASD-EBPs, they tend to do so with low to moderate intensity (Brookman-Frazee et al., 2010). This research-to-practice gap illustrates the ongoing challenges of translating research knowledge from university-based settings into community-based usual care settings in a manner that facilitates the fit, feasibility, acceptability, utility, fidelity and sustainment of ASD-EBPs (Chambers et al., 2013). Thus, access to quality behavioral and mental healthcare remains one of the largest unmet service needs for individuals with ASD (Chiri & Warfield, 2012).

Importantly, differential use of ASD-EBPs limits their effectiveness to fully address the behavioral and mental health concerns of individuals with ASD, and use of practices lacking an evidence base may even be harmful to those receiving the services (Chorpita, 2003; Ganz et al., 2018). Finally, individuals with ASD who are disadvantaged in multiple ways (e.g., low socioeconomic status, minority status) are at even greater risk for adverse health and behavioral outcomes when ASD-EBPs are inaccessible as compared with the general population and other individuals with ASD who are not members of a marginalized group (Bishop-Fitzpatrick & Kind, 2017). Overall, a lack of EBP availability within ASD-CBOs may lead to increases in health disparities and reduced quality of life for individuals with ASD, given that many individuals with ASD and their families receive specialized services within these settings (Bishop-Fitzpatrick & Kind, 2017; Brookman-Frazee et al., 2010).

By exploring implementation-as-usual (IAU) processes utilized within ASD-CBOs, we may better understand naturally occurring implementation efforts, including effective strategies, and identify barriers to implementation efforts or areas that may be improved upon for ASD-CBO providers. Once components of the IAU process are better understood, researchers and ASD-CBO community partners may be better suited to create tools facilitating adoption, uptake, implementation and sustainment of ASD-EBPs within ASD-CBOs (BLINDED; Stahmer et al., 2017). Thus, an important and critical first step to increase adoption and utilization of ASD-EBPs is to understand the IAU processes used among ASD-CBOs.

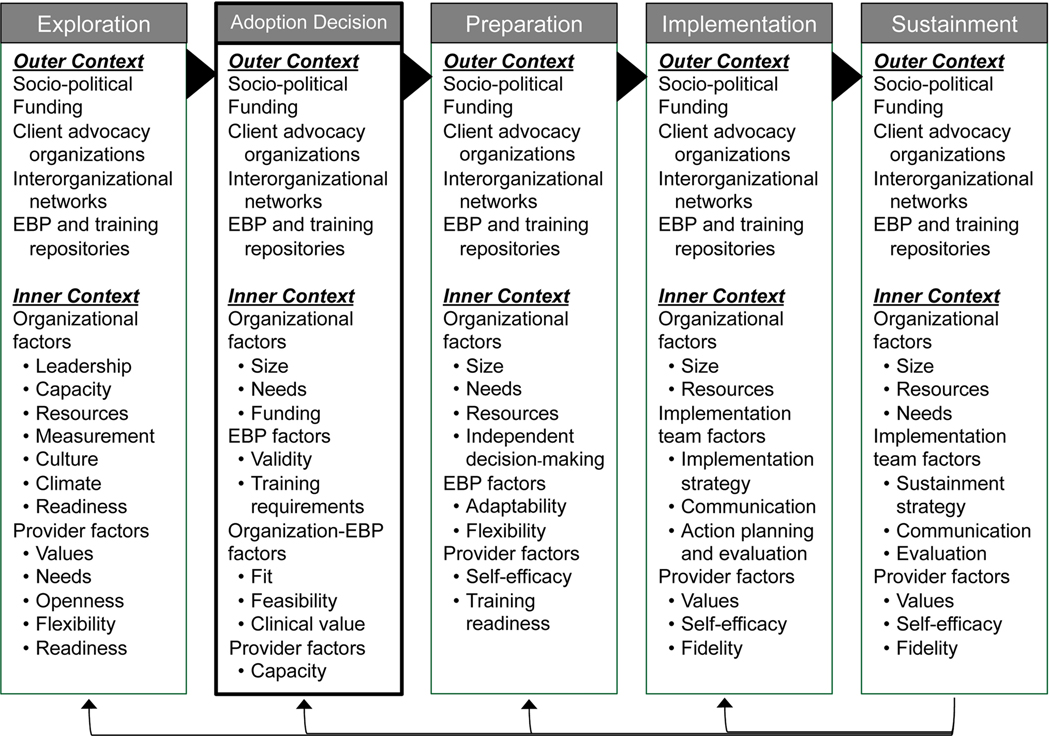

Dissemination and implementation (D&I) models can provide useful guidance for considering a comprehensive set of organizational and individual provider factors likely to contribute to the implementation and sustainment of EBPs (Moullin et al., 2019; Aarons et al., 2011; Greenhalgh et al., 2004; Tabak et al., 2012). The EPIS implementation framework (Aarons et al., 2011) is both a process and determinant framework (Nilsen, 2015) that includes four phases—Exploration, Preparation, Implementation, and Sustainment—each of which includes outer and inner contextual factors, bridging factors linking outer and inner contexts, and innovation factors that collectively are proposed to facilitate EBP implementation and sustainment (see Moullin et al., 2019 for systematic review of the EPIS). Guided by the EPIS framework, the current study aimed to provide the first evaluation of the IAU processes within ASD-CBOs providing specialized services to individuals with ASD. Given the paucity of information about IAU, generally, and IAU processes within ASD-CBOs, specifically, this study sought to answer the following research questions:

What specific activities are involved in the IAU process within ASD-CBOs?

What factors facilitate or hinder the IAU process for new EBPs within ASD-CBOs?

How do agency leaders perceive the IAU process within their ASD-CBO?

Method

Design

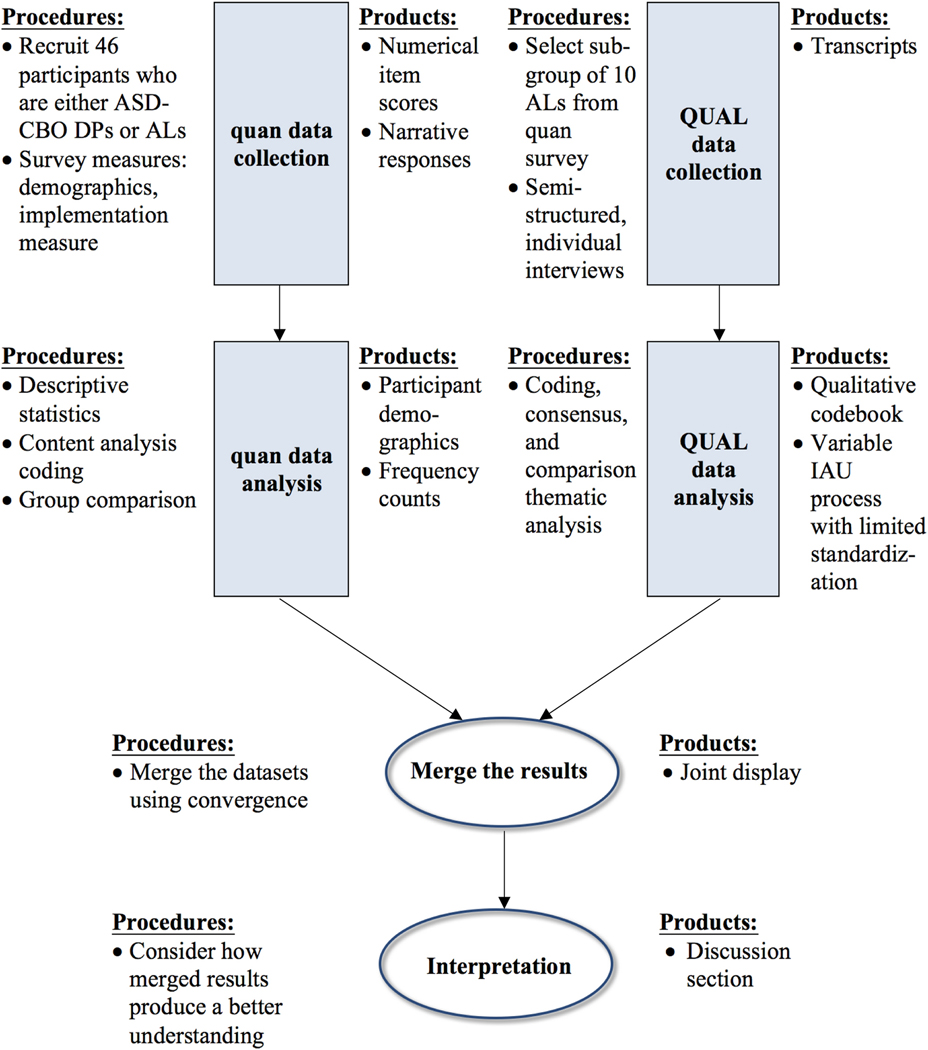

Data for the current study were drawn from a broader study of the BLINDED (BLINDED; PI: BLINDED), which evaluated usual care strategies for training, treatment delivery, and implementation within ASD-CBOs. The purpose of collecting these data was to inform the development of a comprehensive implementation toolkit in collaboration with ASD community stakeholders participating in a community-academic partnership (CAP; BLINDED). The current study (Figure 1) utilized a convergent parallel (quan+QUAL) mixed methods research design in which quantitative and qualitative data were collected independently, and in which qualitative methods were primary (indicated by capital letters) (Creswell & Plano Clark, 2011; Palinkas et al., 2011). This design was selected in order to draw upon the strengths of both quantitative and qualitative methods (also known as “methodological plurality”) by integrating the data to draw convergent and/or divergent conclusions from the quantitative and qualitative results (Fetters & Molina-Azorin, 2017; Landrum & Garza, 2015). Survey and semi-structured interview data were collected concurrently from ASD-CBO agency leaders and direct service providers. The two strands of the mixed methods design were analyzed independently of one another.

Figure 1.

Visual model of convergent parallel (quan+QUAL) design procedures

Integrating the Methods.

Results from the quantitative analyses and qualitative thematic coding were then integrated utilizing a joint display of the data and compared. Integrating the methodological strands during the interpretation phase of the study allowed for a more comprehensive understanding of the perceptions of the IAU process and factors influencing EBP implementation within ASD-CBOs (Fetters & Molina-Azorin, 2017; Landrum & Garza, 2015; Guetterman et al., 2015; Palinkas et al., 2011).
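As an illustration of what a joint display can look like, the sketch below places survey percentages beside interview counts for a few process steps. The survey percentages are copied from Table 2 and the interview counts from the Qualitative Results. The pairing of each survey code with an interview theme, the row labels, and the names `ROWS` and `render_joint_display` are assumptions made for this sketch, not the joint display used in the study.

```python
# Survey percentages are copied from Table 2 and interview counts from the
# Qualitative Results. Pairing each survey code with an interview theme is an
# assumption made for this sketch, not the study's own joint display.
ROWS = [
    # (process step, AL survey %, DP survey %, interviews ever-coded, frequency)
    ("Identifying gaps: staff discussion", 40.0, 30.8, 5, 7),
    ("Practice selection: research literature", 50.0, 34.6, 8, 18),
    ("Evaluating evidence: literature review", 40.0, 23.1, 5, 7),
    ("Adoption decision: leader decides alone", 10.0, 30.8, 5, 5),
]


def render_joint_display(rows):
    """Return a Markdown table with one row per paired survey code and theme."""
    lines = [
        "| Process step | AL survey % | DP survey % | Ever-coded | Frequency |",
        "|---|---|---|---|---|",
    ]
    for step, al_pct, dp_pct, ever_coded, frequency in rows:
        lines.append(
            f"| {step} | {al_pct:.1f} | {dp_pct:.1f} | {ever_coded} | {frequency} |"
        )
    return "\n".join(lines)


print(render_joint_display(ROWS))
```

Reading across a row shows where the strands converge (for example, research literature is prominent in both the survey and the interviews) and where they diverge (for example, who is reported to make the adoption decision).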

Quantitative Phase

Participants

Participants included a nonprobability sample (Etikan et al., 2016) recruited from 3 Southern California counties in the United States. The sample included ASD-CBO agency leaders (ALs) and direct providers (DPs). See Table 1 for participant demographics.

Table 1.

Participant Demographics by Agency Role and Data Source

| Participant Role | AL | AL | DP |
|---|---|---|---|
| Data Source | AMI Survey | AMI Interview | AMI Survey |
| Total Participant N | N = 20 | N = 10 | N = 26 |
| | n (%) | n (%) | n (%) |
| Gender | | | |
| Female | 17 (85.0) | 9 (90.0) | 24 (92.3) |
| Male | 3 (15.0) | 1 (10.0) | 2 (7.7) |
| Mean Age (SD) | 40.3 (8.4) | 40.4 (7.2) | 28.1 (4.1) |
| Race/Ethnicity | | | |
| White | 18 (90.0) | 9 (90.0) | 15 (57.7) |
| African American/Black | 0 (0.0) | 0 (0.0) | 1 (3.8) |
| Hispanic or Latinx | 1 (5.0) | 1 (10.0) | 4 (15.4) |
| Asian American/Pacific Islander | 1 (5.0) | 0 (0.0) | 3 (11.5) |
| Filipino/a American | 1 (5.0) | 0 (0.0) | 1 (3.8) |
| Mixed | 0 (0.0) | 0 (0.0) | 2 (7.7) |
| Highest Degree Earned | | | |
| Bachelor’s Degree | 0 (0.0) | 0 (0.0) | 16 (61.5) |
| Master’s Degree | 11 (55.0) | 4 (40.0) | 10 (38.5) |
| Doctorate | 9 (45.0) | 6 (60.0) | 0 (0.0) |
| Discipline/Educational Background (Not mutually exclusive) | | | |
| Psychology | 13 (65.0) | 7 (70.0) | 15 (57.7) |
| Marriage and Family Therapy | 2 (10.0) | 1 (10.0) | 0 (0.0) |
| Social Work | 0 (0.0) | 0 (0.0) | 1 (3.8) |
| Speech/Language/Communication | 4 (20.0) | 2 (20.0) | 4 (14.8) |
| Occupational/Physical Therapy | 1 (5.0) | 0 (0.0) | 0 (0.0) |
| Education | 1 (5.0) | 1 (10.0) | 7 (26.9) |
| Behavior Analysis | 10 (50.0) | 0 (0.0) | 11 (42.3) |
| ASD Training in Education | | | |
| Yes | 16 (80.0) | 8 (80.0) | 19 (73.1) |
| No | 4 (20.0) | 2 (20.0) | 7 (26.9) |
| Work History | | | |
| Mean Years at Current Agency (SD) | 7.7 (4.8) | 8.8 (4.9) | 2.5 (2.6) |
| Mean Years Experience w/ASD (SD) | 17.1 (6.8) | 15.9 (6.4) | 4.5 (2.9) |
| Licensed/Certified | | | |
| Yes | 20 (100.0) | 10 (100.0) | 9 (34.6) |
| No | 0 (0.0) | 0 (0.0) | 17 (65.4) |
| Service Delivery Setting (Not mutually exclusive)ᵃ | | | |
| Clinic | 14 (70.0) | 6 (60.0) | |
| Community | 18 (90.0) | 8 (80.0) | |
| School | 14 (70.0) | 6 (60.0) | |
| Home | 17 (85.0) | 8 (80.0) | |
| Other | 3 (15.0) | 1 (10.0) | |

ᵃ DPs were not asked this item.

ASD-CBO Agency Leaders.

Twenty ASD ALs participated in the quantitative phase of this study. ALs were eligible to participate if: a) they had the role of director, CEO, and/or decision-maker of treatments used at the ASD-CBO, b) the ASD-CBO provided community-based treatment services to youth with ASD, and c) services provided included one or more of the following service types: applied behavioral analysis, speech and language therapy, mental health services, occupational therapy, or physical therapy. ALs were excluded if they worked in a school or nonprofit organization. It was thought that organizations operating within these service sectors were distinctly different from the specialty service ASD-CBOs (e.g., operating in a distinct outer context that might influence ASD-EBP implementation).

Most ALs were female (85%) and White (90%). All participating ALs held either master’s (55%) or doctoral level (45%) degrees, all were licensed or certified in their field, and most participants (80%) had training related to ASD interventions and practices during their education. AL education disciplines included (not mutually exclusive): psychology, behavioral analysis, speech and language/communication, marriage and family therapy, occupational/physical therapy, and education. ALs had provided or directed services delivered to individuals with ASD for 17.1 (SD=6.8) years on average, and ALs were at their current agency for 7.7 (SD=4.8) years on average.

ASD-CBO Direct Providers.

Twenty-six DPs participated in the quantitative phase of this study. DPs were eligible to participate if they: a) worked in a community agency providing services to youth with ASD, b) were direct service providers or supervisors of direct providers who delivered ASD treatment services from the above included service types, and c) had worked at the same agency for the past six months or more. Most DPs were female (92%) and White (58%), though DPs reported greater racial and ethnic diversity than ALs: Latinx (15%), Asian American/Pacific Islander (12%), African American/Black (4%), or Filipino/a American (4%). Most DPs had their bachelor’s degree, and the rest had their master’s degree. Just over half (58%) of the DPs had an educational background in psychology with many also having an educational background in behavioral analysis (42%), education (27%), and speech and language/communication (15%). DPs had an average of 4.5 (SD=2.9) years providing services to individuals with ASD and had worked at their current agency for 2.5 (SD=2.6) years on average.

Procedure

A recruitment database of ASD-CBOs, comprising 144 agencies located within San Diego, Imperial and Orange counties in California, was developed by reviewing existing ASD service resource guides, web-based searches, and snowball sampling methods including discussions with established service providers in the area. The recruitment database included agency name, AL name (if available), address and email information, and service type. Utilizing this recruitment database, ALs were emailed, called, and (if no response) mailed recruitment flyers with information about the study. ALs were also asked to distribute recruitment flyers to DPs within their ASD-CBOs. Moreover, the recruitment flyer was posted on ASD parenting network websites, in ASD parenting and service provider newsletters, and on institutional websites.

Individuals interested in participating in the quantitative phase of the study contacted study personnel, were provided detailed information about the study’s purpose and procedures, and were given a brief eligibility screening. If eligible for inclusion, participants were asked to provide their name and email address. A survey link was sent to participants using Qualtrics® software. Participants who opted for the survey in a paper-and-pencil format (determined during the screening) were mailed the consent form and survey along with a postage-paid envelope in which to return the materials (n=2 DPs). Participants were provided a $25 incentive for completing the survey, which took 45 to 60 minutes. All procedures were reviewed and approved by the San Diego State University Institutional Review Board.

Measures

Autism Model of Implementation (AMI) Survey Battery.

The AMI survey battery was completed by ALs and DPs, and included items related to the demographic characteristics of the survey respondent (e.g., age, length of time at the current ASD-CBO, service context, educational and training background), organizational characteristics (e.g., typical client demographics: age range, typical co-occurring diagnoses, common presenting problems, number of clients currently being provided services), training and IAU processes within the participant’s current ASD-CBO, organizational climate, culture, and readiness for new innovations, and provider attitudes toward ASD-EBPs. For the current study, only items related to participant demographics and the IAU items (described below) were included in the data analyses.

AMI implementation-as-usual (IAU) items.

The IAU items were developed by the first author in collaboration with the AMI collaborative group, a community-academic partnership that was developed to provide: a) contextual information about ASD service provision in Southern California, b) important domains for inquiry in the AMI Survey and item construction, and c) feedback about the instruments and materials being developed through the study (BLINDED; BLINDED). Five items related to ASD-CBO IAU processes were included in the analysis. The first item, “Does your agency discuss providing new intervention strategies for working with children with ASD?”, required a binary (yes/no) response. For participants who responded, “Yes,” the following four items were administered: “What is the process to: (a) …Find a new intervention? (b) …Adopt the new intervention? (c) …Begin to use the new intervention? (d) …Monitor the new intervention’s use?” For each of these items, participants were asked to type responses reflecting their experiences at their current ASD-CBO. No limit was established for the number of responses or characters allowed in their responses.

Data Analysis

The binary screening item was analyzed using chi-square analysis. Thereafter, typed responses to follow-up items (M=6.42 words for ALs; M=5.99 words for DPs) were analyzed using text content analysis methodology (Neuendorf, 2002). Two of the co-authors (BLINDED) independently read each text response and developed a list of frequent responses both within each question and across questions. Three co-authors (BLINDED) examined the lists of frequent responses to generate a final codebook in which all content themes were fully conceptualized and defined. After obtaining reliability on the use of the codebook, two coders independently coded all text responses. The first author reviewed the coding forms and, if a coding discrepancy arose, the coders utilized a consensus method to determine the best code for the text. Frequency counts of the content analysis codes were then conducted and visually inspected by code and group (AL vs. DP) (see Table 2).

Table 2.

Quantitative Data Frequency Counts

| Quantitative Survey Item / Quantitative Content Analysis Codes | AL (n = 20) Count (%) | DP (n = 26) Count (%) |
|---|---|---|
| Item 1. Does your agency discuss providing new intervention strategies? | | |
| Yes | 17 (85%) | 18 (69.2%) |
| No | 2 (10%) | 8 (30.8%) |
| Missing | 1 (5%) | 0 |
| Participants who responded yes provided responses about their agency’s IAU process. | | |
| Identifying Practice and Delivery Gaps | | |
| Team meeting or staff discussion | 8 (40%) | 8 (30.8%) |
| Done by an authority (director, supervisor) but process is informal | 4 (20%) | 5 (19.2%) |
| Global client needs assessment | 5 (25%) | 0 |
| Survey | 3 (15%) | 0 |
| Not done/Only assess individual client needs | 0 | 3 (11.5%) |
| “Don’t know” | 0 | 10 (38.5%) |
| Practice Selection | | |
| Learn about new practices from research literature | 10 (50%) | 9 (34.6%) |
| Learn about new practices from conferences/presentations | 4 (20%) | 0 |
| Develop new practices | 4 (20%) | 0 |
| Consult with supervisor to learn about new practices | 0 | 7 (26.9%) |
| “Don’t know” | 0 | 8 (30.8%) |
| Evaluating Practice Evidence | | |
| Review published literature | 8 (40%) | 6 (23.1%) |
| Adoption Decision | | |
| Staff discussion before adoption decision | 4 (20%) | 0 |
| Director/Supervisor decision | 2 (10%) | 8 (30.8%) |
| “Don’t know” | 0 | 8 (30.8%) |
| Implementation Strategy Use | | |
| Pilot then revise, if needed; Trial and error | 4 (20%) | 2 (7.7%) |
| Staff training | 4 (20%) | 0 |
| “Just use it” | 5 (25%) | 6 (23.1%) |
| “Implement it” | 2 (10%) | 6 (23.1%) |
| Consult with supervisor | 0 | 8 (30.8%) |
| “Don’t know” | 0 | 8 (30.8%) |
| Monitoring Fidelity | | |
| Assess individual client progress via data collection | 8 (40%) | 8 (30.8%) |
| Staff meetings/discussions; Supervision | 6 (30%) | 8 (30.8%) |
| Fidelity monitoring | 2 (10%) | 0 |
| “Don’t know” | 0 | 8 (30.8%) |

Quantitative Results

Seventeen ALs (85%) and 18 DPs (69.2%) reported that direct providers were involved in discussions related to the implementation process for new interventions, whereas 2 ALs (10%) and 8 DPs (30.8%) responded that DPs were not involved in implementation process discussions. Chi-square analysis indicated that the frequency of these responses was not significantly different by group, χ²(1, N = 45) = 2.60, p = 0.11.
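The group comparison can be checked against the Item 1 counts in Table 2. The sketch below assumes a Pearson chi-square test without continuity correction and excludes the one AL with a missing response (N = 45). The function name `chi_square_2x2` is hypothetical. A 2×2 table has one degree of freedom, so the p-value is obtained from the complementary error function rather than from a statistics library.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square (no continuity correction) for a 2x2 table of counts.

    Returns the statistic, the p-value for 1 degree of freedom, and N.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value, n


# Rows: ALs, DPs. Columns: "Yes", "No" (Item 1 in Table 2; missing response excluded).
counts = [[17, 2], [18, 8]]
stat, p_value, n = chi_square_2x2(counts)
print(f"chi2(1, N={n}) = {stat:.2f}, p = {p_value:.2f}")
# prints: chi2(1, N=45) = 2.60, p = 0.11
```

Two of the four expected cell counts are small (about 4.2 and 5.8), so an exact test would be a reasonable sensitivity check on the same table.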

Frequency counts by code and group revealed similarities between AL and DP responses related to ASD-CBO implementation processes. For example, both ALs (40%) and DPs (30.8%) reported that identification of practice and delivery gaps within the agency was done through team meetings or staff discussions, whereas 20% of the ALs and 19.2% of the DPs reported that this exploration was done in an informal manner by the AL. For practice selection, ALs provided more varied and specific responses about the methods used to identify or develop new practices for possible use at their ASD-CBO, including: learning about new practices from research literature (50%) or conferences or presentations (20%), or developing practice-based interventions (20%). DPs stated that they learned about new practices from research literature (34.6%) and also reported that they consulted with their supervisor to learn about new practices for use (26.9%). Yet, fewer than half of the ALs and fewer than one-third of the DPs reported any method of evaluating the research evidence of a new practice (40% of ALs; 23.1% of DPs) once identified.

Related to the process of making an adoption decision about a new practice, DPs (30.8%) reported that ALs alone made the decision, whereas only 10% of ALs explicitly stated that it was solely their decision. Moreover, 20% of the ALs reported that staff discussions occurred about a new practice before an adoption decision was made; however, no DPs reported staff discussion before making an adoption decision.

When asked about which implementation strategies were utilized to facilitate EBP uptake and use, 20% of the ALs reported each of the following strategies: “pilot then revise, if needed; trial and error” and “train staff.” For DPs, the most frequently reported implementation strategy was “consult with supervisor” (30.8%). Finally, the data suggest that formal fidelity monitoring of new practice implementation occurred but was infrequently reported (AL: 10%; DP: 0%), and that the monitoring that did occur focused on individual client progress, either through the collection and review of progress data (AL: 40%; DP: 30.8%) or during staff meetings/supervision (AL: 30%; DP: 30.8%), rather than on the fidelity of practice components.

Of note, most DPs did not endorse any systematic, agency-wide implementation efforts occurring at their ASD-CBO. For example, 10 DPs (38.5%) explicitly reported that they “didn’t know” how practice and delivery gaps were identified at their agency, and 8 (30.8%) DPs stated that they “didn’t know” how practices were selected for adoption and implementation, what implementation strategies were utilized within their ASD-CBO, or how treatment implementation was monitored. No ALs stated that they “didn’t know” how these things occurred. While it makes sense that ALs would be aware of these processes more so than DPs, the data suggest a lack of explicitness of the implementation process within ASD-CBOs.

Qualitative Phase

Procedure

ALs who participated in the quantitative phase were invited to participate in a qualitative phase of the study. Individual qualitative interviews occurred at a location convenient to the ALs, typically in their office. Interviews ranged in duration from 30–57 minutes (M=43.5 minutes, SD=9.25). ALs who participated in the interview received a $20 incentive.

Participants

Ten of the ALs (50%) who participated in the quantitative phase agreed to participate in the qualitative phase. Table 1 includes demographic information about this subsample.

Measure

Interview.

The AMI interview protocol was semi-structured, containing topics and prompts guided by the phases of the EPIS framework (see Appendix A for interview protocol), and matched to the constructs administered through the quantitative survey in an effort to maximize the potential for convergence between the strands of data and ease of integrating the results (Fetters & Molina-Azorin, 2017). The first section included questions asking about the ASD-CBO’s process to determine unmet client needs and training needs of staff at their agency. The second section included questions about factors related to learning about new intervention practices and what factors were considered when determining whether to implement a new practice. The third section included questions related to adoption factors, characteristics of DPs and ALs that facilitated uptake and implementation of new practices, their ASD-CBO’s usual implementation process for the uptake of new practices, and process satisfaction. The fourth section included questions addressing implementation factors, staff training, and perceptions of previous implementation success. The final section included questions asking about their initial thoughts related to the development of a multi-phased and systematic toolkit to guide ASD-EBP implementation within ASD-CBOs.

Data Analysis

The qualitative interview data were analyzed using a coding, consensus, and comparison methodology (Willms et al., 1990), which followed an iterative approach rooted in Grounded Theory (Glaser & Strauss, 1967; Creswell & Poth, 2017). All interviews were first transcribed and de-identified. Following this, two co-authors, trained in qualitative data coding through didactic and practice activities with a project consultant, were provided with two random interviews to examine independently (BLINDED) in order to elicit emergent themes (i.e., themes surfacing from the transcriptions) within the interviews. Emergent themes were identified and assigned a code by considering the frequency of and salience (i.e., importance or emphasis) with which an AL discussed it. The second author reviewed the coding schema for clarity of the codes, coding definitions, and exemplar text. These codes were developed into the AMI Qualitative Coding Schema (available upon request from the first author). The first author then trained members of the coding team to use the coding schema through didactic and practice exercises. Members of the coding team included the codebook developers and a third research team member who was employed as a project research assistant.

Segments of text, ranging from sentences to paragraphs, were assigned specific codes that enabled members of the research team to consolidate interview data into analyzable units. Following this, two members of the research team independently coded each interview and then collectively reviewed the codes with the first author to ensure coding consensus and to resolve discrepancies. A review of all of the codes for each interview was conducted until members of the research team reached coding consensus for all segments of text. After consensus was achieved among coders, interview transcripts were then entered, coded, and analyzed in QSR-NVivo 2.0 (Tappe, 2002). Moreover, the qualitative data were quantitized in order to present ever-coded (i.e., the number of transcripts to which the code was ever assigned) and frequency (i.e., the number of times the code was assigned across all transcripts) counts, which provided additional data to support the salience of emergent themes (Landrum & Garza, 2015).
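The difference between the two counts can be shown with a short sketch. The transcript identifiers, code labels, and the function name `quantitize` below are hypothetical and are not taken from the AMI Qualitative Coding Schema. The study's counts were produced in NVivo, not with this code.

```python
from collections import Counter

# Hypothetical coded segments: one (transcript_id, code) pair per coded text segment.
coded_segments = [
    ("AL01", "informal_process"),
    ("AL01", "informal_process"),
    ("AL01", "research_literature"),
    ("AL02", "research_literature"),
    ("AL02", "research_literature"),
    ("AL03", "informal_process"),
]


def quantitize(segments):
    """Return ever-coded and frequency counts for each code.

    frequency:  how many segments received the code across all transcripts.
    ever_coded: how many distinct transcripts received the code at least once.
    """
    frequency = Counter(code for _, code in segments)
    # Deduplicate (transcript, code) pairs so each transcript counts once per code.
    ever_coded = Counter(code for _, code in set(segments))
    return {
        code: {"ever_coded": ever_coded[code], "frequency": frequency[code]}
        for code in frequency
    }


for code, counts in sorted(quantitize(coded_segments).items()):
    print(code, counts)
```

A code with a high frequency but a low ever-coded count was emphasized by only a few respondents, whereas a code with a high ever-coded count was raised across many interviews. Reporting both helps distinguish these cases.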

To further contextualize the IAU process and to understand the application of D&I frameworks in usual care settings, themes identified in the qualitative phase of the current study were compared with the multi-phased EPIS model (Moullin et al., 2019; Aarons et al., 2011). The final themes from the current study are described below.

Qualitative Results

The following primary themes accompanied with illustrative quotes emerged from the AL qualitative interviews about the IAU processes within ASD-CBOs.

Perspectives of IAU Process.

Overall, ALs perceived that their IAU process was successful or “pretty successful” (ever-coded: 8; frequency: 11) despite noting problems (ever-coded: 6; frequency: 11) during previous implementation efforts and that the implementation process is difficult (ever-coded: 4; frequency: 8). Most salient, ALs reported that their IAU process was variable, lacking consistency or systematization (ever-coded: 6; frequency: 9).

Identifying Practice and Delivery Gaps.

Overall, ALs noted that their process for identifying practice and delivery gaps within their ASD-CBO was informal (ever-coded: 7, frequency: 8), and stated a need for a standardized process to identify service and delivery gaps (ever-coded: 6, frequency: 7). Additionally, ALs reported that one method for identifying practice and delivery gaps was through provider/staff discussions or suggestions (ever-coded: 5, frequency: 7). As stated by one AL, “…the information trickles up to [agency CEO] then trickles back down. A lot of time goes by, a lot of discussion in getting things implemented. So I think that having a little bit more of a structure for how to make that decision, umm, and how to do that initial needs assessment, I think it would make things go a little bit more quickly.”

Practice Selection.

ALs reported that they learned about new practices from three sources: conferences (ever-coded: 9, frequency: 13), research literature (ever-coded: 8, frequency: 18), and parents (ever-coded: 7, frequency: 9). Of these sources, learning about new practices from research literature was most salient.

Evaluating Practice Evidence.

ALs noted two strategies that they used to evaluate evidentiary support for new practices. These strategies included researching published literature (ever-coded: 5, frequency: 7) and deferring to professional expertise to support the use of new practices within their ASD-CBOs (ever-coded: 4, frequency: 7). For example, one AL stated, “Typically we do a little literature review…and then for some of our strategies, you know, we have occupational therapists that come in and do some sensory things, um, that don’t have an evidence base but they feel are important and do not have any evidence that they’re harmful in any way…so we defer to their discipline level expertise.”

Adoption Decision.

Related to adoption decisions, some ALs reported considering staff opinions before finalizing the decision (ever-coded: 7, frequency: 7), while others reported that they alone made the adoption decision (ever-coded: 5, frequency: 5). Moreover, descriptions of how ALs ultimately made the final adoption decision were salient (ever-coded: 6, frequency: 7). For example, one respondent stated, “So the final choice would be [clinical director and me]…but we would take into consideration, obviously, everybody’s thought. But I would say, we would have a final decision.”

Factors Influencing Practice Adoption.

ALs reported several contextual and innovation factors that influenced adoption decisions. Specifically, ALs perceived client needs/progress (ever-coded: 9, frequency: 26) and funding/reimbursement (ever-coded: 7, frequency: 24) as salient contextual factors influencing practice adoption. ALs also frequently considered innovation factors, such as the feasibility of a new practice within the existing organizational structure (ever-coded: 8, frequency: 24), the evidence base of a new practice (ever-coded: 8, frequency: 16), practice adaptability/flexibility (ever-coded: 7, frequency: 12), and agency-practice fit (ever-coded: 7, frequency: 11). Other salient inner contextual factors included staff training requirements (ever-coded: 6, frequency: 9) and staff training costs (ever-coded: 6, frequency: 7), the practice’s cost-effectiveness (ever-coded: 5, frequency: 8), provider expertise (ever-coded: 5, frequency: 7), certification requirements (ever-coded: 4, frequency: 7), and training modality (e.g., in-person vs. online training) (ever-coded: 3, frequency: 5). For example, one AL stated, “agency-wide we’ve tried implementing [new data collection process], and that has been tough and there’s been a lot of resistance. Umm, I think that [direct providers] didn’t feel that the resources were available at the time, and the value.”

Implementation Strategy Use.

Some ALs reported piloting, and revising when needed, new practices within their ASD-CBOs (ever-coded: 6, frequency: 13). One AL stated, “we’ve got a lot of little like test groups going on, so maybe like two therapists are, umm, really involved, and really doing it, and they might report back and report their data…almost like a mini pilot. So three to six months, and say ‘Was this valuable?’” However, some ALs reported reliance on staff training as the only implementation strategy to facilitate EBP uptake and utilization (ever-coded: 4, frequency: 8). For example, one AL stated, “One or two [people] are kind of identified to be the people who are going to, gonna get trained in this and then communicate it to the rest of the supervisors, and then the supervisors…implement it with their staff.” Finally, a few of the ALs reported monitoring clinical outcomes when implementing new practices (ever-coded: 3, frequency: 7) as a method for monitoring practice fidelity. However, two ALs explicitly stated that there was no treatment fidelity monitoring for new or established practices (ever-coded: 2, frequency: 3).

Discussion

The current study assessed IAU processes specifically within the context of CBOs providing specialized services to individuals with ASD. Our interpretation of the integrated data strands from the convergent mixed methods approach (Table 3) is presented by research question.

Table 3.

Joint Display Integrating Convergent Quantitative Content Analysis with Qualitative Findings

| Themes | Content analysis code (quan strand) | ALs (n = 20), frequency (%) | DPs (n = 26), frequency (%) | QUAL strand (n = 10 ALs) |
|---|---|---|---|---|
| Identifying Practice and Delivery Gaps | Done by an authority (director, supervisor) but process is informal | 4 (20%) | 5 (19.2%) | “…the information trickles up to [agency CEO] then trickles back down. A lot of time goes by, a lot of discussion in getting things implemented. So I think that having a little bit more of a structure for how to make that decision, umm, and how to do that initial needs assessment, I think it would make things go a little bit more quickly.” |
| | Team meeting/staff discussion | 8 (40%) | 8 (30.8%) | “The biggest would be probably group collaborations, so, when the team of therapists and the decision makers meet, which is bi-weekly, umm, we discuss either needs from other agencies that have come in or parents or just clinician discovery and uh, start the conversation from there.” |
| Practice Selection | Learn about new practices from conferences | 4 (20%) | 0 | “We try to have people go to conferences and trainings as much as possible. Sometimes the therapists will say, “Oh there’s this really cool conference, is there a way to help get funding for that?” And other times, um, a leader will see something and say, “Oh gosh, who wants to go to this? ‘Cause we would really like some expertise.” But again, I would say that’s pretty informal.” |
| | Learn about new practices from research literature | 10 (50%) | 9 (34.6%) | “Typically we do a little literature review…and then for some of our strategies, you know, we have occupational therapists that come in and do some sensory things, um, that don’t have an evidence base but they feel are important and do not have any evidence that they’re harmful in any way…so we defer to their discipline level expertise” |
| | Develop new practice-based interventions | 4 (20%) | 0 | “Any system, anything, it’s all based on clinical judgment.” |
| Evaluating Practice Evidence | Research published literature | 8 (40%) | 6 (23.1%) | “It’s also like there’s two levels of research, there’s the, umm you know, the peer review journal articles, lots of data research, and then there’s, you know, our professional ASHA. So our professional organization, which yeah, it might have a pilot project attached to it, but maybe not a huge amount of research. So there’s kind of two levels of that, but mostly through our professional and, organization and then autism organization” |
| Adoption Decision | Staff discussion before adoption decision | 4 (20%) | 0 | “So the final choice would be [clinical director and me]…but we would take into consideration, obviously, everybody’s thought. But I would say, we would have a final decision.” |
| | Director/supervisor decision | 2 (10%) | 8 (30.8%) | |
| Implementation Strategy Use | Pilot then revise, if needed/trial and error | 4 (20%) | 2 (7.7%) | “we’ve got a lot of little like test groups going on, so maybe like two therapists are, umm, really involved, and really doing it, and they might report back and report their data…almost like a mini pilot. So three to six months, and say ‘Was this valuable?’” |
| | Train staff | 4 (20%) | 0 | “One or two [people] are kind of identified to be the people who are going to, gonna get trained in this and then communicate it to the rest of the supervisors, and then the supervisors…implement it with their staff.” |
| | Fidelity monitoring | 2 (10%) | 0 | “We would rely on more objective behavioral measures of the, with respect with fidelity of implementation [of the new practice] rather than checklists or… or subjective measures. So you know we developed fidelity checklists and at the end of training…at various points of trainings the trainees are assessed… ” |
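
The percentages in the quan strand of Table 3 appear to be simple proportions of each respondent group (20 ALs, 26 DPs). The following is a minimal sketch of that arithmetic, not the authors' analysis code. The counts are transcribed from a subset of Table 3 rows, and the names `percent`, `summarize`, `ROWS`, `N_ALS` and `N_DPS` are hypothetical, introduced only for illustration.

```python
# Illustrative sketch only: counts are transcribed from Table 3, and all
# names below are hypothetical (they do not come from the study materials).
N_ALS = 20  # agency leaders who completed the survey
N_DPS = 26  # direct providers who completed the survey

# Each row: (content analysis code, AL count, DP count)
ROWS = [
    ("Done by an authority but process is informal", 4, 5),
    ("Team meeting/staff discussion", 8, 8),
    ("Learn about new practices from research literature", 10, 9),
    ("Research published literature", 8, 6),
    ("Director/supervisor decision", 2, 8),
    ("Pilot then revise, if needed/trial and error", 4, 2),
]


def percent(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


def summarize(rows):
    """Return (code, AL percent, DP percent) for each row."""
    return [(code, percent(al, N_ALS), percent(dp, N_DPS)) for code, al, dp in rows]


if __name__ == "__main__":
    for code, al_pct, dp_pct in summarize(ROWS):
        print(f"{code}: ALs {al_pct}%, DPs {dp_pct}%")
```

Because the two groups have different denominators, equal counts (e.g., 8 ALs and 8 DPs endorsing team meetings) correspond to different percentages, which is worth keeping in mind when comparing the AL and DP columns.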

1. What specific activities are involved in the IAU process within ASD-CBOs?

Overall, several IAU processes were identified and converged through the integration of the data strands. Further, IAU processes were similar to those included in the EPIS implementation framework. For example, “Identifying practice and delivery gaps” was similar to the EPIS Exploration phase, “Practice selection” was similar to the EPIS Preparation phase, and “Conducting the implementation plan” and “Monitoring fidelity” were similar to the EPIS Implementation phase.

Specifically, this study found that ALs typically used informal processes, such as team meetings, discussions or provider suggestions, to identify practice and delivery gaps. The quantitative data also indicated that some ALs utilized global client needs assessments or surveys to identify service and delivery gaps; however, no DPs reported the use of these methods. Instead, DPs most frequently indicated through the quantitative survey that they did not know how these gaps were identified. Notably, 60% of the ALs reported during qualitative interviews that a standardized process to identify service and delivery gaps was needed.

Additionally, AL and DP data converged to indicate that they learned about new practices primarily from reviewing research literature. ALs also reported that they learned about new practices from conferences and parents or developed practice-based interventions when needed, whereas DPs reported that they learned about new practices from their agency supervisors or that they did not know how they learned about new practices. After selecting a new practice for adoption consideration, ALs and DPs both reported that they review the published literature about the practice, but only ALs reported that they may defer to professional expertise, if needed. Additional inner contextual and intervention factors considered prior to the adoption decision were derived from the qualitative data and are consistent with those identified in the recent EPIS systematic review (Moullin et al., 2019). Finally, when adoption decisions are being finalized, data converged to suggest that ALs make the final adoption decision. While ALs indicated that they consider DPs’ opinions prior to making an adoption decision, no DPs reported this.

Finally, the data strands converged to suggest three evidence-based implementation strategies that facilitate the utilization of new practices in ASD-CBOs: (1) piloting the new practice and revising if needed, (2) training staff, and (3) client outcome monitoring. Interestingly, some qualitative data diverged from the quantitative data. For example, in the quantitative survey, both ALs and DPs reported that the providers “just use [the new practice]” or “implement it.”

These integrated findings indicate that implementation of new ASD practices within ASD-CBOs may involve various strategies, some with supportive evidence (e.g., pilot ASD-EBP within the agency and revise, if needed; Powell et al., 2014) and others without evidence that they effectively change provider behavior (e.g., train staff only, “just use [the practice]”; Fixsen et al., 2009). Importantly, variability in implementation strategy use was noted both across and within ASD-CBOs. For example, some ALs reported both piloting new practices with small groups and relying only on staff training to facilitate uptake and implementation.

These results, along with discussions with AMI community-academic partnership members (BLINDED), supported the need for developing a systematic implementation process for use within ASD-CBOs. A systematized implementation process may facilitate adoption, uptake, implementation and sustained use of EBPs within ASD-CBOs (Chinman et al., 2013). Additionally, these results support adapting the EPIS framework to include an adoption decision phase (Figure 2). This phase can then facilitate ALs’ consideration of factors that were reported to influence adoption decisions about new ASD practices, such as whether the practice is feasible within existing organizational structures. Of note, the current study indicated that once a new practice had been adopted (defined as “The decision of an organization or community to commit to and initiate an evidence-based intervention,” Rabin & Brownson, 2017, p. 21), actual implementation efforts were not well known or systematized. Future studies that include an EPIS adoption decision phase are warranted, as both theoretical and research-based publications related to the EPIS framework indicate that an adoption decision is the end result of the Exploration phase (Becan et al., 2018; Nilsen, 2015).

Figure 2.

Adapted EPIS Implementation Model (Adapted from Aarons et al., 2011)

2. What factors facilitate or hinder the IAU process for new EBPs within ASD-CBOs?

Given the description of the IAU process within ASD-CBOs, communication between ALs, supervisors and DPs appears to be particularly important. Specifically, 3 of the 16 IAU qualitative codes and 6 of the 27 quantitative content analysis codes involved some type of discussion between co-workers or between staff and supervisors to facilitate the implementation process. Moreover, many of these discussions seem to involve staff from multiple levels of the agency’s hierarchy. For example, 85% of the ALs and 69.2% of the DPs reported that agency leaders discussed the process of implementing a new EBP with direct providers and supervisors. These findings align with theories describing how communication can help develop shared understanding and foster knowledge that supports implementation (Manojlovich et al., 2015). Relationship-building and effective communication strategies have the potential to further contribute to healthcare quality, with more successful improvements in practices (Lanham et al., 2009). Thus, communication about IAU processes has multiple implications for improving implementation efforts. Future studies should assess how different communication strategies influence the success of implementation efforts.

3. How do agency leaders perceive the IAU process within their ASD-CBO?

While ASD-CBO ALs reported relative success when discussing their overall perception of their IAU processes, this study found considerable discordant data. Findings suggest that the IAU processes used within ASD-CBOs focused primarily on initial EBP uptake (e.g., single-time staff training) and were fraught with challenges. The IAU process involved very limited use of implementation strategies, primarily training, as has generally been the case in the limited descriptions of IAU (Schoenwald et al., 2008). While ASD-CBOs endorsed some “best practices” in implementation (Aarons et al., 2011; Powell et al., 2012), such as referencing the research literature when selecting practices, training and outcome monitoring, usual implementation often lacked other strategies that may be particularly effective. For instance, training alone may not be enough to impact DP behaviors (Beidas & Kendall, 2010; Herschell et al., 2010) but may be enhanced with the inclusion of quality assurance strategies, such as EBP fidelity monitoring, an implementation strategy that was absent in ASD-CBO IAU. Moreover, ALs suggested that there are no existing systematic implementation processes or tools available for use that fit within the ASD-CBO service setting. As a result, ALs indicated that they had to attempt the complex process of EBP implementation without assistance or structured, evidence-based guidance, which, as might be expected, resulted in a lack of structure or consistency in EBP implementation efforts. For example, one AL, whose agency had a highly structured initial staff training and competency monitoring, stated, “I described our infrastructure for our initial training, which I’m really proud of, but I think that, um, for ongoing training for someone who has been here for a year or two and now we are going to implement something different, it’s…more challenging because the infrastructure is not in place. We have staff meetings…so we can, you know, sort of roll things out there but again that’s primarily didactic. There’s no mechanism.”

Fit with the existing literature

Comparing the current findings with those of other IAU studies reveals similar gaps in the IAU process but also underscores the need for studies of IAU processes across multiple service settings. One of the richest descriptions of IAU comes from a mixed-methods study of seven social service organizations serving children, youth and families (Powell et al., 2013; Powell, 2014). Similar to the current study, some “best practices” specified in the implementation literature were present; however, there were also major discrepancies between IAU and established standards for implementation. Notably, these agencies similarly lacked a systematic process for implementation and for monitoring implementation fidelity. Limited use of implementation strategies, mainly focused on training, supervision and outcome monitoring, has also been noted in characterizations of usual care settings for children’s mental health (Schoenwald et al., 2008). In contrast, Powell and colleagues (2014) identified the use of 50 implementation strategies by agencies related to planning, education, finances, restructuring, and quality improvement. This may, in part, be due to their use of a survey that explicitly asked about CBOs’ use of each of the 50 strategies. However, the discrepancy also points to the importance of examining IAU practices in various service settings delivering different types of EBPs.

While this study converges with other IAU studies showing a need for more systematic methods and plans for implementation (Powell et al., 2014), understanding the specific gaps in usual care across service settings is crucial, as “it is difficult and perhaps foolhardy to try to improve what you do not understand” (Hoagwood & Kolko, 2009, p. 35). The implementation process and the types of implementation strategies already being used in IAU may vary based on characteristics of the service setting. For instance, in a naturalistic study of IAU of mandated clinical practice guidelines in Veterans Affairs (VA) facilities, sites were able to rely on regionally or nationally provided resources for implementation, such as data warehouses (Hysong et al., 2007). This facilitated the use of technologically advanced implementation strategies that may not be feasible in other settings. Understanding the existing implementation landscape may support targeted selection and application of implementation strategies (Waltz et al., 2015) and may guide implementation strategy refinement.

Development of an Implementation Toolkit for ASD-CBOs

The current study provides foundational support for developing a multifaceted implementation strategy (i.e., one combining a number of discrete implementation strategies) specifically for ASD-CBOs (Proctor et al., 2013; Squires et al., 2014). ALs and DPs indicated the need for a more systematic and standardized implementation process that would fit within the unique ASD-CBO service system. Specifically, ALs reported a need for feasible, acceptable and useful strategies to (1) identify agency-, provider-, and client-level needs; (2) match ASD-EBPs to these needs in a manner that fits current organizational- and system-level characteristics; and (3) systematically plan for and enact EBP adaptations (if needed), staff training, and implementation and sustainment plans, as well as evaluate the completion of these plans.

Limitations

Limitations to the current study warrant further discussion. This study took place within a single geographical region and, thus, the results may be specific to this area. Moreover, the outer contextual factors that were influencing the ASD-CBOs within the current study may be idiosyncratic to the State of California. Other areas (countries, states, and even neighborhoods) likely have different outer contextual factors influencing the adoption, implementation and sustainment of ASD-EBPs. For example, interorganizational networks may contribute to the adoption and utilization of practices with or without an evidence base (Greenhalgh et al., 2004; Valente, 2010; Valente et al., 2015). Further, this study involved a convenience sample of participants. The perspectives of the participating ALs and DPs may not reflect the broader population’s view of typical implementation processes. Finally, this study utilized an unvalidated quantitative measure of IAU within ASD-CBOs. As has been noted in the literature, very few validated measures of implementation processes exist (Hartveit et al., 2019; Chamberlain et al., 2011), requiring researchers to rely on laboratory-specific measures. As a result, the psychometric properties of the measure involved in the current study are unknown. Despite these study limitations, this is the first study to explore the IAU of ASD-CBOs. Further, the use of a mixed methods design strengthened the scientific rigor of this study and allowed both deductive and inductive evaluation of the IAU process. Thus, we believe that these findings contribute to the broader D&I science literature and will assist in the development of a comprehensive EBP implementation toolkit.

Conclusions

There are limited data available to understand the underlying IAU processes for adopting and implementing ASD-EBPs among ASD-CBOs (National Advisory Mental Health Council, 2001). Currently, literature suggests that ASD-CBOs underutilize or inconsistently utilize EBPs designed specifically for individuals with ASD (Ramanadhan et al., 2017; Brookman-Frazee et al., 2012; Pickard et al., 2018). This study adds to the existing literature by exploring the IAU processes within ASD-CBOs to determine whether the implementation process may be one area for intervention to increase the use of ASD-EBPs in community-based, usual care settings. Findings indicate that ASD-EBP implementation efforts lack structure and consistency. Given the variability and limited knowledge of systematic procedures for identifying ASD-EBPs, results from the study warrant the development of an implementation toolkit to guide the phases of implementation within these settings. With greater adoption, implementation and sustained utilization of ASD-EBPs within community-based usual care settings, especially when delivered with a high degree of fidelity, it is expected that the symptoms associated with ASD, as well as the commonly co-occurring challenging behaviors and disorders, will be reduced, while the quality of life of individuals with ASD and their families will significantly improve. Further, by increasing the availability of ASD-EBPs within ASD-CBOs serving individuals with ASD and their families from marginalized backgrounds, health disparities may be reduced such that individuals from minority backgrounds receive appropriate services at the right time to effect positive change.


Acknowledgements.

The authors would like to acknowledge and thank Dr. Lawrence Palinkas for consultation on the study design and data collection, analysis and interpretation, as well as the Autism Model of Implementation Collaborative Group for guidance and feedback on interpretation of these mixed methods results and on the development of the ACT SMART Implementation Toolkit.

Funding. The National Institute of Mental Health (K01MH093477, PI: Drahota) supported the design of the study; data collection, analysis and interpretation; and the writing of the manuscript. Interpretation of data and preparation of this manuscript were also supported in part by the Implementation Research Institute (IRI), at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25MH080916) and Quality Enhancement Research Initiative (QUERI), Department of Veterans Affairs Contract, Veterans Health Administration, Office of Research & Development, Health Services Research & Development Service. Final preparation of this manuscript was supported by the Department of Psychology at Michigan State University and Michigan State University, Flint Center for Health Equity Solutions (U54MD011227).

Footnotes

Publisher's Disclaimer: This Author Accepted Manuscript is a PDF file of an unedited peer-reviewed manuscript that has been accepted for publication but has not been copyedited or corrected. The official version of record that is published in the journal is kept up to date and so may therefore differ from this version.

References

- Aarons GA, Hurlburt M, & Horwitz SM (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 4–23.

- Aarons GA (2004). Mental health provider attitudes toward adoption of evidence-based practice: The Evidence-Based Practice Attitude Scale (EBPAS). Mental Health Services Research, 6(2), 61–74.

- Bachman SS, Comeau M, Tobias C, Allen D, Epstein S, Jantz K, & Honberg L. (2012). State health care financing strategies for children with intellectual and developmental disabilities. Intellectual and Developmental Disabilities, 50(3), 181–189.

- Baller JB, Barry CL, Shea K, Walker MM, Ouellette R, & Mandell DS (2016). Assessing early implementation of state autism insurance mandates. Autism, 20(7), 796–807.

- Becan JE, Bartkowski JP, Knight DK, Wiley TR, DiClemente R, Ducharme L, Welsh WN, Bowser D, McCollister K, Hiller M, & Spaulding AC (2018). A model for rigorously applying the Exploration, Preparation, Implementation, Sustainment (EPIS) framework in the design and measurement of a large scale collaborative multi-site study. Health & Justice, 6(1), 9.

- Beidas RS & Kendall PC (2010). Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice, 17(1), 1–30.

- Benevides TW, Carretta HJ, & Lane SJ (2016). Unmet need for therapy among children with autism spectrum disorder: Results from the 2005–2006 and 2009–2010 National Survey of Children with Special Health Care Needs. Maternal and Child Health Journal, 20(4), 878–888.

- Bishop-Fitzpatrick L & Kind AJH (2017). A scoping review of health disparities in autism spectrum disorder. Journal of Autism and Developmental Disorders, 47(11), 3380–3391.

- Brookman-Frazee L, Baker-Ericzén M, Stadnick N, & Taylor R. (2012). Parent perspectives on community mental health services for children with autism spectrum disorders. Journal of Child and Family Studies, 21(4), 533–544.

- Brookman-Frazee LI, Taylor R, & Garland AF (2010). Characterizing community-based mental health services for children with autism spectrum disorders and disruptive behavior problems. Journal of Autism and Developmental Disorders, 40(10), 1188–1201.

- Chamberlain P, Brown CH, & Saldana L. (2011). Observational measure of implementation progress in community based settings: The Stages of Implementation Completion (SIC). Implementation Science, 6, 116.

- Chambers DA, Glasgow RE, & Stange KC (2013). The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implementation Science, 8, 117.

- Chinman M, Ebener P, Malone PS et al. (2018). Testing implementation support for evidence-based programs in community settings: A replication cluster-randomized trial of Getting to Outcomes®. Implementation Science, 13, 131.

- Chiri G & Warfield ME (2012). Unmet need and problems accessing core health care services for children with autism spectrum disorder. Maternal and Child Health Journal, 16(5), 1081–1091.

- Chorpita B. (2003). The frontier of evidence-based practice. In Kazdin AE & Weisz JR (Eds.), Evidence based psychotherapies for children and adolescents (pp. 42–59). New York, NY: Guilford Press.

- Creswell J & Poth CN (2017). Qualitative inquiry and research design. 4th Edition. Thousand Oaks, CA: Sage.

- Creswell J & Plano Clark VL (2011). Designing and conducting mixed methods research. Thousand Oaks, CA: Sage.

- Dingfelder HE & Mandell DS (2011). Bridging the research-to-practice gap in autism intervention: An application of diffusion of innovation theory. Journal of Autism and Developmental Disorders, 41(5), 597–609.

- BLINDED

- BLINDED

- Etikan I, Musa SA, & Alkassim RS (2016). Comparison of convenience sampling and purposeful sampling. American Journal of Theoretical and Applied Statistics, 5(1), 1–4.

- Fetters MD & Molina-Azorin JF (2017). The Journal of Mixed Methods Research starts a new decade: The mixed methods research integration trilogy and its dimensions. Journal of Mixed Methods Research, 11(3), 291–307.

- Fixsen DL, Blase KA, Naoom SF, & Wallace F. (2009). Core implementation components. Research on Social Work Practice, 19(5), 531–540.

- Ganz JB, Katsiyannis A, & Morin KL (2018). Facilitated communication: The resurgence of a disproven treatment for individuals with autism. Intervention in School and Clinic, 54(1), 52–56.

- Glaser BG & Strauss A. (1967). The discovery of grounded theory: Strategies for qualitative research. New York: Aldine Publishing Co.

- BLINDED

- Greenhalgh T, Robert G, Macfarlane F, Bate P, & Kyriakidou O. (2004). Diffusion of innovations in service organizations: Systematic review and recommendations. The Milbank Quarterly, 82(4), 581–629. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guetterman TC, Fetters MD, & Creswell JW (2015). Integrating quantitative and qualitative results in health science mixed methods research through joint displays. Annals of Family Medicine, 13(6), 554–561. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hartveit M, Hovlid E, Nordin MHA, Øvretveit J, Bond GR, Biringer E et al. (2019). Measuring implementation: Development of the implementation process assessment tool (IPAT). BMC Health Services Research, 19, 721. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Herschell AD, Kolko DJ, Baumann BL, & Davis AC (2010). The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review, 30(4), 448–466. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hoagwood K & Kolko DJ (2009). Introduction to the special section on practice contexts: A glimpse into the nether world of public mental health services for children and families. Administration and Policy in Mental Health and Mental Health Services Research, 36(1), 35–36. [DOI] [PubMed] [Google Scholar]

- Hysong SJ, Best RG, & Pugh JA (2007). Clinical practice guideline implementation strategy patterns in veterans affairs primary care clinics. Health Services Research, 42(1, Pt. 1), 84–103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kerns C, Moskowitz L, Rosen T, Drahota A, Wainer A, Josephson A et al. (2019). A cross-regional and multidisciplinary Delphi consensus study describing usual care for school to transition-age youth with autism. Journal of Clinical Child and Adolescent Psychology, 48(Sup1), S247–S268. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Landrum B & Garza G. (2015). Mending fences: Defining the domains and approaches of quantitative and qualitative research. Qualitative Psychology, 2(2), 199–209. [Google Scholar]

- Manojlovich M, Squires JE, Davies B, & Graham ID (2015). Hiding in plain sight: Communication theory in implementation science. Implementation Science, 10(58), 1–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McLennan JD, Huculak S, & Sheehan D (2008). Brief report: Pilot investigation of service receipt by young children with autistic spectrum disorders. Journal of Autism and Developmental Disorders, 38(6), 1192–1196.

- Moullin JC, Dickson KS, Stadnick NA, Rabin B, & Aarons GA (2019). Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Implementation Science, 14, 1.

- National Advisory Mental Health Council (2001). Educating children with autism. Committee on Educational Interventions for Children with Autism. Lord C & McGee JP (Eds.). Division of Behavioral and Social Sciences and Education. Washington, DC: National Academy Press.

- National Autism Center (2015). National Standards Project findings and conclusions: Phase 2. Randolph, MA: Author.

- Neuendorf KA (2002). The Content Analysis Guidebook. 2nd ed. Thousand Oaks, CA: Sage.

- Nilsen P (2015). Making sense of implementation theories, models and frameworks. Implementation Science, 10(1), 53.

- Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt M, & Landsverk J (2011). Mixed method designs in implementation research. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 44–53.

- Paytner JM & Keen D (2015). Knowledge and use of intervention practices by community-based early intervention service providers. Journal of Autism and Developmental Disorders, 45(6), 1614–1623.

- Pickard K, Meza R, Drahota A, & Brikho B (2018). They’re doing what? A brief paper on service use and attitudes in ASD community-based agencies. Journal of Mental Health Research in Intellectual Disabilities, 11(2), 111–123.

- Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC et al. (2012). A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review, 69(2), 123–157.

- Powell BJ, Proctor EK, & Glass JE (2014). A systematic review of strategies for implementing empirically supported mental health interventions. Research on Social Work Practice, 24(2), 192–212.

- Powell BJ, Proctor EK, Glisson CA, Kohl PL, Raghavan R, Brownson RC et al. (2013). A mixed methods multiple case study of implementation as usual in children’s social service organizations: Study protocol. Implementation Science, 8, 92.

- Powell B, Proctor EK, Brownson R, Carpenter C, Glisson C, Kohl P, Raghavan R, & Stoner B (2014). A Mixed Methods Multiple Case Study of Implementation as Usual in Children’s Social Service Organizations. ProQuest Dissertations and Theses.

- Proctor EK, Powell BJ, & McMillen JC (2013). Implementation strategies: Recommendations for specifying and reporting. Implementation Science, 8, 139.

- Rabin BA & Brownson RC (2017). Terminology for dissemination and implementation research. In Brownson RC, Colditz GA, & Proctor EK (Eds.), Dissemination and Implementation Research in Health: Translating Science to Practice (2nd ed., pp. 19–27). New York, NY: Oxford University Press.

- Ramanadhan S, Minsky S, Martinez-Dominguez, & Viswanath K (2017). Building practitioner networks to support dissemination and implementation of evidence-based programs in community settings. Translational Behavioral Medicine, 7(3), 532–541.

- Schoenwald SK, Chapman JE, Kelleher K, Hoagwood KE, Landsverk J, Stevens J et al. (2008). A survey of the infrastructure for children’s mental health services: Implications for the implementation of empirically supported treatments (ESTs). Administration and Policy in Mental Health and Mental Health Services Research, 35(1–2), 84–97.

- Squires JE, Sullivan K, Eccles MP, Worswick J, & Grimshaw JM (2014). Are multifaceted interventions more effective than single component interventions in changing health-care professionals’ behaviours? An overview of systematic reviews. Implementation Science, 9, 152.

- Stahmer AC, Aranbarri A, Drahota A, & Rieth S (2017). Toward a more collaborative research culture: Extending translational science from research to community and back again. Autism, 21(3), 259–261.

- Steinbrenner JR, Hume K, Odom SL, Morin KL, Nowell SW, Tomaszewski B, Szendrey S, McIntyre NS, Yücesoy-Özkan S, & Savage MN (2020). Evidence-based practices for children, youth, and young adults with Autism. The University of North Carolina at Chapel Hill, Frank Porter Graham Child Development Institute, National Clearinghouse on Autism Evidence and Practice Review Team.

- Tabak RG, Khoong EC, Chambers DA, & Brownson RC (2012). Bridging research and practice: Models for dissemination and implementation research. American Journal of Preventive Medicine, 43(3), 337–350.

- Tappe A (2002). Using NVivo in qualitative research. Melbourne: QSR International.

- Valente TW (2010). Social networks and health: Models, methods, and applications. New York, NY: Oxford University Press.

- Valente TW, Palinkas LA, Czaja S, Chu K-H, & Hendricks-Brown C (2015). Social network analysis for program implementation. PLOS One, 10(6), 1–18.

- Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL et al. (2015). Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: Results from the expert recommendations for implementing change (ERIC) study. Implementation Science, 10(1), 109.

- Wang L, Mandell DS, Lawer L, Cidav Z, & Leslie DL (2013). Healthcare service use and costs for autism spectrum disorder: A comparison between Medicaid and private insurance. Journal of Autism and Developmental Disorders, 43(5), 1057–1064.

- Willms DG, Best JA, Taylor DW, Gilbert JR, Wilson DM, Lindsay EA, & Singer J (1990). A systematic approach for using qualitative methods in primary prevention research. Medical Anthropology Quarterly, 4(4), 391–409.

- Wong C, Odom SL, Hume KA, Cox AW, Fettig A, Kucharczyk S et al. (2015). Evidence-based practices for children, youth, and young adults with autism spectrum disorder: A comprehensive review. Journal of Autism and Developmental Disorders, 45(7), 1951–1966.
