Abstract

Individualized treatment rules (ITRs) recommend treatment according to patient characteristics. There is growing interest in developing novel and efficient statistical methods for constructing ITRs. We propose an improved doubly robust estimator of the optimal ITR. The proposed estimator is based on direct optimization of an augmented inverse-probability weighted estimator (AIPWE) of the expected clinical outcome over a class of ITRs. The method enjoys two key properties. First, it is doubly robust, meaning that the proposed estimator is consistent when either the propensity score or the outcome model is correct. Second, it achieves the smallest variance among the class of doubly robust estimators when the propensity score model is correctly specified, regardless of the specification of the outcome model. Simulation studies show that the estimated ITRs obtained from our method yield better results than those obtained from currently popular methods. Data from the Sequenced Treatment Alternatives to Relieve Depression (STAR*D) study are analyzed as an illustrative example.

Keywords: Double robustness, Individualized treatment rule, Personalized medicine, Propensity score

1. Introduction

In recent years, personalized medicine, or precision medicine, has received tremendous attention in clinical practice and medical research (Hamburg and Collins, 2010; Chan and Ginsburg, 2011; Collins and Varmus, 2015). Its development stems from the fact that patients often exhibit heterogeneous responses to treatments. A drug that works for the majority of individuals may not work for a subgroup of patients with certain characteristics. For example, trastuzumab has been shown to be effective for treating HER2-overexpressing metastatic breast cancer because it is specifically designed to target HER2 amplification (Vogel et al., 2002). Individualized treatment rules (ITRs) formalize personalized treatment decisions by recommending treatments based on patients' own information; the optimal ITR maximizes the mean of a pre-specified clinical outcome if followed by the patient population.

Using data collected from clinical trials or observational studies, numerous methods have been developed for estimating optimal ITRs. One approach is to fully or partly specify a model of the clinical outcome given treatment and covariates, and then use the fitted model to infer the optimal ITR. This includes Q-learning (Qian and Murphy, 2011) and A-learning (Murphy, 2003; Robins, 2004; Blatt et al., 2004). Q-learning models the conditional mean of the outcome given treatment and covariates, while A-learning directly models the differential treatment effects between treatments. However, one drawback of Q- and A-learning is that the optimal treatment rule is estimated indirectly through posited regression models and is thus sensitive to model misspecification. Value-search or direct-maximization methods offer an alternative to regression-based methods by directly maximizing an estimator of the marginal mean outcome over a pre-specified class of ITRs (Zhao et al., 2012; Zhang et al., 2012; Zhou et al., 2017; Zhao et al., 2019), thereby separating the class of decision rules from the posited regression models.

In particular, Zhang et al. (2012) estimated the optimal ITR by maximizing an augmented inverse-probability weighted estimator (AIPWE) of the population mean outcome over a class of ITRs. This estimator is doubly robust (DR) in the sense that it consistently estimates the optimal ITR if either the propensity score or the outcome regression model is correctly specified. Doubly robust estimation has enjoyed great popularity in missing data and causal inference problems (Scharfstein et al., 1999; Robins and Rotnitzky, 2001; Van der Laan and Robins, 2003; Bang and Robins, 2005). DR estimators require specification of two nuisance working models: one for the missingness or treatment assignment mechanism, and another for the distribution of the complete data or potential outcomes. Historically, estimation of the nuisance parameters indexing the working models in DR estimators received little attention, partly because the asymptotic properties of DR estimators do not depend on the choice of nuisance parameter estimates when both working models are correctly specified (Tsiatis, 2007). As a result, standard maximum likelihood estimators are typically used, i.e., logistic regression for the propensity score model and linear regression for the outcome model. This practice began to change after Kang and Schafer (2007) cautioned against the use of DR estimators when both working models are misspecified. Several discussion articles (Robins et al., 2007; Tsiatis and Davidian, 2007; Tan, 2007) further pointed out that the choice of nuisance parameter estimates can have a dramatic impact on the properties of DR estimators when at least one working model is misspecified. Indeed, in the context of estimating optimal ITRs using DR methods, there is still room for improvement. For example, as illustrated in the simulation studies of Zhao et al. (2019), the usual DR estimator (Zhang et al., 2012) can be inefficient, i.e., it exhibits large variation when the outcome regression model is misspecified. This poor performance may be partly a consequence of the default use of maximum likelihood estimators for the coefficients in the misspecified outcome regression model (Cao et al., 2009). This motivates us to develop improved DR approaches for learning optimal ITRs.

Several improved DR estimators have been proposed in missing data and causal inference models for the purpose of variance reduction. The nuisance parameters indexing the outcome model are estimated so as to minimize the variance of the DR estimator under a correctly specified propensity score model (Rubin and van der Laan, 2008; Cao et al., 2009; Tan, 2010; Tsiatis et al., 2011). In this article, we propose to estimate the optimal ITR by maximizing an improved DR estimator of the population mean outcome among a set of ITRs. Our proposed estimator is doubly robust. In addition, it achieves the smallest variance among its class of DR estimators when the propensity score models are correctly specified, regardless of the specification of the outcome models. As we demonstrate, this approach leads to estimated optimal regimes achieving comparable or better performance than those from Zhang et al. (2012).

The heterogeneity in response to treatments exists not only between patients but also within each patient. A patient's response to treatment can change over time because individual characteristics, and the nature of the disease itself, evolve. This motivates the development of dynamic treatment regimes (DTRs) (Murphy, 2003), which are sequential decision rules that adapt over time to the clinical status of each patient. At each decision point, the available patient history is used as input for the decision rule, and an individualized treatment is recommended for the next stage. Construction of optimal DTRs has been of great interest, and several methods have been developed to handle multi-stage problems (Zhang et al., 2013; Laber et al., 2014; Schulte et al., 2014; Zhao et al., 2015; Wallace and Moodie, 2015; Liu et al., 2018). In this paper, we also discuss extending the proposed method to estimate optimal DTRs with added efficiency and robustness.

This article makes two major contributions to the literature. (1) We propose improved DR approaches for estimating optimal ITRs, which have not previously been investigated in the field of personalized medicine. (2) Existing work such as Cao et al. (2009) and Tsiatis et al. (2011) employed inverse-probability weighted estimating equations to estimate the nuisance parameters. Instead, we propose augmented inverse-probability weighted estimating equations for this purpose, which provide further stability.

The remainder of the article is organized as follows. In Section 2, we introduce background information and review existing doubly robust estimators in learning optimal ITRs. We then formally describe the proposed improved doubly robust estimator in single-stage optimal treatment problems. Theoretical results are presented in Section 3. In Section 4, we present simulation studies to evaluate finite sample performance of the proposed method. The method is then illustrated using data from the Sequenced Treatment Alternatives to Relieve Depression (STAR*D) Study in Section 5. Some concluding remarks are given in Section 6. Technical results are relegated to the supplementary material.

2. Method

2.1. Background and preliminaries

We consider the estimation of the optimal ITR in the single-stage setting. We observe $\{(X_i, A_i, Y_i), i = 1, \ldots, n\}$, comprising n independent and identically distributed triplets of $(X, A, Y)$, where $X \in \mathcal{X}$ denotes the patient's baseline variables; $A \in \mathcal{A} = \{-1, 1\}$ denotes the assigned treatment; and Y denotes the clinical outcome of interest, coded so that larger values are better. The data come from either randomized trials or observational studies. An ITR is a map $d: \mathcal{X} \to \mathcal{A}$ such that a patient presenting with $X = x$ will receive treatment $d(x)$.

Let $\mathcal{D}$ denote a class of ITRs of interest. To formally define the optimal ITR, $d^{opt}$, we adopt the potential outcome framework (Rubin, 1974). Let Y(a) denote the potential outcome under treatment $a \in \mathcal{A}$. The potential outcome under any ITR, d, can be defined as $Y(d) = \sum_{a \in \mathcal{A}} Y(a)\, I\{d(X) = a\}$, where $I(\cdot)$ is the indicator function. Here we suppress the dependence of Y(d) on X. The performance of d is measured by the marginal mean outcome $V(d) = E\{Y(d)\}$, the so-called value function associated with the rule d. In other words, the value function V(d) represents the overall population mean outcome if treatment were assigned according to d. The optimal ITR, $d^{opt}$, is a rule that maximizes V(d) among $\mathcal{D}$, i.e., $V(d^{opt}) \geq V(d)$ for all $d \in \mathcal{D}$.

In order to connect the potential outcomes with the observed data, we make the following assumptions: (i) consistency, $Y = Y(A)$; (ii) positivity, $P(A = a \mid X) > 0$ for $a \in \mathcal{A}$ and for all X; (iii) no unmeasured confounding, $\{Y(1), Y(-1)\} \perp A \mid X$. These are standard and well-studied assumptions in causal inference (Imbens and Rubin, 2015). Assumption (iii) holds by design in a randomized trial but is unverifiable in an observational study (Robins et al., 2000).

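Define $Q(X, A) = E(Y \mid X, A)$. Then, under the aforementioned assumptions, it can be shown that

$$V(d) = E\{Y(d)\} = E_X\big[Q(X, 1)\, I\{d(X) = 1\} + Q(X, -1)\, I\{d(X) = -1\}\big],$$

where the outer expectation is taken with respect to the marginal distribution of X. The above formulation implies that $d^{opt}(X) = \arg\max_{a \in \mathcal{A}} Q(X, a)$. One approach is to posit a regression model $Q(X, A; \beta)$ for $Q(X, A)$ and estimate the nuisance parameter β by some $\hat\beta$, e.g., by least squares. Subsequently, the optimal ITR is estimated by $\hat d^{opt}(X) = \arg\max_{a \in \mathcal{A}} Q(X, a; \hat\beta)$ (Qian and Murphy, 2011). This is usually referred to as an indirect approach, which could lead to inconsistent estimators of $d^{opt}$ when the posited model $Q(X, A; \beta)$ is incorrect.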
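As a minimal sketch of this indirect approach, the Python code below fits a linear working model with treatment-by-covariate interactions by least squares and recommends the treatment with the larger fitted mean. It assumes treatments coded as $\mathcal{A} = \{-1, 1\}$ and the illustrative working model $Q(X, A; \beta) = b_0 + X^T b_1 + A(c_0 + X^T c_1)$; the function names are hypothetical.

```python
import numpy as np


def fit_q_learning(X, A, Y):
    """Least-squares fit of the illustrative working model
    Q(X, A; beta) = b0 + X'b1 + A * (c0 + X'c1), with A coded in {-1, 1}."""
    n = X.shape[0]
    design = np.column_stack([np.ones(n), X, A, A[:, None] * X])
    beta, *_ = np.linalg.lstsq(design, Y, rcond=None)
    return beta


def q_learning_rule(beta, X):
    """Estimated rule argmax_a Q(X, a; beta_hat) over a in {-1, 1}."""
    p = X.shape[1]
    # Q(X, 1) - Q(X, -1) = 2 * (c0 + X'c1), so only the interaction part matters.
    contrast = beta[p + 1] + X @ beta[p + 2:]
    return np.where(contrast > 0, 1, -1)
```

If the interaction part of the working model is misspecified, the recommended rule inherits that error, which is the sensitivity to model misspecification noted above.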

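To alleviate the above issue, value-search or direct-maximization methods attempt to estimate $d^{opt}$ by directly maximizing an estimator of the value function over the class $\mathcal{D}$. The key step is to construct a consistent and robust estimator of the value function, say $\hat V(d)$. Then $d^{opt}$ is estimated by $\hat d^{opt} = \arg\max_{d \in \mathcal{D}} \hat V(d)$. Let $\pi(X) = P(A = 1 \mid X)$ denote the true propensity score, and write $C_d = I\{A = d(X)\}$ and $\pi_d(X) = P\{A = d(X) \mid X\} = \pi(X)\, I\{d(X) = 1\} + \{1 - \pi(X)\}\, I\{d(X) = -1\}$. The value function can then be rewritten as (Qian and Murphy, 2011; Zhao et al., 2012)

$$V(d) = E\left\{\frac{C_d\, Y}{\pi_d(X)}\right\}.$$

In an observational study, $\pi(X)$ is unknown. A parametric model $\pi(X; \gamma)$ may be posited; for example, a logistic regression model $\pi(X; \gamma) = \{1 + \exp(-X^T\gamma)\}^{-1}$, with an intercept included in X. Let $\hat\gamma$ denote the maximum likelihood estimator for γ based on $\{(X_i, A_i), i = 1, \ldots, n\}$, and define $\pi_d(X; \gamma)$ analogously to $\pi_d(X)$. An inverse-probability weighted estimator (IPWE) for V(d) is

$$\hat V^{\rm IPWE}(d) = \mathbb{P}_n\left\{\frac{C_d\, Y}{\pi_d(X; \hat\gamma)}\right\},$$

where $\mathbb{P}_n$ is the empirical measure. It is straightforward to show that the IPWE is consistent for V(d) if $\pi(X; \gamma)$ is correctly specified, that is, $\pi(X; \gamma_0) = \pi(X)$ for some $\gamma_0$, but it may not be otherwise.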
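Once propensity scores are available, the IPWE is a one-line computation. The sketch below assumes $\mathcal{A} = \{-1, 1\}$, takes fitted (or known) values of $P(A = 1 \mid X_i)$ as an input array, and uses hypothetical function names.

```python
import numpy as np


def prob_of_recommended(pi1, d):
    """pi_d(X) = P{A = d(X) | X}, given pi1 = P(A = 1 | X) and d(X) in {-1, 1}."""
    return np.where(d == 1, pi1, 1.0 - pi1)


def ipwe_value(Y, A, d, pi1):
    """IPWE of V(d): empirical mean of C_d * Y / pi_d(X), where C_d = I{A = d(X)}."""
    C = (A == d).astype(float)
    return np.mean(C * Y / prob_of_recommended(pi1, d))
```

Only subjects whose received treatment agrees with d(X) contribute, each upweighted by the inverse probability of having received that treatment.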

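Following ideas from Robins et al. (1994), an AIPWE can be constructed:

$$\hat V^{\rm AIPWE}(d; \hat\gamma, \hat\beta) = \mathbb{P}_n\left[\frac{C_d\, Y}{\pi_d(X; \hat\gamma)} - \frac{C_d - \pi_d(X; \hat\gamma)}{\pi_d(X; \hat\gamma)}\, m_d(X; \hat\beta)\right], \qquad (1)$$

where $m_d(X; \beta) = Q\{X, d(X); \beta\}$.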

By adding an augmentation term that involves both the estimated propensity score and the outcome regression model, the AIPWE improves efficiency and provides additional protection against model misspecification. The AIPWE is doubly robust in that it consistently estimates V(d) as long as one of the nuisance working models is correctly specified, i.e., $\hat V^{\rm AIPWE}(d; \hat\gamma, \hat\beta) \to V(d)$ in probability if either $\pi(X; \gamma)$ or $Q(X, A; \beta)$ is correctly specified.

Throughout the paper, we focus on the AIPWE and suppress the superscript 'AIPWE' in $\hat V^{\rm AIPWE}(d; \hat\gamma, \hat\beta)$. We refer to the estimator (1), with γ estimated by maximum likelihood and β estimated by least squares, as the usual doubly robust estimator of Zhang et al. (2012). However, when the propensity score model is correctly specified but the outcome model is not, using the least squares estimate of β in (1) is inefficient, and $\hat V(d; \hat\gamma, \hat\beta)$ can have large variation. This motivates us to develop improved DR estimators with desirable efficiency properties.
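The usual DR estimator can be sketched in Python as follows. This is an illustrative implementation under stated assumptions: treatments coded in {-1, 1}, a logistic propensity model with intercept fitted by plain Newton-Raphson (no step-halving), and a hypothetical linear working model with treatment-by-covariate interactions fitted by ordinary least squares. All function names are hypothetical.

```python
import numpy as np


def fit_logistic(Xp, A, n_iter=25):
    """Newton-Raphson MLE of gamma in logit P(A = 1 | X) = Xp @ gamma, A in {-1, 1}."""
    R = (A == 1).astype(float)                  # I(A = 1) = (1 + A) / 2
    gamma = np.zeros(Xp.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xp @ gamma))
        score = Xp.T @ (R - p)                  # binomial score for gamma
        info = (Xp * (p * (1.0 - p))[:, None]).T @ Xp
        gamma = gamma + np.linalg.solve(info, score)
    return gamma


def outcome_design(X, a):
    """Rows of the working model Q(X, a; beta) = b0 + X'b1 + a * (c0 + X'c1)."""
    n = X.shape[0]
    a = np.broadcast_to(a, (n,)).astype(float)
    return np.column_stack([np.ones(n), X, a, a[:, None] * X])


def aipwe_value(Y, A, d, pi1, m_d):
    """AIPWE (1): mean of C_d Y / pi_d - (C_d - pi_d) / pi_d * m_d(X)."""
    C = (A == d).astype(float)
    pd = np.where(d == 1, pi1, 1.0 - pi1)
    return np.mean(C * Y / pd - (C - pd) / pd * m_d)


def usual_dr_value(X, A, Y, d):
    """Usual DR: gamma by maximum likelihood, beta by ordinary least squares."""
    Xp = np.column_stack([np.ones(X.shape[0]), X])
    pi1 = 1.0 / (1.0 + np.exp(-Xp @ fit_logistic(Xp, A)))
    beta_ls, *_ = np.linalg.lstsq(outcome_design(X, A), Y, rcond=None)
    m_d = outcome_design(X, d) @ beta_ls        # m_d(X; beta) = Q{X, d(X); beta}
    return aipwe_value(Y, A, d, pi1, m_d)
```

The least squares fit treats all observed (X, A) pairs equally; nothing in it targets the variance of the resulting value estimate, which is the issue addressed next.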

2.2. Improved doubly robust estimators when the propensity score is fully specified

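We first consider a fully specified propensity score model, say $\tilde\pi(X)$, involving no nuisance parameters; we will relax this shortly. Here, the specified propensity score model may or may not be the same as the true propensity score $\pi(X)$. Writing $\tilde\pi_d(X) = \tilde\pi(X)\, I\{d(X) = 1\} + \{1 - \tilde\pi(X)\}\, I\{d(X) = -1\}$, the class of AIPW estimators for V(d), for a fixed treatment regime d, is

$$\hat V(d; \beta) = \mathbb{P}_n\left[\frac{C_d\, Y}{\tilde\pi_d(X)} - \frac{C_d - \tilde\pi_d(X)}{\tilde\pi_d(X)}\, m_d(X; \beta)\right]. \qquad (2)$$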

We use $\hat V(d; \beta)$ to emphasize that the estimator does not involve nuisance parameters related to the propensity score; varying the choice of β leads to different DR estimators with potentially very different behaviors. In the following, we derive an estimator for β, denoted by $\hat\beta$, such that the resulting value function estimator $\hat V(d; \hat\beta)$ satisfies two properties:

- (i) Doubly robust. $\hat V(d; \hat\beta)$ consistently estimates V(d) when either the propensity score or the outcome model is correctly specified.
- (ii) If the propensity score is correctly specified, $\hat V(d; \hat\beta)$ achieves the smallest asymptotic variance among all estimators of form (2), regardless of the specification of the outcome model.

Hence, when the outcome model is correctly specified but the propensity score may not be, the desired $\hat\beta$ must converge in probability to $\beta_0$, where $Q(X, A; \beta_0) = Q(X, A)$. On the other hand, when the propensity score is correctly specified, $\hat V(d; \beta)$ is consistent for V(d) for any fixed β.

Lemma 1.

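Let $\hat\beta$ be any root-n consistent estimator converging in probability to some $\beta^*$, i.e., $\sqrt{n}(\hat\beta - \beta^*) = O_p(1)$. When the propensity score is correct, $\tilde\pi(X) = \pi(X)$, but $Q(X, A; \beta)$ may or may not be, the influence function for $\hat V(d; \hat\beta)$ is

$$\frac{C_d\, Y}{\pi_d(X)} - \frac{C_d - \pi_d(X)}{\pi_d(X)}\, m_d(X; \beta^*) - V(d). \qquad (3)$$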

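A proof is given in Appendix A. The preceding result shows that when the propensity score is correct, the asymptotic variance of $\hat V(d; \hat\beta)$ does not depend on the sampling variation of $\hat\beta$ but only on its probability limit β*. Based on Lemma 1 and the law of total variance (conditioning on X), its asymptotic variance is proportional to

$$\underbrace{E\left[\operatorname{Var}\left\{\frac{C_d Y}{\pi_d(X)} - \frac{C_d - \pi_d(X)}{\pi_d(X)}\, m_d(X; \beta^*) \,\middle|\, X\right\}\right]}_{\rm (I)} + \underbrace{\operatorname{Var}\left[E\left\{\frac{C_d Y}{\pi_d(X)} - \frac{C_d - \pi_d(X)}{\pi_d(X)}\, m_d(X; \beta^*) \,\middle|\, X\right\}\right]}_{\rm (II)}. \qquad (4)$$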

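Notice that (II) in (4) equals $\operatorname{Var}[Q\{X, d(X)\}]$, which does not depend on β*. Furthermore, it can be shown that (see Appendix A for details)

$${\rm (I)} = E\left[\frac{\sigma_d^2(X)}{\pi_d(X)}\right] + E\left[\frac{1 - \pi_d(X)}{\pi_d(X)}\big[Q\{X, d(X)\} - m_d(X; \beta^*)\big]^2\right],$$

where $\sigma_d^2(X) = \operatorname{Var}\{Y \mid X, A = d(X)\}$.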

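Denote the minimizer of (4) as $\beta_{opt}$. By taking the derivative of (4) with respect to β* and setting it equal to zero, $\beta_{opt}$ is the solution to

$$E\left[\frac{1 - \pi_d(X)}{\pi_d(X)}\big[Q\{X, d(X)\} - m_d(X; \beta)\big]\,\dot m_d(X; \beta)\right] = 0, \qquad (5)$$

where $\dot m_d(X; \beta) = \partial m_d(X; \beta)/\partial\beta$.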

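Hence, if the outcome model is correct, that is, $Q(X, A; \beta_0) = Q(X, A)$ for some $\beta_0$, then in fact $\beta_{opt} = \beta_0$. If the outcome model is incorrect, $\beta_{opt}$ still exists and minimizes (4). However, the usual least squares estimate, which solves $\mathbb{P}_n[\{Y - Q(X, A; \beta)\}\, \partial Q(X, A; \beta)/\partial\beta] = 0$, does not in general converge to $\beta_{opt}$. This explains why the usual DR estimator is sub-optimal when the outcome regression model is misspecified.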
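A worked special case makes the difference concrete. Take the deliberately misspecified intercept-only working model $Q(X, A; \beta) = \beta$ (an illustrative choice, not one used in this paper), so that $m_d(X; \beta) = \beta$ and $\dot m_d = 1$, and write $\pi_d(X) = P\{A = d(X) \mid X\}$ and $w_d(X) = \{1 - \pi_d(X)\}/\pi_d(X)$. The population least squares equation and (5) then give different limits:

$$
\begin{aligned}
\text{least squares:}\quad & E(Y - \beta) = 0 \;\Longrightarrow\; \beta^*_{\rm LS} = E(Y),\\
\text{equation (5):}\quad & E\big[w_d(X)\,[Q\{X, d(X)\} - \beta]\big] = 0 \;\Longrightarrow\; \beta_{opt} = \frac{E[w_d(X)\, Q\{X, d(X)\}]}{E\{w_d(X)\}}.
\end{aligned}
$$

The least squares limit averages the outcome over all observed treatments, whereas $\beta_{opt}$ averages $Q\{X, d(X)\}$ under the recommended treatment, upweighting covariate values at which $d(X)$ is rarely received; the two coincide only in special cases.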

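In the following, we propose two different estimators of β, which converge in probability to $\beta_{opt}$ and satisfy (i) and (ii) simultaneously. We first consider $\hat\beta_{\rm IPW}$, the solution to the following inverse-probability weighted estimating equation

$$\mathbb{P}_n\left[\frac{C_d\{1 - \tilde\pi_d(X)\}}{\tilde\pi_d(X)^2}\{Y - m_d(X; \beta)\}\,\dot m_d(X; \beta)\right] = 0. \qquad (6)$$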

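This can be viewed as a weighted least squares fit based on subjects whose treatment assignments coincide with those recommended by d, with weights $\{1 - \tilde\pi_d(X)\}/\tilde\pi_d(X)^2$. When the propensity score is correct but the outcome regression may not be, the left-hand side of (6) converges in probability to the left-hand side of (5); hence $\hat\beta_{\rm IPW} \to \beta_{opt}$ in probability. On the other hand, when the outcome regression is correct but the propensity score may not be, the left-hand side of (6) converges in probability to

$$E\left[\frac{\pi_d(X)\{1 - \tilde\pi_d(X)\}}{\tilde\pi_d(X)^2}\big[Q\{X, d(X)\} - m_d(X; \beta)\big]\,\dot m_d(X; \beta)\right],$$

which equals 0 when $\beta = \beta_0$; thus $\hat\beta_{\rm IPW} \to \beta_0$ in probability. The following lemma formally establishes the improved doubly robust property of the proposed estimator $\hat V(d; \hat\beta_{\rm IPW})$. See Appendix A for the proof.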
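For a working model that is linear in β, the estimating equation (6) has a closed-form weighted least squares solution. The sketch below assumes A in {-1, 1}, a known propensity score supplied as the array `pi1` of values $P(A = 1 \mid X_i)$, and the hypothetical linear working model $Q(X, a; \beta) = b_0 + X^T b_1 + a(c_0 + X^T c_1)$, so that $\dot m_d(X; \beta)$ is simply the design row evaluated at $a = d(X)$. Function names are hypothetical.

```python
import numpy as np


def working_design(X, a):
    """Rows of a linear working model Q(X, a; beta) = b0 + X'b1 + a * (c0 + X'c1)."""
    n = X.shape[0]
    a = np.broadcast_to(a, (n,)).astype(float)
    return np.column_stack([np.ones(n), X, a, a[:, None] * X])


def beta_ipw(X, A, Y, d, pi1):
    """Solve the IPW estimating equation (6) for a linear working model.

    With m_d(X; beta) = working_design(X, d) @ beta, (6) is a weighted least squares
    fit on subjects with A = d(X), using weights (1 - pi_d) / pi_d**2.
    """
    pd = np.where(d == 1, pi1, 1.0 - pi1)
    keep = A == d
    w = (1.0 - pd[keep]) / pd[keep] ** 2
    Z = working_design(X[keep], d[keep])
    WZ = Z * w[:, None]
    return np.linalg.lstsq(WZ.T @ Z, WZ.T @ Y[keep], rcond=None)[0]


def value_fixed_ps(X, A, Y, d, pi1, beta):
    """AIPW estimator (2) with the fully specified propensity score pi1 = P(A = 1 | X)."""
    pd = np.where(d == 1, pi1, 1.0 - pi1)
    C = (A == d).astype(float)
    m_d = working_design(X, d) @ beta
    return np.mean(C * Y / pd - (C - pd) / pd * m_d)
```

Using `lstsq` on the normal equations returns a minimum-norm solution if the design is rank deficient (e.g., when d(X) is constant), which leaves the fitted $m_d$ unchanged.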

Lemma 2.

$\hat V(d; \hat\beta_{\rm IPW})$ converges in probability to V(d) when either the propensity score or the outcome regression model is correctly specified. In addition, when the propensity score model is correct, $\hat V(d; \hat\beta_{\rm IPW})$ achieves the smallest asymptotic variance among all estimators of form (2).

The estimating equation (6) only uses subjects whose treatment assignments coincide with those recommended by d. Since we need to search for the best treatment rule in a large class of ITRs, $\mathcal{D}$, it is possible that for some d very few subjects satisfy $A = d(X)$. This makes $\hat\beta_{\rm IPW}$ highly unstable, which is particularly problematic when the sample size n is small. To address this issue, we propose an augmented inverse-probability weighted estimating equation, whose solution we denote by $\hat\beta_{\rm AIPW}$:

$$(*) - \mathbb{P}_n\left[\frac{C_d - \tilde\pi_d(X)}{\tilde\pi_d(X)} \cdot \frac{1 - \tilde\pi_d(X)}{\tilde\pi_d(X)}\big[\hat Q\{X, d(X)\} - m_d(X; \beta)\big]\,\dot m_d(X; \beta)\right] = 0.$$

Here, (*) is the left-hand side of (6), and $\hat Q(X, a)$ is an estimator of $Q(X, a) = E(Y \mid X, A = a)$. We propose to use nonparametric techniques to obtain $\hat Q(X, a)$, which provides flexibility in model specification. For continuous X, we apply kernel regression, i.e.,

$$\hat Q(x, a) = \frac{\sum_{i=1}^n K_H(X_i - x)\, I(A_i = a)\, Y_i}{\sum_{i=1}^n K_H(X_i - x)\, I(A_i = a)},$$

where $K_H(\cdot)$ is a multivariate kernel with bandwidth matrix H. When X contains both continuous and categorical variables, the 'generalized product kernels' of Racine and Li (2004) are used. Under some regularity conditions, $\hat Q(X, a)$ is a consistent estimator of $Q(X, a)$. As a consequence, $\hat\beta_{\rm AIPW} \to \beta_{opt}$ in probability when the propensity score is correct, and $\hat\beta_{\rm AIPW} \to \beta_0$ in probability when the outcome regression is correct. The following lemma formally establishes the improved doubly robust property of the estimator $\hat V(d; \hat\beta_{\rm AIPW})$. The technical conditions and the proofs are provided in Appendix A.
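The sketch below illustrates the augmented estimating equation for a linear working model. It assumes the standard AIPW form, in which the augmentation term replaces Y by $\hat Q\{X, d(X)\}$ and is multiplied by $\{C_d - \tilde\pi_d(X)\}/\tilde\pi_d(X)$. Under that form, rearranging shows the equation is a weighted least squares fit, over all subjects, of the pseudo-outcome $C_d\{Y - \hat Q_d\}/\tilde\pi_d + \hat Q_d$ with weights $(1 - \tilde\pi_d)/\tilde\pi_d$. The Gaussian product kernel with a single bandwidth `h = 0.5` is an illustrative assumption (no bandwidth selection is performed), as are the working model and all function names.

```python
import numpy as np


def working_design(X, a):
    """Rows of a linear working model Q(X, a; beta) = b0 + X'b1 + a * (c0 + X'c1)."""
    n = X.shape[0]
    a = np.broadcast_to(a, (n,)).astype(float)
    return np.column_stack([np.ones(n), X, a, a[:, None] * X])


def nw_regression(X_train, Y_train, X_eval, h):
    """Nadaraya-Watson estimate of E(Y | X = x): Gaussian product kernel, common bandwidth h."""
    diff = (X_eval[:, None, :] - X_train[None, :, :]) / h
    K = np.exp(-0.5 * np.sum(diff ** 2, axis=2))
    return (K @ Y_train) / np.maximum(K.sum(axis=1), 1e-300)


def beta_aipw(X, A, Y, d, pi1, h=0.5):
    """Solve the augmented IPW estimating equation for a linear working model.

    Rearranged, it is a weighted least squares fit over ALL subjects of the
    pseudo-outcome C_d / pi_d * (Y - Qhat_d) + Qhat_d, with weights (1 - pi_d) / pi_d.
    """
    pd = np.where(d == 1, pi1, 1.0 - pi1)
    C = (A == d).astype(float)
    q_pos = nw_regression(X[A == 1], Y[A == 1], X, h)    # Qhat(X, 1)
    q_neg = nw_regression(X[A == -1], Y[A == -1], X, h)  # Qhat(X, -1)
    q_d = np.where(d == 1, q_pos, q_neg)                  # Qhat{X, d(X)}
    pseudo = C / pd * (Y - q_d) + q_d
    w = (1.0 - pd) / pd
    Z = working_design(X, d)
    WZ = Z * w[:, None]
    return np.linalg.lstsq(WZ.T @ Z, WZ.T @ pseudo, rcond=None)[0]


def value_fixed_ps(X, A, Y, d, pi1, beta):
    """AIPW estimator (2) with the fully specified propensity score pi1 = P(A = 1 | X)."""
    pd = np.where(d == 1, pi1, 1.0 - pi1)
    C = (A == d).astype(float)
    m_d = working_design(X, d) @ beta
    return np.mean(C * Y / pd - (C - pd) / pd * m_d)
```

Unlike the IPW version, every subject enters the fit: those with $A \neq d(X)$ contribute through $\hat Q\{X, d(X)\}$, which is what stabilizes the estimate when few subjects follow d. The kernel matrix has size (number of evaluation points) by (number of training points), so this direct implementation is only suitable for moderate n.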

Lemma 3.

$\hat V(d; \hat\beta_{\rm AIPW})$ converges in probability to V(d) when either the propensity score or the outcome regression model is correctly specified. In addition, when the propensity score is correct, $\hat V(d; \hat\beta_{\rm AIPW})$ achieves the smallest asymptotic variance among all estimators of form (2).

2.3. Scenario where there is a nuisance parameter in the propensity score model

In practice, if the propensity scores are unknown, we can posit a parametric propensity score model $\pi(X; \gamma)$ involving nuisance parameters γ. To construct an improved DR estimator for the value function, we must take into account the effect of estimating γ. Consider the class of AIPW estimators presented in (1). Let $\hat\gamma$ be the maximum likelihood estimator of γ based on $\{(X_i, A_i), i = 1, \ldots, n\}$. We aim to find $\hat\beta$ such that $\hat V(d; \hat\gamma, \hat\beta)$ is doubly robust and has the smallest asymptotic variance among the class of estimators (1) when the propensity score is correctly specified.

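Since $\hat\gamma$ is the maximizer of the binomial likelihood

$$\prod_{i=1}^n \pi(X_i; \gamma)^{(1 + A_i)/2}\{1 - \pi(X_i; \gamma)\}^{(1 - A_i)/2},$$

the score vector for γ is

$$S_\gamma(X, A; \gamma) = \frac{(1 + A)/2 - \pi(X; \gamma)}{\pi(X; \gamma)\{1 - \pi(X; \gamma)\}}\,\dot\pi(X; \gamma),$$

where $\dot\pi(X; \gamma) = \partial\pi(X; \gamma)/\partial\gamma$. When $\pi(X; \gamma)$ is correctly specified, i.e., $\pi(X; \gamma_0) = \pi(X)$ for some $\gamma_0$, and $\hat\beta$ converges in probability to β*, the influence functions corresponding to estimators of the form (1) have the following expression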

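$$\frac{C_d\, Y}{\pi_d(X; \gamma_0)} - \frac{C_d - \pi_d(X; \gamma_0)}{\pi_d(X; \gamma_0)}\, m_d(X; \beta^*) - V(d) - H(\beta^*)^T \{E(S_\gamma S_\gamma^T)\}^{-1} S_\gamma, \qquad (7)$$

where $S_\gamma = S_\gamma(X, A; \gamma_0)$, and

$$H(\beta^*) = E\left[\frac{Q\{X, d(X)\} - m_d(X; \beta^*)}{\pi_d(X; \gamma_0)}\,\frac{\partial\pi_d(X; \gamma_0)}{\partial\gamma}\right].$$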

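Compared with (3), the influence function (7) involves an additional term due to estimation of γ. However, this additional term disappears when both models are correct, because $H(\beta^*) = 0$ when the outcome model is correct. Define $\dot\pi_d(X; \gamma) = \partial\pi_d(X; \gamma)/\partial\gamma$. In a slight abuse of notation, denote the minimizer of the variance of (7) as $\beta_{opt}$. It is the solution to

$$E\left[\frac{1 - \pi_d(X)}{\pi_d(X)}\big[Q\{X, d(X)\} - m_d(X; \beta)\big]\,\dot m_d(X; \beta)\right] - E\left[\frac{\dot m_d(X; \beta)\,\dot\pi_d(X; \gamma_0)^T}{\pi_d(X)}\right]\{E(S_\gamma S_\gamma^T)\}^{-1} H(\beta) = 0. \qquad (8)$$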

Detailed derivations of the influence function and its variance are deferred to Appendix A.
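To see how the extra term in (7) behaves in practice, the sketch below computes the AIPWE (1) with a sandwich-type standard error. It relies on the fact that, when the propensity model is correct, $H(\beta^*)$ equals $E\{h\, S_\gamma\}$, where h is the uncorrected term; the extra term is therefore the least squares projection of the uncorrected influence function onto the logistic score, and the corrected influence function is a regression residual. It assumes a logistic model with intercept and A in {-1, 1}; all names are hypothetical, and the naive standard error (ignoring estimation of γ) is also returned for comparison.

```python
import numpy as np


def fit_logistic(Xp, A, n_iter=25):
    """Newton-Raphson MLE of gamma in logit P(A = 1 | X) = Xp @ gamma, A in {-1, 1}."""
    R = (A == 1).astype(float)
    gamma = np.zeros(Xp.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xp @ gamma))
        info = (Xp * (p * (1.0 - p))[:, None]).T @ Xp
        gamma = gamma + np.linalg.solve(info, Xp.T @ (R - p))
    return gamma


def aipwe_with_se(Y, A, d, Xp, gamma_hat, m_d):
    """AIPWE (1), its standard error accounting for gamma_hat, and the naive SE.

    The estimated influence function is (h - V_hat) minus its least squares
    projection onto the logistic score S = {I(A = 1) - pi(X)} * Xp.
    """
    n = len(Y)
    pi1 = 1.0 / (1.0 + np.exp(-Xp @ gamma_hat))
    pd = np.where(d == 1, pi1, 1.0 - pi1)
    C = (A == d).astype(float)
    h = C * Y / pd - (C - pd) / pd * m_d
    v_hat = h.mean()
    S = ((A == 1).astype(float) - pi1)[:, None] * Xp
    coef = np.linalg.lstsq(S, h - v_hat, rcond=None)[0]
    infl = (h - v_hat) - S @ coef
    se = np.sqrt(np.mean(infl ** 2) / n)
    se_naive = np.sqrt(np.mean((h - v_hat) ** 2) / n)
    return v_hat, se, se_naive
```

Because a least squares residual can never be longer than the vector being projected, the corrected standard error never exceeds the naive one, consistent with the well-known result that estimating a correctly specified propensity score does not reduce, and can improve, the efficiency of the AIPWE.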

To compress notations, we write to . Consider as the solution to

| (9) |

where , and . In Appendix A, we show that is doubly robust, and achieves the smallest asymptotic variance with when the propensity score is correct.

Correspondingly, we can construct an augmented inverse-probability weighted estimating equation and consider as the solution to

| (10) |

where (**) is the left hand side of (9). Using a similar argument, satisfies (i) and (ii), and is improved doubly robust.

In the above discussion, we proposed improved DR estimators of V(d) for a fixed treatment regime d. Notice that by (8), the optimal value $\beta_{opt}$ is d-dependent, i.e., different d's correspond to different $\beta_{opt}$. Strictly speaking, we should write $\beta_{opt}(d)$ and $\hat\beta(d)$ for the nuisance parameter estimates. To estimate $d^{opt}$, we first identify the corresponding $\hat\beta(d)$ and $\hat V\{d; \hat\gamma, \hat\beta(d)\}$ for each $d \in \mathcal{D}$. We then find the d in $\mathcal{D}$ that leads to the largest estimated value, i.e., $\hat d^{opt} = \arg\max_{d \in \mathcal{D}} \hat V\{d; \hat\gamma, \hat\beta(d)\}$. In practice, the ITR is often indexed by a set of parameters, for instance, linear rules $d_\eta(X) = \operatorname{sign}\{(1, X^T)\eta\}$, where η is a parameter vector. Since $\hat V(d_\eta)$ is a nonsmooth function of η, standard optimization methods can be problematic. We used a genetic algorithm discussed by Goldberg (1989), which is available in the R package rgenoud (Mebane Jr and Sekhon, 2011). In the rest of the paper, we suppress the letter d in $\beta_{opt}(d)$ and $\hat\beta(d)$ when there is no confusion.
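The outer maximization over a parametric rule class can be illustrated with a much simpler optimizer. The sketch below is not the genetic algorithm used in the paper; it swaps in plain random search over unit-norm η (normalizing is an assumption that exploits the scale invariance of the sign rule) for rules $d_\eta(X) = \operatorname{sign}\{(1, X^T)\eta\}$. The value estimator is passed in as a function, so any of the estimators above could be plugged in; names are hypothetical.

```python
import numpy as np


def search_linear_rule(value_fn, X, n_candidates=2000, seed=0):
    """Random search over unit-norm eta for rules d_eta(X) = sign{(1, X') eta}.

    value_fn maps a vector of recommended treatments d (entries in {-1, 1}) to an
    estimated value, e.g., an AIPWE computed for that rule.
    """
    rng = np.random.default_rng(seed)
    Xt = np.column_stack([np.ones(X.shape[0]), X])
    best_eta, best_val = None, -np.inf
    for _ in range(n_candidates):
        eta = rng.normal(size=Xt.shape[1])
        eta /= np.linalg.norm(eta)            # the sign rule is invariant to scaling eta
        val = value_fn(np.where(Xt @ eta > 0, 1, -1))
        if val > best_val:
            best_eta, best_val = eta, val
    return best_eta, best_val
```

Random search scales poorly with the dimension of η; population-based methods such as genetic algorithms are a more practical choice for the piecewise-constant objectives that arise here.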

3. Theoretical results

In this section, we establish asymptotic normality of the proposed estimators and of the usual doubly robust estimator of V(d). We do not discuss the situation in which both the propensity score and outcome models are misspecified, since the resulting estimator is generally not consistent for V(d). We first consider the case where the propensity score is fully specified. We have the following result.

Theorem 1.

(Asymptotic normality when the propensity score model is fully specified). When either the propensity score or the outcome model is correct,

See Appendix C for detailed expressions of . The true parameters are where satisfies . where satisfies . where satisfies

The usual DR estimator and the estimator based on the IPW estimating equation (6) each involve solving jointly a set of M-estimating equations (Stefanski and Boos, 2002), so their asymptotic variances can be calculated using standard M-estimation theory. The estimator based on the augmented IPW estimating equation is obtained by solving a set of estimating equations in which some infinite-dimensional parameters, in this case $Q(X, a)$, are first estimated nonparametrically; such estimators are referred to as semiparametric M-estimators (Andrews, 1994; Newey, 1994; Chen et al., 2003; Ichimura and Lee, 2010). In Appendix B, we establish asymptotic normality of such semiparametric M-estimators by extending Theorem 2 in Chen et al. (2003). The detailed proof of Theorem 1 can be found in Appendix C.

Remark 1.

When the propensity score is correct, it can be shown that which equals (4), the asymptotic variance of the influence function for . In addition, when the propensity score is correct but the outcome model is incorrect, . Recall that $\beta_{opt}$, defined in (5), minimizes the asymptotic variance (4). However, the limit of the least squares estimates generally differs from $\beta_{opt}$. Consequently, the two proposed estimators (based on the IPW and the augmented IPW estimating equations) have the same asymptotic variance, which is smaller than that of the usual DR estimator. In small samples, however, the estimator based on the augmented IPW estimating equation is preferred, since it uses the complete data and can lead to a more stable estimate. When both models are correct, , where satisfies . As a result, all three estimators have the same asymptotic variance. When the outcome model is correct but the propensity score is incorrect, the asymptotic variances of these estimators cannot be compared directly.

The following theorem presents asymptotic properties of and , the estimators for the value function of d where there is a nuisance parameter in the propensity score model.

Theorem 2.

(Asymptotic normality when there is a nuisance parameter in the propensity score model). When either the propensity score or the outcome model is correct,

The true values are where where satisfies .

When either the propensity score or the outcome model is correct,

The true parameters are where is the solution to the following set of equations:

| (11) |

and

| (12) |

The true parameters are where is the solution to (11) and

where (***) is the left hand side of (12). See Appendix D for detailed expressions of and the definitions of , , . Note that here we use to represent a matrix with j-th column being , similarly for .

Remark 2.

When the propensity score model is correct, observe that where , where is the minimizer of the variance of (7) in this case. Furthermore, it can be shown that , , equal to the variance of (7) evaluated at and , respectively. Therefore, when the propensity score is correct but the outcome model is incorrect, the two proposed estimators are asymptotically equivalent and more efficient than the usual DR estimator. When both models are correct, all three estimators are asymptotically equivalent.

Remark 3.

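An estimator for $V(d^{opt})$, the overall population mean outcome under the optimal regime, may be found as $\hat V(\hat d^{opt})$. Following Zhang et al. (2012), under regularity conditions,

$$\sqrt{n}\{\hat V(\hat d^{opt}) - V(d^{opt})\} = \sqrt{n}\{\hat V(d^{opt}) - V(d^{opt})\} + o_p(1).$$

Thus, the asymptotic variance of $\hat V(\hat d^{opt})$ can be approximated by that of $\hat V(d^{opt})$, which by Theorem 2 can be estimated using the usual sandwich technique.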

4. Simulation Studies

We conducted several simulation studies to evaluate the finite sample performance of our proposed method. The following six methods were compared: Q-learning based on linear regression (QL-LR, Qian and Murphy (2011)); Q-learning based on kernel regression (QL-KR); maximizing the IPWE within a pre-specified class of ITRs (IPWE); maximizing the AIPWE (1) with standard maximum likelihood estimators for the nuisance parameters (Usual-DR, Zhang et al. (2012)); maximizing the AIPWE with β estimated by the IPW estimating equation (9) (Improved-DR); and maximizing the AIPWE with β estimated by the augmented IPW estimating equation (10) (Aug-Improved-DR).

The simulation setup is similar to that of Kang and Schafer (2007), with some modifications. was generated as standard multivariate normal, and was defined as , so that Z can be expressed in terms of X. The treatment A was generated from according to the model , where l(x) = − z1 + 0.5z2 − 0.25z3 − 0.1z4 in Scenario 1, and in Scenario 2. The response variable was normally distributed with , where . It is straightforward to deduce that . Via Monte Carlo simulation with $10^6$ replicates, we obtained $E\{Y(d^{opt})\} = 21.32$. The following modeling choices are considered for the propensity and outcome regression models.

CC: A correctly specified logistic regression model for $P(A = 1 \mid X)$ with Z as predictors in both scenarios, and a correctly specified model for $E(Y \mid X, A)$ with Z, A, ZA as predictors in both scenarios.

CI: A correctly specified logistic regression model for $P(A = 1 \mid X)$ with Z as predictors in both scenarios, and an incorrectly specified model for $E(Y \mid X, A)$ with as predictors in both scenarios.

IC: An incorrectly specified logistic regression model for $P(A = 1 \mid X)$ with X as predictors in Scenario 1 and without any predictors in Scenario 2, and a correctly specified model for $E(Y \mid X, A)$ with Z, A, ZA as predictors in both scenarios.

II: An incorrectly specified logistic regression model for $P(A = 1 \mid X)$ with X as predictors in Scenario 1 and without any predictors in Scenario 2, and an incorrectly specified model for $E(Y \mid X, A)$ with as predictors in both scenarios.

For IPWE, we use C. and I. to denote correct and incorrect propensity models, respectively. For QL-LR, we use .C and .I to denote correct and incorrect linear regression models. For QL-KR, we use .C and .I to denote kernel regression based on (Z, A) and on (X, A), respectively. In all direct-maximization methods (IPWE, Usual-DR, Improved-DR, Aug-Improved-DR), we choose so that . By imposing , corresponds to .

For each scenario, we considered four training sample sizes, n = 100, 250, 500, or 1000, and repeated the simulation 500 times. The ITRs were constructed on the training set and then evaluated on a large independent test set (size 10000) using two criteria: the value function, i.e., the overall population mean outcome when the estimated optimal ITR is applied to the test dataset; and the misclassification rate of the estimated optimal ITR relative to the true optimal ITR, i.e., $\mathbb{P}_m[I\{\hat d^{opt}(X) \neq d^{opt}(X)\}]$. Here $\mathbb{P}_m$ denotes the empirical measure over the test data.
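The two test-set criteria can be computed as in the sketch below. It assumes a simulation in which the true mean function $Q(X, a)$ is known and evaluates the value by averaging $Q\{X, \hat d^{opt}(X)\}$ over the test covariates (averaging simulated outcomes under the estimated rule would also work). The function and argument names are hypothetical.

```python
import numpy as np


def evaluate_rule(d_hat, d_opt, q_true, X_test):
    """Test-set criteria for an estimated ITR when Q(X, a) is known (simulation only).

    value:    empirical mean of Q{X, d_hat(X)} over the test set, estimating V(d_hat).
    misclass: P_m[I{d_hat(X) != d_opt(X)}], the misclassification rate.
    """
    value = np.mean(q_true(X_test, d_hat))
    misclass = np.mean(d_hat != d_opt)
    return value, misclass
```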

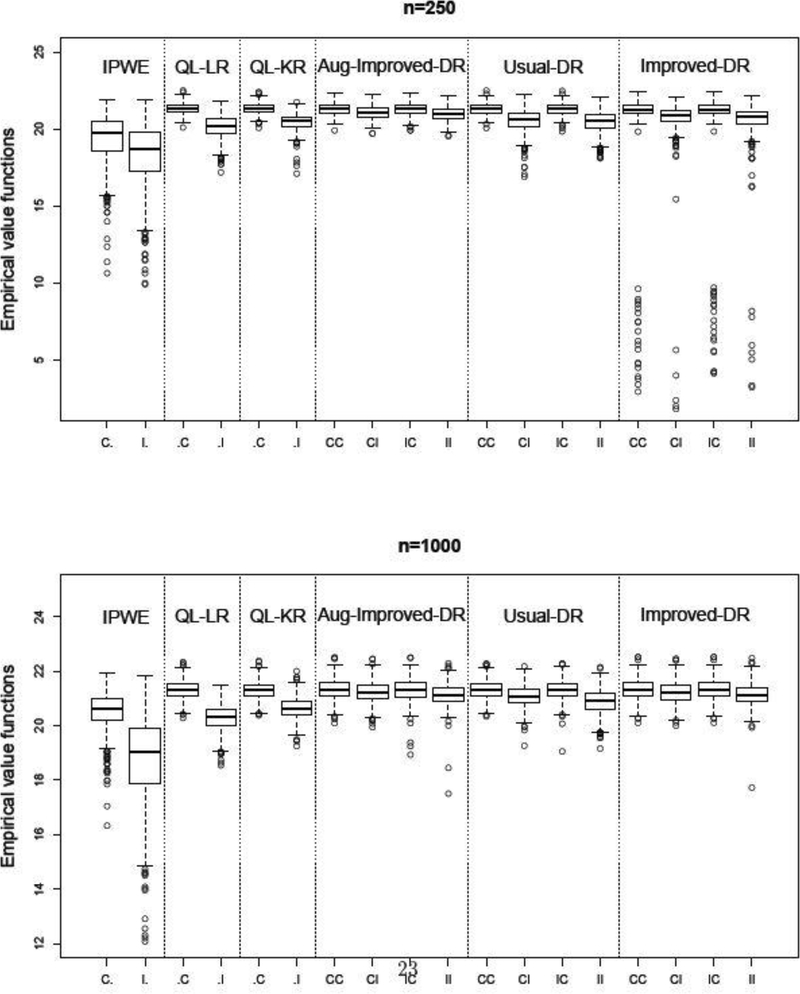

Results for Scenario 1 are presented in Figure 1, where we draw boxplots of the value functions over 500 replications. Here we report only the results for n = 250 and 1000 (see Appendix E for further results, e.g., n = 100 and 500). As expected, Q-learning works best if the outcome model is correctly specified but performs relatively poorly if this model is incorrect. When the outcome model is correct (CC, IC), Aug-Improved-DR and Usual-DR have similar performance. This is not surprising: recall that when the outcome model is correct, the proposed nuisance parameter estimate converges in probability to $\beta_0$, the same limit as the least squares estimates. When the propensity model is correct but the outcome regression model is misspecified (CI), Aug-Improved-DR dominates Usual-DR, as evidenced by larger value functions and smaller variance in value functions; e.g., the mean (sd) of the value functions for Aug-Improved-DR are 21.07 (0.43) and 21.23 (0.39) when the sample size is 250 and 1000, respectively. By comparison, for Usual-DR, the mean (sd) of the value functions are 20.52 (0.76) and 21.07 (0.40). In addition, Improved-DR and Aug-Improved-DR have almost identical performance when the sample size is large (n = 1000). However, Improved-DR is unstable for the smaller sample size (n = 250). This justifies the need to construct augmented IPW estimating equations to estimate the nuisance parameters, as discussed in the method section.

Fig. 1.

Simulation results for Scenario 1. Value functions over 500 replications. The optimal value is E{Y(dopt)} = 21.32.

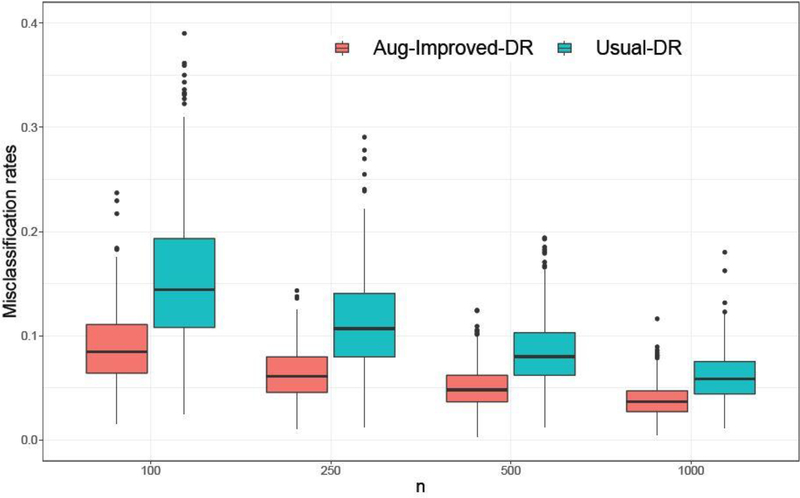

To better demonstrate the superior performance of our proposed method under the CI setting, we focus on the comparison between Aug-Improved-DR and Usual-DR in terms of the misclassification rates. Results for Scenario 1 are shown in Figure 2 with sample sizes ranging from 100 to 1000. Notice that Aug-Improved-DR produced much smaller misclassification rates as well as smaller variations. In particular, it outperforms the usual DR estimator by a large margin when the sample size is small.

Fig. 2.

Simulation results for Scenario 1 under CI: propensity score correct, outcome model incorrect. Misclassification rates over 500 replications.

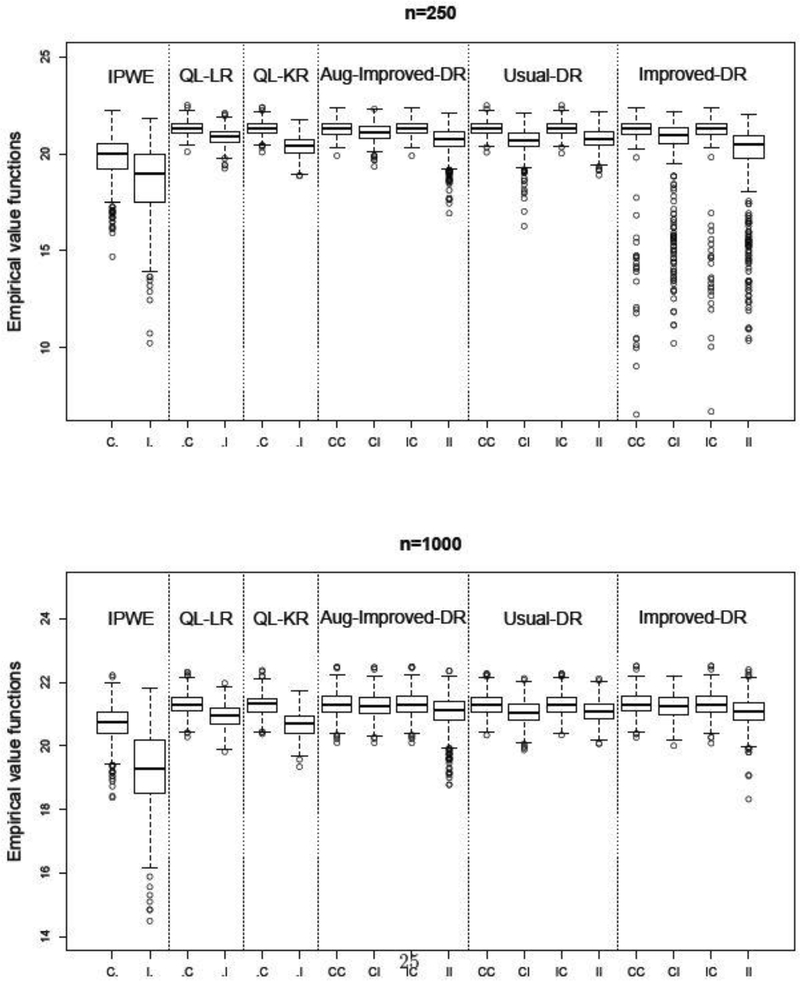

Simulation results for Scenario 2 are provided in Figure 3 and Appendix E. Again, the proposed method outperforms other competing methods in both value functions and misclassification rates. In Appendix E, we also report the mean squared errors (MSE) of different methods in terms of estimating η. Aug-Improved-DR has smaller MSE than its competitors.

Fig. 3.

Simulation results for Scenario 2. Value functions over 500 replications. The optimal value is E{Y(dopt)} = 21.32.

5. Application to the STAR*D Study

We apply the proposed method to analyze data from the STAR*D Study (Rush et al., 2004). Funded by the National Institute of Mental Health, the study was conducted to compare various treatment options for major depressive disorder when patients fail to respond to the initial treatment of citalopram (CIT). From 2001 to 2006, a total of 4041 outpatients with nonpsychotic depression, aged 18–75, were enrolled from 41 clinical sites in the U.S. The score on the 16-item Quick Inventory of Depressive Symptomatology (QIDS) was the primary outcome. The QIDS score ranges from 0 to 27, where higher scores indicate more severe depression.

The trial had four levels (see Fig. 1 in Rush et al. (2004)). Here, we focus on the first two levels. At level-1, patients received CIT for 12 to 14 weeks. Those who achieved a clinically meaningful response (total QIDS score under 5) did not proceed to further treatment. At level-2, participants without a satisfactory response to CIT had the option either to switch to a different medication or to augment their existing citalopram. Those in the “switch” group were randomly assigned to bupropion (BUP), cognitive therapy (CT), sertraline (SER), or venlafaxine (VEN). Those in the “augment” group were randomly assigned to CIT+BUP, CIT+buspirone (BUS), or CIT+CT. Patients with no preference could be assigned to any of the above treatments.

We use the QIDS score at the end of level-2 as the clinical outcome Y and compare two categories of treatments: (i) treatment with selective serotonin reuptake inhibitors (SSRIs): CIT+BUP, CIT+BUS, CIT+CT, and SER; (ii) non-SSRI: BUP, CT, and VEN. Denote A = 1 for SSRI and A = − 1 for non-SSRI. Since patients in the “augment” group were all treated with SSRIs (violating the positivity assumption), we exclude these subjects from our analysis, which leaves a total of 817 subjects. Among them, 656 and 161 patients were in the “switch” and “no preference” groups, respectively. In total, 296 patients received SSRI treatments, while 521 patients received non-SSRI treatments. A t-test shows no significant difference in QIDS scores between the SSRI and non-SSRI categories.

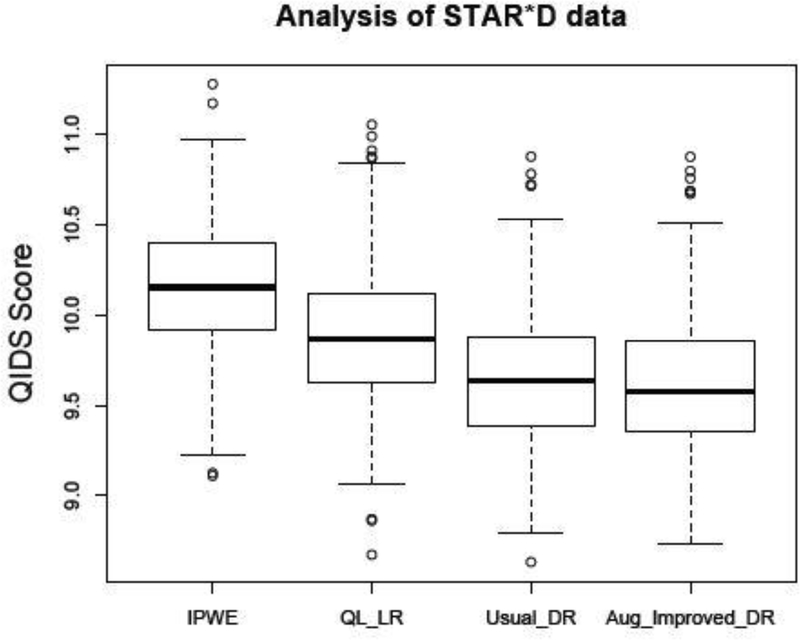

We applied four methods to estimate the optimal ITR for patients who entered level-2. Prognostic variables X include the QIDS score at the start of level-2, the change in QIDS score during level-1, preference regarding level-2 treatment, and demographic variables such as gender, race, age, education level and employment status. The propensity scores were estimated by empirical proportions within each preference group (“switch” or “no preference”). We used a linear regression of Y given for the outcome model. For all methods, we randomly split the data into training and test sets with a 1:1 ratio. The estimated ITR was obtained using the training set and then evaluated on the test set by . This procedure was repeated 500 times. Results for IPWE, QL-LR, Usual-DR and Aug-Improved-DR are displayed in Figure 4, where lower scores are desirable. The estimated QIDS score using Aug-Improved-DR is 9.62 (sd = 0.37), which is smaller than that of IPWE (10.15, sd = 0.36), QL-LR (9.87, sd = 0.38), and Usual-DR (9.65, sd = 0.40). In addition, Aug-Improved-DR outperformed the one-size-fits-all approaches (QIDS score of 9.98 for SSRI and 10.12 for non-SSRI).

Fig. 4. Estimated QIDS scores over 500 replications. Lower scores are preferable.

6. Discussion

In this article, we proposed an improved DR estimator of the optimal ITR that directly maximizes an AIPWE of the marginal mean outcome over a class of ITRs. The estimator is doubly robust and is designed to be more efficient than other DR estimators when the propensity score model is correctly specified, regardless of whether the outcome model is. In the numerical studies, the proposed method performed better than the existing methods it was compared with. The method is particularly appealing in practice because the outcome model is often hard to specify correctly, whereas the propensity score is either known by design or more likely to be correctly specified.
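For reference, one standard form of the AIPWE of the mean outcome under a rule d (cf. Zhang et al., 2012) is shown below. The notation is assumed here and may differ from the symbols used in the methods sections: π(X) = P(A = 1 | X) is the propensity score, μ(X, a) is a working model for E(Y | X, A = a), C_d = I{A = d(X)}, and π_d(X) = π(X)I{d(X) = 1} + {1 − π(X)}I{d(X) = −1}.

$$
\hat V_{\mathrm{AIPW}}(d) = \frac{1}{n}\sum_{i=1}^{n}\left[\frac{C_{d,i}\,Y_i}{\pi_d(X_i)} - \frac{C_{d,i}-\pi_d(X_i)}{\pi_d(X_i)}\,\mu\{X_i, d(X_i)\}\right]
$$

If π is correctly specified, E(C_d | X) = π_d(X), so the augmentation term has mean zero. If μ is correctly specified, rewriting the summand as μ{X, d(X)} + C_d[Y − μ{X, d(X)}]/π_d(X) shows that the second piece has mean zero. Either way the estimator is consistent, which is the double robustness property. The display leaves open how the parameters of μ are estimated; in the spirit of Cao et al. (2009), that choice governs the variance among DR estimators when π is correct. For an outcome such as QIDS, where lower is better, the rule would be chosen to minimize rather than maximize this quantity.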

This work can be extended in several important directions. The first is the multi-stage decision setting. Zhang et al. (2013) proposed a doubly robust estimator of the optimal DTR in which the nuisance parameters indexing the outcome models are estimated iteratively by a sequence of least squares regressions. More efficient DR estimators could be obtained by instead estimating these nuisance parameters with IPW or augmented IPW estimating equations. We are currently pursuing this direction.

Another direction is bias-reduced doubly robust estimation, that is, estimating the nuisance parameters so as to minimize the bias of the DR estimator when both working models are misspecified. Vermeulen and Vansteelandt (2015) proposed bias-reduced DR estimators for several missing data and causal inference models; it would be interesting to investigate whether this principle can be adapted to estimating optimal treatment regimes.


Acknowledgments

The authors gratefully acknowledge support by R01DK108073 awarded by the National Institutes of Health.


Supplementary Material

The online supplementary materials contain the appendices for the article.

References

- Andrews DW (1994). Asymptotics for semiparametric econometric models via stochastic equicontinuity. Econometrica, 62:43–72.

- Bang H and Robins JM (2005). Doubly robust estimation in missing data and causal inference models. Biometrics, 61(4):962–973.

- Blatt D, Murphy SA, and Zhu J (2004). A-learning for approximate planning. Ann Arbor, 1001:48109–2122.

- Cao W, Tsiatis AA, and Davidian M (2009). Improving efficiency and robustness of the doubly robust estimator for a population mean with incomplete data. Biometrika, 96(3):723–734.

- Chan IS and Ginsburg GS (2011). Personalized medicine: progress and promise. Annual Review of Genomics and Human Genetics, 12:217–244.

- Chen X, Linton O, and Van Keilegom I (2003). Estimation of semiparametric models when the criterion function is not smooth. Econometrica, 71(5):1591–1608.

- Collins FS and Varmus H (2015). A new initiative on precision medicine. New England Journal of Medicine, 372(9):793–795.

- Goldberg DE (1989). Genetic Algorithms in Search, Optimization and Machine Learning. Addison-Wesley Longman Publishing Co., Inc., Boston, MA, USA, 1st edition.

- Hamburg MA and Collins FS (2010). The path to personalized medicine. New England Journal of Medicine, 363(4):301–304.

- Ichimura H and Lee S (2010). Characterization of the asymptotic distribution of semiparametric M-estimators. Journal of Econometrics, 159(2):252–266.

- Imbens GW and Rubin DB (2015). Causal Inference for Statistics, Social, and Biomedical Sciences. Cambridge University Press.

- Kang JD and Schafer JL (2007). Demystifying double robustness: A comparison of alternative strategies for estimating a population mean from incomplete data. Statistical Science, 22(4):523–539.

- Laber EB, Linn KA, and Stefanski LA (2014). Interactive model building for Q-learning. Biometrika, 101(4):831–847.

- Liu Y, Wang Y, Kosorok MR, Zhao Y, and Zeng D (2018). Augmented outcome-weighted learning for estimating optimal dynamic treatment regimens. Statistics in Medicine, 37(26):3776–3788.

- Mebane WR Jr and Sekhon JS (2011). Genetic optimization using derivatives: the rgenoud package for R. Journal of Statistical Software, 42(11):1–26.

- Murphy SA (2003). Optimal dynamic treatment regimes. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 65(2):331–355.

- Newey WK (1994). The asymptotic variance of semiparametric estimators. Econometrica, 62(6):1349–1382.

- Qian M and Murphy SA (2011). Performance guarantees for individualized treatment rules. Annals of Statistics, 39(2):1180.

- Racine J and Li Q (2004). Nonparametric estimation of regression functions with both categorical and continuous data. Journal of Econometrics, 119(1):99–130.

- Robins J, Sued M, Lei-Gomez Q, and Rotnitzky A (2007). Comment: Performance of double-robust estimators when “inverse probability” weights are highly variable. Statistical Science, 22(4):544–559.

- Robins JM (2004). Optimal structural nested models for optimal sequential decisions. In Proceedings of the Second Seattle Symposium in Biostatistics, pages 189–326. Springer.

- Robins JM, Hernan MA, and Brumback B (2000). Marginal structural models and causal inference in epidemiology.

- Robins JM and Rotnitzky A (2001). Comment on the Bickel and Kwon article, “Inference for semiparametric models: Some questions and an answer”. Statistica Sinica, 11(4):920–936.

- Robins JM, Rotnitzky A, and Zhao LP (1994). Estimation of regression coefficients when some regressors are not always observed. Journal of the American Statistical Association, 89(427):846–866.

- Rubin DB (1974). Estimating causal effects of treatments in randomized and nonrandomized studies. Journal of Educational Psychology, 66(5):688.

- Rubin DB and van der Laan MJ (2008). Empirical efficiency maximization: Improved locally efficient covariate adjustment in randomized experiments and survival analysis. The International Journal of Biostatistics, 4(1).

- Rush AJ, Fava M, Wisniewski SR, Lavori PW, Trivedi MH, Sackeim HA, Thase ME, Nierenberg AA, Quitkin FM, Kashner TM, et al. (2004). Sequenced treatment alternatives to relieve depression (STAR*D): rationale and design. Controlled Clinical Trials, 25(1):119–142.

- Scharfstein DO, Rotnitzky A, and Robins JM (1999). Adjusting for nonignorable drop-out using semiparametric nonresponse models. Journal of the American Statistical Association, 94(448):1096–1120.

- Schulte PJ, Tsiatis AA, Laber EB, and Davidian M (2014). Q- and A-learning methods for estimating optimal dynamic treatment regimes. Statistical Science, 29(4):640.

- Stefanski LA and Boos DD (2002). The calculus of M-estimation. The American Statistician, 56(1):29–38.

- Tan Z (2007). Comment: Understanding OR, PS and DR. Statistical Science, 22(4):560–568.

- Tan Z (2010). Bounded, efficient and doubly robust estimation with inverse weighting. Biometrika, 97(3):661–682.

- Tsiatis A (2007). Semiparametric Theory and Missing Data. Springer Science & Business Media.

- Tsiatis AA and Davidian M (2007). Comment: Demystifying double robustness: A comparison of alternative strategies for estimating a population mean from incomplete data. Statistical Science, 22(4):569.

- Tsiatis AA, Davidian M, and Cao W (2011). Improved doubly robust estimation when data are monotonely coarsened, with application to longitudinal studies with dropout. Biometrics, 67(2):536–545.

- Van der Laan MJ and Robins JM (2003). Unified Methods for Censored Longitudinal Data and Causality. Springer Science & Business Media.

- Vermeulen K and Vansteelandt S (2015). Bias-reduced doubly robust estimation. Journal of the American Statistical Association, 110(511):1024–1036.

- Vogel CL, Cobleigh MA, Tripathy D, Gutheil JC, Harris LN, Fehrenbacher L, Slamon DJ, Murphy M, Novotny WF, Burchmore M, et al. (2002). Efficacy and safety of trastuzumab as a single agent in first-line treatment of HER2-overexpressing metastatic breast cancer. Journal of Clinical Oncology, 20(3):719–726.

- Wallace MP and Moodie EE (2015). Doubly-robust dynamic treatment regimen estimation via weighted least squares. Biometrics, 71(3):636–644.

- Zhang B, Tsiatis AA, Laber EB, and Davidian M (2012). A robust method for estimating optimal treatment regimes. Biometrics, 68(4):1010–1018.

- Zhang B, Tsiatis AA, Laber EB, and Davidian M (2013). Robust estimation of optimal dynamic treatment regimes for sequential treatment decisions. Biometrika, 100(3):681–694.

- Zhao Y-Q, Laber EB, Ning Y, Saha S, and Sands B (2019). Efficient augmentation and relaxation learning for individualized treatment rules using observational data. Journal of Machine Learning Research. In press.

- Zhao Y-Q, Zeng D, Laber EB, and Kosorok MR (2015). New statistical learning methods for estimating optimal dynamic treatment regimes. Journal of the American Statistical Association, 110(510):583–598.

- Zhao Y-Q, Zeng D, Rush AJ, and Kosorok MR (2012). Estimating individualized treatment rules using outcome weighted learning. Journal of the American Statistical Association, 107(499):1106–1118.

- Zhou X, Mayer-Hamblett N, Khan U, and Kosorok MR (2017). Residual weighted learning for estimating individualized treatment rules. Journal of the American Statistical Association, 112(517):169–187.
