Abstract

Background

The ongoing coronavirus disease 2019 (COVID-19) pandemic has put radiologists at a higher risk of infection during computed tomography (CT) examination of patients. To help address this problem, we adopted a remote-enabled, automated contactless imaging workflow for CT examination that combines an intelligent guided robot with automatic positioning technology, aiming to reduce the potential exposure of radiologists to 2019 novel coronavirus (2019-nCoV) infection and to improve examination efficiency, patient scanning accuracy, and image quality in chest CT imaging.

Methods

From February 10 to April 12, 2020, adult COVID-19 patients who underwent chest CT examinations at Wuhan Leishenshan Hospital (China) on a CT scanner with the same scan protocol, using either the conventional imaging workflow (CW group) or an automatic contactless imaging workflow (AW group), were retrospectively (CW group) or prospectively (AW group) enrolled in this study. The total examination time in the two groups was recorded and compared. Patient compliance with breath holding, positioning accuracy, image noise, and signal-to-noise ratio (SNR) were assessed by three experienced radiologists and compared between the two groups.

Results

Compared with the CW group, the total examination time of the AW group was shorter ((118.0 ± 20.0) s vs. (129.0 ± 29.0) s, P = 0.001), the scanning accuracy was higher (98% vs. 93%), and the difference in lung length between the scout and axial images was significantly smaller ((0.90 ± 1.24) cm vs. (1.16 ± 1.49) cm, P = 0.009). For lesions located in the pulmonary centrilobular and subpleural regions, the image noise in the AW group was significantly lower than that in the CW group (centrilobular region: (140.4 ± 78.6) HU vs. (153.8 ± 72.7) HU, P = 0.028; subpleural region: (140.6 ± 80.8) HU vs. (159.4 ± 82.7) HU, P = 0.010). For lesions located in the peripheral, centrilobular, and subpleural regions, SNR was significantly higher in the AW group than in the CW group (peripheral region: 6.8 ± 4.4 vs. 5.4 ± 4.3, P = 0.011; centrilobular region: 6.6 ± 4.3 vs. 4.9 ± 3.7, P = 0.006; subpleural region: 6.4 ± 4.4 vs. 4.8 ± 4.0, P < 0.001).

Conclusions

The automatic contactless imaging workflow, which combines an intelligent guided robot with automatic positioning technology, reduces the examination time and improves patient compliance with breath holding, positioning accuracy, and image quality in chest CT imaging.

Keywords: Coronavirus disease 2019, Artificial intelligence, Robotics, Computed tomography

1. Introduction

The ongoing coronavirus disease 2019 (COVID-19) pandemic has spread rapidly worldwide. The World Health Organization (WHO) declared the pneumonia epidemic caused by a novel coronavirus a public health emergency of international concern on January 30, 2020 [1]. Within a short period of time, the surge in patients brought already overburdened hospitals close to collapse. In particular, because of the high infectiousness of COVID-19 and the close proximity of technicians to COVID-19 patients, many medical workers have become infected, which calls for greater caution from technicians during computed tomography (CT) examination of patients. The current operating workflow not only slows down CT examination and lowers its efficiency, but also adds pressure to the overwhelmed hospital system.

Generally, the conventional workflow of CT imaging for COVID-19 patients includes three stages: (1) pre-examination preparation, (2) positioning operation, and (3) scanning and image acquisition. To improve patient compliance with breath holding and positioning accuracy, the technician must stay in close contact with the patient during the first two stages. However, the conventional imaging workflow (CW) is technologist-dependent and time-consuming, is often inconsistent and non-optimal, and carries a high risk of viral exposure during the COVID-19 pandemic. Thus, an automatic contactless imaging workflow (AW) is needed to minimize the contact.

Artificial intelligence (AI) is an emerging technology that has been widely used in medical imaging [2]. Recently, AI-empowered positioning technology has been established, mainly comprising visual sensors (cameras) and auto-positioning devices that rely on deep neural networks for image contouring [3]. These technologies can automatically and accurately position patients by identifying their body shapes from images acquired with visual sensors, e.g., thermal (far-infrared) cameras or RGB-depth input sensors [4], [5]. However, with automatic positioning technology alone, it remains difficult for technicians to assist with patients' breath-holding training and to give patients instructions during pre-examination preparation without contact. For patients with infectious diseases, this interaction still exposes technicians to a risk of cross-infection. Thus, a completely contactless and automatic CT imaging workflow is needed to minimize contact between technicians and patients.

Here, we adopted a remote-enabled and automatic contactless imaging workflow for CT examination by the combination of intelligent guided robot and automatic positioning technology. This workflow was successfully used for diagnosing COVID-19 patients in our hospital during the pandemic. Additionally, an analytic comparison was made between AW and conventional manual workflow (CW) involving total examination time, positioning accuracy, contact rate and image quality of COVID-19 patients. We hope our findings may provide useful information to inspire future practical applications and methodological research.

2. Methods

2.1. Patients and data source

This study was approved by the Medical Ethics Committee of Zhongnan Hospital of Wuhan University (Approval Number 2020037), and the requirement for written informed consent from patients was waived by our institutional review board.

Patients diagnosed with COVID-19 at Wuhan Leishenshan Hospital according to the guideline for 2019-nCoV (Fifth Trial Edition) issued by the National Health Commission of China [6] were enrolled in this study. The inclusion and exclusion criteria were as follows: (1) confirmed COVID-19 with common-type pneumonia; (2) no complications; (3) no life-supporting tubes or other equipment; (4) able to move freely and follow verbal commands. The enrolled patients were divided into two groups according to the chest CT examination workflow: the conventional imaging workflow (CW) group and the automatic contactless imaging workflow (AW) group.

Patients examined with the AW from February 13 to April 12, 2020 were prospectively enrolled in this study. Patients who underwent a CW examination for the first time between February 10 and March 9, 2020 were retrospectively enrolled.

2.2. Conventional imaging workflow

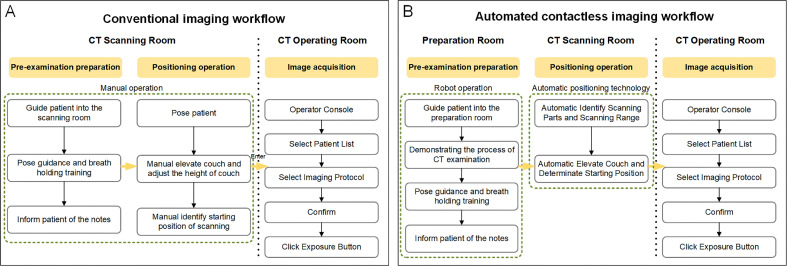

CW included pre-examination preparation, positioning operation, and image acquisition. During pre-examination preparation and positioning, the technician first guided the patient into the scanning room, conducted pose guidance and breath-holding training, and gave the patient the examination instructions. The technician then helped position the patient according to the chest scanning protocol, manually raised the couch and adjusted its height, and identified the starting position of the scan. Finally, the technician entered the CT operation room to start the scan (Figure 1A).

Figure 1.

Schematic diagram for the operating steps of conventional imaging workflow and automatic imaging workflow. (A) Flowchart for conventional imaging workflow. (B) Flowchart for automatic imaging workflow.

2.3. Automatic contactless imaging workflow

To improve the scanning efficiency and positioning accuracy and to reduce the potential exposure risk, we proposed an AW (Figure 1B). This workflow was realized through two key technologies: an intelligent guided robot and automatic positioning technology. An intelligent robot (TAMI Intelligence Technology, Beijing, China http://www.tamigroup.com) guided the patients into the preparation room and demonstrated the process of CT examination. The robot then conducted pose guidance and breath-holding training for the patients using its vision, ultrasonic, and acoustic sensors, and finally relayed the technicians' instructions to the patients (Figure 2A and 2B). The robot consisted of a mechanical system, a driving system, a control system, and a perception system. The driving and perception systems were responsible for hardware control and data acquisition, while the control system was responsible for interaction, data processing, and flow control.

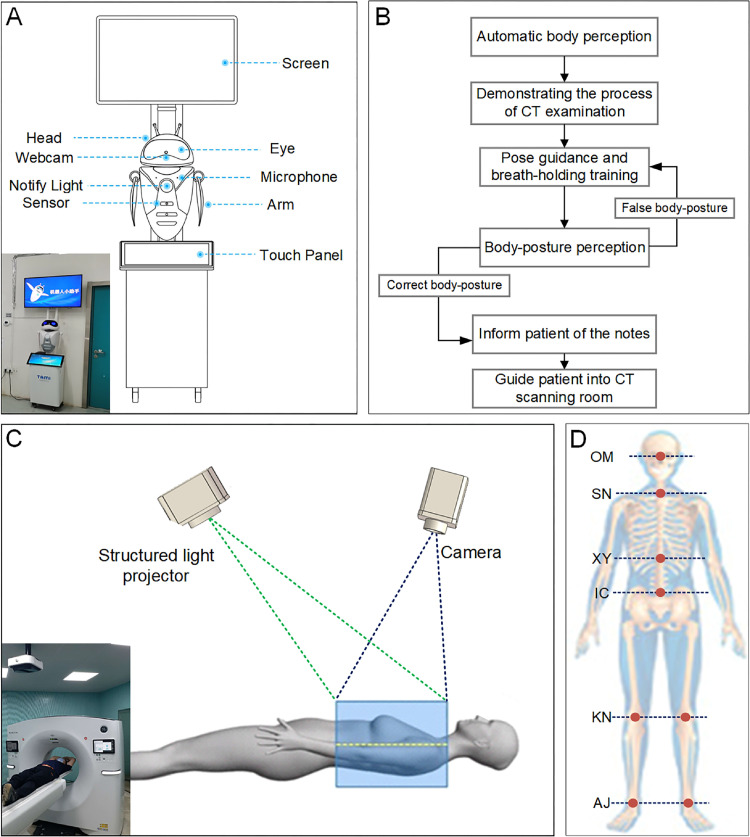

Figure 2.

Schematic diagram of intelligent guided robot and automatic positioning technology. (A) Composition of intelligent guided robot. (B) Flowchart of pose guidance and breath-holding training by the intelligent guided robot. (C) Automatic positioning technology used a fixed, ceiling-mounted, off-the-shelf, structured light projector and 2D/3D video camera that could determine the distances among various points in its field of view. (D) Eight supporting anatomical references / landmarks.

The automatic positioning technology used a fixed, ceiling-mounted, off-the-shelf 2D/3D video camera that could determine the distances among various points in its field of view (Figure 2C). The standard red green blue (RGB) video images were displayed on the existing gantry-mounted touchscreen of the CT system. The information from the standard output of the camera was used, along with the precise spatial information of the individual CT system's gantry and table installation geometry, to determine the anatomical landmark locations and the start and end locations for the scout scans. There were 8 anatomical references for the automatic positioning technology: orbital meatal baseline (OM), sternoclavicular notch (SN), xiphoid (XY), iliac crest (IC), left and right knees (KN), and left and right ankle joints (AJs) (Figure 2D). The automatic positioning software used two deep learning algorithms (the RGB LandmarkNet network and the Depth LandmarkNet network) with different inputs that produced comparable outputs to identify all 8 anatomical landmarks on the patient's body (https://www.gehealthcare.com). The RGB LandmarkNet network took 2D video images as input and output the locations of all eight predefined landmarks in X and Z. In parallel, the Depth LandmarkNet network used the 3D depth data from the camera to produce the locations of the same eight predefined landmarks. The SN and IC landmarks were used for the chest scan in this study.
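As a rough illustration of how landmark output might feed scan planning, the sketch below stores landmark positions and derives a longitudinal range bounded by the SN and IC landmarks. It is a minimal sketch under stated assumptions, not the vendor's implementation: the `Landmark` type, the `chest_scout_range` function, the millimetre table coordinates, the left/right naming suffixes, and the optional margin are all hypothetical, and the text does not state that SN and IC mark the exact start and end of the scout scan.

```python
from dataclasses import dataclass

# The eight landmark abbreviations listed in the text; the left/right suffixes
# (KN_L, KN_R, AJ_L, AJ_R) are naming assumptions made for this sketch.
LANDMARKS = ("OM", "SN", "XY", "IC", "KN_L", "KN_R", "AJ_L", "AJ_R")


@dataclass(frozen=True)
class Landmark:
    name: str
    x_mm: float  # lateral position (assumed unit: mm)
    z_mm: float  # longitudinal position along the table (assumed unit: mm)


def chest_scout_range(landmarks, margin_mm=0.0):
    """Return (start_z, end_z) bounded by the SN and IC landmarks.

    Hypothetical helper: the margin and the use of SN/IC as range limits
    are assumptions for illustration only.
    """
    by_name = {lm.name: lm for lm in landmarks}
    missing = {"SN", "IC"} - set(by_name)
    if missing:
        raise ValueError(f"missing landmarks: {sorted(missing)}")
    z_values = (by_name["SN"].z_mm, by_name["IC"].z_mm)
    return min(z_values) - margin_mm, max(z_values) + margin_mm
```

Taking the minimum and maximum of the two Z values keeps the helper independent of whether the patient lies head-first or feet-first on the table.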

2.4. Acquisition and reconstruction of CT images

The imaging workflows for the CW and AW groups are shown in Figure 1A and 1B. Chest CT scanning was performed from the apex pulmonis to the diaphragm on a Revolution Maxima CT scanner equipped with AI-based automatic patient centering and positioning software (GE Healthcare, Waukesha, USA). The same scan protocol was used in both groups, with the following parameters: tube voltage, 120 kVp; gantry rotation time, 0.4 s; pitch, 1.375:1; scan field-of-view (SFOV), 50 cm; slice thickness, 5 mm; tube current, automated tube current modulation (ATCM) set to obtain a noise index of 11.57; reconstruction, a standard algorithm with the standard kernel for the axial images; reconstruction display field-of-view (DFOV), 35–50 cm; reconstruction thickness, 1.25 mm.

2.4.1. Contact rate

During the CT scan, if a patient needed help or had difficulty cooperating and completing the examination, the radiologist could enter the CT scanning room or preparation room to help. Such a patient was counted as having had contact with the radiologist. The contact rate was calculated as the number of patients who had contact with the radiologist divided by the total number of patients in the CW or AW group.
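The contact rate defined above is a simple proportion. The following minimal sketch shows the calculation; the function name `contact_rate` is hypothetical, and the example counts (23 of 165 in the AW group, all 146 in the CW group) are those reported later in this article.

```python
def contact_rate(n_contact: int, n_total: int) -> float:
    """Fraction of patients in a group who had contact with the radiologist."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    if not 0 <= n_contact <= n_total:
        raise ValueError("n_contact must lie between 0 and n_total")
    return n_contact / n_total


aw_rate = contact_rate(23, 165)    # about 0.139, reported as 14% in the text
cw_rate = contact_rate(146, 146)   # 1.0, i.e. 100%
```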

2.4.2. Total examination time

The total examination time was recorded by the CT technician for each group; it was defined as the time from the patient entering the CT scanning room to walking out of the examination room after finishing the CT examination.

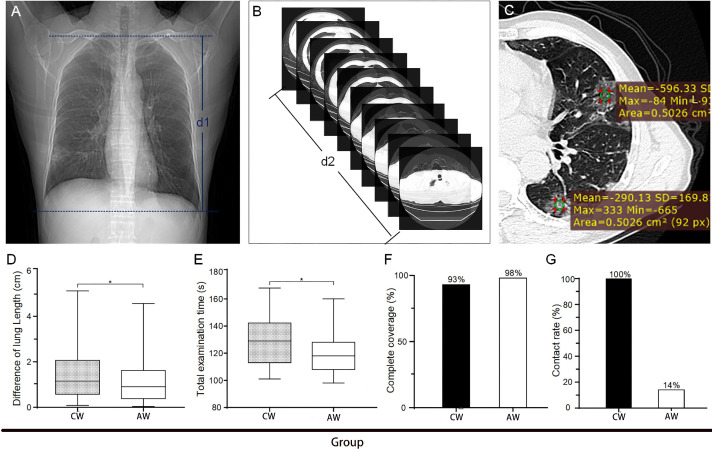

2.4.3. Evaluation of breath-holding training and positioning accuracy

A complete chest scan consisted of two parts: scout image scanning and axial thin-layer image acquisition. Both parts required the patient to hold their breath following the voice prompts. Patient compliance with breath-holding training was evaluated indirectly by measuring the difference in lung length between the scout image and the axial thin-layer image. The difference was measured in two steps: (1) measuring the distance (d1) between the apex pulmonis and the basis pulmonis on the scout image (Figure 3A); (2) recording the distance (d2) from the apex pulmonis to the basis pulmonis on the axial thin-layer image (Figure 3B). The difference in lung length was then calculated as |d2 − d1|.
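The per-patient metric and its group summary can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the use of the sample standard deviation is an assumption, and the example distances are made-up values rather than study data.

```python
from statistics import mean, stdev


def lung_length_difference(d1_cm: float, d2_cm: float) -> float:
    """Absolute difference |d2 - d1| between scout (d1) and axial (d2) lung lengths."""
    return abs(d2_cm - d1_cm)


def summarize_differences(pairs):
    """Return (mean, sample SD) of |d2 - d1| over (d1, d2) pairs for one group."""
    diffs = [lung_length_difference(d1, d2) for d1, d2 in pairs]
    return mean(diffs), stdev(diffs)


# Hypothetical (d1, d2) measurements in cm for three patients.
example_pairs = [(24.0, 25.0), (26.0, 25.0), (22.0, 25.0)]
group_mean, group_sd = summarize_differences(example_pairs)
```

A smaller mean difference suggests that the patient held a similar inspiration level during the scout and axial acquisitions.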

Figure 3.

Measurement and comparison of the difference in lung length, total examination time, and complete coverage between the conventional workflow and the automatic workflow in CT imaging for COVID-19 patients. The difference in lung length was measured using the scout image (A) and the axial thin-layer image (B) of the same patient, and the distance (d1 or d2) between the apex pulmonis and the basis pulmonis on the scout image or axial thin-layer image was recorded. (C) The lesions are shown along with the ROI locations (green circles) used to acquire the CT value (mean±SD) in different lung regions. (D) Measurement of the difference in lung length. (E) Quantification of the positioning time. (F) Comparison of the complete coverage on the axial thin-layer images acquired by CW and AW (data are presented as n (%), where n is the number of patients with complete chest CT axial images). (G) Comparison of contact rate between CW and AW. *P<0.01; CW group, n = 146; AW group, n = 165.

For the positioning accuracy, a complete coverage of axial thin-layer image should contain the range from the apex pulmonis to the diaphragm. Thus, if the images of apex pulmonis and diaphragm were fully covered, the patient positioning was considered to be successful; otherwise, it was defined as incomplete or inaccurate.

2.4.4. Assessment of image quality

The image quality was analyzed by the same three radiologists at a standard pulmonary display window setting (window level = −700 and window width = 1500). The pulmonary lesions and the locations of the regions of interest (ROIs) were established by consensus. The mean CT value and standard deviation (SD), in Hounsfield units (HU), of the pulmonary lesions were measured by placing a 50 mm² ROI at the center of each lesion (Figure 3C). Each ROI was measured on three consecutive images in each group, and the measurements were averaged. The pulmonary lesions mainly included ground-glass opacification, consolidation, and interstitial thickening. Other radiographic abnormalities (hydrothorax, nodule or lump, cavitation or calcification, bronchiole or bronchiectasis, and emphysema) were also noted. The lungs were divided into five regions with reference to previous studies [7], [8]: central zone (CZ), including the main and segmental bronchi within 20 mm of the hila; peripheral zone (PZ), including small bronchi and bronchioles within 20–40 mm of the hila; subpleural region (SPR), within 10 mm of the chest wall; centrilobular region (CLR); and apical zone (AZ). The signal-to-noise ratio (SNR) of the lesions was calculated as SNR = mean CT value / SD. The image noise was represented by the SD value.
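A minimal sketch of the noise and SNR calculation for one lesion is given below. It assumes that the mean CT value and the SD are each averaged over the three consecutive images before the ratio is taken; the text does not specify this order, nor whether the absolute CT value is used, so the formula is applied literally. The function name and the example ROI values are hypothetical.

```python
from statistics import mean


def lesion_noise_and_snr(slice_measurements):
    """Return (noise, snr) for one lesion.

    slice_measurements: iterable of (mean_hu, sd_hu) pairs, one per image.
    Noise is the averaged SD; SNR is the averaged mean CT value divided by it.
    """
    measurements = list(slice_measurements)
    if not measurements:
        raise ValueError("at least one ROI measurement is required")
    avg_mean = mean(m for m, _ in measurements)
    avg_sd = mean(s for _, s in measurements)
    if avg_sd <= 0:
        raise ValueError("SD must be positive to compute SNR")
    return avg_sd, avg_mean / avg_sd


# Hypothetical ROI readings (mean HU, SD HU) on three consecutive images.
noise, snr = lesion_noise_and_snr([(600.0, 150.0), (630.0, 140.0), (570.0, 160.0)])
```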

2.5. Statistical analysis

Continuous variables were expressed as mean ± SD and compared using unpaired-sample t tests if they were normally distributed according to the Shapiro-Wilk test; otherwise, they were expressed as median (Q1, Q3) and compared with Wilcoxon signed-rank tests. Categorical variables were expressed as number (%) and compared with the χ² test. A two-tailed P value of less than 0.05 was considered statistically significant. All statistical analyses were conducted with IBM SPSS software (version 22.0, USA).
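For readers who want to reproduce a categorical comparison without SPSS, the sketch below computes a χ² test for a 2×2 table using only the Python standard library. It assumes the Pearson statistic without continuity correction, which the text does not specify, and the function name is hypothetical. The example uses the contact counts reported in this article (AW: 23 of 165 with contact; CW: 146 of 146).

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (chi2, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: AW, CW; columns: contact, no contact.
chi2, p = chi_square_2x2(23, 142, 146, 0)
significant = p < 0.05  # two-tailed threshold stated in the text
```

With small expected cell counts, Fisher's exact test or a continuity correction may be more appropriate; that choice is outside what the text describes.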

3. Results

3.1. General information

A total of 165 patients meeting the inclusion and exclusion criteria were prospectively enrolled in the AW group: 89 men and 76 women, with a median age of 55 (21, 81) years. A total of 584 patients underwent CW chest CT examinations for the first time at Wuhan Leishenshan Hospital between February 10 and March 9, 2020, of whom 146 met the inclusion and exclusion criteria and were retrospectively enrolled in this study: 77 men and 69 women, with a median age of 56 (19, 78) years. There were no statistically significant differences in age (P = 0.24) or body mass index (BMI) (P = 0.92) between the AW and CW groups (Table 1).

Table 1.

Demographics and baseline characteristics of 311 enrolled COVID-19 patients.

| Groups | Number of patients | Age (years, M (Q1, Q3)) | Ratio of male to female | Body mass index (kg/m², M (Q1, Q3)) |
|---|---|---|---|---|
| CW group | 146 | 56 (19, 78) | 1.11:1 | 24.1 (17.5, 32.6) |
| AW group | 165 | 55 (21, 81) | 1.17:1 | 23.7 (18.1, 33.2) |
| P values | – | 0.24 | – | 0.92 |

CW: conventional manual workflow; AW: automatic contactless workflow.

3.2. Evaluation of breath-holding training and positioning accuracy

The difference of lung length was determined using the scout images and axial thin-layer images, and it was significantly smaller in AW group than that in CW group ((0.90±1.24) cm vs. (1.16±1.49) cm, P = 0.009) (Figure 3D).

3.3. Total examination time

The total examination time in the AW group was significantly less than that in the CW group ((118.0 ± 20.0) s vs. (129.0 ± 29.0) s, P = 0.001) (Figure 3E).

3.4. Scanning accuracy and contact rate

The complete coverage of axial chest images was used to evaluate the scanning accuracy. The scanning accuracy in the AW group was higher than that in the CW group (98.0% (162/165) vs. 93.0% (136/146)) (Figure 3F), and the contact rate was significantly lower in the AW group (14%) than in the CW group (100%) (Figure 3G).

3.5. Image noise and SNR of pulmonary lesions

Pulmonary lesions were found in all lung regions in both the AW and CW groups. However, they were predominantly distributed in the peripheral, centrilobular, and subpleural regions of the lungs (926 of 1056 lesions (87.7%) in the CW group and 1026 of 1184 lesions (86.7%) in the AW group). Overall, the AW group had marginally lower image noise and higher SNR for lesions across the lung regions (Table 2). For lesions located in the centrilobular and subpleural regions, the AW group had significantly lower image noise than the CW group (CLR: (140.4 ± 78.6) HU vs. (153.8 ± 72.7) HU, P = 0.028; SPR: (140.6 ± 80.8) HU vs. (159.4 ± 82.7) HU, P = 0.010). There was no significant difference in noise between the two groups in the other lung regions. For lesions located in the peripheral, centrilobular, and subpleural regions, the AW group had a significantly higher SNR than the CW group (Table 2).

Table 2.

Distribution of image noise and SNR of CW and AW groups in different lesion locations in chest CT.

| Lesion location (zone) | All lesions (n, CW/AW) | Image noise, CW (HU, mean±SD) | Image noise, AW (HU, mean±SD) | P value (image noise) | SNR, CW | SNR, AW | P value (SNR) |
|---|---|---|---|---|---|---|---|
| Apical | 71/86 | 136.3 ± 70.7 | 133.3 ± 71.1 | 0.713 | 6.3 ± 4.1 | 6.7 ± 3.1 | 0.120 |
| Central | 59/72 | 134.1 ± 66.3 | 130.2 ± 67.5 | 0.430 | 6.4 ± 4.2 | 6.9 ± 3.9 | 0.188 |
| Peripheral | 232/261 | 146.6 ± 66.7 | 135.8 ± 74.4 | 0.069 | 5.4 ± 4.3 | 6.8 ± 4.4 | 0.011 |
| Centrilobular | 497/534 | 153.8 ± 72.7 | 140.4 ± 78.6 | 0.028 | 4.9 ± 3.7 | 6.6 ± 4.3 | 0.006 |
| Subpleural | 197/231 | 159.4 ± 82.7 | 140.6 ± 80.8 | 0.010 | 4.8 ± 4.0 | 6.4 ± 4.4 | <0.001 |

The data are mean±SD, where n is the number of the pulmonary lesions with available data. CW: conventional manual workflow; AW: automatic contactless workflow; SNR: signal-to-noise ratio.

4. Discussion

We proposed an AW for CT examination that combines an intelligent guided robot with automatic positioning technology, and compared the examination time and image quality for COVID-19 patients examined using CW or AW. Our results indicated that the automatic workflow not only improved patient compliance with breath holding but also reduced the examination time and overall image noise through better breath-holding training and positioning.

The application of AI technology in CT imaging has been shown to make the scanning procedure more efficient and imaging-based diagnosis more effective [9]. Recently, AI-empowered visual sensors and ISO-centering technology have been introduced into CT imaging systems, making automatic contactless positioning possible. Wang et al. [10] found that using visual sensors and deep convolutional neural networks could significantly improve the scanning efficiency. Our results showed that the automatic imaging workflow might shorten the time needed to complete the chest CT examination. In particular, the total examination time was reduced by 8.6% in the AW group compared with the CW group. The automatic imaging workflow was also accurate in chest imaging: its accuracy (98%) was higher than that of the conventional imaging workflow (93%).

Other important positioning technologies (including ISO-centering and RGB-depth input sensors) applied in CT positioning and imaging can improve image quality by precisely adjusting the bed height [11], [12], because CT image quality and temporal resolution are best nearest the isocenter, and image noise decreases substantially when patients are accurately positioned. However, because of the barrier created by various kinds of personal protective equipment, normal communication between medical staff and patients is limited, and the motion artifacts in chest CT imaging caused by insufficient breath-holding training of patients were still not well resolved during the epidemic period. The intelligent robot and automatic positioning technique could demonstrate the correct body posture and perceive the patient's posture by virtue of an infrared detection system and a feedback system. Our results revealed that the difference in lung length in CT images obtained with the AW was significantly smaller than that in the CW group. These findings indirectly indicate that patient compliance with breath holding can be improved by the intelligent guided robot.

This result also suggested that breath-holding training delivered by the robot was more effective than that delivered by technicians, for which there may be two reasons. First, because of the barrier of personal protective equipment, communication between radiologists and patients was limited, which led to insufficient breath-holding training in the CW group. Second, the intelligent robot could show the correct body posture on its screen, which could help patients better understand the breath-holding training process. More importantly, the AW greatly reduced the opportunities for contact between radiologists and patients. In this study, only 14% of patients (23 of 165) in the AW group required the radiologist to enter the CT scanning room or preparation room and come into close contact with them during CT scanning. This shows that the AW can reduce contact between patients and medical workers while also improving the scanning accuracy.

In this study, the images of the AW group had significantly higher SNR and lower image noise than those of the CW group. The main factor affecting the noise and SNR of pulmonary lesions may be the patient's off-center distance. The AI-based automatic positioning technique could effectively reduce the off-center distance and optimize image quality. According to related studies, mispositioning degrades CT image quality [13], [14], [15], [16]. The image noise in the AW group, particularly for lesions in the centrilobular and subpleural areas of the lungs, was significantly lower than that in the CW group. Xie et al. [17] and Chung et al. [18] reported that the lung lesions in COVID-19 patients were predominantly distributed in the peripheral region of the lungs. Hence, CT scanning with the AW may have a positive impact on the image quality of peripheral lesions.

This study had some limitations. Firstly, the study might have a confounding bias due to the relatively small number of patients. Secondly, we only evaluated one CT scanner from one manufacturer. Additional studies are needed to investigate whether the automatic imaging workflow generalizes to different CT scanners. Thirdly, the patients were limited to those who did not require life-supporting tubes or other equipment and who could follow verbal commands. In addition, other patient positions, such as the feet-first prone position and the lateral position, and other special conditions were not included.

In summary, this study indicates that the use of AW might lead to higher examination efficiency, scanning accuracy, and image quality in chest CT imaging, and reduce the cross-infection risks for diagnosing COVID-19 patients.

Conflicts of interest statement

The authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article. The authors declare that there are no conflicts of interest related to this article.

Funding

This study was supported by the National Key Research and Development Plan of China (Grant No. 2017YFC0108803), the National Natural Science Foundation of China (Grant Nos. 81771819 and 81801667).

Author Contributions

Xinghuan Wang and Haibo Xu had the idea for the study and take responsibility for the integrity of the entire study. Yadong Gang initiated the current project, analyzed the data, and created all the figures in addition to writing the manuscript. Xiongfeng Chen, Hanlun Wang and Jinxiang Hu analyzed the data. Jianying Li, Ying Guo and Bin Wen provided technical support for the entire study.

Acknowledgements

We thank Yunxiao Han and Yinghong Ge for assistance with collecting the image data. We thank Zhusha Wang, Chenhong Fan, Wenbo Sun, Gao Lei and other members of the Department of Radiology, Zhongnan Hospital of Wuhan University for their comments and advice on revising the manuscript.

Editor notes

Given his role as an editorial board member, Xinghuan Wang had no involvement in the peer review of this article and has no access to information regarding its peer review. Full responsibility for the editorial process for this article was delegated to Jing Sun and Zhuqingqing Cui.

References

- 1. WHO, Geneva. Statement on the second meeting of the International Health Regulations (2005) Emergency Committee regarding the outbreak of novel coronavirus (2019-nCoV). 2020. Available from: https://www.who.int/news-room/detail/30-01-2020-statement-on-the-second-meeting-of-the-international-health-regulations-(2005)-emergency-committee-regarding-the-outbreak-of-novel-coronavirus-(2019-ncov), 30 January 2020.

- 2. Vaishya R, Javaid M, Khan IH, et al. Artificial Intelligence (AI) applications for COVID-19 pandemic. Diabetes Metab Syndr. 2020;14(4):337–339. doi: 10.1016/j.dsx.2020.04.012.

- 3. Shi F, Wang J, Shi J, et al. Review of Artificial intelligence techniques in imaging data acquisition, segmentation, and diagnosis for COVID-19. IEEE Rev Biomed Eng. 2021;14:4–15. doi: 10.1109/RBME.2020.2987975.

- 4. Singh V, Chang YJ, Ma K, et al. Estimating a patient surface model for optimizing the medical scanning workflow. Med Image Comput Comput Assist Interv. 2014;17(Pt1):472–479. doi: 10.1007/978-3-319-10404-1_59.

- 5. Casas L, Navab N, Demirci S. Patient 3D body pose estimation from pressure imaging. Int J Comput Assist Radiol Surg. 2019;14(3):517–524. doi: 10.1007/s11548-018-1895-3.

- 6. China National Health Committee. Diagnosis and treatment of pneumonitis caused by novel coronavirus (Trial Version 5). 2020. Available from: http://www.nhc.gov.cn/yzygj/s7653p/202002/3b09b894ac9b4204a79db5b8912d4440/files/7260301a393845fc87fcf6dd52965ecb.pdf.

- 7. Christe A, Charimo-Torrente J, Roychoudhury K, et al. Accuracy of low-dose computed tomography (CT) for detecting and characterizing the most common CT-patterns of pulmonary disease. Eur J Radiol. 2013;82(3):e142–e150. doi: 10.1016/j.ejrad.2012.09.025.

- 8. Cristofaro M, Busi Rizzi E, Piselli P, et al. Image quality and radiation dose reduction in chest CT in pulmonary infection. Radiol Med. 2020;125(5):451–460. doi: 10.1007/s11547-020-01139-5.

- 9. Luengo-Oroz M, Pham KH, Bullock J, et al. Artificial intelligence cooperation to support the global response to COVID-19. Nat Mach Intell. 2020;2:295–297.

- 10. Wang Y, Lu X, Zhang Y, et al. Precise pulmonary scanning and reducing medical radiation exposure by developing a clinically applicable intelligent CT system: toward improving patient care. EBioMedicine. 2020;54. doi: 10.1016/j.ebiom.2020.102724.

- 11. Booij R, van Straten M, Wimmer A, et al. Automated patient positioning in CT using a 3D camera for body contour detection: accuracy in pediatric patients. Eur Radiol. 2021;31(1):131–138. doi: 10.1007/s00330-020-07097-w.

- 12. Kadkhodamohammadi A, Gangi A, De Mathelin M, et al. A multi-view RGB-D approach for human pose estimation in operating rooms. IEEE Winter Conference on Applications of Computer Vision (WACV). 2017.

- 13. Kaasalainen T, Palmu K, Reijonen V, et al. Effect of patient centering on patient dose and image noise in chest CT. AJR Am J Roentgenol. 2014;203(1):123–130. doi: 10.2214/AJR.13.12028.

- 14. Saltybaeva N, Alkadhi H. Vertical off-centering affects organ dose in chest CT: evidence from Monte Carlo simulations in anthropomorphic phantoms. Med Phys. 2017;44(11):5697–5704. doi: 10.1002/mp.12519.

- 15. Toth T, Ge Z, Daly MP. The influence of patient centering on CT dose and image noise. Med Phys. 2007;34(7):3093–3101. doi: 10.1118/1.2748113.

- 16. Gang Y, Chen X, Li H, et al. A comparison between manual and artificial intelligence-based automatic positioning in CT imaging for COVID-19 patients. Eur Radiol. 2021:1–10. doi: 10.1007/s00330-020-07629-4.

- 17. Xie X, Zhong Z, Zhao W, et al. Chest CT for typical coronavirus disease 2019 (COVID-19) pneumonia: relationship to negative RT-PCR testing. Radiology. 2020;296(2):E41–E45. doi: 10.1148/radiol.2020200343.

- 18. Chung M, Bernheim A, Mei X, et al. CT imaging features of 2019 novel coronavirus (2019-nCoV). Radiology. 2020;295(1):202–207. doi: 10.1148/radiol.2020200230.