Abstract

Recent advances in artificial intelligence, driven by the development and application of big data, supercomputing, sensor networks, brain science, and other technologies, have demonstrated success in a variety of clinical tasks. However, no artificial intelligence project has yet been adopted on a large scale in real clinical practice because of a lack of standardized processes, insufficient ethical and legal supervision, and other issues. We analyzed the existing problems in the field of artificial intelligence and herein propose possible solutions. We call for the establishment of a process framework to ensure the safe and orderly development of artificial intelligence in the medical industry. Such a framework will facilitate the design and implementation of artificial intelligence products, support better management by regulatory authorities, and help ensure that reliable and safe artificial intelligence products are selected for application.

Keywords: Artificial intelligence, machine learning, closed-loop framework, clinical decision support, regulation, medical industry, clinical practice

Introduction

Artificial intelligence (AI) originated in the United States in 1956;1 in essence, it consists of algorithms built by analyzing existing data and learning from them. After decades of development, AI has gradually been integrated into daily medical practice and has made considerable progress in medical image processing,2–7 medical process optimization,8,9 medical education, and other applications.6,7,10,11 However, several problems have emerged alongside the rapid development of AI technology, and as a result AI is not yet widely applied in clinical practice and public health. We searched the PubMed database for articles published in the past 5 years on AI medical applications, laws, and ethics. In this narrative review, we discuss the current applications of AI in medicine, summarize the obstacles preventing its universal acceptance, and explore potential strategies for overcoming them.

Application of AI in the COVID-19 epidemic

During the COVID-19 epidemic in early 2020, AI algorithms that combined chest computed tomography findings, clinical symptoms, exposure history, and laboratory tests were able to quickly diagnose COVID-19-positive patients. AI systems also improved the detection of patients who were positive for COVID-19 by reverse-transcriptase polymerase chain reaction but had normal computed tomography findings.12–14 AI-based screening rapidly narrowed the search for drugs with potential anti-COVID-19 effects15,16 and helped predict the possible sequences of next-generation viruses.17 Thermal scanners equipped with body and face detection were used to screen people passing through crowded places and identify elevated temperatures that might be related to COVID-19. Based on individuals’ self-reported health status, travel history, contact history, and other parameters, AI analysis was used to generate “health quick response codes” that distinguished diagnosed patients, patients with suspected disease, close contacts, and unaffected residents, thus guiding anti-epidemic policies in various places; this endeavor effectively slowed the spread of the epidemic.18 An AI strategy based on an improved susceptible-infected model and a self-organizing feature map captured transmission dynamics close to those actually observed and predicted the development of the epidemic.19,20 A low-cost self-testing and tracking system coupling blockchain and AI helped resource-limited areas carry out effective disease surveillance.21
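The susceptible-infected (SI) model mentioned above describes how an infection spreads through a closed population. As a minimal sketch for readers unfamiliar with it, the code below implements only the basic discrete-time SI model, assuming a well-mixed population with no recovery. It is not the improved model or the self-organizing feature map used in the cited studies, and the population size, initial case count, and transmission rate `beta` are hypothetical values chosen for illustration.

```python
def simulate_si(population: int, initial_infected: float, beta: float, days: int) -> list[float]:
    """Basic discrete-time SI model: new infections per day = beta * S * I / N.

    S = N - I because the basic SI model has no recovered compartment.
    Returns the infected count at the end of each day (index 0 = initial state).
    All parameter values passed in are illustrative assumptions.
    """
    infected = [float(initial_infected)]
    for _ in range(days):
        i = infected[-1]
        s = population - i
        new_infections = beta * s * i / population
        # Guard so the infected count never exceeds the population.
        infected.append(min(float(population), i + new_infections))
    return infected


if __name__ == "__main__":
    # Hypothetical outbreak: 10,000 people, 10 initial cases, beta = 0.3 per day.
    curve = simulate_si(population=10_000, initial_infected=10, beta=0.3, days=60)
    for day in (0, 10, 20, 30, 40, 60):
        print(f"day {day:2d}: {curve[day]:8.1f} infected")
```

Because the basic SI model has no recovery, the infected count follows an S-shaped curve toward the whole population; this oversimplification is one reason practical forecasting work modifies the basic structure to fit observed epidemic data.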

Problems and challenges

The inherent challenges of machine learning, imperfect ethical and legal frameworks, and poor social acceptance have all hindered the development of AI.

Ethical issues

Data ethics

Data ethics is the foundation of AI, and its key areas include informed consent, privacy and data protection, ownership, objectivity, and transparency.22 Can personal health data be given a monetary value? Unfortunately, such data transactions are not uncommon; one example is the data-sharing agreement between DeepMind and the Royal Free London NHS Foundation Trust. Who owns such massive amounts of personal health data? Canadian regulations recognize healthcare providers as the “information custodians” of patients’ private health data, whereas ownership of the data belongs to patients. This custodianship reflects the recognition that patients have interests in their medical records and that these interests are protected by law.23 Therefore, as the owners of their data, patients have the right to know how and to what extent their personal health data are recorded and used.24

Fairness

Unfairness caused by bias in data sources is the most common ethical issue. Every data set is biased to some extent with respect to gender, sexual orientation, race, or sociologic, environmental, or economic factors.24 AI programs learn from existing data and draw conclusions from them, and because historical data also capture patterns of inequality in healthcare, machine learning models trained on these data may perpetuate these inequalities.25 For example, a study conducted in the United States showed that clinicians might disregard positive results for African American patients because they assumed that the model’s positive predictive value in this group was low. In fact, the low positive predictive value resulted from the small number of African Americans who participated in the initial experiment, which made false-positive results more likely.26 In addition, injustices may arise in the design, deployment, and evaluation of models.25 For example, if clinicians routinely stop treatment for patients with certain findings (such as extreme preterm birth or brain injury), AI may learn that such findings are invariably fatal, a learned tendency that can lead to serious ethical problems and may be fatal for patients.27 In terms of resource allocation, a model may deprioritize residents of poor communities whose hospital stays are long because of poverty or long travel distances, and may therefore disproportionately allocate case management resources to patients from wealthy, predominantly white communities.28 Automation bias will also hinder the practical application of AI, and this problem is exacerbated when AI makes decisions based on subtle features that humans cannot perceive. In under-resourced populations, the risk of automation bias may be magnified because there is no local expert to overrule the results.24
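One simple safeguard against the subgroup problem described above is to report a model’s positive predictive value (PPV = true positives / all positive predictions) separately for each group, together with the number of positive predictions the estimate rests on, so that estimates from small groups are flagged rather than trusted or dismissed. The sketch below assumes hypothetical `(group, predicted_positive, truly_positive)` records and an arbitrary minimum of 30 positive predictions; neither the record format nor the threshold comes from the cited studies.

```python
from collections import defaultdict


def ppv_by_group(records, min_positive_predictions=30):
    """Compute PPV = TP / (TP + FP) separately for each group.

    records: iterable of (group, predicted_positive, truly_positive) tuples.
    Returns {group: (ppv, n_positive_predictions, reliable)}, where reliable is
    False when the estimate rests on fewer than min_positive_predictions
    (a hypothetical cut-off). Groups with no positive predictions are omitted.
    """
    true_pos = defaultdict(int)
    predicted_pos = defaultdict(int)
    for group, predicted, truth in records:
        if predicted:
            predicted_pos[group] += 1
            if truth:
                true_pos[group] += 1
    return {
        group: (true_pos[group] / n, n, n >= min_positive_predictions)
        for group, n in predicted_pos.items()
    }


if __name__ == "__main__":
    # Hypothetical data: group B is small, so its PPV estimate is unstable.
    records = (
        [("A", True, True)] * 80 + [("A", True, False)] * 20
        + [("B", True, True)] * 3 + [("B", True, False)] * 5
    )
    print(ppv_by_group(records))
    # {'A': (0.8, 100, True), 'B': (0.375, 8, False)}
```

Reporting the count alongside the PPV makes it visible when an apparently low value for a group reflects too little data rather than a property of the patients in that group.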

However, it may be difficult for us to know in advance whether an AI system or improvement plan will harm or benefit a particular group.

Ethics of clinical practice

The introduction of AI into healthcare practice brings new challenges for doctors.29 Although a strictly rule-oriented robot may initially seem more reliable, an ethical person is more trustworthy in situations requiring complex clinical decisions.30 AI also tends to amplify biased findings without regard to the specific clinical context. In one practical example, AI classified patients with pneumonia alone as high-risk but erroneously classified patients with both pneumonia and asthma as low-risk, even though comorbid asthma complicates pneumonia; the model had learned from historical data in which patients with asthma received more aggressive care and therefore had better recorded outcomes.31

Some scholars argue that when those involved with an algorithm can no longer predict the machine’s future behavior, they cannot be held morally responsible for it.32 Such a responsibility gap would aggravate conflicts between doctors and patients and is unacceptable in healthcare. In AI ethics statements issued by radiology societies in Europe and North America, doctors play the role of the “guardian of the machine,” serving as active operators rather than passive users. Therefore, doctors remain responsible for the outcome of a patient’s diagnostic strategy regardless of whether the strategy was partially or fully assisted by the AI system.23,24,33 In addition, doctors now face new ethical challenges because AI-generated models tend to be difficult to explain, and many doctors do not understand the internal working principles of the algorithms.32,33

Legal issues arising in AI

Healthcare workers undergo strict assessments before they are employed, and they must abide by a series of codes of conduct in daily practice. By contrast, no globally unified laws or regulations currently govern the application of AI in medicine or standardize the behavior of its practitioners.34 If AI is exploited by criminals, AI-crime, a new and destructive form of crime, may emerge.35 Therefore, broad and detailed AI laws are urgently needed. However, several issues must be considered. First, legal experts cannot formulate such laws alone; stakeholders involved in the construction or development of AI-based medical solutions must also participate.36 Second, when AI-related infringement occurs, we should clarify whether responsibility lies with the AI manufacturer, user, or maintainer. Where does each stakeholder’s responsibility end? In a complicated case, how should responsibility be apportioned, rather than simply requiring doctors to bear all the risks of AI-assisted medical treatment?29 Finally, the laws that have been formulated must be continuously updated. Studies have shown that health-related data now far exceed what the original privacy protection laws anticipated (such as the Health Insurance Portability and Accountability Act [HIPAA], passed by the US Congress in 1996).37–39 Fortunately, many new laws have been introduced to regulate AI data protection, responsibility determination, and supervision.37,40,41

Even where a legal basis exists, no clear AI regulatory agency or accountability pathway is currently available. For example, NHS 111 powered by Babylon, an AI-based triage chatbot app, was registered as a medical device with the Medicines and Healthcare products Regulatory Agency despite the absence of rigorous clinical validation and sufficient evidence.42

Security

Security is the most important issue in the application of AI to the medical industry, and it also requires the most rigorous review.

Hardware security

All AI products currently depend on electronic devices, such as computers, mobile phones, and wristbands, to perform their functions. Three key issues regarding the security of such hardware must be noted. First, even the best physically unclonable functions (hardware features used to authenticate chips and protect them against cloning) are affected by factors such as cost, temperature variations, and electromagnetic interference.43 Second, the complexity and specialization of both medical knowledge and information technology make it difficult for doctors or engineers alone to use AI that integrates multiple technologies. On one hand, engineers must be retrained to access and process medical system data, which may disrupt the medical workflow and cause data leakage.44 On the other hand, doctors may poorly understand the principles and use of AI products in real practice, leading to reduced efficiency and increased errors. Third, AI network security must be addressed: if key nodes are attacked or fail during complex network transmission, cascading failures may spread across the entire network.45,46

Software security

Even algorithms with powerful functions are highly vulnerable to deliberately designed attacks.47–50 An AI system that performs excellently in initial validation often performs unsatisfactorily when confronted with targeted attacks. In fact, every stage of algorithm development can be attacked; in the most severe (white-box) scenario, the attacker is assumed to know everything about the trained neural network model (training data, model architecture, hyperparameters, number of layers, activation functions, and model weights).50 A false-positive attack generates a negative sample that is misclassified as positive, whereas a false-negative attack generates a positive sample that is misclassified as negative; both confuse the system’s classification. Attacks can succeed even without knowledge of the structure and parameters of the target model or its training data set (black-box attacks).50,51 Errors can also arise without any external interference: an algorithm may gradually drift from correct behavior because of changes in disease patterns,52 missing data,53 and errors in autonomous updates.54
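To make the white-box scenario concrete, the sketch below applies a perturbation in the style of the fast gradient sign method (FGSM), one well-known attack from the adversarial-examples literature, to a toy logistic-regression classifier. The weights, bias, input, and perturbation size `epsilon` are hypothetical; a real medical model would be far larger, but the mechanism is the same: knowing the model’s weights, the attacker nudges each input feature in the direction that most increases the loss for the true class.

```python
import math


def predict_proba(weights, bias, x):
    """Positive-class probability of a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Shift each feature by epsilon in the sign of the loss gradient (FGSM-style).

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to input feature x_i is (p - y) * w_i.
    """
    p = predict_proba(weights, bias, x)
    grads = [(p - true_label) * w for w in weights]
    # (g > 0) - (g < 0) is the sign of g as an int: 1, 0, or -1.
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grads)]


if __name__ == "__main__":
    # Hypothetical toy model and a sample correctly classified as positive.
    w, b, x = [2.0, -1.5, 1.0], -0.5, [0.6, 0.2, 0.3]
    x_adv = fgsm_perturb(w, b, x, true_label=1, epsilon=0.2)
    print(round(predict_proba(w, b, x), 3), round(predict_proba(w, b, x_adv), 3))
    # 0.668 0.45
```

No feature moves by more than 0.2, yet the positive sample falls below the 0.5 threshold and is classified as negative, which is an instance of the false-negative attack described above.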

Human factors

Today’s AI is implemented in software. When writing thousands of lines of code, engineers will inevitably make mistakes. An ordinary AI system can be improved through subsequent patches and updates; in AI programs used in the medical field, however, such errors may directly endanger patients’ health. Moreover, developers often focus on the effectiveness of an AI system rather than its security.55 Doctors can also make mistakes: when a usually reliable AI system agrees with their diagnosis, busy clinicians may stop considering alternatives.56,57

Protection of human genetic resources

The DNA sequences of all human chromosomes have been determined to complete the mapping of the human genome, and a shared information system has been established. This information system focuses on the relationship between genes and function, especially the relationship between genes and diseases.58 Mapping the complete human genome over the past three decades has enabled much more detailed research on clinical diseases at the genetic and cellular levels. On one hand, human genetic resources are of great help in medical diagnosis and treatment59; on the other hand, such resources have catastrophic potential for all human beings if misused. The Ministry of National Defense of the People’s Republic of China has reported on a biologic weapon called the “gene weapon,” consisting of bacteria, insects, or microorganisms that carry disease-causing genes introduced through genetic engineering and that could have devastating effects in warfare. By studying genetic characteristics, such weapons could be designed to target genetic variants in specific ethnic groups; therefore, protecting human genome resources is of vital importance. Additionally, with the development of the Internet and genetic testing technology, increasing numbers of patients are undergoing genetic testing to assist in diagnosis and treatment, and the results are stored by hospitals or testing organizations. This creates a risk of data exposure to unauthorized personnel and may lead to discrimination in insurance or employment. Thus, legal provisions protecting patient privacy in DNA collection, transmission, and storage should be strengthened.

Societal acceptance

Although most patients are willing to trust an AI-based diagnosis, they tend to trust doctors more when the AI-based diagnosis differs from the doctor’s. One survey showed that resident physicians and medical students wanted AI-related training but that few had opportunities to take data science or machine learning courses themselves.60 Moreover, medical workers in less developed areas are greatly concerned that they may be replaced by AI in the future.61

Possible solutions

AI-based ecological network

The progression from the initial acquisition of complex clinical data or observation of clinical phenomena to an AI program ready for clinical application is complex. We herein propose an AI-based ecological network whose components are connected through a public big data sample database. The framework describes in detail each link in the generation of AI and the issues needing attention, and it can be divided into the following three steps.

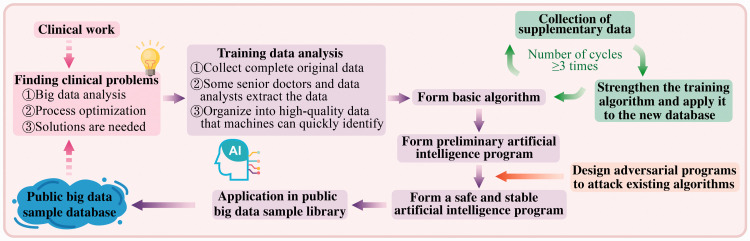

Step 1. Preclinical (Figure 1)

Figure 1.

Artificial intelligence before clinical application

The first step is to identify valuable problems from clinical work or the public big data sample database. A preliminary algorithm is then built through data collection and technology development and tested with a specially designed attack program. Algorithms that survive this test are then applied in simulation to the public big data sample database to screen out truly safe and stable AI programs.
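The screening logic of this step can be sketched as a gate that keeps only candidate algorithms whose accuracy remains acceptable both on clean validation data and under the designed attack. Everything here is a hypothetical illustration: the candidate names, accuracy figures, and the 0.90 and 0.80 thresholds are assumptions, not values proposed in this framework.

```python
from dataclasses import dataclass


@dataclass
class CandidateResult:
    name: str
    clean_accuracy: float        # accuracy on unperturbed validation data
    adversarial_accuracy: float  # accuracy on the same data after the attack program


def screen_candidates(results, min_clean=0.90, min_adversarial=0.80):
    """Return names of candidates passing both (hypothetical) accuracy thresholds."""
    return [
        r.name
        for r in results
        if r.clean_accuracy >= min_clean and r.adversarial_accuracy >= min_adversarial
    ]


if __name__ == "__main__":
    results = [
        CandidateResult("model_a", 0.95, 0.62),  # strong on clean data, collapses under attack
        CandidateResult("model_b", 0.92, 0.85),
        CandidateResult("model_c", 0.86, 0.84),  # robust but below the clean threshold
    ]
    print(screen_candidates(results))  # ['model_b']
```

Requiring both thresholds reflects the point made in the security discussion that excellent performance in initial validation does not by itself guarantee robustness against targeted attacks.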

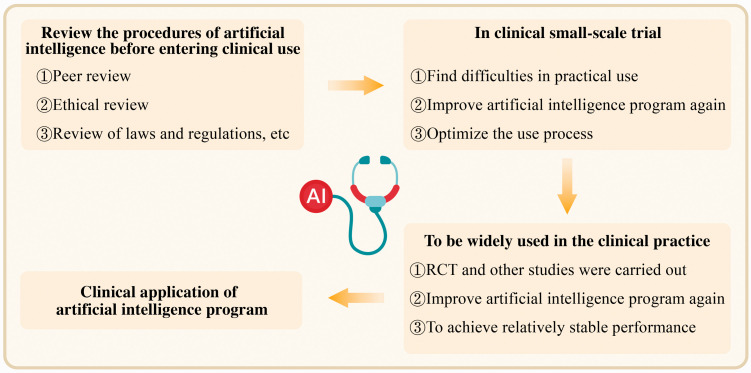

Step 2. Clinical application (Figure 2)

Figure 2.

Application of artificial intelligence in clinical practice

After a series of review procedures, the AI program can enter small-scale clinical application. The problems encountered and experience gained during use are summarized to optimize the algorithm, after which large-scale clinical application is conducted. Finally, an AI program suitable for real-world clinical application is formed.

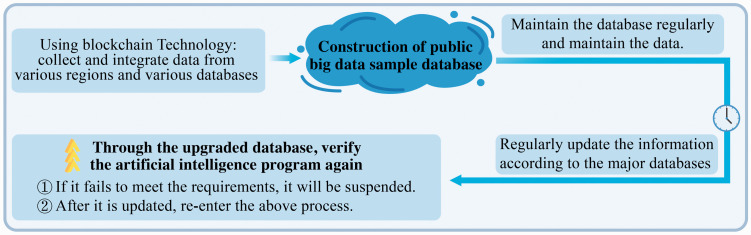

Step 3. Construction of public big data sample database (Figure 3)

Figure 3.

Maintenance and application of database

We use blockchain, big data, and other technologies to integrate high-quality data sets and store them in secure data storage software. The big data sample database requires daily maintenance (including of its data storage status, data security, and other features) and regular updates. The AI program is then re-verified against the updated database; if it fails to meet the corresponding standards or shows other problems, it must be debugged again. Additionally, a dedicated supervisory department is needed to oversee the whole process and provide complaint channels so that problems are resolved promptly.
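The text does not specify how blockchain technology would be used. One minimal interpretation, sketched here purely as an assumption, is a hash chain in which each record of a database update stores the SHA-256 hash of the previous record, so that later tampering with an earlier update breaks verification. This toy example uses Python’s standard `hashlib` and `json` modules; it is not a distributed blockchain (there is no consensus protocol or replication), and the update descriptions are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _hash_block(block: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_update(chain: list, description: str) -> None:
    """Append a record describing a database update, linked to the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    block = {"index": len(chain), "description": description, "prev_hash": prev_hash}
    block["hash"] = _hash_block(block)  # hash computed before the "hash" key exists
    chain.append(block)


def verify_chain(chain: list) -> bool:
    """Return True only if every record's hash and back-link are intact."""
    for i, block in enumerate(chain):
        body = {k: v for k, v in block.items() if k != "hash"}
        if _hash_block(body) != block["hash"]:
            return False
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_HASH
        if block["prev_hash"] != expected_prev:
            return False
    return True


if __name__ == "__main__":
    chain = []
    append_update(chain, "v1: initial de-identified data set")
    append_update(chain, "v2: scheduled update with new cases")
    print(verify_chain(chain))  # True
    chain[0]["description"] = "v1: altered"
    print(verify_chain(chain))  # False
```

A verification failure of this kind would be one trigger for the supervisory department to investigate before the AI program is re-verified against the database.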

Ethical adoption of AI in medicine

The development of AI requires ethicists to supervise the whole process to address ethical issues in data, resource allocation, and practice. First, we must ensure the independence of ethics committees.62 Next, with respect to data ethics, we can refer to the “land” model, under which patients own their medical data just as landowners hold surface rights, whereas the right to access the data for the purpose of improving healthcare may be considered to belong to other parties, such as healthcare providers or the government.23 To ensure fairness, we must fully consider marginalized populations and the fairness of distribution in specific clinical and organizational environments, securing the “three fairs”: equal outcomes, equal performance, and equal allocation.25 In terms of practice, we believe that ethics committees must formulate uniform rules, standards, and codes of conduct that are agreed upon and continuously updated to ensure that the development of AI in medical care does not violate ethics.
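The “three fairs” can be made operational by comparing simple group-level statistics. The sketch below uses one common metric for each, which is our assumption rather than a definition from the cited work: equal outcomes as the rate of good outcomes, equal performance as the true-positive rate (sensitivity) among patients who need care, and equal allocation as the share of patients selected for a resource. The record format and toy data are hypothetical.

```python
from collections import defaultdict


def fairness_gaps(records):
    """Per-group rates and max-min gaps for three illustrative fairness metrics.

    Each record is a dict with keys: group, needs_care (ground truth),
    selected (model-guided decision), good_outcome (observed result).
    Boolean fields may be True/False or 1/0.
    """
    counts = defaultdict(lambda: {"n": 0, "needs": 0, "tp": 0, "selected": 0, "good": 0})
    for r in records:
        c = counts[r["group"]]
        c["n"] += 1
        c["selected"] += int(bool(r["selected"]))
        c["good"] += int(bool(r["good_outcome"]))
        if r["needs_care"]:
            c["needs"] += 1
            c["tp"] += int(bool(r["selected"]))

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "outcome": c["good"] / c["n"],                        # equal outcomes
            "tpr": c["tp"] / c["needs"] if c["needs"] else None,  # equal performance
            "allocation": c["selected"] / c["n"],                 # equal allocation
        }

    gaps = {}
    for metric in ("outcome", "tpr", "allocation"):
        values = [v[metric] for v in rates.values() if v[metric] is not None]
        gaps[metric] = max(values) - min(values) if values else None
    return rates, gaps


if __name__ == "__main__":
    def make(group, needs, selected, good):  # builds hypothetical toy records
        return [{"group": group, "needs_care": n, "selected": s, "good_outcome": o}
                for n, s, o in zip(needs, selected, good)]

    records = (make("A", [1, 1, 0, 0], [1, 1, 1, 0], [1, 1, 1, 0])
               + make("B", [1, 1, 0, 0], [1, 0, 0, 0], [1, 0, 1, 0]))
    rates, gaps = fairness_gaps(records)
    print(gaps)  # {'outcome': 0.25, 'tpr': 0.5, 'allocation': 0.5}
```

A gap of zero on every metric is rarely achievable at once, so in practice an ethics committee would need to decide which gaps matter most in a given clinical and organizational setting.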

Establishment and perfection of the legal system

A complete legal system must be developed. Based on the “no harm” principle, strict and prudent rules should be formulated for every step of AI from the laboratory to clinical application. The legal system must also be flexible: rather than restricting the development of AI, it should guide that development through established regulations. Laws must keep pace with the times and cannot be set in stone; laws and regulations designed for earlier stages of AI technology will inevitably fail to meet supervisory needs as the technology advances. Thus, we need to re-examine current regulations, promptly eliminate emerging legal ambiguities or inadequacies, and improve the legal terms.

Notably, however, it is doctors who are responsible for medical decisions. Doctors should not blindly accept the information given by AI but should instead remain skeptical and formulate the best plan for patients based on the actual situation.

Improvement in feasibility of AI in the real world

Individuals and communities must be provided with more powerful AI products that offer a convenient workflow, reliable results, and stable performance. These products can then be improved according to the shortcomings that users report. In addition, people’s exposure to AI should be increased, for example through offline experience stores combined with social media, live-streaming platforms, and other channels.

Above all, patients and medical staff must be able to truly benefit from AI products through lower expenses, time savings, and fewer errors and conflicts. We believe that with sustained effort, more and more people will accept AI products in the medical industry.

Conclusion

AI will not replace doctors. Just as biochemical analyzers have not replaced laboratory scientists, AI is not a threat to doctors; rather, it will reshape the doctor’s role. AI research should not be limited to the accuracy and sensitivity of reported results but should also address the nature of diseases, such as their etiology and pathogenesis, and should enrich our understanding of biology.63 Interpretable algorithms will gain wider acceptance and help bring AI-based medical treatment into people’s lives. We must strengthen research on the ethics, laws, and supervision of AI as soon as possible. Additionally, we need to build a large public database containing information such as human genome data, supported by strict security protection measures, regular upgrades, and maintenance.

The era of AI has arrived, and all walks of life are stepping up to integrate with it; medical treatment is no exception. In the near future, AI-assisted medicine will experience a qualitative leap, and people’s understanding and acceptance of medical AI will increase.

Footnotes

Ethics: Ethics approval was not required because of the nature of this research (narrative review).

Declaration of conflicting interest: The authors declare that there is no conflict of interest.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was funded by the National Natural Science Foundation of China (No. 81971982) and the Zhejiang Provincial Science and Technology Department (No. 2020C03046).

ORCID iD: Lushun Jiang https://orcid.org/0000-0002-4026-7418

References

- 1. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism 2017; 69S: S36–S40.

- 2. McKinney SM, Sieniek M, Godbole V, et al. International evaluation of an AI system for breast cancer screening. Nature 2020; 577: 89–94.

- 3. Ehteshami Bejnordi B, Veta M, Johannes Van Diest P, et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 2017; 318: 2199–2210.

- 4. Ardila D, Kiraly AP, Bharadwaj S, et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat Med 2019; 25: 954–961.

- 5. Ahmad OF, Soares AS, Mazomenos E, et al. Artificial intelligence and computer-aided diagnosis in colonoscopy: current evidence and future directions. Lancet Gastroenterol Hepatol 2019; 4: 71–80.

- 6. El Hajjar A, Rey JF. Artificial intelligence in gastrointestinal endoscopy: general overview. Chin Med J (Engl) 2020; 133: 326–334.

- 7. Matava C, Pankiv E, Raisbeck S, et al. A convolutional neural network for real time classification, identification, and labelling of vocal cord and tracheal using laryngoscopy and bronchoscopy video. J Med Syst 2020; 44: 44.

- 8. Gutierrez G. Artificial intelligence in the intensive care unit. Crit Care 2020; 24: 101.

- 9. Bellini V, Guzzon M, Bigliardi B, et al. Artificial intelligence: a new tool in operating room management. Role of machine learning models in operating room optimization. J Med Syst 2019; 44: 20.

- 10. Sarker A, Mollá D, Paris C. Automatic evidence quality prediction to support evidence-based decision making. Artif Intell Med 2015; 64: 89–103.

- 11. Mirchi N, Bissonnette V, Yilmaz R, et al. The Virtual Operative Assistant: an explainable artificial intelligence tool for simulation-based training in surgery and medicine. PLoS One 2020; 15: e0229596.

- 12. Mei X, Lee HC, Diao KY, et al. Artificial intelligence-enabled rapid diagnosis of patients with COVID-19. Nat Med 2020; 26: 1224–1228.

- 13. Murphy K, Smits H, Knoops AJG, et al. COVID-19 on the chest radiograph: a multi-reader evaluation of an AI system. Radiology 2020: 201874.

- 14. Oh Y, Park S, Ye JC. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans Med Imaging 2020; 39: 2688–2700.

- 15. Ke YY, Peng TT, Yeh TK, et al. Artificial intelligence approach fighting COVID-19 with repurposing drugs. Biomed J 2020; 43: 355–362.

- 16. Zhang DH, Wu KL, Zhang X, et al. In silico screening of Chinese herbal medicines with the potential to directly inhibit 2019 novel coronavirus. J Integr Med 2020; 18: 152–158.

- 17. Park Y, Casey D, Joshi I, et al. Emergence of new disease: how can artificial intelligence help? Trends Mol Med 2020; 26: 627–629.

- 18. Bragazzi NL, Dai H, Damiani G, et al. How big data and artificial intelligence can help better manage the COVID-19 pandemic. Int J Environ Res Public Health 2020; 17: 3176.

- 19. Simsek M, Kantarci B. Artificial intelligence-empowered mobilization of assessments in COVID-19-like pandemics: a case study for early flattening of the curve. Int J Environ Res Public Health 2020; 17: 3437.

- 20. Zheng N, Du S, Wang J, et al. Predicting COVID-19 in China using hybrid AI model. IEEE Trans Cybern 2020; 50: 2891–2904.

- 21. Mashamba-Thompson TP, Crayton ED. Blockchain and artificial intelligence technology for novel coronavirus disease-19 self-testing. Diagnostics (Basel) 2020; 10: 198.

- 22. Mittelstadt BD, Floridi L. The ethics of big data: current and foreseeable issues in biomedical contexts. Sci Eng Ethics 2016; 22: 303–341.

- 23. Jaremko JL, Azar M, Bromwich R, et al. Canadian Association of Radiologists white paper on ethical and legal issues related to artificial intelligence in radiology. Can Assoc Radiol J 2019; 70: 107–118.

- 24. Geis JR, Brady AP, Wu CC, et al. Ethics of artificial intelligence in radiology: summary of the joint European and North American multisociety statement. J Am Coll Radiol 2019; 16: 1516–1521.

- 25. Rajkomar A, Hardt M, Howell MD, et al. Ensuring fairness in machine learning to advance health equity. Ann Intern Med 2018; 169: 866–872.

- 26. Drew BJ, Harris P, Zègre-Hemsey JK, et al. Insights into the problem of alarm fatigue with physiologic monitor devices: a comprehensive observational study of consecutive intensive care unit patients. PLoS One 2014; 9: e110274.

- 27. Char DS, Shah NH, Magnus D. Implementing machine learning in health care - addressing ethical challenges. N Engl J Med 2018; 378: 981–983.

- 28. Epstein AM, Stern RS, Tognetti J, et al. The association of patients' socioeconomic characteristics with the length of hospital stay and hospital charges within diagnosis-related groups. N Engl J Med 1988; 318: 1579–1585.

- 29. Tobia K, Nielsen A, Stremitzer A. When does physician use of AI increase liability? J Nucl Med 2020.

- 30. Gelhaus P. Robot decisions: on the importance of virtuous judgment in clinical decision making. J Eval Clin Pract 2011; 17: 883–887.

- 31. Nabi J. How bioethics can shape artificial intelligence and machine learning. Hastings Cent Rep 2018; 48: 10–13.

- 32. Basu T, Engel-Wolf S, Menzer O. The ethics of machine learning in medical sciences: where do we stand today? Indian J Dermatol 2020; 65: 358–364.

- 33. Artificial intelligence and medical imaging 2018: French Radiology Community white paper. Diagn Interv Imaging 2018; 99: 727–742.

- 34. Mitchell C, Ploem C. Legal challenges for the implementation of advanced clinical digital decision support systems in Europe. J Clin Transl Res 2018; 3: 424–430.

- 35. King TC, Aggarwal N, Taddeo M, et al. Artificial intelligence crime: an interdisciplinary analysis of foreseeable threats and solutions. Sci Eng Ethics 2020; 26: 89–120.

- 36. Cath C. Governing artificial intelligence: ethical, legal and technical opportunities and challenges. Philos Trans A Math Phys Eng Sci 2018; 376: 20180080.

- 37. Kayaalp M. Patient privacy in the era of big data. Balkan Med J 2018; 35: 8–17.

- 38. Golbus JR, Price WN, 2nd, Nallamothu BK. Privacy gaps for digital cardiology data: big problems with big data. Circulation 2020; 141: 613–615.

- 39. Matthias A. The responsibility gap: ascribing responsibility for the actions of learning automata. Ethics Inf Technol 2004; 6: 175–183. 10.1007/s10676-004-3422-1.

- 40. Halamka J. John Halamka: Japan to emerge as the leading learning lab for digital health innovation [Internet]. Healthcare IT News. 2019. [cited 2019 Sep 30]. Available from: https://www.healthcareitnews.com/news/johnhalamka-japan-emerge-leading-learning-lab-digital-health-innovation.

- 41. The European Parliament and the Council of the European Union. General Data Protection Regulation. 2012/0011 (COD). Brussels Council of the European Union; 2016.

- 42. McCartney M. Margaret McCartney: AI in medicine must be rigorously tested. BMJ 2018; 361: k1752.

- 43. Pu YF, Yi Z, Zhou JL. Defense against chip cloning attacks based on fractional Hopfield neural networks. Int J Neural Syst 2017; 27: 1750003.

- 44. Sohn JH, Chillakuru YR, Lee S, et al. An open-source, vender agnostic hardware and software pipeline for integration of artificial intelligence in radiology workflow. J Digit Imaging 2020; 33: 1041–1046.

- 45. Lu ZM, Li XF. Attack vulnerability of network controllability. PLoS One 2016; 11: e0162289.

- 46. O'Sullivan S, Nevejans N, Allen C, et al. Legal, regulatory, and ethical frameworks for development of standards in artificial intelligence (AI) and autonomous robotic surgery. Int J Med Robot 2019; 15: e1968.

- 47. Mozaffari-Kermani M, Sur-Kolay S, Raghunathan A, et al. Systematic poisoning attacks on and defenses for machine learning in healthcare. IEEE J Biomed Health Inform 2015; 19: 1893–1905.

- 48. Mygdalis V, Tefas A, Pitas I. K-Anonymity inspired adversarial attack and multiple one-class classification defense. Neural Netw 2020; 124: 296–307.

- 49. Nguyen DT, Pham TD, Lee MB, et al. Visible-light camera sensor-based presentation attack detection for face recognition by combining spatial and temporal information. Sensors (Basel) 2019; 19: 410.

- 50. Yuan X, He P, Zhu Q, et al. Adversarial examples: attacks and defenses for deep learning. IEEE Trans Neural Netw Learn Syst 2019; 30: 2805–2824.

- 51. Zhang X, Wu D. On the vulnerability of CNN classifiers in EEG-based BCIs. IEEE Trans Neural Syst Rehabil Eng 2019; 27: 814–825.

- 52. Davis SE, Lasko TA, Chen G, et al. Calibration drift in regression and machine learning models for acute kidney injury. J Am Med Inform Assoc 2017; 24: 1052–1061.

- 53. Dawson NV, Arkes HR. Systematic errors in medical decision making: judgment limitations. J Gen Intern Med 1987; 2: 183–187.

- 54. Nemati S, Ghassemi MM, Clifford GD. Optimal medication dosing from suboptimal clinical examples: a deep reinforcement learning approach. Annu Int Conf IEEE Eng Med Biol Soc 2016; 2016: 2978–2981.

- 55. Belard A, Buchman T, Forsberg J, et al. Precision diagnosis: a view of the clinical decision support systems (CDSS) landscape through the lens of critical care. J Clin Monit Comput 2017; 31: 261–271.

- 56. Parasuraman R, Manzey DH. Complacency and bias in human use of automation: an attentional integration. Hum Factors 2010; 52: 381–410.

- 57. Carter SM, Rogers W, Win KT, et al. The ethical, legal and social implications of using artificial intelligence systems in breast cancer care. Breast 2019; 49: 25–32.

- 58. Lander ES, Linton LM, Birren B, et al. Initial sequencing and analysis of the human genome. Nature 2001; 409: 860–921.

- 59. Lander ES. Initial impact of the sequencing of the human genome. Nature 2011; 470: 187–197.

- 60. Ooi SKG, Makmur A, Soon AYQ, et al. Attitudes toward artificial intelligence in radiology with learner needs assessment within radiology residency programmes: a national multi-programme survey. Singapore Med J 2019 (online ahead of print). 10.11622/smedj.2019141.

- 61. Abdullah R, Fakieh B. Health care employees’ perceptions of the use of artificial intelligence applications: survey study. J Med Internet Res 2020; 22: e17620.

- 62. International AI ethics panel must be independent. Nature 2019; 572: 415.

- 63. Ghosh A, Kandasamy D. Interpretable artificial intelligence: why and when. AJR Am J Roentgenol 2020; 214: 1137–1138.