Abstract

Pragmatic trials testing the effectiveness of interventions under “real world” conditions help bridge the research-to-practice gap. Such trial designs are optimal for studying the impact of implementation efforts, such as the effectiveness of integrated behavioral health clinicians in primary care settings. Formal pragmatic trials conducted in integrated primary care settings are uncommon, making it difficult for researchers to anticipate the potential pitfalls associated with balancing scientific rigor with the demands of routine clinical practice. This paper is based on our experience conducting the first phase of a large, multisite, pragmatic clinical trial evaluating the implementation and effectiveness of behavioral health consultants treating patients with chronic pain using a manualized intervention, brief cognitive behavioral therapy for chronic pain (BCBT-CP). The paper highlights key choice points using the PRagmatic-Explanatory Continuum Indicator Summary (PRECIS-2) tool. We discuss the dilemmas of pragmatic research that we faced and offer recommendations for aspiring integrated primary care pragmatic trialists.

Keywords: Chronic pain, Primary care, Pragmatic trials, Dissemination and implementation science, Primary care behavioral health

Introduction

Background

Research findings can take up to 17 years to be incorporated into clinical practice (Balas & Boren, 2000; Colditz & Emmons, 2018; Morris et al., 2011). Sluggish research uptake is likely due to the fact that most clinical research is conducted under tightly controlled conditions that do not mirror routine clinical practice (Ruggeri et al., 2013). Randomized controlled trials (RCTs) maximize internal validity to establish treatment efficacy in early stages of treatment development. RCTs are the gold-standard research design for the establishment of empirically-supported treatments, and they are the primary studies considered in the establishment of clinical practice guidelines. However, tight controls and strict inclusion and exclusion criteria employed in typical RCTs result in treatments that may not be as effective in real-world clinics (i.e., poor external validity; Kessler & Glasgow, 2011; Rothwell, 2005). Concerns about this “research to practice gap” (Kazdin, 2008) have led to novel research designs and approaches to speed the translation of empirically-supported psychological treatments into routine practice (Kessler & Glasgow, 2011; Meffert et al., 2016; Peek et al., 2014).

Although not a type of study design per se, a “pragmatic” trial approach may allow for identification of actionable, real-world data leading to better and more rapid health service delivery and outcomes (Kessler & Glasgow, 2011). Notably, any number of research designs can have a “pragmatic attitude,” meaning the study addresses clinical translation by focusing on informing clinical care, health services delivery and health policies (Treweek & Zwarenstein, 2009). For example, in the field of implementation science, different “hybrid” study designs have emerged that allow for simultaneous examination of intervention and implementation strategy effectiveness (e.g., Curran et al., 2012). Use of any of these hybrid designs may be considered pragmatic.

Pragmatic trials differ from typical RCTs—explanatory trials—in that they eschew explaining or studying efficacy. Pragmatic and explanatory trials are best considered as two ends of the same research continuum (Treweek & Zwarenstein, 2009). In contrast to explanatory trials, pragmatic trials focus on gathering data under “usual” conditions so ineffective therapies can be quickly abandoned and effective therapies can be rapidly applied in the real world (Schwartz & Lellouch, 1967). Since studies that are more pragmatic have more immediate applicability to clinical practice (Schwartz & Lellouch, 1967; Treweek & Zwarenstein, 2009), pragmatic trials are well-suited to primary care settings and are critical for guiding evidence-based practice (Kessler & Glasgow, 2011).

Primary care settings have increasingly integrated licensed behavioral health providers to assist in provision of comprehensive healthcare. The Primary Care Behavioral Health (PCBH) model of integration was established more than 20 years ago and is “a team-based primary care approach to managing behavioral health problems and biopsychosocially influenced health conditions” (Reiter et al., 2018). The PCBH model involves behavioral health consultants (BHCs) as core members of the primary care team (e.g., psychologists, licensed clinical social workers, marriage and family therapists; Reiter et al., 2018; Robinson & Reiter, 2016; Strosahl, 1998). BHCs deliver treatments with RCT-derived evidence, adapted for use in this setting. Effectiveness research studies have found that BHCs are able to help patients improve their functioning and add value to the primary care team (Hunter et al., 2018a; Vogel et al., 2017). Many such effectiveness studies may be considered “pragmatic,” although they might not overtly describe themselves as such. Unfortunately, one criticism of the literature on the PCBH model is that little rigorous research has been conducted demonstrating its effectiveness, although conducting traditional RCTs within primary care settings is challenging (Hunter et al., 2018a).

A pragmatic approach may be optimal for studying the effectiveness of BHCs in primary care settings, but there is little specific guidance and there are few examples available to inform the development of these trials. Therefore, this paper will focus on the design and methods of a pragmatic trial of manualized brief cognitive behavioral therapy for chronic pain (BCBT-CP; Beehler, Dobmeyer, Hunter, & Funderburk, 2018; Beehler et al., 2019) delivered by a BHC operating within the integrated PCBH model using the PRagmatic-Explanatory Continuum Indicator Summary (PRECIS-2) tool (Loudon et al., 2015). There are many tools available to evaluate the implementation elements of a trial (e.g., RE-AIM evaluation framework, Gaglio et al., 2013; Glasgow et al., 1999), and they are not mutually exclusive. We chose to focus on our use of the PRECIS-2 tool because we found it to be the most comprehensive in guiding development and design of our study protocol. Specific considerations and choice points are discussed using a case example incorporating our experiences conducting the first phase of a large, multisite, pragmatic trial titled “Targeting Chronic Pain in Primary Care Settings Using Behavioral Health Consultants” (Goodie, Kanzler et al., 2020).

Choice Points

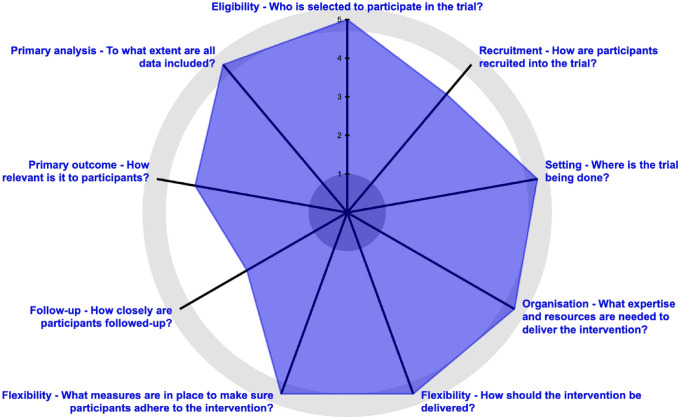

The PRECIS-2 tool was developed to assist researchers in making study design decisions (Loudon et al., 2015; tool available at http://www.precis-2.org). The PRECIS-2 consists of nine different domains that should be considered when developing a clinical trial, each of which is scored on pragmatism (Loudon et al., 2015). The PRECIS-2 can be used by trialists who utilize diverse research designs. Trialists can iteratively rate how explanatory or pragmatic their study design is across these domains: eligibility, recruitment, setting, organization, flexibility (delivery), flexibility (adherence), follow-up, primary outcome, and primary analysis. The domains are rated from 1 (very explanatory) to 5 (very pragmatic). See Fig. 1 for the wheel summarizing our pilot study ratings in each of the domains. The PRECIS-2 tool helps researchers clarify the purpose of their trial and align their goals and design choices; it has been recommended for use in integrated primary care research (Hunter et al., 2018a). Thus, it is an ideal framework from which to explore our study choice points and challenges.

Fig. 1. Our study ratings on the domains presented in the PRagmatic-Explanatory Continuum Indicator Summary (PRECIS-2) wheel (Loudon et al., 2015; tool available at www.precis-2.org)

Each of the domains described next is part of the PRECIS-2 tool (Loudon et al., 2015) and is explored in the context of the first phase of our pragmatic trial on behavioral health consultants treating chronic pain in primary care (Goodie, Kanzler et al., 2021). Our study took place in the context of the Military Health System (MHS) within the US Department of Defense (DoD), which serves active duty military service members, military retirees, and their families. We conducted a single-site open trial with two aims: (1) to test feasibility and acceptability of the BCBT-CP component of the Military Health System Stepped Care Model for Pain pathway (Defense Health Agency, 2018); and (2) to examine the effectiveness of BCBT-CP delivered by a BHC. Our study design may be best described as a hybrid effectiveness-implementation trial (type 2), since we sought to examine both effectiveness of the BCBT-CP intervention and feasibility and utility of our method of implementation (Curran et al., 2012). During this first, pilot stage of the trial, identification of challenges and important decisions was critical, since choices made in this phase were going to guide our next multisite trial phase. This study was reviewed for compliance with federal and DoD codes for the conduct of human subjects research by the University of Texas Health Science Center at San Antonio (UTHSCSA) Institutional Review Board. The Uniformed Services University and the Carl R. Darnall Army Medical Center deferred their review to UTHSCSA.

Domain 1: Eligibility Criteria. To What Extent are the Participants in the Trial Similar to Those Who Would Receive This Intervention if it was Part of Usual Care?

We wanted the results of our study to be applicable to typical patients being seen in the primary care clinics. Therefore, our inclusion criteria needed to be broad and our exclusion criteria minimal. Our study examined a new approach to chronic pain care in military primary care clinics whereby patients with chronic pain are referred to BHCs to obtain BCBT-CP (Goodie, Kanzler et al., 2020). We included all patients who would normally receive BCBT-CP.

Our exclusion criteria were based on the same criteria a BHC would consider when deciding upon usual care (i.e., deciding whether this patient is a good candidate for receiving BCBT-CP), including the following: presence of symptoms of psychosis and/or delirium, a medical condition or life circumstance that would contraindicate or prevent BCBT-CP (e.g., a scheduled surgery), and inability to comprehend the study instructions. The choice to minimize exclusion to mirror clinical practice as much as possible meant that we enrolled patients into the study with more physical and mental health comorbidities, taking a greater number of medications, and experiencing other factors outside of the control built into explanatory trials; however, this choice ensured greater generalizability and scalability of findings. Eligibility criteria PRECIS score: 5.

Recommendations for Trialists in Determining Eligibility Criteria

We recommend that trialists conducting pragmatic trials in integrated primary care settings consider how their eligibility criteria will impact the ultimate goals of the trial. The PRECIS-2 model suggests that if the trial aims to explain the efficacy of a specific intervention or treatment in a particular, well-defined patient population, then choosing to have strict inclusion and broad exclusion criteria is appropriate. Employing an explanatory approach to eligibility has been criticized in the literature as being too strict to be relevant to stakeholders, such as clinicians and patients (Ford & Norrie, 2016; Kessler & Glasgow, 2011). Selecting study participants that are as similar as possible to typical primary care patients is a key consideration in designing a trial that will have more rapid applicability to primary care providers and patients.

Domain 2: Recruitment. How Much Extra Effort is Made to Recruit Participants Over and Above What Would be Used in the Usual Care Setting to Engage with Patients?

This domain presented notable challenges to our team, many of whom are more familiar with conducting RCT explanatory-type trials. There is a dynamic tension between wanting to recruit as many participants as possible and not interfering with usual clinical care.

We struggled with a number of options for participant recruitment and ultimately chose to support clinic personnel in their usual methods for identifying and linking appropriate patients with the BHC for treatment of pain. We were careful not to engage in extra efforts to recruit participants, such as advertising within or outside the clinic. While not wanting to offer incentives for participating in the research, we were interested in determining whether there were any objective changes in physical activity levels to be measured using an activity tracker not typically given to patients in routine care. Therefore, in a dual purpose of both an incentive and as a measure of physical activity, participants were offered the choice to (1) wear a commercial activity tracker provided by the study (Fitbit), (2) use their own (if they had one), or (3) decline to wear one. We also struggled with how much the presence in the clinic of our study staff for the purpose of conducting study activities, including recruitment and gathering research outcome data, might influence usual care. The primary care providers and staff were fully aware of why our project coordinator was in the clinic and knew about the research study. Based on qualitative feedback, we know that many primary care providers made concerted efforts to support the project. This included telling their patients about the study and introducing potential participants to the research coordinator to consider consenting into the study after seeing their BHC. The presence of the research coordinator and the intention of the primary care providers and BHC to assist in completing the project contributed to extra attention recruiting individuals to participate in the study. This was not viewed as coercive or as considerable “extra effort” beyond what would happen within the normal clinical setting. Recruitment PRECIS score: 4.

Recommendations for Trialists in the Consideration of Recruitment

It is critical for researchers in integrated care to consider the degree to which their efforts at recruiting participants may impact or deviate from usual care. In our study, we examined a new standard of care, so very little extra effort was needed by providers and participants. Other studies, which are introducing assignment to or choices between interventions, may need to do more to facilitate recruitment. However, to maximize the pragmatic nature of a study, it is important to reduce efforts that may unduly influence participation in the treatment or processes being studied.

Domain 3: Setting. How Different are the Settings of the Trial from the Usual Care Setting?

The goal of our study was to examine an intervention delivered by a BHC in an existing Primary Care Behavioral Health program; therefore, we sought a clinic that met such criteria. However, there were many details to consider. We wanted our pilot phase site to resemble other military primary care clinics that would be invited to participate in the next multisite phase of the study, and we wanted to ensure that our findings would be broadly applicable to other military primary care clinics. Informed by PRECIS-2 recommendations (Loudon et al., 2015), we had to consider many factors in selecting the study site for our pilot, such as geographic location of the clinic, size of the clinic, affiliated military branch (i.e., Army, Air Force, or Navy), and clinic population.

In the pilot phase, we were most concerned with developing methods and identifying challenges and solutions in advance of our larger trial. We considered that if our pilot was carried out in an unusually large or small clinic, we might draw conclusions about the pain intervention, the study protocol, or research methods that were inaccurate. Ultimately, we were able to conduct the pilot in a moderately sized clinic that had features we hoped would help us prepare well for the next phase of the study. Setting PRECIS score: 5.

Recommendations for Trialists in the Consideration of Setting

Researchers studying aspects of integrated primary care may face significant limitations in the selection of their clinical research sites, particularly for smaller studies with little or no funding. It is important to consider the impact of the setting when interpreting findings, acknowledging how results could vary in different settings. Integrated primary care happens across a great diversity of clinic types (Robinson & Reiter, 2016), including different medical specialties (e.g., family medicine, obstetrics and gynecology, internal medicine, and pediatrics), BHC disciplines (e.g., psychologists, licensed clinical social workers, licensed marriage and family therapists, licensed counselors), healthcare system types [e.g., Military Health System, Federally Qualified Health Center, US Department of Veterans Affairs (VA), residency training site] and geographic and regional settings (e.g., urban vs rural). Finding a clinic setting that is as similar as possible to the types of clinics where your results are likely to be applied is critical.

Domain 4: Organization. How Different are the Resources, Provider Expertise, and the Organization of Care Delivery in the Intervention Arm of the Trial from Those Available in Usual Care?

We struggled with this domain. In particular, we questioned whether we should (1) hire another BHC to work at the study site, (2) require a certain level of BHC proficiency for participation, and/or (3) deliver more extensive or targeted training in the delivery of care using BCBT-CP. Manipulating any of these variables would have reduced the pragmatic goals of the study.

Grant-funded explanatory clinical studies that our team has previously conducted often included provision for the external funding of an additional interventionist who delivered the treatment under investigation. We had many discussions about the appropriateness of adding, for example, a postdoctoral fellow who could serve as a BHC in the study site. When conducting research in a clinical setting where none of the researchers already practice, we view being able to “give back” to the clinic as an important part of building relationships and stakeholder engagement. Supplying additional staffing could be seen as valuable to the clinic; thus, it could increase the likelihood of the success of the study and engender greater support for the pilot study and the next larger trial. However, adding a resource of this level to the clinic builds clinical capacity that might not be sustainable once the study ended and would definitely be a nontrivial departure from usual care.

We also considered whether to ensure the BHC had a certain level of expertise or experience; if they had more than a typical BHC, then perhaps their outcomes would be better than those of a BHC with less expertise or experience. In this case our findings would apply only to other experienced BHCs. In contrast, if we engaged a less experienced BHC in the study, we might risk our ability to draw accurate conclusions about effectiveness of the behavioral intervention and/or the implementation methods.

As part of the roll-out of the new DoD Stepped Care Model for Pain pathway, all BHCs across the MHS are trained to deliver BCBT-CP in a standardized manner (Defense Health Agency, 2018; Goodie et al., 2018). Other primary care providers working in these clinics will also be trained in the pathway. The training across the Defense Health Agency (DHA) will use both in-person and virtual delivery methods (for more details, see protocol paper, Goodie, Kanzler et al., 2020). We had concerns about whether to enhance the standard training to ensure its effectiveness for our study site, but we opted not to monitor training or provide additional training.

Ultimately, we chose not to add a BHC with study resources for our pilot study, and we chose to work with an early-career licensed clinical social worker with moderate experience as a BHC. We sought balance so that our findings would be more generalizable to other BHCs. Although we did not augment training with additional resources, we obtained feedback about the quality and quantity of the training experience. Organization PRECIS score: 5.

Recommendations for Trialists in the Consideration of Organization

Researchers in integrated primary care settings must carefully consider the ways research procedures could intentionally or unintentionally affect the organization under study. We recommend that pragmatic trialists study organic staff providing care. If additional research-funded resources are provided to the clinic (e.g., training, staffing, or otherwise), consider whether these resources will be available to other usual integrated primary care clinics and available after the study is completed.

Domain 5: Flexibility (Delivery). How Different is the Flexibility in How the Intervention is Delivered and the Flexibility Anticipated in Usual Care?

A highly pragmatic approach to delivery of care would mean our intervention should be as close as possible to usual care. The BHC would have flexibility in how to approach care, making decisions regardless of the research study. The DHA, in collaboration with the VA Center for Integrated Healthcare, adapted the BCBT-CP protocol used in the VA’s integrated primary care program (Beehler, Dobmeyer, Hunter, & Funderburk, 2018). There are seven BCBT-CP treatment “modules” that BHCs can deliver flexibly, so we did not need to consider protocol development for this study. The DHA BCBT-CP protocol recommends that BHCs encourage patients to complete a minimum of three modules; however, patients may receive all seven modules if indicated, depending on the individual and primary care team decisions (Beehler et al., 2018; Hunter et al., 2018b). Standard Primary Care Behavioral Health practice is flexible (Robinson & Reiter, 2016); some patients may be seen more frequently (e.g., weekly), while others might be seen less frequently (e.g., monthly). This variability in treatment scheduling presented a significant challenge in identifying treatment adherence and treatment completion. Although modular treatments allow for easy tailoring of treatments to individual patients, they present a significant challenge to defining the “dose” of treatment. Given that 3–7 visits were recommended for each patient, yet one is the modal number of visits in usual BHC practice (Haack et al., 2020), when should we conduct our post- and follow-up assessments? This was not a typical trial with standardized, specific intervention delivery methods, doses, and scheduled assessments. The trialists and regulatory staff on our team frequently raised questions: How can we know when treatment is done? What if we do a posttreatment assessment after five visits, but then more visits are received after that? What if some people received weekly treatment and others received monthly?

Those of us who were practicing BHCs raised other points: When is treatment in primary care ever “done?” Can treatment of chronic pain be considered complete when the patient continues to be seen by other members of the primary care team? Episodes of care are highly variable in practice and may be interrupted, restarted, or ended unpredictably. What if the BHC initially delivers BCBT-CP and then switches gears due to the needs of the patient or changes in the overall treatment plan? The uniqueness of integrated care and the tension between explanatory and pragmatic goals was evident in these discussions.

We did not make any adaptations to the DHA-specified treatment modules or delivery methods since the goal was simply to monitor the implementation of a clinical approach developed by the leadership of a very large healthcare system. We decided to track what interventions were provided and conduct chart reviews to measure fidelity. We agreed not to provide feedback or corrective action if there was nonadherence; instead, such observations would inform us that there were problems with some aspect of implementation (e.g., the training or manual). Regarding timing of assessments, we opted not to base this on number of visits, due to potentially great variability across patients. Considering that the 3–7 visits should be scheduled approximately every 2 weeks, we instead chose a time-based method, conducting assessments 3 and 6 months following the first visit. In this pilot study, we also chose to monitor clinical (non-research) outcomes for patients who continued their episode of care with the BHC through review of the electronic medical record. Flexibility (Delivery) PRECIS score: 5.
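The time-based assessment rule described above can be illustrated with a minimal sketch. It assumes that "3 and 6 months" means calendar months counted from the first BHC visit, with the day clamped to the end of shorter months; the function names `add_months` and `assessment_schedule` are hypothetical and are not part of the study protocol.

```python
import calendar
from datetime import date
from typing import Dict, Tuple


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    Assumption: if the target month is shorter than the start day
    (e.g., the 31st), the result is clamped to that month's last day.
    """
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def assessment_schedule(
    first_visit: date, months_after: Tuple[int, ...] = (3, 6)
) -> Dict[int, date]:
    """Map each follow-up offset (in months) to its target assessment date.

    The schedule depends only on the first visit date, not on how many
    visits the patient ultimately attends.
    """
    return {m: add_months(first_visit, m) for m in months_after}


if __name__ == "__main__":
    # Hypothetical first visit date, for illustration only.
    for offset, due in assessment_schedule(date(2020, 1, 15)).items():
        print(f"{offset}-month assessment due on {due.isoformat()}")
```

Because the schedule is anchored to the first visit alone, a patient seen once and a patient seen seven times would be assessed at the same points, which is the property that made the time-based method attractive given variable episodes of care.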

Recommendations for Trialists in the Consideration of Flexibility of Delivery

Researchers seeking to study an intervention in integrated primary care settings are encouraged to consider if any of the study-related treatment delivery interferes with or is distinct from usual BHC care. A more explanatory study would standardize the treatment protocol and the dose and frequency of the intervention. Although such parameters will contribute to improved internal validity, findings will have limitations when implemented in usual integrated primary care practice. To speed implementation and uptake of findings, we encourage a more pragmatic approach to the delivery of BHC interventions, taking care to monitor differences across BHC practice and to consider how these variances may be affecting treatment response.

Domain 6: Flexibility (Adherence). What Measures are in Place to Make Sure Participants Adhere to the Intervention?

An explanatory trial requires close monitoring of behavior change plans and intervention adherence. For example, we could have a study staff member make between-BHC visit calls to check on and encourage patients to do their homework, or we could train the study site BHC in additional motivation-enhancement techniques. However, in typical Primary Care Behavioral Health practice, the type of intervention is left up to the BHC and primary care team; appropriately, the BCBT-CP protocol allows for much flexibility in this area. Our team did not want to interfere with that process, even though taking steps to ensure adherence could improve rigor of the study.

No study staff “encouraged” patients to be adherent, and the BHC provided usual care for anybody who struggled with behavior changes. However, in a pilot study, it was important for us to know if patients were adherent to certain modules and assignments. We chose to monitor these outcomes without intervening or “dropping” any nonadherent outliers. Our study coordinator did engage in additional contact with participants to conduct assessments. While these contacts were not designed to increase use of the skills taught by the BHC, simply being contacted may have served as a prompt to use the learned pain management skills. Flexibility of Adherence PRECIS score: 4.

Recommendations for Trialists in the Consideration of Flexibility of Adherence

The degree to which researchers influence adherence is an important question in integrated primary care settings. Nearly all interventions provided in this context include a self-management component which may include “homework” or some kind of new behavior between visits (Hunter et al., 2017). Knowing whether participants are adherent is important, but designing additional prompts, interventions, or encouragements would be outside the norm of routine care and could be difficult to scale up, if effective.

Domain 7: Follow-up. How Closely are Participants Followed up?

Like most researchers, our team was interested in collecting follow-up data for analysis. Collecting follow-up data can be challenging as patients have completed their course of care and may not be motivated to complete follow-up assessments. Good Primary Care Behavioral Health practice necessitates gathering routine clinical outcomes (Robinson & Reiter, 2016), but our team was interested in more than routine clinical data. We struggled with decisions about length of assessment batteries, number/frequency/duration of assessment visits, and length of time for follow-up after the episode of care concluded.

We tried to balance the need for meaningful data with a pragmatic assessment approach. Our stakeholders representing the DHA leadership were interested in understanding the impact of this clinical approach. Therefore, it was important for us to systematically gather more than routine clinical outcome data. We included research assessments at all BCBT-CP visits (i.e., 2–7 appointments scheduled approximately 1–2 weeks apart) and during follow-up at 3 and 6 months following their initial appointment with the BHC. Gathering data from activity trackers also introduced additional research steps that typical patients would not need to take, such as regularly opening an app to “refresh” the data. However, based on anecdotal data, technology is increasingly incorporated into BHC visits as a tool to improve motivation or track outcomes (e.g., mindfulness apps, sleep trackers). Follow-Up PRECIS score: 3.

Recommendations for Trialists in the Consideration of Follow-up

Intensity, frequency, and duration of assessments and length of time for follow-up are critical considerations for would-be pragmatic trialists. In most pragmatic trials, all of the data are collected during the course of normal clinical care. However, within primary care settings, there is little time for self-report measures. As such, outside of the primary outcomes (e.g., improved functioning), it would be difficult to understand the factors that contributed to the primary outcomes without allowing for more intensive assessment periods. In our study, we wanted to know whether other factors (e.g., sleep, physical activity, perceptions of disability) associated with chronic pain were also changing, beyond pain intensity and functioning. By adding these measures and additional assessment periods, we informed not only ourselves, but also the DHA stakeholders who were implementing the chronic pain pathway.

Domain 8: Primary Outcome. How Relevant is it to Participants?

Because our team was interested in effectiveness of BHC-delivered care, we had choice points about our primary outcome. We wanted to select outcomes that would be relevant to our stakeholders, which include DHA leadership (e.g., policymakers, stakeholders), hospital leadership, BHCs, and patients. There are two aspects to consider when selecting primary outcomes (Loudon et al., 2015): (1) Are the outcomes meaningful to participants? (2) Is the way we measure the outcome similar to usual care?

Our first aim was to test feasibility and acceptability of the BCBT-CP treatment approach. As we developed this aim, we considered the various options for measuring “feasibility and acceptability.” We wanted to know how the treatment delivered by the BHC was perceived by all stakeholders. So in this case, any outcomes we selected would inherently be relevant to them.

Our second aim was to examine the effectiveness of BHCs delivering care consistent with the DHA BCBT-CP training. There were many options for examining effectiveness; some could be more explanatory (e.g., number of steps per day based on activity data), while other options were more pragmatic (e.g., report of pain levels on routinely administered pain measures).

For the first aim, we decided to gather qualitative data in provider and patient focus groups conducted following care delivery. We developed a semistructured interview designed to assess the usability, ease of use, perceived effectiveness, helpfulness, and barriers to implementing the chronic pain intervention. Additionally, we included a standardized measure of satisfaction and treatment helpfulness, using a modified Treatment Helpfulness Questionnaire (THQ; Chapman et al., 1996), which rates the value of different pain-related BCBT-CP components on a scale from -5 (very harmful) to +5 (very helpful).

To address effectiveness in Aim 2, we selected many outcome measures. Some of these went beyond the course of usual care and may not have been meaningful to patients, which brought our PRECIS-2 score down. We wanted a comprehensive battery for the pilot phase to determine which measures would be the most informative for the larger pragmatic randomized clinical trial. One of the outcomes, physical activity, was very relevant to patient stakeholders but not necessarily to their providers or the medical system. We found that the Defense and Veterans Pain Rating Scale (DVPRS; Buckenmaier et al., 2013) and the Pain Intensity, Enjoyment and General Activity measure (PEG-3; Krebs et al., 2009) were the two most relevant outcomes. The PEG-3 is often used by BHCs, and the DVPRS is administered routinely as part of clinical care. Both of these scales measure potential changes in pain severity, as well as any changes in the impact of pain on daily living. Primary outcome PRECIS score: 4.
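For readers less familiar with the PEG-3, the following minimal sketch shows how a single administration is commonly scored. It assumes the scoring described by Krebs et al. (2009), in which three items (pain intensity, interference with enjoyment of life, interference with general activity) are each rated from 0 to 10 and averaged. The function name, argument names, and example ratings are hypothetical and are not taken from the study.

```python
def peg_score(pain, enjoyment, general_activity):
    """Average the three PEG items (each assumed to be rated 0-10)."""
    items = (pain, enjoyment, general_activity)
    for value in items:
        if not 0 <= value <= 10:
            raise ValueError("each PEG item is expected to fall between 0 and 10")
    return sum(items) / len(items)


# Hypothetical ratings for one patient visit (illustrative only).
example_score = peg_score(pain=6, enjoyment=7, general_activity=5)
```

Because the score is a simple mean of three ratings, it can be computed during a routine visit without additional tooling, which is part of what makes such a measure compatible with usual care.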

Recommendations for Trialists in the Consideration of Outcomes

Future integrated primary care researchers must consider if their selected measures are consistent with what is important to “participants,” broadly defined. Which outcomes matter most to patients, BHCs, primary care providers, administrators, and policymakers? Ideally, primary outcomes will be relevant to all stakeholders, because not only should patients find the outcome meaningful, but those who are responsible for implementing the treatment approach in primary care also should value the patient outcomes. Having patients and providers both satisfied with the results of their efforts will help ensure uptake and sustainability (Loudon et al., 2015). For example, studying a new clinical pathway to improve diabetes outcomes may require tracking glycated hemoglobin (HbA1c), which is not always relevant to patients; a compromise may be to include this objective lab work—which is part of routine care—as an outcome, while also measuring participants’ subjective experiences of functioning or wellness.

Domain 9: Primary Analysis. To What Extent are All Data Included?

A fully pragmatic approach would be to include all participants in an “intent-to-treat” (ITT) approach (Loudon et al., 2015). An ITT analytic strategy is also recommended for fully explanatory RCTs that are designed to establish the efficacy and safety of an intervention (Lewis & Machin, 1993). Our team did not struggle much with this question, as it was our acknowledged intent to follow an ITT analytic strategy.

We chose, a priori, not to exclude any participants from the study, even if they dropped out before completing their course of care or were outliers. Our inferential analytic plan was to conduct repeated-measures t tests to determine whether there was a statistically significant difference between posttreatment and pretreatment scores on the PEG-3 and DVPRS. Primary analysis PRECIS score: 5.
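As an illustration of the repeated-measures (paired) t test named above, the sketch below computes the t statistic and degrees of freedom from paired pretreatment and posttreatment scores. It is a minimal example under stated assumptions, not the study's analysis code: the function name and the scores are hypothetical, and it uses only participants who have both scores. How missing posttreatment scores are handled under an ITT strategy is a separate decision that is not shown here.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (t, df) for a paired t test on the differences post - pre."""
    if len(pre) != len(post):
        raise ValueError("pre and post need one score per participant")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two paired observations are required")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical PEG-3 style scores for six participants (illustrative only).
pre_scores = [7, 6, 8, 5, 7, 6]
post_scores = [5, 5, 6, 5, 4, 5]
t_stat, df = paired_t(pre_scores, post_scores)
```

A negative t here would indicate lower posttreatment scores. To obtain a p-value, the statistic can be referred to a t distribution with the returned degrees of freedom; a statistical library (for example, `scipy.stats.ttest_rel`) would typically be used for that step.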

Recommendations for Trialists in the Consideration of Primary Analysis

Researchers of integrated behavioral health in primary care must consider whether to include all participants in their analyses. Selecting only participants who complete their course of care or were fully adherent, or including only BHCs who adhered closely to the protocol, makes the study less pragmatic and ultimately less applicable to other integrated primary care clinics. While there may be appropriate times to exclude data, we generally recommend ITT analyses for studies conducted in integrated primary care settings.
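The difference between an ITT sample and a completers-only sample can be sketched as a simple selection step. The example below is illustrative only: the `Participant` record, the `completed_course` flag, and the participant identifiers are hypothetical and do not describe the study's data.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    pid: str
    completed_course: bool  # hypothetical flag, not a study variable


def analysis_sample(participants, strategy="itt"):
    """Select who enters the analysis under the named strategy."""
    if strategy == "itt":
        # Intent-to-treat: everyone enrolled is analyzed.
        return list(participants)
    if strategy == "completers":
        # Less pragmatic: only those who finished their course of care.
        return [p for p in participants if p.completed_course]
    raise ValueError(f"unknown strategy: {strategy}")


# Hypothetical enrollment list (illustrative only).
enrolled = [
    Participant("P01", True),
    Participant("P02", False),
    Participant("P03", True),
    Participant("P04", False),
]
itt = analysis_sample(enrolled, "itt")
completers = analysis_sample(enrolled, "completers")
```

In this toy example the completers-only sample drops half of those enrolled, which shows how such a restriction can leave an analysis that no longer reflects the patients a clinic actually sees.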

Discussion and Conclusions

Pragmatic trials are well suited to primary care environments and help inform the uptake of evidence-based treatments within these health care settings. Pragmatic trials require researchers to balance internal and external validity questions. The PRECIS-2 tool may be useful in helping teams make research design decisions. Although there are many tools and frameworks available for use in the conduct of translational behavioral science, we found the PRECIS-2 tool to be the most helpful in aiding our team in making thoughtful decisions across a range of domains. At times we chose to be less pragmatic, because we believed it was necessary for conducting meaningful research within the context of primary care.

The PRECIS-2 tool is not without limitations, however. For example, its domains do not assess other important elements of implementation research, such as adoption over time and long-term maintenance of intervention outcomes (e.g., as in the RE-AIM framework; Glasgow et al., 1999). However, we do plan to study these important aspects in our upcoming multi-site trial using additional implementation science evaluation instruments. There are also questions regarding the effects of using the PRECIS-2 tool. It is unknown at this time whether trials rated more pragmatic on PRECIS-2 dimensions are actually more likely to be acceptable, or whether the interventions/programs tested prove to be more scalable to other primary care settings. More research is needed to understand the potential impact of the PRECIS-2 tool on effectiveness and implementation outcomes. We also do not know whether experiences and results from our pilot will generalize to other sites. With the addition of multiple sites in our next study, PRECIS-2 scores may vary based on location. For example, the way one clinic responds to providing care in the context of COVID-19 (e.g., increases in telehealth visits) may differ from the way another clinic responds. Differences in the way care is delivered may complicate the ability to draw conclusions regarding the effectiveness of BCBT-CP, so we plan to carefully track how this care is delivered (e.g., in-person vs. telehealth). We adopted an approach that maximized pragmatic methodology in several domains (e.g., recruitment, treatment implementation), which limits our ability to explain systematic change in symptoms as a function of treatment (because we are not manipulating treatment). However, an emphasis on pragmatic methodology will allow for more certainty about the transition of treatment into the clinical environment.

Based on our experiences, we recommend the PRECIS-2 tool be considered by researchers conducting investigations in primary care, regardless of study design or use of additional implementation science framework, and hope the choice points highlighted in this paper will serve as a model for future studies. Future research in integrated primary care must be relevant to its intended audience, and a pragmatic approach paves the way for rapid uptake of innovations that ultimately can improve patients’ lives.

Acknowledgements

We would like to thank Paul Fowler, Lisanne Gross, Nicole Hawkins, Kevin Vowles, Jennifer Bell, and the South Texas Research Organizational Network Guiding Studies on Trauma and Resilience (STRONG STAR Consortium) team.

Author contributions

KEK and JLG developed the article idea; KEK, DDM, CM, and JLG performed literature reviews; KEK, JLG, DDM, CM, AEB, SY-M, and ALP drafted the manuscript; CJB, BAC, ACD, CLH, AB, and JAB provided critical revisions.

Funding

The U.S. Army Medical Research Acquisition Activity, 820 Chandler Street, Fort Detrick MD 21702–5014 is the awarding and administering acquisition office. This work was supported by the Assistant Secretary of Defense for Health Affairs endorsed by the Department of Defense, through the Pain Management Collaboratory—Pragmatic Clinical Trials Demonstration Projects under Award No. W81XWH-18-2-0008. Opinions, interpretations, conclusions and recommendations are those of the authors and do not reflect an endorsement by or the official policy or position of the US Army, the Department of Defense, the Department of Health and Human Services, the Department of Veterans Affairs, or the US Government. Additional research support for this publication was provided by the National Center for Complementary and Integrative Health of the National Institutes of Health under Award Number U24AT009769 and the National Institute of Diabetes and Digestive and Kidney Diseases of the National Institutes of Health under Award Number K23DK123398. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Declarations

Conflict of interest

Kathryn E. Kanzler, Donald D. McGeary, Cindy McGeary, Abby E. Blankenship, Stacey Young-McCaughan, Alan L. Peterson, J. Christine Buhrer, Briana A. Cobos, Anne C. Dobmeyer, Christopher L. Hunter, Aditya Bhagwat, John A. Blue Star, and Jeffrey L. Goodie declare that they have no conflict of interest.

Informed Consent

All study participants provided informed consent.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Balas EA, Boren SA. Managing clinical knowledge for health care improvement. Yearbook of Medical Informatics. 2000;9(1):65–70. doi: 10.1055/s-0038-1637943.

- Beehler GP, Dobmeyer AC, Hunter CL, Funderburk JS. Brief cognitive behavioral therapy for chronic pain: BHC manual. Defense Health Agency; 2018.

- Beehler GP, Murphy JL, King PR, Dollar KM, Kearney LK, Haslam A, Wade M, Goldstein WR. Brief cognitive behavioral therapy for chronic pain. The Clinical Journal of Pain. 2019;35(10):809–817. doi: 10.1097/AJP.0000000000000747.

- Buckenmaier CC, III, Galloway KT, Polomano RC, McDuffie M, Kwon N, Gallagher RM. Preliminary validation of the defense and veterans pain rating scale (DVPRS) in a military population. Pain Medicine. 2013;14(1):110–123. doi: 10.1111/j.1526-4637.2012.01516.x.

- Chapman SL, Jamison RN, Sanders SH. Treatment helpfulness questionnaire: A measure of patient satisfaction with treatment modalities provided in chronic pain management programs. Pain. 1996;68(2):349–361. doi: 10.1016/S0304-3959(96)03217-4.

- Colditz GA, Emmons KM. The promise and challenges of dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: Translating science into practice. 2nd ed. Oxford University Press; 2018. pp. 1–18.

- Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care. 2012;50(3):217. doi: 10.1097/MLR.0b013e3182408812.

- Defense Health Agency (2018, June 8). Pain management and opioid safety in the Military Health System (MHS) (Procedural Instruction No. 6025.04). Retrieved May 13, 2020 from Military Health System website: https://health.mil/Reference-Center/Policies/2018/06/08/DHA-PI-6025-04-Pain-Management-and-Opioid-Safety-in-the-MHS

- Ford I, Norrie J. Pragmatic trials. New England Journal of Medicine. 2016;375(5):454–463. doi: 10.1056/NEJMra1510059.

- Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: A systematic review of use over time. American Journal of Public Health. 2013;103(6):e38–e46. doi: 10.2105/AJPH.2013.301299.

- Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health. 1999;89(9):1322–1327. doi: 10.2105/AJPH.89.9.1322.

- Goodie J, McGeary D, Kanzler K, Hunter C. Using pragmatic trial designs to examine IBHC interventions for chronic pain in primary care. Annals of Behavioral Medicine. 2018;52(Suppl 1):S188. doi: 10.1093/abm/kay013.

- Goodie JL, Kanzler KE, McGeary C, Blankenship AE, Young-McCaughan S, Peterson A, McGeary D. Targeting chronic pain in primary care settings using behavioral health consultants: Methods of a randomized pragmatic trial. Pain Medicine. 2020;21(Suppl_2):S83–S90. doi: 10.1093/pm/pnaa346.

- Goodie JL, Kanzler KE, McGeary C, Blankenship AE, Young-McCaughan S, Peterson A, McGeary D. Targeting chronic pain in primary care settings using behavioral health consultants: Findings from a pragmatic pilot study. Manuscript in preparation; 2021.

- Haack S, Erickson JM, Iles-Shih M, Ratzliff A. Integration of primary care and behavioral health. In: Levin BL, Hinson A, editors. Foundations of behavioral health. Springer; 2020. pp. 273–300.

- Hunter C, Goodie J, Oordt M, Dobmeyer A. Integrated behavioral health in primary care: Step-by-step guidance for assessment and intervention. American Psychological Association; 2017.

- Hunter CL, Funderburk JS, Polaha J, Bauman D, Goodie JL, Hunter CM. Primary care behavioral health (PCBH) model research: Current state of the science and a call to action. Journal of Clinical Psychology in Medical Settings. 2018;25(2):127–156. doi: 10.1007/s10880-017-9512-0.

- Hunter CL, Kanzler KE, Albert S, Robinson P. Extending our reach: Using pathways to address persistent pain. Annals of Behavioral Medicine. 2018;52(Suppl 1):S427. doi: 10.1093/abm/kay013.

- Kazdin AE. Evidence-based treatment and practice: New opportunities to bridge clinical research and practice, enhance the knowledge base, and improve patient care. American Psychologist. 2008;63(3):146–159. doi: 10.1037/0003-066X.63.3.146.

- Kessler R, Glasgow RE. A proposal to speed translation of healthcare research into practice: Dramatic change is needed. American Journal of Preventive Medicine. 2011;40(6):637–644. doi: 10.1016/j.amepre.2011.02.023.

- Krebs EE, Lorenz KA, Bair MJ, Damush TM, Wu J, Sutherland JM, Asch SM, Kroenke K. Development and initial validation of the PEG, a three-item scale assessing pain intensity and interference. Journal of General Internal Medicine. 2009;24(6):733–738. doi: 10.1007/s11606-009-0981-1.

- Lewis J, Machin D. Intention to treat—who should use ITT? British Journal of Cancer. 1993;68(4):647–650. doi: 10.1038/bjc.1993.402.

- Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M. The PRECIS-2 tool: Designing trials that are fit for purpose. British Medical Journal. 2015;350:h2147. doi: 10.1136/bmj.h2147.

- Meffert SM, Neylan TC, Chambers DA, Verdeli H. Novel implementation research designs for scaling up global mental health care: Overcoming translational challenges to address the world’s leading cause of disability. International Journal of Mental Health Systems. 2016;10:19. doi: 10.1186/s13033-016-0049-7.

- Morris ZS, Wooding S, Grant J. The answer is 17 years, what is the question: Understanding time lags in translational research. Journal of the Royal Society of Medicine. 2011;104(12):510–520. doi: 10.1258/jrsm.2011.110180.

- Peek CJ, Glasgow RE, Stange KC, Klesges LM, Purcell EP, Kessler RS. The 5 R’s: An emerging bold standard for conducting relevant research in a changing world. Annals of Family Medicine. 2014;12(5):447–455. doi: 10.1370/afm.1688.

- Reiter JT, Dobmeyer AC, Hunter CL. The primary care behavioral health (PCBH) model: An overview and operational definition. Journal of Clinical Psychology in Medical Settings. 2018;25(2):109–126. doi: 10.1007/s10880-017-9531-x.

- Robinson PJ, Reiter JT. Behavioral consultation and primary care: A guide to integrating services. 2nd ed. Springer; 2016.

- Rothwell PM. External validity of randomised controlled trials: “To whom do the results of this trial apply?”. The Lancet. 2005;365(9453):82–93. doi: 10.1016/s0140-6736(04)17670-8.

- Ruggeri M, Lasalvia A, Bonetto C. A new generation of pragmatic trials of psychosocial interventions is needed. Epidemiology and Psychiatric Sciences. 2013;22(2):111–117. doi: 10.1017/S2045796013000127.

- Schwartz D, Lellouch J. Explanatory and pragmatic attitudes in therapeutical trials. Journal of Chronic Diseases. 1967;20(8):637–648. doi: 10.1016/0021-9681(67)90041-0.

- Strosahl K. Integrating behavioral health and primary care services: The primary mental health care model. In: Blount A, editor. Integrated primary care: The future of medical and mental health collaboration. W. W. Norton & Co; 1998. pp. 139–166.

- Treweek S, Zwarenstein M. Making trials matter: Pragmatic and explanatory trials and the problem of applicability. Trials. 2009;10(1):37. doi: 10.1186/1745-6215-10-37.

- Vogel ME, Kanzler KE, Aikens JE, Goodie JL. Integration of behavioral health and primary care: Current knowledge and future directions. Journal of Behavioral Medicine. 2017;40(1):69–84. doi: 10.1007/s10865-016-9798-7.