Abstract

Learning to associate a positive or negative experience with an unrelated cue after the presentation of a reward or a punishment defines associative learning. The ability to form associative memories has been reported in animal species as complex as humans and as simple as insects and sea slugs. Associative memory has even been reported in tardigrades [1], species that diverged from other animal phyla 500 million years ago. Understanding the mechanisms of memory formation is a fundamental goal of neuroscience research. In this article, we work on resolving the current contradictions between different Drosophila associative memory circuit models and propose an updated version of the circuit model that predicts known memory behaviors that current models do not. Finally, we propose a model for how dopamine may function as a reward prediction error signal in Drosophila, a dopamine function that is well-established in mammals but not in insects [2, 3].

Introduction and Overview of the Current Models

The Mushroom Body Memory Center

The mushroom body (MB) neuropil is a compartmentalized higher brain structure that is necessary for most forms of associative memory in Drosophila [4–6]. Each hemibrain of the bilateral Drosophila central nervous system has ~2000 Kenyon cells (KCs) of 3 types: α′β′, αβ, and γ. KC axons bifurcate to form the MB neuropil. Each one of the 15 MB compartments is a convergence zone for axons of KCs, their presynaptic dopaminergic neurons (DANs), and their postsynaptic MB output neurons (MBONs), along with several types of other extrinsic neurons [7–9]. Twenty-one types of MBONs have been shown to drive either attraction or avoidance behavior when activated, and thus are categorized as either approach MBONs or avoidance MBONs [10, 11]. In Drosophila, the oldest and most commonly used associative memory paradigms pair an odor (Conditioned Stimulus; CS) with a punishment or a reward (Unconditioned Stimulus; US). This results in the formation of aversive [12, 13] or appetitive memory [14, 15] to the paired odor, respectively. The ability to investigate the workings of the Drosophila associative memory circuit has been greatly enhanced by the development of genetically encoded calcium indicators which have allowed the visualization of physical/functional changes that occur with memory formation [16]. These changes have been considered by the field to represent memory “engrams”. Engrams are physical instantiations of memory or memory traces. In Drosophila, changes in a neuron’s calcium response to the trained odor after pairing are taken to be an engram of the odor memory. Tracking these memory traces in the Drosophila brain has allowed the development of associative memory circuit models that predict the fly’s behavior after different types of training.

The Classical Model – Dopamine is the US Signal

What we refer to as the “classical” model of Drosophila olfactory associative memory is based on the schema explicated by Heisenberg in 2003 [4], with significant modifications catalyzed by the recent revolution in MB anatomy [7, 10]. In this model, odor information (CS) flows from the antennal olfactory receptor neurons to the projection neurons before being encoded in a random sparse group of KCs [17–22]. The US activates one of two dopaminergic clusters. Punishment activates PPL1s, which project mainly to the vertical lobes of the MB, while reward activates PAMs, which project mainly to the horizontal lobes [10] (although a few exceptions have been recently found, such as the PAM-γ3, which encodes punishment [23]). PPL1 and PAM neurons encode the valence of the US in the MB through activation of dD1R dopamine receptors [3, 8, 24–31]. The coincidence of KC activation by an odor and the activation of dD1Rs in an MB compartment is the gate for associative learning.

Memory is encoded by depression at synapses of odor-responding KCs onto a subset of their readout MBONs. CS + punishment training depresses the KC → approach MBONs synapses, achieving aversive learning, and CS + reward training depresses the KC → avoidance MBONs synapses, achieving appetitive learning [11, 32–39]. A cardinal characteristic of this model is that it posits an innate balance of KC synapses onto approach and avoidance MBONs. Learning occurs through biasing this balance by modulating the synaptic strengths of the sparse group of KCs activated by the CS onto approach and avoidance MBONs via a dopamine-dependent mechanism (Fig. 1).

Fig. 1.

The classical model of associative learning in Drosophila. A In a naïve fly, the response to an odor is neutral since odor responses in MBONs that drive approach (green) and MBONs that drive avoidance (red) are unaltered. B In aversive training, a punishment such as electrical shock (ES) activates PPL1s. The coincidence between the dopaminergic input to KCs and the activation of the same KCs by odor results in long-term depression (LTD) of the KC → approach.MBON synapse. In future encounters with the odor, the circuit is biased to the output of avoidance MBONs because approach MBON responses are suppressed. This results in aversive memory behavior. C In appetitive training, a reward such as sugar activates PAMs. Coincidence between the dopaminergic input and odor responses in KCs depresses KC → avoidance.MBON synapses resulting in a positively-biased response to the odor and appetitive memory. Green, approach-related synapse; red, avoidance-related synapse; dotted line, multisynaptic odor pathway from antennae to KC projections in the Mushroom Body (MB). MB is shown with the 3 different types of KCs: α′β′ (dark grey), αβ (light grey), and γ (black).
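The balance-and-bias logic of the classical model can be illustrated with a toy calculation. The following is a minimal sketch rather than a published implementation: the sparsity of the odor code, the size of the depression, and the function names are all assumptions chosen for illustration, and each MBON class is reduced to a single linear readout of KC activity.

```python
import numpy as np

N_KC = 2000        # KCs per hemibrain, as given in the text
SPARSITY = 0.05    # ASSUMPTION: fraction of KCs responding to one odor
LTD_FACTOR = 0.2   # ASSUMPTION: fraction of weight remaining after LTD


def make_odor(rng):
    """Random sparse KC activity pattern (1.0 = KC responds to the odor)."""
    return (rng.random(N_KC) < SPARSITY).astype(float)


def net_valence(odor, w_approach, w_avoid):
    """Approach MBON drive minus avoidance MBON drive for one odor."""
    return float(odor @ w_approach - odor @ w_avoid)


def depress(odor, weights):
    """LTD restricted to synapses of KCs that are active for the paired odor."""
    return np.where(odor > 0, weights * LTD_FACTOR, weights)


rng = np.random.default_rng(0)
odor_a, odor_b = make_odor(rng), make_odor(rng)

# Naive fly: balanced KC -> approach.MBON and KC -> avoidance.MBON weights.
w_approach = np.ones(N_KC)
w_avoid = np.ones(N_KC)
naive_a = net_valence(odor_a, w_approach, w_avoid)

# Aversive training: odor A coincides with PPL1 dopamine, which depresses
# the KC -> approach.MBON synapses of the odor-A KCs only.
w_approach_trained = depress(odor_a, w_approach)
trained_a = net_valence(odor_a, w_approach_trained, w_avoid)
trained_b = net_valence(odor_b, w_approach_trained, w_avoid)

print(naive_a, trained_a, trained_b)
```

In this sketch the paired odor acquires a negative net valence, while an unpaired odor is affected only through the few KCs it happens to share with the paired odor, which mirrors the odor specificity described in the text.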

This model is supported by the majority of the behavioral and imaging experiments that have been carried out in the MB: (a) aversive memory is formed after pairing the CS with activation of PPL1s and conversely, appetitive memory is formed if the CS is paired with activation of PAMs [24–26, 28, 36, 40]; (b) memory is specific to the CS temporally coupled with the activation of the DANs, while preference for unpaired odors does not change; (c) activation of DANs alone or adding a delay between the CS and DAN activation does not produce associative memory; (d) imaging studies report an enhancement engram (increased KC calcium response to the trained odor, but see [41]) and inhibiting the synaptic transmission from these KCs impairs memory recall [42–47], while inhibiting the dopamine signaling during training blocks the engram formation [10, 25]; (e) calcium responses to the CS are suppressed in approach MBONs after aversive training, and in avoidance MBONs after appetitive training, and silencing DANs during training blocks this suppression [11, 32, 38].

There are, however, a number of observations that have been made in behaving animals that are not completely explained by the general version of the classical model. For example, after forward training, in addition to the LTD of KC → MBONs, a potentiation of the responses of opposite valence MBONs to the paired odor has been reported [29, 48, 49]. In addition, flipping the order of the CS and the US presentations during training results in the formation of a memory that is opposite to the US valence [29, 50, 51], while presentation of the CS alone or the US alone after learning abolishes the formed memory [48, 52–54]. The current version of the classical model fails to explain these findings, suggesting that refinement is needed.

The ex vivo Model – Dopamine is Downstream of Coincidence

In an effort to investigate the associative memory circuit in a more reduced preparation, Ueno et al. developed an ex vivo (dissected brain) preparation for aversive memory. This preparation was conceived to try to overcome some of the experimental drawbacks of working with intact behaving animals and its potential utility is analogous to that of hippocampal long-term potentiation for rodent behavior [55, 56]. The authors dissected out the brain and the ventral nerve cord into a dish and paired electrical stimulation of the CS and US pathways. They recorded an enhanced MB response to CS pathway activation that was similar to the enhancement engram detected after training in vivo. In contrast to in vivo studies, however, they reported no dopamine release upon unpaired CS pathway or US pathway activation. In this preparation, dopamine was only released after the coincidence of CS and US pathway activation [56]. Surprisingly, the perfusion of dopamine (at high concentration) was sufficient to enhance MB responses. In their model, the authors suggested that dopamine does not encode the valence of the US signal, rather it is released downstream of CS + US coincidence. This model proposes that the US signal is carried to the MB by glutamatergic inputs and that coincidence-driven dopamine release directly drives memory formation.

This conclusion is inconsistent with the classical model and its supporting data in several major ways. The first is that multiple in vivo studies report dopamine release in response to both unpaired electric shock [3, 8, 36, 57, 58] and CS alone [2, 3, 8, 33, 36, 57, 59–62]. The discrepancy with the finding of US-alone dopamine release may be explained by a limitation in the in vivo setup; it cannot guarantee a lack of fly-perceived coincidence with some feature of the environment (unintended by the investigator) causing a covert “coincidence”, and hence dopamine release, to occur. Although this rationalization might explain why dopamine is released in vivo after unpaired US presentation, it is more difficult to explain how dopamine is released in vivo in response to unpaired CS presentation [2, 3, 8, 33, 36, 57, 59–62], as an odor requires no association to valence. It is more likely that the inability of Ueno et al. (2017) to detect dopamine release after US pathway activation [56] is due to a technical limitation of the reporters used. In the ex vivo experiments, the pH-dependent reporter SynaptopHluorin was used [56] while the in vivo experiments used the recently developed, and more sensitive, calcium sensor sytGCaMP6s [33, 57].

A second, more difficult to reconcile, discrepancy is that the unpaired optogenetic or thermogenetic activation of DANs is a typical control experiment for all in vivo studies and does not cause associative memory formation. This could in theory be explained by lack of specificity. If learning relies on biasing the balance of the MB circuit towards approach or escape in response to a specific group of KCs that are activated in training, the generalized unpaired dopaminergic neuron activation may bias the responses of too many/all cells, making the plastic changes that occur too broad to be linked to the CS and occluding choice-based memory. However, recent findings suggest that simple lack of specificity may not underlie this discrepancy between ex vivo and in vivo results. Cohn et al. showed that paired and unpaired dopamine actually have opposite modulatory effects on the circuit. The paired signal depresses KC → MBON synapses while the unpaired signal strengthens them [33]. This shift in plasticity from potentiation to depression requires CS + dopamine coincidence in the presynaptic cells (KCs) [29, 32]. This means that the effects of unpaired dopamine on the circuit are likely to be qualitatively, not just quantitatively, different and that the context in which dopamine is released can have radical effects on its actions.

An additional problematic feature of the ex vivo model is the conclusion in Ueno et al. (2017) that the US information is delivered to the MB via glutamatergic synapses onto KCs, and that the coincidence between CS cholinergic input and US glutamatergic input gates dopamine release [56]. To the best of our knowledge, there is no direct evidence that glutamatergic inputs or glutamatergic receptors exist in the MB. The glutamatergic neurons in their study that were activated in response to the electric shock application do not actually innervate the MB [63]. An alternative interpretation of the ex vivo experiments demonstrating a general requirement for NMDA receptors and glutamatergic neurons in memory formation is that glutamatergic input is needed to encode US information going to the MB, but it is not the last step of this pathway, rather it is upstream of the dopamine US signal.

Finally, the ex vivo preparation, and most critically induction by unpaired dopamine, has not been shown to recapitulate the change in KC → MBON responses to the CS pathway activation induced by memory formation. While the enhancement of CS responses in KCs has been demonstrated ex vivo, it is not clear that this enhancement is mechanistically the same as that produced by learning. Of concern is a recent study showing that the unpaired dopamine release in the mouse striatum drives an increase in the excitability of dopamine receptor (D1R)-expressing neurons that persists for at least 10 min [64]. This unpaired dopamine effect is similar to the enhanced excitability of the dD1R-expressing KCs in the MB after the unpaired dopamine application, raising the possibility that bath dopamine application in the ex vivo preparation induces a memory-irrelevant enhancement of KC excitability. As discussed earlier, associative learning takes place by a depression of KC → MBON synapses [11, 32–39]. It will be critical to examine the change in MBON responses to CS pathway activation after unpaired dopamine application in the ex vivo preparation, especially in light of the previously discussed Cohn et al. findings [33], which suggest that an enhancement rather than an inhibition of MBON calcium responses is likely to be seen. Reconciling these experiments with the rest of the literature will likely require some integration of dopamine as part of the coincidence event.

Despite the contradictions between the ex vivo model and the extensive literature on the MB circuit, the idea that dopamine release might be amplified after coincidence has some support. A dopamine amplification mechanism in the context of the classical model was suggested by Cervantes-Sandoval et al. (2017) [57]. This study showed that dopamine release is stronger after CS + US coincidence than after the unpaired US presentation. This study also demonstrated the existence of reciprocal synapses between KCs and their presynaptic DANs, and that blocking the cholinergic transmission from the KCs significantly reduces dopamine release [57]. The activity of these reciprocal KC ⇌ DAN synapses may gate amplification of a sub-threshold dopamine signal that could not be detected by the tools used in the ex vivo experiments.

In this in vivo study, knocking down cholinergic receptors in DANs modestly weakened learning but did not completely abolish it [57], suggesting a non-cholinergic neurotransmitter may gate dopamine amplification. Consistent with this idea, Ueno et al. (2020) showed that carbon monoxide (CO) is released from KCs onto DANs in the ex vivo prep. CO release is coincidence-dependent, and inhibiting the CO synthesis in the MB or blocking its release onto DANs impaired the detection of dopamine release [65]. This raises the possibility that a CS + US coincidence-dependent release of CO may gate amplification of dopamine release (although a study in larvae suggests a different neurotransmitter for KC → DAN feedback [66]). Interestingly, the authors also demonstrate that the CO-induced dopamine release in the ex vivo preparation is action potential-independent [65]. An action potential-independent neuromodulatory mechanism may provide a more sophisticated local layer to assure that learning is specific to the presented CS, since the lack of action potentials allows the dopamine amplification at specific synapses, as there is no activity propagation to the soma. The idea that dopamine signaling is locally amplified is therefore supported by experiments in both behaving flies and explanted brains and provides a natural starting place for an integrative model that could be tested in both preparations.

An Integrative Model

Rules of Dopamine Release

While dopamine levels have an important role in establishing the internal state of animals [33, 59], the acutely evoked dopamine release in response to a CS or US is critical for memory formation in both the classical and ex vivo models (Fig. 2A, B). Evoked release alone, however, is not sufficient to achieve associative learning. In our integrative model (Fig. 2C), this is due to 2 limiting factors: (1) a lack of specificity to the CS, and (2) the release of sub-threshold levels of dopamine by unpaired CS or US [57]. The coincidence-dependent activation of a positive feedback loop between KCs and DANs overcomes both limiting factors, being activated only in cells that are responding to the CS, and amplifying dopamine gain locally. The feedback loop activation may be gated by the coincidence-dependent CO release from the KCs onto presynaptic DANs (Fig. 2). This model suggests that the dopamine release is both upstream and downstream of the CS + US coincidence.

Fig. 2.

Rules of dopamine release in the updated model. A In the classical model, the CS activates a sparse subset of KCs, and the US causes dopamine release from dopaminergic neurons (DANs). Coincidence between KC activation and dopamine produces learning in downstream neurons. B In the ex vivo model, the US does not activate DANs but rather activates KCs through NMDA receptors. Coincidence between the KC activation and the NMDA receptor activation results in carbon monoxide release, activating DANs. The resultant dopamine release is sufficient to cause learning. C In the proposed updated model, the US activates both KCs and DANs. Activation of DANs is not sufficient to cause strong dopamine release. The simultaneous activation of KCs by both the CS and the US gates a positive feedback loop between KCs and DANs, possibly via the carbon monoxide release from KCs. This positive feedback loop increases the dopamine release locally onto KCs. Coincidence between the amplified dopamine signal onto KCs and their activation by the CS distinguishes the KCs responding to the CS, and results in specific learning.
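The release rule of the integrative model (Fig. 2C) can be written as a small truth table. The sketch below is illustrative only: the basal release, the feedback gain, and the threshold are arbitrary assumed values, and the KC ⇌ DAN loop is collapsed into a single multiplicative gain applied at the synapses of one KC.

```python
BASAL_RELEASE = 0.3   # ASSUMPTION: dopamine evoked by an unpaired CS or US (a.u.)
FEEDBACK_GAIN = 4.0   # ASSUMPTION: amplification by the KC <-> DAN loop
LTD_THRESHOLD = 1.0   # ASSUMPTION: dopamine level needed to depress a synapse


def dopamine_release(cs_active: bool, us_active: bool) -> float:
    """Dopamine at the synapses of one KC, in arbitrary units."""
    if not (cs_active or us_active):
        return 0.0
    release = BASAL_RELEASE
    if cs_active and us_active:
        # Coincidence gates the positive feedback loop (possibly via CO).
        release *= FEEDBACK_GAIN
    return release


def induces_ltd(cs_active: bool, us_active: bool) -> bool:
    """LTD requires an odor-activated KC and supra-threshold dopamine."""
    return cs_active and dopamine_release(cs_active, us_active) >= LTD_THRESHOLD


for cs, us in [(True, True), (True, False), (False, True), (False, False)]:
    print(cs, us, dopamine_release(cs, us), induces_ltd(cs, us))
```

Under these assumptions, the unpaired CS and the unpaired US each evoke detectable but sub-threshold dopamine, and only their coincidence at a CS-responding KC crosses the threshold. This captures both limiting factors named above: specificity and gain.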

Memory Circuits Need to Allow Flexible Behavior

While the addition of a dopamine amplification step to the classical model helps to explain some of the findings in the literature, a truly robust model for the associative memory circuit should explain and predict all of the associative memory behaviors that the fly performs. Despite the current models’ success in predicting behavioral outcomes for the traditional associative learning (forward learning), they fail to predict the outcomes of other learning and forgetting scenarios, some of which are described below, that flies encounter in nature and in labs.

Learning can be split into 2 primary types: forward and backward. Forward learning occurs when the CS precedes or coincides with punishment or reward (US), resulting in the formation of aversive or appetitive memory, respectively. Backward learning occurs when the order of the CS and US presentation is flipped so that punishment or reward precedes the CS, resulting in a memory opposite in valence to the US [29, 50, 51]. It is canonically believed that backward training is only successful for punishment, forming an appetitive or relief memory. However, an experiment by Handler et al. (2019) demonstrated that backward training in which the activation of reward neurons (PAM) precedes the CS forms aversive memory [29]. While in forward training the CS predicts a punishment or a reward, in backward training the CS predicts termination of the US, thus imparting a valence opposite that of the US to the CS. The current models explain the formation of US-valence memory after forward learning via the dopamine-mediated long-term depression (LTD) of KC → MBON synapses (Fig. 1). However, they neither predict the reported [29, 48, 49] potentiation of odor responses in MBONs opposite in valence to those that are directly downstream of the US-stimulated dopamine signal, nor explain the memory formation after backward learning.

As noted by Davis and Zhong in their 2017 review, forgetting, from an experimental psychology point of view, can also be split into 2 primary types: active and passive, each of which encompasses multiple subtypes [67]. Active forgetting is the process by which the brain removes or updates old memories, and it is divided into 4 subtypes: (1) Motivated forgetting, (2) Retrieval-induced forgetting, (3) Intrinsic forgetting, and (4) Interference-induced forgetting. Passive forgetting, however, is the natural decay of memories due to ongoing molecular turnover and is divided into (1) Forgetting by natural decay and (2) Forgetting by loss of context cues. Such classifications of forgetting, especially passive forgetting, are debatable and differ according to the point of view of the investigator. For example, the natural decay of memories, known as passive forgetting, can be considered a subtype of active forgetting because the underlying molecular turnover is achieved by triggering cellular processes to degrade old memories. For clarity in this paper, we will adhere to the experimental psychology classification and nomenclature provided by Davis and Zhong [67]. We will focus on two subtypes: Retrieval-induced forgetting, which happens when the CS is repetitively presented after learning without the predicted US [48], and intrinsic forgetting, which describes the gradual weakening of the acquired memory over time. We will also talk about a third type of forgetting which happens when the US is presented to the fly after learning without being paired with the CS [52]; we will refer to this type as US re-exposure-induced forgetting. Current models of the associative learning circuit cannot fully explain any of these behaviors.

Other types of learning/forgetting also exist but will not be discussed in detail in this article because they can be thought of as a combination of one or more of the aforementioned primary types. An example is memory update (also called counterconditioning), which occurs when a CS that was previously paired with a US is subsequently paired with an opposite valence US, thus forming a new opposite valence memory in place of the old one. Memory updating can be thought of as the retrieval-induced forgetting in combination with simultaneous learning by forward training.

These unexplained memory behaviors challenge the robustness of the current associative learning models. An update of the models which can predict the formation or erasure of these multiple forms of memory is therefore needed. Here, we first synthesize recent findings from the literature in an attempt to extract the most salient features of the data that need to be incorporated into a new circuit model. We then update the current associative memory model such that it predicts the correct outcomes of the primary behavioral paradigms. Furthermore, we show that the new model also predicts other memory behaviors that are less studied in Drosophila but are well-established in other organisms, such as trace memory formation and the transfer of old associative memories to novel sensory stimuli (here called memory transfer). Finally, we put forward a hypothesis as to how the two dopaminergic clusters (PPL1 and PAM) can function together to code a reward prediction error signal in Drosophila.

Cardinal Features of MB Behavior that Drive the Need for Updating the Associative Learning Model

As mentioned briefly above, we have an excellent understanding, down to the EM level [68], of the connectivity of the MB neuropil. This understanding can be used in the context of functional data to create very detailed circuit models for behavior. Utilizing more detailed recent functional and anatomical findings, we identify four key features of the circuit that can be axiomatized to formulate a more robust and flexible memory model. Firstly, there is the reciprocal inhibition between approach and avoidance MBONs. It was recently demonstrated that approach MBONs can inhibit avoidance MBONs; specifically, the GABAergic MBON11 inhibits MBON01 and MBON03 [48]. Also, the recently published connectome data show strong synaptic connections between avoidance MBONs and approach MBONs; in particular, the glutamatergic MBON01 synapses on MBON12, MBON13, and MBON15 [68]. This indicates that avoidance MBONs may also inhibit approach MBONs. Reciprocal inhibition between approach and avoidance MBONs may underlie a secondary layer of information such that the primary learning-induced KC → MBON depression releases opposite valence MBONs from inhibition, thus potentiating their responses to the trained odor. This communication between opposite valence MBONs improves the robustness of the memory circuit, especially regarding memory update mechanisms, because a new associative experience not only forms a new memory, but also influences the existent memories for the same CS such that it weakens or abolishes the old memory if the valence of the new association is opposite to the old one. Therefore, we assume the reciprocal inhibition between approach and avoidance MBONs to be the first axiom of our updated model.

Secondly, anatomical evidence demonstrates that MBONs directly synapse on their presynaptic DANs both in larvae [69] and in adult flies [68, 70]. Experimental evidence shows that MBON → DAN synapses can be excitatory in some compartments and inhibitory in others [48, 54, 71–73]. Also, some DANs show delayed inhibition followed by a post-inhibitory rebound after termination of MBON activity [48]. It is also important to note that not only can MBONs drive different responses in DANs, but the same MBON may drive distinct responses in different DANs [48]. This diversity in MBON → DAN communication enables old memories to modulate dopamine release with new associative training. Such features help explain memory behaviors that do not require DAN activation by a US; for example, retrieval-induced forgetting. The ability to adjust dopamine levels in the context of old experiences also supports a role of dopamine in reward prediction error in Drosophila. In summary, the second axiom of our updated model is that MBONs drive distinct responses in different DANs: excitatory, inhibitory, or delayed inhibition followed by a rebound.

Thirdly, Cohn et al. (2015) and Handler et al. (2019) demonstrated that while pairing the CS with activation of DANs results in the depression of postsynaptic MBON responses to the CS, unpaired dopamine results in potentiation of the responses of the same MBON [29, 33]. Although the potentiation of MBON responses is not sufficient to drive associative memory behavior due to a lack of specificity to KCs activated by the CS, it may be sufficient to alleviate a baseline MBON depression due to formation of an earlier memory. Adding this assumption to the memory model is key in explaining memory behaviors that do not require coincidence with the CS; for example, US re-exposure-induced forgetting. Therefore, based on the evidence by Cohn et al. and Handler et al. [29, 33], the third axiom of the updated memory model is that unpaired dopamine potentiates MBON responses such that it can abolish the previous learning-induced depression of KC → MBON synapses.

Fourthly, Cohn et al. (2015) showed that a positive reward like sucrose not only activates PAMs, but also inhibits PPL1s. Similarly, punishment both activates PPL1s and inhibits PAMs [33]. It is likely that this US-induced inhibition is followed by a post-inhibitory rebound in some DANs [48]. This feature of the circuitry is particularly useful in explaining associative memory formation after backward training paradigms. The fourth axiom of the updated model, therefore, is that a US both activates the DANs congruent with its valence and inhibits the DANs that respond to opposite valence stimuli. Inhibition of the opposite valence DANs causes rebound firing on release.

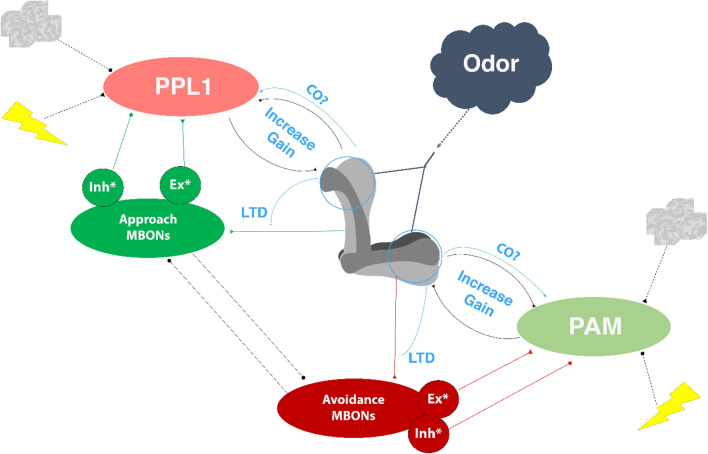

Incorporating these 4 axioms, all of which are well-supported by experimental and anatomical findings, into the associative learning circuit model enables it to explain the diverse memory behaviors performed by Drosophila (Fig. 3). In the updated model, PPL1 and PAM neurons are oppositely modulated by both reward and punishment. A coincidence between the CS and activity of PPL1 or PAM neurons gates a KC ⇌ DANs positive excitatory feedback that amplifies dopamine gain on the postsynaptic KC → MBON synapses and results in their depression. This in turn potentiates CS responses in the opposite valence MBONs due to their release from inhibition by the depressed MBONs. Activation of MBONs results in either activation or inhibition of their presynaptic DANs. Also, not shown in the figure, an unpaired dopamine signal can be sufficient to recover the depressed KC → MBON synapses to their baseline levels. These additions improve the current circuit model and allow it to predict Drosophila’s diverse associative learning behavioral repertoire.

Fig. 3.

Updated model of the Drosophila associative memory circuit. Odors are sparsely encoded in the KCs of the MB. Reward activates PAMs and inhibits PPL1s (which rebound on release, not shown). Similarly, punishment activates PPL1s and inhibits PAMs (followed by a rebound, not shown). Coincidence between the KC activation and dopamine in the MB initiates a CO-dependent positive feedback loop between KCs and DANs, increasing the dopamine release. This amplified dopamine signal, paired with the KC activation, depresses KC → MBON synapses. Two groups of MBONs are shown for both approach and avoidance MBONs: excitatory and inhibitory. (*Excitatory and inhibitory MBON labels represent the MBON effect on DANs rather than any inherent property of the MBON). Recurrent feedback loops maintain the circuit balance between approach and avoidance MBONs. Importantly, the inhibition of DANs in this model is followed by a post-inhibitory rebound. This model relies on a recurrent-loop architecture between KC ⇌ DAN and approach.MBONs ⇌ avoidance.MBONs. Green and red colors indicate a positive (approach) or negative (avoidance) valence, respectively. Blue indicates neural events downstream of CS + US coincidence in the MB.
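The third axiom, that paired dopamine depresses a KC → MBON synapse while unpaired dopamine restores it, can be sketched as a single-synapse update rule. This is a minimal illustration under stated assumptions: the step sizes are arbitrary, and capping recovery at the naive baseline is a simplification adopted here rather than a claim from the cited studies.

```python
W_BASELINE = 1.0   # ASSUMPTION: naive KC -> MBON synaptic weight
LTD_STEP = 0.5     # ASSUMPTION: depression per paired trial
LTP_STEP = 0.25    # ASSUMPTION: recovery per unpaired dopamine exposure


def update_weight(w: float, kc_active: bool, dopamine: bool) -> float:
    """One plasticity step for a single KC -> MBON synapse."""
    if dopamine and kc_active:
        # Paired CS + dopamine: depression.
        return max(0.0, w - LTD_STEP)
    if dopamine:
        # Unpaired dopamine: potentiation, capped at baseline (simplification).
        return min(W_BASELINE, w + LTP_STEP)
    # No dopamine: no change in this sketch.
    return w


# Forward training depresses the synapse ...
w_trained = update_weight(W_BASELINE, kc_active=True, dopamine=True)

# ... and repeated US re-exposure without the CS restores it.
w_recovered = w_trained
for _ in range(3):
    w_recovered = update_weight(w_recovered, kc_active=False, dopamine=True)

print(w_trained, w_recovered)
```

In this toy rule, a naive synapse is unchanged by unpaired dopamine, consistent with the observation that DAN activation alone does not produce associative memory, whereas a previously depressed synapse returns to baseline, as proposed for US re-exposure-induced forgetting.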

Testing the Updated Model on Diverse Memory Behaviors

Learning in the Drosophila associative memory circuit relies on shifting the balance between the CS responses of approach MBONs and avoidance MBONs. In this section, we will describe in detail how different training paradigms engage elements of the updated circuit model (Fig. 3) to produce the correct behavior. Also, we will review experimental evidence that supports the proposed mechanisms.

Forward Learning

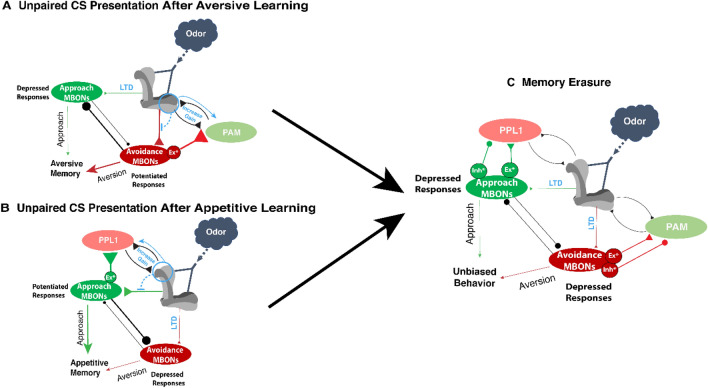

Forward learning is the most studied form of associative learning and occurs when a CS precedes or coincides with a reward or a punishment (the US). The valence of the resultant memory matches that of the US. In the case of a negative US valence such as electric shock punishment, a coincidence of the odor signal (encoded in all MB compartments) and dopamine (released on the vertical lobes) takes place in the MB vertical lobe compartments. This coincidence results in depression of the synapses between KCs and their postsynaptic approach MBONs, which biases the circuit towards aversion, hence driving escape behavior in future encounters with the same odor. In contrast, a reward activates PAMs that mainly synapse onto the MB horizontal lobe compartments; thus, coincidence of an odor with a reward depresses the KC → avoidance.MBON synapses, shifting the output behavior to approach. Thus far, this is largely well explained by the current classical model (Fig. 1).

Although the classical model explains the behavioral outcomes of forward learning, it fails to account for the physiological observation that CS responses in opposite-valence MBONs (the ones not innervated by the US-activated DANs) are potentiated by learning. Recent findings demonstrate that in addition to the depression of the KC → approach.MBONs after aversive training, odor responses in avoidance MBONs get potentiated. Likewise, odor responses in approach MBONs are potentiated after appetitive training [29, 48, 49]. The classical model cannot explain this potentiation because the opposite-valence MBONs do not receive neuromodulatory signals from neurons activated by the US. Our updated model offers an alternative mechanism through which this can occur (Fig. 3) via the feedback inhibition between approach and avoidance MBONs. This feedback inhibition provides a secondary layer of information which accounts for the neuromodulation of the opposite-valence MBONs and predicts the potentiated responses of avoidance MBONs to the trained odor (Fig. 4A, B). This approach.MBONs → avoidance.MBONs inhibition is supported by previous studies by Felsenberg et al. (2018) and Perisse et al. (2016), which show that the GABAergic approach MBON11 inhibits avoidance MBON01 and MBON03. When the CS response of MBON11 is suppressed, MBON01 and MBON03 responses to the trained odor are potentiated [48, 49]. The avoidance.MBONs → approach.MBONs communication is supported by anatomical findings. For example, glutamatergic avoidance MBON01 directly synapses onto approach MBON12, MBON13, and MBON15. If these synapses from avoidance MBONs onto approach MBONs are inhibitory, this explains the potentiated responses of approach MBONs to an appetitively trained odor. In summary, the updated model explains the potentiated responses of MBONs that drive US-congruent behaviors, thus filling in this gap in the current model.

Fig. 4.

Forward Learning in the updated model. A Odor responses are initially balanced in the naïve fly. B After aversive training, CS + US coincidence increases the gain of PPL1 dopamine release via a CO-dependent positive feedback loop. Coincidence between the amplified dopamine and the KC activation causes LTD of odor-specific KC → approach.MBON synapses. The LTD in approach MBONs releases avoidance MBONs from the inhibition by approach MBONs, resulting in potentiated odor responses in avoidance MBONs and aversive memory formation. C After appetitive training, CS + US coincidence increases the gain of PAM dopamine release via a CO-dependent positive feedback loop. The coincidence between the high dopamine and the KC activation causes LTD of odor-specific KC → avoidance.MBON synapses. The LTD in avoidance MBONs releases approach MBONs from the inhibition by avoidance MBONs, resulting in potentiated odor responses in approach MBONs, and appetitive memory formation.
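The disinhibition logic of forward learning can be illustrated with a minimal rate-model sketch. This is only an illustration under stated assumptions, not the circuit model itself: the linear steady-state equations, the inhibition gain g, and the synaptic weight values are all hypothetical choices made for the example.

```python
def steady_state(w_app, w_av, g=0.5):
    """Steady-state odor responses of two mutually inhibiting MBON groups.

    Assumed toy equations (not from the article):
        a = w_app - g * v   (approach response)
        v = w_av  - g * a   (avoidance response)
    Responses are clamped at zero because firing rates cannot be negative.
    """
    denom = 1.0 - g * g
    a = (w_app - g * w_av) / denom
    v = (w_av - g * w_app) / denom
    if a < 0.0:          # approach group silenced, avoidance uninhibited
        a, v = 0.0, w_av
    elif v < 0.0:        # avoidance group silenced, approach uninhibited
        a, v = w_app, 0.0
    return a, v


# Hypothetical KC -> MBON weights: 1.0 at baseline, 0.5 after LTD.
naive = steady_state(1.0, 1.0)        # balanced circuit
aversive = steady_state(0.5, 1.0)     # LTD of KC -> approach.MBON synapses
appetitive = steady_state(1.0, 0.5)   # LTD of KC -> avoidance.MBON synapses

print("naive      (approach, avoidance):", naive)
print("aversive   (approach, avoidance):", aversive)
print("appetitive (approach, avoidance):", appetitive)
```

In this sketch, depressing only one set of KC → MBON synapses raises the odor response of the opposite-valence MBONs above its naive level, even though those MBONs receive no direct neuromodulatory change.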

Backward Learning

Backward learning occurs when the US precedes the CS, forming a memory of valence opposite to that of the US [29, 50, 51]. From a psychological perspective, this can be understood as the fly perceiving the CS to be either a sign that the punishment has ended or a sign indicating reward withdrawal. Encoding of such memories cannot be completely explained by the classical associative learning model but is accounted for in the updated model. In our proposed model, a punishment US not only activates negative-valence-encoding PPL1s, but also inhibits a subset of the opposite-valence-encoding PAMs; similarly, a reward US activates the positive-valence-encoding PAMs and inhibits a subset of the opposite-valence-encoding PPL1s (Fig. 3). The model also posits that a subset of the inhibited DANs exhibit a post-inhibitory rebound upon termination of the US. If the presentation of the CS after US termination coincides with this post-inhibitory rebound in the DANs encoding valence opposite to that of the US, a memory opposite in valence to the US is formed (Fig. 5).

Fig. 5.

Backward Learning. Upper panels: the learning circuit in different scenarios. Lower panel: theoretical prediction of PAM neuron activity before and during US presentation, and after US termination. A Before training, the associative circuit is balanced between approach and avoidance MBONs. The PAM activity is at baseline. B US presentation without the CS activates PPL1s but is insufficient to initiate the KC ⇌ PPL1 positive feedback loop due to lack of coincidence with the CS. C After termination of the US, a subset of PAMs are released from inhibition and have a post-inhibitory rebound. Presentation of the CS during this time window (grey shaded area) achieves the coincidence between CS + PAM, driving appetitive learning as in Fig. 4C.

The updated model explains the backward memory formation by differing from the classical circuit model in only two ways: (1) the DANs encoding valence opposite to that of the US are inhibited by the US, and (2) this inhibition is followed by rebound activity in the inhibited neurons. The first point has been experimentally demonstrated; Cohn et al. showed that a sugar reward inhibits the PPL1s innervating the γ2 MB compartment (PPL1-03) and that an electric shock punishment inhibits the PAMs innervating the γ4 and γ5 MB compartments (PAM-08 and PAM-01) [33]. The second point is strongly supported by recent findings. Konig et al. demonstrated that, although dopamine synthesis in the DANs activated by the US is dispensable for backward memory formation, stronger or longer US presentation still enhances backward learning [50]. This indicates that either backward learning relies on a neurotransmitter other than dopamine, or that it requires dopamine synthesis in neurons other than those activated by the US. With regard to the former, a recent study reported that another neurotransmitter, nitric oxide, is released from the DANs activated by the US, and that it has an effect on memory formation opposite to that of dopamine [74]. While this suggested that nitric oxide might drive memory formation after backward training, it was shown to be dispensable for this behavior [74]. This implies that dopamine is needed for backward learning, but that it is released from neurons other than those activated by the US. Our model suggests that dopamine release after a post-inhibitory rebound in the DANs inhibited by the US is what underlies memory formation after backward training. Direct experimental and anatomical findings support this idea as well. Jacob et al. (2020) showed that an appetitive memory is formed to the unpaired CS (CS-) after spaced aversive training. Agreeing with our prediction of a rebound in PAMs after a punishment US, this appetitive memory requires activity in PAMs at the time of CS- presentation, and it is formed only if the CS- is presented after termination of, not before presentation of, the US [75]. Also, Felsenberg et al. showed a weak post-inhibitory rebound in PAM-08, and a strong rebound in PAM-05 and PAM-06 neurons after the termination of inhibition [48]. Such a rebound after US termination may be a feature intrinsic to these DANs or it may be driven by post-inhibitory rebound in their presynaptic excitatory partners. For example, Berry et al. report a post-inhibitory rebound in MBON12 [52], an excitatory cholinergic neuron that is presynaptic to PPL1-03 [68]. Therefore, a post-inhibitory rebound in MBON12 is expected to result in activation of its postsynaptic partner, PPL1-03. The post-inhibitory rebound suggested here in Drosophila DANs is analogous to that in mammals, where subsets of the DANs that are inhibited by a reward US exhibit a post-inhibitory rebound after US termination [76–80]. In conclusion, a post-inhibitory rebound after US termination likely takes place in a subset of the DANs inhibited by the US, allowing a coincidence between a CS presented after US termination and activation of DANs that encode a valence opposite to that of the US. This results in formation of a memory of a valence opposite to the presented US (Fig. 5).
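The timing dependence described in this section can be summarized as a simple decision rule. The sketch below is a hedged illustration only: the function name, the 10 s rebound window, and the treatment of time as a single CS onset value are assumptions introduced for the example, and the rule ignores trace conditioning, in which the CS precedes the US.

```python
def predicted_memory(us_valence, cs_time, us_on, us_off, rebound_window=10.0):
    """Predict the valence of the memory formed to the CS.

    us_valence: -1 for a punishment US, +1 for a reward US.
    cs_time, us_on, us_off: times in seconds (hypothetical units).
    rebound_window: assumed duration of the post-inhibitory rebound.
    Returns -1 (aversive), +1 (appetitive) or 0 (no memory predicted).
    """
    if us_on <= cs_time <= us_off:
        # CS coincides with the DANs activated by the US (forward learning).
        return us_valence
    if us_off < cs_time <= us_off + rebound_window:
        # CS coincides with the rebound in the DANs inhibited by the US.
        return -us_valence
    return 0


# Shock from t = 0 s to t = 10 s.
print(predicted_memory(-1, cs_time=5.0, us_on=0.0, us_off=10.0))   # forward
print(predicted_memory(-1, cs_time=12.0, us_on=0.0, us_off=10.0))  # backward
print(predicted_memory(-1, cs_time=40.0, us_on=0.0, us_off=10.0))  # too late
```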

US Re-exposure-induced Forgetting

Re-applying the US after learning abolishes previously-formed aversive memory and is termed extinction [52]. Although this is an established behavior in Drosophila, the classical model falls short of explaining the circuit mechanism. Important insight into this process was provided by Berry et al. who showed that the odor responses in approach MBONs (MBON12) that had been depressed by learning, return to baseline after presentation of the unpaired US [52]. This indicates that forgetting engages a mechanism for memory erasure rather than forming a competing memory that masks the aversive behavior. These results were replicated by replacing the US with artificial activation of PPL1 neurons (PPL1-03) in both the initial aversive training and in the extinction paradigm (unpaired PPL1-03 activation) [52]. This confirmed that extinction requires activity of the same DANs activated during the initial training. The third axiom of our updated model builds upon this information to provide an explanation for how extinction occurs. If unpaired dopamine potentiates KC → MBON synapses [33], presentation of the US after training, which activates the same DANs activated during the initial training paradigm, or direct activation of those neurons, potentiates the previously depressed KC → MBON synapses such that they return to baseline levels restoring the balance between avoidance and approach MBONs (Fig. 6).

Fig. 6.

US re-exposure-induced forgetting. A After aversive learning, the circuit is biased towards avoidance of the learned odor due to LTD of the KC → approach.MBON synapses. Presentation of the US after training activates PPL1s. The unpaired dopamine is sufficient to abolish the LTD of KC → approach.MBONs (dashed black excitatory line). B Approach MBON odor responses return to normal levels after the unpaired dopamine potentiates the depressed synapses, occluding the old memory.

Our updated circuit model also predicts a secondary outcome of US re-exposure-induced forgetting that has been demonstrated by previous behavioral experiments. The presentation of a new odor (odor B) during the unpaired US presentation after learning of odor A, results in the erasure/weakening of odor A memory and the formation of a new memory for odor B. Different odors are encoded in different ensembles of KCs: odor A activates one ensemble of KCs (KC.A) while odor B activates a different ensemble (KC.B). Repeated presentation of the same US paired with odor B, instead of odor A, results in a paired CS + dopamine in KC.B, and unpaired dopamine in KC.A. The depressed KC.A → MBON synapses get potentiated by dopamine to return to baseline levels, abolishing the old memory of odor A. Concurrently, KC.B → MBON synapses get depressed by paired CS + dopamine, forming an aversive memory to odor B. In conclusion, the updated version of the associative memory circuit model explains both behavioral and physiological findings showing that repeating the US presentation after training results in forgetting of the old memory, while coincidental presentation of a new odor during this process forms a new memory for the new odor.
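The two outcomes of US re-exposure can be sketched with a toy plasticity rule applied separately to each odor's KC ensemble. The rule below is an assumption made for illustration: the multiplicative LTD factor, the recovery rate, and the baseline weight of 1.0 are hypothetical and are not taken from the studies cited above.

```python
BASELINE = 1.0  # assumed naive KC -> approach.MBON synaptic weight


def update(weights, active_odor, dopamine, ltd=0.5, recovery=0.5):
    """Apply one trial of a toy dopamine-gated plasticity rule.

    weights: dict mapping odor name -> KC -> approach.MBON weight.
    active_odor: odor presented on this trial, or None for US alone.
    dopamine: True if PPL1 dopamine is released on this trial.
    Paired dopamine depresses the active ensemble's synapses; unpaired
    dopamine moves all other synapses back toward baseline.
    """
    new = dict(weights)
    if not dopamine:
        return new
    for odor, w in weights.items():
        if odor == active_odor:
            new[odor] = w * (1.0 - ltd)                 # paired: LTD
        else:
            new[odor] = w + recovery * (BASELINE - w)   # unpaired: recovery
    return new


naive = {"A": 1.0, "B": 1.0}
trained = update(naive, "A", dopamine=True)       # aversive training, odor A
us_alone = update(trained, None, dopamine=True)   # US re-exposure alone
us_with_b = update(trained, "B", dopamine=True)   # US re-exposure with odor B

print("trained:  ", trained)
print("US alone: ", us_alone)
print("US + B:   ", us_with_b)
```

Under these assumptions, US re-exposure alone moves the odor A synapses back toward baseline, while re-exposure paired with odor B does the same and additionally depresses the odor B synapses.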

Retrieval-Induced Forgetting

Associative memory can also be abolished by unpaired presentation of the CS. A psychological explanation of this forgetting is that when the fly receives the trained CS without the expected reinforcement, it realizes that this CS is not predictive of punishment or reward. While the classical model does not explain this behavior, our updated model, using data from recent studies, provides a circuit mechanism (Fig. 3). First, as discussed earlier, responses of avoidance MBONs to the CS are potentiated after aversive learning, and responses of approach MBONs to the CS are potentiated after appetitive learning (see “Forward Learning” section). Second, our model assumes that avoidance MBONs excite a subset of PAMs, and approach MBONs excite a subset of PPL1s. Based on these assumptions, CS presentation after aversive training drives the activation of PAMs, while CS presentation after appetitive training drives the activation of PPL1s. CS + PAM coincidence depresses the KC → avoidance.MBON synapses corresponding to that CS. This suppression of CS responses in avoidance MBONs forms a parallel appetitive memory and balances out the depression of the KC → approach.MBON synapses that was formed after the initial aversive training. Likewise, unpaired presentation of the CS after appetitive learning activates a subset of PPL1s to form a parallel aversive memory and balance out the depression in the KC → avoidance.MBON synapses that was formed after the initial appetitive training. In summary, our updated model proposes a mechanism for retrieval-induced forgetting that relies on the CS-induced activity of the DANs that encode valence opposite to that of the initial memory (Fig. 7).

Fig. 7.

Retrieval-induced forgetting. A In a circuit biased towards odor aversion due to LTD of KC → approach.MBON synapses, odor responses in avoidance MBONs are released from their inhibition by approach MBONs. This potentiation in MBON responses drives the activation of PAMs. This allows for a coincidence between the odor presentation and the PAM activation, causing LTD of the KC → avoidance.MBON synapses. C The effects of this LTD of KC → avoidance.MBON synapses balance out the LTD of KC → approach.MBON synapses and occlude the aversive memory behavior. B Opposite to (A), appetitive training causes LTD of KC → avoidance.MBON synapses, which releases approach MBONs from their inhibition by avoidance MBONs. This in turn drives the activation of PPL1s. Therefore, the unpaired presentation of the CS after appetitive training allows the coincidence between an odor and PPL1 activation and results in depression of KC → approach.MBON synapses. C This newly-formed LTD of KC → approach.MBON synapses balances out the LTD of KC → avoidance.MBON synapses and occludes the memory behavior. The dashed blue line represents the new LTD that forms after unpaired CS presentation.

Two recent studies support this model of retrieval-induced forgetting. Firstly, the GABAergic approach MBON11, in which CS responses after aversive learning are suppressed, inhibits avoidance MBON01 and MBON03 [48, 49]. Secondly, activation of MBON01 and MBON03 drives activation of PAM-01 neurons [48]. Taken together, these two studies provide evidence that aversive learning inhibits odor responses in approach MBONs (at least MBON11), which in turn releases avoidance MBONs (at least MBON01 and MBON03) from inhibition. These avoidance MBONs drive the activity of PAM-01 neurons. Thus, the unpaired CS presentation after aversive training becomes coincident with the activation of at least one PAM neuron, which is sufficient to form a parallel appetitive memory.

In the case of appetitive memory extinction by unpaired CS presentation, our model is mainly supported by anatomical data. The recent hemibrain connectome demonstrated that glutamatergic avoidance MBONs form strong direct synapses onto cholinergic approach MBONs. For example, the avoidance MBON01 forms 11 synapses onto each neuron of the approach MBON12 pair, 13 synapses onto the approach MBON13, and 4 and 6 synapses onto the neurons of the approach MBON15 pair [68]. These approach MBONs make strong direct synapses onto PPL1s. For example, one neuron of the MBON15 pair forms many direct synapses onto one neuron of the PPL1-01 pair (33 synapses), one neuron of the PPL1-03 pair (22 synapses), and one neuron of the PPL1-04 pair (20 synapses). The other neuron of the MBON15 pair directly synapses onto one neuron of the PPL1-03 pair (27 synapses) and one neuron of the PPL1-04 pair (18 synapses) [68]. Given the excitatory nature of the cholinergic receptors in the fly brain, it is reasonable to assume that the potentiated odor responses in these approach MBONs drive the activation of their postsynaptic PPL1 partners. This prediction is further supported by physiological evidence. It was demonstrated that the activation of a cluster of cholinergic approach MBONs (containing MBON15, MBON16, MBON17, MBON18, and MBON19) drives strong activation of PPL1s [54]. Based on these anatomical and physiological data, the CS presentation after appetitive learning drives potentiated responses in excitatory approach MBONs, and in turn, drives the activation of postsynaptic PPL1s. The CS + PPL1 coincidence is sufficient to form a parallel aversive memory by suppressing the CS responses in approach MBONs. This rebalances the responses in approach and avoidance MBONs and negates the initially learnt appetitive behavior. The specific synaptic connections noted here, which may drive this memory behavior, can be experimentally investigated. Overall, our model emphasizes that retrieval-induced forgetting is achieved by formation of a parallel opposite memory, not a direct erasure of the old memory.
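The distinction between a parallel opposite memory and direct erasure can be made concrete with a short sketch. The update rule is an assumption chosen for illustration: PAM drive is taken to be proportional to the imbalance between avoidance and approach synaptic weights, and the learning rate of 0.5 is hypothetical.

```python
def unpaired_cs(w_app, w_av, rate=0.5):
    """One unpaired CS presentation after aversive training (toy rule).

    w_app, w_av: KC -> approach.MBON and KC -> avoidance.MBON weights.
    The disinhibited avoidance MBONs are assumed to excite PAMs in
    proportion to the current imbalance, and CS + PAM coincidence
    depresses only the KC -> avoidance.MBON synapses.
    """
    pam_drive = max(0.0, w_av - w_app)
    w_av = w_av - rate * pam_drive
    return w_app, w_av


w_app, w_av = 0.5, 1.0   # hypothetical state after aversive training
imbalance = []
for _ in range(5):
    w_app, w_av = unpaired_cs(w_app, w_av)
    imbalance.append(w_av - w_app)

print("approach weight after extinction:", w_app)
print("avoidance weight after extinction:", w_av)
print("imbalance per trial:", imbalance)
```

In this sketch the behavioral bias decays toward zero while the original depression of the KC → approach.MBON synapses stays in place, which is the signature of a parallel memory rather than erasure.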

Second-Order Conditioning

Second-order conditioning is similar to forward learning, except that the US, the “primary reinforcer”, is replaced by a stimulus that engages the circuit downstream of CS + US coincidence: the “second-order reinforcer”. Since aversive learning results in depression of KC → approach.MBON synapses, for example, those onto MBON11 [25, 32], the suppression of MBON11 is in theory a second-order reinforcer that could replace the US in aversive learning. Consistent with this, Ueoka et al. (2017) and Konig et al. (2019) demonstrated that pairing an odor with silencing of MBON11 (which is an approach MBON) induces aversive memory formation [81, 82]. This result conflicts with the results of Felsenberg et al. (2018), which showed that after aversive training, when KC → approach.MBON synapses (like those onto MBON11) are depressed, odor presentation results in the depression of KC → avoidance.MBON synapses, forming an appetitive memory [48] (see “Retrieval-Induced Forgetting” section). To reconcile this contradiction, Konig et al. suggested that initial CS pairing with a second-order reinforcer provides a strong effect which is extinguished if training is extended. This rationalization presents a problem because it suggests that extending the presentation of information results in an effect opposite to that of the initial presentation of the same information. Our updated associative learning circuit model provides a mechanism that reconciles these studies and illuminates the circuit basis for this apparent contradiction. Importantly, in our updated model, the presentation of the same information to the fly always results in the same behavioral outcome.

Our model, like the classical model, relies on a balance between approach and avoidance MBONs which is continuously modified by information encoded by PPL1s and PAMs. To understand how this model reconciles the aforementioned contradiction, it is useful to consider the circuit as a seesaw. The direction in which the seesaw falls (aversion or attraction) depends upon two balls, each applying their weight to one side. In this analogy, each ball represents the effects of either PAMs or PPL1s on the balance of the associative memory circuit. Now imagine the balls are each suspended above their respective sides of the seesaw by some number of springs. These springs represent the inhibitory effect of MBONs on PPL1 and PAM neurons and control the percentage of each ball’s total weight that can be applied to the seesaw.

Let us consider the case of inhibition by GABAergic MBON11, the neuron that is manipulated in the contradictory studies [48, 81, 82]. In this scenario, we assume that the PPL1 ball is slightly heavier than the PAM ball (Fig. 8A). This is because, according to the anatomical evidence, the PPL1 inhibition by MBON11 occurs through direct synapses [68], while the PAM inhibition by MBON11 is indirect: MBON11 inhibits avoidance MBONs that drive PAM activation. Anatomical data also indicate more synapses from MBON11 onto PPL1s than from MBON11 onto avoidance MBONs [68]. Collectively, this indicates that PPL1s are more inhibited by MBON11 than are PAMs; hence, in a balanced circuit, when PPL1s and PAMs are totally released from their inhibition by MBON11, PPL1s will have a greater impact on the circuit. In this analogy, cutting most or all of the springs suspending the two balls reflects the experimental silencing of MBON11 performed by Konig et al. and Ueoka et al. [81, 82]. The heavier ball (PPL1) pushes down its side of the seesaw more than the lighter ball (PAM). This is consistent with the formation of an aversive memory when a CS is paired with silencing of MBON11 [81, 82] (Fig. 8B).

Fig. 8.

Illustration of the second-order conditioning with a numerical example. In this example, we arbitrarily assume a 50% release of available PPL1 and PAM weight due to the suppression of MBON11 after each step. A In a natural situation, both PAM and PPL1 are completely suspended by the MBON11 springs. B Cutting all the springs (similar to silencing of approach MBONs) results in the release of both balls. PPL1 has a more significant impact in this case because it is heavier. C Aversive training results in a biased system where PPL1 is partially released from inhibition (50% of the ball weight is applied to the seesaw). D Re-exposure to the unpaired CS when MBON11 is suppressed releases an extra 50% of PPL1’s available weight, and 50% of PAM’s full weight. Note that the difference between applied weights (Δ) is less after the unpaired CS exposure than after the initial training. E Repeating unpaired exposure to the CS applies an extra 50% of the available weight of each ball; the effect of the extra release of PAMs is more significant than that of the extra release of PPL1s because of the greater available weight of the PAM ball, hence the PPL1-induced aversive memory is gradually abolished. Note that with every repetition of the unpaired CS exposure, the difference in applied weights between PPL1 and PAM (Δ) gets smaller. Key: springs represent the direct inhibition of PPL1s by approach MBONs and the indirect inhibition of PAMs by the same approach MBONs, through their inhibition of avoidance MBONs. Grey bars represent the system state in the previous panel. Light-grey shapes represent the available weights. Colored sections represent the applied weights.

On the other hand, after aversive learning, the KC → approach.MBON synapses are already depressed. Thus, the circuit is already biased toward PPL1. In the seesaw example, this is represented by the PPL1 ball already applying a portion of its weight to its side at the beginning of the experiment (Fig. 8C). Repeating the CS exposure after training is like pairing the CS with the partial inhibition of approach MBONs. In the seesaw example this is represented by cutting a percentage of the springs suspending each ball; arbitrarily, 50% of each side’s weight. This results in PAM applying 50% of its total weight on its side of the seesaw, but the change in PPL1 force is 50% of its remaining weight, not its full weight. This results in a stronger activation of PAMs compared to PPL1s and reduces the circuit bias, weakening the old aversive memory (Fig. 8D). The repetitive unpaired CS presentation will further reduce the circuit bias until the old memory is abolished. This agrees with the behavioral data by Felsenberg et al. where repetitive CS presentation after aversive learning erases the old memory via a PAM-dependent mechanism [48] (Fig. 8E).
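The arithmetic of the seesaw example can be worked through in a few lines. This is a sketch under stated assumptions: the total ball weights (1.2 for PPL1 and 1.0 for PAM) are hypothetical values chosen only so that the PPL1 ball is slightly heavier, and the 50% release per step follows the arbitrary figure used in Fig. 8.

```python
PPL1_TOTAL = 1.2   # hypothetical total weight of the PPL1 ball
PAM_TOTAL = 1.0    # hypothetical total weight of the PAM ball


def release(applied, total, fraction=0.5):
    """Apply a fraction of the still-available weight to the seesaw."""
    return applied + fraction * (total - applied)


# Naive fly, complete silencing of MBON11: both balls fully released.
naive_delta = PPL1_TOTAL - PAM_TOTAL      # positive: net aversive

# Aversive training applies 50% of the PPL1 weight (Fig. 8C).
ppl1 = release(0.0, PPL1_TOTAL)
pam = 0.0
deltas = [ppl1 - pam]

# Repeated unpaired CS exposures (Fig. 8D, E).
for _ in range(3):
    ppl1 = release(ppl1, PPL1_TOTAL)   # 50% of the remaining PPL1 weight
    pam = release(pam, PAM_TOTAL)      # 50% of the remaining PAM weight
    deltas.append(ppl1 - pam)

print("naive silencing, PPL1 - PAM:", round(naive_delta, 3))
print("trained fly, PPL1 - PAM per step:", [round(d, 3) for d in deltas])
```

With these values the difference in applied weights shrinks with every unpaired CS exposure, as in Fig. 8. In this particular parameterization it approaches the difference in total weights rather than zero, so how completely the bias is removed depends on parameter choices that the analogy leaves open.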

In summary, our model predicts the contradictory behavioral results between the CS presentation after aversive learning [48] and the second-order conditioning [81, 82]. Our model reconciles the contradiction by distinguishing the effects of partial suppression of approach MBONs from the effects of complete silencing. In this updated model, the partial inhibition of approach MBONs will result in a stronger relative change in PAM effects and form an appetitive memory by depressing KC → avoidance.MBON synapses, agreeing with the results of Felsenberg et al. [48], while a strong silencing of approach MBONs will cause a larger relative change in PPL1 effects and form an aversive memory by depressing KC → approach.MBON synapses, agreeing with the results of Ueoka et al. and Konig et al. [81, 82]. Of note, an important assumption in this explanation is that the naturally-occurring suppression in KC → MBON synapses due to associative learning plateaus at a partial level and the suppressed synapses are never completely silenced. Our model also guarantees that repeating the presentation of the same information to the fly does not result in contradictory behavioral outcomes, but rather, actually reinforces the resulting behavior.

Intrinsic Forgetting

In the absence of CS- or US-induced forgetting, memories tend to decay over time; this memory decay is referred to as intrinsic forgetting. While neither the current model nor our updated model provides a comprehensive circuit pathway for intrinsic forgetting, findings by Berry et al. (2012) suggest that this process may rely on the third axiom of our updated model, that unpaired dopamine reverses the learning-induced depression of KC → MBON synapses such that they return to their baseline activity. Berry et al. demonstrated that intrinsic forgetting requires the ongoing activity of DANs [53]; the existence of this ongoing activity has been confirmed by several studies [33, 53, 57, 58]. In this study, blocking the ongoing synaptic transmission from PPL1s or from a subset of them (PPL1-01, PPL1-03, and PPL1-05) for at least 40 min immediately after learning reduced forgetting, whereas activation of the same neurons for as little as 5 min after learning enhanced it, when memory recall was measured 3 h after training [53]. Based on these findings, intrinsic forgetting can occur through the unpaired ongoing dopamine release that likely abolishes the synaptic signature of the memory. By combining the third axiom of our model with these findings, we propose the following mechanism for intrinsic forgetting: the ongoing activity of DANs after learning results in an unpaired dopamine signal at the KC → MBON synapses that have been depressed by learning, and returns their activity to baseline levels, thus abolishing the formed memory.

Given that PPL1 and PAM neurons modulate the activity of KC synapses onto approach and avoidance MBONs, respectively, our proposed mechanism predicts a role for PPL1s in the decay of aversive memories, and a role for PAMs in the decay of appetitive memories. However, two studies indicate that this prediction may be an oversimplification. Berry et al. showed that the aforementioned PPL1 neurons (PPL1-01, PPL1-03, and PPL1-05) play a role in appetitive memory decay as well [53], and Shuai et al. showed that PAM neurons (PAM-13 and PAM-14) play a role in aversive memory decay [83]. Of note, Shuai et al. also reported that the activation of MBON-05 induces aversive memory decay [83]. This can be explained by our model because MBON-05 likely drives activation of PPL1s: anatomical evidence shows direct synapses from MBON-05 onto PPL1-03 neurons (31 synapses) [68]. The findings of Berry et al. and Shuai et al., although seemingly not predicted by our proposed mechanism, can actually be rationalized within its framework. Different axons of the same KCs synapse onto both approach and avoidance MBONs and are modulated by PPL1 and PAM neurons, respectively [7]. Therefore, it is possible that the prolonged activity of PPL1 or PAM neurons has modulatory effects on the KC somata, which in turn modulate KC → MBON synapses that are not directly downstream of those DANs. If so, the activity of PPL1s can slowly weaken appetitive memories by indirectly reversing the depressed KC → avoidance.MBON synapses to their baseline levels, and the activity of PAMs can slowly weaken aversive memories by indirectly reversing the depressed KC → approach.MBON synapses to their baseline levels. This rationalization is also supported by the fact that the postsynaptic MBON is dispensable during learning [32], hence memory formation must be encoded presynaptically (in the KCs). Similarly, modulation of only the presynaptic cell may underlie memory decay as well. In conclusion, while our proposed model provides a circuit mechanism for the intrinsic forgetting of aversive and appetitive memories that relies on the ongoing activity of PPL1 or PAM neurons, respectively, it does not provide a conclusive explanation of the inverse. Therefore, a dedicated circuit for intrinsic forgetting may remain to be identified.

Trace Memory Formation

Trace memory forms similarly to forward learning but after training in which a time gap is introduced between CS and US presentation [84]. Flies are able to form trace memories in an MB-dependent manner [2, 85, 86]. Since the classical model relies on a close CS + US coincidence, it cannot explain trace memory formation. Our updated model demonstrates that reciprocal KC ⇌ DAN synapses [57] can circumvent the requirement of simultaneous CS + US coincidence by generating a positive feedback loop between the KCs responding to the CS and DANs, prolonging CS-induced KC activity beyond the time of CS presentation. If a US is presented before the degradation of the CS information maintained in this KC ⇌ DAN loop, the CS + US coincidence requirement is met, driving memory formation. This also predicts that the strength of the memory will decrease with increasing time gaps between CS and US presentations, and that after a certain time window, memory can no longer be formed because the activity of the KC ⇌ DAN positive feedback loop retaining the CS imprint in KCs has decayed (Fig. 9).

Fig. 9.

Trace Memory Formation. A CS information is encoded by activity in KCs and is prolonged due to a recurrent loop between KCs and DANs. This imprint degrades gradually over time. B Delivering an electric shock (US) after CS termination but before degradation of the prolonged activity of KCs allows for a coincidence between the CS and US signal encoded by PPL1s, which causes LTD of KC → approach.MBONs, thus forming an aversive memory. Similarly, the CS imprint in the KCs is prolonged by a reciprocal synapse with PAMs (not shown). C Hypothetical CS-induced activity of KCs before and after CS presentation showing the time window in which US presentation achieves learning either without adding the KC ⇌ DAN recurrent loop (light grey) or after adding this loop (dark grey).
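The predicted dependence of trace memory on the CS-US gap can be sketched with a decaying CS trace. The exponential form, the two time constants, and the coincidence threshold are all assumptions introduced for illustration; the model itself only states that the KC ⇌ DAN loop prolongs KC activity and that this activity degrades over time.

```python
import math

TAU_NO_LOOP = 2.0      # hypothetical decay constant without the loop (s)
TAU_WITH_LOOP = 15.0   # hypothetical decay constant with the KC-DAN loop (s)
THRESHOLD = 0.2        # assumed minimum trace needed for CS + US coincidence


def cs_trace(gap, tau):
    """Residual CS-induced KC activity `gap` seconds after CS offset."""
    return math.exp(-gap / tau)


def learning_window(tau, threshold=THRESHOLD):
    """Longest CS-US gap at which the trace still exceeds the threshold."""
    return tau * math.log(1.0 / threshold)


def memory_strength(gap, tau, threshold=THRESHOLD):
    """Toy memory strength: the residual trace, or 0 below threshold."""
    trace = cs_trace(gap, tau)
    return trace if trace >= threshold else 0.0


for gap in (0.0, 5.0, 15.0, 30.0):
    print(gap,
          round(memory_strength(gap, TAU_NO_LOOP), 3),
          round(memory_strength(gap, TAU_WITH_LOOP), 3))
print("window without loop (s):", round(learning_window(TAU_NO_LOOP), 2))
print("window with loop (s):   ", round(learning_window(TAU_WITH_LOOP), 2))
```

Under these assumptions, memory strength falls as the gap grows and drops to zero beyond a window whose length scales with the decay constant, so the recurrent loop widens the window in which a delayed US can still drive learning.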

Memory Transfer

An associative memory may transfer from one conditioned stimulus to another if they are presented together after training [87]. For example, pairing an aversively-trained odor (CS-A) with a novel odor (CS-B) may result in the formation of an aversive memory to CS-B, similar to the original memory to CS-A. To the best of our knowledge, memory transfer has not yet been shown in fruit flies and the classical model does not have a mechanism to predict such a behavioral feature. However, our updated model predicts, and provides a possible circuit mechanism for, the transfer of associative memories between different CSs.

We illustrated earlier, using a seesaw analogy, that pairing an odor with silencing MBON11 in a naïve fly results in aversive memory formation, while in a trained fly where the odor is aversively conditioned, it leads to gradual erasure of the aversive memory by forming a parallel appetitive memory to this odor (for details, see “Second-Order Conditioning” section). We can again employ the seesaw analogy, where inhibition of the effects of PPL1 and/or PAM on the learning circuit by approach MBONs is represented by springs suspending PPL1 and PAM balls above opposite sides of the seesaw. In this system, pairing odor A with a novel odor B, subsequent to training with odor A, engages a retrieval-induced forgetting pathway for odor A, and a second-order conditioning pathway for odor B. This in turn results in the memory transfer from odor A to odor B.

To examine this more closely we take the case of aversive learning of odor A. In this scenario, odor A’s seesaw is already biased towards aversion (PPL1). The repeated presentation of odor A, whose KC → approach.MBON synapses are already depressed due to aversive training, results in increased activity of both PPL1 and PAM neurons. This is because these neurons are released from inhibition by the depressed approach MBONs. As explained earlier, in a biased seesaw that has been aversively trained, PPL1 is already applying a portion of its weight to its side of the seesaw. The further release of PPL1 and PAM from inhibition results in adding a percentage of the full weight of the PAM ball to its side of the seesaw, and a similar percentage of the remaining weight of the PPL1 ball to its side of the seesaw (see “Second-Order Conditioning” section). Because the PAM ball had more “available” weight, the PAM ball will have more impact on the system than the PPL1 ball, hence weakening the memory of odor A (Fig. 10A, B, D).

Fig. 10.

A mechanism for memory transfer. Similar to the seesaw model shown in Fig. 8, the system is balanced before training (A). Aversive training with odor A applies 50% of PPL1’s weight on the circuit. This is represented by a partial release of the PPL1 ball (B). However, odor B responses are still balanced because the synapses between the KCs responding to odor B and approach MBONs are not depressed (C). For odor A, as described in Fig. 8, 50% of PPL1 weight is already applied to the seesaw. Therefore, presenting odor A with odor B releases 50% of the full PAM weight and an extra 50% of PPL1 available weight. This results in an added stronger impact of PAMs on the circuit and thus a weakening of odor A’s aversive memory; this is signified by the smaller (Δ) in (D). Presenting odor A with odor B allows odor B to utilize the suppression of approach MBONs due to aversive learning to odor A. However, for the novel odor B, the system is still balanced. Therefore, 50% of the full weight of both PPL1 and PAM is released. This results in a stronger impact of PPL1 than of PAM, hence the formation of a weak aversive memory for odor B (E). Key: springs represent the direct inhibition of PPL1s by approach MBONs and the indirect inhibition of PAMs by the same approach MBONs, through their inhibition of avoidance MBONs. Light-grey shapes represent the available weights. Colored sections represent the applied weights.

For odor B, because there is no memory, the seesaw is equally balanced between PPL1 and PAM. However, odor B is still subject to the effects of the depression of approach MBONs because it is presented simultaneously with the previously trained odor A. Thus, in the seesaw example, an equal portion of the full PPL1 ball weight and the full PAM ball weight will be applied to the seesaw. Because the PPL1 ball is heavier than the PAM ball (as explained in the “Second-Order Conditioning” section), the PPL1 ball applies more weight than the PAM ball and pushes down its side of the seesaw. Accordingly, pairing a novel odor B with an already aversively trained odor A results in a higher impact of PPL1s than of PAMs and forms a weak aversive memory (Fig. 10C, E). If odor B is presented alone after training with odor A, odor B is not affected by odor A’s learning-induced depression of MBONs; therefore, PPL1 and PAM neurons are not released from inhibition and, consequently, no learning occurs. In conclusion, our updated model predicts that transfer of an associative memory between different conditioned stimuli may occur if the conditioned stimuli are presented together after training to one of them.
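The seesaw arithmetic for memory transfer can be made concrete with a small numerical sketch. The ball weights below (1.0 for PPL1 and 0.8 for PAM) and the function names are illustrative assumptions chosen only so that the PPL1 ball is the heavier one; the 50% release fraction follows the example in Fig. 10. This is a toy calculation, not a fitted model of the circuit.

```python
# Toy arithmetic for the seesaw analogy. All numbers are illustrative assumptions.
PPL1_FULL = 1.0  # hypothetical full weight of the PPL1 ball (the heavier ball)
PAM_FULL = 0.8   # hypothetical full weight of the PAM ball (the lighter ball)
RELEASE = 0.5    # fraction of the still-available weight released per presentation


def release(applied_ppl1, applied_pam, fraction=RELEASE):
    """Apply `fraction` of each ball's still-available weight to the seesaw."""
    new_ppl1 = applied_ppl1 + fraction * (PPL1_FULL - applied_ppl1)
    new_pam = applied_pam + fraction * (PAM_FULL - applied_pam)
    return new_ppl1, new_pam


def aversive_bias(applied_ppl1, applied_pam):
    """Positive values tilt the seesaw toward avoidance, negative toward approach."""
    return applied_ppl1 - applied_pam


# Odor A after aversive training: 50% of PPL1 applied, no PAM weight applied.
odor_a_trained = (0.5 * PPL1_FULL, 0.0)
# Odor B is novel: nothing has been applied yet.
odor_b_naive = (0.0, 0.0)

# Presenting A and B together releases weight for both odors.
odor_a_after = release(*odor_a_trained)
odor_b_after = release(*odor_b_naive)

print("odor A bias before co-presentation:", aversive_bias(*odor_a_trained))
print("odor A bias after co-presentation: ", aversive_bias(*odor_a_after))
print("odor B bias after co-presentation: ", aversive_bias(*odor_b_after))
```

Under these assumed weights, the aversive bias for odor A shrinks (the memory weakens) because PAM had more available weight to release, while odor B acquires a small aversive bias because equal fractions of a heavier PPL1 ball and a lighter PAM ball are applied.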

Dopamine Encodes a Reward Prediction Error Signal

A powerful idea in the field of learning and memory holds that dopamine encodes a reward prediction error; it represents a discrepancy between predicted and experienced reward. This has become a standard model for reinforcement-based learning thanks to groundbreaking experimental and computational evidence in mammals [88–92]. In Drosophila, little experimental evidence has accumulated to address the role of dopamine in reward prediction error (RPE). Dopamine in Drosophila (from PPL1 and PAM neurons) is still thought of more simply as a reward signal that encodes US valence in associative learning. Although several studies indicate that DANs likely encode RPE to achieve learning [48, 54], the only published experimental study that directly tested whether dopamine encodes the RPE signal in Drosophila concluded that it does not. In this study, Dylla et al. (2017) showed that dopamine is released after the unpaired CS presentation in an aversively trained fly, which agrees with the RPE hypothesis. However, equal evoked dopamine signals were recorded after both repetitive CS + US presentations and in control experiments where the CS and US were separated by 90 s [2]. This is not consistent with the expected behavior of an RPE signal; dopamine would not be released when expected experience matches actual experience. More recently, inspired by the overwhelming evidence from mammals, a few computational studies attempted to model dopamine as an RPE signal in fruit flies [93–95]. These papers do not explain, and even contradict, the experimental findings by Dylla et al., in addition to presenting other issues. A mechanism for dopamine encoding an RPE signal in Drosophila has thus been elusive. In this section, we propose a new model for RPE coding by dopamine that is compatible with our updated circuit model and reconciles the findings by Dylla et al. (2017).

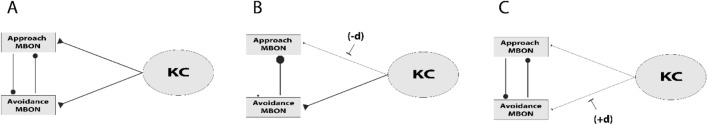

In the reward prediction error model, dopamine encodes the difference between experience and prediction. During the first CS exposure, the animal does not predict either positive or negative reinforcement (prediction = 0). If a US is not presented with or following the CS (experience = 0), then there is no difference between experience and prediction, so no dopamine should be released, and hence, no learning occurs. However, if a US of positive or negative valence is paired (experience = ±X), then a prediction error exists (experience {±X} – prediction {0} = ±X); thus, dopamine is released at the time of US presentation and learning is achieved. After this memory is formed, for example aversive memory, the CS predicts a negative experience {−X}. If the CS is then presented without the US, this will result in a positive prediction error (experience {0} – prediction {−X} = +X). Thus, dopamine will be released at the time of CS presentation to update the old memory. Finally, in the case of repetitive aversive training, prediction and experience are the same (experience {−X} – prediction {−X} = 0). Thus, no dopamine is released; hence, no new learning occurs, and the old memory remains stable. This last assumption underlies Dylla et al.’s conclusion that dopamine does not encode a reward prediction error in Drosophila, as they found no decrease in the dopamine signal after repeated CS + US training [2]. However, we propose an RPE model in which dopamine release may still occur even when the prediction is correct. As discussed earlier, two anatomically and functionally distinct subsets of DANs drive learning in Drosophila: PPL1 encodes negative valence and PAM encodes positive valence. We use this special feature of the Drosophila learning circuit to assign a negative prediction error component to PPL1s (−d) and a positive component to PAMs (+d). 
We suggest that learning by prediction error in Drosophila takes place only when (−d) ≠ (+d), while if both are equal, there is no prediction error and hence, no learning. In our model, we propose that a negative prediction error does not necessarily lead to dopamine release from PPL1s only, but rather it signifies that a relatively stronger dopamine signal is released from PPL1 (−d) than from PAM (+d). Similarly, a correct reward prediction, like in the case of repetitive CS + US training, does not necessarily result in no dopamine release, but it may signify dopamine release from both PPL1 and PAM neurons, where (+d) = (−d). Thus, our model suggests that dopamine can encode RPE via two opposite valence components that negate each other’s effects on learning, and that the mean of these two components is indicative of whether positive, negative, or no prediction error will be calculated.

In applying our model to different associative learning scenarios, we predict the following. Presentation of an unpaired CS before training does not evoke an experience different from the prediction (experience – prediction = 0); thus (+d) and (−d) are equal, and no learning occurs. When an aversive US {−X} is presented during training, experience {−X} – prediction {0} = −X. This leads to PPL1s being activated more strongly than PAMs; thus, aversive learning takes place due to a stronger (−d) component. After training, if the CS is presented alone, then experience {0} – prediction {−X} = +X. This positive prediction error leads to a stronger activation of PAM than of PPL1; thus, a parallel appetitive memory is formed due to a relatively stronger (+d) component. If CS + US presentation is repeated after aversive training, the prediction error calculation yields no error (experience {−X} – prediction {−X} = 0). Thus, activation of both PPL1 and PAM neurons results in equal (+d) and (−d) components; hence, no prediction error is calculated, and no learning occurs.

These ideas are supported by experimental evidence. Felsenberg et al. (2018) showed that CS presentation after aversive training causes activation of PAMs and forms a parallel appetitive memory [48]. Accordingly, it is expected that repetitive aversive CS + US pairings will result in the activation of both PPL1 (by the US) and PAM (by the CS) neurons. This resolves the contradiction between the findings of Dylla et al. (2017) [2] and the classical RPE hypothesis, as it predicts that even in cases of no RPE, dopamine may still be released, but the negative and positive components will be equal.
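The four aversive-learning scenarios above can be sketched numerically. The mapping used here is a simplifying assumption made for illustration: the (−d) component is taken to scale with the magnitude of the aversive US (PPL1 driven by the US), and the (+d) component with the magnitude of the aversive prediction carried by the CS (PAM driven by the CS). The function names and the value X = 1.0 are hypothetical, and the sketch covers only the aversive paradigm.

```python
def classical_rpe(experience, prediction):
    """Classical single-signal RPE: experience minus prediction."""
    return experience - prediction


def two_component_rpe(experience, prediction):
    """Assumed two-component readout for the aversive paradigm only
    (experience <= 0 and prediction <= 0)."""
    neg_d = abs(experience)  # assumption: PPL1 (-d) is driven by the aversive US
    pos_d = abs(prediction)  # assumption: PAM (+d) is driven by the CS-evoked prediction
    return neg_d, pos_d


def outcome(net):
    """Translate the net error into the learning outcome described in the text."""
    if net < 0:
        return "aversive learning"
    if net > 0:
        return "parallel appetitive memory"
    return "no learning"


X = 1.0  # hypothetical magnitude of the aversive US
scenarios = {
    "unpaired CS before training": (0.0, 0.0),
    "first CS + US pairing": (-X, 0.0),
    "CS alone after training": (0.0, -X),
    "repeated CS + US after training": (-X, -X),
}

results = {}
for name, (experience, prediction) in scenarios.items():
    neg_d, pos_d = two_component_rpe(experience, prediction)
    net = pos_d - neg_d          # comparison of the two components
    total = pos_d + neg_d        # total dopamine released by both subsets
    results[name] = (neg_d, pos_d, net, total)
    print(f"{name}: -d={neg_d}, +d={pos_d}, net={net}, "
          f"total dopamine={total}, {outcome(net)}")
```

In this sketch the net of the two components matches the classical RPE in every scenario, yet the repeated CS + US case still has a nonzero total dopamine signal, which is the feature the model relies on to accommodate the observations of Dylla et al. (2017).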

Two mechanisms may underlie the ability of PPL1 (−d) and PAM (+d) components to interact: (1) each component may separately evoke its own downstream changes on the synapses from KCs onto approach or avoidance MBONs such that behavior results from a difference in the strengths of these effects, or (2) each component abolishes the synaptic changes evoked by the other, so that only the stronger component manages to depress downstream KC → MBON synapses to form a memory. However, it is possible, and perhaps more likely, that RPE is encoded by the interplay of both of these mechanisms. We suggest that the opposite dopaminergic components directly cause downstream synaptic changes at KC → MBON synapses, but also indirectly abolish the synaptic changes downstream of the opposite component through the reciprocal MBON ⇌ MBON inhibition. For example, the depressed odor responses in an approach MBON due to aversive training, through PPL1s (encoding the (−d) component), may return to normal levels after the activation of PAMs (encoding the (+d) component). This is because the activation of PAMs depresses odor responses in avoidance MBONs, which in turn releases approach MBONs from inhibition and thus potentiates their odor responses (see “Forward Learning” section). This potentiation abolishes the depression of the approach MBON odor responses that was encoded by the (−d) component. Therefore, our proposed RPE hypothesis functions through opposite components released by the PPL1 and PAM neurons; these components separately encode synaptic changes on their downstream opposite KC → MBON synapses and indirectly abolish each other’s effects through a KC → MBON ⇌ MBON ← KC circuit (Fig. 11).
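The indirect interaction through the KC → MBON ⇌ MBON ← KC circuit can be illustrated with a minimal rate-model sketch. Everything here is an assumption made for illustration: two rectified-linear units stand in for the approach and avoidance MBONs, the inhibition strength of 0.5 and the depressed synaptic weight of 0.6 are arbitrary, and the function name `settle` is hypothetical.

```python
def settle(w_approach, w_avoid, kc_drive=1.0, inhibition=0.5, steps=200):
    """Iterate two mutually inhibiting, rectified-linear MBON units to steady state.

    w_approach and w_avoid are the KC -> approach.MBON and KC -> avoidance.MBON
    synaptic weights; `inhibition` is the strength of the reciprocal inhibition.
    """
    approach = avoid = 0.0
    for _ in range(steps):
        approach, avoid = (
            max(0.0, w_approach * kc_drive - inhibition * avoid),
            max(0.0, w_avoid * kc_drive - inhibition * approach),
        )
    return approach, avoid


DEPRESSED = 0.6  # hypothetical weight after dopamine-induced synaptic depression

naive = settle(1.0, 1.0)                    # balanced odor responses
after_ppl1 = settle(DEPRESSED, 1.0)         # (-d): KC -> approach.MBON depressed
after_both = settle(DEPRESSED, DEPRESSED)   # (+d) added: KC -> avoidance.MBON depressed too

for label, (approach, avoid) in [
    ("naive", naive),
    ("after PPL1 (-d)", after_ppl1),
    ("after PPL1 (-d) and PAM (+d)", after_both),
]:
    print(f"{label}: approach={approach:.3f}, avoidance={avoid:.3f}")
```

In this toy circuit, depressing only the KC → approach.MBON synapse lowers the approach response and raises the avoidance response. Depressing the KC → avoidance.MBON synapse by the same amount raises the approach response again and restores the balance between the two outputs, although absolute response levels are not identical to the naïve state under these simple assumptions.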

Fig. 11.

Reward prediction error with two DAN components. A In a naïve fly, evoked responses in approach and avoidance MBONs by KC activation are balanced. Reciprocal inhibition between approach and avoidance MBONs maintains this balance. B Activation of optimistic neurons (PPL1; not shown) encodes a negative reward prediction error component (–d) that depresses the KC → approach.MBON synapse. This results in weaker responses in approach MBONs, hence weaker inhibition of avoidance MBONs. In turn, responses in avoidance MBONs, and inhibition of approach MBONs by avoidance MBONs, are potentiated. C Activation of pessimistic neurons (PAM; not shown) encodes a positive reward prediction error component (+d) that depresses the KC → avoidance.MBON synapse. This results in the weakening of avoidance MBON responses, and, consequently, weakening of the avoidance.MBON → approach.MBON inhibitory synapse. In turn, approach MBON responses are potentiated; thus, the depressed responses in approach MBONs that were encoded by the (–d) component are abolished by the (+d) component and return to baseline levels. No learning occurs when both components are equally activated.

Interestingly, a recent study indicates that RPE coding in mammals may indeed operate through a similar framework, i.e. via positive and negative dopaminergic components. Dabney et al. (2020), using multielectrode recording, demonstrated that the firing of any one dopaminergic neuron does not encode the mean prediction error, but rather responses in the DAN ensemble represent a probability distribution of all possible prediction errors. Some DANs respond to positive valence stimuli while others respond to negative valence stimuli. The mean of the probability distribution represents the final learning-instructive prediction error, and the variance of the distribution is useful in estimating risks and assigning different weights to possible choices [96]. This also agrees with earlier findings that certain DANs fire when mice are rewarded while others fire when they are punished [80]. As an example, when the animal is presented with a stimulus of valence more negative than the prediction, not all DANs will encode a negative prediction error. Rather, they respond differently according to each neuron’s level of optimism or pessimism: optimistic neurons are more sensitive to negative experiences and thus encode negative prediction error components, whereas pessimistic neurons are more sensitive to positive experiences and thus encode positive prediction error components. The Dabney et al. (2020) study shows that prediction error coding is more complex than simply DAN firing vs. no DAN firing. For example, when repeating CS + US presentation after memory formation (no prediction error), both optimistic and pessimistic neurons may still fire to encode a probability distribution, but this time, the mean of this distribution will be zero.
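The ensemble idea can be illustrated with a toy simulation. The sketch below assumes a simple asymmetric update rule in which each model neuron scales positive and negative errors differently; the asymmetry values, learning rate, and reward sequence are arbitrary illustrative choices and are not taken from Dabney et al. (2020).

```python
# Hypothetical asymmetries: the fraction of weight given to positive errors.
TAUS = {"pessimistic": 0.2, "neutral": 0.5, "optimistic": 0.8}
LEARNING_RATE = 0.05


def train(tau, rewards, value=0.5):
    """Learn a value estimate with asymmetric scaling of prediction errors."""
    for reward in rewards:
        delta = reward - value
        # Positive errors are scaled by tau, negative errors by (1 - tau).
        scale = tau if delta > 0 else 1.0 - tau
        value += LEARNING_RATE * scale * delta
    return value


# A variable outcome that alternates between 1 and 0 (mean outcome 0.5).
rewards = [1.0, 0.0] * 1000
values = {name: train(tau, rewards) for name, tau in TAUS.items()}

# Deliver the mean outcome and read out each neuron's prediction error.
mean_outcome = 0.5
errors = {name: mean_outcome - value for name, value in values.items()}
ensemble_mean = sum(errors.values()) / len(errors)

for name in TAUS:
    print(f"{name}: value={values[name]:.3f}, error={errors[name]:+.3f}")
print(f"ensemble mean error: {ensemble_mean:+.3f}")
```

In this sketch the optimistic unit settles on a value above the mean outcome and so reports a negative error when the mean outcome is delivered, the pessimistic unit reports a positive error, and the ensemble mean stays close to zero even though individual units remain active.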

Although the lack of information about individual responses of Drosophila DANs makes it too early to claim that DANs in flies act as an ensemble, our hypothesis of two subsets coding opposite error components, (−d)-coding PPL1s (optimistic) and (+d)-coding PAMs (pessimistic), might represent one step earlier on the evolutionary trajectory, prior to the expansion of the number of DANs and the development of a probability distribution mechanism in mammals. The suggestion by Dabney et al. (2020) that an ensemble of positive and negative valence neuron responses encodes a probability distribution is similar to our proposed simpler role of the Drosophila PAMs and PPL1s in RPE coding. We suggest that PAM and PPL1 firing represents the RPE of each subset and provides positive (+d) and negative (−d) components; the comparison of the two components represents the final learning-instructive RPE value.