Abstract

Information processing can leave distinct footprints on the statistics of neural spiking. For example, efficient coding minimizes the statistical dependencies on the spiking history, while temporal integration of information may require the maintenance of information over different timescales. To investigate these footprints, we developed a novel approach to quantify history dependence within the spiking of a single neuron, using the mutual information between the entire past and current spiking. This measure captures how much past information is necessary to predict current spiking. In contrast, classical time-lagged measures of temporal dependence like the autocorrelation capture how long (potentially redundant) past information can still be read out. Strikingly, we find for model neurons that our method disentangles the strength and timescale of history dependence, whereas the two are mixed in classical approaches. When applying the method to experimental data, which are necessarily of limited size, a reliable estimation of mutual information is only possible for a coarse temporal binning of past spiking, a so-called past embedding. To still account for the vastly different spiking statistics and potentially long history dependence of living neurons, we developed an embedding-optimization approach that varies not only the number and size of past bins, but also their exponential stretching. For extra-cellular spike recordings, we found that the strength and timescale of history dependence can indeed vary independently across experimental preparations: in hippocampus, history dependence was strong and long; in visual cortex, it was weak and short; and in vitro, it was strong but short. This work enables an information-theoretic characterization of history dependence in recorded spike trains, which captures a footprint of information processing that is beyond time-lagged measures of temporal dependence. 
To facilitate the application of the method, we provide practical guidelines and a toolbox.

Author summary

Even with exciting advances in recording techniques for neural spiking activity, experiments provide only a comparatively short glimpse into the activity of a tiny subset of all neurons. How can we learn from these experiments about the organization of information processing in the brain? To that end, we exploit the fact that different properties of information processing leave distinct footprints on the firing statistics of individual spiking neurons. In our work, we focus on a particular statistical footprint: We quantify how much a single neuron’s spiking depends on its own preceding activity, which we call history dependence. By quantifying history dependence in neural spike recordings, one can, in turn, infer some of the properties of information processing. Because recording lengths are limited in practice, a direct estimation of history dependence from experiments is challenging. The embedding optimization approach that we present in this paper aims at extracting as much history dependence as possible within the limits set by a reliable estimation. The approach is highly adaptive and thereby enables a meaningful comparison of history dependence between neurons with vastly different spiking statistics, which we exemplify on a diversity of spike recordings. In conjunction with recent, highly parallel spike recording techniques, the approach could yield valuable insights into how hierarchical processing is organized in the brain.

Introduction

How is information processing organized in the brain, and what are the principles that govern neural coding? Fortunately, footprints of different information processing and neural coding strategies can be found in the firing statistics of individual neurons, and in particular in the history dependence, the statistical dependence of a single neuron’s spiking on its preceding activity.

In classical, noise-less efficient coding, history dependence should be low to minimize redundancy and optimize efficiency of neural information transmission [1–3]. In contrast, in the presence of noise, history dependence and thus redundancy could be higher to increase the signal-to-noise ratio for a robust code [4]. Moreover, history dependence can be harnessed for active information storage, i.e. maintaining past input information to combine it with present input for temporal processing [5–7] and associative learning [8]. In addition to its magnitude, the timescale of history dependence provides an important footprint of processing at different processing stages in the brain [9–11]. This is because higher-level processing requires integrating information on longer timescales than lower-level processing [12]. Therefore, history dependence in neural spiking should reach further into the past for neurons involved in higher-level processing [9, 13]. Quantifying history dependence and its timescale could probe these different footprints and thus yield valuable insights into how neural coding and information processing are organized in the brain.

Often, history dependence is characterized by how strongly spiking is correlated with spiking at a certain time lag [14, 15]. From the decay time of this lagged correlation, one obtains an intrinsic timescale of how long past information can still be read out [9–11, 16]. However, to quantify not only a timescale of statistical dependence, but also its strength, one has to quantify how much of a neuron’s spiking depends on its entire past. Here, this is done with the mutual information between the spiking of a neuron and its own past [17], also called active information storage [5–7], or predictive information [18, 19].

Estimating this mutual information directly from spike recordings, however, is notoriously difficult. The reason is that statistical dependencies may reside in precise spike times, extend far into the past and contain higher-order dependencies. This makes it hard to find a parametric model, e.g. from the family of generalized linear models [20, 21], that is flexible enough to account for the variety of spiking statistics encountered in experiments. Therefore, one typically infers mutual information directly from observed spike trains [22–26]. The downside is that this requires a lot of data, otherwise estimates can be severely biased [27, 28]. A lot of work has been devoted to finding less biased estimates, either by correcting bias [28–31], or by using Bayesian inference [32–34]. Although these estimators alleviate to some extent the problem of bias, a reliable estimation is only possible for a much reduced representation of past spiking, also called past embedding [35]. For example, many studies infer history dependence and transfer entropy by embedding the past spiking using a single bin [26, 36].

While previously most attention was devoted to a robust estimation given a (potentially limited) embedding, we argue that a careful embedding of past activity is crucial. In particular, a past embedding should be well adapted to the spiking statistics of a neuron, but also be low-dimensional enough to enable a reliable estimation. To that end, we here devise an embedding optimization scheme that selects the embedding that maximizes the estimated history dependence, while reliable estimation is ensured by two independent regularization methods.

In this paper, we first provide a methods summary where we introduce the measure of history dependence and the information timescale, as well as the embedding optimization method employed to estimate history dependence in neural spike trains. A glossary of all the abbreviations and symbols used in this paper can be found at the beginning of Materials and methods. In Results, we first compare the measure of history dependence with classical time-lagged measures of temporal dependence on different models of neural spiking activity. Second, we test the embedding optimization approach on a tractable benchmark model, and also compare it to existing estimation methods on a variety of experimental spike recordings. Finally, we demonstrate that the approach reveals interesting differences between neural systems, both in terms of the total history dependence and the information timescale. For the reader interested in applying the method, we provide practical guidelines in the Discussion and at the end of Materials and methods. The method is readily applicable to highly parallel spike recordings, and a toolbox for Python3 is available online [37].

Methods summary

Definition of history dependence

First, we define history dependence R(T) in the spiking of a single neuron. We quantify history dependence based on the mutual information

$$I(\text{spiking};\text{past}(T)) = H(\text{spiking}) - H(\text{spiking} \mid \text{past}(T)) \tag{1}$$

between current spiking in a time bin [t, t + Δt) and its own past in a past range [t − T, t) (Fig 1B). Here, we assume stationarity and ergodicity, hence the measure is an average over all times t. This mutual information is also called active information storage [5], and is related to the predictive information [18, 19]. It quantifies how much of the current spiking information H(spiking) can be predicted from past spiking. The spiking information is given by the Shannon entropy [38]

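$$H(\text{spiking}) = -p(\text{spike})\log_2 p(\text{spike}) - \left(1 - p(\text{spike})\right)\log_2\left(1 - p(\text{spike})\right), \tag{2}$$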

where p(spike) = rΔt is the probability to spike within a small time bin Δt for a neuron with average firing rate r. The Shannon entropy H(spiking) quantifies the average information that a spiking neuron could transmit within one bin, assuming no statistical dependencies on its own past. In contrast, the conditional entropy H(spiking|past(T)) (see Materials and methods) quantifies the average spiking information (in the sense of entropy) that remains when dependencies on past spiking are taken into account. Note that past dependencies can only reduce the average spiking information, i.e. H(spiking|past(T)) ≤ H(spiking). The difference between the two then gives the amount of spiking information that is redundant or entirely predictable from the past. To transform this measure of information into a measure of statistical dependence, we normalize the mutual information by the entropy H(spiking) and define history dependence R(T) as

$$R(T) \equiv \frac{I(\text{spiking};\text{past}(T))}{H(\text{spiking})}. \tag{3}$$

While the mutual information quantifies the amount of predictable information, R(T) gives the proportion of spiking information that is predictable or redundant with past spiking. As such, it interpolates between the following intuitive extreme cases: R(T) = 0 corresponds to independent and R(T) = 1 to entirely predictable spiking. Moreover, while the entropy and thus the mutual information I(spiking;past(T)) increase with the firing rate (see S13 Fig for an example on real data), the normalized R(T) is comparable across recordings of neurons with very different firing rates. Finally, all the above measures can depend on the size of the time bin Δt, which discretizes the current spiking activity in time. Too small a time bin risks that noise in the spike emission reduces the overall predictability or history dependence, whereas an overly large time bin risks destroying coding-relevant temporal information in the neuron’s spike train. Thus, we chose the smallest time bin, Δt = 5 ms, that does not yet show a decrease in history dependence (S16 Fig).
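As a worked illustration of Eqs (1)–(3), the sketch below computes H(spiking) for a hypothetical neuron with r = 5 Hz and Δt = 5 ms, and R(T) for a toy first-order Markov spike train in which spiking depends only on the preceding bin. The Markov example, its transition probabilities, and all function names are assumptions chosen for illustration, not models from this paper; for such a process, R(T) is the same for every T ≥ Δt.

```python
import numpy as np

def binary_entropy(p):
    """Shannon entropy in bits of a binary variable with P(1) = p, as in Eq (2)."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * np.log2(p) - (1.0 - p) * np.log2(1.0 - p)

def spiking_entropy(rate_hz, dt_s):
    """H(spiking) with p(spike) = r * dt (valid while r * dt < 1)."""
    return binary_entropy(rate_hz * dt_s)

def markov_history_dependence(p11, p10):
    """R(T) for a hypothetical spike train whose spiking depends only on the previous bin.

    p11 = P(spike | spike in previous bin), p10 = P(spike | no spike in previous bin).
    """
    p = p10 / (1.0 - p11 + p10)                                          # stationary spike probability
    h = binary_entropy(p)                                                # H(spiking)
    h_cond = p * binary_entropy(p11) + (1.0 - p) * binary_entropy(p10)   # H(spiking | past)
    return (h - h_cond) / h                                              # Eq (1) normalized as in Eq (3)

H = spiking_entropy(5.0, 0.005)                   # about 0.169 bits per 5 ms bin
R_markov = markov_history_dependence(0.82, 0.02)  # about 0.58
R_indep = markov_history_dependence(0.1, 0.1)     # no past dependence, so R = 0
```

The independent case confirms the lower extreme R(T) = 0; raising p11 towards 1 while keeping p10 small pushes R towards the upper extreme.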

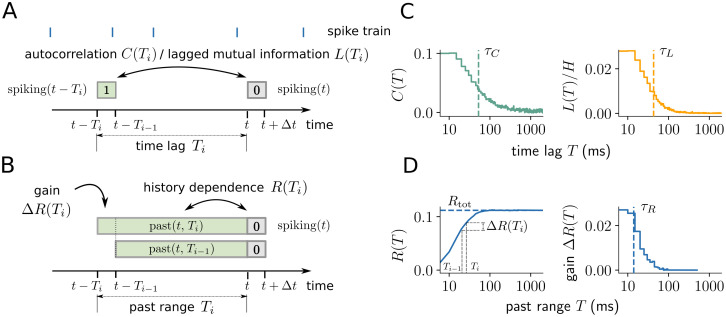

Fig 1. Illustration of history dependence and related measures in a neural spike train.

(A) For the analysis, spiking is represented by 0 or 1 in a small time bin Δt (grey box). Autocorrelation C(Ti) or the lagged mutual information L(Ti) quantify the statistical dependence of spiking on past spiking in a single past bin with time lag Ti (green box). (B) In contrast, history dependence R(Ti) quantifies the dependence of spiking on the entire spiking history in a past range Ti. The gain in history dependence ΔR(Ti) = R(Ti) − R(Ti−1) quantifies the increase in history dependence by increasing the past range from Ti−1 to Ti, and is defined in analogy to the lagged measures. (C) Autocorrelation C(T) and lagged mutual information L(T) for a typical example neuron (mouse, primary visual cortex). Both measures decay with increasing T, where L(T) decays slightly faster due to the non-linearity of the mutual information. Timescales τC and τL (vertical dashed lines) can be computed either by fitting an exponential decay (autocorrelation) or by using the generalized timescale (lagged mutual information). (D) In contrast, history dependence R(T) increases monotonically for systematically increasing past range T, until it saturates at the total history dependence Rtot. From R(T), the gain ΔR(Ti) can be computed between increasing past ranges Ti−1 and Ti (grey dashed lines). The gain ΔR(T) decays to zero like the time-lagged measures, with information timescale τR (dashed line).

Total history dependence and the information timescale

Here, we introduce measures to quantify the strength and the timescale of history dependence independently. First, note that the history dependence R(T) monotonically increases with the past range T (Fig 1D), until it converges to the total history dependence

$$R_{\text{tot}} \equiv \lim_{T \to \infty} R(T). \tag{4}$$

The total history dependence Rtot quantifies the proportion of predictable spiking information once the entire past is taken into account.

While the history dependence R(T) is monotonically increasing, the gain in history dependence ΔR(Ti) ≡ R(Ti) − R(Ti−1) between two past ranges Ti > Ti−1 tends to decrease, and eventually decreases to zero for Ti, Ti−1 → ∞ (Fig 1D). This is in analogy to time-lagged measures of temporal dependence such as the autocorrelation C(T) or lagged mutual information L(T) (Fig 1A and 1C). Moreover, because R(T) is monotonically increasing, the gain cannot be negative, i.e. ΔR(Ti) ≥ 0. From ΔR(Ti), we quantify a characteristic timescale τR of history dependence similar to an autocorrelation time. In analogy to the integrated autocorrelation time [39], we define the generalized timescale

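$$\tau_R \equiv \frac{\sum_{i=1}^{n} \left(\frac{T_{i-1} + T_i}{2} - T_0\right) \Delta R(T_i)}{\sum_{i=1}^{n} \Delta R(T_i)} \tag{5}$$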

as the average of past ranges $\frac{T_{i-1} + T_i}{2} - T_0$, weighted with their gain ΔR(Ti) = R(Ti) − R(Ti−1). Here, steps between two past ranges Ti−1 and Ti should be chosen small enough, and using the midpoints of the steps further reduces the discretization error. T0 is the starting point, i.e. the first past range for which R(T) is computed, and was set to T0 = 10 ms to exclude short-term past dependencies like refractoriness (see Materials and methods for details). Moreover, the last past range Tn has to be large enough such that R(Tn) has converged, i.e. R(Tn) = Rtot. Here, we set Tn = 5 s unless stated otherwise.

To illustrate the analogy to the autocorrelation time, we note that if the gain decays exponentially, i.e. $\Delta R(T_i) \propto e^{-T_i/\tau_{\text{auto}}}$ with decay constant τauto, then τR = τauto for n → ∞ and sufficiently small steps Ti − Ti−1. The advantage of τR is that it also generalizes to cases where the decay is not exponential. Furthermore, it can be applied to any other measure of temporal dependence (e.g. the lagged mutual information) as long as the sum in Eq (5) remains finite and the coefficients are non-negative. Note that estimates of ΔR(Ti) can also be negative, so we included corrections to allow a sensible estimation of τR (Materials and methods). Finally, since τR quantifies the timescale over which unique predictive information is accumulated, we refer to it as the information timescale.
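A minimal sketch of Eq (5), assuming R(T) is given on a grid T0 < T1 < ... < Tn with non-negative gains. The corrections for negative estimated gains described in Materials and methods are not implemented here; the function simply refuses such input. The check uses a hypothetical R(T) whose gain decays exponentially with τauto = 100 ms, for which τR should recover the decay constant.

```python
import numpy as np

def information_timescale(T, R):
    """Generalized timescale tau_R of Eq (5).

    T: past ranges T_0 < T_1 < ... < T_n (seconds); R: history dependence R(T_i).
    Assumes all gains Delta R(T_i) = R(T_i) - R(T_{i-1}) are non-negative.
    """
    T = np.asarray(T, dtype=float)
    R = np.asarray(R, dtype=float)
    gains = np.diff(R)                           # Delta R(T_i), i = 1..n
    if np.any(gains < 0):
        raise ValueError("negative gains: apply a correction before computing tau_R")
    midpoints = 0.5 * (T[:-1] + T[1:]) - T[0]    # (T_{i-1} + T_i)/2 - T_0
    return np.sum(midpoints * gains) / np.sum(gains)

# Hypothetical R(T) with exponentially decaying gain: T_0 = 10 ms, T_n = 5 s, 1 ms steps
T = np.arange(0.010, 5.0 + 1e-9, 0.001)
R = 0.3 * (1.0 - np.exp(-(T - T[0]) / 0.1))
tau_R = information_timescale(T, R)              # close to tau_auto = 0.1 s
```

Because the gains are normalized by their sum, the amplitude 0.3 (standing in for Rtot − R(T0)) drops out, which is why τR measures the timescale independently of the strength of history dependence.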

Binary past embedding of spiking activity

In practice, estimating history dependence R from spike recordings is extremely challenging. In fact, if data is limited, a reliable estimation of history dependence is only possible for a reduced representation of past spiking, also called past embedding [35]. Here, we outline how we embed past spiking activity to estimate history dependence from neural spike recordings.

First, we choose a past range T, which defines the time span of the past embedding. For each point in time t, we partition the immediate past window [t − T, t) into d bins and count the number of spikes in each bin. The number of bins d sets the temporal resolution of the embedding. In addition, we let bin sizes scale exponentially with the bin index j = 1, …, d as $\tau_j = \tau_1 10^{(j-1)\kappa}$ (Fig 2A), where bin j = 1 is the most recent one. A scaling exponent of κ = 0 translates into equal bin sizes, whereas for κ > 0 bin sizes increase. For fixed d, this allows one to obtain a higher temporal resolution on recent past spikes by decreasing the resolution on distant past spikes.
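The exponential bin scaling can be sketched as follows. We assume, as the partition of [t − T, t) implies, that τ1 is fixed by requiring the d bin sizes to sum to T, and that bin j = 1 is adjacent to t. The function names are illustrative only and are not the API of the toolbox [37].

```python
import numpy as np

def past_bin_sizes(T, d, kappa):
    """Sizes tau_j = tau_1 * 10**((j - 1) * kappa), j = 1..d, that partition a past range T.

    Bin j = 1 is adjacent to the current time t; for kappa > 0, older bins are wider.
    """
    scale = 10.0 ** (kappa * np.arange(d))   # 10**((j - 1) * kappa) for j = 1..d
    tau_1 = T / scale.sum()                  # choose tau_1 so that the bins cover exactly T
    return tau_1 * scale

def past_bin_edges(T, d, kappa):
    """Offsets into the past: bin j covers [t - edges[j], t - edges[j - 1])."""
    return np.concatenate(([0.0], np.cumsum(past_bin_sizes(T, d, kappa))))

equal = past_bin_sizes(1.0, 5, 0.0)          # five bins of 0.2 s each
stretched = past_bin_sizes(1.0, 5, 0.3)      # short recent bins, long distant bins
```

Successive bins differ by a constant factor 10^κ, so a single parameter controls how quickly the temporal resolution degrades with distance into the past.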

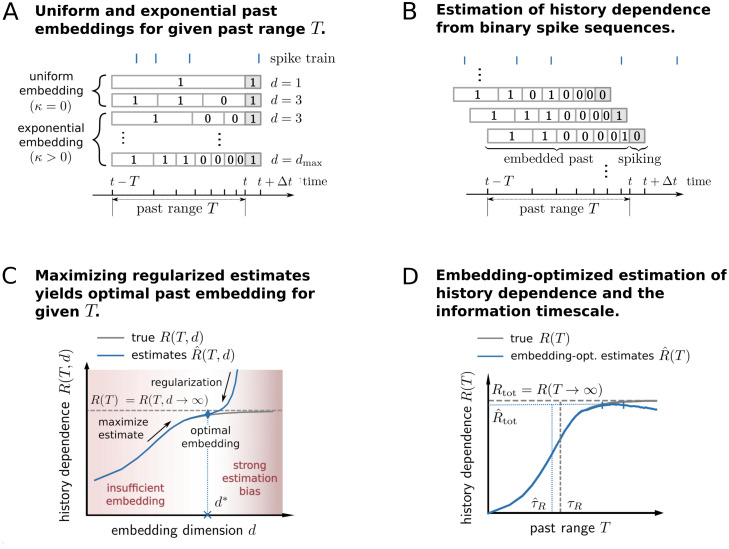

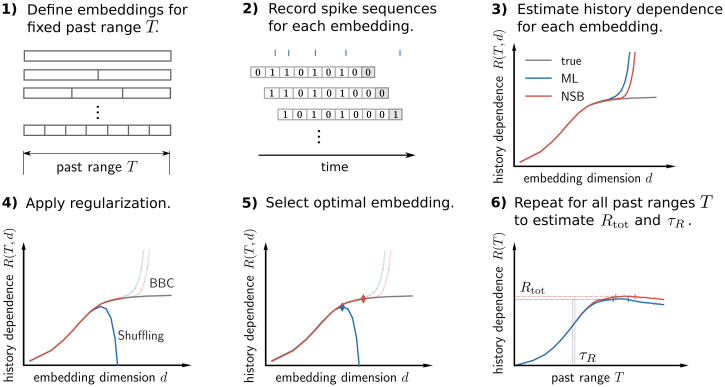

Fig 2. Illustration of embedding optimization to estimate history dependence and the information timescale.

(A) History dependence R is estimated from the observed joint statistics of current spiking in a small time bin [t, t + Δt) (dark grey) and the embedded past, i.e. a binary sequence representing past spiking in a past window [t − T, t). We systematically vary the number of bins d and bin sizes for fixed past range T. Bin sizes scale exponentially with bin index and a scaling exponent κ to reduce resolution for spikes farther into the past. (B) The joint statistics of current and past spiking are obtained by shifting the past range in steps of Δt and counting the resulting binary sequences. (C) Finding a good choice of embedding parameters (e.g. embedding dimension d) is challenging: When d is chosen too small, the true history dependence R(T) (dashed line) is not captured appropriately (insufficient embedding) and underestimated by estimates $\hat{R}(T, d)$ (blue solid line). When d is chosen too high, estimates are severely biased and R(T, d), as well as R(T), are overestimated (biased regime). Past-embedding optimization finds the optimal embedding parameter d* that maximizes the estimated history dependence $\hat{R}(T, d)$ subject to regularization. This yields a best estimate $\hat{R}^*(T)$ of R(T) (blue diamond). (D) Estimation of history dependence R(T) as a function of past range T. For each past range T, embedding parameters d and κ are optimized to yield an embedding-optimized estimate $\hat{R}^*(T)$. From estimates $\hat{R}^*(T)$, we obtain estimates $\hat{\tau}_R$ and $\hat{R}_{\text{tot}}$ of the information timescale τR and total history dependence Rtot (vertical and horizontal dashed lines). To compute $\hat{R}_{\text{tot}}$, we average estimates $\hat{R}^*(T)$ in an interval [TD, Tmax], for which estimates reach a plateau (vertical blue bars, see Materials and methods). For high past ranges T, estimates $\hat{R}^*(T)$ may decrease because a reliable estimation requires past embeddings with reduced temporal resolution.

The past window [t − T, t) of the embedding is slid forward in steps of Δt through the whole recording with recording length Trec, starting at t = T. This gives rise to N = (Trec − T)/Δt measurements of current spiking in [t, t + Δt), and of the number of spikes in each of the d past bins (Fig 2B). We chose to use only binary sequences of spike counts to estimate history dependence. To that end, each past bin was assigned a 1 if its spike count was larger than the median spike count over the N measurements in that bin, and a 0 otherwise. A binary representation drastically reduces the number of possible past sequences for a given number of bins d, thus enabling an estimation of history dependence even from short recordings.
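A minimal sketch of the sliding binary embedding, assuming spike times in seconds and past bin sizes ordered from the most recent to the most distant bin and summing to T (e.g. τj = τ1 10^{(j−1)κ}). Current spiking is coded as 1 if the bin [t, t + Δt) contains at least one spike. The function name and the small hand-made spike train are hypothetical.

```python
import numpy as np

def binary_embedding(spike_times, t_rec, T, bin_sizes, dt):
    """Slide a past window [t - T, t) in steps of dt, starting at t = T.

    Returns current spiking in [t, t + dt) as 0/1 and an (N, d) binary past array in which
    each past bin is 1 if its spike count exceeds that bin's median count over all N steps.
    """
    spikes = np.sort(np.asarray(spike_times, dtype=float))
    n = int(np.floor((t_rec - T) / dt + 1e-9))           # N = (T_rec - T) / dt measurements
    t = T + dt * np.arange(n)

    def count(lo, hi):                                    # number of spikes in [lo, hi)
        return (np.searchsorted(spikes, hi, side="left")
                - np.searchsorted(spikes, lo, side="left"))

    current = (count(t, t + dt) > 0).astype(np.int8)
    edges = np.concatenate(([0.0], np.cumsum(bin_sizes)))  # offsets 0, tau_1, ..., T
    counts = np.stack([count(t - edges[j + 1], t - edges[j]) for j in range(len(bin_sizes))],
                      axis=1)
    past = (counts > np.median(counts, axis=0)).astype(np.int8)
    return current, past

current, past = binary_embedding([0.0125, 0.0325, 0.0335, 0.0475], t_rec=0.06, T=0.02,
                                 bin_sizes=[0.01, 0.01], dt=0.005)
```

Counting via `np.searchsorted` on the sorted spike times avoids building a finely discretized spike train, so the cost scales with the number of steps N and bins d rather than with the temporal resolution of the spike times.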

Estimation of history dependence with binary past embeddings

To estimate history dependence R, one has to estimate the probability of a spike occurring together with different past sequences. The probabilities πi of these different joint events i can be directly inferred from the frequencies ni with which the events occurred during the recording. Without any additional assumptions, the simplest way to estimate the probabilities is to compute the relative frequencies $\hat{\pi}_i = n_i/N$, where N is the total number of observed joint events. This estimate is the maximum likelihood (ML) estimate of joint probabilities πi for a multinomial likelihood, and the corresponding estimate of history dependence will also be denoted by ML. This direct estimate of history dependence is known to be strongly biased when data are too limited [28, 30]. The bias is typically positive, because, under limited data, probabilities of observed joint events are given too much weight. Therefore, statistical dependencies are overestimated. Even worse, the overestimation becomes more severe the higher the number of possible past sequences K. Since K increases exponentially with the dimension of the past embedding d, i.e. $K = 2^d$ for binary spike sequences, history dependence is severely overestimated for high d (Fig 2C). The potential overestimation makes it hard to choose embeddings that represent past spiking sufficiently well. In the following, we outline how one can optimally choose embeddings if appropriate regularization is applied.
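The ML (plug-in) estimate can be sketched in a few lines, assuming binary arrays `current` (length N) and `past` (N × d) such as those produced by a binary past embedding. The demo uses hypothetical, fully independent spiking, so the true history dependence is zero; the plug-in estimate is nevertheless positive and grows with d, illustrating the overestimation described above.

```python
import numpy as np

def binary_entropy(p):
    """Shannon entropy (bits) of a binary variable with P(1) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * np.log2(p) - (1.0 - p) * np.log2(1.0 - p)

def plugin_history_dependence(current, past):
    """ML estimate of R from the relative frequencies n_i / N of joint events."""
    current = np.asarray(current, dtype=np.int64)
    past = np.asarray(past, dtype=np.int64)
    n = len(current)
    codes = past @ (1 << np.arange(past.shape[1], dtype=np.int64))  # one of K = 2**d sequences
    _, inverse, n_seq = np.unique(codes, return_inverse=True, return_counts=True)
    n_spike = np.bincount(inverse, weights=current)                  # spikes following each sequence
    h = binary_entropy(current.mean())
    h_cond = sum((k / n) * binary_entropy(s / k) for k, s in zip(n_seq, n_spike))
    return (h - h_cond) / h

rng = np.random.default_rng(1)
N = 2000
current = (rng.random(N) < 0.1).astype(np.int8)       # independent spiking: true R = 0
past = (rng.random((N, 10)) < 0.5).astype(np.int8)    # unrelated binary past bins
R_d1 = plugin_history_dependence(current, past[:, :1])
R_d10 = plugin_history_dependence(current, past)      # strongly overestimated
```

With d = 10 there are 1024 possible past sequences but only 2000 samples, so most sequences are seen once or twice and the empirical conditional entropy collapses towards zero.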

Estimating history dependence with past-embedding optimization

Due to systematic overestimation, high-dimensional past embeddings are prohibitive for a reliable estimation of history dependence from limited data. Yet, high-dimensional past embeddings might be required to capture all history dependence. The reason is that history dependence may reside in precise spike times, but also may extend far into the past.

To illustrate this trade-off, we consider a discrete past embedding of spiking activity in a past range T, where the past spikes are assigned to d equally large bins (κ = 0). We would like to obtain an estimate of the maximum possible history dependence R(T) for the given past range T, with R(T) ≡ R(T, d → ∞) (Fig 2C). The number of bins d can go to infinity only in theory, though. In practice, we only have estimates $\hat{R}(T, d)$ of the history dependence R(T, d) for finite d. On the one hand, one would like to choose a high number of bins d, such that R(T, d) approximates R(T) well for the given past range T. Too few bins d otherwise reduce the temporal resolution, such that R(T, d) is substantially less than R(T) (Fig 2C). On the other hand, one would like to choose d not too large in order to enable a reliable estimation from limited data. If d is too high, estimates $\hat{R}(T, d)$ strongly overestimate the true history dependence R(T, d) (Fig 2C).

Therefore, if the past embedding is not chosen carefully, history dependence is either overestimated due to strong estimation bias, or underestimated because the chosen past embedding was too simple.

Here, we thus propose the following past-embedding optimization approach: For a given past range T, select embedding parameters d*, κ* that maximize the estimated history dependence $\hat{R}(T, d, \kappa)$, while overestimation is avoided by an appropriate regularization. This yields an embedding-optimized estimate $\hat{R}^*(T)$ of the true history dependence R(T). In terms of the above example, past-embedding optimization selects the optimal embedding dimension d*, which provides the best lower bound to R(T) (Fig 2C).

Since we can only provide a lower bound anyway, regularization only has to ensure that estimates are either unbiased or a lower bound to the observable history dependence R(T, d, κ). For that purpose, in this paper we introduce a Bayesian bias criterion (BBC) that selects only unbiased estimates. In addition, we use an established bias correction, the so-called Shuffling estimator [31], which, to leading order in the sample size, is guaranteed to provide a lower bound to the observable history dependence (see Materials and methods for details).
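To make the optimization loop concrete, the sketch below maximizes a bias-corrected estimate over the number of equal-sized bins d (κ = 0) for a fixed past range of 12 time steps. It implements neither the BBC nor the Shuffling estimator [31]. As a plainly labeled stand-in, it subtracts the mean plug-in estimate obtained after randomly permuting the current-spiking sequence against the past, which destroys genuine history dependence while approximately preserving the estimator's bias. All function names, data, and parameter values are hypothetical.

```python
import numpy as np

def binary_entropy(p):
    """Shannon entropy (bits) of a binary variable with P(1) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * np.log2(p) - (1.0 - p) * np.log2(1.0 - p)

def plugin_R(current, past):
    """Plug-in (ML) estimate of history dependence from binary current and (N, d) past arrays."""
    codes = past.astype(np.int64) @ (1 << np.arange(past.shape[1], dtype=np.int64))
    _, inv, n_seq = np.unique(codes, return_inverse=True, return_counts=True)
    n_spike = np.bincount(inv, weights=current)
    h = binary_entropy(current.mean())
    h_cond = sum((k / len(current)) * binary_entropy(s / k) for k, s in zip(n_seq, n_spike))
    return (h - h_cond) / h

def equal_bin_embedding(x, T_steps, d):
    """Binary embedding of the last T_steps steps in d equal bins (kappa = 0), median-thresholded."""
    w = T_steps // d                                   # bin width in time steps
    cs = np.concatenate(([0], np.cumsum(x)))           # cs[i] = number of spikes in x[:i]
    t = np.arange(T_steps, len(x))                     # indices of the current bins
    counts = np.stack([cs[t - j * w] - cs[t - (j + 1) * w] for j in range(d)], axis=1)
    past = (counts > np.median(counts, axis=0)).astype(np.int64)
    return x[t].astype(np.int64), past

def optimize_embedding(x, T_steps, d_values, n_shuffles=5, seed=0):
    """Return (d*, R*) maximizing a surrogate-corrected estimate (stand-in regularization)."""
    rng = np.random.default_rng(seed)
    best_d, best_r = None, -np.inf
    for d in d_values:
        current, past = equal_bin_embedding(x, T_steps, d)
        # bias proxy: mean plug-in estimate after shuffling current spiking against the past
        bias = np.mean([plugin_R(rng.permutation(current), past) for _ in range(n_shuffles)])
        r = plugin_R(current, past) - bias
        if r > best_r:
            best_d, best_r = d, r
    return best_d, best_r

d_values = (1, 2, 3, 4, 6, 12)                         # divisors of T_steps = 12 time steps
rng = np.random.default_rng(2)
x_indep = (rng.random(20_000) < 0.1).astype(np.int64)  # no history dependence at all
d_indep, R_indep = optimize_embedding(x_indep, 12, d_values)

x_dep = np.zeros(20_000, dtype=np.int64)               # toy chain: P(spike | spike) = 0.5, else 0.05
for i in range(1, len(x_dep)):
    x_dep[i] = rng.random() < (0.5 if x_dep[i - 1] else 0.05)
d_dep, R_dep = optimize_embedding(x_dep, 12, d_values)
```

For the independent train, the corrected estimates stay near zero for every d, so the maximum does not run away with the embedding dimension; for the dependent chain, the finest embedding recovers a clearly positive value.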

Together with these regularization methods, the embedding optimization approach enables complex embeddings of past activity while minimizing the risk of overestimation. See Materials and methods for details on how we used embedding-optimized estimates $\hat{R}^*(T)$ to compute estimates $\hat{R}_{\text{tot}}$ and $\hat{\tau}_R$ of the total history dependence and information timescale (Fig 2D, blue dashed lines).

Results

In the first part, we demonstrate the differences between history dependence and time-lagged measures of temporal dependence for several models of neural spiking activity. We then benchmark the estimation of history dependence using embedding optimization on a tractable neuron model with long-lasting spike adaptation. Moreover, we compare the embedding optimization approach to existing estimation methods on a variety of extra-cellular spike recordings. In the last part, we apply the method to analyze history dependence in a variety of neural systems, and compare the results to the autocorrelation and other statistical measures on the data.

Differences between history dependence and time-lagged measures of temporal dependence

The history dependence R(T) quantifies how predictable neural spiking is, given activity in a certain past range T. In contrast, time-lagged measures of temporal dependence like the autocorrelation C(T) [40] or lagged mutual information L(T) [41, 42] quantify the dependence of spiking on activity in a single past bin with time lag T (Fig 1A and 1C; Materials and methods). In the following, we showcase the main differences between the two approaches.

History dependence disentangles the effects of input activation, reactivation and temporal depth of a binary autoregressive process

To show the behavior of the measures in a well controlled setup, we analyzed a simple binary autoregressive process with varying temporal depth l (Fig 3A). The process evolves in discrete time steps, and has an active (1) or inactive (0) state. Active states are evoked either by external input with probability h, or by internal reactivations that are triggered by activity within the past l steps. Each past activation increases the reactivation probability by m, which regulates the strength of history dependence in the process. In the following, we describe how the measures behave as we vary each of the different model parameters, and then summarize the key difference between the measures.
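A minimal simulation sketch of the binary autoregressive process. The exact update rule is given in Materials and methods and is not reproduced here; we assume that the activation probability is h plus m times the number of active states in the past l steps, capped at 1. Under this assumption and for l = 1, the lag-1 autocorrelation equals m, consistent with the autocorrelation time τC = −Δt/log(m) quoted below for l = 1. The function name and parameter values are hypothetical.

```python
import numpy as np

def simulate_binary_ar(h, m, l, n_steps, seed=0):
    """Binary autoregressive process under an assumed update rule.

    P(x_t = 1) = min(1, h + m * (x_{t-l} + ... + x_{t-1})).
    """
    rng = np.random.default_rng(seed)
    u = rng.random(n_steps)
    x = np.zeros(n_steps, dtype=np.int8)
    n_active = 0                                 # active states within the past l steps
    for t in range(n_steps):
        x[t] = u[t] < min(1.0, h + m * n_active)
        n_active += int(x[t])
        if t >= l:
            n_active -= int(x[t - l])           # drop the state that leaves the window
    return x

x = simulate_binary_ar(h=0.02, m=0.8, l=1, n_steps=200_000)
rate = x.mean()                                  # stationary value h / (1 - m) = 0.1 for l = 1
c1 = np.corrcoef(x[:-1], x[1:])[0, 1]            # lag-1 autocorrelation, approximately m
```

For l = 1 this is a two-state Markov chain whose correlation at lag k is m^k, which is exactly the exponential decay that makes τC grow with m.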

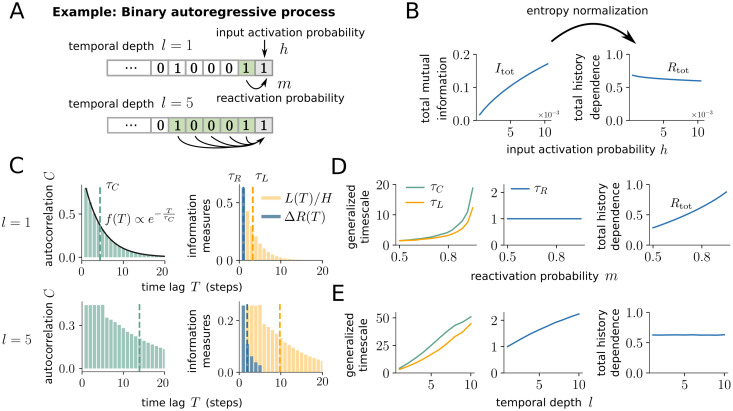

Fig 3. History dependence disentangles the effects of input activation, reactivation and temporal depth of a binary autoregressive process.

(A) In the binary autoregressive process, the state of the next time step (grey box) is active (one) either because of an input activation with probability h, or because of an internal reactivation. The internal activation is triggered by activity in the past l time steps (green), where each active state increases the activation probability by m. (B) Increasing the input activation probability h increases the total mutual information Itot, although input activations are random and therefore not predictable. Normalizing the total mutual information by the entropy yields the total history dependence Rtot, which decreases mildly with h. (C) Autocorrelation C(T), lagged mutual information L(T) and gain in history dependence ΔR(T) decay differently with the time lag T. For l = 1 and m = 0.8 (top), autocorrelation C(T) decays exponentially with autocorrelation time τC, whereas L(T) decays faster due to the non-linearity of the mutual information. For l = 5 (bottom), C(T) and L(T) plateau over the temporal depth, and then decay much slower than for l = 1. In contrast, ΔR(T) is non-zero only for T shorter or equal to the temporal depth of the process, with much shorter timescale τR. Parameters m and h were adapted to match the firing rate and total history dependence between l = 1 and l = 5. (D) When increasing the reactivation probability m for l = 1, timescales of time-lagged measures τC and τL increase. For history dependence, the information timescale τR remains constant, but the total history dependence Rtot increases. (E) When varying the temporal depth l, all timescales increased. Parameters h and m were adapted to hold the firing rate and Rtot constant.

The input strength h increases the firing rate and thus the spiking entropy H(spiking). This leads to a strong increase in the total mutual information Itot, whereas the total history dependence Rtot is normalized by the entropy and decreases slightly (Fig 3B). This slight decrease is expected from a sensible measure of history dependence, because the input is random and has no temporal dependence. In addition, input activations may coincide with internal activations, which slightly reduces the total history dependence.

In contrast, the total history dependence Rtot increases with the reactivation probability m, as expected (Fig 3D). For the autocorrelation, the reactivation probability m influences not only the magnitude of the correlation coefficients, but also their decay. For autoregressive processes (and l = 1), autocorrelation coefficients C(T) decay exponentially [14] (Fig 3C), where the autocorrelation time τC = −Δt/log(m) increases with m and diverges as m → 1 (Fig 3D). The lagged mutual information L(T) is a non-linear measure of time-lagged dependence and behaves very similarly to the autocorrelation, with a slightly faster decay and thus smaller generalized timescale τL (Fig 3C and 3D). Note that we normalized L(T) by the spiking entropy H to make it directly comparable to ΔR(T). In contrast to the time-lagged measures, the gain in history dependence ΔR(T) is only non-zero for T smaller than or equal to the true temporal depth l of the process (Fig 3C). As a consequence, the information timescale τR does not increase with m for fixed l (Fig 3D).
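The contrast between time-lagged measures and the gain ΔR can be checked numerically on a first-order (l = 1) binary process. The sketch below assumes P(1 | previous 1) = h + m and P(1 | previous 0) = h with hypothetical h = 0.02 and m = 0.8, and estimates C(T), L(T)/H and plug-in R(T) with one-step past bins. At lag 2, C and L remain clearly positive, whereas ΔR(2) = R(2) − R(1) is close to zero, because x_{t−2} adds no predictive information once x_{t−1} is known.

```python
import numpy as np

def binary_entropy(p):
    """Shannon entropy (bits) of a binary variable with P(1) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * np.log2(p) - (1.0 - p) * np.log2(1.0 - p)

def conditional_entropy(current, past):
    """Plug-in H(current | past) in bits; past is an (N, d) binary array."""
    codes = past.astype(np.int64) @ (1 << np.arange(past.shape[1], dtype=np.int64))
    _, inv, n_seq = np.unique(codes, return_inverse=True, return_counts=True)
    n_spike = np.bincount(inv, weights=current)
    return sum((k / len(current)) * binary_entropy(s / k) for k, s in zip(n_seq, n_spike))

def autocorrelation(x, k):
    """C(k): Pearson correlation between x_t and x_{t-k}."""
    return np.corrcoef(x[:-k], x[k:])[0, 1]

def lagged_mi_normalized(x, k):
    """L(k) / H: dependence of x_t on the single past bin x_{t-k}."""
    current = x[k:]
    h = binary_entropy(current.mean())
    return (h - conditional_entropy(current, x[:-k, None])) / h

def history_dependence(x, k):
    """Plug-in R(k): dependence of x_t on all k preceding one-step bins."""
    current = x[k:]
    past = np.stack([x[k - j - 1:len(x) - j - 1] for j in range(k)], axis=1)
    h = binary_entropy(current.mean())
    return (h - conditional_entropy(current, past)) / h

# First-order binary process (l = 1) with hypothetical h = 0.02, m = 0.8
rng = np.random.default_rng(0)
u = rng.random(100_000)
x = np.zeros(len(u), dtype=np.int64)
for t in range(1, len(x)):
    x[t] = u[t] < (0.82 if x[t - 1] else 0.02)

C = [autocorrelation(x, k) for k in (1, 2)]        # about 0.8 and 0.64
L = [lagged_mi_normalized(x, k) for k in (1, 2)]   # still clearly positive at lag 2
R = [history_dependence(x, k) for k in (1, 2)]
delta_R2 = R[1] - R[0]                             # gain Delta R at T = 2 steps, close to 0
```

At lag 1 the lagged mutual information and the history dependence coincide, since both condition on the same single past bin; they only diverge once more than one past bin is involved.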

Finally, the temporal depth l controls how far into the past activations depend on their preceding activity. Indeed, we find that the information timescale τR increases with l as expected (Fig 3C and 3E). Similarly, the timescales of the time-lagged measures τC and τL increase with the temporal depth l. Note that parameters m and h were adapted for each l to keep the firing rate and total history dependence Rtot constant, hence differences in the timescale can be unambiguously attributed to the increase in l.

To conclude, history dependence disentangles the effects of input activation, reactivation and temporal depth, which provides a comprehensive characterization of past dependencies in the autoregressive model. This is different from the total mutual information, which lacks the entropy normalization and is sensitive to the firing rate. This is also different from time-lagged measures, whose timescales are sensitive to both the reactivation probability m and the temporal depth l. The confusion of effects in the timescales is rooted in the time-lagged nature of the measures: by quantifying past dependencies out of context, C(T) and L(T) also capture indirect, redundant dependencies on past events. Indirect, redundant dependencies arise from unique dependencies, because past states that are uniquely predictive of future activities were in turn uniquely dependent on their own past. The stronger the unique dependence, the longer the indirect dependencies reach into the past, which increases the timescale of time-lagged measures. In contrast, indirect dependencies do not contribute to the history dependence, because they add no predictive information once more-recent past is taken into account.

History dependence dismisses redundant past dependencies and captures synergistic effects

A key property of history dependence is that it evaluates past dependencies in the light of more-recent past. This allows the measure to dismiss indirect, redundant past dependencies and to capture synergistic effects. In three common models of neural spiking activity, we demonstrate how this leads to a substantially different characterization of past dependencies compared to time-lagged measures of temporal dependence.

First, we simulated a subsampled branching process [14], which is a minimal model for activity propagation in neural networks and captures key properties of spiking dynamics in cortex [15]. As in the binary autoregressive process, active neurons activate neurons in the next time step with probability m, the so-called branching parameter, and neurons are activated externally with some probability h. The process was simulated in time steps of Δt = 4 ms with a population activity of 500 Hz, which was subsampled to obtain a single spike train with a firing rate of 5 Hz (Fig 4A). Similar to the binary autoregressive process, the autocorrelation decays exponentially with autocorrelation time τC = −Δt/log(m) = 198 ms, and the lagged mutual information decays slightly faster (Fig 4B). In comparison, the gain in history dependence ΔR decays much faster. When increasing the branching parameter m (for fixed firing rate), the total history dependence increased, as in the autoregressive process (S11 Fig). Strikingly, the timescale τR remained constant or even decreased for larger m > 0.967 and thus higher autocorrelation time τC > 120 ms (S11 Fig), which is different from the binary autoregressive process. The reason is that the branching process evolves at the population level, whereas history dependence is quantified at the single-neuron level. Thereby, history dependence also captures indirect dependencies, because the neuron’s own spiking history reflects the population activity. The higher the branching parameter m, the more informative past spikes are about the population activity, and the shorter the timescale τR over which all the relevant information about the population activity can be collected. Thus, for the branching process, the total history dependence Rtot captures the influence of the branching parameter, whereas the information timescale τR behaves very differently from the timescales of time-lagged measures.
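A simulation sketch of a subsampled branching process, under assumptions not stated in this section (the exact model in Materials and methods may differ): the population activity At (events per time step) follows At+1 ~ Poisson(m·At + h), with h chosen so that the stationary mean h/(1 − m) matches 500 Hz × Δt, and each population event is independently assigned to the recorded neuron with probability 5 Hz / 500 Hz. The check confirms that the lag-1 autocorrelation of At is close to m, so that τC = −Δt/log(m) ≈ 198 ms for m = 0.98 and Δt = 4 ms.

```python
import numpy as np

def subsampled_branching(m, pop_rate, neuron_rate, dt, n_steps, seed=0):
    """Population activity A_t of a Poisson branching process and one subsampled spike train."""
    rng = np.random.default_rng(seed)
    mean_activity = pop_rate * dt             # expected events per step (500 Hz * 4 ms = 2)
    h = mean_activity * (1.0 - m)             # external drive; stationary mean is h / (1 - m)
    A = np.empty(n_steps, dtype=np.int64)
    a = round(mean_activity)
    for t in range(n_steps):
        a = rng.poisson(m * a + h)            # each event triggers on average m new events
        A[t] = a
    spikes = rng.binomial(A, neuron_rate / pop_rate)   # keep each event with prob 5 / 500
    return A, spikes

m, dt = 0.98, 0.004
A, spikes = subsampled_branching(m, pop_rate=500.0, neuron_rate=5.0, dt=dt, n_steps=300_000)
c1 = np.corrcoef(A[:-1], A[1:])[0, 1]         # approximately m
tau_C_ms = -dt / np.log(m) * 1e3              # about 198 ms
rate_hz = spikes.mean() / dt                  # approximately 5 Hz
```

`spikes` holds per-step spike counts of the subsampled neuron; at 5 Hz and Δt = 4 ms, counts above one are rare. The slow population fluctuations are what the neuron's own past reports on, which is the indirect dependence discussed above.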

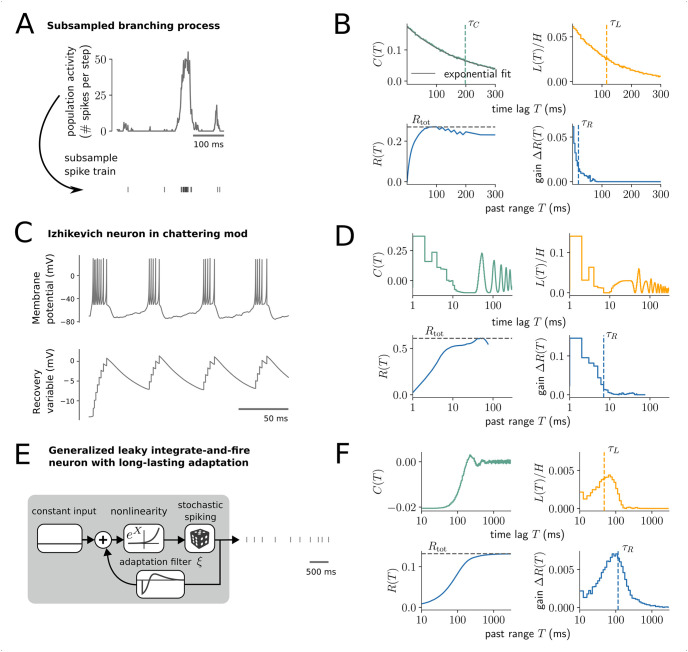

Fig 4. History dependence dismisses redundant past dependencies and captures synergistic effects.

(A,B) Analysis of a subsampled branching process. (A) The population activity was simulated as a branching process (m = 0.98) and subsampled to yield the spike train of a single neuron (Materials and methods). (B) Autocorrelation C(T) and lagged mutual information L(T) include redundant dependencies and decay much more slowly than the gain ΔR(T), with much longer timescales (vertical dashed lines). (C,D) Analysis of an Izhikevich neuron in chattering mode with constant input and small voltage fluctuations. The neuron fires in regular bursts of activity. (D) Time-lagged measures C(T) and L(T) measure both intra-burst (T < 10 ms) and inter-burst (T > 10 ms) dependencies, which decay very slowly due to the regularity of the firing. The gain ΔR(T) reflects that most spiking can already be predicted from intra-burst dependencies, whereas inter-burst dependencies are highly redundant. In this case, only ΔR(T) yields a sensible timescale (blue dashed line). (E,F) Analysis of a generalized leaky integrate-and-fire neuron with long-lasting adaptation filter ξ [3, 43] and constant input. (F) Here, ΔR(T) decays to zero more slowly than the autocorrelation C(T), and is higher than L(T) for long T. Therefore, the dependence on past spikes is stronger when more recent past spikes are taken into account (ΔR(T)) than when they are considered independently (L(T)). Due to these synergistic past dependencies, ΔR(T) is the only measure that captures the long-range nature of the spike adaptation.

Second, we demonstrate the difference between history dependence and time-lagged measures on an Izhikevich neuron, which is a flexible model that can produce different neural firing patterns similar to those observed in real neurons [44]. Here, parameters were chosen according to the “chattering mode” [44], with constant input and small voltage fluctuations (Materials and methods). The neuron fires in regular bursts of activity, with consistent timing between spikes within and between bursts (Fig 4C). While time-lagged measures capture all the regularities in spiking and oscillate with the bursts of activity, history dependence correctly captures that spiking can be predicted almost entirely from intra-burst dependencies alone (Fig 4D). History dependence dismisses the redundant inter-burst dependencies and thereby yields a sensible measure of a timescale (blue dashed line).
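For readers who want to reproduce the qualitative burst structure, here is a minimal Euler-integration sketch of the Izhikevich model with the chattering parameters commonly given for this model (a = 0.02, b = 0.2, c = −50, d = 2). The input current, the integration step and the optional additive voltage noise are illustrative assumptions, not the values used for Fig 4C, and the function name is hypothetical.

```python
import numpy as np


def izhikevich_chattering(duration_ms=1000.0, current=10.0, dt=0.1, noise_std=0.0, seed=0):
    """Euler integration of the Izhikevich model with chattering parameters.

    current, dt (ms) and the additive voltage noise are illustrative assumptions.
    Returns spike times in ms.
    """
    a, b, c, d = 0.02, 0.2, -50.0, 2.0
    rng = np.random.default_rng(seed)
    v, u = -65.0, b * -65.0
    spike_times = []
    for step in range(int(duration_ms / dt)):
        dv = 0.04 * v * v + 5.0 * v + 140.0 - u + current
        du = a * (b * v - u)
        v += dt * dv + noise_std * np.sqrt(dt) * rng.standard_normal()
        u += dt * du
        if v >= 30.0:                 # spike: reset membrane potential, increment recovery variable
            spike_times.append(step * dt)
            v, u = c, u + d
    return np.array(spike_times)


isi = np.diff(izhikevich_chattering(duration_ms=2000.0))
print(np.round(isi[:12], 1))   # short intra-burst intervals interleaved with longer inter-burst gaps
```

The inter-spike intervals should separate into short intra-burst intervals of a few milliseconds and longer inter-burst intervals, which is the regularity that the time-lagged measures in Fig 4D keep picking up.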

Finally, we analyzed a generalized leaky integrate-and-fire neuron with long-range spike adaptation (22 seconds) (Fig 4E), which reproduces spike-frequency adaptation as observed in somatosensory pyramidal neurons [3, 43]. For this model, the time-lagged measures C(T) and L(T) actually decay to zero much faster than the gain in history dependence ΔR(T), which is the only measure that captures the long-range adaptation effects of the model (Fig 4F). This shows that past dependencies in this model include synergistic effects, where the dependence on a past spike is stronger in the context of more recent spikes. This is most likely due to the non-linearity of the model, where past spikes taken together cause a different adaptation than the sum of their individual contributions.

Thus, due to its ability to dismiss redundant past dependencies and to capture synergistic effects, history dependence provides a characterization of past dependencies that is complementary to time-lagged measures. Importantly, because the approach better disentangles the effects of timescale and total history dependence, the results remain interpretable for very different models and provide a more comprehensive view of past dependencies.

Embedding optimization captures history dependence for a neuron model with long-lasting spike adaptation

On a benchmark spiking neuron model, we first demonstrate that without optimization and proper regularization, past embeddings are likely to capture much less history dependence, or lead to estimates that severely overestimate the true history dependence. Readers who are familiar with the bias problem of mutual information estimation may want to skip to the next part, where we validate that embedding-optimized estimates indeed capture the model’s true history dependence while being robust to systematic overestimation. As a model, we chose a generalized leaky integrate-and-fire (GLIF) model with spike-frequency adaptation, whose parameters were fitted to experimental data [3, 43]. The model was chosen because it is equipped with a long-lasting spike adaptation mechanism, and its total history dependence Rtot can be directly computed from sufficiently long simulations (Materials and methods). For demonstration, we show results for a variant of the model where adaptation reaches one second into the past; results for the original model with a 22-second kernel are shown in S1, S2 and S5 Figs. For simulation, the neuron was driven with a constant input current to achieve an average firing rate of 4 Hz. In the following, estimates are shown for a simulated recording of 90 minutes, whereas the true values R(T) were computed on a 900-minute recording (Materials and methods).

Without regularization, history dependence is severely overestimated for high-dimensional embeddings

For demonstration, we estimated the history dependence R(τ, d) for varying numbers of bins d and a constant bin size τ = 20 ms (i.e. κ = 0 and T = d ⋅ τ). We compared estimates obtained by maximum likelihood (ML) estimation [28], or Bayesian estimation using the NSB estimator [33], with the model’s true R(τ, d) (Fig 5A). Both estimators accurately estimate R(τ, d) for up to d ≈ 20 past bins. As expected, the NSB estimator starts to be biased at higher d than the ML estimator. For embedding dimensions d > 30, both estimators severely overestimate R(τ, d). Note that ± two standard deviations are plotted as shaded areas, but are too small to be visible. Therefore, any deviation of estimates from the model’s true history dependence R(τ, d) can be attributed to positive estimation bias, i.e. a systematic overestimation of the true history dependence due to limited data.
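The positive bias of the ML estimator can be reproduced with a few lines of Python. As a hedged illustration (not the GLIF benchmark), the sketch below draws an independent Bernoulli spike train, for which the true history dependence is zero, and computes plug-in ML estimates of R for a uniform embedding with d past bins of one time step each. Any nonzero estimate is therefore pure estimation bias; the spike probability, the recording length and the function names are assumptions.

```python
import numpy as np


def entropy_bits(labels):
    """Plug-in entropy in bits of a 1-D array of discrete labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def ml_history_dependence(x, d):
    """Plug-in (ML) estimate of R = I(x_t; past) / H(x_t) for d uniform one-step past bins."""
    n = len(x)
    now = x[d:].astype(np.int64)
    past = np.zeros(n - d, dtype=np.int64)
    for k in range(1, d + 1):                  # encode the d past bins as one integer symbol
        past = 2 * past + x[d - k : n - k]
    h_now = entropy_bits(now)
    mi = h_now + entropy_bits(past) - entropy_bits(2 * past + now)
    return mi / h_now


rng = np.random.default_rng(1)
x = (rng.random(20_000) < 0.1).astype(np.int8)   # independent Bernoulli spiking: true R = 0
for d in (1, 2, 4, 8, 12, 16):
    print(d, round(ml_history_dependence(x, d), 4))
```

With 20,000 time bins, the estimate stays near zero for small d but grows substantially for d = 16, because most high-dimensional past patterns are observed only a few times.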

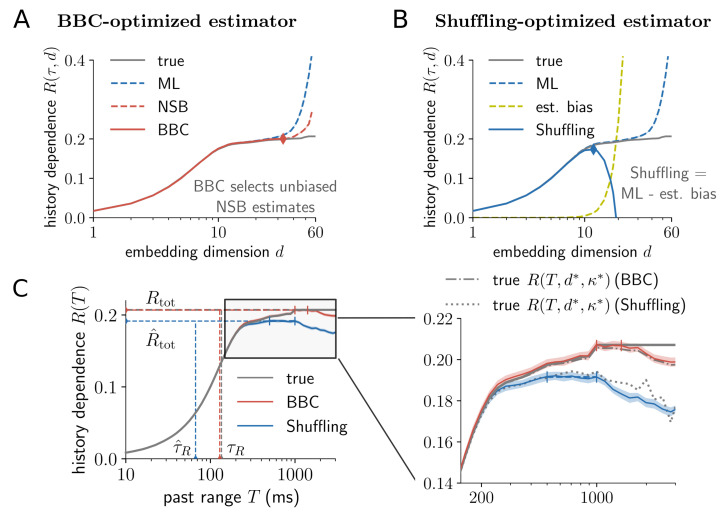

Fig 5. Embedding optimization captures history dependence for a neuron model with long-lasting spike adaptation.

Results are shown for a generalized leaky integrate-and-fire (GLIF) model with long-lasting spike frequency adaptation [3, 43] with a temporal depth of one second (Materials and methods). (A) For illustration, history dependence R(τ, d) was estimated on a simulated 90 minute recording for different embedding dimensions d and a fixed bin width τ = 20 ms. Maximum likelihood (ML) [28] and Bayesian (NSB) [33] estimators display the insufficient embedding versus estimation bias trade-off: For small embedding dimensions d, the estimated history dependence is much smaller, but agrees well with the true history dependence R(τ, d) for the given embedding. For larger d, the estimated history dependence increases, but when d is too high (d > 20), it severely overestimates the true R(τ, d). The Bayesian bias criterion (BBC) selects NSB estimates for which the difference between ML and NSB estimate is small (red solid line). All selected estimates are unbiased and agree well with the true R(τ, d) (grey line). Embedding optimization selects the highest, yet unbiased estimate (red diamond). (B) The Shuffling estimator (blue solid line) subtracts estimation bias on surrogate data (yellow dashed line) from the ML estimator (blue dashed line). Since the surrogate bias is higher than the systematic overestimation in the ML estimator (difference between grey and blue dashed lines), the Shuffling estimator is a lower bound to R(τ, d). Embedding optimization selects the highest estimate, which is still a lower bound (blue diamond). For A and B, shaded areas indicate ± two standard deviations obtained from 50 repeated simulations, which are very small and thus hardly visible. (C) Embedding-optimized BBC estimates (red line) yield accurate estimates of the model neuron’s true history dependence R(T), total history dependence Rtot and information timescale τR (horizontal and vertical dashed lines). 
The zoom-in (right panel) shows robustness of both regularization methods: For all T the model neuron’s R(T, d*, κ*) lies within errorbars (BBC), or consistently above the Shuffling estimator that provides a lower bound. Here, the model’s R(T, d*, κ*) was computed for the optimized embedding parameters d*, κ* that were selected via BBC or Shuffling, respectively (dashed lines). Shaded areas indicate ± two standard deviations obtained by bootstrapping, and colored vertical bars indicate past ranges over which estimates were averaged to compute Rtot (Materials and methods).

The aim is now to identify the largest embedding dimension d* for which the estimate of R(τ, d) is not yet biased. A biased estimate is expected as soon as the ML and NSB estimates start to differ significantly from each other (Fig 5A, red diamond), which is formalized by the Bayesian bias criterion (BBC) (Materials and methods). According to the BBC, all NSB estimates with d less than or equal to d* are unbiased (solid red line). We find that indeed all BBC estimates agree well with the true R(τ, d) (grey line), and that d* yields the largest unbiased estimate.
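The selection logic of the BBC can be sketched as follows, assuming (our assumption; see Materials and methods for the exact criterion) that an NSB estimate is accepted when the relative difference |R_NSB − R_ML| / R_NSB is below a threshold. The threshold of 0.05, the function name `bbc_select` and the numbers in the usage example are illustrative only; computing the NSB estimates themselves is outside the scope of this sketch.

```python
import numpy as np


def bbc_select(dims, r_ml, r_nsb, threshold=0.05):
    """Hypothetical BBC-style selection over embedding dimensions.

    An NSB estimate is accepted if |R_NSB - R_ML| / R_NSB < threshold (assumed criterion);
    among accepted estimates, the largest one and its dimension d* are returned.
    """
    dims = np.asarray(dims)
    r_ml = np.asarray(r_ml, dtype=float)
    r_nsb = np.asarray(r_nsb, dtype=float)
    relative_diff = np.abs(r_nsb - r_ml) / r_nsb
    accepted = relative_diff < threshold
    if not accepted.any():
        raise ValueError("no embedding passes the bias criterion")
    best = int(np.argmax(np.where(accepted, r_nsb, -np.inf)))
    return int(dims[best]), float(r_nsb[best])


# Illustrative numbers only (not results from the paper)
dims = [5, 10, 20, 30, 40]
r_ml = [0.100, 0.150, 0.190, 0.300, 0.450]
r_nsb = [0.100, 0.151, 0.195, 0.240, 0.300]
print(bbc_select(dims, r_ml, r_nsb))   # (20, 0.195)
```

In this made-up example, d = 30 and d = 40 are rejected because the ML and NSB estimates disagree strongly, and d* = 20 is selected as the largest accepted estimate.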

The problem of estimation bias has also been addressed previously by the so-called Shuffling estimator [31]. The Shuffling estimator is based on the ML estimator and applies a bias correction term (Fig 5B). In detail, one approximates the estimation bias using surrogate data, which are obtained by shuffling of the embedded spiking history. The surrogate estimation bias (yellow dashed line) is proven to be larger than the actual estimation bias (difference between grey solid and blue dashed line). Therefore, Shuffling estimates provide lower bounds to the true history dependence R(τ, d). As with the BBC, one can safely maximize Shuffling estimates over d to find the embedding dimension d* that provides the largest lower bound to the model’s total history dependence Rtot (Fig 5B, blue diamond).
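A simpler surrogate than the one described in the text illustrates the general idea of subtracting a surrogate bias from the ML estimate. In the sketch below, surrogates are obtained by randomly permuting the current-bin values relative to their embedded pasts, which destroys any true dependence while keeping both marginal distributions. This is a swapped-in scheme, not the shuffling of [31], so the lower-bound guarantee stated above is not claimed for it. Function names and parameters are hypothetical.

```python
import numpy as np


def entropy_bits(labels):
    """Plug-in entropy in bits of a 1-D array of discrete labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def embed_uniform(x, d):
    """Current bin x_t and its d one-step past bins, encoded as a single integer symbol."""
    n = len(x)
    now = x[d:].astype(np.int64)
    past = np.zeros(n - d, dtype=np.int64)
    for k in range(1, d + 1):
        past = 2 * past + x[d - k : n - k]
    return now, past


def shuffle_corrected_r(x, d, n_surrogates, rng):
    """Return (ML estimate, ML estimate minus mean surrogate estimate) of R = I(x_t; past) / H(x_t)."""
    now, past = embed_uniform(x, d)
    h_now, h_past = entropy_bits(now), entropy_bits(past)

    def r_of(current):
        return (h_now + h_past - entropy_bits(2 * past + current)) / h_now

    r_ml = r_of(now)
    # surrogates: permuting the current bin relative to the past destroys any true dependence
    r_surrogate = np.mean([r_of(rng.permutation(now)) for _ in range(n_surrogates)])
    return r_ml, r_ml - r_surrogate


rng = np.random.default_rng(2)
x = (rng.random(20_000) < 0.1).astype(np.int8)   # independent spiking: true R = 0
r_ml, r_corrected = shuffle_corrected_r(x, 16, n_surrogates=10, rng=rng)
print(round(r_ml, 3), round(r_corrected, 3))
```

On this independent spike train, the ML estimate for d = 16 is clearly positive, whereas the surrogate-corrected estimate stays close to zero.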

Thus, using a model neuron, we illustrated that history dependence can be severely overestimated if the embedding is chosen too complex. Only when overestimation is tamed by one of the two regularization methods, BBC or Shuffling, can embedding parameters be safely optimized to yield better estimates of history dependence.

Optimized embeddings capture the model’s true history dependence

In the previous part, we demonstrated how embedding parameters are optimized for the example of fixed κ and τ. Now, we optimize all embedding parameters for fixed past range T to obtain embedding-optimized estimates of R(T). We find that embedding-optimized BBC estimates agree well with the true R(T), hence the model’s total history dependence Rtot and information timescale τR are well estimated (Fig 5C, vertical and horizontal dashed lines). In contrast, the Shuffling estimator underestimates the true R(T) for past ranges T > 200 ms, hence the model’s Rtot and τR are underestimated (blue dashed lines). For large past ranges T > 1000 ms, estimates of both estimators decrease again, because no additional history dependence is uncovered, whereas the constraint of an unbiased estimation decreases the temporal resolution of the embedding.

Embedding-optimized estimates are robust to overestimation despite maximization over complex embeddings

In the previous part, we investigated how much of the true history dependence for different past ranges T (grey solid line) we miss by embedding the spiking history. An additional source of error is the estimation of history dependence from limited data. In particular, estimates are prone to overestimate history dependence systematically (Fig 5A and 5B).

To test explicitly for overestimation, we computed the true history dependence R(T, d*, κ*) for exactly the same sets of embedding parameters T, d*, κ* that were found during embedding optimization with BBC (grey dash-dotted line), and the Shuffling estimator (grey dotted line, Fig 5C, zoom-in). We expect that BBC estimates are unbiased, i.e. the true history dependence should lie within errorbars of the BBC estimates (red shaded area) for a given T. In contrast, Shuffling estimates are a lower bound, i.e. estimates should lie below the true history dependence (given the same T, d*, κ*). We find that this is indeed the case for all T. Note that this is a strong result, because it requires that the regularization methods work reliably for every single set of embedding parameters used for optimization—otherwise, parameters that cause overestimation would be selected.

Thus, we can confirm that the embedding-optimized estimates do not systematically overestimate the model neuron’s history dependence, and are on average lower bounds to the true history dependence. This is important for the interpretation of the results.

Mild overfitting can occur during embedding optimization on short recordings, but can be overcome with cross-validation

We also tested whether the recording length affects the reliability of embedding-optimized estimates, and found very mild overestimation (1–3%) of history dependence for BBC for recordings as short as 3 minutes (S1 and S4 Figs). The overestimation is a consequence of overfitting during embedding optimization: Variance in the estimates increases for shorter recordings, hence maximizing over estimates selects embedding parameters that have high history dependence by chance. Therefore, the overestimation can be overcome by cross-validation, e.g. by optimizing embedding parameters on the first half, and computing estimates on the second half of the data (S1 Fig). In contrast, we found that for the model neuron, Shuffling estimates do not overestimate the true history dependence even for recordings as short as 3 minutes (S1 Fig). This is because the effect of overfitting was small compared to the systematic underestimation of Shuffling estimates. Here, all experimental recordings where we apply BBC are long enough (≈ 90 minutes), thus no cross-validation was applied on the experimental data.
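The overfitting mechanism and its cross-validated remedy can be seen in a toy model without any spike data. In the sketch below (an illustrative assumption, not the simulation behind S1 Fig), 50 candidate embeddings all have the same true history dependence of 0.2, and their estimates on each half of the data carry independent Gaussian noise. Maximizing over the first-half estimates biases the selected value upward, whereas re-estimating the selected candidate on the second half removes that bias.

```python
import numpy as np

rng = np.random.default_rng(0)
n_repeats, n_candidates = 2000, 50
true_r, noise = 0.2, 0.01      # every candidate embedding has the same true value (toy assumption)

in_sample, cross_validated = [], []
for _ in range(n_repeats):
    first_half = true_r + noise * rng.standard_normal(n_candidates)   # estimates on the first half
    second_half = true_r + noise * rng.standard_normal(n_candidates)  # independent estimates on the second half
    best = np.argmax(first_half)                  # "embedding optimization" on the first half
    in_sample.append(first_half[best])            # maximized estimate: biased upward
    cross_validated.append(second_half[best])     # re-estimated on held-out data: unbiased

print(np.mean(in_sample), np.mean(cross_validated))
```

The in-sample maximum exceeds 0.2 by roughly two noise standard deviations, while the cross-validated mean stays at 0.2.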

Estimates of the information timescale are sensitive to the recording length

Finally, we also tested the impact of the recording length on estimates of the total history dependence as well as estimates of the information timescale. While on recordings of 3 minutes embedding optimization still estimated ≈ 95% of the true Rtot, estimates were only ≈ 75% of the true τR (S2 Fig). Thus, estimates of the information timescale τR are more sensitive to the recording length, because they depend on the small additional contributions to R(T) for high past ranges T, which are hard to estimate for short recordings. Therefore, we advise analyzing recordings of similar length to make results on τR comparable across experiments. In the following, we explicitly shorten some recordings such that all recordings have approximately the same recording length.

In conclusion, embedding optimization accurately estimated the model neuron’s true history dependence. Moreover, for all past ranges, embedding-optimized estimates were robust to systematic overestimation. Embedding optimization is thus a promising approach to quantify history dependence and the information timescale in experimental spike recordings.

Embedding optimization is key to estimate long-lasting history dependence in extra-cellular spike recordings

Here, we apply embedding optimization to long spike recordings (≈ 90 minutes) from rat dorsal hippocampus layer CA1 [45, 46], salamander retina [47, 48] and in vitro recordings of rat cortical culture [49]. In particular, we compare embedding optimization to other popular estimation approaches, and demonstrate that an exponential past embedding is necessary to estimate history dependence for long past ranges.

Embedding optimization reveals history dependence that is not captured by a generalized linear model or a single past bin

We use embedding optimization to estimate history dependence R(T) as a function of the past range T (see Fig 6B for an example single unit from hippocampus layer CA1, and S6, S7 and S8 Figs for all analyzed sorted units). In this example, BBC and Shuffling with a maximum of dmax = 20 past bins led to very similar estimates for all T. Notably, embedding optimization with both regularization methods estimated high total history dependence of almost Rtot ≈ 40% with a temporal depth of almost a second, and an information timescale of τR ≈ 100 ms (Fig 6B). This indicates that embedding-optimized estimates capture a substantial part of history dependence also in experimental spike recordings.

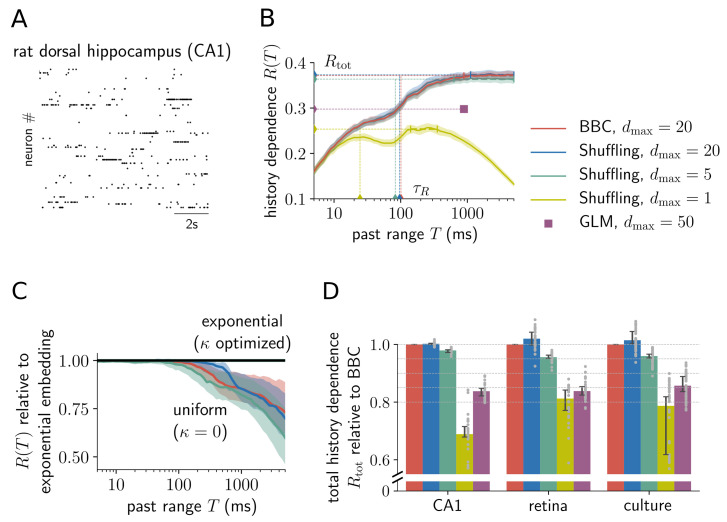

Fig 6. Embedding optimization is key to estimate long-lasting history dependence in extra-cellular spike recordings.

(A) Example of recorded spiking activity in rat dorsal hippocampus layer CA1. (B) Estimates of history dependence R(T) for various estimators, as well as estimates of the total history dependence Rtot and information timescale τR (dashed lines) (example single unit from CA1). Embedding optimization with BBC (red) and Shuffling (blue) for dmax = 20 yields consistent estimates. Embedding-optimized Shuffling estimates with a maximum of dmax = 5 past bins (green) are very similar to estimates obtained with dmax = 20 (blue). In contrast, using a single past bin (dmax = 1, yellow) or fitting a GLM for the temporal depth found with BBC (violet dot) yields much lower estimates of the total history dependence. Shaded areas indicate ± two standard deviations obtained by bootstrapping, and small vertical bars indicate past ranges over which estimates of R(T) were averaged to estimate Rtot (Materials and methods). (C) An exponential past embedding is crucial to capture history dependence for high past ranges T. For T > 100 ms, uniform embeddings strongly underestimate history dependence. Shown is the median of embedding-optimized estimates of R(T) with uniform embeddings, relative to estimates obtained by optimizing exponential embeddings, for BBC with dmax = 20 (red) and Shuffling with dmax = 20 (blue) and dmax = 5 (green). Shaded areas show 95% percentiles. Median and percentiles were computed over analyzed sorted units in CA1 (n = 28). (D) Comparison of total history dependence Rtot for different estimation and embedding techniques for three different experimental recordings. For each sorted unit (grey dots), estimates are plotted relative to embedding-optimized estimates for BBC and dmax = 20. Embedding optimization with Shuffling and dmax = 20 yields consistent but slightly higher estimates than BBC. Strikingly, Shuffling estimates for as few as dmax = 5 past bins (green) capture more than 95% of the estimates for dmax = 20 (BBC). 
In contrast, estimates obtained by optimizing a single past bin, or fitting a GLM, are considerably lower. Bars indicate the median and lines indicate 95% bootstrap confidence intervals on the median over analyzed sorted units (CA1: n = 28; retina: n = 111; culture: n = 48).

Importantly, other common estimation approaches fail to capture the same amount of history dependence (Fig 6B and 6D). To compare how well the different estimation approaches capture the total history dependence, we plotted for each sorted unit the different estimates of Rtot relative to the corresponding BBC estimate (Fig 6D). Embedding optimization with Shuffling yields estimates that agree well with BBC estimates; on the experimental data, the Shuffling estimator even yields slightly higher values. Interestingly, embedding optimization with the Shuffling estimator and as few as dmax = 5 past bins captures almost the same history dependence as BBC with dmax = 20, with a median above 95% for all neural systems. In contrast, we find that a single past bin only accounts for 70% to 80% of the total history dependence. A GLM offers little additional advantage, with a slightly higher median of ≈ 85%. To save computation time, GLM estimates were only computed for the temporal depth that was estimated using BBC (Fig 6B, violet square). The remaining embedding parameters d and κ of the GLM’s history kernel were separately optimized using the Bayesian information criterion (Materials and methods). Since these parameters were optimized, we argue that the GLM underestimates history dependence because of its specific model assumptions, i.e. no interactions between past spikes. Moreover, we found that the GLM performs worse than embedding optimization with only five past bins. Therefore, we conclude that for typical experimental spike trains, interactions between past spikes are important, but do not require very high temporal resolution. In the remainder of this paper we use the reduced approach (Shuffling dmax = 5) to compare history dependence among different neural systems.

Increasing bin sizes exponentially is crucial to estimate long-lasting history dependence

To demonstrate this, we plotted embedding-optimized estimates of R(T) using a uniform embedding, i.e. equal bin sizes, relative to estimates obtained with an exponential embedding (Fig 6C), both for BBC with dmax = 20 (red) and for Shuffling with dmax = 20 (blue) or dmax = 5 (green). For past ranges T > 100 ms, estimates using a uniform embedding miss considerable history dependence, and this becomes more severe the longer the past range. In the case of dmax = 5, a uniform embedding captures around 80% for T = 1 s, and only around 60% for T = 5 s (median over analyzed sorted units in CA1). Therefore, we argue that an exponential embedding is crucial for estimating long-lasting history dependence.
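To make the two embedding types concrete, the following sketch builds past-bin edges for a given past range T, number of bins d and stretching exponent κ. It assumes (our assumption; see Materials and methods for the exact parametrization) that bin sizes grow as τ_j = τ_1 · 10^((j−1)κ), with τ_1 chosen so that the bins span T exactly, so that κ = 0 recovers the uniform embedding with τ = T/d. It then maps a spike train to a binary past symbol, marking a bin as 1 if it contains at least one spike. The function names are hypothetical.

```python
import numpy as np


def past_bin_edges(T, d, kappa):
    """Edges (time before the current bin) of d past bins spanning the past range T.

    Assumed parametrization: tau_j = tau_1 * 10**((j - 1) * kappa); kappa = 0 is uniform.
    """
    growth = 10.0 ** (kappa * np.arange(d))
    tau_1 = T / growth.sum()                     # first (most recent) bin size
    return np.concatenate(([0.0], np.cumsum(tau_1 * growth)))


def embed_spikes(spike_times, t_now, edges):
    """Binary past symbol: entry j is 1 if a spike occurred with lag in (edges[j], edges[j+1]]."""
    lags = t_now - np.asarray(spike_times, dtype=float)
    symbol = np.zeros(len(edges) - 1, dtype=np.int8)
    for j in range(len(edges) - 1):
        symbol[j] = np.any((lags > edges[j]) & (lags <= edges[j + 1]))
    return symbol


print(past_bin_edges(5.0, 5, 0.0))              # uniform: bins of 1 s
print(np.diff(past_bin_edges(5.0, 5, 0.3)))     # stretched: bin sizes grow with distance into the past
print(embed_spikes([0.95, 0.5], 1.0, past_bin_edges(1.0, 5, 0.0)))   # [1 0 1 0 0]
```

For T = 5 s, d = 5 and κ = 0.3, the most recent bin shrinks to about 0.16 s while the most distant bin covers about 2.6 s, which illustrates how the exponential embedding trades temporal resolution in the distant past for resolution in the recent past.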

Together, total history dependence and the information timescale show clear differences between neural systems

In addition to recordings from rat dorsal hippocampus layer CA1, salamander retina and rat cortical culture, we analyzed sorted units in a recording of mouse primary visual cortex using the novel Neuropixels probe [50]. Recordings from primary visual cortex were approximately 40 minutes long. Thus, to make results comparable, we analyzed only the first 40 minutes of all recordings.

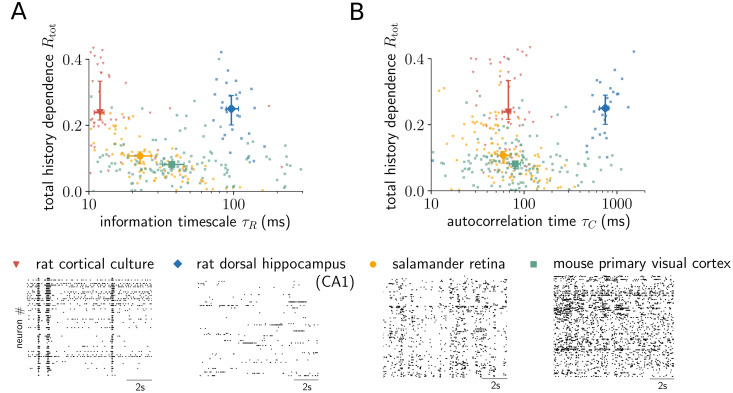

We find clear differences between the neural systems, both in terms of the total history dependence and the information timescale (Fig 7A). Sorted units in cortical culture and hippocampus layer CA1 have high total history dependence Rtot, with medians over sorted units of ≈ 24% and ≈ 25%, whereas sorted units in retina and primary visual cortex typically have low Rtot of ≈ 11% and ≈ 8%. In terms of the information timescale τR, sorted units in hippocampus layer CA1 display much higher τR, with a median of ≈ 96 ms, than units in cortical culture, with a median τR of ≈ 12 ms. Similarly, sorted units in primary visual cortex have higher τR, with a median of ≈ 37 ms, than units in retina, with a median of ≈ 23 ms. These differences could reflect differences between early visual processing (retina, primary visual cortex) and high-level processing and memory formation in hippocampus, or likewise, between neural networks that are mainly input driven (retina) or exclusively driven by recurrent input (culture). Notably, total history dependence and the information timescale varied independently among neural systems: studying them in isolation would miss differences, whereas considering them jointly allows one to distinguish the different systems. Moreover, no clear differentiation between cortical culture, retina and primary visual cortex is possible using the autocorrelation time τC (Fig 7B), with medians τC ≈ 68 ms (culture), τC ≈ 60 ms (retina) and τC ≈ 80 ms (primary visual cortex), respectively.

Fig 7. Together, total history dependence and the information timescale show clear differences between neural systems.

(A) Embedding-optimized Shuffling estimates (dmax = 5) of the total history dependence Rtot are plotted against the information timescale τR for individual sorted units (dots) from four different neural systems (raster plots show spike trains of different sorted units). No clear relationship between the two quantities is visible. The analysis shows systematic differences between the systems: sorted units in rat cortical culture (n = 48) and rat dorsal hippocampus layer CA1 (n = 28) have higher median total history dependence than units in salamander retina (n = 111) and mouse primary visual cortex (n = 142). At the same time, sorted units in cortical culture and retina show smaller timescale than units in primary visual cortex, and much smaller timescale than units in hippocampus layer CA1. Overall, neural systems are clearly distinguishable when jointly considering the total history dependence and information timescale. (B) Total history dependence Rtot versus the autocorrelation time τC shows no clear relation between the two quantities, similar to the information timescale τR. Also, the autocorrelation time gives the same relation in timescale between retina, primary visual cortex and CA1, whereas the cortical culture has a higher timescale (different order of medians on the x-axis). In general, neural systems are harder to differentiate in terms of the autocorrelation time τC compared to τR. Errorbars indicate median over sorted units and 95% bootstrap confidence intervals on the median.

To better understand how other well-established statistical measures relate to the total history dependence Rtot and the information timescale τR, we show Rtot and τR versus the median interspike interval (ISI), the coefficient of variation CV = σISI/μISI of the ISI distribution, and the autocorrelation time τC in S14 Fig. Estimates of the total history dependence Rtot tend to decrease with the median ISI, and to increase with the coefficient of variation CV. This result is expected for a measure of history dependence, because a shorter median ISI indicates that spikes tend to occur together, and a higher CV indicates a deviation from independent Poisson spiking. In contrast, the information timescale τR tends to increase with the autocorrelation time, as expected, with no clear relation to the median ISI or the coefficient of variation CV. However, the correlation between the measures depends on the neural system. For example in retina (n = 111), Rtot is significantly anti-correlated with the median ISI (Pearson correlation coefficient: r = −0.69, p < 10−5) and strongly correlated with the coefficient of variation CV (r = 0.90, p < 10−5), and τR is significantly correlated with the autocorrelation time τC (r = 0.75, p < 10−5). In contrast, for mouse primary visual cortex (n = 142), we found no significant correlations between any of these measures. Thus, the relation between Rtot or τR and the established measures is not systematic, and therefore one cannot replace the history dependence by any of them.
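The two ISI statistics used for this comparison are straightforward to compute from spike times. Below is a minimal sketch with a regular and a Poisson spike train (both at 4 Hz, an illustrative choice) as sanity checks: a regular train has CV = 0, and a Poisson train has CV ≈ 1 and a median ISI of about ln 2 times the mean ISI. The helper name `isi_statistics` is hypothetical.

```python
import numpy as np


def isi_statistics(spike_times):
    """Median inter-spike interval and coefficient of variation CV = std(ISI) / mean(ISI)."""
    isi = np.diff(np.sort(np.asarray(spike_times, dtype=float)))
    return float(np.median(isi)), float(np.std(isi) / np.mean(isi))


rng = np.random.default_rng(0)
regular = np.arange(0.0, 100.0, 0.25)                      # perfectly regular train at 4 Hz (s)
poisson = np.cumsum(rng.exponential(0.25, size=20_000))    # Poisson train at 4 Hz (s)
print(isi_statistics(regular))   # median 0.25 s, CV 0
print(isi_statistics(poisson))   # median near 0.25 * ln 2 s, CV near 1
```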

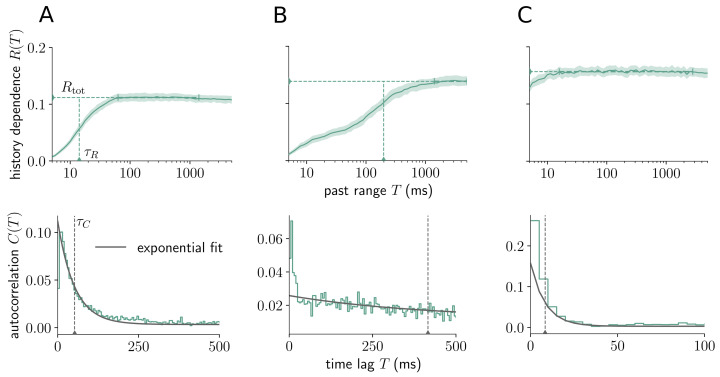

In addition to differences between neural systems, we also find strong heterogeneity of history dependence within a single system. Here, we demonstrate this for three different sorted units in primary visual cortex (Fig 8, see S9 Fig for all analyzed sorted units in primary visual cortex). In particular, sorted units display different signatures of history dependence R(T) as a function of the past range T. For some units, history dependence builds up on short past ranges T (e.g. Fig 8A), for some it only shows for higher T (e.g. Fig 8B), and for some it already saturates for very short T (e.g. Fig 8C). A similar behavior is captured by the autocorrelation C(T) (Fig 8, second row). The rapid saturation in Fig 8C indicates history dependence due to bursty firing, which can also be seen by strong positive correlation with past spikes for short T (Fig 8C, bottom). To exclude the effects of different firing modes or refractoriness on the information timescale, we only considered past ranges T > T0 = 10 ms when estimating τR, or time lags T > T0 = 10 ms when fitting an exponential decay to C(T) to estimate τC. The reason is that differences in the integration of past information are expected to show for larger T. This agrees with the observation that timescales among neural systems were much more similar if one instead sets T0 = 0 ms, whereas they showed clear differences for T0 = 10 ms or T0 = 20 ms (S15 Fig).
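The information timescale is a generalized timescale computed from the gains in history dependence above T0. The sketch below assumes the form τR = Σ_{T_i > T0} (T_i − T0) ΔR(T_i) / Σ_{T_i > T0} ΔR(T_i), with ΔR(T_i) = R(T_i) − R(T_{i−1}) and R = 0 before the first past range; consult Materials and methods for the exact definition used in the paper. As a sanity check, for a toy R(T) that saturates exponentially with time constant 50 ms, the estimate is close to 50 ms for both T0 = 0 and T0 = 10 ms, because exponentially decaying gains are memoryless. The function name is hypothetical.

```python
import numpy as np


def information_timescale(T, R, T0=0.0):
    """Generalized timescale of history dependence (sketch under the assumed definition above)."""
    T, R = np.asarray(T, dtype=float), np.asarray(R, dtype=float)
    dR = np.diff(R, prepend=0.0)          # gains R(T_i) - R(T_{i-1}), assuming R = 0 before T_1
    mask = T > T0                         # ignore refractoriness / burst effects below T0
    return float(np.sum((T[mask] - T0) * dR[mask]) / np.sum(dR[mask]))


T = np.arange(1.0, 1001.0)                # past ranges in ms
R = 0.25 * (1.0 - np.exp(-T / 50.0))      # toy R(T) saturating with a 50 ms time constant
print(information_timescale(T, R), information_timescale(T, R, T0=10.0))
```

On estimated R(T), noise can make individual gains negative, so in practice the sum is only meaningful once R(T) has been estimated reliably over the whole range of T.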

Fig 8. Distinct signatures of history dependence for different sorted units within mouse primary visual cortex.

(Top) Embedding-optimized estimates of R(T) reveal distinct signatures of history dependence for three different sorted units (A,B,C) within a single neural system (mouse primary visual cortex). In particular, sorted units have similar total history dependence Rtot, but differ vastly in the information timescale τR (horizontal and vertical dashed lines). Note that for unit C, τR is smaller than 5 ms and thus doesn’t appear in the plot. Shaded areas indicate ± two standard deviations obtained by bootstrapping, and vertical bars indicate the interval over which estimates of R(T) were averaged to estimate Rtot (Materials and methods). Estimates were computed with the Shuffling estimator and dmax = 5. (Bottom) Autocorrelograms for the same sorted units (A,B, and C, respectively) roughly show an exponential decay, which was fitted (solid grey line) to estimate the autocorrelation time τC (grey dashed line). Similar to the information timescale τR, only coefficients for T larger than T0 = 10 ms were considered during fitting.

We also observed that history dependence can build up on all timescales up to seconds, and that it shows characteristic increases at particular past ranges, e.g. T ≈ 100 ms and T ≈ 200 ms in CA1 (Fig 6B), possibly reflecting phase information in the theta cycles [45, 46]. Thus, the analysis does not only serve to investigate differences in history dependence between neural systems, but also resolves clear differences between sorted units. This could be used to investigate differences in information processing between different cortical layers, different neuron types or neurons with different receptive field properties. Importantly, because units are so different, ad hoc embedding schemes with a fixed number of bins or fixed bin width will miss considerable history dependence.

Discussion

To estimate history dependence in experimental data, we developed a method where the embedding of past spiking is optimized for each individual spike train. Thereby, it estimates as much history dependence as the limited amount of data allows. We found that embedding optimization is a robust and flexible estimation tool for neural spike trains with vastly different spiking statistics, where ad hoc embedding strategies would estimate substantially less history dependence. Based on our results, we arrived at practical guidelines that are summarized in Fig 9. In the following, we contrast history dependence R(T) with time-lagged measures such as the autocorrelation in more detail, discussing both the advantages and the limitations of the approach. We then discuss how one can relate estimated history dependence to neural coding and information processing based on the example data sets analyzed in this paper.

Fig 9. Practical guidelines for the estimation of history dependence in single neuron spiking activity.

More details regarding the individual points can be found at the end of Materials and methods.

Advantages and limitations of history dependence in comparison to the autocorrelation and lagged mutual information

A key difference between history dependence R(T) and the autocorrelation or lagged mutual information is that R(T) quantifies statistical dependencies between current spiking and the entire past spiking in a past range T (Fig 1B). This has the following advantages as a measure of statistical dependence, and as a footprint of information processing in single neuron spiking. First, R(T) allows one to compute the total history dependence, which, from a coding perspective, represents the redundancy of neural spiking with all past spikes, i.e. how much of the past information is also represented when emitting a spike. Second, because past spikes are considered jointly, R(T) captures synergistic effects and dismisses redundant past information (Fig 4). Finally, we found that this enables R(T) to disentangle the strength and timescale of history dependence for the binary autoregressive process (Fig 3). In contrast, the autocorrelation C(T) or lagged mutual information L(T) quantify the statistical dependence of neural spiking on a single past bin with time lag T, without considering any of the other bins (Fig 1A). Thereby, they miss synergistic effects, and they quantify redundant past dependencies that vanish once spiking activity in the more recent past is taken into account (Fig 4). As a consequence, the timescales of these measures reflect both the strength and the temporal depth of history dependence in the binary autoregressive process (Fig 3).

On a technical level, the autocorrelation time τC is obtained by fitting an exponential decay to the coefficients C(T). Applying the generalized timescale to the autocorrelation instead is difficult, because the coefficients C(T) can be negative and are too noisy for large T. While model fitting is in general more data-efficient than the model-free estimation presented here, it can also produce biased and unreliable estimates [16]. Furthermore, when the coefficients do not decay exponentially, a more complex model has to be fitted [52], or the analysis simply cannot be applied. In contrast, the generalized timescale can be applied directly to estimates of the history dependence R(T) to yield the information timescale τR, without any further assumptions or model fitting. However, we found that estimates of τR can depend strongly on the estimation method and embedding dimension (S12 Fig) and on the size of the data set (S2 and S3 Figs). The dependence on data size is weaker for the practical approach of optimizing up to dmax = 5 past bins, but we still recommend using data sets of similar length when aiming for comparability across experiments. Moreover, there might be cases where a model-free estimation of the true timescale is infeasible because of the complexity of past dependencies (S2 Fig, neuron with a 22 s past kernel). In this case, only ≈ 80% of the true timescale could be estimated from a 90-minute recording.
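As a rough illustration of why the generalized timescale needs no model fit, the sketch below computes it directly from a sampled R(T) curve. It assumes the form τR = Σi ((Ti−1 + Ti)/2 − T0) ΔR(Ti) / Σj ΔR(Tj), i.e. each gain ΔR(Ti) from the Glossary is attributed to the midpoint of its past-range interval. This weighting and the function name generalized_timescale are assumptions for illustration; the definition given in Results takes precedence. For a saturating exponential R(T) = Rtot(1 − e^(−T/τ)) sampled finely from T0 = 0, the result should approximate τ.

```python
import numpy as np

def generalized_timescale(past_ranges, R):
    """Gain-weighted mean past range of a history-dependence curve R(T).

    Assumed form (illustrative): each gain Delta R(T_i) = R(T_i) - R(T_{i-1})
    is attributed to the midpoint of [T_{i-1}, T_i], measured from T_0.
    """
    T = np.asarray(past_ranges, dtype=float)
    R = np.asarray(R, dtype=float)
    gains = np.diff(R)                       # Delta R(T_i), i = 1..n
    midpoints = 0.5 * (T[:-1] + T[1:])       # (T_{i-1} + T_i) / 2
    return np.sum((midpoints - T[0]) * gains) / np.sum(gains)

# Saturating exponential with tau = 0.1 s, sampled from T_0 = 0 to 3 s
tau = 0.1
T = np.linspace(0.0, 3.0, 3001)
R = 0.3 * (1.0 - np.exp(-T / tau))
print(round(generalized_timescale(T, R), 4))   # prints 0.1, i.e. approximately tau
```

Because only differences ΔR(Ti) enter, the estimate does not depend on the amplitude Rtot, and no functional form of R(T) has to be assumed.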

Another downside of quantifying the history dependence R(T) is that its estimation requires more data than fitting the autocorrelation time τC. To make the best use of the limited data, we devised the embedding-optimization approach, which finds the most efficient representation of past spiking for estimating history dependence. Even so, we found empirically that a minimum of 10 minutes of recorded spiking activity is advisable to achieve a meaningful quantification of history dependence and its timescale (S2 and S3 Figs). In addition, for shorter recordings, the analysis can lead to mild overestimation, because embedding parameters are optimized, and thereby overfitted, on noisy estimates (S2 Fig). This overestimation can, however, be avoided by cross-validation, which we find to be particularly relevant for the Bayesian bias criterion (BBC) estimator. Finally, our approach uses an embedding model that ranges from uniform embedding to an embedding with exponentially stretching past bins, assuming that information farther in the past requires less temporal resolution [53]. This embedding model might be inappropriate if, for example, spiking depends on the exact timing of distant past spikes, with gaps in time where past spikes are irrelevant. In such a case, embedding optimization could be used to optimize more complex embedding models that also account for this kind of spiking statistics.
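The combination of embedding optimization and cross-validation can be sketched as follows, under simplifying assumptions that are ours rather than the toolbox's: the plug-in (ML) estimator is swapped in for the BBC and shuffling estimators, the (d, κ) grid is small and fixed, and the recording is split once into a first half for selecting the embedding and a second half for evaluating it. All function names (embed, plugin_R, optimize_embedding) are hypothetical and do not describe the API of the published toolbox. Past bins follow the exponential embedding τj = τ1·10^((j−1)κ) with median thresholding of spike counts, as defined in Materials and methods.

```python
import itertools
import numpy as np

def embed(spike_times, t_grid, T, d, kappa):
    """Binary past embedding of [t - T, t): d bins of width tau_1 * 10**((j - 1) * kappa)."""
    widths = 10.0 ** (kappa * np.arange(d))
    widths *= T / widths.sum()                                # bins tile [t - T, t)
    edges = np.concatenate(([0.0], np.cumsum(widths)))        # bin edges as lags before t
    counts = np.empty((len(t_grid), d))
    for j in range(d):                                        # bin j covers [t - edges[j+1], t - edges[j])
        counts[:, j] = (np.searchsorted(spike_times, t_grid - edges[j])
                        - np.searchsorted(spike_times, t_grid - edges[j + 1]))
    return (counts > np.median(counts, axis=0)).astype(int)  # median threshold per bin

def entropy_bits(labels):
    _, c = np.unique(labels, return_counts=True)
    p = c / c.sum()
    return -np.sum(p * np.log2(p))

def plugin_R(x, past):
    """Plug-in (ML) estimate of I(X; X_-T) / H(X); biased upward for finite data."""
    keys = past @ (1 << np.arange(past.shape[1]))             # binary sequence -> integer code
    hx = entropy_bits(x)
    return (hx + entropy_bits(keys) - entropy_bits(2 * keys + x)) / hx

def optimize_embedding(spike_times, t_rec, T, dt=0.005, dims=range(1, 6), kappas=(0.0, 0.3, 0.6)):
    """Select (d, kappa) on the first half of the recording; evaluate R on the held-out half."""
    t_grid = np.arange(T, t_rec - dt, dt)
    x = (np.searchsorted(spike_times, t_grid + dt) - np.searchsorted(spike_times, t_grid) > 0).astype(int)
    fit, held_out = np.array_split(np.arange(len(t_grid)), 2)
    best = max(itertools.product(dims, kappas),
               key=lambda p: plugin_R(x[fit], embed(spike_times, t_grid[fit], T, *p)))
    return best, plugin_R(x[held_out], embed(spike_times, t_grid[held_out], T, *best))

# Illustrative input: a 10 min Poisson spike train at 10 Hz, which has no true history dependence
rng = np.random.default_rng(0)
spikes = np.sort(rng.uniform(0.0, 600.0, rng.poisson(10 * 600)))
(best_d, best_kappa), R_cv = optimize_embedding(spikes, t_rec=600.0, T=0.5)
print(best_d, best_kappa, round(R_cv, 4))
```

On such a Poisson spike train, the held-out estimate should be close to zero, whereas the in-sample maximum over the grid is inflated by the selection itself; the held-out half is what protects against this overfitting.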

Differences in total history dependence and information timescale between data sets agree with ideas from neural coding and hierarchical information processing

First, we found that the total history dependence Rtot clearly differs among the experimental data sets. Notably, Rtot was low for recordings from early visual processing areas such as the retina and primary visual cortex, which is in line with the theory of efficient coding [1, 54] and with neural adaptation for temporal whitening as observed in experiments [3, 55]. In contrast, Rtot was high for neurons in dorsal hippocampus (area CA1) and in cortical culture. In CA1, the original study [46] found that the temporal structure of neural activity within the temporal windows set by the theta cycles went beyond what one would expect from the integration of feed-forward excitatory inputs. The authors concluded that this could be due to local circuit computations. The high values of Rtot support this idea and suggest that local circuit computations could serve the integration of past information, either for the formation of a path integration–based neural map [56] or to recognize statistical structure for associative learning [8]. In cortical culture, neurons are driven exclusively by recurrent input and exhibit strong bursts in the population activity [57]. This leads to strong history dependence also at the single-neuron level.

To summarize, history dependence was low for early sensory processing and high for higher-level processing or for past dependencies induced by strong recurrent feedback in a neural network. We thus conclude that the estimated total history dependence Rtot does indeed provide a footprint of neural coding and information processing.

Second, we observed that the information timescale τR increases from retina (≈ 23 ms) to primary visual cortex (≈ 37 ms) to CA1 (≈ 96 ms), in agreement with the idea of a temporal hierarchy in neural information processing [12]. These results qualitatively agree with similar results obtained for the autocorrelation time of spontaneous activity [9], although the information timescales are overall much smaller than the autocorrelation times. Our results suggest that the hierarchy of intrinsic timescales may also be reflected in the history dependence of single neurons, as measured by the mutual information.

Conclusion

Embedding optimization enables the estimation of history dependence in a diversity of spiking neural systems, in terms of both its strength and its timescale. The approach could be used in future experimental studies to quantify history dependence across a diversity of brain areas, e.g. using the novel Neuropixels probe [58], or even across cortical layers within a single area. To this end, we provide a toolbox for Python3 [37]. These analyses might yield a more complete picture of hierarchical processing in terms of timescales, as well as a footprint of information processing and coding principles, i.e. information integration versus redundancy reduction.

Materials and methods

In this section, we provide all mathematical details required to reproduce the results of this paper. We first provide the basic definitions of history dependence, time-lagged measures and the past embedding. We then describe the embedding optimization approach that is used to estimate history dependence from neural spike recordings, and provide a description of the workflow. Next, we delineate the estimators of history dependence considered in this paper, and present the novel Bayesian bias criterion. Finally, we provide details on the benchmark model and how we approximated its history dependence for given past range and embedding parameters. All code for Python3 that was used to analyze the data and to generate the figures is available online at https://github.com/Priesemann-Group/historydependence.

Ethics statement

Data from salamander retina were recorded in strict accordance with the recommendations in the Guide for the Care and Use of Laboratory Animals of the National Institutes of Health, and the protocol was approved by the Institutional Animal Care and Use Committee (IACUC) of Princeton University (Protocol Number: 1828). The rat dorsal hippocampus experimental protocols were approved by the Institutional Animal Care and Use Committee of Rutgers University. Data from mouse primary visual cortex were recorded according to the UK Animals Scientific Procedures Act (1986).

Glossary

Terms

Past embedding: discrete, reduced representation of past spiking through temporal binning

Past-embedding optimization: Optimization of temporal binning for better estimation of history dependence

Embedding-optimized estimate: Estimate of history dependence for optimized embedding

Abbreviations

GLM: generalized linear model

ML: maximum likelihood

BBC: Bayesian bias criterion

Shuffling: Shuffling estimator based on a bias correction for the ML estimator

Symbols

Δt: bin size of the time bin for current spiking

T: past range of the past embedding

[t − T, t): embedded past window

d: embedding dimension or number of bins

κ: scaling exponent for exponential embedding

Trec: recording length

N = (Trec − T)/Δt: number of measurements, i.e. number of observed joint events of current and past spiking

X: random variable with binary outcomes x ∈ {0, 1}, which indicate the presence of a spike in a time bin of size Δt

X−T: random variable whose outcomes are binary sequences x−T ∈ {0, 1}^d, which represent past spiking activity in a past range T

Information-theoretic quantities

H(spiking) ≡ H(X): average spiking information

H(spiking|past(T)) ≡ H(X|X−T): average spiking information for given past spiking in a past range T

I(spiking;past(T)) ≡ I(X; X−T): mutual information between current spiking and past spiking in a past range T

R(T) ≡ I(X;X−T)/H(X): history dependence for given past range T

R(T; d, κ): history dependence for given past range T and past embedding d, κ

Rtot: total history dependence

ΔR(Ti)≡R(Ti) − R(Ti−1): gain in history dependence

τR: information timescale or generalized timescale of history dependence R(T)

L(T) ≡ I(X; X−T): lagged mutual information with time lag T, where X−T here denotes spiking in a single past bin at lag T

τL: generalized timescale of lagged mutual information L(T)

Estimated quantities

R̂(T; d, κ): estimated history dependence for given past range T and past embedding d, κ

R̂(T) ≡ R̂(T; d*, κ*): embedding-optimized estimate of R(T) for optimal embedding parameters d*, κ*

R̂tot: estimated total history dependence, i.e. the average of R̂(T) over T ∈ [TD, Tmax], the interval of saturated estimates

τ̂R: estimated information timescale

Basic definitions

Definition of history dependence

We quantify history dependence R(T) as the mutual information I(X; X−T) between present and past spiking X and X−T, normalized by the Shannon information H(X) of the binary spiking variable, i.e.

$$R(T) \equiv \frac{I(X; X_{-T})}{H(X)}. \tag{6}$$

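Under the assumption of stationarity and ergodicity, the mutual information can be computed either as an average over the stationary distribution p(x, x−T) or as a time average [21, 58], i.e.

$$I(X; X_{-T}) = \sum_{x \in \{0,1\}} \sum_{x_{-T} \in \{0,1\}^d} p(x, x_{-T}) \log \frac{p(x, x_{-T})}{p(x)\, p(x_{-T})} = \lim_{N \to \infty} \frac{1}{N} \sum_{n=1}^{N} \log \frac{p(x_{t_n}, x_{-T,t_n})}{p(x_{t_n})\, p(x_{-T,t_n})}.$$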

Here, $x_{t_n} \in \{0, 1\}$ indicates the presence of a spike in a small interval [tn, tn + Δt), with Δt = 5 ms throughout the paper, and $x_{-T,t_n}$ encodes the spiking history in the time window [tn − T, tn), at times tn = nΔt that are shifted by Δt.
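When empirical frequencies stand in for the probabilities, the ensemble average and the time average give the same plug-in estimate, because the time average simply visits each joint outcome as often as it occurs in the data. The sketch below checks this numerically under illustrative assumptions: a hypothetical binary spike train in which spiking is more likely right after a spike, a uniform embedding of d = 2 bins of width Δt, and the plug-in estimator, which is biased for limited data. The function names are hypothetical.

```python
import math
import random
from collections import Counter

def mi_ensemble(x, past):
    """I(X; X_-T) in bits as a sum over the empirical joint distribution p(x, x_-T)."""
    n = len(x)
    pj, px, pp = Counter(zip(x, past)), Counter(x), Counter(past)
    return sum(c / n * math.log2(c * n / (px[a] * pp[b])) for (a, b), c in pj.items())

def mi_time_average(x, past):
    """The same quantity as a time average of log p(x, x_-T) / (p(x) p(x_-T)) over t_n."""
    n = len(x)
    pj, px, pp = Counter(zip(x, past)), Counter(x), Counter(past)
    return sum(math.log2(pj[(a, b)] * n / (px[a] * pp[b])) for a, b in zip(x, past)) / n

def entropy(x):
    """Plug-in Shannon information H(X) in bits."""
    n = len(x)
    return -sum(c / n * math.log2(c / n) for c in Counter(x).values())

random.seed(1)
s = [0]
for _ in range(20_000):                      # spiking is more likely right after a spike
    s.append(int(random.random() < (0.3 if s[-1] else 0.05)))
d = 2                                        # uniform embedding: d past bins of width dt
x = s[d:]
past = [tuple(s[n - d:n]) for n in range(d, len(s))]
R = mi_ensemble(x, past) / entropy(x)
print(math.isclose(mi_ensemble(x, past), mi_time_average(x, past), abs_tol=1e-9), round(R, 3))
```

The first printed value is True; the second is the plug-in history dependence R for this embedding.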

Definition of lagged mutual information

The lagged mutual information L(T) [41] for a stationary neural spike train is defined as the mutual information between present spiking X and past spiking X−T in a single bin at time lag T, i.e.

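$$L(T) \equiv I(X; X_{-T}) \tag{11}$$

$$= \sum_{x \in \{0,1\}} \sum_{x_{-T} \in \{0,1\}} p(x, x_{-T}) \log \frac{p(x, x_{-T})}{p(x)\, p(x_{-T})} \tag{12}$$

$$= \lim_{N \to \infty} \frac{1}{N} \sum_{n=1}^{N} \log \frac{p(x_{t_n}, x_{t_n - T})}{p(x_{t_n})\, p(x_{t_n - T})} \tag{13}$$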

Here, $x_{t_n}$ indicates the presence of a spike in a time bin [tn, tn + Δt) and $x_{t_n - T}$ the presence of a spike in a single past bin [tn − T, tn − T + Δt), at times tn = nΔt that are shifted by Δt. In analogy to R(T), one can apply the generalized timescale to the lagged mutual information to obtain a timescale τL with

| (14) |
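A minimal plug-in estimate of L(T) from a binary spike train, using empirical frequencies in place of the probabilities in the ensemble form above, might look as follows. The lag is given as an integer number of bins of width Δt, the example spike train is a hypothetical first-order Markov process, and the function name lagged_mi is an assumption for illustration.

```python
import numpy as np

def lagged_mi(x, lag):
    """Plug-in L(T) in bits between x_{t_n} and the single past bin x_{t_n - T}.

    T = lag * dt with an integer lag >= 1.
    """
    x = np.asarray(x, dtype=int)
    cur, past = x[lag:], x[:-lag]
    joint = np.zeros((2, 2))
    np.add.at(joint, (cur, past), 1.0)                       # counts of (x_{t_n}, x_{t_n - T})
    joint /= joint.sum()                                     # empirical p(x, x_{-T})
    indep = np.outer(joint.sum(axis=1), joint.sum(axis=0))   # p(x) p(x_{-T})
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / indep[nz])))

rng = np.random.default_rng(1)
u = rng.random(50_000)
x = np.zeros(u.size, dtype=int)
for t in range(1, u.size):                                   # first-order Markov spiking (illustrative)
    x[t] = u[t] < (0.3 if x[t - 1] else 0.05)
print([round(lagged_mi(x, k), 4) for k in (1, 2, 3)])
```

Since each evaluation considers only one past bin, computing L(T) over many lags is cheap, but, as discussed above, it cannot separate new from redundant past information.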

Definition of autocorrelation

The autocorrelation C(T) for a stationary neural spike train is defined as

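$$C(T) = \frac{\langle x_{t_n} x_{t_n - T} \rangle_n - \langle x_{t_n} \rangle_n^2}{\langle x_{t_n}^2 \rangle_n - \langle x_{t_n} \rangle_n^2} \tag{15}$$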

with time lag T, and $x_{t_n}$ and $x_{t_n - T}$ as above. For an exponentially decaying autocorrelation $C(T) \propto e^{-T/\tau_C}$, τC is called the autocorrelation time.
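For comparison, the sketch below computes autocorrelation coefficients and estimates τC with a least-squares line fit to log C(T), discarding non-positive coefficients. This simple log-linear fit is a swapped-in choice for illustration, not the fitting procedure of the cited studies [16, 52], and the function names are hypothetical. For a binary first-order Markov chain with P(spike | spike) = 0.8 and P(spike | no spike) = 0.05, C(T) decays as 0.75^(T/Δt), so the exact value τC = −Δt/ln 0.75 ≈ 17.4 ms is available as a check.

```python
import numpy as np

def autocorrelation(x, max_lag):
    """Autocorrelation coefficients C(T) for lags T = dt, 2 dt, ..., max_lag * dt."""
    x = np.asarray(x, dtype=float)
    dev = x - x.mean()
    var = np.mean(dev ** 2)
    return np.array([np.mean(dev[k:] * dev[:-k]) / var for k in range(1, max_lag + 1)])

def fit_autocorrelation_time(C, dt):
    """Least-squares fit of log C(T) = a - T / tau_C; coefficients C(T) <= 0 are dropped."""
    lags = dt * np.arange(1, len(C) + 1)
    keep = C > 0                                  # the logarithm is undefined for C(T) <= 0
    slope, _ = np.polyfit(lags[keep], np.log(C[keep]), 1)
    return -1.0 / slope

rng = np.random.default_rng(2)
u = rng.random(100_000)
x = np.zeros(u.size, dtype=int)
for t in range(1, u.size):                        # Markov spiking with C(T) = 0.75**(T/dt) in expectation
    x[t] = u[t] < (0.8 if x[t - 1] else 0.05)
dt = 0.005
tau_C = fit_autocorrelation_time(autocorrelation(x, max_lag=5), dt)
print(round(tau_C * 1e3, 1), "ms; exact value:", round(-dt / np.log(0.75) * 1e3, 1), "ms")
```

The fit is restricted to short lags here; at larger lags the coefficients become small relative to their noise, which is one reason why fitting C(T) requires care.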

Past embedding

Here, we encode the spiking history in a finite time window [t − T, t) as a sequence of binary spike counts in d past bins (Fig 2). When more than one spike can occur in a single bin, the ith symbol is set to 1 if the spike count exceeds the median activity in the ith bin, and to 0 otherwise. This type of temporal binning is more generally referred to as past embedding. It is formally defined as a mapping

$$\mathcal{F}_{[t-T,\,t)} \longrightarrow S_d = \{0, 1\}^d \tag{16}$$

from the set of all possible spiking histories, i.e. the sigma algebra generated by the point process (neural spiking) in the time interval [t − T, t), to the set of d-dimensional binary sequences Sd = {0, 1}^d. We can drop the dependence on the time t because we assume stationarity of the point process. Here, T is the embedded past range, d the embedding dimension, and θ denotes all the embedding parameters that govern the mapping, i.e. θ = (d, …). The resulting binary sequence at time t for given embedding θ and past range T will be denoted by x−T(t). In this paper, we consider the following two embeddings for the estimation of history dependence.
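The median rule for binarizing spike counts can be written in a few lines. In this sketch, counts are assumed to be arranged with one row per time step tn and one column per past bin, and the median is taken separately for each bin over the recording; the function name binarize_counts is hypothetical. When the median count of a bin is zero, the rule reduces to marking whether the bin contains at least one spike.

```python
import numpy as np

def binarize_counts(counts):
    """Binary past symbols from spike counts (rows: time steps t_n, columns: past bins i).

    Symbol i is 1 if the count in bin i exceeds that bin's median count over the recording.
    """
    counts = np.asarray(counts)
    return (counts > np.median(counts, axis=0)).astype(int)

counts = np.array([[0, 2], [1, 3], [0, 5], [0, 1]])
print(binarize_counts(counts).tolist())   # [[0, 0], [1, 1], [0, 1], [0, 0]]
```

In the example, the first bin has median 0 (spike presence), whereas the second bin has median 2.5, so only counts of 3 or more are mapped to 1.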

Uniform embedding

If all bins have the same bin width τ = T/d, the embedding is called uniform. The main drawback of the uniform embedding is that, for fixed d, larger past ranges T force a uniform loss of temporal resolution across all bins.

Exponential embedding

One can generalize the uniform embedding by letting bin widths increase exponentially with bin index j = 1, …, d according to $\tau_j = \tau_1 \cdot 10^{(j-1)\kappa}$. Here, τ1 gives the bin size of the first past bin, and is uniquely determined when T, d and κ are specified. Note that κ = 0 yields a uniform embedding, whereas κ > 0 decreases resolution on distant past spikes. For fixed embedding dimension d and past range T, this retains a higher resolution on spikes in the more recent past.
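Because the d bins tile the window [t − T, t), their widths must sum to T, which fixes the first bin size: τ1 = T / Σj=1..d 10^((j−1)κ), or equivalently τ1 = T(10^κ − 1)/(10^(dκ) − 1) for κ > 0. The sketch below computes bin widths and edges from (T, d, κ); the function names are hypothetical.

```python
import numpy as np

def exponential_bin_widths(T, d, kappa):
    """Widths tau_j = tau_1 * 10**((j - 1) * kappa) for j = 1..d, with tau_1 chosen so they sum to T."""
    growth = 10.0 ** (kappa * np.arange(d))
    tau_1 = T / growth.sum()
    return tau_1 * growth

def past_bin_edges(T, d, kappa):
    """Lags before t that delimit the past bins: bin j covers [t - e_j, t - e_{j-1})."""
    return np.concatenate(([0.0], np.cumsum(exponential_bin_widths(T, d, kappa))))

print(exponential_bin_widths(1.0, 4, 0.0))   # kappa = 0: uniform bins of width T/d = 0.25
print(past_bin_edges(1.0, 3, 1.0))           # tau_1 = 1/111, so edges at 0, 1/111, 11/111 and 1
```

With κ = 1 and d = 3, the most distant bin is 100 times wider than the most recent one, which illustrates how quickly resolution on distant spikes is traded for resolution on recent ones.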

Sufficient embedding

Ideally, the past embedding preserves all the information that the spiking history in the past range T has about the present spiking dynamics. In that case, no additional past information influences the probability of $x_t$ once the embedded spiking history is given, i.e.

$$p\big(x_t \mid \text{spiking history in } [t-T,\, t)\big) = p\big(x_t \mid x_{-T}(t)\big) \tag{17}$$