Abstract

Segmentation of the prostate bed, the residual tissue remaining after removal of the prostate gland, is an essential prerequisite for post-prostatectomy radiotherapy. It is also a challenging task, because the prostate bed lacks contrast boundaries and has highly variable shapes that depend on the neighboring organs. In this work, we propose a novel deep learning-based method to automatically segment this “invisible target”. The main idea of our design is to take the surrounding normal structures (bladder&rectum) as a reference and use this information to facilitate prostate bed segmentation. To achieve this goal, we first use a U-Net as the backbone network to perform bladder&rectum segmentation, which serves as a low-level task that provides references for the high-level task of prostate bed segmentation. On top of the backbone network, we build a novel attention network with a series of cascaded attention modules to further extract discriminative features for the high-level prostate bed segmentation task. Because the attention network depends on the backbone network but not vice versa, simulating the clinical workflow in which normal structures guide the delineation of the radiotherapy target, we name the resulting model the asymmetrical multi-task attention U-Net. Extensive experiments on a clinical dataset of 186 CT images demonstrate the effectiveness of this design and the superior performance of the model compared with conventional atlas-based methods for prostate bed segmentation. The source code is publicly available at https://github.com/superxuang/amta-net.

Keywords: CT image segmentation, Fully convolutional networks, Multi-task learning

1. Introduction

Radical prostatectomy is an effective treatment for prostate cancer when the cancer is confined to the prostate. However, after resection of the prostate gland, residual cancerous tissue may still be present in the remaining surgical bed and the surrounding tissues, a region known as the prostate bed (or prostatic fossa). Left untreated, this residual disease can substantially increase the risk of cancer recurrence and even metastasis. Postoperative radiotherapy of the prostate bed is therefore regarded as a standard adjuvant or salvage treatment after radical prostatectomy. As a prerequisite for postoperative radiotherapy, accurate prostate bed segmentation in planning computed tomography (CT) images is vital to successful disease control.

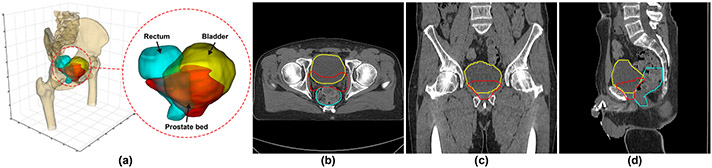

However, accurate contouring of the prostate bed in CT images is a highly challenging and unique task. As shown in Fig. 1, the prostate bed, an anatomical region in the male pelvis situated between the bladder and rectum, mainly consists of the residual prostatic tissue left after removal of the prostate gland and some adjacent volume of the bladder. Unlike the prostate gland, it is not an intact structure with a visible boundary, so it is often referred to in the literature as a “virtual” volume [1,3,4]. This makes prostate bed segmentation a unique problem, much harder than the segmentation of most other structures. The boundary of the prostate bed is defined mainly by the shapes of the neighboring organs and a series of consensus guidelines [9,10,12,14] rather than by local intensity contrast. Therefore, it is difficult to identify the prostate bed in CT images from gray-level differences alone, without anatomical knowledge. Moreover, since the prostate bed mainly consists of soft tissue, its shape and size vary greatly across patients: the status (full or empty) of the neighboring bladder and rectum can significantly change its volume.

Fig. 1.

An example post-prostatectomy case showing the prostate bed (red), bladder (yellow), and rectum (cyan) in 3D (a) and 2D (b, c, d) views (Color figure online).

In clinical practice, this challenging task is commonly performed by physicians with manual contouring tools, which is time-consuming and prone to inter-observer variation. Although automated workflows are highly desirable, only a few methods have been proposed for prostate bed segmentation, all of them atlas-based [1,3]. Hwee et al. [3] were the first to segment the prostate bed automatically, using commercial atlas-based segmentation (ABS) software with 75 atlas images. Although their method was much faster than manual contouring, the accuracy of the generated contours was not adequate for clinical use (mean Dice similarity coefficient (DSC) of 0.47). A similar conclusion was drawn in a later study by Delpon et al. [1], who compared the performance of five commercial ABS systems for segmenting the prostate bed and the neighboring organs at risk (OARs). Their results showed that the ABS methods were efficient and accurate for high-contrast organs (e.g., the femoral heads) but insufficient for the prostate bed. Overall, atlas-based methods are not accurate enough for prostate bed segmentation because they (1) rely too heavily on local intensity contrast and (2) give insufficient consideration to the geometric correlation between the prostate bed and the neighboring organs.

In this paper, to address these issues, we present a novel asymmetrical multi-task attention U-Net (AMTA-U-Net) for accurate segmentation of the prostate bed in CT images. Deep learning-based methods, specifically fully convolutional networks (FCNs) [5,6,8], have significantly improved the accuracy of medical image segmentation, especially for organs with highly variable shapes and ambiguous boundaries (e.g., pelvic organs [2,13,15]). We therefore adopt an FCN framework for the difficult task of prostate bed segmentation. Considering the strong geometric correlation between the prostate bed and the bladder&rectum, we formulate prostate bed segmentation as a high-level task that depends on the low-level task of bladder&rectum segmentation. This results in a novel multi-task network with an asymmetrical attention mechanism that derives the prostate bed mask from the structural information of the bladder&rectum.

In summary, the main contributions of this work are threefold: (1) To achieve robust segmentation of the prostate bed, a treatment target with no clear margin, we leverage deep learning to handle this challenging problem. To the best of our knowledge, this is the first attempt to solve this clinical problem with deep learning-based methods. (2) To further improve the segmentation accuracy of the prostate bed, we propose a novel asymmetrical multi-task attention U-Net that formulates prostate bed segmentation as a high-level task taking references from the low-level bladder&rectum segmentation task. (3) As a by-product, the proposed method segments the bladder and rectum jointly with the prostate bed, which is useful for OAR delineation in radiotherapy planning. To demonstrate the effectiveness of the proposed method, we conduct extensive experiments on a clinical dataset of 186 CT images from 186 post-prostatectomy patients. The experimental results show that the proposed AMTA-U-Net achieves higher prostate bed segmentation accuracy than the baseline U-Net and other multi-task deep models and significantly outperforms conventional atlas-based methods.

2. Method

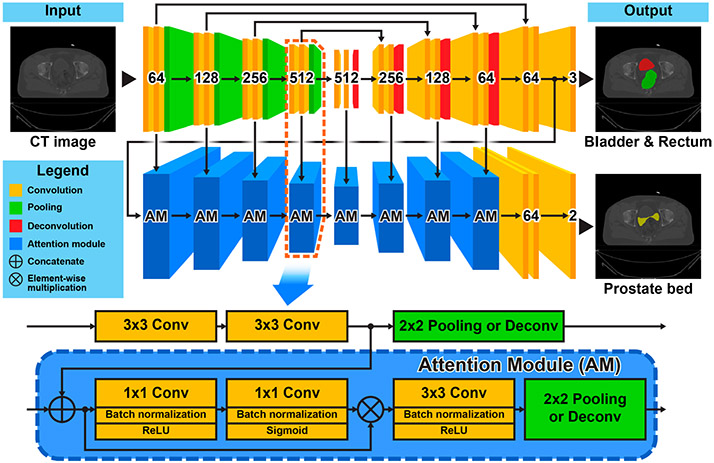

In this section, we describe the proposed AMTA-U-Net for prostate bed segmentation in detail. The top part of Fig. 2 gives a schematic representation of the method. It consists of two main components: (1) a backbone network with a U-Net architecture for the low-level bladder&rectum segmentation task, and (2) an attention network, built on the backbone network from a series of cascaded attention modules (AMs), for the high-level prostate bed segmentation task. The inner structure of the attention module is shown at the bottom of Fig. 2.

Fig. 2.

Schematic representation of the proposed asymmetrical multi-task attention U-Net (top) and the inner structure of the attention module (bottom).

2.1. Backbone Network for Bladder&Rectum Segmentation

The shape of the bladder&rectum is an essential reference for physicians when they delineate the prostate bed. Accordingly, segmenting the bladder&rectum is the first step of the proposed method. To this end, we use the U-Net [11], a classical and powerful FCN architecture for medical image segmentation, as the backbone network to predict a pixel-wise mask of the bladder&rectum. The detailed architecture of the backbone U-Net is illustrated at the top of Fig. 2, where the number on each convolutional block denotes its number of output channels. As the input image propagates through the backbone network, we obtain not only the bladder&rectum segmentation mask but also a series of hierarchical feature maps that carry progressively richer bladder&rectum structural information. These feature maps serve as references from which the subsequent attention network infers the final prostate bed mask.

2.2. Attention Network for Prostate Bed Segmentation

Since the prostate bed boundary largely depends on the shape of the bladder&rectum, the hierarchical features used for bladder&rectum segmentation can naturally serve as references for prostate bed segmentation as well. However, rather than learning from entire feature maps, focusing on their most informative parts can make model training more efficient and improve performance. Inspired by the attention mechanism in multi-task learning [7], we design an attention network that extracts discriminative features from the backbone network to infer the prostate bed mask. As shown in Fig. 2, the attention network consists of a series of cascaded attention modules, each laterally connected to a convolutional block of the backbone U-Net. With this architecture, the attention network has full access to the hierarchical features of the backbone network and can exploit both spatial image information (from the convolutional blocks on the left, i.e., the encoder side) and semantic bladder&rectum structural information (from the convolutional blocks on the right, i.e., the decoder side) to infer the prostate bed mask progressively. The inner structure of the attention module is illustrated at the bottom of Fig. 2. It is designed to self-learn a pixel-wise attention mask from the input features and thereby extract features that are more discriminative for the final target. This procedure is implemented by two cascaded 1×1 convolutional layers followed by a 3×3 convolutional layer. The two 1×1 convolutional layers act as a feature selector: they self-learn an element-wise soft mask with values in [0, 1], which tailors the input features through element-wise multiplication. The subsequent 3×3 convolutional layer acts as a feature extractor that further adapts the masked feature map to the final target.
To keep the spatial resolution consistent with the backbone, a 2×2 pooling or deconvolutional layer down-samples or up-samples, respectively, the extracted feature map at the end of each attention module.
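To make the data flow of a single attention module concrete, the NumPy sketch below implements one forward pass under stated assumptions; it is not the authors' implementation (their code is at https://github.com/superxuang/amta-net). The ReLU between the two 1×1 layers, the sigmoid that produces the [0, 1] mask, the ReLU after the 3×3 layer, max pooling as the down-sampling operator, the absence of normalization layers, and all function names and tensor sizes are illustrative assumptions. The input `feat` stands for whatever the module receives from its lateral backbone block; how it may be combined with the previous module's output is not modeled here.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(x, w, b):
    """1x1 convolution: x is (C_in, H, W), w is (C_out, C_in), b is (C_out,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def conv3x3(x, w, b):
    """Zero-padded, stride-1 3x3 convolution: w is (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out + b[:, None, None]


def maxpool2x2(x):
    """2x2 max pooling with stride 2 (H and W must be even)."""
    c, h, wd = x.shape
    return x.reshape(c, h // 2, 2, wd // 2, 2).max(axis=(2, 4))


def deconv2x2(x, w):
    """Stride-2, 2x2 transposed convolution: w is (C_in, C_out, 2, 2)."""
    _, h, wd = x.shape
    out = np.einsum("coab,chw->ohawb", w, x)  # out[o, i, a, j, b] -> pixel (2i+a, 2j+b)
    return out.reshape(w.shape[1], 2 * h, 2 * wd)


def attention_module(feat, p, resample="down"):
    """Hypothetical attention module: feature selector, feature extractor, resampling."""
    hidden = relu(conv1x1(feat, p["w1"], p["b1"]))          # first 1x1 layer
    mask = sigmoid(conv1x1(hidden, p["w2"], p["b2"]))       # second 1x1 layer -> soft mask in [0, 1]
    selected = mask * feat                                  # element-wise feature selection
    extracted = relu(conv3x3(selected, p["w3"], p["b3"]))   # 3x3 feature extractor
    if resample == "down":
        return maxpool2x2(extracted), mask
    return deconv2x2(extracted, p["w_up"]), mask


rng = np.random.default_rng(0)
C, H, W, K = 8, 16, 16, 16  # illustrative channel and spatial sizes
params = {
    "w1": 0.1 * rng.normal(size=(C, C)), "b1": np.zeros(C),
    "w2": 0.1 * rng.normal(size=(C, C)), "b2": np.zeros(C),
    "w3": 0.1 * rng.normal(size=(K, C, 3, 3)), "b3": np.zeros(K),
    "w_up": 0.1 * rng.normal(size=(K, K // 2, 2, 2)),
}
x = rng.normal(size=(C, H, W))
down, mask = attention_module(x, params, "down")
up, _ = attention_module(x, params, "up")
print(down.shape, up.shape)  # (16, 8, 8) (8, 32, 32)
```

The down-sampling variant halves the spatial size and the up-sampling variant doubles it, which is how, in this sketch, a module's output can be handed to the next module in the cascade at the resolution of the adjacent backbone level.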

2.3. Implementation Details

The proposed AMTA-U-Net takes 2D CT slices as input. To incorporate more spatial context, we stack each center slice with its two adjacent slices to form a 3-channel input image. All CT slices are center-cropped and resampled to a uniform size of 128×128 with a spatial resolution of 2 mm × 2 mm. Pixel intensities are rescaled from [−200, 800] Hounsfield units (HU) to [0, 1]. To mitigate overfitting during training, we randomly translate and rotate the input CT slices within [−5, 5] mm and [−0.05, 0.05] rad, respectively. We train the models for 100 epochs with a base learning rate of 10⁻³ and a batch size of 144. Across all training epochs, the model with the best performance on the validation set is selected as the final model and evaluated on the testing set. The implementation of the proposed method is publicly available at https://github.com/superxuang/amta-net.
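The preprocessing and augmentation settings above can be sketched in NumPy as follows. This is an illustration under assumptions, not the released pipeline: replicating the center slice at the first and last slice of a volume, sampling the shift and angle uniformly, and all function and constant names are our own choices.

```python
import numpy as np

HU_MIN, HU_MAX = -200.0, 800.0      # intensity window stated in the text
SPACING_MM = 2.0                     # in-plane pixel size after resampling
MAX_SHIFT_MM, MAX_ROT_RAD = 5.0, 0.05


def normalize_hu(hu):
    """Clip to [-200, 800] HU and map linearly onto [0, 1]."""
    return (np.clip(hu, HU_MIN, HU_MAX) - HU_MIN) / (HU_MAX - HU_MIN)


def three_channel_input(volume_hu, k):
    """Stack slices k-1, k, k+1 of a (D, H, W) volume into a (3, H, W) input.
    At the first/last slice the missing neighbor is replaced by the center slice (assumption)."""
    last = volume_hu.shape[0] - 1
    idx = [max(k - 1, 0), k, min(k + 1, last)]
    return normalize_hu(volume_hu[idx])


def sample_augmentation(rng):
    """Uniformly sample an in-plane shift (converted to pixels) and a rotation angle."""
    shift_px = rng.uniform(-MAX_SHIFT_MM, MAX_SHIFT_MM, size=2) / SPACING_MM  # within +/-2.5 px
    angle_rad = rng.uniform(-MAX_ROT_RAD, MAX_ROT_RAD)                        # within about +/-2.86 deg
    return shift_px, angle_rad


rng = np.random.default_rng(0)
volume = rng.uniform(-1000.0, 1500.0, size=(10, 128, 128))  # synthetic HU values
x = three_channel_input(volume, 0)
shift, angle = sample_augmentation(rng)
print(x.shape, x.min() >= 0.0, x.max() <= 1.0)  # (3, 128, 128) True True
```

With 128 pixels at 2 mm per pixel, each crop covers a 256 mm × 256 mm field of view, and the ±5 mm translation range corresponds to ±2.5 pixels. Actually warping the image with the sampled shift and rotation (for example with an interpolating affine transform) is omitted from this sketch.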

3. Experiments

3.1. Dataset

We conduct experiments on a dataset of 186 post-prostatectomy patients collected at a single clinical site between 2009 and 2019. Each subject has one planning CT image and three segmentation masks corresponding to the prostate bed, bladder, and rectum, respectively. We randomly divide the dataset into five folds and evaluate the performance of different models by cross-validation, using three folds for training, one for validation, and one for testing in each round. DSC and average symmetric surface distance (ASD) are used as the evaluation metrics.
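For reference, DSC = 2|P ∩ G| / (|P| + |G|) for a predicted mask P and ground-truth mask G, and ASD averages, over the surface points of both masks, the distance from each point to the nearest surface point of the other mask. The NumPy sketch below follows these standard definitions. The choice of 4-/6-connected neighbors to define surface points, the brute-force distance computation, the convention DSC = 1 for two empty masks, and the function names are assumptions; the text does not state whether the metrics are computed per slice or per 3D volume.

```python
import numpy as np


def dice(pred, gt):
    """Dice similarity coefficient (DSC) of two binary masks; 1.0 if both are empty."""
    pred, gt = np.asarray(pred, bool), np.asarray(gt, bool)
    denom = pred.sum() + gt.sum()
    return 2.0 * np.logical_and(pred, gt).sum() / denom if denom else 1.0


def surface_points(mask):
    """Indices of foreground voxels that touch the background along any axis (2D or 3D)."""
    m = np.pad(np.asarray(mask, bool), 1)
    core = tuple(slice(1, -1) for _ in range(m.ndim))
    interior = m[core].copy()
    for axis in range(m.ndim):
        for step in (-1, 1):
            interior &= np.roll(m, step, axis=axis)[core]
    return np.argwhere(m[core] & ~interior)


def asd(pred, gt, spacing):
    """Average symmetric surface distance in physical units (e.g. mm).
    Uses brute-force pairwise distances and assumes both masks are non-empty."""
    sp = np.asarray(spacing, float)
    a, b = surface_points(pred) * sp, surface_points(gt) * sp
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return (d.min(axis=1).sum() + d.min(axis=0).sum()) / (len(a) + len(b))


gt = np.zeros((10, 10), bool); gt[2:6, 2:6] = True
pred = np.zeros((10, 10), bool); pred[2:6, 3:7] = True  # same square shifted by one pixel
print(dice(pred, gt), asd(pred, gt, spacing=(2.0, 2.0)))  # 0.75 1.0
```

For full CT volumes the pairwise distance matrix can become large; computing distances with a Euclidean distance transform (for example `scipy.ndimage.distance_transform_edt` with its `sampling` argument set to the voxel spacing) is a common, more memory-efficient alternative.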

3.2. Role of the Bladder&Rectum in Prostate Bed Segmentation

As mentioned above, the contour of the bladder&rectum is an essential reference that helps physicians manually delineate the prostate bed. Thus, this reference contour may serve as a prerequisite for automatic methods as well. To verify the contribution of the bladder&rectum structures to prostate bed segmentation, we treat the ground-truth mask of the bladder&rectum as prior knowledge and combine it with the input CT slices to compose a multi-channel image, from which a standard U-Net predicts the prostate bed mask. As shown in Table 1, with the prior knowledge of the bladder&rectum, the U-Net achieves an average DSC of 75.54%, which is 2.25 percentage points higher than that of a U-Net without this prior knowledge (DSC = 73.29%); the ASD also decreases from 2.79 mm to 2.47 mm. This result demonstrates that bladder&rectum structural information can help improve the segmentation accuracy of the prostate bed. Based on this finding, we consider the prostate bed and the bladder&rectum jointly in the design of our proposed method and reformulate prostate bed segmentation as a high-level task built on the low-level task of bladder&rectum segmentation.
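The input used for the "with prior knowledge" row of Table 1 can be sketched as a channel-wise concatenation. In this illustration, using one channel per organ (rather than a single merged bladder&rectum channel), the 3-channel CT input from the preprocessing step, and the function name are assumptions. Note that such ground-truth masks exist only in this ablation; for new patients they are unavailable, which is why the proposed method predicts them with the backbone network instead.

```python
import numpy as np


def add_structure_prior(ct_input, bladder_mask, rectum_mask):
    """Append binary bladder and rectum masks to a (3, H, W) CT input -> (5, H, W).
    One channel per organ is an assumption; a single merged channel is an alternative."""
    prior = np.stack([bladder_mask, rectum_mask]).astype(ct_input.dtype)
    return np.concatenate([ct_input, prior], axis=0)


ct = np.random.default_rng(0).uniform(0.0, 1.0, size=(3, 128, 128)).astype(np.float32)
bladder = np.zeros((128, 128), bool); bladder[40:70, 50:80] = True   # toy masks
rectum = np.zeros((128, 128), bool); rectum[85:100, 55:75] = True
x = add_structure_prior(ct, bladder, rectum)
print(x.shape, x.dtype)  # (5, 128, 128) float32
```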

Table 1.

Comparison of prostate bed segmentation with/without bladder&rectum structural prior knowledge.

| Using prior knowledge of bladder&rectum | DSC [mean(std) %] | ASD [mean(std) mm] |
|---|---|---|
| No | 73.29(7.43) | 2.79(1.27) |
| Yes | 75.54(6.92) | 2.47(1.12) |

3.3. Comparison with Different Deep Learning Models

Compared with the baseline U-Net, three key properties of the proposed method contribute to the improved prostate bed segmentation: (1) the multi-task learning strategy, which improves model generalization through parameter sharing; (2) the asymmetrical network architecture, which encodes the geometric dependency of the prostate bed on the bladder&rectum; and (3) the attention mechanism, which selects discriminative features for the specific target. To verify the effectiveness of these designs, we compare the proposed method with other deep models that embody different combinations of these properties. The experimental results are summarized in Table 2.

Table 2.

Comparison of different deep learning-based models.

| Models | Prostate bed DSC [mean(std) %] | Bladder DSC [mean(std) %] | Rectum DSC [mean(std) %] | Prostate bed ASD [mean(std) mm] | Bladder ASD [mean(std) mm] | Rectum ASD [mean(std) mm] |
|---|---|---|---|---|---|---|
| U-Net | 73.29(7.43) | - | - | 2.79(1.27) | - | - |
| Multi-task U-Net | 74.41(7.23) | 87.84(10.04) | 80.22(7.82) | 2.58(1.21) | 1.62(2.12) | 2.50(1.85) |
| Cascaded U-Net | 75.03(7.41) | 87.93(8.95) | 79.68(9.54) | 2.54(1.23) | 1.53(1.47) | 2.78(2.97) |
| MTA-U-Net [7] | 75.03(7.11) | 88.46(8.08) | 80.31(9.23) | 2.51(1.13) | 1.44(1.30) | 2.56(2.91) |
| Proposed | 75.67(6.56) | 88.40(8.72) | 80.35(9.36) | 2.42(1.03) | 1.47(1.22) | 2.60(3.00) |

As shown in Table 2, all the multi-task models (Multi-task U-Net, Cascaded U-Net, MTA-U-Net, and our method) outperform the single-task model (U-Net) on prostate bed segmentation, demonstrating the effectiveness of the multi-task learning strategy for this task. Among the multi-task models, the proposed method achieves the best mean prostate bed accuracy in terms of both DSC and ASD, outperforming both the models that ignore the dependency between the prostate bed and the bladder&rectum (Multi-task U-Net and MTA-U-Net) and the models without an attention mechanism (Multi-task U-Net and Cascaded U-Net). This result indicates that an attention mechanism incorporating the geometric dependency between the prostate bed and the bladder&rectum can help improve prostate bed segmentation.
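The gains over the single-task U-Net can be tallied directly from the mean prostate bed DSC values in Table 2. The short script below only re-derives these differences (in percentage points) from the reported numbers; the variable names are illustrative and no new results are introduced.

```python
# Mean prostate bed DSC (%) as reported in Table 2.
pb_dsc = {
    "U-Net": 73.29,
    "Multi-task U-Net": 74.41,
    "Cascaded U-Net": 75.03,
    "MTA-U-Net": 75.03,
    "Proposed": 75.67,
}

baseline = pb_dsc["U-Net"]
# Difference to the single-task baseline, rounded to hide floating-point noise.
gains = {name: round(v - baseline, 2) for name, v in pb_dsc.items() if name != "U-Net"}

for name, gain in sorted(gains.items(), key=lambda kv: kv[1]):
    print(f"{name:17s} {gain:+.2f} points")
```

The proposed model's 2.38-point gain is the largest, while the Cascaded U-Net and MTA-U-Net tie at 1.74 points and the Multi-task U-Net gains 1.12 points.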

3.4. Comparison with Atlas-Based Method

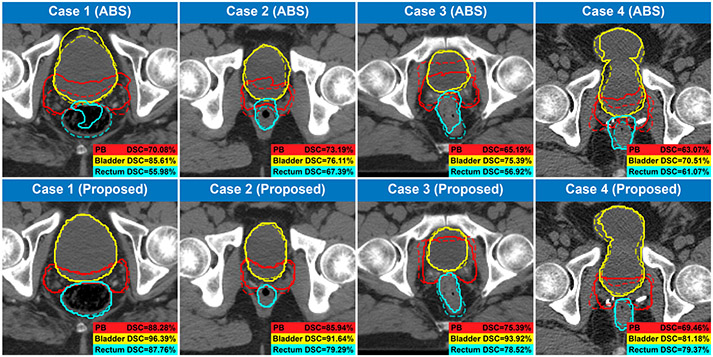

As discussed in Sect. 1, automated prostate bed segmentation has rarely been studied, apart from a few attempts using commercial atlas-based segmentation (ABS) software. Hence, to demonstrate the advantage of the proposed method, we compare it with these commercial ABS packages. In this comparison, we not only list the results of commercial ABS software reported on other datasets but also evaluate one of the high-performing ABS packages (MIM) on our dataset using the same five-fold cross-validation strategy as for the proposed method (four folds for atlas building and one fold for testing). As shown in Table 3, the proposed method significantly outperforms the atlas-based method on both the prostate bed and the bladder&rectum segmentation tasks, demonstrating its superior performance compared with the conventional atlas-based method. Figure 3 visualizes several cases from this comparison.
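The two cross-validation protocols, three training, one validation, and one testing fold per round for the deep models (Sect. 3.1), and four atlas folds plus one testing fold for the ABS baseline, can be sketched as follows. Which fold serves as the validation fold in each round, the random seed, the round-robin fold assignment, and the function names are assumptions; the text only specifies the fold counts.

```python
import random


def make_folds(n_subjects, k=5, seed=0):
    """Randomly partition subject indices into k roughly equal folds."""
    ids = list(range(n_subjects))
    random.Random(seed).shuffle(ids)
    return [ids[i::k] for i in range(k)]


def deep_model_rounds(folds):
    """Round r: fold r tests, fold r+1 validates (assumption), the other three train."""
    k = len(folds)
    for r in range(k):
        val_idx = (r + 1) % k
        train = [s for i, f in enumerate(folds) if i not in (r, val_idx) for s in f]
        yield train, folds[val_idx], folds[r]


def atlas_rounds(folds):
    """Round r: fold r tests, the other four folds form the atlas set."""
    for r, test in enumerate(folds):
        atlas = [s for i, f in enumerate(folds) if i != r for s in f]
        yield atlas, test


folds = make_folds(186)
print(sorted(len(f) for f in folds))  # [37, 37, 37, 37, 38]
```

Every subject is tested exactly once under both protocols, so the reported means in Table 3 for the two methods evaluated on our dataset cover all 186 subjects.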

Table 3.

Comparison with commercial atlas-based segmentation (ABS) software.

| Method | Dataset (train/test) | Prostate bed DSC [mean(std) %] | Bladder DSC [mean(std) %] | Rectum DSC [mean(std) %] | Prostate bed ASD [mean(std) mm] | Bladder ASD [mean(std) mm] | Rectum ASD [mean(std) mm] |
|---|---|---|---|---|---|---|---|
| ABS (MIM) [3] | 80 (75/5) | 47.0(16.0) | 67.0(18.0) | 58.0(9.0) | - | - | - |
| ABS (WFB) [1] | 20 (10/10) | 56.0(10.0) | 76.0(12.0) | 73.0(7.0) | - | - | - |
| ABS (MIM) [1] | 20 (10/10) | 61.0(9.0) | 80.0(14.0) | 75.0(7.0) | - | - | - |
| ABS (ABAS) [1] | 20 (10/10) | 67.0(13.0) | 81.0(13.0) | 75.0(9.0) | - | - | - |
| ABS (SPICE) [1] | 20 (10/10) | 37.0(9.0) | 76.0(26.0) | 68.0(12.0) | - | - | - |
| ABS (RS) [1] | 20 (10/10) | 51.0(17.0) | 59.0(15.0) | 49.0(12.0) | - | - | - |
| ABS (MIM) | 186 (5-fold CV) | 64.2(11.9) | 64.1(17.5) | 61.8(11.5) | 4.8(11.4) | 7.7(15.3) | 5.9(11.0) |
| Proposed | 186 (5-fold CV) | 75.7(6.6) | 88.4(8.7) | 80.4(9.4) | 2.4(1.0) | 1.5(1.2) | 2.6(3.0) |

Fig. 3.

Contours generated by the ABS method and the proposed method. The predicted and ground-truth contours are denoted by solid and dashed lines, respectively.

4. Conclusion

In this work, we present a novel multi-task deep learning-based method, the asymmetrical multi-task attention U-Net, to handle the highly challenging problem of segmenting the “invisible target”, the prostate bed, in CT images. The proposed method performs prostate bed segmentation jointly with bladder&rectum segmentation in a multi-task manner. The high-level task of prostate bed segmentation is accomplished by an attention network that extracts discriminative features from a backbone U-Net used for the low-level task of bladder&rectum segmentation. Extensive experiments on a clinical dataset of 186 CT images demonstrate the effectiveness of the designs in the proposed method and the superior performance of deep learning-based methods compared with conventional atlas-based methods for prostate bed segmentation.


Acknowledgments

This work was supported in part by NIH Grant CA206100.

Footnotes

Electronic supplementary material: The online version of this chapter (https://doi.org/10.1007/978-3-030-59719-1_46) contains supplementary material, which is available to authorized users.

References

- 1. Delpon G, et al.: Comparison of automated atlas-based segmentation software for postoperative prostate cancer radiotherapy. Front. Oncol. 6, 178 (2016)

- 2. He K, Cao X, Shi Y, Nie D, Gao Y, Shen D: Pelvic organ segmentation using distinctive curve guided fully convolutional networks. IEEE Trans. Med. Imaging 38(2), 585–595 (2018)

- 3. Hwee J, et al.: Technology assessment of automated atlas based segmentation in prostate bed contouring. Radiat. Oncol. 6(1), 110 (2011)

- 4. Latorzeff I, Sargos P, Loos G, Supiot S, Guerif S, Carrie C: Delineation of the prostate bed: the “invisible target” is still an issue? Front. Oncol. 7, 108 (2017)

- 5. Lian C, Liu M, Zhang J, Shen D: Hierarchical fully convolutional network for joint atrophy localization and Alzheimer’s disease diagnosis using structural MRI. IEEE Trans. Pattern Anal. Mach. Intell. 42(4), 880–893 (2020)

- 6. Lian C, Zhang J, Liu M, Zong X, Hung SC, Lin W, Shen D: Multi-channel multi-scale fully convolutional network for 3D perivascular spaces segmentation in 7T MR images. Med. Image Anal. 46, 106–117 (2018)

- 7. Liu S, Johns E, Davison AJ: End-to-end multi-task learning with attention. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1871–1880 (2019)

- 8. Long J, Shelhamer E, Darrell T: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

- 9. Michalski JM, et al.: Development of RTOG consensus guidelines for the definition of the clinical target volume for postoperative conformal radiation therapy for prostate cancer. Int. J. Radiat. Oncol. Biol. Phys. 76(2), 361–368 (2010)

- 10. Poortmans P, Bossi A, Vandeputte K, Bosset M, Miralbell R, Maingon P, Boehmer D, Budiharto T, Symon Z, Van den Bergh AC, et al.: Guidelines for target volume definition in post-operative radiotherapy for prostate cancer, on behalf of the EORTC radiation oncology group. Radiother. Oncol. 84(2), 121–127 (2007)

- 11. Ronneberger O, Fischer P, Brox T: U-Net: convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells WM, Frangi AF (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

- 12. Sidhom MA, Kneebone AB, Lehman M, Wiltshire KL, Millar JL, Mukherjee RK, Shakespeare TP, Tai KH: Post-prostatectomy radiation therapy: consensus guidelines of the Australian and New Zealand radiation oncology genitourinary group. Radiother. Oncol. 88(1), 10–19 (2008)

- 13. Wang S, He K, Nie D, Zhou S, Gao Y, Shen D: CT male pelvic organ segmentation using fully convolutional networks with boundary sensitive representation. Med. Image Anal. 54, 168–178 (2019)

- 14. Wiltshire KL, et al.: Anatomic boundaries of the clinical target volume (prostate bed) after radical prostatectomy. Int. J. Radiat. Oncol. Biol. Phys. 69(4), 1090–1099 (2007)

- 15. Xu X, Zhou F, Liu B: Automatic bladder segmentation from CT images using deep CNN and 3D fully connected CRF-RNN. Int. J. Comput. Assist. Radiol. Surg. 13(7), 967–975 (2018)
