Abstract

Due to the point-like nature of neuronal spiking, efficient neural network simulators often employ event-based simulation schemes for synapses. Yet many types of synaptic plasticity rely on the membrane potential of the postsynaptic cell as a third factor in addition to pre- and postsynaptic spike times. In some learning rules membrane potentials not only influence synaptic weight changes at the time points of spike events but in a continuous manner. In these cases, synapses therefore require information on the full time course of membrane potentials to update their strength which a priori suggests a continuous update in a time-driven manner. The latter hinders scaling of simulations to realistic cortical network sizes and relevant time scales for learning. Here, we derive two efficient algorithms for archiving postsynaptic membrane potentials, both compatible with modern simulation engines based on event-based synapse updates. We theoretically contrast the two algorithms with a time-driven synapse update scheme to analyze advantages in terms of memory and computations. We further present a reference implementation in the spiking neural network simulator NEST for two prototypical voltage-based plasticity rules: the Clopath rule and the Urbanczik-Senn rule. For both rules, the two event-based algorithms significantly outperform the time-driven scheme. Depending on the amount of data to be stored for plasticity, which heavily differs between the rules, a strong performance increase can be achieved by compressing or sampling of information on membrane potentials. Our results on computational efficiency related to archiving of information provide guidelines for the design of learning rules in order to make them practically usable in large-scale networks.

Keywords: event-based simulation, voltage-based plasticity rules, spiking neural network simulator, NEST, Clopath rule, Urbanczik-Senn rule

1. Introduction

One mechanism for learning in the brain is implemented by changing the strengths of connections between neurons, known as synaptic plasticity. Already early on, such plasticity was found to depend on the activity of the connected neurons. Donald Hebb postulated the principle “Cells that fire together, wire together” (Hebb, 1949). Later on, it was shown that plasticity is shaped by temporal coordination of activities even down to the level of individual spikes (Markram et al., 1997; Bi and Poo, 1998). Synaptic plasticity rules for spiking neural networks, such as spike timing-dependent plasticity (STDP, Gerstner et al., 1996), consequently employ spike times of pre- and postsynaptic cells to predict the change in connections.

In recent years, a new class of biologically inspired plasticity rules has been developed that takes into account the membrane potential of the postsynaptic neuron as an additional factor (for a review, see Mayr and Partzsch, 2010; Gerstner et al., 2014). The rule by Clopath et al. (2010) can be seen as a prototypical example for a voltage-based plasticity rule since long-term potentiation of synapses depends on the presynaptic spike arrival and a filtered version of the postsynaptic membrane potential. This additional voltage dependence enables the Clopath rule to describe phenomena that are not covered by ordinary STDP but can be observed in experimental data, such as the complex frequency dependence of the synaptic weight changes in spike pairing experiments (Sjöström et al., 2001). Furthermore, it provides a mechanism for the creation of strong bidirectional connections in networks, which have been found to be overrepresented in some cortical areas (Song et al., 2005).

Further inspiration for recently proposed plasticity rules originates from the field of artificial neural networks. These networks showed great success in the past decade, for example in image or speech recognition tasks (Hinton et al., 2006; Krizhevsky et al., 2012; Hannun et al., 2014; LeCun et al., 2015). The involved learning paradigms, for example the backpropagation algorithm (Werbos, 1974; Lecun, 1985; Parker, 1985; Rumelhart et al., 1986), are, however, often not compatible with biological constraints such as locality of information for weight updates. To bridge the gap to biology, different biologically inspired approximations and alternatives to the backpropagation algorithm have been proposed (Neftci et al., 2017; Sacramento et al., 2018; Bellec et al., 2020; Cartiglia et al., 2020). A common feature of many of these rules is that weight updates not only depend on the output activity of pre- and postsynaptic cells, but also on a third factor, which is a time-continuous signal. A prominent example of such biologically and functionally inspired rules is the voltage-based plasticity rule proposed by Urbanczik and Senn (2014), where the difference between somatic and dendritic membrane potential serves as an error signal that drives learning. This rule, incorporated in complex microcircuits of multi-compartment neurons, implements local error-backpropagation (Sacramento et al., 2018).

Research on functionally inspired learning rules in biological neural networks is often led by the requirement to implement a particular function rather than efficiency. Present studies are therefore primarily designed to prove that networks with a proposed learning rule minimize a given objective function. Indeed many learning rules are rather simple to implement and to test in ad-hoc implementations where at any point the algorithm has access to all state variables. While the latter implementations are sufficient for a proof of principle, they are hard to reuse, reproduce, and generalize. In particular, simulations are restricted to small network sizes, as the simulation code cannot be straight-forwardly distributed across compute nodes and thus parallelized. This also limits the simulation speed which is, in particular, problematic given that successful learning requires simulating networks for long biological times.

In parallel to the above efforts are long-term developments of simulation software for biological neural networks (for a review, see Brette et al., 2007). Such open-source software, combined with interfaces and simulator-independent languages (Davison et al., 2008; Djurfeldt et al., 2010, 2014), supports maintainability and reproducibility, as well as community driven development. The design of such simulators is primarily led by implementation efficiency. Code is optimized for neuron and synapse dynamics, with the aim to upscale simulations to biologically realistic network sizes. A modular structure of the code facilitates re-use and extensions in functionality. Therefore, one aim of the community should be the transfer of ad-hoc proof-of-principle implementations to these well-tested platforms. Given the differences in design principles behind the exploratory development of specific models and general-purpose simulation technology, this transfer is not trivial. In the current study, we show how to make voltage-based learning rules compatible with spiking neural network simulators that employ an event-driven update scheme for synapses.

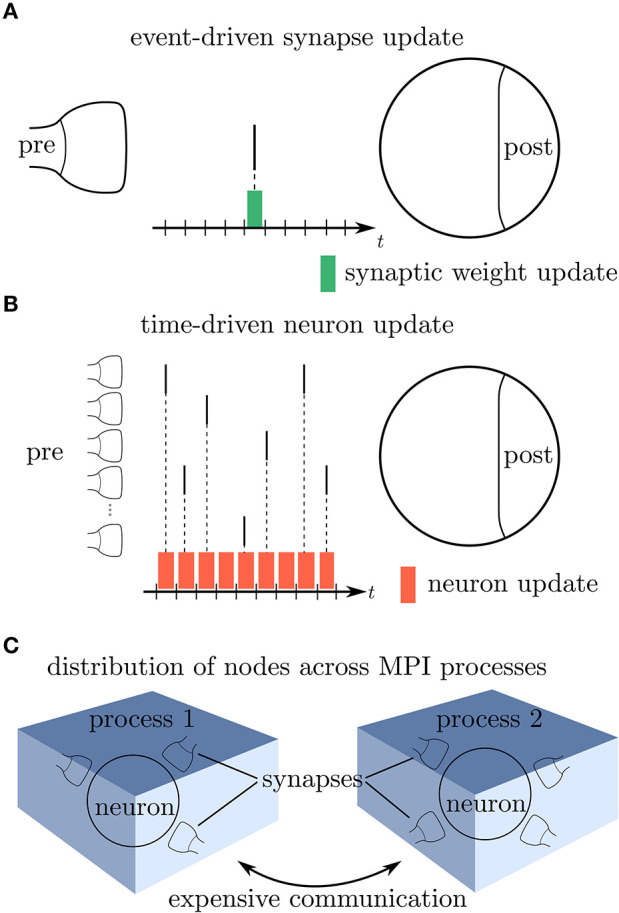

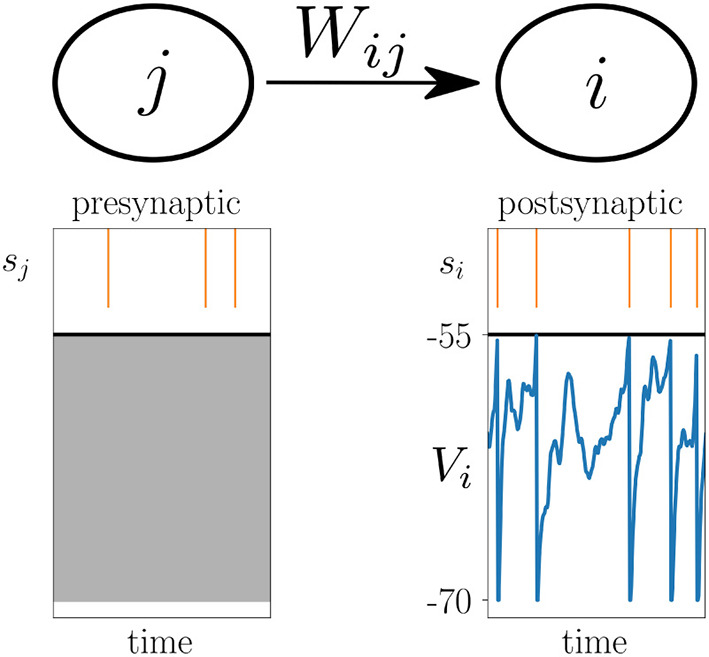

Modern network simulators use individual objects for different neurons and synapses. One common strategy of parallelization is to distribute these objects across many compute processes (Lytton et al., 2016; Jordan et al., 2018). Communication between neurons then implies exchange of information between compute processes. Neurons in the brain primarily communicate in an event-based fashion via spikes. The duration of these spike events is on the order milliseconds, which together with typical rates during physiological brain states of a few spikes per second yields a coupling that is sparse in time (Figure 1A). Spiking simulators emulate this communication by idealizing spikes as instantaneous events. Thus, in the absence of direct electrical coupling via gap junctions (Kumar and Gilula, 1996; Hahne et al., 2015; Jordan et al., 2020a), there is no neuronal interaction in between two spike events such that the dynamics of neuronal and synaptic state variables can be evolved independently in time. This led to the development of event-based simulation schemes, where synapses are only updated in their state at the times of incoming spikes (Watts, 1994; Morrison et al., 2005). This significantly reduces the amount of function calls to synapse code and optimizes computational performance in network simulations. Modern spiking network simulators such as Auryn (Zenke and Gerstner, 2014), Brian2 (Stimberg et al., 2014), Neuron (Carnevale and Hines, 2006), NEST (Gewaltig and Diesmann, 2007), and Nevesim (Pecevski et al., 2014) therefore employ an event-based update scheme for synapses. Even though spike events at single synapses are rare, each single neuron typically receives a large amount of spikes in rapid succession due to its large number of incoming connections (in-degree). This suggests a time-driven update of neurons (Figure 1B). The resulting hybrid simulation scheme for neurons and synapses (Morrison et al., 2005; D'Haene et al., 2014; Krishnan et al., 2017) is nowadays commonly used across many spiking network simulators (for a review, see Brette et al., 2007).

Figure 1.

Update schemes for neurons and synapses. (A) A spike crosses a synapse from the presynaptic (pre) to the postsynaptic (post) neuron. Since this is a rare event, the synaptic weight is computed only when a spike is delivered, indicated by the green bar (event-driven update). (B) Neurons with a large in-degree receive spikes in rapid succession which suggests a time-driven update of the neuron's state in each time step (red bars). (C) Since the computation of the synaptic weights requires information from the postsynaptic neuron, storing the synapses on the same compute node reduces the amount of expensive communication between compute processes.

An event-based scheme for synapses is perfectly suitable for classical STDP rules, which only rely on a comparison between the timings of spike events. In these rules, synaptic weights formally depend on spike traces, which are continuous signals that are fully determined by spike timings of pre- and postsynaptic neurons and which can be updated at the time of spike events. Optimizations of simulations including STDP have been extensively discussed (Song et al., 2000; Ros et al., 2006; Rudolph and Destexhe, 2006; Morrison et al., 2007a) and routinely used in spiking network simulators such as Auryn (Zenke and Gerstner, 2014), Brian2 (Stimberg et al., 2014), Neuron (Carnevale and Hines, 2006), NEST (Gewaltig and Diesmann, 2007), and Nevesim (Pecevski et al., 2014) as well as in neuromorphic hardware (Pfeil et al., 2013; Serrano-Gotarredona et al., 2013; Neftci et al., 2014; Galluppi et al., 2015; Friedmann et al., 2016; Thakur et al., 2018). Some STDP variants also include the membrane potential of postsynaptic cells at the time points of presynaptic spike events as a gating variable (Brader et al., 2007; Diederich et al., 2018). At the update, these rules only require the synapse to know the current value of the postsynaptic membrane potential in addition to the pre- and postsynaptic spike time. Obtaining this value from the neuron objects is efficient to implement and already employed in Brian2 (Stimberg et al., 2014) and in a range of neuromorphic systems (Serrano-Gotarredona et al., 2013; Galluppi et al., 2015; Qiao et al., 2015; Moradi et al., 2018; Cartiglia et al., 2020).

We here focus on more complex voltage-based learning rules which not only rely on membrane potentials at the time points of spike events, but on an extended history of membrane potentials. For these rules synapses continuously require information from the postsynaptic neurons in order to update their weights (Clopath et al., 2010; Mayr and Partzsch, 2010; Brea et al., 2013; Yger and Harris, 2013; Urbanczik and Senn, 2014; Albers et al., 2016). This a priori breaks the idea behind an event-based update scheme. Therefore, previous attempts to incorporate such voltage-based plasticity in spiking network simulators resorted to time-driven synapse updates for NEST (Jordan et al., 2020b) and NEURON (see implementation of Clopath plasticity on ModelDB, Hines et al., 2004). These implementations therefore only profit from the simulation environment on the level of the implementation language, but have not been able to exploit the algorithmic optimizations and speedup of event-based synapse updates.

In this study we present an efficient archiving method for the history of postsynaptic state variables that allows for an event-based update of synapses and thus makes complex voltage-based plasticity rules compatible with state-of-the-art simulation technology for spiking neural networks. In particular, we derive two event-based algorithms that store a time-continuous or discontinuous history, respectively. These algorithms apply to plasticity rules with any dependence on post-synaptic state variables and therefore cover a large range of existing models (Brader et al., 2007; Mayr and Partzsch, 2010; Legenstein and Maass, 2011; Brea et al., 2013, 2016; Yger and Harris, 2013; Qiao et al., 2015; Albers et al., 2016; Sheik et al., 2016; Diederich et al., 2018; Sacramento et al., 2018; Cartiglia et al., 2020). We theoretically analyze advantages of the two event-driven algorithms with respect to each other and compare to a straight-forward time-driven algorithm.

The presented simulation concepts are exemplified and evaluated in a reference implementation in the open source simulation code NEST (Gewaltig and Diesmann, 2007; Jordan et al., 2019). The reference implementation thereby exploits existing functionality of a scalable software platform which can be used on laptops as well as supercomputers. NEST is employed by a considerable user community and equipped with an interface to the programming language Python (Eppler et al., 2009) that is currently widely used in the field of computational neuroscience (Muller et al., 2015). It supports relevant neuron models and connection routines for the construction of complex networks. Despite this flexibility the simulation engine shields the researcher from the difficulties of handling a model description in a distributed setting (Morrison et al., 2005; Plesser et al., 2015).

To exemplify the general simulation algorithms, we here focus on the voltage-based plasticity rules by Clopath et al. (2010) and Urbanczik and Senn (2014). The two rules represent opposing ends of a family of learning rules in the amount of data required to compute weight updates. The Clopath rule by design only triggers plasticity in the vicinity of postsynaptic spike events; storing a history, which is non-continuous in time, thus becomes beneficial. In contrast, the Urbanczik-Senn rule considers noisy prediction errors based on postsynaptic membrane voltages and spikes. Such prediction errors never vanish and therefore always need to be stored to update the weights, leading to time-continuous histories. For a given span of biological time, simulations of the Urbanczik-Senn rule are therefore by design less efficient than those of the Clopath rule. However, we show that a compression of membrane potential information reduces this performance gap. Changing the learning rule to include a sparse sampling of the membrane voltage further increases efficiency and makes performance comparable to simulations with ordinary STDP.

Our study begins with a specification of the mathematical form of the learning rules that we consider (section 2.1). We distinguish between classical STDP (section 2.2) and voltage-based rules (section 2.3) and present a special case where voltage-based rules can be efficiently implemented by compressing information on the postsynaptic membrane potential. We then introduce the Clopath and the Urbanczik-Senn rule as two examples of voltage-based plasticity (sections 2.4 and 2.5). In section 3 we first contrast time- and event-driven schemes for updating synapses with voltage-based plasticity (section 3.1). Subsequently, we detail a reference implementation of the algorithms in NEST (section 3.2) and use this to reproduce results from the literature (section 3.3). After that, we examine the performance of the reference implementation for the Clopath and the Urbanczik-Senn rule (section 3.4). Conclusions from the implementation of the two rules are drawn in section 3.5, followed by a general Discussion in section 4. The technology described in the present article is available in the 2.20.1 release of the simulation software NEST as open source. The conceptual and algorithmic work is a module in our long-term collaborative project to provide the technology for neural systems simulations (Gewaltig and Diesmann, 2007).

2. Materials and Methods

2.1. General Structure of Learning Rules

The focus of this study are plasticity models of the general form

| (1) |

where the change of the synaptic weight Wij between the presynaptic neuron j and postsynaptic neuron i is given by a function F that potentially depends on the current synaptic weight Wij(t), as well as on which are causal functionals of the postsynaptic spike train si, the presynaptic spike train sj, and the postsynaptic membrane potential Vi, respectively (Figure 2). Causal functional here refers to potentially depending on all past values ; likewise V*(t) depends on V(t′ ≤ t). Note that for simplicity of the notation, we only show one function F on the right hand side of (1), while generally there could be a sum of multiple functions or functionals Fα, where each one depends on spike trains and membrane potentials in a different manner. Note also that F mixes information of pre- and postsynaptic neurons, while the functionals denoted by * only need to take into account information of either the pre- or postsynaptic neuron. In cases where F is a functional, i.e., where F depends on the whole time course of its arguments, it can take into account an additional joint history dependence on , and . A special case, the Urbanczik-Senn learning rule, is discussed further below.

Figure 2.

Voltage-based plasticity rules. The change ΔWij in synaptic strength between presynaptic neuron j and postsynaptic neuron i depends on the presynaptic spike train sj, the postsynaptic spike train si and the postsynaptic membrane potential Vi.

One can formally integrate (1) to obtain the weight change between two arbitrary time points t and T

| (2) |

2.2. Spike-Timing Dependent Plasticity

In general, the integral on the right hand side of the equation cannot be calculated analytically. There is, however, a notable exception, which is the model of spike-timing dependent plasticity (STDP). This model is a form of Hebbian plasticity that relies on the exact spike times of pre- and postsynaptic neurons and ignores any effect of the postsynaptic membrane potential. The dependence on the exact spike times becomes apparent by the fact that either the pre- or postsynaptic spike functional is the spike train itself, for example

| (3) |

where is the k-th spike of the i-th neuron. This yields a plasticity rule that reads (Morrison et al., 2008)

| (4) |

with functions f± that model the weight dependence, and functionals given as convolutions of spike trains with kernels κ±, which in the classical STDP rule correspond to one-sided exponential decays. The appearance of the raw spike trains (delta distributions) in the differential equation of the STDP model renders the integration of the ODE trivial

| (5) |

where . An update of the synaptic weight between any two time points only requires knowledge of the weight and spike functionals at the timing of the pre- and postsynaptic spikes.

For models that do not solely rely on exact spike times, but for example on filtered versions of the spike trains, much more information is needed in order to calculate a weight update ΔWij(t, T) between any two time points. This makes the computation more involved: the synapse needs all values of for t′ ∈ [t, T] to update its weight. The remainder of this study describes different approaches to this problem and their advantages and disadvantages.

2.3. Voltage-Based Plasticity

In a time-driven neuron update, the membrane potential in many simulators is computed at each simulation step tα = α · h, where h is the simulation step size and α ∈ ℕ. For plasticity models that rely on the membrane potential, the time discretization of (2) therefore yields

| (6) |

| (7) |

which, in comparison to the small sum over spikes in the STDP rule (5), contains a large sum over all time steps tα in between time points t and T. As the membrane potential is only known at time points t′ = tα, it generally enters (7) in a piecewise constant manner – hence the argument V(tα). The synapse therefore predominantly needs information of the postsynaptic neuron in order to update its weight. Thus, in a distributed simulation framework, where neurons are split across multiple compute processes, it is beneficial to store the synapses at the site of the postsynaptic neurons in order to reduce communication (Figure 1C). This confirms the earlier design decision of Morrison et al. (2005) who place synapses at the site of the postsynaptic neuron to reduce the amount of data communicated by the presynaptic site.

If weight changes ΔWij depend on the synaptic weight themselves, then (7) cannot be used in practice as intermediate weights for tα < t′ < tα+1 are not known. In this scenario, weight changes have to be calculated on the simulation grid with in case of a forward Euler scheme, or in case of a backward Euler scheme. In the following we, for simplicity, stick to the forward Euler setting and arrive at the core computation for voltage-based plasticity rules

| (8) |

Given that si and sj are spike trains, the functionals and are obtained trivially from the kernels of their corresponding Volterra expansions. If F in addition does not depend on and in a too complicated manner, which is usually the case (see examples below), the integral in (8) can be calculated analytically.

2.3.1. Compression of Postsynaptic Information

The major operation of the plasticity scheme in terms of frequency and complexity is the computation of infinitesimal weight changes . Since the presynaptic spike train enters F in (8), the same postsynaptic information on and is used many times for very similar computations: the membrane potential trace of each neuron is effectively integrated many times. Is there a way to employ the result of the computation for neuron j for the computations for other neurons k ≠ j? In a simple setting, where F factorizes into with and

| (9) |

defined via the Heaviside step function H(x), we can make use of the property

| (10) |

where t > tLS and tLS denotes the last spike time of the presynaptic neuron. In this case the weight update in between two spike events factorizes

| (11) |

where the latter integral ΔWi(tLS, tS) is independent of the presynaptic spike train . Moreover, ΔWi depends on tLS only via an exponential prefactor. Thus, an integral ΔWi(t1, t2) over an arbitrary time interval tLS ≤ t1 < t2 ≤ tS which is completely independent of any presynaptic information, can be used as a part of the whole integral ΔWi(tLS, tS) since it can be decomposed as

Therefore, whenever an integral of the postsynaptic quantities and is computed, it can be used to advance the weight update of all incoming connections and the integration only needs to be performed once. To account for the generally different last spike times tLS of the incoming connections, the postsynaptic neuron stores the different ΔWi(tLS, t) in a so-called compressed history. At the time of an incoming spike event, ΔWi(tLS, tS) can be read out by the synapse for the correct tLS of that synapse and be combined with the stored presynaptic spike trace .

2.4. Example 1: Clopath Plasticity

The Clopath rule (Clopath et al., 2010) was designed as a voltage-based STDP rule that accounts for non-linear effects of spike frequency on weight changes which had been previously observed in experiments (Sjöström et al., 2001). It does so by using the evolution of the postsynaptic membrane voltage around postsynaptic spike events instead of the postsynaptic spikes themselves. This requires a neuron model that takes into account features of membrane potential excursions near spike events, such as modified adaptive exponential integrate-and-fire (aeif) model neurons that are used in the original publication (Clopath et al., 2010, see section 5.2) or Hodgkin-Huxley (hh) neurons that are used in a NEURON reference implementation on ModelDB (Hines et al., 2004).

The plasticity rule is of the general form (1) with a sum of two different functions Fα on the right hand side. It treats long-term depression (LTD) and potentiation (LTP) of the synaptic weight in the two terms FLTD and FLTP, with

| (12) |

and

| (13) |

Here (x − x0)+ = H(x − x0)(x − x0) is the threshold-linear function and H(x) is the Heaviside step function. ALTD and ALTP are prefactors controlling the relative strength of the two contributions. κ± are exponential kernels of the form (9), which are applied to the postsynaptic membrane potential, and κs is an exponential kernel applied to the presynaptic spike train. The time-independent parameters θ± serve as thresholds below which the (low-pass filtered) membrane potential does not cause any weight change (Figure 3). Note that ALTP can also depend on the membrane potential. This case is described in Appendix Section 5.5.

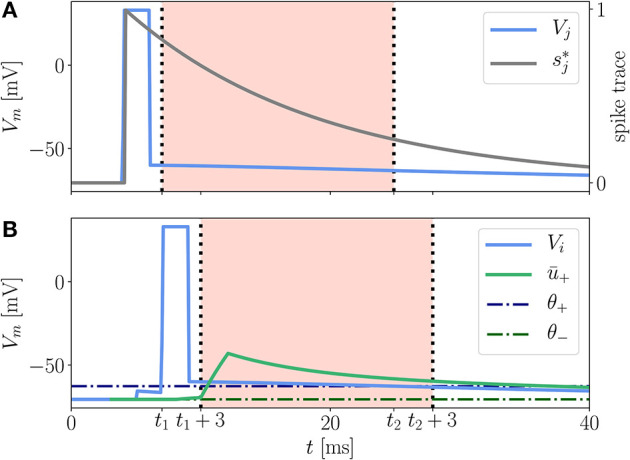

Figure 3.

Illustration of LTP contribution to the Clopath rule. A presynaptic neuron (A) and a postsynaptic neuron (B) emit a spike at tsp,pre = 4 ms and tsp,post = 6 ms, respectively. The presynaptic spike elicits a trace (gray) at the synapse. The excursion of the postsynaptic membrane potential Vi (B, blue) elevates the low-pass filtered potential ū+ (green) so that both Vi and ū+ exceed the respective thresholds θ+ (dash-dotted, dark blue) and θ− (dash-dotted, dark green), cf. (13), between t1 and t2. Only within this period, shifted by ds = 3 ms, which is for times t1 + 3 ms < t < t2 + 3 ms (B, red background), see section 3.2.3 for details, the LTP of the synaptic weight is non-vanishing because of the threshold-linear functions in equation (13). The shift by ds = 3 ms does not apply to the spike trace (A, red background). The rectangular shape of the spikes is achieved by a clamping of the membrane potential to Vclamp = 33 mV for a period of tclamp = 2 ms.

In a reference implementation of the Clopath rule by C. Clopath and B. Torben-Nielsen available on ModelDB (Hines et al., 2004), there is a subtle detail not explicitly addressed in the original journal article. In their implementation the authors introduce an additional delay ds between the convolved version of the membrane potentials ū± and the bare one [cf. parameter ds in (12) and (13)]. The convolved potentials are shifted backwards in time by the duration of a spike ds (see Supplementary Tables 1, 3). As a result, the detailed shape of the excursion of the membrane potential during a spike of the postsynaptic neuron does not affect the LTP directly but only indirectly via the low-pass filtered version ū+, see red background in Figure 3B. Incorporating this time shift in ū± is essential to reproduce the results from Clopath et al. (2010) on spike-pairing experiments.

The depression term FLTD depends on the unfiltered spike train sj. It can thus be treated analogous to ordinary STDP rules (cf. (4)ff). In particular, only needs to be available for time points of presynaptic spikes (potentially taking into account additional delays of the connection). The potentiation term FLTP, however, depends on the filtered spike train ; consequently needs to be known also for times in between spike events.

2.5. Example 2: Urbanczik-Senn Plasticity

The Urbanczik-Senn rule (Urbanczik and Senn, 2014) applies to synapses that connect to dendrites of multicompartment model neurons. The main idea of this learning rule is to adjust the weights of dendritic synapses such that the dendrite can predict the firing rate of the soma. The dendrite expects the firing rate to be high when the dendrite's membrane potential is elevated due to many incoming spikes at the dendrite, and to be low if there are only a few incoming spikes. Thus, for this prediction to be true, synapses that transmit a spike toward the dendrite while the firing rate of the soma is low are depressed and those that provide input while the soma's firing rate is high are facilitated. Learning can be triggered by applying a teacher signal to the neuron via somatic synapses such that the actual somatic firing deviates from the dendritic prediction.

The plasticity rule is again of the general form (1), with a functional F on the right hand side that reads

| (14) |

| (15) |

with exponential filter kernels κ and κs and non-linearities ϕ and h. Note that F depends on the postsynaptic spike train si via . The latter can be interpreted as a prediction error, which never vanishes as spikes si (point process) are compared against a rate prediction ϕ(Vi) (continuous signal).

In order to solve (1), we need to integrate over , cf. (2). Writing down the convolution with κ explicitly, we obtain

| (16) |

A straight forward implementation of this expression is inefficient in terms of memory usage and computations because of the two nested integrals. However, since the kernels κ and κs are exponentials, one can perform one of the integrations analytically (see Appendix Section 5.1 for a derivation) to rewrite the weight change as

| (17) |

which is in line with the general formulation discussed in section 2.3.

3. Results

In the following, we first discuss time- and event-driven update schemes for synapses with voltage-based plasticity. Then we present a reference implementation for the Clopath rule (Clopath et al., 2010) and the Urbanczik-Senn rule (Urbanczik and Senn, 2014) in the spiking network simulator NEST (Jordan et al., 2019). Finally, we show that these implementations reproduce results of the original works and we assess their simulation performance on a distributed computing architecture.

3.1. Time-Driven vs. Event-Driven Update Scheme for Synapses With Voltage-Based Plasticity

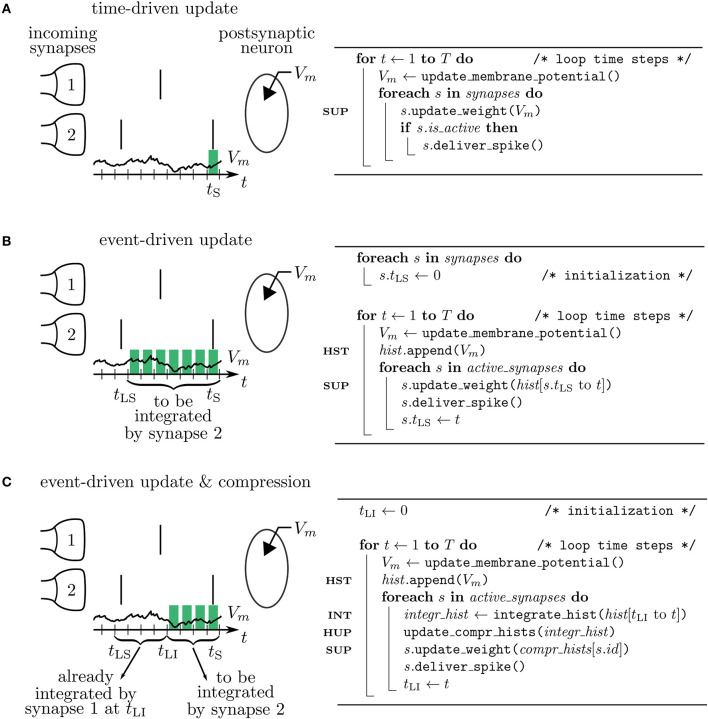

Let's assume in the following that tLS and tS denote two consecutive spike times of a presynaptic neuron j. The synaptic weight Wij(tS) corresponding to the spike at time tS can be obtained from the weight Wij(tLS) at the time of the previous spike at tLS and (6) by employing (8) to calculate the latter. As F mixes information of the pre- and postsynaptic neurons, this computation should be done in the synapse. Since there are no spikes in between tLS and tS, it does not matter when the synapse is performing the updates of its weight. Two possibilities are: 1) Neurons calculate their own s* and V* for the current time step and make it accessible to the synapse to enable direct readout and update according to (8) in every time step. This method corresponds to a time-driven update of synapses (Figure 4A). 2) Neurons store a history of s* and V* and the synapse reads out this information at tS, i.e., at the time where the weight update becomes relevant for the network. This method corresponds to an event-driven update of synapses (Figure 4B). Both methods have their advantages and disadvantages analyzed in the following section.

Figure 4.

Simulation concepts. Left: illustration of processing the postsynaptic voltage trace Vm (t) for three simulation concepts. Two incoming synapses (1 and 2) transmit spikes (black, vertical bars) to the postsynaptic neuron. Depending on the algorithm, a different number of past membrane potentials has to be stored (green blocks) so that synapse 2 can update its weight when it delivers the spike at time tS. Right: corresponding pseudocodes. (A) In the time-driven update scheme the synaptic weight change is evaluated in every time step of the simulation for all the synapses. This requires only the latest value of the membrane potential to be accessible by the synapse to update its weight at tS (see line marked SUP in pseudocode). (B) In the event-driven update scheme the computation of the synaptic weight change is performed only if a spike crosses the synapse. Therefore, storage of the time trace of Vm (see HST in code) from the last spike delivered by synapse 2 at tLS up to the current time step tS is needed. (C) In the compressed event-driven update scheme synapse 2 uses the time trace of Vm integrated from the last incoming spike at tLI up to the current time step tS (see INT in code) to complete its weight update (see SUP in code) and also to advance that of synapse 1. The preceding part of Vm from tLS to tLI was already integrated and applied to all incoming synapses (see HUP in code) by synapse 1 when it delivered the spike at tLI.

3.1.1. Time-Driven Scheme

In a time-driven update scheme the information on the membrane potential is directly processed by the synapses such that only the current value of the membrane potential needs to be stored, corresponding to a membrane potential history of length L = 1 (Figure 5 and Table 1). For a simulation of T time steps, the history needs to be manipulated H = T times: the single stored value gets updated once per time step. The price that comes with the short history is that synapses need to be updated as often as neurons. This amounts to M = K · T function calls to synapse code for each neuron. Here K denotes the in-degree of each neuron. Each function call of synapse code causes a single computation of , giving rise to in total C = K · T computations per neuron. The membrane potential trace is thus effectively integrated K times; once for each synapse. As both K and T are large numbers in typical simulations of plastic cortical networks, the amount of function calls and computations is therefore large in this setting. The time-driven scheme furthermore forces the execution of synapse code also at time steps where no update would be required, i.e., at time steps, where have values for which . In addition, for delayed connections a history of of length L = dmax of the maximal delay dmax measured in simulation steps needs to be stored. We here assume the delay to be on the postsynaptic side; it represents the time the fluctuations of the somatic membrane potential propagate back through the dendrites to the synapses. Therefore, F does not depend on , but on with a delay dj encoding the location of the synapse with presynaptic neuron j.

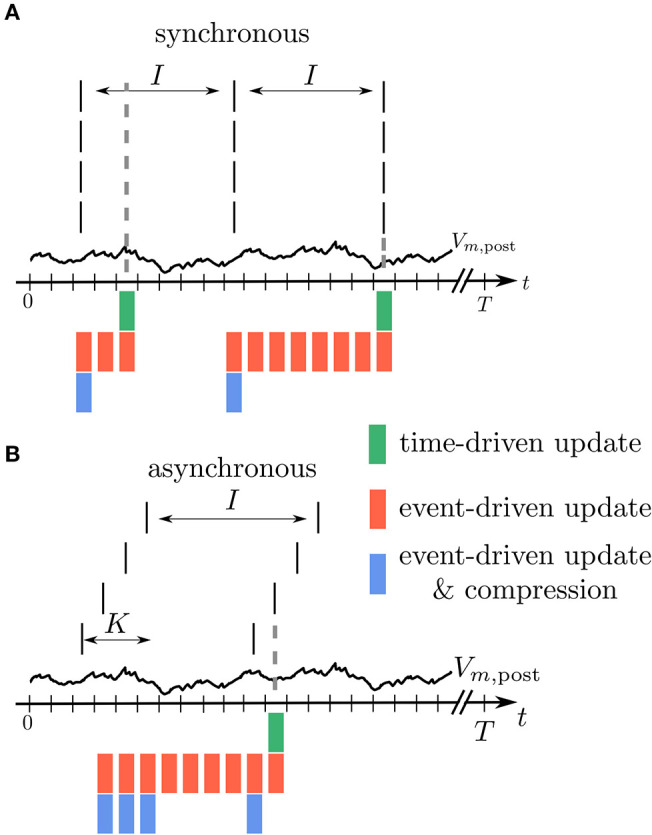

Figure 5.

Illustration of buffer sizes for different simulation schemes in case of fully synchronous or asynchronous spikes. (A) All incoming spikes arrive synchronously: In the time-driven scheme the synaptic weight is updated in every time step of the simulation, so that only the current value of Vm,post needs to be available (green). In the event-driven scheme every synapse processes Vm,post from the last spike to the current one. Therefore, the relevant time trace needs to be stored (red). In the compressed event-driven scheme this part of Vm,post is processed only once and used to update the weight of all the synapses. Since the weight change is a function of the last spike time which is the same for all the synapses, only one value needs to be updated (blue). In this situation the length L of the compressed history is i = 1, see Table 1. (B) All incoming spikes arrive in different time bins: For the time-driven and the event-driven scheme the scenario is similar to (A). For the compressed event-driven scheme the number of values that need to be updated equals the number of incoming synapses K so that i = K.

Table 1.

Comparison of synapse update schemes.

| Time-driven | Event-driven | Event-driven & compression | |

|---|---|---|---|

| History length L | 1 | I | i |

| Synapse function calls M | K · T | K · T/I | K · T/I |

| Weight change computations C | K · T | K · T | T |

| History entry manipulations H | T | T | K · T/I · i |

From the view point of a postsynaptic neuron, the table shows the maximal length of the history L, the number of function calls M of synapse code, the number of computations C of infinitesimal weight changes , and the number of history entry manipulations H for a simulation of T time steps, a uniform inter-spike interval I between spikes of a single presynaptic neuron, and an in-degree K for each neuron and no delays. For the event-driven compression scheme the entries show the length of the compressed history where i is the number of different spike times within the last inter-spike interval I.

3.1.2. Event-Driven Scheme

In an event-driven update scheme for synapses, the time trace of the membrane potential needs to be stored until all incoming synapses have read out the information to update their weight for a given period. The storage and management of such a history can be expensive in terms of memory and runtime. In each time step, the value of the current membrane potential is appended to the history, leading to H = T history manipulations for a simulation of T time steps. Assuming for simplicity a homogeneous inter-spike interval of I time steps between consecutive spikes of single neurons, we in the following showcase some qualitative history sizes. As synapses need all values of in between two consecutive spikes, the maximum history length is L = I (Figure 5). In case of different firing rates, I corresponds to the maximum inter-spike interval of any of the presynaptic neurons. Synapse code in this scheme is, however, only called in the event of a spike, leading to only M = K · T/I function calls per neuron, where T/I is the number of spikes passing a single synapse during the simulation of T time steps. The total amount of computations C of weight changes is of course unchanged with respect to the time-driven scheme; they are just split across fewer function calls (C = M · L = K · T). Table 1 immediately shows the trade-off between memory consumption (length of history) and run time (number of function calls): the event-based scheme consumes more memory, but is faster than the time-driven scheme. Note that since a history of the membrane potential is stored anyway, this scheme is naturally applicable to connections with different delays. A further performance increase can be achieved in plasticity rules, where weight changes only happen under certain conditions on : if values are rare, a non-continuous history can be stored. In such a scenario, time stamps need to be stored alongside the membrane potential to enable synapses to read out the correct time intervals (see section 3.2.3).

3.1.3. Event-Driven Compression

The event-driven compression scheme is a modified event-driven scheme that makes use of the fact that for a specific class of plasticity rules the integrated time trace of the membrane potential can be used to advance the weight update of all incoming synapses, see section 2.3.1. Therefore, the time trace of stored in the postsynaptic neuron only needs to extend back to the last incoming spike (denoted by tLI in Figure 4C). This way the history of is always short, as the total rate of incoming spikes is high in physiological network states. Due to the dependence of the weight update on the time of the last spike that crossed the synapse, the postsynaptic neuron stores the compressed history of length L = i, where i is the number of different spike times within the last inter-spike interval I (Figure 5). The compressed history is consequently never larger than the history length L = I of the ordinary event-driven scheme (Figure 5B). For synchronous spikes where the last presynaptic spike time is the same for all synapses, the compressed history, however, contains only one entry (Figure 5A). Still, synapse code is executed at every spike event, giving rise to M = K · T/I function calls. The full membrane potential trace of length T is effectively only integrated once, amounting to in total C = T infinitesimal weight change computations that are performed in batches in between any two incoming spike events (Table 1). The price for this is that history updates are more expensive: instead of appending a single entry in each time step, at each spike event the full compressed history is updated, giving rise to in total H = M · i = K · T · i/I history entry manipulations, as opposed to H = T in the time- and ordinary event-driven schemes (Table 1). In practice, infinitesimal weight change computations are, however, often more costly than history updates, such that the compression algorithm achieves a performance increase (see section 3.4).

Finally, a drawback of the event-driven compression is that it relies on the fact that all synapses use the same processed membrane potential . For distributed delays, ΔWi(tLS, T) has a dependence on the presynaptic neuron j via . In this case, a separate compressed history needs to be stored for every different delay of connections to the neuron.

3.2. Reference Implementation in Network Simulator With Event-Based Synapse Updates

This section describes the implementation of two example voltage-based plasticity rules by Clopath et al. (2010) and Urbanczik and Senn (2014) in a spiking neural network simulator that employs a time-driven update of neurons and an event-based update of synapses. While the naming conventions refer to our reference implementation in the simulation software NEST, the algorithms and concepts presented below are portable to other parallel spiking network simulators.

The Clopath and Urbanczik-Senn rule are chosen as widely used prototypical models of voltage-based plasticity. The differences in the two rules help to exemplify the advantages and disadvantages of the algorithms discussed in section 3.1. As originally proposed, they are implemented here for two different types of neuron models, Adex and Hodgkin-Huxley point-neurons for the Clopath rule (aeif_psc_delta_clopath and hh_psc_alpha_clopath) and two-compartment Poisson neurons (pp_cond_exp_mc_urbanczik) for the Urbanczik-Senn rule. Extensions to multiple dendritic compartments in the latter case are straight forward. Our implementation of aeif_psc_delta_clopath follows the reference implementation on ModelDB which introduced a clamping of the membrane potential after crossing the spiking threshold to mimic an action potential. Details can be found in Appendix Section 5.2.

The plasticity rules differ in the state variable that is being stored and its interpretation. For the Clopath rule, the stored variable is a thresholded and filtered version of the membrane potential that takes into account characteristics of membrane potential evolution within cells in the vicinity of spike events. The restriction to temporal periods around spikes suggests to implement a history that is non-continuous in time. In contrast, the Urbanczik-Senn rule uses the dendritic membrane potential to predict the somatic spiking; the resulting difference is taken as an error signal that drives learning. This error signal never vanishes and thus needs to be stored in a time-continuous history.

Finally, the proposed infrastructure for storing both continuous and non-continuous histories is generic so that it can also be used and extended to store other types of signals such as external teacher signals.

3.2.1. Exchange of Information Between Neurons and Synapses

The implementation of voltage-based plasticity rules in NEST follows the modular structure of NEST, key part of which is the separation between neuron and synapse models. This separation makes it easy for a newly added neuron model to be compatible with existing synapse models and vice versa. A downside is that information, such as values of parameters and state variables, is encapsulated within the respective objects. Simulations in NEST employ a hybrid parallelization scheme: OpenMP threads are used for intra node parallelization and the Message Passing Interface (MPI) for inter node communication. In parallel simulations, synapses are located at the same MPI process as the postsynaptic neurons (Morrison et al., 2005). Thereby, no communication between MPI processes is needed for the required transfer of information between postsynaptic neurons and synapses to compute weight changes of connections and only one spike needs to be communicated by a given source neuron for all target neurons living on the same MPI process.

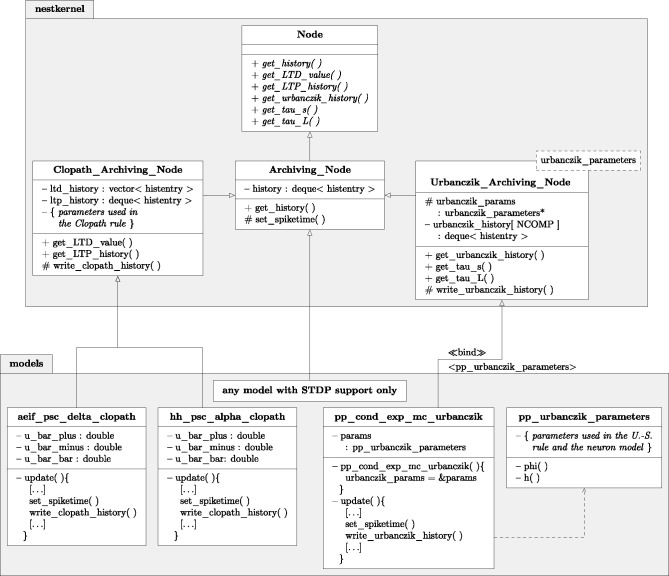

The model of STDP requires synapses to access spike times of postsynaptic neurons. In order to provide a standardized transfer of this information across all neuron models that support STDP, in recent years the so-called Archiving_Node has been introduced as a parent class of the respective neuron models (Morrison et al., 2007a). It provides member functions to store and access spike histories. If a neuron model supports STDP, it only needs to be a child of Archiving_Node and contain one additional line of code, namely a call of the function set_spiketime(), which stores the time of outgoing spike events. We here extended this framework for voltage-based plasticity rules and enhanced the functionality of the archiving node by the member functions write_history(), get_history(), and compress_history() to additionally store, read out and manipulate voltage traces or other continuous signals (for Details, see Appendix Section 5.3). To avoid overhead for simulations with only STDP synapses, we introduced two child classes of Archiving_Node, Clopath_Archiving_Node, and Urbanczik_Archiving_Node, that each provide containers and functions for the specific histories required for the two plasticity rules. Neuron models that support the respective synapse model then derive from the child classes instead of the root level archiving node.

3.2.2. Delays and Min_delay Communication

All synapses implemented in NEST are so far purely event-driven. To assess the performance of the time-driven update scheme of synapses with voltage-based plasticity, we also implemented a time-driven version of the Clopath and Urbanczik-Senn synapse. Spiking network simulators exploit the delay of connections to reduce communication between compute processes (Morrison et al., 2005): Instead of sending each spike individually, spikes are buffered and sent in a batch after a certain period. The length of this period, the min_delay, corresponds to the minimal delay of all connections in the network. The buffering of spikes within this period is possible because the earliest time point that a spike at time tS can affect the postsynaptic membrane potential is at t = tS + min_delay. In between tS and t neurons are decoupled such that their state variables can be propagated forward in time independent of each other and in a batch (Morrison and Diesmann, 2008). We implemented the same min_delay update scheme for synapses, by imposing a function call to time-driven synapses in every min_delay period to update their synaptic weight. If min_delay equals the simulation step size h, this scheme corresponds to the scheme explained in section 3.1.1. Making use of the min_delay infrastructure of NEST speeds up simulations with time-driven synapses in the case d > h as fewer function calls to synapses are needed (see section 3.4). In case of simulations with synaptic delays, the time-driven update scheme requires the storage of a history of the membrane potential of length max_delay.

Storing state variables in event-driven schemes is more complex as the history does not have a fixed length max_delay. Instead it needs to be dynamically extended and shortened. A long history can occupy a large amount of memory and its processing by the synapses becomes computationally expensive. Therefore, it is advantageous to optimize the way how information is stored and accessed and how entries that are no longer needed can be identified for deletion. For details of these optimizations in our NEST implementation, see Appendix Section 5.3.

As discussed in section 3.1.3, the event-based compression scheme relies on the fact that all synapses to one postsynaptic neuron employ the same . This is not the case if delays of the corresponding connections are distributed. The compression scheme can therefore only be efficient if all delays have a fixed value. If spikes are processed and synapses are updated in a chronological order, then a well-defined segment of the history of can be integrated and the compressed history can be updated. In NEST, spikes are, however, buffered within a period of min_delay before being sent and processed. Consequently, synapses are not necessarily updated in chronological order. Therefore, the event-based compression scheme can only be implemented in NEST in the case where delays equal the simulation time step. Future work may explore whether the latter restriction could be overcome by sorting all incoming spike events of a given postsynaptic neuron prior to delivery.

3.2.3. Specifics of Clopath Plasticity

We implement both an adaptive exponential integrate-and-fire neuron model (aeif_psc_delta_clopath) and a Hodgkin-Huxley neuron model (hh_psc_alpha_clopath) supporting Clopath plasticity. These implementations consider the filtered versions ū± of the membrane potential as additional state variables of the neuron. Thereby, they can be included in the differential equation solver of the neurons to compute their temporal evolution. Parameters of κ± consequently need to be parameters of the neuron object rather than the synapse. The same is true for the values of θ±; they are used in the neuron to determine whether and evaluate to zero, which systematically happens due to the Heaviside functions in their definitions.

The LTD mechanism is convenient to implement within the event-driven framework: when the synapse is updated at time t, it reads the values ū− (t − d) and θ− from its target and computes the new weight. Here, d denotes the dendritic delay of the connection that accounts for the time it takes to propagate somatic membrane potential fluctuations to the synapse. The archiving node contains a cyclic buffer, also called ring buffer, that stores the history of ū− for the past max_delay time steps so that the synapse can access a past value of this quantity. Consequently, the LTD history is always short and can be forgotten in a deterministic fashion.

The computation of the weight change due to LTP requires the evaluation of the integral over . The latter is stored in the archiving node as a vector whose elements are objects that contain three values: the corresponding time t, the value of and an access counter that initially is set to zero.

3.2.3.1. Time-Driven Update

For simulations with homogeneous delays equal to the simulation time step, the history of always contains only a single value as it is read out in every time step by all synapses. For larger delays, the history is of length max_delay, and each synapse reads out a segment of length min_delay, increasing the access counter of the corresponding entries by one. For the last synapse that requests a certain segment, the access counter then equals the in-degree K, which is the criterion to delete the corresponding entries from the history. Although for simplicity done in our reference implementation, the time-driven scheme does not require us to store the time stamp t of each history entry. The overhead of this additional number is, however, negligible.

3.2.3.2. Event-Driven Update

In event-driven schemes, the history of dynamically grows and shrinks depending on the spikes of presynaptic neurons. Since many values of are zero, it is beneficial to only store the non-zero values. In this case, a time stamp of each entry is required to assign values of the non-continuous history of to their correct times. In case of the uncompressed scheme, when a synapse j is updated at time tS of a spike, it requests the part of the history between the last spike tLS and the current spike tS (minus the dendritic delay, see Appendix Section 5.3) from the archiving node. This history segment is then integrated in synapse j and used for its weight update. Each synapse thus integrates the history anew (section 3.1.2). For the compressed scheme, the history of is integrated between the last incoming spike at tLI and the current spike at tS inside the archiving node. Using this newly integrated time trace, the weight of synapse j is updated and the compressed history for all other last spike times is advanced. Afterwards the history of is deleted. Thereby, is only integrated once for all synapses.

In any case, the integrated history of needs to be combined with the presynaptic spike trace . The latter is easily computed analytically inside the synapse because it is an exponential decay of the corresponding value at the time of the last spike. At the end of the update process the trace is increased by to account for the trace of the current spike, where τs is the time constant of the kernel κs.

3.2.4. Specifics of Urbanczik-Senn Plasticity

Following the original publication (Urbanczik and Senn, 2014), we implement a Poisson spiking neuron model (pp_cond_exp_mc_urbanczik) supporting Urbanczik-Senn plasticity. One peculiarity of this model is that the gain function ϕ that translates the membrane potential into a firing rate also enters the plasticity rule through V*. Therefore ϕ as well as its parameters need to be known by the neuron and the synapse. Creating an additional helper class (pp_urbanczik_parameters) as a template argument for the corresponding archiving node (Urbanczik_Archiving_Node) and neuron model (pp_cond_exp_mc_urbanczik) solves this problem (Figure 6): it contains all parameters and functions required by both classes. As explained in section 2.5, the representation (17) is more beneficial for implementing the Urbanczik-Senn rule than that of (16). The first two integrals in (17) only extend from t to T; history entries for times smaller than t are not needed and can be deleted after the corresponding update. The dependence on the full history back until 0 arising from the convolution with κ is accumulated in the last term in (17), which the synapse computes with the help of storing one additional value I2(0, t). At the end of a weight update this value is overwritten by the new value which is then used in the next update. Either the synapse (time- and event-driven update) or the archiving node (event-driven compression) compute the two integrals I1 and I2 but in all cases the archiving node stores the history of .

Figure 6.

Class diagram of NEST classes and functions. Simplified class diagram for embedding the Clopath (Left) and Urbanczik-Senn rule (Right) in the NEST infrastructure. The code is distributed across the nestkernel and neuron models. nestkernel contains the base class Node of all neurons models. Models that support ordinary STDP are derived from the Archiving_Node, models that can use the Clopath synapse (aeif_psc_delta_clopath and hh_psc_alpha_clopath) or Urbanczik-Senn synapse (pp_cond_exp_mc_urbanczik) are derived from the Clopath_Archiving_Node or the Urbanczik_Archiving_Node, respectively. The latter add the required functions for storing and managing the history of continuous quantities. The model pp_cond_exp_mc_urbanczik requires a helping class pp_urbanczik_parameters because the Urbanczik_Archiving_Node needs to access functions and parameters that are specific to the neuron model and therefore not located in the Urbanczik_Archiving_Node to keep its implementation more general.

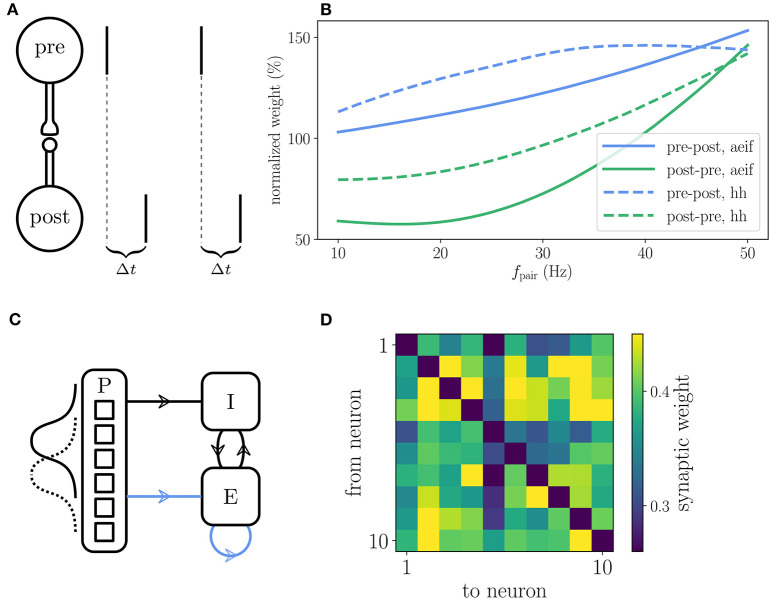

3.3. Reproduction of Results in Literature

The reference implementation of the Clopath plasticity reproduces the results from Clopath et al. (2010) on the frequency dependence of weight changes in spike-pairing experiments and the emergence of bidirectional connections in small all-to-all connected networks (Figure 7). The setup of the spike-pairing experiment in Figure 7A consists of two neurons connected via a plastic synapse. The pre- and postsynaptic neuron are forced to spike with a time delay of Δt multiple times which leads to a change in synaptic weight that depends on the frequency of the spike pairs (Figure 7B). The setup of the small network is shown in Figure 7C. The weights of the plastic synapses within the recurrently connected excitatory population are initialized all to the same value. At the end of the simulation during which the network receives a time varying input, some pairs of neurons show strong bidirectional connections (Figure 7D). See Appendix Section 5.4 for details on the setup of both experiments as implemented in NEST.

Figure 7.

Reproduction of results with Clopath rule. (A) Setup of the spike pairing experiment. Two neurons (“pre” and “post”) that are connected by a plastic synapse receive input so that they spike one after another with a delay Δt. The change of the synaptic weight is computed according to the Clopath rule as a function of the frequency fpair with which the spike pairs are induced. (B) Result of the spike pairing experiment. The relative change of the synaptic weight after five spike pairs as a function of fpair is shown for two different neuron models (aeif: solid curves, Hodgkin-Huxley: dashed curves). The blue curves represent a setup where the postsynaptic neuron fires after the presynaptic one (pre-post, Δt = 10 ms) and the green curves represent the opposite case (post-pre, Δt = −10 ms). This panel corresponds to Figure 2B of Clopath et al. (2010). (C) Setup of the network that produces strong bidirectional couplings. The network consists of an inhibitory (I) and an excitatory (E) population which receive Poisson spike trains (P) as an external input. The firing rate of the latter is modulated with a Gaussian shape whose center is shifted every 100 ms. The external input connections to the excitatory population are plastic as well as the connections within the excitatory population (indicated by blue arrows). (D) Synaptic weights of the all-to-all connected excitatory neurons after the simulation of the network. Strong bidirectional couplings can be found, e.g., between neurons 2 and 3, 2 and 9, and 4 and 7. The setup of this experiment is similar to that shown in Figure 5 of Clopath et al. (2010). A more detailed description of the two experiments can be found in Appendix Section 5.4.

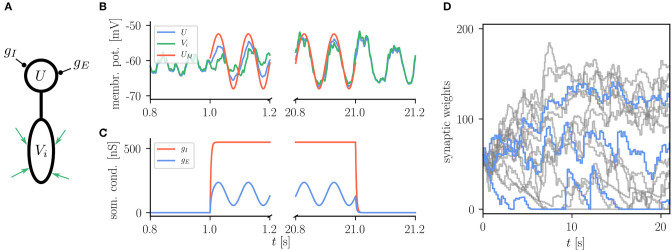

The basic use of the Urbanczik-Senn rule in NEST is exemplified in Figure 8 which shows the reproduction of a simple learning experiment from the original publication (Urbanczik and Senn, 2014). Here the neuron is supposed to transform spike patterns in the input to the dendritic compartment into a sinusoidal modulation of the somatic membrane potential. This target potential is determined by an external teaching signal during learning. Via minimizing the error between the dendritic prediction of the somatic membrane potential and the actual somatic membrane potential, weights of dendritic synapses are organizing such that the neuron can produce the desired membrane potential. There is, however, no stop-learning region in the Urbanczik-Senn rule (for a modified version, see Cartiglia et al., 2020): The error never vanishes completely which causes weights to keep changing despite an overall good approximation of the target signal. Details of the experiment and NEST setup can be found in Appendix Section 5.6.

Figure 8.

Reproduction of results with Urbanczik-Senn rule. (A) Setup of a simple learning task using the Urbanczik-Senn plasticity rule. The somatic conductances gI and gE of a two-compartment neuron are modulated such that they induce a teaching signal with sinusoidal shape. The dendrite receives a repeating spike pattern as an input via plastic synapses (green arrows). (B) The synapses adapt their weights so that the somatic membrane potential U (blue) and the dendritic prediction Vi (green) follow the matching potential UM (red) after learning. (C) Excitatory (gE) and inhibitory (gI) somatic conductances that produce the teaching signal. (A,B) correspond to Figure 1 of Urbanczik and Senn (2014). (D) Temporal evolution of the synaptic weights during learning. For the sake of better overview, only a subset of weights is shown (gray) with three randomly chosen time traces highlighted in blue. Synapses in NEST fulfill Dale's principle which means that a weight update cannot convert an excitatory into an inhibitory synapse and vice versa giving rise to the rectification at zero.

3.4. Performance of the Reference Implementations

3.4.1. Clopath Plasticity

In order to evaluate the performance of the implementation of the Clopath rule in NEST, in a weak-scaling setup, we simulate excitatory-inhibitory networks of increasing size, but fixed in-degree K. As we expect the performance to critically depend on the number of synapses, we examine two scenarios: a small in-degree K = 100, and a rather large in-degree K = 5, 000. While the first case might be suitable for small functional networks, the latter in-degree represents a typical number for cortical networks. Further details on network and simulation parameters are given in Supplementary Table 5. As a reference, we also simulate the same network with STDP synapses, which require much fewer computations as they rely solely on spike times. To achieve the same network state, that is the same spikes, for the different connectivity rules, we impose the weights to stay constant across time by setting learning rates to zero. This way all computations for weight changes are being performed, but just not applied. This has the additional advantage that reasonable asynchronous irregular network states are simple to find based on predictions for static synapses (Brunel, 2000).

The Clopath rule has originally been proposed for connections without delays (Clopath et al., 2010). Therefore, we first evaluate its performance in this setting (delay equals simulation time step), which is, however, not the natural setting for a simulator like NEST that makes use of delays to speed up communication between compute processes. The first observation is that, as expected, simulations with Clopath synapses are slower than those with ordinary STDP (Figure 9). Given the update of synapses in every simulation step, the time-driven scheme for Clopath synapses is much slower than the event-driven scheme (Figure 9A). The difference becomes larger the more synapses there are (Figure 9B). Introducing a delay leads to fewer function calls to synapses (once every min_delay) and therefore increases the speed of the time-driven scheme (Figure 9C). Its simulation times, however, remain much above the event-driven scheme. This comparison illustrates the benefit of event-driven updates for Clopath synapses.

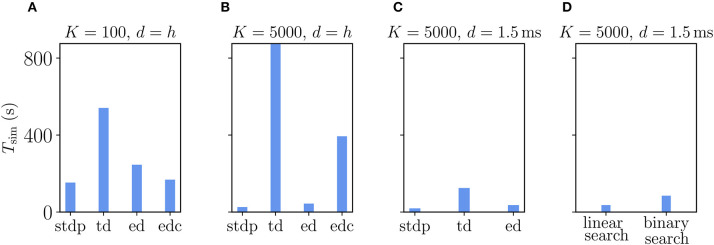

Figure 9.

Comparison of simulation times Tsim for excitatory-inhibitory networks with different implementations of the Clopath plasticity in NEST. Simulation times exclude network building and only account for updates of the dynamical state of the system. The following implementations are shown: “stdp”: standard implementation of STDP synapse, “td”: time-driven implementation of Clopath synapse, “ed”: event-driven scheme as included in NEST 2.20.1, “edc”: event-driven compression. (A) Network of size N = 1.92 · 106 with small in-degree K = 100 and all synapses having a delay d equal to the resolution of the simulation h = 0.1 ms. (B) Network of size N = 1.54 · 105 with large in-degree K = 5, 000 and d = h. (C) Same network as in (B) but d = 1.5 ms (for d > h “edc” not compatible with NEST, see section 3.1.3). In (A–C) both “ed” and “edc” use linear search of the history and access counters, see Appendix Section 5.3. (D) Comparison between “ed”-implementations using linear search and direct computation of the position, see Appendix Section 5.3.0.2. All simulations use 768 threads distributed over 32 compute nodes each running one MPI process. Further parameters as in Supplementary Table 5.

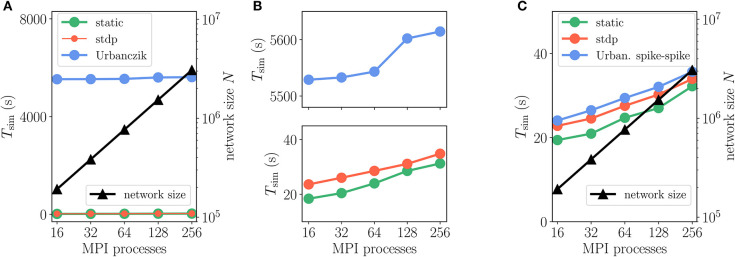

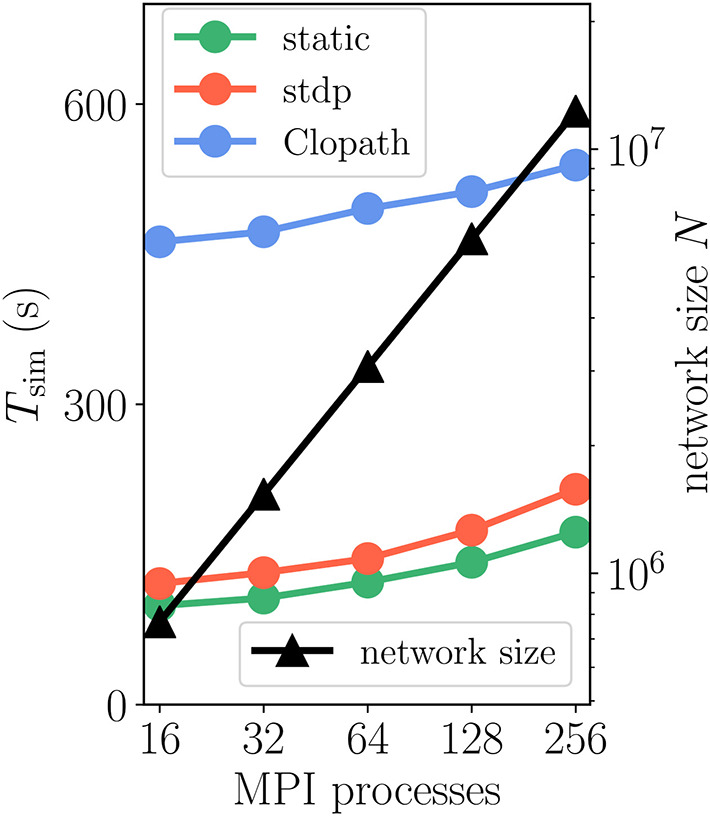

How does compression of the history change the picture? As discussed in section 3.1.3, compression has the advantage of not integrating the membrane potential history for each synapse separately. A downside of the event-based compression is that it requires storing one history entry for each last spike time of presynaptic neurons. For large in-degrees, this history is therefore longer than the history of , which we implemented as non-continuous for the Clopath rule. Consequently, the event-based compression scheme only outperforms the ordinary event-driven scheme for small in-degrees (Figure 9A), but not for large in-degrees (Figure 9B). Given that the compression can only be implemented in NEST for connections with delay equal to the resolution of the simulation (see section 3.2.2), the method of choice is therefore the ordinary event-driven scheme (section 3.1.2). Although a bit slower, its run-time is on the same order of magnitude as the ordinary STDP synapse, with similar weak-scaling behavior (Figure 10). The additional computations with respect to STDP result in a constant overhead.

Figure 10.

Scaling of simulation time Tsim with network size for 2 s of biological time: Clopath plasticity. Weak scaling: computational resources (horizontal axis) increase proportionally to network size N (black curve and triangles, right vertical axis). Event-driven scheme for Clopath rule (blue) compared to static (green) and STDP synapse (red). Network and simulation parameters as in Supplementary Table 5 with in-degree K = 5, 000. For all simulations each compute node runs one MPI process with 24 threads.

Another advantage of having short non-continuous histories is that searching the history at readout is fast. A simple linear iteration scheme is therefore even faster than a binary search (Figure 9D) because the latter search requires an additional list of presynaptic spike times (see Appendix Section 5.3) which is unnecessary overhead in this scenario. As a result the ordinary event-driven scheme with linear history iteration is the most general and efficient scheme and therefore integrated into NEST 2.20.1 (Jordan et al., 2019).

3.4.2. Urbanczik-Senn Plasticity

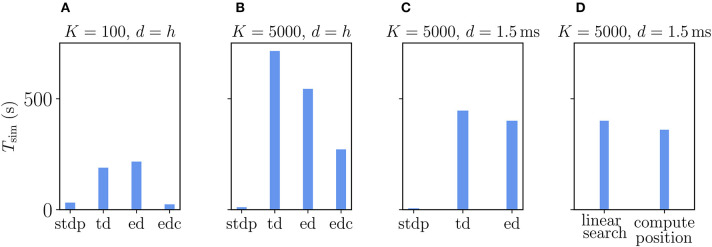

The Urbanczik-Senn rule, in its original version, does not account for delays in connections (Urbanczik and Senn, 2014). As for the Clopath rule, we therefore first evaluate its performance for connections with delays that equal the simulation time step. We compare the results to networks with ordinary STDP synapses, again setting all learning rates to zero to maintain the same network state across different types of plasticity. Naturally, the processing of the membrane potential information makes the Urbanczik-Senn plasticity less efficient to simulate than networks with ordinary STDP synapses (Figure 11). Note that the absolute numbers of simulation times are not directly comparable to simulations with Clopath plasticity (Figure 9) as network sizes are smaller here (Supplementary Table 5). Networks with small and large in-degrees behave qualitatively similar: given the long continuous history that needs to be stored and read out, the event-driven scheme does not significantly outperform the time-driven scheme (Figures 11A,B). In the network with small in-degree, the time-driven scheme is even slightly faster (Figure 11A). This behavior reverses for large in-degrees as the number of synapse calls grows stronger than the length of the history (Figure 11B). However, given that the length of the history is so critical in this rule, the compression algorithm can in both cases achieve a significant increase in performance (Figures 11A,B). This performance increase is larger the smaller the in-degree, as the compressed history becomes shorter (Figure 11A). Due to current NEST specifics (see section 3.2.2), the compression algorithm cannot be used in settings with delays that are larger than the simulation time step (Figure 11C): Here, as expected, the time-driven scheme becomes faster than in the d = h case, but it is in general still comparable in performance to the event-driven scheme. The latter is therefore the method of choice for simulations with delayed connections; for zero-delay connections, the compression algorithm performs best. Whether the history readout is done via linear iteration or via computing positions of history entries has no significant impact on the performance (Figure 11D). Therefore, the simple linear iteration is integrated in NEST 2.20.1.

Figure 11.

Comparison of simulation times Tsim for excitatory-inhibitory networks with different implementations of the Urbanczik-Senn plasticity in NEST. The following implementations are shown: “stdp”: standard implementation of STDP synapse in NEST, “td”: time-driven implementation of Urbanczik-Senn synapse, “ed”: event-driven scheme, edc”: event-driven compression. (A) Network of size N = 3.84 · 105 with small in-degree K = 100 and all synapses having a delay d equal to the resolution of the simulation h = 0.1 ms. (B) Network of size N = 3.84 · 104 with large in-degree K = 5, 000 and d = h. (C) Same network as in (B) but d = 1.5 ms (for d > h “edc” not compatible with NEST, see section 3.1.3). In (A–C) both “ed” and “edc” use linear search of the history and the access counters, see Appendix Section 5.3. (D) Comparison between “ed”-implementations using linear search and direct computation of the position, see Appendix Section 5.3.0.2. All simulations use 768 threads distributed over 32 compute nodes each running one MPI process. Details on network parameters in Supplementary Table 5.

We furthermore employ a weak-scaling setup with excitatory-inhibitory networks of increasing size and fixed in-degree K = 5, 000 (Figures 12A,B, and Supplementary Table 5). Apart from a constant offset, the scaling of simulation time Tsim for updating neurons and synapses is similar for Urbanczik, static and STDP synapses. With increasing network size N and proportionally increasing number of MPI processes, Tsim rises only slightly (Figure 12B), indicating almost ideal weak-scaling behavior. The constant offset in Tsim is larger than for Clopath synapses as the Urbanczik-Senn rule requires longer histories of membrane potentials and a more extensive history management.

Figure 12.

Scaling of simulation times Tsim with network size for 2 s of biological time: Urbanczik-Senn plasticity. Same weak scaling as in Figure 10. (A) Event-driven Urbanczik-Senn rule (blue) compared to static (green) and STDP synapse (red). On the scale of the vertical axis the red curve (STDP synapses) falls on top of the green curve (static synapses), indicated by finer line width and marker size of the former. (B) Same simulation time data as in (A) but with a smaller range on the vertical axis. Upper panel: enlargement of Urbanczik-Senn data. Lower panel: enlargement of data for static and STDP synapses. (C) Spike-spike version of the Urbanczik-Senn rule compared to static and STDP synapse. Network and simulation parameters as in Supplementary Table 5 with in-degree K = 5, 000. For all simulations each compute node runs one MPI process with 24 threads.

3.5. Conclusions

The analyses of the Clopath and the Urbanczik-Senn plasticity as prototypical examples for rules that rely on storage of discontinuous vs. continuous histories show that the former are much faster to simulate, in particular for large networks that require distributed computing. For discontinuous histories, the event-driven scheme is most generally applicable and efficient, which makes corresponding rules easy to integrate into modern simulators with event-based synapses. The performance gap between the different rules should be kept in mind in the design of new learning rules. Furthermore, it is worthwhile to test modifications of existing learning rules to decrease the amount of stored information.

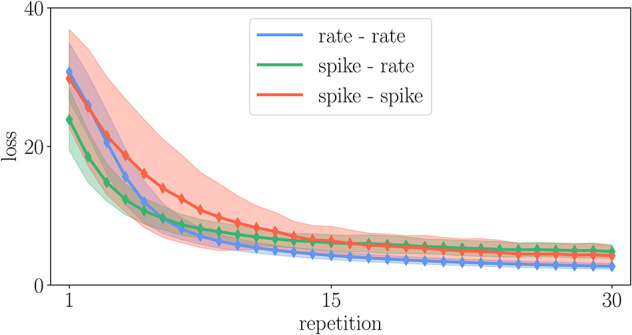

For illustration, we here test a spike-based alternative to the original Urbanczik-Senn rule, where we replace the rate prediction ϕ (Vi (t)) in V* of (15) by a noisy estimate, which we generate by a non-homogeneous Poisson generator with rate ϕ (Vi(t)), see Appendix Section 5.7. The prediction error then results in a comparison of somatic and dendritic spikes, si and , respectively; it is therefore purely based on point processes. In terms of storage and computations, the rule thereby becomes similar to ordinary STDP [cf. (5)]. This becomes apparent in the weak-scaling experiment in Figure 12C, which shows that the modification of the learning rule results in a speedup of a factor 10 to 30 arriving essentially at the same run time as the ordinary STDP rule.

When changing learning rules to improve the efficiency of an implementation, the question is in how far the modified rule, in our example including the noisy estimate of the dendritic prediction, still fulfills the functionality that the original rule was designed for. Generally, without control of the error any simulation can be made arbitrarily fast. Therefore, Morrison et al. (2007b) define efficiency as the wall-clock time required to achieve a given accuracy. We test in Figure 13 whether the dynamics is still robust enough to achieve proper learning and function in the reproduced task of Figure 8. The learning works as well as in the original Urbanczik-Senn rule. However, given the simplicity of the chosen task, this result may not generalize to other more natural tasks. We leave a more detailed investigation of this issue to future studies. The basic exploration here, however, illustrates how taking into account the efficiency of implementations can guide future development of learning rules to make them practically usable for large-scale simulations of brain networks.

Figure 13.

Comparison of learning curves in the experiment described in Appendix Section 5.6 for different variants of the Urbanczik-Senn plasticity rule. The loss is averaged over 128 trials of different input patterns. Solid curves denote the mean value and the shaded area the corresponding standard deviation of the loss.

4. Discussion

This work presents efficient algorithms to implement voltage-based plasticity in modern neural network simulators that rely on event-based updates of synapses (for a review, see Brette et al., 2007). This update scheme restricts function calls of synapse code to time points of spike events and thereby improves performance in simulations of biologically plausible networks, where spike events at individual synapses are rare and the total number of synapses is large compared to the number of neurons. While our framework has no restrictions on the postsynaptic voltage-dependence of the learning rule, a particular focus of this work is on those plasticity rules, where synapses rely on an extended history of membrane potentials and therefore continuous information of state variables of postsynaptic cells to update their strength. This dependence naturally suggests a time-driven update of synapses. Instead, we here propose an efficient archiving of voltage traces to enable event-based synapse updates and detail two schemes for storage, read out and post-processing of time-continuous or discontinuous information. We show their superior performance with respect to time-driven update both theoretically and with a reference implementation in the neural network simulation code NEST for the rules proposed in Clopath et al. (2010) and Urbanczik and Senn (2014).

Event-driven update schemes for voltage-based plasticity come at the expense of storing possibly long histories of a priori continuous state variables. Such histories not only require space in memory but they also affect the runtime of simulations, which we focus on here. The time spent for searching and post-processing the history to calculate weight updates increases with increasing length, and these operations have to be done for each synapse. Therefore, in addition to an ordinary event-driven scheme, we devised a compression scheme that becomes superior for long histories as occurring in the Urbanczik-Senn rule. In particular for networks with small in-degrees or synchronous spiking, the compression scheme results in a shorter history. It further reduces the total amount of computations for weight changes by partially re-using results from other synapses thereby avoiding multiple processing of the history. For short histories as occurring in the Clopath rule, the compression results in unnecessary overhead and an increase in history size as one entry per last presynaptic spike time needs to be stored instead of a discontinuous membrane potential around sparse postsynaptic spike events. We here, for simplicity, contrasted time- and event-driven update schemes. However, further work could also investigate hybrid schemes, where synapses are not only updated at spike events, but also on a predefined and coarse time grid to avoid long histories and corresponding extensive management. A similar mechanism is used in Kunkel et al. (2011) to implement a normalization of synaptic weights. The corresponding technical details can be found in Kunkel (2015, ch. 5.2).

The storage and management of the history as well as complex weight change computations naturally reduce the performance of simulations with voltage-based plasticity in comparison to static or STDP synapses. The latter only require information on spike times which is much less data compared to continuous signals. Nevertheless, given that the Clopath rule is based on thresholded membrane potentials and consequently short, discontinuous histories, the performance and scaling of the event-driven algorithms is only slightly worse than for ordinary STDP. Time-driven implementations cannot employ this model feature and update weights also in time steps where no adjustment would be required, leading to significantly slower simulations. The performance gain of using event-driven schemes is less pronounced for the Urbanczik-Senn rule as, by design, histories are typically long. In this case, the compression scheme naturally yields better results in terms of runtime. Our own modification of the Urbanczik-Senn rule only requires storage of sparsely sampled membrane potentials, giving rise to the same performance as STDP. Generally, an algorithm is faster if it requires fewer computations. However, opportunities for vectorization and cache efficient processing, outside of the scope of the present manuscript, may change the picture.