Abstract

Objective

Develop and evaluate an interactive information visualization embedded within the electronic health record (EHR) by following human-centered design (HCD) processes and leveraging modern health information exchange standards.

Materials and Methods

We applied an HCD process to develop a Fast Healthcare Interoperability Resources (FHIR) application that displays a patient’s asthma history to clinicians in a pediatric emergency department. We performed a preimplementation comparative system evaluation to measure time on task, number of screens, information retrieval accuracy, cognitive load, user satisfaction, and perceived utility and usefulness. Application usage and system functionality were assessed using application logs and a postimplementation survey of end users.

Results

Usability testing of the Asthma Timeline Application demonstrated a statistically significant reduction in time on task (P < .001), number of screens (P < .001), and cognitive load (P < .001) for clinicians when compared to base EHR functionality. Postimplementation evaluation demonstrated reliable functionality and high user satisfaction.

Discussion

Following HCD processes to develop an application in the context of clinical operations/quality improvement is feasible. Our work also highlights the potential benefits and challenges associated with using internationally recognized data exchange standards as currently implemented.

Conclusion

Compared to standard EHR functionality, our visualization increased clinician efficiency when reviewing the charts of pediatric asthma patients. Application development efforts in an operational context should leverage existing health information exchange standards, such as FHIR, and evidence-based mixed methods approaches.

Keywords: clinical decision support systems, human-centered design, health information interoperability, health information exchange, implementation science

INTRODUCTION

Growth in electronic health record (EHR) adoption has drastically increased the amount of health data recorded.1 This wealth of data can strain the working memory that healthcare providers rely on to build an accurate overview of the patient.2 Reviewing relevant patient information is especially challenging in a fast-paced healthcare environment, such as the emergency department (ED), where clinicians must quickly assess a patient’s medical history by searching for information that is scattered across multiple screens in the EHR.3 Some organizations and vendors have attempted to integrate succinct displays of relevant data into clinical workflows4; however, the information is often displayed in a tabular format similar to a spreadsheet. This format severely limits a clinician’s ability to recognize patterns or trends related to the patient’s longitudinal plan of care.

Turning this vast amount of data into actionable information is possible through data visualization strategies, which take advantage of humans’ visual processing ability to more efficiently detect changes or make comparisons between objects across a number of dimensions including shape, size, or color.5 For over 2 decades, organizations have successfully implemented health-related information visualizations.6–8 When such interventions are implemented, evidence suggests that human-centered design (HCD) approaches, which can include an analysis of the work environment, active user involvement in the development process, iterative systems development, evaluation of use in context, and involvement of usability experts, yield better results.9–12 There also exists research using HCD methods to design innovative information displays for the ED.13–15 However, many of these systems were aimed at tracking patient care and resource allocation across the ED or were focused on information displays related to the current encounter.

Additionally, limitations in health application architectures and information exchange standards often present barriers when trying to scale such applications to other institutions.16 Integration within the EHR has also typically been limited, and the visualizations themselves have consequently lacked support for important workflow features (eg, the ability to place orders or “drill down” to view patient-specific summaries or reports available elsewhere in the EHR).17 Similar workflow gaps have been associated with usability-related issues within EHRs.18

Recent developments in health information exchange standards, such as Fast Healthcare Interoperability Resources (FHIR), are starting to make multisite deployments more feasible.19–21 FHIR is a data exchange specification built on open internet standards; it offers a lightweight alternative to the Simple Object Access Protocol (SOAP) and supports both JavaScript Object Notation (JSON) and Extensible Markup Language (XML).22–25
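To make the resource-oriented style of FHIR concrete, the following is a minimal sketch of how a client might build a RESTful search request for a patient's encounters in JSON. The base URL and the function name are hypothetical and are not taken from this project; the `patient`, `date`, and `_format` search parameters are standard FHIR search parameters, but any real server should be checked for which of them it supports.

```python
from urllib.parse import urlencode

# Hypothetical FHIR base URL; a real deployment would use its own endpoint.
FHIR_BASE = "https://fhir.example.org/dstu2"


def encounter_search_url(patient_id: str, since: str, base: str = FHIR_BASE) -> str:
    """Build a FHIR search URL for a patient's encounters on or after `since` (YYYY-MM-DD)."""
    params = {
        "patient": patient_id,  # restrict the search to one patient
        "date": f"ge{since}",   # 'ge' prefix: on or after this date
        "_format": "json",      # request JSON rather than XML
    }
    return f"{base}/Encounter?{urlencode(params)}"


if __name__ == "__main__":
    print(encounter_search_url("123", "2016-09-03"))
```

Because the request is an ordinary HTTP GET with query parameters, the same call can in principle be issued from a browser-based application without a SOAP toolkit.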

OBJECTIVE

To develop and evaluate an interactive information visualization embedded within the production EHR by using HCD approaches and leveraging modern health information exchange standards.

MATERIALS AND METHODS

Project setting

The Asthma Timeline Application was developed for use in the ED of the Children’s Hospital of Philadelphia (CHOP), a large, academic, tertiary care children’s hospital that utilizes a commercial EHR (Epic Systems Inc., Verona, WI). We focused on asthma because it is 1 of the most common chronic illnesses of childhood and a leading cause of ED visits and hospitalizations. The CHOP ED treats over 7000 acute asthma visits annually, with a 30% admission rate.

Project design

At the end of 2015, the director of Emergency Information Systems and coauthor of this article (JZ) observed that information about prior asthma encounters, treatments, and outcomes was separated into multiple areas of the EHR, making it hard for clinicians to aggregate it while formulating a treatment plan. In discussing approaches with colleagues, we hypothesized that a single view of this information would reduce time and effort to gather this information and improve provider efficiency and satisfaction. To support this work, a multidisciplinary team was formed that consisted of physicians (DF, JZ, LU, RG), software engineers (JM, JT), and a human computer interaction (HCI) specialist (DK). Three physicians (JZ, LU, RG) were board-certified clinical informaticians and 1 (DF) was in training at the time of this project. The 2 software engineers had over 11 years of experience developing clinical decision support applications embedded within the EHR. Our HCI specialist has a master’s degree in Human Computer Interaction and over 20 years of experience in clinical informatics research. Team formation began at the end of 2015, direct observations and semistructured interviews occurred throughout 2016, application design and development occurred at varying levels from 2016 to 2018, and postimplementation evaluation occurred from 2017 to 2018. The project was conducted in 5 phases: (1) cognitive task analysis; (2) design; (3) preimplementation comparative system evaluation; (4) intervention development; and (5) postimplementation feedback and monitoring. A generalized version of this cycle is presented in Figure 1. This project was conducted as part of a quality improvement project and determined to be exempt from requirements for human subject research by CHOP’s Institutional Review Board.

Figure 1.

Human-centered design process for application development.

Intervention

The Asthma Timeline Application was developed to intervene on high- and rising-risk pediatric patients presenting with a chief complaint of respiratory distress in the ED. High-risk patients were defined as having either ≥ 2 inpatient admissions for asthma or ≥ 3 ED visits for asthma in the 12 months preceding the current visit. Rising-risk patients were defined as those having either 1 inpatient admission for asthma or 2 ED visits for asthma. We used diagnosis codes associated with hospital billing records to classify an encounter as asthma-related. In addition to a qualifying diagnosis, emergency visits required that either a systemic steroid (eg, prednisone) or albuterol treatment was ordered, and inpatient visits required that a systemic steroid was ordered.
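The risk definitions above can be sketched as a small classification function. This is an illustration rather than the production logic: the `AsthmaEncounter` type and `classify_risk` function are hypothetical, encounters are assumed to have already passed the diagnosis and medication-order criteria, the 12-month window is approximated as 365 days, and the same window is assumed to apply to the rising-risk definition (the text states it explicitly only for high risk).

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, Optional


@dataclass(frozen=True)
class AsthmaEncounter:
    """A prior encounter already classified as asthma-related (hypothetical type)."""
    visit_date: date
    setting: str  # "inpatient" or "ed"


def classify_risk(encounters: Iterable[AsthmaEncounter],
                  current_visit: date,
                  lookback_days: int = 365) -> Optional[str]:
    """Return "high", "rising", or None for the patient at the current visit."""
    window_start = current_visit - timedelta(days=lookback_days)
    # Count only encounters that fall before the current visit and inside the window.
    recent = [e for e in encounters if window_start <= e.visit_date < current_visit]
    inpatient = sum(1 for e in recent if e.setting == "inpatient")
    ed = sum(1 for e in recent if e.setting == "ed")

    if inpatient >= 2 or ed >= 3:
        return "high"
    if inpatient == 1 or ed == 2:
        return "rising"
    return None
```

Checking the high-risk rule first means a patient with 1 admission and 3 ED visits is labeled high risk rather than rising risk.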

Cognitive task analysis

One author (JT) observed different provider types (attending, fellow, resident, advanced practice nurse, and nurse) treating asthma patients within the ED. Detailed notes about the environment in which work was conducted, as well as the tools used, were collected. To supplement the observations, 2 authors (JM, JT) conducted semistructured interviews with a total of 11 clinicians (2 attendings, 4 fellows, 3 advanced practice nurses, and 2 nurses). Clinicians of all types at our institution were recruited to represent multiple perspectives on the treatment of asthma patients in the ED and a range of experience levels. We recruited new participants through informal introductions and terminated recruitment when 3 authors (DK, JM, JT) observed thematic saturation of participant responses. Participants were asked to describe their use of the EHR, what information they reviewed, and what information was not available or difficult to find when treating asthma patients. The same 3 authors synthesized responses from the interviews with information from the direct observations through a cognitive task analysis to develop an understanding of the work involved in treating asthma patients in the ED.26–28 Throughout the analysis, our understanding of the work environment, users, and tasks was iteratively validated by 4 subject matter experts on the project team (JZ, DF, RG, LU).

Design

Results from the cognitive task analysis were used to identify information requirements for the application through a formative design process.29,30 Each feature represented in the application was designed to address the challenges identified in both the users’ tasks and work environment. We created multiple wireframe prototypes that were presented to representative clinicians in a design walkthrough format.31 The walkthrough was performed with individual clinicians and in larger groups. Each presentation included a quick overview about the project before walking through the designed scenario. Detailed notes from the sessions were taken by 1 author (JT). Feedback from these sessions was used to iteratively modify the prototypes to correct for any discovered problems. The prototypes continued to increase in fidelity, which allowed for users to provide more specific feedback about the application.

Preimplementation comparative system evaluation

Following the formative design phase, we developed the Asthma Timeline Application and conducted a summative usability test.32 The test was designed to determine the usability and utility of the Asthma Timeline Application and to compare it with the standard EHR. Objective metrics included time on task, number of EHR screens accessed, and the ability to retrieve patient asthma history information (eg, previous encounters and medications). Subjective metrics included the NASA Task Load Index (TLX), raw format,33–35 and questions related to perceived ease of use and perceived usefulness based on the Technology Acceptance Model (TAM). We chose the TAM for our survey due to its multiple constructs and established use in the evaluation of healthcare information technology.36

During the test, screen actions and audio were recorded using usability testing software (Techsmith, Okemos, MI). After a short pretest questionnaire, participants were asked to use the think aloud protocol as they reviewed 2 patient cases,37,38 1 using standard EHR features and 1 using the Asthma Timeline Application integrated into the EHR. In both cases, participants were asked to identify and record a count of specific asthma history information from the patient chart. This information retrieval task was evaluated as a binary outcome across data categories (eg, encounters or medications) where users either successfully retrieved all information or failed to retrieve all information. Upon completion of each case using each system (EHR or Asthma Timeline Application), participants completed the NASA TLX and TAM-based questionnaires. At the completion of the test, participants completed a post-test questionnaire.

A total of 12 participants were recruited via e-mail from a pool of ED providers and given a 5-dollar gift card for their time. There is disagreement within the usability community regarding the number of participants required to perform effective usability tests.39 Some experts suggest only 5 are needed,40,41 while others suggest more.42 Given this variation, we chose to limit our test to 12 participants based on practical limitations and experience from past projects. Participants and patient cases were randomized using a 3-way randomized complete block design, which ensures that each intervention (EHR vs Asthma Timeline Application) is applied the same number of times to subjects and scenarios and therefore removes the subject and scenario effects.43 This allowed 4 patient cases to be randomized among the 12 participants so that each case was reviewed a total of 6 times, 3 times with the standard EHR and 3 times with the Asthma Timeline Application. The test was conducted in a hospital-supported EHR test environment that is based on a complete copy of production and includes real patient data. Patient cases were selected based on a representative sample of patients visiting the CHOP ED. We used a paired (by provider) 2-tailed t-test for continuous variables and a Chi-squared test for proportions to evaluate statistical significance. All analyses were performed in R version 3.6.3.
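One way to obtain the balance described above (4 cases, 12 participants, each case reviewed 3 times per system) is sketched below. This is not the randomization procedure used in the study, which is not described in detail; it relies on the observation that 4 cases yield exactly 12 ordered pairs of distinct cases, so assigning one pair (EHR case, Timeline case) to each participant gives the required counts. The function names and case labels are hypothetical, and the order in which each participant used the two systems is not modeled.

```python
import itertools
import random
from collections import Counter


def balanced_assignment(participants, cases, seed=0):
    """Assign each participant one case per system so every case is used equally often."""
    # Every ordered pair of distinct cases: (case reviewed in EHR, case reviewed in Timeline).
    pairs = list(itertools.permutations(cases, 2))
    if len(pairs) != len(participants):
        raise ValueError("need exactly len(cases) * (len(cases) - 1) participants")
    rng = random.Random(seed)  # seeded so the allocation is reproducible
    rng.shuffle(pairs)
    return {p: {"ehr": e, "timeline": t} for p, (e, t) in zip(participants, pairs)}


def review_counts(plan):
    """Count how many times each case is reviewed under each system."""
    return (Counter(a["ehr"] for a in plan.values()),
            Counter(a["timeline"] for a in plan.values()))


if __name__ == "__main__":
    people = [f"P{i:02d}" for i in range(1, 13)]
    plan = balanced_assignment(people, ["A", "B", "C", "D"], seed=1)
    for person, cases_used in plan.items():
        print(person, cases_used)
```

Each participant acts as their own control (one case per system), which is what allows a paired comparison of time on task and screen counts.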

Intervention development

We aimed to integrate the application within the EHR workflow of our ED clinicians. We prioritized the use of internationally recognized standards, such as Health Level 7 International’s (HL7) FHIR or other frameworks that utilized such standards, including Substitutable Medical Applications Reusable Technologies (SMART). Where gaps existed, we attempted to use proprietary services offered by our EHR vendor (ie, vendor web services or database logic). When neither solution was available, open source or homegrown technology was used.

Postimplementation feedback and monitoring

Application usage, which included mouse hovers and clicks, and system functionality were obtained using application performance monitoring logs. Additional feedback from ED users was obtained through a voluntary web-based survey using REDCap. The survey link was sent via e-mail to all frontline ordering ED staff members, who are the subset of users with access to the application (n = 117), and included items similar to the usability questionnaire to assess satisfaction with the application. Two months after implementation, 2 authors (DF, JT) shadowed clinicians in the ED and interviewed 6 end users as they provided patient care.

RESULTS

During the first 3 phases of our HCD process, we elicited feedback from a total of 44 users (Table 1). An additional 50 order-writing clinicians responded to our online postimplementation survey. Nurses, improvement advisors, and EHR analysts were not included in all phases of the design process since the application was not developed for their use. Advanced practice nurses have higher representation due to the frequency with which they treat asthma patients in CHOP’s ED. Each phase of the process is described in more detail below.

Table 1.

Participant characteristics of human-centered design process

| | Cognitive Task Analysis and Design (%) | Preimplementation Comparative System Evaluation (%) | Intervention Development (%) b | Postimplementation Feedback (%) | Total (%) |
|---|---|---|---|---|---|
| Participant characteristics | | | | | |
| n | 25 | 12 | 7 | 50 | 94 |
| Type | | | | | |
| Clinical | | | | | |
| Attending | 2 (8) | 3 (25) | 2 (29) | – | 7 (7) |
| Fellow | 4 (16) | 1 (8) | 1 (14) | – | 6 (6) |
| Resident | 1 (4) | 3 (25) | – | – | 4 (4) |
| Advanced practice nurse | 11 (44) | 5 (42) | 1 (14) | – | 17 (18) |
| Nurse | 2 (8) | – | – | – | 2 (2) |
| Order writing, unspecified a | – | – | – | 50 (100) | 50 (53) |
| Nonclinical | | | | | |
| Improvement advisors | 2 (8) | – | – | – | 2 (2) |
| EHR analysts | 3 (12) | – | 3 (43) | – | 6 (6) |

a: Identifiable information, including provider type, was not requested as part of the survey.

b: Includes users involved in both the development and production deployment of the application.

Cognitive task analysis

Analysis of the direct observations and semistructured interviews revealed common themes related to the treatment of asthma patients in the ED. Clinicians performed their work in the context of a high-paced and time sensitive environment with a number of competing demands. When reviewing information, clinicians would consistently switch between multiple screens within the EHR to obtain information and would occasionally use external paper notes to keep counts of previous encounters and medication courses. All interview participants stated they experienced difficulty in finding relevant information in a timely manner to assess a patient’s asthma severity. This included asthma-related encounters, medications, and asthma treatment plans.

After synthesizing information from the observations and interviews, we were able to develop an understanding of the environment and the work to be completed. This understanding allowed us to identify the necessary information requirements for our initial prototype design, which included the ability to: (1) quickly obtain a count of a patient’s medical history (eg, encounters and medications); (2) identify whether the patient is being tracked by outpatient resources (eg, primary care, allergy, or pulmonary); (3) identify temporal relationships between data points; (4) access details related to previous encounters (eg, clinical notes); and (5) access additional care tools required for the current visit (eg, other functions within the EHR).

Design

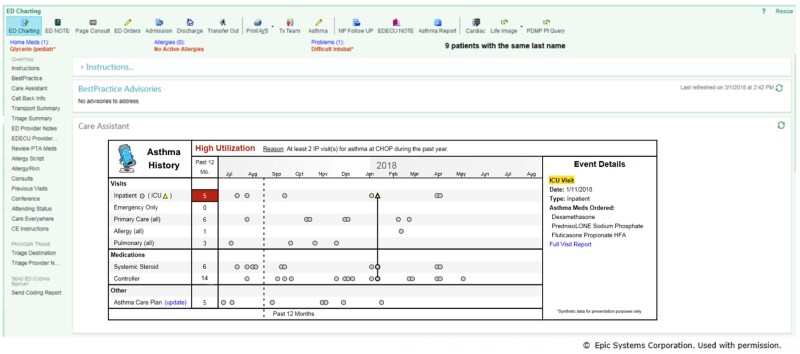

Results of the analysis phase informed the design of the application, with each information requirement mapped to specific design elements. For example, our users identified a limitation in current EHR designs, which use tabular formats for most data types. This design technique limits a user’s ability to efficiently identify temporal relationships with other information. From this, we hypothesized that an interactive timeline could serve as the functional foundation for the application. Additional requirements not generally represented on a timeline (eg, summary counts and encounter details) were added to the design. A total of 4 design feedback sessions were conducted using a combination of low- and high-fidelity prototypes (see Appendix A for previous iterations). The final design presented 14 months of patient data including asthma-related encounters, medications, and personalized asthma treatment plans (Figure 2). We chose a duration of 14 months since many children have seasonal variation in their asthma symptoms. This window gave clinicians the opportunity to capture year-to-year differences in the timing of common asthma triggers, such as viral illnesses and seasonal allergens. Inpatient stays involving the intensive care unit were denoted by a triangle and yellow highlighting. Each data point represented on the timeline is interactive through mouse hovers/clicks and can provide additional details through links to the source encounter, note, or order.
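The combination of a 14-month window and summary counts described above can be illustrated with a short sketch. The helper names, the event labels, and the tuple representation of events are assumptions made for this example; the application's actual data model is not described here.

```python
import calendar
from collections import Counter
from datetime import date


def months_before(d: date, months: int) -> date:
    """Return the date `months` calendar months before `d`, clamping the day if needed."""
    total = d.year * 12 + (d.month - 1) - months
    year, month_index = divmod(total, 12)
    last_day = calendar.monthrange(year, month_index + 1)[1]
    return date(year, month_index + 1, min(d.day, last_day))


def summarize_window(events, today: date, months: int = 14) -> Counter:
    """Count events by kind within the lookback window ending today (inclusive)."""
    start = months_before(today, months)
    return Counter(kind for when, kind in events if start <= when <= today)


if __name__ == "__main__":
    # Hypothetical events: (date, kind)
    events = [
        (date(2016, 8, 1), "ed_visit"),        # outside the 14-month window
        (date(2016, 10, 15), "ed_visit"),
        (date(2017, 3, 2), "steroid_course"),
        (date(2017, 10, 20), "ed_visit"),
    ]
    print(summarize_window(events, today=date(2017, 11, 3)))
```

A 14-month window ending in November reaches back to the preceding September, so the same season from the prior year stays visible for comparison.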

Figure 2.

Production version of the Asthma Timeline Application embedded in the EHR with synthetic patient data.

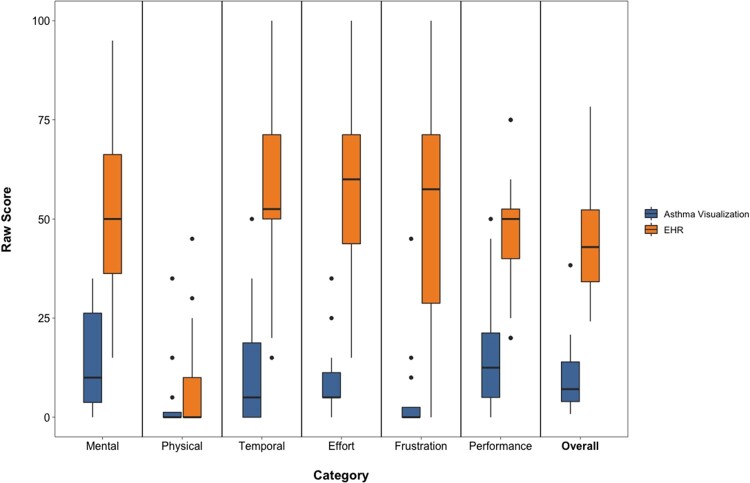

Preimplementation comparative system evaluation

We recruited a purposive sample of 12 pediatric ED providers with varied experience: advanced practice nurse (5); attending (3); resident (3); and fellow (1). The average number of years using the vendor EHR among participants was 4.33 (SD 1.78). When using the Asthma Timeline Application, users, on average, had lower task completion times (standard EHR, 5.7 minutes; Asthma Timeline Application, 2.0 minutes; P < .001) and viewed fewer screens (standard EHR, 12.8; Asthma Timeline Application, 1; P < .001) (Table 2). Information retrieval accuracy was higher when using the Asthma Timeline Application to identify asthma-specific information. None of the 12 participants were able to retrieve all of the patient asthma history information using the standard EHR. The Asthma Timeline Application also significantly reduced overall cognitive load when compared to the standard EHR using the NASA TLX, raw format (standard EHR, 45.14; Asthma Timeline Application, 10.83; P < .001) (Figure 3). Finally, the Asthma Timeline Application had higher perceived usability/utility scores when compared to the standard EHR according to a post-task questionnaire based on the TAM (Table 3).

Table 2.

Preimplementation comparative system evaluation objective measures

| Objective Task Measure | EHR a | Asthma Timeline a | 95% CI of the Differences | P Value c |
|---|---|---|---|---|
| Total Time (minutes) | 5.7 (1.7) | 2.0 (1.0) b | [−5.2, −2.4] | <.001 |
| Screens | 12.8 (3.9) | 1.0 (0.0) | [−14.3, −9.4] | <.001 |
| Accuracy (proportion correct) | | | | |
| Inpatient Admissions | 0.42 | 0.92 | [0.1, 0.9] | .03 |
| Steroid Courses | 0.17 | 0.92 | [0.4, 1.0] | .001 |

a: For continuous measures (time, screens) values reported are mean (SD).

b: Includes time for the participant to explore the visualization.

c: Paired t-test for continuous measures (time, screens), Chi-squared for proportions (accuracy).

Figure 3.

Box plot of NASA TLX results.

Table 3.

Subjective measures for the EHR and asthma timeline application

| | EHR b | Asthma Visualization b | 95% CI of the Differences | P Value c |
|---|---|---|---|---|
| NASA Task Load Index (raw format) | | | | |
| Mental | 50.83 (24.1) | 14.58 (13.4) | [−49.94, −22.56] | <.001 |
| Physical | 9.17 (15.3) | 4.58 (10.5) | [−12.06, 2.89] | .2 |
| Temporal | 55.83 (23.3) | 12.92 (16.6) | [−59.08, −26.75] | <.001 |
| Effort | 55.42 (25.2) | 10.0 (10.4) | [−62.63, −28.20] | <.001 |
| Frustration | 52.5 (28.2) | 5.83 (13.3) | [−66.27, −27.07] | <.001 |
| Performance | 47.08 (18.4) | 17.08 (15.9) | [−44.65, −15.35] | <.001 |
| Overall | 45.14 (15.1) | 10.83 (10.7) | [−44.14, −24.47] | <.001 |
| Usability/utility questions a | | | | |
| This system is easy to use to determine a patient's asthma history. | 2.42 (1.0) | 4.75 (0.5) | [1.65, 3.02] | <.001 |
| This system is efficient in helping me determine a patient's asthma history. | 2.50 (0.9) | 4.83 (0.4) | [1.77, 2.90] | <.001 |
| This system provides useful features to determine a patient's asthma history. | 2.92 (1.1) | 4.92 (0.3) | [1.34, 2.66] | <.001 |
| This system helps me feel confident in determining a patient's asthma history. | 2.83 (0.8) | 4.58 (0.5) | [1.36, 2.14] | <.001 |
| This system provides important patient information in addressing the care of asthmatic patients in the ED. | 3.50 (0.7) | 4.67 (0.5) | [0.71, 1.62] | <.001 |
| Overall, I am satisfied with how this system is designed to address the care of asthmatic patients in the ED. | 2.75 (1.2) | 4.75 (0.5) | [1.28, 2.72] | <.001 |

a: 5-point Likert-type scale: 1 = strongly disagree to 5 = strongly agree.

b: All values reported are mean (SD).

c: Paired t-test.
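For readers unfamiliar with the raw format of the NASA TLX, the overall score is the unweighted mean of the 6 subscale ratings. The sketch below applies that definition to the subscale means reported in Table 3 and recovers the overall values in the same table. It assumes every participant rated every subscale, in which case the mean of the subscale means equals the mean of the per-participant overall scores; the function and variable names are illustrative.

```python
from statistics import fmean

SUBSCALES = ("Mental", "Physical", "Temporal", "Effort", "Frustration", "Performance")

# Subscale means copied from Table 3.
EHR_MEANS = {"Mental": 50.83, "Physical": 9.17, "Temporal": 55.83,
             "Effort": 55.42, "Frustration": 52.5, "Performance": 47.08}
TIMELINE_MEANS = {"Mental": 14.58, "Physical": 4.58, "Temporal": 12.92,
                  "Effort": 10.0, "Frustration": 5.83, "Performance": 17.08}


def raw_tlx(scores: dict) -> float:
    """Raw (unweighted) NASA TLX: the simple mean of the six subscale ratings."""
    missing = [name for name in SUBSCALES if name not in scores]
    if missing:
        raise KeyError(f"missing subscales: {missing}")
    return fmean(scores[name] for name in SUBSCALES)


if __name__ == "__main__":
    print(round(raw_tlx(EHR_MEANS), 2))       # 45.14
    print(round(raw_tlx(TIMELINE_MEANS), 2))  # 10.83
```

The raw format skips the pairwise weighting step of the original instrument, which keeps administration short during a timed usability session.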

Intervention development

During the project period, our EHR vendor had not yet fully implemented the specific FHIR resources required to achieve our project goals. Two of the 3 resources we required (Encounter and Medication) were listed as part of the US FHIR Core profiles. To facilitate future interoperability, we developed custom web services that were formatted to align as closely as possible with the constraints outlined in each respective FHIR specification based on the Draft Standard for Trial Use 2, which was the available release at the start of the project. Response objects for each resource were formed by augmenting the full FHIR resource specifications (Encounter and MedicationOrder) or, for data that the FHIR specification as implemented by our EHR did not support (eg, asthma treatment plans), by adhering to information exchange best practices. Extracted values, such as encounter diagnosis, were coded to use nationally recognized standards (eg, International Classification of Diseases). Notable changes to each resource included adding EHR vendor-specific record identifiers.
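As an illustration of what a FHIR-aligned response with an added vendor-specific identifier might look like, the sketch below maps a flat record to an Encounter-shaped object. It is not the project's actual payload. The input field names, the identifier system URN, and the function name are hypothetical, and the element names (`patient`, `class`, `period`, `reason`) follow our reading of the Draft Standard for Trial Use 2 Encounter resource and should be checked against the specification before reuse.

```python
import json

# Hypothetical namespace for the EHR vendor's internal record identifiers.
VENDOR_ID_SYSTEM = "urn:example:ehr-vendor:encounter-id"
# Code system URI for ICD-10-CM diagnosis codes (assumed here for illustration).
ICD10_CM_SYSTEM = "http://hl7.org/fhir/sid/icd-10-cm"


def to_encounter_resource(row: dict) -> dict:
    """Map a flat encounter record (hypothetical shape) to an Encounter-like resource."""
    return {
        "resourceType": "Encounter",
        "id": row["encounter_id"],
        # Vendor-specific record identifier carried alongside the standard elements.
        "identifier": [{"system": VENDOR_ID_SYSTEM, "value": row["vendor_record_id"]}],
        "status": "finished",
        "class": row["encounter_class"],  # eg, "emergency" or "inpatient"
        "patient": {"reference": f"Patient/{row['patient_id']}"},
        "period": {"start": row["start"], "end": row["end"]},
        # Diagnosis coded with a nationally recognized terminology.
        "reason": [{"coding": [{"system": ICD10_CM_SYSTEM, "code": row["icd10_code"]}]}],
    }


if __name__ == "__main__":
    example = {
        "encounter_id": "enc-1", "vendor_record_id": "000123", "encounter_class": "emergency",
        "patient_id": "pat-9", "start": "2017-10-20T14:05:00Z", "end": "2017-10-20T19:30:00Z",
        "icd10_code": "J45.901",
    }
    print(json.dumps(to_encounter_resource(example), indent=2))
```

Keeping the vendor identifier in the standard `identifier` element, rather than in a custom field, lets a consuming application link back to the source record without departing from the resource structure.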

No native EHR feature existed that allowed the Asthma Timeline Application to integrate seamlessly into the workflow of ED providers. The SMART framework, as implemented by our EHR vendor, required users to manually launch a separate screen that did not support the required workflow. To reduce the potential fragmentation of the workflow, we utilized a homegrown clinical decision support (CDS) framework developed in 2008 that follows a similar web services-based architecture (later adopted by SMART) but allows for a wider range of launch options.17 Using this framework allowed the Asthma Timeline Application to directly integrate within the workflow context of the ED provider. The application was deployed to our production EHR on November 3, 2017. Prior to go-live, CHOP’s ED leadership gave a brief presentation at an ED department meeting and sent an e-mail communication to ED staff, which described the application’s purpose.

Postimplementation feedback and monitoring

Application monitoring

During our evaluation period (12 months), the Asthma Timeline Application was triggered in 4234 patient encounters and was viewed by 631 distinct users, 258 (41%) of whom interacted with the timeline at least once to view additional data. In total, users performed 3958 actions (ie, mouse hovers or clicks) within the application. During this period, there were 3 failures related to our EHR vendor’s web server that temporarily disrupted the functioning of our application (an estimated 4 hours of unscheduled downtime, or approximately 0.05% of the evaluation period).
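The proportions in this paragraph can be rechecked with simple arithmetic. The sketch below assumes a 365-day evaluation period with round-the-clock availability as the denominator for downtime, which is our assumption rather than a stated definition; under it, 4 hours corresponds to roughly 0.05% of the period.

```python
def percent(part: float, whole: float) -> float:
    """Return part/whole expressed as a percentage."""
    return 100.0 * part / whole


VIEWERS = 631            # distinct users who viewed the application
INTERACTORS = 258        # users who hovered or clicked at least once
ACTIONS = 3958           # total hovers and clicks
DOWNTIME_HOURS = 4       # estimated unscheduled downtime
PERIOD_HOURS = 365 * 24  # assumed denominator: one year, 24 hours a day

interaction_rate = percent(INTERACTORS, VIEWERS)
downtime_share = percent(DOWNTIME_HOURS, PERIOD_HOURS)
actions_per_interactor = ACTIONS / INTERACTORS

if __name__ == "__main__":
    print(f"{interaction_rate:.0f}% of viewers interacted")
    print(f"{downtime_share:.3f}% of the period was unscheduled downtime")
    print(f"{actions_per_interactor:.1f} actions per interacting user")
```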

User feedback

Fifty of the 117 invited ED providers (43%) responded to our postimplementation TAM survey. Most responded “agree” or “strongly agree” on the 5-point Likert scale, indicating that the Asthma Timeline Application was efficient (92%), helped them feel confident (82%), provided useful features (94%), and provided important information (90%) related to the care of asthma patients in the ED (Table 4). Users identified challenges with the colors of icons differentiating intensive care unit stays on some of the monitors in our ED and suggested additional use cases for the application (eg, sickle cell disease). During postimplementation observations and interviews with 6 representative users, we witnessed some of the color-related challenges on monitors firsthand. We also discovered that, while some users may need more instruction on how to interact with the tool, many knew the tool was interactive but reported getting most of the value from the summary display of the timeline.

Table 4.

Postimplementation survey results

| Survey Questions | Asthma Timeline a, b |
|---|---|
| The ED Asthma Timeline is efficient in helping me determine a patient's asthma history. | 4.5 (0.9) |
| The ED Asthma Timeline provides useful features to determine a patient's asthma history. | 4.5 (0.6) |
| The ED Asthma Timeline helps me feel confident in determining a patient's asthma history. | 4.3 (1.0) |
| The ED Asthma Timeline provides important patient information in addressing the care of asthmatic patients in the ED. | 4.4 (0.8) |
| Overall, I am satisfied with how the ED Asthma Timeline is designed to address the care of asthmatic patients in the ED. | 4.4 (0.9) |
| I prefer to have the ED Asthma Timeline available over the standard electronic health record (EHR) alone. | 4.0 (1.4) |

a: N = 48; only included responses where ≥ 1 Likert-scale question was answered.

b: All values reported are mean (SD).

DISCUSSION

We applied HCD principles to develop, implement, and evaluate an Asthma Timeline Application that incorporated modern health data standards. Usability testing of the application demonstrated a statistically significant reduction in time on task and cognitive load for clinicians when compared to base EHR functionality. We also identified an increase in information retrieval accuracy of specific asthma-related data points when using the Asthma Timeline Application. The postimplementation survey helped us to reach a larger audience than usability testing alone. Feedback for the visualization was generally positive and aided in identifying issues that would have otherwise likely gone undetected (eg, computer screens with color distortion). We also discovered that some users found that the visualization alone (ie, without interaction) provided substantial clinical value by giving them a snapshot of the patient’s asthma history. This finding is consistent with research that suggests current EHRs are limited in their abilities to provide easy-to-access and interpretable graphical reviews of patient data.44 Additionally, though we initially focused on a single disease, asthma is a chronic condition that requires continual follow-up over many years, so we believe our visualization can generalize well to other chronic conditions, such as sickle cell disease.

The application of HCD principles to clinical application development is a growing area of research interest.14,15,45–48 This comes at a time when clinician burnout is being recognized as a major problem in healthcare.49 Previous research has already demonstrated that some physicians spend more time in the EHR than they do on direct patient care.50 Research has also suggested a strong relationship between EHR usability and physician burnout.51,52 Additionally, beyond the economic cost of rehiring and training new employees, recent studies have linked physician burnout to lower quality patient care.53

Having recognized the impact EHR usability can have on both patients and clinicians, our approach was informed by other efforts to use HCD approaches. As such, we adapted and combined evidence-based methods, both quantitative and qualitative, that allowed us to evaluate the impact/effort of next steps and iterate on design rapidly. Furthermore, by including a multidisciplinary team from the outset, we benefitted from the different perspectives that these teams can offer.54 Our team included members with deep clinical and technical expertise as well as extensive institutional knowledge about how clinical and technical work is managed locally. Along these lines, key stakeholders from our institution’s operational teams (clinical and technical) were engaged early in the process, and as a result, the application is now supported by the operational technical team. We propose that the multidisciplinary approach described in this article—combining informaticists, information services personnel, clinical users, and quality experts—can serve as a model for teams conducting similar work in a variety of clinical domains.

Our work also highlights the potential benefits and challenges associated with developing web-based EHR applications using modern health data standards. A large number of commercial EHR vendors have instituted some level of support for FHIR.55,56 However, our work identified significant gaps in our EHR’s available FHIR resources that did not provide adequate support for methods to retrieve encounter or medication data. This limits the ability of the application to scale beyond a single institution without significant investment. Additionally, our EHR vendor’s implementation of the SMART standard was limited to isolated screens. This limitation has the potential to increase the cognitive burden placed on clinicians by requiring users to access multiple screens while maintaining large amounts of information in working memory. While other EHR vendors are beginning to adopt more flexible integration options that may improve the viability of SMART moving forward, we chose to rely on a custom CDS framework that provided more direct integration of the Asthma Timeline Application into the clinical workflow.

The potential benefit of utilizing FHIR-based web services is that it opens up the possibility of implementing the Asthma Timeline Application across organizations. Other institutions have also demonstrated the feasibility of utilizing the FHIR specification to develop EHR integrated applications.57,58 As the number of institutions using FHIR continues to increase, there is increased potential to share applications across EHR platforms without vendor-specific code. In general, while development of the timeline was feasible given the available resources of our institution, further advances within EHRs and other health application platforms are necessary to fulfill the promise of application interoperability.
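To illustrate what vendor-neutral retrieval could look like, the following minimal sketch builds standard FHIR RESTful search requests for the two resource types discussed above (encounters and medications). It is not the code used in the Asthma Timeline Application. The base URL, the patient identifier, and the helper name `fhir_search_url` are hypothetical, and we assume a server that supports the `patient` search parameter on the `Encounter` and `MedicationRequest` resources.

```python
from urllib.parse import urlencode


def fhir_search_url(base_url: str, resource_type: str, patient_id: str, count: int = 50) -> str:
    """Build a FHIR search URL for one patient's resources.

    Hypothetical helper for illustration only; it constructs the URL and
    does not contact any server.
    """
    # `patient` is a search parameter on Encounter and MedicationRequest;
    # `_count` requests a page size for the returned Bundle.
    query = urlencode({"patient": patient_id, "_count": count})
    return f"{base_url.rstrip('/')}/{resource_type}?{query}"


# Assumed values: any FHIR server base URL and patient id could be substituted.
BASE_URL = "https://ehr.example.org/fhir"
PATIENT_ID = "example-patient-id"

for resource_type in ("Encounter", "MedicationRequest"):
    print(fhir_search_url(BASE_URL, resource_type, PATIENT_ID))
```

Because only the base URL changes between servers, the same request logic could in principle be reused across institutions, provided each vendor exposes the required resources and search parameters.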

Our work had several limitations. The Asthma Timeline Application was limited to a single institution as part of a broader quality improvement initiative that was able to take advantage of institutional resources (eg, HCI specialists, software engineers, informatics-trained clinicians, and a custom CDS framework) that may not be available to other organizations. Additionally, due to limitations in our vendor EHR and in nationally recognized frameworks, such as SMART, at the time of this project, we used some components from a homegrown CDS framework to implement our application. Future work in this area should focus on building standards-based tools that provide more direct access to external CDS applications within the workflow of EHR users. We are also exploring methods to assess the impact of our visualization on clinical care as it relates to provider workflow.

CONCLUSION

This work demonstrates the feasibility of developing, implementing, and evaluating a custom application embedded in a commercial EHR that decreases providers’ mental workload while caring for children with asthma in a pediatric ED. As interoperable health applications are likely to play an increasing role in the way clinicians and patients interact with health data and the health application marketplace continues to grow, application development done in an operational context should leverage evidence-based mixed methods approaches, including best practices related to HCD, currently available health technology standards, and a multidisciplinary team that consists of members with clinical, technical, and operational knowledge.

FUNDING

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

AUTHOR CONTRIBUTIONS

JT contributed to the conception and design of the project, acquisition and analysis of data, software development, and drafted the manuscript. DF contributed to the conception and design of the project, acquisition and analysis of data, and critically reviewed the manuscript. JM contributed to the conception and design of the project, acquisition and analysis of data, software development, and critically reviewed the manuscript. DK contributed to the conception and design of the project, acquisition and analysis of data, and critically reviewed the manuscript. RG contributed to the analysis of data, software development, and critically reviewed the manuscript. LU contributed to the conception and design of the project, analysis of data, and critically reviewed the manuscript. JZ contributed to the conception and design of the project, analysis of data, and critically reviewed the manuscript. All authors approved the final manuscript as submitted.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGMENTS

We thank the network of emergency department physicians and the clinical quality improvement team for their contributions to this project. We also thank Linda Tague and William Lingenfelter for their work in navigating our vendor EHR system and Dr. Naveen Muthu, Dr. Marc Tobias, and Dr. Evan Orenstein for their expertise during the task analysis and design stages of the project.

DATA AVAILABILITY STATEMENT

The data underlying this article are available in the article and in its online supplementary material.

CONFLICT OF INTEREST STATEMENT

Mr Thayer and Drs Ferro, Grundmeier, Utidjian, and Zorc are coinventors of “HealthChart,” a clinical information visualization software. They hold no patent on the software and have earned no money from this invention. No licensing agreement exists.

REFERENCES

- 1. Ross MK, Wei W, Ohno-Machado L. ‘Big data’ and the electronic health record. Yearb Med Inform 2014; 23 (01): 97–104.

- 2. Zhang J. The nature of external representations in problem solving. Cogn Sci 1997; 21 (2): 179–217.

- 3. Beasley JW, Wetterneck TB, Temte J, et al. Information chaos in primary care: implications for physician performance and patient safety. J Am Board Fam Med 2011; 24 (6): 745–51.

- 4. Laxmisan A, McCoy AB, Wright A, et al. Clinical summarization capabilities of commercially-available and internally-developed electronic health records. Appl Clin Inform 2012; 3 (1): 80–93.

- 5. Wickens CD, Hollands JG, Banbury S, et al. Engineering psychology and human performance. Eng Psychol Hum Perform 2013; 4: 394–5.

- 6. Plaisant C, Milash B, Rose A, et al. LifeLines: visualizing personal histories. Proceedings of ACM CHI 1996 1998; 30: 34–5.

- 7. Wongsuphasawat K, Gotz D. Exploring flow, factors, and outcomes of temporal event sequences with the outflow visualization. IEEE Trans Visual Comput Graphics 2012; 18 (12): 2659–68.

- 8. Forrest CB, Fiks AG, Bailey LC, et al. Improving adherence to otitis media guidelines with clinical decision support and physician feedback. Pediatrics 2013; 131 (4): e1071–81.

- 9. Gulliksen J, Göransson B, Boivie I, Blomkvist S, Persson J, Cajander Å. Key principles for user-centred systems design. Behav Inf Technol 2003; 22 (6): 397–409. doi: 10.1080/01449290310001624329.

- 10. Belden JL, Wegier P, Patel J, et al. Designing a medication timeline for patients and physicians. J Am Med Inform Assoc 2019; 26 (2): 95–105.

- 11. Curran RL, Kukhareva PV, Taft T, et al. Integrated displays to improve chronic disease management in ambulatory care: a SMART on FHIR application informed by mixed-methods user testing. J Am Med Inform Assoc 2020; 27 (8): 1225–34.

- 12. International Organization for Standardization: ISO 9241-210:2019. Ergonomics of human-system interaction - Part 210: Human-centred design for interactive systems; 2019. https://www.iso.org/standard/77520.html Accessed December 22, 2020.

- 13. Clark LN, Benda NC, Hegde S, et al. Usability evaluation of an emergency department information system prototype designed using cognitive systems engineering techniques. Appl Ergon 2017; 60: 356–65.

- 14. Wang X, Kim TC, Hegde S, et al. Design and evaluation of an integrated, patient-focused electronic health record display for emergency medicine. Appl Clin Inform 2019; 10 (04): 693–706.

- 15. McGeorge N, Hegde S, Berg RL, et al. Assessment of innovative emergency department information displays in a clinical simulation center. J Cogn Eng Decis Mak 2015; 9 (4): 329–46.

- 16. Kawamoto K, Hongsermeier T, Wright A, et al. Key principles for a national clinical decision support knowledge sharing framework: synthesis of insights from leading subject matter experts. J Am Med Inform Assoc 2013; 20 (1): 199–207.

- 17. Thayer JG, Miller JM, Fiks AG, et al. Assessing the safety of custom web-based clinical decision support systems in electronic health records: a case study. Appl Clin Inform 2019; 10 (02): 237–46.

- 18. Ratwani RM, Savage E, Will A, et al. Identifying electronic health record usability and safety challenges in pediatric settings. Health Aff 2018; 37 (11): 1752–9.

- 19. Mandel JC, Kreda DA, Mandl KD, et al. SMART on FHIR: a standards-based, interoperable apps platform for electronic health records. J Am Med Inform Assoc 2016; 23 (5): 899–908.

- 20. Gordon WJ, Baronas J, Lane WJ. A FHIR Human Leukocyte Antigen (HLA) interface for platelet transfusion support. Appl Clin Inform 2017; 08 (02): 603–11.

- 21. Warner JL, Rioth MJ, Mandl KD, et al. SMART precision cancer medicine: a FHIR-based app to provide genomic information at the point of care. J Am Med Inform Assoc 2016; 23 (4): 701–10.

- 22. Franz B, Schuler A, Krauss O. Applying FHIR in an integrated health monitoring system. EJBI 2015; 11 (02): 51–6. https://www.ejbi.org/scholarly-articles/applying-fhir-in-an-integrated-health-monitoring-system.pdf Accessed October 10, 2017.

- 23. Bender D, Sartipi K. HL7 FHIR: an agile and RESTful approach to healthcare information exchange. In: Proceedings of the 26th IEEE International Symposium on Computer-Based Medical Systems. IEEE; June 20–22, 2013; Porto, Portugal. doi: 10.1109/CBMS.2013.6627810.

- 24. Pervez U, Hasan O, Latif K, et al. Formal reliability analysis of a typical FHIR standard based e-Health system using PRISM. In: 2014 IEEE 16th International Conference on e-Health Networking, Applications and Services (Healthcom). IEEE; October 15–18, 2014; Natal, Brazil. doi: 10.1109/HealthCom.2014.7001811.

- 25. Http - FHIR v4.0.1. http://hl7.org/fhir/http.html Accessed July 22, 2020.

- 26. Burns C, Hajdukiewicz J. Ecological Interface Design. Boca Raton, FL: CRC Press; 2004.

- 27. Crandall B, Klein G, Hoffman RR. Working Minds: A Practitioner’s Guide to Cognitive Task Analysis. Cambridge, MA: MIT Press; 2006.

- 28. Militello LG. Learning to think like a user: using cognitive task analysis to meet today’s health care design challenges. Biomed Instrum Technol 1998; 32: 535–40.

- 29. Abras C, Maloney-Krichmar D, Preece J. User-Centered Design. In: Bainbridge W, ed. Encyclopedia of Human-Computer Interaction. Thousand Oaks, CA: SAGE; 2004. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.94.381 Accessed May 8, 2020.

- 30. Nielsen J. Designing Web Usability. Thousand Oaks, CA: New Riders Publishing; 1999.

- 31. Nielsen J. Usability Inspection Methods. New York, NY: John Wiley & Sons; 1994.

- 32. Rubin J, Chisnell D. How to Plan, Design, and Conduct Effective Tests. Indianapolis, IN: John Wiley & Sons; 2008.

- 33. Hart SG, Staveland LE. Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Adv Psychol 1988; 52: 139–83.

- 34. Hoonakker P, Carayon P, Gurses AP, et al. Measuring workload of ICU nurses with a questionnaire survey: the NASA Task Load Index (TLX). IIE Trans Healthc Syst Eng 2011; 1 (2): 131–43.

- 35. Hart SG. NASA-Task Load Index (NASA-TLX); 20 years later. Proc Hum Factors Ergon Soc Annu Meet 2006; 50 (9): 904–8.

- 36. Holden RJ, Karsh B-T. The Technology Acceptance Model: its past and its future in health care. J Biomed Inform 2010; 43 (1): 159–72.

- 37. Ericsson KA, Simon HA. Verbal reports as data. Psychol Rev 1980; 87 (3): 215–51.

- 38. Fonteyn ME, Kuipers B, Grobe SJ. A description of think aloud method and protocol analysis. Qual Health Res 1993; 3 (4): 430–41.

- 39. Faulkner L. Beyond the five-user assumption: benefits of increased sample sizes in usability testing. In: Behavior Research Methods, Instruments, and Computers. Psychonomic Society; 2003: 379–83. doi: 10.3758/BF03195514.

- 40. Nielsen J. Usability Engineering. Cambridge, MA: AP Professional; 1993.

- 41. Virzi RA. Refining the test phase of usability evaluation: how many subjects is enough? Hum Factors 1992; 34 (4): 457–68.

- 42. Spool J, Schroeder W. Testing web sites: five users is nowhere near enough. In: Conference on Human Factors in Computing Systems - Proceedings. New York: ACM Press; 2001: 285–6. doi: 10.1145/634067.634236.

- 43. Cox G, Cochran W. Experimental Designs. New York: John Wiley & Sons; 1957.

- 44. Howe JL, Adams KT, Hettinger AZ, et al. Electronic health record usability issues and potential contribution to patient harm. JAMA 2018; 319 (12): 1276.

- 45. Patel VL, Kushniruk AW. Interface design for health care environments: the role of cognitive science. Proc AMIA Symp 1998; 1998: 29–37.

- 46. Johnson CM, Johnson TR, Zhang J. A user-centered framework for redesigning health care interfaces. J Biomed Inform 2005; 38 (1): 75–87.

- 47. Zhang J, Walji MF. TURF: toward a unified framework of EHR usability. J Biomed Inform 2011; 44 (6): 1056–67.

- 48. Clark LN, Benda NC, Hegde S, et al. Usability evaluation of an emergency department information system prototype designed using cognitive systems engineering techniques. Appl Ergon 2017; 60: 356–65.

- 49. Dzau VJ, Kirch DG, Nasca TJ. To Care Is Human - Collectively Confronting the Clinician-Burnout Crisis. N Engl J Med 2018; 378 (4): 312–4.

- 50. Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time-motion observations. Ann Fam Med 2017; 15 (5): 419–26.

- 51. Melnick ER, Dyrbye LN, Sinsky CA, et al. The association between perceived electronic health record usability and professional burnout among US physicians. Mayo Clin Proc 2020; 95 (3): 476–87.

- 52. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc 2019; 26 (2): 106–14.

- 53. Halbesleben JRB, Rathert C. Linking physician burnout and patient outcomes: Exploring the dyadic relationship between physicians and patients. Health Care Manage Rev 2008; 33 (1): 29–39.

- 54. Paletz SBF, Schunn CD. A social-cognitive framework of multidisciplinary team innovation. Top Cogn Sci 2010; 2 (1): 73–95.

- 55. Home | Cerner Code - Developer Portal. https://code.cerner.com/ Accessed July 13, 2017.

- 56. open.epic. https://open.epic.com/ Accessed July 13, 2017.

- 57. Alterovitz G, Warner J, Zhang P, et al. SMART on FHIR Genomics: facilitating standardized clinico-genomic apps. J Am Med Inform Assoc 2015; 22 (6): 1173–8.

- 58. Bloomfield RA Jr, Polo-Wood F, Mandel JC, et al. Opening the Duke electronic health record to apps: implementing SMART on FHIR. Int J Med Inform 2017; 99: 1–10.
