Abstract

Objective

To derive 7 proposed core electronic health record (EHR) use metrics across 2 healthcare systems with different EHR vendor product installations and examine factors associated with EHR time.

Materials and Methods

A cross-sectional analysis of ambulatory physicians’ EHR use across the Yale-New Haven and MedStar Health systems was performed for August 2019 using 7 proposed core EHR use metrics normalized to 8 hours of patient scheduled time.

Results

Five out of 7 proposed metrics could be measured in a population of nonteaching, exclusively ambulatory physicians. Among 573 physicians (Yale-New Haven N = 290, MedStar N = 283) in the analysis, median EHR-Time8 was 5.23 hours. Gender, additional clinical hours scheduled, and certain medical specialties were associated with EHR-Time8 after adjusting for age and health system on multivariable analysis. For every 8 hours of scheduled patient time, the model predicted these differences in EHR time (P < .001, unless otherwise indicated): female physicians +0.58 hours (P = .001); each additional clinical hour scheduled per month −0.01 hours; practicing cardiology −1.30 hours; medical subspecialties other than gastroenterology −0.89 hours (P = .002); neurology/psychiatry −2.60 hours; obstetrics/gynecology −1.88 hours; pediatrics −1.05 hours (P = .001); sports/physical medicine and rehabilitation −3.25 hours; and surgical specialties −3.65 hours.

Conclusions

For every 8 hours of scheduled patient time, ambulatory physicians spend more than 5 hours on the EHR. Physician gender, specialty, and number of clinical hours practicing are associated with differences in EHR time. While audit logs remain a powerful tool for understanding physician EHR use, additional transparency, granularity, and standardization of vendor-derived EHR use data definitions are still necessary to standardize EHR use measurement.

INTRODUCTION

Electronic health records (EHRs) have transformed everyday work for physicians. However, there is significant concern over the EHR’s negative influence on patient safety,1–3 physician work–life integration,4–7 and professional burnout.8–14 Although EHRs were intended to improve efficiency, the clerical burden from documentation needs8 and EHR inbox messages and notifications,11,15 coupled with usability issues,12 has resulted in physicians spending as much as half of their workday on EHR-related activities.16–19 Time on EHR-related activities is defined as time spent actively using the EHR (whether the time is spent with the patient at the bedside or elsewhere) and can be further subdivided into specific clinical tasks, such as documentation, order entry, and chart review. A 2016 direct observation time-motion study of 57 physicians reported that ambulatory physicians spend 2 hours on the EHR for every 1 hour of direct clinical face time with patients as well as an additional 1–2 hours of personal time each night on additional EHR-related activities.19 There is conflicting evidence on the allocation of time spent on specific EHR activities during and after scheduled patient time. For example, a 2017 retrospective cohort study of 142 family medicine physicians’ Epic (Epic Systems, Verona, WI) EHR use based on audit log data validated by direct observation reported that 23.7% of EHR time was spent on inbox management, whereas a 2020 descriptive study of 155,000 US physicians’ Cerner Millennium (Cerner Corporation, Kansas City, MO) EHR audit log data reported that only 10% of total EHR time was spent on inbox management.16,20 These differences could be due to differences in the practice environment, software, data collection, or measurement.

Standardizing EHR use measurement would allow direct and equitable comparisons of individual and group EHR use, vendor products, and progress over time. To improve the quality and delivery of healthcare, the 21st Century Cures Act, enacted in 2016 with bipartisan support, requires an EHR reporting program with a usability focus for vendors to maintain certification.21 Developing standard and objective EHR use measures has been proposed as an essential component of this program.22 Doing so would provide a systematic and consistent understanding of physician EHR work. Sinsky et al have proposed 7 core, normalized EHR use metrics to standardize this measurement and address current variability with the aim of improving the patient and physician experience as well as practice efficiency and physician retention.23 The metrics reflect multiple dimensions of ambulatory physician EHR practice efficiency normalized to 8 hours of scheduled patient time, including: total EHR time (during and outside of clinic sessions, EHR-Time8), work outside of work (here defined as outside of scheduled clinical hours, WOW8), time on encounter note documentation (Note-Time8), time on prescriptions (Script-Time8), time on inbox (IB-Time8), teamwork for orders (TWORD), and undivided attention (ATTN).23

To our knowledge, there have been no large-scale cross-sectional analyses of standardized EHR use across healthcare systems using different EHR vendor products. EHR audit logs24–28 (also known as event logs but referred to as audit logs from this point) show the best potential as a data source to perform such a large-scale analysis in an efficient and reproducible way. Originally intended to track inappropriate access to protected health information, audit logs are datasets that capture specific EHR users’ detailed, timestamped activities. However, a recent systematic review of 85 EHR audit log studies found wide variability and inadequate transparency in vendor data definitions and validity.27 Vendor-derived EHR-use platforms compile EHR audit log data to synthesize information on physician time on EHR activities for practice leaders. The primary objective of this study was to derive and report the 7 proposed core EHR use metrics across 2 healthcare systems with different EHR vendor product installations in a cross-sectional analysis. The secondary objective of this study was to examine factors associated with EHR time.

MATERIALS AND METHODS

Study design and setting

This cross-sectional analysis of ambulatory physician EHR use during the month of August 2019 was performed in the Yale-New Haven Health and MedStar Health Systems. The Yale-New Haven Health System has ambulatory locations in Connecticut, New York, and Rhode Island and operates on a single installation of the Epic EHR. MedStar Health has ambulatory locations in Washington, DC and the greater metropolitan area in Virginia and Maryland and operates on a single installation of the Cerner Millennium EHR. The 2 health systems were selected, since they used different EHR vendor products, to demonstrate the feasibility of deriving normalized EHR use in those different products. The study protocol was approved by the Yale (protocol #2000026556) and MedStar (protocol #STUDY00000233) IRBs.

Participants/inclusion criteria

All nontrainee (ie, attending) ambulatory physicians across both healthcare systems were eligible for inclusion in the analysis. The core EHR use measures were calculated for August 2019 for the complete ambulatory physician roster for both health systems. Since vendor-compiled EHR use data did not adequately differentiate ambulatory from inpatient EHR activity or scheduled hours for teaching attendings, the analysis was performed on a subset of nonteaching, ambulatory physicians. To assess measure accuracy, results were examined for outliers, defined as measurements more than 3 standard deviations above the mean value for that metric. Multiple possible combinations of percent clinical effort, percent outpatient notes, percent outpatient orders, number of clinical hours per month, and time in EHR were considered and assessed as inclusion criteria. Selecting 30 or more scheduled clinical hours and at least 1 hour in the EHR during the study period preserved the sample size while leaving fewer than 10 outliers per health system, each of whose EHR metrics was confirmed by manual chart review to reflect actual outlying EHR use (Figure 1). For example, a physician who had 1 clinic day during the study period and spent the remainder of the time in inpatient care would be excluded, since the majority of their EHR activities would relate to inpatient rather than ambulatory care and the vendor-derived data could not differentiate EHR time between the 2 settings. After application of these inclusion criteria to limit the dataset to exclusively ambulatory physicians (attending physicians with at least 30 scheduled ambulatory clinical hours and at least 1 hour in the EHR over the study period), there were no missing EHR metric data for study physicians.
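The inclusion thresholds and the outlier rule described above can be sketched in a few lines of Python. This is an illustration only, not the study's code: the function names are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which form was used.

```python
from statistics import mean, stdev

# Thresholds taken from the inclusion criteria in the text.
MIN_SCHEDULED_HOURS = 30.0  # scheduled ambulatory clinical hours in the month
MIN_EHR_HOURS = 1.0         # hours in the EHR in the month


def is_included(scheduled_hours: float, ehr_hours: float) -> bool:
    """Apply the two inclusion thresholds to one physician-month."""
    return scheduled_hours >= MIN_SCHEDULED_HOURS and ehr_hours >= MIN_EHR_HOURS


def flag_outliers(values: list[float], n_sd: float = 3.0) -> list[int]:
    """Return indices of values more than n_sd standard deviations above the mean.

    Assumption: the sample standard deviation is used.
    """
    if len(values) < 2:
        return []
    threshold = mean(values) + n_sd * stdev(values)
    return [i for i, v in enumerate(values) if v > threshold]


# Hypothetical metric values: 20 typical physicians and 1 extreme value.
example = [5.0] * 20 + [50.0]
print(flag_outliers(example))
```

In the study, flagged values were then checked by manual chart review rather than dropped automatically.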

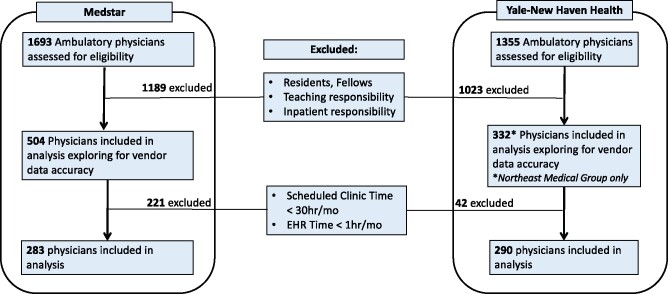

Figure 1.

CONSORT diagram. Flow diagram stratified by health system for participant eligibility and inclusion in the analysis.

Measurement: Core EHR use metrics

Sinsky et al recently proposed these 7 core metrics of ambulatory care EHR use be calculated based on audit log data: EHR-Time8, WOW8, Note-Time8, Script-Time8, IB-Time8, TWORD, and ATTN.23 A one-month reporting period was recommended when the core EHR metrics were initially proposed.23 This analysis includes EHR use during the month of August 2019.
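As a minimal sketch of what "normalized to 8 hours of scheduled patient time" means for the time-based metrics, the calculation below divides the time spent on an activity by the scheduled hours in the reporting period and rescales to 8 hours. The function name and the example inputs are hypothetical; the vendor-specific formulas are those summarized in Table 2.

```python
def normalize_to_8h(activity_hours: float, scheduled_hours: float) -> float:
    """Scale time on an EHR activity to 8 hours of scheduled patient time."""
    if scheduled_hours <= 0:
        raise ValueError("scheduled_hours must be positive")
    return activity_hours / scheduled_hours * 8.0


# Hypothetical physician-month: 52.3 hours in the EHR, 80 scheduled hours.
ehr_time8 = normalize_to_8h(52.3, 80.0)
print(round(ehr_time8, 2))
```

The same function would apply to note, inbox, or order time by changing the numerator.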

Data sources

To optimize the quality of the metrics for this analysis and replication of these metrics in other health systems using Epic and Cerner EHRs, vendor-derived EHR use data platforms were selected as the primary data source. In both vendors’ systems, there were separate data sources for EHR use and scheduled clinical hours. For Yale-New Haven Health, Epic Signal was the data source for EHR use data (selected over UAL Lite and Event Log due to its more active data capture of EHR activities), and Epic Clarity was the source for scheduling data. For MedStar, Cerner Advance was the data source for EHR use data, and IDX was the data source for scheduling data. In both health systems, physician demographic data were obtained from human resources rosters. Per the protocol, the investigators were blinded to the physicians’ identities, so physician demographic data were reconciled by a third-party honest broker to avoid participant identification in the study dataset available to the investigative team.
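Vendor platforms turn timestamped audit log events into "active" EHR time, and the result depends on how long a gap between events still counts as activity. The sketch below is one simple way to model that, not either vendor's algorithm: each gap between consecutive events is counted up to a time-out. The function name and event times are hypothetical; the 5-second and 30-second values are the vendor time-outs reported in the Limitations section.

```python
def active_seconds(event_times: list[float], timeout: float) -> float:
    """Sum the gaps between consecutive events, capping each gap at `timeout`.

    Assumption: this capping rule is a simplified stand-in for vendor logic.
    """
    times = sorted(event_times)
    total = 0.0
    for previous, current in zip(times, times[1:]):
        total += min(current - previous, timeout)
    return total


# Hypothetical events, in seconds from the start of a session.
events = [0, 3, 10, 70]
print(active_seconds(events, timeout=5))   # shorter time-out
print(active_seconds(events, timeout=30))  # longer time-out
```

Under this model the same event stream yields more active time with the longer time-out, which is the direction of the inflation the Limitations section describes for the MedStar metrics.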

Analysis

Results are reported using descriptive statistics and stratified by health system and medical specialty. Individual physicians were the unit of analysis with each physician having 1 measurement per metric for the 1-month study period. To ensure confidentiality and minimize variability, specialties with fewer than 5 participants from 1 health system were grouped within larger specialty domain categories. Missing demographic data are reported in Table 1. Multivariable analysis of differences in normalized EHR use was performed using linear regression. Age, gender, medical specialty, healthcare system, and clinical hours scheduled were included in the models to identify characteristics associated with EHR use outcomes. The level of statistical significance for the model was set as a 2-tailed P < .05. Participants were excluded from the model if they were missing demographic data for significant variables. The univariate relationships between the individual metrics were explored and reported using Pearson’s correlation coefficient. All analyses were performed with R (version 3.6.1, R Foundation).
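For readers unfamiliar with the correlation measure named above, the following is a minimal pure-Python sketch of Pearson's correlation coefficient between two metrics. The study itself used R; the function name and the example values are hypothetical.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's correlation coefficient between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


# Hypothetical Note-Time8 and EHR-Time8 values for 5 physicians.
note_time8 = [1.2, 1.8, 2.5, 1.0, 2.1]
ehr_time8 = [4.1, 5.3, 6.8, 3.9, 5.9]
print(round(pearson_r(note_time8, ehr_time8), 2))
```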

Table 1.

Physician characteristics for Yale-New Haven Health and MedStar Health

| Characteristic | Yale-New Haven Health, N (%) | MedStar Health, N (%) |
|---|---|---|
| Total | 290 | 283 |
| Gender | | |
| Male | 176 (60.7%) | 125 (44.2%) |
| Female | 114 (39.3%) | 147 (51.9%) |
| Missing | 0 | 11 (3.9%) |
| Age (y) | | |
| Median (IQR) | 52 (44–62) | 49 (41–62) |
| <35 | 11 (3.8%) | 16 (5.6%) |
| 35–44 | 65 (22.4%) | 83 (29.3%) |
| 45–54 | 89 (30.7%) | 66 (23.3%) |
| 55–64 | 74 (25.5%) | 62 (21.9%) |
| ≥65 | 45 (15.5%) | 35 (12.4%) |
| Missing | 6 (2.1%) | 21 (7.4%) |
| Specialty | | |
| Internal Medicine | 97 (33.4%) | 97 (34.2%) |
| Cardiology | 43 (14.8%) | 21 (7.4%) |
| GI | 15 (5.2%) | 7 (2.5%) |
| Other Medicine Subspecialties | 43 (14.8%) | 13 (4.6%) |
| Family Medicine | 33 (11.4%) | 50 (17.7%) |
| Pediatrics Specialties | 20 (6.9%) | 21 (7.4%) |
| Surgical Specialties | 24 (8.2%) | 17 (6.0%) |
| Obstetrics/Gynecology | 10 (3.4%) | 9 (3.2%) |
| Neurology/Psychiatry | 5 (1.7%) | 18 (6.4%) |
| Sports Medicine/Physical Medicine and Rehabilitation | 0 | 27 (9.5%) |
| Average Outpatient Hours Scheduled Per Week | | |
| Median (IQR) | 17.9 (16.4–30.3) | 22.0 (16.2–30.7) |
| <10 h | 37 (12.8%) | 27 (9.5%) |
| 10–19 h | 129 (44.5%) | 87 (30.7%) |
| 20–29 h | 102 (35.2%) | 124 (43.8%) |
| ≥30 h | 22 (7.6%) | 45 (15.9%) |

RESULTS

For Yale-New Haven Health, among 1355 physicians assessed for eligibility across 523 practice sites, 290 physicians across 133 practice sites met criteria for the analysis, of whom 39.3% were female; the median age was 52 years (IQR 44–62, Figure 1; Table 1). For MedStar, among 1693 physicians assessed for eligibility across 298 practice sites, 283 physicians across 88 practice sites met criteria for the analysis, of whom 51.9% were female; the median age was 49 years (IQR 41–62). A wide range of medical specialties was represented in the sample included in the analysis.

Five of the 7 proposed core EHR metrics could be calculated with available data (Table 2). Given differences in vendor definitions of work outside of work, WOW8 measures were strikingly different between Yale-New Haven Health and MedStar physicians. WOW8 calculation required modifications to address different definitions of work after hours between vendors. Furthermore, MedStar uses a third-party scheduling platform, which limited our ability to identify and adjust for all work outside of scheduled hours. Therefore, differences in WOW8 between the 2 health systems are likely artifactual and do not imply large differences in EHR time outside of scheduled hours by physicians in the 2 health systems. For Script-Time8, vendor-derived EHR use data could not distinguish between time spent ordering medications and time spent on nonmedication orders. Therefore, we instead report Ord-Time8, total time on orders per 8 hours of patient scheduled time. ATTN could not be calculated due to inaccurate or missing visit start and end times in available vendor-derived EHR use data. For TWORD, IB-Time8, Note-Time8, and EHR-Time8, similarities and differences between groups likely reflect actual differences in EHR use patterns.

Table 2.

Core EHR use metric definitions, abbreviations, and method of implementation across 2 EHR vendor systems. For Yale-New Haven Health, Epic Signal was the data source for EHR use data, and Epic Clarity was the source for scheduling data. For MedStar, Cerner Advance was the data source for EHR use data, and IDX was the data source for scheduling data

| Measure | Definition | Abbreviation | Cerner formula for metric calculationᵃ | Epic formula for metric calculationᵇ |
|---|---|---|---|---|
| Total EHR Time | Total time on EHR (during and outside of clinic sessions) per 8 hours of patient scheduled time | EHR-Time8 | | |
| Work Outside of Work | Time on EHR outside of scheduled patient hours per 8 hours of patient scheduled time | WOW8 | | |
| Time on Encounter Note | Hours on documentation (note writing) per 8 hours of scheduled patient time | Note-Time8 | | |
| Time on Prescriptions | Total time on prescriptions per 8 hours of patient scheduled time | Script-Time8 | Cerner does not record data specific to medication orders. | Epic does not record data specific to medication orders. Event logs record activity with any orders, not specific to medications. |
| Teamwork for Orders | The percentage of orders with team contribution | TWORD | | |
| Time on Inbox | Total time on inbox per 8 hours of patient scheduled time | IB-Time8 | [(Time per Patient (min): Messaging + Endorse Results + … | |
| Undivided Attention | The amount of undivided attention patients receive from their physicians | ATTN | Goal is to approximate this metric by [(total time per session) minus (EHR time per session)]/total time per session. Currently unable to implement with Cerner Advance or Epic Signal. | |

ᵃ IDX scheduled hours included start and end times reserved for outpatient clinical care, indicated as appointment status (ie, canceled, no-shows, arrived). Time scheduled at multiple clinic locations was accounted for in each physician’s calculations.

ᵇ Scheduled hours from Clarity included start and end times reserved for outpatient clinical care, regardless of whether patients canceled an appointment, were no-shows, or were double-booked.
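Although ATTN could not be implemented with either vendor platform, the approximation stated in Table 2 is simple to express once session and EHR times are available. The sketch below follows that stated ratio; the function name and the example session are hypothetical.

```python
def attn(total_session_hours: float, ehr_session_hours: float) -> float:
    """Approximate undivided attention as the share of a session not spent in the EHR.

    Follows the Table 2 approximation:
    (total time per session - EHR time per session) / total time per session.
    """
    if total_session_hours <= 0:
        raise ValueError("total_session_hours must be positive")
    if not 0 <= ehr_session_hours <= total_session_hours:
        raise ValueError("ehr_session_hours must be between 0 and the session length")
    return (total_session_hours - ehr_session_hours) / total_session_hours


# Hypothetical 4-hour clinic session with 1.5 hours of EHR time during the session.
print(attn(4.0, 1.5))
```

The calculation depends on accurate visit or session start and end times, which is the data element the vendor platforms lacked.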

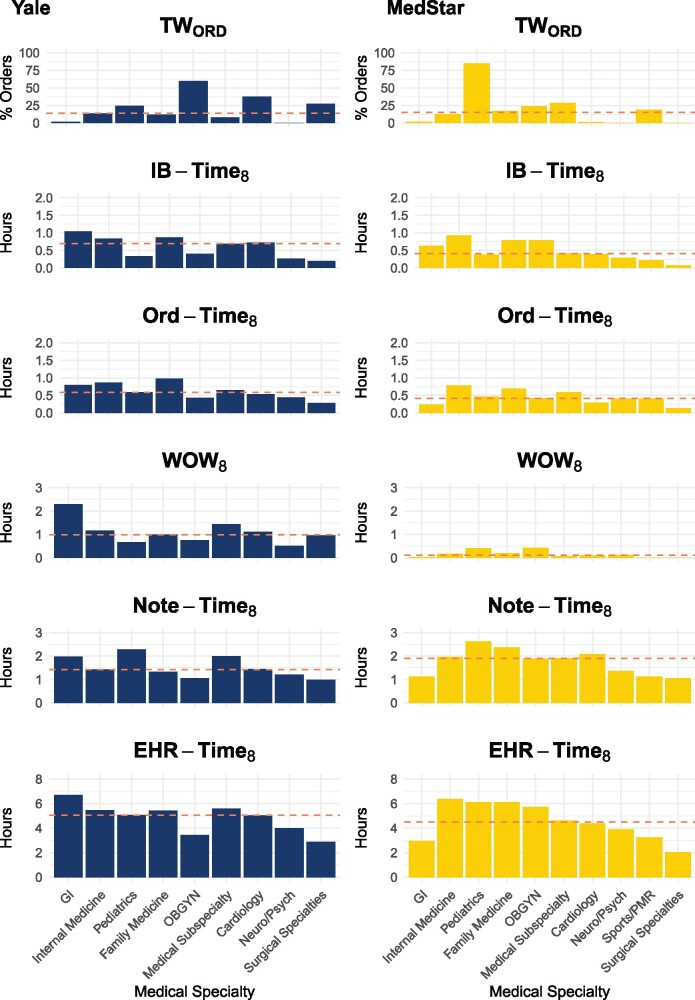

Among the 573 physicians (Yale-New Haven N = 290, MedStar N = 283) included in the analysis, the median values for the core metrics were (Figure 2; Supplementary Material Table 1): EHR-Time8 5.23 hours (mean 5.40, 95% CI 5.22–5.57), Note-Time8 1.73 hours (mean 1.89, 95% CI 1.80–1.97), WOW8 0.53 hours (mean 0.93, 95% CI 0.84–1.02), Ord-Time8 0.65 hours (mean 0.70, 95% CI 0.66–0.73), IB-Time8 0.67 hours (mean 0.72, 95% CI 0.68–0.76), and TWORD 15.1% (mean 26.0%, 95% CI 23.6%–28.4%). There was substantial variation in EHR-Time8 by specialty, with the highest raw median EHR-Time8 in gastroenterology, internal medicine, and family medicine and the lowest in the surgical specialties, sports/physical medicine and rehabilitation, neurology/psychiatry, and obstetrics/gynecology.

Figure 2.

Normalized EHR core measures by specialty. Distribution of EHR core measures stratified by medical specialty and health system (Yale-New Haven on left and MedStar on right) and with each institution’s median value noted with dotted coral pink line. Note that the metrics are not sufficiently aligned between Cerner and Epic to allow direct comparisons.

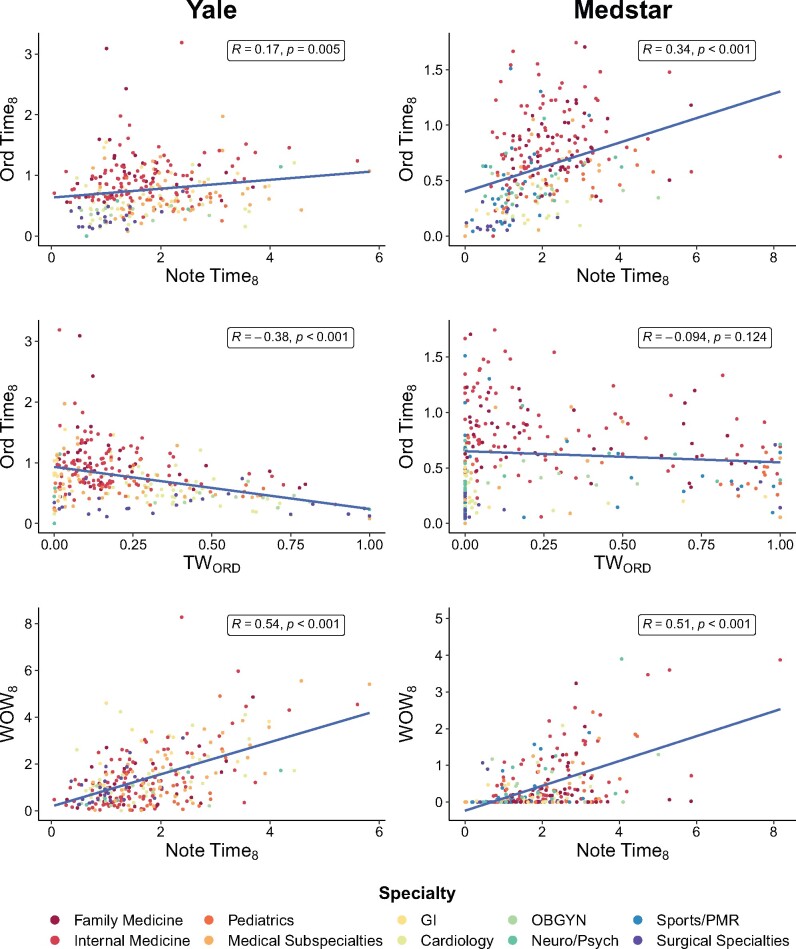

Gender, additional clinical hours scheduled, and certain medical specialties were all independently associated with normalized EHR-Time8 after adjusting for age and health system on multivariable analysis. Notably, neither physician age nor health system/EHR vendor was predictive of EHR-Time8 in this model. Specifically, for every 8 hours of scheduled patient time, the following factors were independently associated with EHR time (Table 3): female compared to male physicians (+0.58 hours, P = .001); each additional clinical hour scheduled per month (−0.01 hours, P < .001); and, compared to internal medicine, practicing cardiology (−1.30 hours, P < .001), medical subspecialties (exclusive of gastroenterology, −0.89 hours, P = .002), neurology/psychiatry (−2.60 hours, P < .001), obstetrics/gynecology (−1.88 hours, P < .001), pediatrics (−1.05 hours, P = .001), sports/physical medicine and rehabilitation (−3.25 hours, P < .001), and surgical specialties (−3.65 hours, P < .001). In pooled analysis, Ord-Time8, IB-Time8, Note-Time8, and WOW8 were all positively correlated with EHR-Time8 (R = 0.60, P < .001; R = 0.69, P < .001; R = 0.67, P < .001; R = 0.73, P < .001, respectively), whereas TWORD was negatively correlated with these measures (R = −0.22, P < .001; R = −0.15, P < .001; R = −0.01, P = .82; R = −0.18, P = .003, respectively), although its correlation with Note-Time8 was not statistically significant. Potential relationships between metrics of interest on univariate analysis by health system were explored, with several associations presented in Figure 3.

Table 3.

Predictors of EHR-Time8 in a multivariable linear regression model among nonteaching ambulatory-only physicians in the Yale-New Haven and MedStar Health Systems

| Predictor | Coefficient (95% CI) | P value |
|---|---|---|
| Gender, male as reference | | |
| Female | 0.58 (0.23, 0.94) | .001 |
| Age, compared to <35 years old | | |
| 35–44 | 0.24 (−0.50, 0.99) | .52 |
| 45–54 | −0.30 (−1.04, 0.44) | .42 |
| 55–64 | 0.19 (−0.56, 0.94) | .62 |
| ≥65 | 0.11 (−0.69, 0.92) | .78 |
| Specialty, general internal medicine as reference | | |
| Cardiology | −1.30 (−1.86, −0.74) | <.001 |
| Family Medicine | −0.26 (−0.76, 0.23) | .30 |
| Gastroenterology | −0.61 (−1.45, 0.24) | .16 |
| Medical Subspecialties | −0.89 (−1.44, −0.33) | .002 |
| Neurology/Psychiatry | −2.60 (−3.43, −1.77) | <.001 |
| Obstetrics/Gynecology | −1.88 (−2.78, −0.99) | <.001 |
| Pediatrics | −1.05 (−1.68, −0.41) | .001 |
| Sports/Physical Medicine and Rehabilitation | −3.25 (−4.06, −2.44) | <.001 |
| Surgical Specialties | −3.65 (−4.30, −3.01) | <.001 |
| Health system, Yale-New Haven as reference | | |
| MedStar Health | 0.20 (−0.12, 0.53) | .22 |
| Hours worked, additional time in EHR for each additional clinical hour scheduled | −0.01 (−0.02, −0.01) | <.001 |

Note: Model overall adjusted R²: 0.272; F-statistic: 13.7 on 16 and 528 degrees of freedom; P value: < .001.
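Because the model is linear, the coefficients in Table 3 add up to give a predicted difference in EHR-Time8 relative to the reference physician (male, general internal medicine), holding age group, health system, and scheduled hours fixed. The sketch below is a worked illustration of that arithmetic using only the gender and specialty coefficients from the table. The dictionary keys and function name are hypothetical, and no intercept is used because none is reported.

```python
# Coefficients copied from Table 3 (hours of EHR-Time8).
FEMALE = 0.58  # reference: male
SPECIALTY = {
    "internal_medicine": 0.0,  # reference category
    "cardiology": -1.30,
    "family_medicine": -0.26,
    "gastroenterology": -0.61,
    "medical_subspecialties": -0.89,
    "neurology_psychiatry": -2.60,
    "obstetrics_gynecology": -1.88,
    "pediatrics": -1.05,
    "sports_pmr": -3.25,
    "surgical_specialties": -3.65,
}


def predicted_difference(female: bool, specialty: str) -> float:
    """Predicted EHR-Time8 difference (hours) versus a male general internist."""
    return (FEMALE if female else 0.0) + SPECIALTY[specialty]


# A female cardiologist versus a male general internist: 0.58 + (-1.30) hours.
print(round(predicted_difference(True, "cardiology"), 2))
```

Several of these coefficients (family medicine, gastroenterology) were not statistically significant, so differences built from them should be read with the confidence intervals in Table 3 in mind.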

Figure 3.

Univariate associations between normalized EHR core measures by specialty and health system. Scatterplot matrix of several pertinent EHR core metrics in both health systemsᵃ with regression line and Pearson’s correlation coefficient between each measure. All units are in hours except TWORD, which is reported as a percentage.

ᵃ MedStar WOW8 was deemed less reliable.

DISCUSSION

Key results

The findings from this cross-sectional analysis of EHR use in 2 large healthcare systems using different EHR vendor products indicate that it is possible to normalize EHR use across vendor products. However, in their current form, EHR audit logs and vendor-derived EHR use platform data have multiple limitations that prevent derivation of all proposed core EHR metrics and hinder comparison of metrics across vendor products. Five of the 7 proposed core EHR metrics were measurable, while 2 were not. Even for the metrics that were measurable, some of the measure implementations were imperfect or differed substantially between vendors.

Among the exclusively ambulatory, nonteaching attending physicians whose EHR use could be normalized and compared, physicians spent more than 5 hours on the EHR for every 8 hours of scheduled clinical time. On average, approximately 33% of this time was spent on documentation, 13% on inbox activity, and 12% on orders (Figure 2).16,19,20 These values and proportions are consistent with previous literature16,19,20,29 and can provide a benchmark for future measurement of standardized EHR use.

Consistent with a case study from UCSF,30 we found that female physicians spend more time on the EHR than their male colleagues, even after controlling for age, health system, medical specialty, and number of clinical hours scheduled. Differences in characteristics of male and female physicians’ patient panels and time spent per patient were beyond the scope of this analysis. However, a recent cross-sectional analysis of 24.4 million primary care office visits reported that female physicians spend more time in direct patient care per visit and per day.31 These types of practice differences could contribute to additional EHR time. Although gender is not associated with physicians’ perception of their EHR’s usability,12 future work could explore whether gender differences in EHR use contribute to gender differences in physician burnout.

Variability in EHR time by specialty is also consistent with previous literature12,20 and suggests both that EHR needs vary by medical specialty and that procedural specialties spend less time overall in the EHR with a higher contribution of teamwork on orders. This finding, along with the negative correlation between TWORD and multiple metrics, suggests that a team-based approach to EHR activities decreases the physician EHR burden. Such an interpretation is consistent with previous assertions that a team-based care model decreases clerical burden and enables increased engagement with patients.32–35 The positive correlation between documentation time and work outside of work presented in Figure 3 suggests that higher metrics for 1 activity could be predictive of increased time on other activities as well.

The finding that each additional clinical hour scheduled per month was associated with a small reduction in normalized EHR time is consistent with previous research suggesting that physicians who are more proficient in the EHR have better satisfaction with their EHR;36 however, this finding may not indicate proficiency or satisfaction and could instead reflect the need to spend less time on the EHR to accommodate a larger clinical load. The positive associations between the time-based metrics and EHR-Time8 have face validity; these relationships are hypothesis-generating and warrant further study.

Limitations

While audit logs remain a powerful tool for understanding physician EHR use, vendor-derived EHR use data platforms have many limitations for reporting normalized EHR use. Among the proposed core metrics that could be calculated, there were limitations in determining clinical context, scheduled hours of teaching attendings, time-out lengths of data capture, and definitions and data capture for determining EHR activity completed outside of scheduled clinical hours and inbox activity. Since work context and teaching physicians’ scheduled hours could not be accurately determined within the vendor platforms, this analysis focused on nonteaching physicians practicing outpatient medicine only. Inclusion of all physicians using currently available vendor-derived EHR use data would have artificially inflated normalized metrics by including excess inpatient EHR activity and/or EHR activity related to care of patients scheduled to be seen by trainees.

Differences in active use time-out lengths for vendor audit log data capture (5 seconds for Epic and 30 seconds for Cerner) likely caused an inflation of unknown magnitude for the MedStar time-based metrics compared to Yale-New Haven’s. WOW8 was likely underestimated in both systems due to different challenges in each product. For Epic, the Signal platform includes an additional 30 minutes at the beginning and end of each scheduled day (termed “shoulder time”) in the daily total for scheduled work hours. For the MedStar health system, physician schedule data are stored in a separate third-party software system, which only permits an approximation of WOW8 using Cerner’s definition of after-hours work (any EHR activity occurring outside of 6:00am to 6:00pm on weekdays) rather than the definition as specified for WOW8. Until differences such as these are resolved by harmonization of measure specifications, comparisons across vendors are limited.

For inbox time, current vendor-derived EHR use data capture inbox screen time alone, not the proposed “time to resolve an inbox task.”23 It is possible that differences in physicians’ efficiency, EHR proficiency, and patient panels could contribute to variation in EHR use. Controlling for these factors was beyond the scope of this analysis. There is also potential for selection bias given our inclusion criteria. However, this was minimized by including both procedural and nonprocedural specialties in a variety of practice locations in 2 geographically distinct areas of the US. Furthermore, the inclusion criteria of the main analysis are consistent with active, community-based ambulatory practice.
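To make the WOW8 limitation concrete, the sketch below applies three definitions of "outside of work" to the same hypothetical day of EHR activity: the WOW8 specification (outside scheduled hours), scheduled hours widened by 30 minutes of shoulder time on each end (as described for Epic Signal), and a fixed 6:00am to 6:00pm weekday window (as described for Cerner). The activity intervals, the clinic schedule, and the function names are assumptions for illustration only.

```python
def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the overlap between two intervals, in hours."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def hours_outside(activity: list[tuple[float, float]], window: tuple[float, float]) -> float:
    """Hours of EHR activity that fall outside a single (start, end) window."""
    total = sum(end - start for start, end in activity)
    inside = sum(overlap(start, end, window[0], window[1]) for start, end in activity)
    return total - inside


# Hypothetical weekday; times are hours since midnight.
activity = [(7.5, 8.0), (8.0, 12.0), (12.5, 13.0), (17.0, 18.0), (20.0, 21.0)]
scheduled = (8.0, 12.0)  # hypothetical half-day clinic

wow_specified = hours_outside(activity, scheduled)
wow_shoulder = hours_outside(activity, (scheduled[0] - 0.5, scheduled[1] + 0.5))
wow_clock = hours_outside(activity, (6.0, 18.0))
print(wow_specified, wow_shoulder, wow_clock)
```

In this example both vendor-style definitions count less outside-of-work time than the specified definition, which matches the direction of underestimation described above.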

Interpretation, generalizability, and future directions

Given the large share of the EHR market held by Epic and Cerner, the findings of this study are likely generalizable to a considerable portion of healthcare organizations. Namely, the core measures proposed by Sinsky et al have the potential to allow for meaningful comparisons of EHR use patterns by individuals over time as well as within and across groups. However, current audit logs and vendor-derived EHR use data platforms do not adequately distinguish clinical context (outpatient versus inpatient) or teaching physicians’ scheduled clinical hours, and they lack the data elements needed to derive all the proposed, normalized core EHR use metrics for all physicians using their products.23 To improve standardization of EHR use reporting across all physicians and for the remaining measures, vendors would need to provide: (1) specifications for work outside of scheduled hours that include all of the time before and after scheduled hours as opposed to a one-size-fits-all clock time interval, (2) standardized time-out intervals, (3) actual visit start and end times (to derive ATTN), (4) clinical context, (5) teaching physicians’ scheduled clinical hours, and (6) time on prescriptions specifically, as distinct from orders in general. These findings are consistent with other research highlighting the complexity, fragmentation, and vendor variation of audit log data.27,37 After controlling for gender, specialty, and number of patient-scheduled hours and despite differences in vendor measure specification, we found that physician time spent on the EHR did not differ significantly between vendors.

Regardless, further harmonization of measurement specifications and reporting between vendors and health systems could drive quality improvement and interventions aiming to improve the EHR user experience as well as future research further exploring the relationship between EHR use and physician professional burnout, changes in professional effort, and retention.8,12,38 Until then, it will not be clear whether the differences in some of the metrics reported here are real (ie, due to differences between individuals or practice groups) or are measurement artifacts arising from limitations of current vendor-derived EHR use data platforms.

CONCLUSION

This is likely the first study to measure EHR use across vendor products in a standardized way. Although audit logs hold tremendous potential for EHR use research, this study reveals challenges to using vendor-derived EHR use data platforms to measure EHR use in a normalized manner with currently proposed core metrics. Further transparency, granularity, and standardization of metrics across vendors are crucial to enhance the capability of hospital administrators, departmental leaders, and researchers to more accurately assess and compare EHR use across vendors and health systems. Given these limitations, findings across systems should be interpreted cautiously. However, persistent differences in EHR use by specialty and physician gender remain compelling, with important implications for EHR design, implementation, and policy. Understanding EHR use at scale has the potential to monitor, benchmark, and improve care delivery and physician wellness.12,39,40

FUNDING STATEMENT

This work was supported by 2 American Medical Association Practice Transformation Initiatives, contract numbers 36648 and 36650. The content is solely the responsibility of the authors and does not necessarily represent the official views of the American Medical Association.

Role of Funder/Sponsor: Dr. Sinsky of the American Medical Association (AMA) was a thought partner in the high-level design of the study and interpretation of the findings and contributed to the manuscript. The AMA had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. Investigators from Yale and MedStar designed and led the study. Dr. Saglia of Cerner Corporation was a content expert on the Cerner platform to ensure data validity from the MedStar health system. Cerner Corporation had no role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript.

AUTHOR CONTRIBUTIONS

ERM, SYO, RMR, and CAN significantly contributed to the conception and design of the study. SYO and AF acquired the data. ERM, SYO, VS, BN, MS, AF, RMR, and CAS analyzed the data. ERM, SYO, BN, and AF drafted the initial manuscript. All authors were involved in data interpretation and manuscript revision and approved the final version submitted for publication. ERM takes responsibility for all aspects of the work.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGMENTS

We thank the physicians of the Yale-New Haven and MedStar Health Systems. We would also like to thank the following individuals for their contributions and support: Prem Thomas, MD, Center for Medical Informatics, Yale School of Medicine, data analysis, not compensated; Becky Tylutki, Team Coordinator, Joint Data Analytics Team, Yale-New Haven Health System, data collection and honest broker, not compensated; Brian G. Arndt, MD, University of Wisconsin School of Medicine and Public Health, data interpretation and formatting suggestions for the formulas presented in Table 2, not compensated; Josh Cowdy, Director of Technology, Physician Compass, Epic data source expert, not compensated; John O’Bryan, Signal Director, Epic, WI, data interpretation, not compensated; Kyra Cappellucci, Program Coordinator, AMA, not compensated; and Nancy Nankivil, Director, Practice Transformation Initiative, AMA, not compensated.

DATA AVAILABILITY

The data underlying this article cannot be shared publicly due to the risk of participant identification. The data will be shared on reasonable request to the corresponding author.

COMPETING INTERESTS STATEMENT

Dr. Saglia reports that he is employed by Cerner Corporation; Dr. Sinsky reports that she is employed by the American Medical Association.

REFERENCES

- 1. Howe JL, Adams KT, Hettinger AZ, et al. Electronic health record usability issues and potential contribution to patient harm. JAMA 2018; 319 (12): 1276–8.

- 2. Ratwani RM, Savage E, Will A, et al. Identifying electronic health record usability and safety challenges in pediatric settings. Health Aff 2018; 37 (11): 1752–9.

- 3. Ratwani RM, Savage E, Will A, et al. A usability and safety analysis of electronic health records: a multi-center study. J Am Med Inform Assoc 2018; 25 (9): 1197–201.

- 4. Babbott S, Manwell LB, Brown R, et al. Electronic medical records and physician stress in primary care: results from the MEMO Study. J Am Med Inform Assoc 2014; 21 (e1): e100–6.

- 5. Robertson SL, Robinson MD, Reid A. Electronic health record effects on work-life balance and burnout within the i3 population collaborative. J Grad Med Educ 2017; 9 (4): 479–84.

- 6. Holman GT, Waldren SE, Beasley JW, et al. Meaningful use’s benefits and burdens for US family physicians. J Am Med Inform Assoc 2018; 25 (6): 694–701.

- 7. Melnick ER, Sinsky CA, Dyrbye LN, et al. Association of perceived electronic health record usability with patient interactions and work-life integration among US physicians. JAMA Netw Open 2020; 3 (6): e207374.

- 8. Shanafelt TD, Dyrbye LN, Sinsky C, et al. Relationship between clerical burden and characteristics of the electronic environment with physician burnout and professional satisfaction. Mayo Clin Proc 2016; 91 (7): 836–48.

- 9. Olson K, Sinsky C, Rinne ST, et al. Cross-sectional survey of workplace stressors associated with physician burnout measured by the Mini-Z and the Maslach Burnout Inventory. Stress Health 2019; 35 (2): 157–75.

- 10. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc 2019; 26 (2): 106–14.

- 11. Tai-Seale M, Dillon EC, Yang Y, et al. Physicians’ well-being linked to in-basket messages generated by algorithms in electronic health records. Health Aff 2019; 38 (7): 1073–8.

- 12. Melnick ER, Dyrbye LN, Sinsky CA, et al. The association between perceived electronic health record usability and professional burnout among US physicians. Mayo Clin Proc 2020; 95 (3): 476–87.

- 13. Adler-Milstein J, Zhao W, Willard-Grace R, et al. Electronic health records and burnout: time spent on the electronic health record after hours and message volume associated with exhaustion but not with cynicism among primary care clinicians. J Am Med Inform Assoc 2020; 27 (4): 531–8.

- 14. Micek MA, Arndt B, Tuan W-J, et al. Physician burnout and timing of electronic health record use. ACI Open 2020; 04 (01): e1–e8.

- 15. Murphy DR, Meyer AND, Russo E, et al. The burden of inbox notifications in commercial electronic health records. JAMA Intern Med 2016; 176 (4): 559–60.

- 16. Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time-motion observations. Ann Fam Med 2017; 15 (5): 419–26.

- 17. Tai-Seale M, Olson CW, Li J, et al. Electronic health record logs indicate that physicians split time evenly between seeing patients and desktop medicine. Health Aff 2017; 36 (4): 655–62.

- 18. Young RA, Burge SK, Kumar KA, et al. A time-motion study of primary care physicians’ work in the electronic health record era. Fam Med 2018; 50 (2): 91–9. doi:10.22454/fammed.2018.184803

- 19. Sinsky C, Colligan L, Li L, et al. Allocation of physician time in ambulatory practice: a time and motion study in 4 specialties. Ann Intern Med 2016; 165 (11): 753–60.

- 20. Overhage JM, McCallie DM Jr. Physician time spent using the electronic health record during outpatient encounters: a descriptive study. Ann Intern Med 2020; 172 (3): 169.

- 21. Ratwani RM, Moscovitch B, Rising JP. Improving pediatric electronic health record usability and safety through certification: seize the day. JAMA Pediatr 2018; 172 (11): 1007–8.

- 22. Ratwani RM, Reider J, Singh H. A decade of health information technology usability challenges and the path forward. JAMA 2019; 321 (8): 743. doi:10.1001/jama.2019.0161

- 23. Sinsky CA, Rule A, Cohen G. Metrics for assessing physician activity using electronic health record log data. J Am Med Inform Assoc 2020; 27 (4): 639–43.

- 24. Chen Y, Xie W, Gunter CA, et al. Inferring clinical workflow efficiency via electronic medical record utilization. AMIA Annu Symp Proc 2015; 2015: 416–25.

- 25. Zhu X, Tu S-P, Sewell D, et al. Measuring electronic communication networks in virtual care teams using electronic health records access-log data. Int J Med Inform 2019; 128: 46–52.

- 26. Adler-Milstein J, Adelman JS, Tai-Seale M, et al. EHR audit logs: A new goldmine for health services research? J Biomed Inform 2020; 101: 103343.

- 27. Rule A, Chiang MF, Hribar MR. Using electronic health record audit logs to study clinical activity: a systematic review of aims, measures, and methods. J Am Med Inform Assoc 2020; 27 (3): 480–90.

- 28. Hron JD, Lourie E. Have you got the time? Challenges using vendor electronic health record metrics of provider efficiency. J Am Med Inform Assoc 2020; 27 (4): 644–6.

- 29. Akbar F, Mark G, Warton EM, et al. Physicians’ electronic inbox work patterns and factors associated with high inbox work duration. J Am Med Inform Assoc 2021; 28 (5): 923–30.

- 30. Gupta K, Murray SG, Sarkar U, Mourad M, Adler-Milstein J. Differences in ambulatory EHR use patterns for male vs. female physicians. NEJM Catalyst 2019; 5 (6).

- 31. Ganguli I, Sheridan B, Gray J, et al. Physician work hours and the gender pay gap — evidence from primary care. N Engl J Med 2020; 383 (14): 1349–57. doi:10.1056/nejmsa2013804

- 32. Bank AJ, Obetz C, Konrardy A, et al. Impact of scribes on patient interaction, productivity, and revenue in a cardiology clinic: a prospective study. Clinicoecon Outcomes Res 2013; 5: 399–406.

- 33. Sinsky CA, Willard-Grace R, Schutzbank AM, et al. In search of joy in practice: a report of 23 high-functioning primary care practices. Ann Fam Med 2013; 11 (3): 272–8.

- 34. Misra-Hebert AD, Rabovsky A, Yan C, et al. A team-based model of primary care delivery and physician-patient interaction. Am J Med 2015; 128 (9): 1025–8.

- 35. Smith CD, Balatbat C, Corbridge S; American College of Physicians, et al. Implementing optimal team-based care to reduce clinician burnout. NAM Perspectives 2018; 8 (9). doi:10.31478/201809c

- 36. Longhurst CA, Davis T, Maneker A; on behalf of the Arch Collaborative, et al. Local investment in training drives electronic health record user satisfaction. Appl Clin Inform 2019; 10 (02): 331–5.

- 37. Cohen G, Brown L, Fitzgerald M, et al. To Measure the Burden of EHR Use, Audit Logs Offer Promise—But Not Without Further Collaboration. Health Affairs. 2020; https://www.healthaffairs.org/do/10.1377/hblog20200226.453011/full/?mi=3u3irl&af=R&ConceptID=891&content=blog&countTerms=true&target=topic-blog Accessed 4 Mar 2020

- 38. Shanafelt TD, Mungo M, Schmitgen J, et al. Longitudinal study evaluating the association between physician burnout and changes in professional work effort. Mayo Clin Proc 2016; 91 (4): 422–31.

- 39. DiAngi YT, Lee TC, Sinsky CA, et al. Novel metrics for improving professional fulfillment. Ann Intern Med 2017; 167 (10): 740–1.

- 40. Tutty MA, Carlasare LE, Lloyd S, et al. The complex case of EHRs: examining the factors impacting the EHR user experience. J Am Med Inform Assoc 2019; 26 (7): 673–7.
