Abstract

Consider a linear elliptic PDE defined over a stochastic geometry that is a function of N random variables. In many applications, quantifying the uncertainty propagated to a Quantity of Interest (QoI) is an important problem. The random domain is split into large and small variation contributions. The large variations are approximated by applying a sparse grid stochastic collocation method. The small variations are approximated with a stochastic collocation-perturbation method and added as a correction term to the large variation sparse grid component. Convergence rates for the variance of the QoI are derived and compared to those obtained in numerical experiments. Our approach significantly reduces the dimensionality of the stochastic problem, making it suitable for high-dimensional problems. The computational cost of the correction term increases at most quadratically with respect to the number of dimensions of the small variations. Moreover, when the small and large variations are independent, the cost increases linearly.

Keywords: Uncertainty Quantification, Stochastic Collocation, Perturbation, Stochastic PDEs, Finite Elements, Complex Analysis, Smolyak Sparse Grids

1. Introduction

The problem of design under uncertainty of the underlying domain is encountered in many real-life applications. For example, in semiconductor fabrication the underlying geometry becomes increasingly uncertain as the physical scales are reduced [33]. This uncertainty is propagated to an important Quantity of Interest (QoI), such as the capacitance of the semiconductor circuit. If the variance of the capacitance is high this could lead to low yields during the manufacturing process. Quantifying the uncertainty in a given QoI, such as the capacitance, is important in order to maximize yields. This will have a direct impact in reducing the costly and time-consuming design cycle. Other examples include graphene nano-sheet fabrication [21]. In this paper we focus on the problem of how to efficiently compute the statistics of the QoI given uncertainty in the underlying geometry.

Uncertainty Quantification (UQ) methods applied to Partial Differential Equations (PDEs) with random geometries can be mostly divided into collocation and perturbation approaches. For large deviations of the geometry the collocation method [6,8,14,32] is well suited. In addition, in [6,18] the authors derive error estimates of the solution with respect to the number of stochastic variables in the geometry description. However, this approach is only effective for medium size stochastic problems. In contrast, the perturbation approaches introduced in [20,33,17,9,13,11,12] are very efficient for high dimensions, but only for small perturbations of the domain. More recently, new approaches based on multi-level Monte Carlo have been developed [28] that are well suited for low regularity of the solution. Furthermore, the domain mapping approach has been extended to elliptic problems with random domains in [19].

We develop a hybrid collocation-perturbation method that is well suited for a combination of large and small variations. The main idea is to combine both approaches so that the trade-off between accuracy and the dimension of the problem is significantly improved.

We represent the domain in terms of a series of random variables and then remap the corresponding PDE to a deterministic domain with random coefficients. The random geometry is split into small and large deviations. A collocation sparse grid method is used to approximate the contribution to the QoI from the first NL large deviation terms of the stochastic domain expansion. Conversely, the contribution of the small deviations (the tail) is cheaply computed with a collocation and perturbation method. This contribution is called the variance correction.

For the collocation method we apply an isotropic sparse grid. This is to simplify the presentation in this work. However, we are free to use any collocation method such as anisotropic sparse grids [26], quasi-optimal [25] or dimension adaptive [15,23,22] to increase the efficiency of the collocation computation. The results in this paper show that the variance correction significantly reduces the overall dimensionality of the stochastic problem while the computational cost of the correction term increases at most quadratically with respect to the number of dimensions of the small variations.

A rigorous convergence analysis of the statistics of the QoI in terms of the number of collocation knots and the perturbation approximation of the tail is derived. Analytic estimates show that the error of the QoI for the hybrid collocation-perturbation method (or the hybrid perturbation method for short) decays quadratically with respect to the sum of the coefficients of the expansion of the tail. This is in contrast to the linear decay of the error estimates derived in [6] for the pure stochastic collocation approach. Furthermore, numerical experiments show a faster convergence rate than the stochastic collocation approach. Moreover, the variance correction is computed at a fraction of the cost of the low dimensional large variations.

In Section 2 mathematical background material is introduced. In Section 3 the stochastic domain problem is introduced. We assume that there exists a bijective map such that the elliptic PDE with a stochastic domain is remapped to a deterministic reference domain with a random diffusion matrix. The random boundary is assumed to be parameterized by N random variables. In Section 4 the hybrid collocation-perturbation approach is derived. This approach reduces to computing mean and variance correction terms that quantify the perturbation contribution from the tail of the random domain expansion. In Section 5 we show that an analytic extension exists for the variance correction term. In Section 6 mean and variance error estimates are derived in terms of the finite element, sparse grid and perturbation approximations. In Section 7 a complexity and tolerance analysis is derived. In Section 8 we test our approach on numerical examples that are consistent with the theoretically derived convergence rates.

2. Background

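In this section we introduce the general notation and mathematical background that will be used in this paper. Let (Ω, ℱ, ℙ) be a complete probability space, where Ω is the set of outcomes, ℱ is a sigma algebra of events and ℙ is a probability measure. Define $L^q_{\mathbb{P}}(\Omega)$, $q \in [1, \infty]$, as the following Banach spaces:

$$L^q_{\mathbb{P}}(\Omega) := \Big\{ v \;\Big|\; \int_\Omega |v(\omega)|^q \, d\mathbb{P}(\omega) < \infty \Big\} \quad \text{and} \quad L^\infty_{\mathbb{P}}(\Omega) := \Big\{ v \;\Big|\; \operatorname*{ess\,sup}_{\omega \in \Omega} |v(\omega)| < \infty \Big\},$$

where v : Ω → ℝ is a measurable random variable.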

Consider the random variables Y1, … , YN measurable in (Ω, ℱ, ℙ). Form the N valued random vector Y := [Y1, … , YN], Y : Ω → Γ, and let Γ := Γ1 × ⋯ × ΓN. Without loss of generality denote Γn := [−1, 1] as the image of Yn for n = 1, … , N and let ℬ(Γ) be the Borel σ-algebra.

For all consider the induced measure . Suppose that μY is absolutely continuous with respect to the Lebesgue measure defined on Γ. From the Radon–Nikodym theorem [4] we conclude that there exists a density function ρ(y) : Γ → [0, +∞) such that for any event we have that . For any measurable function define the expected value as . Finally, the following Banach spaces will be useful for the stochastic collocation sparse grid error estimates. For let

In the next section we discuss an approach for approximating a function with sufficient regularity by multivariate polynomials and sparse grid interpolation.

2.1. Sparse Grids

Our goal is to find a compact and accurate approximation of a multivariate function with sufficient regularity. It is assumed that where

and V is a Banach space. Consider the univariate Lagrange interpolant along the nth dimension of Γ

where i ⩾ 1 denotes the level of approximation and m(i) the number of collocation knots used to build the interpolation at level i such that m(0) = 0, m(1) = 1 and m(i) < m(i + 1) for i ⩾ 1. Furthermore let . The space is the set of polynomials of degree at most m(i) − 1.

We can construct an interpolant by taking tensor products of along each dimension for n = 1, … , N. However, the number of collocation knots explodes exponentially with respect to the number of dimensions, thus limiting feasibility to small dimensions. Alternately, consider the difference operator along the nth dimension

The sparse grid approximation of is defined as

| (1) |

where w ⩾ 0, , is the approximation level, , and is strictly increasing in each argument. The sparse grid can also be rewritten as

| (2) |

From the previous expression, we see that the sparse grid approximation is obtained as a linear combination of full tensor product interpolations. However, the constraint g(i) ⩽ w in (2) restricts the growth of tensor grids of high degree.
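The combination form can be illustrated with a short sketch. Since the displayed formula (2) is not reproduced here, the code assumes the form that is standard in the sparse grid literature, namely coefficients c(i) = Σ_{j ∈ {0,1}^N, g(i+j) ⩽ w} (−1)^{|j|} multiplying full tensor interpolants. The function names and the choice g(i) = Σ_n (i_n − 1) are assumptions made for illustration.

```python
from itertools import product

def g_smolyak(i):
    """Assumed Smolyak-type index function g(i) = sum_n (i_n - 1), levels i_n >= 1."""
    return sum(k - 1 for k in i)

def combination_coefficients(N, w, g=g_smolyak):
    """Return {i: c(i)} with c(i) = sum over j in {0,1}^N with g(i+j) <= w of (-1)^|j|.

    Only multi-indices with a nonzero coefficient are stored.
    """
    coeffs = {}
    # For g_smolyak, g(i) <= w implies i_n <= w + 1 in every dimension.
    for i in product(range(1, w + 2), repeat=N):
        if g(i) > w:
            continue
        c = 0
        for j in product((0, 1), repeat=N):
            shifted = tuple(a + b for a, b in zip(i, j))
            if g(shifted) <= w:
                c += (-1) ** sum(j)
        if c != 0:
            coeffs[i] = c
    return coeffs

coeffs = combination_coefficients(N=2, w=2)
for index in sorted(coeffs):
    print(index, coeffs[index])
```

Only multi-indices close to the boundary g(i) = w carry a nonzero weight, and the weights sum to one, which is what allows the sparse grid to reproduce constants with far fewer tensor grids than a full tensor product.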

Consider the multi-indexed vector m(i) = (m(i1), … , m(iN)) and the associated polynomial set

Let be the multivariate polynomial space

It can be shown that (see e.g. [2]). One of the most popular choices for m and g is given by the Smolyak (SM) formulas (see [29,3,2])

in conjunction with Clenshaw-Curtis (CC) abscissas as interpolation points. This choice gives rise to a sequence of nested one dimensional interpolation formulas. The number of interpolation knots of the Smolyak sparse grid grows significantly slower than that of Tensor Product (TP) (see [2]) and Total Degree (TD) grids. Other popular choices include Hyperbolic Cross (HC) sparse grids.
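To make the growth comparison concrete, the following sketch counts the distinct knots of an isotropic Smolyak grid built from nested Clenshaw-Curtis abscissas and compares the count with the full tensor grid at the same maximum level. It assumes the usual doubling rule m(1) = 1, m(i) = 2^(i−1) + 1 for i > 1 and g(i) = Σ_n (i_n − 1), which is the standard form of the Smolyak choice but is not reproduced in the text above. Knots are stored exactly as fractions t with y = cos(πt), so that nested points coincide without floating point comparisons.

```python
from fractions import Fraction
from itertools import product

def m(i):
    """Number of knots at level i (assumed doubling rule)."""
    if i == 0:
        return 0
    return 1 if i == 1 else 2 ** (i - 1) + 1

def cc_knots(i):
    """Clenshaw-Curtis knots at level i, stored as t = k/(m(i)-1) with y = cos(pi*t)."""
    if i == 1:
        return {Fraction(1, 2)}  # the single knot y = cos(pi/2) = 0
    return {Fraction(k, m(i) - 1) for k in range(m(i))}

def smolyak_knots(N, w):
    """Union of all tensor grids whose levels satisfy sum_n (i_n - 1) <= w."""
    knots = set()
    for i in product(range(1, w + 2), repeat=N):
        if sum(k - 1 for k in i) <= w:
            knots.update(product(*(cc_knots(k) for k in i)))
    return knots

w = 3
for N in (2, 3, 5):
    sparse = len(smolyak_knots(N, w))
    tensor = m(w + 1) ** N
    print(f"N={N}: sparse grid knots={sparse}, tensor grid knots={tensor}")
```

The gap between the two counts widens rapidly with N, which is the reason the sparse grid remains feasible for the moderate dimension NL of the large variations.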

It can also be shown that the TD, SM and HC anisotropic sparse approximation formulas can be readily constructed with improved convergence rates (see [26]). Moreover, in [10], the authors show convergence of anisotropic sparse grid approximations with infinite dimensions (N → ∞). In [25] the authors show that quasi-optimal grids have exponential convergence.

As pointed out in the introduction, we have the option of using any collocation method such as anisotropic [26], quasi-optimal [25] or dimension adaptive [15,23,22] sparse grids to increase the efficiency of the collocation computation. The important result in this paper is that the overall dimensionality of the stochastic problem is significantly reduced with the addition of the perturbation component.

3. Problem setup and formulation

Let , , be an open bounded domain with Lipschitz boundary that is shape dependent on the stochastic parameter ω ∈ Ω

Suppose there exists a reference domain , which is open and bounded with Lipschitz boundary ∂U. In addition assume that almost surely in Ω there exists a bijective map . The map η ↦ x, , is written as

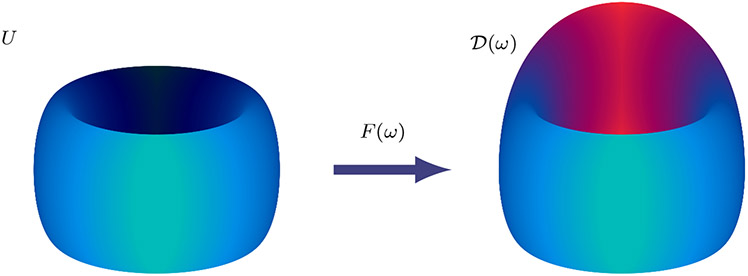

where η are the coordinates for the reference domain U and x are the coordinates for . See the cartoon example in Figure 1. Denote by J(ω) the Jacobian of F(ω) and suppose that F satisfies the following assumption.

Fig. 1.

Example of reference domain deformation through the bijective map F(ω) for some realization ω ∈ Ω. The image is created with TikZ code [30].

Assumption 1 Given a bijective map there exist constants and such that

almost everywhere in U and almost surely in Ω. We have denoted by σmin(J(ω)) (and σmax(J(ω))) the minimum (respectively maximum) singular value of the Jacobian J(ω). In Figure 1 a cartoon example of the deformation of the reference domain U is shown.

By applying the chain rule on Sobolev spaces [1] for any we have that ∇v = J^{−T}∇(v ∘ F), where J^{−T} := (J^{−1})^T, i.e. the transpose of the matrix J^{−1}, and v ∘ F ∈ H^1(U). Therefore we can prove the following result.

Lemma 1 Under Assumption 1 the following results hold:

and L2(U) are isomorphic almost surely.

and H1(U) are isomorphic almost surely.

Proof For i) and ii) see [6] or [18].

Let , i.e. the region in defined by the union of all the perturbations of the stochastic domain. Consider the functions , and that are defined over the region of all the stochastic perturbations of the domain in . Similarly, let be region formed by the union of all the stochastic perturbations of the boundary. For ω ∈ Ω a.s. let be defined as the trace of a deterministic function .

Consider the following boundary value problem: Given and find such that almost surely

| (3) |

We now make the following assumption:

Assumption 2 Let and

Assume that the constants amin and amax satisfy the following inequality: 0 < amin ⩽ amax < ∞.

Recall that is open and bounded with Lipschitz boundary . By applying a change of variables the weak form of (3) can be formulated on the reference domain (see [6] for details) as:

Problem 1 Given that (f ∘ F)(η, ω) ∈ L2(U) find s.t.

| (4) |

almost surely, where , , , for any w,

C(η, ω) := J(ω)TJ(ω), and . This homogeneous boundary value problem can be remapped to as , thus we can rewrite . The solution for the Dirichlet boundary value problem is obtained as .

The solution of (4) is numerically computed with a semi-discrete approximation. Suppose that we have a set of regular triangulations with maximum mesh spacing parameter h > 0. Furthermore, let be the space of continuous piecewise polynomials defined on with cardinality Nh. Let be the semi-discrete approximation of the solution of Problem (4) that satisfies the following problem: Find such that

| (5) |

for all vh ∈ Hh(U) and for a.s. y ∈ Γ. Note that G(y) := (a ∘ F(y)) ∣J(y)∣ J(y)^{−1} J(y)^{−T} and .
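As a small numerical illustration of the remapped coefficient matrix, the sketch below evaluates G = a ∣J∣ J^{−1} J^{−T} at a single point for a 2 × 2 Jacobian. The values of a and J are hypothetical, and ∣J∣ is taken to be the absolute value of the determinant, which is an assumption about the notation. In two dimensions det(G) = a² regardless of J, which gives a convenient sanity check.

```python
def det2(M):
    """Determinant of a 2x2 matrix given as nested lists."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def inv2(M):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    d = det2(M)
    return [[M[1][1] / d, -M[0][1] / d], [-M[1][0] / d, M[0][0] / d]]

def matmul2(A, B):
    """Product of two 2x2 matrices."""
    return [[sum(A[r][k] * B[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]

def transpose2(M):
    return [[M[0][0], M[1][0]], [M[0][1], M[1][1]]]

def G_matrix(a, J):
    """Pointwise coefficient matrix G = a * |det J| * J^{-1} J^{-T}."""
    J_inv = inv2(J)
    core = matmul2(J_inv, transpose2(J_inv))
    scale = a * abs(det2(J))
    return [[scale * x for x in row] for row in core]

a = 2.0                          # hypothetical diffusion coefficient value
J = [[1.0, 0.0], [0.3, 1.2]]     # hypothetical Jacobian of the domain map
G = G_matrix(a, J)
print(G)
```

G is symmetric and, as long as J is invertible, positive definite, so the remapped problem on the reference domain stays elliptic.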

3.1. Quantity of Interest

For many practical problems the QoI is not necessarily the solution of the elliptic PDE, but instead a bounded linear functional of the solution. For example, this could be the average of the solution on a specific region of the domain, i.e.

| (6) |

with q ∈ L2(U) over the region . It is assumed that there exists δ > 0 such that .

In the next section, the perturbation approximation is derived for Q(u) and not directly from the solution u. It is thus necessary to introduce the influence function , which can be easily computed by the following adjoint problem:

Problem 2 Find such that for all

| (7) |

a.s. in Ω. After computing the influence function φ, the QoI can be computed as .
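The role of the influence function can be seen in a finite dimensional analogue. In the sketch below a linear system A u = f stands in for the discretized primal problem and q for the discretized functional, so that Q = q · u. Solving the adjoint system Aᵀ φ = q once gives the same value as φ · f. The matrix and vectors are hypothetical toy data, and A is deliberately nonsymmetric so that the transpose is visible (for the symmetric bilinear form of an elliptic problem the adjoint operator coincides with the primal one).

```python
def solve2(A, b):
    """Solve a 2x2 linear system A x = b by Cramer's rule."""
    (a11, a12), (a21, a22) = A
    det = a11 * a22 - a12 * a21
    return [(b[0] * a22 - a12 * b[1]) / det,
            (a11 * b[1] - a21 * b[0]) / det]

def transpose(A):
    return [list(col) for col in zip(*A)]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

A = [[4.0, 1.0], [2.0, 3.0]]   # toy system matrix (hypothetical)
f = [1.0, 2.0]                 # toy load vector
q = [0.5, -1.0]                # toy functional, Q(u) = q . u

u = solve2(A, f)               # primal solve
phi = solve2(transpose(A), q)  # adjoint solve gives the influence vector

Q_primal = dot(q, u)
Q_adjoint = dot(phi, f)
print(Q_primal, Q_adjoint)
```

The benefit appears when derivatives of Q are needed: with φ available, sensitivities with respect to many parameters can be formed without solving a new primal problem for each parameter.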

Remark 1 We can pick a particular operator T such that and vanishes in the region defined by . Thus we have that and .

3.2. Domain parameterization

To simplify the analysis of the elliptic PDE with a random domain from equation (3) we remap the solution onto a fixed deterministic reference domain. This approach has also been applied in [6,14,18,17] and is reminiscent of Karhunen-Loève (KL) expansions of random fields (see [18]).

Suppose that b1, … , bN is a collection of vector valued Sobolev functions where each of the entries of for n = 1, … , N belongs to the space W^{1,∞}(U). We further make the following assumptions.

Assumption 3 Assume that F(η, ω) has the finite noise model

Assumption 4

1. ‖ ‖bn‖_{l∞} ‖_{L∞(U)} = 1 for n = 1, … , N.

2. ∞ > μ1 ⩾ ⋯ ⩾ μN ⩾ 0.

The stochastic domain perturbation is now split as

where we denote FL(η, ω) as the large deviation modes and FS(η, ω) as the small deviation modes with the following parameterization:

where NL + NS = N. Furthermore, for n = 1, … , NL let μL,n := μn, bL,n(η) := bn(η), and for n = 1, … , NS let μS,n := μn+NL and bS,n(η) := bn+NL (η).

Denote yL := [y1, … ,yNL], , and as the joint probability density of yL. Similarly denote yS := [yNL+1, … , yN], , and as the joint probability density of yS. From the stochastic model the Jacobian J is written as

| (8) |

where for n = 1, … N, Bn(η) is the Jacobian of bn(η).
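A sampling-based check of Assumption 1 for a given parameterization can be sketched as follows. Because equation (8) is not reproduced here, the code assumes that the Jacobian is affine in y, of the form J = I + Σ_n s_n y_n B_n, where s_n is a hypothetical scale factor standing in for the dependence on μ_n and B_n is the Jacobian of b_n at a fixed point η. All numerical values are invented for illustration, and scanning a grid of y values gives evidence for the singular value bounds, not a proof.

```python
import math
from itertools import product

def jacobian(y, scales, B):
    """Assumed affine model J = I + sum_n scales[n] * y[n] * B[n] (2x2 case)."""
    J = [[1.0, 0.0], [0.0, 1.0]]
    for s, yn, Bn in zip(scales, y, B):
        for r in range(2):
            for c in range(2):
                J[r][c] += s * yn * Bn[r][c]
    return J

def singular_values(J):
    """Closed-form (sigma_min, sigma_max) of a 2x2 matrix."""
    frob2 = sum(x * x for row in J for x in row)       # trace of J^T J
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    disc = math.sqrt(max(frob2 * frob2 - 4.0 * det * det, 0.0))
    return (math.sqrt(max((frob2 - disc) / 2.0, 0.0)),
            math.sqrt((frob2 + disc) / 2.0))

scales = [0.3, 0.1, 0.05]                      # hypothetical decaying scales
B = [[[0.0, 0.0], [1.0, 0.0]],                 # hypothetical mode Jacobians
     [[0.0, 0.0], [0.0, 1.0]],
     [[0.0, 0.0], [1.0, 1.0]]]

grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
samples = [singular_values(jacobian(y, scales, B))
           for y in product(grid, repeat=len(scales))]
sigma_min = min(s[0] for s in samples)
sigma_max = max(s[1] for s in samples)
print(sigma_min, sigma_max)
```

If the smallest sampled singular value approaches zero, the map is close to losing invertibility for some realization and the perturbation amplitudes would need to be reduced.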

4. Perturbation approach

In this section a perturbation method is presented to approximate Q(y) with respect to the domain perturbation. In Section 4.1, the perturbation approach is applied with respect to the tail field FS(η, ω). A stochastic collocation approach is then used to approximate the contribution with respect to FL(η, ω). We follow a similar approach as in [20] by using shape calculus. To this end we introduce the following definition.

Definition 1 Let ψ be a regular function of the parameters , the Gâteaux derivative (shape derivative) evaluated at y on the space of perturbations is defined as

Similarly, the second order derivative (shape Hessian) as a bilinear form on W is defined as

Suppose that Q is a regular function with respect to the parameters y, then for all y = y0 + δy ∈ W the following expansion holds:

| (9) |

for some θ ∈ (0, 1). Thus we have a procedure to approximate the QoI Q(y) with respect to the first order term and bound the error with the second order term. To explicitly formulate the first and second order terms we make the following assumption:

Assumption 5 For all v, , let , where G(y) := (a ∘ F)(η, y)J−1(y)J−T(y)∣J(y)∣, we have that for all y ∈ W

- For n = 1, … , N there exists a uniformly bounded constant on W s.t.

Furthermore, for all y ∈ W we assume that ∇y(f ∘ F)(y), .

Remark 2 Although we have that (i) and (ii) are assumptions for now, under Assumptions 1 - 4, , and Lemma 9 in Section 6 it can be shown that Assumption 5(i) and (ii) are true for all y ∈ Γ.

Definition 2 For all v, , and y ∈ W let

Remark 3 Under Assumption 5 for any v, we have that for all y ∈ W

where G(y) := (a ∘ F)(η, y)J−1(y)J−T(y)∣J(y)∣. Furthermore, under Assumption 5 we have that

We can introduce as well the derivative for any function (v ∘ F)(η, y) ∈ L2(U) with respect to y: For all y ∈ W we have that

Lemma 2 Suppose that Assumptions 1 to 5 are satisfied. Then for any y, δy ∈ W and for all we have that

Proof

then

The result follows. □

Lemma 3 Suppose that Assumptions 1 to 5 are satisfied. Then for any y, δy ∈ W and for all we have that

Proof We follow the same procedure as in Lemma 2. □

A consequence of Lemma 2 and Lemma 3 is that and Dyφ(y)(δy) belong in for any y ∈ W and δy ∈ W.

Lemma 4 Under the same assumptions as Lemma 3 we have that

| (10) |

where the influence function φ(y) satisfies equation (7).

Proof

From Lemma 2 with v = φ(y) and Lemma 3 with we obtain the result. □

Lemma 5 Suppose that Assumptions 1 to 5 are satisfied. Then for any y, δy ∈ W and for all we have that

Proof Let (see Lemma 4). Taking the first variation of E(y) we have that

Following the same approach as in Lemmas 3 and 4 we obtain the result. □

4.1. Hybrid collocation-perturbation approach

We now consider a linear approximation of the QoI Q(y) with respect to y ∈ Γ. For any y = y0 + δy, y0 ∈ Γ, y ∈ Γ, the linear approximation has the form

where δy = y − y0 ∈ Γ. Recall that Γ = ΓL × ΓS and make the following definitions and assumptions

y := [yL, yS], δy := [δyL, δyS], and .

takes values on ΓL and δyL := 0 ∈ ΓL.

and δyS = yS takes values on ΓS.

We can now construct a linear approximation of the QoI with respect to the allowable perturbation set Γ. Consider the following linear approximation of Q(yL, yS)

| (11) |

and from Lemma 4 we have that

where

This linear approximation only shows the explicit dependence on the variable yS without the decay of the coefficients for n = 1, … , NS. However, to directly capture the effect of the coefficients let for n = 1, … , N and . It is not hard to see that , can be reformulated with respect to the variables and by applying Lemma 4 as

| (12) |

where

This will allow an explicit dependence of the mean and variance error in terms of the coefficients μS,n, n = 1, … , NS, as shown in Section 6.
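The behaviour of a linearization about yS = 0 can be checked on a toy model. In the sketch below the function Q is a hypothetical stand-in for the QoI (it is not derived from the PDE), with one large variable y_L and one small variable y_S. The linear approximation keeps y_L exact and expands only in y_S, and the printed error ratios show the second order decay expected from the Taylor remainder.

```python
def Q(y_L, y_S):
    """Hypothetical smooth stand-in for the quantity of interest."""
    return 1.0 / (2.0 + y_L + 0.5 * y_S)

def dQ_dyS(y_L, y_S):
    """Exact partial derivative of the toy Q with respect to y_S."""
    return -0.5 / (2.0 + y_L + 0.5 * y_S) ** 2

def Q_linear(y_L, y_S):
    """First order expansion in y_S about y_S = 0, exact in y_L."""
    return Q(y_L, 0.0) + dQ_dyS(y_L, 0.0) * y_S

y_L = 0.3
deltas = [0.2, 0.1, 0.05]
errors = [abs(Q(y_L, d) - Q_linear(y_L, d)) for d in deltas]
ratios = [errors[k] / errors[k + 1] for k in range(len(errors) - 1)]

for d, e in zip(deltas, errors):
    print(f"delta={d}: linearization error={e:.3e}")
print("error ratios when delta is halved:", ratios)
```

Halving the size of the small variable reduces the error by a factor close to four, which is the mechanism behind the quadratic dependence of the perturbation error on the size of the tail.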

The mean of can be obtained as

From Fubini’s theorem we have

| (13) |

and from equation (12)

| (14) |

where , ρ(yL) is the marginal distribution of ρ(y) with respect to the variables yL and similarly for . The term is referred to as the mean correction. The variance of can be computed as

The term (I) is referred to as the variance correction of . From Fubini’s theorem and equation (12) we have that

| (15) |

and is equal to

| (16) |

Note that the mean and variance depend only on the large variation variables yL. If the region of analyticity of the QoI with respect to the stochastic variables yL is sufficiently large, it is reasonable to approximate with a Smolyak sparse grid , with respect to the variable yL (see [6] for details). Thus in equations (13) - (16) is replaced with the sparse grid approximation and for n = 1, … , NS γn(yL, 0) is replaced with .

Remark 4 For the special case that for n = 1, … , N and m = 1, … , N , ρ(y) = ρ(yL)ρ(yS), for all yL ∈ ΓL and yS ∈ ΓS (i.e. independence assumption of the joint probability distribution ρ(yL, yS)), the mean and variance corrections are simplified. Applying Fubini’s theorem and from equation (13) the mean of now becomes

i.e. there is no contribution from the small variations. Applying a similar argument we have that

Notice that for this case the variance correction consists of NS terms, thus the computational cost depends linearly on NS.
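The linear cost in the independent case can be illustrated with a toy model. The sketch assumes a linear approximation of the form Q̂ = Q0(yL) + Σ_n α_n(yL) yS,n with independent, zero mean, uniformly distributed variables on [−1, 1]. The functions Q0 and α_n and the constants in C are hypothetical stand-ins, with any coefficient scaling absorbed into C. Under these assumptions the variance is Var[Q0] plus one term E[α_n²] E[yS,n²] per small dimension, which is compared against a brute force tensor quadrature.

```python
def midpoint_rule(M):
    """Midpoint nodes and weights for the uniform density 1/2 on [-1, 1]."""
    nodes = [-1.0 + (2 * k + 1) / M for k in range(M)]
    return nodes, [1.0 / M] * M

C = [0.1, 0.05]  # hypothetical sensitivities of the two small variables

def Q0(y_L):
    """Toy QoI restricted to y_S = 0."""
    return 1.0 / (2.0 + y_L)

def alpha(n, y_L):
    """Toy first order sensitivity with respect to the n-th small variable."""
    return C[n] / (2.0 + y_L) ** 2

nodes, weights = midpoint_rule(20)
rule = list(zip(nodes, weights))

# Formula for the independent case: N_S one-dimensional terms.
mean_Q0 = sum(w * Q0(y) for y, w in rule)
var_Q0 = sum(w * Q0(y) ** 2 for y, w in rule) - mean_Q0 ** 2
second_moment = sum(w * y * y for y, w in rule)
correction = sum(second_moment * sum(w * alpha(n, y) ** 2 for y, w in rule)
                 for n in range(len(C)))
var_formula = var_Q0 + correction

# Brute force check: full tensor quadrature over (y_L, y_S1, y_S2).
mean_b = 0.0
second_b = 0.0
for y_L, w_L in rule:
    for y_1, w_1 in rule:
        for y_2, w_2 in rule:
            q = Q0(y_L) + alpha(0, y_L) * y_1 + alpha(1, y_L) * y_2
            w = w_L * w_1 * w_2
            mean_b += w * q
            second_b += w * q * q
var_brute = second_b - mean_b ** 2
print(var_formula, var_brute)
```

The formula needs only quadratures over yL, one per small dimension, whereas the brute force loop grows exponentially with the number of small variables.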

5. Analytic correction

In this section we show that the mean and variance corrections are analytic in a well defined region in with respect to the variables yL ∈ ΓL. The size of the region of analyticity will be directly correlated with the convergence rate of a Smolyak sparse grid. To this end, let us establish the following definition: For any , for some constant , define the following region in ,

| (17) |

Observe that the size of the region Θβ,NL is mostly controlled by the decay of the coefficients μl and the size of ∥Bl(η)∥2. Thus the faster the coefficient μl decays the larger the region Θβ,NL will be.

Furthermore, rewrite J(η, yL) as J(yL) = I+R(yL), with . We now state the first analyticity theorem for the solution with respect to the random variables yL ∈ ΓL.

To simplify the analyticity proof the following assumptions are made.

Assumption 6

(a ∘ F)(η, ω) is only a function of η ∈ U and independent of ω ∈ Ω.

is only a function of η ∈ U and independent of ω ∈ Ω.

can be analytically extended in . Furthermore assume that the analytic extension Re(f ∘ F)(η, z), Im(f ∘ F)(η, z) ∈ L2(U).

There exists such that , for all η ∈ U.

Remark 5 Note that Assumption 6 (i) is not strictly necessary, and Theorems 1 and 2 and the error analysis in Section 6 can be easily adapted for a less restrictive hypothesis (see Assumption 7 and Lemma 2 in [7]). Nonetheless, this assumption is still practical for layered materials such as semiconductor design. For such problems can be non-constant along the non-stochastic directions.

Remark 6 Under Assumption 6 (iv) we have that for all z ∈ Θβ,NL, where . This implies that Re det(J(z)) never changes sign for z ∈ Θβ,NL.

The following theorem can be proven with a slight modification of Theorem 7 in [7] to take into account Assumption 6 (ii) and the mapping model of equation (8).

Theorem 1 Let then the solution of Problem 1 can be extended holomorphically on Θβ,NL if

where .

Remark 7 By following a similar argument, the influence function φ(y) can be extended holomorphically in Θβ,NL if

Remark 8 To prove the following theorem we will use the following matrix calculus identity [5]: Suppose that the matrix is a function of the real variable α then

We are now ready to show that the linear approximation can be analytically extended on Θβ,NL. Note that it is sufficient to show that can be analytically extended on Θβ,NL.

Theorem 2 Let , if , then there exists an extension of , for n = 1, … , NS, which is holomorphic in Θβ,NL.

Proof Consider the extension of yL → zL, where . First, we have that

| (18) |

for n = 1, … , NS can be extended on Θβ,NL. Note that, to reduce notational clutter, we have dropped the dependence on the variable η ∈ U, which is understood from context unless clarification is needed.

We now show that each entry of the matrix is holomorphic on Θβ,NL for all y ∈ ΓS. First, we have that

From Assumption 6 (a ∘ F)(·, zL) and are holomorphic on Θβ,NL for all yS ∈ ΓS. From Remark 8 we have that

Since the series

is convergent for all zL ∈ Θβ,NL and for all yS ∈ ΓS, it follows that each entry of ∂F(zL, y)−1 and therefore C(zL, y)−1 is holomorphic for all zL ∈ Θβ,NL and for all yS ∈ ΓS. We have that ∣J(zL, yS)∣ and are polynomial functions, therefore they are holomorphic for all zL ∈ Θβ,NL and yS ∈ ΓS.

From Jacobi’s formula we have that for all zL ∈ Θβ,NL, yS ∈ ΓS and l = 1, … , NS

It follows that for all zL ∈ Θβ,NL and yS ∈ ΓS we have that are holomorphic for n = 1, … NL.

We shall now prove the main result. First, extend yL along the nth dimension as yn → zn, and let . From Theorem 1 we have that and φ(zL, yS) are holomorphic for zL ∈ Θβ,NL and yS ∈ ΓS if

Thus from Theorem 1.9.1 in [16] the series

are absolutely convergent in for all , where , for l = 0, … , ∞. Furthermore,

i.e. is holomorphic on Θβ,NL along the nth dimension. A similar argument is made for ∇φ(zL, yS).

Since the matrix is holomorphic for all zL ∈ Θβ,NL and yS ∈ ΓS then we can rewrite the (i, j) entry as where . For each i, j = 1, … , d consider the map

For i, j = 1, … , d, for all zL ∈ Θβ,NL and yS ∈ ΓS

Thus equation (18) can be analytically extended on Θβ,NL along the nth dimension for all yS ∈ ΓS. Repeating this analytic extension for n = 1, … , NL, equation (18) can be analytically extended on the entire domain Θβ,NL. Hartogs' Theorem implies that (18) is continuous in Θβ,NL. Osgood’s Lemma then implies that (18) is holomorphic on Θβ,NL. Following a similar argument as for (18) we can analytically extend the rest of the terms of αn(yL, yS) on Θβ,NL for n = 1, … , NS. □

6. Error analysis

In this section we analyze the perturbation error between the exact QoI Q(yL, yS) and the sparse grid hybrid perturbation approximation . With a slight abuse of notation by we mean the two sparse grid approximations:

where αn,h(·, yL, 0), for n = 1, … , NS, and Qh(yL, yS) are the finite element approximations of αn(·, yL, 0) and Q(yL, yS) respectively. It is easy to show that is equal to

(I) Applying Jensen’s inequality we have that

| (19) |

(II) Similarly, we have that

Applying Jensen’s inequality

| (20) |

Combining equations (19) and (20) we have that

Similarly we have that the mean error satisfies the following bound:

Remark 9 For the case that the probability distributions ρ(yL) and ρ(yS) are independent, the mean correction is exactly zero, thus the mean error would be bounded by the following terms

for some positive constants CT, CFE and CSG. We refer the reader to Section 5 in [6] for the definition of the constants and the bounds of these errors.

6.1. Perturbation error

In this section we analyze the perturbation approximation error

| (21) |

where the remainder is equal to

for some θ ∈ (0, 1). Since the perturbation approach involves two derivatives, to obtain a bounded error estimate the following assumptions are made:

Assumption 7 Assume that . Furthermore, assume that is also 2-smooth almost surely.

From this assumption the following lemma can be proven.

Lemma 6

and H2(U) are isomorphic almost surely.

Proof See Theorem 3.35 in [1]. □

Remark 10 Note that the previous Sobolev norm equivalence will depend on the parameter ω ∈ Ω. The constant depends on the transformation F and the determinant of the Jacobian ∣J∣. See the proof of Theorem 3.35 in [1] for more details.

To estimate the perturbation error the next step is to bound the remainder. To this end the following series of lemmas are useful.

Lemma 7 For all n = 1, … , NS and for all y ∈ Γ

Proof From Remark 8 we have that

and thus

From Assumption 1 the result follows. □

Lemma 8 For all y ∈ Γ

Proof Using Jacobi’s formula we have that for all y ∈ Γ

□

Lemma 9 For all n, m = 1, … , NS and for all y ∈ Γ

Proof From Remark 8 we have that

Applying the triangle and multiplicative inequalities, and following the same approach as in Lemma 7, we obtain the desired result. □

Lemma 10 For n, m = 1, … , NS for all y ∈ Γ

Proof Using Jacobi’s formula we have

The result follows. □
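The two matrix calculus facts used in Lemmas 7 to 10 can be verified numerically. The sketch below assumes that the identity of Remark 8 is the standard derivative of a matrix inverse, d(A⁻¹)/dα = −A⁻¹ (dA/dα) A⁻¹ (the displayed identity is not reproduced above), and uses Jacobi's formula in the form d det(A)/dα = det(A) tr(A⁻¹ dA/dα). Both are compared with central finite differences for a hypothetical 2 × 2 family A(α) = I + αB.

```python
def det2(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def inv2(M):
    d = det2(M)
    return [[M[1][1] / d, -M[0][1] / d], [-M[1][0] / d, M[0][0] / d]]

def matmul2(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]

B = [[0.2, -0.1], [0.4, 0.3]]  # hypothetical direction, dA/dalpha = B

def A(alpha):
    """Matrix family A(alpha) = I + alpha * B."""
    return [[(1.0 if r == c else 0.0) + alpha * B[r][c] for c in range(2)]
            for r in range(2)]

alpha0, h = 0.5, 1e-6
A0_inv = inv2(A(alpha0))

# Analytic derivatives from the two identities.
minus_inv_B_inv = [[-x for x in row] for row in matmul2(matmul2(A0_inv, B), A0_inv)]
inv_B = matmul2(A0_inv, B)
ddet_analytic = det2(A(alpha0)) * (inv_B[0][0] + inv_B[1][1])

# Central finite differences for comparison.
inv_plus, inv_minus = inv2(A(alpha0 + h)), inv2(A(alpha0 - h))
dinv_fd = [[(inv_plus[r][c] - inv_minus[r][c]) / (2 * h) for c in range(2)]
           for r in range(2)]
ddet_fd = (det2(A(alpha0 + h)) - det2(A(alpha0 - h))) / (2 * h)

inv_error = max(abs(dinv_fd[r][c] - minus_inv_B_inv[r][c])
                for r in range(2) for c in range(2))
det_error = abs(ddet_fd - ddet_analytic)
print(inv_error, det_error)
```

In the setting of the lemmas, A plays the role of the Jacobian J(y) and α that of a single stochastic variable, so dA/dα reduces to a scaled mode Jacobian.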

Lemma 11 For all v, and y ∈ Γ we have that

where for all y ∈ Γ and

Proof First we expand the partial derivative of with respect to :

From Lemmas 7, 8 and the triangle inequality we have that

□

Lemma 12 For all v, and y ∈ Γ we have that

is less than or equal to

Proof From Remark 8 we have that

From Lemmas 7 - 10, and the triangle inequality we have that for all y ∈ Γ

□

The next step is to bound and .

Lemma 13 For all yL ∈ ΓL and δyS ∈ Γ we have that:

(a)

where C is a uniformly bounded constant.

(b)

Proof (a) For any and for all yL ∈ ΓL and δyS ∈ Γ from Lemma 2

With the choice of and from Lemma 11

Now,

Finally, from Lemma 8 the result follows.

(b) Apply Lemma 3 with and Lemma 11. □

Lemma 14 For all y ∈ Γ and n, m = 1, … , N we have that

Proof (a) Follow the proof in Lemma 13. (b) By applying the chain rule for Sobolev spaces and Lemma 6 we obtain that for all y ∈ Γ

for some constant C > 0. This completes the proof. □

From Lemmas 4 - 5 and 7 - 14 we have that for all yL ∈ ΓL and for all δyS ∈ ΓS

where

is a bounded constant that depends on the indicated parameters. We have now proven the following result.

Theorem 3 Let be the solution to the bilinear Problem 1 that satisfies Assumptions 1-7 then for all yL ∈ ΓL and yS ∈ ΓS

where .

6.2. Finite element error

The finite element convergence rates for the solution and the influence function φ depend directly on the regularity of these functions, the polynomial order of the finite element space and the mesh size h. By applying the triangle inequality

Following a duality argument we obtain

for some constant , CΓL (r) := ʃΓL C(r, u(yL, 0))ρ(yL)dy and DΓL (r) := ʃΓL C(r, φ(yL, 0)) ρ(yL)dy.

The constant r is a function of i) the regularity properties of the influence function and the solution ()(·, yL, 0) and ii) the polynomial degree of the finite element basis.

It follows that

| (22) |

where , and

are bounded constants for n = 1, … , NS.

6.3. Sparse grid error

For the sake of simplicity only convergence rates for the isotropic Smolyak sparse grid are shown. This analysis can be extended to the anisotropic case without much difficulty.

where and

for n = 1, … , NS, and

for any Banach space V defined on U.

It can be shown that (and for n = 1, … , NS) have an algebraic or sub-exponential convergence rate as a function of the number of collocation knots η (see [26,27]). A necessary condition is that the semi-discrete solution and , n = 1, … , NS admit an analytic extension in the same region Θβ,NL. This is a reasonable assumption to make.

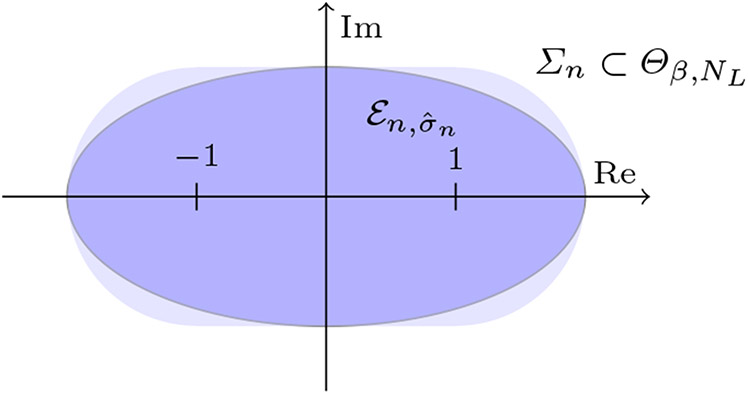

Consider the polyellipse in where

and let

for n = 1, … , NL. For the sparse grid error estimates to be valid the solution and , n = 1, … , NS, have to admit an extension on the polyellipse . The coefficients σn, for n = 1, … , N control the overall decay of the sparse grid error estimate. Since we restrict our attention to isotropic sparse grids the decay will be dictated by the smallest σn i.e. .

The next step is to find a suitable embedding of in Θβ,NL. Thus we need to pick the largest σn, n = 1, … , NL, such that . This is achieved by forming the set Σ := Σ1 × ⋯ × ΣNL and letting as shown in Figure 2.

Fig. 2.

Embedding of in Σn ⊂ Θβ,NL.

We now have almost everything we need to state the sparse grid error estimates. However, in [27] to simplify the estimate it is assumed that if then the term M(v) (see page 2322) is equal to one. We reintroduce the term M(v) and note that it can be bounded by maxz∈Θβ,NL and update the sparse grid error estimate. To this end let , .

Remark 11 In [6] Corollary 8 a bound for , z ∈ Θβ,NL, can be obtained by applying the Poincaré inequality. Following a similar argument, a bound for can be obtained for all z ∈ Θβ,NL. Thus bounds for for n = 0, … , NL and for all z ∈ Θβ,NL can be obtained.

Modifying Theorem 3.11 in [27] it can be shown that, given a sufficiently large η (w > NL/log 2), for a Smolyak sparse grid with nested Clenshaw-Curtis abscissas we obtain the following estimate

| (23) |

and

| (24) |

for n = 1, … , NS, where , ,

and . Furthermore, ,

and

7. Complexity analysis

We now analyze accuracy versus total work. The objective is to derive the total work W as a function of a tolerance TOL > 0, such that and . We restrict our attention to the isotropic sparse grid with Clenshaw-Curtis abscissas. For each realization of the semi-discrete approximation uh, it is assumed that it requires work to compute, where Nh is the cardinality of the finite element space , and the constant q is a function of the regularity of uh and the efficiency of the solver. The cost for solving the approximation of the influence function φh ∈ Hh(U) is also assumed to be . Thus for any yL ∈ ΓL, the cost for computing Qh(yL, 0) := B(yL, 0; uh(yL, 0),φh(yL, 0)) is bounded by . Similarly, for any yL ∈ ΓL the cost for evaluating is .

Remark 12 To compute the expectation integrals for the mean and variance correction a Gauss quadrature scheme can be used coupled with an auxiliary probability distribution such that

for some C > 0 (see [6] for details). However, to simplify the analysis it is assumed that the quadrature is exact and of cost .

Let η0(NL, m, g, w, Θβ,NL) be the number of the sparse grid knots for constructing and ηn(NL, m, g, w, Θβ,NL) for constructing , for n = 1, … , NS. The cost for computing is and the cost for computing is bounded by , where

The total cost for computing the mean correction is bounded by

| (25) |

Following a similar argument the cost for computing the variance correction is bounded by

| (26) |

We now obtain the estimates for NS(TOL), Nh(TOL) and η(TOL) for the perturbation, finite element and sparse grid contributions, respectively:

(a) Perturbation: From the perturbation estimate derived in Section 6.1 we seek with respect to the decay of the coefficients , n = 1, … , NS. First, make the assumption that for some uniformly bounded CD > 0 and l > 0. It follows that if

Finally, we have that

(b) Finite Element: From Section 6.2 if, then . Solving the quadratic inequality we obtain that

Assuming that Nh grows as then

for some constant D3 > 0.

(c) Sparse Grid: We seek . This is satisfied if and

for n = 1, … , NS. Now, following a similar approach as in [27] let . Thus if

for a sufficiently large NL, where , and . Similarly, for a sufficiently large NL we have that

for n = 1, … , NS.

Combining (a), (b) and (c) into equations (25) and (26) we obtain the total work and as a function of a given user error tolerance TOL.
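To give a feel for how the number of sparse grid knots η enters the work estimates, the following sketch counts the knots of an isotropic Smolyak grid built from nested Clenshaw-Curtis abscissas and compares the count with a full tensor grid of the same maximal one-dimensional level. It is a minimal illustration under stated assumptions (the doubling rule of [27] and the index set with |i − 1| ≤ w); the function names are hypothetical and the brute-force enumeration is only practical for a handful of dimensions.

```python
from itertools import product
from math import prod


def new_points(level: int) -> int:
    """Abscissas added at a 1D level by the nested Clenshaw-Curtis rule."""
    if level == 1:
        return 1
    if level == 2:
        return 2
    return 2 ** (level - 2)


def sparse_grid_knots(dim: int, w: int) -> int:
    """Distinct knots of the isotropic Smolyak grid of level w in dim dimensions."""
    total = 0
    for idx in product(range(1, w + 2), repeat=dim):
        # Keep multi-indices i with sum_n (i_n - 1) <= w.
        if sum(i - 1 for i in idx) <= w:
            total += prod(new_points(i) for i in idx)
    return total


def tensor_grid_knots(dim: int, w: int) -> int:
    """Knots of the full tensor grid using 1D level w + 1 in every direction."""
    m = 1 if w == 0 else 2 ** w + 1
    return m ** dim


# Knot counts for the collocation dimensions and levels used in the experiments.
for dim in (2, 3, 4):
    for w in range(6):
        print(dim, w, sparse_grid_knots(dim, w), tensor_grid_knots(dim, w))
```

For example, in two dimensions with w = 2 the sparse grid has 13 knots, whereas the corresponding tensor grid has 25. Each knot costs one deterministic solve in the work model of this section, so the knot count multiplies the per-solve cost.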

8. Numerical results

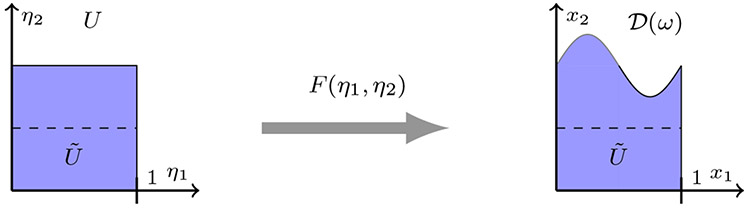

In this section the hybrid collocation-perturbation method is tested on an elliptic PDE with a stochastic deformation of the unit square domain i.e. U = (0, 1) × (0, 1). The deformation map is given by

Under this map only the upper half of the square is deformed, while the lower half is left unchanged. A cartoon example of the deformation of the unit square U is shown in Figure 3.

Fig. 3.

Stochastic deformation of unit square U according to the rule given by . The region is not deformed and given by (0, 1) × (0, 0.5).

The Dirichlet boundary conditions are set according to the following rule:

where . Note that the boundary condition on the upper border does not change even after the stochastic perturbation.

For the stochastic model e(η1, ω) we use a variant of the Karhunen-Loève expansion of an exponentially oscillating kernel of the kind encountered in optical problems [24]. This model is given by

with decay , , and

It is assumed that are independent and uniformly distributed in (, ), thus , for n, m = 1, … , N where δ[·] is the Kronecker delta function.
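The interval endpoints are not reproduced above. As an illustration only, assume the variables are scaled to have zero mean and unit variance, which is consistent with the Kronecker delta relation. A uniform variable on (−a, a) has variance a²/3, so under that assumption a = √3. The sketch below samples such variables and checks empirically that the second-moment matrix is close to the identity; the function name, sample size and seed are hypothetical choices.

```python
import numpy as np


def sample_uniform_unit_variance(n_samples: int, n_dims: int, seed: int = 0) -> np.ndarray:
    """Independent samples, uniform on (-sqrt(3), sqrt(3)) in each coordinate.

    The interval is an assumption made for this illustration: it is the
    symmetric uniform interval with zero mean and unit variance.
    """
    rng = np.random.default_rng(seed)
    a = np.sqrt(3.0)
    return rng.uniform(-a, a, size=(n_samples, n_dims))


Y = sample_uniform_unit_variance(200_000, 4)
# Empirical E[Y_n Y_m]; it should be close to the identity matrix.
second_moments = Y.T @ Y / Y.shape[0]
print(np.round(second_moments, 2))
```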

It can be shown that for n > 1 we have that

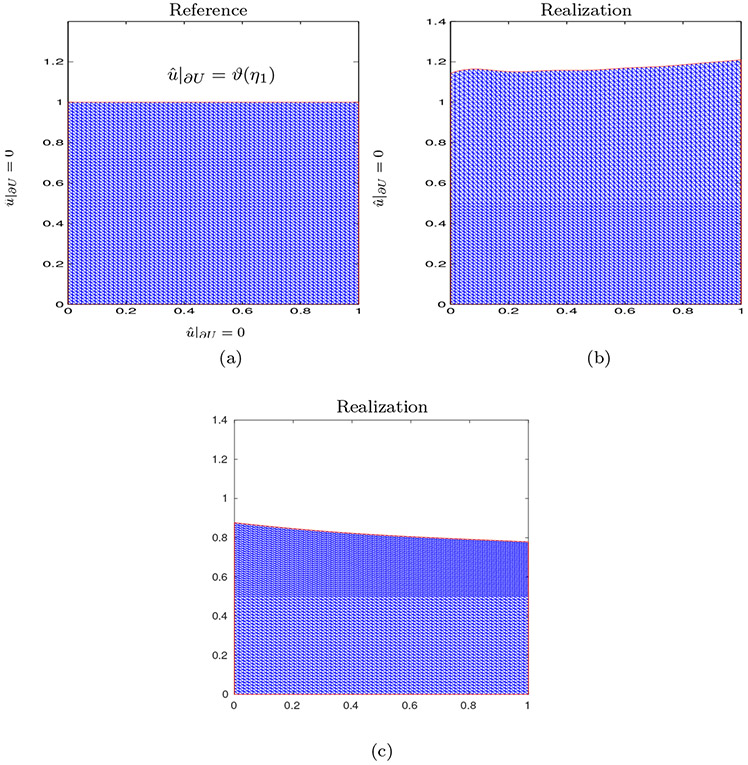

Thus for all we have that supx∈U σmax(Bl(η1)) < C for some constant C. Thus for k = 1 we obtain linear decay on the gradient of the deformation. In Figure 4(a) a mesh example of the reference domain is shown with Dirichlet boundary conditions. In Figure 4(b) and (c) two realizations of the reference domain U from the deformation model F(η1, η2, ω) are also shown. These realizations correspond to the 15-dimensional example (N = 15) with k = 3, c = 1/15 and L = 1/2.

Fig. 4.

Random deformation of a reference square domain U. (a) U reference domain with Dirichlet boundary conditions. (b) Realization of the deformed reference square U. (c) Second realization of the deformed reference square U.

The QoI is defined on the bottom half of the reference domain (), which is not deformed, as

In addition, we have the following:

a(x) = 1 for all x ∈ U, L = 1/2, LP = 1, N = 15.

The domain is discretized with a 2049 × 2049 or 4097 × 4097 triangular mesh.

, , and are computed with the Clenshaw-Curtis isotropic sparse grid from the Sparse Grids Matlab Kit [31,2].

The reference solutions var[Qh(uref)] and for N = 15 dimensions are computed with a dimension adaptive sparse grid from the Sparse Grid Toolbox V5.1 [15,23,22]. The choice of abscissas is set to Chebyshev-Gauss-Lobatto.

The QoI is normalized by dividing by Q(U), i.e. the QoI of the solution on the reference domain U.

The computed reference mean is 1.054 and the variance is 0.1122 (standard deviation 0.3349) for c = 1/15 and cubic decay (k = 3). This shows a significant aleatory deformation of the solution with respect to the random domain .
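As a quick sanity check on the reported reference statistics, the short sketch below recomputes the standard deviation from the quoted variance and expresses it relative to the mean. The variable names are hypothetical; only the two quoted numbers are taken from the text.

```python
import math

mean_ref = 1.054   # normalized reference mean quoted above
var_ref = 0.1122   # normalized reference variance quoted above

std_ref = math.sqrt(var_ref)
coeff_of_variation = std_ref / mean_ref

print(f"std = {std_ref:.4f}, coefficient of variation = {coeff_of_variation:.3f}")
```

The recomputed standard deviation is about 0.335, which agrees with the quoted 0.3349 up to rounding of the variance. The standard deviation is roughly a third of the mean, which is the sense in which the deformation has a significant effect on the QoI.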

Remark 13 The variance correction term is computed on the fixed reference domain U as described by Problem 1 instead of the perturbed domain. The pure collocation approach (without the variance correction) and the reference solution are also computed on U. Numerical experiments confirm that computing the pure collocation approach on U, as described by Problem 1, or on the perturbed domain leads to the same answer up to the finite element error. This is consistent with the theory.

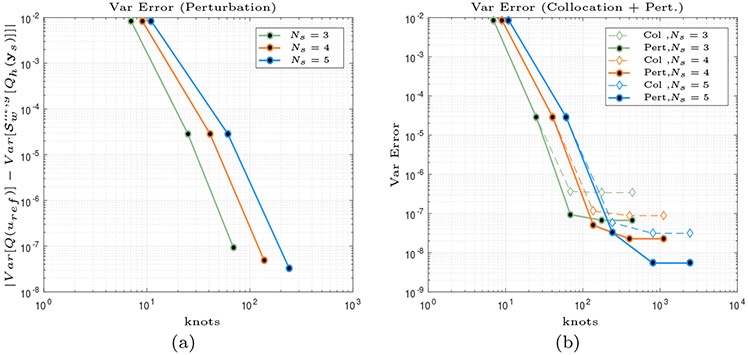

For the first numerical example we assume cubic decay of the deformation, i.e. the gradient terms decay as n^{-3}. The domain is discretized with a 2049 × 2049 triangular mesh. The reference solution is computed with 30,000 knots (dimension adaptive sparse grid). In Figure 5(a) we show the results for the hybrid collocation-perturbation method for c = 1/15, k = 3 (cubic decay) and NL = 2, 3, 4 dimensions, and compare them to the reference solution. For the collocation method the level of accuracy is set up to w = 5. For the variance correction we increase the level only until w = 3 is reached, since there is no benefit to increasing w further once the sparse grid error is smaller than the perturbation error. The observed computational cost of the variance correction is about 10% of that of the collocation method.

Fig. 5.

Hybrid collocation-perturbation results with k = 3 (cubic decay) and c = 1/15. (a) Variance error for the hybrid collocation-perturbation method as a function of the number of collocation samples with an isotropic sparse grid and Clenshaw-Curtis abscissas. The maximum level is set to w = 3. (b) Comparison between the pure collocation (Col) and the hybrid collocation-perturbation (Pert) approaches. The error decays significantly with the addition of the variance correction. However, the graphs saturate once the perturbation/truncation error is reached. Note that the number of sparse grid knots is computed up to w = 5 for the pure collocation method. For the variance correction the sparse grid level is set to w = 3, since at this point the error is smaller than the perturbation error and there is no benefit to increasing w. The number of sparse grid knots needed for the variance correction is almost negligible compared to the pure collocation.

In Figure 5(b) we compare the results of the pure collocation [6] and hybrid collocation-perturbation methods. Notice that the hybrid collocation-perturbation method shows a marked improvement in accuracy over the pure collocation approach.

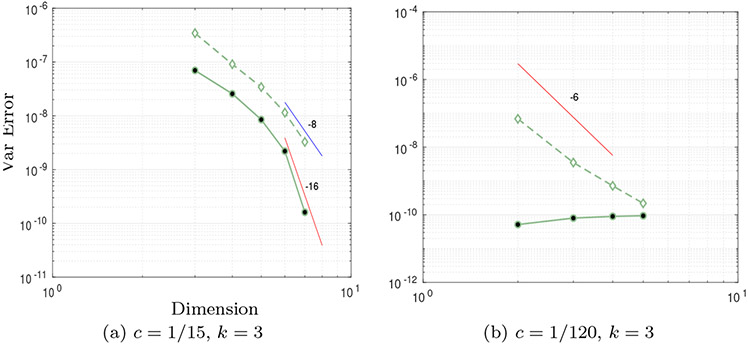

In Figure 6 the variance error decay plots for k = 3 (cubic decay) with (a) c = 1/15 and (b) c = 1/120 are shown for the collocation (dashed line) and hybrid methods (solid line). The reference solutions are computed with a dimension adaptive sparse grid with 20,000 knots for (a) and (b). The mesh size is set to 4097 × 4097 for (a) and 2049 × 2049 for (b). The collocation and hybrid estimates are computed with an isotropic sparse grid with Clenshaw-Curtis abscissas.

Fig. 6.

Variance error comparison of the truncation and hybrid collocation-perturbation methods as a function of the number of dimensions and different decay rates. (a) Variance error for the pure collocation (dashed line) and hybrid collocation-perturbation (solid line) methods for c = 1/15 and k = 3. (b) Variance error ratio between the collocation and hybrid methods for c = 1/120 (i.e. small perturbation) and k = 3. Note that the finite element error is reached at NS = 2, which saturates the overall accuracy.

It is observed that the error for the hybrid collocation-perturbation method decays faster than for the pure collocation method. Moreover, as the number of dimensions increases, the accuracy gain of the perturbation method accelerates significantly (cf. Figure 6(a)). The accuracy improves from around to about . Note that the computational costs of the hybrid and collocation methods are roughly equal. The hybrid method thus achieves a significant reduction in computational cost for the same accuracy, making it suitable for high-dimensional problems.

In Figure 6(b) the perturbation of the geometry is significantly reduced (c = 1/120). Because the perturbation is small, the perturbation approximation is significantly more accurate and the variance error decreases substantially for NS = 2. This is expected since perturbation methods work well under small variations of the geometry. Notice that for NS = 2, 3, … the accuracy of the hybrid method appears not to improve; the limiting factor at this point is the finite element error.

9. Conclusions

In this paper we propose a new hybrid collocation-perturbation scheme for computing the statistics of the QoI with respect to random domain deformations that are split into large and small deviations. The large deviations are approximated with a stochastic collocation scheme. In contrast, the small deviation components of the QoI are approximated with a perturbation approach. A rigorous convergence analysis of the hybrid approach is developed.

It is shown that for a linear elliptic partial differential equation with a random domain the variance correction term can be analytically extended to a well defined region Θβ,NL embedded in with respect to the random variables. This analysis leads to a provable subexponential convergence rate of the QoI computed with an isotropic Clenshaw-Curtis sparse grid. The size of the region Θβ,NL, and therefore the rate of convergence of an isotropic sparse grid, is a function of the gradient decay of the random deformation.

Error estimates and numerical experiments show that the error decays as the square of the polynomial order with respect to the number of dimensions. This shows a marked reduction in the effective dimensionality of the problem. Moreover, in practice, the variance correction term can be computed at a fraction of the cost of the low dimensional large variation component.

The hybrid approach is essentially a dimensionality reduction technique. We demonstrate both theoretically and numerically that the variance error with respect to the collocation dimensions NL decays quadratically faster than for the pure stochastic collocation approach. In addition, the hybrid method is compatible with other stochastic collocation approaches such as anisotropic sparse grids [26]. This makes the method well suited for a large number of stochastic variables.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. 1736392. Research reported in this technical report was supported in part by the National Institute of General Medical Sciences (NIGMS) of the National Institutes of Health under award number 1R01GM131409-01.


Contributor Information

Julio E. Castrillón-Candás, Boston University, Department of Mathematics and Statistics, 111 Cummington Mall, Boston, MA 02215

Fabio Nobile, École Polytechnique Fédérale de Lausanne, Station 8, CH1015, Lausanne, Switzerland

Raúl F. Tempone, Applied Mathematics and Computational Science, 4700 King Abdullah University of Science and Technology, Thuwal, 23955-6900, Saudi Arabia

References

- 1. Adams RA: Sobolev Spaces. Academic Press (1975)

- 2. Bäck J, Nobile F, Tamellini L, Tempone R: Stochastic spectral Galerkin and collocation methods for PDEs with random coefficients: A numerical comparison. In: Hesthaven JS, Rønquist EM (eds.) Spectral and High Order Methods for Partial Differential Equations, Lecture Notes in Computational Science and Engineering, vol. 76, pp. 43–62. Springer, Berlin Heidelberg (2011)

- 3. Barthelmann V, Novak E, Ritter K: High dimensional polynomial interpolation on sparse grids. Advances in Computational Mathematics 12, 273–288 (2000)

- 4. Billingsley P: Probability and Measure, third edn. John Wiley and Sons (1995)

- 5. Brookes M: The matrix reference manual (2017). URL http://www.ee.ic.ac.uk/hp/staff/dmb/matrix/calculus.html

- 6. Castrillón-Candás J, Nobile F, Tempone R: Analytic regularity and collocation approximation for PDEs with random domain deformations. Computers and Mathematics with Applications 71(6), 1173–1197 (2016)

- 7. Castrillón-Candás J, Xu J: A stochastic collocation approach for parabolic PDEs with random domain deformations. ArXiv e-prints (2019)

- 8. Chauviere C, Hesthaven J, Lurati L: Computational modeling of uncertainty in time-domain electromagnetics. SIAM J. Sci. Comput 28, 751–775 (2006)

- 9. Chernov A, Schwab C: First order k-th moment finite element analysis of nonlinear operator equations with stochastic data. Math. Comput 82(284), 1859–1888 (2013)

- 10. Chkifa A, Cohen A, Schwab C: High-dimensional adaptive sparse polynomial interpolation and applications to parametric PDEs. Foundations of Computational Mathematics 14(4), 601–633 (2014). DOI 10.1007/s10208-013-9154-z

- 11. Dambrine M, Greff I, Harbrecht H, Puig B: Numerical solution of the Poisson equation on domains with a thin layer of random thickness. SIAM Journal on Numerical Analysis 54(2), 921–941 (2016)

- 12. Dambrine M, Greff I, Harbrecht H, Puig B: Numerical solution of the homogeneous Neumann boundary value problem on domains with a thin layer of random thickness. Journal of Computational Physics 330, 943–959 (2017). DOI 10.1016/j.jcp.2016.10.044. URL http://www.sciencedirect.com/science/article/pii/S0021999116305484

- 13. Dambrine M, Harbrecht H, Puig B: Computing quantities of interest for random domains with second order shape sensitivity analysis. ESAIM: M2AN 49(5), 1285–1302 (2015)

- 14. Fransos D: Stochastic numerical methods for wind engineering. Ph.D. thesis, Politecnico di Torino (2008)

- 15. Gerstner T, Griebel M: Dimension-adaptive tensor-product quadrature. Computing 71(1), 65–87 (2003)

- 16. Gohberg I: Holomorphic operator functions of one variable and applications: methods from complex analysis in several variables. Operator theory: advances and applications. Birkhäuser, Basel (2009)

- 17. Guignard D, Nobile F, Picasso M: A posteriori error estimation for the steady Navier-Stokes equations in random domains. Computer Methods in Applied Mechanics and Engineering 313, 483–511 (2017). DOI 10.1016/j.cma.2016.10.008. URL http://www.sciencedirect.com/science/article/pii/S0045782516301803

- 18. Harbrecht H, Peters M, Siebenmorgen M: Analysis of the domain mapping method for elliptic diffusion problems on random domains. Numerische Mathematik 134(4), 823–856 (2016)

- 19. Harbrecht H, Schmidlin M: Multilevel quadrature for elliptic problems on random domains by the coupling of FEM and BEM (2018)

- 20. Harbrecht H, Schneider R, Schwab C: Sparse second moment analysis for elliptic problems in stochastic domains. Numerische Mathematik 109, 385–414 (2008)

- 21. van den Hout M, Hall A, Wu MY, Zandbergen H, Dekker C, Dekker N: Controlling nanopore size, shape and stability. Nanotechnology 21 (2010)

- 22. Klimke A: Sparse Grid Interpolation Toolbox – user’s guide. Tech. Rep. IANS report 2007/017, University of Stuttgart (2007)

- 23. Klimke A, Wohlmuth B: Algorithm 847: spinterp: Piecewise multilinear hierarchical sparse grid interpolation in MATLAB. ACM Transactions on Mathematical Software 31(4) (2005)

- 24. Kober V, Alvarez-Borrego J: Karhunen-Loève expansion of stationary random signals with exponentially oscillating covariance function. Optical Engineering (2003)

- 25. Nobile F, Tamellini L, Tempone R: Convergence of quasi-optimal sparse-grid approximation of Hilbert-space-valued functions: application to random elliptic PDEs. Numerische Mathematik 134(2), 343–388 (2016). DOI 10.1007/s00211-015-0773-y

- 26. Nobile F, Tempone R, Webster C: An anisotropic sparse grid stochastic collocation method for partial differential equations with random input data. SIAM Journal on Numerical Analysis 46(5), 2411–2442 (2008)

- 27. Nobile F, Tempone R, Webster C: A sparse grid stochastic collocation method for partial differential equations with random input data. SIAM Journal on Numerical Analysis 46(5), 2309–2345 (2008)

- 28. Scarabosio L: Multilevel Monte Carlo on a high-dimensional parameter space for transmission problems with geometric uncertainties. ArXiv e-prints (2017)

- 29. Smolyak S: Quadrature and interpolation formulas for tensor products of certain classes of functions. Soviet Mathematics, Doklady 4, 240–243 (1963)

- 30. Stacey A: Smooth map of manifolds and smooth spaces. http://www.texample.net/tikz/examples/smooth-maps/

- 31. Tamellini L, Nobile F: Sparse grids Matlab kit (2009–2015). http://csqi.epfl.ch/page-107231-en.html

- 32. Tartakovsky D, Xiu D: Stochastic analysis of transport in tubes with rough walls. Journal of Computational Physics 217(1), 248–259 (2006). Uncertainty Quantification in Simulation Science

- 33. Zhenhai Z, White J: A fast stochastic integral equation solver for modeling the rough surface effect computer-aided design. In: IEEE/ACM International Conference ICCAD-2005, pp. 675–682 (2005)