Abstract

High-dimensional logistic regression is widely used in analyzing data with binary outcomes. In this paper, global testing and large-scale multiple testing for the regression coefficients are considered in both single- and two-regression settings. A test statistic for testing the global null hypothesis is constructed using a generalized low-dimensional projection for bias correction, and its asymptotic null distribution is derived. A lower bound for the global testing problem is established, which shows that the proposed test is asymptotically minimax optimal over some sparsity range. For testing the individual coefficients simultaneously, multiple testing procedures are proposed and shown to control the false discovery rate (FDR) and falsely discovered variables (FDV) asymptotically. Simulation studies are carried out to examine the numerical performance of the proposed tests and their superiority over existing methods. The testing procedures are also illustrated by analyzing a data set from a metabolomics study that investigates the association between fecal metabolites and pediatric Crohn’s disease and the effects of treatment on such associations.

Keywords: False discovery rate, Global testing, Large-scale multiple testing, Minimax lower bound

1. INTRODUCTION

Logistic regression models have been applied widely in genetics, finance, and business analytics. In many modern applications, the number of covariates of interest usually grows with, and sometimes far exceeds, the number of observed samples. In such high-dimensional settings, statistical problems such as estimation, hypothesis testing, and construction of confidence intervals become much more challenging than those in the classical low-dimensional settings. The increasing technical difficulties usually emerge from the non-asymptotic analysis of both statistical models and the corresponding computational algorithms.

In this paper, we consider testing for the high-dimensional logistic regression model

$$\log\frac{\pi_i}{1-\pi_i} = X_i^\top\beta, \qquad i = 1, \ldots, n, \tag{1}$$

where $\beta \in \mathbb{R}^p$ is the vector of regression coefficients. The observations are i.i.d. samples Zi = (yi, Xi) for i = 1, …, n, and we assume yi | Xi ~ Bernoulli(πi) independently for each i = 1, …, n.
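To make model (1) concrete, the following is a minimal Python sketch that draws i.i.d. samples $(y_i, X_i)$ from it. The covariate distribution $X_i \sim N(0, I_p)$ and the helper names `logistic` and `simulate_logistic` are illustrative assumptions; the model itself does not prescribe a covariate distribution.

```python
import math
import random


def logistic(u):
    """f(u) = e^u / (1 + e^u), evaluated without overflow for large |u|."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def simulate_logistic(n, beta, seed=0):
    """Draw n i.i.d. pairs (y_i, X_i) from model (1).

    Assumption (illustration only): X_i ~ N(0, I_p).
    """
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in beta]
        # pi_i = P(y_i = 1 | X_i) = f(X_i^T beta), i.e. logit(pi_i) = X_i^T beta
        pi = logistic(sum(xj * bj for xj, bj in zip(x, beta)))
        X.append(x)
        y.append(1 if rng.random() < pi else 0)
    return X, y
```

For example, `X, y = simulate_logistic(200, [1.0, 0.0, 0.0])` gives 200 observations in which only the first covariate is associated with the outcome.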

1.1. Global and Simultaneous Hypothesis Testing

It is important in high-dimensional logistic regression to determine 1) whether there are any associations between the covariates and the outcome and, if so, 2) which covariates are associated with the outcome. The first question can be formulated as testing the global null hypothesis H0:β = 0, and the second as simultaneously testing the null hypotheses H0,i:βi = 0 for i = 1, …, p. Besides such single logistic regression problems, hypothesis testing involving two logistic regression models with regression coefficients $\beta^{(1)}$ and $\beta^{(2)}$ in $\mathbb{R}^p$ is also important. Specifically, one is interested in testing the global null hypothesis H0:β(1) = β(2), or in identifying the differentially associated covariates by simultaneously testing the null hypotheses $H_{0,i}: \beta^{(1)}_i = \beta^{(2)}_i$ for each i = 1, …, p.

Estimation for high-dimensional logistic regression has been studied extensively. van de Geer (2008) considered high-dimensional generalized linear models (GLMs) with Lipschitz loss functions, and proved a non-asymptotic oracle inequality for the empirical risk minimizer with the Lasso penalty. Meier, van de Geer, and Bühlmann (2008) studied the group Lasso for logistic regression and proposed an efficient algorithm that leads to statistically consistent estimates. Negahban et al. (2010) obtained the rate of convergence for the $\ell_1$-regularized maximum likelihood estimator under GLMs using the restricted strong convexity property. Bach (2010) extended tools from the convex optimization literature, namely self-concordant functions, to extend theoretical results for the square loss to the logistic loss. Plan and Vershynin (2013) connected sparse logistic regression to one-bit compressed sensing and developed a unified theory for signal estimation with noisy observations.

In contrast, hypothesis testing and confidence intervals for high-dimensional logistic regression have only been recently addressed. van de Geer et al. (2014) considered constructing confidence intervals and statistical tests for single or low-dimensional components of the regression coefficients in high-dimensional GLMs. Mukherjee, Pillai, and Lin (2015) studied the detection boundary for minimax hypothesis testing in high-dimensional sparse binary regression models when the design matrix is sparse. Belloni, Chernozhukov, and Wei (2016) considered estimating and constructing the confidence regions for a regression coefficient of primary interest in GLMs. More recently, Sur, Chen, and Candès (2017) and Sur and Candès (2019) considered the likelihood ratio test for high-dimensional logistic regression under the setting that p / n → κ for some constant κ < 1 / 2, and showed that the asymptotic null distribution of the log-likelihood ratio statistic is a rescaled χ2 distribution. Cai et al. (2017) proposed a global test and a multiple testing procedure for differential networks against sparse alternatives under the Markov random field model. Nevertheless, the problems of global testing and large-scale simultaneous testing for high-dimensional logistic regression models with p ≳ n remain unsolved.

In this paper, we first consider global and multiple testing for a single high-dimensional logistic regression model. The global test statistic is constructed as the maximum of squared standardized statistics for individual coefficients, which are based on a two-step standardization procedure. The first step is to correct the bias of the logistic Lasso estimator using a generalized low-dimensional projection (LDP) method, and the second step is to normalize the resulting nearly unbiased estimators by their estimated standard errors. We show that the asymptotic null distribution of the test statistic is a Gumbel distribution and that the resulting test is minimax optimal under the Gaussian design by establishing the minimax separation distance between the null and alternative spaces. For large-scale multiple testing, data-driven testing procedures are proposed and shown to control the false discovery rate (FDR) and falsely discovered variables (FDV) asymptotically. The framework for a single logistic regression model is then extended to the setting of two logistic regression models.

The main contributions of the present paper are threefold.

We propose novel procedures for both global testing and large-scale simultaneous testing for high-dimensional logistic regression. The dimension p is allowed to be much larger than the sample size n. Specifically, we require $\log p = O(n^{c_1})$ for the global test and $p = O(n^{c_2})$ for the multiple testing procedure, for some constants c1, c2 > 0. For the global alternatives characterized by the $\ell_\infty$ norm of the regression coefficients, the global test is shown to be minimax rate optimal with the optimal separation distance of order $\sqrt{\log p/n}$.

Following similar ideas in Ren, Zhang, and Zhou (2016) and Cai et al. (2017), our construction of the test statistics depends on a generalized version of the LDP method for bias correction. The original LDP method (Zhang and Zhang 2014) relies on the linearity between the covariates and outcome variable. For logistic regression, the generalized approach first finds a linearization of the regression function, and the weighted LDP is then applied. Besides its usefulness in logistic regression, the generalized LDP method is flexible and can be applied to other nonlinear regression problems (see Section 7 for a detailed discussion).

The minimax lower bound is obtained for the global hypothesis testing under the Gaussian design. The lower bound depends on the calculation of the χ2-divergence between two logistic regression models. To the best of our knowledge, this is the first lower bound result for high-dimensional logistic regression under the Gaussian design.

1.2. Other Related Work

We should note that a different but related problem, namely inference for high-dimensional linear regression, has been well studied in the literature. Zhang and Zhang (2014), van de Geer et al. (2014) and Javanmard and Montanari (2014a,b) considered confidence intervals and testing for low-dimensional parameters of the high-dimensional linear regression model and developed methods based on a two-stage debiased estimator that corrects the bias introduced at the first stage due to regularization. Cai and Guo (2017) studied minimaxity and adaptivity of confidence intervals for general linear functionals of the regression vector.

The problems of global testing and large-scale simultaneous testing for high-dimensional linear regression have been studied by Liu and Luo (2014), Ingster, Tsybakov, and Verzelen (2010) and more recently by Xia, Cai, and Cai (2018) and Javanmard and Javadi (2019). However, due to the nonlinearity and the binary outcome, the approaches used in these works cannot be directly applied to logistic regression problems. In the Markov random field setting, Ren, Zhang, and Zhou (2016) and Cai et al. (2017) constructed pivotal/test statistics based on the debiased LDP estimators for node-wise logistic regressions with binary covariates. However, the results for sparse high-dimensional logistic regression models with general continuous covariates remain unknown.

Other related problems include joint testing and false discovery rate control for high-dimensional multivariate regression (Xia, Cai, and Li 2018) and testing for high-dimensional precision matrices and Gaussian graphical models (Liu 2013; Xia, Cai, and Cai 2015), where the inverse regression approach and de-biasing were carried out in the construction of the test statistics. Such statistics were then used for testing the global null with extreme value type asymptotic null distributions or to perform multiple testing that controls the false discovery rate.

1.3. Organization of the Paper and Notations

The rest of the paper is organized as follows. In Section 2, we propose the global test, establish its optimality, and compare it in detail with existing works. In Section 3, we present the multiple testing procedures and show that they control the FDR/FDP or FDV/FWER asymptotically. The framework is extended to the two-sample setting in Section 4. In Section 5, the numerical performance of the proposed tests is evaluated through extensive simulations. In Section 6, the methods are illustrated by an analysis of a metabolomics study. Further extensions and related problems are discussed in Section 7. In Section 8, some of the main theorems are proved. The proofs of the other theorems, the technical lemmas, and some further discussions are collected in the online Supplementary Materials.

Throughout our paper, for a vector $a = (a_1, \ldots, a_n)^\top \in \mathbb{R}^n$, we define the $\ell_q$ norm $\|a\|_q = (\sum_{i=1}^n |a_i|^q)^{1/q}$ and the $\ell_\infty$ norm $\|a\|_\infty = \max_{1\le i\le n}|a_i|$. $a_{-j} \in \mathbb{R}^{n-1}$ stands for the subvector of a without the j-th component. We denote by diag(a1, …, an) the n × n diagonal matrix whose diagonal entries are a1, …, an. For a matrix $A \in \mathbb{R}^{p\times q}$, λi(A) stands for the i-th largest singular value of A, and λmax(A) = λ1(A), $\lambda_{\min}(A) = \lambda_{p\wedge q}(A)$. For a smooth function f(x) defined on $\mathbb{R}$, we denote $f'(x) = df(x)/dx$ and $f''(x) = d^2f(x)/dx^2$. Furthermore, for sequences {an} and {bn}, we write an = o(bn) if $\lim_{n\to\infty} a_n/b_n = 0$, and write an = O(bn), an ≲ bn or bn ≳ an if there exists a constant C such that an ≤ Cbn for all n. We write an ≍ bn if an ≲ bn and an ≳ bn. For a set A, we denote by |A| its cardinality. Lastly, C, C0, C1, … are constants that may vary from place to place.

2. GLOBAL HYPOTHESIS TESTING

In this section, we consider testing the global null hypothesis

$$H_0: \beta = 0 \quad \text{versus} \quad H_1: \beta \neq 0$$

under the logistic regression model with random designs. The global testing problem corresponds to detecting any association between the covariates and the outcome.

Our construction of the global testing procedure begins with a bias-corrected estimator built upon a regularized estimator such as the $\ell_1$-regularized M-estimator. For high-dimensional logistic regression, the $\ell_1$-regularized M-estimator is defined as

$$\hat\beta = \arg\min_{\beta\in\mathbb{R}^p}\left\{\frac{1}{n}\sum_{i=1}^n\left[\log\left(1+e^{X_i^\top\beta}\right) - y_iX_i^\top\beta\right] + \lambda\|\beta\|_1\right\}, \tag{2}$$

which is the minimizer of a penalized negative log-likelihood function. Negahban et al. (2010) showed that, when Xi are i.i.d. sub-Gaussian, under some mild regularity conditions, standard high-dimensional estimation error bounds for $\hat\beta$ under the $\ell_1$ or $\ell_2$ norm can be obtained by choosing $\lambda \asymp \sqrt{\log p/n}$. Once we obtain the initial estimator $\hat\beta$, our next step is to correct its bias.
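As a rough illustration of (2), the sketch below minimizes the $\ell_1$-penalized logistic loss by proximal gradient descent (ISTA) in plain Python. The solver, the fixed iteration count, and the function names are our assumptions, not the paper's; in practice one would use an established solver and choose λ of order $\sqrt{\log p/n}$ by a rule of one's choice.

```python
import math


def logistic(u):
    """Numerically stable f(u) = e^u / (1 + e^u)."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def soft_threshold(z, t):
    """Proximal map of t*|.|: sign(z) * max(|z| - t, 0)."""
    return math.copysign(max(abs(z) - t, 0.0), z)


def logistic_lasso(X, y, lam, n_iter=500):
    """ISTA sketch for (1/n) sum_i [log(1 + e^{X_i^T b}) - y_i X_i^T b] + lam * ||b||_1."""
    n, p = len(X), len(X[0])
    # The loss gradient is Lipschitz with constant <= ||X||_F^2 / (4n),
    # so the reciprocal of that bound is a safe step size.
    frob2 = sum(x * x for row in X for x in row)
    step = 4.0 * n / frob2
    beta = [0.0] * p
    for _ in range(n_iter):
        # r_i = f(X_i^T beta) - y_i, derivative of the loss w.r.t. X_i^T beta
        r = [logistic(sum(a * b for a, b in zip(row, beta))) - yi
             for row, yi in zip(X, y)]
        grad = [sum(X[i][j] * r[i] for i in range(n)) / n for j in range(p)]
        # gradient step on the smooth part, then soft-thresholding for the l1 part
        beta = [soft_threshold(beta[j] - step * grad[j], step * lam)
                for j in range(p)]
    return beta
```

A very large λ returns the zero vector, while a small λ on data with one strong signal recovers that coefficient as the dominant one.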

For technical reasons, we split the samples so that the initial estimation step and the bias correction step are conducted on separate and independent datasets. Without loss of generality, we assume there are 2n samples, divided into two subsets $\mathcal{D}_1$ and $\mathcal{D}_2$, each with n independent samples. The initial estimator $\hat\beta$ is obtained from $\mathcal{D}_1$. In the following, we construct a nearly unbiased estimator based on $\hat\beta$ and the samples from $\mathcal{D}_2$, using the generalized LDP approach. Throughout the paper, the samples Zi = (yi, Xi), i = 1, …, n, are from $\mathcal{D}_2$, which are independent of $\mathcal{D}_1$. We emphasize that sample splitting is used only to simplify the theoretical analysis and is not a restriction for practical applications. As our simulations in Section 5 show, sample splitting is in fact not needed for our methods to perform well numerically (see further discussions in Section 7).

2.1. Construction of the Test Statistic via Generalized Low-Dimensional Projection

Let X be the design matrix whose i-th row is Xi. We rewrite the logistic regression model defined by (1) as

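$$y_i = f(X_i^\top\beta) + \epsilon_i, \qquad i = 1, \ldots, n, \tag{3}$$

where $f(u) = e^u/(1 + e^u)$ and ϵi is the error term with $E(\epsilon_i \mid X_i) = 0$. To correct the bias of the initial estimator $\hat\beta$, we consider the Taylor expansion of f(ui) at $\hat u_i = \hat\beta^\top X_i$ for ui = β⊤Xi:

$$f(u_i) = f(\hat u_i) + f'(\hat u_i)(u_i - \hat u_i) + Re_i,$$

where Rei is the remainder term. Plugging this into the regression model (3), we have

$$y_i - f(\hat u_i) + f'(\hat u_i)X_i^\top\hat\beta = f'(\hat u_i)X_i^\top\beta + Re_i + \epsilon_i, \qquad i = 1, \ldots, n. \tag{4}$$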

By rewriting the logistic regression model as (4), we can treat $y_i - f(\hat\beta^\top X_i) + f'(\hat\beta^\top X_i)X_i^\top\hat\beta$ on the left-hand side as the new response variable, $f'(\hat\beta^\top X_i)X_i$ as the new covariates, and Rei + ϵi as the noise. Consequently, β can be regarded as the regression coefficient of this approximately linear model.
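The linearization in (4) is easy to check numerically. The sketch below (helper names are ours) builds the working response $y_i - f(\hat u_i) + f'(\hat u_i)X_i^\top\hat\beta$ and the working covariates $f'(\hat u_i)X_i$, with $\hat u_i = \hat\beta^\top X_i$. Feeding it the noise-free "response" $f(X_i^\top\beta)$ together with $\hat\beta = \beta$ makes the remainder vanish, so the working model then holds exactly; this is only a sanity check, not a use case.

```python
import math


def logistic(u):
    """Numerically stable f(u) = e^u / (1 + e^u)."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def logistic_deriv(u):
    """f'(u) = f(u) * (1 - f(u))."""
    s = logistic(u)
    return s * (1.0 - s)


def linearize(X, y, beta_hat):
    """Working response and covariates of the approximately linear model (4)."""
    y_work, X_work = [], []
    for xi, yi in zip(X, y):
        u_hat = sum(a * b for a, b in zip(xi, beta_hat))  # u_hat_i = beta_hat^T X_i
        w = logistic_deriv(u_hat)
        y_work.append(yi - logistic(u_hat) + w * u_hat)   # left-hand side of (4)
        X_work.append([w * a for a in xi])                 # new covariates f'(u_hat_i) X_i
    return y_work, X_work
```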

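The bias-corrected estimator, or the generalized LDP estimator, is defined as

$$\tilde\beta_j = \hat\beta_j + \frac{\sum_{i=1}^n v_{ij}\{y_i - f(\hat\beta^\top X_i)\}}{\sum_{i=1}^n v_{ij}f'(\hat\beta^\top X_i)X_{ij}}, \qquad j = 1, \ldots, p, \tag{5}$$

where Xij is the j-th component of Xi and $v_j = (v_{1j}, v_{2j}, \ldots, v_{nj})^\top$ is a score vector to be chosen carefully (Ren, Zhang, and Zhou 2016; Cai et al. 2017). More specifically, we define the weighted inner product ⟨·,·⟩n for any $a, b \in \mathbb{R}^n$ as $\langle a, b\rangle_n = \sum_{i=1}^n f'(\hat\beta^\top X_i)a_ib_i$, and denote by ⟨·,·⟩ the ordinary inner product in Euclidean space. Combining (4) and (5), we can write

$$\tilde\beta_j - \beta_j = \frac{\langle v_j, \epsilon\rangle}{\langle v_j, X_{\cdot j}\rangle_n} + \frac{\langle v_j, X_{\cdot,-j}(\beta_{-j} - \hat\beta_{-j})\rangle_n}{\langle v_j, X_{\cdot j}\rangle_n} + \frac{\langle v_j, Re\rangle}{\langle v_j, X_{\cdot j}\rangle_n}, \tag{6}$$

where $X_{\cdot j}$ denotes the j-th column of X, $X_{\cdot,-j}$ is the submatrix of X without the j-th column, $\epsilon = (\epsilon_1, \ldots, \epsilon_n)^\top$, and $Re = (Re_1, \ldots, Re_n)^\top$ with $Re_i = f(\beta^\top X_i) - f(\hat\beta^\top X_i) - f'(\hat\beta^\top X_i)X_i^\top(\beta - \hat\beta)$. We will construct the score vector vj so that the first term on the right-hand side of (6) is asymptotically normal, while the second and third terms, which together contribute to the bias of the generalized LDP estimator $\tilde\beta_j$, are negligible.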
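A direct transcription of the generalized LDP estimator is sketched below, assuming (5) takes the form $\tilde\beta_j = \hat\beta_j + \sum_i v_{ij}\{y_i - f(\hat\beta^\top X_i)\} / \sum_i v_{ij} f'(\hat\beta^\top X_i)X_{ij}$; the score vector v is taken as given, and `ldp_estimate` is a hypothetical name. One way to see what the correction does: when p = 1 and $v = X_{\cdot 1}$, the update is exactly one Newton-Raphson step for the logistic likelihood, so iterating it drives the score equation to zero, which the test checks.

```python
import math


def logistic(u):
    """Numerically stable f(u) = e^u / (1 + e^u)."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def ldp_estimate(X, y, beta_hat, v, j):
    """Bias-corrected coordinate j of beta_hat, using a given score vector v."""
    num = den = 0.0
    for xi, yi, vi in zip(X, y, v):
        u_hat = sum(a * b for a, b in zip(xi, beta_hat))
        s = logistic(u_hat)
        num += vi * (yi - s)                  # sum_i v_ij {y_i - f(u_hat_i)}
        den += vi * s * (1.0 - s) * xi[j]     # sum_i v_ij f'(u_hat_i) X_ij
    return beta_hat[j] + num / den
```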

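To determine the score vector vj efficiently, we consider the following weighted node-wise regression among the covariates:

$$\sqrt{f'(\hat\beta^\top X_i)}\,X_{ij} = \sqrt{f'(\hat\beta^\top X_i)}\,X_{i,-j}^\top\gamma_j + \eta_{ji}, \qquad i = 1, \ldots, n, \tag{7}$$

where $\gamma_j \in \mathbb{R}^{p-1}$ and $\eta_j = (\eta_{j1}, \ldots, \eta_{jn})^\top$ is the error term. Intuitively, if we set $v_{ij} = \eta_{ji}/\sqrt{f'(\hat\beta^\top X_i)}$ for i = 1, …, n, then it should follow that $\langle v_j, X_{\cdot k}\rangle_n = \sum_{i=1}^n \eta_{ji}\sqrt{f'(\hat\beta^\top X_i)}X_{ik} \approx 0$ for all k ≠ j, so that the second term in (6) is negligible.

In practice, we use the node-wise Lasso to obtain an estimate of ηj. For X from the second subsample and $\hat\beta$ obtained from the first, the score vj is obtained by calibrating the Lasso-generated residual, i.e.,

$$v_{ij}(\lambda) = \frac{\hat\eta_{ji}(\lambda)}{\sqrt{f'(\hat\beta^\top X_i)}} = X_{ij} - X_{i,-j}^\top\hat\gamma_j(\lambda), \qquad \hat\gamma_j(\lambda) = \arg\min_{b\in\mathbb{R}^{p-1}}\left\{\frac{1}{2n}\sum_{i=1}^n f'(\hat\beta^\top X_i)\left(X_{ij} - X_{i,-j}^\top b\right)^2 + \lambda\|b\|_1\right\}. \tag{8}$$

Clearly, vj(λ) depends on the tuning parameter λ. Define the following quantities

$$\tau_j(\lambda) = \frac{\|v_j(\lambda)\|_n}{|\langle v_j(\lambda), X_{\cdot j}\rangle_n|}, \qquad \zeta_j(\lambda) = \max_{k\neq j}\frac{|\langle v_j(\lambda), X_{\cdot k}\rangle_n|}{\|v_j(\lambda)\|_n}, \tag{9}$$

where $\|v\|_n = \langle v, v\rangle_n^{1/2}$. By Hölder's inequality, the second term in (6) is at most $\tau_j(\lambda)\zeta_j(\lambda)\|\hat\beta - \beta\|_1$ in absolute value, and since $\mathrm{Var}(\epsilon_i \mid X_i) = f'(\beta^\top X_i) \approx f'(\hat\beta^\top X_i)$, the first term has standard deviation approximately $\tau_j(\lambda)$. Thus ζj controls the bias and τj the noise level.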

The tuning parameter λ can be determined through ζj (λ) and τj (λ) by the algorithm in Table 1, which is adapted from the algorithm in Zhang and Zhang (2014).
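The sketch below computes, for one coordinate j and one λ, the score vector and the two diagnostics. It assumes the weighted node-wise Lasso $\hat\gamma_j(\lambda) = \arg\min_b \{(2n)^{-1}\sum_i w_i(X_{ij} - X_{i,-j}^\top b)^2 + \lambda\|b\|_1\}$ with weights $w_i = f'(\hat\beta^\top X_i)$, the score $v_{ij} = X_{ij} - X_{i,-j}^\top\hat\gamma_j(\lambda)$, and $\tau_j = \|v_j\|_n/|\langle v_j, X_{\cdot j}\rangle_n|$, $\zeta_j = \max_{k\ne j}|\langle v_j, X_{\cdot k}\rangle_n|/\|v_j\|_n$ with $\langle a,b\rangle_n = \sum_i w_ia_ib_i$; this is our reading of (7) to (9). Cyclic coordinate descent and the function names are our choices.

```python
import math


def soft_threshold(z, t):
    """sign(z) * max(|z| - t, 0)."""
    return math.copysign(max(abs(z) - t, 0.0), z)


def weighted_inner(a, b, w):
    """<a, b>_n = sum_i w_i a_i b_i, with w_i = f'(beta_hat^T X_i) supplied by the caller."""
    return sum(wi * ai * bi for wi, ai, bi in zip(w, a, b))


def score_vector(X, w, j, lam, n_sweeps=200):
    """Return (v_j, tau_j, zeta_j) for one lambda via a weighted node-wise Lasso.

    Assumes lam > 0 or n > p - 1, so that the residual v_j is not identically zero.
    """
    n, p = len(X), len(X[0])
    cols = [[row[k] for row in X] for k in range(p)]
    others = [k for k in range(p) if k != j]
    gamma = {k: 0.0 for k in others}
    r = cols[j][:]  # residual X_.j - X_.,-j gamma, starting from gamma = 0
    for _ in range(n_sweeps):
        for k in others:  # cyclic coordinate descent
            xk = cols[k]
            d = weighted_inner(xk, xk, w) / n
            if d == 0.0:
                continue
            # weighted correlation of x_k with the partial residual excluding x_k
            z = (weighted_inner(xk, r, w) + d * n * gamma[k]) / n
            new = soft_threshold(z, lam) / d
            delta = new - gamma[k]
            if delta != 0.0:
                r = [ri - a * delta for ri, a in zip(r, xk)]
                gamma[k] = new
    v = r  # score vector v_j(lam)
    norm_v = math.sqrt(weighted_inner(v, v, w))
    tau = norm_v / abs(weighted_inner(v, cols[j], w))
    zeta = max((abs(weighted_inner(v, cols[k], w)) for k in others), default=0.0) / norm_v
    return v, tau, zeta
```

With a very large λ the score is the raw column $X_{\cdot j}$; with λ = 0 (and n > p) it is exactly weighted-orthogonal to the other columns, so ζj is essentially zero at the price of a larger τj.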

Table 1.

Computation of vj from the Lasso (8)

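| Step | Computation |
|---|---|
| Input: | An upper bound $\zeta_j^*$ for ζj with default value $\zeta_j^* = \sqrt{2\log p}$; tuning parameters κ0 ∈ [0,1] and κ1 ∈ (0,1]; |
| Step 1: | If $\zeta_j(\lambda) > \zeta_j^*$ for all λ ≥ 0, set $\zeta_j^* \leftarrow (1+\kappa_1)\inf_{\lambda}\zeta_j(\lambda)$; then $\lambda_j \leftarrow \max\{\lambda: \zeta_j(\lambda) \le \zeta_j^*\}$ and $\tau_j^* \leftarrow \tau_j(\lambda_j)$; |
| Step 2: | $\lambda_j \leftarrow \min\{\lambda: \tau_j(\lambda) \le (1+\kappa_0)\tau_j^*\}$; |
| | vj ← vj(λj), τj ← τj(λj), ζj ← ζj(λj) |
| Output: | λj, vj, τj, ζj |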
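Over a finite grid of λ values, a two-step tuning rule of this kind reduces to simple searches. The sketch below assumes precomputed lists ζj(λ) and τj(λ) (for example, from a node-wise Lasso path) and follows the rule as we read it, adapted from Zhang and Zhang (2014): Step 1 takes the largest λ whose ζj(λ) is within the bound ζj* (default $\sqrt{2\log p}$), enlarging ζj* to (1 + κ1) min ζj(λ) if no λ qualifies; Step 2 takes the smallest λ whose τj(λ) stays within (1 + κ0) times the Step-1 value. Replacing the infimum over λ by a grid minimum and the name `select_lambda` are our simplifications.

```python
import math


def select_lambda(lams, zeta, tau, p, kappa0=0.0, kappa1=0.5, zeta_star=None):
    """Grid version of the two-step tuning rule; returns (lambda_j, grid index)."""
    if zeta_star is None:
        zeta_star = math.sqrt(2.0 * math.log(p))  # default upper bound for zeta_j
    idx = range(len(lams))
    # Step 1: relax the bound if no lambda meets it, then take the largest
    # lambda whose bias factor zeta_j(lambda) is within the bound.
    if all(z > zeta_star for z in zeta):
        zeta_star = (1.0 + kappa1) * min(zeta)
    i1 = max((i for i in idx if zeta[i] <= zeta_star), key=lambda i: lams[i])
    tau_star = tau[i1]
    # Step 2: allow the noise factor to grow by (1 + kappa0) and take the
    # smallest such lambda, which typically lowers the bias factor.
    i2 = min((i for i in idx if tau[i] <= (1.0 + kappa0) * tau_star),
             key=lambda i: lams[i])
    return lams[i2], i2
```

With κ0 = 0, as in the simulations of Section 5, Step 2 leaves the Step-1 choice unchanged whenever τj(λ) is decreasing in λ.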

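Once $\tilde\beta_j$ and τj are obtained, we define the standardized statistics

$$M_j = \frac{\tilde\beta_j}{\tau_j}, \qquad j = 1, \ldots, p.$$

The global test statistic is then defined as

$$M_n = \max_{1\le j\le p} M_j^2. \tag{10}$$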

2.2. Asymptotic Null Distribution

We now turn to the analysis of the properties of the global test statistic Mn defined in (10). For the random covariates, we consider both the Gaussian design and the bounded design. Under the Gaussian design, the covariates are generated from a multivariate Gaussian distribution with an unknown covariance matrix $\Sigma \in \mathbb{R}^{p\times p}$. In this case, we assume

(A1). Xi ~ N(0, Σ) independently for each i = 1, …, n.

In the case of bounded design, we assume instead

(A2). Xi for i = 1, …, n are i.i.d. random vectors satisfying and $\max_{1\le i\le n}\|X_i\|_\infty \le T$ for some constant T > 0.

Define the ball

In general, includes any matrix Ω whose rows ωi are sparse with ‖ωi‖0 ≤ k or sparse with for all i = 1, …, p. The parameter spaces of the covariance matrix Σ and the regression vector β are defined as follows.

(A3). The parameter space Θ(k) of satisfies

for some constant M ≥ 1. For convenience, we denote and , so that Θ(k) = Θ1(k) × Θ2(k).

The following theorem states that the asymptotic null distribution of Mn under either the Gaussian or bounded design is a Gumbel distribution.

Theorem 1. Let Mn be the test statistic defined in (10), D be the diagonal of $\Sigma^{-1}$ and $(\zeta_{ij}) = D^{-1/2}\Sigma^{-1}D^{-1/2}$. Suppose $\max_{1\le i<j\le p}|\zeta_{ij}| \le c_0$ for some constant 0 < c0 < 1, $\log p = O(n^r)$ for some 0 < r < 1/5, and

1. under the Gaussian design, we assume (A1) (A3) and; or

2. under the bounded design, we assume (A2) (A3) and.

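Then under H0, for any given $x \in \mathbb{R}$,

$$P\left(M_n - 2\log p + \log\log p \le x\right) \to \exp\left(-\frac{1}{\sqrt{\pi}}e^{-x/2}\right), \qquad \text{as } (n, p) \to \infty.$$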

The condition that $\log p = O(n^r)$ for some 0 < r < 1/5 is consistent with those required for testing the global hypothesis in high-dimensional linear regression (Xia, Cai, and Cai 2018) and for testing two-sample covariance matrices (Cai, Liu, and Xia 2013). It allows the dimension p to be exponentially large compared to the sample size n, which is much more flexible than the likelihood ratio test considered in Sur, Chen, and Candès (2017) and Sur and Candès (2019), where the dimension can only scale as p < n. Under the Gaussian design, the sparsity k must satisfy the condition in part 1 of Theorem 1, whereas for the bounded design it suffices that k satisfies the condition in part 2.

Remark 1. The analysis can be extended to testing H0:βG = 0 versus H1:βG ≠ 0 for a given index set G. Specifically, we can construct the test statistic as $M_G = \max_{j\in G} M_j^2$ and obtain a similar Gumbel limiting distribution by replacing p by |G|, as (n, |G|) → ∞. The sparsity conditions should then be imposed with respect to the set G.

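Based on the limiting null distribution, the asymptotically α-level test can be defined as

$$\Phi_\alpha(M_n) = I\{M_n \ge 2\log p - \log\log p + q_\alpha\},$$

where qα is the 1 − α quantile of the Gumbel distribution with the cumulative distribution function $\exp(-\pi^{-1/2}e^{-x/2})$, i.e.,

$$q_\alpha = -\log(\pi) - 2\log\log(1-\alpha)^{-1}.$$

The null hypothesis H0 is rejected if and only if Φα(Mn) = 1.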
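Putting (10) and the rejection rule together, a minimal sketch of the global test takes the bias-corrected estimates $\tilde\beta_j$ and standard errors τj as inputs (computing them is outside this snippet), forms $M_j = \tilde\beta_j/\tau_j$ and $M_n = \max_j M_j^2$, and compares Mn with $2\log p - \log\log p + q_\alpha$. The function names are ours.

```python
import math


def gumbel_cdf(x):
    """Limiting null CDF of M_n - 2 log p + log log p."""
    return math.exp(-math.exp(-x / 2.0) / math.sqrt(math.pi))


def gumbel_quantile(alpha):
    """q_alpha = -log(pi) - 2 log log (1 - alpha)^{-1}, the 1 - alpha quantile."""
    return -math.log(math.pi) - 2.0 * math.log(math.log(1.0 / (1.0 - alpha)))


def global_test(beta_tilde, tau, alpha=0.05):
    """Return (M_n, Phi_alpha(M_n)) for the global null H_0: beta = 0."""
    p = len(beta_tilde)
    M = [b / t for b, t in zip(beta_tilde, tau)]   # standardized statistics M_j
    M_n = max(m * m for m in M)                    # global statistic (10)
    threshold = 2.0 * math.log(p) - math.log(math.log(p)) + gumbel_quantile(alpha)
    return M_n, int(M_n >= threshold)
```

For p = 100 and α = 0.05 the rejection threshold for Mn is about 12.48, compared with about 3.84 for a single χ² test with one degree of freedom at the same level; the gap is the price of scanning all p coordinates.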

2.3. Minimax Separation Distance and Optimality

In this subsection, we address the question: “What is the essential difficulty of testing the global hypothesis in logistic regression?” To fix ideas, we begin by defining the minimax separation distance, which measures this essential difficulty for testing the global null hypothesis at a given level and type II error. In particular, we consider the alternative

$$H_1: \|\beta\|_\infty \ge \rho$$

for some ρ > 0. This alternative concerns the detection of any discernible signal among the regression coefficients, where the signals can be extremely sparse, which has interesting applications (see Xia, Cai, and Cai (2015)). Similar alternatives are also considered by Cai, Liu, and Xia (2013) and Cai, Liu, and Xia (2014).

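By fixing a level α > 0 and a type II error probability δ > 0, we can define the δ-separation distance of a level-α test procedure Φα for a given design covariance Σ as

$$\rho(\Phi_\alpha, \delta, \Sigma) = \inf\left\{\rho > 0: \inf_{\beta\in\Theta_1(k):\, \|\beta\|_\infty \ge \rho} P_{(\beta,\Sigma)}(\Phi_\alpha = 1) \ge 1 - \delta\right\}. \tag{11}$$

The δ-separation distance ρ(Φα, δ, Θ(k)) over Θ(k) is then defined by taking the supremum over all covariance matrices Σ ∈ Θ2(k), so that

$$\rho(\Phi_\alpha, \delta, \Theta(k)) = \sup_{\Sigma\in\Theta_2(k)}\rho(\Phi_\alpha, \delta, \Sigma),$$

which corresponds to the minimal distance such that the null hypothesis H0 is well separated from the alternative H1 by the test Φα. The δ-separation distance is an analogue of the statistical risk in estimation problems: it characterizes the performance of a specific α-level test with a guaranteed type II error δ. Consequently, we can define the (α, δ)-minimax separation distance over Θ(k) and all α-level tests as

$$\rho^*(\alpha, \delta, \Theta(k)) = \inf_{\Phi_\alpha}\rho(\Phi_\alpha, \delta, \Theta(k)),$$

where the infimum is taken over all tests Φα satisfying $\sup_{\Sigma\in\Theta_2(k)}P_{(0,\Sigma)}(\Phi_\alpha = 1) \le \alpha$.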

The definition of (α, δ)-minimax separation distance generalizes the ideas of Ingster (1993), Baraud (2002) and Verzelen (2012). The following theorem establishes the minimax lower bound of the (α, δ)-separation distance under the Gaussian design for testing the global null hypothesis over the parameter space Θ′(k) ⊂ Θ(k) defined as

Theorem 2. Assume that α + δ ≤ 1. Under the Gaussian design, if (A1) and (A3) hold, (β, Σ) ∈ Θ′(k) and $k \lesssim p^\gamma$ for some 0 < γ < 1/2, then the (α, δ)-minimax separation distance over Θ′(k) has the lower bound

$$\rho^*(\alpha, \delta, \Theta'(k)) \ge c\sqrt{\frac{\log p}{n}} \tag{12}$$

for some constant c > 0.

In order to show the above lower bound is asymptotically sharp, we prove that it is actually attainable under certain circumstances, by our proposed global test Φα. In particular, for the bounded design, we make the following additional assumption.

(A4). It holds that $P_\theta(\max_{1\le i\le n}|\beta^\top X_i| \ge C) = O(p^{-c})$ for some constants C, c > 0.

Theorem 3. Suppose that $\log p = O(n^r)$ for some 0 < r < 1. Under the alternative $\|\beta\|_\infty \ge c_2\sqrt{\log p/n}$ for some c2 > 0, and

(i) under the Gaussian design, assume that (A1) and (A3) hold, for , $\log p \gtrsim \log^{1+\delta} n$ for some δ > 0 and ; or

(ii) under the bounded design, assume that (A2), (A3), and (A4) hold, and.

Then we have Pθ(Φα(Mn) = 1) → 1 as (n, p) → ∞.

In Theorem 3, (A4) is assumed for the bounded case and is required for the Gaussian case. In particular, since $\log p = O(n^r)$ for some 0 < r < 1, the upper bound for ‖β‖2 can be as large as . In Theorem 2, the minimax lower bound is established over (β, Σ) ∈ Θ′(k), so that the same lower bound holds over a larger set

| (13) |

since . On the other hand, Theorem 3 (i) indicates an upper bound attained by our proposed test under the Gaussian design over the set (13). These two results imply the minimax rate and the minimax optimality of our proposed test over the set (13).

2.4. Comparison with Existing Works

In this section, we make detailed comparisons and connections with some existing works concerning global hypothesis testing in the high-dimensional regression literature.

Ingster, Tsybakov, and Verzelen (2010) addressed the detection boundary for high-dimensional sparse linear regression models, and more recently Mukherjee, Pillai, and Lin (2015) studied the detection boundary for hypothesis testing in high-dimensional sparse binary regression models. However, although both works obtained the sharp detection boundary for the global testing problem H0:β = 0, their alternative hypotheses are different from ours. Specifically, Mukherjee, Pillai, and Lin (2015) considered the alternative hypothesis , which implies that β has at least k nonzero coefficients exceeding A in absolute value. Ingster, Tsybakov, and Verzelen (2010) considered the alternative hypothesis , which concerns k-sparse β with $\ell_2$ norm at least ρ. In fact, the proof of our Theorem 2 can be directly extended to such an alternative concerning the $\ell_2$ norm, which amounts to obtaining a lower bound of order for high-dimensional logistic regression. However, developing a minimax optimal test for such an alternative is beyond the scope of the current paper.

Additionally, in contrast to the minimax separation distance considered in this paper, the papers by Ingster, Tsybakov, and Verzelen (2010) and Mukherjee, Pillai, and Lin (2015) considered the minimax risk (or the minimax total error probability) given by

| (14) |

where the infimum is taken over all tests Φ. This minimax risk can be also written as

| (15) |

A comparison of (11) and (15) reveals the slight difference between the two criteria: one depends on a prespecified Type I error level α, and the other does not.

Moreover, these two papers considered design scenarios different from ours. In Ingster, Tsybakov, and Verzelen (2010), only the isotropic Gaussian design was considered. As a result, the optimal tests proposed therein rely heavily on the independence assumption. In Mukherjee, Pillai, and Lin (2015), the general binary regression was studied under fixed sparse design matrices. In particular, the minimax lower and upper bounds were only derived in the special case of design matrices with binary entries and certain sparsity structures.

In comparison with the recent works of Sur, Chen, and Candès (2017), Candès and Sur (2018) and Sur and Candès (2019), besides the aforementioned difference in the asymptotic regime of (p, n), these works only considered the random Gaussian design, whereas our work also considers the random bounded design as in van de Geer et al. (2014). In addition, Sur, Chen, and Candès (2017) and Sur and Candès (2019) developed the likelihood ratio test (LLR) for testing the null hypothesis that a given set of k coefficients are all zero, for any finite k. Intuitively, a valid test for the global null when p / n → κ ∈ (0, 1/2) can be adapted from the individual LLR tests using the Bonferroni procedure. However, as our simulations in Section 5 show, such a test is less powerful than our proposed test.

Lastly, our minimax results focus on the highly sparse regime $k \lesssim p^\gamma$ where γ ∈ (0, 1/2). As shown by Ingster, Tsybakov, and Verzelen (2010) and Mukherjee, Pillai, and Lin (2015), the problem in the dense regime where γ ∈ (1/2, 1) can be very different from that in the sparse regime. Most likely, the fundamental difficulty of the testing problem changes in this situation, so that different methods need to be carefully developed. We leave these interesting questions for future investigation.

3. LARGE-SCALE MULTIPLE TESTING

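Denote by β the true coefficient vector in the model, and denote $\mathcal{H}_0 = \{j: \beta_j = 0, 1 \le j \le p\}$ and $\mathcal{H}_1 = \{j: \beta_j \neq 0, 1 \le j \le p\}$. In order to identify the indices in $\mathcal{H}_1$, we consider simultaneous testing of the following null hypotheses

$$H_{0,j}: \beta_j = 0 \quad \text{versus} \quad H_{1,j}: \beta_j \neq 0, \qquad j = 1, \ldots, p.$$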

Apart from identifying as many nonzero βj as possible, to obtain results of practical interest, we would like to control the false discovery rate (FDR) as well as the false discovery proportion (FDP), or the number of falsely discovered variables (FDV).

3.1. Construction of Multiple Testing Procedures

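Recall that in Section 2, we defined the standardized statistics $M_j = \tilde\beta_j/\tau_j$, for j = 1, …, p. For a given threshold level t > 0, each individual hypothesis H0,j:βj = 0 is rejected if |Mj| ≥ t. Therefore, for each t, we can define the false discovery proportion and the false discovery rate

$$\mathrm{FDP}(t) = \frac{\sum_{j:\,\beta_j = 0} I\{|M_j| \ge t\}}{\max\{\sum_{j=1}^p I\{|M_j| \ge t\}, 1\}}, \qquad \mathrm{FDR}(t) = E[\mathrm{FDP}(t)],$$

and the expected number of falsely discovered variables

$$\mathrm{FDV}(t) = E\Big[\sum_{j:\,\beta_j = 0} I\{|M_j| \ge t\}\Big].$$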

Procedure Controlling FDR/FDP.

In order to control the FDR/FDP at a pre-specified level 0 < α < 1, we can set the threshold level as

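$$\hat t_o = \inf\left\{0 \le t \le b_p: \frac{\sum_{j:\,\beta_j = 0} I\{|M_j| \ge t\}}{\max\{\sum_{j=1}^p I\{|M_j| \ge t\}, 1\}} \le \alpha\right\} \tag{16}$$

for some $b_p$ to be determined later.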

In general, the ideal choice $\hat t_o$ is unknown and needs to be estimated because it depends on knowledge of the true null set $\{j: \beta_j = 0\}$. Let G0(t) be the proportion of the nulls falsely rejected by the procedure among all the true nulls at the threshold level t, namely, $G_0(t) = p_0^{-1}\sum_{j:\,\beta_j = 0} I\{|M_j| \ge t\}$, where $p_0 = |\{j: \beta_j = 0\}|$. In practice, it is reasonable to assume that the true alternatives are sparse, so that p0 is close to p. If the sample size is large, we can use the tail of the normal distribution G(t) = 2 − 2Φ(t) to approximate G0(t). In fact, it will be shown that, for $0 \le t \le b_p$, G0(t)/G(t) → 1 in probability as (n, p) → ∞. To summarize, we have the following logistic multiple testing (LMT) procedure controlling the FDR and the FDP.

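Procedure 1 (LMT). Let 0 < α < 1, and define

$$\hat t = \inf\left\{0 \le t \le b_p: \frac{pG(t)}{\max\{\sum_{j=1}^p I\{|M_j| \ge t\}, 1\}} \le \alpha\right\}, \qquad b_p = \sqrt{2\log p - 2\log\log p}. \tag{17}$$

If $\hat t$ in (17) does not exist, then let $\hat t = \sqrt{2\log p}$. We reject H0,j whenever $|M_j| \ge \hat t$.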
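A minimal sketch of Procedure 1 follows (the name `lmt` is ours). It assumes $b_p = \sqrt{2\log p - 2\log\log p}$ and the fallback threshold $\sqrt{2\log p}$, uses $G(t) = 2 - 2\Phi(t) = \mathrm{erfc}(t/\sqrt 2)$, and searches only over the observed |Mj| not exceeding b_p plus b_p itself: between consecutive |Mj| the rejection count is constant while G decreases, so the smallest admissible candidate yields the same rejection set as the exact infimum. The quadratic-time counting is for clarity only, and p ≥ 2 is assumed.

```python
import math


def G(t):
    """Two-sided normal tail G(t) = 2 - 2*Phi(t) = erfc(t / sqrt(2))."""
    return math.erfc(t / math.sqrt(2.0))


def lmt(M, alpha):
    """Procedure 1 (LMT): return the threshold t_hat and the rejected indices."""
    p = len(M)
    a = [abs(m) for m in M]
    b_p = math.sqrt(2.0 * math.log(p) - 2.0 * math.log(math.log(p)))
    candidates = sorted(t for t in a if t <= b_p) + [b_p]
    t_hat = math.sqrt(2.0 * math.log(p))  # used when no t in [0, b_p] qualifies
    for t in candidates:
        R = sum(1 for x in a if x >= t)      # number of rejections at threshold t
        if p * G(t) / max(R, 1) <= alpha:    # estimated FDP at t
            t_hat = t
            break
    return t_hat, [j for j, x in enumerate(a) if x >= t_hat]
```

With 50 strong signals among p = 1000, the estimated FDP at b_p is already below 0.1, so the procedure stops there; with only 5 signals it falls back to $\sqrt{2\log p}$, which behaves like a Bonferroni-type cutoff.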

Procedure Controlling FDV.

For large-scale inference, it is sometimes of interest to directly control the number of falsely discovered variables (FDV) instead of the less stringent FDR/FDP, especially when the sample size is small (Liu and Luo 2014). By definition, the FDV control, or equivalently, the per-family error rate control, provides an intuitive description of the Type I error (false positives) in variable selection. Moreover, controlling FDV = r for some 0 < r < 1 is related to the family-wise error rate (FWER) control, which is the probability of at least one false positive. In fact, FDV control can be achieved by a suitable modification of the FDP controlling procedure introduced above. Specifically, we propose the following FDV (or FWER) controlling logistic multiple testing (LMTV) procedure.

Procedure 2 (LMTV). For a given tolerable number of falsely discovered variables r < p (or a desired FWER level 0 < r < 1), let $\hat t = G^{-1}(r/p)$. H0,j is rejected whenever $|M_j| \ge \hat t$.
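Procedure 2 needs only a normal quantile: solving $G(\hat t) = r/p$ gives $\hat t = \Phi^{-1}(1 - r/(2p))$, so that, if the null statistics were exactly standard normal, the expected number of false rejections would be $p_0G(\hat t) \le r$. A minimal sketch using Python's standard `statistics.NormalDist` (the function name `lmtv` is ours):

```python
import math
from statistics import NormalDist


def lmtv(M, r):
    """Procedure 2 (LMTV): threshold t_hat = G^{-1}(r / p) and rejected indices."""
    p = len(M)
    # G(t) = 2 - 2*Phi(t) = r/p  <=>  t = Phi^{-1}(1 - r/(2p))
    t_hat = NormalDist().inv_cdf(1.0 - r / (2.0 * p))
    return t_hat, [j for j, m in enumerate(M) if abs(m) >= t_hat]
```

For p = 1000 and r = 1 the threshold is about 3.29.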

3.2. Theoretical Properties for Multiple Testing Procedures

In this section we show that our proposed multiple testing procedures control the theoretical FDR/FDP or FDV asymptotically. For simplicity, our theoretical results are obtained under the bounded design scenario. For FDR/FDP control, we need an additional assumption on the interplay between the dimension p and the parameter space Θ(k).

Recall that ηj = (ηj1, …, ηjn) for j = 1, …, p defined in (7). We define for 1 ≤ j, k ≤ p, and . Denote and .

(A5). Suppose that for some ϵ > 0 and q > 0, .

The following proposition shows that Mj is asymptotically normally distributed and that G0(t) is well approximated by G(t).

Proposition 1. Under (A2), (A3) and (A4), suppose $p = O(n^c)$ for some constant c > 0, , then as (n, p) → ∞,

| (18) |

If in addition we assume (A5), then

| (19) |

in probability, where Φ is the cumulative distribution function of the standard normal distribution and .

The following theorem provides the asymptotic FDR and FDP control of our procedure.

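Theorem 4. Under the conditions of Proposition 1, for $\hat t$ defined in our LMT procedure, we have

$$\limsup_{(n,p)\to\infty}\mathrm{FDR}(\hat t) \le \alpha \quad \text{and} \quad \lim_{(n,p)\to\infty}P\big(\mathrm{FDP}(\hat t) \le \alpha + \epsilon\big) = 1 \tag{20}$$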

for any ϵ > 0.

For the FDV/FWER controlling procedure, we have the following theorem.

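Theorem 5. Under (A2), (A3) and (A4), assume $p = O(n^c)$ for some c > 0 and .

Let r < p be the desired level of FDV. For $\hat t$ defined in our LMTV procedure, we have $\limsup_{(n,p)\to\infty}\mathrm{FDV}(\hat t)/r \le 1$. In addition, if 0 < r < 1, we have $\limsup_{(n,p)\to\infty}P\big(\sum_{j:\,\beta_j = 0} I\{|M_j| \ge \hat t\} \ge 1\big) \le r$, that is, the FWER is asymptotically controlled at level r.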

The above theoretical results are obtained under the dimensionality condition $p = O(n^c)$, which is stronger than that of the global test. Essentially, the condition is needed to obtain the uniform convergence (18), which is stated as a ratio and is therefore stronger than ordinary convergence in distribution, which only controls a difference.

4. TESTING FOR TWO LOGISTIC REGRESSION MODELS

In some applications, it is also of interest to consider hypothesis testing that involves two separate logistic regression models of the same dimension. Specifically, for d = 1, 2 and $i = 1, \ldots, n_d$, $y_i^{(d)} = f(X_i^{(d)\top}\beta^{(d)}) + \epsilon_i^{(d)}$, where $\beta^{(d)} \in \mathbb{R}^p$, $f(u) = e^u/(1 + e^u)$, and $y_i^{(d)}$ is a binary random variable such that $P(y_i^{(d)} = 1 \mid X_i^{(d)}) = f(X_i^{(d)\top}\beta^{(d)})$. The global null hypothesis H0:β(1) = β(2) implies that there is overall no difference in association between covariates and the response. If this null hypothesis is rejected, we are interested in simultaneously testing the hypotheses $H_{0,j}: \beta^{(1)}_j = \beta^{(2)}_j$ for each j = 1, …, p.

To test the global null H0:β(1) = β(2) against H1:β(1) ≠ β(2), we can first obtain $\tilde\beta^{(d)}_j$ and $\tau^{(d)}_j$, d = 1, 2, for each model, and then calculate the coordinate-wise standardized statistics $T_j = (\tilde\beta^{(1)}_j - \tilde\beta^{(2)}_j)/\sqrt{(\tau^{(1)}_j)^2 + (\tau^{(2)}_j)^2}$, for j = 1, …, p. Define the global test statistic as $T_n = \max_{1\le j\le p} T_j^2$; it can be shown that its limiting null distribution is also a Gumbel distribution. The α-level global test is thus defined as Φα(Tn) = I{Tn ≥ 2 log p − log log p + qα}, where qα = −log(π) − 2 log log(1 − α)^{−1}. For simultaneous testing of $H_{0,j}: \beta^{(1)}_j = \beta^{(2)}_j$, j = 1, …, p, we use the test statistics Tj defined above. The two-sample multiple testing procedure controlling FDR/FDP is given as follows.

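Procedure 3. Let 0 < α < 1 and define $\hat t = \inf\{0 \le t \le b_p: pG(t)/\max\{\sum_{j=1}^p I\{|T_j| \ge t\}, 1\} \le \alpha\}$, with $b_p = \sqrt{2\log p - 2\log\log p}$ and G(t) = 2 − 2Φ(t). If such a $\hat t$ does not exist, let $\hat t = \sqrt{2\log p}$. We reject H0,j whenever $|T_j| \ge \hat t$.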
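For the two-sample setting, a sketch of the statistics and of the global test follows, assuming $T_j = (\tilde\beta^{(1)}_j - \tilde\beta^{(2)}_j)/\sqrt{(\tau^{(1)}_j)^2 + (\tau^{(2)}_j)^2}$, the natural standardization when the two samples are independent. The per-model estimates are taken as inputs, and the function names are ours; Procedure 3 can reuse any implementation of Procedure 1 with Mj replaced by Tj.

```python
import math


def two_sample_stats(bt1, tau1, bt2, tau2):
    """Coordinate-wise standardized differences T_j between two independent fits."""
    return [(a - b) / math.sqrt(s * s + t * t)
            for a, s, b, t in zip(bt1, tau1, bt2, tau2)]


def two_sample_global_test(T, alpha=0.05):
    """Return (T_n, Phi_alpha(T_n)) for H_0: beta^(1) = beta^(2)."""
    p = len(T)
    q_alpha = -math.log(math.pi) - 2.0 * math.log(math.log(1.0 / (1.0 - alpha)))
    T_n = max(t * t for t in T)
    return T_n, int(T_n >= 2.0 * math.log(p) - math.log(math.log(p)) + q_alpha)
```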

5. SIMULATION STUDIES

In this section we examine the numerical performance of the proposed tests. Due to the space limit, for both global and multiple testing problems, we only focus on the single regression setting, and report the results on two logistic regressions in the Supplementary Materials. Throughout our numerical studies, sample splitting was not used.

5.1. Global Hypothesis Testing

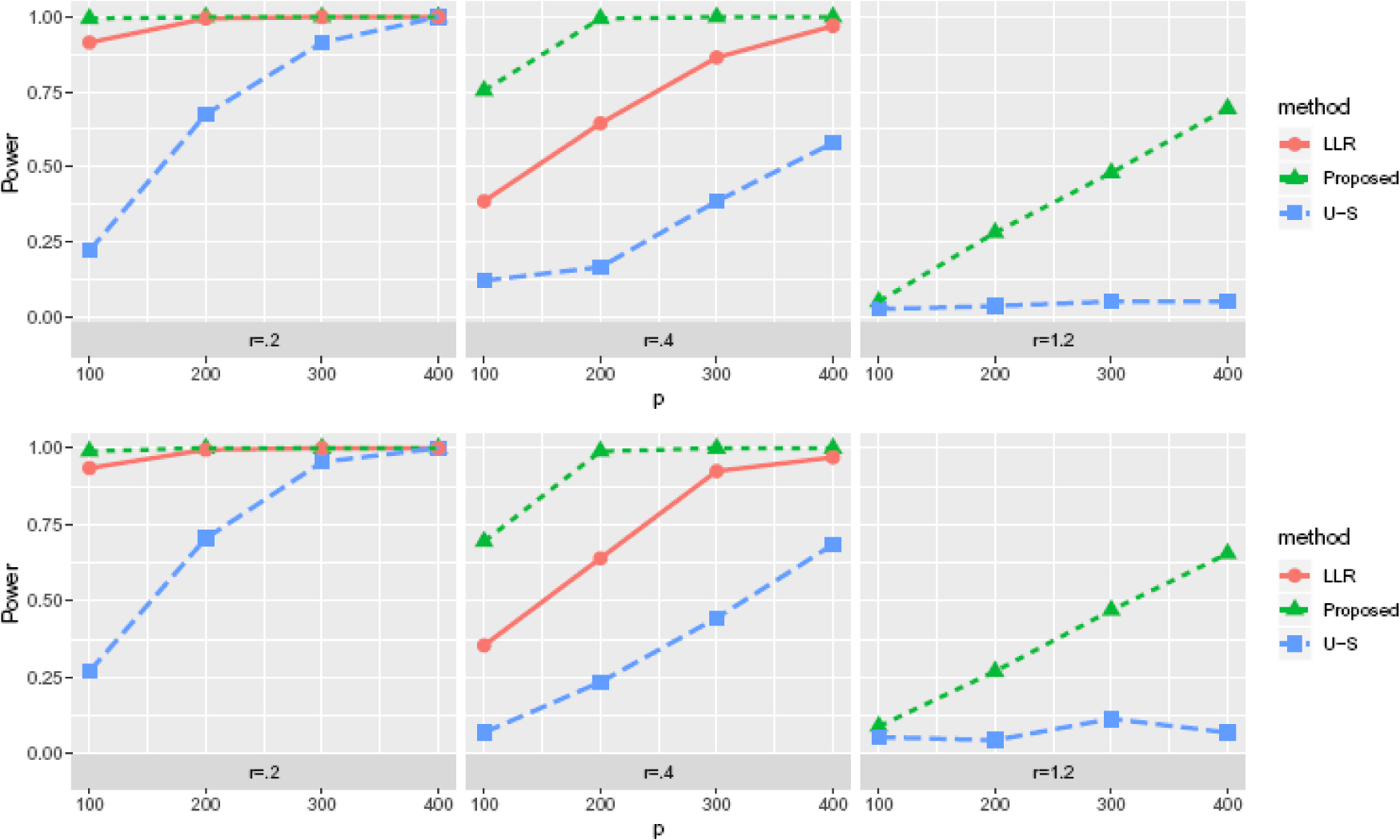

In the following simulations, we consider a variety of dimensions, sample sizes, and sparsity levels. For the alternative hypotheses, the dimension p of the covariates takes the values 100, 200, 300 and 400, and the sparsity k is set to 2 or 4. The sample size n is determined by the ratio r = p / n, which takes the values 0.2, 0.4 and 1.2. To generate the design matrix X, we consider the Gaussian design with blockwise-correlated covariates, Σ = ΣB, where ΣB is a p × p block-diagonal matrix with 10 equal-sized blocks whose diagonal elements are 1 and whose within-block off-diagonal elements are 0.7. Under the alternative, letting S denote the support of β with |S| = k, we set βj = ±ρ for j ∈ S and βj = 0 otherwise, with ρ = 0.75 and equal proportions of ρ and −ρ. In the algorithm of Table 1, we set κ0 = 0 and κ1 = 0.5.
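The blockwise design above can be generated without a Cholesky factorization: with a shared factor per block, $X_{ij} = \sqrt{0.7}\,Z_{i,b(j)} + \sqrt{0.3}\,E_{ij}$ has unit variance and within-block correlation 0.7, where b(j) is the block of covariate j and all Z, E are independent standard normals. The sketch below assumes p is a multiple of 10 and, hypothetically, places the support S uniformly at random with alternating signs; how the support is chosen is not specified above. The function names are ours.

```python
import math
import random


def block_sigma(p, n_blocks=10, rho_within=0.7):
    """Sigma_B: block diagonal, 1 on the diagonal, rho_within inside each block."""
    if p % n_blocks:
        raise ValueError("p must be a multiple of n_blocks")
    size = p // n_blocks
    return [[1.0 if i == j else (rho_within if i // size == j // size else 0.0)
             for j in range(p)] for i in range(p)]


def sample_block_gaussian(n, p, n_blocks=10, rho_within=0.7, seed=0):
    """Draw n rows from N(0, Sigma_B) using one shared factor per block."""
    if p % n_blocks:
        raise ValueError("p must be a multiple of n_blocks")
    rng = random.Random(seed)
    size = p // n_blocks
    X = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(n_blocks)]  # block factors
        X.append([math.sqrt(rho_within) * z[j // size]
                  + math.sqrt(1.0 - rho_within) * rng.gauss(0.0, 1.0)
                  for j in range(p)])
    return X


def sparse_beta(p, k, rho=0.75, seed=0):
    """Hypothetical signal: k random positions, half +rho and half -rho (k even)."""
    rng = random.Random(seed)
    beta = [0.0] * p
    for idx, j in enumerate(rng.sample(range(p), k)):
        beta[j] = rho if idx % 2 == 0 else -rho
    return beta
```

The sample size for a given ratio r = p / n is n = p / r; for example, p = 200 and r = 0.4 give n = 500 rows.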

To assess the empirical performance of our proposed test ("Proposed"), we compare it with (i) a Bonferroni procedure applied to the p-values from univariate screening using the MLE statistic ("U-S"), and (ii) the likelihood-ratio-based method of Sur, Chen, and Candès (2017) and Sur and Candès (2019) ("LLR"). LLR is applied only in the settings r = 0.2 and 0.4, because the MLE on which it relies does not exist when p > n.

Table 2 shows the empirical type I errors of these tests at level α = 0.05 based on 1000 simulations. Figure 1 shows the corresponding empirical powers under various settings. As expected, our proposed method is the most powerful of the three in all the cases, including the moderate-dimensional cases r = 0.2 and 0.4, and its power increases as n or p grows. In the lowest-dimensional setting, r = 0.2, LLR performs almost as well as our proposed method.

Table 2.

Type I error with α = 0.05 for the proposed method (Proposed), the Bonferroni corrected univariate screening method (U-S) and the Bonferroni corrected likelihood ratio based method of Sur and Candès (2019) (LLR), for different n, p and k.

| Method | p / n | k = 2, p = 100 | p = 200 | p = 300 | p = 400 | k = 4, p = 400 | p = 600 | p = 800 | p = 1000 |
|---|---|---|---|---|---|---|---|---|---|
| Proposed | 0.2 | 0.052 | 0.066 | 0.042 | 0.054 | 0.058 | 0.050 | 0.046 | 0.070 |
| | 0.4 | 0.038 | 0.054 | 0.062 | 0.054 | 0.046 | 0.050 | 0.060 | 0.074 |
| | 1.2 | 0.026 | 0.044 | 0.042 | 0.045 | 0.014 | 0.044 | 0.054 | 0.054 |
| U-S | 0.2 | 0.040 | 0.032 | 0.024 | 0.018 | 0.018 | 0.022 | 0.028 | 0.034 |
| | 0.4 | 0.050 | 0.032 | 0.024 | 0.020 | 0.028 | 0.028 | 0.032 | 0.046 |
| | 1.2 | 0.028 | 0.038 | 0.024 | 0.020 | 0.032 | 0.018 | 0.034 | 0.014 |
| LLR | 0.2 | 0.050 | 0.050 | 0.068 | 0.040 | 0.058 | 0.044 | 0.046 | 0.034 |
| | 0.4 | 0.084 | 0.070 | 0.048 | 0.056 | 0.062 | 0.042 | 0.058 | 0.064 |

Fig. 1.

Empirical power with α = 0.05 for the proposed method (Proposed), the Bonferroni corrected univariate screening method (U-S) and the Bonferroni corrected likelihood ratio based method of Sur and Candès (2019) (LLR). Top panel: k = 2; bottom panel: k = 4.

5.2. Multiple Hypotheses Testing

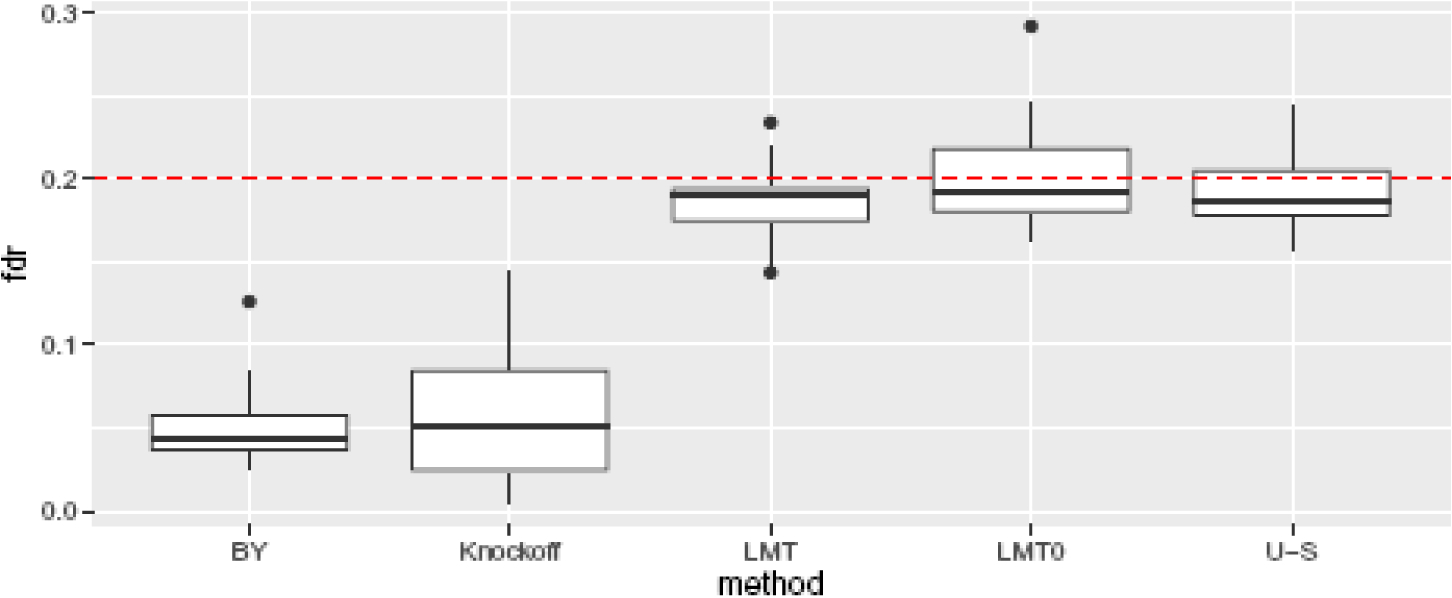

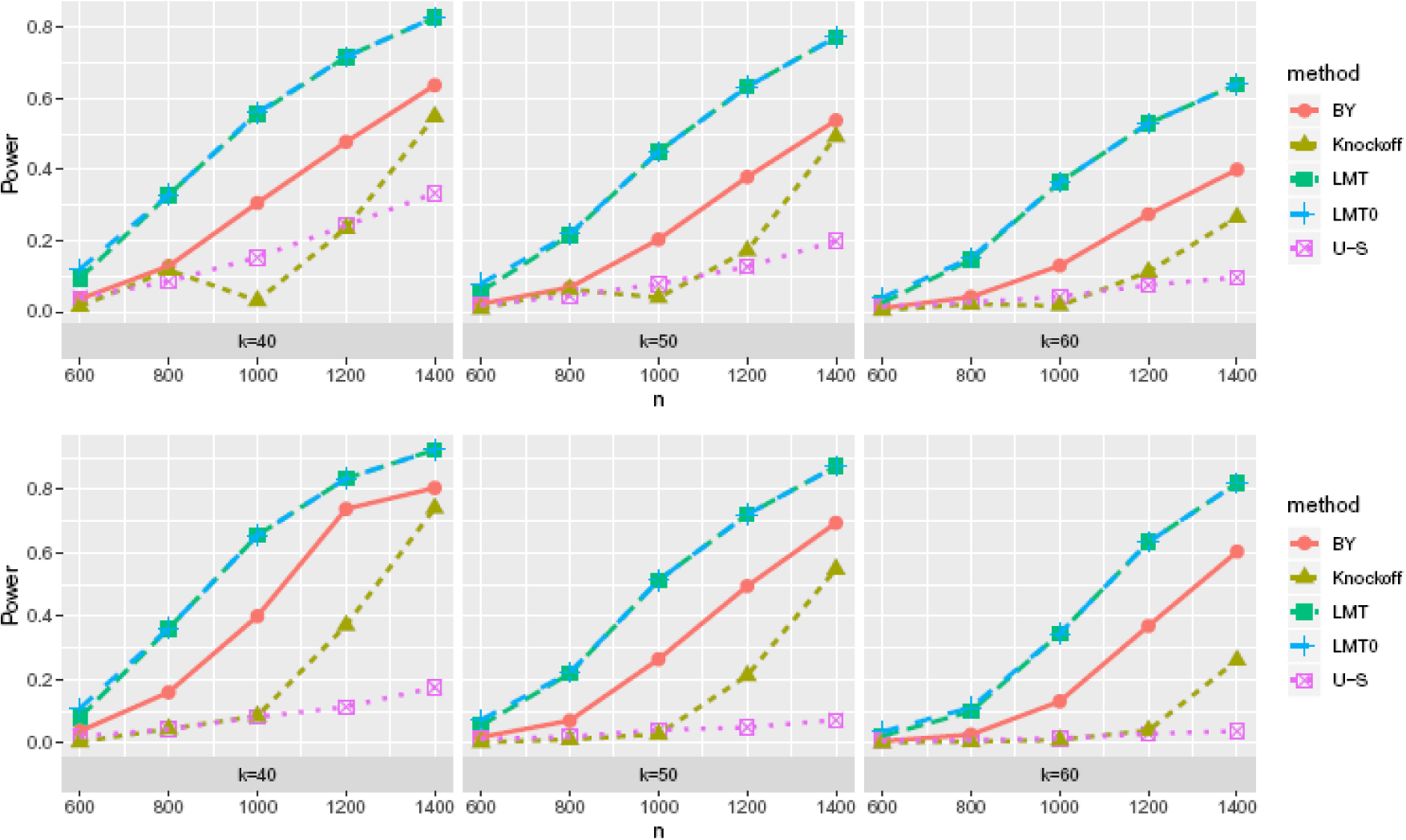

FDR Control.

In this setting, we set p = 800 and let n vary over 600, 800, 1000, 1200 and 1400, so that all the cases are high-dimensional in the sense that p > n / 2. The sparsity level k takes the values 40, 50 and 60. For the true positives, given the support $S \subset \{1, \dots, p\}$ with $|S| = k$, we set $\beta_j = \pm\rho$ for $j \in S$ and $\beta_j = 0$ otherwise, with equal proportions of ρ and −ρ; the signal magnitude is ρ = 3 or 4 (see Figure 3). The design covariates Xi's are generated from a -truncated multivariate Gaussian distribution with covariance matrix Σ = 0.01ΣM, where ΣM is a p × p block-diagonal matrix of 10 identical Toeplitz blocks with unit diagonal whose off-diagonal entries decrease from 0.1 to 0 (see the Supplementary Material for the explicit form). The choices of κ0 and κ1 are the same as in the global testing simulations. Throughout, we set the desired FDR level to α = 0.2.

We compare our proposed procedure (denoted "LMT") with the following methods: (i) the basic LMT procedure with bp in (17) replaced by ∞ ("LMT0"), which is equivalent to applying the BH procedure (Benjamini and Hochberg 1995) to our debiased statistics Mj; (ii) the BY procedure (Benjamini and Yekutieli 2001) applied to our debiased statistics Mj ("BY"), implemented using the R function p.adjust(…,method="BY"); (iii) a BH procedure applied to the p-values from univariate screening using the MLE statistics ("U-S"); and (iv) the knockoff method of Candès et al. (2018) ("Knockoff"). Based on 1000 replications, Figure 2 shows boxplots of the pooled empirical FDRs (see the Supplementary Material for the case-by-case FDRs), and Figure 3 shows the empirical powers of these methods. Here the power is defined as the number of correctly discovered variables divided by the number of truly associated variables. LMT controls the FDR, and LMT and LMT0 have the greatest power in all the cases. The powers of LMT and LMT0 are almost the same and increase as the number of true signals k decreases, the signal magnitude ρ increases, or the sample size n increases, but LMT0 has slightly inflated FDRs. Although the U-S method controls the FDR, it has poor power, largely because of the dependence among the covariates.
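The empirical FDR and power in Figures 2 and 3, and the empirical FDV in Table 3, are averages of per-replication quantities. The minimal sketch below assumes that `rejected` is a boolean vector of discoveries and `beta` is the true coefficient vector. We read FDV as the expected number of falsely discovered variables, which matches the level r = 10 and the magnitudes in Table 3. This reading is our interpretation rather than a definition given in this section.

```python
import numpy as np


def fdp_power(rejected, beta):
    """Per-replication false discovery proportion, power, and number of false discoveries."""
    rejected = np.asarray(rejected, dtype=bool)
    truth = np.asarray(beta) != 0  # truly associated variables
    n_rej = int(rejected.sum())
    false_disc = int((rejected & ~truth).sum())
    true_disc = int((rejected & truth).sum())
    fdp = false_disc / max(n_rej, 1)  # FDP is 0 when nothing is rejected
    power = true_disc / max(int(truth.sum()), 1)
    return fdp, power, false_disc


def empirical_rates(results):
    """Average (FDP, power, V) over replications: empirical FDR, power and FDV."""
    return np.array(results, dtype=float).mean(axis=0)
```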

Fig. 2.

Boxplots of the empirical FDRs across all the settings for α = 0.2.

Fig. 3.

Empirical power under FDR α = 0.2 for ρ = 3 (top) and ρ = 4 (bottom).

FDV Control.

We apply our proposed procedure that controls the FDV (denoted LMTV), with desired FDV level r = 10, to various settings. Specifically, we set ρ = 3, p ∈ {800, 1000, 1200} and k ∈ {40, 50, 60}, and let n vary over 400, 600, 800 and 1000. The design covariates are generated in the same way as in the FDR simulations. The resulting empirical FDVs and powers are summarized in Table 3. LMTV controls the FDV in all the settings (the empirical FDV ranges from 2.69 to 8.42, below the target r = 10), and its power increases as n grows, k decreases, or p decreases.

Table 3.

Empirical performance of LMTV with FDV level r = 10.

| ρ | p | k | FDV, n = 400 | FDV, n = 600 | FDV, n = 800 | FDV, n = 1000 | Power, n = 400 | Power, n = 600 | Power, n = 800 | Power, n = 1000 |
|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 800 | 40 | 4.07 | 5.45 | 6.44 | 7.11 | 0.08 | 0.23 | 0.40 | 0.59 |
| 3 | 800 | 50 | 4.30 | 6.29 | 7.27 | 8.26 | 0.06 | 0.16 | 0.32 | 0.49 |
| 3 | 800 | 60 | 4.33 | 6.63 | 7.48 | 8.42 | 0.05 | 0.12 | 0.25 | 0.42 |
| 3 | 1000 | 40 | 3.30 | 4.59 | 5.79 | 6.82 | 0.06 | 0.18 | 0.35 | 0.52 |
| 3 | 1000 | 50 | 3.49 | 5.42 | 6.43 | 7.03 | 0.05 | 0.13 | 0.26 | 0.43 |
| 3 | 1000 | 60 | 3.68 | 5.47 | 7.29 | 7.97 | 0.03 | 0.09 | 0.20 | 0.34 |
| 3 | 1200 | 40 | 2.69 | 4.36 | 5.00 | 5.68 | 0.05 | 0.15 | 0.31 | 0.46 |
| 3 | 1200 | 50 | 2.97 | 4.22 | 5.73 | 6.43 | 0.03 | 0.11 | 0.21 | 0.36 |
| 3 | 1200 | 60 | 2.78 | 4.91 | 5.91 | 7.25 | 0.02 | 0.07 | 0.16 | 0.27 |

6. REAL DATA ANALYSIS

We illustrate our proposed methods by analyzing a dataset from the Pediatric Longitudinal Study of Elemental Diet and Stool Microbiome Composition (PLEASE), a prospective cohort study investigating the effects of inflammation, antibiotics and diet as environmental stressors on the gut microbiome in pediatric Crohn's disease (Lewis et al. 2015; Lee et al. 2015; Ni et al. 2017). The study examined the association between pediatric Crohn's disease and fecal metabolomics by collecting fecal samples from 90 pediatric patients with Crohn's disease at baseline, 1 week and 8 weeks after initiation of either anti-tumor necrosis factor (TNF) or enteral diet therapy, as well as from 25 healthy control children (Lewis et al. 2015). In detail, an untargeted fecal metabolomic analysis was performed on these samples using liquid chromatography-mass spectrometry (LC-MS). Metabolites with more than 80% missing values across all samples were removed from the analysis. For each metabolite, missing values were imputed with that metabolite's minimum abundance across samples. To limit the influence of large outliers, the metabolite abundances of each sample were further normalized by dividing by the 90% cumulative sum of the abundances of all metabolites in that sample. The normalized abundances were then log-transformed and used in all analyses. The metabolomics annotation was obtained from the Human Metabolome Database (Lee et al. 2015). In total, abundances of 335 known metabolites were obtained for each sample and used in our analysis.
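The preprocessing steps can be sketched as below. This is a minimal NumPy illustration, not the study's pipeline. It assumes a samples × metabolites array with `np.nan` for missing values and strictly positive abundances. It also reads "90% cumulative sum" as the sum, within each sample, of the abundances at or below that sample's 90th percentile, in the spirit of cumulative-sum scaling. That reading is an assumption.

```python
import numpy as np


def preprocess(abund, max_missing: float = 0.8, quantile: float = 0.9):
    """Filter, impute, normalize and log-transform a samples x metabolites array."""
    A = np.asarray(abund, dtype=float)
    # 1. drop metabolites with more than 80% missing values across samples
    keep = np.isnan(A).mean(axis=0) <= max_missing
    A = A[:, keep]
    # 2. impute each metabolite's missing values with its minimum observed abundance
    col_min = np.nanmin(A, axis=0)
    A = np.where(np.isnan(A), col_min, A)
    # 3. assumed "90% cumulative sum": per sample, sum abundances up to the 90th percentile
    q = np.quantile(A, quantile, axis=1, keepdims=True)
    scale = np.where(A <= q, A, 0.0).sum(axis=1, keepdims=True)
    # 4. normalize and log-transform
    return np.log(A / scale), keep
```

Computing the scaling factor from the lower 90% of each sample's abundances means that a few extreme metabolites do not dominate the normalization, which is the stated motivation in the text.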

6.1. Association Between Metabolites and Crohn’s Disease Before and After Treatment

We first test the overall association between the 335 characterized metabolites and Crohn's disease by fitting a logistic regression to the data of the 25 healthy controls and the 90 Crohn's disease patients at baseline. The global test statistic is 433.88 with a p-value < 0.001, indicating a strong association between Crohn's disease and fecal metabolites. At an FDR level of 5%, our multiple testing procedure selects four metabolites: C16:0.cholesteryl ester, C14:0.sphingomyelin, C24:1.Ceramide.(d18:1) and 3-methyladipate/pimelate (see Table 4). Recent studies have demonstrated that sphingolipid metabolites, particularly ceramide and sphingosine-1-phosphate, are signaling molecules that regulate a diverse range of cellular processes that are important in immunity, inflammation and inflammatory disorders (Maceyka and Spiegel 2014). In fact, ceramide acts to reduce TNF release (Rozenova et al. 2010) and plays important roles in the control of autophagy, a process strongly implicated in the pathogenesis of Crohn's disease (Barrett et al. 2008; Sewell et al. 2012).

Table 4.

Significant metabolites associated with Crohn’s disease (coded as 1 in logistic regression) at the baseline, one week and 8 weeks after treatment with FDR < 5%. The refitted regression coefficients show the direction of the association.

| Disease Stage | HMDB ID | Synonyms | Refitted Coefficient |
|---|---|---|---|
| Baseline | 00885 | C16:0.cholesteryl ester | 4.45 |
| | 12097 | C14:0.sphingomyelin | 1.74 |
| | 04953 | C24:1.Ceramide.(d18:1) | 4.25 |
| | 00555 | 3-methyladipate/pimelate | −12.82 |
| Week 1 | 06726 | C20:4.cholesteryl ester | 2.17 |
| | 12097 | C14:0.sphingomyelin | 2.06 |
| | 04949 | C16:0.Ceramide.(d18:1) | 0.87 |
| | 00555 | 3-methyladipate/pimelate | −6.10 |
| | 00056 | beta-alanine | 2.95 |
| | 00448 | adipate | −4.50 |
| Week 8 | 00883 | valine | 1.40 |
| | 00222 | C16.carnitine | 0.58 |
| | 00848 | C18.carnitine | 0.39 |
| | 00555 | 3-methyladipate/pimelate | −5.95 |
| | 00056 | beta-alanine | 0.63 |

We next investigate whether treatment of Crohn's disease alters the association between metabolites and Crohn's disease by fitting two separate logistic regressions using the metabolites measured one week and 8 weeks after the treatment. At each time point, our global test detects a significant association (p-value < 0.001). One week after the treatment, six metabolites are associated with Crohn's disease (see Table 4). Two of them, C14:0.sphingomyelin and 3-methyladipate/pimelate, were also selected at baseline. Two others, C20:4.cholesteryl ester and C16:0.Ceramide.(d18:1), belong to the same lipid classes (cholesteryl esters and ceramides) as the remaining two baseline metabolites. The last two, beta-alanine and adipate, are new. The beta-alanine and adipate associations are likely explained by the fact that both are important ingredients of the enteral nutrition treatment of Crohn's disease. Interestingly, 8 weeks after the treatment, valine, C16.carnitine and C18.carnitine are associated with Crohn's disease, together with 3-methyladipate/pimelate and beta-alanine. Carnitine is known to play an important role in Crohn's disease, possibly as a consequence of the functional association between Crohn's disease and mutations in the carnitine transporter genes (Peltekova et al. 2004; Fortin 2011). Carnitine deficiency can lead to severe gut atrophy, ulceration and inflammation in animal models (Shekhawat et al. 2013). Our results may suggest that the treatment increases carnitine, leading to a reduction of inflammation.

6.2. Comparison of Metabolite Associations Between Responders and Non-Responders

To compare the metabolite associations with Crohn's disease for responders (n = 47) and non-responders (n = 34) eight weeks after treatment, we fit two logistic regression models: responders versus normal controls and non-responders versus normal controls. Our global test shows an overall difference between the regression coefficients for responders and those for non-responders when each group is compared with the normal controls (p-value < 0.001). We next apply our proposed multiple testing procedure to identify the metabolites whose regression coefficients differ between the two logistic regression models. At an FDR level of 5%, our procedure identifies 9 metabolites with different regression coefficients (see Table 5). Interestingly, each of these 9 metabolites has refitted coefficients of the same sign in the two models, while the magnitudes of the associations differ between responders and non-responders. Besides C20:4.cholesteryl ester, beta-alanine, valine, C18.carnitine and 3-methyladipate/pimelate, which we observed in the previous analyses, the metabolites linoleic acid, 5-hydroxytryptophan, nicotinate and succinate also show differential associations between responders and non-responders when compared with the controls.

Table 5.

Significant metabolites identified via logistic regression of responder vs normal control and non-responder vs normal control for FDR ≤ 5%.

| HMDB ID | Synonyms | Refitted Coefficient (Responder vs. Normal) | Refitted Coefficient (Non-Responder vs. Normal) |
|---|---|---|---|
| 06726 | C20:4.cholesteryl ester | 0.139 | 1.854 |
| 01043 | Linoleic.acid | −0.686 | −0.388 |
| 00472 | 5-hydroxytryptophan | 1.000 | 1.034 |
| 00056 | beta-alanine | 0.503 | 2.298 |
| 00883 | valine | 0.628 | 0.530 |
| 00848 | C18.carnitine | 1.100 | 0.457 |
| 01488 | nicotinate | −1.936 | −4.312 |
| 00254 | succinate | 0.750 | 1.508 |
| 00555 | 3-methyladipate/pimelate | −1.989 | −4.209 |

7. DISCUSSION

In this paper, for both global and multiple testing, the precision matrix $\Omega = \Sigma^{-1}$ of the covariates is assumed to be sparse and unknown. Node-wise regression among the covariates is used to learn the covariance structure when constructing the debiased estimator. However, if it is known a priori that Ω = I, the algorithm can be simplified greatly. Specifically, instead of incorporating the Lasso estimators as in (8), we let and τj = ‖vj‖n/〈vj, xj〉 for each j = 1, …, p. The theoretical properties of the resulting global and multiple testing procedures still hold, while the computational efficiency is improved dramatically. Nevertheless, our theoretical analysis indicates that, even when Ω = I is known, the sparsity requirement on the model ( in the Gaussian case and in the bounded case) cannot be relaxed, owing to the nonlinearity of the problem.

Sample splitting was used in this paper for theoretical purposes. This differs from other work on inference in high-dimensional linear/logistic regression models, including Ingster, Tsybakov, and Verzelen (2010), van de Geer et al. (2014), Mukherjee, Pillai, and Lin (2015) and Javanmard and Javadi (2019), where sample splitting is not needed. However, as discussed throughout the paper, the assumptions and alternatives we consider differ from those in these previous papers. For the high-dimensional logistic regression model, sample splitting seems unavoidable within the current framework of our technical analysis unless additional strong structural assumptions are made, such as the sparse inverse Hessian matrices used in van de Geer et al. (2014) or the weakly correlated design matrices used in Mukherjee, Pillai, and Lin (2015). Our simulations, which did not use sample splitting, suggest that it is not needed for the proposed methods to perform well in practice. It is of interest to develop technical tools that can eliminate sample splitting in inference for high-dimensional logistic regression models.

As mentioned in the introduction, the logistic regression model can be viewed as a special case of the single index model $y = f(\beta^\top x) + \epsilon$, where f is a known transformation function (Yang et al. 2015). Our analysis shows that the theoretical results are not limited to the logistic (sigmoid) function. In fact, the proposed methods can be applied to a wide range of transformation functions satisfying the following conditions: (C1) f is continuous and 0 < f(u) < 1 for any $u \in \mathbb{R}$; (C2) for any , there exists a constant L > 0 such that ; and (C3) for any constant C > 0, there exists δ > 0 such that for any . Examples include, but are not limited to, the following function classes:

Cumulative distribution functions: f(x) = P(X ≤ x) for some continuous random variable X supported on $\mathbb{R}$. In particular, when X ~ N(0, 1), the resulting model is the probit regression model.

Affine hyperbolic tangent functions: for some parameter . In particular, (a, b) = (1, 0) corresponds to $f(x) = e^x/(1 + e^x)$.

Generalized logistic functions: $f(x) = (1 + e^{-x})^{-\alpha}$ for some α > 0.
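The three example classes can be written down directly. In the minimal sketch below, the affine hyperbolic tangent family uses the assumed parametrization $f(x) = \{1 + \tanh((ax + b)/2)\}/2$. The original display was lost. This form is chosen because (a, b) = (1, 0) then reproduces the logistic function, as stated above. The code only checks condition (C1) numerically on a grid, because the displays for (C2) and (C3) are not available here.

```python
import math


def logistic(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def probit(x: float) -> float:
    """Standard normal CDF, written via the complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def tanh_link(x: float, a: float = 1.0, b: float = 0.0) -> float:
    """Assumed affine tanh form; (a, b) = (1, 0) gives the logistic function."""
    return 0.5 * (1.0 + math.tanh((a * x + b) / 2.0))


def generalized_logistic(x: float, alpha: float = 2.0) -> float:
    return (1.0 + math.exp(-x)) ** (-alpha)


def satisfies_c1(f, grid) -> bool:
    """Numerical check of 0 < f(u) < 1 on a finite grid (not a proof)."""
    return all(0.0 < f(u) < 1.0 for u in grid)


# moderate range: far in the tails, f(u) rounds to 0 or 1 in double precision
GRID = [i / 10 for i in range(-50, 51)]
```

The identity behind the tanh parametrization is $\{1 + \tanh(x/2)\}/2 = e^x/(1 + e^x)$. With α = 1, the generalized logistic function also reduces to the logistic function.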

Besides the problems considered in this paper, it is also of interest to construct confidence intervals for functionals of the regression coefficients, such as $\|\beta\|_1$, $\|\beta\|_2$, or $\theta^\top\beta$ for a given loading vector θ. In modern statistical machine learning, logistic regression is regarded as an efficient classification method (Abramovich and Grinshtein 2018). In practice, a predicted label accompanied by an uncertainty assessment is usually preferred. Therefore, another important problem is the construction of prediction intervals for the conditional probability π* associated with a given predictor X*. These problems are related to the current work and are left for future investigation.

8. PROOFS OF THE MAIN THEOREMS

In this section, we prove Theorems 1, 2 and 4. The proofs of the other results, including Theorems 3 and 5, Proposition 1 and the technical lemmas, are given in our Supplementary Materials.

Proof of Theorem 1

Define . Under H0, , and by (A3), $c < F_{jj} < C$ for j = 1, …, p and some constants $C > c > 0$. Define statistics

and , . The following lemma shows that and therefore are good approximations of Mn.

Lemma 1. Under the conditions of Theorem 1, the following events

hold with probability at least $1 - O(p^{-c})$ for some constant c > 0.

Let $y_p = 2\log p - \log\log p + x$ and $\epsilon_n = o(1)$. It follows that, on the event $B_1 \cap B_2$, we have

Therefore it suffices to prove that for any $x \in \mathbb{R}$, as (n, p) → ∞,

| (21) |

Now define where for τn = log(p + n), and . The following lemma states that is close to .

Lemma 2. Under the conditions of Theorem 1, with probability at least $1 - O(p^{-c})$ for some constant c > 0.

By Lemma 2, it suffices to prove that for any $x \in \mathbb{R}$, as (n, p) → ∞,

| (22) |

To prove this, we need the classical Bonferroni inequality.

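Lemma 3. (Bonferroni inequality) Let $B = \bigcup_{t=1}^{p} B_t$. For any integer k < p / 2, we have

$$\sum_{t=1}^{2k} (-1)^{t-1} E_t \le P(B) \le \sum_{t=1}^{2k-1} (-1)^{t-1} E_t, \qquad (23)$$

where $E_t = \sum_{1 \le i_1 < \cdots < i_t \le p} P(B_{i_1} \cap \cdots \cap B_{i_t})$.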
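Lemma 3 says that truncating the inclusion-exclusion expansion of $P(\bigcup_t B_t)$ after an even number of terms gives a lower bound, and truncating after an odd number gives an upper bound. The small check below illustrates this with exact rational arithmetic on a hypothetical finite probability space with randomly generated events. Nothing in it comes from the paper. It confirms that the truncations bracket the union probability for every k < p/2.

```python
import random
from fractions import Fraction
from itertools import combinations


def bonferroni_check(p: int = 6, n_outcomes: int = 40, seed: int = 1):
    """Return (k, lower, P(union), upper) for each integer k < p / 2."""
    rng = random.Random(seed)
    # events B_1, ..., B_p as random subsets of a uniform space {0, ..., n_outcomes - 1}
    events = [{o for o in range(n_outcomes) if rng.random() < 0.3} for _ in range(p)]

    def prob(s):
        return Fraction(len(s), n_outcomes)

    union = prob(set().union(*events))
    # E[t] = sum over all t-subsets of indices of P(intersection of those events)
    E = [Fraction(0)] + [
        sum((prob(set.intersection(*[events[i] for i in idx]))
             for idx in combinations(range(p), t)), Fraction(0))
        for t in range(1, p + 1)
    ]
    out = []
    for k in range(1, (p + 1) // 2):  # all integers k with k < p / 2
        lower = sum((-1) ** (t - 1) * E[t] for t in range(1, 2 * k + 1))
        upper = sum((-1) ** (t - 1) * E[t] for t in range(1, 2 * k))
        out.append((k, lower, union, upper))
    return out
```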

By Lemma 3, for any integer 0 < q < p / 2,

| (24) |

where . Now let for j = 1, …, p, and for 1 ≤ i ≤ n. Define $\|a\|_{\min} = \min_{1 \le i \le d} |a_i|$ for any vector $a \in \mathbb{R}^d$. Then we have

Then it follows from Theorem 1.1 in Zaitsev (1987) that

| (25) |

where $c_1 > 0$ and $c_2 > 0$ are constants, $\epsilon_n \to 0$ will be specified later, and $N_d$ is a normal random vector with $E(N_d) = 0$ and $\mathrm{cov}(N_d) = \mathrm{cov}(W_1)$. Here d is a fixed integer that does not depend on n or p. Because $\log p = o(n^{1/5})$, we can let $\epsilon_n \to 0$ sufficiently slowly, say, , so that for any large c > 0,

| (26) |

Combining (24), (25) and (26), we have

| (27) |

Similarly, one can derive

| (28) |

Now we use the following lemma from Xia, Cai, and Cai (2018).

Lemma 4. For any fixed integer d ≥ 1 and real number $x \in \mathbb{R}$,

It then follows from the above lemma, (27) and (28) that

for any positive integer q. By letting q → ∞, we obtain (22) and the proof is complete. □

Proof of Theorem 2.

The proof essentially follows the general Le Cam method described in Section 7.1 of Baraud (2002). The key elements can be summarized in the following lemma, which reduces the lower bound problem to the calculation of the total variation distance between two posterior distributions.

Lemma 5. Let be some subset of a bounded Hilbert space and ρ some positive number. Let μρ be some probability measure on . Set , let P0 be the (posterior) distribution at the null, and denote by Φα the set of level-α tests. Then we have

where denotes the total variation distance between and P0.

Now since by definition ρ*(Φα, δ, Θ(k)) ≥ ρ*(Φα, δ, Σ) for any Σ ∈Θ2(k), by Lemma 5, it suffices to construct the corresponding for β ∈Θβ(k) and find a lower bound ρ1 = ρ(η) such that

| (29) |

for fixed covariance Σ = I. In this case, an upper bound for the χ²-divergence between and P0, defined as , can be obtained by carefully constructing the alternative space . Since (see p. 90 of Tsybakov (2009)), it follows that . By choosing ρ1 = ρ(η) such that for any ρ ≤ ρ1, , we obtain that (29) holds. In the following, we construct the alternative space and derive an upper bound of , where P0 corresponds to the null space consisting of the single point β = 0. We divide the proof into two steps. Throughout, the design covariance matrix is chosen as Σ = I.

Step 1: Construction of .

First, for a set M, we define as the set of all the n-element subsets of M. Let [1: p] ≡ {1, …, p}, so contains all the k-element subsets of [1: p]. We define the alternative parameter space . In other words, contains all the k-sparse vectors β(I) whose nonzero components, all equal to ρ, are indexed by I. Clearly, for any , we have ‖β‖∞ = ρ and .

Step 2: Control of .

Let π denote the uniform prior of the random index set I over . This prior induces a prior distribution over the parameter space . For , the corresponding joint distribution of the data is

Similarly, the posterior distribution of the samples over the prior is denoted as

As a result, we have the following lemma controlling .

Lemma 6. Let where $h(\eta) = [\log(\eta^2 + 1)]^{-1}$ and $\eta = 1 - \alpha - \delta$. Then $\chi^2(g, f) \le (1 - \alpha - \delta)^2$.

Combining Lemma 5 and Lemma 6, we know that for α, δ > 0 and α + δ < 1, if , then . Therefore, it follows that

| (30) |

Lastly, note that for the above chosen ρ, when for some 0 < γ < 1 / 2. This completes the proof. □

Proof of Theorem 4.

The proof follows arguments similar to those in the proof of Theorem 3.1 in Javanmard and Javadi (2019). We first consider the case when , given by (17), does not exist. In this case, and we consider the event that there is at least one false positive. To show that the FDR/FDP can be controlled in this case, we show that

| (31) |

Note that for , we have Then

| (32) |

For any ϵ > 0, we can bound the first term by

By the proof of Lemma 1, we know that . In addition, for , where for some sufficiently large c > 0. Now since where and , by Lemma 6.1 of Liu (2013), we have . Now let , we have .

Hence , which goes to zero as (n, p) → ∞. By symmetry, we know that the second term in (32) also goes to 0. Therefore we have proved (31).

Now consider the case when holds. We have

where Note that by definition . The proof is complete if Ap → 0 in probability, which has been shown by Proposition 1. □


SUPPLEMENTARY MATERIALS

In the online Supplementary Materials, we prove Theorems 3 and 5, Proposition 1 and the technical lemmas. The technical results and simulations for the two-sample tests discussed in Section 4 are also included.

References

- Abramovich F, and Grinshtein V (2018), "High-dimensional classification by sparse logistic regression," IEEE Transactions on Information Theory, 65, 3068–3079.

- Bach F (2010), "Self-concordant analysis for logistic regression," Electronic Journal of Statistics, 4, 384–414.

- Baraud Y (2002), "Non-asymptotic minimax rates of testing in signal detection," Bernoulli, 8, 577–606.

- Barrett JC, Hansoul S, Nicolae DL, Cho JH, Duerr RH, Rioux JD, Brant SR, Silverberg MS, Taylor KD, Barmada MM, et al. (2008), "Genome-wide association defines more than 30 distinct susceptibility loci for Crohn's disease," Nature Genetics, 40, 955.

- Belloni A, Chernozhukov V, and Wei Y (2016), "Post-selection inference for generalized linear models with many controls," Journal of Business & Economic Statistics, 34, 606–619.

- Benjamini Y, and Hochberg Y (1995), "Controlling the false discovery rate: a practical and powerful approach to multiple testing," Journal of the Royal Statistical Society: Series B (Methodological), 57, 289–300.

- Benjamini Y, and Yekutieli D (2001), "The control of the false discovery rate in multiple testing under dependency," The Annals of Statistics, 29, 1165–1188.

- Cai TT, and Guo Z (2017), "Confidence intervals for high-dimensional linear regression: Minimax rates and adaptivity," The Annals of Statistics, 45, 615–646.

- Cai TT, Li H, Ma J, and Xia Y (2017), "Differential Markov random field analysis with applications to detecting differential microbial community structures," Unpublished Manuscript.

- Cai TT, Liu W, and Xia Y (2013), "Two-sample covariance matrix testing and support recovery in high-dimensional and sparse settings," Journal of the American Statistical Association, 108, 265–277.

- ——— (2014), "Two-sample test of high dimensional means under dependence," Journal of the Royal Statistical Society: Series B (Statistical Methodology), 76, 349–372.

- Candès E, Fan Y, Janson L, and Lv J (2018), "Panning for gold: model-X knockoffs for high dimensional controlled variable selection," Journal of the Royal Statistical Society: Series B (Statistical Methodology), 80, 551–577.

- Candès EJ, and Sur P (2018), "The phase transition for the existence of the maximum likelihood estimate in high-dimensional logistic regression," arXiv preprint arXiv:1804.09753.

- Fortin G (2011), "L-Carnitine and intestinal inflammation," in Vitamins & Hormones, Elsevier, vol. 86, pp. 353–366.

- Ingster YI (1993), "Asymptotically minimax hypothesis testing for nonparametric alternatives. I, II, III," Mathematical Methods of Statistics, 2, 85–114.

- Ingster YI, Tsybakov AB, and Verzelen N (2010), "Detection boundary in sparse regression," Electronic Journal of Statistics, 4, 1476–1526.

- Javanmard A, and Javadi H (2019), "False discovery rate control via debiased lasso," Electronic Journal of Statistics, 13, 1212–1253.

- Javanmard A, and Montanari A (2014a), "Confidence intervals and hypothesis testing for high-dimensional regression," Journal of Machine Learning Research, 15, 2869–2909.

- ——— (2014b), "Hypothesis testing in high-dimensional regression under the Gaussian random design model: Asymptotic theory," IEEE Transactions on Information Theory, 60, 6522–6554.

- Lee D, Baldassano RN, Otley AR, Albenberg L, Griffiths AM, Compher C, Chen EZ, Li H, Gilroy E, Nessel L, et al. (2015), "Comparative effectiveness of nutritional and biological therapy in North American children with active Crohn's disease," Inflammatory Bowel Diseases, 21, 1786–1793.

- Lewis JD, Chen EZ, Baldassano RN, Otley AR, Griffiths AM, Lee D, Bittinger K, Bailey A, Friedman ES, Hoffmann C, et al. (2015), "Inflammation, antibiotics, and diet as environmental stressors of the gut microbiome in pediatric Crohn's disease," Cell Host & Microbe, 18, 489–500.

- Liu W (2013), "Gaussian graphical model estimation with false discovery rate control," The Annals of Statistics, 41, 2948–2978.

- Liu W, and Luo S (2014), "Hypothesis testing for high-dimensional regression models," Technical report.

- Maceyka M, and Spiegel S (2014), "Sphingolipid metabolites in inflammatory disease," Nature, 510, 58.

- Meier L, van de Geer S, and Bühlmann P (2008), "The group lasso for logistic regression," Journal of the Royal Statistical Society: Series B (Statistical Methodology), 70, 53–71.

- Mukherjee R, Pillai NS, and Lin X (2015), "Hypothesis testing for high-dimensional sparse binary regression," Annals of Statistics, 43, 352–381.

- Negahban S, Ravikumar P, Wainwright MJ, and Yu B (2010), "A unified framework for high-dimensional analysis of M-estimators with decomposable regularizers," Technical Report Number 979.

- Ni J, Shen T-CD, Chen EZ, Bittinger K, Bailey A, Roggiani M, Sirota-Madi A, Friedman ES, Chau L, Lin A, et al. (2017), "A role for bacterial urease in gut dysbiosis and Crohn's disease," Science Translational Medicine, 9, eaah6888.

- Peltekova VD, Wintle RF, Rubin LA, Amos CI, Huang Q, Gu X, Newman B, Van Oene M, Cescon D, Greenberg G, et al. (2004), "Functional variants of OCTN cation transporter genes are associated with Crohn's disease," Nature Genetics, 36, 471.

- Plan Y, and Vershynin R (2013), "Robust 1-bit compressed sensing and sparse logistic regression: A convex programming approach," IEEE Transactions on Information Theory, 59, 482–494.

- Ren Z, Zhang C-H, and Zhou HH (2016), "Asymptotic normality in estimation of large Ising graphical model," Unpublished Manuscript.

- Rozenova KA, Deevska GM, Karakashian AA, and Nikolova-Karakashian MN (2010), "Studies on the role of acid sphingomyelinase and ceramide in the regulation of tumor necrosis factor α (TNFα)-converting enzyme activity and TNFα secretion in macrophages," Journal of Biological Chemistry, 285, 21103–21113.

- Sewell GW, Hannun YA, Han X, Koster G, Bielawski J, Goss V, Smith PJ, Rahman FZ, Vega R, Bloom SL, et al. (2012), "Lipidomic profiling in Crohn's disease: abnormalities in phosphatidylinositols, with preservation of ceramide, phosphatidylcholine and phosphatidylserine composition," The International Journal of Biochemistry & Cell Biology, 44, 1839–1846.

- Shekhawat PS, Sonne S, Carter AL, Matern D, and Ganapathy V (2013), "Enzymes involved in L-carnitine biosynthesis are expressed by small intestinal enterocytes in mice: Implications for gut health," Journal of Crohn's & Colitis, 7, e197–e205.

- Sur P, and Candès EJ (2019), "A modern maximum-likelihood theory for high-dimensional logistic regression," Proceedings of the National Academy of Sciences, 116, 14516–14525.

- Sur P, Chen Y, and Candès EJ (2017), "The likelihood ratio test in high-dimensional logistic regression is asymptotically a rescaled chi-square," Probability Theory and Related Fields, 1–72.

- Tsybakov AB (2009), Introduction to Nonparametric Estimation, Springer Series in Statistics, Springer, New York.

- van de Geer S (2008), "High-dimensional generalized linear models and the lasso," The Annals of Statistics, 36, 614–645.

- van de Geer S, Bühlmann P, Ritov Y, and Dezeure R (2014), "On asymptotically optimal confidence regions and tests for high-dimensional models," The Annals of Statistics, 42, 1166–1202.

- Verzelen N (2012), "Minimax risks for sparse regressions: Ultra-high dimensional phenomenons," Electronic Journal of Statistics, 6, 38–90.

- Xia Y, Cai T, and Cai TT (2015), "Testing differential networks with applications to the detection of gene-gene interactions," Biometrika, 102, 247–266.

- ——— (2018), "Two-sample tests for high-dimensional linear regression with an application to detecting interactions," Statistica Sinica, 28, 63–92.

- Xia Y, Cai TT, and Li H (2018), "Joint testing and false discovery rate control in high-dimensional multivariate regression," Biometrika, 105, 249–269.

- Yang Z, Wang Z, Liu H, Eldar YC, and Zhang T (2015), "Sparse nonlinear regression: Parameter estimation and asymptotic inference," arXiv preprint arXiv:1511.04514.

- Zaitsev AY (1987), "On the Gaussian approximation of convolutions under multidimensional analogues of S.N. Bernstein's inequality conditions," Probability Theory and Related Fields, 74, 535–566.

- Zhang C-H, and Zhang SS (2014), "Confidence intervals for low dimensional parameters in high dimensional linear models," Journal of the Royal Statistical Society: Series B (Statistical Methodology), 76, 217–242.
