Abstract

Significance: We introduce and evaluate emerging devices and modalities for wound size imaging and also promising image processing tools for smart wound assessment and monitoring.

Recent Advances: Some commercial devices are available for optical wound assessment but with limited possibilities compared to the power of multimodal imaging. With new low-cost devices and machine learning, wound assessment has become more robust and accurate. Wound size imaging not only provides area and volume but also the proportion of each tissue on the wound bed. Near-infrared and thermal spectral bands also enhance the classical visual assessment.

Critical Issues: The ability to embed advanced imaging technology in portable devices such as smartphones and tablets with tissue analysis software tools will significantly improve wound care. As wound care and measurement are performed by nurses, the equipment needs to remain user-friendly, enable quick measurements, provide advanced monitoring, and be connected to the patient data management system.

Future Directions: Combining several image modalities and machine learning, optical wound assessment will be smart enough to enable real wound monitoring, to provide clinicians with relevant indications to adapt the treatments and to improve healing rates and speed. Sharing the wound care histories of a number of patients on databases and through telemedicine practice could induce a better knowledge of the healing process and thus a better efficiency when the recorded clinical experience has been converted into knowledge through deep learning.

Keywords: wound size imaging, tissue classification, mobile health, computer vision, deep learning

Yves Lucas, PhD

Scope and Significance

All types of wounds will benefit from the emergence of advanced image acquisition devices and processing tools, as they share a common healing process that requires fast and accurate periodic examination to adapt the treatments. As wound care is performed not only in hospital but also at home, wound assessment needs to rely on low-cost, user-friendly, and portable equipment. We summarize here recent experiments in computer vision laboratories on wound images with emerging image modalities and sensors. A comprehensive review of the introduction and development of wound size imaging helps to understand the power and the limits of this tool in clinical practice. Other aspects of imaging, such as vascular or infection imaging, are not covered here but are required to fully assess a wound.

Smart assessment is concerned with both software and hardware aspects. On one side, artificial intelligence provides algorithms for which simply supplying labeled images during the learning step is sufficient to build a model that is efficient in the recognition task. On the other side, smartphone technology is entering the medical field with user-friendly, low-cost, and portable devices. The word “smartphone” itself contains “smart”: this reminds us that this property is attached not only to software but also to the hardware side of wound imaging devices, with their evident mobility and connectivity capabilities.

Translational Relevance

It is clear that adding information about the wound tends to improve the quality of assessment: each imaging modality extracts specific data to better evaluate the healing process. The assistance of medical experts is still required to provide the ground truth for tuning the image processing algorithms and validating the outputs. At a higher level, the knowledge of these experts is also necessary to combine all the data to describe the wound state accurately.

Clinical Relevance

The benefits of wound size imaging are already visible in automatic wound assessment, but the room for improvement is even greater if we consider wound monitoring. To anticipate and favor the evolution of a wound, it is necessary to integrate all its history and to analyze how the different regions, with their different tissue types, have been transformed, how the frontiers of these regions have been distorted, and at what speed. By accumulating data, the learning process becomes more robust, since neural networks perform best on huge learning databases, so that more efficient therapeutic options can be proposed to improve healing rates. Nevertheless, we should not forget that many other factors influence the wound evolution, in particular all the biological data documented in the patient's medical record. These data need to be included in the learning process to refine wound assessment and monitoring.

Evolution of Practice

The burden of wound care in the health system

Wound care is a major health issue as it is anticipated that worldwide 380 million people will suffer from wounds by the year 2025. In 2018 in Europe, for example, the population prevalence of chronic wounds was 3–4/1,000 people, which roughly translates to between 1.5 and 2.0 million of the 491 million inhabitants of the European Union, and the annual incidence estimate for both acute and chronic wounds stands at 4 million in the region.1–3 There is a wide range of wounds, such as surgical, ulcers (venous stasis, diabetic, arterial, pressure, decubitus …), traumatic injuries, and burns.

The rising prevalence of diabetes, a pathology associated with a slow healing process, and the growing geriatric population can be considered as the two major factors of the increase in the burden of wound care in the health system, further increased by the rise in the number of trauma injuries and road accidents. As publicly reported wound healing rates are underestimated, the cost could be higher for the health system.4

These wounds have a major long-term influence on the health and quality of life of patients and their families, causing depression, loss of function and mobility, social isolation, prolonged hospital stays, and high treatment costs. Emergency wound care and clinicians with considerable technical skill play a frontline role by performing successful wound care. It is also essential that the wounds be treated promptly and properly for the treatment to be efficient and to improve the wound healing rate. Patients with wounds need frequent clinical evaluation to check the local wound status regularly and adjust therapy. The assessment and monitoring of wounds is therefore a critical task to perform an accurate diagnosis and to select a suitable treatment.

Visual assessment

In clinical routine, wound care is performed by nurses, and visual assessment is consequently only possible after the nurse has removed the dressing and cleaned the wound, a time-consuming procedure. As a result, wound assessment suffered for many years from being a strictly manual practice, and only poor data were available for accurate wound monitoring, especially when a patient's wound history was not shared between the nurses.

The periodic assessment of a wound is based on visual examination: clinicians describe the wound by geometrical measurements and quantify the skin tissue involved.5 Measurements are generally one-dimensional (1D) (a basic ruler or a specific Kundin gauge), sometimes 2D (the contour is drawn on a transparency to measure its surface), and 3D for severe cases (the volume is obtained from an alginate cast or serum injection inside the wound). However, these methods are imprecise and require direct contact with the wound. The evaluation of the percentage of each type of tissue in the wound bed based on color and texture analysis helps to understand the progress of the healing and provides a contactless quantitative measurement.6,7 The healing status is obtained from a color evaluation protocol based on the dominant color (red, yellow, black, and pink) of the different tissues present on a wound (respectively, granulation, fibrin, necrosis, and epithelium). The percentage of each type of tissue is recorded on a color-coded scale.8 However, during wound tissue identification, it is difficult for clinicians to determine their precise proportions by a simple visual inspection. Therefore, numerous techniques have become available for tissue classification over the wound region, ranging from the use of tracings to the more sophisticated methods detailed below requiring the use of cameras and computers.

Pioneering work in wound size imaging

With traditional film cameras, it was only possible to document the patient record, but the arrival of affordable digital cameras enabled quantitative and automatic measurements on wounds based on image processing technology. The first studies considered the wound as planar, so that the wound area could be computed simply by counting the pixels. The percentage of each type of tissue was then derived after classifying the segmented regions.9–11 This two-dimensional approximation provides low metric precision on large and curved wounds because of the projection onto the focal plane. Moreover, the lighting conditions and the RGB sensor embedded in a given digital camera lead to color variability and tissue misclassifications. Machine learning also requires tedious labeling of hundreds of images by experts to build a ground truth and the relevant tissue descriptors.
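The planar pixel-counting approach can be sketched as follows. This is only an illustration under stated assumptions, not the method of any cited study: the label map, the tissue codes, and the `mm_per_pixel` scale (assumed to come from a ruler or calibration pattern visible in the image) are hypothetical.

```python
from collections import Counter

# Hypothetical tissue codes for a labeled wound image (0 = background or healthy skin).
TISSUES = {1: "granulation", 2: "fibrin", 3: "necrosis"}


def planar_wound_stats(label_map, mm_per_pixel):
    """Return (area in mm^2, {tissue: percentage}) for a wound assumed planar.

    label_map is a list of rows of integer tissue codes; mm_per_pixel is the
    assumed side length of one pixel on the wound plane.
    """
    counts = Counter(code for row in label_map for code in row if code in TISSUES)
    wound_pixels = sum(counts.values())
    if wound_pixels == 0:
        return 0.0, {}
    area_mm2 = wound_pixels * mm_per_pixel ** 2
    percentages = {
        TISSUES[code]: 100.0 * n / wound_pixels for code, n in counts.items()
    }
    return area_mm2, percentages


# Toy 3 x 4 label map with 8 wound pixels.
label_map = [
    [0, 1, 1, 0],
    [1, 1, 2, 2],
    [0, 3, 3, 0],
]
area, shares = planar_wound_stats(label_map, mm_per_pixel=0.5)
```

Because a single scale factor is applied to every pixel, the result is only meaningful when the wound is roughly flat and parallel to the sensor, which is exactly the limitation noted above for large and curved wounds.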

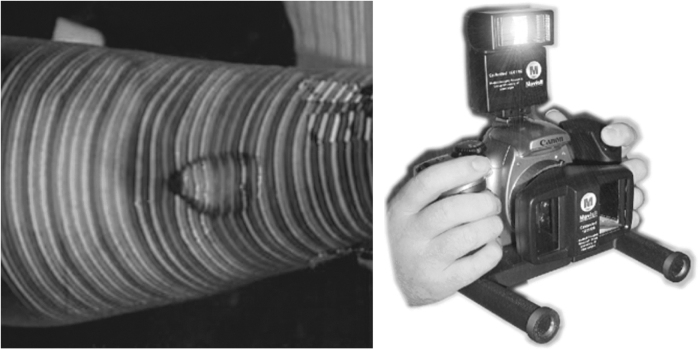

Some works focused on wound 3D modeling for spatial and volumetric measurements based on active and passive devices (Fig. 1). The passive technique consists in extracting and matching interest points in a sequence of images.12–14 In the active one, a laser line or a matrix pattern illuminates the wound. In both cases, the 3D points are triangulated by back-projecting light rays.15–17 The prototypes developed by research laboratories were not suited to routine care because of their overall dimensions, cost, and complex operation. In fact, given the high prevalence of wounds in clinical centers, only low-cost systems could be deployed across hospital services. As already mentioned, wound assessment is done by the nurse during wound care, and only a few minutes can be spared daily after cleaning the wound bed, which excludes complex protocols. Critical wounds nevertheless require a more reliable diagnosis aided by an advanced multimodal device.

Figure 1.

Volume measurement: active vision by color stripes projection (MAVIS I) and passive vision by dual lens stereovision (MAVIS II).

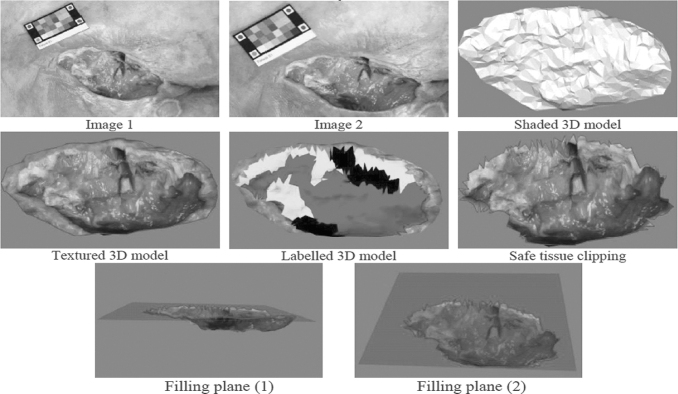

No pioneering work produced a versatile device able both to describe wound shape and to quantify tissue distribution, although both are essential for visual assessment. Later, prototypes were replaced by commercial digital cameras to produce wound 3D maps with tissue legends18,19 (Fig. 2). This was a significant step toward smart wound size imaging: the calibration step was avoided thanks to advances in multiple-view geometry,20 and a standard pattern placed in the field of view provided color constancy and metric gauging.21,22

Figure 2.

3D wound reconstruction and labeling using a simple handheld digital camera. 3D, three-dimensional.

Subsequently, no significant improvements were made23–27 in either the software or the hardware to propose a specific device with embedded image processing intended for wound assessment. The emergence of low-cost, accurate, portable, and handheld devices with spectacular embedded computing power was to radically change wound care practice,28 as these devices could be deployed massively. Indeed, if the algorithms are installed and run inside the mobile devices without the need for a server, processing becomes much faster and remains possible without internet access.

First commercial devices for wound size imaging

The SilhouetteMobile™ system (Aranz Medical Ltd., Christchurch, New Zealand) (Fig. 3 left) provided a handheld tool with an embedded digital camera, active vision, and image processing software. Thanks to the RGB sensor and three laser line generators, an electronic device could meet the requirements of a basic visual assessment (Fig. 5). Unfortunately, only geometrical measurements are provided: wound area is obtained by contour detection in the image and the average volume is deduced from the projection of laser patterns on the wound surface. No tissue classification is therefore available to assess the healing stage. To evaluate its accuracy, it was compared with the Visitrak™ (Smith & Nephew, Watford, United Kingdom) wound measurement system, a tool based on manual tracing on transparent sheets transferred onto a touch tablet, and with a basic elliptical approximation made with a ruler, using a scanner as the reference.29 The study demonstrated that all the wound imaging devices clearly outperform manual measurements with a ruler. The accuracies, based on the relative error, were 13.3%, 6.8%, and 2.3%, and the precisions, based on the coefficient of variation, were 6%, 6.3%, and 3.1%, respectively, for the ruler, the Visitrak, and the Silhouette devices.
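The two metrics quoted in this comparison can be computed as in the sketch below. The exact definitions used in the cited study are not given here, so this assumes the common ones: mean absolute relative error against the reference for accuracy, and sample standard deviation divided by the mean for the coefficient of variation. The measurement values are invented for illustration and are not data from the study.

```python
from statistics import mean, stdev


def relative_error_pct(measurements, reference):
    """Mean absolute relative error (%) of repeated measurements vs. a reference."""
    return 100.0 * mean(abs(m - reference) / reference for m in measurements)


def coefficient_of_variation_pct(measurements):
    """Sample standard deviation divided by the mean, in percent."""
    return 100.0 * stdev(measurements) / mean(measurements)


# Hypothetical repeated area measurements (cm^2) of one wound whose
# reference area, e.g. from a scanner, is 10.0 cm^2.
areas = [9.0, 10.0, 11.0]
accuracy_pct = relative_error_pct(areas, reference=10.0)
precision_pct = coefficient_of_variation_pct(areas)
```

The first metric needs a trusted reference, whereas the second only needs repeated measurements, which is why a device can be precise without being accurate.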

Figure 3.

(Left) Silhouette wound assessment device from Aranz Medical. (Right) inSight device from eKare.

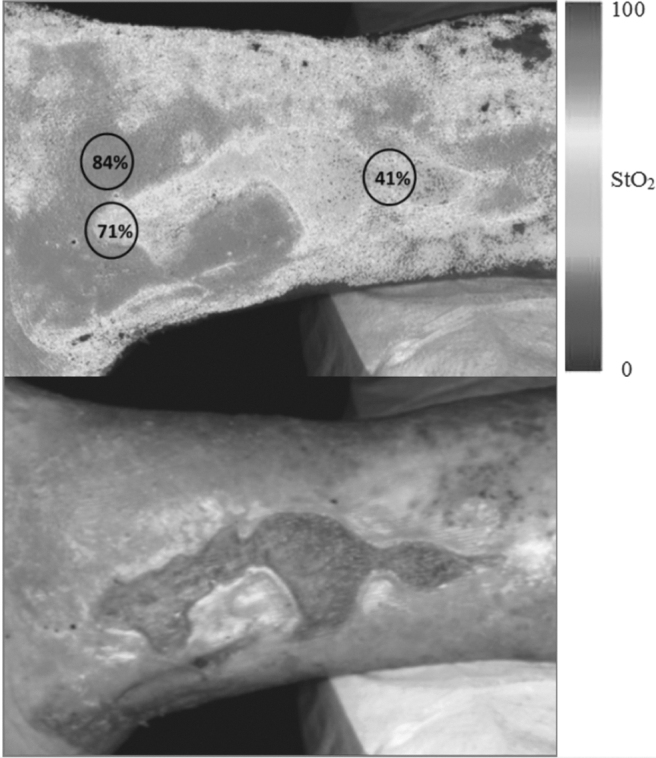

Figure 5.

Snapshot NIR allows viewing oxygen saturation (StO2) levels throughout the wound and surrounding tissue. Kent Imaging SnapshotNIR device. NIR, near infrared.

For accurate 3D measurements, several thousands of data points are required to build a numerical mesh. The WoundZoom (Woundzoom, Inc.) device is based on a specially designed tablet with a built-in 3D image sensor that can capture the length, width, and depth of a patient's wound. The software program calculates the surface area and volume. It also provides professionals with thermal mapping, which is another indicator of tissue health. Recently, InSight (eKare, Fairfax, Virginia, USA) (Fig. 3 right), a small device based on an iPad with an add-on stereo color sensor and embedded image processing software, has been marketed.30 It provides a wound 3D model, tissue labeling, and wound region extraction from the background.

Numerous commercial imaging devices have been released since that time, so it is legitimate to search for the best one. To check and compare the technical accuracies announced for all the available commercial devices, it would be necessary to capture wound images from the same patients during a consistent clinical study, with the same panel of experts delivering the ground truth for the tissue labels. This explains why the specifications provided by the manufacturers, which are rarely verified by independent laboratories, are given here only through the companies' links.

Emerging Acquisition Devices and Image Modalities

Just as digital cameras revolutionized the field of photography, new emerging and low-cost devices again offer unexpected possibilities, such as thermal and infrared imaging or 3D scanning (Table 1). Spectral exploration was developed to detect nonvisible wavelengths, analyze narrow bands, and drastically improve spectral resolution. It provides relevant data for tissue analysis and classification. Competing techniques are also available for 3D geometrical measurements over the wound. The trend is to associate spectral analysis and 3D scanning in multimodal devices.

Table 1.

Wound imaging methods for tissue analysis and shape measurements

| Wound imaging method | Features | Application in wound care | Advantages | Disadvantages |
|---|---|---|---|---|
| Color imaging | Three broad channel RGB sensor | Basic tissue classification; shape measurements | Available on all smartphones; high spatial resolution | Limited 3D reconstruction precision on poor textured wounds |
| Multispectral/Hyperspectral imaging | Visible and/or near IR sensor; up to several hundreds of narrow bands | Enhanced tissue classification; oxygen saturation; bacterial environment | High spectral resolution | No add-on sensor for smartphone; powerful illumination required |
| Thermal imaging | Mid-infrared band sensor | Insights on tissue inflammation and healing effectiveness | Add-on sensor available | Low thermal precision and poor spatial resolution |
| Light pattern | Projection of laser lines or infrared speckle | Shape measurements | Available on some smartphones with infrared light pattern | Implies specific lighting system for pattern projection |
| Time of flight | Time taken by light to reach a point and go back to the sensor | Shape analysis and volume measurement | Add-on sensor available; high robustness for shape measurements | Scanning required to create a 3D map; device customization needed to comply with wound small size |

3D, three-dimensional.

Spectral exploration

Acquiring a large number of narrow spectral bands, about ten for a multispectral image or one or two hundred in the case of a hyperspectral image, provides much more data than color imaging, which is limited to red, green, and blue channels with a large bandpass. These devices, initially reserved for aerial and satellite remote sensing, are becoming widespread in factories to inspect consumer goods, and have been applied more recently in the medical field, where visible and infrared bands are investigated.31,32

In remote sensing, acquisition relies on push broom sensing, in which the scene is scanned during airborne displacement of the sensor. Since the camera needs to be translated, as in a photocopier, to obtain a spectrum in each scanned line, this technique is not suited to wound imaging, where the capture of complete image frames is required. To capture a hyperspectral cube, composed of a series of images at different wavelengths, it is necessary to operate very quickly when the scene is not static. A basic and low-cost approach is to use a wheel with several filters to capture in vivo wound images.

Even with a few wavelengths, preliminary experiments indicated that wound assessment is greatly enhanced by spectral discrimination.33,34 Manual filter selection can be avoided with liquid crystal filters, which are electronically tuned through a computer interface. This technique proved to be efficient to explore which wavelengths are relevant to detect and display vital tissues during surgery in an operating room.32 Recently, advanced snapshot mosaic sensors (IMEC, Leuven, Belgium) have been marketed. As they allow a series of wavelengths to be captured simultaneously in the visible and near-infrared band, medical applications will undoubtedly benefit from this technology. The most recent sensor has key benefits: color and narrow-band near-infrared imaging are integrated into a single chip for low-cost and compact solutions. Moreover, the infrared band is tunable to match a specific filter band, which is valuable for medical imaging using fluorescence: the sensor can be customized to capture only the wavelengths emitted by the tissues stimulated by fluorescence.

Another technique to gather tissue response to specific wavelengths is to illuminate the tissue with these wavelengths in a dark environment. For example, based on digital light processing videoprojection, a hyperspectral imaging system has been designed for visualizing the chemical composition of in vivo tissues during surgical procedures, in particular, to quantify the oxygenation of the tissues.35 In a recent study, hyperspectral imaging was used to monitor wound healing in a 3D wound model designed to mimic the healing process in vitro. The model comprised human fibroblasts embedded in a collagen matrix and keratinocytes on the surface as representatives of the most important skin cells. This wound model was established and incubated without and with acute and chronic wound fluid. Tissue samples were fixed and investigated histologically and immunohistochemically to confirm that the model was able to correlate cell quantity and spectral reflectance during wound closure.36

The number of wavelengths provides sharp discrimination between tissues, but the ability to stimulate tissues with only a particular wavelength can reveal hidden properties. This is the case of the i:X Wound Intelligence device (MolecuLight, Toronto, Canada) (Fig. 4). With the guidance of fluorescence imaging, this portable touch-screen device with an intuitive interface allows clinicians to quickly, safely, and easily visualize bacteria. Bacteria simply appear in red in the image, providing maximum insights for accurate treatment selection. A monochromatic violet light is emitted by the device and its interaction with the tissue and bacteria leads to a green fluorescence of the wound, which turns to red for harmful bacteria. A similar approach is followed in the SnapshotNIR device (Kent Imaging, Calgary, Canada) (Fig. 5), which uses light in the near-infrared spectrum for wound assessment. As the wavelength-dependent light absorption of hemoglobin differs depending on whether or not it is carrying oxygen, this device reports approximate values and displays images of oxygen saturation, relative oxyhemoglobin, and deoxyhemoglobin levels in superficial tissue without injectable dyes or patient contact. It thus conveys a comprehensive picture of tissue health and the healing capacity of wounds.
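Oxygen saturation is conventionally defined as the fraction of hemoglobin that carries oxygen, StO2 = HbO2 / (HbO2 + Hb). The sketch below applies this definition pixel by pixel to two small maps of relative concentrations. How a given commercial device estimates these concentrations from near-infrared absorption is not described here, so the input values and function names are purely illustrative assumptions.

```python
def oxygen_saturation_pct(oxy_hb, deoxy_hb):
    """StO2 (%) = HbO2 / (HbO2 + Hb), from relative concentrations."""
    total = oxy_hb + deoxy_hb
    if total <= 0:
        raise ValueError("total hemoglobin must be positive")
    return 100.0 * oxy_hb / total


def saturation_map(oxy_map, deoxy_map):
    """Apply the StO2 definition to two equally sized 2D maps."""
    return [
        [oxygen_saturation_pct(o, d) for o, d in zip(oxy_row, deoxy_row)]
        for oxy_row, deoxy_row in zip(oxy_map, deoxy_map)
    ]


# Hypothetical relative oxy- and deoxyhemoglobin levels (arbitrary units).
oxy = [[3.0, 1.0]]
deoxy = [[1.0, 1.0]]
sto2 = saturation_map(oxy, deoxy)
```

A color-coded display of such a map is what lets the clinician compare oxygenation between the wound and the surrounding tissue at a glance.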

Figure 4.

Diabetic Foot Ulcer, Heel - FL-image™ (real-time imaging in fluorescence mode as a result of violet light illumination) revealed both cyan fluorescing bacteria, which is indicative of Pseudomonas aeruginosa (arrows), and red fluorescing bacteria. MolecuLight i:X Wound Intelligence Device.

HyperView™, a new surface tissue oximetry device (HyperMed Imaging, Inc., Memphis, Tennessee, USA), also provides color-coded images depicting approximate concentrations of oxyhemoglobin and deoxyhemoglobin, as well as oxygen saturation. In a smaller and more portable configuration, it relies on the same hyperspectral imaging technology as the no longer available OxyVu™-1 system, which differentiates the light absorption of oxygenated hemoglobin from that of deoxygenated hemoglobin. HyperMed Imaging, Inc. received FDA clearance in 2017 for this device, which determines oxygenation levels in superficial tissues, an important concern for wound healing, diabetic foot ulcers, and critical limb ischemia.

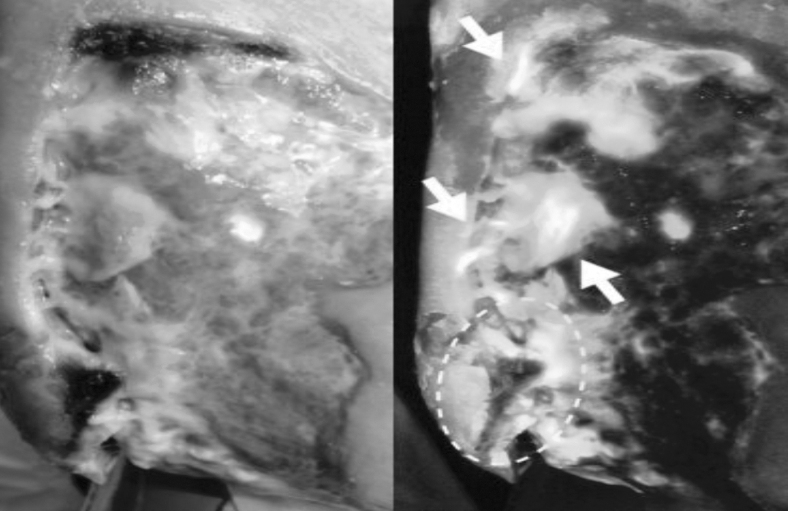

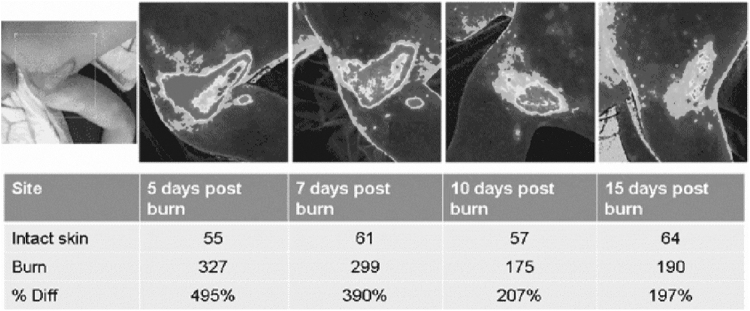

Another technique, still based on near-infrared imaging, is laser speckle contrast imaging (Fig. 6). A laser (785 nm) is diffused over the tissues, creating a speckle pattern which is captured in real time with a charge-coupled device (CCD) camera and analyzed to image blood perfusion and study microcirculation. Such a system, for example the PeriCam PSI NR (Perimed, Järfälla, Sweden), is not handheld but remains easy to move and to operate when mounted on a cart fitted with a flexible arm.
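The quantity usually computed in this modality is the local speckle contrast K, the ratio of the standard deviation to the mean of the intensities in a small pixel window. Moving scatterers such as red blood cells blur the speckle during the exposure, so a lower K indicates higher perfusion. The sketch below uses this standard definition with invented intensity values; it does not reproduce the processing of any commercial system.

```python
from statistics import mean, pstdev


def speckle_contrast(window):
    """Speckle contrast K = sigma / mean of the intensities in one window."""
    values = [v for row in window for v in row]
    mu = mean(values)
    return pstdev(values) / mu if mu else 0.0


# Hypothetical 2 x 2 intensity windows with the same mean brightness.
static_tissue = [[10, 30], [30, 10]]    # sharp speckle, little motion
perfused_tissue = [[19, 21], [21, 19]]  # speckle blurred by blood flow

k_static = speckle_contrast(static_tissue)
k_perfused = speckle_contrast(perfused_tissue)
```

Sliding such a window over the whole frame yields a contrast map from which relative perfusion images are derived.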

Figure 6.

Wound healing progress for burns analyzed with PeriCam PSI NR, Burn Center, Linköping Univ. Hospital, Sweden.

Tissues have a number of useful properties in the near-infrared region of the spectrum, linked to their weak absorption and high scattering of these wavelengths. The light–tissue interaction of components such as red blood cells, hemoglobin, or collagen is relevant to assess wound healing.37

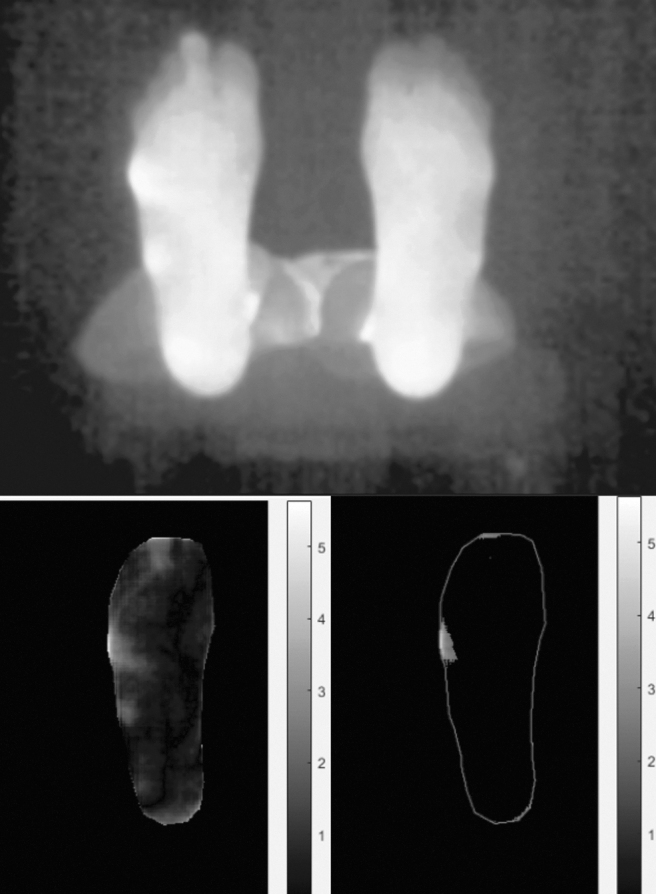

Longer wavelengths in the infrared band provide thermal information. As new devices become cheaper and embedded as add-on sensors, thermal cameras are becoming common for industrial control and visual inspection. This imaging modality is particularly relevant for wound assessment and has been investigated in this field.38–40 It allows for physical and physiological monitoring, feeding information to the physician about blood flow and metabolic activity, and it helps to identify differences between affected and unaffected tissues. The Scout solution (WoundVision Indianapolis, Indiana, USA), for instance, is a visual and infrared imaging device that computes and stores wound dimensions and biological modifications on the cutaneous tissues, based on temperature differential. In the current European STANDUP project41 (Smartphone Thermal ANalysis for Diabetic Foot Ulcer Prevention detection and treatment) dedicated to diabetic foot ulcers42 (Fig. 7), thermal information is used to prevent ulcers by hyperthermia detection, to monitor ulcer healing by combining thermal, color, and 3D measurements and to improve the design of foot insole and foot pads.
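A temperature differential of the kind used for hyperthermia detection can be sketched as a comparison between matching regions of the two feet. This is an assumption made for illustration: the region names, the contralateral comparison, and the `threshold_c` value are hypothetical and are not taken from the STANDUP project.

```python
def hyperthermia_regions(left_temps_c, right_temps_c, threshold_c=2.0):
    """Flag regions whose left/right temperature gap exceeds threshold_c.

    Both arguments map a region name to its mean temperature in degrees
    Celsius. Returns {region: left minus right} for the flagged regions.
    threshold_c is an illustrative default, not a clinical recommendation.
    """
    flagged = {}
    for region in left_temps_c.keys() & right_temps_c.keys():
        diff = left_temps_c[region] - right_temps_c[region]
        if abs(diff) > threshold_c:
            flagged[region] = diff
    return flagged


# Hypothetical mean temperatures extracted from a thermal image of both feet.
left = {"heel": 31.0, "hallux": 29.5}
right = {"heel": 28.0, "hallux": 29.0}
alerts = hyperthermia_regions(left, right)
```

Comparing a region with its counterpart on the other foot removes most of the influence of ambient temperature, which matters given the low absolute precision of add-on thermal sensors.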

Figure 7.

From an infrared feet image with a smartphone equipped with add-on thermal sensor (left) temperature differential can be computed (center) to detect hyperthermia for diabetic ulcer prevention.

Nonoptical devices, such as ultrasound, are outside the scope of this article, but they can be coupled with color imaging to gain insights into the condition of underlying tissues43 and to make the screening of bed sores possible.44

3D geometrical measurements

A single image provides a lot of information on a wound, but it fails to produce accurate shape measurements, due to perspective projection errors on nonplanar wounds. To obtain 3D data, many approaches are now possible.

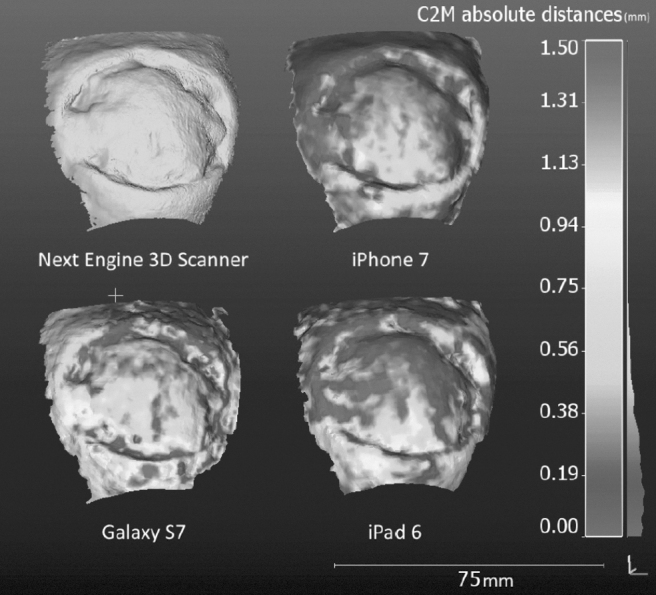

By combining several images from different points of view, 3D points can be reconstructed by triangulation. This is the basic concept of stereoscopic systems. Nowadays, the acquisition of image pairs can be advantageously replaced by video acquisition, since in an image sequence, the mapping of homologous points is easily done between two successive frames, whereas it is often tricky to find corresponding points between distant viewpoints. The weak point of this technique is that it does not work if the wound is insufficiently textured: not enough points can be matched between the images, resulting in a sparse 3D map and poor measurements. On well-textured scenes, this shape from motion approach competes with powerful laser scanners equipped with turning tables, as tested in a study on volume estimation of skin ulcers45 (Fig. 8).
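For the simplest case of two rectified views separated by a known baseline, triangulation reduces to the relation Z = f · B / d, where d is the disparity between homologous points. The sketch below assumes such an idealized pinhole stereo pair; the focal length, baseline, principal point, and pixel coordinates are hypothetical values.

```python
def triangulate_point(x_left, x_right, y, focal_px, baseline_mm, cx, cy):
    """Return (X, Y, Z) in mm for one pair of homologous points.

    Assumes rectified images from identical pinhole cameras, so that the
    matched points lie on the same row y and differ only in x.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_mm / disparity
    x = (x_left - cx) * z / focal_px
    y_mm = (y - cy) * z / focal_px
    return x, y_mm, z


# Hypothetical setup: 1000 px focal length, 50 mm baseline.
point = triangulate_point(
    x_left=700, x_right=450, y=500,
    focal_px=1000, baseline_mm=50, cx=600, cy=500,
)
```

Since depth is inversely proportional to disparity, a matching error of one pixel costs more precision on distant points, and unmatched points on poorly textured wounds simply leave holes in the 3D map.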

Figure 8.

Reproducibility test: Residual distances between three models generated from different mobile cameras after ICP registration with the laser scanner reference (top left) —Zenteno 2019.45 ICP, iterative closest point.

To ensure more robust results than with the preceding passive vision techniques, in active vision, light patterns are projected onto the wound to obtain a 3D shape from the distorted pattern. This is the solution embedded in the commercial wound assessment device Silhouette (Aranz Medical, New Zealand) which projects three laser lines to obtain wound 3D data. The projection of a textured pattern provides denser 3D maps for accurate volume estimation, as done with the inSight device (eKare).

Recently, affordable time-of-flight cameras became available. These cameras are so named because they produce depth images by measuring on each point the time taken by light to reach this point and be reflected back to the sensor. These devices can compete with manual practice for volumetric measurement.46 This is also the case in a new multimodal prototype sensor system for wound assessment and pressure ulcer care. Multiple imaging modalities, including RGB, 3D scanning, thermal, multispectral, and chemical sensing, are integrated into a portable hand-held probe for real-time wound assessment. This multimodal sensor system performs various assessments, including tissue composition, 3D wound measurement, temperature profiling, spectral, and chemical vapor analysis, to estimate healing progress.47
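The time-of-flight principle amounts to the relation d = c · t / 2, since the light travels to the point and back. The sketch below applies it to a single round-trip time; real sensors often infer this time indirectly, which is not modeled here, and the function names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s):
    """Distance to the surface from the round-trip time of a light pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_for_distance_s(distance_m):
    """Inverse relation: round-trip time for a given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


distance = tof_distance_m(2e-9)                   # a 2 ns round trip
timing_for_1_mm = round_trip_for_distance_s(1e-3)
```

The second value, a few picoseconds, shows why millimetric depth resolution on a small wound is demanding for this class of sensor.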

Another class of sensors could soon revolutionize photographic devices: the newly manufactured plenoptic sensors (Raytrix, Kiel, Germany), which are already integrated in industrial inspection tasks. While a classical digital camera measures on each pixel the total intensity of light emitted from one point of the real scene, a plenoptic camera48 captures in a single snapshot the direction of each ray contributing to the intensity on a pixel, called the light field. Whereas a conventional camera produces a 2D image formed by a single lens, a light field camera contains a microlens array. Typically, a hologram is a photographic recording of the light field, which appears 3D to the naked eye. In practice, plenoptic cameras deliver all-in-focus images at high resolution for accurate metrology. In the near future, this technology could be integrated in smartphone cameras, as light field modules for smartphones have aroused the interest of manufacturers. These tools are very promising for medical applications. For example, in soft-tissue surgery, a novel fused plenoptic and near-infrared camera tracking system enables 3D tracking of tools and target tissue while overcoming blood and tissue occlusion in the uncontrolled, rapidly changing surgical environment.49

Artificial Intelligence for Tissue Segmentation

Expert knowledge

When wound assessment is done during visual examination, the clinician's knowledge is required to characterize the nature of the tissues. Even with the naked eye, an expert is able to discriminate between healing and infected tissues under uncontrolled lighting, but is much less effective at producing quantitative measurements of the wound status or evolution. Wound imaging enables automatic measurement of tissue areas, but tissue classes first need to be defined. Clearly, the expert knowledge needs to be transferred to the machine vision system by a learning step. It consists in collecting certified samples of each class of tissue to constitute a tissue database.

The clinician can draw tissue outlines on digital wound images but this process is time consuming. One alternative is to label previously segmented images with one of the tissue class labels.19–50 The clinician is no longer free to delimit exactly tissue regions but if the segmentation level is small enough, one can avoid creating hybrid regions containing several tissue classes, which the clinician would be unable to label. Note that intraobserver repeatability is not maximal in this process and that interobserver repeatability is even lower, so that several experts are needed to produce a robust ground truth. Tissue regions with poor consensus should be discarded for the learning step.
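One simple way to build such a ground truth is a majority vote per region, discarding regions where agreement is too low. The sketch below is only an illustration of that idea: the `min_agreement` threshold, the region identifiers, and the labels are hypothetical, and the cited works may merge expert labels differently.

```python
from collections import Counter


def consensus_labels(expert_labels, min_agreement=0.75):
    """Keep the majority label of each region if enough experts agree.

    expert_labels maps a region id to the list of labels given by the
    experts. Regions below min_agreement are dropped from the result.
    """
    kept = {}
    for region, labels in expert_labels.items():
        if not labels:
            continue
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            kept[region] = label
    return kept


# Hypothetical labels from four experts on three pre-segmented regions.
votes = {
    "r1": ["granulation", "granulation", "granulation", "granulation"],
    "r2": ["fibrin", "fibrin", "necrosis", "fibrin"],
    "r3": ["fibrin", "necrosis", "granulation", "necrosis"],
}
ground_truth = consensus_labels(votes)
```

Here region r3 is excluded from the learning step because no label reaches the required agreement.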

Machine learning

The power of machine learning,51 an application of artificial intelligence, resides in its ability to automatically learn and improve from experience without being explicitly programmed. The sample tissue regions labeled by the clinicians directly feed the learning step, during which descriptors are computed on each tissue region. After various labeled samples have been processed, the algorithm is able to label a new sample with a high confidence level, based on the region descriptors extracted from it and compared with those of the known samples. In practice, the learning database is built from part of the labeled tissue samples. Machine learning is then run and validated by automatically classifying the other part of the samples, called the test database. Building a tissue model requires manually designing and extracting color and texture descriptors to characterize each sample and assign the correct tissue label to it.
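The learn-then-validate workflow can be sketched with a deliberately simple classifier. The example below uses a nearest-centroid rule on mean RGB descriptors as a stand-in; it is not the classifier of any cited work, and the descriptor values and the split between learning and test samples are invented.

```python
from math import dist
from statistics import mean


def fit_centroids(samples):
    """Learn one mean descriptor per tissue label from (descriptor, label) pairs."""
    by_label = {}
    for descriptor, label in samples:
        by_label.setdefault(label, []).append(descriptor)
    return {
        label: tuple(mean(axis) for axis in zip(*descriptors))
        for label, descriptors in by_label.items()
    }


def predict(centroids, descriptor):
    """Assign the label whose centroid is closest to the descriptor."""
    return min(centroids, key=lambda label: dist(centroids[label], descriptor))


def accuracy(centroids, samples):
    """Fraction of test samples whose predicted label matches the expert label."""
    hits = sum(predict(centroids, d) == label for d, label in samples)
    return hits / len(samples)


# Hypothetical mean RGB descriptors of expert-labeled regions.
learning_set = [
    ((200, 40, 40), "granulation"), ((180, 50, 50), "granulation"),
    ((220, 200, 80), "fibrin"), ((200, 190, 90), "fibrin"),
    ((30, 25, 20), "necrosis"), ((50, 40, 35), "necrosis"),
]
test_set = [
    ((190, 45, 45), "granulation"),
    ((210, 195, 85), "fibrin"),
    ((40, 30, 30), "necrosis"),
]
model = fit_centroids(learning_set)
score = accuracy(model, test_set)
```

Keeping the test samples out of the learning step is what makes the score an honest estimate; real wound images need far richer color and texture descriptors than a mean color.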

The machine learning approach remains very popular and has inspired many efficient marketed medical imaging systems. The support vector machine (SVM) is the most popular supervised algorithm and generally reaches high performance on most classification problems, given its mathematical properties such as convex optimization. Several machine learning architectures have been designed to implement wound tissue classification. For example, a robust skin tissue classification tool using cascaded two-stage SVM-based classification was proposed.15 The segmentation task consisted in the extraction of texture and color descriptors from wound images, followed by the SVM classifier to label the tissues within the wound into three categories (granulation, slough, and necrosis). Computer methods based on manually engineered descriptors or image processing methods were also developed to segment diabetic foot ulcers.52

Convolutional neural networks

A critical step in the classical machine learning workflow consists of selecting discriminant features from the images. This task is still left to humans. In contrast, with deep learning, the so-called new generation of neural networks, manual feature engineering is not required. Instead, the network automatically builds high-level features from raw data during the learning step, but massive image databases are required in this supervised process.

Deep learning has demonstrated its efficiency in numerous applications, such as text translation, autonomous vehicles, voice assistants, or economic estimates. Images are an ideal data structure for these networks and, after spectacular results in computer vision tasks such as semantic image segmentation, their application to medical imaging has been investigated with success by computer vision specialists.53 Clearly, this approach will bring many medical advances in the coming years. Traditionally, scientific discoveries are the result of intuition and observation, making hypotheses from associations and then designing and running experiments to test the hypotheses. However, with medical images, observing and quantifying associations can often be difficult because of the wide variety of features, patterns, colors, values, and shapes that are present in real data. Here, deep learning can extract new knowledge from the accumulation of hundreds of thousands of real cases.

Deep learning in health care addresses a wide range of problems and can provide doctors with an accurate analysis of a disease, helping them to treat it better and to make better medical decisions. It is noteworthy that the number of articles published on applications of artificial intelligence in medical image analysis54 quadrupled between 2015 and 2017 and that the segmentation of anatomical structures is the most frequently tackled topic. The most commonly used deep learning architecture for medical image analysis is the convolutional neural network (CNN).

Deep learning can contribute to a range of fundamental tasks in medical image analysis: retrieval, registration, detection, segmentation, classification, image synthesis, and enhancement. For example, using deep learning models trained on patient data consisting of retinal fundus images, it is now possible to predict cardiovascular risk factors not previously thought to be present or quantifiable in retinal images, such as age, gender, smoking status, systolic blood pressure, and major adverse cardiac events.55 Deep learning can also outperform the expert eye more directly in the detection of pathologies during breast, liver, and lung radiological examinations. As X-ray images provide huge amounts of data, CNNs can rise to the challenge of identifying very small image regions depicting anomalies, such as nodules and masses that might represent cancers. Deep neural networks also reached a diagnostic accuracy similar to that of highly trained dermatologists in identifying several types of skin cancer, but this required a huge, reliably annotated image database, which is not currently available for wounds.56

Image segmentation is one of the first areas in which deep learning has made promising contributions to medical image analysis, and some pioneering studies have recently investigated this approach for wounds (Table 2). As deep learning requires a massive amount of training data, which is a real problem for wound images captured in the patient's room, several strategies have been tested to overcome this limitation.

Table 2.

Summary of deep learning studies on wound tissue segmentation and classification

| Works | Goals | Methods | Database | Results |
|---|---|---|---|---|
| Sofia Zahia [2018, USA]: Tissue classification and segmentation of pressure injuries using ConvNets57 | Segmentation of the different tissue types present in pressure injuries (granulation, slough, and necrotic tissues) using a small database | ConvNet (5 × 5 inputs) | 22 images, 1,020 × 1,020; patches 5 × 5; 75% for training set and 25% for test set | Accuracy = 92.01%; DSC = 91.38%; precision per class: granulation = 97.31%, necrotic = 96.59%, slough = 77.90% |
| H. Nejati [2018, Singapore]: Fine-grained wound tissue analysis using deep neural network61 | Classification of seven types of tissues (necrotic, slough, infected, epithelialization, healthy, unhealthy, hyper granulation) | AlexNet (227 × 227 inputs); SVM (HSV, LBP, HSV+LBP); principal component analysis | 350 images; patches 20 × 20; resizing patches to 227 × 227 | Three-fold cross validation: AlexNet = 86.40%, HSV = 77.57%, LBP = 79.66%, HSV+LBP = 77.09% |
| Fangzhao Li [2018, China]: A composite model of wound segmentation based on traditional methods and deep neural networks63 | Wound image segmentation framework that combines traditional digital image processing and deep learning methods | FCN (MobileNet) | 950 images | Precision = 94.69% |
| Manu Goyal [2017, UK]: DFUNet: CNNs for DFU classification84 | Novel fast CNN architecture called DFUNet for classification of ulcers and non-ulcerous skin, which outperformed GoogLeNet and AlexNet | DFUNet; LeNet; AlexNet; GoogLeNet; SVM (LBP); SVM (LBP+HOG); SVM (LBP+HOG+color descriptors) | 292 images of patients' feet with ulcers and 105 images of healthy feet; patches 256 × 256; 85% for training set, 5% for validation set, and 10% for testing set; data augmentation (rotation, flipping, color spaces) | AUC: DFUNet = 0.9608, LeNet = 0.9292, AlexNet = 0.9504, GoogLeNet = 0.9604, LBP = 0.9322, LBP+HOG = 0.9308, LBP+HOG+color descriptors = 0.9430 |
| Manu Goyal [2017, UK]: Fully convolutional networks for diabetic foot ulcer segmentation58 | Automated segmentation of DFU and its surrounding skin by using fully convolutional networks | FCN-AlexNet; FCN-32s; FCN-16s; FCN-8s | 600 DFU images and 105 healthy foot images; from the 600 DFU images in the dataset, 600 ROIs of DFU and 600 ROIs of the skin surrounding the DFU were produced | Specificity for ulcer: FCN-AlexNet = 0.982, FCN-32s = 0.986, FCN-16s = 0.986, FCN-8s = 0.987; specificity for surrounding skin: FCN-AlexNet = 0.991, FCN-32s = 0.989, FCN-16s = 0.994, FCN-8s = 0.993 |
| Changhan Wang [2015, USA]: A unified framework for automatic wound segmentation and analysis with CNN62 | Wound segmentation for surface area estimation and feature extraction; infection detection; healing progress prediction | ConvNet; kernel SVM; Gaussian process regression | 350 images; patches 20 × 20; resizing patches to 227 × 227 | Accuracy: SVM (RGB) = 77.6%, ConvNet = 95% |

Wound Imaging: ready for smart assessment and monitoring.

AUC, area under the curve; CNNs, convolutional neural networks; DFU, diabetic foot ulcer; DSC, dice similarity coefficient; FCN, fully convolutional network; HOG, histogram of oriented gradients; HSV, hue saturated value; LBP, local binary pattern; SVM, support vector machine.

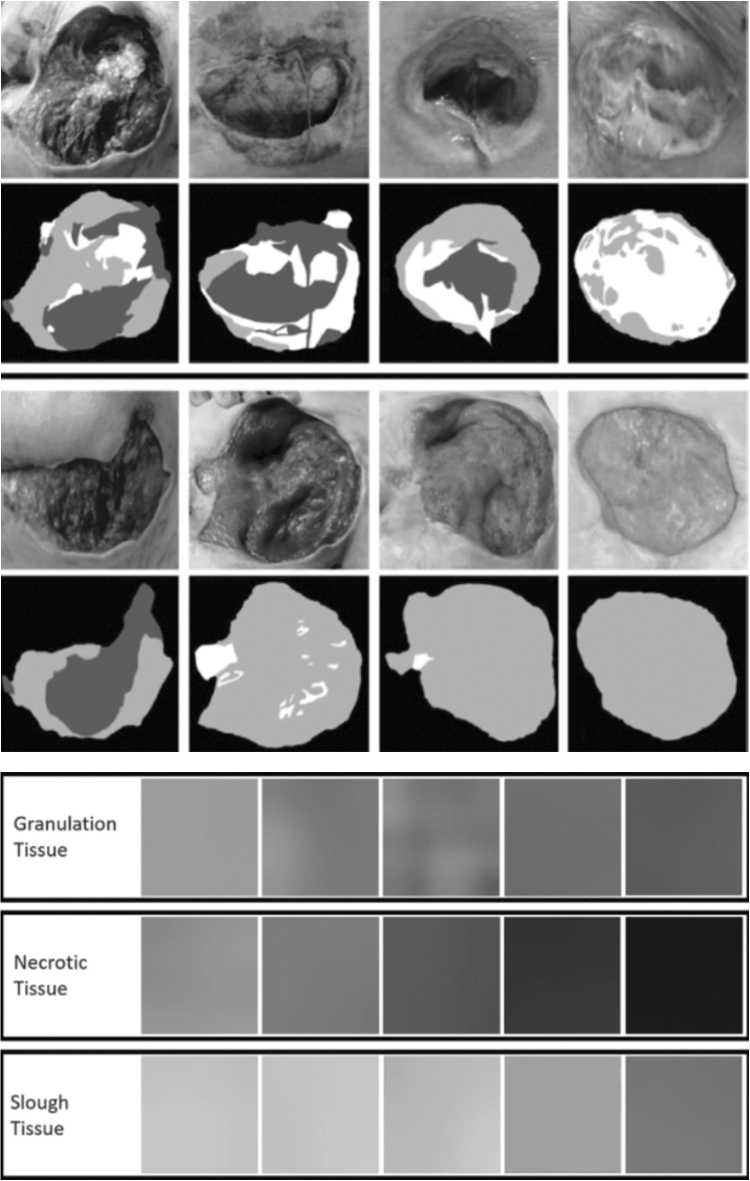

One solution is to split large images into small ones to expand the size of the database. Geometrical transformations such as scaling, translation, rotation, flipping, and elastic deformation, or color space changes, can also be applied to generate many subimages. In a recent study57 (Fig. 9), only 22 images of pressure injuries were used for tissue classification (granulation, slough, and necrosis). The method applied a CNN to a dataset composed of many cropped images to perform optimized segmentation. A standard resolution was adopted for the training and test images, and a preprocessing step created a set of small subimages that were used as input for the CNN, which achieved an overall average classification accuracy of 92%.
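The two database expansion ideas mentioned above, patch splitting and geometrical transformation, can be illustrated with a short pure-Python sketch. It assumes non-overlapping square patches and uses only flips and 90-degree rotations; the function names are hypothetical, and the preprocessing actually used in the cited study may differ.

```python
def split_into_patches(image, size):
    """Cut a 2D image (list of rows) into non-overlapping size x size patches."""
    rows, cols = len(image), len(image[0])
    patches = []
    for r in range(0, rows - size + 1, size):
        for c in range(0, cols - size + 1, size):
            patches.append([row[c:c + size] for row in image[r:r + size]])
    return patches

def flip_horizontal(patch):
    """Mirror the patch left to right."""
    return [row[::-1] for row in patch]

def rotate_90(patch):
    """Rotate the patch clockwise by 90 degrees."""
    return [list(col) for col in zip(*patch[::-1])]

def augment(patch):
    """Return the 4 rotations of the patch and of its mirror image (8 variants)."""
    variants = []
    for base in (patch, flip_horizontal(patch)):
        current = base
        for _ in range(4):
            variants.append(current)
            current = rotate_90(current)
    return variants

# Toy 10 x 10 "image" whose pixel values are simply running indices.
image = [[r * 10 + c for c in range(10)] for r in range(10)]
patches = split_into_patches(image, 5)
augmented = [v for p in patches for v in augment(p)]
print(len(patches), len(augmented))

# Same arithmetic for a 1,020 x 1,020 image cut into 5 x 5 patches,
# assuming no overlap between patches.
patches_per_image = (1020 // 5) ** 2
print(patches_per_image)
```

Under the no-overlap assumption, a single 1,020 × 1,020 image already yields tens of thousands of 5 × 5 patches, which shows how a few images can feed a patch-based network.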

Figure 9.

Preprocessing step for database creation (top) and dataset dictionary for three wound tissues (bottom)—Zahia 2018.57

Another solution is to pretrain the network on a very large-scale generic image database before training it on a smaller one dedicated to the application. A two-tier transfer learning method was applied by training a fully convolutional network (FCN) on larger image datasets and using it as a pretrained model for the automated segmentation of diabetic foot ulcers.58 A dataset of 705 images was assembled, including 600 diabetic foot ulcer images and 105 healthy foot images. The surrounding skin was also considered, as it is a relevant indicator of ulcer healing. The specificity for ulcer tissue was around 98%.

Expanding the training sample by geometrical transformation does not account for variations resulting from different imaging protocols and lesion specificities. So, a third solution takes advantage of a particular class of networks called generative adversarial networks.59 Composed of a generative model G and a discriminator model D, they have the ability to explore and discover the underlying structure of the training data and learn to generate new realistic images for network training using the G model. This is particularly interesting for wound imaging where data scarcity and patient privacy are important concerns. The discriminator D can be seen as a regularizer to ensure that the synthesized images are valid. This approach has been tested for unconditional dermoscopy image synthesis before skin tissue classification.60

One interesting result is that, while CNNs outperform classical machine learning for wound segmentation and even feature extraction, an SVM may be preferable for the subsequent tissue classification step, so that classification becomes a two-step process.

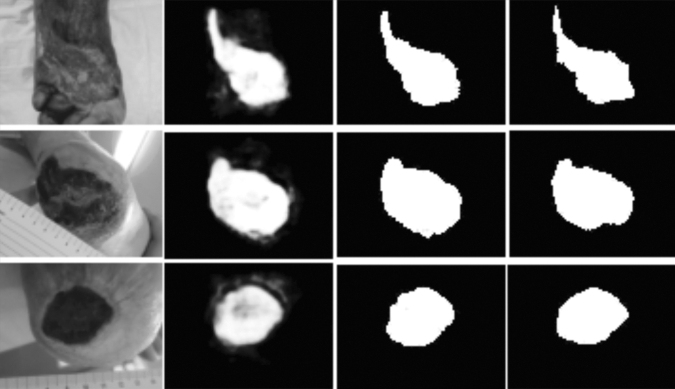

Feature extraction can be run on a pretrained network such as AlexNet before a tissue classification step based on a linear kernel SVM.61 A dataset composed of 350 images was labeled into seven classes of tissue. Only three types of tissue are generally considered over the wound (sloughy, granulation, and necrotic), but additional types (infected, granulating healthy or not, epithelial) enhance the classification. With this architecture combining CNN and SVM, instead of feeding the SVM with manually designed descriptors, the accuracy was improved (86.4% instead of 79.66%). Similarly, a wound segmentation approach has been proposed (Fig. 10).62 A CNN architecture is adopted to extract the features that feed both SVM classifiers to detect infection and Gaussian process regression models to predict healing. For wound segmentation, CNN accuracy (95%) outperformed the SVM classifier (77.6%), but for infection detection, the SVM classifier trained with CNN features outperformed the neural network (84.7%). In fact, CNNs should be combined with traditional image processing in the overall workflow, for instance, for environmental background removal in a preprocessing step and semantic correction in a postprocessing step.63

Figure 10.

(From left to right) The cropped image is taken as input by the neural network. At the output, pixel-wise probabilities of belonging to the wound segment are provided in gray levels, and the final mask is obtained by setting a threshold of 0.5 on every pixel, to be compared with the ground truth mask displayed in the last column. Wang 2015.62
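The thresholding step described in this caption, and the comparison with the ground truth mask, can be sketched as follows. The probability values are hypothetical, the choice of "greater than or equal to 0.5" is an assumption, and the Dice similarity coefficient is used here as one common way to score the overlap.

```python
def threshold_mask(probabilities, threshold=0.5):
    """Turn a pixel-wise probability map into a binary wound mask."""
    return [[1 if p >= threshold else 0 for p in row] for row in probabilities]

def dice(pred, truth):
    """Dice similarity coefficient between two binary masks."""
    intersection = sum(
        p & t for pred_row, truth_row in zip(pred, truth)
        for p, t in zip(pred_row, truth_row)
    )
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    return 2 * intersection / total if total else 1.0

# Hypothetical network output for a 4 x 4 crop.
probabilities = [
    [0.1, 0.2, 0.1, 0.0],
    [0.3, 0.8, 0.9, 0.2],
    [0.2, 0.7, 0.6, 0.4],
    [0.0, 0.1, 0.55, 0.1],
]
# Hypothetical ground truth mask drawn by an expert.
ground_truth = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

mask = threshold_mask(probabilities)
print(mask)
print(round(dice(mask, ground_truth), 3))
```

In this toy case, one pixel just above the threshold is wrongly included in the mask, which lowers the overlap score below 1.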

Wound Image Management

Image databases

In the field of medical imaging, while a number of open access datasets are available, most of them are related to radiology (X-rays, MRI, PET, CT …) and not to wound imaging. Yet such databases would be valuable for research, especially when machine learning and artificial intelligence are involved, and would also enable algorithm efficiency to be compared between competing research teams, through challenges or simply in published reports. In a typical scientific study, a research team works on its own image database, so it is difficult to compare algorithm performance. Moreover, the quality of the ground truth cannot be checked by other teams.

There are several reasons for this: first, wound images are difficult to obtain as they are taken at the bedside (it is not pleasant for the patient to face a camera with such a handicap and taking pictures is often only possible when the dressing is changed by the nurse); second, as these images are difficult to obtain, researchers are tempted to reserve their exploitation to their own group; third, there is a lack of standardization in the protocols for wound image capture: lighting control, points of view and centring, scale factor, color constancy, wound history, patients' medical records, and so on are all features that can vary from one study to another. All these points should be addressed. Not only are wound images already difficult to find but also obtaining series of images covering a wound history from its inception to healing is nearly impossible.

Various wound images can nevertheless be freely retrieved from the Medetec wound database, including pressure ulcers, surgical wounds, and diabetic ulcers, regularly encountered by a wound care practitioner. A complementary database named Medetec Surgical Dressings Database contains images of many wound technical dressings, which are used to stimulate the healing process.

Computer aided wound monitoring

At the beginning, a precise wound assessment is a prerequisite for wound monitoring. Many components of the assessment should be included in weekly documentation through a nurse's narrative note or a wound assessment chart: wound location, etiology, classification or stage, size of wound (length, width, and depth), amount of wound tunneling and undermining, types of tissues and structures observed in the wound bed, amount of exudates, state of the surrounding skin and wound margins, signs and symptoms of wound infections, individual's pain level, patient's medical factors which could delay healing, and treatment objectives.

To record the evolution of a wound across the different healing stages, the Pressure Ulcer Scale for Healing (PUSH) and the Bates-Jensen Wound Assessment Tool (BWAT) are the standard procedures for clinicians. The PUSH tool, developed by the National Pressure Ulcer Advisory Panel (NPUAP), tracks whether wound evolution is positive or not. The PUSH score is the sum of three subscores related to area, exudates, and tissue condition. In the same manner, the BWAT scores thirteen parameters to assess a wound, including dimensions, necrotic tissues, exudates, granulation, and epithelialization.
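As a minimal illustration of how such a score can be tracked over time, the sketch below adds the three subscores and compares the first and last assessments. The class name, the subscore values, and the assumption that a decreasing total indicates healing are illustrative only; the official scoring sheet defines how each subscore is obtained.

```python
from dataclasses import dataclass

@dataclass
class PushAssessment:
    """One weekly assessment; the subscore values used below are hypothetical."""
    week: int
    area_subscore: int
    exudate_subscore: int
    tissue_subscore: int

    @property
    def total(self):
        # The total score is the sum of the three subscores.
        return self.area_subscore + self.exudate_subscore + self.tissue_subscore

def trend(assessments):
    """Compare first and last totals, assuming a lower total means healing."""
    ordered = sorted(assessments, key=lambda a: a.week)
    change = ordered[-1].total - ordered[0].total
    if change < 0:
        return "improving"
    if change > 0:
        return "worsening"
    return "unchanged"

history = [
    PushAssessment(week=0, area_subscore=8, exudate_subscore=2, tissue_subscore=3),
    PushAssessment(week=1, area_subscore=7, exudate_subscore=2, tissue_subscore=3),
    PushAssessment(week=2, area_subscore=6, exudate_subscore=1, tissue_subscore=2),
]
print([a.total for a in history], trend(history))
```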

After wound assessment, evaluation of care and a wound treatment plan can be investigated. Wound imaging provides only one component of a patient's state and obviously cannot determine on its own whether the treatment has to be changed. With the commercial devices currently available in the clinical environment, only global parameters such as wound dimensions and the proportion of the different tissues are extracted. Analyzing the distribution of the different tissues and the evolution of the 3D surface locally and over time could improve understanding of the healing process and diagnosis. The accumulation of a great number of wound healing histories, including all the wound assessment components, could feed machine learning algorithms to assist the clinician in treatment decisions.

Wound monitoring quality can be significantly improved when the wound imaging device is linked to a data management tool. For example, the WoundZoom tablet (Perceptive Solutions, Inc., Eagan, Minnesota, USA), through its Web portal, helps collate patient information and can be easily integrated with a hospital's electronic health records, which is vital to creating a seamless digital network and eliminating double documentation.

Data can be exchanged between clinical centers or between clinicians and remote patients. Many hospitals have investigated the advantages of telemedicine for dermatology or wound follow-up. For this reason, clinicians should develop partnerships with companies offering emerging tools for telemedicine environments. Currently, images are compressed, uploaded, and transferred between hospitals to enable discussions with remote experts, but image processing capabilities are not supported online. With the development of mobile health, patients with chronic wounds who need evaluation by a wound care specialist can take photographs of their wounds with a digital camera or smartphone and send them via the internet to the specialist. These digital photographs allow the expert to diagnose and evaluate the chronic wounds on a periodic basis. Nevertheless, it is necessary to be aware that reliability is degraded when wound assessment is performed using mobile images.64

Smartphone Applications

A smart sensor is commonly defined as a device that does not output raw signals but embeds a processor, memory, knowledge, and software to process these signals, extract high-level information, detect major events or anomalies, and export the results over networks. It also has user-friendly calibration and configuration capabilities. The idea behind smart wound assessment is that it relies on connected devices that not only capture images but also process them locally and completely to assess wound healing status with a high level of significance.

For several years, smartphones have surpassed mid-range digital cameras on the consumer market. These low-cost and familiar devices include not only powerful processors but also high-rate interfaces. A smartphone is not as reliable as a high-grade medical imaging device, but it is the instrument of choice for mobile health.65,66 Clearly, the opportunity offered by the Internet of Medical Things and smart devices is already relevant for wound care, for example, to improve the surveillance of diabetic foot ulcers.67 The range of applications is also extended by add-on or connected sensors.

For example, smartphones are used for visual acuity screening in rural areas. Moreover, retina health can be monitored by plugging the Peek Retina medical ophthalmoscope (Peek Vision, London, United Kingdom), which illuminates the macula and optic nerve, into the smartphone. In orthodontics, the Dental Monitoring application (Dental Monitoring Company, Paris, France) is prescribed and activated by an orthodontist for all types of patients, whether in treatment, monitoring, or retention. Patients download the application from the App Store or Google Play digital distribution platforms and use their smartphone to take mouth photos, which are then uploaded to the Dental Monitoring website for further processing. Many sensors are embedded in a smartphone and have been used for medical applications, such as continuous patient tracking with GPS to deal with the risk of wandering, or monitoring of patients' balance using the phone's accelerometer.

Smartphones or tablets are indeed promising tools for standard wound monitoring at the patient's bedside in hospital or at home.68–70 It is clear that sophisticated equipment could enable more accurate ulcer detection or assessment than a smartphone but none of it is designed for mobile health applications (mHealth). So, the challenge is to embed in a smartphone the essential features required by the nurse to rapidly assess an ulcer, characterize its evolution, transfer measurements to the hospital data management system, and obtain therapeutic indications.

Wound management

With the development of eHealth, smartphones and tablets are appropriate tools for wound management when mobile and connected devices are required. For the nurse responsible for wound care, such a device becomes a personal organizer and secretary. Several software tools have been developed to simplify wound management.

Smartphone applications such as SmartWoundCare (Internet Innovation Centre, Beijing, China) can replace paper-based charting with electronic charting for chronic wounds. Such an application facilitates telehealth through data sharing and data transfer between multiple health care providers, allowing for more timely consultation and reducing the need for patients with mobility difficulties to attend consultations in person.

Wound treatment, staff communication, and quality reporting can be simplified if a handheld device is available directly at the bedside to acquire clinical data. The Braden scale has been clinically validated to predict pressure ulcer risk, while the PUSH tool and telemedicine applications such as WoundRounds (Telemedicine Solutions LLC, Schaumburg, Illinois, USA) are used to track wound healing. These indicators are valuable inputs for a risk prevention system. Patients are sorted by overall risk, and the nurse can even be prompted to deliver patient interventions. An mHealth application for decision-making support in wound dressing selection has also been proposed, so that the nurse can be assisted at the patient's bedside.71

Wound size imaging without add-on sensors

To comply with the concept of mobile health (mHealth), one should ideally use nothing but a smartphone, excluding add-on sensors, especially if use by patients at home is intended. Some advanced imaging modalities are then excluded but automatic wound assessment is nevertheless possible.

To obtain geometrical measurements of the wound with this limitation, several solutions were evaluated.72 A mobile application to document chronic wounds using a smartphone was extended to facilitate geometrical measurements on wounds using the smartphone's integrated camera. Various strategies have been implemented and tested to extract measurements: depth from focus, inertial sensors, and an original pinch/zoom method.

It is valuable to compare the performance of smartphone-based applications with that of marketed wound measurement devices. A recent study73 showed that Planimator, a wound area measurement application for Android smartphones, outperforms commercial devices such as the previously presented SilhouetteMobile (Aranz Medical, New Zealand) and Visitrak (Smith & Nephew, United Kingdom). The median relative errors were, respectively, 0.32%, 2.09%, and 7.69%, and the standard deviations of the relative differences were, respectively, 0.52%, 5.83%, and 8.92%.
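The two statistics quoted above can be computed as in the following sketch. The area values are hypothetical, and the exact definitions used in the cited study (for example, signed versus absolute differences) are assumptions made here for illustration.

```python
import statistics

def relative_differences(measured, reference):
    """Signed relative differences, in percent, between measured and reference areas."""
    return [(m - r) / r * 100 for m, r in zip(measured, reference)]

# Hypothetical reference areas and device measurements, in square centimeters.
reference_cm2 = [4.0, 10.0, 2.5, 8.0, 20.0]
measured_cm2 = [4.1, 9.8, 2.5, 8.4, 19.0]

diffs = relative_differences(measured_cm2, reference_cm2)

# Median of the absolute relative errors (robust to a few large errors).
median_relative_error = statistics.median(abs(d) for d in diffs)

# Sample standard deviation of the signed relative differences.
spread = statistics.stdev(diffs)

print(f"median relative error: {median_relative_error:.2f}%")
print(f"standard deviation of relative difference: {spread:.2f}%")
```

A low median with a large standard deviation would indicate a device that is accurate on average but inconsistent from one wound to the next.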

In commercial applications for 3D reconstruction without add-on sensors, the technique is based on photogrammetry: the user takes a series of pictures from different viewpoints, and a 3D colored and textured model of the object is derived by computation using such popular applications as QLone (EyeCue Vision Technologies Ltd., Yokneam, Israel), Scandy Pro (Scandy, New Orleans, Louisiana, USA), or Scann3D (SmartMobileVision, Budapest, Hungary). A video acquired with a high-tech smartphone can compete with a laser scanner if the wound tissue is sufficiently textured to enable dense 3D map reconstruction.45

For wound tissue segmentation purposes, the embedded processing power of recent smartphones is now sufficient to implement powerful algorithms. For example, the smartphone can perform wound segmentation based on the accelerated mean-shift method.74 The classical tissue color code is then used to summarize wound healing progress and analyze its trend over time. Another efficient algorithm, the random forest classifier based on various color and texture features, has been implemented on mobile devices to classify granular, sloughy, and necrotic tissues. Training is a long and tricky computer process, but classification is fast enough to be run on a smartphone.75
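Once each wound pixel has been assigned a tissue class, summarizing healing progress amounts to counting pixels per class at each visit. The sketch below illustrates this on two hypothetical label maps; the class names and the use of a "skin" label for non-wound pixels are assumptions.

```python
from collections import Counter

def tissue_proportions(label_map, background="skin"):
    """Fraction of wound pixels belonging to each tissue class."""
    counts = Counter(
        label for row in label_map for label in row if label != background
    )
    total = sum(counts.values())
    return {tissue: n / total for tissue, n in counts.items()} if total else {}

# Hypothetical label maps of the same wound at two successive visits.
visit_1 = [
    ["skin", "necrosis", "necrosis", "skin"],
    ["slough", "slough", "granulation", "skin"],
    ["skin", "granulation", "skin", "skin"],
]
visit_2 = [
    ["skin", "slough", "skin", "skin"],
    ["granulation", "granulation", "granulation", "skin"],
    ["skin", "skin", "skin", "skin"],
]

first = tissue_proportions(visit_1)
second = tissue_proportions(visit_2)
granulation_gain = second.get("granulation", 0) - first.get("granulation", 0)
print(first)
print(second)
print(f"granulation change: {granulation_gain:+.2f}")
```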

Relying on the power of deep learning, the Deepwound CNN76 is able to run wound assessment on a smartphone in the context of surgical site surveillance. The size of the dataset, which is composed of 1,355 smartphone wound images, makes it possible to assign several labels to each test image, including not only granulation tissue or fibrinous exudates but also infection, drainage, staples, or sutures.

Wound size imaging with add-on sensors

A smartphone is able to perform wound 3D scanning with the emergence of software applications and add-on sensors. These advances are mainly driven by new face identification functions embedded into the smartphone, but the translation to medical applications is immediate. With add-on sensors, the current technique available is based on light pattern projection over the wound.

For example, in the Structure Sensor (Occipital Inc., San Francisco, California, USA) (Fig. 11 top), integrated in the TechMed 3D medical application intended for the digitization of body parts, the structured light consists of an infrared speckled pattern, which gives access to multiple custom measurements and to the export of scans to iMed files. In the Eora 3D scanner (Eora 3D, Sydney, Australia), a green laser line generator attached to the smartphone provides structured light, but the part to be scanned needs to rest on a turntable to obtain a complete scan, so this technique is not applicable to a patient.

Figure 11.

Structure Sensor by Occipital mounted on a tablet (top); Vivo's Nex Dual Screen TOF 3D sensor for smartphone (center); FLIR ONE Pro LT thermal camera for iPhone and iPad (bottom). TOF, time of flight.

The most accurate technique is time of flight (TOF) (Fig. 11 center). From the time a light pulse takes to reach an obstacle and return to the sensor, it is simple to compute the depth in different directions and accurately map objects as a purely geometrical mesh. This technique is embedded in Vivo's new Nex Dual Screen smartphone (Vivo Mobile Communication Co., Beijing, China) to enable enhanced 3D face recognition protection with a depth resolution of 300,000 pixels, which is said to be 10 times that of structured light technology. Some other advanced smartphones are also equipped with such a TOF sensor, but it is dedicated to portrait enhancement (Huawei P30 Pro) or to 3D face scanning after processing by the 3D Creator application (Sony Xperia XZ3), and remains far from the resolution required for wound imaging, as it cannot detect portrait details such as noses or ears. In fact, smartphone technology is driven by the consumer market, for which high precision at close distance is not a priority.
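The depth computation behind the TOF principle is a one-line formula: the light pulse travels to the surface and back, so the depth is half the round-trip distance. The sketch below applies it and also shows the timing resolution that a given depth resolution would imply; the 30 cm working distance and the 1 mm target resolution are hypothetical values chosen for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def tof_depth_m(round_trip_time_s):
    """Depth of the surface from the round-trip time of a light pulse."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2

def round_trip_time_s(depth_m):
    """Round-trip time of a light pulse for a surface at the given depth."""
    return 2 * depth_m / SPEED_OF_LIGHT_M_PER_S

# Hypothetical working distance of 30 cm between the sensor and the wound.
t = round_trip_time_s(0.30)
print(f"round trip for 30 cm: {t * 1e9:.3f} ns")
print(f"recovered depth: {tof_depth_m(t):.3f} m")

# Timing resolution needed to separate two depths 1 mm apart.
dt = round_trip_time_s(0.001)
print(f"time difference for 1 mm: {dt * 1e12:.2f} ps")
```

The picosecond scale of the last figure gives an idea of why fine depth resolution at close range is demanding for consumer-grade TOF sensors.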

Compact add-on thermal sensors have also been marketed for Android smartphones and iPhones. For example, in the STANDUP European project41 currently in progress, thermal information is provided by a compact FLIR ONE Pro camera (FLIR Systems, Inc., Wilsonville, Oregon, USA) (Fig. 11 bottom) plugged into an Android smartphone. Two smartphone applications are currently being developed. The first one will be able to detect possible hyperthermia of the plantar foot surface and will analyze temperature variations in targeted regions of interest. The local temperature differential between the two plantar arches and the temperature variations just after a cold stress test are analyzed for screening purposes. The second one will assess the temperature, color, and 3D shape of diabetic foot ulcers over time. The integrated camera provides color imaging, and 3D measurements should be obtained from video capture and compared, for evaluation, with those of an add-on sensor plugged into the smartphone.
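The comparison of the two plantar arches can be sketched as follows. The temperature samples are hypothetical, and the 2.2 °C alert threshold is an assumption introduced for illustration; it is not a value given by the project described above.

```python
from statistics import mean

def arch_differential_c(left_c, right_c):
    """Absolute difference between the mean temperatures of the two arches."""
    return abs(mean(left_c) - mean(right_c))

def flag_hyperthermia(left_c, right_c, threshold_c=2.2):
    """Flag a foot pair whose arch differential exceeds the (assumed) threshold."""
    return arch_differential_c(left_c, right_c) > threshold_c

# Hypothetical temperatures (degrees Celsius) sampled in each region of interest.
left_arch = [30.1, 30.3, 30.2]
right_arch_warm = [33.0, 33.2, 33.1]
right_arch_normal = [30.8, 31.0, 30.9]

print(round(arch_differential_c(left_arch, right_arch_warm), 2),
      flag_hyperthermia(left_arch, right_arch_warm))
print(round(arch_differential_c(left_arch, right_arch_normal), 2),
      flag_hyperthermia(left_arch, right_arch_normal))
```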

Color and thermal modalities are also combined in another smartphone-based application,77 so that periwound temperature increase can be tracked to detect infection.

The design and adaptation of other sensors for smartphones are still in progress to overcome technical challenges. This is the case for optical coherence tomography, which has proved relevant for monitoring wound healing processes in biological tissues78 and is now being addressed by sensor manufacturers for the mHealth market.79

Discussion

When faced with the ongoing revolution in imaging devices and software tools for wound assessment, one may legitimately feel somewhat bewildered. When digital cameras replaced traditional photography, the advantages of digital files over printed pictures were obvious. The reduction in the cost of taking a picture was drastic, and the digital file could be stored, shared, and transferred easily and quickly. Recently, new imaging modalities have emerged at reduced costs and are very promising for wound care. So, which devices should clinical staff reasonably be equipped with in the coming years?

Wound research focused for many years on two major criteria: healing time and wound area decrease. The automatic measurement of wound volume and of the percentage and distribution of each type of tissue has changed this situation. 3D wound scanners and tissue segmentation and classification software should therefore be integrated into the weekly wound assessment.

A major point is the need for low-cost, user-friendly imaging devices: given the high prevalence of wounds in hospitals, these devices need to be routine equipment for nurses, like a thermometer or a blood pressure monitor. Considerable time is already devoted to dressing the wound, so there is little extra time to spend on wound size imaging. The same criteria also apply to mobile health development: the patient should be able to operate the device easily.

Another essential point is the need for data exchange: the imaging device has to be connected to the hospital data management system. This is critical for transferring data and specific care instructions, as nurses regularly take over from one another at the patient's bedside. Building large wound databases for machine learning purposes also depends on the dissemination of wound images at a very large scale, as the efficiency of new deep learning techniques relies on the number of sample images used during the learning step. As for other patient medical data, the collection of wound images raises the problems of consent and confidentiality. A distinction should be made between the routine use of images and research: in the first case, wound images should be collected with the same confidentiality rules as during ultrasound or endoscopic examinations, for instance. In the case of research, the patient should give written consent to the use of the images in a clinical study or in a wound image database, and all data should be anonymized. To simplify wound image sharing between distant sites, smartphones may provide immediate digital signing of patient consent each time a situation is valuable for other investigators.

The superiority of multimodal imaging tools is also relevant. Combining several wavelengths and 3D geometrical measurements helps to develop a more robust wound description than that obtained with a single sensor.

For these reasons, tablets and smartphones are the best platforms for wound assessment at the bedside or at home. As more and more computing resources, imaging technology and sensors are embedded in these devices at reduced cost due to large scale production, they will play an increasing role in wound assessment. Some functions such as thermal imaging, bacterial activity, or oxygen saturation display could be reserved for therapeutic follow-up of severe wounds; at least add-on sensors for smartphones can be shared easily by the clinical staff.

The ability of smart imaging devices to measure wounds with great accuracy is well established. In contrast, their efficiency and reliability in discriminating between different types of tissue are more questionable. The main reason is that expert knowledge must be introduced into the learning process. The labeling task, which consists of assigning a tissue class to each pixel, is essential to build a ground truth. But this process is subjective, tedious, and relies only on the naked eye, not on histological references. It is even ambiguous for hybrid, mixed, or multilayered tissues. Nevertheless, a common wound RGB image can be segmented into granulation, fibrin, necrosis, and healthy regions with great confidence. If narrow spectral bands can be extracted, especially in the near-infrared band, extra insights into tissue oxygenation and perfusion are provided. Longer wavelengths in the thermal band are relevant for the early detection of infection or inflammation, which cannot be seen by the clinician's naked eye.

One could think that deep learning could boost the overall performance of all these imaging devices, as in other applications of medical imaging, for example cancer screening on radiographs. But to achieve high performance, deep learning networks require hundreds of thousands of labeled images. This is the bottleneck: wound image labeling is approximate and time-consuming for the clinicians involved in this process, and consequently large datasets are currently not available. At present, deep learning is able to accurately extract a wound from its background by semantic segmentation, clearly outperforming classical image processing algorithms. It counts the pixels covered by the main classes of tissue, but it does not provide a diagnosis of the healing process and cannot replace histopathology of wounds.

Patient care is the main application of wound imaging, but what about preclinical studies on small animals to develop new treatments? The available commercial devices are clearly not adapted to a narrow field of view and close-distance imaging, whereas advanced smartphones have macrophotography capabilities. Even so, very small structures such as capillaries cannot be distinguished because of insufficient resolution and close-focus limits. Combined with an eyepiece and an objective, however, a mobile phone can be transformed into a quantitative imaging device, a mobile phone microscope.80

To achieve this on a smartphone, all automated settings and manufacturer image preprocessing filters have to be cancelled or minimized to restore raw image quality.

Illumination at close distance can be problematic, but in the context of a clinical study, lighting systems can be installed over the experimental setup, which is not the case in a patient room. The development of new treatments for wound care in the context of preclinical studies is already being investigated.81

The exploration of preclinical models of skin wound healing is now proposed for testing efficacy before clinical testing, and wound imaging is essential for quantitative measurements on wounds induced by thermal, mechanical, or chemical action, which are monitored in rodents and large animals.82 In vivo imaging is applied to wound dimensional measurements, skin temperature, and inflammation biomarker quantification.

The next frontier will be advanced tools for wound monitoring and treatment plan assistance. Optical assessment is generally based on the present wound condition. Recording and analyzing wound shape and tissue distribution over time could contribute to a better evaluation of the healing process and help to adapt the treatments. Until now, only graphs of global parameters over time, such as wound size or the proportion of each type of tissue, have been available, and wound history is commonly summed up by scores such as PUSH or BWAT. Taking the local changes in wound geometry and tissue distribution over the wound surface into account could improve analysis of the healing process and help to adapt treatment.
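As an illustration of what local analysis could look like, the sketch below counts, pixel by pixel, how tissue labels change between two visits. It is a simplified example under strong assumptions that are not from the source: the two label maps are taken to be already registered to the same pixel grid (the difficult step in practice), the maps are nested lists of class-name strings, and the function name `tissue_transitions` is hypothetical.

```python
from collections import Counter


def tissue_transitions(labels_before, labels_after):
    """Count (label at visit 1, label at visit 2) pairs over all pixels.

    Both maps are lists of rows of class-name strings and are assumed
    to be registered, so that the same index refers to the same skin site.
    """
    if len(labels_before) != len(labels_after) or any(
        len(row_b) != len(row_a)
        for row_b, row_a in zip(labels_before, labels_after)
    ):
        raise ValueError("label maps must have the same shape")

    transitions = Counter()
    for row_before, row_after in zip(labels_before, labels_after):
        for before, after in zip(row_before, row_after):
            transitions[(before, after)] += 1
    return transitions


# Toy example: two registered label maps of the same wound.
visit_1 = [["necrosis", "fibrin", "granulation"],
           ["fibrin", "granulation", "healthy"]]
visit_2 = [["fibrin", "granulation", "granulation"],
           ["granulation", "healthy", "healthy"]]

for (before, after), count in sorted(tissue_transitions(visit_1, visit_2).items()):
    print(f"{before} -> {after}: {count} pixel(s)")
```

Unlike a global proportion curve, such a transition table distinguishes, for example, fibrin replaced by granulation from fibrin that merely moved, although its reliability depends entirely on the quality of the registration and of the per-visit segmentation.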

In conclusion, we should not forget that wound size imaging remains only one component of wound assessment among those listed in the clinical chart and that all the biological and health data in the patient's record contribute to devising an efficient treatment plan to optimize wound healing. To predict the healing outcome, many imaging probes have been developed,83 which provide in vivo real-time assessment of the tissue microenvironment and inflammatory responses, giving cues on the underlying wound histology. These devices are complementary to the wound size imaging devices presented here, which describe the present wound state and can quantify its past changes but are not adapted to estimating its evolution. Ultimately, the clinical staff will still make the final decision, but they will be far better assisted, thanks to the standardization of wound imaging devices.

Summary

Wound assessment no longer relies only on manual measurements, since optical devices can accurately capture the 3D shape of the wound and label tissues across the wound bed. However, compactness and user-friendliness are prerequisites for routine clinical use and for acceptance by the nurses in charge of wound care. Low-cost wound size imaging devices now address range finding, hyperspectral resolution, and thermal sensing. The performance of a multimodal system will not be the sum but the product of its single modalities, offering precise and reliable wound assessment. The emergence of deep learning is also promising for tissue analysis.

The marketed devices do not embed the latest technological advances, but routine wound care will soon take advantage of them. Indeed, the drive to reduce health system spending will shape the devices marketed in the coming years, and mobile health should undergo spectacular development with the integration of enhanced imaging hardware and software tools in smartphones.

Take-Home Messages

Smartphones will soon become common devices for routine wound assessment

Add-on sensors significantly enhance the capabilities of smartphone-based wound assessment

Wound size imaging modalities have been extended from the visible spectral band to the near-infrared and thermal bands in low-cost and portable devices

Hyperspectral imaging increases the spectral resolution of color images, limited to the broad red, green, and blue channels, to hundreds of narrow spectral bands

Wound imaging is not limited to wound shape measurement. It enables tissue classification and provides insights on oxygenation, perfusion, inflammation, or infection

Advanced features for wound assessment are already available in recent commercial imaging devices

Deep learning should improve wound assessment and monitoring, as observed in many other medical imaging applications

Abbreviations and Acronyms

- AUC

area under the curve

- BWAT

Bates-Jensen Wound Assessment Tool

- CCD

charge-coupled device

- CNNs

convolutional neural networks

- DFU

diabetic foot ulcer

- DSC

Dice similarity coefficient

- EU

European Union

- FCN

fully convolutional network

- HOG

histogram of oriented gradients

- HSV

hue saturation value

- ICP

iterative closest point

- IR

infrared

- LBP

local binary pattern

- NIR

near infrared

- NPUAP

National Pressure Ulcer Advisory Panel

- PUSH

Pressure Ulcer Scale for Healing

- ROI

region of interest

- SVM

support vector machine

- TOF

time of flight

Acknowledgments and Funding Sources

This work was supported by internal funding sources of the PRISME laboratory, Orléans, France, and by the H2020-MSCA-RISE STANDUP European project.

Author Disclosure and Ghostwriting

No competing financial interests exist. The content of this article was expressly written by the authors listed. No ghostwriters were involved in the writing of this article.

About the Authors

Yves Lucas, PhD is an associate professor at PRISME laboratory, Orléans University, France, in charge of the Image and Vision Team activities covering 3D geometrical modeling, multimodal imaging, and video analysis. He received his MS degree in discrete mathematics from Lyon 1 University, France, in 1988. After a postgraduate degree in computer science, he worked on CAD-based 3D vision system programming and received his PhD degree from the National Institute of Applied Sciences in Lyon, France in 1993. After that, he joined PRISME laboratory at Orléans University and focused on complete image processing chains and 3D computer vision system design, including multimodal imaging and machine learning. His research interests are mainly oriented toward medical applications such as optical wound assessment, tissue detection in visible and infrared for surgical guidance, hyperspectral endoscopy, and patient care monitoring for orthodontics.

Rania Niri, MD is a PhD student in computer vision and image processing at the universities of Orléans, France and Ibn Zohr, Agadir, Morocco. Within the STANDUP project, she is developing deep learning methods for the segmentation of diabetic foot ulcers.

Sylvie Treuillet, PhD received her graduate degrees from the University of Clermont-Ferrand (France) in 1988. For two years, she worked as an R&D engineer in a private company in Montpellier. She then joined the University of Clermont-Ferrand and was awarded her PhD degree in computer vision in 1993. Since 1994, she has been an assistant professor in computer science and electronic engineering at the Ecole Polytechnique of the University of Orléans. Her research interests include computer vision for 3D modeling, pattern recognition, and hyperspectral imaging.

Hassan Douzi, PhD has been a research professor at Ibn-Zohr University, Agadir in Morocco since 1993. He holds a PhD in Applied Mathematics from Paris IX University (Dauphine) and is the head of the IRF-SIC laboratory. His research focuses on wavelet analysis and image processing applications as well as information processing methods in general.

Benjamin Castaneda, PhD received his B.S. degree from the Pontificia Universidad Catolica del Peru (PUCP) (Peru, 2000), his M.Sc. from Rochester Institute of Technology (USA, 2004), and his Ph.D. from University of Rochester (USA, 2009). He is currently a Principal Professor, Chair of Biomedical Engineering and Head of Research of the Medical Imaging Laboratory at PUCP. He received the SINACYT/CONCYTEC award to the Peruvian academic innovator for his work toward the development of medical technology in Peru (2013) and was twice a finalist of the Young Investigator Award of the American Institute of Biomedical Ultrasound (USA, 2007 & 2011).

References

- 1. European Wound Management Association. Prevalence of chronic wounds in different modalities of care in Germany. https://ewma.org/fileadmin/user_upload/EWMA.org/EWMA_Journal/Articles_latest_issue/April_2018/Kroeger_Prevalence_of_Chronic_Wounds_in_different_modalities_in_Germany.pdf (last accessed July 20, 2019)

- 2. European Pressure Ulcer Advisory Panel. 18th EPUAP Annual Meeting 2015 Ghent, Belgium: Putting pressure on the heart of Europe. https://www.epuap.org/epuap-meetings/18th-epuap-annual-meeting-2015-ghent-belgium/ (last accessed July 20, 2019)

- 3. Mordor Intelligence. Europe advanced wound care market - Growth, trends, and forecast (2020–2025). https://www.mordorintelligence.com/industry-reports/europe-advanced-wound-care-management-market-industry (last accessed July 20, 2019)

- 4. Fife CE, Eckert KA, Carter MJ. Publicly reported wound healing rates: the fantasy and the reality. Adv Wound Care 2017;7:77–94

- 5. Khoo R, Jansen S. The evolving field of wound measurement techniques: a literature review. Wounds 2016;28:175–181

- 6. Grey JE, Enoch S, Harding KG. ABC of wound healing: wound assessment. BMJ 2006;332:285–288

- 7. Doughty DB. Wound assessment: tips and techniques. Adv Skin Wound Care 2004;17:369–372

- 8. Gottrup F, Kirsner R, Meaume S, Münter C, Sibbald G. Clinical Wound Assessment. A Pocket Guide. Minneapolis, MN: Coloplast Corp, 2003

- 9. Oduncu H, Hoppe H, Clark M, Williams R, Harding K. Analysis of skin wound images using digital color image processing: a preliminary communication. Int J Low Extrem Wounds 2004;3:151–156

- 10. Kolesnik M, Fexa A. Segmentation of wounds in the combined color-texture feature space. SPIE Med Imaging 2004;5370:549–556

- 11. Zheng H, Bradley L, Patterson D, Galushka M, Winder J. New protocol for leg ulcer tissue classification from colour images. Conf Proc IEEE Eng Med Biol Soc 2004;2004:1389–1392

- 12. Plassman P, Jones T. MAVIS: a non-invasive instrument to measure area and volume of wounds. Med Eng Phys 1998;20:332

- 13. Malian A, Van den Heuvel F, Azizi A. A robust photogrammetric system for wound measurement. Int Arch Photogramm Remote Sens 2002;34:264–269

- 14. Plassman P, Jones C, McCarthy M. Accuracy and precision of the MAVIS-II wound measurement device. Wound Repair Regen 2015:A129

- 15. Krouskop TA, Baker R, Wilson MS. A non contact wound measurement system. J Rehabil Res Dev 2002;39:337

- 16. Callieri M, Cignoni P, Coluccia M, et al. Derma: monitoring the evolution of skin lesions with a 3d system. 8th Int. Workshop on Vis. Mod. and Visualization, Munich, Germany, 2003, 167–174

- 17. Malian A, Azizi A, Van Den Heuvel FA. Medphos: a new photogrammetric system for medical measurement; International Society for Photogrammetry and Remote Sensing, Int. archives of photogrammetry, Int. congress for photogrammetry and remote sensing; ISPRS XXth congress, Istanbul, Turkey, 2004, 1682–1750

- 18. Treuillet S, Albouy B, Lucas Y. Three dimensional assessment of skin wounds using a standard digital camera. IEEE Trans Med Imaging 2009;28:752–762

- 19. Wannous H, Lucas Y, Treuillet S. Robust Assessment of the wound healing process by an accurate multi-view classification. IEEE Trans Med Imaging 2011;30:315–326

- 20. Albouy B, Treuillet S, Lucas Y, Pichaud JC. Volume estimation from uncalibrated views applied to wound measurement. In: Lecture Notes in Computer Science. Berlin, Germany: Springer Verlag, 2005, 945–952

- 21. Wannous H, Treuillet S, Lucas Y. Robust tissue classification for reproducible wound assessment in telemedicine environments. J Electron Imaging 2010;19

- 22. Wannous H, Lucas Y, Treuillet S, Mansouri A, Voisin Y. Improving color correction across camera and illumination changes by contextual sample selection. J Electron Imaging 2012;21:023015

- 23. Veredas F, Mesa H, Morente L. Binary tissue classification on wound images with neural networks and bayesian classifiers. IEEE Trans Med Imaging 2010;29:410–427

- 24. Kumar KS, Reddy BE. Digital Analysis of Changes in Chronic Wounds through Image Processing. Int J Signal Proc Image Proc Pattern Recognit 2013;6:367–380

- 25. Moghimi S, Baygi MHM, Torkaman G, Kabir E, Mahloojifar A, Armanfard N. Studying pressure sores through illuminant invariant assessment of digital color images. J Zhejiang Univ Sci 2010;11:598–606

- 26. Dorileo EAG, Frade MAC, Rangayyan RM, Azevedo-Marques PM. Segmentation and analysis of the tissue composition of dermatological ulcers. Conference: Proceedings of the 23rd Canadian Conference on Electrical and Computer Engineering, CCECE 2-5 May 2010, Calgary, Alberta, Canada

- 27. Yadav M, Dhane D, Mukharjee G, Chakraborty C. Segmentation of chronic wound areas by clustering techniques using selected color space. J Med Imaging Health Inform 2013;3:22–29