Abstract

Objective:

This study evaluated the use of a deep-learning approach for automated detection and numbering of deciduous teeth in children as depicted on panoramic radiographs.

Methods and materials:

An artificial intelligence (AI) algorithm (CranioCatch, Eskisehir, Turkey) using Faster R-CNN Inception v2 (COCO) models was developed to automatically detect and number deciduous teeth as seen on pediatric panoramic radiographs. The algorithm was trained and tested on a total of 421 panoramic images. System performance was assessed using a confusion matrix.

Results:

The AI system was successful in detecting and numbering the deciduous teeth of children as depicted on panoramic radiographs. The sensitivity and precision rates were high. The estimated sensitivity, precision, and F1 score were 0.9804, 0.9571, and 0.9686, respectively.

Conclusion:

Deep-learning-based AI models are a promising tool for the automated charting of panoramic dental radiographs from children. In addition to serving as a time-saving measure and an aid to clinicians, AI plays a valuable role in forensic identification.

Keywords: Artificial intelligence, deep learning, tooth detecting, panoramic radiography, children, pediatric dentistry, deciduous tooth

Introduction

Dental radiography is an invaluable diagnostic tool that frequently complements the clinical examinations of adults and children.1,2 Panoramic radiography has the following advantages over other radiographic techniques: it comprehensively displays the facial bones and teeth, uses a low radiation dose, and is technically fast and easy to use. These features make panoramic radiography ideally suited for children with special needs, those who are non-cooperative, or those who have strong nausea reflexes.3–6 The facial structures revealed by panoramic radiography include the maxillary and mandibular dental arches and their supporting structures, all of which can be seen on a single tomographic image.

However, panoramic radiography also has several disadvantages. The images are of low resolution and do not provide the fine details obtained by intraoral radiography. To avoid positioning artifacts, the patient must be positioned correctly, which is often difficult in pediatric patients. Furthermore, it is difficult to visualize both jaws in children with severe maxilla–mandible discordance.6

Nonetheless, panoramic radiography is used in children for various purposes, including general evaluation of all stages of dentition and assessment of occlusion and of abnormalities such as impacted teeth and infraocclusion, together with the relationship of those abnormalities with the surrounding anatomical structures. Although rare in children, intraosseous pathologies such as cysts, tumors, and infections can also be examined. Dentomaxillofacial traumas, by contrast, are frequent in pediatric patients and are readily evaluated using panoramic radiography. Other applications include diagnosis, treatment, and follow-up of developmental disorders of the maxillofacial skeleton. However, thus far, panoramic radiography has been used mostly for dental age determination and diagnosis of dental abnormalities.5–7

Artificial intelligence (AI), and specifically deep learning, is increasingly being implemented in complex tasks such as problem-solving, decision-making, and object recognition. Deep-learning algorithms learn from large volumes of data rather than following a set of pre-programmed directions.8 One of the most popular deep-learning models in the field of medical imaging is the convolutional neural network (CNN). The layered design of a CNN follows the similarly hierarchical organization of the visual cortex of the brain.9 In the literature, many studies have demonstrated the potential of CNN-based deep-learning methods to assist clinicians in dentistry.10–30 Thus, in this study, we evaluated the success of deep-learning methods in the automated detection and enumeration of the deciduous teeth of pediatric patients as depicted on panoramic radiographs.

Methods and materials

Patient selection

The 421 anonymized panoramic radiographs from pediatric patients (5–7 years of age) included in our study were obtained from the archive of Ataturk University, Faculty of Dentistry. Images with any artifacts were excluded. The study protocol was approved by the Clinical Research Ethical Committee of Ataturk University (04/30–07.05.2020). The study was conducted following the principles of the Declaration of Helsinki.

Radiographic data

All images in this study were obtained using the Planmeca Promax 2D Panoramic system (Planmeca, Helsinki, Finland) at 68 kVp, 14 mA, and 12 s.

Image evaluation

A pedodontist with 10 years of clinical experience annotated the panoramic images using the Colabeler annotation software (MacGenius, Blaze Software, CA). The bounding box method (rectangular boxes) was used to define the location of the deciduous teeth, and a class label was then applied according to the FDI numbering system (51-52-53-54-55-61-62-63-64-65-71-72-73-74-75-81-82-83-84-85).
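The annotation scheme described above can be sketched as a small data structure. This is only an illustration: the `ToothAnnotation` class and its field names are hypothetical and are not taken from the Colabeler software or the CranioCatch code. The 20 class labels are generated from the FDI convention, in which the first digit (5 to 8) gives the deciduous quadrant and the second digit (1 to 5) gives the tooth position.

```python
from dataclasses import dataclass

# FDI two-digit codes for deciduous teeth: quadrant digit 5-8,
# position digit 1 (central incisor) to 5 (second molar).
FDI_DECIDUOUS = [f"{quadrant}{position}"
                 for quadrant in (5, 6, 7, 8)
                 for position in range(1, 6)]


@dataclass(frozen=True)
class ToothAnnotation:
    """Hypothetical record for one labeled tooth: a rectangular
    bounding box in pixel coordinates plus its FDI class label."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str

    def __post_init__(self):
        # Reject labels outside the 20 deciduous FDI classes.
        if self.label not in FDI_DECIDUOUS:
            raise ValueError(f"unknown FDI deciduous label: {self.label}")
        # Reject degenerate or inverted boxes.
        if not (self.x_min < self.x_max and self.y_min < self.y_max):
            raise ValueError("bounding box must have positive width and height")


# Example: a box around the maxillary right first primary molar (54).
example = ToothAnnotation(x_min=120, y_min=80, x_max=180, y_max=150, label="54")
```

Validating the label at construction time is a design choice of this sketch; it keeps typing errors in class names from silently entering a training set.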

Deep convolutional neural network

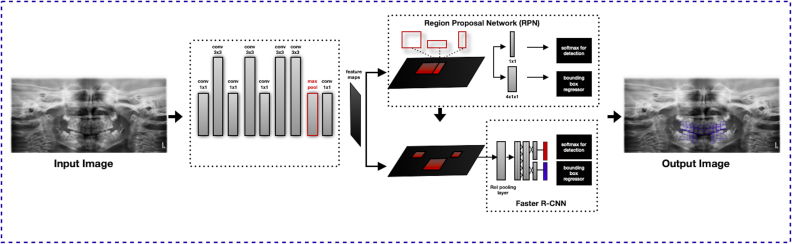

Faster region-based CNN (Faster R-CNN) consists of convolutional layers, a region proposal network (RPN), and class and bounding-box predictions. Convolutional networks consist of convolutional layers, pooling layers, and a final component, either a fully connected layer or another extension, suited to the task at hand, such as classification or detection. Pooling reduces the number of features in the feature map by removing low-value pixels. The fully connected layer is used to classify those features that are not of interest in Faster R-CNN. The RPN is a small neural network that slides over the final feature map of the convolutional layers and predicts both whether an object is present and the bounding box of that object. Other fully connected neural networks then use the regions proposed by the RPN as input and predict the object class (classification) and the bounding box (regression). A Faster R-CNN model provides the object detectors and employs an architecture developed from R-CNN.9,30 In this study, region proposal generation and the object detection function were performed using the same CNN, which allowed much faster object detection (Figure 1).

Figure 1.

System architecture of the Faster R-CNN algorithm. R-CNN, region-based convolutional neural network.
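The pooling step mentioned in the architecture description can be illustrated with a toy example. The text does not state which pooling variant or window size the model uses, so the sketch below assumes 2 × 2 max pooling with stride 2, the most common choice; the function name `max_pool_2x2` is hypothetical.

```python
def max_pool_2x2(feature_map):
    """Apply 2x2 max pooling with stride 2 to a 2-D list of numbers.

    Each non-overlapping 2x2 window is replaced by its largest value,
    so lower values in the window are discarded. A trailing odd row or
    column is dropped.
    """
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - 1, 2):
        pooled_row = []
        for c in range(0, cols - 1, 2):
            window = (
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


toy_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 0, 5, 6],
    [1, 2, 7, 8],
]
pooled_map = max_pool_2x2(toy_map)  # 4x4 input becomes a 2x2 output
```

In this toy case the 16 input values are reduced to 4, which is the sense in which pooling shrinks the feature map while keeping the strongest responses.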

Model pipeline

The open-source Python programming language (Python 3.6.1, Python Software Foundation, Wilmington, DE, retrieved August 1, 2019, https://www.python.org/) and the TensorFlow library were used for model development. In this study, an AI algorithm (CranioCatch, Eskisehir, Turkey) was developed using deep-learning techniques to automatically detect and number teeth on the panoramic radiographs of children. Faster R-CNN Inception v2 (COCO) models were used. The training was performed on a PC equipped with 16 GB RAM and an NVIDIA GeForce GTX 1060Ti graphics card. Before training, each panoramic radiograph was resized from 2943 × 1435 pixels to 750 × 750 pixels. A maximum of 20 different classes (51-52-53-54-55-61-62-63-64-65-71-72-73-74-75-81-82-83-84-85) were detected on each radiograph. Among the 421 panoramic radiographs, there were 421 missing teeth. The 7999 labeled teeth included 1797 teeth with dental caries, 149 with restorative fillings, and 15 with stainless-steel crowns. Among the teeth on the 329 panoramic radiographs in the training group, 6430 were labeled.
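Because resizing from 2943 × 1435 to 750 × 750 pixels changes the aspect ratio, any bounding box drawn on the original image has to be scaled independently along each axis. The text does not say whether annotation was done before or after resizing, so the following is a minimal sketch under the assumption that boxes were defined on the original image; the function name `scale_box` is hypothetical.

```python
def scale_box(box, src_size=(2943, 1435), dst_size=(750, 750)):
    """Map a box (x_min, y_min, x_max, y_max) from the source image
    to the resized image. Sizes are given as (width, height)."""
    scale_x = dst_size[0] / src_size[0]  # horizontal shrink factor
    scale_y = dst_size[1] / src_size[1]  # vertical shrink factor
    x_min, y_min, x_max, y_max = box
    return (x_min * scale_x, y_min * scale_y,
            x_max * scale_x, y_max * scale_y)


# A box covering the full original image maps to the full resized image.
full_image = scale_box((0, 0, 2943, 1435))
```

With these sizes the horizontal factor is roughly 0.25 and the vertical factor roughly 0.52, so teeth appear noticeably narrower relative to their height after resizing.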

Training phase

The images were randomly distributed as follows:

Training group: 329 images (6430 labels)

Validation group: 46 images (713 labels)

Test group: 46 images (856 labels)

The data from the test group were not used for model creation.
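A random split with these group sizes could be produced as follows. This is a sketch only: the source does not describe the splitting procedure, and the function name, the fixed seed, and the image identifiers are assumptions made for illustration.

```python
import random


def split_dataset(image_ids, n_train=329, n_val=46, n_test=46, seed=0):
    """Shuffle image identifiers and cut them into three disjoint groups."""
    if n_train + n_val + n_test != len(image_ids):
        raise ValueError("group sizes must add up to the number of images")
    shuffled = list(image_ids)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical identifiers for the 421 radiographs.
all_ids = [f"img_{i:03d}" for i in range(421)]
train_ids, val_ids, test_ids = split_dataset(all_ids)
```

Splitting at the image level, as here, keeps every tooth from a given radiograph in the same group, so no test image contributes labels to training.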

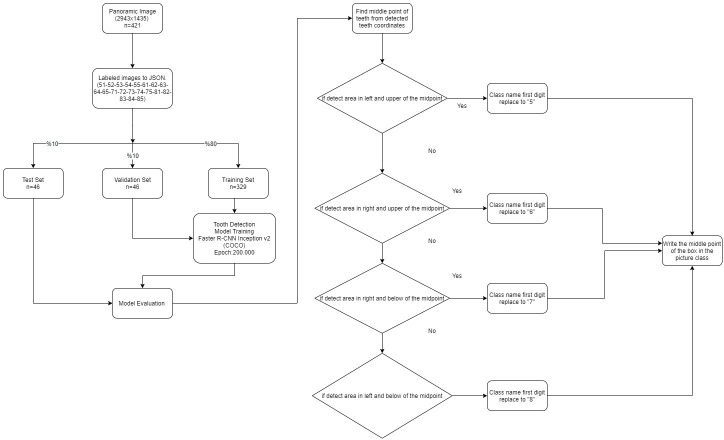

The approach used by CranioCatch for detecting and numbering the deciduous teeth of children was based on Faster R-CNN Inception v2 (COCO) architecture. The models consisted of the tooth detection model and the tooth numbering model (Figure 2).

Figure 2.

Diagram of the artificial intelligence model (CranioCatch): development steps

Tooth detection model: initially, 20 single-model trainings were performed using 329 images. A maximum of 20 classes can be detected on each radiograph using Faster R-CNN Inception v2 (COCO) models with 200,000 epochs.

Tooth numbering model: the midpoint of the detected tooth coordinates was found. If the detected area was to the left of and above the midpoint, the first digit of the class name was 5 (5_). If the detected area was to the right of and above the midpoint, the first digit was 6 (6_). For teeth below the midpoint and to the right or left, the first digit was 7 (7_) or 8 (8_), respectively.
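The quadrant rule of the tooth numbering model can be sketched in a few lines. The text does not specify how the midpoint is computed, so this sketch assumes it is the mean of the centers of all detected boxes; the function names and the handling of ties (a box exactly on the midpoint counts as right or lower) are likewise assumptions. Image coordinates are used, with y increasing downward.

```python
def box_center(box):
    """Center (x, y) of a box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


def midpoint_of_boxes(boxes):
    """Assumed midpoint: the mean of all detected box centers."""
    centers = [box_center(b) for b in boxes]
    n = len(centers)
    return (sum(x for x, _ in centers) / n, sum(y for _, y in centers) / n)


def quadrant_digit(box, midpoint):
    """First FDI digit (5-8) from the box position relative to the midpoint."""
    x, y = box_center(box)
    mid_x, mid_y = midpoint
    is_upper = y < mid_y  # smaller y is higher on the image
    is_left = x < mid_x
    if is_upper:
        return 5 if is_left else 6
    return 8 if is_left else 7


# Four toy detections, one per image quadrant.
detections = [
    (100, 100, 200, 200),  # upper left
    (500, 100, 600, 200),  # upper right
    (100, 400, 200, 500),  # lower left
    (500, 400, 600, 500),  # lower right
]
mid = midpoint_of_boxes(detections)
digits = [quadrant_digit(b, mid) for b in detections]
```

Note that the left side of a panoramic image shows the patient's right side, which is why the upper-left image quadrant receives the digit 5 (maxillary right) in FDI notation.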

Statistical analysis

To calculate the success of the model, a confusion matrix was used together with the following procedures and metrics: true positive (TP), defined as a tooth correctly detected and numbered; false positive (FP), a tooth correctly detected but incorrectly numbered; and false negative (FN), a tooth incorrectly detected and numbered. If the algorithm labeled a tooth with a wrong number (e.g., the maxillary right first molar labeled as 55 instead of 54), this was a FP. If a tooth was not labeled with any number, then it was a FN. The following metrics were calculated using the TP, FP, and FN: sensitivity (true positive rate = TP/(TP + FN)), precision (positive predictive value = TP/(TP + FP)), and the F1 score (2TP/(2TP + FP + FN)).
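The three formulas can be written as one short function. This is a minimal sketch rather than the authors' code; the function name is hypothetical, and the example counts are the test-group values reported in Table 1.

```python
def detection_metrics(tp, fp, fn):
    """Return (sensitivity, precision, F1) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)           # true positive rate
    precision = tp / (tp + fp)             # positive predictive value
    f1 = 2 * tp / (2 * tp + fp + fn)       # harmonic mean of the two
    return sensitivity, precision, f1


# Test-group counts from Table 1: 804 TP, 36 FP, 16 FN.
sensitivity, precision, f1 = detection_metrics(tp=804, fp=36, fn=16)
```

Evaluated on those counts, the results agree with the reported sensitivity, precision, and F1 score to three decimal places.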

Results

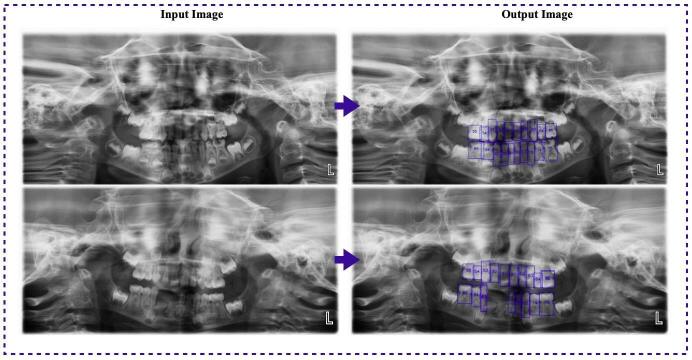

Among the 46 test group images with 856 labels, there were 804 TPs, 36 FPs, and 16 FNs (Table 1). Both the sensitivity (0.9804) and precision (0.9571) were high for the detection and numbering tasks, and the F1 score was 0.9686 (Table 2). Thus, the Faster R-CNN algorithm performed well in detecting and numbering deciduous teeth on pediatric panoramic radiographs (Figure 3).

Table 1.

Number of teeth detected or not in the test group using the artificial intelligence model (CranioCatch)

| Metric | Number |
|---|---|
| TP | 804 |
| FP | 36 |
| FN | 16 |
| Total | 856 |

FN, false negative; FP, false positive; TP, true positive.

Table 2.

Predictive performance measurement using the artificial intelligence model

| Measure | Value | Derivation |
|---|---|---|
| Sensitivity (TPR) | 0.9804 | TPR = TP/(TP + FN) |
| Precision (PPV) | 0.9571 | PPV = TP/(TP + FP) |
| F1 score | 0.9686 | F1 = 2TP/(2TP + FP + FN) |

PPV, positive predictive value; TPR, true positive rate.

Figure 3.

Tooth detection and numbering on pediatric panoramic radiographs using the artificial intelligence model (CranioCatch)

Discussion

Tooth detection and numbering using dental radiographs are the first steps in dental diagnostics. Image-processing algorithms, based on mathematical morphology, active contour, or level-set methods, have been developed for classification and segmentation purposes using dental radiographs.31–38 Mahoor et al38 presented an automated dental identification system based on Bayesian classification for the classification and numbering of teeth on bitewing radiographs. High accuracy in classifying and numbering the teeth was demonstrated.38 Lin et al31 recommended a tooth classification and numbering system to efficiently segment, classify, and number the teeth on bitewing radiographs using an image-enhancement technique. Their system performed well in its classification ability, even with difficult-to-classify images.31 Research on tooth detection and numbering during the last decades has focused mainly on threshold- and region-based techniques, in contrast to CNN, which relies on deep learning.

Eun et al39 focused on tooth localization as an important aspect of dental image applications and successfully presented an original tooth localization technique for periapical radiographs based on oriented tooth proposals and a CNN.39 Miki et al24 considered an automated tooth classifying system based on seven tooth types evaluated on axial slice cone-beam CT images using a CNN. The successful classification performance of this system was demonstrated by its high efficiency (91.0%) following the use of an increasing amount of training data with rotation and intensity transformation. The authors concluded that their seven-tooth type classification method can be applied to the efficient automated charting of tooth lists and may be valuable for forensic identification.24 Oktay25 proposed a CNN model modified using AlexNet architecture for tooth detection on panoramic radiographs. The study focused on mouth gap detection and the possible placement of teeth in a pre-processing step. The method had high accuracy, with >90% of the detected teeth assigned to four classes.25

Jader et al40 proposed segmenting the teeth using a mask-region-based CNN method with transfer learning strategies. The superiority of this approach was evidenced by an accuracy of 98%, F1 score of 88%, precision of 94%, recall rate of 84%, and specificity of 99%. Lee et al41 used a full deep-learning mask-R-CNN method implemented via a fine-tuning process for automated tooth segmentation. A high level of performance for automated tooth segmentation on panoramic radiographs was determined.41 In a 2018 study, Zhang et al36 proposed a deep-learning CNN model with a VGG16 network (a 16-layer CNN architecture) structure for the detection and classification of teeth as seen on periapical radiographs. Their novel method used a “label tree with cascade network structure combining several key strategies,” and the authors showed that this method was more successful than a single state-of-the-art multiclass network. The precision and recall rates were high (95.8% and 96.1%, respectively) when the label tree with a cascade network structure was combined with several key strategies. Wirtz et al42 analyzed automated tooth segmentation based on the position and scale of the teeth on panoramic radiographs and a coupled-shape model with a neural network. The precision and recall rates were average.42 Zakirov et al43 presented coarse-to-fine volumetric segmentation of teeth using cone-beam CT images that efficiently handled large volumetric images, at least for tooth segmentation.

Chen et al11 used Faster R-CNN in the TensorFlow library to detect and number teeth on periapical radiographs. The detection accuracy was enhanced by the use of three post-processing methods. The AI model’s performance was close to that of a junior dentist.11 Tuzoff et al30 also used state-of-the-art Faster R-CNN together with the VGG-16 network for tooth detection and numbering and reported a sensitivity and precision of 0.9941 and 0.9945 for tooth detection, respectively, and sensitivity and specificity of 0.9800 and 0.9994 for tooth numbering, respectively. The performance of the AI model was similar to that of an expert.30

In our study, a Faster R-CNN model implemented in combination with GoogLeNet Inception v2 architecture was used to develop an AI model to detect and number the deciduous teeth of children. To the best of our knowledge, this is the first model capable of detecting and numbering each primary tooth on pediatric panoramic radiographs. The performance of the model was high.

Conclusion

The automated charting of dental radiographs is an important step in achieving a digital diagnostic approach in dentistry. Deep-learning-based AI models offer a promising approach and play a valuable role in terms of saving time and effort in the practice of dentistry. Moreover, the algorithms can pave the way for digital forensic dentistry.

Footnotes

Conflict of Interest: The authors declare that they have no conflict of interest.

Funding: There is no funding to report for this manuscript.

Ethical approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent: Additional informed consent was obtained from all individual participants included in the study.

REFERENCES

- 1. Kim Y-H, Yang B-E, Yoon S-J, Kang B-C, Lee J-S. Diagnostic reference levels for panoramic and lateral cephalometric radiography of Korean children. Health Phys 2014; 107: 111–6. doi: 10.1097/HP.0000000000000071

- 2. Tsiklakis K, Mitsea A, Tsichlaki A, Pandis N. A systematic review of relative indications and contra-indications for prescribing panoramic radiographs in dental paediatric patients. Eur Arch Paediatr Dent 2020; 21: 387–406. doi: 10.1007/s40368-019-00478-w

- 3. Visser H, Hermann KP, Bredemeier S, Köhler B. Dose measurements comparing conventional and digital panoramic radiography. Mund Kiefer Gesichtschir 2000; 4: 213–6. doi: 10.1007/s100060000169

- 4. Gavala S, Donta C, Tsiklakis K, Boziari A, Kamenopoulou V, Stamatakis HC. Radiation dose reduction in direct digital panoramic radiography. Eur J Radiol 2009; 71: 42–8. doi: 10.1016/j.ejrad.2008.03.018

- 5. Sabbadini GD. A review of pediatric radiology. J Calif Dent Assoc 2013; 41: 575–81, 584.

- 6. White SC, Pharoah MJ. Oral radiology-E-Book: principles and interpretation. Elsevier Health Sciences 2014.

- 7. Osman F, Davies RM, Stephens CD, Dowell TB. Radiographs taken for orthodontic purposes in general practice. Br J Orthod 1985; 12: 82–6. doi: 10.1179/bjo.12.2.82

- 8. Mazurowski MA, Buda M, Saha A, Bashir MR. Deep learning in radiology: an overview of the concepts and a survey of the state of the art with focus on MRI. J Magn Reson Imaging 2019; 49: 939–54. doi: 10.1002/jmri.26534

- 9. Saba L, Biswas M, Kuppili V, Cuadrado Godia E, Suri HS, Edla DR, et al. The present and future of deep learning in radiology. Eur J Radiol 2019; 114: 14–24. doi: 10.1016/j.ejrad.2019.02.038

- 10. Ariji Y, Yanashita Y, Kutsuna S, Muramatsu C, Fukuda M, Kise Y, et al. Automatic detection and classification of radiolucent lesions in the mandible on panoramic radiographs using a deep learning object detection technique. Oral Surg Oral Med Oral Pathol Oral Radiol 2019; 128: 424–30. doi: 10.1016/j.oooo.2019.05.014

- 11. Chen H, Zhang K, Lyu P, et al. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci Rep 2019; 9: 1–11.

- 12. Devito KL, de Souza Barbosa F, Felippe Filho WN. An artificial multilayer perceptron neural network for diagnosis of proximal dental caries. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 2008; 106: 879–84. doi: 10.1016/j.tripleo.2008.03.002

- 13. De Tobel J, Radesh P, Vandermeulen D, Thevissen PW. An automated technique to stage lower third molar development on panoramic radiographs for age estimation: a pilot study. J Forensic Odontostomatol 2017; 35: 42–54.

- 14. Ekert T, Krois J, Meinhold L, Elhennawy K, Emara R, Golla T, et al. Deep learning for the radiographic detection of apical lesions. J Endod 2019; 45: 917–22. doi: 10.1016/j.joen.2019.03.016

- 15. Fukuda M, Inamoto K, Shibata N, Ariji Y, Yanashita Y, Kutsuna S, et al. Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiol 2020; 36: 337–43. doi: 10.1007/s11282-019-00409-x

- 16. Hiraiwa T, Ariji Y, Fukuda M, Kise Y, Nakata K, Katsumata A, et al. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac Radiol 2019; 48: 20180218. doi: 10.1259/dmfr.20180218

- 17. Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: a systematic review. Dentomaxillofac Radiol 2020; 49: 20190107. doi: 10.1259/dmfr.20190107

- 18. Krois J, Ekert T, Meinhold L, Golla T, Kharbot B, Wittemeier A, et al. Deep learning for the radiographic detection of periodontal bone loss. Sci Rep 2019; 9: 8495. doi: 10.1038/s41598-019-44839-3

- 19. Kunz F, Stellzig-Eisenhauer A, Zeman F, Boldt J. Artificial intelligence in orthodontics: evaluation of a fully automated cephalometric analysis using a customized convolutional neural network. J Orofac Orthop 2020; 81: 52–68. doi: 10.1007/s00056-019-00203-8

- 20. Lee J-H, Kim D-H, Jeong S-N. Diagnosis of cystic lesions using panoramic and cone beam computed tomographic images based on deep learning neural network. Oral Dis 2020; 26: 152–8. doi: 10.1111/odi.13223

- 21. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J Dent 2018; 77: 106–11. doi: 10.1016/j.jdent.2018.07.015

- 22. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J Periodontal Implant Sci 2018; 48: 114–23. doi: 10.5051/jpis.2018.48.2.114

- 23. Merdietio Boedi R, Banar N, De Tobel J, Bertels J, Vandermeulen D, Thevissen PW. Effect of lower third molar segmentations on automated tooth development staging using a convolutional neural network. J Forensic Sci 2020; 65: 481–6. doi: 10.1111/1556-4029.14182

- 24. Miki Y, Muramatsu C, Hayashi T, Zhou X, Hara T, Katsumata A, et al. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput Biol Med 2017; 80: 24–9. doi: 10.1016/j.compbiomed.2016.11.003

- 25. Oktay AB. Tooth detection with convolutional neural networks. 2017 Medical Technologies National Congress (TIPTEKNO). IEEE 2017: 1–4.

- 26. Orhan K, Bayrakdar IS, Ezhov M, Kravtsov A, Özyürek T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. Int Endod J 2020; 53: 680–9. doi: 10.1111/iej.13265

- 27. Poedjiastoeti W, Suebnukarn S. Application of convolutional neural network in the diagnosis of jaw tumors. Healthc Inform Res 2018; 24: 236–41. doi: 10.4258/hir.2018.24.3.236

- 28. Saghiri MA, Asgar K, Boukani KK, Lotfi M, Aghili H, Delvarani A, et al. A new approach for locating the minor apical foramen using an artificial neural network. Int Endod J 2012; 45: 257–65. doi: 10.1111/j.1365-2591.2011.01970.x

- 29. Schwendicke F, Golla T, Dreher M, Krois J. Convolutional neural networks for dental image diagnostics: a scoping review. J Dent 2019; 91: 103226. doi: 10.1016/j.jdent.2019.103226

- 30. Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol 2019; 48: 20180051. doi: 10.1259/dmfr.20180051

- 31. Lin PL, Lai YH, Huang PW. An effective classification and numbering system for dental bitewing radiographs using teeth region and contour information. Pattern Recognit 2010; 43: 1380–92. doi: 10.1016/j.patcog.2009.10.005

- 32. Mahoor MH, Abdel-Mottaleb M. Automatic classification of teeth in bitewing dental images. 2004 International Conference on Image Processing (ICIP'04). IEEE 2004: 3475–8.

- 33. Said EH, Nassar DEM, Fahmy G, Ammar HH. Teeth segmentation in digitized dental X-ray films using mathematical morphology. IEEE Transactions on Information Forensics and Security 2006; 1: 178–89. doi: 10.1109/TIFS.2006.873606

- 34. Silva G, Oliveira L, Pithon M. Automatic segmenting teeth in X-ray images: trends, a novel data set, benchmarking and future perspectives. Expert Syst Appl 2018; 107: 15–31. doi: 10.1016/j.eswa.2018.04.001

- 35. Wirtz A, Wambach J, Wesarg S. Automatic teeth segmentation in cephalometric X-ray images using a coupled shape model. OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis. Springer 2018: 194–203.

- 36. Zhang K, Wu J, Chen H, Lyu P. An effective teeth recognition method using label tree with cascade network structure. Comput Med Imaging Graph 2018; 68: 61–70. doi: 10.1016/j.compmedimag.2018.07.001

- 37. Yuniarti A, Nugroho AS, Amaliah B, Arifin AZ. Classification and numbering of dental radiographs for an automated human identification system. TELKOMNIKA 2012; 10: 137. doi: 10.12928/telkomnika.v10i1.771

- 38. Mahoor MH, Abdel-Mottaleb M. Classification and numbering of teeth in dental bitewing images. Pattern Recognit 2005; 38: 577–86. doi: 10.1016/j.patcog.2004.08.012

- 39. Eun H, Kim C. Oriented tooth localization for periapical dental X-ray images via convolutional neural network. 2016 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA). IEEE 2016: 1–7.

- 40. Jader G, Fontineli J, Ruiz M, Abdalla K, Pithon M, Oliveira L. Deep instance segmentation of teeth in panoramic X-ray images. 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI). IEEE 2018: 400–7.

- 41. Lee J-H, Han S-S, Kim YH, Lee C, Kim I. Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol 2020; 129: 635–42. doi: 10.1016/j.oooo.2019.11.007

- 42. Wirtz A, Mirashi SG, Wesarg S. Automatic teeth segmentation in panoramic X-ray images using a coupled shape model in combination with a neural network. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer 2018: 712–9.

- 43. Zakirov A, Ezhov M, Gusarev M, Alexandrovsky V, Shumilov E. End-to-end dental pathology detection in 3D cone-beam computed tomography images. 2018.