Abstract

Although naturalistic developmental behavioral interventions (NDBIs) have a sizeable and growing evidence base for supporting the development of children on the autism spectrum, their active ingredients and mechanisms of change are not well understood. This study used qualitative content analysis to better understand the intervention process of a parent-mediated NDBI. Caregivers completed weekly written reflection responses as they learned each intervention technique. These responses were coded, and code co-occurrences were examined to understand the relationship between implementation of specific intervention techniques and potential mechanisms of change according to caregiver observations. The responses were subsequently compared to a theoretical causal model derived from the intervention manual. Many responses were consistent with the intervention theory; however, some theoretical outcomes were not reported by caregivers, and caregivers described some potential mechanisms that were not explicitly stated in the intervention theory. Importantly, we found that individual techniques were associated with various mechanisms, suggesting that global measures of social communication may be insufficient for measuring context-dependent responses to individual intervention techniques. Our findings point to specific observable behaviors that may be useful targets of measurement in future experimental studies and indicators of treatment response in clinical settings. Overall, qualitative methods may be useful for understanding complex intervention processes.

Keywords: early intervention, active ingredients, autism, qualitative methods

The evidence base is growing for a class of early interventions for children on the autism spectrum that combine behavioral learning techniques with principles from developmental science (Sandbank et al., 2020; Tiede & Walton, 2019). These interventions, termed Naturalistic Developmental Behavioral Interventions (NDBIs), use child-directed teaching within natural contexts such as daily routines and play (Schreibman et al., 2015). In addition to teaching language and other communication skills, NDBIs are thought to address several important developmental targets for children with social communication delays, such as imitation, joint attention, and joint engagement; these are considered “pivotal skills” because they are thought to have cascading developmental effects (Schreibman et al., 2015). These interventions comprise several interacting treatment components; common elements include following the child’s lead, modeling appropriate language, using communicative temptations, and using prompting techniques to teach new skills (Frost et al., 2020).

Caregiver involvement in early intervention is considered best practice (Wong et al., 2015; Zwaigenbaum et al., 2015). Accordingly, nearly all NDBIs have been examined using caregiver-implemented delivery (e.g., Early Start Denver Model, Estes et al., 2014; Enhanced Milieu Teaching, Kaiser et al., 2000; Joint Attention, Symbolic Play, Engagement & Regulation, Kasari et al., 2014), and some were developed explicitly as caregiver-implemented interventions (e.g., Social ABCs, Brian et al., 2016; Project ImPACT, Ingersoll & Wainer, 2013). In caregiver-implemented interventions, trained providers teach caregivers how to implement intervention techniques with their child (Bearss et al., 2015). As such, they are thought to allow for a higher dose of treatment techniques while minimizing direct treatment hours, enabling providers to serve more families (Wetherby et al., 2018). For the same reason, caregiver-implemented interventions may be easier to implement in existing service delivery systems in low-resource settings (Reichow et al., 2013; Wetherby et al., 2018). Despite their promise, caregiver-implemented NDBIs have not consistently produced positive effects on standardized child outcomes (McConachie & Diggle, 2007; Nevill et al., 2018; Oono et al., 2013; Wetherby et al., 2018), suggesting the need to better understand how and for whom they work.

NDBIs are complex interventions with several elements, many of which are thought to be “active ingredients” responsible for causing change in different developmental skills (Schreibman et al., 2015). When delivered together, these treatment elements are theorized to have complementary, additive or interactive effects that combine to form a more potent intervention package (Schreibman et al., 2015). This complexity makes it challenging to understand how these interventions work. The techniques taught to parents in caregiver-mediated NDBIs are meant to elicit certain child social communication behaviors in the moment, which in turn have cascading developmental effects over time (Charman, 2003; Pickles et al., 2015, 2016; Wetherby et al., 2018). These context-dependent, short-term responses to intervention techniques are therefore thought to be mechanisms of change through which these interventions affect longer-term developmental outcomes. While randomized controlled trials are considered the gold standard in treatment efficacy research, their focus on measuring a limited number of generalized (and often distal) outcomes after treatment is complete limits our ability to examine treatment process (Crawford et al., 2002). Consequently, there is limited evidence regarding which intervention elements can be considered active ingredients, whether different treatment elements have differential effects on child outcomes (Gulsrud et al., 2016; Ingersoll & Wainer, 2013), and whether they act on different mechanisms of change or child outcomes altogether. Likewise, our understanding of how treatment elements effect change, usually conceptualized as mechanisms or mediators of change, remains limited. An increased focus on how NDBIs work is essential for improving these interventions (Bruinsma et al., 2019), and this calls for innovative approaches to identifying their active ingredients and mechanisms of change.

In caregiver-implemented NDBIs, caregivers are usually taught one intervention technique at a time to facilitate caregiver learning and mastery. This structure provides a unique opportunity to identify potential active ingredients and mechanisms by observing the relationship between parents’ use of specific intervention techniques and their child’s response. For example, Ingersoll and Wainer (2013) used multilevel modeling with a single-case, multiple-baseline design to examine the relationship between caregivers’ use of four intervention strategies and their child’s spontaneous language use. However, this approach is limited to outcomes that the researcher deems important a priori, even though the relevant outcomes may differ across individual intervention techniques. Because of their proximity to the child and their ability to observe the child’s response across multiple settings and daily routines, caregivers are uniquely situated to report on how children respond to the intervention techniques in real time. Qualitative methods are particularly well suited for obtaining an in-depth understanding of complex intervention processes (Crawford et al., 2002). They have been used to examine parent-level change processes in parent training interventions (Holtrop et al., 2014) as well as child-level changes and potential mechanisms (Mejia et al., 2016). By using qualitative analysis of parent reports, we can take a first step toward linking implementation of active ingredients to caregiver perceptions of mechanisms of change. However, future experimental research that directly manipulates and measures caregiver implementation of intervention techniques and child responses is needed to empirically test these causal relationships.

The focus of this study is Project ImPACT (Ingersoll & Dvortcsak, 2019), a manualized, evidence-based, caregiver-implemented NDBI (Steinbrenner et al., 2020) informed by previous literature in the developmental and behavioral sciences. Project ImPACT has demonstrated efficacy in single-case (Ingersoll et al., 2017; Ingersoll & Wainer, 2013) and group designs (Stahmer et al., 2019; Yoder et al., 2020). Furthermore, it has demonstrated efficacy across various modes of delivery, including a group model (Sengupta et al., 2020), individual in-person sessions (Ingersoll & Wainer, 2013; Stahmer et al., 2019; Yoder et al., 2020), and individual telehealth sessions (Hao et al., 2020; Ingersoll et al., 2016). Caregivers participating in Project ImPACT learn a series of treatment techniques on a weekly basis over 12 weeks, which they practice in the context of play and daily routines with their child (Ingersoll & Dvortcsak, 2019). This study used archival data from a telehealth adaptation of Project ImPACT to analyze caregiver perspectives on children’s response to each intervention technique while caregivers learned and practiced the techniques at home. Using qualitative content analysis of open-ended reflection responses embedded within the online training program, we linked caregiver use of specific Project ImPACT techniques to potential mechanisms of child change according to caregiver observations. In addition to better understanding potential active ingredients of Project ImPACT, a secondary goal of this research was to understand how qualitative methods can be leveraged to help understand complex intervention processes. To do so, we compared the qualitative codes to a theoretical causal model, allowing us to validate and refine that model.

Method

Participants

Participants were 51 caregivers of children on the autism spectrum between the ages of 17.7 and 83.9 months (M = 45.338, SD = 14.292). Caregivers received access to a web-based adaptation of Project ImPACT as part of one of two research studies evaluating the efficacy of a telehealth-based, caregiver-mediated intervention (Ingersoll et al., 2016). Twenty-three participants were enrolled in a pilot randomized controlled trial (RCT) from 2012 to 2014, and 28 participants were enrolled in an ongoing full-scale RCT from 2015 to 2020. Eligible families had caregivers who were proficient in English and who had not previously received parent training to support social communication development. All children had a classification of “autism” or “autism spectrum” on the Autism Diagnostic Observation Schedule, Second Edition (ADOS-2; Lord et al., 2012), and did not have known genetic syndromes or uncontrolled seizures. Participant demographics are shown in Table 1. From both studies, only data from participants who had completed the open-ended reflection questions for at least 5 lessons were used in this study. At the post-intervention timepoint, overall parent fidelity of implementation was scored from a 10-minute free-play interaction with a standardized box of toys. Implementation of each technique was rated on a scale from 1 (low fidelity) to 5 (high fidelity), and these ratings were averaged to obtain an overall fidelity score. Caregivers in this sample had an average fidelity rating of 3.354 (SD = 0.823).
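The overall fidelity score described above is a simple average of technique-level ratings. As a minimal sketch, assuming one 1–5 rating per technique, the hypothetical Python below computes that average and applies the >3.5 cutoff that the Results section uses to define the high-fidelity subset. The function names, technique labels, and ratings are illustrative only and are not study data or the study's scoring software.

```python
from statistics import mean

# Cutoff used to define the high-fidelity subset (>3.5 on the 1-5 scale).
HIGH_FIDELITY_CUTOFF = 3.5


def overall_fidelity(technique_ratings: dict[str, int]) -> float:
    """Average per-technique fidelity ratings (1 = low, 5 = high)."""
    values = list(technique_ratings.values())
    if not values:
        raise ValueError("at least one technique rating is required")
    if any(not 1 <= v <= 5 for v in values):
        raise ValueError("every rating must fall on the 1-5 scale")
    return mean(values)


def is_high_fidelity(score: float) -> bool:
    """True only if the overall score strictly exceeds the cutoff."""
    return score > HIGH_FIDELITY_CUTOFF


# Hypothetical ratings for one caregiver; not data from this study.
example_ratings = {
    "following_the_childs_lead": 4,
    "imitating_the_child": 3,
    "animation": 4,
    "modeling_language": 3,
}
score = overall_fidelity(example_ratings)
print(score, is_high_fidelity(score))  # 3.5 False
```

Because the reported cutoff is strict (>3.5), a caregiver averaging exactly 3.5 would fall outside the high-fidelity subset in this sketch.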

Table 1.

Participant demographics

| Characteristic | Self-directed (N=17) | Therapist-assisted (N=34) | Overall (N=51) |
|---|---|---|---|
| Parent demographics | | | |
| Gender (% female) | 94.1 | 85.3 | 88.2 |
| Education (% less than college degree) | 29.4 | 52.9 | 45.1 |
| Child demographics | | | |
| Gender (% female) | 35.3 | 20.6 | 25.5 |
| Race (% White) | 76.5 | 64.7 | 68.6 |
| Ethnicity (% Hispanic or Latino) | 0.0 | 11.8 | 7.8 |
| Child age in months, M (SD) | 43.812 (10.996) | 46.124 (15.826) | 45.338 (14.292) |
| ADOS-2 CSS, M (SD) | 6.417 (1.240) | 6.867 (1.479) | 6.738 (1.415) |

Note. ADOS-2 CSS = Autism Diagnostic Observation Schedule, Second Edition, Calibrated Severity Score.

Intervention

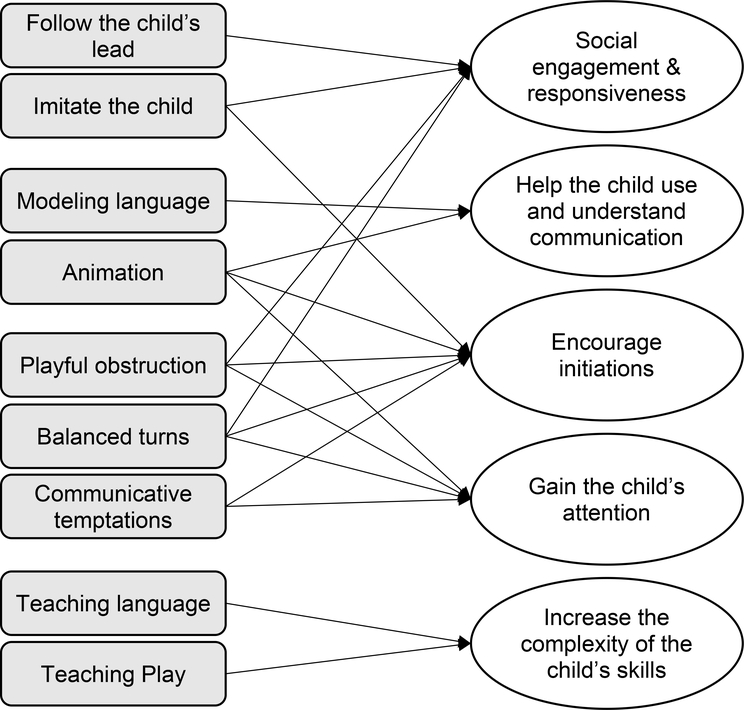

Project ImPACT techniques are thought to act on a variety of underlying mechanisms that support child social communication development. A theoretical model based on information described in the treatment manual is presented in Figure 1. First, caregivers learn the “focus on your child” strategy, which consists of following the child’s lead and imitating the child and is meant to improve the child’s social engagement and joint attention and to increase the amount of time the dyad can play together. Imitating the child is also thought to support child initiations. Next, caregivers learn the “adjust your communication” strategy, which consists of two techniques: using animation, and modeling and expanding communication. Together, these techniques are meant to encourage social engagement and support verbal and nonverbal communication development. Animation is thought to focus more on child initiation, social attention, and nonverbal aspects of language, while modeling and expanding communication is thought to help the child use and understand verbal communication. The “create opportunities” strategy consists of three techniques (playful obstruction, balanced turns, and communicative temptations), which broadly aim to give the child opportunities to communicate (e.g., request, protest) and to gain the child’s attention. In addition, playful obstruction and balanced turns are thought to support reciprocal interactions or turn-taking, and communicative temptations are thought to help expand the reasons the child communicates. The “teach new skills” strategy spans multiple lessons that help caregivers use prompts and rewards effectively so that the child can practice new communication, imitation, and play skills.

Figure 1.

Summary of theoretical model of Project ImPACT.

Delivery model

Participants in this study received a web-based adaptation of Project ImPACT consisting of 12 individual lessons, a video library with examples of therapists and caregivers implementing the intervention, and a general resource library with links to information about ASD and child development. Each lesson included a digital caregiver manual chapter, narrated slideshows with embedded video examples of the intervention techniques, multiple-choice questions to check for understanding, and open-ended reflection questions. Seventeen participants worked through the online program on their own in a self-directed manner, and 34 participants worked through the program with therapist assistance and coaching. Although the complete intervention consisted of 12 lessons, the first three covered introductory information, goal setting, and preparation for participation, and the last lesson involved reflecting on the intervention as a whole. Lessons 4–11 (Table 2), which focused on teaching specific intervention skills, were used for this study.

Table 2.

Reflection response questions

| Lesson number | Intervention technique | Reflection response prompt |
|---|---|---|
| 4 | Following the child’s lead | • How does your child respond when you follow his lead? How long were you able to play? |
| 4 | Imitating the child | • How does your child respond when you imitate his toy play, gestures, body movements, or vocalizations? (e.g., Does he look at you or smile? Does he change activities to see if you will continue to imitate him?) |
| 5 | Using animation | • How does your child respond when you use animation? (e.g., Does he pay more attention to you? Does he imitate your nonverbal communication?) |
| 5 | Modeling and expanding the child’s language | • How does your child respond when you model language around his play and interests? (e.g., Does he imitate your sounds or words? Does he use new gestures or words on his own?) |
| 6 | Playful obstruction | • How does your child respond when you use playful obstruction? (e.g., Does he look at you? Does he use language to communicate?) |
| 6 | Balanced turns | • How does your child respond when you use balanced turns? (e.g., Does he request a turn? Does he watch you take your turn?) |
| 7 | Communicative temptations | • How does your child respond when you use communicative temptations? (e.g., Does he look at you? Does he use gestures or language to initiate communication?) |
| 8 | Teaching language | • How does your child respond when you prompt him to use more complex language? (e.g., Does he use new communication skills?) |
| 9 | Teaching language (2) | • Which prompts did you use to expand your child’s language? How did your child respond to the different prompts? • Were you able to decrease your support to encourage your child to use language spontaneously? How did your child respond? |
| 10 | Teaching play | • How does your child respond when you teach imitative play with toys? (e.g., Does he imitate your play with toys? Does he play in more creative ways?) • How does your child respond when you teach gesture imitation? (e.g., Does he imitate your gestures? Does he use more gestures on his own?) |
| 11 | Teaching play (2) | • Which prompts did you use to expand your child's play? How did your child respond to the different prompts? • Were you able to decrease your support to encourage your child to use play skills spontaneously? How did your child respond? |

Reflection responses

Caregivers completed two to three short-answer reflection responses per lesson, which they typed directly into the online program. The reflection responses analyzed for this study focused on the child’s response to the caregiver’s use of intervention techniques from the lesson. Thirty-six participants completed the reflection responses for all eight lessons; the remaining participants had some missing reflection responses. The text of the open-ended reflection questions is included verbatim in Table 2.

Qualitative coding

Qualitative content analysis using a combined inductive and deductive approach was used to analyze the semantic content of the reflection responses. Codes were applied based on the manifest content of the reflection responses (i.e., content directly described in the typed responses rather than inferred). A set of categories describing caregiver use of intervention techniques was derived deductively from the content of the online program; this included 10 techniques covered across 8 lessons (Table 2). Each response was coded with the lesson to which it applied, as well as any specific techniques mentioned in the response. This directed content analysis approach is consistent with our emphasis on examining the existing theoretical model of the intervention (Hsieh & Shannon, 2005).

An inductive approach was used to generate categories pertaining to children’s response to the intervention techniques, consistent with an “open coding” process (Strauss & Corbin, 1990). We took an inductive approach in order to capture the full range of child responses to the intervention techniques, rather than only the expected or ideal outcomes. The second author and an undergraduate research assistant independently familiarized themselves with the caregiver responses, and each generated a list of potential categories describing child responses. They then met to finalize the categories and create a codebook. The second author and research assistant met twice a week to consensus-code all responses using Dedoose. The first author subsequently read through all the responses and checked the final codes.

Analysis

Once coding was complete, we used Dedoose to generate code co-occurrence tables (Table 3) to examine “thematic proximity,” that is, the relationship between codes (Armborst, 2017). Specifically, our analysis focused on how often intervention techniques and specific child responses co-occurred within each reflection response. We then examined the most frequent child response codes for each technique and compared them to the theoretical model presented in Figure 1.
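The co-occurrence counts in Table 3 can be read as a tally of reflection responses in which a technique code and a child response code were both applied. The Python below is a minimal sketch of that logic, assuming each response counts once per code pair; it is not Dedoose's implementation, and the `CodedResponse` structure, code labels, and example responses are hypothetical. The `top_codes` helper mirrors the step of identifying the most frequent child response codes for each technique.

```python
from collections import Counter
from collections.abc import Iterable
from dataclasses import dataclass, field


@dataclass
class CodedResponse:
    """One caregiver reflection response and the codes applied to it."""
    techniques: set[str] = field(default_factory=set)
    child_responses: set[str] = field(default_factory=set)


def co_occurrence(responses: Iterable[CodedResponse]) -> Counter:
    """Count, for each (technique, child response) pair, the number of
    reflection responses in which both codes were applied."""
    counts: Counter = Counter()
    for response in responses:
        for technique in response.techniques:
            for child_code in response.child_responses:
                counts[(technique, child_code)] += 1
    return counts


def top_codes(counts: Counter, technique: str, k: int = 3) -> list[str]:
    """Return the k child response codes that co-occur most often
    with the given technique."""
    per_technique = Counter(
        {code: n for (tech, code), n in counts.items() if tech == technique}
    )
    return [code for code, _ in per_technique.most_common(k)]


# Hypothetical coded responses; not data from this study.
example = [
    CodedResponse({"imitating"}, {"enjoyment", "anticipation"}),
    CodedResponse({"imitating"}, {"enjoyment", "reciprocal"}),
    CodedResponse({"imitating", "following_lead"}, {"enjoyment"}),
    CodedResponse({"playful_obstruction"}, {"eye_contact", "frustration"}),
]
counts = co_occurrence(example)
print(counts[("imitating", "enjoyment")])  # 3
print(top_codes(counts, "imitating", k=1))  # ['enjoyment']
```

Because one response can mention several techniques (as in the third example), a single response may contribute to more than one technique column in this sketch.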

Table 3.

Code co-occurrence table indicating child response codes associated with each Project ImPACT intervention technique.

| Child response code | Following the child's lead | Imitating the child | Animation | Model language | Communicative temptations | Balanced turns | Playful obstruction | Teaching language | Teaching play |
|---|---|---|---|---|---|---|---|---|---|
| Child social communication responses | | | | | | | | | |
| Reciprocal interaction/joint engagement | 14 | 30 | 21 | 5 | 7 | 14 | 8 | 5 | 23 |
| Child enjoyment in interaction | 22 | 43 | 24 | 5 | 7 | 7 | 19 | 1 | 16 |
| Longer duration of interaction | 7 | 4 | 4 | 2 | 0 | 1 | 0 | 1 | 0 |
| Child anticipation | 5 | 21 | 3 | 1 | 1 | 5 | 6 | 0 | 1 |
| Child imitation | 0 | 7 | 11 | 20 | 2 | 0 | 1 | 2 | 68 |
| Child eye contact | 5 | 14 | 12 | 2 | 27 | 2 | 32 | 5 | 2 |
| Child attention | 6 | 12 | 25 | 7 | 0 | 14 | 1 | 2 | 11 |
| Child gestures | 1 | 6 | 3 | 10 | 14 | 3 | 1 | 19 | 37 |
| Child requests | 3 | 2 | 3 | 1 | 22 | 12 | 9 | 22 | 6 |
| Child vocalizes | 2 | 11 | 7 | 35 | 23 | 12 | 22 | 60 | 20 |
| Child initiation of social interaction | 5 | 9 | 5 | 12 | 32 | 12 | 1 | 15 | 18 |
| Child skill growth | 1 | 3 | 0 | 6 | 0 | 1 | 0 | 6 | 12 |
| Other child responses | | | | | | | | | |
| Child responds well | 15 | 1 | 9 | 2 | 9 | 9 | 7 | 35 | 42 |
| Child exhibits RRBs | 2 | 4 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| Child disengagement in social interaction | 9 | 9 | 5 | 0 | 3 | 10 | 4 | 10 | 23 |
| Child difficulty knowing how to respond | 3 | 6 | 1 | 1 | 1 | 0 | 4 | 6 | 5 |
| Child frustration | 4 | 6 | 1 | 0 | 5 | 11 | 14 | 8 | 15 |
| Child response variability | 8 | 16 | 8 | 5 | 9 | 11 | 16 | 28 | 34 |

Note: Italicized code counts indicate that the behavior was used as an example in the reflection response prompt. The most common 2–3 child social communication response codes for each lesson are shaded in gray. Teaching language and Teaching play were each covered across 2 lessons, thus the total co-occurrences can surpass the total number of participants.

Community involvement

Community members were not directly involved in the development, design, or interpretation of this study.

Results

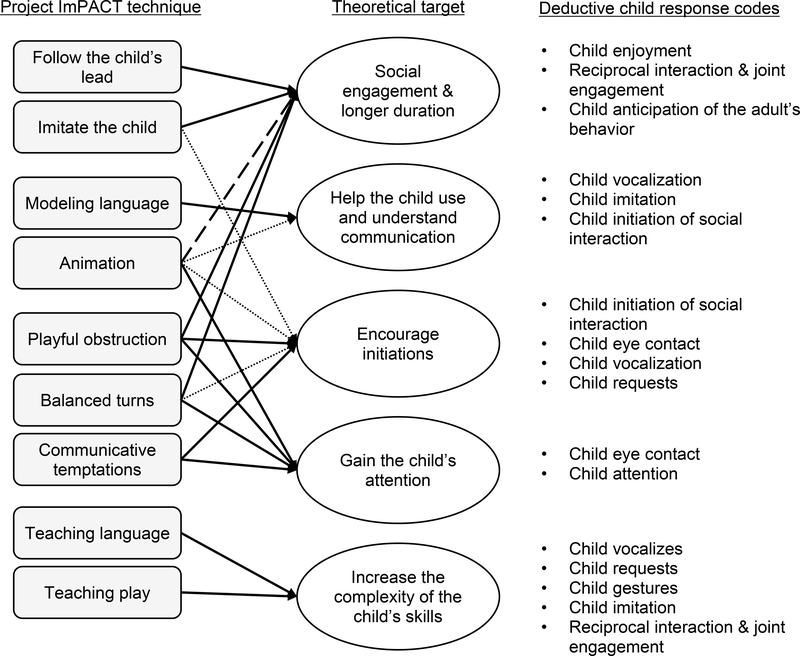

The code counts for the co-occurrence of each intervention technique with each child response are shown in Table 3. The results largely supported the theoretical model of the intervention (Figure 1), with a few key differences (Figure 2). We also examined the same code co-occurrences for the subset of families who implemented the intervention with relatively high fidelity (>3.5 on a 5-point scale) during the unstructured, 10-minute play session at the end of the program; because the pattern of results was virtually identical, we report only the full sample here.

Figure 2.

Summary of qualitative analysis results.

Note. Solid lines indicate findings supported by data and consistent with intervention theory; dashed lines indicate findings supported by data but inconsistent with intervention theory; dotted lines indicate findings not supported by data but consistent with intervention theory.

Consistent with the intervention theory, caregivers described child responses indicating increased social engagement and longer caregiver-child interactions (e.g., child enjoyment, child attention) when following the child’s lead and imitating the child. For example, one caregiver wrote, “Once I started following Sam’s lead; our play was more enjoyable as Sam was a bit surprised and then amused by realizing that mommy is trying to follow his play on his terms and helping/facilitating him to do what he enjoys to do. We were able to play longer and it was more fun than ever.” While the manual describes animation as facilitating social attention, helping the child use and understand nonverbal communication, and encouraging initiations, caregivers most often associated animation with social engagement (e.g., child enjoyment) and gaining the child’s attention: “She pays more attention and thinks it is funny.” Thus, the theoretical model for animation was only partially supported. When modeling and expanding language, caregivers perceived that they helped the child use communication (e.g., child vocalization, child initiation of social interaction), consistent with the intervention theory. One caregiver reported, “Luke has had a lot more spontaneous babble the past week,” and another wrote, “Rebecca does like to repeat what I say when I give her words for what she’s doing.” As described in the intervention manual, communicative temptations were perceived to fulfill their dual function of gaining the child’s attention and encouraging the child to initiate. For example, one caregiver said, “Jaina responded in a variety of ways; […] Mostly; she used language. She did also make eye contact repeatedly (especially when wanting “more” of something).” Likewise, consistent with the intervention theory, playful obstruction was perceived to facilitate child social engagement, gain the child’s attention, and encourage the child to initiate.
One caregiver wrote that their son “laughs most of the time even tries to imitate and make sounds... but sometimes shies away and that’s when we take a break and get back to activity later.” Findings for balanced turns were only partially consistent with the intervention theory. Caregivers often associated balanced turns with child social engagement and gaining the child’s attention; however, they did not often associate balanced turns with child initiations. Last, lessons focused on teaching new communication, play, and imitation skills were perceived to be associated with a variety of child responses consistent with the treatment targets of the intervention (e.g., requests, gestures, imitation). For example, one caregiver reported, “She uses single words when she’s motivated enough; especially for things like bubbles. With less motivating activities I have to help her point sometimes,” and another caregiver wrote, “She imitates and plays on. She is really starting to join with others in her play; more than side by side play.”

Caregivers also noted that some techniques led to variable child responses, suggesting that the techniques did not always work consistently across settings and situations: “[he] reacts to my imitation in mixed ways. Sometimes he continues and enjoys the attention; and sometimes he moves on to avoid me.” This variability in responding was most often described for imitating the child, playful obstruction, and teaching language and play. In addition, some techniques were more likely than others to lead to child frustration. Caregivers reported that balanced turns, playful obstruction, and teaching play sometimes resulted in child frustration. One parent wrote, “She doesn’t respond well to [playful obstruction]. She gets very frustrated especially if she is very intent on the activity.”

Discussion

To our knowledge, this is the first study in the autism field to use archival qualitative data to identify putative active ingredients and mechanisms of an intervention; however, this method has been used for parent management training, a type of behavioral parent training program for child disruptive behavior (Holtrop et al., 2014; Mejia et al., 2016). We found written reflection questions to be a practical and convenient source of qualitative data, and we believe such data add richness to the quantitative measures collected as part of clinical trials. Through qualitative content analysis, we found evidence that, according to caregiver perceptions, the suite of techniques that make up Project ImPACT does appear to target a variety of social communication behaviors. Furthermore, different techniques seem to target different behaviors. Many of the results were consistent with the intervention theory; however, some theoretical outcomes were not reported by caregivers (e.g., balanced turns were not associated with child initiations). In addition, caregivers described some potential mechanisms that were not explicitly stated in the intervention theory (e.g., the association between animation and social engagement).

Future quantitative research that systematically measures caregiver implementation of the intervention techniques and child responses is needed to directly assess the active ingredients of Project ImPACT. Experimental designs that manipulate the intervention techniques and measure mechanisms and outcomes (e.g., factorial experiments, single-case component analyses) are needed to provide causal evidence of the associations between active ingredients and outcomes; ideally, such designs would also measure the mediating effects of treatment mechanisms. A small body of research on early interventions for autism has begun to address this (Gulsrud et al., 2016; Ingersoll & Wainer, 2013), and this will be an important area for continued research.

However, we also believe that qualitative methods can expand on and complement this type of quantitative experimental research in several ways. For example, our findings suggest which specific, context-dependent child behaviors might be ideal targets of measurement in experimental designs, particularly for evaluating potential mechanisms of change. It will be important to link these mechanisms to broader outcomes measured later and in generalized contexts in order to understand treatment effects more fully. Yet our results suggest that a single broad measure of child social communication, even one that is quite sensitive to change, may not capture the different child responses associated with individual, focused intervention techniques (e.g., duration of joint engagement for imitating the child; child eye contact or attention for communicative temptations). Studies designed to evaluate the child’s real-time response to individual intervention techniques, typical of single-case experimental designs, may require measurement of a variety of specific social communication behaviors over time in order to evaluate and differentiate treatment effects associated with individual intervention techniques. Moreover, some caregivers reported considerable variability in children’s responses to specific intervention techniques, while other caregivers perceived that some intervention techniques caused frustration in their children. Clinical trials may not ordinarily capture these types of responses, yet such information is important for understanding heterogeneity in treatment response, moderators of treatment effects, and how to optimally individualize treatment for children with different strengths. In addition, we believe that parent perceptions of outcomes have value in their own right and align with principles of family-centered care, which is associated with improved outcomes for children with special health care needs (Bailey et al., 2012; Kuhlthau et al., 2011).
Although caregivers may not have recognized every instance in which they used a technique, or may not have implemented all techniques with high fidelity, their understanding of how their child responded to their use of a technique can affect factors such as therapeutic self-efficacy (Russell & Ingersoll, 2020), which has downstream effects on parenting stress (Hastings & Brown, 2002).

We also believe that mixed methods research that meaningfully integrates qualitative and quantitative data may provide useful insights into how and for whom interventions work. For example, a convergent mixed methods design could be used to examine meaningful differences in patterns of code co-occurrence for subgroups of caregivers (e.g., high compared to low parenting self-efficacy) or children (e.g., preverbal children compared to children who communicate verbally). Sequential mixed methods designs could also be used in several ways: qualitative data could be used to contextualize or gain additional information about quantitative results, or quantitative results could be used to corroborate themes and relationships identified from qualitative responses. In the future, we plan to examine some of the specific relationships identified in this report using quantitative measures coded from behavioral observations.

In addition, this work may have helpful clinical applications. Qualitative research may provide guidance on which specific behaviors might be used to identify early or slow treatment response. Here, we have identified specific, observable behaviors indicative of a short-term treatment response that are feasible to identify in a practice setting. These may be useful and sensitive early signals of treatment response for clinicians.

However, our data set was not without limitations. First, because parents learn several techniques in a prescribed sequence, children's responses to individual techniques are not due to that technique alone, but rather to the new technique in the presence of previously learned techniques. While this is not ideal for understanding the isolated effects of active ingredients, it does represent how the intervention techniques are meant to be used: not in isolation, but integrated and used in concert. Next, our source material was limited in richness; because reflection responses were typed into an online program, there was no opportunity to probe for additional detail or to ask follow-up questions for clarification. Qualitative interviews or focus groups are likely to provide a much richer description of how the intervention techniques are perceived to affect child outcomes, although such data are more costly to collect and more time-intensive to code. A further limitation is that the reflection questions included theoretically consistent child responses as examples to help caregivers understand what type of information to think about and report in their online reflection responses. While not ideal for the purposes of data collection, this served a function within the training program itself. Although we cannot know whether or how this affected caregiver responses, the fact that caregivers described responses beyond the examples named in the questions suggests that these data have merit. Last, these data come from a telehealth adaptation of Project ImPACT. Project ImPACT could have different active ingredients or mechanisms of change when delivered this way, but we do not think this is likely: although caregivers learned the techniques through an online modality, they learned the same techniques and delivered them directly with their child as they would in a face-to-face intervention.
Furthermore, recent research suggests that Project ImPACT has similar efficacy when delivered face to face and via telehealth (Hao et al., 2020).

Conclusion

According to caregiver perceptions examined through qualitative content analysis, the suite of techniques that comprises Project ImPACT appears to target a variety of social communication behaviors. Many of the results were consistent with the intervention theory; however, some theoretical mechanisms were not reported by caregivers, and some reported mechanisms were not explicitly stated in the intervention theory. These findings also support written parent reflections as a practical source of qualitative data that can add richness to quantitative measures in clinical trials. Future research is needed to directly assess the active ingredients of Project ImPACT. Experimental designs that manipulate the intervention techniques and measure both mechanisms and outcomes are also needed to test the mediating effects of treatment mechanisms and to provide causal evidence of the associations between active ingredients and outcomes.

Acknowledgements:

Many thanks to the families whose participation in research made this work possible. In addition, thank you to Grace MacDonald, who assisted with qualitative coding.

Funding: We are also grateful for the support of the following grants: Eunice Kennedy Shriver National Institute of Child Health and Human Development F31HD103209, PI: Frost; Congressionally Directed Medical Research Programs W81XWH-10-1-0586, PI: Ingersoll; Health Resources and Services Administration-Maternal and Child Health Bureau R40MC27704, PI: Ingersoll. The content and conclusions are those of the authors and should not be construed as the official position or policy of, nor should any endorsements be inferred by HRSA or the U.S. Government.

Declaration of interest: Author K.M.F. is involved with training providers to implement Project ImPACT at fidelity, and is currently supported by an NIH grant to study how Project ImPACT works. Author K.R. has no conflicts of interest to declare. Author B.I. is a co-developer of Project ImPACT. She receives royalties from Guilford Press for the curriculum and fees for training others in the program. She donates profits from this work to support research and continued development of Project ImPACT.

Footnotes

Ethical approval: This study was approved by the Institutional Review Board (IRB) at Michigan State University. All participants consented for their data to be used for research purposes.

Contributor Information

Kyle M. Frost, Michigan State University.

Kaylin Russell, Michigan State University.

Brooke Ingersoll, Michigan State University.

References

- Armborst A (2017). Thematic Proximity in Content Analysis. SAGE Open, 7(2), 215824401770779. 10.1177/2158244017707797

- Bailey DB, Raspa M, & Fox LC (2012). What Is the Future of Family Outcomes and Family-Centered Services? Topics in Early Childhood Special Education, 31(4), 216–223. 10.1177/0271121411427077

- Bearss K, Burrell TL, Stewart L, & Scahill L (2015). Parent training in autism spectrum disorder: What’s in a name? Clinical Child and Family Psychology Review, 18(2), 170–182.

- Brian JA, Smith IM, Zwaigenbaum L, Roberts W, & Bryson SE (2016). The Social ABCs caregiver‐mediated intervention for toddlers with autism spectrum disorder: Feasibility, acceptability, and evidence of promise from a multisite study. Autism Research, 9(8), 899–912.

- Bruinsma Y, Minjarez MB, Schreibman L, & Stahmer AC (Eds.). (2019). Naturalistic Developmental Behavioral Interventions for Autism Spectrum Disorder. Brookes Publishing.

- Charman T (2003). Why is joint attention a pivotal skill in autism? Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 358(1430), 315–324. 10.1098/rstb.2002.1199

- Crawford MJ, Weaver T, Rutter D, Sensky T, & Tyrer P (2002). Evaluating new treatments in psychiatry: The potential value of combining qualitative and quantitative research methods. International Review of Psychiatry, 14(1), 6–11. 10.1080/09540260120114005

- Estes A, Vismara L, Mercado C, Fitzpatrick A, Elder L, Greenson J, Lord C, Munson J, Winter J, Young G, Dawson G, & Rogers S (2014). The Impact of Parent-Delivered Intervention on Parents of Very Young Children with Autism. Journal of Autism and Developmental Disorders, 44(2), 353–365. 10.1007/s10803-013-1874-z

- Frost KM, Brian J, Gengoux GW, Hardan A, Rieth SR, Stahmer A, & Ingersoll B (2020). Identifying and measuring the common elements of naturalistic developmental behavioral interventions for autism spectrum disorder: Development of the NDBI-Fi. Autism. 10.1177/1362361320944011

- Gulsrud AC, Hellemann G, Shire S, & Kasari C (2016). Isolating active ingredients in a parent-mediated social communication intervention for toddlers with autism spectrum disorder. Journal of Child Psychology and Psychiatry, 57(5), 606–613. 10.1111/jcpp.12481

- Hao Y, Franco JH, Sundarrajan M, & Chen Y (2020). A Pilot Study Comparing Tele-therapy and In-Person Therapy: Perspectives from Parent-Mediated Intervention for Children with Autism Spectrum Disorders. Journal of Autism and Developmental Disorders. 10.1007/s10803-020-04439-x

- Hastings RP, & Brown T (2002). Behavior Problems of Children With Autism, Parental Self-Efficacy, and Mental Health. American Journal on Mental Retardation, 107(3), 222–232.

- Holtrop K, Parra-Cardona JR, & Forgatch MS (2014). Examining the Process of Change in an Evidence-Based Parent Training Intervention: A Qualitative Study Grounded in the Experiences of Participants. Prevention Science, 15(5), 745–756. 10.1007/s11121-013-0401-y

- Hsieh H-F, & Shannon SE (2005). Three Approaches to Qualitative Content Analysis. Qualitative Health Research, 15(9), 1277–1288. 10.1177/1049732305276687

- Ingersoll B, & Dvortcsak A (2019). Teaching Social Communication to Children with Autism and Other Developmental Delays: The Project ImPACT Guide to Coaching Parents and the Project ImPACT Manual for Parents (2nd ed.). Guilford Press.

- Ingersoll B, & Wainer A (2013). Initial Efficacy of Project ImPACT: A Parent-Mediated Social Communication Intervention for Young Children with ASD. Journal of Autism and Developmental Disorders, 43(12), 2943–2952. 10.1007/s10803-013-1840-9

- Ingersoll B, Wainer AL, Berger NI, Pickard KE, & Bonter N (2016). Comparison of a Self-Directed and Therapist-Assisted Telehealth Parent-Mediated Intervention for Children with ASD: A Pilot RCT. Journal of Autism and Developmental Disorders, 46(7), 2275–2284. 10.1007/s10803-016-2755-z

- Ingersoll B, Wainer AL, Berger NI, & Walton KM (2017). Efficacy of low intensity, therapist-implemented Project ImPACT for increasing social communication skills in young children with ASD. Developmental Neurorehabilitation, 20(8), 502–510. 10.1080/17518423.2016.1278054

- Kaiser AP, Hancock TB, & Nietfeld JP (2000). The Effects of Parent-Implemented Enhanced Milieu Teaching on the Social Communication of Children Who Have Autism. Early Education & Development, 11(4), 423–446. 10.1207/s15566935eed1104_4

- Kasari C, Lawton K, Shih W, Barker TV, Landa R, Lord C, Orlich F, King B, Wetherby A, & Senturk D (2014). Caregiver-Mediated Intervention for Low-Resourced Preschoolers With Autism: An RCT. Pediatrics, 134, e72–e79. 10.1542/peds.2013-3229

- Kuhlthau KA, Bloom S, Van Cleave J, Knapp AA, Romm D, Klatka K, Homer CJ, Newacheck PW, & Perrin JM (2011). Evidence for Family-Centered Care for Children With Special Health Care Needs: A Systematic Review. Academic Pediatrics, 11(2), 136–143.e8. 10.1016/j.acap.2010.12.014

- McConachie H, & Diggle T (2007). Parent implemented early intervention for young children with autism spectrum disorder: A systematic review. Journal of Evaluation in Clinical Practice, 13(1), 120–129. 10.1111/j.1365-2753.2006.00674.x

- Mejia A, Ulph F, & Calam R (2016). Exploration of Mechanisms behind Changes after Participation in a Parenting Intervention: A Qualitative Study in a Low‐Resource Setting. American Journal of Community Psychology, 57(1–2), 181–189.

- Nevill RE, Lecavalier L, & Stratis EA (2018). Meta-analysis of parent-mediated interventions for young children with autism spectrum disorder. Autism, 22(2), 84–98. 10.1177/1362361316677838

- Oono IP, Honey EJ, & McConachie H (2013). Parent-mediated early intervention for young children with autism spectrum disorders (ASD). Cochrane Database of Systematic Reviews. 10.1002/14651858.CD009774.pub2

- Pickles A, Harris V, Green J, Aldred C, McConachie H, Slonims V, Le Couteur A, Hudry K, Charman T, & the PACT Consortium. (2015). Treatment mechanism in the MRC preschool autism communication trial: Implications for study design and parent-focussed therapy for children. Journal of Child Psychology and Psychiatry, 56(2), 162–170. 10.1111/jcpp.12291

- Pickles A, Le Couteur A, Leadbitter K, Salomone E, Cole-Fletcher R, Tobin H, Gammer I, Lowry J, Vamvakas G, Byford S, Aldred C, Slonims V, McConachie H, Howlin P, Parr JR, Charman T, & Green J (2016). Parent-mediated social communication therapy for young children with autism (PACT): Long-term follow-up of a randomised controlled trial. The Lancet, 388(10059), 2501–2509. 10.1016/S0140-6736(16)31229-6

- Reichow B, Servili C, Yasamy MT, Barbui C, & Saxena S (2013). Non-Specialist Psychosocial Interventions for Children and Adolescents with Intellectual Disability or Lower-Functioning Autism Spectrum Disorders: A Systematic Review. PLoS Medicine, 10(12), e1001572. 10.1371/journal.pmed.1001572

- Russell KM, & Ingersoll B (2020). Factors related to parental therapeutic self-efficacy in a parent-mediated intervention for children with autism spectrum disorder: A mixed methods study. Autism, 136236132097423. 10.1177/1362361320974233

- Sandbank M, Bottema-Beutel K, Crowley S, Cassidy M, Dunham K, Feldman JI, Crank J, Albarran SA, Raj S, Mahbub P, & Woynaroski TG (2020). Project AIM: Autism intervention meta-analysis for studies of young children. Psychological Bulletin, 146(1), 1–29. 10.1037/bul0000215

- Schreibman L, Dawson G, Stahmer AC, Landa R, Rogers SJ, McGee GG, Kasari C, Ingersoll B, Kaiser AP, Bruinsma Y, McNerney E, Wetherby A, & Halladay A (2015). Naturalistic Developmental Behavioral Interventions: Empirically Validated Treatments for Autism Spectrum Disorder. Journal of Autism and Developmental Disorders, 45(8), 2411–2428. 10.1007/s10803-015-2407-8

- Sengupta K, Mahadik S, & Kapoor G (2020). Glocalizing project ImPACT: Feasibility, acceptability and preliminary outcomes of a parent-mediated social communication intervention for autism adapted to the Indian context. Research in Autism Spectrum Disorders, 76, 101585. 10.1016/j.rasd.2020.101585

- Stahmer AC, Rieth SR, Dickson KS, Feder J, Burgeson M, Searcy K, & Brookman-Frazee L (2019). Project ImPACT for Toddlers: Pilot outcomes of a community adaptation of an intervention for autism risk. Autism, 136236131987808. 10.1177/1362361319878080

- Steinbrenner JR, Hume K, Odom SL, Morin KL, Nowell SW, Tomaszewski B, Szendrey S, McIntyre NS, Yücesoy-Özkan Ş, & Savage MN (2020). Evidence-based Practices for Children, Youth, and Young Adults with Autism. The University of North Carolina at Chapel Hill, Frank Porter Graham Child Development Institute, National Clearinghouse on Autism Evidence and Practice Review Team, 143.

- Strauss A, & Corbin J (1990). Basics of qualitative research. Sage Publications.

- Tiede G, & Walton KM (2019). Meta-analysis of naturalistic developmental behavioral interventions for young children with autism spectrum disorder. Autism, 136236131983637. 10.1177/1362361319836371

- Wetherby AM, Woods J, Guthrie W, Delehanty A, Brown JA, Morgan L, Holland RD, Schatschneider C, & Lord C (2018). Changing Developmental Trajectories of Toddlers With Autism Spectrum Disorder: Strategies for Bridging Research to Community Practice. Journal of Speech, Language, and Hearing Research, 61(11), 2615–2628. 10.1044/2018_JSLHR-L-RSAUT-18-0028

- Wong C, Odom SL, Hume KA, Cox AW, Fettig A, Kucharczyk S, Brock ME, Plavnick JB, Fleury VP, & Schultz TR (2015). Evidence-Based Practices for Children, Youth, and Young Adults with Autism Spectrum Disorder: A Comprehensive Review. Journal of Autism and Developmental Disorders, 45(7), 1951–1966. 10.1007/s10803-014-2351-z

- Yoder PJ, Stone WL, & Edmunds SR (2020). Parent utilization of ImPACT intervention strategies is a mediator of proximal then distal social communication outcomes in younger siblings of children with ASD. 14.

- Zwaigenbaum L, Bauman ML, Choueiri R, Kasari C, Carter A, Granpeesheh D, Mailloux Z, Smith Roley S, Wagner S, Fein D, Pierce K, Buie T, Davis PA, Newschaffer C, Robins D, Wetherby A, Stone WL, Yirmiya N, Estes A, … Natowicz MR (2015). Early Intervention for Children With Autism Spectrum Disorder Under 3 Years of Age: Recommendations for Practice and Research. Pediatrics, 136 Suppl 1, S60–81. 10.1542/peds.2014-3667E