Abstract

Spontaneous brain activity is characterized by bursts and avalanche-like dynamics, with scale-free features typical of critical behaviour. The stochastic version of the celebrated Wilson-Cowan model has been widely studied as a system of spiking neurons reproducing non-trivial features of the neural activity, from avalanche dynamics to oscillatory behaviours. However, to what extent such phenomena are related to the presence of a genuine critical point remains elusive. Here we address this central issue, providing analytical results in the linear approximation and extensive numerical analysis. In particular, we present results supporting the existence of a bona fide critical point, where a second-order-like phase transition occurs, characterized by scale-free avalanche dynamics, scaling with the system size and a diverging relaxation time-scale. Moreover, our study shows that the observed critical behaviour falls within the universality class of the mean-field branching process, where the exponents of the avalanche size and duration distributions are, respectively, 3/2 and 2. We also provide an accurate analysis of the system behaviour as a function of the total number of neurons, focusing on the time correlation functions of the firing rate in a wide range of the parameter space.

Author summary

Networks of spiking neurons are introduced to describe some features of the brain activity, which are characterized by burst events (avalanches) with power-law distributions of size and duration. The observation of this kind of noisy behaviour in a wide variety of real systems led to the hypothesis that neuronal networks work in the proximity of a critical point. This hypothesis is at the core of an intense debate. At variance with previous claims, here we show that a stochastic version of the Wilson-Cowan model presents a phenomenology in agreement with the existence of a bona fide critical point for a particular choice of the relative synaptic weight between excitatory and inhibitory neurons. The system behaviour at this point shows all features typical of criticality, such as diverging timescales, scaling with the system size and scale-free distributions of avalanche sizes and durations, with exponents corresponding to the mean-field branching process. Our analysis unveils the critical nature of the observed behaviours.

Introduction

Spontaneous brain activity shows complex spatio-temporal patterns characterized by a rich phenomenology, including power-law spectra [1], instabilities and metastability transitions [2–4], synchronization [5, 6], the presence of multiple spatio-temporal scales [7, 8], etc. Another striking feature is the occurrence of bursts, or avalanches, as first observed in organotypic cultures from coronal slices of rat cortex [9]. This kind of behaviour has been confirmed in a wide variety of systems, from cortical activity of awake monkeys [10] to human fMRI (functional Magnetic Resonance Imaging) [11] and MEG (MagnetoEncephaloGraphy) recordings [12]. In experiments, the distribution of avalanche size S is characterized by the scaling law P(S) ∼ S^(−τS) with exponent τS ≃ 1.5, whereas the distribution of the avalanche duration T follows the scaling P(T) ∼ T^(−τT) with τT ≃ 2. Both these behaviours are consistent with the universality class of the mean-field branching process [13], where the propagation of an avalanche can be described by a front of independent sites that can either trigger further activity or die out.

The hypothesis that some features of the brain activity can be interpreted as the result of a dynamics acting close to a critical point has inspired several statistical models where a critical state can be selected by the fine tuning of a parameter [14–16], or is self-organized [17–20]. Numerical data for different neuronal network models well reproduce experimental results. On the other hand, other stochastic models have been proposed that can reproduce the avalanche dynamics of the neural activity, without invoking the existence of an underlying critical behaviour. Among these models of spiking neurons, a central role is played by the celebrated Wilson-Cowan model (WCM), which describes the coupled dynamics of populations of excitatory and inhibitory neurons [21–24]. One of the major merits of this model is that it allows for analytical treatment in the large population size limit [22, 25, 26]. The stochastic version of this model has been shown to reproduce avalanche dynamics [26] and oscillatory behaviour of the activity [27]. However, the underlying mechanisms responsible for such phenomenology have been identified in the noisy functionally coupled structure of the dynamics, rather than in the presence of a critical point.

Requirements to assess critical behavior

In order to clarify the main requirements for the behaviour of a system to be classified as critical, here we briefly summarize the fundamental features of criticality [28]. Second order (critical) phase transitions are characterized by singularities in the proximity of a specific value of a control parameter, for instance the temperature in thermal phase transitions, in the limit of infinite system sizes and, generally, for vanishing external fields. More explicitly, fundamental properties of the system either diverge or go to zero approaching the critical value of the parameter. In particular, the order parameter of the system goes continuously to zero at the critical point, being non-zero only on one side of the transition (low temperatures in thermal systems), whereas the response function, proportional to the fluctuations of the order parameter, diverges. A fundamental requirement of second order phase transitions is that this singular behaviour is described by a function that in the neighbourhood of the critical point can be approximated by a power law, neglecting terms of higher order representing corrections to scaling. This property allows one to define a critical exponent for each quantity of interest and therefore a family of critical exponents characterizing the critical behaviour, named universality class. Different systems can belong to the same universality class if they are described by Hamiltonians with the same symmetries. Since critical transitions occur in systems of interacting components, the divergence of the response function implies, by fluctuation-dissipation relations, that at the critical point the spatial and temporal correlation ranges diverge in an infinite system. The divergence of the temporal correlation range is an expression of the well-known critical slowing down taking place at the critical point, whereas the divergence of the correlation length expresses the large scale sensitivity of the system to external perturbations.

In finite systems, the spatial correlation range at criticality equals the system size. As a consequence, a diverging (or equal to the system size) correlation length implies that no characteristic size exists in the system and therefore the extension of the power law regime, namely the cutoff, must scale with the system size. Therefore, the divergence of the correlation length and the absence of a characteristic size are reflected in the power law behaviour of characteristic distributions. Summarizing, to assess that a system exhibits critical behaviour, one must identify an order parameter going continuously to zero at a critical value of a control parameter. At the critical point, the fluctuations of the order parameter must diverge, as well as the range of temporal and spatial correlations. In finite systems criticality implies that the cutoff in power law behaviour should scale with the system size.

A classic example of a second order phase transition is the ferro-paramagnetic transition exhibited by the Ising model Hamiltonian in zero external magnetic field. The magnetization per spin, which plays the role of the order parameter, is different from zero (the ferromagnetic phase) at low temperature, namely below the critical temperature Tc, vanishes at Tc and remains zero above Tc (the paramagnetic phase). For this transition the response function is the magnetic susceptibility, namely the derivative of the magnetization with respect to a vanishing external magnetic field. This susceptibility is indeed the spatial integral of the fluctuations of the magnetization and diverges at Tc due to the divergence of the correlation length, namely the size of clusters of correlated spins. All these singularities approaching Tc behave as power laws, defining the well-known Ising model universality class [28]. It is interesting to mention that the Ising model, besides this second order phase transition in zero field, also shows a first order phase transition for varying non-zero magnetic fields, below Tc. A first order phase transition is conversely characterized by a discontinuous order parameter and, possibly, the presence of hysteresis [29]. Increasing fluctuations approaching the transition can be observed also in this context, but the scaling with the system size is usually lacking, hampering the definition of critical exponents. The choice of the control parameters is, therefore, crucial in determining the order of the phase transition and the two phenomena can coexist in the same model (see Discussion).

This well-established scenario for equilibrium systems described by a Hamiltonian has been extended also to non-equilibrium open systems, even in the case where a Hamiltonian is not defined [30]. In particular, neuronal networks (biological and models) represent an instance of an entire field of systems where non-equilibrium phase transitions can occur in a self-organized manner, namely in the absence of a tuning parameter but due to the interaction of many degrees of freedom [20, 31, 32]. Also in non-equilibrium systems a continuous transition can be observed, with critical exponents typical of a well-defined universality class. More specifically, according to the conjecture by Janssen and Grassberger [30], systems with short-range interactions, exhibiting a continuous phase transition into a single absorbing state, belong generically to the Directed Percolation universality class, provided that they are characterized by a one component order parameter without additional symmetries and without unconventional features such as quenched disorder. Different scaling behavior is expected to occur in systems where at least one of these requirements is not fulfilled. We stress that directed percolation and the branching process on a tree, i.e. in the mean field approximation, belong to the same universality class.

Here we reconsider the stochastic WCM in this framework, addressing the central issue related to its critical behaviour. First, we observe that this model is defined by dynamical equations which are not derived from a Hamiltonian function describing the energy of the system. Therefore a real thermodynamic phase transition, where singularities in the second derivative of the free energy occur, is not expected. However, we present a systematic analysis of the features typical of a critical behaviour, showing that a bona fide critical point in the parameter space of the WCM can be actually identified. In particular, we show that: i) the mean firing rate plays the role of the order parameter, passing from zero value to a finite value across the critical point; ii) the correlation time of the order parameter diverges at the critical point; iii) the avalanche size and duration distributions follow a power-law behaviour; iv) for finite systems, this power-law regime scales with the system size, as expected at the critical point. Moreover, we show that the critical exponents fall within the universality class of the mean-field branching process [13].

Results

The stochastic Wilson-Cowan model

The stochastic version of the Wilson-Cowan model [26] describes the coupled dynamics of NE excitatory and NI inhibitory neurons. The state ai of neuron i can be active (ai = 1) or quiescent (ai = 0) and evolves according to a continuous-time Markov process. The transition rate from an active state to a quiescent state (1 → 0) is α for all neurons, while the rate for the inverse transition (0 → 1) is described by the activation function f(si), which depends on the total synaptic input si of the i-th neuron. The total synaptic input si is defined as

$$ s_i = \sum_j w_{ij}\, a_j + h_i \tag{1} $$

where wij are the synaptic strengths and the parameter hi plays the role of a small external input that adds up to the synaptic inputs from the connected neurons and the sum runs over all neurons. The activation function is given by

$$ f(s) = \begin{cases} \beta \tanh(s) & s > 0 \\ 0 & s \le 0 \end{cases} \tag{2} $$

where β has the dimension of an inverse time. The quantity s represents the distance of the membrane potential from the firing threshold and is measured in mV. In Eq (2) s is made dimensionless by dividing by 1 mV. In the following we consider that each neuron is coupled with all other neurons. The synaptic weights wij are equal to wEE/NE for excitatory-excitatory connections, wIE/NE for excitatory-inhibitory connections, −wEI/NI for inhibitory-excitatory connections and −wII/NI for inhibitory-inhibitory connections. Therefore the input of a neuron, in the large N limit, only depends on the excitatory or inhibitory type of the neuron, namely si = sE if the i-th neuron is excitatory, and si = sI if the i-th neuron is inhibitory. In the following we set α = 0.1 ms⁻¹, β = 1 ms⁻¹, NE = NI = N, hE = hI = h, and consider symmetric synaptic weights wEE = wIE = wE, wII = wEI = wI. Thus sE = sI = s, with s = (wE k − wI l)/N + h, where 0 ≤ k ≤ N and 0 ≤ l ≤ N are respectively the numbers of active excitatory and inhibitory neurons [26]. In the following we will focus on the instantaneous firing rate, defined as

$$ R = \left(1 - \frac{k + l}{2N}\right) f(s) \tag{3} $$

so that the mean number of neurons that fire in a small time interval Δt is given by 2NRΔt, since the network contains 2N neurons in total.
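As an illustration of the dynamics defined above, the following Python sketch simulates the population-level Markov process with a Gillespie-type update. It is a minimal sketch and not the implementation used for the results reported here: the function names, the choice of tracking only the numbers k and l of active neurons, and the form f(s) = β tanh(s) for s > 0 (zero otherwise) are assumptions made for this example.

```python
import math
import random


def activation(s, beta=1.0):
    """Activation rate. Assumed form: beta * tanh(s) for s > 0, zero otherwise."""
    return beta * math.tanh(s) if s > 0 else 0.0


def gillespie_wcm(N, w_E, w_I, h, alpha=0.1, beta=1.0, t_max=100.0, seed=0):
    """Simulate the fully connected model with N excitatory and N inhibitory neurons.

    Returns the list of spike times (quiescent -> active transitions) and the
    final numbers (k, l) of active excitatory and inhibitory neurons.
    """
    rng = random.Random(seed)
    k = l = 0          # start from the fully quiescent state
    t = 0.0
    spike_times = []
    while True:
        s = (w_E * k - w_I * l) / N + h      # common synaptic input
        act = activation(s, beta)
        # Rates of the four possible events.
        rates = [alpha * k,          # one excitatory neuron deactivates
                 alpha * l,          # one inhibitory neuron deactivates
                 (N - k) * act,      # one excitatory neuron fires
                 (N - l) * act]      # one inhibitory neuron fires
        total = sum(rates)
        if total == 0.0:
            break                     # absorbing state: nothing can happen
        t += rng.expovariate(total)   # exponential waiting time
        if t > t_max:
            break
        event = rng.choices(range(4), weights=rates)[0]
        if event == 0:
            k -= 1
        elif event == 1:
            l -= 1
        elif event == 2:
            k += 1
            spike_times.append(t)
        else:
            l += 1
            spike_times.append(t)
    return spike_times, k, l


if __name__ == "__main__":
    spikes, k, l = gillespie_wcm(N=1000, w_E=7.0, w_I=6.8, h=1e-3, t_max=200.0)
    print(len(spikes), "spikes; final state k =", k, ", l =", l)
```

In this example wE + wI = 13.8 and w0 = wE − wI = 0.2; other values of w0 can be explored by changing the two weights while keeping their sum fixed.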

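The temporal evolution of the system can be effectively described in terms of the coupled non-linear Langevin equations [33]

$$ \frac{dk}{dt} = -\alpha k + (N - k) f(s) + \sqrt{\alpha k + (N - k) f(s)}\;\eta_E(t), \qquad \frac{dl}{dt} = -\alpha l + (N - l) f(s) + \sqrt{\alpha l + (N - l) f(s)}\;\eta_I(t), \tag{4} $$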

where the noises satisfy 〈ηi(t)〉 = 0, 〈ηi(t)ηj(t′)〉 = δij δ(t − t′). Following Ref. [26], we make a Gaussian approximation and assume that the number of active neurons is the sum of a deterministic component and a stochastic perturbation, i.e. k = N·E + √N ξE and l = N·I + √N ξI. Introducing the variables Σ = (E + I)/2 and Δ = (E − I)/2, which represent the total average activity and the imbalance between excitatory and inhibitory activity, respectively, and expanding Eq (4) in powers of N^(−1/2), the leading terms proportional to N provide a set of dynamical equations for the deterministic components

$$ \frac{d\Sigma}{dt} = -\alpha \Sigma + (1 - \Sigma) f(s), \qquad \frac{d\Delta}{dt} = -\Delta \left[\alpha + f(s)\right], \tag{5} $$

with s = w0Σ + (wE + wI)Δ + h.

At long times, Δ relaxes to the fixed point value equal to zero, expression of the balance of excitation and inhibition [34] and direct consequence of the hypothesis of symmetric synaptic connections. Conversely, Σ relaxes to the fixed point Σ0, given by the solution of the equation

$$ -\alpha \Sigma_0 + (1 - \Sigma_0) f(s_0) = 0 \tag{6} $$

with s0 = w0Σ0 + h and w0 = wE − wI. We stress that, by this definition, w0 expresses the relative balance between the excitatory and inhibitory connection strength, and it will turn out to be the parameter controlling the critical transition. In addition, the terms proportional to N^(1/2) in Eq (4) can be written as the linearized Langevin equations for the fluctuating components [22, 25, 26]

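$$ \frac{d}{dt} \begin{pmatrix} \xi_\Sigma \\ \xi_\Delta \end{pmatrix} = \begin{pmatrix} -1/\tau_1 & w_{ff} \\ 0 & -1/\tau_2 \end{pmatrix} \begin{pmatrix} \xi_\Sigma \\ \xi_\Delta \end{pmatrix} + \sqrt{\frac{\alpha \Sigma_0}{2}} \begin{pmatrix} \eta_E + \eta_I \\ \eta_E - \eta_I \end{pmatrix} \tag{7} $$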

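where ξΣ = (ξE + ξI)/2, ξΔ = (ξE − ξI)/2, the feed-forward term is wff = (1 − Σ0)(wE + wI)f′(s0), and

$$ \tau_1^{-1} = \alpha + f(s_0) - (1 - \Sigma_0)\, w_0\, f'(s_0), \qquad \tau_2^{-1} = \alpha + f(s_0). \tag{8} $$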

The times τ1 and τ2 represent the correlation times in the linear approximation of the dynamical equations. Indeed, in such approximation the temporal correlation functions Cxy(t) = 〈x(t)y(0)〉 − 〈x〉〈y〉, where x and y are two observables and where the symbol 〈⋯〉 represents an average over noise in the stationary state (see Methods), can be written as the linear combinations of two exponential decays [35] (see Methods for the explicit expressions)

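$$ C_{xy}(t) = A_{xy}\, e^{-t/\tau_1} + B_{xy}\, e^{-t/\tau_2} \tag{9} $$

with amplitudes Axy and Bxy that depend on the chosen pair of observables.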

Note that Eq (6) can have more than one solution. In this case, the relevant one is the one characterized by positive values of the relaxation times τ1 and τ2, so that the fixed point is attractive. On the other hand, fixed points characterized by negative values of either τ1 or τ2 are repulsive and not relevant to the dynamics of the system. An accurate analysis of the stability properties of the WCM for finite external fields can be found for instance in [36].

Critical point of the dynamical equations

We here discuss the behavior of the system predicted by the linear noise approximation, namely in the limit of very large system size. When the external input h is zero, Σ0 = 0 is always a solution of the fixed point Eq (6). However, one can show, by taking the linear approximation of the hyperbolic tangent, that there is a critical value w0c = αβ⁻¹. This value expresses the balance between the activation and deactivation characteristic neuronal times and therefore can be interpreted as an optimal value for excitation/inhibition balance. In particular, for w0 < w0c (when inhibition dominates) the fixed point Σ0 = 0 is stable, whereas for w0 > w0c (when excitation dominates) it is unstable and another stable point Σ0 ≃ (w0 − w0c)/w0 > 0 appears continuously from zero at the onset of the transition. When h > 0 there is always only one attractive fixed point with Σ0 > 0 and the transition is smoothed out (see Methods).
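The fixed point discussed above can be checked numerically. The sketch below solves αΣ0 = (1 − Σ0) f(w0Σ0 + h) by bisection and compares the result with the estimate (w0 − w0c)/w0. It is only an illustration: the function names are hypothetical, and the activation function is assumed to be f(s) = β tanh(s) for s > 0 and zero otherwise.

```python
import math


def activation(s, beta=1.0):
    """Assumed activation function: beta * tanh(s) for s > 0, zero otherwise."""
    return beta * math.tanh(s) if s > 0 else 0.0


def fixed_point(w0, h=0.0, alpha=0.1, beta=1.0, tol=1e-12):
    """Attractive fixed point Sigma_0 of the deterministic dynamics.

    Solves (1 - Sigma) f(w0 * Sigma + h) - alpha * Sigma = 0 by bisection.
    Returns 0.0 when only the quiescent solution exists (h = 0, w0 <= alpha/beta).
    """
    def g(sigma):
        return (1.0 - sigma) * activation(w0 * sigma + h, beta) - alpha * sigma

    lo, hi = 1e-9, 1.0
    if g(lo) <= 0.0:
        return 0.0            # activity decays: quiescent fixed point
    # g(lo) > 0 and g(1) = -alpha < 0, so a root lies in between.
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    alpha, beta = 0.1, 1.0
    w0c = alpha / beta
    for w0 in (0.05, 0.11, 0.2, 1.0):
        sigma0 = fixed_point(w0, 0.0, alpha, beta)
        estimate = max(0.0, (w0 - w0c) / w0)
        rate = (1.0 - sigma0) * activation(w0 * sigma0, beta)  # R0 at the fixed point
        print(w0, sigma0, estimate, rate)
```

Since the estimate (w0 − w0c)/w0 relies on the linearization of the hyperbolic tangent, the agreement is expected to be close only in the vicinity of w0c.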

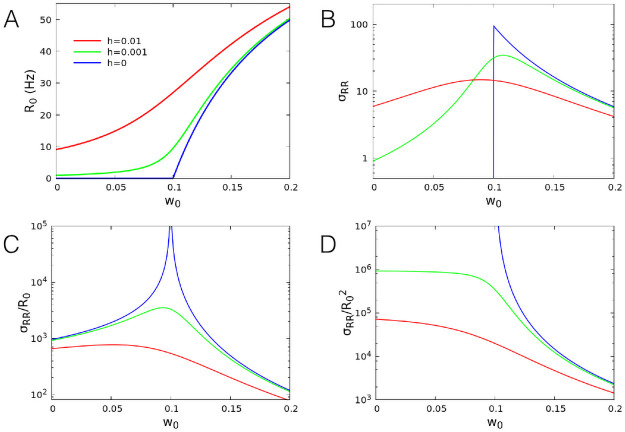

In Fig 1A we show the firing rate R0 = (1 − Σ0)f(w0Σ0 + h) computed at the attractive fixed point, as a function of w0 for different external input h. This quantity shows the typical behaviour of an order parameter. In particular, for h = 0, R0 = 0 for w0 < w0c, whereas it continuously increases for w0 ≥ w0c as R0 ∼ (w0 − w0c), as expected in a second-order phase transition. For finite h values, R0 shows a qualitatively similar behaviour characterized by a continuous increase, and the transition is smoothed out.

Fig 1. Order parameter and its variance.

(A) Analytical dependence of the firing rate per neuron at the fixed point on the value of w0, for different values of h. (B) Normalized variance σRR = N〈(R − R0)²〉 as a function of w0. (C) Fano factor σRR/R0. (D) Squared coefficient of variation σRR/R0², that is equal to N times the variance of the ratio R/R0. Other parameters: α = 0.1 ms⁻¹, β = 1 ms⁻¹, wE + wI = 13.8.

Next we analysed the behaviour of the variance of the firing rate at the fixed point, as a function of w0 for different h values. For a large number of neurons, the variance of the firing rate is proportional to N⁻¹ (see Methods), therefore σRR = N〈(R − R0)²〉 is independent of N, and can be computed in the linear approximation (see Methods). In Fig 1B we show σRR as a function of w0 for several values of h. For h = 0 it reaches a maximum at the critical point w0c = 0.1 and sharply vanishes for w0 < w0c. For finite values of h, the variance shows a smooth maximum close to the critical point. The fact that the variance of the order parameter does not diverge at the critical point, unusual in the framework of second order phase transitions, can be attributed to the vanishing of the noise amplitude in Eq (7). This is due to the particular choice of the activation function. Indeed, different functional forms for f(s) lead to a non-zero Σ0 at the critical point and to diverging fluctuations. However, such a divergence is observed in the ratio of the variance to the mean value of the order parameter. In Fig 1C we show the Fano factor of the firing rate, that is the ratio σRR/R0. This quantity is defined as the ratio of the variance and the mean value, and measures how much the statistics of a variable deviates from the behaviour expected for a Poissonian variable. In the present case, it diverges at the critical point for h = 0, with a behaviour σRR/R0 ∼ |w0 − w0c|⁻¹, while it shows a maximum near w0 = w0c for h > 0.

Moreover, in Fig 1D we show the squared coefficient of variation σRR/R0². Considering that the linear approximation is derived under the condition that fluctuations are much smaller than the average firing rate (in this case close to the fixed point value), this quantity can be interpreted as the limiting value of N for its validity. Indeed, if N ≫ σRR/R0², then the standard deviation is much smaller than the mean R0 and the linear approximation holds (see below), whereas for N ≪ σRR/R0² the opposite is true. Therefore, the divergence of this quantity near the critical point means that, no matter how large N is, the linear approximation does not apply.

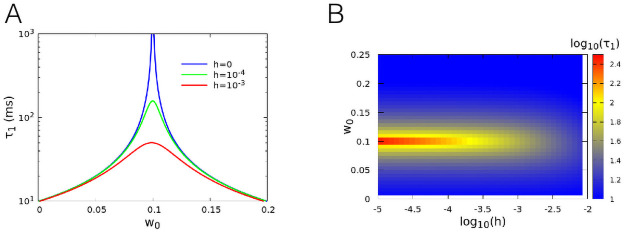

The critical behaviour in standard critical phenomena is accompanied by the slowing down of the dynamics. This is evidenced by the divergence of the characteristic time-scales of the system. To study the decay of the correlation function close to the critical point, we observe that when h = 0 and w0 ≃ w0c, Σ0 and s0 = w0Σ0 are much smaller than one, so that tanh(s0) ≈ s0. Using this approximation in Eq (8), we find that τ1 ≃ β⁻¹|w0 − w0c|⁻¹ both for w0 < w0c and w0 > w0c, while τ2 ≤ α⁻¹. If h > 0, the divergence of τ1 is rounded off, and one finds a maximum at w0 = w0c, diverging for h → 0. In Fig 2A and 2B we show the autocorrelation time τ1 in the linear approximation, where a clear divergence is observed for zero external field.
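The divergence of τ1 can be made concrete with a short numerical sketch. The code below evaluates the two relaxation rates obtained by linearizing the deterministic dynamics around the fixed point, under the assumptions that f(s) = β tanh(s) for s > 0 (zero otherwise), that 1/τ1 = α + f(s0) − (1 − Σ0) w0 f′(s0) and that 1/τ2 = α + f(s0). These expressions, like the function names, are assumptions of this example and should be checked against Eq (8).

```python
import math


def activation(s, beta=1.0):
    """Assumed activation function: beta * tanh(s) for s > 0, zero otherwise."""
    return beta * math.tanh(s) if s > 0 else 0.0


def activation_prime(s, beta=1.0):
    """Derivative of the assumed activation (right derivative at s = 0)."""
    return beta / math.cosh(s) ** 2 if s >= 0 else 0.0


def fixed_point(w0, h, alpha, beta, tol=1e-13):
    """Attractive fixed point of alpha*Sigma = (1 - Sigma) f(w0*Sigma + h), by bisection."""
    def g(sigma):
        return (1.0 - sigma) * activation(w0 * sigma + h, beta) - alpha * sigma

    lo, hi = 1e-9, 1.0
    if g(lo) <= 0.0:
        return 0.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if g(mid) > 0.0 else (lo, mid)
    return 0.5 * (lo + hi)


def relaxation_times(w0, h=0.0, alpha=0.1, beta=1.0):
    """Return (tau1, tau2) from the assumed linearized rates; inf if a rate vanishes."""
    sigma0 = fixed_point(w0, h, alpha, beta)
    s0 = w0 * sigma0 + h
    rate1 = alpha + activation(s0, beta) - (1.0 - sigma0) * w0 * activation_prime(s0, beta)
    rate2 = alpha + activation(s0, beta)
    tau1 = 1.0 / rate1 if rate1 > 0.0 else math.inf
    return tau1, 1.0 / rate2


if __name__ == "__main__":
    for w0 in (0.05, 0.09, 0.099, 0.101, 0.11, 0.2):
        tau1, tau2 = relaxation_times(w0)
        print(w0, tau1, 1.0 / abs(w0 - 0.1), tau2)
```

The second printed column can be compared with the third, which is the estimate 1/(β|w0 − w0c|) for β = 1 ms⁻¹; the last column stays below 1/α.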

Fig 2. Divergence of the correlation time at criticality.

(A) Analytical result for the decay time τ1 in the linear approximation, for the same parameters of Fig 1. (B) Contour plot of τ1 as a function of both h and w0. The graphs show the divergence of the decay time at the critical value w0c = 0.1.

In conclusion, near the critical point w0 = w0c and for h = 0, both the Fano factor and the correlation time of the firing rate diverge. Divergence is also found for the Fano factor and correlation time of other dynamical variables, e.g. the total number of active neurons. These results provide further evidence that the occurring phenomenology can be rightfully interpreted in the framework of critical systems.

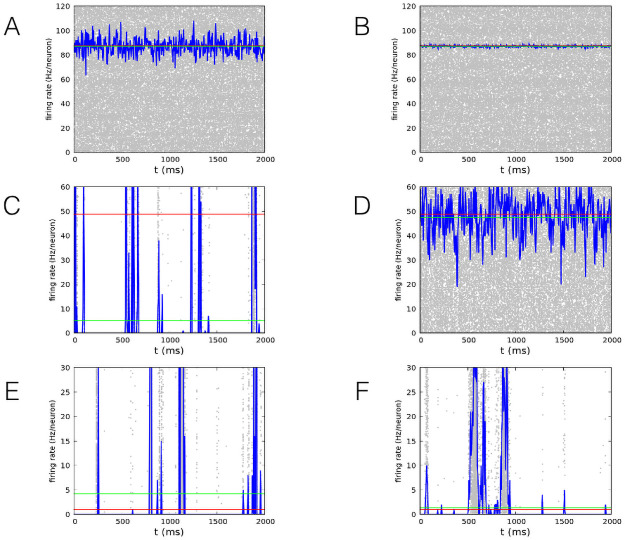

Firing rate dynamics

Near the critical point the linear approximation does not hold even for very large system sizes. We support this conclusion by analysing the instantaneous firing rate (Fig 3) as a function of time for h = 10⁻⁵ and three values of w0, w0 = 1 (upper row), w0 = 0.2 (middle row) and w0 = 0.1 (lower row). For each value of w0 we show two values of N, N = 10³ on the left and N = 10⁵ on the right. For w0 = 1 (upper row) the normalized variance is σRR ≃ 6, so that the dynamics for N ≫ 6 is always “continuous”, smoothly fluctuating around the attractive fixed point, and can be accurately described within the linear approximation. For w0 = 0.2 (middle row), the normalized variance is σRR ≃ 2400, therefore if N < σRR (left) the dynamics of the system is irregular and characterized by avalanches. The firing rate frequently hits the value R = 0, and a “downstate” of the network follows, where the activation of the neurons is controlled only by the external input h and the activity recovery can take a long time if h is small. On the right, conversely, N ≫ σRR, and the dynamics becomes “continuous”, as in the previous case. Finally, near the critical point, for w0 = 0.1 (lower row), the normalized variance is σRR ≃ 4.6 × 10⁷, therefore the dynamics is characterized by avalanches up to N = 10⁵ and above.

Fig 3. Firing rate per neuron at the critical point and far from it.

Firing rate measured in numerical simulations as a function of time for wE + wI = 13.8, h = 10⁻⁵. Upper row: w0 = 1 (E dominates) (A), middle row: w0 = 0.2 (E dominates) (C), lower row: w0 = 0.1 (E/I balance) (E), left column: N = 10³, right column: N = 10⁵ (B,D,F). Blue lines represent the firing rate of the network, while gray dots represent single neuron spikes. Red lines show the value of the firing rate R0 at the fixed point of the dynamics. Note that this value can be quite different from the mean firing rate (green lines), when large non linear effects are present.

This qualitative analysis suggests that the occurrence of the avalanche activity in dynamics of the stochastic WCM is indeed related to the presence of a critical point. If the system is moved away from the critical point, this kind of behaviour persists as long as the size of the system is small enough and disappears for larger sizes. More precisely, the system size must be smaller than the squared coefficient of variation of the firing rate. In this case, fluctuations of the firing rate are much larger than the mean value and the dynamics becomes avalanche-like.

Avalanche dynamics

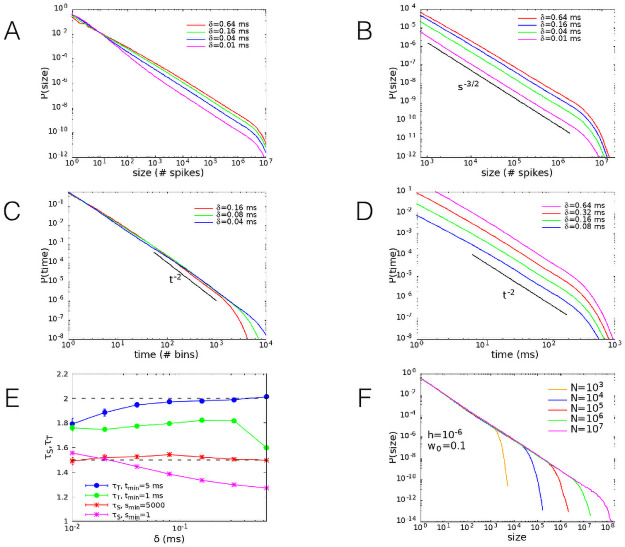

The above conclusions are strengthened by the quantitative analysis of the avalanche dynamics. We study the distribution of avalanches in the WCM simulated by the Gillespie algorithm [37] (see Methods). We implement two different procedures to define an avalanche and we start discussing the statistics of avalanches defined by the discretization in time bins of the temporal signal. More precisely, we divide the time in discrete bins of width δ [9] and identify an avalanche as a continuous series of time bins in which there is at least one spike (i.e., a transition of one neuron from a quiescent to an active state). The size of the avalanche is defined as the total number of spikes, while the duration is the number of time bins of the avalanche multiplied by the width δ of the bins.
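The binning procedure described above can be sketched in a few lines of Python. The function name and the representation of the data as a plain list of spike times are assumptions made for this illustration.

```python
def avalanches_from_spikes(spike_times, delta):
    """Split a spike train into avalanches using time bins of width delta.

    An avalanche is a maximal run of consecutive non-empty bins. Its size is
    the number of spikes it contains and its duration is the number of bins
    multiplied by delta. Returns the two lists (sizes, durations).
    """
    sizes, durations = [], []
    if not spike_times:
        return sizes, durations
    # Index of the bin that contains each spike, in increasing order.
    bins = sorted(int(t // delta) for t in spike_times)
    size, first, last = 1, bins[0], bins[0]
    for b in bins[1:]:
        if b - last <= 1:
            size += 1                 # same or adjacent bin: avalanche goes on
        else:
            # At least one empty bin in between: close the current avalanche.
            sizes.append(size)
            durations.append((last - first + 1) * delta)
            size, first = 1, b
        last = b
    sizes.append(size)
    durations.append((last - first + 1) * delta)
    return sizes, durations


if __name__ == "__main__":
    spikes = [0.1, 0.2, 1.5, 4.2, 7.0, 7.3, 8.9]
    print(avalanches_from_spikes(spikes, delta=1.0))
```

With δ = 1 the spikes of this example fall in bins 0, 0, 1, 4, 7, 7, 8, so three avalanches are identified, separated by the empty bins 2–3 and 5–6.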

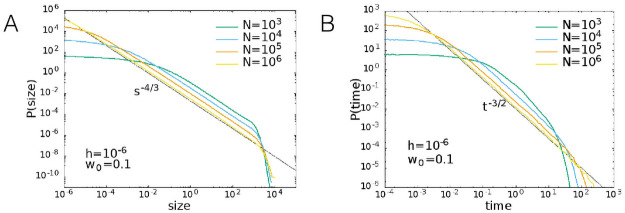

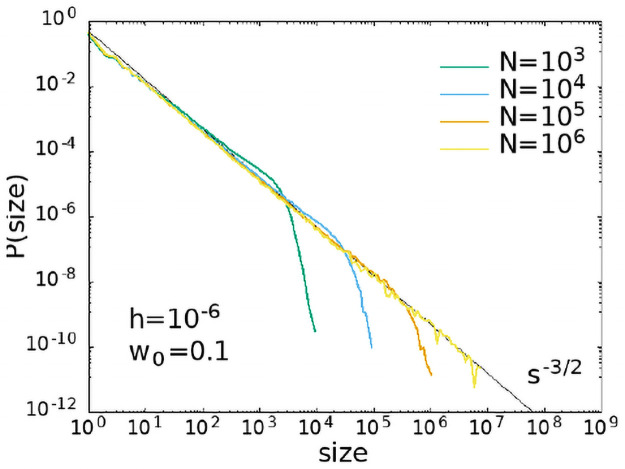

In Fig 4 we show the dependence of the size and duration distribution functions on the time bin δ, for w0 = 0.1, h = 10⁻⁶, N = 10⁶. The behaviour of the distribution for small and large avalanche sizes is separately evidenced in Fig 4A and 4B. We notice that at small sizes the slope of the curves strongly depends on the bin width, as also evidenced in experimental data [9–12]. Conversely, at large sizes all the curves exhibit a slope quite independent of the bin width, according to the power-law dependence with an exponent τS very close to 3/2. Analogously, in Fig 4C and 4D the distribution of avalanche durations exhibits a scaling with an exponent τT ∼ 2, which is very robust with respect to the bin width. In Fig 4E we show the values of both exponents with error bars that best fit the data using the estimator introduced by Clauset et al. [38] (see Methods). It is evident that, if exponents are evaluated restricting the procedure to the large avalanche regime, their value converges to the expected exponents of the mean field branching process universality class, independently of the bin size [13]. This observation suggests that an underlying mechanism of marginal propagation of neural activity could be responsible for the avalanche behaviour.
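To illustrate how an exponent and its error bar depend on the lower bound of the fitting window, the following sketch implements the maximum-likelihood estimator for a continuous power law, τ = 1 + n / Σ ln(x_i/x_min), with standard error (τ − 1)/√n. This is a simplified stand-in: avalanche sizes are integers and the analysis reported here may rely on a different variant of the estimator, so the code and its function names should be read as assumptions.

```python
import math
import random


def powerlaw_mle(samples, x_min):
    """Maximum-likelihood exponent of p(x) ~ x**(-tau) for x >= x_min.

    Continuous-variable estimator; returns (tau, standard_error).
    """
    tail = [x for x in samples if x >= x_min]
    n = len(tail)
    log_sum = sum(math.log(x / x_min) for x in tail)
    tau = 1.0 + n / log_sum
    return tau, (tau - 1.0) / math.sqrt(n)


def sample_powerlaw(n, tau, x_min=1.0, seed=0):
    """Draw n synthetic values from a continuous power law by inverse transform."""
    rng = random.Random(seed)
    return [x_min * (1.0 - rng.random()) ** (-1.0 / (tau - 1.0)) for _ in range(n)]


if __name__ == "__main__":
    data = sample_powerlaw(20000, tau=1.5)
    # Scan the lower bound of the fitting window, as done for the error bars.
    for x_min in (1.0, 2.0, 5.0, 10.0):
        tau, err = powerlaw_mle(data, x_min)
        print(x_min, round(tau, 3), round(err, 3))
```

On synthetic data drawn from a pure power law the estimate should not depend on x_min within the error bars; a drift with x_min signals that the small-value regime deviates from the asymptotic scaling.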

Fig 4. Size and duration avalanche distributions.

(A) Distribution function of the avalanche sizes on the whole observed range. (B) Distribution function of the avalanche sizes on the region where robust power law behaviour is observed. (C) Distribution function of the avalanche duration as a function of the number of bins. (D) Distribution function of the avalanche duration as a function of time. Parameters w0 = 0.1, h = 10⁻⁶, N = 10⁶. Different curves correspond to different values of the bin width δ, introduced to define the avalanche (see Methods). (E) Exponents of size and duration distributions, with error bars, computed using the estimator introduced in [38, 39], for different values of the lower bound of the fitting window. Error bars are not shown if they are smaller than the symbol size. (F) Size distribution function of the avalanches for w0 = 0.1, h = 10⁻⁶, and different values of the system size N = 10³–10⁷. As expected for a critical behaviour in finite systems, the exponential cut-off scales with N.

As expected for critical phenomena in finite systems, at the critical point the power-law behaviour of the avalanche size distribution function presents an exponential cut-off that scales with the system size. This is clearly shown in Fig 4F for w0 = 0.1 and vanishing external field, confirming that the power-law behaviour of the distribution is a genuine expression of the absence of a characteristic size at the critical point. We have also considered a different avalanche definition, through the introduction of a finite threshold in activity [40], confirming that this can lead to wrong values of critical exponents [41] (see Methods).

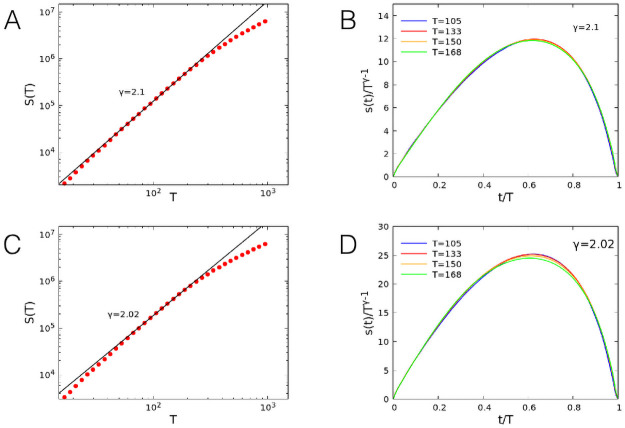

In recent years, the scaling properties of the avalanche shape have received wide attention in the community [42], searching for the collapse onto a universal curve according to a specific rescaling. Indeed, this analysis has been first proposed in the context of the crackling noise [43], where the scaling exponent for the avalanche size as a function of its duration has been derived to be γ = (τT − 1)/(τS − 1). Under this assumption, it is possible to obtain the collapse of the shapes of avalanches with different sizes onto a universal curve. Here we examine first the scaling behavior of the avalanche size versus its duration, see Fig 5A and 5C. Results evidence the expected scaling behavior of the avalanche size vs. its duration: The exponent γ is slightly larger than 2, the value predicted by the previous relation for τT = 2 and τS = 1.5 (γ ∼ 2.1 ± 0.05 for 50% inhibitory neurons and γ ∼ 2.02 ± 0.05 for 20% inhibitory neurons, where the fit is on the interval T ∈ [80, 200]). Moreover, the avalanche shapes for different sizes collapse onto a universal function for durations up to 400 time steps, corresponding to the scaling regime for the size distributions. However, at variance with the results from crackling noise [43], the shape is non-parabolic and strongly asymmetrical for all avalanche durations, for both percentages of inhibitory neurons.
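As a worked example of the relation γ = (τT − 1)/(τS − 1), the sketch below evaluates it for the mean-field exponents and estimates γ from a set of (T, 〈S〉) pairs by a least-squares fit in log-log scale. The function names and the synthetic data are assumptions used only for illustration; they do not reproduce the fit reported above.

```python
import math


def predicted_gamma(tau_S, tau_T):
    """Crackling-noise relation between the size-duration exponent and tau_S, tau_T."""
    return (tau_T - 1.0) / (tau_S - 1.0)


def loglog_slope(durations, mean_sizes):
    """Least-squares slope of log(mean size) versus log(duration)."""
    xs = [math.log(T) for T in durations]
    ys = [math.log(S) for S in mean_sizes]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


if __name__ == "__main__":
    # Mean-field branching process: tau_S = 3/2 and tau_T = 2.
    print(predicted_gamma(1.5, 2.0))
    # Synthetic data following S = 3 * T**2 on the window T in [80, 200].
    T = list(range(80, 201, 10))
    S = [3.0 * t ** 2 for t in T]
    print(loglog_slope(T, S))
```

For τS = 3/2 and τT = 2 the relation gives γ = 1/0.5 = 2, the value against which the measured exponents are compared.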

Fig 5. Shape of the avalanche distributions.

Scaling of the avalanche size S as a function of its duration T for networks with 50% (A) and 20% (C) inhibitory neurons. Collapse of the avalanche shape for avalanche size in the scaling regime for networks with 50% (B) and 20% (D) inhibitory neurons. Parameters w0 = 0.1, h = 10⁻⁶, N = 10⁷.

Temporal correlation functions

In order to complete the description of the critical behaviour shown by the WCM, we focus here on the temporal correlation function of the firing rate simulated by the Gillespie algorithm. At each time we compute the mean total activity Σ = (k + l)/2N and the difference Δ = (k − l)/2N. We focus on the correlation function of the mean firing rate R = (1 − Σ)f[w0Σ + (wE + wI)Δ + h] in the stationary state

$$ C_{RR}(t) = \langle R(t) R(0) \rangle - \langle R \rangle^2 \tag{10} $$
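A stationary correlation function of this kind can be estimated from a single long time series by replacing the noise average with a time average. The sketch below assumes that the firing rate has already been sampled on a uniform time grid (the Gillespie algorithm produces events at irregular times, so a resampling step is needed first) and tests the estimator on a synthetic autoregressive signal with a known exponential decay. All names are hypothetical.

```python
import random


def autocorrelation(x, max_lag):
    """Estimate C(lag) = <x(t + lag) x(t)> - <x>^2 for lag = 0..max_lag.

    x is a sequence sampled at uniform time steps in the stationary state.
    """
    n = len(x)
    mean = sum(x) / n
    y = [v - mean for v in x]
    corr = []
    for lag in range(max_lag + 1):
        total = sum(y[i] * y[i + lag] for i in range(n - lag))
        corr.append(total / (n - lag))
    return corr


def ar1_series(n, a, seed=0):
    """Synthetic test signal x[i+1] = a * x[i] + noise, with C(lag)/C(0) = a**lag."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(a * x[-1] + rng.gauss(0.0, 1.0))
    return x


if __name__ == "__main__":
    series = ar1_series(50000, a=0.9)
    c = autocorrelation(series, max_lag=10)
    for lag, value in enumerate(c):
        print(lag, value / c[0], 0.9 ** lag)
```

The normalized estimate in the second printed column can be compared with the exact decay a^lag of the test signal in the third.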

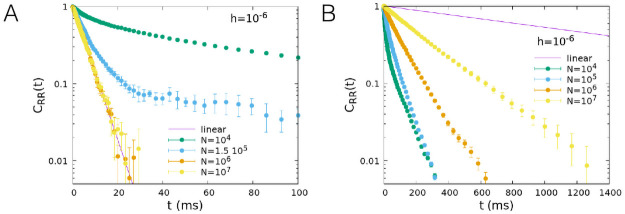

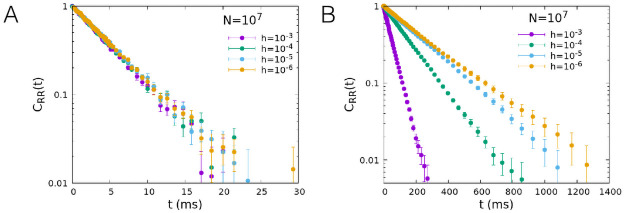

In Fig 6 we show the dependence of the correlation function on the number of neurons N for h = 10⁻⁶ and two values of w0, w0 = 0.2 far from the critical point and w0 = 0.1, corresponding to the critical point for h → 0. In both cases, the correlation function simulated by the Gillespie algorithm (dots) tends to the value predicted by the linear approximation (continuous line) given by Eq (9) for N → ∞. However for w0 = 0.2, far from the critical point, numerical data reproduce the linear approximation as soon as N ≳ 10⁵, while for w0 = 0.1 the convergence is much slower. In Fig 7 we show the dependence of the correlation function on h for a fixed value of the number of neurons, N = 10⁷. Data confirm that at the critical point (w0 = 0.1) the decay of the correlation function slows down in the limit h → 0. As expected, critical slowing down is not observed for w0 = 0.2.

Fig 6. Temporal decay of the firing rate autocorrelation, role of the system size N.

Time correlation function of the firing rate for several values of N, for α = 0.1 ms⁻¹, β = 1 ms⁻¹, wE + wI = 13.8, h = 10⁻⁶, and w0 = 0.2 (A), w0 = 0.1 (B). Dots correspond to the correlation function of the model simulated with the Gillespie algorithm, while the continuous line corresponds to the linear approximation, that is valid for large values of N.

Fig 7. Temporal decay of the firing rate autocorrelation, role of the external input h.

Time correlation function of the firing rate for several values of h, for N = 10⁷ and w0 = 0.2 (A), w0 = 0.1 (B), other parameters as in Fig 6.

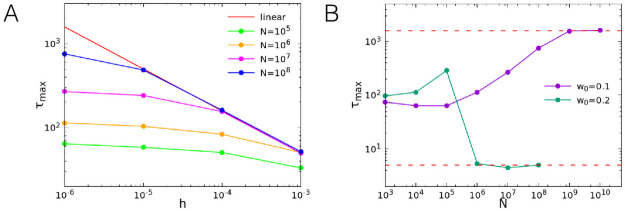

The maximum correlation time, obtained from an exponential fit of the long time tail of the functions, is plotted as a function of h in Fig 8A for w0 = 0.1. For a fixed value of the number of neurons N, the correlation times saturate at a finite value at the critical point w0 = 0.1. The value at which the time saturates, however, increases with the system size, so that the range of agreement of the measured correlation time with the linear approximation prediction extends toward smaller values of h for increasing N. In the limit N → ∞ the correlation time is always given by the linear approximation for any value of h, and therefore diverges for h → 0. In Fig 8B we plot the maximum correlation time as a function of N for h = 10⁻⁶ and w0 = 0.1, 0.2. It can be observed that the relaxation time saturates to the large value predicted by the linear approximation for N → ∞ at the critical point w0 = 0.1, whereas it decreases to smaller values far from the critical point.
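The extraction of a correlation time from the long time tail can be sketched as a straight-line fit of the logarithm of the correlation function. The code below is only a minimal illustration: the function name, the choice of a plain least-squares fit, and the lower cutoff `t_min` of the fitting window are assumptions, not the procedure actually used for Fig 8.

```python
import math

def fit_correlation_time(times, corr, t_min):
    """Fit log C(t) = a - t/tau on the tail t >= t_min and return tau.

    Only points with C(t) > 0 are used, since the logarithm is needed.
    """
    pts = [(t, math.log(c)) for t, c in zip(times, corr) if t >= t_min and c > 0.0]
    if len(pts) < 2:
        raise ValueError("not enough positive points in the fitting window")
    n = len(pts)
    mean_t = sum(t for t, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in pts)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in pts)
    slope = sxy / sxx
    if slope >= 0.0:
        raise ValueError("tail does not decay, no correlation time can be extracted")
    return -1.0 / slope
```

When the correlation function is a sum of two exponentials, as in the linear approximation, choosing `t_min` well beyond the shorter time scale isolates the longer one.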

Fig 8. Long time scale behaviour as a function of the system size N and the external input h.

(A) Maximum correlation time extracted from an exponential fit of the long time tail of the correlation function, as a function of h and for different values of N, for w0 = 0.1. The continuous red line corresponds to the linear approximation. (B) Maximum correlation time as a function of N for h = 10⁻⁶ and w0 = 0.1, 0.2.

Our analysis of the firing rate correlation function provides further evidence of the critical behaviour occurring in the WCM. In particular, the divergence of the characteristic time is in agreement with the slowing down of the dynamics in systems close to the critical point.

Discussion

The origin and nature of the power-law behaviour of the spontaneous activity in neural systems is a long-standing open issue. This kind of dynamics is widely observed in real systems, as well as in the different models proposed to explain it. Similar scaling behaviour is indeed observed in a variety of integrate-and-fire neuronal network models, either self-organized, i.e. in the absence of a tuning parameter [17, 20], or obtained by tuning a relevant parameter to an appropriate value [14, 19]. The central question in this context is whether scale-free phenomena are the mirror image of genuine critical behaviour or emerge from nonlinear stochastic dynamics. Among the different approaches, the stochastic Wilson-Cowan model, formulated in terms of the activity of populations of neurons, describes many interesting phenomena observed in neural dynamics. Moreover, it has the advantage that it can be analysed not only numerically but, most importantly, analytically under certain approximations. In previous studies this model was indicated as an example where the emergence of neuronal avalanches in the activity is not associated with a genuine critical point, but is rather the byproduct of the network structure combined with noisy neuronal dynamics [26]. In order to clarify this point, we explore a wider range of the parameter space, focusing on the behaviour of different quantities, such as the temporal correlation function of the firing rate, in order to verify whether a critical point can be identified, shedding new light on the nature of the phenomenon observed in the model.

Several previous papers have studied the WCM and similar models, evidencing different kinds of behaviors, such as first order phase transitions at the bifurcation point [36, 44, 45]. For instance, in [36] the authors considered the bifurcation transitions appearing upon varying the (always finite) external voltage inputs. However, the observed first-order transitions occur in a region of the parameter space of the model which does not overlap with that studied in our work. In particular, the theoretical results show slowing down and increasing fluctuations close to the bifurcation point and describe real systems in specific conditions, such as anesthesia, sleep cycles or seizures (see for instance [46]). These conditions are very different from the spontaneous activity state analyzed in the present study, which occurs in the absence of strong external stimulations, such as the administration of anesthetic drugs, and corresponds neither to transitions between different sleep states nor to situations where epileptic crises can occur. In the review by Breakspear and co-workers [47], the possible different behaviors in brain activity are discussed. It is shown how the thermodynamic phase transitions in spatially extended systems are somehow the counterpart of the bifurcation transitions for systems with few components. This is an interesting remark, since second order phase transitions are observed only in systems with a sufficiently large number of degrees of freedom, namely in the thermodynamic limit.

In the light of the above discussion, the first important remark is that critical behaviour is expected for vanishing external fields. This requirement, together with the limit of very large system sizes, represents the foundation of the symmetry-breaking phenomenon that gives rise to second order phase transitions. Recently, critical behavior has been observed in non-zero field in the presence of self-adaptation mechanisms [20, 48]. Here we analyse the analytical solution of the WCM for vanishing h and in a wide range of values of the parameter w0, searching for a quantity playing the role of an order parameter. In fact, the linear noise approximation is derived [22, 26] in the limit of very large N, namely in the thermodynamic limit. The analytical solution shows that there exists a particular value of the control parameter, w0c, above which, for vanishing h, a second fixed point appears, besides the absorbing state Σ0 = 0. In the neighbourhood of this critical value w0c, the system activity is small, allowing for the linearisation of the activation function. Interestingly, the critical value w0c = αβ⁻¹ is the ratio of two characteristic rates, the deactivation rate α and the activation rate β. Criticality is therefore tuned by the optimal balance between these two (active-inactive) transitions: below w0c the deactivation time is much shorter than the activation time and the absorbing state attracts the dynamics. Conversely, for very short activation times global activity becomes self-sustained even in the absence of external fields. An intriguing alternative interpretation of the expression of the critical point stems from the relation βw0c = α, where α is the characteristic neuronal deactivation rate. The l.h.s. is reminiscent of the product βJ in the Ising model, where β is the inverse temperature (entropic parameter) and J the interaction strength (energetic parameter). Therefore, the value of the critical point also expresses a non-local balance between the strength of the connection between pre- and post-synaptic neurons and the activation time in the post-synaptic neuron.

Within the linear approximation hypothesis, the analytical solution is able to provide a coherent description of the system dynamics in terms of the second order phase transition framework. More precisely, the system firing rate plays the role of the order parameter, going to zero at w0 = w0c; the correlation time diverges, evidencing the critical slowing down, as do the fluctuations of the firing rate with respect to the fixed point value. Interestingly, the fixed point value for the variable Δ is Δ0 = 0, independently of w0. This suggests that the balance of the activity of excitatory and inhibitory neurons is a necessary but not sufficient condition for criticality. However, the dynamical equations, Eq (5), are derived under the hypothesis of equal size populations of excitatory and inhibitory neurons, as well as symmetric connection strengths between different populations. Therefore, in order to further investigate in the WCM the role of the balance of excitation and inhibition in the critical properties of the activity, it is necessary to extend the analytical study and generalize the analytical solutions by relaxing the above hypotheses.

This analysis thus offers a coherent scenario to understand the WCM behaviour and also provides a tool to infer in which limit the linear approximation fails, giving rise to bursty behaviour. More precisely, in order to observe neuronal avalanches, the coefficient of variation, measuring the fluctuations of the order parameter, should be much larger than the system size. In particular, for w0 ≳ w0c (Fig 3C and 3D) neuronal avalanches are found for the smaller system size, whereas the linear approximation (continuous behaviour) holds for the larger N. At the critical point (Fig 3E and 3F), fluctuations are larger than any N and avalanches are always detected. Analogously, far from the critical point (Fig 3A and 3B) the linear approximation always holds and avalanches are never found. Interestingly, similar results have been recently found for a different model [49]. Numerical data for the cortical branching model have evidenced, in fact, that the firing rate goes to zero for a specific value of the control parameter (the branching parameter), where the susceptibility diverges as well. As expected, moving away from the critical point these features are no longer found, since the system no longer satisfies the condition of vanishing external fields and the behaviour ceases to be critical.

Having clarified under which conditions critical behaviour is to be expected, we next address the issue of the scaling behaviour of neuronal avalanches. The determination of critical exponents in experimental systems represents an important challenge in terms of the appropriate tools to identify each avalanche. Common approaches implement the discretization of the temporal signal in bins. This approach leads to exponents varying with the bin size; as a consequence, the optimal bin is identified with the one leading to a branching ratio equal to one, the signature of a critical branching process [12]. Alternatively, a threshold in the amplitude of the signal can be chosen, defining as avalanche size the area delimited by the signal above threshold. A recent study has shown that special care must be taken in implementing this method, in order to get the right critical exponent values [41]. In the present study we implement both approaches to identify the avalanche size, in order to verify the existence and robustness of the universal scaling behaviour. Numerical simulations by the Gillespie algorithm are very efficient and allow the study of very large system sizes. This advantage turned out to be crucial in identifying an interesting scaling behaviour of the distributions for very large avalanche sizes. More precisely, by monitoring the value of the exponents as a function of the bin size δ, without imposing any additional requirement, we find that, as expected, τS and τT depend on the bin size for moderate values of S; however, for large avalanche sizes, S > 10³, the scaling of the distribution becomes independent of δ. This surprising result, evidenced only because the analysis explored seven decades of N values, is in line with what is expected from critical phenomena. Indeed, the critical slowing down at the critical point implies the absence of a characteristic time. By rescaling the time variable by a finite δ the temporal signal should be self-similar and therefore δ-independent. Most importantly, the extension of the scaling regime correctly scales with the system size. This behaviour can then be considered as a further confirmation of the critical nature of the activity in the absence of external fields.
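The identification of avalanches by temporal binning can be sketched as follows. The snippet assumes the common convention in which an avalanche is a maximal sequence of consecutive non-empty bins, its size is the number of spikes it contains and its duration is the number of bins times δ; the function name and the input format (a plain list of spike times) are hypothetical and chosen only for illustration.

```python
def avalanches_from_spikes(spike_times, delta):
    """Return a list of (size, duration) pairs obtained with bin width delta.

    An avalanche is a run of consecutive occupied bins delimited by empty bins.
    Avalanches touching the start or end of the record may be truncated.
    """
    if delta <= 0:
        raise ValueError("delta must be positive")
    if not spike_times:
        return []
    bins = sorted(int(t // delta) for t in spike_times)
    avalanches = []
    start = prev = bins[0]
    size = 1
    for b in bins[1:]:
        if b - prev <= 1:
            # same bin or adjacent bin: the avalanche continues
            size += 1
        else:
            # at least one empty bin in between: close the avalanche
            avalanches.append((size, (prev - start + 1) * delta))
            start = b
            size = 1
        prev = b
    avalanches.append((size, (prev - start + 1) * delta))
    return avalanches
```

Repeating the analysis for several values of `delta` gives a direct way to inspect how the measured distributions depend on the bin size.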

The universality class of the scaling behaviour for large avalanche sizes is in agreement with the mean field branching model universality class. These results are consistent with a variety of experimental data on different neuronal systems [9–12] and numerical simulations on complex networks in finite dimensions [17, 19]. We stress that the emergence of a mean field universality class in finite dimension could appear surprising. A possible explanation is the small-world feature of functional networks, well supported by a number of experimental results. Moreover, it has recently been shown [50] that, starting from a regular square lattice, a neuronal integrate and fire model exhibits a crossover from the 2d sand pile behavior to the mean field branching process universality class. This crossover is due to the interplay between synaptic plastic adaptation and refractory time, which makes the regular lattice evolve into a tree with negligible loops. In order to clarify this point in the context of the WCM we performed preliminary simulations on a 2d square lattice 32 × 32. On each site of the lattice we placed one excitatory and one inhibitory neuron, for a total number of neurons N = 2048. Each neuron can establish an average number of 80 synaptic connections at random, and the connection probability is proportional to exp(−r/5), where r is the distance between two neurons measured in lattice constants. Preliminary results show that the avalanche activity exhibits exponents very close to the values detected for the fully connected network. However, due to the limited system size, the scaling regime is limited to about one decade and the estimation of the exponents is not fully accurate. Moreover, in this calculation we used the value of the critical point w0c found for the fully connected network, even if a more precise identification is necessary, since the critical point is not a universal quantity but depends on the network structure. Therefore, we plan to investigate the WCM behavior in finite dimensions in more detail and with better statistics in a future study.
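A network of the kind described above can be sketched as follows. Several details are not specified in the text and are assumptions of this illustration: open (non-periodic) boundaries, directed connections drawn independently for each ordered pair, and a global normalization chosen so that the expected mean out-degree equals the requested value. The function and parameter names are hypothetical.

```python
import math
import random

def build_lattice_network(side=32, mean_degree=80.0, decay_length=5.0, seed=0):
    """One excitatory and one inhibitory neuron per site of a side x side lattice.

    A directed link i -> j is drawn with probability proportional to
    exp(-r / decay_length), r being the Euclidean distance in lattice constants.
    Returns (neurons, targets), where targets[i] lists the neurons reached by i.
    """
    rng = random.Random(seed)
    neurons = [(x, y, kind) for x in range(side) for y in range(side)
               for kind in ("E", "I")]
    n = len(neurons)
    # Lookup table of exp(-r / decay_length), indexed by |dx| and |dy|.
    table = [[math.exp(-math.hypot(dx, dy) / decay_length) for dy in range(side)]
             for dx in range(side)]

    def weight(i, j):
        xi, yi, _ = neurons[i]
        xj, yj, _ = neurons[j]
        return table[abs(xi - xj)][abs(yi - yj)]

    # Normalization: the sum of all link probabilities equals mean_degree * n.
    total = sum(weight(i, j) for i in range(n) for j in range(n) if i != j)
    scale = mean_degree * n / total
    targets = []
    for i in range(n):
        row = [j for j in range(n)
               if j != i and rng.random() < min(1.0, scale * weight(i, j))]
        targets.append(row)
    return neurons, targets
```

With the default arguments the double loop over about four million ordered pairs takes some time in pure Python; a smaller `side` is convenient for quick checks.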

Moreover, we observe that an alternative definition of avalanches, implementing a threshold in the signal amplitude, can indeed lead to wrong exponent values (see Methods). In fact, we recover the expected behaviour only for vanishing threshold, whereas for finite thresholds the signal behaves as an Ornstein-Uhlenbeck process. This is an interesting observation since it suggests that neglecting regions in the signal with small amplitudes provides a signal typical of an uncorrelated process, in contrast with the feature of the whole neural activity.

The analytical calculation of the firing rate correlation function confirms the existence of a critical value for the control parameter, w0 = w0c, where the correlation time diverges for vanishing external fields in the linear approximation. Numerical simulations confirm that at the critical point the correlation function tends to the linear approximation behaviour in the limit of very large N, with a correlation time which remains finite far from w0c and increases with N at the critical point. This temporal relaxation behaviour, evidence of the critical slowing down, confirms the critical features of the firing rate activity. In conclusion, we confirm that the WCM, able to reproduce a variety of complex features of neuronal activity, such as oscillations and noisy limit cycles [27], exhibits critical behaviour at a specific value of the tunable parameter in the thermodynamic limit and for vanishing external fields.

Methods

Stability of the fixed points

From Eq (6) one has that, for h = 0, the fixed point Σ0 = 0 is unstable if w0 > w0c and stable if w0 < w0c, where w0c = α/β. Near the transition h = 0, w0 = w0c = α/β, assuming both h and Σ0 are small, so that a linear approximation of the hyperbolic tangent can be considered, Eq (6) becomes

| (11) |

The only acceptable solutions are those with Σ0 ≥ 0. Since the first and third coefficients have opposite sign, for h > 0 there is always exactly one acceptable solution. For h = 0, there is always a solution Σ0 = 0. When w0 ≤ w0c this is the only acceptable solution, while for w0 > w0c we also have the solution Σ0 ≃ (w0 − w0c)/w0 at first order in w0 − w0c. To investigate the stability of the fixed point, we have to consider the sign of the eigenvalues of the Jacobian, that is τ1 and τ2 given by Eq (8). These can be written also as
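The fixed-point structure described here can be explored numerically with a short sketch. It assumes that the fixed-point condition reads αΣ0 = (1 − Σ0)β tanh(w0Σ0 + h), whose linearization in the argument of the hyperbolic tangent gives the quadratic βw0Σ0² + (α − βw0 + βh)Σ0 − βh = 0. This explicit form is an assumption consistent with w0c = α/β and with the solution Σ0 ≃ (w0 − w0c)/w0 quoted in the text; it is not copied from Eq (6) or Eq (11). Function names are hypothetical.

```python
import math

def linearized_fixed_point(w0, h, alpha=0.1, beta=1.0):
    """Non-negative root of beta*w0*x^2 + (alpha - beta*w0 + beta*h)*x - beta*h = 0."""
    a = beta * w0
    b = alpha - beta * w0 + beta * h
    c = -beta * h
    root_disc = math.sqrt(b * b - 4.0 * a * c)
    if b >= 0.0:
        # numerically stable form, avoids cancellation when h is tiny
        denom = b + root_disc
        return (-2.0 * c) / denom if denom > 0.0 else 0.0
    return (-b + root_disc) / (2.0 * a)

def nonlinear_fixed_point(w0, h, alpha=0.1, beta=1.0):
    """Root in (0, 1) of -alpha*x + (1 - x)*beta*tanh(w0*x + h), for h > 0, by bisection."""
    if h <= 0.0:
        raise ValueError("bisection bracket requires h > 0")

    def g(x):
        return -alpha * x + (1.0 - x) * beta * math.tanh(w0 * x + h)

    lo, hi = 0.0, 1.0  # g(lo) > 0 and g(hi) < 0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Under this assumed form the firing rate at the fixed point is αΣ0 (in ms⁻¹ for the rates used here), which can be compared with the values of R0 quoted in the section on the role of the threshold.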

Note that the expressions (12) are exact. Therefore, one of the two eigenvalues is always positive. At the fixed point Σ0 = 0, the other one, τ1, is positive for w0 < w0c (the fixed point is stable) and negative for w0 > w0c (the fixed point is unstable). For w0 > w0c and at the fixed point Σ0 ≃ (w0 − w0c)/w0, τ1 is positive at first order in w0 − w0c, so that the fixed point is stable.
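As a worked check of these signs, one can assume that the dynamics of Σ at Δ = 0 reads dΣ/dt = −αΣ + (1 − Σ)β tanh(w0Σ + h), i.e. that the activation function is f(s) = β tanh(s) for s > 0. This explicit form is an assumption consistent with w0c = α/β and with the hyperbolic tangent mentioned above; it is not copied from Eq (5) or Eq (12). Under this assumption the inverse relaxation time along Σ is obtained by differentiating the right-hand side at the fixed point:

```latex
\begin{align}
\frac{1}{\tau_1} &= \alpha + \beta\tanh(s_0) - (1-\Sigma_0)\,\beta w_0\,\mathrm{sech}^2(s_0),
  \qquad s_0 = w_0\Sigma_0 + h, \\
\left.\frac{1}{\tau_1}\right|_{\Sigma_0 = 0,\; h = 0} &= \alpha - \beta w_0 = \beta\,(w_{0c} - w_0), \\
\left.\frac{1}{\tau_1}\right|_{\Sigma_0 \simeq (w_0 - w_{0c})/w_0,\; h = 0} &\simeq \beta\,(w_0 - w_{0c}).
\end{align}
```

In this sketch the relaxation time diverges symmetrically on both sides of w0c at first order, in line with the stability statements above.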

Alternative definition of avalanches: Role of the threshold

In order to investigate the robustness of the observed scaling behaviours, we study the avalanche statistics implementing a different definition of avalanche, which is based on the analysis of the continuous temporal signal of the firing rate. We set a fixed threshold value Θ, and define the avalanche as an interval of time in which the firing rate is continuously above the threshold. The duration of the avalanche is the width of the time interval, while the size can be defined in three different ways: 1) as the total number of spikes observed in the time interval; 2) as the integral of the firing rate in the time interval; 3) as the integral of the difference between the firing rate and the threshold value. Definitions 1) and 2) give quite similar results, because the total number of spikes is proportional to the integral of the firing rate apart from small fluctuations. Fig 9A and 9B show the distributions of avalanche size and durations, defined with Θ = 0. We used definition 2 or 3 (they coincide in the case of Θ = 0) to measure the size of the avalanches. The exponents obtained with this procedure fully agree with the ones obtained by temporal binning, and are therefore those of a mean field branching process.
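The threshold-based definition can be sketched for a uniformly sampled firing rate signal. The snippet is a minimal illustration under stated assumptions: integrals are approximated with the rectangle rule, excursions cut by the start or the end of the record are discarded, and only definitions 2) and 3) of the size are computed, since definition 1) requires the individual spikes. The function name is hypothetical.

```python
def threshold_avalanches(rate, dt, theta):
    """Return a list of (duration, size_rate, size_excess) for each excursion above theta.

    size_rate   : integral of the firing rate over the excursion (definition 2)
    size_excess : integral of (rate - theta) over the excursion (definition 3)
    """
    avalanches = []
    run = []
    inside_record = False  # True once a sample at or below threshold has been seen
    for r in rate:
        if r > theta:
            if inside_record:
                run.append(r)
        else:
            inside_record = True
            if run:
                duration = len(run) * dt
                size_rate = sum(run) * dt
                size_excess = (sum(run) - theta * len(run)) * dt
                avalanches.append((duration, size_rate, size_excess))
                run = []
    # an excursion still open at the end of the record is discarded
    return avalanches
```

For Θ = 0 the two sizes returned for each avalanche coincide, as noted in the text.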

Fig 9. Avalanche size and duration distributions measured from the analysis of the continuous-time series of the firing rate signal.

The avalanche is defined as a continuous interval of time in which the firing rate is greater than a zero threshold and its duration is the width of the time interval. (A) Distribution function of the avalanche sizes. (B) Distribution function of the avalanche duration. Parameters: w0 = 0.1, h = 10⁻⁶, N = 10⁶. Exponents of size and duration distributions, computed using the estimator introduced in [38, 39] with lower bounds of the fitting windows Smin = 10, tmin = 10 ms, are τS = 1.54 ± 0.03, τT = 2.04 ± 0.04.
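For orientation, the maximum-likelihood estimate of a power-law exponent for continuous data with a fixed lower bound can be written in a few lines. This is only a sketch of the standard continuous estimator, τ = 1 + n / Σ ln(x/xmin) with standard error (τ − 1)/√n; it does not reproduce the full procedure of [38, 39], and the function name is hypothetical.

```python
import math

def powerlaw_mle(samples, x_min):
    """Continuous maximum-likelihood exponent and its standard error for x >= x_min."""
    tail = [x for x in samples if x >= x_min]
    n = len(tail)
    if n < 2:
        raise ValueError("not enough samples above x_min")
    log_sum = sum(math.log(x / x_min) for x in tail)
    if log_sum <= 0.0:
        raise ValueError("all samples equal x_min, exponent undefined")
    exponent = 1.0 + n / log_sum
    error = (exponent - 1.0) / math.sqrt(n)
    return exponent, error
```

The same function can be applied to sizes and durations by passing the corresponding lower bound of the fitting window as `x_min`.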

However, as recently pointed out in [41], for continuous-time signals, the introduction of a finite threshold value in the definition of avalanches can lead to different scaling regimes. In order to verify this point, we consider the case of finite thresholds, Θ > 0. In our analysis, we consider as threshold the mean firing rate, Θ = 〈R〉. For w0 = 0.1 and h = 10⁻⁶ the mean firing rates fall within the interval from 0.63 Hz for N = 10³ to 0.54 Hz for N = 10⁶ (the firing rate at the fixed point is R0 = 0.316 Hz), while for w0 = 0.2 and h = 10⁻³ the mean firing rates take values in the interval from 11 Hz for N = 10³ to 50 Hz for N = 10⁶ (the firing rate at the fixed point is R0 = 50.3 Hz).

In Fig 10 we show the size and duration distributions for w0 = 0.1 and h = 10⁻⁶. The sizes were measured with definition 3, that is as the integral of the difference between the firing rate and the threshold. The observed power-law exponent for the sizes is −4/3, while the exponent for the durations is −3/2, as expected for a random walk or Ornstein-Uhlenbeck process [41].

Fig 10. Avalanche size and duration distributions measured from a finite threshold.

(A) Size distribution and (B) duration distribution according to definition 3 for w0 = 0.1 and h = 10⁻⁶.

Moreover, in Fig 11 we show the size distribution measured according to definition 2, that is as the integral of the firing rate, therefore integrating also the area below the threshold. In this case, consistently with what was observed in [41], one finds also for the size distribution an exponent equal to −3/2, as for the duration distribution.

Fig 11. Avalanche size distributions measured from a finite threshold.

Distribution of the sizes according to definition 2 for w0 = 0.1 and h = 10⁻⁶.

Our analysis confirms the warning resulting from the discussion presented in [41]: The introduction of a threshold can lead to an incorrect evaluation of the scaling behavior. In the present case, choosing the threshold Θ = 0 allows us to recover the same scaling exponents obtained from the alternative definition of avalanche built on the time binning, in the universality class of the mean-field branching process. On the other hand, a different choice of Θ hides this scaling and reveals a behaviour similar to the simple random walk model.

Analytic expressions of variances and correlation functions

The covariance matrix σ of the system (7) has elements σij = 〈ξi(0)ξj(0)〉 with i, j = (Σ, Δ), where 〈⋯〉 denotes an average in the stationary state, which satisfy the relation [51]

| (13) |

where the superscript T denotes the transpose and the matrix entering Eq (13) is

| (14) |

Solving Eq (13) one obtains

| (15) |

The inverse matrix σ−1 then reads

| (16) |

The elements of the time correlation matrix are obtained from the equations [51]

| (17) |

The matrix of Eq (14) has eigenvalues (−1/τ1, −1/τ2) and eigenvectors (1, 0)ᵀ and (−wff τ1 τ2/(τ1 − τ2), 1)ᵀ. Diagonalizing it, one obtains the matrix exponential

| (18) |

Note that in the case of τ1 = τ2 the upper right element in the above matrix becomes wff t exp(−t/τ1). Next, from Eq (17), one obtains the explicit expressions for the cross-correlation functions

| (19) |

| (20) |

| (21) |

| (22) |
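The matrix exponential entering these expressions can be cross-checked numerically. The eigenvalues and eigenvectors quoted above fix the matrix to be upper triangular, with diagonal elements −1/τ1 and −1/τ2 and upper right element wff; the sketch below compares the resulting closed form of its exponential with a plain Taylor series. The function names are hypothetical and the code is only a consistency check, not part of the original analysis.

```python
import math

def exp_closed_form(tau1, tau2, w_ff, t):
    """exp(A t) for A = [[-1/tau1, w_ff], [0, -1/tau2]], written in closed form."""
    e1 = math.exp(-t / tau1)
    e2 = math.exp(-t / tau2)
    if tau1 == tau2:
        off = w_ff * t * e1  # degenerate limit tau1 -> tau2
    else:
        off = w_ff * tau1 * tau2 / (tau1 - tau2) * (e1 - e2)
    return [[e1, off], [0.0, e2]]

def exp_taylor(a, t, terms=80):
    """Taylor series of exp(a t) for a 2x2 matrix given as nested lists."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    term = [[1.0, 0.0], [0.0, 1.0]]
    for k in range(1, terms):
        # term_k = term_{k-1} * a * t / k
        term = [[sum(term[i][m] * a[m][j] for m in range(2)) * t / k
                 for j in range(2)] for i in range(2)]
        result = [[result[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return result
```

The degenerate branch reproduces the limit τ1 → τ2 of the upper right element.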

Within the linear approximation valid in the limit of large number of neurons that we are here considering, and in the stationary state when the deterministic components have relaxed to the attractive fixed point, the firing rate (3) can be written as

| (23) |

where

| (24) |

and

| (25) |

| (26) |

are the derivatives of R computed at the fixed point. The correlation function of ξR(t) is therefore given by

| (27) |

The variance of R can then be computed as 〈(R − R0)²〉 = N⁻¹〈ξR(0)²〉 (note the factor N appearing due to the definition (23)), and σRR = N〈(R − R0)²〉 = 〈ξR(0)²〉, so that

| (28) |

where σΣΣ, σΣΔ and σΔΔ are the elements of the covariance matrix (15).
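The structure of this result can be made explicit with a short worked derivation. It assumes that the fluctuation of the rate is the linear combination of the fluctuations of Σ and Δ weighted by the derivatives of R = (1 − Σ)f[w0Σ + (wE + wI)Δ + h] at the fixed point; the symbols R_Σ and R_Δ for these derivatives are notation introduced here for illustration and need not coincide with that of Eqs (24)–(26).

```latex
\begin{align}
\xi_R &= R_\Sigma\,\xi_\Sigma + R_\Delta\,\xi_\Delta, \\
R_\Sigma &= \left.\frac{\partial R}{\partial \Sigma}\right|_{0}
          = -f(s_0) + (1-\Sigma_0)\,w_0\,f'(s_0), \\
R_\Delta &= \left.\frac{\partial R}{\partial \Delta}\right|_{0}
          = (1-\Sigma_0)\,(w_E + w_I)\,f'(s_0), \\
\sigma_{RR} &= \langle \xi_R(0)^2 \rangle
          = R_\Sigma^2\,\sigma_{\Sigma\Sigma}
          + 2\,R_\Sigma R_\Delta\,\sigma_{\Sigma\Delta}
          + R_\Delta^2\,\sigma_{\Delta\Delta},
\end{align}
```

where s0 = w0Σ0 + h is the argument of f at the fixed point (with Δ0 = 0). The last line follows from the bilinearity of the average.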

Numerical simulation methods

The network dynamics is simulated as a continuous-time Markov process, using the Gillespie algorithm [37]. More precisely, the steps of the algorithm are the following: 1) for each neuron i we compute the transition rate ri: ri = α if neuron i is active, or ri = f(si) if it is quiescent; 2) we compute the sum over all neurons r = ∑i ri; 3) we draw a time interval dt from an exponential distribution with rate r; 4) we choose the i–th neuron with probability ri/r and change its state; 5) we update the time to t + dt.
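The steps above can be sketched for the fully connected case, where all neurons of a population are equivalent and the per-neuron rates can be grouped into four population-level events. The snippet is a minimal illustration under stated assumptions: the activation function is taken as f(s) = β tanh(s) for s > 0 and zero otherwise, the input is s = w0Σ + (wE + wI)Δ + h as in the expression of the firing rate, and N in Σ = (k + l)/2N is interpreted as the size of each population. Function and parameter names are hypothetical, and this is not the authors' code.

```python
import math
import random

def activation(s, beta=1.0):
    """Assumed activation function: beta * tanh(s) for s > 0, zero otherwise."""
    return beta * math.tanh(s) if s > 0.0 else 0.0

def gillespie_wcm(n, w0, w_sum, h, alpha=0.1, beta=1.0, t_max=100.0, seed=0):
    """Simulate n excitatory and n inhibitory neurons; return a list of (t, k, l).

    k and l are the numbers of active excitatory and inhibitory neurons,
    and w_sum stands for wE + wI.
    """
    rng = random.Random(seed)
    k = l = 0
    t = 0.0
    trajectory = [(t, k, l)]
    while True:
        sigma = (k + l) / (2.0 * n)
        delta = (k - l) / (2.0 * n)
        f = activation(w0 * sigma + w_sum * delta + h, beta)
        # (rate, change in k, change in l) for the four possible events
        events = ((alpha * k, -1, 0), (alpha * l, 0, -1),
                  (f * (n - k), 1, 0), (f * (n - l), 0, 1))
        total = sum(rate for rate, _, _ in events)
        if total == 0.0:
            break  # absorbing state: nothing can happen any more
        t += rng.expovariate(total)  # exponential waiting time with rate `total`
        if t > t_max:
            break
        u = rng.random() * total
        chosen = None
        for rate, dk, dl in events:
            if rate > 0.0:
                chosen = (dk, dl)  # keeps the last possible event if rounding overshoots
                if u < rate:
                    break
                u -= rate
        k += chosen[0]
        l += chosen[1]
        trajectory.append((t, k, l))
    return trajectory
```

Grouping the rates in this way is statistically equivalent to choosing a single neuron with probability ri/r when all neurons of a population receive the same input; for a structured network the per-neuron version of the steps is needed.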

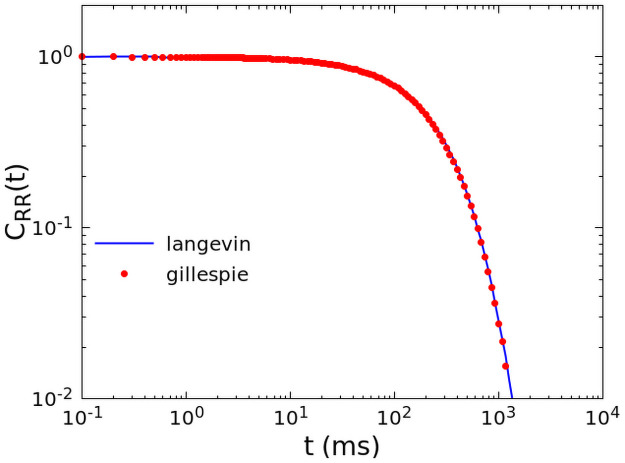

For a very large number of neurons, N > 10⁷, we optimized the numerical computation by directly simulating the Langevin Eq (4), with a fixed time step dt = 10⁻³ ms. In Fig 12 we show that the two simulation methods coincide perfectly for N = 10⁷.

Fig 12. Equivalence of different numerical simulation methods.

Comparison between the correlation function calculated by the Gillespie algorithm (red dots) and fully non-linear Langevin equation (blue line), for N = 10⁷, w0 = 0.1, h = 10⁻⁶.

Acknowledgments

LdA and AS acknowledge support from Program (VAnviteLli pEr la RicErca: VALERE) 2019 financed by the University of Campania “L. Vanvitelli”.

Data Availability

All relevant data are within the manuscript. The numerical codes for the Gillespie algorithm and the correlation function can be found at http://people.na.infn.it/~decandia/codice/cowan/ploscompbio/code.zip.

Funding Statement

AdC, IA and LdA acknowledge financial support from the MIUR PRIN 2017WZFTZP. AS acknowledges financial support from MIUR PRIN PRIN201798CZLJ. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Freeman WJ, Zhai J. Simulated power spectral density (PSD) of background electrocorticogram (ECoG). Cognitive neurodynamics. 2009;3(1):97–103. doi: 10.1007/s11571-008-9064-y

- 2.Freeman WJ, Holmes MD, West GA, Vanhatalo S. Dynamics of human neocortex that optimizes its stability and flexibility. International Journal of Intelligent Systems. 2006;21(9):881–901. doi: 10.1002/int.20167

- 3.Freeman WJ, Holmes MD. Metastability, instability, and state transition in neocortex. Neural Networks. 2005;18(5-6):497–504. doi: 10.1016/j.neunet.2005.06.014

- 4.Deco G, Jirsa VK. Ongoing cortical activity at rest: criticality, multistability, and ghost attractors. Journal of Neuroscience. 2012;32(10):3366–3375. doi: 10.1523/JNEUROSCI.2523-11.2012

- 5.Nunez PL, Wingeier BM, Silberstein RB. Spatial-temporal structures of human alpha rhythms: Theory, microcurrent sources, multiscale measurements, and global binding of local networks. Human brain mapping. 2001;13(3):125–164. doi: 10.1002/hbm.1030

- 6.Bénar CG, Grova C, Jirsa VK, Lina JM. Differences in MEG and EEG power-law scaling explained by a coupling between spatial coherence and frequency: a simulation study. Journal of computational neuroscience. 2019;47(1):31–41. doi: 10.1007/s10827-019-00721-9

- 7.Deco G, Jirsa VK, McIntosh AR. Emerging concepts for the dynamical organization of resting-state activity in the brain. Nature Reviews Neuroscience. 2011;12(1):43–56. doi: 10.1038/nrn2961

- 8.Robinson P, Rennie C, Rowe D, O’Connor S, Wright J, Gordon E, et al. Neurophysical modeling of brain dynamics. Neuropsychopharmacology. 2003;28(1):S74–S79. doi: 10.1038/sj.npp.1300143

- 9.Beggs JM, Plenz D. Neuronal avalanches in neocortical circuits. Journal of neuroscience. 2003;23:11167. doi: 10.1523/JNEUROSCI.23-35-11167.2003

- 10.Petermann T, Thiagarajan TC, Lebedev MA, Nicolelis MA, Chialvo DR, Plenz D. Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proceedings of the National Academy of Sciences. 2009;106:15921. doi: 10.1073/pnas.0904089106

- 11.Fransson P, Metsaranta M, Blennow M, Aden U, Lagercrantz H, Vanhatalo S. Early development of spatial patterns of power-law frequency scaling in fmri resting-state and eeg data in the newborn brain. Cerebral cortex. 2012;23:638. doi: 10.1093/cercor/bhs047

- 12.Shriki O, Alstott J, Carver F, Holroyd T, Henson RN, Smith ML, et al. Neuronal avalanches in the resting MEG of the human brain. Journal of Neuroscience. 2013;33:7079. doi: 10.1523/JNEUROSCI.4286-12.2013

- 13.Zapperi S, Lauritsen KB, Stanley HE. Self-Organized Branching Processes: Mean-Field Theory for Avalanches. Phys Rev Lett. 1995;75:4071. doi: 10.1103/PhysRevLett.75.4071

- 14.Levina A, Herrmann JM, Geisel T. Dynamical synapses causing selforganized criticality in neural networks. Nature physics. 2007;3:857. doi: 10.1038/nphys758

- 15.van Kessenich LM, Lukovíc M, de Arcangelis L, Herrmann HJ. Critical neural networks with short-and long-term plasticity. Physical Review E. 2018;97:032312. doi: 10.1103/PhysRevE.97.032312

- 16.Raimo D, Sarracino A, de Arcangelis L. Role of inhibitory neurons in temporal correlations of critical and supercritical spontaneous activity. Physica A. 2020;565:125555. doi: 10.1016/j.physa.2020.125555

- 17.de Arcangelis L, Perrone-Capano C, Herrmann HJ. Self-organized criticality model for brain plasticity. Physical review letters. 2006;96:028107. doi: 10.1103/PhysRevLett.96.028107

- 18.de Arcangelis L. Are dragon neuronal avalanches dungeons for selforganized brain activity? The European Physical Journal Special Topics. 2012;205:243. doi: 10.1140/epjst/e2012-01574-6

- 19.de Arcangelis L, Herrmann HJ. Activity-dependent neuronal model on complex networks. Frontiers in physiology. 2012;3:1. doi: 10.3389/fphys.2012.00062

- 20.Kinouchi O, Pazzini R, Copelli M. Mechanisms of Self-Organized Quasicriticality in Neuronal Network Models. Frontiers in Physics. 2020;8:530. doi: 10.3389/fphy.2020.583213

- 21.Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J. 1972;12:1. doi: 10.1016/S0006-3495(72)86068-5

- 22.Ohira T, Cowan JD. Stochastic neurodynamics and the system size expansion. In: Ellacort S, Anderson I, editors. Mathematics of Neural Networks: Models, Algorithms, and Applications. Springer, Berlin; 1997. p. 290.

- 23.Buice M, Cowan J. Field-theoretic approach to fluctuation effects in neural networks. Physical Review E. 2007;75:51919. doi: 10.1103/PhysRevE.75.051919

- 24.Buice M, Cowan J, Chow C. Systematic fluctuation expansion for neural network activity equations. Neural Computation. 2010;22:377. doi: 10.1162/neco.2009.02-09-960 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Bressloff PC. Stochastic neural field theory and the system-size expansion. SIAM Journal on Applied Mathematics. 2010;70:1488. doi: 10.1137/090756971 [DOI] [Google Scholar]

- 26.Benayoun M, Cowan JD, van Drongelen W, Wallace E. Avalanches in a Stochastic Model of Spiking Neurons. PLoS Comput Biol. 2010;6:e1000846. doi: 10.1371/journal.pcbi.1000846 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Wallace E, Benayoun M, Drongelen WV, Cowan JD. Emergent oscillations in networks of stochastic spiking neurons. Plos one. 2011;6:e14804. doi: 10.1371/journal.pone.0014804 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Stanley EH. Introduction to Phase Transitions and Critical Phenomena. Oxford University Press; 1971. [Google Scholar]

- 29.Binder K. Theory of first-order phase transitions. Reports on progress in physics. 1987;50(7):783. doi: 10.1088/0034-4885/50/7/001 [DOI] [Google Scholar]

- 30.Henkel M, Hinrichsen H, Lübeck S. Non-Equilibrium Phase Transitions. Springer; 2008. [Google Scholar]

- 31.Bonachela JA, Munoz MA. Self-organization without conservation: true or just apparent scale-invariance? J Stat Mech. 2009;2009:P09009. doi: 10.1088/1742-5468/2009/09/P09009 [DOI] [Google Scholar]

- 32.Bonachela JA, Franciscis SD, Torres JJ, Munoz MA. Self-organization without conservation: are neuronal avalanches generically critical? J Stat Mech. 2010;2010:P02015. doi: 10.1088/1742-5468/2010/02/P02015 [DOI] [Google Scholar]

- 33.van Kampen NG. Stochastic Processes in Physics and Chemistry. North Holland; 2007. [Google Scholar]

- 34.Lombardi F, Herrmann H, Perrone-Capano C, Plenz D, de Arcangelis L. Balance between excitation and inhibition controls the temporal organization of neuronal avalanches. Physical review letters. 2012;108(22):228703. doi: 10.1103/PhysRevLett.108.228703 [DOI] [PubMed] [Google Scholar]

- 35.Sarracino A, Arviv O, Shriki O, de Arcangelis L. Predicting brain evoked response to external stimuli from temporal correlations of spontaneous activity. Phys Rev Research. 2020;2:033355. doi: 10.1103/PhysRevResearch.2.033355 [DOI] [Google Scholar]

- 36.Negahbani E, Steyn-Ross DA, Steyn-Ross ML, Wilson MT, Sleigh JW. Noise-induced precursors of state transitions in the stochastic Wilson–Cowan model. The Journal of Mathematical Neuroscience (JMN). 2015;5(1):1–27. doi: 10.1186/s13408-015-0021-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Gillespie D. Exact stochastic simulation of coupled chemical reactions. J Phys Chem. 1977;81:2340. doi: 10.1021/j100540a008

- 38.Clauset A, Shalizi CR, Newman MEJ. Power-Law Distributions in Empirical Data. SIAM Review. 2009;51:661. doi: 10.1137/070710111

- 39.Alstott J, Bullmore E, Plenz D. powerlaw: A Python Package for Analysis of Heavy-Tailed Distributions. PLoS ONE. 2014;9:e85777. doi: 10.1371/journal.pone.0085777

- 40.Gautam SH, Hoang TT, McClanahan K, Grady SK, Shew WL. Maximizing sensory dynamic range by tuning the cortical state to criticality. PLoS Computational Biology. 2015;11:e1004576. doi: 10.1371/journal.pcbi.1004576

- 41.Villegas P, di Santo S, Burioni R, Munoz MA. Time-series thresholding and the definition of avalanche size. Phys Rev E. 2019;100:012133. doi: 10.1103/PhysRevE.100.012133

- 42.Friedman N, Ito S, Brinkman BA, Shimono M, DeVille RL, Dahmen KA, et al. Universal critical dynamics in high resolution neuronal avalanche data. Physical Review Letters. 2012;108(20):208102. doi: 10.1103/PhysRevLett.108.208102

- 43.Kuntz MC, Sethna JP. Noise in disordered systems: The power spectrum and dynamic exponents in avalanche models. Phys Rev B. 2000;62:11699. doi: 10.1103/PhysRevB.62.11699

- 44.Steyn-Ross ML, Steyn-Ross DA, Sleigh JW. Modelling general anaesthesia as a first-order phase transition in the cortex. Progress in Biophysics and Molecular Biology. 2004;85(2-3):369–385. doi: 10.1016/j.pbiomolbio.2004.02.001

- 45.Steyn-Ross DA, Steyn-Ross ML, Sleigh JW, Wilson MT, Gillies IP, Wright J. The sleep cycle modelled as a cortical phase transition. Journal of Biological Physics. 2005;31(3-4):547–569. doi: 10.1007/s10867-005-1285-2

- 46.Steyn-Ross DA, Steyn-Ross ML, Wilson MT, Sleigh JW. Phase transitions in single neurons and neural populations: Critical slowing, anesthesia, and sleep cycles. In: Modeling Phase Transitions in the Brain. Springer; 2010. p. 1–26.

- 47.Cocchi L, Gollo LL, Zalesky A, Breakspear M. Criticality in the brain: A synthesis of neurobiology, models and cognition. Progress in Neurobiology. 2017;158:132–152. doi: 10.1016/j.pneurobio.2017.07.002

- 48.Girardi-Schappo M, Brochini L, Costa AA, Carvalho TTA, Kinouchi O. Synaptic balance due to homeostatically self-organized quasicritical dynamics. Phys Rev Research. 2020;2:012042. doi: 10.1103/PhysRevResearch.2.012042

- 49.Fosque LJ, Williams-Garcia RV, Beggs JM, Ortiz G. Evidence for Quasicritical Brain Dynamics. Phys Rev Lett. 2021;126:098101. doi: 10.1103/PhysRevLett.126.098101

- 50.Van Kessenich LM, de Arcangelis L, Herrmann HJ. Synaptic plasticity and neuronal refractory time cause scaling behaviour of neuronal avalanches. Scientific Reports. 2016;6(1):1–7.

- 51.Risken H. The Fokker-Planck Equation. Springer; 1996.