Abstract

Explainable Artificial Intelligence (XAI) is an emerging research topic in machine learning that aims to open up how the black-box decisions of AI systems are made. The field inspects the measures and models involved in decision-making and seeks ways to explain them explicitly. Many machine learning algorithms cannot reveal how and why a decision has been reached. This is particularly true of the most popular deep neural network approaches currently in use. Consequently, our confidence in AI systems can be hindered by the lack of explainability in these black-box models. Although deep neural networks can deliver impressive gains in performance, XAI is becoming increasingly crucial for deep learning powered applications, especially in medical and healthcare studies. The insufficient explainability and transparency of most existing AI systems may be one of the major reasons why successful implementation and integration of AI tools into routine clinical practice remain uncommon. In this study, we first surveyed the current progress of XAI and in particular its advances in healthcare applications. We then introduced our solutions for XAI leveraging multi-modal and multi-centre data fusion, and subsequently validated them in two showcases following real clinical scenarios. Comprehensive quantitative and qualitative analyses demonstrate the efficacy of our proposed XAI solutions, from which we can envisage successful applications in a broader range of clinical questions.

Keywords: Explainable AI, Information fusion, Multi-domain information fusion, Weakly supervised learning, Medical image analysis

Highlights

- We performed a mini-review for XAI in medicine and digital healthcare.
- Our mini-review is comprehensive on most recent studies of XAI in the medical field.
- We proposed two XAI methods and constructed two representative showcases.
- One of our XAI models is based on weakly supervised learning for COVID-19 classification.
- One of our XAI models is developed for ventricle segmentation in hydrocephalus patients.

1. Introduction

Recent years have seen significant advances in the capacity of Artificial Intelligence (AI), which is growing in sophistication, complexity and autonomy. The continuous, explosive growth of data, together with rapid iterations of computing hardware, has provided a turbo boost for the development of AI.

AI is a generic concept and an umbrella term that implies the use of a machine to model intelligent actions with limited human interference. It covers a broad range of research, from machine intelligence for computer vision, robotics and natural language processing to more theoretical machine learning algorithm design and the recently re-branded and thriving field of deep learning (Fig. 1).

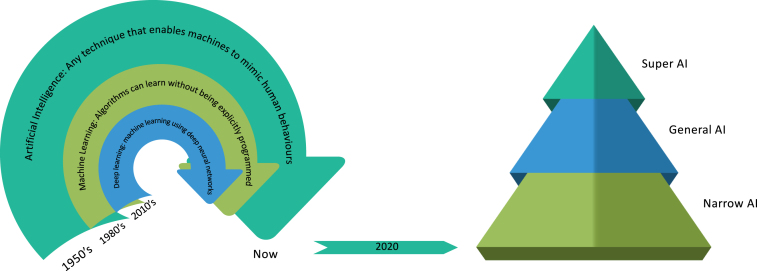

Fig. 1.

Left: Terminology and historical timeline of AI, machine learning and deep learning. Right: We are still at the stage of narrow AI, a concept used to describe AI systems that are capable of handling a single task or a limited set of tasks. General AI refers to hypothetical AI systems capable of comprehending or learning any intellectual activity a human being might perform. Super AI is an AI that exceeds human intelligence and skills.

1.1. Birth of AI

AI is changing almost every sector globally: it enhances (digital) healthcare (e.g., making diagnosis more accurate and improving disease prevention), accelerates drug/vaccine development and repurposing, raises agricultural productivity, supports mitigation of and adaptation to climate change, improves the efficiency of manufacturing processes through predictive maintenance, supports the development of autonomous vehicles and the design of more efficient transport networks, and enables many other successful applications that make a significant positive socio-economic impact. Besides, AI systems are being deployed in highly sensitive policy fields, such as facial recognition in policing or recidivism prediction in the criminal justice system, and in areas where diverse social and political forces are present. AI systems are therefore now incorporated into a wide variety of decision-making processes. As a result, the degree to which people who develop AI, or who are subject to an AI-enabled decision, can understand how the resulting decision-making mechanism operates and why a specific decision is reached has been increasingly debated in science and policy communities.

The term “artificial intelligence” refers to a collection of technologies that exhibit capabilities typically associated with human or animal intelligence. John McCarthy, who coined the term in 1955, described it as “the science and engineering of making intelligent machines”, and many different definitions have been proposed since then.

1.2. Growth of machine learning

Machine learning is a subdivision of AI that helps computer systems to execute complex tasks intelligently. Traditional AI methods, which specify step by step how to address a problem, are normally based on hard-coded rules. A machine learning framework, by contrast, leverages a large amount of data (as positive and negative examples) to identify the characteristics needed to accomplish a pre-defined task. The framework then learns how best to obtain the target output. Three primary subdivisions of machine learning algorithms exist:

- A machine learning framework that is trained using labelled data is generally categorised as supervised machine learning. Each data point is labelled with one or more classes, such as “cats” or “dogs”. The supervised machine learning framework learns patterns from these labelled data (i.e., training data) and predicts the categories of new, so-called test data.
- Learning without labels is referred to as unsupervised learning. The aim is to identify shared patterns among data points, for example by forming clusters and allotting data points to these clusters.
- Reinforcement learning, on the other hand, is about learning from experience. In standard reinforcement learning settings, an agent interacts with its environment and is given a reward function that it tries to optimise. The purpose of the agent is to understand the effect of its decisions and to discover the best strategies for maximising its rewards during the training and learning procedure.
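The contrast between the first two settings can be sketched in a few lines. The following is a minimal illustration only, assuming invented one-dimensional measurements and two deliberately simple methods (a nearest-centroid classifier for the supervised case and two-cluster k-means for the unsupervised case); the data, function names and methods are assumptions made for illustration and are not taken from the studies reviewed here.

```python
# Toy 1-D data: hypothetical measurements, invented for illustration only.
labelled = [(1.0, "cat"), (1.2, "cat"), (0.8, "cat"),
            (3.0, "dog"), (3.3, "dog"), (2.9, "dog")]

def fit_centroids(pairs):
    """Supervised step: average the training values of each labelled class."""
    sums, counts = {}, {}
    for x, y in pairs:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(centroids, x):
    """Assign a test point to the class whose centroid is nearest."""
    return min(centroids, key=lambda y: abs(centroids[y] - x))

def two_means(values, iterations=10):
    """Unsupervised step: split unlabelled values into two clusters (k-means, k=2)."""
    c0, c1 = min(values), max(values)  # simple deterministic initialisation
    for _ in range(iterations):
        g0 = [v for v in values if abs(v - c0) <= abs(v - c1)]
        g1 = [v for v in values if abs(v - c0) > abs(v - c1)]
        c0, c1 = sum(g0) / len(g0), sum(g1) / len(g1)
    return sorted(g0), sorted(g1)

# Supervised: labels are used for training, then a new test point is classified.
centroids = fit_centroids(labelled)
test_label = predict(centroids, 2.8)

# Unsupervised: the labels are discarded and only the values are clustered.
clusters = two_means([x for x, _ in labelled])
```

In this toy case the clusters found without labels coincide with the labelled classes, which need not hold for real data.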

It is of note that some hybrid methods, e.g., semi-supervised learning (using partially labelled data) and weakly supervised learning (using indirect labels), are also under development.

Although they do not achieve the human-level intelligence often associated with the definition of general AI, machine learning systems can learn from data, which increases the number and sophistication of tasks they can tackle (Fig. 1). A wide variety of technologies, many of which people encounter on a daily basis, are nowadays enabled by rapid developments in machine learning, contributing to current advancements and to debate about the influence of AI in society. Many of the concepts that frame existing machine learning systems are not new. The mathematical underpinnings of the field date back many decades, and since the 1950s researchers have developed machine learning algorithms with varying degrees of complexity. To make forecasts, machine learning requires computers to process a vast volume of data. How systems equipped with machine learning handle probabilities or uncertainty in decision-making is normally informed by statistical approaches. Statistics, however, also covers areas of research that are not concerned with developing algorithms that learn to make forecasts or decisions from data. Although several key principles of machine learning are rooted in data science and statistical analysis, some of the complex computational models do not fit naturally within these disciplines. In contrast to statistical methods, symbolic approaches are also used for AI; these methods use logic and inference to create representations of a problem and to reach a solution.

1.3. Boom of deep learning

Deep learning is a relatively recent family of approaches that has radically transformed machine learning. Deep learning is not an algorithm per se, but a range of algorithms that implement neural networks with many layers. These neural networks are so deep that modern computing hardware, such as graphics processing units (GPUs) or clusters of compute nodes, is usually needed to train them successfully. Deep learning works very well with vast quantities of data, and it largely removes the need for manual feature engineering even when a problem is complex (for example, due to unstructured data). In image detection, natural language processing and voice recognition, deep learning often outperforms other types of algorithms. Applications such as deep learning assisted disease screening, clinical outcome prediction and automated driving, which were not feasible using previous methods, have now been well demonstrated. In general, deeper neural networks trained with more data tend to achieve higher accuracy. Deep learning is powerful, but it has several disadvantages. The reasoning by which deep learning algorithms reach a certain solution is almost impossible to reveal clearly. Although several tools are now available that can increase insight into the inner workings of a deep learning model, this black-box problem still exists. Deep learning also often involves long training cycles, large amounts of data and demanding hardware requirements, and it is not easy to acquire the specific skills necessary to create a new deep learning approach to tackle a new problem.

Although we acknowledge that AI includes a wide variety of scientific areas, this paper uses the umbrella term ‘AI’, and much of the recent interest in AI has been motivated by developments in machine learning and deep learning. More importantly, we should recognise that no single algorithm will suit or solve every problem. Success normally depends on the exact problem to be solved and the knowledge available. A hybrid solution, in which various algorithms are combined, is often required to solve the problem. Each problem requires a detailed analysis of what constitutes the best-fit algorithm. Transparency, input size, the capabilities of the deep neural network and time efficiency should also be taken into consideration, since certain algorithms take a long time to train.

1.4. Stunted by the black box and the promotion of explainable AI

Many of today’s deep learning tools are capable of generating highly accurate outcomes, but they are often highly opaque, if not fully invisible, making it difficult to understand their behaviour. Even for skilled experts, fully comprehending these so-called ‘black-box’ models may still be difficult. As these deep learning tools are applied on a wide scale, researchers and policymakers may question whether accuracy on a given task outweighs other essential factors in the decision-making procedure.

As part of attempts to integrate ethical standards into the design and implementation of AI-enabled technologies, policy discussions around the world increasingly involve demands for some form of Trustable AI, which includes Valid AI, Responsible AI, Privacy-Preserving AI and Explainable AI (XAI). XAI aims to address the fundamental question of the rationale behind the decision-making process, at both the human level and the machine level (Fig. 2). For example, in the UK, such calls came from the AI Committee of the House of Lords, which argued that the development of intelligible AI systems is a fundamental requirement if AI is to be integrated as a trustworthy tool for our society. In the EU, the High-Level Expert Group on AI has initiated more studies on the pathway towards XAI (Fig. 2). Similarly, in the USA, the Defense Advanced Research Projects Agency (DARPA) funds a research effort aimed at the development of AI with more explainability. These discussions will become more urgent as AI approaches are used to solve problems in a wide variety of complicated policy-making areas, as experts increasingly work alongside AI-enabled decision-making tools, for example in clinical studies, and as people more regularly encounter AI systems in real life when decisions have a major impact. Meanwhile, research in AI continues to progress at a steady pace. XAI is a vigorous area, with many ongoing studies and several new strategies emerging that have a substantial impact on AI development in various ways.

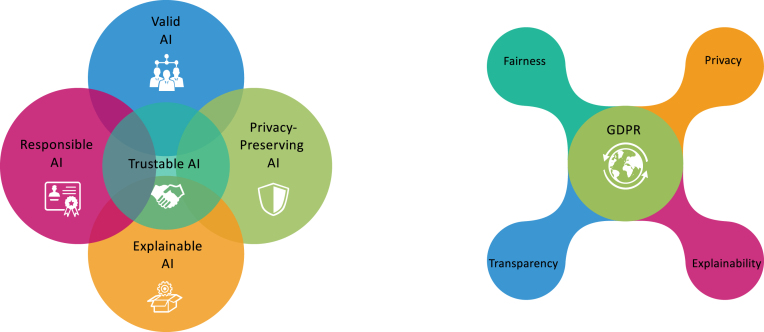

Fig. 2.

Left: Trustable AI or Trustworthy AI includes Valid AI, Responsible AI, Privacy-Preserving AI, and Explainable AI (XAI). Right: EU General Data Protection Regulation (GDPR) highlights the Fairness, Privacy, Transparency and Explainability of the AI.

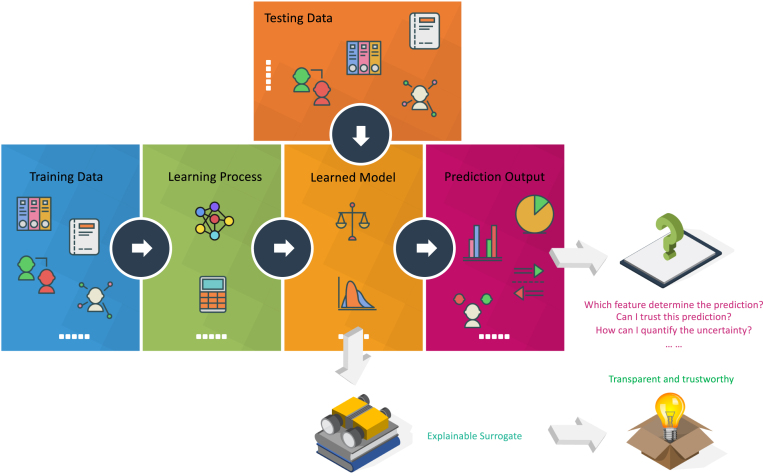

While the usage of the term is inconsistent, “XAI” refers to a class of systems that provide insight into how an AI system makes decisions and predictions. XAI explores the reasoning behind the decision-making process, presents the strengths and drawbacks of the system, and offers a glimpse of how the system will act in the future. By offering accessible explanations of how AI systems perform their tasks, XAI can allow researchers to understand the insights that come from research results. For example, in Fig. 3, an additional explainable surrogate module can be added to the learnt model to achieve a more transparent and trustworthy model. In other words, for a conventional machine or deep learning model only the generalisation error is considered, whereas by adding an explainable surrogate both the generalisation error and human experience can be considered, and a verified prediction can be achieved. In contrast, a learnt black-box model without an explainable surrogate module will cause concerns for end-users even though the performance of the learnt model can be high. Such a black-box model can prompt questions like “Why did you do that?”, “Why did you not do that?”, “When do you succeed or fail?”, “How do I correct an error?”, and “Can I trust the prediction?”. The XAI powered model, on the other hand, can provide clear and transparent predictions that reassure the user: “I understand why.”, “I understand why not.”, “I know why you succeed or fail.”, “I know how to correct an error.”, and “I understand, therefore I trust”. A typical feedback loop of XAI development can be found in Fig. 4, which includes seven steps: training, quality assurance (QA), deployment, prediction, split testing (A/B test), monitoring, and debugging.

Fig. 3.

Schema of the added explainable surrogate module for the normal machine or deep learning procedure that can achieve a more transparent and trustworthy model.

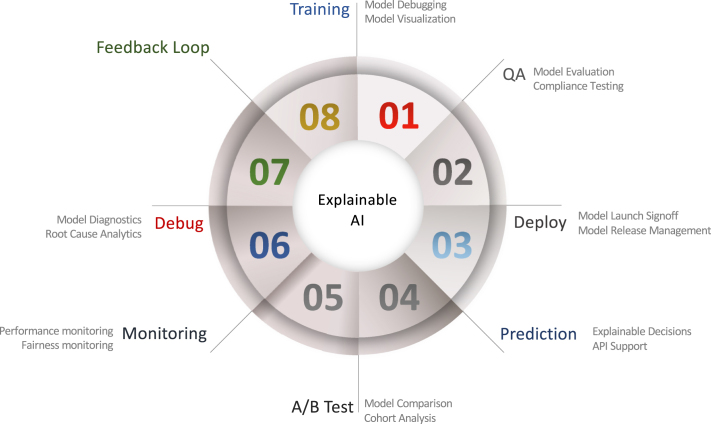

Fig. 4.

A typical feedback loop of XAI development that includes seven steps: training, quality assurance (QA), deployment, prediction, split testing (A/B test), monitoring, and debugging.

A variety of terms are used to define certain desired characteristics of an XAI system in research, public, and policy debates, including:

- Interpretability: a sense of knowing how the AI technology functions.
- Explainability: the ability to explain to a wide range of users how a decision has been reached.
- Transparency: the level of accessibility of the data or model.
- Justifiability: an understanding of the case in support of a particular outcome.
- Contestability: how users can argue against a decision.

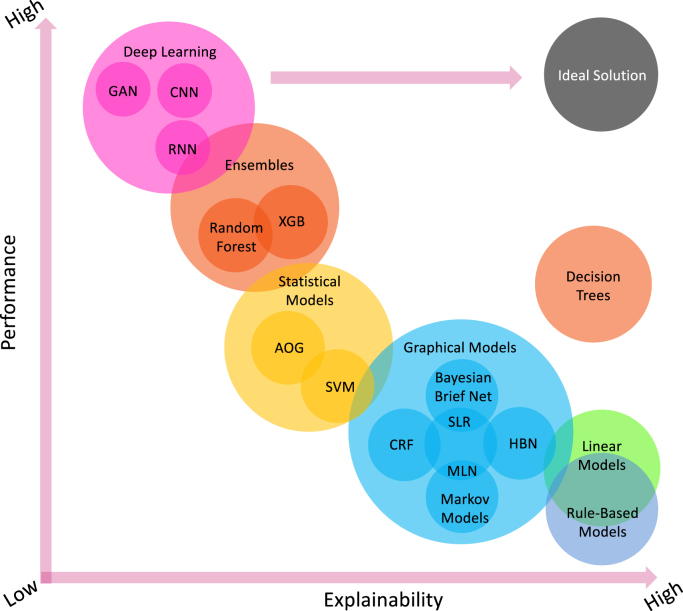

Comprehensive surveys on general XAI can be found elsewhere, e.g., [1], [2], [3], [4]; therefore, here we provide an overview of the most important concepts of XAI. Broadly speaking, XAI approaches can be categorised as model-specific or model-agnostic. Besides, these methods can be classified as local or global, and as either intrinsic or post-hoc [1]. Many machine learning models are intrinsically explainable, e.g., linear models, rule-based models and decision trees, which are also known as transparent or white-box models. However, these relatively simple models may have relatively lower performance (Fig. 5). For more complex models, e.g., support vector machines (SVM), convolutional neural networks (CNN), recurrent neural networks (RNN) and ensemble models, we can design model-specific and post-hoc XAI strategies for each of them. Commonly used strategies include explanation by simplification, architecture modification, feature relevance explanation, and visual explanation [1]. In general, these more complex models may achieve better performance at the cost of lower explainability (Fig. 5). It is of note that, depending on the nature of the problem, less complicated models may still perform well compared to deep learning based models while preserving explainability. Therefore, Fig. 5 depicts only a preliminary representation inspired by [1], in which XAI demonstrates its ability to improve the trade-off between model interpretability and performance.

Fig. 5.

Model explainability vs. model performance for widely used machine learning and deep learning algorithms. The ideal solution should have both high explainability and high performance. However, existing linear models, rule-based models and decision trees are more transparent but generally have lower performance. In contrast, complex models, e.g., deep learning and ensembles, achieve higher performance but offer less explainability. HBN: Hierarchical Bayesian Networks; SLR: Simple Linear Regression; CRF: Conditional Random Fields; MLN: Markov Logic Network; SVM: Support Vector Machine; AOG: Stochastic And-Or-Graphs; XGB: XGBoost; CNN: Convolutional Neural Network; RNN: Recurrent Neural Network; and GAN: Generative Adversarial Network.

Recently, model-agnostic approaches, which rely on a simplified surrogate function to explain the predictions, have attracted great attention [3]. Model-agnostic approaches are not attached to a specific machine learning model. In other words, this class of techniques separates prediction from explanation. Model-agnostic representations are usually post-hoc and are generally used to explain deep neural networks with interpretable surrogates that can be local or global [2]. Below we summarise XAI approaches for more complex deep learning based models.

1.4.1. Model-specific global XAI

By integrating interpretability constraints into the deep learning procedure, model-specific global XAI strategies can improve the understandability of the models. Structural restrictions may include sparsity and monotonicity (where fewer input features are leveraged, or the relationship between features and predictions is constrained to be monotonic). Semantic prior knowledge can also be imposed to restrict the higher-level abstractions derived from the data. For instance, in a CNN based brain tumour detection/classification model using multimodal MRI data fusion, constraints can be imposed by forcing disentangled representations that are recognisable for each MRI modality (e.g., T1, T1 post-contrast and FLAIR). In doing so, the model can identify crucial information from each MRI modality and distinguish brain tumour sub-regions, e.g., necrotic and more or less infiltrative regions, which can provide vital diagnostic and prognostic information. In contrast, simple aggregation based information fusion (combining all the multimodal MRI data like a sandwich) would not provide such explainability.
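A sparsity constraint of the kind mentioned above can be sketched on a model far simpler than a CNN. The example below is an illustrative assumption rather than a method from the reviewed studies: it fits a linear model with an L1 penalty by proximal gradient descent, so that a weakly informative input receives a weight of exactly zero and the remaining weight is easier to interpret. The data, the penalty strength `l1` and all function names are hypothetical.

```python
def soft_threshold(w, t):
    """Proximal operator of the L1 penalty: shrink w towards zero by t."""
    if w > t:
        return w - t
    if w < -t:
        return w + t
    return 0.0

def fit_sparse_linear(X, y, l1=0.1, lr=0.1, steps=500):
    """Minimise mean squared error + l1 * sum(|w|) by proximal gradient descent."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(steps):
        residuals = [sum(wj * xj for wj, xj in zip(w, row)) - yi
                     for row, yi in zip(X, y)]
        grad = [2.0 / n * sum(r * row[j] for r, row in zip(residuals, X))
                for j in range(d)]
        # Gradient step on the squared error, then shrinkage from the L1 term.
        w = [soft_threshold(wj - lr * gj, lr * l1) for wj, gj in zip(w, grad)]
    return w

# Invented data: feature 0 drives the target, feature 1 barely matters.
X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
y = [2.0 * x0 + 0.02 * x1 for x0, x1 in X]

weights = fit_sparse_linear(X, y)
selected = [j for j, wj in enumerate(weights) if wj != 0.0]
```

The penalty removes the weak feature entirely and slightly shrinks the weight of the informative one, which is the usual price paid for a sparser, more readable model.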

1.4.2. Model-specific local XAI

In a deep learning model, a model-specific local XAI technique offers an interpretation for a particular instance. Recently, novel attention mechanisms have been proposed to emphasise the importance of different features of the high-dimensional input data in order to explain a representative instance. Consider a deep learning algorithm that encodes an X-ray image into a vector using a CNN and then uses an RNN to produce a clinical description of the X-ray image from the encoded vector. For the RNN, an attention module can be applied to show the user which image regions the model focuses on when producing each substantive term of the clinical description. For example, when a clinician struggles to link the clinical key words to the regions of interest in the X-ray image, the attention mechanism can highlight the image segments that correspond to the clinical key words derived by the deep learning model.
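The attention step described above can be sketched as follows. This is a minimal dot-product attention over a handful of image regions, assuming invented region names, two-dimensional region features and a decoder query vector; a real captioning model would learn these quantities, and the attention variant used in any particular study may differ.

```python
import math

def softmax(scores):
    """Turn raw relevance scores into attention weights that sum to one."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(region_features, query):
    """Dot-product attention: score each image region against the decoder query."""
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in region_features]
    weights = softmax(scores)
    # The context vector is the attention-weighted average of region features.
    context = [sum(w * feat[k] for w, feat in zip(weights, region_features))
               for k in range(len(query))]
    return weights, context

# Hypothetical encoded regions of an X-ray (names and values are invented).
regions = {"left lung": [0.9, 0.1], "right lung": [0.2, 0.3], "heart": [0.1, 0.8]}
query = [2.0, 0.0]  # hypothetical decoder state while emitting one clinical term

weights, context = attend(list(regions.values()), query)
explanation = dict(zip(regions, weights))  # region name -> attention weight
most_attended = max(explanation, key=explanation.get)
```

Reading `explanation` for each generated term gives the per-word region weights that an attention heat map would visualise.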

1.4.3. Model-agnostic global XAI

In model-agnostic global XAI, a surrogate representation is developed that approximates the black-box model with an interpretable module. For instance, an interpretable decision tree based model can be used to approximate a more complex deep learning model of how clinical symptoms impact treatment response. The IF-THEN logic of the decision tree can clarify the relative importance of the variables that affect treatment response. Clinical experts can analyse these variables and are likely to trust the model to the extent that particular symptomatic factors are known to be reasonable and confounding noise can be accurately removed. Diagnostic methods can also be useful for producing insights into the significance of individual features in the predictions of the model. Partial dependence plots can be leveraged to determine the marginal effect of the chosen features on the predicted outcome, whereas individual conditional expectation can be employed to obtain a granular explanation of how a specific feature affects particular instances and to explore how its impact varies across instances. For example, a partial dependence plot can elucidate the role of clinical symptoms in responding favourably to a particular treatment strategy, as observed by a computer-aided diagnosis system. On the other hand, individual conditional expectation can reveal variability in the treatment response among subgroups of patients.
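The difference between partial dependence and individual conditional expectation (ICE) can be sketched with a toy example. The black-box function below is an invented stand-in for a trained model, and the patient records, feature names and grid are hypothetical; only the two computations themselves follow the standard definitions.

```python
def black_box(symptom_score, age):
    """Hypothetical stand-in for a trained model: predicted treatment response."""
    # Invented rule: the symptom helps younger patients and harms older ones.
    return 0.5 + (0.1 if age < 60 else -0.1) * symptom_score

def ice_curves(model, data, grid):
    """ICE: for each patient, vary one feature over the grid, keep the rest fixed."""
    return [[model(value, age) for value in grid] for _, age in data]

def partial_dependence(curves):
    """Partial dependence: average the ICE curves point by point."""
    n = len(curves)
    return [sum(curve[k] for curve in curves) / n for k in range(len(curves[0]))]

patients = [(1.0, 40), (2.0, 45), (1.5, 70), (0.5, 75)]  # (symptom_score, age)
grid = [0.0, 1.0, 2.0]  # values of symptom_score to probe

curves = ice_curves(black_box, patients, grid)
pdp = partial_dependence(curves)
```

In this constructed case the partial dependence curve is flat, because the opposite trends of the two age groups cancel in the average, while the ICE curves expose the subgroup-specific responses.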

1.4.4. Model-agnostic local XAI

For this type of XAI approach, the aim is to produce model-agnostic explanations for a particular instance or the vicinity of a particular instance. Local Interpretable Model-Agnostic Explanation (LIME) [5], a well-validated tool, can provide an explanation for a complex deep learning model in the neighbourhood of an instance. Consider a deep learning algorithm that classifies a physiological attribute as a high-risk factor for certain diseases or a cause of death, for which the clinician requires a post-hoc clarification. The interpretable inputs are perturbed to determine how the predictions change with those physiological attributes. On this perturbed dataset, a linear model is learnt with higher weights given to the perturbed instances in the vicinity of the instance being explained. The most important components of the linear model indicate the influence of a particular physiological attribute, suggesting either that it is a high-risk factor or the contrary. This provides a comprehensible means for clinicians to interpret the classifier.
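The perturb, weight and fit procedure described above can be sketched without any external library. The code below is a simplified illustration of the idea behind LIME, not the API of the `lime` package: the risk model, the feature names `heart_rate` and `age`, the perturbation scales and the kernel width are all assumptions chosen for the example.

```python
import math
import random

def black_box(heart_rate, age):
    """Hypothetical risk model standing in for a trained deep network."""
    return 1.0 / (1.0 + math.exp(-(0.08 * (heart_rate - 90.0) + 0.01 * (age - 50.0))))

def solve(A, b):
    """Solve the small linear system A x = b by Gauss-Jordan elimination."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def explain_locally(model, instance, scales, n_samples=500, width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in instance]  # standardised offsets
        x = [v + s * zi for v, s, zi in zip(instance, scales, z)]
        rows.append([1.0] + z)  # intercept column plus the offsets
        targets.append(model(*x))
        # Perturbations closer to the instance receive a larger weight.
        weights.append(math.exp(-sum(zi * zi for zi in z) / width ** 2))
    d = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y.
    A = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(d)]
         for i in range(d)]
    b = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
         for i in range(d)]
    return solve(A, b)[1:]  # drop the intercept, keep one coefficient per feature

coefs = explain_locally(black_box, instance=[95.0, 60.0], scales=[5.0, 5.0])
importance = dict(zip(["heart_rate", "age"], coefs))
```

Each coefficient is the local change in predicted risk per one perturbation scale of the corresponding attribute, so comparing coefficients ranks the attributes for this one patient only; a different instance may yield a different ranking.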

2. Related studies in AI for healthcare and XAI for healthcare

2.1. AI in healthcare

AI attempts to emulate human cognitive functions, and it introduces a paradigm change to healthcare, driven by growing access to healthcare data and rapid development in analytical techniques. We briefly survey the present state of healthcare AI applications and explore their prospects. For a detailed, up-to-date review, readers can refer to Jiang et al. [6], Panch et al. [7], and Yu et al. [8] on general AI techniques for healthcare and Shen et al. [9], Litjens et al. [10] and Ker et al. [11] on medical image analysis.

In the medical literature, the effects of AI have been widely debated [12], [13], [14]. Sophisticated algorithms can be developed using AI to ‘read’ features from a vast amount of healthcare data and then use the knowledge learnt to help clinical practice. AI can also be equipped with learning and self-correcting capabilities to increase its accuracy based on feedback. By presenting up-to-date medical knowledge from journals, manuals and professional procedures to inform effective patient care, an AI-powered device [15] can support clinical decision making. Besides, an AI system may help to reduce the medical and therapeutic mistakes that are unavoidable in human clinical practice (i.e., by being more objective and reproducible) [12], [13], [15], [16], [17], [18], [19]. In addition, an AI system can handle valuable knowledge collected from a large patient population to help render real-time inferences for health risk warning and health outcome estimation [20].

As AI has recently re-emerged into the scientific and public consciousness, AI in healthcare has seen new breakthroughs, and clinical environments are being imbued with novel AI-powered technologies at a breakneck pace. Indeed, healthcare has long been described as one of the most exciting application fields for AI. Researchers have proposed and built several systems for clinical decision support since the mid-twentieth century [21], [22]. Since the 1970s, rule-based methods have achieved many successes and have been shown to interpret ECGs [23], identify diseases [24], choose optimal therapies [25], explain clinical reasoning [26] and assist doctors in developing diagnostic hypotheses and theories in challenging patient cases [27]. Rule-based systems, however, are expensive to develop and can be brittle, since they require explicit expressions of decision rules and, like any textbook, require human-authored updates. Besides, higher-order interactions between various pieces of knowledge written by different specialists are difficult to encode, and the performance of these systems is constrained by the comprehensiveness of prior medical knowledge [28]. It was also difficult to implement a method that combines deterministic and probabilistic reasoning procedures to narrow down the appropriate clinical context, prioritise diagnostic hypotheses and recommend treatment [29], [30].

In contrast to the first generation of AI programmes, which relied only on the curation of medical knowledge by experts and the formulation of rigorous decision rules, recent AI research has leveraged machine learning approaches, which can account for complicated interactions [31], to recognise patterns in clinical data. A machine learning algorithm learns to produce the correct output for a given input in new instances by evaluating the patterns extracted from all the labelled input–output pairs [32]. Supervised machine learning algorithms are designed to determine the optimal model parameters that minimise the differences between their predictions for the training cases and the outcomes observed in these cases, with the hope that the associations found generalise to cases not included in the training dataset. The generalisability of the model can then be estimated using the test dataset. For supervised machine learning models, classification, regression and characterisation of the similarity between instances with similar outcome labels are among the most commonly used tasks. For unlabelled datasets, unsupervised learning infers the underlying patterns in order to discover sub-clusters of the original dataset, to detect outliers in the data, or to generate low-dimensional data representations. However, it is of note that low-dimensional representations of labelled datasets may be identified more effectively in a supervised manner. Machine learning approaches allow the development of AI applications that promote the exploration of previously unrecognised data patterns without the need to define decision-making rules for each particular task or to account explicitly for complicated interactions between input features. Machine learning has therefore become the preferred method for developing AI utilities [31], [33], [34].
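The supervised training objective described above can be written compactly. The notation below is an assumption introduced for illustration (it does not appear in the cited works): $f_\theta$ denotes the model with parameters $\theta$, $\ell$ a loss function measuring the difference between a prediction and the observed outcome, $(x_i, y_i)$ the $N$ training cases and $(x'_j, y'_j)$ the $M$ held-out test cases.

```latex
% Training: choose the parameters that minimise the average loss on the training cases.
\hat{\theta} = \arg\min_{\theta} \; \frac{1}{N} \sum_{i=1}^{N} \ell\big(f_{\theta}(x_i),\, y_i\big)

% Evaluation: estimate generalisability as the average loss on the held-out test cases.
\widehat{R}_{\mathrm{test}} = \frac{1}{M} \sum_{j=1}^{M} \ell\big(f_{\hat{\theta}}(x'_j),\, y'_j\big)
```

A large gap between the training loss and $\widehat{R}_{\mathrm{test}}$ indicates that the associations learnt from the training cases do not generalise well.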

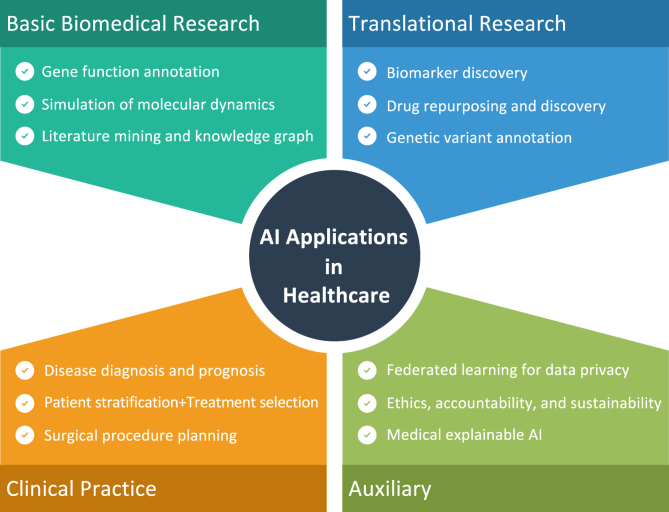

The recent rebirth of AI has primarily been motivated by the successful application of deep learning, which involves training a multi-layer artificial neural network (i.e., a deep neural network) on massive datasets, to large sources of labelled data [35]. Existing neural networks are getting deeper and typically have many layers. Multi-layer neural networks may model complex interactions between input and output, but may also require more data, processing time, or advanced architecture designs to achieve better performance. Modern neural networks commonly have tens of millions to hundreds of millions of parameters and require significant computing resources for model training [8]. Fortunately, recent developments in computer-processor architecture have provided the computing resources required for deep learning [36]. However, deep learning algorithms are incredibly ‘data hungry’ for labelled instances. Huge repositories of medical data that can be fed into these algorithms have only recently become readily available, due to the establishment of a range of large-scale studies (in particular the Cancer Genome Atlas [37] and the UK Biobank [38]), data collection platforms (e.g., Broad Bioimage Benchmark Collection [39] and the Image Data Resources [40]) and the Health Information Technology for Economic and Clinical Health (HITECH) Act, which has promised to provide financial incentives for the use of electronic health records (EHRs) [41], [42]. In general, deep learning based AI algorithms have been developed for image-based classification [43], diagnosis [44], [45], [46] and prognosis [47], [48], genome interpretation [49], biomarker discovery [50], [51], monitoring by wearable life-logging devices [52], and automated robotic surgery [53] to enhance digital healthcare [54].

The rapid expansion of AI has given rise to the possibility of using aggregated health data to generate powerful models that can automate diagnosis and also allow an increasingly precise approach to medicine by tailoring therapies and targeting services with optimal efficacy in a timely and dynamic manner. A non-exhaustive map of possible applications is shown in Fig. 6.

Fig. 6.

A non-exhaustive map of the AI in healthcare applications.

While AI is promising to revolutionise medical practice, several technological obstacles lie ahead. Because deep learning based approaches rely heavily on the availability of vast volumes of high-quality training data, caution must be taken to collect data that is representative of the target patient population. For example, data from various healthcare settings, which include different forms of bias and noise, may cause a model trained in the data of one hospital to fail to generalise to another [55]. Where the diagnostic role has an incomplete inter-expert agreement, it has been shown that consensus diagnostics could greatly boost the efficiency of the training of the deep learning based models [56]. In order to manage heterogeneous data, adequate data curation is important. However, achieving a good quality gold standard for identifying the clinical status of the patients requires physicians to review their clinical results independently and maybe repeatedly, which is prohibitively costly at a population scale. A silver standard [57] that used natural-language processing methods and diagnostic codes to determine the true status of patients has recently been proposed [58]. Sophisticated algorithms that can handle the idiosyncrasies and noises of different datasets can improve the efficiency and safety of prediction models in life-and-death decisions.

Most of the recent advances in neural networks have been limited to well-defined tasks that do not require data integration across several modalities. Approaches for applying deep neural networks to general diagnostics (such as analysis of signs and symptoms, prior medical history, laboratory findings and clinical course) and treatment planning are less straightforward. While deep learning has been effective in image detection [59], translation [60], speech recognition [61], [62], sound synthesis [63] and even automated neural architecture search [64], clinical diagnosis and treatment tasks often need to take more into account (e.g., patient interests, beliefs, social support and medical history) than the narrow tasks at which deep learning is normally adept. Moreover, it is unknown whether transfer learning approaches will be able to translate models learnt from broad non-medical datasets into algorithms for the study of multi-modality clinical datasets. This suggests that more comprehensive data-collection and data-annotation activities are needed to build end-to-end clinical AI programmes. It is of note that one recent study advocated the use of Graph Neural Networks as a tool of choice for multi-modal causability knowledge fusion [65].

The design of a computing system for the processing, storage and exchange of EHRs and other critical health data remains a problem [104]. Privacy-preserving approaches, e.g., via federated learning, can allow safe sharing of data or models across cloud providers [105]. However, the creation of interoperable systems that meet the requirements for the representation of clinical knowledge is important for the broad adoption of such technology [106]. Deep and seamless integration of data across healthcare applications and locations remains uncertain and can be inefficient. Nevertheless, new software interfaces for clinical data are starting to show substantial adoption by several EHR providers, such as Substitutable Medical Applications and Reusable Technologies on the Fast Healthcare Interoperability Resources platform [107], [108]. Most of the previously developed AI in healthcare applications were evaluated on retrospective data as a proof of concept [109]. Prospective research and clinical trials that assess the performance of the developed AI systems in clinical environments are necessary to verify the real-world usefulness of these medical AI systems [110]. Prospective studies will help to recognise the fragility of AI models in real-world heterogeneous and noisy clinical settings and to identify approaches for incorporating medical AI into existing clinical workflows.

AI in medicine will eventually raise safety, legal and ethical challenges [111] with respect to medical negligence attributed to complicated decision-making support structures, and will have to face regulatory hurdles [112]. If malpractice lawsuits involving medical AI applications occur, the judicial system will need to provide specific guidance as to which party is responsible. Health providers with malpractice insurance have to be clear on their coverage when healthcare decisions are made in part by an AI system [8]. With the deployment of automatic AI for particular clinical activities, the criteria for diagnostic, surgical, supporting and paramedical tasks will need to be revised, and the functions of healthcare practitioners will begin to change as different AI modules are incorporated into the standard of care. Throughout, bias needs to be minimised while patient satisfaction must be maximised [113], [114].

2.2. XAI in healthcare

Although deep learning based AI technologies will usher in a new era of digital healthcare, challenges exist. XAI can play a crucial role, as an auxiliary development (Fig. 6), in potentially solving the small sample learning problem by filtering out clinically meaningless features. Moreover, many high-performance deep learning models produce findings that are impossible for unaided humans to understand. While these models can achieve better-than-human performance, it is not easy to express intuitive interpretations that can justify model findings, define model uncertainties, or derive additional clinical insights from these computational ‘black-boxes.’ With potentially millions of parameters in the deep learning model, it can be tricky to understand what the model sees in the clinical data, e.g., radiological images [115]. For example, one research investigation has explicitly stated that being a black box is a “strong limitation” for AI in dermatology, since it cannot provide the kind of personalised evaluation by a qualified dermatologist that can be used to clarify clinical facts [116]. This black-box design poses an obstacle to the validation of the developed AI algorithms. It is necessary to demonstrate that a high-performance deep learning model actually identifies the appropriate area of the image and does not over-emphasise unimportant findings. Recent approaches, including visualisation methods, have been developed to describe AI models. Some widely used tools include occlusion maps [117], salience maps [118], class activation maps [119], and attention maps [120]. Localisation and segmentation algorithms can be more readily interpreted since the output is an image. Model understanding, however, remains much more difficult for deep neural network models trained on non-imaging data, which is an open question for ongoing research efforts [5].

Deep learning-based AI methods have gained popularity in the medical field, with a wide range of work in automatic triage, diagnosis, prognosis, treatment planning and patient management [6]. We can find many open questions in the medical field that have galvanised clinical trials leveraging deep learning and AI approaches (e.g., from grand-challenge.org). Nevertheless, in the medical field, the issue of interpretability is far from being a purely theoretical matter. More precisely, interpretability in the clinical sector involves considerations not recognised in other areas, including risk and responsibility [7], [121]. Lives may be at risk when medical decisions are made, and leaving those crucial decisions to AI algorithms that lack explainability and accountability would be irresponsible [122]. Apart from legal concerns, this is a serious vulnerability that could become disastrous if exploited with malicious intent.

As a result, several recent studies [120], [123], [124], [125], [126], [127] have been devoted to the exploration of explainability in medical AI. More specifically, particular applications have been investigated, e.g., chest radiography [128], emotion analysis in medicine [129], and COVID-19 detection and classification [43], and this research encourages an understanding of the importance of interpretability in the medical field [130]. Besides, the exposition in [131] argues that a certain degree of opaqueness is acceptable, that is, it would be more important to deliver empirically verified, reliable findings than to dwell too much on how to unravel the black-box. Readers are advised to consider these studies first, at least for an overview of interpretability in medical AI.

An obvious XAI approach taken by many researchers is to provide their predictive models with intrinsic interpretability. These methods depend primarily on maintaining the interpretability of less complicated AI models while improving their performance through refinement and optimisation techniques. For example, as Fig. 5 shows, decision tree based methods are normally interpretable, and research studies have used automated pruning of decision trees for the classification of various illnesses [132] and accurate decision trees based on boosting for patient stratification [133]. However, such model optimisation is not always straightforward and is not a trivial task.

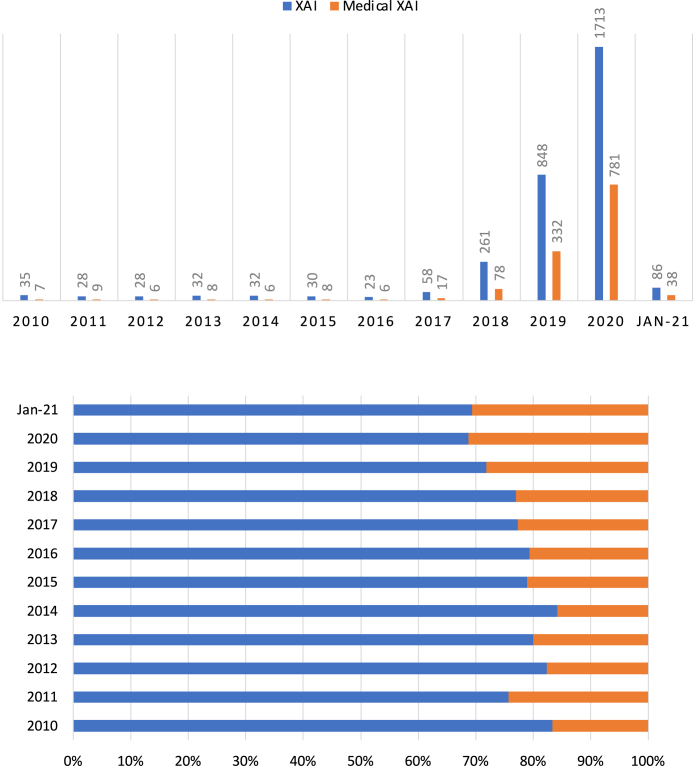

Previous survey studies on XAI in healthcare can be found elsewhere, e.g., Tjoa and Guan [134] on medical XAI and Payrovnaziri et al. [135] on XAI for EHR. For specific applications, e.g., digital pathology, readers can refer to Pocevivciute et al. [136] and Tosun et al. [137]. Research studies in XAI and medical XAI have increased exponentially, especially after 2018, alongside the increasing development of multimodal clinical information fusion (Fig. 7). In this mini-review, we surveyed only the most recent studies that were not covered by previous, more comprehensive reviews. We classified XAI in medicine and healthcare into five categories, synthesising the approach of Payrovnaziri et al. [135]: (1) XAI via dimension reduction, (2) XAI via feature importance, (3) XAI via attention mechanism, (4) XAI via knowledge distillation, and (5) XAI via surrogate representations (Table 1).

Fig. 7.

Publication per year for XAI and medical XAI (top) and percentage for two categories of research (bottom). Data retrieved from Scopus® (Jan 8th, 2021) by using these commands when querying this database—XAI: (ALL(“Explainable AI”) OR ALL(“Interpretable AI”) OR ALL(“Explainable Artificial Intelligence”) OR ALL(“Interpretable Artificial Intelligence”) OR ALL(“XAI”)) AND PUBYEAR = 20XX; Medical XAI: (ALL(“Explainable AI”) OR ALL(“Interpretable AI”) OR ALL(“Explainable Artificial Intelligence”) OR ALL(“Interpretable Artificial Intelligence”) OR ALL(“XAI”)) AND (ALL(“medical”) OR ALL(“medicine”)) AND PUBYEAR = 20XX, in which XX represents the actual year.

Table 1.

Summary of various XAI methods in digital healthcare and medicine including their category (XAI via dimension reduction, feature importance, attention mechanism, knowledge distillation, and surrogate representations), reference, key idea, type (Intrinsic or Post-hoc, Local or Global, and Model-specific or Model-agnostic) and specific clinical applications.

| XAI Category | Reference | Method | Intrinsic/Post-hoc | Local/Global | Model-specific/Model-agnostic | Application |
|---|---|---|---|---|---|---|
| Dimension Reduction | Zhang et al. [66] | Optimal feature selection | Intrinsic | Global | Model-specific | Drug side effect estimation |
| | Yang et al. [67] | Laplacian Eigenmaps | Intrinsic | Global | Model-specific | Brain tumour classification using MRS |
| | Zhao and Bolouri [68] | Cluster analysis and LASSO | Intrinsic | Global | Model-agnostic | Lung cancer patients stratification |
| | Kim et al. [69] | Optimal feature selection | Intrinsic | Global | Model-agnostic | Cell-type specific enhancers prediction |
| | Hao et al. [70] | Sparse deep learning | Intrinsic | Global | Model-agnostic | Long-term survival prediction for glioblastoma multiforme |
| | Bernardini et al. [71] | Sparse-balanced SVM | Intrinsic | Global | Model-agnostic | Early diagnosis of type 2 diabetes |
| Feature Importance | Eck et al. [72] | Feature marginalisation | Post-hoc | Global, Local | Model-agnostic | Gut and skin microbiota/inflammatory bowel diseases diagnosis |
| | Ge et al. [73] | Feature weighting | Post-hoc | Global | Model-agnostic | ICU mortality prediction (all-cause) |
| | Zuallaert et al. [74] | DeepLIFT | Post-hoc | Global | Model-agnostic | Splice site detection |
| | Suh et al. [75] | Shapley value | Post-hoc | Global, Local | Model-agnostic | Decision-supporting for prostate cancer |
| | Singh et al. [76] | DeepLIFT and others | Post-hoc | Global, Local | Model-agnostic | Ophthalmic diagnosis |
| Attention Mechanism | Kwon et al. [77] | Attention | Intrinsic | Global, Local | Model-specific | Clinical risk prediction (cardiac failure/cataract) |
| | Zhang et al. [78] | Attention | Intrinsic | Local | Model-specific | EHR based future hospitalisation prediction |
| | Choi et al. [79] | Attention | Intrinsic | Local | Model-specific | Heart failure prediction |
| | Kaji et al. [80] | Attention | Intrinsic | Global, Local | Model-specific | Predictions of clinical events in ICU |
| | Shickel et al. [81] | Attention | Intrinsic | Global, Local | Model-specific | Sequential organ failure assessment/in-hospital mortality |
| | Hu et al. [82] | Attention | Intrinsic | Local | Model-specific | Prediction of HIV genome integration site |
| | Izadyyazdanabadi et al. [83] | MLCAM | Intrinsic | Local | Model-specific | Brain tumour localisation |
| | Zhao et al. [84] | Respond-CAM | Intrinsic | Local | Model-specific | Macromolecular complexes |
| | Couture et al. [85] | Super-pixel maps | Intrinsic | Local | Model-specific | Histologic tumour subtype classification |
| | Lee et al. [86] | CAM | Intrinsic | Local | Model-specific | Acute intracranial haemorrhage detection |
| | Kim et al. [87] | CAM | Intrinsic | Local | Model-specific | Breast neoplasm ultrasonography analysis |
| | Rajpurkar et al. [88] | Grad-CAM | Intrinsic | Local | Model-specific | Diagnosis of appendicitis |
| | Porumb et al. [89] | Grad-CAM | Intrinsic | Local | Model-specific | ECG based hypoglycaemia detection |
| | Hu et al. [43] | Multiscale CAM | Intrinsic | Local | Model-specific | COVID-19 classification |
| Knowledge Distillation | Caruana et al. [90] | Rule-based system | Intrinsic | Global | Model-specific | Prediction of pneumonia risk and 30-day readmission forecast |
| | Letham et al. [91] | Bayesian rule lists | Intrinsic | Global | Model-specific | Stroke prediction |
| | Che et al. [92] | Mimic learning | Post-hoc | Global, Local | Model-specific | ICU outcome prediction (acute lung injury) |
| | Ming et al. [93] | Visualisation of rules | Post-hoc | Global | Model-specific | Clinical diagnosis and classification (breast cancer, diabetes) |
| | Xiao et al. [94] | Complex relationships distilling | Post-hoc | Global | Model-specific | Prediction of the heart failure caused hospital readmission |
| | Davoodi and Moradi [95] | Fuzzy rules | Intrinsic | Global | Model-specific | In-hospital mortality prediction (all-cause) |
| | Lee et al. [96] | Visual/textual justification | Post-hoc | Global, Local | Model-specific | Breast mass classification |
| | Prentzas et al. [97] | Decision rules | Intrinsic | Global | Model-specific | Stroke Prediction |
| Surrogate Models | Pan et al. [98] | LIME | Post-hoc | Local | Model-agnostic | Forecast of central precocious puberty |
| | Ghafouri-Fard et al. [99] | LIME | Post-hoc | Local | Model-agnostic | Autism spectrum disorder diagnosis |
| | Kovalev et al. [100] | LIME | Post-hoc | Local | Model-agnostic | Survival models construction |
| | Meldo et al. [101] | LIME | Post-hoc | Local | Model-agnostic | Lung lesion segmentation |
| | Panigutti et al. [102] | LIME like with rule-based XAI | Post-hoc | Local | Model-agnostic | Prediction of patient readmission, diagnosis and medications |
| | Lauritsen et al. [103] | Layer-wise relevance propagation | Post-hoc | Local | Model-agnostic | Prediction of acute critical illness from EHR |

2.2.1. XAI via dimension reduction

Dimension reduction methods, e.g., principal component analysis (PCA) [138], independent component analysis (ICA) [139], Laplacian Eigenmaps [140] and other more advanced techniques, are commonly and conventionally used to decipher AI models by representing the most important features. For example, by integrating multi-label k-nearest neighbour and genetic algorithm techniques, Zhang et al. [66] developed a model for drug side effect estimation based on the optimal dimensions of the input features. Yang et al. [67] proposed a nonlinear dimension reduction method to improve unsupervised classification of $^{1}$H MRS brain tumour data and extract the most prominent features using Laplacian Eigenmaps. Zhao and Bolouri [68] stratified stage-one lung cancer patients by defining the most insightful examples via a supervised learning scheme. In order to recognise a group of “exemplars” to construct a “dense data matrix”, they introduced a hybrid method for dimension reduction by combining pattern recognition with regression analytics. They then used the exemplars that are most predictive of the outcome in the final model. Based on domain knowledge, Kim et al. [69] developed a deep learning method to extract and rank the most important features based on their weights in the model, and visualised the outcome for predicting cell-type-specific enhancers. To explore the gene pathways and their associations in patients with brain tumours, Hao et al. [70] proposed a pathway-associated sparse deep learning method. Bernardini et al. [71] used the least absolute shrinkage and selection operator (LASSO) to promote sparsity in SVMs for the early diagnosis of type 2 diabetes.

Simplifying the information down to a small subset using dimension reduction methods can make the underlying behaviour of the model understandable. Besides, the resulting regularised models are potentially more stable and less prone to overfitting, which may also be beneficial in general. Nevertheless, there is a risk of losing crucial features that may still be relevant for clinical predictions on a case-by-case basis; such features may be neglected unintentionally by dimension-reduced models.
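As a minimal illustration of the idea, and not the method of any study cited above, the following sketch extracts the first principal component of a toy feature matrix by power iteration on the sample covariance matrix. The toy data, the function names `first_principal_component` and `project`, and the iteration count are assumptions made for this example only.

```python
import math


def first_principal_component(rows, iters=200):
    """Return (unit-norm leading eigenvector of the sample covariance, feature means)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centred = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[a] * c[b] for c in centred) / (n - 1) for b in range(d)]
           for a in range(d)]
    # Power iteration: repeatedly multiply by the covariance and renormalise.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v, means


def project(rows, component, means):
    """Project each centred sample onto the component (one score per sample)."""
    return [sum((r[j] - means[j]) * component[j] for j in range(len(component)))
            for r in rows]


# Toy data (hypothetical): feature 1 is roughly 2 * feature 0, feature 2 is nearly constant.
data = [[1.0, 2.1, 0.5], [2.0, 3.9, 0.4], [3.0, 6.2, 0.6],
        [4.0, 7.8, 0.5], [5.0, 10.1, 0.5]]
pc1, mu = first_principal_component(data)
scores = project(data, pc1, mu)
print("loadings:", [round(x, 3) for x in pc1])
print("scores:  ", [round(s, 3) for s in scores])
```

The loadings indicate how strongly each original feature contributes to the retained dimension; here the near-constant third feature receives a loading close to zero, which is exactly the kind of feature that such a reduction would discard.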

2.2.2. XAI via feature importance

Researchers have leveraged feature importance to explain the characteristics and significance of the extracted features, the correlations among features, and the correlations between features and outcomes, thereby providing interpretability for AI models [2], [141], [142]. Ge et al. [73] used feature weights to rank the top ten extracted features for predicting mortality in the intensive care unit. Suh et al. [75] developed a risk calculator model for prostate cancer (PCa) and clinically significant PCa with XAI modules that used Shapley values to determine the feature importance [143]. Sensitivity analysis of the extracted features can represent the feature importance; essentially, the more important features are those to which the output is more sensitive [144]. Eck et al. [72] defined the most significant features of a microbiota-based diagnosis task by approximately marginalising the features and testing the effect on the model performance.

Shrikumar et al. [145] implemented Deep Learning Important FeaTures (DeepLIFT), a backpropagation based approach to realise interpretability. Backpropagation approaches compute the gradient of the output with respect to the input through the backpropagation algorithm to report the significance of each feature. Zuallaert et al. [74] developed a DeepLIFT based method to create interpretable deep models for splice site prediction by measuring the contribution score for each nucleotide. A recent comparative study of different XAI models, including DeepLIFT [145], Guided backpropagation (GBP) [146], Layer wise relevance propagation (LRP) [147], SHapley Additive exPlanations (SHAP) [148] and others, was conducted for ophthalmic diagnosis [76].

XAI via the extraction of feature importance can not only explain essential feature characteristics, but may also reflect their relative importance for clinical interpretation; however, numerical weights are not always easy to understand and may be misinterpreted.
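The "marginalise a feature and measure the effect on performance" idea can be sketched as follows. This is a simplified stand-in, not the procedure of any cited study: each feature is replaced by its mean value (mean ablation) and the resulting increase in mean squared error is taken as its importance. The `model` function, the toy data and the helper names are hypothetical.

```python
def model(x):
    # Hypothetical fitted black box: depends strongly on x[0], weakly on x[1],
    # and not at all on x[2].
    return 3.0 * x[0] + 0.5 * x[1]


def mse(predict, X, y):
    """Mean squared error of `predict` on samples X with targets y."""
    return sum((predict(row) - target) ** 2 for row, target in zip(X, y)) / len(X)


def ablation_importance(predict, X, y):
    """Importance of feature j = increase in MSE when column j is set to its mean."""
    base = mse(predict, X, y)
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    scores = []
    for j in range(d):
        X_ablated = [row[:j] + [means[j]] + row[j + 1:] for row in X]
        scores.append(mse(predict, X_ablated, y) - base)
    return scores


# Toy data (hypothetical); targets are generated by the model itself, so the base error is 0.
X = [[0.0, 1.0, 5.0], [1.0, 0.0, 3.0], [2.0, 2.0, 8.0], [3.0, 1.0, 1.0]]
y = [model(row) for row in X]
importance = ablation_importance(model, X, y)
for j, score in enumerate(importance):
    print(f"feature {j}: importance {score:.3f}")
```

The resulting ranking is easy to compute, but, as noted above, the raw numbers carry no clinical meaning on their own and depend on the scale of each feature.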

2.2.3. XAI via attention mechanism

The core concept behind the attention mechanism [149] is that the model “pays attention” only to the parts of the input where the most important information is available. It was originally proposed for tackling the relation extraction task in machine translation and other natural language processing problems. Because certain words are more relevant than others in the relation extraction task, the attention mechanism can assess the importance of the words for the purpose of classification, generating a meaning representation vector. There are various types of attention mechanisms, including global attention, which uses all words to build the context, local attention, which depends only on a subset of words, or self-attention, in which several attention mechanisms are implemented simultaneously, attempting to discover every relation between pairs of words [150]. The attention mechanism has also been shown to contribute to the enhancement of interpretability as well as to technical advances in the field of visualisation [151].
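A minimal sketch of how attention produces weights that can be inspected is given below. Dot-product scoring is assumed here for brevity and is not necessarily the scoring function used in [149]; the toy keys, values and query are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Return (attention weights, context vector) for one query."""
    # One relevance score per input element (dot product of query and key).
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    # Context vector: attention-weighted average of the values.
    dim = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, context


# Three input elements (e.g., words or clinical visits); the query is most
# similar to the key of the second element.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[1.0], [10.0], [5.0]]
query = [0.0, 2.0]
weights, context = attend(query, keys, values)
print("weights:", [round(w, 3) for w in weights])
print("context:", [round(c, 3) for c in context])
```

The weights sum to one and can be displayed directly over the input sequence, which is what makes attention attractive as an explanation device.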

Kaji et al. [80] demonstrated particular occasions when the input features have mostly influenced the predictions of clinical events in ICU patients using the attention mechanism. Shickel et al. [81] presented an interpretable acuity score framework using deep learning and attention-based sequential organ failure assessment that can assess the severity of patients during an ICU stay. Hu et al. [82] provided “mechanistic explanations” for the accurate prediction of HIV genome integration sites. Zhang et al. [78] also built a method to learn how to represent EHR data that could document the relationship between clinical outcomes within each patient. Choi et al. [79] implemented the Reverse Time Attention Model (RETAIN), which incorporated two sets of attention weights, one for visit level to capture the effect of each visit and the other at the variable-level. RETAIN was a reverse attention mechanism intended to maintain interpretability, to replicate the actions of clinicians, and to integrate sequential knowledge. Kwon et al. [77] proposed a visually interpretable cardiac failure and cataract risk prediction model based on RETAIN (RetainVis). The general intention of these research studies is to improve the interpretability of deep learning models by highlighting particular position(s) within a sequence (e.g., time, visits, DNA) in which those input features can affect the prediction outcome.

The class activation mapping (CAM) [152] method and its variations have been investigated for XAI since 2016, and have subsequently been used in digital healthcare, especially in medical image analysis. Lee et al. [86] developed an XAI algorithm for the detection of acute intracranial haemorrhage from small datasets, which is one of the best-known studies using CAM. Kim et al. [87] summarised AI based breast ultrasonography analysis with CAM based XAI. Zhao et al. [84] reported a Respond-CAM method that offered heatmap-based saliency on 3D images obtained from cellular cryo-electron tomography. The region where macromolecular complexes were present was marked by high intensity in the heatmap. Izadyyazdanabadi et al. [83] developed a multilayer CAM (MLCAM), which was used for brain tumour localisation. Coupled with a CNN, Couture et al. [85] proposed a multi-instance aggregation approach to classify breast tumour tissue microarrays for various clinical tasks, e.g., histologic subtype classification; the derived super-pixel maps could highlight the area where the tumour cells were, and each mark corresponded to a tumour class. Rajpurkar et al. [88] used Grad-CAM for the diagnosis of appendicitis from a small dataset of CT exams using video pretraining. Porumb et al. [89] combined a CNN and an RNN for electrocardiogram (ECG) analysis and applied Grad-CAM to identify the heartbeat segments most relevant to hypoglycaemia detection. In Hu et al. [43], a COVID-19 classification system was implemented with multiscale CAM to highlight the infected areas. Because of their visual interpretability, these saliency maps are a recommended form of explanation. Clinicians who examine the AI output can verify that the target is correctly identified by the AI model, rather than the model mistaking the combination of the object and its surroundings for the object itself.

Attention based XAI methods do not advise the clinical end user specifically of the response, but highlight the areas of greater concern to facilitate easier decision-making. Clinical users can, therefore, be more tolerant of imperfect precision. However, it might not be beneficial to actually offer this knowledge to a clinical end user because of the major concerns, including information overload and warning fatigue. It can potentially be much more frustrating to have areas of attention without clarification about what to do with the findings if the end user is unaware of what the rationale of a highlighted segment is, and therefore the end user can be prone to ignore non-highlighted areas that could also be critical.

2.2.4. XAI via knowledge distillation and rule extraction

Knowledge distillation is one form of model-specific XAI, which is about eliciting knowledge from a complicated model into a simplified model: it enables the training of a student model, which is usually explainable, with a teacher model, which is hard to interpret. For example, this can be accomplished by model compression [153] or tree regularisation [154] or through a coupling approach of model compression and dimension reduction [141]. Research studies have investigated this kind of technique for several years, e.g., Hinton et al. [155], but it has recently received renewed attention along with the development of AI interpretability [156], [157], [158]. Rule extraction is another widely used XAI method that is closely associated with knowledge distillation and can have a straightforward application in digital healthcare. For example, decision sets or rule sets have been studied for interpretability [159], and the Model Understanding through Subspace Explanations (MUSE) method [160] has been developed to describe the projections of the global model by considering various subgroups of instances defined by characteristics of interest to the user; it also produces explanations in the form of decision sets.

Che et al. [92] introduced an interpretable mimic-learning approach, which is a straightforward knowledge-distillation method that uses gradient-boosting trees to learn interpretable structures and make the baseline model understandable. The approach used the information distilled to construct an interpretable prediction model for the outcome of the ICU, e.g., death, ventilator usage, etc. A rule-based framework that could include an explainable statement of death risk estimation due to pneumonia was introduced by Caruana et al. [90]. Letham et al. [91] also proposed an XAI model named Bayesian rule lists, which offered certain stroke prediction claims. Ming et al. [93] developed a visualisation approach to derive rules by approximating a complicated model via model induction at different tasks such as diagnosis of breast cancer and the classification of diabetes. Xiao et al. [94] built a deep learning model to break the dynamic associations between readmission to hospital and possible risk factors for patients by translating EHR incidents into embedded clinical principles to characterise the general situation of the patients. Classification rules were derived as a way of providing clinicians interpretable representations of the predictive models. Davoodi and Moradi [95] developed a rule extraction based XAI technique to predict mortality in ICUs and Das et al. [161] used a similar XAI method for the diagnosis of Alzheimer’s disease. In the LSTM-based breast mass classification, Lee et al. [96] incorporated the textual reasoning for interpretability. For the characterisation of stroke and risk prediction, Prentzas et al. [97] implemented the argumentation theory for their XAI algorithm training process by extracting decision rules.

XAI approaches, which rely on knowledge distillation and rule extraction, are theoretically more stable models. The summarised representations of complicated clinical data can provide clinical end-users with the interpretable results intuitively. However, if the interpretation of these XAI results could not be intuitively understood by clinical end-users, then the representations are likely to make it much harder for the end-users to comprehend.
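The teacher-student idea can be sketched with a deliberately tiny student. In the example below, a hypothetical opaque `teacher` produces soft predictions, and a depth-one regression tree (a decision stump) is fitted to those predictions so that the result reads as a single rule. This is a simplification chosen for brevity: Che et al. [92] used gradient-boosting trees, and the teacher, data grid and helper names here are assumptions.

```python
import math


def teacher(x):
    # Hypothetical opaque model returning a probability; stands in for a deep network.
    return 1.0 / (1.0 + math.exp(-(4.0 * x[0] - 2.0 + 0.3 * math.sin(5.0 * x[1]))))


def fit_stump(X, targets):
    """Fit a depth-1 regression tree to the teacher's soft outputs.

    Returns (feature index, threshold, mean output left, mean output right).
    """
    best = None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2.0
            left = [y for row, y in zip(X, targets) if row[j] <= t]
            right = [y for row, y in zip(X, targets) if row[j] > t]
            mean_l, mean_r = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((y - mean_l) ** 2 for y in left)
                   + sum((y - mean_r) ** 2 for y in right))
            if best is None or sse < best[0]:
                best = (sse, j, t, mean_l, mean_r)
    _, j, t, mean_l, mean_r = best
    return j, t, mean_l, mean_r


# Unlabelled inputs on a grid; the student learns from the teacher's outputs,
# not from ground-truth labels.
X = [[i / 10.0, k / 10.0] for i in range(11) for k in range(11)]
soft_labels = [teacher(row) for row in X]
feature, threshold, p_left, p_right = fit_stump(X, soft_labels)
print(f"IF x[{feature}] <= {threshold:.2f} THEN risk ~ {p_left:.2f} ELSE risk ~ {p_right:.2f}")
```

The extracted rule is trivially readable, but it only approximates the teacher; how much fidelity is lost in exchange for that readability is the central trade-off of this family of methods.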

2.2.5. XAI via surrogate representation

An effective application of XAI in the medical field is the recognition of individual health-related factors that lead to disease prediction using the local interpretable model-agnostic explanation (LIME) method [5], which offers explanations for any classifier by approximating the reference model with a surrogate interpretable and “locally faithful” representation. LIME perturbs an instance, generates neighbourhood data, and learns linear models in the neighbourhood to produce explanations [162].

Pan et al. [98] used LIME to analyse the contribution of new instances to forecast central precocious puberty in children. Ghafouri-Fard et al. [99] applied a similar approach to diagnose autism spectrum disorder. Kovalev et al. [100] proposed a method named SurvLIME to explain AI-based survival models. Meldo et al. [101] used a local post-hoc explanation model, i.e., LIME, to select important features from a special feature representation of the segmented suspicious lung objects. Panigutti et al. [102] developed the “Doctor XAI” system that could predict the readmission, diagnosis and medication order for a patient. Similar to LIME, the implemented system trained a local surrogate model to mimic the black-box behaviour with a rule-based explanation, which can then be mined using a multi-label decision tree. Lauritsen et al. [103] tested an XAI method using Layer-wise Relevance Propagation [163] for the prediction of acute critical illness from EHR.

Surrogate representation is a widely used scheme for XAI; however, the white-box approximation must accurately describe the black-box model to gain trustworthy explanation. If the surrogate models are too complicated or too abstract, the clinician comprehension might be affected.
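The perturb, weight and fit steps behind a local surrogate can be sketched as follows. This is a LIME-like illustration written from scratch, not the API of the LIME package: it uses a deterministic grid of perturbations instead of random sampling so that the result is reproducible, it handles exactly two features, and `black_box`, the kernel width and the helper names are hypothetical.

```python
import math


def black_box(x):
    # Hypothetical opaque model (nonlinear in x[0]); an assumption for this sketch.
    return x[0] ** 2 + 3.0 * x[1]


def solve(A, b):
    """Solve the linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def local_surrogate(predict, instance, offsets, width):
    """Fit a proximity-weighted linear model around a two-feature instance.

    Returns [intercept, coefficient of x[0], coefficient of x[1]].
    """
    samples, weights = [], []
    for a in offsets:
        for b in offsets:
            samples.append([instance[0] + a, instance[1] + b])
            # Closer perturbations receive larger weights (Gaussian kernel).
            weights.append(math.exp(-(a * a + b * b) / (width * width)))
    rows = [[1.0, s[0], s[1]] for s in samples]
    targets = [predict(s) for s in samples]
    # Weighted least squares via the normal equations.
    A = [[sum(w * r[p] * r[q] for w, r in zip(weights, rows)) for q in range(3)]
         for p in range(3)]
    rhs = [sum(w * r[p] * t for w, r, t in zip(weights, rows, targets))
           for p in range(3)]
    return solve(A, rhs)


intercept, coef_x0, coef_x1 = local_surrogate(
    black_box, [1.0, 0.0], [-0.2, -0.1, 0.0, 0.1, 0.2], 0.2)
print(f"local explanation: {coef_x0:.3f} * x0 + {coef_x1:.3f} * x1 + {intercept:.3f}")
```

Around the chosen instance the surrogate coefficients match the local slope of the black box, but they would differ at another instance, which is why such explanations are local rather than global.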

3. Proposed method

3.1. Problem formulation

In this study, we demonstrate two typical but important applications of XAI, developed for classification and segmentation, two of the most widely discussed problems in medical image analysis and AI-powered digital healthcare. Our XAI techniques are demonstrated using CT image classification for COVID-19 patients and segmentation for hydrocephalus patients using CT and MRI datasets.

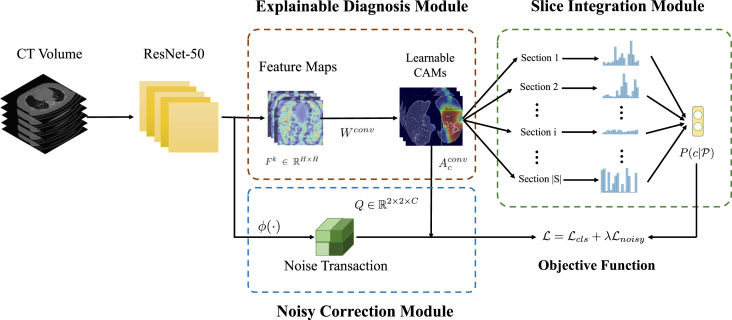

3.2. XAI for classification

In this subsection, we provide a practical XAI solution for explainable COVID-19 classification that is capable of alleviating the domain shift problem caused by multicentre data collected for distinguishing COVID-19 patients from patients with other lung diseases using CT images. The main challenge for multicentre data is that hospitals are likely to use different scanning protocols and CT scanner parameters when collecting data from patients, leading to distinct data distributions. Moreover, images obtained from various hospitals are visually different although they depict the same organ. If a machine learning model is trained on data from one hospital and tested on data from another hospital (i.e., another centre), the performance of the model often degrades drastically. Another challenge is that commonly only patient-level annotations are available, whereas image-level labels are not, since it would take radiologists a large amount of time to annotate them [164]. Therefore, we propose a weakly supervised learning based classification model to cope with these two problems. In addition, an explainable diagnosis module in the proposed model can offer auxiliary diagnostic information visually for radiologists. The overview of our proposed model is illustrated in Fig. 8.

Fig. 8.

The overview of our proposed model. denotes the probability of the Section , and represents the probability of the patient who is COVID-19 infected or not. indicates the noise transaction from the probability of the true label to the noise label . Besides, is a non-linear feature transformation function, which projects the feature into embedding space.

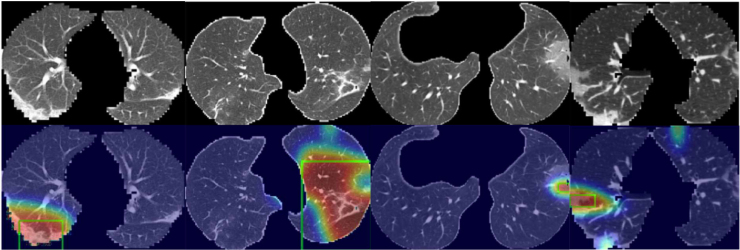

3.2.1. Explainable diagnosis module (EDM)

Because the prediction process of deep learning models is a black box, it is desirable to develop an explainable technique for medical image diagnosis that can serve as an auxiliary tool for radiologists. In common practice, CAM generates localisation maps for a prediction through a weighted sum of the feature maps from a backbone network such as ResNet [165]. Suppose $F_k$ is the $k$th feature map with the shape of $H \times W$, and $W^{fc} \in \mathbb{R}^{K \times C}$ is the weight of the last fully connected layer, where $K$ is the number of feature maps and $C$ is the number of classes. The class score for class $c$ can then be computed as

$$s_c = \sum_{k=1}^{K} W^{fc}_{k,c} \left( \frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} F_k^{(i,j)} \right). \tag{1}$$

Therefore, the activation map $A^{fc}_c$ for class $c$ can be defined by

$$A^{fc\,(i,j)}_c = \sum_{k=1}^{K} W^{fc}_{k,c} F_k^{(i,j)}. \tag{2}$$

However, generating CAMs is not an end-to-end process: the network must first be trained on the dataset, and the weights of the last fully connected layer are then used to compute the CAMs, which brings extra computation. To tackle this drawback, in our explainable diagnosis module (EDM), we replace the fully connected layer with a 1 × 1 convolutional layer whose weight $W^{conv}$ shares the same mathematical form as $W^{fc}$. So we can reformulate Eq. (1) as

$$s_c = \frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} \sum_{k=1}^{K} W^{conv}_{k,c} F_k^{(i,j)} = \frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} A^{conv\,(i,j)}_c, \tag{3}$$

where $A^{conv}_c$ is the activation map for class $c$, which can be learnt adaptively during the training procedure. The activation map produced by the EDM not only indicates the importance of each region of the CT images and locates the infected parts of the patients, but also offers explainable results that account for the prediction.
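The equivalence between "global average pooling followed by a fully connected layer" and "1 × 1 convolution followed by spatial averaging" can be checked numerically. The following is a minimal NumPy sketch rather than the implementation used in this work; the array sizes, the random values and all variable names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
K, H, W, C = 8, 7, 7, 2            # feature maps, height, width, classes (illustrative sizes)
F = rng.normal(size=(K, H, W))     # hypothetical backbone feature maps
weights = rng.normal(size=(K, C))  # classifier weights shared by both formulations

# CAM-style scoring: global average pooling, then a fully connected layer.
pooled = F.mean(axis=(1, 2))       # shape (K,)
scores_fc = pooled @ weights       # shape (C,)

# EDM-style scoring: a 1x1 convolution with the same weights gives one
# activation map per class, and averaging each map gives the class score.
activation_maps = np.einsum("kc,khw->chw", weights, F)  # shape (C, H, W)
scores_conv = activation_maps.mean(axis=(1, 2))

print(np.allclose(scores_fc, scores_conv))
```

Because both operations are linear, the two scores coincide, while the convolutional form yields the activation maps as a by-product of the forward pass.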

3.2.2. Slice integration module (SIM)

Intuitively, the severity of infection differs between COVID-19 patients. Some patients are severely infected with large lesions, whereas most positive cases are mild, with only a small portion of the CT volume infected. Therefore, if we directly applied the patient-level annotations as labels for the image slices, the labels would be extremely noisy, leading to poor performance. To overcome this problem, instead of relying on single images, we propose a slice integration module (SIM) that uses the joint distribution of the image slices to model the probability of the patient being infected. In our SIM, we assume that the lesions are consecutive and that the distribution of the lesion positions is consistent. We therefore adopt a section-based strategy and fit it into a Multiple Instance Learning (MIL) framework [166]. In MIL, each sample is regarded as a bag composed of a set of instances. A positive bag contains at least one positive instance, while a negative bag consists solely of negative instances. In our scenario, only patient annotations (bag labels) are provided, and the sections are regarded as the instances in the bags.

Given the CT slices of a patient, we divide them into disjoint sections, with the total number of sections for the patient given by

| (4) |

where the section length is a design parameter. We then integrate the section probabilities into the probability of the patient, that is

| (5) |

where each term is the probability that a section belongs to a given class. By taking the $k$-max probability of the images for each class to compute the section probability, we mitigate the problem that some slices may contain few infections, which can hinder the prediction for the section. The $k$-max selection method can be formulated as

| (6) |

where the inputs are the top-ranked class scores of the slices in a section for a given class, passed through the Sigmoid function. We then apply the patient annotations to compute the classification loss, which can be formulated as

| (7) |
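The section-based pipeline described above (split the slices into sections, keep the top-scoring slices of each section, then aggregate the sections into one patient-level probability) can be sketched as follows. This is a minimal illustration under stated assumptions, not the formulation of Eqs. (4)–(7): the rule for counting sections, the mean over the top-$k$ scores, and the noisy-OR aggregation are all assumed here, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def split_into_sections(slice_scores, section_len):
    """Split per-slice scores into consecutive, disjoint sections.
    Assumed rule: leftover slices are appended to the last section."""
    n = len(slice_scores)
    n_sections = max(1, n // section_len)
    bounds = [i * section_len for i in range(n_sections)] + [n]
    return [slice_scores[bounds[i]:bounds[i + 1]] for i in range(n_sections)]

def section_probability(section_scores, k):
    """k-max selection: sigmoid of the mean of the k largest slice scores."""
    k = min(k, len(section_scores))
    top_k = np.sort(section_scores)[-k:]
    return float(sigmoid(top_k.mean()))

def patient_probability(slice_scores, section_len, k):
    """Noisy-OR aggregation (an assumption): the patient is positive
    if at least one section is positive, as in the MIL bag definition."""
    sections = split_into_sections(slice_scores, section_len)
    probs = np.array([section_probability(s, k) for s in sections])
    return 1.0 - float(np.prod(1.0 - probs))

# Synthetic per-slice scores: a healthy volume and one with a small lesion.
healthy = np.full(32, -4.0)
infected = healthy.copy()
infected[10:13] = 3.0

p_healthy = patient_probability(healthy, section_len=8, k=2)
p_infected = patient_probability(infected, section_len=8, k=2)
print(p_healthy, p_infected)
```

In this toy example only three of the 32 slices carry a lesion, yet the patient-level probability is high, because the top-$k$ selection ignores the uninformative slices within the affected section.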

3.2.3. Noisy correction module (NCM)

In real-world applications, radiologists would often diagnose the disease from a single image. Therefore, it is also important to improve the prediction accuracy on single images. However, the image-level labels are extremely noisy since only patient-level annotations are available. To further alleviate the negative impact of patient-level annotations, we propose a noisy correction module (NCM). Inspired by [167], we model the noise transition distribution, which transforms the true posterior distribution into the noisy label distribution by

| (8) |

In practice, we estimate the noise transition distribution for each class via

| (9) |

where the nonlinear mapping function is implemented by convolution layers and the remaining parameters are trainable. The noise transition score represents the confidence of the transition from the true label to the noisy label for a given class. Therefore, Eq. (8) can be reformulated as

| (10) |

By estimating the noisy label distribution for a patient, the noisy classification loss can be computed by

| (11) |

By combining Eqs. (7) and (11), we obtain the total loss function for our XAI solution for explainable COVID-19 classification, that is

$$\mathcal{L} = \mathcal{L}_{cls} + \lambda \mathcal{L}_{noisy}, \tag{12}$$

where $\lambda$ is a hyper-parameter that balances the classification loss $\mathcal{L}_{cls}$ and the noisy classification loss $\mathcal{L}_{noisy}$.
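The idea of a learnt noise transition can be illustrated with a small numerical sketch in the spirit of the noise adaptation layer of [167]. It is not the NCM implementation: the feature vector, the parameter shapes, the bias initialisation, the value of the balancing weight and the choice of which loss term it scales are all assumptions made for illustration.

```python
import numpy as np

def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
feature = rng.normal(size=16)            # hypothetical embedded image feature
w = rng.normal(size=(2, 2, 16)) * 0.1    # trainable weights, indexed [noisy, true, feature]
b = np.array([[2.0, 0.0], [0.0, 2.0]])   # bias initialised to favour "label unchanged"

# Transition matrix T[i, j] = P(noisy label = i | true label = j).
# The softmax over the noisy index makes every column sum to one.
T = softmax(w @ feature + b, axis=0)

p_true = np.array([0.3, 0.7])            # model posterior over the true label
p_noisy = T @ p_true                     # implied posterior over the observed, noisy label

def cross_entropy(p, label):
    return -np.log(p[label])

lam = 0.5                                # hypothetical balancing weight
observed_label = 1                       # patient-level annotation used as the noisy label
total_loss = cross_entropy(p_true, observed_label) + lam * cross_entropy(p_noisy, observed_label)
print(T, p_noisy, total_loss)
```

Because the noisy posterior is a convex combination of the columns of the transition matrix, it remains a valid probability distribution, so the same cross-entropy form can be applied to it.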

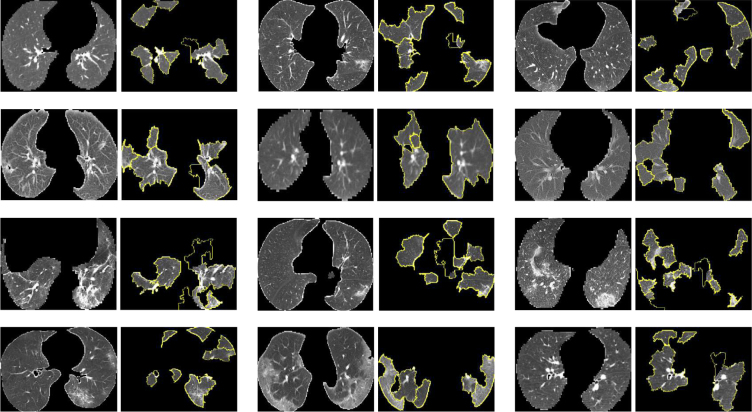

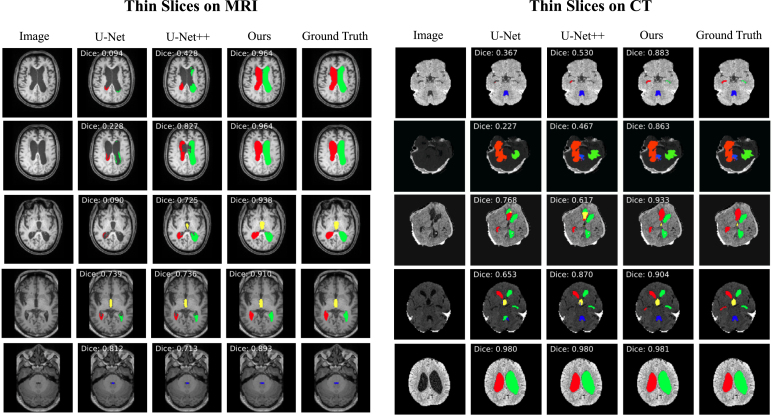

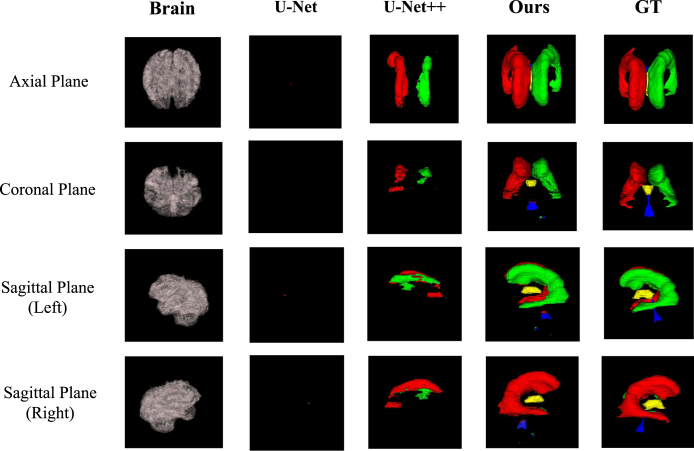

3.3. XAI for segmentation

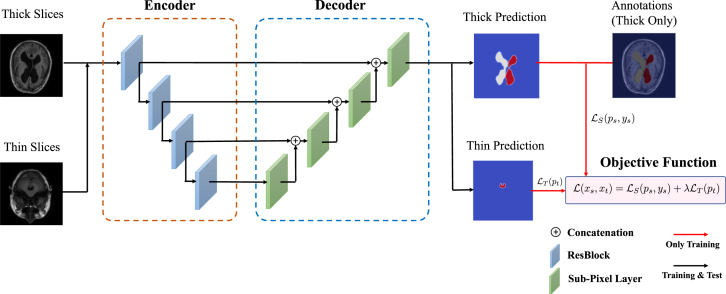

In this subsection, we introduce an XAI model for explainable brain ventricle segmentation using multimodal MRI data acquired from hydrocephalus patients. Previous methods [168], [169] conducted experiments using images with a slice thickness of less than 3 mm, because the thinner the slices, the more images can be obtained, which helps to improve the representation power of the model. However, in a real-world scenario, it is not practical for clinicians to use these models because labelling thin-slice images is extremely labour-intensive and time-consuming. Consequently, annotations are far more commonly available for images with larger slice thicknesses than for thin-slice images. Besides, models trained only on thick-slice images generalise poorly to thin-slice images. To alleviate these problems, we propose a thickness-agnostic image segmentation model that is applicable to both thick-slice and thin-slice images but requires only the annotations of thick-slice images during training.

Suppose we have a set of annotated thick-slice images and a set of unlabelled thin-slice images. The main idea of our model is to utilise the unlabelled thin-slice images to minimise the performance gap between thick-slice and thin-slice images, while also enabling a post-hoc XAI analysis.

3.3.1. Segmentation network

With the wide application of deep learning methods, encoder–decoder architectures are commonly adopted for automated, high-accuracy medical image segmentation. The workflow of our proposed segmentation network is illustrated in Fig. 9. Inspired by the U-Net [170] model, we replace the original encoder with a ResNet-50 [165] pre-trained on the ImageNet dataset [171], since it provides better feature representations of the input images. In addition, the decoder of the U-Net has at least two drawbacks: 1) the increase of low-resolution feature maps brings a large amount of computational complexity, and 2) interpolation methods [172] such as bilinear and bicubic interpolation do not bring extra information to improve the segmentation. Instead, the decoder of our model adopts sub-pixel convolution to construct the segmentation results. The sub-pixel convolution can be represented as

$$F^{L} = SP\left(W_L * F^{L-1} + b_L\right), \tag{13}$$

where the operator $SP(\cdot)$ transforms and arranges a tensor with the shape of $H \times W \times C \cdot r^2$ into a tensor with the shape of $rH \times rW \times C$, and $r$ is the scaling factor. $F^{L-1}$ and $F^{L}$ are the input and output feature maps, respectively. $W_L$ and $b_L$ are the parameters of the sub-pixel convolution operator for layer $L$.
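The rearrangement step of a sub-pixel convolution (often called pixel shuffle) can be sketched in a few lines. The sketch below is illustrative only: it uses a channel-first layout and the channel ordering adopted by common deep learning frameworks, which is an assumption here, and it omits the convolution that precedes the rearrangement.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) tensor into (C, H*r, W*r).

    Output pixel (c, h*r + i, w*r + j) is taken from input channel
    c*r*r + i*r + j at spatial position (h, w).
    """
    c_r2, h, w = x.shape
    assert c_r2 % (r * r) == 0, "channel count must be divisible by r*r"
    c = c_r2 // (r * r)
    x = x.reshape(c, r, r, h, w)     # split channels into (c, i, j)
    x = x.transpose(0, 3, 1, 4, 2)   # reorder to (c, h, i, w, j)
    return x.reshape(c, h * r, w * r)

# Four low-resolution channels become one channel at twice the resolution.
x = np.arange(4 * 2 * 3).reshape(4, 2, 3)
y = pixel_shuffle(x, r=2)
print(y.shape)
```

No interpolation is involved: every output pixel is a value produced by the preceding convolution, which is why the upsampling itself can be learnt.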

Fig. 9.

Overview of our proposed XAI model for explainable segmentation. Here ResBlock represents the residual block proposed in the ResNet [165].

3.3.2. Multimodal training

As mentioned above, only the thick-slice images have annotations. Therefore, in order to minimise the performance gap between thick-slice and thin-slice images, we apply a multimodal training procedure that jointly optimises for both types of images. Overall, the objective function of our proposed multimodal training can be computed as

$$\mathcal{L} = \mathcal{L}_S(p_S, y_S) + \beta \mathcal{L}_T(p_T), \tag{14}$$

where $\beta$ is a hyper-parameter for weighting the impact of $\mathcal{L}_S$ and $\mathcal{L}_T$, and $y_S$ denotes the thick-slice annotations. $p_S$ and $p_T$ are the predicted segmentation probability maps with the shape of $H \times W \times C$ for thick-slice images and thin-slice images, respectively. In particular, $\mathcal{L}_S$ is the cross-entropy loss defined as follows

| (15) |

For the unlabelled thin-slice images, we assume that the thin-slice loss $\mathcal{L}_T$ can push the features away from the decision boundary of the feature distributions of the thick-slice images, thus achieving distribution alignment. Besides, according to [173], minimising the distance between the prediction distribution and the uniform distribution can diminish the uncertainty of the prediction. To measure the distance between these two distributions, the objective function can be modelled by the $f$-divergence, that is

| (16) |

Most existing methods [173], [174] tend to choose the KL-divergence as the $f$-divergence. However, one of the main obstacles is that, with this choice, the gradient of the thin-slice loss $\mathcal{L}_T$ is extremely imbalanced. To be more specific, it assigns a large gradient to easily classified samples while assigning a small gradient to samples that are hard to classify. Therefore, in order to mitigate this imbalance during optimisation, we incorporate the Pearson $\chi^2$-divergence, whose generator function is quadratic, rather than the KL-divergence for $\mathcal{L}_T$, that is

| (17) |

After applying the Pearson $\chi^2$-divergence, the gradient imbalance is mitigated since the slope of the gradient is constant, which can be verified by taking the second-order derivative of the resulting objective.

During the training procedure, the objective is optimised alternately for thick-slice and thin-slice images.
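The difference between the two divergences can be illustrated numerically. The sketch below uses the standard textbook generator functions $t \log t$ (KL) and $(t-1)^2$ (Pearson $\chi^2$) of an $f$-divergence measured against the uniform distribution. The exact form and sign convention of Eqs. (16) and (17) are not reproduced; the helper names and sample points are assumptions.

```python
import numpy as np

def f_divergence(p, f):
    """D_f(p || u) = sum_c u_c * f(p_c / u_c), with u the uniform distribution."""
    p = np.asarray(p, dtype=float)
    u = np.full_like(p, 1.0 / p.size)
    return float(np.sum(u * f(p / u)))

def kl_generator(t):
    return t * np.log(t)

def pearson_generator(t):
    return (t - 1.0) ** 2

def second_derivative(f, t, h=1e-3):
    """Central finite-difference estimate of f''(t)."""
    return (f(t + h) - 2.0 * f(t) + f(t - h)) / h ** 2

ts = np.array([0.1, 0.5, 1.0, 1.9])
kl_curvature = second_derivative(kl_generator, ts)            # approximately 1 / t
pearson_curvature = second_derivative(pearson_generator, ts)  # approximately 2 everywhere

confident = [0.95, 0.05]
print(f_divergence(confident, kl_generator), f_divergence(confident, pearson_generator))
print(kl_curvature, pearson_curvature)
```

The curvature of the KL generator varies strongly with the argument, whereas that of the Pearson generator is constant, which matches the argument that the gradient slope is uniform across samples.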

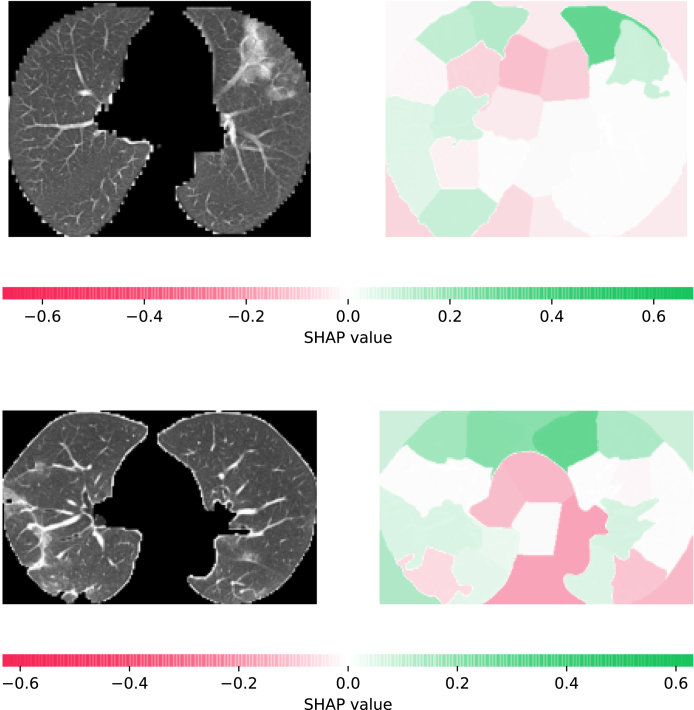

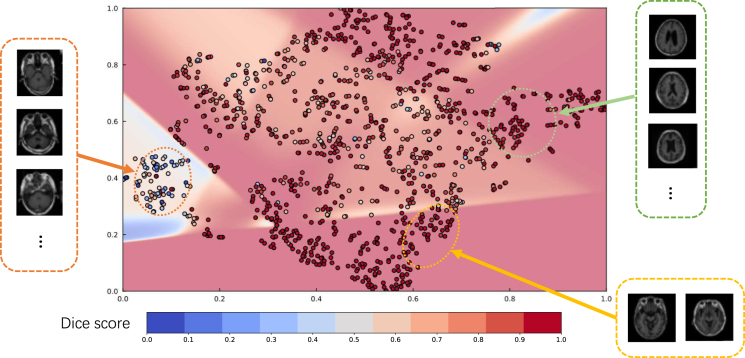

3.3.3. Latent space explanation

Once the model has been trained using the multimodal datasets, the performance of the network can be quantitatively evaluated by volumetric or regional overlap metrics, e.g., Dice scores. However, the relation between network performance and input samples remains unclear. To provide information about the characteristics of the data and their effect on model performance, from which users can set their expectations accordingly, we investigate the feature space and its correlation with the model performance. For feature space visualisation, we extract the outputs of the encoder module of our model and project them onto a two-dimensional space via Principal Component Analysis (PCA). To estimate the whole space, we use a multi-layer perceptron to fit the projected samples to their corresponding Dice scores, which provides an estimate of the Dice score for regions of interest in the latent space where no data are available. Therefore, by analysing the characteristics of the samples in the latent space, we can retrieve information about the relationship between samples and their prediction power.
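The two steps of this analysis (projecting encoder features onto two principal components, then fitting a surrogate that maps latent coordinates to Dice scores) can be sketched as follows. This is an illustration on synthetic data, not the analysis code of this work. In particular, a quadratic least-squares fit stands in for the multi-layer perceptron to keep the example short, and the feature dimension, sample count and Dice values are made up.

```python
import numpy as np

rng = np.random.default_rng(0)
features = rng.normal(size=(200, 64))             # hypothetical encoder outputs, one row per sample
dice = 1.0 / (1.0 + np.exp(-features[:, 0]))      # synthetic Dice scores in (0, 1)

# PCA to two dimensions via the SVD of the centred feature matrix.
mean = features.mean(axis=0)
_, _, vt = np.linalg.svd(features - mean, full_matrices=False)
latent = (features - mean) @ vt[:2].T             # shape (200, 2)

def design_matrix(z):
    """Quadratic features of the 2-D latent coordinates."""
    x, y = z[:, 0], z[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])

# Surrogate regressor (stand-in for the MLP): latent coordinates -> Dice score.
coef, *_ = np.linalg.lstsq(design_matrix(latent), dice, rcond=None)

# Evaluate the surrogate on a regular grid, including regions without samples.
gx, gy = np.meshgrid(
    np.linspace(latent[:, 0].min(), latent[:, 0].max(), 50),
    np.linspace(latent[:, 1].min(), latent[:, 1].max(), 50),
)
grid = np.column_stack([gx.ravel(), gy.ravel()])
dice_map = (design_matrix(grid) @ coef).reshape(gx.shape)
print(latent.shape, dice_map.shape)
```

The resulting grid can be drawn as a heat map under the scatter plot of the projected samples, so that regions of the latent space with low expected Dice scores become visible.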

3.4. Implementation details

For both our classification and segmentation tasks, we used ResNet-50 [165] as the backbone network pre-trained on ImageNet [171]. For classification, we resized these images into a spatial resolution of 224 × 224. During the training procedure, we set , the dropout rate as 0.7, and the weight decay coefficient as . Besides, was set to 16, and was set to 8 for the sake of computing the patient-level probability. For segmentation, we set for balancing the impact of supervised loss and unsupervised loss. During the training, Adam [175] optimiser was utilised with a learning rate . The training procedure is terminated after 4,000 iterations with batch size 8. All of the experiments were conducted on a workstation with 4 NVIDIA RTX GPUs using PyTorch framework with version 1.5.

4. Experimental settings and results

4.1. Showcase I: Classification for COVID-19

4.1.1. Datasets

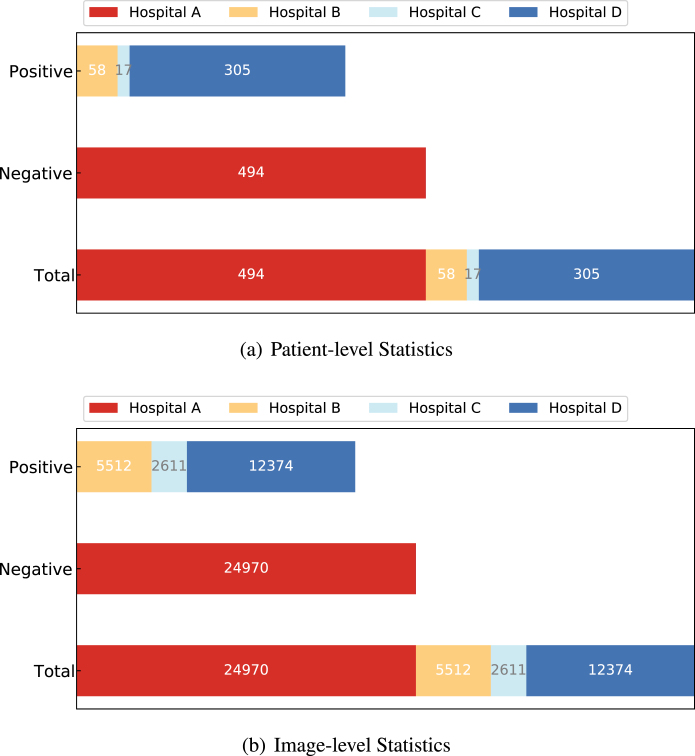

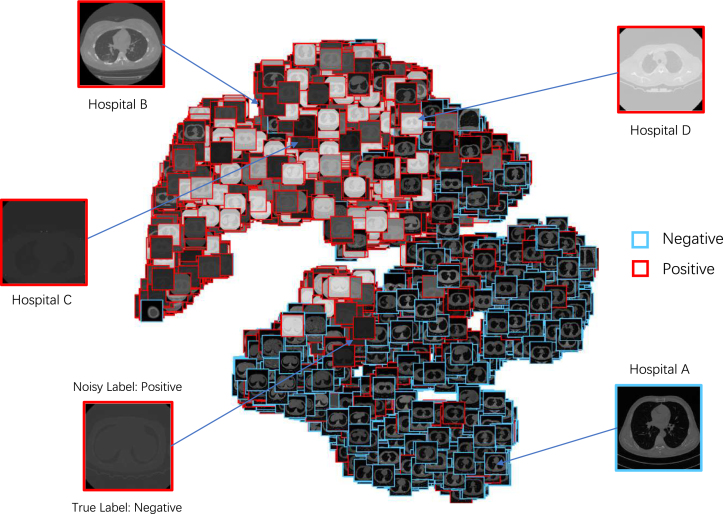

We collected CT data from four different local hospitals in China and removed the personal information to ensure data privacy. The information of our collected data is summarised in Fig. 10. In total, there were 380 CT volumes of patients who tested COVID-19 positive (confirmed by reverse transcription polymerase chain reaction test) and 424 COVID-19 negative CT volumes. For a fair comparison, we trained the model on the cross-centre datasets collected from hospitals A, B, C, and D. For unbiased independent testing, the CC-CCII dataset [176], a publicly available dataset containing 2,034 CT volumes with 130,511 images, was adopted to verify the effectiveness of the trained models.

Fig. 10.

Class distribution of the collected CT data. The numbers in the sub-figures (a) and (b) represent the counts for the patient-level statistics and image-level statistics, respectively. The data collected from several clinical centres can result in great challenges in learning discriminative features from those class-imbalanced centres.

4.1.2. Data standardisation, pre-processing and augmentation

Following the protocol described in [176], we used the U-Net segmentation network [170] to segment the CT images. Then, we randomly cropped a rectangular region whose aspect ratio and area were each randomly sampled from a fixed range, and the region was then resized to 224 × 224. Meanwhile, we randomly flipped the input volumes horizontally with a probability of 0.5. The input data were a set of CT volumes, each composed of consecutive CT image slices.
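The cropping, resizing and flipping steps can be sketched for a single slice as follows. This is a minimal illustration rather than the pre-processing code of this work: the sampling ranges for the area and the aspect ratio are placeholder values, not the ones used in the experiments, nearest-neighbour resizing is used for brevity, and the function name is hypothetical.

```python
import numpy as np

def random_resized_crop_flip(img, rng, out_size=224,
                             area_range=(0.08, 1.0), ratio_range=(3 / 4, 4 / 3)):
    """Random crop, resize to out_size x out_size, and random horizontal flip.

    area_range and ratio_range are placeholder values for illustration.
    """
    h, w = img.shape
    area = rng.uniform(*area_range) * h * w
    # Sample the aspect ratio (width / height) uniformly in log space.
    ratio = np.exp(rng.uniform(np.log(ratio_range[0]), np.log(ratio_range[1])))
    # Clip the crop to the image bounds (this may slightly alter the ratio).
    crop_h = int(np.clip(round(np.sqrt(area / ratio)), 1, h))
    crop_w = int(np.clip(round(np.sqrt(area * ratio)), 1, w))
    top = rng.integers(0, h - crop_h + 1)
    left = rng.integers(0, w - crop_w + 1)
    crop = img[top:top + crop_h, left:left + crop_w]

    # Nearest-neighbour resize by index lookup.
    rows = np.arange(out_size) * crop_h // out_size
    cols = np.arange(out_size) * crop_w // out_size
    out = crop[np.ix_(rows, cols)]

    # Horizontal flip with probability 0.5.
    if rng.random() < 0.5:
        out = out[:, ::-1]
    return out

slice_img = np.arange(512 * 512, dtype=float).reshape(512, 512)
augmented = random_resized_crop_flip(slice_img, np.random.default_rng(0))
print(augmented.shape)
```

For a whole CT volume, the same crop window and flip decision would typically be drawn once and applied to every slice, so that consecutive slices stay spatially aligned.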

Table 2.

Comparison results of our method vs. state-of-the-art methods performed on the CC-CCII dataset.

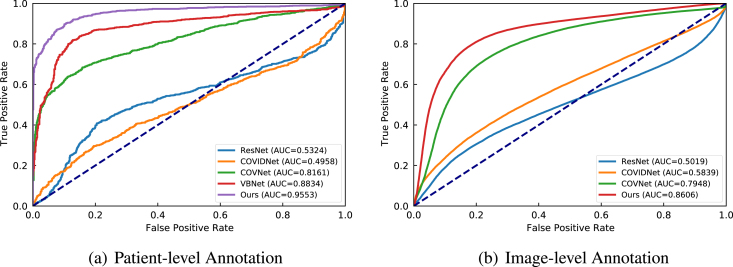

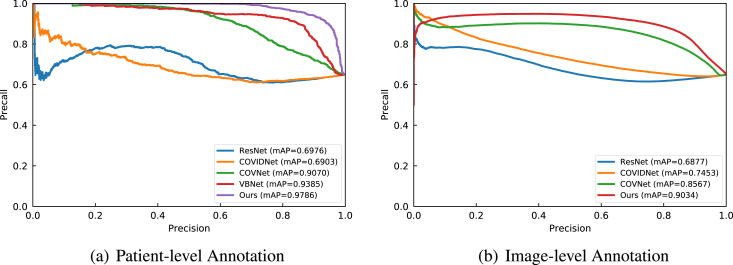

| Annotation | Method | Patient Acc. (%) | Precision (%) | Sensitivity (%) | Specificity (%) | AUC (%) |
|---|---|---|---|---|---|---|
| Patient-level | ResNet-50 [165] | 53.44 | 64.45 | 63.03 | 35.71 | 53.24 |
| Patient-level | COVID-Net [177] | 57.13 | 62.53 | 84.70 | 6.16 | 49.58 |
| Patient-level | COVNet [178] | 69.96 | 70.20 | 93.33 | 26.75 | 81.61 |
| Patient-level | VB-Net [179] | 76.11 | 75.84 | 92.73 | 45.38 | 88.34 |
| Patient-level | Ours | 89.97 | 92.99 | 91.44 | 87.25 | 95.53 |
| Image-level | ResNet-50 [165] | 52.56 | 61.60 | 71.27 | 18.06 | 50.19 |
| Image-level | COVID-Net [177] | 60.03 | 64.81 | 83.91 | 15.98 | 58.39 |
| Image-level | COVNet [178] | 75.55 | 79.90 | 83.24 | 61.37 | 79.48 |
| Image-level | Ours | 80.41 | 88.56 | 80.15 | 80.89 | 86.06 |
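For reference, the threshold-based columns of Table 2 follow the standard confusion-matrix definitions, which can be sketched as below. The counts in the example are invented for illustration and are unrelated to the reported results. The AUC is omitted because it is computed from ranked prediction scores rather than from a single confusion matrix.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts.

    No guard against empty denominators is included in this sketch.
    """
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": tp / (tp + fp),
        "sensitivity": tp / (tp + fn),   # recall on the positive class
        "specificity": tn / (tn + fp),   # recall on the negative class
    }

# Hypothetical counts, chosen only to exercise the function.
metrics = binary_metrics(tp=90, fp=10, tn=80, fn=20)
print(metrics)
```

A method can reach high sensitivity with very low specificity by predicting almost every case as positive, which is why the two columns are best read together.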

4.1.3. Quantitative results