Abstract

The “Narratives” collection aggregates a variety of functional MRI datasets collected while human subjects listened to naturalistic spoken stories. The current release includes 345 subjects, 891 functional scans, and 27 diverse stories of varying duration totaling ~4.6 hours of unique stimuli (~43,000 words). This data collection is well-suited for naturalistic neuroimaging analysis, and is intended to serve as a benchmark for models of language and narrative comprehension. We provide standardized MRI data accompanied by rich metadata, preprocessed versions of the data ready for immediate use, and the spoken story stimuli with time-stamped phoneme- and word-level transcripts. All code and data are publicly available with full provenance in keeping with current best practices in transparent and reproducible neuroimaging.

Subject terms: Computational neuroscience, Language, Social neuroscience, Psychology, Cortex

| Measurement(s) | functional brain measurement |
|---|---|
| Technology Type(s) | functional magnetic resonance imaging |
| Factor Type(s) | story • native language |
| Sample Characteristic - Organism | Homo sapiens |

Machine-accessible metadata file describing the reported data: 10.6084/m9.figshare.14818587

Background & Summary

We use language to build a shared understanding of the world. In speaking, we package certain brain states into a sequence of linguistic elements that can be transmitted verbally, through vibrations in the air; in listening, we expand a verbal transmission into the intended brain states, bringing our own experiences to bear on the interpretation1. The neural machinery supporting this language faculty presents a particular challenge for neuroscience. Certain aspects of language are uniquely human2 (for example, only humans recursively combine linguistic elements into complex, hierarchical expressions with long-range dependencies3,4), limiting the efficacy of nonhuman animal models and restricting the use of invasive methods. Language is also highly dynamic and context-dependent. Linguistic narratives evolve over time: the meaning of any given element depends in part on the history of elements preceding it, and certain elements may retroactively resolve the ambiguity of preceding elements. This makes it difficult to decompose natural language using traditional experimental manipulations5–7.

Noninvasive neuroimaging tools, such as functional MRI, have laid the groundwork for a neurobiology of language comprehension8–14. Functional MRI measures local fluctuations in blood oxygenation (the blood-oxygen-level-dependent, or BOLD, signal) associated with changes in neural activity over the course of an experimental paradigm15–18. Despite relatively poor temporal resolution (e.g. one sample every 1–2 seconds), fMRI allows us to map brain-wide responses to linguistic stimuli at a spatial resolution of millimeters. A large body of prior work has revealed a partially left-lateralized network of temporal and frontal cortices encoding acoustic-phonological19–22, syntactic23–25, and semantic features26,27 of linguistic stimuli (with considerable individual variability28–30). This work has historically been grounded in highly controlled experimental paradigms focusing on isolated phonemes31,32, single words33–35, or contrasting sentence manipulations36–41 (with some exceptions42–44). While these studies have provided a tremendous number of insights, most rely on manipulations with limited generalizability to natural language5,45. Recent work, however, has begun extending our understanding to more ecological contexts using naturalistic text46 and speech47.

In parallel, the machine learning community has made tremendous advances in natural language processing48,49. Neurally-inspired computational models are beginning to excel at complex linguistic tasks such as word-prediction, summarization, translation, and question-answering50,51. Rather than using symbolic lexical representations and syntactic trees, these models typically rely on vectorial representations of linguistic content52: linguistic elements that are similar in some respect are encoded nearer to each other in a continuous embedding space, and seemingly complex linguistic relations can be recovered using relatively simple arithmetic operations53,54. In contrast to experimental traditions in linguistics and neuroscience, machine learning has embraced complex, high-dimensional models trained on enormous corpora of real-world text, emphasizing predictive power over interpretability55–57.

We expect that a reconvergence of these research trajectories supported by “big” neural data will be mutually beneficial45,58. Public, well-curated benchmark datasets can both accelerate research and serve as useful didactic tools (e.g. MNIST59, CIFAR60). Furthermore, there is a societal benefit to sharing human brain data: fMRI data are expensive to acquire and the reuse of publicly shared fMRI data is estimated to have saved billions in public funding61. Public data also receive much greater scrutiny, with the potential to reveal (and rectify) “bugs” in the data or metadata. Although public datasets released by large consortia have propelled exploratory research forward62,63, relatively few of these include naturalistic stimuli (cf. movie-viewing paradigms in Cam-CAN64,65, HBN66, HCP S120063). On the other hand, maturing standards and infrastructure67–69 have enabled increasingly widespread sharing of smaller datasets from the “long tail” of human neuroimaging research70. We are beginning to see a proliferation of public neuroimaging datasets acquired using rich, naturalistic experimental paradigms46,71–84. The majority of these datasets are not strictly language-oriented, comprising audiovisual movie stimuli rather than audio-only spoken stories.

With this in mind, we introduce the “Narratives” collection of naturalistic story-listening fMRI data for evaluating models of language85. The Narratives collection comprises fMRI data collected over the course of seven years by the Hasson and Norman Labs at the Princeton Neuroscience Institute while participants listened to 27 spoken story stimuli ranging from ~3 minutes to ~56 minutes for a total of ~4.6 hours of unique stimuli (~43,000 words; Table 1). The collection currently includes 345 unique subjects contributing 891 functional scans with accompanying anatomical data and metadata. In addition to the MRI data, we provide demographic data and comprehension scores where available. Finally, we provide the auditory story stimuli, as well as time-stamped phoneme- and word-level transcripts in hopes of accelerating analysis of the linguistic content of the data. The data are standardized according to the Brain Imaging Data Structure86 (BIDS 1.2.1; https://bids.neuroimaging.io/; RRID:SCR_016124), and are publicly available via OpenNeuro87 (https://openneuro.org/; RRID:SCR_005031): https://openneuro.org/datasets/ds002345. Derivatives of the data, including preprocessed versions of the data and stimulus annotations, are available with transparent provenance via DataLad88,89 (https://www.datalad.org/; RRID:SCR_003931): http://datasets.datalad.org/?dir=/labs/hasson/narratives.

Table 1.

The “Narratives” datasets summarized in terms of duration, word count, and sample size.

| Story | Duration | TRs | Words | Subjects |
|---|---|---|---|---|
| “Pie Man” | 07:02 | 282 | 957 | 82 |
| “Tunnel Under the World” | 25:34 | 1,023 | 3,435 | 23 |
| “Lucy” | 09:02 | 362 | 1,607 | 16 |
| “Pretty Mouth and Green My Eyes” | 11:16 | 451 | 1,970 | 40 |
| “Milky Way” | 06:44 | 270 | 1,058 | 53 |
| “Slumlord” | 15:03 | 602 | 2,715 | 18 |
| “Reach for the Stars One Small Step at a Time” | 13:45 | 550 | 2,629 | 18 |
| “It’s Not the Fall That Gets You” | 09:07 | 365 | 1,601 | 56 |
| “Merlin” | 14:46 | 591 | 2,245 | 36 |
| “Sherlock” | 17:32 | 702 | 2,681 | 36 |
| “Schema” | 23:12 | 928 | 3,788 | 31 |
| “Shapes” | 06:45 | 270 | 910 | 59 |
| “The 21st Year” | 55:38 | 2,226 | 8,267 | 25 |
| “Pie Man (PNI)” | 06:40 | 267 | 992 | 40 |
| “Running from the Bronx (PNI)” | 08:56 | 358 | 1,379 | 40 |
| “I Knew You Were Black” | 13:20 | 534 | 1,544 | 40 |
| “The Man Who Forgot Ray Bradbury” | 13:57 | 558 | 2,135 | 40 |
| Total: | 4.6 hours | 11,149 TRs | 42,989 words | |
| Total across subjects: | 6.4 days | 369,496 TRs | 1,399,655 words | |

Some subjects participated in multiple experiments, resulting in overlapping samples. For the “Milky Way” and “Shapes” datasets, each row lists the duration and word count only once for the closely related experimental manipulations, whereas the totals at bottom reflect all versions. For the “Schema” dataset, we list the summed duration and word count across eight brief stories. The temporally scrambled versions of the “It’s Not the Fall That Gets You” dataset are not included in the duration and word totals.
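The TR counts in Table 1 are consistent with dividing each stimulus duration by the 1.5 s TR and rounding any partial TR up. The Python sketch below reproduces that arithmetic for a few rows; the rounding-up convention is our reading of the table rather than a documented rule, and the helper names are hypothetical.

```python
import math

TR_SECONDS = 1.5  # all Narratives scans use a 1.5 s TR


def duration_to_seconds(mmss: str) -> int:
    """Convert an 'MM:SS' duration string (as listed in Table 1) to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def duration_to_trs(mmss: str, tr: float = TR_SECONDS) -> int:
    """TRs needed to cover the stimulus; partial TRs are rounded up (assumed convention)."""
    return math.ceil(duration_to_seconds(mmss) / tr)


# A few rows from Table 1: (story, duration, TRs as listed)
rows = [
    ("Pie Man", "07:02", 282),
    ("Tunnel Under the World", "25:34", 1023),
    ("Schema", "23:12", 928),
    ("The 21st Year", "55:38", 2226),
]

for story, duration, listed in rows:
    print(f"{story}: {duration} -> {duration_to_trs(duration)} TRs (listed: {listed})")
```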

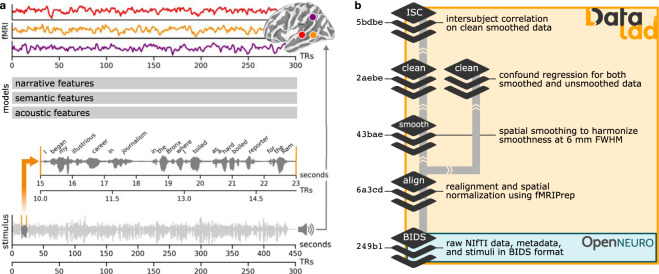

We believe these data have considerable potential for reuse because naturalistic spoken narratives are rich enough to support testing of a wide range of meaningful hypotheses about language and the brain5,7,45,90–95. These hypotheses can be formalized as quantitative models and evaluated against the brain data96,97 (Fig. 1a). For example, the Narratives data are particularly well-suited for evaluating models capturing linguistic content ranging from lower-level acoustic features98–100 to higher-level semantic features47,101–103. More broadly, naturalistic data of this sort can be useful for evaluating shared information across subjects104,105, individual differences77,106–108, algorithms for functional alignment81,109–113, models of event segmentation and narrative context114–118, and functional network organization119–122. In the following, we describe the Narratives data collection and provide several perspectives on data quality.

Fig. 1.

Schematic depiction of the naturalistic story-listening paradigm and data provenance. (a) At bottom, the full auditory story stimulus “Pie Man” by Jim O’Grady is plotted as a waveform of varying amplitude (y-axis) over the duration of 450 seconds (x-axis), corresponding to 300 fMRI volumes sampled at a TR of 1.5 seconds. An example clip (marked by vertical orange lines) is expanded and accompanied by the time-stamped word annotation (“I began my illustrious career in journalism in the Bronx, where I worked as a hard-boiled reporter for the Ram…”). The story stimulus can be described according to a variety of models; for example, acoustic, semantic, or narrative features can be extracted from or assigned to the stimulus. In a prediction or model-comparison framework, these models serve as formal hypotheses linking the stimulus to brain responses. At top, preprocessed fMRI response time series from three example voxels for an example subject are plotted for the full duration of the story stimulus (x-axis: scan duration in TRs; y-axis: fMRI signal magnitude; red: early auditory cortex; orange: auditory association cortex; purple: temporoparietal junction). See the plot_stim.py script in the code/ directory for details. (b) At bottom, MRI data, metadata, and stimuli are formatted according to the BIDS standard and publicly available via OpenNeuro. All derivative data are version-controlled and publicly available via DataLad. The schematic preprocessing workflow includes the following steps: realignment, susceptibility distortion correction, and spatial normalization with fMRIPrep; parallel unsmoothed and spatially smoothed workflows; confound regression to mitigate artifacts from head motion and physiological noise; and intersubject correlation (ISC) analyses used for quality control in this manuscript.
Each stage of the processing workflow is publicly available and indexed by a commit hash (left) providing a full, interactive history of the data collection. This schematic is intended to provide a high-level summary and does not capture the full provenance in detail; for example, derivatives from MRIQC are also included in the public release alongside other derivatives (but are not depicted here).

Methods

Participants

Data were collected over the course of roughly seven years, from October 2011 to September 2018. Participants were recruited from the Princeton University student body as well as non-university-affiliated members of the broader community in Princeton, NJ. All participants provided informed, written consent prior to data collection in accordance with experimental procedures approved by the Princeton University Institutional Review Board. Across all datasets, 345 adults participated in data collection (ages 18–53 years, mean age 22.2 ± 5.1 years, 204 reported female). Demographics for each dataset are reported in the “Narrative datasets” section. Both native and non-native English speakers are included in the dataset, but all subjects reported fluency in English (our records do not contain detailed information on fluency in other languages). All subjects reported having normal hearing and no history of neurological disorders.

Experimental procedures

Upon arriving at the fMRI facility, participants first completed a simple demographics questionnaire, as well as a comprehensive MRI screening form. Participants were instructed to listen and pay attention to the story stimulus, remain still, and keep their eyes open. In some cases, subject wakefulness was monitored in real time using an eye-tracker. Stimulus presentation was implemented using either Psychtoolbox123,124 or PsychoPy125–127. In some cases a centrally-located fixation cross or dot was presented throughout the story stimulus; however, participants were not instructed to maintain fixation. Auditory story stimuli were delivered via MRI-compatible insert earphones (Sensimetrics, Model S14); headphones or foam padding were placed over the earphones to reduce scanner noise. In most cases, a volume check was performed to ensure that subjects could comfortably hear the auditory stimulus over the MRI acquisition noise prior to data collection. A sample audio stimulus was played during an EPI sequence (that was later discarded) and the volume was adjusted by either the experimenter or subject until the subject reported being able to comfortably hear and understand the stimulus. In the staging/ directory on GitHub, we provide an example stimulus presentation PsychoPy script used with the “Pie Man (PNI)”, “Running from the Bronx (PNI)”, “I Knew You Were Black”, and “The Man Who Forgot Ray Bradbury” stories (story_presentation.py), as well as an example volume-check script (soundcheck_presentation.py; see “Code availability”).

For many datasets in this collection, story scans were accompanied by additional functional scans including different stories or other experimental paradigms, as well as auxiliary anatomical scans. In all cases, an entire story stimulus was presented in a single scanning run; however, in some cases multiple independent stories were collected in a single scanning run (the “Slumlord” and “Reach for the Stars One Small Step at a Time” stories, and the “Schema” stories; see the “Narrative datasets” section below). In scanning sessions with multiple stories and scanning runs, participants were free to request volume adjustments between scans (although this was uncommon). Participants were debriefed as to the purpose of a given experiment at the end of a scanning session, and in some cases filled out follow-up questionnaires evaluating their comprehension of the stimuli. Specific procedural details for each dataset are described in the “Narrative datasets” section below.

Stimuli

To add to the scientific value of the neuroimaging data, we provide waveform (WAV) audio files containing the spoken story stimulus for each dataset (e.g. pieman_audio.wav). Audio files were edited using the open source Audacity software (https://www.audacityteam.org). These audio files served as stimuli for the publicly-funded psychological and neuroscientific studies described herein. The stimuli are intended for non-profit, non-commercial scholarly use (principally feature extraction) under “fair use” or “fair dealing” provisions; (re)sharing and (re)use of these media files is intended to respect these guidelines128. Story stimuli span a variety of media, including commercially-produced radio and internet broadcasts, authors and actors reading written works, professional storytellers performing in front of live audiences, and subjects verbally recalling previous events (in the scanner). Manually written transcripts are provided with each story stimulus. The specific story stimuli for each dataset are described in more detail in the “Narrative datasets” section below. In total, the auditory stories sum to roughly 4.6 hours of unique stimuli corresponding to 11,149 TRs (excluding TRs acquired with no auditory story stimulus). Concatenating across all subjects, this amounts to roughly 6.4 days’ worth of story-listening fMRI data, or 369,496 TRs.
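These totals follow from multiplying the TR counts by the 1.5 s TR. A minimal Python check of that arithmetic (the helper names are illustrative only):

```python
TR_SECONDS = 1.5  # repetition time shared by all datasets


def trs_to_hours(n_trs: int, tr: float = TR_SECONDS) -> float:
    """Convert a TR count to hours of data."""
    return n_trs * tr / 3600


def trs_to_days(n_trs: int, tr: float = TR_SECONDS) -> float:
    """Convert a TR count to days of data."""
    return n_trs * tr / 86400


unique_trs = 11_149        # unique stimulus TRs (Table 1)
all_subject_trs = 369_496  # TRs concatenated across subjects (Table 1)

print(f"{trs_to_hours(unique_trs):.1f} hours of unique stimuli")
print(f"{trs_to_days(all_subject_trs):.1f} days across subjects")
```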

By including all stimuli in this data release, researchers can extract their choice of linguistic features for model evaluation7,129, opening the door to many novel scientific questions. To kick-start this process, we used Gentle 0.10.1130 to create time-stamped phoneme- and word-level transcripts for each story stimulus in an automated fashion. Gentle is a robust, lenient forced-alignment algorithm that relies on the free and open source Kaldi automated speech recognition software131 and the Fisher English Corpus132. The initial (non-time-stamped) written transcripts were manually transcribed by the authors and supplied to the Gentle forced-alignment algorithm alongside the audio file. The first-pass output of the forced-alignment algorithm was visually inspected and any errors in the original transcripts were manually corrected; then the corrected transcripts were resubmitted to the forced-alignment algorithm to generate the final time-stamped transcripts.

The Gentle forced-alignment software generates two principal outputs. First, a simple word-level transcript with onset and offset timing for each word is saved as a tabular CSV file. Each row in the CSV file corresponds to a word. The CSV file contains four columns (no header) where the first column indicates the word from the written transcript, the second column indicates the corresponding word in the vocabulary, the third column indicates the word onset timing from the beginning of the stimulus file, and the fourth column indicates the word offset timing. Second, Gentle generates a more detailed JSON file containing the full written transcript followed by a list of word entries where each entry contains fields indicating the word (“word”) and whether the word was found in the vocabulary (“alignedWord”), the onset (“start”) and offset (“end”) timing of the word referenced to the beginning of the audio file, the duration of each phone comprising that word (“phones” containing “phone” and “duration”), and a field (“case”) indicating whether the word was correctly localized in the audio signal. Words that were not found in the vocabulary are indicated by “<unk>” for “unknown” in the second column of the CSV file or in the “alignedWord” field of the JSON file. Words that are not successfully localized in the audio file receive their own row but contain no timing information in the CSV file, and are marked as “not-found-in-audio” in the “case” field of the JSON file. All timing information generated by Gentle is indexed to the beginning of the stimulus audio file. This should be referenced against the BIDS-formatted events.tsv files accompanying each scan describing when the story stimulus began relative to the beginning of the scan (this varies across datasets as described in the “Narrative datasets” section below). Some word counts may be slightly inflated by the inclusion of disfluencies (e.g. “uh”) in the transcripts.
Transcripts for the story stimuli contain 42,989 words in total across all stories; 789 words (1.8%) were not successfully localized by the forced-alignment algorithm and 651 words (1.5%) were not found in the vocabulary (see the get_words.py script in the code/ directory). Concatenating across all subjects yields 1,399,655 words occurring over the course of 369,496 TRs.
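As a sketch of how the word-level CSV might be consumed, the Python below parses the four headerless columns, counts words that were not localized or not found in the vocabulary, and maps word onsets onto scan TRs by adding the story onset from the scan’s events.tsv. Several assumptions apply: the example rows and timings are made up; non-localized words are assumed to have empty (or missing) timing fields; the first row of events.tsv is assumed to mark the story; and the TR mapping ignores the hemodynamic delay, which an analysis would typically model separately. This is not the authors’ get_words.py.

```python
import csv
import io
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Word:
    word: str                # word as written in the transcript
    aligned: str             # vocabulary word, or "<unk>" if not in the vocabulary
    onset: Optional[float]   # seconds from the start of the audio file
    offset: Optional[float]  # None if the word was not localized in the audio


def read_gentle_csv(text: str) -> List[Word]:
    """Parse a headerless four-column Gentle word-level CSV."""
    words = []
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue
        row = row + [""] * (4 - len(row))  # assumption: timing columns may be empty or absent
        onset = float(row[2]) if row[2] else None
        offset = float(row[3]) if row[3] else None
        words.append(Word(row[0], row[1], onset, offset))
    return words


def summarize(words: List[Word]) -> dict:
    """Count all words, words without timing, and out-of-vocabulary words."""
    return {
        "n_words": len(words),
        "not_localized": sum(w.onset is None for w in words),
        "unknown": sum(w.aligned == "<unk>" for w in words),
    }


def first_event_onset(events_tsv: str) -> float:
    """Onset (s) of the first row of a BIDS events.tsv, assumed here to be the story."""
    reader = csv.DictReader(io.StringIO(events_tsv), delimiter="\t")
    return float(next(reader)["onset"])


def word_onset_trs(words: List[Word], stim_onset: float, tr: float = 1.5) -> List[Optional[int]]:
    """0-based index of the TR in which each word begins (no hemodynamic lag)."""
    return [
        None if w.onset is None else math.floor((stim_onset + w.onset) / tr)
        for w in words
    ]


# Hypothetical example rows and events file (not taken from the dataset)
example_csv = "I,i,0.50,0.62\nbegan,began,0.62,0.98\nuh,<unk>,1.60,1.90\ncareer,career,,\n"
example_events = "onset\tduration\n0.9\t400.0\n"

words = read_gentle_csv(example_csv)
print(summarize(words))
print(word_onset_trs(words, first_event_onset(example_events)))
```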

Gentle packages the audio file, written transcript, CSV and JSON files with an HTML file for viewing in a browser that allows for interactively listening to the audio file while each word is highlighted (with its corresponding phones) when it occurs in the audio. Note that, apart from the manual transcript corrections described above, our forced-alignment procedure using Gentle was fully automated; the resulting word and phone timings were not manually adjusted. That said, the time-stamped transcripts are not perfect: speech disfluencies, multiple voices speaking simultaneously, sound effects, and scanner noise pose a challenge for automated transcription. We hope this annotation can serve as a starting point, and expect that better transcriptions will be created in the future. We invite researchers who derive additional features and annotations from the stimuli to contact the corresponding author and we will help incorporate these annotations into the publicly available dataset.

MRI data acquisition

All datasets were collected at the Princeton Neuroscience Institute Scully Center for Neuroimaging. Acquisition parameters are reproduced below in compliance with the guidelines put forward by the Organization for Human Brain Mapping (OHBM) Committee on Best Practices in Data Analysis and Sharing133 (COBIDAS), and are also included in the BIDS-formatted JSON metadata files accompanying each scan. All studies used a repetition time (TR) of 1.5 seconds. Several groups of datasets differ in acquisition parameters due to both experimental specifications and the span of years over which datasets were acquired; for example, several of the newer datasets were acquired on a newer scanner and use multiband acceleration to achieve greater spatial resolution (e.g. for multivariate pattern analysis) while maintaining the same temporal resolution. The “Pie Man”, “Tunnel Under the World”, “Lucy”, “Pretty Mouth and Green My Eyes”, “Milky Way”, “Slumlord”, “Reach for the Stars One Small Step at a Time”, “It’s Not the Fall That Gets You”, “Merlin”, “Sherlock”, and “The 21st Year” datasets were collected on a 3 T Siemens Magnetom Skyra (Erlangen, Germany) with a 20-channel phased-array head coil using the following acquisition parameters. Functional BOLD images were acquired in an interleaved fashion using gradient-echo echo-planar imaging (EPI) with an in-plane acceleration factor of 2 using GRAPPA: TR/TE = 1500/28 ms, flip angle = 64°, bandwidth = 1445 Hz/Px, in-plane resolution = 3 × 3 mm, slice thickness = 4 mm, matrix size = 64 × 64, FoV = 192 × 192 mm, 27 axial slices with roughly full brain coverage and no gap, anterior–posterior phase encoding, prescan normalization, fat suppression. In cases where full brain coverage was not attainable, inferior regions (e.g. cerebellum, brainstem) were typically excluded to maximize coverage of the cerebral cortex.
At the beginning of each run, three dummy scans were acquired and discarded by the scanner to allow for signal stabilization. T1-weighted structural images were acquired using a high-resolution single-shot MPRAGE sequence with an in-plane acceleration factor of 2 using GRAPPA: TR/TE/TI = 2300/3.08/900 ms, flip angle = 9°, bandwidth = 240 Hz/Px, in-plane resolution 0.859 × 0.859 mm, slice thickness 0.9 mm, matrix size = 256 × 256, FoV = 172.8 × 220 × 220 mm, 192 sagittal slices, ascending acquisition, anterior–posterior phase encoding, prescan normalization, no fat suppression, 7 minutes 21 seconds total acquisition time.

The “Schema” and “Shapes” datasets were collected on a 3 T Siemens Magnetom Prisma with a 64-channel head coil using the following acquisition parameters. Functional images were acquired in an interleaved fashion using gradient-echo EPI with a multiband (simultaneous multi-slice; SMS) acceleration factor of 4 using blipped CAIPIRINHA and no in-plane acceleration: TR/TE = 1500/39 ms, flip angle = 50°, bandwidth = 1240 Hz/Px, in-plane resolution = 2.0 × 2.0 mm, slice thickness 2.0 mm, matrix size = 96 × 96, FoV = 192 × 192 mm, 60 axial slices with full brain coverage and no gap, anterior–posterior phase encoding, 6/8 partial Fourier, no prescan normalization, fat suppression, three dummy scans. T1-weighted structural images were acquired using a high-resolution single-shot MPRAGE sequence with an in-plane acceleration factor of 2 using GRAPPA: TR/TE/TI = 2530/2.67/1200 ms, flip angle = 7°, bandwidth = 200 Hz/Px, in-plane resolution 1.0 × 1.0 mm, slice thickness 1.0 mm, matrix size = 256 × 256, FoV = 176 × 256 × 256 mm, 176 sagittal slices, ascending acquisition, no fat suppression, 5 minutes 52 seconds total acquisition time.

The “Pie Man (PNI),” “Running from the Bronx (PNI),” “I Knew You Were Black,” and “The Man Who Forgot Ray Bradbury” datasets were collected on the same 3 T Siemens Magnetom Prisma with a 64-channel head coil using different acquisition parameters. Functional images were acquired in an interleaved fashion using gradient-echo EPI with a multiband acceleration factor of 3 using blipped CAIPIRINHA and no in-plane acceleration: TR/TE = 1500/31 ms, flip angle = 67°, bandwidth = 2480 Hz/Px, in-plane resolution = 2.5 × 2.5 mm, slice thickness 2.5 mm, matrix size = 96 × 96, FoV = 240 × 240 mm, 48 axial slices with full brain coverage and no gap, anterior–posterior phase encoding, prescan normalization, fat suppression, three dummy scans. T1-weighted structural images were acquired using a high-resolution single-shot MPRAGE sequence with an in-plane acceleration factor of 2 using GRAPPA: TR/TE/TI = 2530/3.3/1100 ms, flip angle = 7°, bandwidth = 200 Hz/Px, in-plane resolution 1.0 × 1.0 mm, slice thickness 1.0 mm, matrix size = 256 × 256, FoV = 176 × 256 × 256 mm, 176 sagittal slices, ascending acquisition, no fat suppression, prescan normalization, 5 minutes 53 seconds total acquisition time. T2-weighted structural images were acquired using a high-resolution single-shot MPRAGE sequence with an in-plane acceleration factor of 2 using GRAPPA: TR/TE = 3200/428 ms, flip angle = 120°, bandwidth = 200 Hz/Px, in-plane resolution 1.0 × 1.0 mm, slice thickness 1.0 mm, matrix size = 256 × 256, FoV = 176 × 256 × 256 mm, 176 sagittal slices, interleaved acquisition, no prescan normalization, no fat suppression, 4 minutes 40 seconds total acquisition time.
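The three EPI protocols can be compared with simple arithmetic: in-plane resolution is FoV divided by matrix size, slab coverage is slice count times slice thickness (no gap), and with multiband acceleration only n_slices / multiband slice groups need to be excited within each 1.5 s TR. The sketch below uses the parameters as reported; the class, field, and label names are hypothetical. It illustrates how the newer protocols gain spatial resolution without lengthening the TR.

```python
from dataclasses import dataclass

TR_MS = 1500  # shared by all protocols


@dataclass
class EpiProtocol:
    label: str                # hypothetical label for the dataset group
    fov_mm: float             # square in-plane field of view
    matrix: int               # square in-plane matrix size
    slice_thickness_mm: float
    n_slices: int
    multiband: int            # simultaneous multi-slice factor (1 = none)

    @property
    def in_plane_res_mm(self) -> float:
        return self.fov_mm / self.matrix

    @property
    def slab_coverage_mm(self) -> float:
        return self.n_slices * self.slice_thickness_mm  # no inter-slice gap

    @property
    def voxel_volume_mm3(self) -> float:
        return self.in_plane_res_mm ** 2 * self.slice_thickness_mm

    @property
    def slice_groups_per_tr(self) -> float:
        # slices excited simultaneously share one excitation
        return self.n_slices / self.multiband


protocols = [
    EpiProtocol("Skyra datasets", 192, 64, 4.0, 27, 1),
    EpiProtocol("Prisma: Schema, Shapes", 192, 96, 2.0, 60, 4),
    EpiProtocol("Prisma: Pie Man (PNI) and others", 240, 96, 2.5, 48, 3),
]

for p in protocols:
    print(
        f"{p.label}: {p.in_plane_res_mm:.1f} mm in-plane, "
        f"{p.slab_coverage_mm:.0f} mm coverage, {p.voxel_volume_mm3:.1f} mm^3 voxels, "
        f"{p.slice_groups_per_tr:.0f} slice groups per {TR_MS} ms TR"
    )
```

With these numbers, the multiband protocols excite 15 or 16 slice groups per TR, compared with 27 single slices on the Skyra, while covering a taller slab with smaller voxels.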

MRI preprocessing

Anatomical images were de-faced using the automated de-facing software pydeface 2.0.0134 prior to further processing (using the run_pydeface.py script in the code/ directory). MRI data were subsequently preprocessed using fMRIPrep 20.0.5135,136 (RRID:SCR_016216; using the run_fmriprep.sh script in the code/ directory). fMRIPrep is a containerized, automated tool based on Nipype 1.4.2137,138 (RRID:SCR_002502) that adaptively adjusts to idiosyncrasies of the dataset (as captured by the metadata) to apply the best-in-breed preprocessing workflow. Many internal operations of fMRIPrep’s functional processing workflow use Nilearn 0.6.2139 (RRID:SCR_001362). For more details of the pipeline, see the section corresponding to workflows in fMRIPrep’s documentation. The containerized fMRIPrep software was deployed using Singularity 3.5.2-1.1.sdl7140. The fMRIPrep Singularity image can be built from Docker Hub (https://hub.docker.com/r/poldracklab/fmriprep/; e.g. singularity build fmriprep-20.0.5.simg docker://poldracklab/fmriprep:20.0.5). The fMRIPrep outputs and visualizations can be found in the fmriprep/ directory in derivatives/ available via the DataLad release. The fMRIPrep workflow produces two principal outputs: (a) the functional time series data in one or more output spaces (e.g. MNI space), and (b) a collection of confound variables for each functional scan. In the following, we describe fMRIPrep’s anatomical and functional workflows, as well as subsequent spatial smoothing and confound regression implemented in AFNI 19.3.0141,142 (RRID:SCR_005927).
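For readers rerunning preprocessing, a minimal Python sketch that assembles (but does not execute) a Singularity call to fMRIPrep 20.0.5 is shown below. It is not the authors’ run_fmriprep.sh: the paths and participant label are hypothetical, only the two volumetric output spaces are requested (surface spaces are omitted), and `--use-syn-sdc` is included on the assumption that it corresponds to the fieldmap-less distortion correction described below. Check `fmriprep --help` for the exact options of the version you deploy.

```python
import shlex
from typing import List, Sequence


def fmriprep_command(
    bids_dir: str,
    output_dir: str,
    participant: str,
    license_file: str,
    image: str = "fmriprep-20.0.5.simg",
    spaces: Sequence[str] = ("MNI152NLin2009cAsym", "MNI152NLin6Asym"),
) -> List[str]:
    """Assemble a Singularity call to fMRIPrep for one subject (hypothetical settings)."""
    return [
        "singularity", "run", "--cleanenv", image,
        bids_dir, output_dir, "participant",
        "--participant-label", participant,
        "--output-spaces", *spaces,
        "--use-syn-sdc",  # assumed: fieldmap-less susceptibility distortion correction
        "--fs-license-file", license_file,  # FreeSurfer license required by recon-all
    ]


# Hypothetical paths and subject label
cmd = fmriprep_command(
    "/data/narratives",
    "/data/narratives/derivatives",
    "001",
    "/opt/freesurfer/license.txt",
)
print(shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run(cmd, check=True)`, which avoids shell-quoting issues with paths.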

The anatomical MRI T1-weighted (T1w) images were corrected for intensity non-uniformity with N4BiasFieldCorrection143, distributed with ANTs 2.2.0144 (RRID:SCR_004757), and used as T1w-reference throughout the workflow. The T1w-reference was then skull-stripped with a Nipype implementation of the antsBrainExtraction.sh (from ANTs) using the OASIS30ANTs as the target template. Brain tissue segmentation of cerebrospinal fluid (CSF), white-matter (WM), and gray-matter (GM) was performed on the brain-extracted T1w using fast145 (FSL 5.0.9; RRID:SCR_002823). Brain surfaces were reconstructed using recon-all146,147 (FreeSurfer 6.0.1; RRID:SCR_001847), and the brain mask estimated previously was refined with a custom variation of the method to reconcile ANTs-derived and FreeSurfer-derived segmentations of the cortical gray-matter from Mindboggle148 (RRID:SCR_002438). Volume-based spatial normalization to two commonly-used standard spaces (MNI152NLin2009cAsym, MNI152NLin6Asym) was performed through nonlinear registration with antsRegistration (ANTs 2.2.0) using brain-extracted versions of both T1w reference and the T1w template. The following two volumetric templates were selected for spatial normalization and deployed using TemplateFlow149: (a) ICBM 152 Nonlinear Asymmetrical Template Version 2009c150 (RRID:SCR_008796; TemplateFlow ID: MNI152NLin2009cAsym), and (b) FSL’s MNI ICBM 152 Non-linear 6th Generation Asymmetric Average Brain Stereotaxic Registration Model151 (RRID:SCR_002823; TemplateFlow ID: MNI152NLin6Asym). Surface-based normalization based on nonlinear registration of sulcal curvature was applied using the following three surface templates152 (FreeSurfer reconstruction nomenclature): fsaverage, fsaverage6, fsaverage5.

The functional MRI data were preprocessed in the following way. First, a reference volume and its skull-stripped version were generated using a custom methodology of fMRIPrep. A deformation field to correct for susceptibility distortions was estimated using fMRIPrep’s fieldmap-less approach. The deformation field results from co-registering the BOLD reference to the same-subject T1w-reference with its intensity inverted153,154. Registration was performed with antsRegistration (ANTs 2.2.0), and the process was regularized by constraining deformation to be nonzero only along the phase-encoding direction, and modulated with an average fieldmap template155. Based on the estimated susceptibility distortion, a corrected EPI reference was calculated for more accurate co-registration with the anatomical reference. The BOLD reference was then co-registered to the T1w reference using bbregister (FreeSurfer 6.0.1), which implements boundary-based registration156. Co-registration was configured with six degrees of freedom. Head-motion parameters with respect to the BOLD reference (transformation matrices, and six corresponding rotation and translation parameters) were estimated before any spatiotemporal filtering using mcflirt (FSL 5.0.9)157–159. BOLD runs were slice-time corrected using 3dTshift from AFNI 20160207160. The BOLD time-series were resampled onto the following surfaces: fsaverage, fsaverage6, fsaverage5. The BOLD time-series (including slice-timing correction when applied) were resampled onto their original, native space by applying a single, composite transform to correct for head-motion and susceptibility distortions. These resampled BOLD time-series are referred to as preprocessed BOLD in original space, or just preprocessed BOLD. The BOLD time-series were resampled into two volumetric standard spaces, correspondingly generating the following spatially-normalized, preprocessed BOLD runs: MNI152NLin2009cAsym, MNI152NLin6Asym.
All resamplings were performed with a single interpolation step by composing all the pertinent transformations (i.e. head-motion transform matrices, susceptibility distortion correction, and co-registrations to anatomical and output spaces). Gridded (volumetric) resamplings were performed using antsApplyTransforms (ANTs 2.2.0), configured with Lanczos interpolation to minimize the smoothing effects of other kernels161. Non-gridded (surface) resamplings were performed using mri_vol2surf (FreeSurfer 6.0.1).
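The benefit of a single interpolation step can be illustrated in one dimension. The sketch below is not fMRIPrep’s implementation: it uses linear interpolation (fMRIPrep uses Lanczos) and pure translations as stand-ins for the motion-correction and co-registration transforms. Resampling a single-voxel spike twice spreads it more than resampling once with the composed shift, and for affine transforms the composition is simply a matrix product.

```python
import numpy as np


def shift(signal: np.ndarray, offset: float) -> np.ndarray:
    """Resample a 1-D signal at positions i + offset using linear interpolation."""
    grid = np.arange(signal.size, dtype=float)
    return np.interp(grid + offset, grid, signal)


spike = np.zeros(32)
spike[16] = 1.0

# Two resampling steps, e.g. motion correction and then co-registration
two_step = shift(shift(spike, 0.3), 0.4)
# One resampling step with the composed transform
one_step = shift(spike, 0.3 + 0.4)

print(f"peak after two interpolations: {two_step.max():.2f}")  # 0.46
print(f"peak after one interpolation:  {one_step.max():.2f}")   # 0.70

# For affine transforms, composition is a single 4x4 matrix product (applied right to left)
motion = np.eye(4)
motion[:3, 3] = [0.3, 0.0, 0.0]
coreg = np.eye(4)
coreg[:3, 3] = [0.4, 0.0, 0.0]
composite = coreg @ motion
print(composite[:3, 3])
```

Nonlinear warps such as the susceptibility and normalization fields are not affine, but the same principle applies: the composed mapping is evaluated once per output voxel.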

Several confounding time series were calculated based on the preprocessed BOLD: framewise displacement (FD), DVARS, and three region-wise global signals. FD and DVARS were calculated for each functional run, both using their implementations in Nipype162. The three global signals were extracted within the CSF, the WM, and the whole-brain masks. Additionally, a set of physiological regressors was extracted to allow for component-based noise correction (CompCor)163. Principal components were estimated after high-pass filtering the preprocessed BOLD time series (using a discrete cosine filter with 128 s cut-off) for the two CompCor variants: temporal (tCompCor) and anatomical (aCompCor). The tCompCor components were calculated from the top 5% most variable voxels within a mask covering the subcortical regions. This subcortical mask was obtained by heavily eroding the brain mask, which ensures it does not include cortical GM regions. For aCompCor, components were calculated within the intersection of the aforementioned mask and the union of CSF and WM masks calculated in T1w space, after their projection to the native space of each functional run (using the inverse BOLD-to-T1w transformation). Components were also calculated separately within the WM and CSF masks. For each CompCor decomposition, the k components with the largest singular values were retained, with k chosen such that the retained components’ time series are sufficient to explain 50 percent of the variance across the nuisance mask (CSF, WM, combined, or temporal). The remaining components were dropped from consideration. The head-motion estimates calculated in the correction step were also placed within the corresponding confounds file. The confound time series derived from head motion estimates and global signals were expanded with the inclusion of temporal derivatives and quadratic terms for each164. Frames that exceeded a threshold of 0.5 mm FD or 1.5 standardized DVARS were annotated as motion outliers.
All of these confound variables are provided with the dataset for researchers to use as they see fit. HTML files with quality control visualizations output by fMRIPrep are available via DataLad.
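The confound definitions above can be sketched in NumPy as follows. This is an illustration, not the Nipype or fMRIPrep implementation: it assumes the six motion columns are ordered as three translations (mm) followed by three rotations (radians), uses the common 50 mm head radius for framewise displacement, takes standardized DVARS as a precomputed input, and the function names are hypothetical.

```python
import numpy as np

def framewise_displacement(motion, radius=50.0):
    """Power-style framewise displacement (mm).

    motion: (n_volumes, 6) array assumed to hold three translations (mm)
    followed by three rotations (radians). Rotations are converted to arc
    length on a sphere of `radius` mm before summing absolute differences.
    """
    motion = np.asarray(motion, dtype=float)
    deltas = np.abs(np.diff(motion, axis=0))
    deltas[:, 3:] *= radius
    # The first frame has no predecessor; 0 is used here for simplicity.
    return np.concatenate([[0.0], deltas.sum(axis=1)])

def expand_confounds(x):
    """Append temporal derivatives, quadratic terms, and squared derivatives."""
    deriv = np.vstack([np.zeros((1, x.shape[1])), np.diff(x, axis=0)])
    return np.hstack([x, deriv, x ** 2, deriv ** 2])

def motion_outliers(fd, std_dvars, fd_thresh=0.5, dvars_thresh=1.5):
    """Flag frames exceeding 0.5 mm FD or 1.5 standardized DVARS."""
    return (np.asarray(fd) > fd_thresh) | (np.asarray(std_dvars) > dvars_thresh)

def n_compcor_components(data, variance=0.5):
    """Smallest k whose leading components explain >= `variance` of the data.

    data: (n_volumes, n_voxels) high-pass filtered time series from one
    nuisance mask (CSF, WM, combined, or tCompCor)."""
    centered = data - data.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    explained = np.cumsum(s ** 2) / np.sum(s ** 2)
    return int(np.searchsorted(explained, variance) + 1)
```

The 50 percent criterion makes k data-dependent: a mask dominated by one strong noise source may need a single component, while diffuse noise requires more.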

We provide spatially smoothed and non-smoothed versions of the preprocessed functional data returned by fMRIPrep (smoothing was implemented using the run_smoothing.py script in the code/ directory). Analyses requiring voxelwise correspondence across subjects, such as ISC analysis165, can benefit from spatial smoothing because functional–anatomical correspondence varies across individuals, albeit at the expense of spatial specificity (finer-grained intersubject functional correspondence can be achieved using hyperalignment rather than spatial smoothing81,113,166). The smoothed and non-smoothed outputs (and subsequent analyses) can be found in the afni-smooth/ and afni-nosmooth/ directories in derivatives/, available via DataLad. To smooth the volumetric functional images, we used 3dBlurToFWHM in AFNI 19.3.0141,142, which iteratively measures the global smoothness (ratio of variance of first differences across voxels to global variance) and local smoothness (estimated within 4 mm spheres), then applies local smoothing to less-smooth areas until the desired global smoothness is achieved. All smoothing operations were performed within a brain mask to ensure that non-brain values were not smoothed into the functional data (see the brain_masks.py script in the code/ directory). For surface-based functional data, we applied smoothing using SurfSmooth in AFNI, which uses a similar iterative algorithm for smoothing surface data according to geodesic distance on the cortical mantle167,168. In both cases, data were smoothed until a target global smoothness of 6 mm FWHM was met (i.e. 2–3 times the original voxel size169). Equalizing smoothness is critical to harmonize data acquired across different scanners and protocols170.
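For readers who want a simpler baseline than AFNI’s adaptive, iterative approach, the sketch below applies a fixed Gaussian kernel within a brain mask using SciPy. It is an assumption-laden stand-in rather than a reimplementation of 3dBlurToFWHM: it does not measure or equalize smoothness, and additional_fwhm only estimates the extra kernel needed under the assumption that Gaussian smoothness adds in quadrature (e.g. roughly 4.5 mm of extra smoothing to bring data with 4 mm intrinsic smoothness to 6 mm). All names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # about 0.4247

def additional_fwhm(current_fwhm, target_fwhm):
    """Kernel FWHM (mm) to move from current to target smoothness, assuming
    Gaussian smoothness combines in quadrature."""
    if target_fwhm <= current_fwhm:
        return 0.0
    return float(np.sqrt(target_fwhm ** 2 - current_fwhm ** 2))

def masked_smooth(volume, mask, fwhm_mm, voxel_size_mm):
    """Fixed-kernel Gaussian smoothing restricted to a brain mask.

    Normalized convolution: smoothing the masked data and dividing by the
    smoothed mask keeps out-of-mask values from leaking into brain voxels.
    voxel_size_mm must give one voxel size per array axis."""
    sigma_vox = tuple(fwhm_mm * FWHM_TO_SIGMA / v for v in voxel_size_mm)
    weights = mask.astype(float)
    num = gaussian_filter(volume * weights, sigma=sigma_vox)
    den = gaussian_filter(weights, sigma=sigma_vox)
    out = np.zeros(volume.shape, dtype=float)
    inside = weights > 0
    out[inside] = num[inside] / den[inside]
    return out
```

Dividing by the smoothed mask renormalizes the kernel near the brain boundary, so edge voxels are averaged only over in-mask neighbors rather than being pulled toward zero or toward non-brain intensities.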

We next temporally filtered the functional data to mitigate the effects of confounding variables. Unlike in traditional task fMRI experiments with a well-defined event structure, the goal of regression here was not to estimate regression coefficients for any given experimental condition; rather, similar to resting-state functional connectivity analysis, the goal was to model nuisance variables, resulting in a “clean” residual time series. However, unlike conventional resting-state paradigms, naturalistic stimuli enable intersubject analyses, which are less sensitive to idiosyncratic noise than the within-subject functional connectivity analyses typically used with resting-state data119,171. With this in mind, we used a modest confound regression model informed by the rich literature on confound regression for resting-state functional connectivity172,173. AFNI’s 3dTproject was used to regress out the following nuisance variables (via the extract_confounds.py and run_regression.py scripts in the code/ directory): six head motion parameters (three translation, three rotation), the first five principal component time series from an eroded CSF and a white matter mask163,174, cosine bases for high-pass filtering (using a discrete cosine filter with cutoff: 128 s, or ~0.0078 Hz), and first- and second-order detrending polynomials. These variables were included in a single regression model to avoid reintroducing artifacts by sequential filtering175. The scripts used to perform this regression and the residual time series are provided with this data release. This processing workflow ultimately yields smoothed and non-smoothed versions of the “clean” functional time series data in several volumetric and surface-based standard spaces.
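A minimal NumPy sketch of the single-model nuisance regression is shown below. It mirrors the regressor set listed above (polynomials up to second order, six motion parameters, CompCor components, and cosine high-pass bases with a 128 s cutoff) but is not the code in run_regression.py or 3dTproject; the cosine-basis count follows the SPM convention, which is an assumption here, and the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre

def dct_highpass_basis(n_volumes, tr, cutoff=128.0):
    """Discrete cosine regressors (constant excluded) with periods >= `cutoff` s.

    The number of bases, floor(2 * n * TR / cutoff), follows the SPM
    convention; fMRIPrep's cosine regressors may differ slightly."""
    n_basis = int(np.floor(2.0 * n_volumes * tr / cutoff))
    t = np.arange(n_volumes)
    cols = [np.sqrt(2.0 / n_volumes) * np.cos(np.pi * (2 * t + 1) * k / (2 * n_volumes))
            for k in range(1, n_basis + 1)]
    return np.column_stack(cols) if cols else np.empty((n_volumes, 0))

def nuisance_design(motion, compcor, tr, cutoff=128.0):
    """Single design matrix: Legendre polynomials (orders 0-2), six motion
    parameters, CompCor components, and cosine high-pass bases."""
    n = motion.shape[0]
    poly = legendre.legvander(np.linspace(-1.0, 1.0, n), 2)
    return np.hstack([poly, motion, compcor, dct_highpass_basis(n, tr, cutoff)])

def regress_out(data, design):
    """Residuals of (n_volumes, n_voxels) data after one least-squares fit."""
    beta, *_ = np.linalg.lstsq(design, data, rcond=None)
    return data - design @ beta
```

Because all regressors enter one least-squares fit, the residuals are orthogonal to every column at once; high-pass filtering and motion regression applied in separate passes do not guarantee this, which is the motivation for the single model cited above.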

Computing environment

In addition to software mentioned elsewhere in this manuscript, all data processing relied heavily on the free, open source GNU/Linux ecosystem and NeuroDebian distribution176,177 (https://neuro.debian.net/; RRID:SCR_004401), as well as the Anaconda distribution (https://www.anaconda.com/) and conda package manager (https://docs.conda.io/en/latest/; RRID:SCR_018317). Many analyses relied on scientific computing software in Python (https://www.python.org/; RRID:SCR_008394), including NumPy178,179 (http://www.numpy.org/; RRID:SCR_008633), SciPy180,181 (https://www.scipy.org/; RRID:SCR_008058), Pandas182 (https://pandas.pydata.org/; RRID:SCR_018214), NiBabel183 (https://nipy.org/nibabel/; RRID:SCR_002498), IPython184 (http://ipython.org/; RRID:SCR_001658), and Jupyter185 (https://jupyter.org/; RRID:SCR_018315), as well as Singularity containerization140 (https://sylabs.io/docs/) and the Slurm workload manager186 (https://slurm.schedmd.com/). All surface-based MRI data were visualized using SUMA187,188 (RRID:SCR_005927). All other figures were created using Matplotlib189 (http://matplotlib.org/; RRID:SCR_008624), seaborn (http://seaborn.pydata.org/; RRID:SCR_018132), GIMP (http://www.gimp.org/; RRID:SCR_003182), and Inkscape (https://inkscape.org/; RRID:SCR_014479). The “Narratives” data were processed on a Springdale Linux 7.9 (Verona) system based on the Red Hat Enterprise Linux distribution (https://springdale.math.ias.edu/). An environment.yml file specifying the conda environment used to process the data is included in the staging/ directory on GitHub (as well as a more flexible cross-platform environment-flexible.yml file; see “Code availability”).

Narratives datasets

Each dataset in the “Narratives” collection is described below. The datasets are listed in roughly chronological order of acquisition. For each dataset, we provide the dataset-specific subject demographics, a summary of the stimulus and timing, as well as details of the experimental procedure or design.

“Pie Man”

The “Pie Man” dataset was collected between October, 2011, and March, 2013, and comprised 82 participants (ages 18–45 years, mean age 22.5 ± 4.3 years, 45 reported female). The “Pie Man” story was told by Jim O’Grady and recorded live at The Moth, a non-profit storytelling event, in New York City in 2008 (freely available at https://themoth.org/stories/pie-man). The “Pie Man” audio stimulus was 450 seconds (7.5 minutes) long and began with 13 seconds of neutral introductory music followed by 2 seconds of silence, such that the story itself started at 0:15 and ended at 7:17, for a duration of 422 seconds, with 13 seconds of silence at the end of the scan. The stimulus was started simultaneously with the acquisition of the first functional MRI volume, and the scans comprise 300 TRs, matching the duration of the stimulus. The transcript for the “Pie Man” story stimulus contains 957 words; 3 words (0.3%) were not successfully localized by the forced-alignment algorithm, and 19 words (2.0%) were not found in the vocabulary (e.g. proper names). The “Pie Man” data were in some cases collected in conjunction with temporally scrambled versions of the “Pie Man” stimulus, as well as the “Tunnel Under the World” and “Lucy” datasets among others, and share subjects with these datasets (as specified in the participants.tsv file). The current release only includes data collected for the intact story stimulus (rather than the temporally scrambled versions of the stimulus). The following subjects received the “Pie Man” stimulus on two separate occasions (specified by run-1 or run-2 in the BIDS file naming convention): sub-001, sub-002, sub-003, sub-004, sub-005, sub-006, sub-008, sub-010, sub-011, sub-012, sub-013, sub-014, sub-015, sub-016.
We recommend excluding subjects sub-001 (both run-1 and run-2), sub-013 (run-2), sub-014 (run-2), sub-021, sub-022, sub-038, sub-056, sub-068, and sub-069 (as specified in the scan_exclude.json file in the code/ directory) based on criteria explained in the “Intersubject correlation” section of “Data validation.” Subsets of the “Pie Man” data have been previously reported in numerous publications109,115,119,190–198, and additional datasets not shared here have been collected using the “Pie Man” auditory story stimulus199–202. In the filename convention (and figures), “Pie Man” is labeled using the task alias pieman.
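Because the word-level transcripts are time-stamped, word onsets can be mapped to volumes given the TR (1.5 s, consistent with 300 TRs spanning the 450 s “Pie Man” stimulus) and the stimulus onset relative to the first volume. The sketch below assumes onsets are given in seconds relative to the start of the stimulus file and represents words the forced aligner failed to localize as None or NaN; the transcript file layout is not described here, so the function works on a plain list, and its name is hypothetical. It does not account for hemodynamic lag.

```python
import math

def word_onsets_to_trs(onsets, tr=1.5, stim_onset=0.0):
    """Map word onsets (s, relative to the stimulus file) to 0-based TR indices.

    stim_onset: stimulus start relative to the first volume (0 s for
    "Pie Man"; 3 s, i.e. 2 TRs, for "Lucy" or "Tunnel Under the World").
    Words the aligner failed to localize (None or NaN) map to None."""
    trs = []
    for onset in onsets:
        if onset is None or math.isnan(onset):
            trs.append(None)
        else:
            trs.append(math.floor((onset + stim_onset) / tr))
    return trs
```

For example, a “Pie Man” word starting at 15 s (the story onset) falls in TR index 10; the same onset in a run whose stimulus began 3 s after the first volume would fall in TR index 12.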

“Tunnel Under the World”

The “Tunnel Under the World” dataset was collected between May, 2012, and February, 2013, and comprised 23 participants (ages 18–31 years, mean age 22.5 ± 3.8 years, 14 reported female). The “Tunnel Under the World” science-fiction story was authored by Frederik Pohl in 1955 and broadcast in 1956 as part of the X Minus One series, a collaboration between the National Broadcasting Company and Galaxy Science Fiction magazine (freely available at https://www.oldtimeradiodownloads.com). The “Tunnel Under the World” audio stimulus is 1534 seconds (~25.5 minutes) long. The stimulus was started after the first two functional MRI volumes (2 TRs, 3 seconds) were collected, with ~23 seconds of silence after the stimulus. The functional scans comprise 1040 TRs (1560 seconds), except for subjects sub-004 and sub-013, which have 1035 and 1045 TRs, respectively. The transcript for “Tunnel Under the World” contains 3,435 words; 126 words (3.7%) were not successfully localized by the forced-alignment algorithm and 88 words (2.6%) were not found in the vocabulary. The “Tunnel Under the World” and “Lucy” datasets contain largely overlapping samples of subjects, though they were collected in separate sessions, and were, in many cases, collected alongside “Pie Man” scans (as specified in the participants.tsv file). We recommend excluding the “Tunnel Under the World” scans for subjects sub-004 and sub-013 (as specified in the scan_exclude.json file in the code/ directory). The “Tunnel Under the World” data have been previously reported by Lositsky and colleagues203. In the filename convention, “Tunnel Under the World” is labeled using the task alias tunnel.

“Lucy”

The “Lucy” dataset was collected between October, 2012 and January, 2013 and comprised 16 participants (ages 18–31 years, mean age 22.6 ± 3.9 years, 10 reported female). The “Lucy” story was broadcast by the non-profit WNYC public radio in 2010 (freely available at https://www.wnycstudios.org/story/91705-lucy). The “Lucy” audio stimulus is 542 seconds (~9 minutes) long and was started after the first two functional MRI volumes (2 TRs, 3 seconds) were collected. The functional scans comprise 370 TRs (555 seconds). The transcript for “Lucy” contains 1,607 words; 22 words (1.4%) were not successfully localized by the forced-alignment algorithm and 27 words (1.7%) were not found in the vocabulary. The “Lucy” and “Tunnel Under the World” datasets contain largely overlapping samples of subjects, and were acquired contemporaneously with “Pie Man” data. We recommend excluding the “Lucy” scans for subjects sub-053 and sub-065 (as specified in the scan_exclude.json file in the code/ directory). The “Lucy” data have not previously been reported. In the filename convention, “Lucy” is labeled using the task alias lucy.

“Pretty Mouth and Green My Eyes”

The “Pretty Mouth and Green My Eyes” dataset was collected between March, 2013, and October, 2013, and comprised 40 participants (ages 18–34 years, mean age 21.4 ± 3.5 years, 19 reported female). The “Pretty Mouth and Green My Eyes” story was authored by J. D. Salinger for The New Yorker magazine (1951) and subsequently published in the Nine Stories collection (1953). The spoken story stimulus used for data collection was based on an adapted version of the original text that was shorter and included a few additional sentences, and was read by a professional actor. The “Pretty Mouth and Green My Eyes” audio stimulus is 712 seconds (~11.9 minutes) long and began with 18 seconds of neutral introductory music followed by 3 seconds of silence, such that the story started at 0:21 (after 14 TRs) and ended at 11:37, for a duration of 676 seconds (~451 TRs), with 15 seconds (10 TRs) of silence at the end of the scan. The functional scans comprised 475 TRs (712.5 seconds). The transcript for “Pretty Mouth and Green My Eyes” contains 1,970 words; 7 words (0.4%) were not successfully localized by the forced-alignment algorithm and 38 words (1.9%) were not found in the vocabulary.

The “Pretty Mouth and Green My Eyes” stimulus was presented to two groups of subjects in two different narrative contexts according to a between-subject experimental design: (a) in the “affair” group, subjects read a short passage implying that the main character was having an affair; (b) in the “paranoia” group, subjects read a short passage implying that the main character’s friend was unjustly paranoid (see Yeshurun et al., 2017, for the full prompts). The two experimental groups were randomly assigned such that there were 20 subjects in each group and each subject only received the stimulus under a single contextual manipulation. The group assignments are indicated in the participants.tsv file and the scans.tsv file for each subject. Both groups received the identical story stimulus despite receiving differing contextual prompts. Immediately following the scans, subjects completed a questionnaire assessing comprehension of the story. The questionnaire comprised 27 context-independent and 12 context-dependent questions (39 questions in total). The resulting comprehension scores are reported as the proportion of correct answers (ranging 0–1) in the participants.tsv file and scans.tsv file for each subject. We recommend excluding the “Pretty Mouth and Green My Eyes” scans for subjects sub-038 and sub-105 (as specified in the scan_exclude.json file in the code/ directory). The “Pretty Mouth and Green My Eyes” data have been reported in existing publications109,204. In the filename convention, “Pretty Mouth and Green My Eyes” is labeled using the task alias prettymouth.

“Milky Way”

The “Milky Way” dataset was collected between March, 2013, and April, 2017, and comprised 53 participants (ages 18–34 years, mean age 21.7 ± 4.1 years, 27 reported female). The “Milky Way” story stimuli were written by an affiliate of the experimenters and read by a member of the Princeton Neuroscience Institute not affiliated with the experimenters’ lab. There are three versions of the “Milky Way” story capturing three different experimental conditions: (a) the original story (labeled original), which describes a man who visits a hypnotist to overcome his obsession with an ex-girlfriend, but instead becomes fixated on Milky Way candy bars; (b) an alternative version (labeled vodka) where sparse word substitutions yield a narrative in which a woman obsessed with an American Idol judge visits a psychic and becomes fixated on vodka; (c) a control version (labeled synonyms) with a similar number of word substitutions to the vodka version, but instead using synonyms of words in the original version, yielding a very similar narrative to the original. Relative to the original version, both the vodka and synonyms versions substituted on average 2.5 ± 1.7 words per sentence (34 ± 21% of words per sentence). All three versions were read by the same actor and each sentence of the recording was temporally aligned across versions. All three versions of the “Milky Way” story were 438 seconds (7.3 min; 292 TRs) long and began with 18 seconds of neutral introductory music followed by 3 seconds of silence (21 seconds, or 14 TRs, in total), such that the story started at 0:21 and ended at 7:05, for a duration of 404 seconds, with 13 seconds of silence at the end of the scan. The functional runs comprised 297 volumes (445.5 seconds). The transcript for the original version of the stimulus contains 1,059 words; 7 words (0.7%) were not successfully localized by the forced-alignment algorithm and 16 words (1.5%) were not found in the vocabulary.
The vodka version contains 1,058 words; 2 words (0.2%) were not successfully localized and 21 words (2.0%) were not found in the vocabulary. The synonyms version contains 1,066 words; 10 words (0.9%) were not successfully localized and 13 words (1.2%) were not found in the vocabulary.
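The per-sentence substitution statistics reported above could be approximated with Python’s standard difflib module, as in the sketch below. This is not necessarily how the authors counted substitutions: it assumes whitespace tokenization and that sentences are already paired across versions (the recordings were aligned sentence by sentence), and the helper names are hypothetical.

```python
from difflib import SequenceMatcher
from statistics import mean, stdev

def substituted_words(original, variant):
    """Count words of `original` that were replaced or removed in `variant`.

    Returns (count, fraction of the original sentence's words)."""
    a, b = original.split(), variant.split()
    matcher = SequenceMatcher(a=a, b=b, autojunk=False)
    count = sum(i2 - i1 for tag, i1, i2, _, _ in matcher.get_opcodes()
                if tag in ("replace", "delete"))
    return count, count / len(a)

def summarize(pairs):
    """Mean and SD of per-sentence substitution counts over aligned sentence pairs."""
    counts = [substituted_words(o, v)[0] for o, v in pairs]
    return mean(counts), stdev(counts)
```

For instance, “a man visits a hypnotist” versus “a woman visits a psychic” yields two substituted words, or 40% of the original sentence.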

The three versions of the stimuli were assigned to subjects according to a between-subject design, such that there were 18 subjects in each of the three groups, and each subject received only one version of the story. The group assignments are indicated in the participants.tsv file, and the scans.tsv file for each subject. The stimulus filename includes the version (milkywayoriginal, milkywayvodka, milkywaysynonyms) and is specified in the events.tsv file accompanying each run. The data corresponding to the original and vodka versions were collected between March and October, 2013, while the synonyms data were collected in March and April, 2017. Subjects completed a 28-item questionnaire assessing story comprehension following the scanning session. The comprehension scores for the original and vodka groups are reported as the proportion of correct answers (ranging 0–1) in the participants.tsv file and scans.tsv file for each subject. We recommend excluding “Milky Way” scans for subjects sub-038, sub-105, and sub-123 (as specified in the scan_exclude.json file in the code/ directory). The “Milky Way” data have been previously reported197. In the filename convention, “Milky Way” is labeled using the task alias milkyway.

“Slumlord” and “Reach for the Stars One Small Step at a Time”

The “Slumlord” and “Reach for the Stars One Small Step at a Time” dataset was collected between May, 2013, and October, 2013, and comprised 18 participants (ages 18–27 years, mean age 21.0 ± 2.3 years, 8 reported female). The “Slumlord” story was told by Jack Hitt and recorded live at The Moth, a non-profit storytelling event, in New York City in 2006 (freely available at https://themoth.org/stories/slumlord). The “Reach for the Stars One Small Step at a Time” story was told by Richard Garriott and also recorded live at The Moth in New York City in 2010 (freely available at https://themoth.org/stories/reach-for-the-stars). The combined audio stimulus is 1,802 seconds (~30 minutes) long in total and begins with 22.5 seconds of music followed by 3 seconds of silence. The “Slumlord” story begins at approximately 0:25 relative to the beginning of the stimulus file, ends at 15:28, for a duration of 903 seconds (602 TRs), and is followed by 12 seconds of silence. After another 22 seconds of music starting at 15:40, the “Reach for the Stars One Small Step at a Time” story starts at 16:06 (965 seconds; relative to the beginning of the stimulus file), ends at 29:50, for a duration of 825 seconds (~550 TRs), and is followed by 12 seconds of silence. The stimulus file was started after 3 TRs (4.5 seconds) as indicated in the events.tsv files accompanying each scan. The scans were acquired with a variable number of trailing volumes following the stimulus across subjects, but can be truncated as researchers see fit (e.g. to 1205 TRs). The transcript for the combined “Slumlord” and “Reach for the Stars One Small Step at a Time” stimulus contains 5,344 words; 116 words (2.2%) were not successfully localized by the forced-alignment algorithm, and 57 words (1.1%) were not found in the vocabulary. The transcript for “Slumlord” contains 2,715 words; 65 words (2.4%) were not successfully localized and 25 words (0.9%) were not found in the vocabulary. 
The transcript for “Reach for the Stars One Small Step at a Time” contains 2,629 words; 51 words (1.9%) were not successfully localized and 32 words (1.2%) were not found in the vocabulary. We recommend excluding sub-139 due to a truncated acquisition time (as specified in the scan_exclude.json file in the code/ directory).
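To analyze the two stories separately, each slumlordreach run can be cut at the story boundaries listed above. The NumPy sketch below assumes the 1.5 s TR, the 4.5 s stimulus onset, and the approximate boundary times from the text (“Slumlord” from 0:25 to 15:28, “Reach for the Stars One Small Step at a Time” from 965 seconds to 29:50, relative to the stimulus file); rounding outward to whole TRs and ignoring hemodynamic lag are simplifying choices, and the names are hypothetical.

```python
import numpy as np

TR = 1.5          # seconds per volume
STIM_ONSET = 4.5  # the stimulus file started after 3 TRs

# Approximate story boundaries (s) relative to the stimulus file, from the text
SEGMENTS = {"slumlord": (25.0, 928.0), "reach": (965.0, 1790.0)}

def split_run(data, segments=SEGMENTS, tr=TR, stim_onset=STIM_ONSET):
    """Slice a (n_volumes, ...) array into one array per story.

    Boundaries are shifted by the stimulus onset and rounded outward to
    whole TRs; no hemodynamic lag is applied."""
    pieces = {}
    for name, (start, end) in segments.items():
        first = int(np.floor((start + stim_onset) / tr))
        last = int(np.ceil((end + stim_onset) / tr))
        pieces[name] = data[first:last]
    return pieces
```

Applied to a run truncated to 1,205 TRs, this yields 603 volumes for “Slumlord” and 551 for “Reach for the Stars One Small Step at a Time”.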

After the scans, each subject completed an assessment of their comprehension of the “Slumlord” story. To evaluate comprehension, subjects were presented with a written transcript of the “Slumlord” story with 77 blank segments, and were asked to fill in the omitted word or phrase for each blank (free response) to the best of their ability. The free responses were evaluated using the Amazon Mechanical Turk (MTurk) crowdsourcing platform. MTurk workers rated the response to each omitted segment on a scale from 0 to 4 of relatedness to the correct response, where 0 indicates no response provided, 1 indicates an incorrect response unrelated to the correct response, and 4 indicates an exact match to the correct response. The resulting comprehension scores are reported as a proportion of the perfect score of 4 (ranging 0–1) in the participants.tsv file and scans.tsv file for each subject. Comprehension scores for “Reach for the Stars One Small Step at a Time” are not provided. The “Slumlord” and “Reach for the Stars One Small Step at a Time” data have not been previously reported; however, a separate dataset not included in this release has been collected using the “Slumlord” story stimulus205. In the filename convention, the combined “Slumlord” and “Reach for the Stars One Small Step at a Time” data are labeled slumlordreach, and labeled slumlord and reach when analyzed separately.
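One reading of the scoring rule above (total rating divided by the maximum attainable, 4 points per blank) is sketched below in plain Python. How multiple MTurk ratings per blank were combined is not described, so the function assumes one aggregated rating per blank; its name is hypothetical.

```python
def comprehension_score(ratings, max_rating=4):
    """Total rating as a proportion of the maximum attainable score.

    ratings: one 0-4 relatedness rating per blank (77 blanks for "Slumlord").
    Assumes ratings from several workers were already averaged per blank."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > max_rating for r in ratings):
        raise ValueError("ratings must lie between 0 and max_rating")
    return sum(ratings) / (max_rating * len(ratings))
```

Under this reading, a subject rated 4 on every blank scores 1.0, and a subject who left every blank empty scores 0.0.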

“It’s Not the Fall that Gets You”

The “It’s Not the Fall that Gets You” dataset was collected between May, 2013, and October, 2013, and comprised 56 participants (ages 18–29 years, mean age 21.0 ± 2.4 years, 31 reported female). The “It’s Not the Fall that Gets You” story was told by Andy Christie and recorded live at The Moth, a non-profit storytelling event, in New York City in 2009 (freely available at https://themoth.org/stories/its-not-the-fall-that-gets-you). In addition to the original story (labeled intact), two experimental manipulations of the story stimulus were created: (a) in one version, the story stimulus was temporally scrambled at a coarse level (labeled longscram); (b) in the other version, the story stimulus was temporally scrambled at a finer level (labeled shortscram). In both cases, the story was spliced into segments at the level of sentences prior to scrambling, and segment boundaries used for the longscram condition are a subset of the boundaries used for the shortscram condition. The boundaries used for scrambling occurred on multiples of 1.5 seconds to align with the TR during scanning acquisition. We recommend using only the intact version for studying narrative processing because the scrambled versions do not have a coherent narrative structure (by design). All three versions of the “It’s Not the Fall that Gets You” stimulus were 582 seconds (9.7 minutes) long and began with 22 seconds of neutral introductory music followed by 3 seconds of silence such that the story started at 0:25 (relative to the beginning of the stimulus file) and ended at 9:32 for a duration of 547 seconds (365 TRs), with 10 seconds of silence at the end of the stimulus. The stimulus file was started after 3 TRs (4.5 seconds) as indicated in the events.tsv files accompanying each scan. The functional scans comprised 400 TRs (600 seconds).
The transcript for the intact stimulus contains 1,601 words total; 40 words (2.5%) were not successfully localized by the forced-alignment algorithm and 20 words (1.2%) were not found in the vocabulary.
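The nested scrambling scheme can be illustrated with a short Python sketch: sentence boundaries are snapped to multiples of the 1.5 s TR, the coarse (longscram) boundaries are a subset of the fine (shortscram) ones, and segments are then shuffled. The actual segment lengths, the rule for choosing which boundaries to keep, and whether the introductory music was scrambled are not specified here, so the boundary times, the every-other-boundary rule, and the function names are illustrative assumptions.

```python
import random

TR = 1.5  # seconds

def snap_to_tr(boundaries, tr=TR):
    """Round boundary times (s) to the nearest multiple of the TR, sorted and unique."""
    return sorted({round(b / tr) * tr for b in boundaries})

def scramble(duration, boundaries, seed=0):
    """Split [0, duration) at `boundaries` and return the segments in shuffled order."""
    edges = [0.0] + sorted(b for b in boundaries if 0 < b < duration) + [duration]
    segments = list(zip(edges[:-1], edges[1:]))
    random.Random(seed).shuffle(segments)
    return segments

# Hypothetical sentence-boundary times (s)
fine = snap_to_tr([3.1, 7.4, 12.0, 15.2, 20.9])  # shortscram boundaries
coarse = fine[1::2]                               # longscram: a subset of fine
short_order = scramble(24.0, fine, seed=1)
long_order = scramble(24.0, coarse, seed=1)
```

Because every coarse boundary is also a fine boundary, each longscram segment is a concatenation of consecutive shortscram segments, and every segment spans a whole number of TRs.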

The scrambled stimuli were assigned to subjects according to a mixed within- and between-subject design, such that subjects received the intact stimulus and either the longscram stimulus (23 participants) or the shortscram stimulus (24 participants). The group assignments are indicated in the participants.tsv file, and the scans.tsv file for each subject. Due to the mixed within- and between-subject design, the files are named with the full notthefallintact, notthefalllongscram, notthefallshortscram task labels. We recommend excluding the intact scans for sub-317 and sub-335, the longscram scans for sub-066 and sub-335, and the shortscram scan for sub-333 (as specified in the scan_exclude.json file in the code/ directory). The “It’s Not the Fall that Gets You” data have been recently reported206.

“Merlin” and “Sherlock”

The “Merlin” and “Sherlock” datasets were collected between May, 2014, and March, 2015, and comprised 36 participants (ages 18–47 years, mean age 21.7 ± 4.7 years, 22 reported female). The “Merlin” and “Sherlock” stimuli were recorded while an experimental participant recalled events from previously viewed television episodes during fMRI scanning. Note that this spontaneous recollection task (during fMRI scanning) is cognitively distinct from reciting a well-rehearsed story to an audience, making this dataset different from others in this release. This release contains only the data for subjects listening to the auditory verbal recall; it does not include data for the audiovisual stimuli or the speaker’s fMRI data207. The “Merlin” stimulus file is 915 seconds (15.25 minutes) long and began with 25 seconds of music followed by 4 seconds of silence such that the story started at 0:29 and ended at 15:15 for a duration of 886 seconds (591 TRs). The transcript for the “Merlin” stimulus contains 2,245 words; 111 words (4.9%) were not successfully localized by the forced-alignment algorithm and 13 words (0.6%) were not found in the vocabulary. The “Sherlock” stimulus file is 1,081 seconds (~18 minutes) long and began with 25 seconds of music followed by 4 seconds of silence such that the story started at 0:29 and ended at 18:01 for a duration of 1,052 seconds (702 TRs). The stimulus files for both stories were started after 3 TRs (4.5 seconds) as indicated in the events.tsv files accompanying each scan. The “Merlin” and “Sherlock” scans were acquired with a variable number of trailing volumes following the stimulus across subjects, but can be truncated as researchers see fit (e.g. to 614 and 724 TRs, respectively). The transcript for “Sherlock” contains 2,681 words; 171 words (6.4%) were not successfully localized and 17 words (0.6%) were not found in the vocabulary. The word counts for “Merlin” and “Sherlock” may be slightly inflated due to the inclusion of disfluencies (e.g. “uh”) in transcribing the spontaneous verbal recall.

All 36 subjects listened to both the “Merlin” and “Sherlock” verbal recall auditory stimuli. However, 18 subjects viewed the “Merlin” audiovisual clip prior to listening to both verbal recall stimuli, while the other 18 subjects viewed the “Sherlock” audiovisual clip prior to listening to both verbal recall stimuli. In the “Merlin” dataset, the 18 subjects who viewed the “Sherlock” clip (and not the “Merlin” audiovisual clip) are labeled with the naive condition as they heard the “Merlin” verbal recall auditory-only stimulus without previously having seen the “Merlin” audiovisual clip. The other 18 subjects in the “Merlin” dataset viewed the “Merlin” audiovisual clip (and not the “Sherlock” clip) prior to listening to the “Merlin” verbal recall and are labeled with the movie condition. Similarly, in the “Sherlock” dataset, the 18 subjects who viewed the “Merlin” audiovisual clip (and not the “Sherlock” audiovisual clip) are labeled with the naive condition, while the 18 subjects who viewed the “Sherlock” audiovisual clip (and not the “Merlin” audiovisual clip) are labeled with the movie condition. These condition labels are indicated in both the participants.tsv file and the scans.tsv files for each subject. Following the scans, each subject performed a free recall task to assess memory of the story. Subjects were asked to write down events from the story in as much detail as possible with no time limit. The quality of comprehension for the free recall text was evaluated by three independent raters on a scale of 1–10. The resulting comprehension scores are reported as the sum across raters normalized by the perfect score (range 0–1) in the participants.tsv file and scans.tsv file for each subject. Comprehension scores are only provided for the naive condition. We recommend excluding the “Merlin” scan for subject sub-158 and the “Sherlock” scan for sub-139 (as specified in the scan_exclude.json file in the code/ directory).
The “Merlin” and “Sherlock” data have been previously reported207. In the filename convention, “Merlin” is labeled merlin and “Sherlock” is labeled sherlock.

“Schema”

The “Schema” dataset was collected between August, 2015, and March, 2016, and comprised 31 participants (ages 18–35 years, mean age 23.7 ± 4.8 years, 13 reported female). The “Schema” dataset comprised eight brief (~3-minute) auditory narratives based on popular television series and movies featuring restaurant and airport scenes: The Big Bang Theory, Friends, How I Met Your Mother, My Cousin Vinny, The Santa Clause, Shame, Seinfeld, and Up in the Air, labeled bigbang, friends, himym, vinny, santa, shame, seinfeld, and upintheair, respectively. These labels are indicated in the events.tsv file accompanying each scanner run and in the names of the corresponding stimulus files (e.g. bigbang_audio.wav). Two of the eight stories were presented in each of four runs. Each run had a fixed presentation order of a fixed set of stories, but the presentation order of the four runs was counterbalanced across subjects. The functional scans were acquired with a variable number of trailing volumes across subjects, and in each run two audiovisual film clips (not described in this data release) were also presented; the auditory stories must be spliced from the full runs according to the events.tsv file. Overall, the transcripts for the auditory story stimuli in the “Schema” dataset contain 3,788 words; 20 words (0.5%) were not successfully localized by the forced-alignment algorithm and 69 words (1.8%) were not found in the vocabulary. The “Schema” data were previously reported116. In the filename convention, the “Schema” data are labeled using the task alias schema.
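Because each “Schema” run interleaves stories with film clips, the story segments must be cut out using the events.tsv file. The pandas sketch below relies only on the BIDS-required onset and duration columns (in seconds); the label column name (trial_type here), the TR value, the example rows, and the function name are assumptions to check against the actual files and JSON sidecars. Rows for the film clips would need to be filtered out by label.

```python
import numpy as np
import pandas as pd

def splice_events(data, events, tr, label_col="trial_type"):
    """Cut each event out of one run using BIDS onset/duration columns (seconds).

    data: (n_volumes, ...) array; events: DataFrame read from events.tsv.
    label_col is an assumed column name holding the story labels."""
    pieces = {}
    for _, row in events.iterrows():
        first = int(np.floor(row["onset"] / tr))
        last = int(np.ceil((row["onset"] + row["duration"]) / tr))
        pieces[row[label_col]] = data[first:last]
    return pieces

# Hypothetical events table; with real data use pd.read_csv(path, sep="\t")
events = pd.DataFrame({
    "onset": [6.0, 200.0],
    "duration": [180.0, 175.5],
    "trial_type": ["bigbang", "friends"],
})
stories = splice_events(np.zeros((400, 5)), events, tr=1.5)  # tr value assumed
```

Rounding the onset down and the offset up keeps every volume that overlaps a story; researchers may prefer to shift or trim these windows to account for hemodynamic lag.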

“Shapes”

The “Shapes” dataset was collected between May, 2015, and July, 2016, and comprised 59 participants (ages 18–35 years, mean age 23.0 ± 4.5 years, 42 reported female). The “Shapes” dataset includes two related auditory story stimuli describing a 7-minute original animation called “When Heider Met Simmel” (copyright Yale University). The movie uses two-dimensional geometric shapes to tell the story of a boy who dreams about a monster, and was inspired by Heider and Simmel208. The movie itself is available for download at https://www.headspacestudios.org. The two verbal descriptions based on the movie used as auditory stimuli in the “Shapes” dataset were: (a) a purely physical description of the animation (labeled shapesphysical), and (b) a social description of the animation intended to convey intentionality in the animated shapes (labeled shapessocial). Note that the physical description story (shapesphysical) is different from other stimuli in this release in that it describes the movements of geometrical shapes without any reference to the narrative embedded in the animation. Both stimulus files are 458 seconds (7.6 minutes) long and began with a 37-second introductory movie (only the audio channel is provided in the stimulus files) followed by 7 seconds of silence such that the story started at 0:45 and ended at roughly 7:32 for a duration of ~408 seconds (~272 TRs) and ended with ~5 seconds of silence. The stimulus files for both stories were started after 3 TRs (4.5 seconds) as indicated in the events.tsv files accompanying each scan. The functional scans were acquired with a variable number of trailing volumes following the stimulus across subjects, but can be truncated as researchers see fit (e.g. to 309 TRs). The transcript for the shapesphysical stimulus contains 951 words; 9 words (0.9%) were not successfully localized by the forced-alignment algorithm and 25 words (2.6%) were not found in the vocabulary. 
The transcript for the shapessocial stimulus contains 910 words; 6 words (0.7%) were not successfully localized and 14 words (1.5%) were not found in the vocabulary.

Each subject received the auditory physical description of the animation (shapesphysical), the auditory social description of the animation (shapessocial), and the audiovisual animation itself (not included in the current data release) in three separate runs. Run order was counterbalanced across participants, meaning that some participants viewed the animation before hearing an auditory description. The run order for each subject (e.g. physical-social-movie) is specified in the participants.tsv file and the subject-specific scans.tsv files. Immediately following each story scan, subjects performed a free recall task in the scanner (the fMRI data during recall are not provided in this release), in which they were asked to describe the story in as much detail as possible using their own words. An independent rater naive to the purpose of the experiment evaluated the quality of each recall on a scale of 1–10. The resulting comprehension scores are normalized by dividing by 10 (range 0–1) and reported in the participants.tsv file and the scans.tsv file for each subject. Comprehension scores are only provided for scans in which the subject listened to the story stimulus first, prior to hearing the other version of the story or viewing the audiovisual stimulus. We recommend excluding the shapessocial scan for subject sub-238 (as specified in the scan_exclude.json file in the code/ directory). The “Shapes” data were previously reported209.
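Applying recommended exclusions programmatically avoids silently analyzing flagged scans such as the sub-238 shapessocial scan. The sketch below assumes a hypothetical nesting of {task: {subject: [scan-name prefix, ...]}} for scan_exclude.json; the real file's layout may differ and should be inspected before use, and the helper names are illustrative only.

```python
import json


def excluded_scan_prefixes(exclude_json_text, task):
    """Collect scan-name prefixes flagged for exclusion for one task.

    The nesting {task: {subject: [scan prefix, ...]}} is a hypothetical layout
    for illustration; check the actual code/scan_exclude.json before use.
    """
    spec = json.loads(exclude_json_text)
    prefixes = set()
    for subject_scans in spec.get(task, {}).values():
        prefixes.update(subject_scans)
    return prefixes


def drop_excluded(filenames, prefixes):
    """Keep only files whose names do not start with an excluded prefix."""
    return [f for f in filenames
            if not any(f.startswith(p) for p in prefixes)]


# Toy exclusion spec and filenames (hypothetical, for illustration).
spec = '{"shapessocial": {"sub-238": ["sub-238_task-shapessocial"]}}'
files = ["sub-238_task-shapessocial_bold.nii.gz",
         "sub-239_task-shapessocial_bold.nii.gz"]
kept = drop_excluded(files, excluded_scan_prefixes(spec, "shapessocial"))
```

Matching on a filename prefix, rather than an exact name, lets one entry cover the NIfTI image, its JSON sidecar, and its events.tsv together.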

“The 21st Year”

The dataset for “The 21st Year” was collected between February, 2016, and January, 2018, and comprised 25 participants (ages 18–41 years, mean age 22.6 ± 4.7 years, 13 reported female). The story stimulus was written and read aloud by author Christina Lazaridi, and includes two seemingly unrelated storylines relayed in blocks of prose that eventually fuse into a single storyline. The stimulus file is 3,374 seconds (56.2 minutes) long and begins with 18 seconds of introductory music followed by 3 seconds of silence, such that the story starts at 0:21 and ends at 55:59 for a duration of 3,338 seconds (2,226 TRs), followed by 15 seconds of silence. The functional scans comprised 2,249 volumes (3,373.5 seconds). The transcript for the story stimulus contains 8,267 words; 88 words (1.1%) were not successfully localized by the forced-alignment algorithm and 143 words (1.7%) were not found in the vocabulary. Data associated with “The 21st Year” have been previously reported210. In the filename convention, data for “The 21st Year” are labeled using the task alias 21styear.
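The localization and vocabulary percentages reported for each story can, in principle, be recomputed from the time-stamped Gentle transcripts. The sketch below assumes Gentle's JSON output layout: a top-level "words" list in which "case" is "success" for localized words and "alignedWord" is "<unk>" for out-of-vocabulary words. This is our reading of Gentle's format, not necessarily the exact procedure used to produce the reported counts, and the toy alignment is invented.

```python
import json


def alignment_summary(align_json_text):
    """Count unlocalized and out-of-vocabulary words in a Gentle alignment.

    Assumes Gentle's JSON layout: a top-level "words" list in which each entry
    has a "case" field ("success" when the word was localized in the audio)
    and, for aligned words, an "alignedWord" field that is "<unk>" when the
    word is not in the vocabulary.
    """
    words = json.loads(align_json_text)["words"]
    if not words:
        raise ValueError("alignment contains no words")
    n = len(words)
    unlocalized = sum(1 for w in words if w.get("case") != "success")
    oov = sum(1 for w in words if w.get("alignedWord") == "<unk>")
    return {
        "n_words": n,
        "unlocalized": unlocalized,
        "unlocalized_pct": round(100 * unlocalized / n, 1),
        "oov": oov,
        "oov_pct": round(100 * oov / n, 1),
    }


# Invented four-word alignment for illustration.
toy = json.dumps({"words": [
    {"word": "the", "alignedWord": "the", "case": "success", "start": 0.0, "end": 0.2},
    {"word": "Lazaridi", "alignedWord": "<unk>", "case": "success", "start": 0.2, "end": 0.9},
    {"word": "story", "case": "not-found-in-audio"},
    {"word": "began", "alignedWord": "began", "case": "success", "start": 1.2, "end": 1.5},
]})
summary = alignment_summary(toy)
```

As a consistency check on the figures above, 88 of 8,267 words rounds to 1.1% and 143 of 8,267 rounds to 1.7%.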

“Pie Man (PNI)” and “Running from the Bronx”

The “Pie Man (PNI)” and “Running from the Bronx” data were collected between May and September, 2018, and comprised 47 participants (ages 18–53 years, mean age 23.3 ± 7.4 years, 33 reported female). The “Pie Man (PNI)” and “Running from the Bronx” story stimuli were told by Jim O’Grady while undergoing fMRI scans at the Princeton Neuroscience Institute (PNI). The spoken stories were recorded using a noise-cancelling microphone mounted on the head coil, and scanner noise was minimized using Audacity. The “Pie Man (PNI)” stimulus file is 415 seconds (~7 minutes) long and begins with a period of silence such that the story starts at 0:09 and ends at 6:49 for a duration of 400 seconds (267 TRs), followed by 7 seconds of silence. The “Pie Man (PNI)” stimulus conveys the same general story as the original “Pie Man” stimulus but with differences in timing and delivery (as well as poorer audio quality due to being recorded during scanning). The “Running from the Bronx” stimulus file is 561 seconds (9.4 minutes) long and begins with a period of silence such that the story starts at 0:15 and ends at 9:11 for a duration of 536 seconds (358 TRs), followed by 10 seconds of silence. The “Pie Man (PNI)” and “Running from the Bronx” functional scans comprised 294 TRs (441 seconds) and 390 TRs (585 seconds), respectively. Both stimulus files were started after 8 TRs (12 seconds) as indicated in the accompanying events.tsv files. The program card on the scanner computer was organized according to the ReproIn convention211 to facilitate automated conversion to BIDS format using HeuDiConv 0.5.dev1212. The transcript for the “Pie Man (PNI)” stimulus contains 992 words; 7 words (0.7%) were not successfully localized by the forced-alignment algorithm and 19 words (1.9%) were not found in the vocabulary. The transcript for “Running from the Bronx” contains 1,379 words; 23 words (1.7%) were not successfully localized and 19 words (1.4%) were not found in the vocabulary.

At the end of the scanning session, the subjects completed separate questionnaires for each story to evaluate comprehension. Each questionnaire comprised 30 multiple-choice and fill-in-the-blank questions testing memory and understanding of the story. The resulting comprehension scores are reported as the proportion of correct answers (ranging 0–1) in the participants.tsv file and the scans.tsv file for each subject. Data for both stories have been previously reported166,213. The “Pie Man (PNI)” and “Running from the Bronx” data are labeled with the piemanpni and bronx task aliases in the filename convention. The “Pie Man (PNI)” and “Running from the Bronx” data were collected in conjunction with the “I Knew You Were Black” and “The Man Who Forgot Ray Bradbury” data and share the same sample of subjects.

“I Knew You Were Black” and “The Man Who Forgot Ray Bradbury”

The “I Knew You Were Black” and “The Man Who Forgot Ray Bradbury” data were collected between May and September, 2018, and comprised the same sample of subjects as the “Pie Man (PNI)” and “Running from the Bronx” data (47 participants, ages 18–53 years, mean age 23.3 ± 7.4 years, 33 reported female). Unlike the “Pie Man (PNI)” and “Running from the Bronx” story stimuli, the “I Knew You Were Black” and “The Man Who Forgot Ray Bradbury” stimuli were professionally recorded with high audio quality. The “I Knew You Were Black” story was told by Carol Daniel and recorded live at The Moth, a non-profit storytelling event, in New York City in 2018 (freely available at https://themoth.org/stories/i-knew-you-were-black). The “I Knew You Were Black” story is 800 seconds (13.3 minutes, 534 TRs) long and occupies the entire stimulus file. The “I Knew You Were Black” functional scans comprised 550 TRs (825 seconds). The transcript for the “I Knew You Were Black” stimulus contains 1,544 words; 5 words (0.3%) were not successfully localized by the forced-alignment algorithm and 4 words (0.3%) were not found in the vocabulary. “The Man Who Forgot Ray Bradbury” was written and read aloud by author Neil Gaiman at the Aladdin Theater in Portland, OR, in 2011 (freely available at https://soundcloud.com/neilgaiman/the-man-who-forgot-ray-bradbury). The story “The Man Who Forgot Ray Bradbury” is 837 seconds (~14 minutes, 558 TRs) long and occupies the entire stimulus file. The functional scans for “The Man Who Forgot Ray Bradbury” comprised 574 TRs (861 seconds). The transcript for “The Man Who Forgot Ray Bradbury” contains 2,135 words; 16 words (0.7%) were not successfully localized and 29 words (1.4%) were not found in the vocabulary. For both datasets, the audio stimulus was preceded and followed by 8 TRs (12 seconds) of silence.
The program card on the scanner computer was organized according to the ReproIn convention211 to facilitate automated conversion to BIDS format using HeuDiConv 0.5.dev1212.
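The scan lengths reported for these two stories follow directly from the padding scheme: 8 TRs of silence, the story rounded up to whole TRs, and another 8 TRs of silence. A short arithmetic check, assuming a 1.5 s repetition time (the function name expected_volumes is our own):

```python
import math


def expected_volumes(story_s, tr=1.5, pad_before_trs=8, pad_after_trs=8):
    """Number of volumes for a story padded by silence on both sides.

    Partial TRs at the end of the story are rounded up to a whole volume.
    """
    return pad_before_trs + math.ceil(story_s / tr) + pad_after_trs


for name, story_s in [("black", 800), ("forgot", 837)]:
    n = expected_volumes(story_s)
    print(name, n, n * 1.5)
# black 550 825.0
# forgot 574 861.0
```

Both results match the 550-TR and 574-TR scans described above, which is a quick way to confirm that a downloaded run is complete before analysis.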

As with the “Pie Man (PNI)” and “Running from the Bronx” stories, the subjects completed separate questionnaires for each story after scanning to evaluate comprehension. Each questionnaire comprised 25 multiple-choice and fill-in-the-blank questions testing memory and understanding of the story. The resulting comprehension scores are reported as the proportion of correct answers (ranging 0–1) in the participants.tsv file and the scans.tsv file for each subject. Data for both stories have been previously reported166,213. The “I Knew You Were Black” and “The Man Who Forgot Ray Bradbury” data are labeled with the black and forgot task aliases in the filename convention.

Data Records

The core, unprocessed NIfTI-formatted MRI data with accompanying metadata and stimuli are publicly available on OpenNeuro: https://openneuro.org/datasets/ds002345 (10.18112/openneuro.ds002345.v1.1.4)85. All data and derivatives are hosted at the International Neuroimaging Data-sharing Initiative (INDI)214 (RRID:SCR_00536) via the Neuroimaging Informatics Tools and Resources Clearinghouse (NITRC)215 (https://www.nitrc.org/; RRID:SCR_003430): http://fcon_1000.projects.nitrc.org/indi/retro/Narratives.html (10.15387/fcp_indi.retro.Narratives)216. The full data collection is available via the DataLad data distribution (RRID:SCR_019089): http://datasets.datalad.org/?dir=/labs/hasson/narratives.

Data and derivatives have been organized according to the machine-readable Brain Imaging Data Structure (BIDS) 1.2.186, which follows the FAIR principles for making data findable, accessible, interoperable, and reusable217. A detailed description of the BIDS specification can be found at http://bids.neuroimaging.io/. Organizing the data according to the BIDS convention facilitates future research by enabling the use of automated BIDS-compliant software (BIDS Apps)218. Briefly, files and metadata are labeled using key-value pairs where key and value are separated by a hyphen, while key-value pairs are separated by underscores. Each subject is identified by an anonymized numerical identifier (e.g. sub-001). Each subject participated in one or more story-listening scans indicated by the task alias for the story (e.g. task-pieman). Subject identifiers are conserved across datasets; subjects contributing to multiple datasets are indexed by the same identifier (e.g. sub-001 contributes to both the “Pie Man” and “Tunnel Under the World” datasets).
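The key-value naming scheme described above is simple enough to parse by hand. The following minimal sketch splits a filename into its entities, suffix, and extension; it ignores many corner cases of the full BIDS specification, and the helper name parse_bids_filename is hypothetical. For full-featured querying, the pybids package (BIDSLayout) is a more complete option.

```python
def parse_bids_filename(filename):
    """Split a BIDS filename into (entities, suffix, extension).

    Entities are hyphen-separated key-value pairs joined by underscores; the
    final underscore-separated token is the suffix (e.g. 'bold'), and the
    extension starts at the first '.' so that '.nii.gz' stays intact.
    """
    stem, dot, rest = filename.partition(".")
    tokens = stem.split("_")
    entities = {}
    for token in tokens[:-1]:
        key, sep, value = token.partition("-")
        if not sep or not key or not value:
            raise ValueError(f"not a key-value pair: {token!r}")
        entities[key] = value
    return entities, tokens[-1], dot + rest


entities, suffix, ext = parse_bids_filename("sub-001_task-pieman_run-1_bold.nii.gz")
# entities == {'sub': '001', 'task': 'pieman', 'run': '1'}, suffix == 'bold', ext == '.nii.gz'
```

Because subject identifiers are shared across datasets, grouping parsed filenames by the sub entity is a simple way to find every story a given subject contributed.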

The top-level BIDS-formatted narratives/ directory contains a tabular participants.tsv file where each row corresponds to a subject and includes demographic variables (age, sex), the stories that subject received (task), as well as experimental manipulations (condition) and comprehension scores where available (comprehension). Cases of omitted, missing, or inapplicable data are indicated by “n/a” according to the BIDS convention. The top-level BIDS directory also contains the following: (a) a dataset_description.json containing top-level metadata, including licensing, authors, acknowledgments, and funding sources; (b) a code/ directory containing scripts used for analyzing the data; (c) a stimuli/ directory containing the audio stimulus files (e.g. pieman_audio.wav), transcripts (e.g. pieman_transcript.txt), and a gentle/ directory containing time-stamped transcripts created using Gentle (described in “Stimuli” in the “Methods” section); (d) a derivatives/ directory containing all MRI derivatives (described in more detail in the “MRI preprocessing” and “Technical validation” sections); and (e) descriptive README and CHANGES files.
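When loading participants.tsv, the “n/a” marker should be converted to a proper missing value rather than treated as a string. A minimal standard-library sketch follows. The participant_id column is required by BIDS, and the other column names come from the description above, but the assumption that multiple stories are comma-separated within the task column is ours and should be verified. The toy rows are invented.

```python
import csv
import io


def load_participants(tsv_text):
    """Read participants.tsv into dicts, mapping the BIDS 'n/a' marker to None."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    return [{k: (None if v == "n/a" else v) for k, v in row.items()}
            for row in reader]


def subjects_for_task(rows, task):
    """Return participant IDs whose 'task' column lists the given alias.

    Assumes (hypothetically) that multiple stories are comma-separated.
    """
    return [r["participant_id"] for r in rows
            if r["task"] is not None and task in r["task"].split(",")]


# Toy table with invented values, using the column names described above.
table = ("participant_id\tage\tsex\ttask\tcondition\tcomprehension\n"
         "sub-001\t23\tF\tpieman,tunnel\tn/a\tn/a\n"
         "sub-002\t25\tM\tbronx\tn/a\t0.8\n")
rows = load_participants(table)
pieman_subjects = subjects_for_task(rows, "pieman")
```

Splitting on commas and testing membership, rather than searching for a substring, avoids false matches between aliases that share a prefix, such as pieman and piemanpni.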

BIDS-formatted MRI data for each subject are contained in separate directories named according to the subject identifiers. Within each subject’s directory, data are segregated into anatomical data (the anat/ directory) and functional data (the func/ directory). All MRI data are stored as gzipped (compressed) NIfTI-1 images219. NIfTI images and the relevant metadata were reconstructed from the original Digital Imaging and Communications in Medicine (DICOM) images using dcm2niix 1.0.20180518220. In instances where multiple scanner runs were collected for the same imaging modality or story in the same subject, the files are differentiated using a run label (e.g. run-1, run-2). All anatomical and functional MRI data files are accompanied by sidecar JSON files containing MRI acquisition parameters in compliance with the COBIDAS report133. Identifying metadata (e.g. name, birth date, acquisition date and time) have been omitted, and facial features have been removed from anatomical images using the automated de-facing software pydeface 2.0.0134. All MRI data and metadata are released under the Creative Commons CC0 license (https://creativecommons.org/), which allows for free reuse without restriction.
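The JSON sidecars make acquisition parameters available without parsing DICOM headers. As a small illustration, the scan duration follows from the standard BIDS RepetitionTime field (in seconds) and the number of volumes. This is a sketch with a toy sidecar; in practice the volume count could be read from the NIfTI header, for example with nibabel.load(path).shape[-1].

```python
import json


def scan_duration_s(sidecar_json_text, n_volumes):
    """Scan duration in seconds from a BIDS sidecar's RepetitionTime.

    RepetitionTime is a standard BIDS sidecar field given in seconds.
    """
    tr = json.loads(sidecar_json_text)["RepetitionTime"]
    return n_volumes * tr


# Toy sidecar; real sidecars sit next to each *_bold.nii.gz file.
print(scan_duration_s('{"RepetitionTime": 1.5}', 2249))  # 3373.5
```

With 2,249 volumes this reproduces the 3,373.5-second scan length reported for “The 21st Year”.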

All data and derivatives are version-controlled using DataLad88,89 (https://www.datalad.org/; RRID:SCR_003931). DataLad uses git (https://git-scm.com/; RRID:SCR_003932) and git-annex (https://git-annex.branchable.com/; RRID:SCR_019087) to track changes made to the data, providing a transparent history of the dataset. Each stage of the dataset’s evolution is indexed by a unique commit hash and can be interactively reinstantiated. Major subdivisions of the data collection, such as the code/, stimuli/, and derivative directories, are encapsulated as “subdatasets” nested within the top-level BIDS dataset. These subdatasets can be independently manipulated and have their own standalone version history. For a primer on using DataLad, we recommend the DataLad Handbook221 (http://handbook.datalad.org/).

Technical Validation

Image quality metrics