Abstract

Recent neuroscience studies demonstrate that a deeper understanding of brain function requires a deeper understanding of behavior. Detailed behavioral measurements are now often collected using video cameras, resulting in an increased need for computer vision algorithms that extract useful information from video data. Here we introduce a new video analysis tool that combines the output of supervised pose estimation algorithms (e.g. DeepLabCut) with unsupervised dimensionality reduction methods to produce interpretable, low-dimensional representations of behavioral videos that extract more information than pose estimates alone. We demonstrate this tool by extracting interpretable behavioral features from videos of three different head-fixed mouse preparations, as well as a freely moving mouse in an open field arena, and show how these interpretable features can facilitate downstream behavioral and neural analyses. We also show how the behavioral features produced by our model improve the precision and interpretation of these downstream analyses compared to using the outputs of either fully supervised or fully unsupervised methods alone.

Author summary

The quantification of animal behavior is a crucial step towards understanding how neural activity produces coordinated movements, and how those movements are affected by genes, drugs, and environmental manipulations. In recent years video cameras have become an inexpensive and ubiquitous way to monitor animal behavior across many species and experimental paradigms. Here we propose a new computer vision algorithm that extracts a succinct summary of an animal’s pose on each frame. This summary contains information about a predetermined set of body parts of interest (such as joints on a limb), as well as information about previously unidentified aspects of the animal’s pose. Experimenters can thus track body parts they think are relevant to their experiment, and allow the algorithm to discover new dimensions of behavior that might also be important for downstream analyses. We demonstrate this algorithm on videos from four different experimental setups, and show how these new dimensions of behavior can aid in downstream behavioral and neural analyses.

This is a PLOS Computational Biology Methods paper.

Introduction

The ability to produce detailed quantitative descriptions of animal behavior is driving advances across a wide range of research disciplines, from genetics and neuroscience to psychology and ecology [1–6]. Traditional approaches to quantifying animal behavior rely on time consuming and error-prone human video annotation, or constraining the animal to perform simple, easy to measure actions (such as reaching towards a target). These approaches limit the scale and complexity of behavioral datasets, and thus the scope of their insights into natural phenomena [7]. These limitations have motivated the development of new high-throughput methods which quantify behavior from videos, relying on recent advances in computer hardware and computer vision algorithms [8, 9].

The automatic estimation of animal posture (or “pose”) from video data is a crucial first step towards automatically quantifying behavior in more naturalistic settings [10–13]. Modern pose estimation algorithms rely on supervised learning: they require the researcher to label a relatively small number of frames (tens to hundreds, which we call “human labels”), indicating the location of a predetermined set of body parts of interest (e.g. joints). The algorithm then learns to label the remaining frames in the video, and these pose estimates (which we refer to simply as “labels”) can be used for downstream analyses such as quantifying behavioral dynamics [13–17] and decoding behavior from neural activity [18, 19]. One advantage of these supervised methods is that they produce an inherently interpretable output: the location of the labeled body parts on each frame. However, specifying a small number of body parts for labeling will potentially miss some of the rich behavioral information present in the video, especially if there are features of the pose important for understanding behavior that are not known a priori to the researcher, and therefore not labeled. Furthermore, it may be difficult to accurately label and track body parts that are often occluded, or are not localizable to a single point in space, such as the overall pose of the face, body, or hand.

A complementary approach for analyzing behavioral videos is the use of fully unsupervised dimensionality reduction methods. These methods do not require human labels (hence, unsupervised), and instead model variability across all pixels in a high-dimensional behavioral video with a small number of hidden, or “latent” variables; we refer to the collection of these latent variables as the “latent representation” of behavior. Linear unsupervised dimensionality reduction methods such as Principal Component Analysis (PCA) have been successfully employed with both video [20–23] and depth imaging data [24, 25]. More recent work performs video compression using nonlinear autoencoder neural networks [26, 27]; these models consist of an “encoder” network that compresses an image into a latent representation, and a “decoder” network which transforms the latent representation back into an image. Especially promising are convolutional autoencoders, which are tailored for image data and hence can extract a compact latent representation with minimal loss of information. The benefit of this unsupervised approach is that, by definition, it does not require human labels, and can therefore capture a wider range of behavioral features in an unbiased manner. The drawback to the unsupervised approach, however, is that the resulting low-dimensional latent representation is often difficult to interpret, which limits the specificity of downstream analyses.

In this work we seek to combine the strengths of these two approaches by finding a low-dimensional, latent representation of animal behavior that is partitioned into two subspaces: a supervised subspace, or set of dimensions, that is required to directly reconstruct the labels obtained from pose estimation; and an orthogonal unsupervised subspace that captures additional variability in the video not accounted for by the labels. The resulting semi-supervised approach provides a richer and more interpretable representation of behavior than either approach alone.

Our proposed method, the Partitioned Subspace Variational Autoencoder (PS-VAE), is a semi-supervised model based on the fully unsupervised Variational Autoencoder (VAE) [28, 29]. The VAE is a nonlinear autoencoder whose latent representations are probabilistic. Here, we extend the standard VAE model in two ways. First, we explicitly require the latent representation to contain information about the labels through the addition of a discriminative network that decodes the labels from the latent representation [30–37]. Second, we incorporate an additional term in the PS-VAE objective function that encourages each dimension of the unsupervised subspace to be statistically independent, which can provide a more interpretable latent representation [38–44]. There has been considerable work in the VAE literature for endowing the latent representation with semantic meaning. Our PS-VAE model is distinct from all existing approaches but has explicit mathematical connections to these, especially to [37, 45]. We provide a high-level overview of the PS-VAE in the following section, and in-depth mathematical exposition in the Methods. We then contextualize our work within related machine learning approaches in S1 Appendix.

We first apply the PS-VAE to a head-fixed mouse behavioral video [46]. We track paw positions and recover unsupervised dimensions that correspond to jaw position and local paw configuration. We then apply the PS-VAE to a video of a mouse freely moving around an open field arena. We track the ears, nose, back, and tail base, and recover unsupervised dimensions that correspond to more precise information about the pose of the body. We then demonstrate how the PS-VAE enables downstream analyses on two additional head-fixed mouse neuro-behavioral datasets. The first is a close up video of a mouse face (a similar setup to [47]), where we track pupil area and position, and recover unsupervised dimensions that separately encode information about the eyelid and the whisker pad. We then use this interpretable behavioral representation to construct separate saccade and whisking detectors. We also decode this behavioral representation with neural activity recorded from visual cortex using two-photon calcium imaging, and find that eye and whisker information are differentially decoded. The second dataset is a two camera video of a head-fixed mouse [22], where we track moving mechanical equipment and one visible paw. The PS-VAE recovers unsupervised dimensions that correspond to chest and jaw positions. We use this interpretable behavioral representation to separate animal and equipment movement, construct individual movement detectors for the paw and body, and decode the behavioral representation with neural activity recorded across dorsal cortex using widefield calcium imaging. Importantly, we also show how the uninterpretable latent representations provided by a standard VAE do not allow for the specificity of these analyses in both example datasets. These results demonstrate how the interpretable behavioral representations learned by the PS-VAE can enable targeted downstream behavioral and neural analyses using a single unified framework. Finally, we extend the PS-VAE framework to accommodate multiple videos from the same experimental setup by introducing a new subspace that captures variability in static background features across videos, while leaving the original subspaces (supervised and unsupervised) to capture dynamic behavioral features. We demonstrate this extension on multiple videos from the head-fixed mouse experimental setup [46]. A python/PyTorch implementation of the PS-VAE is available on github as well as the NeuroCAAS cloud analysis platform [48], and we have made all datasets publicly available; see the Data Availability and Code Availability statements for more details.

PS-VAE model formulation

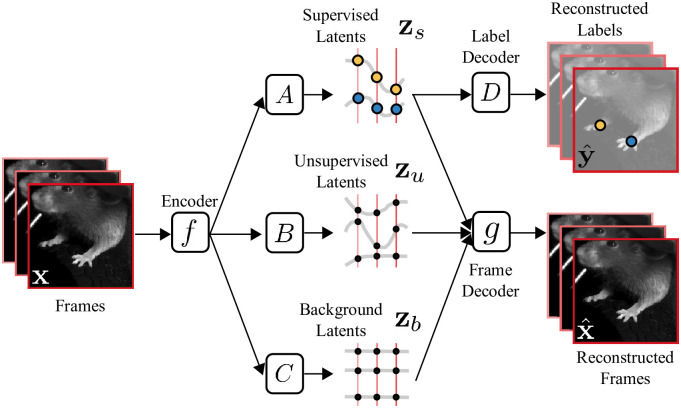

The goal of the PS-VAE is to find an interpretable, low-dimensional latent representation of a behavioral video. Both the interpretability and low dimensionality of this representation make it useful for downstream modeling tasks such as learning the dynamics of behavior and connecting behavior to neural activity, as we show in subsequent sections. The PS-VAE makes this behavioral representation interpretable by partitioning it into two sets of latent variables: a set of supervised latents, and a separate set of unsupervised latents. The role of the supervised latents is to capture specific features of the video that users have previously labeled with pose estimation software, for example joint positions. To achieve this, we require the supervised latents to directly reconstruct a set of user-supplied labels. The role of the unsupervised subspace is to then capture behavioral features in the video that have not been previously labeled. To achieve this, we require the full set of supervised and unsupervised latents to reconstruct the original video frames. We briefly outline the mathematical formulation of the PS-VAE here; full details can be found in the Methods, and we draw connections to related work from the machine learning literature in S1 Appendix.

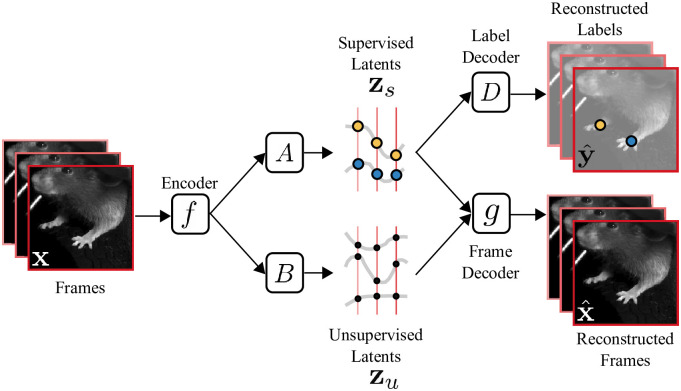

The PS-VAE is an autoencoder neural network model that first compresses a video frame x into a low-dimensional vector μ(x) = f(x) through the use of a convolutional encoder neural network f(⋅) (Fig 1). We then proceed to partition μ(x) into supervised and unsupervised subspaces, respectively defined by the linear transformations A and B. We define the supervised representation as

| (1) |

where (and subsequent ϵ terms) denotes Gaussian noise, which captures the fact that A μ(x) is merely an estimate of zs from the observed data. We refer to zs interchangeably as the “supervised representation” or the “supervised latents.” We construct zs to have the same number of elements as there are label coordinates y, and enforce a one-to-one element-wise linear mapping between the two, as follows:

| (2) |

where D is a diagonal matrix that scales the coordinates of zs without mixing them, and d is an offset term. [Note we could easily absorb the diagonal matrix in to the linear mapping A from Eq 1, but we instead separate these two so that we can treat the random variable zs as a latent variable with a known prior such as which does not rely on the magnitude of the label values.] Thus, Eq 2 amounts to a multiple linear regression predicting y from zs with no interaction terms.

Fig 1. Overview of the Partitioned Subspace VAE (PS-VAE).

The PS-VAE takes a behavioral video as input and finds a low-dimensional latent representation that is partitioned into two subspaces: one subspace contains the supervised latent variables zs, and the second subspace contains the unsupervised latent variables zu. The supervised latent variables are required to reconstruct user-supplied labels, for example from pose estimation software (e.g. DeepLabCut [10]). The unsupervised latent variables are then free to capture remaining variability in the video that is not accounted for by the labels. This is achieved by requiring the combined supervised and unsupervised latents to reconstruct the video frames. An additional term in the PS-VAE objective function factorizes the distribution over the unsupervised latents, which has been shown to result in more interpretable latent representations [45].

Next we define the unsupervised representation as

| (3) |

recalling that B defines the unsupervised subspace. We refer to zu interchangeably as the “unsupervised representation” or the “unsupervised latents.”

We now construct the full latent representation z = [zs; zu] through concatenation and use z to reconstruct the observed video frame through the use of a convolutional decoder neural network g(⋅):

| (4) |

We take two measures to further encourage interpretability in the unsupervised representation zu. The first measure ensures that zu does not contain information from the supervised representation zs. One approach is to encourage the mappings A and B to be orthogonal to each other. In fact we go one step further and encourage the entire latent space to be orthogonal by defining U = [A; B] and adding the penalty term ||UUT − I|| to the PS-VAE objective function (where I is the identity matrix). This orthogonalization of the latent space is similar to PCA, except we do not require the dimensions to be ordered by variance explained. However, we do retain the benefits of an orthogonalized latent space, which will allow us to modify one latent coordinate without modifying the remaining coordinates, facilitating interpretability [37].

The second measure we take to encourage interpretability in the unsupervised representation is to maximize the statistical independence between the dimensions. This additional measure is necessary because even when we represent the latent dimensions with a set of orthogonal vectors, the distribution of the latent variables within this space can still contain correlations (e.g. Fig 2B, top). To minimize correlation, we take an information-theoretic approach and penalize for the “Total Correlation” metric as proposed by [42] and [45]. Total Correlation is a generalization of mutual information to more than two random variables, and is defined as the Kullback-Leibler (KL) divergence between a joint distribution p(z1, …, zD) and a factorized version of this distribution p(z1)…p(zD). Our penalty encourages the joint multivariate latent distribution to be factorized into a set of independent univariate distributions (e.g. Fig 2B, bottom).

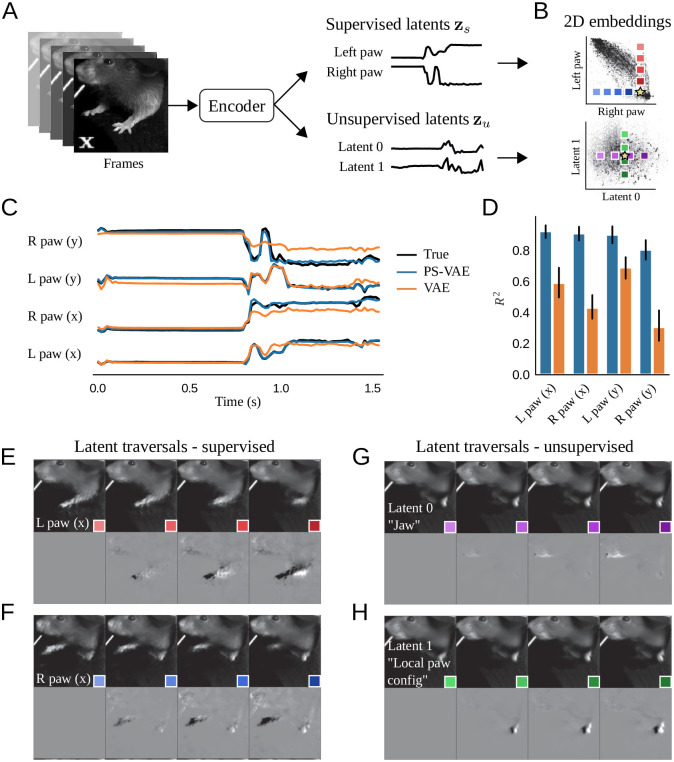

Fig 2. The PS-VAE successfully partitions the latent representation of a head-fixed mouse video [46].

The dataset contains labels for each fore paw. A: The PS-VAE transforms frames from the video into a set of supervised latents zs and unsupervised latents zu. B: Top: A visualization of the 2D embedding of supervised latents corresponding to the horizontal coordinates of the left and right paws. Bottom: The 2D embedding of the unsupervised latents. C: The true labels (black lines) are almost perfectly reconstructed by the supervised subspace of the PS-VAE (blue lines). We also reconstruct the labels from the latent representation of a standard VAE (orange lines), which captures some features of the labels but misses much of the variability. D: Observations from the trial in C hold across all labels and test trials. Error bars represent a 95% bootstrapped confidence interval over test trials. E: To investigate individual dimensions of the latent representation, frames are generated by selecting a test frame (yellow star in B), manipulating the latent representation one dimension at a time, and pushing the resulting representation through the frame decoder. Top: Manipulation of the x coordinate of the left paw. Colored boxes indicate the location of the corresponding point in the latent space from the top plot in B. Movement along this (red) dimension results in horizontal movements of the left paw. Bottom: To better visualize subtle differences between the frames above, the left-most frame is chosen as a base frame from which all frames are subtracted. F: Same as E except the manipulation is performed with the x coordinate of the right paw. G, H: Same as E, F except the manipulation is performed in the two unsupervised dimensions. Latent 0 encodes the position of the jaw line, while Latent 1 encodes the local configuration (rather than absolute position) of the left paw. See S6 Video for a dynamic version of these traversals. See S1 Table for information on the hyperparameters used in the models for this and all subsequent figures.

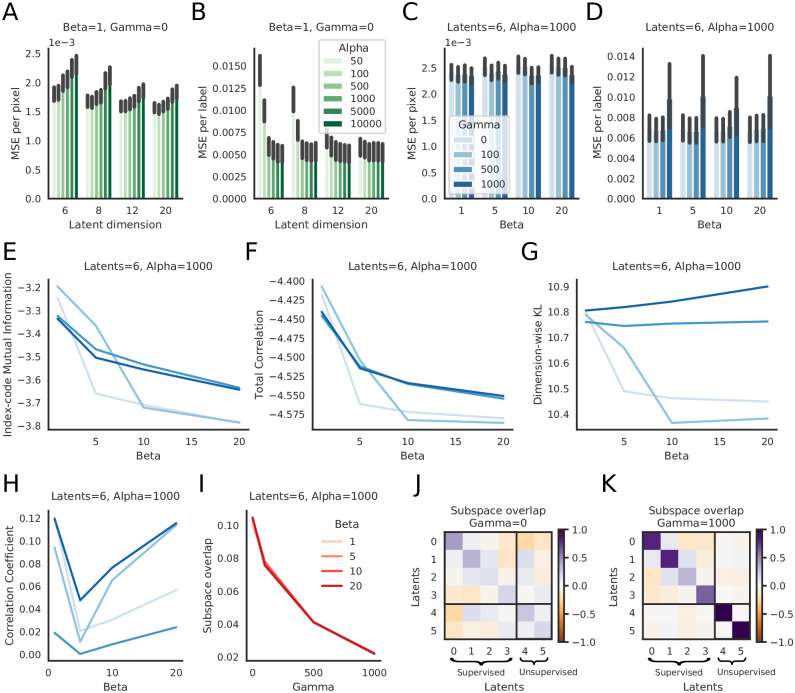

The final PS-VAE objective function contains terms for label reconstruction, frame reconstruction, orthogonalization of the full latent space, and the statistical independence between zu’s factors. The model requires several user-provided hyperparameters, and in the Methods we provide guidance on how to set these. One important hyperparameter is the dimensionality of the unsupervised subspace. In the following sections we use 2D unsupervised subspaces, because these are easy to visualize and the resulting models perform well empirically. At several points we explore models with larger subspaces. In general we recommend starting with a 2D subspace, then increasing one dimension at a time until the results are satisfactory. We emphasize that there is no single correct value for this hyperparameter; what constitutes a satisfactory result will depend on the data and the desired downstream analyses.

Results

Application of the PS-VAE to a head-fixed mouse dataset

We first apply the PS-VAE to an example dataset from the International Brain Lab (IBL) [46], where a head-fixed mouse performs a visual decision-making task by manipulating a wheel with its fore paws. We tracked the left and right paw locations using Deep Graph Pose [13]. First, we quantitatively demonstrate the model successfully learns to reconstruct the labels, and then we qualitatively demonstrate the model’s ability to learn interpretable representations by exploring the correspondence between the extracted latent variables and reconstructed frames. For the results shown here, we used models with a 6D latent space: a 4D supervised subspace (two paws, each with x and y coordinates) and a 2D unsupervised subspace. S1 Table details the complete hyperparameter settings for each model, and in the Methods we explore the selection and sensitivity of these hyperparameters.

Model fits

We first investigate the supervised representation of the PS-VAE, which serves two useful purposes. First, by forcing this representation to reconstruct the labels, we ensure these dimensions are interpretable. Second, we ensure the latent representation contains information about these known features in the data, which may be overlooked by a fully unsupervised method. For example, the pixel-wise mean square error (MSE) term in the standard VAE objective function will only allow the model to capture features that drive a large amount of pixel variance. However, meaningful features of interest in video data, such as a pupil or individual fingers on a hand, may only drive a small amount of pixel variance. As long as these features themselves change over time, we can ensure they are represented in the latent space of the model by tracking them and including them in the supervised representation. Rare behaviors (such as a single saccade) will be more difficult for the model to capture.

We find accurate label reconstruction (Fig 2C, blue lines), with R2 = 0.85 ± 0.01 (mean ± s.e.m) across all held-out test data. This is in contrast to a standard VAE, whose latent variables are much less predictive of the labels; to show this, we first fit a standard VAE model with 6 latents, then fit a post-hoc linear regression model from the latent space to the labels (Fig 2C, orange lines). While this regression model is able to capture substantial variability in the labels (R2 = 0.55 ± 0.02), it still fails to perform as well as the PS-VAE (Fig 2D). We also fit a post-hoc nonlinear regression model in the form of a multi-layer perceptron (MLP) neural network, which performed considerably better (R2 = 0.83 ± 0.01). This performance shows that the VAE latents do in fact contain significant information about the labels, but much of this information is not linearly decodable. This makes the representation more difficult to use for some downstream analyses, which we address below. The supervised PS-VAE latents, on the other hand, are linearly decodable by construction.

Next we investigate the degree to which the PS-VAE partitions the supervised and unsupervised subspaces. Ideally the information contained in the supervised subspace (the labels) will not be represented in the unsupervised subspace. To test this, we fit a post-hoc linear regression model from the unsupervised latents zu to the labels. This regression has poor predictive power (R2 = 0.07 ± 0.03), so we conclude that there is little label-related information contained in the unsupervised subspace, as desired.

We now turn to a qualitative assessment of how well the PS-VAE produces interpretable representations of the behavioral video. In this context, we define an “interpretable” (or “disentangled”) representation as one in which each dimension of the representation corresponds to a single factor of variation in the data, e.g. the movement of an arm, or the opening/closing of the jaw. To demonstrate the PS-VAE’s capacity to learn interpretable representations, we generate novel video frames from the model by changing the latent representation one dimension at a time—which we call a “latent traversal”—and visually compare the outputs [37–39, 42–44]. If the representation is sufficiently interpretable (and the decoder has learned to use this representation), we should be able to easily assign semantic meaning to each latent dimension.

The latent traversal begins by choosing a test frame and pushing it through the encoder to produce a latent representation (Fig 2A). We visualize the latent representation by plotting it in both the supervised and unsupervised subspaces, along with all the training frames (Fig 2B, top and bottom, respectively; the yellow star indicates the test frame, black points indicate all training frames). Next we choose a single dimension of the representation to manipulate, while keeping the value of all other dimensions fixed. We set a new value for the chosen dimension, say the 20th percentile of the training data. We can then push this new latent representation through the frame decoder to produce a generated frame that should look like the original, except for the behavioral feature represented by the chosen dimension. Next we return to the latent space and pick a new value for the chosen dimension, say the 40th percentile of the training data, push this new representation through the frame decoder, and repeat, traversing the chosen dimension. Traversals of different dimensions are indicated by the colored boxes in Fig 2B. If we look at all of the generated frames from a single traversal next to each other, we expect to see smooth changes in a single behavioral feature.

We first consider latent traversals of the supervised subspace. The y-axis in Fig 2B (top) putatively encodes the horizontal position of the left paw; by manipulating this value—and keeping all other dimensions fixed—we expect to see the left paw move horizontally in the generated frames, while all other features (e.g. right paw) remain fixed. Indeed, this latent space traversal results in realistic looking frames with clear horizontal movements of the left paw (Fig 2E, top). The colored boxes indicate the location of the corresponding latent representation in Fig 2B. As an additional visual aid, we fix the left-most generated frame as a base frame and replace each frame with its difference from the base frame (Fig 2E, bottom). We find similar results when traversing the dimension that putatively encodes the horizontal position of the right paw (Fig 2F), thus demonstrating the supervised subspace has adequately learned to encode the provided labels.

The representation in the unsupervised subspace is more difficult to validate since we have no a priori expectations for what features the unsupervised representation should encode. We first note that the facial features are better reconstructed by the full PS-VAE than when using the labels alone, hinting at the features captured by the unsupervised latents (see S1 Video for the frame reconstruction video, and S14 Fig for panel captions). We repeat the latent traversal exercise once more by manipulating the representation in the unsupervised subspace. Traversing the horizontal (purple) dimension produces frames that at first appear all the same (Fig 2G, top), but when looking at the differences it becomes clear that this dimension encodes jaw position (Fig 2G, bottom). Similarly, traversal of the vertical (green) dimension reveals changes in the local configuration of the left paw (Fig 2H). It is also important to note that none of these generated frames show large movements of the left or right paws, which should be fully represented by the supervised subspace. See S6 Video for a dynamic version of these traversals, and S15 Fig for panel captions. The PS-VAE is therefore able to find an interpretable unsupervised representation that does not qualitatively contain information about the supervised representation, as desired.

Qualitative model comparisons

We now utilize the latent traversals to highlight the role that the label reconstruction term and the Total Correlation (TC) term of the PS-VAE play in producing an interpretable latent representation. We first investigate a standard VAE as a baseline model, which neither reconstructs the labels nor penalizes the TC among the latents. We find that many dimensions in the VAE representation simultaneously encode both the paws and the jaw (S10 Video). Next we fit a model that contains the TC term, but does not attempt to reconstruct the labels. This model is the β-TC-VAE of [45]. As in the PS-VAE, the TC term orients the latent space by producing a factorized representation, with the goal of making it more interpretable. [Note, however, the objective still acts to explain pixel-level variance, and thus will not find features that drive little pixel variance.] The β-TC-VAE traversals (S11 Video) reveal dimensions that mostly encode movement from one paw or the other, although these do not directly map on the x and y coordinates as the supervised subspace of the PS-VAE does. We also find a final dimension that encodes jaw position. The TC term therefore acts to shape the unsupervised subspace in a manner that may be more interpretable to human observers. Finally, we consider a PS-VAE model which incorporates label information but does not penalize the TC term. The traversals (S7 Video) reveal each unsupervised latent contains (often similar) information about the paws and jaw, and thus the model has lost interpretability in the unsupervised latent space. However, the supervised latents still clearly represent the desired label information, and thus the model has maintained interpretability in the supervised latent space; indeed, the label reconstruction ability of the PS-VAE is not affected by the TC term across a range of hyperparameter settings (Methods). These qualitative evaluations demonstrate that the label reconstruction term influences the interpretability of the supervised latents, while the TC term influences the interpretability of the unsupervised latents.

We now briefly explore PS-VAE models with more than two unsupervised dimensions, and keep all other hyperparameters the same as the best performing 2D model. We first move to a 3D unsupervised subspace, and find two dimensions that correspond to those we found in the 2D model: a jaw dimension, and a left paw configuration dimension. The third dimension appears to encode the position of the elbows: traversing this dimension shows the elbows moving inwards and outwards together (S8 Video). We then move to a 4D unsupervised subspace, and find three dimensions that correspond to those in the 3D model, and a fourth dimension that is almost an exact replica of the elbow dimension (S9 Video). Additional models fit with different weight initializations produced the same behavior: three unique dimensions matching those of the 3D model, and a fourth dimension that matched one of the previous three. It is possible that adjusting the hyperparameters could lead to four distinct dimensions (for example by increasing the weight on the TC term), but we did not explore this further. We conclude that the PS-VAE can reliably find a small number of interpretable unsupervised dimensions, but the possibility of finding a larger number of unique dimensions will depend on the data and other hyperparameter settings.

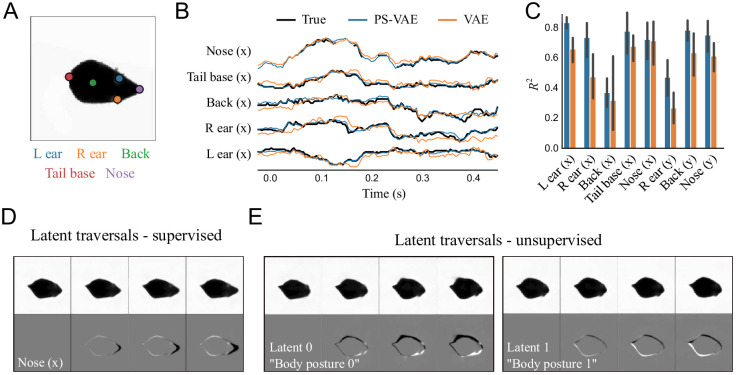

Application of the PS-VAE to a freely moving mouse dataset

We next apply the PS-VAE to a video of a mouse in an open field arena—another ubiquitous behavioral paradigm [49]—to demonstrate how our model generalizes beyond the head-fixed preparation. We tracked the nose, ears, back, and tail base using DeepLabCut [10], and cropped and rotated the mouse to obtain egocentric alignment across frames (Fig 3A). For our analysis we use a PS-VAE model with a 10D latent space: an 8D supervised subspace (x, y coordinates for five body parts, minus two y coordinates that are fixed by our alignment procedure) and a 2D unsupervised subspace.

Fig 3. The PS-VAE successfully partitions the latent representation of a freely moving mouse video.

A: Example frame from the video. The indicated points are tracked to provide labels for the PS-VAE supervised subspace. B: The true labels (black lines) and their reconstructions from the PS-VAE supervised subspace (blue lines) and a standard VAE (orange lines); both models are able to capture much of the label variability. The PS-VAE is capable of interpolating missing labels, as seen in the “Nose (x)” trace; see text for details. Only x coordinates are shown to reduce clutter. C: Observations from the example trial hold across all labels and test trials. The y coordinates of the left ear and tail base are missing because these labels are fixed by our egocentric alignment procedure. Error bars are computed as in Fig 2D. D: Frames generated by manipulating the latent corresponding to the x coordinate of the nose in the supervised subspace. E: Same as panel D except the manipulation is performed in the two unsupervised dimensions. These latents capture more detailed information about the body posture than can be reconstructed from the labels. See S12 Video for a dynamic version of these traversals.

The PS-VAE is able to reconstruct the labels reasonably well (R2 = 0.68 ± 0.02), slightly outperforming the linear and nonlinear regressions from the VAE latents (R2 = 0.59 ± 0.03 and R2 = 0.65 ± 0.02, respectively) (Fig 3B and 3C). This dataset also highlights how the PS-VAE can handle missing label data. Tracking may occasionally be lost, for example when a body part is occluded, and pose estimation packages like DLC and DGP will typically assign low likelihood values to the corresponding labels on such frames. During the PS-VAE training, all labels that fall below an arbitrary likelihood threshold of 0.9 are simply omitted from the computation of the label reconstruction term and its gradient (likelihood values lie in the range [0, 1]). Nevertheless, the supervised subspace of the PS-VAE will still produce an estimate of the label values for these omitted time points, which allows the model to interpolate through missing label information (Fig 3B, “Nose (x)” trace).

In addition to the good label reconstructions, the latent traversals in the supervised subspace also show the PS-VAE learned to capture the label information (Fig 3D). While the supervised latents capture much of the pose information, we find there are additional details about the precise pose of the body that the unsupervised latents capture (see S2 Video for the frame reconstruction video). The latent traversals in the unsupervised subspace show these dimensions have captured complementary (Fig 3E) and uncorrelated (S10H Fig) information about the body posture (S12 Video). To determine whether or not these unsupervised latents also encode label-related information, we again fit a post-hoc linear regression model from the unsupervised latents to the labels. This regression has poor predictive power (R2 = 0.08 ± 0.03), which verifies the unsupervised latent space is indeed capturing behavioral variability not accounted for by the labels.

The PS-VAE enables targeted downstream analyses

The previous section demonstrated how the PS-VAE can successfully partition variability in behavioral videos into a supervised subspace and an interpretable unsupervised subspace. In this section we turn to several downstream applications using different datasets to demonstrate how this partitioned subspace can be exploited for behavioral and neural analyses. For each dataset, we first characterize the latent representation by showing label reconstructions and latent traversals. We then quantify the dynamics of different behavioral features by fitting movement detectors to selected dimensions in the behavioral representation. Finally, we decode the individual behavioral features from simultaneously recorded neural activity. We also show how these analyses are not possible with the “entangled” representations produced by the VAE.

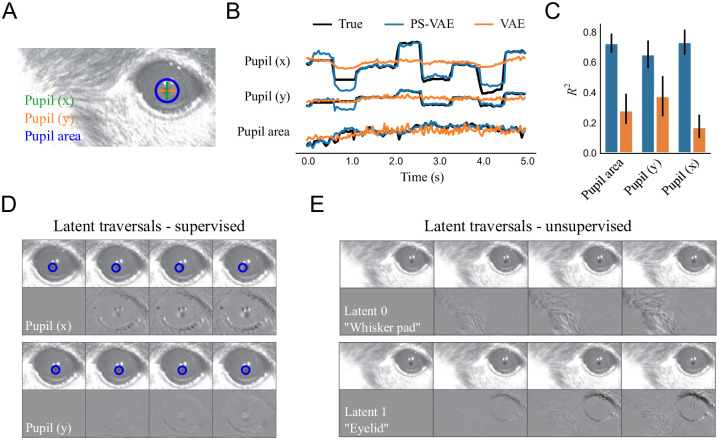

A close up mouse face video

The first example dataset is a close up video of a mouse face (Fig 4A), recorded while the mouse quietly sits and passively views drifting grating stimuli (setup is similar to [47]). We tracked the pupil location and pupil area using Facemap [50]. For our analysis we use models with a 5D latent space: a 3D supervised subspace (x, y coordinates of pupil location, and pupil area) and a 2D unsupervised subspace.

Fig 4. The PS-VAE successfully partitions the latent representation of a mouse face video.

A: Example frame from the video. Pupil area and pupil location are tracked to provide labels for the PS-VAE supervised subspace. B: The true labels (black lines) are again almost perfectly reconstructed by the supervised subspace of the PS-VAE (blue lines). Reconstructions from a standard VAE (orange lines) are able to capture pupil area but miss much of the variability in the pupil location. C: Observations from the example trial hold across all labels and test trials. Error bars are computed as in Fig 2D. D: Frames generated by manipulating the representation in the supervised subspace. Top: Manipulation of the x coordinate of the pupil location. The change is slight due to a small dynamic range of the pupil position in the video, so a static blue circle is superimposed as a reference point. Bottom: Manipulation of the y coordinate of the pupil location. E: Same as panel D except the manipulation is performed in the two unsupervised dimensions. Latent 0 encodes the position of the whisker pad, while Latent 1 encodes the position of the eyelid. See S14 Video for a dynamic version of these traversals.

The PS-VAE is able to successfully reconstruct the pupil labels (R2 = 0.71 ± 0.02), again outperforming the linear regression from the VAE latents (R2 = 0.27 ± 0.03) (Fig 4B and 4C). The difference in reconstruction quality is even more pronounced here than the previous datasets because the feature that we are tracking—the pupil—is composed of a small number of pixels, and thus is not (linearly) captured well by the VAE latents. Furthermore, in this dataset we do not find a substantial improvement when using nonlinear MLP regression from the VAE latents (R2 = 0.31 ± 0.01), indicating that the VAE ignores much of the pupil information altogether. The latent traversals in the supervised subspace show the PS-VAE learned to capture the pupil location, although correlated movements at the edge of the eye are also present, especially in the horizontal (x) position (Fig 4D; pupil movements are more clearly seen in the traversal video S14 Video). The latent traversals in the unsupervised subspace show a clear separation of the whisker pad and the eyelid (Fig 4E). To further validate these unsupervised latents, we compute a corresponding 1D signal for each latent by cropping the frames around the whisker pad or the eyelid, and taking the first PCA component as a “hand engineered” representation of that behavioral feature. The PS-VAE latents show a reasonable correlation with their corresponding PCA signals (whisker pad, r = 0.51; eyelid r = 0.92; S1 Fig). Together these results from the label reconstruction analysis and the latent traversals demonstrate the PS-VAE is able to learn an interpretable representation for this behavioral video.

The separation of eye and whisker pad information allows us to independently characterize the dynamics of each of these behavioral features. As an example of this approach we fit a simple movement detector using a 2-state autoregressive hidden Markov model (ARHMM) [51]. The ARHMM clusters time series data based on dynamics, and we typically find that a 2-state ARHMM clusters time points into “still” and “moving” states of the observations [13, 27]. We first fit the ARHMM on the pupil location latents, where the “still” state corresponds to periods of fixation, and the “moving” state corresponds to periods of pupil movement; the result is a saccade detector (Fig 5A). Indeed, if we align all the PS-VAE latents to saccade onsets found by the ARHMM, we find variability in the pupil location latents increases just after the saccades (Fig 5C). See S21 Video for example saccade clips, and S16 Fig for panel captions. This saccade detector could have been constructed using the original pupil location labels, so we next fit the ARHMM on the whisker pad latents, obtained from the unsupervised latents, which results in a whisker pad movement detector (Fig 5B and 5D; see S22 Video for example movements). To validate this detector we compare it to a hand engineered detector, in which we fit an ARHMM to the 1D whisker signal extracted from the whisker pad; these hand engineered states have 87.1% overlap with the states from the PS-VAE-based detector (S1 Fig). The interpretable PS-VAE latents thus allow us to easily fit several simple ARHMMs to different behavioral features, rather than a single complex ARHMM (with more states) to all behavioral features. This is a major advantage of the PS-VAE framework, because we find that ARHMMs provide more reliable and interpretable output when used with a small number of states, both in simulated data (S2 Fig) and in this particular dataset (S3 Fig).

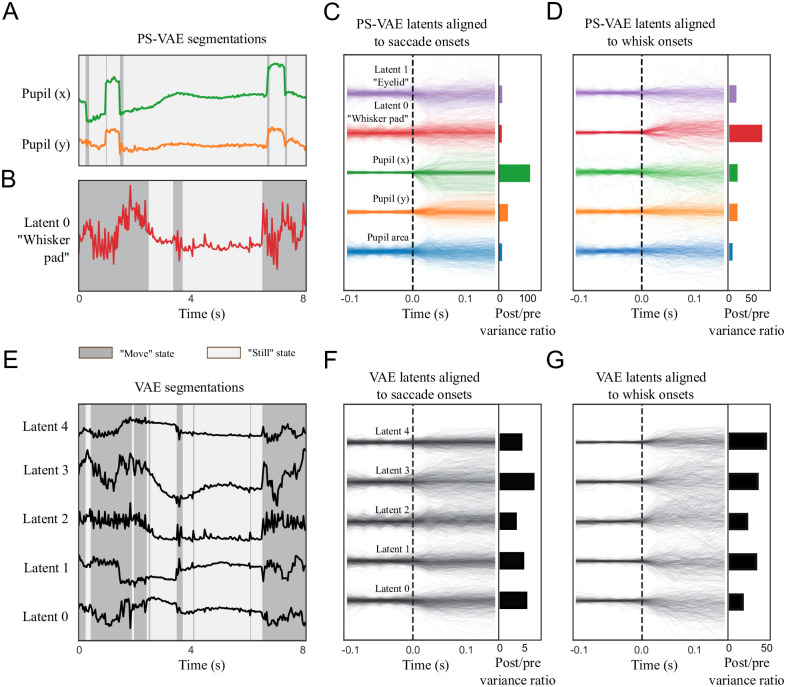

Fig 5. The PS-VAE enables targeted downstream behavioral analyses of the mouse face video.

A simple 2-state autoregressive hidden Markov model (ARHMM) is used to segment subsets of latents into “still” and “moving” states (which refer only to the behavioral features modeled by the ARHMM, not the overall behavioral state of the mouse). A: An ARHMM is fit to the two supervised latents corresponding to the pupil location, resulting in a saccade detector (S21 Video). Background colors indicate the most likely state at each time point. B: An ARHMM is fit to the single unsupervised latent corresponding to the whisker pad location, resulting in a whisking detector (S22 Video). C: Left: PS-VAE latents aligned to saccade onsets found by the model from panel A. Right: The ratio of post-saccade to pre-saccade activity shows the pupil location has larger modulation than the other latents. D: PS-VAE latents aligned to onset of whisker pad movement; the largest increase in variability is seen in the whisker pad latent. E: An ARHMM is fit to five fully unsupervised latents from a standard VAE. The ARHMM can still reliably segment the traces into “still” and “moving” periods, although these tend to align more with movements of the whisker pad than the pupil location (compare to segmentations in panels A and B). F: VAE latents aligned to saccade onsets found by the model from panel A. Variability after saccade onset increases across many latents, demonstrating the distributed nature of the pupil location representation. G: VAE latents aligned to whisker movement onsets found by the model from panel B. The whisker pad is clearly represented across all latents. This distributed representation makes it difficult to interpret individual VAE latents, and therefore does not allow for the targeted behavioral models enabled by the PS-VAE.

We now repeat the above analysis with the latents of a VAE to further demonstrate the advantage gained by using the PS-VAE in this behavioral analysis. We fit a 2-state ARHMM to all latents of the VAE (since we cannot easily separate different dimensions) and again find “still” and “moving” states, which are highly overlapping with the whisker pad states found with the PS-VAE (92.2% overlap) and the hand engineered whisker pad states (85.0% overlap). However, using the VAE latents, we are not able to easily discern the pupil movements (70.2% overlap). This is due in part to the fact that the VAE latents do not contain as much pupil information as the PS-VAE (Fig 4C), and also due to the fact that what pupil information does exist is generally masked by the more frequent movements of the whisker pad (Fig 5A and 5B). Indeed, plotting the VAE latents aligned to whisker pad movement onsets (found from the PS-VAE-based ARHMM) shows a robust detection of movement (Fig 5G), and also shows that the whisker pad is represented non-specifically across all VAE latents. However, if we plot the VAE latents aligned to saccade onsets (found from the PS-VAE-based ARHMM), we also find variability after saccade onset increases across all latents (Fig 5F). So although the VAE movement detector at first seems to mostly capture whisker movements, it is also contaminated by eye movements.

A possible solution to this problem is to increase the number of ARHMM states, so that the model may find different combinations of eye movements and whisker movements (i.e. eye still/whisker still, eye moving/whisker still, etc.). To test this we fit a 4-state ARHMM to the VAE latents, but find the resulting states do not resemble those inferred by the saccade and whisking detectors, and in fact produce a much noisier segmentation than the combination of simpler 2-state ARHMMs (S3 Fig). Therefore we conclude that the entangled representation of the VAE does not allow us to easily construct saccade or whisker pad movement detectors, as does the interpretable representation of the PS-VAE.

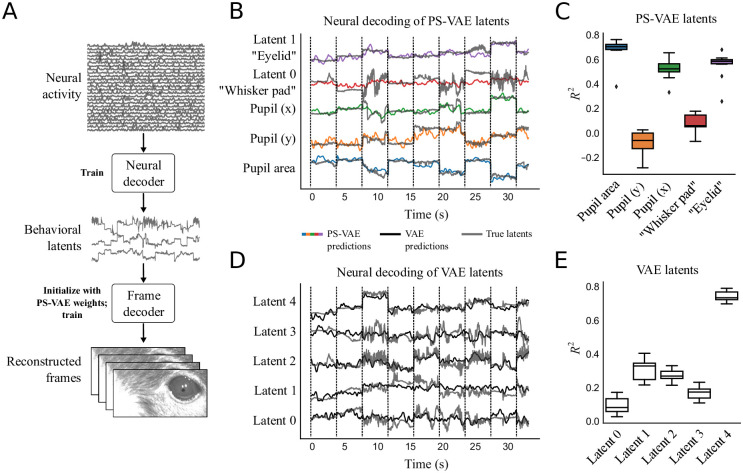

The separation of eye and whisker pad information also allows us to individually decode these behavioral features from neural activity. In this dataset, neural activity in primary visual cortex was optically recorded using two-photon calcium imaging. We randomly subsample 200 of the 1370 recorded neurons and decode the PS-VAE latents using nonlinear MLP regression (Fig 6A and 6B). We repeated this subsampling process 10 times, and find that the neural activity is able to successfully reconstruct the pupil area, eyelid, and horizontal position of the pupil location, but does not perform as well reconstructing the whisker pad or the vertical position of the pupil location (which may be due to the small dynamic range of the vertical position and the accompanying noise in the labels) (Fig 6C). Furthermore, we find these R2 values to be very similar whether decoding the PS-VAE supervised latents (shown here in Fig 6) or the original labels (S8 Fig).

Fig 6. The PS-VAE enables targeted downstream neural analyses of the mouse face video.

A: A neural decoder is trained to map neural activity to the interpretable behavioral latents. These predicted latents can then be further mapped through the frame decoder learned by the PS-VAE to produce video frames reconstructed from neural activity. B: PS-VAE latents (gray traces) and their predictions from neural activity (colored traces) recorded in primary visual cortex with two-photon imaging. Vertical black lines delineate individual test trials. See S25 Video for a video of the full frame decoding. C: Decoding accuracy (R2) computed separately for each latent demonstrates how the PS-VAE can be utilized to investigate the neural representation of different behavioral features. Boxplots show variability over 10 random subsamples of 200 neurons from the full population of 1370 neurons. D: Standard VAE latents (gray traces) and their predictions from the same neural activity (black traces). E: Decoding accuracy for each VAE dimension reveals one dimension that is much better decoded than the rest, but the distributed nature of the VAE representation makes it difficult to understand which behavioral features the neural activity is predicting.

In addition to decoding the PS-VAE latents, we also decoded the motion energy (ME) of the latents (S6 Fig), as previous work has demonstrated that video ME can be an important predictor of neural activity [22, 23, 52]. We find in this dataset that the motion energy of the whisker pad is decoded reasonably well (R2 = 0.33 ± 0.01), consistent with the results in [22] and [23] that use encoding (rather than decoding) models. The motion energies of the remaining latents (pupil area and location, and eyelid) are not decoded well.

Again we can easily demonstrate the advantage gained by using the PS-VAE in this analysis by decoding the VAE latents (Fig 6D). We find that one latent dimension in particular is decoded well (Fig 6E, Latent 4). Upon reviewing the latent traversal video for this VAE (S15 Video), we find that Latent 4 encodes information about every previously described behavioral feature—pupil location, pupil area, whisker pad, and eyelid. This entangled VAE representation makes it difficult to understand precisely how well each of those behavioral features is represented in the neural activity; the specificity of the PS-VAE behavioral representation, on the other hand, allows for a greater specificity in neural decoding.

We can take this decoding analysis one step further and decode not only the behavioral latents, but the behavioral videos themselves from neural activity. To do so we retrain the PS-VAE’s convolutional decoder to map from the neural predictions of the latents (rather than the latents themselves) to the corresponding video frame (Fig 6A). The result is an animal behavioral video that is fully reconstructed from neural activity. See S25 Video for the neural reconstruction video, and S17 Fig for panel captions. These videos can be useful for gaining a qualitative understanding of which behavioral features are captured (or not) by the neural activity—for example, it is easy to see in the video that the neural reconstruction typically misses high frequency movements of the whisker pad. It is also possible to make these reconstructed videos with the neural decoder trained on the VAE latents and the corresponding VAE frame decoder [27]. These VAE reconstructions are qualitatively and quantitatively similar to the PS-VAE reconstructions, suggesting the PS-VAE can provide interpretability without sacrificing information about the original frames in the latent representation.

A two-view mouse video

The next dataset that we consider [22] poses a different set of challenges than the previous datasets. This dataset uses two cameras to simultaneously capture the face and body of a head-fixed mouse in profile and from below (Fig 7A). Notably, the cameras also capture the movements of two lick spouts and two levers. As we show later, the movement of this mechanical equipment drives a significant fraction of the pixel variance, and is thus clearly encoded in the latent space of the VAE. By tracking this equipment we are able to encode mechanical movements in the supervised subspace of the PS-VAE, which allows the unsupervised subspace to capture solely animal-related movements.

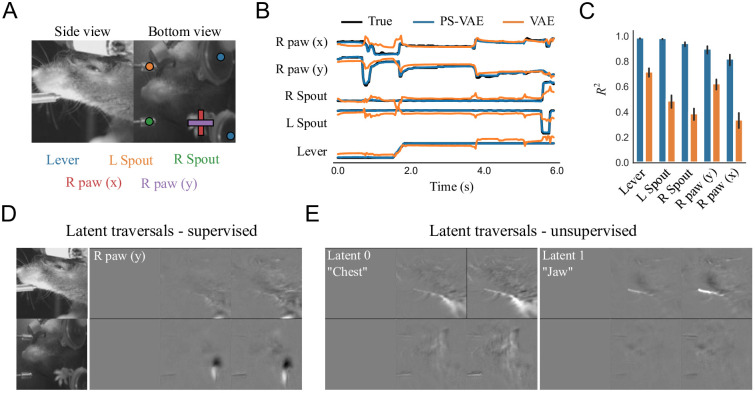

Fig 7. The PS-VAE successfully partitions the latent representation of a two-view mouse video [22].

A: Example frames from the video. Mechanical equipment (lever and two independent spouts) as well as the single visible paw are tracked to provide labels for the PS-VAE supervised subspace. By tracking the moving mechanical equipment, the PS-VAE can isolate this variability in a subset of the latent dimensions, allowing the remaining dimensions to solely capture the animal’s behavior. B: The true labels (black lines) are again almost perfectly reconstructed by the supervised subspace of the PS-VAE (blue lines). Reconstructions from a standard VAE (orange lines) miss much of the variability in these labels. C: Observations from the example trial hold across all labels and test trials. Error bars are computed as in Fig 2D. D: Frames generated by manipulating the y coordinate of the tracked paw results in changes in the paw position, and only small changes in the side view. Only differenced frames are shown for clarity. E: Manipulation of the two unsupervised dimensions. Latent 0 (left) encodes the position of the chest, while Latent 1 (right) encodes the position of the jaw. The contrast of the latent traversal frames has been increased for visual clarity. See S16 Video for a dynamic version of these traversals.

We tracked the two moving lick spouts, two moving levers, and the single visible paw using DeepLabCut [10]. The lick spouts move independently, but only along a single dimension, so we were able to use one label (i.e. one dimension) for each spout. The levers always move synchronously, and only along a one-dimensional path, so we were able to use a single label for all lever-related movement. Therefore in our analysis we use models with a 7D latent space: a 5D supervised subspace (three equipment labels plus the x, y coordinates of the visible paw) and a 2D unsupervised subspace. To incorporate the two camera views into the model we resized the frames to have the same dimensions, then treated each grayscale view as a separate channel (similar to having separate red, green, and blue channels in an RGB image).

The PS-VAE is able to successfully reconstruct all of the labels (R2 = 0.93 ± 0.01), again outperforming the linear regression from the VAE latents (R2 = 0.53 ± 0.01) (Fig 7B and 7C) as well as the nonlinear MLP regression from the VAE latents (R2 = 0.85 ± 0.01). The latent traversals in the supervised subspace also show the PS-VAE learned to capture the label information (Fig 7D). The latent traversals in the unsupervised subspace show one dimension related to the chest and one dimension related to the jaw location (Fig 7E), two body parts that are otherwise hard to manually label (S16 Video). Nevertheless, we attempted to extract 1D hand engineered signals from crops of the chest and jaw as in the mouse face dataset, and again find reasonable correlation with the corresponding PS-VAE latents (chest, r = 0.41; jaw, r = 0.76; S4 Fig). [We note that an analogue of the chest latent is particularly difficult to extract from the frames by cropping due to the presence of the levers and paws.] Together these results from the label reconstruction analysis and the latent traversals demonstrate that, even with two concatenated camera views, the PS-VAE is able to learn an interpretable representation for this behavioral video.

We also use this dataset to demonstrate that the PS-VAE can find more than two interpretable unsupervised latents. We removed the paw labels and refit the PS-VAE with a 3D supervised subspace (one dimension for each of the equipment labels) and a 4D unsupervised subspace. We find that this model recovers the original unsupervised latents—one for the chest and one for the jaw—and the remaining two unsupervised latents capture the position of the (now unlabeled) paw, although they do not learn to strictly encode the x and y coordinates (see S17 Video; “R paw 0” and “R paw 1” panels correspond to the now-unsupervised paw dimensions).

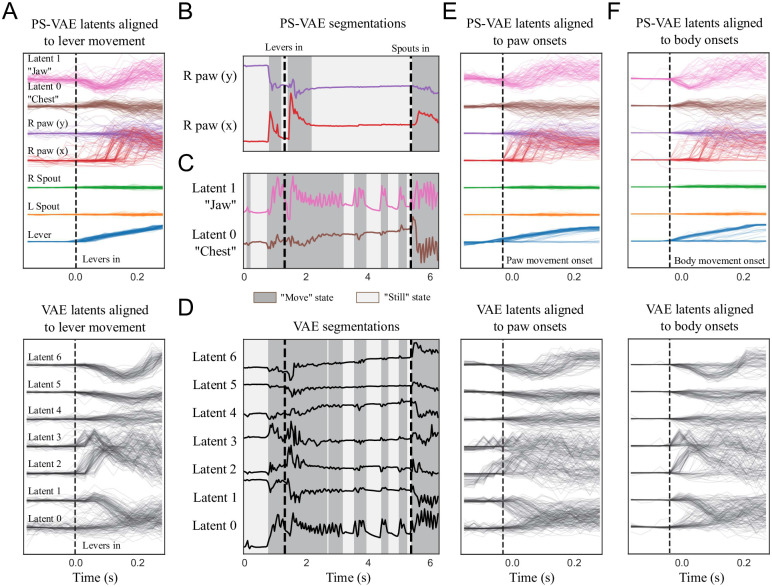

As previously mentioned, one major benefit of the PS-VAE for this dataset is that it allows us find a latent representation that separates the movement of mechanical equipment from the movement of the animal. To demonstrate this point we align the PS-VAE and VAE latents to the time point where the levers move in for each trial (Fig 8A). The PS-VAE latent corresponding to the lever increases with little trial-to-trial variability (blue lines), while the animal-related latents show extensive trial-to-trial variability. On the other hand, the VAE latents show activity that is locked to lever movement onset across many of the dimensions, but it is not straightforward to disentangle the lever movements from the body movements here. The PS-VAE thus provides a substantial advantage over the VAE for any experimental setup that involves moving mechanical equipment that is straightforward to track.

Fig 8. The PS-VAE enables targeted downstream behavioral analyses of the two-view mouse video.

A: PS-VAE latents (top) and VAE latents (bottom) aligned to the lever movement. The PS-VAE isolates this movement in the first (blue) dimension, and variability in the remaining dimensions is behavioral rather than mechanical. The VAE does not clearly isolate the lever movement, and as a result it is difficult to distinguish variability that is mechanical versus behavioral. B: An ARHMM is fit to the two supervised latents corresponding to the paw position (S23 Video). Background colors as in Fig 5. C: An ARHMM is fit to the two unsupervised latents corresponding to the chest and jaw, resulting in a “body” movement detector that is independent of the paw (S24 Video). D: An ARHMM is fit to seven fully unsupervised latents from a standard VAE. The “still” and “moving” periods tend to align more with movements of the body than the paw (compare to panels B and C). E: PS-VAE latents (top) and VAE latents (bottom) aligned to the onsets of paw movement found in B. This movement also is often accompanied by movements of the jaw and chest, although this is impossible to ascertain from the VAE latents. F: This same conclusion holds when aligning the latents to the onsets of body movement.

Beyond separating equipment- and animal-related information, the PS-VAE also allows us to separate paw movements from body movements (which we take to include the jaw). As in the mouse face dataset, we demonstrate how this separation allows us to fit some simple movement detectors to specific behavioral features. We fit 2-state ARHMMs separately on the paw latents (Fig 8B) and the body latents (Fig 8C) from the PS-VAE. To validate the body movement detector—constructed from PS-VAE unsupervised latents—we fit another ARHMM to the 1D body signal analogues extracted directly from the video frames, and find high overlap (94.9%) between the states of these two models (S4 Fig). We also fit an ARHMM on all latents from the VAE (Fig 8D). Again we see the VAE segmentation tends to line up with one of these more specific detectors more than the other (VAE and PS-VAE-paw state overlap: 72.5%; VAE and PS-VAE-body state overlap: 95.3%; VAE and hand engineered body state overlap: 93.1%). If we align all the PS-VAE latents to paw movement onsets found by the ARHMM (Fig 8E, top), we can make the additional observation that these paw movements tend to accompany body movements, as well as lever movements (see S23 Video for example clips). However, this would be impossible to ascertain from the VAE latents alone (Fig 8E, bottom), where the location of the mechanical equipment, the paw, and the body are all entangled. We make a similar conclusion when aligning the latents to body movement onsets (Fig 8F; see S24 Video for example clips). Furthermore, we find that increasing the number of ARHMM states does not help with interpretability of the VAE states (S5 Fig). The entangled representation of the VAE therefore does not allow us to easily construct paw or body movement detectors, as does the interpretable representation of the PS-VAE.

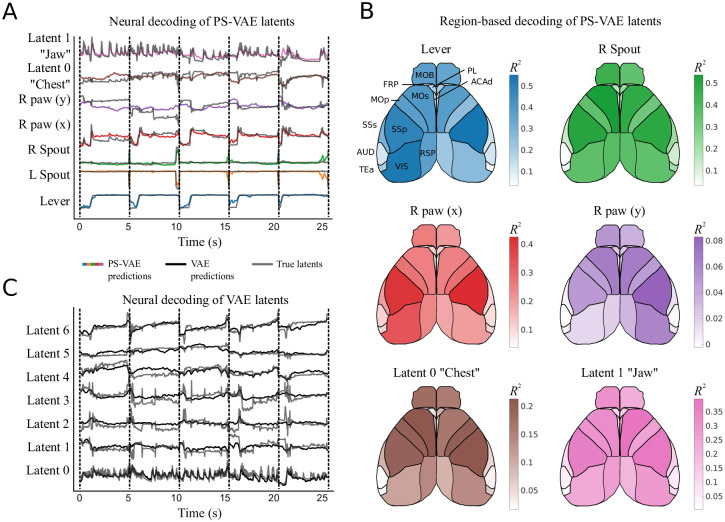

Finally, we decode the PS-VAE latents—both equipment- and animal-related—from neural activity. In this dataset, neural activity across dorsal cortex was optically recorded using widefield calcium imaging. We extract interpretable dimensions of neural activity using LocaNMF [19], which finds a low-dimensional representation for each of 12 aggregate brain regions defined by the Allen Common Coordinate Framework Atlas [53]. We first decode the PS-VAE latents from all brain regions using nonlinear MLP regression and find good reconstructions (Fig 9A), even for the equipment-related latents (quantified in S7 Fig). The real benefit of our approach becomes clear, however, when we perform a region-based decoding analysis (Fig 9B). The confluence of interpretable, region-based neural activity with interpretable behavioral latents from the PS-VAE leads to a detailed mapping between specific brain regions and specific behaviors.

Fig 9. The PS-VAE enables a detailed brain region-to-behavior mapping in the two-view mouse dataset.

A: PS-VAE latents (gray traces) and their predictions from neural activity (colored traces) recorded across dorsal cortex with widefield calcium imaging. Vertical dashed black lines delineate individual test trials. See S26 Video for a video of the full frame decoding. B: The behavioral specificity of the PS-VAE can be combined with the anatomical specificity of computational tools like LocaNMF [19] to produce detailed mappings from distinct neural populations to distinct behavioral features. Region acronyms are defined in Table 1. C: VAE latents (gray traces) and their predictions from the same neural activity as in A (black traces). The distributed behavioral representation produced by the VAE does not allow for the same region-to-behavior mappings enabled by the PS-VAE.

In this detailed mapping we see the equipment-related latents actually have higher reconstruction quality than the animal-related latents, although the equipment-related latents contain far less trial-to-trial variability (Fig 8A). Of the animal-related latents, the x coordinate of the right paw (supervised) and the jaw position (unsupervised) are the best reconstructed, followed by the chest and then the y coordinate of the right paw. Most of the decoding power comes from the motor (MOp, MOs) and somatosensory (SSp, SSs) regions, although visual regions (VIS) also perform reasonably well. We note that, while we could perform a region-based decoding of VAE latents [27], the lack of interpretability of those latents does not allow for the same specificity as the PS-VAE.

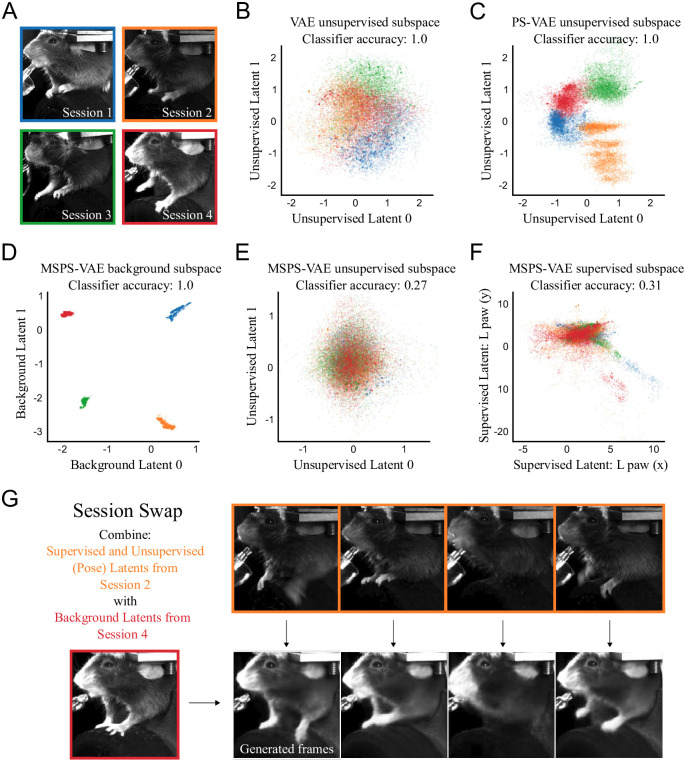

Extending the PS-VAE framework to multiple sessions

Thus far we have focused on validating the PS-VAE on single videos from different experimental setups; in practice, though, we will typically want to produce a low-dimensional latent representation that is shared across multiple experimental sessions, rather than fitting session-specific models. However, the inclusion of multiple videos during training introduces a new problem: different videos from the same experimental setup will contain variability in the experimental equipment, lighting, or even physical differences between animals, despite efforts to standardize these features (Fig 10A). We do not want these differences (which we refer to collectively as the “background”) to contaminate the latent representation, as they do not contain the behavioral information we wish to extract for downstream analyses.

Fig 10. The multi-session PS-VAE (MSPS-VAE) accounts for session-level differences between videos in the head-fixed mouse dataset [46].

A: One example frame from each of four experimental sessions with variation in lighting, experimental equipment, and animal appearance. B: Distribution of two latents from a VAE trained on all four sessions. Noticeable session-related structure is present, and a linear classifier can perfectly predict session identity on held-out test data (note the VAE has a total of eleven latent dimensions). Colors correspond to borders in panel A. C: Distribution of two unsupervised latents from a PS-VAE. D: Distribution of two background latents from an MSPS-VAE, which are designed to contain all of the static across-session variability. E: Distribution of two unsupervised latents from an MSPS-VAE. Note the lack of session-related structure; a linear classifier can only predict 27% of the data points correctly (chance level is 25%). F: Distribution of two supervised latents from an MSPS-VAE. G: Example of a “session swap” where the pose of one mouse is combined with the background appearance of another mouse to generate new frames. These swaps qualitatively demonstrate the model has learned to successfully encode these different features in the proper subspaces. See S27 Video for a dynamic version of these swaps.

To demonstrate how the background can affect the latent representation, we first fit a standard VAE using four sessions from the same experimental setup as the head-fixed mouse dataset (Fig 10A; see S1 Table for hyperparameter settings for this and subsequent models). The distribution of latents shows considerable session-specific structure (Fig 10B). We quantify this observation by fitting a logistic regression classifier from the VAE latent space to the session identity for each time point. We find this classifier can predict the correct session on held-out test data 100% of the time. However, a classifier fit to the z-scored labels predicts the correct session only 22% of the time (chance level is 25%). This demonstrates that much of the information concerning session identity comes not from the labels (i.e. the behavior), but instead from the background information. The standard PS-VAE does not perform any better; a classifier fit to the PS-VAE unsupervised latents can again perfectly predict session identity (Fig 10C).

To address this issue within the framework of the PS-VAE, we introduce a new subspace into our model which captures static differences between sessions (the “background” subspace) while leaving the other subspaces (supervised and unsupervised) to capture dynamic behaviors. As before, we briefly describe this multi-session PS-VAE (MSPS-VAE) here, while full details can be found in the Methods. Recall that the PS-VAE forms the supervised and unsupervised latent representations zs and zu as a nonlinear transformation of the observed video frame x. In a similar fashion, we define the background representation zb and encourage all three subspaces to be orthogonal to one another. We employ a triplet loss [54] that moves data points from the same session nearer to each other in the background subspace, and pushes data points from different sessions farther apart. Finally, the concatenated latent representation z = [zu; zs; zb] is used to reconstruct the observed video frame.

We fit the MSPS-VAE to the four sessions in Fig 10A, and visualize the three latent spaces. The background subspace, trained with the triplet loss, has learned to cleanly separate the four sessions (Fig 10D). An immediate consequence of this separation is that the distribution of data points in the unsupervised subspace no longer contains session-specific structure (Fig 10E); in fact, a classifier can only predict the correct session identity 27% of the time (where again chance level is 25%). We also find these dimensions to be relatively interpretable, and conserved across the four different sessions (S20 Video). Finally, we see little session-specific structure in the supervised latents (Fig 10F), and note that label reconstructions are still good and outperform VAE label reconstructions (S9 Fig).

Once the MSPS-VAE has been trained, the background representation zb should be independent of the behavioral information represented in zs and zu. As a qualitative test of this, we perform a “session swap” where we combine the poses of one session (encoded in zs and zu) with the appearance of another session (encoded in zb). This allows us to, for example, take a clip from one session, then generate corresponding clips that appear as if animals from the other sessions are performing the exact same movements (Fig 10G; see S27 Video, and S18 Fig for panel captions). We find that the movements of obvious body parts such as the paws and tongue are synchronized across the videos, while background information such as the lighting, lickspout, and head stage acquire the appearance of the desired session.

Discussion

In this work we introduced the Partitioned Subspace VAE (PS-VAE), a model that produces interpretable, low-dimensional representations of behavioral videos. We applied the PS-VAE to three head-fixed mouse datasets (Figs 2, 4 and 7) and a freely moving mouse dataset (Fig 3), demonstrating on each that our model is able to extract a set of supervised latents corresponding to user-supplied labels, and another set of unsupervised latents that account for other salient behavioral features. Notably, the PS-VAE can accommodate a range of tracking algorithms—the analyzed datasets contain labels from Deep Graph Pose [13] (head-fixed mouse), Facemap [50] (mouse face), and DeepLabCut [10] (freely moving mouse, two-view mouse). [Although all of our examples use pose estimates as labels, this is not an explicit requirement of the model; the labels can in general be any variable, continuous or discrete, that might be predicted from the video data.] We then demonstrated how the PS-VAE’s interpretable representations lend themselves to targeted downstream analyses which were otherwise infeasible using supervised or unsupervised methods alone. In one dataset we constructed a saccade detector from the supervised representation, and a whisker pad movement detector from the unsupervised representation (Fig 5); in a second dataset we constructed a paw movement detector from the supervised representation, and a body movement detector from the unsupervised representation (Fig 8). We then decoded the PS-VAE’s behavioral representations from neural activity, and showed how their interpretability allows us to better understand how different brain regions are related to distinct behaviors. For example, in one dataset we found that neurons from visual cortex were able to decode pupil information much more accurately than whisker pad position (Fig 6); in a second dataset we separately decoded mechanical equipment, body position, and paw position from across the dorsal cortex (Fig 9). Finally, we extended the PS-VAE framework to accommodate multiple videos from the same experimental setup (Fig 10). To do so we introduced a new subspace that captures variability in static background features across videos, while leaving the original subspaces (supervised and unsupervised) to capture dynamic behavioral features.

The PS-VAE contributes to a growing body of research that relies on automated video analysis to facilitate scientific discovery, which often requires supervised or unsupervised dimensionality reduction approaches to first extract meaningful behavioral features from video. Notable examples include “behavioral phenotyping,” a process which can automatically compare animal behavior across different genetic populations, disease conditions, and pharmacological interventions [16, 55]; the study of social interactions [56–59]; and quantitative measurements of pain response [60] and emotion [61]. The more detailed behavioral representation provided by the PS-VAE enables future such studies to consider a wider range of behavioral features, potentially offering a more nuanced understanding of how different behaviors are affected by genes, drugs, and the environment.

Automated video analysis is also becoming central to the search for neural correlates of behavior. Several recent studies applied PCA (an unsupervised approach) to behavioral videos to demonstrate that movements are encoded across the entire mouse brain, including regions not previously thought to be motor-related [22, 23]. In contrast to PCA, which does not take into account external covariates, the PS-VAE extracts interpretable pose information, as well as automatically discovers additional sources of variation in the video. These interpretable behavioral representations, as shown in our results (Figs 6 and 9), lead to more refined correlations between specific behaviors and specific neural populations. Moreover, motor control studies have employed supervised pose estimation algorithms to extract kinematic quantities and regress them against simultaneously recorded neural activity [56, 62–65]. The PS-VAE may allow such studies to account for movements that are not easily captured by tracked key points, such as soft tissues (e.g. a whisker pad or throat) or body parts that are occluded (e.g. by fur or feathers).

Finally, an important thread of work scrutinizes the neural underpinnings of naturalistic behaviors such as rearing [25] or mounting [66]. These discrete behaviors are often extracted from video data via segmentation of a low-dimensional representation (either supervised or unsupervised), as we demonstrated with the ARHMMs (Figs 5 and 8). Here too, the interpretable representation of the PS-VAE can allow segmentation algorithms to take advantage of a wider array of interpretable features, producing a more refined set of discrete behaviors.

In the results presented here we have implicitly defined “interpretable” behavioral features to mean individual body parts such as whisker pads, eyelids, and jaws. However, we acknowledge that “interpretable” is a subjective term [67], and will carry different meanings for different datasets. It is of course possible that the PS-VAE could find “interpretable” features that involve multiple coordinated body parts. Furthermore, features that are not immediately interpretable to a human observer may still contain information that is relevant to the scientific question at hand. For example, when comparing the behaviors of two subject cohorts (e.g. healthy and diseased) we might find that a previously uninterpretable feature is a significant predictor of cohort. Regardless of whether or not the unsupervised latents of the PS-VAE map onto intuitive behavioral features, these latents will still account for variance that is not explained by the user-provided labels.

There are some obvious directions to explore by applying the PS-VAE to different species and different experimental preparations, though the model may not be appropriate for analyzing behavioral videos where tracking non-animal equipment is not possible. Examples include bedding that moves around in a home cage experiment, or a patterned ball used for locomotion [68]. Depending on the amount of pixel variance driven by changes in these non-trackable, non-behavioral features, the PS-VAE may attempt to encode them in its unsupervised latent space. This encoding may be difficult to control, and could lead to uninterpretable latents.

The PS-VAE is not limited to the analysis of video data; rather, it is a general purpose nonlinear dimensionality reduction tool that partitions the low-dimensional representation into a set of dimensions that are constrained by user-provided labels, and another set of dimensions that account for remaining variability (similar in spirit to demixed PCA [69]). As such its application to additional types of data is a rich direction for future work. For example, the model could find a low-dimensional representation of neural activity, and constrain the supervised subspace with a low-dimensionsal representation of the behavior—whether that be from pose estimation, a purely behavioral PS-VAE, or even trial variables provided by the experimenter. This approach would then partition neural variability into a behavior-related subspace and a non-behavior subspace. [70] and [71] both propose a linear version of this model, although incorporating the nonlinear transformations of the autoencoder may be beneficial in many cases. [72] take a related nonlinear approach that incorporates behavioral labels differently from our work. Another use case comes from spike sorting: many pipelines contain a spike waveform featurization step, the output of which is used for clustering [73, 74]. The PS-VAE could find a low-dimensional representation of spike waveforms, and constrain the supervised subspace with easy to compute features such as peak-to-peak amplitude and waveform width. The unsupervised latents could then reveal interpretable dimensions of spike waveforms that are important for distinguishing different cells.

The structure of the PS-VAE fuses a generative model of video frames with a discriminative model that predicts the labels from the latent representation [30–37], and we have demonstrated how this structure is able to produce a useful representation of video data (e.g. Fig 2). An alternative approach to incorporating label information is to condition the latent representation directly on the labels, instead of predicting them with a discriminative model [72, 75–82]. We pursued the discriminative (rather than conditional) approach based on the nature of the labels we are likely to encounter in the analysis of behavioral videos, i.e. pose estimates: although pose estimation has rapidly become more accurate and robust, we still expect some degree of noise in the estimates. With the discriminative approach we can explicitly model that noise with the label likelihood term in the PS-VAE objective function. This approach also allows us to easily incorporate a range of label types beyond pose estimates, both continuous (e.g. running speed or accelerometer data) and discrete (e.g. trial condition or animal identity).

Extending the PS-VAE model itself offers several exciting directions for future work. We note that all of our downstream analyses in this paper first require fitting the PS-VAE, then require fitting a separate model (e.g., an ARHMM, or neural decoder). It is possible to incorporate some of these downstream analyses directly into the model. For example, recent work has combined autoencoders with clustering algorithms [15, 16], similar to what we achieved by separately fitting the ARHMMs (a dynamic clustering method) on the PS-VAE latents. There is also growing interest in directly incorporating dynamics models into the latent spaces of autoencoders for improved video analysis, including Markovian dynamics [83, 84], ARHMMs [26], RNNs [85–89], and Gaussian Processes [90]. There is also room to improve the video frame reconstruction term in the PS-VAE objective function. The current implementation uses the pixel-wise mean square error (MSE) loss. Replacing the MSE loss with a similarity metric that is more tailored to image data could substantially improve the quality of the model reconstructions and latent traversals [88, 91]. And finally, unsupervised disentangling remains an active area of research [38, 42–45, 72, 82, 92, 93], and the PS-VAE can benefit from improvements in this field through the incorporation of new disentangling cost function terms as they become available in the future.

Methods

Data details

Ethics statement

Animal experimentation: All procedures and experiments were carried out in accordance with the local laws and following approval by the relevant institutions: the Columbia University Institutional Animal Care and Use Committee [AC-AABL7561] (freely moving mouse dataset) and the Home Office in accordance with the UK Animals (Scientific Procedures) Act 1986 [P1DB285D8] (mouse face dataset).

Head-fixed mouse dataset [46]

A head-fixed mouse performed a visual decision-making task by manipulating a wheel with its fore paws. Behavioral data was recorded using a single camera at a 60 Hz frame rate; grayscale video frames were cropped and downsampled to 192x192 pixels. Batches were arbitrarily defined as contiguous blocks of 100 frames. We chose to label the left and right paws (Fig 2) for a total of 4 label dimensions (each paw has an x and y coordinate). For the single session analyzed in Fig 2 we hand labeled 66 frames and trained Deep Graph Pose [13] to obtain labels for the remaining frames. For the multiple sessions analyzed in Fig 10 we used a DLC network [10] trained on many thousands of frames. Each label was individually z-scored to make hyperparameter values more comparable across the different datasets analyzed in this paper, since the label log-likelihood values will depend on the magnitude of the labels. Note, however, that this preprocessing step is not strictly necessary due to the scale and translation transform in Eq 2.

Freely moving mouse dataset