ABSTRACT

Since the early 1990s, ecologists and evolutionary biologists have aggregated primary research using meta‐analytic methods to understand ecological and evolutionary phenomena. Meta‐analyses can resolve long‐standing disputes, dispel spurious claims, and generate new research questions. At their worst, however, meta‐analysis publications are wolves in sheep's clothing: subjective with biased conclusions, hidden under coats of objective authority. Conclusions can be rendered unreliable by inappropriate statistical methods, problems with the methods used to select primary research, or problems within the primary research itself. Because of these risks, meta‐analyses are increasingly conducted as part of systematic reviews, which use structured, transparent, and reproducible methods to collate and summarise evidence. For readers to determine whether the conclusions from a systematic review or meta‐analysis should be trusted – and to be able to build upon the review – authors need to report what they did, why they did it, and what they found. Complete, transparent, and reproducible reporting is measured by ‘reporting quality’. To assess perceptions and standards of reporting quality of systematic reviews and meta‐analyses published in ecology and evolutionary biology, we surveyed 208 researchers with relevant experience (as authors, reviewers, or editors), and conducted detailed evaluations of 102 systematic review and meta‐analysis papers published between 2010 and 2019. Reporting quality was far below optimal and approximately normally distributed. Measured reporting quality was lower than what the community perceived, particularly for the systematic review methods required to measure trustworthiness. The minority of assessed papers that referenced a guideline (~16%) showed substantially higher reporting quality than average, and surveyed researchers showed interest in using a reporting guideline to improve reporting quality. 
The leading guideline for improving reporting quality of systematic reviews is the Preferred Reporting Items for Systematic reviews and Meta‐Analyses (PRISMA) statement. Here we unveil an extension of PRISMA to serve the meta‐analysis community in ecology and evolutionary biology: PRISMA‐EcoEvo (version 1.0). PRISMA‐EcoEvo is a checklist of 27 main items that, when applicable, should be reported in systematic review and meta‐analysis publications summarising primary research in ecology and evolutionary biology. In this explanation and elaboration document, we provide guidance for authors, reviewers, and editors, with explanations for each item on the checklist, including supplementary examples from published papers. Authors can consult this PRISMA‐EcoEvo guideline both in the planning and writing stages of a systematic review and meta‐analysis, to increase reporting quality of submitted manuscripts. Reviewers and editors can use the checklist to assess reporting quality in the manuscripts they review. Overall, PRISMA‐EcoEvo is a resource for the ecology and evolutionary biology community to facilitate transparent and comprehensively reported systematic reviews and meta‐analyses.

Keywords: comparative analysis, critical appraisal, evidence synthesis, non‐independence, open science, study quality, pre‐registration, registration

I. INTRODUCTION

Ecological and evolutionary research topics are often distilled in systematic review and meta‐analysis publications (Gurevitch et al., 2018; Koricheva & Kulinskaya, 2019). Although terminology differs both across and within disciplines, here we use the term ‘meta‐analysis’ to refer to the statistical synthesis of effect sizes from multiple independent studies, whereas a ‘systematic review’ is the outcome of a series of established, transparent, and reproducible methods to find and summarise studies (definitions are discussed further in Primer A below). As with any scientific project, systematic reviews and meta‐analyses are susceptible to quality issues, limitations, and biases that can undermine the credibility of their conclusions. First, the strength of primary evidence included in the review might be weakened by selective reporting and research biases (Jennions & Møller, 2002; Forstmeier, Wagenmakers & Parker, 2017; Fraser et al., 2018). Second, reviews might be conducted or communicated in ways that summarise existing evidence inaccurately (Whittaker, 2010; Ioannidis, 2016). Systematic review methods have been designed to identify and mitigate both these threats to credibility (Haddaway & Macura, 2018) but, from the details that authors of meta‐analyses report, it is often unclear whether systematic review methods have been used in ecology and evolution. For a review to provide a firm base of knowledge on which researchers can build, it is essential that review authors transparently report their aims, methods, and outcomes (Liberati et al., 2009; Parker et al., 2016a).

In evidence‐based medicine, where biased conclusions from systematic reviews can endanger human lives, transparent reporting is promoted by reporting guidelines and checklists such as the Preferred Reporting Items for Systematic reviews and Meta‐Analyses (PRISMA) statement. PRISMA, first published in 2009 (Moher et al., 2009) and recently updated as PRISMA‐2020 (Page et al., 2021b), describes minimum reporting standards for authors of systematic reviews of healthcare interventions. PRISMA has been widely cited and endorsed by prominent journals, and there is evidence of improved reporting quality in clinical research reviews following its publication (Page & Moher, 2017). Several extensions of PRISMA have been published to suit different types of reviews (e.g. PRISMA for Protocols, PRISMA for Network Meta‐Analyses, and PRISMA for individual patient data: Hutton et al., 2015; Moher et al., 2015; Stewart et al., 2015).

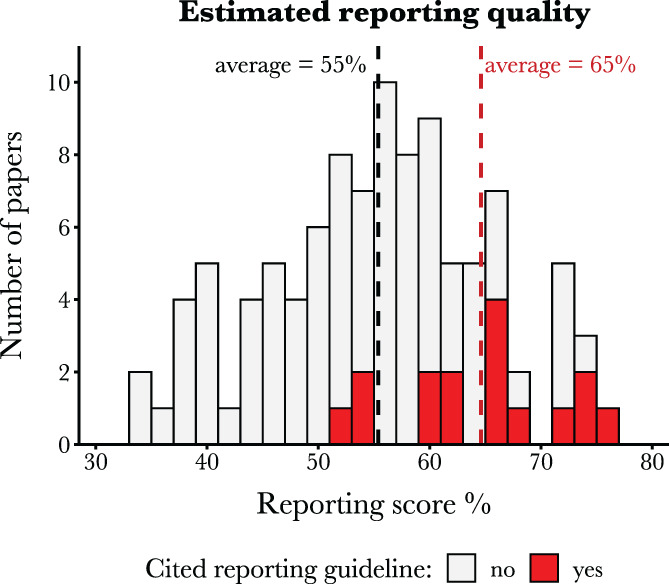

Ecologists and evolutionary biologists seldom reference reporting guidelines in systematic reviews and meta‐analyses. However, there is community support for wider use of reporting guidelines (based on our survey of 208 researchers; see online Supporting Information) and benefits to their adoption. In a representative sample of 102 systematic review and meta‐analysis papers published between 2010 and 2019, the 16% of papers that mentioned a reporting guideline showed above‐average reporting quality (Fig. 1). In all but one paper, the reporting guideline used by authors was PRISMA, despite it being focussed on reviews of clinical research. While more discipline‐appropriate reporting checklists are available for our fields (e.g. ‘ROSES: RepOrting standards for Systematic Evidence Syntheses’; Haddaway et al., 2018; and the ‘Tools for Transparency in Ecology and Evolution’; Parker et al., 2016a), these have so far focussed on applied topics in environmental evidence, and/or lack explanations and examples for meta‐analysis reporting items. Ecologists and evolutionary biologists need a detailed reporting guideline for systematic review and meta‐analysis papers.

Fig 1.

Results from our assessment of reporting quality of systematic reviews and meta‐analyses published between 2010 and 2019, in ecology and evolutionary biology (n = 102). For each paper, the reporting score represents the mean ‘average item % score’ across all applicable items. Full details are provided in the Supporting Information and supplementary code. Red columns indicate the minority of papers that cited a reporting guideline (n = 15 cited PRISMA, and n = 1 cited Koricheva & Gurevitch, 2014). The subset of papers that referenced a reporting guideline tended to have higher reporting scores (note that these observational data cannot distinguish between checklists causing better reporting, or authors with better reporting practices being more likely to report using checklists). Welch's t‐test: t‐value = 5.21; df = 25.65; P < 0.001.
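
The fractional degrees of freedom reported above (df = 25.65) are characteristic of Welch's unequal‐variance t‐test, which uses the Welch–Satterthwaite approximation. The following is a minimal sketch of that calculation using only the Python standard library. The function name `welch_t` and the two lists of scores are hypothetical and invented for illustration; they are not the data or code from the assessment, which are described in the Supporting Information.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance t-test on two samples."""
    # Squared standard error of each group mean (sample variance / n).
    va = variance(a) / len(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical reporting scores (%), NOT the values from the assessed papers.
cited = [62.0, 70.5, 58.0, 66.0, 73.5]
not_cited = [48.0, 55.5, 41.0, 52.0, 60.0, 45.5, 50.0]

t, df = welch_t(cited, not_cited)
print(f"t = {t:.2f}, df = {df:.2f}")
```

Because the two groups are allowed to have different variances, the degrees of freedom fall between the smaller group's n − 1 and the pooled n₁ + n₂ − 2, and are generally not an integer.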

We have designed version 1.0 of a PRISMA extension for ecology and evolutionary biology: PRISMA‐EcoEvo. This guideline caters for the types of reviews and methods common within our fields. For example, meta‐analyses in ecology and evolutionary biology often combine large numbers of diverse studies to summarise patterns across multiple taxa and/or environmental conditions (Nakagawa & Santos, 2012; Senior et al., 2016). Aggregating diverse studies often creates multiple types of statistical non‐independence that require careful consideration (Noble et al., 2017), and guidance on reporting these statistical issues is not comprehensively covered by PRISMA. Conversely, some of the items on PRISMA are yet to be normalised within ecology and evolution (e.g. risk of bias assessment, and duplicate data extraction). Without pragmatic consideration of these differences between fields, most ecologists and evolutionary biologists are unlikely to use a reporting guideline for systematic reviews and meta‐analyses.

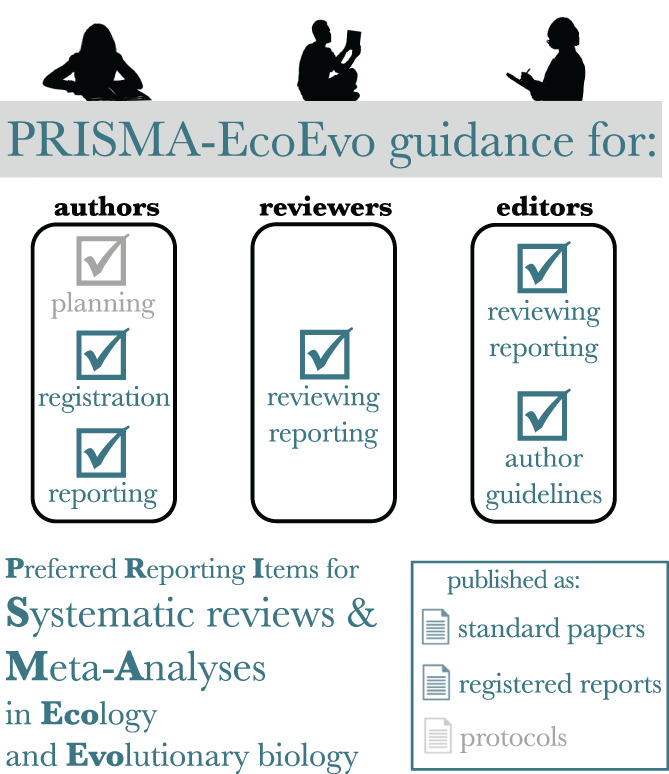

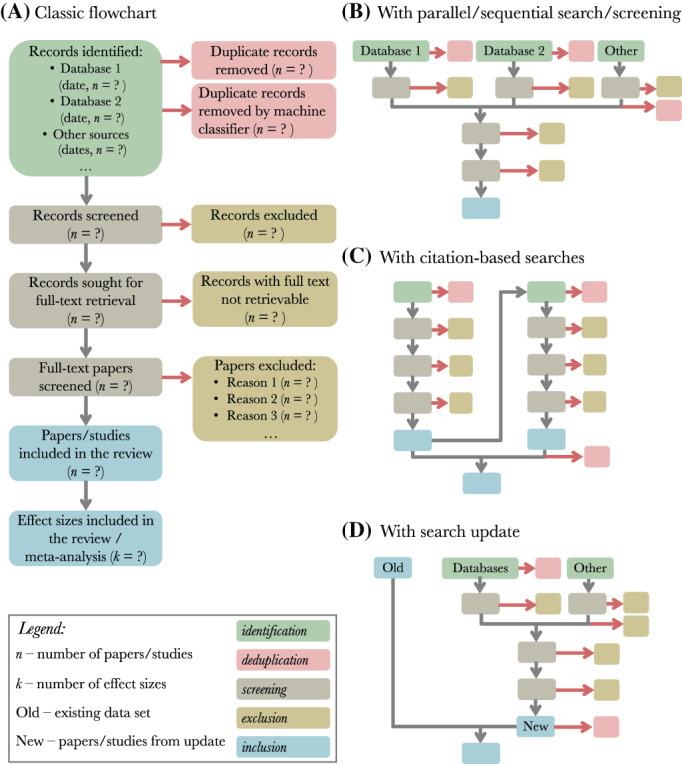

Here we explain every item of the PRISMA‐EcoEvo checklist, for use by authors, peer‐reviewers, and editors (Fig. 2). We also include extended discussion of the more difficult topics for authors in five ‘Primer’ sections (labelled A–E). Table 1 presents a checklist of sub‐items, to aid the assessment of partial reporting. The full checklist applies to systematic reviews with a meta‐analysis, but many of the items will be applicable to systematic reviews without a meta‐analysis, and meta‐analyses without a systematic review. Examples of each item from a published paper are presented in the Supporting Information, alongside text descriptions of current reporting practices.

Fig 2.

PRISMA‐EcoEvo for authors, peer‐reviewers, and editors. Planning and protocols are shown in grey because, while PRISMA‐EcoEvo can point authors in the right direction, authors should seek additional resources for detailed conduct guidance. Authors can use PRISMA‐EcoEvo as a reporting guideline for both registered reports (Primer C) and completed manuscripts. Reviewers and editors can use PRISMA‐EcoEvo to assess reporting quality of the systematic review and meta‐analysis manuscripts they read. Editors can promote high reporting quality by asking submitting authors to complete the PRISMA‐EcoEvo checklist, either by downloading a static file at https://osf.io/t8qd2/, or by using an interactive web application at https://prisma‐ecoevo.shinyapps.io/checklist/.

Table 1.

PRISMA‐EcoEvo v1.0. Checklist of preferred reporting items for systematic reviews and meta‐analyses in ecology and evolutionary biology, alongside an assessment of recent reporting practices (based on a representative sample of 102 meta‐analyses published between 2010 and 2019; references to all assessed papers are provided in the reference list, while the Supporting Information presents details of the assessment). The proportion of papers meeting each sub‐item is presented as a percentage. While all papers were assessed for each item, there was a set of reasons why some items might not be applicable (e.g. no previous reviews on the topic would make sub‐item 2.2 not applicable). Only applicable sub‐items contributed to reporting scores; sample sizes for each sub‐item are shown in the column on the right. Asterisks (*) indicate sub‐items that are identical, or very close, to items from the 2009 PRISMA checklist. In the wording of each sub‐item, ‘review’ encompasses all forms of evidence syntheses (including systematic reviews), while ‘meta‐analysis’ and ‘meta‐regression’ refer to statistical methods for analysing data collected in the review (definitions are discussed further in Primer A).

| Checklist item | Sub‐item number | Sub‐item | Papers meeting component (%) | No. papers applicable |
|---|---|---|---|---|
| Title and abstract | 1.1 | Identify the review as a systematic review, meta‐analysis, or both* | 100 | 102 |
| | 1.2 | Summarise the aims and scope of the review | 97 | 102 |
| | 1.3 | Describe the data set | 74 | 102 |
| | 1.4 | State the results of the primary outcome | 96 | 102 |
| | 1.5 | State conclusions* | 94 | 102 |
| | 1.6 | State limitations* | 17 | 96 |
| Aims and questions | 2.1 | Provide a rationale for the review* | 100 | 102 |
| | 2.2 | Reference any previous reviews or meta‐analyses on the topic | 93 | 75 |
| | 2.3 | State the aims and scope of the review (including its generality) | 91 | 102 |
| | 2.4 | State the primary questions the review addresses (e.g. which moderators were tested) | 96 | 102 |
| | 2.5 | Describe whether effect sizes were derived from experimental and/or observational comparisons | 57 | 76 |
| Review registration | 3.1 | Register review aims, hypotheses (if applicable), and methods in a time‐stamped and publicly accessible archive and provide a link to the registration in the methods section of the manuscript. Ideally registration occurs before the search, but it can be done at any stage before data analysis | 3 | 102 |
| | 3.2 | Describe deviations from the registered aims and methods | 0 | 3 |
| | 3.3 | Justify deviations from the registered aims and methods | 0 | 3 |
| Eligibility criteria | 4.1 | Report the specific criteria used for including or excluding studies when screening titles and/or abstracts, and full texts, according to the aims of the systematic review (e.g. study design, taxa, data availability) | 84 | 102 |
| | 4.2 | Justify criteria, if necessary (i.e. not obvious from aims and scope) | 54 | 67 |
| Finding studies | 5.1 | Define the type of search (e.g. comprehensive search, representative sample) | 25 | 102 |
| | 5.2 | State what sources of information were sought (e.g. published and unpublished studies, personal communications)* | 89 | 102 |
| | 5.3 | Include, for each database searched, the exact search strings used, with keyword combinations and Boolean operators | 49 | 102 |
| | 5.4 | Provide enough information to repeat the equivalent search (if possible), including the timespan covered (start and end dates) | 14 | 102 |
| Study selection | 6.1 | Describe how studies were selected for inclusion at each stage of the screening process (e.g. use of decision trees, screening software) | 13 | 102 |
| | 6.2 | Report the number of people involved and how they contributed (e.g. independent parallel screening) | 3 | 102 |
| Data collection process | 7.1 | Describe where in the reports data were collected from (e.g. text or figures) | 44 | 102 |
| | 7.2 | Describe how data were collected (e.g. software used to digitize figures, external data sources) | 42 | 102 |
| | 7.3 | Describe moderator variables that were constructed from collected data (e.g. number of generations calculated from years and average generation time) | 56 | 41 |
| | 7.4 | Report how missing or ambiguous information was dealt with during data collection (e.g. authors of original studies were contacted for missing descriptive statistics, and/or effect sizes were calculated from test statistics) | 47 | 102 |
| | 7.5 | Report who collected data | 10 | 102 |
| | 7.6 | State the number of extractions that were checked for accuracy by co‐authors | 1 | 102 |
| Data items | 8.1 | Describe the key data sought from each study | 96 | 102 |
| | 8.2 | Describe items that do not appear in the main results, or which could not be extracted due to insufficient information | 42 | 53 |
| | 8.3 | Describe main assumptions or simplifications that were made (e.g. categorising both ‘length’ and ‘mass’ as ‘morphology’) | 62 | 86 |
| | 8.4 | Describe the type of replication unit (e.g. individuals, broods, study sites) | 73 | 102 |
| Assessment of individual study quality | 9.1 | Describe whether the quality of studies included in the systematic review or meta‐analysis was assessed (e.g. blinded data collection, reporting quality, experimental versus observational) | 7 | 102 |
| | 9.2 | Describe how information about study quality was incorporated into analyses (e.g. meta‐regression and/or sensitivity analysis) | 6 | 102 |
| Effect size measures | 10.1 | Describe effect size(s) used | 97 | 102 |
| | 10.2 | Provide a reference to the equation of each calculated effect size (e.g. standardised mean difference, log response ratio) and (if applicable) its sampling variance | 63 | 91 |
| | 10.3 | If no reference exists, derive the equations for each effect size and state the assumed sampling distribution(s) | 7 | 28 |
| Missing data | 11.1 | Describe any steps taken to deal with missing data during analysis (e.g. imputation, complete case, subset analysis) | 37 | 57 |
| | 11.2 | Justify the decisions made to deal with missing data | 21 | 57 |
| Meta‐analytic model description | 12.1 | Describe the models used for synthesis of effect sizes | 97 | 102 |
| | 12.2 | The most common approach in ecology and evolution will be a random‐effects model, often with a hierarchical/multilevel structure. If other types of models are chosen (e.g. common/fixed effects model, unweighted model), provide justification for this choice | 50 | 40 |
| Software | 13.1 | Describe the statistical platform used for inference (e.g. R) | 92 | 102 |
| | 13.2 | Describe the packages used to run models | 74 | 80 |
| | 13.3 | Describe the functions used to run models | 22 | 69 |
| | 13.4 | Describe any arguments that differed from the default settings | 29 | 75 |
| | 13.5 | Describe the version numbers of all software used | 33 | 102 |
| Non‐independence | 14.1 | Describe the types of non‐independence encountered (e.g. phylogenetic, spatial, multiple measurements over time) | 32 | 102 |
| | 14.2 | Describe how non‐independence has been handled | 74 | 102 |
| | 14.3 | Justify decisions made | 47 | 102 |
| Meta‐regression and model selection | 15.1 | Provide a rationale for the inclusion of moderators (covariates) that were evaluated in meta‐regression models | 81 | 94 |
| | 15.2 | Justify the number of parameters estimated in models, in relation to the number of effect sizes and studies (e.g. interaction terms were not included due to insufficient sample sizes) | 20 | 94 |
| | 15.3 | Describe any process of model selection | 80 | 40 |
| Publication bias and sensitivity analyses | 16.1 | Describe assessments of the risk of bias due to missing results (e.g. publication, time‐lag, and taxonomic biases) | 65 | 102 |
| | 16.2 | Describe any steps taken to investigate the effects of such biases (if present) | 47 | 30 |
| | 16.3 | Describe any other analyses of robustness of the results, e.g. due to effect size choice, weighting or analytical model assumptions, inclusion or exclusion of subsets of the data, or the inclusion of alternative moderator variables in meta‐regressions | 35 | 102 |
| Clarification of post hoc analyses | 17.1 | When hypotheses were formulated after data analysis, this should be acknowledged | 14 | 28 |
| Metadata, data, and code | 18.1 | Share metadata (i.e. data descriptions) | 44 | 102 |
| | 18.2 | Share data required to reproduce the results presented in the manuscript | 77 | 102 |
| | 18.3 | Share additional data, including information that was not presented in the manuscript (e.g. raw data used to calculate effect sizes, descriptions of where data were located in papers) | 39 | 102 |
| | 18.4 | Share analysis scripts (or, if a software package with graphical user interface (GUI) was used, then describe full model specification and fully specify choices) | 11 | 102 |
| Results of study selection process | 19.1 | Report the number of studies screened* | 37 | 102 |
| | 19.2 | Report the number of studies excluded at each stage of screening | 22 | 102 |
| | 19.3 | Report brief reasons for exclusion from the full‐text stage | 27 | 102 |
| | 19.4 | Present a Preferred Reporting Items for Systematic Reviews and Meta‐Analyses (PRISMA)‐like flowchart (www.prisma‐statement.org)* | 19 | 102 |
| Sample sizes and study characteristics | 20.1 | Report the number of studies and effect sizes for data included in meta‐analyses | 96 | 91 |
| | 20.2 | Report the number of studies and effect sizes for subsets of data included in meta‐regressions | 57 | 93 |
| | 20.3 | Provide a summary of key characteristics for reported outcomes (either in text or figures; e.g. one quarter of effect sizes reported for vertebrates and the rest invertebrates) | 62 | 102 |
| | 20.4 | Provide a summary of limitations of included moderators (e.g. collinearity and overlap between moderators) | 22 | 87 |
| | 20.5 | Provide a summary of characteristics related to individual study quality (risk of bias) | 60 | 5 |
| Meta‐analysis | 21.1 | Provide a quantitative synthesis of results across studies, including estimates for the mean effect size, with confidence/credible intervals | 94 | 87 |
| Heterogeneity | 22.1 | Report indicators of heterogeneity in the estimated effect (e.g. I², tau² and other variance components) | 52 | 84 |
| Meta‐regression | 23.1 | Provide estimates of meta‐regression slopes (i.e. regression coefficients) and confidence/credible intervals | 78 | 94 |
| | 23.2 | Include estimates and confidence/credible intervals for all moderator variables that were assessed (i.e. complete reporting) | 59 | 94 |
| | 23.3 | Report interactions, if they were included | 59 | 27 |
| | 23.4 | Describe outcomes from model selection, if done (e.g. R² and AIC) | 81 | 36 |
| Outcomes of publication bias and sensitivity analyses | 24.1 | Provide results for the assessments of the risks of bias (e.g. Egger's regression, funnel plots) | 60 | 102 |
| | 24.2 | Provide results for the robustness of the review's results (e.g. subgroup analyses, meta‐regression of study quality, results from alternative methods of analysis, and temporal trends) | 44 | 102 |
| Discussion | 25.1 | Summarise the main findings in terms of the magnitude of effect | 73 | 102 |
| | 25.2 | Summarise the main findings in terms of the precision of effects (e.g. size of confidence intervals, statistical significance) | 57 | 102 |
| | 25.3 | Summarise the main findings in terms of their heterogeneity | 47 | 89 |
| | 25.4 | Summarise the main findings in terms of their biological/practical relevance | 98 | 102 |
| | 25.5 | Compare results with previous reviews on the topic, if available | 88 | 72 |
| | 25.6 | Consider limitations and their influence on the generality of conclusions, such as gaps in the available evidence (e.g. taxonomic and geographical research biases) | 72 | 100 |
| Contributions and funding | 26.1 | Provide names, affiliations, and funding sources of all co‐authors | 92 | 102 |
| | 26.2 | List the contributions of each co‐author | 31 | 102 |
| | 26.3 | Provide contact details for the corresponding author | 100 | 102 |
| | 26.4 | Disclose any conflicts of interest | 0 | 8 |
| References | 27.1 | Provide a reference list of all studies included in the systematic review or meta‐analysis | 92 | 102 |
| | 27.2 | List included studies as referenced sources (e.g. rather than listing them in a table or supplement) | 18 | 102 |
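
To illustrate how a per‐paper reporting score could be built from a checklist like Table 1, the sketch below averages sub‐item results within each item and then averages across items, skipping sub‐items marked as not applicable. This is one assumed reading of the ‘average item % score’ described in the caption of Fig. 1. The binary met/not‐met coding, the function name `reporting_score`, and the example paper are all hypothetical; the actual scoring procedure is documented in the Supporting Information and supplementary code.

```python
from statistics import mean


def reporting_score(assessment):
    """Mean of per-item % scores for one paper.

    `assessment` maps each checklist item to a list of sub-item results:
    True (met), False (not met), or None (not applicable).
    """
    item_scores = []
    for sub_items in assessment.values():
        applicable = [s for s in sub_items if s is not None]
        if applicable:  # an item with no applicable sub-items is skipped
            item_scores.append(100 * sum(applicable) / len(applicable))
    return mean(item_scores)


# Hypothetical assessment of a single paper (three items only, for brevity).
paper = {
    "Title and abstract": [True, True, False, True, True, None],
    "Review registration": [False, None, None],
    "Finding studies": [True, True, False, False],
}

print(f"Reporting score: {reporting_score(paper):.1f}%")
```

Averaging within items first gives every checklist item equal weight, regardless of how many sub‐items it contains.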

II. PRIMER A: TERMINOLOGY

Within the ecology and evolutionary biology community there are terminological differences regarding how ‘meta‐analysis’ is defined (Vetter, Rücker & Storch, 2013). In the broadest sense, any aggregation of results from multiple studies is sometimes referred to as a ‘meta‐analysis’ (including the common but inadvisable practice of tallying the number of significant versus non‐significant results, i.e. ‘vote‐counting’; Vetter et al., 2013; Koricheva & Gurevitch, 2014; Gurevitch et al., 2018). Here, we reserve the term ‘meta‐analysis’ for studies in which effect sizes from multiple independent studies are combined in a statistical model, to give an estimate of a pooled effect size and error. Each effect size represents a result, and the effect sizes from multiple studies are expressed on the same scale. Usually the effect sizes are weighted so that more precise estimates (lower sampling error) have a greater impact on the pooled effect size than imprecise estimates (although unweighted analyses can sometimes be justified; see Item 12).
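
The weighting described above can be sketched with the simplest case, an inverse‐variance weighted mean under a common (fixed) effect model. This is for illustration only, since random‐effects and multilevel models are more common in ecology and evolutionary biology (see Item 12). The function name `pooled_effect` and the three effect sizes are hypothetical.

```python
from math import sqrt


def pooled_effect(effects, variances):
    """Inverse-variance weighted mean effect and its standard error."""
    # More precise estimates (smaller sampling variance) get larger weights.
    weights = [1.0 / v for v in variances]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    standard_error = sqrt(1.0 / sum(weights))
    return estimate, standard_error


# Hypothetical effect sizes on a common scale, with their sampling variances.
effects = [0.2, 0.5, 0.8]
variances = [0.01, 0.04, 0.16]

estimate, se = pooled_effect(effects, variances)
unweighted = sum(effects) / len(effects)
print(f"weighted = {estimate:.3f} (SE {se:.3f}), unweighted = {unweighted:.3f}")
```

In this example the most precise study reports the smallest effect, so the weighted estimate is pulled below the unweighted mean.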

In comparison with meta‐analyses, which have been used in ecology and evolutionary biology for nearly 30 years (the first meta‐analysis in ecology was published by Jarvinen, 1991), systematic reviews are only now becoming an established method (Gurevitch et al., 2018; Berger‐Tal et al., 2019; but see Pullin & Stewart, 2006). Systematic‐review methods are concerned with how information was gathered and synthesised. In fields such as medicine and conservation biology, the required steps for a systematic review are as follows: defining specific review questions; identifying all likely relevant records; screening studies against pre‐defined eligibility criteria; assessing the risk of bias both within and across studies (i.e. ‘critical appraisal’; Primer D); extracting data; and synthesising results (which might include a meta‐analysis) (Pullin & Stewart, 2006; Liberati et al., 2009; Haddaway & Verhoeven, 2015; James, Randall & Haddaway, 2016; Cooke et al., 2017; Higgins et al., 2019). Under this formal definition, systematic reviews in ecology and evolutionary biology are exceedingly rare for two reasons. First, we tend not to conduct exhaustive searches to find all relevant records (e.g. we usually rely on a sample of published sources from just one or two databases). Second, assessing the risk of bias in primary studies is very uncommon (based on the meta‐analyses we assessed; see Supporting Information and Section VIII). Given current best practice and usage of the term ‘systematic review’ in ecology and evolutionary biology, the PRISMA‐EcoEvo checklist is targeted towards meta‐analyses that were conducted on data collected from multiple sources and whose methods were structured, transparent, and reproducible.

III. ABSTRACT & INTRODUCTION

Item 1: Title and abstract

In the title or abstract, identify the review as a systematic review, meta‐analysis, or both. In the abstract provide a summary including the aims and scope of the review, description of the data set, results of the primary outcome, conclusions, and limitations.

Explanation and elaboration

Identifying the report as a systematic review and/or meta‐analysis in the title, abstract, or keywords makes these types of reviews identifiable through database searches. It is essential that the summary of the review in the abstract is accurate because this is the only part of the review that some people will read (either by choice or because of restricted access) (Beller et al., 2013). While it is currently rare for abstracts to report limitations, this practice should change. Casual readers can be misled if the abstract does not disclose limitations of the review or fails to report the result of the primary outcome. Even very concise abstracts (e.g. for journals that allow a maximum of 150 words) should state obvious limitations. To signify greater accountability, authors can report when their review was registered in advance (Item 3).

Item 2: Aims and questions

Explain the rationale of the study, including reference to any previous reviews or meta‐analyses on the topic. State the aims and scope of the study (including its generality) and its primary questions (e.g. which moderators were tested), including whether effect sizes are derived from experimental and/or observational comparisons.

Explanation and elaboration

An effective introduction sets up the reader so that they understand why a study was done and what it entailed, making it easier to process subsequent information (Liberati et al., 2009). In this respect, the introduction of a systematic review is no different to that of primary studies (Heard, 2016). Previous review articles are likely to influence thinking around a research topic, so these reviews should be placed in context, as their absence signifies a research gap. If the introduction is well written and the study is well designed, then the reader can roughly infer what methods were used before reading the methods section. To achieve such harmony, authors should clearly lay out the scope and primary aims of the study (e.g. which taxa and types of studies; hypothesis testing or generating/exploratory). The scope is crucial because this influences many aspects of the study design (e.g. eligibility criteria; Item 4) and interpretation of results. It is also important to distinguish between experimental and observational studies, as experiments provide an easier path to causal conclusions.

IV. PRIMER B: TYPES OF QUESTIONS

Broadly, systematic reviews with meta‐analyses can answer questions of two kinds: the generality of a phenomenon, or its overall effect (Gurevitch et al., 2018). When PRISMA was published in 2009 for reviews assessing the overall effect (i.e. the meta‐analytic intercept/mean) it recommended that questions be stated with reference to ‘PICO’ or ‘PECO’: population (e.g. adult humans at risk of cardiovascular disease), intervention or exposure (e.g. statin medication), comparator (e.g. control group taking a placebo), and outcome (e.g. difference in the number of cardiovascular disease events between the intervention and control groups) (Liberati et al., 2009). When a population is limited to one species, or one subset of a population, the number of studies available to quantify the overall effect of an intervention or exposure is typically small (e.g. <20 studies) (Gurevitch et al., 2018). However, even when ecological and evolutionary questions can be framed in terms of ‘PICO’ or ‘PECO’, the ‘population’ is often broad (e.g. vertebrates, whole ecosystems) leading to larger and more diverse data sets (Gerstner et al., 2017). Examples include the effect of the latitudinal gradient on global species richness (Kinlock et al., 2018; n = 199 studies), the effect of parasite infection on body condition in wild species (Sánchez et al., 2018; n = 187 studies), the effect of livestock grazing on ecosystem properties of salt marshes (Davidson et al., 2017; n = 89 studies), and the effect of cytoplasmic genetic variation on phenotypic variation in eukaryotes (Dobler et al., 2014; n = 66 studies).

In ecological and evolutionary meta‐analyses, determining the average overall effect across studies is usually of less interest than exploring the extent, and sources, of variability in effect sizes among studies. Combining a large number of studies across species or contexts increases variability and makes estimation and interpretation of the average effect difficult and arguably meaningless. Instead, exploring variables which influence the magnitude or direction of an effect can be particularly fruitful; these variables could be biological or methodological (Gerstner et al., 2017). To explore sources of variation in effects, and quantify statistical power, it is important to report the magnitude of heterogeneity among effect sizes (i.e. differences in effect sizes between studies beyond what is expected from sampling error; Item 22). Heterogeneity is typically high when diverse studies are combined in a single meta‐analysis. High heterogeneity is considered problematic in medical meta‐analyses (Liberati et al., 2009; Muka et al., 2020), but it is the norm in ecology and evolutionary biology (Stewart, 2010; Senior et al., 2016), and identifying sources of heterogeneity could produce both biological and methodological insights (Rosenberg, 2013). Authors of meta‐analyses in ecology and evolutionary biology should be aware that high heterogeneity reduces statistical power for a given sample size, especially for estimates of moderator effects and their interactions (Valentine, Pigott & Rothstein, 2010). It is therefore important to report estimates of heterogeneity (Item 22) alongside full descriptions of sample sizes (Item 20), and communicate appropriate uncertainty in analysis results.
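
As a rough illustration of the heterogeneity indicators mentioned above, the sketch below computes Cochran's Q, the DerSimonian–Laird estimate of the between‐study variance (tau²), and I² for a simple set of independent effect sizes. It is a minimal example under strong assumptions: one effect size per study and no multilevel structure. Multilevel models, which are common in our fields, typically estimate variance components in other ways. The function name `heterogeneity` and the data are hypothetical.

```python
def heterogeneity(effects, variances):
    """Return (Q, tau2, I2) for independent effect sizes.

    Q    : Cochran's weighted sum of squared deviations from the pooled mean
    tau2 : DerSimonian-Laird estimate of between-study variance
    I2   : % of total variation attributed to heterogeneity, not sampling error
    """
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    mu = sum(wi * y for wi, y in zip(w, effects)) / sum_w
    q = sum(wi * (y - mu) ** 2 for wi, y in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)  # truncated at zero
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, tau2, i2


# Hypothetical effect sizes and sampling variances.
effects = [0.2, 0.5, 0.8]
variances = [0.01, 0.04, 0.16]

q, tau2, i2 = heterogeneity(effects, variances)
print(f"Q = {q:.2f}, tau2 = {tau2:.4f}, I2 = {i2:.1f}%")
```

Both tau² and I² are truncated at zero because Q can fall below its expected value (k − 1) by chance when the true heterogeneity is small.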

V. REGISTRATION

Item 3: Review registration

Register study aims, hypotheses (if applicable), and methods in a time‐stamped and publicly accessible archive. Ideally registration occurs before the search, but it can be done at any stage before data analysis. A link to the archived registration should be provided in the methods section of the manuscript. Describe and justify deviations from the registered aims and methods.

Explanation and elaboration

Registering planned research and analyses, in a time‐stamped and publicly accessible archive, is easily achieved with existing infrastructure (Nosek et al., 2018) and is a promising protection against false‐positive findings (Allen & Mehler, 2019) (discussed in Primer C). While ecologists and evolutionary biologists have been slower to adopt registrations compared to researchers in the social and medical sciences, our survey found that authors who had tried registrations viewed their experience favourably (see Supporting Information). Given that authors of systematic reviews and meta‐analyses often plan their methods in advance (e.g. to increase the reliability of study screening and data extractions), only a small behavioural change would be required to register these plans in a time‐stamped and publicly available archive. Inexperienced authors might feel ill‐equipped to describe analysis plans in detail, but there are still benefits to registering conceptual plans (e.g. detailed aims, hypotheses, predictions, and variables that will be extracted for exploratory purposes only). Deviations from registered plans should be acknowledged and justified in the final report (e.g. when the data collected cannot be analysed using the proposed statistical model due to violation of assumptions). Authors who are comfortable with registration might consider publishing their planned systematic review or meta‐analysis as a ‘registered report’, whereby the abstract, introduction and methods are submitted to a journal prior to the review being conducted. Some journals even publish review protocols before the review is undertaken (as is commonly done in environmental sciences, e.g. Greggor, Price & Shier, 2019).

VI. PRIMER C: REGISTRATION AND REGISTERED REPORTS

Suboptimal reporting standards are often attributed to perverse incentives for career advancement (Ioannidis, 2005; Smaldino & McElreath, 2016; Moher et al., 2020; Munafò et al., 2020). ‘Publish or perish’ research cultures reward the frequent production of papers, especially papers that gain citations quickly. Researchers are therefore encouraged, both directly and indirectly, to extract more‐compelling narratives from less‐compelling data. For example, given multiple choices in statistical analyses, researchers might favour paths leading to statistical significance (i.e. ‘P‐hacking’; Simmons, Nelson & Simonsohn, 2011; Head et al., 2015). Similarly, there are many ways to frame results in a manuscript. Results might be more impactful when framed as evidence for a hypothesis, even if data were not collected with the intention of testing that hypothesis (a problematic practice known as ‘HARKing’ – Hypothesising After the Results are Known; Kerr, 1998). Engaging in these behaviours does not require malicious intent or obvious dishonesty. Concerted effort is required to avoid the trap of self‐deception (Forstmeier et al., 2017; Aczel et al., 2020). As researchers conducting a systematic review or meta‐analysis, we need both to be aware that these practices could reduce the credibility of primary studies (Primer D), and to guard against committing these practices ourselves when conducting and writing the review.

‘Registration’ or ‘pre‐registration’ is an intervention intended to make it harder for researchers to oversell their results (Rice & Moher, 2019). Registration involves publicly archiving a written record of study aims, hypotheses, experimental or observational methods, and an analysis plan prior to conducting a study (Allen & Mehler, 2019). The widespread use of public archiving of study registrations only emerged in the 2000s when — in recognition of the harms caused by false‐positive findings — the International Committee of Medical Journal Editors, World Medical Association, and the World Health Organisation, mandated that all medical trials should be registered (Goldacre, 2013). Since then, psychologists and other social scientists have adopted registrations too (which they term ‘pre‐registrations’; Rice & Moher, 2019), in response to high‐profile cases of irreproducible research (Nelson, Simmons & Simonsohn, 2018; Nosek et al., 2019). In addition to discouraging researchers from fishing for the most compelling stories in their data, registration may also help locate unpublished null results, which are typically published more slowly than ‘positive’ findings (Jennions & Møller, 2002) (i.e. registrations provide a window into researchers’ ‘file drawers’, a goal of meta‐analysts that seemed out of reach for decades; Rosenthal, 1979).

Beyond registration, a more powerful intervention is the ‘registered report’, because these not only make it harder for researchers to oversell their research and selectively report outcomes, but also prevent journals basing their publication decisions on study outcomes. In a registered report, the abstract, introduction, and method sections of a manuscript are submitted for peer review prior to conducting a study, and studies are provisionally accepted for publication before their results are known (Parker, Fraser & Nakagawa, 2019). This publication style can therefore mitigate publication bias and helps to address flaws in researchers’ questions and methods before it is too late to change them. Although the ‘in principle’ acceptance for publication does rely on authors closely following their registered plans, this fidelity comes with the considerable advantage of not requiring ‘surprising’ results for a smooth path to publication (and, if large changes reverse the initial decision of provisional acceptance, authors can still submit their manuscript as a new submission). Currently, a small number of journals that publish meta‐analyses in ecology and evolutionary biology accept registered reports (see https://cos.io/rr/ for an updated list) and, as with a regular manuscript, the PRISMA‐EcoEvo checklist can be used to improve reporting quality in registered reports.

Systematic reviews and meta‐analyses are well suited for registration and registered reports because these large and complicated projects have established and predictable methodology (Moher et al., 2015; López‐López et al., 2018; Muka et al., 2020). Despite these advantages, in ecology and evolutionary biology registration is rare (see Supporting Information). When we surveyed authors, reviewers, and editors, we found researchers had either not considered registration as an option for systematic reviews and meta‐analyses or did not consider it worthwhile. Even in medical reviews, registration rates are lower than expected (Pussegoda et al., 2017). Rather than a leap to perfect science, registration is a step towards greater transparency in the research process. Still, the practice has been criticised for not addressing underlying issues with research quality and external validity (Szollosi et al., 2020). Illogical research questions and methods are not rescued by registration (Gelman, 2018), but registered reports provide the opportunity for them to be addressed before a study is conducted. Overall, wider adoption of registrations and registered reports is the clearest path towards transparent and reliable research.

VII. FINDING AND EXTRACTING INFORMATION

Item 4: Eligibility criteria

Report the specific criteria used for including or excluding studies when screening titles and/or abstracts, and full texts, according to the aims of the meta‐analysis (e.g. study design, taxa, data availability). Justify criteria, if necessary (i.e. not obvious from aims and scope).

Explanation and elaboration

Fully disclosing which studies were included in the review allows readers to assess the generality, or specificity, of the review's conclusions (Vetter et al., 2013). To decide upon the scope of the review, we typically use an iterative process of trial‐and‐error to refine the eligibility criteria, in conjunction with refining the research question. These planning stages should be conducted prior to registering study methods. Pragmatically, the scope of a systematic review should be sufficiently broad to address the research question meaningfully, while being achievable within the authors’ constrained resources (time and/or funding) (Forero et al., 2019).

The eligibility criteria represent a key ‘forking path’ in any meta‐analysis; slight modifications to the eligibility criteria could send the review down a path towards substantially different results (Palpacuer et al., 2019). When planning a review, it is crucial to define explicit criteria for which studies will be included that are as objective as possible. These criteria need to be disclosed in the paper or supplementary information for the review to be replicable. It is especially important to describe criteria that do not logically follow from the aims and scope of the review (e.g. exclusion criteria chosen for convenience, such as excluding studies with missing data rather than contacting authors).

Item 5: Finding studies

Define the type of search (e.g. comprehensive search, representative sample), and state what sources of information were sought (e.g. published and unpublished studies, personal communications). For each database searched include the exact search strings used, with keyword combinations and Boolean operators. Provide enough information to repeat the equivalent search (if possible), including the timespan covered (start and end dates).

Explanation and elaboration

Finding relevant studies to include in a systematic review is hard. Weeks can be spent sifting through massive piles of literature to find studies matching the eligibility criteria and yet, when reporting methods of the review, these details are typically skimmed over (average reporting quality <50%; see Supporting Information). While authors might deem it needlessly tedious to report the minutiae of their search methods, the supplementary information can serve readers who wish to evaluate the appropriateness of the search methods (e.g. ‘PRESS’ – Peer Review of Electronic Search Strategies; McGowan et al., 2016). Detailing search methods is also necessary for the study to be updatable using approximately the same methods (Garner et al., 2016). Although journal subscriptions might vary over time and between different institutions (Mann, 2015), all authors can aim for approximately replicable searches. For instance, authors searching for studies through Web of Science should specify which databases were included in their search; institutions will typically only have access to a portion of the possible databases.

To recall how and why searches were conducted, authors should record the process and workflow of search strategy development. Often, multiple scoping searches are trialled before settling on a final search strategy (Siddaway, Wood & Hedges, 2019). For this process of trial‐and‐error, authors can check the ability of different searches to find a known set of suitable studies (studies that meet, or almost meet, the eligibility criteria; Item 4) (Bartels, 2013). The scoping searches can be conducted using a single database, but it is preferable to use more than one database for the final search (Bramer et al., 2018) (requiring duplicated studies to be removed prior to study selection, for which software is available; Rathbone et al., 2015a; Westgate, 2019; Muka et al., 2020). Sometimes potentially useful records will be initially inaccessible (e.g. when authors’ home institutions do not subscribe to the journal), but efforts can be made to retrieve them from elsewhere (e.g. inter‐library loans; directly contacting authors) (Stewart et al., 2013). Authors should note whether the search strategy was designed to retrieve unpublished sources and grey literature. While most meta‐analysts in ecology and evolutionary biology only search for published studies, the inclusion of unpublished data could substantially alter results (Sánchez‐Tójar et al., 2018).
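To illustrate what removing duplicated records from multiple databases might look like, the sketch below matches records on DOI or, failing that, on a normalised title plus publication year. It is not the algorithm used by any of the cited tools; the record fields (`doi`, `title`, `year`) and both function names are hypothetical, and real exports would need field mapping first.

```python
import re
import unicodedata
from typing import Dict, List, Tuple


def normalise_title(title: str) -> str:
    """Lower-case, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def deduplicate(records: List[Dict]) -> Tuple[List[Dict], int]:
    """Keep the first record seen for each DOI or (title, year) pair.

    Returns the unique records and the number of duplicates removed,
    so that the count can be reported in the flow diagram.
    """
    seen = set()
    unique = []
    for rec in records:
        keys = []
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.append(("doi", doi))
        title = normalise_title(rec.get("title") or "")
        if title:
            keys.append(("title", title, rec.get("year")))
        if any(key in seen for key in keys):
            continue  # already have this study from another source
        seen.update(keys)
        unique.append(rec)
    return unique, len(records) - len(unique)
```

Exact matching of this kind will miss duplicates with differently worded titles and could merge distinct items that share a title and year (e.g. a corrigendum), so the number removed and any manual checks are worth reporting.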

Traditional systematic reviews aim to be comprehensive and find all relevant studies, published and unpublished, during a ‘comprehensive search’ (Primer A). In order to achieve comprehensive coverage in medical reviews, teams of systematic reviewers often employ an information specialist or research librarian. In ecology and evolutionary biology, it is more common to obtain a sample of available studies, sourced from a smaller number of sources and/or from a restricted time period. The validity of this approach depends on whether the sample is likely to be representative of all available studies; if the sampling strategy is not biased, aiming for a representative sample is justifiable (Cote & Jennions, 2013). We encourage authors to be transparent about the aim of their search and consider the consequences of sampling decisions. Further guidance on reporting literature searches is available from PRISMA‐S (Rethlefsen et al., 2021), and guidance on designing and developing searches is available from Bartels (2013), Bramer et al. (2018), Siddaway et al. (2019) and Stewart et al. (2013).

Item 6: Study selection

Describe how studies were selected for inclusion at each stage of the screening process (e.g. use of decision trees, screening software). Report the number of people involved and how they contributed (e.g. independent parallel screening).

Explanation and elaboration

As with finding studies, screening studies for inclusion is a time‐consuming process that ecologists and evolutionary biologists rarely describe in their reports (average reporting quality <10%; see Supporting Information). Typically, screening is conducted in two stages. First, titles and abstracts are screened to exclude obviously ineligible studies (usually the majority of screened studies will be ineligible). Software can help speed up the process of title and abstract screening (e.g. Rathbone, Hoffmann & Glasziou, 2015b; Ouzzani et al., 2016). Second, the full texts of potentially eligible studies are downloaded (e.g. using a reference manager) and screened. At the full‐text stage, the authors should record reasons why each full text did not meet the eligibility criteria (Item 19). Pre‐determined, documented, and piloted eligibility criteria (Item 4) are essential for both stages of screening to be reliable. Preferably, each study is independently screened by more than one person. Authors should report how often independent decisions were in agreement, and the process for resolving conflicting decisions (Littell, Corcoran & Pillai, 2008). To increase the reliability and objectivity of screening criteria, especially when complete independent screening is impractical, authors could restrict independent parallel screening to the piloting stage, informing protocol development. Regardless of how studies were judged for inclusion, authors should be transparent about how screening was conducted.
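One way to summarise how often independent screening decisions agreed is sketched below: the function returns the raw proportion of matching decisions together with Cohen's kappa, a widely used chance‐corrected agreement statistic. Kappa is our suggestion rather than a requirement of this checklist, and the function name and the decision labels are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def screening_agreement(rater_a: Sequence[str], rater_b: Sequence[str]) -> Dict[str, float]:
    """Proportion of identical decisions and Cohen's kappa for two screeners."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both screeners must have judged the same non-empty set of records")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each screener's marginal frequencies
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / n ** 2
    if expected == 1.0:
        kappa = 1.0  # both screeners used one identical category throughout
    else:
        kappa = (observed - expected) / (1.0 - expected)
    return {"proportion_agreement": observed, "kappa": kappa}
```

Because most screened records are usually ineligible, raw agreement can look high even when screeners disagree about which studies to include, which is the reason for reporting a chance‐corrected value alongside it.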

Item 7: Data collection process

Describe where in the reports data were collected from (e.g. text or figures), how data were collected (e.g. software used to digitize figures, external data sources), and what data were calculated from other values. Report how missing or ambiguous information was dealt with during data collection (e.g. authors of original studies were contacted for missing descriptive statistics, and/or effect sizes were calculated from test statistics). Report who collected data and state the number of extractions that were checked for accuracy by co‐authors.

Explanation and elaboration

Describing how data were collected both informs the reader about the likelihood of errors and allows other people to update the review using consistent methods. Data extraction errors will be reduced if authors follow pre‐specified data extraction protocols, especially when encountering missing or ambiguous data. For example, when sample sizes were only available as a range, were the minimum or mean sample sizes taken, or were corresponding authors contacted for precise numbers? Were papers excluded when contacted authors did not provide information, or was there a decision rule for the maximum allowable range (e.g. such that n = 10–12 would be included, but n = 10–30 would be excluded)? Another ambiguity occurs when effect sizes can be calculated in multiple ways, depending on which data are available (sensibly, the first priority should be given to raw data, followed by descriptive statistics – e.g. means and standard deviations, followed by test‐statistics and then P‐values). Data can also be duplicated across multiple publications and, to avoid pseudo‐replication (Forstmeier et al., 2017), the duplicates should be removed following objective criteria. Whatever precedent is set for missing, ambiguous, or duplicated information from one study should be applied to all studies. Without recording the decisions made for each scenario, interpretations can easily drift over time. Authors can record and report the percentages of collected data that were affected by missing, ambiguous, or duplicate information.
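Writing decision rules down as code is one way to keep them from drifting. The sketch below encodes two of the rules discussed above: a maximum allowable sample size range and an order of preference among data sources. The 25% threshold, the choice to take the minimum of an acceptable range, and all names are hypothetical choices made for illustration; authors would substitute whatever their own protocol specifies.

```python
from typing import Optional, Set

# Order of preference described in the text: raw data first, P-values last.
SOURCE_PRIORITY = ("raw_data", "descriptive_statistics", "test_statistic", "p_value")


def resolve_sample_size(n_min: int, n_max: int,
                        max_relative_range: float = 0.25) -> Optional[int]:
    """Return a usable sample size for a reported range, or None to exclude.

    Hypothetical rule: accept the range when (n_max - n_min) / n_min is at
    most `max_relative_range`, and then take the conservative minimum.
    """
    if n_min < 1 or n_max < n_min:
        raise ValueError("expected 1 <= n_min <= n_max")
    if (n_max - n_min) / n_min > max_relative_range:
        return None  # range too wide: exclude, or contact the authors
    return n_min


def choose_effect_size_source(available: Set[str]) -> Optional[str]:
    """Pick the most preferred data source that a study actually reports."""
    for source in SOURCE_PRIORITY:
        if source in available:
            return source
    return None
```

Under these assumed settings, a study reporting n = 10–12 is retained with n = 10, whereas one reporting n = 10–30 is flagged for exclusion or author contact.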

Data collection can be more efficient and accurate when authors invest time in developing and piloting a data collection form (or database), which can be made publicly available to facilitate updates (Item 18). The form should describe precisely where data were presented in the original studies, both to help re‐extractions, and because some data sources are more reliable than others. Using software to extract data from figures can improve reproducibility [e.g. metaDigitise (Pick, Nakagawa & Noble, 2019) and metagear (Lajeunesse, 2016)]. Ideally, all data should be collected by at least two people (which should correct for the majority of extraction errors). While fully duplicating extractions of large data sets might be impractical for small teams (Primer B), a portion of collected data could be independently checked. Authors can then report the percentage of collected data that were extracted or checked by more than one person, error rates, and how discrepancies were resolved.

Item 8: Data items

Describe the key data sought from each study, including items that do not appear in the main results, or which could not be extracted due to insufficient information. Describe main assumptions or simplifications that were made (e.g. categorising both ‘length’ and ‘mass’ as ‘morphology’). State the type of replication unit (e.g. individuals, broods, study sites).

Explanation and elaboration

Data collection approaches fall on a spectrum between recording just the essential information to address the aim of the review, and recording all available information from each study. We recommend reporting both the data that were collected and the data that authors attempted, but were unable, to collect. Complete reporting facilitates re‐analyses, allows others to build upon previous reviews, and makes it easier to detect selective reporting of results (Primer C). For re‐analyses, readers could be interested in the effects of additional data items (e.g. species information), and it is therefore useful to know whether those data are already available (Item 18). Similarly, stating which data were unavailable, despite attempts to collect them, identifies gaps in primary research or reporting standards. For selective reporting, authors could collect a multitude of variables but present only a selection of the most compelling results (inflating the risk of false positives; Primer C). Having a registered analysis plan is the easiest way to detect selective reporting (Item 3). Readers and peer‐reviewers can also be alerted to this potential source of bias if it is clear that, for example, three different body condition metrics were collected, but the results of only one metric were reported in the paper.

VIII. PRIMER D: BIAS FROM PRIMARY STUDIES

The conclusions drawn from systematic reviews and meta‐analyses are only as strong as the studies that comprise them (Gurevitch et al., 2018). Therefore, an integral step of a formal systematic review is to evaluate the quality of the information that is being aggregated (Pullin & Stewart, 2006; Haddaway & Verhoeven, 2015). If this evaluation reveals that the underlying studies are poorly conducted or biased, then a meta‐analysis cannot answer the original research question. Instead, the synthesis serves a useful role in unearthing flaws in the existing primary studies and guiding newer studies (Ioannidis, 2016). While other fields emphasise quality assessment, risk of bias assessment, and/or ‘critical appraisal’ (Cooke et al., 2017), ecologists and evolutionary biologists seldom undertake these steps. When surveyed, authors, reviewers, and editors of systematic reviews and meta‐analyses in ecology and evolutionary biology were largely oblivious to the existence of study quality or risk of bias assessments, sceptical of their importance, and somewhat concerned that such assessments could introduce more bias into the review (see Supporting Information). In this respect, little has changed since 2002, when Simon Gates wrote that randomization and blinding deserve more attention in meta‐analyses in ecology (Gates, 2002).

It is difficult to decide upon metrics of ‘quality’ for the diverse types of studies that are typically combined in an ecological or evolutionary biology meta‐analysis. We typically consider two types of quality – internal validity and external validity (James et al., 2016). Internal validity describes methodological rigour: are the inferences of the study internally consistent, or are the inferences weakened by limitations such as biased sampling or confounds? External validity describes whether the study addresses the generalised research question. In ecology and evolutionary biology, the strongest causal evidence and best internal validity might come from large, controlled experiments that use ‘best practice’ methods such as blinding. If we want to generalise across taxa and understand the complexity of nature, however, then we need ‘messier’ evidence from wild systems. Note that in the medical literature, risk of bias (practically equivalent to internal validity) is considered a separate and preferable construct to ‘study quality’ (Büttner et al., 2020), and there are well established constructs such as ‘GRADE’ for evaluating the body of evidence (‘Grading of Recommendations, Assessment, Development and Evaluations’; Guyatt et al., 2008). In PRISMA‐EcoEvo we are broadly referring to study quality (Item 9) until such a time when more precise and accepted constructs are developed for our fields.

In PRISMA‐EcoEvo we encourage ecologists and evolutionary biologists to consider the quality of studies included in systematic reviews and meta‐analyses carefully, while recognising difficulties inherent in such assessments. A fundamental barrier is that we cannot see how individual studies were conducted. Usually, we only have the authors’ reports to base our assessments on and, given problems with reporting quality, it is arguable whether the authors’ reports can reliably represent the actual studies (Liberati et al., 2009; Nakagawa & Lagisz, 2019). Quality assessments are most reliable when they measure what they claim to be measuring (‘construct validity’) with a reasonable degree of objectivity, so that assessments are consistent across reviewers (Cooke et al., 2017). Despite the stated importance of quality assessment in evidence‐based medicine, there are still concerns that poorly conducted assessments are worse than no assessments (Herbison, Hay‐Smith & Gillespie, 2006), and these concerns were echoed in responses to our survey (see Supporting Information). Thoughtful research is needed on the best way to conduct study quality and/or risk‐of‐bias assessments. While internal validity (or risk of bias) will usually be easier to assess, we urge review authors to be mindful of external validity too (i.e. generalisability).

IX. ANALYSIS METHODS

Item 9: Assessment of individual study quality

Describe whether the quality of studies included in the meta‐analysis was assessed (e.g. blinded data collection, reporting quality, experimental versus observational), and, if so, how this information was incorporated into analyses (e.g. meta‐regression and/or sensitivity analysis).

Explanation and elaboration

Meta‐analysis authors in ecology and evolutionary biology almost never report study quality assessment, or the risk of bias within studies, despite these assessments being a defining feature of systematic reviews (average reporting quality <10%, see Supporting Information; Primer D). Potentially, authors are filtering out studies deemed unambiguously unreliable during the study selection process (Item 6), but this process is poorly reported, making reproducibility impractical. A more informative approach would be to code indicators of study quality and/or risk of bias within studies, and then use meta‐regression or subgroup analyses to assess how these indicators impact the review's conclusions (Curtis et al., 2013). While sensible in theory, quality assessment is difficult in practice (some might say impossible, given current reporting standards in the primary literature; Primer D). The principal difficulty is that we rely on authors’ being reliable narrators of their conduct; omitting important information, such as the process of randomization, leaves us searching in the dark for a signal of study quality (O'Boyle, Banks & Gonzalez‐Mulé, 2017). Uncertainty about the reliability of author reports is exacerbated by the absence of registration for most publications in ecology and evolutionary biology (Primer C). Until further research is conducted on reliable methods of quality assessment in our fields, we recommend review authors critically consider and report whether meaningful quality (or risk of bias) indicators could be collected from included studies. For example, indicators for experimental studies could include whether or not data collection and/or analysis was blinded for those collecting or analysing data [as blinding reduces the risk of bias (van Wilgenburg & Elgar, 2013; Holman et al., 2015)] and whether the study showed full reporting of results [e.g. using a checklist such as Hillebrand & Gurevitch (2013); an example of the latter is shown in Parker et al. (2018a)]. Authors should then measure the impact that quality indicators have on the review's results (Items 16 and 24). Ultimately, as with collecting studies and data (Items 5 and 7), review authors are bound by the reporting quality of the primary literature.

Item 10: Effect size measures

Describe effect size(s) used. For calculated effect sizes (e.g. standardised mean difference, log response ratio) provide a reference to the equation of each effect size and (if applicable) its sampling variance, or derive the equations and state the assumed sampling distribution(s).

Explanation and elaboration

For results to be understandable, interpretable, and dependable, the choice of effect size should be carefully considered, and the justification reported (Harrison, 2010). For interpretable results it is essential to state the direction of the effect size clearly (e.g. for a mean difference, what was the control, was it subtracted from the treatment, or was the treatment subtracted from the control?). Sometimes, results will only be interpretable when the signs of some effect sizes are selectively reversed (i.e. positive to negative, or vice versa), and these instances need to be specified (and labelled as such in the available data; Item 18). For example, when measuring the effect of a treatment on mating success, both positive and negative differences could be ‘good’ outcomes (e.g. more offspring and less time to breed), so the signs of ‘good’ negative differences would be reversed.

Choosing an established effect size (such as Hedges’ g for mean differences, or Fisher's z for correlations) carries the advantage of the effect size's statistical properties being sufficiently understood and described previously (Rosenberg, Rothstein & Gurevitch, 2013). When a non‐conventional effect size is chosen, authors should provide equations for both the effect size and its sampling variance. Details should be provided on how the equations were derived, and how the sampling variance was determined (with analytic solutions or simulations) (Mengersen & Gurevitch, 2013).
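For the two established effect sizes named above, the sketch below shows commonly used textbook forms of the point estimate and its sampling variance: Hedges' g with the usual approximate small‐sample correction, and Fisher's z. Several variants of these formulas are in circulation, so the exact expressions here are an assumption on our part, which is precisely why a reference to the equations used should accompany any analysis. Function and argument names are hypothetical.

```python
import math
from typing import Tuple


def hedges_g(mean_t: float, sd_t: float, n_t: int,
             mean_c: float, sd_c: float, n_c: int) -> Tuple[float, float]:
    """Hedges' g (treatment minus control) and its sampling variance.

    Positive values mean the treatment mean exceeds the control mean.
    """
    if n_t < 2 or n_c < 2:
        raise ValueError("each group needs at least two observations")
    df = n_t + n_c - 2
    s_pooled = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    if s_pooled == 0:
        raise ValueError("pooled standard deviation is zero")
    d = (mean_t - mean_c) / s_pooled                 # uncorrected standardised mean difference
    j = 1.0 - 3.0 / (4.0 * df - 1.0)                 # approximate small-sample correction
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2.0 * (n_t + n_c))
    return j * d, j ** 2 * var_d


def fisher_z(r: float, n: int) -> Tuple[float, float]:
    """Fisher's z transformation of a correlation and its sampling variance."""
    if not -1.0 < r < 1.0 or n < 4:
        raise ValueError("need -1 < r < 1 and n >= 4")
    return math.atanh(r), 1.0 / (n - 3)
```

Stating the direction in the docstring (here, treatment minus control) mirrors the point made above: the sign of an effect size is only interpretable when the subtraction order is reported.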

Item 11: Missing data

Describe any steps taken to deal with missing data during analysis (e.g. imputation, complete case, subset analysis). Justify the decisions made.

Explanation and elaboration

There are multiple methods to analyse data sets that are missing entries for one or more variables; the chosen methods should therefore be reported transparently. Statistical programs often default to ‘complete case’, deleting rows that contain missing data (empty cells) prior to analysis, but our assessment of reporting practices found it was uncommon for authors to state that complete case analysis was conducted (despite their data showing missing values for meta‐regression moderator variables). Understandably, authors might not recognise the passive method of complete case analysis as a method of dealing with missing data, but it is important to be explicit about this step, both for the sample size implications (Item 20) and because of the potential to introduce bias when data are not ‘missing completely at random’ (Nakagawa & Freckleton, 2008; Little & Rubin, 2020). As an alternative to complete case analysis, authors can impute missing data based on the values of available correlated variables (e.g. multiple imputation methods, which retain uncertainty in the estimates of missing values; for discussion of these methods, see Ellington et al., 2015). Data imputation can be used for missing moderator variables as well as information related to effect sizes (e.g. sampling variances), thereby increasing the number of effect sizes included in analyses (Item 20) (Lajeunesse, 2013). Because imputation methods rely on the presence of correlated information, authors might extract additional data items to inform the imputation models, even if those data items are not of interest to the main analyses (Item 8). When justifying the chosen method, authors can conduct sensitivity analyses (Item 16) to assess the impact of missing data on estimated effects.
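Because complete case deletion often happens silently, it can help to make the step explicit and count what it removes. The sketch below does this for a data set held as a list of dictionaries; the function names and the example moderator names used in the test are hypothetical, and the sketch does not attempt imputation.

```python
import math
from typing import Dict, List, Sequence, Tuple


def is_missing(value) -> bool:
    """Treat None and floating-point NaN as missing."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def complete_case_report(rows: List[Dict], variables: Sequence[str]) -> Tuple[Dict, List[Dict]]:
    """Apply complete case deletion explicitly and summarise its consequences.

    Returns a summary (rows before and after, missing values per variable)
    and the retained rows, so the reduction in sample size can be reported.
    """
    n_missing = {v: sum(is_missing(r.get(v)) for r in rows) for v in variables}
    complete = [r for r in rows
                if not any(is_missing(r.get(v)) for v in variables)]
    summary = {
        "n_total": len(rows),
        "n_complete": len(complete),
        "n_dropped": len(rows) - len(complete),
        "n_missing": n_missing,
    }
    return summary, complete
```

Reporting the summary for each meta‐regression model shows readers how many effect sizes each moderator analysis actually used.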

Item 12: Meta‐analytic model description

Describe the models used for synthesis of effect sizes. The most common approach in ecology and evolution will be a random‐effects model, often with a hierarchical/multilevel structure. If other types of models are chosen (e.g. common/fixed effects model, unweighted model), this requires justification.

Explanation and elaboration

Meta‐analyses in ecology and evolutionary biology usually combine effect sizes from a broad range of studies, making it sensible to use a model that allows the ‘true’ effect size to vary between studies in a ‘random‐effects meta‐analysis’ (the alternative is a ‘common’ or ‘fixed’‐effect meta‐analysis). Both frequentist and Bayesian statistical packages can implement random‐effects meta‐analyses. It is also common for multiple random effects to be included in a multilevel or hierarchical structure to account for non‐independence (Item 14). Traditional meta‐analytic models are weighted so that more precise effects have a greater influence on the pooled estimate than effects that are less certain (Primer A). In a random‐effects meta‐analysis, weights are usually taken as the inverse of the sum of the within‐study sampling variance and the between‐study variance. As a consequence of these variances being combined, large between‐study variance will dilute the impact of within‐study sampling variances, making the weights more similar across studies. Alternatively, weights can be taken from the within‐study sampling variances alone (as is done for common‐effect models) (Henmi & Copas, 2010). When between‐study variance is large (which can be assessed with heterogeneity statistics; Item 22), these two weighting structures could give different results. Authors could therefore assess the robustness of their results to alternative weighting methods as part of sensitivity analyses (Items 16 and 24).
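The effect of the two weighting structures can be seen in a small numerical sketch. The function below computes an inverse‐variance weighted mean with weights 1/(vᵢ + τ²); setting τ² to zero gives the common‐effect weights. It returns point estimates and relative weights only (no standard errors or confidence intervals), assumes one effect size per study, and uses hypothetical names throughout.

```python
from typing import List, Sequence, Tuple


def weighted_pooled_estimate(effects: Sequence[float],
                             variances: Sequence[float],
                             tau2: float = 0.0) -> Tuple[float, List[float]]:
    """Weighted mean effect with weights 1 / (sampling variance + tau2).

    tau2 = 0 gives common-effect weights; tau2 > 0 gives random-effects
    weights. Also returns each study's share of the total weight.
    """
    if len(effects) != len(variances) or len(effects) == 0:
        raise ValueError("effects and variances must be non-empty and of equal length")
    if tau2 < 0:
        raise ValueError("tau2 cannot be negative")
    weights = [1.0 / (v + tau2) for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, [w / total for w in weights]
```

With effects of 0.2 and 1.0 and sampling variances of 0.01 and 0.09, the more precise study carries 90% of the weight when τ² = 0 (pooled estimate 0.28) but only 62.5% when τ² = 0.11 (pooled estimate 0.5), showing how large between‐study variance evens out the weights.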

Unweighted meta‐analyses are regularly published in ecology and evolutionary biology journals, but we advise that these analyses be interpreted cautiously, and justified sufficiently. Theoretically, when publication bias is absent and effects have a normal sampling distribution, unweighted analyses can provide unbiased estimates (just with lower precision) (Morrissey, 2016). However, it is hard to detect effects that are inflated due to publication bias without sampling variances (Item 16), and from unweighted analyses we cannot estimate the contribution of sampling variance to the overall variation among effects (i.e. heterogeneity; Item 22). Unweighted analyses become more problematic for analyses of absolute values, because the ‘folded’ sampling distribution produces upwardly biased estimates (Nakagawa & Lagisz, 2016). Such analyses of magnitudes, ignoring directions, are relatively common in ecology and evolutionary biology. There are two possible corrections for bias from analyses of absolute values (sensu Morrissey, 2016): (i) ‘transform‐analyse’, where the folded distribution is converted to an unfolded distribution before analysis; or (ii) ‘analyse‐transform’, where the folded estimates are back‐transformed to correct for bias.
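
The size of the upward bias can be illustrated with the standard formula for the mean of a folded normal distribution. The Python sketch below is only an illustration of why absolute values overestimate magnitude; it does not implement either of the corrections described by Morrissey (2016), and the values of `mu` and `sigma` are hypothetical.

```python
import math


def normal_cdf(z):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def folded_normal_mean(mu, sigma):
    """Expected value of |X| when X ~ Normal(mu, sigma^2)."""
    z = mu / sigma
    return (sigma * math.sqrt(2.0 / math.pi) * math.exp(-0.5 * z * z)
            + mu * (1.0 - 2.0 * normal_cdf(-z)))


# Hypothetical true mean effects, each with a standard deviation of 1.
for mu in (0.0, 0.2, 1.0, 5.0):
    expected_abs = folded_normal_mean(mu, 1.0)
    print(f"true mean {mu:.1f} -> expected absolute value {expected_abs:.3f}")
```

When the true mean is zero, the expected absolute value is still about 0.8 standard deviations, so an apparent 'magnitude' can arise from sampling noise alone. The bias shrinks as the true mean becomes large relative to the standard deviation.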

Item 13: Software

Describe the statistical platform used for inference (e.g. R), and the packages and functions used to run models, including version numbers. Specify any arguments that differed from the default settings.

Explanation and elaboration

Given the many software options and methods available for conducting meta‐analyses, transparent reporting is required for analyses to be reproducible (Sandve et al., 2013). Authors should cite all software used and provide complete descriptions of version numbers. When describing software, it is easy to overestimate familiarity among the readership; changes from the default settings will not be obvious to some and should be described in full. That said, this item is less important than sharing data and code (Item 18) because shared code will convey much of the same information in a more reproducible form. Nonetheless it is helpful to describe software details in the text for the majority of readers who will not dig into the shared code.
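
Version information is easiest to report accurately when it is captured programmatically at the time of analysis. In R, `sessionInfo()` and `packageVersion()` serve this purpose. The sketch below shows the same idea in Python using only the standard library; the function name `software_report` and the package names passed to it are placeholders, not part of any assessed workflow.

```python
import platform
from importlib import metadata


def software_report(packages):
    """Return interpreter, operating system, and package versions as a dict."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Record the absence instead of failing, so the report is complete.
            versions[name] = "not installed"
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "os": platform.platform(),
        "packages": versions,
    }


# Placeholder package names; list whichever packages the analysis imports.
report = software_report(["numpy", "a-package-that-does-not-exist"])
for key, value in report.items():
    print(key, ":", value)
```

Saving this output with the shared code (Item 18) complements the in‐text description. It does not replace a statement of which arguments differed from their defaults.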

Item 14: Non‐independence

Describe the types of non‐independence encountered (e.g. phylogenetic, spatial, multiple measurements over time) and how non‐independence has been handled, including justification for decisions made.

Explanation and elaboration

Meta‐analyses in ecology and evolutionary biology regularly violate assumptions of statistical independence, which can bias effect estimates and inflate precision. For example, studies containing pseudo‐replication (Forstmeier et al., 2017) have inflated sample sizes and downwardly biased sampling variances. When multiple effect sizes are derived from the same study, they are often not statistically independent, such as when multiple experimental groups are compared to the same control group (Gleser & Olkin, 2009). Alternatively, there may be non‐independence among effect sizes across studies. There are numerous sources of non‐independence at this level, including dependence among effect sizes due to phylogenetic relatedness (discussed in Primer E), and correlations between effect sizes originating from the same population or research group (Nakagawa et al., 2019). Despite the ubiquity of non‐independence in ecological and evolutionary meta‐analyses, these issues are often not disclosed in the report (only 32% of 102 meta‐analyses described the types of non‐independence encountered; see Supporting Information). We recommend that authors report all potential sources of non‐independence among effect sizes included in the meta‐analysis, and the proportion of effect sizes that are impacted (for further guidance, see Noble et al., 2017; López‐López et al., 2018).

In addition to listing all sources of non‐independence, authors should report and justify any steps that were taken to account for the stated non‐independence. Steps range from the familiar (e.g. averaging multiple effect sizes from the same source, the inclusion of random effects, and robust variance estimation; Hedges, Tipton & Johnson, 2010) to the more involved (e.g. modelling correlations directly by including correlation or covariance matrices). Complicated methods of dealing with non‐independence are best communicated through shared analysis scripts (Item 18). When primary studies are plagued by pseudo‐replication (which could be considered in quality assessment; Item 9), an effect size could be chosen that is less sensitive to biased sample sizes (Item 10) (e.g. for mean differences, the log response ratio, lnRR, rather than the standardised mean difference, d), and (to be more conservative) sampling variances could be increased (Noble et al., 2017).
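
As one hedged illustration of 'modelling correlations directly', the Python sketch below builds a sampling variance–covariance matrix in which effect sizes from the same study share an assumed correlation `rho`. It also computes the proportion of effect sizes that share a study with at least one other effect size. The study labels, sampling variances, and values of `rho` are hypothetical. Because the true correlation is rarely known, the matrix would normally be rebuilt over a range of values as a sensitivity analysis (Item 16).

```python
import math
from collections import Counter


def block_covariance(study_ids, vi, rho):
    """Sampling (co)variance matrix: vi on the diagonal, and
    rho * sqrt(vi * vj) for pairs of effect sizes from the same study."""
    k = len(vi)
    V = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            if i == j:
                V[i][j] = vi[i]
            elif study_ids[i] == study_ids[j]:
                V[i][j] = rho * math.sqrt(vi[i] * vi[j])
    return V


def proportion_non_independent(study_ids):
    """Share of effect sizes whose study contributes more than one effect size."""
    counts = Counter(study_ids)
    return sum(counts[s] > 1 for s in study_ids) / len(study_ids)


# Hypothetical data: five effect sizes nested within three studies.
study_ids = ["s1", "s1", "s2", "s3", "s3"]
vi = [0.04, 0.09, 0.05, 0.02, 0.08]

print("proportion affected:", proportion_non_independent(study_ids))

# Assumed within-study correlations, varied as a sensitivity analysis.
for rho in (0.3, 0.5, 0.8):
    V = block_covariance(study_ids, vi, rho)
    print(f"rho = {rho}: cov(es1, es2) = {V[0][1]:.4f}")
```

A matrix of this form can be supplied to multilevel meta‐analytic software in place of a vector of sampling variances. The chosen value of `rho` and its justification should be reported together with the results obtained under alternative values.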

It is not expected that non‐independence can be completely controlled for. As with primary studies, problems of non‐independence are complicated, and often the information necessary to solve the problem is unavailable (e.g. strength of correlation between non‐independent samples or effect sizes, or an accurate phylogeny). Where there are multiple, imperfect, solutions, we encourage running sensitivity analyses (Item 16) and reporting how these decisions affect the magnitude and precision of results (Item 24).

Item 15: Meta‐regression and model selection

Provide a rationale for the inclusion of moderators (covariates) that were evaluated in meta‐regression models. Justify the number of parameters estimated in models, in relation to the number of effect sizes and studies (e.g. interaction terms were not included due to insufficient sample sizes). Describe any process of model selection.

Explanation and elaboration

When meta‐regressions are used to assess the effects of moderator variables (i.e. meta‐analyses with one or more fixed effects), the probability of false‐positive findings increases with multiple comparisons. Therefore, rationales for each moderator variable should be provided in either the introduction or methods section of the meta‐analysis manuscript. Analyses conducted solely for exploration and description should be distinguished from hypothesis‐testing analyses (see Item 17). Authors should also report how closely the chosen moderator variables relate to the biological phenomena of interest (e.g. using mating call rate as a proxy for mating investment), and how the variables were categorised (Item 8).
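
The growth in false‐positive risk can be made concrete with the familywise error rate. The sketch below assumes independent tests of moderators that all have no true effect, which is a simplifying assumption: moderators in real data sets are often correlated, so the numbers are indicative only.

```python
def familywise_error(alpha, n_tests):
    """Probability of at least one false positive across n independent tests."""
    return 1.0 - (1.0 - alpha) ** n_tests


# Number of moderators tested, each at a nominal alpha of 0.05.
for n_moderators in (1, 5, 10):
    rate = familywise_error(0.05, n_moderators)
    print(f"{n_moderators} moderators -> familywise error rate {rate:.3f}")
```

With ten moderators the chance of at least one spurious 'significant' result is about 40% under these assumptions. This is why each moderator needs a stated rationale and why exploratory tests should be labelled as such.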

For model selection and justification, principles from ordinary regression analyses apply to meta‐regressions too (Gelman & Carlin, 2014; Harrison et al., 2018; Meteyard & Davies, 2020). To avoid cryptic multiple hypothesis testing and associated high rates of false positive findings, authors should report full details of any model selection procedures (Forstmeier & Schielzeth, 2011). Under‐powered meta‐regressions should be reported with obvious caveats (or else avoided completely), to discourage the results from being interpreted with unwarranted confidence (Tipton, Pustejovsky & Ahmadi, 2019). Meta‐regressions have lower statistical power than meta‐analyses, especially when including interaction terms between two or more moderator variables (Hedges & Pigott, 2004). Low statistical power can be due to any combination of too few available studies, small sample sizes within studies, or high amounts of variability between study effects, and therefore justification of meta‐regression models should include consideration of sample sizes (Item 20) and heterogeneity (Item 22) (Valentine et al., 2010).

Item 16: Publication bias and sensitivity analyses

Describe assessments of the risk of bias due to missing results (e.g. publication, time‐lag, and taxonomic biases), and any steps taken to investigate the effects of such biases (if present). Describe any other analyses of robustness of the results, e.g. due to effect size choice, weighting or analytical model assumptions, inclusion or exclusion of subsets of the data, or the inclusion of alternative moderator variables in meta‐regressions.

Explanation and elaboration

Reviews can produce biased conclusions if they summarise a biased subset of the available information, or if there is bias within the information itself. Authors should therefore assess risks of bias so that confidence in the conclusions (or lack thereof) can be accurately conveyed. Bias within the information itself was discussed in Item 9 and Primer D. Publication bias occurs when journals and authors prioritise the publication of studies with particular outcomes. For example, journals might prefer studies that support an exciting hypothesis, rather than publishing null or contradictory evidence (Rosenthal, 1979; Murtaugh, 2002; Leimu & Koricheva, 2004). Meta‐analyses in ecology and evolutionary biology typically rely on published papers for data (Item 5) and hence are especially vulnerable to the effects of publication bias (e.g. Sánchez‐Tójar et al., 2018). Even if all research was published, the resulting papers would still provide information that was biased towards certain taxa (e.g. vertebrates), geographical locations (e.g. field sites close to Western universities), and study designs (e.g. short‐term studies contained within the length of a PhD) (Pyšek et al., 2008; Rosenthal et al., 2017). Such ‘research biases’ (Gurevitch & Hedges, 1999) should be considered when categorising studies (Item 8).

While none are entirely satisfactory, multiple tools are available to detect publication bias in a meta‐analytic data set (Møller & Jennions, 2001; Parekh‐Bhurke et al., 2011; Jennions et al., 2013). Many readers will be familiar with funnel plots, whereby effect sizes (either raw, or residuals) are plotted against the inverse of their sampling variances, which should form a funnel shape. Asymmetries in the funnel can indicate studies that are ‘missing’ due to publication bias, but could also be a benign outcome of heterogeneity (Item 22) (Egger et al., 1997; Terrin et al., 2003). Although funnel plots and Egger's regression (a test of funnel plot asymmetry) were originally only useful for common‐effect meta‐analytic models (Egger et al., 1997), modified methods have been proposed to suit the random‐effects meta‐analytic models commonly used in ecology and evolutionary biology (Item 12; Moreno et al., 2009; Nakagawa & Santos, 2012). Publication bias might also be indicated by a reduction in the magnitude of an effect through time (tested with a meta‐regression using publication year as a moderator, or with a cumulative meta‐analysis) (Jennions & Møller, 2002; Leimu & Koricheva, 2004; Koricheva & Kulinskaya, 2019). When biases are detected, we recommend authors report multiple sensitivity analyses to assess the robustness of the review's results (Rothstein, Sutton & Borenstein, 2005; Vevea, Coburn & Sutton, 2019). Subgroup analyses can be reported to test whether the original effect remains once the data set is restricted to recent studies, or studies that have been assessed to have a lower risk of bias (Item 9). To assess the sensitivity of the results to individual studies, authors can also report ‘leave‐one‐out’ analyses, and plot variability in the primary outcome (Willis & Riley, 2017).
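
Two of the checks described above can be sketched in a few lines of Python. The first is the classic, common‐effect form of Egger's regression, in which standardised effects are regressed on precision and an intercept far from zero suggests asymmetry. The second is a leave‐one‐out analysis using inverse‐variance weights. Both are simplified illustrations on invented data: the sketch omits the standard error needed to test the intercept formally, and it does not include the modifications proposed for random‐effects or multilevel models.

```python
import math


def ols_line(x, y):
    """Ordinary least-squares intercept and slope for y = a + b * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def egger_regression(yi, vi):
    """Regress standardised effects (yi / se) on precision (1 / se).
    Returns (intercept, slope); the intercept measures asymmetry."""
    se = [math.sqrt(v) for v in vi]
    precision = [1.0 / s for s in se]
    standardised = [y / s for y, s in zip(yi, se)]
    return ols_line(precision, standardised)


def leave_one_out(yi, vi):
    """Inverse-variance pooled estimate with each effect size omitted in turn."""
    estimates = []
    for omit in range(len(yi)):
        pairs = [(y, 1.0 / v)
                 for i, (y, v) in enumerate(zip(yi, vi)) if i != omit]
        estimates.append(sum(y * w for y, w in pairs) / sum(w for _, w in pairs))
    return estimates


# Hypothetical data in which less precise studies report larger effects.
vi = [0.01, 0.04, 0.09, 0.16, 0.25]
yi_asymmetric = [0.20, 0.30, 0.45, 0.60, 0.80]

intercept, slope = egger_regression(yi_asymmetric, vi)
loo = leave_one_out(yi_asymmetric, vi)

print("Egger intercept:", round(intercept, 3), "slope:", round(slope, 3))
print("leave-one-out estimates:", [round(e, 3) for e in loo])
```

In this invented data set the intercept is clearly positive, which is the pattern expected when small studies with small effects are missing. The leave‐one‐out estimates show how far the pooled effect moves when any single effect size is removed.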

Item 17: Clarification of post hoc analyses

When hypotheses were formulated after data analysis, this should be acknowledged.

Explanation and elaboration

Usually, a hypothesis should only be tested on data that were collected with the prior intention of testing that hypothesis. While a meta‐analysis can test different hypotheses from those addressed in primary studies on which it is based, it is important that these hypotheses are formulated in advance. It is common, however, for researchers to be curious about patterns in their data after they have already been collected. Exploration and description are integral to research, but problems arise when such analyses are presented as hypothesis‐testing (also called ‘Hypothesising After Results are Known’; see Primer C; Kerr, 1998), especially when they deviate from what is stated in a registration. Ideally, authors will have a registered analysis plan (Item 3) to protect against the common self‐deception that a post‐hoc analysis was, in hindsight, the obvious a‐priori choice (Parker et al., 2016a). When a public registration is not provided, the reader is reliant on the memory and honesty of the authors. It is currently rare to see post‐hoc acknowledgements in ecology and evolution meta‐analyses (or indeed in primary studies) but, in the methods section, we encourage authors to state transparently which analyses were developed after data collection and, in the discussion, temper confidence in the results of such analyses accordingly.

Item 18: Metadata, data, and code

Share metadata (i.e. data descriptions), data, and analysis scripts with peer‐reviewers. Upon publication, upload this information to a permanently archived website in a user‐friendly format. Provide all information that was collected, even if it was not included in the analyses presented in the manuscript (including raw data used to calculate effect sizes, and descriptions of where data were located in papers). If a software package with a graphical user interface (GUI) was used, then describe the full model specification and all choices made.

Explanation and elaboration