Abstract

The Nash equilibrium concept has previously been shown to be an important tool to understand human sensorimotor interactions, where different actors vie for minimizing their respective effort while engaging in a multi-agent motor task. However, it is not clear how such equilibria are reached. Here, we compare different reinforcement learning models to human behavior engaged in sensorimotor interactions with haptic feedback based on three classic games, including the prisoner’s dilemma, and the symmetric and asymmetric matching pennies games. We find that a discrete analysis that reduces the continuous sensorimotor interaction to binary choices as in classical matrix games does not allow to distinguish between the different learning algorithms, but that a more detailed continuous analysis with continuous formulations of the learning algorithms and the game-theoretic solutions affords different predictions. In particular, we find that Q-learning with intrinsic costs that disfavor deviations from average behavior explains the observed data best, even though all learning algorithms equally converge to admissible Nash equilibrium solutions. We therefore conclude that it is important to study different learning algorithms for understanding sensorimotor interactions, as such behavior cannot be inferred from a game-theoretic analysis alone, that simply focuses on the Nash equilibrium concept, as different learning algorithms impose preferences on the set of possible equilibrium solutions due to the inherent learning dynamics.

Subject terms: Neuroscience, Mathematics and computing

Introduction

Nash equilibria are the central solution concept for understanding strategic interactions between different agents1. Crucially, unlike other maximum expected utility decision-making models2–4, the Nash equilibrium concept cannot assume a static environment that can be exploited to find the optimal action in a single sweep, but it rather defines a fixed point representing a combination of strategies that can be found by iteration, so that finally no agent has anything to gain by deviating from their equilibrium behavior. Here, a strategy is conceived as a probability distribution over actions, so that Nash equilibria are in general determined by combinations of probability distributions over actions (mixed Nash equilibria), and only in special cases by combinations of single actions (pure equilibria)5. An example of the first kind is the popular rock-papers-scissors game which can be simplified to the matching pennies game with two action choices, where in either case the mixed Nash equilibrium requires players to randomize their choices uniformly. An example of the second kind is the prisoner’s dilemma6 where both players choose between cooperating and defecting without communication. The pure Nash equilibrium in this game requires both players to defect, because the payoffs are designed in a way that allow for a dominant strategy from the perspective of a single player, where it is always better to defect, no matter what the other player is doing.

The Nash equilibrium concept has not only been broadly applied in economic modeling of interacting rational agents, companies and markets7–10, but also to explain the dynamics of animal conflict11,12, population dynamics including microbial growth13,14, foraging behavior15–18, the emergence of theory of mind19–22, and even monkeys playing rock-papers-scissors23,24. Recently, Nash equilibria have also been proposed as a concept to understand human sensorimotor interactions25–29. In these studies human dyads are typically coupled haptically30 and experience physical forces that can be regarded as payoffs in a sensorimotor game. By designing the force payoffs appropriately in dependence of subjects’ actions, these sensorimotor games can be made to correspond to classic pen-and-paper games like the prisoner’s dilemma with a single pure equilibrium, coordination games with multiple equilibria, or signaling games with Bayesian Nash equilibria to model optimal sensorimotor communication. In general, it was found in these studies that subjects’ sensorimotor behavior during haptic interactions was in agreement with the game theoretic predictions of the Nash equilibrium, even though the pen-and-paper versions of some games systematically violate these predictions. This raises the question of how such equilibria are attained, especially since the Nash equilibrium concept itself provides no explanation of how it is reached, especially when there are multiple equivalent equilibria.

In this study we investigate what kind of learning models could explain how humans that are interacting through a haptic sensorimotor coupling reach a Nash equilibrium. The problem of learning in games can be approached within different frameworks, including learning of simple fixed response models like partial best response dynamics for reaching pure Nash equilibria31,32, or fictitious play with smoothed best response dynamics for mixed equilibria33,34, as well as more sophisticated reinforcement learning models like Q-learning35,36, policy gradients37,38, minimax Q-learning39 or Nash Q-learning40,41, together with learning models in evolutionary game theory for reaching Nash equilibria (evolutionary stable strategies) through population dynamics42. Here we focus on model-free reinforcement learning models to explain subjects’ sensorimotor interactions, as in many of the previous experiments25–27 subjects interact only through haptic feedback and cannot otherwise “see” the choices of the other player. In particular, we consider model-free reinforcement learning models like Q-learning and direct policy search methods like policy gradients that exclusively rely on force feedback during learning. We compare Q-learning and policy gradient learning in games with pure and mixed Nash equilibria, including the prisoner's dilemma and two versions of the matching pennies game with symmetric and asymmetric pay-offs respectively. While the prisoner's dilemma game has a single pure equilibrium, the sensorimotor version of the matching pennies games has infinitely many mixed Nash equilibria that are theoretically equivalent, and we investigate whether the different learning algorithms introduce additional preferences between these equilibrium strategies based on the inherent learning dynamics and we check how these match up with human learning behavior.

Results

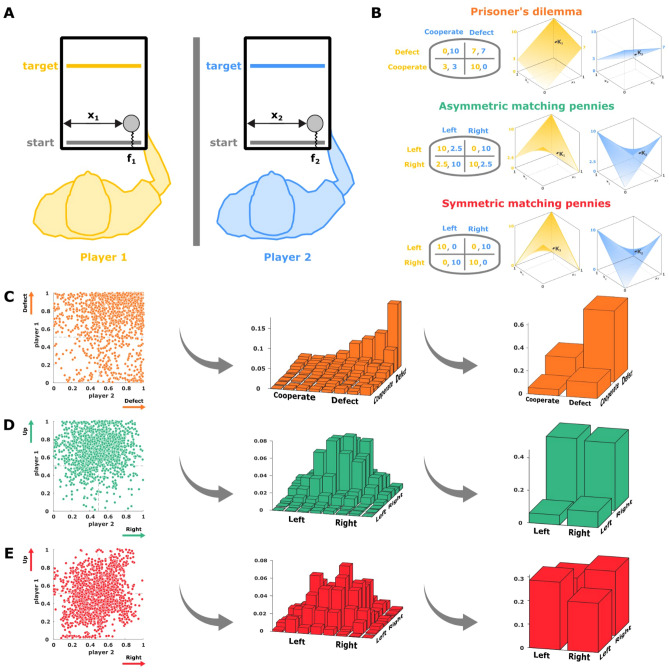

To investigate learning during sensorimotor interactions with pure and mixed Nash equilibria, we designed three continuous sensorimotor games that are variants of the traditional two-player matrix games of the prisoner’s dilemma, the asymmetric matching pennies game, and the symmetric matching pennies game (see Fig. 1 and Methods for details). During the experiment two players were sitting next to each other and interacted through a virtual reality system with the handles of two robotic interfaces that were free to move in the horizontal plane (see Fig. 1A). In each trial, players were requested to move their handle to cross a target bar ahead of them, where the lateral position of both handles determined the individual magnitude of a resistive force opposing the forward motion of the handle. Thus, the movement of each player directly impacted on the forces experienced by both players in a continuous fashion. This way, our sensorimotor game differs in three essential aspects from the traditional cognitive versions, in that first, we have an implicit effort cost through haptic coupling, second, subjects could choose from a continuum of actions defining a continuous payoff landscape, where the payoffs in the four corners correspond to the payoffs in the traditional payoff matrices, and third, that subjects are unaware of the structure of the haptic coupling, i.e., they do not have complete information about the payoffs, as they only have access to their own payoffs through force feedback.

Figure 1.

Experimental setup. (A) Sensorimotor game. Subjects were sitting next to each other without communication, and interacted through two handles that generated a force on their hand opposing their forward movement. (B) Payoff matrices for each one of the 3 games. The 2-by-2 payoff matrices determine the boundary values of the force payoffs at the extremes of the -space for both players (left). Interpolation of the payoffs defining a continuous payoff landscape for a continuum of actions (right). (C) Subjects’ results in the continuous prisoner's dilemma game. Final decisions shown as a scatter plot in the -space (left), where the single pure Nash equilibrium is located in the top-right corner at (1,1). The same responses binned into an 8-by-8 histogram (middle), and the corresponding categorical representation (right). (D) Subjects’ results in the continuous asymmetric matching pennies game. Final decisions (left), where the mixed Nash equilibrium strategies have expectation (0.8, 0.5). The same responses binned into an 8-by-8 histogram (middle), and the corresponding categorical representation (right). (E) Subjects’ results in the continuous matching pennies. Final decisions game (left), where the mixed Nash equilibrium strategies have expectation (0.5, 0.5). The same responses binned into an 8-by-8 histogram (middle), and the corresponding categorical representation (right). Created using MATLAB R2021a (https://www.mathworks.com)43.

Since the payoffs can only be learned from experience, we devised different reinforcement learning models that can emulate subjects’ behavior by adapting their choice distributions in a way that avoids punitive forces. As subjects were only communicated their own payoff when making their choices, we compare only model-free reinforcement learning schemes, in particular Q-learning and policy gradient models. We consider two reward conditions, with and without intrinsic costs added to the extrinsically imposed reward defined by the haptic forces, where the intrinsic costs make it more expensive for the learner to change actions abruptly between trials—see Methods for details. We focus on trial-by-trial learning across the 40 trial blocks where games were repeated and neglect within-trial adaptation, as we found that initial and final positions of movement trajectories were close together most of the time. In particular, we found that in approximately of the trials for the prisoner’s dilemma, and in and of the trials for the asymmetric and symmetric matching pennies respectively, the subjects’ final decision laid within a 3.6cm neighborhood ( of the entire workspace) of their initial position in each trial, and there was no systematic change across the block of trials. We found that the distribution of distances between the initial and final position in each trial did not change significantly when comparing the first 20 trials of each block with the last 20 trials of each block (Kolmogorov-Smirnov test, for all games). Therefore we concentrate only on the final position of each trial, where the force pay-off was strongest.

Categorical analysis

Previously, we have studied subjects’ choices in the sensorimotor game in a binary fashion25,26 to allow for a direct comparison with the corresponding pen-and-paper 2-by-2 matrix game. To this end, we divide each subject’s action space into two halves and categorize actions accordingly—compare Fig. 2. The four quadrants of the combined action space then reflect all possible combinations, for example in the prisoner’s dilemma (defect, defect), (defect, cooperate), (cooperate, defect) and (cooperate, cooperate). When comparing the histogram over subjects’ choices over the first 10 trials compared to the last 10 trials of each block of 40 trials, it can be clearly seen that behavior adapts and becomes more consistent with the Nash equilibrium solution of the 2-by-2 matrix games for the prisoner’s dilemma and the asymmetric matching pennies game. In particular, in the prisoner’s dilemma game, subjects increasingly concentrate on the defect/defect solution, even though the Nash solution is never perfectly reached, as other action combinations also occur late in learning. In the asymmetric matching pennies game, player 1 displays the expected asymmetric action distribution, whereas player 2 shows the expected 50:50 behavior. In both games, the entropy of the joint action distribution decreases significantly over the block of 40 trials—see Supplementary Fig. 1. In the case of the symmetric matching pennies game, the histograms do not reveal any learning or entropy decrease, as expected, because the random Nash strategy coincides with the random initial strategy.

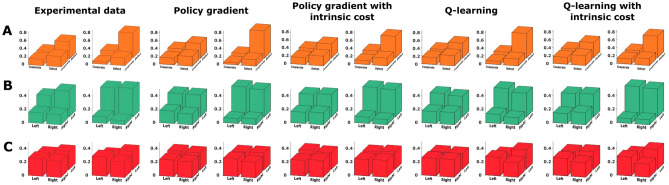

Figure 2.

Categorical analysis. Final decisions in the -space categorized into two halves for the experimental data (left) and, from left to right, the results of the binary gradient descent, binary gradient descent with prior cost, binary Q-learning, and binary Q-learning with prior cost. We show the first 10 trials compared to the last 10 trials of each block of 40 trials.(A) Prisoner’s dilemma. (B) Asymmetric matching pennies. (C) Matching pennies. Created using MATLAB R2021a (https://www.mathworks.com)43.

We compared the binary Q-learning described in the methods as model 1, and the policy gradient described as model 2, to the human data by exposing both models to the same kind of game sequence that was experienced by subjects. In the simulations, we always applied the same learning model for both players, albeit with player-specific parameters that were fitted to match subjects’ action distributions. In Fig. 2 it can be seen that both kinds of learning models explain subjects’ behavior equally well. Also when repeating the same analysis including the intrinsic cost function that discourages deviations from previous positions, the result remains essentially the same. This leaves open the possibility that the categorical analysis may be too coarse to distinguish between these kinds of models. In the following, we therefore study the continuous games in terms of their continuous responses and not just their binary discretization. We compare subjects’ behavior in the three different games to two continuous reinforcement learning models, where one is a policy gradient model with a continuous action distribution, and the other one a continuous Q-learning model.

Continuous analysis

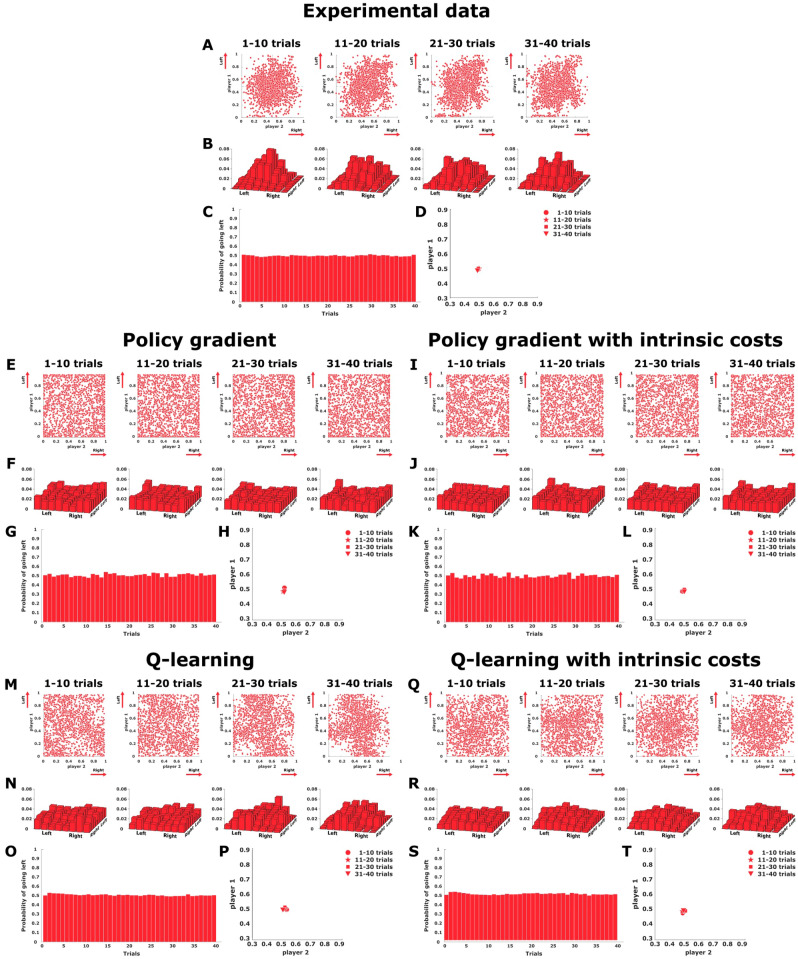

Prisoner’s dilemma: Endpoint distributions. In Fig. 3A subjects’ final decisions in the continuous prisoner’s dilemma game are shown as a scatter plot within the -space, where the single pure Nash equilibrium is located in the top-right corner at position (1, 1). Over the course of 40 trials subjects’ responses increasingly cluster around the Nash equilibrium, even though a considerable spread remains. The difference in endpoint distribution between the first 10 trials in a block and the last 10 trials was highly significant for player 1 and player 2 (KS-test, for each player). A two-dimensional histogram binning of the experimental scatter plots can be found in Fig. 3B.

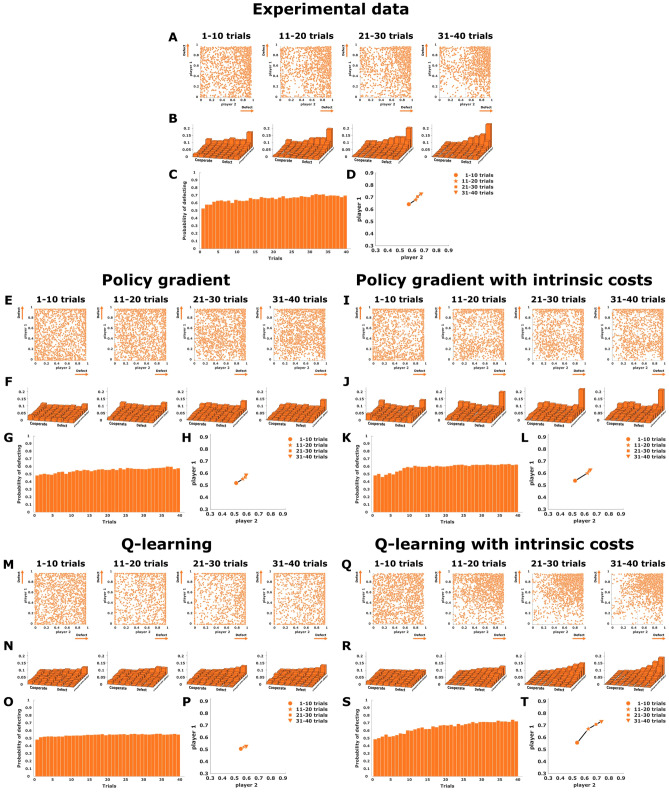

Figure 3.

Prisoner’s dilemma. (A),(E),(I),(M), and (Q) show scatter plots of final decisions in the -plane, where subjects’ actions are expected to cluster around the single pure Nash equilibrium located in the top-right corner at position (1,1). (B),(F),(J),(N), and (R) show two-dimensional histograms binning the experimental scatter plots. (C),(G),(K),(O), and (S) represent the change of the mean endpoints (averaged for both players) for each trial across the block of 40 trials. (D),(H),(L),(P), and (T) show the direction of adaptation in the endpoint space. The experimental data is shown at the top, the four continuous models are shown below. Created using MATLAB R2021a (https://www.mathworks.com)43.

Figure 3E shows the performance of two coupled continuous policy gradient learners on the same task. The model learners also converge to the Nash solution, but the variability is spread mainly along the boundaries and corners, which is in stark contrast to subjects’ behavior. The same is also true if the policy gradient learners are parameterized slightly differently with the logit-normal—see Supplementary Figure 2. Figure 3M shows two coupled continuous Q-learning agents performing the prisoner’s dilemma sensorimotor task. The model learners slowly start concentrating probability mass in the quadrant of the Nash equilibrium, but their responses are spread out more uniformly than the subjects’ responses, giving in comparison a distribution that is too flat due to the broad exploration. One way to enforce more specific exploration is through the consideration of intrinsic costs, for example by assuming that selecting actions that significantly deviate from the action prior, given by averaging over all trials, is costly44,45. Figure 3I and Q show the performance of the two learning models considering such an intrinsic cost. While the behavior of the gradient learner still shows a sharp peak in the corner, the Q-learning model concentrates actions in a way that is more similar to human behavior in the quadrant of the Nash equilibrium, and it even shows the exponential decline in probability that is observed in the human data when moving away from the Nash corner. Accordingly, the Q-learning model with intrinsic costs achieves the most similar two-dimensional histogram to the data, as quantified by the Euclidean distance between the subjects’ and the models’ histograms—compare Supplementary Table 1.

Prisoner’s dilemma: Response frequency adaptation. A statistical measure to assess the effects of learning is the change of the mean endpoint (averaged for both players) for each trial across the block of 40 trials. Figure 3C shows a slow increase from 0.5 to approximately 0.7. The Q-learning model without intrinsic costs shows a flat distribution resulting from the broad exploration (see Fig. 3O). In contrast, both the gradient learning models (see Fig. 3G and K) and the local Q-learning model with intrinsic costs (see Fig. 3S), manage to mimic the experimental responses, with the latter model achieving the lowest mean-squared error compared to the data—compare Supplementary Table 1.

To study the direction of adaptation in the endpoint space, we look at the difference vectors resulting from subtracting the mean endpoints of each mini-block of 10 trials from the preceding mini-block of 10 trials. The arrow plot in Fig. 3D shows that subjects move towards the Nash equilibrium on a straight path, where the step size of learning becomes smaller over time. As can be seen in Fig. 3H and L this adaptation pattern is reproduced by both the gradient learning models as well as the Q-learning model with intrinsic costs (see Fig. 3T). Without the intrinsic costs, the extensive search of the Q-learning agents generates an almost uniform adaptation (see Fig. 3P). In summary, our analysis demonstrates that it is not enough to look at the mean behavior to distinguish between the different models. In fact, when looking at the entire two-dimensional histogram over the continuous action space, it becomes apparent that the Q-learning model with intrinsic costs is the only one that can capture subjects’ behavior well, as it is the only model that concentrates actions in the Nash quadrant where the concentration increases gradually with proximity to the Nash solution (compare Fig. 3B,F,J,N,R).

Asymmetric matching pennies: endpoint distribution. Figure 4A shows subjects’ final decisions in the continuous asymmetric matching pennies game as a scatter plot in the -plane, where the set of mixed Nash equilibria corresponds to all joint distributions where the marginal distribution of player 1 has the expected location and the marginal distribution of player 2 has the expected location . Throughout the course of 40 trials, subjects’ responses evolve from a symmetric distribution centered approximately at (0.5, 0.5) to an asymmetric distribution that is tilted for player 1 as expected. The difference in endpoint distribution between the first 10 trials in a block and the last 10 trials was highly significant (KS-test, ). A two-dimensional histogram binning of the scatter plots can be seen in Fig. 4B.

Figure 4.

Asymmetric matching pennies. (A),(E),(I),(M), and (Q) show final decisions as a scatter plot in the -plane, where subjects’ actions are expected to cluster in top quadrants. (B),(F),(J),(N), and (R) show a two-dimensional histogram binning of the experimental scatter plots. (C),(G),(K),(O), and (S) present the change of the mean endpoint (independently for each player) for each trial across the block of 40 trials. (D),(H),(L),(P), and (T) show the direction of adaptation in the endpoint space. The experimental data is shown on the top, the four continuous models are below. Created using MATLAB R2021a (https://www.mathworks.com)43.

Figure 4E shows the endpoint distribution of two coupled policy gradient learners performing in the continuous asymmetric matching pennies game. As expected, player 1 shifts to an asymmetric behavior, while player 2 remains random, but the model actions are mainly spread along the two opposing boundaries, which singles out a possible Nash solution, but is in stark contrast to subjects’ behavior that concentrates in the upper quadrants of the workspace. This preference for extreme responses of the gradient model is even more extreme when including intrinsic costs, which eliminates most of the asymmetry (see Fig. 4I) and also holds for a logit-normal parameterization of the gradient learners (compare Supplementary Figure 3).

Figure 4M shows two coupled continuous Q-learning agents performing the same task. In contrast to the gradient learning models, the best-fitting Q-learning models manage to preserve substantial probability mass in the center of the workspace, but this happens at the expense of such a slow learning progress that the resulting action distribution is close to uniform, and therefore also does not capture subjects’ behavior well. This changes, however, when including intrinsic costs. Figure 4Q shows two fitted continuous Q-learning agents with intrinsic costs that manage to qualitatively capture the experimentally observed distribution in that player 1 concentrates its probability mass in the top quadrants, whereas player 2 shows more uniform behavior, as expected.

Asymmetric matching pennies: response frequency adaptation. Figure 4C shows the change of the mean endpoint (independently for each player) in each trial across the block of 40 trials. We can observe an increase from 0.5 to approximately 0.8 for player 1, whereas for player 2 the mean value remains constant along all trials around 0.5. This behavior is also observed in Fig. 4D, which shows the average direction of subjects’ adaptation. These mean adaptation patterns are reproduced by the gradient learning model (see Fig. 4G,H), however, when including the intrinsic costs, the symmetry between extreme responses causes more random behavior in both players (see Fig. 4K,L), although this is not so much the case in the logit-normal parameterization (compare Supplementary Figure 3). We see also that the Q-learning model without intrinsic costs depicted in Fig. 4O is very slow in learning, which can also be seen from the mean direction of adaptation depicted in Fig. 4P. However, when considering intrinsic costs in the Q-learning model, the change of the mean endpoint (see Fig. 4S) and the average direction of adaptation (see Fig. 4T) match subjects behavior quite well. Even though the Q-learning model with intrinsic costs is initially slightly slower than the human subjects in picking up the equilibrium behavior, taken together with the two-dimensional histogram binning of the scatter plots in Fig. 4B,F,J,N,R the Q-learning model with intrinsic costs provides the best fit to subjects’ behavior, confirmed by the lowest Euclidean distance between model and subject histograms—compare Supplementary Table 1.

Symmetric matching pennies: endpoint distribution

While the asymmetric matching pennies game requires adaptation in action frequency, learning in the symmetric game is not expected to show changes in the action frequency, and thus we finally need to look for other signatures of learning. In Fig. 5A subjects final decisions in the continuous matching pennies game are shown as a scatter plot in the -plane, where the set of mixed Nash equilibria corresponds to all joint distributions where the marginal distribution of player 1 and player 2 has the expected location . Subjects’ responses generally cluster around the center position with mean (0.5, 0.5) in the first block of 10 trials and remain that way through the entire block of 40 trials. A two-dimensional histogram binning of the scatter plots can be seen in Fig. 5B. While subjects’ initial behavior can be explained as a result of ignorance, the same behavior is also compatible with the Nash solution, and so the response frequencies remain stable throughout the block of 40 trials. This can be seen from the mean endpoint (averaged for both players) across the block of 40 trials shown in Fig. 5C, and from the average direction of subjects’ adaptation depicted in Fig. 5D.

Figure 5.

Symmetric matching pennies. (A),(E),(I),(M), and (Q) show final decisions as a scatter plot in the -plane, where subjects’ actions are expected to cluster around the center of the workspace. (B),(F),(J),(N), and (R) show a histogram binning of the experimental scatter plots. (C),(G),(K),(O), and (S) present the change of the mean endpoint (averaged for both players) for each trial across the block of 40 trials. (D),(H),(L),(P), and (T) show the direction of adaptation in the endpoint space. The experimental data is shown in the top, the four continuous models are below. Created using MATLAB R2021a (https://www.mathworks.com)43.

The endpoint distribution of two coupled policy gradient learners performing in the continuous symmetric matching pennies game is shown in Fig. 5E, and the corresponding version including intrinsic costs is shown in Fig. 5I. In both cases the distribution of responses for both players is rather uniform. Figure 5M shows two coupled continuous Q-learning agents performing the same task, and Fig. 5Q the same model including intrinsic costs. Contrarily to the gradient learning models, the Q-learning models manage to preserve substantial probability mass in the center of the workspace.

While all four models capture the effect of stable mean response frequencies—see Fig. 5G,H,K,L,O,P, and S,T for comparison—, the gradient learning models predict a more uniform distribution of responses, including in particular a high amount of extreme responses at the borders of the workspace that were mostly absent in the experiment. In contrast, the Q-learning models cluster in the center of the work space. Moreover, the inclusion of intrinsic costs that punish large deviations from behavior in the previous trial lead to a slightly stronger concentration of responses in the center of the work space in agreement with subject data. Again, based on the two-dimensional histogram binning of the scatter plots in Fig. 5B,F,J,N,R the Q-learning model with intrinsic costs achieves the best fit to subjects’ behavior, confirmed by the lowest Euclidean distance between the fourth binned histogram—compare Supplementary Table 1.

Symmetric matching pennies: response frequency adaptation

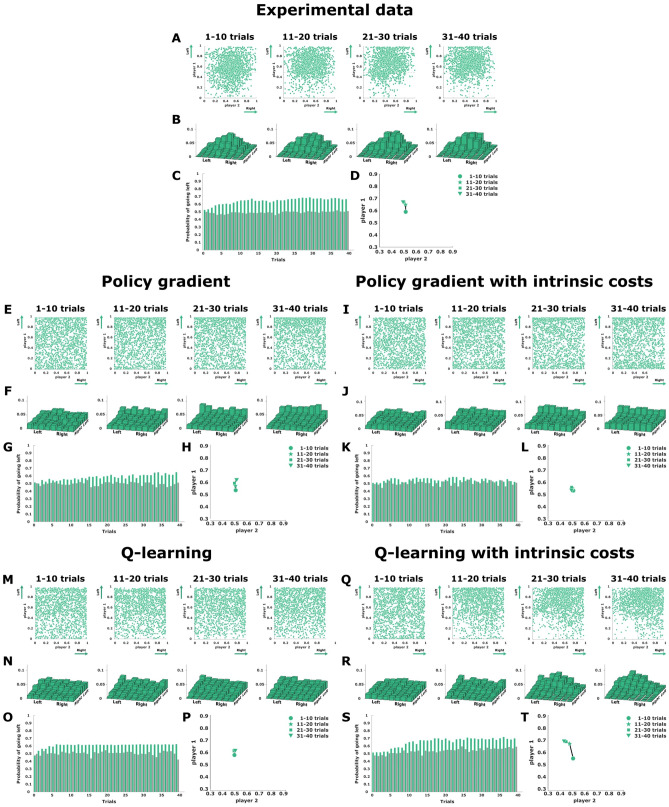

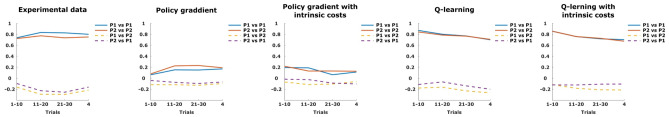

As there is no change in response frequencies, assessing learning in this game is more challenging, even though the difference in endpoint distribution between the first 10 trials in a block and the last 10 trials was significant (KS-test, ). To study the trial-by-trial adaptation process in more detail, we correlated subjects’ positional response from the current trial either to their positional response in the previous trial or the positional response of the other player in the previous trial. The correlations across four batches of ten consecutive trials that make up the blocks of 40 trials are shown in Fig. 6. The positive correlations displayed by the solid lines indicate that subjects had a strong tendency to give the same kind of response in consecutive trials. In contrast, the close-to-zero correlations displayed by the dashed lines indicate that subjects’ behavior cannot be predicted from the other player’s response in the previous trial. These correlations were stable across the block of 40 trials. The close-to-zero correlations represented by the dashed lines are reproduced by all learning models. However, the solid lines representing the first-order autocorrelation behave quite differently in the models. The gradient learners start out with zero correlation between successive actions and increase this correlation slightly over trials, but always far below the high autocorrelation displayed by subjects. In contrast, the Q-learning models exhibit an autocorrelation comparable in magnitude to the subject data. Although the modeled autocorrelation decreases slightly over time, the continuous Q-learning models again fit the data best.

Figure 6.

Autocorrelation in the symmetric matching pennies. The solid lines show the autocorrelation between actions from the current and the previous trial for both players. The dashed lines indicate cross-correlations between one players action in the current trial with another player’s action in the previous trial. From left to right: experimental data, policy gradient, policy gradient with intrinsic costs, Q-learning, Q-learning with intrinsic costs. Created using MATLAB R2021a (https://www.mathworks.com)43.

Discussion

In this study we investigate human multi-agent learning in three different kinds of continuous sensorimotor games based on the three -matrix games of matching pennies, asymmetric matching pennies and the prisoner’s dilemma. In these games, subjects were haptically coupled and learned to move in a way that would reduce the experienced haptic coupling force resisting their forward motion, which served as a negative reward during the interaction. To explain subjects’ learning behavior across multiple repetitions, we compare their movements to different reinforcement learning models with or without internal costs arising when deviating from the previous action or average behavior. In previous studies25, the attainment of Nash equilibria in sensorimotor interactions has been reported, but without investigating the mechanisms of learning. In the current study we found, when simulating different learning models, that it is not enough to study binary discretizations of the continuous games as previously, as this coarse analysis does not reveal fine differences between learning models. However, clear differences between learning models arise when studying the games in their native continuous sensorimotor space. In particular, we found that a continuous Q-learning model with intrinsic action costs best explains subjects’ co-adaptation.

While we considered binary and continuous models in the paper to highlight the advantage the latter may have compared to the former, there is a gradual spectrum from discrete to continuous models. Naively, discrete models with more than two actions can be used to approximate the continuous case, but then learning is slower, because there are many more independent actions to learn. In the reinforcement learning literature46, this problem is remedied with multiple tilings, where each tiling contains a fixed number of tiles that cover the workspace. Having multiple tilings that are shifted with respect to each other introduces neighbourhood relationships where the number of tiles per tiling reflects the width of the neighbourhood. When formulating the Q-learning model in our examples as a discrete tiled agent, we get qualitatively similar results to the continuous agents, however, sometimes there are border effects. Therefore, we decided to use continuous models that more closely reflect the experimental setup, and that can be discretized for visualization.

In all of the games, the continuous Q-learning model trained without additional intrinsic costs tends to spread its probability fairly evenly across the workspace, and shows rather slow learning. The reason is not that Q-learning per se would be too slow to learn, but is rather a consequence of the fitting procedure that determines that the slow learning in that case provides the best fit, as faster learning would produce behavior that deviates from subjects’ behavior even more. However, we found that introducing an intrinsic cost that punishes large deviations from the previous action could alleviate this problem, allowing for more concentrated learning and making the Q-learning model qualitatively the best fit to human behavior. The intrinsic cost can be interpreted as a simple intrinsic cost for motor effort, that is not considered in the payoff function of the game, or it could be the consequence of an intrinsic motor planning cost that favors similar actions. As all our models were initialized with a uniform distribution, such an intrinsic cost could also be thought of as a non-uniform prior that one could use to initialize the models.

An alternative interpretation would be to conceptualize the minimization of the trial-by-trial deviation as minimizing the information difference between each action and the marginal distribution over actions given by the average behavior. Such an implicit information penalization of action policies can be regarded as a form of bounded rational decision-making, where decision-makers trade off between utility and information costs that are required to achieve a certain level of precision47–49. Such information costs have been previously suggested to model costs of motor planning and abstraction50,51. Another form of limited information-processing capability is subjects’ limited sensory precision regarding the perception of force, that we have modeled by assuming Gaussian sensory noise with a magnitude roughly corresponding to ten percent of the maximum force52,53. The assumption of this sensory noise is vital for all the models, as otherwise the simulations predict very fast convergence and steep learning transients that are not observed in human subjects.

Compared to the Q-learning model, the policy gradient learners preferentially converged to strategies involving actions at the extremes, even though average behavior of these models fitted with game-theoretic predictions. This preference for extremes occurs not only for the particular parameterization that we chose (Kumaraswamy or beta-distribution), but also occurs for other parameterizations like the logit-normal model—compare Supplementary Figures 2- 4. While this can only happen when the policy gradient models are initialized uniformly, a non-uniform initialization of these models must assign zero probability to the extremes of the workspace, and therefore trivially avoids this problem. When initialized uniformly, the gradient learners prefer the extreme solutions, as the reward gradient is steepest in this direction. This demonstrates that the choice of learning algorithm imposes a preference on the set of possible equilibrium solutions, that a priori would all be equally acceptable, due to the inherent learning dynamics. We conclude that it is important to study different learning algorithms for understanding sensorimotor interactions, as such behavior cannot be inferred from a game-theoretic analysis alone, that simply focuses on the Nash equilibrium concept. While our study can of course not exclude the possibility that there could be many other learning algorithms that could explain the data equally well or better, it shows also that it is not trivial that the Q-learning with intrinsic costs manages to capture the adaptive behavior of human interactions.

Unlike the abstract concept of the Nash equilibrium solution, reinforcement learning models are deeply rooted in the psychology literature of classical and operant conditioning46 and thereby open up a mechanistic approach to understanding sensorimotor interactions. In the neurosciences there have been numerous attempts to relate these psychological concepts to neuronal mechanisms like spike-timing dependent plasticity and dopaminergic modulation54,55. In human strategic interactions, previous reinforcement learning models have been used to explain, for example, the emergence of dominant strategies56–58, conditional cooperation59,60, learning in extensive form games61–63, and transfer of reward-predictive representations64,65. The interactions in these studies were mostly based on cognitive strategies that participants could develop, as they were made explicitly aware of the meaning of different choice options. Instead, we have focused on haptic interactions with forces that require implicit learning that can be construed as the minimization of reward prediction error in the context of reinforcement learning66,67.

The role of reinforcement learning in the context of implicit learning has been previously examined in a number of studies on motor learning68–70, including physical robot-human interactions71,72 and group coordination73, however, not in the context of game theory and haptic interactions. While our task was simple enough to allow for model-free reinforcement learning, a challenge for the future remains to study reinforcement learning in more complex environments with multiple agents. This may include, for example, studying model-based learning strategies74 or learning strategies that succeed in the presence of multiple equilibria26. Although parallel single-agent Q-learning over multiple trials can lead to successful action coordination in some strategic interactions, game-theoretic learning algorithms like Nash Q-learning make it more likely to reach a joint optimal path in more general games41. Whether human players would devise such strategies during sensorimotor interactions remains an open question. In summary, our study adds to a growing body of research harnessing the power of reinforcement learning models to understand human interactions and highlights that a more detailed understanding of learning mechanisms can also contribute to a better understanding of equilibrium behavior.

Methods

Experimental methods

Participants

Sixteen naïve subjects participated in this study and provided written informed consent for participation. The study was approved by the ethics committee of Ulm University. All sixteen participants were undergraduate students that were compensated for their time with an hourly payment of 10 euros, while they played two sensorimotor versions of the matching pennies game. Before the experiment, participants were instructed that they should move a cursor to a target bar while trying to minimize the resistive forces experienced during the movement. Participants were told that they are not allowed to communicate with each other during the experiment. The data of another sixteen subjects was reanalyzed from a previous study25, where they performed a sensorimotor version of the prisoner’s dilemma under the same kind of experimental conditions presented here in the current study. All methods were carried out in accordance with relevant guidelines and regulations.

Setup

The experiment was run on two vBOT haptic devices75 that were connected to a virtual reality environment in which two subjects could control one cursor each by moving a handle in the horizontal plane. This setup provides both subjects separately with a visual feedback of their own cursor position, without showing a direct view of their hand or the hand of the other player—see Fig. 1A. The subjects were haptically coupled by applying individual forces on the two handles representing a negative payoff. Both the forces and the cursors’ positions were updated and recorded with a sampling frequency of 1 kHz throughout each trial.

Experimental design

To begin each trial, subjects had to simultaneously locate their cursor on the 15-cm-wide starting bar placed at each bottom side of their respective workspace. Each participant’s workspace was constrained by the vBOT simulating solid walls at the extremes left () and right () for both players. A beep sound informed players that a valid starting position was chosen and a 15-cm-wide target bar was displayed in front of each player with the same distance that was randomly drawn from the uniform distribution between 5 and 20 cm—see Fig. 1A. Subjects were instructed to move as soon as they heard the beep. Each participant had to hit their respective target bar with their cursor, for which they had to move forward (y-direction) and touch the bar within a maximum time period of 1500 ms. Each trial was finished as soon as both players had hit their respective target bar, which they could touch anywhere along its length. For the analysis, we interpreted this horizontal position of the target crossing as subjects’ final choice.

To determine the payoffs of the interaction, each player’s cursor was attached to a simulated one-dimensional spring whose equilibrium point was located on the starting bar. This generated a force on their hand in the negative y-direction that opposed their forward movement with , where corresponds to the y-distance of the cursor of player from the starting bar. Crucially, the spring constants were not constant but variable functions depending on the normalized horizontal positions and of both participants, where corresponds to extreme left and represents extreme right of the workspace of player i. In particular, the spring functions were determined by the bilinear interpolation

| 1 |

where was a constant scaling parameter set to throughout the experiment, and the 2-by-2 matrices corresponds to the 2-by-2 payoff matrix of player i and determine the boundary values of the force payoffs at the extremes of the -space. Consequently, is the payoff assigned to player i when player 1 takes action and player 2 takes action where . Each one of the three games analyzed in this study was defined by a different set of payoff matrices corresponding to the 2-by-2 games of the prisoner’s dilemma, asymmetric matching pennies, and symmetric matching pennies—see Fig. 1B. Players’ actions are considered as the final positions in the -space, where the continuous responses can be categorized by using different binning (see Fig. 1C,D,E). To encourage learning, the payoff matrices were permuted every 40 trials, so that the mapping between action and payoff had to be relearned every 40 trials. There were four possible permutations corresponding to the possible reassignments and . Subject pairs repeated 20 blocks of 40 trials each, totaling in 800 trials over the whole experiment.

Prisoner’s dilemma motor game

In the pen-and-paper version of the prisoner’s dilemma the 2-by-2 payoff matrices are given by

and represent a scenario where two delinquents are caught separately by the police and face the choice of either admitting their crime (cooperating) or denying it (defecting), while being individually interrogated with no communication allowed. For each player it is always best to defect, no matter what the other player is doing, even though cooperation would lead to a more favorable outcome if both players choose to do so. Hence, there is only one pure Nash equilibrium given by defect/defect. In our continuous sensorimotor version of the game, the Nash equilibrium corresponds to a corner of the -space—see Fig. 1C.

Matching pennies motor games

In the classic version of the symmetric matching pennies game the 2-by-2 payoff matrices are given by

and can be motivated from the scenario of 2 football players during a penalty kick with the two actions left and right. In this case player 2 (e.g. the goalkeeper) will try to select the matching action (e.g. ) to stop the ball, while player 1 (e.g. the striker) will try to unmatch by aiming the shot away from player 1 (e.g. ). Choosing an action deterministically in this game is a bad strategy, since the opponent can exploit predictable behavior. The mixed Nash equilibrium is therefore given by a pair of distributions with and (see Fig. 1E). In the continuous sensorimotor version of the experiment there are infinitely many mixed Nash equilibria corresponding to the set of all distributions where and assuming —see Supplementary Material.

In the 2-by-2 version of the asymmetric matching pennies game the payoff matrices are given by

which is similar to the symmetric scenario, only that one player now has a higher incentive to choose a particular action (e.g. the striker gets awarded more points when choosing ). The mixed Nash equilibrium is then given by a pair of distributions with and (see Fig. 1D). In the continuous sensorimotor version of the experiment there are infinitely many mixed Nash equilibria corresponding to the set of all distributions where and assuming —see Supplementary Material.

Theoretical methods

Computational models

Every model consists of two artificial agents whose parameters are updated over the course of 40 trials. Like in the actual experiment, every agent decides independently from the other agent about an action, observes the reward signal in the form of a punishing force and adapts their strategy. The models can be categorized in binary action models with action space , and continuous action models that operate on the action space . All models have the same structure:

At the beginning of every trial t every agent i samples an action from the distribution .

After both agents have taken their respective action simultaneously, they receive a reward , where is calculated based on the spring constant from Equation (1), such that , where ε is a Gaussian random variable with mean zero and standard deviation 0.1 to model the effect of sensory noise.

When considering intrinsic costs, the reward signal is augmented to for all in order to punish high trial-by-trial deviations. For all simulations we use .

Depending on the action and reward, the agents adapt their strategy to optimize future rewards.

Binary action models

Binary action models are limited to the two actions 0 and 1. These models can be used for learning in the classic matrix versions of the different games.

Model 1: Q-learning

As in standard Q-Learning, agents using the first model represent their knowledge in the form of a Q-value lookup table. Since there are only two actions the lookup table for player i has two values, namely:

For the distribution we chose a softmax-distribution with parameter :

and the updates for the Q-values are of the following form:

where is a learning rate that decreases exponentially over the course of the trials. All Q-values are initialized as 0, such that the agents start the game without any initial knowledge.

Model 2: Gradient Decent

The gradient decent model learns a parameterized policy that can select actions without consulting a value function. The policy used by the binary gradient decent learner is the Bernoulli probabilty distribution with outcomes . An action a is determined by sampling from the policy:

where is a parameter that is adapted whenever a player receives the reward for their current action .

with an exponentially decaying learning rate parameter that is fitted to the behavior of the human participants. The parameter is initialized as such that both actions are equally probable for the initial policy.

Continuous action models

Continuous models give a more faithful representation of the sensorimotor version of the game in that they are able to sample actions from the full interval [0, 1].

Model 3: Gradient Descent

The gradient decent model directly learns a parameterized policy that can select actions without consulting a value function. The parameterized policy used in this study is given by the Kumaraswamy probability distribution on the interval (0, 1). An action a is determined by sampling from the policy:

where and are shape-parameters that are adapted whenever a player receives reward or punishment in form of a force for their current action. For an action and reward , the parameters are updated according to the following update equations:

with an exponentially decaying learning rate parameter that is fitted to the behavior of the human participants. The shape-parameters are initialized as such that the initial policy for all players is the uniform distribution on (0, 1).

Model 4: Q-Learning with Gaussian basis functions

This model is an extension of the discrete Q-Learning model to the continuous action space [0, 1]. Its core consists of an action value function with radial basis functions such that the real action value function is well reflected by a set of weights that are adapted throughout the trials and a set of parameters with that are held constant. For the simulation we chose Gaussian basis functions centered around with standard deviation such that action samples can be drawn from a Gaussian mixture model. Accordingly, we can generate an action at time t, by sampling an index j from a categorical distribution with softmax-parameters and softmax function σ(.), and then draw a sample a from the j-th basis function in the Gaussian mixture. Samples that lie outside of the interval [0, 1] are rejected and resampled. Unlike the discrete case we update all weights Q in every trial according to the following rule. If and are the action taken and the reward at time t, then

where is a learning rate parameter, is a discount factor and is the clipped Gaussian density function . All weights were initialized as 0.

Optimizing parameters

All parameters were optimized such that the simulated agents’ behavior fitted the behavior of the average player in a specific player slot (i.e. player 1 or player 2). For the optimization we minimized the absolute error:

that occurred over 40 trials between the participants data d and the simulation’s results . The histogram function h maps the data points onto an matrix where each entry of this matrix contains the normalized amount of data points that lie in the corresponding bin of an binned histogram. The time index t of indicates that all data points from the first trial up until trial t are used to generate the matrix.

Supplementary information

Acknowledgements

This study was funded by European Research Council (ERC-StG-2015-ERC Starting Grant, Project ID: 678082, BRISC: Bounded Rationality in Sensorimotor Coordination).

Author contributions

C.L.L., G.S., and D.B. designed the experiment. C.L.L. performed experiments and analyzed the data. G.S. generated predictions from computer simulations. D.B. supervised the project. C.L.L. and D.B. wrote the paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Cecilia Lindig-León and Gerrit Schmid.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-021-99428-0.

References

- 1.Nash JF. Equilibrium points in n-person games. Proc. Natl. Acad. Sci. USA. 1950;36:48–49. doi: 10.1073/pnas.36.1.48. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.von Neumann, J. & Morgenstern, O. The Theory of Games and Economic Behavior (Princeton University Press, 1947).

- 3.Savage, L. J. The foundations of statistics. Wiley (1954).

- 4.Todorov E. Optimality principles in sensorimotor control (review) Nat. Neurosci. 2004;7:907–915. doi: 10.1038/nn1309. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Fudenberg, D. & Tirole, J. Game Theory (MIT Press, 1991).

- 6.Poundstone, W. Prisoner’s Dilemma: John Von Neumann, Game Theory and the Puzzle of the Bomb (Doubleday, 1992).

- 7.Buchanan JM. Game theory, mathematics, and economics. Null. 2001;8:27–32. doi: 10.1080/13501780010022802. [DOI] [Google Scholar]

- 8.C.R. McConnell, S. F., S.L. Brue. Economics: principles, problems, and policies (McGraw-Hill Education, 2018).

- 9.Camerer C. Behavioral game theory: experiments in strategic interaction. Princeton, N.J.: Princeton University Press; 2003. [Google Scholar]

- 10.Sanfey, A. G. Social decision-making: insights from game theory and neuroscience. Science (New York, N.Y.)318, 598–602 (2007). 10.1126/science.1142996. [DOI] [PubMed]

- 11.Keynes, J. M. The general theory of employment, interest and money (Macmillan, 1936). https://search.library.wisc.edu/catalog/999623618402121.

- 12.DeDeo S, Krakauer DC, Flack JC. Inductive game theory and the dynamics of animal conflict. PLoS Comput. Biol. 2010;6:1–16. doi: 10.1371/journal.pcbi.1000782. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Sumpter, D. The principles of collective animal behavior. Philosophical transactions of the Royal Society of London. Series B, Biological sciences361, 5–22 (2006). [DOI] [PMC free article] [PubMed]

- 14.Cai J, Tan T, Chan SHJ. Predicting nash equilibria for microbial metabolic interactions. Bioinformatics. 2020 doi: 10.1093/bioinformatics/btaa1014. [DOI] [PubMed] [Google Scholar]

- 15.Giraldeau LA, Livoreil B. Game theory and social foraging. Oxford: Oxford University Press; 1998. [Google Scholar]

- 16.Basar, T. & Olsder, G. J. Dynamic Noncooperative Game Theory (SIAM, 1999).

- 17.Özgüler AB, Yıldız A. Foraging swarms as nash equilibria of dynamic games. IEEE Trans. Cybern. 2014;44:979–987. doi: 10.1109/TCYB.2013.2283102. [DOI] [PubMed] [Google Scholar]

- 18.Cressman R, Křivan V, Brown JS, Garay J. Game-theoretic methods for functional response and optimal foraging behavior. PLoS ONE. 2014;9:e88773. doi: 10.1371/journal.pone.0088773. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Rilling JK, Sanfey AG, Aronson JA, Nystrom LE, Cohen JD. The neural correlates of theory of mind within interpersonal interactions. NeuroImage. 2004;22:1694–1703. doi: 10.1016/j.neuroimage.2004.04.015. [DOI] [PubMed] [Google Scholar]

- 20.Sanfey AG. Social decision-making: Insights from game theory and neuroscience. Science. 2007;318:598. doi: 10.1126/science.1142996. [DOI] [PubMed] [Google Scholar]

- 21.Yoshida W, Dolan RJ, Friston KJ. Game theory of mind. PLoS Comput. Biol. 2008;4:e1000254. doi: 10.1371/journal.pcbi.1000254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Moriuchi, E. “social credit effect” in a sharing economy: A theory of mind and prisoner’s dilemma game theory perspective on the two-way review and rating system. Psycho. Mark. 37, 641–662 (2021). 10.1002/mar.21301.

- 23.Lee D, McGreevy BP, Barraclough DJ. Learning and decision making in monkeys during a rock-paper-scissors game. Cogn. Brain Res. 2005;25:416–430. doi: 10.1016/j.cogbrainres.2005.07.003. [DOI] [PubMed] [Google Scholar]

- 24.Gao J, Su Y, Tomonaga M, Matsuzawa T. Learning the rules of the rock-paper-scissors game: Chimpanzees versus children. Primates. 2018;59:7–17. doi: 10.1007/s10329-017-0620-0. [DOI] [PubMed] [Google Scholar]

- 25.Braun DA, Ortega PA, Wolpert DM. Nash equilibria in multi-agent motor interactions. PLoS Comput. Biol. 2009;5:e1000468. doi: 10.1371/journal.pcbi.1000468. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Braun DA, Ortega PA, Wolpert DM. Motor coordination: When two have to act as one. Exp. Brain Res. 2011;211:631–641. doi: 10.1007/s00221-011-2642-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Grau-Moya J., P. G., Hez E. & A., B. D. The effect of model uncertainty on cooperation in sensorimotor interactions. J. R. Soc.10 (2013). [DOI] [PMC free article] [PubMed]

- 28.Leibfried F, Grau-Moya J, Braun DA. Signaling equilibria in sensorimotor interactions. Cognition. 2015;141:73–86. doi: 10.1016/j.cognition.2015.03.008. [DOI] [PubMed] [Google Scholar]

- 29.Chackochan, V. & Sanguineti, V. Modelling collaborative strategies in physical human-human interaction. In Ibáñez, J., González-Vargas, J., Azorán, J., Akay, M. & Pons, J. (eds.) Converging Clinical and Engineering Research on Neurorehabilitation II. Biosystems & Biorobotics, vol. 15 (Springer, Cham, 2017).

- 30.Van der Wel RPRD, Knoblich G, Sebanz N. Let the force be with us: Dyads exploit haptic coupling for coordination. J. Exp. Psychol. Hum. Percept. Perform. 2011;37:1420. doi: 10.1037/a0022337. [DOI] [PubMed] [Google Scholar]

- 31.Fudenberg, D. The Theory of Learning in Games (MIT Press, 1998).

- 32.Cournot, A. A., Fisher, I. & Bacon, N. T. Researches into the mathematical principles of the theory of wealth. No. xxiv p., 1 L., 213 p. in Economic classics (The Macmillan company, New York, 1927). http://hdl.handle.net/2027/mdp.39015014823705.

- 33.Ellison G, Fudenberg D. Learning purified mixed equilibria. J. Econ. Theory. 2000;90:84–115. doi: 10.1006/jeth.1999.2581. [DOI] [Google Scholar]

- 34.Shamma JS, Arslan G. Dynamic fictitious play, dynamic gradient play, and distributed convergence to nash equilibria. IEEE Trans. Autom. Control. 2005;50:312–327. doi: 10.1109/TAC.2005.843878. [DOI] [Google Scholar]

- 35.Watkins CJCH, Dayan P. Q-learning. Mach. Learn. 1992;8:279–292. [Google Scholar]

- 36.Grau-Moya, J., Leibfried, F. & Bou-Ammar, H. Balancing two-player stochastic games with soft q-learning. arXiv preprintarXiv:1802.03216 (2018).

- 37.Banerjee, B. & Peng, J. Adaptive policy gradient in multiagent learning. 686–692 (2003).

- 38.Mazumdar E, Ratliff LJ, Sastry SS. On gradient-based learning in continuous games. SIAM J. Math. Data Sci. 2020;2:103–131. doi: 10.1137/18M1231298. [DOI] [Google Scholar]

- 39.Littman, M. L. Markov games as a framework for multi-agent reinforcement learning. 157–163 (Elsevier, 1994).

- 40.Hu, J. & Wellman, M. P. Multiagent reinforcement learning: theoretical framework and an algorithm. vol. 98, 242–250 (Citeseer, 1998).

- 41.Hu J, Wellman MP. Nash q-learning for general-sum stochastic games. J. Mach. Learn. Res. 2003;4:1039–1069. [Google Scholar]

- 42.Weibull, J. W. Evolutionary Game Theory (MIT Press, 1995).

- 43.MATLAB. version 9.10.0 (R2021a) (The MathWorks Inc., Natick, Massachusetts, 2021).

- 44.Tishby, N. & Polani, D. Information theory of decisions and actions. 601–636 (Springer, 2011).

- 45.Genewein T, Leibfried F, Grau-Moya J, Braun DA. Bounded rationality, abstraction, and hierarchical decision-making: An information-theoretic optimality principle. Front. Robot. AI. 2015;2:27. doi: 10.3389/frobt.2015.00027. [DOI] [Google Scholar]

- 46.Sutton, R. S. & Barto, A. G. Reinforcement learning: An introduction (MIT Press, 2018).

- 47.Ortega PA, Braun DA. Thermodynamics as a theory of decision-making with information-processing costs. Proc. R. Soc. A: Math. Phys. Eng. Sci. 2013;469:20120683. doi: 10.1098/rspa.2012.0683. [DOI] [Google Scholar]

- 48.Gottwald S, Braun DA. The two kinds of free energy and the bayesian revolution. PLoS Comput. Biol. 2020;16:e1008420. doi: 10.1371/journal.pcbi.1008420. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Leimar O, McNamara JM. Learning leads to bounded rationality and the evolution of cognitive bias in public goods games. Sci. Rep. 2019;9:16319. doi: 10.1038/s41598-019-52781-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Schach S, Gottwald S, Braun DA. Quantifying motor task performance by bounded rational decision theory. Front. Neurosci. 2018;12:932. doi: 10.3389/fnins.2018.00932. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Lindig-León C, Gottwald S, Braun DA. Analyzing abstraction and hierarchical decision-making in absolute identification by information-theoretic bounded rationality. Front. Neurosci. 2019;13:1230. doi: 10.3389/fnins.2019.01230. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Juslin P, Olsson H. Thurstonian and Brunswikian origins of uncertainty in judgment: A sampling model of confidence in sensory discrimination. Psychol. Rev. 1997;104:344. doi: 10.1037/0033-295X.104.2.344. [DOI] [PubMed] [Google Scholar]

- 53.Ennis, D. M. Thurstonian models: Categorical decision making in the presence of noise (Institute for Perception, 2016).

- 54.Worgotter F, Porr B. Temporal sequence learning, prediction, and control: A review of different models and their relation to biological mechanisms. Neural Comput. 2005;17:245–319. doi: 10.1162/0899766053011555. [DOI] [PubMed] [Google Scholar]

- 55.Florian RV. Reinforcement learning through modulation of spike-timing-dependent synaptic plasticity. Neural Comput. 2007;19:1468–1502. doi: 10.1162/neco.2007.19.6.1468. [DOI] [PubMed] [Google Scholar]

- 56.Sandholm TW, Crites RH. Multiagent reinforcement learning in the iterated prisoners dilemma. Biosystems. 1996;37:147–166. doi: 10.1016/0303-2647(95)01551-5. [DOI] [PubMed] [Google Scholar]

- 57.Beggs AW. On the convergence of reinforcement learning. J. Econ. Theory. 2005;122:1–36. doi: 10.1016/j.jet.2004.03.008. [DOI] [Google Scholar]

- 58.Harper, M. et al. Reinforcement learning produces dominant strategies for the iterated prisoner’s dilemma. PLoS ONE12, e0188046. 10.1371/journal.pone.0188046 (2017). [DOI] [PMC free article] [PubMed]

- 59.Ezaki T, Horita Y, Takezawa M, Masuda N. Reinforcement learning explains conditional cooperation and its moody cousin. PLoS Comput. Biol. 2016;12:e1005034. doi: 10.1371/journal.pcbi.1005034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Horita Y, Takezawa M, Inukai K, Kita T, Masuda N. Reinforcement learning accounts for moody conditional cooperation behavior: Experimental results. Sci. Rep. 2017;7:39275. doi: 10.1038/srep39275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Roth AE, Erev I. Learning in extensive-form games: Experimental data and simple dynamic models in the intermediate term. Games Econ. Behav. 1995;8:164–212. doi: 10.1016/S0899-8256(05)80020-X. [DOI] [Google Scholar]

- 62.Laslier J-F, Walliser B. A reinforcement learning process in extensive form games. Int. J. Game Theory. 2005;33:219–227. doi: 10.1007/s001820400194. [DOI] [Google Scholar]

- 63.Ling, C., Fang, F. & Kolter, J. Z. What game are we playing? end-to-end learning in normal and extensive form games. In IJCAI (2018).

- 64.Russek EM, Momennejad I, Botvinick MM, Gershman SJ, Daw ND. Predictive representations can link model-based reinforcement learning to model-free mechanisms. PLoS Comput. Biol. 2017;13:e1005768. doi: 10.1371/journal.pcbi.1005768. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Lehnert L, Littman ML, Frank MJ. Reward-predictive representations generalize across tasks in reinforcement learning. PLoS Comput. Biol. 2020;16:e1008317. doi: 10.1371/journal.pcbi.1008317. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Glimcher PW. Understanding dopamine and reinforcement learning: The dopamine reward prediction error hypothesis. Proc. Natl. Acad. Sci. 2011;108:15647–15654. doi: 10.1073/pnas.1014269108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Watabe-Uchida M, Eshel N, Uchida N. Neural circuitry of reward prediction error. Annu. Rev. Neurosci. 2017;40:373–394. doi: 10.1146/annurev-neuro-072116-031109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.J, I. & R, S. ,. Learning from sensory and reward prediction errors during motor adaptation. PLOS Comput. Biol.7, e1002012 (2011). [DOI] [PMC free article] [PubMed]

- 69.Krakauer JW, Mazzoni P. Human sensorimotor learning: Adaptation, skill, and beyond. Curr. Opin. Neurobiol. 2011;21:636–644. doi: 10.1016/j.conb.2011.06.012. [DOI] [PubMed] [Google Scholar]

- 70.Uehara S, Mawase F, Therrien AS, Cherry-Allen KM, Celnik P. Interactions between motor exploration and reinforcement learning. J. Neurophysiol. 2021;122:797–808. doi: 10.1152/jn.00390.2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Mitsunaga N, Smith C, Kanda T, Ishiguro H, Hagita N. Adapting robot behavior for human–robot interaction. IEEE Trans. Rob. 2008;24:911–916. doi: 10.1109/TRO.2008.926867. [DOI] [Google Scholar]

- 72.Akkaladevi SC, et al. Toward an interactive reinforcement based learning framework for human robot collaborative assembly processes. Front. Robot. AI. 2018;5:126. doi: 10.3389/frobt.2018.00126. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Schmid G, Braun DA. Human group coordination in a sensorimotor task with neuron-like decision-making. Sci. Rep. 2020;10:8226. doi: 10.1038/s41598-020-64091-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Howard IS, Ingram JN, Wolpert DM. A modular planar robotic manipulandum with end-point torque control. J. Neurosci. Methods. 2009;181:199–211. doi: 10.1016/j.jneumeth.2009.05.005. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.