Abstract

Limited research has reported the economic feasibility, from both a research and a practice perspective, of efforts to recruit and enroll an intended audience in evidence-based approaches for disease prevention. We aimed to retrospectively estimate the costs of a population health management (PHM) approach to identify, engage, and enroll patients in a Type 1 Hybrid Effectiveness-Implementation (HEI) diabetes-prevention trial. We used activity-based costing to estimate the recruitment costs of a PHM approach integrated within an HEI trial. We took the perspective of a healthcare system that may adopt, and possibly sustain, the strategy in typical practice. We also estimated replication costs based on how the strategy could be applied in healthcare systems interested in referring patients to a local diabetes prevention program from a payer perspective. The total recruitment and enrollment costs were $360,424 to accrue 599 participants over approximately 15 months. The average costs per screened and per enrolled participant were $263 and $620, respectively. Translated to typical settings, total recruitment costs for replication were estimated at $193,971 (range: $43,827–$210,721). Sensitivity and scenario analyses indicated that replication costs would be approximately $283–$444 per patient enrolled if glucose testing were necessary, based on Medicare-covered services. From a private payer perspective, and without glucose testing, per-participant assessed costs were estimated at $31. A PHM approach can be used to accrue a large number of participants in a short period of time for an HEI trial, at a comparable cost per participant.

Keywords: Activity-based costing, Reach, Adoption, Behavioral lifestyle intervention, Prediabetes, Process mapping

Implications.

Practice: Population health management (PHM), a multicomponent strategy that includes identifying subpopulations of patients who would benefit from a given evidence-based intervention (EBI) and examining the characteristics of these populations using available health record data, holds the potential of maximizing program reach into the intended audience.

Policy: Reach is a key implementation outcome; thus, the costs of recruiting patients to participate in EBIs should be considered when estimating economic feasibility.

Research: Future research is needed to explore the use of an automated PHM approach in the EHR system to further prompt outreach to high-risk or hard-to-reach patients to encourage subsequent diabetes screening tests and interventions to prevent diabetes or other preventive services.

Introduction

Prediabetes, the predecessor of diabetes, is a leading contributor to lifetime healthcare costs due to its high prevalence [1]. Approximately 84 million (or one in three) adults in the U.S. have prediabetes, with an estimated conversion rate to type 2 diabetes (T2D) of 5%–10% per year [2]. A number of efficacious weight-loss interventions that combine healthy eating and physical activity plans with behavioral strategies to promote weight loss have been developed for this population, the most well-known of which is the lifestyle intervention from the Diabetes Prevention Program (DPP) [3]. Studies have also shown that DPPs improved participants’ glycemic outcomes [4], reduced the incidence of diabetes [3], and reduced healthcare costs and utilization in the adult workforce [5] and Medicare populations [6] in the first intervention year. The Centers for Disease Control and Prevention (CDC) Diabetes Prevention Recognition Program developed standards to track and ensure the quality of lifestyle intervention programs, and currently recognizes both in-person and virtual/online/digital programs to support relevant behavior changes [7]. To date, most of these programs have been delivered in person [8, 9], limiting program scalability and accessibility for at-risk individuals dealing with time and/or transportation barriers. In a recent review identifying factors leading to the successful implementation of DPPs in real-world settings [10], Aziz et al. emphasized that even modest weight loss can have a significant population-level impact if a high proportion of at-risk individuals participate in the programs. Unfortunately, engaging participants in these lifestyle programs has been a challenge [11]. In the most recent National Health Interview Survey, of respondents at risk for diabetes, only 2.4% reported participating in a diabetes prevention program [12].

To address low participation rates, digital platforms offer scalability and the ability to overcome barriers often associated with in-person diabetes prevention interventions. Specifically, the use of asynchronous sessions that allow for social support and coaching when and where it is most convenient for a given participant addresses issues with scheduling and travel time [13]. Digital DPPs have also demonstrated success in supporting participant weight loss of a similar magnitude to in-person programs [14–16]. Still, the challenge of engaging populations at risk for diabetes persists. Indeed, 25% of people at risk for diabetes indicate that they would like to participate in a DPP if it were available [12]. Unfortunately, there has been limited research on methods to recruit or engage potential participants in either in-person or digital DPPs [17, 18].

Evidence-based interventions (EBIs) are faced with the challenge of ensuring clinical effectiveness while attracting a broad and representative sample of the target population [19]. The need for multi-component strategies which maximize reach (i.e., the number, proportion, and representativeness of participants) [20] is paramount given the challenges faced by in-person and digital DPPs. Processes that efficiently identify patients at risk of developing T2D and engage primary care providers (PCPs) are needed and seldom reported in the literature [21]. Population Health Management is gaining traction as highly relevant for organizations aiming to provide primary care services while tackling the challenges associated with the management of health care delivery and payment systems [22]. In primary care settings, proactive population health management would include, for example, identifying subpopulations of patients who would benefit from a given EBI, examining the characteristics of these populations using available health record data, creating reminders for patients and providers, tracking performance measures, and making data widely available for clinical decision making at the practice level [22, 23]. A prominent barrier to EBI implementation is a paucity of evidence on the startup costs, or costs related to the uptake of such an approach within existing healthcare or community-based systems [24, 25]. Assessing costs of recruitment and enrollment is an important first step towards understanding the economic feasibility of adopting and implementing a population health management approach in the delivery of digital DPPs to maximize reach into the intended audience.

The success of participant recruitment is related to several factors, including staffing resources, length of intervention, post-intervention follow-up duration, and costs [26–28]. However, most intervention studies overlook the importance of recruitment costs and resources, and these are seldom reported in the literature [25, 29–31]. Information on the resources and potential costs associated with recruitment activities and processes is critical to decision-makers in charge of resource allocation and upfront investment within limited budgets. Moreover, underestimating the costs associated with participant recruitment can contribute to recruitment problems, which inhibit or delay the translation of EBIs into practice [27]. The objectives of this study were to (a) assess the costs of applying a population health management approach to reach patients at risk of diabetes and enroll them in a clinical trial comparing a digital DPP to an enhanced standard of care, and (b) estimate the potential cost variation if the approach were replicated or sustained in general practice with modifications that reflect population health management approaches typical for chronic disease. It is anticipated that this information will be useful for informed decision-making in the widespread adoption of lifestyle interventions targeting diabetes prevention and the reduction of cardiovascular disease risk (e.g., obesity, diet, and hypertension) in healthcare settings.

METHODS

Setting and overview of the PREDICTS trial

The Preventing Diabetes with Digital Health and Coaching for Translation and Scalability trial (PREDICTS) is a Type 1 Hybrid Effectiveness-Implementation (HEI) trial that was conducted to determine the clinical effectiveness of a technology-enabled and adapted DPP lifestyle intervention to reduce hemoglobin A1c (HbA1c) and body weight of patients with prediabetes in an integrated healthcare system. As a Type 1 HEI trial [32], secondary aims related to examining the dissemination and implementation context included the assessment of potential reach, recruitment costs, and the potential for adoption and sustained implementation of digital diabetes prevention strategies within a typical healthcare setting [33]. The PREDICTS trial recruited 599 overweight or obese adults with prediabetes, determined by an HbA1c range of 5.7%–6.4%. The study protocol and details about participant recruitment and intervention reach are presented elsewhere [8, 33]. In brief, eight clinics within the Nebraska Medicine healthcare system in the Greater Omaha area participated in the trial, from which 22,642 patients aged 19 and older who were at risk of T2D and had a body mass index of ≥25 kg/m² were identified via an electronic health record (EHR) system query. Partnering PCPs reviewed the health records of 11,313 of the resulting patient pool, and those who were not excluded from participation after physician review were sent a recruitment packet inviting them to participate in the trial. Packets included an opt-out postcard for patients to return if not interested. Trained study staff members telephoned potential participants who did not return the opt-out postcard within 2 weeks to determine interest and conduct a telephone screening to further assess eligibility. A total of 2,796 patients were telephone screened, 30% of whom were found ineligible because they did not meet the inclusion criteria [33]. In total, 1,412 patients who passed the telephone screening attended an in-person screening at which HbA1c was assessed to determine final eligibility. Of these, 630 were found eligible and 599 of them were enrolled in the trial.

Participants were randomly assigned to the digital DPP (the intervention arm, n = 299) or to the enhanced standard of care (n = 300). The digital DPP is a technology-based delivery of the DPP lifestyle intervention [3] that consists of small group support, personalized health coaching, digital tracking tools, and a weekly behavior change curriculum approved by the CDC Diabetes Prevention Recognition Program (the Omada Health Program) [14]. Using internet-enabled devices (laptop, tablet, or smartphone), program participants can asynchronously complete weekly interactive curriculum lessons, privately message a health coach for individual counseling, track weight loss and physical activity using a wireless weight scale and pedometer, and monitor their engagement and weight loss progress. The program comprises an initial 16-week intensive curriculum focusing on weight loss and a subsequent 36-week curriculum focusing on weight maintenance, for a total of 12 months of educational lessons. Participants in the control arm were provided with a one-time, 2-hr diabetes prevention education class, consisting of detailed information on current recommended levels of physical activity and healthy food choices involving portion size, eating regular meals, and a well-balanced diet based on the CDC My Plate recommendations, and the development of a personal action plan. The recruitment phase of the PREDICTS trial lasted 15 months, from November 2017 through March 2019, when the last eligible participant was randomized. The trial was approved by the University of Nebraska Medical Center Institutional Review Board and Western IRB and is registered at clinicaltrials.gov (NCT03312764).

Analytical framework

We designed the analytic approach to address two primary issues. First, we focused on determining the cost needed for a large HEI randomized controlled trial to accrue the proposed sample size over a finite period of time (i.e., 599 participants over 15 months). This reflects the actual costs of recruitment and enrollment for the HEI trial. Second, we focused on sensitivity and scenario analyses to determine the potential costs of our population health management approach if it were to be used by a healthcare system for recruitment and enrollment to a local program (not a clinical trial) that aligned with the CDC Diabetes Prevention Recognition Program requirements. The analytical approach followed the best practice guidelines for the costing of prevention interventions [34, 35] and the modified cost assessment procedure proposed by Ritzwoller et al. [29], consisting of five elements: (a) perspective of the analysis, (b) identifying cost components, (c) capturing relevant costs, (d) data analysis, and (e) sensitivity analysis.

The recruitment costs were assessed from the organizational (i.e., healthcare system) perspective, given that organizations decide whether or not to integrate such programs into their practices and thus bear the costs of implementing them. All costs were categorized as labor or non-labor costs and expressed in 2020 U.S. dollars using the Consumer Price Index [36], because the majority of the activities and resources involved in the present study were not medical care-related. For the collection and analysis of costs, we utilized a micro-costing approach with an activity-based costing strategy [37], a method that is widely adopted in healthcare, to explicitly identify, measure, and value all resources used to recruit participants for the study. Specifically, total labor costs were estimated by summing the costs of each recruitment activity, each of which was calculated by multiplying the total activity time (in hours) by the per-hour cost of resources.

Costing a population health management approach for participant recruitment and enrollment

Step 1: identify labor cost components by recruitment activities and associated labor hours

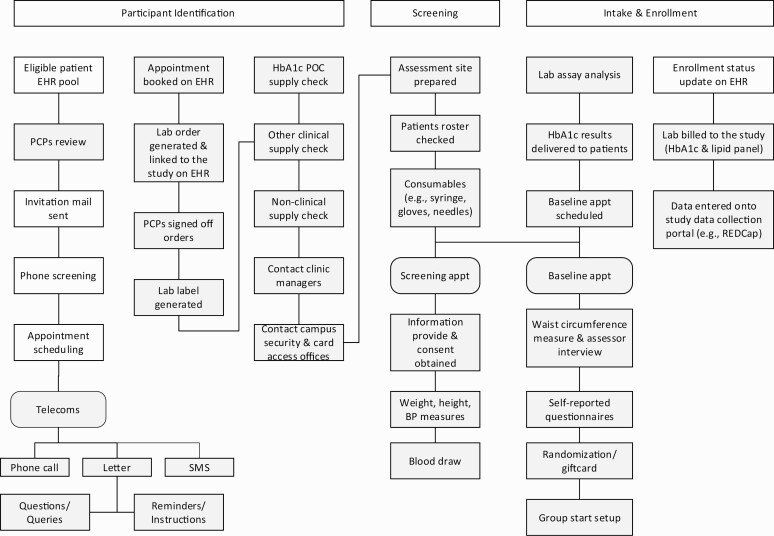

The diabetes prevention trial applied a population health management approach that holds the potential to be automated within existing healthcare systems to identify, screen, enroll, and engage potential participants. To better capture the recruitment costs, the majority of which are activity-based, we created a process map (Fig. 1) to illustrate the study recruitment process. Each step reflects an activity in the participant recruitment process, from initially identifying individuals at risk, ordering screening tests, conducting screening tests, and managing screening test results to the final enrollment in the preventive services. All the identified recruitment activities were further categorized into three sections: participant identification, participant eligibility screening, and eligible participant intake and enrollment. At the end of the recruitment phase, members of the research team estimated the average number of hours per week they spent on each specific task. These estimates were supplemented by the average times spent on each subcategory, which were regularly documented through project management tracking of recruitment progress and resource use. We then multiplied the average hours per week by the number of weeks dedicated to a recruitment activity to derive the total number of labor hours spent on a specific task over the entire recruitment process.

Fig 1.

Process map depicting the recruitment activities and process for the PREDICTS trial. Gray box = activities or resources that may not be required for replication of the recruitment strategy. EHR, electronic health record; PCP, primary care provider; SMS, short message service; HbA1c, hemoglobin A1c; POC, point-of-care; BP, blood pressure.
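The hour and cost arithmetic described in Step 1 can be sketched as follows. This is a minimal illustration, not the study's actual tooling: the `Activity` class, its field names, and all input values are hypothetical and are not study data.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """One recruitment activity on the process map (hypothetical structure)."""
    name: str
    hours_per_week: float  # staff-estimated average weekly hours
    weeks: float           # number of weeks dedicated to the activity
    hourly_wage: float     # per-hour cost of the person doing the work

    @property
    def total_hours(self) -> float:
        # Total labor hours = average hours per week x number of weeks
        return self.hours_per_week * self.weeks

    @property
    def labor_cost(self) -> float:
        # Activity cost = total activity time (hours) x per-hour cost
        return self.total_hours * self.hourly_wage


def total_labor_cost(activities) -> float:
    """Sum the activity-level costs to get total labor cost."""
    return sum(a.labor_cost for a in activities)


# Hypothetical inputs for illustration only (NOT study data).
activities = [
    Activity("EHR query", hours_per_week=8, weeks=10, hourly_wage=40.0),
    Activity("Screening calls", hours_per_week=20, weeks=30, hourly_wage=15.0),
]

for a in activities:
    print(f"{a.name}: {a.total_hours:.0f} h, ${a.labor_cost:,.0f}")
print(f"Total labor cost: ${total_labor_cost(activities):,.0f}")
```

Keeping hours and wages as separate inputs makes it straightforward to vary the time spent on an activity later, which is how the labor ranges in the sensitivity analysis are defined.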

Participant identification

A computer programmer applied pre-specified inclusion and exclusion criteria to identify potentially eligible participants via EHR query [8]. Once a list of potential participants was generated, a physician champion engaged PCPs at each of the participating clinics for patient list review and clearance, or potential participant referral. A recruitment packet consisting of a physician invitation letter, a study description, and an opt-out postcard was prepared and mailed to potentially eligible patients. A total of 14 recruitment packet preparation sessions were conducted to prepare and send 10,770 invitation packets by postal mail.

Participant eligibility screening

Research assistants conducted a telephone screening call to assess specific inclusion and exclusion criteria for all patients who did not return the opt-out postcard. After initial eligibility was determined, a screening visit was scheduled, and the initial screening packet, containing screening instructions, directions to the screening location, and a copy of the informed consent form, was prepared and sent by postal mail or email (based on participant preference). Research assistants and clinical staff (e.g., research nurse coordinators, medical assistants, or phlebotomists) conducted the screening assessment sessions, including HbA1c testing and blood pressure, weight, height, and resting heart rate measurements, at eight different primary care clinics across the metropolitan area [33].

Eligible participant intake and enrollment

Participants found eligible by HbA1c screening completed an in-person baseline assessment prior to being randomized into one of the trial conditions. Research staff conducted all the assessment and data collection activities (survey questionnaires and waist circumference measurement) at the baseline visit.

Step 2: determine hourly wage rates

Labor costs were estimated from the time spent on each recruitment activity outlined on the process map (Fig. 1) and the corresponding hourly wages (i.e., activity-based costing). The activities were conducted by staff members and a full-time project manager who oversaw all aspects of the study, including staff recruitment, orientation and training, meetings and planning, coordination between clinics, and IRB-related tasks. The number of hours worked was also tracked using the bi-weekly timesheets of all research personnel. The hourly rates for the personnel who conducted the EHR query with computer programming and for the PCPs who reviewed the lists of their potentially eligible patients, to exclude any patients for reasons related to safety and appropriateness of the intervention, were estimated at $44.29 and $124.87, respectively. These rates were calculated from annual salaries plus fringe benefits at a standard rate of 28%. All other recruitment activities were conducted by non-clinical and clinical research staff at an hourly rate of $16.35. Costs associated with the project manager were estimated from the actual 2017–2019 salaries and accounted only for the proportion of the manager's full-time equivalent spent on recruitment-related activities.
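As a rough illustration of how a loaded hourly rate and the project manager's recruitment share might be derived, consider the sketch below. Only the 28% fringe rate comes from the text. The 2,080 paid hours per year, the function names, and the salary figures are assumptions made for this example.

```python
FRINGE_RATE = 0.28     # standard fringe benefit rate stated in the text
HOURS_PER_YEAR = 2080  # ASSUMPTION: 40 h/week x 52 weeks; not stated in the text


def loaded_hourly_rate(annual_salary: float,
                       fringe_rate: float = FRINGE_RATE,
                       hours_per_year: float = HOURS_PER_YEAR) -> float:
    """Hourly rate from annual salary plus fringe benefits."""
    return annual_salary * (1 + fringe_rate) / hours_per_year


def manager_recruitment_cost(annual_salary: float,
                             fte_share: float,
                             years: float,
                             fringe_rate: float = FRINGE_RATE) -> float:
    """Salary plus fringe, scaled by the share of FTE spent on recruitment."""
    return annual_salary * (1 + fringe_rate) * fte_share * years


# Hypothetical salaries for illustration only (NOT study data).
print(round(loaded_hourly_rate(65_000), 2))
print(round(manager_recruitment_cost(50_000, fte_share=0.5, years=1.0), 2))
```

A different convention for paid hours per year would change the hourly rate proportionally, so that divisor should be treated as a setting-specific input.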

Step 3: determine non-labor costs

Non-labor costs for telecommunication service subscription, appointment reminder service subscription, equipment, and supplies were based on actual amounts spent and were tracked from receipts and payment invoices. The research team collected the non-labor costs and categorized each as fixed or variable. Variable costs were reported as unit costs and multiplied by the number of participants or items purchased. These costs included postage, telephone and cellphone services, point-of-care HbA1c fingerstick tests and venipuncture HbA1c tests, incentives, iPads and cases, Apple pencil, safety box, recruitment materials printing, clinical supplies (e.g., gauge butterflies, syringes, vials, and sharps containers), Gulick tape, stadiometer, sphygmomanometer, stethoscope, arm pressure monitor, and scales. Fixed costs included the Appointment Reminder software subscription and AppleCare. A detailed listing of the materials and services and their individual costs, grouped into operational services, operational supplies, and medical supplies, is provided in Table 1. Other non-labor costs included research staff travel for assessment sessions, which accrued at a rate of $0.25 per mile and was included in the others category. We did not take overhead or space costs into account, because the study-related screening and assessment sessions, which were conducted in primary care clinics, conference rooms, or classrooms, occurred outside regular business hours (on weekday evenings or Saturday mornings).
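The fixed/variable split described in Step 3 can be sketched as below. Only the $0.25 per mile travel rate is taken from the text. The function name, item names, unit costs, and quantities are hypothetical.

```python
MILEAGE_RATE = 0.25  # dollars per mile, as stated in the text


def non_labor_total(variable_items: dict, fixed_items: dict,
                    miles_driven: float = 0.0) -> float:
    """Total non-labor cost.

    variable_items maps item -> (unit_cost, quantity); each contributes
    unit_cost x quantity. fixed_items maps item -> lump-sum cost.
    Staff travel is added at the per-mile rate.
    """
    variable = sum(unit * qty for unit, qty in variable_items.values())
    fixed = sum(fixed_items.values())
    travel = MILEAGE_RATE * miles_driven
    return variable + fixed + travel


# Hypothetical inputs for illustration only (NOT study data).
variable_items = {
    "postage": (0.75, 1000),          # $ per packet, packets mailed
    "point-of-care test": (8.00, 100),  # $ per test, tests run
}
fixed_items = {"reminder software subscription": 400.00}

total = non_labor_total(variable_items, fixed_items, miles_driven=200)
print(f"Total non-labor cost: ${total:,.2f}")
```

Separating unit costs from quantities makes it easier to rescale the variable items when estimating costs for a program of a different size.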

Table 1.

PREDICTS trial recruitment costs.

| Activity/category | Time, hours | No. of participants/units | Costs ($) |
|---|---|---|---|
| Labor costs | | | |
| A full-time project manager, including fringe benefits | | | $60,584 |
| Participant identification | | | |
| EHR query | 472 | | $21,548 |
| PCP recruit and review | 132 | 11,313 | $16,988 |
| Recruitment packet preparation | 236 | 10,770 | $3,969 |
| Participant eligibility screening | | | |
| Participant screening calls and schedule | 2,984 | 2,796 | $50,288 |
| Screening visit packet preparation | 132 | 1,832 | $2,224 |
| Preparation for screening visits, non-clinical | 396 | 1,832 | $6,673 |
| Ordering of HbA1c testing and PCP signed off | 24 | 1,832 | $3,029 |
| Screening visit | 1,476 | 1,412 | $24,873 |
| Follow-up for screening visit | 230 | 1,432 | $3,867 |
| Eligible participants intake and enrollment | | | |
| Baseline visit packet preparation | 77 | 630 | $1,298 |
| Preparation for baseline visits | 96 | 630 | $1,622 |
| Baseline visit | 790 | 599 | $13,313 |
| Follow-up for baseline visit | 66 | 599 | $1,104 |
| Total labor costs | 7,109 | | $211,379 |
| Non-labor costs | | | |
| Operational Service | | | |
| Mail/Postage | | 10,770 | $7,983 |
| Telephone/cellphone, monthly fee, device, and data plan | | | $2,984 |
| Venipuncture HbA1c test (b) | | 837 | $81,092 |
| Operational/medical supplies | | | |
| Incentives | | 1,412 | $36,383 |
| iPad and iPad cases | | 15 | $6,840 |
| AppleCare | | | $896 |
| Apple pencil | | 1 | $133 |
| Safety box | | 2 | $74 |
| Recruitment materials printing | | | $3,787 |
| Appointment Reminder App subscription fee | | | $435 |
| Others (c) | | | $982 |
| Clinical supplies (e.g. gauge butterflies, syringe, vials, and sharp container) | | | $1,683 |
| Gulick tape | | 10 | $499 |
| POC HbA1c test (b) | | 575 | $4,563 |
| Stadiometer | | 2 | $310 |
| Sphygmomanometer, stethoscope, arm pressure monitor, and scale | | | $402 |
| Total non-labor costs | | | $149,045 |
| Total recruitment costs | | | $360,424 |
| Total costs per screened patient | | | $263 |
| Total costs per enrolled patient | | | $620 |

EHR, electronic health record; PCPs, primary care providers; HbA1c, hemoglobin A1c; POC, point-of-care.

(a) The hourly wages for the EHR query and PCP recruit and review activities were $44.29 and $124.87, respectively. The hourly wage for all other activities was $16.35.

(b) The screening protocol was switched from a POC HbA1c fingerstick test to lab HbA1c testing to determine eligibility 6 months after the initiation of study recruitment, due to a high proportion of false positive POC results (52%) (see Wilson et al. [33] for more detail).

(c) Others included duffel bag, headset, 250 GB SSD, hard drive adapter, changing room divider, stationery, information technology, and mileage.

Data analysis

We used descriptive analyses to estimate the total number of labor hours and the total labor and non-labor costs associated with study participant recruitment. Measures included total recruitment costs, broken down into labor and non-labor costs, and costs per participant screened and per participant enrolled, calculated by dividing total recruitment costs by the number of participants screened and enrolled, respectively. Additionally, exploratory descriptive analyses were conducted to estimate the cost of replicating the population health management approach to participant recruitment for preventive interventions.

Estimated costs for replication

For the estimate of replication costs, we used the process map (Fig. 1) to map replication resources needed to guide our cost estimate. We focused on activities that would be required for a healthcare system to implement the population health management strategy. We excluded any tasks, activities, and expenses that dealt with the clinical trial protocol development, clinical trial assessment and data collection, and any other clinical trial-related activities that would not need to be replicated if the study were continued at the organization or if it were replicated or adopted in another setting.

We further conducted one-way (deterministic) sensitivity analyses, varying one input parameter at a time, to evaluate how the recruitment cost estimates varied with the parameter assumptions across a variety of settings and circumstances. The cost range estimates for the labor activities and resources were derived via the consensus of the study investigators. Each input variable was first labeled as required or optional, depending on whether it was identified as a needed resource during the replication process. For required activities or cost categories, we calculated the minimum and maximum plausible values by varying the original costs by ±50%; for optional activities or resources, we assumed that the costs ranged from 0 (not required) to 100% (required). Using values 50% lower or higher than the original costs as the lower and upper bounds is a commonly used measure of sensitivity when specific data are not available [38]. For labor costs, we varied the time spent conducting a specific activity. For example, the percentage of computer programming time used to query the EHR ranged from 50% to 150%, because the query is the essential element of a recruitment strategy applying a population health management approach. We assumed discounted resources for participant screening calls and scheduling and for follow-up after a screening visit and enrollment (varied from 50% to 100%), because telephone screening for a randomized trial is more laborious, and its follow-up more intensive, than a general screening call to offer a preventive service. In addition, we considered PCP review of the potential participant list an optional activity because it is not required when referring patients to CDC-recognized DPPs.

For non-labor costs, we considered glucose testing optional, and thus its cost was assumed to range from 0 (not required) to 100% (required). Enrollment in a Medicare-supported DPP requires glucose test results within the eligibility range (5.7%–6.4% for the HbA1c test, 110–125 mg/dl for the fasting glucose test, and 140–199 mg/dl for the 2-hr oral glucose tolerance test) [39]; however, glucose testing is not required for participation in CDC-recognized DPPs (i.e., the private payer perspective). Currently, however, HbA1c testing, which was used in the present study, is not reimbursed by Medicare, whereas the fasting glucose test and the 2-hr oral glucose tolerance test are [40, 41]. Similarly, for other optional activities or resources (e.g., telephone service), we assumed that the cost ranged between 0% and 100%. We used tornado diagrams [38] to summarize the effects of varying key input parameters one at a time on the replication costs. The parameters were sorted in descending order by their influence on the cost outcomes, so that longer bars indicate the most important parameters.
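The one-way procedure and the tornado ordering can be sketched with the point estimates and ranges listed in Table 2. This is an illustrative reconstruction, not the authors' code. The tuple layout and the `one_way` helper are assumptions, and because the line items are rounded, the sums differ from the published totals by about a dollar.

```python
# (name, point estimate in $, low multiplier, high multiplier), from Table 2.
# Required items vary 50-150% (or 50-100% where discounted); optional 0-100%.
PARAMETERS = [
    ("EHR query", 21548, 0.5, 1.5),
    ("PCPs recruit and review", 16988, 0.0, 1.0),
    ("Recruitment packet preparation", 3969, 0.5, 1.5),
    ("Participant screening calls and schedule", 50288, 0.5, 1.0),
    ("Screening packet preparation", 2224, 0.0, 1.0),
    ("Ordering of glucose testing and PCP signed off", 3029, 0.0, 1.0),
    ("Follow-up for screening visit and enrollment", 3867, 0.5, 1.0),
    ("Recruitment packet postage", 7983, 0.5, 1.5),
    ("Telecommunication", 2984, 0.0, 1.0),
    ("HbA1c testing", 81092, 0.0, 1.0),
]

# Point estimate and the all-low / all-high bounds of the total.
base_total = sum(cost for _, cost, _, _ in PARAMETERS)
lower_total = sum(cost * lo for _, cost, lo, _ in PARAMETERS)
upper_total = sum(cost * hi for _, cost, _, hi in PARAMETERS)


def one_way(parameters):
    """Vary one parameter at a time, holding the others at their point
    estimates. Returns (name, low_total, high_total, swing) rows sorted by
    swing, largest first, which is the order of bars in a tornado diagram."""
    base = sum(cost for _, cost, _, _ in parameters)
    rows = []
    for name, cost, lo, hi in parameters:
        low_total = base - cost + cost * lo
        high_total = base - cost + cost * hi
        rows.append((name, low_total, high_total, high_total - low_total))
    return sorted(rows, key=lambda row: row[3], reverse=True)


tornado = one_way(PARAMETERS)
print(f"Base {base_total:,.0f}; range {lower_total:,.0f} to {upper_total:,.0f}")
for name, low, high, swing in tornado:
    print(f"{name:<48} {low:>10,.0f} {high:>10,.0f} {swing:>9,.0f}")
```

Sorting by the width of each low-to-high interval, rather than by the point estimate, is what places an optional item with a large cost at the top of the diagram.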

In addition, we conducted a scenario analysis to estimate the potential replication costs of recruitment from the perspective of different stakeholders (e.g., Medicare or private payers), where the main difference lies in whether glucose testing is required to enroll in a DPP. From the Medicare perspective, we varied the costs of the fasting glucose test ($40 per unit) and the 2-hr oral glucose tolerance test ($108 per unit) by 50% above and below their original values, which were based on costs provided by the healthcare system that participated in this study. From a private payer perspective, we did not account for the cost of glucose testing and excluded all other optional activities and resources.
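A rough sketch of the Medicare scenario arithmetic follows. It rests on assumptions that the text does not state: that the replication total from Table 2 with HbA1c testing removed ($193,971 − $81,092) serves as the base, and that one glucose test is ordered per screened patient (n = 1,412). Under these assumptions the results land within about a dollar of the per-enrolled figures reported in the scenario analysis, so the match should be read as approximate.

```python
SCREENED = 1412   # patients attending in-person screening (from the text)
ENROLLED = 599    # patients enrolled (from the text)

# ASSUMPTION: base = Table 2 replication total minus the HbA1c testing line.
BASE_WITHOUT_TESTING = 193_971 - 81_092

# Unit costs stated in the text for the Medicare-reimbursed tests.
UNIT_COSTS = {"fasting glucose": 40.0, "2-hr OGTT": 108.0}


def cost_per_enrolled(unit_cost: float, tests: int = SCREENED) -> float:
    """Replication cost per enrolled patient for a given test unit cost.

    ASSUMPTION: one test per screened patient.
    """
    return (BASE_WITHOUT_TESTING + unit_cost * tests) / ENROLLED


def scenario(unit_cost: float):
    """Point estimate plus bounds at 50% below and above the unit cost."""
    return (cost_per_enrolled(unit_cost),
            cost_per_enrolled(unit_cost * 0.5),
            cost_per_enrolled(unit_cost * 1.5))


results = {name: scenario(cost) for name, cost in UNIT_COSTS.items()}
for name, (point, low, high) in results.items():
    print(f"{name}: ${point:.0f} (range: ${low:.0f} to ${high:.0f})")
```

If fewer patients needed testing, for example because recent results were already in the EHR, the `tests` argument would be lower and the per-enrolled cost would fall accordingly.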

RESULTS

Recruitment costs for the PREDICTS trial

Labor costs

Table 1 reports the personnel time in hours and the costs associated with the recruitment activities. Labor hours summed to 840, 5,241, and 1,029 h for participant identification, eligibility screening, and eligible participant intake and enrollment, respectively. Total labor costs summed to $211,379, including the cost of the time the project manager spent on recruitment activities. The majority (74%) of labor hours were accrued in participant eligibility screening, at a cost of $90,955, followed by eligible participant intake and enrollment (14%) and participant identification (12%). Activity-associated labor costs were primarily attributable to participant eligibility screening (60%), which comprised costs related to screening calls, scheduling screening visits, ordering HbA1c testing, PCP patient approvals, delivering reminders to participants, preparing for screening visits, conducting screening sessions (n = 132 sessions over 15 months), and follow-up phone calls. Participant identification via the EHR system and targeted mailing accounted for 28% of the total recruitment activity-related labor costs ($42,504), followed by eligible participant intake and enrollment (11%, $17,336). Participant identification and participant intake and enrollment rank differently by time spent than by cost; this difference is attributable to the higher hourly wages of the study personnel who conducted the EHR query and the PCP recruitment and review process, relative to the staff who made the screening and scheduling calls.
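The percentage shares quoted above can be checked with a few lines of arithmetic. The sketch below uses the section-level hours and costs reported in this paragraph. The activity-related cost base excludes the project manager, which is inferred from the phrase "activity-associated labor costs" rather than stated explicitly.

```python
# Section-level labor hours and activity-related labor costs from the text.
HOURS = {
    "identification": 840,
    "eligibility screening": 5241,
    "intake and enrollment": 1029,
}
COSTS = {
    "identification": 42504,
    "eligibility screening": 90955,
    "intake and enrollment": 17336,
}


def shares(values: dict) -> dict:
    """Each section's share of the total, as a whole-number percentage."""
    total = sum(values.values())
    return {name: round(100 * v / total) for name, v in values.items()}


def ranking(values: dict) -> list:
    """Section names ordered from largest to smallest."""
    return sorted(values, key=values.get, reverse=True)


hour_shares = shares(HOURS)
cost_shares = shares(COSTS)
print("Share of hours:", hour_shares)
print("Share of costs:", cost_shares)
print("Rank by hours:", ranking(HOURS))
print("Rank by costs:", ranking(COSTS))
```

Comparing the two rankings shows that identification moves from third place by hours to second place by cost, which is the pattern the paragraph attributes to wage differences.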

Non-labor costs

Non-labor costs are presented in Table 1 and summed to $149,045. The vast majority of non-labor costs were associated with HbA1c testing/point-of-care HbA1c used to define patients’ eligibility ($85,655, 57%), followed by the incentives to compensate patients’ time for participating in the screening activities ($36,383, 24%).

Total recruitment costs

The total recruitment costs (labor and non-labor costs) were $360,424, which translated to $263 per participant screened and assessed (n = 1,412) and $620 per participant enrolled (n = 599) in the PREDICTS trial.

Estimated costs for replicating the population health management approach for recruitment

Sensitivity analysis

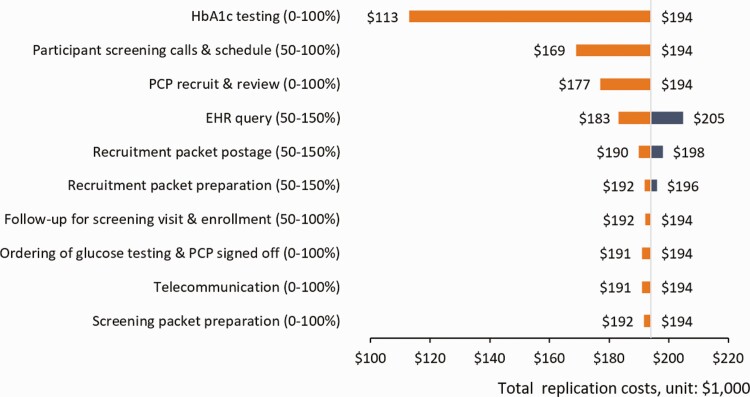

The process map (Fig. 1), depicting the flow of the recruitment activities employing a population health management approach, reveals that many activities associated with the trial could be omitted when the approach is used in typical healthcare system practice. As shown in Table 2, based on the recruitment activities (not trial-associated) and non-labor resources that may be required for future replication, the total recruitment costs for replication were estimated at $193,971 (range: $43,827 [the sum of the lower bound values of all input parameters]–$210,721 [the sum of the upper bound values of all input parameters]) for programs of a similar scale. This translated to $137 (range: $31–$149) and $324 (range: $73–$352) per patient screened and enrolled, respectively. With the percentage effort assumption made for each activity, Fig. 2 presents the one-way sensitivity analysis results as a tornado diagram that summarizes the effect of varying the input parameters (recruitment labor activities or non-labor resources) one at a time on the total replication estimate. The input with the greatest impact on the replication costs was glucose testing (an optional resource): the estimate ranged from no change in the current replication costs (testing required) to a reduction of $81,092, the total cost of HbA1c testing (testing not required).
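The per-patient replication figures follow from dividing the replication totals by the number of patients screened (n = 1,412) and enrolled (n = 599). A minimal check, with variable names chosen for this example:

```python
SCREENED = 1412  # patients screened and assessed in person
ENROLLED = 599   # patients enrolled

# Replication totals reported in the text, in dollars.
TOTALS = {"point": 193_971, "lower": 43_827, "upper": 210_721}

# (cost per screened patient, cost per enrolled patient), rounded to dollars
per_patient = {
    label: (round(total / SCREENED), round(total / ENROLLED))
    for label, total in TOTALS.items()
}

for label, (screened, enrolled) in per_patient.items():
    print(f"{label}: ${screened} per screened, ${enrolled} per enrolled")
```

The lower bound per screened patient corresponds to the scenario in which every optional activity and resource, including glucose testing, is excluded.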

Table 2.

Estimated replication costs and assumptions for replication used in the sensitivity analyses

| Activity/cost category | Cost estimate | Needed for replication? | Range |
|---|---|---|---|
| Labor costs | | | |
| Participant identification, screening, and enrollment | | | |
| EHR query | $21,548 | Required, fixed | 50–150% |
| PCPs recruit and review | $16,988 | Optional, variable | 0–100% |
| Recruitment packet preparation | $3,969 | Required, variable | 50–150% |
| Participant screening calls and schedule<sup>a</sup> | $50,288 | Required, variable | 50–100% |
| Screening packet preparation | $2,224 | Optional, variable | 0–100% |
| Ordering of glucose testing and PCP sign-off | $3,029 | Optional, variable | 0–100% |
| Follow-up for screening visit and enrollment<sup>a</sup> | $3,867 | Required, variable | 50–100% |
| Non-labor costs | | | |
| Recruitment packet postage | $7,983 | Required, variable | 50–150% |
| Telecommunication | $2,984 | Optional, variable | 0–100% |
| HbA1c testing | $81,092 | Optional, variable | 0–100% |
| Total replication costs | $193,971 | | $43,827–$210,721 |

EHR, electronic health record; PCPs, primary care providers.

<sup>a</sup>The replication cost range was discounted because screening for a randomized trial is more laborious than screening to offer a preventive service.
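The totals and ranges in Table 2 can be reproduced by scaling each line item by the ends of its range and summing. The sketch below is illustrative only: the variable and function names are assumptions of this example rather than part of the study's analysis, and the sums may differ from the published totals by about a dollar because the table reports rounded line items.

```python
# Illustrative sketch: rebuild the Table 2 totals from its line items.
# All names are hypothetical; the dollar values and ranges are copied from Table 2.

# (activity, base cost in USD, low multiplier, high multiplier)
TABLE_2 = [
    ("EHR query", 21548, 0.5, 1.5),
    ("PCPs recruit and review", 16988, 0.0, 1.0),
    ("Recruitment packet preparation", 3969, 0.5, 1.5),
    ("Participant screening calls and schedule", 50288, 0.5, 1.0),
    ("Screening packet preparation", 2224, 0.0, 1.0),
    ("Ordering of glucose testing and PCP sign-off", 3029, 0.0, 1.0),
    ("Follow-up for screening visit and enrollment", 3867, 0.5, 1.0),
    ("Recruitment packet postage", 7983, 0.5, 1.5),
    ("Telecommunication", 2984, 0.0, 1.0),
    ("HbA1c testing", 81092, 0.0, 1.0),
]

SCREENED = 1412  # participants screened and assessed
ENROLLED = 599   # participants enrolled


def replication_totals(items):
    """Return (base, low, high) totals across all line items."""
    base = sum(cost for _, cost, _, _ in items)
    low = sum(cost * lo for _, cost, lo, _ in items)
    high = sum(cost * hi for _, cost, _, hi in items)
    return base, low, high


base, low, high = replication_totals(TABLE_2)
for label, total in (("base", base), ("low", low), ("high", high)):
    print(
        f"{label}: total ${total:,.0f}, "
        f"${total / SCREENED:,.0f} per screened, "
        f"${total / ENROLLED:,.0f} per enrolled"
    )
```

Dividing each total by the number of patients screened (1,412) or enrolled (599) gives the per-patient figures reported in the text.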

Fig 2.

One-way sensitivity testing around the total replication costs ($193,971) for key recruitment activities and resources. Each row shows the change in cost from the total replication estimate across the range of values for that parameter. The parameters are sorted in descending order by their impact on the recruitment estimates. Longer bars indicate the most influential parameters, giving the diagram its “tornado” appearance. Note: HbA1c, hemoglobin A1c; PCP, primary care provider; EHR, electronic health record.
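As a rough illustration of how a one-way sensitivity analysis of this kind can be computed, the sketch below moves one Table 2 parameter at a time to the ends of its range while holding the others at their base values, then sorts the parameters by the width of the resulting swing. The names are hypothetical, and the ordering produced here is derived only from the Table 2 values, so it need not match the published figure exactly.

```python
# Illustrative one-way sensitivity sketch (hypothetical names; Table 2 values).
# Each entry maps a parameter to (base cost in USD, low multiplier, high multiplier).
ITEMS = {
    "EHR query": (21548, 0.5, 1.5),
    "PCPs recruit and review": (16988, 0.0, 1.0),
    "Recruitment packet preparation": (3969, 0.5, 1.5),
    "Participant screening calls and schedule": (50288, 0.5, 1.0),
    "Screening packet preparation": (2224, 0.0, 1.0),
    "Ordering of glucose testing and PCP sign-off": (3029, 0.0, 1.0),
    "Follow-up for screening visit and enrollment": (3867, 0.5, 1.0),
    "Recruitment packet postage": (7983, 0.5, 1.5),
    "Telecommunication": (2984, 0.0, 1.0),
    "HbA1c testing": (81092, 0.0, 1.0),
}


def one_way_swings(items):
    """Return (name, change_at_low, change_at_high) rows, widest swing first."""
    rows = []
    for name, (cost, lo, hi) in items.items():
        # Change in the total when only this parameter moves to a bound;
        # every other parameter stays at its base value, so it cancels out.
        rows.append((name, cost * (lo - 1.0), cost * (hi - 1.0)))
    rows.sort(key=lambda row: row[2] - row[1], reverse=True)
    return rows


rows = one_way_swings(ITEMS)
for name, down, up in rows:
    print(f"{name:<46} {down:>+10,.0f} {up:>+10,.0f}")
```

Each printed row corresponds to one bar of a tornado diagram: the two numbers are the left and right ends of the bar relative to the base estimate.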

Scenario analysis

Varying the screening method used to determine program eligibility, especially for a Medicare population, resulted in an estimated total replication cost per enrolled patient of $283 (range: $236–$331) with the fasting blood glucose test and $444 (range: $316–$571) with the 2-hr post-glucose challenge test (both are reimbursed by Medicare).

When the private payer is considered as the potential decision-maker, glucose testing and its associated recruitment activities (PCP recruit and review, screening packet preparation, glucose testing, ordering of glucose testing and PCP approvals, and telecommunication) may not be needed. With these cost components and activities removed, the replication costs of recruiting and referring eligible patients to CDC-recognized DPPs were estimated at the lower bound of the replication costs, $43,827, which translates to $31 per patient assessed and $73 per patient enrolled.

Discussion

The potential to reach the individuals for whom an intervention is intended is often understudied and overlooked when determining intervention impact and justifying the economic feasibility of EBIs; as a result, the costs associated with recruitment activities are underreported. In this study, we aimed to assess the costs of using a population health management approach to identify, engage, and enroll patients in a type 1 HEI, digital diabetes prevention trial and to estimate the potential costs of applying this approach if replicated in typical practice. Our results indicate that the total recruitment costs of the PREDICTS trial were $360,424, of which 59% were for labor activities and 41% for non-labor resources, which translated to $263 per participant screened and $620 per participant enrolled/randomized. Moreover, the replication costs were estimated at $193,971 for a recruitment effort in which 11,313 patients were reviewed by PCPs, 2,796 patients were telephone screened for eligibility, 1,832 screening packages were prepared, 1,412 patients attended the screening sessions, and 630 patients were eligible [33]. This information may be helpful to other research groups planning to accrue a large number of participants over a short period of time, or to healthcare systems of a similar scale that plan to refer their eligible patients to a DPP. We also examined what costs could look like for a healthcare system interested in identifying and engaging patients in a CDC-recognized diabetes prevention program. As cost is a key factor when considering the implementation of an EBI [35], our results provide additional cost information on the potential upfront investment in the infrastructure and capacity required in the pre-implementation phase (i.e., participant recruitment) of an EBI [21]. We also projected a potential enrollment cost (at the lower end of our estimates) of $73 per participant. This number could be reduced further through economies of scale and by identifying efficiencies (e.g., automating enrollment package preparation or screening call procedures) over time. This provides additional avenues for future work to examine potential efficiencies that could allow broad scale-up at incrementally lower costs as time passes and as more participants are accrued.

As indicated in the sensitivity analysis, glucose screening was one of the key parameters in the cost estimates of recruitment replication. Per the CDC-recognized DPP eligibility criteria [42], patients may not need glucose testing (HbA1c, fasting plasma glucose, or 2-hr plasma glucose testing) to be referred to the program if they meet other criteria, such as having a diagnosis of prediabetes or a history of gestational diabetes, or if they take the self-report risk test and receive a high-risk result. Without accounting for the glucose testing, the replication cost was $112,879 ($80 per patient assessed, Table 2). With the other optional recruitment labor activities and resources also excluded, the replication costs can be as low as $31 per patient screened from the perspective of private payers. In a recently published pragmatic DPP trial conducted in the Veterans Affairs (VA) healthcare system, Damschroder and colleagues reported that the labor cost to recruit participants was $68 per participant assessed and $330 per participant identified as eligible for VA-DPP [21]. Unlike the population health management approach used in our HEI trial, which integrated EHR computer programming into the recruitment process, they engaged PCPs to refer potentially eligible patients for participation and did not account for the activities of participant identification and screening, which may contribute to the cost difference. This is reflected by the fact that 21% of the target population in their study were eligible compared to 45% in the PREDICTS trial [33]. Because their approach relied on PCPs to refer patients in the face of other competing demands, our approach has great potential to minimize missed opportunities to efficiently identify and engage high-risk individuals before they progress to diabetes [21]. While the comparison of pragmatic trial costs to our population health management sensitivity analysis results is not ideal, it does appear that applying a population health management approach to increase program reach may be cost-efficient for identifying and recruiting potential participants for DPPs.

In the PREDICTS trial, we used HbA1c testing to determine eligibility, at a cost of approximately $97 per patient. Not surprisingly, HbA1c testing costs more than the other glucose testing methods, as it reduces the burden of patient waiting time and inconvenience (i.e., fasting). However, it is not reimbursed by Medicare as one of the diabetes screening methods for determining eligibility for the Medicare DPP [41]. Healthcare system decision-makers should balance the potential patient costs (e.g., time and discomfort) against the screening costs when considering adopting this recruitment strategy for the Medicare population. Furthermore, reimbursement for diabetes screening is critical to supporting and scaling population-level strategies to prevent diabetes [40]. Results from the scenario analysis reveal that the replication costs of this recruitment strategy can vary substantially depending on the potential stakeholders and their designated eligibility criteria.

Underestimating recruitment costs compounds existing recruitment problems, such as under-representation of minority or gender groups or the absence of attractive program features, and could delay or prohibit the translation of evidence-based programs like a digital DPP into practice [29, 37, 43, 44]. The current study provides insight into the cost of using a population health management approach, which has the potential to be automated in the EHR system, to improve program reach when recruiting participants for a digital diabetes prevention trial. The use of the EHR system, the involvement of PCPs in reviewing the list of potential participants, and the mailing of a physician-endorsed invitation letter were identified as fundamental elements of this approach. These elements could inform the future design of automated risk assessment in clinical populations and prompt further outreach to high-risk or hard-to-reach patients (i.e., those who would not otherwise participate via passive forms of recruitment) to encourage subsequent diabetes screening tests and interventions to prevent diabetes or other preventive services [40], and could improve participant recruitment in clinical trials [45].

Limitations

Some limitations need to be acknowledged. First, as in other studies that costed behavioral lifestyle interventions retrospectively [46], the current study may suffer from recall bias in the self-reported hours spent on recruitment activities. However, retrospective cost capture is considered a practical and low-burden method [29]. For labor time, we asked multiple staff members to report the hours spent on similar tasks and averaged the reported hours, which should partially alleviate this concern. Second, it is challenging to disentangle upstream resources and activities, such as staff training and planning, from the resources needed for study implementation, intervention delivery, and participant retention. The implementation of the study would not have been possible without the startup and infrastructure setup in the pre-implementation phase of the trial. Third, we may have overestimated the replication costs of implementing this recruitment strategy in a real-world setting, despite the sensitivity analysis exercises. Some of the operational costs (e.g., mail postage or telecommunication) or labor resources may be shared costs in an existing system. Fourth, we acknowledge that the estimated replication costs may not be readily generalizable to general practice or other healthcare settings because overhead and space cost data, which may be meaningful when considering application elsewhere, were unavailable. Similarly, we were not able to estimate the costs by patient characteristics because the cost data collection process was not planned to capture such information. Fifth, we recognize that varying some required activities or cost categories by ±50% to show the effect on the total recruitment cost estimates, which was intended to address factors such as geographic variation in salaries and fringe benefits, may seem arbitrary. However, it is considered a rule-of-thumb approach and can be used as a measure of sensitivity when limited data are available [38]. Moreover, the hours needed for each labor-related activity are provided in Table 1, which allows the total recruitment costs to be estimated using salaries and fringe benefits from different settings. Finally, as healthcare systems differ in available resources, organizational capacity, system characteristics, service scope, marketing/communication ability, and patient components, further investigation in other healthcare systems is warranted to increase generalizability.

Conclusions

To facilitate the uptake and scale-up of DPP-like programs, Damschroder and colleagues pointed to the need for referral processes that are (a) compatible and integrated with existing clinical processes; (b) effective in identifying and engaging high-risk participants; and (c) easy to use [21]. Our study presents a pragmatic method for costing the recruitment activities of a population health management approach designed to maximize program reach, along with the estimated replication costs of applying this approach in other healthcare settings. This estimated cost information can inform future clinical system changes to improve the reach of existing evidence-based health promotion and disease prevention interventions.

Acknowledgments

We would like to acknowledge the entire UNMC and Wake Forest School of Medicine research team, who have been instrumental in the development and implementation of the PREDICTS trial, including LuAnn Larson, Rachel Harper, Tristan Gilmore, Haydar Hasan, Carrie Fay, Cody Goessl, Priyanka Chaudhary, Thomas Ward, Jennifer Alquicira, Lindsay Thomsen, Sharalyn Steenson, Emiliane Pereira, Mariam Taiwo, Amanda Kis, Xiaolu Hou, Ashley Raposo-Hadley, Kaylee Schwasinger, Norah Winter, Tiffany Powell, Markisha Jackson, Kalynn Hamlin, Camia Sellers, Kumar Gaurav, Jessica Tran, Destiny Gamble, Akou Vei, Carol Kittel (WFSM), Amir Alexander (WFSM), Lea Harvin (WFSM), and Patty Davis (WFSM).

Funding: Research reported in this publication was supported by Omada Health, Inc., San Francisco, CA, USA. The content is solely the responsibility of the authors and does not necessarily represent the official views of Omada Health.

Compliance with Ethical Standards

Authors’ Statement of Conflict of Interest and Adherence to Ethical Standards: All authors declare that they have no conflicts of interest.

Human Rights: This article does not contain any studies with human participants performed by any of the authors.

Informed Consent: This study does not involve human participants and informed consent was therefore not required.

Welfare of Animals: This article does not contain any studies with animals performed by any of the authors.

Transparency Statements: The diabetes prevention trial (PREDICTS) mentioned in the manuscript was registered at clinicaltrials.gov (NCT03312764). Otherwise, this study was not formally registered. The analysis plan was not formally pre-registered.

Data Availability: Deidentified data are available on request. There is no analytic code associated with this study. Materials used to conduct the study are not publicly available.

References

- 1. Dall TM, Yang W, Halder P, et al. The economic burden of elevated blood glucose levels in 2012: Diagnosed and undiagnosed diabetes, gestational diabetes mellitus, and prediabetes. Diabetes Care. 2014;37(12):3172–3179.

- 2. Centers for Disease Control and Prevention. National Diabetes Statistics Report, 2017. Atlanta, GA: Centers for Disease Control and Prevention, US Department of Health and Human Services; 2017.

- 3. Knowler WC, Barrett-Connor E, Fowler SE, et al.; Diabetes Prevention Program Research Group. Reduction in the incidence of type 2 diabetes with lifestyle intervention or metformin. N Engl J Med. 2002;346(6):393–403.

- 4. Diabetes Prevention Program Research Group. HbA1c as a predictor of diabetes and as an outcome in the diabetes prevention program: A randomized clinical trial. Diabetes Care. 2015;38(1):51–58.

- 5. Sweet CC, Jasik CB, Diebold A, DuPuis A, Jendretzke B. Cost savings and reduced health care utilization associated with participation in a digital diabetes prevention program in an adult workforce population. J Health Econ Outcomes Res. 2020;7(2):139–147.

- 6. Alva ML, Hoerger TJ, Jeyaraman R, Amico P, Rojas-Smith L. Impact of the YMCA of the USA diabetes prevention program on Medicare spending and utilization. Health Aff (Millwood). 2017;36(3):417–424.

- 7. Centers for Disease Control and Prevention. Centers for Disease Control and Prevention Diabetes Prevention Recognition Program. Available at https://www.cdc.gov/diabetes/prevention/pdf/dprp-standards.pdf. Accessed November 5, 2019.

- 8. Almeida FA, Michaud TL, Wilson KE, et al. Preventing diabetes with digital health and coaching for translation and scalability (PREDICTS): A type 1 hybrid effectiveness-implementation trial protocol. Contemp Clin Trials. 2019;88:105877. doi: 10.1016/j.cct.2019.105877.

- 9. Porter GC, Laumb K, Michaud T, et al. Understanding the impact of rural weight loss interventions: A systematic review and meta-analysis. Obesity Rev. 2019;20(5):713–724.

- 10. Aziz Z, Absetz P, Oldroyd J, Pronk NP, Oldenburg B. A systematic review of real-world diabetes prevention programs: Learnings from the last 15 years. Implement Sci. 2015;10(1):172.

- 11. Ali MK, Bullard KM, Imperatore G, et al. Reach and use of diabetes prevention services in the United States, 2016–2017. JAMA Network Open. 2019;2(5):e193160.

- 12. Venkataramani M, Pollack CE, Yeh HC, Maruthur NM. Prevalence and correlates of diabetes prevention program referral and participation. Am J Prev Med. 2019;56(3):452–457.

- 13. Sepah SC, Jiang L, Peters AL. Translating the diabetes prevention program into an online social network: Validation against CDC standards. Diabetes Educ. 2014;40(4):435–443.

- 14. Sepah SC, Jiang L, Ellis RJ, McDermott K, Peters AL. Engagement and outcomes in a digital Diabetes Prevention Program: 3-year update. BMJ Open Diabetes Res Care. 2017;5(1):e000422.

- 15. Castro Sweet CM, Chiguluri V, Gumpina R, et al. Outcomes of a digital health program with human coaching for diabetes risk reduction in a Medicare population. J Aging Health. 2018;30(5):692–710.

- 16. Michaelides A, Raby C, Wood M, Farr K, Toro-Ramos T. Weight loss efficacy of a novel mobile Diabetes Prevention Program delivery platform with human coaching. BMJ Open Diabetes Res Care. 2016;4(1):e000264.

- 17. Ali MK, Echouffo-Tcheugui J, Williamson DF. How effective were lifestyle interventions in real-world settings that were modeled on the Diabetes Prevention Program? Health Aff (Millwood). 2012;31(1):67–75.

- 18. Ali MK, Wharam F, Kenrik Duru O, et al.; NEXT-D Study Group. Advancing health policy and program research in diabetes: Findings from the natural experiments for translation in diabetes (NEXT-D) network. Curr Diab Rep. 2018;18(12):146.

- 19. Estabrooks P, You W, Hedrick V, Reinholt M, Dohm E, Zoellner J. A pragmatic examination of active and passive recruitment methods to improve the reach of community lifestyle programs: The Talking Health Trial. Int J Behav Nutr Phys Act. 2017;14(1):7.

- 20. Glasgow RE, Harden SM, Gaglio B, et al. RE-AIM planning and evaluation framework: Adapting to new science and practice with a 20-year review. Front Public Health. 2019;7:64.

- 21. Damschroder LJ, Reardon CM, AuYoung M, et al. Implementation findings from a hybrid III implementation-effectiveness trial of the Diabetes Prevention Program (DPP) in the Veterans Health Administration (VHA). Implement Sci. 2017;12(1):94.

- 22. Li Y, Kong N, Lawley MA, Pagán JA. Using systems science for population health management in primary care. J Prim Care Community Health. 2014;5(4):242–246.

- 23. Berwick DM, Nolan TW, Whittington J. The triple aim: Care, health, and cost. Health Aff (Millwood). 2008;27(3):759–769.

- 24. Powell BJ, Fernandez ME, Williams NJ, et al. Enhancing the impact of implementation strategies in healthcare: A research agenda. Front Public Health. 2019;7:3.

- 25. Vale L, Thomas R, MacLennan G, Grimshaw J. Systematic review of economic evaluations and cost analyses of guideline implementation strategies. Eur J Health Econ. 2007;8(2):111–121.

- 26. Lovato LC, Hill K, Hertert S, Hunninghake DB, Probstfield JL. Recruitment for controlled clinical trials: Literature summary and annotated bibliography. Control Clin Trials. 1997;18(4):328–352.

- 27. Sadler GR, Ko CM, Malcarne VL, Banthia R, Gutierrez I, Varni JW. Costs of recruiting couples to a clinical trial. Contemp Clin Trials. 2007;28(4):423–432.

- 28. Beaton SJ, Sperl-Hillen JM, Worley AV, et al. A comparative analysis of recruitment methods used in a randomized trial of diabetes education interventions. Contemp Clin Trials. 2010;31(6):549–557.

- 29. Ritzwoller DP, Sukhanova A, Gaglio B, Glasgow RE. Costing behavioral interventions: A practical guide to enhance translation. Ann Behav Med. 2009;37(2):218–227.

- 30. Speich B, von Niederhäusern B, Schur N, et al.; MAking Randomized Trials Affordable (MARTA) Group. Systematic review on costs and resource use of randomized clinical trials shows a lack of transparent and comprehensive data. J Clin Epidemiol. 2018;96:1–11.

- 31. Grimshaw J, Thomas R, MacLennan G, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Int J Technol Assess Health Care. 2005;21(1):149.

- 32. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217–226.

- 33. Wilson K, Michaud T, Almeida F, et al. Using a population health management approach to enroll participants in a diabetes prevention trial: Reach outcomes from the PREDICTS randomized clinical trial. Transl Behav Med. 2021.

- 34. Foster EM, Porter MM, Ayers TS, Kaplan DL, Sandler I. Estimating the costs of preventive interventions. Eval Rev. 2007;31(3):261–286.

- 35. Ingels JB, Walcott RL, Wilson MG, et al. A prospective programmatic cost analysis of fuel your life: A worksite translation of DPP. J Occup Environ Med. 2016;58(11):1106–1112.

- 36. U.S. Bureau of Labor Statistics. Consumer price index - all urban consumers. https://www.bls.gov/cpi/data.htm. Accessed February 2, 2021.

- 37. Raghavan R. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd ed. The Role of Economic Evaluation in Disseminations and Implementation Research. New York: Oxford University Press; 2018.

- 38. Briggs AH, Weinstein MC, Fenwick EA, et al. Model parameter estimation and uncertainty: A report of the ISPOR-SMDM modeling good research practices task force-6. Value Health. 2012;15(6):835–842.

- 39. Centers for Medicare and Medicaid Services. Medicare Diabetes Prevention Program (MDPP) - Beneficiary Eligibility Fact Sheet. Available at: https://innovation.cms.gov/files/fact-sheet/mdppbeneelig-fs.pdf. Accessed February 3, 2021.

- 40. Bowen ME, Schmittdiel JA, Kullgren JT, Ackermann RT, O’Brien MJ. Building toward a population-based approach to diabetes screening and prevention for US adults. Curr Diab Rep. 2018;18(11):104.

- 41. Centers for Medicare and Medicaid Services. Medicare Provides Coverage of Diabetes Screening Tests. https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNMattersArticles/downloads/se0821.pdf. Accessed November 1, 2019.

- 42. Centers for Disease Control and Prevention. National Diabetes Prevention Program - Program Eligibility. https://www.cdc.gov/diabetes/prevention/program-eligibility.html. Accessed November 5, 2019.

- 43. Leslie LK, Mehus CJ, Hawkins JD, et al. Primary health care: Potential home for family-focused preventive interventions. Am J Prev Med. 2016;51(4 Suppl 2):S106–S118.

- 44. Jordan N, Graham AK, Berkel C, Smith JD. Costs of preparing to implement a family-based intervention to prevent pediatric obesity in primary care: A budget impact analysis. Prev Sci. 2019;20(5):655–664.

- 45. Effoe VS, Katula JA, Kirk JK, et al.; LIFT Diabetes Research Team. The use of electronic medical records for recruitment in clinical trials: Findings from the Lifestyle Intervention for Treatment of Diabetes trial. Trials. 2016;17(1):496.

- 46. Diabetes Prevention Program Research Group. Costs associated with the primary prevention of type 2 diabetes mellitus in the diabetes prevention program. Diabetes Care. 2003;26(1):36–47.