Abstract

Background

In recent years, there has been rapid growth in the availability and use of mobile health (mHealth) apps around the world. A consensus regarding an accepted standard to assess the quality of such apps has yet to be reached. A factor that exacerbates the challenge of mHealth app quality assessment is variation in the interpretation of quality and its subdimensions. Consequently, it has become increasingly difficult for health care professionals worldwide to distinguish apps of high quality from those of lower quality. This exposes both patients and health care professionals to unnecessary risks. Despite progress, the contributions of researchers in low- and middle-income countries (LMICs) to this topic are poorly understood. Furthermore, the applicability of quality assessment methodologies in LMIC settings remains relatively unexplored.

Objective

This rapid review aims to identify current methodologies in the literature to assess the quality of mHealth apps, understand what aspects of quality these methodologies address, determine what input has been made by authors from LMICs, and examine the applicability of such methodologies in LMICs.

Methods

This review was registered with PROSPERO (International Prospective Register of Systematic Reviews). A search of PubMed, EMBASE, Web of Science, and Scopus was performed for papers related to mHealth app quality assessment methodologies, which were published in English between 2005 and 2020. By taking a rapid review approach, a thematic and descriptive analysis of the papers was performed.

Results

Electronic database searches identified 841 papers. After the screening process, 52 papers remained for inclusion. Of the 52 papers, 5 (10%) proposed novel methodologies that could be used to evaluate mHealth apps of diverse medical areas of interest, 8 (15%) proposed methodologies that could be used to assess apps concerned with a specific medical focus, and 39 (75%) used methodologies developed by other published authors to evaluate the quality of various groups of mHealth apps. The authors in 6% (3/52) of papers were solely affiliated to institutes in LMICs. A further 15% (8/52) of papers had at least one coauthor affiliated to an institute in an LMIC.

Conclusions

Quality assessment of mHealth apps is complex in nature and at times subjective. Despite growing research on this topic, to date, an all-encompassing appropriate means for evaluating the quality of mHealth apps does not exist. There has been engagement with authors affiliated to institutes across LMICs; however, limited consideration of current generic methodologies for application in LMIC settings has been identified.

Trial Registration

PROSPERO CRD42020205149; https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=205149

Keywords: mHealth app, health app, mobile health, health website, quality, quality assessment, methodology, high-income country, low-income country, middle-income country, LMIC, mobile phone

Introduction

Background

Mobile health (mHealth) apps can be defined as software “incorporated into smartphones to improve health outcome, health research, and health care services” [1]. In 2017, >325,000 mHealth apps were available for download [2]. These apps can enhance health promotion and disease prevention, resulting in improved patient outcomes and economic savings [3,4].

In 2020, 35% of US health care consumers used mHealth apps compared with just 16% in 2014 [5]. Access to and use of these apps is also increasing in many low- and middle-income countries (LMICs) [6]. In 2015, there were >7 billion mobile telephone subscriptions worldwide, 70% of which were in LMICs [7,8]. Furthermore, 95% of the global population resides in an area covered by mobile cellular networks, with 84% of people having access to mobile broadband networks [9]. Such widespread use and access to smartphones has helped incorporate mHealth solutions into health care systems within LMICs [10].

Since the introduction of mHealth in the late 2000s, apps have facilitated improvements in disease management, reductions in health care costs, and gains in service efficiency [3,4,11]. Despite the growing popularity of mHealth apps, research has also identified the potential risks associated with their use. Regardless of location, quality of content and software functionality are areas of concern in mHealth apps [12], as are data privacy and security [10,13]. For successful implementation of mHealth in LMIC settings, additional factors such as user-perspective and technical factors should also be considered [10].

At present, there is no comprehensive, universally available methodology to assess the quality of mHealth apps [14]. In addition, the existing five-star rating scales available within app stores provide subjective indications of quality, which are often unreliable [15]. Given the paucity of current methodologies, the unreliability associated with star ratings, and the ever-expanding mHealth app market, it is becoming increasingly difficult for health care professionals to identify high-quality apps.

A factor that exacerbates the conundrum of quality assessment is indeed the word quality itself. Quality can be considered an umbrella term encompassing many dimensions, depending on its context. Hence, disparities exist in the depth and focus of its definition. The Institute of Medicine defines quality in health care broadly as “the degree to which health services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge” [16]. The International Organization for Standardization adopts a more expansive approach and defines quality as the “degree to which a set of inherent characteristics of an object fulfills requirements” [17]. Nouri et al [18] proposed a broad classification model to address quality in relation to the mHealth app evaluation. Within this model, criteria and subcriteria are outlined for consideration when evaluating the quality of mHealth apps.

Perhaps it is this hierarchical, multifaceted nomenclature that has made it difficult to agree on a single standard of quality for mHealth apps. Various approaches have been taken to help identify higher quality apps. In the United Kingdom, the National Health Service app library provides a collection of mHealth apps of approved quality [19]. The Federal Institute for Drugs and Medical Devices in Germany is set to examine the quality of apps with a view to doctors ultimately being able to “prescribe health care apps to patients” [20].

Efforts are also being made to develop mHealth app evaluation methods for LMIC settings [21]. However, despite rapidly increasing market access, significant developments have yet to occur. Given the variability of socioeconomic conditions across the globe, methodologies for mHealth app evaluation in emerging economies may require additional parameters.

Objectives

The primary aim of this rapid review is to identify current methodologies in the literature to assess the quality of mHealth apps. Second, it aims to determine what aspects of quality these methodologies consider. Third, it aims to examine global research input on this topic since 2005. Finally, this review examines the applicability of such methodologies in LMIC settings.

Methods

Study Design

Rapid reviews draw upon traditional systematic review processes to accelerate and streamline research while preserving the rigor and quality of review methodology [22]. Given the aforementioned research aims and objectives, a rapid review approach was deemed appropriate.

The broad principles of scoping review methodology, as defined by Arksey and O’Malley [23], were followed to formulate the research question and identify relevant studies for selection. A concept-centric approach was taken for the charting procedure in line with the advice given by Webster and Watson [24] for writing literature reviews in the field of information sciences. A standard protocol was followed in accordance with the PRISMA-ScR (Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews) checklist [25].

Search Strategy

A systematic search strategy was developed and applied across four databases: PubMed, EMBASE, Scopus, and Web of Science. These databases have strong scientific and medical focuses. This combination of databases was chosen in an effort to guarantee adequate and efficient coverage of relevant papers [26].

Under the guidance of an academic librarian and reflecting upon the advice of Arksey and O’Malley [23] for conducting literature reviews, the research question was split into the following four specific concepts: methodology, assess, quality, and mHealth app. Through the iterative process of keyword searching and preliminary search testing using Medical Subject Headings terms, synonyms of each concept were incorporated into the search string. The final search string is provided in Multimedia Appendix 1. An intrinsic link exists between mHealth apps and health websites. Therefore, variations of health website were included in the search string to identify papers that potentially covered both mHealth app and health website domains.
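The concept-based construction described above can be sketched in a few lines of Python. This is only an illustration of the general technique (synonyms joined with OR inside each concept, concepts joined with AND); the synonym lists below are hypothetical placeholders, and the search string actually used in this review is the one given in Multimedia Appendix 1.

```python
# Hypothetical synonym lists for the four concepts named in the text.
# The review's real terms are in Multimedia Appendix 1, not here.
CONCEPTS = {
    "methodology": ["methodology", "framework", "tool"],
    "assess": ["assess*", "evaluat*"],
    "quality": ["quality"],
    "mHealth app": ["mHealth app*", "health app*", "health website*"],
}


def quote(term: str) -> str:
    """Wrap multiword terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_search_string(concepts: dict[str, list[str]]) -> str:
    """OR the synonyms within each concept, then AND the concept groups."""
    groups = [
        "(" + " OR ".join(quote(term) for term in terms) + ")"
        for terms in concepts.values()
    ]
    return " AND ".join(groups)


print(build_search_string(CONCEPTS))
```

Wildcard and phrase syntax differs between PubMed, EMBASE, Scopus, and Web of Science, so a string built this way would still need to be adapted to each database.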

The search was conducted in December 2020 and was limited to studies published in English between 2005 and 2020. The year 2005 was chosen as the starting point so that the search window would predate the release of the first iPhone in 2007 and the creation of the App Store in 2008 [27]. Geographical restrictions were not imposed on this search.

Study Selection

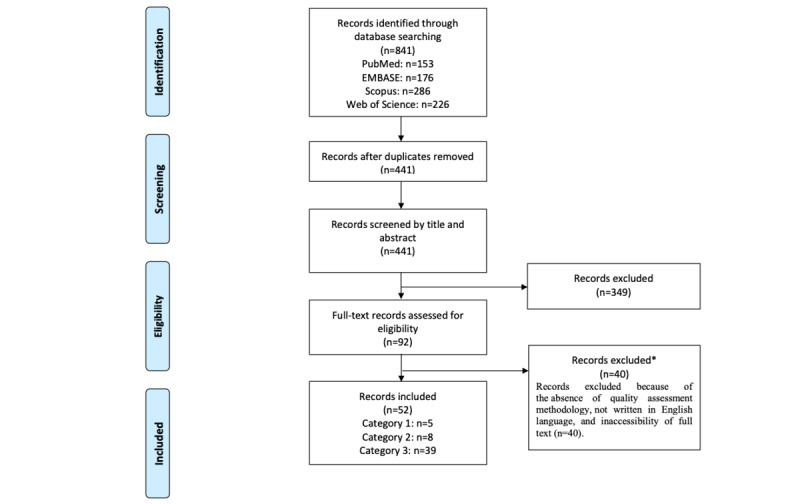

The reference management software EndNote X9 (Clarivate Analytics) was used to collate the initial literature search citations. Duplicates were removed before exporting the remaining citations to the Covidence systematic review software (v2409). The author FW initially screened titles and abstracts to determine whether a paper met the general study selection criteria. The full texts of the remaining papers were formally screened against the inclusion and exclusion criteria by FW. Any papers that the author FW was unsure about were screened by and clarified through engagement with the author JOD. The search results were presented in a PRISMA flow diagram (Figure 1).

Figure 1.

PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram of the inclusion process.

Selection Criteria

The inclusion criteria for studies were as follows:

Papers that proposed a methodology to evaluate the quality of mHealth apps (regardless of assessing one aspect or several aspects of quality)

Papers that used a methodology for the quality evaluation of specific groups of mHealth apps

Papers published in English from 2005 onward in research journals

The exclusion criteria for studies were as follows:

Papers that proposed a methodology for the evaluation of non-mHealth apps

Papers that proposed a methodology solely for the evaluation of health websites

Papers that were not available through the library of University College Cork, interlibrary loans, or via direct communication with authors

Papers that were not available in the English language

Categorization of Reviewed Papers

Papers that successfully passed full-text screening were subdivided into three categories based on their thematic synergies. These were as follows:

Category 1 (generic methodologies): papers that proposed generic methodologies to evaluate the quality of mHealth apps or mHealth websites

Category 2 (health condition–specific methodologies): papers that proposed methodologies designed specifically to evaluate the quality of mHealth apps that focus on one medical condition (eg, the App Quality Evaluation Tool to evaluate the quality of nutrition apps)

Category 3 (use of existing methodologies): papers that used a prepublished methodology to evaluate certain groups of mHealth apps

Data Extraction

Data items extracted from all studies included paper title, author, year, aim or objective, name of methodology used or developed, target platform of methodology, disease focus of study, strengths, validity and reliability of methodology, weaknesses, and future work. The location of authors’ institute affiliations was classified based on the World Bank Classification system [28] into high-, middle-, or low-income countries. Any uncertainty was clarified through discussion with a second reviewer (JOD). The extraction form was initially piloted and amended where necessary.

The methodologies proposed by the papers in category 1 (ie, those that proposed a generic methodology for mHealth app evaluation) were compared with a reference classification checklist of criteria for assessing mHealth app quality proposed by Nouri et al [18].

Quality Evaluation

The aim of this rapid review is to assess the extent of published literature on mHealth quality assessment methodologies and related studies rather than to evaluate specific causes and effects. Therefore, as supported by the World Health Organization, risk of bias assessment was not conducted, as this review served as an information gathering process [29].

Results

The search and paper retrieval processes are illustrated in Figure 1. A total of 841 potentially relevant papers were identified. Of the 841 papers, following the removal of duplicates, 441 (52.4%) papers remained, with 52 (6.2%) papers meeting the criteria for inclusion.
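The percentages reported above can be reproduced with a short calculation. The sketch below uses only the three counts given in the text; the variable names are illustrative, and treating the gap between 841 and 441 as duplicates alone is an assumption based on the wording of the sentence rather than a figure stated by the review.

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)


identified = 841           # records returned by the database searches
after_deduplication = 441  # records remaining once duplicates were removed
included = 52              # papers meeting the inclusion criteria

# Assumption: every record dropped at the first step was a duplicate.
duplicates_removed = identified - after_deduplication
excluded_in_screening = after_deduplication - included

print("duplicates removed:", duplicates_removed)
print("excluded during screening:", excluded_in_screening)
print("remaining after deduplication (%):", pct(after_deduplication, identified))
print("included, of all identified (%):", pct(included, identified))
print("included, of screened records (%):", pct(included, after_deduplication))
```

The last figure is not reported in the text; it is included only to show that the inclusion rate looks different depending on which denominator is chosen.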

Characteristics of Retrieved Papers

The papers were subdivided into three categories. Category characteristics and respective citations are indicated in Table 1.

Table 1.

Summary of paper categories and their respective citations (N=52).

| Category | Number of papers, n (%) | General explanation of category |

| Category 1: generic methodologies | 5 (10) | |

| Category 2: health condition–specific methodologies | 8 (15) | |

| Category 3: use of existing methodologies | 39 (75) | |

aAQEL: App Quality Evaluation Tool.

bMARS: Mobile App Rating Scale.

Category 1

Of the five papers identified in category 1, 1 (20%) proposed a methodology to evaluate the quality of both mHealth apps and health websites [30], and 4 (80%) proposed methodologies solely used to evaluate the quality of mHealth apps [31-34]. The coverage of the Nouri et al [18] mHealth app evaluation criteria found in the methodologies within this category can be viewed in Table 2.

Table 2.

Coverage by category 1 methodologies of the criteria for assessing the quality of mHealth apps proposed by Nouri et al [18].

| mHealth app quality assessment criteria (Nouri et al [18]) | Baumel et al (Enlight) [30] | Yasini et al [31] | Anderson et al (ACDCa) [32] | Stoyanov et al (MARSb) [33] | Martínez-Pérez et al [34] |

| Design | | | | | |

| Suitability of design | ✓ | | | | |

| Aesthetics | ✓ | | | ✓ | |

| Appearance | ✓ | | | | ✓ |

| Design consistency | ✓ | | ✓ | ✓ | |

| Information or content | | | | | |

| Credibility | ✓ | ✓ | ✓ | ✓ | ✓ |

| Accuracy | ✓ | ✓ | | ✓ | ✓ |

| Quality of information | ✓ | | ✓ | ✓ | |

| Quantity of information | ✓ | | ✓ | ✓ | |

| Usability | | | | | |

| Ease of use | ✓ | ✓ | ✓ | ✓ | ✓ |

| Operability | | ✓ | | | |

| Visibility | ✓ | | | | |

| User control and freedom | | | | | |

| Consistency and standards | | | | | |

| Error prevention | | | | | ✓ |

| Completeness | ✓ | ✓ | | | |

| Information needs | ✓ | ✓ | | | |

| Flexibility and customizability | | ✓ | | | |

| Competency | | | | | |

| Style | ✓ | | | | |

| Behavior | ✓ | | | | |

| Structure | | | | | |

| Functionality | | | | | |

| Performance | | | ✓ | ✓ | ✓ |

| Health warnings | | | ✓ | | |

| Feedback | ✓ | | ✓ | | |

| Connectivity and interpretability | | ✓ | ✓ | | |

| Record | ✓ | | | | |

| Display | | | | | |

| Guide | ✓ | | | | |

| Remind or alert | ✓ | | | | |

| Communicate | ✓ | | | | ✓ |

| Ethical issues | | | | | |

| Beneficence | ✓ | ✓ | | | |

| Nonmaleficence | | ✓ | | | |

| Autonomy | | ✓ | | | |

| Justice | | ✓ | | | |

| Legal obligations | ✓ | ✓ | | | |

| Security and privacy | | | | | |

| Security | ✓ | ✓ | | | ✓ |

| Privacy | ✓ | ✓ | | | |

| User perceived value | | | | | |

| User perceived value | ✓ | ✓ | ✓ | ✓ | ✓ |

aACDC: App Chronic Disease Checklist.

bMARS: Mobile App Rating Scale.
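To make the coverage in Table 2 easier to compare across methodologies, the check marks can be tallied programmatically. The following is a minimal sketch in which the table has been transcribed by hand into flag strings (an assumption of this example, so any transcription slip would carry through); the resulting counts are an illustration and are not figures reported by the review.

```python
METHODS = ["Enlight", "Yasini et al", "ACDC", "MARS", "Martínez-Pérez et al"]

# One string per criterion, one character per methodology in METHODS order
# ("1" = check mark in Table 2, "0" = blank cell).
TABLE_2 = {
    "Design": {
        "Suitability of design": "10000",
        "Aesthetics": "10010",
        "Appearance": "10001",
        "Design consistency": "10110",
    },
    "Information or content": {
        "Credibility": "11111",
        "Accuracy": "11011",
        "Quality of information": "10110",
        "Quantity of information": "10110",
    },
    "Usability": {
        "Ease of use": "11111",
        "Operability": "01000",
        "Visibility": "10000",
        "User control and freedom": "00000",
        "Consistency and standards": "00000",
        "Error prevention": "00001",
        "Completeness": "11000",
        "Information needs": "11000",
        "Flexibility and customizability": "01000",
        "Competency": "00000",
        "Style": "10000",
        "Behavior": "10000",
        "Structure": "00000",
    },
    "Functionality": {
        "Performance": "00111",
        "Health warnings": "00100",
        "Feedback": "10100",
        "Connectivity and interpretability": "01100",
        "Record": "10000",
        "Display": "00000",
        "Guide": "10000",
        "Remind or alert": "10000",
        "Communicate": "10001",
    },
    "Ethical issues": {
        "Beneficence": "11000",
        "Nonmaleficence": "01000",
        "Autonomy": "01000",
        "Justice": "01000",
        "Legal obligations": "11000",
    },
    "Security and privacy": {"Security": "11001", "Privacy": "11000"},
    "User perceived value": {"User perceived value": "11111"},
}


def coverage(table: dict) -> dict:
    """Count, per methodology, how many criteria carry a check mark."""
    totals = dict.fromkeys(METHODS, 0)
    for criteria in table.values():
        for flags in criteria.values():
            for method, flag in zip(METHODS, flags):
                totals[method] += flag == "1"
    return totals


n_criteria = sum(len(criteria) for criteria in TABLE_2.values())
for method, covered in coverage(TABLE_2).items():
    print(f"{method}: {covered}/{n_criteria} criteria")
```

A raw count treats every criterion as equally important, which the review does not claim; it is best read as a quick way to see breadth of coverage rather than as a quality ranking of the methodologies.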

Category 2

The methodologies proposed in category 2 of the papers focused specifically on asthma [35], pain management [36], medication adherence [37], medication-related problems [38], hard of hearing [39], diabetes mellitus [40], infant feeding [41], and nutritional [42] mHealth apps. The methodologies proposed within this category of papers were highly specific to one topic of medicine. Therefore, their respective dimensions of quality were not subjected to further investigation.

Category 3

Papers in category 3 were concerned with a variety of medical conditions. Quality assessment of nutrition-related mHealth apps was the most prevalent area of research within this category [46,47,50,55,58,63,65,76]. Other types of mHealth apps studied were those related to obesity and weight management [53,62,64], pain management [43,70,75], mental health [51,67], and oncology [57,82].

Within this category, of the 39 papers, 23 (59%) papers applied one methodology, and 16 (41%) papers used a combination of methodologies to evaluate the quality of mHealth apps. The Mobile App Rating Scale (MARS) construct [33] was the most commonly used methodology (24/39, 62%). It was used in 38% (15/39) of papers as the sole means of quality evaluation [45-49,52,57,69-71,73-76,78,79]. MARS was also used in an additional 23% (9/39) of papers along with other methodologies, such as clinical guidelines [46,55,56,58,65,67,68,77,81]. The breakdown of methodologies and the frequency of their use can be viewed in Table 3.

Table 3.

Breakdown of methodologies used by papers in category 3 (N=39).

| Methodology used | Articles, n (%) |

| MARSa [45-49,52,57,69-71,73-76,78,79] | 15 (38) |

| uMARSb [48,63] | 2 (5) |

| Silberg scale [51,53,64] | 3 (8) |

| AQELc [50] | 1 (3) |

| mHONd code [61] | 1 (3) |

| IOMe aims [72] | 1 (3) |

| Combination of methodologies (System Usability Scale and clinical guidelines) [46,47,54-56,58-60,62,65-68,77,80,81] | 16 (41) |

aMARS: Mobile App Rating Scale.

buMARS: user version of Mobile App Rating Scale.

cAQEL: App Quality Evaluation Tool.

dmHON: Mobile applications–Health on the Net.

eIOM: Institute of Medicine.

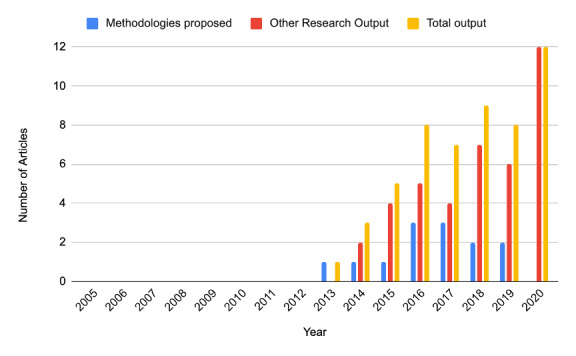

Timeline of Published mHealth Assessment Methodologies and Studies

Research output on the topic of mHealth quality assessment has increased markedly in recent years. The 52 included papers were published between 2005 and 2020; of these, 12 (23%) were published in 2020 alone. The research output of novel methodologies for evaluating mHealth apps (categories 1 and 2) and studies relating to the topic (category 3) since 2005 are illustrated in Figure 2.

Figure 2.

Illustration of research output on mobile health app evaluation studies from 2005 to 2020.

International Input

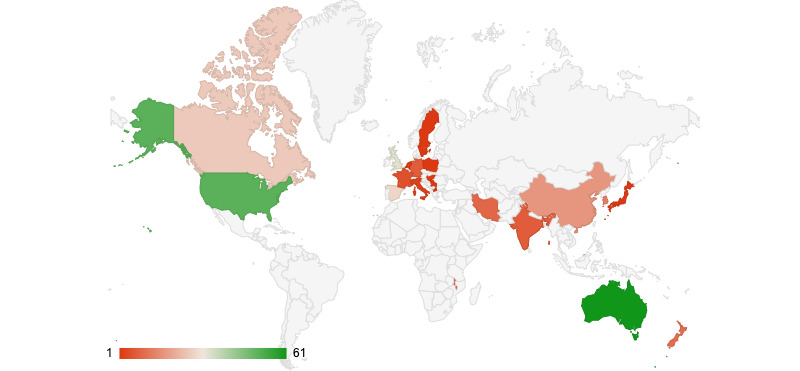

The location of authors’ institute affiliations for all papers (categories 1, 2, and 3) was classified into high-, middle-, or low-income countries based on the World Bank Classification system [28]. All category 1 papers were published by authors affiliated with institutions in high-income countries. One paper in category 2 was published by authors affiliated with an institute in a low- or middle-income country [35]. Of the 39 papers in category 3, 2 (5%) were solely published by authors affiliated with institutes in LMICs [44,78]. A further 21% (8/39) of papers in this category had at least one author affiliated with institutes in LMICs [45,54,56,60,63,67,74,75]. The location of authors’ affiliated institutes can be viewed in the geo chart in Figure 3. A breakdown of countries and the number of authors affiliated with each can be viewed in Table 4.

Figure 3.

Geo chart indicating research output affiliated to each country.

Table 4.

A breakdown of research contribution based on the country of the authors’ affiliated institutes.

| Author affiliation and country (ordered alphabetically) | Number of authors affiliated to institutions in that country, n (%) |

| High-income countries | |

| Australia | 61 (23.7) |

| Canada | 26 (10.1) |

| France | 5 (1.9) |

| Germany | 7 (2.7) |

| Hungary | 1 (0.4) |

| Italy | 2 (0.8) |

| Japan | 1 (0.4) |

| Korea, Republic | 13 (5.1) |

| Netherlands | 1 (0.4) |

| New Zealand | 10 (3.9) |

| Poland | 1 (0.4) |

| Spain | 28 (10.9) |

| Singapore | 16 (6.2) |

| Sweden | 1 (0.4) |

| United Kingdom | 33 (12.8) |

| United States | 51 (19.8) |

| Total affiliations from high-income countries | 257 (100) |

| Middle-income countries | |

| China | 17 (48.6) |

| India | 7 (20) |

| Iran, Islamic Republic | 9 (25.7) |

| Macedonia | 1 (2.9) |

| Serbia | 1 (2.9) |

| Total affiliations from middle-income countries | 35 (100) |

| Low-income countries | |

| Brunei | 3 (42.9) |

| Malawi | 4 (57) |

| Total affiliations from low-income countries | 7 (100) |
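Table 4 reports percentages within each income group. As a complementary view, the three group totals can be combined to show each group's share of all author affiliations. The sketch below uses only the totals in Table 4; the overall shares it prints are derived for illustration and are not stated in the review.

```python
# Group totals taken from the "Total affiliations" rows of Table 4.
AFFILIATIONS = {"high-income": 257, "middle-income": 35, "low-income": 7}


def shares(counts: dict) -> dict:
    """Return each group's percentage of all affiliations, to one decimal."""
    total = sum(counts.values())
    return {group: round(100 * n / total, 1) for group, n in counts.items()}


total_affiliations = sum(AFFILIATIONS.values())
for group, share in shares(AFFILIATIONS).items():
    print(f"{group}: {AFFILIATIONS[group]}/{total_affiliations} ({share}%)")
```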

Discussion

Principal Findings

A variety of methodologies to assess the quality of mHealth apps have been identified in this review. Some adopted a generic approach and can be used to evaluate mHealth apps for various medical conditions. Other methodologies take a disease-centric approach and are only relevant when considering apps concerned with that particular disease. Despite a number of quality assessment methodologies being available, significant variations in the dimensions of quality that they address were identified. Given the subjective nature of quality and its subdimensions, it is not surprising to find this high degree of diversity.

As presented in category 3, the MARS construct proposed by Stoyanov et al [33] has been widely used by other authors to evaluate the quality of mHealth apps. MARS is a concise, easy-to-use tool that covers many of the Nouri et al [18] criteria for assessing mHealth app quality (Table 2). Despite its popularity, MARS fails to address some key aspects of quality, most notably security and privacy. The use of mHealth apps may involve the processing of sensitive information by multiple parties. Therefore, awareness of and concern about the safety of the information that they contain are rising [13,83]. This underscores the importance of considering privacy and security when evaluating mHealth apps and highlights a significant limitation of the MARS construct. Only two of the five generic methodologies took both of these dimensions into consideration [30,31].

In contrast to MARS, the Enlight suite of assessments proposed by Baumel et al [30] provides a more thorough assessment of quality. It has been designed for both mHealth apps and health website quality evaluation purposes. As presented in Table 2, Enlight has comprehensive coverage of the Nouri et al [18] criteria for mHealth app quality assessment. Rating measures within the Enlight suite are divided into two sections: quality assessments and checklists. The quality assessment section refers to aspects of quality that relate to the user’s experience of an mHealth app. The checklists are not expected to directly impact the end user’s experience of the product’s efficacy; rather, these lists may expose the user (or provider) to acknowledged risks or benefits.

Respondent fatigue is a well-documented phenomenon in questionnaires [84]. Although the Enlight suite provides a far-reaching means to evaluate the quality of mHealth apps, its all-encompassing nature may, in reality, curtail its use. Along with the checklists section, 28 questions are contained within the Enlight Quality Assessment section. This is significantly greater than that of other generic methodologies. Hence, the use of the Enlight suite would take significantly longer than others to score the quality of mHealth apps. Undeniably, a greater balance is needed to maximize user uptake and engagement among the health care community. This is especially important, as in many cases health care professionals are not allotted additional time to assess new apps.

Although an abundance of mHealth apps is available, academic studies on their clinical impact are lacking. Concerningly, many mHealth apps are not based on any behavior change theory, and in many cases, their effectiveness has not been correctly evaluated [82,85]. With that said, the ability of apps to stimulate behavior change is a growing area of interest [86]. Behavior change technique is not an explicit quality criterion proposed by Nouri et al [18]; however, the World Health Organization recognizes the importance of health outcome–based measures [87]. This review identified consideration of behavior change in three of the generic methodologies [30,32,33]. The App Chronic Disease Checklist (ACDC) construct includes behavior change as a singular point of consideration [32]. In contrast, the MARS construct assesses “the perceived impact of an app on the user’s knowledge, attitudes, intentions to change as well as the likelihood of actual change in the targeted health behavior” in its App-Specific section [33]. Similarly, the Enlight suite’s Therapeutic Persuasiveness section is specifically dedicated to addressing the topic of behavior change techniques [30]. Although behavior change technique in itself is a broad concept, it is reassuring to identify its consideration, even to a certain extent, within several methodologies.

Challenges in App Assessment

As mentioned in the introduction, a paucity of uniform definitions for quality and its respective subdimensions exists. A lack of clear-cut definitions not only poses a challenge to this research but also adds a level of ambiguity to mHealth app quality evaluations as a whole. Until precise definitions of each dimension of quality are provided, ongoing subjectivity regarding the interpretation of a dimension of quality with respect to an mHealth app may continue.

It is important to consider the validity and reliability of assessment tools in health care [88]. Validity indicates how well a tool measures what it intends to measure, and reliability expresses the extent to which the obtained results are reproducible [88]. Most of the selected tools offered some form of face and content validity based on expert opinions [30-33]. Only 4% (2/52) of studies [30,33] provided reliability results. However, the selected studies did not conduct factor analysis, which can limit their construct validity. In addition, none of the tools provided any predictive validity, which is the extent to which the scores predict the ability of the mHealth app to improve the targeted health condition. Thus, the paucity of information on the validity and reliability of the available tools could limit their usefulness in practice.

Methodologies proposed within category 1 provide the user with a means to assess the quality of mHealth apps. However, no methodology within this category provides the user with a scoring mechanism or rubric to interpret the results. For example, when using the ACDC checklist, what does it mean if an app contains an overwhelming amount of information but scores perfect results in all other dimensions of quality? Does this render the app low quality? A lack of clear scoring mechanisms may hinder a user’s interpretation of the evaluation process, making it an inconclusive exercise.

Applicability of mHealth App Evaluation Methodologies in High-, Middle-, and Low-Income Countries

Although 46% of new mHealth app publishers are from Europe [89], the apps they develop are often available in international markets. As the functionality of mHealth apps becomes more diverse and ownership of smartphones rises, it is likely that their adoption by those living in LMICs will continue to increase. The applicability of the aforementioned methodologies for assessing mHealth app quality in LMIC settings has not been widely considered. As a health care professional contemplates whether a specific mHealth app would be beneficial for their patient, the suitability of the app in the context of that patient must be considered. Various regulatory, technical, and user-perspective factors have been identified as obstacles to the integration of mHealth solutions in resource-poor settings [10].

Many regulatory factors that may affect mHealth use in LMICs also affect their use in high-income countries (HICs). Security and privacy of data are two examples. Table 2 highlights that these factors are currently considered in many quality assessment methodologies. Continued access to the internet represents a technological factor that may affect mHealth use in LMICs disproportionately to that in HICs [10]. Despite the penetration rate of mobile broadband signal doubling in LMICs over the past two decades [90], challenges such as use, cost, and speed continue to exist. As such, researchers may wish to consider the impact of inconsistent internet services on an app's functionality. The ACDC checklist [32] was the sole generic methodology to address the facilitation of an offline mode. The incorporation of questions such as this within methodologies helps to consider the reality faced by many within LMICs at present.

Socioeconomics can impact the use of mHealth solutions. With increased global demand, it represents an important parameter for consideration. Two factors within the domain of socioeconomics that may be important are cultural appropriateness and literacy. Cultural appropriateness is essential for designing user interfaces or web interfaces for international and country-specific audiences that will be accepted and liked by users [91]. Cultural appropriateness applies to mHealth app evaluations not only in LMICs but also in HICs. If the content of an mHealth app is unsuitable for a particular audience, its download may become a contentious or fruitless exercise. For example, an app designed for prenatal care in Ireland may not be appropriate for use in sub-Saharan Africa. As far as the authors are aware, no generic methodology has explicitly examined the cultural appropriateness of an mHealth app. However, vague considerations were made in the MARS construct [33] and the Enlight suite [30]. In these cases, the suitability of certain aspects of an app, such as information and visual content, with respect to the target audience was mentioned. Given the broadening cultural diversity of app users, perhaps a more formal effort to consider cultural appropriateness is warranted for the benefit of those in LMICs and HICs.

Health literacy is a concern for many low- and middle-income populations. Within the domain of literacy, readability refers to the comprehension level required by an individual to correctly understand and engage with written material [92]. Past research indicates that many mHealth apps are written at excessively high reading grade levels [66,93]. Poor readability may increase the scope for misinterpretation and render an app inaccessible to many potential end users [66,93]. Nouri et al [18] considered readability as a subcriterion of ease of use. Only two of the five generic methodologies explicitly consider this subcriterion [31,32]. Although the average reading level in LMICs is rising, in many cases, it is still behind that of HICs [94]. Given the proportion of mHealth apps developed in HICs, there is a salient need for health care professionals in LMICs to consider the readability of these apps in terms of their potential end users.
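As an illustration of how a reading grade level can be estimated, the sketch below applies the Flesch-Kincaid grade level formula, 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. This is one common readability measure chosen here as an assumption; the review does not prescribe a specific formula, the syllable counter is a rough vowel-group heuristic, and both sample sentences are invented for the example.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count runs of consecutive vowels, with a minimum of one."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_kincaid_grade(text: str) -> float:
    """Estimate the US school grade needed to read `text` (Flesch-Kincaid)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(word) for word in words)
    return (
        0.39 * len(words) / len(sentences)
        + 11.8 * syllables / len(words)
        - 15.59
    )


# Invented sample texts: the same advice in plain and in dense wording.
plain = "Take one pill each day. Call your nurse if you feel sick."
dense = (
    "Administer the prescribed anticoagulant medication daily and "
    "immediately notify your physician regarding adverse symptomatology."
)

print(f"plain wording: grade {flesch_kincaid_grade(plain):.1f}")
print(f"dense wording: grade {flesch_kincaid_grade(dense):.1f}")
```

The formula was developed for English text, so it would not transfer directly to apps written in other languages; an assessor working in an LMIC setting would likely need a measure validated for the local language.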

Future Work

The authors identify several directions for future work in this area of research. First, the review could be extended to papers published in languages other than English, providing a more accurate representation of quality assessment methodologies currently available at an international level.

The Enlight suite provides a thorough means for evaluating the quality of mHealth apps; however, its fundamental usability and ability to consider an app in the context of various populations could be enhanced. An area of active research by the authors is the revision and enhancement of this tool based on the knowledge of this rapid review. Through a Delphi study and supporting survey techniques, the suite is in the process of being modified to make it more user friendly and comprehensive in LMIC settings.

This study highlights several challenges associated with the use of quality assessment methodologies in practice. There is scope to formalize methodology reliability processes, yielding more transparency and comparability in assessments. A scoring mechanism or rubric may be considered in future methodologies that provides users with a means to summarize an app based on the aggregated dimensions of quality that it fulfills.
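One possible shape for such a rubric is sketched below, purely as an illustration. It assumes that an assessor records, for each quality dimension, whether the app fulfills it, and it summarizes the app as the proportion of dimensions met. The function name, the dimension names, and the unweighted scoring are all assumptions of this sketch and are not drawn from any of the methodologies reviewed.

```python
from typing import Dict, Mapping


def summarize_quality(fulfilled: Mapping[str, bool]) -> Dict[str, object]:
    """Summarize an app by the share of quality dimensions it fulfills.

    `fulfilled` maps a dimension name to True (met) or False (not met).
    All dimensions are weighted equally, which is an assumption here.
    """
    if not fulfilled:
        raise ValueError("at least one quality dimension is required")
    met = sorted(name for name, ok in fulfilled.items() if ok)
    unmet = sorted(name for name, ok in fulfilled.items() if not ok)
    return {"score": len(met) / len(fulfilled), "met": met, "unmet": unmet}


# Hypothetical assessment of a single app.
example = summarize_quality(
    {
        "usability": True,
        "privacy": False,
        "readability": True,
        "cultural_appropriateness": False,
    }
)
```

Listing the unmet dimensions alongside the score keeps the summary transparent, so two apps with the same score can still be compared on which aspects of quality each one lacks.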

On a practical level, this research provides additional emphasis on the importance of mHealth app quality assessments. Methodologies such as the Enlight suite and MARS construct are suitable for the purposes outlined in this paper. However, going forward, these methodologies may also be used in consultation with health care professionals for reasons of app development, providing a template for quality assurance.

Strengths

This review has several strengths. To the best of the authors’ knowledge, this is the first review to consider the applicability of generic methodologies to evaluate the quality of mHealth apps in LMIC settings. Furthermore, it highlights the affiliations of the authors’ institutes, indicating where significant research input has come from in the past. This review begins to consider further parameters that one may wish to incorporate into methodologies in the future to improve their relevance across resource-poor settings.

Limitations

This research is not without limitations. A decision was made by the research team to exclude methodologies for the evaluation of health websites only and non-mHealth apps. This decision was based on the fact that such methodologies often consider parameters that are not applicable to mHealth apps themselves. In an effort to retrieve all relevant papers, terms relating to these concepts were included within the search string. However, only those papers that formally satisfied the inclusion and exclusion criteria were considered in this review.

Although reviewed by a second author where necessary, paper retrieval, selection, and data extraction were completed by one reviewer (FW). Nouri et al provided generalized definitions or examples of their respective quality assessment criteria [18]. A considered approach was taken by the author FW, whereby a methodology with reasonable coverage of the criteria was positively reflected in data extraction. A lack of universal definitions for quality and its respective subdimensions posed a challenge for data extraction, comparison, and synthesis on this topic.

The investigators acknowledge that the country affiliation of methodology authors may have limited relevance toward the application of those methodologies within their respective locations. Nevertheless, given the international market demand and varying socioeconomics, the investigators believe that this approach serves as one of many, which may help indicate the suitability of mHealth app quality assessment methodologies in LMICs.

Finally, only articles published in English were included in this review. This may have some impact on our results presented in Figure 3 and Table 4, as methodologies published in other languages were not identified.

Conclusions

Quality assessment of mHealth apps is a complex task. Significant heterogeneity exists between the aspects of quality that are considered by the methodologies identified by this rapid review. Some key aspects of quality remain unaddressed by certain methodologies despite their growing popularity. Although engagement with authors affiliated to institutes in LMICs exists on this topic, limited consideration has been made for the use of current methodologies in LMIC settings.

Owing to the variety of stakeholders involved in mHealth (eg, software engineers, information technology departments or companies, health care professionals, and patients), the challenge of finding or developing an all-encompassing methodology to assist health care professionals in assessing the quality of a given app is easily appreciated. With the ever-increasing role of mHealth apps in health care, it is time to consider policy development at the international level. An inclusive and intuitive mHealth app assessment methodology is required to ensure the reliable use of mHealth apps worldwide.

Acknowledgments

All those who met the authorship criteria are listed above as the authors. Each certifies that they have participated sufficiently in this body of work. The lead author, FW, received an Irish Health Research Board Scholarship, which helped support this project (Scholarship Number: SS-2020-089).

Abbreviations

- ACDC: App Chronic Disease Checklist

- HIC: high-income country

- LMIC: low- and middle-income country

- MARS: Mobile App Rating Scale

- mHealth: mobile health

- PRISMA-ScR: Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews

- PROSPERO: International Prospective Register of Systematic Reviews

Search string applied to databases.

Footnotes

Conflicts of Interest: None declared.

References

- 1.Ming LC, Hameed MA, Lee DD, Apidi NA, Lai PS, Hadi MA, Al-Worafi YM, Khan TM. Use of medical mobile applications among hospital pharmacists in Malaysia. Ther Innov Regul Sci. 2016 Jul 30;50(4):419–26. doi: 10.1177/2168479015624732. [DOI] [PubMed] [Google Scholar]

- 2.Mobile health (mHealth) market to reach USD 311.98 billion by 2027. Reports and Data. 2020. [2021-02-07]. https://www.globenewswire.com/news-release/2020/04/28/2023512/0/en/Mobile-Health-mHealth-Market-To-Reach-USD-311-98-Billion-By-2027-Reports-and-Data.html .

- 3.Duplaga M, Tubek A. mHealth - areas of application and the effectiveness of interventions. Zdrowie Publiczne i Zarządzanie. 2018;16(3):155–66. doi: 10.4467/20842627oz.18.018.10431. [DOI] [Google Scholar]

- 4.Ghanchi J. mHealth apps: how are they revolutionizing the healthcare industry? Mddionline. 2019. Sep, [2021-01-30]. https://www.mddionline.com/digital-health/mhealth-apps-how-are-they-revolutionizing-healthcare-industry .

- 5.Safavi K, Kalis B. How can leaders make recent digital health gains last? US findings. Accenture 2020 Digital Health Consumer Survey. 2020. [2021-01-30]. https://www.accenture.com/_acnmedia/PDF-130/Accenture-2020-Digital-Health-Consumer-Survey-US.pdf#zoom=40 .

- 6.Feroz A, Kadir MM, Saleem S. Health systems readiness for adopting mhealth interventions for addressing non-communicable diseases in low- and middle-income countries: a current debate. Glob Health Action. 2018;11(1):1496887. doi: 10.1080/16549716.2018.1496887. http://europepmc.org/abstract/MED/30040605 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Measuring the information society report. International Telecommunication Union. 2015. [2021-02-07]. http://www.itu.int/en/ITU-D/Statistics/Documents/publications/misr2015/MISR2015-w5.pdf .

- 8.Key ICT Indicators for Developed and Developing Countries and the World (Totals and Penetration Rates) Geneva: International Telecommunication Union; 2017. Mobile-cellular telephone subscriptions. [Google Scholar]

- 9.About International Telecommunication Union (ITU) International Telecommunication Union. [2021-01-30]. https://www.itu.int/en/about/Pages/default.aspx .

- 10.Wallis L, Blessing P, Dalwai M, Shin SD. Integrating mHealth at point of care in low- and middle-income settings: the system perspective. Glob Health Action. 2017 Jun 25;10(sup3):1327686. doi: 10.1080/16549716.2017.1327686. http://europepmc.org/abstract/MED/28838302 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Ventola CL. Mobile devices and apps for health care professionals: uses and benefits. P T. 2014 May;39(5):356–64. http://europepmc.org/abstract/MED/24883008 . [PMC free article] [PubMed] [Google Scholar]

- 12.Akbar S, Coiera E, Magrabi F. Safety concerns with consumer-facing mobile health applications and their consequences: a scoping review. J Am Med Inform Assoc. 2020 Feb 01;27(2):330–40. doi: 10.1093/jamia/ocz175. http://europepmc.org/abstract/MED/31599936 .5585394 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Sampat B, Prabhakar B. Privacy risks and security threats in mHealth apps. J Int Technol Inf Manag. 2017;26(4):126–53. https://scholarworks.lib.csusb.edu/cgi/viewcontent.cgi?article=1353&context=jitim . [Google Scholar]

- 14.Moshi MR, Tooher R, Merlin T. Suitability of current evaluation frameworks for use in the health technology assessment of mobile medical applications: a systematic review. Int J Technol Assess Health Care. 2018 Jan;34(5):464–75. doi: 10.1017/S026646231800051X.S026646231800051X [DOI] [PubMed] [Google Scholar]

- 15.Kuehnhausen M, Frost V. Trusting smartphone apps? To install or not to install, that is the question. Proceedings of the IEEE International Multi-Disciplinary Conference on Cognitive Methods in Situation Awareness and Decision Support; IEEE International Multi-Disciplinary Conference on Cognitive Methods in Situation Awareness and Decision Support (CogSIMA); Feb 25-28, 2013; San Diego, CA, USA. 2013. [DOI] [Google Scholar]

- 16.IOM definition of quality. Institute of Medicine. 2013. [2021-02-07]. http://iom.nationalacademies.org/Global/News%20Announcements/Crossing-the-Quality-Chasm-The-IOM-Health-Care-Quality-Initiative .

- 17.ISO 8000-2:2020(en) Data quality — Part 2: Vocabulary. Online Browsing Platform. 2011. [2021-02-07]. https://www.iso.org/obp/ui/#iso:std:iso:8000:-2:ed-4:v1:en:term:3.1.3 .

- 18.Nouri R, R Niakan Kalhori S, Ghazisaeedi M, Marchand G, Yasini M. Criteria for assessing the quality of mHealth apps: a systematic review. J Am Med Inform Assoc. 2018 Aug 01;25(8):1089–98. doi: 10.1093/jamia/ocy050. http://europepmc.org/abstract/MED/29788283 .4996915 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.NHS apps library. National Health Service. [2021-01-15]. https://www.nhs.uk/apps-library/

- 20.German doctors to prescribe health apps in ‘World First’. HealthManagement.org. 2020. [2021-01-15]. https://healthmanagement.org/c/it/news/german-doctors-to-prescribe-health-apps-in-world-first .

- 21.The MAPS Toolkit: mHealth Assessment and Planning for Scale. Geneva: World Health Organization; 2015. pp. 1–94. [Google Scholar]

- 22.Kelly SE, Moher D, Clifford TJ. Defining rapid reviews: a modified delphi consensus approach. Int J Technol Assess Health Care. 2016 Jan;32(4):265–75. doi: 10.1017/S0266462316000489.S0266462316000489 [DOI] [PubMed] [Google Scholar]

- 23.Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005 Feb;8(1):19–32. doi: 10.1080/1364557032000119616. [DOI] [Google Scholar]

- 24.Webster J, Watson R. Analyzing the past to prepare for the future: writing a literature review. MIS Quarterly. 2002 Jun;26(2):xiii–xxiii. https://www.jstor.org/stable/4132319 . [Google Scholar]

- 25.Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, Moher D, Peters MD, Horsley T, Weeks L, Hempel S, Akl EA, Chang C, McGowan J, Stewart L, Hartling L, Aldcroft A, Wilson MG, Garritty C, Lewin S, Godfrey CM, Macdonald MT, Langlois EV, Soares-Weiser K, Moriarty J, Clifford T, Tunçalp Ö, Straus SE. PRISMA extension for Scoping Reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018 Oct 02;169(7):467–73. doi: 10.7326/M18-0850. https://www.acpjournals.org/doi/abs/10.7326/M18-0850?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed .2700389 [DOI] [PubMed] [Google Scholar]

- 26.Bramer W, Rethlefsen ML, Kleijnen J, Franco OH. Optimal database combinations for literature searches in systematic reviews: a prospective exploratory study. Syst Rev. 2017 Dec 06;6(1):245. doi: 10.1186/s13643-017-0644-y. https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-017-0644-y .10.1186/s13643-017-0644-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.A brief history of the Apple App store in its first 10 years. Digital Trends. 2021. [2021-06-21]. https://www.digitaltrends.com/news/apple-app-store-turns-10/

- 28.World Bank Country And Lending Groups – World Bank Data Help Desk. Datahelpdesk.worldbank.org. 2021. [2021-01-12]. https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups .

- 29.Rapid Reviews To Strengthen Health Policy And Systems: A Practical Guide. Geneva: World Health Organization; 2017. [Google Scholar]

- 30.Baumel A, Faber K, Mathur N, Kane JM, Muench F. Enlight: a comprehensive quality and therapeutic potential evaluation tool for mobile and web-based eHealth interventions. J Med Internet Res. 2017 Mar 21;19(3):e82. doi: 10.2196/jmir.7270. https://www.jmir.org/2017/3/e82/ v19i3e82 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Yasini M, Beranger J, Desmarais P, Perez L, Marchand G. mHealth quality: a process to seal the qualified mobile health apps. Stud Health Technol Inform. 2016;228:205–9. [PubMed] [Google Scholar]

- 32.Anderson K, Burford O, Emmerton L. App chronic disease checklist: protocol to evaluate mobile apps for chronic disease self-management. JMIR Res Protoc. 2016 Nov 04;5(4):e204. doi: 10.2196/resprot.6194. https://www.researchprotocols.org/2016/4/e204/ v5i4e204 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Stoyanov SR, Hides L, Kavanagh DJ, Zelenko O, Tjondronegoro D, Mani M. Mobile app rating scale: a new tool for assessing the quality of health mobile apps. JMIR Mhealth Uhealth. 2015 Mar 11;3(1):e27. doi: 10.2196/mhealth.3422. https://mhealth.jmir.org/2015/1/e27/ v3i1e27 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Martínez-Pérez B, de la Torre-Díez I, Candelas-Plasencia S, López-Coronado M. Development and evaluation of tools for measuring the quality of experience (QoE) in mHealth applications. J Med Syst. 2013 Oct;37(5):9976. doi: 10.1007/s10916-013-9976-x. [DOI] [PubMed] [Google Scholar]

- 35.Guan Z, Sun L, Xiao Q, Wang Y. Constructing an assessment framework for the quality of asthma smartphone applications. BMC Med Inform Decis Mak. 2019 Oct 15;19(1):192. doi: 10.1186/s12911-019-0923-8. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-019-0923-8 .10.1186/s12911-019-0923-8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Reynoldson C, Stones C, Allsop M, Gardner P, Bennett MI, Closs SJ, Jones R, Knapp P. Assessing the quality and usability of smartphone apps for pain self-management. Pain Med. 2014 Jun;15(6):898–909. doi: 10.1111/pme.12327. [DOI] [PubMed] [Google Scholar]

- 37.Ali EE, Teo AK, Goh SX, Chew L, Yap KY. MedAd-AppQ: a quality assessment tool for medication adherence apps on iOS and android platforms. Res Social Adm Pharm. 2018 Dec;14(12):1125–33. doi: 10.1016/j.sapharm.2018.01.006.S1551-7411(17)30665-4 [DOI] [PubMed] [Google Scholar]

- 38.Loy JS, Ali EE, Yap KY. Quality assessment of medical apps that target medication-related problems. J Manag Care Spec Pharm. 2016 Oct;22(10):1124–40. doi: 10.18553/jmcp.2016.22.10.1124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Romero RL, Kates F, Hart M, Ojeda A, Meirom I, Hardy S. Quality of deaf and hard-of-hearing mobile apps: evaluation using the Mobile App Rating Scale (MARS) with additional criteria from a content expert. JMIR Mhealth Uhealth. 2019 Oct 30;7(10):e14198. doi: 10.2196/14198. https://mhealth.jmir.org/2019/10/e14198/ v7i10e14198 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Kaltheuner M, Drossel D, Heinemann L. DiaDigital apps: evaluation of smartphone apps using a quality rating methodology for use by patients and diabetologists in Germany. J Diabetes Sci Technol. 2019 Jul 28;13(4):756–62. doi: 10.1177/1932296818803098. http://europepmc.org/abstract/MED/30264585 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Zhao J, Freeman B, Li M. How do infant feeding apps in China measure up? A content quality assessment. JMIR Mhealth Uhealth. 2017 Dec 06;5(12):e186. doi: 10.2196/mhealth.8764. https://mhealth.jmir.org/2017/12/e186/ v5i12e186 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.DiFilippo KN, Huang W, Chapman-Novakofski KM. A new tool for Nutrition App Quality Evaluation (AQEL): development, validation, and reliability testing. JMIR Mhealth Uhealth. 2017 Oct 27;5(10):e163. doi: 10.2196/mhealth.7441. https://mhealth.jmir.org/2017/10/e163/ v5i10e163 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Salazar A, de Sola H, Failde I, Moral-Munoz JA. Measuring the quality of mobile apps for the management of pain: systematic search and evaluation using the mobile app rating scale. JMIR Mhealth Uhealth. 2018 Oct 25;6(10):e10718. doi: 10.2196/10718. https://mhealth.jmir.org/2018/10/e10718/ v6i10e10718 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Rangrazejeddi F, Anvari S, Farrahi SR, Sharif RS. The most popular Iranian smartphone applications for traditional medicine: A quality assessment. Shiraz E-Med J. 2018;19(Suppl):e66306. https://sites.kowsarpub.com/semj/articles/66306.html . [Google Scholar]

- 45.Jovičić S, Siodmiak J, Watson ID, European Federation of Clinical Chemistry and Laboratory Medicine Working Group on Patient Focused Laboratory Medicine. Quality evaluation of smartphone applications for laboratory medicine. Clin Chem Lab Med. 2019 Feb 25;57(3):388–97. doi: 10.1515/cclm-2018-0710.cclm-2018-0710 [DOI] [PubMed] [Google Scholar]

- 46.Cheng H, Tutt A, Llewellyn C, Size D, Jones J, Taki S, Rossiter C, Denney-Wilson E. Content and quality of infant feeding smartphone apps: five-year update on a systematic search and evaluation. JMIR Mhealth Uhealth. 2020 May 27;8(5):e17300. doi: 10.2196/17300. https://mhealth.jmir.org/2020/5/e17300/ v8i5e17300 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Taki S, Elliott R, Russell G, Laws R, Campbell K, Denney-Wilson E. Smartphone applications and websites on infant feeding: a systematic analysis of quality, suitability and comprehensibility. Obes Res Clin Pract. 2014 Dec;8(1):101. doi: 10.1016/j.orcp.2014.10.184. [DOI] [Google Scholar]

- 48.LeBeau K, Huey LG, Hart M. Assessing the quality of mobile apps used by occupational therapists: evaluation using the user version of the mobile application rating scale. JMIR Mhealth Uhealth. 2019 May 01;7(5):e13019. doi: 10.2196/13019. https://mhealth.jmir.org/2019/5/e13019/ v7i5e13019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Chapman C, Champion KE, Birrell L, Deen H, Brierley M, Stapinski LA, Kay-Lambkin F, Newton NC, Teesson M. Smartphone apps about crystal methamphetamine ("Ice"): systematic search in app stores and assessment of composition and quality. JMIR Mhealth Uhealth. 2018 Nov 21;6(11):e10442. doi: 10.2196/10442. https://mhealth.jmir.org/2018/11/e10442/ v6i11e10442 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.DiFilippo KN, Huang WD, Chapman-Novakofski KM. Mobile apps for the Dietary Approaches to Stop Hypertension (DASH): app quality evaluation. J Nutr Educ Behav. 2018 Jun;50(6):620–5. doi: 10.1016/j.jneb.2018.02.002.S1499-4046(18)30089-7 [DOI] [PubMed] [Google Scholar]

- 51.Zhang MW, Ho RC, Loh A, Wing T, Wynne O, Chan SW, Car J, Fung DS. Current status of postnatal depression smartphone applications available on application stores: an information quality analysis. BMJ Open. 2017 Nov 14;7(11):e015655. doi: 10.1136/bmjopen-2016-015655. https://bmjopen.bmj.com/lookup/pmidlookup?view=long&pmid=29138195 .bmjopen-2016-015655 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Jupp JC, Sultani H, Cooper CA, Peterson KA, Truong TH. Evaluation of mobile phone applications to support medication adherence and symptom management in oncology patients. Pediatr Blood Cancer. 2018 Nov;65(11):e27278. doi: 10.1002/pbc.27278. [DOI] [PubMed] [Google Scholar]

- 53.Jeon E, Park H, Min YH, Kim H. Analysis of the information quality of Korean obesity-management smartphone applications. Healthc Inform Res. 2014 Jan;20(1):23–9. doi: 10.4258/hir.2014.20.1.23. https://www.e-hir.org/DOIx.php?id=10.4258/hir.2014.20.1.23 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Xiao Q, Wang Y, Sun L, Lu S, Wu Y. Current status and quality assessment of cardiovascular diseases related smartphone apps in China. Stud Health Technol Inform. 2016;225:1030–1. [PubMed] [Google Scholar]

- 55.Lambert K, Mullan J, Mansfield K, Owen P. Should we recommend renal diet-related apps to our patients? An evaluation of the quality and health literacy demand of renal diet-related mobile applications. J Ren Nutr. 2017 Nov;27(6):430–8. doi: 10.1053/j.jrn.2017.06.007.S1051-2276(17)30149-8 [DOI] [PubMed] [Google Scholar]

- 56.Regmi K, Kassim N, Ahmad N, Tuah N. Assessment of content, quality and compliance of the STaR mobile application for smoking cessation. Tob Prev Cessat. 2017 Jul 3;3(July):120. doi: 10.18332/tpc/75226. http://europepmc.org/abstract/MED/32432194 .120 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Hartin P, Cleland I, Nugent C, Tschanz J, Clark C, Norton M. Assessing app quality through expert peer review: a case study from the gray matters study. Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Aug 16-20, 2016; Orlando, FL, USA. 2016. pp. 4379–82. [DOI] [PubMed] [Google Scholar]

- 58.McAleese D, Papadaki A. A content analysis of the quality and behaviour change techniques of smartphone apps promoting the Mediterranean diet. Proc Nutr Soc. 2020 Jun 10;79(OCE2):E271. doi: 10.1017/s0029665120002190. [DOI] [Google Scholar]

- 59.Bergeron D, Iorio-Morin C, Bigder M, Dakson A, Eagles ME, Elliott CA, Honey CM, Kameda-Smith MM, Persad AR, Touchette CJ, Tso MK, Fortin D, Canadian Neurosurgery Research Collaborative. Mobile applications in neurosurgery: a systematic review, quality audit, and survey of Canadian neurosurgery residents. World Neurosurg. 2019 Jul;127:e1026–38. doi: 10.1016/j.wneu.2019.04.035.S1878-8750(19)31027-7 [DOI] [PubMed] [Google Scholar]

- 60.Crehan C, Kesler E, Dube Q, Lufesi N, Giaconne M, Normand C, Heys M. The acceptability, feasibility and usability of the neotree application in malawi: an integrated data collection, clinical management and education mhealth solution to improve quality of newborn care and thus newborn survival in health facilities in resource-poor settings. Br Med J. 2018;103(Suppl 1):117. doi: 10.1136/archdischild-2018-rcpch.278. [DOI] [Google Scholar]

- 61.Cabrera A, Ranasinghe M, Frossard C, Postel-Vinay N, Boyer C. An exploratory empirical analysis of the quality of mobile health apps. Proceedings of the 18th International Conference on WWW/Internet; 18th International Conference on WWW/Internet; Nov 7-9, 2019; Cagliari, Italy. 2019. [DOI] [Google Scholar]

- 62.Chen J, Cade JE, Allman-Farinelli M. The most popular smartphone apps for weight loss: a quality assessment. JMIR Mhealth Uhealth. 2015 Dec 16;3(4):e104. doi: 10.2196/mhealth.4334. https://mhealth.jmir.org/2015/4/e104/ v3i4e104 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Li Y, Ding J, Wang Y, Tang C, Zhang P. Nutrition-related mobile apps in the China app store: assessment of functionality and quality. JMIR Mhealth Uhealth. 2019 Jul 30;7(7):e13261. doi: 10.2196/13261. https://mhealth.jmir.org/2019/7/e13261/ v7i7e13261 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Zhang MW, Ho RC, Hawa R, Sockalingam S. Analysis of the information quality of bariatric surgery smartphone applications using the Silberg scale. Obes Surg. 2016 Jan 30;26(1):163–8. doi: 10.1007/s11695-015-1890-5.10.1007/s11695-015-1890-5 [DOI] [PubMed] [Google Scholar]

- 65.Lambert K, Owen P, Koukoumas A, Mesiti L, Mullan J, Mansfield K, Lonergan M. Content analysis of the quality of diet-related smartphone applications for people with kidney disease. Nephrol. 2015 Sep;:57. https://www.researchgate.net/publication/296088088_CONTENT_ANALYSIS_OF_THE_QUALITY_OF_DIET-RELATED_SMARTPHONE_APPLICATIONS_FOR_PEOPLE_WITH_KIDNEY_DISEASE . [Google Scholar]

- 66.Ayyaswami V, Padmanabhan DL, Crihalmeanu T, Thelmo F, Prabhu AV, Magnani JW. Mobile health applications for atrial fibrillation: a readability and quality assessment. Int J Cardiol. 2019 Oct 15;293:288–93. doi: 10.1016/j.ijcard.2019.07.026. http://europepmc.org/abstract/MED/31327518 .S0167-5273(19)33276-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Li Y, Zhao Q, Cross WM, Chen J, Qin C, Sun M. Assessing the quality of mobile applications targeting postpartum depression in China. Int J Ment Health Nurs. 2020 Oct 28;29(5):772–85. doi: 10.1111/inm.12713. [DOI] [PubMed] [Google Scholar]

- 68.Bearne LM, Sekhon M, Grainger R, La A, Shamali M, Amirova A, Godfrey EL, White CM. Smartphone apps targeting physical activity in people with rheumatoid arthritis: systematic quality appraisal and content analysis. JMIR Mhealth Uhealth. 2020 Jul 21;8(7):e18495. doi: 10.2196/18495. https://mhealth.jmir.org/2020/7/e18495/ v8i7e18495 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Pourrahmat M, De Vera MA. FRI0629 Patient-targeted smartphone apps for systemic lupus erythematosus: a systematic review and assessment of features and quality. Br Med J. 2018;77:7106. doi: 10.1136/annrheumdis-2018-eular.7106. [DOI] [Google Scholar]

- 70.Hoffmann A, Faust-Christmann CA, Zolynski G, Bleser G. Toward gamified pain management apps: mobile application rating scale-based quality assessment of pain-mentor's first prototype through an expert study. JMIR Form Res. 2020 May 26;4(5):e13170. doi: 10.2196/13170. https://formative.jmir.org/2020/5/e13170/ v4i5e13170 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Santo K, Richtering SS, Chalmers J, Thiagalingam A, Chow CK, Redfern J. Mobile phone apps to improve medication adherence: a systematic stepwise process to identify high-quality apps. JMIR Mhealth Uhealth. 2016 Dec 02;4(4):e132. doi: 10.2196/mhealth.6742. https://mhealth.jmir.org/2016/4/e132/ v4i4e132 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Lee Y, Shin S, Kim J, Kim JH, Seo D, Joo S, Park J, Kim WS, Lee J, Bates DW. Evaluation of mobile health applications developed by a tertiary hospital as a tool for quality improvement breakthrough. Healthc Inform Res. 2015 Oct;21(4):299–306. doi: 10.4258/hir.2015.21.4.299. https://www.e-hir.org/DOIx.php?id=10.4258/hir.2015.21.4.299 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Sullivan RK, Marsh S, Halvarsson J, Holdsworth M, Waterlander W, Poelman MP, Salmond JA, Christian H, Koh LS, Cade JE, Spence JC, Woodward A, Maddison R. Smartphone apps for measuring human health and climate change co-benefits: a comparison and quality rating of available apps. JMIR Mhealth Uhealth. 2016 Dec 19;4(4):e135. doi: 10.2196/mhealth.5931. https://mhealth.jmir.org/2016/4/e135/ v4i4e135 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Davalbhakta S, Advani S, Kumar S, Agarwal V, Bhoyar S, Fedirko E, Misra DP, Goel A, Gupta L, Agarwal V. A systematic review of smartphone applications available for Corona Virus Disease 2019 (COVID19) and the Assessment of their quality using the Mobile Application Rating Scale (MARS) J Med Syst. 2020 Aug 10;44(9):164. doi: 10.1007/s10916-020-01633-3. http://europepmc.org/abstract/MED/32779002 .10.1007/s10916-020-01633-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Escriche-Escuder A, De-Torres I, Roldán-Jiménez C, Martín-Martín J, Muro-Culebras A, González-Sánchez M, Ruiz-Muñoz M, Mayoral-Cleries F, Biró A, Tang W, Nikolova B, Salvatore A, Cuesta-Vargas AI. Assessment of the quality of mobile applications (apps) for management of low back pain using the Mobile App Rating Scale (MARS) Int J Environ Res Public Health. 2020 Dec 09;17(24):9209. doi: 10.3390/ijerph17249209. https://www.mdpi.com/resolver?pii=ijerph17249209 .ijerph17249209 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Moseley I, Roy A, Deluty A, Brewer JA. Evaluating the quality of smartphone apps for overeating, stress, and craving-related eating using the mobile application rating scale. Curr Addict Rep. 2020 Sep 01;7(S2):260–7. doi: 10.1007/s40429-020-00319-7. [DOI] [Google Scholar]

- 77.Jones C, O'Toole K, Jones K, Brémault-Phillips S. Quality of psychoeducational apps for military members with mild traumatic brain injury: an evaluation utilizing the mobile application rating scale. JMIR Mhealth Uhealth. 2020 Aug 18;8(8):e19807. doi: 10.2196/19807. https://mhealth.jmir.org/2020/8/e19807/ v8i8e19807 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Kalhori SR, Hemmat M, Noori T, Heydarian S, Katigari MR. Quality evaluation of English mobile applications for gestational diabetes: app review using Mobile Application Rating Scale (MARS) Curr Diabetes Rev. 2021 Jul 03;17(2):161–8. doi: 10.2174/1573399816666200703181438.CDR-EPUB-107881 [DOI] [PubMed] [Google Scholar]

- 79.Amor M, Collado-Borrell R, Escudero-Vilaplana V. 1ISG-013 Smartphone applications for patients diagnosed with genitourinary tumours: analysis of the quality using the mobile application rating scale. Eur J Hosp Pharm. 2020;27(1):A6. doi: 10.1136/ejhpharm-2020-eahpconf.13. [DOI] [Google Scholar]

- 80.Martínez-Pérez B, de la Torre-Díez I, López-Coronado M. Experiences and results of applying tools for assessing the quality of a mHealth app named Heartkeeper. J Med Syst. 2015 Nov;39(11):142. doi: 10.1007/s10916-015-0303-6. [DOI] [PubMed] [Google Scholar]

- 81.Hay-Smith J, Peebles L, Farmery D, Dean S, Grainger R. Apps-olutely fabulous? - The quality of PFMT smartphone app content and design rated using the Mobile App Rating Scale, Behaviour Change Taxonomy, and guidance for exercise prescription. Proceedings of the ICS 2019 Gothenburg Conference; ICS 2019 Gothenburg Conference; Sep 3-6, 2019; Gothenburg, Sweden. 2019. https://www.ics.org/2019/abstract/547 . [Google Scholar]

- 82.McKay FH, Wright A, Shill J, Stephens H, Uccellini M. Using health and well-being apps for behavior change: a systematic search and rating of apps. JMIR Mhealth Uhealth. 2019 Jul 04;7(7):e11926. doi: 10.2196/11926. https://mhealth.jmir.org/2019/7/e11926/ v7i7e11926 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Nurgalieva L, O'Callaghan D, Doherty G. Security and privacy of mHealth applications: a scoping review. IEEE Access. 2020 Jun 4;8:104247–68. doi: 10.1109/access.2020.2999934. [DOI] [Google Scholar]

- 84.Lavrakas PJ. Encyclopedia of Survey Research Methods. Thousand Oaks, California, United States: SAGE Publications Ltd; 2008. Respondent fatigue. [Google Scholar]

- 85.Han M, Lee E. Effectiveness of mobile health application use to improve health behavior changes: a systematic review of randomized controlled trials. Healthc Inform Res. 2018 Jul;24(3):207–26. doi: 10.4258/hir.2018.24.3.207. https://www.e-hir.org/DOIx.php?id=10.4258/hir.2018.24.3.207 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.McKay FH, Slykerman S, Dunn M. The app behavior change scale: creation of a scale to assess the potential of apps to promote behavior change. JMIR Mhealth Uhealth. 2019 Jan 25;7(1):e11130. doi: 10.2196/11130. https://mhealth.jmir.org/2019/1/e11130/ v7i1e11130 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.mHealth: New Horizons for Health Through Mobile Technologies. Geneva: World Health Organization; 2011. pp. 1–102. [Google Scholar]

- 88.Bolarinwa O. Principles and methods of validity and reliability testing of questionnaires used in social and health science researches. Niger Postgrad Med J. 2015;22(4):195–201. doi: 10.4103/1117-1936.173959. http://www.npmj.org/article.asp?issn=1117-1936;year=2015;volume=22;issue=4;spage=195;epage=201;aulast=Bolarinwa .NigerPostgradMedJ_2015_22_4_195_173959 [DOI] [PubMed] [Google Scholar]

- 89.The rise of mHealth apps: a market snapshot. Liquid State. 2018. [2021-01-15]. https://liquid-state.com/mhealth-apps-market-snapshot/

- 90.Mobile network coverage and evolving technologies. ICT Facts and Figures. 2016. [2016-11-15]. https://www.itu.int/en/ITU-D/Statistics/Documents/facts/ICTFactsFigures2016.pdf .

- 91.What is cultural appropriateness. IGI Global. [2021-01-30]. https://www.igi-global.com/dictionary/culturally-appropriate-web-user-interface/6361 .

- 92.Albright J, de Guzman C, Acebo P, Paiva D, Faulkner M, Swanson J. Readability of patient education materials: implications for clinical practice. Appl Nurs Res. 1996 Aug;9(3):139–43. doi: 10.1016/S0897-1897(96)80254-0. [DOI] [PubMed] [Google Scholar]

- 93.Dunn Lopez K, Chae S, Michele G, Fraczkowski D, Habibi P, Chattopadhyay D, Donevant SB. Improved readability and functions needed for mHealth apps targeting patients with heart failure: an app store review. Res Nurs Health. 2021 Feb;44(1):71–80. doi: 10.1002/nur.22078. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.International student assessment (PISA) OECD Library. 2021. [2021-02-07]. https://www.oecd-ilibrary.org/education/reading-performance-pisa/indicator/english_79913c69-en .
