Graphical abstract

Keywords: Artificial intelligence, Functional genomics, Genomics, Proteomics, Epigenomics, Transcriptomics, Epitranscriptomics, Metabolomics, Machine learning, Deep learning

Abstract

We review the current applications of artificial intelligence (AI) in functional genomics. The recent explosion of AI follows the remarkable achievements made possible by “deep learning”, along with a burst of “big data” that can meet its hunger. Biology is about to overthrow astronomy as the paradigmatic representative of big data producer. This has been made possible by huge advancements in the field of high throughput technologies, applied to determine how the individual components of a biological system work together to accomplish different processes. The disciplines contributing to this bulk of data are collectively known as functional genomics. They consist in studies of: i) the information contained in the DNA (genomics); ii) the modifications that DNA can reversibly undergo (epigenomics); iii) the RNA transcripts originated by a genome (transcriptomics); iv) the ensemble of chemical modifications decorating different types of RNA transcripts (epitranscriptomics); v) the products of protein-coding transcripts (proteomics); and vi) the small molecules produced from cell metabolism (metabolomics) present in an organism or system at a given time, in physiological or pathological conditions. After reviewing main applications of AI in functional genomics, we discuss important accompanying issues, including ethical, legal and economic issues and the importance of explainability.

1. Introduction

In the last decades, the fast development of high-throughput technologies in biological sciences has led to the production of large amounts of data. These data are aimed at the quantification and characterization of selected ensembles of biological molecules, such as DNA, RNA, proteins and metabolites, with the ultimate goal to understand how these molecules contribute to determine the structure, function and dynamics of a living system, such as a cell, tissue or organism. Disciplines that aim at collecting and analysing large sets of biological data are generally indicated as “omics” by derivation of the word genome, used to indicate the whole amount of DNA present in each cell of an organism, with an extra flavour of openness to big challenges [1]. The different disciplines that contribute to generate this massive volume of biological data are named after the main target of investigation, be it the DNA information content of an organism or system (genomics), the modulations that DNA can reversibly undergo (epigenomics), the RNA transcripts originated by a genome (transcriptomics), the set and dynamics of RNA modifications (epitranscriptomics), the translational products of protein-coding transcripts (proteomics) or the metabolites (metabolomics) that can be present in a given organism or system, at a given time and condition, in physiological and pathological states. All these disciplines are independent fields of study, but the knowledge and data that they produce converge into the ambitious goal of functional genomics, a field of research aimed at characterizing the action and interaction of all main actors (DNA, RNA, proteins and metabolites, along with their modifications) that link a set of observable characteristics of a cell or individual (that is, the phenotype) to the functional interplay between the underlying genetic characteristics (the genotype) and the environmental conditions.

Omics data can get easily too bulky and complex to be investigated through visual analysis or statistical correlations. This has encouraged the use of the so-called Machine Intelligence or Artificial Intelligence (AI) [2], able not only to manage amounts of data that are intractable for human minds, but also to extract information that go beyond our current understanding of the system under investigation and, importantly, to improve automatically through experience gained on training data.

Within AI, independence from the need of being explicitly programmed to perform a given task is the distinguishing feature of Machine Learning (ML) algorithms, including Linear Regression, Clustering and Bayesian Networks (Table 1). The first applications of ML methods in Biology date back to the early 1980s [3]. More recently, ML programs have been applied in all research areas related to functional genomics, such as genomics [4], [5], [6], transcriptomics [7], proteomics [8], [9] and metabolomics [10], [11].

Table 1.

A summary of commonly used machine learning methods, including a brief description of their distinctive features and indication of core applications.

| Learning Type | Method | Description and Most Relevant Features | Main Applications | References |

|---|---|---|---|---|

| LR | Linear Regression is a supervised learning method to investigate the linear relationship between a dependent and one or more independent variables. LR was the oldest and the most widely used type of regression. To overcome the limit of linear assumption many regression techniques have been developed, varying in the type of cost function used: Non-Linear Regression, Polynomial Regression, Logistic Regression (Sigmoid function), Poisson Regression and many others. | Classification. Functional causal modelling. Metabolomics. Genotype-phenotype associations. |

2001 [49] 2005 [50] 2006 [51] 2008 [52] 2012 [53] 2014 [54] |

|

| SVM | Support Vector Machines are supervised learning methods for binary classification. SVMs represent data as points in space and construct a hyperplane or set of hyperplanes in a high-dimensional space to separate the points and predict the belonging to a category [21]. SVMs can perform linear classification and non-linear classification using kernel methods, a class of algorithms for high-dimensional pattern analysis. | Cancer genomics classification. Outliers detection. Discovery of new biomarkers and new drug targets. |

2003 [55] 2007 [56] 2008 [57] 2011 [58] 2013 [59] 2014 [60] |

|

| Supervised | RDF | Random Decision Forests are learning methods that train and average predictions provided by many Decision Trees (DTs). DTs are ML approaches in which predictions are represented by a series of decisions to predict the target value of a variable starting from features observations [61]. Target variable can take continuous (Regression Trees) or discrete values (Classification Trees). DTs are often unstable methods, but have the big advantage to be easily interpretable. | Genome-Wide Association (GWA). Epistasis detection. Pathway analysis. Visualization of decision processes. |

2003 [62] 2004 [63] 2006 [64] 2009 [65] 2012 [66] 2015[67] |

| Naive Bayes | Bayes Classifiers are ML methods that use the Bayes’ theorem for the classification process. A strong assumption for Naive Bayes is mutual feature-independence. These classifiers are very fast and, despite their simplicity, they are efficient in many complex tasks, also with small training data sets. | Short-sequences classification. Multi-class prediction. DNA barcoding. Biomarker selection. |

2001 [68] 2002 [69] 2006 [70] 2009 [71] |

|

| k-NN | The k-Nearest Neighbours is an instance-based learning algorithm used for classification or regression. The algorithm assigns weights to neighbour contribution. The nearest neighbours contribute more to the computed average than distant ones. | Cancer genomics classification. Gene expression analysis. |

2005 [72] 2006 [73] 2010 [74] |

|

| PCA | Principal Component Analysis is a statistical procedure for the reduction of the dimensionality of variable space. PCA consists in a linear coordinate transformation that projects variables from an high-dimensional space to a low-dimensional space trying to maintain the variance as much as possible[19]. One of the main limits of this method is that it can capture only linear correlations between variables. To overcome this disadvantage, Sparse PCA and Nonlinear PCA have been recently introduced. | Dimensionality reduction. Cancer classification. SNPs tagging. Visualization of genetic distances. Proteomic analysis. |

2004 [75] 2007 [76] 2009 [77] 2011 [78] 2013 [79] 2014 [80] |

|

| Unsupervised | DBNs | A Dynamic Bayesian Network is a Bayesian Network (a probabilistic graphical model that uses Bayesian inference for probability computations) with a temporal extension able to model stochastic processes over time [20]. The advantage of this kind of architectures is that they can model very complex time series and relationships between multiple time series. | Gene regulation analysis. Epigenetic data integration. Protein sequencing. |

2007 [81] 2010 [82] 2012 [83] 2014 [84] 2016 [85] |

| LDA | Linear Discriminant Analysis is a linear dimensionality reduction technique for the projection of a dataset on a lower-dimensional space. LDA is very similar to PCA, but in addition to maximizing data variance, LDA is also interested in finding axes that minimize variance. | Data pre-processing. Motifs identification. Cancer genomics classification. |

2000 [86] 2008 [87] 2009 [88] |

|

| k-Means | k-Means Clustering is a vector-quantization method for the partition of observations into k clusters. At each step the algorithm re-updates centroids as cluster barycenters and re-assigns each data point to the nearest centroid. k-Means is at the same time a simple and efficient algorithm for clustering problems. | Genome clustering. Gene expression pattern recognition. Image segmentation. |

2005 [89] 2007 [90] 2015 [91] 2016 [92] |

|

Among ML methods, the most promising to address omics-data complexity are the ones collectively known as Deep Learning (DL) methods. These methods process information by performing mathematical operations (named neurons, in analogy to the “computational elements” in the brain) arranged in multiple layers (thus deep) connected to one other (thus referred to as a neural network) (Table 2). Although the first neural network models were implemented more than 60 years ago, at the beginning they constituted a fascinating but unsustainable resource, due to prohibitive monetary and computational costs. The Perceptron, the first neural network architecture introduced to the scientific community in 1958 by Frank Rosenblatt [12], had limited learning ability. Moreover, despite being the size of a large room, the computer that was running the Perceptron algorithm had quite limited processing power. In general, effective application of DL methods has become possible only in the last decade thanks to steep increase in processor performance that reached their high computational demand, especially following the repurposing of gaming-aimed Graphical Processing Units (GPUs) [13]. In the same years, tremendous decrease of sequencing costs favoured availability of a flood of large genome-scale datasets and made functional genomics a fertile ground for DL applications [14], [15], [16].

Table 2.

A summary of most relevant deep learning architectures, including a brief description of their distinctive features and indication of core applications.

| Learning Type | Method | Description and Most Relevant Features | Main Applications | References |

|---|---|---|---|---|

| DNNs | Deep Neural Networks are neural networks with many hidden layers of artificial neurons. The output of a layer represents the input of the following layer [23]. This kind of architecture allows to capture non-linear relationships and provides complex representations of input data. | Cancer genomics. Protein sequence classification. Phenotype from genotype prediction. |

2017 [93] 2018 [94] 2018 [95] 2019 [96] 2020 [7] |

|

| MLP | The Single Layer Perceptron (SLP) is a ML algorithm for linear binary classification. Neurons of the layer learn optimal weights for input signals one at a time and generate two linearly separable classes [12]. The Multilayer Perceptron (MLP) contains many SLPs organized into three or more layers with feed-forward connections. Unlike SLP, MLP can perform also non-linear classifications. | Protein structure prediction. Molecular Classification. Cancer genomics. |

1982 [3] 2006 [97] 2009 [98] 2010 [99] 2013 [100] |

|

| CNNs | Convolutional Neural Networks are hierarchical architectures inspired by biological processes governing the organization of animals’ visual cortex. This architecture uses combination of convolution and pooling layers and can detect complex local and global patterns [27]. They work by scanning multidimensional arrays such as 2D images or weight matrices of DNA motifs. To be efficient, CNNs require many layers and large labelled datasets. | Modelling regulatory elements. Detection of DNA accessibility. Finding Binding-sites sequences. |

2016 [101] 2016 [102] 2016 [103] 2017 [104] 2018 [105] 2018 [106] 2019 [107] |

|

| Supervised | RNNs | Recurrent Neural Networks are deep architectures able to capture temporal dynamic behaviours. They are suitable for processing time series or sequential data and in general to predict outputs depending on previous states. RNNs hidden layers retain information from previous layers and feed to the next layer, providing the architecture with a sort of memory [25]. | Transcription factor binding sites prediction. Mutation and variants identification. Protein homology detection. |

2017 [108] 2017 [109] 2018 [110] 2019 [111] 2019 [107] |

| LSTM | Long Short Term Memory is a particular type of RNN architecture with feedback connections. It is able to retain information over a long time and to learn long-term dependencies [112]. LSTMs can overcome the vanishing gradient problem, typical of traditional RNNs. They are useful to make predictions, especially when dealing with time series with lags, even wide, between events. | Splicing prediction. Gene expression regulation. Detection of genomic long-term correlations. |

2015 [113] 2015 [114] 2016 [115] 2017 [116] 2019 [117] 2020 [118] |

|

| DBMs | Deep Boltzman Machines are a kind of RNNs based on a stochastic maximum likelihood algorithm. The network contains undirected connections between all layers and has the ability to learn internal representations that become increasingly complex and abstract [26]. The main disadvantage of this kind of networks is the slow speed. | Protein function prediction. SNPs pattern recognition. Cancer genomics. |

2004 [119] 2017 [120] 2017 [121] 2017 [122] 2018 [123] |

|

| DBN | Deep Belief Networks can be viewed as a composition of Restricted Boltzmann Machines (two-layers generative stochastic neural networks) where each layer learns the entire input [24]. Unlike DBMs, only the first two layers of DBNs have undirected connections. A disadvantage of this kind of networks is the high computational cost of the training process since layers must be trained one at a time. | Enhancers prediction. Gene expression pattern recognition. Cancer classification. Drug discovery. |

2014 [124] 2016 [125] 2016 [126] 2017 [127] 2017 [128] 2017 [129] |

|

| AEs | AutoEncoders are neural networks trained to reconstruct the input. The output layer of an AE has the same number of neurons as the input layer, while one or more hidden layers have a lower dimensionality, in order to force the AE to compress data and to extract important features neglecting unimportant ones [28], [29]. Many variants can make representation very robust and precise: Variational AEs, Spares AEs, Denoising AEs, Contractive AEs, Convolutional AEs. | Dimensionality Reduction. Mutation and variants identification. Methylation analysis. Drug discovery. |

2014 [130] 2016 [103] 2017 [131] 2018 [132] 2019 [133] 2020 [134] |

|

| Unsupervised | GANs | Generative Adversarial Networks are architectures made of two neural networks, one against the other [30]. The first neural network, called the generator, generates new instances, the second neural network, the discriminator, evaluates if the generated instances can belong to the training data-set or not. This way GANs are able to generate new data that are indistinguishable from the observed ones. | Data denoising. Data augmentation. Missing data imputation. Genome editing. |

2017 [135] 2018 [136] 2018 [137] 2019 [138] 2019 [139] 2020 [140] |

DL methods in recent years have opened up interesting and exciting perspectives in core areas of research (e.g., image analysis, language analysis and also omics sciences) [17], [18], having many important advantages over traditional ML techniques such as Principal Component Analysis (PCA) [19], Bayesian Methods (BMs) [20], Support Vector Machines (SVMs) [21], Random Forests (RFs) and Decision Trees (DTs) [22] (Table 1). The main advantage of DL over ML methods is the end-to-end learning, that is the possibility of obtaining classification or prediction results directly from the raw data. While not saving the process from possible sources of bias (e.g., input data selection for the network training phase), end-to-end learning benefits from avoiding the potential bias introduced by manual intervention in the various data processing stages. Also, DL methods ease the integration of different input data types (textual, numeric, images, audio files). Finally, DL architectures have a much higher capability of abstraction compared to traditional ML techniques.

Modern DL architectures, such as Deep Neural Networks (DNNs) [23], Deep Belief Networks (DBNs) [24], Recurrent Neural Networks (RNNs) [25], Deep Boltzmann Machines (DBMs) [26], Convolutional Neural Networks (CNNs) [27], AutoEncoders (AEs) [28], [29] and Generative Adversarial Networks (GANs) [30] (Table 2), have moved a long way from the Rosenblatt’s Perceptron in terms of both efficiency and performance. Yet, progress came at the cost of decreased transparency and loss of the ability to trace associative feature extraction and classification processes. This loss of explainability stems from the increased architecture complexity, moving from the single layer of neurons of the Perceptron to the many layers of hidden neurons intervening between the input and output layers of advanced DL models. Of note, loss of explainability entails new risks of obtaining variously biased results and, therefore, it is currently one of the most active research areas in AI (see Sections 5, 6). Explainability is indeed a major issue for the exploitation of DL potential, especially in the biomedical research and healthcare domains, where features selected by the learning system towards the output decision need to be made understandable in human terms. In fact, the ability of DL architectures to extract much more elaborate features than visual deduction and infer associations based on very high abstraction levels facilitates new investigative strategies. However, it also raises major ethical and legal issues due to the cryptic rationale supporting the machine decisions, that is given as a black-box impeding to evaluate the process and to clear possible sources of errors or biases (see Section 7). Finally, more and more sophisticated DL architectures are subject to increased training complexity, due to the exploding number of model configuration parameters (e.g., the weights - or contribution to the prediction - of each node in an artificial neural network) that need to be estimated from the training data. Moreover, DL architectures need a careful and long tuning of configuration hyper-parameters (e.g. the learning rate for training a neural network) that are external to the model and whose value cannot be estimated from the data, but that can strongly impact training speed and model performance. The reader is referred to excellent recent reviews [4], [31], [32] for a detailed discussion on parameter and hyper-parameter setting in biological applications of DL architectures. Taken together, these considerations make DL a powerful tool to be handled with care.

Here we review the main applications of AI methods in functional genomics and the interlaced fields of genomics, epigenomics, transcriptomics, epitranscriptomics, proteomics and metabolomics. In particular, we focus on recent years applications following the raising of big data production in functional genomics and the natural crossing of this discipline with the flourishing field of AI (Fig. 1). In this framework, we also discuss important aspects of data management, such as data integration, cleaning, balancing and imputation of missing data. Furthermore, we address legal, ethical and economic issues related to the application of AI methods in the functional genomics domain. Finally, we endeavour to provide a glimpse of possible future scenarios.

Alan Turing - Mathematician and philosopher.

It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers… They would be able to converse with each other to sharpen their wits. At some stage, therefore, we should have to expect the machines to take control.

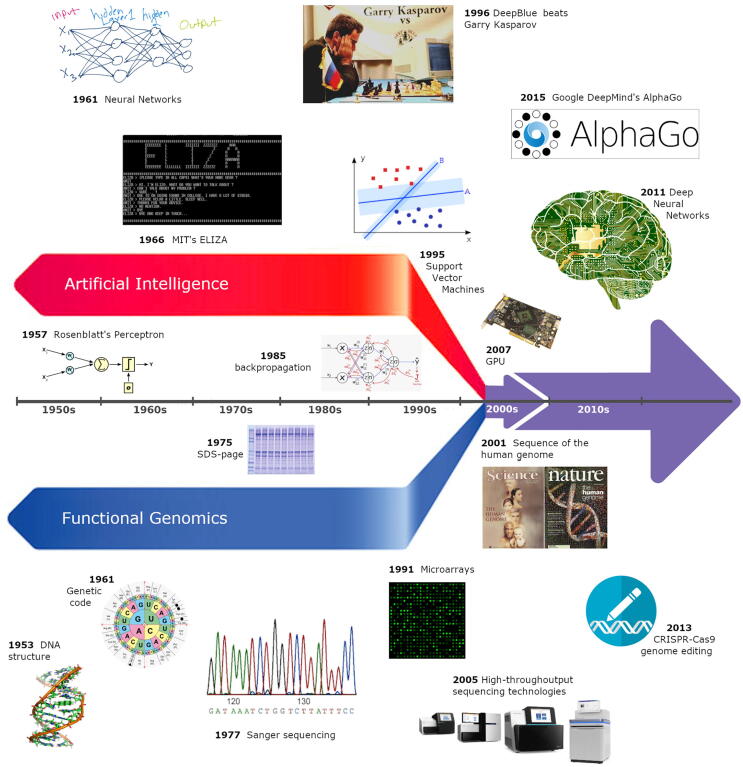

Fig. 1.

A timeline of momentous events in functional genomics and artificial intelligence from their foundation until the time they crossed their paths.

2. Functional genomics

Functional genomics is the science that studies, on a genome-wide scale, the relationships among the components of a biological system - genes, transcripts, proteins, metabolites, etc. - and how these components work together to produce a given phenotype. The term ”functional genomics” takes root in the scientific community at the time of the rising of the first genome sequencing projects. These projects are ultimately aimed at determining the complete genome sequence of a given organism and to annotate functionally relevant features therein, such as protein-coding and non-coding genes as well as DNA regulatory regions. The landmark such endeavour is the Human Genome Project (HGP),1 a worldwide collaborative project launched in 1990 and officially completed in 2003 (International Human Genome Sequencing Consortium [33]). However, the first completely sequenced genome from a eukaryote, that of the budding yeast Saccharomyces cerevisiae, was released already in 1996 [34] and provided material to start exploring the complex relationships between genes and gene products at the genome scale. Indeed, a tentative definition of functional genomics was first published in 1997 by Hieter and Boguski [35], that at the beginning of their paper state: “An informal poll of colleagues indicates that the term [functional genomics] is widely used, but has many different interpretations. There is even some sentiment that the term is unnecessary and that it does nothing more than refer to biological research as a whole.” Nevertheless, in the same paper, they also recognize that “[…] the concept of functional genomics has arrived and it is stimulating the creation of new ideas and approaches to understanding biological mechanisms in the context of knowledge of whole genome structure.”. Functional genomics is eventually defined by these authors as a “new phase of genome analysis”, following the conclusion of the “structural genomics” phase (i.e., construction of a physical map and sequencing of the genome). This “new phase” consisted in developing and applying genome-wide experimental approaches and computational techniques to infer gene functions.

The impressive advances occurred since the beginning of this century in massively parallel sequencing technologies and related protocols have changed the face of functional genomics. Today, we can claim that the term is not open to different interpretations any more: it refers to a discipline integrating a large variety of “omics” data and relying on a plethora of high-throughput experimental methodologies and computational approaches to understand the behaviour of biological systems, being the system a cell, tissue or entire organism, in either healthy or pathological conditions.

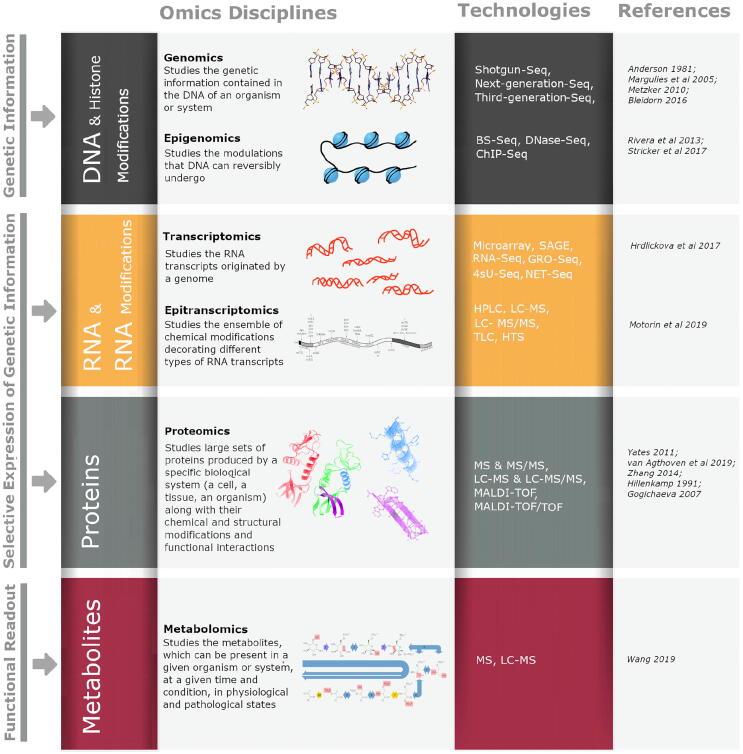

Specifically, the data used in functional genomics analyses are produced in the context and with the technologies of “omics” disciplines, including genomics, epigenomics, transcriptomics, epitranscriptomics, proteomics and metabolomics (Fig. 2).

Elaine Rich – Computer scientist.

Artificial Intelligence is the study of how to make computers do things which, at the moment, people do better.

Fig. 2.

The many facets of functional genomics: contributing “omics” disciplines, target biological features and core high-throughput technologies for data production. Abbreviations: 4sU-Seq: 4-thiouridine (4sU)-labeled RNA Sequencing; BS-Seq: Bisulfite sequencing; ChIP-Seq: Chromatin ImmunoPrecipitation followed by sequencing; DNase-Seq: DNase I hypersensitive sites sequencing; GRO-seq: Global Run On Sequencing; HPLC: high-performance liquid chromatography; HTS: High- Throughput Sequencing; LC-MS: Liquid Chromatography coupled with Mass Spectrometry; LC-MS/MS: Liquid Chromatography coupled with tandem Mass Spectrometry; MALDI-TOF: Matrix-assisted laser desorption/ionization (MALDI) Time Of Flight; MALDI-TOF/TOF: MALDI coupled with tandem Time Of Flight; MS: Mass Spectrometry; MS/MS: tandem Mass Spectrometry; NET-Seq: Nascent RNA Transcript Sequencing; RNA-Seq: RNA Sequencing; SAGE: serial analysis of gene expression; TLC: thin-layer chromatography..

3. AI applications in functional genomics

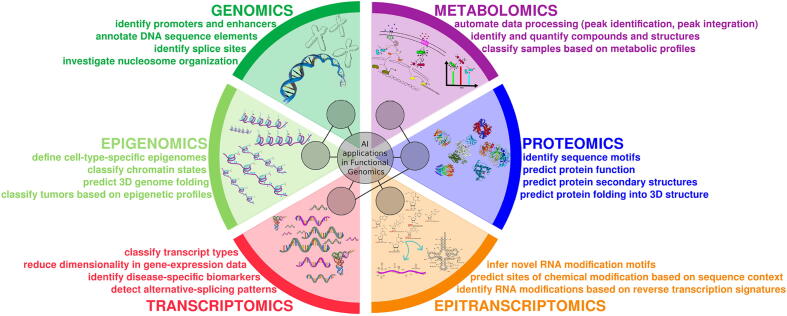

In the last decades, ML has been widely used in many areas of “omics” sciences, especially those characterized by the production of large amounts of data and/or complex mechanisms governed by the synergic participation of different factors. Important applications include: prediction of DNA regulatory regions; discovery of cell morphology and spatial organization; identification of associations between phenotypes and genotypes; classification of DNA methylation and histone modifications; biomarkers discovery; transcriptional enhancers detection; cancer diagnosis and analysis of evolutionary mechanisms [36], [37], [38], [39], [40], [41], [42] (see Fig. 3).

Fig. 3.

AI applications in functional genomics.

Since the 1980s we have witnessed the first attempts to apply supervised training techniques to “omics” sciences. In 1982, Stormo et al. used the Perceptron algorithm to distinguish E. coli translational initiation sites from all other sites in a library of over 78.000 nucleotides of mRNA sequence [3]. In 1993, Rost and Sander implemented a neural network to predict the protein secondary structure [43]. DL techniques began to be massively used in functional genomics only in the second decade of the 2000s, due to the improvement of PC performance and the collapse of genome sequencing costs [44], [45], [46].

In 2015, two important deep architectures have been implemented and applied to functional genomics, producing results of great scientific impact. DeepBind [47] is a fully automatic stand-alone software for the prediction of sequence specificities of DNA and RNA binding proteins. DeepSEA (deep learning-based sequence analyser) [48] predicts chromatin effects of sequence alterations with single-nucleotide resolution, by learning regulatory sequences from large-scale chromatin-profiling data. Both methods, based on deep architectures, have overcome many challenges such as the processing of millions of sequences, the generalization between data from different technologies, the tolerance of noise and missing data and the end-to-end and totally automatic learning, without the need for hand-tuning. These approaches outperformed other state-of-the-art methods and encouraged many scientists to follow similar exciting paths.

In the next sections, we analyse in detail some of the highest impact applications of ML and DL in the main disciplines converging into functional genomics.

Yoshua Bengio – Computer scientist.

I don’t think that any of the human faculties is something inherently inaccessible to computers. I would say that some aspects of humanity are less accessible and creativity of the kind that we appreciate is probably one that is going to be something that’s going to take more time to reach. But maybe even more difficult for computers, but also quite important, will be to understand not just human emotions, but also something a little bit more abstract, which is our sense of what’s right and what’s wrong.

3.1. Genomics

The concept of “genome” was first proposed in 1920 by Hans Winkler, then professor of Botany at the University of Hamburg, referring to “the haploid number of chromosomes” located in the nucleus [141]. In the current era of biological research, with the technological progress in sequencing and the discovery of the DNA complexity, this concept has been extended to the whole set of DNA sequences in a cell or organism (i.e., accounting for the number of copies of the basic set of chromosomes, or ploidy, and including the DNA material from extranuclear organelles such as the mitochondria).

Genomics can be defined as the “science of genomes”. The term was coined in 1986 by Thomas Roderick to describe the nascent discipline of sequencing, mapping, annotating and analysing genomes [35]. The first complete genome sequence of a eukaryotic organelle (the human mitochondrion, 16.6 kb in length) was determined in 1981 [142]; the first free living organism (H. influenzae, 1.8 Mb) was sequenced in 1995 [143]; and the first eukaryotic genome (S. cerevisiae, 12.1 Mb) was completed in 1996 [34]. Sequencing of the human genome (3 Gb), a milestone in the genomics field, took 13 years and was completed in 2003 [33].

Starting from early low-throughput methods (the first generation of sequencing technologies) [144], [145], [146], in a few decades the field was first revolutionized by high parallelization of sequencing reactions, reaching the production of millions of short reads in few hours of run (the second generation) [147], [148]; more recently, it further evolved towards single molecule sequencing and very long sequencing reads (the third generation) [149], [150], [151].

Today, thanks to the advent of high-throughput sequencing techniques, hundreds of thousands of genomes from different kingdoms have been fully sequenced, including over 15.000 eukaryotic genomes (Genome NCBI),2 and the term genomics has now expanded to include the investigation of DNA structure, function, evolution, and editing.

As pointed out by Libbrecht and Stafford Noble [152], ML has been widely used in genomics to annotate sequence elements, identify splice sites, find promoters and enhancers, etc. A large amount of genome sequences have been used to train ML models to recognize specific functional elements. In 1990, an important paper by Bucher [153] was published, where an Optimized-Weight-Matrix algorithm has been applied to hundreds of unrelated promoter sequences to identify promoter elements.

Of note, this ML application, as others in the following years, has been made possible by establishment of databases such as the Eukaryotic Promoter Database (EPD)3 or the European Nucleotide Archive (ENA).4 In 2002, SVM and NB prediction methods [69] have been applied for splice site prediction and showed improvements and advantages over traditional relevant features selection methods. In 2006, Segal et al. proposed an important combined experimental and computational approach [154] to investigate the nucleosome organization. In the proposed pipeline, nucleosome-bound sequences from yeast were isolated at high resolution and used to construct a probabilistic nucleosome-DNA interaction model for linking nucleosome positions to specific chromosome functions and predicting the genome-wide organization of nucleosomes. In 2007, Heintzman et al. mapped five histone modifications and four transcription factors on 30 Mb of the human genome using a clustering ML approach [90]. In 2012, Hoffman et al. applied an unsupervised Dynamic BN method [83] to analyse different types of omics data (such as histone modification marks and binding sites for modifiers of chromatin structure), all of which derived from a human chronic myeloid leukemia cell line, to analyse the entire genome at 1-bp resolution, despite the presence of noise and missing data (See Sections 4.2, 4.1).

DL methods have only been applied in the genomic field in more recent years. An open-source package based on CNNs named Basset [101] for the annotation and interpretation of the non-coding genome was proposed in 2016. The following year, Killoran et al. proposed a GAN to generate DNA sequences with specific properties [135]. More recently, Avsec et al. [155] introduced a DL approach to unravel the influence of motif spacing between neighbour transcription-factor binding sites on transcription factor cooperativity.

Unsupervised approaches, such as GANs and AEs (see Table 1) have a great ability to extract very representative features and learn complex representations of the input data without any type of supervision and addressing. Moreover, they can efficiently denoise and reduce dimensionality without loss of information.

In recent years, representation models used for Natural Language Processing (NLP) have been applied to biological sequence data processing [156], [157]. In a sense biological sequences may be considered as sentences of a language. A widely used method in NLP is LSTM [112], based on RNN architecture, which is suitable for extracting semantic and contextual information from long sequences. In 2013, Mikolov et al. proposed Word2Vec [158], an unsupervised word embedding method to perform low-dimensional vector representation of natural language words. This method is capable of capturing the context of a word in a document, underline relationships between words, and capture semantic and syntactic similarities. In 2015, Vaswani et al. [159] proposed Transformer, a new architecture based on attention mechanism. Transformers are designed to handle sequential data, like LSTM and RNN, and in this sense they are suitable for text translation and interpretation; however, they neither use recurrence nor process inputs in their order. Transformers use a random initialization and are based on dynamic word embeddings (unlike other NLP architectures that use static word embedding). In 2018, Devlin et al., introduced a new NLP method named BERT (Bidirectional Encoder Representations from Transformers) [160] where the authors applied the bidirectional training of Transformer, which was highly performing in capturing semantic meaning and context words.

Very recent papers reported interesting applications of unsupervised word embedding methods on biological sequences. Woloszynek et al. [161] used Word2Vec to embed nucleotide sequences, in particular k-mers obtained from 16S rRNA amplicon surveys, and managed to extract relevant features related to sequence context, taxonomy and classification. Ostrovsky-Berman et al. presented Immune2vect [162], an adaptation of Word2Vec for B-cell receptor sequencing data, where they embedded immune sequencing data in low-dimensional vector-representations to extract relevant features such as n-gram properties and classify immunoglobulin heavy-chain variable (IGHV) genes. Recently Le et al. [163] presented a new technique made up of a BERT and a CNN for DNA enhancer prediction. This approach turned out to be more efficient than Word2Vec in capturing the hidden information in DNA sequences because the word embedding generated with BERT is dynamic, and nucleotides can be represented in different positions and assume different vector values. This is an advantage over static word embeddings, where the same vectors are obtained for the same words regardless of their context, because it provides more detailed and accurate representations.

A major goal in genomics is the identification of genetic variants that underpin human traits, particularly diseases. HTS technologies greatly accelerated our ability to identify gene mutations responsible for human disorders that are caused by variation of large effect in a single gene (e.g., Huntington disease, Duchenne muscular dystrophy). Additionally, thousands of genome-wide association studies (GWAS) have produced long lists of genetic variants associated with common diseases (e.g., asthma, diabetes, heart disease), which are often due to weak contribution of multiple genes and environmental factors. Nevertheless, our understanding of the genetic determinants of these complex diseases still remains limited. This is partly due to unexpected phenotypic readouts originating from functional interactions between two or more genes, as in the case of a genetic mutation whose presence can mask the effects of an allele at another locus (a.k.a. epistasis). Systematic genetics screens conducted in model organisms have fostered a better understanding of the interplay between genotype and phenotype, and provide a framework for the development of personalized genetics in humans by mapping phenotypes between organisms [164]. The most comprehensive analyses have been conducted in the budding yeast Saccharomyces cerevisiae [165], [166], which has led to quantitative phenotypic measurements for tens of million pairs of mutations in yeast. These massive screening efforts have been leveraged together with ML and DL methods for multiple scopes, including: automatic prediction of growth impact of selected genetic interactions in yeast metabolic network, based on both regression and a genetic algorithm that improved prediction accuracy [167]; association of genetic interactions to functional impact, based on RF regression [168]; and costruction of an interpretable or ‘visible’ NN, named DCell, which simulates a basic eukaryotic cell growth [169] and predicts response to genetic perturbation in terms of cellular fitness.

The advent of powerful genome editing technologies, such as CRISPR-Cas9 (Clustered Regularly Interspaced Short Palindromic Repeats-CRISPR-associated protein 9) has enabled scalable manipulation of DNA to functionally characterize genes and gene regulatory elements in a number of different organisms and in human cell model systems. A key point for successful application of CRISPR-Cas9 is the proper design of short RNAs (broadly referred to as gRNAs, acronym to guide RNAs), which provide scaffold to and guide the enzymatic complex to target sites for editing, based on sequence complementarity of 17–20 nucleotides at the 5’-end of the gRNA. In particular, in the gRNA design process, it is crucial to optimize the engineered sequence towards specific interaction with the editing target (on-target activity) while minimizing unintended interactions with other genomic sites (off-target activity), which may arise from sequence similarity with the genuine target. Various ML methods and DL methods have been developed to optimize gRNA design and predict both on-target and off-target activity, including: CRISTA [170], an RF-based regression model that scores the propensity of a genomic site to be cleaved by a given gRNA; DeepCRISPR [171], a computational platform that uses data augmentation technique to expand the training dataset of experimentally validated gRNA sequences and feeds two CNNs (one for on- and one for off-target activity prediction), with gRNA representations produced by pre-trained autoencoders; CROTON [172], an end-to-end framework based on deep multi-task CNNs and neural architecture search to predicting CRISPR-Cas9 editing outcomes; and the complementary tools CRISPR-ONT and CRISPR-OFFT [173], attention-based CNNs trained to predict gRNA on- and off-target activities, respectively.

Combining efficient gene perturbation provided by CRISPR-Cas9 technology with manifold transcriptional phenotyping provided by single-cell RNA sequencing (scRNA-seq) offers an unprecedented opportunity to explore genetic interactions in mammalian cells at large scale. This experimental framework was recently explored by Norman et al. [174], who applied recommender system ML for dimensionality reduction of the high-dimensional map of transcriptional states (phenotypes) associated to gene perturbation, to allow visual analysis and predict genetic interactions.

Several groups have explored ML and DL approaches both to identify disease-associated genetic interactions and to predict the genetic risk of complex diseases in populations from genome-wide maps of genetic variation, such as the occurrence of single-nucleotide polymorphisms (SNPs) or small nucleotide insertions or deletions (indels) in human genomes. In 2014, Kircher et al. proposed CADD (Combined Annotation-Dependent Depletion) [60], an SVM approach for the classification of functional, deleterious and pathogenic variants, which was trained with millions of both high-frequency human derived alleles and simulated variants. The method outperformed existing methods at distinguishing various pathogenic variants that underlie diseases from nearby benign variants. In 2016, Quang and Xie implemented DANN [115], a method for annotating the pathogenicity of genetic variants developed by using the same feature set and training data as CADD, but based a DNN, more suitable than SVMs to capture non-linear relationships between features. In the same year, Ionita-Laza et al. [175], proposed an alternative method based on unsupervised spectral approach (Eigen) that scores genetic variants for disease-association. In 2018, Zhou et al. [106] proposed ExPecto, an end-to-end framework based on a CNN, which was trained on multiple omics data obtained from 200 human tissues and cell types, to predict cell-type-specific effects of genetic sequence variation on gene expression and disease risk. Finally, concerning the analysis of raw sequencing data to identify the presence of genetic variation, in 2018 the genomics team at Google Brain published a deep learning architecture, named DeepVariant, based on a CNN trained to call SNPs and indels variants from piles of aligned sequencing reads [176]. This method won the highest performance award for SNPs in US Food and Drug Administration-sponsored variant calling Truth Challenge in May 2016.

3.1.1. Cancer genomics

In the last decades, the rise of NGS techniques has revolutionized the medical approach to cancer [177]. Genomics has become increasingly important in clinical study, prevention, treatment and monitoring practices. Cancer genomics studies differences in DNA sequences and gene expression between tumour and normal cells, with the aim to understand the dynamics underlying the formation and spread of tumours at the genetic, metabolic, systemic and environmental level. The Cancer Genome Atlas [178] project collected multi-level NGS data for 33 different types of common tumours, an enormous data resource made available to study tumour-specific as well as recurrent cancer mechanisms. The availability and integration of large quantities of genomic, proteomic and epigenomic information has allowed increasingly comprehensive representations of complex dynamics, such as cancer formation[179], to be obtained. Indeed, integration of multiple omics data can help overcome possible noise and/or bias of single data layers, thus improving the relevance of extracted representative features. In this framework, data integration has been an active field of research for ML and DL techniques applied to omics data, especially cancer genomics [180], [181] (see Section 4.3 for a more detailed discussion on data integration). In particular, the introduction of autoencoders, such as denoising autoencoders, has allowed robust representations of heterogeneous data to be provided, and extraction of highly representative and predictive features to be more easily performed [182], [183], [184]. Indeed, AI applications to cancer genomics can provide useful information for a rapid growth of precision medicine and for disease prevention and monitoring.

ML applications to mutation detection and interpretation can help in identifying cancer-predisposing genes such as BRCA1/2 and in predicting cancer risk [185], [186]. AI performances in cancer genomics are very promising. As an example, AI results in the diagnosis of melanoma and breast cancer are very reliable and often surpass expert evaluation [187], [188]. Many ML techniques have been applied to cancer detection and classification, and especially to biomarker identification. In 2003, Vlahou et al. obtained good ovarian cancer classification results by applying a Decision Tree [62]. In the early 2010s, two groups, Abeel et al. [189] and Chen et al. [190], applied SVM for cancer biomarkers identification. In recent years, deep architectures have been applied to variant calling and mutation detection. In 2016, Yuan et al. proposed DeepGene, a DNN cancer classifier, [191] and in 2018 Qi et al. used a MVP for prioritizing pathogenic missense variants [192]. In the same year, Malta et al. proposed a one-class LR for the extraction of transcriptomic and epigenetic features associated with dedifferentiated oncogenic states [193]. Survival models, such as SurvivalNet [194], a DL approach for the screening of large cancer genomic datasets, can be useful for prognosis accuracy improvement and prediction of cancer outcomes.

A recent and promising field of application for AI methods in cancer genomics concerns the computational investigation of synthetic lethal interactions in cancer cell lines to guide anti-cancer drug design. Synthetic lethality refers to a type of genetic interaction where the simultaneous perturbation of two genes leads to cell death or severe impairment of cell viability, while a perturbation of either gene alone does not. Concomitant availability of thorough maps of genetic interactions obtained in model organisms [166], catalogues of cancer genomics data [178], powerful tools for genome editing (e.g. CRISPR-Cas9 editing system) and single cell high-throughput sequencing technologies opened the way to systematic phenotypic discovery at single cell resolution, which is utterly important to tackle tumour cell heterogeneity. In 2017, Way et al. [195] developed an ML approach based on ensemble logistic regression, which was trained on both mutation and transcriptomic profiles of glioblastoma from The Cancer Genome Atlas [178], to predict genes that may exhibit synthetic lethality in cancer cells lacking the neurofibromin 1 tumour suppressor gene. In 2019, Das et al. implemented DiscoverSL [196], a multiparameter RF classifier trained on multi-omic cancer data from The Cancer Genome Atlas [178] to predict and visualize synthetic lethality in cancers. In 2020, Wan et al. developed EXP2SL [197], a semi-supervised NN-based method, which was trained on a large collection of cancer cell line expression signatures from the LINCS1000 Program [198], to predict cancer cell-line specific synthetic lethal interactions.

Other important applications of AI in cancer genomics concern identification of regulatory variants in noncoding domains [199], bioactivity prediction [200], anticancer drug prioritization [201] and sensitivity prediction [202], [203]. All of these applications represent important steps towards personalized medicine, increasing accurate and less invasive prevention, treatment and monitoring paths based on the specific characteristics of the patients and the environment in which they live [204].

Erik Brynjolfsson – Director of Stanford Human-Centered AI.

We can virtually eliminate global poverty massively reduce disease and provide better education to almost everyone on the planet. That said, AI and ML can also be used to increasingly concentrate wealth and power, leaving many people behind, and to create even more horrifying weapons… The right question is not ‘What will happen?’ but ‘What will we choose to do?’ We need to work aggressively to make sure technology matches our values.

3.2. Epigenomics

Epigenomics is a discipline that studies epigenetic processes at the genome scale. These processes include the regulatory mechanisms of gene activity and inheritance that are dictated by genome architecture and independent of changes in the DNA sequence. The term epigenetics, coined in 1942 by British biologist Conrad Waddington, indicates a regulatory layer of gene expression mainly mediated by small chemical compounds (such as Methyl-, Acetyl- or Phosphate-groups) that can be reversibly attached to DNA (e.g., DNA methylation) or chromatin proteins (e.g., methylation, acetylation, phosphorylation and other chemical modifications occurring at the tails of histone proteins). These epigenetic marks are dynamically orchestrated (i.e., layered, interpreted or removed) from the so-called “writer”, “reader” and “eraser” proteins. They cause DNA modulation both in terms of spatial organization and capacity to interact with the gene regulatory machinery, ultimately resulting in switching on or off the expression of the affected genes. In addition to DNA methylation and histone modifications, chromatin remodelling complexes in concert with other DNA binding proteins (such as enhancer-binding proteins and mediators of long-range chromatin looping) provide further epigenetic mechanisms that collectively define the three-dimensional (3D) organization of the genome. This, in turn, defines chromatin regions of active (i.e., transcriptionally competent) or repressed (i.e., inaccessible to transcriptional machinery) states. Epigenomics aims at systematically charting ensembles of epigenetic marks and landscapes of active and repressed genomic regions (i.e., the epigenome) in different cell types and states, to characterize the functional effect on gene expression. In fact, each cell type has a unique epigenome that allows a specific differentiation and reflects a specific state for the cell [205]. Identification of chromatin states, local density of epigenetic marks, long-range chromatin contacts and histone modification patterns has proven relevant for studying and interpreting regulatory regions, cell specific activity and disease-associated patterns. To this end, many ML and DL techniques have been applied to define cell type-specific profiles of DNA methylation (or methylomes) and histone modifications, classify chromatin regions into active and repressed states and, more recently, classify tumour types based on high-throughput methylome data and predict 3D genome folding [206], [207], [208].

In 2015, Ernst and Kellis developed ChromImpute [67], an ML approach based on regression trees to make large-scale prediction of epigenomic marks (such as DNA methylation and histone marks) and chromatin states (such as DNA accessibility). The authors demonstrated the performance of their inference method on a large compendium of publicly available epigenomic maps, achieving strong agreement between experimentally observed and computationally imputed signals. In 2016, Zhang and co-workers developed IDEAS [85], an integrative epigenome annotation system based on quantitative Hidden Markov Models (HMMs) for the characterization of epigenetic dynamics and the detection of regulatory regions. The proposed method is able to handle multiple genomes and to compare inferred epigenomic events at base resolution across different cell types, to identify recurrent as well as cell specific patterns. Wang et al. [209] developed a stacked denoising autoencoder architecture, named DeepMethyl, that uses both DNA sequence features and 3D genome structure to predict DNA methylation status of CpG sites. Recently, Kelley and co-workers proposed Basenji [105], a CNN approach to predict cell-type-specific epigenetic and transcriptional profiles using only DNA sequence as input.

Epigenomics data are often affected by noise and biases (see Section 4.2), and ML and DL methods have been widely used in recent years for data quality enhancement. In 2017, Koh et al. [210] used a CNN to denoise and improve data quality of histone ChIP-seq (chromatin immune-precipitation sequencing) data. In 2019, Hiranuma et al. proposed AIControl [211], a regression algorithm for genome-wide detection of binding-enriched regions, which integrates many publicly available control datasets to improve background subtraction and signal discrimination. The advantage of data integration exploited by AIControl is the ability to subtract different kind of biases affecting ChIP-seq data, thus providing an effective method to remove background signals from experiments lacking control samples. Most recently, Lal et al. introduced AtacWorks [212], a DL-based toolkit, which trains a residual NN model consisting of multiple stacked residual blocks, to denoise low-coverage or low-quality single-cell sequencing data obtained by ATAC-seq (Assay for Transposase-Accessible Chromatin using Sequencing), a high-throughput technique that captures genome-wide open chromatin sites as a proxy for active regulatory regions.

Several ML approaches have been applied to modelling chromatin structure from experimental data obtained by chromosome conformation capture (3C) and its derived technologies (such as 4C, 5C and Hi-C) [213], [214]. In 2012, Ernst and Kellis presented ChromHMM [215], an automated method based on a multivariate HMM for the inference of chromatin states starting from sets of aligned reads for each chromatin modification mark under investigation. In 2014, Gusmao et al. [84] proposed an HMM for the detection of transcription factor binding sites and open chromatin regions integrating structural information such as DNase I hypersensitivity and histone modifications. Chrom3D [216] and ChromStruct [217], [218] use Monte Carlo optimization with loss-score function minimization for the estimation of the chromatin structure starting for Hi-C data. Many of the computational frameworks for the 3D-modelling of chromatin also provide visualization tools [219], in order to allow chromatin structural patterns to be visually interpreted and relationships between chromatin states, genomic positions and pathological modifications to be more easily understood. In 2020, Fudenberg et al. developed Akita [220], a CNN that predicts local 3D genome structures in terms of locus-specific contact frequencies. The Akita algorithm, which was trained on a collection of high-resolution Hi-C maps, takes a genomic region of one million base pairs as input and predicts contact frequencies between any pair of 2,048 bp long windows of DNA sequence within this region. In the same year, Schwessinger et al. developed DeepC [221], a DNN that leverages transfer learning approach and tissue-specific Hi-C data, to train models that predict genome folding in megabase-sized DNA windows. These trained models are then exploited to predict both chromatin domain boundaries at high-resolution and sequence determinants of genome folding, which allows DeepC to also predict the impact of genetic variants of different size (e.g., from large structural variations to SNPs) on 3D-structure.

Ginni Rometty – CEO of IBM.

Some people call this artificial intelligence, but the reality is this technology will enhance us. So instead of artificial intelligence, I think we’ll augment our intelligence.

3.3. Transcriptomics

The transcriptome is the complete set of transcribed genes present within a cell at a given point of time. The first use and definition of the word “transcriptome” date back to 1997 in a work by Velculescu et al. [222], where the authors analysed and characterized the genes expressed in yeast, the only eukaryote for which the entire genome sequence was available at the time [34]. Transcripts were quantified using one of the earliest sequencing-based transcriptomic methods to be developed, namely the serial analysis of gene expression (SAGE) [223]. Velculescu et al. [222] define the transcriptome as “the identity of each expressed gene and its level of expression for a defined population of cells”. The term came later to be used in a broader meaning, and can now be applied to a defined population of cells, a tissue, an organ or an entire organism. It encompasses the whole transcript content, comprising both protein- and non-protein-coding transcribed genes, from the most commonly known infrastructural RNAs (transfer and ribosomal RNAs) and messenger RNAs (involved in protein translation) to the most recently identified small and long non-coding RNAs (defined by a heuristic length cut off of 200 bases [224]), circular RNAs [225], Piwi-interacting RNAs [226], and many other novel non-coding RNA (ncRNA) types. In fact, consortia-based projects for the systematic annotation and characterization of functional elements, such as the ENCyclopedia Of DNA Elements - ENCODE (www.encodeproject.org) [227], [228], detected an unexpected pervasive transcription across genomes, with about 80% of mammalian genomic DNA being actively transcribed, the vast majority of this classified as ncRNA. Compared to the genome, the transcriptome is intrinsically variable and dynamic, making its definition and analysis considerably more complicated.

Transcriptomics is the study of the transcriptome in given physiological or pathological conditions of interest, aimed at capturing the dynamic link between the genome of an organism and its phenotypical characteristics. Ideally, it tries to identify all RNA types and sequences present in a given cell at a given time; to determine the transcriptional structure of genes in terms of start sites, 5’ and 3’ ends, exons, introns and splicing patterns; to detect gene expression levels and unravel possible regulation mechanisms at the whole-genome scale using high-throughput techniques. Instead of focusing on the function of individual genes or transcripts, transcriptomics has the ambition to characterize the whole transcriptome and its changes across a variety of cells, developmental stages, in different biological and environmental conditions. Since the late 1990s, transcriptomics research has been repeatedly revolutionized by the new technological innovations in the field, re-specifying at each step what was possible to investigate. The development of microarrays [229], [230] and, later, NGS technologies [231], [232] have been two key moments in this process. Microarrays allow quantification of a set of already known and preselected RNA sequences since their output signals rely on hybridization of the target molecules with ad hoc designed probes being anchored on the array. NGS technologies applied to RNA sequencing (RNA-seq) [233], [234] are able to capture transcribed molecules independent of prior knowledge since they reconstruct the sequence of assayed RNA molecules as part of the detection step (such in the sequencing-by-synthesis approach, where the target sequence is revealed by synthesis of the complementary strand accompanied by a detection system of the nucleotides inserted during synthesis). As a result of increased throughput, higher accuracy, and lower cost of these specialized NGS technologies, the last two decades have witnessed an exponential growth in transcriptomics studies, which have provided valuable resources for extensive investigations of transcriptional and post-transcriptional regulation [235].

3.3.1. Protein-coding and non protein-coding transcript classification

One core goal of functional genomics is the classification of transcriptome elements, such as the annotation of transcripts as mRNAs (i.e., protein-coding) or ncRNAs, or the prediction of coding potential for each of the multiple transcript products (i.e., isoforms) originating from the same gene locus due to alternative-splicing (AS) events. Many in silico (bioinformatics) methods have attempted to solve this task, but it can be surprisingly difficult in practice. In fact, the proposed solutions often results in manually curated and time-consuming workflows with a number of limitations. The ENCODE and GENCODE projects [227], [228], [236] played a crucial role in this context. In the vast majority of cases, the characterization of novel transcripts is based on the comparison with current sets of genome annotations available from public databases, such as transcript and protein sequences collected from different organisms, known protein domains and structures, integrated with multi-omics experimental data. The more the supporting evidences, the higher the confidence to call the transcript under investigation as being or being not protein-coding [237], [238], [239].

Classification of transcript type provides one application where AI can be crucial. Indeed, this is a typical ML task for which several methods and tools, based on both supervised and unsupervised learning, have been made available. For example, SVM methods were successfully applied to assign coding potential to transcripts according to selected sequences and structure features. In particular, diverse classification algorithms variably integrated relevant characteristics such as: the length of an open reading frame (ORF), which is the specific mRNA sub-sequence dictating the series of amino acids to produce a protein; the corresponding amino acid composition; the predicted protein secondary structure; the predicted proportion of protein residues exposed to the solvent; the existence of corresponding homologous in other organisms; and the synonymous versus non-synonymous substitution rates [240], [241], [242], [243].

Furthermore, classifiers based on ML algorithms were also proposed to distinguish long ncRNAs (lncRNAs) from protein-coding transcripts. For example, Pian et al. [244] used an RF method with some new specific features. Since protein-coding transcripts seem to have longer ORFs compared to lncRNAs, the authors selected the following two specific features for better discrimination: [i.] the longer ORF length (MaxORF) obtained in the three possible lecture schemes (i.e., starting in silico translation of each triplet of nucleotides into the corresponding amino acid at position 1, 2 or 3 of the given transcript); and [ii.] the normalized MaxORF value, obtained by taking into account the total length of the transcript. Similarly, other algorithms that extract a selection of features from sequences and feed it into traditional ML algorithms to assess coding potential have been developed and are available [245], [246].

Even though the integration of additional information not intrinsically derived from transcript sequences may improve the transcript classification, it can also introduce dependence on reliable annotation and be limited by current scientific knowledge, which is biased towards mainstream topics or species (e.g., less available for lncRNAs compared to mRNAs, or for non-model compared to model organisms). Furthermore, manual feature selection made as in traditional ML can introduce biases in the classification, because they are designed and picked by hand. Conversely, DL methods using neural networks can de novo discover complex and hidden biological rules in transcript raw data, thus becoming a more powerful tool to investigate the transcriptomes of the myriad of species made available by current high-throughput sequencing technologies [247], [248], [249], [250].

3.3.2. Gene-expression data analysis

Gene expression is the dynamic process that converts the information encoded within the genome into final functional gene products, giving rise to a range of proteins or ncRNAs. Identifying the molecular mechanisms that control differential gene expression is a major goal of both basic and applied biological research. Gene-expression data from microarrays or RNA-seq platforms have been widely used to distinguish tissues, biological vs. physiological conditions, disease phenotypes, and identify valuable disease biomarkers. A typical problem with high-throughput technologies is the disproportion of dimensions between samples and variables in the dataset. In fact, the high-dimensional number of assayed variables, such as the expression levels of tens of thousands of genes or transcripts, typically far outnumbers that of available samples under investigation (e.g., biological replicates, individuals with a disease). Moreover, these high-dimensional datasets are often sparse and noisy (see Sections 4.1, 4.2 for a more detailed discussion). Practically, the increase in sparsity hampers the collection of data with statistical power, making it extremely difficult to gain biological insights from these data using traditional analytical approaches. This phenomenon is called the “curse of dimensionality”.

Specialized ML algorithms can be powerful tools to address such issues and other serious challenges. Unsupervised learning approaches such as clustering and PCA have been extensively used to find inherent patterns within the data without reference to prior knowledge, for example, to identify gene signatures in gene-expression profiles that might otherwise be overlooked. Global gene-expression correlations (or meta-analyses) are even possible, by comparing numerous genome-wide studies.

Talavera et al. [251] performed a meta-analysis of about 1,500 yeast microarray datasets containing several stress-related experiments. They used an agglomerative clustering algorithm to identify groups (blocks) of transcripts showing high correlation of RNA levels across multiple conditions. Subsequent functional enrichment analyses of the obtained transcriptional blocks, performed using yeast genome annotations of biological processes based on Gene Ontology subsets (also known as GO slims), showed that those groups of consistently up- or down-regulated genes were indeed associated with biological processes linked to responses to different external stimuli (e.g., oxidative stress, osmotic stress, DNA damage stimulus, glucose limitation). This strategy highlights how functional information at the transcript block level, rather than at the single-gene level, in differential expression analyses can effectively help to make hypotheses and model molecular biological mechanisms of the system under investigation.

Microarrays or RNA-seq data can also be used by AI approaches as training sets to effectively learn how to discriminate distinct clinical groups and correctly assign patients to them [252]. In a very recent work [253], the authors analysed about 200 soft-tissue sarcoma samples from The Cancer Genome Atlas project [178] to gain novel insights into the many subtypes differing in prognosis and treatment, which unfortunately have considerable morphological overlap among each other and make differential diagnosis really difficult. To this end, the authors applied different ML algorithms: PCA for dimensionality reduction; a DNN to investigate the overlap of gene-expression patterns of the soft-tissue sarcomas with gene-expression patterns of healthy human tissues; an RF approach to identify novel diagnostic markers. Finally, tumor subtype-specific prognostic genes were identified and tested as predictive of the metastasis-free interval using k-NN analysis.

Very interestingly, in the latest phase of the ENCODE project [254], hierarchical clustering was used to define core gene sets that correspond to major cell types in 53 primary cells from different locations in the human body. Clustering of these primary cells revealed that most cells in the human body share a few broad transcriptional programs, which define five major cell types: epithelial, endothelial, mesenchymal, neural, and blood cells. Based on gene-expression profiles, this new set of cell types redefined the basic histological paradigm by which tissues have been traditionally classified.

Due to the raise of technologies able to profile molecules within an individual cell, such as scRNA-seq, the task of dimensionality reduction to allow visualization and analysis of high-dimensional datasets has becoming increasingly demanding. Consequently, non-linear methods, such as t-distributed Stochastic Neighbour Embedding (t-SNE) [255] and Uniform Manifold Approximation and Projection (UMAP) [256], gained momentum when dealing with large and heterogeneous samples over conventional linear methods such as PCA [257].

The scarce amount of RNA material inherent to scRNA-seq experiments is reflected in the very noisy and incomplete nature of output data. In particular, one major problem related to scRNA-seq experiments is the high percentage of zero-valued observations (a.k.a. dropouts), which stimulated the development of several ML- and DL-based approaches for data imputation (See Section 4.1 for a more detailed discussion on data imputation). In 2018, Li et al. proposed ScImpute [258], an iterative LASSO regression for the imputation of dropout values in scRNA-seq data. Gong et al. developed DrImpute [259], a clustering-based approach that uses a consensus strategy to impute missing values for a given target gene in scRNA-seq data, based on gene expression values of other cells belonging to the same cluster. Arisdakessian et al. implemented DeepImpute [260], a DNN architecture embedding a divide-and-conquer approach to extract relevant patterns useful for imputation of missing expression values for target genes. Specifically, given a set of target genes with dropouts in a scRNA-seq data, DeepImpute builds multiple sub-neural networks, each aimed at learning the relationship between the input genes (predictor genes) and a subset of target genes with dropouts (with zero-values of gene expression to be imputed), thus reducing the complexity by learning smaller problems. Ghahramani et al. [136] applied a GAN to integrate and denoise different scRNA-seq datasets derived from diverse laboratories and experimental protocols, and perform dimensionality reduction. In 2019, Grønbech et al. used an unsupervised DL approach based on Variational AEs [133] to estimate gene expression levels directly from raw scRNA-seq data.

3.3.3. Alternative-splicing code detection

Eukaryotic mRNA AS constitutes an important source of protein diversity [261]. It has been reported that most (i.e., 95%) of multi-exon human genes can undergo AS events [262], [263]. Aberrant AS has been shown to be associated with many diseases [264], [265], [266], [267], [268], [269], [270], [271], [272]. In addition to providing information on RNA abundance, RNA-seq data can be used to infer AS patterns and identify differential AS events linked to different sample conditions, such as treatment vs. control, disease vs. normal, diverse developmental stages, etc. The seminal work on developing DL methods to decipher the splicing code was done by Leung and colleagues [271]. The authors have predicted splicing patterns from mouse RNA-Seq data by using a DNN, with millions of variables representing both the genomic features and the tissue context, which outperformed previous attempts that were based on shallower architectures.

3.3.4. Alternative polyadenylation event detection

Several tools have been introduced in the literature to predict polyadenylation sites (PASs) from human genomic sequences. DNAFSMiner [273] predicts PASs from sequences using k-mer features in an SVM model. Dragon PolyA Spotter [274] also predicts PASs from sequences using both an ANN and an RF. POLYAH [275] discriminates real PASs from other hexamer signals using a linear discriminant function. This algorithm focuses only on the canonical PAS (i.e., the AATAAA sequence motif) in the analysis, although alternative PASs (variants of the AATAAA sequence) may influence the site discrimination. Polyadq [276] uses a quadratic discriminant function to predict real PAS regions. This tool considers two PAS signals in the analysis.

However, the biology underlying alternative polyadenylation is more complicated, and the choice for the polyadenylation machinery to recognize a given PAS depends not only on the PAS itself but also on downstream U/GU-rich elements (AUEs and DAEs). Polyasvm [277] predicts polyA sites from sequences using an SVM model. PolyAR [278] also predicts polyA sites from sequences using a linear discriminant function. Both of these tools use hand-picked sequence features. In order to overcome this limit, DL models such as DeepPolyA [279], DeeReCT-PolyA [280], and Conv-Net [281] have been recently introduced to predict PASs and recognize relatively dominant gene PASs (i.e., most frequently used PASs in a given gene). Of note, all of these models use CNNs to extract features from input genomic sequences. Although the secondary structure near a PAS is also crucial for the PAS to be selected for the polyadenylation process [282], [283], [284], none of these tools consider RNA secondary structures in their prediction procedures.

Geoffrey Hinton - Cognitive psychologist and computer scientist.

I have always been convinced that the only way to get artificial intelligence to work is to do the computation in a way similar to the human brain. That is the goal I have been pursuing. We are making progress, though we still have lots to learn about how the brain actually works.

3.4. Epitranscriptomics

Among the diverse regulatory mechanisms of molecular biology, it has been emerging that all classes of cellular RNA are subject to co- and post-transcriptional modification. The transcriptome modification status is dynamic, revealing a novel and finer layer of complexity in gene expression regulation. Similar to epigenomics, this regulatory mechanism seems orchestrated by writer, reader, and eraser RNA-binding proteins, which can rapidly alter transcript expression levels upon environmental and developmental changes. Taken together, the multitude of RNA modifications, including both non-substitutional chemical modifications and editing events, constitute the “epitranscriptome” [285]. Early reports about RNA modifications deriving from studies on abundant non-coding RNAs such as transfer RNAs and ribosomal RNAs in prokaryotes and simple eukaryotes date back to decades ago [286], [287]. However, only recently, technical advances and refined computational approaches have revealed thousands of novel modification sites within different species of cellular RNA, including mRNAs and lncRNAs. Currently, over 150 distinct post-transcriptional modifications are known to occur on diverse RNA types [288], [289], and the number of discovered epitranscriptomic marks is ever-growing. Nevertheless, knowledge about the function and specific location of RNA modifications remains scarce thus far. Accordingly, epitranscriptomics is the research field devoted to identifying the full spectra of RNA modifications and characterizing them in both protein-coding and non-protein-coding RNA, where they seem to have roles beyond simply fine-tuning of RNA structure and function, as numerous studies on various disease syndromes have highlighted.

In 2012, two independent groups [290], [291] achieved a transcriptome-wide mapping of a specific type of modification (i.e., methylation on the sixth position of the purine ring in RNA adenine, or m6A). These results demonstrated the feasibility of identifying RNA modifications across the entire transcriptome and established the field of epitranscriptomics [292]. Availability of large collections of experimentally identified m6A modification sites stimulated the development of many supervised learning algorithms for the prediction of transcriptome modification sites. Among others, the top performing was SRAMP [293], a predictor of m6A modification sites based on multiple RF classifiers. In 2019, Chen et al. [294] developed WHISTLE, an ML approach that outperformed other algorithms by integrating multiple genomic features (e.g., gene expression profiles, RNA methylation profiles, and protein–protein interaction networks) to predict m6A modification sites rather than rely only on transcript sequences. The next year, Dao and co-workers [295] established iRNA-m6A, an SVM-based classifier for the identification of m6A sites in multiple tissues of human, mouse and rat. The classifier worked on a set of optimal features selected from three kinds of sequence encoding features (i.e., physical–chemical property matrix, mono-nucleotide binary encoding and nucleotide chemical property) computed from the input RNA sequences. Most recently, Zhang et al. introduced DNN-m6A [296], a DNN-based method outperforming preexisting methods in the same task (i.e., prediction of m6A modification sites in RNA sequences of different mammalian tissues).

As mentioned above, transcripts can either be edited (i.e., with base replacement), or covalently linked to small molecules. The former case (i.e., introduction of base changes) can be detected directly by using RNA-seq techniques due to the mismatches that will emerge when the sequencing reads are mapped back to the reference genome. The latter case (i.e., covalent link to small molecules) is more complicated to detect because conventional NGS approaches would erase information about the chemical modification during the sample preparation, specifically during the reverse transcription step. In this mandatory step of NGS protocols, an enzyme called reverse transcriptase (RT) converts RNA into complementary DNA (cDNA) by reading the trascript as a template and inserting base by base the complementary DNA nucleotide in the growing cDNA strand. Consequently, modifications that do not affect Watson–Crick base pairing during cDNA synthesis will be canceled out.

Experimental assays dedicated to the detection of non-mutational RNA modifications have been developed, such as immunoprecipitation with ad hoc antibodies. Importantly, these methods can be applied to a limited number of RNA modifications since they rely on availability of effective antibodies. Other methods exploit the natural consequence of a handful of RNA modifications to induce the RT to arrest during cDNA synthesis, or to make errors (i.e., incorporate non-complementary nucleotides) into the nascent cDNA. In both cases, disturbance in the RT processing will become visible in so-called RT-signatures, that are typical for a given RNA modification and will become visible by mapping the set of sequencing reads spanning the modified RNA position under investigation back to the reference genome. These RT-signatures include accumulation of sequencing reads with identical ends, which match the modified RNA position that caused the RT to stall, or in variable patterns of mismatches, which arise from misreading of the modified RNA residue by the RT. Most recently, Werner and co-workers [297] used an RF approach to predict RNA modifications based on RT-signatures. Their results show strong variability in the success rates depending not only on the type of RNA modification but also on the specific RT enzyme used in the cDNA synthesis step.