Abstract

Graph machine learning (GML) is receiving growing interest within the pharmaceutical and biotechnology industries for its ability to model biomolecular structures, the functional relationships between them, and integrate multi-omic datasets — amongst other data types. Herein, we present a multidisciplinary academic-industrial review of the topic within the context of drug discovery and development. After introducing key terms and modelling approaches, we move chronologically through the drug development pipeline to identify and summarize work incorporating: target identification, design of small molecules and biologics, and drug repurposing. Whilst the field is still emerging, key milestones including repurposed drugs entering in vivo studies, suggest GML will become a modelling framework of choice within biomedical machine learning.

Keywords: graph machine learning, drug discovery, drug development

Introduction

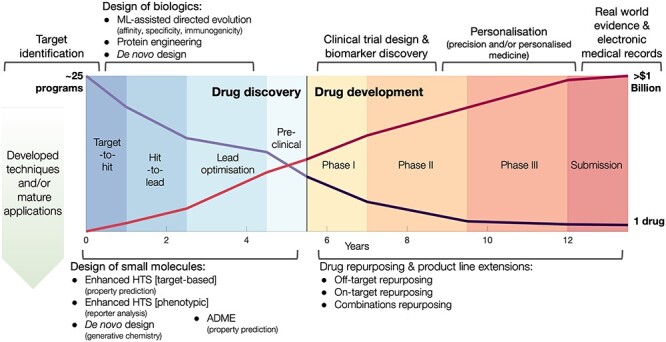

The process from drug discovery to market costs, on average, well over $1 billion and can span 12 years or more [1–3]; due to high attrition rates, rarely can one progress to market in less than ten years [4, 5]. The high levels of attrition throughout the process not only make investments uncertain but require market approved drugs to pay for the earlier failures. Despite an industry-wide focus on efficiency for over a decade, spurred on by publications and annual reports highlighting revenue cliffs from ending exclusivity and falling productivity, significant improvements have proved elusive against the backdrop of scientific, technological and regulatory change [2]. For the aforementioned reasons, there is now a greater interest in applying computational methodologies to expedite various parts of the drug discovery and development pipeline [6], see Figure 1.

Figure 1 .

Timeline of drug development linked to potential areas of application by GML methodologies. Preclinical drug discovery applications are shown on the left side of the figure ( 5.5 years), and clinical drug development applications are shown on the right hand side of the figure (

5.5 years), and clinical drug development applications are shown on the right hand side of the figure ( 8 years). Over this period, for every

8 years). Over this period, for every  25 drug discovery programmes, a single successful drug reaches market approval. Applications listed in the top of half of the figure are less developed in the context of GML with limited experimental validation. Financial, timeline and success probability data are taken from Paul et al. [5].

25 drug discovery programmes, a single successful drug reaches market approval. Applications listed in the top of half of the figure are less developed in the context of GML with limited experimental validation. Financial, timeline and success probability data are taken from Paul et al. [5].

Digital technologies have transformed the drug development process generating enormous volumes of data. Changes range from moving to electronic lab notebooks [7], electronic regulatory submissions, through increasing volumes of laboratory, experimental and clinical trial data collection [8] including the use of devices [9, 10] to precision medicine and the use of ‘big data’ [11]. The data collected about therapies extend well beyond research and development to include hospital, specialist and primary care medical professionals’ patient records — including observations taken from social media, e.g. for pharmacovigilance [12, 13]. There are innumerable online databases and other sources of information including scientific literature, clinical trials information, through to databases of repurposable drugs [14, 15]. Technological advances now allow for greater -omic profiling beyond genotyping and whole genome sequencing (WGS); standardization of microfluidics and antibody tagging has made single-cell technologies widely available to study both the transcriptome, e.g. using RNA-seq [16], the proteome (targeted), e.g. via mass cytometry [17], or even multiple modalities together [18].

One of the key characteristics of biomedical data that is produced and used in the drug discovery process is its inter-connected nature. Such data structure can be represented as a graph, a mathematical abstraction ubiquitously used across disciplines and fields in biology to model the diverse interactions between biological entities that intervene at the different scales. At the molecular scale, proteins and other biomolecules can be represented as graphs capturing spatial and structural relationships between their amino acid residues [19, 20] and small molecule drugs as graphs relating their constituent atoms and chemical bonding structure [21, 22]. At an intermediary scale, interactomes are graphs that capture specific types of interactions between biomolecular species (e.g. metabolites, mRNA, proteins) [23], with protein–protein interaction (PPI) graphs being perhaps most commonplace. Finally, at a higher level of abstraction, knowledge graphs can represent the complex relationships between drugs, side effects, diagnosis, associated treatments and test results [24, 25] as found in electronic medical records (EMR).

Within the last decade, two emerging trends have reshaped the data modelling community: network analysis and deep learning. The ‘network medicine’ paradigm has long been recognized in the biomedical field [26], with multiple approaches borrowed from graph theory and complex network science applied to biological graphs such as PPIs and gene regulatory networks (GRNs). Most approaches in this field were limited to handcrafted graph features such as centrality measures and clustering. In contrast, deep neural networks, a particular type of machine learning algorithms, are used to learn optimal tasks-specific features. The impact of deep learning was ground-breaking in computer vision [27] and natural language processing [28] but was limited to specific domains by the requirements on the regularity of data structures. At the convergence of these two fields is graph machine learning (GML) a new class of ML methods exploiting the structure of graphs and other irregular datasets (point clouds, meshes, manifolds, etc).

The essential idea of GML methods is to learn effective feature representations of nodes [29, 30] (e.g. users in social networks), edges (e.g. predicting future interactions in recommender systems) or entire graphs [31] (e.g. predicting properties of molecular graphs). In particular, graph neural networks (GNNs) [32–34], which are deep neural network architectures specifically designed for graph-structure data, are attracting growing interest. GNNs iteratively update the features of the nodes of a graph by propagating information from their neighbours. These methods have already been successfully applied to a variety of tasks and domains such as recommendation in social media and E-commerce [35–38], traffic estimations in Google Maps [39], misinformation detection in social media [40], and various domains of natural sciences including modelling fluids, rigid solids, and deformable materials interacting with one another [41] and event classification in particle physics [42, 43].

In the biomedical domain, GML has now set the state of the art for mining graph-structured data including drug–target–indication interaction and relationship prediction through knowledge graph embedding [30, 44, 45]; molecular property prediction [21, 22], including the prediction of absorption, distribution, metabolism and excretion (ADME) profiles [46]; early work in target identification [47] to de novo molecule design [48, 49]. Most notably, Stokes et al. [50] used directed message passing GNNs operating on molecular structures to propose repurposing candidates for antibiotic development, validating their predictions in vivo to propose suitable repurposing candidates remarkably structurally distinct from known antibiotics. Therefore, GML methods appear to be extremely promising in applications across the drug development pipeline.

Compared to previous review papers on GML [51–55] for the machine learning community or general reviews on ML within drug development more broadly [56–58], the focus of our paper is both for biomedical researchers without extensive ML backgrounds and ML experts interested in biomedical applications — with a thematic focus on GML. We provide an introduction to the key terms and building blocks of graph learning architectures (Definitions & Machine Learning on Graphs) and contextualize these methodologies within the drug discovery and development pipeline from an industrial perspective for method developers without extensive biological expertize (Drug Development Applications) before providing a closing discussion (Discussion).

Definitions

Notations and preliminaries of graph theory

We denote a graph  where

where  is a set of

is a set of  nodes, or vertices, and

nodes, or vertices, and  is a set of

is a set of  edges. Let

edges. Let  denote a node and

denote a node and  denote an edge from node

denote an edge from node  to node

to node  . When multiple edges can connect the same pair of nodes, the graph is called a multigraph. Node features are represented by

. When multiple edges can connect the same pair of nodes, the graph is called a multigraph. Node features are represented by  and

and  are the

are the  features of node

features of node  . Edge features, or attributes, are similarly represented by

. Edge features, or attributes, are similarly represented by  where

where  . We may also denote different nodes as

. We may also denote different nodes as  and

and  such that

such that  is the edge from

is the edge from  to

to  with attributes

with attributes  . Note that under this definition, undirected graphs are defined as directed graphs with each undirected edge represented by two directed edges.

. Note that under this definition, undirected graphs are defined as directed graphs with each undirected edge represented by two directed edges.

The neighbourhood  of node

of node  , sometimes referred to as one-hop neighbourhood, is the set of nodes that are connected to it by an edge,

, sometimes referred to as one-hop neighbourhood, is the set of nodes that are connected to it by an edge,  , with shorthand

, with shorthand  used for compactness. The cardinality of a node’s neighbourhood is called its degree and the diagonal degree matrix,

used for compactness. The cardinality of a node’s neighbourhood is called its degree and the diagonal degree matrix,  , has elements

, has elements  .

.

Two nodes  and

and  in a graph

in a graph  are connected if there exists a path in

are connected if there exists a path in  starting at one and ending at the other, i.e. there exists a sequence of consecutive edges of

starting at one and ending at the other, i.e. there exists a sequence of consecutive edges of  connecting the two nodes. A graph is connected if there exists a path between every pair of nodes in the graph. The shortest path distance between

connecting the two nodes. A graph is connected if there exists a path between every pair of nodes in the graph. The shortest path distance between  and

and  is defined as the number of edges in the shortest path between the two nodes and denoted by

is defined as the number of edges in the shortest path between the two nodes and denoted by  .

.

A graph  is a subgraph of

is a subgraph of  if and only if

if and only if  and

and  . If it also holds that

. If it also holds that  , then

, then  is called an induced subgraph of

is called an induced subgraph of  .

.

The adjacency matrix  typically represents the relations between nodes such that the entry on the

typically represents the relations between nodes such that the entry on the  row and

row and  column indicates whether there is an edge from node

column indicates whether there is an edge from node  to node

to node  , with

, with  representing that there is an edge, and

representing that there is an edge, and  that there is not (i.e.

that there is not (i.e.  ). Most commonly, the adjacency matrix is a square (from a set of nodes to itself), but the concept extends to bipartite graphs where an

). Most commonly, the adjacency matrix is a square (from a set of nodes to itself), but the concept extends to bipartite graphs where an  matrix can represent the edges from one set of

matrix can represent the edges from one set of  nodes to another set of size

nodes to another set of size  , and is sometimes used to store scalar edge weights. The Laplacian matrix of a simple (unweighted) graph is

, and is sometimes used to store scalar edge weights. The Laplacian matrix of a simple (unweighted) graph is  . The normalized Laplacian

. The normalized Laplacian  is often preferred, with a variant defined as

is often preferred, with a variant defined as  .

.

Knowledge graph

The term knowledge graph is used to qualify a graph that captures  types of relationships between a set of entities. In this case,

types of relationships between a set of entities. In this case,  includes relationship types as edge features. Knowledge graphs are commonly introduced as sets of triplets

includes relationship types as edge features. Knowledge graphs are commonly introduced as sets of triplets  , where

, where  represents the set of relationships. Note that multiple edges of different types can connect two given nodes. As such, the standard adjacency matrix is ill-suited to capture the complexity of a knowledge graph. Instead, a knowledge graph is often represented as a collection of adjacency matrices

represents the set of relationships. Note that multiple edges of different types can connect two given nodes. As such, the standard adjacency matrix is ill-suited to capture the complexity of a knowledge graph. Instead, a knowledge graph is often represented as a collection of adjacency matrices  , forming an adjacency tensor, in which each adjacency matrix

, forming an adjacency tensor, in which each adjacency matrix  captures one type of relationship.

captures one type of relationship.

Random walks

A random walk is a sequence of nodes selected at random during an iterative process. A random walk is constructed by considering a random walker that moves through the graph starting from a node  . At each step, the walker can either move to a neighbouring node with probability

. At each step, the walker can either move to a neighbouring node with probability  or stay on node

or stay on node  with probability

with probability  . The sequence of nodes visited after a fixed number of steps

. The sequence of nodes visited after a fixed number of steps  gives a random walk of length

gives a random walk of length  . Graph diffusion is a related notion that models the propagation of a signal on a graph. A classic example is heat diffusion [59], which studies the propagation of heat in a graph starting from some initial distribution.

. Graph diffusion is a related notion that models the propagation of a signal on a graph. A classic example is heat diffusion [59], which studies the propagation of heat in a graph starting from some initial distribution.

Graph isomorphism

Two graphs  and

and  are said to be isomorphic if there exists a bijective function

are said to be isomorphic if there exists a bijective function  such that

such that  . Finding if two graphs are isomorphic is a recurrent problem in graph analysis that has deep ramifications for machine learning on graphs. For instance, in graph classification tasks, it is assumed that a model needs to capture the similarities between pairs of graphs to classify them accurately.

. Finding if two graphs are isomorphic is a recurrent problem in graph analysis that has deep ramifications for machine learning on graphs. For instance, in graph classification tasks, it is assumed that a model needs to capture the similarities between pairs of graphs to classify them accurately.

The Weisfeiler-Lehman (WL) graph isomorphism test [60] is a classical polynomial-time algorithm in graph theory. It is based on iterative graph recolouring, starting with all nodes of identical ‘colour’ (label). At each step, the algorithm aggregates the colours of nodes and their neighbourhoods and hashes the aggregated colour into unique new colours. The algorithm stops upon reaching a stable colouring. If at that point, the colourings of the two graphs differ, the graphs are deemed non-isomorphic. However, if the colourings are the same, the graphs are possibly (but not necessarily) isomorphic. In other words, the WL test is a necessary but insufficient condition for graph isomorphism. There exist non-isomorphic graphs for which the WL test produces identical colouring and thus considers them possibly isomorphic; the test is said to fail in this case [61].

Machine Learning on Graphs

Most machine learning methods that operate on graphs can be decomposed into two parts: a general-purpose encoder and a task-specific decoder [62]. The encoder embeds a graph’s nodes, or the graph itself, in a low-dimensional feature space. To embed entire graphs, it is common first to embed nodes and then apply a permutation invariant pooling function to produce a graph level representation (e.g. sum, max or mean over node embeddings). The decoder computes an output for the associated task. The components can either be combined in two-step frameworks, with the encoder pre-trained in an unsupervised setting, or in an end-to-end fashion. The end tasks can be classified following multiple dichotomies: supervised/unsupervised, inductive/transductive and node-level/graph-level.

Supervised/unsupervised task. This is the classic dichotomy found in machine learning [63]. Supervised tasks aim to learn a mapping function from labelled data such that the function maps each data point to its label and generalizes to unseen data points. In contrast, unsupervised tasks highlight unknown patterns and uncover structures in unlabelled datasets.

Inductive/transductive task. Inductive tasks correspond to supervised learning discussed above. Transductive tasks expect that all data points are available when learning a mapping function, including unlabelled data points [32]. Hence, in the transductive setting, the model learns both from unlabelled and labelled data. In this respect, inductive learning is more general than transductive learning, as it extends to unseen data points.

Node-level/graph-level task. This dichotomy is based on the object of interest. A task can either focus on the nodes within a graph, e.g. classifying nodes within the context set by the graph, or focus on whole graphs, i.e. each data point corresponds to an entire graph [31]. Note that node-level tasks can be further decomposed into node attribute prediction tasks [32] and link inference tasks [30]. The former focuses on predicting properties of nodes while the latter infers missing links in the graph.

As an illustration, consider the task of predicting the chemical properties of small molecules based on their chemical structures. This is a graph-level task in a supervised (inductive) setting whereby labelled data is used to learn a mapping from chemical structure to chemical properties. Alternatively, the task of identifying groups of proteins that are tightly associated in a PPI graph is an unsupervised node-level task. However, predicting proteins’ biological functions using their interactions in a PPI graph corresponds to a node-level transductive task.

Further, types of tasks can be identified, e.g. based on whether we have static or varying graphs. Biological graphs can vary and evolve along a temporal dimension resulting in changes to composition, structure and attributes [64, 65]. However, the classifications detailed above are the most commonly found in the literature. We review below the existing classes of GML methods.

Traditional approaches

Graph statistics

In the past decades, a flourish of heuristics and statistics have been developed to characterize graphs and their nodes. For instance, the diverse centrality measures capture different aspects of graphs connectivity. The closeness centrality quantifies how closely a node is connected to all other nodes, and the betweenness centrality measures how many shortest paths between pairs of other nodes a given node is part of. Furthermore, graph sub-structures can be used to derive topological descriptors of the wiring patterns around each node in a graph. For instance, motifs [66] and graphlets [67] correspond to sets of small graphs used to characterize local wiring patterns of nodes. Specifically, we can derive a feature vector with length corresponding to the number of considered motifs (or graphlets) where the  element indicates the frequency of the

element indicates the frequency of the  motif.

motif.

These handcrafted features can provide node, or graph, representations that can be used as input to machine learning algorithms. A popular approach has been the definition of kernels based on graph statistics that can be used as input to support vector machines (SVM). For instance, the graphlet kernel [68] captures node wiring patterns similarity, and the WL kernel [69] captures graph similarity based on the WL algorithm discussed in Section 2.

Random walks

Random-walk based methods have been a popular, and successful, approach to embed a graph’s nodes in a low-dimensional space such that node proximities are preserved. The underlying idea is that the distance between node representations in the embedding space should correspond to a measure of distance on the graph, measured here by how often a given node is visited in random walks starting from another node. Deepwalk [29] and node2vec [70] are arguably the most famous methods in this category.

In practice, Deepwalk simulates multiple random walks for each node in the graph. Then, given the embedding  of a node

of a node  , the objective is to maximize the log probability

, the objective is to maximize the log probability  for all nodes

for all nodes  that appear in a random walk within a fixed window of

that appear in a random walk within a fixed window of  . The method draws its inspiration from the SkipGram model developed for natural language processing [71].

. The method draws its inspiration from the SkipGram model developed for natural language processing [71].

DeepWalk uses uniformly random walks, but several follow-up works analyze how to bias these walks to improve the learned representations. For example, node2vec biases the walks to behave more or less like certain search algorithms over the graph. The authors report a higher quality of embeddings with respect to information content when compared to Deepwalk.

Geometric approaches

Geometric models for knowledge graph embedding posit each relation type as a geometric transformation from source to target in the embedding space. Consider a triplet  ,

,  denoting the source node and

denoting the source node and  denoting the target node. A geometric model learns a transformation

denoting the target node. A geometric model learns a transformation  such that

such that  is small, with

is small, with  being some notion of distance (e.g. Euclidean distance) and

being some notion of distance (e.g. Euclidean distance) and  denoting the embedding of entity

denoting the embedding of entity  . The key differentiating choice between these approaches is the form of the geometric transformation

. The key differentiating choice between these approaches is the form of the geometric transformation  .

.

TransE [72] is a purely translational approach, where  corresponds to the sum of the source node and relation embeddings. In essence, the model enforces that the motion from the embedding

corresponds to the sum of the source node and relation embeddings. In essence, the model enforces that the motion from the embedding  of the source node in the direction given by the relation embedding

of the source node in the direction given by the relation embedding  terminates close to the target node’s embedding

terminates close to the target node’s embedding  as quantified by the chosen distance metric. Due to its formulation, TransE is not able to account effectively for symmetric relationships or one-to-many interactions.

as quantified by the chosen distance metric. Due to its formulation, TransE is not able to account effectively for symmetric relationships or one-to-many interactions.

Alternatively, RotatE [30] represents relations as rotations in a complex latent space. Thus,  applies a rotation matrix

applies a rotation matrix  , corresponding to the relation, to the embedding vector of the source node

, corresponding to the relation, to the embedding vector of the source node  such that the rotated vector

such that the rotated vector  lies close to the embedding vector

lies close to the embedding vector  of the target node in terms of Manhattan distance. The authors demonstrate that rotations can correctly capture diverse relation classes, including symmetry/anti-symmetry.

of the target node in terms of Manhattan distance. The authors demonstrate that rotations can correctly capture diverse relation classes, including symmetry/anti-symmetry.

Matrix/tensor factorization

Matrix factorization is a common problem in mathematics that aims to approximate a matrix  by the product of

by the product of  low-dimensional latent factors,

low-dimensional latent factors,  . The general problem can be written as

. The general problem can be written as

|

where  represents a measure of the distance between two inputs, such as Euclidean distance or Kullback-Leibler divergence. In machine learning, matrix factorization has been extensively used for unsupervised applications such as dimensionality reduction, missing data imputation and clustering. These approaches are especially relevant to the knowledge graph embedding problem and have set state-of-the-art (SOTA) results on standard benchmarks [73].

represents a measure of the distance between two inputs, such as Euclidean distance or Kullback-Leibler divergence. In machine learning, matrix factorization has been extensively used for unsupervised applications such as dimensionality reduction, missing data imputation and clustering. These approaches are especially relevant to the knowledge graph embedding problem and have set state-of-the-art (SOTA) results on standard benchmarks [73].

For graphs, the objective is to factorize the adjacency matrix  , or a derivative of the adjacency matrix (e.g. Laplacian matrix). It can effectively be seen as finding embeddings for all entities in the graph on a low-dimensional, latent manifold under user-defined constraints (e.g. latent space dimension) such that the adjacency relationships are preserved under dot products.

, or a derivative of the adjacency matrix (e.g. Laplacian matrix). It can effectively be seen as finding embeddings for all entities in the graph on a low-dimensional, latent manifold under user-defined constraints (e.g. latent space dimension) such that the adjacency relationships are preserved under dot products.

Laplacian eigenmaps, introduced by Belkin et al. [74], is a fundamental approach designed to embed entities based on a similarity derived graph. Laplacian eigenmaps uses the eigendecomposition of the Laplacian matrix of a graph to embed each of the  nodes of a graph

nodes of a graph  in a low-dimensional latent manifold. The spectral decomposition of the Laplacian is given by equation

in a low-dimensional latent manifold. The spectral decomposition of the Laplacian is given by equation  , where

, where  is a diagonal matrix with entries corresponding to the eigenvalues of L and column

is a diagonal matrix with entries corresponding to the eigenvalues of L and column  of

of  gives the eigenvector associated to the

gives the eigenvector associated to the  eigenvalue

eigenvalue  (i.e.

(i.e.  ). Given a user defined dimension

). Given a user defined dimension  , the embedding of node

, the embedding of node  is given by the vector

is given by the vector  , where

, where  indicates the

indicates the  entry of vector

entry of vector  .

.

Nickel et al. [75] introduced RESCAL to address the knowledge graph embedding problem. RESCAL’s objective function is defined as

|

where  and

and  , with

, with  denoting the latent space dimension. The function

denoting the latent space dimension. The function  denotes a regularizer, i.e. a function applying constraints on the free parameters of the model, on the factors

denotes a regularizer, i.e. a function applying constraints on the free parameters of the model, on the factors  and

and  . Intuitively, factor

. Intuitively, factor  learns the embedding of each entity in the graph and factor

learns the embedding of each entity in the graph and factor  specifies the interactions between entities under relation

specifies the interactions between entities under relation  . Yang et al. [76] proposed DistMult, a variation of RESCAL that considers each factor

. Yang et al. [76] proposed DistMult, a variation of RESCAL that considers each factor  as a diagonal matrix. Trouillon et al. [77] proposed ComplEx, a method extending DistMult to the complex space taking advantage of the Hermitian product to represent asymmetric relationships.

as a diagonal matrix. Trouillon et al. [77] proposed ComplEx, a method extending DistMult to the complex space taking advantage of the Hermitian product to represent asymmetric relationships.

Alternatively, some existing frameworks leverage both a graph’s structural information and the node’s semantic information to embed each entity in a way that preserves both sources of information. One such approach is to use a graph’s structural information to regularize embeddings derived from the factorization of the feature matrix  [78, 79]. The idea is to penalize adjacent entities in the graph to have closer embeddings in the latent space, according to some notion of distance. Another approach is to jointly factorize both data sources, for instance, introducing a kernel defined on the feature matrix [80, 81].

[78, 79]. The idea is to penalize adjacent entities in the graph to have closer embeddings in the latent space, according to some notion of distance. Another approach is to jointly factorize both data sources, for instance, introducing a kernel defined on the feature matrix [80, 81].

Graph neural networks

GNNs were first introduced in the late 1990s [82–84] but have attracted considerable attention in recent years, with the number of variants rising steadily [32–34, 44, 85–89]. From a high-level perspective, GNNs are a realization of the notion of group invariance, a general blueprint underpinning the design of a broad class of deep learning architectures. The key structural property of graphs is that the nodes are usually not assumed to be provided in any particular order, and any functions acting on graphs should be permutation invariant (order-independent); therefore, for any two isomorphic graphs, the output of said functions are identical. A typical GNN consists of one or more layers implementing a node-wise aggregation from the neighbour nodes; since the ordering of the neighbours is arbitrary, the aggregation must be permutation invariant. When applied locally to every node of the graph, the overall function is permutation equivariant, i.e. its output is changed in the same way as the input under node permutations.

GNNs are amongst the most general class of deep learning architectures currently in existence. Popular architectures such as DeepSets [90], transformers [91] and convolutional neural networks [92] can be derived as particular cases of GNNs operating on graphs with an empty edge set, a complete graph, and a ring graph, respectively. In the latter case, the graph is fixed and the neighbourhood structure is shared across all nodes; the permutation group can therefore be replaced by the translation group, and the local aggregation expressed as a convolution. While a broad variety of GNN architecture exists, their vast majority can be classified into convolutional, attentional and message-passing ‘flavours’ — with message-passing being the most general formulation.

Message passing networks

A message passing-type GNN layer is comprised of three functions: (1) a message passing function Msg that permits information exchange between nodes over edges; (2) a permutation-invariant aggregation function Agg that combines the collection of received messages into a single, fixed-length representation (3) and an update function Update that produces node-level representations given the previous representation and the aggregated messages. Common choices are a simple linear transformation for Msg, summation, simple- or weighted-averages for Agg and multilayer perceptrons (MLP) with activation functions for the Update function, although it is not uncommon for the Msg or Update function to be absent or reduced to an activation function only. Where the node representations after layer  are

are  , we have

, we have

|

or, more compactly,

|

where  and

and  are the update, aggregation and message passing functions, respectively, and

are the update, aggregation and message passing functions, respectively, and  indicates the layer index [93]. The design of the aggregation

indicates the layer index [93]. The design of the aggregation  is important: when chosen to be an injective function, the message passing mechanism can be shown to be equivalent to the colour refinement procedure in the WL algorithm [86]. The initial node representations,

is important: when chosen to be an injective function, the message passing mechanism can be shown to be equivalent to the colour refinement procedure in the WL algorithm [86]. The initial node representations,  , are typically set to node features,

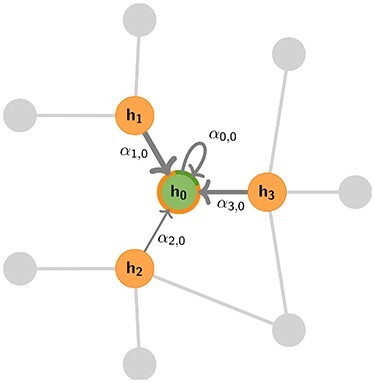

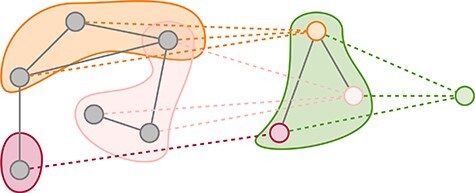

, are typically set to node features,  . Figure 2 gives a schematic representation of this operation.

. Figure 2 gives a schematic representation of this operation.

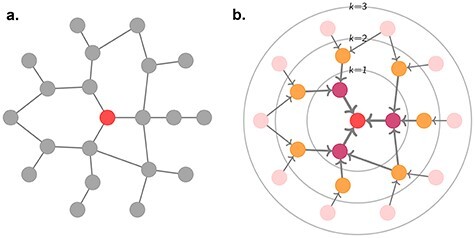

Figure 2 .

Illustration of a general aggregation step performed by a GNN for the central node (green) based on its direct neighbours (orange). Messages may be weighted depending on their content, the source or target node features or the attributes of the edge they are passed along, as indicated by the thickness of incoming arrows.

Graph convolutional network

The graph convolutional network (GCN) [32] can be decomposed in this framework as

|

where  is some activation function, usually a rectified linear unit (ReLU). The scheme is simplified further if we consider the addition of self-loops, that is, an edge from a node to itself, commonly expressed as the modified adjacency

is some activation function, usually a rectified linear unit (ReLU). The scheme is simplified further if we consider the addition of self-loops, that is, an edge from a node to itself, commonly expressed as the modified adjacency  , where the aggregation includes the self-message and the update reduces to

, where the aggregation includes the self-message and the update reduces to

|

With respect to the notations in Figure 2, for GCN we have  .

.

As the update depends only on a node’s local neighbourhood, these schemes are also commonly referred to as neighbourhood aggregation. Indeed, taking a broader perspective, a single-layer GNN updates a node’s features based on its immediate or one-hop neighbourhood. Adding a second GNN layer allows information from the two-hop neighbourhood to propagate via intermediary neighbours. By further stacking GNN layers, node features can come to depend on the initial values of more distant nodes, analogous to the broadening the receptive field in later layers of convolutional neural networks—the deeper the network, the broader the receptive field (see Figure 3). However, this process is diffusive and leads to features washing out as the graph thermalizes. This problem is solved in convolutional networks with pooling layers, but an equivalent canonical coarsening does not exist for irregular graphs.

Figure 3 .

-hop neighbourhoods of the central node (red). Typically, a GNN layer operates on the 1-hop neighbourhood, i.e. nodes with which the central node shares an edge, within the

-hop neighbourhoods of the central node (red). Typically, a GNN layer operates on the 1-hop neighbourhood, i.e. nodes with which the central node shares an edge, within the  circle. Stacking layers allows information from more distant nodes to propagate through intermediate nodes.

circle. Stacking layers allows information from more distant nodes to propagate through intermediate nodes.

Graph attention network

Graph attention networks (GAT) [33] weight incoming messages with an attention mechanism and multi-headed attention for train stability, including self-loops, the message, aggregation and update functions

|

are otherwise unchanged. Although the authors suggest the attention mechanism is decoupled from the architecture and should be task specific, in practice, their original formulation is most widely used. The attention weights,  are softmax normalized, that is

are softmax normalized, that is

|

where  is the output of a single layer feed-forward neural network without a bias (a projection) with LeakyReLU activations, that takes the concatenation of transformed source- and target-node features as input,

is the output of a single layer feed-forward neural network without a bias (a projection) with LeakyReLU activations, that takes the concatenation of transformed source- and target-node features as input,

|

where  .

.

Relational graph convolutional networks

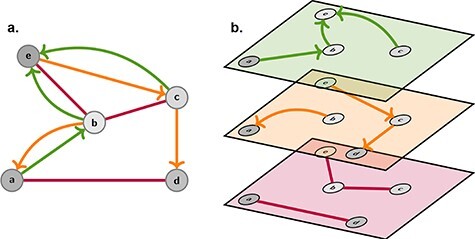

At many scales of systems biology, the relationships between entities have a type, a direction, or both. For instance, the type of bonds between atoms, binding of two proteins, and gene regulatory interactions are essential to understanding the systems in which they exist. This idea is expressed in the message passing framework with messages that depend on edge attributes. Relational graph convolutional networks (R-GCNs) [44] learn separate linear transforms for each edge type, which can be viewed as casting the graph as a multiplex graph and operating GCN-like models independently on each layer, as shown in Figure 4.

Figure 4 .

A multi-relational graph (A) can be parsed into layers of a multiplex graph (B). The R-GCN learns a separate transform for each layer, and the self-loop and messages are passed according to the connectivity of the layers. For example, node (A) passes a message to node (B) in the top layer, receives a message from (B) in the middle layer, and does not communicate with (B) in the bottom layer.

The R-GCN model decomposes to

|

for edge types  , with separate transforms

, with separate transforms  for self-loops, and problem-specific normalization constant

for self-loops, and problem-specific normalization constant  .

.

The different types of GNNs above illustrate some approaches to define message passing on graphs. Note that there is no established best scheme for all scenarios and that each specific application might require a different scheme.

Graph pooling

Geometric deep learning approaches machine learning with graph-structured data as the generalization of methods designed for learning with grid and sequence data (images, time-series; Euclidean data) to non-Euclidean domains, i.e. graphs and manifolds [94]. This is also reflected in the derivation and naming conventions of popular GNN layers as generalized convolutions [32, 44]. Modern convolutional neural networks have settled on the combination of layers of  kernels interspersed with

kernels interspersed with  max-pooling. Developing a corresponding pooling workhorse for GNNs is an active area of research. The difficulty is that, unlike Euclidean data structures, there are no canonical up- and down-sampling operations for graphs. As a result, there are many proposed methods that centre around learning to pool or prune based on features [95–99], and learned or non-parametric structural pooling [100–103]. However, the distinction between featural and structural methods is blurred when topological information is included in the node features.

max-pooling. Developing a corresponding pooling workhorse for GNNs is an active area of research. The difficulty is that, unlike Euclidean data structures, there are no canonical up- and down-sampling operations for graphs. As a result, there are many proposed methods that centre around learning to pool or prune based on features [95–99], and learned or non-parametric structural pooling [100–103]. However, the distinction between featural and structural methods is blurred when topological information is included in the node features.

The most successful feature-based methods extract representations from graphs directly either for cluster assignments [95, 99] or for top-k pruning [96–98]. DiffPool uses GNNs both to produce a hierarchy of representations for overall graph classification and to learn intermediate representations for soft cluster assignments to a fixed number of pools [95]. Figure 5 presents an example of this kind of pooling. top-k pooling takes a similar approach, but instead of using an auxiliary learning process to pool nodes, it is used to prune nodes [96, 97]. In many settings, this simpler method is competitive with DiffPool at a fraction of the memory cost.

Figure 5 .

A possible graph pooling schematic. Nodes in the original graph (left, grey) are pooled into nodes in the intermediate graph (centre) as shown by the dotted edges. The final pooling layer aggregates all the intermediate nodes into a single representation (right, green). DiffPool could produce the pooling shown [95].

Structure-based pooling methods aggregate nodes based on the graph topology and are often inspired by the processes developed by chemists and biochemists for understanding molecules through their parts. For example, describing a protein in terms of its secondary structure ( -helix,

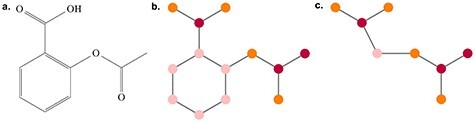

-helix,  -sheets) and the connectivity between these elements can be seen as a pooling operation over the protein’s molecular graph. Figure 6 shows how a small molecule can be converted to a junction tree representation, with the carbon ring (in pink) being aggregated into a single node. Work on decomposing molecules into motifs bridges the gap between handcrafted secondary structures and unconstrained learning methods [103]. Motifs are extracted based on a combined statistical and chemical analysis, where motif templates (i.e. graph substructures) are selected based on how frequently they occur in the training corpus and molecules are then decomposed into motifs according to some chemical rules. More general methods look to concepts from graph theory such as minimum cuts [100, 102] and maximal cliques [101] on which to base pooling. Minimum cuts are graph partitions that minimize some objective and have obvious connections to graph clustering, whilst cliques (subsets of nodes that are fully connected) are in some sense at the limit of node community density.

-sheets) and the connectivity between these elements can be seen as a pooling operation over the protein’s molecular graph. Figure 6 shows how a small molecule can be converted to a junction tree representation, with the carbon ring (in pink) being aggregated into a single node. Work on decomposing molecules into motifs bridges the gap between handcrafted secondary structures and unconstrained learning methods [103]. Motifs are extracted based on a combined statistical and chemical analysis, where motif templates (i.e. graph substructures) are selected based on how frequently they occur in the training corpus and molecules are then decomposed into motifs according to some chemical rules. More general methods look to concepts from graph theory such as minimum cuts [100, 102] and maximal cliques [101] on which to base pooling. Minimum cuts are graph partitions that minimize some objective and have obvious connections to graph clustering, whilst cliques (subsets of nodes that are fully connected) are in some sense at the limit of node community density.

Figure 6 .

Illustration of (A) the molecule aspirin, (B) its basic graph representation and (C) the associated junction tree representation. Colours on the node correspond to atom types.

Drug Development Applications

The process of discovering a drug and making it available to patients can take up to 10 years and is characterized by failures, or attrition, see Figure 1. The early discovery stage involves target identification and validation, hit discovery, lead molecule identification and then optimization to achieve the desired characteristics of a drug candidate [114]. Pre-clinical research typically comprises both in vitro and in vivo assessments of toxicity, pharmacokinetics (PK), pharmacodynamics (PD) and efficacy of the drug. Providing good pre-clinical evidence is presented, the drug then progresses for human clinical trials normally through three different phases of clinical trials. In the following subsections, we explore how GML can be applied to distinct stages within the drug discovery and development process.

In Table 1, we provide a summary of how some of the key work reviewed in this section ties to the methods discussed in Section 3. Table 2 outlines the underlying key data types and databases therein. Biomedical databases are typically presented as general repositories with minimal reference to downstream applications. Therefore, additional processing is often required for a specific task; to this end, efforts have been directed towards processed data repositories with specific endpoints in mind [115, 116].

Table 1.

Exemplar references linking applications from Section 4 to methods described in Section 3. The entries in the data types column refers to acronyms defined in Table 2. The last column indicates the presence of follow up experimental validation. *The authors consider a mesh graph over protein surfaces.

| Relevant application | Ref. | Method type | Task level | ML approach | Data types | Exp. val? |

|---|---|---|---|---|---|---|

| 4.1 Target identification | ||||||

| − | [47] | Geometric (§3.2) | Node-level | Unsupervised | Di, Dr, GA | |

| 4.2 Design of small molecules therapies | ||||||

| Molecular property prediction | [21] | GNN (§3.4) | Graph-level | Supervised | Dr | |

| [104] | GNN (§3.4) | Graph-level | Supervised | Dr | ||

| [22] | GNN (§3.4) | Graph-level | Supervised | Dr | ||

| Enhanced high throughput screens | [50] | GNN (§3.4) | Graph-level | Supervised | Dr | ✓ |

| De novo design | [105] | GNN (§3.4) | Graph-level | Unsupervised | Dr | |

| [48] | Factorisation (§3.3) | Graph-level | Semi-supervised | Dr | ✓ | |

| 4.3 Design of new biological entities | ||||||

| ML-assisted directed evolution | − | − | − | − | − | |

| Protein engineering | [49] | GNN (§3.4) | Subgraph-level | *Supervised | PS | |

| De novo design | [106] | GNN (§3.4) | Graph-level | Supervised | PS | ✓ |

| 4.4 Drug repurposing | ||||||

| Off-target repurposing | [107] | Factorisation (§3.3) | Node-level | Unsupervised | Dr, PI | |

| [108] | GNN (§3.4) | Graph-level | Supervised | Dr, PS | ||

| [109] | Factorisation (§3.3) | Node-level | Unsupervised | Dr, Di | ||

| On-target repurposing | [110] | GNN (§3.4) | Node-level | Supervised | Dr, Di | |

| [111] | Geometric (§3.2) | Node-level | Unsupervised | Dr, Di, PI, GA | ||

| Combination repurposing | [112] | GNN (§3.4) | Node-level | Supervised | Dr, PI, DC | |

| [113] | GNN (§3.4) | Graph-level | Supervised | Dr, DC | ✓ | |

Table 2.

Different types of data relevant to drug discovery and development applications with associated databases.

Target identification

Target identification is the search for a molecular target with a significant functional role(s) in the pathophysiology of a disease such that a hypothetical drug could modulate said target culminating with beneficial effect [132, 133]. Early targets included G-protein coupled receptors (GPCRs), kinases, and proteases and formed the major target protein families amongst first-in-class drugs [134] — targeting other classes of biomolecules is also possible, e.g. nucleic acids. For an organ-specific therapy, an ideal target should be strongly and preferably expressed in the tissue of interest, and preferably a three-dimensional structure should be obtainable for biophysical simulations.

There is a range of complementary lines of experimental evidence that could support target identification. For example, a phenomenological approach to target identification could consider the imaging, histological or -omic presentation of diseased tissue when compared to matched healthy samples. Typical differential presentation includes chromosomal aberrations (e.g. from WGS), differential expression (e.g. via RNA-seq) and protein translocation (e.g. from histopathological analysis) [135]. As the availability of -omic technologies increases, computational and statistical advances must be made to integrate and interpret large quantities of high dimensional, high-resolution data on a comparatively small number of samples, occasionally referred to as panomics [136, 137].

In contrast to a static picture, in vitro and in vivo models are built to examine the dynamics of disease phenotype to study mechanism. In particular, genes are manipulated in disease models to understand key drivers of a disease phenotype. For example, random mutagenesis could be induced by chemicals or transposons in cell clones or mice to observe the phenotypic effect of perturbing certain cellular pathways at the putative target protein [133]. As a targeted approach, bioengineering techniques have been developed to either silence mRNA or remove the gene entirely through genetic editing. In modern times, CRISPR is being used to knockout genes in a cleaner manner to prior technologies, e.g. siRNA, shRNA, TALEN [138–140]. Furthermore, innovations have led to CRISPR interference (CRISPRi) and CRISPR activation (CRISPRa) that allow for suppression or overexpression of target genes [141].

To complete the picture, biochemical experiments observe chemical and protein interactions to inform on possible drug mechanisms of action [132], examples include: affinity chromatography, a range of mass spectrometry techniques for proteomics, and drug affinity responsive target stability assays [142–144]. X-ray crystallography and cryogenic electron microscopy (cryo-EM) can be used to detail structures of proteins to identify druggable pockets [145]; computational approaches can be used to assess the impacts of mutations in cancer resulting in perturbed crystal structures [146]. Yeast two-hybrid or three-hybrid systems can be employed to detail genomic PPI or RNA–protein interaction [147, 148].

Systems biology aims to unify phenomenological observations on disease biology (the ‘-omics view’), genetic drivers of phenotypes (driven by bioengineering) through a network view of interacting biomolecules [149]. The ultimate goal is to pin down a ‘druggable’ point of intervention that could hopefully reverse the disease condition. One of the outcomes of this endeavour is the construction of signalling pathways; for example, the characterization of the TGF- , PI3K/AKT and Wnt-dependent signalling pathways have had profound impacts on oncology drug discovery [150–152].

, PI3K/AKT and Wnt-dependent signalling pathways have had profound impacts on oncology drug discovery [150–152].

In contrast to complex diseases, target identification for infectious disease requires a different philosophy. After eliminating pathogenic targets structurally similar to those within the human proteome, one aims to assess the druggability of the remaining targets. This may be achieved using knowledge of the genome to model the constituent proteins when 3D structures are not already available experimentally. The Blundell group has shown that 70-80% of the proteins from Mycobacterium tuberculosis and a related organism, Mycobacterium abscessus (infecting cystic fibrosis patients), can be modelled via homology [153, 154]. By examining the potential binding sites, such as the catalytic site of an enzyme or an allosteric regulatory site, the binding hotspot can be identified and the potential value as a target estimated [155]. Of course, target identification is also dependent on the accessibility of the target to a drug, as well as the presence of efflux pumps — and metabolism of any potential drug by the infectious agent.

From systems biology to machine learning on graphs

Organisms, or biological systems, consist of complex and dynamic interactions between entities at multiple scales. At the submolecular level, proteins are chains of amino acid residues which fold to adopt highly specific conformational structures. At the molecular scale, proteins and other biomolecules physically interact through transient and long-timescale binding events to carry out regulatory processes and perform signal amplification through cascades of chemical reactions. By starting with a low-resolution understanding of these biomolecular interactions, canonical sequences of interactions associated with specific processes become labelled as pathways that ultimately control cellular functions and phenotypes. Within multicellular organisms, cells interact with each other forming diverse tissues and organs. A reductionist perspective of disease is to view it as being the result of perturbations of the cellular machinery at the molecular scale that manifest through aberrant phenotypes at the cellular and organismal scales. Within target identification, one is aiming to find nodes that upon manipulation lead to a causal sequence of events resulting in the reversion from a diseased to a healthy state.

It seems plausible that target identification will be the greatest area of opportunity for machine learning on graphs. From a genetics perspective, examining Mendelian traits and genome-wide association studies linked to coding variants of drug targets have a greater chance of success in the clinic [156, 157]. However, when examining PPI networks, Fang et al. [158] found that various protein targets were not themselves ‘genetically associated’, but interacted with other proteins with genetic associations to the disease in question. For example in the case of rheumatoid arthritis (RA), tumour necrosis factor (TNF) inhibition is a popular drug mechanism of action with no genetic association — but the interacting proteins of TNF including CD40, NFKBIA, REL, BIRC3 and CD8A have variants that are known to exhibit a genetic predisposition to RA.

Oftentimes, systems biology has focused on networks with static nodes and edges, ignoring faithful characterization of underlying biomolecules that the nodes represent. With GML, we can account for much richer representations of biology accounting for multiple relevant scales, for example, graphical representation of molecular structures (discussed in Sections 4.2 and 4.3), functional relationships within a knowledge graph (discussed in Section 4.4), and expression of biomolecules. Furthermore, GML can learn graphs from data as opposed to relying on pre-existing incomplete knowledge [159, 160]. Early work utilizing GML for target identification includes Pittala et al. [47], whereby a knowledge graph link prediction approach was used to beat the in house algorithms of open targets [161] to rediscover drug targets within clinical trials for Parkinson’s disease.

The utilization of multi-omic expression data capturing instantaneous multimodal snapshots of cellular states will play a significant role in target identification as costs decrease [162, 163] — particularly in a precision medicine framework [136]. Currently, however, only a few panomic datasets are publicly available. A small number of early adopters have spotted the clear utility in employing GML [164], occasionally in a multimodal learning [165, 166], or causal inference setting [167]. These approaches have helped us move away from the classical Mendelian ‘one gene – one disease’ philosophy and appreciate the true complexity of biological systems.

Design of small molecule therapies

Drug design broadly falls into two categories: phenotypic drug discovery and target-based drug discovery. Phenotypic drug discovery (PDD) begins with a disease’s phenotype without having to know the drug target. Without the bias from having a known target, PDD has yielded many first-in-class drugs with novel mechanisms of action [168]. It has been suggested that PDD could provide the new paradigm of drug design, reducing costs substantially and increasing productivity [169]. However, drugs found by PDD are often pleiotropic and impose greater safety risks when compared to target-oriented drugs. In contrast, best-in-class drugs are usually discovered by a target-based approach.

For target-based drug discovery, after target identification and validation, ‘hit’ molecules would be identified via high-throughput screening of compound libraries against the target [114], typically resulting in a large number of possible hits. Grouping these into ‘hit series’ and they become further refined in functional in vitro assays. Ultimately, only those selected via secondary in vitro assays and in vivo models would be the drug ‘leads’. With each layer of screening and assays, the remaining compounds should be more potent and selective against the therapeutic target. Finally, lead compounds are optimized by structural modifications, to improve properties such as PKPD, typically using heuristics, e.g. Lipinski’s rule of five [170]. In addition to such structure-based approach, fragment-based (FBDD) [171, 172] and ligand-based drug discovery (LBDD) have also been popular [173, 174]. FBDD enhances the ligand efficiency and binding affinity with fragment-like leads of  150 Da, whilst LBDD does not require 3D structures of the therapeutic targets.

150 Da, whilst LBDD does not require 3D structures of the therapeutic targets.

Both phenotypic- and target-based drug discovery comes with their own risks and merits. While the operational costs of target ID may be optional, developing suitable phenotypic screening assays for the disease could be more time-consuming and costly [169, 175]. Hence, the overall timeline and capital costs are roughly the same [175].

In this review, we make no distinction between new chemical entities (NCE), new molecular entities (NME) or new active substances (NAS) [176].

Modelling philosophy

For a drug, the base graph representation is obtained from the molecule’s SMILES signature and captures bonds between atoms, i.e. each node of the graph corresponds to an atom and each edge stands for a bond between two atoms [21, 22]. The features associated with atoms typically include its element, valence and degree. Edge features include the associated bond’s type (single, double, triple), its aromaticity, and whether it is part of a ring or not. Additionally, Klicpera et al. [22] consider the geometric length of a bond and geometric angles between bonds as additional features. This representation is used in most applications, sometimes complemented or augmented with heuristic approaches.

To model a graph structure, Jin et al. [105] used the base graph representation in combination with a junction tree derived from it. To construct the junction tree, the authors first define a set of molecule substructures, such as rings. The graph is then decomposed into overlapping components, each corresponding to a specific substructure. Finally, the junction tree is defined with each node corresponding to an identified component and each edge associates overlapping components.

Jin et al. [103] then extended their previous work by using a hierarchical representation with various coarseness of the small molecule. The proposed representation has three levels: (1) an atom layer, (2) an attachment layer and (3) a motif layer. The first level is simply the basic graph representation. The following levels provide the coarse and fine-grain connection patterns between a molecule’s motifs. Specifically, the attachment layer describes at which atoms two motifs connect, while the motif layer only captures if two motifs are linked. Considering a molecule base graph representation  , a motif is defined as a subgraph of

, a motif is defined as a subgraph of  induced on atoms in

induced on atoms in  and bonds in

and bonds in  . Motifs are extracted from a molecule’s graph by breaking bridge bonds.

. Motifs are extracted from a molecule’s graph by breaking bridge bonds.

Kajino [177] opted for a hypergraph representation of small molecules. A hypergraph is a generalization of graphs in which an edge, called a hyperedge, can connect any number of nodes. In this setting, a node of the hypergraph corresponds to a bond between two atoms of the small molecule. In contrast, a hyperedge then represents an atom and connects all its bonds (i.e. nodes).

Molecular property prediction

Pharmaceutical companies may screen millions of small molecules against a specific target, e.g. see GlaxoSmithKline’s DNA-encoded small molecule library of 800 million entries [178]. However, as the end result will be optimized via a skilled medicinal chemist, one should aim to substantially cut down the search space by screening only a representative selection of molecules for optimization later. One route towards this is to select molecules with heterogeneous chemical properties using GML approaches. This is a popular task with well-established benchmarks such as QM9 [179] and MD17 [180]. Top-performing methods are based on GNNs.

For instance, using a graph representation of drugs, Duvenaud et al. [21] have shown substantial improvements over non-graph-based approaches for molecule property prediction tasks. Specifically, the authors used GNNs to embed each drug and tested the predictive capabilities of the model on diverse benchmark datasets. They demonstrated improved interpretability of the model and predictive superiority over previous approaches which relied on circular fingerprints [181]. The authors use a simple GNN layer with a read-out function on the output of each GNN layer that updates the global drug embedding.

Alternatively, Schutt et al. [182] introduced SchNet, a model that characterizes molecules based on their representation as a list of atoms with interatomic distances, that can be viewed as a fully connected graph. SchNet uses learned embeddings for each atom using two modules: (1) an atom-wise module and (2) an interaction module. The former applies a simple MLP transformation to each atom representation input, while the latter updates the atom representation based on the representations of the other atoms of the molecule and using relative distances to modulate contributions. The final molecule representation is obtained with a global sum pooling layer over all atoms’ embeddings.

With the same objective in mind, Klicpera et al. [22] recently introduced DimeNet, a novel GNN architecture that diverges from the standard message passing framework presented in Section 3. DimeNet defines a message coefficient between atoms based on their relative positioning in 3D space. Specifically, the message from node  to node

to node  is iteratively updated based on

is iteratively updated based on  ’s incoming messages as well as the distances between atoms and the angles between atomic bonds. DimeNet relies on more geometric features, considering both the angles between different bonds and the distance between atoms. The authors report substantial improvements over SOTA models for the prediction of molecule properties on two benchmark datasets.

’s incoming messages as well as the distances between atoms and the angles between atomic bonds. DimeNet relies on more geometric features, considering both the angles between different bonds and the distance between atoms. The authors report substantial improvements over SOTA models for the prediction of molecule properties on two benchmark datasets.

Most relevant to the later stages of preclinical work, Feinberg et al. extended previous work on molecular property prediction [104] to include ADME properties [46]. In this scenario, by only using structures of drugs predictions were made across a diverse range of experimental observables, including half-lives across in vivo models (rat, dog), human Ether-à-go-go-Related Gene protein interactions and IC values for common liver enzymes predictive of drug toxicity.

values for common liver enzymes predictive of drug toxicity.

Enhanced high-throughput screens

Within the previous section, chemical properties were a priori defined. In contrast, Stokes et al. [50] leveraged results from a small phenotypic growth inhibition assay of 2335 molecules against Escherichia coli to infer antibiotic properties of the ZINC15 collection of >107 million molecules. After ranking and curating hits, only 23 compounds were experimentally tested — leading to halicin being identified. Of particular note was that the Tanimoto similarity of halicin when compared its nearest neighbour antibiotic, metronidazole, was only  0.21 — demonstrating the ability of the underlying ML to generalize to diverse structures.

0.21 — demonstrating the ability of the underlying ML to generalize to diverse structures.

Testing halicin against a range of bacterial infections, including Mycobacterium tuberculosis, demonstrated broad-spectrum activity through selective dissipation of the  pH component of the proton motive force. In a world first, Stokes et al. showed efficacy of an AI-identified molecule in vivo (Acinetobacter baumannii infected neutropenic BALB/c mice) to beat the standard of care treatment (metronidazole) [50].

pH component of the proton motive force. In a world first, Stokes et al. showed efficacy of an AI-identified molecule in vivo (Acinetobacter baumannii infected neutropenic BALB/c mice) to beat the standard of care treatment (metronidazole) [50].

De novo design

A more challenging task than those previously discussed is de novo design of small molecules from scratch; that is, for a fixed target (typically represented via 3D structure) can one design a suitable and selective drug-like entity?

In the landmark paper, Zhavoronkov et al. [48] created a novel chemical matter against discoidin domain receptor 1 (DDR1) using a variational autoencoder style architecture. Notably, they penalize the model to select structures similar to disclosed drugs from the patent literature. As the approach was designed to find small molecules for a well-known target, crystal structures were available and subsequently utiliszed. Additionally, the ZINC dataset containing hundreds of millions of structures was used (unlabelled data) along with confirmed positive and negative hits for DDR1.

In total, six compounds were synthesized with four attaining <1 values. Whilst selectivity was shown for two molecules of DDR1 when compared to DDR2, selectivity against a larger panel of off-targets was not shown. Whilst further development (e.g. PK or toxicology testing) was not shown, Zhavoronkov et al. demonstrated de novo small molecule design in an experimental setting [48]. Arguably, the recommendation of an existing molecule is a simpler task than designing one from scratch.

values. Whilst selectivity was shown for two molecules of DDR1 when compared to DDR2, selectivity against a larger panel of off-targets was not shown. Whilst further development (e.g. PK or toxicology testing) was not shown, Zhavoronkov et al. demonstrated de novo small molecule design in an experimental setting [48]. Arguably, the recommendation of an existing molecule is a simpler task than designing one from scratch.

Design of new biological entities

New biological entities (NBE) refer to biological products or biologics, that are produced in living systems [183]. The types of biologics are very diversified, from proteins (>40 amino acids), peptides, antibodies, to cell and gene therapies. Therapeutic proteins tend to be large, complex structured and are unstable in contrast to small molecules [184]. Biologic therapies typically use cell-based production systems that are prone to post-translational modification and are thus sensitive to environmental conditions requiring mass spectrometry to characterize the resulting heterogeneous collection of molecules [185].

In general, the target-to-hit-to-lead pathway also applies to NBE discovery, with similar procedures like high-throughput screening assays. Typically, an affinity-based high-throughput screening method is used to select from a large library of candidates using one target. One must then separately study off-target binding from similar proteins, peptides and immune surveillance [186].

Modelling philosophy

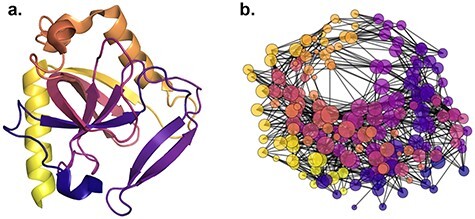

Focusing on proteins, the consensus to derive the protein graph representation is to use pairwise spatial distances between amino acid residues, i.e. the protein’s contact map, and to apply an arbitrary cut-off or Gaussian filter to derive adjacency matrices [19, 20, 187], see Figure 7.

Figure 7 .

Illustration of (A) a protein (PDB accession: 3EIY) and (B) its graph representation derived based on intramolecular distance with cut-off threshold set at  Å.

Å.

However, protein structures are substantially more complex than small molecules and, as such, there are several resulting graph construction schemes. For instance, residue-level graphs can be constructed by representing the intramolecular interactions, such as hydrogen bonds, that compose the structure as edges joining their respective residues. This representation has the advantage of explicitly encoding the internal chemistry of the biomolecule, which determines structural aspects such as dynamics and conformational rearrangements. Other edge constructions can be distance-based, such as K-NN (where a node is joined to its  most proximal neighbours) [19, 20] or based on Delaunay triangulation. Node features can include structural descriptors, such as solvent accessibility metrics, encoding the secondary structure, distance from the centre or surface of the structure and low-dimensional embeddings of physicochemical properties of amino acids. It is also possible to represent proteins as large molecular graphs at the atomic level in a similar manner to small molecules. Due to the plethora of graph construction and featurization schemes available, tools are being made available to facilitate the pre-processing of said protein structure [188].

most proximal neighbours) [19, 20] or based on Delaunay triangulation. Node features can include structural descriptors, such as solvent accessibility metrics, encoding the secondary structure, distance from the centre or surface of the structure and low-dimensional embeddings of physicochemical properties of amino acids. It is also possible to represent proteins as large molecular graphs at the atomic level in a similar manner to small molecules. Due to the plethora of graph construction and featurization schemes available, tools are being made available to facilitate the pre-processing of said protein structure [188].

One should note that sequences can be considered as special cases of graphs and are compatible with graph-based methods. However, in practice, language models are preferred to derive protein embeddings from amino acids sequences [189, 190]. Recent works suggest that combining the two can increase the information content of the learnt representations [187, 191]. Several recurrent challenges in the scientific community aim to push the limit of current methods. For instance, the CAFA [192] and CAPRI [193] challenges aim to improve protein functional classification and PPI prediction.

ML-assisted directed evolution

Display technologies have driven the modern development of NBEs; in particular, phage display and yeast display are widely used for the generation of therapeutic antibodies. In general, a peptide or protein library with diverse sequence variety is generated by PCR, or other recombination techniques [194]. The library is ‘displayed’ for genotype–phenotype linkage such that the protein is expressed and fused to surface proteins while the encoding gene is still encapsulated within the phage or cell. Therefore, the library could be screened and selected, in a process coined ‘biopanning’, against the target (e.g. antigen) according to binding affinity. Thereafter, the selected peptides are further optimized by repeating the process with a refined library. In phage display, selection works by physical capture and elution [195]; for cell-based display technologies (like yeast display), fluorescence-activated cell sorting is utilized for selection [196].

Due to the repeated iteration of experimental screens and the high number of outputs, such display technologies are now being coupled to ML systems for greater speed, affinity and further in silico selection [197, 198]. As of yet, it does not appear that advanced GML architectures have been applied in this domain, but promising routes forward have been recently developed. For example, Hawkins-Hooker et al. [199] trained multiple variational autoencoders on the amino acid sequences of 70 000 luciferase-like oxidoreductases to generate new functional variants of the luxA bacterial luciferase. Testing these experimentally led to variants with increased enzyme solubility without disrupting function. Using this philosophy, one has a grounded approach to refine libraries for a directed evolution screen.

Protein engineering

Some proteins have reliable scaffolds that one can build upon, for example, antibodies whereby one could modify the variable region but leave the constant region intact. For example, Deac et al. [200] used dilated (á trous) convolutions and self-attention on antibody sequences to predict the paratope (the residues on the antibody that interact with the antigen) as well as a cross-modal attention mechanism between the antibody and antigen sequences. Crucially, the attention mechanisms also provide a degree of interpretability to the model.

In a protein-agnostic context, Fout et al. [19] used Siamese architectures [201] based on GNNs to predict at which amino acid residues are involved in the interface of a protein–protein complex. Each protein is represented as a graph where nodes correspond to amino acid residues and edges connect each residue to its  closest residues. The authors propose multiple aggregation functions with varying complexities and following the general principles of a diffusion convolutional neural network [202]. The output embeddings of each residue of both proteins are concatenated all-to-all and the objective is to predict if two residues are in contact in the protein complex based on their concatenated embeddings. The authors report a significant improvement over the method without the GNN layers, i.e. directly using the amino acid residue sequence and structural properties (e.g. solvent accessibility, distance from the surface).

closest residues. The authors propose multiple aggregation functions with varying complexities and following the general principles of a diffusion convolutional neural network [202]. The output embeddings of each residue of both proteins are concatenated all-to-all and the objective is to predict if two residues are in contact in the protein complex based on their concatenated embeddings. The authors report a significant improvement over the method without the GNN layers, i.e. directly using the amino acid residue sequence and structural properties (e.g. solvent accessibility, distance from the surface).

Gainza et al. [49] recently introduced molecular surface interaction fingerprinting (MaSIF) for tasks such as, binding pocket classification or protein interface site prediction. The approach is based on GML applied on mesh representations of the solvent-excluded protein surface, abstracting the underlying sequence and internal chemistry of the biomolecule. In practice, MaSIF first discretizes a protein surface with a mesh where each point (vertex) is considered as a node in the graph representation. Then, the protein surface is decomposed into overlapping small patches based on the geodesic radius, i.e. clusters of the graph. For each patch, geometric and chemical features are handcrafted for all nodes within the patch. The patches serve as bases for learnable Gaussian kernels [203] that locally average node-wise patch features and produce an embedding for each patch. The resulting embeddings are fed to task-dependent decoders that, for instance, give patch-wise scores indicating if a patch overlaps with an actual protein-binding site.

De novo design

One of the great ambitions of bioengineering is to design proteins from scratch. In this case, one may have an approximate structure in mind, e.g. to inhibit the function of another endogenous biomolecule. This motivates the inverse protein-folding problem, identifying a sequence that can produce a pre-determined protein structure. For instance, Ingraham et al. [191] leveraged an autoregressive self-attention model using graph-based representations of structures to predict corresponding sequences.

Strokach et al. [106] leveraged a deep GNN to tackle protein design as a constraint satisfaction problem. Predicted structures resulting from novel sequences were initially assessed in silico using molecular dynamics and energy-based scoring. Subsequent in vitro synthesis of sequences led to structures that matched the secondary structure composition of serum albumin evaluated using circular dichroism.