Abstract

Social companion robots are attracting growing attention as a way to help elderly people stay independent at home and to decrease their social isolation. When developing solutions, one remaining challenge is to design the right applications that are usable by elderly people. For this purpose, co-creation methodologies involving multiple stakeholders and a multidisciplinary research team (e.g., elderly people, medical professionals, and computer scientists such as roboticists or IoT engineers) are designed within the ACCRA (Agile Co-Creation of Robots for Ageing) project. This paper addresses the following research question: How can Internet of Robotic Things (IoRT) technology and co-creation methodologies help to design emotional-based robotic applications? This is supported by the ACCRA project, which develops advanced social robots to support active and healthy ageing, co-created by various stakeholders such as ageing people and physicians. We demonstrate this with three robots, Buddy, ASTRO, and RoboHon, used for daily life, mobility, and conversation. The three robots understand and convey emotions in real time using Internet of Things and Artificial Intelligence technologies (e.g., knowledge-based reasoning).

Keywords: Co-creation, Robotics, Smart health, Emotional care, Ageing, Elderly, Internet of robotic things, Cloud robotic, Artificial intelligence, Ontology, Semantic reasoning, Semantic web technologies, Reusable knowledge engineering, Semantic web of things (SWoT), Knowledge directory service, Body of knowledge

Introduction

Service robots [86] are in high demand to assist human beings, typically by performing a job that is dirty, dull, distant, dangerous or repetitive. Indeed, according to the International Federation of Robotics1 “by 2019, sales forecasts indicate another rapid increase of service robots up to an accumulated value of US$ 23 billion for the period 2016-2019”. “Investments under the joint SPARC initiative (the largest research and innovation program in robotics in the world) are expected to reach 2.8 billion euro with 700 million euro in financial investments coming from the European Commission under Horizon 2020 over 7 years.”

Service robots are becoming an important technology to invest in. Sophia [95], the humanoid robot, is one of the latest demonstrations of the advancements in this area. The key remaining challenge when developing robots is to make them interact well with humans and their environment.2 Over 70% of people aged 80 and over experience problems with personal mobility, while they want to remain independent in their lives.3 Others, who are able to maintain their full mobility, struggle with isolation and a lack of social interaction. People can become socially isolated for a variety of reasons, such as getting older or weaker, no longer being the hub of their family, leaving the workplace, the deaths of spouses and friends, or through disability or illness. Whatever the cause, it is shockingly easy to be left feeling alone and vulnerable, which can lead to depression and a serious decline in physical health and wellbeing.4 Social robots are autonomous robots that interact and communicate with humans or other autonomous physical agents by following social behaviors and rules attached to their role. Social robots can assist elderly people to live secure, independent, and sociable lives. These robots also lighten the workload of caregivers, doctors, and nurses. Social companion robots [42] play the role of a companion to help people enjoy being with someone and to reduce loneliness. They are attracting more and more attention,5 for example Pepper, which dances and jokes, or Buddy,6 which conveys emotions.

Social robots need to detect emotions and behave accordingly [80]. Innovative social robots should have personalized behaviors based on users’ emotional states so that they fit better into users’ activities and improve human-robot interaction. Consequently, robots are required to follow mental models based on human-human interaction because they are perceived as social actors. The human is in the loop: the robot detects and analyses the emotion as a first step, plans a reaction as a second step, and closes the loop on the human being as the third step.

Further, social robots need to interact better with humans and understand and/or convey emotions [85]. Technology can help. Wearable devices can be used for this purpose: these devices produce physiological signals that can be analyzed by machines to understand humans’ emotions and their physical state (see Table 7). Internet of Things (IoT) technology can connect wearables to AI-based capabilities on the web, leveraging approaches such as the W3C Web of Things.7 The integration of Robotics, IoT, Web and Cloud technologies paves the way to the Internet of Robotic Things (IoRT)8 [94, 98, 106, 115], i.e. an environment where unlimited and continuously improving knowledge reasoning and data processing is available. This endows robots with capabilities for context awareness and for interacting with humans by reacting to their emotions, thus enhancing the quality of human-robot interactions.

Table 7.

Well-being and IoT-based emotion applications (positive and negative) related work synthesis

| Authors | Year | Research problem addressed and project | Sensor or measurement type | Reasoning |
|---|---|---|---|---|
| Nouh et al. [81] | 2019 | Well-being RS, Food RS | No | Hybrid RS, KNN, Unsupervised ML |
| Budner et al. [19] | 2017 | Detect happiness from watch correlation weather and mood correlation friends having positive or negative mood | Pebble smart watch heart rate, light, activity, VMC temperature, humidity, pressure, wind, cloud | Random Forest ML Weka |
| Ahmed et al. [4] | 2017 | Heart attack prediction | Heart rate, blood pressure, cholesterol, blood sugar | KNN ML |
| Lim [71] | 2013 | I-Wellness: personalized wellness therapy RS | Health status, personal lifestyle, wellness concern | RS, Hybrid Case-Based Reasoning (CBR) |
| Likamwa et al. [69] | 2013 | User’s: mood mobile app correlates mood and phone usage | Phone | Clustering classifier multi-linear regression |
| Koelstra et al. [63] | 2012 | DEAP dataset: emotion analysis using physiological signals | GSR, Blood volume pressure, respiration, skin temperature, EMG, EOG, EEG | Gaussian Naive Bayes (EEG) |
| Lin et al. [73] | 2011 | Motivate: personalized context-aware RS | Location, weather, agenda | RS, Rule-based (If-then rules) |
| Lane et al. [65] | 2011 | BeWell: Well-being mobile app | Sleep, activity, social | No |
| Rabbi et al. [92] | 2011 | Well-being mobile application | Voice, accelerometer, depressive symptoms | HMM |
| Church et al. [23] | 2010 | MobiMood: mobile mood awareness and communication | Location, calls, SMS, mood intensity | No |
| Afzal et al. [3] | 2018 | Personalized well-being health | Location, activity, weather, emotion | RS, Rule-based |
| Garcia-Ceja et al. [39] | 2018 | Mental Health Monitoring Systems (MHMS) Survey | Heart rate, GSR, body or skin temperature | Survey paper ML algorithm |
| Kim et al. [61] | 2017 | Depression Severity Elderly People, AAL | Infrared motion sensor | Bayesian Network Decision Tree, SVM, ANN |
| Zhou et al. [123] | 2015 | Monitoring mental health states | Heart rate, pupil variation, head movement, eye blink, facial expression | ML (Logistic regression, SVM) |
| Garcia-Ceja et al. [40] | 2016 | Stress | Accelerometer data (from smartphone) | Naive Bayes, Decision Tree |
| Yoon et al. [121] | 2016 | New stress monitoring patch | Skin conductance, pulse wave, skin temperature | No |
| Lu et al. [76] | 2012 | StressSense | Voice data (smartphone) | GMMs |
| Chang et al. [20] | 2011 | AMMON: Stress detector | Voice data | SVM |
| Yacchirema et al. [119] | 2018 | Obstructive Sleep Apnea (OSA) | Heart rate, snoring, activity, BMI, weight, step counts, temperature, humidity, air pollutant | Rule-based ANN-MLP RELU |
| Angelidou [7] | 2015 | Sleep apnea diagnosis, snoring | Bio-signal and sound data | Decision tree (Weka) |
| Laxminarayan [66] | 2004 | Exploratory sleep analysis | Heart rate, oxygen potential, body position | Association rule mining Logistic regression, Weka |

Sensor measurement and reasoning taxonomy to later process sensor data with reasoning mechanisms within applications. Set of keywords relevant for automatic analysis. Legend: Machine Learning (ML), Support Vector Machine (SVM), Recommender System (RS), Ambient Assisted Living (AAL), Gaussian Mixture Models (GMMs), Artificial Neural Network (ANN), Multilayer Perceptron (MLP), Galvanic Skin Response (GSR), Hidden Markov Model (HMM), K-Nearest Neighbors (KNN)

ACCRA9 is a joint Europe-Japan project focusing on the application of a co-creation methodology to build robot applications for ageing, co-created by various stakeholders such as ageing people and physicians. ACCRA has developed three social companion robot applications for older adults (daily life, conversation, and mobility applications) using three robots: Buddy, RoboHon, and ASTRO. Buddy is an emotional robot conveying specific feelings and emotions to humans on demand, the RoboHon10 robot can understand emotions by using face recognition, and ASTRO11 helps with mobility.

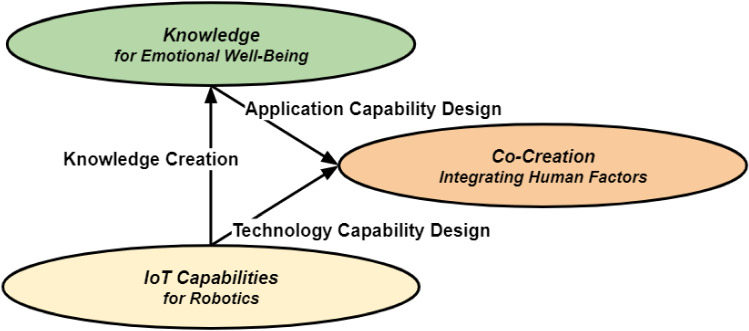

ACCRA designed an engineering framework consisting of three components as shown in Fig. 1:

IoT capabilities for robotics, i.e. connecting robots to other IoT devices and to cloud processing capabilities.

Knowledge base for emotional well-being, i.e. accessing capabilities for context awareness, human interaction learning and emotion management.

Co-creation methodologies, i.e. integrating multidisciplinary expertise and involving humans in the design of applications.

Fig. 1.

Components of the engineering framework

In this light, our main research question is: How can IoRT technology and co-creation methodologies help to design emotional-based robotic applications?

We address the following sub-research questions (RQ):

RQ1: What is the architecture of social companion robot systems for smart healthcare and emotional well-being?

RQ2: What kind of robots do we need to enhance elderly’s mobility?

RQ3: What kind of robots do we need to help elderly to socialize and reduce their loneliness?

RQ4: How do we ensure that implemented applications meet the need of elderly to stay independent at home?

RQ5: What kind of robots do we need to recognize emotions? How can we integrate IoT-based emotional understanding into applications?

The main contributions of this paper are:

C1: The design of the robotic-based IoT architecture of the ACCRA project; it addresses RQ1 in Sect. 3.2.

C2: The ASTRO robot for the mobility application, designed with co-creation; it addresses RQ2 in Sect. 5.1.1.

C3: The Buddy robot for the daily life and conversational applications, designed with co-creation; it addresses RQ3 in Sects. 5.1.2 and 5.1.3.

C4: The design of robotic applications is supported by the Listen, Innovate, Field-test, Evaluate (LIFE) co-creation methodology for IoT, integrating human factors; it addresses RQ4 in Sect. 6.1.

C5: The knowledge API to enrich and reason on data generated by sensors to build emotional and ontology-based applications; it addresses RQ5 in Sects. 4 and 5.2.

C6: The RoboHon robot recognizes emotions with face-recognition; it addresses RQ5 in Sect. 3.1.

Structure of the paper: Section 2 introduces the concepts for robotics and emotional well-being as well as information on technological solutions that may be used in this context. Section 3 describes the ACCRA system, the robots, and their applications. Section 4 focuses on the Knowledge API. Section 5 explains the engineering components for robotics in smart health and emotional well-being and their evaluation within the ACCRA project. Section 6 evaluates our proposed solutions with stakeholders. Section 7 recalls the key contributions and summarizes lessons learnt. Section 8 concludes the paper and provides suggestions for future work.

Related Work: Internet of Robotic Things and Emotional Care

The three sections hereafter introduce the related work for robotics and smart health emotional care. Some of the technologies mentioned hereafter are employed in the engineering framework described in Sect. 5. The purpose of the literature study is to scope the state of the art and its historical development. The literature has been collected over the last 10 years by searching for specific keywords in corpora such as Google Scholar. We synthesize the related work in Tables 2, 3, 7 and 8 for a quick overview and comparison. We deliberately keep citing the oldest pioneering work in order to acknowledge it.

Table 2.

Set of online demonstrators: ontology catalog, rule discovery, and full scenarios

Table 3.

Maturity of engineering components in ACCRA applications.

| Application | IoT capabilities for robotics component | Knowledge for emotional well-being component | Co-creation integrating human factors component |
|---|---|---|---|
| Daily life application | IoT for emotional well-being (Table 7): +++ | IoT for emotional well-being (Table 7), Emotion ontologies (Table 8): +++ | Buddy robot, RoboHon robot: +++ |
| Conversation application | IoT for emotional well-being (Table 7): + | Emotion ontologies (Table 8): +++ | Buddy robot: +++ |
| Mobility application | IoT for emotional well-being (Table 7): + | IoT for emotional well-being (Table 7): + | ASTRO robot: +++ |

Legend: +++ is more advanced than +

Table 8.

Related work synthesis: Ontology-based emotional projects and reasoning mechanisms employed

| Authors | Year | Project | OA | Reasoning | Text analysis |
|---|---|---|---|---|---|
| Lin et al. [72] | 2018 2017 | Emotion and mood ontology representing visual cues of emotions | No | No | |
| Gil et al. [43] | 2015 | Emotion and cognition ontology used to understand emotions from Emotiv EEG neuroheadset for online learning | owl:Restriction | No | |
| Berthelon et al. [16] | 2013 | Emotion ontology for context awareness taxonomy of 6 emotions | No | No | |
| Sanchez-Rada et al. [100] | 2018 2016 | Onyx: describing emotions on the web of data | No SPIN mentioned | | |
| Hastings et al. [54] | 2012 2011 | MFOEM: mental health and disease ontologies Taxonomy of emotions | No | No | |
| Lopez et al. [74] | 2008 | Describing emotions | No | No | |
| Abaalkhail et al. [2] | 2018 | Survey on 20 ontologies for affective states and their influences | No | No | No |
| Tabassum et al. [108] | 2018 | EmotiOn ontology for emotion analysis based on Plutchik’s wheel of emotions | No | HermiT for consistency and inference | |
| Arguedas et al. [8] | 2015 | Emotion and mood awareness for E-learning (wiki, chat, forum) | No | Event-Condition-Action (ECA) rule system | |
| Tapia et al. [109] | 2014 | Semantic human emotion ontology (SHEO) for text and images; DetectionEmotion: facial complex emotions | No | SWRL rules for complex emotions | |
| Sykora et al. [107] | 2013 | Emotive ontology for social networks message analysis (Twitter) | No | No | |
| Ptaszynski et al. [91] | 2012 | Ontology for extracting emotion objects from texts (blogs) | No | No | |
| Baldoni et al. [11] | 2012 | Emotion ontology for Italian art work annotated tags | No | Jena Reasoner | |
| Honold et al. [55] | 2012 | Emotion ontology for nonverbal communication | No | Bayesian | No |
| Eyharabide et al. [35] | 2011 | OLA: ontology for predicting learners’ affect to infer emotions | No | inference, Decision Tree (Weka) | No |
| Francisco et al. [37] | 2010 2006 | Recognizing emotions from voice, and text | No | OWL DL, Pellet, Racer Pro owl:Restriction, inference | |
| Grassi et al. [45] | 2011 2009 | Human emotion ontology (HEO) for voice, text, gesture, and face | No | No | |
| Radulovic et al. [93] | 2009 | Ontology of emoticons (smileys as text or pictures) with annotation of emotion classes | No | No | |
| Yan et al. [120] | 2008 | Chinese emotion ontology based on HowNet for text analysis | No | Rules for annotation | |
| Benta et al. [14] | 2007 2005 | User’s affective States for context aware museum guide | No | Fuzzy Logic in OWL (logical inference) | No |
| Garcia-Rojas et al. [41] | 2006 | Emotional face and body expression profiles for virtual human (MPEG-4 animations) | No | RacerPro and nRQL (natural Racer Query Language) | No |
| Obrenovic et al. [82] | 2005 2003 | Ontology for description of emotional cues from different modalities (text, speech) | No | No | |

Legend: Ontology Availability (OA), when the code is available, the ontologies are classified on the top. Then, the ontology-based projects are classified by year of publications

Robotics Technologies and Internet of Robotic Things

We selected papers with keywords: “Internet of Robotics Things”, “IoT and Robotics”, “Cloud Robotics”, “Service Robotics”, “Robotic-as-a-Service”, “Robots Web”, “Robotics Ontologies”. Survey papers have been read first to find key references. A World Wide Web for Robots is introduced by the RoboEarth project [117]. RoboEarth provides a cloud-based knowledge repository for robots learning to solve tasks. “Internet of Robotic Things” (IoRT) integrates robotics, Internet of Things (IoT), and artificial intelligence (AI) to design and develop new frontiers in human-robot interaction (HRI), collaborative robotics, and cognitive robotics [94]. Thanks to the pervasiveness of IoT solutions, robots can expand their sensing abilities [5] whereas cloud computing resources and artificial intelligence can expand robots’ cognitive abilities [60]. Cloud Robotics is another term to describe the benefit of computational power for robots [57, 60, 99].

Kamilaris et al. [59] address six research questions when surveying the connection between robotics and the principles of IoT and WoT: (1) Which concepts, characteristics, architectures, platforms, software, hardware, and communication standards of IoT are used by existing robotic systems and services? (2) Which sensors and actuators are incorporated in IoT-based robots? (3) In which application areas is IoRT applied? (4) Which technologies of IoT are used in robotics till today? (5) Is Web of Things (WoT) used in robotics? By employing which technologies? (6) Which is the overall potential of WoT in combination with robotics, towards a WoRT? Tools supporting those IoRT technologies are still missing.

Tiddi et al. identify the main characteristics, core themes and research challenges of social robots for cities [113] and encourage knowledge-based environments for robots, but do not focus on emotional care. Nocentini et al. [80] surveyed Human-Robot Interaction (HRI) approaches that provide robots with cognitive and affective capabilities to establish empathic relationships with users, focusing on the following architectural aspects: (1) the development of adaptive behavioral models, (2) the design of cognitive architectures, and (3) the ability to establish empathy with the user. They encourage a holistic approach to designing empathic robots.

Paulius et al. [86] give advice on designing an effective knowledge representation (e.g., distinguish representation from learning, cloud computing interface for robots, sharing knowledge and task experience between robots to avoid redeveloping from scratch). They conclude that standards are needed for service robotics and briefly mention the need for ontologies [90], without describing the IEEE Autonomous robotics ontologies or the numerous existing ontologies for robotics.

Surveys about robotics using IoT, Cloud, and AI technologies without a focus on semantic web technologies are summarized in Table 2. We reviewed ontology-based robotics projects, with a focus on robot autonomy in [83] published in 2019. We design Table 3 for this new paper to give a quick overview.

Zander et al. [122] surveyed semantic-based robotics projects and compared them according to six dimensions that are relevant for describing robotic components and capabilities: (i) domain and scope of an approach, (ii) system design and architecture, (iii) ontology scope and extensibility, (iv) reasoning features, (v) technological foundation of the reasoning techniques, and (vi) additional relevant technological features.

Shortcomings of the Literature Study: Previous work focuses on technological aspects such as integration or bringing AI (semantic web, IoT, Cloud) capabilities, and not on bringing emotional care. A survey of all the models already designed to cover the robotic domain is lacking (this is the purpose of our survey [83]). It remains to be understood why new models are constantly designed instead of reusing existing ones. This clearly demonstrates the need for tools to support developers willing to design new applications. The Internet of Robotic Things (IoRT) study published in 2017 [115] cites only one ontology for robotics, designed by Prestes et al. (the IEEE standardization) [89].

Emotion Capture and Reproduction

Emotional understanding is widely investigated: (1) emotion recognition is investigated by Ekman [32], a pioneer who defined the six basic emotions (anger, happiness, surprise, disgust, sadness, and fear) focusing on face recognition, (2) positive psychology and research about happiness is investigated by Seligman [104], and (3) affective computing [88] combines affective sciences with computing capabilities. Blue Frog Robotics designed the Buddy companion robot, with a cute and smiley face. Buddy’s strength is to assist, entertain, educate, and make everybody smile. We selected papers with the following keywords: “IoT and emotion”, “IoT Well-Being”, “well-being recommender system”, “wellness sensor”, “happiness IoT”, “emotion ontologies”, and keywords for solutions that remedy related disorders: “depression sensor”, “stress sensor”, “sleep disorder sensor”. Survey papers have been read first to find key references. The main goal was to classify the key sensors used in such systems and the reasoning mechanisms used to interpret sensor data. The classification of emotional well-being projects using IoT devices (Table 7) or ontologies (Table 8) is provided in Sect. 5.2.

In Table 7, the first column references the authors, the second column gives the year of publication, the third column provides a short description of the research, the fourth column describes the sensors mentioned, and the last column provides the reasoning mechanisms employed to infer meaningful information from sensor data.

Conclusion: Emotional robots are still in their infancy. There is a need to classify sensors that can help to better understand emotions (not just a camera for face recognition) [33], and to better understand the relationships between physiological data and emotions [12, 17]. Table 7 is a first step towards bringing together IoT devices to enhance mood and emotions by managing disorders such as stress, sleep, mental health, and depression to enhance quality of life with empowered IoT-based well-being solutions.
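As an illustration of how such a sensor and reasoning taxonomy could be queried automatically, the following minimal sketch encodes a few rows of Table 7 as records and indexes the reasoning mechanisms by sensor type. The record layout, the normalized sensor names, and the function name `reasoning_by_sensor` are assumptions made for this example; only the row contents come from Table 7.

```python
from collections import defaultdict

# A few rows of Table 7, transcribed by hand (sensor names normalized to lower case).
TABLE7_ROWS = [
    {"authors": "Koelstra et al. [63]", "year": 2012,
     "sensors": ["gsr", "blood volume pressure", "respiration",
                 "skin temperature", "emg", "eog", "eeg"],
     "reasoning": ["Gaussian Naive Bayes"]},
    {"authors": "Garcia-Ceja et al. [40]", "year": 2016,
     "sensors": ["accelerometer"],
     "reasoning": ["Naive Bayes", "Decision Tree"]},
    {"authors": "Yoon et al. [121]", "year": 2016,
     "sensors": ["skin conductance", "pulse wave", "skin temperature"],
     "reasoning": []},  # Table 7 reports no reasoning mechanism for this row
    {"authors": "Lu et al. [76]", "year": 2012,
     "sensors": ["voice"], "reasoning": ["GMMs"]},
    {"authors": "Chang et al. [20]", "year": 2011,
     "sensors": ["voice"], "reasoning": ["SVM"]},
]


def reasoning_by_sensor(rows):
    """Map each sensor type to the set of reasoning mechanisms applied to it."""
    index = defaultdict(set)
    for row in rows:
        for sensor in row["sensors"]:
            index[sensor].update(row["reasoning"])
    return dict(index)


if __name__ == "__main__":
    index = reasoning_by_sensor(TABLE7_ROWS)
    for sensor in sorted(index):
        print(sensor, "->", sorted(index[sensor]))
```

Such an index makes it straightforward to ask which reasoning mechanisms have already been applied to a given sensor before designing a new application.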

System Design and Lifecycle Process: Agile Programming, DevOps and Co-creation

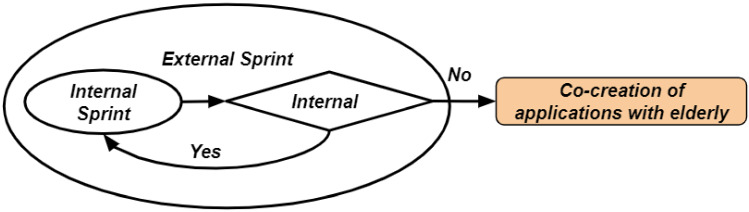

Agile programming Product Development12 fosters rapid and flexible response to change; it is carried out in short periods called sprints. Sprints’ objectives are agreed by members of the project team: the agile programming and engineering team, the product manager, and the product owner. Sprints’ results are demonstrated and assessed by the product owners and managers. Product backlogs list future features to be integrated and developed such as new features, changes, bug fixes, infrastructure changes, etc.

DevOps is a ‘release early, release often’ software development methodology where engineers are involved in planning, designing and developing product releases, with varied activities and skills required at each stage. DevOps emphasizes the creation of tight feedback loops between engineers and testers or end-users, and reduces the risk of creating software that no one will use. Development teams are encouraged to implement smaller sets of features, with minimal capabilities, in order to reshape future product planning and to decide which features are worth developing.

The co-creation approach actively involves multi-disciplinary partners as users in development, as opposed to being the subject of development [34]. Users co-develop solutions using tools and techniques that involve them in making ’things’ themselves, such as collages, mock-ups, and prototypes. Co-creation methods, tools and techniques access the tacit and latent knowledge of people, which may be very difficult to express, as opposed to what people say they want (explicit knowledge) or what we can see people wanting (observable knowledge). Such methods can uncover people’s dreams, needs and wants, giving access to their true feelings [34]. This is relevant when developing IoT robotics applied to emotional well-being, because people’s emotions can be accessed through co-creation; this may not be the case when using methods such as interviews or observations, which focus on uncovering explicit or observable knowledge.

Conclusion: Agile, DevOps, and co-creation methodologies are used internally within the ACCRA engineering team to develop applications (mobility, daily life, and socialization) and externally with elderly people and multidisciplinary stakeholders to adapt applications to their needs (through the LIFE methodology [1] explained in Sect. 6.1).

System Used in ACCRA: Robots, and Architecture

The robots employed within ACCRA are described in Sect. 3.1, and the ACCRA project architecture is explained in Sect. 3.2.

Buddy, ASTRO, and RoboHon Robots

Buddy is a companion robot that helps and entertains people every day. It offers several services: daily company, protection and security, communication and social connection, well-being and entertainment, and conversation.

Companionship: The robot is a playful and endearing companion that keeps company and entertains. It hums a tune, tells jokes, gives a warm smile when its head or nose is stroked, answers questions about the weather, the date, and the time, etc.

Protection and Security: The robot assists elderly people in case of falling or getting stuck when moving. It can call for help upon request. It reminds users of medical appointments, medication to take, and good practices to follow (e.g., drink regularly in hot weather).

Communication and Social Connection: The robot enables close ones (family, grandchildren, friends, etc.) to interact with the elderly by phone or video call, send photos, videos and drawings, and displays them on its screen.

Well-being and Entertainment: The robot accompanies the elderly with well-being or relaxation activities by playing videos, either to unwind (relaxation sessions, sophrology, etc.) or to keep fit (physical exercise, gentle gymnastics, yoga, etc.). It can also play for amusement or to exercise the user’s memory, read an audiobook, or play the user’s favorite music.

Conversation: The robot entertains by talking to the elderly about a variety of topics to stimulate the mind and can retrieve useful information for them.

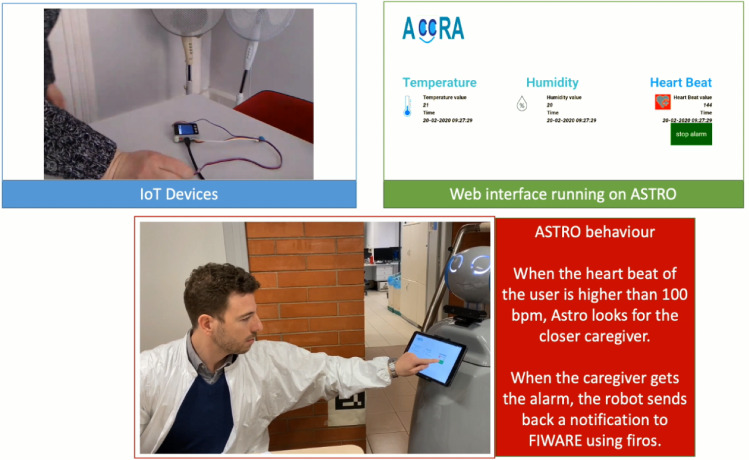

ASTRO is a socially assistive robot designed with elderly people to support their indoor mobility, manage exercises, support caregivers at work, and provide communication and telepresence.

Walking support: ASTRO supports the walking of elderly users through the robotic handle by acting like a rollator. Older persons can easily drive ASTRO by changing their handgrip strength; the control adapts automatically to the user and their velocity over time (using a customized machine learning algorithm).

Exercises: ASTRO helps users with physical exercises that can be selected among a list of videos managed by the caregiver. Users can see themselves on various ASTRO interfaces while performing the exercise, and later perform self-assessments.

Support caregiver at work: ASTRO supports the work of the caregiver: (1) Managing exercises: the caregiver can select the proper exercises for each user. (2) Monitoring: the caregiver can get an overview of user satisfaction with the exercises and the walking support services, as well as an overview of the usage of the service, the total traveled time, and the mean velocity. The data are clustered and organized in a bar graph to make the overview easier to read. (3) Communication and Telepresence: the caregiver can call the elderly person through this service and can use ASTRO to visit them remotely; using the ad hoc interface, the caregiver can guide ASTRO and see what the robot sees.
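The monitoring overview described above (usage of the service, total traveled time, and mean velocity) can be illustrated with a minimal sketch. The `WalkSession` record, its field names, and the choice to compute the mean velocity as total distance divided by total time are assumptions made for this example; the source does not specify how ASTRO stores or aggregates these values.

```python
from dataclasses import dataclass


@dataclass
class WalkSession:
    """Hypothetical record of one walking-support session."""
    user: str
    distance_m: float   # distance traveled during the session, in meters
    duration_s: float   # duration of the session, in seconds


def usage_overview(sessions):
    """Aggregate sessions per user: count, total time, and mean velocity."""
    overview = {}
    for s in sessions:
        entry = overview.setdefault(
            s.user,
            {"sessions": 0, "total_time_s": 0.0, "total_distance_m": 0.0},
        )
        entry["sessions"] += 1
        entry["total_time_s"] += s.duration_s
        entry["total_distance_m"] += s.distance_m
    for entry in overview.values():
        total_time = entry["total_time_s"]
        # Assumption: mean velocity = total distance / total time.
        entry["mean_velocity_m_s"] = (
            entry["total_distance_m"] / total_time if total_time > 0 else 0.0
        )
    return overview


if __name__ == "__main__":
    demo = [
        WalkSession("user-a", 120.0, 300.0),
        WalkSession("user-a", 60.0, 100.0),
        WalkSession("user-b", 50.0, 200.0),
    ]
    for user, stats in usage_overview(demo).items():
        print(user, stats)
```

The per-user dictionaries returned by `usage_overview` are the kind of values a bar graph in the caregiver interface could display.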

RoBoHoN [38] is a robot that provides therapy to blind elderly people to induce a positive emotional state, reduce stress, and reduce social isolation. RoBoHoN is a personal mobile robot whose main interface is the voice. Because it is a humanoid robot, it is easy to talk to and can express itself through movement. Through the voice UI, applications such as telephone, mail, and camera, and contents such as weather, fortune-telling, and news can be transformed into special experiences, and various apps can be added to help the owner. RoBoHoN provides the following functionalities:

Emotional Face Recognition: RoBoHoN [102] recognizes emotion (true face, joy, surprise, anger, and sadness) from the face using the OMRON’s Human Vision camera Component (HVC-P2) embedded within the robot’s eyes.

Emotional Conversation: RoBoHoN senses which sentence affected the emotions of the elderly and responds appropriately.

Person’s Location from Voice Recognition: RoBoHoN is a humanoid robot capable of voice communication that identifies where the person is located in the room using the speech recognition engine (Rospeex). To move around and understand its environment, RoBoHoN relies on several sensors: a three-axis accelerometer, a three-axis geomagnetometer, a three-axis gyroscope, a light sensor, a temperature sensor, a humidity sensor, a heart rate sensor, and a camera for facial recognition. These sensors make it easy for RoBoHoN to walk, to interact with its environment, and to know its exact location.

The RoBoHoN operating system is Google Android (TM), and the standard Android API is also available. Developers who have experience in Android application development can reuse the knowledge and technology that they have acquired so far. In addition, developers can build apps that provide a user experience different from that of smartphones by taking advantage of RoBoHoN’s characteristic functions (voice dialogue, motion, etc.). RoBoHoN uses the FIWARE infrastructure software, with MQTT as the communication protocol for sensor data.
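To make the exchange of sensor data more concrete, the following minimal sketch shows how a set of RoBoHoN sensor readings could be represented as an entity in the style of the FIWARE NGSI v2 data model (an `id`, a `type`, and attributes that each carry a `value` and a `type`) and serialized as a JSON payload for an MQTT message. The entity identifier, the entity type `Robot`, the attribute names, and the topic name are all hypothetical; the source does not describe the actual data model or topics used in ACCRA, and no MQTT client is invoked here.

```python
import json

# Hypothetical MQTT topic; the real topic layout used in ACCRA is not given in the source.
SENSOR_TOPIC = "accra/robohon/sensors"


def to_ngsi_entity(robot_id, readings):
    """Build an NGSI v2 style entity from a dict of numeric sensor readings."""
    entity = {"id": f"urn:ngsi-ld:Robot:{robot_id}", "type": "Robot"}
    for name, value in readings.items():
        # Each attribute carries its value and a declared type.
        entity[name] = {"value": value, "type": "Number"}
    return entity


def to_mqtt_message(robot_id, readings):
    """Return the (topic, payload) pair that a publisher could send."""
    payload = json.dumps(to_ngsi_entity(robot_id, readings), sort_keys=True)
    return SENSOR_TOPIC, payload


if __name__ == "__main__":
    topic, payload = to_mqtt_message(
        "robohon-01", {"temperature": 22.5, "humidity": 40, "heartRate": 72}
    )
    print(topic)
    print(payload)
```

Keeping the payload construction separate from the transport means the same entity could be sent over MQTT or posted to a context broker over HTTP.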

ACCRA Architecture

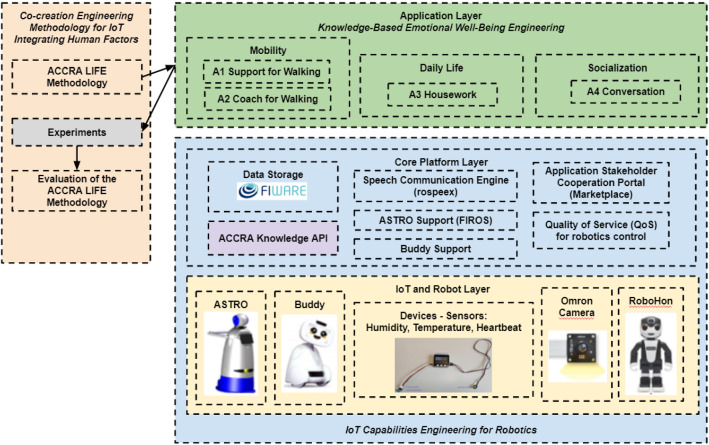

The ACCRA overview architecture, depicted in Fig. 2, has three layers:

The IoT and Robot Layer (see Sect. 3.1) comprises the robots used for mobility, daily life, and conversation applications, and sensors such as room humidity and thermometer, heartbeat, and a camera to detect facial expressions.

The Core Platform Layer comprises the following functionalities: (1) The FIWARE platform, which exchanges data between the sensors and the services that use them. The server forwards information from the sensors to the services that are running on the different robots (ASTRO, Buddy, and RoboHon). (2) ASTRO Support, which communicates with and receives data from the FIWARE platform. (3) Buddy Support. (4) RoboHon Support with the Rospeex Speech Communication platform, which is used for conversational applications and which can recognize facial emotions (true face, joy, surprise, anger, and sadness) with the camera. (5) Application Stakeholder Cooperation Portal (Marketplace). (6) Quality of Service (QoS) to prioritize network traffic. For instance, the ACCRA Knowledge API (see Sect. 4) provides the platform with the knowledge needed to decide which messages and orders are sent to the services, depending on the data received from the different sensors. The reasoning engine interprets the meaning of the data to build well-being and emotional applications.

The Application Layer provides the three main applications (mobility, daily life, and conversation), developed and experimented within the ACCRA methodology. The applications are detailed in Sect. 5.1.

More information regarding the ACCRA architecture and the relationships between core components is provided in ACCRA D5.3 Platform Environment for Marketplace [25].
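As a rough illustration of how a sensor reading might be packaged before it is exchanged through the Core Platform Layer, the sketch below builds an NGSI v2-style entity (the normalized JSON form used by FIWARE context brokers). The entity identifier, entity type, and attribute names are hypothetical and are not taken from the ACCRA deployment; only the general `type`/`value`/`metadata` shape follows NGSI v2.

```python
import json
from datetime import datetime, timezone


def build_ngsi_entity(entity_id, entity_type, attributes, observed_at):
    """Build an NGSI v2-style entity (normalized form) from plain readings.

    `attributes` maps an attribute name to a (value, unit) pair.
    All names passed in by the caller below are hypothetical examples.
    """
    entity = {"id": entity_id, "type": entity_type}
    for name, (value, unit) in attributes.items():
        entity[name] = {
            "type": "Number",
            "value": value,
            "metadata": {"unit": {"type": "Text", "value": unit}},
        }
    entity["dateObserved"] = {
        "type": "DateTime",
        "value": observed_at.isoformat(),
    }
    return entity


reading = build_ngsi_entity(
    "urn:example:astro:room-sensor-1",  # hypothetical identifier
    "RoomObservation",                  # hypothetical entity type
    {"temperature": (21.5, "Cel"), "humidity": (40.0, "%")},
    datetime(2021, 1, 15, 10, 30, tzinfo=timezone.utc),
)
payload = json.dumps(reading, sort_keys=True)  # string ready to be published
```

How the payload is transported (for example over MQTT, as mentioned for RoBoHoN) is deliberately left out, since the topics and broker configuration are not described here.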

Fig. 2.

ACCRA overview architecture

ACCRA Knowledge API for Robotics Emotional Well-Being

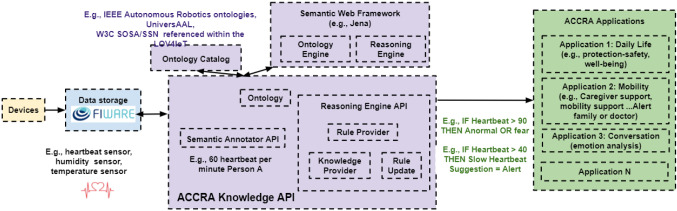

Inferring Meaningful Knowledge from Data

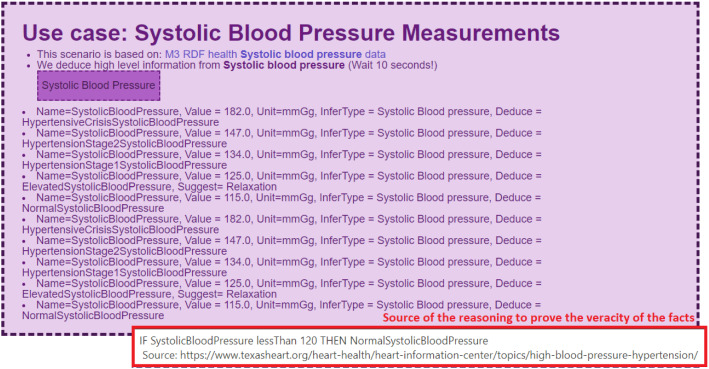

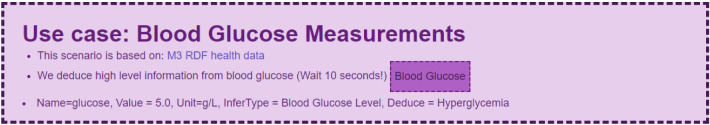

The end-to-end architecture provided in Fig. 3 uses data generated by devices (e.g., heartbeat sensor, temperature, humidity) available within the ACCRA project. The data are stored and managed within the FIWARE middleware. The Semantic Annotator API component explicitly annotates the data (e.g., unit of the measurement, context such as body temperature or room temperature) and unifies the data when needed. The semantic annotation uses ontologies that can be found through ontology catalogs (e.g., LOV4IoT [49] [83], Sect. 4.2). The chosen ontology must be compliant with a set of rules to infer additional information. The Reasoning Engine API (inspired by [48, 50] and supported by the AIOTI group on semantic interoperability [79]) deduces additional knowledge from the data (e.g., abnormal heartbeat) using rule-based reasoning. The IF THEN ELSE rules executed by the reasoning engine add new data to the FIWARE data storage. Finally, the enriched data can be exploited within end-user applications (e.g., call the family or doctor when the heartbeat is abnormal, since it might be an emergency).
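The flow described above (annotate, reason, exploit) can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the actual components are the Semantic Annotator API and a Jena-based Reasoning Engine API, whereas the class, the annotation table, the rule list, and the thresholds below are hypothetical placeholders, not clinically validated values.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    sensor_type: str   # hypothetical label, e.g. "HeartBeatSensor"
    value: float
    unit: str = ""
    context: str = ""
    inferred: list = field(default_factory=list)


# Hypothetical annotation table: raw sensor name -> (type, unit, context).
ANNOTATIONS = {
    "hr": ("HeartBeatSensor", "beats per minute", "body"),
    "room_temp": ("Thermometer", "degree Celsius", "room"),
}

# Illustrative IF-THEN rules: (sensor type, condition, deduced label).
# Thresholds are placeholders chosen for the example only.
RULES = [
    ("HeartBeatSensor", lambda v: v > 100 or v < 50, "AbnormalHeartbeat"),
    ("Thermometer", lambda v: v < 17, "ColdRoom"),
]


def annotate(raw_name, value):
    """Semantic annotation step: attach type, unit, and context."""
    sensor_type, unit, context = ANNOTATIONS[raw_name]
    return Observation(sensor_type, value, unit, context)


def reason(obs):
    """Reasoning step: add every deduced label whose rule fires."""
    for sensor_type, condition, label in RULES:
        if obs.sensor_type == sensor_type and condition(obs.value):
            obs.inferred.append(label)
    return obs


def recommend(obs):
    """End-user application step: act on the enriched data."""
    if "AbnormalHeartbeat" in obs.inferred:
        return "call family or doctor"
    return "no action"


enriched = reason(annotate("hr", 130))
decision = recommend(enriched)
```

Keeping the rules as data rather than hard-coded branches mirrors the idea that rules can be shared and reused across applications.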

Fig. 3.

ACCRA knowledge API: the ontology-based reasoning architecture from sensor data to end-user services

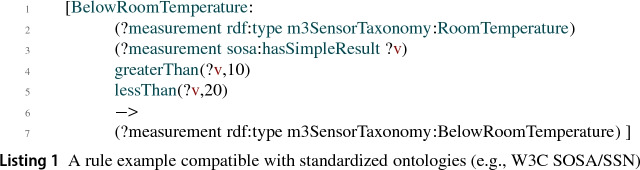

Listing 1 shows a rule compliant with the Jena framework and with the W3C Sensor, Observation, Sample, and Actuator (SOSA)/Semantic Sensor Network (SSN) ontology and its extension, the Machine-to-Machine-Measurement (M3) ontology, which classifies sensor types, measurement types, units, etc. to perform knowledge-based reasoning.

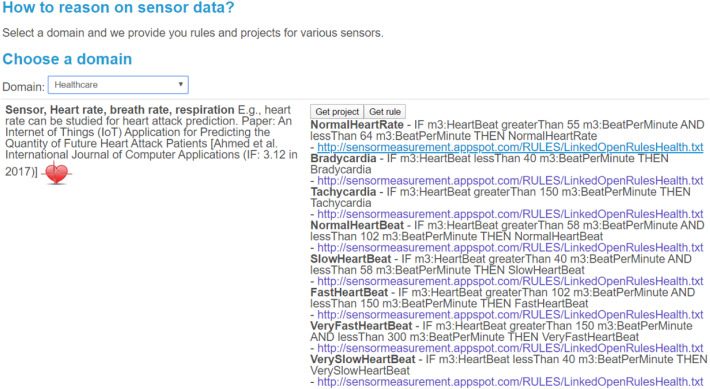

Table 1 provides examples of the web services used by the GUI (Fig. 4). Such web services are later employed to code rules, such as the one depicted in Listing 1, for scenarios that match the applications’ needs.

Table 1.

Specification draft of the S-LOR knowledge reasoning API

| Web service description | Web service URL and example |

|---|---|

| Getting all rules for a specific sensor | http://linkedopenreasoning.appspot.com/slor/rule/sensorType ; example: http://linkedopenreasoning.appspot.com/slor/rule/BodyThermometer ; sensorType should be compliant with the classes referenced with the M3 ontology |

| Update the rule dataset | We can add new sensors in the dataset and add new rules by adding a new instantiation within the dataset (see the code example above and the contribution to the semantic interoperability for IoT white paper 2019 [13]). The GUI to add new sensors: http://linkedopenreasoning.appspot.com/?p=ruleRegistry |
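A client of the first web service in Table 1 only needs to append the sensor type to the base URL. The helper below is a small sketch of that step; the function name is hypothetical, and the response format is not described in this section, so the actual HTTP call is left to the caller.

```python
from urllib.parse import quote

# Base URL taken from Table 1.
SLOR_RULE_BASE = "http://linkedopenreasoning.appspot.com/slor/rule/"


def slor_rule_url(sensor_type):
    """Return the rule-lookup URL for a sensor type.

    `sensor_type` is expected to be a class name referenced by the
    M3 ontology, such as "BodyThermometer".
    """
    if not sensor_type or not sensor_type.strip():
        raise ValueError("sensor_type must be a non-empty M3 class name")
    # Percent-encode anything that is not URL-safe in a path segment.
    return SLOR_RULE_BASE + quote(sensor_type.strip(), safe="")


url = slor_rule_url("BodyThermometer")
```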

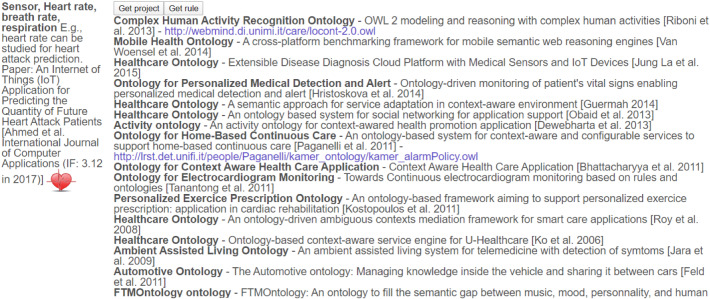

Fig. 4.

The IoT developer selects a domain (e.g., healthcare) to automatically retrieve sensors relevant for this domain (e.g., heartbeat) and then all rules to interpret data for a specific sensor (e.g., deduce abnormal heartbeat) when the “Get rule” button is selected. Similarly, there is a dedicated rule mechanism for robotics, emotion, and smart home

Various rules (compliant with the Jena framework, such as Listing 1) can be provided by the Sensor-based Linked Open Rules (S-LOR) tool, which classifies rules per domain and per sensor. In ACCRA, we focus on the healthcare domain, since we have the heartbeat sensor, and on the smart home domain for Ambient Assisted Living, with temperature and humidity sensors. Figures 4 and 5 show a demonstrator of the rule-based engine for IoT, S-LOR. They show a drop-down list with the set of IoT sub-domains, such as smart home, that we are interested in. Once the domain is selected, the list of sensors relevant for this domain (e.g., presence detector, temperature, light sensor) is displayed. The developer clicks on the “Get project” button to retrieve existing projects already using such sensors, or on the “Get rule” button to find existing rules relevant for this sensor to deduce meaningful information from sensor data. For instance, for a temperature sensor within a smart home, the smart home application integrating a rule-based reasoner understands that when the temperature is too cold or too hot, it can automatically switch on the heater or the air-conditioning.
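The idea of classifying rules per domain and per sensor, and of retrieving them on demand as the “Get rule” button does, can be mimicked with a small in-memory registry. The sketch below is a toy model, not the S-LOR implementation: the class name, rule names, and temperature thresholds are hypothetical.

```python
class RuleRegistry:
    """Toy registry that classifies rules per domain and per sensor."""

    def __init__(self):
        self._rules = {}  # domain -> sensor -> list of rule dicts

    def add_rule(self, domain, sensor, name, condition, action, source=""):
        rule = {
            "name": name,
            "condition": condition,
            "action": action,
            "source": source,  # provenance, e.g. a scientific publication
        }
        self._rules.setdefault(domain, {}).setdefault(sensor, []).append(rule)

    def sensors(self, domain):
        """Sensors known for a domain (the list shown after selecting it)."""
        return sorted(self._rules.get(domain, {}))

    def get_rules(self, domain, sensor):
        """Rules available for one sensor (the 'Get rule' step)."""
        return list(self._rules.get(domain, {}).get(sensor, []))

    def actions(self, domain, sensor, value):
        """Actions whose rule condition holds for a measured value."""
        return [
            rule["action"]
            for rule in self.get_rules(domain, sensor)
            if rule["condition"](value)
        ]


registry = RuleRegistry()
# Thresholds in degrees Celsius are illustrative assumptions.
registry.add_rule("smart home", "temperature", "cold room",
                  lambda t: t < 18, "switch on heater")
registry.add_rule("smart home", "temperature", "hot room",
                  lambda t: t > 28, "switch on air-conditioning")

cold_actions = registry.actions("smart home", "temperature", 12)
```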

Fig. 5.

The provenance of the knowledge encoded as a rule is kept by providing the source (e.g., scientific publication) when the “Get project” button is selected. Similarly, there is a dedicated rule mechanism for robotics, emotion, and smart home

Several reasoning examples are provided to demonstrate the full scenarios from a simple measurement:

Skin conductance to infer potential emotion such as anxiety (Fig. 6).

Blood pressure to infer potential disease such as hypertension (Fig. 7).

Blood glucose to infer potential disease such as hyperglycemia (Fig. 8).

Heartbeat to infer diseases such as tachycardia (Fig. 9). Another scenario could be to detect emotions such as fear if the heartbeat is elevated (e.g., 155 beats per minute).

Demos provide tooltips that indicate the source supporting each fact. The facts come, most of the time, from scientific publications referenced within the LOV4IoT catalogs and the Sensor-based Linked Open Rules (S-LOR) tool. Such technical AI solutions can later be embedded within the robots to better understand users’ emotions and diseases from sensor devices.

Fig. 6.

Inferring emotion (e.g., anxiety) from skin conductance

Fig. 7.

Inferring disease (e.g., hypertension) from blood pressure

Fig. 8.

Inferring disease (e.g., hyperglycemia) from blood glucose

Fig. 9.

Inferring disease (e.g., tachycardia) from heartbeat

Reusing Knowledge Expertise

The LOV4IoT ontology catalog is generic enough to be extended to any domain. In this paper, we are mainly focused on the following domains: emotion, health, robotics, and home.

LOV4IoT-Emotion knowledge catalog (see Table 2 for URLs). We have collected 22 ontology-based emotion projects in Table 8 (enrichment of [48]), from 2003 to 2018, and have classified them as: (1) ontologies that are open-source and define domain knowledge (column ontology availability with a green check mark), and (2) ontologies that are not openly accessible (column ontology availability with a red cross mark). We released the catalog as an open-source dataset, web service, and dump (see Table 2 for URLs) to easily retrieve the list of projects, their ontology URLs, and scientific publications for further automatic knowledge extraction. Those ontologies classify the numerous emotions to be automatically recognized with tasks such as text analysis (e.g., robots understanding conversations) or face recognition. Table 8 shows that ontologies are frequently not accessible online, which demonstrates the need to disseminate the Semantic Web Community Best practices guidelines13 more widely within the Affective Computing community, to encourage the reuse and the publication of ontologies. Ontology-based emotional projects do not state which devices are required to recognize emotions (except a microphone for voice data and a camera for face detection). For this reason, we also classified well-being projects (e.g., recommendation systems) using IoT devices to interpret emotions in Table 7. This table helped us to build the reasoning engine for emotions explained in [48].

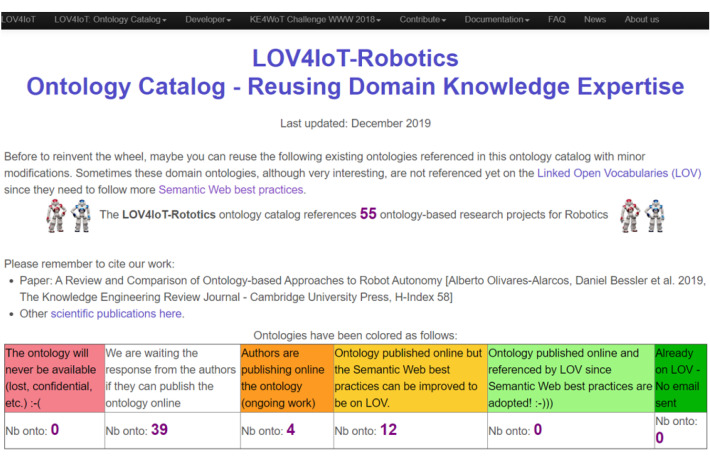

LOV4IoT-Robotics provides an HTML interface (Fig. 10) for humans, but the RDF dataset is also employed for statistics (number of ontologies for the robotics domain and the quality of each ontology, such as whether it is published online or not) and for other automatic tasks (e.g., the Perfecto project, to link the ontology URL with semantic web tools and analyze ontology best practices, or automatic visualization). In the same way, a focus on Ambient Assisted Living (AAL) ontologies (e.g., UniversAAL ontologies) could be done, or even on the intersection of robotic ontologies for AAL. We also released dump files with the ontology code for a quick analysis of common concepts used within present or past robotics projects.

Fig. 10.

LOV4IoT-robotics: ontology catalog for robotics. Similarly, there is a dedicated ontology catalog for health, emotion, and smart home

Similarly, we have built LOV4IoT-Health and LOV4IoT-Home (see Table 2 for URLs). All of those ontology datasets have been released for the AI4EU Knowledge Extraction for the Web of Things (KE4WoT) Challenge.14

Knowledge Catalog Methodology

We define the following survey Inclusion Criteria:

Open-Source projects. Ideally, projects have a project website, documentation, ontologies released online, etc.

Impactful projects (e.g., European projects with numerous partners, projects in partnership with industrial companies, etc.).

Supported by standards (e.g., ETSI, W3C, ISO, IEC, OneM2M).

Reuse of the ontologies within other projects.

Size of the ontology or knowledge base.

Maintenance of the projects.

As explained in Sect. 2, conducting a Systematic Literature Survey following approaches such as PRISMA, PICO or [18, 96] [62] is out of the scope of the paper. However, we continuously searched for keyphrases such as “robotic ontologies” or “robotic ontology healthcare” on search engines; the set of keywords has been regularly refined. We provide this overview to corroborate the discussion and the context of our work. We persistently explored the references included within the bibliography of the papers that have been studied, and added any new papers that needed to be investigated. When looking for papers on search engines, the most cited paper will probably be referenced first. However, we also have to consider the latest publications, which are not cited yet. For this reason, we can use Google Scholar to explicitly request publications per year (e.g., 2018, 2019), to include the latest publications. We give priority to open-source projects that share their ontologies online. Another criterion is the maintenance of the project and/or the ontology.

Discussions: More than a survey, an ontology catalog tool. On top of this survey paper, we created an ontology catalog tool accessible online, called LOV4IoT, to ease the task of retrieving ontology URLs when available, links to scientific publications, etc. The ontology catalog provides a table view for humans but is also encoded in a machine-processable format (RDF). We also provide a web service using the RDF dataset to be used by developers (e.g., robotics or knowledge representation experts). A tutorial to help reuse those ontology-based projects provides: (1) the easy use of the web service, (2) the opportunity to download the zip file with ontology code when available, and (3) a table sheet with ontology URLs when available and potential issues encountered (e.g., versioning).

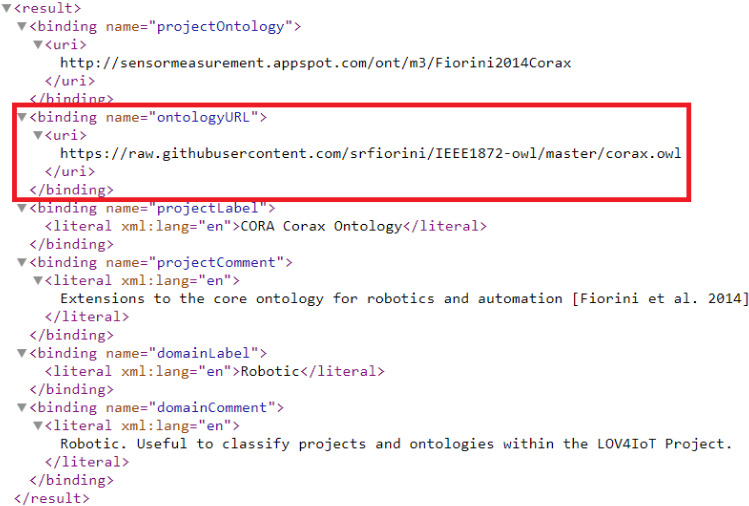

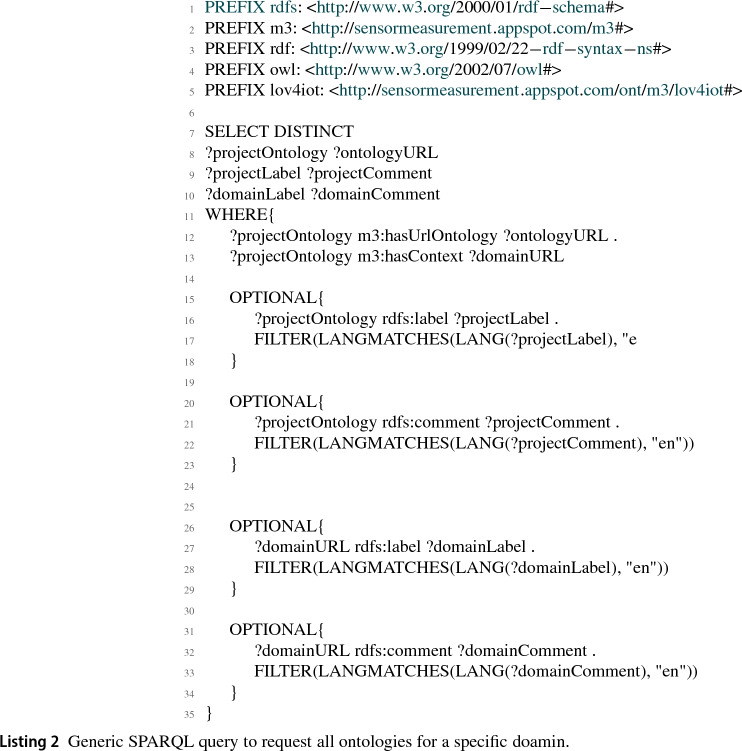

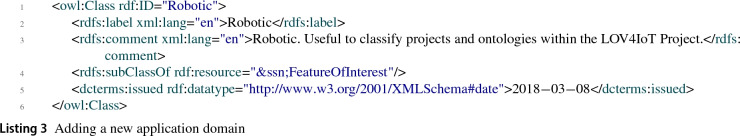

The generic SPARQL query to automatically retrieve all ontologies for a specific domain from the LOV4IoT ontology catalog is displayed in Listing 2. The generic variable ?domainURL makes this possible. In Listing 3, which queries the robotic domain, ?domainURL is replaced by m3:Robotic. The same mechanism works for other domains covered in this paper (e.g., IoT, emotion, health).

Figure 11 demonstrates the web service providing all robotic ontologies. The web service is publicly accessible.15 Note that the ontologyURL matches the one given within the RDF dataset (Listing 4). This figure shows the result returned by the SPARQL query when executed.
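The substitution of ?domainURL can be illustrated with a short Python helper. This is a sketch only: the query text below is a hypothetical stand-in for Listing 2 (the namespace IRI and the property names `m3:hasContext` and `m3:hasUrlOntology` are assumptions made for the example), and only the replacement of ?domainURL by a value such as m3:Robotic reflects the mechanism described above.

```python
import re

# Hypothetical stand-in for the generic query of Listing 2.
# The prefix IRI and the property names are assumptions.
GENERIC_QUERY = """\
PREFIX m3: <http://sensormeasurement.appspot.com/m3#>
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
SELECT DISTINCT ?project ?label ?ontologyURL WHERE {
  ?project m3:hasContext ?domainURL .
  ?project rdfs:label ?label .
  OPTIONAL { ?project m3:hasUrlOntology ?ontologyURL . }
}
"""


def query_for_domain(domain):
    """Specialize the generic query for one domain, e.g. 'm3:Robotic'."""
    # Accept only a simple prefixed name so that arbitrary text
    # cannot be injected into the query.
    if not re.fullmatch(r"m3:[A-Za-z][A-Za-z0-9_]*", domain):
        raise ValueError(f"unexpected domain name: {domain!r}")
    return GENERIC_QUERY.replace("?domainURL", domain)


robotic_query = query_for_domain("m3:Robotic")
```

The same call with another domain name would yield the query for that domain, which is what makes a single generic query sufficient.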

Fig. 11.

Web service providing all robotic ontologies

Summary of Knowledge API Demonstrators

We retrieve knowledge expertise from existing projects by classifying sensors, ontologies used to model data and applications, and the reasoning mechanisms used to interpret sensor data. We integrate the knowledge from several communities (Affective Computing designing new AI algorithms to understand IoT data, Affective Sciences modeling emotion within ontologies, Robotics community designing ontologies, IoT community designing ontologies, etc.). The collected knowledge can be automatically retrieved within online Knowledge API demonstrators, as referenced in Table 2.

Engineering Framework for Robotics in Smart Health and Emotional Care

ACCRA is using a framework consisting of three multi-disciplinary engineering components (Fig. 1) and their maturity is illustrated in Table 3:

IoT capabilities for robotics (Sect. 5.1),

Knowledge for emotional well-being (Sect. 5.2), and

Co-creation (Sect. 6.1).

Engineering IoT Capabilities for Robotics

The ACCRA platform is evaluated with the mobility, daily life, and conversational applications detailed hereafter.

Mobility Application

The ASTRO robot acts as a walker to support frail persons during indoor walking tasks [26]. Data collected from pressure sensors located on the ASTRO handles are aggregated in real-time with the AI algorithm stored on the robot to tune the physical human-robot interaction. ASTRO provides a personalized list of physical exercises and monitors the performance of the patient over time. ASTRO can also be used by caregivers to support their work through a customized web interface stored in FIWARE: they can monitor the performance of a user over a certain period of time (e.g., statistics on the exercises performed over a day, related satisfaction with the walking service) or monitor parameters related to sarcopenia and frailty, namely handgrip strength and walking velocity [36]. Caregivers can personalize the list of exercises according to the clinical profile.

Daily Life Application (e.g., Well-Being)

IoT technologies are investigated in healthcare applications too (e.g., to provide an affordable way to unobtrusively monitor the patient’s lifestyles in terms of the amount of physical activity suggested by international guidelines for patients with chronic conditions like diabetes [6]). We designed IAMHAPPY [48], an innovative IoT-based well-being recommendation system (ontology and rule-based system) to encourage people’s happiness daily. The system helps people deal with day-to-day discomforts (e.g., minor symptoms such as headache, fever) by using home remedies and related alternative medicines (e.g., naturopathy, aromatherapy), activities to reduce stress, etc. The recommendation system queries the web-based knowledge repository for emotions that reference expertise from past emotional ontology-based projects (Table 8) and well-being IoT-based applications (Table 7), reused to build applications as explained below in Sect. 5.2. The knowledge repository helps to analyze the data produced by IoT devices to understand users’ emotions and health. The knowledge repository is integrated with a rule-based engine to suggest recommendations to enhance people’s well-being every day. The naturopathy application scenario supports the recommendation system, which has also been enriched with food that boosts the immune system, and scientific facts, necessary to face the COVID-19 pandemic.

Conversational Application

Buddy challenges people with intellectual exercises to stimulate their curiosity on different topics. The results of the needs analysis (see the “Listen” phase of the LIFE methodology in Sect. 6.1) indicate differences between the Italian and the Japanese cultures [31]. Japanese elderly people are more interested in conversations on fashion, golf, and travel, which are adapted to their preferences and psychological profile in order to improve functioning in the everyday context, thus encouraging the autonomy of the patients and reducing the risk of isolation. Italian elderly people use the robot as a cognitive stimulus: to remember appointments and things to do during the day, to suggest music tracks to listen to, to keep up to date with daily news, and to show pictures in order to support patients with cognitive impairment during hospitalization. Furthermore, the robot could improve the users’ degree of comfort when using the device by automatically adapting to users’ behaviors and their personal histories.

Engineering Knowledge for Robotics Emotional Well-Being

Robots can either convey emotions via their cute interface (e.g. Buddy), or robots can understand human emotions by using: (1) cameras with face recognition (e.g. RoboHon), (2) speech with voice recognition, and (3) IoT devices (e.g., heartbeat, ECG). We designed a knowledge-based repository for emotion, health, robotics, and home (as explained in detail in Sect. 4). For instance, we classify and analyze emotional-based projects either using IoT technologies to understand human emotions (Table 7) or ontologies to model emotions (Table 8).

As an example, Fig. 12 shows reasoning from three IoT devices (thermometer, heart beat sensor, and humidity) embedded within the ASTRO mobility robots to assist stakeholders such as physicians when emergency actions must be taken.

Fig. 12.

Knowledge Reasoning embedded within robots to assist stakeholders such as physicians

Evaluation

This section evaluates our proposed solutions with stakeholders: (1) elderly and caregivers through the Listen, Innovate, Field-test, Evaluate (LIFE) co-creation methodology in Sect. 6.1, (2) researchers and IT experts in Sect. 6.2 via a user form and Google Analytics in Sect. 6.3.

Engineering Co-Creation for IoT Integrating Human Factors

Our approach is based on Listen, Innovate, Field-test, Evaluate (LIFE)16, a co-creation methodology for IoT that integrates human factors and focuses on: (1) user-centric design involving elderly people and caregivers, and (2) technology design involving robotics features. The LIFE methodology aims to identify the needs of elderly people experiencing a loss of autonomy, to co-create robotic solutions that meet these needs, to field-test their daily use, and to evaluate the solutions’ sustainability.

The methodology consists of four phases, each ending with a checkpoint to assure that the project team only passes to a next phase if the scope is clear, the goals are met, and the necessary conditions in terms of resources are in place. The phases are: (1) Needs Analysis, (2) Agile co-creation, (3) Agile pre-experiment, and (4) Final evaluations. Table 4 details activities and checkpoints in each of the phases. The agile co-creation stage is the heart of the LIFE methodology. It is based on iterative cycles comprising four sub-steps: Co-design, Test, Develop, and Quality check meeting.

Table 4.

Co-creation methodology processes

| Process | Activities |

|---|---|

| Needs analysis process | Project scoping; Needs prioritization; Services offering description; Technical feasibility; Final services prioritization; Consumer expression of final services |

| Agile co-creation process | Capability scoping; Co-design; Test; Agile development; Quality check meeting |

| Agile pre-experiment process | Stability check; Plan: design the pre-experiment; Do: execute the pre-experiment; Check: evaluate the pre-experiment; Act: recommendations for future work |

| Final evaluation process | Maturity check; End experiment; Market assessment; Sustainability assessment; Market-readiness check |

Experiments: The goal of the experimentation phase is to test the robotics solution under real conditions with a large group of end users. The experimentation only took place in Europe. For each use case, a methodology for the experimentation has been designed, taking into account what the robot’s functionalities are and what would (given the time constraints of the project) be the optimal duration of use. The design was either a before-and-after design or a cross-sectional design without a control group. We used mixed-method data collection consisting of surveys, interviews and, for the conversation use case, video recording. An extensive amount of data was collected, giving insight into, among others, perception of the robot, usability, and quality of life of the user. The target sample per country for each use case was to recruit the following stakeholders: 20 elderly, around 6 formal caregivers, and as many informal caregivers as available and willing to participate. In total, 100 people participated in the experiment. The results reached in the ACCRA project are beyond the scope of this paper, but will be reported in other publications.

The application usability is measured with the System Usability Scale (SUS) [77]. SUS provides specific questions such as: (1) I think that I would like to use this system frequently. (2) I found the system unnecessarily complex. (3) I thought the system was easy to use. (4) I think that I would need the support of a technical person to be able to use this system. (5) I found the various functions in this system were well integrated. (6) I thought there was too much inconsistency in this system. (7) I would imagine that most people would learn to use this system very quickly. (8) I found the system very cumbersome to use. (9) I felt very confident using the system. (10) I needed to learn a lot of things before I could get going with this system.
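The text does not state how the ten answers are aggregated, so the sketch below assumes the standard SUS scoring procedure: each answer is given on a 1 to 5 scale, positively worded (odd-numbered) items contribute the answer minus 1, negatively worded (even-numbered) items contribute 5 minus the answer, and the sum is multiplied by 2.5 to obtain a score between 0 and 100. The function name is hypothetical.

```python
def sus_score(responses):
    """Compute a SUS score (0 to 100) from ten answers on a 1-5 scale.

    `responses[0]` is the answer to question (1), `responses[9]` the
    answer to question (10), in the order listed in the text.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten answers")
    if any(answer not in (1, 2, 3, 4, 5) for answer in responses):
        raise ValueError("each answer must be an integer from 1 to 5")

    total = 0
    for number, answer in enumerate(responses, start=1):
        if number % 2 == 1:
            total += answer - 1   # odd items are positively worded
        else:
            total += 5 - answer   # even items are negatively worded
    return total * 2.5


best = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
neutral = sus_score([3] * 10)
```

Averaging the per-participant scores then gives one usability figure per application, which is how SUS results are commonly reported.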

Conclusion: Agile and DevOps (introduced in Sect. 2.3) are used to co-create applications (mentioned above) with elderly and medical professionals, amongst other stakeholders. Internal sprints are done within the engineering team. Once an application is ready, it is presented to the previously mentioned multidisciplinary stakeholders during co-creation workshops for validation, refinement or further development (within external sprints), depicted in Fig. 13. Technical limitations of the study are described in Sect. 7.

Fig. 13.

Internal and external sprints for co-creation of applications with elderly

Experiments of Knowledge API Demonstrators with Users Through Google Forms

To evaluate LOV4IoT, an evaluation form17 has been made available on the LOV4IoT user interface. It has been filled in by 50 volunteers, who used the LOV4IoT web site and found the evaluation form there. We did not select their profiles but requested the information “Who are you?” in the form. We keep the evaluation form open so that additional volunteers can still provide their feedback; for this reason, statistics can evolve. This form demonstrates that the synthesis and classification of numerous ontology-based projects is useful for other developers and researchers, and not only for the IoT research field. It helps users with their state of the art or with finding and reusing existing ontologies. The percentages do not always sum to 100%, either because the question was not mandatory or because we added the question later to get more information. The LOV4IoT evaluation form contains the following questions and results.

Who are you? Users are either: 37% semantic-based IoT developers, 37% IoT developers, 11% ontology matching tool experts and 9% domain experts. It means that the dataset is mainly used by the IoT community.

Domain ontologies that you are looking for? 40% of users are interested in smart home ontologies (the highest score), 28% in health ontologies, 10% in emotion. It means that users are interested in most of the domains that we cover.

How did you find this tool? 23% found the LOV4IoT tool thanks to search engines, 23% thanks to emails that we sent to ask people to share their domain knowledge or to fill this form, 19% thanks to research articles, and 13% thanks to people who recommended this tool. Everybody can find and use this tool, not necessarily researchers.

Do you trust the results since we reference research articles? 57% of users trust the LOV4IoT tool since we reference research articles, 40% are partially convinced. It means they consider this dataset as a reliable source.

In which information are you interested? 72% of users are interested in ontology URL referenced, 66% of users are interested in research articles, 37% in technologies, 27% in rules and 27% in sensors used. The classification and description of each project is beneficial for our users.

Do you use this web page for your state-of-the art? 40% of users answered yes frequently, 32% yes, and 28% no. Thanks to this work, users save time by doing the state of the art on our dataset.

In your further IoT application developments, do you think you will use again this web page? 59% of users answered yes frequently, 36% yes, and 4% no. This result is really encouraging to maintain the dataset for domain and IoT experts.

In general, do you think this web page is useful: 69% of users answered yes frequently, 28% yes, and 2% no. This result is really encouraging to maintain the dataset and add new functionalities.

Would you recommend this web page to other colleagues involved in ontology-based IoT development projects? 82% of users answered yes frequently, 16% yes, and 2% no. This result is really encouraging to maintain the dataset and add new functionalities.

This evaluation shows that LOV4IoT is really relevant for the IoT community. The results are encouraging to update the dataset with additional domains and ontologies. LOV4IoT leads to the AIOTI (The Alliance for the Internet of Things Innovation) IoT ontology landscape survey form18 and analysis result19, executed by the WG03 Standards—Semantic Interoperability Expert Group. It aims to help industrial practitioners and non-experts to answer those questions: Which ontologies are relevant in a certain domain? Where to find them? How to choose the most suitable? Who is maintaining and taking care of their evolution?

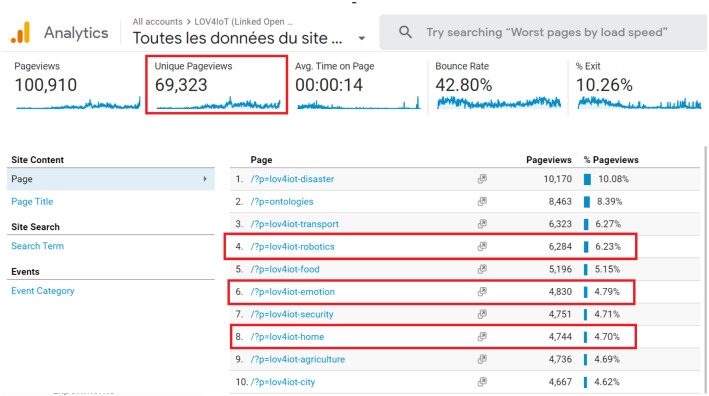

Experiments of Knowledge API Demonstrators with Google Analytics

Google Analytics has been set up since August 2014. In January 2021, more than 955 visits were recorded on the ontologies page (all ontologies), of which 794 were unique visits. Since we realized that the LOV4IoT-ontologies page was one of the most visited (after the home page) of the entire “Semantic Web of Things” web site, we decided to create a dedicated web site for LOV4IoT. In April 2018, Google Analytics was set up on the dedicated LOV4IoT web site, as depicted in Fig. 14. We can see a constant increase in the number of visits. Because of such encouraging results, we also started to split the web page per domain to clearly observe the IoT domains that interest the audience (Fig. 15). It demonstrates that the robotics, emotion, and home pages referencing existing ontologies are the most visited: robotics with more than 6200 page views, emotion with more than 4800 page views, and home with more than 4700 page views. It means that visitors return several times to this dataset, which demonstrates its usefulness. However, we are aware of the need for front-end developers to enhance the GUI user experience. As mentioned previously, LOV4IoT leads to the AIOTI IoT ontology landscape survey.

Fig. 14.

LOV4IoT google analytics

Fig. 15.

LOV4IoT google analytics per IoT domain (e.g., robotics, emotion, and home)

In the same way, we have recently refactored the S-LOR rule discovery web page into a dedicated web site and then split it per domain; the Google Analytics results are not impressive yet.

Key Contributions and Lessons Learnt

Internet of Robotic Things (IoRT): the ACCRA partner Scuola Superiore Sant’Anna is a pioneer of the IoRT field [115]; several partners have an interest in contributing more to the convergence of the IoT and robotics communities and technologies [94] [106].

Cloud Robotics [57, 60, 99]: the reasoning engine component is available on the Cloud to benefit from the computational power. The intelligence of the robot can be downloaded from the Cloud according to the application needs. The reasoning engine is flexible enough to be used on the Cloud, and it has also already been tested on Android-powered devices in previous projects, which means that any robot platform compatible with Android should be able to run the reasoning engine and the Knowledge API.

Unifying Models: A common language that allows machines and devices to talk with each other is necessary; we found that robots need a common language to exchange information with each other (more sophisticated than RoboEarth [124] and RobotML [28]). Our findings show that this can be achieved with semantic web technologies (e.g., ontology). The IEEE RAS Ontologies for Robotics and Automation Working Group [89] is becoming known by the IoRT community [115]. There is no emotion ontology endorsed by standards bodies such as IEEE, ISO, or W3C yet. The reuse and integration of existing ontologies is a technical challenge to face; there is a need to disseminate semantic web best practices [52] more widely to communities such as IoT, Robotics, Affective Sciences, etc.

Autonomous robots: The IEEE 1872.2 ontology for autonomous robotics standardization designs ontological patterns to be reused among heterogeneous robots. LOV4IoT-Robotics has been refined with the knowledge from the IEEE robotic experts (see the survey of ontology-based approaches to robot autonomy [83]). LOV4IoT-Robotics classifies 55 ontology-based projects (in August 2020), many more projects than the ones covered by the survey.

Trust (e.g., Security and Privacy): Cloud technologies bring new AI capabilities; however, we are aware of trust issues (including security and privacy) that must be addressed to be compliant with the European Commission vision conveyed within its white paper [24].

Conclusion and Future Work

Three social robots (Buddy, ASTRO, and RoboHon) assist elderly people to stay independent at home and improve their socialization in the context of the ACCRA project. The robots embed three kinds of emotional-based applications (mobility, daily life and conversational), conceived with the Listen, Innovate, Field-test, Evaluate (LIFE) co-creation methodology for IoT, integrating human factors to fit the elderly’s needs. We also have detailed the knowledge API to reuse expertise from cross-domain communities (e.g., robotics, IoT, health, and affective sciences).

Table 5.

Summary of robotics surveys using IoT, cloud, and AI technologies

| Authors | Year | Expertise | Semantic web (e.g., ontologies) |

|---|---|---|---|

| Paulius et al. [86] | 2019 | Survey service robotics | Knowledge representation list and prolog for rules (reasoning and inference) |

| Simoens et al. [106] | 2018 | IoT robotics | No |

| Saha et al. [99] | 2018 | Survey cloud robotics | No |

| Mouradian et al. [78] | 2018 | Robotics-as-a-service (RaaS) cloud, disaster | No |

| Vermesan et al. [115] | 2017 | Internet of robotics things (IoRT) | cites only the IEEE standardization [89] |

| Chowdhury et al. [97] | 2017 | IoT, robotics | No |

| Jangid et al. [58] | 2016 | Cloud robotics, disaster | No |

| Kehoe et al. [60] | 2015 | Survey, RaaS, ML, cloud | No |

| Koubaa et al. [64] | 2015 | RaaS, robot operating system (ROS) web service (WS) | No |

| Waibel et al. [117] (RoboEarth EU FP7 project) | 2011 | WWW robots, cloud | No |

Table 6.

Overview of existing ontology-based robotic and IoT projects

| Authors | Year | Expertise | LOV4IoT-robotic dataset | Ontology availability (OA) |
|---|---|---|---|---|
| Tiddi et al. [112] | 2017 | Capability of robots | Ontology | |
| Tenorth, Beetz et al. [111] [110] (KnowRob, OpenEase, RobotHub) | 2017 2013 | Cognitive robots | Ontology | 16 ontologies online |
| Li et al. [68] | 2017 | Underwater robots | Ontology | |
| Prestes, Fiorini [89] [90] | 2014 2013 | Core ontology for robotics and automation (CORA) standard | Ontology | |
| Lortal et al. [75] [27] | 2010 | Robotic DSL | Ontology | |
| Mobile manipulation ontologies | – | Mobile manipulation ontologies | Ontology | |
| IEEE P1872.2 Standard | 2021 | Autonomous robotics (AuR) | Ontology | No (ongoing) |
| Tosello et al. [114] | 2018 | Robot task and motion planning | Ontology | No |
| Haidegger et al. [53] | 2013 | Service robots | Ontology | No |
| Balakirsky et al. [10] | 2012 | Industrial robotics | Ontology | No |
| Hotz et al. [56] | 2012 | Robot tasks | Ontology | No |
| Chella et al. [22] | 2002 | Robotic environments | Ontology | No |
| Sabri et al. [98] | 2018 | Context-aware semantic internet of robotic things (IoRT) | Ontology | No |
| Olszewska et al. [84] | 2017 | Autonomous robotics | Ontology | No |
| Gonçalves et al. [44] | 2016 | Surgical robotics | Ontology | No |
| Zander et al. [122] | 2016 | Survey semantic-based robotics projects | Survey | No |
| Saraydaryan, Grea et al. [101] [46] | 2015 2014 | Services | Ontology | No |
| Saxena et al. [102] [105] | 2014 | RoboBrain knowledge engine based on WordNet, Wikipedia, Freebase, and ImageNet | Ontology | No |
| Azkune et al. [9] | 2012 | Social-robot self-configuration | Ontology | No |
| Lim et al. [70] | 2011 | Service robots, indoor environment | Ontology | No |
| Dogmus et al. [30] [29] | 2015 2013 | Rehabilitation robotics ontology | Ontology | No |
| RobotML [28] | 2012 | RobotML language | Ontology | No |
| Paull et al. [87] | 2012 | Autonomous robotics | Ontology | No |
| Lemaignan et al. [67] | 2010 | Cognitive robotics | Ontology | No |
| Vorobieva et al. [116] | 2010 | Object recognition and manipulation | Ontology | No |
| Bermejo et al. [15] | 2010 | Autonomous systems | Ontology | No |
| Chatterjee et al. [21] | 2005 | Robot for disaster and rescue | Ontology | No |
| Wang et al. [118] | 2005 | Robot context understanding | Ontology | No |
| Schlenoff et al. [103] | 2005 | Robot urban search and rescue | Ontology | No |

Legend: OA stands for ontology availability. Projects whose ontology code is available are listed at the top; the ontology-based projects are then ordered by year of publication.

Future work will contribute to the IEEE 1872.2 ontology for autonomous robotics and apply it to deployed, innovative cross-domain applications. These applications will make greater use of wearables with elderly people through co-creation methodologies and will integrate ontologies from various domains (e.g., robotics, IoT, Ambient Assisted Living). The evaluation of application usability could also be strengthened with the World Health Organisation Quality of Life assessment (WHOQOL) [47], which asks questions about overall quality of life and general health. We will continue the work on the Personalized Health Knowledge Graph [51]. Automatically extracting knowledge from ontologies and from the scientific publications that describe their purpose remains challenging (see our AI4EU Knowledge Extraction for the Web of Things Challenge); addressing it would allow the expertise designed by robotics and domain experts (e.g., physicians) to be reused and made usable by machines.

Acknowledgements

This work has partially received funding from the European Union’s Horizon 2020 research and innovation program (ACCRA) under grant agreement No. 738251, the National Institute of Information and Communications Technology (NICT) of Japan, and AI4EU No. 825619. We would like to thank the ACCRA partners for their valuable comments. The opinions expressed are those of the authors and do not reflect those of the sponsors.

Biographies

Dr. Amelie Gyrard

is a consultant on Research & Innovation European projects at Trialog, Paris, France. Dr. Amelie Gyrard has experience in topics such as Internet of Things (IoT) and Artificial Intelligence (AI) Semantic Interoperability, Robotics, and Well-being. Dr. Gyrard is involved in standardization activities relevant to the Internet of Things and ontologies (e.g., ISO/IEC SC41 IoT and Digital Twin, ISO/IEC SC42 AI, ETSI SmartM2M, W3C, IEEE Ontology for Autonomous Robotics, iot.schema.org). She co-authored Semantic Interoperability for Internet of Things (IoT) white papers, targeting developers and engineers, on which different standardization bodies collaborate (W3C Web of Things, ISO/IEC JTC1, ETSI, ONEM2M, IEEE P2413, and AIOTI).

Dr. Kasia Tabeau

is an Assistant Professor in Health Services Innovation and Cocreation at the Department of Health Services Management and Organization (HSMO), Erasmus School of Health Policy & Management, The Netherlands. Her research focuses on how co-creation in healthcare should be organized and managed to ensure products and services meet patients’ needs, as well as those of their relatives and the (medical) professionals involved in their care. In particular, she studies how design thinking, i.e., the practices and abilities of designers, can help bring together the needs of these multiple, heterogeneous stakeholders.

Dr. Laura Fiorini

is a postdoctoral researcher at the University of Florence, Department of Industrial Engineering, Florence, Italy. She received the M.Sc. degree in Biomedical Engineering from the University of Pisa in 2012 (full marks, cum laude). She obtained a Ph.D. in Biorobotics (full marks, cum laude) at the BioRobotics Institute of Scuola Superiore Sant’Anna in 2016, with a fellowship offered by Telecom Italia, defending a thesis entitled “Cloud Robotic Services for Assisted Living Application”. In 2015 she visited the Bristol Robotics Laboratory at the University of the West of England (Bristol, UK). From 2016 to 2020, she was a postdoctoral researcher at the BioRobotics Institute and collaborated on several EU and national projects, such as Robot-Era, ACCRA, CloudIA, and SI-ROBOTICS. Currently, she is the coordinator of the Italian pilot site of the Pharaon project. She is the author of articles in ISI journals in the fields of social assistive robotics and wearable devices.

Mr. Antonio Kung

has more than 30 years of experience in embedded systems and ICT systems. He co-founded Trialog in 1987, where he acts as CEO. He has coordinated several collaborative projects in the security and privacy area (PRECIOSA, PRIPARE, PARIS, PDP4E). He is active in standardization (editor of ISO/IEC 27550 privacy engineering, rapporteur of two study periods: privacy guidelines for the IoT, privacy in smart cities). He is a member of the IPEN community and is responsible for a wiki on privacy standards (ipen.trialog.com). He holds a Master’s degree from Harvard University, USA, and an engineering degree from Ecole Centrale Paris, France.

Dr. Eloise Senges

is an Associate Professor in Marketing, with expertise in senior consumers, at the Toulouse School of Management, Toulouse 1 Capitole University, Toulouse, France. Before working in research, she spent 10 years in consumer goods marketing (Kraft Foods, now called Mondelez, and Cadbury). She was Group Marketing Manager in charge of two markets (€225 million) and 13 brands. Her Ph.D. thesis (2016) dealt with the influence of Ageing Well on seniors’ consumption. From 2016 to 2020, she was a research engineer at Paris Dauphine University. She collaborated in European and national scientific projects aimed at developing robotic solutions and new technologies for ageing well, such as ACCRA and AMISURE. Dr. Eloise Senges has (co)authored several papers on the ageing well of elderly consumers.

Dr. Marleen De Mul

is a health scientist with a broad interest in the development, implementation and evaluation of technologies for health and wellbeing. As an assistant professor at Erasmus School of Health Policy & Management she leads a research line on eHealth. In her research she applies mainly qualitative methods. Dr. De Mul (co)authored several papers on IT-supported quality management, development of patient portals, and use of robots in elderly care.

Francesco Giuliani

is the Director of the Innovation and Research Unit at Fondazione Casa Sollievo della Sofferenza. The Unit promotes the development of innovative solutions in the field of healthcare IT systems. He has coordinated several research programs in the fields of Computer Science, Robotics, Network Analysis, Medical Informatics, Telemedicine, Medical Devices Management, Scientometrics and Chronobiology. He has broad multidisciplinary experience in managing research and innovation projects from a regional to a European level. He is the author of articles, comments and interviews published in international journals.

Delphine Lefebvre

works at Blue Frog Robotics, Paris, France, as a project manager. She is a computer science engineer and has worked as a software developer and project manager in virtual reality, simulation, and robotics in France and Italy. At Blue Frog Robotics she manages projects for the robot Buddy in fields such as education, robotics for elderly care, and autism.

Dr. Hiroshi Hoshino

is CEO of Connectdot Ltd., an IT company in Kyoto, Japan. He has a PhD in engineering from Kyoto University. For 10 years at Kubota Computer, he localized the OS of super graphics computers using MIPS RISC CPU chips and introduced X Window Terminals to Japan. After that, he joined the Software Research Institute in Kyoto City and engaged in research on applying IT to the local community. Currently, he is also a part-time lecturer at Kyoto University, where he is in charge of software engineering lectures. He also develops apps for the visually impaired, and his life's work is to support the disabled and the elderly with IT.

Dr. Isabelle Fabbricotti

is an associate professor in the research group Health Services Management and Organization at the Institute of Health Policy & Management, Erasmus University Rotterdam. Her main field of expertise is integrated care and new care arrangements to enhance the efficiency and effectiveness of care. In her research she uses qualitative, quantitative, and mixed-methods designs as well as co-creation designs. Fabbricotti has extensive experience with the evaluation of large-scale, complex, and multidisciplinary interventions. Fabbricotti was project leader of the work package of the experiment of the Horizon 2020 ACCRA project. Fabbricotti holds a master's degree in Health Sciences from the Erasmus University Rotterdam and a doctorate from the same institution.

Dr. Daniele Sancarlo

is a geriatrician and bioinformatician who has worked as a researcher in the Geriatrics Unit of CSS since 2008. He is the author of over 70 scientific articles, published mainly in international indexed journals, and over 60 abstracts. In recent years he has been involved in several international and national research projects. He is currently participating as PI for his institution in the Pharaon European Project. His activities concern ageing, technology applied to the elderly, AAL, multidimensional impairment, assistive robotics, mortality prognostic models, and dementia.

Dr. Grazia D’Onofrio

is a Psychologist with a PhD in Robotics. She has worked as a Researcher in the Psychological Service of the CSS since 2007. She is the author of more than 60 scientific publications, mainly published in international journals. She is currently an Editorial Board Member for 3 international journals: Current Updates in Aging (OPR Science), Current Updates in Gerontology (OPR Science), and Austin Aging Research (Austin Publishing Group). In recent years she has been actively involved in several national, regional, and international projects. Her activities mainly concern dementia, psychological and behavioral symptoms, Ambient Assisted Living, and multidimensional impairment.

Prof. Filippo Cavallo

is an Associate Professor in Biomedical Robotics at the University of Florence, Department of Industrial Engineering, Florence, Italy. He received the Master's degree in Electronics Engineering from the University of Pisa, Italy, and the Ph.D. degree in Bioengineering from the BioRobotics Institute of Scuola Superiore Sant’Anna, Pisa, Italy. From 2007 to 2013, he was a postdoctoral researcher and, from 2013 to 2019, he was an assistant professor and the head and scientific lead of the Assistive Robotics Lab at the BioRobotics Institute, Scuola Superiore Sant’Anna. He has participated in various national and European projects; he is currently principal investigator of the Olimpia project and of the Pharaon project on pilots for active and healthy ageing, and scientific lead of the ACCRA, Capsula, Corsa, CloudIA, Si-Robotics, Intride, and Metrics projects. He is the author of more than 130 papers in conferences and ISI journals.

Prof. Denis Guiot

is Professor of Marketing and has headed the Marketing Research Center of Paris-Dauphine University (DRM-ERMES) (18 scholars and 23 PhD students) and the Consulting and Research Master program in Marketing and Strategy since 2006. He has carried out research on the influence of time on consumer behavior. His current research projects explore alternative forms of consumption, including the second-hand market and the use of virtual communities by consumers. He is also an expert in marketing to the elderly and has been a consultant for major companies such as Humanis, IBM, and Lagardère, and for advertising companies. He has written more than 80 research papers in the field of consumer behavior and participated, as a scientific coordinator for Paris-Dauphine University, in various national and European projects such as AMISURE and ACCRA. His research has been published in numerous books and in the Journal of Retailing, Psychology & Marketing, Recherche et Applications en Marketing (English and French editions), and Decisions Marketing.

Ms Estibaliz Arzoz-Fernandez

holds a master's degree in Telecommunications Engineering from the University of the Basque Country (Spain). She has more than 10 years of experience working as an engineer and has dedicated the last 7 years to privacy and security projects as a Project Manager in the fields of research and innovation, managing and coordinating projects such as ACCRA and PDP4E. She is involved in several European research and innovation projects, managing the privacy and cybersecurity activities in the energy sector and the health domain (InterConnect, SENDER, MAESHA).

Prof. Yasuo Okabe