Abstract

Background

Digital biomarkers are defined as objective, quantifiable, physiological, and behavioral data that are collected and measured using digital devices such as portables, wearables, implantables, or digestibles. For their widespread adoption in publicly financed health care systems, it is important to understand how their benefits translate into improved patient outcomes, which is essential for demonstrating their value.

Objective

The paper presents the protocol for a systematic review that aims to assess the quality and strength of the evidence reported in systematic reviews regarding the impact of digital biomarkers on clinical outcomes compared to interventions without digital biomarkers.

Methods

A comprehensive search for reviews from 2019 to 2020 will be conducted in PubMed and the Cochrane Library using keywords related to digital biomarkers and a filter for systematic reviews. Original full-text English publications of systematic reviews comparing clinical outcomes of interventions with and without digital biomarkers via meta-analysis will be included. The AMSTAR-2 tool will be used to assess the methodological quality of these reviews. To assess the quality of evidence, we will evaluate the systematic reviews using the Grading of Recommendations, Assessment, Development and Evaluation (GRADE) tool. To detect the possible presence of reporting bias, we will determine whether a protocol was published prior to the start of the studies. A qualitative summary of the results by digital biomarker technology and outcomes will be provided.

Results

This protocol was submitted before data collection. Search, screening, and data extraction will commence in December 2021 in accordance with the published protocol.

Conclusions

Our study will provide a comprehensive summary of the highest level of evidence available on digital biomarker interventions, providing practical guidance for health care providers. Our results will help identify clinical areas in which the use of digital biomarkers has led to favorable clinical outcomes. In addition, our findings will highlight areas of evidence gaps where the clinical benefits of digital biomarkers have not yet been demonstrated.

International Registered Report Identifier (IRRID)

PRR1-10.2196/28204

Keywords: digital biomarker; outcome; systematic review; meta-analysis; digital health; mobile health; Grading of Recommendations, Assessment, Development and Evaluation; AMSTAR-2; review; biomarkers; clinical outcome; interventions; wearables; portables; digestibles; implants

Introduction

The advent of new medical technologies such as sensors has accelerated the process of collecting patient data for relevant clinical decisions [1], leading to the emergence of a new technology, namely digital biomarkers. By definition, “digital biomarkers are objective, quantifiable, physiological, and behavioral measures collected using digital devices that are portable, wearable, implantable, or digestible” [2].

In addition to their role in routine clinical care, digital biomarkers also play a significant role in clinical trials [3]. Digital biomarkers are considered important enablers of the health care value chain [4], and the global digital biomarker market is projected to grow at a rate of 40.4% between 2019 and 2025, reaching US $5.64 billion in revenue by 2025 [5].

By providing reliable disease-related information [6], digital biomarkers can offer considerable diagnostic and therapeutic value in modern health care systems as monitoring tools and as part of novel therapeutic interventions [7]. Digital biomarkers could reduce clinical errors and improve the accuracy of diagnostic methods for patients and clinicians using measurement-based care [8]. As an alternative to cross-sectional surveillance or prospective follow-ups with a limited number of visits, these technologies can provide more reliable results through continuous and remote home-based observation, and when combined with appropriate interventions, digital biomarkers have the potential to improve therapeutic outcomes [9]. In addition, predicting patients' disease status during continuous monitoring provides opportunities for treatment processes with fewer complications [10].

Given the recent rapid pace of the development of digital health technologies such as software [11], sensors [12], or robotic devices [13,14], their widespread adoption in publicly financed health care systems requires a systematic evaluation and demonstration of their clinical benefits and economic value [15]. The new European Medical Device Regulations effective from May 2021 seek sufficient clinical evidence with the goal of improving clinical safety and providing equitable access to appropriate products [16].

Because of rapid technological changes, several potential user groups, and a wide range of functionalities, assessing the value of digital health technologies is a challenging, multidimensional task that often involves broader issues than standard health economic evaluations [17-20]. The National Institute for Health and Care Excellence (NICE) has published an evidence framework to guide innovators on what is considered a good level of evidence to support the evaluation of digital health technology. According to the NICE framework, digital biomarkers can fall into several digital health technology risk categories, ranging from simple consumer health monitoring to digital health interventions that potentially impact treatment or diagnosis. Although evidence of measurement accuracy and ongoing data collection on use may be sufficient for lower risk categories, for high-risk technologies, demonstration of clinical benefits in high-quality interventional studies is required as a minimum standard of evidence. NICE considers randomized controlled trials (RCTs) conducted in a relevant health care system or meta-analyses of RCTs to be the best practice evidence standard [20].

Numerous systematic reviews of digital biomarkers have been conducted in recent years, with varying results. For instance, a meta-analysis found that implantable cardioverter defibrillators (ICDs) are generally effective in reducing all-cause mortality in patients with nonischemic cardiomyopathy [21], whereas another reported that ICD therapy for the primary prevention of sudden cardiac death in women does not reduce all-cause mortality [16]. In a meta-analysis comparing ICDs with drug treatments, ICDs were found to be more effective than drugs in preventing sudden cardiac death [22]. Some systematic reviews on the use of wearable sensors for monitoring Parkinson disease have reported that wearable sensors are the most effective digital devices to detect differences in standing balance between people with Parkinson disease and control subjects [23] and that they improve quality of life [24]. In another systematic review, the clinical utility of wearable sensors in supporting clinical decision making for patients with Parkinson disease was not clear [25]. A 2011 systematic review found no differences between the effectiveness of portable coagulometers and conventional coagulometers in monitoring oral anticoagulation [26].

The inconsistent results from current systematic reviews call for a more systematic assessment of the strength and quality of evidence regarding the health outcomes of interventions based on digital biomarkers. Lack of knowledge about or omission of the quality of evidence of systematic reviews may lead to biased therapeutic guidelines and economic evaluations, and consequently to the widespread adoption of potentially harmful practices and a lag in the adoption of beneficial interventions [27].

Several systems for assessing the quality of evidence have been developed [28], of which the Grading of Recommendations, Assessment, Development and Evaluation (GRADE) system has been adopted by organizations such as the World Health Organization, American College of Physicians, and the Cochrane Collaboration due to its simplicity, methodological rigor, and usefulness in systematic reviews, health technology assessments, and therapeutic guidelines [27]. By assessing study limitations, inconsistency of results, indirectness of evidence, imprecision and reporting bias, GRADE classifies the quality of evidence into four levels from high to very low, with high quality indicating that further research is unlikely to alter our confidence in the effect estimate. Furthermore, by assessing the risk and benefit profile of interventions, GRADE offers two grades of recommendation—strong or weak, with strong recommendations indicating a clear positive or negative balance of risks and benefits [27]. However, some systematic reviews do not provide a structured assessment of the quality of synthesized evidence, and the quality of reporting may also limit the quality assessment of their results. Therefore, the AMSTAR-2 tool was developed as a validated tool to assess the methodological quality of systematic reviews [29].

Our goal is to provide innovators and policy makers with practical insights into the state of evidence generation on digital biomarkers, a rapidly evolving area of medicine [2]. This systematic review of systematic reviews will assess the overall strength of evidence and the reporting quality of systematic reviews that report a quantitative synthesis of the impact of digital biomarkers on health outcomes when compared to interventions without digital biomarkers. Methodological quality of the studies will be assessed using the AMSTAR-2 tool, whereas overall quality of evidence will be evaluated according to GRADE by digital biomarker technologies and reported outcomes.

Methods

This protocol was prepared following the PRISMA-P (Preferred Reporting Items for Systematic Reviews and Meta-Analyses Protocols) statement [30]. Any amendments to or deviations from this protocol will be reported together with the results of this study.

Eligibility Criteria

Original full-text English publications of systematic reviews that report meta-analyses of clinical outcomes of digital biomarker–based interventions compared with alternative interventions without digital biomarkers will be included. Specifically, we will include studies examining digital biomarkers used for diagnosing humans with any health condition in any age group and across genders. Studies investigating the use of digital biomarkers in animals will be excluded. Furthermore, the definition of digital biomarkers may overlap with sensor applications in the general population such as citizen sensing [31]. In this research, we will only consider systematic reviews focusing on digital devices used by clinicians or patients with the aim of collecting clinical data during treatment.

All interventions that involve the use of digital biomarkers for any purpose related to diagnosing patients, monitoring outcomes, or influencing the delivery of a therapeutic intervention will be considered. There will be no restrictions on comparators as long as the comparator arm does not involve the application of digital biomarkers for the purposes listed above. Only meta-analyses of clinical outcomes that report the intentional or unintentional change in the health status of participants resulting from an intervention will be considered. Systematic reviews that focus on measurement properties or other technical or use-related features of digital biomarkers that are not measures of a change in participants' health status due to an intervention are not eligible for this review.

Systematic reviews published between January 1, 2019, and December 31, 2020, will be included. We will include full-text articles published in English in peer-reviewed journals, conference papers, or systematic review databases, as well as full-text documents of systematic reviews from non–peer-reviewed sources, such as book chapters or health technology assessment reports.

Search Strategy

A comprehensive literature search will be conducted in PubMed and the Cochrane Library. In addition, the reference lists of eligible full-text systematic reviews will be searched for other potentially eligible reviews. Keywords related to “digital biomarkers” and filters for “systematic reviews” and publication dates will be combined in the literature search. Automatic expansion of the search terms to include applicable MeSH (Medical Subject Headings) terms will be allowed. To search for digital biomarker studies, we operationalized the definition of “digital biomarkers” [2]. To identify systematic reviews, the search filter proposed by the National Library of Medicine will be used [32]. This filter was designed to retrieve systematic reviews from PubMed that have been assigned the publication type “Systematic Review” during MEDLINE indexing, citations that have not yet completed MEDLINE indexing, and non-MEDLINE citations. The full syntax is provided in Table 1. An equivalent syntax will be developed to retrieve Cochrane reviews from the Cochrane Library.

Table 1.

Search expressions for PubMed.

| Terms | Number | Syntax |
| --- | --- | --- |
| Digital biomarkers | #1 | “digital biomarker” OR “digital biomarkers” OR portable OR portables OR wearable OR wearables OR implantable OR implantables OR digestible OR digestibles |
| Systematic reviews | #2 | (((systematic review[ti] OR systematic literature review[ti] OR systematic scoping review[ti] OR systematic narrative review[ti] OR systematic qualitative review[ti] OR systematic evidence review[ti] OR systematic quantitative review[ti] OR systematic meta-review[ti] OR systematic critical review[ti] OR systematic mixed studies review[ti] OR systematic mapping review[ti] OR systematic Cochrane review[ti] OR systematic search and review[ti] OR systematic integrative review[ti]) NOT comment[pt] NOT (protocol[ti] OR protocols[ti])) NOT MEDLINE [subset]) OR (Cochrane Database Syst Rev[ta] AND review[pt]) OR systematic review[pt] |
| Publication date | #3 | (“2019/01/01”[Date - Publication]: “2020/12/31”[Date - Publication]) |
| Final search strategy | #4 | #1 AND #2 AND #3 |
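As an illustration of how the three blocks in Table 1 combine into the final expression (#1 AND #2 AND #3), the following minimal Python sketch assembles a query string. The function names and the shortened `REVIEW_FILTER_STUB` are hypothetical placeholders introduced here; in practice the full filter syntax from row #2 would be pasted in unchanged.

```python
# Keyword block (#1), taken from Table 1.
DIGITAL_BIOMARKER_TERMS = [
    '"digital biomarker"', '"digital biomarkers"',
    "portable", "portables", "wearable", "wearables",
    "implantable", "implantables", "digestible", "digestibles",
]

# Hypothetical stand-in for the full systematic review filter (#2);
# only its last clause is reproduced here to keep the sketch short.
REVIEW_FILTER_STUB = "systematic review[pt]"


def or_block(terms):
    """Join terms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def date_block(start, end):
    """Publication date range (#3) in the notation used in Table 1."""
    return f'("{start}"[Date - Publication] : "{end}"[Date - Publication])'


def build_query(terms, review_filter, start, end):
    """Combine the three blocks as #1 AND #2 AND #3."""
    blocks = [or_block(terms), f"({review_filter})", date_block(start, end)]
    return " AND ".join(blocks)


query = build_query(
    DIGITAL_BIOMARKER_TERMS, REVIEW_FILTER_STUB, "2019/01/01", "2020/12/31"
)
print(query)
```

Keeping each block as a separate value makes it easier to reuse the keyword and date blocks when an equivalent expression is written for another database.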

Screening and Selection

After removing duplicates, 2 reviewers will independently screen the titles and abstracts according to two main eligibility criteria: (1) systematic reviews and (2) interventions including digital biomarkers that meet the definition “objective, quantifiable, physiological, and behavioral measures collected using digital devices that are portable, wearable, implantable, or digestible” [2]. Following this definition, imaging or any other technology that does not measure physiological or behavioral data will be excluded from this study. Portable, wearable, implantable, or digestible medical devices or sensors that generate physiological or behavioral data (such as fitness trackers and defibrillators) will be considered digital biomarkers. We interpret portable as “portable with respect to patients or consumers”; therefore, portable devices that are operated by health care professionals (eg, digital stethoscopes) will be excluded. Studies other than systematic reviews will be excluded in the screening phase. Interreviewer calibration exercises will be performed after title and abstract screening of the first 100 records, using the following method: each of the two screening criteria will be scored as 1 if “criterion not met” and 0 if “criterion met or unsure.” Reviewers can thus evaluate each record by assigning a score of 1, 2, 3, or 4, denoting the response patterns (0,0), (1,0), (0,1), and (1,1), respectively. Interrater agreement and the κ statistic will be calculated for the score, and reviewers will be retrained if at least substantial agreement (κ≥0.6) is not observed [33]. In case of discordant evaluations, a third reviewer will make the decision.
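The calibration scoring described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' analysis code: the function names are hypothetical, the unweighted Cohen κ is assumed, and the retraining rule is read as "retrain when κ falls below 0.6."

```python
from collections import Counter

# Response pattern (criterion 1, criterion 2) -> score, as described in the text;
# 1 means "criterion not met" and 0 means "criterion met or unsure".
PATTERN_TO_SCORE = {(0, 0): 1, (1, 0): 2, (0, 1): 3, (1, 1): 4}


def record_score(criterion1_not_met, criterion2_not_met):
    """Map one reviewer's two screening judgments to a score from 1 to 4."""
    return PATTERN_TO_SCORE[(int(criterion1_not_met), int(criterion2_not_met))]


def percent_agreement(scores_a, scores_b):
    """Share of records on which both reviewers assigned the same score."""
    return sum(a == b for a, b in zip(scores_a, scores_b)) / len(scores_a)


def cohen_kappa(scores_a, scores_b):
    """Unweighted Cohen kappa for two reviewers scoring the same records."""
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("both reviewers must score the same non-empty set of records")
    n = len(scores_a)
    observed = percent_agreement(scores_a, scores_b)
    freq_a, freq_b = Counter(scores_a), Counter(scores_b)
    # Agreement expected by chance, from each reviewer's marginal frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used one identical category throughout
    return (observed - expected) / (1.0 - expected)


def needs_retraining(kappa, threshold=0.6):
    """Assumed reading of the rule: retrain when kappa is below the threshold."""
    return kappa < threshold
```

For example, `cohen_kappa([1, 1, 2, 2], [1, 1, 2, 4])` combines an observed agreement of 0.75 with a chance agreement of 0.375.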

After screening, full-text articles will be evaluated against all eligibility criteria by 2 independent reviewers: (1) is the language English? (yes/no or unsure), (2) does the review concern human studies? (yes/no or unsure), (3) was the review published between January 1, 2019, and December 31, 2020? (yes/no or unsure), (4) does the review involve a meta-analysis of clinical outcomes? (yes/no or unsure), (5) does the intervention involve a digital biomarker used for diagnosis, patient monitoring, or influencing therapy? (yes/no or unsure), (6) does the comparator arm lack a digital biomarker for the same purposes? (yes/no or unsure). For inclusion, the answer to all 6 criteria must be yes. Discrepancies will first be discussed by the 2 reviewers; if disagreement persists, a third reviewer will decide whether to include the article. Excluded full-text articles and the reasons for exclusion will be included as an appendix to the publication of results.
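The all-or-nothing inclusion rule above can be expressed as a small check. The sketch below is illustrative only; the criterion keys and function names are hypothetical labels for the 6 questions, and any answer other than "yes" (including "no or unsure") is treated as a failed criterion.

```python
# Hypothetical short labels for the 6 full-text eligibility questions.
CRITERIA = (
    "english_language",
    "human_studies",
    "published_2019_2020",
    "meta_analysis_of_clinical_outcomes",
    "digital_biomarker_intervention",
    "comparator_without_digital_biomarker",
)


def failed_criteria(answers):
    """Return the criteria whose answer is not 'yes' (missing answers also fail)."""
    return [c for c in CRITERIA if answers.get(c) != "yes"]


def is_eligible(answers):
    """A review is included only if all 6 criteria are answered 'yes'."""
    return not failed_criteria(answers)


example = {c: "yes" for c in CRITERIA}
example["human_studies"] = "no or unsure"
print(is_eligible(example), failed_criteria(example))
```

Returning the list of failed criteria, rather than only a yes/no flag, gives the reason for exclusion that the appendix of excluded full-text articles requires.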

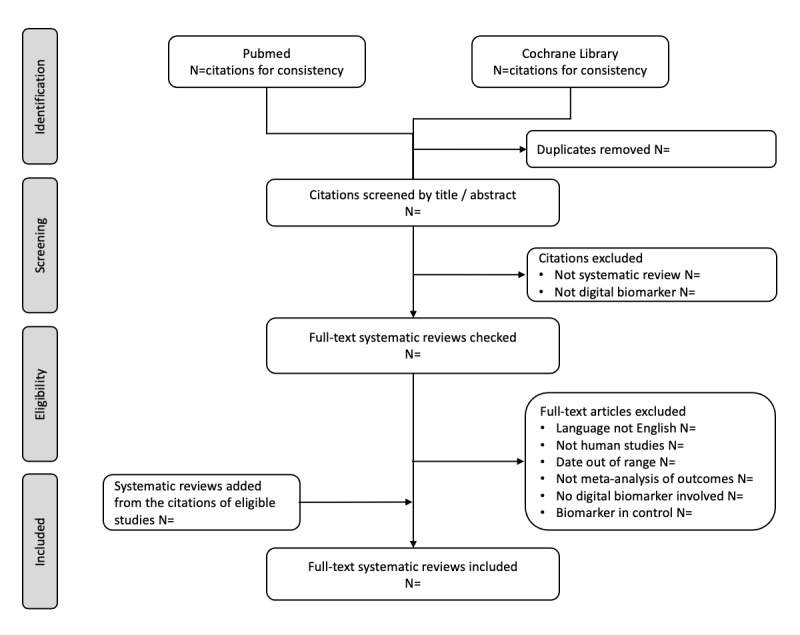

The screening results and selection of eligible studies will be visualized using the PRISMA-P 2009 flow diagram shown in Figure 1 [34].

Figure 1.

PRISMA-P (Preferred Reporting Items for Systematic Reviews and Meta-Analyses Protocols) flow diagram of included studies.

Data Extraction

Data extraction will be performed by 2 independent researchers, and a calibration exercise (evaluation of interrater agreement) will be conducted after completing data extraction from 20% of the included studies. Discrepancies between reviewers will be resolved by consensus, and residual differences will be settled by a third reviewer. Any modifications needed in the data extraction form will be made at this point.

Study-Level Variables

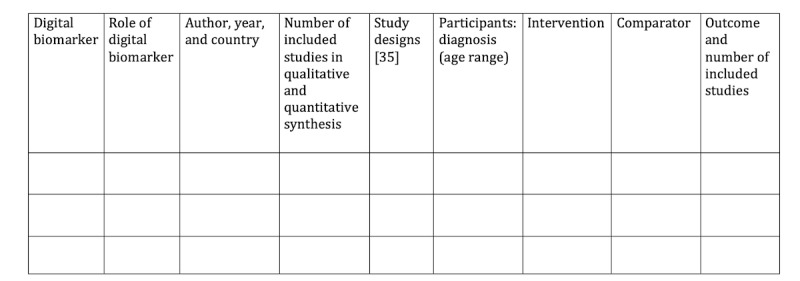

We will record the following study-level variables: year of publication, the first author’s country using the ISO 3166-1 country codes of the International Organization for Standardization, the total number of studies included in the qualitative and quantitative syntheses as well as separately for every outcome, study designs of the included studies (RCTs and non-RCTs, cohort studies, case-control studies, and cross-sectional studies) [35], population and its age range, disease condition using the International Classification of Diseases 11th Revision coding [36], intervention, type of intervention using the International Classification of Health Interventions coding [37], comparator, type of comparator, digital biomarker, role of digital biomarker (diagnosis, patient monitoring, and influencing intervention), bodily function quantified by the digital biomarker using the International Classification of Functioning, Disability and Health coding [38], and the list of synthesized outcomes. Each eligible study will be summarized in Figure 2.

Figure 2.

Summary of the included studies, which will include resources retrieved from non–peer-reviewed sources and reviews retrieved from peer-reviewed sources. Study designs will be listed in abbreviated form as the following: randomized controlled trial (RCT), non–randomized controlled trial (non-RCT), cohort study (C), case-control study (CC), and cross-sectional study (CS).

Assessment of the Methodological Quality of Systematic Reviews

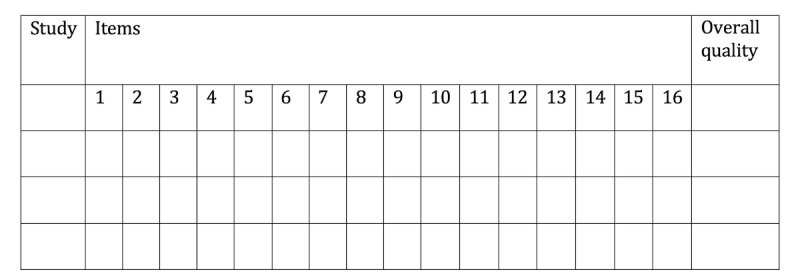

The methodological quality of the eligible systematic reviews will be assessed using the criteria of the AMSTAR-2 tool [29] by 2 independent reviewers. Discrepancies will be resolved by consensus; lingering differences will be resolved by a third reviewer. AMSTAR-2 is a reliable and valid tool used for assessing the methodological quality of systematic reviews of randomized and nonrandomized studies of health care interventions [29,39]. In brief, AMSTAR-2 evaluates methodological quality according to the following 16 criteria: (1) research question according to the PICO (patient, intervention, comparison, outcome) framework, (2) methods established prior to the study, (3) explicit inclusion criteria, (4) comprehensive literature search, (5) study selection in duplicate, (6) data extraction in duplicate, (7) reporting of excluded studies, (8) detailed description of included studies, (9) risk of bias (RoB) assessment, (10) disclosure of funding sources, (11) appropriate statistical methods for evidence synthesis, (12) quantitative assessment of RoB in main results, (13) study-level discussion of RoB, (14) explanation for heterogeneity of results, (15) investigation of publication bias, and (16) reporting conflicts of interest.

For consistent rating [40], we will use the AMSTAR-2 website [41]. The AMSTAR-2 website provides an overall grading of the studies in four categories: critically low, low, medium, and high. It also provides explicit criteria for the answer options (yes, partially yes, and no). For each eligible article, answers for all AMSTAR-2 items and the overall ratings will be presented in Figure 3. The AMSTAR-2 items are presented in Textbox 1.

Figure 3.

Assessment of the methodological quality of reviews (AMSTAR-2). Overall quality will be listed as critically low (CL), low (L), medium (M), and high (H).

AMSTAR-2 items.

1. Did the research questions and inclusion criteria for the review include the components of the PICO (patient, intervention, comparison, outcome) framework?
2. Did the review report contain an explicit statement that the review methods were established prior to conducting the review and did the report justify any significant deviations from the protocol?
3. Did the review authors explain their selection of the study designs for inclusion in the review?
4. Did the review authors use a comprehensive literature search strategy?
5. Did the review authors perform study selection in duplicate?
6. Did the review authors perform data extraction in duplicate?
7. Did the review authors provide a list of excluded studies and justify the exclusions?
8. Did the review authors describe the included studies in adequate detail?
9. Did the review authors use a satisfactory technique for assessing the risk of bias (RoB) in individual studies that were included in the review?
10. Did the review authors report on the sources of funding for the studies included in the review?
11. If a meta-analysis was performed, did the review authors use appropriate methods for the statistical combination of results?
12. If a meta-analysis was performed, did the review authors assess the potential impact of RoB in individual studies on the results of the meta-analysis or other evidence synthesis?
13. Did the review authors account for RoB in individual studies when interpreting and discussing the results of the review?
14. Did the review authors provide a satisfactory explanation for and discussion of any heterogeneity observed in the results of the review?
15. If they performed a quantitative synthesis, did the review authors carry out an adequate investigation of publication bias (small study bias) and discuss its likely impact on the results of the review?
16. Did the review authors report any potential sources of conflicts of interest, including any funding they received for conducting the review?

Outcome-Level Variables

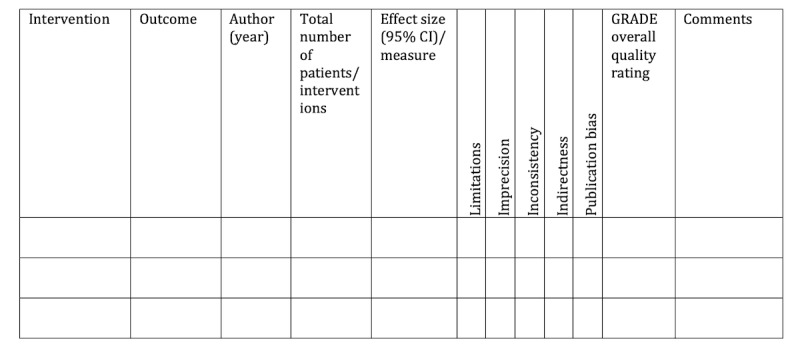

In addition to study-level variables, for each outcome synthesized in the meta-analyses, the following information will be extracted in duplicate, using the process described above: the measured outcome, total number of studies per outcome, total number of patients and number of patients in the intervention arm, effect size and its 95% CI (upper and lower limits), as well as the type of effect size (eg, standardized mean difference, odds ratio, or risk ratio). Quantitative descriptions of outcomes will be grouped by digital biomarker and provided in Figure 4, along with the assessment of the quality of evidence.

Figure 4.

Evidence summary and quality assessment by the GRADE (Grading of Recommendations, Assessment, Development and Evaluation) tool. Effect measures will be listed as risk ratio (RR), odds ratio (OR), mean difference (MD), and standardized mean difference (SMD). GRADE certainty ratings will be provided as high (H), moderate (M), low (L), and very low quality of evidence (VL).

Assessing the Quality of Evidence

We will evaluate the quality of evidence of the meta-analyses for each outcome using the GRADE system [27,42]. By default, GRADE considers the evidence from RCTs as high quality; however, this assessment may be downgraded for each outcome based on the evaluation of the following quality domains: (1) RoB [43], (2) inconsistency [44], (3) imprecision [45], (4) publication bias [46], and (5) indirectness [47]. Depending on the severity of the quality concerns, a downgrade of 0, 1, or 2 can be proposed for each domain. We will assign downgrades for RoB according to the following criteria: if 75% or more of the included studies for a given outcome are reported to have low RoB, no downgrade will be assigned; if less than 75% of the included studies have low RoB, 1 downgrade will be assigned; if the RoB is not reported, 1 downgrade will be assigned [48].
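The RoB rule above reduces to a simple threshold check, sketched below in Python. The function name and the use of `None` for "RoB not reported" are illustrative assumptions, not part of the protocol.

```python
def rob_downgrade(n_low_rob, n_studies):
    """Downgrades for risk of bias for one outcome.

    n_low_rob: number of included studies rated as low RoB,
               or None if the review did not report RoB (assumed encoding).
    n_studies: number of studies included for the outcome.
    """
    if n_low_rob is None:
        return 1  # RoB not reported
    if n_studies <= 0 or not 0 <= n_low_rob <= n_studies:
        raise ValueError("study counts are inconsistent")
    share_low_rob = n_low_rob / n_studies
    return 0 if share_low_rob >= 0.75 else 1


print(rob_downgrade(6, 8), rob_downgrade(5, 8), rob_downgrade(None, 8))
```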

To evaluate inconsistency, the reported heterogeneity (I² statistic) of studies for each outcome will be considered. If the heterogeneity of the included studies of an outcome is less than or equal to 75%, no downgrade will be assigned. If the heterogeneity of the included studies of the outcome is more than 75%, 1 downgrade will be assigned. If only 1 trial is included in the outcome, no downgrade will be assigned. In cases where the heterogeneity is not reported, we will assign 1 downgrade [48].
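A minimal sketch of the inconsistency rule follows. The function name and the `None` encoding for unreported heterogeneity are assumptions made for illustration; the single-trial case is checked first, since heterogeneity is not defined for one study.

```python
def inconsistency_downgrade(i_squared_percent, n_studies):
    """Downgrades for inconsistency for one outcome.

    i_squared_percent: reported heterogeneity in percent (0 to 100),
                       or None if not reported (assumed encoding).
    n_studies: number of studies pooled for the outcome.
    """
    if n_studies == 1:
        return 0  # a single trial cannot be inconsistent with itself
    if i_squared_percent is None:
        return 1  # heterogeneity not reported
    return 1 if i_squared_percent > 75 else 0


print(inconsistency_downgrade(40.0, 5), inconsistency_downgrade(82.5, 5))
```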

To assess imprecision, the sample size and CI will be evaluated [49]. Following the broad recommendations of the GRADE handbook [42], we will apply no downgrade if the pooled sample size is over 2000. We will apply 1 downgrade if the pooled sample size is less than 200. For pooled sample sizes between 200 and 2000, we will assess the optimal information size criterion as follows [42]: by expecting a weak effect size of 0.2 [50], we will calculate the sample size for an RCT using the pooled standard error and pooled sample size assuming a balanced sample, power of 0.8, and significance level of .05. If the calculated sample size is greater than the pooled sample size, 1 downgrade will be applied [42,49].
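The imprecision rule can be illustrated with the textbook sample size formula for a two-arm trial. The sketch below makes assumptions that go beyond the text: the effect size of 0.2 is treated as a standardized mean difference, so the outcome's standard deviation cancels out and the pooled standard error is not needed; the arms are balanced; and the test is two-sided. It does not reproduce the protocol's calculation based on the pooled standard error, and the function names are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def required_total_sample_size(effect_size=0.2, alpha=0.05, power=0.8):
    """Total sample size for a balanced two-arm RCT (two-sided test),
    assuming effect_size is a standardized mean difference."""
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)
    z_beta = standard_normal.inv_cdf(power)
    n_per_arm = 2 * (z_alpha + z_beta) ** 2 / effect_size ** 2
    return 2 * ceil(n_per_arm)


def imprecision_downgrade(pooled_n, effect_size=0.2, alpha=0.05, power=0.8):
    """Downgrades for imprecision based on the pooled sample size."""
    if pooled_n > 2000:
        return 0
    if pooled_n < 200:
        return 1
    # Between 200 and 2000: compare with the optimal information size.
    required = required_total_sample_size(effect_size, alpha, power)
    return 1 if required > pooled_n else 0


print(required_total_sample_size())
print(imprecision_downgrade(500), imprecision_downgrade(1000))
```

Under these assumptions the optimal information size is the same for every outcome, so the middle band effectively splits at a fixed pooled sample size; a calculation on the outcome's original scale would instead vary with the reported standard error.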

Publication bias appears when a pooled estimate does not comprise all the studies that could be included in the evidence synthesis [51]. One way to detect publication bias is to visually inspect a funnel plot. Owing to the limitations of the funnel plot [46,52], this method may not show publication bias accurately [49,53] and may lead to false conclusions [52,54]. Therefore, we will assess publication bias using the trim and fill method proposed by Duval and Tweedie [55]. Potentially missing studies will be imputed, and the pooled effect size of the complete data set will be recalculated. If the imputation of potentially missing studies changes the conclusions of the analysis (eg, a significant effect size is no longer significant), we will apply 1 downgrade attributable to publication bias [55].
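Once the trim and fill estimate is available, the downgrade decision itself is a comparison of two confidence intervals. The following sketch covers only that decision step and does not implement the trim and fill imputation; the function names are hypothetical, and "change in conclusions" is narrowed here to a change in statistical significance, which is the example given in the text.

```python
def is_significant(ci_lower, ci_upper, null_value=0.0):
    """An effect is significant if its CI excludes the null value.

    Use null_value=0.0 for mean differences and standardized mean
    differences, and null_value=1.0 for ratio measures (RR, OR).
    """
    return not (ci_lower <= null_value <= ci_upper)


def publication_bias_downgrade(original_ci, adjusted_ci, null_value=0.0):
    """1 downgrade if significance differs between the original pooled
    estimate and the estimate recalculated after imputation."""
    before = is_significant(original_ci[0], original_ci[1], null_value)
    after = is_significant(adjusted_ci[0], adjusted_ci[1], null_value)
    return 1 if before != after else 0


# Hypothetical SMD: significant before imputation, not significant after.
print(publication_bias_downgrade((0.10, 0.50), (-0.05, 0.40)))
```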

When assessing indirectness, any differences between the population, interventions, and comparators in each outcome of the research questions of the reviews will be considered [52]. In this regard, the studies included in each meta-analysis outcome will be evaluated. If the population, interventions, or comparators are consistent with the main aims of the meta-analysis, no downgrading will be considered. If the population, interventions, or comparators of the studies do not match the main objectives of the meta-analysis, depending on the severity of this mismatch, a downgrade of 1 or 2 will be considered based on the consensus of the 2 independent researchers involved in data extraction.

The quality evaluation and assignment of downgrades in each domain will be performed by 2 independent reviewers. Discrepancies will be resolved by consensus, and if required, decisions will be made by a third reviewer. The overall grading of the quality of evidence for each outcome will be performed by consensus. As a starting point for the consensus on overall evaluation, we will use the recommendations by Pollock et al [48]: (1) high quality indicates that further research is very unlikely to change our confidence in the effect estimate (0 downgrades); (2) moderate quality means further research is likely to have an important impact on our confidence in the effect estimate and may change the estimate (1-2 downgrades); (3) low quality implies further research is very likely to have an important impact on our confidence in the effect estimate and is likely to change the estimate (3-4 downgrades); (4) very low quality means any effect estimate is very uncertain (5-6 downgrades) [27,48].
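The starting point for the overall rating can be written as a lookup from the total number of downgrades to a quality level. This sketch is illustrative: the domain keys and function name are hypothetical, and totals above 6 (possible in principle with 5 domains of up to 2 downgrades each) are assumed to map to very low. The final rating remains a consensus decision, which the code does not capture.

```python
DOMAINS = ("rob", "inconsistency", "imprecision", "publication_bias", "indirectness")


def overall_quality(downgrades):
    """Map per-domain downgrades (0, 1, or 2 each) to an overall GRADE level."""
    for domain in DOMAINS:
        if downgrades.get(domain, 0) not in (0, 1, 2):
            raise ValueError(f"invalid number of downgrades for {domain}")
    total = sum(downgrades.get(domain, 0) for domain in DOMAINS)
    if total == 0:
        return "high"
    if total <= 2:
        return "moderate"
    if total <= 4:
        return "low"
    return "very low"  # 5 or more downgrades (assumption for totals above 6)


example = {"rob": 1, "inconsistency": 1, "imprecision": 1,
           "publication_bias": 0, "indirectness": 0}
print(overall_quality(example))
```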

In addition to the quantitative description of outcomes, the number of downgrades (0, 1, or 2) for each domain and the overall quality assessment (high, moderate, low, or very low) of the evidence with reasons for downgrades will be presented in Figure 4 for each outcome by each digital biomarker.

If the required information from the eligible studies is lacking at any stage of the research, or in case of ambiguity, we will contact the corresponding authors of the reviews by email to obtain the required information or to remove the ambiguity. If we do not receive any response, the case will be considered as “missing” or “not reported.”

Evidence Synthesis

Interrater agreement during screening will be evaluated via the percentage of agreement and the Cohen κ statistic. Study characteristics will be summarized using descriptive statistics. Given the heterogeneity of the included populations and interventions, we plan to provide a qualitative synthesis of the results for each digital biomarker by the type of intervention and outcome.

Results

This protocol was submitted before data collection. Search, screening, and data extraction will commence in December 2021 in accordance with the published protocol. The study is funded by the National Research, Development and Innovation Fund of Hungary (reference number: NKFIH-869-10/2019).

Discussion

Our study will provide a comprehensive summary of the breadth and quality of evidence available on the clinical outcomes of interventions involving digital biomarkers.

Strengths

Most of the systematic review studies conducted in the field of digital biomarkers in recent years have been mainly performed with a specific focus on one or more disease areas or technologies such as the effects of wearable fitness trackers on motivation and physical activity [56] or ICD troubleshooting in patients with left ventricular assist devices [57]. To the best of our knowledge, no comprehensive systematic review of systematic reviews has been published on all types of digital biomarkers in all populations and diseases. Therefore, our review aims to assess the quality of methods and evidence of systematic reviews, without being limited to a specific area or technology, using validated tools and standard methodologies. As a result, the strength of evidence can be compared between different types of interventions, providing practical guidance for clinicians and policy makers.

Limitations

One of the potential limitations of this study is the restricted search time period (2019 and 2020). Owing to the breadth of the scope, we chose a shorter timeframe for our review. However, we hypothesized that considering the new European Medical Device Regulations that were published in 2017 [16], this is a highly relevant period for evaluating the available clinical evidence generated prior to the implementation of the regulations. Furthermore, we hypothesized that given the rapid development of the field [3], systematic reviews are published regularly to summarize key developments in the generation of clinical evidence.

We operationalized the definition of digital biomarkers in our search. However, the sensitivity and specificity of our search filter to retrieve articles concerning digital biomarkers have not been tested. In addition to the general keywords applied in our search expressions, digital biomarkers may be identified by specific terms referring to the technology or type of data collected [3]. However, the creation of a comprehensive list of relevant search terms for all existing technologies was beyond the scope of this study and remains a research question to be answered. Furthermore, we will apply the definition of digital biomarkers in a clinical setting. Some sensor applications in the general population may have public health implications (eg, COVID-19 contact tracing apps [58]), which will be omitted from this review. The challenges of interpreting the digital biomarker definition will be discussed.

Although relevant guidelines for systematic reviews of systematic reviews recommend searching the Database of Abstracts of Reviews of Effectiveness (DARE) in addition to PubMed and the Cochrane Library [59], we will limit our search to PubMed and the Cochrane Library. DARE will not be used because it has not included new reviews since 2015.

Conclusions

In conclusion, our results will help identify clinical areas where the use of digital biomarkers has led to favorable clinical outcomes. Furthermore, our results will highlight areas with evidence gaps where the clinical usage of digital biomarkers has not yet been studied.

Acknowledgments

This research (project TKP2020-NKA-02) has been implemented with support provided by the National Research, Development and Innovation Fund of Hungary, financed under the Tématerületi Kiválósági Program funding scheme. We thank Ariel Mitev (Institute of Marketing, Corvinus University of Budapest), who provided valuable insight and expertise for developing the search strategy, and Balázs Benyó (Department of Control Engineering and Information Technology, Budapest University of Technology and Economics) for comments that significantly improved the manuscript.

In connection with writing this paper, LG, ZZ, and HM-N have received support (project TKP2020-NKA-02) from the National Research, Development and Innovation Fund of Hungary, financed under the Tématerületi Kiválósági Program funding scheme. ZZ has received funding from the European Research Council (ERC) under the European Union's Horizon 2020 Research and Innovation Programme (grant 679681). MP has received funding (grant 2019-1.3.1-KK-2019-00007) from the National Research, Development and Innovation Fund of Hungary, financed under the 2019-1.3.1-KK funding scheme. MP is a member of the EuroQol Group, a not-for-profit organization that develops and distributes instruments for assessing and evaluating health.

Abbreviations

- DARE: Database of Abstracts of Reviews of Effectiveness

- GRADE: Grading of Recommendations, Assessment, Development and Evaluation

- ICD: implantable cardioverter defibrillator

- MeSH: Medical Subject Headings

- NICE: National Institute for Clinical Excellence

- PICO: patient, intervention, comparison, outcome

- PRISMA-P: Preferred Reporting Items for Systematic Reviews and Meta-Analyses Protocols

- RCT: randomized clinical trial

- RoB: risk of bias

Footnotes

Authors' Contributions: HM-N, ZZ, LG, and MP developed the concept. HM-N wrote the first manuscript draft. All authors have commented on and approved the final manuscript.

Conflicts of Interest: None declared.

References

- 1. Califf RM. Biomarker definitions and their applications. Exp Biol Med (Maywood). 2018 Feb;243(3):213–221. doi: 10.1177/1535370217750088. http://europepmc.org/abstract/MED/29405771

- 2. Babrak L, Menetski J, Rebhan M, Nisato G, Zinggeler M, Brasier N, Baerenfaller K, Brenzikofer T, Baltzer L, Vogler C, Gschwind L, Schneider C, Streiff F, Groenen P, Miho E. Traditional and Digital Biomarkers: Two Worlds Apart? Digit Biomark. 2019 Aug 16;3(2):92–102. doi: 10.1159/000502000. http://europepmc.org/abstract/MED/32095769

- 3. Coravos A, Khozin S, Mandl KD. Developing and adopting safe and effective digital biomarkers to improve patient outcomes. NPJ Digit Med. 2019;2(1):14. doi: 10.1038/s41746-019-0090-4.

- 4. Meister S, Deiters W, Becker S. Digital health and digital biomarkers – enabling value chains on health data. Curr Dir Biomed Eng. 2016 Sep;2(1):577–581. doi: 10.1515/cdbme-2016-0128.

- 5. Global Digital Biomarkers Market: Focus on Key Trends, Growth Potential, Competitive Landscape, Components (Data Collection and Integration), End Users, Application (Sleep and Movement, Neuro, Respiratory and Cardiological Disorders) and Region – Analysis and Forecast, 2019-2025. BIS Research. 2020. [2021-12-27]. https://bisresearch.com/industry-report/digital-biomarkers-market.html

- 6. Lipsmeier F, Taylor KI, Kilchenmann T, Wolf D, Scotland A, Schjodt-Eriksen J, Cheng W, Fernandez-Garcia I, Siebourg-Polster J, Jin L, Soto J, Verselis L, Boess F, Koller M, Grundman M, Monsch AU, Postuma RB, Ghosh A, Kremer T, Czech C, Gossens C, Lindemann M. Evaluation of smartphone-based testing to generate exploratory outcome measures in a phase 1 Parkinson's disease clinical trial. Mov Disord. 2018 Dec;33(8):1287–1297. doi: 10.1002/mds.27376. http://europepmc.org/abstract/MED/29701258

- 7. Robb MA, McInnes PM, Califf RM. Biomarkers and Surrogate Endpoints: Developing Common Terminology and Definitions. JAMA. 2016 Mar 15;315(11):1107–8. doi: 10.1001/jama.2016.2240.

- 8. Insel TR. Digital Phenotyping: Technology for a New Science of Behavior. JAMA. 2017 Oct 03;318(13):1215–1216. doi: 10.1001/jama.2017.11295.

- 9. Guthrie NL, Carpenter J, Edwards KL, Appelbaum KJ, Dey S, Eisenberg DM, Katz DL, Berman MA. Emergence of digital biomarkers to predict and modify treatment efficacy: machine learning study. BMJ Open. 2019 Jul 23;9(7):e030710. doi: 10.1136/bmjopen-2019-030710. https://bmjopen.bmj.com/lookup/pmidlookup?view=long&pmid=31337662

- 10. Shin EK, Mahajan R, Akbilgic O, Shaban-Nejad A. Sociomarkers and biomarkers: predictive modeling in identifying pediatric asthma patients at risk of hospital revisits. NPJ Digit Med. 2018 Oct 2;1(1):50. doi: 10.1038/s41746-018-0056-y.

- 11. Lunde P, Nilsson BB, Bergland A, Kværner KJ, Bye A. The Effectiveness of Smartphone Apps for Lifestyle Improvement in Noncommunicable Diseases: Systematic Review and Meta-Analyses. J Med Internet Res. 2018 May 04;20(5):e162. doi: 10.2196/jmir.9751. http://www.jmir.org/2018/5/e162/

- 12. Lenouvel E, Novak L, Nef T, Klöppel S. Advances in Sensor Monitoring Effectiveness and Applicability: A Systematic Review and Update. Gerontologist. 2020 May 15;60(4):e299–e308. doi: 10.1093/geront/gnz049.

- 13. Nagy TD. A DVRK-based Framework for Surgical Subtask Automation. Acta Polytechnica Hungarica. 2019 Sep 09;16(8):61–78. doi: 10.12700/aph.16.8.2019.8.5. http://acta.uni-obuda.hu/Nagy_Haidegger_95.pdf

- 14. Haidegger T. Probabilistic Method to Improve the Accuracy of Computer-Integrated Surgical Systems. Acta Polytechnica Hungarica. 2019 Sep 09;16(8):119–140. doi: 10.12700/aph.16.8.2019.8.8. http://acta.uni-obuda.hu/Haidegger_95.pdf

- 15. Drummond MF. The use of health economic information by reimbursement authorities. Rheumatology (Oxford). 2003 Nov;42 Suppl 3:iii60–3. doi: 10.1093/rheumatology/keg499.

- 16. Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices, amending Directive 2001/83/EC, Regulation (EC) No 178/2002 and Regulation (EC) No 1223/2009 and repealing Council Directives 90/385/EEC and 93/42/EEC. Official Journal of the European Union. 2017 May 5. [2021-10-15]. https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32017R0745

- 17. Biggs JS, Willcocks A, Burger M, Makeham MA. Digital health benefits evaluation frameworks: building the evidence to support Australia's National Digital Health Strategy. Med J Aust. 2019 Apr;210 Suppl 6:S9–S11. doi: 10.5694/mja2.50034.

- 18. Enam A, Torres-Bonilla J, Eriksson H. Evidence-Based Evaluation of eHealth Interventions: Systematic Literature Review. J Med Internet Res. 2018 Nov 23;20(11):e10971. doi: 10.2196/10971. http://www.jmir.org/2018/11/e10971/

- 19. Lau F, Kuziemsky C, editors. Handbook of eHealth Evaluation: An Evidence-based Approach. Victoria, BC: University of Victoria; 2017.

- 20. Evidence Standards Framework for Digital Health Technologies. National Institute for Clinical Excellence. 2019. [2020-12-27]. https://www.nice.org.uk/Media/Default/About/what-we-do/our-programmes/evidence-standards-framework/digital-evidence-standards-framework.pdf

- 21. Al-Khatib SM, Fonarow GC, Joglar JA, Inoue LYT, Mark DB, Lee KL, Kadish A, Bardy G, Sanders GD. Primary Prevention Implantable Cardioverter Defibrillators in Patients With Nonischemic Cardiomyopathy: A Meta-analysis. JAMA Cardiol. 2017 Jun 01;2(6):685–688. doi: 10.1001/jamacardio.2017.0630. http://europepmc.org/abstract/MED/28355432

- 22. Peck KY, Lim YZ, Hopper I, Krum H. Medical therapy versus implantable cardioverter-defibrillator in preventing sudden cardiac death in patients with left ventricular systolic dysfunction and heart failure: a meta-analysis of > 35,000 patients. Int J Cardiol. 2014 May 01;173(2):197–203. doi: 10.1016/j.ijcard.2014.02.014.

- 23. Hubble RP, Naughton GA, Silburn PA, Cole MH. Wearable sensor use for assessing standing balance and walking stability in people with Parkinson's disease: a systematic review. PLoS One. 2015;10(4):e0123705. doi: 10.1371/journal.pone.0123705. http://dx.plos.org/10.1371/journal.pone.0123705

- 24. Rovini E, Maremmani C, Cavallo F. How Wearable Sensors Can Support Parkinson's Disease Diagnosis and Treatment: A Systematic Review. Front Neurosci. 2017 Oct 06;11:555. doi: 10.3389/fnins.2017.00555.

- 25. Johansson D, Malmgren K, Alt Murphy M. Wearable sensors for clinical applications in epilepsy, Parkinson's disease, and stroke: a mixed-methods systematic review. J Neurol. 2018 Aug 9;265(8):1740–1752. doi: 10.1007/s00415-018-8786-y. http://europepmc.org/abstract/MED/29427026

- 26. Caballero-Villarraso J, Villegas-Portero R, Rodríguez-Cantalejo F. [Portable coagulometer devices in the monitoring and control of oral anticoagulation therapy: a systematic review]. Aten Primaria. 2011 Mar;43(3):148–56. doi: 10.1016/j.aprim.2010.03.017. https://linkinghub.elsevier.com/retrieve/pii/S0212-6567(10)00203-9

- 27. Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, Schünemann HJ, GRADE Working Group. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008 Apr 26;336(7650):924–6. doi: 10.1136/bmj.39489.470347.AD. http://europepmc.org/abstract/MED/18436948

- 28. Atkins D, Eccles M, Flottorp S, Guyatt GH, Henry D, Hill S, Liberati A, O'Connell D, Oxman AD, Phillips B, Schünemann H, Edejer TT, Vist GE, Williams JW, GRADE Working Group. Systems for grading the quality of evidence and the strength of recommendations I: critical appraisal of existing approaches The GRADE Working Group. BMC Health Serv Res. 2004 Dec 22;4(1):38. doi: 10.1186/1472-6963-4-38. https://bmchealthservres.biomedcentral.com/articles/10.1186/1472-6963-4-38

- 29. Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, Moher D, Tugwell P, Welch V, Kristjansson E, Henry DA. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017 Sep 21;358:j4008. doi: 10.1136/bmj.j4008. http://www.bmj.com/cgi/pmidlookup?view=long&pmid=28935701

- 30. Kamioka H. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Jpn Pharmacol Ther. 2019;47(8):1177–85.

- 31. Pritchard H, Gabrys J. From Citizen Sensing to Collective Monitoring: Working through the Perceptive and Affective Problematics of Environmental Pollution. GeoHumanities. 2016 Nov 16;2(2):354–371. doi: 10.1080/2373566x.2016.1234355.

- 32. Search Strategy Used to Create the PubMed Systematic Reviews Filter. National Library of Medicine. 2018 Dec. [2020-12-27]. https://www.nlm.nih.gov/bsd/pubmed_subsets/sysreviews_strategy.html

- 33. McHugh ML. Interrater reliability: the kappa statistic. Biochem Med. 2012:276–282. doi: 10.11613/BM.2012.031.

- 34. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JPA, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009 Jul 21;6(7):e1000100. doi: 10.1371/journal.pmed.1000100. http://dx.plos.org/10.1371/journal.pmed.1000100

- 35. Grimes DA, Schulz KF. An overview of clinical research: the lay of the land. Lancet. 2002 Jan 05;359(9300):57–61. doi: 10.1016/S0140-6736(02)07283-5.

- 36. ICD-11 International Classification of Diseases 11th Revision: The global standard for diagnostic health information. World Health Organization. 2020. [2020-12-27]. https://icd.who.int/en

- 37. International Classification of Health Interventions (ICHI). World Health Organization. 2020. [2020-12-27]. https://www.who.int/standards/classifications/international-classification-of-health-interventions

- 38. ICF Browser. World Health Organization. 2017. [2021-12-27]. https://apps.who.int/classifications/icfbrowser/

- 39. Lorenz RC, Matthias K, Pieper D, Wegewitz U, Morche J, Nocon M, Rissling O, Schirm J, Jacobs A. A psychometric study found AMSTAR 2 to be a valid and moderately reliable appraisal tool. J Clin Epidemiol. 2019 Oct;114:133–140. doi: 10.1016/j.jclinepi.2019.05.028.

- 40. Pieper D, Lorenz RC, Rombey T, Jacobs A, Rissling O, Freitag S, Matthias K. Authors should clearly report how they derived the overall rating when applying AMSTAR 2-a cross-sectional study. J Clin Epidemiol. 2021 Jan;129:97–103. doi: 10.1016/j.jclinepi.2020.09.046.

- 41. Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, Moher D, Tugwell P, Welch V, Kristjansson E, Henry DA. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017 Sep 21;358:j4008. doi: 10.1136/bmj.j4008. http://www.bmj.com/lookup/pmidlookup?view=long&pmid=28935701

- 42. Schünemann H, Brozek J, Guyatt G, Oxman A. Handbook for grading the quality of evidence and the strength of recommendations using the GRADE approach. 2013. [2020-12-27]. https://gdt.gradepro.org/app/handbook/handbook.html#h.9rdbelsnu4iy

- 43. Guyatt GH, Oxman AD, Vist G, Kunz R, Brozek J, Alonso-Coello P, Montori V, Akl EA, Djulbegovic B, Falck-Ytter Y, Norris SL, Williams JW, Atkins D, Meerpohl J, Schünemann HJ. GRADE guidelines: 4. Rating the quality of evidence-study limitations (risk of bias). J Clin Epidemiol. 2011 Apr;64(4):407–15. doi: 10.1016/j.jclinepi.2010.07.017.

- 44. Guyatt GH, Oxman AD, Kunz R, Woodcock J, Brozek J, Helfand M, Alonso-Coello P, Glasziou P, Jaeschke R, Akl EA, Norris S, Vist G, Dahm P, Shukla VK, Higgins J, Falck-Ytter Y, Schünemann HJ. GRADE guidelines: 7. Rating the quality of evidence-inconsistency. J Clin Epidemiol. 2011 Dec;64(12):1294–302. doi: 10.1016/j.jclinepi.2011.03.017.

- 45. Guyatt GH, Oxman AD, Kunz R, Brozek J, Alonso-Coello P, Rind D, Devereaux PJ, Montori VM, Freyschuss B, Vist G, Jaeschke R, Williams JW, Murad MH, Sinclair D, Falck-Ytter Y, Meerpohl J, Whittington C, Thorlund K, Andrews J, Schünemann HJ. GRADE guidelines: 6. Rating the quality of evidence-imprecision. J Clin Epidemiol. 2011 Dec;64(12):1283–93. doi: 10.1016/j.jclinepi.2011.01.012.

- 46. Guyatt GH, Oxman AD, Montori V, Vist G, Kunz R, Brozek J, Alonso-Coello P, Djulbegovic B, Atkins D, Falck-Ytter Y, Williams JW, Meerpohl J, Norris SL, Akl EA, Schünemann HJ. GRADE guidelines: 5. Rating the quality of evidence-publication bias. J Clin Epidemiol. 2011 Dec;64(12):1277–82. doi: 10.1016/j.jclinepi.2011.01.011.

- 47. Guyatt GH, Oxman AD, Kunz R, Woodcock J, Brozek J, Helfand M, Alonso-Coello P, Falck-Ytter Y, Jaeschke R, Vist G, Akl EA, Post PN, Norris S, Meerpohl J, Shukla VK, Nasser M, Schünemann HJ. GRADE guidelines: 8. Rating the quality of evidence-indirectness. J Clin Epidemiol. 2011 Dec;64(12):1303–10. doi: 10.1016/j.jclinepi.2011.04.014.

- 48. Pollock A, Farmer SE, Brady MC, Langhorne P, Mead GE, Mehrholz J, van Wijck F, Wiffen PJ. An algorithm was developed to assign GRADE levels of evidence to comparisons within systematic reviews. J Clin Epidemiol. 2016 Feb;70:106–10. doi: 10.1016/j.jclinepi.2015.08.013. https://linkinghub.elsevier.com/retrieve/pii/S0895-4356(15)00389-3

- 49. Zhang Y, Coello PA, Guyatt GH, Yepes-Nuñez JJ, Akl EA, Hazlewood G, Pardo-Hernandez H, Etxeandia-Ikobaltzeta I, Qaseem A, Williams JW, Tugwell P, Flottorp S, Chang Y, Zhang Y, Mustafa RA, Rojas MX, Xie F, Schünemann HJ. GRADE guidelines: 20. Assessing the certainty of evidence in the importance of outcomes or values and preferences-inconsistency, imprecision, and other domains. J Clin Epidemiol. 2019 Jul;111:83–93. doi: 10.1016/j.jclinepi.2018.05.011.

- 50. Cohen J. Statistical Power Analysis for the Behavioral Sciences. New York, NY: Routledge; 1988.

- 51. Austin TM, Richter RR, Sebelski CA. Introduction to the GRADE approach for guideline development: considerations for physical therapist practice. Phys Ther. 2014 Nov;94(11):1652–9. doi: 10.2522/ptj.20130627.

- 52. Terrin N, Schmid CH, Lau J. In an empirical evaluation of the funnel plot, researchers could not visually identify publication bias. J Clin Epidemiol. 2005 Sep;58(9):894–901. doi: 10.1016/j.jclinepi.2005.01.006.

- 53. Mavridis D, Salanti G. How to assess publication bias: funnel plot, trim-and-fill method and selection models. Evid Based Ment Health. 2014 Feb;17(1):30. doi: 10.1136/eb-2013-101699.

- 54. Zwetsloot P, Van Der Naald M, Sena ES, Howells DW, IntHout J, De Groot JA, Chamuleau SA, MacLeod MR, Wever KE. Standardized mean differences cause funnel plot distortion in publication bias assessments. Elife. 2017 Sep 08;6:e24260. doi: 10.7554/eLife.24260.

- 55. Duval S, Tweedie R. Trim and fill: A simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics. 2000 Jun;56(2):455–63. doi: 10.1111/j.0006-341x.2000.00455.x.

- 56. Nuss K, Moore K, Nelson T, Li K. Effects of Motivational Interviewing and Wearable Fitness Trackers on Motivation and Physical Activity: A Systematic Review. Am J Health Promot. 2021 Feb 14;35(2):226–235. doi: 10.1177/0890117120939030.

- 57. Black-Maier E, Lewis RK, Barnett AS, Pokorney SD, Sun AY, Koontz JI, Daubert JP, Piccini JP. Subcutaneous implantable cardioverter-defibrillator troubleshooting in patients with a left ventricular assist device: A case series and systematic review. Heart Rhythm. 2020 Sep;17(9):1536–1544. doi: 10.1016/j.hrthm.2020.04.019.

- 58. Amann J, Sleigh J, Vayena E. Digital contact-tracing during the Covid-19 pandemic: An analysis of newspaper coverage in Germany, Austria, and Switzerland. PLoS One. 2021;16(2):e0246524. doi: 10.1371/journal.pone.0246524. https://dx.plos.org/10.1371/journal.pone.0246524

- 59. Smith V, Devane D, Begley CM, Clarke M. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med Res Methodol. 2011;11(1):15. doi: 10.1186/1471-2288-11-15. http://www.biomedcentral.com/1471-2288/11/15