Abstract

There is an urgent need for automated methods to assist accurate and effective assessment of COVID-19. Radiology and nucleic acid test (NAT) are complementary COVID-19 diagnosis methods. In this paper, we present an end-to-end multitask learning (MTL) framework (COVID-MTL) that is capable of automated and simultaneous detection (against both radiology and NAT) and severity assessment of COVID-19. COVID-MTL learns different COVID-19 tasks in parallel through our novel random-weighted loss function, which assigns learning weights drawn from a Dirichlet distribution to prevent task dominance; our new 3D real-time augmentation algorithm (Shift3D) introduces space variances for 3D CNN components by shifting low-level feature representations of volumetric inputs in three dimensions. The MTL framework is thereby able to accelerate convergence and improve joint learning performance compared to single-task models. Using only chest CT scans, COVID-MTL was trained on 930 CT scans and tested on 399 separate cases. COVID-MTL achieved AUCs of 0.939 and 0.846, and accuracies of 90.23% and 79.20%, for detection of COVID-19 against radiology and NAT, respectively, which outperformed the state-of-the-art models. Meanwhile, COVID-MTL yielded AUCs of 0.800 ± 0.020 and 0.813 ± 0.021 (with transfer learning) for classifying control/suspected, mild/regular, and severe/critically ill cases. To decipher the recognition mechanism, we also identified high-throughput lung features that were significantly related (P < 0.001) to the positivity and severity of COVID-19.

Keywords: COVID-19, Multitask learning, 3D CNNs, Diagnosis, Severity assessment, Deep learning, Computed tomography

1. Introduction

First reported in December 2019 [1], COVID-19 is caused by a novel coronavirus (SARS-CoV-2) and presents with severe respiratory symptoms similar to those of pneumonia and seasonal flu, such as fever, cough, fatigue, and myalgia [2]. The outbreak led the World Health Organization (WHO) to declare it a pandemic. This disease, which is transmitted from human to human, had resulted in more than 119 million infections worldwide and over 2.6 million deaths as of March 2021, according to statistics released by Johns Hopkins University.

To contain the spread of COVID-19 pneumonia, besides personal protection, WHO recommended preventive measures including quickly identifying suspect cases, timely testing, isolating infectious people, and, more importantly, identifying all close contacts of the infected [3]. Nucleic acid test (NAT) via real-time polymerase chain reaction (RT-PCR) is considered an operational “gold standard” for detection of the causative agent of COVID-19 [4]. However, the RT-PCR test suffers from a relatively high false-negative rate, especially at initial disease presentation and in asymptomatic people [5], which may be due to prolonged nucleic acid conversion [6], a lack of sufficient test kits, and the low quality of the swab samples [4].

It was reported that SARS-CoV-2 affects the lung lobes and that patients infected with COVID-19 pneumonia widely exhibit ground-glass opacities (GGO), consolidation, or both in their chest computed tomography (CT) scans [7]. Naturally, such anatomical changes can also be captured by measuring imaging features, especially texture features, and used for COVID-19 diagnosis. Besides, the chest CT scan is suggested to be able to detect COVID-19 at an early stage, which is especially useful for screening asymptomatic patients or patients with negative NAT results [8]. More importantly, signs of disease progression can also be observed in chest CT images: as reported in [9], GGO, GGO plus reticular pattern, and consolidation were all common in the early rapid progressive stage; GGO plus consolidation dominated the advanced stage; and GGO plus consolidation sharply decreased in the recovery (absorption) stage. Consequently, the chest CT scan has become a complementary strategy to NAT and is widely used in clinical practice.

Considering the spread of COVID-19 across 192 countries/regions, the rapid increase in the number of new cases, and the success of deep learning in medical image analysis, there is an urgent need to develop a deep learning-based system for automated assessment of COVID-19. Several methods have been proposed and have achieved promising results. For instance, Li et al. [10] proposed a ResNet50-based COVNet model, in which a series of CT image slices were fed into different network branches and the feature maps obtained from the individual branches were finally concatenated, for detection of COVID-19 and community-acquired pneumonia. Later, Harmon et al. [11] proposed an artificial intelligence (AI) system to detect COVID-19 pneumonia using multinational chest CT datasets, which achieved up to 90.8% accuracy. Similar performance was achieved by Sun et al. [12], who first extracted imaging features from volumetric CT scans and then proposed a deep forest network guided by adaptive feature selection for COVID-19 classification. Wang et al. [13] developed a tailored 2D convolutional neural network, named COVID-Net, for the detection of COVID-19 using chest X-ray images. This model was later redesigned in [14] for COVID-19 CT image slice classification. More recently, Shorfuzzaman and Hossain [15] used a fine-tuned pre-trained convolutional encoder to capture feature representations of COVID-19 from limited X-ray training samples, and then adopted a Siamese network for classification of COVID-19. Besides the binary classification of COVID-19, Wang et al. [16] developed a deep learning model that can simultaneously localize the infectious regions of COVID-19 on chest X-ray images. Tang et al. [17] extracted radiomic features from CT images and then combined them with clinical indices for classification of severe vs. non-severe COVID-19 in a small cohort using a random forest model. Very recently, Ning et al. [18] made their COVID-19 dataset publicly available and proposed using CT imaging data as well as clinical features for the detection and severity assessment of COVID-19. They manually labeled 19,685 CT slices to train their single-task CNN models. Meanwhile, Wang et al. proposed deCoVnet, based on Facebook SlowFast, to harness 3D CNNs for COVID-19 diagnosis [19].

Existing works have mainly focused on detecting COVID-19 using CT or X-ray images against either radiology or NAT. Given the relatively high false-negative rate of NAT in asymptomatic detection, prediction results evaluated only against NAT might be biased. More seriously, although people with NAT-negative infection may not present any symptoms, they still carry the virus and may well transmit it to their contacts, which poses an even greater risk to communities since contact tracing is harder for asymptomatic transmission. Radiology, especially computed tomography, which is more sensitive than other imaging modalities for early diagnosis of COVID-19, now serves as an essential complementary method for more accurate diagnosis. Thus, automated detection of COVID-19 against both NAT and computed tomography is increasingly needed and may be more useful in clinical practice. However, CT slice-based solutions may not be applicable at scale because selecting proper CT slices for model inference requires expert involvement. Methods that directly utilize volumetric CT images to reduce the heavy workload of experts are therefore more clinically feasible and in high demand. Besides diagnosis, automated and fast severity assessment of COVID-19 may be especially beneficial for severe patients given the extreme shortage of hospital beds to handle the unexpected surge of COVID-19 admissions across many countries.

To address these challenges, we propose a multitask learning (MTL) framework that ensembles a 3D CNN and an auxiliary feed-forward neural network (FNN) to harness volumetric CT inputs and high-throughput CT lung features for automated and simultaneous detection of COVID-19 pneumonia against both radiology (diagnosed by radiologists using CT scans) and NAT (RT-PCR), as well as for assessing the severity of the infection. Due to the imbalance of task difficulty, more difficult tasks such as severity assessment are prone to slow convergence in the MTL learning procedure. To tackle this issue, a novel random-weighted loss function is proposed to prioritize vulnerable COVID-19 tasks, with the aim of alleviating task dominance and enhancing joint learning performance. COVID-MTL takes whole chest CT scans as inputs, which is more stable than the slice-based approach because its inference process is fully automated. To overcome the hurdles faced by conventional 3D CNNs when processing volumetric CT inputs, we propose a novel Shift3D layer that introduces space variances on low-level volumetric feature representations to alleviate overfitting and improve convergence and accuracy for state-of-the-art 3D CNN components. In addition, because accurate lung segmentation is necessary for automated diagnosis, we design a novel unsupervised algorithm to address the under-segmentation of crucial, diagnostically relevant structures such as GGO in COVID-19 CT scans.

2. Related work

2.1. Lung segmentation from COVID-19 CT scans

Lung segmentation is a necessary and critical step for the diagnosis and treatment of lung diseases, especially in the early stage. Conventionally, U-net, a symmetric model architecture that is widely used in medical image segmentation, is applied for lung [20] and lung lesion/nodule segmentation [21]. However, this method requires lung delineation masks that are paired to each input CT slice for training. Recent studies have shown that people infected with SARS-CoV-2 may undergo ground-glass opacities or GGO in their lungs within a few weeks after symptom onset and thus subsequently demonstrate a white lung appearance in CT scans [22]. The white lung areas may introduce additional difficulties for some of the existing lung segmentation methods, especially for algorithms involving intensity or thresholding, where under-segmentation of GGO may occur.

To tackle those problems, different strategies have been proposed more recently. For instance, Oulefki et al. improved a multilevel thresholding algorithm based on Kapur entropy for automatic segmentation of COVID-19 infected lung regions from chest CT scans [23]; Fan et al. proposed a semi-supervised framework for segmentation of lung infections from COVID-19 CT scans, from which limited labeled images and randomly selected propagation strategies were used to train an Inf-Net CNN model [24].

However, given the shortage of radiologist engagement during the pandemic, an unsupervised segmentation algorithm is more favorable and more viable for mass studies and applications on COVID-19. In this research, we intended to improve a classical unsupervised lung segmentation algorithm [25], which was widely adopted by the community (e.g., Kaggle competition, Data Science Bowl 2017), for the following tasks, i.e., detection and severity assessment of COVID-19.

2.2. 3D convolutional neural network

The convolutional neural network was initially proposed to process 2D images, for tasks including handwriting recognition and natural image classification. Besides extracting features from the spatial dimensions, 3D convolution was later introduced to simultaneously handle the temporal dimension of an input series, such as motion information captured from multiple adjacent video frames, hand pose signals estimated from a single depth image, and organ tissue segmented from volumetric medical images. For CT images, one can either take a single CT slice as input using 2D CNNs, which fail to leverage context from adjacent slices, or harness inter-slice context from volumetric input with 3D convolution kernels. Although 3D CNNs can lead to improved performance in comparison to their 2D counterparts, the benefit comes with extreme memory and computational costs due to the complexity of 3D convolution and the increased number of network weights. To tackle the problem, well-known resource-efficient 2D CNNs have recently been converted to 3D CNNs to leverage spatio-temporal features [26], such as ResNet3D, SqueezeNet3D, and MobileNet3D. SqueezeNet is a lightweight CNN architecture that can achieve accuracy similar to AlexNet with 50 times fewer parameters [27]. In this work, we build our 3D CNN model with SqueezeNet as the backbone.

2.3. Multitask learning

Multitask learning (MTL) is a learning paradigm that aims to improve the generalization performance of multiple related tasks by leveraging their relational information [28]. To harness the power of MTL, the learning tasks or a subset of tasks are assumed to be related. For example, a task to detect the positivity of COVID-19 is related to a task designed to assess the severity of the infection. Given the nature of different tasks (imbalance of task difficulty), tasks such as the assessment of severity can be more difficult to learn than a task to detect infection. Depending on how the hidden layers are shared, there are two different types of MTL approaches, i.e., hard and soft parameter sharing [28]. In hard parameter sharing, which is more common and capable of greatly reducing the risk of overfitting, hidden layers are shared between all tasks, and each task has its own task-specific output layer(s) [29]. In comparison, the soft parameter sharing approach uses regularization to reduce the distance between different task models (each task has its own model and parameters) [28]. Both MTL approaches are prone to unnecessarily emphasizing easier tasks, which can lead to convergence problems for difficult tasks [30]. As a result, specific tasks may dominate the entire learning procedure. Different strategies have been proposed to tackle the problem. For example, Guo et al. introduced adaptive weight adjustment to automatically prioritize more difficult tasks; Kendall et al. adopted homoscedastic uncertainty (task-dependent uncertainty) as a basis for weighting losses [31]; Tian et al. recently proposed using two sets of eigenfunctions (a common one shared by different tasks and unique ones used in individual tasks) to approximate the MTL objective function [32]. Recently, the multitask learning approach has been utilized for medical diagnostics. For example, Huang et al. adopted graph-based feature selection and proposed a multi-gate mixture-of-experts model for joint diagnosis of autism spectrum disorder and attention deficit hyperactivity disorder from resting-state functional MRI data [33]; Amyar et al. introduced a multitask learning workflow based on U-Net for lesion segmentation and classification of COVID-19 CT slices [34].

3. COVID-19 CT studies

A total of 1329 studies with chest CT scans and corresponding diagnosis and severity assessment results were enrolled from a public dataset [18] (collected by Wuhan Union Hospital and Wuhan Liyuan Hospital). Of these, 761 patients were confirmed as COVID-19 positive by nucleic acid test (COVID-NAT), and 998 studies were diagnosed as COVID-19 by radiologists using chest CT images (COVID-CT). The 237 studies diagnosed as COVID-19 using CT scans but not yet confirmed by the nucleic acid test were regarded as “suspected” cases, and 331 COVID-19 negative cases served as “control”. The severities of the COVID-19 patients were assessed by physicians based on the infection, symptoms, disease progression, and patient conditions, and were categorized into control/suspected (type I), mild/regular (type II), and severe/critically ill (type III) (COVID-Severity). The cohort was split arbitrarily into a training/cross-validation dataset (70%, n = 930) and a testing dataset (30%, n = 399). The split was stratified by COVID-NAT, and the two datasets have similar class distributions. A summary of the study distribution and the training/cross-validation vs. testing split is shown in Table 1.

4. Methodology

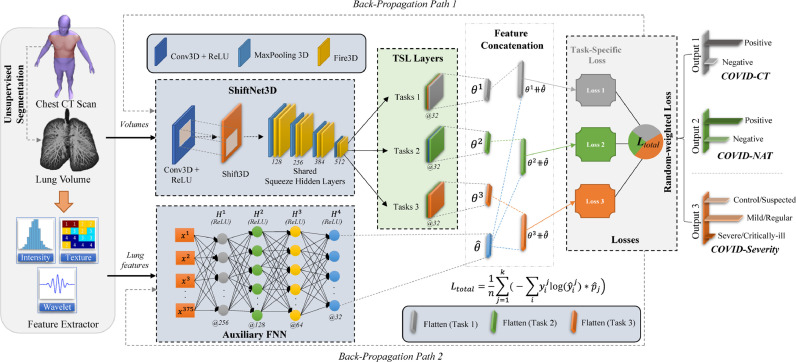

As illustrated in Fig. 1, the proposed multitask learning framework (COVID-MTL) consists of six major components for diagnosis and severity assessment on COVID-19 CT inputs. An unsupervised 3D lung segmentation module is first used to extract lung volumes from chest CT scans. Then, a feature extractor is used to obtain high-throughput CT features, including intensity, texture, and wavelet features, from the segmented lung volumes. Next, the segmented lung volumes and the extracted lung features are fed into a ShiftNet3D and a feed-forward neural network (FNN), respectively, to leverage both raw CT data and high-throughput imaging features. A hard parameter sharing approach is adopted to construct the MTL model.

Fig. 1.

Overview of COVID-19 Multitask Learning Framework.

As the major component of COVID-MTL, ShiftNet3D includes a Shift3D layer, which boosts network performance by introducing space variances on low-level feature representations of the volumetric inputs, and 8 consecutive 3D Fire modules (the backbone of SqueezeNet [27]), which serve as shared hidden layers between all tasks. To learn task-specific representations, each task has its own output layer (TSL layers) and loss function. High-level feature representations obtained from CT imaging features through the auxiliary FNN (AFNN) are concatenated with each TSL layer for performance enhancement. As such, there are two backpropagation paths in the overall MTL network. Last, a random-weighted loss function is attached to calculate the combined task loss so that different COVID-19 tasks can be trained simultaneously using the weighted total loss as guidance. Key modules of COVID-MTL are described in Sections 4.1–4.3.

4.1. Unsupervised lung segmentation and high-throughput lung feature extraction

To avoid the heavy burden of manually labeling lung volumes from chest CT scans during the pandemic, an unsupervised lung segmentation algorithm is more favorable and more viable for mass studies of COVID-19 than learning-based methods. However, the widely adopted unsupervised lung segmentation algorithm [25], which is based on intensity and region connection, is not able to properly handle white lung areas (e.g., GGO) in COVID-19 CT scans. To address this problem, we propose an active contour-based algorithm to refine the initial segmentation results produced by the classical method. The inflated contours of the initially segmented lungs are used as seeds for the refinement. The energy-minimizing refinement method evolves from the given seeds and stops at the boundary of the respective lungs. It thus avoids the under-segmentation defects inherited from thresholding-based methods when dealing with more complicated COVID-19 lung regions. The corresponding pseudocode is summarized in Algorithm 1.

Algorithm 1.

Pseudocode of the unsupervised refinement method.


Given the limited engagement of radiologists during the outbreak, over a thousand (1056) CT slices, instead of hundreds of thousands, were arbitrarily selected from the cohort (covering patients in various states) and manually delineated as ground truth under a senior radiologist's supervision for performance comparison. Segmentation masks produced by different state-of-the-art methods and by the proposed method were compared with the ground truth. The segmentation performance was measured by standard metrics, including the Dice similarity coefficient, Jaccard index, Matthews correlation coefficient (MCC), and precision, which are defined as:

$$\mathrm{Dice}=\frac{2\,TP}{2\,TP+FP+FN},\qquad \mathrm{Jaccard}=\frac{TP}{TP+FP+FN}$$

$$\mathrm{MCC}=\frac{TP\cdot TN-FP\cdot FN}{\sqrt{(TP+FP)(TP+FN)(TN+FP)(TN+FN)}},\qquad \mathrm{Precision}=\frac{TP}{TP+FP}$$

where $TP$: true positive; $TN$: true negative; $FP$: false positive; $FN$: false negative.
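As a small worked illustration of these four metrics, the sketch below computes them from a pair of flattened binary masks. The function names and the toy masks are hypothetical and are not taken from the study; the zero-denominator handling is likewise an assumption made only to keep the example safe to run.

```python
import math

def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN for two equally sized flat binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, tn, fp, fn

def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero (assumed convention)."""
    return num / den if den else 0.0

def segmentation_metrics(pred, truth):
    """Dice, Jaccard, MCC, and precision of a predicted mask against ground truth."""
    tp, tn, fp, fn = confusion_counts(pred, truth)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "dice": safe_div(2 * tp, 2 * tp + fp + fn),
        "jaccard": safe_div(tp, tp + fp + fn),
        "mcc": safe_div(tp * tn - fp * fn, mcc_den),
        "precision": safe_div(tp, tp + fp),
    }

# Toy masks (hypothetical): 3 TP, 3 TN, 1 FP, 1 FN.
pred = [1, 1, 1, 0, 0, 0, 1, 0]
truth = [1, 1, 0, 0, 0, 0, 1, 1]
metrics = segmentation_metrics(pred, truth)
print(metrics)
```

For these toy masks the counts are TP = 3, TN = 3, FP = 1, and FN = 1, so Dice = 6/8, Jaccard = 3/5, precision = 3/4, and MCC = 8/16.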

After the lung volumes were automatically segmented by the proposed unsupervised algorithm, a total of 375 high-throughput lung features, including First Order Statistics, Gray Level Cooccurrence Matrix (GLCM), Gray Level Run Length Matrix (GLRLM), Gray Level Size Zone Matrix (GLSZM), and Wavelet features, were extracted from the corresponding lung volumes for the cohort study. To extract wavelet features, Coiflets 1 (coif1) low- and high-pass filters were applied in each of the three dimensions, which yielded 8 sub-bands (or decompositions). GLCM and GLRLM features were then derived from each sub-band.

4.2. Shift3D

Chest CT is more sensitive for early diagnosis of COVID-19 than NAT and other imaging modalities. However, existing COVID-19 diagnosis based on CT slices unavoidably needs expert involvement. To automate the diagnostic workup, it is necessary to directly process the volumetric radiographic images. 3D CNNs are specifically designed to harness volumetric inputs, but they are notoriously difficult to train because of slow convergence and extremely high memory and computational costs. Therefore, more efficient structures such as 3D SqueezeNet have been used instead. However, lightweight 3D CNNs come with accuracy degradation and are still prone to overfitting and slow convergence. To address those problems and make them more feasible in practice, we propose a 3D real-time augmentation method, named Shift3D, which introduces space variances by randomly shifting low-level feature representations of the volumetric inputs in three dimensions (or 6 directions). The rationale for this setting is our observation that the location of human organs in CT scans varies from one case to another, and even between different scans of the same patient (people do not lie on the CT bed in exactly the same position). Such space variances may affect network performance and are thus worth addressing. In comparison to traditional augmentation methods, Shift3D operates directly on different levels of feature representations (3D feature maps) instead of the original volumetric inputs, and leverages GPU computing power by being implemented as a neural network layer. Related studies with 2D CNNs suggest that nonlinearly augmenting 2D feature maps can achieve better performance than input augmentation [35, 36]. 3D scaling may also introduce space variances, but it is more computationally expensive.

The pseudocode of Shift3D is illustrated in Algorithm 2. There are three parameters for Shift3D: the max shift percentage (default 0.2) decides the maximum percentage of a shift in each of the 6 directions (relative to the size of the corresponding dimension); elements are re-introduced at the first position if they are shifted beyond the last position, and the other two parameters are used to fill the re-introduced elements with specific numbers. The usage of Shift3D is flexible. For example, one can lower the frequency of calling Shift3D in a wrapped network layer to reduce the shifting chance and processing cost; it can also be combined with existing augmentation methods to further boost network performance. As with other augmentation algorithms, using Shift3D in the inference stage is not recommended.

Algorithm 2.

Pseudocode implementation of Shift3D with PyTorch. Note: randint is the function from Python's standard random module; randint(a, b) returns a random integer in [a, b].

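To make the shifting operation described in this section concrete, the following is a minimal NumPy sketch under stated assumptions; it is not the authors' PyTorch layer. It shifts a single 3D array, whereas the described method operates on feature maps inside the network. The function name `shift3d`, the argument names `fill` and `fill_value`, and the choice of drawing one independent shift per axis are all hypothetical.

```python
import numpy as np

def shift3d(volume, max_shift_pct=0.2, fill=True, fill_value=0.0, rng=None):
    """Randomly shift a 3D array along each axis by at most max_shift_pct of its size.

    Elements pushed past one end re-enter at the other end (np.roll). When
    `fill` is true, the re-entered slab is overwritten with `fill_value`.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = np.array(volume, copy=True)
    for axis, size in enumerate(out.shape):
        limit = int(size * max_shift_pct)
        # The sign of the shift selects one of the two directions on this axis.
        shift = int(rng.integers(-limit, limit + 1))
        if shift == 0:
            continue
        out = np.roll(out, shift, axis=axis)
        if fill:
            slab = [slice(None)] * out.ndim
            # A positive shift wraps elements to the front, a negative one to the back.
            slab[axis] = slice(0, shift) if shift > 0 else slice(shift, None)
            out[tuple(slab)] = fill_value
    return out

# Toy volume with strictly positive entries, so filled zeros are easy to spot.
toy = np.arange(4 * 5 * 6, dtype=float).reshape(4, 5, 6) + 1.0
shifted = shift3d(toy, max_shift_pct=0.5, rng=np.random.default_rng(0))
print(shifted.shape, int((shifted == 0).sum()), "elements were filled")
```

Consistent with the recommendation above, such a function would be applied only during training and bypassed at inference time.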

4.3. Random-weighted multitask loss

Difficult tasks such as severity assessment of COVID-19 may induce higher losses than easier tasks (e.g., diagnosis) and are thus more vulnerable in the MTL learning procedure, e.g., to slow convergence and lower learning priority. Inspired by the task-dependent uncertainty loss proposed by Kendall et al. [31], where uncertainty weights were learned by tuning log variances, we propose a random-weighted loss function, which randomly assigns learning weights to different tasks during each iteration of the joint training, to prevent the learning procedure from being dominated by any specific task. The random weights are drawn from the Dirichlet distribution since it can generate probability distributions that satisfy our needs: 1) the sum of all task weights (probabilities) is equal to 1; 2) it allows us to control the concentration of the generated weight distribution (discussed later). Such a random-weighted setting rests on the probabilistic argument that each task has a chance to be prioritized if the number of iterations in joint training is large. Therefore, vulnerable tasks still have a sufficient chance to be prioritized and trained.

The Dirichlet distribution has a probability density function defined as:

$$f(w_1,\dots,w_K;\alpha_1,\dots,\alpha_K)=\frac{1}{B(\boldsymbol{\alpha})}\prod_{i=1}^{K}w_i^{\alpha_i-1} \tag{1}$$

where $K \ge 2$ is the number of learning tasks; $w_i \ge 0$, with $\sum_{i=1}^{K} w_i = 1$, is the weight of learning task $i$; and $B(\boldsymbol{\alpha})$ is the normalization constant with $\boldsymbol{\alpha}=(\alpha_1,\dots,\alpha_K)$, which can be expressed in terms of the gamma function:

$$B(\boldsymbol{\alpha})=\frac{\prod_{i=1}^{K}\Gamma(\alpha_i)}{\Gamma\left(\sum_{i=1}^{K}\alpha_i\right)} \tag{2}$$

The objective function for each task $i$ is a cross-entropy loss, defined as:

$$L_i=-\sum_{c=1}^{C_i}y_{i,c}\log(p_{i,c}) \tag{3}$$

where $C_i$ is the number of classes of task $i$, $y_{i,c}$ is the one-hot ground-truth label, and $p_{i,c}$ is the predicted probability of class $c$.

The total loss function of an MTL model with random-weighted loss can therefore be calculated as:

$$L_{total}=\sum_{i=1}^{K}w_iL_i \tag{4}$$

For the special case when $K=2$, the weights for the two learning tasks can be simply decided as:

$$w_1=w,\qquad w_2=1-w \tag{5}$$

where $w$ can be drawn from either a Dirichlet distribution or another distribution, such as the Uniform distribution, for two tasks.

One can draw random weights $N$ times and average the results to avoid the potentially heavy fluctuation of a single Dirichlet draw; thus the total loss function can finally be modeled as:

$$L_{total}=\frac{1}{N}\sum_{i=1}^{K}W_iL_i,\qquad W_i=\sum_{n=1}^{N}w_i^{(n)} \tag{6}$$

where $W_i$ is the accumulated weight of the $N$ draws for task $i$. $N$ can be seen as a hyperparameter that controls the weight difference (or concentration) between tasks: the larger $N$ is, the smaller the difference among the tasks' weights (more evenly distributed), so the random-weighted loss degrades to a simple mean loss if $N$ is large enough. In other words, a larger $N$ is preferred if the tasks' difficulties are similar, whereas a smaller $N$ is better for tasks with distinct difficulties.

As indicated in Eq. (6), instead of tuning $\boldsymbol{\alpha}$ (a vector of concentration parameters), we fixed it, which made the Dirichlet distribution generate highly concentrated probabilities by default, and then introduced a single integer parameter $N$ to control the concentration (which is much easier to tune) and the degree to which vulnerable task(s) are prioritized.
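The averaging over several Dirichlet draws can be sketched in a few lines of NumPy. This is an illustrative sketch only: the function names are hypothetical, the per-task losses are passed in as plain numbers rather than computed by a network, and the symmetric concentration value `alpha=1.0` is an assumption, since the fixed value used in this work is not stated in this passage.

```python
import numpy as np

def random_task_weights(num_tasks, num_draws=2, alpha=1.0, rng=None):
    """Average `num_draws` Dirichlet samples to obtain one weight per task."""
    rng = np.random.default_rng() if rng is None else rng
    # Each row is one draw on the simplex: non-negative and summing to 1.
    draws = rng.dirichlet(np.full(num_tasks, alpha), size=num_draws)
    return draws.mean(axis=0)

def random_weighted_loss(task_losses, num_draws=2, alpha=1.0, rng=None):
    """Combine per-task losses with freshly drawn random weights."""
    losses = np.asarray(task_losses, dtype=float)
    weights = random_task_weights(len(losses), num_draws, alpha, rng)
    return float(np.dot(weights, losses)), weights

rng = np.random.default_rng(42)
# Hypothetical per-task losses for one training iteration (three tasks).
total, weights = random_weighted_loss([0.4, 0.7, 1.1], num_draws=2, rng=rng)
print(weights, total)

# With many draws the averaged weights approach the uniform 1/K.
many = random_task_weights(3, num_draws=20000, rng=rng)
print(many)
```

Calling `random_weighted_loss` once per training iteration gives each task a chance of receiving the largest weight, while increasing `num_draws` pulls the weights toward an equal split.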

4.4. Pattern analysis of high-throughput lung features and their correlation with COVID-19

Because the deep features extracted by the neural network lack interpretability, high-throughput imaging features extracted from CT lung volumes were analyzed instead to decipher the correlation between CT images and COVID-19. The feature studies were stratified into different groups based on the positivity and severity of COVID-19. Because the stratified groups may not have equal feature variances or equal sample sizes, Welch's ANOVA was used to test for differences between the group means. The top imaging features that are significantly related to COVID-19 were then identified based on the results of Welch's test. The patterns of the high-throughput features and their correlation with COVID-19 infection and severity, as well as with routine clinical parameters such as gender and age, can be further analyzed with a clustering heatmap, in which imaging features were first scaled by z-score and then hierarchically clustered based on a distance derived from the Pearson correlation coefficient.
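For reference, the Welch's ANOVA statistic can be computed from group sizes, means, and sample variances alone. The sketch below uses only the Python standard library and returns the F statistic with its two degrees of freedom; the function name and the toy samples are hypothetical, and obtaining a P value would additionally require the survival function of the F distribution, which is omitted here.

```python
from statistics import mean, variance

def welch_anova(groups):
    """Return (F, df1, df2) of Welch's one-way ANOVA for a list of samples."""
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    # Precision weights n_j / s_j^2, with s_j^2 the sample variance of group j.
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    w_sum = sum(weights)
    grand = sum(w * m for w, m in zip(weights, means)) / w_sum
    between = sum(w * (m - grand) ** 2 for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / w_sum) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    f_stat = between / (1 + 2 * (k - 2) * lam / (k ** 2 - 1))
    return f_stat, k - 1, (k ** 2 - 1) / (3 * lam)

# Toy feature values (hypothetical) for three groups of unequal size and spread.
group_a = [1.0, 2.0, 3.0, 4.0, 5.0]
group_b = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
group_c = [5.0, 5.5, 6.5, 7.0]
print(welch_anova([group_a, group_b, group_c]))
```

With exactly two groups this statistic reduces to the square of Welch's t statistic, which provides a convenient sanity check.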

5. Experiments and results

5.1. Experiment settings

A total of seven machine learning and deep learning models were used in this study for the diagnosis and severity assessment of COVID-19. All of the chest CT scans were resampled to a spacing of 1 mm × 1 mm × 1 mm for lung segmentation. High-throughput features extracted from lung CT volumes were used to train Random Forests (RF) and LightGBM (LGBM) models. In each of the two machine learning models, 1000 estimators (decision trees) were utilized. A learning rate of 0.01 was used to train the LGBM model. The number of feature maps of ShiftNet3D ranges from 64 to 512; in comparison, the AFNN branch contains 256, 128, 64, and 32 nodes in its four hidden layers. To train the deep learning models, i.e., ResNet3D (ResNet34 structure) [26], SqueezeNet3D [26], deCoVnet [19], ShiftNet3D, and COVID-MTL, standard preprocessing and augmentation procedures were utilized, including normalization, random rotation, flip, and crop (size of 200 × 250 × 250 pixels covering major lung regions). As for the training parameters, stochastic gradient descent (SGD) with Nesterov momentum of 0.9, weight decay of 5E-5, 80 epochs, and a batch size of 10 was used for all CNN models. A cosine learning rate scheduler was utilized; the learning rate started at 0.005 and gradually declined to a minimum of 5E-5. He normal initialization was adopted to initialize network weights. The number of Dirichlet draws in each training iteration is 2. The abovementioned hyperparameters were derived from the training/cross-validation dataset. More complicated 3D ResNet models, such as the 3D version of ResNet-50, could not be trained due to limited GPU memory capacity. All CNN models were trained on two Nvidia RTX 2080Ti GPUs using consistent settings. COVID-MTL can be trained with or without high-throughput inputs. The last-epoch testing performances, measured by precision, recall, F1 score, accuracy, and area under the curve (AUC), were reported.
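As an illustration of the learning-rate schedule, a standard cosine annealing curve from 0.005 down to 5E-5 over 80 epochs can be written as below. This assumes a single cosine cycle evaluated once per epoch without warm restarts; the exact scheduler variant used in the experiments is not specified here, and the function name is hypothetical.

```python
import math

def cosine_lr(epoch, total_epochs=80, lr_max=0.005, lr_min=5e-5):
    """Cosine-annealed learning rate: lr_max at epoch 0, lr_min at total_epochs."""
    progress = epoch / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * progress))

# Learning rate at a few checkpoints of an 80-epoch run.
for epoch in (0, 20, 40, 60, 80):
    print(epoch, round(cosine_lr(epoch), 6))
```

The rate falls slowly at first, fastest around the midpoint of training, and flattens out again as it approaches the minimum.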

5.2. Unsupervised lung segmentation on COVID-19 chest CT scans

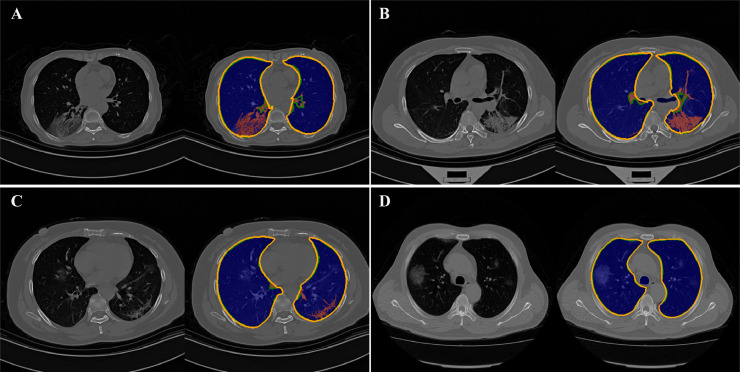

As shown in Table 2, both the classical method [25] and the proposed algorithm achieved high segmentation performance. The proposed refinement method consistently improved on the state of the art, and the benefits are expected to come from the reduction of under-segmentation in white lung areas, as indicated in Fig. 2.

Table 2.

Performance metrics of lung segmentation on COVID-19 CT scans.

Fig. 2.

Sample lung segmentation on COVID-19 CT scans. Blue region: segmented by classical method; red regions contain tissue structures like GGO that are crucial for COVID-19 diagnosis; orange contour: refinement results; green contour: ground-truth.

The performance of discriminative localization segmentation (DLS) [37] was relatively lower than that of the other methods, which agrees with our previous discussion that traditional thresholding-based methods may have difficulty handling white-appearing lung areas. U-Net-R231CovidWeb [38] achieved lower performance than expected, which may be due to the small training cohort (36 cases or 3393 CT slices) it adopted [38]. Fine-tuning U-Net-R231CovidWeb on part of the dataset we used might help to improve the segmentation outcomes. However, it would need a considerable amount of slice-by-slice expert delineation to generate training labels, which may not be feasible due to the limited engagement of radiologists during the pandemic.

The sample results in Fig. 2 illustrate that the proposed refinement algorithm can accurately detect white lung areas in COVID-19 CT studies in different scenarios, especially in the more challenging cases illustrated in Fig. 2A-C, where the classical method failed to detect crucial COVID-19 diagnostic structures (red region, Fig. 2A-C). However, the classical method does not always produce negative outcomes: it can detect ongoing GGOs in some cases where a mild to moderate white appearance mostly occurred within, but not on the edge of, the lung (Fig. 2D).

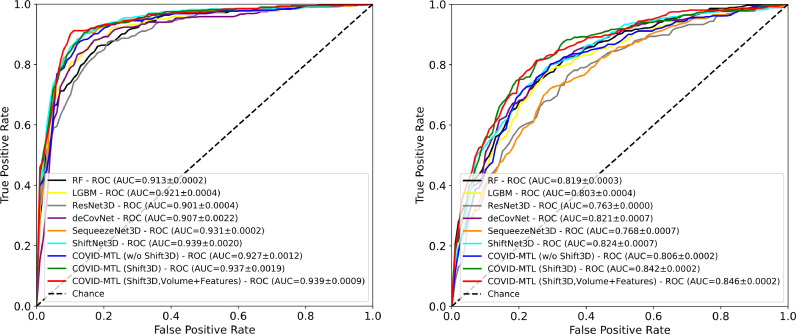

5.3. Experimental results of COVID-19 diagnosis

The seven types of models were trained on the full training/cross-validation dataset and then tested on another 399 CT studies (Table 1). Corresponding models were assessed against radiology (COVID-CT) and nucleic acid tests (COVID-NAT). As shown in Fig. 3 and Table 3, the two popular machine learning models (RF and LGBM) achieved similar detection performance, i.e., AUCs of 0.913/0.921 and accuracies of 86.47%/86.47% against radiology, and AUCs of 0.819/0.803 and accuracies of 73.93%/76.19% against nucleic acid tests, using high-throughput lung features.

Table 1.

Summary of patient studies and training/cross-validation and testing split.

| Features | Train/Cross-Val (n = 930) | Testing (n = 399) |
|---|---|---|
| Male/Female | 465/465 | 214/185 |
| Age (mean ± std) | 54.67 ± 16.81 | 53.81 ± 17.80 |
| COVID-CT | | |
| Positive | 701 | 297 |
| Negative | 229 | 102 |
| COVID-NAT | | |
| Positive | 533 | 228 |
| Negative | 397 | 171 |
| COVID-Severity | | |
| Control/Suspected | 397 | 171 |
| Mild/Regular | 398 | 164 |
| Severe/Critically ill | 135 | 64 |
| Data Source | | |
| Wuhan Union Hospital | 669 | 290 |
| Wuhan Liyuan Hospital | 261 | 109 |

Fig. 3.

ROC/AUCs of machine learning and deep learning models for detection of COVID-19 against radiologists (left) and SARS-CoV-2 nucleic acid test (right).

Table 3.

Performance metrics of machine learning and deep learning models for COVID-19 diagnosis.

Columns prefixed "Rad." report COVID-19 detection against radiology; columns prefixed "NAT" report detection against the nucleic acid test.

| Group | Model | Input(s) | Rad. Prec. | Rad. Rec. | Rad. F1 | Rad. Acc. | Rad. AUC | NAT Prec. | NAT Rec. | NAT F1 | NAT Acc. | NAT AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Single Task | RF | CT Features | 0.861 | 0.865 | 0.862 | 86.47% | 0.913 | 0.739 | 0.739 | 0.739 | 73.93% | 0.819 |
| Single Task | LGBM | CT Features | 0.862 | 0.865 | 0.863 | 86.47% | 0.921 | 0.761 | 0.762 | 0.761 | 76.19% | 0.803 |
| Single Task | ResNet3D | CT Volume | 0.841 | 0.840 | 0.840 | 83.96% | 0.901 | 0.723 | 0.724 | 0.724 | 72.43% | 0.763 |
| Single Task | deCoVnet | CT Volume | 0.865 | 0.860 | 0.862 | 85.96% | 0.907 | 0.771 | 0.772 | 0.771 | 77.19% | 0.821 |
| Single Task | SqueezeNet3D | CT Volume | 0.897 | 0.885 | 0.888 | 88.47% | 0.931 | 0.713 | 0.707 | 0.708 | 70.68% | 0.768 |
| Single Task | ShiftNet3D | CT Volume | 0.896 | 0.887 | 0.890 | 88.72% | 0.939 | 0.762 | 0.762 | 0.762 | 76.19% | 0.824 |
| COVID-MTL (Multi-task) | w/o. Shift3D | CT Volume | 0.891 | 0.877 | 0.881 | 87.72% | 0.927 | 0.760 | 0.757 | 0.758 | 75.69% | 0.806 |
| COVID-MTL (Multi-task) | Shift3D | CT Volume | 0.891 | 0.882 | 0.885 | 88.22% | 0.937 | 0.796 | 0.794 | 0.791 | 79.45% | 0.842 |
| COVID-MTL (Multi-task) | Shift3D | CT Volume, CT Features | 0.912 | 0.902 | 0.905 | 90.23% | 0.939 | 0.791 | 0.792 | 0.792 | 79.20% | 0.846 |

When directly utilizing 3D CT lung volumes as inputs, 3D CNN models, especially ShiftNet3D, yielded higher performance than RF and LGBM. Compared to ResNet3D and SqueezeNet3D, ShiftNet3D achieved around 4–5% higher accuracy and AUC for detection of COVID-19 against nucleic acid tests (Table 3), suggesting that the introduction of Shift3D can boost the performance of existing 3D CNNs on the more challenging COVID-19 learning task. deCoVnet [19] achieved the highest single-task accuracy on the COVID-NAT task (77.19%) but ranked second worst (slightly better than ResNet3D) on the other two tasks (Tables 3 and 4).

Table 4.

Performance metrics of machine learning and deep learning models for severity assessment of COVID-19.

| Group | Model | Prec. | Rec. | F1 | Acc. | AUC |
|---|---|---|---|---|---|---|
| Single Task | RF | 0.628 | 0.639 | 0.624 | 63.91% | 0.791 |
| Single Task | LGBM | 0.630 | 0.647 | 0.632 | 64.66% | 0.784 |
| Single Task | ResNet3D | 0.546 | 0.556 | 0.549 | 55.64% | 0.737 |
| Single Task | deCoVnet | 0.540 | 0.591 | 0.552 | 59.15% | 0.757 |
| Single Task | SqueezeNet3D | 0.655 | 0.659 | 0.653 | 65.91% | 0.794 |
| Single Task | ShiftNet3D | 0.655 | 0.659 | 0.653 | 65.91% | 0.794 |
| Multi-task | COVID-MTL | 0.666 | 0.667 | 0.649 | 66.67% | 0.800 |
| Multi-task | COVID-MTL (Transfer) | 0.647 | 0.669 | 0.632 | 66.92% | 0.813 |

After adopting the random-weighted loss function, COVID-MTL can simultaneously detect COVID-19 against both radiology (AUC of 0.939, accuracy of 90.23%) and nucleic acid tests (AUC of 0.846, accuracy of 79.20%) (Fig. 3 and Table 3). Compared to single-task models, the MTL approach achieved around 1.5–6% higher accuracy for detection against radiology and significantly higher diagnostic performance against nucleic acid tests, e.g., an accuracy improvement of over 3% compared to ShiftNet3D and of around 7–9% compared to ResNet3D and SqueezeNet3D (Table 3), suggesting the effectiveness of joint learning. Even higher performance was achieved when CT lung volumes and high-throughput imaging features were used as parallel inputs; this configuration detected 92.54% and 84.69% of clinically-confirmed positive and negative test cases, respectively. COVID-MTL models equipped with Shift3D consistently outperformed models without Shift3D. It is worth noting that the training and inference time of COVID-MTL is significantly reduced (about three times less) compared to conventional 3D CNN models, since the latter must be trained and run separately for each task.

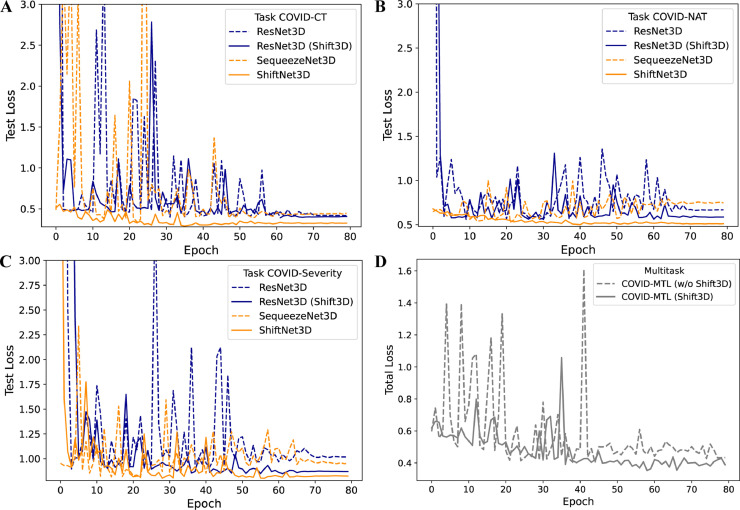

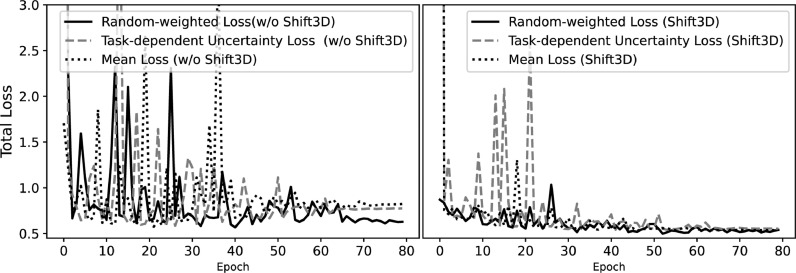

As shown in Fig. 4A-C, under consistent experimental settings, single-task 3D CNN models with Shift3D converged faster, more stably (with less fluctuation), and at lower loss levels on all three tasks. In comparison, Fig. 4D illustrates the total loss of COVID-MTL models (with CT volumetric inputs) for COVID-19 detection with and without Shift3D. The losses of the different tasks were randomly weighted and summed during each training iteration, and the corresponding total test loss fluctuated, as expected, in the earlier learning stage. With the help of Shift3D, the multitask learning model converged faster, and the fluctuation of the total loss was also alleviated (Fig. 4D).
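
The random weighting of task losses mentioned above can be sketched as follows. This is a minimal illustration rather than the released implementation: it assumes one weight vector is drawn from a Dirichlet distribution per iteration (as stated in the abstract), and the concentration vector `alpha`, the example loss values, and the function names are hypothetical.

```python
import random


def dirichlet_weights(alpha, rng):
    """Draw one weight vector from Dirichlet(alpha) via normalised Gamma variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def random_weighted_loss(task_losses, alpha, rng):
    """Combine per-task losses using freshly sampled weights that sum to 1."""
    weights = dirichlet_weights(alpha, rng)
    total = sum(w * loss for w, loss in zip(weights, task_losses))
    return total, weights


rng = random.Random(0)
# Hypothetical per-task losses for one iteration (COVID-CT, COVID-NAT, severity).
losses = [0.35, 0.62, 0.90]
for step in range(3):
    total, weights = random_weighted_loss(losses, alpha=[1.0, 1.0, 1.0], rng=rng)
    print(step, [round(w, 3) for w in weights], round(total, 3))
```

Because the weights change at every iteration, the summed loss is expected to fluctuate even when the individual task losses are stable, which is in line with the early-stage fluctuation described for Fig. 4D.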

Fig. 4.

Loss comparison of lightweight 3D CNNs (A-C) and COVID-MTL (D) for COVID-19 assessment with (solid lines) and without (dashed lines) Shift3D.

5.4. Experimental results of COVID-19 severity assessment

As shown in Table 4, except for ResNet3D and deCoVnet, the deep learning models achieved consistently higher performance than RF and LGBM for COVID-19 severity assessment. ShiftNet3D performed similarly to its backbone model (SqueezeNet3D). In comparison, COVID-MTL achieved a slight performance boost, with an AUC of 0.800 ± 0.020 and an accuracy of 66.67%.

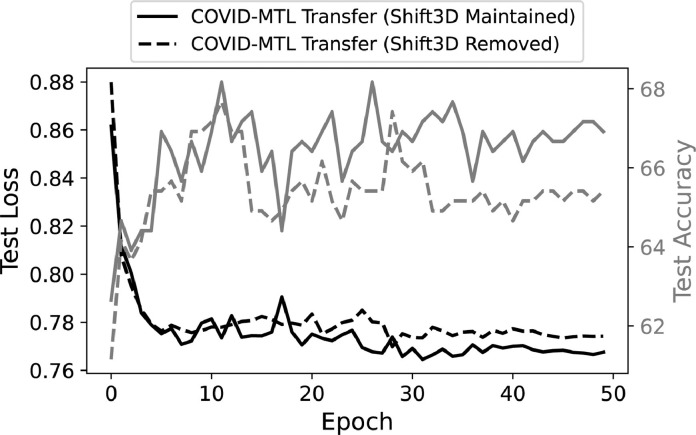

Rather than training the three tasks together, it is reasonable to assume that the MTL model trained for the two diagnosis tasks can be reused for severity assessment, since the control/suspected cases can be inferred from the positivity of the CT diagnosis and nucleic acid tests. To validate this hypothesis, the COVID-MTL model trained for the two diagnosis tasks was repurposed for severity assessment using transfer learning. The task-specific output and classification layers of COVID-MTL were replaced with fully-connected layers, the pretrained convolutional layers were frozen, and the reused model was then trained for an additional 50 epochs. As a result, the transfer learning model achieved an AUC of 0.813 ± 0.021 for classifying control/suspected (AUC of 0.841), mild/regular (AUC of 0.808), and severe/critically-ill (AUC of 0.789) cases, which is a slight improvement over the original COVID-MTL model.
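
As a quick arithmetic check, the reported overall AUC can be related to the three per-class AUCs. The source does not state how the overall value and its spread were computed; the sketch below assumes an unweighted mean and a population standard deviation over the three classes, which happens to reproduce the reported figures.

```python
from statistics import mean, pstdev

# Per-class AUCs of the transfer learning model, as reported in the text.
per_class_auc = {
    "control/suspected": 0.841,
    "mild/regular": 0.808,
    "severe/critically-ill": 0.789,
}

values = list(per_class_auc.values())
macro_auc = mean(values)   # unweighted (macro) average, an assumption
spread = pstdev(values)    # population standard deviation, an assumption

print(f"{macro_auc:.3f} ± {spread:.3f}")  # prints 0.813 ± 0.021
```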

Interestingly, the 3D implementation of ResNet (ResNet34 in this work), which was based on [26], achieved the lowest performance on severity assessment. More complex 3D ResNet models could not be loaded under the present settings due to GPU memory limitations. Deeper ResNet structures could be converted to 3D versions and explored when sufficient GPU resources become available.

5.5. Analysis results of high-throughput lung features and their correlation with COVID-19

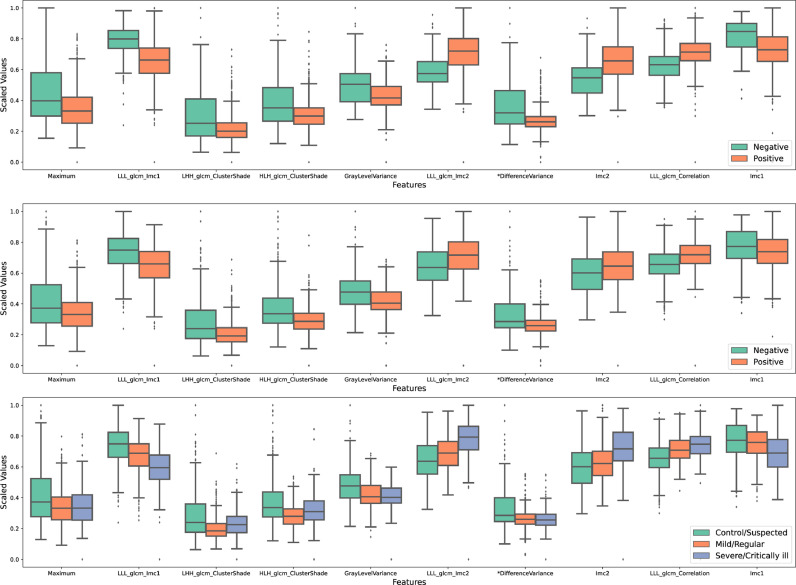

For each COVID-19 task, feature importance was generated after training the machine learning model (LGBM in this work). The top 10 most important imaging features were selected for each task. After removing repeated features, 24 high-throughput lung features remained for correlation analyses. As mentioned in the methods section, the 24 imaging features under different COVID-19 states were analyzed using Welch's ANOVA test, which identified 16 image features that were significantly related to COVID-19 positivity and severity (P < 0.001 in all three tests; shaded gray in the original Table 5). Ten of the significant features were further scaled to the same value range and box-plotted for better illustration (Fig. 5).
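
For readers unfamiliar with the test, the statistic and the second degrees of freedom reported in Table 5 can be computed as in the following sketch of the textbook Welch's ANOVA formulas. It is not the authors' analysis script, and the feature values in the usage example are hypothetical.

```python
from statistics import mean, variance


def welch_anova(groups):
    """Return (F statistic, df1, df2) for Welch's one-way ANOVA.

    groups: list of lists of observations, one list per group.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    # Precision weights n_i / s_i^2, using the sample variance of each group.
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    w_total = sum(weights)
    grand_mean = sum(w * m for w, m in zip(weights, means)) / w_total
    between = sum(w * (m - grand_mean) ** 2 for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / w_total) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    f_stat = between / (1 + 2 * (k - 2) * lam / (k ** 2 - 1))
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)
    return f_stat, df1, df2


# Hypothetical values of one lung feature in three severity groups.
control = [0.12, 0.15, 0.11, 0.14, 0.13]
mild = [0.18, 0.22, 0.17, 0.25, 0.20, 0.19]
severe = [0.30, 0.41, 0.28, 0.36]
f_stat, df1, df2 = welch_anova([control, mild, severe])
print(round(f_stat, 2), df1, round(df2, 2))
```

The significance value then follows from the upper tail of the F distribution with (df1, df2) degrees of freedom, for example via `scipy.stats.f.sf(f_stat, df1, df2)`. With two groups (df1 = 1), the statistic reduces to the square of Welch's t statistic.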

Table 5.

Welch's ANOVA test of top CT lung features between COVID-19 positive and negative cases, and among different severity groups.

Robust tests of equality of means. Column groups: COVID-19 against radiologists ("Rad.", df1 = 1), COVID-19 against nucleic acid test ("NAT", df1 = 1), and COVID-19 severity ("Sev.", df1 = 2).

| High-throughput Lung Features | Rad. Stat. | Rad. df2 | Rad. Sig. | NAT Stat. | NAT df2 | NAT Sig. | Sev. Stat. | Sev. df2 | Sev. Sig. |
|---|---|---|---|---|---|---|---|---|---|
| HLL_glcm_ClusterProminence | 43.06 | 340.89 | <0.001 | 61.80 | 604.97 | <0.001 | 30.86 | 472.32 | <0.001 |
| LHL_glcm_Idmn | 30.06 | 530.47 | <0.001 | 30.43 | 1198.09 | <0.001 | 18.10 | 540.37 | <0.001 |
| Maximum | 80.41 | 441.28 | <0.001 | 57.10 | 956.95 | <0.001 | 29.73 | 542.92 | <0.001 |
| Energy | 2.49 | 451.09 | 0.115 | <0.01 | 1096.54 | 0.986 | 10.52 | 514.79 | <0.001 |
| LLL_glcm_Imc1 | 409.21 | 668.77 | <0.001 | 167.23 | 1208.49 | <0.001 | 112.46 | 541.73 | <0.001 |
| HLL_glcm_Correlation | 29.58 | 512.00 | <0.001 | 1.65 | 1119.28 | 0.199 | 1.19 | 591.13 | 0.306 |
| LLH_glcm_Correlation | 3.89 | 604.49 | 0.049 | 7.79 | 1321.56 | 0.005 | 6.45 | 539.50 | 0.002 |
| LHH_glcm_ClusterShade | 77.59 | 393.65 | <0.001 | 114.05 | 817.28 | <0.001 | 75.90 | 529.59 | <0.001 |
| *LongRunLowGrayLevelEmphasis | 54.96 | 689.20 | <0.001 | 68.80 | 1306.63 | <0.001 | 37.82 | 489.17 | <0.001 |
| HLH_glcm_ClusterShade | 70.23 | 402.28 | <0.001 | 127.16 | 868.70 | <0.001 | 82.64 | 522.13 | <0.001 |
| Idn | 13.55 | 530.18 | <0.001 | 7.65 | 1158.71 | 0.006 | 6.35 | 546.89 | 0.002 |
| LLH_glcm_ClusterShade | 2.96 | 489.89 | 0.086 | 0.05 | 1191.24 | 0.832 | 0.21 | 595.76 | 0.807 |
| LargeAreaHighGrayLevelEmphasis | 1.07 | 537.72 | 0.300 | <0.01 | 1311.65 | 0.993 | 4.31 | 515.67 | 0.014 |
| *ShortRunHighGrayLevelEmphasis | 44.66 | 360.21 | <0.001 | 50.89 | 702.63 | <0.001 | 27.48 | 475.40 | <0.001 |
| Idmn | 8.63 | 534.83 | 0.003 | 0.72 | 1146.50 | 0.395 | 1.32 | 549.05 | 0.268 |
| GrayLevelVariance | 84.66 | 442.59 | <0.001 | 124.43 | 1030.40 | <0.001 | 63.26 | 582.43 | <0.001 |
| HHH_glcm_ClusterShade | 62.03 | 377.48 | <0.001 | 108.32 | 753.05 | <0.001 | 64.82 | 534.31 | <0.001 |
| LLL_glcm_Imc2 | 346.27 | 630.78 | <0.001 | 107.75 | 1178.31 | <0.001 | 93.34 | 546.17 | <0.001 |
| LLL_glrlm_RunEntropy | 47.48 | 548.11 | <0.001 | 2.72 | 1237.29 | 0.100 | 43.13 | 515.62 | <0.001 |
| LLH_glcm_ClusterProminence | 29.54 | 429.01 | <0.001 | 25.00 | 1032.16 | <0.001 | 14.92 | 598.86 | <0.001 |
| *DifferenceVariance | 129.02 | 383.67 | <0.001 | 143.71 | 792.11 | <0.001 | 71.98 | 567.16 | <0.001 |
| Imc2 | 279.23 | 651.74 | <0.001 | 48.15 | 1178.92 | <0.001 | 54.21 | 530.26 | <0.001 |
| LLL_glcm_Correlation | 171.47 | 512.35 | <0.001 | 173.88 | 1138.84 | <0.001 | 89.70 | 539.75 | <0.001 |
| Imc1 | 253.18 | 673.94 | <0.001 | 51.66 | 1208.52 | <0.001 | 42.87 | 533.73 | <0.001 |

Stat. Asymptotically F distributed; *LongRunLowGrayLevelEmphasis: HLH_glrlm_LongRunLowGrayLevelEmphasis.

*ShortRunHighGrayLevelEmphasis: LHH_glrlm_ShortRunHighGrayLevelEmphasis; *DifferenceVariance: HLL_glcm_DifferenceVariance.

Fig. 5.

Box plots of CT lung features between COVID-19 positive and negative cases (first row: against radiology; second row: against SARS-CoV-2 nucleic acid test) as well as among different severity groups (last row).

In other words, those identified features are significantly different between COVID-19 positive and negative cases against both radiology (P < 0.001; second column, Table 5; first row of Fig. 5) and SARS-CoV-2 nucleic acid test (P < 0.001; third column, Table 5; second row of Fig. 5). Similar results were observed in the analysis of COVID-19 severity, where significant value differences were found in different severity groups (P < 0.001, last column, Table 5), especially when comparing control/suspected and severe/critically ill cases (last row, Fig. 5).

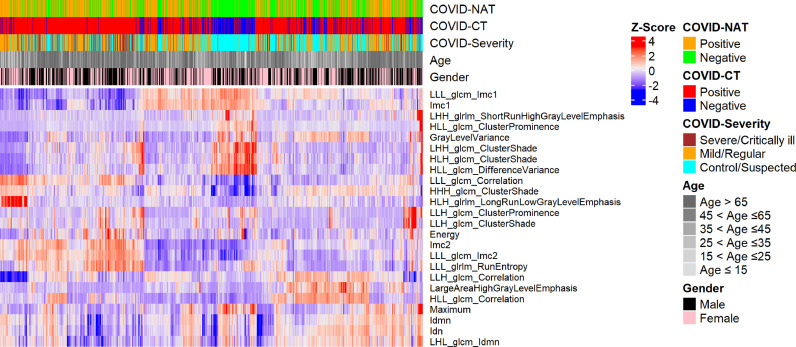

As shown in Fig. 6, clustering of the top lung imaging features demonstrated that the nucleic acid test results were not always consistent with the diagnosis from radiologists (using CT scans), which is in accordance with the published literature [5]. The inconsistency between the two diagnosis standards may be due to the relatively higher false-negative rate of NAT in the detection of asymptomatic transmission. The clustering also shows that COVID-19 infection can be found across different age and gender groups (Fig. 6). People in older age groups are more vulnerable to severe/critically-ill infection (Fig. 6), given that their immune response to SARS-CoV-2 is less effective than that of their younger counterparts. More interestingly, there is a group of uninfected people (mostly male) whose lung CT features demonstrated a distinct pattern compared with the others (middle, Fig. 6).

Fig. 6.

Clustering of top high-throughput lung features related to COVID-19.

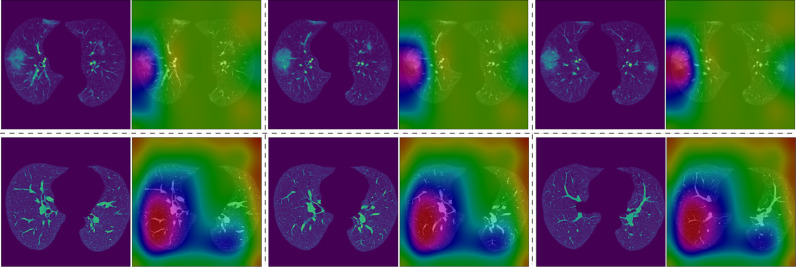

6. Case study

To decipher the underlying mechanism of COVID-MTL for detection of COVID-19 infection, we obtained 3D feature maps from the last convolutional layer of COVID-MTL when running inference on an infected case (upper panel, Fig. 7) and a normal study (bottom panel, Fig. 7). The feature maps were then converted to Class Activation Maps (CAMs) and overlaid on the two cases to compare the discriminative regions captured by the MTL model. The comparison shows a distinct discriminative pattern between the two cases: the discriminative regions captured from the infected case are focused on lung areas that exhibited ground-glass opacities (red attention color in the upper panel, Fig. 7), whereas large and homogeneous lung tissue regions were covered in the normal case (red attention color in the bottom panel, Fig. 7).
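
The conversion of feature maps into a CAM is not detailed here; a minimal sketch of the classic formulation (a class-weighted sum over feature channels, rescaled to [0, 1]) is given below. It assumes that per-channel class weights are available from the classification layer, uses plain nested lists indexed `[z][y][x]` for clarity, and omits the resizing step needed to overlay the map on the input volume. All names and values are hypothetical.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature volumes, min-max scaled to [0, 1].

    feature_maps: K volumes, each a nested list indexed [z][y][x].
    class_weights: K weights linking each channel to the class of interest.
    """
    depth = len(feature_maps[0])
    height = len(feature_maps[0][0])
    width = len(feature_maps[0][0][0])
    cam = [[[sum(w * fm[z][y][x] for w, fm in zip(class_weights, feature_maps))
             for x in range(width)]
            for y in range(height)]
           for z in range(depth)]
    flat = [v for plane in cam for row in plane for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant map
    return [[[(v - lo) / span for v in row] for row in plane] for plane in cam]


# Two hypothetical 1 x 2 x 2 feature volumes and their class weights.
maps = [
    [[[0.0, 1.0], [2.0, 3.0]]],
    [[[1.0, 1.0], [0.0, 0.0]]],
]
cam = class_activation_map(maps, class_weights=[1.0, 0.5])
print(cam)
```

Voxels with values close to 1 correspond to the regions that contribute most to the class score, i.e., the areas rendered in red in an overlay such as Fig. 7.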

Fig. 7.

CAM visualization for comparison of discriminative regions captured by COVID-MTL in the diagnosis of COVID-19. Upper panel: lung region and corresponding CAM visualization of an infected case (ongoing infection with severe symptoms, ground-glass opacities exhibited); Bottom panel: lung region and corresponding CAM visualization of a normal case.

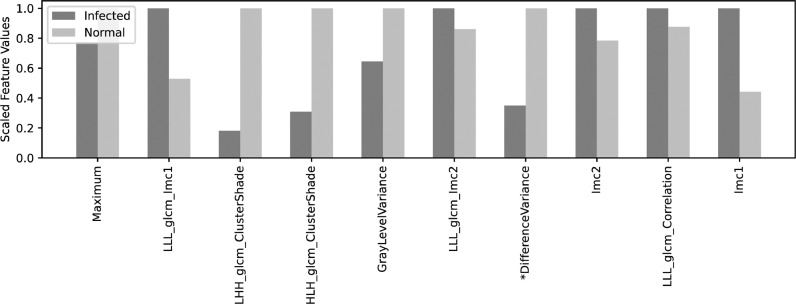

A comparison of their lung CT features is shown in Fig. 8, in which several features differ markedly between the two cases, including Imc1, HLL_glcm_DifferenceVariance, HLH_glcm_ClusterShade, LHH_glcm_ClusterShade, and LLL_glcm_Imc1.

Fig. 8.

Comparison of top high-throughput lung features between an infected and a normal case. Features are scaled to 0 - 1 for comparison.

7. Ablation study

We compared the performance of task-dependent uncertainty loss, random-weighted loss, and mean loss (the average of the three task losses) under COVID-MTL for detection and severity assessment of COVID-19. The results show that our proposed random-weighted multitask loss function achieved faster and more stable convergence, as well as better performance, than task-dependent uncertainty loss [31] and mean loss (left panel, Fig. 9). COVID-MTL models equipped with Shift3D achieved consistently better performance than models without Shift3D, i.e., faster convergence and better performance were achieved under all three types of loss functions, with random-weighted loss slightly better than the other two methods (right panel, Fig. 9).

Fig. 9.

Performance of three different multitask loss functions for detection and severity assessment of COVID-19 without and with Shift3D.

As shown earlier, the COVID-MTL model trained for the two diagnosis tasks can be repurposed for severity assessment (Table 4), achieving a slight performance boost (AUC of 0.813 ± 0.021, accuracy of 66.92%, and recall of 0.669) compared to the original MTL model (AUC of 0.800 ± 0.020, accuracy of 66.67%, and recall of 0.667). However, this performance gain was obtained with the Shift3D layer still enabled during the additional training (fine-tuning) procedure. Without Shift3D, the transfer learning model was unable to achieve such a gain (AUC of 0.810 ± 0.024, accuracy of 65.41%, and recall of 0.654) under the current settings, even though it was trained for an additional 50 epochs. This finding is also illustrated in Fig. 10, where the model with Shift3D achieved lower loss and higher test accuracy.

Fig. 10.

Performance comparison of COVID-MTL transfer learning model for severity assessment of COVID-19 when maintaining (solid lines) and removing (dashed lines) Shift3D.

The performance of 3D CNNs was further evaluated using the initial lung segmentation outputs produced by [25]. As shown in Table 6, the 3D CNNs, especially SqueezeNet3D and ShiftNet3D, achieved on average about 2% lower end-to-end performance with the initial lung segmentation than their counterparts with refined segmentation (Tables 3 and 4). This result is explainable, since diagnostically relevant structures such as GGOs are more likely to be under-segmented by classical methods, especially in severe and critically-ill cases, making it harder to differentiate severities.
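
The size of this gap can be checked directly from the reported tables. The source does not state which metric was averaged; the sketch below assumes test accuracy and copies the values from Tables 3, 4, and 6.

```python
# Test accuracies (%) ordered as (COVID-CT, COVID-NAT, COVID-Severity).
# "refined" values come from Tables 3 and 4, "initial" values from Table 6.
refined = {
    "ResNet3D": (83.96, 72.43, 55.64),
    "SqueezeNet3D": (88.47, 70.68, 65.91),
    "ShiftNet3D": (88.72, 76.19, 65.91),
}
initial = {
    "ResNet3D": (82.96, 72.43, 61.15),
    "SqueezeNet3D": (86.97, 71.18, 58.40),
    "ShiftNet3D": (86.97, 74.44, 63.66),
}


def mean_accuracy_drop(model):
    """Average of (refined - initial) accuracy over the three tasks."""
    diffs = [r - i for r, i in zip(refined[model], initial[model])]
    return sum(diffs) / len(diffs)


for model in refined:
    print(model, round(mean_accuracy_drop(model), 2))
```

Under this assumption, the two lightweight models lose roughly two to three percentage points on average, with the largest single drop on the severity task for SqueezeNet3D, while ResNet3D does not follow the same pattern on severity assessment.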

Table 6.

Performance of 3D CNNs using initial lung segmentation outputs.

| Task | Model | Prec. | Rec. | F1 | Acc. | AUC |
|---|---|---|---|---|---|---|
| COVID-CT | ResNet3D | 0.847 | 0.830 | 0.835 | 82.96% | 0.893 |
| COVID-CT | SqueezeNet3D | 0.876 | 0.870 | 0.872 | 86.97% | 0.932 |
| COVID-CT | ShiftNet3D | 0.879 | 0.870 | 0.873 | 86.97% | 0.941 |
| COVID-NAT | ResNet3D | 0.723 | 0.724 | 0.723 | 72.43% | 0.779 |
| COVID-NAT | SqueezeNet3D | 0.712 | 0.712 | 0.712 | 71.18% | 0.770 |
| COVID-NAT | ShiftNet3D | 0.743 | 0.744 | 0.741 | 74.44% | 0.820 |
| COVID-Severity | ResNet3D | 0.608 | 0.612 | 0.607 | 61.15% | 0.754 |
| COVID-Severity | SqueezeNet3D | 0.579 | 0.584 | 0.581 | 58.40% | 0.756 |
| COVID-Severity | ShiftNet3D | 0.631 | 0.637 | 0.633 | 63.66% | 0.781 |

By default, the random number of shifting lines in Shift3D is drawn from a discrete uniform distribution; it can also be drawn from a Gaussian distribution. As shown in Table 7, ShiftNet3D performed better with the default discrete uniform distribution than with the Gaussian distribution on nearly all metrics, the only exception being the severity AUC (0.794 vs. 0.796).
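
The two sampling options can be illustrated with the sketch below. It is only an illustration of the idea, not the Shift3D implementation: the maximum shift, the use of signed offsets, the Gaussian parameterisation (standard deviation of half the maximum shift, rounded and clipped), and the zero padding are all assumptions, and the function names are hypothetical.

```python
import random


def sample_shift(max_shift, distribution, rng):
    """Draw a signed number of lines to shift along one axis."""
    if distribution == "uniform":
        return rng.randint(-max_shift, max_shift)
    if distribution == "gaussian":
        # Assumed parameterisation: sigma = max_shift / 2, rounded and clipped.
        value = round(rng.gauss(0.0, max_shift / 2))
        return max(-max_shift, min(max_shift, value))
    raise ValueError(f"unknown distribution: {distribution}")


def shift_1d(values, offset, fill=0.0):
    """Shift a sequence by `offset` positions, padding vacated slots with `fill`."""
    n = len(values)
    return [values[i - offset] if 0 <= i - offset < n else fill for i in range(n)]


rng = random.Random(0)
# One independent offset per axis (depth, height, width) of a 3D feature map.
offsets = [sample_shift(3, "uniform", rng) for _ in range(3)]
print(offsets)
print(shift_1d([1, 2, 3, 4], 1))
```

A Gaussian draw concentrates the offsets around zero, so large shifts occur less often than under the discrete uniform option, which may be one reason for the difference observed between the two settings.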

Table 7.

Performance of ShiftNet3D using shift numbers generated from Discrete Uniform and Gaussian distributions.

| Task | Distribution | Prec. | Rec. | F1 | Acc. | AUC |
|---|---|---|---|---|---|---|
| COVID-CT | Discrete Uniform (Current) | 0.896 | 0.887 | 0.890 | 88.72% | 0.939 |
| COVID-CT | Gaussian | 0.878 | 0.865 | 0.869 | 86.47% | 0.930 |
| COVID-NAT | Discrete Uniform (Current) | 0.762 | 0.762 | 0.762 | 76.19% | 0.824 |
| COVID-NAT | Gaussian | 0.754 | 0.754 | 0.754 | 75.44% | 0.800 |
| COVID-Severity | Discrete Uniform (Current) | 0.655 | 0.659 | 0.653 | 65.91% | 0.794 |
| COVID-Severity | Gaussian | 0.639 | 0.647 | 0.640 | 64.66% | 0.796 |

Further, the performance of MTL using only the extracted CT lung features was evaluated. As shown in Table 8, the MTL approach learned exclusively by an FNN from CT features achieved lower performance, especially compared to COVID-MTL, which learns simultaneously through a 3D CNN and an FNN from both CT lung volumes and features, suggesting the effectiveness of the proposed framework (Fig. 1; Tables 3 and 4).

Table 8.

MTL performance using only the extracted CT lung features for COVID-19 diagnosis and severity assessment.

| Task | Prec. | Rec. | F1 | Acc. | AUC |
|---|---|---|---|---|---|
| COVID-CT | 0.847 | 0.852 | 0.844 | 85.21% | 0.886 |
| COVID-NAT | 0.746 | 0.747 | 0.744 | 74.69% | 0.802 |
| COVID-Severity | 0.518 | 0.602 | 0.552 | 60.15% | 0.741 |

Feature engineering plays an important role in machine learning performance. In addition to radiomic features, we trained RF and LGBM with Histogram of Oriented Gradients (HOG) features (32 bins) under the same settings, and the test results were inferior (Table 9).

Table 9.

Performance of machine learning models with Histogram of Oriented Gradients (HOG) features.

| Model | Task | Prec. | Rec. | F1 | Acc. | AUC |
|---|---|---|---|---|---|---|
| RF | COVID-CT | 0.554 | 0.744 | 0.635 | 74.44% | 0.583 |
| RF | COVID-NAT | 0.631 | 0.582 | 0.450 | 58.15% | 0.536 |
| RF | COVID-Severity | 0.360 | 0.426 | 0.390 | 42.61% | 0.559 |
| LGBM | COVID-CT | 0.666 | 0.742 | 0.647 | 74.19% | 0.675 |
| LGBM | COVID-NAT | 0.563 | 0.579 | 0.548 | 57.89% | 0.560 |
| LGBM | COVID-Severity | 0.442 | 0.461 | 0.425 | 46.12% | 0.659 |

Note: HOG features were dimensionally reduced to the same size as radiomic features for a fair comparison.

Additional samples were collected from a recently published work [39], which provided 121 positive CT studies diagnosed with RT-PCR. Given that normal chest CT scans are a scarce resource, 98 control cases from the existing test data were included to compensate for the class imbalance, forming a multi-institutional test cohort. As shown in Table 10, the proposed methods consistently achieved better performance on the newly formed test cohort. The results also demonstrate the power of learning COVID-19 infection against both radiology and the nucleic acid test: aggregate approaches, including multitask learning and model aggregation (e.g., ShiftNet3D), can achieve significantly higher performance than learning against only one target.

Table 10.

Performance of 3D CNN-based models on the newly-formed test cohort with volume inputs.

| Model | Model Instance | Prec. | Rec. | F1 | Acc. | AUC |
|---|---|---|---|---|---|---|
| ResNet3D | Against Radio. | 0.896 | 0.872 | 0.868 | 87.21% | 0.964 |
| ResNet3D | Against NAT | 0.887 | 0.877 | 0.877 | 87.67% | 0.891 |
| ResNet3D | Model Aggregated | 0.905 | 0.886 | 0.883 | 88.58% | 0.954 |
| SqueezeNet3D | Against Radio. | 0.919 | 0.918 | 0.918 | 91.78% | 0.951 |
| SqueezeNet3D | Against NAT | 0.812 | 0.804 | 0.804 | 80.37% | 0.860 |
| SqueezeNet3D | Model Aggregated | 0.900 | 0.900 | 0.899 | 89.95% | 0.935 |
| deCoVnet | Against Radio. | 0.890 | 0.881 | 0.879 | 88.13% | 0.903 |
| deCoVnet | Against NAT | 0.939 | 0.932 | 0.931 | 93.15% | 0.939 |
| deCoVnet | Model Aggregated | 0.936 | 0.927 | 0.926 | 92.69% | 0.927 |
| ShiftNet3D | Against Radio. | 0.925 | 0.922 | 0.922 | 92.24% | 0.952 |
| ShiftNet3D | Against NAT | 0.905 | 0.904 | 0.904 | 90.41% | 0.932 |
| ShiftNet3D | Model Aggregated | 0.943 | 0.941 | 0.940 | 94.06% | 0.952 |
| COVID-MTL | Against Radio. (Output 1) | 0.930 | 0.922 | 0.921 | 92.24% | 0.974 |
| COVID-MTL | Against NAT (Output 2) | 0.950 | 0.945 | 0.945 | 94.52% | 0.929 |
| COVID-MTL | Output Aggregated | 0.946 | 0.941 | 0.940 | 94.06% | 0.957 |

8. Discussion and conclusion

With the dramatic increase in COVID-19 infections over the past few months and the global shortage of human resources in clinical practice, automated methods for diagnosis and severity assessment of this highly infectious disease are increasingly in demand. People who were NAT negative but diagnosed as CT positive were classified by physicians as “suspected cases”, which is the source of the difference in results between the two methods. Generally speaking, quarantine measures need to be considered for people who test positive by either CT or NAT, since they are at high risk of transmission (even when classified as suspected). As a fast method, chest CT (which typically takes 30 s) is highly sensitive for detecting asymptomatic transmission and has been adopted as a complementary tool for more accurate diagnosis in clinical practice since the outbreak, when NAT was unable to confirm positivity in a timely manner.

The two complementary examination standards naturally share outcome labels to a certain extent, which conforms with real-world scenarios: people infected with SARS-CoV-2 have a high probability of testing positive in both nucleic acid tests (NAT) and computed tomography (CT). This is a manifestation of the interrelationship among multiple tasks. Because the two diagnosis methods are related, we treated learning against them as a multitask problem, in which the network was trained with consideration of both CT and NAT to improve generalization and joint learning performance. Better prediction results for the three tasks were achieved by fusing the losses of multiple tasks with the multitask loss function; the network weights were then adjusted accordingly by back-propagating the fused loss. The shared network weights before the task-specific layers were therefore able to learn more general COVID-19-related features, which improved network performance across tasks. Statistical analysis demonstrated that, compared to each of the other existing models, COVID-MTL achieved statistically significant improvements on at least two of the three tasks (p-values ranging from 1.12E-08 to 0.02).

In addition to boosting joint learning performance, another promising aspect of our proposed framework lies in its high recognition accuracy against radiologists and its capability to automatically screen out cases at high risk of transmission. Meanwhile, simultaneously inferring NAT results from CT scans indicates that deep features obtained from chest CT scans may contain richer information for COVID-19 assessment than expected, which is an interesting topic with great practical benefits considering the speed of CT-based detection. In comparison, conventional NAT relies on RT-PCR, a much slower and more complicated operation that requires highly qualified technicians.

By combining a 3D CNN and an auxiliary FNN, different representations of chest CT information, i.e., volumetric lung CT data and high-throughput lung CT features, were propagated and concatenated in the network for evaluation of COVID-19. Fine-grained detection results can be obtained from the predictions against both radiology and NAT (a combination of COVID-CT and COVID-NAT). However, the diagnosis of COVID-19 is more complicated than expected due to prolonged incubation and asymptomatic infection, for which biomedical tests and repeated swab samples need to be collected and evaluated periodically to confirm suspected cases. Nonetheless, the proposed framework demonstrated promising performance in identifying positive and negative cases.
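
One way to read the combination of the two outputs is sketched below. The "suspected" label for CT-positive, NAT-negative cases and the either-positive quarantine rule follow the discussion above; the 0.5 decision threshold, the remaining label names, and the function itself are illustrative assumptions rather than part of the released framework.

```python
def fine_grained_status(p_ct, p_nat, threshold=0.5):
    """Map the two predicted probabilities to a combined label.

    Returns (label, quarantine_flag). The flag is raised when either
    output is positive, mirroring the either-CT-or-NAT rule in the text.
    """
    ct_positive = p_ct >= threshold
    nat_positive = p_nat >= threshold
    if ct_positive and nat_positive:
        label = "positive on both CT and NAT"
    elif ct_positive:
        label = "suspected (CT positive, NAT negative)"
    elif nat_positive:
        label = "NAT positive, CT negative"
    else:
        label = "negative on both"
    return label, ct_positive or nat_positive


# Hypothetical model outputs for four studies.
for p_ct, p_nat in [(0.92, 0.81), (0.88, 0.20), (0.10, 0.70), (0.05, 0.08)]:
    print(p_ct, p_nat, fine_grained_status(p_ct, p_nat))
```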

The proposed Shift3D and random-weighted multitask loss function were experimentally validated to improve the convergence and accuracy of state-of-the-art methods, especially on the more challenging COVID-19 tasks. Shift3D introduces spatial shift invariance to existing 3D CNNs to improve (or accelerate) model convergence and alleviate overfitting, and it works well under different loss configurations. In comparison, the random-weighted loss function gives vulnerable tasks a sufficient chance to be prioritized and prevents the joint learning procedure from being dominated by specific tasks; it outperformed task-dependent uncertainty loss and linearly combined mean loss. As a result, multitask learning performance was boosted compared to single-task models. The Dirichlet distribution allows us to control the concentration of the generated weight distributions by fixing the concentration parameter vector and tuning the number of draws instead, which is quite useful when dealing with tasks of imbalanced difficulty. Distributions such as the normal distribution can also be used when there are only two tasks. Other probability distributions that satisfy the abovementioned needs may also be effective and deserve further exploration. The proposed random-weighted loss could be further extended to 2D CNNs and to combined classification and regression tasks.

COVID-MTL requires only chest CT scans. It is a self-contained framework that can work independently without human intervention, thus reducing inter- and intra-observer variability, especially compared to slice-based methods, in which inference can be heavily affected by the inputs (the quality of manually labeled CT slices). COVID-MTL is independent of clinical parameters but can be further enhanced by integrating such clinical factors, including biochemical tests. In comparison to single-task solutions, the performance on the different tasks, especially the more challenging ones, was boosted under joint learning, while training and inference time can be significantly reduced given the framework's capability of learning and predicting three different COVID-19 tasks in parallel.

The baseline RF/LGBM with radiomic features adopted in this work is a state-of-the-art machine learning approach for medical imaging analysis and demonstrated comparable or even better performance than existing models, i.e., ResNet3D and SqueezeNet3D, in certain learning tasks. Lightweight 3D CNNs have their own weaknesses, including potential accuracy degradation, although they are more resource-efficient and better able to counterbalance overfitting than conventional structures. Meanwhile, one of the vulnerable aspects of the traditional machine learning method lies in its heavy reliance on feature engineering: improper selection of features may lead to an inferior machine learning baseline, as demonstrated in the ablation study, which is consistent with the experimental results reported in [19].

A deep learning model is largely a black box. To decipher the relationship between radiographic images and COVID-19, high-throughput lung features were extracted from COVID-19 CT scans, and the top imaging features were identified through statistical analyses. The analyses showed that those lung CT features are significantly (P < 0.001) related to COVID-19 positivity and severity. The case study, which was conducted to decipher the underlying mechanism of COVID-MTL for recognition of COVID-19, supported these findings by showing the distinct discriminative patterns captured by the neural network from CT images of the infected and normal cases, respectively. The analyses of high-throughput lung features and their correlation with COVID-19 may help the community better understand the disease with regard to its relevance to radiology. The identified lung features could be used in future unsupervised COVID-19 assessments.

In conclusion, we proposed an end-to-end multitask learning framework for automated and simultaneous diagnostic classification and severity assessment of COVID-19. Our experiments and ablation study demonstrated that the key components of the COVID-MTL framework, including unsupervised lung segmentation, Shift3D, and random-weighted multitask loss, improved the diagnostic workflow and outperformed their counterpart methods, which ultimately boosted joint learning performance when dealing with COVID-19 tasks of imbalanced difficulty. High-throughput lung features related to the positivity and severity of COVID-19 were deciphered from chest CT scans, and a case study was given to help further understand the pattern recognition mechanism of the network. Chest CT scans may contain richer information than expected but are currently not fully utilized in COVID-19 research. Future studies may focus on exploring unsupervised diagnosis approaches with the identified lung imaging features, extending random-weighted loss to 2D CNNs and regression tasks, and integrating clinical parameters. In addition, including cross-continental COVID-19 CT data may further validate and improve the performance of COVID-MTL and make it more viable for clinical practice. All our experimental data, pretrained models, and computer code have been made publicly available, which may facilitate future research and relevant applications in the community.

Data availability and experimental reproducibility

The dataset (including 1329 segmented chest CT scans and corresponding extracted high-throughput lung features), source code, and pretrained models have been released at a public repository: https://github.com/guoqingbao/COVID-MTL.

Declaration of Competing Interest

The authors declare no competing interests.

Biographies

Guoqing Bao, Ph.D. candidate at School of Computer Science, The University of Sydney. He was a senior software engineer at iQiYi.com from 2011 to 2016. His research interests include medical image processing, deep learning, and translational medicine.

Huai Chen, Ph.D. candidate at Department of Automation, Institute of Image Processing and Pattern Recognition, Shanghai Jiao Tong University. His research interests include deep learning, medical image processing, and computer vision.

Tongliang Liu, Lecturer in Machine Learning at School of Computer Science, The University of Sydney. His research interests lie in understanding machine learning models and designing efficient learning algorithms for problems in computer vision and data mining. He has published 60+ peer-reviewed papers in leading international journals and proceedings of top international conferences.

Guanzhong Gong, Radiation physicist at the Department of Radiation Oncology, Shandong Cancer Hospital. He is mainly engaged in basic research and clinical applications of precise tumor radiotherapy guided by medical image processing technology.

Yong Yin, Professor and Director of the Department of Radiation Physics, Shandong Cancer Hospital. He is mainly engaged in clinical application and technology research in radiation oncology. He has published 75 academic papers, 32 of which are indexed in the Science Citation Index.

Lisheng Wang, Professor at the Department of Automation, Shanghai Jiao Tong University, China. His research interests include analysis and visualization of 3D images and computer-aided diagnosis.

Xiuying Wang, Associate Professor at the School of Computer Science, The University of Sydney. Her research interests include medical data analysis and fusion, image segmentation, registration, and saliency detection.
