Abstract

Observers spontaneously segment larger activities into smaller events. For example, “washing a car” might be segmented into “scrubbing,” “rinsing,” and “drying” the car. This process, called event segmentation, separates “what is happening now” from “what just happened.” In this study we show that event segmentation predicts activity in the hippocampus when people access recent information. Participants watched narrative films and occasionally attempted to retrieve from memory objects that had recently appeared in the film. The delay between object presentation and test was always 5 s. Critically, for some of the objects the event changed during the delay, while for others the event continued. Using functional magnetic resonance imaging, we examined whether retrieval-related brain activity differed when the event changed during the delay. Brain regions involved in remembering past experiences over long periods of time, including the hippocampus, were more active during retrieval when the event changed during the delay. Thus, the way an object encountered just five seconds ago is retrieved from memory appears to depend in part on what happened in those five seconds. These data strongly suggest that the segmentation of ongoing activity into events is a control process that regulates when memory for events is updated.

Keywords: Episodic Memory, Event Perception, fMRI, Medial Temporal Lobe, Retrieval

As a part of ongoing perception, observers separate what is happening now from what just happened (Newtson, 1973; Zacks, Speer, Swallow, Braver, & Reynolds, 2007). For example, while watching a man cross the street, an observer may divide the activity into two parts: the man waits for traffic to clear, then he walks through the intersection. This process, called event segmentation, can be measured in the lab by asking participants to press a button when they believe an event boundary (the moment in time that separates two events) has occurred. Event segmentation has observable effects on neural processing and long-term memory for events. When observers passively view movies of goal-directed activities, the points in time that correspond to event boundaries are associated with increased blood-oxygen-level-dependent (BOLD) activity in bilateral extrastriate cortex, including motion-sensitive and biological-motion-sensitive regions, right prefrontal cortex, and bilateral medial parietal cortex (Speer, Swallow, & Zacks, 2003; Zacks, Braver, Sheridan, Donaldson, Snyder, Ollinger, et al., 2001; Zacks, Swallow, Vettel, & McAvoy, 2006). In long-term memory tests, event boundaries are also better recognized than other timepoints in the movie (Baird & Baldwin, 2001; Newtson & Engquist, 1976; Swallow, Zacks, & Abrams, 2009; Zacks, Speer, Swallow, Braver, & Reynolds, 2007). Although these data show that event segmentation has important consequences for the way perceived events are processed and encoded, relatively little is known about its consequences for memory retrieval.

Several theories of perception and comprehension suggest that changes in events should lead to changes in how recent information is retrieved from memory (Gernsbacher, 1985; Zacks, Speer, Swallow, Braver, & Reynolds, 2007; Zwaan & Radvansky, 1998). In general, these theories propose that observers represent the current situation in a mental model that encodes features of the current event, including the location of the event, the actors, their goals, and the objects that are present. According to Event Segmentation Theory (EST; Zacks, Speer, Swallow, Braver, & Reynolds, 2007), models of the current event (event models) are actively maintained in memory until the event is segmented. Once the event is segmented, EST claims that active memory is cleared and a new event model is built from current perceptual information.

EST entails three specific hypotheses about memory encoding and retrieval.

First, event boundaries should be better encoded into episodic memory than other moments in time. As part of setting up a new event model, information presented at event boundaries should receive additional processing and therefore should be better encoded into episodic memory than nonboundary information.

Second, event boundaries should mark when recently encountered information is cleared from active memory. If and when subsequent retrieval is needed, this information must be retrieved from episodic memory. Clearing active memory at event boundaries should have several consequences for memory for recently encountered objects. Because it is not processed as well as boundary information, information presented during nonboundary periods is less likely to be encoded into episodic memory and should be less accurately retrieved across events than within events (Baird & Baldwin, 2001; Newtson & Engquist, 1976; Swallow, Zacks, & Abrams, 2009). Indeed, relative to boundary information, nonboundary information is poorly remembered after long delays (Newtson & Engquist, 1976) and appears to contribute little to an observer’s comprehension of an event (Schwan & Garsoffky, 2004). In addition, because forgetting irrelevant information reduces the degree to which it interferes with the retrieval of relevant information (Kuhl, Dudukovic, Kahn, & Wagner, 2007), forgetting nonboundary information may facilitate the retrieval of boundary information that has been encoded into episodic memory (Swallow, Zacks, & Abrams, 2009). Because it should be encoded into episodic memory, boundary information may be remembered as well, or better, after active memory has been cleared at a subsequent event boundary.

Finally, the proposal that active memory is cleared at event boundaries implies that the brain systems involved in retrieving recently encountered information should change when events change. Brain regions involved in episodic retrieval, such as the medial temporal lobe (MTL) and medial and lateral parietal cortex, should be more active during retrieval across events than during retrieval within events. In addition, regions that are most active during successful retrieval from episodic memory should also be most active when boundary information is retrieved across events.

Previous research on event perception provides substantial evidence in favor of the first hypothesis (Baird & Baldwin, 2001; Newtson & Engquist, 1976; Swallow, Zacks, & Abrams, 2009), showing that movie frames and objects that are visible at an event boundary are better recognized than those that are not. Research in narrative and discourse comprehension (Gernsbacher, 1985; Jarvella, 1979; Radvansky & Copeland, 2006; Speer & Zacks, 2005) and two studies of retrieval during film viewing (Carroll & Bever, 1976; Swallow, Zacks, & Abrams, 2009) provide evidence for the second hypothesis: Changes in perceived and narrated events can impair retrieval of information encountered prior to the change. However, to date no research has investigated neural activity during memory retrieval as a function of event segmentation.

To examine whether the neural systems involved in remembering recent information change in response to changes in events, we asked 28 participants to watch movies depicting goal-directed activities while undergoing functional magnetic resonance imaging (fMRI). The movies were rich, naturalistic stimuli excerpted from professional narrative cinema. The task was identical to that used in another study of event segmentation and memory (Swallow, Zacks, & Abrams, 2009). Occasionally, the movies stopped for a recognition test on an object that had recently been presented in the movie (Figure 1a). All objects were tested 5 s after they were presented. For this test, the question “Which of these objects was just in the movie?” appeared above an old object that had been presented in the movie and an object that was contextually appropriate for, but not present in, the movie. As in earlier studies, several variables that could influence the memorability of each object (e.g., object size, eccentricity, and the ease with which the object is detected within the scene) were measured and statistically controlled.

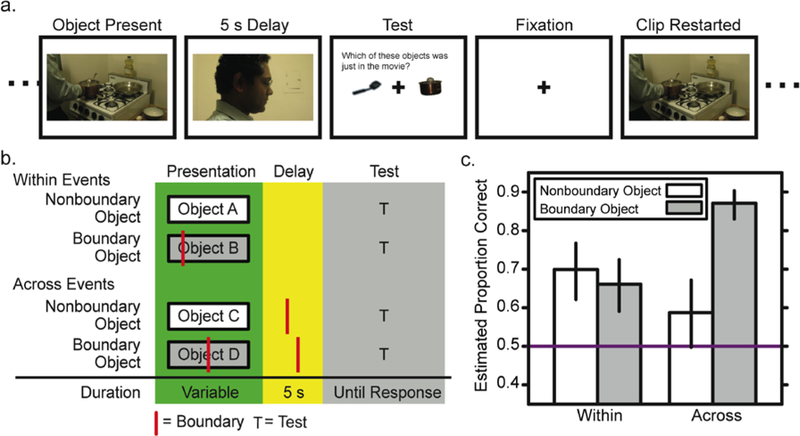

Figure 1.

Design and behavioral data. (a) Participants viewed clips that depicted goal-directed activities. Five seconds after an object was presented, the clip stopped for a two-alternative forced-choice recognition test. Afterwards, the movie restarted at a point 10 s before the point at which it had stopped. (b) Object recognition tests fell into four conditions based on whether event boundaries occurred during object presentation and during the delay. (c) Mean estimates of accuracy for tests of an average old object (see Methods) in each of the four object test conditions. The purple line indicates chance performance. Error bars are 95% confidence intervals. NOTE: The images in this figure were not used in the experiments but are illustrative of what participants saw. All movies and images were displayed in full color.

We examined how recognition test performance and retrieval-related BOLD activity varied as a function of two attributes of event segmentation (Figure 1b). First, for each trial an event boundary may have occurred during object presentation (boundary object trials) or not (nonboundary object trials). For example, in one of the stimulus movies, a man is shown aiming a toy gun at a balloon and then firing, at which point an event boundary occurs (perhaps reflecting that the actor’s activity changed from aiming to firing). A clock is on the wall behind the man when the event boundary occurs, making it a boundary object. According to EST, the occurrence of an event boundary during the presentation of the clock should increase the likelihood that it is processed and encoded into episodic memory. Second, for each trial an event boundary may have occurred during the 5-s delay between object presentation and test (across-event trials) or not (within-event trials). In the previous example, a couple of seconds after the man shoots the toy gun, the movie cuts to a new scene in which he is shown taking a picture. An event boundary occurs at this time, perhaps reflecting the actor’s change in location and activity. Because the clock is tested soon after this event boundary, it is tested across events. According to EST, anything that has not been encoded into episodic memory (less likely for nonboundary objects) should be less recognizable when it is tested across events rather than within events. Anything that has been encoded into episodic memory (likely for boundary objects) should remain recognizable following an event boundary.

For all conditions, the delay between object presentation and test was held constant while the presence of event boundaries during object presentation and during the delay varied.

Materials and Methods

Participants

Participants were 28 right-handed, native English-speaking volunteers (20 female; ages 18–28 years) who provided informed consent. All procedures were approved by the Washington University Institutional Review Board.

Image Acquisition and Processing

Data acquisition was performed in a Siemens 3T MRI Scanner (Erlangen, Germany). A high-resolution T1-weighted image (MPRAGE; 1×1×1.25 mm) was acquired. BOLD data (Ogawa, Lee, Kay, & Tank, 1990) were acquired with a T2*-weighted asymmetric spin-echo echo-planar sequence (slice TR=64 ms, TE=25 ms) in 32 transverse slices (4.0 mm isotropic voxels) aligned with the anterior and posterior commissures. To facilitate registration of the BOLD data to individual anatomy, a high-resolution T2-weighted fast turbo-spin echo image (1.3×1.3×4.0 mm voxels, slice TR=8430 ms, TE=96 ms) was acquired in the same plane as the T2* images before the BOLD data were collected. Timing offsets in the functional data were corrected with cubic spline interpolation, and intensity differences across slices were removed to compensate for interleaved slice acquisition. Functional and structural data were aligned, warped to standard stereotaxic space (Talairach & Tournoux, 1988), and resampled to 3.0 mm isotropic voxels.

Stimulus Presentation

Stimuli were presented with PsyScope X software (Cohen, MacWhinney, Flatt, & Provost, 1993) on a PowerBook G4 (Apple Inc., Cupertino, CA). Visual stimuli were back-projected onto a screen at the head of the scanner bore. Movie soundtracks were presented over headphones.

Materials

Detailed descriptions of the materials are available elsewhere (Swallow, Zacks, & Abrams, 2009). In brief, five clips from four commercial movies, Mr. Mom (Dragoti, 1983), Mon Oncle (Tati, 1958), One Hour Photo (Romanek, 2002), and 3 Iron (Ki-Duk, 2004), depicted characters engaged in everyday activities in natural and realistic settings, had little dialogue, and presented clearly identifiable objects. Scenes from the film 3 Iron were presented in two clips to permit the introduction of a central character appearing in later scenes. A clip from The Red Balloon (Lamorisse, 1956) was used for a practice session. Five seconds of black screen preceded and followed each clip.

A second group of 16 individuals identified event boundaries. Participants watched the clips and pressed a button whenever they believed one natural unit of activity ended and another began. Participants performed the task twice to identify events at large and small temporal resolutions (grains). The button press time-series for each clip and grain were smoothed (Gaussian kernel; large grain bandwidth=2.5 s, small grain bandwidth=1 s). Event boundaries were defined as the highest local maxima of the smoothed time-series. The number of boundaries equaled the mean number of button presses for that clip and grain.
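The boundary-identification procedure above can be sketched in a few lines. This is an illustrative reconstruction, not the authors' code: it assumes that presses from all observers are pooled into one series per clip and grain, that the reported bandwidth is the standard deviation of the Gaussian kernel, and that the series is evaluated on a 0.1-s grid. The function name `boundaries_from_presses` is hypothetical.

```python
import numpy as np

def boundaries_from_presses(press_times, clip_length, bandwidth, n_boundaries, dt=0.1):
    """Return boundary times (s) as the n highest local maxima of a
    Gaussian-smoothed button-press time series (hypothetical helper)."""
    t = np.arange(0.0, clip_length, dt)                 # evaluation grid in seconds
    presses = np.asarray(press_times, dtype=float)
    # Sum of Gaussian kernels centered on each press; bandwidth is treated as the SD.
    density = np.exp(-0.5 * ((t[:, None] - presses[None, :]) / bandwidth) ** 2).sum(axis=1)
    # Interior local maxima: higher than the left neighbor, at least as high as the right.
    is_peak = (density[1:-1] > density[:-2]) & (density[1:-1] >= density[2:])
    peak_idx = np.flatnonzero(is_peak) + 1
    # Keep the n highest peaks and return them in temporal order.
    top = peak_idx[np.argsort(density[peak_idx])[::-1][:n_boundaries]]
    return np.sort(t[top])
```

For a large-grain segmentation, one would call it with `bandwidth=2.5` and `n_boundaries` set to the mean number of presses per observer for that clip; for the small grain, `bandwidth=1.0`.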

Thirty-five objects presented in the movie clips were selected for testing. The 35 object tests were classified according to the presence or absence of an event boundary during object presentation and during the 5-s delay between object presentation and test (Figure 1). There were 7 objects in the nonboundary object, within event condition, 8 objects in the boundary object, within event condition, 9 objects in the nonboundary object, across events condition, and 11 objects in the boundary object, across events condition. Thirty-five additional objects were identified for a secondary analysis but were not tested (nontest control). Like the objects that were tested, these objects were classified according to whether an event boundary occurred while the object was on the screen and during the 5-s period that followed object presentation (equivalent to the delay period for tested objects). The number of tested and untested objects in each of the four conditions defined by these two factors was equivalent.
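Classifying each object into the 2 × 2 design amounts to two interval checks against the boundary times. In the sketch below, the `ObjectTest` record and the `classify` function are hypothetical, and whether a boundary falling exactly on an interval edge counts as occurring "during" presentation or the delay is an assumption the text does not settle.

```python
from dataclasses import dataclass

@dataclass
class ObjectTest:
    name: str
    onset: float    # s, object first visible
    offset: float   # s, object last visible; the test (or nontest equivalent) follows 5 s later

def classify(test, boundaries, delay=5.0):
    """Assign an object to one cell of the presentation x delay design.
    Edge handling (inclusive presentation window, half-open delay) is an assumption."""
    presentation = any(test.onset <= b <= test.offset for b in boundaries)
    during_delay = any(test.offset < b <= test.offset + delay for b in boundaries)
    return ("boundary" if presentation else "nonboundary",
            "across events" if during_delay else "within event")
```

The same function applies unchanged to the nontest control objects, using the 5-s period after each object leaves the screen.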

Recognition Test Alternatives.

The 35 old objects that were selected for testing were continuously visible for at least 1 s and were not presented within 5 s of other old objects. For each old object, an object that was contextually appropriate but from a different semantic category than the old object (e.g., cat vs. chair) served as the recognition test foil. An image for the test foil was photographed, acquired online, or taken from stock photography and manipulated to match the properties (e.g., contrast) of the old object (see Swallow, Zacks, & Abrams, 2009 for additional details).

In two pilot studies, participants performed match-to-sample tasks. For these tasks, a frame from the movie appeared above images of two objects. One group was shown the old object and an object from the same category as the old object (e.g., two different chairs). The other group was shown an object from the same category as the old object (e.g., a different chair) and the recognition test foil (e.g., a cat). Participants were told to select, as quickly as they could, the object (or type of object) that most closely matched an object in the frame. Only objects that were correctly matched by at least 80% of participants were used in the recognition tests.

Task and Procedure

Functional data were acquired in 5 BOLD runs (TR=2.048 s), one for each clip. Clip order was counterbalanced across participants. Prior to each run, a brief introduction was read. Runs began with 19 frames of a black fixation cross (1°×1°) on a white background. The clip then played at the center of the screen. About once a minute the clip stopped for a two-alternative forced-choice recognition test. Thirty-five tests were object tests (Figure 1a), for which the question “Which of these objects was just in the movie?” appeared 4.17° above a fixation cross at the center of the screen. The old object and its corresponding foil (an object of a different type) were presented 4.86° to the left and right of the fixation cross. Twelve tests were event tests, which consisted of a question about a recent activity (e.g., “Who started the music?”) and two reasonable alternatives (e.g., “The young man.” “The woman.”). Event tests were included to ensure that participants attended to the activities in the films but were not designed to test the hypotheses derived from EST. The delay between the end of object presentation (or the end of the event) and its test was always 5 s. Participants responded to tests with their right hand using a four-key response box. Following a response, the fixation cross was presented for 1–5 frames before the movie was restarted. Fifteen frames of fixation followed the final portion of the clip instead of a test. Five comprehension questions focusing on the activities, intentions, and goals of the characters were administered after the run. Because the movie resumed only after each response, run durations varied across participants: on average, the shortest run lasted 8.73 min and the longest 18.9 min.

A practice session performed during the structural scan with The Red Balloon presented primarily event tests (6/8) to encourage participants to attend to the activities. Prior to the scan, the volume of the soundtrack was adjusted to ensure it was audible.

Data Analysis

Matching times from the two match-to-sample pilot studies, a variable coding whether the actor interacted with the object (actor-object interactions), object size, and object eccentricity were used as covariates in the behavioral analyses of object test accuracy and response times.[footnote 1] Actor-object interactions were defined as any change in the relationship between the actor and an object while the object was on the screen (e.g., changing the position of an object is an actor-object interaction; holding that object in the same position and manner is not).[footnote 2] One model was calculated for each participant. For accuracy, logistic regression coefficients for the effects of delay- and presentation-boundaries were obtained, and t-tests across participants evaluated the statistical significance of these coefficients. Post hoc tests were performed on the logits of accuracy. For response times, residuals from linear regression models were analyzed with analysis of variance (ANOVA). For the figures, accuracy was estimated for an “average” old object for each trial and individual. Estimates of the probability of a correct response on each trial and its associated response time were obtained by multiplying the appropriate regression coefficients from the individual regression models by the two mean matching time values, the mean of the actor-object interaction variable, mean object size, mean object eccentricity, and dummy variables coding object test condition.
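A minimal sketch of the per-participant accuracy model follows. It assumes the design matrix columns are an intercept, the covariates, and dummy codes for the test conditions (for instance presentation-boundary, delay-boundary, and their product). The Newton-Raphson fit, the helper names, and the column coding are assumptions for illustration; the text does not say which software or coding was used. With only 35 object tests per participant, a real fit could run into perfect separation, which this sketch does not handle.

```python
import numpy as np

def fit_logistic(X, y, n_iter=25):
    """Maximum-likelihood logistic regression by Newton-Raphson (IRLS).
    X must already include an intercept column; assumes no perfect separation."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))          # predicted P(correct)
        w = p * (1.0 - p)                            # IRLS weights
        hessian = X.T @ (X * w[:, None])             # Fisher information matrix
        beta = beta + np.linalg.solve(hessian, X.T @ (y - p))
    return beta

def one_sample_t(coefs):
    """t statistic (df = n - 1) testing whether the mean coefficient across participants is 0."""
    coefs = np.asarray(coefs, dtype=float)
    return coefs.mean() / (coefs.std(ddof=1) / np.sqrt(len(coefs)))

def average_object_probability(beta, covariate_means, condition_dummies):
    """P(correct) for an 'average' old object: the linear predictor is evaluated at the
    covariate means plus one condition's dummy codes, then passed through the inverse logit."""
    x = np.concatenate(([1.0], covariate_means, condition_dummies))
    return 1.0 / (1.0 + np.exp(-x @ beta))
```

In this scheme, one coefficient vector is fitted per participant, `one_sample_t` is applied to each coefficient across the 28 participants, and `np.exp` of a coefficient gives the corresponding odds ratio.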

BOLD data were analyzed using the general linear model (GLM) and an assumed hemodynamic response function (Boynton, Engel, Glover, & Heeger, 1996). Regressors in the GLM modeled each type of object test (one per condition, duration = response time[footnote 3]), each type of nontest control (one per condition, duration matched to tests in corresponding conditions), event tests (duration = response time), movie presentation (duration = clip length), linear drift in the BOLD signal during each run, and baseline differences in BOLD signal across runs. The first 4 frames of BOLD data were dropped, and the remaining data were spatially smoothed with a Gaussian kernel (FWHM=6 mm). For region of interest analyses, one model was estimated per region per participant. For whole-brain analyses, one model was estimated per voxel per participant. In the whole-brain analysis, regions were defined as sets of contiguous voxels, and the percent signal change under each object test condition was estimated for each region.
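One way to build a task regressor of the kind described above is to convolve a boxcar lasting each test's response time with a gamma-variate hemodynamic response, h(t) = ((t − δ)/τ)^(n−1) e^(−(t−δ)/τ) / (τ(n − 1)!) for t ≥ δ. The sketch below assumes δ = 2.25 s, τ = 1.25 s, and n = 3 (illustrative values, not reported here), models a single run with one intercept and a linear drift term, and expresses effects as a percentage of the intercept, which is only one possible convention. All function names are hypothetical.

```python
import math
import numpy as np

def boynton_hrf(t, delta=2.25, tau=1.25, n=3):
    """Gamma-variate HRF of the form used by Boynton et al. (1996).
    delta, tau, and n are illustrative assumptions, not values from this study."""
    s = np.clip((np.asarray(t, dtype=float) - delta) / tau, 0.0, None)
    return s ** (n - 1) * np.exp(-s) / (tau * math.factorial(n - 1))

def event_regressor(onsets, durations, n_frames, tr=2.048, dt=0.1):
    """Boxcars (one per test, lasting its response time) convolved with the HRF
    and sampled once per frame."""
    t_fine = np.arange(0.0, n_frames * tr, dt)
    box = np.zeros_like(t_fine)
    for onset, dur in zip(onsets, durations):
        box[(t_fine >= onset) & (t_fine < onset + dur)] = 1.0
    hrf = boynton_hrf(np.arange(0.0, 30.0, dt))
    conv = np.convolve(box, hrf)[: len(t_fine)] * dt   # Riemann approximation of the convolution integral
    frame_idx = np.round(np.arange(n_frames) * tr / dt).astype(int)
    return conv[frame_idx]

def fit_glm(y, regressors):
    """OLS fit with a run intercept and a linear drift term ahead of the task regressors."""
    n = len(y)
    X = np.column_stack([np.ones(n), np.linspace(-1.0, 1.0, n), *regressors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def percent_signal_change(beta, index):
    """One common convention: a task beta as a percentage of the baseline (intercept)."""
    return 100.0 * beta[index] / beta[0]
```

A full model would stack one such regressor per test condition, nontest control condition, event tests, and movie presentation, plus one intercept and drift term per run.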

Identification of Regions of Interest

Using established protocols (Head, Snyder, Girton, Morris, & Buckner, 2005; Insausti, Insausti, Sobreviela, Salinas, & Martínez-Peñuela, 1998), one researcher traced each participant’s right and left hippocampus (HPC) and parahippocampal gyrus (PHG) twice on coronal slices of the T1-weighted structural volumes. The HPC included the dentate gyrus and subiculum. The PHG (including entorhinal, perirhinal, and posterior parahippocampal cortex) was bounded by white matter dorsally and by the collateral sulcus. Test-retest reliability was adequate (all intraclass correlations > .75). A motion-sensitive region in extrastriate cortex (MT+) was identified using data from another study. For that study, 28 participants were shown displays of moving dots (translating motion) and still dots for 1 s. Moving dot displays were presented in low and high contrast. Right MT+ was defined as voxels in the right lateral posterior temporal cortex that were more active during moving dot displays than during still dot displays across participants (p<0.05, z≥4.0, cluster size≥5 voxels).

A third region of interest in inferior parietal lobule (iIPL) was identified independently of the current data set using coordinates of bilateral lateral inferior parietal regions reported by Vincent and colleagues (2006). In their study, Vincent and colleagues (2006) identified brain regions whose resting-state activity correlated with seed regions in the hippocampus. Subsequent analyses confirmed that these regions showed standard old/new and remember/know effects in recognition memory. iIPL regions were defined as all voxels within 9 mm of the voxel with the peak resting-state correlation in the inferior parietal lobule (left iIPL: −39 −73 42; right iIPL: 45 −69 40; Vincent, et al., 2006).
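The 9-mm spherical ROIs can be sketched as a distance test on the 3-mm resampled grid. The grid shape and origin used below are hypothetical, as are the function names. On a grid that contains the peak exactly, a 9-mm radius captures 123 voxel centers; the count can differ when the peak falls between voxel centers, as published coordinates usually do.

```python
import numpy as np

def voxel_centers(shape, origin_mm, voxel_mm=3.0):
    """World (x, y, z) coordinates in mm of every voxel center in a regular grid."""
    idx = np.indices(shape).reshape(3, -1).T
    return np.asarray(origin_mm, dtype=float) + idx * voxel_mm

def sphere_mask(centers_mm, peak_mm, radius_mm=9.0):
    """True for voxels whose center lies within radius_mm of the peak.
    A tiny tolerance keeps centers exactly on the sphere surface despite float rounding."""
    d = np.linalg.norm(centers_mm - np.asarray(peak_mm, dtype=float), axis=1)
    return d <= radius_mm + 1e-9
```

For the left iIPL, for example, the call would be `sphere_mask(centers, (-39.0, -73.0, 42.0))` with `centers` built from the study's actual atlas grid.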

Results

Behavioral Data

Participants accurately responded to the event tests and the post-clip comprehension questions, indicating that they were attending to the activities presented in the movies. Mean accuracy for the event tests was .946 (SD = 0.061), with a mean of participants’ median response times of 3.27 s (SD = 0.854 s). Mean accuracy and response time for the post-clip comprehension questions were .870 (SD = 0.076) and 7.05 s (SD = 1.54 s), respectively.

According to EST, the perception of an event boundary should lead to increased perceptual processing and the construction of a new mental model describing the current situation. If this is the case, then boundary objects are more likely to be encoded into episodic memory than are nonboundary objects. In addition, EST claims that active memory is cleared at event boundaries. As a result, recognizing objects across events should depend on episodic memory representations. Furthermore, because related information is no longer in active memory to interfere with retrieval, objects encoded into episodic memory may be better remembered when they are tested across events rather than within events (cf. Kuhl, Dudukovic, Kahn, & Wagner, 2007). EST therefore predicts an interaction between presentation-boundaries and delay-boundaries: Both nonboundary and boundary objects should be recognizable when tested within events, but only those objects that are encoded into episodic memory (likely for boundary objects but not for nonboundary objects) should be available in memory when they are tested across events.

Figure 1c illustrates recognition test accuracy for the four object test conditions. The data support EST’s predictions: When an event boundary occurred during the 5-s delay between presentation and test, accuracy for nonboundary objects declined, t(27) = −2.24, p = .03, and accuracy for boundary objects increased, t(27) = 6.70, p < .001, resulting in a reliable interaction between event boundaries during the delay and event boundaries during object presentation: odds ratio = 1.54, mean logistic regression coefficient = 0.433, SD = 0.42, t(27) = 5.46, p < .001 (main effect of presentation-boundaries: odds ratio = 1.50, mean logistic regression coefficient = 0.41, SD = 0.48, t(27) = 4.48, p < .001; main effect of delay-boundaries: odds ratio = 1.25, mean logistic regression coefficient = 0.22, SD = 0.33, t(27) = 3.58, p < .002). An analysis of response times (Table 1) indicated that differences in response accuracy across the four test conditions did not result from a speed-accuracy trade-off. Responses were fastest when boundary objects were tested across events, slowest when boundary objects were tested within events, and comparable in the remaining two conditions. This pattern produced a marginally reliable main effect of delay-boundaries, F(1, 27) = 3.66, p < .066, ηp² = .119 (the main effect of presentation-boundaries and the interaction were not reliable, both F(1, 27) < 2.41, p > .132).
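The reported odds ratios appear to equal exp(b̄), and the t values appear to be one-sample t statistics on the per-participant coefficients, t = b̄ / (SD/√n). The check below recomputes both from the rounded values given in the text. It reproduces the interaction (OR = 1.54, t(27) = 5.46), and the main effects agree to within what the two-decimal rounding of the published coefficients and SDs allows.

```python
import math

N_PARTICIPANTS = 28
# Reported (mean logistic regression coefficient, SD across participants).
reported = {
    "interaction": (0.433, 0.42),
    "presentation-boundaries": (0.41, 0.48),
    "delay-boundaries": (0.22, 0.33),
}

results = {}
for effect, (mean_b, sd_b) in reported.items():
    odds_ratio = math.exp(mean_b)                          # logit-scale coefficient -> odds ratio
    t = mean_b / (sd_b / math.sqrt(N_PARTICIPANTS))        # one-sample t, df = N - 1
    results[effect] = (odds_ratio, t)
    print(f"{effect}: OR = {odds_ratio:.2f}, t({N_PARTICIPANTS - 1}) = {t:.2f}")
```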

Table 1.

Mean and standard deviation (in parentheses) of response times, in seconds, to object tests

| | Within Events: Nonboundary | Within Events: Boundary | Across Events: Nonboundary | Across Events: Boundary |
|---|---|---|---|---|
| Raw Mean | 3.27 (0.72) | 3.44 (0.74) | 3.29 (0.58) | 3.15 (0.69) |
| Average Object | 3.31 (0.74) | 3.38 (0.72) | 3.41 (0.62) | 3.08 (0.66) |

Note: Average object response times were derived from the linear regression models of individual participants’ data (see Data Analysis in Methods) using the mean object size, mean actor-object interactions, mean object eccentricity, and mean matching times of all the old objects.

There are two striking aspects of these data. First, recognition accuracy was low and near chance for nonboundary objects that were tested across events but well above chance for nonboundary objects tested within events. This effect is wholly consistent with the predictions derived from EST. According to EST, nonboundary objects are not likely to be stored in episodic memory, and anything not stored in episodic memory should be difficult to recognize after an event boundary. Second, the occurrence of an event boundary during the 5-s delay between presentation and test was associated with greater recognition accuracy for boundary objects. This difference may be due to the fact that within event and across event tests occurred at different times relative to the beginning of the current event (or the most recent event boundary). Additional information may be acquired and stored in active memory as an event progresses. Cognitive load and interference from information stored in active memory therefore should be greater later in an event, when within event tests occurred, than earlier in an event, when across event tests occurred. Following the clearance of active memory at delay-boundaries, decreases in cognitive load and interference would enhance recognition memory for boundary objects, which are likely to be stored in episodic memory, but not for nonboundary objects, which should be less available for retrieval. Additional research is needed to determine whether interference and cognitive load can account for better recognition of boundary objects tested across events than those tested within events. Importantly, however, the data were consistent with predictions derived from EST and replicated data from previously reported experiments (Swallow, Zacks, & Abrams, 2009).

Imaging Data

If retrieval across events relies on episodic memory, then brain regions involved in episodic memory retrieval should be more active when an object is retrieved across events than when it is retrieved within an event. Such regions include the hippocampus (HPC) and the parahippocampal gyrus (PHG). These regions have been tied, respectively, to the encoding and successful retrieval of domain-general relational information about an episode and to the encoding and successful retrieval of context, scene, and layout information (Davachi, 2006; Dobbins, Rice, Wagner, & Schacter, 2003; Hannula, Tranel, & Cohen, 2006). Because HPC and PHG show greater increases in activity when encoding context is successfully retrieved from episodic memory (Dobbins, Rice, Wagner, & Schacter, 2003), EST predicts that they should show larger increases in activity when boundary objects are tested across events (and purportedly retrieved from episodic memory) than within events. We defined anatomical regions of interest for the left and right HPC and PHG (Head, Snyder, Girton, Morris, & Buckner, 2005; Insausti, Insausti, Sobreviela, Salinas, & Martínez-Peñuela, 1998) and used a general linear model (GLM) to estimate, for each participant, the degree to which BOLD activity in these regions differed across the four types of object tests. These estimates were then submitted to a repeated measures analysis of variance (ANOVA) with four factors: event boundaries during object presentation, event boundaries during the delay interval, brain hemisphere, and anatomical region.
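Because every factor in this design has two levels, each effect's F(1, n − 1) in a fully within-participants ANOVA equals the square of a one-sample t on a per-participant contrast, averaged over any other factors (for the ROI analysis, over hemisphere and region). The sketch below illustrates this identity for the presentation × delay effects. The array layout and the function name are assumptions, and it is not meant to reproduce the authors' ANOVA software.

```python
import numpy as np

def within_2x2_effects(cells):
    """cells: array (n_participants, 2, 2) indexed [participant, presentation, delay],
    0 = no boundary, 1 = boundary, already averaged over hemisphere and region.
    Returns F(1, n - 1) for each effect as the squared one-sample t of its contrast."""
    cells = np.asarray(cells, dtype=float)
    n = cells.shape[0]
    contrasts = {
        "presentation-boundary": cells[:, 1, :].mean(axis=1) - cells[:, 0, :].mean(axis=1),
        "delay-boundary": cells[:, :, 1].mean(axis=1) - cells[:, :, 0].mean(axis=1),
        "interaction": (cells[:, 1, 1] - cells[:, 1, 0]) - (cells[:, 0, 1] - cells[:, 0, 0]),
    }
    out = {}
    for name, c in contrasts.items():
        t = c.mean() / (c.std(ddof=1) / np.sqrt(n))
        out[name] = t ** 2
    return out
```

Working with contrasts in this way also makes the follow-up pairwise comparisons (e.g., boundary objects across events versus each other condition) simple paired t-tests on the same per-participant estimates.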

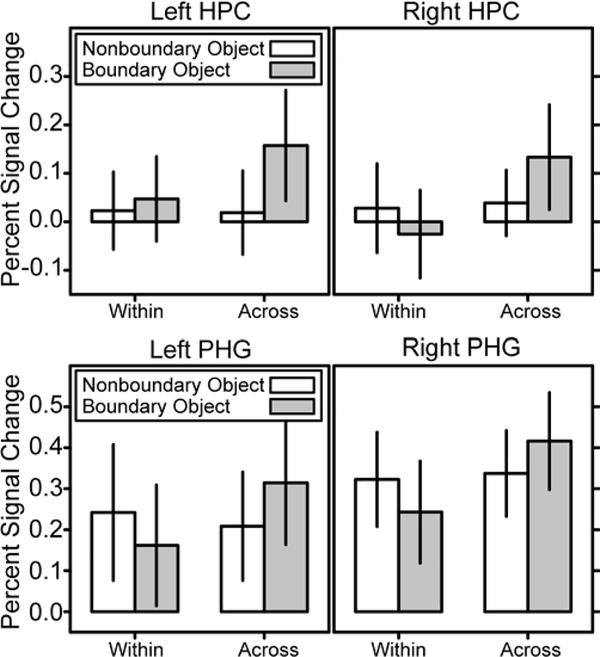

Estimated BOLD activity in the HPC and PHG during the four different types of object tests is illustrated in Figure 2. Overall, the PHG was more active during retrieval than was the HPC, main effect of region F(1, 27) = 46.8, p < .001. However, the PHG and HPC showed a similar pattern of activity across the four object tests. As can be seen in Figure 2, both the HPC and PHG were more active during retrieval across events than during retrieval within events, but only when boundary objects were tested, resulting in a reliable delay-boundary × presentation-boundary interaction, F(1, 27) = 7.42, p = .011, and a main effect of delay-boundaries, F(1, 27) = 8.96, p = .006. Delay- and presentation-boundaries did not reliably interact with hemisphere or region, largest F(1, 27) = 1.94, p = .175. Of critical importance, however, was whether retrieval-related activity in the HPC and PHG was greater when boundary objects were retrieved across events than in each of the other three test conditions. This was the case in the HPC, where activity was greater when boundary objects were retrieved across events than in the other three test conditions, smallest t(27) = 3.46, p = .002. In the PHG, activity was reliably greater when boundary objects were retrieved across events than when they were retrieved within events and when nonboundary objects were retrieved across events, smallest t(27) = 2.11, p = .044. The difference in activity between boundary objects retrieved across events and nonboundary objects retrieved within events was not reliable in the PHG, t(27) = 1.61, p = .119. Thus, the HPC showed the largest increases in activity in the condition in which the objects should have been successfully retrieved from episodic memory. The PHG also increased in activity most when successful retrieval from episodic memory was expected, though this effect was reliable in only two of the three comparisons.

Figure 2.

Activity in anatomical regions of interest defined for the bilateral HPC and PHG varied across the four types of object recognition tests. Error bars indicate 95% confidence intervals.

To further evaluate whether episodic retrieval systems are more engaged when retrieving objects across events than within events, we conducted a voxel-wise whole-brain analysis. As with the region of interest analysis, responses for each participant were estimated using a GLM that included contrasts for each type of test. Model estimates were submitted to a 2×2 ANOVA with event boundaries during object presentation and event boundaries during the delay as within-participants factors and participant as a random effect. F-values were sphericity-corrected and converted to z-values. The map-wise false positive rate was held to p<0.05 (z≥4.0, cluster size ≥ 4 voxels; McAvoy, Ollinger, & Buckner, 2001). The resulting regions are listed in Table 2.
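The F-to-z conversion and the cluster-extent criterion above can be sketched with SciPy. This assumes the conversion matches upper-tail p-values and that clusters consist of face-sharing (6-connected) voxels, which is SciPy's default and may differ from the criterion the authors used; the function names are hypothetical. Because every factor in the 2×2 design has two levels, each effect has one numerator degree of freedom, so a sphericity correction leaves these particular F-values unchanged.

```python
import numpy as np
from scipy import ndimage, stats

def f_to_z(F, df1, df2):
    """Convert F statistics to z-scores with the same upper-tail p-value."""
    p = stats.f.sf(F, df1, df2)
    return stats.norm.isf(p)

def cluster_threshold(zmap, z_thresh=4.0, min_voxels=4):
    """Keep voxels with z >= z_thresh that belong to a face-connected cluster
    of at least min_voxels voxels."""
    labels, _ = ndimage.label(zmap >= z_thresh)
    sizes = np.bincount(labels.ravel())
    keep = sizes >= min_voxels
    keep[0] = False  # label 0 is the sub-threshold background
    return keep[labels]
```

Applied to a whole-brain F map with df = (1, 27), `cluster_threshold(f_to_z(F_map, 1, 27))` yields a boolean mask of surviving voxels.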

Table 2.

Regions whose activity varied across the four object tests

| Cortical Region | Hemisphere | Brodmann Area | Center of Mass |
|---|---|---|---|
| Main Effect of Event Boundaries During the Delay | | | |
| Precentral Sulcus | Left | 4 | −20, −21, 58 |
| Precentral Gyrus | Left | 4 | −34, −28, 42 |
| Inferior Frontal Cortex | Right | 44/6 | 55, 6, 3 |
| Precuneus | Left | 31/18 | −13, −62, 21 |
| | Right | 31/18 | 15, −57, 23 |
| Posterior Cingulate | Left | 23/29/30 | −16, −48, 6 |
| Inferior Parietal Lobule | Right | 40/39 | 38, −72, 32 |
| Medial Temporal Lobe | Left | 35/36 | −24, −39, −11 |
| | Right | 35/36 | 26, −38, −10 |
| Superior Temporal Gyrus | Left | 22 | −58, −29, 1 |
| | Left | 41/42 | −55, −43, 4 |
| | Right | 22 | 57, −25, 1 |
| Cuneus | Both | 18 | 0, −93, 0 |
| Cerebellum | Left | | −14, −41, −39 |
| | Right | | 12, −48, 6 |
| Main Effect of Event Boundaries During Object Presentation | | | |
| Precentral Sulcus | Left | 6 | −17, −30, 51 |
| Inferior Parietal Lobule | Right | 40/39 | 41, −63, 39 |
| | Left | 40/39 | −41, −68, 36 |
| Precuneus | Both | 31/18 | 1, −67, 31 |
| Posterior Cingulate | Right | 23/29/31 | 5, −45, 26 |
| Angular Gyrus | Right | 22 | 56, −43, 18 |
| Temporal Occipital Cortex | Left | 39/37 | −42, −68, 6 |
| Lateral Occipital Cortex | Left | 18 | −28, −89, 8 |
| | Right | 18 | 31, −85, 13 |
| Medial Occipital Cortex | Both | 19 | 8, −93, 23 |
| Interaction | | | |
| Precuneus | Right | 31 | 5, −38, 38 |
| Intraparietal Sulcus | Left | 39/7 | −38, −56, 42 |
| Inferior Parietal Lobule | Right | 40/39 | 36, −65, 41 |
| Temporal Occipital Cortex | Left | 39/37 | −41, −70, 5 |
| | Right | 39/37 | 46, −69, 10 |
| Lingual Gyrus | Left | 18 | −4, −82, −10 |
| Cerebellum | Right | | 7, −58, −36 |

Note: Center of Mass is in (x, y, z) coordinates.

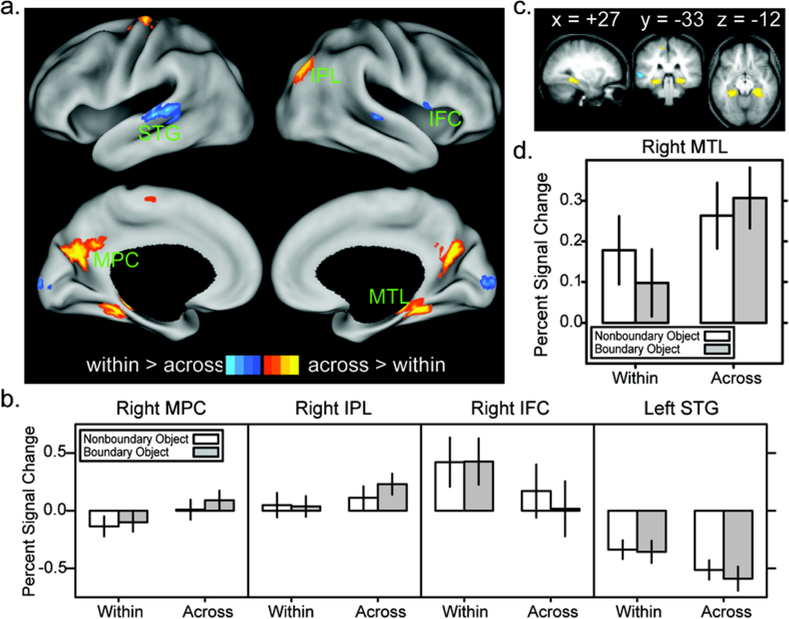

As illustrated in Figure 3, regions in the bilateral MTL (BA 35/36), the medial parietal cortex (MPC), including bilateral precuneus (BA 31/18) and left posterior cingulate (PCC; BA 23/31), and the right inferior parietal lobule (rIPL, BA 19) were more active during retrieval across events than during retrieval within events (smallest F(1, 27) = 79.6, p < 0.001). This delay-boundary effect interacted with the effect of event boundaries during object presentation in the right MTL, left PCC, and right IPL (smallest F(1, 27) = 6.75, p = 0.015). Although the delay-boundary effect was larger for boundary objects than for nonboundary objects, Tukey's post-hoc tests confirmed that it was also reliable for nonboundary objects (smallest q = 4.55, p = 0.017, in all regions except the PCC, where the effect for nonboundary objects was marginal, q = 3.56, p = 0.079). These data indicate that when an event boundary occurred during the 5 s delay between object presentation and test, attempts to retrieve both boundary and nonboundary objects engaged the MTL, MPC, and right IPL.

Figure 3.

Retrieval-related activity in a network of regions was associated with whether an event boundary occurred during the 5-s delay. (a) Regions more active during retrieval across events than within events are in yellow; regions more active during retrieval within events than across events are in blue (mapped to the PALS atlas with CARET; Van Essen, 2002; Van Essen, 2004). (b) Percent signal change during tests in four conditions for representative regions. (c) Slices showing the regions in the MTL on the average anatomy of participants. (d) Percent signal change, plotted as for (b). Error bars indicate 95% confidence intervals.

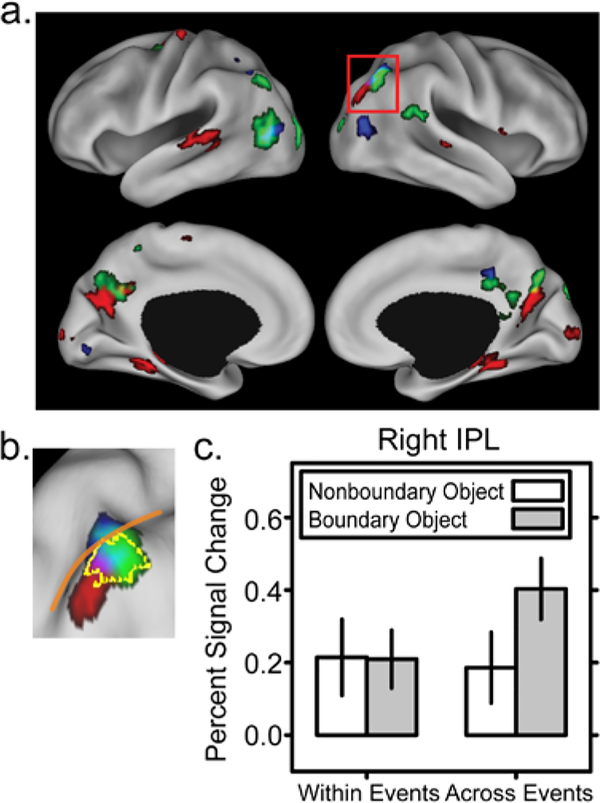

Another region in the right IPL showed a reliable interaction between delay boundaries and presentation boundaries (Figure 4). This region was medial and superior to the rIPL region that exhibited a main effect of delay boundaries. It showed a pattern of activity very similar to that observed in the anatomically defined HPC: changes in activity were greatest when boundary objects were retrieved across events (smallest t(27) = 5.79, p < .001). However, for nonboundary objects there was no significant effect of whether they were tested within or across events (t(27) = −1.03, p = .31).

Figure 4.

Regions showing the delay-boundary effect, the presentation-boundary effect, and an interactive effect of these two factors. (a) Regions whose activity differed between within- and across-event retrieval (red) were largely separate from those whose activity changed when an event boundary occurred during object presentation (green) and those whose activity depended on the interaction of these factors (blue). Overlap is shown in yellow, magenta, and light blue. (b) Retrieval-related activity in several adjacent regions in the right IPL (outlined in the red box in panel a and shown here from a dorsal posterior angle) immediately ventral to the posterior intraparietal sulcus (marked in orange) differed along the delay- and presentation-boundaries factors, as well as their interaction. The yellow outline indicates the iIPL region of interest defined by coordinates from a study of resting state activity in the HPC (Vincent et al., 2006). (c) Activity in the right IPL region that showed an interactive effect of delay- and presentation-boundaries (blue region in panel b) mirrored recognition test accuracy, changing most when boundary objects were tested across events. Error bars indicate 95% confidence intervals.

To further explore the relationship between activity in the HPC and the IPL during the object tests, regions in bilateral IPL were independently defined using coordinates reported in another study (iIPL; see Methods; Vincent et al., 2006). Although activity in the HPC was greater than activity in the iIPL (which decreased in activity in most conditions; see Table 3), F(1, 27) = 7.4, p = .011, the overall pattern of activity in these regions was similar across test conditions (the presentation-boundary × delay-boundary × region interaction was not reliable, F(1, 27) = 1.08, p = .31). Activity in bilateral HPC and iIPL was greatest when boundary objects were tested across events and similar in the remaining three test conditions (presentation-boundary × delay-boundary interaction, F(1, 27) = 16.3, p < .001; main effect of presentation-boundary, F(1, 27) = 24.0, p < .001; main effect of delay-boundary, F(1, 27) = 5.15, p = .031). The main effect of presentation-boundaries was stronger in the iIPL than in the HPC, F(1, 27) = 17.7, p < .001.

Table 3.

Mean and Standard Deviation of Percent Signal Change in Regions of Interest

| Region | Hemisphere | Test / Within / NBO | Test / Within / BO | Test / Across / NBO | Test / Across / BO | Nontest Control / Within / NBO | Nontest Control / Within / BO | Nontest Control / Across / NBO | Nontest Control / Across / BO |
|---|---|---|---|---|---|---|---|---|---|
| HPC | L | 0.02 (0.21) | 0.05 (0.23) | 0.02 (0.22) | 0.16 (0.29) | −0.01 (0.2) | 0 (0.15) | 0.08 (0.13) | 0.02 (0.16) |
| | R | 0.03 (0.24) | −0.03 (0.23) | 0.04 (0.17) | 0.13 (0.28) | 0 (0.2) | −0.01 (0.13) | 0.01 (0.14) | 0 (0.19) |
| PHG | L | 0.24 (0.43) | 0.16 (0.38) | 0.21 (0.34) | 0.31 (0.39) | −0.02 (0.3) | 0.11 (0.31) | 0.09 (0.2) | 0.05 (0.17) |
| | R | 0.32 (0.3) | 0.24 (0.32) | 0.34 (0.27) | 0.42 (0.31) | 0.03 (0.2) | 0.01 (0.22) | 0.01 (0.21) | 0.06 (0.18) |
| MT+ | R | −0.64 (0.57) | −0.57 (0.52) | −0.55 (0.51) | −0.7 (0.56) | 0.01 (0.2) | −0.03 (0.21) | −0.03 (0.18) | 0.02 (0.2) |
| PreC | L | 0.18 (0.11) | 0.1 (0.19) | 0.26 (0.14) | 0.31 (0.21) | 0.05 (0.1) | 0.03 (0.21) | 0.03 (0.14) | 0.01 (0.22) |
| | R | −0.12 (0.09) | −0.07 (0.14) | 0.01 (0.1) | 0.09 (0.16) | 0.07 (0.12) | −0.08 (0.16) | −0.01 (0.12) | 0 (0.17) |
| PCC | L | −0.14 (0.13) | −0.1 (0.22) | 0.01 (0.12) | 0.09 (0.21) | 0.04 (0.16) | −0.1 (0.22) | −0.04 (0.15) | −0.02 (0.22) |
| IPL | R | −0.08 (0.08) | −0.11 (0.2) | −0.01 (0.1) | 0.06 (0.23) | 0.03 (0.11) | −0.02 (0.22) | 0.00 (0.13) | 0.04 (0.19) |
| MTL | L | 0.05 (0.13) | 0.04 (0.23) | 0.11 (0.14) | 0.23 (0.23) | 0.01 (0.11) | −0.01 (0.26) | −0.04 (0.14) | 0.04 (0.27) |
| | R | 0.16 (0.1) | 0.12 (0.26) | 0.27 (0.13) | 0.3 (0.25) | 0.01 (0.11) | 0.06 (0.27) | 0.02 (0.14) | 0.01 (0.29) |
| iIPL | L | −0.14 (0.45) | −0.06 (0.38) | −0.22 (0.43) | 0.05 (0.38) | 0.02 (0.31) | −0.06 (0.26) | −0.05 (0.22) | −0.06 (0.3) |
| | R | −0.21 (0.5) | −0.15 (0.33) | −0.29 (0.41) | 0.04 (0.27) | 0.02 (0.24) | −0.09 (0.22) | −0.1 (0.29) | −0.06 (0.3) |

Values are mean percent signal change, with standard deviations in parentheses. NBO=Nonboundary Object; BO=Boundary Object; L=Left; R=Right; HPC=Hippocampus; PHG=Parahippocampal Gyrus; MT+=putative human analog to motion sensitive Middle Temporal Cortex in monkey; PreC=Precuneus; PCC=Posterior Cingulate Cortex; IPL=Inferior Parietal Lobule; MTL=Medial Temporal Lobe; iIPL=independently identified Inferior Parietal Lobule region defined by coordinates from Vincent et al. (2006).

Because event segmentation is accompanied by a transient increase in activity in medial parietal and extrastriate regions of the brain (Zacks et al., 2001), some of the observed effects of delay-boundaries on activity could reflect processing that would have occurred even in the absence of retrieval attempts. A second analysis therefore examined activity during a nontest control period: the time 5 s after untested objects were presented, when a test would normally have occurred. Activity during the nontest control period was analyzed according to whether event boundaries occurred during object presentation and during the 5 s period that followed presentation. In addition, we defined a control region in a motion-sensitive region of right extrastriate cortex (MT+) that transiently increases in activity at event boundaries (Zacks et al., 2001). Estimates of percent signal change in the test and nontest control periods are reported in Table 3. The independent variables did not reliably interact during the nontest control period in the HPC, PHG, and MT+ regions of interest (largest F(1, 27) = 1.04, p = 0.317). For the regions identified through the whole-brain analysis (MTL, MPC, rIPL), ANOVAs on BOLD activity following tested and untested objects showed that the delay-boundary effect was greater during tests than during the nontest control period (interaction, smallest F(1, 27) = 16.7, p < 0.001). Thus, the selective pattern of responses in the HPC, PHG, MTL, MPC, and rIPL during retrieval likely reflects retrieval-related processing rather than ongoing event processing.
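The logic of the nontest control comparison can be made concrete with a short sketch. The delay-boundary effect is taken here as the mean across-event response minus the mean within-event response; the question is whether this effect is larger during tests than during the nontest control period, which for two-level factors again reduces to a one-sample t on per-participant difference scores (F = t²). The function names and argument order are hypothetical, and applying `delay_effect` to the group means in Table 3 is only descriptive, since an F statistic requires per-participant values that are not reported here.

```python
import math
from statistics import mean, stdev


def delay_effect(within_nbo, within_bo, across_nbo, across_bo):
    """Mean across-event activity minus mean within-event activity."""
    return (across_nbo + across_bo) / 2 - (within_nbo + within_bo) / 2


def period_by_delay_F(test_rows, control_rows):
    """F(1, n-1) for 'delay effect during tests minus delay effect during control'.

    Each row holds one participant's four condition estimates in the
    argument order of delay_effect.
    """
    scores = [delay_effect(*t) - delay_effect(*c)
              for t, c in zip(test_rows, control_rows)]
    n = len(scores)
    t_stat = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t_stat * t_stat, (1, n - 1)


# Group means for the left MTL from Table 3 (descriptive illustration only).
test_effect = delay_effect(0.05, 0.04, 0.11, 0.23)
control_effect = delay_effect(0.01, -0.01, -0.04, 0.04)
```

With these Table 3 means, the delay-boundary effect in the left MTL is about 0.125 percent signal change during tests and 0 during the nontest control period, the pattern the reported interaction describes.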

Discussion

If event boundaries mark when active memory for the current event is reset and updated, then retrieving information from the event just prior to the current one should engage episodic memory systems (Zacks, Speer, Swallow, Braver, & Reynolds, 2007). Therefore, the MTL, MPC, and IPL should be more active when objects are tested across events than when they are tested within events. The data support this prediction. The MTL, which include the HPC and the PHG, were differentially engaged in retrieval as a function of when event boundaries occurred in the clips. Activity in the bilateral MTL, bilateral MPC, and right IPL was greater when boundary and nonboundary objects were tested across events than when they were tested within events. Furthermore, the HPC and a region in the right IPL were most active when boundary objects were tested across events, the condition in which successful retrieval from episodic memory was predicted. These differences in activity were observed even though other potentially confounding factors were held constant (i.e., the 5 s delay between object presentation and test, equivalent testing conditions, and, presumably, equivalent retrieval strategies).

Retrieving Objects Across Events Engages Episodic Memory Systems

Activity in the MTL, MPC, and right IPL has been repeatedly observed in neuroimaging studies of episodic memory retrieval and during the recollection of encoding context, objects, words, and visual scenes (Ciaramelli, Grady, & Moscovitch, 2008; Dobbins, Rice, Wagner, & Schacter, 2003; Summerfield, Lepsien, Gitelman, Mesulam, & Nobre, 2006; Wagner, Shannon, Kahn, & Buckner, 2005). The HPC, PHG, and right IPL are also more active when participants search for targets in visual scenes that they have previously encountered, indicating that they may be involved in retrieving the locations of objects in scenes from memory (Summerfield, Lepsien, Gitelman, Mesulam, & Nobre, 2006). In addition, during an episodic retrieval task, activity in an IPL region whose resting state activity is correlated with that in the MTL was greater when participants reported remembering an item than when they reported that they were familiar with the item (Vincent et al., 2006). This IPL region was similar in location to the right IPL region that was selectively active when both boundary and nonboundary objects were tested across events (Figures 3 & 4). Moreover, like the HPC, activity in bilateral IPL regions defined using coordinates from Vincent and colleagues (2006) was greatest when boundary objects were tested across events and similar for the remaining three types of object tests. Activity in the IPL has been associated with a variety of retrieval phenomena including the adoption of a task set for episodic retrieval, successful recollection of an earlier experience from episodic memory, and reporting that a test item was previously studied (Ciaramelli, Grady, & Moscovitch, 2008; Wagner, Shannon, Kahn, & Buckner, 2005). The involvement of these regions in retrieval across events converges with the behavioral data and the anatomical region of interest analyses, suggesting that retrieval across events relies on episodic memory systems. It is therefore plausible that the MTL, MPC, and right IPL were engaged when objects were retrieved across events in order to reinstate the previous event in memory.

The observed pattern of activity in the IPL, PHG, and HPC does not simply reflect successful recognition of the object being tested. In this experiment, recognition accuracy was best when boundary objects were tested across events, moderate when boundary objects and nonboundary objects were tested within events, and worst when nonboundary objects were tested across events (Figure 1c). Activity in the HPC and a region in the right IPL (Figure 4b, light blue) was also greatest when boundary objects were tested across events. However, BOLD activity in these regions was similar when objects were tested within events and when nonboundary objects were tested across events. Activity in these regions did not distinguish between objects that were recognized at near chance levels (nonboundary objects that were tested across events) and objects that were recognized moderately well (objects that were tested within events).

Rather, the data more closely conform to EST's prediction that successful retrieval from episodic memory should only occur when boundary objects are tested across events. According to EST, episodic retrieval should not be necessary when objects are retrieved within events. Any region whose activity reflects retrieval success from episodic memory should therefore show the largest increases in activity when boundary objects are tested across events and should not differentiate between the other three conditions. The pattern of activity in the HPC and right IPL, both of which have been associated with retrieval success from episodic memory (Ciaramelli, Grady, & Moscovitch, 2008; Dobbins, Rice, Wagner, & Schacter, 2003; Vincent et al., 2006; Wagner, Shannon, Kahn, & Buckner, 2005), is consistent with this prediction.
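EST's prediction can be written as a planned contrast over the four test conditions: a positive weight on boundary objects tested across events, equal negative weights on the other three, and a check that those three do not differ from one another. The sketch below is a hypothetical illustration rather than an analysis reported in this study; the weights and function names are assumptions, and it is applied only to the group means for the left HPC in Table 3.

```python
# Condition order: (NBO within, BO within, NBO across, BO across)
EST_WEIGHTS = (-1, -1, -1, 3)  # hypothetical planned-contrast weights (sum to 0)


def est_contrast(means):
    """Boundary objects tested across events vs. the other three conditions."""
    return sum(w * m for w, m in zip(EST_WEIGHTS, means))


def residual_spread(means):
    """Range of the three conditions that EST predicts should not differ."""
    others = means[:3]
    return max(others) - min(others)


# Test-period group means for the left HPC, taken from Table 3.
left_hpc = (0.02, 0.05, 0.02, 0.16)
print(round(est_contrast(left_hpc), 2), round(residual_spread(left_hpc), 2))
```

For the left HPC the contrast is about 0.39 while the three remaining conditions span only about 0.03, the shape EST predicts. A region that instead tracked recognition accuracy would also separate nonboundary objects tested across events from the within-event conditions.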

Implications for Episodic Memory

Current theories of memory suggest that at least three factors may affect which brain systems are involved in holding a piece of information in memory at a given moment in time: the type of information maintained in memory (e.g., words vs. faces), the amount of time that has elapsed since the information was encountered, and the amount of intervening information encountered in that period of time (Anderson & Neely, 1996; Baddeley & Logie, 1999; Johnson & Rugg, 2007). Recent data show that the HPC, once thought to be selectively involved in long-term episodic memory, is also necessary for retaining relational information over short time periods (Hannula, Tranel, & Cohen, 2006; Hartley et al., 2007; Olson, Moore, Stark, & Chatterjee, 2006). These data have reignited the debate about the relationship between episodic memory and active memory (Jonides et al., 2008; Olson, Moore, Stark, & Chatterjee, 2006; Shrager, Levy, Hopkins, & Squire, 2008), supporting claims that it is the type of information maintained in memory that is most important for predicting whether the MTL are involved in its maintenance and retrieval. However, a growing number of studies also show that the involvement of the HPC in retrieval depends on whether any information intervenes between encoding and retrieval (Cowan, 1999; Hannula, Tranel, & Cohen, 2006; Hartley et al., 2007; Jonides et al., 2008; Olson, Moore, Stark, & Chatterjee, 2006; Öztekin, McElree, Staresina, & Davachi, 2008). In one study, Öztekin and colleagues (2008) presented a list of five consonants to participants and immediately afterwards administered a two-alternative recognition test on one of the consonants. When the last item in the list was tested, activity in the HPC was significantly lower than it was when the tested item was presented in an earlier position. These data indicate that the HPC is involved in retrieval when any amount of information, even a single consonant, intervenes between encoding and retrieval.

Our data provide a unique perspective on the role of the HPC and MTL in memory. In this experiment, the type of information that was tested and the delay between object presentation and test were constant across conditions. In addition, because the film continued during the 5 s delay between object presentation and test, some amount of intervening information occurred in all conditions. What differentiated the conditions was whether an event boundary occurred during the delay and whether an event boundary occurred during object presentation. Despite the fact that the same amount of time had elapsed and information was continuously presented during the delay, the HPC and MTL were most active during retrieval when an object had to be retrieved across an event boundary. Therefore, these data suggest that the involvement of the HPC and MTL in memory retrieval depends not just on how much time has elapsed or how much intervening material has been presented, but also on whether a new event has begun since the information was encoded.

Although the present study examined memory retrieval, these results have implications for the encoding of episodic memories. A parsimonious proposal is that, as a result of memory updating at event boundaries, successive events may constitute qualitatively different context signals. These context signals may be used to discriminate the events in episodic memory (Polyn & Kahana, 2008). Thus, event segmentation may determine the elementary units of episodic memories. If so, abnormal segmentation patterns should be associated with poorer memory for events. Indeed, memory for events is disrupted both in individuals who abnormally segment events (Zacks, Speer, Vettel, & Jacoby, 2006) and when experimental manipulations interfere with normal segmentation (Boltz, 1992; Schwan & Garsoffky, 2004). Elementary episodic memory units may be quickly forgotten, or integrated into larger knowledge structures that represent knowledge goals and event structure (Conway, in press). Research on autobiographical memory indicates that such integration is critical for delayed recall (Conway, in press).

The data from this experiment also suggest an intriguing relationship between memory deficits associated with MTL lesions and the way perceived events are structured in time. Patients with MTL lesions show a marked impairment in the ability to remember a recent event after a brief delay (Stefanacci, Buffalo, Schmolck, & Squire, 2000). In particular, HPC damage leads to impairments in remembering the spatial, temporal, and associative relations among items (Hannula, Tranel, & Cohen, 2006; Konkel, Warren, Duff, Tranel, & Cohen, 2008), all of which may be important components of the mental representations of ongoing events (Zacks, Speer, Swallow, Braver, & Reynolds, 2007). The present data indicate that when an event is segmented, retrieving information encountered prior to segmentation engages the MTL. Although this does not mean that the MTL are necessary for retrieval across event boundaries, it does suggest that event segmentation may influence when amnesiacs lose track of recent events. Specifically, patients with MTL damage may retain information about an ongoing event until the event changes. Because event segmentation has been previously associated with changes in high-level conceptual features of activity (e.g., an actor putting an object down, walking to a new spatial location, or changing his or her goals; Speer, Zacks, & Reynolds, 2007) and with changes in low-level perceptual features of an activity (e.g., object velocity; Zacks, 2004), conceptual and perceptual changes in events could influence when amnesiacs are more likely to forget what just happened.

Conclusion

Information does not continuously move into and out of active memory. Rather, the present data indicate that what one remembers, and for how long, depends on when events are segmented as well as on subsequent input. The data are consistent with studies showing that the MTL are involved in retrieving study items over both short and long delays (Hannula, Tranel, & Cohen, 2006; Olson, Moore, Stark, & Chatterjee, 2006). Beyond this, they offer insight into when episodic retrieval systems are involved in retrieval and when they are not. Indeed, the data point to the conceptual and perceptual changes in events that correspond to event boundaries (e.g., an actor putting an object down or changing his or her goal state; Speer, Zacks, & Reynolds, 2007; Zacks, 2004) as important factors in determining when people will likely forget what has just happened.

Acknowledgements

This work was supported by a National Institutes of Health R01 grant to JMZ and a Washington University in St. Louis Dean's Dissertation Fellowship to KMS. The authors thank Nicole Speer, Emily Podany, John Harwell, Erbil Akbudak, Fran Miezin, Martin Conway, and Randy Buckner for their comments and help with the project.

Footnotes

Thematic relevance of the object to the scene, thematic relevance of the foil to the scene, and semantic relatedness of the target and foil to each other were also examined. These variables were not reliably related to recognition test accuracy (rs < .04, t(33) < 0.22, p > .83) and were not included as covariates in the behavioral data analysis.

A more inclusive variable coding whether any actor in the scene touched the object was also obtained. This variable was less strongly related to recognition test accuracy (r = .30) than was the actor-object interactions variable (r = .46). Because of the high degree of overlap in these variables, only the variable that served as the strongest predictor of recognition test accuracy was included as a covariate in the analysis.

Response times to the object tests varied across conditions (see Figure 1) and ranged from a mean minimum of 1.53 s (SD = .221 s) to a mean maximum of 6.41 s (SD = 1.94 s). The hemodynamic response function (HRF) was extended by response times to account for this variability. A second analysis in which the HRF was not extended by RT was also conducted and yielded data consistent with those reported here.
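One common way to let the modeled response depend on response time is to convolve a boxcar lasting from test onset until the response with a gamma-shaped hemodynamic response, such as the function described by Boynton et al. (1996). The NumPy sketch below assumes that approach. The gamma parameters (n = 3, τ = 1.25 s, δ = 2.25 s), the 2-s TR, and the 0.1-s sampling step are illustrative assumptions, not values taken from this study, and the function names are hypothetical.

```python
import math
import numpy as np


def gamma_hrf(t, n=3, tau=1.25, delta=2.25):
    """Gamma-function HRF of the form used by Boynton et al. (1996).

    Parameter defaults are illustrative assumptions, not this study's values.
    """
    s = (t - delta) / tau
    shape = np.where(s > 0, s ** (n - 1) * np.exp(-s), 0.0)
    return shape / (tau * math.factorial(n - 1))


def rt_extended_regressor(onsets, rts, n_scans, tr=2.0, dt=0.1):
    """Predicted BOLD time course for tests whose duration equals the RT.

    onsets and rts are in seconds; the result has one value per TR.
    """
    step = int(round(tr / dt))            # high-resolution samples per TR
    t_hi = np.arange(n_scans * step) * dt
    boxcar = np.zeros_like(t_hi)
    for onset, rt in zip(onsets, rts):
        # "On" from test onset until the response; overlapping tests are set to 1.
        boxcar[(t_hi >= onset) & (t_hi < onset + rt)] = 1.0
    hrf = gamma_hrf(np.arange(0.0, 32.0, dt))
    # Discrete approximation of the convolution integral, cut to the run length.
    bold = np.convolve(boxcar, hrf)[: t_hi.size] * dt
    return bold[::step]                   # one sample at the start of each TR


# A single test at 10 s answered after the mean minimum RT reported above.
regressor = rt_extended_regressor(onsets=[10.0], rts=[1.53], n_scans=30)
```

Longer response times produce a larger and more prolonged predicted response, so trials with slow responses are not mistaken for trials with unusually strong activity. Fixing the boxcar duration instead of using `rts` corresponds to the alternative model in which the HRF was not extended by RT.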

References

- Anderson MC, & Neely JH (1996). Interference and inhibition in memory retrieval. In Bjork EL & Bjork RA (Eds.), Memory (2nd ed., pp. 237–313). San Diego, CA: Academic Press.

- Baddeley A, & Logie RH (1999). The multiple-component model. In Miyake A & Shah P (Eds.), Models of Working Memory: Mechanisms of Active Maintenance and Executive Control (pp. 29–61). New York: Cambridge University Press.

- Baird JA, & Baldwin DA (2001). Making sense of human behavior: Action parsing and intentional inference. In Malle BF & Moses LJ (Eds.), Intentions and intentionality: Foundations of social cognition (pp. 193–206). Cambridge, MA: MIT Press.

- Boltz M (1992). Temporal accent structure and the remembering of filmed narratives. Journal of Experimental Psychology: Human Perception & Performance, 18(1), 90–105.

- Boynton GM, Engel SA, Glover GH, & Heeger DJ (1996). Linear systems analysis of functional magnetic resonance imaging in human V1. Journal of Neuroscience, 16(13), 4207–4221.

- Carroll JM, & Bever TG (1976). Segmentation in cinema perception. Science, 191(4231), 1053–1055.

- Ciaramelli E, Grady CL, & Moscovitch M (2008). Top-down and bottom-up attention to memory: A hypothesis (AtoM) on the role of the posterior parietal cortex in memory retrieval. Neuropsychologia, 46, 1828–1851.

- Cohen JD, MacWhinney B, Flatt M, & Provost J (1993). PsyScope: A new graphic interactive environment for designing psychology experiments. Behavior Research Methods, Instruments, & Computers, 25(2), 257–271.

- Conway MA (in press). Episodic memories. Neuropsychologia.

- Cowan N (1999). An embedded-processes model of working memory. In Miyake A & Shah P (Eds.), Models of Working Memory: Mechanisms of Active Maintenance and Executive Control (pp. 62–101). New York: Cambridge University Press.

- Davachi L (2006). Item, context and relational episodic encoding in humans. Current Opinion in Neurobiology, 16, 693–700.

- Dobbins IG, Rice HJ, Wagner AD, & Schacter DL (2003). Memory orientation and success: Separable neurocognitive components underlying episodic recognition. Neuropsychologia, 41, 318–333.

- Dragoti S (Writer) (1983). Mr. Mom. In J. Hughes (Producer). United States: Orion Pictures.

- Gernsbacher MA (1985). Surface information loss in comprehension. Cognitive Psychology, 17, 324–363.

- Hannula DE, Tranel D, & Cohen NJ (2006). The long and short of it: Relational memory impairments in amnesia, even at short lags. Journal of Neuroscience, 26(32), 8352–8359.

- Hartley T, Bird CM, Chan D, Cipolotti L, Husain M, Vargha-Khadem F, et al. (2007). The hippocampus is required for short-term topographical memory in humans. Hippocampus, 17, 34–48.

- Head D, Snyder AZ, Girton LE, Morris JC, & Buckner RL (2005). Frontal-hippocampal double dissociation between normal aging and Alzheimer's disease. Cerebral Cortex, 15, 732–739.

- Insausti R, Insausti AM, Sobreviela MT, Salinas A, & Martínez-Peñuela JM (1998). Human medial temporal lobe in aging: Anatomical basis of memory preservation. Microscopy Research and Technique, 43, 8–15.

- Jarvella RJ (1979). Immediate memory and discourse processing. In Bower GH (Ed.), The Psychology of Learning and Motivation (Vol. 13, pp. 379–421). New York: Academic Press.

- Johnson JD, & Rugg MD (2007). Recollection and the reinstatement of encoding-related cortical activity. Cerebral Cortex, 17(11), 2507–2515.

- Jonides J, Lewis RL, Nee DE, Lustig CA, Berman MG, & Moore KS (2008). The mind and brain of short-term memory. Annual Review of Psychology, 59, 193–224.

- Ki-Duk K (Writer) (2004). 3-Iron [DVD]. In K. Ki-Duk (Producer). South Korea: Sony Pictures Classics.

- Konkel A, Warren DE, Duff MC, Tranel D, & Cohen NJ (2008). Hippocampal amnesia impairs all manner of relational memory. Frontiers in Human Neuroscience, 2(15), 1–15.

- Kuhl BA, Dudukovic NM, Kahn I, & Wagner AD (2007). Decreased demands on cognitive control reveal the neural processing benefits of forgetting. Nature Neuroscience, 10(7), 908–914.

- Lamorisse A (Writer) (1956). The Red Balloon. In A. Lamorisse (Producer). United States: Public Media, Inc.

- McAvoy MP, Ollinger JM, & Buckner RL (2001). Cluster size thresholds for assessment of significant activation in fMRI. NeuroImage, 15, S198.

- Newtson D (1973). Attribution and the unit of perception of ongoing behavior. Journal of Personality and Social Psychology, 28(1), 28–38.

- Newtson D, & Engquist G (1976). The perceptual organization of ongoing behavior. Journal of Experimental Social Psychology, 12, 436–450.

- Ogawa S, Lee TM, Kay AR, & Tank DW (1990). Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proceedings of the National Academy of Sciences, USA, 87, 9868–9872.

- Olson IR, Moore KS, Stark M, & Chatterjee A (2006). Visual working memory is impaired when the medial temporal lobe is damaged. Journal of Cognitive Neuroscience, 18(7), 1087–1097.

- Öztekin I, McElree B, Staresina BP, & Davachi L (2008). Working memory retrieval: Contributions of the left prefrontal cortex, the left posterior parietal cortex, and the hippocampus. Journal of Cognitive Neuroscience, 21, 581–593.

- Polyn SM, & Kahana MJ (2008). Memory search and the neural representation of context. Trends in Cognitive Sciences, 12(1), 24–30.

- Radvansky GA, & Copeland DE (2006). Walking through doorways causes forgetting: Situation models and experienced space. Memory & Cognition, 34(5), 1150–1156.

- Romanek M (Writer) (2002). One Hour Photo. In M. Romanek (Producer). United States: Fox Searchlight Pictures.

- Schwan S, & Garsoffky B (2004). The cognitive representation of filmic event summaries. Applied Cognitive Psychology, 18, 37–55.

- Shrager Y, Levy DA, Hopkins RO, & Squire LR (2008). Working memory and the organization of brain systems. Journal of Neuroscience, 28(18), 4818–4822.

- Speer NK, Swallow KM, & Zacks JM (2003). Activation of human motion processing areas during event perception. Cognitive, Affective, and Behavioral Neuroscience, 3(4), 335–345.

- Speer NK, & Zacks JM (2005). Temporal changes as event boundaries: Processing and memory consequences of narrative time shifts. Journal of Memory and Language, 53(1), 125–140.

- Speer NK, Zacks JM, & Reynolds JR (2007). Human brain activity time-locked to narrative event boundaries. Psychological Science, 18, 449–455.

- Stefanacci L, Buffalo EA, Schmolck H, & Squire LR (2000). Profound amnesia after damage to the medial temporal lobe: A neuroanatomical and neuropsychological profile of patient E. P. Journal of Neuroscience, 20(18), 7024–7036.

- Summerfield JJ, Lepsien J, Gitelman DR, Mesulam MM, & Nobre AC (2006). Orienting attention based on long-term memory experience. Neuron, 49, 905–916.

- Swallow KM, Zacks JM, & Abrams RA (2009). Event boundaries in perception affect memory encoding and updating. Journal of Experimental Psychology: General, 138(2), 236–257.

- Talairach J, & Tournoux P (1988). Co-planar Stereotaxic Atlas of the Human Brain: 3-Dimensional Proportional System: An Approach to Cerebral Imaging. New York: Thieme Medical Publishers.

- Tati J (Writer) (1958). Mon Oncle. In J. Lagrange, J. L'Hôte & J. Tati (Producer). France: Spectra Films.

- Vincent JL, Snyder AZ, Fox MD, Shannon BJ, Andrews JR, Raichle ME, et al. (2006). Coherent spontaneous activity identifies a hippocampal-parietal memory network. Journal of Neurophysiology, 96, 3517–3531.

- Wagner AD, Shannon BJ, Kahn I, & Buckner RL (2005). Parietal lobe contributions to episodic memory retrieval. Trends in Cognitive Sciences, 9(9), 445–453.

- Zacks JM (2004). Using movement and intentions to understand simple events. Cognitive Science, 28(6), 979–1008.

- Zacks JM, Braver TS, Sheridan MA, Donaldson DI, Snyder AZ, Ollinger JM, et al. (2001). Human brain activity time-locked to perceptual event boundaries. Nature Neuroscience, 4(6), 651–655.

- Zacks JM, Speer NK, Swallow KM, Braver TS, & Reynolds JR (2007). Event perception: A mind/brain perspective. Psychological Bulletin, 133(2), 273–293.

- Zacks JM, Speer NK, Vettel JM, & Jacoby LL (2006). Event understanding and memory in healthy aging and dementia of the Alzheimer type. Psychology and Aging, 21(3), 466–482.

- Zacks JM, Swallow KM, Vettel JM, & McAvoy MP (2006). Visual motion and the neural correlates of event perception. Brain Research, 1076, 150–162.

- Zwaan RA, & Radvansky GA (1998). Situation models in language comprehension and memory. Psychological Bulletin, 123(2), 162–185.