Significance

Changing individuals’ behavior is key to tackling some of today’s most pressing societal challenges such as the COVID-19 pandemic or climate change. Choice architecture interventions aim to nudge people toward personally and socially desirable behavior through the design of choice environments. Although increasingly popular, little is known about the overall effectiveness of choice architecture interventions and the conditions under which they facilitate behavior change. Here we quantitatively review over a decade of research, showing that choice architecture interventions successfully promote behavior change across key behavioral domains, populations, and locations. Our findings offer insights into the effects of choice architecture and provide guidelines for behaviorally informed policy making.

Keywords: choice architecture, nudge, behavioral insights, behavior change, meta-analysis

Abstract

Over the past decade, choice architecture interventions or so-called nudges have received widespread attention from both researchers and policy makers. Built on insights from the behavioral sciences, this class of behavioral interventions focuses on the design of choice environments that facilitate personally and socially desirable decisions without restricting people in their freedom of choice. Drawing on more than 200 studies reporting over 450 effect sizes (n = 2,149,683), we present a comprehensive analysis of the effectiveness of choice architecture interventions across techniques, behavioral domains, and contextual study characteristics. Our results show that choice architecture interventions overall promote behavior change with a small to medium effect size of Cohen’s d = 0.45 (95% CI [0.39, 0.52]). In addition, we find that the effectiveness of choice architecture interventions varies significantly as a function of technique and domain. Across behavioral domains, interventions that target the organization and structure of choice alternatives (decision structure) consistently outperform interventions that focus on the description of alternatives (decision information) or the reinforcement of behavioral intentions (decision assistance). Food choices are particularly responsive to choice architecture interventions, with effect sizes up to 2.5 times larger than those in other behavioral domains. Overall, choice architecture interventions affect behavior relatively independently of contextual study characteristics such as the geographical location or the target population of the intervention. Our analysis further reveals a moderate publication bias toward positive results in the literature. We end with a discussion of the implications of our findings for theory and behaviorally informed policy making.

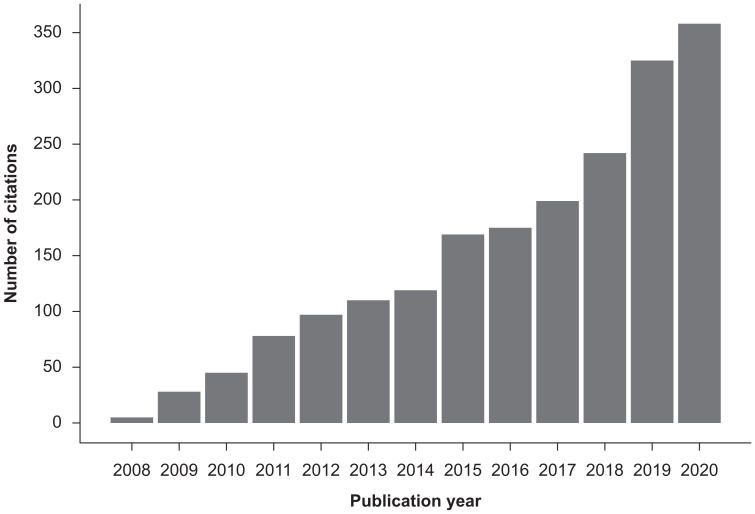

Many of today’s most pressing societal challenges such as the successful navigation of the COVID-19 pandemic or the mitigation of climate change call for substantial changes in individuals’ behavior. Whereas microeconomic and psychological approaches based on rational agent models have traditionally dominated the discussion about how to achieve behavior change, the release of Thaler and Sunstein’s book Nudge—Improving Decisions about Health, Wealth, and Happiness (1) widely introduced a complementary intervention approach known as choice architecture or nudging, which aims to change behavior by (re)designing the physical, social, or psychological environment in which people make decisions while preserving their freedom of choice (2). Since the publication of the first edition of Thaler and Sunstein (1) in 2008, choice architecture interventions have seen an immense increase in popularity (Fig. 1). However, little is known about their overall effectiveness and the conditions under which they facilitate behavior change—a gap the present meta-analysis aims to address by analyzing the effects of the most widely used choice architecture techniques across key behavioral domains and contextual study characteristics.

Fig. 1.

Number of citations of Thaler and Sunstein (1) between 2008 and 2020. Counts are based on citation search in Web of Science.

Traditional microeconomic intervention approaches are often built around a rational agent model of decision making, which assumes that people base their decisions on known and consistent preferences that aim to maximize the utility, or value, of their actions. In determining their preferences, people are thought to engage in an exhaustive analysis of the probabilities and potential costs and benefits of all available options to identify which option provides the highest expected utility and is thus the most favorable (3). Interventions aiming to change behavior are accordingly designed to increase the utility of the desired option, either by educating people about the existing costs and benefits of a certain behavior or by creating entirely new incentive structures by means of subsidies, tax credits, fines, or similar economic measures. Likewise, traditional psychological intervention approaches explain behavior as the result of a deliberate decision making process that weighs and integrates internal representations of people’s belief structures, values, attitudes, and norms (4, 5). Interventions accordingly focus on measures such as information campaigns that aim to shift behavior through changes in people’s beliefs or attitudes (6).
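The rational agent logic described above can be made concrete with a minimal sketch. It assumes that each option is a set of outcomes with known probabilities and utilities; the option names, the numbers, and the helper functions `expected_utility` and `best_option` are hypothetical and serve only as illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    probability: float  # chance that this outcome occurs
    utility: float      # subjective value of the outcome


def expected_utility(outcomes):
    """Probability-weighted sum of utilities for one option."""
    return sum(o.probability * o.utility for o in outcomes)


def best_option(options):
    """Return the name of the option with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name]))


# Hypothetical commuting decision; all numbers are made up for illustration.
options = {
    "drive": [Outcome(0.9, 10.0), Outcome(0.1, -20.0)],
    "transit": [Outcome(0.8, 8.0), Outcome(0.2, -2.0)],
}
baseline_choice = best_option(options)

# An incentive-style intervention (e.g., a subsidy) is modeled here as a
# fixed utility gain added to every outcome of the desired option.
subsidy = 2.0
options_with_subsidy = dict(options)
options_with_subsidy["transit"] = [
    Outcome(o.probability, o.utility + subsidy) for o in options["transit"]
]
subsidized_choice = best_option(options_with_subsidy)
```

In this toy setup the agent switches options only because the incentive changes the payoffs, which is the mechanism that traditional economic interventions rely on.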

Over the past years, intervention approaches informed by research in the behavioral sciences have emerged as a complement to rational agent-based approaches. They draw on an alternative model of decision making which acknowledges that people are bounded in their ability to make rational decisions. Rooted in dual-process theories of cognition and information processing (7), this model recognizes that human behavior is not always driven by the elaborate and rational thought processes assumed by the rational agent model but instead often relies on automatic and computationally less intensive forms of decision making that allow people to navigate the demands of everyday life in the face of limited time, available information, and computational power (8, 9). Boundedly rational decision makers often construct their preferences ad hoc based on cognitive shortcuts and biases, which makes them susceptible to supposedly irrational contextual influences, such as the way in which information is presented or structured (10–12). This susceptibility to contextual factors, while seemingly detrimental to decision making, has been identified as a promising lever for behavior change because it offers the opportunity to influence people’s decisions through simple changes in the so-called choice architecture that defines the physical, social, and psychological context in which decisions are made (2). Rather than relying on education or significant economic incentives, choice architecture interventions aim to guide people toward personally and socially desirable behavior by designing environments that anticipate and integrate people’s limitations in decision making to facilitate access to decision-relevant information, support the evaluation and comparison of available choice alternatives, or reinforce previously formed behavioral intentions (13) (see Table 1 for an overview of intervention techniques based on choice architecture*).

Table 1.

Taxonomy of choice architecture categories and intervention techniques

| Psychological barrier | Intervention category | Intervention technique |
|---|---|---|
| Limited access to decision-relevant information | Decision information: increase the availability, comprehensibility, and/or personal relevance of information | Translate information: adapt attributes to facilitate processing of already available information and/or shift decision maker’s perspective |
| | | Make information visible: provide access to relevant information |
| | | Provide social reference point: provide social normative information to reduce situational ambiguity and behavioral uncertainty |
| Limited capacity to evaluate and compare choice options | Decision structure: alter the utility of choice options through their arrangement in the decision environment or the format of decision making | Change choice defaults: set no action default or prompt active choice to address behavioral inertia, loss aversion, and/or perceived endorsement |
| | | Change option-related effort: adjust physical or financial effort to remove friction from desirable choice option |
| | | Change range or composition of options: adapt categories or grouping of choice options to facilitate evaluation |
| | | Change option consequences: adapt social consequences or microincentives to address present bias, bias in probability weighting, and/or loss aversion |
| Limited attention and self-control | Decision assistance: facilitate self-regulation | Provide reminders: increase the attentional salience of desirable behavior to overcome inattention due to information overload |
| | | Facilitate commitment: encourage self or public commitment to counteract failures of self-control |

Addressing Psychological Barriers through Choice Architecture

Unlike the assumption of the rational agent model, people rarely have access to all relevant information when making a decision. Instead, they tend to base their decisions on information that is directly available to them at the moment of the decision (14, 15) and to discount or even ignore information that is too complex or meaningless to them (16, 17). Choice architecture interventions based on the provision of decision information aim to facilitate access to decision-relevant information by increasing its availability, comprehensibility, and/or personal relevance to the decision maker. One way to achieve this is to provide social reference information that reduces the ambiguity of a situation and helps overcome uncertainty about appropriate behavioral responses. In a natural field experiment with more than 600,000 US households, for instance, Allcott (18) demonstrated the effectiveness of descriptive social norms in promoting energy conservation. Specifically, the study showed that households which regularly received a letter comparing their own energy consumption to that of similar neighbors reduced their consumption by an average of 2%. This effect was estimated to be equivalent to that of a short-term electricity price increase of 11 to 20%. Other examples of decision information interventions include measures that increase the visibility of otherwise covert information (e.g., feedback devices and nutrition labels; refs. 19, 20), or that translate existing descriptions of choice options into more comprehensible or relevant information (e.g., through simplifying or reframing information; ref. 21).

Not only do people have limited access to decision-relevant information, but they often refrain from engaging in the elaborate cost-benefit analyses assumed by the rational agent model to evaluate and compare the expected utility of all choice options. Instead, they use contextual cues about the way in which choice alternatives are organized and structured within the decision environment to inform their behavior. Choice architecture interventions built around changes in the decision structure utilize this context dependency to influence behavior through the arrangement of choice alternatives or the format of decision making. One of the most prominent examples of this intervention approach is choice default, or the preselection of an option that is imposed if no active choice is made. In a study comparing organ donation policies across European countries, Johnson and Goldstein (22) demonstrated the impact of defaults on even highly consequential decisions, showing that in countries with presumed consent laws, which by default register individuals as organ donors, the rate of donor registrations was nearly 60 percentage points higher than in countries with explicit consent laws, which require individuals to formally agree to becoming an organ donor. Other examples of decision structure interventions include changes in the effort related to choosing an option (23), the range or composition of options (24), and the consequences attached to options (25).

Even if people make a deliberate and potentially rational decision to change their behavior, limited attentional capacities and a lack of self-control may prevent this decision from actually translating into the desired actions, a phenomenon described as the intention–behavior gap (26). Choice architecture interventions that provide measures of decision assistance aim to bridge the intention–behavior gap by reinforcing self-regulation. One example of this intervention approach is the commitment device, which is designed to strengthen self-control by removing psychological barriers such as procrastination and intertemporal discounting that often stand in the way of successful behavior change. Thaler and Benartzi (27) demonstrated the effectiveness of such commitment devices in a large-scale field study of the Save More Tomorrow program, showing that employees increased their average saving rates from 3.5 to 13.6% when committing in advance to allocating parts of their future salary increases toward retirement savings. If applied across the United States, this program was estimated to increase the total of annual retirement contributions by approximately $25 billion for each 1% increase in saving rates. Other examples of decision assistance interventions are reminders, which affect decision making by increasing the salience of the intended behavior (28).

The Present Meta-analysis

Despite the growing interest in choice architecture, only a few attempts have been made to quantitatively integrate the empirical evidence on its effectiveness as a behavior change tool (29–32). Previous studies have mostly been restricted to the analysis of a single choice architecture technique (33–35) or a specific behavioral domain (36–39), leaving important questions unanswered, including how effective choice architecture interventions overall are in changing behavior and whether there are systematic differences across choice architecture techniques and behavioral domains that so far may have remained undetected and that may offer new insights into the psychological mechanisms that drive choice architecture interventions.

The aim of the present meta-analysis was to address these questions by first quantifying the overall effect of choice architecture interventions on behavior and then providing a systematic comparison of choice architecture interventions across different techniques, behavioral domains, and contextual study characteristics to answer 1) whether some choice architecture techniques are more effective in changing behavior than others, 2) whether some behavioral domains are more receptive to the effects of choice architecture interventions than others, 3) whether choice architecture techniques differ in their effectiveness across varying behavioral domains, and finally, 4) whether the effectiveness of choice architecture interventions is impacted by contextual study characteristics such as the location or target population of the intervention. Drawing on an exhaustive literature search that yielded more than 200 published and unpublished studies, this comprehensive analysis presents important insights into the effects and potential boundary conditions of choice architecture interventions and provides an evidence-based guideline for selecting behaviorally informed intervention measures.

Results

Effect Size of Choice Architecture.

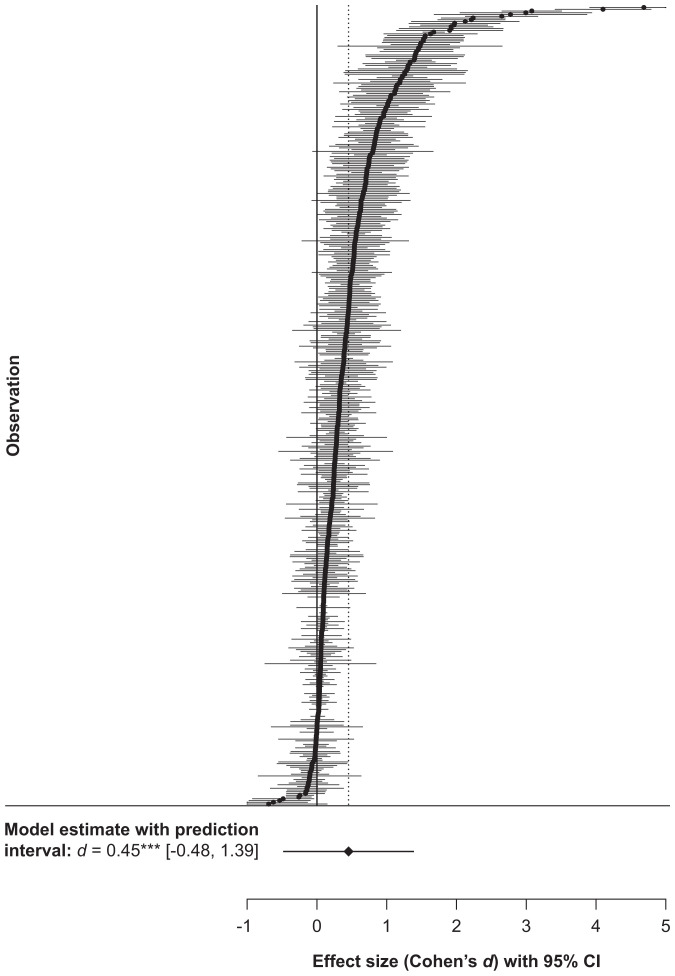

Our meta-analysis of 455 effect sizes from 214 publications (n = 2,149,683) revealed a statistically significant effect of choice architecture interventions on behavior (Cohen’s d = 0.45, 95% CI [0.39, 0.52], t(340) = 14.38, P < 0.001) (Fig. 2). Using conventional criteria, this effect can be classified as small to medium in size (40). The effect size was reliable across several robustness checks, including the removal of influential outliers, which marginally decreased the overall size of the effect but did not change its statistical significance (95% CI [0.37, 0.46], P < 0.001). Additional leave-one-out analyses at the individual effect size level and the publication level found the effect of choice architecture interventions to be robust to the exclusion of any one effect size and publication, with d ranging from 0.43 to 0.46 and all P < 0.001.
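To illustrate how a pooled effect size and its confidence interval arise from individual study estimates, the following sketch implements a standard DerSimonian-Laird random-effects estimator. It is a simplification that treats all effect sizes as independent, whereas the estimates in Table 2 come from three-level models; the function name `random_effects_pool` and the toy input values are assumptions made for illustration and are not data from the present analysis.

```python
import math


def random_effects_pool(effects, variances):
    """DerSimonian-Laird random-effects pooling of independent effect sizes."""
    k = len(effects)
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    fixed = sum(wi * d for wi, d in zip(w, effects)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (d - fixed) ** 2 for wi, d in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)  # between-study variance
    w_star = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * d for wi, d in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    # I^2: estimated share of total variability due to true heterogeneity
    i2 = max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    ci = (pooled - 1.96 * se, pooled + 1.96 * se)  # normal-approximation 95% CI
    return {"d": pooled, "se": se, "ci": ci, "tau2": tau2, "i2": i2}


# Made-up Cohen's d values and sampling variances, for illustration only.
toy_d = [0.10, 0.35, 0.60, 0.80, 0.45]
toy_var = [0.010, 0.020, 0.015, 0.040, 0.005]
result = random_effects_pool(toy_d, toy_var)
```

The returned dictionary holds the pooled d, its SE and 95% CI, the between-study variance, and I², which correspond conceptually to the quantities reported in this section.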

Fig. 2.

Forest plot of all effect sizes (k = 455) included in the meta-analysis with their corresponding 95% confidence intervals. Extracted Cohen’s d values ranged from –0.69 to 4.69. The proportion of true to total variance was estimated at I² = 99.67%. ***

The estimated total heterogeneity indicated considerable variability in the effect size of choice architecture interventions. More specifically, the dispersion of effect sizes suggests that while the majority of choice architecture interventions will successfully promote the desired behavior change with a small to large effect size, ∼15% of interventions are likely to backfire, i.e., reduce or even reverse the desired behavior, with a small to medium effect (95% prediction interval [–0.48, 1.39]) (40–42).
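The link between the prediction interval and the share of interventions expected to backfire can be approximated with a short calculation. The sketch below assumes that true effects are normally distributed and that the reported interval equals its midpoint ± 1.96 SDs; both are simplifying assumptions, and the helper `normal_cdf` is introduced here for illustration only.

```python
import math


def normal_cdf(x, mean=0.0, sd=1.0):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


lower, upper = -0.48, 1.39  # reported 95% prediction interval
center = (lower + upper) / 2.0
# Assumption: interval = center +/- 1.96 * sd (normal approximation)
sd = (upper - lower) / (2.0 * 1.96)

# Share of the predicted effect distribution that falls below zero
share_backfiring = normal_cdf(0.0, mean=center, sd=sd)
```

Under these assumptions the share of negative effects is of the same order as the ∼15% stated in the text; an exact match is not expected because the calculation rests on rounded interval bounds and a normal approximation.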

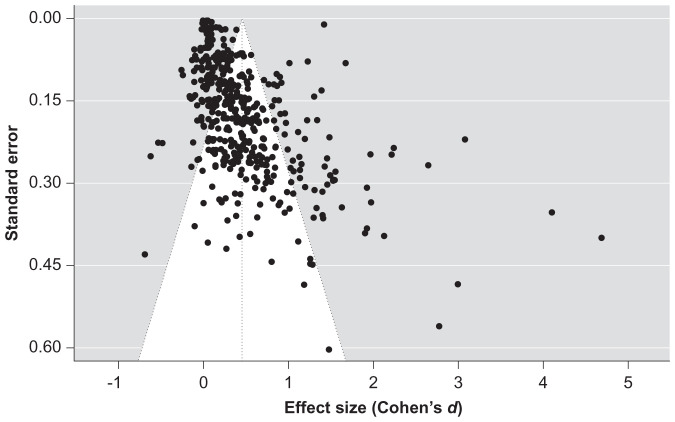

Publication Bias.

Visual inspection of the relation between effect sizes and their corresponding SEs (Fig. 3) revealed an asymmetric distribution that suggested a one-tailed overrepresentation of positive effect sizes in studies with comparatively low statistical power (43). This finding was formally confirmed by Egger’s test (44), which found a positive association between effect sizes and SEs (regression coefficient 95% CI [1.31, 3.25], P < 0.001). Together, these results point to a publication bias in the literature that may favor the reporting of successful as opposed to unsuccessful implementations of choice architecture interventions in studies with small sample sizes. Sensitivity analyses imposing a priori weight functions on a simplified random effects model suggested that this one-tailed publication bias could have affected the estimate of our meta-analytic model (43). Assuming a moderate one-tailed publication bias in the literature attenuated the overall effect size of choice architecture interventions by 26.79% from a baseline of Cohen’s d = 0.42 (95% CI [0.37, 0.46]). Assuming a severe one-tailed publication bias attenuated the overall effect size even further; however, this assumption was only partially supported by the funnel plot. Although our general conclusion about the effects of choice architecture interventions on behavior remains the same in the light of these findings, the true effect size of interventions is likely to be smaller than estimated by our meta-analytic model due to the overrepresentation of positive effect sizes in our sample.
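The logic of Egger’s test can be illustrated with simulated data. The sketch below regresses effect sizes on their SEs by weighted least squares (weights 1/SE²) and compares a literature without selective reporting to one in which imprecise, nonsignificant results remain unpublished. The simulation settings, the censoring rule, and the function names `egger_slope` and `simulate` are assumptions chosen for illustration; the sketch does not reproduce the model used in the present analysis.

```python
import random


def egger_slope(effects, ses):
    """Weighted least-squares slope of effect size on SE (weights 1/SE^2)."""
    w = [1.0 / s ** 2 for s in ses]
    sw = sum(w)
    mean_se = sum(wi * s for wi, s in zip(w, ses)) / sw
    mean_d = sum(wi * d for wi, d in zip(w, effects)) / sw
    sxy = sum(wi * (s - mean_se) * (d - mean_d)
              for wi, s, d in zip(w, ses, effects))
    sxx = sum(wi * (s - mean_se) ** 2 for wi, s in zip(w, ses))
    return sxy / sxx


def simulate(n_studies, true_d, censor, rng):
    """Simulate studies; if censor is True, drop imprecise null results."""
    effects, ses = [], []
    while len(effects) < n_studies:
        se = rng.uniform(0.05, 0.5)
        d = rng.gauss(true_d, se)
        significant = d / se > 1.96  # one-tailed: only positive results count
        if censor and se > 0.2 and not significant:
            continue  # imprecise, nonsignificant result stays unpublished
        effects.append(d)
        ses.append(se)
    return effects, ses


rng = random.Random(42)
unbiased = egger_slope(*simulate(2000, 0.3, False, rng))
biased = egger_slope(*simulate(2000, 0.3, True, rng))
```

Without selective reporting the slope stays close to zero, whereas censoring small studies with nonsignificant results produces a clearly positive slope, which is the pattern an asymmetric funnel plot reflects.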

Fig. 3.

Funnel plot displaying each observation as a function of its effect size and SE. In the absence of publication bias, observations should scatter symmetrically around the pooled effect size indicated by the gray vertical line and within the boundaries of the 95% confidence intervals shaded in white. The asymmetric distribution shown here indicates a one-tailed publication bias in the literature that favors the reporting of successful implementations of choice architecture interventions in studies with small sample sizes.

Moderator Analyses.

Supported by the high heterogeneity among effect sizes, we next tested the extent to which the effectiveness of choice architecture interventions was moderated by the type of intervention, the behavioral domain in which it was implemented, and contextual study characteristics.

Intervention category and technique.

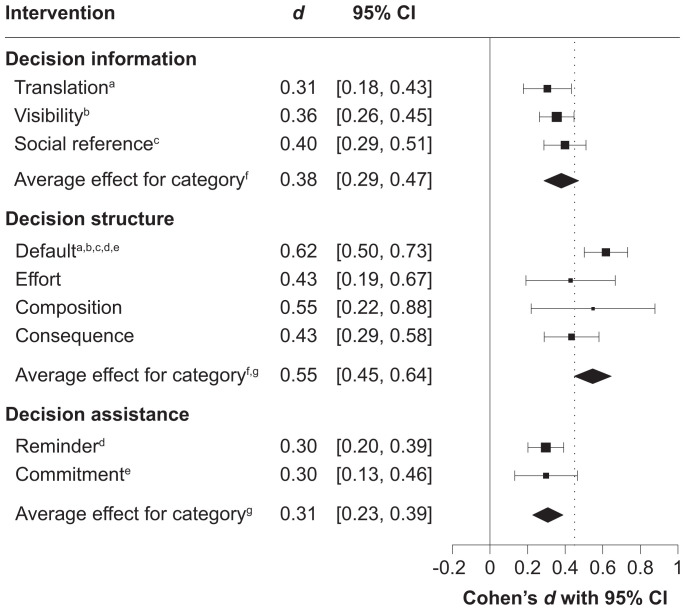

Our first analysis focused on identifying potential differences between the effect sizes of decision information, decision structure, and decision assistance interventions. This analysis found that intervention category indeed moderated the effect of choice architecture interventions on behavior (F(3, 337) = 9.79, P < 0.001). With average effect sizes ranging from d = 0.31 to 0.55, interventions across all three categories were effective in inducing statistically significant behavior change (Fig. 4). Planned contrasts between categories, however, revealed that interventions in the decision structure category had a stronger effect on behavior compared to interventions in the decision information (b = 0.17, 95% CI [0.03, 0.31], P = 0.02) and the decision assistance category (95% CI [0.11, 0.36], P < 0.001). No difference was found in the effectiveness of decision information and decision assistance interventions (P = 0.25). Including intervention category as a moderator in our meta-analytic model marginally reduced the proportion of true to total variability in effect sizes (SI Appendix, Table S3).
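The contrasts between categories can be related to the category means listed in Table 2 with simple arithmetic. The sketch below computes raw differences between the reported means; the helper `raw_contrast` is hypothetical, and raw differences of rounded means need not equal the model-based contrast estimates.

```python
# Category means (Cohen's d) as reported in Table 2.
category_d = {
    "decision information": 0.38,
    "decision structure": 0.55,
    "decision assistance": 0.31,
}


def raw_contrast(a, b):
    """Unadjusted difference between two reported category means."""
    return round(category_d[a] - category_d[b], 2)


structure_vs_information = raw_contrast("decision structure", "decision information")
structure_vs_assistance = raw_contrast("decision structure", "decision assistance")
information_vs_assistance = raw_contrast("decision information", "decision assistance")
```

The first difference reproduces the reported contrast of b = 0.17 between decision structure and decision information interventions; the remaining two are rough approximations only.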

Fig. 4.

Forest plot of effect sizes across categories of choice architecture intervention techniques (see Table 1 for more detailed description of techniques). The position of squares on the x axis indicates the effect size of each respective intervention technique. Bars indicate the 95% confidence intervals of effect sizes. The size of squares is inversely proportional to the SE of effect sizes. Diamond shapes indicate the average effect size and confidence intervals of intervention categories. The solid line represents an effect size of Cohen’s d = 0. The dotted line represents the overall effect size of choice architecture interventions, Cohen’s d = 0.45, 95% CI [0.39, 0.52]. Identical letter superscripts indicate statistically significant (P < 0.05) pairwise comparisons.

To test whether the effect sizes of the three intervention categories adequately represented differences on the underlying level of choice architecture techniques, we reran our analysis with intervention technique rather than category as the key moderator. As illustrated in Fig. 4, each of the nine intervention techniques was effective in inducing behavior change, with Cohen’s d ranging from 0.30 to 0.62 (all P < 0.01). Within intervention categories, techniques were generally consistent in their effect sizes (for all contrasts, P > 0.05). Between categories, however, some techniques differed substantially in their effect sizes. In line with the previously reported results, techniques within the decision structure category were consistently stronger in their effects on behavior than intervention techniques within the decision information or the decision assistance category. The observed effect size differences between the decision information, the decision structure, and the decision assistance category were thus unlikely to be driven by a single intervention technique but rather representative of the entire set of techniques within those categories.

Behavioral domain.

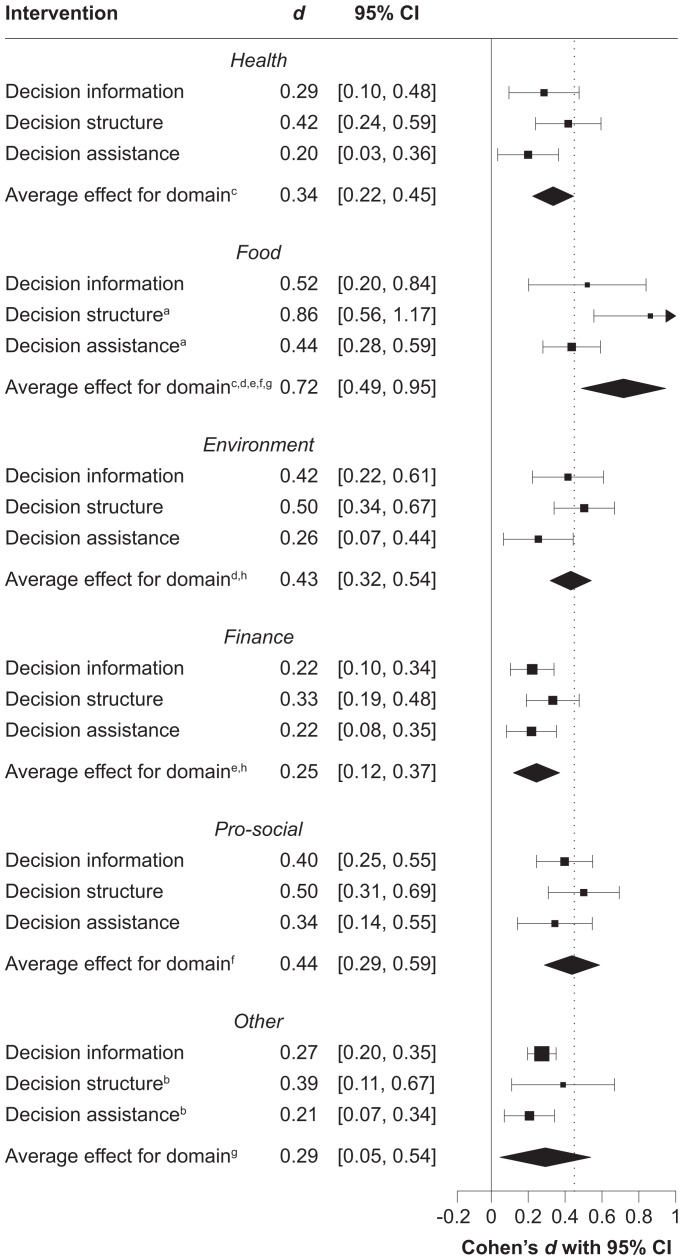

Following our analysis of the effectiveness of varying types of choice architecture interventions, we next focused on identifying potential differences among the behavioral domains in which interventions were implemented. As illustrated in Fig. 5, effect sizes varied quite substantially across domains, with Cohen’s d ranging from 0.25 to 0.72. Our analysis confirmed that the effectiveness of interventions was moderated by domain (F(6, 334) = 4.62, P < 0.001). Specifically, it showed that choice architecture interventions, while generally effective in inducing behavior change across all six domains, had a particularly strong effect on behavior in the food domain, with d = 0.72 (95% CI [0.49, 0.95]). No other domain showed comparably large effect sizes (for all contrasts, P < 0.05). The smallest effects were observed in the financial domain. With an average intervention effect of d = 0.25 (95% CI [0.12, 0.37]), this domain was less receptive to choice architecture interventions than the other behavioral domains we investigated. Introducing behavioral domain as a moderator in our meta-analytic model marginally reduced the ratio of true to total heterogeneity among effect sizes (SI Appendix, Table S3).

Fig. 5.

Forest plot of effect sizes across categories of choice architecture interventions and behavioral domains. The position of squares on the x axis indicates the effect size of each intervention category within a behavioral domain. Bars indicate the 95% confidence intervals of effect sizes. The size of squares is inversely proportional to the SE of effect sizes. Diamond shapes indicate the overall effect size and confidence intervals of choice architecture interventions within a behavioral domain. The solid line represents an effect size of Cohen’s d = 0. The dotted line represents the overall effect size of choice architecture interventions, Cohen’s d = 0.45, 95% CI [0.39, 0.52]. Identical letter superscripts indicate statistically significant (P < 0.05) pairwise comparisons.

Intervention category across behavioral domain.

Comparing the effectiveness of decision information, decision structure, and decision assistance interventions across domains consistently showed interventions within the decision structure category to have the largest effect on behavior, with Cohen’s d ranging from 0.33 to 0.86 (Fig. 5). This result suggests that the observed effect size differences between the three categories of choice architecture interventions were relatively stable and independent of the behavioral domain in which interventions were applied. Including the interaction of intervention category and behavioral domain in our meta-analytic model reduced the proportion of true to total effect size variability (SI Appendix, Table S3).

Study characteristics.

Last, we were interested in the extent to which the effect size of choice architecture interventions was moderated by contextual study characteristics, such as the location of the intervention (inside vs. outside of the United States), the target population of the intervention (adults vs. children and adolescents), the experimental setting in which the intervention was investigated (conventional laboratory experiment, artifactual field experiment, framed field experiment, or natural field experiment; ref. 45), and the year in which the data were published. As can be seen in Table 2, choice architecture interventions affected behavior relatively independently of contextual influences since neither location nor target population had a statistically significant impact on the effect size of interventions. In support of the external validity of behavioral measures, our analysis moreover did not find any difference in the effect size of different types of experiments. Only year of publication predicted the effect of interventions on behavior, with more recent publications reporting smaller effect sizes than older publications.

Table 2.

Parameter estimates of three-level meta-analytic models showing the overall effect size of choice architecture interventions as well as effect sizes across categories, techniques, behavioral domains, and contextual study characteristics

| Effect | k | n | Effect size (d) | 95% CI | Test statistic | P |
|---|---|---|---|---|---|---|
| Random-effects model | | | | | | |
| Overall effect size | 455 | 2,149,683 | 0.45 | [0.39, 0.52] | t(340) = 14.38 | <0.001 |
| Mixed-effects models: substantive moderators | | | | | | |
| Choice architecture category | | | | | F(3, 337) = 9.79 | <0.001 |
| Decision informationᵃ | 130 | 913,963 | 0.38 | [0.29, 0.47] | | |
| Decision structureᵃ,ᵇ | 227 | 357,179 | 0.55 | [0.45, 0.64] | | |
| Decision assistanceᵇ | 98 | 878,541 | 0.31 | [0.23, 0.39] | | |
| Choice architecture technique | | | | | F(9, 331) = 4.27 | <0.001 |
| Translationᶜ | 50 | 52,230 | 0.31 | [0.18, 0.43] | | |
| Visibilityᵈ | 31 | 822,242 | 0.36 | [0.26, 0.45] | | |
| Social referenceᵉ | 49 | 39,491 | 0.40 | [0.29, 0.51] | | |
| Defaultᶜ,ᵈ,ᵉ,ᶠ,ᵍ | 132 | 139,948 | 0.62 | [0.50, 0.73] | | |
| Effort | 23 | 8,033 | 0.43 | [0.19, 0.67] | | |
| Composition | 53 | 7,434 | 0.55 | [0.22, 0.88] | | |
| Consequence | 19 | 201,764 | 0.43 | [0.29, 0.58] | | |
| Reminderᶠ | 69 | 870,381 | 0.30 | [0.20, 0.39] | | |
| Commitmentᵍ | 29 | 8,160 | 0.30 | [0.13, 0.46] | | |
| Behavioral domain | | | | | F(6, 334) = 4.62 | <0.001 |
| Healthʰ | 84 | 122,762 | 0.34 | [0.22, 0.45] | | |
| Foodʰ,ⁱ,ʲ,ᵏ,ˡ | 111 | 12,515 | 0.72 | [0.49, 0.95] | | |
| Environmentⁱ,ᵐ | 76 | 105,848 | 0.43 | [0.32, 0.54] | | |
| Financeʲ,ᵐ | 45 | 38,730 | 0.25 | [0.12, 0.37] | | |
| Prosocialᵏ | 66 | 1,041,629 | 0.44 | [0.29, 0.59] | | |
| Otherˡ | 73 | 828,199 | 0.29 | [0.05, 0.54] | | |
| Mixed-effects models: contextual study characteristics | | | | | | |
| Location | | | | | t(339) = 0.53 | 0.599 |
| Outside United States | 186 | 1,214,499 | | | | |
| Inside United States | 269 | 935,184 | | | | |
| Population | | | | | t(339) = –1.13 | 0.258 |
| Children and adolescents | 27 | 9,891 | | | | |
| Adults | 428 | 2,139,792 | | | | |
| Type of experiment | | | | | F(3, 337) = 0.27 | 0.846 |
| Conventional laboratory | 124 | 12,723 | 0.44 | [0.33, 0.55] | | |
| Artifactual field | 160 | 49,118 | 0.43 | [0.24, 0.62] | | |
| Framed field | 81 | 15,595 | 0.52 | [0.34, 0.70] | | |
| Natural field | 90 | 2,072,247 | 0.44 | [0.11, 0.77] | | |
| Year of publication | 1982 to 2021* | 2,149,683 | | | t(339) = –3.44 | <0.001 |

k, number of effect sizes; n, sample size. Within each moderator with more than two subgroups, identical letter superscripts indicate statistically significant (P < 0.05) pairwise comparisons between subgroups.

*Values refer to range of publication years rather than number of effect sizes.

Discussion

Changing individuals’ behavior is key to solving some of today’s most pressing societal challenges. But how can this behavior change be achieved? Recently, more and more researchers and policy makers have approached this question through the use of choice architecture interventions. The present meta-analysis integrates over a decade’s worth of research to shed light on the effectiveness of choice architecture and the conditions under which it facilitates behavior change. Our results show that choice architecture interventions promote behavior change with a small to medium effect size of Cohen’s d = 0.45, which is comparable to more traditional intervention approaches such as education campaigns or financial incentives (46–48). Our findings are largely consistent with those of previous analyses that investigated the effectiveness of choice architecture interventions in a smaller subset of the literature (e.g., refs. 29, 30, 32, 33). In their recent meta-analysis of choice architecture interventions across academic disciplines, Beshears and Kosowsky (30), for example, reported an average effect size of choice architecture interventions that is broadly in line with ours. Similarly, focusing on one choice architecture technique only, Jachimowicz et al. (33) found that choice defaults had an average effect size slightly higher than the one our analysis revealed for this intervention technique (d = 0.62). Our results suggest a somewhat higher overall effectiveness of choice architecture interventions than meta-analyses that have focused exclusively on field experimental research (31, 37), a discrepancy that holds even when accounting for differences between experimental settings (45). This inconsistency in findings may in part be explained by differences in meta-analytic samples. Only 7% of the studies analyzed by DellaVigna and Linos (31), for example, meet the strict inclusion and exclusion criteria of the present meta-analysis. Among other things, these criteria excluded studies that combined multiple choice architecture techniques. While this restriction allowed us to isolate the unique effect of each individual intervention technique, it may conflict with the reality of field experimental research that often requires researchers to leverage the effects of several choice architecture techniques to address the specific behavioral challenge at hand (see Materials and Methods for details on the literature search process and inclusion criteria). Similarly, the techniques that are available to field experimental researchers may not always align with the underlying psychological barriers to the target behavior (Table 1), decreasing their effectiveness in encouraging the desired behavior change.

Not only does choice architecture facilitate behavior change, but according to our results, it does so across a wide range of behavioral domains, population segments, and geographical locations. In contrast to theoretical and empirical work challenging its effectiveness (49–51), choice architecture constitutes a versatile intervention approach that lends itself as an effective behavior change tool across many contexts and policy areas. Although the present meta-analysis focuses on studies that tested the effects of choice architecture alone, the applicability of choice architecture is not restricted to stand-alone interventions but extends to hybrid policy measures that use choice architecture as a complement to more traditional intervention approaches (52). Previous research, for example, has shown that the impact of economic interventions such as taxes or financial incentives can be enhanced through choice architecture (53–55).

In addition to the overall effect size of choice architecture interventions, our systematic comparison of interventions across different techniques, behavioral domains, and contextual study characteristics reveals substantial variations in the effectiveness of choice architecture as a behavior change tool. Most notably, we find that across behavioral domains, decision structure interventions that modify decision environments to address decision makers’ limited capacity to evaluate and compare choice options are consistently more effective in changing behavior than decision information interventions that address decision makers’ limited access to decision-relevant information or decision assistance interventions that address decision makers’ limited attention and self-control. This relative advantage of structural choice architecture techniques may be due to the specific psychological mechanisms that underlie the different intervention techniques or, more specifically, their demands on information processing. Decision information and decision assistance interventions rely on relatively elaborate forms of information processing in that the information and assistance they provide needs to be encoded and evaluated in terms of personal values and/or goals to determine the overall utility of a given choice option (56). Decision structure interventions, by contrast, often do not require this type of information processing but provide a general utility boost for specific choice options that offers a cognitive shortcut for determining the most desirable option (57, 58). Accordingly, decision information and decision assistance interventions have previously been described as attempts to facilitate more deliberate decision making processes, whereas decision structure interventions have been characterized as attempts to advance more automatic decision making processes (59). 
Decision information and decision assistance interventions may thus more frequently fail to induce behavior change and show overall smaller effect sizes than decision structure interventions because they may exceed people’s cognitive limits in decision making more often, especially in situations of high cognitive load or time pressure.

The engagement of internal value and goal representations by decision information and decision assistance interventions introduces a second factor that may impact their effectiveness in changing behavior: the moderating influence of individual differences. Nutrition labels, a prominent example of decision information interventions, for instance, have been shown to be used more frequently by consumers who are concerned about their diet and overall health than by consumers who do not share those concerns (60). By targeting only certain population segments, information and assistance-based choice architecture interventions may show an overall smaller effect size when assessed at the population level compared to structure-based interventions, which rely less on individual values and goals and may therefore have an overall larger impact across the whole population. From a practical perspective, this suggests that policy makers who wish to use choice architecture as a behavioral intervention measure may need to precede decision information and decision assistance interventions with an assessment and analysis of the values and goals of the target population or, alternatively, choose a decision structure approach in cases when a segmentation of the population in terms of individual differences is not possible.

In summary, the higher effectiveness of decision structure interventions may potentially be explained by a combination of two factors: 1) lower demand on information processing and 2) lower susceptibility to individual differences in values and goals. Our explanation remains somewhat speculative, however, as empirical research especially on the cognitive processes underlying choice architecture interventions is still relatively scarce (but see refs. 53, 56, 57). More research efforts are needed to clarify the psychological mechanisms that drive the impact of choice architecture interventions and determine their effectiveness in changing behavior.

Besides the effect size variations between different categories of choice architecture techniques, our results reveal considerable differences in the effectiveness of choice architecture interventions across behavioral domains. Specifically, we find that choice architecture interventions had a particularly strong effect on behavior in the food domain, with average effect sizes up to 2.5 times larger than those in the health, environmental, financial, prosocial, or other behavioral domains.† A key characteristic of food choices and other food-related behaviors is the fact that they bear relatively low behavioral costs and few, if any, perceived long-term consequences for the decision maker. Previous research has found that the potential impact of a decision can indeed moderate the effectiveness of choice architecture interventions, with techniques such as gain and loss framing having a smaller effect on behavior when the decision at hand has a high, direct impact on the decision maker than when the decision has little to no impact (61). Consistent with this research, we observe not only the largest effect sizes of choice architecture interventions in the food domain but also the overall smallest effect sizes of interventions in the financial domain, a domain that predominantly represents decisions of high impact to the decision maker. This systematic variation of effect sizes across behavioral domains suggests that when making decisions that are perceived to have a substantial impact on their lives, people may be less prone to the influence of automatic biases and heuristics, and thus the effects of choice architecture interventions, than when making decisions of comparatively smaller impact.

Another characteristic of food choices that may explain the high effectiveness of choice architecture interventions in the food domain is the fact that they are often driven by habits. Commonly defined as highly automatized behavioral responses to cues in the choice environment, habits distinguish themselves from other behaviors through a particularly strong association between behavior on the one hand and choice environment on the other hand (62, 63). It is possible that choice architecture interventions benefit from this association to the extent that they target the choice environment and thus potentially alter triggers of habitualized, undesirable behaviors. To illustrate, previous research has shown that people tend to adjust their food consumption relative to portion size, meaning that they consume more when presented with large portions and less when presented with small portions (39). Here portion size acts as an environmental cue that triggers and guides the behavioral response to eat. Choice architecture interventions that target this environmental cue, for example, by changing the default size of a food portion, are likely to be successful in changing the amount of food people consume because they capitalize on the highly automatized association between portion size and food consumption. The congruence between factors that trigger habitualized behaviors and factors that are targeted by choice architecture interventions may not only explain why interventions in our sample were so effective in changing food choices but more generally indicate that choice architecture interventions are an effective tool for changing instances of habitualized behaviors (64). This finding is particularly relevant from a policy making perspective as habits tend to be relatively unresponsive to traditional intervention approaches and are therefore generally considered to be difficult to change (62). 
Given that choice architecture interventions can only target the environmental cues that trigger habitualized responses but not the association between choice environment and behavior per se, it should be noted though that the effects of interventions are likely limited to the specific choice contexts in which they are implemented.

While the present meta-analysis provides a comprehensive overview of the effectiveness of choice architecture as a behavior change tool, more research is needed to complement and complete our findings. For example, our methodological focus on individuals as the unit of analysis excludes a large number of studies that have investigated choice architecture interventions on broader levels, such as households, school classes, or organizations, which may reduce the generalizability of our results. Future research should target these studies specifically to add to the current analysis. Similarly, our data show very high levels of heterogeneity among the effect sizes of choice architecture interventions. Although the type of intervention, the behavioral domain in which it is applied, and contextual study characteristics account for some of this heterogeneity (SI Appendix, Table S3), more research is needed to identify factors that may explain the variability in effect sizes above and beyond those investigated here. Research has recently started to reveal some of those potential moderators of choice architecture interventions, including sociodemographic factors such as income and socioeconomic status as well as psychological factors such as domain knowledge, numerical ability, and attitudes (65–67). Investigating these moderators systematically can not only provide a more nuanced understanding of the conditions under which choice architecture facilitates behavior change but may also help to inform the design and implementation of targeted interventions that take into account individual differences in the susceptibility to choice architecture interventions (68). Ethical considerations should play a prominent role in this process to ensure that potentially more susceptible populations, such as children or low-income households, retain their ability to make decisions that are in their personal best interest (66, 69, 70). 
Based on the results of our own moderator analyses, additional avenues for future research may include the study of how information processing influences the effectiveness of varying types of choice architecture interventions and how the overall effect of interventions is determined by the type of behavior they target (e.g., high-impact vs. low-impact behaviors and habitual vs. one-time decisions). In addition, we identified a moderate publication bias toward the reporting of effect sizes that support a positive effect of choice architecture interventions on behavior. Future research efforts should take this finding into account and place special emphasis on appropriate sample size planning and analysis standards when evaluating choice architecture interventions. Finally, given our choice to focus our primary literature search on the terms “choice architecture” and “nudge,” we recognize that the present meta-analysis may have failed to capture parts of the literature published before the popularization of this now widely used terminology, despite our efforts to expand the search beyond those terms (for details on the literature search process, see Materials and Methods). Due to the large increase in choice architecture research over the past decade (Fig. 1), however, the results presented here likely offer a good representation of the existing evidence on the effectiveness of choice architecture in changing individuals’ behavior.

Conclusion

Few behavioral intervention measures have lately received as much attention from researchers and policy makers as choice architecture interventions. Integrating the results of more than 450 behavioral interventions, the present meta-analysis finds that choice architecture is an effective and widely applicable behavior change tool that facilitates personally and socially desirable choices across behavioral domains, geographical locations, and populations. Our results provide insights into the overall effectiveness of choice architecture interventions as well as systematic effect size variations among them, revealing promising directions for future research that may facilitate the development of theories in this still new but fast-growing field of research. Our work also provides a comprehensive overview of the effectiveness of choice architecture interventions across a wide range of intervention contexts that are representative of some of the most pressing societal challenges we are currently facing. This overview can serve as a guideline for policy makers who seek reliable, evidence-based information on the potential impact of choice architecture interventions and the conditions under which they promote behavior change.

Materials and Methods

The meta-analysis was conducted in accordance with guidelines for conducting systematic reviews (71) and conforms to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (72) standards.

Literature Search and Inclusion Criteria.

We searched the electronic databases PsycINFO, PubMed, PubPsych, and ScienceDirect using a combination of keywords associated with choice architecture (nudge OR “choice architecture”) and empirical research (method* OR empiric* OR procedure OR design).‡ Since the terms nudge and choice architecture were established only after the seminal book by Thaler and Sunstein (1), we restricted this search to studies that were published no earlier than 2008. To compensate for the potential bias this temporal restriction might introduce to the results of our meta-analysis, we identified additional studies, including studies published before 2008, through the reference lists of relevant review articles and a search for research reports by governmental and nongovernmental behavioral science units. To reduce the possibly confounding effects of publication status on the estimation of effect sizes, we further searched for unpublished studies using the ProQuest Dissertations & Theses database and requesting unpublished data through academic mailing lists. The search concluded in June 2019, yielding a total of 9,606 unique publications.

Given the exceptionally high heterogeneity in choice architecture research, we restricted our meta-analysis to studies that 1) empirically tested one or more choice architecture techniques using a randomized controlled experimental design, 2) had a behavioral outcome measure that was assessed in a real-life or hypothetical choice situation, 3) used individuals as the unit of analysis, and 4) were published in English. Studies that examined choice architecture in combination with other intervention measures, such as significant economic incentives or education programs, were excluded from our analyses to isolate the unique effects of choice architecture interventions on behavior.

The final sample comprised 455 effect sizes from 214 publications with a pooled sample size of 2,149,683 participants (N ranging from 14 to 813,990). SI Appendix, Fig. S1 illustrates the literature search and review process. All meta-analytic data and analyses reported in this paper are publicly available on the Open Science Framework (https://osf.io/fywae/) (74).

Effect Size Calculation and Moderator Coding.

Due to the large variation in behavioral outcome measures, we calculated Cohen’s d (40) as a standardized effect size measure of the mean difference between control and treatment conditions. Positive Cohen’s d values were coded to reflect behavior change in the desired direction of the intervention, whereas negative values reflected an undesirable change in behavior.
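As an illustration of the sign convention described above, the following minimal sketch computes Cohen’s d from raw scores using the textbook pooled-standard-deviation formula. The function name `cohens_d`, the `higher_is_desired` flag, and the example data are hypothetical and are not taken from the original analysis scripts; studies that report proportions or test statistics would need a different conversion, which is not shown here.

```python
import math
from statistics import mean, stdev


def cohens_d(treatment, control, higher_is_desired=True):
    """Standardized mean difference between treatment and control.

    Uses the pooled standard deviation (an assumption; the text does not
    specify the exact estimator). The sign is flipped when a lower score
    is the desired outcome, so that positive d always means behavior
    change in the intended direction.
    """
    n_t, n_c = len(treatment), len(control)
    if n_t < 2 or n_c < 2:
        raise ValueError("each group needs at least two observations")
    var_t = stdev(treatment) ** 2  # sample variance (n - 1 denominator)
    var_c = stdev(control) ** 2
    pooled_sd = math.sqrt(
        ((n_t - 1) * var_t + (n_c - 1) * var_c) / (n_t + n_c - 2)
    )
    d = (mean(treatment) - mean(control)) / pooled_sd
    return d if higher_is_desired else -d


# Hypothetical example: servings of vegetables chosen per participant.
treated = [3, 4, 5, 6, 7]
controls = [1, 2, 3, 4, 5]
print(round(cohens_d(treated, controls), 3))
```

If the intervention instead aimed to reduce the measured quantity (for example, energy use), passing `higher_is_desired=False` keeps the coding consistent with the convention above.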

To categorize systematic differences between choice architecture interventions, we coded studies for seven moderators describing the type of intervention, the behavioral domain in which it was implemented, and contextual study characteristics. The type of choice architecture intervention was classified using a taxonomy developed by Münscher and colleagues (13), which distinguishes three broad categories of choice architecture: decision information, decision structure, and decision assistance. Each of these categories targets a specific aspect of the choice environment, with decision information interventions targeting the way in which choice alternatives are described (e.g., framing), decision structure interventions targeting the way in which those choice alternatives are organized and structured (e.g., choice defaults), and decision assistance interventions targeting the way in which decisions can be reinforced (e.g., commitment devices). With its tripartite categorization framework the taxonomy is able to capture and categorize the vast majority of choice architecture interventions described in the literature, making it one of the most comprehensive classification schemes of choice architecture techniques in the field (see Table 1 for an overview). Many alternative attempts to organize and structure choice architecture interventions are considered problematic because they combine descriptive categorization approaches, which classify interventions based on choice architecture technique, and explanatory categorization approaches, which classify interventions based on underlying psychological mechanisms, within a single framework. The taxonomy we use here adopts a descriptive categorization approach in that it organizes interventions exclusively in terms of choice architecture techniques. 
We chose this approach not only to avoid common shortcomings of hybrid classification schemes, such as a reduction in the interpretability of results, but also to ensure a highly reliable categorization of interventions in the absence of psychological outcome measures that would allow us to infer explanatory mechanisms. Using a descriptive categorization approach further allowed us to generate theoretically meaningful insights that can be easily translated into concrete recommendations for policy making. Each intervention was coded according to its specific technique and corresponding category. Interventions that combined multiple choice architecture techniques were excluded from our analyses to isolate the unique effect of each approach. Based on previous reviews (73) and inspection of our data, we distinguished six behavioral domains: health, food, environment, finance, prosocial behavior, and other behavior. Contextual study characteristics included the type of experiment that had been conducted (conventional laboratory experiment, artifactual field experiment, framed field experiment, or natural field experiment), the location of the intervention (inside vs. outside of the United States), the target population of the intervention (adults vs. children and adolescents), and the year in which the data were published. Interrater reliability across a random sample of 20% of the publications was high, with Cohen’s κ ranging from 0.76 to 1.
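For readers unfamiliar with the interrater statistic, the sketch below shows how Cohen’s κ can be computed for two coders who assign one category to each item. It is a generic illustration, not the original coding procedure: the function name `cohens_kappa` and the example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters on categorical codes."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty item set")
    n = len(rater_a)
    # Observed agreement: share of items coded identically.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement under independence, from each rater's marginals.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / n ** 2
    if expected == 1:
        return 1.0  # both raters used a single identical category
    return (observed - expected) / (1 - expected)


# Hypothetical domain codes from two coders for four publications.
coder_1 = ["food", "food", "health", "health"]
coder_2 = ["food", "food", "health", "food"]
print(cohens_kappa(coder_1, coder_2))
```

A value of 1 indicates perfect agreement, and a value of 0 indicates agreement no better than chance.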

Statistical Analysis.

We estimated the overall effect of choice architecture interventions using a three-level meta-analytic model with random effects on the treatment and the publication level. This approach allowed us to account for the hierarchical structure of our data due to publications that reported multiple relevant outcome variables and/or more than one experiment (75–77). To further account for dependency in sampling errors due to overlapping samples (e.g., in cases where multiple treatment conditions were compared to the same control condition), we computed cluster-robust SEs, confidence intervals, and statistical tests for the estimated effect sizes (78, 79).
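One common way to write a three-level random-effects model of this kind is given below. The notation is assumed here for illustration and is not taken from the original analysis: d_ij denotes effect size i in publication j, μ the overall mean effect, u_j the publication-level random effect, v_ij the treatment-level random effect, and e_ij the sampling error with known variance s²_ij.

```latex
d_{ij} = \mu + u_j + v_{ij} + e_{ij}, \qquad
u_j \sim \mathcal{N}\!\left(0, \sigma^2_{\text{publication}}\right), \quad
v_{ij} \sim \mathcal{N}\!\left(0, \sigma^2_{\text{treatment}}\right), \quad
e_{ij} \sim \mathcal{N}\!\left(0, s^2_{ij}\right)
```

Under this formulation, effect sizes from the same publication share the term u_j and are therefore allowed to correlate, which is what the hierarchical structure is meant to capture. The cluster-robust SEs described above additionally guard against dependence among the e_ij that the model itself does not represent.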

To identify systematic differences between choice architecture interventions, we ran multiple moderator analyses in which we tested for the effects of type of intervention, behavioral domain, and study characteristics using mixed-effects meta-analytic models with random effects on the treatment and the publication level. All analyses were conducted in R using the package metafor (80).

Acknowledgments

This research was supported by Swiss National Science Foundation Grant PYAPP1_160571 awarded to Tobias Brosch and Swiss Federal Office of Energy Grant SI/501597-01. It is part of the activities of the Swiss Competence Center for Energy Research – Competence Center for Research in Energy, Society and Transition, supported by the Swiss Innovation Agency (Innosuisse). The funding sources had no involvement in the preparation of the article; in the study design; in the collection, analysis, and interpretation of data; nor in the writing of the manuscript. We thank Allegra Mulas and Laura Pagel for their assistance in data collection and extraction.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2107346118/-/DCSupplemental.

*While alternative classification schemes of choice architecture interventions can be found in the literature, the taxonomy used in the present meta-analysis distinguishes itself through its comprehensiveness, which makes it a highly reliable categorization tool and allows for inferences of both theoretical and practical relevance.

†Please note that our results are robust to the exclusion of nonretracted studies by the Cornell Food and Brand Laboratory, which has been criticized for repeated scientific misconduct; retracted studies by this research group were excluded from the meta-analysis.

‡Search terms were adapted from Szaszi et al. (73).

Data Availability

Data have been deposited in the Open Science Framework (https://osf.io/fywae/).

References

- 1. Thaler R. H., Sunstein C. R., Nudge: Improving Decisions about Health, Wealth, and Happiness (Yale University Press, 2008).

- 2. Thaler R. H., Sunstein C. R., Balz J. P., “Choice architecture” in The Behavioral Foundations of Public Policy, Shafir E., Ed. (Princeton University Press, 2013), pp. 428–439.

- 3. Becker G. S., The Economic Approach to Human Behavior (University of Chicago Press, ed. 1, 1976).

- 4. Ajzen I., The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50, 179–211 (1991).

- 5. Stern P. C., Toward a coherent theory of environmentally significant behavior. J. Soc. Issues 56, 407–424 (2000).

- 6. Albarracin D., Shavitt S., Attitudes and attitude change. Annu. Rev. Psychol. 69, 299–327 (2018).

- 7. Evans J. S. B. T., Dual-processing accounts of reasoning, judgment, and social cognition. Annu. Rev. Psychol. 59, 255–278 (2008).

- 8. Simon H. A., A behavioral model of rational choice. Q. J. Econ. 69, 99–118 (1955).

- 9. Simon H. A., Models of Bounded Rationality (MIT Press, 1982).

- 10. Gigerenzer G., Gaissmaier W., Heuristic decision making. Annu. Rev. Psychol. 62, 451–482 (2011).

- 11. Lichtenstein S., Slovic P., Eds., The Construction of Preference (Cambridge University Press, 2006).

- 12. Payne J. W., Bettman J. R., Johnson E. J., Behavioral decision research: A constructive processing perspective. Annu. Rev. Psychol. 43, 87–131 (1992).

- 13. Münscher R., Vetter M., Scheuerle T., A review and taxonomy of choice architecture techniques. J. Behav. Decis. Making 29, 511–524 (2016).

- 14. Kahneman D., Thinking, Fast and Slow (Farrar, Straus and Giroux, 2011).

- 15. Slovic P., From Shakespeare to Simon: Speculations—and some evidence—about man’s ability to process information. Or. Res. Inst. Res. Bull. 12, 1–19 (1972).

- 16. Hsee C. K., Zhang J., General evaluability theory. Perspect. Psychol. Sci. 5, 343–355 (2010).

- 17. Shah A. K., Oppenheimer D. M., Easy does it: The role of fluency in cue weighting. Judgm. Decis. Mak. 2, 371–379 (2007).

- 18. Allcott H., Social norms and energy conservation. J. Public Econ. 95, 1082–1095 (2011).

- 19. Jessoe K., Rapson D., Knowledge is (less) power: Experimental evidence from residential energy use. Am. Econ. Rev. 104, 1417–1438 (2014).

- 20. Roberto C. A., Larsen P. D., Agnew H., Baik J., Brownell K. D., Evaluating the impact of menu labeling on food choices and intake. Am. J. Public Health 100, 312–318 (2010).

- 21. Larrick R. P., Soll J. B., Economics. The MPG illusion. Science 320, 1593–1594 (2008).

- 22. Johnson E. J., Goldstein D., Medicine. Do defaults save lives? Science 302, 1338–1339 (2003).

- 23. Maas J., de Ridder D. T. D., de Vet E., de Wit J. B. F., Do distant foods decrease intake? The effect of food accessibility on consumption. Psychol. Health 27 (suppl. 2), 59–73 (2012).

- 24. Martin J. M., Norton M. I., Shaping online consumer choice by partitioning the web. Psychol. Mark. 26, 908–926 (2009).

- 25. Sharif M. A., Shu S. B., Nudging persistence after failure through emergency reserves. Organ. Behav. Hum. Decis. Process. 163, 17–29 (2021).

- 26. Sheeran P., Webb T. L., The intention-behavior gap. Soc. Personal. Psychol. Compass 10, 503–518 (2016).

- 27. Thaler R. H., Benartzi S., Save more tomorrow: Using behavioral economics to increase employee saving. J. Polit. Econ. 112, 164–187 (2004).

- 28. Loibl C., Jones L., Haisley E., Testing strategies to increase saving in individual development account programs. J. Econ. Psychol. 66, 45–63 (2018).

- 29. Benartzi S., et al., Should governments invest more in nudging? Psychol. Sci. 28, 1041–1055 (2017).

- 30. Beshears J., Kosowsky H., Nudging: Progress to date and future directions. Organ. Behav. Hum. Decis. Process. 161 (suppl.), 3–19 (2020).

- 31. DellaVigna S., Linos E., “RCTs to scale: Comprehensive evidence from two nudge units” (Working Paper 27594, National Bureau of Economic Research, 2020; https://www.nber.org/papers/w27594).

- 32. Hummel D., Maedche A., How effective is nudging? A quantitative review on the effect sizes and limits of empirical nudging studies. J. Behav. Exp. Econ. 80, 47–58 (2019).

- 33. Jachimowicz J. M., Duncan S., Weber E. U., Johnson E. J., When and why defaults influence decisions: A meta-analysis of default effects. Behav. Public Policy 3, 159–186 (2019).

- 34. Kluger A. N., DeNisi A., The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol. Bull. 119, 254–284 (1996).

- 35. Kühberger A., The influence of framing on risky decision: A meta-analysis. Organ. Behav. Hum. Decis. Process. 75, 23–55 (1998).

- 36. Abrahamse W., Steg L., Vlek C., Rothengatter T., A review of intervention studies aimed at household energy conservation. J. Environ. Psychol. 25, 273–291 (2005).

- 37. Cadario R., Chandon P., Which healthy eating nudges work best? A meta-analysis of field experiments. Mark. Sci. 39, 459–486 (2020).

- 38. Nisa C. F., Bélanger J. J., Schumpe B. M., Faller D. G., Meta-analysis of randomised controlled trials testing behavioural interventions to promote household action on climate change. Nat. Commun. 10, 4545 (2019).

- 39. Zlatevska N., Dubelaar C., Holden S. S., Sizing up the effect of portion size on consumption: A meta-analytic review. J. Mark. 78, 140–154 (2014).

- 40. Cohen J., Statistical Power Analysis for the Behavioral Sciences (Lawrence Erlbaum Associates, 1988).

- 41. Borenstein M., Hedges L. V., Higgins J. P., Rothstein H. R., Identifying and Quantifying Heterogeneity (John Wiley & Sons, Ltd, 2009), pp. 107–125.

- 42. Borenstein M., Higgins J. P., Hedges L. V., Rothstein H. R., Basics of meta-analysis: I2 is not an absolute measure of heterogeneity. Res. Synth. Methods 8, 5–18 (2017).

- 43. Vevea J. L., Woods C. M., Publication bias in research synthesis: Sensitivity analysis using a priori weight functions. Psychol. Methods 10, 428–443 (2005).

- 44. Egger M., Davey Smith G., Schneider M., Minder C., Bias in meta-analysis detected by a simple, graphical test. BMJ 315, 629–634 (1997).

- 45. Harrison G. W., List J. A., Field experiments. J. Econ. Lit. 42, 1009–1055 (2004).

- 46. Maki A., Burns R. J., Ha L., Rothman A. J., Paying people to protect the environment: A meta-analysis of financial incentive interventions to promote proenvironmental behaviors. J. Environ. Psychol. 47, 242–255 (2016).

- 47. Mantzari E., et al., Personal financial incentives for changing habitual health-related behaviors: A systematic review and meta-analysis. Prev. Med. 75, 75–85 (2015).

- 48. Snyder L. B., et al., A meta-analysis of the effect of mediated health communication campaigns on behavior change in the United States. J. Health Commun. 9 (suppl. 1), 71–96 (2004).

- 49. Hagmann D., Ho E. H., Loewenstein G., Nudging out support for carbon tax. Nat. Clim. Chang. 9, 484–489 (2019).

- 50. IJzerman H., et al., Use caution when applying behavioural science to policy. Nat. Hum. Behav. 4, 1092–1094 (2020).

- 51. Kristal A. S., Whillans A. V., What we can learn from five naturalistic field experiments that failed to shift commuter behaviour. Nat. Hum. Behav. 4, 169–176 (2020).

- 52. Loewenstein G., Chater N., Putting nudges in perspective. Behav. Public Policy 1, 26–53 (2017).

- 53. Hardisty D. J., Johnson E. J., Weber E. U., A dirty word or a dirty world?: Attribute framing, political affiliation, and query theory. Psychol. Sci. 21, 86–92 (2010).

- 54. Homonoff T. A., Can small incentives have large effects? The impact of taxes versus bonuses on disposable bag use. Am. Econ. J. Econ. Policy 10, 177–210 (2018).

- 55. McCaffery E. J., Baron J., Thinking about tax. Psychol. Public Policy Law 12, 106–135 (2006).

- 56. Mertens S., Hahnel U. J. J., Brosch T., This way please: Uncovering the directional effects of attribute translations on decision making. Judgm. Decis. Mak. 15, 25–46 (2020).

- 57. Dinner I., Johnson E. J., Goldstein D. G., Liu K., Partitioning default effects: Why people choose not to choose. J. Exp. Psychol. Appl. 17, 332–341 (2011).

- 58. Knowles D., Brown K., Aldrovandi S., Exploring the underpinning mechanisms of the proximity effect within a competitive food environment. Appetite 134, 94–102 (2019).

- 59. Sunstein C. R., People prefer System 2 nudges (kind of). Duke Law J. 66, 121–168 (2016).

- 60. Campos S., Doxey J., Hammond D., Nutrition labels on pre-packaged foods: A systematic review. Public Health Nutr. 14, 1496–1506 (2011).

- 61.Marteau T. M., Framing of information: Its influence upon decisions of doctors and patients. Br. J. Soc. Psychol. 28, 89–94 (1989). [DOI] [PubMed] [Google Scholar]

- 62.Verplanken B., Wood W., Interventions to break and create consumer habits. J. Public Policy Mark. 25, 90–103 (2006). [Google Scholar]

- 63.Wood W., Rünger D., Psychology of habit. Annu. Rev. Psychol. 67, 289–314 (2016). [DOI] [PubMed] [Google Scholar]

- 64.Venema T. A. G., Kroese F. M., Verplanken B., de Ridder D. T. D., The (bitter) sweet taste of nudge effectiveness: The role of habits in a portion size nudge, a proof of concept study. Appetite 151, 104699 (2020). [DOI] [PubMed] [Google Scholar]

- 65.Allcott H., Site selection bias in program evaluation. Q. J. Econ. 130, 1117–1165 (2015). [Google Scholar]

- 66.Ghesla C., Grieder M., Schubert R., Nudging the poor and the rich—A field study on the distributional effects of green electricity defaults. Energy Econ. 86, 104616 (2020). [Google Scholar]

- 67.Mrkva K., Posner N. A., Reeck C., Johnson E. J., Do nudges reduce disparities? Choice architecture compensates for low consumer knowledge. J. Mark. 85, 67–84 (2021). [Google Scholar]

- 68.Bryan C. J., Tipton E., Yeager D. S., Behavioural science is unlikely to change the world without a heterogeneity revolution. Nat. Hum. Behav. 5, 980–989 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Hahnel U. J. J., Chatelain G., Conte B., Piana V., Brosch T., Mental accounting mechanisms in energy decision-making and behaviour. Nat. Energy 5, 952–958 (2020). [Google Scholar]

- 70.Sunstein C. R., The distributional effects of nudges. Nat. Hum. Behav. 10.1038/s41562-021-01236-z (2021). [DOI] [PubMed] [Google Scholar]

- 71.Siddaway A. P., Wood A. M., Hedges L. V., How to do a systematic review: A best practice guide for conducting and reporting narrative reviews, meta-analyses, and meta-syntheses. Annu. Rev. Psychol. 70, 747–770 (2019). [DOI] [PubMed] [Google Scholar]

- 72.Moher D., Liberati A., Tetzlaff J., Altman D. G.; PRISMA Group, Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 6, e1000097 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Szaszi B., Palinkas A., Palfi B., Szollosi A., Aczel B., A systematic scoping review of the choice architecture movement: Toward understanding when and why nudges work. J. Behav. Decis. Making 31, 355–366 (2018). [Google Scholar]

- 74.Mertens S., Herberz M., Hahnel U. J. J., Brosch T., The effectiveness of nudging: A meta-analysis of choice architecture interventions across behavioral domains. Open Science Framework. https://osf.io/fywae/. Deposited 11 September 2021. [DOI] [PMC free article] [PubMed]

- 75.Cheung M. W. L., Modeling dependent effect sizes with three-level meta-analyses: A structural equation modeling approach. Psychol. Methods 19, 211–229 (2014). [DOI] [PubMed] [Google Scholar]

- 76.Van den Noortgate W., López-López J. A., Marín-Martínez F., Sánchez-Meca J., Three-level meta-analysis of dependent effect sizes. Behav. Res. Methods 45, 576–594 (2013). [DOI] [PubMed] [Google Scholar]

- 77.Van den Noortgate W., López-López J. A., Marín-Martínez F., Sánchez-Meca J., Meta-analysis of multiple outcomes: A multilevel approach. Behav. Res. Methods 47, 1274–1294 (2015). [DOI] [PubMed] [Google Scholar]

- 78.Cameron A. C., Miller D. L., A practitioner’s guide to cluster-robust inference. J. Hum. Resour. 50, 317–372 (2015). [Google Scholar]

- 79.Hedges L. V., Tipton E., Johnson M. C., Robust variance estimation in meta-regression with dependent effect size estimates. Res. Synth. Methods 1, 39–65 (2010). [DOI] [PubMed] [Google Scholar]

- 80.Viechtbauer W., Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 36, 1–48 (2010). [Google Scholar]

Data Availability Statement

Data have been deposited in the Open Science Framework (https://osf.io/fywae/).