Abstract

Purpose

Stuttering is characterized by intermittent speech disfluencies, which are dramatically reduced when speakers synchronize their speech with a steady beat. The goal of this study was to characterize the neural underpinnings of this phenomenon using functional magnetic resonance imaging.

Method

Data were collected from 16 adults who stutter and 17 adults who do not stutter while they read sentences aloud either in a normal, self-paced fashion or paced by the beat of a series of isochronous tones (“rhythmic”). Task activation and task-based functional connectivity analyses were carried out to compare neural responses between speaking conditions and groups after controlling for speaking rate.

Results

Adults who stutter produced fewer disfluent trials in the rhythmic condition than in the normal condition. Adults who stutter did not have any significant changes in activation between the rhythmic condition and the normal condition, but when groups were collapsed, participants had greater activation in the rhythmic condition in regions associated with speech sequencing, sensory feedback control, and timing perception. Adults who stutter also demonstrated increased functional connectivity among cerebellar regions during rhythmic speech as compared to normal speech and decreased connectivity between the left inferior cerebellum and the left prefrontal cortex.

Conclusions

Modulation of connectivity in the cerebellum and prefrontal cortex during rhythmic speech suggests that this fluency-inducing technique activates a compensatory timing system in the cerebellum and potentially modulates top-down motor control and attentional systems. These findings corroborate previous work associating the cerebellum with fluency in adults who stutter and indicate that the cerebellum may be targeted to enhance future therapeutic interventions.

Supplemental Material

Stuttering is a speech disorder that impacts the production of smooth and timely articulations of planned utterances. Stuttering typically emerges early in childhood and persists over the life span for 1% of the population (Craig et al., 2009; Yairi & Ambrose, 1999). Speech of people who stutter (PWS) is characterized by perceptually salient repetitions and prolongations of individual phonemes, as well as abnormal silent pauses at the onset of syllables and words accompanied by tension in the articulatory musculature (Max, 2004). These disfluencies are often accompanied by other secondary behaviors, such as eye blinking and facial grimacing (Guitar, 2014). Along with these more overt characteristics, stuttering also has a severe impact on those who experience it, including increased social anxiety and decreased self-confidence, emotional functioning, and overall mental health (Craig et al., 2009; Craig & Tran, 2006, 2014). Gaining a better understanding of how and why stuttering occurs will help lead to more targeted therapies and improve quality of life for PWS.

Considerable effort has been made to identify the core pathology underlying stuttering (for reviews, see Max, 2004; Max et al., 2004). More recently, diverse brain imaging modalities have been used to examine how the brains of PWS differ from those of people who do not stutter and how these measures change in different speaking scenarios or following therapy (see Etchell et al., 2018, for a complete literature review). Studies have consistently found that PWS show structural and functional differences in the brain network pertaining to speech initiation and timing (cortico-thalamo-basal ganglia motor loop; Chang & Zhu, 2013; Giraud, 2008; Lu, Peng, et al., 2010) and reduced structural integrity in speech planning areas (left ventral premotor cortex [vPMC] and inferior frontal gyrus [IFG]; Beal et al., 2013, 2015; Chang et al., 2008, 2011; Garnett et al., 2018; Kell et al., 2009; Lu et al., 2012). Functionally, previous work has indicated that, during speech, adults who stutter (AWS) have reduced activation in left hemisphere auditory areas (Belyk et al., 2015; Braun et al., 1997; Chang et al., 2009; De Nil et al., 2008, 2000; Fox et al., 1996; Van Borsel et al., 2003) and overactivation in right hemisphere structures (Braun et al., 1997; De Nil et al., 2000; Fox et al., 1996, 2000; Ingham et al., 2000; Van Borsel et al., 2003), which are typically nondominant for language processing. These studies strongly suggest that stuttering occurs as the result of impaired speech timing, planning, and auditory processing and that brain structures not normally involved in speech production are potentially recruited to compensate.

In addition to these task activation analyses, previous studies have examined task-based functional connectivity (i.e., activation coupling between multiple brain areas during a speaking task) differences between AWS and adults who do not stutter (ANS). Some studies show reduced connectivity between the left IFG and the left precentral gyrus in AWS (Chang et al., 2011; Lu et al., 2009), which suggests an impairment in translating speech plans for motor execution (Guenther, 2016). Other studies show group differences in connectivity between auditory, motor, premotor, and subcortical areas (Chang et al., 2011; Kell et al., 2018; Lu, Chen, et al., 2010; Lu et al., 2009; Lu, Peng, et al., 2010). Results of these task-based connectivity studies, as well as resting-state and structural connectivity studies (e.g., Chang & Zhu, 2013; Sitek et al., 2016), have made it apparent that stuttering behavior is not merely the result of disruptions to one or more separate brain regions but also of differences in the ability of brain regions to communicate with one another during speech.

Beyond examining neural activation in AWS during typical speech, imaging studies have also looked at activation during conditions where AWS speak more fluently. One such condition that has been widely examined behaviorally is the rhythm effect, in which stuttering disfluencies are dramatically reduced when speakers synchronize their speech movements with isochronous pacing stimuli (Azrin et al., 1968; Barber, 1940; Hutchinson & Norris, 1977; Stager et al., 1997; Toyomura et al., 2011). These fluency-enhancing effects are robust; they occur regardless of whether the pacing stimulus is presented in the auditory or visual modality (Barber, 1940), can be induced even by an imagined rhythm (Barber, 1940; Stager et al., 2003), and occur independently of speaking rate (Davidow, 2014; Hanna & Morris, 1977). Previous studies investigating changes in brain activation during the rhythm effect (Braun et al., 1997; Stager et al., 2003; Toyomura et al., 2011, 2015) have found that, during isochronous speech, both AWS and ANS had increased activation in speech-related auditory and motor regions of cortex as well as parts of the basal ganglia. These activation increases were especially pronounced for AWS as compared to ANS. Toyomura et al. (2011) also demonstrated that these activation increases occurred in regions displaying underactivation during the unpaced speaking condition. This suggests that pacing speech with a metronome improves fluency by “normalizing” underactivation in speech production regions. In light of the functional connectivity studies mentioned previously, characterizing changes in brain connectivity between typical and isochronously paced speech could illuminate how external pacing leads to normalized activation in the speech network and, ultimately, fluency.

In this study, we employed functional magnetic resonance imaging (fMRI) during an overt isochronously paced sentence-reading task in AWS and ANS to characterize modulation of brain activation and functional connectivity related to the rhythm effect in stuttering. In addition, this study sought to address an important issue not previously accounted for in neuroimaging studies of the rhythm effect: a reduced speaking rate in the paced compared to the unpaced condition. Reduced speaking rate and paced speech can both induce fluency in AWS (Andrews et al., 1982), but the effects are dissociable: the rhythm effect increases fluency even when speaking rates are matched between speaking conditions (Davidow, 2014). Since brain activation is also modulated by speaking rate (Fox et al., 2000; Riecker et al., 2006), unless rate is accounted for, activation changes between paced and unpaced conditions may reflect the planning and production demands of a slower rate, or the combined fluency-inducing effects of slower rate and pacing, rather than the effect of pacing alone. Two prior studies (Braun et al., 1997; Stager et al., 2003) examined general differences between “fluent” and “disfluent” speaking conditions, aiming to characterize the neural underpinnings of fluency without controlling for the features that contributed to it (e.g., rate, speaking style, percent voicing). Toyomura et al. (2011) attempted to control for rate differences between the conditions by instructing participants to speak at similar rates during both conditions. However, they still found a significantly reduced speaking rate in the metronome-paced condition that was not accounted for in their analyses. Separating out the effects of rate would help elucidate the neural underpinnings of the rhythm effect itself. In this study, a combination of training and analysis procedures was used to accomplish this.

Method

The current study complied with the principles of research involving human subjects as stipulated by the Boston University (BU) Institutional Review Board (Protocol 2421E) and the Massachusetts General Hospital (MGH) Human Research Committee, and participants gave informed consent before taking part. The entire experimental procedure took approximately 2 hr, and subjects received monetary compensation.

Subjects

Sixteen AWS (11 men and five women, aged 18–58 years, M age = 29.9 years, SD = 12.9) and 17 ANS (11 men and six women, aged 18–49 years, M age = 28.7 years, SD = 8.1) from the greater Boston area were included in the final analyses. Age was not significantly different between groups (two-sample t test, t = 0.31, p = .756). Subjects were native speakers of American English who reported normal (or corrected-to-normal) vision and no history of hearing, speech, language, or neurological disorders (aside from persistent developmental stuttering for the AWS). Handedness was measured with the Edinburgh Handedness Inventory (Oldfield, 1971). Using this metric, all AWS were found to be right-handed (scoring greater than 40), but there was more variability among ANS (13 right-handed, one left-handed, and three ambidextrous). There was a significant difference in handedness score between groups (Wilcoxon rank-sum test, z = 2.29, p = .022); therefore, handedness score was included as a covariate in all group imaging comparisons. For each stuttering participant, stuttering severity was determined using the Stuttering Severity Instrument–Fourth Edition (SSI-4; Riley, 2008; mean score = 23.1, range: 9–42; see Table 1 for individual participants). Four additional subjects (three AWS and one ANS) were also tested, but they were excluded during data inspection (described below in the Behavioral Analysis and Task Activation fMRI Analysis sections).

Table 1.

Demographic and stuttering severity data from adults who stutter (AWS).

| Subject ID | Age (years) | Gender | SSI-4 composite | SSI-Mod | Disfluency rate |
|---|---|---|---|---|---|
| AWS01 | 19 | F | 28 | 19 | 0% |
| AWS02 | 22 | F | 31 | 26 | 3.03% |
| AWS03 | 31 | F | 30 | 22 | 3.03% |
| AWS04 | 21 | M | 9 | 7 | 1.92% |
| AWS05 | 58 | M | 14 | 11 | 0% |
| AWS06 | 23 | M | 42 | 29 | 0% |
| AWS07 | 53 | M | 27 | 22 | 0% |
| AWS08 | 44 | M | 20 | 16 | 0% |
| AWS09 | 20 | M | 18 | 15 | 1.52% |
| AWS10 | 22 | M | 27 | 18 | 3.02% |
| AWS11 | 21 | M | 19 | 16 | 6.06% |
| AWS12 | 20 | M | 24 | 14 | 1.52% |
| AWS13 | 18 | F | 14 | 11 | 0% |
| AWS14 | 35 | M | 30 | 19 | 0% |
| AWS15 | 42 | M | 22 | 17 | 1.52% |
| AWS16 | 29 | M | 14 | 12 | 0% |

Note. SSI-4 = Stuttering Severity Instrument–Fourth Edition; SSI-Mod = a modified version of the SSI-4 that does not include a subscore related to concomitant movements; Disfluency rate = the percentage of trials containing disfluencies during the normal speech condition; F = female; M = male.

fMRI Paradigm

Sixteen eight-syllable sentences were selected from the Revised List of Phonetically Balanced Sentences (Harvard Sentences; Institute of Electrical and Electronics Engineers, 1969; see Appendix A). These sentences, composed of one- and two-syllable words, contain a broad distribution of English speech sounds (e.g., “The juice of lemons makes fine punch”). During a functional brain-imaging session, subjects read aloud the stimulus sentences under two different speaking conditions, one in which individual syllables were paced by isochronous auditory beats (i.e., the rhythm condition) and one in which syllables were not paced (i.e., the normal condition). For each trial, subjects were presented with eight isochronous tones (1,000 Hz, 25-ms duration), with a 270-ms interstimulus interval. The resulting rate of approximately 222 beats per minute was chosen so that participants' speech would approximate the rate of the normal condition (based on previous estimates of mean speaking rate in English; Davidow, 2014; Pellegrino et al., 2004). Participants were instructed to refrain from using any part of their body (e.g., finger or foot) to tap to the rhythm.
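The pacing-rate arithmetic can be checked with a few lines of Python. This is a minimal sketch that assumes the 270-ms value is the interval between successive tone onsets, which is the reading the reported figure of about 222 beats per minute implies; the constant names are illustrative.

```python
# Pacing-rate arithmetic for the rhythm condition (illustrative sketch).
# Assumption: 270 ms is treated as the onset-to-onset interval between tones.
N_TONES = 8        # one tone per syllable of an eight-syllable sentence
TONE_MS = 25       # duration of each tone (ms)
INTERVAL_MS = 270  # assumed onset-to-onset interval (ms)

beats_per_minute = 60_000 / INTERVAL_MS
# The last tone begins after (N_TONES - 1) intervals and then lasts TONE_MS.
sequence_ms = (N_TONES - 1) * INTERVAL_MS + TONE_MS

print(f"{beats_per_minute:.1f} beats per minute")  # prints: 222.2 beats per minute
print(f"tone sequence lasts {sequence_ms} ms")     # prints: tone sequence lasts 1915 ms
```

If the 270 ms were instead measured from the offset of one tone to the onset of the next, the onset-to-onset interval would be 295 ms and the rate about 203 beats per minute, so the reported rate points to the onset-to-onset reading. Under either reading, the eight-tone sequence fits within the tone period shown in Figure 1.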

To avoid confounding the blood oxygen level–dependent (BOLD) response of auditory regions to the pacing tones with the response to auditory feedback from the subjects' own speech, the pacing tones were terminated prior to the presentation of the orthographic stimulus. During a rhythm or normal trial, the orthography of a given sentence was presented with the corresponding trial identifier (i.e., “rhythm” or “normal”) above the sentence. Based on this identifier, subjects were instructed either to read the sentence “in a rhythmic way” by aligning each syllable to a beat or to read it in a natural way. Thus, on rhythm trials, subjects used the tones to pace their forthcoming speech, while on normal trials, they read the stimuli at a normal speaking rate, rhythm, and intonation (see Appendix B for detailed instructions). The font color was either blue for rhythm or green for normal or vice versa, and colors were counterbalanced across subjects. Subjects were instructed to begin reading aloud immediately after the sentence appeared on the screen. In the event that they made a mistake, they were asked to refrain from producing any corrections and remain silent until the next trial. Silent baseline trials were also included wherein subjects heard the tones and saw a random series of typographical symbols (e.g., “+\^ &$/[|\ $=[ [)*% /-@ \| -%-/”) clustered into wordlike groupings (matched to stimulus sentences); subjects refrained from speaking during these trials.

Subjects participated in a behavioral experiment (not reported here) prior to the imaging experiment that gave them experience with the speech stimuli and the task. The time between this prior exposure and the present experiment ranged from 0 to 424 days. Immediately prior to the imaging session, subjects practiced each sentence under both conditions until they demonstrated competence with the task and sentence production. Subjects also completed a set of six practice trials in the scanner prior to fMRI data collection. To control basic speech parameters across conditions and groups, subjects were provided with performance feedback on their overall speech rate and loudness during practice only. Following this practice set, subjects completed between two and four experimental runs of test trials, depending on time constraints (14 ANS and 14 AWS completed four runs, three ANS and one AWS completed three runs, and one AWS completed two runs). During the experimental session, verbal feedback was provided between runs if subjects consistently performed outside the specified speech rate (mean syllable duration = 220–320 ms). Each run consisted of 16 rhythm trials, 16 normal trials, and 16 baseline trials, pseudorandomly interleaved within each run for each subject. All trials were audio-recorded for later processing.

Data Acquisition

MRI data for this study were collected at two locations: the Athinoula A. Martinos Center for Biomedical Imaging at the MGH, Charlestown Campus (nine AWS, nine ANS) and the Cognitive Neuroimaging Center at BU (eight AWS, eight ANS). At MGH, images were acquired with a 3T Siemens Skyra scanner and a 32-channel head coil, while a 3T Siemens Prisma scanner with a 64-channel head coil was used at BU. At each location, subjects lay supine in the scanner, and functional volumes were collected using a gradient echo, echo-planar imaging BOLD sequence (repetition time [TR] = 11.5 s, acquisition time = 2.47 s, echo time = 30 ms, flip angle = 90°). Each functional volume covered the entire brain and was composed of 46 axial slices (64 × 64 matrix) acquired in interleaved order and accelerated using a simultaneous multislice factor of 3, with a 192-mm field of view. The in-plane resolution was 3.0 × 3.0 mm², and slice thickness was 3.0 mm with no gap. Additionally, a high-resolution T1-weighted whole-brain structural image was collected from each participant to anatomically localize the functional data (MPRAGE [magnetization-prepared rapid gradient-echo] sequence, 256 × 256 × 176 mm³ volume, with a 1-mm isotropic resolution, TR = 2.53 s, inversion time = 1,100 ms, echo time = 1.69 ms, flip angle = 7°).

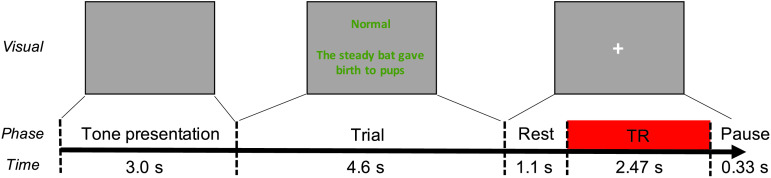

Functional data were acquired using a sparse image acquisition paradigm (Eden et al., 1999; Hall et al., 1999) that allowed participants to produce the target sentences during silent intervals between volume acquisitions. Volumes were acquired 5.7–8.17 s after visual stimulus presentation to ensure a 4- to 6-s delay between the middle of sentence production (approximately 2.3 s post–sentence presentation) and the middle of the acquisition (approximately 6.9 s post–sentence presentation), aligning the acquisition to the peak of the canonical task-related BOLD response to the subject's production (Poldrack et al., 2011). Prior work has shown there is variation in the timing of this hemodynamic response across tasks, brain regions, and participants (Handwerker et al., 2004; Janssen & Mendieta, 2020). However, because the functional volumes are acquired over 2.47 s, sentences are produced over the course of about 2 s, and there is a random amount of jitter between the start of sentence production and the start of the acquisition on each trial, the single acquisition provides a broad sampling of the hemodynamic response across a range of different delay times. Furthermore, by scanning after speech production has ended, this paradigm reduces head motion–induced scan artifacts, eliminates the influence of scanner noise on speaker performance, and allows subjects to perceive their own self-generated auditory feedback in the absence of scanner noise (e.g., Gracco et al., 2005). A schematic representation of the trial structure and timeline is shown in Figure 1.

Figure 1.

Schematic diagram illustrating the temporal structure of stimulus presentation during functional data acquisition. At the start of each trial, isochronous tone sequences were presented for 3.0 s. The visual stimulus then appeared and remained on screen for 4.6 s. At 1.1 s after stimulus offset, a whole-brain volume was acquired. The next trial started 0.33 s after data acquisition was complete. TR = repetition time.
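The durations in the Figure 1 caption can be chained to confirm the reported TR and acquisition window. The sketch below uses only the caption's values plus the reported 2.3-s midpoint of speech. It assumes the visual stimulus appears immediately after the tone period, as the caption describes, and the variable names are illustrative.

```python
# Trial timeline reconstructed from the Figure 1 caption (all times in seconds).
TONES = 3.0      # isochronous tone sequence
STIMULUS = 4.6   # sentence on screen
GAP = 1.1        # stimulus offset -> start of volume acquisition
ACQ = 2.47       # acquisition time of one volume
POST = 0.33      # end of acquisition -> start of next trial

stim_onset = TONES                      # sentence appears when the tones end
acq_start = stim_onset + STIMULUS + GAP
acq_end = acq_start + ACQ
tr = acq_end + POST                     # one volume per trial, so trial length = TR

# Timing relative to sentence presentation, as reported in the text.
acq_window = (acq_start - stim_onset, acq_end - stim_onset)
acq_middle = acq_window[0] + ACQ / 2
speech_middle = 2.3                     # approximate midpoint of speech (reported)
delay = acq_middle - speech_middle      # should fall in the intended 4- to 6-s range

print(f"TR = {tr:.2f} s")
print(f"acquisition {acq_window[0]:.2f}-{acq_window[1]:.2f} s after sentence onset")
print(f"delay from mid-speech to mid-acquisition: {delay:.2f} s")
```

The chain reproduces the 11.5-s TR and the 5.7–8.17 s acquisition window. It places the acquisition midpoint at about 6.9 s after sentence onset, roughly 4.6 s after the middle of speech.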

Visual stimuli were projected onto a screen viewed from within the scanner via a mirror attached to the head coil. Auditory stimuli were delivered to both ears through Sensimetrics Model S-14 MRI-compatible earphones using MATLAB (The MathWorks). Subjects' utterances were transduced with a Fibersound Model FOM1-MR-30m fiberoptic microphone, sent to a laptop (Lenovo ThinkPad W540), and recorded using MATLAB. Subjects took a short break after completing each run.

Behavioral Analysis

The open-source large-vocabulary continuous speech recognition engine Julius (Lee & Kawahara, 2009) was used in conjunction with the free VoxForge American English acoustic models (voxforge.org) to perform phoneme-level alignment on the sentence recordings. This resulted in phoneme boundary timing information for every trial. A researcher manually inspected each trial to ensure correct automatic detection of phoneme boundaries. Any trials in which the subject made a reading error, a condition error (i.e., spoke at an isochronous pace when they were cued to speak normally or vice versa), or a disfluency categorized as a stutter by a licensed speech-language pathologist were eliminated from further behavioral analysis. One ANS who made consistent condition errors was eliminated from further analysis. One AWS was eliminated from further analysis due to an insufficient number of fluent trials during the normal speech condition (six of 64 attempted). Neither was included in the total participant count in the Subjects section.

To evaluate whether there was a fluency-enhancing effect of isochronous pacing, the percentage of trials eliminated due to stuttering in the AWS group was compared between the two speaking conditions using a nonparametric Wilcoxon signed-ranks test. Measures of the total sentence duration and intervocalic timing from each trial were also extracted to determine the rate and isochronicity of each production. Within each sentence, the intervals between the centers of successive vowels (seven intervocalic intervals [IVIs] for the eight vowels of a sentence) were measured, and their mean was calculated. The reciprocal of the mean IVI (1/IVI) was then calculated, resulting in a measure of speaking rate in units of IVIs per second. The coefficient of variation for IVIs (CV-IVI) was also calculated by dividing the standard deviation of the IVIs by their mean. A higher CV-IVI indicates greater variability of IVIs, while a CV-IVI of 0 reflects perfect isochronicity. Rate and CV-IVI were compared between groups and conditions using a mixed-design analysis of variance. A Bonferroni correction was applied across these two analyses to account for multiple testing.
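The per-sentence rate and isochronicity measures can be expressed compactly. This sketch takes the vowel-center times of one sentence (e.g., from the phoneme alignment). It assumes the sample standard deviation is used for the CV, which the text does not specify, and the helper name `ivi_measures` is hypothetical.

```python
import statistics


def ivi_measures(vowel_centers):
    """Return (mean IVI, speaking rate, CV-IVI) for one sentence.

    vowel_centers: times in seconds of the centers of successive vowels,
    e.g. the eight vowels of an eight-syllable sentence.
    """
    if len(vowel_centers) < 3:
        raise ValueError("need at least three vowels to compute a CV of the IVIs")
    # Intervocalic intervals between successive vowel centers (7 for 8 vowels).
    ivis = [b - a for a, b in zip(vowel_centers, vowel_centers[1:])]
    mean_ivi = statistics.fmean(ivis)
    rate = 1.0 / mean_ivi  # speaking rate in IVIs per second
    # Assumption: sample standard deviation; 0 means perfectly isochronous.
    cv_ivi = statistics.stdev(ivis) / mean_ivi
    return mean_ivi, rate, cv_ivi


# A perfectly isochronous sentence paced at 270-ms intervals.
paced = [0.1 + 0.27 * i for i in range(8)]
mean_ivi, rate, cv_ivi = ivi_measures(paced)
```

For the paced example, the rate is 1/0.27 ≈ 3.7 IVIs per second and the CV-IVI is zero up to floating-point rounding.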

Task Activation fMRI Analysis

Preprocessing

Following data collection, all images were processed through two preprocessing pipelines: a surface-based pipeline for cortical activation analyses and a volume-based pipeline for subcortical and cerebellar analyses. For both the surface- and volume-based pipelines, functional images from each subject were simultaneously realigned to the mean subject image and unwarped (motion-by-inhomogeneity interactions) using SPM12's realign and unwarp procedure (Andersson et al., 2001). Outlier scans were detected with artifact detection tools (https://www.nitrc.org/projects/artifact_detect/) based on motion displacement (scan-to-scan motion threshold of 0.9 mm) and mean signal change (scan-to-scan signal change threshold of 5 SDs above the mean). For the surface-based pipeline, functional images from each subject were then coregistered with their high-resolution T1 structural images and resliced using SPM12's intermodal registration procedure with a normalized mutual information objective function. The structural images were segmented into white matter, gray matter, and cerebrospinal fluid, and cortical surfaces were reconstructed using the FreeSurfer image analysis suite (freesurfer.net; Fischl et al., 1999). Functional data were then resampled at the location of the FreeSurfer fsaverage tessellation of each subject-specific cortical surface. For the vertex-level analyses (see Second-Level Group Analyses section), surfaces were additionally smoothed using iterative diffusion smoothing with 40 diffusion steps (equivalent to an 8-mm full-width half-maximum smoothing kernel; Hagler et al., 2006).
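The two outlier criteria can be illustrated with a simplified stand-in for the artifact detection step. This sketch is not the artifact detection tools' actual algorithm (which computes its own composite motion measure). It assumes per-scan displacement values are already available, z-scores the scan-to-scan change in global mean signal, and uses a hypothetical helper name, `flag_outlier_scans`.

```python
import statistics


def flag_outlier_scans(displacement_mm, global_signal,
                       motion_thresh_mm=0.9, signal_thresh_sd=5.0):
    """Return sorted indices of scans flagged as outliers (simplified sketch).

    displacement_mm[i]: head displacement from scan i-1 to scan i
        (displacement_mm[0] is ignored).
    global_signal[i]: mean BOLD signal over the brain in scan i.
    """
    n = len(global_signal)
    # Scan-to-scan change in global signal, later expressed in SD units.
    diffs = [global_signal[i] - global_signal[i - 1] for i in range(1, n)]
    mu, sd = statistics.fmean(diffs), statistics.pstdev(diffs)
    flagged = set()
    for i in range(1, n):
        if displacement_mm[i] > motion_thresh_mm:
            flagged.add(i)
        if sd > 0 and abs(diffs[i - 1] - mu) / sd > signal_thresh_sd:
            flagged.add(i)
    return sorted(flagged)


# Simulated run: small signal fluctuations, one signal spike, one head jerk.
signal = [100 + 0.1 * (i % 2) for i in range(100)]
signal[50] += 20
displacement = [0.1] * 100
displacement[70] = 1.2
outliers = flag_outlier_scans(displacement, signal)
```

Note that an isolated signal spike produces two large scan-to-scan changes (into and out of the spike), so this simple version flags both scan 50 and scan 51, in addition to scan 70 for motion.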

For the volume-based pipeline, after the outlier detection step, functional volumes were then simultaneously segmented and normalized directly to Montréal Neurological Institute (MNI) space using SPM12's combined normalization and segmentation procedure (Ashburner & Friston, 2005). For the voxel-level analyses (see Second-Level Group Analyses section), volumes were also smoothed using an 8-mm full-width half-maximum smoothing kernel. A mask was then applied, such that only voxels within the subcortical structures were submitted to subsequent analyses. The original T1 structural image from each subject was also centered, segmented, and normalized using SPM12. Following preprocessing, two AWS (not included in the 16 described in the Subjects section) were eliminated from subsequent analyses: one due to excessive head motion in the scanner (> 1.5 mm average scan-to-scan motion) and one due to structural brain abnormalities.
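An 8-mm full-width half-maximum kernel corresponds to a Gaussian with a standard deviation of about 3.4 mm, using the standard relation FWHM = 2√(2 ln 2)·σ. A minimal sketch of the conversion, with a hypothetical function name:

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Standard deviation (mm) of a Gaussian kernel with the given FWHM (mm)."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma = fwhm_to_sigma(8.0)
print(f"{sigma:.2f} mm")  # prints: 3.40 mm
```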

First-Level Analysis

After preprocessing, BOLD responses were estimated for each subject using a general linear model (GLM) in SPM12. Because images were collected in a sparse sequence with a relatively long TR, the BOLD response for each trial (event) was modeled as an individual epoch. The model included regressors for each of the conditions of interest: normal, rhythm, and baseline. Trials that contained reading errors, condition errors, or disfluencies were modeled as a single separate condition of noninterest. To control for differences in rate between the two conditions (see the Results section), trial-by-trial mean IVIs were centered and added as a covariate of noninterest. These regressors were collapsed across runs to maximize power while controlling for potential differences in the number of trials produced without errors or disfluencies. For each run, regressors were added to remove linear effects of time (e.g., signal drift, adaptation) in addition to six motion covariates (taken from the realignment step) and a constant term, as well as outlier regressors (one regressor per identified outlier) to remove the effects of acquisitions with excessive scan-to-scan motion or global signal change (estimated from the artifact detection step, described above). The first-level GLM regressor coefficients for the three conditions of interest were estimated at each surface vertex and subcortical voxel. The mean normal speech and rhythm speech coefficients were then contrasted with the baseline condition to yield contrast effect size values for the two contrasts of interest (normal–baseline and rhythm–baseline).
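Because each trial contributes a single volume, the first-level design can be pictured as one row per trial. The sketch below shows a hypothetical layout for the conditions of interest, the error-trial condition, and the mean-centered IVI covariate, together with the two contrast vectors. It assumes the IVI covariate is centered across all fluent speech trials and set to zero for baseline and error trials. It omits the per-run drift, motion, constant, and outlier regressors, and the names (`build_design`, the contrast constants) are illustrative.

```python
import statistics

CONDITIONS = ("normal", "rhythm", "baseline")


def build_design(trials):
    """Trial-wise design matrix rows (hypothetical layout, one row per volume).

    trials: list of (condition, mean_ivi_s or None, is_error) tuples.
    Columns: normal, rhythm, baseline, error trials, centered IVI covariate.
    """
    speech_ivis = [ivi for cond, ivi, err in trials
                   if cond != "baseline" and not err]
    center = statistics.fmean(speech_ivis)  # assumption: pooled over conditions
    rows = []
    for cond, ivi, err in trials:
        row = [0.0] * (len(CONDITIONS) + 2)
        if err:
            row[len(CONDITIONS)] = 1.0  # reading/condition errors, disfluencies
        else:
            row[CONDITIONS.index(cond)] = 1.0
            if cond != "baseline":
                row[-1] = ivi - center  # rate covariate of noninterest
        rows.append(row)
    return rows


# Contrast weights over [normal, rhythm, baseline, error, IVI].
NORMAL_VS_BASELINE = [1, 0, -1, 0, 0]
RHYTHM_VS_BASELINE = [0, 1, -1, 0, 0]

X = build_design([("normal", 0.25, False), ("rhythm", 0.30, False),
                  ("baseline", None, False), ("normal", 0.28, True)])
```

Centering the covariate means the normal and rhythm coefficients describe activation at a common (mean) IVI, which is what lets the rhythm and normal estimates be compared after controlling for rate.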

Region of Interest Definition

Cortical regions of interest (ROIs) were labeled according to a modified version of the SpeechLabel atlas previously described in Cai et al. (2014); the atlas divides the cortex into macro-anatomically defined ROIs specifically tailored for studies of speech. Labels are applied by mapping the atlas from the FreeSurfer fsaverage cortical surface template to each individual surface reconstruction.

Subcortical and cerebellar ROIs were extracted from multiple atlases. Thalamic ROIs were extracted from the mean atlas of thalamic nuclei described by Krauth et al. (2010). Basal ganglia ROIs were derived from the nonlinear normalized probabilistic atlas of basal ganglia described by Keuken et al. (2014). Each ROI was thresholded at a minimum probability of 33% and combined in a single labeled volume in the atlas's native space (the MNI104 template). Cerebellar ROIs were derived from the SUIT (spatially unbiased infra-tentorial template) 25% maximum probability atlas of cerebellar regions (Diedrichsen, 2006; Diedrichsen et al., 2009, 2011). Each atlas was nonlinearly registered to the SPM12 MNI152 template and then combined into a single labeled volume.

Second-Level Group Analyses

Group activation differences were examined in the two speech conditions compared to baseline (normal–baseline, rhythm–baseline) as well as the Group × Condition interaction. Additionally, differences between the two speech conditions (rhythm–normal) were examined in each group separately. All group-level analyses were performed using a GLM with random effects across subjects. Group comparisons included the following four control covariates: (a) subject motion (average framewise displacement score for each subject), (b) acquisition site (MGH vs. BU), (c) handedness (due to the significant difference in handedness between the two groups; see the Subjects section), and (d) stuttering severity within the AWS group only. This severity covariate was a modification of the SSI-4 score, hereafter termed “SSI-Mod.” SSI-Mod removes the secondary concomitants subscore from each subject's SSI-4 score, thus focusing the measure on speech-related function. The SSI-Mod and SSI-4 composite scores for each subject are included in Table 1. With 16 AWS, 17 ANS, and four control covariates, power exceeds 80% to detect large between-group differences (Cohen's d > 0.87) at a false-positive control level of p < .05. It is not uncommon to find or expect such large effects in the context of voxel- or surface-level analyses, and these sample sizes are comparable to or larger than those of similar studies (Stager et al., 2003; Toyomura et al., 2011, 2015). Additional regression analyses were carried out to determine whether stuttering severity, measured by the SSI-Mod, or disfluencies occurring during the experiment were correlated with task activation. Because very few disfluencies occurred during the rhythm condition, we were only able to calculate the correlation between the percentage of disfluencies occurring during normal trials (“disfluency rate”) and the normal–baseline activation.
Note that because trials containing disfluencies were regressed out of the first-level effects, correlations with disfluency rate are capturing activation related to the propensity to stutter and not disfluent speech itself.
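The minimum detectable effect size quoted above can be approximated in closed form. The text does not state how it was computed. The sketch below assumes a one-sided test and the normal approximation d = (z₁₋α + z₁₋β) / √(n₁n₂/(n₁+n₂)), which reproduces the reported 0.87 for 16 and 17 subjects. It ignores the degrees of freedom spent on covariates, so it is slightly optimistic relative to an exact noncentral-t calculation. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def min_detectable_d(n1, n2, alpha=0.05, power=0.80, one_sided=True):
    """Smallest Cohen's d detectable at the given power (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha) if one_sided else z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return (z_alpha + z_beta) / sqrt(n1 * n2 / (n1 + n2))


print(round(min_detectable_d(16, 17), 2))  # prints: 0.87
```

Under the same approximation, a two-sided test at p < .05 would require d of about 0.98.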

Two sets of group-level analyses were carried out to detect activation differences across groups and conditions: analyses at the level of the vertex (cortical) or voxel (subcortical) and exploratory ROI analyses. For the vertex/voxel analyses, the GLM was carried out on the smoothed data at each unit. Unit-wise statistics were first thresholded at a height threshold of p < .01, uncorrected. Cluster-level statistics were then estimated using a permutation/randomization analysis with 1,000 simulations (Bullmore et al., 1999), and only clusters surviving a cluster-level threshold of p FDR < .05 (topological false discovery rate [FDR]; Chumbley et al., 2010) are reported. Additional ROI analyses were performed to determine whether activation in other brain regions was also modulated by group or condition at a less strict threshold. First-level contrast effects calculated from nonsmoothed data were averaged within each ROI. For each exploratory analysis, ROIs with p < .05, uncorrected, are reported.

Functional Connectivity Analysis

Preprocessing and Analysis

Seed-based functional connectivity analyses were carried out using the CONN toolbox (Whitfield-Gabrieli & Nieto-Castanon, 2012). The same preprocessed data used for the task activation analysis were used for the functional connectivity analysis. The seeds for this analysis comprised a subset of the ROIs used in the exploratory task activation analysis, defined in either fsaverage surface (cortical) or MNI volume (subcortical) space. These included regions with significant positive activation (thresholded at one-sided p < .05 and corrected for multiple comparisons using a false discovery rate correction within each contrast; Benjamini & Hochberg, 1995) in the normal–baseline or rhythm–baseline contrasts, or significant rhythm–normal activation in either direction (thresholded at two-sided p < .05, uncorrected) across all subjects. In addition, prior work has found that connectivity between left orbitofrontal regions and the cerebellum is both increased in adults who have spontaneously recovered from stuttering (Kell et al., 2018) and negatively associated with severity (Sitek et al., 2016), indicating a potential common substrate of fluency in AWS. To determine whether connectivity between these regions is also found in rhythm-induced fluency, three left orbitofrontal regions were added as seeds (see Supplemental Figures S10 and S11 for a complete list).
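The seed-selection step applies the Benjamini and Hochberg (1995) step-up procedure to the ROI p-values within each contrast. A minimal sketch of that procedure follows. The input would be one one-sided p-value per ROI, and the example values are made up for illustration.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans: True where the test survives BH FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) with p_(k) <= (k / m) * q.
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            cutoff = rank
    # Step-up: every test ranked at or below the cutoff is declared significant.
    passed = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= cutoff:
            passed[i] = True
    return passed


# Hypothetical ROI p-values for one contrast.
survivors = benjamini_hochberg([0.01, 0.04, 0.03, 0.045])
```

In this example, the 0.03 and 0.04 values fail their own rank thresholds, but all four ROIs survive because the largest p-value (0.045) meets its threshold of 0.05. This is the step-up behavior that distinguishes the procedure from a simple per-test cutoff.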

The BOLD time series was first averaged within seed ROIs. To include connections between the speech production network and other regions that potentially have a moderating effect on this network, the target area in this analysis was extended to the whole brain. The target functional volume data were smoothed using an 8-mm full-width half-maximum Gaussian smoothing kernel. Following preprocessing, an aCompCor (anatomical component-based noise correction; Behzadi et al., 2007) denoising procedure was used to eliminate extraneous motion, physiological, and artifactual effects from the BOLD signal in each subject. In each seed ROI and every voxel in the smoothed brain volume, denoising was carried out using a linear regression model (Nieto-Castañón, 2020) that included five white matter regressors; five cerebrospinal fluid regressors; six subject motion parameters plus their first-order temporal derivatives; scrubbing regressors to remove the effects of outlier scans (from artifact detection, described above); and separate regressors for each run/session (constant effects and first-order linear trends), task condition (main and first-order derivative terms), and error trial. No bandpass filter was applied in order to preserve high-frequency fluctuations in the residual data.
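The denoising model amounts to an ordinary least-squares regression whose residuals are carried forward. The sketch below is a simplified stand-in for the CONN implementation under these assumptions: a single run; white matter and cerebrospinal fluid signals supplied as (time × voxel) arrays, from which the first five principal components are taken; motion parameters with first-order differences as derivatives; one spike regressor per outlier scan; and a constant plus linear trend. Task-condition and error-trial regressors are omitted for brevity, and, as in the text, no bandpass filter is applied. All names are hypothetical.

```python
import numpy as np


def top_components(signals, k=5):
    """First k principal component time series of a (time, voxels) matrix (aCompCor-style)."""
    centered = signals - signals.mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, :k] * s[:k]


def denoise(bold, wm, csf, motion, outlier_scans):
    """Regress nuisance signals out of bold (time, units) and return the residuals."""
    n = bold.shape[0]
    # First-order temporal derivatives of the six motion parameters
    motion_deriv = np.vstack([np.zeros((1, motion.shape[1])), np.diff(motion, axis=0)])
    # One scrubbing (spike) regressor per outlier scan
    idx = np.asarray(outlier_scans, dtype=int)
    spikes = np.zeros((n, idx.size))
    spikes[idx, np.arange(idx.size)] = 1.0
    # Constant and first-order linear trend for the run
    trend = np.column_stack([np.ones(n), np.linspace(-1, 1, n)])
    X = np.column_stack([top_components(wm), top_components(csf),
                         motion, motion_deriv, spikes, trend])
    beta, *_ = np.linalg.lstsq(X, bold, rcond=None)
    return bold - X @ beta
```

Because each spike regressor absorbs its scan completely, the residuals at outlier scans are zero, which is how scrubbing removes their influence on later estimates.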

For each participant, a generalized psychophysiological interaction (PPI; McLaren et al., 2012) analysis was implemented using a multiple regression model, predicting the signal in each target voxel with three sets of regressors: (a) the BOLD time series in a seed ROI, characterizing baseline connectivity between the seed ROI and each target voxel; (b) the main effects of each task condition (normal, rhythm, and baseline), characterizing direct functional responses to each task in the target voxel; and (c) the seed-time-series-by-task interactions (PPI terms), characterizing task-related changes in functional connectivity strength. The implementation of PPI in CONN used in this article (Nieto-Castañón, 2020) is based on the original Friston et al. (1997) formulation, in which the interaction is modeled and estimated directly at the level of the BOLD signal. Among other potential benefits, this allows PPI and generalized PPI to be applied directly to sparse acquisition data sets. Second-level random effects analyses were then used to compare these interaction terms within and between groups and conditions, specifically the rhythm–normal contrast in AWS and in ANS and the Group × Condition interaction. Additional analyses examining the correlation between normal–baseline connectivity and SSI-Mod, rhythm–baseline connectivity and SSI-Mod, and normal–baseline connectivity and disfluency rate in the normal condition were also carried out. All group-level analyses included the same four control covariates used in the task activation analyses. For each comparison, a separate seed-to-voxel analysis was run from each of the 116 seed ROIs to the whole brain. Within each analysis, a two-step thresholding procedure was used: voxels were thresholded at a p < .001 height threshold, followed by a cluster size threshold of p FDR < .05 estimated using random Gaussian field theory (Worsley et al., 1996). To control for familywise error (FWE) across the 116 separate seed-to-voxel analyses, a within-comparison Bonferroni correction was applied so that only significant clusters with p FDR < .00043 (0.05/116) were reported.
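A single-voxel version of the generalized PPI model can be sketched as follows. It assumes that the seed time series and task regressors are already available for one subject, forms each interaction term by multiplying the mean-centered seed BOLD signal by a task regressor (a signal-level interaction, as in the formulation described above), and fits all terms by ordinary least squares. The names and array layout are hypothetical. If the condition regressors sum to one at every scan, the design becomes rank-deficient and only differences between PPI terms (such as rhythm minus normal) are estimable, so the example below leaves some scans outside both conditions. The Bonferroni-adjusted cluster threshold from the text is included as a constant.

```python
import numpy as np

# Bonferroni adjustment across the 116 seed-to-voxel analyses (0.05 / 116)
SEED_ALPHA = 0.05 / 116


def gppi_betas(target, seed, tasks):
    """Fit a generalized PPI model for one target time series.

    target: (time,) BOLD signal in one target voxel
    seed:   (time,) BOLD signal averaged within the seed ROI
    tasks:  (time, conditions) task regressors (hypothetical layout)
    Returns one interaction (PPI) coefficient per condition.
    """
    seed_c = seed - seed.mean()
    interactions = seed_c[:, None] * tasks  # seed-by-task terms formed on the BOLD signal
    X = np.column_stack([np.ones_like(seed_c), seed_c, tasks, interactions])
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    return beta[-tasks.shape[1]:]
```

With conditions ordered as [normal, rhythm], the subject-level rhythm–normal connectivity change for one voxel would be `b[1] - b[0]`, which is the quantity compared at the second level.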

Results

Behavioral Analysis

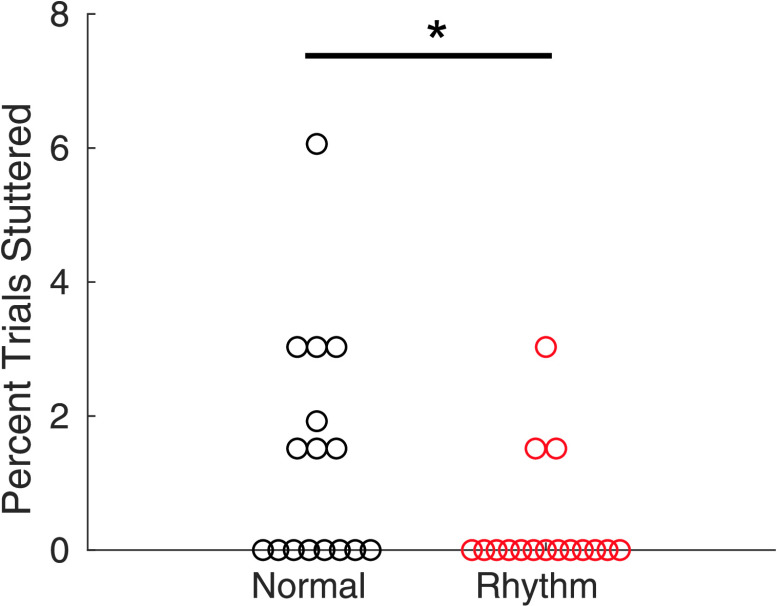

Stuttering occurred infrequently over the course of the experiment, with seven of the 16 AWS producing no disfluencies. Nevertheless, AWS produced a significantly lower percentage of disfluent trials in the rhythm condition (0.38%) than in the normal condition (1.35%; W = 42, p = .023; see Figure 2). For speaking rate, there was no Group × Condition interaction or main effect of group, but there was a significant main effect of condition: normal trials (3.773 IVI/s) were produced at a faster rate than rhythm trials (3.463 IVI/s), F(1, 31) = 54.7, p FWE < .001. To examine whether this reduction in rate, rather than the isochronous pacing itself, accounted for the increase in fluency, we tested for a correlation between the change in speaking rate and the reduction in disfluencies. These two measures were not significantly correlated (r = −.07, p = .80). For isochronicity, there was no main effect of group or Group × Condition interaction. There was a significant main effect of condition, with a lower CV-IVI (greater isochronicity) in the rhythm condition (0.13) than in the normal condition (0.25), F(1, 31) = 492.0, p FWE < .001. For complete results regarding speaking rate and CV-IVI, see Table 2.
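The two rhythm measures can be computed per utterance as sketched below, assuming that vowel onset times (in seconds) have already been marked. The sketch treats speaking rate as the reciprocal of the mean intervocalic interval (IVI) and CV-IVI as the standard deviation of the IVIs divided by their mean. It uses the population standard deviation, which may differ from the original analysis, and the function name is hypothetical.

```python
import numpy as np


def ivi_measures(vowel_onsets):
    """Speaking rate (IVI/s) and CV-IVI from vowel onset times (s) in one utterance."""
    onsets = np.sort(np.asarray(vowel_onsets, dtype=float))
    ivis = np.diff(onsets)          # intervocalic intervals
    rate = 1.0 / ivis.mean()        # intervals per second
    cv = ivis.std() / ivis.mean()   # lower values indicate more isochronous speech
    return rate, cv
```

Perfectly isochronous onsets at 0, 0.25, 0.5, and 0.75 s give a rate of 4 IVI/s and a CV-IVI of 0, whereas onsets at 0, 0.2, and 0.5 s give the same rate but a CV-IVI of 0.2.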

Figure 2.

Comparison of disfluencies between the normal and rhythm conditions for adults who stutter. Circles represent individual participants. *p < .05.

Table 2.

Descriptive and inferential statistics for speaking rate and coefficient of variation for intervocalic intervals (CV-IVI).

| Measure | ANS, normal | ANS, rhythm | AWS, normal | AWS, rhythm | Main effect of group | Main effect of condition | Interaction |
|---|---|---|---|---|---|---|---|
| Speaking rate (IVI/s) | 3.797 ± 0.086 | 3.456 ± 0.080 | 3.748 ± 0.164 | 3.470 ± 0.173 | F(1, 31) = 0.1, p FWE = 1 | **F(1, 31) = 54.7, p FWE < .001** | F(1, 31) = 0.6, p FWE = .92 |
| CV-IVI | 0.259 ± 0.013 | 0.127 ± 0.006 | 0.251 ± 0.019 | 0.132 ± 0.007 | F(1, 31) = 0.1, p FWE = 1 | **F(1, 31) = 492.0, p FWE < .001** | F(1, 31) = 1.4, p FWE = .48 |

Note. Error estimates indicate 95% confidence intervals. Significant effects are highlighted in bold. ANS = adults who do not stutter; AWS = adults who stutter; FWE = familywise error rate.

Task Activation fMRI Analysis

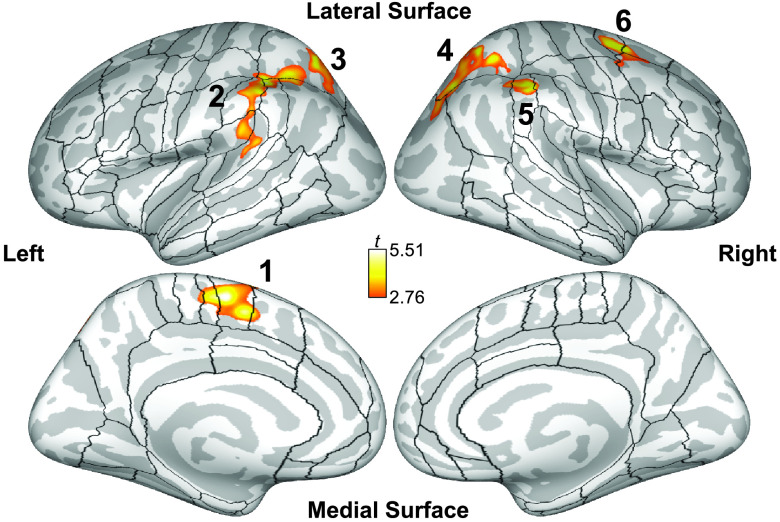

For the vertex/voxel-wise analysis, no significant differences were found between groups for either the normal–baseline or the rhythm–baseline contrast (vertex/voxel-level p < .01, cluster-level p FDR < .05). Similarly, no clusters showed a significant Group × Condition interaction. Within the AWS group, there were no significant differences between the two conditions. Because there were no significant group differences in either condition and no significant Group × Condition interactions, the rhythm–normal analysis was collapsed across groups to improve power. Clusters that had greater activation during the rhythm condition than the normal condition (vertex/voxel-level p < .01, cluster-level p FDR < .05) are shown in Table 3 and Figure 3. These six clusters include left hemisphere cortex spanning the posterior Sylvian fissure (planum temporale [PT] and parietal operculum, supramarginal gyrus [SMg], and intraparietal sulcus), left posterior superior parietal lobule (SPL), left supplementary motor area (SMA), right SPL, right SMg, and right dorsal premotor cortex (dPMC). No cortical or subcortical regions were more active during the normal condition than during the rhythm condition.

Table 3.

Cortical clusters with activation differences between the rhythm and normal conditions collapsed across groups (vertex-wise p < .01, cluster-wise p FDR < .05).

| Cluster | Peak MNI x | Peak MNI y | Peak MNI z | Cluster mass | p FDR |
|---|---|---|---|---|---|
| Combined groups, rhythm > normal | | | | | |
| L lateral superior parietal cortex (aSMg, SPL, PO, PT, pSMg) | −42 | −39 | 45 | 42456 | .0080 |
| R superior parietal cortex (SPL, OC) | 32 | −51 | 55 | 29904 | .0090 |
| L supplementary motor area (SMA, dMC, pre-SMA) | −09 | −08 | 59 | 19658 | .0166 |
| L posterior superior parietal cortex (SPL) | −21 | −68 | 59 | 13488 | .0253 |
| R posterior supramarginal gyrus (pSMg, AG) | 48 | −32 | 46 | 12479 | .0253 |
| R dorsal premotor cortex (mdPMC, adPMC, pMFg) | 23 | −04 | 53 | 12169 | .0253 |

Note. FDR = false discovery rate; MNI = Montréal Neurological Institute; L = left; aSMg = anterior supramarginal gyrus; SPL = superior parietal lobule; PO = parietal operculum; PT = planum temporale; pSMg = posterior supramarginal gyrus; R = right; OC = occipital cortex; SMA = supplementary motor area; dMC = dorsal primary motor cortex; pre-SMA = presupplementary motor area; AG = angular gyrus; mdPMC = middle dorsal premotor cortex; adPMC = anterior dorsal premotor cortex; pMFg = posterior middle frontal gyrus.

Figure 3.

Cortical clusters significantly more active during the rhythm condition than the normal condition collapsed across both groups and displayed on an inflated cortical surface (vertex-wise p < .01, cluster-wise p FDR < .05). 1 = left supplementary motor area; 2 = left lateral superior parietal cortex; 3 = left posterior superior parietal cortex; 4 = right superior parietal cortex; 5 = right posterior supramarginal gyrus; 6 = right dorsal premotor cortex. Black outlines indicate cortical regions of interest used in the exploratory analysis. FDR = false discovery rate.

In the exploratory ROI analysis of the normal–baseline contrast, AWS had greater activation than ANS in the left middle temporo-occipital cortex (p = .004), left posterior middle temporal gyrus (p = .010), and left anterior ventral superior temporal sulcus (p = .042), and less activation in cerebellar vermis X (p = .049; see Supplemental Table S1). In the rhythm–baseline contrast, AWS had less activation than ANS in the left anterior frontal operculum (p < .009), midline cerebellar vermis VIIIb (p < .008) and vermis VIIIa (p < .042), and right anterior middle temporal gyrus (p < .040) and cerebellar lobule X (p < .046; see Supplemental Table S1). This exploratory analysis also revealed Group × Condition interactions in a number of cortical and subcortical ROIs, including bilateral auditory regions and the left inferior cerebellum (see Supplemental Table S2 and Supplemental Figure S2 for complete results). In all cases, ANS had increased activation in the rhythm condition compared to the normal condition, whereas AWS showed no change or a decrease. For complete exploratory ROI results for the rhythm–normal analysis in each group separately and combined, see Supplemental Table S3 and Supplemental Figures S3–S5.

Brain–Behavior Correlation Analyses

In our vertex/voxel-wise analysis, no significant clusters were found showing a correlation between SSI-Mod and normal–baseline or rhythm–baseline, or between disfluency rate and normal–baseline. Exploratory results can be found in Supplemental Table S4 and Supplemental Figures S6–S9. Of note, positive correlations were found between SSI-Mod and activation in bilateral premotor and frontal opercular cortex, and negative correlations were found in left medial prefrontal regions. In addition, positive correlations between disfluency rate and normal–baseline were found in right perisylvian regions, left putamen, and bilateral ventral anterior thalamus (VA)/ventral lateral thalamus and inferior cerebellum.

Functional Connectivity Analyses

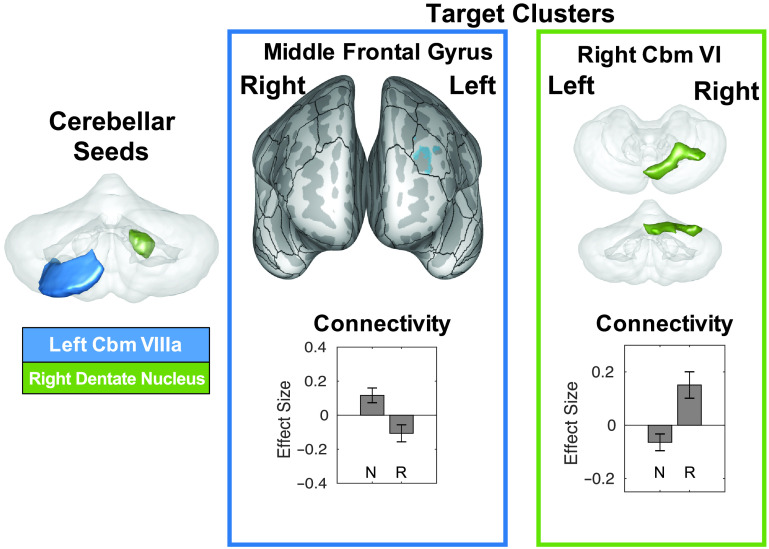

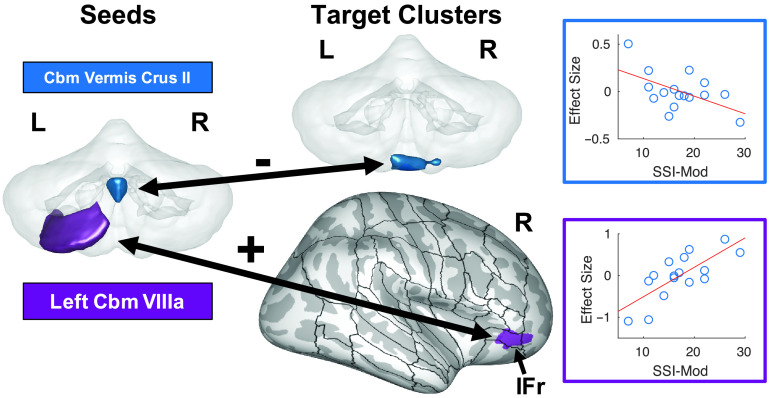

The set of 116 cortical and subcortical ROIs used as seeds in the functional connectivity analyses is illustrated in Supplemental Figures S10 and S11. Within the AWS group, two connections differed significantly between the rhythm and normal conditions (p FDR < .00043), both involving the cerebellum (see Table 4 and Figure 4). The right dentate nucleus showed increased connectivity in the rhythm condition with a cluster covering right cerebellar lobule VI and crus I, as well as vermis VI, whereas the left cerebellar lobule VIIIa showed reduced connectivity in the rhythm condition with a cluster in the left anterior middle frontal gyrus. To determine whether these differences were specific to AWS, we carried out a post hoc analysis, which found that these connections did not reach significance in the ANS group, even at an uncorrected α level of .05. Instead, a different set of connections differed significantly between conditions in ANS. In the rhythm condition, connectivity increased between the right putamen and a cluster in the anterior cingulate gyrus straddling the midline, between the right anterior insula and a cluster in the left inferior frontal sulcus, and between the left Heschl's gyrus, right pre-SMA, and right ventral somatosensory cortex seeds and clusters in the left posterior SPL abutting occipital cortex. There was also a decrease in connectivity during the rhythm condition between the left inferior temporo-occipital cortex (ITO) and left IFG pars opercularis and triangularis, and between the right anterior dPMC and bilateral occipital cortex (see Supplemental Figure S13).

Table 4.

Functional connectivity analysis—condition and interaction effects.

| Seed ROI | Target cluster regions | Peak MNI x | Peak MNI y | Peak MNI z | Cluster size (no. of voxels) | p FDR |
|---|---|---|---|---|---|---|
| AWS, rhythm > normal | | | | | | |
| R dentate nucleus | Superior cerebellum (R VI, Ver VI, R crus I) | 14 | −72 | −20 | 435 | < 1 × 10⁻⁶ |
| AWS, normal > rhythm | | | | | | |
| L Cbm VIIIa | Left anterior middle frontal gyrus (L aMFg) | −28 | 34 | 30 | 170 | .000207 |
| ANS, rhythm > normal | | | | | | |
| L H | Left parieto-occipital cortex (L SPL) | −24 | −70 | 38 | 186 | .000195 |
| R vSC | Left parieto-occipital cortex (L SPL, L AG, L OC) | −24 | −66 | 34 | 199 | .000176 |
| R aINS | Left inferior frontal sulcus (L aIFs, L pIFs, L aMFg) | −50 | 26 | 26 | 243 | .000032 |
| R putamen | Midline cingulate motor cortex (L dCMA, R vCMA, L vCMA, R dCMA, L SMg, L aCG, R SFg, R aCG) | 02 | 14 | 30 | 353 | < 1 × 10⁻⁶ |
| R pre-SMA | Left parieto-occipital cortex (L SPL, L OC, L PCN, L AG) | −16 | −70 | 34 | 224 | .000100 |
| ANS, normal > rhythm | | | | | | |
| L ITO | Left inferior frontal gyrus (L vIFo, L pIFt, L pFO) | −56 | 24 | 04 | 175 | .000221 |
| Group × Condition interaction | | | | | | |
| L Cbm I–IV | Medial sensorimotor cortex (L dSC, L pCG, L PCN, L dMC) | −16 | −52 | 44 | 254 | .000301 |
| L VA | Right inferior occipital cortex (R OC, R LG, R TOF, R VI, Ver VI) | 08 | −72 | −08 | 365 | .000023 |
| R aITg | Left frontoparietal operculum (L aINS, L aCO, L pCO, L pINS, L pFO, L H, L PO, L vPMC) | −40 | −12 | 14 | 507 | < 1 × 10⁻⁶ |

Note. Roman numerals indicate cerebellar lobules. ROI = region of interest; MNI = Montréal Neurological Institute; FDR = false discovery rate; AWS = adults who stutter; R = right; Ver = vermis; L = left; Cbm = cerebellum; aMFg = anterior middle frontal gyrus; ANS = adults who do not stutter; H = Heschl's gyrus; SPL = superior parietal lobule; vSC = ventral primary somatosensory cortex; AG = angular gyrus; OC = occipital cortex; aINS = anterior insula; aIFs = anterior inferior frontal sulcus; pIFs = posterior inferior frontal sulcus; dCMA = dorsal cingulate motor area; vCMA = ventral cingulate motor area; SMg = supramarginal gyrus; aCG = anterior cingulate gyrus; SFg = superior frontal gyrus; pre-SMA = presupplementary motor area; PCN = precuneus; ITO = inferior temporo-occipital cortex; vIFo = ventral inferior frontal gyrus pars opercularis; pIFt = posterior inferior frontal gyrus pars triangularis; pFO = posterior frontal operculum; dSC = dorsal primary somatosensory cortex; pCG = posterior cingulate gyrus; dMC = dorsal primary motor cortex; VA = ventro-anterior regions of the thalamus; LG = lingual gyrus; TOF = temporo-occipital fusiform gyrus; aITg = anterior inferior temporal gyrus; aCO = anterior central operculum; pCO = posterior central operculum; pINS = posterior insula; PO = parietal operculum; vPMC = ventral premotor cortex.

Figure 4.

A summary of functional connections that are significantly different between the normal and rhythm conditions in adults who stutter. Seed regions for these connections are indicated on the left side on a transparent 3D rendering of the cerebellum (viewed posteriorly), and colors in the rest of the figure refer back to these seed regions. Two target clusters (representing two distinct connections) are displayed in the right portion of the figure. Target Cluster 1 is projected onto an inflated surface of cerebral cortex (anterior view), along with the full cortical region of interest parcellation of the SpeechLabel atlas described in Cai et al. (2014). Target Cluster 2 is displayed on a transparent 3D rendering of the cerebellum (top view: superior; bottom view: posterior). The connectivity effect sizes in the normal and rhythm conditions for each connection are displayed below each cluster visualization. Roman numerals indicate cerebellar lobules. Error bars indicate 90% confidence intervals. Cbm = cerebellum; N = normal; R = rhythm.

There were three connections that showed a significant interaction between group and speech condition (normal vs. rhythm; see Supplemental Figure S12). Connections that were lower in the rhythm condition for AWS and higher in this condition for ANS included the left cerebellar lobules I–IV to the left medial rolandic cortex and precuneus (see result cluster labeled 1 in the bottom-left panel of Supplemental Figure S12) and the left VA to the right lingual gyrus and occipital cortex (extending to right cerebellar lobule VI; Cluster 2). One connection was higher in the rhythm condition for AWS and lower in this condition for ANS: between the right anterior inferior temporal gyrus and a cluster covering parts of the left central operculum, insula, and surrounding regions. Simple effects for each group and condition are shown in the bottom panel of Supplemental Figure S12. Because AWS showed increased connectivity between different parts of the cerebellum during isochronous speech, we also tested average pairwise connectivity among all 20 cerebellar ROIs active during speech. These ROIs showed a significant Group × Condition interaction (t = 2.73, p = .011), driven by an increase in connectivity for AWS from normal to rhythm (t = 2.68, p = .019) and a nonsignificant decrease in connectivity for ANS (t = −1.93, p = .073).
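The cerebellar summary test can be illustrated with a short sketch, assuming each subject contributes one ROI × ROI matrix of connectivity estimates per condition for the 20 cerebellar ROIs. Average pairwise connectivity is taken as the mean of the off-diagonal upper triangle, and the Group × Condition interaction then amounts to comparing the per-subject rhythm − normal change between AWS and ANS. The group-level analyses in this study included four control covariates; this sketch omits them and uses a plain pooled-variance t statistic, and all names are hypothetical.

```python
import numpy as np


def mean_pairwise(conn):
    """Mean of the off-diagonal upper triangle of an (roi, roi) connectivity matrix."""
    i, j = np.triu_indices(conn.shape[0], k=1)
    return conn[i, j].mean()


def rhythm_minus_normal(conn_normal, conn_rhythm):
    """Per-subject change in mean pairwise connectivity; inputs are (subjects, roi, roi)."""
    return np.array([mean_pairwise(rh) - mean_pairwise(nm)
                     for nm, rh in zip(conn_normal, conn_rhythm)])


def two_sample_t(a, b):
    """Pooled-variance two-sample t statistic (no covariates), e.g., AWS vs. ANS changes."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * np.var(a, ddof=1) + (nb - 1) * np.var(b, ddof=1)) / (na + nb - 2)
    return (np.mean(a) - np.mean(b)) / np.sqrt(pooled * (1 / na + 1 / nb))
```

Under this scheme, a positive t for AWS change scores minus ANS change scores corresponds to the direction of the interaction reported above.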

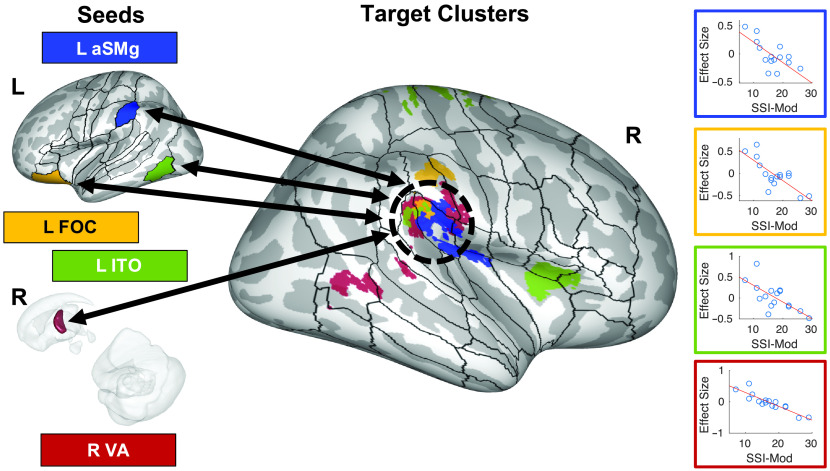

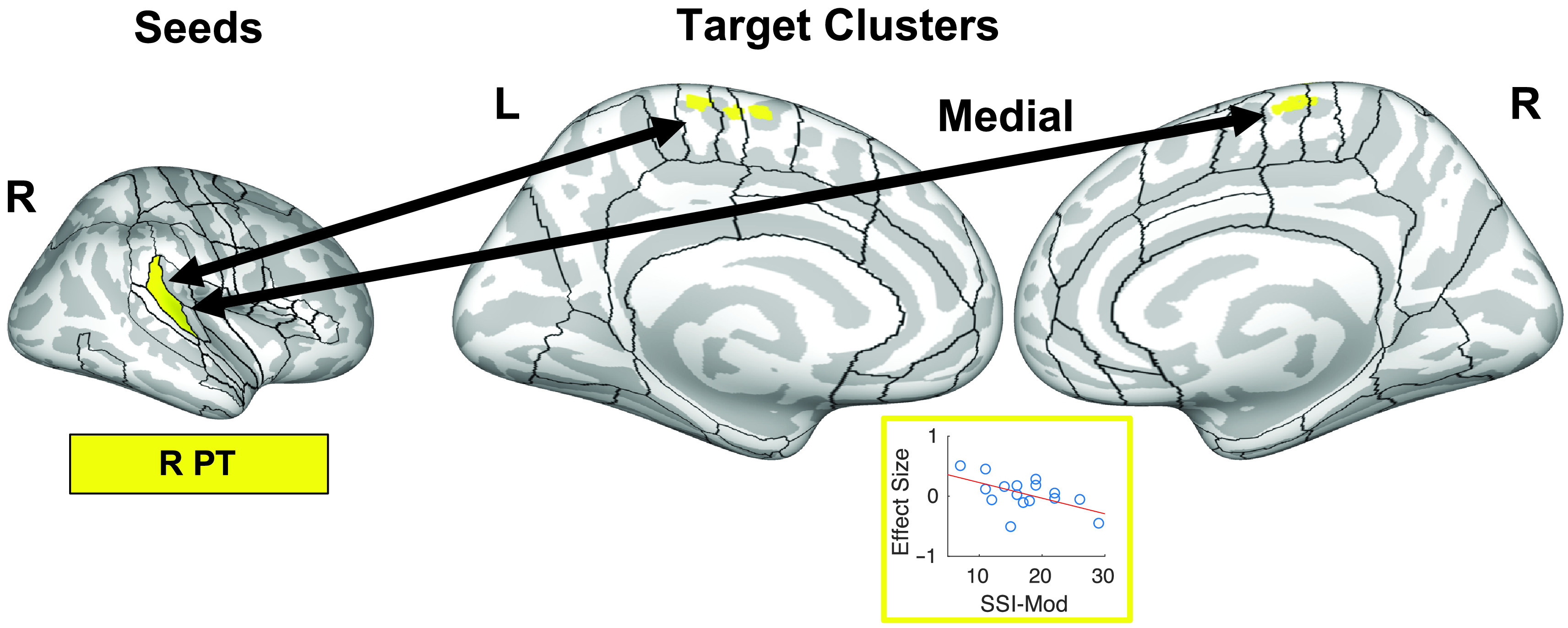

For the AWS group, multiple functional connections were significantly correlated with either SSI-Mod or disfluency rate. Results are summarized in Table 5 and Supplemental Figures S14–S19. Of note, for the normal–baseline contrast, connectivity between cerebellar vermis crus II and bilateral cerebellar lobules IX and VIIIb was negatively correlated with SSI-Mod, whereas connectivity between the left cerebellar lobule VIIIa and right IFG pars orbitalis (IFr) was positively correlated with SSI-Mod (see Figure 5). In addition, for the rhythm–baseline contrast, SSI-Mod was negatively correlated with connectivity between the right temporoparietal junction and each of the left anterior SMg (aSMg), left fronto-orbital cortex (FOC), left ITO, and right VA seeds (see Figure 6), and between the right PT and a cluster in medial premotor cortex/SMA (see Figure 7).

Table 5.

Functional connectivity analysis—correlations with SSI-Mod (a modified version of the Stuttering Severity Instrument–Fourth Edition) and disfluency rate.

| Seed ROI | Target cluster regions | Peak MNI x | Peak MNI y | Peak MNI z | Cluster size (no. of voxels) | p FDR |
|---|---|---|---|---|---|---|
| AWS, normal > baseline, negative correlation with SSI-Mod | | | | | | |
| L aSMg | Left parietal operculum (L PO, L aSMg) | −32 | −22 | 24 | 216 | .000108 |
| L aCO | Left medial prefrontal cortex (L SFg, L aCG) | −12 | 38 | 30 | 227 | .000031 |
| L OC | Right medial posterior temporal lobe (R LG, R TOF, R pPHg, R pTFg) | 22 | −54 | −12 | 320 | .000001 |
| L Cbm crus I | Right inferior temporal lobe (R LG, R TOF, R pPHg, R pTFg, R ITO, R avSTs, R adSTs, R VI, R V, R MGN, R SN, R VPM) | 38 | −42 | −14 | 1672 | < 1 × 10⁻⁶ |
| | Left inferior medial temporal lobe (L LG, L TOF, L pTFg, L V, L VI, L I–IV, R I–IV) | −06 | −52 | −10 | 396 | < 1 × 10⁻⁶ |
| L STh | Left occipital cortex/superior cerebellum (L TOF, L OC, L VI, L crus I, Ver VI) | −8 | −78 | −18 | 235 | .000178 |
| Cbm vermis crus II | Brainstem/inferior cerebellum (brainstem, L IX, R VIIIb, R IX) | −4 | −52 | −60 | 174 | .000364 |
| R midMC | Left medial prefrontal cortex (L SFg, L FP) | 02 | 54 | −08 | 231 | .000109 |
| R VA | Left angular gyrus (L AG) | −58 | −64 | 28 | 264 | .000026 |
| | Right middle temporo-occipital cortex (R MTO) | 60 | −60 | 02 | 253 | .000026 |
| | Right parietal operculum (R PO, R PT, R pINS) | 34 | −24 | 16 | 240 | .000029 |
| R vSC | Left medial prefrontal cortex (L SFg, L FP) | −04 | 52 | 18 | 353 | .000001 |
| R pdSTs | Midline medial prefrontal cortex (L SFg, L FP, R SFg) | −12 | 40 | 26 | 184 | .000247 |
| AWS, normal > baseline, positive correlation with SSI-Mod | | | | | | |
| L Cbm VIIIa | Right orbital inferior frontal gyrus (R IFr, R FP, R FOC) | 50 | 50 | −12 | 234 | .000061 |
| L pIFt | Right angular gyrus (R AG) | 36 | −70 | 46 | 271 | .000017 |
| R VA | Right inferior temporal sulcus (R pMTg, R pITg) | 54 | −38 | −16 | 169 | .000391 |
| R vSC | Brainstem (brainstem) | 08 | −14 | −36 | 292 | .000005 |
| | Right posterior angular gyrus (R AG, R OC) | 36 | −74 | 36 | 260 | .000010 |
| AWS, rhythm > baseline, negative correlation with SSI-Mod | | | | | | |
| L aSMg | Right parietal operculum (R PO, R aSMg, R pINS) | 38 | −24 | 24 | 404 | < 1 × 10⁻⁶ |
| L FOC | Right inferior parietal cortex (R aSMg, R PO, R vSC) | 50 | −22 | 40 | 374 | < 1 × 10⁻⁶ |
| L ITO | Right dorsal rolandic cortex (R dMC, R dPMC, R vSC) | 18 | −10 | 70 | 358 | < 1 × 10⁻⁶ |
| | Right frontal operculum (R pFO, R aFO, R aINS) | 32 | 14 | 10 | 299 | .000002 |
| | Right temporo-parietal junction (R PT, R PO) | 40 | −30 | 30 | 290 | .000002 |
| L Cbm I–IV | Midline occipital cortex (R OC, L OC, R LG) | 00 | −70 | 06 | 401 | < 1 × 10⁻⁶ |
| L Cbm crus I | Left parieto-occipital fissure (L PCN, L OC) | −08 | −64 | 28 | 259 | .000073 |
| R TP | Right middle frontal gyrus (R pMFg, R pIFs) | 52 | 18 | 38 | 213 | .000254 |
| R PT | Midline medial precentral gyrus (R dPMC, L dPMC, L dMC, L SMA) | 10 | −28 | 74 | 190 | .000194 |
| R aCG | Right supramarginal gyrus (R pSMg, R AG) | 54 | −40 | 46 | 350 | .000001 |
| R midMC | Midline rostral prefrontal cortex (L aCG, L FP, R FP, R FMC) | −08 | 50 | 04 | 370 | < 1 × 10⁻⁶ |
| R STh | Right anterior insula (R aINS, R IFr) | 34 | 22 | 12 | 368 | .000001 |
| R SN | Right inferior cerebellum (R IX, R X, L IX, Ver VIIIa, Ver VIIIb, L VIIb, R VIIIa, L VIIIa, L dentate, R VIIb, Ver IX) | −10 | −66 | −40 | 404 | < 1 × 10⁻⁶ |
| R VA | Right temporo-parietal junction (R PO, R PT, R aSMg, R vSC) | 60 | −22 | 20 | 559 | < 1 × 10⁻⁶ |
| | Right middle temporo-occipital cortex (R MTO) | 60 | −50 | 02 | 232 | .000036 |
| R Cbm I–IV | Midline occipital cortex (L OC, R OC, R PCN) | −08 | −78 | 06 | 286 | .000008 |
| Cbm vermis VI | Left parieto-occipital fissure (L PCN, L OC, L LG) | −24 | −62 | 24 | 622 | < 1 × 10⁻⁶ |
| | Right parieto-occipital fissure (R PCN, R OC) | 10 | −64 | 16 | 382 | < 1 × 10⁻⁶ |
| | Right anterior cingulate cortex (R aCG) | 16 | 26 | 20 | 160 | .000415 |
| AWS, rhythm > baseline, positive correlation with SSI-Mod | | | | | | |
| L aSMg | Left inferior occipital cortex (L OC, L TOF, L ITO) | −42 | −74 | −16 | 226 | .000032 |
| AWS, normal > baseline, negative correlation with disfluency rate | | | | | | |
| L pdPMC | Right insula (R aINS, R aCO, R putamen) | 42 | 04 | 08 | 186 | .000360 |
| L pdSTs | Right medial temporal cortex (R pPHg, R pTFg) | 34 | −34 | −20 | 191 | .000059 |
| | Right lateral occipital cortex (R OC) | 38 | −64 | −12 | 144 | .000292 |
| L pCO | Midline rolandic cortex (R dMC, R dPMC, R dSC, L dMC, L dSC) | 14 | −22 | 78 | | |
| L dentate | Right occipital cortex (R OC) | 12 | −84 | 16 | 216 | .000083 |
| L Cbm crus I | Right dorsal prefrontal cortex (R FP, R SFg) | 24 | 48 | 36 | 199 | .000038 |
| Cbm vermis crus I | Right occipital cortex (R OC, R PCN) | 06 | −80 | 28 | 1149 | < 1 × 10⁻⁶ |
| Cbm vermis VIIIa | Right occipital cortex (R OC, R LG) | 10 | −62 | 06 | 184 | .000223 |
| AWS, normal > baseline, positive correlation with disfluency rate | | | | | | |
| R Cbm X | Inferior temporal cortex (R pITg, R pTFg, R VI) | 46 | −36 | −28 | 356 | .000001 |

Note. Roman numerals indicate cerebellar lobules. Regions of the SpeechLabel atlas (Cai et al., 2014) containing at least 10 voxels of a given cluster are indicated in parentheses. ROI = region of interest; MNI = Montréal Neurological Institute; FDR = false discovery rate; AWS = adults who stutter; L = left; aSMg = anterior supramarginal gyrus; PO = parietal operculum; aCO = anterior central operculum; SFg = superior frontal gyrus; aCG = anterior cingulate gyrus; OC = occipital cortex; R = right; LG = lingual gyrus; TOF = temporo-occipital fusiform gyrus; pPHg = posterior parahippocampal gyrus; pTFg = posterior temporal fusiform gyrus; Cbm = cerebellum; ITO = inferior temporo-occipital cortex; avSTs = anterior ventral superior temporal sulcus; adSTs = anterior dorsal superior temporal sulcus; MGN = medial geniculate nucleus of the thalamus; SN = substantia nigra; VPM = ventral postero-medial portion of the thalamus; STh = subthalamic nucleus; Ver = vermis; midMC = middle primary motor cortex; FP = frontal pole; VA = ventral anterior portion of the thalamus; AG = angular gyrus; MTO = middle temporo-occipital cortex; PT = planum temporale; pINS = posterior insula; vSC = ventral primary somatosensory cortex; pdSTs = posterior dorsal superior temporal sulcus; IFr = inferior frontal gyrus pars orbitalis; FOC = fronto-orbital cortex; pIFt = posterior inferior frontal gyrus pars triangularis; pMTg = posterior middle temporal gyrus; pITg = posterior inferior temporal gyrus; dMC = dorsal primary motor cortex; dPMC = dorsal premotor cortex; pFO = posterior frontal operculum; aFO = anterior frontal operculum; aINS = anterior insula; PCN = precuneus; TP = temporal pole; pMFg = posterior middle frontal gyrus; pIFs = posterior inferior frontal sulcus; SMA = supplementary motor area; pSMg = posterior supramarginal gyrus; FMC = fronto-medial cortex; pdPMC = posterior dorsal premotor cortex; pCO = posterior central operculum; dSC = dorsal primary somatosensory cortex.

Figure 5.

Two notable correlations of cerebellar functional connectivity (normal > baseline) with stuttering severity. Seed regions for these connections are indicated on the left side of the figure on a transparent 3D rendering of the cerebellum viewed posteriorly. Colors in the rest of the figure refer back to these seed regions. Target clusters are either displayed on the same transparent rendering of the cerebellum or projected onto an inflated surface of cerebral cortex, along with the full cortical region of interest parcellation of the SpeechLabel atlas described in Cai et al. (2014). The “+” and “−” indicate positive and negative correlations, respectively. The right portion of the figure plots the beta estimates of the psychophysiological interaction regressors from individual adults who stutter against stuttering severity. Roman numerals indicate cerebellar lobules. Full results of this analysis can be found in Supplemental Figures S14 and S15 and Table 5. Cbm = cerebellum; IFr = inferior frontal gyrus pars orbitalis; L = left; R = right; SSI-Mod = modification of the Stuttering Severity Instrument–Fourth Edition score.

Figure 6.

Correlations of functional connectivity (rhythm > baseline) between seed regions of interest (ROIs) and the right temporoparietal junction with stuttering severity. Seed regions for these connections are indicated on the left side of the figure either on an inflated surface of the left cerebral cortex or on a transparent 3D rendering of right subcortical structures viewed medially. Colors in the rest of the figure refer back to these seed regions. Target clusters are projected onto an inflated surface of the right cerebral cortex, along with the full cortical ROI parcellation of the SpeechLabel atlas described in Cai et al. (2014). The black dashed oval indicates a rough border of the right temporoparietal junction. The right portion of the figure plots the beta estimates of the psychophysiological interaction regressors from individual adults who stutter against stuttering severity for each functional connection. Full results of this analysis can be found in Supplemental Figures S16 and S17 and Table 5. aSMg = anterior supramarginal gyrus; FOC = fronto-orbital cortex; ITO = inferior temporo-occipital cortex; L = left; R = right; SSI-Mod = modification of the Stuttering Severity Instrument–Fourth Edition score; VA = ventral anterior portion of the thalamus.

Figure 7.

Correlations of functional connectivity (rhythm > baseline) between the right planum temporale and a medial premotor cortex cluster with stuttering severity. The seed region is indicated on the left side of the figure on an inflated surface of the right cerebral cortex. One target cluster (straddling the midline) is projected onto an inflated surface of the cerebral cortex, along with the full cortical region of interest parcellation of the SpeechLabel atlas described in Cai et al. (2014). Below, the beta estimates of the psychophysiological interaction regressors from individual adults who stutter are plotted against stuttering severity. Full results of this analysis can be found in Supplemental Figures S16 and S17 and Table 5. L = left; PT = planum temporale; R = right; SSI-Mod = modification of the Stuttering Severity Instrument–Fourth Edition score.

Discussion

This study aimed to characterize the changes in functional activation and connectivity that occur when adults time their speech to an external metronomic beat, and how these changes differ between AWS and ANS. Extending previous work, this paradigm was novel in that the metronome was paced at the typical rate of English speech. The rate and isochronicity of paced speech in AWS were also similar to those of ANS. Consistent with prior literature, AWS produced significantly fewer disfluencies during externally paced speech than during normal, internally paced speech (see Figure 2). Controlling for speaking rate, participants exhibited greater activation during isochronously paced speech than during internally paced speech in left hemisphere sensory association areas and bilateral attentional and premotor regions. Relative to internally paced speech, AWS showed greater functional connectivity within the cerebellum and reduced connectivity between the left inferior cerebellum and left prefrontal cortex during isochronous speech. Finally, SSI-Mod was significantly correlated with functional connectivity within the cerebellum, between the cerebellum and orbitofrontal cortex, and between the right temporoparietal junction and left hemisphere speech-related regions. The following sections discuss these results in relation to prior behavioral and neuroimaging literature.

A Possible Compensatory Role for the Cerebellum in AWS

A role for the cerebellum in mediating speech timing is well established (see Ackermann, 2008, for a review), and damage to this structure can lead to “scanning speech,” in which syllables are evenly paced (Duffy, 2013). Previous work posits that when the basal ganglia–SMA “internal” timing system is impaired in AWS, an “external” timing system comprising the cerebellum and lateral cortical premotor structures is recruited instead (Alm, 2004; Etchell et al., 2014). In support of this, numerous fMRI and positron emission tomography studies demonstrate cerebellar overactivation and hyperconnectivity during normal speech production in AWS (e.g., Brown et al., 2005; Chang et al., 2009; Ingham et al., 2012; Lu et al., 2012; Lu, Peng, et al., 2010; Watkins et al., 2007) that is reduced following therapy (De Nil et al., 2001; Lu et al., 2012; Neumann et al., 2003; Toyomura et al., 2015), a potential indication of a naturally occurring attempt at compensation. In this study, the increased connectivity among speech-related regions of the cerebellum, along with increased fluency during the rhythm condition, may thus reflect similar neural processes.

Note that functional connectivity does not necessarily reflect direct structural connections between a seed and a target region. Following Bernard et al. (2013), we interpret the increased within-cerebellar connectivity as reflecting greater synchrony among multiple cerebrocerebellar loops. Thus, in AWS, several areas of cerebral cortex may simultaneously drive distinct areas of the cerebellum, drawing on its temporal processing capabilities to maintain accurate speech timing during the rhythm condition.
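To make the distinction between functional and structural connectivity concrete, the following minimal sketch computes functional connectivity in its simplest form: a Fisher z-transformed Pearson correlation between a seed and a target time series. It is not the task-based connectivity method used in this study, which is not reproduced here; the helper `seed_target_connectivity` and all time series are hypothetical and synthetic. In this toy model, two "cerebellar" regions have no link to each other, yet they show strong connectivity because both receive the same simulated cortical input, which is the sense in which increased within-cerebellar connectivity can reflect synchrony among loops rather than a direct connection.

```python
import numpy as np


def seed_target_connectivity(seed: np.ndarray, target: np.ndarray) -> float:
    """Fisher z-transformed Pearson correlation between two time series.

    Hypothetical helper for illustration only: real task-based analyses
    also model task regressors and nuisance signals, omitted here.
    """
    r = np.corrcoef(seed, target)[0, 1]
    return float(np.arctanh(r))  # Fisher z-transform of r


rng = np.random.default_rng(0)
n_volumes = 200

# Synthetic shared cortical drive feeding two cerebellar regions that are
# not connected to each other in this toy model.
cortical_drive = rng.standard_normal(n_volumes)
cereb_a = cortical_drive + 0.5 * rng.standard_normal(n_volumes)
cereb_b = cortical_drive + 0.5 * rng.standard_normal(n_volumes)

# A region whose activity is independent of the shared drive.
unrelated = rng.standard_normal(n_volumes)

z_shared = seed_target_connectivity(cereb_a, cereb_b)
z_unrelated = seed_target_connectivity(cereb_a, unrelated)
print(f"shared input: z = {z_shared:.2f}; independent: z = {z_unrelated:.2f}")
```

With a shared input of unit variance and independent noise of variance 0.25 in each region, the expected correlation is about 0.8, whereas the independent pair should hover near zero.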

The reduced connectivity between the left prefrontal cortex and the left inferior cerebellum may be an exception. At rest, both regions are functionally connected with areas of the ventral attention network, including the bilateral temporoparietal junction and IFG (Buckner et al., 2011; Vossel et al., 2014; Yeo et al., 2011). This largely right-lateralized network is associated with modulating attention in response to new or surprising stimuli (Vossel et al., 2014) and overlaps with regions involved in responding to sensory feedback errors during speech production (Golfinopoulos et al., 2011; Tourville et al., 2008). Cerebellar lobule VIII is likewise involved in sensory feedback control (Golfinopoulos et al., 2011; Tourville et al., 2008) and in suprasyllabic speech sequencing (Bohland & Guenther, 2006). Thus, reduced connectivity between these two regions during the rhythm condition may reflect decreased reliance on this network in favor of more top-down control in AWS.

Changes in Activation During Isochronous Speech

Comparing neural activation between isochronously paced and normal speech showed that participants had greater activation during isochronous speech in left hemisphere medial premotor and sensory association areas, bilateral parietal cortex, and right hemisphere dPMC. Activation in the left temporoparietal sensory association cortex (PT, aSMg) and right vPMC (in the exploratory results) may be related to increased reliance on sensory feedback control during this novel speech condition. Previous studies have shown that sensory feedback errors (i.e., mismatches between the auditory signal expected from the current motor commands and the actual auditory signal) lead to increased activation in secondary auditory and somatosensory areas (Hashimoto & Sakai, 2003; Parkinson et al., 2012; Takaso et al., 2010; Tourville et al., 2008), whereas greater activation in right vPMC is thought to reflect the transformation of sensory errors into corrective motor responses (Golfinopoulos et al., 2011; Hashimoto & Sakai, 2003; Tourville et al., 2008). Temporoparietal cortex may also play a more general role in audio–motor integration (Hickok et al., 2003); therefore, increased activation in this region may reflect the need to hold the rhythmic auditory stimulus in working memory and translate it into a motor response in the rhythm condition of the current study. This interpretation is supported by increased activity in the bilateral intraparietal sulcus and posterior SMg, additional regions commonly recruited in working memory tasks (Rottschy et al., 2012).

There was also increased activation during isochronous speech in areas thought to be involved in speech planning and sequencing (left SMA; Bohland et al., 2010; Civier et al., 2013; Guenther, 2016), producing complex motor sequences (left SPL; Haslinger et al., 2002; Heim et al., 2012), producing novel sequences (left SPL; Jenkins et al., 1994; Segawa et al., 2015), attending to stimulus timing (left SPL; Coull, 2004), and controlled respiration (right dPMC; McKay et al., 2003). Because the rhythm condition requires participants to produce speech in an unfamiliar way, their speech becomes less automatic and may require greater recruitment of these areas to time the sequence of syllables (Alario et al., 2006; Bohland & Guenther, 2006; Schubotz & von Cramon, 2001). Bengtsson et al. (2004, 2005) found that, for both finger tapping and simple repetition of “pa,” more complex timing patterns led to greater SMA activation than simple patterns. In other words, the increased demand of implementing a timing pattern recruited the same structure that mediates temporal sequencing.

Unlike previous studies (Braun et al., 1997; Stager et al., 2003; Toyomura et al., 2011, 2015), this study found no significant increase in activation in AWS in the rhythm condition compared to the normal condition. The most consistent finding from these studies was that both groups showed increased activation in bilateral auditory regions during isochronously paced speech and that AWS showed greater increases in the basal ganglia. In this study, the lack of clear between-condition effects within the AWS group or between the AWS and ANS groups may be due to greater individual variability for this contrast in AWS than in ANS. Future work is needed to determine whether this within-group variability is driving the null findings in the AWS group. Furthermore, Toyomura et al. (2011) found that, whereas the basal ganglia, left precentral gyrus, left SMA, left IFG, and left insula were less active in AWS than in ANS during normal speech, activity in these areas increased to the level of ANS during isochronous speech. These results suggested that isochronously paced speech had a “normalizing” effect on activity in these regions, which differs from the present results.

Methodological differences between the current work and similar studies could also have affected the results. First, in the current study, the rhythmic stimulus was presented before speaking in both conditions, unlike previous work in which the participant heard the stimulus while speaking and only during the rhythm condition (Toyomura et al., 2011). Thus, group effects reported by Toyomura et al. (2011) likely reflect auditory processing of the pacing stimulus in addition to any differences in speech motor processes. Second, our study sought to examine the rhythm effect when speech was produced at a conversational speaking rate. Previous studies used a metronome set at 92–100 beats per minute, considerably slower than the mean conversational rate in English (228–372 syllables per minute; Davidow, 2014; Pellegrino et al., 2011) and the rate observed in our study (approximately 207 syllables per minute). Although Toyomura et al. (2011, 2015) instructed participants to speak at a similar rate during the normal condition (which earlier studies had not done), the slower tempo overall may have led to increased auditory feedback processing. This could have altered the mechanisms by which ANS and AWS controlled their speech timing. Finally, only one of the previous studies accounted for disfluencies during the task in its imaging analysis (Stager et al., 2003), despite evidence that disfluencies correlate significantly with brain activation (Braun et al., 1997). However, given the small number of disfluencies in this and previous studies, this omission may have had a limited impact on the results.
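The rate comparison above can be made concrete by converting each rate into the interval between successive syllables. The sketch below uses only the rates cited in the text and assumes one syllable per metronome beat in the earlier paradigms, an assumption the text does not state explicitly; the helper `interval_ms` is hypothetical.

```python
def interval_ms(rate_per_minute: float) -> float:
    """Milliseconds between successive events at a given per-minute rate."""
    return 60_000.0 / rate_per_minute


# Rates cited in the text. ASSUMPTION: one syllable per metronome beat.
rates = {
    "previous metronome, slow end (92 bpm)": 92,
    "previous metronome, fast end (100 bpm)": 100,
    "current study (approx. 207 syllables/min)": 207,
    "conversational English, slow end (228 syllables/min)": 228,
    "conversational English, fast end (372 syllables/min)": 372,
}

for label, rate in rates.items():
    print(f"{label}: {interval_ms(rate):.0f} ms per syllable")

# How much faster the current study's rate is than the fastest prior metronome.
print(f"current / previous (100 bpm): {207 / 100:.2f}x")
```

Under this assumption, each syllable in the earlier paradigms occupied roughly 600–650 ms, more than twice the roughly 290 ms observed here. That gap is consistent with the suggestion that the slower tempo left more time for auditory feedback processing.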

Correlation Between Activation and Severity

The voxel/vertex-based analysis did not find significant correlations between disfluency rate and activation in the normal–baseline contrast. However, in the exploratory ROI analysis, activation in the left VA thalamus and bilateral ventral lateral thalamus had among the strongest positive correlations with the disfluency rate (p < .005; see Supplemental Table S4 and Supplemental Figure S9 for details). These nuclei are part of both the cortico-cerebellar and cortico-basal ganglia motor loops and are structurally connected with premotor and primary motor areas (Barbas et al., 2013). Because these nuclei relay signals between subcortical structures and the cortex, increased activation in participants with a higher disfluency rate during the task may reflect greater reliance on these modulatory pathways during speech. It is also worth noting that, at an exploratory threshold (p < .05, uncorrected), some ROIs showed patterns similar to those reported in previous literature. The propensity to stutter during the task, measured by the disfluency rate, was associated with greater cortical activation in largely right hemisphere regions and with bilateral subcortical activation at uncorrected thresholds. The right-lateralized cortical associations in this study may reflect increased compensatory activity in AWS (as in Braun et al., 1997; Cai et al., 2014; Kell et al., 2009; Preibisch et al., 2003; Salmelin et al., 2000). This interpretation is supported by findings that fluency-inducing therapy leads to more left-lateralized activation (De Nil et al., 2003; Neumann et al., 2003, 2005), similar to that of neurotypical speakers. Because of the low number of disfluencies produced during the task, however, it may not have been possible to determine a clear relationship between fluency and activation.

Functional connectivity between multiple seed ROIs and target clusters was significantly correlated with SSI-Mod. Given the large number of significantly correlated connections, we focus here on those we consider most salient; the full set of findings is detailed in the supplemental materials.

In the normal–baseline comparison, the negative association between SSI-Mod and the connection between cerebellar vermis crus II and the midline inferior cerebellum indicates that AWS with less severe stuttering have greater within-cerebellum connectivity. This fits conceptually with the increased connectivity within the cerebellum during the rhythm condition; both results associate the cerebellum with greater fluency. There was also a positive correlation between SSI-Mod and the connection between left cerebellum VIIIa and right IFr. The direction of this correlation is surprising given previous work. For instance, Sitek et al. (2016) found a negative relationship between SSI scores and resting-state connectivity between the left cerebellum and IFr, and Kell et al. (2018) reported hyperconnectivity between the cerebellum and left IFr in recovered AWS when comparing overt and covert speech. Both findings suggest that greater fluency is associated with stronger connections between these regions. However, the cerebellar regions in those studies were defined less finely than the ROI in the current study, and the tasks from which those connections were derived differed from the normal–baseline comparison in this study.

In the rhythm–baseline contrast, multiple connections between seeds in the left fronto-orbital cortex, left aSMg, left ITO, and right VA and overlapping clusters in the right temporoparietal junction were negatively correlated with SSI-Mod. Thus, AWS with more severe stuttering had weaker connections than those with less severe stuttering. In general, these connections are consistent with the idea that the right hemisphere is recruited to compensate for impaired left hemisphere processing (Braun et al., 1997; De Nil et al., 2000; Fox et al., 1996). Indeed, this temporoparietal region was found to be hyperactive in a meta-analysis of stuttering neuroimaging studies (Belyk et al., 2015). The convergence of these connections on the right temporoparietal junction may relate to this region's role in responding to salient or unexpected events (Corbetta & Shulman, 2002). In the realm of speech production, these connections (especially with left aSMg) may reflect greater use of the somatosensory feedback loop by AWS with less severe stuttering to control speech during the rhythm condition (Golfinopoulos et al., 2011). One additional finding for the rhythm–baseline contrast is the negative association between SSI-Mod and the connection between the right PT and midline primary motor cortex/premotor cortex/SMA. In the auditory feedback loop proposed by Tourville et al. (2008) and Guenther (2016), sensory state, target, and error maps send error signals to the right PMC to generate corrective motor commands. Connectivity between the right PT and medial premotor regions may then reflect an interface between these sensory feedback loops and the SMA–basal ganglia “internal” timing system, which is disrupted in stuttering (Chang & Guenther, 2020). More fluent speakers may use this connection to a greater extent to resolve conflicts between competing motor programs (Guenther, 2016).

Limitations