Abstract

Constraining the many biological parameters that govern cortical dynamics is computationally and conceptually difficult because of the curse of dimensionality. This paper addresses these challenges by proposing (1) a novel data-informed mean-field (MF) approach to efficiently map the parameter space of network models; and (2) an organizing principle for studying parameter space that enables the extraction biologically meaningful relations from this high-dimensional data. We illustrate these ideas using a large-scale network model of the Macaque primary visual cortex. Of the 10-20 model parameters, we identify 7 that are especially poorly constrained, and use the MF algorithm in (1) to discover the firing rate contours in this 7D parameter cube. Defining a “biologically plausible” region to consist of parameters that exhibit spontaneous Excitatory and Inhibitory firing rates compatible with experimental values, we find that this region is a slightly thickened codimension-1 submanifold. An implication of this finding is that while plausible regimes depend sensitively on parameters, they are also robust and flexible provided one compensates appropriately when parameters are varied. Our organizing principle for conceptualizing parameter dependence is to focus on certain 2D parameter planes that govern lateral inhibition: Intersecting these planes with the biologically plausible region leads to very simple geometric structures which, when suitably scaled, have a universal character independent of where the intersections are taken. In addition to elucidating the geometry of the plausible region, this invariance suggests useful approximate scaling relations. Our study offers, for the first time, a complete characterization of the set of all biologically plausible parameters for a detailed cortical model, which has been out of reach due to the high dimensionality of parameter space.

Author summary

Cortical circuits are characterized by a high degree of structural and dynamical complexity, and this biological reality is reflected in the large number of parameters in even semi-realistic cortical models. A fundamental task of computational neuroscience is to understand how these parameters govern network dynamics. While some neuronal parameters can be measured in vivo, many remain poorly constrained due to limitations of available experimental techniques. Computational models can address this problem by relating difficult-to-measure parameters to observable quantities, but to do so one must overcome two challenges: (1) the computational expense of mapping a high dimensional parameter space, and (2) extracting biological insights from such a map. This study aims to address these challenges in the following ways: First, we propose a parsimonious data-informed algorithm that efficiently predicts spontaneous cortical activity, thereby speeding up the mapping of parameter landscapes. Second, we show that lateral inhibition provides a basis for conceptualizing cortical parameter space, enabling us to begin to make sense of its geometric structure and attendant scaling relations. We illustrate our approach on a biologically realistic model of the monkey primary visual cortex.

Introduction

From spatially and temporally homogeneous but sensitive resting states to highly structured evoked responses, neuronal circuits in the cerebral cortex exhibit an extremely broad range of dynamics in support of information processing in the brain [1–8]. Accompanying this dynamical flexibility is a high degree of morphological and physiological complexity [9–15]. As a result, any effort to characterize cortical circuits necessarily involves a large number of biological parameters [16–21]. Understanding the range of parameters compatible with biologically plausible cortical dynamics and how individual parameters impact neural computation are, in our view, basic questions in computational neuroscience.

Due to limitations of available experimental techniques, many neuronal and network parameters are poorly constrained. Biologically realistic network models can bridge this gap by quantifying the dependence of observable quantities like firing rates on parameters, thereby constraining their values. However, two challenges stand in the way of efforts to map the parameter landscape of detailed cortical networks. First, a direct approach, i.e., parameter sweeps using network models, may be extremely costly or even infeasible. This is because even a single layer of a small piece of cortex consists of tens of thousands of neurons, and the computational cost grows rapidly with the size of the network. This cost is compounded by the need for repeated model runs during parameter sweeps, and by the “curse of dimensionality,” i.e., the exponential growth of parameter space volume with the number of parameters. Second, even after conducting parameter sweeps, one is still faced with the daunting task of making sense of the high dimensional data to identify interpretable, biologically meaningful features.

This paper addresses the twin challenges of computational cost and interpretable cortical parameter mapping. Starting from a biologically realistic network model, we define as “viable” those parameters that yield predictions compatible with empirically observed firing rates, and seek to identify the viable region. To mitigate the computational cost of parameter space scans, we propose a parsimonious, data-informed mean-field (MF) algorithm. MF methods replace rapidly-fluctuating quantities like membrane potentials with their mean values; they have been used a great deal in neuroscience [22–38]. MF models of neuronal networks are all based on the relevant biology to different degrees, but most rely on idealized voltage-rate relations (so-called “gain” or “activation” function) to make the system amenable to analysis (see, e.g., [39, 40] and the Discussion for more details). In contrast, our MF equations are derived from a biologically realistic network model: Instead of making assumptions on gain functions, our MF equations follow closely the anatomical and physiological information incorporated in the network, hence reflecting its key features. To stress this tight connection to a realistic network model, we have described our method as a “data-informed MF approach”. As we will show, the algorithm we propose is capable of accurately predicting network firing rates at a small fraction of the expense of direct network simulations.

We illustrate the power of this approach and how one might conceptualize the mapping it produces using a biologically realistic model of Macaque primary visual cortex (V1). Focusing on spontaneous activity, our main result is that the viable region is a thin neighborhood of a codimension-1 manifold in parameter space. (A codimension-1 manifold is an (n − 1)-dimensional surface in an n-dimensional parameter space.) Being approximately codimension-1 implies that the viable region is simultaneously sensitive and flexible to parameter changes: sensitive in that a small perturbation can easily move a point off the manifold; flexible in the sense that it allows for a great variety of parameter combinations, consistent with the wide variability observed in biology. Our analysis of parameter dependence is based on the following organizing principle: By restricting attention to certain 2D planes associated with lateral inhibition, we discover geometric structures that are remarkably similar across all such “inhibition planes”. Our findings suggest a number of simple approximate scaling relations among neuronal parameters.

The Macaque V1 network model we use for illustration involves ≳ 4 × 104 neurons in Layer 4Cα of V1, and has been carefully benchmarked to a large number of known features of V1 response [41]. In [41], the authors focused on a small parameter region which they had reason to believe to be viable. The present study produces a much more comprehensive characterization of the set of all viable parameters defined in terms of spontaneous activity. The reason we have focused on spontaneous activity is that it is a relatively simple, homogeneous equilibrium steady state, and understanding it is necessary before tackling more complex, evoked responses. However, as all cortical activity depends on a delicate balance between excitation and inhibition, even background dynamics can be rather nontrivial.

Parameter search and tuning are problems common to all areas of computational biology. By significantly reducing the cost of mapping parameter landscapes, we hope the computational strategy proposed in the present paper will enable computational neuroscientists to construct high-fidelity cortical models, and to use these models to shed light on spontaneous and evoked dynamics in neural circuitry. Moreover, reduced models of the type proposed here may be useful as a basis for parameter and state estimation on the basis of experimental data.

Results

As explained in the Introduction, this work (1) proposes a novel data-informed mean-field approach to facilitate efficient and systematic parameter analysis of neuronal networks, which we validate using a previously constructed model of the monkey visual cortex; and (2) we develop ways to conceptualize and navigate the complexities of high-dimensional parameter spaces of neuronal models by organizing around certain relationships among parameters, notably those governing lateral inhibition.

Sect. 1 describes the network model of the visual cortex that will be used both to challenge the MF algorithm and to assess its efficacy, together with a brief introduction to the algorithm itself; details are given in Methods. Sect. 2 uses the algorithm to explore the parameter landscape of the model. Qualitative analysis is offered along the way leading to a conceptual understanding of parameter dependence.

1 Network model and parameter landscape

We use as starting point the large-scale network model in [41]. This is a mechanistic model of an input layer to the primary visual cortex (V1) of the Macaque monkey, which has vision very similar to that of humans [42–46] Among existing neuronal network models, [41] is at the very high end on the scale of details and biological realism: It incorporates a good amount of known neuroanatomy in its network architecture, capturing the dynamics of individual neurons as well as their dynamical interaction.

In [41], the authors located a small set of potentially viable parameters, which they refined by benchmarking the resulting regimes against multiple sets of experimental data. No claims were made on the uniqueness or optimality of the parameters considered. Indeed, because of the intensity of the work involved in locating viable parameters, little attempt was made to consider parameters farther from the ones used. This offers a natural testing ground for our novel approach to parameter determination: We borrow certain aspects of the model from [41], including network architecture, equations of neuronal dynamics and parameter structure, but instead of using information on the parameters found, we will search for viable parameter regions using the techniques developed here.

For a set of parameters to be viable, it must produce firing rates similar to those of the real cortex, including background firing, the spontaneous spiking produced when cortex is unstimulated. Background activity provides a natural way to constrain parameters: It is an especially simple state of equilibrium, one in which spiking is statistically homogeneous in space and time and involves fewer features of cortical dynamics. For example, synaptic depression and facilitation are not known to play essential roles in spontaneous activity. A goal in this paper will be to systematically identify all regions in parameter space with acceptable background firing rates.

1.1 Network model of an input layer to primate visual cortex

The network model is that of a small patch of Macaque V1, layer 4Cα (hereafter “L4”), the input layer of the Magnocellular pathway. This layer receives input from the lateral geniculate nucleus (LGN) and feedback from layer 6 (L6) of V1. Model details are as in [41], except for omission of features not involved in background activity. We provide below a model description that is sufficient for understanding—at least on a conceptual level – the material in Results. Precise numerical values of the various quantities are given in S1 Text. For more detailed discussions of the neurobiology behind the material in this subsection, we refer interested readers to [41] and references therein.

Network architecture

Of primary interest to us is L4, which is modeled as a 2D network of point neurons. Locations within this layer are identified with locations in the retina via neuronal projections, and distances on the retina are measured in degrees. Cells are organized into hypercolumns of about 4000 neurons each, covering a 0.25° × 0.25° area. (In this paper, “cells” and “neurons” always refer to nerve cells in the primary visual cortex.) The neurons are assumed to be of two kinds: 75−80% are Excitatory (E), and the rest are Inhibitory (I). The E-population is evenly placed in a lattice on the cortical surface; the same is true for I-cells. Projections to E- and I-cells are assumed to be isotropic, with probabilities of connection described by truncated Gaussians. E-neurons (which are assumed to be spiny stellate cells) have longer axons, about twice that of I-neurons (which are assumed to be basket cells). E-to-E coupling is relatively sparse, at about 15% at the peak, while E-to-I, I-to-E and I-to-I coupling is denser, at about 60%. Connections are drawn randomly subject to the probabilities above. On average, each E-neuron receives synaptic input from slightly over 200 E-cells and about 100 I-cells, while each I-cell receives input from ∼800 E-cells and 100 I-cells. Exact numbers are given in S1 Text.

Cells in L4 also receive synaptic input from two external sources, “external” in the sense that they originate from outside of this layer. One source is LGN: Each L4 cell, E or I, is assumed to be connected to 4 LGN cells on average; each LGN cell is assumed to provide 20 spikes per sec, a typical firing rate in background. These spikes are assumed to be delivered to L4 cells in a Poissonian manner, independently from cell to cell. L6 provides another source of synaptic input: We assume each E-cell in L4 receives input from 50 E-cells from L6, consistent with known neurobiology, and that each L6 E-cell fires on average 5 spikes per sec in background. For I-cells, the number of presynaptic L6 cells is unknown; this is one of the free parameters we will explore. Spike times from L6 are also assumed to be Poissonian and independent from cell to cell, a slight simplification of [41]. All inputs from external sources are excitatory. Finally, we lump together all top-down modulatory influences on L4 not modeled into a quantity we call “ambient”. Again, see S1 Text for all pertinent details.

Equations of neuronal dynamics

We model only the dynamics of neurons in L4. Each neuron is modeled as a conductance-based point neuron whose membrane potential v evolves according to the leaky integrate-and-fire (LIF) equation [47, 48]

| (1) |

Following [41], we nondimensionalize v, with resting potential Vrest = 0 and spiking threshold Vth = 1. In Eq (1), v is driven toward Vth by the Excitatory current gE(t)(v − VE), and away from it by the leak term gLv and the Inhibitory current gI(t)(v − VI). When v reaches 1, a spike is fired, and v is immediately reset to 0, where it is held for a refractory period of 2 ms. The membrane leakage times 1/gL = 20 ms for E-neurons and 16.7 ms for I-neurons, as well as the reversal potentials VE = 14/3 and VI = −2/3, are standard [49].

The quantities gE(t) and gI(t), the Excitatory and Inhibitory conductances, are defined as follows. First,

| (2) |

Here, the neuron whose dynamics are described in Eq (1) is assumed to be of type Q, Q = E or I, and the constant SQI is the synaptic coupling weight from I-neurons to neurons of type Q. The summation is taken over , times at which a spike from a presynaptic I-cell from within layer 4Cα is received by the neuron in question. Upon the arrival of each spike, gI(t) is elevated for 5–10 ms and GI(⋅) describes the waveform in the IPSC. Second, the Excitatory conductance gE(t) is the sum of 4 terms, the first three of which are analogs of the right side of Eq (2), with an EPSC lasting 3–5 ms: they represent synaptic input from E-cells from within L4, from LGN and from L6. The 4th term is from ambient.

This completes the main features of the network model. Details are given in S1 Text.

1.2 Parameter space to be explored

Network dynamics can be very sensitive—or relatively robust—to parameter changes, and dynamic regimes can change differently depending on which parameter (or combination of parameters) is varied. To demonstrate the multiscale and anisotropic nature of the parameter landscape, we study the effects of parameter perturbations on L4 firing rates, using as reference point a set of biologically realistic parameters [41]. Specifically, we denote L4 E/I-firing rates at the reference point by , and fix a region around consisting of firing rates (fE, fI) we are willing to tolerate. We then vary network parameters one at a time, changing it in small steps and computing network firing rates until they reach the boundary of , thereby determining the minimum perturbations needed to force L4 firing rates out of .

Table 1 shows the results. We categorize the parameters according to the aspect of network dynamics they govern. As can be seen, L4 firing rates show varying degrees of sensitivity to perturbations in different parameter groups. They are most sensitive to perturbations to synaptic coupling weights within L4, where deviations as small as 1% can push the firing rates out of . This likely reflects the delicate balance between excitation and inhibition, as well as the fact that the bulk of the synaptic input to a L4 neuron comes from lateral interaction, facts consistent with earlier findings [41]. With respect to parameters governing inputs from external sources, we find perturbing LGN parameters to have the most impact, followed by amb and L6, consistent with their net influence on gE,I in background. We also note that the parameters governing afferents to I cells are more tolerant of perturbations than those for E cells.

Table 1. Network parameters and response.

Using the parameters from [41] as a reference point, we set and and vary one parameter at a time. We then compute the minimum perturbation needed to force the network firing rates out of . Values such as FEP, where P ∈ {lgn, L6, amb}, represent the total number of spikes per second received by an E-cell in L4 from source P. For example, in the reference set, each L4 cell has 4 afferent LGN cells on average, the mean firing rate of each is assumed to be 20 spikes/s, so FElgn = 80 Hz. Column 5 gives the lower and upper bounds of single-parameter variation (rounded to the nearest 1%) from the reference point that yield firing rates within .

| Group | Parameter | Meaning | Value | Bounds |

|---|---|---|---|---|

| within L4 | S EE | E-to-E synaptic weight | 0.024 | (-3%, 1%) |

| S II | I-to-I synaptic weight | 0.120 | (-4%, 1%) | |

| S EI | I-to-E synaptic weight | 0.0362 | (-1%, 3%) | |

| S IE | E-to-I synaptic weight | 0.0176 | (-1%, 3%) | |

| LGN to L4 | S Elgn | lgn-to-E synaptic weight | 0.048 | (-5%, 3%) |

| S Ilgn | lgn-to-I synaptic weight | 0.096 | (-6%, 9%) | |

| F Flgn | total # lgn spikes/s to E | 80 Hz | (-7%, 4%) | |

| F Ilgn | total # lgn spikes/s to I | 80 Hz | (-9%, 11%) | |

| L6 to L4 | S EL6 | L6-to-E synaptic weight | 0.008 | (-16%, 11%) |

| S IL6 | L6-to-I synaptic weight | 0.0058 | (-19%, 30%) | |

| F EL6 | total # L6 spikes/s to E | 250 Hz | (-16%, 10%) | |

| F IL6 | total # L6 spikes/s to I | 750 Hz | (-16%, 29%) | |

| amb to L4 | S amb | ambient-to-E/I synaptic wt. | 0.01 | (-8%, 6%) |

| F Eamb | rate of ambient to E | 500 Hz | (-7%, 5%) | |

| F Iamb | rate of ambient to I | 500 Hz | (-10%, 27%) |

These observations suggest that network dynamics depend in a complex and subtle way on parameters; they underscore the challenges one faces when attempting to tune parameters “by hand.” We now identify the parameters in the network model description in Sect. 1.1 to be treated as free parameters in the study to follow.

Free parameters

We consider a parameter “free” if it is hard to measure (or has not yet been measured) directly in the laboratory, or when data offer conflicting guidance. When available data are sufficient to confidently associate a value to a parameter, we consider it fixed. Following this principle, we designate the following 6 synaptic coupling weights governing recurrent interactions within L4 and its thalamic inputs as free parameters:

As shown in Table 1, these are also the parameters to which network response rates are the most sensitive. As for SEL6 and SIL6, which govern synaptic coupling from L6 to E- and I-neurons in L4, we assume

following [50] (see also [41]). This means that in our study, these quantities will vary, but they are indexed to SEE and SIE in a fixed manner and we will not regard them as free parameters.

A second category of parameters govern external sources. Here we regard FElgn, FIlgn and FEL6 as fixed to the values given in Table 1. L6 firing rates in background have been measured, but we know of no estimates on the number of presynaptic L6 cells to I-cells, so we treat FIL6 (which combines the effects of both) as a free parameter. The relation between SIL6 and SIE assumed above is in fact unknown from experiments. On the other hand, we are assuming that errors in the estimate of SIL6 can be absorbed into FIL6, which we vary. As for “ambient”, these inputs are thought to be significant, though not enough to drive spikes on their own. Since so little is known about this category of inputs, we fix the values of Samb, FEamb, and FIamb to those given in Table 1, having checked that they meet the conditions above.

As discussed earlier, we are interested in L4 firing rates under background conditions. Denoting E- and I- firing rates by fE and fI respectively, the aim of our study can be summarized as follows:

Aim: To produce maps of fE and fI as functions of the 7 parameters

| (3) |

to identify biologically relevant regions, and to provide a conceptual understanding of the results.

1.3 A brief introduction to our proposed MF approach

The approach we take is a MF computation of firing rates augmented by synthetic voltage data, a scheme we will refer to as “MF+v”. To motivate the “+v” part of the scheme, we first write down the MF equations obtained from Eq (1) by balancing mean membrane currents. These MF equations will turn out to be incomplete. We discuss briefly how to secure the missing information; details are given in Methods.

MF equations

Eq (1) reflects instantaneous current balance across the cell membrane of a L4 neuron. Assuming that this neuron’s firing rate coincides with that of the L4 E/I-population to which it belongs and neglecting (for now) refractory periods, we obtain a general relation between firing rates and mean currents by integrating Eq (1). We will refer to the equations below as our “MF equations”. They have the general form

where [4E → E] represents the integral of the current contribution from E-cells in L4 to E-cells in L4, [4I → E] represents the corresponding quantity from I-cells in L4 to E-cells in L4, and so on. The contribution from lateral, intralaminar interactions can be further decomposed into, e.g.,

Here NEE and NEI are the mean numbers of presynaptic E- and I-cells from within L4 to an E-neuron, fE, fI, SEE and SEI are as defined earlier, and is the mean membrane potential v among E-neurons in L4. Other terms in Eq (4a) and in Eq (4b) are defined similarly; detailed derivation of the MF equations is given in Methods.

Network connectivity and parameters that are not considered “free parameters” are assumed to be fixed throughout. If additionally we fix a set of the 7 free parameters in (3), then Eq (4) is linear in fE and fI, and are easily solved—except for two undetermined quantities, and . For network neurons, and are emergent quantities that cannot be easily estimated from the equations of evolution or parameters chosen.

Estimating mean voltages

We explain here the ideas that lead to the algorithm we use for determining and , leaving technical details to Methods.

Our first observation is that the values of fE and fI computed from Eq (4) depend delicately on and ; they can vary wildly with small changes in and . This ruled out the use of (guessed) approximate values, and even called into question the usefulness of the MF equations. But as we demonstrate in Methods, if one collects mean voltages and from network simulations and plug them into Eq (4) to solve for fE and fI, then one obtains results that agree very well with actual network firing rates. This suggests Eq (4) can be useful, provided we correctly estimate and .

As it defeats the purpose of an MF approach to use network simulations to determine and , we sought to use a pair of LIF-neurons, one E and one I, to provide this information. To do that, we must create an environment for this pair of neurons that is similar to that within the network, incorporating the biological features with the LIF neurons. For example, one must use the same parameters and give them the same external drives, i.e., LGN, L6, and ambient. But a good fraction of the synaptic input to neurons in L4 are generated from lateral interactions; to simulate that, we would have to first learn what fE and fI are. The problem has now come full circle: what we need are self-consistent values of fE and fI for the LIF-neurons, so that their input and output firing rates coincide.

These and other ideas to be explained (e.g., efficiency and stability) go into the algorithm proposed. In a nutshell, we use the aid of a pair of LIF-neurons to help tie down and , and use the MF equations to compute fE and fI. This mean-field algorithm aided by voltage closures (MF+v) is discussed in detail in Methods. We present next firing rate plots generated using this algorithm.

2 Dependence of firing rates on system parameters

Even with a fast algorithm, so that many data points can be computed, discovery and representation of functions depending on more than 3 or 4 variables can be a challenge, not to mention conceptualization of the results. In Sects. 2.1–2.3, we propose to organize the 7D parameter space described in Sect. 1.2 in ways that take advantage of insights on how the parameters interact: Instead of attempting to compute 6D level surfaces for fE and fI embedded in the 7D parameter space, we identify a biologically plausible region of parameters called the “viable region”, and propose to study parameter structures by slicing the 7D space with certain 2D planes called “inhibition planes”. We will show that intersections of the viable region and inhibition planes—called “good areas”—possess certain canonical geometric structures, and that these structures offer a biologically interpretable landscape of parameter dependence. The three terms, viable regions, inhibition planes and good areas, the precise definitions of which are given in Sect. 2.1, are objects of interest throughout this section. In Sect. 2.4 we show comparison of firing rate computations from our algorithm and from actual network simulations.

2.1 Canonical structures in inhibition planes

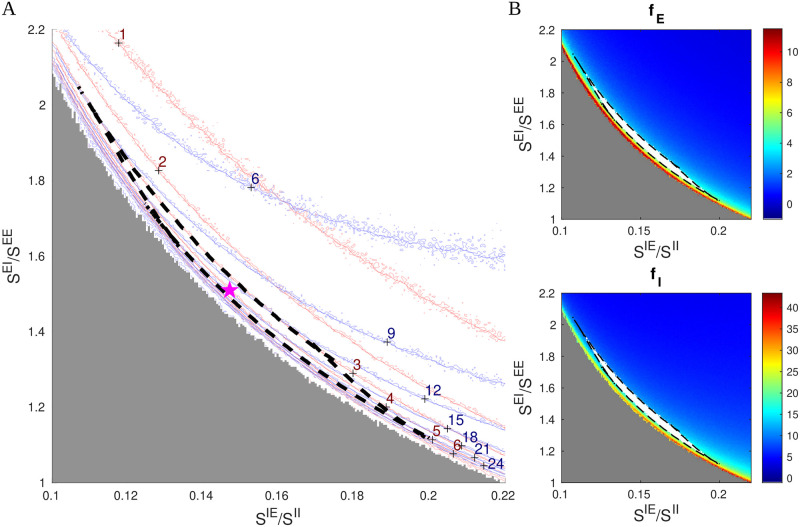

We have found it revealing to slice the parameter space using 2D planes defined by varying the parameters governing lateral inhibition, SIE and SEI, with all other parameters fixed. As we will show, these planes contain very simple and stable geometric structures around which we will organize our thinking about parameter space. Fig 1A shows one such 2D slice. We computed raw contour curves for fE and fI on a 480 × 480 grid using the MF+v algorithm, with red curves for fE and blue for fI.

Fig 1. Canonical structures in an inhibition plane.

A. Firing rates contours computed from firing rate maps on a 480×480 grid, showing fE = 1 − 6 Hz (red) and fI = 6 − 24 Hz (blue). The good area is indicated by the black dash lines (fE ∈ (3, 5) Hz and fI/fE ∈ (3, 4.25)); the reference point is indicated by the purple star (⋆). The MF+v method becomes unstable and fails when the inhibitory index SIE is too low (the gray region). B. Firing rate maps of fE (upper) and fI (lower), in which the good areas are indicated by white bands surrounded by black dash lines. The other five free parameters are as in Table 1: SEE = 0.024, SII = 0.12, SElgn = 2 × SEE, SIlgn = 2 × SElgn, and FIL6 = 3 × FEL6.

A striking feature of Fig 1A is that the level curves are roughly hyperbolic in shape. We argue that this is necessarily so. First, note that in Fig 1A, we used SIE/SII as x-axis and SEI/SEE as y-axis. The reason for this choice is that SIE/SII can be viewed as the degree of cortical excitation of I-cells, and SEI/SEE the suppressive power of I-cells from the perspective of E-cells. The product

can therefore be seen as a suppression index for E-cells: the larger this quantity, the smaller fE. This suggests that the contours for fE should be of the form xy = constant, i.e., they should have the shape of hyperbolas. As E and I firing rates in local populations are known to covary, these approximately hyperbolic shapes are passed to contours of fI.

A second feature of Fig 1A is that fI contours are less steep than those of fE at lower firing rates. That I-firing covaries with E-firing is due in part to the fact that I-cells receive a large portion of their excitatory input from E-cells through lateral interaction, at least when E-firing is robust. When fE is low, fI is lowered as well as I-cells lose their supply of excitation from E-cells, but the drop is less severe as I-cells also receive excitatory input from external sources. This causes fI contours to bend upwards relative to the fE-hyperbolas at lower firing rates, a fact quite evident from Fig 1.

We now define the viable region, the biologically plausible region in our 7D parameter space, consisting of parameters that produce firing rates we deem close enough to experimentally observed values. For definiteness, we take these to be [51]

and refer to the intersection of the viable region with the 2D slice depicted in Fig 1A as the “good area”. Here, the good area is the crescent-shaped set bordered by dashed black lines. For the parameters in Fig 1, it is bordered by 4 curves, two corresponding to the fE = 3 and 5 Hz contours and the other two are where fI/fE = 3 and 4.25. That such an area should exist as a narrow strip of finite length, with unions of segments of hyperbolas as boundaries, is a consequence of the fact that fE and fI-contours are roughly but not exactly parallel. Fig 1B shows the good area (white) on firing rate maps for fE and fI.

Hereafter, we will refer to 2D planes parametrized by SIE/SII and SEI/SEE, with all parameters other than SIE and SEI fixed, as inhibition planes, and will proceed to investigate the entire parameter space through these 2D slices and the good areas they contain. Though far from guaranteed, our aim is to show that the structures in Fig 1A persist, and to describe how they vary with the other 5 parameters.

Fig 1 is for a particular set of parameters. We presented it in high resolution to show our computational capacity and to familiarize the reader with the picture. As we vary parameters in the rest of this paper, we will present only heat maps for fE for each set of parameters studied. The good area, if there is one, will be marked in white, in analogy with the top panel of Fig 1B.

Finally, we remark that the MF+v algorithm does not always return reasonable estimates of L4 firing rates. MF+v tends to fail especially for a low suppression index (gray area in Fig 1A), where the network simulation also exhibits explosive, biologically unrealistic dynamics. This issue is discussed in Methods and S1 Text.

2.2 Dependence on external drives

There are two main sources of external input, LGN and L6 (while ambient input is assumed fixed). In both cases, it is their effect on E- versus I-cells in L4, and the variation thereof as LGN and L6 inputs are varied, that is of interest here.

LGN-to-E versus LGN-to-I

Results from [50] suggest that the sizes of EPSPs from LGN are ∼ 2× those from L4. Based on this, we consider the range SElgn/SEE ∈ (1.5, 3.0) in our study. Also, data show that LGN produces somewhat larger EPSCs in I-cells than in E-cells [52], though the relative coupling weights to E and I-cells are not known. Here, we index SIlgn to SElgn, and consider SIlgn/SElgn ∈ (1.5, 3.0).

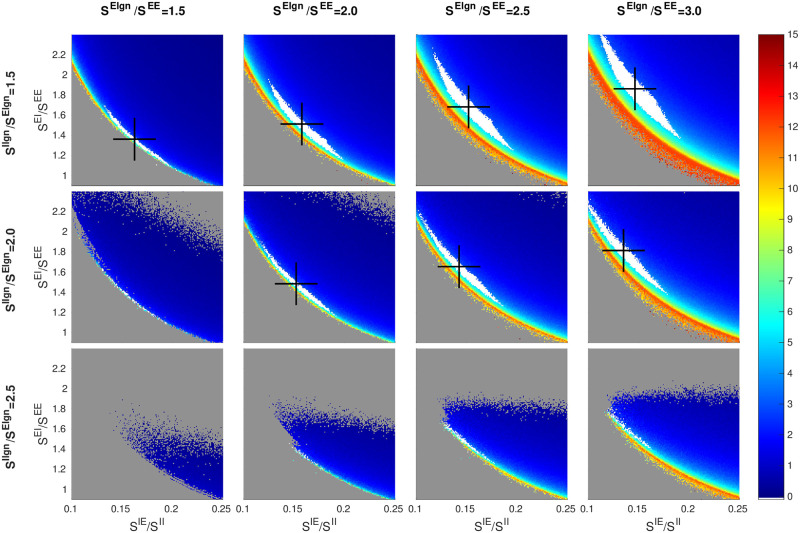

Fig 2 shows a 3 × 4 matrix of 2D panels, each one of which is an inhibition plane (see Fig 1). This is the language we will use here and in subsequent figures: we will refer to the rows and columns of the matrix of panels, while x and y are reserved for the axes for each smaller panel.

Fig 2. Dependence of firing rate and good area on LGN synaptic strengths.

A 3 × 4 matrix of panels is shown: each row corresponds to a fixed value of SIlgn/Elgn and each column a fixed value of SElgn/SEE. Each panel shows a heat map for fE on an inhibition plane (color bar on the right); x and y-axes are as in Fig 1. Good areas are in white, and their centers of mass marked by black crosses. The picture for SElgn/SEE = 2 and SIlgn/Elgn = 2 (row 2, column 2) corresponds to the fE rate map in Fig 1. Other free parameters are SEE = 0.024, SII = 0.12, and FIL6 = 3 × FEL6.

We consider first the changes as we go from left to right in each row of the matrix in Fig 2. With SIlgn/Elgn staying fixed, increasing SElgn/SEE not only increases LGN’s input to E, but also increases LGN to I by the same proportion. It is evident that the rate maps in the subpanels are all qualitatively similar, but with gradual changes in the location and the shape of the good area. Specifically, as LGN input increases, (i) the center of mass of the good area (black cross) shifts upward and to the left following the hyperbola, and (ii) the white region becomes wider.

To understand these trends, it is important to keep in mind that the good area is characterized by having firing rates within a fairly narrow range. As LGN input to E increases, the amount of suppression must be increased commensurably to maintain E-firing rate. Within an inhibition plane, this means an increase in SEI, explaining the upward move of black crosses as we go from left to right. Likewise, the amount of E-to-I input must be decreased by a suitable amount to maintain I-firing at the same level, explaining the leftward move of the black crosses and completing the argument for (i). As for (ii), recall that the SIE measures the degree of suppression of E-cells from within L4 (and L6, the synaptic weights of which are indexed to those in L4). Increased LGN input causes E-cells to be less suppressed than their SIE index would indicate. This has the effect of spreading the fE contours farther apart, stretching out the picture in a northeasterly direction perpendicular to the hyperbolas and widening the good area.

Going down the columns of the matrix, we observe a compression of the contours along the same northeasterly axis and a leftward shift of the black crosses. Recall that the only source of excitation of I-cells counted in the SIE-index is from L4 (hence also L6). When LGN to E is fixed and LGN to I is increased, the additional external drive to I produces a larger amount of suppression than the SIE-index would indicate, hence the compression. It also reduces the amount of SIE needed to produce the same I-firing rate, hence the leftward shift of the good area.

The changes in LGN input to E and to I shown cover nearly all of the biological range. We did not show the row corresponding to SIlgn/S Elgn = 3 because the same trend continues and there are no viable regions. Notice that even though we have only shown a 3 × 4 matrix of panels, the trends are clear and one can easily interpolate between panels.

LGN-to-I versus L6-to-I

Next, we examine the relation between two sources of external drive: LGN and L6. In principle, this involves a total of 8 quantities: total number of spikes and coupling weights, from LGN and from L6, received by E and I-neurons in L4. As discussed in Sect. 1.2, enough is known about several of these quantities for us to treat them as “fixed”, leaving as free parameters the following three: SElgn, SIlgn and FIL6. Above, we focused on SIlgn/SElgn, the impact of LGN on I relative to E. We now compare SIlgn/SElgn to FIL6/FEL6, the corresponding quantity with L6 in the place of LGN.

As there is little experimental guidance with regard to the range of FIL6, we will explore a relatively wide region of FIL6: Guided by the fact that

in L4 and the hypothesis that similar ratios hold for inter-laminar connections, we assume FIL6/FEL6 ∈ (1.5, 6). We have broadened the interval because it is somewhat controversial whether the effect of L6 is net-Excitatory or net-Inhibitory: the modeling work [53] on monkey found that it had to be at least slightly net-Excitatory, while [54] reported that it was net-Inhibitory in mouse.

Fig 3 shows, not surprisingly, that increasing LGN and L6 inputs to I have very similar effects: As with SIlgn/SElgn, larger FIL6/FEL6 narrows the strip corresponding to the good area and shifts it leftwards, that is, going from left to right in the matrix of panels has a similar effect as going from top to bottom. Interpolating, one sees, e.g., that the picture at (SIlgn/SElgn, FIL6/FEL6) = (1.5, 4) is remarkably similar to that at (2, 1.5). In background, changing the relative strengths of LGN to I vs to E has a larger effect than the corresponding changes in L6, because LGN input is a larger component of the Excitatory input than L6. This relation may not hold under drive, however, where L6 response is known to increase significantly.

Fig 3. Dependence of firing rate and good area on LGN versus L6.

A 3 × 4 matrix of panels is shown: each row corresponds to a fixed value of SIlgn/SElgn and each column a fixed value of FIL6/FEL6. Smaller panels depict fE maps on inhibition planes and are as in Fig 2. The panel for FEL6/FIL6 = 3 and SIlgn/SElgn = 2 (row 2, column 2) corresponds to the fE rate map in Fig 1. Other free parameters are SEE = 0.024, SII = 0.12, and SElgn = 2 × SEE.

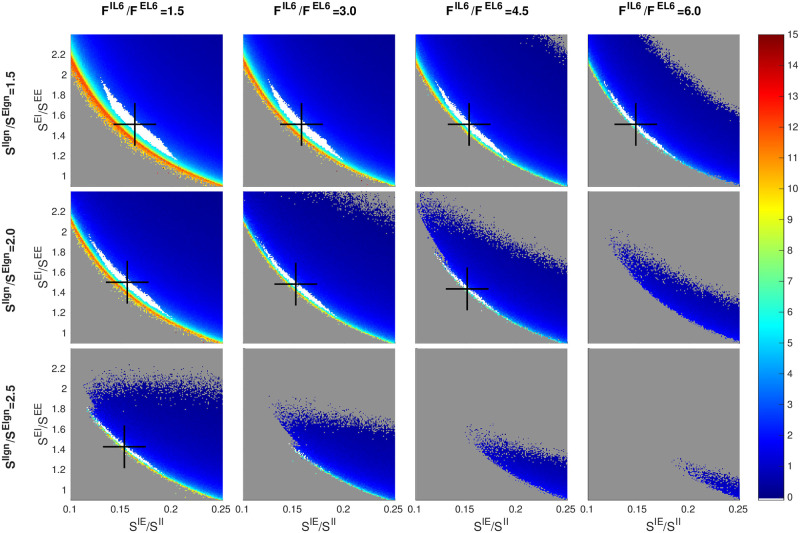

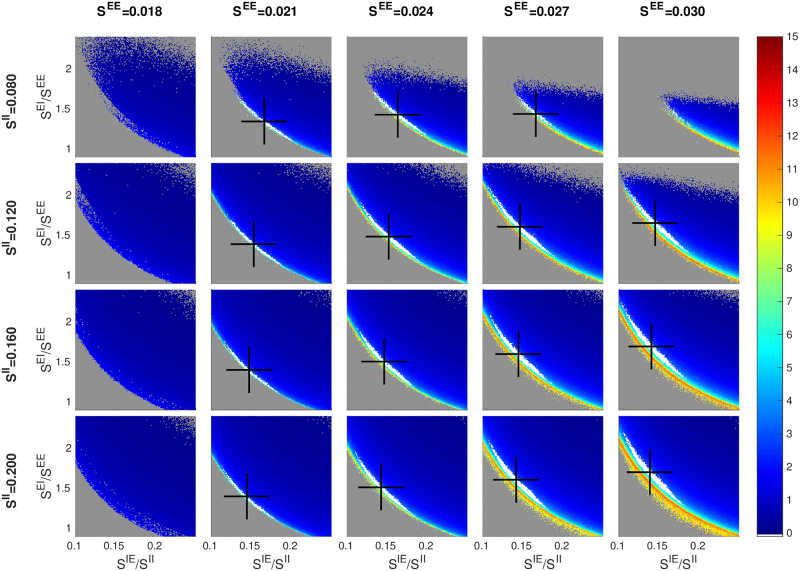

2.3 Scaling with SEE and SII

We have found SEE and SII to be the most “basic” of the parameters, and it has been productive indexing other parameters to them. In Fig 4, we vary these two parameters in the matrix rows and columns, and examine changes in the inhibition planes.

Fig 4. Dependence of firing rate and good area on SEE and SII.

Smaller panels are as in Figs 2 and 3, with good areas (where visible) in white and black crosses denoting their centers of mass. The picture for SEE = 0.024 and SII = 0.120 (row 2, column 3) corresponds to the fE rate map in Fig 1. Other free parameters are SElgn = 2 × SEE, SIlgn = 2 × SElgn, and FIL6 = 3 × FEL6.

We assume SEE ∈ (0.015, 0.03). This follows from the conventional wisdom [41, 50] that when an E-cell is stimulated in vitro, it takes 10–50 consecutive spikes in relatively quick succession to produce a spike. Numerical simulations of a biologically realistic V1 model suggested SEE values lie well within the range above [55]. As for SII, there is virtually no direct information other than some experimental evidence to the effect that EPSPs for I-cells are roughly comparable in size to those for E-cells; see [56] and also S1 Text. We arrived at the range we use as follows: With SII ∈ (0.08, 0.2) and SIE/SII ∈ (0.1, 0.25), we are effectively searching through a range of SIE ∈ (0.008, 0.05). As this interval extends quite a bit beyond the biological range for SEE, we hope to have cast a wide enough net given the roughly comparable EPSPs for E and I-cells.

Fig 4 shows a matrix of panels with SEE and SII in these ranges and the three ratios SElgn/SEE = 2.5, SIlgn/Elgn = 2 and FIL6/FEL6 = 3. As before, each of the smaller panels shows an inhibition plane. Good areas with characteristics similar to those seen in earlier figures varying from panel to panel are clearly visible.

A closer examination reveals that (i) going along each row of the matrix (from left to right), the center of mass of the good area (black cross) shifts upward as SEE increases, and (ii) going down each column, the black cross shifts slightly to the left as SII increases, two phenomena we now explain. Again, it is important to remember that firing rates are roughly constant on the good areas.

To understand (i), consider the currents that flow into an E-cell, decomposing according to source as follows: Let [4E], [6E], [LGN] and [amb] denote the total current into an E-cell from E-cells in L4 and L6, from LGN and ambient, and let [I] denote the magnitude of the I-current. As fE is determined by the difference (or gap) between the Excitatory and Inhibitory currents, we have

It is an empirical fact that the quantity in curly brackets is strictly positive (recall that ambient alone does not produce spikes). An increase of x% in SEE will cause not only [4E] to increase but also [6E] and [LGN], both of which are indexed to SEE, to increase by the same percentage. If [I] also increases by x%, then the quantity inside curly brackets will increase by x% resulting in a larger current gap. To maintain E-firing rate hence current gap size, [I] must increase by more than x%. Since I-firing rate is unchanged, this can only come about through an increase in the ratio SEI/SEE, hence the upward movement of the black crosses.

To understand (ii), we consider currents into an I-cell, and let [⋯] have the same meanings as before (except that they flow into an I-cell). Writing

we observe empirically that the quantity inside curly brackets is slightly positive. Now we increase SII by x% and ask how SIE should vary to maintain the current gap. Since [LGN] and [amb] are unchanged, we argue as above that SIE must increase by < x% (note that 6E is also indexed to SIE). This means SIE/SII has to decrease, proving (ii).

We have suggested that the inhibition plane picture we have seen many times is canonical, or universal, in the sense that through any point in the designated 7D parameter cube, if one takes a 2D slice as proposed, pictures qualitatively similar to those in Figs 2–4 will appear. To confirm this hypothesis, we have computed a number of slices taken at different values of the free parameters (see S1 Text), at

For all 18 = 3 × 3 × 2 combinations of these parameters, we reproduce the 3 × 4 panel matrix in Fig 2, i.e., the 4D slices of (SElgn/SEE, SIlgn/SII) × (SEI/SEE, SIE/SII), the first pair corresponding to rows and columns of the matrix, and the second to the xy-axes of each inhibition plane plot. All 18 plots confirm the trends observed above. Interpolating between them, we see that the contours and geometric shapes on inhibition planes are indeed universal, and taken together they offer a systematic, interpretable view of the 7D parameter space.

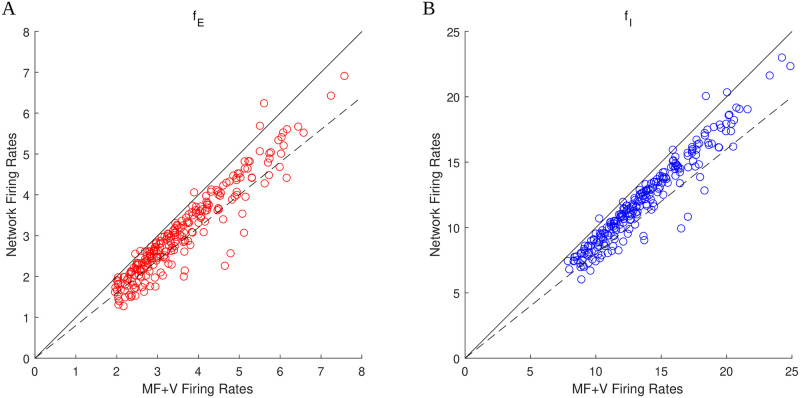

2.4 Comparing network simulations and the MF+v algorithm

Figs 1–4 were generated using the MF+v algorithm introduced in Sect. 1.3 and discussed in detail in Methods. Indeed, the same analysis would not be feasible using direct network simulations. But how accurately does the MF+v algorithm reproduce network firing rates? To answer that question, we randomly selected 128 sets of parameters in or near the good areas in Figs 1–4, and compared the values of fE and fI computed from MF+v to results of direct network simulations. The results are presented in Fig 5. They show that in almost all cases, MF+v overestimated network firing rates by a little, with < 20% error for ∼ 80% of the parameters tested. In view of the natural variability of neuronal parameters, both within a single individual under different conditions and across a population, we view of this level of accuracy as sufficient for all practical purposes. Most of the larger errors are associated with network E-firing rates that are lower than empirically observed (at about 2 spikes/sec).

Fig 5. Comparison of firing rates computed using the MF+v algorithm to those from direct network simulations.

The scatter plots show results for 128 sets of parameters randomly chosen in or near the good areas in Figs 1–4. A. Comparison of fE. B. Comparison of fI. Solid lines: y = x. Dashed lines: y = 0.8x. A majority of data points fall in the range of 20% accuracy.

3 Other views of the viable region

We have shown in Sect. 2 that a systematic and efficient way to explore parameter dependence is to slice the viable region using inhibition planes with rescaled coordinate axes, but there are many other ways to view the 6D manifold that approximates the viable region. Here are some examples.

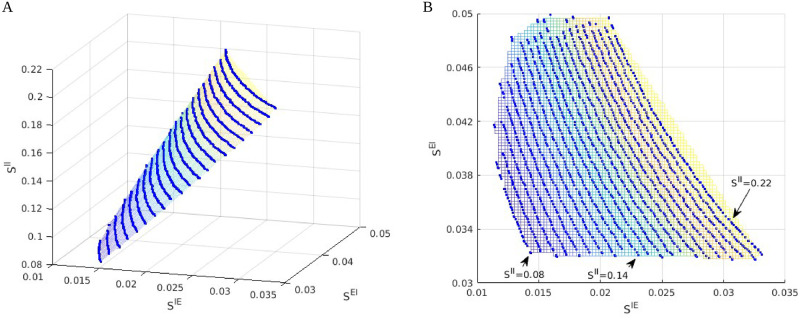

Fig 6 shows two views of the viable region projected to two different low dimension subspaces. The left panel shows the viable region as a surface parametrized by hyperbolas with varying aspect ratios. This is how it looks in unscaled coordinates, compared to the panels in, e.g., Fig 4, where we have uniformized the aspect ratios of the hyperbolas by plotting against SIE/SII instead of SIE. The right panel of Fig 6 shows a bird’s-eye view of the same plot, with the {fE = 4}-contours in the (unscaled) inhibition plane, giving another view of the SII dependence.

Fig 6. Other views of the viable regions.

A. A 2D surface approximating the viable region projected to the 3D-space defined by SIE × SEI × SII. Blue lines are projections of contours for fE = 4 Hz intersected with the good areas, computed on 21 different inhibition planes. Parameters are fixed at SEE = 0.024, SElgn/SEE = 2, SIlgn/SElgn = 2, and FIL6/FEL6 = 2; SII varies from 0.22 for the top contour to 0.08 for the bottom contour. B. A bird’s eye view of the left panel, with colors indicating corresponding locations of the projected E-contours.

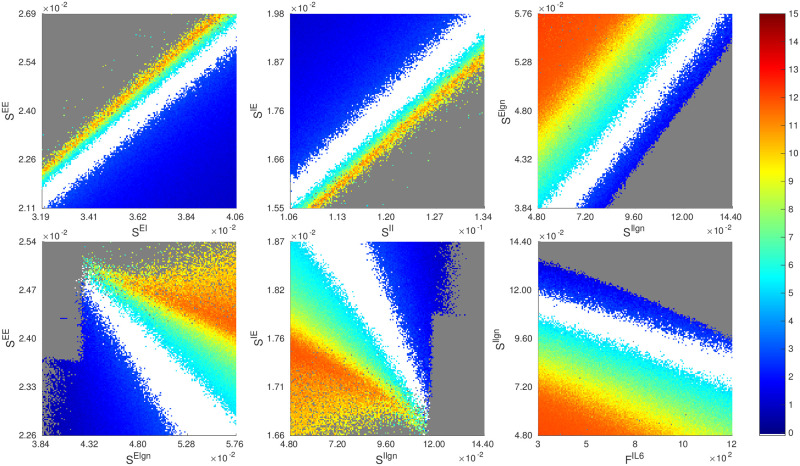

Table 1 gives a sense of how the viable region near the reference parameter point looks when we cut through the 7D parameter cube with 1D lines. In Fig 7, we show several heat maps for firing rates obtained by slicing the viable region with various 2D planes though the same reference point. In the top row, we have chosen pairs of parameters that covary (positively), meaning to stay in the viable region, these pairs of parameters need to be increased or decreased simultaneously by roughly constant proportions. The idea behind these plots is that to maintain constant firing rates, increased coupling strength from the E-population must be compensated by a commensurate increase in coupling strength from the I-population (left and middle panels), and increased drive to E must be compensated by a commensurate increase in drive to I (right panel). In the second row, we have selected pairs of parameters that covary negatively, i.e., their sums need to be conserved to stay in the viable region. The rationale here is that to maintain constant firing rates, total excitation from cortex and from LGN should be conserved (left and middle panels), as should total drive from L6 and from LGN (right panel).

Fig 7. Slicing the 7D parameter space from other directions through the reference point.

We compute the rate maps of fE and indicate viable regions on six other 2D slices, namely, the planes of SEE × SEI, SIE × SII, SElgn × SIlgn, SEE × SElgn, SIE × SIlgn, and SIlgn × FIL6. Firing rates are as in the color bar, and as usual the good area is in white.

Thus, together with the results in Sect. 2, we have seen three different ways in which pairs of parameters can relate: (i) they can covary, or (ii) their sums can be conserved, or, (iii) as in the case of inhibition planes, it is the product of the two parameters that needs to be conserved. Like (iii), which we have shown to hold ubiquitously and not just through this one parameter point, the relations in (i) and (ii) are also valid quite generally.

Discussion

We began with some very rough a priori bounds for the 7 free parameters identified in Sect. 1.2, basing our choices on physiological data when available and casting a wide net when they are not. We also identified a biologically plausible region (referred to as “the viable region”) defined to be the set of parameters that lead to spontaneous E- and I-firing rates compatible with experimental values, and sought to understand the geometry of this region of parameter space. Our most basic finding is that the viable region as defined is a slightly thickened 6D submanifold—the amount of thickening varies from location to location, and is so thin in places that for all practical purposes the submanifold vanishes. This is consistent with Table 1, which shows that varying certain parameters by as little as 1% can take us out of the viable region. One can think of directions that show greater sensitivity in Table 1 as more “perpendicular” to the slightly thickened 6D surface, while those that are more robust make a smaller angle with its tangent plane. The codimension-1 property is largely a consequence of the E-I balance and has a number of biological implications, the most important of which is that the parameters giving rise to biologically plausible regimes are robust—provided one compensates appropriately when varying parameters. Such compensation can come about from a variety of sources in vivo, e.g., synaptic depression of I-neurons [57, 58]; increased thresholds for potential generation of E-spikes due to Kv currents [59]; and a host of other homeostatic mechanisms [60]. To a first approximation, one can view these mechanisms as regulating synaptic weights, and our findings may be pertinent to anyone wishing to study homeostatic mechanisms governing neuronal activity.

Our analysis offers a great deal more information on the structure of the viable region beyond its being a thickened 6D manifold. We have found it profitable to slice the 7D parameter cube with inhibition planes, 2D planes containing the parameter axes SEI and SIE. Each inhibition plane intersects the viable region in a narrow strip surrounding a segment of a hyperbola (noted as “good area” above). Moreover, in rescaled variables SEI/SEE and SIE/SII, these hyperbolas are not only remarkably alike in appearance but their exact coordinate locations and aspect ratios vary little as we move from inhibition plane to inhibition plane, suggesting approximate scaling relations for firing rates as functions of parameters.

Summarizing, we found that most points in the viable region have SEE ∈ [0.02, 0.03] and SII ∈ [0.1, 0.2]; the lower limits of these ranges were found in our parameter analysis and the upper limits were a priori bounds. Our parameter exploration also shows that SEI/SEE ∈ (1, 2), and SIE/SII ∈ (0.1, 0.15), SElgn/SEE ∈ (1.5, 3), SIlgn/SElgn ∈ (1.5, 2), and FIL6/FIL6 ∈ (3, 4.5). We have further observed a strong correlation between degeneration of good areas and external inputs to I being too large in relation to that of E. For example, when SElgn/SEE is too low, or when SIlgn/Elgn or FIL6/FEL6 is too high, the hyperbolic strips in inhibition planes narrow, possibly vanishing altogether. (See S1 Text.) We have offered explanations in terms of a suppression index for E-cells.

Relation to previous work on MF

Since the pioneering work of Wilson, Cowan, Amari, and others [22–37, 61], MF ideas have been used to justify the use of firing rate-based models to model networks of spiking neurons. The basic idea underlying MF is to start with a relation between averaged quantities, e.g., an equation similar or analogous to Eq (4), and supplement it with an “activation” or “gain” function relating incoming spike rates and the firing rate of the postsynaptic neuron, thus arriving at a closed governing equation for firing rates. MF and related ideas have yielded valuable mathematical insights into a wide range of phenomena and mechanisms, including pattern formation in slices [25, 30], synaptic plasticity and memory formation [62–66], stability of attractor networks [67–73], and many other features of network dynamics involved in neuronal computation [31, 32, 34, 36, 61, 74–100]. However, as far as we are aware, MF has not been used to systematically map out cortical parameter landscape.

Another distinction between our approach and most previous MF models has to do with intended use. In most MF models, the form of the gain function is assumed, usually given by a simple analytical expression; see, e.g., [39]. In settings where the goal is a general theoretical understanding and the relevant dynamical features are insensitive to the details of the gain function, MF theory enables mathematical analysis and can be quite informative. Our goals are different: our MF models are computationally efficient surrogates for realistic biological network models, models that are typically highly complex, incorporating the anatomy and physiology of the biological system in question. For such purposes, it is essential that our MF equations capture quantitative details of the corresponding network model with sufficient accuracy. In particular, we are not free to design gain functions; they are dictated by the connectivity statistics, types of afferents and overall structure of the network model. We have termed our approach “data-informed MF” to stress these differences with the usual MF theories.

We have tried to minimize the imposition of additional hypotheses beyond the basic MF assumption of a closed model in terms of rates. As summarized in Results and discussed in depth in Methods and S1 Text, we sought to build an MF equation assuming only that the dynamics of individual neurons are governed by leaky integrate-and-fire (LIF) equations with inputs from lateral and external sources, and when information on mean voltage was needed to close the MF equation, we secured that from synthetic data using a pair of LIF neurons driven by the same inputs as network neurons. The resulting algorithm, which we have called the “MF+v” algorithm, is to our knowledge novel and is faithful to the idea of data-informed MF modeling.

As we have shown, our simple and flexible approach produces accurate firing rate estimates, capturing cortical parameter landscape at a fraction of the cost of realistic network simulations. It is also apparent that its scope goes beyond background activity, and can be readily generalized to other settings, e.g., to study evoked responses.

Contribution to a model of primate visual cortex

Our starting point was [41], which contains a mechanistic model of the input layer to the monkey V1 cortex. This model was an ideal proving ground for our data-informed MF ideas: it is a large-scale network model of interacting neurons built to conform to anatomy. For this network, the authors of [41] located a small patch of parameters with which they reproduced many visual responses both spontaneous and evoked. Their aim was to show that such parameters existed; parameters away from this patch were not considered—and this is where they left off and where we began: Our MF+v algorithm, coupled with techniques for conceptualizing parameter space, made it possible to fully examine a large 7D parameter cube. In this paper, we identified all the parameters in this cube for which spontaneous firing rates lie within certain acceptable ranges. The region we found includes the parameters in [41] and is many times larger; it is a slightly thickened 6D manifold that is nontrivial in size. Which subset of this 6D manifold will produce acceptable behavior when the model is stimulated remains to be determined, but since all viable parameters – viable in the sense of both background and evoked responses—must lie in this set, knowledge of its coordinates should provide a head-start in future modeling work.

Taking stock and moving forward

In a study such as the one conducted here, had we not used basic biological insight and other simplifications (such as inhibition planes, rescaled parameters, viable regions, and good areas) to focus the exploration of the 7D parameter space, the number of parameter points to be explored would have been N7, where N is the number of grid points per parameter, and the observations in Table 1 suggest that may be the order of magnitude needed. Obtaining this many data points from numerical simulation of the entire network would have been out of the question. Even after pruning out large subsets of the 7D parameter cube and leveraging the insights and scaling relations as we have done, producing the figures in this paper involved computing firing rates for ∼107 distinct parameters. That would still have required significant effort and resources to implement and execute using direct network simulations. In contrast, using the proposed MF+v algorithm, each example shown in this paper can be implemented with moderate programming effort and computed in a matter of hours on a modern computing cluster.

We have focused on background or spontaneous activity because its spatially and temporally homogeneous dynamics provide a natural testing ground for the MF+v algorithm. Having tested the capabilities of MF+v, our next challenge is to proceed to evoked activity, where visual stimulation typically produces firing rates with inhomogeneous spatial patterns across the cortical sheet. The methods developed in this paper continue to be relevant in such studies: evoked activity is often locally constant in space (as well as in time), so our methods apply to local populations, the dynamics of which form building blocks of cortical responses to stimuli with different spatiotemporal structure.

Finally, we emphasize that while MF+v provides a tremendous reduction to the cost of estimating firing rates given biological parameters, the computational cost of a parameter grid search remains exponential in the number of parameters (“curse of dimensionality”). Nevertheless, we expect our MF+v-based strategy, in combination with more efficient representations of data in high dimensional spaces (e.g., sparse grids [101]) and the leveraging of biological insight, can scale to systems with many more degrees of complexity.

Methods

As explained in Introduction and Results, we seek parsimonious phenomenological models that are (i) simple and efficient; (ii) flexible enough to accommodate key biological features and constraints; and (iii) able to faithfully capture mean firing rates and voltages of network models across a wide range of parameters. We use for illustration a model of the monkey primary visual cortex, treating as “ground truth” the network model described in Sect. 1.1 of Results. Here we elaborate on the MF+v scheme outlined in Sect. 1.3, applying it to study firing rates in Layer 4Cα (L4), an input layer to V1 in the network model.

M1 Mean-field rate-voltage relation

We begin by stating precisely and deriving the MF Eq (4) alluded to in Sect. 1.3 of Results. Consider an LIF model (Eq (1)) for neuron i in L4 of the network model. We set Vrest = 0, Vth = 1, and let t1, t2, …tn be the spiking times of neuron i on the time interval [0, T] for some large T. Integrating Eq (1) in time, we obtain

| (5) |

where , i.e., the time interval [0, T] minus the union of all refractory periods. Let fi = limT→∞ Ni(T)/T denote the mean firing rate of the ith neuron. We then have

| (6) |

where and is the total length of . The term (⋆) is the fraction of time the ith neuron is in refractory, and is the conditional expectation of the quantity x(t) given the cell is not refractory at time t. We have neglected correlations between conductances and voltages, as is typically done in mean-field (MF) theories. See, e.g., [29].

The long-time averages and reflect the numbers and sizes of E/I-kicks received by neuron i. In our network model (see S1 Text for details), the only source of inhibition comes from I-cells in L4, while excitatory inputs come from LGN, layer 6 (L6), ambient inputs (amb), and recurrent excitation from E-cells in L4. Mean conductances can thus be decomposed into:

where for P ∈ { lgn, L6, amb, E, I}, Si,P is the synaptic coupling weight from cells in P to neuron i, and Fi,P is the total number of spikes per second neuron i receives from source P, i.e., from all of its presynaptic cells in P combined. Here and in the rest of Methods, “E” and “I” refer to L4, the primary focus of the present study, so that Fi, E, for example, is the total number of spikes neuron i receives from E-cells from within L4.

As discussed in the main text, we are interested in background or spontaneous activity. During spontaneous activity, we may assume under the MF limit that all E-cells in L4 receive statistically identical inputs, i.e., for each P, (Si,P, Fi,P) is identical for all E-cells i in L4. We denote their common values by (SEP, FEP), and call the common firing rate of all E-cells fE. Corresponding quantities for I-cells are denoted (SIP, FIP) and fI. Combining Eqs 6 and 7, we obtain the MF equations for E/I-cells:

In Eq (8), and are leakage conductances; NQ′Q represents the average number of type-Q neurons in L4 presynaptic to a type-Q′ neuron in L4. These four numbers follow estimations of neuron density and connection probability of Layer 4Cα of the monkey primary visual cortex. Refractory periods are , and E-to-E synapses are assumed to have a synaptic failure rate pfall, also fixed. Details are discussed in S1 Text.

We seek to solve Eq (8) for (fE, fI) given network connections, synaptic coupling weights and external inputs. That is, we assume all the quantities that appear in Eq (8) are fixed, except for and . The latter two, the mean voltages and , cannot be prescribed freely as they describe the sub-threshold activity of L4 neurons once the other parameters are specified. Thus what we have is a system that is not closed: there are four unknowns, and only two equations.

A second observation is that once and are determined, Eq (8) has a very simple structure. To highlight this near-linear structure, we rewrite Eq (8) in matrix form as

| (9) |

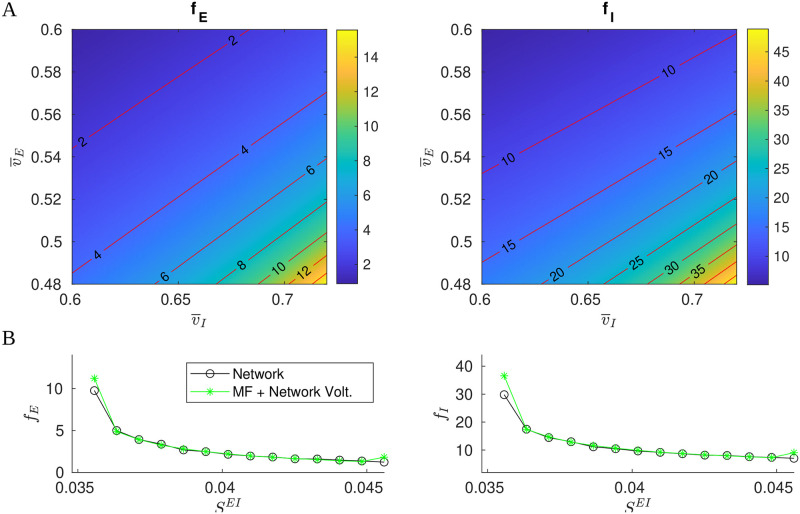

Here , , is a (voltage-dependent) linear operator acting on L4 E/I firing rates, includes inputs from external sources and leakage currents, and R accounts for refractory periods. (See S1 Text.) Neglecting refractory periods, Eq (9) is linear in assuming and are known. At typical cortical firing rates in background, the refractory factor R contributes a small nonlinear correction.

To understand the dependence on , we show in Fig 8A the level curves of fE and fI as functions of from the mapping defined by Eq (8). As expected, (fE, fI) vary with , the nearly straight contours reflecting the near-linear structure of Eq (9). One sees both fE and fI increase steadily (in a nonlinear fashion) as we decrease and increase , the dependence on being quite sensitive in the lower right part of the panels. The sensitive dependence of fE and fI on rules out arbitrary choices on the latter in a MF theory that aims to reproduce network dynamics. How to obtain reasonable information on is the main issue we need to overcome.

Fig 8. MF approximations.

A. Contours of E/I firing rates as functions of . B. Comparison of network firing rates (black) and (fE, fI) computed from Eq (8) using network-computed mean voltages (green).

We propose in this paper to augment Eq (8) with values of informed by (synthetic) data. To gauge the viability of this idea, we first perform the most basic of all tests: We collect firing rates and mean voltages (averaged over time and over neurons) computed directly from network simulations, and compare the firing rates to fE and fI computed from Eq (8) with set to network-computed mean voltages. The results for a range of synaptic coupling constants are shown in Fig 8B, and the agreement is excellent except when firing rates are very low or very high.

M2 The MF+v algorithm

Simulating the entire network to obtain defeats the purpose of MF approaches, but the results in Fig 8B suggest that we might try using single LIF neurons to represent typical network neurons, and use them to estimate mean voltages.

The idea is as follows: Consider a pair of LIF neurons, one E and one I, and fix a set of parameters and external drives. In order for this pair to produce similar to the mean voltages in network simulations, we must provide these cells with surrogate inputs that mimic what they would receive if they were operating within a network. However, the bulk of the input into L4 cells are from other L4 cells. This means that in addition to surrogate LGN, L6, and ambient inputs, we need to provide our LIF neurons surrogate L4 inputs (both E and I) commensurate with those received by network cells. Arrival time statistics will have to be presumed (here we use Poisson), but firing rates should be those of L4 cells—the very quantities we are seeking from our MF model. Thus there is the following consistency condition that must be fulfilled: For suitable parameters and external inputs, we look for values , , fE, and fI such that

given and , Eq (8) returns fE and fI as firing rates; and

when L4 firing rates of fE and fI are presented to the LIF pair along with the stipulated parameters and external inputs, direct simulations of this LIF pair return values of and .

If we are able to locate values of , , fE, and fI that satisfy the consistency relations above, it will follow that the LIF pair, acting as surrogate for the E and I-populations in the network, provide mean voltage data that enable us to determine mean network firing rates in a self-consistent fashion.

A natural approach to finding self-consistent firing rates is to alternate between estimating mean voltages using LIF neurons receiving surrogate network inputs—including L4 firing rates from the previous iteration—and using the MF formula (Eq (4)) to estimate L4 firing rates using voltage values from the previous step. A schematic representation of this iterative method is shown in Fig 9A. In more detail, let and be the mean voltage and firing rate estimates obtained from the pth iteration of the cycle above. In the next iteration, we first simulate the LIF cells for a prespecified duration tLIF, with L4 firing rate set to , to obtain an estimate of the mean voltage. We then update the rate estimate by solving

| (10) |

for , leading to

| (11) |

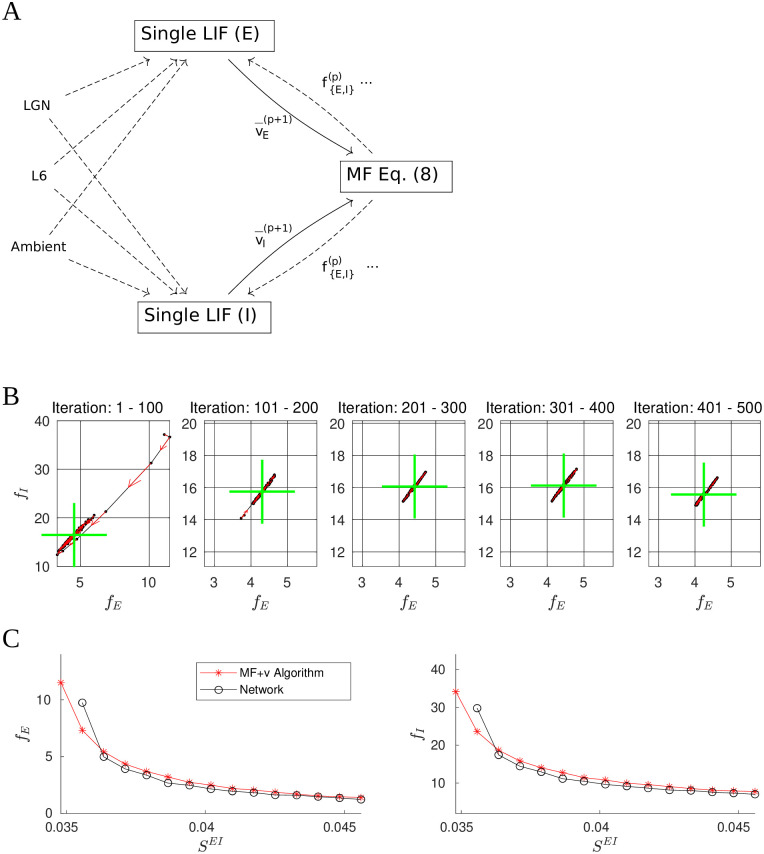

Fig 9. The MF+v algorithm.

A. Schematic of the algorithm. We begin by choosing initial values and . These values are used to drive a pair of LIF neurons for 20 seconds (biological time). The resulting membrane voltages are then fed into the MF Eq (8), which gives us firing rates for the next iteration. This is repeated until certain convergence criteria are met (see text). In the above, all dashed lines are modeled by Poisson processes. B. 500 training iterations of the MF+v. The means of every 100 iterations are indicated by green crosses, stability properties of which are quite evident. C. Comparison of network (black) and MF+v computed (red) firing rates.

When the iteration converges, i.e., when and , we have a solution of Eq (9).

Fig 9B shows 500 iterations of this scheme in -space. Shown are iterations and running means (green crosses). Observe that after transients, the settle down to what appears to be a narrow band of finite length, and wanders back and forth along this band without appearing to converge. We have experimented with doubling the integration time tLIF and other measures to increase the accuracy of the voltage estimates , but they have had no appreciable impact on the amplitudes of these oscillations. A likely explanation is that the contours of the E/I firing rates (Fig 8A) are close to but not exactly parallel: Had they been exactly parallel, a long line of would produce the same , implying the MF Eq (8) do not have unique solutions. The fact that they appear to be nearly parallel then suggests a large number of near-solutions, explaining why our attempt at fixed point iteration cannot be expected to converge in a reasonable amount of time.

However, the oscillations shown in Fig 9B are very stable and well defined, suggesting a pragmatic way forward: After the iterations settle to this narrow band, we can run a large number of iterations and average the to produce a single estimate. Specifically, we first carry out a number of “training” iterations, and when the firing rate estimates settle to a steady state by a heuristic criterion, we compute a long-time average and output the result. Combining this with the MF formula (4) yields the MF+v algorithm. See S1 Text for more details.

Fig 9C compares MF+v predictions with network simulations. As one would expect, averaging significantly reduces variance. The results show strong agreement between MF+v predictions and their target values given by direct network simulations.

Finally, we remark that a natural alternative to our MF+v algorithm might be to forgo the MF equation altogether, and construct a self-consistent model using single LIF neurons. We found that such an LIF-only method is much less stable than MF+v. (See S1 Text.) This is in part because firing rates require more data to estimate accurately: For each second of simulated activity, each LIF neuron fires only 3–4 spikes, whereas we can collect a great deal more data on voltages over the same time interval.

M3 Issues related to implementation of MF+v

The hybrid approach of MF+v, which combines the use of the MF formula (4) with voltage estimates from direct simulations of single neurons, has enabled us to seamlessly incorporate the variety of kick sizes and rates from L6, LGN, and ambient inputs while benefiting from the efficiency and simplicity of Eq (4). Nevertheless there are some technical issues one should be aware of.

Failure to generate a meaningful result

We have found that MF+v iterations can fail to give meaningful answers in the following two situations. First, in some parameter regimes, the linear operator can become singular, which can result in unreasonably low or even negative values of in the MF+v algorithm (see S1 Text). Second, when firing rates are too low (e.g., fE < 0.1 Hz), the low rate of L4 kicks to the LIF neurons in the MF+v model can result in large fluctuations of , which can destabilize the computed unless the integration time tLIF is sufficiently large.

In Results, whenever MF+v fails, we exclude the parameter set and label it with a gray pixel in the canonical picture; see, e.g., Fig 1.

Computational cost

The majority of computation in MF+v is spent on collecting the mean voltages in each iteration, which can be time-consuming depending on the time-scale and accuracy of LIF-neuron simulation. By repeating for M iterations, the computational cost of MF+v is O(MtLIF), where a general choice of tLIF ∈ (5, 40)s. If we simulate each neuron for tLIF 20s per iteration for up to M = 100 iterations, we typically obtain a firing rate estimate within ∼ 20% accuracy of the network firing rate in ∼ 1.5s. In contrast, the cost of simulating a large network with N neurons typically grows at least as fast as N, and may even grow nonlinearly in N. With the parameters in this paper, a typical network simulation using N ≈ 4 × 104 cells may require up to ∼ 60 seconds, which is substantial when used to map a 7-dimensional parameter grid. The MF+v algorithm thus represents an ∼ 40-fold speedup over the corresponding large network simulation in contemporary computing environments. (Both network simulations and MF+v computations are implemented using MATLAB 2020B with Intel Xeon Platinum 8268 24Core 2.9GHz processors).

It is possible to further reduce the computational cost of MF+v. First, instead of the simple iteration scheme we used in MF+v (i.e., Picard iteration), one can use a stochastic variant of a higher-order method (see, e.g., [102]). Second, one need not make an independent computation of the mean voltages for each iteration. Instead, we can precompute the mean voltages for a coarse grid in (fE, fI) space, then, by interpolation and smoothing, construct a table of values of mean voltages as functions of L4 rates. This approximation can then be used as a surrogate for the Monte Carlo simulation presently used in MF+v. In certain regimes, it may also be possible to compute in a more analytical manner, e.g., via the Fokker-Planck equation for LIF neurons.

Supporting information

(PDF)

Data Availability

Source code for the paper can be downloaded from: https://github.com/Texense/NYU_DIMF_PLOSCB.

Funding Statement

ZCX was supported by the Swartz Foundation. KL was supported in part by the National Science Foundation Grant DMS-182128. LSY was supported in part by the National Science Foundation Grants SMA-1734854 and DMS-1901009. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Mitra P. Observed Brain Dynamics. Oxford University Press; 2007. [Google Scholar]

- 2. Makeig S, Debener S, Onton J, Delorme A. Mining event-related brain dynamics. Trends in cognitive sciences. 2004;8(5):204–210. doi: 10.1016/j.tics.2004.03.008 [DOI] [PubMed] [Google Scholar]

- 3. Spiers HJ, Maguire EA. Thoughts, behaviour, and brain dynamics during navigation in the real world. Neuroimage. 2006;31(4):1826–1840. doi: 10.1016/j.neuroimage.2006.01.037 [DOI] [PubMed] [Google Scholar]

- 4. Del Cul A, Baillet S, Dehaene S. Brain dynamics underlying the nonlinear threshold for access to consciousness. PLoS biology. 2007;5(10):e260. doi: 10.1371/journal.pbio.0050260 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Erb J, Henry MJ, Eisner F, Obleser J. The brain dynamics of rapid perceptual adaptation to adverse listening conditions. Journal of Neuroscience. 2013;33(26):10688–10697. doi: 10.1523/JNEUROSCI.4596-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Schiff ND, Nauvel T, Victor JD. Large-scale brain dynamics in disorders of consciousness. Current opinion in neurobiology. 2014;25:7–14. doi: 10.1016/j.conb.2013.10.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Barttfeld P, Uhrig L, Sitt JD, Sigman M, Jarraya B, Dehaene S. Signature of consciousness in the dynamics of resting-state brain activity. Proceedings of the National Academy of Sciences. 2015;112(3):887–892. doi: 10.1073/pnas.1418031112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Zalesky A, Fornito A, Cocchi L, Gollo LL, Breakspear M. Time-resolved resting-state brain networks. Proceedings of the National Academy of Sciences. 2014;111(28):10341–10346. doi: 10.1073/pnas.1400181111 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Braitenberg V, Schüz A. Cortex: statistics and geometry of neuronal connectivity. Springer Science & Business Media; 2013. [Google Scholar]

- 10. Roth G, Blanke J, Wake DB. Cell size predicts morphological complexity in the brains of frogs and salamanders. Proceedings of the National Academy of Sciences. 1994;91(11):4796–4800. doi: 10.1073/pnas.91.11.4796 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. van Pelt J, van Ooyen A, Uylings HB. The need for integrating neuronal morphology databases and computational environments in exploring neuronal structure and function. Anatomy and embryology. 2001;204(4):255–265. doi: 10.1007/s004290100197 [DOI] [PubMed] [Google Scholar]

- 12. Zeng H, Sanes JR. Neuronal cell-type classification: challenges, opportunities and the path forward. Nature Reviews Neuroscience. 2017;18(9):530–546. doi: 10.1038/nrn.2017.85 [DOI] [PubMed] [Google Scholar]

- 13. Silver RA. Neuronal arithmetic. Nature Reviews Neuroscience. 2010;11(7):474–489. doi: 10.1038/nrn2864 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Im HI, Kenny PJ. MicroRNAs in neuronal function and dysfunction. Trends in neurosciences. 2012;35(5):325–334. doi: 10.1016/j.tins.2012.01.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Debanne D, Campanac E, Bialowas A, Carlier E, Alcaraz G. Axon physiology. Physiological reviews. 2011;91(2):555–602. doi: 10.1152/physrev.00048.2009 [DOI] [PubMed] [Google Scholar]

- 16. Wang XJ. Probabilistic decision making by slow reverberation in cortical circuits. Neuron. 2002;36(5):955–968. doi: 10.1016/S0896-6273(02)01092-9 [DOI] [PubMed] [Google Scholar]

- 17. Friston K, Kiebel S. Cortical circuits for perceptual inference. Neural Networks. 2009;22(8):1093–1104. doi: 10.1016/j.neunet.2009.07.023 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Wolf F, Engelken R, Puelma-Touzel M, Weidinger JDF, Neef A. Dynamical models of cortical circuits. Current opinion in neurobiology. 2014;25:228–236. doi: 10.1016/j.conb.2014.01.017 [DOI] [PubMed] [Google Scholar]

- 19. Peron S, Pancholi R, Voelcker B, Wittenbach JD, Ólafsdóttir HF, Freeman J, et al. Recurrent interactions in local cortical circuits. Nature. 2020;579(7798):256–259. doi: 10.1038/s41586-020-2062-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Niell CM, Scanziani M. How Cortical Circuits Implement Cortical Computations: Mouse Visual Cortex as a Model. Annual Review of Neuroscience. 2021;44. doi: 10.1146/annurev-neuro-102320-085825 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Mochol G, Hermoso-Mendizabal A, Sakata S, Harris KD, De la Rocha J. Stochastic transitions into silence cause noise correlations in cortical circuits. Proceedings of the National Academy of Sciences. 2015;112(11):3529–3534. doi: 10.1073/pnas.1410509112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophysical journal. 1972;12(1):1–24. doi: 10.1016/S0006-3495(72)86068-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Wilson HR, Cowan JD. A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Kybernetik. 1973;13(2):55–80. doi: 10.1007/BF00288786 [DOI] [PubMed] [Google Scholar]

- 24. Amari Si. Dynamics of pattern formation in lateral-inhibition type neural fields. Biological cybernetics. 1977;27(2):77–87. doi: 10.1007/BF00337259 [DOI] [PubMed] [Google Scholar]

- 25. Ermentrout GB, Cowan JD. A mathematical theory of visual hallucination patterns. Biological cybernetics. 1979;34(3):137–150. doi: 10.1007/BF00336965 [DOI] [PubMed] [Google Scholar]