Abstract

Oral squamous cell carcinoma (OSCC) is one of the most prevalent cancers worldwide, and its incidence is rising in many populations. The high incidence rate, late diagnosis, and improper treatment planning remain significant concerns. Diagnosis at an early stage is important for better prognosis, treatment, and survival. Despite recent improvements in the understanding of the molecular mechanisms, late diagnosis and the move toward precision medicine for OSCC patients remain a challenge. To enhance precision medicine, deep machine learning techniques have been touted to improve early detection and, consequently, to reduce cancer-specific mortality and morbidity. In recent years, these techniques have reportedly made significant progress in extracting and analyzing vital information from medical images. They therefore have the potential to assist in the early-stage detection of OSCC. Furthermore, automated image analysis can assist pathologists and clinicians in making informed decisions regarding cancer patients. This article discusses the technical knowledge and algorithms of deep learning for OSCC. It examines the application of deep learning technology in cancer detection, image classification, segmentation and synthesis, and treatment planning. Finally, we discuss how this technique can assist in precision medicine and the future perspectives of deep learning technology in OSCC.

Keywords: machine learning, deep learning, oral cancer, prognostication, precision medicine, precise surgery

Introduction

Oral squamous cell carcinoma (OSCC) is a common cancer whose incidence is increasing across the globe [1–3]. Surgery has traditionally been the cornerstone of primary treatment for OSCC [4]. Additionally, given the aggressive nature of OSCC and the fact that many patients are diagnosed with locoregionally advanced disease, multimodality therapy with concomitant chemoradiotherapy becomes imperative [1]. Despite these treatment options, the high incidence rate and suboptimal treatment outcomes remain a significant concern.

Diagnosis at an early stage is of utmost importance for better prognosis, treatment, and survival [5, 6], and it is central to the proper management of cancer. Despite recent improvements in the understanding of the molecular mechanisms of cancer, late diagnosis has hampered the quest for precision medicine. Therefore, deep machine learning techniques have been touted to enhance early detection and, consequently, to reduce cancer-specific mortality and morbidity [7]. Automated image analysis has the potential to assist pathologists and clinicians in the early-stage detection of OSCC and in making informed decisions regarding cancer management.

This article provides an overview of deep learning for OSCC. It examines the application of deep learning for precision medicine through accurate cancer detection, effective image classification, and efficient treatment planning for the proper management of cancer. Finally, we discuss the prospects for developing deep learning-based predictive models for use in daily clinical practice to improve patient care and outcomes.

In this study, we performed a systematic review of published systematic reviews that examined the application of deep learning in oral cancer. To the best of our knowledge, this is the first systematic review of systematic reviews on the application of deep learning for oral cancer prognostication. This research approach makes it possible to offer clinicians a summary of the evidence regarding a particular domain or phenomenon of interest [8, 9].

We chose this approach of a systematic review of systematic reviews because it summarizes the evidence from more than one systematic review that has examined the application of deep learning for the prognostication of oral cancer outcomes. In our previous study, we examined the use of deep learning techniques for cancer prognostication [7]. In the present study, we aim to summarize the evidence relating to the qualitative (reliability of the studies that examined deep learning for cancer prognostication) and quantitative (performance metrics of the deep learning algorithms) parameters needed to provide an insightful overview of how deep learning techniques have contributed to the improved and effective management of oral cancer. This will contribute to the effort to develop adequate cancer management by providing elements for the process from precise diagnosis to precision medicine.

Materials and Methods

The research question was: “How have deep learning techniques helped in achieving precise diagnosis or prognostication of oral cancer outcomes in the quest for precision medicine?”

Search Strategy

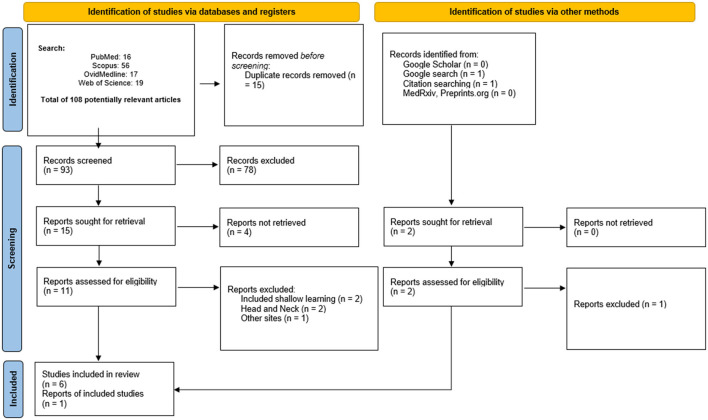

Detailed automated literature searches were performed in PubMed, OvidMedline, Scopus, and Web of Science from inception until the end of October 2021. Additionally, Google Scholar and unpublished studies were searched for possible gray literature, which helps to reduce research waste. Similarly, the reference lists of potentially relevant systematic reviews were searched to ensure that all potential systematic reviews had been included; this manual searching of reference lists helps to avoid selection bias. RefWorks software was used to manage the relevant systematic reviews and remove duplicates. The updated Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guideline was used in the searching and screening processes (Figure 1).

Figure 1.

A simplified illustration of a deep learning algorithm architecture with input data ([9], figure modified).

Search Protocol

The search protocol was developed by combining search keywords: [(“deep learning AND oral cancer AND review”)]. The retrieved hits were exported to RefWorks software for further analysis.

Inclusion Criteria

All systematic reviews that considered deep learning for oral cancer diagnosis and prognosis were included. This includes reviews and scoping reviews that examined the application of deep learning on oral cancer prognostication.

Exclusion Criteria

Because this study is a systematic review of systematic reviews, original articles, systematic reviews of other cancer types, comments, opinions, perspectives, editorials, abstract-only publications, and articles in languages other than English were excluded (Figure 1). These exclusion criteria were important because this study aims to put into perspective what has been summarized in the systematic reviews in order to answer the focused question presented in this study. Similarly, systematic reviews relating to head and neck cancer were excluded. Given the vast number of published studies on neural networks [10–15], we have excluded details about neural networks and focused primarily on the role of deep learning techniques in the quest for precision medicine.

Quality Appraisal

The quality appraisal and risk-of-bias assessment of the included systematic reviews ensure that their quality is reliable. All potentially relevant systematic reviews were assessed against the quality guidelines for systematic reviews recommended by the National Institutes of Health Quality Assessment Tools [16]. The included studies were subjected to four quality criteria informed by the same quality assessment and risk-of-bias tool [17]. These criteria were modified to cover design (review or systematic review), methodology (databases were systematically searched), interventions (deep learning methodology was applied and performance measures from the deep learning algorithms were presented), and statistical analysis (conclusion from the included studies). Each criterion was scored 0 for “No” or “Unclear” and 1 for “Yes”, giving a maximum of 4 points. The total was then expressed as a percentage (i.e., 25% per criterion). A potentially relevant systematic review or review with a quality score of at least 50% was considered reasonable and qualified for inclusion in this systematic review of systematic reviews (Table 1).
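The scoring rule above can be expressed as a short Python sketch. It assumes that each criterion's answer is recorded as the string "Yes", "No", or "Unclear"; the criterion keys, the function names, and the example answers are illustrative assumptions, not part of the appraisal tool [16, 17].

```python
# Sketch of the four-criterion quality score described above.
# Assumption: answers are stored as the strings "Yes", "No", or "Unclear".
CRITERIA = ("design", "methodology", "interventions", "statistical_analysis")
INCLUSION_THRESHOLD = 50.0  # reviews scoring >= 50% were retained


def quality_score(answers: dict) -> float:
    """Percentage score: 1 point per "Yes"; "No", "Unclear", or a missing
    answer scores 0. With four criteria, each is worth 25%."""
    points = sum(1 for criterion in CRITERIA if answers.get(criterion) == "Yes")
    return 100.0 * points / len(CRITERIA)


def is_included(score: float) -> bool:
    """Apply the >= 50% inclusion rule."""
    return score >= INCLUSION_THRESHOLD


# Hypothetical appraisal of one review (not taken from Table 1).
example = {
    "design": "Yes",
    "methodology": "Yes",
    "interventions": "Unclear",
    "statistical_analysis": "No",
}
score = quality_score(example)
print(score, is_included(score))  # 50.0 True
```

Treating a missing answer like "Unclear" is a design choice of this sketch; it keeps the score conservative.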

Table 1.

The quality appraisal of the included systematic reviews.

| Study | Design | Methodology | Interventions | Statistical analysis | Score (%) | Interpretation |
|---|---|---|---|---|---|---|
| Nagi et al. [18] (India) | | | | | 50 | Medium |
| Panigrahi and Swarnkar [19] (India) | | | | | 50 | Medium |
| Sultan et al. [20] (United States) | | | | | 50 | Medium |
| Alabi et al. [7] (Finland) | | | | | 100 | High |
| Ren et al. [21] (China) | | | | | 100 | High |
| Chu et al. [22] (China) | | | | | 100 | High |
| García-Pola et al. [23] (Spain) | | | | | 50 | Medium |

Extraction From Eligible Systematic Review

For each eligible systematic review, the first author's name, year of publication, country, title, number of included studies, and conclusions regarding the application of deep learning for prognostication were summarized (Table 2). The application of deep learning in the transition from precise diagnosis to precision medicine is discussed collectively in the Discussion section.

Table 2.

Summary of review studies on the application of deep learning in oral cancer.

| Review (Country) | Topic | Number of studies included | Performance reported | Conclusion |
|---|---|---|---|---|
| Nagi et al. [18] (India) | Clinical applications and performance of intelligent systems in dental and maxillofacial radiology: A review | 10 | NR | Intelligent systems such as deep learning have been proven to assist in making clinical diagnostic recommendations and treatment planning |
| Panigrahi and Swarnkar [19] (India) | Machine learning techniques used for the histopathological image analysis of oral cancer—A review | 8 14 (deep learning with histopathological images) | NR | Computer-aided approaches such as machine learning can assist in the prediction and prognosis of cancer |
| Sultan et al. [20] (United States) | The use of artificial intelligence, machine learning and deep learning in oncologic histopathology | 9 (histopathological images) | NR | Artificial intelligence has the potential to personalize cancer care. Furthermore, it can achieve excellent and sometimes better results than human pathologists. The AI model should provide a second opinion to expert pathologists to reduce potential diagnostic errors |
| Alabi et al. [7] (Finland) | Utilizing deep machine learning for prognostication of oral squamous cell carcinoma—A systematic review | 34 | Average accuracy was 81.8% for computed tomography images; average accuracy was 96.6% for spectra data | The deep learning approach offers promising potential in the prognostication of oral cancer |
| Ren et al. [21] (China) | Machine learning in dental, oral and craniofacial imaging: a review of recent progress | 27 | NR | The application of deep learning for image detection has been intense in the past few years. Meanwhile, certain areas need to be supplemented for sustainable deep learning research and application in oral and maxillofacial radiology |
| Chu et al. [22] (China) | Deep learning for clinical image analyses in oral squamous cell carcinoma—A review | 14 | Range from 77.89 to 97.51% (pathological images); range from 76 to 94.2% (radiographic images) | The trained deep learning model has the potential to achieve high clinical translatability in the proper management of oral cancer patients |
| García-Pola et al. [23] (Spain) | Role of artificial intelligence in the early diagnosis of oral cancer. A scoping review | 36 | | Artificial intelligence can help in the detection of oral premalignant disorders. In addition, it can help in the early diagnosis of oral cancer. Artificial intelligence can help in the precise diagnosis and prognosis of oral cancer |

NR, not reported.

Results

Characteristics of Relevant Studies

All the included systematic reviews were published in English. Of the 7 included systematic reviews [7, 18–23], 4 were conducted in Asia (2 each from China and India) [18, 19, 21, 22], 2 in Europe (one each from Finland and Spain) [7, 23], and 1 in the United States [20] (Table 2). The quality appraisal indicated that 42.9% of the reviews were of high quality [7, 21, 22] and 57.1% were of medium quality [18–20, 23] (Table 1). Three of the reviews were published in 2020 [18–20] and four in 2021 [7, 21–23].
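As a quick arithmetic check, the two proportions above follow directly from the scores in Table 1. The sketch below simply transcribes those scores and recomputes the percentages.

```python
# Quality scores (percent) transcribed from Table 1.
scores = {
    "Nagi et al. [18]": 50,
    "Panigrahi and Swarnkar [19]": 50,
    "Sultan et al. [20]": 50,
    "Alabi et al. [7]": 100,
    "Ren et al. [21]": 100,
    "Chu et al. [22]": 100,
    "García-Pola et al. [23]": 50,
}

n = len(scores)
high = sum(1 for s in scores.values() if s == 100)   # "High" in Table 1
medium = sum(1 for s in scores.values() if s == 50)  # "Medium" in Table 1
print(f"High: {high}/{n} = {100 * high / n:.1f}%")        # High: 3/7 = 42.9%
print(f"Medium: {medium}/{n} = {100 * medium / n:.1f}%")  # Medium: 4/7 = 57.1%
```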

Data Used in Deep Learning Analyses

The findings of the published reviews (summarized in Table 2) suggest that the application of deep learning in oral cancer management (diagnosis and prognosis) is increasing, judging by the number of studies included in the reviews. Several data types, such as pathologic and radiographic images, have been used in deep learning studies to achieve precise diagnosis and prognosis [20, 22–32]. Other data types used in deep learning analyses for improved diagnosis of oral cancer include gene expression data, spectra data, saliva metabolites, autofluorescence, cytology images, computed tomography images, and clinicopathologic images [7].

Performance of the Deep Learning Models in Oral Cancer Prognostication

The reported average accuracies were 96.6% for spectra data and 81.8% for computed tomography images [7]. In addition, accuracies ranged from 77.89 to 97.51% for pathological images and from 76.0 to 94.2% for radiographic images [22]. These results suggest that deep learning models have the potential to assist in the precise diagnosis and prognosis of oral cancer and could become useful tools for clinical practice in oral oncology.
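For reference, accuracy in a classification task is conventionally computed from the confusion matrix as shown below. The reviews cited [7, 22] may define or aggregate accuracy differently (for example, by averaging across studies), so this is given only as the standard definition:

```latex
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
```

where $TP$, $TN$, $FP$, and $FN$ are the numbers of true positives, true negatives, false positives, and false negatives, respectively.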

It is hoped that disruptive technologies such as deep learning will address some of the limitations of traditional diagnostic methods. For instance, it is sometimes difficult to differentiate between malignant tissue and adjacent non-malignant tissue. This makes it challenging for surgeons and oncologists to detect and localize tumors before a biopsy, to determine surgical resection margins, or to evaluate the resection bed after tumor removal during post-treatment follow-up (for example, by endoscopy) [33]. As a result, various imaging modalities are routinely used as diagnostic tools in oncology. However, image interpretation remains subjective, because the radiologist's experience plays an important role in obtaining an accurate diagnosis [33].

Of note, histopathological examination of a biopsy is the gold standard for diagnosing oral squamous cell carcinoma. However, obtaining histopathological images is time-consuming, invasive, subject to the pathologist's judgement, and relatively expensive. Hyperspectral imaging offers a potential non-invasive approach to tumor detection and the assessment of pathological tissue by capturing the spectral features of different tissues [21, 33, 34]. With this approach, data can become readily available, which can help low-volume centers acquire the experience of high-volume centers, to the benefit of patients.

Despite these benefits of hyperspectral imaging, several implications should be considered. First, clinical examinations using these techniques might require more resources owing to the learning phase and costs involved. In addition, drawing conclusions for treatment decisions and prognosis from subjective information provided by these methods, rather than from histopathological diagnosis, has raised valid concerns [33]. It has therefore been suggested that each patient's findings should be imaged multiple times to demonstrate the reproducibility of the images and, subsequently, the reliability of the diagnosis [33]. Similarly, the injection of a potentially hazardous fluorescent material (porphyrin) raises another issue worth considering [33]. Nonetheless, a laser-induced fluorescence technique has been proposed to overcome this concern [33, 35, 36]. Irrespective of the data type used to train a deep learning-based prognostication model, it is important to emphasize that clinicians' judgement remains the bedrock of decision-making. These models are poised to serve as assistive tools to aid clinical decision-making.

Discussion

Deep Learning in Oral Cancer Management: An Overview

Advances in technology, particularly increased computational power, have allowed model architectures to progress from shallow to deep machine learning [7, 37, 38]. Because deep learning builds on the traditional neural network, it is also referred to as a deep neural network (DNN) [37]. Given the continuous generation of data by various medical devices and digital record systems, it is important to process these data accurately to obtain meaningful insights from them.

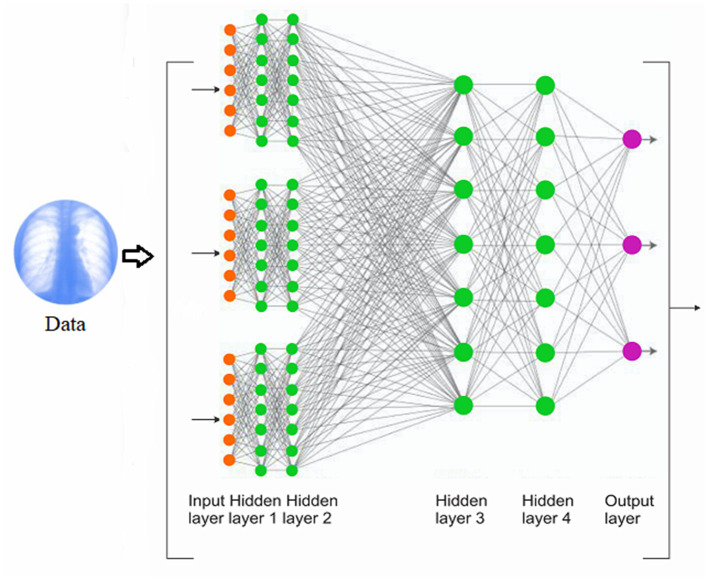

Deep learning offers an insightful approach to support clinical decision-making. It is unique in that it can devise its own representations needed for pattern recognition [38]. Typically, a deep learning model consists of multiple sequentially arranged layers that apply a large number of primitive, non-linear operations: the input fed into the first layer passes to the second layer and then to subsequent layers, being transformed into progressively more abstract representations, until the input space has been iteratively transformed into distinguishable data points [38, 39] (Figure 2).
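The layer-by-layer transformation described above can be written generically as follows. This is a sketch in fully connected notation, chosen here for simplicity; in a CNN, the matrix product in each convolutional layer is replaced by a convolution:

```latex
\begin{aligned}
\mathbf{h}^{(0)} &= \mathbf{x},\\
\mathbf{h}^{(l)} &= \phi\!\left(\mathbf{W}^{(l)}\mathbf{h}^{(l-1)} + \mathbf{b}^{(l)}\right), \qquad l = 1, \dots, L,\\
\hat{\mathbf{y}} &= g\!\left(\mathbf{h}^{(L)}\right),
\end{aligned}
```

where $\mathbf{x}$ is the input, $\mathbf{W}^{(l)}$ and $\mathbf{b}^{(l)}$ are the learned weights and biases of layer $l$, $\phi$ is a non-linear activation function, and $g$ maps the final representation $\mathbf{h}^{(L)}$ to the output (for example, class probabilities).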

Figure 2.

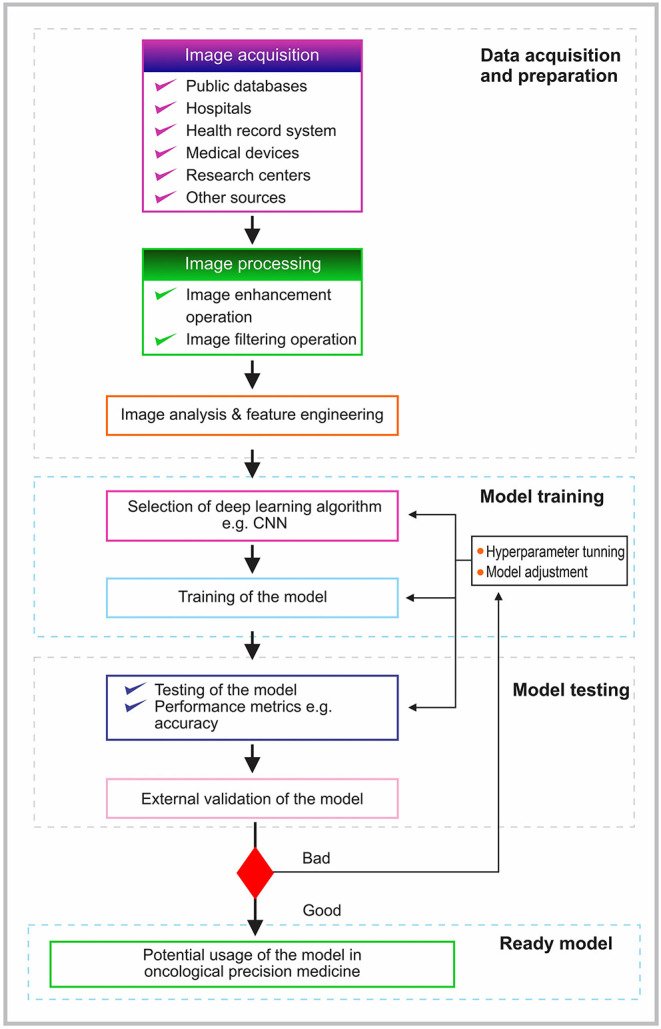

The schematic pipeline of the training process of a convolutional neural network.

In general, deep learning can follow any of the learning paradigms, and the purpose of the application determines the learning approach. In some cases, these learning paradigms can be combined to achieve good model performance. For example, deep neural networks (DNNs) were initially pre-trained with an unsupervised learning paradigm, followed by supervised fine-tuning of the stacked network [40–42]. Stacked auto-encoders (SAEs) and deep belief networks (DBNs) are examples of architectures that used this approach. However, this approach requires complex techniques and a significant amount of engineering to achieve good performance. Therefore, the supervised method became the most popular, since it simplified the entire training process [40]. The convolutional neural network (CNN) and the recurrent neural network (RNN) are examples of architectures that use the supervised learning paradigm [40]. Accordingly, most of the architectures used in the published studies were based on CNNs or their derivatives. However, two unsupervised architectures, the variational auto-encoder (VAE) and the generative adversarial network (GAN), are beginning to attract attention, and it would be interesting to explore their applications in the analysis of medical images [40].

In deep learning based on the supervised learning paradigm, the dataset consists of input data points and corresponding output labels [38, 40, 43]. Meaningful information is also extracted from the data to delineate features of clinical interest and importance (segmentation) [40, 43]. Of note, deep learning can featurize and learn from a variety of data types, such as images, temporal data, and multimodal inputs [38].

In medicine, especially in cancer management, data from primary diagnostic modalities are fed into deep learning models to obtain trained models that can offer effective prognostication of cancer outcomes [44]. Examples of such high-dimensional, mineable data include radiomic data, ultrasound (US), computed tomography (CT), genomic data, magnetic resonance imaging (MRI), and positron emission tomography (PET) [44]. Similarly, high-resolution whole-slide images of stained tissue sections from biopsies or surgical resections provide cytological and immunohistochemical data that have been used as inputs for deep learning techniques.

Other non-invasive tools and data types include infrared thermal imaging, confocal laser endomicroscopy, multispectral narrow-band imaging, and Raman spectroscopy [44]. Among these, computed tomography (or contrast-enhanced computed tomography) has been reported as the most widely used non-invasive data type for deep learning analysis [7]. Spectra data have also been reported as an emerging non-invasive data type for precision and personalized cancer management using deep learning techniques [7, 44].

Convolutional Neural Network

A typical CNN consists of input and output layers together with convolutional, max pooling, and fully connected layers. These layers enable the network to learn progressively more abstract features from input data such as an image. When an input is fed into a CNN, the convolutional layer extracts features from the input image to produce feature maps. The feature maps are passed to the pooling layers for further processing (downsampling). Finally, the reduced feature maps are fed into the fully connected layer, which classifies the images into the corresponding labels. The entire process of building a deep learning-based prognostic model is summarized in the sub-section Pipeline for Building a Deep Learning-Based Prognostic Model. The CNN is an important type of neural network that has found application in medicine because it addresses several disadvantages of traditional fully connected networks, such as their computational overhead. Several types of CNNs have evolved over time, such as LeNet, AlexNet, DenseNet, GoogLeNet, ResNet, and VGGNet [22]. However, the principle of operation of these variants remains largely the same.
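To make the convolution step concrete, the following pure-Python sketch slides a 3x3 kernel over a toy 5x5 image to produce a feature map. The kernel is hand-chosen here as a vertical-edge detector purely for illustration (an assumption); in a trained CNN, the kernel weights are learned from the data. As in most deep learning libraries, the kernel is not flipped, so the operation is strictly a cross-correlation.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D convolution as used in CNNs.
    The kernel is not flipped, so this is technically cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window anchored at (i, j).
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Toy 5x5 "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-chosen vertical-edge kernel (illustrative; a CNN would learn this).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

fm = conv2d(image, kernel)
print(fm)  # [[3.0, 3.0, 0.0], [3.0, 3.0, 0.0], [3.0, 3.0, 0.0]]
```

The feature map responds strongly only where the 3x3 window straddles the edge and is zero over the uniform region, which is the sense in which the convolutional layer "extracts a feature".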

Pipeline for Building a Deep Learning-Based Prognostic Model

The typical pipeline for building a deep learning model involves image acquisition, preprocessing, image analysis, and feature engineering [21] (Figure 3). In image acquisition, the image type to be used in the analysis is acquired from the chosen source. The acquired images are preprocessed to remove artifacts and noise [21]. Of note, it is important to properly pre-process the data as the quality of data provided as inputs will determine the quality of results [19, 22, 45]. Therefore, using high-quality images is essential in the new era of precision medicine.
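The reviews do not specify individual preprocessing operations, and these vary by modality. One common step, assumed here purely for illustration, is rescaling pixel intensities to a fixed range so that images from different sources are comparable. A minimal sketch:

```python
def min_max_normalize(image):
    """Rescale pixel intensities to [0, 1].
    A constant image (no intensity range) is mapped to all zeros."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / span for p in row] for row in image]


img = [[10, 20],
       [30, 50]]
print(min_max_normalize(img))  # [[0.0, 0.25], [0.5, 1.0]]
```

Per-image min-max scaling is only one option; z-score normalization or dataset-wide statistics may be preferable when intensities carry absolute meaning, as in CT.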

Figure 3.

A schematic of a typical deep machine learning training process.

Several image enhancement and filtering options may be applied to improve image quality [46] (Figure 3). For example, content-aware image restoration and Noise2Void are deep learning methods used to denoise and restore bioimages [47–50]. The preprocessing step is followed by the analysis and feature engineering stage, at which the necessary features are extracted. Given the size of high-quality image data, it has been suggested to use certain pre-extracted features or to downsize the images. These suggestions have two major drawbacks. First, there is no guarantee of which parts of the image contain the most important information for proper diagnosis or prognosis, so informative regions may be inadvertently downsized. Second, manual identification of important (pre-extracted) features on the image by professionals may miss other features that are critical for precise diagnosis and prognosis. As a result, regions of interest are detected automatically using a detection (segmentation) network [25]. The preprocessed image data are subsequently used for training (Figure 3).
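Content-aware image restoration and Noise2Void are learned denoisers and are beyond the scope of a short example. As a simpler stand-in (a classical filter, not a deep learning method), the sketch below applies a 3x3 median filter, which suppresses isolated noisy pixels while largely preserving edges.

```python
from statistics import median


def median_filter3(image):
    """3x3 median filter. Border pixels are copied unchanged for simplicity."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            # Collect the 9 pixels of the neighborhood centered at (i, j).
            window = [image[i + di][j + dj] for di in (-1, 0, 1) for dj in (-1, 0, 1)]
            out[i][j] = median(window)
    return out


noisy = [[10, 10, 10],
         [10, 255, 10],  # isolated "salt" pixel
         [10, 10, 10]]
clean = median_filter3(noisy)
print(clean[1][1])  # 10
```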

The preprocessed data are usually divided into training and testing sets [5, 51]. The training set, that is, the preprocessed data with outcome annotations, is fed into a machine learning algorithm such as a convolutional neural network (CNN) to perform the required tasks. A typical CNN is made up of convolutional, pooling, and fully connected layers. The training data enter the network through the convolutional layer, which automatically performs image feature extraction to produce a feature map [21, 22, 52–55]. However, the resulting feature map is still large and needs to be downsized [21]. An intuitive approach to downsizing the feature map is pooling, which works in a similar way to the convolution operation described earlier but with a different purpose (i.e., to downsize the feature map) [21, 54]. The pooling filter is designed to output either the maximum value or the average value of each window, so two types of pooling are used in the pooling layer: max pooling and mean (average) pooling [21]. Of note, pooling raises concerns about excessive loss of information and possible destruction of partial information in the processed images [21], although several attempts have been made to address these concerns [56–59].
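The two pooling variants described above can be sketched as follows. The 2x2 window and stride of 2 are common defaults assumed here, not values taken from the reviewed studies.

```python
def pool2d(fm, size=2, stride=2, mode="max"):
    """Downsample a feature map with max or mean (average) pooling.
    With the defaults (size == stride == 2), the windows do not overlap."""
    out = []
    for i in range(0, len(fm) - size + 1, stride):
        row = []
        for j in range(0, len(fm[0]) - size + 1, stride):
            window = [fm[i + u][j + v] for u in range(size) for v in range(size)]
            if mode == "max":
                row.append(max(window))
            elif mode == "mean":
                row.append(sum(window) / len(window))
            else:
                raise ValueError("mode must be 'max' or 'mean'")
        out.append(row)
    return out


fm = [[1, 3, 2, 0],
      [4, 2, 1, 1],
      [0, 1, 5, 6],
      [2, 1, 7, 8]]
print(pool2d(fm, mode="max"))   # [[4, 2], [2, 8]]
print(pool2d(fm, mode="mean"))  # [[2.5, 1.0], [1.0, 6.5]]
```

Both variants shrink the 4x4 map to 2x2; max pooling keeps the strongest response in each window, whereas mean pooling keeps an average, which illustrates the information loss mentioned above.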

The output of the convolution and max pooling process is then flattened into a single vector of values to facilitate processing in the next phase, the fully connected layers [52, 60], which classify the images into the corresponding labels. Typically, the fully connected part of the network consists of input, hidden, and output layers [22], and each layer connects all of its neurons to all the neurons in the next layer. In each neuron, a linear transformation combines the inputs from the neurons in the previous layer into a single signal, which is then passed through an activation function to produce a non-linear transformation before being output to the next layer [21, 52, 61]. The rectified linear unit (ReLU) is the most widely used activation function in the hidden layers, and the softmax function is typically used in the output layer for multi-class classification problems [52, 53, 55]; for two-class problems, a sigmoid output is a common alternative.
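The flatten, fully connected, and activation steps can be sketched in plain Python. The weights below are arbitrary placeholders (an assumption), not trained values; the point is the data flow: the pooled feature map is flattened, passed through a hidden layer with ReLU, and mapped to class probabilities with softmax.

```python
import math


def flatten(fm):
    """Turn a 2D feature map into a single vector."""
    return [x for row in fm for x in row]


def dense(x, weights, bias):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w_i * x_i for w_i, x_i in zip(w_row, x)) + b
            for w_row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, z) for z in v]


def softmax(z):
    m = max(z)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


pooled = [[4.0, 2.0],
          [2.0, 8.0]]
x = flatten(pooled)  # 4 input values

# Hypothetical weights: a hidden layer of 2 units, then 3 output classes.
W1 = [[0.1, 0.0, 0.0, 0.1],
      [0.0, 0.2, 0.2, 0.0]]
b1 = [0.0, 0.0]
W2 = [[1.0, 0.0],
      [0.0, 1.0],
      [0.5, 0.5]]
b2 = [0.0, 0.0, 0.0]

hidden = relu(dense(x, W1, b1))
probs = softmax(dense(hidden, W2, b2))
predicted_class = probs.index(max(probs))
print(predicted_class)  # 0
```

Softmax makes the three outputs non-negative and sum to 1, so they can be read as class probabilities; the predicted label is the class with the largest probability.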

Depending on the output generated, hyperparameter tuning and model adjustment may be needed to maximize the overall predictive performance of the model [22]. A model with suitable performance can then be evaluated on the test set and externally validated to ensure that it works as expected (Figure 3). In general, feature extraction and classification in deep learning are learned through forward and backward passes (backpropagation) through all the corresponding layers (convolutional, pooling, and fully connected layers) [22].
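The forward and backward passes, and the use of a held-out test set, can be illustrated with a deliberately small model. The sketch below trains a single logistic unit (standing in for a full CNN, an assumption made for brevity) by gradient descent on synthetic two-feature data and holds out a quarter of the samples for testing. The data, learning rate, and number of epochs are illustrative choices, not values from the reviewed studies.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, w, b):
    """Forward pass: weighted sum of the inputs followed by the sigmoid."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(data, lr=1.0, epochs=500):
    """Full-batch gradient descent on the binary cross-entropy loss."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in data:
            err = predict_proba(x, w, b) - y  # forward pass; dLoss/dlogit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]  # backward pass
            grad_b += err
        # Parameter update using the averaged gradients.
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


def accuracy(data, w, b):
    hits = sum((predict_proba(x, w, b) >= 0.5) == (y == 1) for x, y in data)
    return hits / len(data)


# Synthetic toy data (not patient data): label 1 when x0 + x1 > 1.
# Points close to the boundary are dropped so that the classes are well separated.
rng = random.Random(0)
data = []
for _ in range(400):
    x0, x1 = rng.random(), rng.random()
    if abs(x0 + x1 - 1.0) >= 0.3:
        data.append(((x0, x1), int(x0 + x1 > 1.0)))
rng.shuffle(data)
cut = int(0.75 * len(data))
train_set, test_set = data[:cut], data[cut:]  # held-out test set

w, b = train(train_set)
print(f"train accuracy: {accuracy(train_set, w, b):.2f}")
print(f"test accuracy:  {accuracy(test_set, w, b):.2f}")
```

In a real pipeline, the same evaluation function would be re-applied to an independent cohort for external validation, and hyperparameters such as `lr` and `epochs` would be tuned on a separate validation split rather than on the test set.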

Deep Learning For Oral Cancer: From Precise Diagnosis To Precision Medicine

Precision medicine considers numerous technologies for designing and optimizing the route for personalized diagnosis, prognosis, and treatment of patients [62]. Precise diagnosis and prognosis of oral cancer are of utmost importance for treatment-related decision making in precision medicine. The realization of this offers the potential to improve patient care and outcome by guiding the clinicians toward carefully tailored interventions [22]. Additionally, it provides the opportunity to shift decisions away from trial and error and to reduce disease-associated health burdens [63].

The increased computing performance and availability of large medical datasets (such as cancer datasets) in recent years are essential to the implementation of precision medicine, because technologies such as deep learning can use large, multidimensional biological datasets that capture individual variability in clinical, pathologic, genomic, biomarker, functional, or environmental factors [62, 63]. The approach can identify hard-to-discern patterns in these datasets that might not be easily discovered by traditional statistical approaches [51, 63]. Thus, this methodology has shown high accuracy in the precise diagnosis and prognosis of oral cancer [7].

For example, for precise diagnosis, deep learning models have been used to detect oral cancer [24, 25, 64–75]. These models have also assisted in predicting lymph node metastasis [27–29, 76] and have been reported to perform well in differentiating between precancerous and cancerous lesions [64, 77–81]. They have been integrated to offer automated diagnosis of oral cancer [24, 64, 82]. This approach offers the opportunity for cost-efficient early detection of oral cancer [64, 69, 70, 73, 80, 82], which is the foundation of effective oral cancer management [21]. Proper management of oral cancer is in turn expected to increase patient survival through improved treatment planning that provides precision and personalized medicine [5, 21].

Similarly, deep learning models have shown promising value in the prognostication of outcomes in oral cancer. For instance, deep learning models have been reported to show significant performance in the quantification of tumor-infiltrating lymphocytes [30], multi-class grading for oral cancer patients [26, 83], predicting disease-free survival in oral cancer patients [32], and dividing patients into risk groups and identifying patient groups who are at a high risk of poor prognosis [84].

Therefore, deep learning methodology can help achieve more precise detection, diagnosis, and prognostication in oral cancer management. In the quest for precision medicine, it can help ensure that oral cancer is detected early and that the cost of tests and errors in the recognition procedure are reduced [19]. These advantages can translate into an improved chance of survival and better management of oral cancer [19, 21]. The clinicians' workload can be greatly reduced because the data can be processed rapidly to obtain prognostic information on patient outcomes [18]. Cognitive bias and errors associated with treatment planning can also be reduced by automated deep learning tools that predict treatment outcomes [18].

In summary, high-performance computing capabilities and the availability of large biological datasets of cancer patients are essential to achieve precision medicine. At the center of this computational power are deep learning models that can identify patterns in multidimensional datasets.

Challenges Of Deep Learning In Precision Medicine

Despite the reported potential benefits of deep learning techniques for precision medicine, several important challenges must be resolved to realize them. First, the quality of the data (such as pathological or radiological images) used in the analysis must be high. To achieve this, a standardized image resolution should be agreed upon between, for example, machine learning experts and pathologists, so that the resolution is sufficient to capture the histological features of the tumor and its microenvironment [22]. There should also be standardized guidelines for both pathological and radiological image acquisition to obtain images that are useful for deep learning analysis and, ultimately, for precision medicine.

Deep learning often achieves better performance (i.e., higher accuracy) than traditional shallow machine learning methods, but it is also more complex, which raises concerns about the explainability and interpretability of the models. The possible trade-off between accuracy on the one hand and explainability and interpretability on the other poses a challenge [85, 86].

The developed models must also be generalizable. To this end, a model should be trained on a large dataset of high-quality images. Another way to obtain a generalizable model is to manipulate the real data (data augmentation) to produce appropriately varied training data for deep learning [87]. Generalizability ensures that the model performs satisfactorily across the entire intended target population. External validation can help confirm that the model works as intended and without bias; in particular, the model should perform well on a population that differs from the training population in some properties [87]. Research efforts toward developing generalizable models are ongoing [87].
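The manipulation of real data mentioned above is commonly done through data augmentation. The sketch below shows one common form for square image patches: random horizontal and vertical flips, random 90° rotations, and a mild global brightness change. It is a hedged illustration rather than the procedure of reference [87]; the function names, the assumption that pixel values are floats in [0, 1], and the 0.9 to 1.1 brightness range are all hypothetical choices.

```python
import numpy as np


def augment(patch: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly transformed copy of an H x W (x C) patch.

    Assumes pixel values are floats in [0, 1] (hypothetical convention).
    Square patches keep their shape under the 90-degree rotations.
    """
    out = patch
    if rng.random() < 0.5:
        out = np.flip(out, axis=1)  # horizontal flip
    if rng.random() < 0.5:
        out = np.flip(out, axis=0)  # vertical flip
    # Rotate by 0, 90, 180 or 270 degrees in the image plane
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Mild global brightness change, clipped back to the valid range
    factor = rng.uniform(0.9, 1.1)
    return np.clip(out.astype(np.float64) * factor, 0.0, 1.0)


def augmented_copies(patch: np.ndarray, n: int, seed: int = 0) -> list:
    """Generate n augmented variants of one patch, reproducibly via a seed."""
    rng = np.random.default_rng(seed)
    return [augment(patch, rng) for _ in range(n)]


if __name__ == "__main__":
    patch = np.random.default_rng(1).random((64, 64, 3))  # stand-in for a real patch
    variants = augmented_copies(patch, n=8)
    print(len(variants), variants[0].shape)  # prints 8 (64, 64, 3)
```

Flips and rotations are often treated as label-preserving for histology patches because tissue sections have no canonical orientation; whether intensity or colour changes are appropriate depends on the imaging modality and is best decided together with domain experts.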

Future Consideration

Enhancing precision medicine, i.e., offering better individualized prognostication and management of oral cancer, is important for improving overall outcomes. Nevertheless, the application of deep learning models to introduce such precision is still at an early stage of development, and several factors, such as those mentioned above, still need to be considered. For instance, deep learning approaches should be standardized with respect to data preprocessing, the reporting of methodologies, and the performance metrics of the corresponding models.

Additionally, these models should be externally validated on different cohorts to confirm that their accuracy is as reported. In fact, the accuracy of a developed model should be reported based on its performance on external cohorts (external validation) to reflect its true performance [88]. These models should perform better than human experts to fulfill their touted benefit as clinical support systems. Concerted efforts should be made to develop powerful AI algorithms that can handle the available clinical datasets while producing acceptable prognostic performance. Moreover, frameworks should be developed to guide the adoption of these models in daily clinical practice. Failure to consider all of these points may lead to an accumulation of studies on the potential of deep learning for the prognostication of patient outcomes, but without any true benefit to individual oral cancer patients. Such validation is likewise warranted before these models are used in daily clinical practice as clinical support systems that provide a second opinion to the clinician.
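To make the recommendation on external validation concrete, the sketch below computes the same set of metrics (sensitivity, specificity, accuracy, and the area under the ROC curve) for an internal test set and for an external cohort, keeping the decision threshold fixed at the value chosen during development rather than re-tuning it on the external data. The function names, the 0.5 threshold, and the cohort layout are hypothetical; the AUC uses the rank-based (Mann-Whitney) formulation, with tied scores counted as one half. The toy labels and scores are illustrative only and are not data from any cited study.

```python
import numpy as np


def binary_metrics(y_true, y_score, threshold=0.5):
    """Sensitivity, specificity, accuracy and ROC AUC for binary labels (1 = positive)."""
    y_true = np.asarray(y_true).astype(int)
    y_score = np.asarray(y_score, dtype=float)
    pos = y_score[y_true == 1]
    neg = y_score[y_true == 0]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("each cohort needs both positive and negative cases")
    y_pred = (y_score >= threshold).astype(int)
    tp = int(np.sum((y_pred == 1) & (y_true == 1)))
    tn = int(np.sum((y_pred == 0) & (y_true == 0)))
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    # AUC = P(score of a random positive > score of a random negative), ties count 0.5
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    auc = (greater + 0.5 * ties) / (len(pos) * len(neg))
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(y_true),
        "auc": float(auc),
    }


def report_by_cohort(cohorts, threshold=0.5):
    """Apply one fixed threshold to every cohort; cohorts maps name -> (labels, scores)."""
    return {name: binary_metrics(y, s, threshold) for name, (y, s) in cohorts.items()}


if __name__ == "__main__":
    # Toy labels and model scores, for illustration only (not real study data)
    cohorts = {
        "internal_test": ([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]),
        "external": ([1, 0, 1, 0], [0.8, 0.3, 0.7, 0.2]),
    }
    for name, metrics in report_by_cohort(cohorts).items():
        print(name, {k: round(v, 3) for k, v in metrics.items()})
```

Reporting the external-cohort row alongside the internal one, computed with identical metric definitions and an unchanged threshold, is in line with the recommendation above that a model's performance be judged on external cohorts.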

In addition, continuous efforts should be made to increase the availability of existing multidimensional biological and clinical data for deep learning approaches. This would further increase the volume of datasets and, thus, the benefits of applying deep learning, which appears to be a promising new tool for precision medicine in oral cancer.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author Contributions

RA and AM: study concepts and study design. RA: study extraction. AM and AA: acquisition and quality control of included studies. RA, ME, AA, and AM: data analysis and interpretation. RA and AA: manuscript preparation. AM and ME: manuscript review. AA and RA: manuscript editing. All authors approved the final manuscript for submission.

Funding

This work was funded by Helsinki University Hospital Research Fund (No. TYH2020232) and Sigrid Jusélius Foundation (No. Antti Mäkitie 2020).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors thank Sigrid Jusélius Foundation, Helsinki University Hospital Research Fund, and Turku University Hospital Fund.

References

- 1. Coletta RD, Yeudall WA, Salo T. Grand challenges in oral cancers. Front Oral Health. (2020) 1:3. 10.3389/froh.2020.00003

- 2. Du M, Nair R, Jamieson L, Liu Z, Bi P. Incidence trends of lip, oral cavity, and pharyngeal cancers: global burden of disease 1990–2017. J Dent Res. (2020) 99:143–51. 10.1177/0022034519894963

- 3. Miranda-Filho A, Bray F. Global patterns and trends in cancers of the lip, tongue and mouth. Oral Oncol. (2020) 102:104551. 10.1016/j.oraloncology.2019.104551

- 4. Kartini D, Taher A, Panigoro SS, Setiabudy R, Jusman SW, Haryana SM, et al. Effect of melatonin supplementation in combination with neoadjuvant chemotherapy to miR-210 and CD44 expression and clinical response improvement in locally advanced oral squamous cell carcinoma: a randomized controlled trial. J Egyptian Natl Cancer Inst. (2020) 32:12. 10.1186/s43046-020-0021-0

- 5. Alabi RO, Elmusrati M, Sawazaki-Calone I, Kowalski LP, Haglund C, Coletta RD, et al. Machine learning application for prediction of locoregional recurrences in early oral tongue cancer: a Web-based prognostic tool. Virchows Archiv. (2019) 475:489–97. 10.1007/s00428-019-02642-5

- 6. Almangush A, Bello IO, Coletta RD, Mäkitie AA, Mäkinen LK, Kauppila JH, et al. For early-stage oral tongue cancer, depth of invasion and worst pattern of invasion are the strongest pathological predictors for locoregional recurrence and mortality. Virchows Archiv. (2015) 467:39–46. 10.1007/s00428-015-1758-z

- 7. Alabi RO, Bello IO, Youssef O, Elmusrati M, Mäkitie AA, Almangush A. Utilizing deep machine learning for prognostication of oral squamous cell carcinoma—a systematic review. Front Oral Health. (2021) 2:1–11. 10.3389/froh.2021.686863

- 8. Hasanpoor E, Hallajzadeh J, Siraneh Y, Hasanzadeh E, Haghgoshayie E. Using the methodology of systematic review of reviews for evidence-based medicine. Ethiopian J Health Sci. (2019) 29:775–78. 10.4314/ejhs.v29i6.15

- 9. Aromataris E, Fernandez R, Godfrey CM, Holly C, Khalil H, Tungpunkom P. Summarizing systematic reviews: methodological development, conduct and reporting of an umbrella review approach. Int J Evid Based Healthc. (2015) 13:132–40. 10.1097/XEB.0000000000000055

- 10. Kourou K, Exarchos TP, Exarchos KP, Karamouzis MV, Fotiadis DI. Machine learning applications in cancer prognosis and prediction. Comput Struct Biotechnol J. (2015) 13:8–17. 10.1016/j.csbj.2014.11.005

- 11. Amato F, López A, Peña-Méndez EM, Vanhara P, Hampl A, Havel J. Artificial neural networks in medical diagnosis. J Appl Biomed. (2013) 11:47–58. 10.2478/v10136-012-0031-x

- 12. Ayer T, Alagoz O, Chhatwal J, Shavlik JW, Kahn CE, Burnside ES. Breast cancer risk estimation with artificial neural networks revisited: discrimination and calibration. Cancer. (2010) 116:3310–21. 10.1002/cncr.25081

- 13. Bottaci L, Drew PJ, Hartley JE, Hadfield MB, Farouk R, Lee PW, et al. Artificial neural networks applied to outcome prediction for colorectal cancer patients in separate institutions. Lancet. (1997) 350:469–72. 10.1016/S0140-6736(96)11196-X

- 14. Biglarian A, Hajizadeh E, Kazemnejad A, Zayeri F. Determining of prognostic factors in gastric cancer patients using artificial neural networks. Asian Pac J Cancer Prev. (2010) 11:533–6.

- 15. Chien C-W, Lee Y-C, Ma T, Lee T-S, Lin Y-C, Wang W, et al. The application of artificial neural networks and decision tree model in predicting post-operative complication for gastric cancer patients. Hepatogastroenterology. (2008) 55:1140–5.

- 16. National Institutes of Health. Study Quality Assessment Tools. National Heart, Lung, and Blood Institute (2013). Available online at: https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools (accessed October 20, 2021).

- 17. Phillips CS, Becker H. Systematic review: expressive arts interventions to address psychosocial stress in healthcare workers. J Adv Nurs. (2019) 75:2285–98. 10.1111/jan.14043

- 18. Nagi R, Aravinda K, Rakesh N, Gupta R, Pal A, Mann AK. Clinical applications and performance of intelligent systems in dental and maxillofacial radiology: a review. Imaging Sci Dentistry. (2020) 50:81. 10.5624/isd.2020.50.2.81

- 19. Panigrahi S, Swarnkar T. Machine learning techniques used for the histopathological image analysis of oral cancer-a review. TOBIOIJ. (2020) 13:106–18. 10.2174/1875036202013010106

- 20. Sultan AS, Elgharib MA, Tavares T, Jessri M, Basile JR. The use of artificial intelligence, machine learning and deep learning in oncologic histopathology. J Oral Pathol Med. (2020) 49:849–56. 10.1111/jop.13042

- 21. Ren R, Luo H, Su C, Yao Y, Liao W. Machine learning in dental, oral and craniofacial imaging: a review of recent progress. PeerJ. (2021) 9:e11451. 10.7717/peerj.11451

- 22. Chu CS, Lee NP, Ho JWK, Choi S-W, Thomson PJ. Deep learning for clinical image analyses in oral squamous cell carcinoma: a review. JAMA Otolaryngol Head Neck Surg. (2021) 147:893–900. 10.1001/jamaoto.2021.2028

- 23. García-Pola M, Pons-Fuster E, Suárez-Fernández C, Seoane-Romero J, Romero-Méndez A, López-Jornet P. Role of artificial intelligence in the early diagnosis of oral cancer. A scoping review. Cancers. (2021) 13:4600. 10.3390/cancers13184600

- 24. Aubreville M, Knipfer C, Oetter N, Jaremenko C, Rodner E, Denzler J, et al. Automatic classification of cancerous tissue in laserendomicroscopy images of the oral cavity using deep learning. Sci Rep. (2017) 7:11979. 10.1038/s41598-017-12320-8

- 25. Fu Q, Chen Y, Li Z, Jing Q, Hu C, Liu H, et al. A deep learning algorithm for detection of oral cavity squamous cell carcinoma from photographic images: a retrospective study. EClinicalMedicine. (2020) 27:100558. 10.1016/j.eclinm.2020.100558

- 26. Das N, Hussain E, Mahanta LB. Automated classification of cells into multiple classes in epithelial tissue of oral squamous cell carcinoma using transfer learning and convolutional neural network. Neural Netw. (2020) 128:47–60. 10.1016/j.neunet.2020.05.003

- 27. Ariji Y, Fukuda M, Kise Y, Nozawa M, Yanashita Y, Fujita H, et al. Contrast-enhanced computed tomography image assessment of cervical lymph node metastasis in patients with oral cancer by using a deep learning system of artificial intelligence. Oral Surg Oral Med Oral Pathol Oral Radiol. (2019) 127:458–63. 10.1016/j.oooo.2018.10.002

- 28. Ariji Y, Fukuda M, Nozawa M, Kuwada C, Goto M, Ishibashi K, et al. Automatic detection of cervical lymph nodes in patients with oral squamous cell carcinoma using a deep learning technique: a preliminary study. Oral Radiol. (2021) 37:290–6. 10.1007/s11282-020-00449-8

- 29. Ariji Y, Sugita Y, Nagao T, Nakayama A, Fukuda M, Kise Y, et al. CT evaluation of extranodal extension of cervical lymph node metastases in patients with oral squamous cell carcinoma using deep learning classification. Oral Radiol. (2020) 36:148–55. 10.1007/s11282-019-00391-4

- 30. Shaban M, Khurram SA, Fraz MM, Alsubaie N, Masood I, Mushtaq S, et al. A novel digital score for abundance of tumour infiltrating lymphocytes predicts disease free survival in oral squamous cell carcinoma. Sci Rep. (2019) 9:13341. 10.1038/s41598-019-49710-z

- 31. Gupta RK, Kaur M, Manhas J. Tissue level based deep learning framework for early detection of dysplasia in oral squamous epithelium. J Multimed Inf Syst. (2019) 6:81–6. 10.33851/JMIS.2019.6.2.81

- 32. Fujima N, Andreu-Arasa VC, Meibom SK, Mercier GA, Salama AR, Truong MT, et al. Deep learning analysis using FDG-PET to predict treatment outcome in patients with oral cavity squamous cell carcinoma. Eur Radiol. (2020) 30:6322–30. 10.1007/s00330-020-06982-8

- 33. Akbari H, Uto K, Kosugi Y, Kojima K, Tanaka N. Cancer detection using infrared hyperspectral imaging. Cancer Sci. (2011) 102:852–7. 10.1111/j.1349-7006.2011.01849.x

- 34. Lu G, Halig L, Wang D, Qin X, Chen ZG, Fei B. Spectral-spatial classification for noninvasive cancer detection using hyperspectral imaging. J Biomed Optics. (2014) 19:106004. 10.1117/1.JBO.19.10.106004

- 35. Martin ME, Wabuyele MB, Chen K, Kasili P, Panjehpour M, Phan M, et al. Development of an Advanced Hyperspectral Imaging (HSI) system with applications for cancer detection. Ann Biomed Eng. (2006) 34:1061–8. 10.1007/s10439-006-9121-9

- 36. Chang SK, Mirabal YN, Atkinson EN, Cox D, Malpica A, Follen M, et al. Combined reflectance and fluorescence spectroscopy for in vivo detection of cervical pre-cancer. J Biomed Opt. (2005) 10:024031. 10.1117/1.1899686

- 37. Zhu W, Xie L, Han J, Guo X. The application of deep learning in cancer prognosis prediction. Cancers. (2020) 12:603. 10.3390/cancers12030603

- 38. Esteva A, Robicquet A, Ramsundar B, Kuleshov V, DePristo M, Chou K, et al. A guide to deep learning in healthcare. Nat Med. (2019) 25:24–9. 10.1038/s41591-018-0316-z

- 39. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. (2015) 521:436–44. 10.1038/nature14539

- 40. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Med Image Anal. (2017) 42:60–88. 10.1016/j.media.2017.07.005

- 41. Hinton GE, Osindero S, Teh Y-W. A fast learning algorithm for deep belief nets. Neural Comput. (2006) 18:1527–54. 10.1162/neco.2006.18.7.1527

- 42. Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science. (2006) 313:504–7. 10.1126/science.1127647

- 43. Shen D, Wu G, Suk H-I. Deep learning in medical image analysis. Ann Rev Biomed Eng. (2017) 19:221–48. 10.1146/annurev-bioeng-071516-044442

- 44. Mahmood H, Shaban M, Rajpoot N, Khurram SA. Artificial Intelligence-based methods in head and neck cancer diagnosis: an overview. Br J Cancer. (2021) 124:1934–40. 10.1038/s41416-021-01386-x

- 45. Sabottke CF, Spieler BM. The effect of image resolution on deep learning in radiography. Radiol Artif Intellig. (2020) 2:e190015. 10.1148/ryai.2019190015

- 46. Maini R, Aggarwal H. A comprehensive review of image enhancement techniques. Comp Vis Pattern Recogn. (2010) 2.

- 47. Laine RF, Jacquemet G, Krull A. Imaging in focus: an introduction to denoising bioimages in the era of deep learning. Int J Biochem Cell Biol. (2021) 140:106077. 10.1016/j.biocel.2021.106077

- 48. Weigert M, Schmidt U, Boothe T, Müller A, Dibrov A, Jain A, et al. Content-aware image restoration: pushing the limits of fluorescence microscopy. Nat Methods. (2018) 15:1090–7. 10.1038/s41592-018-0216-7

- 49. Krull A, Buchholz T-O, Jug F. Noise2Void - learning denoising from single noisy images. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, CA: IEEE; (2019). p. 2124–32.

- 50. von Chamier L, Laine RF, Jukkala J, Spahn C, Krentzel D, Nehme E, et al. Democratising deep learning for microscopy with ZeroCostDL4Mic. Nat Commun. (2021) 12:2276. 10.1038/s41467-021-22518-0

- 51. Alabi RO, Elmusrati M, Sawazaki-Calone I, Kowalski LP, Haglund C, Coletta RD, et al. Comparison of supervised machine learning classification techniques in prediction of locoregional recurrences in early oral tongue cancer. Int J Med Inform. 2019:104068. 10.1016/j.ijmedinf.2019.104068

- 52. Fujioka T, Mori M, Kubota K, Oyama J, Yamaga E, Yashima Y, et al. The utility of deep learning in breast ultrasonic imaging: a review. Diagnostics. (2020) 10:1055. 10.3390/diagnostics10121055

- 53. Angermueller C, Pärnamaa T, Parts L, Stegle O. Deep learning for computational biology. Mol Syst Biol. (2016) 12:878. 10.15252/msb.20156651

- 54. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. (1998) 86:2278–324. 10.1109/5.726791

- 55. Yasaka K, Akai H, Kunimatsu A, Kiryu S, Abe O. Deep learning with convolutional neural network in radiology. Japanese J Radiol. (2018) 36:257–72. 10.1007/s11604-018-0726-3

- 56. Graham B. Fractional max-pooling. ArXiv [Preprint]. arXiv:1412.6071.

- 57. Zeiler M, Fergus R. Stochastic pooling for regularization of deep convolutional neural networks. ArXiv [Preprint]. arXiv:1301.3557 (2013).

- 58. Springenberg JT, Dosovitskiy A, Brox T, Riedmiller M. Striving for simplicity: the all convolutional net. ArXiv [Preprint]. arXiv:1412.6806 (2014).

- 59. He K, Zhang X, Ren S, Sun J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans Pattern Anal Mach Intellig. (2015) 37:1904–16. 10.1109/TPAMI.2015.2389824

- 60. Liu K, Kang G, Zhang N, Hou B. Breast cancer classification based on fully-connected layer first convolutional neural networks. IEEE Access. (2018) 6:23722–32. 10.1109/ACCESS.2018.2817593

- 61. Karlik B, Olgac AV. Performance Analysis of Various Activation Functions in Generalized MLP Architectures of Neural Networks. IJAE. (2011) 1:111–22.

- 62. Uddin M, Wang Y, Woodbury-Smith M. Artificial intelligence for precision medicine in neurodevelopmental disorders. Npj Digital Medicine. (2019) 2. 10.1038/s41746-019-0191-0

- 63. Plant D, Barton A. Machine learning in precision medicine: lessons to learn. Nat Rev Rheumatol. (2021) 17:5–6. 10.1038/s41584-020-00538-2

- 64. Uthoff RD, Song B, Sunny S, Patrick S, Suresh A, Kolur T, et al. Point-of-care, smartphone-based, dual-modality, dual-view, oral cancer screening device with neural network classification for low-resource communities. PLoS ONE. (2018) 13:e0207493. 10.1371/journal.pone.0207493

- 65. Das DK, Bose S, Maiti AK, Mitra B, Mukherjee G, Dutta PK. Automatic identification of clinically relevant regions from oral tissue histological images for oral squamous cell carcinoma diagnosis. Tissue Cell. (2018) 53:111–9. 10.1016/j.tice.2018.06.004

- 66. Yan H, Yu M, Xia J, Zhu L, Zhang T, Zhu Z. Tongue squamous cell carcinoma discrimination with Raman spectroscopy and convolutional neural networks. Vibrat Spectrosc. (2019) 103:102938. 10.1016/j.vibspec.2019.102938

- 67. Yan H, Yu M, Xia J, Zhu L, Zhang T, Zhu Z, et al. Diverse region-based CNN for tongue squamous cell carcinoma classification with Raman spectroscopy. IEEE Access. (2020) 8:127313–28. 10.1109/ACCESS.2020.3006567

- 68. Yu M, Yan H, Xia J, Zhu L, Zhang T, Zhu Z, et al. Deep convolutional neural networks for tongue squamous cell carcinoma classification using Raman spectroscopy. Photodiagn Photodyn Ther. (2019) 26:430–5. 10.1016/j.pdpdt.2019.05.008

- 69. Chan C-H, Huang T-T, Chen C-Y, Lee C-C, Chan M-Y, Chung P-C. Texture-map-based branch-collaborative network for oral cancer detection. IEEE Transac Biomed Circuits Syst. (2019) 13:766–80. 10.1109/TBCAS.2019.2918244

- 70. Jeyaraj PR, Samuel Nadar ER. Computer-assisted medical image classification for early diagnosis of oral cancer employing deep learning algorithm. J Cancer Res Clin Oncol. (2019) 145:829–37. 10.1007/s00432-018-02834-7

- 71. Xu S, Liu Y, Hu W, Zhang C, Liu C, Zong Y, et al. An early diagnosis of oral cancer based on three-dimensional convolutional neural networks. IEEE Access. (2019) 7:158603–11. 10.1109/ACCESS.2019.2950286

- 72. Jeyaraj PR, Panigrahi BK, Samuel Nadar ER. Classifier feature fusion using deep learning model for non-invasive detection of oral cancer from hyperspectral image. IETE J Res. (2020) 1–12. 10.1080/03772063.2020.1786471

- 73. Panigrahi S, Das J, Swarnkar T. Capsule network based analysis of histopathological images of oral squamous cell carcinoma. J King Saud Univ Comput Inform Sci. (2020) 10.1016/j.jksuci.2020.11.003

- 74. Xia J, Zhu L, Yu M, Zhang T, Zhu Z, Lou X, et al. Analysis and classification of oral tongue squamous cell carcinoma based on Raman spectroscopy and convolutional neural networks. J Modern Opt. (2020) 67:481–9. 10.1080/09500340.2020.1742395

- 75. Ding J, Yu M, Zhu L, Zhang T, Xia J, Sun G. Diverse spectral band-based deep residual network for tongue squamous cell carcinoma classification using fiber optic Raman spectroscopy. Photodiagn Photodyn Ther. (2020) 32:102048. 10.1016/j.pdpdt.2020.102048

- 76. Tomita H, Yamashiro T, Heianna J, Nakasone T, Kobayashi T, Mishiro S, et al. Deep learning for the preoperative diagnosis of metastatic cervical lymph nodes on contrast-enhanced computed tomography in patients with oral squamous cell carcinoma. Cancers. (2021) 13:600. 10.3390/cancers13040600

- 77. Sunny S, Baby A, James BL, Balaji D, Rana MH, Gurpur P, et al. A smart tele-cytology point-of-care platform for oral cancer screening. PLoS ONE. (2019) 14:e0224885. 10.1371/journal.pone.0224885

- 78. Nanditha BR, Geetha A, Chandrashekar HS, Dinesh MS, Murali S. An ensemble deep neural network approach for oral cancer screening. Int J Online Biomed Eng. (2021) 17:121. 10.3991/ijoe.v17i02.19207

- 79. Shams W, Htike Z. Oral cancer prediction using gene expression profiling and machine learning. Int J Appl Eng Res. (2017) 12:4893–8.

- 80. Jubair F, Al-karadsheh O, Malamos D, Al Mahdi S, Saad Y, Hassona Y. A novel lightweight deep convolutional neural network for early detection of oral cancer. Oral Diseases. (2021) 10.1111/odi.13825

- 81. Kouznetsova VL, Li J, Romm E, Tsigelny IF. Finding distinctions between oral cancer and periodontitis using saliva metabolites and machine learning. Oral Diseases. (2021) 27:484–93. 10.1111/odi.13591

- 82. Welikala RA, Remagnino P, Lim JH, Chan CS, Rajendran S, Kallarakkal TG, et al. Automated detection and classification of oral lesions using deep learning for early detection of oral cancer. IEEE Access. (2020) 8:132677–93. 10.1109/ACCESS.2020.3010180

- 83. Musulin J, Štifanić D, Zulijani A, Cabov T, Dekanić A, Car Z. An Enhanced Histopathology analysis: an AI-based system for multiclass grading of oral squamous cell carcinoma and segmenting of epithelial and stromal tissue. Cancers. (2021) 13:1784. 10.3390/cancers13081784

- 84. Kim Y, Kang JW, Kang J, Kwon EJ, Ha M, Kim YK, et al. Novel deep learning-based survival prediction for oral cancer by analyzing tumor-infiltrating lymphocyte profiles through CIBERSORT. OncoImmunology. (2021) 10:1904573. 10.1080/2162402X.2021.1904573

- 85. Huang S, Yang J, Fong S, Zhao Q. Artificial intelligence in cancer diagnosis and prognosis: opportunities and challenges. Cancer Letters. (2020) 471:61–71. 10.1016/j.canlet.2019.12.007

- 86. Alabi RO, Youssef O, Pirinen M, Elmusrati M, Mäkitie AA, Leivo I, et al. Machine learning in oral squamous cell carcinoma: current status, clinical concerns and prospects for future—a systematic review. Artificial Intellig Med. (2021) 115:102060. 10.1016/j.artmed.2021.102060

- 87. Kleppe A, Skrede O-J, De Raedt S, Liestøl K, Kerr DJ, Danielsen HE. Designing deep learning studies in cancer diagnostics. Nat Rev Cancer. (2021) 21:199–211. 10.1038/s41568-020-00327-9

- 88. Zheng Q, Yang L, Zeng B, Li J, Guo K, Liang Y, et al. Artificial intelligence performance in detecting tumor metastasis from medical radiology imaging: a systematic review and meta-analysis. EClinicalMedicine. (2021) 31:100669. 10.1016/j.eclinm.2020.100669
