Abstract

For people with HIV, important transmission prevention strategies include early initiation and adherence to antiretroviral therapy and retention in clinical care with the goal of reducing viral loads as quickly as possible. Consequently, at this point in the HIV epidemic, innovative and effective strategies are urgently needed to engage and retain people in health care to support medication adherence. To address this gap, the Positive Health Check Evaluation Trial uses a type 1 hybrid randomized trial design to test whether the use of a highly tailored video doctor intervention will reduce HIV viral load and retain people with HIV in health care. Eligible and consenting patients from four HIV primary care clinical sites are randomly assigned to receive either the Positive Health Check intervention in addition to the standard of care or the standard of care only. The primary aim is to determine the effectiveness of the intervention. A second aim is to understand the implementation potential of the intervention in clinic workflows, and a third aim is to assess the costs of intervention implementation. The trial findings will have important real-world applicability for understanding how digital interventions that take the form of video doctors can be used to decrease viral load and to support retention in care among diverse patients attending HIV primary care clinics.

Keywords: HIV, Type 1 hybrid trial, Viral load, Medication adherence, Retention in care, Video doctor intervention

1. Introduction

HIV transmission continues to be an urgent public health challenge. According to the Centers for Disease Control and Prevention (CDC), more than one million people in the United States are living with HIV, with nearly 38,000 new infections annually [1]. Because of advances in antiretroviral therapy (ART), which suppresses the plasma HIV-1 viral load (VL), more people are living with and managing HIV as a chronic health condition. Early initiation and adherence to ART and retention in clinical care are important transmission prevention strategies because people with HIV (PWH) who are treated with ART and maintain VL suppression have effectively no risk of sexually transmitting HIV [2–5], and they experience a life expectancy similar to people not infected with HIV [6]. In 2017, of all PWH in the United States, 85.8% knew they were diagnosed and 62.7% were virally suppressed [1]. Consequently, to decrease sexual transmission of HIV, effective interventions are needed to engage PWH in regular health care that supports ART adherence and retention in medical care [4].

Digital interventions that use the internet, mobile devices, and computing technology show promise in improving ART adherence and VL suppression [7–11]. A systematic review found that digital interventions can improve HIV outcomes, including VL suppression, self-care behaviors, and sexual risk reduction [12]. Specifically, digital interventions that use “video doctors” appear very promising because they simulate interactions between the user and a healthcare provider [9,13]. Interactive simulated video doctors can provide patients with highly tailored information that is based on patient input, making these interactions patient-centered. Additionally, tailoring increases the relevance and meaning of the information received, potentially making it more actionable [10,14]. Digital interventions also allow for easy content updates that facilitate the dissemination of new prevention information as evidence changes over time [14].

This article describes the protocol for evaluating the Positive Health Check (PHC) intervention. PHC is a 15- to 20-min English-language intervention that expands on previous interactive video doctor interventions by delivering tailored behavior change messages to improve ART initiation and adherence, increase retention in care, reduce sexual risk, lower mother-to-child transmission, and reduce unsafe injection practices. The PHC Evaluation Trial uses a large heterogeneous sample of participants in community-based HIV primary care clinics and a measurement approach that relies on outcomes abstracted from patients’ electronic medical records (EMRs). Small sample sizes and reliance on self-reported outcomes have been limitations of previous tests of similar interventions [7,13,15]. The current PHC evaluation also advances current research by using a type 1 hybrid trial approach [16]. This approach involves testing the effectiveness of PHC via a randomized trial as the primary aim and assessing the implementation context as a secondary aim. The findings from this research will guide decisions about CDC’s dissemination of PHC in support of the White House initiative on “Ending the HIV Epidemic: A Plan for America” [17].

2. Methods

2.1. Trial aims

The PHC evaluation includes three specific aims. Aim 1 involves determining if the PHC intervention reduces VL beyond what patients experience in the standard of care. Our hypothesis is that patients who complete the PHC intervention and receive the standard of care will have better VL outcomes than patients who only receive the standard of care. Aim 2 seeks to examine PHC implementation longitudinally to assess changes over time in the implementation context for PHC. Our hypothesis is that clinics will report significant improvements over time in the implementation context, signaling a more supportive implementation climate, increased readiness, and better fit of PHC into the clinic flow. Aim 3 involves conducting a cost analysis to estimate the incremental cost of the PHC intervention implementation in the clinic environment, exclusive of research-related costs.

2.2. Aim 1 approach

2.2.1. Participating clinics

We are conducting the PHC Evaluation Trial at four HIV primary care clinics affiliated with the following institutions: Florida Department of Health, Hillsborough County, FL; Crescent Care, New Orleans, LA; the Atlanta Veterans Affairs Medical Center (VAMC) Infectious Disease Clinic, Atlanta, GA; and Rutgers New Jersey Medical School Infectious Disease Practice, Newark, NJ. Sites were chosen based on diverse representation of PWH in the clinics and having a sufficient patient population size to meet sample size criteria. The trial received Institutional Review Board approvals from the University of South Florida and the Florida Department of Health; RTI International (which also covered the Crescent Care clinic); Emory University (which also covers the Atlanta VAMC); and Rutgers University.

2.2.2. Eligibility criteria

Eligible participants for the study have to meet the following criteria: (1) 18 years of age or older; (2) Diagnosed with HIV; (3) English-speaking; (4) Attending one of the four participating HIV clinics; (5) Meet at least one of the following sub-criteria: most recent VL lab result of ≥200 copies/mL, new to care within the past 12 months, or out of care (last attended appointment at the clinic was more than 12 months ago); and (6) Not enrolled in other research studies that could confound the current trial results.
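The criteria above can be read as a simple screening rule. The following is a minimal sketch in Python, not the trial's actual screening software: the `Patient` record and its field names are hypothetical, and the 12-month cutoffs are approximated as 365 days.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Assumption: "12 months" is approximated as 365 days.
TWELVE_MONTHS = timedelta(days=365)


@dataclass
class Patient:
    """Hypothetical screening record; field names are illustrative only."""
    age: int
    hiv_diagnosed: bool
    speaks_english: bool
    attends_participating_clinic: bool
    latest_vl_copies_per_ml: Optional[float]  # None if no VL result on file
    first_visit: Optional[date]               # date the patient entered care
    last_attended_visit: Optional[date]
    in_confounding_study: bool


def meets_subcriteria(p: Patient, today: date) -> bool:
    """Criterion 5: unsuppressed VL, new to care, or out of care."""
    unsuppressed = (
        p.latest_vl_copies_per_ml is not None
        and p.latest_vl_copies_per_ml >= 200
    )
    new_to_care = (
        p.first_visit is not None and today - p.first_visit <= TWELVE_MONTHS
    )
    out_of_care = (
        p.last_attended_visit is not None
        and today - p.last_attended_visit > TWELVE_MONTHS
    )
    return unsuppressed or new_to_care or out_of_care


def is_eligible(p: Patient, today: date) -> bool:
    """Apply criteria 1 through 6 in order."""
    return (
        p.age >= 18
        and p.hiv_diagnosed
        and p.speaks_english
        and p.attends_participating_clinic
        and meets_subcriteria(p, today)
        and not p.in_confounding_study
    )
```

In the trial itself, EMR queries only produce a candidate list; the trial coordinator confirms eligibility in person at consent.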

2.2.3. Recruitment and screening

Each clinic will use its EMR system to identify patients who meet the eligibility criteria. The trial coordinator at each site will confirm each patient’s eligibility at consent, including whether the patient can understand English and is not cognitively impaired.

The clinics will conduct systematic outreach using tailored scripts to recruit eligible patients who have missed appointments or who are out of care and do not have an upcoming appointment scheduled. This outreach is in addition to and different from each clinic’s standard of care linkage/retention activities. Eligible patients with upcoming appointments will be contacted by the Trial Coordinator via telephone and introduced to the trial. When eligible patients arrive in the clinic, the Trial Coordinator will invite them to participate. After patients are consented and enrolled in the trial, the clinics will continue with their standard of care practices to address missed appointments and retention in care. Each clinic has a Microsoft Access database, which is only accessible to clinic-based trial staff, to track patient participation in the trial.

2.2.4. Design, randomization, and blinding

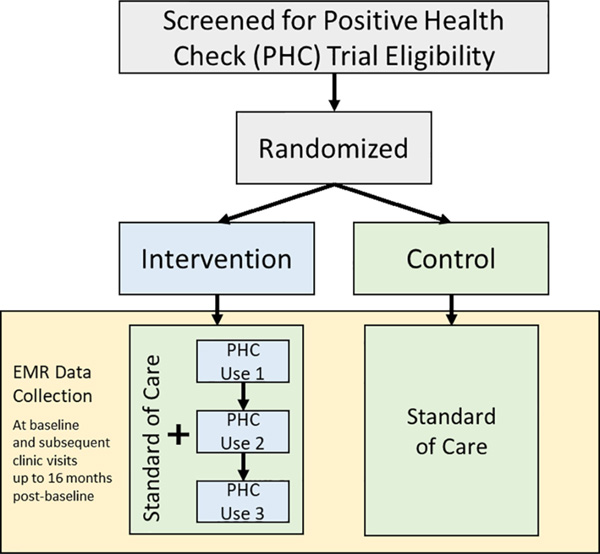

We will be testing PHC via a two-arm hybrid type 1 pragmatic randomized trial. Characteristics of the PHC Evaluation Trial that make it pragmatic include minimal inclusion/exclusion criteria; intervention and evaluation integrated into the clinical workflow (that is, the trial is being conducted in the participants’ own clinics while they see their own clinicians); and assessment of multiple outcomes [18–20]. We will use an electronic sealed-envelope approach to randomize participants. We will generate 500 PHC Identifications (IDs) for each site, noting the order in which they are generated. We will then use sealedenvelope.com’s “Create a randomization list” software [21] to randomize the assignment of PHC IDs to the intervention arm or the control arm. Although the trial is registered as open label [22], to maintain integrity of the primary analysis some trial staff will be blinded to trial conditions, including the principal investigator, lead statistician, and trial staff who interact with clinic stakeholders who are implementing the protocol. An overview of the trial design is shown in Fig. 1.

Fig. 1.

Overview of Positive Health Check evaluation trial design. Note: EMR = Electronic Medical Record.
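The allocation step described in Section 2.2.4 (pre-generating an ordered list of PHC IDs per site and assigning each ID to an arm) can be illustrated with a short sketch. This is not the sealedenvelope.com implementation. It assumes 1:1 allocation in permuted blocks of four, which the protocol does not specify, and the ID format and function name are hypothetical.

```python
import random


def make_allocation_list(site, n_ids=500, block_size=4, seed=0):
    """Return [(phc_id, arm), ...] in ID-generation order.

    Assumptions (not stated in the protocol): 1:1 allocation using
    permuted blocks of `block_size`, and IDs of the form "<site>-001".
    """
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for 1:1 allocation")
    rng = random.Random(seed)  # fixed seed makes the list reproducible
    arms = []
    while len(arms) < n_ids:
        half = block_size // 2
        block = ["intervention"] * half + ["control"] * half
        rng.shuffle(block)     # random order within each block
        arms.extend(block)
    ids = [f"{site}-{i:03d}" for i in range(1, n_ids + 1)]
    return list(zip(ids, arms[:n_ids]))
```

Because the list is fixed before enrollment begins, the next enrolled participant simply receives the next unused ID, and the arm is revealed only at that point, which is the idea behind a sealed-envelope approach.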

2.2.5. Continued standard of care services

Participants in either the intervention condition or the control condition will continue to receive the standard of care throughout their observation period. During the informed consent process, participants will be advised that they will continue to receive the standard of care during their enrollment in the trial and that they should freely consult with their healthcare providers, as needed, about their health condition. No restrictions to care will be placed on healthcare providers. The standard of care will be measured for each clinic at baseline, midpoint and the end of the data collection, and updated if needed during the course of the trial. Understanding the standard of care may help describe variation in intervention use and outcomes across sites.

2.2.6. PHC intervention

The PHC intervention is guided by multiple health behavior theories, including the theory of motivational interviewing [23], the Information–Behavioral–Motivation Model [24], and the Transtheoretical Model [25]. The intervention aims to improve self-efficacy for performing behaviors important for medication adherence and reducing HIV transmission. It also aims to reduce psychosocial barriers to initiation and continuation of healthcare and increase intentions to adhere to medication regimens while engaging in prevention behaviors. Lastly, the intervention aims to enhance patient-provider communication, which can further increase self-efficacy, promote patient engagement, and improve health outcomes [26,27].

Intervention delivery procedures will begin at the time of enrollment, and the first PHC use will be on-site in the HIV clinic waiting room or a private room before the scheduled provider appointment. The Trial Coordinator will assist the participant with logging on and changing passwords and will describe how the PHC patient handout (described below) will be delivered. The participant will then complete the intervention using an iPad or Android tablet while listening to the intervention’s audio via a trial-provided headset. Each tablet will be covered with a privacy screen if the intervention is completed in a clinic waiting room. After completing the intervention, the participant will return the device, receive the handout, and be called to the provider appointment. If the participant’s PHC use is interrupted, a link will be e-mailed to the participant upon request so that the intervention can be finished at a later time. Participants also will be allowed to use PHC after their scheduled appointment if they are unable to complete use prior to the appointment. After completing the first PHC use, participants will receive a $50 gift card. A participant’s second and third PHC use will be completed before a subsequent provider appointment, or before or after any ancillary appointment (such as a blood draw) within 20 days of a scheduled clinic visit.

There will be a 2-month waiting period following each PHC use after which participants can be approached again when they are in the clinic for an appointment. The goal is for each participant in the intervention arm to use PHC up to three times in a 12-month period. Participants will receive a second $50 gift card when completing PHC during the 10- to 16-month primary outcome assessment window when a final VL is obtained.
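The re-approach rule in the paragraph above (a waiting period after each use, and at most three uses) can be expressed as a small check. This is a hypothetical sketch, not the logic of the trial's tracking tools, and it approximates the 2-month waiting period as 60 days.

```python
from datetime import date, timedelta
from typing import Sequence

WAITING_PERIOD = timedelta(days=60)  # assumption: 2 months taken as 60 days
MAX_USES = 3                         # up to three PHC uses per participant


def can_reapproach(previous_uses: Sequence[date], today: date) -> bool:
    """Return True if the participant may be offered PHC at today's visit."""
    if not previous_uses:
        return True                  # first use happens at enrollment
    if len(previous_uses) >= MAX_USES:
        return False                 # all three uses already completed
    return today - max(previous_uses) >= WAITING_PERIOD
```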

The components of the PHC intervention are summarized in Table 1. The participant is welcomed by a video nurse and asked to answer four initial demographic questions, followed by the tailored content. The participant is then able to select a video doctor to work with throughout the intervention. Based on participant-reported answers to the doctor’s standard questions, the doctor delivers tailored motivational messages to the participant. Messages can pertain to appointment keeping, medication adherence, and other behaviors to decrease transmission risk. The participant can also select tips to practice before the next clinic visit and questions they would like to discuss with their provider during the appointment from a tips and questions interface that is part of each intervention module. If a participant selects tips and/or questions as part of their intervention experience, a patient handout is printed automatically and delivered to the patient when they finish their PHC use. At the end of PHC, participants can choose to have a link to the intervention and/or their tailored handout e-mailed to them. Following PHC use, they are given access to Extra Info, a microsite embedded at the end of the intervention that provides peer-to-peer educational videos and other resources.

Table 1.

PHC intervention components.

| Component | Description |
|---|---|
| Participant-reported tailoring questions | ▪ 4 demographic questions used for routing the participant to modules throughout PHC are presented by the video nurse at the start of the intervention. ▪ 17 tailoring questions, delivered by the video doctor and interspersed in the intervention, probe for medication and adherence behaviors (n = 5), HIV care appointments (n = 2), sexual risk behaviors (n = 4), pregnancy status (n = 1), and drug use behaviors (n = 1). ▪ Responses to these 17 questions provide the basis for the response provided by the video doctor. |
| Video doctor | ▪ Participants interact with a video doctor who delivers tailored information based on responses to the questions posed by the doctor. ▪ Tailored messages are delivered from a database of 672 video clips. ▪ Based on applicability, participants can receive information from the doctor in 6 domains: treatment readiness, medication adherence, retention in care, sexual risk reduction, mother-to-child transmission, and injection drug use risk reduction. ▪ Participants choose from four video doctors who vary by race (white, black) and sex (female, male). There is one doctor for each race-by-sex combination. |
| Behavior change tips and messages | ▪ PHC programming draws from a “library” of 19 behavior change tips that relate to 3 behavior change domains: medication adherence (n = 9), retention in care (n = 4), and sexual risk reduction (n = 6). ▪ To pique participants’ interest, each behavior change tip has 2 supporting messages (38 total) that are attached to the tip in the handout. ▪ Participants can choose behavior change tips to practice in between health care visits. |
| Questions library | ▪ PHC programming draws from a “library” of 10 questions that relate to 3 behavior change domains: treatment readiness (n = 1), adherence (n = 7), and sexual risk reduction (n = 2). ▪ To pique participants’ interest, a link to relevant information from Extra Info is attached to each question. ▪ Participants can choose questions they want to ask their doctor during their clinic visit. |
| Patient handout | ▪ PHC automatically generates a handout at the completion of a session if a participant selects at least one behavior change tip and/or question in the intervention. ▪ Before the participant logs off, if a handout was generated, it is automatically printed on a dedicated trial printer and delivered by trial staff. Participants can also choose to have the handout sent via e-mail. |
| Extra Info | ▪ A microsite is offered at the end of the intervention with links to publicly available resources and videos that provide additional information on topics relevant to PWH. ▪ Topics include HIV medications, sexually transmitted diseases, condom use, mental health, and transgender health. |
| Clinic Web Application (CWA) | ▪ The CWA allows trial staff to generate and activate user identification numbers for intervention arm participants, re-approach participants for subsequent uses, view a participant’s progress through the intervention, reset passwords to access the intervention, and send a link to PHC or the patient handout to the participant’s e-mail address. ▪ It also allows trial staff to export lists of their intervention participants by trial ID with PHC usage process data, such as number of PHC uses, timestamps for PHC uses, and whether participants are eligible to be re-approached for a subsequent use. ▪ Trial staff can generate a variety of reports, such as the number of participants onboarded to PHC, the number of participants who completed a use, the average time spent in PHC in the clinic, and the average time spent in Extra Info. |

PHC includes other components that allow clinic staff to monitor participants’ progress through the intervention. The PHC Clinic Web Application (CWA) will be used by clinic trial staff to manage participants’ PHC access and to monitor participants’ use of the intervention. A PHC Structured Query Language (SQL) database captures patient-level intervention data for each PHC intervention session, including access location (at clinic or remote location), exposure to modules, patient selections in each module, and timestamps for each module and session. The CWA was programmed to communicate with the PHC SQL database to provide trial staff with a user-friendly, real-time tracking and monitoring tool. Trial staff will be able to export their clinic list of intervention arm participants (populated using their trial IDs) with up-to-date key process variables (patient selections from intervention sessions are not included) and produce aggregate-level reports.

2.2.7. Fidelity assurance

To ensure fidelity to trial procedures, we will conduct extensive training on identifying eligible participants, consent and randomization processes, data management, delivering the PHC intervention, and re-approaching participants to use PHC at clinic visits after baseline use. Training webinars will be available for reference in addition to implementation manuals, PowerPoint slide decks, and checklists. Changes to implementation procedures and clarifications will be addressed during monthly all-site check-ins.

Fidelity issues will be assessed through telephone interviews and data monitoring. We will maintain frequent contact with the sites to provide one-on-one technical assistance. We will confirm that any threats to fidelity are resolved and that key staff understand all procedural changes and clarifications. Additionally, clinic sites are encouraged to contact their technical assistance providers immediately to discuss any questions regarding implementation procedures.

We will receive monthly recruitment and enrollment data directly from the sites and intervention use data from the CWA. We will review these data to monitor randomization, intervention use, and handout delivery. Quality control issues identified during this review will be addressed with the site. Finally, we will use the metrics captured in the PHC database, including completion of the intervention by participants and handout delivery, as quantitative indicators of intervention fidelity.

2.2.8. Retaining participants in the trial

Trial coordinators will specialize in building rapport with all participants, and they will leverage this skill to retain participants in the trial. A $50 incentive will be given both at trial enrollment and at collection of the final VL data point. Each site will use a database to track all participants over time and to monitor follow-up visits to the clinic. After their first PHC use at a scheduled primary care appointment, intervention arm participants also will be able to use PHC at ancillary clinic appointments. The database and the PHC CWA will flag intervention arm participants who can be approached again to use PHC a second and third time when they attend a clinic visit.

In order to avoid contaminating the retention in care measure, outreach to retain participants will only be conducted for those who do not have an appointment scheduled during the 12-month VL endpoint (10–16 months after randomization) assessment window. Trial staff will be trained to use an evidence-based outreach protocol [28] that includes participant, emergency, and other contact information. We will conduct biweekly technical assistance calls with the clinics’ PHC teams, including trial coordinators, outreach specialists, data managers, and principal investigators to address questions related to retention and other implementation strategies.

2.2.9. Measures and data collection

To assess the primary outcome of HIV-1 VL suppression, we will use EMR data to calculate the number and proportion of trial participants in the intervention arm and the standard of care arm who achieve VL suppression (<200 copies/mL) by the end of their individual follow-up (a window from the start of 10 months through the end of 16 months post-randomization). In addition to VL suppression, we will calculate changes in HIV-1 VL values from the baseline to the final measurement and model the amount of time it takes to achieve VL suppression over the study period for participants in the intervention arm and the control arm.

Secondary outcomes include ART initiation, which will be assessed by calculating the number and proportion of trial participants naïve to ART (or treatment-experienced but not currently prescribed ART) who receive a prescription for ART within 90 days from the date of randomization. Retention in care will be defined as whether a patient had at least one visit in each 6-month period within 12 months, with visits separated by 2 months or more [29,30]. Incident sexually transmitted diseases (STDs) will be assessed by calculating the number and proportion of trial participants diagnosed with a laboratory-confirmed incident STD from the date of randomization, including hepatitis B virus, syphilis, gonorrhea, and, for women only, chlamydia and trichomoniasis.
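The retention-in-care definition is easier to follow with a concrete rule. The sketch below is one possible reading, not the trial's abstraction code. It assumes the 12-month window starts at randomization, treats each 6-month period as 182 days and "2 months" as 60 days, and requires a visit in each half with at least 60 days between the earliest first-half visit and the latest second-half visit.

```python
from datetime import date, timedelta
from typing import Iterable


def retained_in_care(visits: Iterable[date], start: date,
                     period_days: int = 182, min_gap_days: int = 60) -> bool:
    """One reading of the measure: a visit in each 6-month period of a
    12-month window, with visits at least `min_gap_days` apart.

    Assumptions: the window starts at `start` (e.g., randomization),
    6 months = 182 days, and 2 months = 60 days.
    """
    visits = list(visits)
    mid = start + timedelta(days=period_days)
    end = start + timedelta(days=2 * period_days)
    first_half = [v for v in visits if start <= v < mid]
    second_half = [v for v in visits if mid <= v < end]
    if not first_half or not second_half:
        return False
    # The widest spacing across the two periods is from the earliest
    # first-half visit to the latest second-half visit.
    return (max(second_half) - min(first_half)).days >= min_gap_days
```

Two visits that fall a few days either side of the 6-month boundary would satisfy "one visit in each period" but fail the spacing requirement, which is why the separation condition is part of the definition.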

2.2.10. Aim 1 analytical plan

Descriptive statistics—such as counts (percentages), means (standard deviations), and medians (interquartile ranges)—will be computed to summarize outcomes for participants in the intervention condition and the standard of care condition. For the primary outcome, the number and proportion of trial participants will be computed for the intervention condition and the standard of care condition who achieve VL suppression at the 12-month endpoint. Effect size measures, including Cohen’s d for continuous outcomes and risk differences and relative risks for binary outcomes, with 95% confidence intervals, will be used to quantify the size of the difference in outcomes between the two conditions. Statistical significance of differences between binary outcomes for the two conditions will be tested by chi-square tests or by Fisher’s exact tests, depending on sample size. Differences between conceptually continuous outcomes will be tested by t-tests (after an appropriate transformation when needed) or by nonparametric tests (e.g., median tests).
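For the binary outcomes, the risk difference and relative risk with confidence intervals can be computed directly from arm-level counts. The sketch below is illustrative only: it uses a Wald interval for the risk difference and the log-transformed interval for the relative risk, which are common choices but are not specified in the protocol, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def risk_difference_and_ratio(events_1, n_1, events_0, n_0, level=0.95):
    """Effect sizes for a binary outcome (arm 1 vs. arm 0).

    Assumptions: Wald CI for the risk difference and a log-scale CI for
    the relative risk; both require nonzero event counts in each arm.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for 95%
    p1, p0 = events_1 / n_1, events_0 / n_0

    rd = p1 - p0
    se_rd = math.sqrt(p1 * (1 - p1) / n_1 + p0 * (1 - p0) / n_0)

    rr = p1 / p0
    se_log_rr = math.sqrt(1 / events_1 - 1 / n_1 + 1 / events_0 - 1 / n_0)

    return {
        "rd": rd,
        "rd_ci": (rd - z * se_rd, rd + z * se_rd),
        "rr": rr,
        "rr_ci": (rr * math.exp(-z * se_log_rr), rr * math.exp(z * se_log_rr)),
    }
```

Calling `risk_difference_and_ratio(62, 100, 50, 100)` with made-up counts, for example, gives a risk difference of 12 percentage points and a relative risk of 1.24, each with its interval.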

For each outcome, generalized linear mixed models (GLMMs) will be fit, using procedures such as PROC GLIMMIX in SAS software (version 9.4), to compare outcomes between the intervention condition and the standard of care condition, controlling for sociodemographic characteristics and trial site.

The appropriate form of the model will be selected based on the distributions of the variables, such as a logistic model for categorical variables (e.g., VL suppression) or a Poisson model for count variables (e.g., number of missed appointments). Similar models will be used to examine changes in VL values from baseline through follow-up while accounting for demographics and repeated measurements over time. A time by trial condition interaction will be tested within these models to determine if changes in VL values vary across the two trial conditions (i.e., PHC vs. standard of care). In addition, time-to-event methods, including Kaplan-Meier methods, Turnbull nonparametric interval-censored estimation methods, and Cox proportional hazards regression models, will be used to compare the time to first VL suppression between the two study conditions while controlling for demographic characteristics.

We will test for potential modifiers of the effect of the PHC intervention on the trial’s primary outcome, namely VL suppression. Interaction terms will be tested in the regression models to assess differences in the effect of PHC by variables including age, sex, race, ethnicity, income, and clinics’ standard of care (clinic level), followed by subgroup analyses to estimate intervention impact among those groups. In addition to the primary analyses that will compare outcomes between the PHC and standard of care groups, secondary analyses will be conducted to examine a dose-response effect of the intervention by comparing outcomes among participants based on the number of times they used the PHC tool (0, 1, or 2+ times). Similar analyses will also be conducted to quantify the impact of specific aspects of the intervention (e.g., particular PHC modules viewed).

Participants will be included in the analyses on an intent-to-treat basis. The extent of missing data will be examined at both the item-level and the person-level. If data appear to be missing at random, multiple imputation will be used for item-level missing data on explanatory variables, such as sociodemographic characteristics. In the case of person-level missing data (e.g., attrition), demographic characteristics of participants who have a final outcome measurement will be compared with participants who are missing data on the final outcome measurement. Characteristics that vary based on attrition will be included as control variables in the mixed effect regression models. Additionally, sensitivity analyses will be conducted to assess the impact of missing data imputation methods for the final outcome measurement, such as carrying the last measurement forward.

2.2.11. Sample size and data interpretation

We calculated the target sample size by hypothesizing that 50% of the standard of care arm and 62% of the intervention arm will have VL suppression at 12 months, a difference of 12 percentage points. We planned to use Fisher’s exact test at the 5% significance level to detect a difference between proportions. An unadjusted sample size of 568 provides 80% power to detect this difference between the standard of care and intervention arms; with a linear adjustment for 25% attrition in each arm, the target sample size is 568/(1 − 0.25), which rounds up to 758.
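The arithmetic behind these figures can be checked with a short calculation. The protocol does not state which software or formula was used, so the sketch below substitutes a standard approximation: the normal-approximation sample size for two proportions with the Fleiss continuity correction, which is often used to approximate the sample size for Fisher's exact test. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def n_per_arm_two_proportions(p_control, p_intervention,
                              alpha=0.05, power=0.80):
    """Approximate per-arm n for comparing two proportions (two-sided).

    Assumption: normal approximation with the Fleiss continuity
    correction, used here as a stand-in for an exact-test calculation.
    """
    z_a = NormalDist().inv_cdf(1 - alpha / 2)
    z_b = NormalDist().inv_cdf(power)
    delta = abs(p_intervention - p_control)
    p_bar = (p_control + p_intervention) / 2
    # Uncorrected sample size per arm.
    n = (z_a * math.sqrt(2 * p_bar * (1 - p_bar))
         + z_b * math.sqrt(p_control * (1 - p_control)
                           + p_intervention * (1 - p_intervention))) ** 2
    n /= delta ** 2
    # Continuity correction for equal allocation.
    n_cc = n / 4 * (1 + math.sqrt(1 + 4 / (n * delta))) ** 2
    return math.ceil(n_cc)


def inflate_for_attrition(n_total, attrition):
    """Linear attrition adjustment: n / (1 - attrition), rounded up."""
    return math.ceil(n_total / (1 - attrition))


per_arm = n_per_arm_two_proportions(0.50, 0.62)
total = 2 * per_arm
target = inflate_for_attrition(total, 0.25)
```

Under these assumptions the sketch gives 284 participants per arm (568 across both arms) and 758 after the 25% attrition adjustment, which agrees with the figures above if 568 is read as the total across the two arms.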

2.3. Aim 2 approach

2.3.1. Aim 2

Examine PHC implementation longitudinally to assess changes over time in the implementation context for PHC and factors that impact implementation effectiveness. Our hypothesis is that clinics will report significant improvements over time in the implementation context, signaling a more supportive implementation climate, increased readiness, and fit of PHC into the clinic workflow.

2.3.2. Design

Aim 2 will use a mixed-methods explanatory sequential design in which quantitative (survey) and qualitative (semi-structured interview) assessments are conducted every 3 months, providing 8 data collection time points. The qualitative assessments will be used to provide context for the quantitative findings.

2.3.3. Participants

Eight rounds of surveys and interviews will be conducted with 3 to 4 clinic stakeholders from each clinic representing the following roles: PHC principal investigator, trial coordinator, outreach worker, and data manager.

2.3.4. Measures

Several measures of the implementation context will be assessed both quantitatively and qualitatively: 1) implementation climate, which refers to the stakeholders’ perceptions that PHC implementation is rewarded, supported, and expected; 2) organizational readiness for implementing change, which refers to the extent that stakeholders feel committed to and confident in making the changes necessary to implement PHC; 3) implementation readiness, which refers to having the tangible resources needed for implementation; and 4) perceptions that PHC fits their clinic workflow and supports PWH. These four constructs are all important dimensions for understanding the overall implementation context [31]. Qualitative questions probed in the interviews seek to understand details about the stakeholders’ perceptions of facilitators or barriers to implementation across implementation climate, organizational readiness, and perceived fit of PHC.

Implementation climate will be assessed using 6 items adapted from a measure developed by Jacobs and colleagues (2014) [32], who used confirmatory factor analysis to support the use of the 6 items to measure implementation climate as a global construct. Additional support for this measure was provided by Jacobs and colleagues (2015) [33], who found this 6-item measure of implementation climate to be predictive of implementation effectiveness. Sample items include “During the past 3 months, PHC project staff implementing PHC were expected to enroll a certain number of patients in the Positive Health Check study”; “During the past 3 months, PHC project staff got the support they need to use PHC with eligible patients”; and “During the past 3 months, PHC project staff received appreciation for using Positive Health Check with eligible patients.” Responses are given on a scale ranging from 1 (strongly disagree) to 5 (strongly agree).

Organizational readiness for implementing change will be assessed with 12 items adapted from a measure developed by Shea and colleagues (2014) [34], who supported the psychometric properties of this measure via four studies. Sample items include “During the past 3 months, PHC project staff implementing PHC … were committed to implementing this intervention” and “During the past 3 months, PHC project staff implementing PHC … felt confident that they could handle the challenges that might arise in implementing this intervention.” Responses are given on a scale ranging from 1 (strongly disagree) to 5 (strongly agree).

Implementation readiness will be assessed with 8 items developed for the PHC Evaluation Trial based on the conceptualization of this construct by Damschroder and colleagues (2009) [35], which assesses tangible resources needed for implementation. Sample items include “To what extent during the last 3 months did PHC project staff implementing PHC have … necessary training to implement this intervention?” and “To what extent during the last 3 months did PHC project staff implementing PHC have … necessary clinic IT support to implement this intervention?” Responses are given on a scale ranging from 1 (strongly disagree) to 5 (strongly agree).

Perceived fit will be assessed with items developed for this trial based on prior research that highlighted the importance of “fit between the innovation users’ values and the innovation” [31,36]. Five items will be asked for each of the five intervention components (i.e., patient outreach, onboarding, PHC delivery, handout printing and delivery, and the CWA). Each item begins “During the past 3 months, to what extent did PHC project staff implementing PHC believe PHC as an intervention …” and ends with one of the following: “fit your clinic workflow?”; “fit your clinic values?”; “fit your clinic philosophy?”; “was accepted by staff within your clinic?”; and “was well-matched to your clinic environment?” Responses to all 25 items are given on a scale ranging from 0 (Not at all) to 5 (Highest extent possible), with the mean of these items representing perceived fit.
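The scoring rule for perceived fit (the mean of 25 items, 5 per intervention component, each rated 0 to 5) can be illustrated with a minimal Python sketch. The function name, the dictionary layout, and the requirement that all 25 responses be present are assumptions made for illustration; the protocol does not specify how incomplete responses are handled.

```python
from statistics import mean

# Component labels follow the five intervention components named in the text.
COMPONENTS = [
    "patient outreach",
    "onboarding",
    "PHC delivery",
    "handout printing and delivery",
    "CWA",
]
ITEMS_PER_COMPONENT = 5  # five fit items are asked for each component


def perceived_fit_score(responses):
    """Return the mean of all 25 item ratings (hypothetical helper).

    `responses` maps each component label to a list of five ratings on the
    0 (Not at all) to 5 (Highest extent possible) scale.
    Assumption: a complete set of ratings is required.
    """
    all_ratings = []
    for component in COMPONENTS:
        ratings = responses[component]
        if len(ratings) != ITEMS_PER_COMPONENT:
            raise ValueError(f"expected {ITEMS_PER_COMPONENT} ratings for {component}")
        for rating in ratings:
            if not 0 <= rating <= 5:
                raise ValueError(f"rating {rating} is outside the 0-5 scale")
        all_ratings.extend(ratings)
    return mean(all_ratings)


# Illustrative (made-up) responses: four components rated 4, one rated 2.
example = {component: [4] * 5 for component in COMPONENTS}
example["CWA"] = [2] * 5
score = perceived_fit_score(example)  # (20 * 4 + 5 * 2) / 25
```

A component-level mean could be computed the same way if fit needs to be compared across the five components.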

Implementation effectiveness has been operationally defined as the consistency and quality of implementation [31,36–38]. However, given that PHC is a computer-delivered intervention, the focus is on the consistency of implementation, which will be measured as the number of times PHC was used over the implementation period.

2.3.5. Analysis approach

The analysis of quantitative data examining the implementation context over time is challenging due to only having a relatively small number of organizations or analytic units, such as teams or clinicians. This has been referred to as the “small n” problem [39], and it is a common issue within implementation research. Consequently, we will use longitudinal mixed-effects regression models with estimation options that optimize statistical power in small sample sizes to assess the extent to which there were significant changes over time within the implementation context for the following PHC intervention components: PHC onboarding, PHC module delivery, and PHC offboarding [40,41].

For the analysis of qualitative data examining implementation context over time, we will use a framework analysis method [42] as the foundation for the qualitative coding and analysis approach. Framework analysis provides a systematic, 5-stage approach to organizing and analyzing qualitative data (see Table 2).

Table 2.

Framework analysis methodology.

| Stage | Description |
|---|---|
| Stage 1 | Familiarization involves establishing an overall familiarity with the data by immersing oneself in telephone interview notes. In this trial, as a first step, two program staff will conduct a review of all interview notes. |
| Stage 2 | Identifying a thematic framework includes identifying key themes or concepts that emerge from the data, which provides a manageable structure for examining the data. We will develop a codebook based on themes derived from the interview guide, notes, and knowledge of the PHC program structure, including the three core program components of patient, clinician, and system change. The codebook will provide an overview of the theme-based coding structure, guiding principles for coding, definitions, and examples. |
| Stage 3 | Indexing is systematically applying the thematic framework to all the textual data. We will conduct at least two rounds of test-coding exercises and discussion to establish intercoder reliability. We will examine overlap, discrepancies, and agreement for predefined codes and revise them as needed to establish greater agreement among coders. A team of three trial staff will code the data using the codebook codes. |
| Stage 4 | Charting involves arranging the data in accordance with the thematic framework and “charting” the data through synthesis and abstraction into a theme- or case-based chart. Consequently, we will generate a theme-based chart to organize the data by code, informant role, site, and implementation phase. We will review the coding reports and populate a matrix with themes and patterns identified within each site at each implementation phase by informant role. |
| Stage 5 | Mapping and interpretation will include reviewing and summarizing the matrix themes within each site and time point (mapping) and summarizing and assessing across sites, implementation phases, and time points to better understand the themes across all the data (interpretation). |

2.3.6. Coding and analysis

Interviews will be transcribed and entered into NVivo, a multifunctional software system for coding and analysis. Three trial team members will independently code a subset of transcriptions. Discrepancies in initial coding will be resolved by discussion between trial team members. The coders will then independently conduct a final coding of the remaining data, with one staff person coding each transcript. Intercoder agreement will be quantified and reported for each coding domain using Cohen’s kappa, which is calculated as (observed agreement – chance agreement)/(1 – chance agreement) [43]. Thematic analysis will be guided by the framework analysis approach shown in Table 2.
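As an illustration of the kappa calculation described above, the following Python sketch computes Cohen’s kappa for two coders who each assign one code per text segment. The function name and the example code labels are hypothetical. Because Cohen’s kappa is defined for two raters, applying it to three coders would require pairwise comparisons, which is an assumption here and not something the protocol specifies.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders' labels on the same segments.

    kappa = (observed agreement - chance agreement) / (1 - chance agreement)
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of segments")
    n = len(coder_a)

    # Observed agreement: share of segments given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n

    # Chance agreement: for each code, the product of the two coders'
    # marginal proportions, summed over codes.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    chance = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)

    if chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - chance) / (1 - chance)


# Made-up example: ten segments coded as barrier (B) or facilitator (F).
coder_1 = ["B", "B", "B", "B", "B", "F", "F", "F", "F", "F"]
coder_2 = ["B", "B", "B", "B", "F", "F", "F", "F", "F", "B"]
kappa = cohens_kappa(coder_1, coder_2)  # observed = 0.8, chance = 0.5
```

In this example the coders agree on 8 of 10 segments and chance agreement is 0.5, so kappa is (0.8 – 0.5)/(1 – 0.5) = 0.6.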

2.3.7. Mixed method analysis approach

Upon completion of the quantitative and qualitative data analysis, we will integrate the data using joint display tables to examine the changes in implementation context over time. These tables present the commonalities and differences between the two forms of data in order to demonstrate varying contexts over time and to account for stakeholder type and clinical site.

2.4. Aim 3 approach

2.4.1. Aim 3

Conduct a cost analysis to estimate the incremental cost of the PHC intervention, exclusive of research-related costs.

PHC staff at each clinic will report the costs associated with delivering the PHC intervention. We will collect cost data via 3 questionnaires covering personnel time spent on each of the non-research PHC intervention activities (hourly wages and fringe benefits of the clinic personnel are collected separately); personnel time spent on PHC patient outreach; and cost of materials, supplies, and indirect overhead (nonlabor). The non-research PHC intervention activities for which costs will be collected include staff training and preparation, patient identification and recruitment (first contact with patients), intervention delivery, mobile device management, report generation, and administration/general oversight. Time spent on research/evaluation will not be reported in this questionnaire.

Time spent on participant outreach activities, which is defined as activities related to PHC outreach protocol beyond first contact, will be reported in the PHC patient outreach questionnaire. The PHC patient outreach activities include reaching patients lost to care, contacting patients, management of databases to identify/find patients, and other outreach activities.

The PHC nonlabor costs include costs associated with materials, supplies, travel, and equipment, and indirect/overhead costs charged to PHC, reflecting office rent, repair and maintenance, network connection and maintenance, telephone service, and shared office equipment. Non-research costs and costs associated with patient outreach will be analyzed separately. All costs, regardless of the funding source, are included in the analysis.

Clinic staff who participate in the delivery of the PHC intervention will complete the questionnaires that report intervention and outreach activity time three times during the course of the trial: at 1 month, 6 months, and 12 months from the start of the intervention. In the questionnaires, staff members will be asked to report the number of hours spent on each program activity in a typical week during the specified month.
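To make the labor-cost inputs concrete, the sketch below converts one staff member’s reported hours in a typical week into a weekly cost per activity. It is a simplified illustration only: the function name is hypothetical, and expressing fringe benefits as a proportion of the hourly wage is an assumption, since the protocol states only that wages and fringe benefits are collected separately.

```python
def weekly_labor_cost(hours_by_activity, hourly_wage, fringe_rate):
    """Return the weekly labor cost per activity for one staff member.

    hours_by_activity: hours reported for a typical week, keyed by activity
    hourly_wage: hourly wage in dollars
    fringe_rate: fringe benefits as a proportion of wages (assumed format)
    """
    loaded_wage = hourly_wage * (1 + fringe_rate)
    return {
        activity: hours * loaded_wage
        for activity, hours in hours_by_activity.items()
    }


# Made-up example values, not trial data.
reported_hours = {
    "intervention delivery": 4.0,
    "staff training and preparation": 1.5,
}
costs = weekly_labor_cost(reported_hours, hourly_wage=20.0, fringe_rate=0.25)
total_weekly_cost = sum(costs.values())
```

With these illustrative inputs, the loaded wage is $25 per hour, giving $100 for intervention delivery and $37.50 for training and preparation.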

The Trial Coordinator will complete the questionnaire reporting nonlabor costs. Trial coordinators are instructed to avoid duplicating the costs reported under overhead with costs reported elsewhere in the questionnaire. The Trial Coordinator will complete the nonlabor cost questionnaire every month via an online survey. Cost data will be reviewed for accuracy and completeness.

2.4.2. Cost analysis plan

To conduct a cost analysis of the PHC intervention, we will assess all labor and nonlabor resources spent on the intervention. Our analysis will use a micro-costing approach to ensure that complete information on overall program costs is collected, including labor hours and wages and fringe benefits, material and supply costs, and the opportunity cost of donated labor and in-kind services [44–48]. The cost analysis will be conducted from the healthcare provider’s perspective to reflect the program delivery cost to the clinic; the patients’ time and productivity cost is not assessed [46,47,49]. We also will report the detailed characteristics of the cost variables and the distribution of cost across intervention activities. The total cost will be stratified by labor and nonlabor costs and by variable and fixed costs; the variable cost varies with the number of patients served, whereas the fixed cost remains the same regardless of the number of patients [46,48,50]. The cost of durable equipment will be amortized over the useful life of the equipment. To describe potential variation in costs associated with each clinic’s unique features and patient characteristics, we will analyze each clinic’s costs separately. The site-level variation in cost may be important to understanding the key factors driving the cost. The analysis could help clinics identify areas for efficiency improvement and provide budgetary guidance for those who may be interested in implementing PHC in other locations.
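The distinction between fixed and variable costs, and the amortization of durable equipment, can be sketched as follows. This is an illustration under stated assumptions: straight-line amortization without discounting is a simplification chosen here and is not specified in the protocol, and all function names and values are hypothetical.

```python
def amortized_equipment_cost(purchase_price, useful_life_months, months_in_use):
    """Share of an equipment purchase attributable to the period of use.

    Assumption: straight-line amortization with no discounting.
    """
    if useful_life_months <= 0:
        raise ValueError("useful life must be positive")
    months = min(months_in_use, useful_life_months)
    return purchase_price * months / useful_life_months


def total_program_cost(fixed_cost, variable_cost_per_patient, n_patients):
    """Fixed cost is constant; variable cost scales with patients served."""
    return fixed_cost + variable_cost_per_patient * n_patients


# Made-up example: a $600 tablet with a 36-month useful life, used for 12 months.
tablet_cost = amortized_equipment_cost(600.0, useful_life_months=36, months_in_use=12)

# Made-up example: $1,000 fixed cost plus $15 per patient for 40 patients.
program_cost = total_program_cost(1000.0, variable_cost_per_patient=15.0, n_patients=40)
```

Under these illustrative values, $200 of the tablet’s price is attributed to the 12 months of use, and the program cost is $1,600.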

The primary outcome data will allow us to estimate the number of participants in the treatment group and the control group who have been prescribed ART, who have been retained in care, and who achieve viral suppression. Additionally, we will combine the intervention outcomes with the cost data and generate summary results, including total intervention cost, average cost per patient receiving the PHC intervention, and incremental cost per patient prescribed ART, retained in care, and virally suppressed. The incremental results will be expressed as incremental cost-effectiveness ratios (ICERs), which may be interpreted as the additional cost required to achieve one more unit of outcome as compared with the standard of care [46,50,51]. Our data collection using a micro-costing approach will allow us to collect most of the costs specific to implementing the PHC intervention. For some of the activities and fixed costs that are common to both the intervention and control arms, such as indirect overhead, we will estimate the average cost per patient overall and then calculate the cost attributable to participants in the intervention arm. We will consider those fixed costs to be an essential component of the total intervention cost. We also will conduct sensitivity analyses on key variables, with a wide range of values and likely scenarios, to assess the stability of our results.
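The ICER described above is the difference in cost between arms divided by the difference in outcome. A minimal Python sketch follows; the function name and all numbers are hypothetical, and the sketch assumes that costs and outcomes for the two arms are expressed on a comparable basis (for example, arms of equal size or per-patient values).

```python
def icer(cost_intervention, cost_control, effect_intervention, effect_control):
    """Incremental cost-effectiveness ratio.

    ICER = (cost_intervention - cost_control) / (effect_intervention - effect_control)
    """
    delta_effect = effect_intervention - effect_control
    if delta_effect == 0:
        raise ValueError("ICER is undefined when the arms have equal outcomes")
    return (cost_intervention - cost_control) / delta_effect


# Made-up example: the intervention arm costs $30,000 more and has
# 20 more virally suppressed patients than the standard-of-care arm.
cost_per_additional_suppressed = icer(
    cost_intervention=50_000.0,
    cost_control=20_000.0,
    effect_intervention=160,
    effect_control=140,
)
```

With these illustrative numbers the ICER is $1,500 per additional virally suppressed patient. A sensitivity analysis could rerun the same function over a range of cost inputs.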

2.5. Data management and quality control

This trial collects a variety of data types from multiple sources. Patient outcome assessment data, including demographics, VL, and appointment data, will be collected via each site’s EMR and stored in the site’s Access database. The CWA will collect aggregate intervention data, such as completion rates, handouts delivered, and patient participant progress through the PHC study. PHC implementation data from trial stakeholders will be collected via Qualtrics, and interview data will be recorded, transcribed, and stored in NVivo. Cost and standard of care data are also collected via Qualtrics.

Aggregated patient outcome data will be received and processed quarterly over the trial period. Participant data received and analyzed do not contain personally identifiable information (PII); records are identified only by trial participant ID number. Each site’s quarterly data delivery will be checked for out-of-range and missing values and compared with previous deliveries to ensure data quality. PHC intervention data will be checked monthly for accuracy to ensure participants are being appropriately routed through PHC based on their selections, and missing values and outliers will be examined. The trial team will discuss flagged items prior to cleaning or resolution. All verified and cleaned data will be archived using SAS software, version 9.4. Master data files will be maintained over the trial period along with a master data dictionary. All data will be kept in password-protected share drives and will be accessed only by the data manager. Regular reports are generated on trial accrual, retention, follow-up, and completion. Any irregularities in the data are addressed and updated in the trial’s master files.
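The quarterly checks described above (range checks, missing values, and comparison with previous deliveries) could be sketched as follows. This is a hypothetical illustration in Python, not the trial’s actual procedure, which archives data in SAS; the field names, the plausible viral load range, and the function name are all assumptions.

```python
def check_delivery(records, previous_ids, vl_range=(0, 10_000_000)):
    """Flag data quality issues in one quarterly delivery.

    records: list of dicts with hypothetical keys "participant_id" and "viral_load"
    previous_ids: set of participant IDs seen in earlier deliveries
    vl_range: assumed plausible range for viral load values (copies/mL)
    Returns a list of (participant_id, issue) tuples for team review;
    nothing is cleaned or changed automatically.
    """
    issues = []
    current_ids = set()
    for record in records:
        pid = record.get("participant_id")
        current_ids.add(pid)
        viral_load = record.get("viral_load")
        if viral_load is None:
            issues.append((pid, "missing viral_load"))
        elif not vl_range[0] <= viral_load <= vl_range[1]:
            issues.append((pid, "viral_load out of range"))

    # Participants present in earlier deliveries but absent from this one.
    for pid in sorted(previous_ids - current_ids):
        issues.append((pid, "absent from current delivery"))
    return issues


# Made-up example delivery.
delivery = [
    {"participant_id": "P001", "viral_load": 50},
    {"participant_id": "P002", "viral_load": None},
    {"participant_id": "P003", "viral_load": -5},
]
flagged = check_delivery(delivery, previous_ids={"P001", "P004"})
```

The function only flags items, in keeping with the stated practice of discussing flagged items before any cleaning or resolution.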

3. Discussion

Previous digital interventions have used simulated “video doctors” to deliver tailored HIV prevention messages to reduce risky sexual and drug-related behaviors [13] and to increase medication adherence [52] among PWH. One study found that a digital video doctor intervention increased HIV-related knowledge, intention to use a condom, and acceptance of an HIV test among patients in the waiting room of an emergency department [53,54]. Another study compared a digital nurse that provided medication follow-up counseling to traditional in-person follow-up counseling with healthcare providers. Both study arms covered similar topics to support medication adherence, and both resulted in improved ART adherence, with no statistically significant difference between them [55]. This suggests that virtual healthcare providers can be as effective as traditional in-person counseling encounters, at least for supporting ART adherence.

Although there are clear potential benefits to using video doctor interventions, several gaps in the evidence base need to be addressed to strengthen the scientific premise of this intervention approach. For example, previous studies have often relied on self-reported outcomes [13], have not been tailored to address changes in patients’ adherence behaviors [52], have not used randomization or experimental designs to demonstrate efficacy [56], have had high attrition [56], or have used small sample sizes [7,15]. Other gaps identified in evidence reviews are a lack of technology-based interventions to increase engagement and retention in care and reduce risky sexual behavior [11,57].

Another important gap in the evidence base is the lack of research assessing outcomes related to the implementation of digital HIV interventions [58]. A recent research review argued that understanding facilitators and barriers to implementation and adoption is useful, not only for future implementation efforts, but also for informing plans to scale up interventions [58]. Also, the lack of evidence on cost and cost-effectiveness has been identified as a barrier to the wide-scale dissemination of interventions to increase ART adherence and other outcomes in HIV care [57,58].

The PHC intervention expands on previous digital video doctor interventions in several ways. First, it uses an interactive video doctor that is selected by patients according to their preferences for gender and race to deliver tailored messages that meet specific patient needs related to a variety of issues, including treatment initiation, medication adherence, sexual risk reduction, retention in care, mother-to-child transmission, and substance use. Second, PHC is designed specifically to support patient behavior change by providing useful behavioral strategies (“tips”) for PHC users to practice between visits to their HIV primary care provider. These strategies can increase patient engagement, which has been shown to positively influence health outcomes and reduce healthcare costs [59]. Third, PHC can potentially support patient-provider communication by generating a set of questions that patients have selected to ask their healthcare provider. In this way, PHC supports patients and providers during their clinical encounter and promotes communication and patient engagement, thereby promoting the potential for increased adherence to ART [60]. Fourth, while designed to be used in the clinic waiting room or other designated clinic space, PHC can be accessed from home or other locations, making it easily accessible for patients based on their needs [61]. Finally, PHC was designed from the onset for wide-scale dissemination. Its flexible and updateable digital strategy provides access on multiple devices and platforms. This approach makes PHC a potentially important intervention strategy to improve public health in communities that have a high incidence of HIV infection.

3.1. Limitations

Limitations of the PHC Evaluation Trial relate to the pragmatic trial design and the nature of embedding a trial in a clinic workflow. We used the Pragmatic-Explanatory Continuum Indicator Summary (PRECIS) tool as a guide to design a trial that favored pragmatism over explanatory methods. For example, patients complete PHC at a regularly scheduled clinic visit—as compared with completing PHC at specified trial visits—to gain evidence of effectiveness of implementing PHC in the context of HIV primary care clinics. Additionally, to ensure that trial reminders to come in early to a clinic appointment to complete the intervention did not confound the retention in care measurement, we allow patients to complete PHC after an appointment or at home when assessing PHC uses subsequent to baseline. Finally, there are no patient-reported outcomes collected as part of the trial protocol. All outcome assessments are taken from clinic EMRs. This means that trial mediators are not identified, such as satisfaction with PHC or patient-reported changes in communicating with their provider.

4. Conclusion

To end the HIV epidemic, it is vital to understand and systematically study implementation of innovative digital interventions like Positive Health Check in clinical settings. The overarching goal of the PHC Evaluation Trial is to determine if the intervention is an effective public health approach to improve HIV outcomes. If successful, PHC may enhance clinic efficiency and communication between providers and patients, ultimately leading to improvements among PWH in self-efficacy, engagement in shared decision-making, and medication adherence. The implementation feasibility trial component, the cost analysis, and understanding the role of clinics’ standard of care in implementation will strengthen our understanding of how to streamline the adoption and implementation of digital interventions in busy clinic settings. If PHC is found to be effective, the trial findings will help inform future efforts to disseminate it on a larger scale.

Acknowledgements

We would also like to thank members of the Positive Health Check Study Team, including: Mable Chow, Surya Nagesh, Bomi Choi, Kelli Bedoya, Paige Ricketts, Gabriela Plazarte, and Nadine E. Coner at the Florida Department of Health, Hillsborough County; Eva Dunder, MPH, Christian Telleria BS, Dustin Baker MPH, and Jade Zeng MPH from NO/AIDS Task Force dba Crescent Care; Theron Clark Stuart, Elena Gonzalez, Kathryn Meagley MPH, Nana Addo Padi-Adjirackor, and Rincy Varughese at the Atlanta VA Medical Center; and Marta Paez Quinde, Valorie Cadorett, Eric Asencio, and Robenson Saint-Jean at the Rutgers, New Jersey Medical School Infectious Disease Practice; and Arin Freeman, Cari Courtenay-Quirk, Ann O’Leary, and Gary Marks from the Centers for Disease Control and Prevention.

Funding sources

This research is supported by a Cooperative Agreement from the Centers for Disease Control and Prevention (U18PS004967). V.C.M. has received funding from the Emory University Center for AIDS Research (NIH grant 2P30-AI-050409).

Disclaimer

V.C.M. has received consulting honoraria and/or research support from Lilly, ViiV Healthcare, Gilead and Bayer.

Abbreviations:

- ART: antiretroviral therapy
- CDC: Centers for Disease Control and Prevention
- CWA: Clinic Web Application
- EMR: electronic medical record
- ICER: incremental cost-effectiveness ratio
- PWH: people with HIV
- PHC: Positive Health Check
- PRECIS: Pragmatic-Explanatory Continuum Indicator Summary
- SQL: Structured Query Language
- STD: sexually transmitted disease
- VAMC: Veteran Affairs Medical Center
- VL: viral load

Footnotes

Declaration of Competing Interest

Shobha Swaminathan COI: Gilead Sciences, ViiV Healthcare.

References

- [1].Harris NS, Johnson AS, Huang YA, Kern D, Fulton P, Smith DK, et al. , Vital signs: Status of human immunodeficiency virus testing, viral suppression, and HIV preexposure prophylaxis - United States, 2013–2018, MMWR Morb. Mortal. Wkly Rep. 68 (2019) 1117–1123. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Centers for Disease Control and Prevention, Fact Sheet. Evidence of HIV Treatment and Viral Suppression in Preventing the Sexual Transmission of HIV, Centers forDisease Control and Prevention, Atlanta, GA, 2018. [Google Scholar]

- [3].Cohen MS, Chen YQ, McCauley M, Gamble T, Hosseinipour MC, Kumarasamy N, et al. , Antiretroviral therapy for the prevention of HIV-1 transmission, N. Engl. J. Med. 375 (2016) 830–839. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Crepaz N, Tang T, Marks G, Mugavero MJ, Espinoza L, Hall HI, Durable viral suppression and transmission risk potential among persons with diagnosed HIV infection: United States, 2012–2013, Clin. Infect. Dis. 63 (2016) 976–983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Rodger AJ, Cambiano V, Bruun T, Vernazza P, Collins S, Degen O, et al. , Risk of HIV transmission through condomless sex in serodifferent gay couples with the HIV-positive partner taking suppressive antiretroviral therapy (PARTNER): Final results of a multicentre, prospective, observational study, Lancet. 393 (2019) 2428–2438. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Samji H, Cescon A, Hogg RS, Modur SP, Althoff KN, Buchacz K, et al. , Closing the gap: Increases in life expectancy among treated HIV-positive individuals in the United States and Canada, PLoS One 8 (2013) e81355. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Hersch RK, Cook RF, Billings DW, Kaplan S, Murray D, Safren S, et al. , Test of a web-based program to improve adherence to HIV medications, AIDS Behav. 17(2013) 2963–2976. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Lustria ML, Cortese J, Noar SM, Glueckauf RL, Computer-tailored health interventions delivered over the web: Review and analysis of key components, Patient Educ. Couns. 74 (2009) 156–173. [DOI] [PubMed] [Google Scholar]

- [9].Kurth AE, Spielberg F, Cleland CM, Lambdin B, Bangsberg DR, Frick PA, et al. , Computerized counseling reduces HIV-1 viral load and sexual transmission risk: findings from a randomized controlled trial, J. Acquir. Immune Defic. Syndr. 65 (2014) 611–620. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Noar SM, Black HG, Pierce LB, Efficacy of computer technology-based HIV prevention interventions: A meta-analysis, AIDS. 23 (2009) 107–115. [DOI] [PubMed] [Google Scholar]

- [11].Pellowski JA, Kalichman SC, Recent advances (2011−2012) in technology-delivered interventions for people living with HIV, Curr. HIV/AIDS Rep. 9 (2012) 326–334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Daher J, Vijh R, Linthwaite B, Dave S, Kim J, Dheda K, et al. , Do digital innovations for HIV and sexually transmitted infections work? Results from a systematic review (1996–2017), BMJ Open 7 (2017) e017604. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].Gilbert P, Ciccarone D, Gansky SA, Bangsberg DR, Clanon K, McPhee SJ, et al. , Interactive “Video Doctor” counseling reduces drug and sexual risk behaviors among HIV-positive patients in diverse outpatient settings, PLoS One 3 (2008)e1988. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [14].Noar SM, Computer technology-based interventions in HIV prevention: State of the evidence and future directions for research, AIDS Care 23 (2011) 525–533. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [15].Claborn KR, Fernandez A, Wray T, Ramsey S, Computer-based HIV adherence promotion interventions: A systematic review, Transl. Behav. Med. 5 (2015) 294–306. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C, Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact, Med. Care 50 (2012) 217–226. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [17].U.S. Department of Health & Human Services, Minority HIV/AIDS Fund, What Is ‘Ending the HIV Epidemic: A Plan for America’? HIV.gov / Office of HIV/AIDS and Infectious Disease Policy (OHAIDP), U.S. Department of Health and Human Services, Washington, DC, 2019. [Google Scholar]

- [18].Godwin M, Ruhland L, Casson I, MacDonald S, Delva D, Birtwhistle R, et al. , Pragmatic controlled clinical trials in primary care: The struggle between external and internal validity, BMC Med. Res. Methodol. 3 (2003) 28. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M, The PRECIS-2 tool: Designing trials that are fit for purpose, BMJ. 350 (2015) h2147. [DOI] [PubMed] [Google Scholar]

- [20].Patsopoulos NA, A pragmatic view on pragmatic trials, Dialogues Clin. Neurosci. 13 (2011) 217–224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [21].Sealed Envelope Ltd, Create a Randomisation List, Sealed Envelope Ltd, London, 2019. [Google Scholar]

- [22].National Library of Medicine (U.S.). (2017. -). Positive Health Check Evaluation Trial ((PHC)). NCT03292913. Retrieved from https://clinicaltrials.gov/ct2/show/NCT03292913?term=NCT03292913&draw=2&rank=1.

- [23].Miller ER, Rollnick S, Motivational Interviewing, 3rd ed, Guildford Press, New York, NY, 2013. [Google Scholar]

- [24].Fisher JD, Fisher WA, Changing AIDS-risk behavior, Psychol. Bull. 111 (1992)455–474. [DOI] [PubMed] [Google Scholar]

- [25].Prochaska JO, Velicer WF, The transtheoretical model of health behavior change, Am. J. Health Promot. 12 (1997) 38–48. [DOI] [PubMed] [Google Scholar]

- [26].Hibbard JH, Greene J, What the evidence shows about patient activation: Better health outcomes and care experiences; fewer data on costs, Health Aff. (Millwood).32 (2013) 207–214. [DOI] [PubMed] [Google Scholar]

- [27].Kelley JM, Kraft-Todd G, Schapira L, Kossowsky J, Riess H, The influence of the patient-clinician relationship on healthcare outcomes: A systematic review and meta-analysis of randomized controlled trials, PLoS One 9 (2014) e94207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Sitapati AM, Limneos J, Bonet-Vazquez M, Mar-Tang M, Qin H, Mathews WC, Retention: building a patient-centered medical home in HIV primary care through PUFF (Patients Unable to Follow-up Found), J. Health Care Poor Underserved 23 (2012) 81–95. [DOI] [PubMed] [Google Scholar]

- [29].Mugavero MJ, Westfall AO, Zinski A, Davila J, Drainoni ML, Gardner LI, et al. , Measuring retention in HIV care: the elusive gold standard, J. Acquir. Immune Defic. Syndr. 61 (2012) 574–580. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Health Resources and Services Administration (HRSA), HIV/AIDS Bureau Performance Measures, March ed, United States Department of Health and Human Services, Rockville, MD, 2017. [Google Scholar]

- [31].Weiner BJ, Lewis MA, Linnan LA, Using organization theory to understand the determinants of effective implementation of worksite health promotion programs, Health Educ. Res. 24 (2009) 292–305. [DOI] [PubMed] [Google Scholar]

- [32].Jacobs SR, Weiner BJ, Bunger AC, Context matters: Measuring implementation climate among individuals and groups, Implement. Sci. 9 (2014) 46. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [33].Jacobs SR, Weiner BJ, Reeve BB, Hofmann DA, Christian M, Weinberger M, Determining the predictors of innovation implementation in healthcare: A quantitative analysis of implementation effectiveness, BMC Health Serv. Res. 15 (2015) 6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [34].Shea CM, Jacobs SR, Esserman DA, Bruce K, Weiner BJ, Organizational readiness for implementing change: A psychometric assessment of a new measure, Implement. Sci. 9 (2014) 7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [35].Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC, Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science, Implement. Sci. 4(2009) 1–15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [36].Helfrich CD, Weiner BJ, McKinney MM, Minasian L, Determinants of implementation effectiveness: Adapting a framework for complex innovations, Med. Care Res. Rev. 64 (2007) 279–303. [DOI] [PubMed] [Google Scholar]

- [37].Klein KJ, Sorra JS, The challenge of innovation implementation, Acad. Manag. Rev. 21 (1996) 1055–1080. [Google Scholar]

- [38].Klein KJ, Conn AB, Sorra JS, Implementing computerized technology: An organizational analysis, J. Appl. Psychol. 86 (2001) 811–824. [DOI] [PubMed] [Google Scholar]

- [39].Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B, Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges, Admin. Pol. Ment. Health 36 (2009) 24–34. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [40].Ridenour TA, Wittenborn AK, Raiff BR, Benedict N, Kane-Gill S, Illustrating idiographic methods for translation research: Moderation effects, natural clinical experiments, and complex treatment-by-subgroup interactions, Transl. Behav. Med. 6 (2016) 125–134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [41].Ridenour T, Pineo TZ, Molina MMM, Lich KH, Toward rigorous ideographic research in prevention science: Comparison between three analytic strategies for testing preventive intervention in very small samples, Prev. Sci 14 (2013) 267–278. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [42].Ritchie J, Spencer L, Qualitative data analysis for applied policy research, in: Bryman A, Burgess R. (Eds.), Analysing Qualitative Data, Routledge, London, 1994, pp. 173–194. [Google Scholar]

- [43].Cohen J, A coefficient of agreement for nominal scales, Educ. Psychol. Meas. 20 (1960) 37–46. [Google Scholar]

- [44].Frick KD, Microcosting quantity data collection methods, Med. Care 47 (2009) S76–S81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [45].Smith MW, Barnett PG, Direct measurement of health care costs, Med. Care Res. Rev. 60 (2003) 74s–91s. [DOI] [PubMed] [Google Scholar]

- [46].Haddix AC, Teutsch SM, Corso PS, Prevention Effectiveness: A Guide to Decision Analysis and Economic Evaluation, Oxford University Press, New York, 2003. [Google Scholar]

- [47].Ramsey S, Willke R, Briggs A, Brown R, Buxton M, Chawla A, et al. , Good research practices for cost-effectiveness analysis alongside clinical trials: The ISPOR RCT-CEA Task Force report, Value Health 8 (2005) 521–533. [DOI] [PubMed] [Google Scholar]

- [48].Shrestha RK, Sansom SL, Farnham PG, Comparison of methods for estimating the cost of human immunodeficiency virus-testing interventions, J. Public Health Manag. Pract. 18 (2012) 259–267. [DOI] [PubMed] [Google Scholar]

- [49].Neumann PJ, Sanders GD, Russell LB, Siegel JE, Ganiats TG, Cost-Effectiveness in Health and Medicine, Second ed, Oxford University Press, New York, 2017. [Google Scholar]

- [50].Shrestha RK, Gardner L, Marks G, Craw J, Malitz F, Giordano TP, et al., Estimating the cost of increasing retention in care for HIV-infected patients: Results of the CDC/HRSA retention in care trial, J. Acquir. Immune Defic. Syndr. 68 (2015) 345–350.

- [51].Ruger JP, Ben Abdallah A, Cottler L, Costs of HIV prevention among out-of-treatment drug-using women: Results of a randomized controlled trial, Public Health Rep. 125 (Suppl. 1) (2010) 83–94.

- [52].Fisher JD, Amico KR, Fisher WA, Cornman DH, Shuper PA, Trayling C, et al., Computer-based intervention in HIV clinical care setting improves antiretroviral adherence: The LifeWindows Project, AIDS Behav. 15 (2011) 1635–1646.

- [53].Aronson ID, Bania TC, Race and emotion in computer-based HIV prevention videos for emergency department patients, AIDS Educ. Prev. 23 (2011) 91–104.

- [54].Aronson ID, Plass JL, Bania TC, Optimizing educational video through comparative trials in clinical environments, Educ. Technol. Res. Dev. 60 (2012) 469–482.

- [55].Cote J, Godin G, Ramirez-Garcia P, Rouleau G, Bourbonnais A, Gueheneuc YG, et al., Virtual intervention to support self-management of antiretroviral therapy among people living with HIV, J. Med. Internet Res. 17 (2015) e6.

- [56].Bachmann LH, Grimley DM, Gao H, Aban I, Chen H, Raper JL, et al., Impact of a computer-assisted, provider-delivered intervention on sexual risk behaviors in HIV-positive men who have sex with men (MSM) in a primary care setting, AIDS Educ. Prev. 25 (2013) 87–101.

- [57].Simoni JM, Kutner BA, Horvath KJ, Opportunities and challenges of digital technology for HIV treatment and prevention, Curr. HIV/AIDS Rep. 12 (2015) 437–440.

- [58].Kemp CG, Velloza J, Implementation of eHealth interventions across the HIV care cascade: A review of recent research, Curr. HIV/AIDS Rep. 15 (2018) 403–413.

- [59].Greene J, Hibbard JH, Sacks R, Overton V, Parrotta C, When patient activation levels change, health outcomes and costs change, too, Health Aff. (Millwood) 34 (2015) 431–437.

- [60].Babalola S, Van Lith LM, Mallalieu EC, Packman ZR, Myers E, Ahanda KS, et al., A framework for health communication across the HIV treatment continuum, J. Acquir. Immune Defic. Syndr. 74 (2017) S5–S14.

- [61].Gaglio B, Shoup JA, Glasgow RE, The RE-AIM framework: A systematic review of use over time, Am. J. Public Health 103 (2013) e38–e46.