Abstract

Cereals are the staple food of mankind. Grain shape extraction and filled/unfilled grain recognition are important for crop breeding and genetic analysis. Conventional measurement is mainly manual, which is inefficient, labor-intensive and subjective. Therefore, a novel method was proposed to extract the phenotypic traits of cereal grains from point clouds. First, a structured light scanner was used to obtain the grain point cloud data. Then, single-grain segmentation was accomplished by preprocessing, plane fitting and region-growing clustering. The length, width, thickness, surface area and volume were calculated by dedicated analysis algorithms for the grain point cloud. To demonstrate the method, rice, wheat and corn grains were tested. Compared with manual measurement, the average measurement errors of grain length, width and thickness were 2.07%, 0.97% and 1.13%, respectively, and the average measurement efficiency was about 9.6 s per grain. In addition, grain identification models were built from 25 grain phenotypic traits using 6 machine learning methods. The best accuracy for filled/unfilled grain classification was 90.184%. The best accuracy for indica/japonica identification was 99.950%, whereas that for variety identification was only 47.252%. The method therefore proved to be an efficient and effective tool for crop research.

Subject terms: Plant sciences, Plant breeding

Introduction

Because of population growth, global warming and water shortages, agricultural production faces severe challenges1–3. Cereals, mainly rice, wheat, corn and sorghum, occupy a dominant position in the human diet4, and cereal production is of great importance to food security5,6. Cereal grain traits, including grain shape and grain plumpness, directly influence the final yield, so grain trait measurement is necessary for yield-related research7. Grain shape is an important basis for grain classification, and plumpness is a criterion for judging the quality of rice varieties. Grain trait extraction is therefore essential for cereal research8. However, the conventional approach relies mainly on manual measurement, which is inefficient, labor-intensive and subjective. A novel method for grain trait extraction with high throughput and high accuracy is therefore urgently needed.

The measurement of rice grain size is of great significance in rice breeding and genetic research. With the rapid development of computer technology, machine vision has been applied to grain size measurement9,10. Tanabata et al.11 developed the Smart-Grain software for high-throughput measurement of seed shape based on digital images and the open computer vision library (OpenCV). Ma et al.12 extracted the length and width of rice grains from images taken by smartphones. Le et al.13 proposed a method to study the morphology of developing wheat grains based on X-ray μCT imaging. However, most studies focus on 2D traits14, and 3D grain traits such as volume, surface area and thickness are not easy to obtain. Because grains are small, a high-quality and complete point cloud is needed. Point clouds obtained by binocular stereo vision, structure from motion and space carving are relatively sparse15–18. In contrast, structured light imaging, an active three-dimensional vision technology, can obtain high-precision point clouds and is widely used in industrial inspection, reverse engineering and cultural relic protection19. It therefore provides an effective means for high-precision analysis of 3D cereal grain traits.

Rice grain plumpness is one of the determinants of yield and is of great importance to rice breeding. The number of filled grains per panicle is directly related to crop yield20. Counting the filled and unfilled grains of a panicle is therefore critical for judging rice quality. Traditionally, grains are counted manually, which is labor-intensive, time-consuming and subjective, and filled grains are distinguished from unfilled grains by water-based or wind-based methods21,22. To improve on this, several automated methods have been developed for identifying and counting filled grains. Duan et al.23 proposed a method based on visible light imaging and soft X-ray imaging, which was expensive and carried a radiation risk. Kumar et al.24 built an automated system for discriminating and counting filled and unfilled grains of a rice panicle based on thermal images. Because the system needed to monitor the temperature after heating the grains, it was complicated and difficult to use for high-throughput measurement. A new method for recognizing filled/unfilled grains with high efficiency and low radiation risk is therefore urgently needed.

In this study, a cereal grain trait analysis method based on point clouds was proposed. High-precision grain point clouds were obtained with a structured light scanner, and dedicated algorithms and integrated user software were designed for automatic segmentation of the grain point clouds and extraction of 3D grain traits. Finally, 25 grain traits were computed, on the basis of which a model for filled/unfilled grain identification was established. Our research demonstrates a novel method for extracting 3D grain traits and plumpness information with high throughput and high accuracy, which is helpful to rice breeding and genetic research.

Material and methods

Material

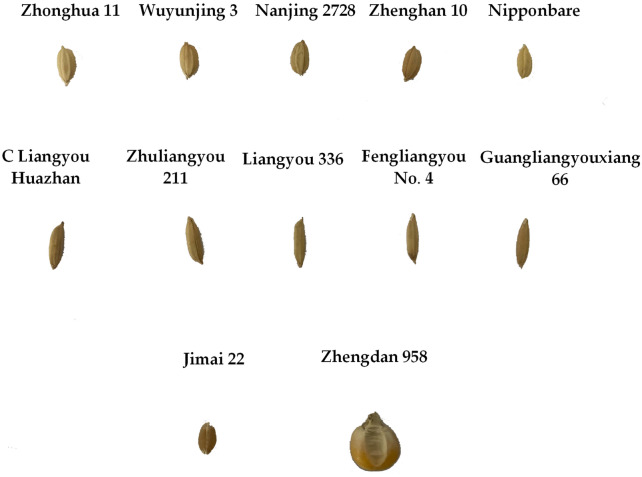

In this study, the test materials comprised three types of cereals (rice, wheat and corn), all purchased from the market, with rice as the main material. Ten rice varieties, 5 indica and 5 japonica, were selected. Each rice variety contributed 100 filled grains and 100 unfilled grains, giving a total of 2000 rice grains. To label filled and unfilled rice grains, three experimenters judged each grain and the averaged judgment was taken as the ground truth. Moreover, 100 grains each of wheat and corn were selected to validate the adaptability of the method. The experimental materials are shown in Fig. 1. The rice varieties were Zhonghua 11, Wuyunjing 3, Nanjing 2728, Zhenghan 10, Nipponbare, C Liangyou Huazhan, Zhuliangyou 211, Liangyou 336, Fengliangyou No.4 and Guangliangyouxiang 66. The first five varieties belong to the japonica subspecies, and the last five belong to the indica subspecies.

Figure 1.

Display of experimental materials, including wheat grains, corn grains and 10 different varieties of rice grains.

System design

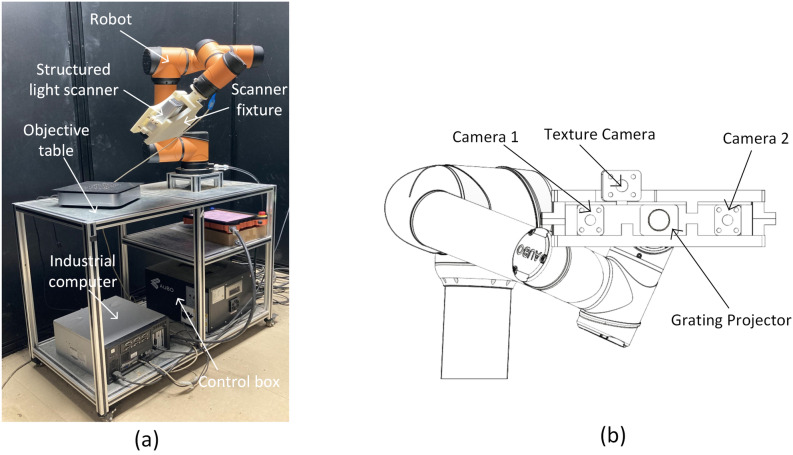

3D structured light scanner

A 3D structured light scanner (Reeyee Pro, China) based on white-light LED raster scanning technology was adopted in the study. Combining the advantages of structured light and binocular stereo vision, the scanner can achieve a single-sided accuracy of 0.05 mm within 2 s, which makes it suitable for high-precision scanning of small workpieces, plastic products and medical equipment. The main equipment is composed of a projector, two cameras and an internal modulated light source. Based on the principle of triangulation and sinusoidal grating images, it can obtain dense point cloud data of objects. The detailed parameters of the Reeyee Pro scanner are listed in Table 1. The structure of the scanner is shown in Fig. 2b.

Table 1.

Reeyee Pro scanner detailed parameters.

| Parameter | Value |
|---|---|
| Light source | White LED |
| Point distance | 0.16 mm |
| Spatial resolution | 0.05 mm |
| Scanning area | |
| Working distance | 290–480 mm |
| Maximum scan size | |

Figure 2.

Schematic diagram of cereal grain scanning system. (a) The overall structure, (b) the structured light scanner.

Cereal grain scanning system

As shown in Fig. 2a, the whole system consists of 6 parts: structured light scanner, robot, scanner fixture, object platform, industrial computer and control unit. An AUBO i5 robot was adopted, which is a collaborative robot with 6 degrees of freedom (DOF), a positioning accuracy of ± 0.02 mm and a maximum load of 5 kg. The working range of the robotic arm is a sphere with a radius of 886.5 mm, which ensured sufficient scanning space. To fix the scanner on the robot, a fixture was designed and 3D printed in ABS, and the total weight of the scanner and the fixture was less than 2.5 kg. The object platform was designed to hold the robot and the samples. The industrial computer was connected to the control unit and the scanner to coordinate the robot movement with the scanner imaging.

Cereal grain point cloud acquisition

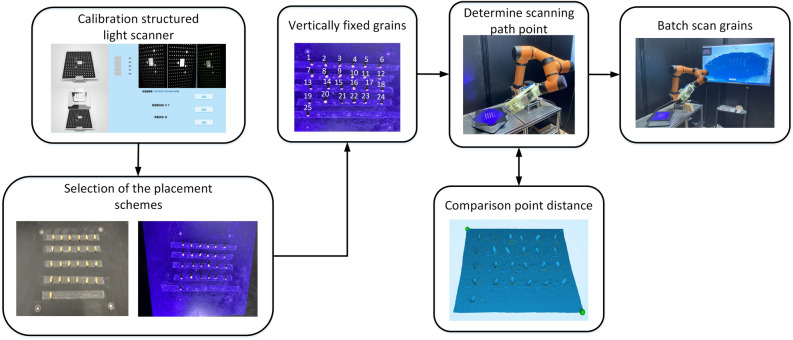

The cereal grain point cloud acquisition is shown in Fig. 3 and can be divided into 4 steps: scanner calibration, selection of the placement scheme, determination of the scanning path, and batch scanning.

Calibration of the scanner. The structured light scanner needed to be calibrated and corrected before use. To calibrate the cameras, the calibration board was set in four orientations (0°, 90°, 180° and 270°). The distance between the scanner and the calibration board was then adjusted from 350 to 450 mm while images were collected.

Selection of the placement schemes. At present, there are mainly two three-dimensional scanning schemes for grains. One is to spread the grains flat on a platform, and the other is to fix each grain in a seed holder25. The former is efficient, but its accuracy is low because the scanned grain surface is incomplete. The latter obtains a complete grain point cloud with high accuracy, but it can only scan a single grain at a time, which is too time-consuming. To improve on both, the grains in this study were fixed vertically on the stage, so that multiple grains could be scanned completely.

Determination of the scanning path point. As shown in Table 1, the minimum point distance of the Reeyee Pro is 0.16 mm. To achieve a spatial resolution as high as possible, the robot poses were tested to find suitable scanning path points. In this study, the average minimum point distance of the grain point cloud reached 0.1731 mm.

Batch scanning. Because of the limited scanning area and the effect of rotation, the grains were placed in the center of the stage. In addition, the distance between adjacent grains was set to 20 mm to avoid mutual shading. The grains were arranged in 4 rows of 6 grains, with the last grain placed separately, which made it easy to match the manual and automatic values. The sample was rotated 8 times in steps of 45° and scanned after each rotation.

Figure 3.

Flow chart of obtaining point cloud of cereal grains using structured light scanning system.

System development environment

The industrial computer was equipped with an i5-3470 CPU and a GTX 1050 Ti GPU. The development environment was Windows 7 Pro, Visual Studio 2015, the cross-platform open source Point Cloud Library (PCL) version 1.8.1 based on C++ (https://pointclouds.org), QT version 5.9.8 (https://www.qt.io), Python 3.7.6 and the Visualization Toolkit (VTK) version 8.0.0 (https://vtk.org). In addition, the Reeyee-Pro_V2.6.1.0 software (https://www.wiiboox.net/support-software.php) was used to display the 3D point cloud data collection in real time. The robot was controlled by the Robot Operating System (ROS) in an Ubuntu environment.

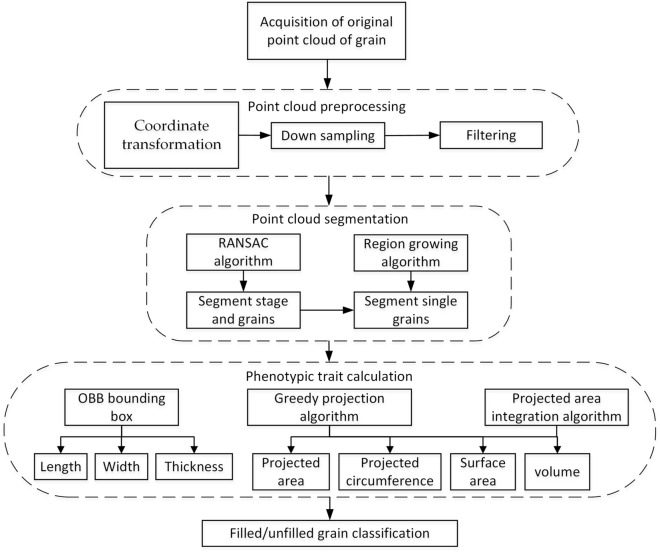

Cereal grain point cloud processing pipeline

The overall processing pipeline of cereal grain point cloud is shown in Fig. 4. It mainly includes 4 steps: point cloud preprocessing, point cloud segmentation, phenotypic traits calculation, and filled/unfilled grain recognition.

Figure 4.

Cereal grain point cloud processing pipeline.

Preprocessing of point clouds

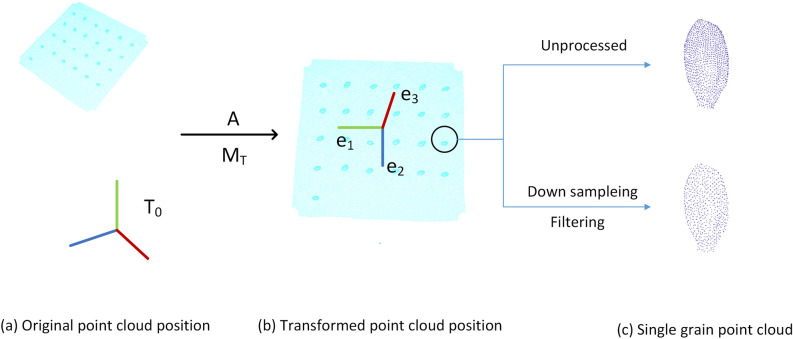

The preprocessing procedure of the grain point cloud is shown in Fig. 5 and mainly includes 3 steps: coordinate transformation, down sampling, and filtering.

Figure 5.

The process and result of preprocessing. (a) Original point cloud position, (b) transformed point cloud position, (c) single grain point cloud.

(1) The coordinate transformation was conducted according to Eqs. (1, 2). Firstly, the origin of the original coordinate system (T0) was moved to the centroid of the point cloud. Then, based on principal component analysis26 (PCA), the covariance matrix (MT) was computed to generate the new coordinate system. The transformed result is shown in Fig. 5b.

| 1 |

| 2 |

where are the three unit eigenvectors of the covariance matrix ; is the original point cloud coordinates; is the new coordinates after coordinate transformation; A is the translation matrix from the original coordinates (T0) to the centroid point of point cloud.
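The coordinate transformation described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes NumPy is available, the function name `pca_align` is hypothetical, and the choice to order the axes by decreasing variance and to enforce a right-handed frame is an assumption made for the example.

```python
import numpy as np

def pca_align(points):
    """Move the origin to the centroid and rotate onto the principal axes.

    Returns the transformed points, the centroid and the 3x3 matrix whose
    columns are the unit eigenvectors of the covariance matrix.
    """
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    centered = points - centroid                 # translation to the centroid
    cov = np.cov(centered, rowvar=False)         # 3x3 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]            # assumption: largest variance first
    axes = eigvecs[:, order]                     # columns are unit eigenvectors
    if np.linalg.det(axes) < 0:                  # assumption: keep a right-handed frame
        axes[:, 2] *= -1
    return centered @ axes, centroid, axes
```

After the transformation the point cloud has zero mean and its longest extent lies along the first axis, which is what the later bounding-box step relies on.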

(2) Point cloud down sampling and filtering are shown in Fig. 5c. Based on voxel grids, all points in each voxel were replaced by their center of gravity to reduce the size of the point cloud, which effectively improves the processing efficiency27. Then a statistical filtering algorithm was applied to remove points whose distance to neighboring points was abnormal28.
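The two preprocessing operations can be illustrated with a short pure-Python sketch. It is not the PCL code used in the study: the function names, the neighbor count `k`, the `std_ratio` threshold and the brute-force neighbor search are all assumptions chosen for readability.

```python
import math
from collections import defaultdict
from statistics import mean, pstdev

def voxel_downsample(points, voxel_size):
    """Replace all points that fall in one voxel by their center of gravity."""
    cells = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        cells[key].append(p)
    return [tuple(mean(axis) for axis in zip(*pts)) for pts in cells.values()]

def statistical_filter(points, k=8, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbors is abnormal.

    A point is removed when that mean distance exceeds the global mean
    plus std_ratio times the global standard deviation.
    """
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(mean(dists[:k]))
    threshold = mean(mean_dists) + std_ratio * pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= threshold]
```

The brute-force search is quadratic in the number of points, so a spatial index such as a k-d tree would be needed for clouds of realistic size.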

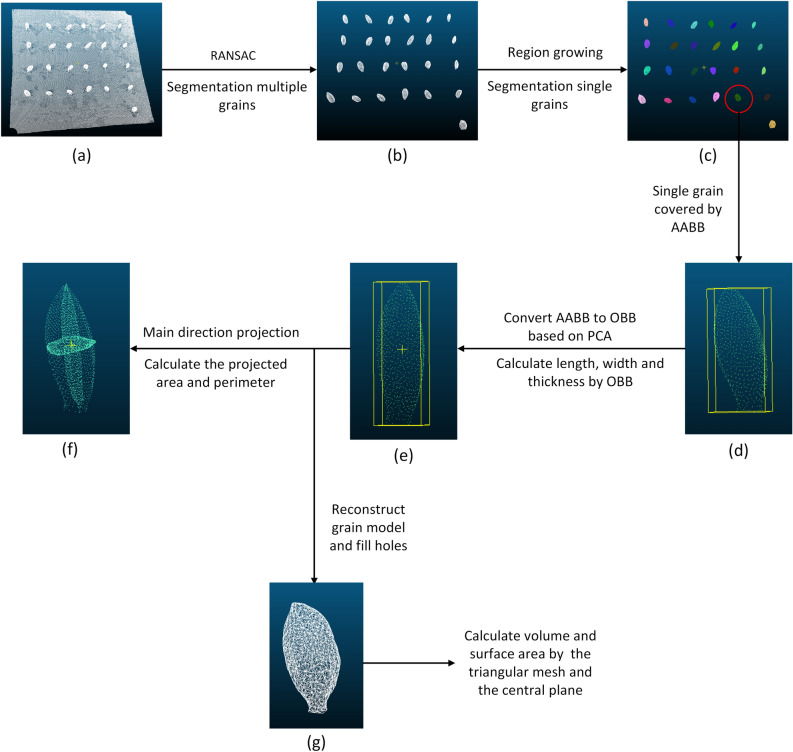

Segmentation of point cloud

The segmentation of the point cloud is shown in Fig. 6. After preprocessing, the random sample consensus (RANSAC) algorithm was adopted to fit the plane of the sample stage29 and separate the grain point clouds from the background. Then, based on curvature and normal angle, the point cloud of each single grain was identified by a region growing algorithm30.
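The plane-fitting step can be sketched as below. This is a generic RANSAC plane fit written for illustration, not the PCL routine used in the study; the distance threshold, iteration count, fixed random seed and function names are assumptions. The region growing step is not shown.

```python
import math
import random

def plane_from_points(p1, p2, p3):
    """Return (unit normal n, offset d) of the plane n.x + d = 0, or None if collinear."""
    u = [b - a for a, b in zip(p1, p2)]
    v = [b - a for a, b in zip(p1, p3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    n = tuple(c / norm for c in n)
    return n, -sum(a * b for a, b in zip(n, p1))

def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Split points into (stage plane inliers, remaining points)."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue
        n, d = model
        inliers = [i for i, p in enumerate(points)
                   if abs(sum(a * b for a, b in zip(n, p)) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    inlier_set = set(best_inliers)
    plane = [points[i] for i in best_inliers]
    rest = [p for i, p in enumerate(points) if i not in inlier_set]
    return plane, rest
```

The points returned in `rest` correspond to the grain points that would then be clustered into single grains.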

Figure 6.

Cereal grain segmentation and traits extraction pipeline.

Phenotypic traits calculation

After single grain was obtained, phenotypic traits were extracted, including length, width, thickness, volume, surface area, projected area and perimeter in the main direction. Figure 6d–g shows the processing steps for grain trait extraction.

Grain length, width and thickness extraction

As shown in Fig. 6d,e, the grain length, width and thickness were extracted by constructing a bounding box. Firstly, the coordinate system of the segmented single-grain point cloud was transformed so that the axis-aligned bounding box (AABB)31 became an oriented bounding box (OBB)32. Secondly, the maximum and minimum values of the transformed single-grain point cloud along each axis of the new coordinate system were calculated as $x_{\max}$, $x_{\min}$, $y_{\max}$, $y_{\min}$, $z_{\max}$ and $z_{\min}$, respectively. Finally, the grain length, width and thickness were computed by Eqs. (3–5).

$$l = x_{\max} - x_{\min} \tag{3}$$

$$w = y_{\max} - y_{\min} \tag{4}$$

$$h = z_{\max} - z_{\min} \tag{5}$$

where l, w and h are the length, width and thickness of a grain, respectively.
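As a small worked example of the bounding-box step, the sketch below takes a point cloud that has already been transformed to its principal axes and returns the three extents. The function name is hypothetical, and sorting the extents so that the largest is the length is an assumption made for the example rather than a detail stated in the text.

```python
def grain_dimensions(aligned_points):
    """Length, width and thickness as bounding-box extents, largest first."""
    extents = [max(axis) - min(axis) for axis in zip(*aligned_points)]
    length, width, thickness = sorted(extents, reverse=True)
    return length, width, thickness

# Corners of a hypothetical 7 x 3 x 2 mm box standing in for a grain.
corners = [(x, y, z) for x in (0.0, 7.0) for y in (0.0, 3.0) for z in (0.0, 2.0)]
print(grain_dimensions(corners))
```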

Grain surface area extraction

Firstly, a triangular mesh model of the point cloud was established by the greedy projection triangulation algorithm33. Secondly, the holes left by the grain segmentation were filled by reconstructing the mesh boundary edges. As shown in Fig. 6g, the side lengths of each triangle were calculated from the coordinates of its three vertices. Then, based on Heron's formula34, the areas of all the triangular faces were calculated and their sum was used to approximate the surface area of the grain. The calculation is given by Eqs. (6–7).

$$S = \sum_{i=1}^{k} S_i = \sum_{i=1}^{k} \sqrt{p_i (p_i - a_i)(p_i - b_i)(p_i - c_i)} \tag{6}$$

$$p_i = \frac{a_i + b_i + c_i}{2} \tag{7}$$

where $S$ is the surface area of a grain, k is the total number of triangles, $S_i$ is the area of the i-th triangle, $p_i$ is half the perimeter of the triangle, and $a_i$, $b_i$ and $c_i$ are the lengths of its three sides.
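The surface area computation can be illustrated with a short sketch that applies Heron's formula to every triangle of a mesh and sums the results. The mesh representation (a vertex list plus index triples) and the function names are assumptions for the example; the regular octahedron is only a test shape whose exact area, 4√3, is known.

```python
import math

def triangle_area(a, b, c):
    """Area of one triangle from its side lengths (Heron's formula)."""
    la, lb, lc = math.dist(a, b), math.dist(b, c), math.dist(c, a)
    p = (la + lb + lc) / 2.0                      # half the perimeter
    return math.sqrt(max(p * (p - la) * (p - lb) * (p - lc), 0.0))

def mesh_surface_area(vertices, triangles):
    """Sum of the areas of all triangles in the mesh."""
    return sum(triangle_area(*(vertices[i] for i in tri)) for tri in triangles)

# Regular octahedron with vertices on the unit axes.
octa_vertices = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
octa_triangles = [(0, 2, 4), (2, 1, 4), (1, 3, 4), (3, 0, 4),
                  (2, 0, 5), (1, 2, 5), (3, 1, 5), (0, 3, 5)]
print(mesh_surface_area(octa_vertices, octa_triangles))
```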

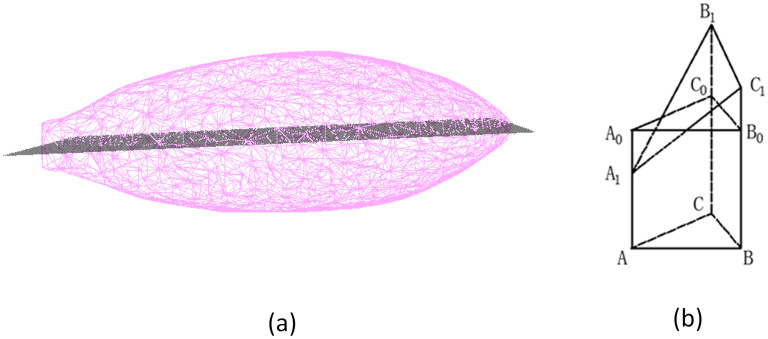

Grain volume extraction

The grain volume was extracted as shown in Fig. 7. Firstly, convex pentahedrons were constructed from the triangular mesh and its projection onto the central plane, and the grain volume V was then taken as the sum of their volumes. Figure 7a shows the central plane onto which the triangular mesh is projected, and Fig. 7b shows the three vertices of one mesh triangle. Each convex pentahedron is assumed to have the same volume as a straight triangular prism on the same projected base, so the height of the straight triangular prism can be approximated by the height of the center of gravity of the mesh triangle (Eq. 8).

$$h = h_g \tag{8}$$

where $h$ is the height of the straight prism and $h_g$ is the height of the center of gravity of the mesh triangle.
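A minimal sketch of the volume integration follows. It assumes that the central plane is the plane z = `plane_z` of the transformed coordinate system and that every line perpendicular to this plane crosses the grain surface once on each side, so that the prisms do not overlap; both are assumptions for illustration, as are the function names. The octahedron test shape has a known volume of 4/3.

```python
def projected_area_xy(a, b, c):
    """Area of the triangle's projection onto the plane z = const."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0

def mesh_volume(vertices, triangles, plane_z=0.0):
    """Sum of prism volumes: projected area times height of the center of gravity."""
    volume = 0.0
    for i, j, k in triangles:
        a, b, c = vertices[i], vertices[j], vertices[k]
        centroid_height = abs((a[2] + b[2] + c[2]) / 3.0 - plane_z)
        volume += projected_area_xy(a, b, c) * centroid_height
    return volume

# Regular octahedron with vertices on the unit axes.
octa_vertices = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
octa_triangles = [(0, 2, 4), (2, 1, 4), (1, 3, 4), (3, 0, 4),
                  (2, 0, 5), (1, 2, 5), (3, 1, 5), (0, 3, 5)]
print(mesh_volume(octa_vertices, octa_triangles))
```

For a triangle that lies entirely on one side of the plane, the projected area multiplied by the mean height of its three vertices is the exact volume of the truncated prism beneath it, which is why the center-of-gravity height is a natural choice.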

Projected area and perimeter of grain in the main direction extraction

In this study, three main directions of grain point cloud were projected, and the projected area and perimeter of cross section, longitudinal section, and horizontal section were obtained as the shape description of grain (Fig. 6f). Firstly, the point cloud of a single grain after coordinate transformation was projected on the plane of x = 0, y = 0, z = 0 respectively. Then, based on the greedy projection triangulation algorithm33, the areas of the projected triangular mesh and the perimeter of the mesh edges were calculated.

Figure 7.

Grain volume calculation method in this study. (a) The central plane of triangular mesh projection, (b) the projected area integration method.

Filled/unfilled grain analysis

A total of 25 phenotypic traits were extracted in the study, including 11 basic traits and 14 derived traits, as shown in Table 2. Compactness index, as a comprehensive grain shape description factor35, is calculated by the following formula:

| 9 |

where is the compactness index, is perimeter of cross-section, is area of cross-section.

Table 2.

25 phenotypic traits.

| No | Symbol | Trait | No | Symbol | Trait |
|---|---|---|---|---|---|
| 1 | | Length | 14 | | Width-thickness ratio |
| 2 | | Width | 15 | | Box volume |
| 3 | | Thickness | 16 | | Specific surface area |
| 4 | | Volume | 17 | | Surface area-length ratio |
| 5 | | Surface area | 18 | | Surface area-width ratio |
| 6 | | Perimeter of cross section | 19 | | Surface area-thickness ratio |
| 7 | | Area of cross section | 20 | | Volume-length ratio |
| 8 | | Perimeter of longitudinal section | 21 | | Volume-width ratio |
| 9 | | Area of longitudinal section | 22 | | Volume-thickness ratio |
| 10 | | Perimeter of horizontal section | 23 | | Compactness index of cross section |
| 11 | | Area of horizontal section | 24 | | Compactness index of longitudinal section |
| 12 | | Length-width ratio | 25 | | Compactness index of horizontal section |
| 13 | | Length-thickness ratio | | | |

Using the rice grain phenotypic dataset, models for recognizing filled and unfilled grains, distinguishing the indica and japonica subspecies, and classifying different rice varieties were established with six machine learning algorithms: decision tree, random forest, support vector machine, Naive Bayes, XGBoost and BP neural network36–38.

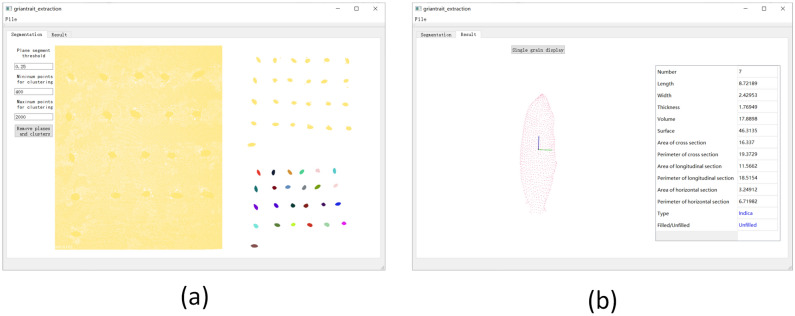

System software design

To facilitate grain 3D point cloud analysis, dedicated user software was designed based on QT Designer, PCL, QVTKWidget and XGBoost, as shown in Fig. 8. It integrates the above algorithms for grain point cloud processing, grain trait calculation and analysis. The segmentation window displays the original point cloud and the grain segmentation result, as shown in Fig. 8a. To predict the grain category and the plumpness, a Python script loads the filled/unfilled grain classification model, and the result window displays the single-grain point cloud, the 11 basic traits, the category and the plumpness, as shown in Fig. 8b. Moreover, the plane segmentation threshold and the cluster point cloud range can easily be modified by users to optimize the grain segmentation result. Finally, the results, including the grain point cloud and traits, are saved. The software operation is shown in Supplementary Video S1.

Figure 8.

The user software for grain 3D point cloud analysis. (a) Grain 3D point cloud processing, (b) grain traits extraction.

Approval for plant experiments

We confirmed that all experiments were performed in accordance with relevant named guidelines and regulations.

Results

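To verify the accuracy of the algorithm, three experimenters used micrometers to measure the length, width and thickness of 2000 rice grains (including filled and unfilled grains), 100 wheat grains and 100 corn grains, and the mean of the three measurements was taken as the ground truth. The accuracy was evaluated by the mean absolute percentage error (MAPE), the root mean square error (RMSE) and the determination coefficient ($R^2$), defined as follows:

$$\mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n} \frac{\left| x_{si} - x_{mi} \right|}{x_{mi}} \times 100\% \tag{10}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} \left( x_{si} - x_{mi} \right)^2} \tag{11}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n} \left( x_{si} - x_{mi} \right)^2}{\sum_{i=1}^{n} \left( x_{si} - \bar{x}_s \right)^2} \tag{12}$$

where n is the total number of measurements, $x_{mi}$ is the manual measurement result, $x_{si}$ is the system measurement result, and $\bar{x}_s$ is the mean of the system measurements.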
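The three error measures can be computed as in the sketch below. The sample values are hypothetical and serve only to show the arithmetic. The form of the determination coefficient used here, with the total sum of squares taken around the mean of the system measurements, is an assumption based on the symbol definitions above.

```python
import math

def mape(manual, system):
    """Mean absolute percentage error, in percent, relative to the manual values."""
    return 100.0 * sum(abs(s - m) / m for m, s in zip(manual, system)) / len(manual)

def rmse(manual, system):
    """Root mean square error between system and manual values."""
    return math.sqrt(sum((s - m) ** 2 for m, s in zip(manual, system)) / len(manual))

def r_squared(manual, system):
    """Determination coefficient (assumed form: 1 - SS_res / SS_tot)."""
    mean_s = sum(system) / len(system)
    ss_res = sum((s - m) ** 2 for m, s in zip(manual, system))
    ss_tot = sum((s - mean_s) ** 2 for s in system)
    return 1.0 - ss_res / ss_tot

manual = [10.0, 20.0, 40.0]   # hypothetical manual measurements (mm)
system = [11.0, 19.0, 42.0]   # hypothetical system measurements (mm)
print(mape(manual, system), rmse(manual, system), r_squared(manual, system))
```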

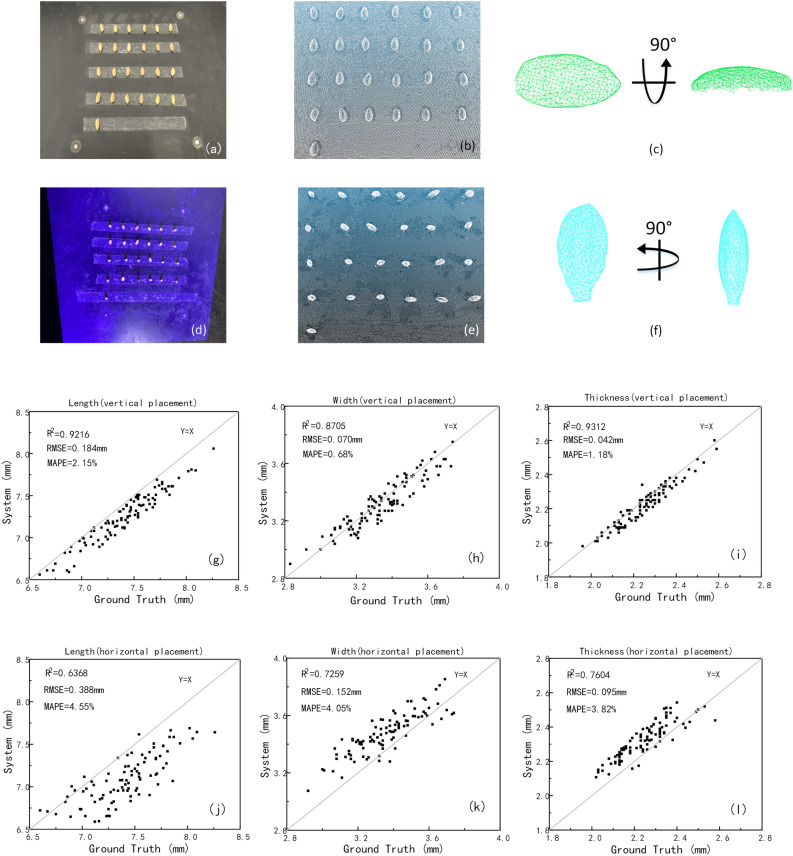

Comparison of placement scheme

To compare the precision of the horizontal and vertical placement schemes, 100 filled grains of Zhonghua 11 were taken as samples. Figure 9a–f shows the point clouds obtained with the two schemes. As shown in Fig. 9g–l, the measurement errors of length, width and thickness were 4.55%, 4.05% and 3.82% for the horizontal placement scheme, and 2.15%, 0.68% and 1.18% for the vertical placement scheme. As Fig. 9c,f shows, the grain point clouds obtained by horizontal placement were incomplete because of the restricted scanning angle, which led to lower measurement accuracy. The vertical placement scheme was therefore preferable.

Figure 9.

Comparison of two placement schemes, (a–c) the effect of horizontal placement scheme, (d–f) the effect of vertical placement scheme, (g–i) the measuring result in vertical placement, (j–l) the measuring result in horizontal placement.

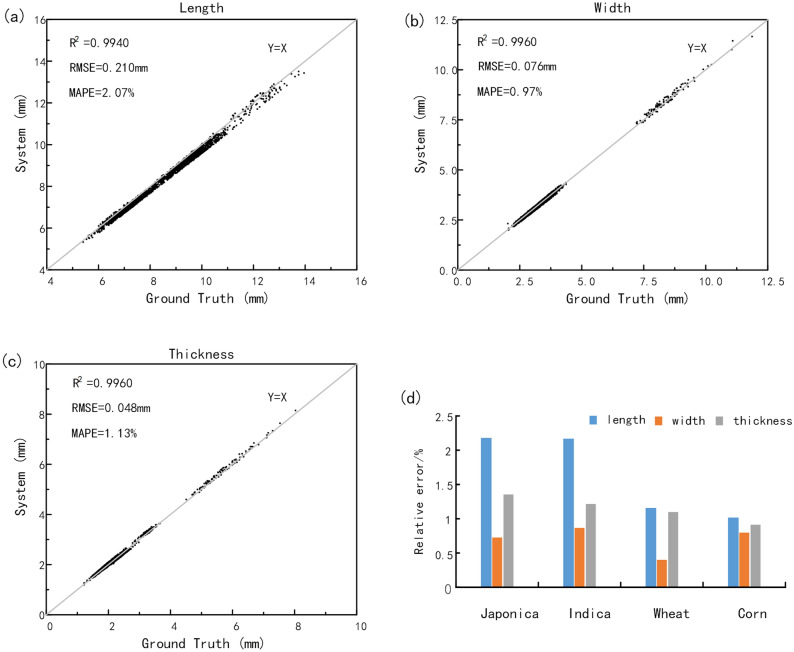

Accuracy analysis for length, width, thickness, surface area and volume

Accuracy analysis was performed on all 2200 samples of rice, wheat and corn, and the results are shown in Fig. 10. Figure 10a shows that the $R^2$, RMSE and MAPE of the length measurement were 0.9940, 0.210 mm and 2.07%, respectively. Figure 10b shows that the $R^2$, RMSE and MAPE of the width measurement were 0.9960, 0.076 mm and 0.97%, respectively. Figure 10c shows that the $R^2$, RMSE and MAPE of the thickness measurement were 0.9960, 0.048 mm and 1.13%, respectively. The system values were thus in good agreement with the manual values, and the method was able to extract grain length, width and thickness with high precision. Meanwhile, as shown in Fig. 10d, the measurement errors of wheat and corn were generally smaller than those of rice, especially for length, because wheat and corn grains were more stable than rice grains when placed vertically, which led to higher scanning accuracy.

Figure 10.

The sample accuracy analysis. (a) Length (b) Width (c) Thickness (d) japonica, indica, wheat and corn grains mean relative error.

Due to the irregular surface morphology of the grains, the surface area and volume are difficult to measure in a non-destructive way. Therefore, a standard sphere with a radius of 10 mm was adopted to verify the system method validity. The results showed that the surface area and volume measuring error were 2.83% and 1.75% respectively.

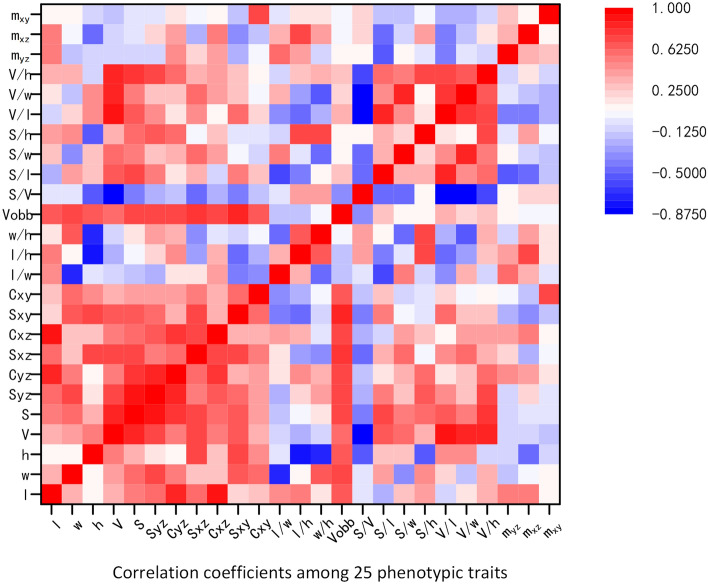

Statistical analysis of grain traits

The 25 grain traits extracted in this study quantitatively describe the geometric shape of a grain. To eliminate the influence of the different dimensions of the traits, the data were preprocessed by Z-score standardization:

$$X^{*} = \frac{X - \bar{X}}{\sigma} \tag{13}$$

where X* is the result of Z-score standardization, X is the sample data, $\bar{X}$ is the mean of the sample data, and $\sigma$ is the standard deviation of the sample data.
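A short worked example of the standardization is given below. The input values are hypothetical, and the use of the population standard deviation (rather than the sample standard deviation) is an assumption; the text does not say which was used.

```python
from statistics import mean, pstdev

def z_score(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(x - mu) / sigma for x in values]

# Hypothetical trait values with mean 5 and population standard deviation 2.
print(z_score([2, 4, 4, 4, 5, 5, 7, 9]))
```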

Then correlation analysis was carried out on the traits of each grain variety. For example, with the traits extracted for Zhonghua 11, a correlation matrix of Pearson coefficients38 was calculated to identify inter-relationships. Intergroup correlation analysis was completed in SPSS version 25.0 (https://www.ibm.com/products/spss-statistics), and the results are shown in Fig. 11. The correlations among the basic traits were strong and all positive, except for thickness. Thickness, an important trait of grain shape, had little correlation with length and width. In particular, the three compactness indexes were highly independent.
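The correlation matrix was computed in SPSS; the sketch below only illustrates the underlying Pearson coefficient in plain Python. The trait names and values are hypothetical, as are the function names.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def correlation_matrix(traits):
    """Nested dict of pairwise Pearson coefficients, keyed by trait name."""
    names = list(traits)
    return {a: {b: pearson(traits[a], traits[b]) for b in names} for a in names}

# Hypothetical measurements for four grains.
traits = {
    "length": [1.0, 2.0, 3.0, 4.0],
    "width": [2.0, 4.0, 6.0, 8.0],
    "thickness": [4.0, 3.0, 2.0, 1.0],
}
matrix = correlation_matrix(traits)
print(matrix["length"]["width"], matrix["length"]["thickness"])
```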

Figure 11.

The result of grain traits correlation analysis.

Recognition model of filled and unfilled grains

Filled and unfilled grain identification is of great importance to the final yield evaluation. In this study, classification models were built with 6 different machine learning methods on the 25 phenotypic traits. All classification models were implemented with the Sklearn Tool Kit version 0.24.2 (http://www.lfd.uci.edu/~gohlke/pythonlibs/#scikit-learn), and the main parameters were determined by learning curves and grid search. Tenfold cross-validation was then applied to validate each model: the data set was randomly divided into 10 parts, each part was used in turn as the test set while the other 9 formed the training set, and the average of the 10 results was taken as the accuracy of the model. The results for filled and unfilled grain classification are shown in Table 3, and the details are as follows:

Classification and regression trees (CART): Information entropy was used as the impurity criterion, the maximum tree depth was 4, and the branch decision mode was random. The classification accuracy of the model was 85.447%.

Random forest (RF): The maximum depth was set to 2, the Gini coefficient was adopted, and the number of base estimators was set to 24. According to the validation results, the classification accuracy reached 88.605%, clearly higher than that of CART.

Support vector machines (SVM): Since the original phenotypic traits are not linearly separable, an optimal high-dimensional space was constructed by selecting the kernel function and the penalty factor. In this study, a Gaussian kernel function was selected and the penalty factor was set to 6. The classification accuracy of the model was 89.684%.

Naive Bayes (NB): Gaussian Naive Bayes was selected, and the classification accuracy was 88.079%.

Back propagation (BP) neural networks: Two hidden layers were used, with 100 neurons in the first layer and 50 in the second. The number of iterations was set to 2000, the initial learning rate was set to 0.0003237, and the other parameters were left at their default values. The classification accuracy of the model was 88.105%.

Extreme gradient boosting (XGBoost): The classifier was built on tree models. The logistic regression loss function was selected, the number of weak classifiers was set to 20, the maximum tree depth was set to 5, and the learning rate was set to 0.3. The classification accuracy of the model was 90.184%, the best among all the models.
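The tenfold cross-validation procedure can be sketched in plain Python as below. To keep the example self-contained, the classifier is a one-trait threshold rule (a decision stump on thickness), which is a deliberate stand-in and not one of the six models used in the study. The synthetic thickness values, the fixed seed and all function names are likewise assumptions.

```python
import random

def k_fold_indices(n, k=10, seed=0):
    """Randomly split the indices 0..n-1 into k disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_stump(xs, ys):
    """Stand-in classifier: best threshold t so that x >= t predicts label 1."""
    values = sorted(set(xs))
    candidates = [(a + b) / 2.0 for a, b in zip(values, values[1:])]
    best_t, best_acc = candidates[0], -1.0
    for t in candidates:
        acc = sum((x >= t) == bool(y) for x, y in zip(xs, ys)) / len(xs)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t

def cross_validate(xs, ys, k=10, seed=0):
    """Mean test accuracy over k folds, each fold held out once."""
    scores = []
    for test_idx in k_fold_indices(len(xs), k, seed):
        held_out = set(test_idx)
        train = [i for i in range(len(xs)) if i not in held_out]
        t = fit_stump([xs[i] for i in train], [ys[i] for i in train])
        correct = sum((xs[i] >= t) == bool(ys[i]) for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)

# Synthetic, perfectly separable thickness values (mm): 0 = unfilled, 1 = filled.
xs = [1.0 + 0.01 * i for i in range(50)] + [2.0 + 0.01 * i for i in range(50)]
ys = [0] * 50 + [1] * 50
print(cross_validate(xs, ys))
```

With real trait data, the stump would be replaced by the chosen model and the same loop would yield the averaged accuracy reported for that model.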

Table 3.

The classification target results of each classification method based on 25 phenotypic traits.

| Classification target | Method | Precision (%) | Recall score | F1 score |
|---|---|---|---|---|
| Filled and unfilled | CART | 85.447 | 0.85333 | 0.85706 |
| | RF | 88.605 | 0.88722 | 0.89145 |
| | SVM | 89.684 | 0.89667 | 0.90371 |
| | NB | 88.079 | 0.88167 | 0.89363 |
| | BP | 88.105 | 0.88167 | 0.88811 |
| | XGBoost | 90.184 | 0.89333 | 0.90615 |
| 10 rice varieties | CART | 37.027 | 0.36586 | 0.30779 |
| | RF | 40.363 | 0.39406 | 0.34210 |
| | SVM | 47.252 | 0.46856 | 0.44847 |
| | NB | 41.435 | 0.41175 | 0.39172 |
| | BP | 38.311 | 0.38047 | 0.36313 |
| | XGBoost | 45.960 | 0.45692 | 0.44967 |
| Indica and japonica | CART | 98.785 | 0.98750 | 0.98745 |
| | RF | 99.400 | 0.99400 | 0.99400 |
| | SVM | 99.950 | 0.99950 | 0.99950 |
| | NB | 99.450 | 0.99450 | 0.99450 |
| | BP | 99.250 | 0.99250 | 0.99245 |
| | XGBoost | 99.750 | 0.99950 | 0.99945 |

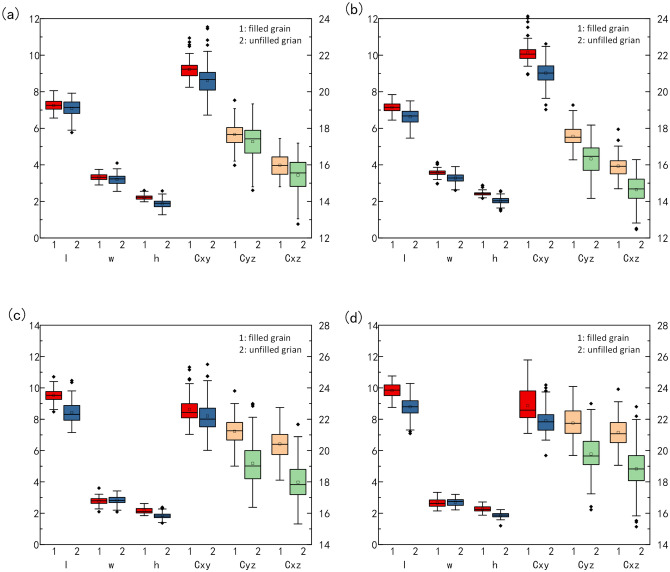

To explore the contribution of the phenotypic traits, the XGBoost classifier was analyzed in detail, and the results are shown in Table 4. The weight of thickness reached 0.34, which made it the dominant trait in filled and unfilled grain classification. Furthermore, the traits volume-width ratio, volume, length-thickness ratio and surface area-length ratio were all related to length, and each had a weight greater than 4%. Moreover, 4 rice varieties were selected to verify the significance of the traits in filled and unfilled grain classification. As shown in Fig. 12, thickness differed more between filled and unfilled grains than width and length did. Length also differed more than width, especially in indica.

Table 4.

Weight rank of characteristic traits (> 4%).

| Rank | Trait | Importance weight |
|---|---|---|
| 1 | Thickness | 0.342219 |
| 2 | Length | 0.067255 |
| 3 | Perimeter of horizontal section | 0.062472 |
| 4 | Volume-width ratio | 0.056376 |
| 5 | Compactness index of horizontal section | 0.053502 |
| 6 | Volume | 0.049749 |
| 7 | Length-thickness ratio | 0.042486 |
| 8 | Surface area-length ratio | 0.042199 |

Figure 12.

Comparison of the main traits between filled and unfilled grains. (a) Zhonghua 11 (b) Wuyunjing 3 (c) C Liangyou Huazhan (d) Zhuliangyou 211.

Classification of different rice varieties and classification of indica and japonica

With the same 6 machine learning methods, the grain phenotypic traits of the 10 varieties, which belong to the two subspecies indica and japonica, were used to build classification models under tenfold cross-validation. The best performance for variety classification was 47.252%, obtained with the SVM model, whereas the best performance for subspecies classification was 99.950%, also obtained with the SVM model. This is because grain phenotypic traits differ much less within a subspecies than between subspecies. The detailed classification results for rice varieties and subspecies are shown in Table 3.

Efficiency evaluation

To obtain complete point clouds, a batch of 25 cereal grains was scanned 8 times, with the sample turntable rotating 45° between scans, and each scan took about 14 s. Point cloud acquisition therefore took about 2 min. Point cloud segmentation and phenotypic trait computation took about another 2 min. Measuring 25 grains thus took about 4 min in total, an average of 9.6 s per grain. By contrast, manual measurement took about 120 s per grain, roughly 12 times longer than the system.
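The efficiency figures can be checked with a few lines of arithmetic. The sketch below uses the rounded durations stated in the text ("about 2 min" for acquisition and for processing); the variable names are illustrative.

```python
GRAINS_PER_BATCH = 25
SCANS_PER_BATCH = 8
SECONDS_PER_SCAN = 14
ACQUISITION_S = 120        # "about 2 min", rounded up from the raw scan time
PROCESSING_S = 120         # "about 2 min" for segmentation and trait computation
MANUAL_S_PER_GRAIN = 120

raw_scan_s = SCANS_PER_BATCH * SECONDS_PER_SCAN          # 8 x 14 s
total_s = ACQUISITION_S + PROCESSING_S                   # about 4 min per batch
system_s_per_grain = total_s / GRAINS_PER_BATCH
speedup = MANUAL_S_PER_GRAIN * GRAINS_PER_BATCH / total_s

print(raw_scan_s, system_s_per_grain, speedup)
```

The raw scan time of 112 s is what the text rounds to about 2 min, and the resulting ratio of manual to system time is 12.5.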

Discussion

Cereal grain traits have important impact on the final yield, which are also necessary for crop breeding and genetic analysis. Phenotypic traits such as length, width, thickness, volume and surface area are of great significance. In this study, a novel method for grain trait extraction by 3D structured light imaging was invented with high-throughput and high-accuracy. In addition, the grain identification model was conducted with 25 grain phenotypic traits, using 6 machine learning methods. The results indicated that the thickness was dominant in filled and unfilled grain classification. The result also proved that the length had higher difference than width, especially in indica.

At present, distinguishing filled grain from unfilled grain mainly relies on water-based or wind-based methods which are inaccurate and destructive. There are few researches on the filled/unfilled grain distinction. Therefore, there is an urgent need for a method that can accurately identify filled and unfilled grains. Liu et al.20 designed a method based on image analysis to measure grain plumpness by the grain shadow in four directions. In addition, some methods were proposed based on X-ray and thermal imaging23,24, but all these methods were identified in 2D imaging and could not provide more phenotypic information. Hua et al.25 extracted the point cloud of rice grains based on a laser scanner to calculate phenotypic information. However, it was not suitable for requirements of high throughput. The method of this study can obtain the phenotypic information of grains with high precision and high efficiency, which provides a method for crop breeding research.

In the study of placement methods, vertical placement was confirmed to be more accurate than horizontal placement. It is worth noting that the stability of vertically placed grains during scanning strongly affected the measurement results. The measurement errors for wheat and corn were generally smaller than those for rice, especially in length, because wheat and corn grains were more stable than rice grains when placed vertically.

The rice variety and subspecies classification results show that subspecies classification performed much better than variety classification. An examination of the parentage of the rice materials found that varieties of the same subspecies had intersecting pedigrees. For example, the rice varieties Zhonghua 11 and Nipponbare, which both belong to the subspecies japonica, share the parent Nonglin 22, which would be expected to lead to relatively consistent phenotypic traits40,41. By contrast, different subspecies had few intersecting pedigrees, which would result in significant differences in phenotypic traits.

Conclusion

Based on 3D structured light imaging, a novel method for cereal grain shape extraction and filled/unfilled grain identification was proposed. The results showed that the system measurements were highly consistent with manual measurements and that the method extracted grain length, width and thickness with high precision. Filled/unfilled grain identification and grain subspecies classification were achieved with XGBoost and SVM models, and dedicated user software was developed to facilitate grain 3D point cloud analysis. In conclusion, this research demonstrated a high-throughput, high-accuracy method for extracting grain 3D and plumpness information, which should be helpful for rice breeding and genetic research. Based on the experimental results, the following conclusions are drawn.

Regarding grain placement, the vertical placement scheme performed better than the horizontal placement scheme. The measurement errors of length, width and thickness were 4.55%, 4.05% and 3.82% in horizontal placement, but only 2.15%, 0.68% and 1.18% in vertical placement.

Twenty-five phenotypic traits of cereal grains, comprising 11 basic traits and 14 derived traits, could be obtained automatically in batches. The average time for measuring a single grain was about 9.6 s, including 3D structured light imaging and point cloud analysis.

A total of 2200 samples of rice, corn and wheat were tested to evaluate the method, and the average relative errors of length, width and thickness were 2.07%, 0.97% and 1.13%, respectively.

With the extracted traits, a matrix of Pearson correlation coefficients was calculated to identify inter-relationships. The results demonstrated that thickness, an important trait in grain shape, had little correlation with length and width. In particular, the three compactness indices were highly independent.

Six machine learning methods were used to classify filled and unfilled grains of 10 kinds of grains from their phenotypic traits. XGBoost was the best of all the models, with a classification accuracy of 90.184%, and thickness proved to be the dominant trait in filled/unfilled grain classification. For classification among 10 different rice varieties, the best accuracy was 47.252%, obtained by the SVM model. Moreover, all the models classified indica and japonica well, and the best accuracy was 99.950%, obtained by the SVM model.
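Two of the summary statistics quoted in the conclusions above, the average relative error against manual measurement and the Pearson correlation between traits, can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation. The relative error is assumed to be |system - manual| / manual averaged over grains, which the text does not state explicitly, and all function names and sample values are hypothetical.

```python
import math


def mean_relative_error(system, manual):
    """Average of |system - manual| / manual over all grains, in percent.

    Assumed definition; the paper does not spell out its formula here.
    """
    if len(system) != len(manual) or not system:
        raise ValueError("inputs must be non-empty and of equal length")
    return 100.0 * sum(abs(s - m) / m for s, m in zip(system, manual)) / len(system)


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(traits):
    """Pairwise Pearson coefficients for a dict mapping trait name to values."""
    names = list(traits)
    return {a: {b: pearson(traits[a], traits[b]) for b in names} for a in names}


if __name__ == "__main__":
    # Made-up values in millimetres, for illustration only (not data from the study).
    traits = {
        "length": [9.8, 10.1, 9.5, 10.4],
        "width": [3.1, 3.3, 3.0, 3.4],
        "thickness": [2.2, 2.0, 2.3, 2.1],
    }
    manual_length = [10.0, 10.0, 9.6, 10.2]
    print(f"length error: {mean_relative_error(traits['length'], manual_length):.2f}%")
    for name, row in correlation_matrix(traits).items():
        print(name, {k: round(v, 3) for k, v in row.items()})
```

A coefficient near zero between thickness and the other dimensions would correspond to the weak correlation reported above, while values near 1 or -1 indicate strongly related traits.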

Acknowledgements

We would like to thank all the colleagues in the Crop Phenotyping Center, Huazhong Agricultural University, for their help with the experiments. This work was supported by grants from the National Natural Science Foundation of China (31600287, 31770397), the National Key Research and Development Plan of China (2016YFD0100101-18), and the Fundamental Research Funds for the Central Universities (2662018JC004).

Author contributions

Z.Q. designed the research, performed the experiments, analyzed the data and wrote the manuscript. Z.Z. and X.H. also performed experiments. W.Y., X.L. and R.Z. revised the manuscript. C.H. supervised the project.

Funding

This article was funded by National Natural Science Foundation of China (Grant nos. 31600287, 31770397), National Key Research and Development Program of China (Grant no. 2016YFD0100101-18) and Fundamental Research Funds for the Central Universities (Grant no. 2662018JC004).

Data availability

All data generated or analyzed during this study are included in this published article and its supplementary information files.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-022-07221-4.

References

- 1. He T, Li C. Harness the power of genomic selection and the potential of germplasm in crop breeding for global food security in the era with rapid climate change. Crop J. 2020;8:688–700. doi: 10.1016/j.cj.2020.04.005.

- 2. Tester M, Langridge P. Breeding technologies to increase crop production in a changing world. Science. 2010;327:818–822. doi: 10.1126/science.1183700.

- 3. Wang Z, et al. Identification of QTLs with main, epistatic and QTL x environment interaction effects for salt tolerance in rice seedlings under different salinity conditions. Theor. Appl. Genet. 2012;125:807–815. doi: 10.1007/s00122-012-1873-z.

- 4. Jiang L, et al. Increased grain production of cultivated land by closing the existing cropping intensity gap in Southern China. Food Secur. 2021. doi: 10.1007/s12571-021-01154-y.

- 5. Fan M, et al. Improving crop productivity and resource use efficiency to ensure food security and environmental quality in China. J. Exp. Bot. 2012;63:13–24. doi: 10.1093/jxb/err248.

- 6. Zhou W-B, Wang H-Y, Hu X, Duan F-Y. Spatial variation of technical efficiency of cereal production in China at the farm level. J. Integr. Agric. 2021;20:470–481. doi: 10.1016/s2095-3119(20)63579-1.

- 7. Upadhyaya HD, Reddy KN, Singh S, Gowda CLL. Phenotypic diversity in Cajanus species and identification of promising sources for agronomic traits and seed protein content. Genet. Resour. Crop Evol. 2012;60:639–659. doi: 10.1007/s10722-012-9864-0.

- 8. Yang W, et al. Crop phenomics and high-throughput phenotyping: past decades, current challenges, and future perspectives. Mol. Plant. 2020;13:187–214. doi: 10.1016/j.molp.2020.01.008.

- 9. Tellaeche A, BurgosArtizzu XP, Pajares G, Ribeiro A, Fernández-Quintanilla C. A new vision-based approach to differential spraying in precision agriculture. Comput. Electron. Agric. 2008;60:144–155. doi: 10.1016/j.compag.2007.07.008.

- 10. Yang W, Duan L, Chen G, Xiong L, Liu Q. Plant phenomics and high-throughput phenotyping: Accelerating rice functional genomics using multidisciplinary technologies. Curr. Opin. Plant Biol. 2013;16:180–187. doi: 10.1016/j.pbi.2013.03.005.

- 11. Tanabata T, Shibaya T, Hori K, Ebana K, Yano M. SmartGrain: High-throughput phenotyping software for measuring seed shape through image analysis. Plant Physiol. 2012;160:1871–1880. doi: 10.1104/pp.112.205120.

- 12. Zhihong M, Yuhan M, Chengliang GLL. Smartphone-based visual measurement and portable instrumentation for crop seed phenotyping. Ifac Papersonline. 2016;49(16):259–264. doi: 10.1016/j.ifacol.2016.10.048.

- 13. Le TDQ, Alvarado C, Girousse C, Legland D, Chateigner-Boutin AL. Use of X-ray micro computed tomography imaging to analyze the morphology of wheat grain through its development. Plant Methods. 2019;15:84. doi: 10.1186/s13007-019-0468-y.

- 14. Ducournau S, et al. High throughput phenotyping dataset related to seed and seedling traits of sugar beet genotypes. Data Brief. 2020;29:105201. doi: 10.1016/j.dib.2020.105201.

- 15. An P, Fang K, Jiang Q, Zhang H, Zhang Y. Measurement of rock joint surfaces by using smartphone structure from motion (SfM) photogrammetry. Sensors. 2021;21:1. doi: 10.3390/s21030922.

- 16. Gutierrez A, Jimenez MJ, Monaghan D, O’Connor NE. Topological evaluation of volume reconstructions by voxel carving. Comput. Vis. Image Underst. 2014;121:27–35. doi: 10.1016/j.cviu.2013.11.005.

- 17. Jay S, Rabatel G, Hadoux X, Moura D, Gorretta N. In-field crop row phenotyping from 3D modeling performed using Structure from Motion. Comput. Electron. Agric. 2015;110:70–77. doi: 10.1016/j.compag.2014.09.021.

- 18. Kim W-S, et al. Stereo-vision-based crop height estimation for agricultural robots. Comput. Electron. Agric. 2021;181:1. doi: 10.1016/j.compag.2020.105937.

- 19. Paulus S. Measuring crops in 3D: Using geometry for plant phenotyping. Plant Methods. 2019;15:103. doi: 10.1186/s13007-019-0490-0.

- 20. Liu T, et al. A shadow-based method to calculate the percentage of filled rice grains. Biosys. Eng. 2016;150:79–88. doi: 10.1016/j.biosystemseng.2016.07.011.

- 21. Yang J, et al. Grain filling pattern and cytokinin content in the grains and roots of rice plants. Plant Growth Regul. 2000;30(3):261–270. doi: 10.1023/A:1006356125418.

- 22. Tirol-Padre A, et al. Grain yield performance of rice genotypes at suboptimal levels of soil N as affected by N uptake and utilization efficiency. Field Crop Res. 1996;46:127–143. doi: 10.1016/0378-4290(95)00095-x.

- 23. Duan L, et al. Fast discrimination and counting of filled/unfilled rice spikelets based on bi-modal imaging. Comput. Electron. Agric. 2011;75:196–203. doi: 10.1016/j.compag.2010.11.004.

- 24. Kumar A, et al. Discrimination of filled and unfilled grains of rice panicles using thermal and RGB images. J. Cereal Sci. 2020;95:1. doi: 10.1016/j.jcs.2020.103037.

- 25. Li H, et al. Calculation method of surface shape feature of rice seed based on point cloud. Comput. Electron. Agric. 2017;142:416–423. doi: 10.1016/j.compag.2017.09.009.

- 26. Wold S, Esbensen K, Geladi P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987;2:37–52. doi: 10.1016/0169-7439(87)80084-9.

- 27. Chiang P-Y, Kuo CCJ. Voxel-based shape decomposition for feature-preserving 3D thumbnail creation. J. Vis. Commun. Image Represent. 2012;23:1–11. doi: 10.1016/j.jvcir.2011.07.008.

- 28. Rusu RB, Marton ZC, Blodow N, Dolha M, Beetz M. Towards 3D Point cloud based object maps for household environments. Robot. Auton. Syst. 2008;56:927–941. doi: 10.1016/j.robot.2008.08.005.

- 29. Schnabel, R., Wahl, R. & Klein, R. in John Wiley & Sons, Ltd Vol. 26 214–226 (2007).

- 30. Date H, Kanai S, Kawashima K. As-built modeling of piping system from terrestrial laser-scanned point clouds using normal-based region growing. J. Comput. Des. Eng. 2014;1:13–26. doi: 10.7315/jcde.2014.002.

- 31. Bergen GVD. Efficient collision detection of complex deformable models using AABB trees. J. Graph. Tools. 1997;2:1–13. doi: 10.1080/10867651.1997.10487480.

- 32. Dimitrov D, Knauer C, Kriegel K, Rote G. Bounds on the quality of the PCA bounding boxes. Comput. Geom. 2009;42:772–789. doi: 10.1016/j.comgeo.2008.02.007.

- 33. Marton, Z. C., Rusu, R. B. & Beetz, M. in IEEE international conference on robotics and automation. IEEE (2009).

- 34. Connelly R. Comments on generalized Heron polynomials and Robbins’ conjectures. Discret. Math. 2009;309:4192–4196. doi: 10.1016/j.disc.2008.10.031.

- 35. Shouche SP, Rastogi R, Bhagwat SG, Sainis JK. Shape analysis of grains of Indian wheat varieties. Comput. Electron. Agric. 2001;1:55–76. doi: 10.1016/S0168-1699(01)00174-0.

- 36. Kurt I, Ture M, Kurum AT. Comparing performances of logistic regression, classification and regression tree, and neural networks for predicting coronary artery disease. Expert Syst. Appl. 2008;34:366–374. doi: 10.1016/j.eswa.2006.09.004.

- 37. Narayan Y. Comparative analysis of SVM and Naive Bayes classifier for the SEMG signal classification. Mater. Today: Proc. 2021;37:3241–3245. doi: 10.1016/j.matpr.2020.09.093.

- 38. Wang X-W, Liu Y-Y. Comparative study of classifiers for human microbiome data. Med. Microecol. 2020;4:1. doi: 10.1016/j.medmic.2020.100013.

- 39. Edelmann D, Móri TF, Székely GJ. On relationships between the Pearson and the distance correlation coefficients. Stat. Probab. Lett. 2021;169:8960. doi: 10.1016/j.spl.2020.108960.

- 40. Zeng X, et al. CRISPR/Cas9-mediated mutation of OsSWEET14 in rice cv. Zhonghua11 confers resistance to Xanthomonas oryzae pv. oryzae without yield penalty. BMC Plant Biol. 2020;20:1. doi: 10.1186/s12870-020-02524-y.

- 41. Deng Y, Pan Y, Luo M. Detection and correction of assembly errors of rice Nipponbare reference sequence. Plant Biol. (Stuttg) 2014;16:643–650. doi: 10.1111/plb.12090.
