Abstract

Safety sciences must cope with uncertainty of models and results as well as information gaps. Acknowledging this uncertainty necessitates embracing probabilities and accepting the remaining risk. Every toxicological tool delivers only probable results. Traditionally, this is taken into account by using uncertainty / assessment factors and worst-case / precautionary approaches and thresholds. Probabilistic methods and Bayesian approaches seek to characterize these uncertainties and promise to support better risk assessment and, thereby, improve risk management decisions. Actual assessments of uncertainty can be more realistic than worst-case scenarios and may allow less conservative safety margins. Most importantly, as soon as we agree on uncertainty, this defines room for improvement and allows a transition from traditional to new approach methods as an engineering exercise. The objective nature of these mathematical tools allows to assign each methodology its fair place in evidence integration, whether in the context of risk assessment, systematic reviews, or in the definition of an integrated testing strategy (ITS) / defined approach (DA) / integrated approach to testing and assessment (IATA). This article gives an overview of methods for probabilistic risk assessment and their application for exposure assessment, physiologically-based kinetic modelling, probability of hazard assessment (based on quantitative and read-across based structure-activity relationships, and mechanistic alerts from in vitro studies), individual susceptibility assessment, and evidence integration. Additional aspects are opportunities for uncertainty analysis of adverse outcome pathways and their relation to thresholds of toxicological concern. In conclusion, probabilistic risk assessment will be key for constructing a new toxicology paradigm – probably!

1. Introduction

Nothing is as certain as death and taxes1. Toxicology (as all of medicine) does not reach this level of certainty, as the Johns Hopkins scholar William Osler (1849–1919) rightly stated, “Medicine is a science of uncertainty and an art of probability”, and in this sense toxicology is a very medical discipline. However, our expectation as to the outcome of safety sciences is certainty – a product coming to the market must be safe. This article aims to make the case that we are actually working with an astonishing level of uncertainty in our assessments, which we hide by using apparently deterministic expressions of results (classifications, labels, thresholds, etc.). It is not that we cannot know, but that our predictions have only a certain probability of being correct – not very comforting when the safety of sometimes millions of patients and consumers is at stake.

The 2017 book The Illusion of Risk Control – What Does it Take to Live with Uncertainty? edited by Gilles Motet and Corinne Bieder, makes the important point of acknowledging that there is always a risk and that we can only assess and manage its probability. Consequently, safety is defined by the absence of unacceptable risk, not as the absence of all risk. Giving up on the illusion of safety and acknowledging uncertainty does give a new perspective on risk assessment and management as we will discuss here, applying it to toxicology. Dupuy (1982) described the problem as “The fundamental incapacity of Industrial Man to control his destiny increasingly appears as the paradoxical and tragic result of a desire for total control – either by reason or by force”. As we will see, embracing uncertainty can free us to adopt a new toxicity testing paradigm.

Uncertainty and probability are two sides of the same coin. Risk assessment under uncertainty, therefore, logically leads us to probabilistic risk assessment (ProbRA). We will go light on mathematics here. This article is primarily about why to use ProbRA and not on how to do it. In recent years, the importance of having a firm understanding of probability has become apparent, and as a result there are several books the reader can consult, which we recommend:

Kurt, Will (2019). Bayesian Statistics the Fun Way.

Mlodinow, Leonard (2008). The Drunkard’s Walk: How Randomness Rules Our Lives.

Wheelan, Charles (2013). Naked Statistics: Stripping the Dread from the Data.

2. Some defining characteristics of (un)certainty versus probability versus risk

2.1. Uncertainty

“We know accurately only when we know little; with knowledge, doubt increases” (Johann Wolfgang von Goethe in Maxims and Reflections).

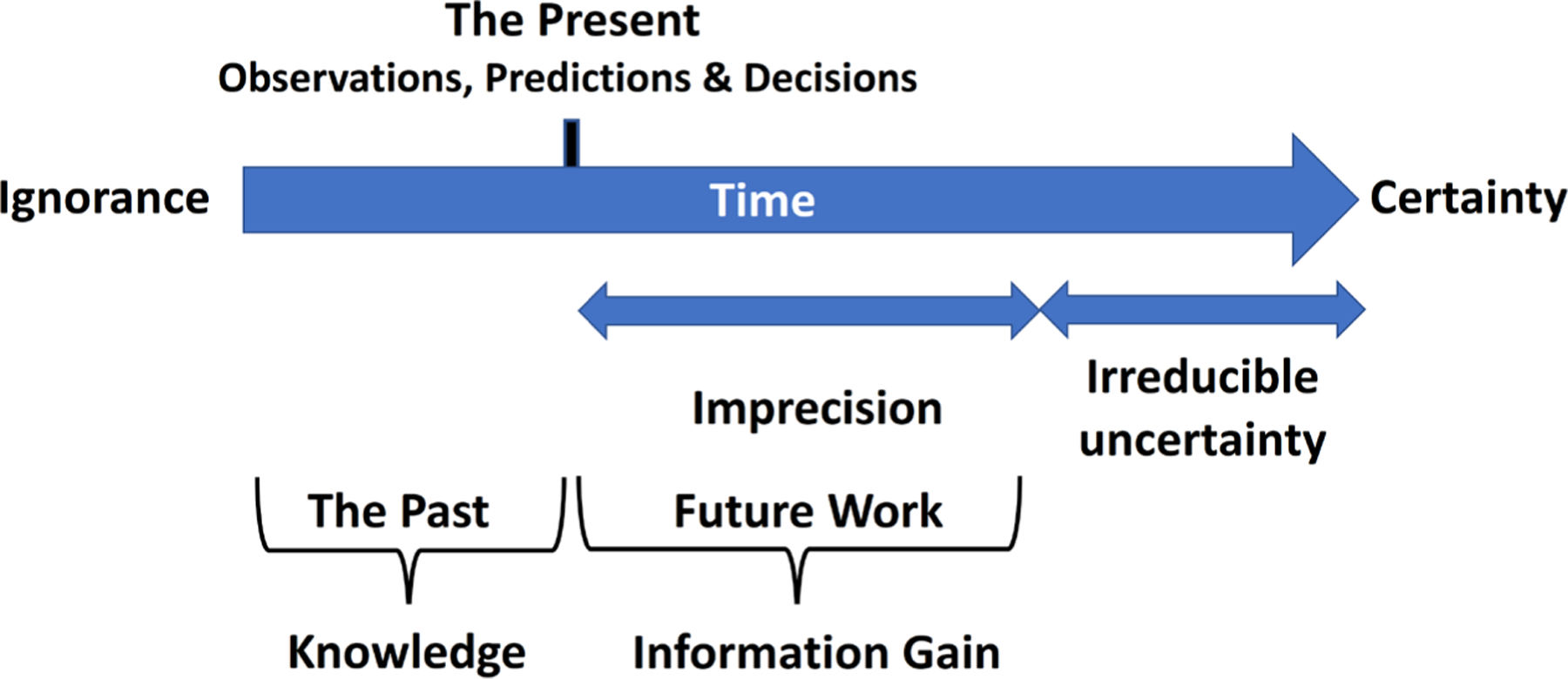

Uncertainty in toxicology is at its base the lack of knowledge of the true value of a quantity or relationships among quantities. Figure 1 illustrates the path from ignorance approximating certainty with some irreducible uncertainty remaining. Walker et al. (2003) note that uncertainty is not simply the absence of knowledge, but a situation of inadequate information (inexactness, unreliability, and sometimes ignorance). “However, uncertainty can prevail in situations where a lot of information is available …. Furthermore, new information can either decrease or increase uncertainty. New knowledge on complex processes may reveal the presence of uncertainties that were previously unknown or were understated. In this way, more knowledge illuminates that our understanding is more limited or that the processes are more complex than thought before”. Cullen and Frey (1999) address uncertainties that arise during risk analyses:

Scenario uncertainty – typically of omission, resulting from incorrect or incomplete specification of the risk scenario to be evaluated. In toxicology, for example, risk assessment before the actual use of a substance is clear.

Model uncertainty – limitations in the mathematical models or techniques often due to (a) simplifying assumptions; (b) exclusion of relevant processes; (c) misspecification of model boundary conditions (e.g., the range of input parameters); or (d) misapplication of a model developed for other purposes. In toxicology, this obviously resonates with many aspects of the risk assessment process.

Input or parameter uncertainty – particular attention must be paid to measurement error, which can be either systemic (when there is a bias in the data) or random (noise in the data). Toxicology obviously faces both, but these are rarely explicitly addressed when risk assessments are made.

Today, additional aspects such as inconsistency, bias, and methodological choices are considered as sources of uncertainty. Recent European Food Safety Authority (EFSA) guidance (EFSA, 2018) details uncertainty very comprehensively for the safety sciences.

Fig. 1: Knowledge gain versus uncertainty.

Modified and combined from Njå et al. (2017) and Augenbaugh (2006)

The Grading of Recommendations, Assessment, Development and Evaluation (GRADE) working group has issued a guideline (Brozek et al., 2021) on assessing the certainty in modelled evidence, which includes the three types of uncertainty mentioned above and provides a flowchart for finding, selecting, and assessing certainty in a model. The certainty of modelled outputs is recommended to be assessed on the following domains:

- Risk of bias

- credibility of the model itself

- certainty of all inputs

Directness

Precision

Consistency

Risk of publication bias

Variability (a.k.a. imprecision) refers to real differences in results over time, space, or members of a population and is a property of the system being studied (e.g., body weight, food consumption, age, etc. for humans or ecological species). Uncertainty is usually seen as the enemy of safety. But as Pariès (2017) rightly states, “Uncertainty is not necessarily bad. Actually we are immerged in uncertainty, we live with it, and we need it to deal with the world’s complexity with our limited resources. We have inherited cognitive and social tools to manage it and deal with the associated unexpected variability. We need to better understand these tools and augment their efficiency in order to engineer resilience into our socio-technical systems”.

2.2. Probability

Here we come to the core of the argument. Stephen Jay Gould (1941–2002, US paleontologist and historian of science) wrote in The Dinosaur in the Haystack (1995), “Misunderstanding of probability may be the greatest of all impediments to scientific literacy”. So, what is probability? George Boole (1815–1864, English mathematician and philosopher best known for his Boolean algebra) stated, “Probability is expectation founded upon partial knowledge. A perfect acquaintance with all the circumstances affecting the occurrence of an event would change expectation into certainty, and leave neither room nor demand for a theory of probabilities”. A probabilistic approach is based on the theory of probability and the fact that randomness plays a role in prediction. It is the opposite of deterministic. A deterministic situation, i.e., one without uncertainty, though does not exclude imprecision affecting our determination. Probabilistic models incorporate random variables and probability distributions into the respective model.

Few probabilities are known, like rolling a perfect die; they are called a priori probabilities. Where observed frequencies are used to predict probabilities, we call them statistical probabilities, to be distinguished from estimated probabilities, which are based on judgement because of the associated uncertainty. Almost all risk decisions in risk assessment are based on a combination of the latter two. The critical question is the reliability of the probability estimate. The purpose of this article is to stress that there are methods to assess the remaining uncertainty and support managing the resulting risk.

The key point we must clarify is that we are not just talking about the p-value of our statistical significance tests when talking about probabilities in risk assessment. Aside the poor use of statistics in toxicology in general (Hartung, 2013), it will surprise many readers that our gold-standard significance test approach, which is increasingly used (Cristea and Ioannidis, 2018), is actually ill-suited for the questions we ask (Goodman, 1999a,b)2: “Biological understanding and previous research play little formal role in the interpretation of quantitative results. This phenomenon is manifest in the discussion sections of research articles and ultimately can affect the reliability of conclusions. The standard statistical approach has created this situation by promoting the illusion that conclusions can be produced with certain ‘error rates,’ without consideration of information from outside the experiment. This statistical approach, the key components of which are P values and hypothesis tests, is widely perceived as a mathematically coherent approach to inference.” The articles discuss the resulting “p value fallacy”. P value fallacy in easy terms means “while most physicians and many biomedical researchers think that a ‘P’ of 0.05 for a clinical trial means that there is only a 5% chance that the null hypothesis is true, that is not the case. Here is what ‘P = 0.05’ actually means: if many similar trials are performed testing the same novel hypothesis, and if the null hypothesis is true, then it (the null) will be falsely rejected in 5% of those trials. For any single trial, it doesn’t tell us much”. Ioannidis (2008) shows the problem for a large number of observational epidemiological studies. Seeing the comparatively high standard of statistics in clinical trials and epidemiology, we are for larger parts of science reminded of Nassim Taleb (2007), “They only knew enough math to be blinded by it”.

It should be noted that an understanding of probability developed only slowly in science; Pierre-Simon Laplace classically defined the probability of an event as the number of outcomes favorable to the event divided by the total number of possible outcomes. So, the probability of throwing a six with a perfect die is 1 in 6. Laplace finalized the classical probability theory in the 19th century, which started as early as the 16th century (especially Pierre de Fermat and Blaise Pascal in the 17th century) mainly from the analysis of games. Jacob Bernoulli expanded to the principle of indifference, taking into account that not all outcomes need to have the same probability, and others expanded it to continuous variables. In 1933, the Russian mathematician A. Kolmogorov (1903–1987) outlined an axiomatic approach that forms the basis for the modern theory defining probability based on the three suggested axioms.

In the 20th century, frequentist statistics was developed and became the dominant statistical paradigm. It continues to be most popular in scientific articles (with p-values, confidence intervals, etc.). Frequentist statistics is about repeatability and gathering more data, and probability is the long-run frequency of repeatable experiments.

An alternative approach is “Bayesian inference” based on Bayes’ theorem, named after Thomas Bayes, an English statistician of the 18th century. Here, probability essentially represents the degree of belief in something, probably closer to most people’s intuitive idea of probability.

We can thus distinguish three major forms of probability:

The classical or axiomatic (based on Kolmogorov’s axioms) probability

The experimental / empirical probability of an event is equal to the long-term frequency of the event’s occurrence when the same process is repeated many times (also termed frequentist statistics or frequentist inference)

Subjective probability as the degree of belief or logical support (updated using Bayes’ theorem)

One drawback of the frequentist approach that is addressed by Bayesian inference is the issue of false-positives, especially for rare events (Szucs and Ioannidis, 2017). We have repeatedly stressed this problem for toxicology, where most hazards occur at low frequencies (Hoffmann and Hartung, 2005). The other way around, “big data” is bringing the reverse challenge of overpowered studies, i.e., “massive data sets expand the number of analyses that can be performed, and the multiplicity of possible analyses combines with lenient P value thresholds like 0.05 to generate vast potential for false positives” (Ioannidis, 2019). Another drawback is that frequentists neglect that opinion plays a major role in both preclinical and clinical research; Bayesian statistics forces the contribution of opinion out into the open where it belongs.

2.3. Likelihood

The distinction between probability and likelihood, a.k.a. reverse probability, is fundamentally important3: “Probability attaches to possible results; likelihood attaches to hypotheses.” This brings us to Bayesian statistics, which consider our beliefs. “Hypotheses, unlike results, are neither mutually exclusive nor exhaustive. … In data analysis, the ‘hypotheses’ are most often a possible value or a range of possible values for the mean of a distribution. … The set of hypotheses to which we attach likelihoods is limited by our capacity to dream them up. In practice, we can rarely be confident that we have imagined all the possible hypotheses. Our concern is to estimate the extent to which the experimental results affect the relative likelihood of the hypotheses we and others currently entertain. Because we generally do not entertain the full set of alternative hypotheses and because some are nested within others, the likelihoods that we attach to our hypotheses do not have any meaning in and of themselves; only the relative likelihoods – that is, the ratios of two likelihoods – have meaning. … This ratio, the relative likelihood ratio, is called the ‘Bayes Factor’.”3

In toxicology, our hypothesis is usually not articulated, but fundamentally we assume that a substance is toxic or, alternatively, that it is non-toxic. This set of hypotheses is neither complete nor mutually exclusive: The substance could be beneficial or toxic for some people or under certain circumstances. Results, on the contrary, refer to the outcome of a specific experiment where associated probabilities are adequate.

2.4. Risk

Risk has in the context of toxicology first to be distinguished from hazard, which is not always easy, as many languages do not make this distinction. Hazard is a source of danger, e.g., a tiger, but it becomes a risk only with exposure, i.e., a possibility of loss or injury with a certain probability. The tiger in the cage is a hazard with negligible risk.

Risk is characterized by two quantities:

the magnitude (severity) of the possible adverse consequence(s), and

the likelihood (probability) of occurrence of each consequence.

Table 1 gives examples of risks with the different combinations of these two properties.

Tab. 1:

Different risk types characterized by probability, possible damage, uncertainty, and public interest – iconic Greek mythology and (toxicological) examples

| Greek mythology | Risk types | Examples | Toxicology examples |

|---|---|---|---|

| Sword of Damocles | low probability, large damage | Nuclear reactors, dams, chemical plants | Chemical spills |

| Cyclops | uncertain probability, large damage | Earthquake, flood, eruption, ABC weapons | Post-marketing drug failure |

| Pythia | uncertain probability, uncertain damage | Disintegration of polar ice sheets, GMO technology | Chemicals’ contribution to obesity, miscarriage, childhood asthma |

| Pandora’s box | uncertain probability, uncertain damage, unknown causal processes | Persistent organic pollutants, endocrine disruptors, ecosystem changes | dito; nanoparticle toxicity |

| Cassandra | high probability, high delayed damage | Global atmospheric warming, loss of biodiversity | Smoking, air pollution |

| Medusa | high public unrest, little scientific concern | Electromagnetic radiation (UMTS), food irradiation | Vaccine safety |

Modified from Vlek (2010), derived from Klinke and Renn (2002); the authors added the column on toxicology.

Kaplan and Garrick (1981) defined risk in the context of toxicology as “risk is probability and consequences”. So, it is about the severity of possible damage or, as former U.S. Environmental Protection Agency (EPA) Administrator William K. Reilly phrased it, “Risk is a common metric that lets us distinguish the environmental heart attacks and broken bones from indigestion or bruises”4. For toxicology, risk is typically defined for an individual or a population. The consequences (hazards) are typically quite clear, but we struggle with the probabilities. Taleb (2007) phrased it outside of toxicology, “We generally take risks not out of bravado but out of ignorance and blindness to probability!”

3. The lack of certainty in toxicology

For the reader of this series of articles, this argument is a common thread. Some favorites in brief: In Hartung (2013, Tab. 1) we list 25 reasons why animal models as the most common approach do not reflect humans and cite studies that 20% of drug candidates fail because of unpredicted toxicities, and after passing clinical trials ~8% are withdrawn from the market mostly because of unexpected side-effects. Major studies by consortia of the pharmaceutical industry showed that rodents predict 43% of side effects in humans (n = 150) (Olson et al., 2000) and for all species had a sensitivity of 48% and specificity of 84% (n = 182) (Monticello et al., 2017).

Animal tests cannot be more relevant for humans than they are reproducible for themselves – we showed that of 670 eye corrosive chemicals, a repeat study showed 70% to be corrosive, 20% to be mild, and 10% to have no effect (Luechtefeld et al., 2016a). For skin sensitization, the reproducibility of the guinea pig maximization test was 93% (n = 624) and of the local lymph node assay (LLNA) in mice 89% (n = 296) (Luechtefeld, 2016b). Others reported for the cancer bioassay 57% reproducibility (n = 121) (cited in Basketter et al., 2012 and Smirnova et al., 2018). In our largest analysis (Luechtefeld et al., 2018b), we showed for the six most used Organisation for Economic Co-operation and Development (OECD) guideline tests and 3,469 cases where a chemical was tested more than twice, an average sensitivity of 69% (accuracy 81%); this means that the toxic property is missed in one of three tests.

Obviously, we usually do not know how well animal studies predict human health effects. However, interspecies comparisons cited in the papers above and in Wang and Gray (2015) allow an estimate, as there is no reason to assume that any species predicts humans better than they predict each other. These are some examples:

Skin sensitization (n = 403): 77% guinea pig versus mouse

Carcinogenicity (n = 317): 57% rat versus mouse

Reproductive toxicity (n = 167): ~61% rat versus rabbit versus mouse

Repeat dose toxicity (n = 37): 75–80% rat versus mouse; 27–55% for organ prediction

Repeat dose toxicity (n = 310): 68% rat versus mouse

In conclusion, toxicity tests in animals done according to OECD guidelines and under Good Laboratory Practice conditions are roughly 80% reproducible, and different lab animal species are concordant about 60% of the time. This quite impressively illustrates the uncertainty with which we operate. These are tests to estimate human safety!

For ecotoxicology, Hrovat et al. (2009) have shown an enormous variability of test results: For 44 compounds with at least 10 data entries in the ECOTOX database each, they analyzed 4,654 test reports and report variability exceeding several orders of magnitude (up to 8, i.e., one hundred million).

It is important to realize that failure to be realistic about uncertainty in toxicology has significant consequences: When a chemical is declared “safe” only to be determined years later to result in unexpected toxicity, this increases public skepticism about the ability of science to protect people (Maertens et al., 2021).

These reproducibility problems matter especially for the low-frequency events we study (Hoffmann and Hartung, 2005). The problem of rare events of big impact has been elegantly covered by Nassim Taleb (2007) in his popular book The Black Swan – The Impact of the Highly Improbable. Some pertinent quotes5 were cited earlier in this series (Bottini and Hartung, 2009). A few others are sprinkled into this article. Furthermore, the reader is referred to Taleb’s earlier book (2004) on randomness, where many of the same ideas are formulated in a less populistic way. With respect to certainty of our (animal) tools in toxicology, the most appropriate quote from Taleb (2007) is, “In the absence of a feedback process you look at models and think that they confirm reality”.

Recently, the Evidence-based Toxicology Collaboration (EBTC6) has tried a new approach to assessing certainty by evaluating rare toxicological events of drug-induced liver injury (DILI), which are poorly predicted by the mandated regulatory test battery. EBTC has put together a multi-stakeholder working group, which has searched for published evidence of DILI effects of drugs with DILI and no-DILI. The approach demonstrated that mechanistic tests reported in the U.S. EPA ToxCast database, and not the mandated regulatory animal tests, predicted rare DILI in humans (Dirven et al., 2021). This evidence-based approach has potential for broader application in toxicological methods validation.

4. Probabilistic risk assessment (ProbRA) 101

In the American system, 101 indicates an introductory course, often with no prerequisites. In this spirit, let’s summarize the principles and refer to the more comprehensive literature for details (Kirchsteiger, 1999; Jensen, 2002; Vose, 2008; Modarres, 2008; Vesely, 2011; Ostrom and Wilhelmsen, 2012).

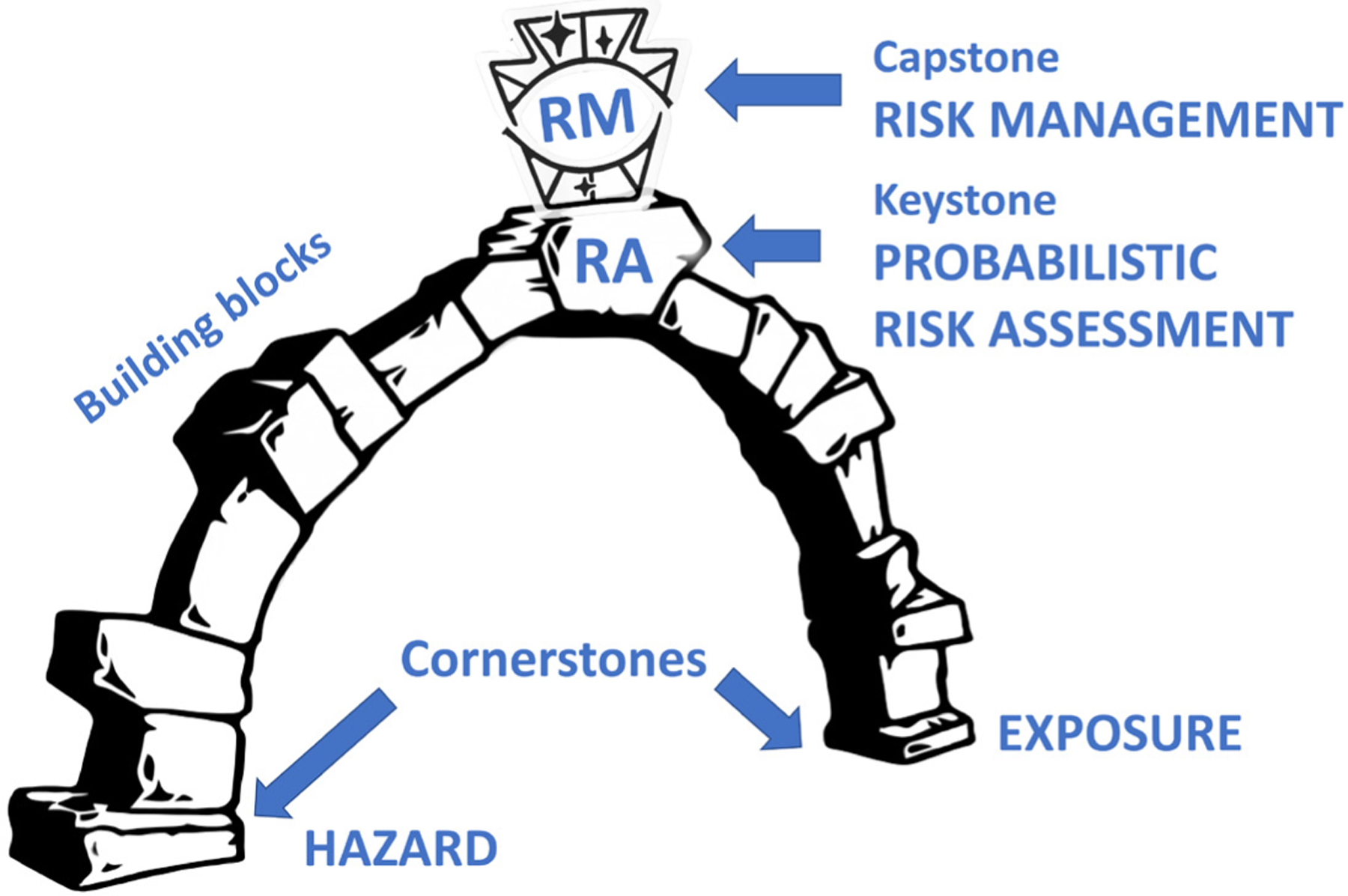

The probabilistic approach is the most widely used method of uncertainty analysis used in mathematical models. ProbRA has emerged as an increasingly popular analysis tool, especially to evaluate risks associated with every aspect of a complex engineering project (e.g., facility, spacecraft, or nuclear power plant) from concept definition, through design, construction, and operation, to end of service and decommissioning. It has its origin in the aerospace industry before and during the Apollo space program. ProbRA is a systematic and comprehensive methodology, which has only rarely been applied to substance safety assessments. ProbRA usually answers three basic questions as summarized by Michael Stamatelatos, NASA Office of Safety and Mission Assurance7:

“What can go wrong with the studied technological entity, or what are the initiators or initiating events (undesirable starting events) that lead to adverse consequence(s)?

What and how severe are the potential detriments, or the adverse consequences that the technological entity may be eventually subjected to as a result of the occurrence of the initiator?

How likely to occur are these undesirable consequences, or what are their probabilities or frequencies?”

Quite obviously, these can be applied to toxicology, where the initiator is exposure, and the adverse / undesirable consequences are hazard manifestations. For the purpose of this article, question 3 is obviously key. However, we will include some thoughts below on applying an uncertainty concept to adverse outcome pathways (AOP), which can be seen as the toxicological mechanistic aspects of questions 1 & 2. Stamatelatos7 further suggests the methodologies listed in Table 2 to answer the three questions above.

Tab. 2:

Key questions addressed in ProbRA and associated tools

| Question | Tools |

|---|---|

| What can go wrong? Screen important initiators. | Master logic diagrams (MLD) or failure modes and effects analyses (FMEA); in toxicology, these would be relevant exposures or molecular initiating events (MIE) triggered within the adverse outcome pathway (AOP) framework |

| What are the adverse consequences? | Deterministic analyses that describe the phenomena that could occur along the path of the accident (here hazard) scenario. In toxicology, this can be understood as the exposure-to-hazard path, more recently defined as AOP with their key events (KE). |

| What is the probability of adverse consequences? | Boolean logic methods for model development (e.g., event tree analysis (ETA) or event sequence diagrams (ESD) analysis and deductive methods like fault tree analysis (FTA)) and by probabilistic or statistical methods for the quantification portion of the model analysis (deductive logic tools like fault trees or inductive logic tools like reliability block diagrams (RBD) and FMEA). The final result of a ProbRA is given in the form of a risk curve and the associated uncertainties. This is evidently least translated to toxicology. |

For toxicology, the U.S. EPA pioneered ProbRA with the 1997 release of EPA’s “Policy for Use of Probabilistic Analysis in Risk Assessment”8. It states that “probabilistic analysis techniques as Monte Carlo analysis, given adequate supporting data and credible assumptions, can be viable statistical tools for analyzing variability and uncertainty in risk assessments”. Monte Carlo simulation (see, for example, textbooks by Melchers, 1999, and Madsen et al., 1986) is a technique that involves using random numbers and probabilities to solve problems. Originally, the EPA used “Monte Carlo method” essentially synonymously with ProbRA.

The modern Monte Carlo method / simulation was developed in the late 1940s by Stanislaw Ulam and John von Neumann in the nuclear weapons projects at the Los Alamos National Laboratory. It is based on the law of large numbers that a random variable can be approximated by taking the empirical mean of independent samples of the variable, where the input parameters are selected according to their respective probability distributions. This repeated random sampling to obtain numerical results uses randomness to solve problems that might be deterministic in principle. This way, it propagates variability or uncertainty of model input parameters and overcomes the uncertainty or variability in the underlying processes. For each combination of input parameters, the deterministic model is then solved, and model results are collected until the specified number of model iterations (shots) is completed. This results in a distribution of the output parameters, which is often parametrized using a Markov chain Monte Carlo (MCMC) sampler.

The Monte Carlo method, however, is just one of many methods for analyzing uncertainty propagation, where the goal is to determine how random variation, lack of knowledge, or error affects the sensitivity, performance, or reliability of the system that is being modeled. An alternative probabilistic methodology is the first- and second-order reliability method (FORM/SORM), a.k.a. Hasofer-Lind reliability index, a semi-probabilistic reliability analysis method devised to evaluate the reliability of a system. It estimates the sensitivity of the failure probability with respect to different input parameters. The method was suggested for ProbRA (Zhang, 2010).

Among the typically applied statistical techniques are (non-) parametric bootstrap methods. A parametric method assumes an underlying model (e.g., lognormal distribution); a non-parametric method only depends on the data points themselves. The term “bootstrap” is suggested to refer to the saying “to pull oneself up by one’s bootstraps” as a metaphor for bettering oneself by one’s own unaided efforts. As a statistical method, it belongs to the broader class of resampling methods. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates (Efron and Tibshirani, 1993; Davison and Hinkley, 1997). A great advantage of bootstrap is that it makes it easy to derive estimates of variability (standard errors) and confidence intervals for estimators of the distribution, such as percentile points, proportions, odds ratios, and correlation coefficients.

Similarly, maximum likelihood estimation9 can characterize uncertainty estimates at low sample sizes by estimating the parameters of an assumed probability distribution (Rossi, 2018). Alternatives are least squares regression or the generalized method of moments. Advantages and disadvantages of maximum likelihood estimation are10:

+ If the model is correctly assumed, the maximum likelihood estimator is the most efficient estimator. Efficiency is one measure of the quality of an estimator. An efficient estimator is one that has a small variance or mean squared error.

+ It provides a consistent but flexible approach that makes it suitable for a wide variety of applications, including cases where assumptions of other models are violated.

+ It results in unbiased estimates in larger samples.

- It relies on the assumption of a model and the derivation of the likelihood function, which is not always easy.

- Like other optimization problems, maximum likelihood estimation can be sensitive to the choice of starting values.

- Depending on the complexity of the likelihood function, the numerical estimation can be computationally expensive11.

- Estimates can be biased in small samples.

The Bayesian network (BN)12,13, also called Bayes network, belief network, belief net, decision net or causal network, introduced by Judea Pearl (1988), is a graphical formalism for representing joint probability distributions. Based on the fundamental work on the representation of and reasoning with probabilistic independence originated by the British statistician A. Philip Dawid in the 1970s, BN aim to model conditional dependence and, therefore causation, by representing conditional dependence by edges in a directed graph. Through these relationships, inference on the random variables in the graph is conducted by using weighing factors. Nodes represent variables (e.g., observable quantities, latent variables, unknown parameters or hypotheses). BN offer an intuitive and efficient way of representing sizable domains, making modeling of complex systems practical. BN provide a convenient and coherent way to represent uncertainty in models. BN have changed the way we think about probabilities.

These different mathematical tools have been employed to carry out probabilistic approaches in risk assessment. In 2014, the EPA published Probabilistic Risk Assessment Methods and Case Studies (EPA, 2014)14, describing ProbRA as “analytical methodology used to incorporate information regarding uncertainty and/or variability into analyses to provide insight regarding the degree of certainty of a risk estimate and how the risk estimate varies among different members of an exposed population, including sensitive populations or lifestages” applicable to both human health and ecological risk assessment. Two National Academy of Science reports influenced the report, namely, the National Research Council (NRC)’s report Science and Decisions: Advancing Risk Assessment (NRC, 2009) and Environmental Decisions in the Face of Uncertainty (IOM, 2013).

There are several comprehensive guides on how to actually do ProbRA (Jensen, 2002; Vose, 2008; Modarres, 2008; Vesely, 2011; Ostrom and Wilhelmsen, 2012). For our arguments, it suffices to say that in ProbRA at least one variable in the risk equation is defined as a probability distribution rather than a single number. However, the vision put forward is that more and more aspects of the risk equation should be seen as probability distributions that can be combined to estimate risk to an individual or, cumulatively, to a population. This is equally applicable to human health risk assessment and to the environment. The big questions are:

Is the method sufficiently advanced for the different aspects of the chemical risk assessment context?

What are the advantages and challenges?

What does it take to make them acceptable for regulators and bring them to broader use?

Different stakeholders have embraced this new approach to different extents. EPA and EFSA are clearly at the forefront. EPA already in 1997 (!) started defining what makes ProbRA approaches acceptable to them (Box 1).

Box 1: The U.S. Environmental Protection Agency Conditions for Acceptance of ProbRA8.

The purpose and scope of the assessment should be clearly articulated in a “problem formulation” section that includes a full discussion of any highly exposed or highly susceptible subpopulations evaluated (e.g., children, the elderly). The questions the assessment attempts to answer are to be discussed and the assessment endpoints are to be well defined.

The methods used for the analysis (including all models used, all data upon which the assessment is based, and all assumptions that have a significant impact upon the results) are to be documented and easily located in the report. This documentation is to include a discussion of the degree to which the data used are representative of the population under study. Also, this documentation is to include the names of the models and software used to generate the analysis. Sufficient information is to be provided to allow the results of the analysis to be independently reproduced.

The results of sensitivity analyses are to be presented and discussed in the report. Probabilistic techniques should be applied to the compounds, pathways, and factors of importance to the assessment, as determined by sensitivity analyses or other basic requirements of the assessment.

The presence or absence of moderate to strong correlations or dependencies between the input variables is to be discussed and accounted for in the analysis, along with the effects these have on the output distribution.

Information for each input and output distribution is to be provided in the report. This includes tabular and graphical representations of the distributions (e.g., probability density function and cumulative distribution function plots) that indicate the location of any point estimates of interest (e.g., mean, median, 95th percentile). The selection of distributions is to be explained and justified. For both the input and output distributions, variability and uncertainty are to be differentiated where possible.

The numerical stability of the central tendency and the higher end (i.e., tail) of the output distributions are to be presented and discussed.

Calculations of exposures and risks using deterministic (e.g., point estimate) methods are to be reported if possible. Providing these values will allow comparisons between the probabilistic analysis and past or screening level risk assessments. Further, deterministic estimates may be used to answer scenario-specific questions and to facilitate risk communication. When comparisons are made, it is important to explain the similarities and differences in the underlying data, assumptions, and models.

Since fixed exposure assumptions (e.g., exposure duration, body weight) are sometimes embedded in the toxicity metrics (e.g., reference doses, reference concentrations, unit cancer risk factors), the exposure estimates from the probabilistic output distribution are to be aligned with the toxicity metric.

5. Software for ProbRA

Several free and commercial software packages are available for ProbRA (Tab. 3).

Tab. 3:

Non-comprehensive list of software packages for ProbRA and Monte Carlo simulations

| Model | Developer/associated organization | Availabilitya |

|---|---|---|

| APROBA-Plus | WHO, RIVM15, Bokkers et al., 2017 | Free |

| CARES (Cumulative and Aggregate Risk Evaluation System) | CARES NG Development Organization16 | Free |

| ConsExpo | RIVM17 | Free |

| DEEM-FCID/Calendex (Dietary Exposure Evaluation Model-Food Commodity Intake Database/Calendex) | US EPA18 | Free |

| FDA-iRisk | Food and Drug Administration Center for Food Safety and Applied Nutrition (FDA/CFSAN), Joint Institute for Food Safety and Applied Nutrition (JIFSAN) and Risk Sciences International (RSI)19 | Free |

| mc2d | Pouillot et al.20 | Free |

| MCRA (Monte Carlo Risk Assessment) | RIVM, EFSA21 | Free |

| PROcEED (Probabilistic Reverse dOsimetry Estimating Exposure Distribution) | US EPA22 | Free |

| SHEDS (Stochastic Human Exposure and Dose Simulation) | US EPA24 | Free |

| AuvTool, bootstrap simulation and two-dimensional Monte Carlo simulation |

Foodrisk.org 25 | Free |

| Agena Risk | Agena Ltd., Fenton and Neil, 2014 | Commercial |

| Crystal Ball | Oracle26 | Commercial |

| @Risk | Palisade27 | Commercial |

List of available models adapted from US EPA23

5.1. Freely available

US EPA has compiled a sizable list of freely available modeling tools for ProbRA, such as RIVM’s ConsExpo and MCRA, ILSI’s CARES, and EPA’s PROcEED, to name a few. The complete list, descriptions, and links to models can be found on US EPA ExpoBox Website28. RIVM’s MCRA model is a comprehensive probabilistic risk tool, while ConsExpo, DEEMS-FCID/Calendex, CARES, and SHEDS are probabilistic exposure modeling tools for various exposure scenarios (e.g., consumer products to dietary and residential exposures) (Young et al., 2012).

Probabilistic Reverse dOsimetry Estimating Exposure Distribution (PROcEED), developed by the US EPA, is used to perform probabilistic reverse dosimetry calculations. In essence, PROcEED estimates a probability distribution of exposure concentrations that would likely have produced the observed biomarker concentrations measured in a given population, using either a discretized Bayesian approach, or, when an exposure-biomarker relation is linear, a more straightforward exposure conversion factor approach.

iRisk is a web-based tool created by the FDA that assesses risk associated with microbial and chemical contaminants in food using a probabilistic approach. Users enter data for the various factors, such as food, hazard, dose-response, etc. to generate a prediction. Further, the model can evaluate the effectiveness of prevention and control measures; the results are presented as a population-based estimate of health burden.

mc2d is an R package for two-dimensional (or second-order) Monte-Carlo simulations to superimpose the uncertainty in the risk estimates stemming from parameter uncertainty29. In order to reflect the natural variability of a modeled risk, a Monte-Carlo simulation approach can model both the empirical distribution of the risk within the population and of distributions reflecting the variability of parameters across the population.

5.2. Commercial

Although not exhaustive, we outline some of the commercially available tools for ProbRA here. Agena Risk (Fenton and Neil, 2014) is a commercial software for Bayesian artificial intelligence (A.I.) and probabilistic reasoning for assessing risk and uncertainty in fields such as operational risk, actuarial analysis, intelligence analysis risk, systems safety and reliability, health risk, cyber-security risk, and strategic financial planning.

Oracle’s Crystal Ball and Palisade’s @Risk are commercially available applications used in spreadsheet-based tools to report and measure risk using Monte Carlo analysis. Advantages to these applications include multiple pre-defined distributions and the ability to use custom data distributions, which improves risk estimates. The user can also carry out a sensitivity analysis to identify the most impactful metrics.

5.3. PBPK / PBTK model software

Paini et al. (2017) summarized a number of PBK modeling software packages (Tab. 4), noting that “the field as a whole has suffered from a fragmented software ecosystem, and the recent discontinuation of a widely used modelling software product (acslX) has highlighted the need for software tool resilience. Maintenance of, and access to, corporate knowledge and legacy work conducted with discontinued commercial software is highly problematic. The availability of a robust, free to use, global community-supported application should offer such resilience and help increase confidence in mathematical modelling approaches required by the regulatory community”.

Tab. 4:

Some software tools available for physiologically-based kinetic modeling

| Model | Developer/associated organization | Availabilitya |

|---|---|---|

| MEGen, a model equation generator (EG) linked to a parameter database | CEFIC LRI29 | Free |

| RVIS – open access PBPK modelling platform | CEFIC LRI, George Loizou (HSE)30 | Free |

| MERLIN-Expo, total exposure assessment chain | Ciffroy et al., 2016,31 | Free |

| KNIME suite of tools | COSMOS Project (SEURAT-1)32, Sala Benito et al., 2017 | Free |

| High-throughput toxicokinetics (httk) | US EPA, Wang, 2010 | Free |

| PLETHEM (Population Lifecourse Exposure-To-Health-Effects Model Suite) | Scitovation33, Pendse et al., 2017 | Free |

| Berkeley Madonna | Berkeley Madonna34 | Commercial |

| MATLAB | MathWorks35 | Commercial |

| Simcyp’s Population-based Simulator | Certara36 | Commercial |

| Gastroplus/ADMET/PBPK PLUS | SimulationPlus37 | Commercial |

| Computational Systems Biology Software Suite (PKSim), tools for the molecular level (MoBi), the organismal level (PK-Sim) |

Open Systems Pharmacology38 | Commercial |

List of available models adapted from Paini et al. (2017)

6. Probability of exposure

The concept that exposure has a certain probability for an individual and cumulatively for the population is intuitive and broadly used (Bogen et al., 2009). Cullen and Frey (1999) wrote a textbook, Probabilistic Techniques in Exposure Assessment, on the concept. Bogen et al. (2009) give a very comprehensive review on probabilistic exposure analysis for chemical risk characterization based on a Society of Toxicology’s Contemporary Concepts in Toxicology meeting (Probabilistic Risk Assessment (PRA): Bridging Components Along the Exposure-Dose-Response Continuum, held June 25–27, 2005, in Washington, DC). Jager et al. (2000) give a very comprehensive example for two substances, an existing chemical (dibutyl phthalate, DBP) and a new chemical notification (undisclosed) and present a review of the approach (summarized also in Jager et al., 2001). Chiu and Slob (2015) suggested a unified probabilistic framework for dose-response assessment of human health effects. EFSA in 2012 published extensive Guidance on the Use of Probabilistic Methodology for Modelling Dietary Exposure to Pesticide Residues39.

The German Federal Institute for Risk Assessment (BfR) lauds probabilistic exposure assessment40: “Exposure assessment can help to determine the type, nature, frequency and intensity of contacts between the population and the contaminant that is to be assessed. Traditional exposure assessment (also called deterministic estimate or point estimate, ‘worst case estimates’) of risks from chemical substances estimates a value that ensures protection for most of the population. Deviations from the real values are tolerated in order to ensure protection of the consumer using simple methods by, in some cases, considerably overestimating actual exposure.

For some time now the use of probabilistic approaches (also called distribution-based or population-related approaches) has been under discussion for exposure assessment. These methods do not merely describe a single, normally extreme case but rather endeavour to depict overall variability in the data and, by extension, to present all possible forms of exposure. The mathematical tools used in this approach are Monte Carlo simulations, distribution adjustments and other principles taken from the probability theory.

In toxicology risks are normally described by establishing limit values. Below a limit value there should be no risk; above a limit value health effects through contact with the chemicals cannot be ruled out. This approach is frequently challenged. The question has been raised whether this approach does justice to transparent, realistic risk assessment. Probabilistic methods could highlight this supposed lack of clarity, help to characterise uncertainties and take them into account in risk assessment.”

Exposure assessments are complex and have clearly limited throughput. They can typically target only a few substances, and individual exposures over time are highly diverse. Depending on the agent studied, either peak exposures or cumulative amounts are relevant. Metabolism of the chemical and interindividual differences add to the complexity. Noteworthy, approaches for rapid exposure assessment exist, such as US EPA’s ExpoCast project41, which allow triaging chemicals of irrelevant exposure (Wambaugh et al., 2015). Probabilistic approaches are again critical components here.

With the rise of biomonitoring studies, internal exposures, especially blood and tissue levels of chemicals, are increasingly becoming available. These depend on exposure and bioavailability (and other biokinetic properties to be discussed next). They offer opportunities to focus on relevant exposures. The concept has been broadened to exposomics (Sillé et al., 2020), which often employs probabilistic analyses for our context here.

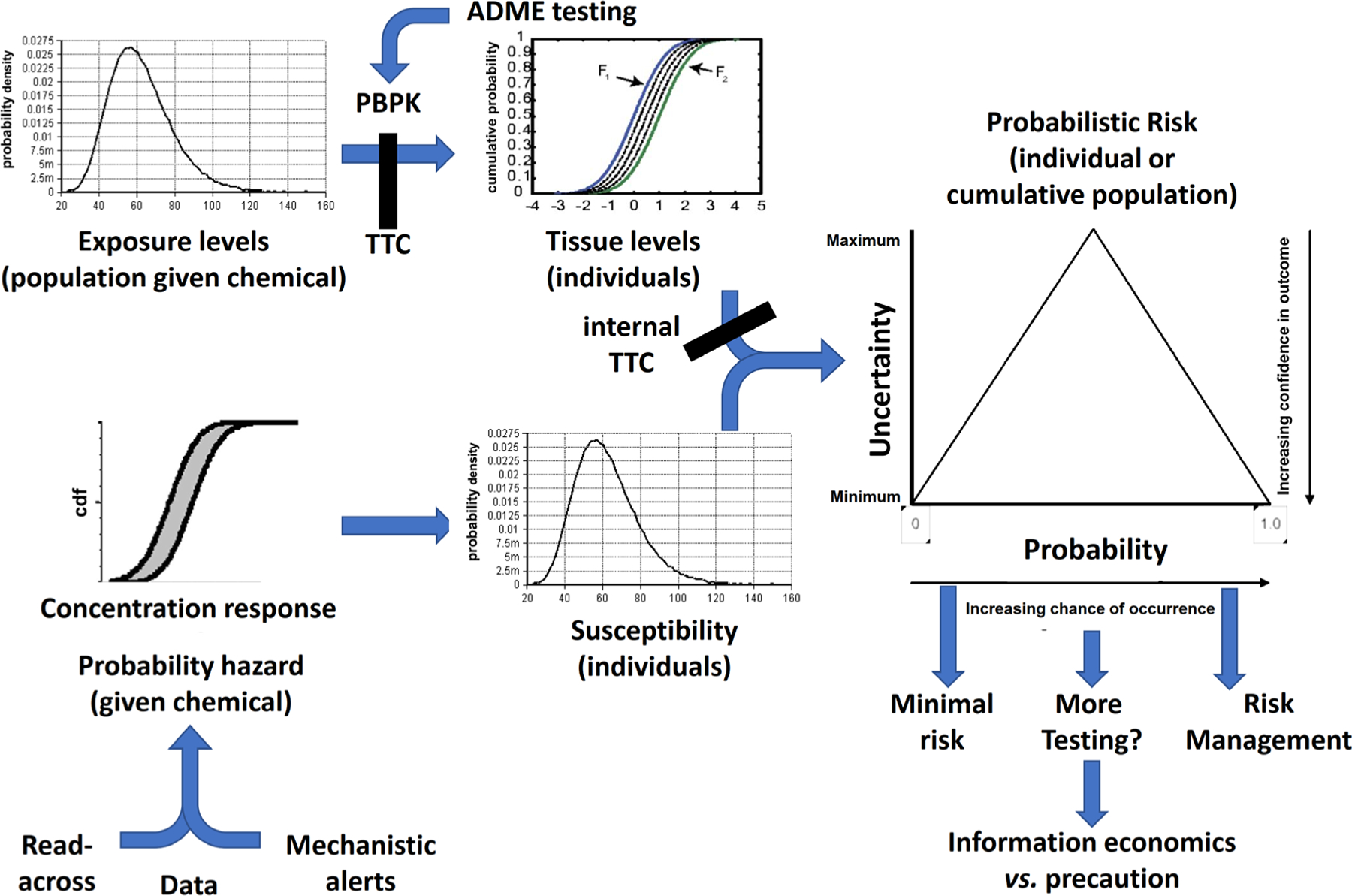

7. Probability as the basis of PBPK / PBTK modeling

We have stressed earlier in this series and elsewhere the importance of pharmacokinetic modeling for modern toxicology (Basketter et al., 2012; Leist et al., 2014; Tsaioun et al., 2016; Hartung, 2017a, 2018a). Pharmacokinetic modeling plays a critical role in informing us whether a given dose of a chemical reaches a critical level at the target organ and, in reverse, what in vitro active concentrations correspond to as exposure needed, i.e., quantitative in-vitro-to-in-vivo-extrapolation (QIVIVE) (McNally et al., 2018).

Here, the most important message in the context of ProbRA is that the most advanced body of probabilistic methods is available as physiologically based pharmacokinetic / toxicokinetic (PBPK/PBTK) modeling (McLanahan et al., 2012). PK / TK theoretical foundation, practical application, and various software packages have been developed in pharmacology (Leung, 1991) and later adapted to toxicology (Bogen and Hall, 1989) for the environmental health context by friends and collaborators such as Mel Andersen, Bas Blaauboer, Frederic Bois, Harvey Clewell, George Loizou, Amin Rostami-Hodjegan, Andrew Worth and others; please see their work for more substantial discussions. Several workshops have documented the field (Tab. 5). Most recently, a textbook became available (Fisher et al., 2020). Loizou et al. (2008) stress the need for kinetics in risk assessment: “The need for increasing incorporation of kinetic data in the current risk assessment paradigm is due to an increasing demand from risk assessors and regulators for higher precision of risk estimates, a greater understanding of uncertainty and variability …, more informed means of extrapolating across species, routes, doses and time …, the need for a more meaningful interpretation of biological monitoring data … and reduction in the reliance on animal testing … . Incorporating PBPK modelling into the risk assessment process can advance all of these objectives.”

Tab. 5:

Major workshops on physiology-based pharmacokinetic/toxicokinetic modeling (PBPK) for risk assessment

| Workshop/reference | Brief summary |

|---|---|

| ECVAM: The use of biokinetics and in vitro methods in toxicological risk evaluation, 1995, Utrecht, The Netherlands (Blaauboer et al., 1996) | Recommendations to encourage and guide future work in the PBK model field. 1. Explore possibilities to integrate in vitro data into the models; 2. Models are built on a case-by-case basis; 3. Establish documentation to illustrate what is needed experimentally; 4. Availability of data required for constructing models; 5. Establish databases; 6. Refine the partition coefficient; 7. Penetration rate should be incorporated into PBK models (barriers information); 8. Biotransformation CYP P450 reactions and information should be included into the model; 9. Emphasis on species comparison (rodent versus human); 10. Target organs and metabolism; 11. In vitro systems should be a reliable representation of in vivo; 12. PBK models should include dynamics; 13. Validation of PBK models should be done with independent data set; 14. Evaluation of the different software; 15. Sensitivity analysis employed to identify potential source of errors |

| ECVAM: Physiologically based kinetic (PBK) modelling: Meeting the 3Rs agendas, 2005, Ispra, Italy (Bouvier d’Yvoire et al., 2007) | To better define the potential role of PBK modelling as a set of techniques capable of contributing to the 3Rs in the risk assessment process of chemicals; needs for technical improvements and applications; needs to increase understanding and acceptance by regulatory authorities of the capabilities and limitations of these models. The recommendations were categorized into i) quality of PBK modelling; ii) availability of reference data and models; and iii) development of testing strategy |

| EPA/NIEHS/CIIT/ INERIS: Uncertainty and variability in PBPK models, 2006, RTP, NC, USA (Barton et al., 2007) | Better statistical models and methods; better databases for physiological properties and their variation; explore a wide range of chemical space; training, documentation, and software. |

| The Mediterranean Agronomic Institute of Chania: The International Workshop on the Development of GMP for PBPK models, 2007, Crete, Greece (Loizou et al., 2008) | Clear descriptions of good practices for (1) model development, i.e., research and analysis activities, (2) model characterization, i.e., methods to describe how consistent the model is with biology and the strengths and limitations of available models and data such as sensitivity analyses, (3) model documentation, and (4) model evaluation, i.e., independent review that will assist risk assessors in their decisions of whether and how to use the models, and also for model developers to understand expectations of various model purposes, e.g., research versus application in risk assessment |

| EPAA & EURL ECVAM: Potential for further integration of toxicokinetic modelling into the prediction of in vivo dose-response curves without animal experiments, 2011, Joint Research Centre, Italy (Bessems et al., 2014) | The aim of the workshop was to critically appraise PBK modelling software platforms as well as a more detailed state-of-the-art overview of non-animal based PBK parameterization tools. Such as: 1) Identification of gaps in non-animal test methodology for the assessment of ADME. 2) Addressing user-friendly PBK software tools and free-to-use web applications. 3) Understanding the requirements for wider and increased take up and use of PBK modelling by regulators, risk assessors and toxicologists in general. 4) Tackling the aspect of obtaining in vivo human toxicokinetic reference data via micro-dosing following the increased interest by the research community, regulators, and politicians |

| US FDA: Application of Physiologically-based pharmacokinetic (PBPK) modelling to support dose selection, 2014, Silver Spring, MD, USA (Wagner et al., 2015) | Workshop to (i) assess the current state of knowledge in the application of PBK in regulatory decision-making, and (ii) share and discuss best practices in the use of PBK modelling to inform dose selection in specific patient populations |

| EURL ECVAM: Physiologically-based kinetic modelling in risk assessment – Reaching a whole new level in regulatory decision-making, 2016, Joint Research Centre, Italy (Paini et al., 2017) | Strategies to enable prediction of systemic toxicity by applying new approach methodologies (NAM) using PBK modelling to integrate in vitro and in silico methods for ADME in humans for predicting whole-body TK behavior, for environmental chemicals, drugs, nano-materials, and mixtures. (i) identify current challenges in the application of PBK modelling to support regulatory decision-making; (ii) discuss challenges in constructing models with no in vivo kinetic data and opportunities for estimating parameter values using in vitro and in silico methods; (iii) present the challenges in assessing model credibility relying on non-animal data and address strengths, uncertainties and limitations in such an approach; (iv) establish a good kinetic modelling practice workflow to serve as the foundation for guidance on the generation and use of in vitro and in silico data to construct PBK models designed to support regulatory decision making. Recommendations on parameterization and evaluation of PBK models: (i) develop a decision tree for model construction; (ii) set up a task force for independent model peer review; (iii) establish a scoring system for model evaluation; (iv) attract additional funding to develop accessible modelling software; (v) improve and facilitate communication between scientists (model developers, data provider) and risk assessors/regulators; and (vi) organize specific training for end users. Critical need for developing a guidance document on building, characterizing, reporting, and documenting PBK models using non-animal data; incorporating PBK models in integrated strategy approaches and integrating them with in vitro toxicity testing and adverse outcome pathways. |

8. Probability of hazard

What indicates a probability of hazard? These four principal components come to mind:

Traditional test data on the given substance, which can range from physico-chemical measurements to animal guideline studies.

Such information on similar substances enabling (automated) read-across.

Structural alerts such as functional groups or chemical descriptors enabling (quantitative) structure-activity relationships ((Q)SAR).

Mechanistic alerts typically from in vitro testing or (clinical) biomarkers.

How these (jointly) indicate a probability of hazard and how to quantify it, is usually not clear. Some elements are more established. We have shown earlier how a combination of (1) and (2) can be used to derive probabilities of hazard (Luechtefeld et al., 2018a,b). These probabilities or, the other way around, measures of uncertainty are among the most remarkable features of the approach (Hartung, 2016) as they indicate whether more information is needed. The approach called read-across-based structure-activity relationship (RASAR) covers the nine most frequently used animal test-based classifications by OECD test guidelines. The method has been implemented as Underwriters Laboratories (UL) Cheminformatics Toolkit42; it has been further developed utilizing deep learning, making (non-validated) estimates of potency as GHS hazard classes and handling applicability domains of chemicals more explicitly. Notably, the method has been included in the new Australian chemicals legislation43, the Industrial Chemicals Act 2019 or AICIS (Australian Industrial Chemicals Introductions Scheme) in effect since July 1, 2020. This law creates a new regulatory scheme for the importation and manufacture of industrial chemicals by Australia. Unlike other jurisdictions, “industrial chemicals” includes personal care and cosmetics, and there is a full ban on new animal testing for these ingredients and dual-use ingredients that are used both in cosmetics and industrial uses. However, broader international acceptance of read-across as promoted also by the EUToxRisk project44 is still outstanding (Chesnut et al., 2018; Rovida et al., 2020). Other A.I.-based methods for hazard identification, which are more or less explicit in expressing probabilities of their predictions, are available (Zhang et al., 2018; Santin et al., 2021).

The approach under (3) is well-known as (Q)SAR, which has been covered earlier in this series of articles (Hartung and Hoffmann, 2009). (Q)SAR are based on structural alerts and physicochemical descriptors. Currently, we are exploring the integration of (Q)SAR as input parameters of the RASAR approach.

Most development is needed for (4). A read-across type of approach has been introduced for the US EPA ToxCast45 data (Shah et al., 2016), which tested about 2,000 chemicals in hundreds of robotized assays. This was also termed generalized read-across46. Pioneering work showed how to use this to predict endocrine activity (Browne et al., 2015; Kleinstreuer et al., 2018a; Judson et al., 2020). However, it is not clear how to extend this to chemicals that were not included in the ToxCast program. We discussed the opportunities of read-across of such biological data earlier (Zhu et al., 2016).

Most toxicologists, out of habit, talk of a xenobiotic exposure “causing” a certain effect, e.g., genotoxins cause cancer, etc. Yet, in reality, this is rarely the case – even when chemical exposures have a clear role in both initiation and progression, there is still a strong stochastic element involved (Tomasetti et al., 2017). For example, bilateral breast cancer is very rare, although both tissues have identical exposures. For other endpoints, it is even more important to remain mindful of the uncertainty intrinsic to most of the causal associations we are looking for in toxicology: For most diseases (Alzheimer’s and autism to name a few) we know that the environment plays an important role; however, decades of studies have failed to find any chemical “smoking gun”. We are instead likely looking for multiple exposures, over a lifetime, each of which may be individually insignificant, but which can, in vulnerable individuals, act as a tipping point.

One conceptual alternative to asking which chemicals “cause” which diseases is instead thinking of potential chemicals as quantifiable liabilities in a threshold-liability model. The threshold-liability model holds that for a given disease there exists within the population some probability distribution of thresholds, with some individuals with a high threshold (the life-long smoker who fails to develop lung cancer or heart disease) and others with considerably lower thresholds. Disease happens when an individual’s liabilities (which can include environmental exposures, stochastic factors, and epigenetic alterations) exceed their threshold. Such a model has been applied to amyotrophic lateral sclerosis (ALS) – a disease that has no known replicable environmental factors and is likely best characterized as the result of a pre-existing genetic load that faces environmental exposures over a lifespan and eventually reaches a tipping point, wherein neurodegeneration begins. While the past decade has seen an enormous expansion in our understanding of the genetic load component thanks to large-scale genome-wide association studies, the environmental component remains poorly characterized. While this is no doubt in part due to the much larger search space for environmental exposures, it must be acknowledged that the tools toxicologists employ – for example, looking for chemicals that will cause an ALS-like neurodegenerative phenotype in rodents at very high doses – are likely not ideal (Al-Chalabi and Hardiman, 2013).

An area where ProbRA has shown important (but largely neglected) opportunities is the test battery of genotoxicity assays. Depending on the field of use, three to six in vitro assays are carried out and, typically, any positive result is taken as an alert, leading to a tremendous rate of false-positive classifications as discussed earlier (Basketter et al., 2012). Aldenberg and Jaworska (2010) applied a BN to the dataset assembled by Kirkland et al., showing the potential of a probabilistic network to analyze such datasets. Expanding on work by Jaworska et al. (2013, 2015) for skin sensitization potency, we earlier showed how probabilistic hazard assessment by dose-response modeling can be done using BN (Luechtefeld et al., 2015). Our contribution was more technical (using feature elimination instead of QSAR, hidden Markov chains, etc.), but it moved the model’s potency predictions to standing cross-validation. Most recently, Zhao et al. (2021) compiled a human exposome database of > 20,000 chemicals, prioritized 13,441 chemicals based on probabilistic hazard quotient and 7,770 chemicals based on risk index, and provided a predicted biotransformation metabolite database of > 95,000 metabolites. While the importance of acute oral toxicity for ranking chemicals can be argued, it shows impressively how probabilistic approaches can be applied to large numbers of substances to allow prioritization.

9. Probability of risk

The prospect of ProbRA is increasingly recognized by regulators as shown earlier for EPA, EFSA and BfR (Tralau et al., 2015) and opinion leaders in the field (Krewski et al., 2014). A framework for performing probabilistic environmental risk assessment (PERA) was proposed (Verdonck et al., 2002, 2003). Risk assessment obviously requires combining hazard and exposure information; van der Voet and Slob (2007) suggested an approach where exposure assessment and hazard characterization are both included in a probabilistic way. Table 6 gives a few examples of ProbRA; notably they are very different in approach and quality, but they illustrate possible applications. Slob et al. (2014) used the ProbRA approach to explore uncertainties in cancer risk assessment. Together, this very incomplete list of examples of ProbRA in toxicology shows the potential of the technology.

Tab. 6:

Examples of ProbRA in toxicology

| Topic of ProbRA | Reference |

|---|---|

| Agrochemicals in the environment | Solomon et al., 2000 |

| Pesticide atrazine in the environment | Verdonck et al., 2002 |

| Environmentally occurring pharmaceuticals | Sanderson, 2003 |

| Linear alkylbenzene sulfonate (LAS) in sewage sludge | Schowanek et al., 2007 |

| Chemical constituents in mainstream smoke of cigarettes | Xie et al., 2012 |

| Flame retardant PBDE in fish | Pardo et al., 2014 |

| Insecticides (malathion and permethrin) | Schleier et al., 2015 |

| Nanosilica in food | Jacobs et al., 2015 |

| Reproductive and developmental toxicants in consumer products | Durand et al., 2015 |

| Perfluorooctane sulfonate (PFOS) | Chou and Lin, 2020 |

10. Uncertainty and the adverse outcome pathway (AOP) concept

As discussed above, a key element of ProbRA is the analysis of how the system is challenged and can fail. This is reminiscent of the AOP approach, which can be seen as the implementation of the call for toxicity pathway mapping from the “Toxicity testing in the 21st century movement” (Krewski et al., 2020). Based on the respective National Academy of Sciences / NRC report (NRC, 2007), a change toward new approach methodologies (NAMs) away from traditional animal testing, which is based on mechanistic understanding, i.e., toxicity pathways, pathways of toxicity (PoT) (Hartung and McBride, 2011; Kleensang et al., 2014) or, increasingly, AOP (Leist et al., 2017) is suggested.

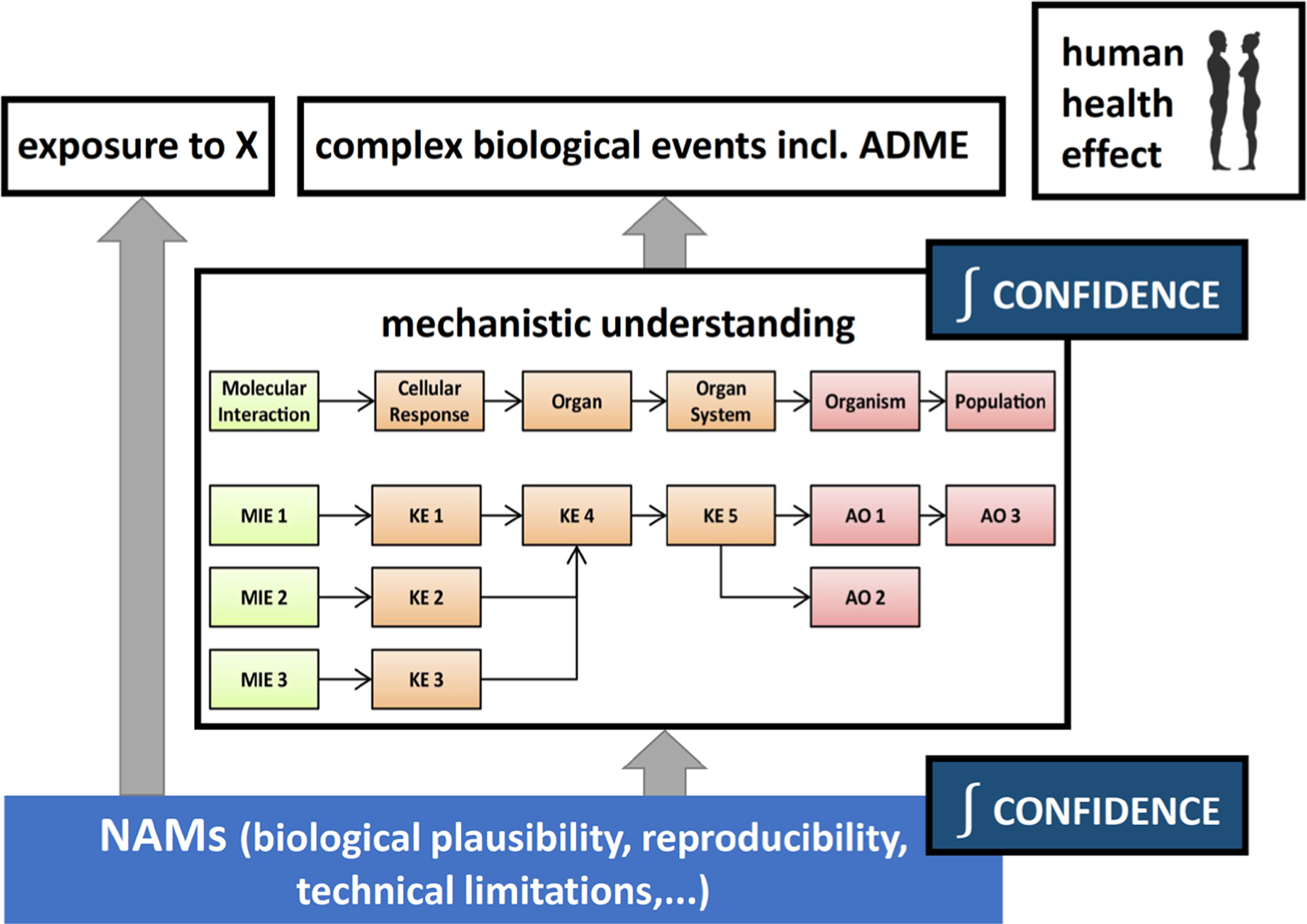

A major obstacle to the introduction of NAMs in regulatory decision-making has been the lack of confidence, or substantial overall uncertainty, in their fitness-for-purpose. While some individual aspects of NAMs contributing uncertainty are assessed in a systematic and thorough manner, a comprehensive approach that maps all uncertainties involved is lacking. A generic framework that integrates current mechanistic knowledge, e.g., condensed into AOP, biological plausibility of NAMs in relation to that knowledge, and NAM reproducibility with well-established risk assessment-related uncertainties, such as intra- and interspecies differences, has the potential to provide a widely agreed basis for a realistic purpose-focused assessment of NAMs. For a given question, e.g., the determination of a specific health hazard, mapping available evidence for the various uncertainty sources onto the framework will provide a complete overview of strengths, weaknesses, and gaps in our mechanistic understanding and ask is the NAM relevant for the health effect? Such an understanding will not only guide future NAM development, but it also allows to uncouple current regulatory practices, i.e., essentially animal-based approaches, from the aim of assessing health effects in humans.

Animal-based approaches are deeply rooted in regulatory approaches, but also in toxicology and environmental health, so that they are often used as a surrogate aim, not making their strengths and weaknesses explicit and transparent. A clear separation of the two would enable a fair and transparent assessment of NAMs, unbiased by current animal-based practices, for the purpose of protecting human health. Depending on the complexity of the human health effect, this approach will provide a clear path to reducing the overall uncertainty in NAM to achieve sufficient confidence in their results (Fig. 2).

Fig. 2:

Increasing confidence in new approach methodologies (NAM) through mechanistic understanding and biokinetics of human health effects

For the identification of sources of uncertainty, uncertainty in our mechanistic understanding of the biological events that lead to human health effects needs to be identified by systematically mapping the peer-reviewed literature that has addressed this topic. Outcomes of recent workshops organized by the EBTC6 (de Vries et al., 2021; Tsaioun et al., in preparation), relevant information from national and international bodies, especially the guidance and case studies of the OECD, and the opinions of leading scientists should be incorporated. The sources of uncertainties in NAM need to be identified using a similar approach, with a focus on literature and other information on the assessment of individual NAM and combinations of NAM in testing strategies.

In order to build the generic framework, the literature can be screened for initiatives in the field of toxicology and environmental health that could be built upon, e.g., by Bogen and Spear (1987). A top-down approach is recommended that starts with a (close to) ideal situation: That is either the theoretical assumption that hazard or risk for a certain health effect upon exposure to an stressor X is known, i.e., quantifiable without uncertainty, or the more practical assumption of adapting the concept of a “target” trial, i.e., a hypothetical, not necessarily feasible or ethical trial, conducted on the population of interest, whose results would answer the question (see, e.g., Sterne et al., 2016). The aim of addressing a human health effect exclusively with NAM and identifying the uncertainties introduced by each step could be achieved by careful mapping of interdependence of sources of uncertainty and will be essential for their integration. This process needs to consider lessons learned from the deterministic and probabilistic integration of uncertainties of animal studies that can be transferred to NAM.

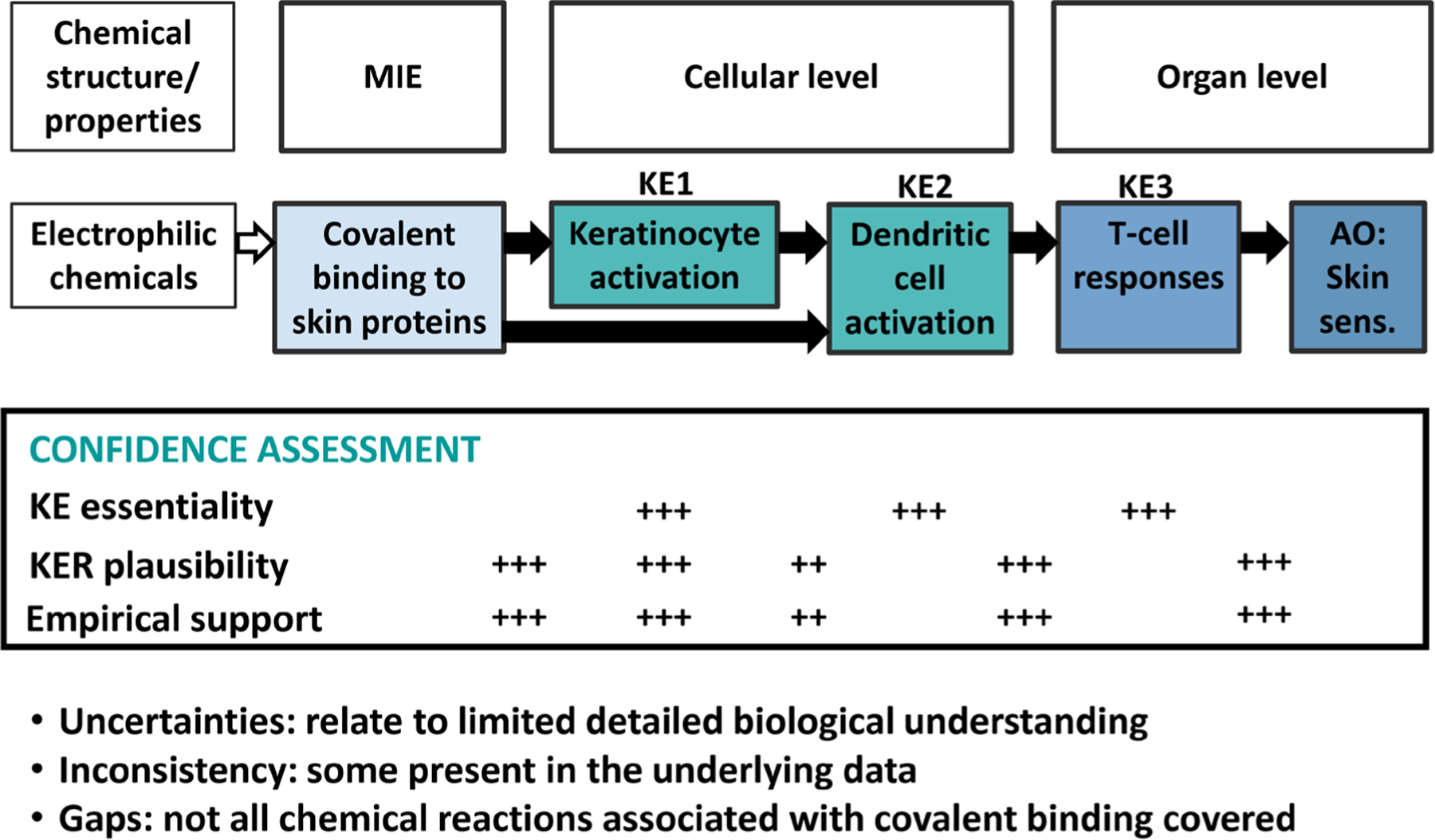

The resulting frameworks could be explored by applying a select one as a case study. For illustration, skin sensitization hazard identification and risk assessment lends itself to this purpose for the following reasons:

low complexity of the etiology of skin sensitization

availability of a well-described AOP (Fig. 3), including formal confidence assessment47 (OECD 2014)

availability of NAMs for the AOP events, many as OECD Test Guidelines (OECD 2018a,b, 2020)

well-characterized NAMs, e.g., limitations, reproducibility, etc. (Hoffmann et al., 2018)

availability of testing strategies, so-called defined approaches (DA) (Kleinstreuer et al., 2018b)

next generation skin sensitization risk assessment (NGRA) approach of cosmetic ingredients (Gilmour et al., 2020)

Available evidence for the various sources of uncertainty needs to be collected and plugged into the framework. Interdependencies of uncertainties can be explored or modelled, where applicable, to inform a qualitative or semi-quantitative integration of all uncertainties to characterize the confidence in the final decision.

Fig. 3: Example of skin sensitization adverse outcome pathway (AOP) confidence assessment.

MIE, molecular initiating event; KE, key event; AO, adverse outcome

The main results would be a generic framework that maps all sources of uncertainty in NAM-based regulatory decisions on human health. Such an objective evidence-based framework enables a transparent fit-for-purpose assessment of NAM and NAM combinations, e.g., integrated approaches to testing and assessment (IATA) (OECD, 2017). Application of the framework will allow for mapping of NAMs and characterization of uncertainty in an integrative manner, while highlighting the strengths but especially the weaknesses and knowledge and NAM gaps. This in turn will help direct future research to address the identified shortcomings. Ultimately, such a comprehensive and transparent approach is a pre-requisite to increase the regulators’ confidence in NAM-based decision-making to a level that will allow abandoning the traditional animal-based approaches, not least as it allows comparison of the approaches.

11. Evidence-based medicine / toxicology and the role of probability and uncertainty

Rysavy (2013) titled an editorial “Evidence-based medicine: A science of uncertainty and an art of probability”. In fact, a lot of the change brought about by evidence-based medicine is replacing the eminence-based (authoritarian) black-and-white of “this is the diagnosis/this is the treatment” to an acceptance of uncertainties, probabilities for differential diagnoses, treatment options, and associated odds for outcome etc., exactly what we describe for ProbRA and its challenge to classification and labeling of toxicities. By promoting transparency and mapping uncertainties and biases as well as broad evidence use, ProbRA promotes very similar goals to evidence-based toxicology.

12. Thresholds of toxicological concern (TTC) as probabilistic approaches

TTC represent a bit of a hybrid between the two worlds. They are based on the distribution of no adverse effect levels (NOAEL), and then the 5th percentile is used as a threshold, applying a safety factor of typically 100 (Hartung, 2017b). Future refinements of the concept might embrace uncertainty and probability considerations. As shown below, TTC might already now serve a role in the ProbRA approach.

13. Probabilistic avatars

Virtual representations of patients (avatars, digital twins)48,49 are increasingly developed as an approach to personalized medicine and even virtual clinical trials (Brown, 2016; Bruynseels et al., 2018). The European DISCIPULUS Project50,51 developed a roadmap for research and development. Earlier (Hartung, 2017c), we suggested that this is a logical extrapolation of the AOP concept: “A virtual patient is not far from the creation of a personal avatar for each patient, where the standard model is adapted to the genetic and pharmacokinetic parameters of the patients and where interventions can be modeled and optimized in virtual treatments. Certainly still largely science fiction, but these were any of the technologies of our current toolbox some decades ago too”. Here, it is important to note that the key underlying concept is the probabilistic approach of PB-PK. Similar to modeling disease and treatment, the hazardous consequences of exposure might be modelled in the future.

Noteworthy, this is also an interesting concept in the context of animal testing. Similar avatars of experimental animals might help with species extrapolations. Furthermore, we often point out that tests like the Draize rabbit eye test are not very reproducible. One source of variance is probably the animals themselves. Modeling the result of an animal test as a function of the chemical and animal tested (here avatar of the animal) would probably explain some of the uncertainty.

14. Artificial intelligence (A.I.) as the big evidence integrator delivers probabilities

A central problem of toxicology is evidence integration. More and more methodologies and results, some conflicting and others difficult to compare, are accumulating. We are facing this problem in more and more risk assessments, just thinking of tens of thousands of publications on bisphenol A, for example. Similarly, systematic reviews (Hoffmann et al., 2017; Farhat et al., 2022; Krewski et al., 2022) need to combine different evidence streams (EFSA and EBTC, 2018; Krewski et al., in preparation). Last but not least, the combination of tests and other assessment methods in integrated testing strategies (Hartung et al., 2013; Tollefsen et al., 2014; Rovida et al., 2015), a.k.a. IATA or DA by OECD, need to integrate different types of information. Again, probabilistic tools lend themselves to all of these.

We have earlier discussed how probabilistic approaches can help with integrated testing strategies, for example by determining the most valuable (next) test (Hartung et al., 2013). Briefly, we can ask how much the overall probability of the result can change with any outcome. Often, we might conclude that this is not actually worth the additional work, bringing an end to endless testing. Value of information analysis (Keisler et al., 2013) has enormous potential in toxicological decision-taking. This leads us to a type of information economics. Information economics is the discipline of modeling the role of information in an economic system as a fundamental force in every economic decision. We have stressed economic considerations earlier in this series of articles (Meigs et al., 2018). It seems like an interesting extension of this thinking if the investment into testing is contrasted quantitatively with the possible gain.

In the extreme, toxicology is seeing the rise of big data, which is defined by the three Vs: volume, velocity, and variety. These are key to understanding how we can measure big data and just how very different big data is to traditional data. Different technologies fuel this, such as omics technologies, high-content imaging, robotized testing (e.g., by ToxCast and the Tox21 alliance), sensor technologies, curated legacy databases, scientific and grey literature of the internet, etc. (Hartung and Tsatsakis, 2021). A.I. is making big sense from big data (Hartung, 2018b). It is worth mentioning that machine learning approaches frequently struggle with probabilities. Several existing approaches attempt to merge machine learning methods with probabilistic methods by modeling distributions or using Bayesian updating52. Frequently the outputs of neural networks are interpreted as probabilities, which can be problematic. Here, more work needs to be done.

Most importantly, by adopting a probabilistic view on safety information, we might come to a more flexible use of new approaches over time. If we do not see an individual method as definitive but only changing probabilities, we might be able to avoid the “war of faith” on the usefulness of animal tests, for example. Over time, we will see how the individual evidence sources contribute to the result of our A.I.-based integration. This might allow phasing out those methods that do not deliver valuable information and implementing those that do.

15. Conclusions and the way forward

As soon as we accept that risk assessment occurs with uncertainty and give up on the illusion of absolute safety, we must deal with probabilities. This is what science can deliver, as every experiment can only approximate truth. Working with models of reality with limited resources and technologies, and inherent variabilities and differences introduces uncertainty. The advantage of ProbRA is making these visible and estimating their potential contribution. By quantifying these uncertainties, we do not always need to default to the most conservative “precautionary” approach but can define acceptable risks and deprioritize scenarios clearly below them. ProbRA of chemicals offers numerous advantages compared to traditional deterministic approaches as well as several challenges53 (Tab. 7) (Kirchsteiger, 1999; Verdonck et al., 2002; Scheringer et al., 2002; Parkin and Morgan, 2006; Bogen et al., 2009; EPA, 2014).

Tab. 7:

Advantages and challenges for ProbRA in human health risk assessment

| Advantages of ProbRA | Challenges of ProbRA |

|---|---|

| Improves transparency and credibility by explicit consideration and treatment of all types of uncertainties; clearly structured; integrative and quantitative; allows ranking of issues and results; more information can be obtained by separating variability from uncertainty | Problem of model incompleteness; relatively time-consuming in performing and interpreting – this “might be a fertile ground for endless debate between utility and regulator” (Kafka, 1998); regulatory delays due to the necessity of analyzing numerous scenarios using various models |