Abstract

Defining the central components of an intervention is critical for balancing fidelity with flexible implementation in both research settings and community practice. Implementation scientists distinguish between an intervention’s essential components (thought to cause clinical change) and its adaptable periphery (recommended, but not necessary). While implementing core components with fidelity may be essential for effectiveness, requiring fidelity to the adaptable periphery may stifle innovation critical for personalizing care and achieving successful community implementation. No systematic method exists for defining essential components a priori. We present the CORE (COmponents & Rationales for Effectiveness) Fidelity Method, a novel method for defining the key components of evidence-based interventions, and apply it to a case example of Reciprocal Imitation Teaching, a parent-implemented social communication intervention. The CORE Fidelity Method involves three steps: (1) gathering information from published and unpublished materials; (2) synthesizing information, including empirical and hypothesized causal explanations of component effectiveness; and (3) drafting a CORE model and ensuring its ongoing use in implementation efforts. Benefits of this method include (a) ensuring alignment between intervention and fidelity materials; (b) clarifying the scope of the adaptable periphery to optimize implementation; and (c) hypothesizing, and later empirically validating, the intervention’s active ingredients and their associated mechanisms of change.

Although research on social communication interventions is growing, evidence-based interventions are still not consistently available to autistic children and their families (Hume et al., 2021). Addressing this science-to-service gap requires translating interventions for community systems while maintaining their effectiveness (Dingfelder & Mandell, 2011; Sam et al., 2020). Positive outcomes for autistic children and their families are thought to require fidelity to intervention protocols. Therefore, ensuring fidelity is widely viewed as essential to effective implementation (Pellecchia et al., 2015; Yoder et al., 2020).

Different conceptualizations of fidelity complicate its measurement (Wiltsey-Stirman, 2020). Fidelity is often defined as the degree to which the “essential” elements of an intervention are replicated “as intended” during implementation (Abry et al., 2015). However, fidelity can also refer to adherence to a written manual or protocol, competence or quality of intervention delivery, and/or the degree to which the intervention differs from other interventions (Kendall & Beidas, 2007; Schoenwald et al., 2011). In multilevel interventions, such as parent-implemented interventions, fidelity can be measured at each level (e.g., providers’ fidelity in teaching parents, parents’ fidelity in using intervention strategies with their children; Wainer & Ingersoll, 2013). Identifying the most central components of evidence-based interventions at each level will facilitate implementation of these interventions in community settings (Miller et al., 2020), both by ensuring fidelity to essential aspects of the intervention and by delineating what may be adapted (Kirk et al., 2020; Chambers & Norton, 2016).

Unfortunately, defining essential or “core” components is surprisingly complex. There is little agreement on what is meant by “core” (e.g., Johnson et al., 2018). Trials of intervention packages or protocols do not indicate which elements of the intervention may have been most responsible for efficacy. Intervention developers’ beliefs about essential elements are seldom explicitly recorded in intervention materials (e.g., protocols, manuals, fidelity measurement tools) and can vary across these materials. Key elements are therefore open to broad interpretation, and intervention protocols likely contain both essential and adaptable components (Damschroder et al., 2009). Intervention manuals in fact serve several other functions beyond documenting key elements, such as standardizing interventions for research trials (Stiles et al., 1986), facilitating therapist training and evaluation, and aiding dissemination (Kendall et al., 1998). Some argue that understanding central intervention elements is a necessary precondition to the effective use of manuals (Kendall et al., 1998), suggesting we cannot rely on manuals to delineate key features.

We and others define essential components as potential “active ingredients” of the intervention: strategies or procedures that are either hypothesized or empirically shown to be responsible for positive clinical outcomes (Chambers & Norton, 2016; Miller et al., 2020). In contrast, the “adaptable periphery” includes aspects of an intervention that are not necessary for efficacy but may enhance effectiveness (Kendall & Frank, 2018). A related distinction is drawn between an intervention’s “core functions” (i.e., the purposes or mechanisms through which an intervention operates) and its “forms” (i.e., the intervention activities carried out to achieve the core functions; Perez Jolles et al., 2019; Kirk et al., 2019). Intervention forms are thought to be adaptable, whereas core functions are not. For example, practicing a new skill and delivering feedback are two common elements of parent coaching. Practice is thought to be essential (and a core function of learning), but the method of feedback is considered adaptable, and feedback can be delivered in many different forms (e.g., live vs. video feedback; during vs. after practice). The “theory of change” approach, long prominent in evaluation science, holds that effective implementation and evaluation depend on a theory of both how and why an intervention works (Weiss, 1995; Breuer et al., 2016). Similarly, Kirk and colleagues’ (2020) Model for Adaptation Design and Impact (MADI) highlights an intervention’s “alignment with core functions” as a potential mechanism by which implementation of the intervention achieves clinical outcomes. Thus, capturing an intervention’s fidelity to core functions requires understanding the rationale for each component’s effectiveness.

Empirical evidence of the causal mechanisms by which interventions work is seldom available or comprehensive. Intervention manuals may include active, inert, and even deleterious elements (Dimidjian & Hollon, 2010), and non-manualized aspects of the intervention may also be responsible for change (Wampold, 2015). Most psychosocial interventions are multicomponent, which obscures which components are responsible and necessary for positive outcomes. Because randomized trials evaluate manualized interventions as a package, they do not offer proof of the causal effects of individual intervention elements or their respective mechanisms of change (Kazdin, 2007). Therefore, while we have growing evidence of overall efficacy or effectiveness for many interventions, this evidence does not clearly delineate which individual components or collections of components are drivers (i.e., mechanisms) of clinical change. Experimental trials using an additive or dismantling approach (Bell et al., 2013), factorial design (Collins et al., 2014), or SMART design (Kasari et al., 2018) have been recommended to isolate individual components, but not all interventions can be easily delivered in a modular fashion, and these designs yield information only after components have been formally tested. A systematic process for integrating available theory, evidence, and clinical insight is needed to generate a priori hypotheses about the core components of an intervention before those components can be formally evaluated.

It is imperative that we define a lean set of key elements for each intervention, because insisting on fidelity to elements that actually belong to the adaptable periphery may stifle clinical judgment and innovation critical for personalizing care and achieving fit-to-context (e.g., with respect to the feasibility, acceptability, cost-effectiveness, and sustainability of the intervention). Attention to key components is growing in the field of implementation science, as indicated by recommendations to categorize adaptations as fidelity-consistent or fidelity-inconsistent (Wiltsey-Stirman et al., 2019) and to employ standardized tools to describe how an intervention was delivered and/or adapted post hoc (e.g., TIDieR, Hoffmann et al., 2014; FRAME, Wiltsey-Stirman et al., 2019). Yet no current models outline how to define essential elements a priori, in the absence of empirical evidence of individual component effectiveness.

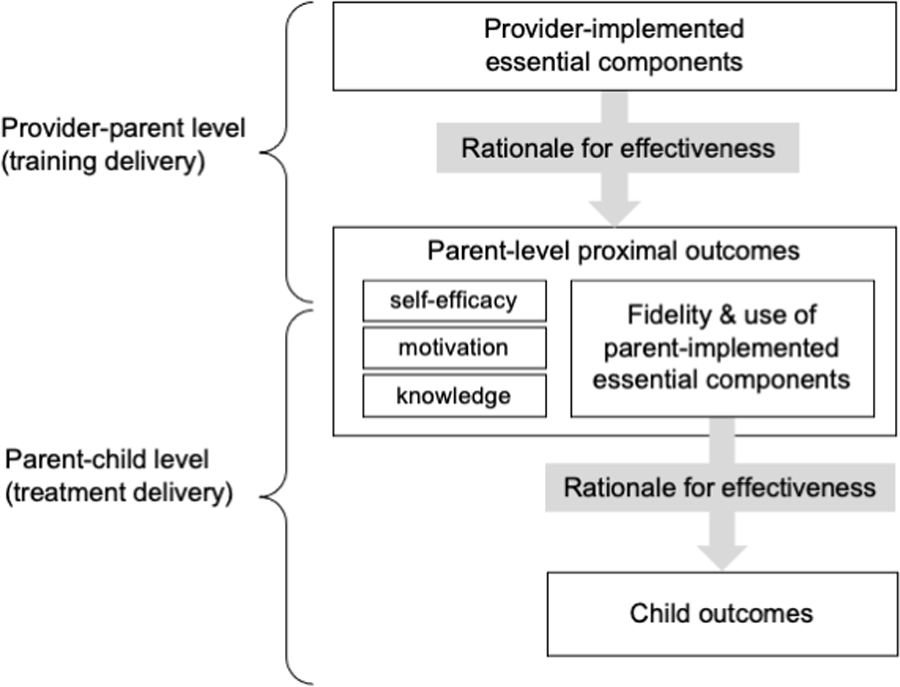

In this paper, we describe the CORE (COmponents & Rationales for Effectiveness) Fidelity Method, which defines the most central components of psychosocial evidence-based treatments (EBTs) for more effective implementation. The CORE Fidelity Method can be used with EBTs at any stage before there is empirical evidence of core components: before the first evaluation of the EBT, after many efficacy studies have been conducted, and iteratively as the EBT is evaluated in different contexts and at different stages of the translational pipeline. Here, we demonstrate its use through a case example of an EBT for autistic children. As is true in the broader field of behavioral health, evidence for the central components of EBTs in autism is growing but limited (Gulsrud et al., 2016; Pellecchia et al., 2015; Frost, Russell & Ingersoll, 2021). Most EBTs for autistic individuals consist of multiple components, and many EBTs for young autistic children are parent-implemented and thus multilevel (Wainer & Ingersoll, 2013; Figure 1).

Figure 1.

Conceptual model of how essential components affect outcomes in a multilevel (parent-implemented) intervention.

Our case example focuses on Reciprocal Imitation Teaching (RIT), a parent-implemented Naturalistic Developmental Behavioral Intervention (NDBI) that targets spontaneous imitation and social communication skills in young children (Ingersoll, 2012; Schreibman et al., 2015). RIT was initially implemented as a direct, provider-to-child intervention (Ingersoll & Schreibman, 2006; Ingersoll et al., 2007; Ingersoll, 2010; Ingersoll, 2012), with four independent replications using single-case design (Cardon & Wilcox, 2011; Zaghlawan & Ostrosky, 2016; Penney & Schwartz, 2019; Töret & Özmen, 2019). RIT has been evaluated in various formats and settings, including telehealth training (Wainer & Ingersoll, 2013), enhanced provider coaching (Penney & Schwartz, 2019), parent implementation (Zaghlawan & Ostrosky, 2016), parent-to-parent peer training (Hall et al., 2018), and implementation in the Washington State Part C early intervention (EI) system (Ibañez et al., 2021). However, the core components of RIT have not been documented, and the rationale for RIT’s effectiveness has not been evaluated.

Our group is beginning a hybrid effectiveness-implementation trial of parent-implemented RIT (Figure 1) across Part C EI systems in four states. In preparation for this trial, there was a clear need to consolidate RIT information from previous studies, especially in light of EI providers’ reports that they often adapt RIT to their circumstances (Ibañez et al., 2021). A priori specification of essential intervention components before an effectiveness-implementation trial will facilitate development of succinct and consistent training materials that are useful for the broadest audience possible (Curran et al., 2012).

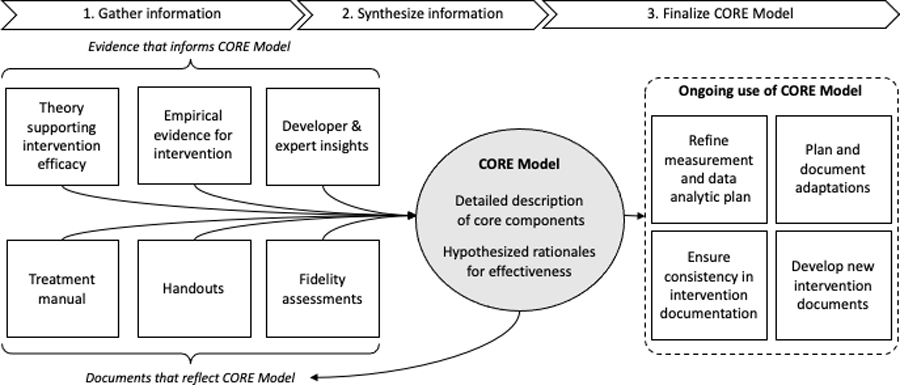

Method

The CORE Fidelity Method (Table 1, Figure 2) is a team-based method with three stages. First, the team gathers information, including a review and discussion of the fidelity literature, published and grey literature on the EBT and related interventions, and interviews with recognized experts on the EBT. Second, the team synthesizes this information by noting key distinctions in how the EBT is structured and delivered, describing commonalities and variations in components across intervention documents, and recording both empirical evidence and theory regarding the hypothesized causal mechanisms by which intervention components are efficacious. Third, the team drafts the CORE (COmponents & Rationales for Effectiveness) Model, which includes (1) the most essential elements of the intervention, along with potential recommended or adaptable components, each paired with an empirically supported or hypothesized causal explanation of efficacy, and (2) a plan for ongoing use of the CORE model to revise and create intervention documents, frame future analyses of efficacy, measure fidelity, and evaluate potential adaptations to the intervention (Figure 2).

Table 1.

COmponents & Rationales for Effectiveness (CORE) Fidelity Method: Development and Case Example with RIT

| Stage | Activity | Activity scope | Illustrative case example: Reciprocal Imitation Teaching (RIT) for social communication |
|---|---|---|---|
| 1. Gather information | Review published literature | • Conceptual literature (e.g., fidelity, adaptation, theory of change) • Empirical literature (e.g., prior efficacy studies, related interventions) | We reviewed literature on adaptation, theory of change, prior efficacy and effectiveness studies of RIT, and studies of other NDBIs. |
| | Review “grey literature” | • Unpublished studies (e.g., conference proceedings) • Intervention materials (e.g., manuals, handouts, fidelity tools) | For RIT, we reviewed the following: • Intervention manuals (previous and current) • Provider training slide deck & handouts • Parent training lesson plans and handouts • Current fidelity measurement forms |
| | Interview recognized experts | • Intervention developers, independent implementers, trainers, and clinicians | We interviewed lead investigators, including RIT developers and experts in other NDBIs. |
| 2. Synthesize information | Describe consistencies & variations across intervention iterations | • Across studies (e.g., adaptations) • Within studies (e.g., different descriptions of what is required versus recommended for fidelity) | • We noted variations across studies in the length of training sessions and the amount of recommended practice and delivery. • There were within-study variations between parent and provider manuals (which encourage certain strategies) and fidelity checklists (which frame them as mandatory). |
| | Conceptualize key distinctions in the structure of the intervention | • Intervention levels (e.g., trainer to therapist, to parent, to child) • Critical strategies (i.e., intrinsic to the intervention as defined) • Modular components (i.e., could be implemented independently) | We considered the following to be important for our conceptualization of key RIT components: • The multilevel nature of RIT as a parent-implemented intervention • Adherence vs. competence in delivering essential practices • Function vs. specifying form when necessary • Elements as mandatory, recommended, or conditional |
| | Record empirical or hypothesized causal explanations of efficacy | • RCTs, small-n designs, evaluations of treatment mechanisms • Published causal models and experts’ beliefs about how the intervention works | We identified efficacy studies of RIT and searched for evidence of treatment mediation effects for similar NDBIs. We identified prior theory underlying RIT and NDBIs and recorded experts’ explicit causal hypotheses. |
| 3. Finalize CORE model | Work towards consensus among intervention experts and stakeholders | • Preliminary discussion • Intentional consensus process • Additional learning cycles as necessary to reach consensus | We used Nominal Group Technique (NGT) and invited both RIT developers and expert trainers/clinicians to contribute their research and clinical insights on potential key components and their hypothesized causal explanations. |
| | Document essential intervention components (Components) | • All intervention elements hypothesized as necessary for causal effects | Examples from RIT CORE model: • Contingently imitate child toy play, body movements, gestures, and vocalizations. • Model an action for the child to imitate (so that the child can see it) after a period of contingent imitation. • Pacing of the RIT cycle: spend more time on imitation than on asking the child to imitate. |
| | Document hypothesized causal explanations (Rationale for Effectiveness) | • Primary and alternative hypotheses of causal effect | Example from RIT CORE model: • Component: Describe the child’s and your play/actions using simple, follow-in language • Rationale for effectiveness: Increases the child’s attention to the imitation model/action and provides appropriate language models. Promotes language. |
| | Use CORE Fidelity Method in ongoing efficacy studies and implementation efforts | A plan for how to prevent drift or unhelpful adaptations by: • Assessing fidelity • Ensuring fidelity when drafting new documents • Analyzing treatment mechanisms | We initiated a study committee to review new documents (e.g., provider handouts) and initiatives (e.g., plan to analyze mechanism) and compare them to the CORE model as documented. |

Figure 2. Diagram of CORE Fidelity Method.

Knowledge of essential intervention components is supported by evidence, theory, and developer insight and is reflected in intervention manuals, materials, and fidelity measures. The CORE model makes this “latent” knowledge observable and concrete by documenting both essential intervention components and their rationales for effectiveness. The CORE Fidelity Method therefore supports informed revisions to original intervention documents; development of new documents; better measurement of fidelity, mechanisms of efficacy, and intervention outcomes; and informed adaptations when implementing in new settings.

CORE Fidelity Method Stage 1: Gather Information

Review Published Literature

The team discusses and becomes familiar with current literature on fidelity measurement, the EBT of interest, and similar interventions. Members should represent a variety of perspectives, roles, and disciplines (e.g., implementation scientists, intervention developers, clinicians). Literature review should focus on a) the description of the form and function of intervention components; and b) theories or empirical demonstrations of active ingredients and mechanisms of change. The goal of this step is for teams to think carefully about what “fidelity” means for their intervention.

Review Grey Literature

Team members closely review “grey literature,” including current and past fidelity documents, manuals, lesson plans (if applicable), EBT training materials, and/or grant applications. The goal of this step is to develop a nuanced understanding of the EBT’s structure (i.e., what comprises a “component”) from sources that typically include greater detail than peer-reviewed manuscripts.

Interview Recognized Experts

Not all information about an intervention is available in written form. Often, clinicians and intervention developers have assumptions about which components of their intervention are most important and why. Relevant interview questions include, “What are the components, procedures, or practices that are essential to this intervention?”, and, “How or why does doing this component lead to the intended outcomes of this intervention?” The goal of this step is to generate a list of potential essential elements with initial hypotheses as to why they work (i.e., causal mechanisms).

CORE Fidelity Method Stage 2: Synthesize Information

Describe Consistencies and Variation Across Intervention Iterations

The research team should review each training or fidelity document within and across research studies or implementation settings and note commonalities and differences across materials. Did the format or content of the intervention change at any point? For example, has task-shifting been used to increase capacity for delivering the intervention (e.g., a transition from provider- to parent-implemented EBT delivery)? Were changes introduced by the research team, or by clinicians in response to local needs? Both inter-study distinctions (e.g., adaptations across studies) and intra-study distinctions (e.g., differing descriptions across documents) should be noted. Common elements across iterations of the intervention may be candidates for core elements, while variations may fall in the adaptable periphery. For interventions with little evidence of drift or with clearly prescribed procedures, this step may be abbreviated. At the end of this step, groups should have a list of common elements and of variations or documented adaptations to the intervention.
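The comparison described in this step can be thought of as a simple set operation. As a minimal sketch, assuming each document has already been coded into a set of component labels (a hypothetical representation; the CORE Fidelity Method does not prescribe one), elements present in every document are flagged as candidate core elements and the remainder as candidate periphery. The document names and component labels below are illustrative only and do not reproduce the contents of any actual RIT document.

```python
from collections import Counter

# Hypothetical coding of three intervention documents into component labels.
# Labels loosely follow RIT elements mentioned in the text; they are NOT the
# actual contents of any RIT document.
documents = {
    "manual_v1": {"imitate child", "narrate imitation", "model action", "pacing", "two toy sets"},
    "manual_v2": {"imitate child", "narrate imitation", "model action", "pacing", "model gestures"},
    "fidelity_checklist": {"imitate child", "narrate imitation", "model action", "pacing"},
}


def compare_documents(docs):
    """Split components into candidate core elements (present in every document)
    and variations (present in some, but not all, documents)."""
    component_sets = list(docs.values())
    common = set.intersection(*component_sets)
    variations = set.union(*component_sets) - common
    # Count how many documents mention each varying component
    counts = Counter(c for s in component_sets for c in s if c in variations)
    return common, variations, counts


common, variations, counts = compare_documents(documents)
print("Candidate core:", sorted(common))
print("Candidate periphery:", sorted(variations))
print("Mentions per variation:", dict(counts))
```

The output is only a starting point for team discussion: a component missing from one document (for example, a fidelity checklist) may reflect an omission rather than a genuinely adaptable element, which is why the later consensus stage remains necessary.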

Conceptualize Key Distinctions in the Structure of the Intervention

Team members discuss key concepts as they relate to the development of the CORE model of the EBT. For example, is it important to account for multiple levels of intervention, or for the system in which the intervention is embedded? Does the intervention include modular components or decision-making algorithms? Will components be required, or framed as conditional on certain circumstances? After this step, teams can begin drafting the initial structure of the intervention’s CORE model (e.g., whether there are multiple levels of intervention delivery).

Record Empirical and Hypothesized Causal Explanations of Efficacy

Both empirically demonstrated and hypothesized causal mechanisms of efficacy should be tentatively paired with each key component. Empirical evidence should be prioritized when available. More often, intervention developers will have written or unwritten theories about why their intervention is effective (e.g., clinical insights based on case examples). All of these “hypothesized” mechanisms, or causal explanations, should be included in the CORE model. Even when not yet empirically validated, causal mechanisms ground and justify each component while setting the stage for future research. After this step, each core element should be paired with one or more plausible hypotheses as to why or how it works.

CORE Fidelity Method Stage 3: Finalize CORE Model

Work Towards Consensus Among Intervention Experts and Stakeholders

Soliciting and incorporating intervention expert and community stakeholder input is necessary to finalize the CORE model. We recommend that the research team choose a method for reaching group consensus that is most pragmatic for their project (e.g., Delphi method, Dalkey & Helmer, 1963; Analytic Hierarchy Process, Saaty, 1990; Nominal Group Technique, Delbecq et al., 1975). To conduct a trial, it is necessary to achieve consensus regarding essential intervention components but not causal explanations; competing causal explanations may inform analyses and stimulate additional research.

Document Essential Intervention Elements (COmponents), & Document Hypothesized Causal Explanation (Rationales for Effectiveness)

Documenting the elements deemed essential for intervention outcomes, along with plausible hypotheses for how they work, is necessary to ensure reliability and prevent drift. The scope and level of detail may vary according to characteristics of the intervention. The team should choose a descriptive format for their CORE model that will be pragmatically useful in achieving their research aims. By the end of this step, the research team should have documented a list or model composed of essential intervention elements with their hypothesized causal explanations.

Ideally, the following types of evidence will be considered as a rationale for effectiveness for each core component, in descending order of weight: 1) evidence of change mechanism from the EBT or other similar intervention studies, 2) other evidence from the EBT or similar intervention studies, 3) evidence from basic science, 4) practice-based evidence (Locke et al., 2019), and 5) clinical insights.
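This ordering can be expressed as a ranking over candidate rationales. The Python sketch below is a hypothetical representation (the class names, field names, and example rationales are our own and are not part of the CORE Fidelity Method): it numbers the five evidence types so that lower values carry more weight and sorts the rationales recorded for a single component accordingly.

```python
from dataclasses import dataclass
from enum import IntEnum


class EvidenceType(IntEnum):
    """Evidence types for a rationale, numbered in descending order of weight
    (1 = most weight). Labels paraphrase the five types listed in the text."""
    CHANGE_MECHANISM = 1    # evidence of change mechanism from the EBT or similar interventions
    OTHER_EBT_EVIDENCE = 2  # other evidence from the EBT or similar intervention studies
    BASIC_SCIENCE = 3       # evidence from basic science
    PRACTICE_BASED = 4      # practice-based evidence
    CLINICAL_INSIGHT = 5    # clinical insights


@dataclass
class Rationale:
    description: str
    evidence: EvidenceType


def rank_rationales(rationales):
    """Return rationales ordered from most to least heavily weighted evidence."""
    return sorted(rationales, key=lambda r: r.evidence)


# Hypothetical rationales recorded for one component (contingent imitation)
rationales = [
    Rationale("Developer's clinical observation of increased engagement", EvidenceType.CLINICAL_INSIGHT),
    Rationale("Adult responsiveness mediated outcomes in other NDBIs", EvidenceType.CHANGE_MECHANISM),
]
ranked = rank_rationales(rationales)
for r in ranked:
    print(r.evidence.name, "-", r.description)
```

Ranking does not discard lower-weighted rationales; consistent with the method, all plausible hypotheses stay attached to the component, and the order simply indicates which ones rest on the strongest evidence.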

Use CORE Fidelity Method in Ongoing Efficacy Studies and Implementation Efforts

By making core components—and their rationales for effectiveness—transparent and concrete, the CORE Fidelity Method supports: 1) informed revisions to original intervention documents; 2) development of new intervention documents to support EBT delivery; 3) more targeted measurement of fidelity, mechanisms of efficacy, and intervention outcomes; and 4) adaptations when implementing in new settings. In addition, the team should commit to a process by which they will reference and use their CORE model for these four purposes in their ongoing work.

Community Involvement.

There was no direct community involvement in the present study. However, members of this project team are EI or clinical providers. Moreover, the need for the CORE Fidelity Method became apparent in part through feedback from community early intervention providers (Ibañez et al., 2021) suggesting that providers frequently adapt RIT. Future work refining the RIT CORE model within the larger RIT trial will involve community providers and parents who have been trained in RIT; we will ask them for their insight on core RIT components and their rationales for effectiveness as we re-complete the CORE Fidelity Method in an iterative fashion. In addition, autistic study team members, providers, and parents of autistic children or children at risk for ASD will be asked for their views on the acceptability of and satisfaction with RIT (i.e., social validity), as well as on how the RIT adaptable periphery could best be tailored. We have existing information on the feasibility and acceptability of RIT for parents and providers (Walton & Ingersoll, 2012; Ibañez et al., 2019).

Results: Application of the CORE Fidelity Method

We applied the CORE Fidelity Method to RIT over approximately 15 weeks. Additional input was provided by a team of five RIT developers and expert RIT trainers. Including preparation time and meetings across all three stages of the Method, the group members most active in creating the RIT CORE model dedicated approximately 73 person-hours to the effort (approximately 15 hours per person).

CORE Fidelity Method Stage 1: Gather Information

Review Published Literature

To start, our group considered the question, “What is fidelity?” We began with a series of informal presentations on conceptual and empirical articles (e.g., Nelson et al., 2012; Wainer & Ingersoll, 2013), which led to several spirited discussions about conceptual aspects of fidelity, including distinctions between adherence and competence (Collyer et al., 2020), intervention forms and core functions (Perez Jolles et al., 2019; Kirk et al., 2019), and the classification of intervention elements as mandatory, recommended, conditional, or prohibited (i.e., incorporating deontic logic). Next, we reviewed published literature on RIT and NDBIs, in particular noting any discussion of the form (e.g., number of sessions, client characteristics) and function (e.g., hypothesized causal mechanisms) of RIT intervention components.

Review “Grey Literature”

We reviewed 17 unique RIT documents, including the current RIT manual, provider training materials and handouts, caregiver handouts and lesson plans, and a fidelity measurement form. RIT has documentation for multiple delivery formats (e.g., researchers, community providers, caregivers, siblings) and via multiple modalities (e.g., in-person, synchronous telehealth, asynchronous telehealth). Document review revealed a wide range of RIT components, with notable variation across documents and in how RIT was defined over time and across studies. RIT documents included a combination of essential and recommended components that were often not explicitly delineated. This variation clearly justified the need for the development of a lean CORE Model for RIT.

Interview Recognized Experts

We created a list of questions for RIT intervention developers regarding what they viewed as the mandatory, recommended, and conditional elements of RIT, and why or how they thought each element influences RIT’s intended outcomes of improved spontaneous imitation and social communication. RIT intervention developers were invited to a meeting to help clarify their position on each of the elements and sources of variation. They provided feedback that informed our preliminary grouping of components, and they also mentioned additional components that we had not previously noted in our document review (e.g., intensity, or having some level of ‘extended’ practice).

CORE Fidelity Method Stage 2: Synthesize Information

Describe Consistencies and Variations across Intervention Iterations

Across the range of sources of information regarding RIT, we noted that some elements were always present (e.g., imitate the child, narrate your imitation, present a model for the child to imitate, and spend more time imitating the child than modeling new actions). We also noted several inter-study variations, including differing levels of delivery, whether providers modeled gestures in addition to actions, whether two sets of identical toys were required, the duration of each RIT session, and the recommended intervention dose. We noted potential intra-study variations between parent and provider manuals (which encourage certain strategies) and fidelity checklists (which frame them as mandatory). For example, “create a defined space” and “limit distractions” were phrased as mandatory steps in RIT manuals and lesson plans but were missing from the fidelity checklist. Both were later conceptualized as contingent on children’s level of distraction in the final CORE model.

Conceptualize Key Distinctions in the Structure of the Intervention

We considered the multilevel nature of RIT as a parent-implemented intervention including: parent-to-child, or treatment delivery; provider-to-parent, or training delivery; and even trainer-to-provider, or implementation strategy delivery. Essential components at the provider-to-parent level may directly impact parents’ self-efficacy, motivation and knowledge of RIT, and are thought to indirectly affect child social communication outcomes by increasing the quality and frequency with which parents use RIT techniques (Figure 1). Parent coaching and building family capacity align with practice recommendations from the Division for Early Childhood (Division for Early Childhood, 2014) and are key principles of the federally-funded early intervention system in which our RIT implementation trial will take place (IDEA, 2004; Pickard et al., 2021).

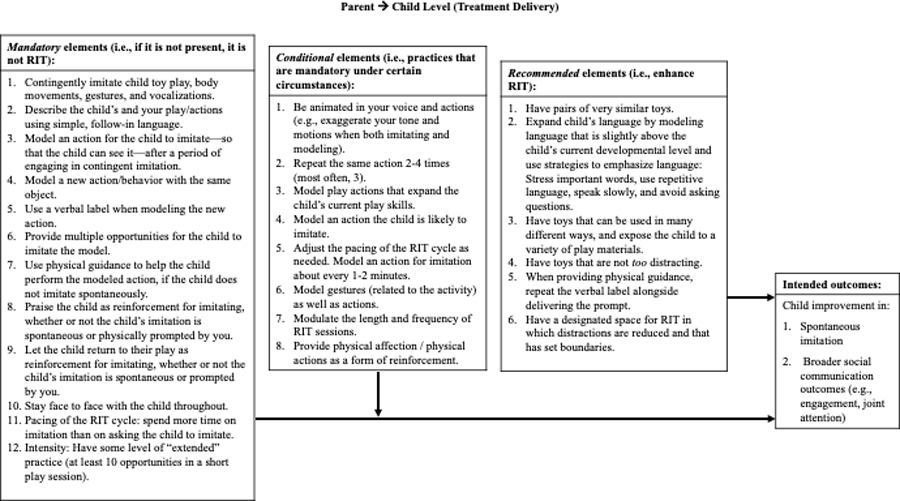

Many intervention developers conceptualize some aspects of their intervention as essential and others as recommended, but rarely is this clearly delineated in their training materials. In creating the CORE model for RIT, we defined mandatory elements as fundamental for the intervention to be considered RIT. Conditional elements were defined as practices that are mandatory under certain circumstances. For example, a conditional practice might become mandatory depending on the characteristics of the child (e.g., language level, play level, self-regulation, attention), caregivers (e.g., stress, schedule demands), or environment (e.g., availability of toys). Recommended elements were defined as practices that improve or enhance the delivery of RIT.
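These three categories behave differently once a delivery context is known: mandatory elements are always required, conditional elements become required only when their condition holds, and recommended elements are never strictly required. The Python sketch below illustrates that logic under stated assumptions. The representation (a predicate over a context dictionary) and the context key `child_easily_distracted` are hypothetical. “Create a defined space” is used as a conditional element because the text describes it as contingent on children’s level of distraction, and the recommended entry is a placeholder rather than an actual RIT classification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    MANDATORY = "mandatory"
    CONDITIONAL = "conditional"
    RECOMMENDED = "recommended"


@dataclass
class Element:
    name: str
    status: Status
    # For conditional elements: a predicate over the delivery context that returns
    # True when the element becomes mandatory (hypothetical representation).
    condition: Optional[Callable[[dict], bool]] = None


def required_in_context(element: Element, context: dict) -> bool:
    """True if delivering this element is required for fidelity in this context."""
    if element.status is Status.MANDATORY:
        return True
    if element.status is Status.CONDITIONAL:
        return element.condition is not None and bool(element.condition(context))
    return False  # recommended elements enhance delivery but are not required


elements = [
    Element("Contingently imitate the child", Status.MANDATORY),
    Element("Create a defined space", Status.CONDITIONAL,
            condition=lambda ctx: ctx.get("child_easily_distracted", False)),
    Element("Placeholder recommended practice", Status.RECOMMENDED),
]

focused = {"child_easily_distracted": False}
distracted = {"child_easily_distracted": True}
print([e.name for e in elements if required_in_context(e, focused)])
# ['Contingently imitate the child']
print([e.name for e in elements if required_in_context(e, distracted)])
# ['Contingently imitate the child', 'Create a defined space']
```

Encoding conditions explicitly makes it possible for a fidelity checklist to ask whether a conditional element applied before scoring it, which addresses the manual-versus-checklist mismatch described earlier.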

Record Empirical and Hypothesized Causal Explanations of Efficacy

We identified efficacy studies of RIT and searched for evidence of intervention mediation effects for similar NDBIs. We identified prior theory underlying RIT and NDBIs and recorded explicit causal hypotheses reported by experts.

The developmental and behavioral theories underlying RIT suggest potential causal explanations for its efficacy. For example, developmental cascades (Masten & Cicchetti, 2010) describe the process by which a child’s gains in one domain (e.g., cognitive or fine motor) build sequentially and influence gains in a variety of other domains. Consistent with this account, children’s imitation of others’ actions has been found to predict later increases in joint attention, language, and social communication in the context of RIT, in other NDBIs, and in development more generally (e.g., Ingersoll, 2012; Yoder et al., 2020; Edmunds et al., 2017). RIT is developmental in that the context for intervention (e.g., which toys or activities are used) is child-directed. RIT can also be viewed through the lens of behavioral theory in that it includes an embedded discrete trial that ends with natural reinforcement via adult praise and the child’s return to their own self-directed play.

Empirical evidence of mediated intervention effects has not yet been established for RIT; thus, it was important to draw on the literature for other NDBIs. For example, the majority of RIT intervention time consists of the adult imitating the child in a responsive manner, and this type of adult responsiveness to the child has been found to mediate intervention effects for three different NDBIs (JASPER, Gulsrud et al., 2016; PACT, Pickles et al., 2015; ImPACT, Yoder et al., 2020).

CORE Fidelity Method Stage 3: Finalize the CORE Model

Work Towards Consensus Among Intervention Experts and Stakeholders

Nominal Group Technique (NGT; Delbecq et al., 1975) is a structured group consensus-building process consisting of four main steps: independent idea generation, round robin idea sharing, clarification of ideas, and voting. NGT was selected for this project because it promotes targeted discussion and produces a clear set of ideas for group voting. Two researchers (SE and AB) facilitated NGT with five RIT developers and expert trainers to reach consensus on the mandatory, recommended, and conditional components of the intervention at both the provider-parent and parent-child levels of delivery. Note that we conducted our consensus process with intervention experts and academic clinicians only; future consensus processes should include other stakeholders who have experienced RIT directly (e.g., providers, parents). In addition, future studies of RIT with older children, adolescents, and adults should include autistic individuals who have received RIT.

Independent idea generation occurred asynchronously. Participants were asked to respond to two sets of probes provided in a spreadsheet sent via email. One set of questions focused on RIT implementation at the provider-parent level, and the other focused on the parent-child level (Appendix, Table A1). Participants and facilitators then met via video conference to complete round robin idea sharing, clarification of ideas, and voting. A facilitator prompted one participant at a time to share a single component of RIT, whether they considered it to be mandatory, conditional, or recommended, and how they thought it worked. The facilitator asked each participant in turn to share one idea until no new ideas were presented. A second facilitator recorded participant responses in a screen-shared document. The round robin process was conducted once for the parent-child level and once for the provider-parent level of RIT delivery.
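The round robin stopping rule (one idea per participant per turn, ending when no new ideas are offered) can be sketched as a short loop. This is an illustrative sketch only, assuming each participant arrives with an ordered list of ideas from the asynchronous spreadsheet and that a repeated idea is detected by case-insensitive text match; in the actual NGT, overlap between ideas was judged by the group during clarification. The function name `round_robin` and the participant labels are hypothetical.

```python
from typing import Dict, List, Tuple


def round_robin(queues: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Record one new idea per participant per turn until a full round adds nothing.

    queues maps a (hypothetical) participant label to the ideas that participant
    prepared during independent idea generation, in the order they would share them.
    Returns (participant, idea) pairs in the order a recorder would write them down.
    """
    recorded: List[Tuple[str, str]] = []
    seen = set()
    # Copy the lists so the caller's input is not consumed.
    remaining = {name: list(ideas) for name, ideas in queues.items()}
    while True:
        new_this_round = False
        for name, ideas in remaining.items():  # fixed speaking order
            # Assumption: an idea already shared by someone else is skipped.
            while ideas and ideas[0].strip().lower() in seen:
                ideas.pop(0)
            if ideas:
                idea = ideas.pop(0)
                seen.add(idea.strip().lower())
                recorded.append((name, idea))
                new_this_round = True
        if not new_this_round:  # nobody offered anything new: sharing ends
            return recorded


ideas = {
    "Expert A": ["Imitate the child's actions", "Model an action to imitate"],
    "Expert B": ["model an action to imitate", "Praise imitation"],
}
result = round_robin(ideas)
```

Here Expert A's second idea duplicates one Expert B already shared, so it is skipped, and sharing ends after the round in which no one has anything new to add.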

RIT components were then reviewed for clarity, and similar components were combined. Participants voted on whether each remaining component was mandatory, conditional, or recommended by inserting a participant-unique icon next to each component description. Components receiving a majority vote within a category were included in that category of the final RIT CORE model, with the developer’s vote weighted more heavily. There was group consensus for the majority of components. At the parent-child level, complete consensus was reached for 42% of the mandatory components; the remainder had at least 80% agreement, and all were endorsed by the developer. Throughout the NGT process, participants revised their categorization of components based on the rationales provided by other experts.
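The voting rule can be made concrete with a small tally function. This is a hedged sketch, not the procedure used in the study: the article does not report how heavily the developer's vote was weighted, so `DEVELOPER_WEIGHT = 2.0` is a hypothetical value, and "majority" is assumed to mean more than half of the total weighted vote. Percent agreement is computed unweighted, matching how the 100% and 80% figures above would arise with five voters (5 of 5 and 4 of 5).

```python
from collections import Counter
from typing import Dict, Optional, Tuple

DEVELOPER_WEIGHT = 2.0  # hypothetical: the actual weight is not reported


def tally(votes: Dict[str, str], developer: str) -> Tuple[Optional[str], float]:
    """Return (winning category or None, unweighted % agreement with the top category).

    votes maps each voter to "mandatory", "conditional", or "recommended".
    """
    weighted: Counter = Counter()
    for voter, category in votes.items():
        weighted[category] += DEVELOPER_WEIGHT if voter == developer else 1.0
    top_category, top_weight = weighted.most_common(1)[0]
    # Assumption: a category wins only with a strict majority of the weighted vote.
    winner = top_category if top_weight > sum(weighted.values()) / 2 else None
    agreement = 100.0 * sum(c == top_category for c in votes.values()) / len(votes)
    return winner, agreement


votes = {
    "developer": "mandatory",
    "expert_1": "mandatory",
    "expert_2": "mandatory",
    "expert_3": "mandatory",
    "expert_4": "conditional",
}
winner, agreement = tally(votes, "developer")
```

With one dissenting vote among five, the component is classified as mandatory with 80% agreement. Returning `None` when no category reaches a weighted majority leaves the component for further discussion rather than forcing a classification.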

Document Essential Intervention Elements (COmponents) and Hypothesized Causal Explanations (Rationales for Effectiveness)

During the NGT consensus process, we assembled the set of components judged to contribute to the efficacy of RIT. Documentation of each RIT component includes a description (i.e., what the strategy looks like), a hypothesized causal mechanism of efficacy (i.e., why the strategy is effective for clinical outcomes), and a specification of whether it is mandatory, recommended, or conditional. Mandatory and conditional components are viewed as essential, whereas recommended components are viewed as part of the adaptable periphery. We encouraged RIT NGT participants to draw on many types of information, including studies of RIT, studies of other NDBIs, RIT materials, and clinical insights, when articulating the rationale for effectiveness of each component they named. For parent-child RIT components, experts frequently drew on previous research on social communication development in general (rather than on intervention evidence). For example, for the mandatory component “describe the child’s and your play/actions using simple, follow-in language,” the rationale for effectiveness drew on empirical evidence from studies of general development: “Increases the child’s attention to the adult’s imitative actions and promotes child language development.” See Figure 3 for an overview model at the parent-child level. A full CORE model at both levels is available in the Appendix.
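For teams that keep their CORE model in a structured, machine-readable form (for example, to generate fidelity checklists), each documented component can be represented as a small record. The sketch below is hypothetical: the class and field names are our own illustration rather than part of the published RIT CORE model, and the two example entries paraphrase components described in this section. It encodes the rule stated above that mandatory and conditional components are essential while recommended components form the adaptable periphery.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Category(Enum):
    MANDATORY = "mandatory"
    CONDITIONAL = "conditional"
    RECOMMENDED = "recommended"


@dataclass(frozen=True)
class CoreComponent:
    description: str  # what the strategy looks like
    rationale: str    # hypothesized causal explanation (rationale for effectiveness)
    category: Category
    level: str        # delivery level: "parent-child" or "provider-parent"

    @property
    def essential(self) -> bool:
        # Mandatory and conditional components are essential;
        # recommended components belong to the adaptable periphery.
        return self.category in (Category.MANDATORY, Category.CONDITIONAL)


def adaptable_periphery(model: List[CoreComponent]) -> List[CoreComponent]:
    """Return the components that may be adapted without departing from the core."""
    return [c for c in model if not c.essential]


model = [
    CoreComponent(
        "Describe the child's and your play/actions using simple, follow-in language",
        "Increases the child's attention to the adult's imitative actions and "
        "promotes child language development",
        Category.MANDATORY,
        "parent-child",
    ),
    CoreComponent(
        "Have a designated space for RIT in which distractions are reduced",
        "Enhances the child's attention to the interaction and maximizes learning",
        Category.RECOMMENDED,
        "parent-child",
    ),
]
```

Keeping the rationale alongside each description means any document generated from the model carries the hypothesized mechanism with it, which supports the alignment goal described later in the method.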

Figure 3. CORE RIT Model at the parent-to-child (treatment delivery) level.

An identical chart for the provider-to-parent (indirect delivery) level of RIT is available in the Appendix.

At the parent-child level, 12 components were identified as mandatory, eight as conditional, and six as recommended. An example mandatory RIT component at the parent-child level is “Model an action for the child to imitate—so that the child can see it—after a period of contingent imitation.” The rationale for effectiveness is that modeling actions to imitate sets up the expectation for children to imitate, and this expectation starts to build the skill of imitation. An example conditional RIT component is “Repeat the same action 2–4 times (often 3).” According to RIT developers and trainers, the child’s attention may be lost if too many opportunities are presented. Alternatively, some children may require additional presentations to process the action. Thus, the ideal number of repetitions depends on the child’s presentation. Finally, an example recommended component is to “have a designated space for RIT in which distractions are reduced and that has set boundaries.” This is thought to enhance children’s attention to the interaction and maximize learning.

At the provider-parent level, 12 RIT components were identified as mandatory, none as conditional, and nine as recommended. For example, one mandatory coaching component is to “have parents practice RIT strategies in some manner (e.g., during session, submitting a video, role play),” because parent practice, when conducted in a way that is consistent with parents’ comfort level and cultural values, increases knowledge of RIT, encourages self-efficacy, and affords opportunities to receive feedback. A recommended RIT coaching component is to provide “visual supports (handouts, videos, etc.) that parents can take away from the session and to which they can refer,” because these supports reinforce knowledge and support ongoing use.

Use CORE Fidelity Method in Ongoing Efficacy Studies and Implementation Efforts

Last, we developed an internal process to use the CORE Fidelity Method in an implementation trial to: 1) inform revisions to intervention documents; 2) ensure accuracy when creating new documents; 3) facilitate better measurement of fidelity, mechanisms of efficacy, and intervention adaptations and outcomes; and 4) inform adaptations when implementing in new settings (Figure 2). We formed a study committee that will review new documents (e.g., revised provider handouts) and initiatives (e.g., plans to analyze mechanisms) and compare them to the CORE model. We also plan to use the CORE model to help us classify provider-led adaptations as fidelity-consistent or fidelity-inconsistent during our effectiveness-implementation trial of RIT in early intervention agency settings across four states.
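One way a study committee might operationalize "fidelity-consistent or fidelity-inconsistent" is a simple decision rule keyed to the CORE categories. The rule below is a hypothetical sketch, not the classification procedure used in the trial: it assumes that changes confined to recommended components (or that preserve a component's function) are fidelity-consistent, that dropping or altering a mandatory component is fidelity-inconsistent, that a conditional component counts as mandatory only when its triggering circumstance applies, and that anything not in the CORE model is referred for review. All names (`Adaptation`, `classify`, `CATEGORIES`, and the abbreviated component labels) are illustrative.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical lookup built from a CORE model: component label -> category.
CATEGORIES: Dict[str, str] = {
    "Model an action for the child to imitate": "mandatory",
    "Repeat the same action 2-4 times": "conditional",
    "Have a designated space for RIT": "recommended",
}


@dataclass(frozen=True)
class Adaptation:
    component: str                   # CORE component the adaptation touches
    alters_component: bool           # does it drop or change what the component does?
    condition_present: bool = False  # conditional only: does the triggering circumstance apply?


def classify(adaptation: Adaptation, categories: Dict[str, str]) -> str:
    category = categories.get(adaptation.component)
    if category is None:
        # Not covered by the CORE model (e.g., newly added content): flag for committee review.
        return "needs review"
    if not adaptation.alters_component or category == "recommended":
        return "fidelity-consistent"  # periphery change, or the component's function is preserved
    if category == "mandatory":
        return "fidelity-inconsistent"
    # Conditional components are mandatory only when their circumstance applies.
    return "fidelity-inconsistent" if adaptation.condition_present else "fidelity-consistent"
```

For example, a provider who runs RIT without a designated play space would be classified as fidelity-consistent, while one who stops modeling actions for the child to imitate would be classified as fidelity-inconsistent.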

Discussion

While researchers are often concerned with the delivery of essential intervention components, providers are more often concerned with the need to adapt practices to the individual and their context (Boyd et al., 2016; Kirk et al., 2020). This mismatch widens the science-to-service gap, which the CORE Fidelity Method aims to help bridge. To advance research on the implementation of autism-focused interventions, we need to capture the dialectic between key components that are thought to affect outcomes and peripheral components that are amenable to adaptation. Further, in line with a “theory of change” approach, we argue that intervention components should be considered essential only if they have a clear rationale for effectiveness. In this paper, we proposed the CORE Fidelity Method, which outlines flexible yet systematic steps for documenting the central components of any psychosocial intervention.

While there is a strong belief in the psychosocial intervention literature that an intervention must be delivered with a high level of fidelity to be effective (Proctor et al., 2011; Stahmer et al., 2015; Prowse & Nagel, 2015), empirical findings have been mixed. In fact, several meta-analyses have found a nonsignificant relation between fidelity and outcomes for psychosocial interventions for children (e.g., Rapley & Loades, 2018; Webb et al., 2010), while others found small relations between adherence and outcome, but not between competence and outcome (Collyer et al., 2018). There could be many reasons for the inconsistent findings between fidelity and outcomes, such as the way that fidelity was measured or a restricted range on the fidelity measure. In existing systems (e.g., IDEA Part C Early Intervention, schools), requiring fidelity to an overly broad set of strategies may hinder the ability to personalize the intervention to meet individual needs. Despite the inconsistent link between fidelity and outcomes, accurate fidelity measurement is still worth achieving, not only to understand content (i.e., clinical outcomes) but also to better understand context (i.e., whether the core components of a selected intervention work in EI settings for young children with autism when implemented by EI providers). We believe that when fidelity is distilled to the central components that have a clear rationale for effectiveness, the relation between intervention fidelity and desired outcomes may become more apparent.
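Restriction of range, one of the explanations offered above, can be illustrated with a short simulation on entirely synthetic data (these are not study results). The sketch assumes standardized fidelity and outcome scores that correlate at a hypothetical r = 0.5 in the full population, then recomputes the correlation using only high-fidelity cases (z > 1, roughly the top sixth), as might happen when every provider in a trial has been trained to a fidelity criterion. Under these assumptions the observed correlation is substantially attenuated even though the underlying relation is unchanged.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation computed from first principles."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(2021)  # fixed seed for reproducibility
n = 20_000
true_r = 0.5  # hypothetical population correlation between fidelity and outcome

fidelity = [rng.gauss(0, 1) for _ in range(n)]
# Outcome = true_r * fidelity + independent noise, so corr(fidelity, outcome) is true_r.
outcome = [true_r * f + rng.gauss(0, math.sqrt(1 - true_r ** 2)) for f in fidelity]
full_r = pearson(fidelity, outcome)

# Keep only high-fidelity cases, mimicking a sample trained to criterion.
kept = [(f, o) for f, o in zip(fidelity, outcome) if f > 1.0]
restricted_r = pearson([f for f, _ in kept], [o for _, o in kept])

print(f"full r = {full_r:.2f}, restricted r = {restricted_r:.2f}")
```

In runs of this kind the restricted correlation comes out at roughly half of the full-range value, which is one reason a weak observed fidelity-outcome association does not by itself show that fidelity is unimportant.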

Looking to the future, we anticipate several ways the CORE Fidelity Method can improve the quality of implementation research and the delivery of EBTs in the community. We recommend that researchers preparing to implement an EBT follow the steps of the CORE Fidelity Method to define the core components of their intervention. In our own research, we believe the CORE Fidelity Method will improve the internal reliability of our randomized trial by helping us resolve inconsistencies across training documents (e.g., manuals, handouts, fidelity measures) and create new documents (e.g., for a new consultation service with EI providers). “Recommended” RIT components have helped us begin to define the adaptable periphery of RIT and make planned adaptations to RIT for our implementation trial. While our use of the CORE Fidelity Method occurred prior to an effectiveness-implementation trial, we recommend that research teams consider using the CORE Fidelity Method at each stage of the efficacy-to-effectiveness pipeline, from single-case designs to randomized controlled trials, so that developers and their teams can track the evolution of an intervention and think about each intervention component mechanistically.

Measuring fidelity is expensive and sometimes impractical in effectiveness and implementation trials (Perepletchikova, 2011). We have already found the RIT CORE model to be useful in developing streamlined fidelity measures that reflect our conceptualization of mandatory, conditional, and recommended elements. We have considered more explicitly the role of provider-parent and parent-child fidelity, and how each level can best be measured. The CORE Fidelity Method is also a relatively cost-effective implementation strategy to use when preparing for a study of intervention implementation or mechanisms of efficacy. Our team included several trainees (including the lead author), who led and attended meetings, led or observed the NGT, and contributed to this process, enhancing both training value and cost effectiveness. Optimizing fidelity measures to focus on key elements may support the creation of more practical and cost-effective fidelity tools, thus making fidelity monitoring more feasible for community implementation.
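A streamlined fidelity measure of the kind described above might score only essential components and only when they are applicable. The scoring rule below is a hypothetical sketch, not the RIT fidelity measure: it assumes each component in a session is rated as delivered (`True`), not delivered (`False`), or not applicable (`None`, allowed only for conditional components whose circumstance did not arise), and that recommended components are counted descriptively but excluded from the fidelity percentage. Component labels in the usage example are placeholders.

```python
from typing import Dict, Optional, Tuple


def core_fidelity(observed: Dict[str, Optional[bool]],
                  categories: Dict[str, str]) -> Tuple[float, int]:
    """Return (% of applicable essential components delivered, # recommended components used).

    observed maps each component to True (delivered), False (not delivered), or
    None (its circumstance did not arise; only valid for conditional components).
    categories maps each component to "mandatory", "conditional", or "recommended".
    """
    applicable = delivered = recommended_used = 0
    for component, was_delivered in observed.items():
        category = categories[component]
        if category == "recommended":
            recommended_used += int(bool(was_delivered))  # tracked, not scored
            continue
        if was_delivered is None:
            if category == "mandatory":
                raise ValueError(f"mandatory component not rated: {component}")
            continue  # conditional component not called for in this session
        applicable += 1
        delivered += int(was_delivered)
    score = 100.0 * delivered / applicable if applicable else float("nan")
    return score, recommended_used


categories = {"mandatory_1": "mandatory", "mandatory_2": "mandatory",
              "conditional_1": "conditional", "conditional_2": "conditional",
              "recommended_1": "recommended"}
observed = {"mandatory_1": True, "mandatory_2": False, "conditional_1": None,
            "conditional_2": True, "recommended_1": True}
score, recommended_used = core_fidelity(observed, categories)
```

Here three essential components were applicable and two were delivered, giving a score of about 66.7%, while the one recommended component used is reported separately so that adaptations to the periphery do not lower the fidelity score.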

Finally, the CORE Fidelity Method has helped us clarify and document the hypothesized mechanisms of change of RIT for later empirical validation. For example, the RIT component requiring parents to present multiple imitation models and adjust the number of models based on child attention is justified by the rationale that children’s attention to the model is crucial for their understanding and imitation. Child attention to models could therefore be evaluated as a potential mediator of treatment effects in future RIT trials. At the provider-parent level, the impact of parent coaching fidelity on child outcomes is rarely examined (Penney & Schwartz, 2019), but will also be a focus of our future research given the parent-implemented nature of most early social communication interventions. Likewise, the CORE Fidelity Method suggests that more complex analyses may be required to empirically demonstrate links between fidelity and positive outcomes. For example, some parent behaviors are required contingently on child behavior (e.g., whether or not the child is displaying disruptive behaviors) and on context (e.g., whether significant distractions arise that require attention). In such cases, behavioral coding of recorded parent-child sessions must account for child behaviors and features of the environment to assess the appropriateness of parent responses. In addition, analysis of the influence of fidelity on child outcomes may require more than simple main effects of parents’ use of RIT strategies; interaction terms that include child- and context-level variables may also be needed to demonstrate influence on outcomes.
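The need for interaction terms can be written as a simple moderated regression. In the sketch below, which is illustrative notation rather than a model fitted in this work, $Y_i$ is a child outcome for family $i$, $F_i$ is the parent's fidelity to RIT strategies, and $C_i$ is a child- or context-level variable (for example, child attention or the presence of disruptive behavior):

```latex
Y_i = \beta_0 + \beta_1 F_i + \beta_2 C_i + \beta_3 \,(F_i \times C_i) + \varepsilon_i ,
\qquad
\frac{\partial Y_i}{\partial F_i} = \beta_1 + \beta_3 C_i .
```

A simple main-effects analysis corresponds to assuming $\beta_3 = 0$. If the benefit of a conditional strategy depends on the child's presentation, the effect of fidelity is instead $\beta_1 + \beta_3 C_i$, so a main-effects model could understate or mask the relation between fidelity and outcomes.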

There are several limitations to this study that inform our future work on the CORE Fidelity Method. First, while we included RIT creators, expert trainers, and clinicians, we did not include end-user stakeholders such as clinicians who are currently working in birth-to-three or community settings and parents who have been trained in RIT. The perspectives of these stakeholders would further inform the application of the RIT CORE model and would be especially helpful in adapting the periphery around core components to best fit family and community needs. Exploring whether families find these components to be face valid and acceptable would add to the social validity of the RIT CORE model and of RIT in general. We plan to involve families and community providers in a review and potential revision of the RIT CORE model as we use the CORE Fidelity Method iteratively in our hybrid trial. In addition, it is possible that some core RIT components, especially those that were termed “recommended” or “conditional,” might be different for families and communities of different cultural, racial, or socioeconomic backgrounds. This should be explored in future iterative evaluations of the RIT CORE model.

From a practitioner perspective, we hope that the RIT CORE model and future CORE models for other social communication interventions will help clinicians develop a clear framework for how these interventions work, which will in turn inform how they choose to use and adapt intervention techniques. Creating a lean set of mandatory intervention elements may also facilitate implementation of social communication interventions across existing service systems. For instance, Azad and colleagues (2021) have found that parents and teachers may use as few as five of eight required intervention components regularly, with even fewer components being used by both parents and teachers. “CORE Models” for other EBTs in autism, or for NDBIs as an intervention class, should be created to inform community implementation across specific brand-name interventions.

In closing, our work dovetails with state-of-the-art adaptation models in implementation science and with growing interest in developing implementation strategies that specifically support EBT adaptation. For example, by first completing the CORE Fidelity Method, researchers and clinicians will be better able to make planned adaptations using the MADI framework (Kirk et al., 2020). Making adaptations in a thoughtful and planned manner has already been the focus of implementation research (Aarons et al., 2012), and we believe that the CORE Fidelity Method complements and supports these efforts. A CORE model, derived with a systematic method, enables the rest of an intervention to be adapted to customize community fit, informs the creation of feasible fidelity measures, sets up hypothesized mechanisms of action for later empirical validation, and empowers the decision-making of researchers and clinicians alike.

Supplementary Material

References

- Abry T, Hulleman CS, & Rimm-Kaufman SE (2015). Using indices of fidelity to intervention core components to identify program active ingredients. American Journal of Evaluation, 36(3), 320–338. 10.1177/1098214014557009

- Azad GF, Minton KE, Mandell DS, & Landa RJ (2021). Partners in School: An implementation strategy to promote alignment of evidence-based practices across home and school for children with autism spectrum disorder. Administration and Policy in Mental Health and Mental Health Services Research, 48(2), 266–278. 10.1007/s10488-020-01064-9

- Bell EC, Marcus DK, & Goodlad JK (2013). Are the parts as good as the whole? A meta-analysis of component treatment studies. Journal of Consulting and Clinical Psychology, 81(4), 722–736. 10.1037/a0033004

- Cardon TA, & Wilcox MJ (2011). Promoting imitation in young children with autism: A comparison of reciprocal imitation training and video modeling. Journal of Autism and Developmental Disorders, 41(5), 654–666. 10.1007/s10803-010-1086-8

- Carroll C, Patterson M, Wood S, Booth A, Rick J, & Balain S (2007). A conceptual framework for implementation fidelity. Implementation Science, 2(1), 1–9. 10.1186/1748-5908-2-40

- Chambers DA, & Norton WE (2016). The Adaptome: Advancing the science of intervention adaptation. American Journal of Preventive Medicine, 51(4), S124–S131. 10.1016/j.amepre.2016.05.011

- Collins LM, Dziak JJ, Kugler KC, & Trail JB (2014). Factorial experiments: Efficient tools for evaluation of intervention components. American Journal of Preventive Medicine, 47(4), 498–504. 10.1016/j.amepre.2014.06.021

- Collyer H, Eisler I, & Woolgar M (2020). Systematic literature review and meta-analysis of the relationship between adherence, competence and outcome in psychotherapy for children and adolescents. European Child and Adolescent Psychiatry, 29(4), 417–431. 10.1007/s00787-018-1265-2

- Cuijpers P, Cristea IA, Karyotaki E, Reijnders M, & Hollon SD (2019). Component studies of psychological treatments of adult depression: A systematic review and meta-analysis. Psychotherapy Research, 29(1), 15–29. 10.1080/10503307.2017.1395922

- Curran GM, Bauer M, Mittman B, Pyne JM, & Stetler C (2012). Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care, 50(3), 217–226. 10.1097/MLR.0b013e3182408812

- Dalkey N, & Helmer O (1963). An experimental application of the Delphi method to the use of experts. Management Science, 9(3), 458–467. 10.1287/mnsc.9.3.458

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, & Lowery JC (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(1), 1–15. 10.1186/1748-5908-4-50

- Delbecq AL, Van de Ven AH, & Gustafson DH (1975). Group techniques for program planning: A guide to nominal group and Delphi processes. Scott, Foresman and Company.

- Dimidjian S, & Hollon SD (2010). How would we know if psychotherapy were harmful? American Psychologist, 65(1), 21–33. 10.1037/a0017299

- Division for Early Childhood (2014). DEC recommended practices in early intervention/early childhood special education 2014. Retrieved from http://www.dec-sped.org/recommendedpractices

- Edmunds SR, Ibañez LV, Warren Z, Messinger DS, & Stone WL (2016). Longitudinal prediction of language emergence in infants at high and low risk for autism spectrum disorder. Development and Psychopathology, 29(1), 1–11. 10.1017/S0954579416000146

- Frost KM, Russell K, & Ingersoll B (2021). Using qualitative content analysis to understand the active ingredients of a parent-mediated naturalistic developmental behavioral intervention. Autism, 11. 10.1177/13623613211003747

- Gallagher M, Hares T, Spencer J, Bradshaw C, & Webb I (1993). The Nominal Group Technique: A research tool for general practice? Family Practice, 10(1), 76–81. 10.1093/fampra/10.1.76

- Gulsrud AC, Hellemann G, Shire S, & Kasari C (2016). Isolating active ingredients in a parent-mediated social communication intervention for toddlers with autism spectrum disorder. Journal of Child Psychology and Psychiatry, 57(5), 606–613. 10.1111/jcpp.12481

- Hall TA, Mastel S, Nickel R, & Wainer A (2018). Parents training parents: Lessons learned from a study of reciprocal imitation training in young children with autism spectrum disorder. Autism. 10.1177/1362361318815643

- Haynes A, Brennan S, Redman S, Williamson A, Gallego G, & Butow P (2016). Figuring out fidelity: A worked example of the methods used to identify, critique and revise the essential elements of a contextualised intervention in health policy agencies. Implementation Science, 11(1). 10.1186/s13012-016-0378-6

- Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, Kadoorie SEL, Dixon-Woods M, McCulloch P, Wyatt JC, Phelan AWC, & Michie S (2014). Better reporting of interventions: Template for intervention description and replication (TIDieR) checklist and guide. BMJ, 348, 1–12. 10.1136/bmj.g1687

- Individuals With Disabilities Education Act, 20 U.S.C. § 1400 (2004).

- Ingersoll B, Berger N, Carlsen D, & Hamlin T (2016). Improving social functioning and challenging behaviors in adolescents with ASD and significant ID: A randomized pilot feasibility trial of reciprocal imitation training in a residential setting. Developmental Neurorehabilitation, 1–10. 10.1080/17518423.2016.1211187

- Ingersoll B (2010). Pilot randomized controlled trial of Reciprocal Imitation Training for teaching elicited and spontaneous imitation to children with autism. Journal of Autism and Developmental Disorders, 40(9), 1154–1160. 10.1007/s10803-010-0966-2

- Ingersoll B, Lewis E, & Kroman E (2007). Teaching the imitation and spontaneous use of descriptive gestures in young children with autism using a naturalistic behavioral intervention. Journal of Autism and Developmental Disorders, 37(8), 1446–1456. 10.1007/s10803-006-0221-z

- Ingersoll B (2012). Brief report: Effect of a focused imitation intervention on social functioning in children with autism. Journal of Autism and Developmental Disorders, 42(8), 1768–1773. 10.1007/s10803-011-1423-6

- Ingersoll B, & Schreibman L (2006). Teaching reciprocal imitation skills to young children with autism using a naturalistic behavioral approach: Effects on language, pretend play, and joint attention. Journal of Autism and Developmental Disorders, 36(4), 487–505. 10.1007/s10803-006-0089-y

- Johnson LD, Fleury V, Ford A, Rudolph B, & Young K (2018). Translating evidence-based practices to usable interventions for young children with autism. Journal of Early Intervention, 40(2), 158–176. 10.1177/1053815117748410

- Kazdin AE (2007). Mediators and mechanisms of change in psychotherapy research. Annual Review of Clinical Psychology, 3(1), 1–27. 10.1146/annurev.clinpsy.3.022806.091432

- Kendall PC, & Beidas RS (2007). Smoothing the trail for dissemination of evidence-based practices for youth: Flexibility within fidelity. Professional Psychology: Research and Practice, 38(1), 13–20. 10.1037/0735-7028.38.1.13

- Kendall PC, Chu B, Gifford A, Hayes C, & Nauta M (1998). Breathing life into a manual: Flexibility and creativity with manual-based treatments. Cognitive and Behavioral Practice, 5(2), 177–198. 10.1016/S1077-7229(98)80004-7

- Kendall PC, & Frank HE (2018). Implementing evidence-based treatment protocols: Flexibility within fidelity. Clinical Psychology: Science and Practice, 25(4), 1–12. 10.1111/cpsp.12271

- Kirk MA, Moore JE, Wiltsey Stirman S, & Birken SA (2020). Towards a comprehensive model for understanding adaptations’ impact: The model for adaptation design and impact (MADI). Implementation Science, 15(1), 1–15. 10.1186/s13012-020-01021-y

- Locke J, Rotheram-Fuller E, Harker C, Kasari C, & Mandell DS (2019). Comparing a Practice-Based Model with a Research-Based Model of social skills interventions for children with autism in schools. Research in Autism Spectrum Disorders, 62, 10–17. 10.1016/j.rasd.2019.02.002

- Miller CJ, Wiltsey-Stirman S, & Baumann AA (2020). Iterative Decision-making for Evaluation of Adaptations (IDEA): A decision tree for balancing adaptation, fidelity, and intervention impact. Journal of Community Psychology, 48(4), 1163–1177. 10.1002/jcop.22279

- Pellecchia M, Connell JE, Beidas RS, Xie M, Marcus SC, & Mandell DS (2015). Dismantling the active ingredients of an intervention for children with autism. Journal of Autism and Developmental Disorders, 45(9), 2917–2927. 10.1007/s10803-015-2455-0

- Penney A, & Schwartz I (2019). Effects of coaching on the fidelity of parent implementation of reciprocal imitation training. Autism, 23(6), 1497–1507. 10.1177/1362361318816688

- Perepletchikova F (2011). On the topic of treatment integrity. Clinical Psychology: Science and Practice, 18(2), 148–153. 10.1111/j.1468-2850.2011.01246.x

- Pickard K, Mellman H, Frost K, Reaven J, & Ingersoll B (2021). Balancing fidelity and flexibility: Usual care for young children with an increased likelihood of having autism spectrum disorder within an early intervention system. Journal of Autism and Developmental Disorders. 10.1007/s10803-021-04882-4

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, & Hensley M (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health, 38(2), 65–76. 10.1007/s10488-010-0319-7

- Prowse PT, & Nagel T (2015). A meta-evaluation: The role of treatment fidelity within psychosocial interventions during the last decade. African Journal of Psychiatry (South Africa), 18(2). 10.4172/Psychiatry.1000251

- Rapley HA, & Loades ME (2019). A systematic review exploring therapist competence, adherence, and therapy outcomes in individual CBT for children and young people. Psychotherapy Research, 29(8), 1010–1019. 10.1080/10503307.2018.1464681

- Saaty TL (1990). How to make a decision: The analytic hierarchy process. European Journal of Operational Research, 48(1), 9–26. 10.1016/0377-2217(90)90057-I

- Sam AM, Cox AW, Savage MN, Waters V, & Odom SL (2020). Disseminating information on evidence-based practices for children and youth with autism spectrum disorder: AFIRM. Journal of Autism and Developmental Disorders, 50(6), 1931–1940.

- Schreibman L, Dawson G, Stahmer AC, Landa R, Rogers SJ, McGee GG, Kasari C, Ingersoll B, Kaiser AP, Bruinsma Y, McNerney E, Wetherby A, & Halladay A (2015). Naturalistic developmental behavioral interventions: Empirically validated treatments for autism spectrum disorder. Journal of Autism and Developmental Disorders, 45(8), 2411–2428. 10.1007/s10803-015-2407-8

- Stahmer AC, Rieth S, Lee E, Reisinger EM, Mandell DS, & Connell JE (2015). Training teachers to use evidence-based practices for autism: Examining procedural and implementation fidelity. Psychology in the Schools, 52(2). 10.1002/pits.21815

- Töret G, & Özmen ER (2019). Effects of reciprocal imitation training on social communication skills of young children with autism spectrum disorder. Egitim ve Bilim, 44(199), 279–296. 10.15390/EB.2019.8222

- Wainer AL, & Ingersoll BR (2013). Disseminating ASD interventions: A pilot study of a distance learning program for parents and professionals. Journal of Autism and Developmental Disorders, 43(1), 11–24. 10.1007/s10803-012-1538-4

- Wainer A, & Ingersoll B (2013). Intervention fidelity: An essential component for understanding ASD parent training research and practice. Clinical Psychology: Science and Practice, 352–374.

- Walton KM, & Ingersoll BR (2012). Evaluation of a sibling-mediated imitation intervention for young children with autism. Journal of Positive Behavior Interventions, 14(4), 241–253. 10.1177/1098300712437044

- Wampold BE (2015). How important are the common factors in psychotherapy? An update. World Psychiatry, 14(3), 270–277. 10.1002/wps.20238

- Webb CA, DeRubeis RJ, & Barber JP (2010). Therapist adherence/competence and treatment outcome: A meta-analytic review. Journal of Consulting and Clinical Psychology, 78(2), 200–211. 10.4088/PCC.19r02465

- Wiltsey-Stirman S (2020). Commentary: Challenges and opportunities in the assessment of fidelity and related constructs. Administration and Policy in Mental Health and Mental Health Services Research, 47(6), 932–934. 10.1007/s10488-020-01069-4

- Wiltsey-Stirman SW, Baumann AA, & Miller CJ (2019). The FRAME: An expanded framework for reporting adaptations and modifications to evidence-based interventions. Implementation Science, 14(58), 1–10. 10.1186/s13012-019-0898-

- Yoder PJ, Stone WL, & Edmunds SR (2020). Parent utilization of ImPACT intervention strategies is a mediator of proximal then distal social communication outcomes in younger siblings of children with ASD. Autism, 25(1). 10.1177/1362361320946883

- Zaghlawan HY, & Ostrosky MM (2016). A parent-implemented intervention to improve imitation skills by children with autism: A pilot study. Early Childhood Education Journal, 44(6), 671–680. 10.1007/s10643-015-0753-y
