Abstract

High-throughput experimentation has revolutionized data-driven experimental sciences and opened the door to the application of machine learning techniques. Nevertheless, the quality of any data analysis strongly depends on the quality of the data and specifically the degree to which random effects in the experimental data-generating process are quantified and accounted for. Accordingly, calibration, i.e. the quantitative association between observed quantities and measurement responses, is a core element of many workflows in experimental sciences.

Particularly in life sciences, univariate calibration, often involving non-linear saturation effects, must be performed to extract quantitative information from measured data. At the same time, the estimation of uncertainty is inseparably connected to quantitative experimentation. Adequate calibration models that describe not only the input/output relationship in a measurement system but also its inherent measurement noise are required. Due to its mathematical nature, statistically robust calibration modeling remains a challenge for many practitioners, at the same time being extremely beneficial for machine learning applications.

In this work, we present a bottom-up conceptual and computational approach that solves many problems of understanding and implementing non-linear, empirical calibration modeling for quantification of analytes and process modeling. The methodology is first applied to the optical measurement of biomass concentrations in a high-throughput cultivation system, then to the quantification of glucose by an automated enzymatic assay. We implemented the conceptual framework in two Python packages, calibr8 and murefi, with which we demonstrate how to make uncertainty quantification for various calibration tasks more accessible. Our software packages enable more reproducible and automatable data analysis routines compared to commonly observed workflows in life sciences.

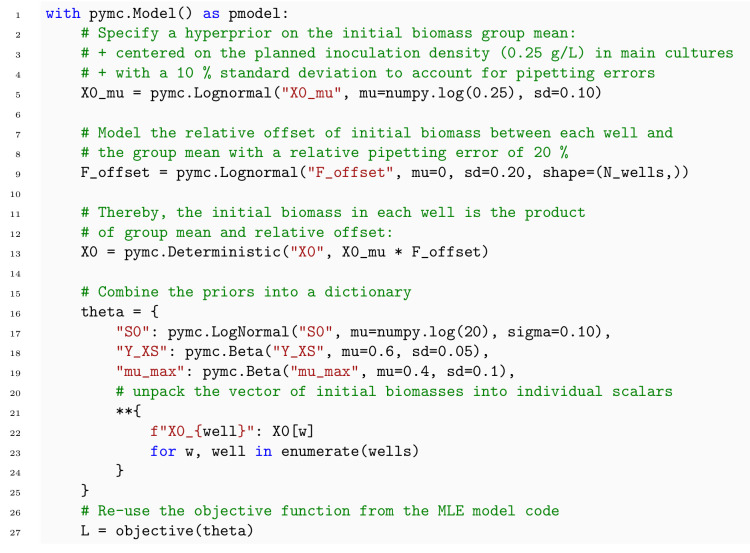

Subsequently, we combine the previously established calibration models with a hierarchical Monod-like ordinary differential equation model of microbial growth to describe multiple replicates of Corynebacterium glutamicum batch cultures. Key process model parameters are learned by both maximum likelihood estimation and Bayesian inference, highlighting the flexibility of the statistical and computational framework.

Author summary

In experimental fields like biotechnology, scientists need to quantify process parameters such as concentrations and state the uncertainty around them. However, measurements rarely yield the desired quantity directly; for example, the measurement of scattered light is just an indirect measure for the number of cells in a suspension. For reliable interpretation, scientists must determine the uncertainty around the underlying quantities of interest using statistical methods.

A key step in these workflows is the establishment of calibration models to describe the relation between the quantities of interest and measurement outcomes. This is typically done using measurements of reference samples for which the true quantities are known. However, implementing and applying these statistical models often requires skills that are not commonly taught.

We therefore developed two software packages, calibr8 and murefi, to simplify such calibration and modeling procedures. To showcase our work, we performed an experiment commonly seen in microbiology: the acquisition of a microbial growth curve, in this case of Corynebacterium glutamicum, in an online measurement device. Using our software, we built a mathematical model of the overall process to quantify relevant parameters with uncertainty, e.g. the growth rate or the yield of biomass per amount of glucose.

This is a PLOS Computational Biology Software paper.

1 Introduction

1.1 Calibration in life sciences

Calibration modeling is an omnipresent task in experimental science. Particularly the life sciences make heavy use of calibration modeling to achieve quantitative insights from experimental data. The importance of calibration models (also known as calibration curves) in bioanalytics is underlined in dedicated guidance documents by EMA and FDA [1, 2] that also make recommendations for many related aspects such as method development and validation. While liquid chromatography and mass spectrometry are typically calibrated with linear models [3], a four- or five-parameter logistic model is often used for immuno- or ligand-binding assays [2, 4–6]. The aforementioned guidance documents focus on health-related applications, but there are countless examples where (non-linear) calibration needs to be applied across biological disciplines. From dose-response curves in toxicology to absorbance or fluorescence measurements, or the calibration of online measurement systems, experimentalists are confronted with the task of calibration.

At the same time, recent advances in affordable liquid-handling robotics enable lab scientists in chemistry and biotechnology to (partially) automate their specialized assays (e.g. [7, 8]). Moreover, advanced robotic platforms for parallelized experimentation, monitoring and analytics [8, 9] motivate online data analysis and calibration for process control of running experiments.

1.2 Generalized computational methods for calibration

Experimental challenges in calibration are often unique to a particular field and require domain knowledge to be solved. At the same time, the statistical or computational aspects of the workflow can be generalized across domains. With the increased amount of available data in high-throughput experimentation comes the need for equally rapid data analysis and calibration. As a consequence, it is highly desirable to develop an automatable, technology-agnostic and easy-to-use framework for quantitative data analysis with calibration models.

From our perspective of working at the intersection between laboratory automation and modeling, we identified a set of requirements for calibration: Data analyses rely more and more on scripting languages such as Python or R, making the use of spreadsheet programs an inconvenient bottleneck. At various levels, and in particular when non-linear calibration models are involved, the statistically sound handling of uncertainty is at the core of a quantitative data analysis.

Before going into detail about the calibration workflow, we would like to highlight its most important aspects and terminology based on the definition of calibration by the International Bureau of Weights and Measures (BIPM) [10]:

2.39 calibration: “Operation that, under specified conditions, in a first step, establishes a relation between the quantity values with measurement uncertainties provided by measurement standards and corresponding indications with associated measurement uncertainties and, in a second step, uses this information to establish a relation for obtaining a measurement result from an indication.”

2.9 measurement result: “[…] A measurement result is generally expressed as a single measured quantity value and a measurement uncertainty.”

The “first step” from the BIPM definition is the establishment of a relation that we will call calibration model henceforth. In statistical terminology, the relationship is established between an independent variable (BIPM: quantity values) and a dependent variable (BIPM: indications) and it is important to note that the description of measurement uncertainty is a central aspect of a calibration model. In the application (“second step”) of the calibration model, the quantification of uncertainty is a core aspect as well.

Uncertainty arises from the fact that measurements are not exact, but subject to some form of random effects. While many methods such as linear regression assume that these random effects are distributed according to a Normal distribution, we want to stress that a generalized framework for calibration should not impose such constraints. Instead, domain experts should be enabled to choose a probability distribution that is best suited to describe their measurement system at hand.

Going beyond the BIPM definition, we see the application of calibration models as two-fold:

1. Inference of individual independent quantity values from one or more observations.

2. Inference of the parameters of a more comprehensive process model from measurement responses obtained from (samples of) the system.

For both applications, uncertainties should be a standard outcome of the analysis. In life sciences, the commonly used estimate of uncertainty is the confidence interval. The interpretation of confidence intervals however is challenging, as it is often oversimplified and confused with other probability measures [11, 12]. Furthermore, their correct implementation for non-linear calibration models, and particularly in combination with complex process models, is technically demanding. For this reason, we use Bayesian credible intervals that are interpreted as the range in which an unobserved parameter lies with a certain probability [13]. In Section 2.3 we go into more details about the uncertainty measures and how they are obtained and interpreted.

Even though high-level conventions and recommendations exist, the task of calibration is approached with different statistical methodology across the experimental sciences. In laboratory automation, we see a lack of tools enabling practitioners to build tailored calibration models while maintaining a generalized approach. At the same time, generalized calibration models have the potential to improve adequacy of complex simulations in the related fields.

While numerous software packages for modeling biological systems are available, most are targeted towards complex biological networks and do not consider calibration modeling or application to large hierarchical datasets. Notable examples are Data2Dynamics [14] or PESTO [15], both of which allow users to customize calibration models and the way the measurement error is described. However, both tools are implemented in MATLAB and are thus incompatible with data analysis workflows that leverage the rich ecosystem of scientific Python libraries. Here, Python packages such as PyCoTools3 [16] for the popular COPASI software [17] provide valuable functionality, but are limited with respect to custom calibration models, especially in a Bayesian modeling context. To the best of our knowledge, none of these frameworks provide customizable calibration models that can be used outside of the process modeling context and are at the same time compatible with Bayesian modeling as well as modular combination with other libraries.

1.3 Aim of this study

This study aims to build an understanding of how calibration models can be constructed to describe both location and spread of measurement outcomes such that uncertainty can be quantified. In two parts, we demonstrate a toolbox for calibration models, calibr8, on the basis of application examples, thus showing how it directly addresses questions typical for quantitative data analysis.

In part one (Section 4.1) we demonstrate how to construct such calibration models based on a reparametrized asymmetric logistic function applied to a photometric assay. We give recommendations for obtaining calibration data and introduce accompanying open-source Python software that implements object-oriented calibration models with a variety of convenience functions.

In part two (Section 4.2) we show how calibration models can become part of elaborate process models to accurately describe measurement uncertainty caused by experimental limitations. We introduce a generic framework, murefi, for refining a template process model into a hierarchical model that flexibly shares parameters across experimental replicates and connects the model prediction with observed data via the previously introduced calibration models. This generic framework is applied to build an ordinary differential equation (ODE) process model for 28 microbial growth curves gained in automated, high-throughput experiments. Finally, we demonstrate how the calibration model can be applied to perform maximum likelihood estimation (MLE) or Bayesian inference of process model parameters while accounting for non-linearities in the experimental observation process.

Although this paper chooses biotechnological applications, the presented approach is generic and our Python implementations are applicable to a wide range of research fields. Our documentation includes examples from broader life-science applications such as cell-counting and enzyme catalysis, which can be transferred to statistically similar problems in, for example, environmental research or chemistry.

2 Theoretical background

2.1 Probability theory for calibration modeling

Probability distributions are at the heart of virtually all statistical and modeling methods. They describe the range of values that a variable of unknown value, also called random variable, may take, together with how likely these values are. This work focuses on univariate calibration tasks, where a continuous variable is obtained as the result of the measurement procedure. Univariate, continuous probability distributions such as the Normal or Student-t distribution are therefore relevant in this context. Probability distributions are described by a set of parameters, such as {μ, σ} in the case of a Normal distribution, or {μ, scale, ν} in the case of a Student-t distribution.

To write that a random variable “rv” follows a certain distribution, the ∼ symbol is used: rv ∼ Student-t(μ, scale, ν). The most commonly found visual representation of a continuous probability distribution is in terms of its probability density function (PDF, S1 Fig), typically written as p(rv).

The term rv conditioned on d is used to refer to the conditional probability of a random variable rv given that certain data d was observed. It is written as p(rv ∣ d).

A related term, the likelihood $\mathcal{L}(\mathrm{rv} \mid d)$, takes the inverse perspective on how likely it is to make observations d given a fixed value of the random variable. Both p(d ∣ rv) and $\mathcal{L}(\mathrm{rv} \mid d)$ are common notations for the likelihood. Note that the likelihood is not a PDF of the random variable [18]. We use the notation $\mathcal{L}(\mathrm{rv} \mid d)$ throughout this paper for better visibility of likelihoods.

In situations where only limited data is available, a Bayesian statistician argues that prior information should be taken into account. The likelihood can then be combined with prior beliefs into the posterior probability according to Bayes’ rule (Eq 1).

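$$p(\mathrm{rv} \mid d) = \frac{p(\mathrm{rv}) \cdot \mathcal{L}(\mathrm{rv} \mid d)}{\int p(\mathrm{rv}) \cdot \mathcal{L}(\mathrm{rv} \mid d) \, \mathrm{d}\,\mathrm{rv}} \tag{1}$$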

According to Eq 1, the posterior probability p(rv∣d) of the random variable rv given the data is equal to the product of prior probability times likelihood, divided by its integral.

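When only considering the observed data, the probability of the random variable conditioned on data, p(rv ∣ d), can be obtained by normalizing the likelihood by its integral (Eq 2).

$$p(\mathrm{rv} \mid d) = \frac{\mathcal{L}(\mathrm{rv} \mid d)}{\int \mathcal{L}(\mathrm{rv} \mid d) \, \mathrm{d}\,\mathrm{rv}} \tag{2}$$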

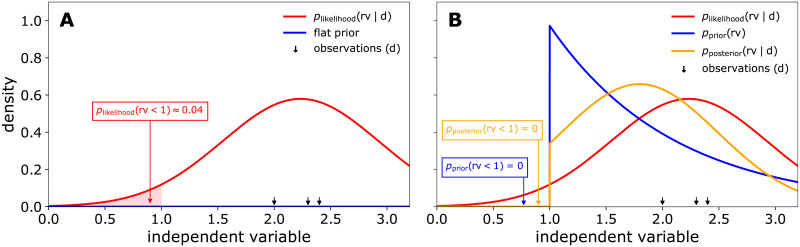

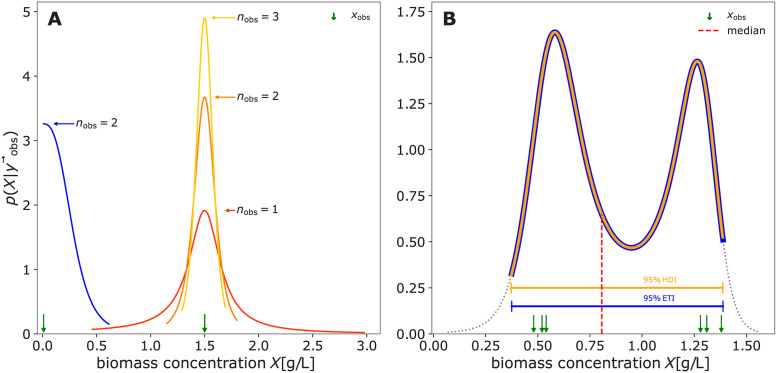

From the Bayesian perspective, Eq 2 can be understood as a special case of Bayes’ rule (Eq 1) with flat (uninformative) prior information. This connection between a likelihoodist and a Bayesian perspective on independent variable probabilities is illustrated in Fig 1. In Fig 1A, the red probability density function was obtained only from the likelihood of observations (black arrows), corresponding to Eq 2 or the Bayesian perspective with a flat prior (blue). Assuming that the independent variable is a priori known to be ≥ 1, one might choose a corresponding prior (Fig 1B, blue). The posterior (orange) is then a compromise between prior and likelihood according to Eq 1, resulting in a posterior probability of 0 that the variable is below 1. For a thorough introduction to Bayesian methods, we refer the interested reader to [19].

Fig 1. Comparison of likelihoodist and Bayesian probability densities.

Using a noise model, the likelihood function normalized by its integral gives a likelihoodist probability density function (red) for the independent variable. In A the resulting probability that the variable of interest lies below 1 is clearly positive. In a hypothetical scenario where the variable of interest is known to be ≥ 1, a shifted exponential prior could be assigned (B). The posterior probability (orange) is then obtained via Bayes’ rule (Eq 1) and gives the desired 0 probability of the variable being less than 1. A may be viewed as a special case of the Bayesian perspective with a flat prior (blue). Observations and distribution parameters were chosen to obtain a good layout: , d = [2.0, 2.3, 2.4].
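The relationship between Eq 1 and Eq 2 can be sketched numerically on a grid. The following minimal example is an illustration only: it assumes a Normal noise model with a hypothetical standard deviation of 1.0 and a shifted exponential prior with a hypothetical scale of 1.0. Only the observations d are taken from the caption of Fig 1, so the numbers are not expected to reproduce the figure.

```python
import numpy as np
from scipy import stats

# Observations from the caption of Fig 1
d = np.array([2.0, 2.3, 2.4])
# ASSUMPTION: Normal noise model with sigma = 1.0 (not taken from the paper)
sigma = 1.0

# Grid of candidate values for the independent variable
grid = np.linspace(-3.0, 8.0, 11001)
dx = grid[1] - grid[0]

# Likelihood of each candidate value: product over all observations
likelihood = np.prod(
    stats.norm.pdf(d[:, None], loc=grid[None, :], scale=sigma), axis=0
)

# Eq 2: normalize the likelihood by its integral (Riemann sum on the grid)
p_likelihoodist = likelihood / (likelihood.sum() * dx)

# ASSUMPTION: shifted exponential prior that is exactly zero below 1
prior = stats.expon.pdf(grid, loc=1.0, scale=1.0)

# Eq 1: prior times likelihood, divided by its integral
unnormalized = prior * likelihood
posterior = unnormalized / (unnormalized.sum() * dx)

# Probability mass below 1 under both perspectives
below_one = grid < 1.0
p_below_likelihoodist = p_likelihoodist[below_one].sum() * dx
p_below_posterior = posterior[below_one].sum() * dx
print(f"P(rv < 1), likelihood only: {p_below_likelihoodist:.4f}")
print(f"P(rv < 1), with prior:      {p_below_posterior:.4f}")
```

With a flat prior both densities coincide; the prior only changes the result where it carries information, here by removing all probability mass below 1.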

2.2 Parameter estimation

A mathematical model ϕ is a function that describes the state of system variables by means of a set of parameters. The model is a representation of the underlying data generating process, meaning that the model output from a given set of parameters is imitating the expected output in the real system. From a given list of parameters $\vec{\theta}$, a model can make predictions of the system variables, in the following denominated as $\vec{y}_{pred}$. In machine learning, this quantity is often called $\hat{y}$.

$$\vec{y}_{pred} = \phi(\vec{\theta}) \tag{3}$$

A predictive model can be obtained when the parameters are estimated from observed experimental data $\vec{y}_{obs}$. In this process, the experimental data is compared to data predicted by the model. In order to find the prediction matching the data best, different approaches of parameter estimation can be applied. This process is sometimes also referred to as inference or informally as fitting.

To obtain one parameter vector, optimization of so-called loss functions or objective functions can be applied. In principle, these functions compare prediction and measurement outcome, yielding a scalar that can be minimized. Various loss functions such as the mean absolute error (MAE or L1 loss) or the mean squared error (MSE or L2 loss) can be formulated for the optimization process.

In the following, we first consider a special case, least squares estimation using the MSE, before coming to the generalized approach of maximum likelihood estimation. The former, which is often applied in biotechnology in the context of linear regression, is specified in the following equation:

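$$\vec{\theta}_{LS} = \underset{\vec{\theta}}{\arg\min} \left\lVert \vec{y}_{obs} - \vec{y}_{pred}(\vec{\theta}) \right\rVert_2^2 \tag{4}$$

Here, the vectors $\vec{y}_{obs}$ and $\vec{y}_{pred}$ represent one observed time series and the corresponding prediction. If several time series contribute to the optimization, their differences (residuals) can be summed up:

$$\vec{\theta}_{LS} = \underset{\vec{\theta}}{\arg\min} \sum_{n=1}^{N} \left\lVert \vec{y}_{obs,n} - \vec{y}_{pred,n}(\vec{\theta}) \right\rVert_2^2 \tag{5}$$

To keep the notation simple, we will in the following use $Y_{obs}$ and $Y_{pred}$ to refer to the set of N time series vectors. While each individual pair of $\vec{y}_{obs,n}$ and $\vec{y}_{pred,n}$ vectors must have the same length, note that different pairs might be of different length. Y should thus not be interpreted as a matrix notation. In later sections, we will see how the Python implementation handles the sets of observations (Section 3.2.4).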

Coming back to the likelihood functions introduced in Section 2.1, the residual-based loss functions are a special case of a broader estimation concept, the maximum likelihood estimation:

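$$\vec{\theta}_{MLE} = \underset{\vec{\theta}}{\arg\max} \; \mathcal{L}(\vec{\theta} \mid Y_{obs}) \tag{6}$$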

Here, a probability density function is used to quantify how well observation and prediction, the latter represented by the model parameters, match. In case of a Normal-distributed likelihood with constant noise, the result of MLE is the same as a weighted least-squares loss [20]. In comparison to residual-based approaches, the choice of the PDF in a likelihood approach leads to more flexibility, for example covering heteroscedasticity or measurement noise that cannot be described by a Normal distribution.
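The equivalence between least squares and MLE under Normal-distributed noise with constant spread can be checked numerically. The sketch below uses a hypothetical linear model with synthetic data and assumes the noise standard deviation is known; all names and values are illustrative and not part of the software described in this paper.

```python
import numpy as np
from scipy import optimize, stats

# Synthetic data: hypothetical linear process with constant Normal noise
rng = np.random.default_rng(1)
x = np.linspace(0.0, 10.0, 30)
sigma = 0.3  # ASSUMPTION: known, constant noise standard deviation
y_obs = 1.5 * x + 0.5 + rng.normal(0.0, sigma, size=x.size)


def predict(theta):
    """Model prediction for a parameter vector (slope, intercept)."""
    slope, intercept = theta
    return slope * x + intercept


def sum_of_squares(theta):
    """Residual-based loss: sum of squared differences."""
    return np.sum((y_obs - predict(theta)) ** 2)


def negative_loglikelihood(theta):
    """Negative log-likelihood under a Normal noise model."""
    return -np.sum(stats.norm.logpdf(y_obs, loc=predict(theta), scale=sigma))


theta_guess = [1.0, 0.0]
theta_ls = optimize.minimize(sum_of_squares, x0=theta_guess).x
theta_mle = optimize.minimize(negative_loglikelihood, x0=theta_guess).x
print("least squares:     ", theta_ls)
print("maximum likelihood:", theta_mle)
```

Replacing `stats.norm` with another distribution, or making `scale` depend on the prediction, changes only the likelihood function, which is the flexibility referred to above.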

As introduced in Section 2.1, an important extension of the likelihood approach is Bayes’ theorem (Eq 1). Applying this concept, we can perform Bayesian inference of model parameters:

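$$p(\vec{\theta} \mid Y_{obs}) = \frac{p(\vec{\theta}) \cdot \mathcal{L}(\vec{\theta} \mid Y_{obs})}{\int p(\vec{\theta}) \cdot \mathcal{L}(\vec{\theta} \mid Y_{obs}) \, \mathrm{d}\vec{\theta}} \tag{7}$$

$$\vec{\theta}_{MAP} = \underset{\vec{\theta}}{\arg\max} \; p(\vec{\theta} \mid Y_{obs}) \tag{8}$$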

Similar to MLE, a point estimate of the parameter vector with highest probability can be obtained by optimization (Eq 8), resulting in the maximum a posteriori (MAP) estimate. While the MLE is focused on the data-based likelihood, MAP estimates incorporate prior knowledge into the parameter estimation.

To obtain the full posterior distribution $p(\vec{\theta} \mid Y_{obs})$, which describes the probability distribution of parameters given the observed data, one has to solve Eq 7. The integral, however, is often intractable or impossible to solve analytically. Therefore, a class of algorithms called Markov chain Monte Carlo (MCMC) algorithms is often applied to find numerical approximations for the posterior distribution (for more detail, see Section 3.2.6).
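To give an intuition for how MCMC approximates a posterior without evaluating the integral in Eq 7, the following sketch implements a plain random-walk Metropolis sampler for a single parameter. This is a generic textbook algorithm chosen for brevity, not necessarily the sampler used in this work. The Normal likelihood with known spread, the Normal prior and all numeric settings are assumptions made so that the result can be compared with the analytic posterior.

```python
import numpy as np

d = np.array([2.0, 2.3, 2.4])    # example observations
sigma = 0.5                      # ASSUMPTION: known noise standard deviation
prior_mu, prior_sd = 0.0, 10.0   # ASSUMPTION: weakly informative Normal prior


def log_posterior_unnormalized(theta):
    """log(prior * likelihood); the normalizing integral is never needed."""
    log_prior = -0.5 * ((theta - prior_mu) / prior_sd) ** 2
    log_likelihood = -0.5 * np.sum(((d - theta) / sigma) ** 2)
    return log_prior + log_likelihood


def metropolis(log_p, start, n_draws, step_sd, rng):
    """Random-walk Metropolis sampler for a scalar parameter."""
    draws = np.empty(n_draws)
    current, current_logp = start, log_p(start)
    for i in range(n_draws):
        proposal = current + rng.normal(0.0, step_sd)
        proposal_logp = log_p(proposal)
        # Accept with probability min(1, p(proposal) / p(current))
        if np.log(rng.uniform()) < proposal_logp - current_logp:
            current, current_logp = proposal, proposal_logp
        draws[i] = current
    return draws


rng = np.random.default_rng(0)
draws = metropolis(log_posterior_unnormalized, 0.0, 20000, 0.5, rng)
samples = draws[2000:]  # discard the warm-up phase

# Analytic posterior of this conjugate Normal-Normal model for comparison
post_precision = 1.0 / prior_sd**2 + len(d) / sigma**2
post_mean = (prior_mu / prior_sd**2 + d.sum() / sigma**2) / post_precision
post_sd = post_precision**-0.5
print(f"MCMC:     mean={samples.mean():.3f}, sd={samples.std():.3f}")
print(f"analytic: mean={post_mean:.3f}, sd={post_sd:.3f}")
```

Only ratios of the unnormalized posterior enter the acceptance step, which is why the intractable denominator of Eq 7 drops out.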

The possibility to obtain not only point estimates but a whole distribution describing the parameter vector leads to an important concept: uncertainty quantification.

2.3 Uncertainty quantification of model parameters

When aiming for predictive models, it is important to not only estimate one parameter vector, but to quantify how certain the estimation is. In the frequentist paradigm, uncertainty is quantified with confidence intervals. When applied correctly, they provide a useful measure, for example in hypothesis testing where the size of a certain effect in a study is to be determined. However, interpretation of the confidence interval can be challenging, and it is frequently misinterpreted as the interval that has a 95% chance to contain the true effect size or true mean [11]. To obtain intervals with such a simple interpretation, further assumptions on model parameters are required [12].

In Bayesian inference, prior distributions provide these necessary assumptions and the posterior can be used for uncertainty quantification. As a consequence, Bayesian credible intervals can indeed be interpreted as the range in which an unobserved parameter lies with a certain probability [13]. The choice of probability level or interval bounds is arbitrary. Commonly chosen probability levels are 99, 95, 94 or 90%. Consequently, there are many equally valid flavors of credible intervals. The most important ones are listed below:

- Highest posterior density intervals (HDI) are chosen such that the width of the interval is minimized.

- Equal-tailed intervals (ETI) are chosen such that the probability mass of the posterior below and above the interval are equal.

- Half-open credible intervals specify the probability that the parameter lies on one side of a threshold.
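The three interval types can be computed directly from posterior samples. The following sketch uses hypothetical helper functions and a skewed Gamma distribution as a stand-in for a posterior; the HDI helper assumes a unimodal posterior, so that a single contiguous interval is meaningful.

```python
import numpy as np


def equal_tailed_interval(samples, prob=0.9):
    """Interval with equal probability mass below and above it."""
    tail = (1.0 - prob) / 2.0
    lower, upper = np.quantile(samples, [tail, 1.0 - tail])
    return lower, upper


def highest_density_interval(samples, prob=0.9):
    """Narrowest interval containing `prob` of the samples (unimodal case)."""
    ordered = np.sort(samples)
    n = len(ordered)
    k = int(np.ceil(prob * n))  # number of samples inside the interval
    # Width of every window of k consecutive sorted samples
    widths = ordered[k - 1:] - ordered[: n - k + 1]
    start = int(np.argmin(widths))
    return ordered[start], ordered[start + k - 1]


def probability_above(samples, threshold):
    """Half-open interval: posterior probability of exceeding a threshold."""
    return float(np.mean(samples > threshold))


# Stand-in for posterior samples: a right-skewed Gamma distribution
rng = np.random.default_rng(123)
samples = rng.gamma(shape=2.0, scale=1.0, size=10000)

eti = equal_tailed_interval(samples)
hdi = highest_density_interval(samples)
p_above = probability_above(samples, 1.0)
print(f"90% ETI: [{eti[0]:.2f}, {eti[1]:.2f}]")
print(f"90% HDI: [{hdi[0]:.2f}, {hdi[1]:.2f}]")
print(f"P(parameter > 1) = {p_above:.2f}")
```

For a symmetric posterior, HDI and ETI nearly coincide; for skewed posteriors such as this one, the HDI is shifted towards the mode and is narrower.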

In the scope of this paper, we will solely focus on the Bayesian quantification of parameter uncertainty. Note that uncertainty of parameters should not be confused with the measurement uncertainty mentioned in the context of calibration in Section 1.2, which will be further explained in the following section.

2.4 Calibration models

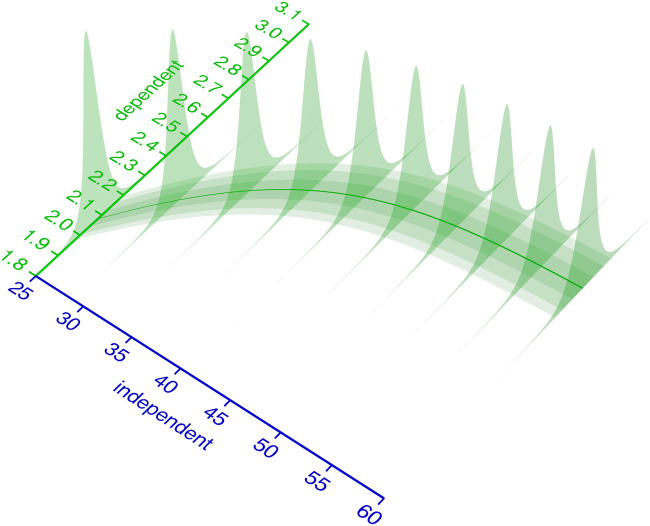

Coming back to the BIPM definition of calibration (Section 1.2), we can now associate aspects of that definition with the statistical modeling terminology. In Fig 2, the blue axis “independent” variable corresponds to the “quantity values” from the BIPM definition. At every value of the independent variable, the calibration model (green) describes the probability distribution (green slices) of measurement responses. This corresponds to the “indications with associated measurement uncertainties” from the BIPM definition.

Fig 2. Relationship of independent and dependent variable.

The distribution of measurement responses (dependent variable) can be modeled as a function of the independent variable. This measurement response probability distribution (here: Student-t) is parametrized by its mean μ (solid green line) and spread parameters σ and ν. Some or all of the distribution's parameters are modeled as a function of the independent variable.

Neither the formal definition nor the conceptual framework presented in this study impose constraints on the kind of probability distribution that describes the measurement responses. Apart from the Normal distribution, a practitioner may choose a Student-t distribution if outliers are a concern. The Student-t distribution has a ν parameter that influences how much probability is attributed to the tails of the distribution (S1 Fig), or in other words how likely it is to observe extreme values. Other distributions such as Laplace or Huber distributions were also shown to be beneficial for outlier-corrupted data [21]. Depending on the measurement system at hand, a Lognormal, Gamma, Weibull, Uniform or other continuous distributions may be appropriate. Also discrete distributions such as the Poisson, Binomial or Categorical may be chosen to adequately represent the observation process. A corresponding example with a Poisson distribution for the dependent variable is included in the documentation [22].

For some distributions, including Normal and Student-t, the parameters may be categorized as location parameters affecting the median or spread parameters affecting the variance, while for many other distributions the commonly used parameterization is not as independent. The parameters of the probability distribution that models the measurement responses must be described as functions of the independent variable. In the example from Fig 2, a Student-t distribution with parameters {μ, scale, ν} is used. Its parameter μ is modeled with a logistic function, the scale parameter as a 1st order polynomial of μ and ν is kept constant. It should be emphasized that the choice of probability distribution and functions to model its parameters is completely up to the domain expert.

When coming up with the structure of a calibration model, domain knowledge about the measurement system should be considered, particularly for the choice of probability distribution. An exploratory scatter plot can help to select an adequate function for the location parameter of the distribution (μ in case of a Normal or Student-t). A popular choice for measurement systems that exhibit saturation kinetics is the (asymmetric) logistic function. Many other measurement systems can be operated in a “linear range”, hence a 1st order polynomial is an equally popular model for the location parameter of a distribution. To describe the spread parameters (σ, scale, ν, …), a 0th (constant) or 1st order (linear) polynomial function of the location parameter is often a reasonable choice.
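The structure described above can be sketched in plain NumPy/SciPy. The example below is a simplified illustration and deliberately does not use the calibr8 API: it assumes a symmetric four-parameter logistic function for μ (instead of the asymmetric variant), a 1st order polynomial of μ for the scale and a fixed ν. All function names, parameter values and the synthetic data are hypothetical.

```python
import numpy as np
from scipy import optimize

from scipy import stats


def logistic(x, lower, upper, x_mid, slope):
    """Symmetric logistic function with lower and upper saturation limits."""
    return lower + (upper - lower) / (1.0 + np.exp(-slope * (x - x_mid)))


def predict_dependent(x, theta, nu=5.0):
    """Parameters (mu, scale, nu) of the Student-t distribution at x."""
    lower, upper, x_mid, slope, scale_0, scale_1 = theta
    mu = logistic(x, lower, upper, x_mid, slope)
    scale = scale_0 + scale_1 * mu  # 1st order polynomial of mu
    return mu, scale, nu            # nu is kept constant


def negative_loglikelihood(theta, x, y):
    """Objective for fitting the calibration model by maximum likelihood."""
    mu, scale, nu = predict_dependent(x, theta)
    if np.any(scale <= 0):
        return np.inf  # invalid spread parameters are rejected
    return -np.sum(stats.t.logpdf(y, df=nu, loc=mu, scale=scale))


# Synthetic calibration data from hypothetical "true" parameters
rng = np.random.default_rng(42)
theta_true = np.array([0.1, 4.0, 5.0, 0.8, 0.02, 0.03])
x_cal = np.linspace(0.1, 12.0, 60)
mu_true, scale_true, nu_true = predict_dependent(x_cal, theta_true)
y_cal = mu_true + scale_true * rng.standard_t(nu_true, size=x_cal.size)

# Fit all six parameters, starting from a rough initial guess
theta_guess = np.array([0.0, 5.0, 4.0, 1.0, 0.1, 0.1])
fit = optimize.minimize(
    negative_loglikelihood,
    theta_guess,
    args=(x_cal, y_cal),
    method="Nelder-Mead",
    options={"maxiter": 5000, "maxfev": 5000},
)
theta_fit = fit.x
print("fitted parameters:", np.round(theta_fit, 3))
```

Swapping the distribution or the functions for μ and scale only requires changes in `predict_dependent` and `negative_loglikelihood`, which mirrors the freedom of choice left to the domain expert.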

After specifying the functions in the calibration model, the practitioner must fit the model (Section 2.2) and decide to stick with the result, or modify the functions in the model. This iteration between model specification and inspection is a central aspect of modeling. To avoid overfitting or lack of interpretability, we recommend finding the simplest model that is in line with domain knowledge about the measurement system, while minimizing the lack-of-fit.

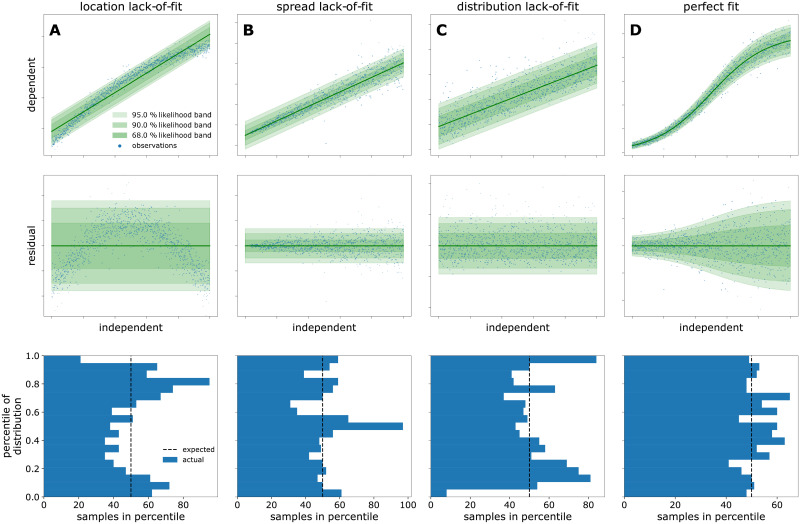

The term lack-of-fit is used to describe systematic deviation between the model fit and data. It refers not only to the trend of location and spread parameters but also to the kind of probability distribution. A residual plot is often instrumental to diagnose lack-of-fit and discriminate it from purely random noise in the observations. In Fig 3, different calibration models (top), residuals (middle) and the spread of data points along the percentiles of the probability distribution (bottom) illustrate how to diagnose a lack-of-fit. The blue data points in Fig 3C are Lognormal-distributed, but the calibration model assumes a Student-t distribution. In such cases, a large number of observations may be needed to spot that the chosen noise model does not match the data. As an alternative to a percentile-based visualization, practitioners may therefore opt to perform a Kolmogorov-Smirnov test, or visualize based on (empirical) cumulative distribution functions (ECDF). Ideally such a visualization should be prepared with ECDF confidence bands [23] and we invite the interested reader to contribute an implementation of this method to calibr8. A well-chosen model (Fig 3D) is characterized by the random spread of residuals without systematic deviation and the equivalence of the modeled and observed distribution. When enough calibration data points are available, the modeled and observed distributions can be compared via the occupancy of percentiles.

Fig 3. Diagnostic plots of model fits.

The raw data (blue dots) and corresponding fit is visualized in the top row alongside 95, 90, and 68% likelihood bands of the model. Linear and logistic models were fitted to synthetic data to show three kinds of lack-of-fit error (columns 1–3) in comparison to a perfect fit (column 4). The underlying structure of the data and model is as follows: A: Homoscedastic linear model, fitted to homoscedastic nonlinear data. B: Homoscedastic linear model, fitted to heteroscedastic linear data. C: Homoscedastic linear model, fitted to homoscedastic linear data that is Lognormal-distributed. D: Heteroscedastic logistic model, fitted to heteroscedastic logistic data. The residual plots in the middle row show the distance between the data and the modeled location parameter (green line). The bottom row shows how many data points fall into the percentiles of the predicted probability distribution. Whereas the lack-of-fit cases exhibit systematic under- and over-occupancy of percentiles, only in the perfect fit case all percentiles are approximately equally occupied.
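The percentile occupancy and the Kolmogorov-Smirnov test mentioned above can be sketched as follows. The example is a simplification under stated assumptions: the location parameter is taken as known rather than fitted, the noise model is a homoscedastic Normal distribution whose spread is estimated from the residuals, and the data are synthetic. The function name is hypothetical.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(7)
n = 2000
x = rng.uniform(0.0, 10.0, size=n)
mu = 2.0 * x + 1.0  # ASSUMPTION: known linear location parameter

# Case 1: noise matches the assumed Normal noise model
y_normal = mu + rng.normal(0.0, 1.0, size=n)
# Case 2: centered Lognormal noise, which violates the Normal noise model
lognormal_noise = rng.lognormal(mean=0.0, sigma=1.0, size=n)
y_lognormal = mu + lognormal_noise - np.exp(0.5)


def percentile_occupancy(y, mu, n_bins=10):
    """Count data points per percentile bin of the modeled distribution."""
    residuals = y - mu
    sigma = residuals.std(ddof=1)
    # Under a correct noise model these values are Uniform(0, 1) distributed
    u = stats.norm.cdf(residuals, loc=0.0, scale=sigma)
    counts, _ = np.histogram(u, bins=n_bins, range=(0.0, 1.0))
    # Estimating sigma from the same data makes the p-value approximate
    ks = stats.kstest(u, "uniform")
    return counts, ks.statistic, ks.pvalue


counts_ok, ks_ok, p_ok = percentile_occupancy(y_normal, mu)
counts_bad, ks_bad, p_bad = percentile_occupancy(y_lognormal, mu)
print("matching noise model:   ", counts_ok, f"KS statistic={ks_ok:.3f}")
print("mismatching noise model:", counts_bad, f"KS statistic={ks_bad:.3f}")
```

A roughly flat occupancy indicates that the modeled and observed distributions agree, whereas systematic over- and under-occupancy of bins points to a lack-of-fit in the noise model.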

Whereas the BIPM definition uses the word uncertainty in multiple contexts, we prefer to always use the term to describe uncertainty in a parameter, but never to refer to measurement noise. In other words, the parameter uncertainty can often be reduced by acquiring more data, whereas measurement noise is inherent and constant. In the context of calibration models, the methods for uncertainty quantification (Section 2.3) may be applied to the calibration model parameters, the independent variable, or both. Uncertainty quantification of calibration model parameters can be useful when investigating the structure of the calibration model itself, or when optimization does not yield a reliable fit. Because the independent variable is in most cases the parameter of interest in the application of a calibration model, the quantification of uncertainty about the independent variable is typically the goal. To keep the examples easy and understandable, we fix calibration model parameters at their maximum likelihood estimate. The calibr8 documentation includes an example where the calibration model parameters are estimated jointly together with the process model [22].

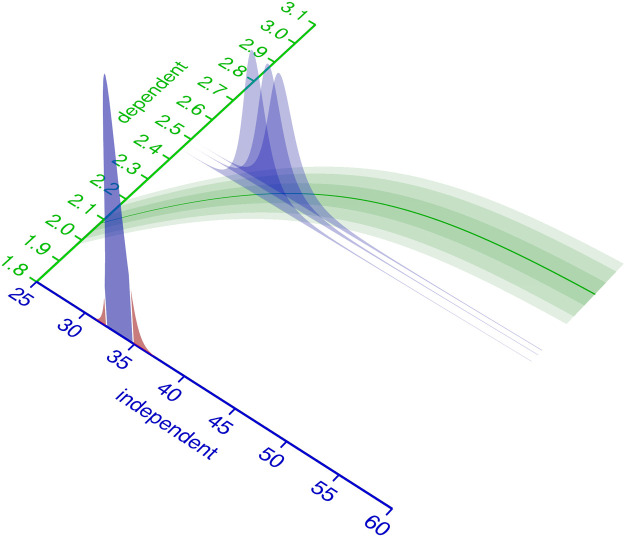

In Fig 4, the green likelihood bands on the ground of the 3D plot represent a calibration model with fixed parameters. To quantify the independent variable with associated Bayesian uncertainty, it must be considered as a random variable. Accordingly, $p(\mathrm{rv}_{independent} \mid d)$ from either a likelihoodist (Eq 2) or Bayesian (Eq 1) perspective is the desired outcome of the uncertainty quantification.

Fig 4. Uncertainty about the independent variable.

An intuition for inferring the independent variable from an observed dependent variable is to cut (condition) the green probability distribution model at the observed value (blue slices) and normalize its area to 1. The resulting (blue) slice is a potentially asymmetric probability distribution that describes the likelihood of the observation, given the independent variable. Its maximum (the maximum likelihood estimate) is the value of the independent variable that best describes the observation. For multiple observations, the probability density function for the independent variable corresponds to the product of the PDFs of the observations. The red shoulders mark the regions outside of the 90% equal-tailed interval.

Given a single observed dependent variable, the likelihoodist $p(\mathrm{rv}_{independent} \mid d)$ (Eq 2) corresponds to the normalized cross section of the likelihood bands at the observed dependent variable (Fig 4, blue slices). With multiple observations, $p(\mathrm{rv}_{independent} \mid d)$ becomes the product (superposition) of the elementwise likelihoods (Fig 4, blue slice at the axis). For a Bayesian interpretation of $p(\mathrm{rv}_{independent} \mid d)$ (Eq 1), the blue likelihood slice is superimposed with an additional prior distribution. More practical details on uncertainty quantification of the independent variable in a calibration model are given in Section 4.
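The procedure of slicing, multiplying and normalizing can be sketched on a grid. The example below assumes a hypothetical, already fitted calibration model (symmetric logistic μ, scale as a 1st order polynomial of μ, constant ν) with made-up parameter values and observations. It illustrates Eq 2 only and is not the calibr8 implementation.

```python
import numpy as np
from scipy import stats


def logistic(x, lower, upper, x_mid, slope):
    """Symmetric logistic function for the location parameter mu."""
    return lower + (upper - lower) / (1.0 + np.exp(-slope * (x - x_mid)))


# ASSUMPTION: fixed parameters of a previously fitted calibration model
LOWER, UPPER, X_MID, SLOPE = 0.1, 4.0, 5.0, 0.8
SCALE_0, SCALE_1, NU = 0.02, 0.03, 5.0


def loglikelihood(x, observations):
    """Log-likelihood of all observations for each candidate value in x."""
    mu = logistic(x, LOWER, UPPER, X_MID, SLOPE)
    scale = SCALE_0 + SCALE_1 * mu
    # Observations along axis 0, candidate values along axis 1
    logp = stats.t.logpdf(
        observations[:, None], df=NU, loc=mu[None, :], scale=scale[None, :]
    )
    # Product of elementwise likelihoods = sum of log-likelihoods
    return logp.sum(axis=0)


# Hypothetical replicate measurements of one sample
observations = np.array([1.25, 1.33, 1.36])

grid = np.linspace(0.0, 12.0, 12001)
dx = grid[1] - grid[0]
logL = loglikelihood(grid, observations)

# Eq 2: normalize the likelihood by its integral (flat prior)
density = np.exp(logL - logL.max())
density /= density.sum() * dx

# Maximum likelihood estimate and 90% equal-tailed interval
x_mle = grid[np.argmax(density)]
cdf = np.cumsum(density) * dx
eti_lower = grid[np.searchsorted(cdf, 0.05)]
eti_upper = grid[np.searchsorted(cdf, 0.95)]
print(f"MLE = {x_mle:.3f}, 90% ETI = [{eti_lower:.3f}, {eti_upper:.3f}]")
```

Multiplying `density` with a prior evaluated on the same grid before normalizing would turn this likelihoodist result into the Bayesian posterior of Eq 1.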

2.5 Process models

Most research questions are not answered by inferring a single variable from some observations. Instead, typical questions target the comparison between multiple conditions, the value of a latent (unobservable) parameter, or the inter- and extrapolation of a temporally evolving system. For example, one might extract a latent parameter that constitutes a key performance indicator, or make decisions based on predictions (extrapolation) of new scenarios. Data analysis for all of these and many more scenarios is carried out with models that are tailored to the system or process under investigation. Such models are typically derived from theoretical (textbook) understanding of the process under investigation and in terms of SI units, but are not concerned with the means of making observations. Henceforth, we use the term process model (ϕpm) to describe such models and discriminate them from calibration models (ϕcm) that are explicitly concerned with the observation procedure.

Whereas calibration models are merely univariate input/output relationships of a measurement system, process models may involve many parameters, hierarchy, multivariate predictions or more complicated functions such as ordinary or partial differential equations. For example, they may predict a temporal evolution of a system with differential equations, sharing some parameters between different conditions, while keeping others local. In life sciences, time series play a central role, hence our application example is also concerned with a temporally evolving system.

Nevertheless, calibration models ϕcm and process models ϕpm are models, and the methods for estimation of their parameters (Section 2.2) as well as uncertainty quantification (Section 2.3) apply to both. As described in Section 2.3, the likelihood is the ultimate all-rounder tool in parameter estimation. The key behind our proposed discrimination between calibration and process models is the observation that a calibration model can serve as a modular likelihood function for a process model (Eq 9).

| (9) |

Conceptually separating calibration models from process models has many advantages for the data analysis workflow in general. For example, the model components are logically separated, but the parameters can still be jointly estimated. After going into more detail about the implementation of calibration models and process models in Section 3, we will demonstrate their application and combination in Section 4.
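To illustrate how a calibration model can act as a modular likelihood, consider the following sketch. It assumes a hypothetical exponential growth process model and a hypothetical linear calibration model with normal noise; the names and parameter values are illustrative only and are not taken from this study.

```python
import math

def process_model(theta_pm, t):
    """Hypothetical process model: exponential growth, predicted in physical units."""
    x0, mu = theta_pm
    return x0 * math.exp(mu * t)

def calibration_loglikelihood(x, y_obs, theta_cm=(0.2, 1.5, 0.05)):
    """Hypothetical calibration model: normal noise around a linear trend."""
    intercept, slope, sigma = theta_cm
    mean = intercept + slope * x
    return -0.5 * ((y_obs - mean) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))

def loglikelihood(theta_pm, times, observations):
    """Log-likelihood of process model parameters, evaluated through the calibration model."""
    return sum(
        calibration_loglikelihood(process_model(theta_pm, t), y)
        for t, y in zip(times, observations)
    )

times = [0.0, 1.0, 2.0, 3.0]
true_theta = (0.5, 0.4)
# noise-free synthetic readouts, generated with the same calibration trend
observations = [0.2 + 1.5 * process_model(true_theta, t) for t in times]
```

The process model never sees the measurement responses directly. Replacing the measurement method only requires replacing `calibration_loglikelihood`, while `process_model` stays untouched.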

3 Material and methods

3.1 Experimental workflows

3.1.1 Automated cultivation platform

All experiments were conducted on a robotic platform with an integrated small-scale cultivation system. In our setup, a BioLector Pro microbioreactor system (m2p-labs GmbH, Baesweiler, Germany) is integrated into a Tecan Freedom EVO liquid handling robot (Tecan, Männedorf, Switzerland). The BioLector Pro is a device to quasi-continuously observe biomass, pH and dissolved oxygen (DO) during cultivation of microorganisms in specialized microtiter plates (MTPs). These rectangular plates comprise multiple reaction cavities called “wells”, usually with volumes in microliter or milliliter scale. The BioLector allows control of temperature and humidity while shaking the plates at adjustable frequencies between 800 and 1500 rpm.

The liquid handler, which is used to take samples for at-line measurements during cultivation, is surrounded by a laminar flow hood to ensure sterile conditions for liquid transfer operations. Next to the BioLector Pro, various other devices are available on the platform, including an Infinite M Nano+ microplate photometer (Tecan, Männedorf, Switzerland), a cooling carrier and a Hettich Rotanta 460 robotic centrifuge (Andreas Hettich GmbH & Co. KG, Tuttlingen, Germany). The overall setup is similar to the one described by Unthan et al. 2015 [8]. The automated platform makes it possible to perform growth experiments with different microorganisms, to autonomously take samples of the running process and to perform bioanalytical measurements, e.g. quantification of glucose. It is thus a device for miniaturised, automated bioprocess cultivation experiments.

In this work, we used specialized 48-well deepwell plates called FlowerPlates (MTP-48-B, m2p-labs GmbH, Baesweiler, Germany) that are commonly used for cultivations in the BioLector device. The plates are pre-sterilized and disposable and were covered with a gas-permeable sealing film with a pierced silicone layer for automation (m2p-labs GmbH, Baesweiler, Germany). The biomass was quasi-continuously detected via scattered light [24] at gain 3 with 4 minutes cycle time to obtain backscatter measurements. DO and pH were not measured since they are not relevant for the application examples. Both cultivation and biomass calibration experiments were conducted in the BioLector Pro at 30°C, 3 mm shaking diameter, 1400 rpm shaking frequency, 21% head-space oxygen concentration and ≥ 85% relative humidity.

3.1.2 Strain, media preparation, cell banking and cultivation

The wild-type strain Corynebacterium glutamicum ATCC 13032 [25] was used in this study. If not stated otherwise, all chemicals were purchased from Sigma–Aldrich (Steinheim, Germany), Roche (Grenzach-Wyhlen, Germany) or Carl Roth (Karlsruhe, Germany) in analytical quality.

Cultivations were performed with CGXII defined medium with the following final amounts per liter of distilled water: 20 g D-glucose, 20 g (NH4)2SO4, 1 g K2HPO4, 1 g KH2PO4, 5 g urea, 13.25 mg CaCl2 · 2 H2O, 0.25 g MgSO4 · 7 H2O, 10 mg FeSO4 · 7 H2O, 10 mg MnSO4 · H2O, 0.02 mg NiCl2 · 6 H2O, 0.313 mg CuSO4 · 5 H2O, 1 mg ZnSO4 · 7 H2O, 0.2 mg biotin, 30 mg protocatechuic acid. MOPS were used as buffering agent and the pH was adjusted to 7.0 using 4 M NaOH.

A working cell bank (WCB) was prepared from a shake flask culture containing 50 mL of the described CGXII medium and 10% (v/v) brain heart infusion (BHI) medium. It was inoculated with 100 μL cryo culture from a master cell bank stored at -80°C. The culture was incubated for approximately 16 hours in an unbaffled shake flask with 500 mL nominal volume at 250 rpm, 25 mm shaking diameter and 30°C. The culture broth was then centrifuged at 4000 × g for 10 minutes at 4°C and washed once in 0.9% (w/v) sterile NaCl solution. After centrifugation, the pellets were resuspended in a suitable volume of NaCl solution to yield a suspension with an optical density at 600 nm (OD600) of 60. The suspension was then mixed with an equal volume of 50% (w/v) sterile glycerol, resulting in cryo cultures of OD600≈30. Aliquots of 1 mL were quickly transferred to low-temperature freezer vials, frozen in liquid nitrogen and stored at -80°C.

3.1.3 Algorithmic planning of dilution series

All calibration experiments require a set of standards (reference samples) with known concentrations, sometimes spanning multiple orders of magnitude. Traditionally such standards are prepared by manually pipetting a serial dilution with a 2x dilution factor in each step. This results in a series of standards whose concentrations are evenly spaced on a logarithmic scale. While easily planned, a serial dilution generally introduces inaccuracies that accumulate with an increasing number of dilution steps. It is therefore desirable to plan a dilution series of reference standards such that the number of serial dilution steps is minimized.

To reduce the planning effort and allow for a swift and accurate preparation of the standards, we devised an algorithm that plans liquid handling instructions for preparation of standards. Our DilutionPlan algorithm considers constraints of a (R × C) grid geometry, well volume, minimum and maximum transfer volumes to generate pipetting instructions for human or robotic liquid handlers.

First, the algorithm reshapes a length R ⋅ C vector of sorted target concentrations into the user-specified (R × C) grid, typically corresponding to a microtiter plate. Next, it iteratively plans the transfer and filling volumes of grid columns, which are subject to the volume constraints. This column-wise procedure improves the compatibility with multi-channel manual pipettes or robotic liquid handlers. Diluting from a stock solution is prioritized over the (serial) dilution from already diluted columns. The result of the algorithm is a set of (machine-readable) pipetting instructions to create R ⋅ C single replicates with concentrations very close to the targets. We open-sourced the implementation as part of the robotools library [26].
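The column-wise planning logic can be sketched as follows. This is a strongly simplified illustration and not the robotools implementation: the function name, its arguments and the rule of trying the stock first and then the earliest prepared column are assumptions made for this example, and the sketch does not check whether a source well still holds enough volume for the transfers drawn from it.

```python
def plan_dilutions(stock, targets, rows, cols, vmax, min_transfer):
    """Plan column-wise dilutions.

    Returns one (source, transfer_volumes, diluent_volumes) tuple per column.
    """
    assert len(targets) == rows * cols
    ordered = sorted(targets, reverse=True)
    # column-major reshape: column j holds the j-th block of sorted targets
    columns = [ordered[j * rows:(j + 1) * rows] for j in range(cols)]
    plan = []
    for j, column in enumerate(columns):
        # candidate sources: the stock first, then already prepared columns
        candidates = [("stock", [stock] * rows)]
        candidates += [(f"column {k}", columns[k]) for k in range(j)]
        for name, source_conc in candidates:
            # row i of the target column is diluted from row i of the source
            transfers = [vmax * t / s for t, s in zip(column, source_conc)]
            if all(min_transfer <= v <= vmax for v in transfers):
                plan.append((name, transfers, [vmax - v for v in transfers]))
                break
        else:
            raise ValueError(f"column {j} cannot be prepared within the volume constraints")
    return plan

# 24 targets, evenly spaced on a logarithmic scale over three orders of magnitude
targets = [10 * 10 ** (-3 * i / 23) for i in range(24)]
plan = plan_dilutions(stock=20.0, targets=targets, rows=8, cols=3, vmax=1000.0, min_transfer=20.0)
```

In this example the first column is prepared from the stock, whereas the second and third columns fall back to a serial dilution step because a direct transfer from the stock would be smaller than the minimum transfer volume.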

As the accuracy of the calibration model parameter estimate increases with the number of calibration points, we performed all calibrations with the maximum number of observations that the respective measurement system can make in parallel. The calibration with 96 glucose and 48 biomass concentrations is covered in the following sections.

3.1.4 Glucose assay calibration

For the quantification of D-glucose, the commercial enzymatic assay kit “Glucose Hexokinase FS” (DiaSys Diagnostic Systems, Holzheim, Germany) was used. For the master mix, four parts buffer and one part enzyme solution were mixed manually. The master mix was freshly prepared for each experiment and incubated at room temperature for at least 30 minutes before use to stabilize its temperature. All other pipetting operations were performed with the robotic liquid handler. For the assay, 280 μL master mix were added to 20 μL analyte in transparent 96-well flat bottom polystyrene plates (Greiner Bio-One GmbH, Frickenhausen, Germany) and incubated for 6 minutes, followed by absorbance measurement at 365 nm. To treat standards and cultivation samples equally, both were diluted by a factor of 10 (100 μL sample/standard + 900 μL diluent) as part of the assay procedure.

As standards for calibration, 96 solutions with concentrations between 0.075 and were prepared from fresh CGXII cultivation medium (Section 3.1.2) with a concentration of D-glucose. The DilutionPlan algorithm (Section 3.1.3) was used to plan the serial dilution procedure with glucose-free CGXII media as the diluent, resulting in 96 unique concentrations, evenly distributed on a logarithmic scale. Absorbance results from the photometer were parsed with a custom Python package (not published) and paired with the concentrations from the serial dilution series to form the calibration dataset used in Section 4.1.2. 83 of the 96 concentration/absorbance pairs lie below and were used to fit a linear model in Section 4.1.1.

3.1.5 Biomass calibration

Calibration data for the biomass/backscatter calibration model (Section 4.1.2) was acquired by measuring 48 different biomass concentrations at cultivation conditions (Section 3.1.2) in the BioLector Pro. 100 mL C. glutamicum WT culture was grown overnight on glucose CGXII medium (Section 3.1.2) in two unbaffled 500 mL shake flasks with 50 mL culture volume each (N = 250 rpm, r = 25 mm). The cultures were harvested in the stationary phase, pooled, centrifuged and resuspended in 25 mL 0.9% (w/v) NaCl solution. The highly concentrated biomass suspension was transferred into a magnetically stirred 100 mL trough on the liquid handler for automated serial dilution with logarithmically evenly spaced dilution factors from 1× to 1000×. The serial dilution was prepared by the robotic liquid handler in a 6 × 8 (48-well square) deep well plate (Agilent Part number 201306–100) according to the DilutionPlan (Section 3.1.3). Six aliquots of 800 μL biomass stock solution were transferred to previously dried and weighed 2 mL tubes, immediately after all transfers of stock solution to the 48-well plate had occurred. The 2 mL tubes were frozen at -80°C, lyophilized overnight, dried again at room temperature in a desiccator overnight and finally weighed again to determine the biomass concentration in the stock solution.

After a column in the 48-well plate was diluted with 0.9% (w/v) NaCl solution, the column was mixed twice by aspirating 950 μL at the bottom of the wells and dispensing above the liquid surface. The transfers for serial dilutions (columns 1 and 2) and to the 48-well FlowerPlate were executed right after mixing to minimize the effects of biomass sedimentation as much as possible. The FlowerPlate was sealed with a gas-permeable sealing foil (product number F-GP-10, m2p-labs GmbH, Baesweiler, Germany) and placed in the BioLector Pro device. The 1 h BioLector process for the acquisition of calibration data was programmed with a shaking frequency profile of 1400, 1200, 1500, 1000 rpm while maintaining 30°C chamber temperature and measuring backscatter with gain 3 in cycles of 3 minutes.

The result file was parsed with the bletl Python package [27] to extract backscatter measurements made at 1400 rpm shaking frequency. A log(independent) asymmetric logistic calibration model was fitted as described in Section 4.1.2. The linear calibration model for comparison purposes (Section 4.2.3) was implemented with its intercept fixed to the background signal predicted by the asymmetric logistic model (). It was fitted to a subset of calibration points approximately linearly spaced at 30 different biomass concentrations from 0.01 to .

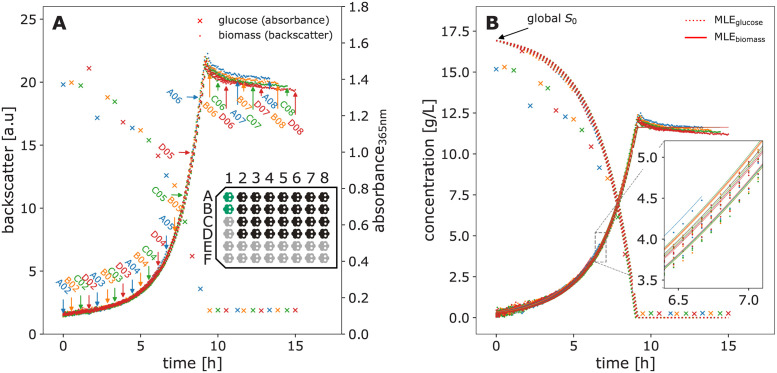

3.1.6 Microbial growth experiment

Cultivations with C. glutamicum were performed in the automated cultivation platform (Section 3.1.1) under the described conditions. CGXII medium with glucose and without BHI was used as cultivation medium. To start the growth experiments, the liquid handler was used to transfer 20 μL of a WCB aliquot into the first column of FlowerPlate wells, which were pre-filled with 780 μL medium. These wells were run as a preculture. When precultures reached a backscatter readout of 15, which corresponds to a cell dry weight of approximately , the inoculation of the main culture wells was triggered. 780 μL medium were distributed into each main culture well (columns 2–8) and allowed to warm up for approximately 15 minutes. Preculture wells A01 and B01 were pooled and 20 μL culture broth was transferred to each main culture well, resulting in 800 μL final volume. The theoretical biomass concentration at the start of the main cultures is accordingly. This strategy was used to avoid a lag-phase with non-exponential growth.

Backscatter measurements of biomass concentration were acquired continuously, while main culture wells were harvested at predetermined time points to measure glucose concentrations in the supernatant. The time points were chosen between 0 and 15 hours after the inoculation of main cultures to cover all growth phases. For harvesting, the culture broth was transferred to a 1 mL deep-well plate by the liquid handler. The plate was centrifuged at 3190 × g at 4°C for 5 minutes and the supernatant was stored on a 1 mL deep well plate chilled to 4°C. The glucose assay was performed after all samples were taken.

3.2 Computational methods

All analyses presented in this study were performed with recent versions of Python 3.7, PyMC ==3.11.2 [28], ArviZ >=0.9 [29], PyGMO >=2.13 [30], matplotlib >=3.1 [31], NumPy >=1.16 [32], pandas >=0.24 [33, 34], SciPy >=1.3 [35] and related packages from the Python ecosystem. For a full list of dependencies and exact versions see the accompanying GitHub repository [36].

The two packages presented in this study, calibr8 and murefi, may be installed via semantically versioned releases on PyPI. Source code, documentation and detailed release notes are available through their respective GitHub projects [37, 38].

3.2.1 Asymmetric logistic function

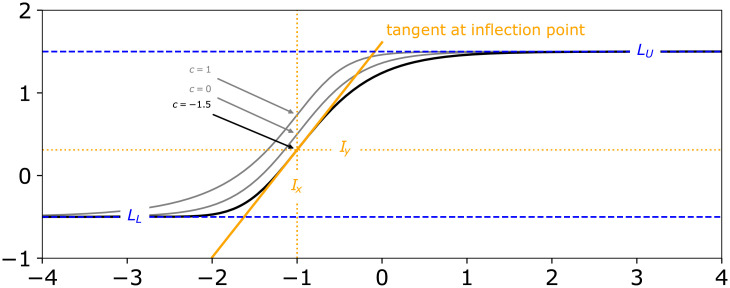

The asymmetric, five-parameter logistic function (also known as Richards' curve) was previously shown to be a good model for many applications [39], but it is often defined in a parameterization (Eq 10) that is non-intuitive. Some parametrizations even introduce a sixth parameter to make the specification more intuitive, but this comes at the cost of structural non-identifiability [40, 41]. Furthermore, in the most commonly found parametrization (Eq 10), one parameter is constrained to be strictly positive. We also found that structural non-identifiability between the parameters makes it difficult to define an initial guess and bounds to reliably optimize a model based on this parametrization.

| (10) |

To make calibration model fitting more user-friendly, we reparameterized the commonly used form such that all five parameters are intuitively interpretable and structurally independent (Fig 5). With our reparameterization (Eq 11), the 5-parameter asymmetric logistic function is parameterized by lower limit LL, upper limit LU, inflection point x-coordinate Ix, slope at inflection point S and an asymmetry parameter c. At c = 0, the y-coordinate of the inflection point (Iy) lies centered between LL and LU. Iy moves closer to LU when c > 0 and accordingly closer to LL when c < 0 (Fig 5, black and gray curves). An interactive version of Fig 5 can be found in a Jupyter Notebook in the calibr8 GitHub repository [37].

Fig 5. Reparametrized asymmetric logistic function.

When parametrized as shown in Eq 11, each of the 5 parameters can be manipulated without influencing the others. Note that, for example, the asymmetry parameter c can be changed without affecting the x-coordinate of the inflection point (Ix), or the slope S at the inflection point (gray vs. black).

For readability and computational efficiency, we used SymPy [42] to apply common subexpression elimination to Eq 11 and to our implementation (S1 File). The stepwise derivation from Eq 10 to Eq 11 is shown in S1 File and in a Jupyter Notebook in the calibr8 GitHub repository [37].

| (11) |

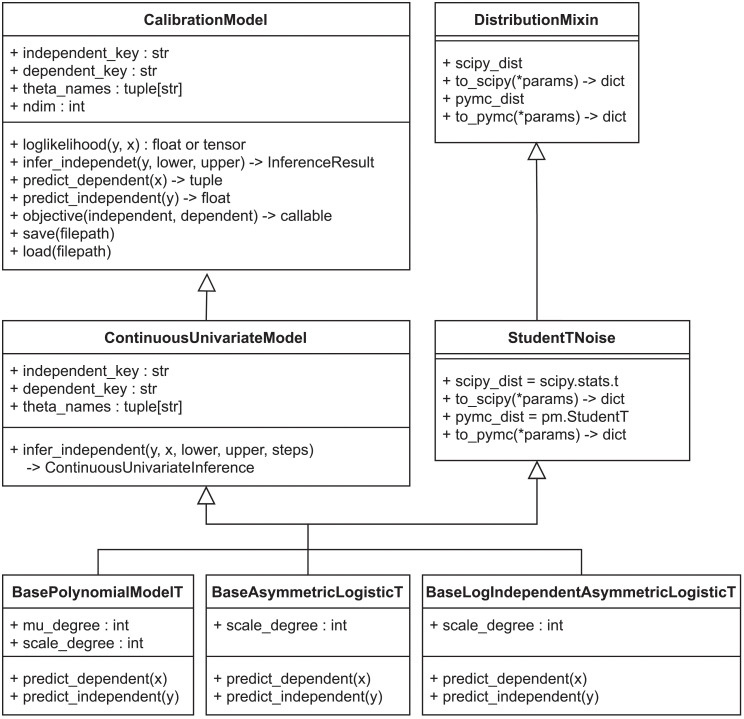

3.2.2 calibr8 package for calibration models and modular likelihoods

With calibr8 we present a lightweight Python package that specializes in the definition and modular implementation of non-linear calibration models for calibration and modeling workflows.

The calibr8 application programming interface (API) was designed such that all calibration models are implemented as classes that inherit from calibr8.CalibrationModel, which implements properties and methods that are common to all calibration models (Fig 6). The common interface simplifies working with calibration models in a data analysis or modeling workflow. For a detailed explanation of classes and inheritance in calibr8 we refer the interested reader to the documentation [22]. For example, the CalibrationModel.objective can be used to create objective functions to optimize the model parameters. The objective relies on the loglikelihood method to compute the sum of log-likelihoods from independent and dependent variables. It uses the predict_dependent method internally to obtain the parameters of the probability distribution describing the dependent variables, conditioned on the independent variable.

Fig 6. calibr8 class diagram.

All calibr8 models inherit from the same CalibrationModel and DistributionMixin classes that define attributes, properties and method signatures that are common to all calibration models. Some methods, like loglikelihood() or objective() are implemented by CalibrationModel directly, whereas others are implemented by the inheriting classes. Specifically the predict_* methods depend on the choice of the domain expert. With a suite of Base*T classes, calibr8 provides base classes for models based on Student-t distributed observations. A domain expert may start from any of these levels to implement a custom calibration model for a specific application.

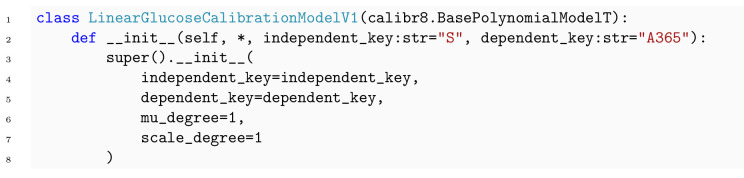

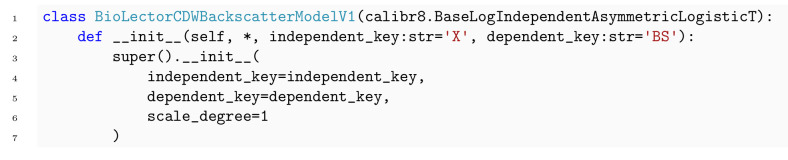

Through its inheritance-based design, the calibr8.CalibrationModel gives the domain expert full control over the choice of trend functions and probability distributions. Conveniently, calibr8 already implements functions such as polynomial, logistic and asymmetric_logistic, as well as base classes for commonly found noise models. By leveraging these base models, the implementation of a user-defined calibration model reduces to just a few lines of code (Box 1 Code 1 and Box 2 Code 2). In the current version we implemented base classes for continuous univariate and multivariate calibration models and anticipated models with discrete independent variables.

Box 1. Code 1. Implementation of glucose/absorbance calibration model using convenience type.

|

Box 2. Code 2. Implementation of CDW/backscatter calibration model using convenience type.

|
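Independently of the calibr8 API, the division of labor between a generic base class and a domain-specific subclass can be sketched in plain Python. All class names below are hypothetical and the example is not the calibr8 implementation; it only mirrors the described interplay, in which an objective relies on a log-likelihood method that in turn calls predict_dependent to obtain the parameters of a Student-t distribution.

```python
import math

class CalibrationModelSketch:
    """Hypothetical stand-in for a calibration model base class."""

    def __init__(self, independent_key, dependent_key, theta_names):
        self.independent_key = independent_key
        self.dependent_key = dependent_key
        self.theta_names = tuple(theta_names)

    def predict_dependent(self, x, theta):
        """Return (mu, scale, df) of a Student-t distribution; left to subclasses."""
        raise NotImplementedError

    def loglikelihood(self, y, x, theta):
        """Sum of Student-t log-densities of observations y at independent values x."""
        total = 0.0
        for xi, yi in zip(x, y):
            mu, scale, df = self.predict_dependent(xi, theta)
            z = (yi - mu) / scale
            total += (
                math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi) - math.log(scale)
                - (df + 1) / 2 * math.log1p(z * z / df)
            )
        return total

    def objective(self, independent, dependent):
        """Create a function of theta that returns the negative log-likelihood."""
        def negative_loglikelihood(theta):
            return -self.loglikelihood(dependent, independent, theta)
        return negative_loglikelihood


class LinearModelSketch(CalibrationModelSketch):
    """Domain-specific choice: linear trend with constant scale."""

    def __init__(self):
        super().__init__("glucose", "absorbance", ["intercept", "slope", "scale", "df"])

    def predict_dependent(self, x, theta):
        intercept, slope, scale, df = theta
        return intercept + slope * x, scale, df


model = LinearModelSketch()
nll = model.objective(independent=[0.0, 1.0, 2.0, 3.0], dependent=[0.1, 0.6, 1.1, 1.6])
```

The returned function `nll` can be passed to any minimizer. Only `predict_dependent` had to be written for the specific application.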

The implementations depicted in Fig 6 are fully compatible with aesara.Variable inputs, resulting in TensorVariable outputs. Aesara is a graph computation framework that auto-differentiates computation graphs written in Python and compiles functions that evaluate with high performance [43]. This way, the loglikelihood function of a CalibrationModel can be auto-differentiated and compiled, thus facilitating efficient computation with optimization or gradient-based MCMC sampling algorithms (Section 3.2.6). For more details about the implementation, please refer to the documentation and code of the calibr8 package [37].

Convenience features. To facilitate modeling workflows, calibr8 implements convenience functions for optimization (fit_scipy, fit_pygmo) and creation of diagnostic plots (calibr8.plot_model) as shown in Section 2.4 and Section 4.1.2. As explained in Section 2.4, the residual plot on the right of the resulting figure is instrumental to judge the quality of the model fit.

Standard properties of the model, estimated parameters and calibration data can be saved to a JSON file via the CalibrationModel.save method. The saved file includes additional information about the type of calibration model and the calibr8 version number (e.g. S3 File) to support good versioning and data provenance practices. When the CalibrationModel.load method is called to instantiate a calibration model from a file, standard properties of the new instance are set and the model type and calibr8 version number are checked for compatibility.
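The idea of storing model type and version alongside parameters and data can be sketched with the standard library. The file layout, the field names and the rule that only the major version must match are assumptions of this example and do not describe the actual calibr8 file format or its compatibility check.

```python
import json
import os
import tempfile

SKETCH_VERSION = "1.2.3"  # hypothetical version string of the saving package

def save_model(path, model_type, theta_names, theta_fitted, cal_independent, cal_dependent):
    """Write model type, version, parameters and calibration data to a JSON file."""
    payload = {
        "model_type": model_type,
        "package_version": SKETCH_VERSION,
        "theta_names": list(theta_names),
        "theta_fitted": list(theta_fitted),
        "cal_independent": list(cal_independent),
        "cal_dependent": list(cal_dependent),
    }
    with open(path, "w", encoding="utf-8") as file:
        json.dump(payload, file, indent=2)

def load_model(path, expected_type):
    """Read a saved model and reject files of another model type or major version."""
    with open(path, encoding="utf-8") as file:
        payload = json.load(file)
    if payload["model_type"] != expected_type:
        raise TypeError(f"file contains a {payload['model_type']}, expected {expected_type}")
    # assumption of this sketch: only the major version has to match
    if payload["package_version"].split(".")[0] != SKETCH_VERSION.split(".")[0]:
        raise ValueError("file was saved with an incompatible major version")
    return payload

path = os.path.join(tempfile.mkdtemp(), "glucose_model.json")
save_model(path, "LinearGlucoseModel", ["intercept", "slope"], [0.1, 0.5], [0.0, 1.0], [0.1, 0.6])
restored = load_model(path, "LinearGlucoseModel")
```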

3.2.3 Numerical inference

To numerically infer the posterior distribution of the independent variable, given one or more observations, infer_independent implements a multi-step procedure. The three outputs of this procedure are a vector of posterior probability evaluations, densely resolved around the locations of high probability mass, and the bounds of the equal-tailed as well as the highest-density intervals (ETI, HDI) corresponding to a user-specified credible interval probability.

In the first step, the likelihood function is integrated in the user-specified interval [lower, upper] with scipy.integrate.quad. Second, we evaluate its cumulative distribution function (CDF) at 10 000 locations in [lower, upper] and determine locations closest to the ETI99.999%. Next, we re-evaluate the CDF at 100 000 locations in the ETI99.999% to obtain it with sufficiently high resolution in the region of highest probability. Both ETI and HDI with the (very close to) user-specified ci_prob are obtained from the high-resolution CDF. Whereas the ETI is easily obtained by finding the CDF evaluations closest to the corresponding lower and upper probability levels, the HDI must be determined through optimization (Eq 12).

| (12) |
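The difference between the two interval types can be illustrated with a grid-based sketch. It deviates from the procedure described above in two labeled ways: it uses a single evenly spaced grid instead of the two-stage refinement, and it finds the HDI by an exhaustive scan over grid intervals rather than by optimization. The function names and the skewed example density are assumptions of this example.

```python
import math

def cdf_on_grid(pdf, lower, upper, n=10_001):
    """Evaluate a normalized CDF on an evenly spaced grid (trapezoidal rule)."""
    step = (upper - lower) / (n - 1)
    grid = [lower + i * step for i in range(n)]
    dens = [pdf(x) for x in grid]
    cdf = [0.0]
    for i in range(1, n):
        cdf.append(cdf[-1] + (dens[i - 1] + dens[i]) / 2 * step)
    total = cdf[-1]
    return grid, [value / total for value in cdf]

def eti(grid, cdf, ci_prob):
    """Equal-tailed interval from the grid locations closest to the tail levels."""
    tail = (1 - ci_prob) / 2
    i_lower = min(range(len(cdf)), key=lambda i: abs(cdf[i] - tail))
    i_upper = min(range(len(cdf)), key=lambda i: abs(cdf[i] - (1 - tail)))
    return grid[i_lower], grid[i_upper]

def hdi(grid, cdf, ci_prob):
    """Narrowest grid interval that contains at least ci_prob probability mass."""
    best = (grid[0], grid[-1])
    j = 0
    for i in range(len(grid)):
        # advance the upper end until the interval holds enough mass
        while j < len(grid) - 1 and cdf[j] - cdf[i] < ci_prob:
            j += 1
        if cdf[j] - cdf[i] < ci_prob:
            break  # intervals starting at i or later cannot hold enough mass
        if grid[j] - grid[i] < best[1] - best[0]:
            best = (grid[i], grid[j])
    return best

# right-skewed example density, proportional to x * exp(-x)
grid, cdf = cdf_on_grid(lambda x: x * math.exp(-x), 0.0, 20.0)
eti_90 = eti(grid, cdf, 0.9)
hdi_90 = hdi(grid, cdf, 0.9)
```

For this asymmetric density the HDI is narrower than the ETI and shifted towards the mode, whereas both intervals coincide for symmetric, unimodal densities.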

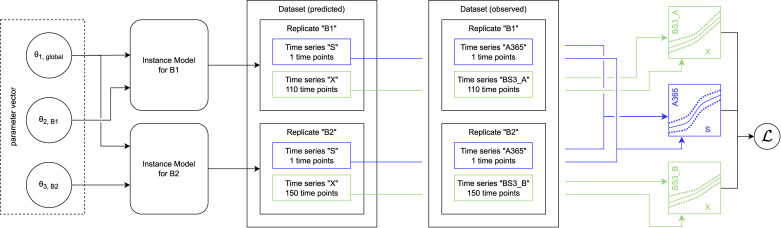

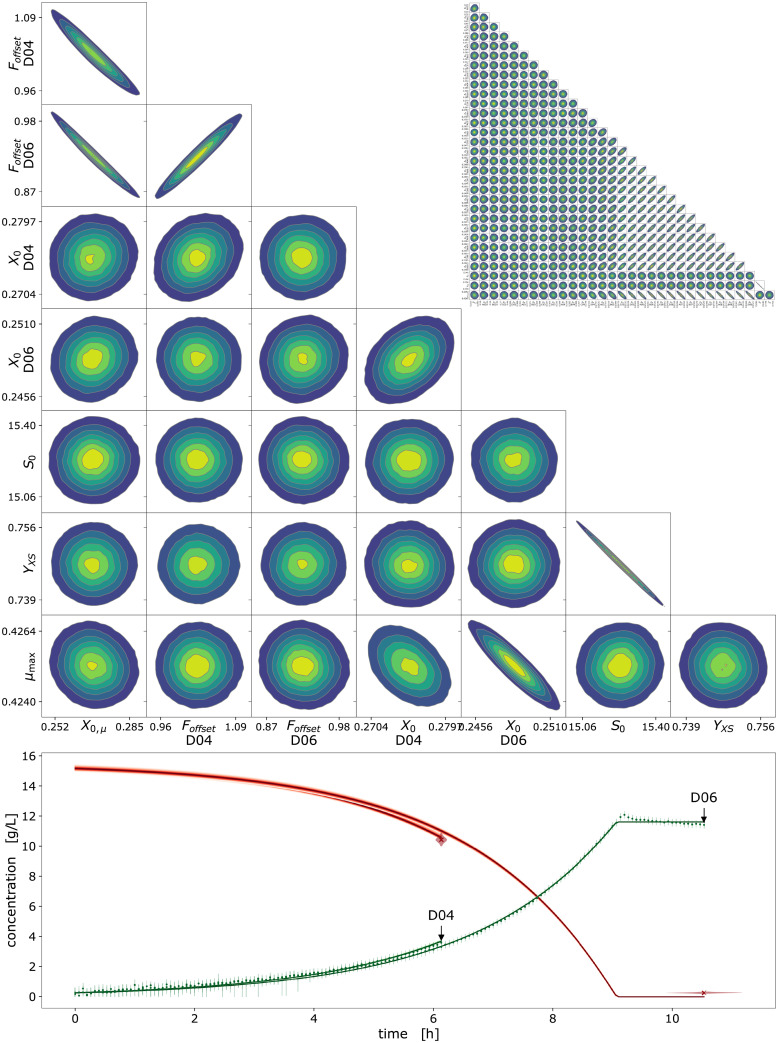

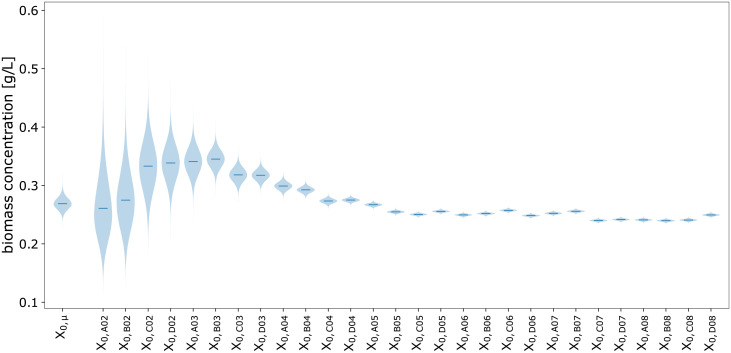

3.2.4 murefi package for building multi-replicate ODE models

Models of biochemical processes are traditionally set up to describe the temporal progression of an individual system, such as a reaction vessel. Experimental data, however, is commonly obtained from multiple reaction vessels in parallel, often run under different conditions to maximize information gain. This discrepancy between the prototypical model of the biological system and the heterogeneous experimental data to be fitted is typically resolved by replicating the model for all realizations of the biological system in the dataset. Along with the replication of the model, some model parameters may be kept global, while others can be local to a subset of the replicates, for example due to batch effects or different start conditions.

With a Python package we call murefi (multi-replicate fitting), we implemented data structures, classes and auxiliary functions that simplify the implementation of models for such heterogeneous time series datasets. It seamlessly integrates with calibr8 to construct likelihood-based objective functions for optimization or Bayesian inference. To enable the application of efficient optimization or sampling algorithms, the use of automatic differentiation to obtain gradients of the likelihood w.r.t. input parameters is highly desirable. Various methods for automatic differentiation of ODE models are available, but their efficiency is closely connected to the implementation and size of the model [44]. In murefi we implemented support for sunode [45], a recently implemented Python wrapper around the SUNDIALS suite of nonlinear and differential/algebraic equation solvers [46]. When used in the context of a PyMC model, a process model created with calibr8 and murefi can therefore be auto-differentiated, solved, optimized and MCMC-sampled with particularly high computational efficiency.

Structure of time series data and models. To accommodate the heterogeneous structure of time series experiments in biological applications, we implemented a data structure of three hierarchy levels. The murefi.Timeseries object represents the time and state vectors of a single state variable or observation time series. To allow association of state and observed variables via calibr8 calibration models, the Timeseries is initialized with independent_key and dependent_key. Multiple Timeseries are bundled to a murefi.Replicate, which represents either the observations obtained from one reaction vessel, or the predictions made by a process model. Consequently, the murefi.Dataset aggregates replicates of multiple reaction vessels, or the model predictions made for them (Fig 7, center). To allow for a separation of data preprocessing and model fitting in both time and implementation, a murefi.Dataset can be saved as and loaded from a single HDF5 file [47, 48].
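The three hierarchy levels can be pictured with plain dataclasses. The classes below are hypothetical illustrations of the described structure and not the murefi classes; in particular, the choice of dictionary keys and the example values are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class TimeseriesSketch:
    """Time and value vectors of one state variable or one observed variable."""
    t: List[float]
    y: List[float]
    independent_key: str  # name of the state variable, e.g. "X"
    dependent_key: str    # name of the observed variable, e.g. "backscatter"

    def __post_init__(self):
        if len(self.t) != len(self.y):
            raise ValueError("t and y must have the same length")

@dataclass
class ReplicateSketch:
    """All time series belonging to one reaction vessel."""
    rid: str
    series: Dict[str, TimeseriesSketch] = field(default_factory=dict)

    def add(self, ts: TimeseriesSketch) -> None:
        self.series[ts.dependent_key] = ts

@dataclass
class DatasetSketch:
    """Replicates of multiple reaction vessels."""
    replicates: Dict[str, ReplicateSketch] = field(default_factory=dict)

    def add(self, replicate: ReplicateSketch) -> None:
        self.replicates[replicate.rid] = replicate

dataset = DatasetSketch()
for rid in ("B1", "B2"):
    replicate = ReplicateSketch(rid)
    replicate.add(TimeseriesSketch([0.0, 0.5, 1.0], [2.1, 2.6, 3.4], "X", "backscatter"))
    replicate.add(TimeseriesSketch([1.0], [0.9], "S", "absorbance"))
    dataset.add(replicate)
```

Note that the two time series of a replicate may have different lengths and time points, as is typical when online backscatter readings are combined with a few at-line samples.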

Fig 7. Data structures and computation graph of murefi models.

Elements in a comprehensive parameter vector are mapped to replicate-wise model instances. In the depicted example, the model instances for both replicates “B1” and “B2” share θ1,global as the first element in their parameter vectors. The second model parameter θ2 is local to the replicates, hence the full parameter vector (left) is comprised of three elements. Model predictions are made such that they resemble the structure of the observed data, having the same number of time points for each predicted time series. An objective function calculating the sum of log-likelihoods is created by associating predicted and observed time series via their respective calibration models. By associating the calibration models based on the dependent variable name, a calibration model may be re-used across multiple replicates, or kept local if, for example, the observations were obtained by different methods.

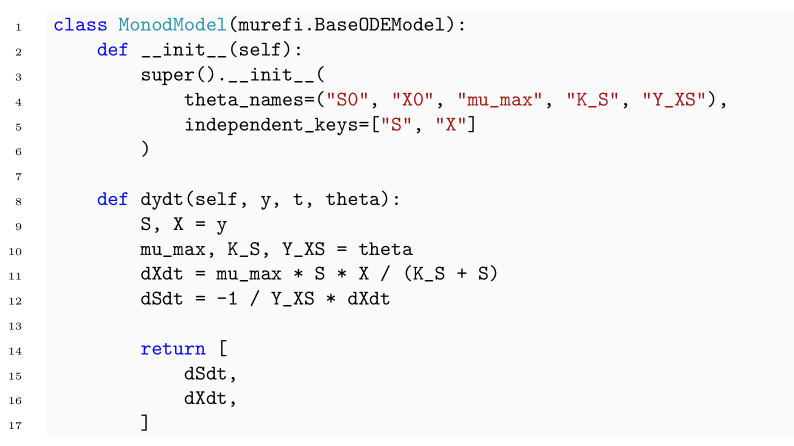

To describe a reaction system by a system of ODEs, a new class is implemented by subclassing the murefi.BaseODEModel convenience type. In the constructor of the class, the names and order of parameters and state variables are defined, whereas the differential equations are implemented in a dydt instance method. An example is shown in Box 3 Code 3 with the implementation of the Monod kinetics for microbial growth.

Box 3. Code 3. Implementation of Monod ODE model using murefi.BaseODEModel convenience type.

|
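Without murefi or sunode, the Monod kinetics can be sketched with a hand-written right-hand side and a classical Runge-Kutta integrator. The order of state variables and parameters, the parameter values and the fixed-step solver are assumptions of this example; the solver merely stands in for the SUNDIALS solvers used in this work.

```python
def monod_dydt(y, t, theta):
    """Monod kinetics with states y = (S, X) and parameters theta = (mu_max, K_S, Y_XS)."""
    S, X = y
    mu_max, K_S, Y_XS = theta
    S = max(S, 0.0)  # guard against tiny negative substrate values
    dXdt = mu_max * S / (K_S + S) * X
    dSdt = -dXdt / Y_XS
    return (dSdt, dXdt)

def solve_rk4(dydt, y0, times, theta, substeps=200):
    """Integrate with the classical fourth-order Runge-Kutta scheme.

    Returns the state at every entry of `times`.
    """
    y = tuple(y0)
    trajectory = [y]
    for t_start, t_end in zip(times[:-1], times[1:]):
        h = (t_end - t_start) / substeps
        t = t_start
        for _ in range(substeps):
            k1 = dydt(y, t, theta)
            k2 = dydt(tuple(a + h / 2 * b for a, b in zip(y, k1)), t + h / 2, theta)
            k3 = dydt(tuple(a + h / 2 * b for a, b in zip(y, k2)), t + h / 2, theta)
            k4 = dydt(tuple(a + h * b for a, b in zip(y, k3)), t + h, theta)
            y = tuple(
                a + h / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
                for a, b1, b2, b3, b4 in zip(y, k1, k2, k3, k4)
            )
            t += h
        trajectory.append(y)
    return trajectory

times = [float(hour) for hour in range(16)]  # 0 to 15 h
theta = (0.42, 0.1, 0.6)                     # illustrative mu_max, K_S, Y_XS
trajectory = solve_rk4(monod_dydt, y0=(20.0, 0.25), times=times, theta=theta)
S_end, X_end = trajectory[-1]
```

Because substrate consumption and biomass formation are coupled through the yield coefficient, X + Y_XS · S stays constant along the trajectory, which provides a simple plausibility check for any implementation.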

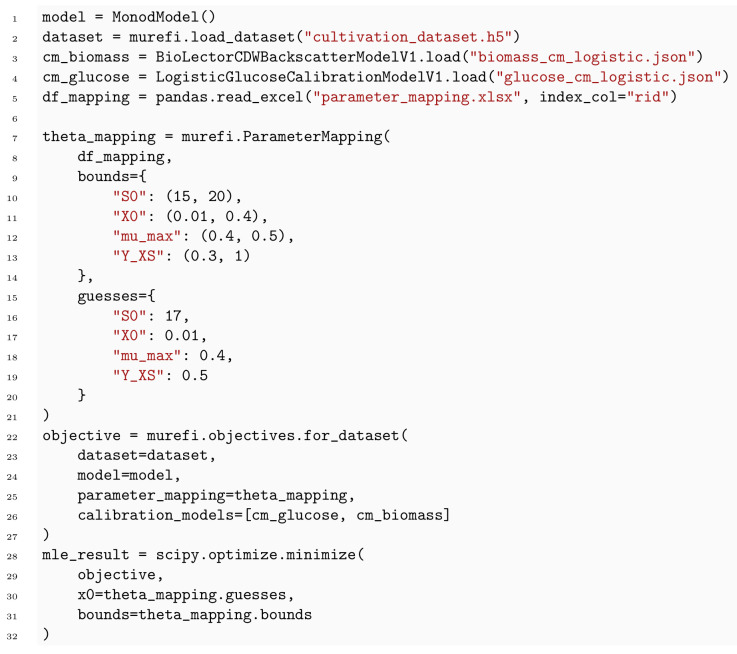

Parameter mapping and objective function

In addition to a murefi model instance, a murefi.Dataset and calibration models, a murefi.ParameterMapping must be defined to facilitate the creation of an objective function. This mapping specifies whether parameters are local or global and the rules with which they are shared between replicates. The ParameterMapping may be represented as a table, assigning each element of replicate-wise parameter vectors to constants or names of parameters in a comprehensive parameter vector. In Fig 7, the parameter mapping is depicted by arrows mapping elements of a 3-element comprehensive parameter vector to 2-element parameter vectors of the replicate-wise models. A table representation of the parameter mapping used to fit the Monod model in Section 4.2 is shown in S4 File.
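The table-like mapping from a comprehensive parameter vector to replicate-wise parameter vectors can be sketched with a dictionary. The function and parameter names are hypothetical and chosen to mirror the example of Fig 7; the sketch does not reproduce the murefi.ParameterMapping interface.

```python
def map_parameters(mapping, theta_names, theta):
    """Build replicate-wise parameter vectors from a comprehensive parameter vector.

    Entries of the mapping are either names of free parameters or numeric constants.
    """
    lookup = dict(zip(theta_names, theta))
    return {
        rid: tuple(lookup[entry] if isinstance(entry, str) else float(entry) for entry in row)
        for rid, row in mapping.items()
    }

# first parameter shared by both replicates, second parameter local to each replicate
mapping = {
    "B1": ("theta1_global", "theta2_B1"),
    "B2": ("theta1_global", "theta2_B2"),
}
theta_names = ("theta1_global", "theta2_B1", "theta2_B2")
per_replicate = map_parameters(mapping, theta_names, (0.4, 1.0, 2.0))
```

An optimizer or sampler only sees the three-element vector, while each replicate-wise model instance receives its own two-element vector.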

Model predictions are made such that the time points of the predicted time series match those of the observed data (Fig 7, center). Based on the (in)dependent_key, the predicted and observed Timeseries can be associated with each other and passed to the corresponding CalibrationModel.loglikelihood method to calculate . Note that this procedure conveniently allows for calibration models to be shared by multiple replicates, as well as making observations of one state variable with more than one analytical method.

An objective function performing the computation depicted in Fig 7 can be created with a single call to a convenience function. For compute-efficient optimization and specifically Bayesian parameter estimation, the elements in the parameter vector can also be Aesara tensors, resulting in the creation of a symbolic computation graph. The computation graph can not only be statically compiled but also auto-differentiated, if all operations in the graph are also auto-differentiable. This is already the case for standard calibr8 calibration models and is also available for murefi-based process models when the sunode [45] package is installed. A corresponding example of obtaining gradients from an ODE model with calibr8, murefi and sunode is included in the murefi documentation [49].

3.2.5 Optimization

In this work, optimization algorithms are involved at multiple steps of the workflow. Unless otherwise noted we used scipy.optimize.minimize with default settings to obtain the MLEs of calibration and process models. Our current implementation to compute HDIs (Section 3.2.3) uses scipy.optimize.fmin with an underlying Nelder-Mead simplex algorithm.

Initial guesses, as well as parameter bounds for maximum-likelihood optimization, were motivated from prior assumptions or exploratory plots of the data. Based on the intuitive parametrization of the asymmetric logistic (Section 3.2.1) we specified initial guesses for calibration models such that the model prediction from the guessed parameter vector was at least in the same order of magnitude as the data. For MLE of process model parameters, the guessed parameters were motivated from prior assumptions, e.g. the amount of used substrate or values obtained from literature research. Likewise, we specified bounds to be realistic both biologically and based on exploratory scatter plots of the data.

3.2.6 MCMC sampling

In contrast to optimization, MCMC sampling follows a very different paradigm. Whereas in MLE the likelihood function is iteratively evaluated to find its maximum, Bayesian inference aims to approximate the posterior probability distribution according to Eq 7.

Most sampling algorithms draw the posterior samples in the form of a Markov chain with an equilibrium distribution that matches the posterior probability distribution. While early MCMC algorithms, such as Random-walk Metropolis [50], are conceptually simple and easy to implement, they are computationally inefficient on problems with more than just a handful of dimensions [51, 52]. Instead of implementing inefficient algorithms by hand, practitioners can rely on state-of-the-art libraries for Bayesian inference. These libraries apply automatic transformations, provide diagnostic tools and implement much more efficient sampling algorithms that often use gradients for higher computational efficiency.

Probabilistic programming languages / libraries (PPL), such as PyMC [53], Pyro [54], Stan [55] or TensorFlow Probability [56] use automatic differentiation and typically implement at least one of the gradient-based sampling algorithms Hamiltonian Monte Carlo (HMC) or No-U-Turn Sampling (NUTS) [52]. While PPLs typically require a model to be implemented using their API, other libraries such as emcee [57] provide, for example, gradient-free ensemble algorithms that can be applied to black-box problems. PyMC, the most popular Python-based PPL, implements both gradient-based (HMC, NUTS) as well as gradient-free algorithms, such as Differential Evolution MCMC (DE-MCMC) [58], DE-MCMC-Z [51] or elliptical slice sampling [59] in Python, allowing easy integration with custom data processing and modeling code. In this study, PyMC was used to sample posterior distributions with either DE-MCMC-Z (pymc.DEMetropolisZ) or NUTS.

MCMC sampling of the process model. Whereas in DE-MCMC, proposals are informed by a random pair of other chains in a “population”, the DE-MCMC-Z version selects a pair of states from its own history, the “Z”-dimension. Compared to DE-MCMC, DE-MCMC-Z yields good results with fewer chains that can run independently. The pymc.DEMetropolisZ sampler differs from the original DE-MCMC-Z in a tuning mechanism: at the end of the tuning phase, a fraction of the sampling history given by tune_drop_fraction (90% by default) is discarded. This trick reliably cuts away unconverged “burn-in” history, leading to faster convergence.
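The proposal mechanism and the history truncation described above can be sketched for a single chain as follows. This is a simplified illustration in plain Python, not the PyMC implementation: the function name, the jump scale 2.38/sqrt(2d), the Gaussian noise term and all numeric settings in the usage example are assumptions made for this sketch.

```python
import math
import random


def de_mcmc_z(logp, x0, n_tune, n_draws, epsilon=1e-3, tune_drop_fraction=0.9, seed=0):
    """Single-chain DE-MCMC-Z sketch: proposals use differences of past states."""
    rng = random.Random(seed)
    d = len(x0)
    lamb = 2.38 / math.sqrt(2 * d)  # jump scale (assumed for this sketch)
    x, lp = list(x0), logp(x0)
    history, draws = [list(x0)], []
    for i in range(n_tune + n_draws):
        noise = [rng.gauss(0.0, epsilon) for _ in range(d)]
        if len(history) >= 2:
            # Pick two distinct past states of this chain (the "Z" history).
            z1, z2 = rng.sample(history, 2)
            x_new = [xi + lamb * (a - b) + e for xi, a, b, e in zip(x, z1, z2, noise)]
        else:
            # Not enough history yet: propose with noise only.
            x_new = [xi + e for xi, e in zip(x, noise)]
        lp_new = logp(x_new)
        if math.log(1.0 - rng.random()) < lp_new - lp:
            x, lp = x_new, lp_new
        history.append(list(x))
        if i == n_tune - 1:
            # End of tuning: drop the oldest part of the history so that
            # later proposals are not informed by unconverged states.
            history = history[int(tune_drop_fraction * len(history)):]
        if i >= n_tune:
            draws.append(list(x))
    return draws


def logp_normal_2d(x):
    # Hypothetical target: two independent standard normal parameters.
    return -0.5 * (x[0] ** 2 + x[1] ** 2)


draws = de_mcmc_z(logp_normal_2d, x0=[0.0, 0.0], n_tune=2000, n_draws=20000, epsilon=0.1)
mean0 = sum(s[0] for s in draws) / len(draws)
var0 = sum((s[0] - mean0) ** 2 for s in draws) / len(draws)
```

Because the step size is derived from the spread of previously visited states, the proposal adapts to the scale of the posterior without gradients, which makes the approach applicable to black-box likelihoods such as those of ODE-based process models.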

pymc.DEMetropolisZ was applied to sample the process model in Section 4.2.3. MCMC chains were initialized at the MAP to accelerate convergence of the DE-MCMC-Z sampler in the tuning phase. 50 000 tuning iterations per chain were followed by 500 000 iterations to draw posterior samples for further analysis. The DEMetropolisZ settings remained fixed at ( (default), ϵ = 0.0001) for the entire procedure.

The diagnostic from ArviZ [29] was used to check for convergence (all , S1 Table).

3.2.7 Visualization techniques

Plots were prepared in Python with a combination of Matplotlib [31], ArviZ and PyMC. We used POV-Ray to produce Figs 2 and 4 and https://diagrams.net for technical drawings. Probability densities were visualized with the pymc.gp.util.plot_gp_dist helper function, which overlays many polygons corresponding to percentiles of a distribution and thereby creates the colored bands seen in the plots of Section 4.2.4 and others. Posterior predictive samples were obtained by randomly drawing observations from the calibration model, based on independent values sampled from the posterior distribution. If not stated otherwise, the densities plotted for MCMC prediction results were obtained from at least 1000 posterior samples. The pair plots of 2-dimensional kernel density estimates of posterior marginals (e.g. S2 Fig) were prepared with ArviZ.
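The percentile bands underlying such density plots can be computed as in the following sketch, which uses only the standard library and omits the plotting itself. The helper names, the chosen percentile levels and the synthetic samples are hypothetical and serve only to illustrate the principle.

```python
import random


def percentile(sorted_values, q):
    """Linearly interpolated percentile (q in [0, 100]) of pre-sorted values."""
    pos = (len(sorted_values) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    return sorted_values[lo] + (sorted_values[hi] - sorted_values[lo]) * (pos - lo)


def density_bands(samples, levels=(50, 90, 98)):
    """Return {level: (lower, upper)} curves from samples[draw][x_index]."""
    n_x = len(samples[0])
    # Sort the draws separately at every x position.
    columns = [sorted(draw[j] for draw in samples) for j in range(n_x)]
    bands = {}
    for level in levels:
        tail = (100 - level) / 2.0
        lower = [percentile(col, tail) for col in columns]
        upper = [percentile(col, 100 - tail) for col in columns]
        bands[level] = (lower, upper)
    return bands


rng = random.Random(1)
x = [0.0, 1.0, 2.0, 3.0]
# Hypothetical predictive draws: a line with noise that grows along x.
samples = [[2.0 * xi + rng.gauss(0.0, 0.1 + 0.1 * xi) for xi in x] for _ in range(1000)]
bands = density_bands(samples)
```

Each pair of lower and upper curves encloses one polygon; drawing many such polygons with partial transparency yields bands that are darkest where the probability density is highest.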

4 Results and discussion

4.1 Application: Implementing (non-)linear calibration models with calibr8

Enzymatic assays are a common application of calibration models in life sciences, and the quantification of glucose is one of many popular examples. In this section, data from a glucose assay is used as a demonstration case for building calibration models with calibr8. First, the linear range of the assay is described by a linear calibration model. An extended concentration range is then explored by implementing a calibration model with a logistic trend of the location parameter. We examine a second calibration example that is nonlinear in its nature, namely the backscatter/biomass relationship of a commercially available cultivation device with online measurement, a BioLector Pro device (Section 3.1.1). Finally, we demonstrate how uncertainty estimates for biomass concentrations can be easily obtained with calibr8.

4.1.1 Linear calibration model

To acquire data for the establishment of a calibration model, 96 glucose standards between 0.001 and were subjected to the glucose assay. A frequent approach to calibration modeling in life sciences is to identify the linear range of an assay and to discard measurements outside this range. From a previous adaptation of the glucose assay for automation with liquid handling robotics, the linear range was expected to be up to (Holger Morschett, personal communication, 2019). Since samples are diluted by a factor of 10 before the assay, 83 glucose standards with concentrations below remain for a linear calibration model.

As described in Section 2.4, calibration models use a probability distribution to describe the relation between independent variable and measurement outcome, both subject to random noise. In this example, we chose a Student-t distribution; the change of its location parameter μ over the independent variable thus determines the trend of the calibration model. calibr8 provides a convenience class BasePolynomialModelT that was used to implement a glucose calibration model with linear trend (Box 1 Code 1). For the spread parameter scale, we also chose a linear function dependent on μ to account for random noise that increases with the absorbance readout of the assay. Both polynomial degrees can easily be adapted by changing the respective parameters mu_degree and scale_degree passed to the constructor of the convenience class. The degrees of freedom ν in a BasePolynomialModelT are estimated from the data as a constant. The mathematical notation of this model can be found in S2 File.
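The structure of such a model can be illustrated independently of calibr8 with a few lines of plain Python. The sketch below is not the calibr8 implementation; the function names, the parameter ordering in theta and the example data are hypothetical. It shows how a linear location parameter, a scale that is linear in μ and a constant ν combine into the log-likelihood that is maximized in MLE.

```python
import math


def student_t_logpdf(y, mu, scale, nu):
    """Log-density of a Student-t distribution with location, scale and nu."""
    z = (y - mu) / scale
    return (
        math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
        - 0.5 * math.log(nu * math.pi) - math.log(scale)
        - (nu + 1) / 2 * math.log1p(z * z / nu)
    )


def predict_dependent(x, theta):
    """Map concentration x to (mu, scale, nu) with two 1st-order polynomials."""
    intercept, slope, scale_intercept, scale_slope, nu = theta
    mu = intercept + slope * x                  # linear trend of the location
    scale = scale_intercept + scale_slope * mu  # spread grows linearly with mu
    return mu, scale, nu


def loglikelihood(theta, xs, ys):
    """Sum of log-densities of all observations under the calibration model."""
    total = 0.0
    for x, y in zip(xs, ys):
        mu, scale, nu = predict_dependent(x, theta)
        total += student_t_logpdf(y, mu, scale, nu)
    return total


# Hypothetical calibration data (concentration, readout) and two candidates.
xs = [0.1, 0.5, 1.0, 2.0]
ys = [0.12, 0.52, 1.01, 2.05]
theta_good = (0.0, 1.0, 0.01, 0.02, 5.0)
theta_bad = (0.0, 2.0, 0.01, 0.02, 5.0)
ll_good = loglikelihood(theta_good, xs, ys)
ll_bad = loglikelihood(theta_bad, xs, ys)
```

An optimizer would search the parameter vector theta for the maximum of loglikelihood; here, the candidate with the matching slope obtains the higher value.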

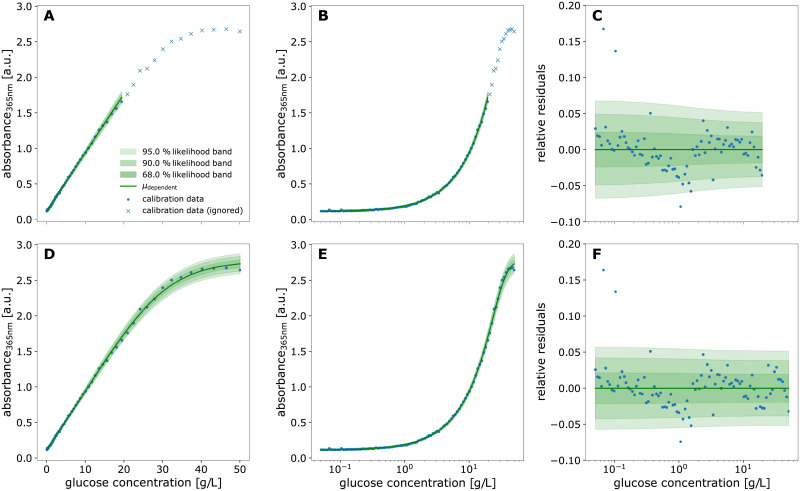

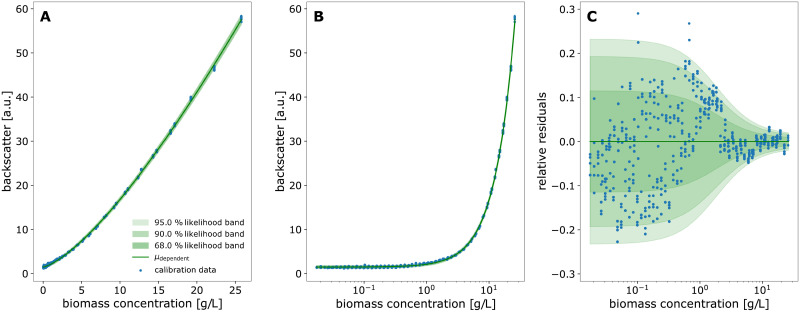

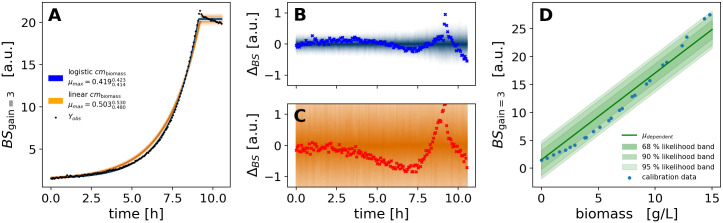

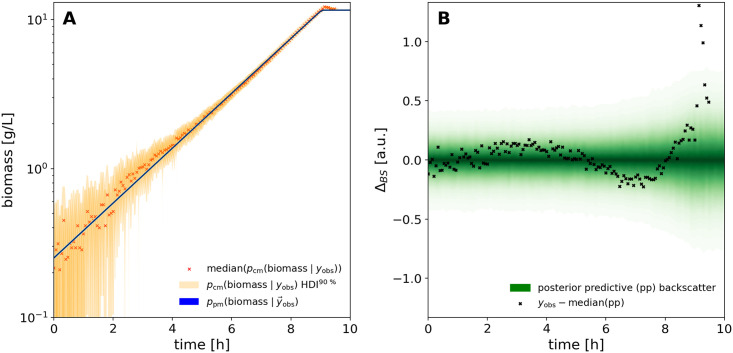

The calibration model resulting from MLE of location and spread parameters was plotted with another calibr8 convenience function (Fig 8A–8C). The plot shows the calibration model and measurement data (Fig 8A), the same relation with a logarithmic x-axis (Fig 8B) and the relative residuals of data and model predictions (Fig 8C). As is often recommended for biological assays, the glucose concentrations of the dilution series were evenly spaced on a logarithmic scale [60, 61], thus ensuring a higher accuracy of the model in the low-concentration range (Fig 8B). To evaluate the quality of the linear calibration model, the residuals of data and model prediction were analyzed (Fig 8C). Overall, the residuals lie within ±5% of the observed values, demonstrating the high precision of the data. For concentrations higher than , an s-shaped trend is observed in the residuals, meaning that the data first lie below and then above the linear model prediction. This indicates a lack-of-fit as described in Section 2.4. However, the discrepancy might also be caused by errors in the serial dilution that was pipetted with the robotic liquid handler, resulting in deviations from the expected linear relation. Moreover, the relative spread of residuals is nearly constant, meaning that the absolute deviation increases with higher concentrations (Fig 8C). Although the linearly increasing scale parameter accounts for this rise of the underlying random noise, the noise is slightly overestimated by the model, since all data points above lie within a 90% probability interval.

Fig 8. Linear (top) and logistic (bottom) calibration model of glucose assay.

A calibration model comprising linear functions for both the location parameter μA365 and the scale parameter of a Student-t distribution was fitted to calibration data of glucose standard concentrations () and absorbance readouts by maximum likelihood estimation (A-C). The calibration data used to fit the linear model is the subset of standards that were spaced evenly on a log-scale up to (B, E). Likewise, a calibration model with a 5-parameter asymmetric logistic function for the μ parameter of the Student-t distribution was fitted to the full calibration dataset (D-E). In both models, the scale parameter was modeled as a 1st-order polynomial function of μ and the degree of freedom ν as a constant. The extended range of calibration standard concentrations up to reveals a saturation kinetic of the glucose assay (A, D) and depending on the glucose concentration, the residuals (C, F) with respect to the modeled location parameter are scattered by approximately 5%. Modeling the scale parameter of the distribution as a 1st-order polynomial function of μ describes the broadening of the distribution at higher concentrations (C).

In comparison to simple linear regression, which is often evaluated by the coefficient of determination R2 alone, the demonstrated diagnostics make it possible to judge whether the choice of model is appropriate. In this case, a more sophisticated model for the spread of the Student-t distribution could be chosen to reduce the lack-of-fit. Moreover, all data points lying above were not considered so far to allow for a linear model. In the following, we will therefore modify the existing calibration model to include a logistic function for the location parameter.

4.1.2 Logistic calibration model

Although linear calibration models are useful in many cases, some relations in datasets are non-linear in their nature. Moreover, restricting analytical measurements to an approximately linear range instead of calibrating all concentrations of interest can be limiting. If the order of magnitude of sample concentrations is unknown, this leads to laborious dilution series or repetition of measurements to ensure that the linear range is met. In contrast, non-linear calibration models can describe complex relationships and, in the case of biological assays, reduce these time- and material-consuming workflows.

Many recommendations for experimental design in calibration can be found in literature (e.g. [60]). Having determined the range of interest for the calibration model, it should be exceeded in both directions if possible, thus ensuring that the relevant concentrations are well-covered. This way, all model parameters, including limits where applicable, can be identified from the observed data. Afterwards, the expected relationship between dependent and independent variable is to be considered. Since the glucose assay readout is based on absorbance in a plate reader (Section 3.1.4), which has a lower and upper detection limit, a saturation effect at high glucose concentrations is expected. In our demonstration example, glucose concentrations of up to were targeted to cover relevant concentrations for cultivation (Section 4.2) and at the same time to exceed the linear range towards the upper detection limit.

Sigmoidal shapes in calibration data, e.g. often observed for immunoassays, can be well-described by logistic functions [39]. In the calibr8 package, a generalized logistic function with five parameters is used in an interpretable form (Section 3.2.1). It was used to implement a calibration model where the location parameter μ is described by a logistic function dependent on the glucose concentration. A respective base class BaseAsymmetricLogisticT is provided by calibr8 (S1 File). The mathematical notation of the resulting model is given in S2 File. Using the whole glucose dataset up to , parameters of the new calibration model were estimated (Fig 8D–8F).
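For illustration, a five-parameter generalized logistic function can be written as in the sketch below. Note that this is a common textbook (Richards-type) parametrization and not necessarily the interpretable parametrization of Section 3.2.1; the parameter names lower, upper, x0, k and v as well as the example values are assumptions made for this sketch.

```python
import math


def softplus(a):
    """Numerically stable log(1 + exp(a))."""
    return max(a, 0.0) + math.log1p(math.exp(-abs(a)))


def generalized_logistic(x, lower, upper, x0, k, v):
    """Richards-type curve: lower + (upper - lower) / (1 + exp(-k (x - x0)))**(1 / v).

    lower, upper: asymptotes for x -> -inf and x -> +inf (for k > 0)
    x0, k:        position and steepness of the transition
    v:            asymmetry parameter; v = 1 gives the symmetric logistic
    """
    # Evaluate in log-space to avoid overflow of exp() far from x0.
    return lower + (upper - lower) * math.exp(-softplus(-k * (x - x0)) / v)


# Hypothetical readout curve with saturation at both ends.
params = dict(lower=0.1, upper=2.5, x0=1.0, k=2.0)
symmetric_mid = generalized_logistic(1.0, v=1.0, **params)
asymmetric_mid = generalized_logistic(1.0, v=0.5, **params)
curve = [generalized_logistic(x / 10.0, v=0.5, **params) for x in range(-50, 51)]
```

With v = 1 the curve passes through the midpoint between both limits at x0, whereas other values of v shift the inflection and make the approach to the two limits asymmetric, which is what allows such functions to follow saturation kinetics more closely than a symmetric logistic.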

Overall, the logistic trend of the location parameter matches the extended calibration data well (Fig 8D and 8E). Since the scale parameter of the Student-t distribution is modeled as a linear function dependent on μ, the width of the likelihood bands approaches a limit at high glucose concentrations (Fig 8F). For concentrations greater than , no residuals lie outside of the 90% probability interval, indicating that the distribution spread is overestimated as it was before. Importantly, a direct comparison between the two calibration models (Fig 8C and 8F) reveals a high similarity in the reduced range (). This demonstrates how a non-linear model extends the range of concentrations available for measurement and modeling while improving the quality of the fit. For the glucose assay, truncating to a linear range thus becomes obsolete.

While non-linear models have so far been shown to extend the usable concentration range of an assay, other applications do not permit a linear approximation of any subrange of measurements. Such an example is the online detection of biomass in growth experiments, where the non-invasive backscatter measurement (Section 3.1.1) does not allow for dilution of the cell suspension during incubation. To model the distribution of backscatter observations as a function of the underlying biomass concentration, a structure similar to the glucose calibration model was chosen (S2 File). In contrast, the location parameter μ was modeled by an asymmetric logistic function of the logarithmic cell dry weight (CDW). The final CDW/backscatter calibration model was implemented using the calibr8.BaseLogIndependentAsymmetricLogisticT convenience class (Box 2 Code 2).

Two independent experiments were conducted to obtain calibration data as described in Section 3.1.5. The model was fitted to pooled data using the calibr8.fit_pygmo convenience function. As shown in Fig 9, the model accurately describes the nonlinear correlation between biomass concentration and observed backscatter measurements in the cultivation device (Fig 9A and 9B). Non-linearity is particularly observed for biomass concentrations below (Fig 9A). Moreover, the residual plot (Fig 9C) mainly shows a random distribution; solely residuals between 1 and indicate a lack-of-fit. To assess the potential influence, the resulting uncertainty in estimated biomass concentrations has to be considered, which will be further discussed in Section 4.1.3. Overall, the chosen logistic calibration model describes the calibration data well and is thus useful to transform backscatter measurements from the BioLector device into interpretable quantitative biomass curves.

Fig 9. Calibration model of biomass-dependent backscatter measurement.

Backscatter observations from two independent calibration experiments (1400 rpm, gain = 3) on the same BioLector Pro cultivation device were pooled. A non-linearity of the backscatter/CDW relationship is apparent already from the data itself (A). The evenly spaced calibration data (B) are well-described with little lack-of-fit error (C). At low biomass concentrations the relative spread of the measurement responses starts at ca. 20% and reduces to approximately 2% at concentrations above .

In summary, this section illustrated how calibration models can be built conveniently with calibr8 and showed that the asymmetric logistic function is suitable to describe many relationships in biotechnological measurement systems. Note that the only requirement to estimate CalibrationModel parameters with calibr8 is to provide a numpy.ndarray with data for the independent and dependent variable. The code is agnostic of bioprocess knowledge and can thus be transferred to other research fields without further adaptations.

Having demonstrated how concentration/readout relations can be described by different calibration models, a remaining question is how to apply those calibration models. An important use-case is to obtain an estimate of concentrations in unknown samples, where uncertainty quantification is a crucial step.