Abstract

This paper describes Guided Search 6.0 (GS6), a revised model of visual search. When we encounter a scene, we can see something everywhere. However, we cannot recognize more than a few items at a time. Attention is used to select items so that their features can be ‘bound’ into recognizable objects. Attention is ‘guided’ so that items can be processed in an intelligent order. In GS6, this guidance comes from five sources of preattentive information: (1) top-down and (2) bottom-up feature guidance, (3) prior history (e.g. priming), (4) reward, and (5) scene syntax and semantics. These sources are combined into a spatial “priority map”, a dynamic attentional landscape that evolves over the course of search. Selective attention is guided to the most active location in the priority map approximately 20 times per second. Guidance will not be uniform across the visual field; it will favor items near the point of fixation. Three types of functional visual field (FVF) describe the nature of these foveal biases: a resolution FVF, an FVF governing exploratory eye movements, and an FVF governing covert deployments of attention. To be identified as targets or rejected as distractors, items must be compared to target templates held in memory. The binding and recognition of an attended object is modeled as a diffusion process taking > 150 msec/item. Since selection occurs more frequently than that, it follows that multiple items are undergoing recognition at the same time, though asynchronously, making GS6 a hybrid of serial and parallel processes. In GS6, if a target is not found, search terminates when an accumulating quitting signal reaches a threshold. The setting of that threshold is adaptive, allowing feedback about performance to shape subsequent searches. Simulation shows that the combination of asynchronous diffusion and a quitting signal can produce the basic patterns of response time and error data from a range of search experiments.

Visual search has been a major topic of research for decades. There are a number of reasons for this. To begin, we spend a great deal of time doing search tasks. Many of these are so fast and seemingly trivial that we don’t tend to think of them as searches. Think, for example, about eating dinner. You search for the fork, then the potatoes, then the salt, then the potatoes again, then your drink, then the napkin, and so forth. As you drive, you look for specific items like the exit sign at the same time as you are searching for broad categories like “danger”. In more specialized realms, radiologists search images for signs of cancer, transportation security officers search carry-on baggage for threats, and so forth. Search is a significant, real-world task. At the same time, it has proven to be a very productive experimental paradigm in the lab. In a classic laboratory search task, observers might be asked to look for a target that is present on 50% of trials among some variable number of distractors. The number of items in the display is known as the “set size” and very systematic and replicable functions relate response time (or “reaction time”, “RT” in either case) and/or accuracy to that set size (Wolfe, 2014).

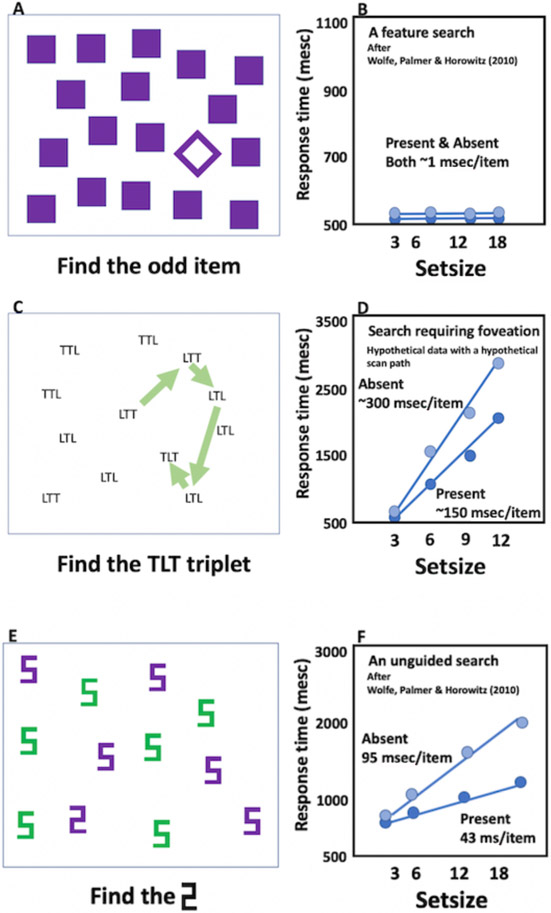

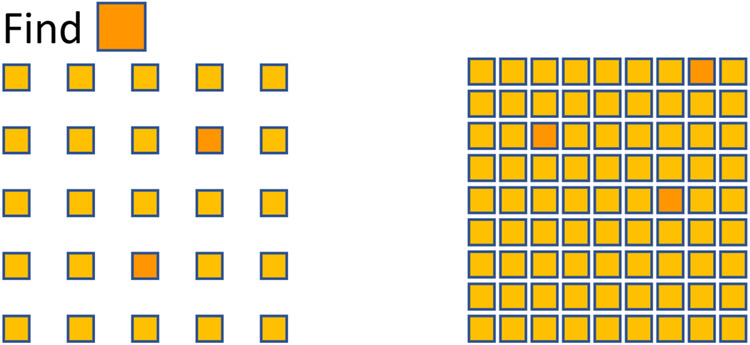

For some tasks (as shown in Figure 1a-b), the number of distractors has little or no impact. The target seems to simply “pop out” of the display (Egeth, Jonides, & Wall, 1972) and, indeed, may “capture” attention, even if it is not the target of search (Jonides & Yantis, 1988; Theeuwes, 1994). The slope of the RT x set size functions will be near (but typically a little greater than) 0 msec/item (Buetti, Xu, & Lleras, 2019). For other tasks, the time required to find the target increases (typically, more or less linearly) with the set size. In some cases, this reflects underlying limits on visual resolution. Thus, if the task is to find “TLT” among other triplets composed of Ts and Ls, a combination of acuity and crowding limits (Levi, Klein, & Aitsebaomo, 1985; Whitney & Levi, 2011) will require that each triplet be foveated in series until the target is found or the search is abandoned (Figure 1c-d). Since the eyes fixate on 3-4 items per second, the slope of the RT x set size functions will be ~250-350 msec/item for target-absent trials (when all items need to be examined in order to be sure that the target is not present). Slopes for target-present trials will be about half that because observers will need to examine about half of the items on average before stumbling on the target. Figure 1e-f shows a more interesting case. Here the target, a digital “2”, is presented among digital 5s. The items are large and the display sparse enough to avoid most effects of crowding. Nevertheless, slopes will tend to be around 90 msec/item on target-absent trials and, again, about half that on target-present trials (Wolfe, Palmer, & Horowitz, 2010). This will be true even if the eyes do not move (Zelinsky & Sheinberg, 1997).
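The "about half" relationship between present and absent slopes can be checked with a small simulation. This is a minimal sketch, not a model from this paper: it assumes an unguided, serial, self-terminating search with a fixed cost per item (`ms_per_item=300`, a hypothetical value inside the ~250-350 msec/item range quoted above) and a hypothetical 400 msec base RT.

```python
import random


def simulate_trial(set_size, target_present, rng, ms_per_item=300.0, base_rt=400.0):
    """RT (msec) for one trial of an unguided, serial, self-terminating search.

    ms_per_item and base_rt are hypothetical illustration values.
    """
    if target_present:
        # The target is equally likely to occupy any position in the inspection order.
        items_examined = rng.randint(1, set_size)
    else:
        # Target absent: every item must be rejected before responding "no".
        items_examined = set_size
    return base_rt + ms_per_item * items_examined


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def rt_set_size_slopes(set_sizes=(4, 8, 12, 16), trials=2000, seed=1):
    """Mean-RT x set size slopes (msec/item) for target-present and target-absent trials."""
    rng = random.Random(seed)
    slopes = {}
    for label, present in (("present", True), ("absent", False)):
        mean_rts = []
        for n in set_sizes:
            rts = [simulate_trial(n, present, rng) for _ in range(trials)]
            mean_rts.append(sum(rts) / trials)
        slopes[label] = ols_slope(list(set_sizes), mean_rts)
    return slopes


if __name__ == "__main__":
    print(rt_set_size_slopes())  # absent slope = 300; present slope close to 150
```

Because a present trial examines (N + 1) / 2 items on average, its slope comes out near half the per-item cost, while the absent slope equals the full cost.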

Figure 1:

Basic laboratory search tasks and RT x set size graphs.

These patterns in the data were uncovered in the ’60s and ’70s (Kristjansson & Egeth, 2020) and formed the basis of Anne Treisman’s enduringly influential Feature Integration Theory (FIT) (Treisman & Gelade, 1980). Building on an architecture proposed by Neisser (1967), Treisman held that there was an initial, “preattentive” stage of processing, in which a limited set of basic features like color and orientation could be processed in parallel across the visual field. In this, she was inspired by the then-novel physiological findings showing cortical cells and areas that appeared to be specialized for these features (e.g. Zeki, 1978). In behavioral experiments, a unique feature, if it was sufficiently different from its neighbors, would pop out and be detected, independent of the number of distractor items.

Basic features might be processed in parallel in separate cortical maps, but we do not see separate features. We see objects whose features are bound together. Treisman proposed that this “binding” required selective attention to connect isolated features to a single representation (Roskies, 1999; Treisman, 1996; Wolfe & Cave, 1999). This attention was capacity limited, meaning that only one or a very few items could be attended and bound at any given time. As a result, while a salient unique feature could be found in parallel, all other types of targets would require serial, selective attention from item to item. This proposed serial/parallel dichotomy and FIT more generally have proven to be extremely influential and persistent (~14,000 citations for Treisman and Gelade, 1980, in Google Scholar at last check).

Influential or not, it became clear over the course of the 1980s that FIT was not quite correct. The core empirical challenge came from searches for conjunctions of two features. For example, observers might be asked to search for a red vertical target among red horizontal and green vertical distractors. Identification of this target would require binding of color and orientation and, thus, it should require serial search. However, it became clear that conjunction searches were often more efficient than FIT would predict (Egeth, Virzi, & Garbart, 1984; McLeod, Driver, & Crisp, 1988; Nakayama & Silverman, 1986; Quinlan & Humphreys, 1987; Wolfe, Cave, & Franzel, 1989). The explanation can be illustrated by a version of a conjunction search used by Egeth, Virzi, and Garbart (1984). If we return to Figure 1e-f, suppose you knew that the “2” was purple. It should be intuitively obvious that, while search may still be necessary, it will be unnecessary to attend to green items. If just half the items are purple, then just half the items are relevant to search and the slopes of the RT x set size functions will be cut in half, relative to the case where there is no color information.
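The arithmetic behind "cut in half" can be written down for an idealized serial, self-terminating search. This sketch assumes that color guidance perfectly excludes the irrelevant (green) items and that the per-item cost is unchanged; `relevant_fraction` is a hypothetical parameter, and 90 msec/item is the target-absent slope quoted earlier for the 2-among-5s search.

```python
def predicted_slopes(ms_per_item, relevant_fraction=1.0):
    """Idealized RT x set size slopes (msec/item) for serial, self-terminating search
    over only the fraction of items that guidance marks as relevant.

    Assumes guidance excludes irrelevant items perfectly and per-item cost is unchanged.
    """
    # Absent: all f*N relevant items are examined, so RT grows by f * cost per item.
    absent = relevant_fraction * ms_per_item
    # Present: on average (f*N + 1) / 2 relevant items are examined, so half that slope.
    present = absent / 2
    return {"present": present, "absent": absent}


print(predicted_slopes(90))       # {'present': 45.0, 'absent': 90.0}
print(predicted_slopes(90, 0.5))  # {'present': 22.5, 'absent': 45.0}
```

Real guidance is rarely this clean, so observed conjunction slopes will generally fall somewhere between these idealized values and the unguided ones.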

Wolfe, Cave, & Franzel (1989) proposed that preattentive feature information could be used to “guide” the serial deployment of attention; hence the name of the model, “Guided Search” (GS). The original version of GS was otherwise quite similar to FIT. The core difference was that, while FIT proposed a dichotomy between parallel and serial search tasks, GS proposed a continuum based on the effectiveness of guidance. Pop-out search (Fig 1a-b) arose when preattentive feature information guided attention to the target the first time, every time. A search for a 2 among 5s would be unguided because target and distractors contain the same basic features. Results for conjunction searches lay in between, reflecting different amounts of guidance.
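The continuum idea can be illustrated with a signal-detection style sketch. This is an assumption for illustration, not the published GS2 equations: every item gets unit-variance Gaussian noise in the priority map, the target also gets an extra `guidance` boost (a hypothetical parameter), and attention visits items from highest to lowest priority. With no boost, the target is found on average halfway through the display; as the boost grows, the slope of items attended against set size falls toward zero.

```python
import random


def items_to_target(set_size, guidance, rng):
    """Items attended up to and including the target, when attention visits items
    in descending order of noisy priority (hypothetical signal-detection model)."""
    target_priority = guidance + rng.gauss(0.0, 1.0)
    # Every distractor whose noisy priority beats the target's is attended first.
    ahead = sum(1 for _ in range(set_size - 1) if rng.gauss(0.0, 1.0) > target_priority)
    return ahead + 1


def items_per_item_slope(guidance, set_sizes=(4, 8, 16, 32), trials=2000, seed=0):
    """Slope of mean items attended against set size, for one guidance strength."""
    rng = random.Random(seed)
    xs = list(set_sizes)
    ys = [sum(items_to_target(n, guidance, rng) for _ in range(trials)) / trials for n in xs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


if __name__ == "__main__":
    for g in (0.0, 1.0, 2.0, 4.0):
        print(g, round(items_per_item_slope(g), 3))
```

Multiplying these items-per-item slopes by a per-item cost turns them into msec/item slopes; unguided search sits near 0.5 items/item and strong guidance approaches pop-out.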

Treisman recognized the problem with the original FIT and proposed her own accounts in subsequent papers (e.g. Treisman & Sato, 1990). It was a subject of some annoyance to her that she continued to get taken to task for theoretical positions that she no longer held. Indeed, to this day, 40 years after FIT appeared, a simple two-stage, parallel-serial dichotomy is asserted in textbooks and by many research papers, especially outside the core disciplines of Experimental Psychology/Cognitive Science. To avoid this fate, when the time came to revise Guided Search in the light of new research, the paper was entitled “Guided Search 2.0: A revised model of visual search” (Wolfe, 1994a). Subsequent revisions have also been given version numbers. GS2 remains the most cited of the versions. GS3 (Wolfe & Gancarz, 1996) was something of a dead end, and GS4 (Wolfe, 2007) was published as a book chapter and is, thus, less widely known. GS5 (Wolfe, Cain, Ehinger, & Drew, 2015) did not get beyond being a conference talk before being derailed by new data. The goal of the present paper is to describe Guided Search 6 (GS6). Since GS2 is the best-known version of GS, this paper will frame GS6 in terms of the major changes from GS2. GS6 is needed because we know a lot more about search than we knew in 1980 or 1994. Still, the model presented here is an evolution, not a repudiation, of the core ideas of FIT and the earlier versions of GS. Though some would disagree (Di Lollo, 2012; Hulleman & Olivers, 2017; Kristjansson, 2015; Zelinsky et al., 2020), the basic ideas from 40 years ago have proven very durable.

Guided Search 2.0

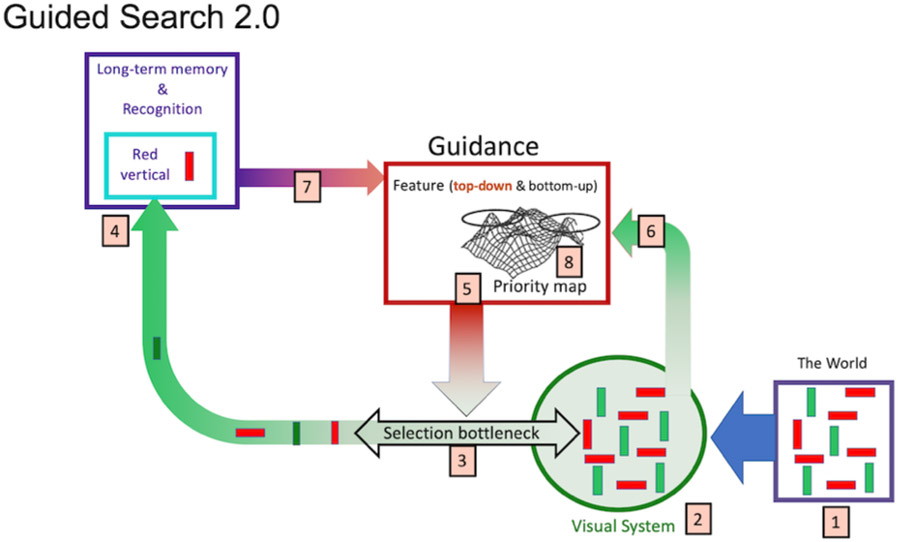

Figure 2 offers an illustration of GS2. The numbers are referred to in the summary of the key ideas, presented below:

Figure 2:

A representation of Guided Search 2.0

1. Information from the world…

2. … is represented in the visual system. The nature of that representation will depend on the position of items in the visual field, properties of early visual channels, etc. In the early stages of processing, this will involve extraction of information about basic features like color and orientation.

3. Capacity limitations mean that many activities, notably object recognition, can be performed on only one or a very few items at a time. Thus, there is a tight bottleneck that passes only the current object of attention for capacity-limited processing (e.g. “binding”).

4. An item, selected by attention, is bound, recognized, and tested to determine if it is a target or a distractor. If it is a match, search can end. If there are no matches, search will terminate when a quitting threshold (not diagrammed here) is reached.

5. Importantly, selection is rarely random. Access to the bottleneck is guided by a “priority map” that represents the system’s best guess as to where to deploy attention next. Attention will be deployed to the most active location in the map.

6. One source of priority map guidance comes from “bottom-up” salience. Salience is based on coarse representations of a limited number of basic features like color, size, etc. Bottom-up is defined as “stimulus-driven”.

7. Attentional priority is also determined by “top-down” guidance, which represents the implicit or explicit goals of the searcher. Top-down guidance is based on the basic features of the target as represented in memory. That is, if the observer were searching for a red vertical line, the red color and vertical orientation of that target could be used to guide attention.

8. Both of these sources of guidance are combined in a weighted manner to direct attention to the next item/location. If that item is a distractor, that location is suppressed (perhaps via “inhibition of return”, IOR; R. Klein, 1988), and attention is deployed to the next highest peak in the map. This guided search continues until the target is found or the search terminates.
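The GS2 selection loop summarized above can be sketched in a few lines. This is a toy under stated assumptions, not GS2's published implementation: the priority map is a weighted sum of per-item bottom-up and top-down activations plus Gaussian noise drawn once per trial, rejected items are excluded by an inhibition-of-return set, and the quitting threshold is simplified to a maximum number of attended items (`quit_after`, a hypothetical parameter).

```python
import random


def gs2_search(bottom_up, top_down, target_index, w_bu=1.0, w_td=1.0,
               noise_sd=0.5, quit_after=None, seed=None):
    """Toy sketch of the GS2 selection loop (all parameters hypothetical).

    bottom_up, top_down: per-item activations (same length).
    target_index: index of the target, or None on target-absent trials.
    Returns (found, number_of_items_attended).
    """
    rng = random.Random(seed)
    n = len(bottom_up)
    if quit_after is None:
        quit_after = n  # simplest quitting rule: stop once every item has been rejected
    # Weighted combination of the two guidance sources, plus noise, forms the priority map.
    priority = [w_bu * b + w_td * t + rng.gauss(0.0, noise_sd)
                for b, t in zip(bottom_up, top_down)]
    inhibited = set()
    attended = 0
    while attended < quit_after and len(inhibited) < n:
        # Deploy attention to the most active location that has not been inhibited.
        candidates = [i for i in range(n) if i not in inhibited]
        best = max(candidates, key=lambda i: priority[i])
        attended += 1
        if best == target_index:
            return True, attended
        inhibited.add(best)  # inhibition of return: a rejected item is not revisited
    return False, attended


if __name__ == "__main__":
    td = [0.0] * 8
    td[3] = 10.0  # strong top-down match at the target location
    print(gs2_search([0.0] * 8, td, target_index=3, seed=1))
```

Lowering the target's top-down activation toward the distractor level turns this into an unguided, random-order serial search.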

From GS2 to GS6

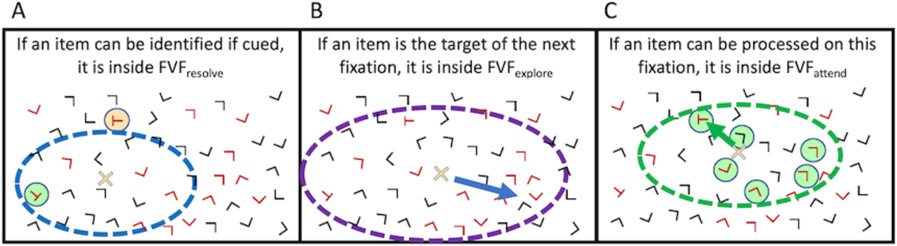

The core ideas of GS have remained relatively constant over time, but new data require modifications of each of the main components: preattentive features, guidance, serial vs. parallel processing, and search termination. In addition, the model requires consideration of topics that were not discussed in GS2, notably the contribution of “non-selective” processing (scene gist, ensembles, etc.), the role of eccentricity (functional visual fields, FVFs), and the nature of the search template (or templates) and their relationship to working memory and long-term memory.

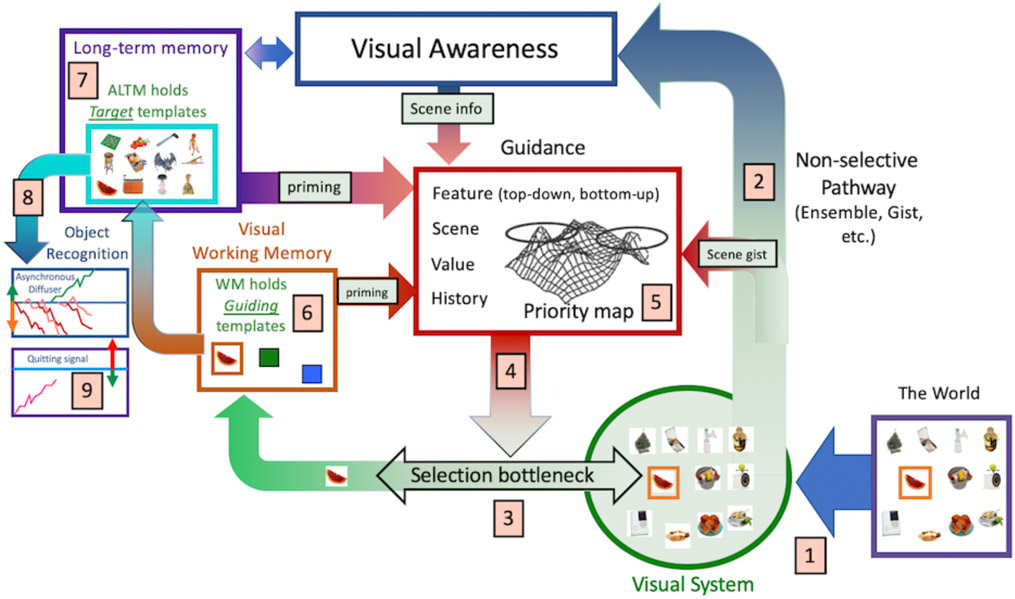

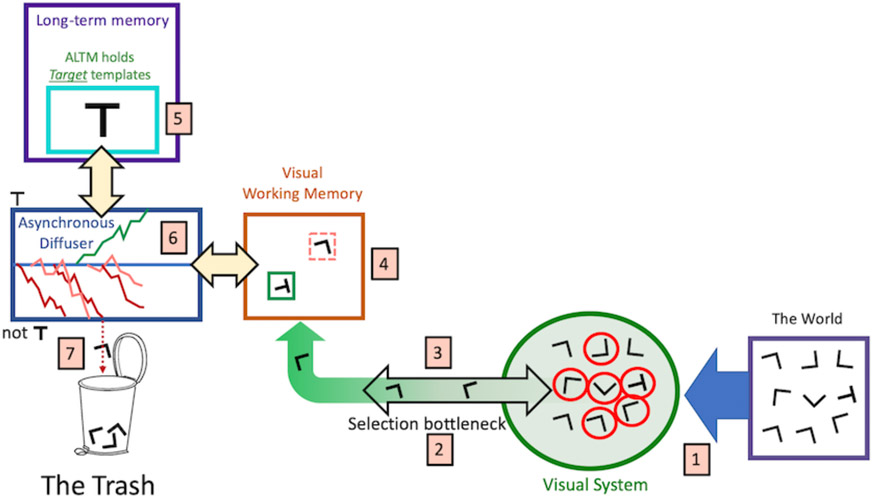

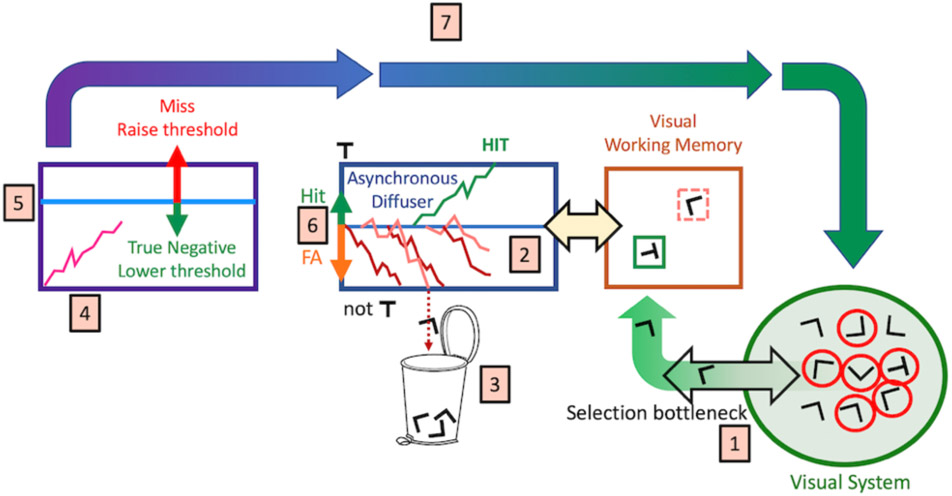

Here, in the same format as the GS2 diagram and description, Figure 3 illustrates GS6. This diagram and description will introduce the topics for the bulk of the paper.

Figure 3:

A representation of Guided Search 6.0

Information from the world is represented in the visual system. The nature of that representation will depend on the position in the visual field relative to fixation (eccentricity effects, crowding, etc.). Thus, an item near fixation will be more richly represented than one further away. These eccentricity constraints define one of three types of functional visual field (FVF) that are relevant for search (see #10, below).

Some representation of the visual input is available to visual awareness at all points in the field, in parallel, and via a non-selective pathway that was not considered in GS2. Thus, you see something everywhere. Ensemble statistics, scene gist, and other rapidly extracted attributes generally do not require selective attention and can be attributed to this non-selective pathway (Wolfe, Vo, Evans, & Greene, 2011).

There are capacity limits that require that many tasks can only be performed on one (or a very few) item/s at a time. Notably, for present purposes, this includes object recognition. Selective attention, as used here, refers to the processes that determine which items or regions of space will be passed through the bottleneck. Items are selected by attention at a rate of ~20 Hz, though this will vary with task difficulty (Wolfe, 1998).

Access to the bottleneck (i.e. attentional selection) is “guided” (hence Guided Search).

In GS6, there are five types of guidance that combine to create an attentional “Priority Map.” Bottom-up (salience) and top-down (user/template-driven) guidance by preattentive visual features are retained from all previous versions of GS. Newer data support guidance by the history of prior attention (e.g. priming), value (e.g. rewarded features), and, very importantly, guidance from the structure and meaning of scenes.

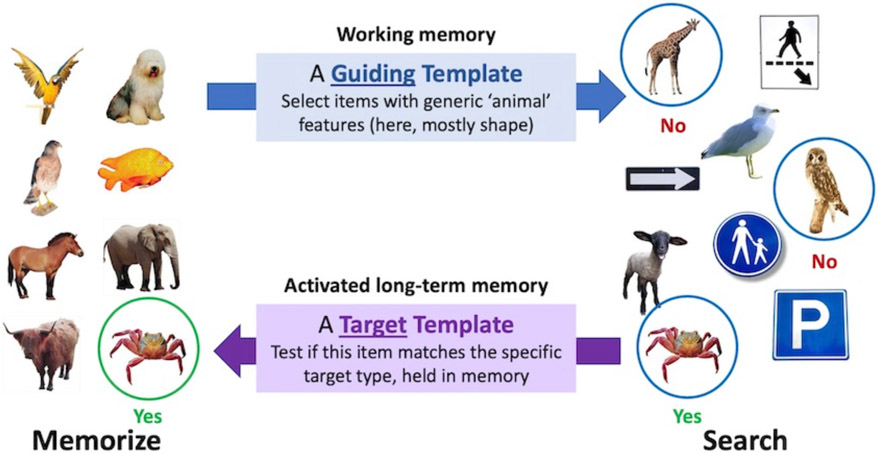

The selected object of attention is represented in working memory (Speculatively, the limits on what can be selected at any given time may be related to the limits on the capacity of WM). The contents of WM can prime subsequent deployments of attention. WM also holds the top-down “guiding template” (i.e. the template that guides toward target attributes like “yellow” and “curved” if you are looking for a banana).

A second template is held in “activated long-term memory” (ALTM), a term referring to a piece of LTM relevant to the current task. This “target template” can be matched against the current object of attention in WM in order to determine if that object of attention is a target item. Thus, the target template is used to determine that this item is not just yellow and curve. Is it, in fact, the specific banana that is being looked for? In contrast to the one or two guiding templates in WM, ALTM can hold a very large number of target templates (as in Hybrid Search tasks having as many as 100 possible targets (Wolfe, 2012)). Those target templates might be highly specific (this banana in this pose) or much more general (e.g. any fruit).

The act of determining if an item, selected by attention, and represented in WM, is a target can be modeled as a diffusion process, with one diffuser for every target template that is held in ALTM. If a target is found, it can be acted upon.

A separate diffuser accumulates toward a quitting threshold. This will, eventually, terminate search if a target is not found before the quitting threshold is reached.

Not shown: In addition to a resolution Functional Visual Field (FVF), mentioned in #1 above, two other FVFs govern search. An attentional FVF governs covert deployments of attention during a fixation. That is, if you are fixated at one point, your choice of items to select is constrained by this attentional FVF. An explorational FVF constrains overt movements of the eyes as they explore the scene in search of a target.
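The hybrid serial/parallel arrangement in #3, #8, and #9 can be made concrete with a toy simulation. This is a minimal sketch under loudly hypothetical assumptions, not the GS6 simulation itself: items are selected in random (unguided) order every 50 msec (~20 Hz); each selected item starts its own two-bound diffuser, simplified to a single target template; the drift, noise, and bound values are invented so that recognition takes a little over 150 msec on average; and one noisy quitting accumulator, with a threshold proportional to set size (`quit_ms_per_item`, a made-up parameter), ends the search if no target is found. Because selection outpaces recognition, several items are in recognition at once.

```python
import random


def carousel_search(n_items, target_present, select_interval_ms=50,
                    drift=0.015, noise=0.1, bound=2.5,
                    quit_drift=1.0, quit_noise=1.0, quit_ms_per_item=60,
                    max_ms=20000, seed=None):
    """Toy asynchronous-diffusion search with a quitting signal (hypothetical parameters)."""
    rng = random.Random(seed)
    order = list(range(n_items))
    rng.shuffle(order)  # unguided: items are selected in random order
    target = rng.randrange(n_items) if target_present else None
    quit_threshold = quit_drift * quit_ms_per_item * n_items
    active = {}  # item -> evidence (positive = "target", negative = "distractor")
    next_pick = 0
    quit_signal = 0.0
    max_concurrent = 0
    for t in range(max_ms):  # one loop iteration = 1 msec
        # Select a new item about 20 times per second.
        if t % select_interval_ms == 0 and next_pick < n_items:
            active[order[next_pick]] = 0.0
            next_pick += 1
        max_concurrent = max(max_concurrent, len(active))
        # Advance every item currently undergoing recognition by one step.
        for item in list(active):
            v = drift if item == target else -drift
            active[item] += v + noise * rng.gauss(0.0, 1.0)
            if active[item] >= bound:
                return {"response": "present", "rt_ms": t + 1,
                        "correct": item == target, "max_concurrent": max_concurrent}
            if active[item] <= -bound:
                del active[item]  # rejected as a distractor
        # The quitting signal accumulates in parallel with the search.
        quit_signal += quit_drift + quit_noise * rng.gauss(0.0, 1.0)
        if quit_signal >= quit_threshold:
            return {"response": "absent", "rt_ms": t + 1,
                    "correct": target is None, "max_concurrent": max_concurrent}
    return {"response": "absent", "rt_ms": max_ms,
            "correct": target is None, "max_concurrent": max_concurrent}


if __name__ == "__main__":
    runs = [carousel_search(16, present, seed=s) for s in range(50) for present in (True, False)]
    print(sum(r["max_concurrent"] for r in runs) / len(runs))  # mean peak items in recognition
```

With these made-up values, target-present trials end when the target's diffuser finishes, while target-absent trials usually end when the quitting signal reaches threshold, so absent RTs run longer.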

A short-hand for capturing the main changes in GS6 might be that there are now 2 pathways, 2 templates, 2 diffusion mechanisms, 3 FVFs, and 5 sources of guidance. The paper is divided into six sections: 1) Guidance, 2) The Search Process, 3) Simulation of the Search Process, 4) Spatial Constraints and Functional Visual Fields, 5) Search Templates, and 6) Other Search Tasks.

Five Forms of Guidance

In this section, we will review the two “classic” forms of guidance: top-down and bottom-up feature guidance. Then we will argue for the addition of three other types of guidance: history (e.g. priming), value, and, the most important of these, scene guidance. The division of guidance into exactly five forms is less important than the idea that there are multiple sources of guidance that combine to create an attention-directing landscape here called a “priority map”.
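A priority map built from several sources can be sketched as a weighted sum over locations. The linear combination, the weights, and the five toy maps below are illustrative assumptions; the text says only that the sources combine into one attention-directing landscape, not how. The example shows how switching scene guidance on or off can change which location wins the competition for attention.

```python
def priority_map(sources, weights):
    """Combine per-location guidance maps into one priority map by weighted sum.

    sources: dict mapping a source name to a list of activations (one per location).
    weights: dict mapping a source name to its weight; missing names get weight 0.
    """
    n_locations = len(next(iter(sources.values())))
    combined = [0.0] * n_locations
    for name, activations in sources.items():
        w = weights.get(name, 0.0)
        for i, a in enumerate(activations):
            combined[i] += w * a
    return combined


def next_location(priority, inhibited=frozenset()):
    """Most active location that has not already been inhibited."""
    candidates = [i for i in range(len(priority)) if i not in inhibited]
    return max(candidates, key=lambda i: priority[i])


# Toy activations at four locations (arbitrary units, purely illustrative).
SOURCES = {
    "bottom_up": [0.9, 0.1, 0.2, 0.1],  # salience
    "top_down": [0.1, 0.8, 0.7, 0.1],   # match to target features
    "history": [0.0, 0.2, 0.0, 0.0],    # e.g. priming from the previous trial
    "value": [0.0, 0.0, 0.0, 0.3],      # e.g. a previously rewarded feature
    "scene": [0.0, 0.1, 0.6, 0.2],      # where targets tend to be in this scene
}
ALL_ON = {name: 1.0 for name in SOURCES}
NO_SCENE = dict(ALL_ON, scene=0.0)

print(next_location(priority_map(SOURCES, ALL_ON)))    # 2
print(next_location(priority_map(SOURCES, NO_SCENE)))  # 1
```

A dynamic version would recompute the source maps as the eyes move and as search history accumulates; this static sketch leaves that out.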

What do we know about classic top-down and bottom-up guidance by preattentive features?

To begin, “preattentive” is an unpopular term in some circles, in part because it can be used, probably incorrectly, to propose that some pieces of the brain are “preattentive” or that preattentive processing occurs for the first N msec and then ends. The term is useful in the following sense. If we accept the existence of selective attention and if we accept that, by definition, we cannot selectively attend to everything at once, it follows that, when a stimulus appears, some aspects have not been selected yet. To the extent that they are being processed, that processing is tautologically preattentive. A stimulus feature that can be processed in this way is, thus, a preattentive feature. This is not the end of the discussion. For instance, if an item is attended and then attention is deployed elsewhere, is its “post-attentive” state similar to its preattentive state (Rensink, 2000; Wolfe, Klempen, & Dahlen, 2000)? For the present, if selective attention is a meaningful term, then preattentive is a meaningful term as well. Some information (e.g. aspects of texture and scene processing) can be thought of as “non-selective” (Wolfe, Vo, Evans, & Greene, 2011) in the sense that not only is it available before attentional selection, but it also has an impact on visual awareness without the need for attentional selection.

Preattentive Feature Guidance

A preattentive feature is a property capable of guiding the deployment of attention. These features are derived from, but are not identical to, the representations in early visual processing stages. Orientation, which has been the subject of extensive research, serves as a clear example of the difference between early vision (#1 in Fig 3) and preattentive guidance (#5). For instance, early visual processes allow for very fine differentiation of the orientation of lines. A half-degree tilt away from vertical is not hard to detect (Olzak & Thomas, 1986). That detectable difference will not guide attention. The difference between an item and its neighbors must be much greater if an attention-guiding priority signal is to be generated (roughly 10-15 deg, depending on the stimulus parameters; see Foster & Ward, 1991a, 1991b; Foster & Westland, 1998). Similar effects occur in color (Nagy & Sanchez, 1990) and, no doubt, they would be found in other features if tested. Guidance is based on a coarse representation of a feature. That coarse representation is not simply the fine representation divided by some constant. Using orientation, again, as an example, geometrically identical sets of orientations do not produce identical guidance of attention. The categorical status of the items is important. Thus, a −10 deg target among +50 and −50 deg distractors is easier to find than a 10 deg target among −30 and 70 deg distractors. The second set of lines is simply a 20 deg rotation of the first, so the angular relations between the target and distractor lines are the same. However, in the first set, the target is the only steep line, whereas in the second set, it is merely the steepest (Wolfe, Friedman-Hill, Stewart, & O'Connell, 1992). A target of a unique category is easier to find (see also Kong, Alais, & Van der Burg, 2017). Again, there are similar effects in color (Nagy & Sanchez, 1990).
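The categorical argument can be checked mechanically. This sketch assumes a hypothetical coding in which each line (angle in degrees from vertical, positive meaning tilted right) gets a "steep"/"shallow" label, using a 45 deg boundary that is itself an assumption, and a "left"/"right" tilt label. It then lists the single categorical dimensions on which the target differs from every distractor, and confirms that the two displays have identical target-distractor angular differences.

```python
def categories(angle):
    """Coarse categories for a line `angle` deg from vertical (+ = tilted right).

    The 45 deg steep/shallow boundary is an illustrative assumption.
    """
    steepness = "steep" if abs(angle) < 45 else "shallow"
    tilt = "right" if angle > 0 else "left" if angle < 0 else "vertical"
    return {"steepness": steepness, "tilt": tilt}


def unique_dimensions(target, distractors):
    """Categorical dimensions on which the target differs from every distractor."""
    t = categories(target)
    return [dim for dim in t
            if all(categories(d)[dim] != t[dim] for d in distractors)]


def angular_differences(target, distractors):
    """Sorted absolute angular differences between target and each distractor."""
    return sorted(abs(target - d) for d in distractors)


print(unique_dimensions(-10, [50, -50]))    # ['steepness']: the only steep line
print(unique_dimensions(10, [-30, 70]))     # []: merely the steepest
print(angular_differences(-10, [50, -50]))  # [40, 60]
print(angular_differences(10, [-30, 70]))   # [40, 60]
```

Geometrically, the two displays are equivalent; only the categorical coding distinguishes them.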

Fine discriminations, like discriminating a half-degree tilt from vertical, rely on information encoded in early vision and require attention. This can be seen as an example of reentrant processing (Di Lollo, Enns, & Rensink, 2000) and/or as support for the Reverse Hierarchy Theory (Hochstein & Ahissar, 2002). In both cases, the idea is that attention makes it possible to reach down from later stages of the visual system to make use of fine-grained information represented in early vision.

Preattentive guidance is complex

It would be lovely if top-down and bottom-up feature guidance could be calculated in a straightforward manner from the stimulus, using rules that generalize across different featural dimensions. Bottom-up salience maps are based on something like this assumption (e.g. Bisley & Mirpour, 2019; Itti & Koch, 2000; Li, 2002) and, certainly, there are important general rules. Duncan and Humphreys (1989) gave a clear articulation of some of the most basic principles. In general, guidance to a target will be stronger when the featural differences between target (T) and distractors (D) are larger (TD differences) and when the featural differences amongst distractors are smaller (DD similarity). Other, more quantitative rules about combining signals across features are appearing (Buetti et al., 2019; Lleras et al., 2020). That said, TD and DD distances are not simple functions of the distance from one feature value to another in some unit of the physical stimulus or some unit of perceptual discriminability like a just noticeable difference (Nagy & Sanchez, 1990; Nagy, Sanchez, & Hughes, 1990). Moreover, it is an unfortunate fact that rules that apply to one guiding feature do not necessarily apply to another guiding feature, or even to the same feature in a different situation. For example, it seems quite clear that color and orientation both guide attention in simple searches for conjunctions of color and orientation (e.g. Friedman-Hill & Wolfe, 1995). One would like to imagine that any time half of the items in a display had a guiding feature like a specific color or orientation, the other half of the items would be treated as irrelevant to search. However, that does not appear to be consistently true. Orientation information can fail to guide and can even make search less efficient (Hulleman, 2020; Hulleman, Lund, & Skarratt, 2019). When guidance is provided by previewing one feature, different features (color, size, orientation) can show very different patterns of guidance, even if the feature differences have been equated (Olds & Fockler, 2004). Here, too, orientation information can actually make search less efficient. For modeling purposes, using basic salience measures and basic rules about TD and DD similarity is a decent approximation but not a full account.
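As a rough illustration of the TD and DD rules (and only of them, given the caveats above), the sketch below scores priority as the sum of a crude bottom-up term (each item's mean absolute feature difference from the other items) and a top-down term (negative distance from the target value). It then reports how far the target's priority exceeds the strongest distractor's. Feature values are arbitrary units, and the scoring rule is a hypothetical simplification, not a published salience model.

```python
def bottom_up(values):
    """Crude feature contrast: mean absolute difference from all other items."""
    n = len(values)
    return [sum(abs(v - w) for j, w in enumerate(values) if j != i) / (n - 1)
            for i, v in enumerate(values)]


def top_down(values, target_value):
    """Template match: items closer to the target feature value score higher."""
    return [-abs(v - target_value) for v in values]


def target_margin(values, target_index, w_bu=1.0, w_td=1.0):
    """Target priority minus the strongest distractor priority (toy measure)."""
    bu = bottom_up(values)
    td = top_down(values, values[target_index])
    pr = [w_bu * b + w_td * t for b, t in zip(bu, td)]
    return pr[target_index] - max(p for i, p in enumerate(pr) if i != target_index)


print(target_margin([0, 40, 40, 40, 40], 0))  # 70.0: large TD, homogeneous distractors
print(target_margin([0, 20, 20, 20, 20], 0))  # 35.0: smaller TD difference
print(target_margin([0, 30, 50, 30, 50], 0))  # 52.5: heterogeneous distractors (less DD similarity)
```

Shrinking the TD difference or making the distractors less similar to each other both shrink the margin, which is the qualitative Duncan and Humphreys pattern; the exact numbers mean nothing beyond that.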

Preattentive processing takes time

Earlier versions of GS (and other accounts of feature guidance) tended to treat the preattentive feature-processing stage as a single, essentially instantaneous step in which the features were processed in parallel across the entire stimulus. If the target was “red” and “vertical”, that color and orientation information was immediately available in a priority map, ready to guide attention. That is not correct. Palmer et al. (2019) showed that it takes 200-300 msec for even very basic guidance by color to be fully effective. Lleras and his colleagues (2020) have produced important insights into the mechanics of this “parallel”, “preattentive” stage of processing in a series of experiments showing that RTs in basic feature searches increase with the log of the set size. Even the most basic feature searches do not appear to have completely flat, 0 msec/item slopes (Buetti, Cronin, Madison, Wang, & Lleras, 2016; Madison, Lleras, & Buetti, 2018). Lleras et al. (2020) offer an interesting account of the cause of this log function in their “target contrast signal theory” (TCS). They argue that a diffusion process (Ratcliff, 1978) accumulates information about the difference between each item and the designated target. Other diffusion models (including GS, see below) typically ask how long it takes for information to accumulate to prove that an item is a target. TCS emphasizes how long it takes to decide that attention does not need to be directed to a distractor item. The TCS model envisions a preattentive stage that ends when all the items that need to be rejected have been rejected. The remaining items (any targets as well as other “lures” or “candidates”) are then passed to the next stage. Since diffusion has a random walk component, some items will take longer than others to finish. Leite and Ratcliff (2010) have shown that the time required to end a process with multiple diffusers will be a log function of the number of diffusers and, in TCS, this explains the log functions in the data. In more recent work, Heaton et al. (2020) make the important point that it is a mistake to think of preattentive processing as something that stops after some time has elapsed. Preattentive and/or non-selective processing must be ongoing while a stimulus is visible. Deployment of attention will depend on the priority map generated by the current state of that preattentive processing, and that current state will be continually evolving, especially as the eyes and/or the observer move.
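The log-function claim can be illustrated numerically. This is a sketch of the general idea, not the TCS model itself: each item gets an independent single-bound diffuser drifting toward "reject" (drift, noise, bound, and the 2 msec Euler step are hypothetical values), and the stage ends when the slowest diffuser finishes. Mean completion time then tracks log N much better than N.

```python
import math
import random


def rejection_time(rng, drift=0.01, noise=0.1, bound=1.0, dt=2.0):
    """First-passage time (msec) of one single-bound diffuser drifting toward 'reject'."""
    x, t = 0.0, 0.0
    step_sd = noise * math.sqrt(dt)
    while x < bound:
        x += drift * dt + step_sd * rng.gauss(0.0, 1.0)
        t += dt
    return t


def time_to_reject_all(n_items, trials=200, seed=0):
    """Mean time until the slowest of n_items parallel diffusers has finished."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += max(rejection_time(rng) for _ in range(n_items))
    return total / trials


def r_squared(xs, ys):
    """Squared Pearson correlation between xs and ys."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)


if __name__ == "__main__":
    ns = [1, 2, 4, 8, 16, 32]
    times = [time_to_reject_all(n, seed=n) for n in ns]
    print([round(t) for t in times])
    print("R^2 vs log N:", round(r_squared([math.log(n) for n in ns], times), 3))
    print("R^2 vs N:    ", round(r_squared(ns, times), 3))
```

Each doubling of set size adds a roughly constant increment because only the right tail of the finishing-time distribution matters for the maximum.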

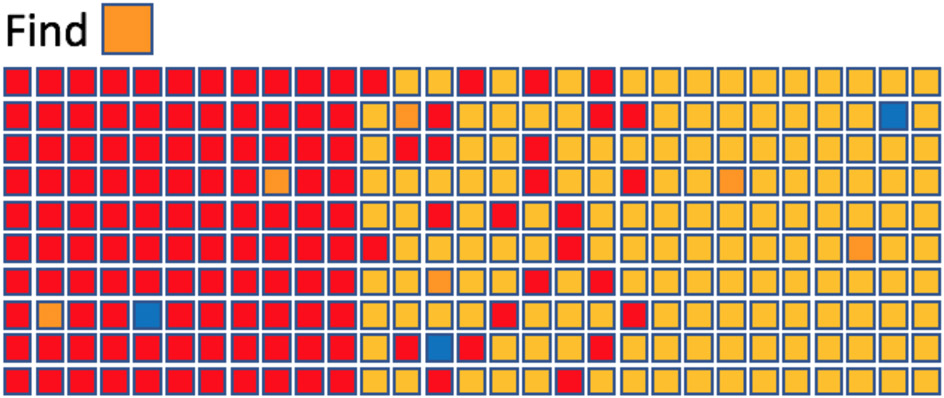

TCS does not explain some important aspects of preattentive processing (nor is it intended to do so). For example, what is happening when an item simply “pops out” because it is unique? In Figure 4 (which we will discuss for other purposes in a moment), the intended targets are orange. Nevertheless, attention is attracted to the blue items even though the blue items can be easily rejected as not orange. They are sufficiently different from their neighbors to attract attention in a ‘bottom-up’, stimulus-driven manner. Regardless, the TCS model and its associated data make the clear point that the preattentive processing stage will take some amount of time and that this time will depend on the number of items in the display, even if all items are processed in parallel.

Figure 4:

Feature search based on bottom-up salience, top-down relations, and top-down identity.

TCS also raises the possibility that guidance could be as much about rejecting distractors as it is about guiding toward targets (Treisman & Sato, 1990), a topic that has seen a recent burst of interest (e.g. Conci, Deichsel, Müller, & Töllner, 2019; Cunningham & Egeth, 2016; Stilwell & Vecera, 2019). In thinking about distractor rejection, it is important to distinguish two forms of rejection. One could reject items that do not have the target feature (e.g. in Fig 4, reject items that are not orange) or one could reject items that have a known distractor feature (e.g. reject items that are red). Friedman-Hill and Wolfe (1995, Exp 4) and Kaptein, Theeuwes, & Van der Heijden (1995) found evidence that observers could not suppress a set of items on the basis of its defining feature. In a study of priming effects, Wolfe, Butcher, Lee, & Hyle (2003) found that the effects of repeating target features were much greater than those of repeating distractors. Still, the distractor effects were present, and subsequent work suggests that distractor inhibition is a contributor to guidance, even if it may take longer to learn and establish (Cunningham & Egeth, 2016; Stilwell & Vecera, 2020).

Feature guidance can be relational

Over the past decade, Stefanie Becker’s work has emphasized the role of relative feature values in the guidance of attention (Becker, 2010; Becker, Harris, York, & Choi, 2017). This is also illustrated in Figure 4, where, on the left, the orange targets are the yellower items while, on the right, the same targets are the redder items. Attention can be guided by a filter that is not maximally sensitive to the feature(s) of the target. On the right side of Figure 4, for example, it might be worth using a filter maximally sensitive to “red” even though the target is not red. The most useful filter will be the one that reveals the greatest difference between target and distractors (Yu & Geng, 2019). Targets and distractors that can be separated by a line drawn in some feature space are said to be “linearly separable” (Bauer, Jolicoeur, & Cowan, 1998; Bauer, Jolicoeur, & Cowan, 1996). If, as in the middle of Figure 4, no line in color space separates targets and distractors, search is notably more difficult (look for the same orange squares). Some of this difficulty is due to the inability to use Becker’s relational guidance when targets are not linearly separable from distractors, and some is due to added bottom-up noise (reduced DD similarity) produced by the highly salient contrast between the yellow and red distractors. Note, however, that attention can still be guided to the orange targets, showing that top-down guidance is not based entirely on a single relationship (for more, see Kong, Alais, & Van der Burg, 2016; Lindsey et al., 2010). Moreover, Buetti et al. (2020) have cast doubt on the whole idea of linear separability, arguing that performance in the inseparable case can be explained as a function of performance on each of the component simple feature searches. In their argument, the middle of Figure 4 would be explained by the two flanking searches without the need for an additional effect of linear separability.
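The "most useful filter" idea can be sketched with Gaussian color filters on a single hue axis. Everything here is an assumption for illustration, not Becker's or Yu and Geng's model: hue angles of roughly 0 (red), 30 (orange), and 60 (yellow) degrees, a 20 deg filter width, and a score equal to the target's response minus the strongest distractor's response. On the right of Figure 4 (orange among yellow), the best filter shifts away from the target toward red; on the left (orange among red), it shifts toward yellow; with both distractor colors present, no shift helps and the optimum stays on the target.

```python
import math


def filter_response(feature, center, width=20.0):
    """Gaussian tuning curve of a hypothetical color filter (hue in degrees)."""
    return math.exp(-((feature - center) ** 2) / (2 * width ** 2))


def best_filter_center(target, distractors, centers=range(-60, 121)):
    """Filter center giving the largest target response minus the strongest distractor response."""
    def separation(center):
        return filter_response(target, center) - max(
            filter_response(d, center) for d in distractors)
    return max(centers, key=separation)


def linearly_separable_1d(target, distractors):
    """On one feature dimension, a line separates target from distractors
    exactly when the target lies outside the distractor range."""
    return target < min(distractors) or target > max(distractors)


RED, ORANGE, YELLOW = 0, 30, 60  # approximate HSV hue angles (assumption)

for label, distractors in (("left: among red", [RED]),
                           ("right: among yellow", [YELLOW]),
                           ("middle: among red and yellow", [RED, YELLOW])):
    print(label, best_filter_center(ORANGE, distractors),
          linearly_separable_1d(ORANGE, distractors))
```

In this toy, the optimal filter is exaggerated away from the distractors rather than centered on the target, which is one way to read relational guidance.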

Spatial relations are at least as important as featural relations in feature guidance. Figure 5 illustrates this point using density. In the figure, the orange targets and yellow distractors on the left are the same as those on the right, but those orange targets are less salient and guide attention less effectively because they are not as physically close to the yellow distractors (Nothdurft, 2000). An interesting side effect of density is that the slope of the RT x set size function can become negative if increasing density speeds the search more than the set size effect slows search (Bravo & Nakayama, 1992).

Figure 5:

Density effects in search: Feature differences are easier to detect when items are closer together.

Feature Guidance is modulated by position in the visual field

GS2, following Neisser (1967), says “there are parallel processes that operate over large portions of the visual field at one time” (Wolfe, 1992, p. 203). However, it is important to think more carefully about the spatial aspects of guidance and preattentive processing.

The visual field is not homogeneous. Of course, we knew this with regard to attentive vision and object recognition. As you read this sentence, you need to fixate one word after another, because acuity falls off with distance from the fovea, the point of fixation (Olzak & Thomas, 1986). Moreover, contours “crowd” each other in the periphery, making them still harder to perceive correctly (Levi, 2008). Thus, you simply cannot read words of a standard font size more than a small distance from fixation. What must be true, but is little remarked on, is that preattentive guidance of attention must also be limited by eccentricity effects.

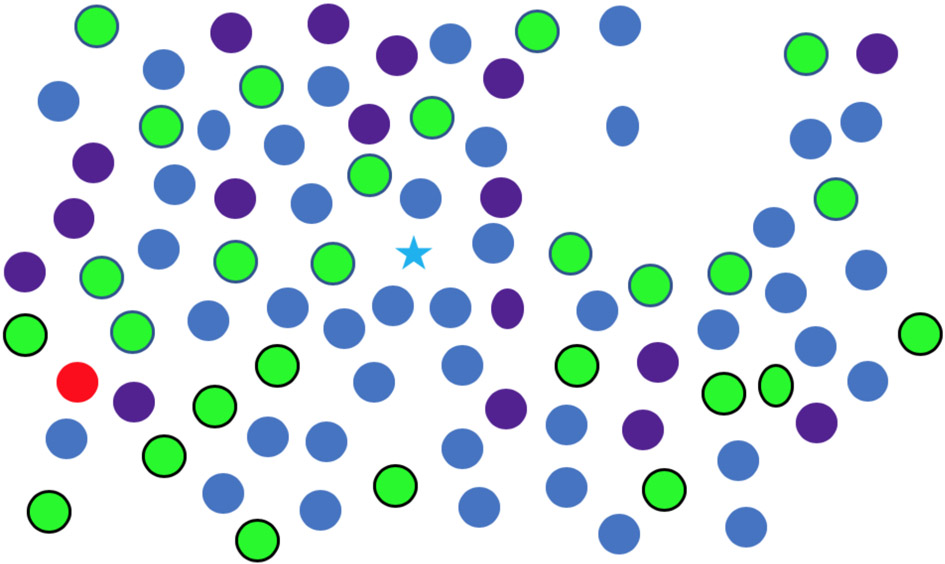

In Figure 6, look at the star and report on the color of all the ovals that you can find. Without moving your eyes, you will be able to report the purple oval at about 4 o’clock and the isolated blue oval at 2 o’clock. The same preattentive shape/orientation information that guides your attention to those ovals will not guide your attention to the other two ovals unless you move your eyes. Thus, while preattentive processing may occur across large swaths of the visual field at the same time, the results of that processing will vary with eccentricity and with the structure of the scene. In that vein, there are well-documented eccentricity effects in search: items near fixation are found more quickly, and these effects can be neutralized by scaling the stimuli to compensate for the effects of eccentricity (Carrasco, Evert, Chang, & Katz, 1995; Carrasco & Frieder, 1997; Wolfe, O'Neill, & Bennett, 1998).
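One common way to scale stimuli for eccentricity is a linear rule in which size grows as 1 + E/E2, where E is eccentricity and E2 is the eccentricity at which size must double. The sketch below assumes that rule and an arbitrary E2 of 2 deg; it is not necessarily the exact procedure used in the studies cited above, and E2 differs considerably across tasks.

```python
def scaled_size(base_size_deg, eccentricity_deg, e2_deg=2.0):
    """Size needed at eccentricity_deg to match an item of base_size_deg at
    fixation, under the linear rule size = base * (1 + E / E2).

    E2 (the eccentricity at which size must double) is task dependent; the
    default of 2.0 deg is purely illustrative."""
    if eccentricity_deg < 0 or e2_deg <= 0:
        raise ValueError("need eccentricity >= 0 and E2 > 0")
    return base_size_deg * (1.0 + eccentricity_deg / e2_deg)


for ecc in (0, 4, 8):
    print(ecc, scaled_size(0.5, ecc))  # 0.5, 1.5, 2.5 deg
```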

Figure 6:

Look at the star and report the color of ovals.

Thinking about search in the real world of complex scenes, it is clear that the effects of eccentricity on guidance are going to be large and varied. Returning to Figure 6, for example, both color and shape are preattentive features, but guidance to “oval” will fail at a much smaller eccentricity than guidance to that one “red” spot. Rosenholtz and her colleagues attribute the bulk of variation in the efficiency of search tasks to the effects of crowding and the loss of acuity in the periphery (Rosenholtz, Huang, & Ehinger, 2012; Rosenholtz, 2011, 2020; Zhang, Huang, Yigit-Elliott, & Rosenholtz, 2015). Guided Search isn’t prepared to go that far, but it is clear that crowding and eccentricity will limit preattentive guidance. Those limits will differ for different features in different situations, but this topic is vastly understudied. We will return to these questions in the later discussion of the functional visual field (FVF). For the present, it is worth underlining the thought that preattentive guidance will vary as the eyes move in any normal, real-world search.

Levels of selection: Dimensional Weighting

Though guidance is shown as a single box (#5 in Figure 3) controlling access to selective processing (#4), it is important to recognize that selection is a type of attentional control, not one single thing. We have been discussing guidance to specific features (e.g. blue … or bluest), but attention can also be directed to a dimension like color. This “dimension weighting” has been extensively studied by Hermann Müller and his group (reviewed in Liesefeld, Pollmann, & Müller, 2019; Liesefeld & Müller, 2019). Their “dimension-weighting account” (DWA) is built on experiments where, for example, the observer might be reporting on some attributes of a green item in a field of blue horizontal items. If there is a salient red “singleton” distractor, it will slow responses more than an equally salient vertical distractor. DWA argues that a search for green puts weight on the color dimension. As a result, a distractor defined by another color is more distracting than one defined in another dimension, like orientation.
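The dimension-weighting idea can be sketched as a weighted sum of per-dimension signals. This is a minimal illustration, assuming that weights are normalized to sum to 1 (standing in for a limited pool of weight shared across dimensions); the function name and the numbers are hypothetical and are not taken from the DWA literature.

```python
def dimension_weighted_priority(signals, weights):
    """Priority of one item under a dimension-weighting scheme.

    signals: dict mapping dimension -> feature-contrast signal for the item.
    weights: dict mapping dimension -> weight. Weights are normalized to sum
    to 1, an assumption standing in for the idea that weight is a limited
    resource shared across dimensions."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(weights.get(dim, 0.0) / total * s for dim, s in signals.items())


# Searching for a green target puts most weight on color (values are made up).
weights = {"color": 0.7, "orientation": 0.3}
red_singleton = {"color": 1.0}             # equally salient distractors that
vertical_singleton = {"orientation": 1.0}  # differ only in their dimension
print(dimension_weighted_priority(red_singleton, weights))
print(dimension_weighted_priority(vertical_singleton, weights))
```

With equal raw salience, the red singleton receives more priority than the vertical one, which is the ordering DWA predicts for interference.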

At a level above dimensions, observers can attend to one sense (e.g. audition) over another (e.g. vision). As any parent can attest, their visual attention to the stimuli beyond the windshield can be disrupted when auditory signals from the back seat of the car capture their attention.

Building the priority map – Temporal factors and the role of “attention capture”

In Guided Search, attention is guided to its next destination by a winner-take-all operation (Koch & Ullman, 1985) on an attentional priority map (Serences & Yantis, 2006). In GS2, the priority map was modeled as a weighted average of contributions from top-down and bottom-up processing of multiple basic features. In GS6, there are further contributions to priority, as outlined in the next sections. In thinking about the multiple sources of guidance, it is worth underlining the point made earlier, that the priority map is continuously changing and continuously present during a search task. Different contributions to priority have different temporal properties. Bottom-up salience, for instance, may be a very powerful but short-lived form of guidance (Donk & van Zoest, 2008). Theeuwes and his colleagues (Theeuwes, 1992; Van der Stigchel, et al., 2009), as well as many others (e.g. Harris, Becker, & Remington, 2015; Lagroix, Yanko, & Spalek, 2018; Lamy & Egeth, 2003), have shown that a salient singleton will attract attention. Indeed, there is an industry studying stimuli that ‘capture’ attention (Folk & Gibson, 2001; Theeuwes, Olivers, & Belopolsky, 2010; Yantis & Jonides, 1990). Donk and her colleagues have argued that this form of guidance is relatively transient in experiments using artificial stimuli (Donk & van Zoest, 2008) and natural scenes (Anderson, Ort, Kruijne, Meeter, & Donk, 2015). Others have shown that the effects may not completely vanish in the time that it takes to make a saccade (De Vries, Van der Stigchel, Hooge, & Verstraten, 2017), but this transient nature of bottom-up salience may help to explain why attention does not get stuck on high-salience, non-target spots in images (Einhäuser, Spain, & Perona, 2008). Lamy et al. (2020) make the useful point that “attention capture” may be a misnomer. It might be better to think that stimuli for attention capture create bumps in the priority map.
In many capture designs, that bump will be the winner in the winner-take-all competition for the next deployment of attention. However, other capture paradigms may be better imagined as changing the landscape of priority, rather than actually grabbing or even splitting the ‘spotlight’ of attention (Gabbay, Zivony, & Lamy, 2019).
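The interplay between a strong but transient bottom-up signal and steadier top-down guidance can be sketched with a winner-take-all step applied to a priority map at different moments after display onset. The exponential decay, its time constant, and the weights are illustrative assumptions, not parameters from GS6 or from the cited experiments.

```python
import math


def priority_map(t_ms, top_down, bottom_up, w_td=1.0, w_bu=3.0, tau_ms=150.0):
    """Priority at each location t_ms after display onset.

    Bottom-up salience decays exponentially with time constant tau_ms; the
    weights and tau_ms are illustrative assumptions, not fitted values."""
    decay = math.exp(-t_ms / tau_ms)
    return [w_td * td + w_bu * bu * decay for td, bu in zip(top_down, bottom_up)]


def winner_take_all(priorities):
    """Index of the most active location."""
    return max(range(len(priorities)), key=lambda i: priorities[i])


# Location 0: target (matches the template, low salience).
# Location 1: salient singleton distractor (no template match).
# Location 2: ordinary distractor.
top_down = [1.0, 0.0, 0.2]
bottom_up = [0.1, 1.0, 0.1]
for t in (0, 100, 300):
    print(t, winner_take_all(priority_map(t, top_down, bottom_up)))
```

With these made-up numbers, the salient singleton wins the competition for the first deployments, and the target wins once the bottom-up bump has decayed (the crossover here falls near 150 ms).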

The landscape of priority can be modulated in a negative/inhibitory manner as well. Suppose that one is searching for blue squares among green squares and blue circles. This conjunction search can be speeded if one set of distractors (e.g. all the green squares) is shown first. This is known as “visual marking” and is thought to reflect some reduction in the activation of the previewed items (Watson & Humphreys, 1997). One could conceive of marking as a boost to the priority of the later stimuli, rather than inhibition (Donk & Theeuwes, 2003; but see Kunar, Humphreys, & Smith, 2003). For present purposes, the important point is that marking shows that priority can evolve over time. Subsequent work has shown the limits on that evolution. If there is enough of a break in the action, the map may get reset (Kunar, Humphreys, Smith, & Hulleman, 2003; Kunar, Shapiro, & Humphreys, 2006). If we think about priority maps in the real world or in movies, it would be interesting to see if the maps are reset by event boundaries (Zacks & Swallow, 2007).

Expanding the idea of guidance – history effects

As the phenomenon of marking suggests, the priority map is influenced by several forms of guidance other than the traditional top-down and bottom-up varieties. To quote Failing and Theeuwes (2018): “Several selection biases can neither be explained by current selection goals nor by the physical salience of potential targets. Awh et al. (2012) suggested that a third category, labeled as “selection history”, competes for selection. This category describes lingering selection biases formed by the history of attentional deployments that are unrelated to top-down goals or the physical salience of items.” (p. 514 of Failing & Theeuwes, 2018). There are a variety of effects of history. We are dividing these into two forms of guidance. We will use the term “history” effects to refer to the effects that arise from passive exposure to some sequence of stimuli (e.g. priming effects). In contrast, “value” or “reward” effects are those where the observer is learning to associate positive or negative value with a feature or location. This distinction is neither entirely clear nor vitally important. These phenomena represent ways to change the landscape of the priority map that are not based on salience or the observer’s goals. One classic form of the priming variety of history effects is the ‘priming of pop-out’ phenomenon of Maljkovic and Nakayama (1994). In an extremely simple search for red among green and vice versa, they showed that RTs were speeded when a red target on trial N followed red on trial N-1 (or green followed green). Theeuwes has argued that all feature-based attention can be described as priming of one form or another (Theeuwes, 2013; Theeuwes, 2018). This seems a bit extreme. After all, you can guide your attention to all the blue regions in your current field of view without having been primed by a previous blue search. Top-down guidance to blue would seem to be enough (see Leonard & Egeth, 2008).
Nevertheless, the previous stimulus clearly exerts a force on the next trial. In the “hybrid foraging” paradigm, where observers (Os) search for multiple instances of more than one type of target, they are often more likely to collect two of the same target type in a row (run) than they are to switch to another target type (Kristjansson, Thornton, Chetverikov, & Kristjansson, 2018; Wolfe, Aizenman, Boettcher, & Cain, 2016). These runs are, no doubt, partially due to priming effects. Introspectively, when the first instance of a target type is found in a search display containing multiple instances, those other instances seem to ‘light up’ in a way that suggests that finding the first one primed all the other instances, giving them more attentional priority.
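Priming of pop-out can be pictured as a history term that is added to the priority of a feature after a target with that feature is found. The sketch below is hypothetical: the boost and decay values are arbitrary, and the point is only that a repeated target color starts the next trial with extra priority while a switched color does not.

```python
def update_history(history, target_feature, boost=0.3, decay=0.5):
    """Trial-by-trial history weights (hypothetical parameters).

    All existing weights decay, then the feature of the target just found
    receives a boost that will add to its priority on the next trial."""
    updated = {feature: w * decay for feature, w in history.items()}
    updated[target_feature] = updated.get(target_feature, 0.0) + boost
    return updated


history = {}
for target_color in ["red", "red", "green"]:
    # Bonus available to this trial's target color from earlier trials.
    print(target_color, "history bonus:", history.get(target_color, 0.0))
    history = update_history(history, target_color)
```

The second red trial starts with a bonus inherited from the first; the green trial that follows starts with none.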

Contextual cueing

Contextual cueing (Chun & Jiang, 1998) represents a different form of a history effect. In contextual cueing, Os come to respond faster to repeated displays than to novel displays, as if the Os had come to anticipate where the target would appear even though they had no explicit idea that the displays had been repeating (Chun, 2000). It has been argued that contextual cueing might just be a form of response priming (Kunar, Flusberg, Horowitz, & Wolfe, 2007). That is, Os might just be faster to respond when they find a target in a contextually cued location. However, the predominant view has been that contextual cueing represents a form of implicit scene guidance (see below) in which recognition of the scene (even implicitly) boosts the priority map in the likely target location (Sisk, Remington, & Jiang, 2019; Harris & Remington, 2020).

Value

A different route to modulation of priority comes from paradigms that associate value with target and/or distractor features. If you reward one feature (e.g. red) and/or punish another (e.g. green), items with rewarded features will attract more attention and items with punished features will attract less attention (Anderson, Laurent, & Yantis, 2011). As with contextual cueing, it could be argued that the effect of reward is to speed responses once the target is found, not to guide attention to the target. However, Lee and Shomstein (2013) varied set sizes and found that value could make slopes shallower. This is an indication that value had its effects on the search process and not just on the response once a target is found. Moreover, the effects of reward can be measured using real scenes (Hickey, Kaiser, & Peelen, 2015), an indication that value can be a factor in everyday search.
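A simple way to picture value-based guidance is a learned value per feature that adds to (or subtracts from) that feature’s priority. The sketch below swaps in a standard Rescorla-Wagner-style delta rule as the learning mechanism; that choice, the learning rate, and the +1/-1 outcomes are assumptions for illustration, not a claim about how the cited studies modeled reward.

```python
def update_value(values, feature, outcome, learning_rate=0.2):
    """Delta-rule update: move the learned value of `feature` a fraction
    (learning_rate) of the way toward the outcome (+1 reward, -1 punishment)."""
    updated = dict(values)
    v = updated.get(feature, 0.0)
    updated[feature] = v + learning_rate * (outcome - v)
    return updated


values = {}
for _ in range(10):  # ten rewarded red trials and ten punished green trials
    values = update_value(values, "red", +1.0)
    values = update_value(values, "green", -1.0)
print(values)  # red near +0.89, green near -0.89
```

Added to the priority map, a positive value raises every red item and a negative value lowers every green item, independent of what the observer is currently looking for.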

We are labeling “history” and “value” as two types of guidance. One could further divide these and treat each paradigm (e.g. contextual cueing) as a separate type of guidance or, like Awh et al. (2012), one could group all these phenomena into “selection history”. Alternatively, priming, contextual cueing, value, marking, etc. could all be seen as variants of top-down guidance. Bottom-up guidance is driven by the stimulus. Top-down guidance would be guidance with its roots in the observer. This was the argument of Wolfe, Butcher, Lee, & Hyle (2003) and of prior versions of GS. GS6 accepts the logic of Awh, Belopolsky, & Theeuwes (2012). Top-down guidance represents what the observer wants to find. History and value guidance show how the state of the observer influences search, independent of the observer’s intentions. Again, the important point is that there are multiple modulators of the landscape of attentional priority beyond top-down and bottom-up guidance.

Scene Guidance

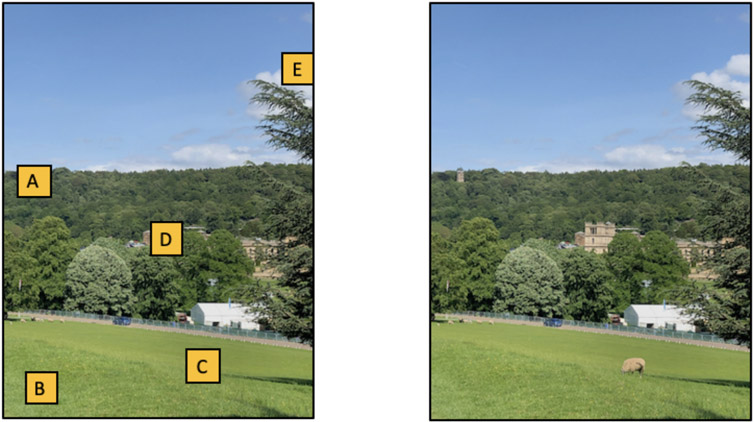

Selection history makes a real contribution to attentional guidance. However, these effects seem quite modest when compared with “scene guidance”, the other addition to the family of attention-guiding factors in GS6. Guidance by scene properties was not a part of earlier forms of Guided Search, largely because scenes were not a part of the body of data being explained by the model. Given a literature that dealt with searching random arrays of isolated elements on a computer screen, there was not much to say about the structure of the scene (though we tried in Wolfe, 1994b). Of course, the real world in which we search is highly structured and that structure exerts a massive influence on search. In Figure 7a, ask which box or boxes are likely to hide a sheep. Unlike a search for a T amongst a random collection of L’s, where every item is a candidate target, there are sources of information in this scene that rapidly label large swathes of the image as ‘not sheep’.

Figure 7:

A) Which boxes could hide a sheep? B) Find sheep. The scene is on the grounds of Chatsworth House, a stately home in Derbyshire, England

Top-down guidance to sheep features is important here, but even with no sign of a sheep, scene constraints make it clear that “C” is a plausible location. Spatial layout cues indicate that any sheep behind “B” would be very small and “A”, “D”, and “E” are implausible, even though, looking at Figure 7b, there are sheep-like basic features in the fluffy white clouds behind “E” and the bits in the building behind “D” that share color and rough shape with the sheep who was, in fact, behind “C”.

Like selection history, scene guidance is a term covering a number of different modulators of priority. Moreover, perhaps more dramatically than the other forms of guidance, scene guidance evolves over time. In Figure 3, this is indicated by having two sources of scene information feeding into guidance. In the first moments after a scene becomes visible, the gist of the scene becomes available. Greene and Oliva (2009) demonstrated that exposures of 100 msec or less are all that are needed to permit Os to grasp the rough layout of the scene. Where is the ground plane? What is the rough scale of the space? A little more time gives the observer rough semantic information about the scene: outdoors, rural, etc. For this specific example, very brief exposures are adequate to determine that there is likely to be an animal present (Li, VanRullen, Koch, & Perona, 2002; Thorpe, Fize, & Marlot, 1996), even if not to localize that animal (Evans & Treisman, 2005). Castelhano and her colleagues have formalized this early guidance by the layout in their Surface Guidance Framework (Pereira & Castelhano, 2019).

With time, other forms of scene guidance emerge. For example, Boettcher et al. (2018) have shown that “anchor objects” can guide attention to the location of other objects. Thus, if you are looking for a toothbrush, you can be guided to likely locations if you first locate the bathroom sink. Presumably, this form of scene guidance requires more processing of the scene than does the appreciation of the gist-like “spatial envelope” of the scene (Oliva, 2005). Since anchor objects are typically larger than the target object (sink → toothbrush), this can be seen as related to the global-local processing distinction originally popularized by Navon (1977).

As one way to quantify scene guidance, Henderson and Hayes (2017) introduced the idea of a “meaning map”. A meaning map is a representation akin to the salience map that reflects bottom-up guidance of attention. To create a meaning map, Henderson and Hayes divided scenes up into many small regions. These were posted online, in isolation and in random order, as a “Mechanical Turk” task in which observers were asked to rate the meaningfulness of each patch (e.g. a patch containing an eye might be rated as highly meaningful; a piece of wall, much less so). These results are summed together to form a heatmap showing where, in the scene, there was more or less meaning present. Meaning maps can predict eye movements better than salience maps calculated for the same images (Pedziwiatr, Wallis, Kümmerer, & Teufel, 2019). Because each patch is rated in isolation, the method loses the valuable guiding signal from scene structure, but it is a useful step on the way to putting scene guidance on a similar footing with top-down and bottom-up guidance.
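The step from patch ratings to a heatmap can be sketched as follows. This is a simplified, hypothetical version: here each pixel takes the mean rating of the square patches that cover it (an assumption; summing gives the same map up to a constant only when every pixel is covered by the same number of patches), and the smoothing and multiple patch sizes that a full implementation would likely use are omitted.

```python
def meaning_map(width, height, patches):
    """Combine patch ratings into a pixel-level map.

    patches: list of (x0, y0, size, rating) for square patches in pixel
    coordinates. Each pixel gets the mean rating of the patches covering it,
    or 0.0 if none do. Smoothing and multiple patch scales are omitted."""
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for x0, y0, size, rating in patches:
        for y in range(max(0, y0), min(height, y0 + size)):
            for x in range(max(0, x0), min(width, x0 + size)):
                total[y][x] += rating
                count[y][x] += 1
    return [
        [total[y][x] / count[y][x] if count[y][x] else 0.0 for x in range(width)]
        for y in range(height)
    ]


# Two overlapping patches on a 4 x 4 "image": a highly rated one (an eye?)
# and a dull one (a piece of wall?). Ratings are invented for illustration.
for row in meaning_map(4, 4, [(0, 0, 2, 5.0), (1, 1, 2, 1.0)]):
    print(row)
```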

Rather like those classical sources of guidance, scene guidance may have a set of features, though these may not be as easy to define as color, size, etc. For example, in scene guidance, it is useful to distinguish between “syntactic” guidance – related to the physics of objects in the scene (e.g. toasters don’t float) and “semantic” guidance – related to the meaning of objects in the scene (e.g. toasters don’t belong in the bathroom; Biederman, 1977; Henderson & Ferreira, 2004; Vo & Wolfe, 2013).

Guidance summary

Guidance remains at the heart of Guided Search. Guidance exists to help the observer to deploy selective attention in an informed manner. The goal is to answer the question, “Where should I attend next?”. The answer to that question will be based on a dynamically changing priority map, constructed as a weighted average of the various sources of guidance (see Yamauchi and Kawahara, 2020, for a recent example of the combination of multiple sources of guidance). The weights are under some explicit control. Top-down guidance to specific features is the classic form of explicit control (I am looking for something shiny and round). There must be an equivalent top-down aspect of scene guidance (I will look for that shiny round ball on the floor). There are substantial bottom-up, automatic, and/or implicit sources of guidance, particularly early in a search. Factors like salience and priming will lead to attentional capture early in a search. More extended searches must become more strategic to avoid perseveration (I know I looked for that shiny ball on the floor. Now I will look elsewhere). One way of understanding these changes is to realize that the priority map is continuously changing over time and, to be clear, that Treisman’s classic “preattentive” and “attentive” stages of processing are both active throughout a search.
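The combination step can be sketched as a weighted average of per-source maps followed by selection of the peak. Source names, weights, and activations below are invented for illustration (history and value maps are simply omitted, i.e. given zero weight); GS6 does not commit to these particular numbers.

```python
def combine_guidance(maps, weights):
    """Weighted average of guidance maps.

    maps: dict mapping a source name (e.g. "top_down", "bottom_up",
    "history", "value", "scene") to a list of activations, one per location.
    weights: dict mapping source name -> non-negative weight."""
    n_locations = len(next(iter(maps.values())))
    total_w = sum(weights.get(src, 0.0) for src in maps)
    if total_w <= 0:
        raise ValueError("at least one source needs a positive weight")
    return [
        sum(weights.get(src, 0.0) * act[i] for src, act in maps.items()) / total_w
        for i in range(n_locations)
    ]


def next_to_attend(priorities):
    """Winner-take-all: index of the highest-priority location."""
    return max(range(len(priorities)), key=lambda i: priorities[i])


maps = {
    "top_down": [0.9, 0.2, 0.1],
    "bottom_up": [0.1, 0.9, 0.1],
    "scene": [0.8, 0.1, 0.0],
}
print(next_to_attend(combine_guidance(maps, {"top_down": 2, "bottom_up": 1, "scene": 1})))  # 0
print(next_to_attend(combine_guidance(maps, {"top_down": 2, "bottom_up": 5, "scene": 1})))  # 1
```

Raising the bottom-up weight moves the peak from the template- and scene-consistent location 0 to the salient location 1, a crude stand-in for the idea that the weights are partly under control.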

Dividing guidance into five forms is somewhat arbitrary. There are different ways to lump or split guiding forces. One way to think about the forms of guidance that are added in GS6 (history, value, & scene) is that all of them can be thought of as long-term memory effects on search. They are learned over time: history effects on a trial-by-trial basis, value over multiple trials, and scene effects over a lifetime of experience. This role for long-term memory is somewhat different from the proposed role of activated long-term memory as the home of target templates in search, as will be discussed later.

What are the basic features that guide visual search?

A large body of work describes the evidence that different stimulus features can guide search (e.g. color, motion, etc.). Other work documents that there are plausible features that do not guide search: e.g. intersection type (Wolfe & DiMase, 2003) or surface material (Wolfe & Myers, 2010). A paper like this one would be an obvious place to discuss the evidence for each candidate feature, but this has been done several times recently (Wolfe, 2014, 2018; Wolfe & Horowitz, 2004; Wolfe & Horowitz, 2017) so the exercise will not be repeated here.

There are changes in the way we think about basic features in GS6. GS2 envisioned guiding features as coarse, categorical abstractions from the ‘channels’ that define sensitivity to different colors, orientations, etc. (Wolfe et al., 1992). The simulation of GS2, for example, made use of some very schematic, broad color and orientation filters (see Figure 3 of Wolfe, 1994a). This works well enough for relatively simple features like color or orientation (though some readers took those invented filters a bit too literally). It does not work well for more complex features like shape. It is clear that some aspects of shape guide attention and there have been various efforts to establish the nature of a preattentive shape space. Huang (2020) has proposed a shape space with three main axes: segmentability, compactness, and spikiness that seems promising. However, the problem becomes quite daunting if we think about searching for objects. Search for a distinctive real-world object in a varied set of other objects seems to be essentially unguided (Vickery, King, & Jiang, 2005). By this, we mean that the RT x set size functions for such a search look like those from other unguided tasks like the search for a T among Ls or a 2 among 5s (Figure 1). On the other hand, a search for a category like “animal” can be guided. In a hybrid search task (Wolfe, 2012) in which observers had to search for any of several (up to 16) different animals, Cunningham et al. (2014) found that Os did not attend to objects like flags or coins; presumably, because no animals are that circular or rectangular. They did attend to distractors like clothing; presumably, because at a preattentive level, crumpled laundry has features that might appear to be sufficiently animal-like to be worth examining. But what are those features?

We would be hard-pressed to describe the category, “animal”, in terms of the presence or absence of classic preattentive features (e.g. What size is an animal, in general?). One way to think about this might be to imagine that the process of object recognition involves something like a deep neural network (DNN; Kriegeskorte & Douglas, 2018). If one is looking for a cow, finding that cow would involve finding an object in the scene that activates the cow node at the top of some many-layered object recognition network. Could some earlier layer in that network contain a representation of cow-like shape properties that could be used to guide attention in the crude manner that shape guidance appears to proceed? That is, there might be a representation in the network that could be used to steer attention away from books and tires, but would not exclude horses or, perhaps, bushes or piles of old clothes. This is speculative, but it is appealing to think that shape guidance might be a rough abstraction from the relatively early stages of the processes that perform object recognition, just as color guidance appears to be a relatively crude abstraction from the processes that allow you to assess the precise hue, saturation, and value of an attended color patch (Nagy & Cone, 1993; Wright, 2012).

The Search Process

In GS6, the search process is simultaneously serial and parallel

How does search for that cow proceed? The guidance mechanisms, described above, create a priority map based on a weighted average of all the various forms of guidance. Attention is directed to the current peak in that map. If it is the target and there is only one target, the search is done. If not, attention moves to the next peak until the target(s) is/are found and/or the search is terminated. Figure 8 shows how GS6 envisions this process.

Figure 8:

The search process in GS6.

Referring to the numbers in Figure 8, (1) there is a search stimulus in the world; here a search for a T among Ls. (2) Items are selected from the visual system’s representation of that stimulus, in series. (3) Not shown: the choice of one item over another would be governed by the priority map, the product of the guidance processes discussed earlier. (4) The selected item may be represented in Working Memory (Drew, Boettcher, & Wolfe, 2015). This will be discussed further when the topic of the “search template” is considered. (5) The object of search is represented as a “Target Template” in Activated Long Term Memory (ALTM). Again, more will be said about templates later. (6) Each selected item is compared to the target template by means of a diffusion process (Ratcliff, 1978; Ratcliff, Smith, Brown, & McKoon, 2016). It is not critical if this is strictly an asynchronous “diffusion” process, a “linear ballistic accumulator” (Brown & Heathcote, 2008), a “leaky accumulator” (Bogacz, Usher, Zhang, & McClelland, 2007), or another similar mechanism. There may be a correct choice to be made here but, for present purposes, all such processes have similar, useful properties, discussed below, and there is good evidence that the nervous system is using such processes in search (Schall, 2019). Evidence accumulates toward a target boundary or a non-target boundary. (7) Distractors that reach the non-target boundary are ‘discarded’. The interesting question about those discarded distractors is whether they are irrevocably discarded or whether they can be selected again, later in the search. For example, in a foraging search like berry picking, one can imagine a berry, rejected on first glance, being accepted later on. In a search for a single target, successful target-present search ends when evidence from a target item reaches the target boundary (6).
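The comparison in step (6) can be sketched as a two-boundary random walk. The drift, noise, and boundary values below are assumptions chosen so that decisions take roughly 200 ms, in line with the >150 msec/item recognition time assumed for GS6; as the text notes, a linear ballistic or leaky accumulator could be substituted.

```python
import math
import random


def diffuse(drift, boundary=1.0, noise=0.03, dt_ms=1.0, rng=None, max_steps=100_000):
    """One item's comparison with the target template as a random walk.

    Evidence starts at 0 and drifts toward +boundary (target) or -boundary
    (non-target). drift is evidence per ms and noise is the SD per sqrt(ms);
    all values are illustrative assumptions. Returns (decision, time_ms)."""
    rng = rng or random.Random()
    x = 0.0
    for step in range(1, max_steps + 1):
        x += drift * dt_ms + rng.gauss(0.0, noise) * math.sqrt(dt_ms)
        if x >= boundary:
            return "target", step * dt_ms
        if x <= -boundary:
            return "non-target", step * dt_ms
    return "undecided", max_steps * dt_ms


rng = random.Random(0)
results = [diffuse(-0.005, rng=rng) for _ in range(1000)]  # distractor items
mean_ms = sum(t for _, t in results) / len(results)
rejected = sum(decision == "non-target" for decision, _ in results)
print(f"{rejected}/1000 rejected, mean time {mean_ms:.0f} ms")
```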

Several questions about this process need to be addressed.

What is the purpose of an “asynchronous diffuser”?

What is the fate of rejected distractors and how do we avoid perseverating on a salient distractor object?

How is search terminated?

The Asynchronous Diffuser or The Carwash

The slope of RT x set size functions, like those in Figure 1, can be thought of as a measure of the rate with which the search process, shown in Figure 8, deals with items in the visual display. A task like the T vs L search, shown here, might produce target-present slopes of about 20-40 msec per item. For purposes of illustration, suppose that this T vs L search produces a target-present slope of 30 msec/item and further suppose, as Treisman would have proposed, that this is an unguided, serial, self-terminating search. If so, then observers would have to search through about half of the items, on average, before stumbling on the target. Taking this factor of 2 into account, if the target-present slope is 30 msec/item, the “true” rate would be about 60 msec/item or about 17 items per second moving through the system. Unfortunately, no one has developed an “attention tracker” that can monitor covert deployments of attention the way that an eye tracker can track overt deployments of the eyes, so we cannot say for sure that attention is being discretely deployed at the rate suggested by the slopes of RT x set size functions. There are useful hints that neural rhythms in the right frequency range are important to the neural basis of covert attention. For instance, Buschman and Miller (2009) could see monkeys shifting attention every 40 msec, accompanied by local field potentials oscillating at 25 Hz. Lee, Whittington, and Kopell (2013) built a neurally inspired model to show how oscillations in this Beta rhythm range (18-25 Hz) could reproduce a variety of top-down attentional effects (see Miller & Buschman, 2013, for a review of this literature).
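The factor-of-2 argument can be written out for an unguided, serial, self-terminating search without replacement: on target-present trials the target is found, on average, after (N + 1)/2 items, while target-absent trials require all N. The sketch below assumes a fixed per-item time and an arbitrary 400 ms of non-search time; only the slopes matter.

```python
def expected_items_inspected(set_size, target_present):
    """Mean number of items inspected in an unguided, serial,
    self-terminating search without replacement."""
    return (set_size + 1) / 2 if target_present else set_size


def predicted_rt(set_size, target_present, ms_per_item=60.0, base_ms=400.0):
    """Predicted RT in ms; base_ms is an arbitrary non-search constant."""
    return base_ms + ms_per_item * expected_items_inspected(set_size, target_present)


def slope(n_small, n_large, target_present, ms_per_item=60.0):
    """Slope (ms/item) of the predicted RT x set size function."""
    rise = (predicted_rt(n_large, target_present, ms_per_item)
            - predicted_rt(n_small, target_present, ms_per_item))
    return rise / (n_large - n_small)


print(slope(4, 16, target_present=True))   # 30.0 ms/item
print(slope(4, 16, target_present=False))  # 60.0 ms/item
print(1000 / 60.0)                         # about 17 items per second
```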

So, GS assumes that items are being selected one after the other and the data suggest that this is occurring at a rate of around 20 Hz. The problem is that no one seems to think that object recognition can take place in ~50 msec. Much more typical is the conclusion from an ERP study by Johnson and Olshausen (2003), which holds that recognition takes “between 150-300 msec”. Even papers proposing “ultra-rapid” recognition (VanRullen & Thorpe, 2001) are suggesting that imperfect but above-chance performance takes 125-150 msec per object (Hung, Kreiman, Poggio, & DiCarlo, 2005; VanRullen & Thorpe, 2001). Thus, a model that proposes that items are selected and fully recognized every 50 msec is simply not plausible.

One solution has been to propose parallel processing of the display (Palmer & McLean, 1995; Palmer, Verghese, & Pavel, 2000) or, more recently, parallel processing of items in some region around fixation (see discussion of the functional visual field, below: Hulleman & Olivers, 2017). The GS6 solution, as illustrated in Figure 8, is to propose that items may be selected to enter the processing pipeline every 50-60 msec or so, but that it may take several hundred msec to move through the process to the point of recognition. As a consequence, at any given moment in search, multiple items will be in the diffusion/recognition process. Since they entered that process one after the other, the result is an asynchronous diffusion. A real-world analogy is a carwash (Moore & Wolfe, 2001; Wolfe, 2003). Cars enter and leave the carwash in a serial manner but multiple cars are being washed at the same time. Hence the process is neither strictly serial nor parallel. In computer science, this would be a pipeline architecture (Ramamoorthy & Li, 1977).
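The carwash idea can be sketched as a schedule in which items enter one at a time but each takes its own, longer time to finish. The 50 ms entry interval and the durations below are assumptions for illustration; with a constant 300 ms duration, about 300/50 = 6 items are in process at once in the steady state.

```python
def carwash(durations_ms, entry_interval_ms=50.0):
    """(start, finish) times for each item: item i enters at i * interval
    (serial entry) and finishes after its own recognition duration, so
    processing of successive items overlaps."""
    return [(i * entry_interval_ms, i * entry_interval_ms + d)
            for i, d in enumerate(durations_ms)]


def items_in_process(schedule, t_ms):
    """Number of items that have entered but not yet finished at t_ms."""
    return sum(1 for start, finish in schedule if start <= t_ms < finish)


def finish_order(schedule):
    """Indices of items in the order in which they finish."""
    return sorted(range(len(schedule)), key=lambda i: schedule[i][1])


steady = carwash([300.0] * 10)
print(items_in_process(steady, 400.0))               # 6 items in the wash
print(finish_order(carwash([300.0, 200.0, 300.0])))  # [1, 0, 2]: item 1 passes item 0
```

Giving the second item a shorter duration lets it leave before the first, which is the “passing” variant described below.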

It is notoriously difficult to use behavioral data to distinguish serial processes from parallel processes (Townsend, 1971; Townsend, 2016). GS6 would argue that it is an essentially fruitless endeavor in visual search. The carwash/asynchronous diffuser is both serial and parallel. Moreover, it is easy to imagine variations on the carwash architecture of Figure 8. Maybe two “cars” can enter at once. Maybe one car can pass another car, entering second but leaving first (this is actually illustrated in the diffusion box in Figure 8 when the second and third red lines cross). It will be next to impossible to discriminate between variants like this in behavioral data and, in fact, it does not matter very much. The important points are: 1) selective attention appears to select one or a very few objects at one time, 2) the time between selections is shorter than the time to recognize an object; and, therefore, 3) multiple items must be undergoing recognition at the same time.

The fate of rejected distractors and the role of inhibition of return

Returning to Figure 8, we show rejected “L”s being tossed into an extremely metaphorical garbage can. What does that mean? In particular, does that mean that once rejected, a distractor is completely removed from the search? Should visual search be characterized as an example of “sampling without replacement”? Feature Integration and early versions of GS assumed this was the case. In GS2, search proceeded from the highest spot in the priority map to the next and the next, until the target was found or the search ended. The proposed mechanism for this was “inhibition of return” (IOR; Klein, 1988; Posner, 1980; Posner & Cohen, 1984). In non-search tasks, if attention is directed to a location and then removed, it is harder to get attention back to the previously attended location (Klein, 2000). This is IOR. Klein (1988) applied IOR to visual search. The idea was that the rejected distractors would be inhibited, preventing re-visitation.

Horowitz and Wolfe (1998) ran a series of experiments in which they made IOR impossible, for example by replotting all of the items on the screen every 100 msec during search. They found that this did not change the efficiency with which targets were found (i.e., the target-present slopes did not change). They declared that “visual search has no memory” (Horowitz & Wolfe, 1998), by which they meant that the search mechanism does not keep track of rejected distractors. Of course, there is usually good memory for targets (Gibson, Li, Skow, Brown, & Cooke, 2000). The “no memory” claim, the claim that visual search is an example of sampling with replacement, was controversial (Horowitz & Wolfe, 2005; Kristjansson, 2000; Ogawa, Takeda, & Yagi, 2002; Peterson, Kramer, Wang, Irwin, & McCarley, 2001; Shi, Allenmark, Zhu, Elliott, & Müller, 2019; Shore & Klein, 2000; von Muhlenen, Muller, & Muller, 2003) and probably too strong. There is probably some modest memory for rejected distractors, but not enough to support sampling without replacement. GS6 assumes that something like 4-6 previous distractors are remembered, in the sense that they are not available to be immediately reselected.
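A small Monte Carlo sketch illustrates the difference between sampling with replacement, sampling without replacement, and the intermediate case GS6 assumes. It ignores guidance entirely and picks at random among the items that are currently available. `mean_selections_to_target` and its parameters are hypothetical. With n items, sampling without replacement needs (n + 1)/2 selections on average to reach the target. Sampling with replacement needs n, which is the mean of a geometric distribution with p = 1/n.

```python
import random
from collections import deque


def mean_selections_to_target(n_items, memory, trials=10000, seed=1):
    """Monte Carlo estimate of the mean number of selections needed to pick
    the target (item 0) among `n_items` items.

    Each pick is uniform over the items that are not among the `memory` most
    recently rejected distractors. memory=0 is sampling with replacement;
    memory >= n_items - 1 is sampling without replacement.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        recent = deque(maxlen=memory)  # a maxlen of 0 remembers nothing
        picks = 0
        while True:
            candidates = [i for i in range(n_items) if i not in recent]
            item = rng.choice(candidates)
            picks += 1
            if item == 0:
                break
            recent.append(item)  # the oldest memory drops out when full
        total += picks
    return total / trials


for memory in (0, 5, 9):
    print(memory, round(mean_selections_to_target(10, memory), 2))
```

Take n = 10 with the last five rejected distractors remembered. Half the time, the target turns up in the first five picks, at an average position of 3. Otherwise, the search continues as a geometric process with p = 1/5. The exact mean is therefore 0.5 × 3 + 0.5 × (5 + 5) = 6.5 selections. This is much closer to the 5.5 of full memory than to the 10 of no memory.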

Several mechanisms probably serve to prevent visual search from getting stuck and perseverating on a couple of highly salient distractors.

There is probably some IOR, serving as a “foraging facilitator” (Klein & MacInnes, 1999), or maybe not (Hooge, Over, van Wezel, & Frens, 2005).

As noted earlier, bottom-up salience may fade after stimulus onset (Donk & van Zoest, 2008), and noise in the priority map may serve to randomly change the location of the peak in that map.

Observers may have implicit or explicit prospective strategies for search that discourage revisiting items (Gilchrist & Harvey, 2006). For example, given a dense array of items, observers will tend to adopt some strategy like “reading” from top left to bottom right. If this is done rigorously, the result is search that is, effectively, without replacement even though no distractor-specific memory would be required.

Finally, in any more extended search, explicit episodic memory can guide search. If I know that I looked on the kitchen counter for the salt, it may be time to check the dining table.
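The “reading” strategy described above can be sketched as a fixed scan order over item locations. The function name `reading_order` and the rule that groups items into rows by vertical bands are hypothetical illustrations, not part of GS6. Each location appears exactly once in the scan path. A search that follows this path therefore never revisits an item, even though nothing is stored about individual rejected distractors.

```python
def reading_order(items, row_height):
    """Hypothetical 'reading' scan: top row first, left to right within a row.

    `items` are (x, y) screen coordinates with y increasing downward. Items
    whose y values fall in the same band of height `row_height` count as one row.
    """
    return sorted(items, key=lambda p: (int(p[1] // row_height), p[0]))


display = [(300, 12), (40, 95), (150, 8), (220, 90)]
scan = reading_order(display, row_height=50)
print(scan)  # [(150, 8), (300, 12), (40, 95), (220, 90)]
```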

GS6 abandons the idea of sampling without replacement. In our work, we never found evidence that observers mark rejected distractors in order to avoid revisiting them. Others have reported some evidence for such marking, however, and the other mechanisms listed above will make the search process behave as if it has some memory. In any case, we can obviously search effectively without becoming stuck on a salient pop-up ad on a webpage.

Search Termination

If we do not mark every rejected distractor, how do we terminate search when there is no target present? The most intuitive approach is to imagine that some pressure builds up, embodying the thought that one has searched long enough. Broadly, there have been two modeling approaches. One approach assumes that, at each time point or after each rejection of a distractor, there is some probability of terminating the search with a target-absent response. That probability increases with each rejected distractor according to some rule (Moran, Zehetleitner, Liesefeld, Müller, & Usher, 2015; Moran, Zehetleitner, Mueller, & Usher, 2013; Schwarz & Miller, 2016). Alternatively, one can propose that an internal signal accumulates toward some quitting threshold and that search is terminated if that threshold is reached (Chun & Wolfe, 1996; Wolfe & Van Wert, 2010). Some models have aspects of both processes (Hong, 2005). There are also other approaches, e.g., one proposing a role for coarse-to-fine processing (Cho & Chong, 2019).
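A minimal sketch can contrast the two families of termination rule. In `quit_probabilistic`, the quitting probability grows linearly with the number of rejections. This linear form is a hypothetical placeholder, since each of the cited models specifies its own rule. `steps_to_quit_accumulator` shows the accumulator idea, with an assumed drift, noise level, and threshold.

```python
import random


def quit_probabilistic(rejection_count, hazard_per_rejection, rng):
    """Probability-of-quitting rule (hypothetical linear form): after each
    rejected distractor, quit with probability hazard * rejections, capped at 1."""
    p_quit = min(1.0, hazard_per_rejection * rejection_count)
    return rng.random() < p_quit


def steps_to_quit_accumulator(drift, noise_sd, threshold, rng, max_steps=100_000):
    """Accumulator rule: a noisy quitting signal grows by `drift` per time step,
    and search ends when it first reaches `threshold`. Returns the step count."""
    signal = 0.0
    for step in range(1, max_steps + 1):
        signal += drift + rng.gauss(0.0, noise_sd)
        if signal >= threshold:
            return step
    return max_steps  # safety cap so the sketch always terminates


rng = random.Random(0)
rejections = 1
while not quit_probabilistic(rejections, 0.05, rng):
    rejections += 1
print("probabilistic rule quit after", rejections, "rejections")
print("accumulator quit after", steps_to_quit_accumulator(1.0, 2.5, 100.0, rng), "steps")
```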

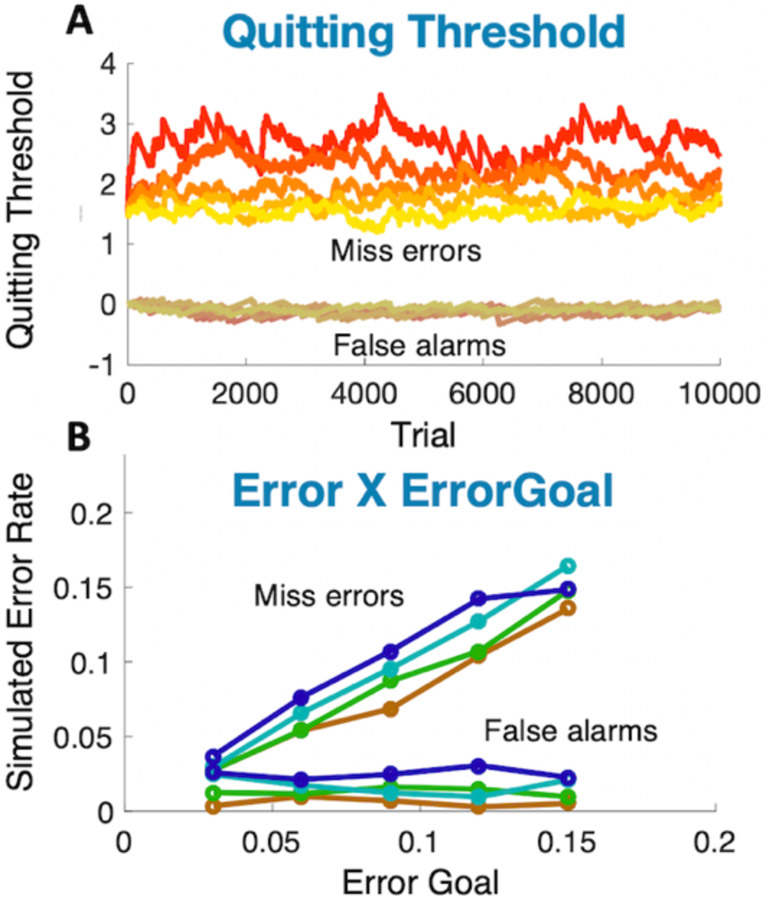

As in earlier versions of GS, GS6 uses diffusion of a signal toward a quitting threshold, though we have implemented probabilistic rules in simulation and have found that the results are comparable. The GS6 version is diagrammed in Figure 9.

Figure 9:

The GS6 search termination process

Items are selected in series (1) and enter into the asynchronous diffuser, described previously (2). Distractors are rejected. The extent to which rejected distractors are remembered and not reselected is a parameter of the model. Our assumption is that any memory for rejected distractors is quite limited (Horowitz & Wolfe, 2005). A noisy signal (4) diffuses toward a quitting threshold (5). If the threshold is reached, search is terminated, resulting in either a true negative response or a false negative (miss) error. If no item has reached the target threshold to produce a true positive (hit) or a false positive (false alarm) error, and if the quitting signal has not reached the quitting threshold, the process continues (7) with another selection.

Critically, the quitting threshold (5) is set adaptively. If the observer makes a true negative response, the threshold is lowered, making subsequent search terminations faster. If the observer misses a target, the threshold is raised. The size of these increases and decreases is determined by the observer’s tolerance for errors. If errors are costly, the threshold increases markedly after an error, resulting in longer response times and fewer errors. The degree to which a search is guided is also captured by this adaptive process. Imagine that 50% of the items in a letter search can be discarded because they have the wrong color to be a target. If the quitting threshold is initially set for an unguided search, no targets will be missed. The threshold will then be driven down, allowing markedly faster target-absent responses.

A second adaptive process governs the starting point of the diffusion in the asynchronous diffuser (6). In signal detection terms, the separation between the target and distractor bounds in the diffuser gives an estimate of the discriminability of targets and distractors (roughly, but not quite, d’ – see below). The starting point of the diffusion is related to the criterion. A starting point closer to the target bound than to the distractor bound corresponds to a liberal criterion (c < 0). If the observer finds a target, the starting point/criterion moves up to a more liberal position. If the observer makes a false positive error, the starting point/criterion moves down to a more conservative position. This adjustment is important in accounting for target-prevalence effects, discussed below.
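The mapping between starting point and criterion borrows the standard equal-variance signal detection indices. The helper below has a hypothetical name. It computes d’ and the criterion c from hit and false-alarm rates using Python's `statistics.NormalDist`. A negative c marks a liberal criterion (more “present” responses), and a positive c marks a conservative one. As the text notes, the diffuser's bound separation and starting point correspond to these indices only approximately.

```python
from statistics import NormalDist


def sdt_indices(hit_rate, false_alarm_rate):
    """Equal-variance signal detection indices from response rates.

    d' = z(H) - z(FA) and c = -(z(H) + z(FA)) / 2, where z is the inverse of
    the standard normal CDF. Negative c = liberal, positive c = conservative.
    """
    z = NormalDist().inv_cdf
    z_hit, z_fa = z(hit_rate), z(false_alarm_rate)
    return z_hit - z_fa, -(z_hit + z_fa) / 2


print(sdt_indices(0.90, 0.20))  # liberal observer: c < 0
print(sdt_indices(0.70, 0.05))  # conservative observer: c > 0
```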

This is illustrated in the simulation below. Before turning to the simulation, however, it is important to note that the process described here applies to laboratory experiments with hundreds of trials of the same search. We need to think somewhat differently about searches in the real world.

Most real-world search tasks are one-time or intermittent tasks. For instance, consider searching the refrigerator for the leftovers from last night’s dinner. There is going to be one trial of this search. If someone else has eaten the leftovers, you will need to stop when you have searched long enough. We assume that a combination of a history of prior searches and an assessment of the current state of the refrigerator allows you to set an initial quitting threshold. In a laboratory version of that task, you could then adjust the threshold adaptively over many trials to optimize your quitting time. In the real world, a one-shot setting of the threshold will let you quit in a reasonable, if probably not optimal, amount of time. That one-shot setting will be based on a long-term adaptive process of learning how long this sort of task should take.

Even laboratory tasks require the equivalent of that assessment of the contents of the refrigerator. The quitting threshold must be different for a set size of 2 and a set size of 20. Evidence suggests that observers correct somewhat imperfectly for changes in set size, with the result that they reliably make more errors at larger set sizes (e.g. Wolfe, 1998). Interestingly, performance in basic search tasks in the lab is about the same whether several set sizes are intermixed or run in separate blocks. This suggests that the quitting threshold (QT) should be expressed as:

Moreover, this f(set size) term should be f(effective set size), where the “effective set size” is some estimate of the number of items that would be worth selective attention. In the colored letter search, mentioned above, the letters of the wrong color would not be part of the effective set size. In the leftovers search, you would search through items that could be the leftovers and not attend to each egg in the egg tray. The idea of the effective set size captures the impact of guidance on the search process.
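One way to write the relationship described in the last two paragraphs separates an adaptive component $Q$ from a function of the effective set size $N_{\text{eff}}$. Here $Q$ is the part that is raised after misses and lowered after correct rejections. This form is an assumption consistent with the simulation specifics below, where the threshold is proportional to set size. It is not the model's own published equation.

$$
\mathrm{QT} = Q \cdot f(N_{\text{eff}}), \qquad f(N_{\text{eff}}) = N_{\text{eff}} \ \text{(the proportional rule used in the simulation)}
$$

In the colored-letter example, suppose half of $N$ letters have the wrong color. Then $N_{\text{eff}} = N/2$ and, for the same $Q$, the threshold is halved. If the quitting signal accumulates at a roughly constant rate, the expected time to quit on a target-absent trial is also halved.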

Simulating the search process

A full computational model of GS would require models of early vision, gist/ensemble processing, scene understanding, and more in order to create a priority map. Sadly, that is more than can be done here. In this paper, we report on the results of a more limited project to simulate the mechanics of search as described in Figure 9. In effect, this is like simulating a T among Ls or a 2 among 5s search (Fig 1E), where the priority map is not relevant (the GS2 simulation had such a condition). Even without the priority map, there are many moving and interlocking parts in the two diffusion mechanisms proposed here. In the absence of an explicit simulation (or a formal mathematical description), it is hard to know whether those parts interact to produce plausible results. Our simulation suggests that this architecture can reproduce a range of important findings from the literature and can do so with a fixed set of parameters. That is, we do not need one set of parameters to explain target-prevalence effects and another set to explain, for example, the ratio of the target-absent to target-present slopes of the RT x set size functions. The simulation does not promise to identify the correct values for all parameters. For example, the idea of the asynchronous diffuser is that multiple items can be selected in series and then processed at the same time. How many items can be in the diffuser at once? We don’t know, and we don’t have a clear empirical way to get a precise answer. In the simulation, a range of values work. We show results for a limit of five items, but it does not matter much if we repeat the simulation with 3 or 6 items. MATLAB code for this simulation will be posted at https://osf.io/9n4hf/files/, and it should be possible to try different parameters. Guided Search has always had a large number of parameters that can be adjusted, as Miguel Eckstein once elegantly illustrated (Eckstein, Beutter, Bartroff, & Stone, 1999). This remains true in GS6.
There are two points to be made here. First, there is no reason to assume that the real human search engine does not have a large number of parameters. Second, the goal is to show that the GS6 search engine can produce a range of findings without the need to specifically adjust parameters for each simulated experiment.

Simulation specifics

The simulation of the architecture proposed in Figure 9 has the following properties:

The asynchronous diffuser has a capacity of 5 items.

A new item is selected every 50 msec, if there is space available.

A currently selected item cannot be reselected, but any other item can, so the model has a memory for at most five items.

The diffuser is updated every 10 msec with a diffusion rate of 1/20th of the distance to either the target or distractor bounds (given a neutral criterion starting point, see below). Thus, without noise it would take 200 msec from selection to target identification.

The diffusion is a noisy process with a standard deviation equal to 2.5 times the diffusion rate.

The quitting signal begins to accumulate after the first item has been identified. This diffusion is also a noisy process with a standard deviation equal to 2.5 times its diffusion rate.

If an item hits the target bound, the trial ends with a target-present response. If an item hits the distractor bound, it is removed from the diffuser and a new item can be selected.

If the quitting signal reaches the quitting threshold, the trial ends with a target-absent response.

On each trial, the quitting threshold is proportional to the set size. That is, if the set size is 20, the quitting threshold is twice what it would be for a set size of 10. Linearity is probably an oversimplification since the quitting threshold would be proportional to some estimate of numerosity and not a perfectly accurate count.

Target-present responses adjust the starting point of the asynchronous diffuser. If the response is correct (a hit), the starting point moves up one step. In effect, the criterion becomes more liberal. If the response is a false positive (false alarm), the starting point moves down by a much larger step, set to 16 times the upward step.

Target-absent responses adjust the quitting threshold. If the response is a true negative, the quitting threshold declines by one step, making subsequent quitting faster. If the response is a false negative (miss error), the quitting threshold increases by a larger step defined as (downward step)/(desired error rate). Thus, if the simulation were aiming for an 8% error rate, the upward step would be 1/0.08 = 12.5 times the downward step.

The upward step is further scaled by the target prevalence. Prevalence refers to the proportion of trials that have a target present. Most search experiments are run at a prevalence of 0.5, or at 1.0 if the task is to localize or identify the target. Prevalence has strong effects on error rates (Wolfe, Horowitz, & Kenner, 2005; Wolfe & Van Wert, 2010). In the simulation, the actual upward step size after a miss is (downward step)/(desired error rate * prevalence * 2).
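The step-size rule can be worked through numerically. The sketch below uses hypothetical function names. It computes the miss step at the five prevalence levels used in the simulation, with the downward step set to 1 and an 8% target error rate. At a prevalence of 0.5, the factor prevalence × 2 equals 1, so the rule reduces to (downward step)/(desired error rate). At a prevalence of 0.1, one miss raises the threshold by as much as 62.5 correct rejections lower it.

The sketch also solves an idealized staircase balance. This is an added assumption that ignores how the threshold depends on set size and ignores any floor on its value. Under this assumption, the threshold stops drifting when two expected changes are equal. The first is the upward change after misses, prevalence × m × up. The second is the downward change after correct rejections, (1 - prevalence) × (1 - fa) × down. This balance yields the desired miss rate exactly at a prevalence of 0.5, and higher miss rates at lower prevalence. That is the same direction as the prevalence effects cited above.

```python
def miss_step(down_step, desired_error_rate, prevalence):
    """Upward quitting-threshold step after a miss, per the rule in the text."""
    return down_step / (desired_error_rate * prevalence * 2)


def balanced_miss_rate(desired_error_rate, prevalence, false_alarm_rate=0.0):
    """Idealized staircase balance (an added assumption, not from the text):
    prevalence * m * up == (1 - prevalence) * (1 - fa) * down.
    Substituting up = down / (e * prevalence * 2) gives
    m = 2 * e * (1 - prevalence) * (1 - fa)."""
    return 2 * desired_error_rate * (1 - prevalence) * (1 - false_alarm_rate)


for prevalence in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(prevalence,
          round(miss_step(1.0, 0.08, prevalence), 2),
          round(balanced_miss_rate(0.08, prevalence), 3))
```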

The simulation was run for 10000 trials at each of five prevalence levels (.1, .3, .5, .7, .9). Set size was randomly distributed among set sizes 5, 10, 15, & 20. The intended error rate was set to 8%. As noted, the parameters are easily varied. This is not a claim about exact values of any of these. It is a claim that one set of values will produce a set of plausible search behaviors.
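The properties listed above can be assembled into a compact trial-by-trial sketch. This is a Python illustration written for this description, not the authors' MATLAB code. Several values that the text leaves open are filled in as labeled assumptions:

- Bounds are set at ±20 drift units, so a noiseless item takes 20 ten-millisecond ticks (200 msec) to reach a bound.
- The quitting signal drifts by 1 per tick.
- The initial starting point, the initial per-item quitting factor, and the two step sizes are chosen arbitrarily.
- A clamp keeps the starting point inside the bounds.
- A floor keeps the quitting factor positive.

The quitting signal is started by the first rejection, which is one reading of “after the first item has been identified.”

```python
import random

BOUND = 20.0        # assumption: start-to-bound distance, in units of per-tick drift
CAPACITY = 5        # items that can be in the asynchronous diffuser at once
SELECT_EVERY = 5    # ticks between selections (5 x 10 ms = 50 ms)
TICK_MS = 10        # diffuser update interval
NOISE_SD = 2.5      # noise SD = 2.5 x the per-tick drift of 1.0


def run_trial(set_size, target_present, start, qt_per_item, rng):
    """Simulate one trial; item 0 is the target when present. Returns (said_present, rt_ms)."""
    evidence = {}                        # item index -> current position in the diffuser
    quit_signal = None                   # starts once the first item is rejected
    threshold = qt_per_item * set_size   # threshold proportional to set size
    tick = 0
    while True:
        # Select a new item every 50 ms if a slot is free. Items already in the
        # diffuser cannot be reselected; previously rejected items can.
        if tick % SELECT_EVERY == 0 and len(evidence) < CAPACITY:
            free = [i for i in range(set_size) if i not in evidence]
            if free:
                evidence[rng.choice(free)] = start
        tick += 1
        for item in list(evidence):
            drift = 1.0 if (target_present and item == 0) else -1.0
            evidence[item] += drift + rng.gauss(0.0, NOISE_SD)
            if evidence[item] >= BOUND:          # target bound: respond "present"
                return True, tick * TICK_MS
            if evidence[item] <= -BOUND:         # distractor bound: reject the item
                del evidence[item]
                if quit_signal is None:
                    quit_signal = 0.0
        if quit_signal is not None:
            quit_signal += 1.0 + rng.gauss(0.0, NOISE_SD)  # assumption: drift of 1 per tick
            if quit_signal >= threshold:
                return False, tick * TICK_MS


def run_block(prevalence, n_trials=1000, desired_error=0.08, seed=0):
    """Run trials with the adaptive rules; return (miss_rate, false_alarm_rate)."""
    rng = random.Random(seed)
    start, qt_per_item = 0.0, 10.0       # hypothetical initial values
    up, down = 0.1, 0.05                 # hypothetical step sizes
    misses = false_alarms = n_present = 0
    for _ in range(n_trials):
        set_size = rng.choice([5, 10, 15, 20])
        present = rng.random() < prevalence
        said_present, _rt = run_trial(set_size, present, start, qt_per_item, rng)
        if said_present:
            # Hit: one step more liberal. False alarm: 16 steps more conservative.
            start = start + up if present else start - 16 * up
            start = max(-BOUND + 1, min(BOUND - 1, start))   # assumption: clamp
            false_alarms += not present
        elif present:                                        # miss
            qt_per_item += down / (desired_error * prevalence * 2)
            misses += 1
        else:                                                # true negative
            qt_per_item = max(0.5, qt_per_item - down)       # assumption: floor
        n_present += present
    n_absent = n_trials - n_present
    return misses / max(n_present, 1), false_alarms / max(n_absent, 1)


for prevalence in (0.1, 0.5, 0.9):
    miss_rate, fa_rate = run_block(prevalence)
    print(prevalence, round(miss_rate, 3), round(fa_rate, 3))
```

Because the step sizes and starting values are hypothetical, the printed rates are only illustrative. They do not reproduce Figure 10.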

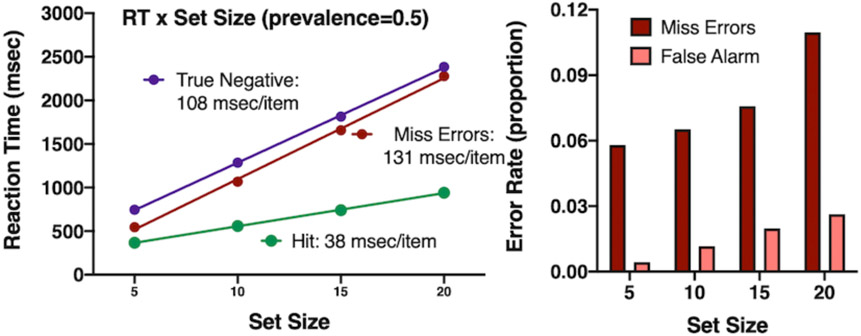

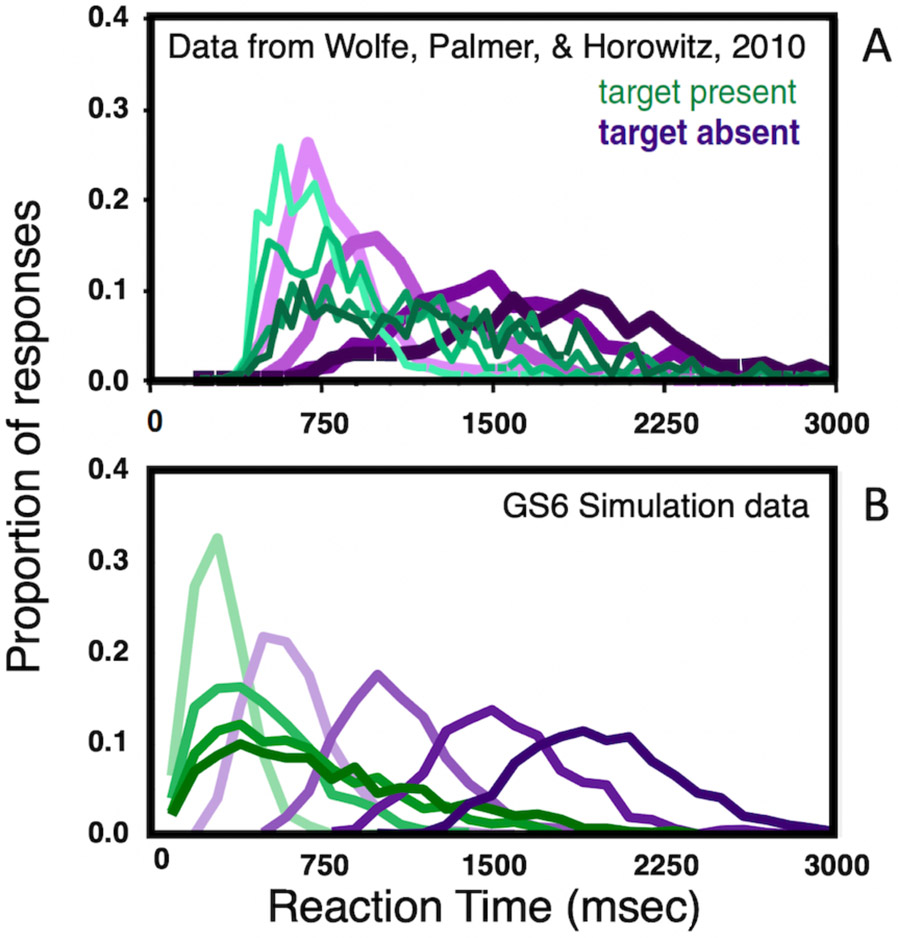

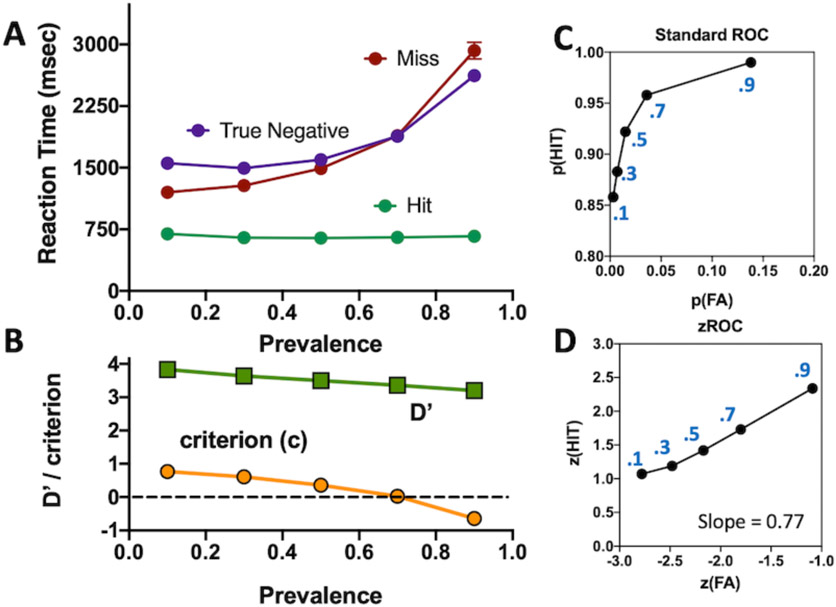

Simulation Results

Figure 10 shows the standard results for a visual search experiment (for comparison, see, for example, Wolfe, Palmer, & Horowitz, 2010). Reaction time (RT) rises linearly with set size. Correct target-present RTs are faster than target-absent RTs. Miss RTs are somewhat faster than true negative RTs. Miss error rates average about 8%, which was the goal in this run of the simulation. Error rates increase with set size, as is typical in search experiments. At 50% target prevalence, false alarm errors are markedly less common than miss errors; again, this is typical in laboratory studies.

Figure 10:

Results of a simulation of the aspects of GS6 illustrated in Figure 9: A: RT x set size functions (cf. Fig 1f), B: Error rates

Slope ratios