Introduction

With increasing funding to the National Institute of Diabetes and Digestive and Kidney Diseases and the Advancing American Kidney Health and Kidney Innovation Accelerator (KidneyX) initiatives, the field of nephrology has great opportunities for expanding research and innovation. However, when seizing these opportunities, it is important to remember that establishing evidence-based practices (EBPs) through clinical research is merely one step on the journey of translating EBPs and best practices into routine clinical care. Equally important are understanding how to disseminate EBPs to relevant stakeholders (i.e., clinicians, patients, professional organizations/societies), and how to evaluate the implementation of such practices in the real world. The processes of gathering this knowledge are known collectively as dissemination and implementation science (DIS). Specifically, dissemination researchers study how to effectively share evidence and information about an EBP with target audiences, and implementation researchers study how to facilitate the adoption, integration, and maintenance of EBPs in targeted settings, with the goal of improving care and outcomes (1).

Whereas clinical research focuses on whether an intervention works in a tightly managed study setting, DIS focuses on how and why interventions work in real-world settings. Clinical research is generally concerned with health effects or outcomes of EBPs, whereas DIS assesses the process of implementing EBPs. No two research settings are exactly alike, and systematic DIS research can reveal what makes an EBP effective in one setting versus another. In this way, DIS takes a pragmatic approach, challenging the adage, “if you build it, they will come,” and instead postulating “if you build it in a carefully documented way and collect key process-related outcome measures throughout, we can learn why some come and some do not.”

DIS borrows from a diverse range of disciplines, including the social sciences, psychology, organization and management, and health economics. DIS study teams also typically comprise interdisciplinary stakeholders and participants, including clinician researchers, social scientists, administrators, staff, and patients. Thus, DIS research teams are well equipped to conduct studies in complex, multistakeholder nephrology care settings, such as outpatient dialysis units, chronic kidney disease clinics, and kidney transplant centers. This article highlights some of the key components of conducting DIS research, illustrates them with applications from the nephrology literature, and juxtaposes DIS research with clinical research and quality improvement.

Key Components for Conducting Dissemination and Implementation Research

The literature offers guidelines and blueprints (2,3) for the conduct of rigorous DIS research and writing effective DIS research grant applications (4). In robust DIS research, researchers typically, at a minimum: (1) address a specific implementation-related research question, (2) are guided by a model, framework, and/or theory, (3) identify an appropriate research design and measurable implementation-related outcomes, and (4) use explicit implementation strategies (see Table 1 for examples of each component and resources).

Table 1.

Dissemination and implementation research methodology, examples, and resources

| Research Component | Examples | Resources |
|---|---|---|
| DIS research questions | • What are barriers and facilitators to implementation of the intervention or evidence-based practice? • What contextual factors influence adoption of the intervention or evidence-based practice? | (2) (4) |
| Frameworks | • Consolidated Framework for Implementation Research (CFIR) | (7) Consolidated Framework for Implementation Research website: www.cfirguide.org |
| | • Exploration, Preparation, Implementation, Sustainment (EPIS) | (8) |
| | • Reach, Effectiveness, Adoption, Implementation, Maintenance (RE-AIM) | (9) |
| | • PRECEDE-PROCEED | (22) |
| | • Promoting Action on Research Implementation in Health Services (PARiHS) | (23) |
| Theories | • Diffusion of Innovations • Organizational readiness for change | (24) (12) (6) (24) (systematic literature review of more than 60 frameworks) (25) Dissemination & Implementation Models in Health Research and Practice website: www.dissemination-implementation.org |
| Research designs | • Experimental • Quasi-experimental • Pragmatic • Observational • SMART/Adaptive designs | (14) |
| | • Effectiveness-implementation hybrid | (15) |
| | • Mixed methods | (26) |
| Implementation outcomes | • Acceptability • Adoption • Appropriateness • Cost • Feasibility • Fidelity • Penetration • Sustainment | (28) |
| Implementation strategies | • Staff training • Stakeholder committees • Feedback reports • Media and marketing campaigns | (27) (19) |

DIS Research Questions

In traditional clinical research studies, research questions investigate effects or health outcomes, often associated with an innovative intervention. DIS research questions instead focus on dissemination, implementation, and integration of innovations or EBPs (2). DIS studies primarily assess determinants or factors influencing implementation and processes of implementation. When asking DIS research questions, researchers can consider what is to be assessed, the level of analysis (e.g., patient, provider, or organizational level), and the outcomes to be measured (2). For example, Goff et al. (5) evaluated the implementation of an advance care planning, shared decision-making intervention among patients on hemodialysis. The authors aimed to collect data that would aid in the subsequent dissemination and scale-up of the intervention in broader clinical settings. To accomplish this, their DIS research question asked: What are the barriers and facilitators to effective implementation of the shared decision-making intervention among patients with end-stage kidney disease who are receiving hemodialysis and approaching the end of life? (5)

Models, Frameworks, and Theories

Models, frameworks, and theories provide the scaffolding for DIS research and are used to illustrate the translation of research into practice, explore and explain influences on implementation outcomes, and guide the evaluation of implementation in every phase (6). These tools can be used flexibly to outline the relationships between study variables and implementation outcomes, and they may be either purely descriptive or explanatory, offering hypotheses or research questions to explain behaviors and EBP implementation outcomes (6). Commonly used frameworks include the Consolidated Framework for Implementation Research (CFIR) (7), which is frequently used to identify factors that may influence implementation outcomes (i.e., barriers and facilitators to implementation within and outside the organization); the Exploration, Preparation, Implementation, Sustainment Framework (8), which characterizes the steps of implementation processes; and the Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE-AIM) framework (9), which supports assessments of implementation dimensions from intervention reach to sustainability. Dozens of frameworks, models, and theories have been developed to date.

In one nephrology-based DIS study, Hynes et al. (10) used the RE-AIM framework to guide the assessment of implementation processes and outcomes of an interdisciplinary care coordination intervention for patients on dialysis. In another study, Washington and colleagues (11) used both the Organizational Readiness for Change theory (12) and the Complex Innovation Implementation Framework (13) to guide their assessment of barriers and facilitators to the implementation of the Chronic Disease Self-Management Program in dialysis and assess staff’s “readiness for change.”

When designing DIS studies, it is critical to consider the context surrounding implementation. For instance, in studies of health care disparities, it is important to consider how social determinants of health, particularly racism, affect health inequities directly and indirectly through differences in implementation processes and outcomes (e.g., differential reach into low-income communities, differential rates of participant attrition). Adapted versions of commonly used DIS frameworks have emerged that focus on health equity, including an extension of RE-AIM (14).

Research Designs

Much like clinical research study designs, DIS studies can use traditional experimental, quasi-experimental, pragmatic, and observational study designs (15). Many DIS studies use mixed-methods designs (15), which examine quantitative data (e.g., surveys, administrative data) alongside qualitative data (e.g., semistructured interviews, focus groups). Qualitative research is especially well suited to DIS because it captures context and nuance related to implementation processes, which ultimately aids in refining how an EBP is scaled in the real world.

Compared with clinical research, DIS emphasizes faster, real-world (pragmatic) implementation of evidence into practice. Hybrid effectiveness-implementation study designs allow varying emphasis on assessing an intervention’s effectiveness versus assessing implementation outcomes within one study rather than across multiple separate studies (16). In the hybrid type 1 design, effectiveness is the primary aim and implementation is a secondary aim, whereas the hybrid type 3 design has the reverse emphasis, focusing more on implementation; the hybrid type 2 design assigns equal priority to implementation and effectiveness (16). Gordon et al. (17) described a hybrid study design in their protocol for implementing and testing an intervention for increasing Hispanic living donor kidney transplant rates.

Implementation Outcomes and Strategies

DIS researchers typically measure the determinants and outcomes of implementation rather than the outcomes of EBPs or other interventions themselves. Proctor et al. (18) developed a “taxonomy” of eight distinct implementation outcome types, encompassing both observed and latent constructs—acceptability, adoption, appropriateness, feasibility, fidelity, cost, penetration, and sustainability of an intervention or EBP (18). For example, in Gordon et al.’s hybrid study (17,19), the authors measured outcomes such as organizational readiness to change, organizational culture, perceptions of implementation, and barriers and facilitators to intervention implementation using in-depth, semistructured interviews guided by CFIR.

When choosing implementation outcomes to assess, it is important to consider and include the range of participants involved in implementation: patients, providers, administrators, and organizations. DIS provides opportunities for collaboration across disciplines and encourages participation and engagement among a wide range of stakeholders and members of the care team historically underrepresented in the research process, such as social workers and dietitians. These team members often have valuable insider knowledge and insight into relevant aspects of DIS, including operations and workflow.

Distinct from the intervention or EBP itself are the strategies used to implement it in practice. Implementation strategies are activities that are meant to catalyze or foster implementation and are often directly tied to measurement outcomes (20). Some examples of implementation strategies include audit and feedback reports, staff training, and marketing campaigns (20). In a study by Patzer et al. (20) that used RE-AIM to evaluate implementation of a multicomponent intervention aimed at improving kidney transplant waitlisting, one implementation strategy used was to assemble an advisory board to oversee intervention dissemination.

Quality Improvement and DIS

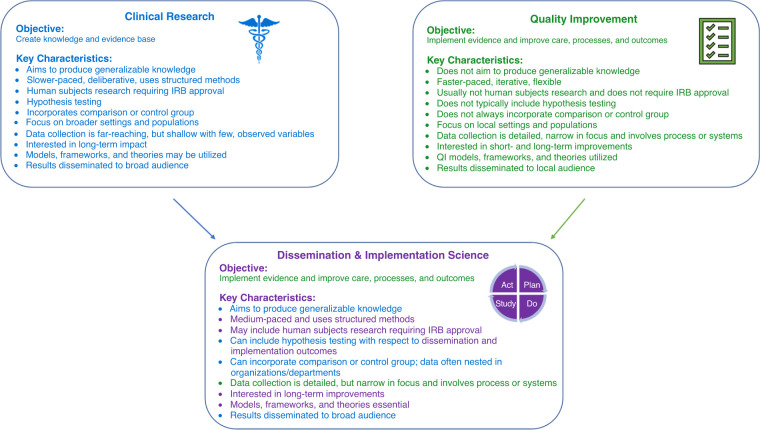

DIS can resemble quality improvement (QI), which is likely more familiar to most nephrology practitioners. QI generally has a narrow scope, targeting the local level (e.g., individual dialysis facilities), and can often be accomplished relatively quickly (1). Conversely, DIS has a broader scope, aims to produce generalizable knowledge, uses more rigorous study designs than QI, and includes multiple levels of stakeholders (1). Key characteristics of clinical research, QI, and DIS are compared in Figure 1. Dorough et al. (21) illustrate the application of both QI and DIS in their study about “My Dialysis Plan,” a patient-centered, multidisciplinary dialysis care planning QI project, which aimed to increase congruence between patients’ goals of care and their dialysis care (21). The DIS study, guided by CFIR, used qualitative methods to assess barriers, facilitators, and strategies for implementation of the QI project (21).

Figure 1.

Comparing and contrasting conventional clinical research, quality improvement, and dissemination and implementation science. QI, quality improvement; DIS, dissemination and implementation science; IRB, institutional review board. Blue text represents characteristics of clinical research, green text represents characteristics of QI, and purple text represents characteristics unique to DIS.

Conclusion

DIS provides the tools needed for bridging the gap between research and practice, and funding agencies have taken note and are increasingly supporting DIS projects. It is encouraging that nephrology has begun to adopt DIS approaches, and the field will benefit from more rigorous DIS research that fully embraces the methods outlined herein. We encourage the reader to explore the resources laid out in this article and to consider how DIS could inform future research in nephrology as we work collectively toward improving the translation of EBPs and best practices into routine clinical care.

Disclosures

C. Escoffery reports being a scientific advisor or member of Society for Public Health Education Past President. M. Urbanski reports receiving research funding from Georgia CTSA TL1TR002382 UL1TR002378; reports being a scientific advisor or member of the Editorial Board of the Journal of Nephrology Social Work. R. Patzer reports being a scientific advisor or member of the Editorial Board of the American Journal of Transplantation, CJASN Editorial Board, and Chair of the United Network for Organ Sharing Data Advisory Board. A. Wilk reports being a scientific advisor and member of the Editorial Board of BMC Nephrology; and reports receiving research funding from the National Institute of Diabetes and Digestive and Kidney Diseases (1K01DK128384-01).

Funding

M. Urbanski's efforts were supported in part by the Georgia CTSA (TL1TR002382, UL1TR002378). A.S. Wilk's efforts were supported in part by the National Institute of Diabetes and Digestive and Kidney Diseases (1K01DK128384-01).

Acknowledgments

The content of this article reflects the personal experience and views of the author(s) and should not be considered medical advice or recommendation. The content does not reflect the views or opinions of the American Society of Nephrology (ASN) or Kidney360. Responsibility for the information and views expressed herein lies entirely with the author(s).

Author Contributions

All authors conceptualized the study, wrote the original draft, and reviewed and edited the manuscript; R. Patzer provided supervision.

References

- 1. Rabin BA, Brownson RC: Terminology for Dissemination and Implementation Research. In: Dissemination and Implementation Research in Health: Translating Science to Practice, edited by Brownson RC, Colditz GA, Proctor EK, 2nd Ed., New York, NY, Oxford University Press, 2018

- 2. Peters DH, Adam T, Alonge O, Agyepong IA, Tran N: Republished research: Implementation research: What it is and how to do it. Br J Sports Med 48: 731–736, 2014. 10.1136/bmj.f6753

- 3. Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM: An introduction to implementation science for the non-specialist. BMC Psychol 3: 32, 2015. 10.1186/s40359-015-0089-9

- 4. Proctor EK, Powell BJ, Baumann AA, Hamilton AM, Santens RL: Writing implementation research grant proposals: Ten key ingredients. Implement Sci 7: 96, 2012. 10.1186/1748-5908-7-96

- 5. Goff SL, Unruh ML, Klingensmith J, Eneanya ND, Garvey C, Germain MJ, Cohen LM: Advance care planning with patients on hemodialysis: An implementation study. BMC Palliat Care 18: 64, 2019. 10.1186/s12904-019-0437-2

- 6. Nilsen P: Making sense of implementation theories, models and frameworks. Implement Sci 10: 53, 2015. 10.1186/s13012-015-0242-0

- 7. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC: Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implement Sci 4: 50, 2009. 10.1186/1748-5908-4-50

- 8. Aarons GA, Hurlburt M, Horwitz SM: Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health 38: 4–23, 2011. 10.1007/s10488-010-0327-7

- 9. Glasgow RE, Vogt TM, Boles SM: Evaluating the public health impact of health promotion interventions: The RE-AIM framework. Am J Public Health 89: 1322–1327, 1999. 10.2105/AJPH.89.9.1322

- 10. Hynes DM, Fischer MJ, Schiffer LA, Gallardo R, Chukwudozie IB, Porter A, Berbaum M, Earheart J, Fitzgibbon ML: Evaluating a novel health system intervention for chronic kidney disease care using the RE-AIM framework: Insights after two years. Contemp Clin Trials 52: 20–26, 2017. 10.1016/j.cct

- 11. Washington TR, Hilliard TS, Mingo CA, Hall RK, Smith ML, Lea JI: Organizational readiness to implement the chronic disease self-management program in dialysis facilities. Geriatrics (Basel) 3: 31, 2018. 10.3390/geriatrics3020031

- 12. Weiner BJ: A theory of organizational readiness for change. Implement Sci 4: 67, 2009. 10.1186/1748-5908-4-67

- 13. Shelton RC, Chambers DA, Glasgow RE: An extension of RE-AIM to enhance sustainability: Addressing dynamic context and promoting health equity over time. Front Public Health 8: 134, 2020. 10.3389/fpubh.2020.00134

- 14. Brown CH, Curran G, Palinkas LA, Aarons GA, Wells KB, Jones L, Collins LM, Duan N, Mittman BS, Wallace A, Tabak RG, Ducharme L, Chambers DA, Neta G, Wiley T, Landsverk J, Cheung K, Cruden G: An overview of research and evaluation designs for dissemination and implementation. Annu Rev Public Health 38: 1–22, 2017. 10.1146/annurev-publhealth-031816-044215

- 15. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C: Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care 50: 217–226, 2012. 10.1097/MLR.0b013e3182408812

- 16. Gordon EJ, Lee J, Kang RH, Caicedo JC, Holl JL, Ladner DP, Shumate MD: A complex culturally targeted intervention to reduce Hispanic disparities in living kidney donor transplantation: An effectiveness-implementation hybrid study protocol. BMC Health Serv Res 18: 368, 2018. 10.1186/s12913-018-3151-5

- 17. Helfrich CD, Weiner BJ, McKinney MM, Minasian L: Determinants of implementation effectiveness: Adapting a framework for complex innovations. Med Care Res Rev 64: 279–303, 2007. 10.1177/1077558707299887

- 18. Gordon EJ, Romo E, Amórtegui D, Rodas A, Anderson N, Uriarte J, McNatt G, Caicedo JC, Ladner DP, Shumate M: Implementing culturally competent transplant care and implications for reducing health disparities: A prospective qualitative study. Health Expect 23: 1450–1465, 2020. 10.1111/hex.13124

- 19. Kirchner JE, Waltz TJ, Powell BJ, Smith JL, Proctor EK: Implementation Strategies. In: Dissemination and Implementation Research in Health: Translating Science to Practice, edited by Brownson RC, Colditz GA, Proctor EK, 2nd Ed., New York, NY, Oxford University Press, 2018

- 20. Patzer RE, Smith K, Basu M, Gander J, Mohan S, Escoffery C, Plantinga L, Melanson T, Kalloo S, Green G, Berlin A, Renville G, Browne T, Turgeon N, Caponi S, Zhang R, Pastan S: The ASCENT (Allocation System Changes for Equity in Kidney Transplantation) Study: A randomized effectiveness-implementation study to improve kidney transplant waitlisting and reduce racial disparity. Kidney Int Rep 2: 433–441, 2017. 10.1016/j.ekir.2017.02.002

- 21. Dorough A, Forfang D, Mold JW, Kshirsagar AV, DeWalt DA, Flythe JE: A person-centered interdisciplinary plan-of-care program for dialysis: Implementation and preliminary testing. Kidney Med 3: 193–205.e1, 2021. 10.1016/j.xkme.2020.11.010

- 22. Green LW, Kreuter MW: Health Program Planning: An Educational and Ecological Approach, 4th Ed., New York, NY, McGraw-Hill, 2005

- 23. Kitson AL, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A: Evaluating the successful implementation of evidence into practice using the PARiHS framework: Theoretical and practical challenges. Implement Sci 3: 1–12, 2005

- 24. Rogers EM: Diffusion of Innovations, 5th Ed., New York, NY, Free Press, 2003

- 25. Tabak RG, Khoong EC, Chambers DA, Brownson RC: Bridging research and practice: Models for dissemination and implementation research. Am J Prev Med 43: 337–350, 2012

- 26. Creswell JW, Klassen AC, Plano Clark V, Clegg Smith K: Best Practices for Mixed Methods in the Health Sciences. Bethesda, MD: Office of Behavioral and Social Sciences Research, National Institutes of Health, 2011

- 27. Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, Proctor EK, Kirchner JE: Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: Results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci 10: 1–8, 2015

- 28. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M: Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health 38: 65–76, 2011