Abstract

Federated learning is a distributed learning framework that is communication efficient and protects participating users’ raw training data. One outstanding challenge of federated learning comes from the users’ heterogeneity, and learning from such data may yield biased and unfair models for minority groups. While adversarial learning is commonly used in centralized learning for mitigating bias, there are significant barriers when extending it to the federated framework. In this work, we study these barriers and address them by proposing a novel approach, Federated Adversarial DEbiasing (FADE). FADE does not require users’ sensitive group information for debiasing and offers users the freedom to opt out of the adversarial component when privacy or computational costs become a concern. We show that, ideally, FADE can attain the same global optimality as the centralized algorithm. We then analyze when its convergence may fail in practice and propose a simple yet effective method to address the problem. Finally, we demonstrate the effectiveness of the proposed framework through extensive empirical studies, including the problem settings of unsupervised domain adaptation and fair learning. Our code and pre-trained models are available at: https://github.com/illidanlab/FADE.

Keywords: Federated learning, Adversarial learning, Unsupervised domain adaptation, Fairness

1. INTRODUCTION

The last decade witnessed the surging adoption of personal devices such as smartphones, smartwatches, and smart personal assistants. These devices directly interface with the users, collect personal data, conduct lightweight computations, and use machine learning models to offer personalized services. The challenges of privacy concerns over sensitive personal data, limited computational resources, and performance issues of localized learning together led to the federated learning (FL) paradigm [4, 34]. FedAvg [32], for example, provides an efficient and privacy-aware FL framework: users train models locally and upload them to a central server, where they are iteratively aggregated to form a global model. FL greatly alleviates privacy concerns because the server can only access model parameters from the users instead of the raw data used for training.

One major challenge of FL comes from user heterogeneity, where users provide statistically different data for training local models [5, 9]. Such heterogeneity may come from different sources. For example, users may collect data under various conditions according to differences in preference or usage. Consider the learning of handwashing behavior from accelerometers of smartwatches, where patterns can change drastically when using different basins worldwide. Such domain shift [19] can lead to negative impacts during knowledge transfer among users [37]. Another common source of heterogeneity is sensitive group information such as age, gender, and social groups, which are variables typically not to be identified during learning. Heterogeneity from this source is often associated with critical fairness issues [6] after deploying the models, where groups with fewer resources or less computational capability may be biased against or even ignored during learning [29], and the resulting global model may perform worse on minority groups.

Adversarial learning [12] has been a powerful approach to mitigate bias in centralized learning, in which an adversarial objective minimizes the information extracted by an encoder that can be maximally recovered by a parameterized model, the discriminator. For example, it has been applied to disentangle task-specific features that may cause negative transfer [27], to perform unsupervised domain adaptation [11, 42], and recently to achieve fair learning [46]. However, there are significant barriers when applying adversarial techniques in FL: 1) Most existing approaches follow a top-down principle. In the context of FL, the adversarial objective requires the server to access the sensitive group variable (e.g., gender) to construct an adversarial loss. This requirement directly violates the privacy design of FL, and users may not want to disclose their sensitive group variables. 2) Adversarial learning demands extra information from users for training the adversarial component and imposes an additional computational burden that smart devices may not be able to afford. 3) It remains unknown how the introduction of an adversarial component would impact the distributed learning behavior (e.g., convergence property) of FL.

To address the challenges mentioned above, we propose a novel adversarial framework for debiasing federated learning following a bottom-up principle, called Federated Adversarial DEbiasing (FADE). Besides the benefits from typical FL on communication efficiency and data privacy, FADE aims to achieve the following goals:

Privacy-Protecting: The learning algorithm conforms to the privacy design of FL and does not require users’ group variable to achieve debiasing w.r.t. the group variable.

Autonomous: A user can choose to join or opt out of the adversarial component at any time (e.g., due to computational budget or privacy budget) while still participating in the regular federated learning.

Satisfiable: Under the above restrictions, the distributed learning should output a debiased and accurate model, despite the user heterogeneity and unpredictable user participation.

To achieve these goals, we first propose a generic algorithm for FADE and show that ideally, it can attain the same global optimality as the one by the central algorithm. We then show how its convergence may fail in practice and propose a simple yet effective method to address the problem. Finally, we demonstrate the effectiveness of the proposed framework through extensive empirical studies on various applications.

2. RELATED WORK

Federated Learning (FL) [32] is a distributed learning framework that allows users with different capabilities to collaboratively train a model without sharing their own data. A critical challenge in FL is the heterogeneity among users. Viewing the learning process of FL as knowledge transfer among different users, heterogeneity in user data leads to negative transfer between users and compromises generalization [3]. One idea to alleviate the negative effect of heterogeneity during training is to find a consensus among users. For example, in [10, 14, 21, 26], the consensus on task knowledge is achieved by distillation. In this work, we seek an alternative and efficient approach by adversarially debiasing the users of different groups.

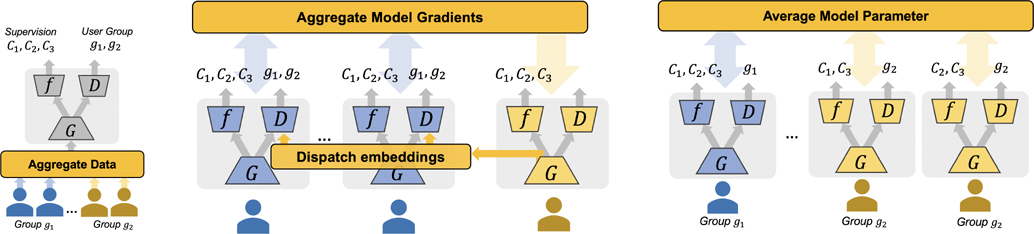

Adversarial Learning has been widely applied in various domains, such as neural language recognition [27], image-to-image (dense) prediction [31], and image generation [12]. Conceptually, adversarial learning aims to solve a two-player (or multi-player) game between two adversarial objectives, which typically leads to a min-max optimization problem. Existing approaches can be broadly categorized as: 1) Sample-to-Sample (S2S) adversarial learning, where the adversarial objective quantifies the difference between synthetic and real samples. Examples include adversarial learning against adversarial attacks [30] and generative adversarial networks [12]. 2) Group-to-Group (G2G) adversarial learning, which aims to reduce the maximum discrepancy (bias) between group distributions, for example, adversarial domain adaptation [11], adversarial fairness [46] and adversarial multi-task learning [27]. All these variants assume the availability of adversarial groups in the same computation node, e.g., by aggregating data as in Fig. 1a, and thus cannot be directly extended to federated learning because they violate its privacy design (they require access to the sensitive group information). A recent effort was made by [38], where embeddings of different groups are shared (see Fig. 1b). Nevertheless, sharing either data or embeddings could induce additional privacy risks and communication costs. The proposed FADE eliminates these requirements, leading to private and efficient distributed collaboration between users/groups.

Figure 1:

Illustrations of different adversarial learning frameworks for debiasing. f, D and G are the classifier (task model), discriminator and encoder, respectively. C1, C2, C3 represent the task supervision, for example, ground-truth classes, of the corresponding users. g1 and g2 represent the two groups of users. The encoders are adversarially trained such that the embeddings are informative for distinguishing C1, C2, C3 but not g1, g2. The proposed FADE tackles a more challenging problem than the other two because of isolated, non-shared group/user data (or embeddings) and class-wise non-iid users within groups.

3. FEDERATED ADVERSARIAL DEBIASING

In this section, we first formulate the proposed Federated Adversarial Debiasing (FADE) framework. We work on the standard federated learning problem setting which learns one model from a set of distributed participating users. Users conduct local learning based on their own data and send the parameters of learning models to a server periodically. The server aggregates the local models to form a global model. We assume the users have non-iid data and each user belongs to one of the E user groups as indicated by a group variable (e.g., age, gender, race) that is not to be shared outside of the local learning.

The model of each user consists of three components: a decoder f for the learning task (e.g., classification target), an encoder G, and a group discriminator D, as illustrated in Fig. 1c. In the two-group setting (a data point belongs to either group 0 or group 1), D outputs a scalar in (0, 1) approximating the probability that an input data point x belongs to group 0. More generally, for E groups, we use a softmax mapping in the last layer of D, which outputs an E-dimensional vector. The FADE objective learns f, D, G by:

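$$\min_{f,\,G}\ \max_{D}\ \sum_{g=1}^{E} \frac{1}{m_g} \sum_{i=1}^{m_g} L_{i,g}(f, G), \tag{1}$$

$$L_{i,g}(f, G) = L^{\text{task}}_{i}(f, G) + \lambda\, L^{\text{adv}}_{i,g}(G), \tag{2}$$

where $L^{\text{task}}_{i}$ is the task loss for the i-th user, $L^{\text{adv}}_{i,g}$ is the adversarial loss, λ is the adversarial parameter, and mg is the number of users in group g. Note that we absorb the variable model D into Li,g in Eq. (2), and the objective is still an optimization over f, D, G. For classification tasks, the task loss can be defined as $L^{\text{task}}_{i}(f, G) = \mathbb{E}_{(x,y)\sim p_i(x,y)}\left[\ell_{\text{CE}}(f(G(x)), y)\right]$, where $\ell_{\text{CE}}$ denotes the cross-entropy loss and pi is the data distribution of user i. The adversarial loss is defined as $L^{\text{adv}}_{i,g}(G) = \mathbb{E}_{x\sim p_i(x)}\left[\log D_g(G(x))\right]$, where Dg(G(x)) is the g-th output of the softmax vector. The optimal solution for the min-max problem is the adversarial balance, in which D is unable to tell the difference of G(x) among groups. For the two-group case, the adversarial loss can be modified as:

$$L^{\text{adv}}_{i,g}(G) = \mathbb{E}_{x\sim p_i(x)}\left[\mathbb{I}(g=0)\,\log D(G(x)) + \mathbb{I}(g=1)\,\log\big(1 - D(G(x))\big)\right], \tag{3}$$

where $\mathbb{I}(\cdot)$ is the indicator function.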

One fundamental difference between traditional adversarial learning and FADE is that FADE only has data from one group in each user’s loss function. Hence, users have no sense of what an adversary (a user from another group) looks like. Directly optimizing this objective may fail to find the right direction towards convergence. In the worst case, the optimal solution may not be the adversarial balance. In the next section, we provide a principled analysis showing that the adversarial balance is achievable under appropriate conditions.
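
To make the single-group nature of the local loss concrete, the following is a minimal sketch of how one user could evaluate a two-group adversarial loss from its own data. It assumes the discriminator output D(G(x)) ∈ (0, 1) is read as the probability of group 0 and that the loss is the average log-likelihood of the user's own group; the function name `local_adv_loss` and the toy discriminator outputs are illustrative assumptions, not taken from the FADE code base.

```python
import math

def local_adv_loss(d_outputs, group):
    """Average log-likelihood the discriminator assigns to the user's own group.

    d_outputs: discriminator outputs D(G(x)) in (0, 1), read as P(group 0 | x).
    group: the user's private group label (0 or 1); it is only used locally.
    """
    if group not in (0, 1):
        raise ValueError("this two-group sketch expects group 0 or 1")
    terms = [math.log(d) if group == 0 else math.log(1.0 - d) for d in d_outputs]
    return sum(terms) / len(terms)

# Hypothetical discriminator outputs on one local batch.
batch = [0.9, 0.8, 0.7]
loss_g0 = local_adv_loss(batch, group=0)   # outputs agree with group 0
loss_g1 = local_adv_loss(batch, group=1)   # same outputs disagree with group 1
balanced = local_adv_loss([0.5, 0.5, 0.5], group=0)  # D cannot tell groups apart
```

Only one of the two indicator terms is ever active for a given user, so a user never evaluates the discriminator on data from the other group.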

We summarize the server and user update strategies in Algorithms 1 and 2. The server is responsible for aggregating users’ models and dispatching the global models to users. Meanwhile, users train the received global model and the adversarial component using local data. Note that we use the gradient reversal strategy to implement the min-max optimization in Algorithm 1. Our algorithm enjoys two nice properties:

Autonomous: Different from vanilla FL, FADE allows users to decide whether or not to join the learning of the discriminator D at each iteration. A user can opt in to the discriminator learning at a low frequency, or completely opt out when privacy becomes a concern or when computational resources are restricted. For example, in the adversarial domain adaptation setting [38], where some users have supervision and others do not, some supervised users may not want to help unsupervised users. FADE significantly reduces the communication cost and privacy-risk overhead by cutting down the interactions from these users.

Privacy: In the proposed FADE framework, the group label g will be restricted to local learning and the group debiasing is done through the discriminator model D. Thus, users will not be able to obtain the other users’ sensitive attributes including the group variable. Moreover, following [33], the privacy of FADE can be strictly protected by directly injecting Differential-Privacy noise during the gradient descent procedure.

Algorithm 1.

FADE User Update

| | |
|---|---|
| Input: | f, G, D received from server, learning rate η, adversarial parameter λ, user data distribution pi |
| 1: | f1, D1, G1 = f, D, G |
| 2: | for t = 1, …, K do |
| 3: | Sample a batch by x ~ pi(x) or (x, y) ~ pi(x, y) |
| 4: | z ← Gt(x) |
| 5: | Evaluate the task loss Ltask and the adversarial loss Ladv on the batch |
| 6: | if adversarial game D is accepted by user i then |
| 7: | L ← Ltask + λ Ladv |
| 8: | Dt+1 ← Dt + η∇D L |
| 9: | Gt+1 ← Gt − η∇G L |
| 10: | else |
| 11: | L ← Ltask |
| 12: | Dt+1 ← Dt |
| 13: | Gt+1 ← Gt − η∇G L |
| | end if |
| 14: | ft+1 ← ft − η∇f L |
| | end for |
| Output: | fK+1, GK+1, DK+1 |
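
The update pattern of Algorithm 1 can be sketched with a deliberately tiny scalar model, so that all gradients can be written by hand. Everything in this sketch is an illustrative assumption: the encoder is z = G·x, the task head is f·z with a squared-error task loss, the discriminator is sigmoid(D·z) read as the probability of group 0, and the adversarial term is the log-likelihood of the user's own group. It is not the authors' implementation, which uses neural networks and gradient reversal.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def user_update(f, G, D, batch, group, lr=0.1, lam=1.0, steps=5, join_adversarial=True):
    """Toy scalar version of a FADE-style local update.

    f, G, D are floats standing in for the task head, encoder and discriminator.
    batch is a list of (x, y) pairs; group is the user's private label (0 or 1).
    """
    for _ in range(steps):
        grad_f = grad_G = grad_D = 0.0
        for x, y in batch:
            z = G * x
            err = f * z - y
            # Task loss (f*z - y)^2 and its gradients w.r.t. f and G.
            grad_f += 2.0 * err * z
            grad_G += 2.0 * err * f * x
            if join_adversarial:
                d = sigmoid(D * z)
                # d/da log(sigmoid(a)) = 1 - d and d/da log(1 - sigmoid(a)) = -d.
                dlog = (1.0 - d) if group == 0 else -d
                grad_D += lam * dlog * z
                grad_G += lam * dlog * D * x
        n = len(batch)
        # All gradients were taken at the current iterate (simultaneous update).
        f -= lr * grad_f / n        # task head descends
        G -= lr * grad_G / n        # encoder descends on task + lam * adversarial
        if join_adversarial:
            D += lr * grad_D / n    # discriminator ascends; frozen when opted out
    return f, G, D

batch = [(1.0, 2.0), (2.0, 4.0)]
opted_out = user_update(1.0, 1.0, 0.5, batch, group=0, join_adversarial=False)
joined_g0 = user_update(1.0, 1.0, 0.5, batch, group=0, steps=1)
joined_g1 = user_update(1.0, 1.0, 0.5, batch, group=1, steps=1)
```

A user that opts out returns the discriminator unchanged, so its upload carries no new group information through D, while the encoder and task head still contribute to the regular federated update.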

Algorithm 2.

FADE Server Aggregation

| | |
|---|---|
| Input: | Initial models f, G, D; momentum parameter β |
| 1: | for t ∈ 1, …, Tmax do |
| 2: | Select m active users uniformly at random into a set St |
| 3: | Broadcast θt = (ft, Gt, Dt) to the users in St |
| 4: | for user i in St in parallel do |
| 5: | User i updates the received models by Algorithm 1 |
| | end for |
| 6: | Aggregate and average the returned user models |
| | end for |
| Output: | ft, Gt, Dt |
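
One communication round of the server loop can be sketched as follows. This is a simplified illustration under stated assumptions: models are flat lists of floats, aggregation is a plain uniform average, and the momentum parameter β from the algorithm input is left out because its exact use is not spelled out here. The helper names `average_models`, `server_round` and `toy_update` are hypothetical.

```python
import random

def average_models(models):
    """Coordinate-wise mean of a list of parameter vectors (lists of floats)."""
    n = len(models)
    return [sum(vals) / n for vals in zip(*models)]

def server_round(global_model, users, m, local_update, rng):
    """One round: sample m users uniformly, broadcast, collect, and average."""
    active = rng.sample(users, m)  # uniform sampling without replacement
    returned = [local_update(list(global_model), u) for u in active]
    return average_models(returned)

def toy_update(model, user_id):
    """Hypothetical local update: move every parameter halfway toward the user id."""
    return [p + 0.5 * (user_id - p) for p in model]

rng = random.Random(0)
theta = [0.0, 0.0]
for _ in range(3):
    theta = server_round(theta, users=[1, 2, 3, 4], m=4, local_update=toy_update, rng=rng)
```

In FADE the same averaging would apply to f, G and D alike; a user that opted out of the adversarial game simply returns the discriminator it received.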

4. OPTIMALITY ANALYSIS

Although FADE enables autonomy and improves privacy in learning, it is critical to ask whether the algorithm gives a satisfiable solution and what the optimal solution of Eq. (1) is. Remarkably, FADE differs from traditional adversarial learning in Eq. (3), where only one group is used to evaluate the adversarial objective. This poses a unique challenge, as it may compromise the convergence of learning. Below we give a formal analysis of the optimality when Algorithm 2 is iterated with users from two groups, each participating with non-zero probability. Since most multi-group adversarial problems can be transformed into two-group problems, we focus on the two-group case for ease of analysis.

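Consider the case when each group has only one user. The data distributions of the two users are p1 and p2, respectively. We single out the min-max optimization in Eq. (1) as:

$$\min_{G}\ \max_{D}\ \mathbb{E}_{x\sim p_1(x)}\left[\log D(G(x))\right] + \mathbb{E}_{x\sim p_2(x)}\left[\log\big(1 - D(G(x))\big)\right].$$

For simplicity, we denote G(x) by z and slightly abuse notation by writing p1(z) for p1(x) in our discussion. Hence, we can define:

$$L^{\text{adv}}(p_1, p_2) = \max_{D}\ \mathbb{E}_{z\sim p_1(z)}\left[\log D(z)\right] + \mathbb{E}_{z\sim p_2(z)}\left[\log\big(1 - D(z)\big)\right],$$

which is the maximal discrepancy between p1(z) and p2(z) that D can characterize. Now, we can rewrite the min-max problem as $\min_{G} L^{\text{adv}}(p_1, p_2)$, which minimizes the distribution distance over z. Alternatively, we can formulate it as $\min_{p_1, p_2} L^{\text{adv}}(p_1, p_2)$ since p1 and p2 are parameterized by G.

Because users may participate in federated learning at varying frequencies, we use an auxiliary random variable ξi ∈ {0, 1} for i = 1, 2 to denote whether user i is active for training. We assume ξi follows the Bernoulli distribution B(1, αi). Weighting the two terms of $L^{\text{adv}}$ by ξ1 and ξ2 and taking the expectation over ξ, we obtain:

$$\bar{L}^{\text{adv}}(p_1, p_2) = \max_{D}\ \alpha_1\,\mathbb{E}_{z\sim p_1(z)}\left[\log D(z)\right] + \alpha_2\,\mathbb{E}_{z\sim p_2(z)}\left[\log\big(1 - D(z)\big)\right]. \tag{4}$$

Therefore, our problem is transformed into minimizing $\bar{L}^{\text{adv}}(p_1, p_2)$.

Note that with p1 and p2 given, the solution of the maximization in $\bar{L}^{\text{adv}}(p_1, p_2)$ is:

$$D^{*}(z) = \frac{\alpha_1\, p_1(z)}{\alpha_1\, p_1(z) + \alpha_2\, p_2(z)}, \tag{5}$$

with which we can derive the optimality sufficiency as below.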

Theorem 4.1. The condition p1(z) = p2(z) is a sufficient condition for minimizing the expected adversarial discrepancy in Eq. (4), and the minimal value is α1 log α1 + α2 log α2 − (α1 + α2) log(α1 + α2).

Theorem 4.1 shows that even if some users are inactive, the distribution matching, p1 = p2, remains a sufficient optimality condition. We remark that the above result can be generalized to multiple users when all users are iid and ξi represent the ratio of group i in users. In addition, we notice Theorem 4.1 does not guarantee a stable convergence or exclude other undesired solutions. We discuss these issues in the following.
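
The optimality statement can be checked numerically on a small discrete support. The sketch below assumes the expected discrepancy has the GAN-style form max over D of α1 E_{p1}[log D(z)] + α2 E_{p2}[log(1 − D(z))], whose pointwise maximizer is D*(z) = α1 p1(z) / (α1 p1(z) + α2 p2(z)). Under that assumption, substituting p1 = p2 gives D* = α1 / (α1 + α2) and the value α1 log α1 + α2 log α2 − (α1 + α2) log(α1 + α2). The distributions and participation rates are made-up toy values.

```python
import math

def expected_adv_objective(p1, p2, a1, a2):
    """Value of max_D a1*E_p1[log D] + a2*E_p2[log(1 - D)] on a finite support.

    Plugs in the pointwise maximizer D*(z) = a1*p1(z) / (a1*p1(z) + a2*p2(z)).
    """
    total = 0.0
    for q1, q2 in zip(p1, p2):
        mix = a1 * q1 + a2 * q2
        if q1 > 0:
            total += a1 * q1 * math.log(a1 * q1 / mix)
        if q2 > 0:
            total += a2 * q2 * math.log(a2 * q2 / mix)
    return total

def matched_value(a1, a2):
    """Closed-form value of the objective when p1 = p2."""
    return a1 * math.log(a1) + a2 * math.log(a2) - (a1 + a2) * math.log(a1 + a2)

a1, a2 = 0.3, 0.9            # hypothetical participation probabilities
p = [0.2, 0.5, 0.3]          # toy representation distribution
q = [0.6, 0.1, 0.3]          # a mismatched alternative
at_match = expected_adv_objective(p, p, a1, a2)
at_mismatch = expected_adv_objective(p, q, a1, a2)
```

Any mismatch between the two distributions adds a non-negative weighted divergence on top of the matched value, which is why matching attains the minimum.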

4.1. The effect of imbalanced groups

Although Theorem 4.1 shows the optimality of the matched distribution, the optimization may still fail to converge, especially when one group of users is relatively inactive, e.g., α1 ≪ α2. When α1 ≪ α2 or the reverse, we call the situation imbalanced groups. Imbalanced groups arise because users are free to quit or join the training. From Eq. (5), we observe that D*(x) will be less sensitive to changes of p1(x) if α1 ≪ α2, and vice versa. Meanwhile, log D*(x) → −∞ and the objective in Eq. (4) approaches the minimum even if p1 and p2 are quite different.

Theorem 4.2. Let ϵ be a positive constant. Suppose |logp1(x) − logp2(x)| ≤ ϵ for any x in the support of p1 and p2. Then we have when α1 ≪ α2.

Theorem 4.2 reveals that the imbalance between groups could greatly reduce the sensitivity to the discrepancy ϵ between p1 and p2. A less sensitive discriminator will ignore minor differences between groups. The importance of discrepancy sensitivity for adversarial convergence was also discussed in [2]. It is easy to see the negative impact of low sensitivity: 1) a higher communication cost is incurred because more communication rounds are required to check the discrepancy; 2) the optimization may fail to converge due to vanishing gradients (scaled by α1).
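
The loss of sensitivity can be illustrated numerically. Assuming the GAN-style log loss with optimal discriminator D*(z) = α1 p1(z) / (α1 p1(z) + α2 p2(z)), the excess of the objective over its matched-distribution value equals α1 KL(p1 ‖ m) + α2 KL(p2 ‖ m), where m is the α-weighted mixture of p1 and p2. The sketch below evaluates this gap for a fixed pair of clearly different toy distributions while α1 shrinks; the distributions and the helper name `discrepancy_gap` are illustrative assumptions.

```python
import math

def discrepancy_gap(p1, p2, a1, a2):
    """Excess of the expected adversarial objective over its matched value.

    Computes a1*KL(p1 || m) + a2*KL(p2 || m) with m = (a1*p1 + a2*p2) / (a1 + a2).
    The gap is zero exactly when p1 = p2.
    """
    gap = 0.0
    for q1, q2 in zip(p1, p2):
        m = (a1 * q1 + a2 * q2) / (a1 + a2)
        if q1 > 0:
            gap += a1 * q1 * math.log(q1 / m)
        if q2 > 0:
            gap += a2 * q2 * math.log(q2 / m)
    return gap

p1 = [0.7, 0.2, 0.1]
p2 = [0.1, 0.2, 0.7]
# Same mismatch, increasingly imbalanced participation (a2 = 1 - a1).
gaps = {a1: discrepancy_gap(p1, p2, a1, 1.0 - a1) for a1 in (0.5, 0.05, 0.005)}
```

Although p1 and p2 stay the same, the signal available to the optimization shrinks as the first group becomes rarer, which matches the vanishing-gradient concern above.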

4.2. Squared adversarial loss

In Eq. (4), when α1 → 0 and α2 → 1, we notice that approaches 0 while . In other words, the large value of is neglected due to its coefficient α1. To re-emphasize the value, a heuristic method is to increase the weight when is large. Thus, we propose to replace by:

| (6) |

which we call squared adversarial loss. We can write the corresponding discrepancy as:

Though we derive the squared adversarial loss in a heuristic manner, the loss can be explained in the view of resource-fair federated learning [22]. Because the adversarial objective pays more attention to the frequent group, we can interpret the problem as the unfairness between groups. Following [22], we generalize our adversarial loss function as:

| (7) |

where q ≥ 1. If q = 1, the loss reduces to the vanilla one.

4.3. The effect of non-iid users

It is well known that typical federated learning approaches suffer from very heterogeneous users, since the users sample data from very different distributions. The adversarial objective captures and decreases the group heterogeneity by design. Another kind of heterogeneity is related to the users’ tasks. We argue that this heterogeneity is natural and could be essential for task discriminability, but it may be accidentally eliminated by adversarial learning. For example, suppose three users are non-iid because each holds a different class. After FADE training, the non-iid users may collapse to similar distributions due to a wrong sense of the group discrepancy.

To prove the existence of user-collapsed solution for FADE, we consider z ~ p(z|T = t), or simply z ~ p(z|t), where t is a discrete hidden variable related to users’ tasks. For example, each user has one class of samples in classification tasks. Then t is the corresponding class. In addition, we define which is a p.d.f. For simplicity, we assume all users always participate the learning, i.e., αi = 1 for all users. Hence, we can obtain as

whose maximizer is given by: . Use similar derivations as in Theorem 4.1, we can show that is a sufficient optimality condition, which implies:

| (8) |

First, we can still obtain from Eq. (8) where we use . If , then we can get the vanilla solution, p1(z) = p2(z).

Besides the vanilla solution, a trivial solution to Eq. (8) is p(z|t) = p2(z). However, this solution could hurt the task utility since it may eliminate the inherent differences between tasks. For instance, if t represents the classification label, the solution will remove the discriminability of the representation z. We call this scenario user collapse. It is worth noticing that user collapse could happen even if p1 and p2 are matched.

4.4. Mitigate user collapse

Since there are arbitrarily many solutions to , we need to constraint the feasible solutions such that the collapsed solution will be eliminated. Notice is related to the mutual information between t and z. Conceptually, we can modify the adversarial loss to:

where I(G(x); t|i) is the mutual information conditioned on user i. Because mutual information is hard to estimate in practice (especially given few samples), we provide some surrogate solutions.

If t represents the class labels and supervision is available, then I(G(x); t|i) is already encouraged by Ltask. If supervision is not available, we may maximize the entropy of the output of classifier f such that the correlation between users’ tasks and representations will not disappear during training. Useful techniques were previously exploited for unsupervised domain adaptation, e.g., [28], and we defer the technical details to Section 5.2.

4.5. Privacy risks from malicious FADE users

Our analysis suggests the feasibility of using adversarial training in the federated setting. The distribution matching is achievable in a variety of cases, including imbalanced groups, although the success rate may vary. But such power also implies a potential privacy overhead associated with FADE. Consider a malicious user i who wants to steal data from others: FADE can match pi(x) with a victim’s data distribution pj(x). The empirical study in [16] also discussed the risk that a malicious attacker may take advantage of the discriminator to steal other users’ data. Our results in Theorem 4.1 theoretically show that the attack is possible in general. During the learning of the adversarial discriminator, injecting predefined noise is known to be effective in defending against such attacks [41]. Meanwhile, users could quit or frequently opt out of the federated communication when the privacy budget (quantified by noise and the Differential Privacy metric [7]) is low. Based on Theorem 4.2, as more and more users opt out of the communication, the adversary’s discriminator can hardly sense any one victim’s distribution.

5. EXPERIMENTS ON UNSUPERVISED DOMAIN ADAPTATION

In this section, we evaluate the FADE algorithms on Unsupervised Domain Adaptation (UDA) [10, 24, 38]. UDA aims to mitigate the domain shift between supervised and unsupervised data such that the trained classifiers can generalize to unlabeled data. We call the supervised user (domain) the source user (domain) and the unsupervised user (domain) the target user (domain). Each domain may include multiple users.

Related work. [38] is among the first to discuss adversarial UDA under the federated constraint, through sharing the embeddings of samples. In contrast, we consider a more challenging problem: federated adversarial learning without sharing data. Recently, learning without access to the source data has gained increasing attention. [24] (SHOT) considered domain adaptation using only the source-domain model, which surprisingly outperformed most traditional UDA methods that use source supervision. However, its success relies on the representation distribution being pre-matched (but not well discriminated) by batch normalization (BN) layers. In the FADE setting, the BN layers will fail to match representations since the local estimates of their means are easily biased. In addition, in [10], distillation is used to avoid directly passing data. Differing from [10], FADE is more efficient since it does not need to upload all models from the source domain to the target domain. For example, if Ms users in the source domain and Mt users in the target domain take part in training, sending models between every source-target pair involves MsMt communications. Instead, FADE only needs Ms + Mt communications between domains.
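
As a quick worked example of the communication argument, the following sketch compares the two counts for a hypothetical setting with ten users per domain. The function name and the per-round interpretation of the counts are assumptions made for illustration.

```python
def cross_domain_messages(num_source, num_target):
    """Return (pairwise, relayed) message counts between two domains."""
    pairwise = num_source * num_target   # every source model sent to every target user
    relayed = num_source + num_target    # each user exchanges with the server once
    return pairwise, relayed

pairwise, relayed = cross_domain_messages(10, 10)
```

With ten users on each side, pairwise model passing needs 100 transfers while server-mediated exchange needs 20, and the difference widens as either domain grows.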

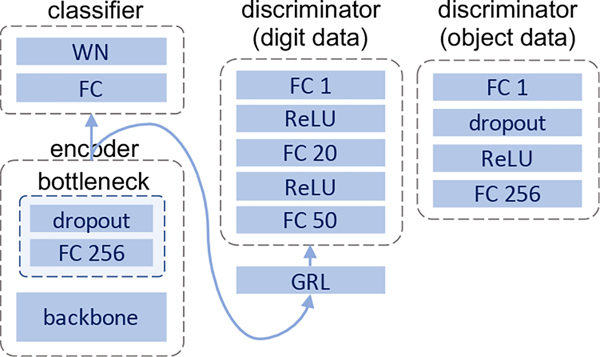

Network architectures. We adopt the same network architecture as the state-of-the-art UDA method [23]. As presented in Fig. 4, we first use a backbone network to extract sample features. Specifically, we use a modified LeNet [28] for digit recognition, ResNet50 [15] for the Office and Office-Home datasets, and ResNet101 for the VisDA-C dataset. We use a one-layer bottleneck to reduce the feature dimension. After the bottleneck, we get a 256-dimensional representation. A single fully-connected layer is used for classification at the end. The discriminators are small-scale networks to match the capability of the classifiers. The networks and algorithms are implemented using PyTorch 1.7. The ResNet backbones pre-trained on ImageNet are retrieved from the torchvision 0.8 package.

5.1. Digit recognition with imbalanced groups

As discussed in Section 4.1, group imbalance could result in the mismatch of group distributions. Here, we empirically evaluate the effect of the imbalanced groups on convergence, adversarial losses and utility performance.

The digit dataset is a standard UDA benchmark built on digit images collected from different environments. It includes the 10 digits from 0 to 9. We follow the UDA protocol of [17] and use two subsets: MNIST and USPS. The MNIST dataset contains 60,000 training and 10,000 testing 28 × 28 gray-scale images. USPS consists of 7291 training and 2007 testing 16 × 16 gray-scale images. We augment the USPS training set by resizing and random rotation.

Setup. We assume two users, one from the source domain and one from the target domain. In each round, we select one user with a predefined probability. For example, the case where the source and target users have probabilities 0.05 and 0.95 implies severe imbalance. A user/group with high probability participates actively in the adversarial learning, while the other participates less. The experiment can also be generalized to multiple users with the same frequency where one domain has more users. Both situations imply an imbalance between the two groups. In the experiments, we fix the batch size to 32 and run one user per communication round. In total, we train the users for 8600 global rounds. In each global round, the selected user trains locally for 10 iterations. Experiments are repeated 3 times with three fixed seeds. At the beginning, we train the models with adversarial coefficient λ = 0, with all source users involved, until the classification loss converges. Then, we follow [11, 23] to use the decaying schedule of learning rates and schedule the adversarial coefficient λ from 0 to 1.
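
The round-level mechanics of this setup can be sketched as below. Two points are assumptions rather than details stated in the text: the ramp for λ uses the sigmoid-shaped schedule from the DANN paper, λ = 2 / (1 + exp(−γ·p)) − 1 with γ = 10 and p the training progress in [0, 1], and the active user is drawn independently in each round. The function names are hypothetical.

```python
import math
import random

def adversarial_coefficient(progress, gamma=10.0):
    """Sigmoid-shaped ramp from 0 to nearly 1 as progress goes from 0 to 1."""
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0

def sample_active_domain(target_prob, rng):
    """Pick which of the two users trains in this communication round."""
    return "target" if rng.random() < target_prob else "source"

rng = random.Random(0)
total_rounds = 8600
# Severely imbalanced case: the target user is active with probability 0.95.
picks = [sample_active_domain(0.95, rng) for _ in range(total_rounds)]
target_share = picks.count("target") / total_rounds
lam_start = adversarial_coefficient(0.0)
lam_mid = adversarial_coefficient(0.5)
lam_end = adversarial_coefficient(1.0)
```

With a target probability of 0.95, the source user is active in only about one round out of twenty, which is the regime where the imbalance analysis of Section 4.1 applies.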

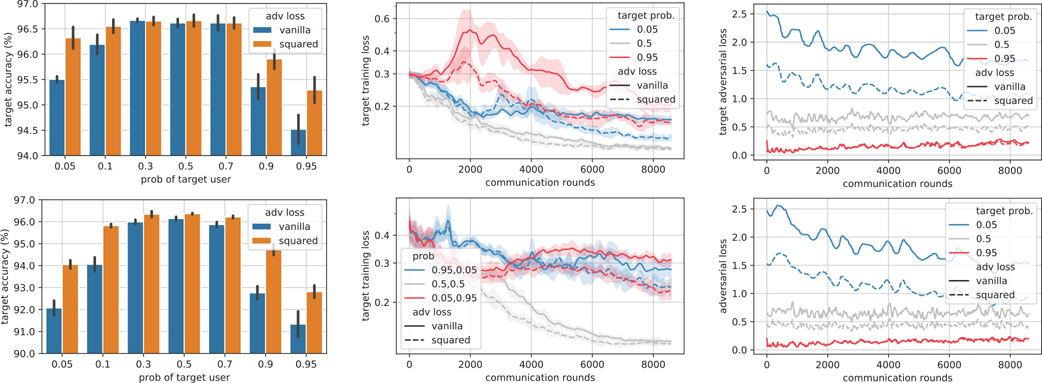

Results are reported in Fig. 2. The two leftmost plots show the negative impact of imbalanced groups. When the imbalance is severe (large or small target probability), the drop in target accuracy is more obvious. In the middle plots, the convergence curves of imbalanced groups fluctuate more significantly and fail to converge. In the last plots, the imbalanced cases have large adversarial losses which barely decrease over the federated iterations. This explains why the corresponding classification tasks fail to converge. When using the squared adversarial loss, the otherwise ignored adversarial losses of low-frequency users are reduced during federated learning. Meanwhile, the convergence of the utility losses is faster. Thus, the negative impact of imbalanced groups is mitigated.

Figure 2:

Comparison of vanilla adversarial loss versus the squared adversarial loss on MNIST-to-USPS (top) and USPS-to-MNIST (bottom) UDA. We vary the probability of target users. For both UDA experiments, the SOTA central methods [23] can achieve over 98% accuracies. From left to right, the columns are target domain accuracies, classification losses and adversarial losses of target domain users.

5.2. Object recognition with non-iid users

In Section 4.3, we prove that the non-iid distribution of users will lead to a trivial solution which may lose the natural discrepancy between users. For federated classification learning where each user only has a partial set of classes, the loss of user discrepancy will make the representations non-discriminative to classes. Here, we conduct experiments to reveal the impact of the non-iid users.

Dataset. We adopt three object recognition datasets, Office [40] (small size), Office-Home [44] (medium size) and VisDA-C [39] (large size), including images of office products. The former two are standard benchmarks widely used for UDA. The Office dataset contains three domains: Amazon (A), DSLR (D) and Webcam (W) with 2817, 498 and 795 images, respectively. Images of 31 object classes are taken under different office environments (corresponding to domains). The Office-Home dataset has 65 categories and 4 domains: Artistic images (Ar), Clip Art (Cl), Product images (Pr), and Real-World images (Re) with 2427, 4365, 4439 and 4357 images, respectively. The VisDA-C dataset is a challenging large-scale benchmark. The source domain comprises 12-way synthetic classification data. In total, 1.5 × 10^5 images are synthesized by rendering 3D models and are adapted to 55,000 unlabeled real-world images.

Setup. In total, 4 users are generated from two domain datasets. First, we let the single source-domain user hold all classes. Second, we generate 3 non-iid target-domain users, each with a partial set of classes, following the standard federated setting [32]. For the Office dataset, each user has 20 classes and adjacent users have consecutive classes with a 10-class stride. For instance, user 1 has class 0 to 20 and user 2 has class 10 to 30. For the OfficeHome dataset, each user has 45 classes with a 20-class stride. For the VisDA-C dataset, each user has 5 classes with a 4-class stride. All users in the same domain have the same number of samples. We select 2 users per communication round when training on OfficeHome. For the VisDA-C dataset, we adopt 1 user per round. In this case, the major difficulty comes from the non-iid distributions of users conditioned on the subset of classes. In the experiments, the parameters for SHOT follow [23]. Details of network architectures and learning setup are discussed in Appendix B.
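
The strided class assignment can be sketched as follows. Two choices here are assumptions, not details given in the text: class ranges are read as half-open (so "0 to 20" means classes 0 through 19), and the last user's range wraps around modulo the number of classes when it would run past the final class. The function name is hypothetical.

```python
def strided_class_subsets(num_classes, num_users, classes_per_user, stride):
    """Assign each user a window of consecutive classes, shifted by a fixed stride.

    Windows wrap around modulo num_classes (an assumption of this sketch).
    """
    subsets = []
    for u in range(num_users):
        start = u * stride
        subsets.append([(start + k) % num_classes for k in range(classes_per_user)])
    return subsets

office = strided_class_subsets(num_classes=31, num_users=3, classes_per_user=20, stride=10)
office_home = strided_class_subsets(num_classes=65, num_users=3, classes_per_user=45, stride=20)
visda = strided_class_subsets(num_classes=12, num_users=3, classes_per_user=5, stride=4)
```

Under these assumptions every class is held by at least one target user, while adjacent users overlap on only part of their classes, which is the source of the class-wise non-iid difficulty.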

Baselines. We compare different UDA methods extended by FADE upon the presence of non-iid users. DANN [11] is the first work on adversarial domain adaptation based on which many recent methods are developed. CDAN [28] is the first to condition the discriminator prediction on the estimated classes, which aligns with our purpose to maximize the mutual information between user (related to classes) and representation. SHOT [23] (extended by FedAvg [32]) is the current state-of-the-art method in domain adaptation which does not use source data, assuming approximately mitigated domain shift.

Results. We summarize the results in Tables 1 and 2. Note that the straightforward extension of DANN without constraints suffers from the user heterogeneity. Therefore, we observe catastrophic failures of DANN, for example, on D→A, where it attains only a low accuracy. This kind of failure happens when the source domain D (498 images) has fewer samples than the target domain A (2817 images). A possible reason is that the discriminators fail to sense the position of the target-domain batches, each of which is a small fraction of all target-domain samples and changes frequently across iterations. In comparison, when regulated by estimated classes, SHOT and methods combined with SHOT perform better. Notably, because SHOT relies on BN states to mitigate domain shift, its accuracies are much worse than those of its central version. Since SHOT can provide pseudo supervision that is conditioned on the estimated users’ local classes, DANN+SHOT outperforms DANN. Conversely, DANN helps SHOT to mitigate the domain shift. We further explore CDAN+SHOT, which conditions group discrimination on local classifier outputs (correlated to users’ classes). As a result, CDAN+SHOT outperforms other methods and is close to the central version of CDAN. In addition, CDAN+SHOT achieves the best average accuracies when the number of users per round varies from 1 to 4. Remarkably, in the hardest case where only one user is trained per round, CDAN+SHOT gains the best accuracies on 8 out of 9 tasks. On the more challenging large-scale VisDA-C dataset, CDAN+SHOT also shows its advantage over other baselines (see Table 2). We note that adversarial methods are more robust to non-iid users.

Table 1:

Averaged classification UDA accuracies (%) on Office and OfficeHome datasets with 3 non-iid target users and 1 source user. Underlines indicate the occurrence of non-converged results. Standard deviations are included in brackets.

| Method | A→D | A→W | D→A | D→W | W→A | W→D | Re→Ar | Re→Cl | Re→Pr | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|
| Federated methods | | | | | | | | | | |
| Source only | 79.5 | 73.4 | 59.6 | 91.6 | 58.2 | 95.8 | 67.0 | 46.5 | 78.2 | 72.2 |
| non-iid target users w/ 20 (Office) or 45 (OfficeHome) classes per user | | | | | | | | | | |
| FADE-DANN | 85.4 (1.9) | 81.8 (1.8) | 43.1 (33) | 97.7 (0.5) | 64.8 (0.5) | 99.7 (0.2) | 46.4 (37) | 34.9 (27) | 78.8 (0.1) | 70.3 |
| FADE-CDAN | 92.3 (1.2) | 91.6 (0.5) | 65.9 (9.3) | 98.9 (0.2) | 70.2 (0.8) | 99.9 (0.1) | 70.3 (1.6) | 54.9 (4.6) | 82.2 (0.1) | 80.7 |
| FedAvg-SHOT | 83.6 (0.5) | 83.1 (0.5) | 64.7 (1.4) | 91.7 (0.2) | 64.7 (2.2) | 97.4 (0.5) | 70.7 (0.5) | 55.4 (0.5) | 80.1 (0.3) | 76.8 |
| iid target users | | | | | | | | | | |
| FADE-DANN | 84.2 (1.5) | 81.3 (0.4) | 66.3 (0.3) | 97.5 (1.2) | 59.4 (10.6) | 99.9 (0.2) | 67.3 (0.9) | 51.3 (0.4) | 79.0 (0.6) | 76.2 |
| FADE-CDAN | 93.6 (0.8) | 92.2 (1.3) | 71.2 (1.0) | 98.7 (0.4) | 71.3 (0.7) | 100 (0.0) | 70.6 (1.3) | 55.1 (1.0) | 82.3 (0.2) | 81.7 |
| FedAvg-SHOT | 96.3 (0.5) | 94.3 (1.1) | 70.9 (2.0) | 98.4 (0.4) | 72.7 (0.9) | 99.8 (0.0) | 74.8 (0.3) | 60.0 (0.1) | 84.9 (0.2) | 83.6 |
| Central methods | | | | | | | | | | |
| ResNet [15] | 68.9 | 68.4 | 62.5 | 96.7 | 60.7 | 99.3 | 53.9 | 41.2 | 59.9 | 67.9 |
| Source only [23] | 80.8 | 76.9 | 60.3 | 95.3 | 63.6 | 98.7 | 65.3 | 45.4 | 78.0 | 73.8 |
| DANN [11] | 79.7 | 82.0 | 68.2 | 96.9 | 67.4 | 99.1 | 63.2 | 51.8 | 76.8 | 76.1 |
| CDAN [28] | 92.9 | 94.1 | 71.0 | 98.6 | 69.3 | 100 | 70.9 | 56.7 | 81.6 | 81.7 |
| SHOT [23] | 94.0 | 90.1 | 74.7 | 98.4 | 74.3 | 99.9 | 73.3 | 58.8 | 84.3 | 83.1 |

Table 2:

Comparison of target accuracies (%) on the VisDA-C dataset.

| Methods | Source only | DANN | SHOT | CDAN |
|---|---|---|---|---|
| Central | 46.6 | 57.6 | 82.9 | 73.9 |
| FADE | 54.3 | 56.4 | 69.2 | 73.1 (+SHOT) |

6. EXPERIMENTS ON FAIR FEDERATED LEARNING

Fair federated learning is motivated by imbalanced groups in training. For example, when a vendor rallies people to use its software and trains a model with locally collected data, the global model may be biased toward the majority, e.g., male users. When a user of another gender uses the software, she or he may find that the model performs poorly. As a result, the minority group vanishes while the majority continues to dominate. Thus, a method that actively debiases w.r.t. the groups is essential to counter this tendency.

Related work. Fairness in federated learning was first discussed in [22], where users are assumed to have different computational capabilities. There, fairness was enforced by increasing the weights of large losses, which were less optimized. In this experiment, we consider the unfairness caused by differences between group distributions. With FADE, we use a discriminator locally to judge whether the user's representations are biased away from the other group. Related central algorithms have been explored [8, 29, 45]. To the best of our knowledge, we are the first to encourage such group-based fairness in the federated setting. Importantly, our method preserves the privacy of group variables. Concerns about the privacy of group variables were previously discussed in [13], where Hashimoto et al. assume that the group membership and the number of groups are unknown to the central learning server when users interact with the system and contribute data. Our FADE extends the setting to a distributed framework where the private group information remains unknown to other parties, including the aggregation server.

We use the Equalized Odds gap (ΔEO) to evaluate the degree of fairness. Consider a binary classifier h predicting the label variable y when representations z are sampled from two groups. We denote the conditional p.d.f. p(z|g, y) as z_{g,y}, which describes the distribution of z for group g and class y. An algorithm is said to be fair if the non-negative ΔEO (defined below) is close to 0.

$$\Delta_{EO} = \left| \mathbb{E}_{z_{0,0}}[h(z)] - \mathbb{E}_{z_{1,0}}[h(z)] \right| + \left| \mathbb{E}_{z_{0,1}}[1 - h(z)] - \mathbb{E}_{z_{1,1}}[1 - h(z)] \right| \tag{9}$$

which comprises the absolute difference in false positive rates and the absolute difference in false negative rates.
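As a minimal sketch of how this metric could be evaluated on hard predictions, the snippet below sums the between-group gap in false positive rates and the gap in false negative rates. The function names `group_rate` and `delta_eo`, the 0/1 coding of groups and labels, and the toy data are assumptions made for illustration; they are not taken from the released code.

```python
def group_rate(preds, labels, groups, group, label, predicted):
    """Share of samples with the given group and true label whose prediction equals `predicted`."""
    selected = [p for p, y, g in zip(preds, labels, groups) if g == group and y == label]
    if not selected:
        raise ValueError(f"no samples with group={group} and label={label}")
    return sum(1 for p in selected if p == predicted) / len(selected)


def delta_eo(preds, labels, groups):
    """Equalized-odds gap between groups 0 and 1 for binary predictions."""
    # Gap in false positive rates: true label 0, predicted 1.
    fpr_gap = abs(group_rate(preds, labels, groups, 0, 0, 1)
                  - group_rate(preds, labels, groups, 1, 0, 1))
    # Gap in false negative rates: true label 1, predicted 0.
    fnr_gap = abs(group_rate(preds, labels, groups, 0, 1, 0)
                  - group_rate(preds, labels, groups, 1, 1, 0))
    return fpr_gap + fnr_gap


# Toy data (hypothetical): group 0 receives noisy predictions, group 1 perfect ones.
groups = [0, 0, 0, 0, 1, 1, 1, 1]
labels = [0, 0, 1, 1, 0, 0, 1, 1]
biased_preds = [1, 0, 1, 0, 0, 0, 1, 1]

print(delta_eo(biased_preds, labels, groups))  # gap for the biased predictor
print(delta_eo(labels, labels, groups))        # a perfect predictor has zero gap
```

A value near 0 means the error rates of the two groups are matched; the metric says nothing about whether those shared error rates are themselves low, which is why utility is reported alongside it.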

6.1. Fair adult income prediction

Dataset. We evaluate our algorithm on the UCI Adult dataset, which is a standard benchmark for fair classification. The dataset consists of over 40,000 vector samples from the 1994 US Census. Each sample includes 14 attributes used to predict whether the person's income is over 50,000 dollars.

Setup. We adversarially disentangle the unfair representations from gender. Keeping the total data size fixed, we construct one female user and vary the number of male users. The samples of each group are split evenly among the synthesized users of that group. We run FADE for 8,000 communication rounds. In every round, 2 users are selected to train for 1 local iteration on a batch of 64 samples. Accuracy and fairness are evaluated on the held-out 10% of samples. The network structure is shown in Fig. 5. We set the hyper-parameter β = 0.5 and the initial learning rate to $10^{-3}$.
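The user construction and per-round sampling described above can be sketched as follows. This is an illustration under stated assumptions, not the released implementation: `build_users` and `sample_round` are hypothetical names, groups are coded as the strings "F" and "M", male samples are split round-robin after shuffling, and users and batches are drawn uniformly at random.

```python
import random


def build_users(groups, num_male_users, seed=0):
    """Return index lists per user: one female user followed by `num_male_users` male users."""
    rng = random.Random(seed)
    female = [i for i, g in enumerate(groups) if g == "F"]
    male = [i for i, g in enumerate(groups) if g == "M"]
    rng.shuffle(male)
    # Round-robin split so that male users receive (almost) equal shares.
    male_users = [male[k::num_male_users] for k in range(num_male_users)]
    return [female] + male_users


def sample_round(users, users_per_round=2, batch_size=64, rng=None):
    """Pick users for one communication round and draw one local batch for each."""
    rng = rng or random.Random(0)
    chosen = rng.sample(range(len(users)), users_per_round)
    return {u: rng.sample(users[u], min(batch_size, len(users[u]))) for u in chosen}


# Toy group labels (hypothetical sizes): 100 female and 300 male samples.
groups = ["F"] * 100 + ["M"] * 300
users = build_users(groups, num_male_users=3)
batches = sample_round(users, rng=random.Random(1))
print([len(u) for u in users])
print({u: len(b) for u, b in batches.items()})
```

Increasing `num_male_users` while the samples stay fixed raises the share of male users in the population, which is the knob the experiment varies.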

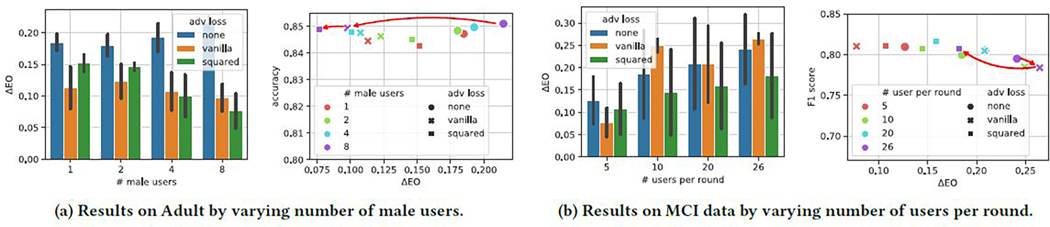

Results are depicted in Fig. 3a. Without adversarial training, unfairness is aggravated as the imbalance between groups worsens. When more male users are involved, the squared adversarial loss further improves fairness. In contrast, the vanilla adversarial loss performs better when the two groups are balanced. Both adversarial losses maintain utility close to that of the non-adversarial method.

Figure 3:

FADE with/without adversarial losses. In each subfigure, the left panel shows fairness measured by ΔEO, where smaller values indicate better fairness; the right panel shows the trade-off between fairness and utility, where the top-left corner is the preferred balance.

6.2. Fair MCI detection

Dataset. Mild Cognitive Impairment (MCI) is the pre-symptomatic stage of Alzheimer's Disease (AD), which typically occurs in elders. Early detection of MCI is important for the prevention and treatment of AD [1, 43]. Details of the dataset are given in Appendix B.3; females form the majority group (over 94%). The prediction task is to classify the disease condition, Normal Cognition (NC) or MCI, based on daily activities (walking speed, etc.).

Setup. In the original dataset, there are 88 users with different numbers of samples. We notice that the imbalance between NC and MCI users greatly degrades the model quality. To focus on our fairness task, we manually select 26 users such that 13 users were diagnosed as NC at least once and the other 13 are stable MCI patients. Because male users are much fewer than female ones, we prefer to select male users when balancing the two classes. After downsampling, users have 39 samples on average. Among the 26 users, there are 6 males and 20 females in total. Details of features, preprocessing and network architectures are deferred to Appendix B.3. We set the hyper-parameter β = 0.5, the initial learning rate to $10^{-2}$ and the batch size to 16. Over the 700 communication rounds, we first train without adversarial losses for 400 rounds and then schedule λ and the learning rates as in the Adult experiments.

Results. We compare the convergence of the training F1-score (utility) and ΔEO (fairness) while varying the number of users per round. As shown in Fig. 3b, the unfairness is obvious, with ΔEO over 0.2, when no adversarial losses are used. The vanilla adversarial loss fails to debias in most cases. In contrast, the squared adversarial loss stably debiases the unfair performance in all cases. When the number of users per round is less than 10, even the non-adversarial loss is fairer. This natural debiasing could be attributed to the random selection of users, which breaks the domination of one group over a short span.
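One way to get a feel for this attribution is to compute how often the minority group makes up at least half of the sampled users in a round. The sketch below uses the 6 male and 20 female users from the setup; the assumption that users are drawn uniformly without replacement, and the function name `prob_minority_at_least_half`, are ours and are not confirmed by the text.

```python
from math import comb


def prob_minority_at_least_half(total, minority, per_round):
    """Hypergeometric probability that minority users are at least half of one sampled round."""
    majority = total - minority
    need = (per_round + 1) // 2  # smallest minority count that is at least half of the round
    favorable = sum(
        comb(minority, m) * comb(majority, per_round - m)
        for m in range(need, min(minority, per_round) + 1)
    )
    return favorable / comb(total, per_round)


# 26 users in total, 6 of them male; compare small and large rounds.
for k in (2, 4, 10):
    print(k, round(prob_minority_at_least_half(26, 6, k), 4))
```

Under these assumptions the minority is far more likely to dominate an individual round when only a few users are sampled, which is consistent with, though not proof of, the explanation offered above.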

7. CONCLUSION

In this work, we propose a unified framework for federated adversarial learning called FADE. Our framework preserves user privacy and allows users to freely opt in or out of learning the adversarial component. To the best of our knowledge, we are the first to study the properties of adversarial learning in the federated setting. We presented the potential challenge and a solution for FADE, and identified a gap between FADE and its centralized counterpart as an open question for future work.

CCS CONCEPTS.

• Computing methodologies → Distributed algorithms; Machine learning; Transfer learning

Acknowledgement

This material is based in part upon work supported by the National Science Foundation under Grant IIS-1749940, EPCN-2053272, Office of Naval Research N00014-20-1-2382, and National Institute on Aging (NIA) R01AG051628, R01AG056102, P30AG066518, P30AG024978, RF1AG072449.

A. PROOFS

Proof of Theorem 4.1. Substitute into Eq. (4):

where the last inequality is from the non-negative property of KL divergence.

Note that when p1 = p2, both KL divergences are 0. Thus, we can conclude that p1 = p2 is a sufficient condition. □

Proof of Theorem 4.2. For the ease of derivation, we assume α1 and α2 are normalized s.t. α1 + α2 = 1. From |log p1(x) – log p2(x)| ≤ ϵ, we can get

Thus,

Similarly,

Therefore,

where we manually add (α1 + α2) to normalize α1. □

B. EXPERIMENT DETAILS

B.1. Dynamic schedules

We use dynamic schedules for learning rates and the adversarial parameter λ following previous work [11]. Specifically,

where K is the number of local iterations and Tmax is the number of global rounds. Notably, ηt is scheduled locally and λt is scheduled globally.
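As a minimal sketch, assuming the schedules take the same functional form as in DANN [11], the adversarial weight ramps up as 2 / (1 + exp(−γp)) − 1 and the learning rate decays as η0 / (1 + αp)^β, with progress p in [0, 1]. How progress is computed from K and Tmax, the constants α = 10 and γ = 10 (the DANN defaults), and the function names are assumptions made for illustration and may differ from the exact schedules used in the experiments.

```python
import math


def adv_lambda(round_idx, t_max, gamma=10.0):
    """Adversarial weight, scheduled globally: progress is measured in communication rounds."""
    p = round_idx / t_max
    return 2.0 / (1.0 + math.exp(-gamma * p)) - 1.0


def learning_rate(round_idx, local_iter, k, t_max, eta0=1e-3, alpha=10.0, beta=0.5):
    """Learning rate, scheduled locally: progress is measured in local iterations."""
    p = (round_idx * k + local_iter) / (k * t_max)
    return eta0 / (1.0 + alpha * p) ** beta


# Example with K = 1 local iteration and a hypothetical horizon of 100 rounds.
for t in (0, 50, 100):
    print(t, adv_lambda(t, 100), learning_rate(t, 0, 1, 100))
```

The ramp keeps the adversarial signal weak while the representation is still noisy early in training, and the decaying step size damps the oscillations typical of min-max optimization later on.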

B.2. Network architectures

Federated UDA.

The network architectures are presented in Fig. 4.

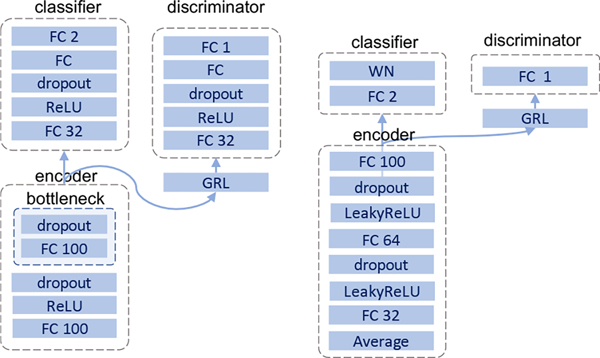

Figure 4:

Network architectures for digit and object datasets. WN denotes the weight-norm layer [23] and FC 256 denotes fully-connected layer with 256 units. GRL is the gradient reversal layer [11].

Batch normalization in FADE.

During training, we share the parameters of ResNet between users. Notably, in ResNet, batch normalization (BN) layers are embedded at many different depths. The BN layer is known to be important for transferring between distinct domains, because the hidden representations are normalized with the mean and variance estimated from a batch. Because such an estimate can easily be biased by a small batch, it is common practice to keep a running estimate that accumulates results from previous batches. It is therefore also important for all users to obtain a global estimate of the mean and variance through communication. However, sharing such a running estimate of the representation mean and variance may leak private information [35, 36]. For example, given a feature vector at a specific layer, the input image can be reconstructed using a conditional generative network [35, 36]. Instead of sharing the mean and variance (BN states), we keep them fixed at the values pre-trained on ImageNet.

Fair federated learning.

We depict the network architectures for the Adult and MCI datasets in Fig. 5. For the Adult dataset, we aim to evaluate the performance of deep networks. Thus, we use a deeper network rather than the shallow one used for central algorithms [29]. Because of the small size of the MCI dataset, we adopt a small network architecture in which only two LSTM layers are used for feature extraction and one layer for classification or group identification.

Figure 5:

Network architectures for Adult and MCI datasets. LSTM 100 indicates a Long Short-Term Memory (LSTM) cell with 100 hidden units.

B.3. Details of MCI datasets

Dataset

Due to the mild symptoms and the high cost of clinical diagnosis, early detection of MCI is a hard task. To address the challenge, MCI detection models are built on an MCI dataset collected with Intelligent Systems for Assessing Aging Change (ISAAC), a longitudinal cohort study [18, 20]. A total of 152 participants were enrolled beginning in 2017. 12 variables are extracted from the participants' sensor data, and clinical diagnoses were made once a year. Meanwhile, four kinds of demographic information are also recorded, including age, gender, education, and ethnicity, which are potentially unfair features for each patient.

Though prior work has shown the effectiveness of machine learning methods in diagnosis prediction [18, 25], the possibility of training such a model fairly in a distributed framework remains unknown. We assume that the sensor data can be used for training locally as soon as they are collected and that only the trained models are sent to the server. The distributed framework brings several new challenges. First, users' data are kept locally and many users only have one-class data, which makes the local model less discriminative. For example, 13 users are always diagnosed as MCI during their recording periods. Second, it is difficult to do adversarial learning as in Fig. 1b. Because the users' group information, e.g., gender, cannot be revealed to others, the server has no idea who will be in the adversarial group. Therefore, we use the FADE framework to tackle these issues, as illustrated in Fig. 1c. As far as privacy is concerned, in the ISAAC protocol, the sensor data were collected periodically by engineers such that the user data are kept away from others. Nevertheless, we argue that our extension to the federated setting is practical because the data are not directly shared.

Preprocessing.

The records of some patients are incomplete because the sensor systems were occasionally offline, and these incomplete samples can introduce uncertainty into our experiments, so we remove samples whose ratio of missing values is too high. To generate samples, the hundreds of days of records for each patient are sliced by a moving window, and each slice is used as a sample for training or prediction. The slicing is done inside each person's sequence without overlap, and the window moves in steps of 7 days. Only subsequences with a small enough ratio of missing values are kept for the current study. The number of sequences for each patient depends on the amount of data the patient has. Some patients have only a small number of records; we also remove the samples of those patients to avoid inaccurate prediction.
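The slicing step can be sketched as below. The window length of 7 days (taken equal to the 7-day step so that slices do not overlap), the 20% missing-value threshold, the use of `None` to mark missing readings, and the name `slice_windows` are all assumptions made for illustration; the text does not state the exact threshold.

```python
def slice_windows(records, window=7, max_missing_ratio=0.2):
    """Cut one patient's daily records into non-overlapping windows and drop sparse ones.

    `records` is a list of per-day feature lists, with None marking a missing reading.
    """
    samples = []
    # Stepping by the window length guarantees that slices never overlap.
    for start in range(0, len(records) - window + 1, window):
        chunk = records[start:start + window]
        values = [v for day in chunk for v in day]
        missing = sum(1 for v in values if v is None)
        if values and missing / len(values) <= max_missing_ratio:
            samples.append(chunk)
    return samples


# Toy sequence (hypothetical): 21 days, 2 features per day, 3 offline days in week two.
records = [[1.0, 2.0] for _ in range(21)]
for day in (7, 8, 9):
    records[day] = [None, None]

samples = slice_windows(records)
print(len(samples))  # the second week is dropped because too many readings are missing
```

Trailing days that do not fill a complete window are discarded by the `range` bound, so every kept sample has the same length.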

We have 12 variables in total, including gender (Rsex), years of education (Ryrschool), race/ethnicity (Rethnic), age at each date (ageyrs), total computer use (compuse), computer sessions (numcsess), track sensor line (linenum), walks (numwalks), mean walking speed (meanws), upper quartile of walking speed (wsq3), coefficient of variation of walking speed (wscv) and standard deviation of walking speed (wsstddev). We preprocess special variables as follows. For linenum, which is a sensor identity value, the integer values are transformed into a one-hot encoding that uses the position of a single one to indicate the ID value. The Rsex and Rethnic variables are encoded in the same way. Ages are transformed by 3-bin discretization. All continuous variables are normalized to [−1, 1] by min-max scaling so that no variable has a much larger scale than the others and the coefficients can be trained in a numerically robust way.
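The three transformations mentioned above can be sketched in a few lines. The helper names, the category lists, and in particular the age bin edges are hypothetical; the text does not specify how the three age bins are chosen.

```python
def one_hot(value, categories):
    """Encode a categorical value as a vector with a single 1 at its position."""
    return [1.0 if value == c else 0.0 for c in categories]


def three_bin(value, edges):
    """Map a number to bin 0, 1 or 2 given two increasing bin edges (assumed, not from the text)."""
    low, high = edges
    if value < low:
        return 0
    return 1 if value < high else 2


def min_max_scale(values):
    """Rescale a list of numbers linearly to the interval [-1, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: map everything to the midpoint
    return [2.0 * (v - lo) / (hi - lo) - 1.0 for v in values]


print(one_hot(3, [1, 2, 3]))              # sensor line ID 3 out of lines 1..3
print(three_bin(70, (65, 80)))            # hypothetical age bins: <65, 65-79, >=80
print(min_max_scale([0.0, 5.0, 10.0]))    # e.g. walking speeds
```

In a federated deployment the minimum and maximum used for scaling would have to be agreed on without pooling raw data; here they are simply computed from the list passed in.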

The collected features are relatively redundant, so they should be carefully selected for better prediction performance. We select features using mutual information, which measures the dependency between two variables. It is equal to zero if and only if the two random variables are independent, and a higher value means a stronger dependency. A special case is the linenum variable, which only makes sense when other walking speed features are used. As a result, when a walking feature is selected according to the above metric, the linenum variable is automatically included.
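A minimal sketch of mutual-information scoring for discrete variables is given below, using the empirical estimate of I(X; Y) = Σ p(x, y) log[p(x, y) / (p(x) p(y))]. The function names and toy data are assumptions; continuous features would first need to be discretized (or scored with a dedicated estimator), which this sketch does not cover.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Empirical mutual information (in nats) between two discrete sequences."""
    n = len(xs)
    count_x = Counter(xs)
    count_y = Counter(ys)
    count_xy = Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), c in count_xy.items():
        # p(x, y) / (p(x) p(y)) simplifies to c * n / (count_x * count_y).
        mi += (c / n) * math.log(c * n / (count_x[x] * count_y[y]))
    return mi


def rank_features(features, labels):
    """Sort feature names by their mutual information with the labels, highest first."""
    scores = {name: mutual_information(col, labels) for name, col in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy example (hypothetical): feature "a" determines the label, feature "b" is independent.
labels = [0, 0, 1, 1]
features = {"a": [0, 0, 1, 1], "b": [0, 1, 0, 1]}
print(rank_features(features, labels))
```

Selecting the top-ranked names and then appending linenum whenever a walking-speed feature is among them would mirror the rule described above.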

Contributor Information

Junyuan Hong, Michigan State University, East Lansing, Michigan, USA.

Zhuangdi Zhu, Michigan State University, East Lansing, Michigan, USA.

Shuyang Yu, Michigan State University, East Lansing, Michigan, USA.

Zhangyang Wang, University of Texas at Austin, Austin, Texas, USA.

Hiroko Dodge, Oregon Health & Science University, Portland, Oregon, USA.

Jiayu Zhou, Michigan State University, East Lansing, Michigan, USA.

REFERENCES

- [1] Aisen PS, Andrieu S, Sampaio C, Carrillo M, Khachaturian ZS, Dubois B, Feldman HH, Petersen RC, Siemers E, Doody RS, Hendrix SB, Grundman M, Schneider LS, Schindler RJ, Salmon E, Potter WZ, Thomas RG, Salmon D, Donohue M, Bednar MM, Touchon J, and Vellas B. 2011. Report of the Task Force on Designing Clinical Trials in Early (Predementia) AD. Neurology 76, 3 (Jan. 2011), 280–286.

- [2] Arjovsky Martin, Chintala Soumith, and Bottou Léon. 2017. Wasserstein GAN. (Jan. 2017).

- [3] Ben-David Shai, Blitzer John, Crammer Koby, Kulesza Alex, Pereira Fernando, and Vaughan Jennifer Wortman. 2010. A Theory of Learning from Different Domains. Machine Learning 79, 1–2 (May 2010), 151–175.

- [4] Bonawitz Keith, Ivanov Vladimir, Kreuter Ben, Marcedone Antonio, McMahan H. Brendan, Patel Sarvar, Ramage Daniel, Segal Aaron, and Seth Karn. 2017. Practical Secure Aggregation for Privacy-Preserving Machine Learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security (CCS '17). Association for Computing Machinery, New York, NY, USA, 1175–1191.

- [5] Dinh Canh T., Tran Nguyen H., and Nguyen Tuan Dung. 2020. Personalized Federated Learning with Moreau Envelopes. In Advances in Neural Information Processing Systems.

- [6] Dwork Cynthia, Hardt Moritz, Pitassi Toniann, Reingold Omer, and Zemel Richard. 2012. Fairness through Awareness. In Proceedings of the 3rd Innovations in Theoretical Computer Science Conference (ITCS '12). Association for Computing Machinery, Cambridge, Massachusetts, 214–226.

- [7] Dwork Cynthia, McSherry Frank, Nissim Kobbi, and Smith Adam. 2006. Calibrating Noise to Sensitivity in Private Data Analysis. In Theory of Cryptography (Lecture Notes in Computer Science). Springer, Berlin Heidelberg, 265–284.

- [8] Edwards Harrison and Storkey Amos. 2016. Censoring Representations with an Adversary. ICLR (March 2016).

- [9] Fallah Alireza, Mokhtari Aryan, and Ozdaglar Asuman. 2020. Personalized Federated Learning: A Meta-Learning Approach. In Advances in Neural Information Processing Systems.

- [10] Feng Hao-Zhe, You Zhaoyang, Chen Minghao, Zhang Tianye, Zhu Minfeng, Wu Fei, Wu Chao, and Chen Wei. 2021. KD3A: Unsupervised Multi-Source Decentralized Domain Adaptation via Knowledge Distillation. AAAI (2021).

- [11] Ganin Yaroslav and Lempitsky Victor. 2015. Unsupervised Domain Adaptation by Backpropagation. In International Conference on Machine Learning. PMLR, 1180–1189.

- [12] Goodfellow Ian J., Pouget-Abadie Jean, Mirza Mehdi, Xu Bing, Warde-Farley David, Ozair Sherjil, Courville Aaron, and Bengio Yoshua. 2014. Generative Adversarial Networks. arXiv:1406.2661 [cs, stat] (June 2014).

- [13] Hashimoto Tatsunori, Srivastava Megha, Namkoong Hongseok, and Liang Percy. 2018. Fairness without demographics in repeated loss minimization. In International Conference on Machine Learning. PMLR, 1929–1938.

- [14] He Chaoyang, Annavaram Murali, and Avestimehr Salman. 2020. Group Knowledge Transfer: Federated Learning of Large CNNs at the Edge. arXiv:2007.14513 [cs] (Nov. 2020).

- [15] He Kaiming, Zhang Xiangyu, Ren Shaoqing, and Sun Jian. 2016. Deep Residual Learning for Image Recognition. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (2016).

- [16] Hitaj Briland, Ateniese Giuseppe, and Perez-Cruz Fernando. 2017. Deep Models Under the GAN: Information Leakage from Collaborative Deep Learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security (CCS '17). ACM, New York, NY, USA, 603–618.

- [17] Hoffman Judy, Tzeng Eric, Park Taesung, Zhu Jun-Yan, Isola Phillip, Saenko Kate, Efros Alexei, and Darrell Trevor. 2018. CyCADA: Cycle-Consistent Adversarial Domain Adaptation. In International Conference on Machine Learning. PMLR, 1989–1998.

- [18] Hong Junyuan, Kaye Jeffrey, Dodge Hiroko H., and Zhou Jiayu. 2020. Detecting MCI Using Real-Time, Ecologically Valid Data Capture Methodology: How to Improve Scientific Rigor in Digital Biomarker Analyses. Alzheimer's & Dementia 16, S5 (2020), e044371.

- [19] Quiñonero-Candela Joaquin, Sugiyama Masashi, Schwaighofer Anton, and Lawrence Neil D. 2008. Dataset Shift in Machine Learning. MIT Press.

- [20] Kaye Jeffrey A., Maxwell Shoshana A., Mattek Nora, Hayes Tamara L., Dodge Hiroko, Pavel Misha, Jimison Holly B., Wild Katherine, Boise Linda, and Zitzelberger Tracy A. 2011. Intelligent Systems for Assessing Aging Changes: Home-Based, Unobtrusive, and Continuous Assessment of Aging. The Journals of Gerontology: Series B 66B, suppl_1 (July 2011), i180–i190.

- [21] Li Daliang and Wang Junpu. 2019. FedMD: Heterogenous Federated Learning via Model Distillation. arXiv:1910.03581 [cs, stat] (Oct. 2019).

- [22] Li Tian, Sanjabi Maziar, Beirami Ahmad, and Smith Virginia. 2019. Fair Resource Allocation in Federated Learning. In International Conference on Learning Representations.

- [23] Liang Jian, Hu Dapeng, and Feng Jiashi. 2020. Do We Really Need to Access the Source Data? Source Hypothesis Transfer for Unsupervised Domain Adaptation. International Conference on Machine Learning (Oct. 2020).

- [24] Liang Jian, Hu Dapeng, Wang Yunbo, He Ran, and Feng Jiashi. 2020. Source Data-Absent Unsupervised Domain Adaptation through Hypothesis Transfer and Labeling Transfer. ArXiv (2020).

- [25] Lin Ming, Gong Pinghua, Yang Tao, Ye Jieping, Albin Roger L., and Dodge Hiroko H. 2018. Big Data Analytical Approaches to the NACC Dataset: Aiding Preclinical Trial Enrichment. Alzheimer Disease & Associated Disorders 32, 1 (2018), 18–27.

- [26] Lin Tao, Kong Lingjing, Stich Sebastian U., and Jaggi Martin. 2020. Ensemble Distillation for Robust Model Fusion in Federated Learning. In Advances in Neural Information Processing Systems.

- [27] Liu Pengfei, Qiu Xipeng, and Huang Xuanjing. 2017. Adversarial Multi-Task Learning for Text Classification. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Association for Computational Linguistics, Vancouver, Canada, 1–10.

- [28] Long Mingsheng, Cao Zhangjie, Wang Jianmin, and Jordan Michael I. 2018. Conditional Adversarial Domain Adaptation. arXiv:1705.10667 [cs] (Dec. 2018).

- [29] Madras David, Creager Elliot, Pitassi Toniann, and Zemel Richard. 2018. Learning Adversarially Fair and Transferable Representations. International Conference on Machine Learning (2018), 11.

- [30] Madry Aleksander, Makelov Aleksandar, Schmidt Ludwig, Tsipras Dimitris, and Vladu Adrian. 2019. Towards Deep Learning Models Resistant to Adversarial Attacks. arXiv:1706.06083 [cs, stat] (Sept. 2019).

- [31] Maninis Kevis-Kokitsi, Radosavovic Ilija, and Kokkinos Iasonas. 2019. Attentive Single-Tasking of Multiple Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1851–1860.

- [32] McMahan Brendan, Moore Eider, Ramage Daniel, Hampson Seth, and Agüera y Arcas Blaise. 2017. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Artificial Intelligence and Statistics. 1273–1282.

- [33] McMahan H. Brendan, Ramage Daniel, Talwar Kunal, and Zhang Li. 2018. Learning Differentially Private Recurrent Language Models. In International Conference on Learning Representations.

- [34] Mohassel P and Zhang Y. 2017. SecureML: A System for Scalable Privacy-Preserving Machine Learning. In 2017 IEEE Symposium on Security and Privacy (SP). 19–38.

- [35] Nguyen Anh, Clune Jeff, Bengio Yoshua, Dosovitskiy Alexey, and Yosinski Jason. 2017. Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4467–4477.

- [36] Nguyen Anh, Dosovitskiy Alexey, Yosinski Jason, Brox Thomas, and Clune Jeff. 2016. Synthesizing the Preferred Inputs for Neurons in Neural Networks via Deep Generator Networks. In Advances in Neural Information Processing Systems 29. Curran Associates, Inc., 3387–3395.

- [37] Pan Sinno Jialin and Yang Qiang. 2010. A Survey on Transfer Learning. IEEE Transactions on Knowledge and Data Engineering 22, 10 (Oct. 2010), 1345–1359.

- [38] Peng Xingchao, Huang Zijun, Zhu Yizhe, and Saenko Kate. 2019. Federated Adversarial Domain Adaptation. In International Conference on Learning Representations.

- [39] Peng Xingchao, Usman Ben, Kaushik Neela, Hoffman Judy, Wang Dequan, and Saenko Kate. 2017. VisDA: The Visual Domain Adaptation Challenge. arXiv:1710.06924 [cs] (Nov. 2017).

- [40] Saenko Kate, Kulis Brian, Fritz Mario, and Darrell Trevor. 2010. Adapting Visual Category Models to New Domains. In Proceedings of the 11th European Conference on Computer Vision: Part IV (ECCV'10). Springer-Verlag, Berlin, Heidelberg, 213–226.

- [41] Torkzadehmahani Reihaneh, Kairouz Peter, and Paten Benedict. 2019. DP-CGAN: Differentially Private Synthetic Data and Label Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops.

- [42] Tzeng Eric, Hoffman Judy, Saenko Kate, and Darrell Trevor. 2017. Adversarial Discriminative Domain Adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 7167–7176.

- [43] Vellas B, Bateman R, Blennow K, Frisoni G, Johnson K, Katz R, Langbaum J, Marson D, Sperling R, Wessels A, Salloway S, Doody R, and Aisen P. 2015. Endpoints for Pre-Dementia AD Trials: A Report from the EU/US/CTAD Task Force. The Journal of Prevention of Alzheimer's Disease 2, 2 (June 2015), 128–135.

- [44] Venkateswara Hemanth, Eusebio Jose, Chakraborty Shayok, and Panchanathan Sethuraman. 2017. Deep Hashing Network for Unsupervised Domain Adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5018–5027.

- [45] Wadsworth Christina, Vera Francesca, and Piech Chris. 2018. Achieving Fairness through Adversarial Learning: An Application to Recidivism Prediction. arXiv:1807.00199 [cs, stat] (June 2018).

- [46] Zhang Brian Hu, Lemoine Blake, and Mitchell Margaret. 2018. Mitigating Unwanted Biases with Adversarial Learning. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society (AIES '18). Association for Computing Machinery, New York, NY, USA, 335–340.