Abstract

In the past decade, decision neuroscience and neuroeconomics have developed many new insights in the study of decision making. This review provides an overarching update on how the field has advanced in this time period. Although our initial review a decade ago outlined several theoretical, conceptual, methodological, empirical, and practical challenges, there has only been limited progress in resolving these challenges. We summarize significant trends in decision neuroscience through the lens of the challenges outlined for the field and review examples where the field has had significant, direct, and applicable impacts across economics and psychology. First, we review progress on topics including reward learning, explore–exploit decisions, risk and ambiguity, intertemporal choice, and valuation. Next, we assess the impacts of emotion, social rewards, and social context on decision making. Then, we follow up with how individual differences impact choices and new exciting developments in the prediction and neuroforecasting of future decisions. Finally, we consider how trends in decision-neuroscience research reflect progress toward resolving past challenges, discuss new and exciting applications of recent research, and identify new challenges for the field.

This article is categorized under:

Psychology > Reasoning and Decision Making

Psychology > Emotion and Motivation

Keywords: choice, decision neuroscience, economics, neuroimaging, noninvasive brain stimulation

Graphical Abstract

1 |. INTRODUCTION

Decision neuroscience and neuroeconomics seek to identify the neural processes that underlie decision making (Beck et al., 2008; Glimcher et al., 2009), particularly the subjective value of rewards (Levy & Glimcher, 2012), the uncertainty of different outcomes (Ma & Jazayeri, 2014), and how people make interpersonal decisions (Hackel & Amodio, 2018). Although the terms “neuroeconomics” and “decision neuroscience” have been used interchangeably in the literature, we use the latter term throughout this review for greater clarity and breadth.

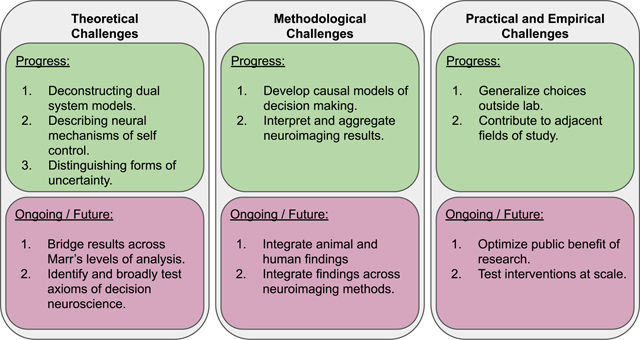

The field of decision neuroscience advanced quickly in its early years, identifying many brain regions involved in the valuation of rewards and social decisions. Despite the early advances of decision neuroscience, our initial review in 2010 identified several key questions and challenges that limited the field (Smith & Huettel, 2010). For example, how can we reconcile the frameworks of decision and cognitive neuroscience? What issues may require advancements in the techniques and technology of neuroscience? How can we integrate and link findings from different species and methodologies? Other challenges can even come from outside the laboratory, preventing meaningful impacts on people’s lives. For example, are neuroscientific results convincing enough to meaningfully contribute to economic policy? Taken together, these questions—which are centered on theoretical, methodological, and practical challenges—highlight critical considerations for the interdisciplinary field of decision neuroscience.

This review provides an overarching update on how the field has advanced since our initial review and identifies the varying degrees of progress made toward addressing these challenges. We extend the original Smith and Huettel review to include literature from the past decade on reward prediction error, reward anticipation, risk and ambiguity, explore–exploit choices, temporal discounting, emotional and social decision making, value comparison, and recent advances in understanding individual differences. We focus on new developments in models, theories, methods, and concepts of value-based decision making that have made the greatest impact over the last 10 years. This approach may overemphasize recent trends and methods (e.g., human fMRI); however, it helps us to characterize how efforts have been spent and the incentives in the field of decision neuroscience. While there have been advances in value-independent and perceptual decision making related to sensory representation (Yeon & Rahnev, 2020), motor decisions, and option selection (Ota et al., 2020; Parvin et al., 2018), we concentrate on choices related to value, social context, and emotion. After reviewing recent trends in decision neuroscience, we conclude our summary with a focus on recent research assessing individual differences. Here we reflect on decision making as a spectrum of behaviors and how they are related to demographic, developmental, and clinical variables. Finally, we consider how these recent trends reflect progress made on various theoretical, conceptual, methodological, empirical, and practical challenges (Huettel, 2010; Smith & Huettel, 2010) in decision neuroscience. We argue that the field has achieved considerable and direct impacts across economics and psychology.

2 |. VALUE: FROM REWARD LEARNING TO VALUE COMPARISON

Much of the early work in decision neuroscience focused on reward learning, the explore–exploit dilemma, risky decisions, temporal discounting, and valuation. Advances before 2010 had identified many of the brain regions involved in these processes. Meta-analyses have since confirmed the robust involvement of regions including the ventral striatum (VS) and the ventromedial prefrontal cortex (vmPFC) during different aspects of decision making such as valuation (Bartra et al., 2013; Clithero & Rangel, 2014), reward learning (Chase et al., 2015), reward consumption, and anticipation (Diekhof et al., 2012). We will review how the theories and constructs underlying these problems have progressed over the past decade. To help orient readers to the brain regions that are repeatedly mentioned throughout the review, we provide a statistical map describing the regions consistently involved in value-based decision making (Figure 1; see Poldrack et al., 2012 for details).

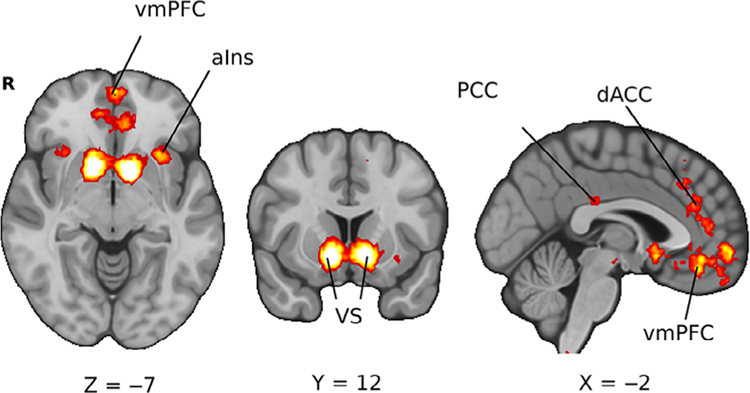

FIGURE 1.

Brain regions associated with decision making. The map shows the association test for an automated meta-analysis of value-based decision-making studies, generated through the Neurosynth platform. Key regions highlighted include the ventral striatum (VS), ventromedial prefrontal cortex (vmPFC), dorsal anterior cingulate cortex (dACC), posterior cingulate cortex (PCC), and anterior insula (aIns).

2.1 |. Reward learning

The process of using feedback through positive and negative reinforcement, described as reward learning, is a fundamental component of value-based decision making. Simple reward learning involves calculating the difference between expected and observed outcomes. This difference between expected and observed outcome values is encoded through dopamine activity as reward prediction errors (RPE; Cannon & Bseikri, 2004; Watabe-Uchida et al., 2017; Wise, 1980). While reward learning is a well-established discipline, there have recently been several substantial advancements. Specifically, three major trends in reward learning during the past decade include research on biases in the RPE signal, investigating the role of the hippocampus and episodic memory in reward learning, and distinguishing between model-based and model-free learning.

Advances in reward learning research over the last decade have focused on teasing apart different influences on positive (better than expected) and negative (worse than expected) learning rates. A consistent set of results reflecting greater positive than negative learning rates led to the interpretation of an optimism bias in reward learning (den Ouden et al., 2013; Niv et al., 2012; Sharot & Garrett, 2016). While there is little consensus as to why positive prediction errors are more heavily weighted, explanations include the influence of reward learning on risk preferences (Niv et al., 2012), exploratory behavior (Garrett & Daw, 2020), and psychological defense mechanisms (Sharot & Garrett, 2016). Others have suggested that the biases for positive prediction errors are driven by processes similar to sensory adaptation (Bavard et al., 2018, 2021). By using simulations, researchers have identified an adaptive role for separate learning rates for optimal learning across environments with high and low long run averages of rewards (Cazé & van der Meer, 2013). In other words, when the long run average of rewards is high, it can be beneficial to underweight negative prediction errors. However, when faced with differentially rewarding environments, humans do not adapt these separate learning rates as predicted in these simulations (Gershman, 2015). Strangely, there still may be a link between the long running rewards in an environment and an optimistic RPE. Tonic dopamine has been thought to encode the long-term average of rewards (Dayan, 2012; Niv et al., 2007) and studies increasing tonic dopamine through the administration of levodopa (L-Dopa) have found that it impairs learning specific to feedback that was worse than expected (Sharot et al., 2012). Other research has also shown that RPEs take into account feedback from unchosen options (Palminteri et al., 2015). 
These results together suggest a complicated relationship between the learned reward environment and an optimistic learning bias.
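The idea of separate learning rates for positive and negative prediction errors can be illustrated with a minimal sketch. The delta-rule form, the function names, and all parameter values below are illustrative assumptions rather than the specification of any cited model.

```python
import random

def update_value(value, reward, alpha_pos, alpha_neg):
    """Apply one delta-rule update and return (new_value, rpe)."""
    rpe = reward - value  # reward prediction error: observed minus expected
    alpha = alpha_pos if rpe > 0 else alpha_neg  # asymmetric learning rates
    return value + alpha * rpe, rpe

def mean_learned_value(rewards, alpha_pos, alpha_neg, burn_in=1000):
    """Average value estimate after an initial burn-in period."""
    value, total, count = 0.0, 0.0, 0
    for trial, reward in enumerate(rewards):
        value, _ = update_value(value, reward, alpha_pos, alpha_neg)
        if trial >= burn_in:
            total += value
            count += 1
    return total / count

rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Hypothetical environment: reward of 1 with probability 0.5, otherwise 0.
rewards = [1.0 if rng.random() < 0.5 else 0.0 for _ in range(5000)]

unbiased = mean_learned_value(rewards, alpha_pos=0.1, alpha_neg=0.1)
optimistic = mean_learned_value(rewards, alpha_pos=0.2, alpha_neg=0.05)
print(f"unbiased: {unbiased:.2f}, optimistic: {optimistic:.2f}")
```

Under these assumed parameters, the learner with a larger positive learning rate settles well above the true mean reward of 0.5, whereas the symmetric learner tracks it. This is one simple way to express an optimistic bias in computational terms.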

Understanding positive versus negative learning rates has been an effective tool to explore how we integrate learned information. Further, examining learning rates for obtained versus forgone outcomes (counterfactual learning; Palminteri et al., 2015) has revealed that optimism bias is not present for counterfactual learning (Chambon et al., 2020), and instead negative learning rates dominate the updating of counterfactual information. These combined results further suggest a general confirmation bias. Exploring traditional cognitive biases within the framework of reward learning has helped to alleviate earlier conceptual challenges through integrating decision and cognitive neuroscience (Doll et al., 2011; Jarcho et al., 2011; Kappes et al., 2020).

To investigate the mechanisms of reward learning in the brain, most reinforcement learning research has naturally focused on canonical reward-sensitive regions such as the VS, vmPFC, and the ventral tegmental area (VTA). However, there have been increased efforts to assess the relationship between reward learning and memory, with a focus on the role of the hippocampus (Kempadoo et al., 2016; Perez & Lodge, 2018). In particular, the hippocampus can alleviate two major problems for common classes of models that rely on RPE. First, the hippocampus supports reward learning when the outcomes of our decisions are delayed (Tan et al., 2008). Second, the hippocampus may help distinguish rewarding elements when learning from stimuli with many features and generalize how those features may be relevant to future outcomes (Gershman & Daw, 2017).

The hippocampus can help to connect actions and outcomes that have been separated over time (e.g., associating study habits you have been developing over a long time period with better grades; Gershman & Daw, 2017; Tan et al., 2008). Although learning from immediate rewards is selectively impaired for individuals with striatal damage, they can still learn from delayed feedback, a skill that is selectively impaired for those with medial temporal lobe and hippocampus damage (Foerde et al., 2013). Other work has shown that coordinated activity between the hippocampus, orbitofrontal cortex (OFC), and striatal regions (Miller et al., 2017; Stoianov et al., 2018; F. Wang et al., 2020) may provide a key link between lower-level reward learning and the integration of high-level information in episodic memory to support future decisions.

Furthermore, the hippocampus can support learning when the outcomes associated with a stimulus depend on the combination of features present rather than each feature independently. For example, though the yellow feature of lemons and bananas indicates ripeness, we know that one fruit will be sour and the other one sweet. In these situations, the hippocampus can compute a sparse representation of the stimulus through a process of pattern separation and a value can be associated with the computed representation rather than each individual feature (O’Reilly & McClelland, 1994). Results from fMRI have confirmed this theory showing that patterns of activity in the hippocampus help to guide reward learning from stimuli with multiple features (Ballard et al., 2019; Niv et al., 2015). Combining fMRI and pharmacological techniques identified functional coupling between the midbrain and the hippocampus, which was modulated by dopamine activity (Kahnt & Tobler, 2016). This dopamine-dependent coupling was particularly important for similarity-based processing and generalizing outcome predictions across stimuli (Kahnt & Tobler, 2016). These results suggest that both episodic memory and reinforcement learning mechanisms work together to understand real-world outcomes. Both understanding complex stimuli with multiple features and properly associating delayed rewards are essential parts of real-world learning. Understanding how the hippocampus interacts with value and reward learning will be important to properly depict and model these processes.

The third trend within reward learning research in the past decade has attempted to identify different kinds of learning, their precise roles in various behaviors, and whether different kinds of learning are supported by distinct or similar neural structures and functions. In particular, there has been considerable effort to understand model-based and model-free reward learning. Model-free learning relies only on feedback to update an expected reward associated with a stimulus or action and is often associated with habitual learning and Pavlovian conditioning. Model-based learning, however, allows learners to form a mental model of the environment and make predictions and updates based on their model. Model-based learning is also often associated with goal-directed behavior (Huys et al., 2014). While model-free learning focuses on action-outcome associations, adaptive behavior requires humans to understand the interaction of states and actions to produce an outcome (i.e., model-based learning; Daw et al., 2011; Kurdi et al., 2019). The two-stage reward learning task (Figure 2) has been widely used to dissociate the weights of model-based and model-free learning (Daw et al., 2011). In this task, an initial decision leads to one of two possible second-stage states, where a second decision is made. By observing how individuals treat rare and common transitions from the first to second stage, we can estimate how heavily individuals rely on each strategy.

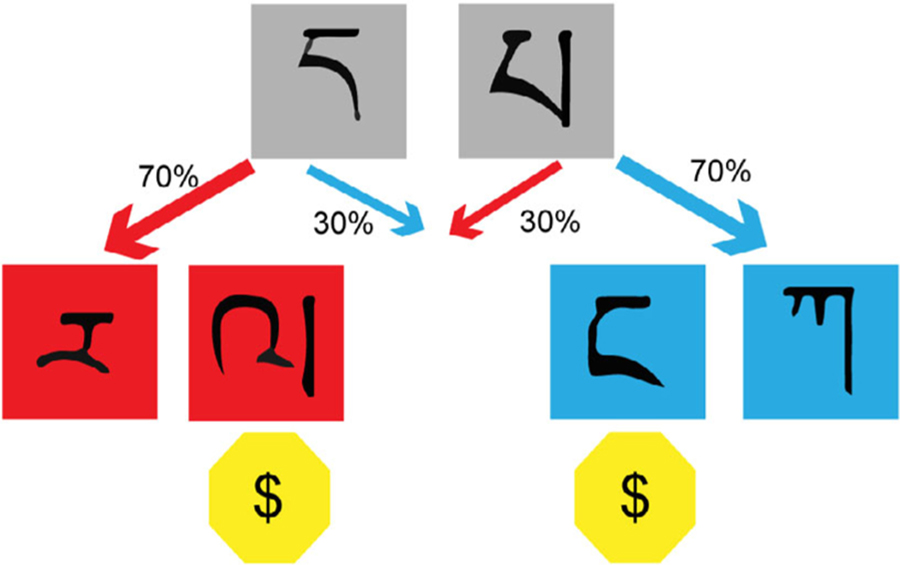

FIGURE 2.

General schematic for the two-stage model-based learning task. In the first stage, participants choose one of two gray boxes, with a Tibetan character to identify it. Depending on the chosen box, participants transition with different probabilities to a second-stage state, either the red or the blue state. In this example, each box preferentially transitions participants to a particular state (red or blue) with a 70% chance and with the remaining chance (30%) to the opposite state. In the second stage, participants choose between two boxes (with identifying Tibetan characters) and receive a reward or do not. Each box in the second stage has a different reward probability which changes throughout the experiment.
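The logic of rare versus common transitions can be made concrete with a small sketch of a single trial. The 70/30 transition probabilities follow the task description; the learning rate, the simplified model-free update (crediting the first-stage choice directly with the final reward), and all names are assumptions made for illustration only.

```python
# Each first-stage action leads to its "common" second-stage state with p = 0.7.
TRANSITIONS = {0: {"red": 0.7, "blue": 0.3},
               1: {"red": 0.3, "blue": 0.7}}

def model_based_values(q_stage2):
    """First-stage values obtained by planning through the known transition model."""
    return {action: sum(p * max(q_stage2[state]) for state, p in probs.items())
            for action, probs in TRANSITIONS.items()}

def model_free_update(q_stage1, action, reward, alpha=0.5):
    """Credit the chosen first-stage action with the reward, ignoring the state visited."""
    updated = dict(q_stage1)
    updated[action] += alpha * (reward - updated[action])
    return updated

def stage2_update(q_stage2, state, action, reward, alpha=0.5):
    """Delta-rule update of the chosen second-stage option."""
    updated = {s: list(values) for s, values in q_stage2.items()}
    updated[state][action] += alpha * (reward - updated[state][action])
    return updated

# One hypothetical trial: first-stage action 0, a RARE transition to the blue
# state, second-stage box 0 chosen there, and a reward delivered.
q1_mf = {0: 0.0, 1: 0.0}
q2 = {"red": [0.0, 0.0], "blue": [0.0, 0.0]}

q2 = stage2_update(q2, "blue", 0, reward=1.0)
q1_mf = model_free_update(q1_mf, action=0, reward=1.0)
q1_mb = model_based_values(q2)

print("model-free first-stage values:", q1_mf)
print("model-based first-stage values:", q1_mb)
```

After a rewarded rare transition, the model-free values favor repeating first-stage action 0, whereas the model-based values favor switching to action 1, because action 1 more often leads to the blue state where the reward was found. This divergence is what allows the relative weight of each strategy to be estimated from stay and switch behavior.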

One potentially major confound in the original design was that some model-free behavior could appear to be model-based learning. Conversely, recent evaluations suggest that participant misconceptions of the commonly used two-stage task may falsely appear as model-free learning, confounding the interpretations of model-free and model-based learning tasks (Feher da Silva & Hare, 2020). Initial alterations to the task to address these shortcomings included adding predictors in the analysis to capture the tendency to repeat correct choices and to map transition reversals from the first step choice (Akam et al., 2015). Further, Kool et al. (2016) identified five different factors that reduced the effectiveness of the two-stage task: the low distinguishability of second-stage probabilities, an overly low drift rate of second-stage probabilities, a probabilistic (rather than deterministic) transition structure between stages, the possibility of choice in the second stage, and the low informativeness of outcomes. By accounting for these factors, the task better reflects the accuracy-demand tradeoffs of model-based and model-free learning. We invite the reader to review Kool et al. (2016) for a detailed analysis. Overall, these recent results suggest that a large set of model-based and model-free algorithms can mimic model-based actions.

Most learners, however, are thought to use both model-based and model-free learning (Daw et al., 2011; Groman et al., 2019). This combined approach suggests that humans need to arbitrate between both approaches to learning. Neuroimaging work seems to suggest that these signals are balanced through the lateral prefrontal cortex, as regions encoding model-free values (e.g., putamen and supplementary motor cortex) can be downregulated during functional coupling with the lateral PFC (Lee et al., 2014). This mechanism may help to arbitrate between model-based and model-free strategies by decreasing the influence of model-free learning on future decisions. The lateral PFC has also been shown to be active during the arbitration of learning through imitation- and emulation-based social observational learning strategies (Charpentier et al., 2020), mirroring its involvement in model-based and model-free learning. While the lateral PFC seems to be key in both accounts, it is unclear whether these are distinct processes or whether the lateral PFC is a domain-general integrator of learned information. Finally, the successor representation algorithm has proposed a way to balance the flexibility of model-based learning and the efficiency of model-free learning without directly arbitrating between the two (Gershman, 2018). Successor representations rely on stored predictions about future states rather than re-computing the value of every decision or using precomputed action values. By storing predictions about future states, successor representations can balance tradeoffs associated with model-free and model-based learning without requiring either (Momennejad et al., 2017). Still, many of the neuroscientific findings, such as work suggesting that dopamine encodes a reward prediction error, can be difficult to disambiguate from successor representations under which dopamine is thought to signal a similar temporal difference error (Gershman, 2018).
Future work should aim to identify hypotheses that might falsify or disambiguate these classes of models.
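A minimal sketch may help convey how stored predictions about future states separate from reward values. It assumes the standard formulation in which a successor matrix M holds discounted expected future state occupancies and state values are obtained as the product of M and a reward vector; the three-state chain, the discount factor, and the function names are hypothetical.

```python
def successor_matrix(transitions, gamma, n_iter=100):
    """Discounted expected future state occupancies, via M = I + gamma * T * M."""
    n = len(transitions)
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    M = [row[:] for row in identity]
    for _ in range(n_iter):
        M = [[identity[i][j]
              + gamma * sum(transitions[i][k] * M[k][j] for k in range(n))
              for j in range(n)]
             for i in range(n)]
    return M

def state_values(M, rewards):
    """Combine stored state predictions with the current reward of each state."""
    return [sum(m * r for m, r in zip(row, rewards)) for row in M]

# Hypothetical chain: state 0 -> state 1 -> state 2, where state 2 is terminal.
T = [[0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0],
     [0.0, 0.0, 0.0]]
M = successor_matrix(T, gamma=0.9)

values_reward_at_end = state_values(M, [0.0, 0.0, 1.0])
# If the reward moves to state 1, values change without relearning M.
values_reward_moved = state_values(M, [0.0, 1.0, 0.0])

print(values_reward_at_end)
print(values_reward_moved)
```

Because the predictive matrix is stored separately from the rewards, a change in reward location is reflected in the values immediately, while a change in the transition structure would still require M to be relearned. This is one way to see how the approach sits between model-free caching and full model-based planning.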

Reward learning has been a significant area of research for much longer than the past decade. However, we have seen concepts and theories broaden to encompass larger domains of human behavior, and the field has begun to address the weaknesses of reward learning by investigating the interaction between reward learning and episodic memory. Finally, there has been considerable research into different types of learning, particularly as they relate to model-based versus model-free strategies. Reward learning promises to be an exciting area of research for the foreseeable future as it relates to the theoretical and conceptual challenges of decision neuroscience.

2.2 |. The explore–exploit dilemma

When making sequential choices, we face a decision to exploit our current source of reward or explore new options. These decisions are significant in a variety of situations, such as choosing how much to invest in a stock or how a firm should allocate its resources. Some ways researchers study such situations include explore–exploit tasks, such as n-armed bandits (Daw et al., 2006) and foraging tasks (Stephens & Krebs, 1986). Research in the preceding decade started investigating the neural correlates of exploration, exploitation, and uncertainty in dynamic situations. This left the field with the major challenge of describing the trade-off between exploitation and exploration as a single problem addressed by a unitary or larger set of mechanisms in the brain (Cohen et al., 2007).

Exploratory behavior has been defined in several ways. One distinction is made between directed exploration, which is driven by information seeking and systematic exploration of one’s options, and random exploration, which is driven by decision noise (Wilson, Geana, et al., 2014). Further, exploratory mechanisms and their effectiveness are critically affected by uncertainty in the environment, such as the probabilities of payouts of various choices in an n-armed bandit task (Daw et al., 2006), or the subsequent patches in a foraging task (Stephens & Krebs, 1986). Two major accounts explain explore–exploit behaviors: one based on the interaction of several neural regions (e.g., dACC, dorsal striatum, lateral PFC, and VS; Donoso et al., 2014), and another based on a dual system driven by opponent processes in frontoparietal regions (Mansouri et al., 2017). Since the debate between these accounts remains unresolved, understanding how subcortical areas interact with frontopolar regions in explore–exploit decisions and how environmental uncertainty mediates this process may clarify the mechanisms underlying explorative and exploitative choices in a dynamic environment.
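The distinction between directed and random exploration can be sketched in a two-armed bandit setting. In this illustration, directed exploration is represented by an information bonus proportional to each option's uncertainty, and random exploration by the temperature of a softmax choice rule. The functional form, names, and numbers are assumptions for illustration and are not taken from the cited studies.

```python
import math

def choice_probabilities(means, uncertainties, info_bonus, temperature):
    """Softmax over estimated value plus an uncertainty-based information bonus."""
    utilities = [m + info_bonus * u for m, u in zip(means, uncertainties)]
    top = max(utilities)  # subtract the maximum for numerical stability
    weights = [math.exp((u - top) / temperature) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical bandit: arm 0 looks slightly better, arm 1 is less well known.
means = [0.6, 0.5]
uncertainties = [0.1, 0.4]

exploit = choice_probabilities(means, uncertainties, info_bonus=0.0, temperature=0.05)
directed = choice_probabilities(means, uncertainties, info_bonus=1.0, temperature=0.05)
random_explore = choice_probabilities(means, uncertainties, info_bonus=0.0, temperature=1.0)

for label, probs in [("exploit", exploit), ("directed", directed), ("random", random_explore)]:
    print(f"{label}: P(choose uncertain arm) = {probs[1]:.2f}")
```

With these assumed settings, the information bonus pushes choice strongly toward the uncertain arm, whereas a high temperature simply moves choice closer to chance regardless of uncertainty. The two strategies therefore make different predictions about how exploration should scale with what is unknown about each option.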

Newer evidence has suggested that subcortical areas and those implicated in dopaminergic transmission have a more substantial role in explore–exploit behaviors than previously realized (Chakroun et al., 2020), which complicates the understanding of what mechanisms drive these behaviors. Increased dopamine levels in the striatum promoted explorative behavior (Verharen et al., 2019) and influenced participants to leave patches in poor environments earlier in a foraging task (Heron et al., 2020). However, lower dopamine levels only attenuated directed exploration compared to random exploration (Chakroun et al., 2020), suggesting that varying dopamine may have disparate effects depending on the exploration strategy implemented. Further, exploitative compared to explorative decision making elicits greater activation in the ventral tegmental area (VTA; Laureiro-Martínez et al., 2015), suggesting that explore–exploit decisions are associated with reward regions.

Nonetheless, cortical regions also exert a clear and major role in explore–exploit decisions, specifically in representing the environmental uncertainty of new options (Badre et al., 2012; Navarro et al., 2016; Tomov et al., 2020). Uncertainty in an environment has been reflected in neural responses in several ways, with relative uncertainty in the right rostrolateral PFC driving directed exploration (Badre et al., 2012), and total uncertainty in the right dlPFC driving random exploration (Tomov et al., 2020). When deciding to switch from exploring to exploiting, the activation of the vmPFC seems to impact three major constructs: uncertainty (Trudel et al., 2020), evidence accumulation in switching decisions (Blanchard & Gershman, 2018), and the decision to leave a given patch to explore another in foraging problems (McGuire & Kable, 2015). Further, a recent transcranial direct current stimulation (tDCS) study applied anodal and cathodal stimulation to the frontopolar cortex, which led participants to make slower exploratory and faster exploitative decisions, respectively (Raja Beharelle et al., 2015). Another study using the same approach found these changes in behavior were specific to directed but not random exploration (Zajkowski et al., 2017), indicating a causal role for frontopolar regions in modulating explore–exploit choices. Further, risk-taking in a sequential decision-making task was represented in the right lateral PFC (Holper, ten Brincke, et al., 2014), suggesting that assessing risk may be another feature of exploration. Next, exploitative decision making elicits greater activation in the ventral tegmental area (VTA) and PFC compared to explorative decision making (Laureiro-Martínez et al., 2015), suggesting that explore–exploit decision making significantly interacts with reward regions.
Finally, activation in the temporoparietal junction (TPJ), intraparietal sulcus (IPS), and anterior cingulate cortex (ACC) in explorative decision making suggest that explore–exploit decisions may employ attentional processes, interacting with executive function (Laureiro-Martínez et al., 2015). Taken together, these findings reinforce the importance of both frontopolar and subcortical regions in explore–exploit decisions.

Recent research has revealed how subcortical regions contribute to reward learning and how frontopolar responses to environmental uncertainty contribute to explore–exploit decisions. Despite these advances, theoretical models of explore–exploit decisions remain unresolved because subcortical regions play a more substantial role than previously realized, complicating the ability to establish a unitary set of mechanisms that cut across multiple explore–exploit paradigms. This result has led to a more complex representation of explore–exploit choices, one that integrates both model-based and model-free representations of the environment. Another complicating factor may be the lack of converging evidence across different kinds of tasks (von Helversen et al., 2018) and the limited ecological validity of tasks that lack the realistic tradeoffs found in the natural world (Mobbs et al., 2018). Another unresolved challenge is understanding how social context affects explore–exploit decisions, such as how teams initiate or terminate a project. Nevertheless, current findings have together made significant inroads into understanding explorative and exploitative behavior. Future research into explore–exploit problems promises to give individuals and policymakers valuable insights into how people explore and allocate resources in uncertain and dynamic environments.

2.3 |. Risk and ambiguity

It is often challenging to predict the outcome of a decision. When faced with uncertain outcomes, decisions can be described in the form of a risky gamble. For example, a patient might need to weigh the probability and value of a treatment option. Although there is considerable heterogeneity in risk behavior across decision makers, decision neuroscience has focused on a small number of models to explain these processes. We focus on results from three of these models, including prospect theory (Kahneman & Tversky, 1979), expected utility theory (Friedman & Savage, 1948; Knutson & Peterson, 2005), and portfolio theory (Markowitz, 1991; Raggetti et al., 2017). The first model, prospect theory, describes risk aversion using diminishing returns for subjective value, accounts for preference reversals in losses (loss aversion), and weights subjective probabilities to explain how people behave in the face of uncertain outcomes (Barberis, 2013). Applying this theory allows researchers to estimate subjective value at an individual level with results indicating that the vmPFC, lateral PFC (Holper, Wolf, & Tobler, 2014; Schultz, 2010), and VS track the subjective value of risky prospects (Blankenstein et al., 2017; Levy, 2017; Levy et al., 2010). These processes are likely supported by dopamine action in those regions (Castrellon et al., 2019; Morgado et al., 2015; Soutschek et al., 2020). Focusing on the differences in behavior between subjects with differences in neuroanatomy tends to highlight an alternate set of regions including the right posterior parietal cortex (Gilaie-Dotan et al., 2014) and amygdala (Jung et al., 2018). While the meaning of this discrepancy awaits further study, these differences could indicate potential sources for trait- and state-like risk behavior.

Although loss aversion and probability weighting, like subjective value, seem to be reflected in the striatum and vmPFC, there are distinct regions and neurochemical systems that uniquely contribute to subjective probability. Specifically, subjective probability, the tendency to overweight low probabilities and underweight high probabilities (Kahneman & Tversky, 1979; Tversky & Kahneman, 1992), is modulated by dopaminergic action (Burke et al., 2018; Takahashi et al., 2010) and has been reflected in the activation of the dlPFC and PCC (Suter et al., 2015; Wu et al., 2011). Neural markers of loss aversion have been reported in the insula and the amygdala (Bartra et al., 2013; Canessa et al., 2013, 2017; Sokol-Hessner et al., 2013), and have been related to both norepinephrine (Sokol-Hessner et al., 2015; Takahashi et al., 2013) and dopamine (Chen et al., 2020), suggesting that subjective value arises through the cooperation of multiple independent mechanisms.
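The three components of prospect theory discussed above (diminishing sensitivity, loss aversion, and probability weighting) can be sketched with the functional forms of Tversky and Kahneman (1992). The default parameter values below are the commonly cited median estimates from that work and serve only as illustrative defaults; using a single weighting parameter for gains and losses is a simplifying assumption, and the function names are hypothetical.

```python
def subjective_value(x, alpha=0.88, loss_aversion=2.25):
    """Power value function: concave for gains, steeper for losses."""
    if x >= 0:
        return x ** alpha
    return -loss_aversion * (-x) ** alpha

def probability_weight(p, gamma=0.61):
    """Inverse-S weighting: overweights small and underweights large probabilities."""
    return p ** gamma / (p ** gamma + (1 - p) ** gamma) ** (1 / gamma)

def mixed_gamble_value(gain, loss, p_gain):
    """Prospect value of a two-outcome gamble with one gain and one loss."""
    return (probability_weight(p_gain) * subjective_value(gain)
            + probability_weight(1 - p_gain) * subjective_value(loss))

# A fair coin flip to win or lose 100 has an expected value of zero,
# but a negative prospect value under these assumed parameters.
coin_flip = mixed_gamble_value(gain=100.0, loss=-100.0, p_gain=0.5)
print(f"prospect value of the coin flip: {coin_flip:.2f}")
print(f"w(0.01) = {probability_weight(0.01):.3f}, w(0.99) = {probability_weight(0.99):.3f}")
```

Fitting such parameters to an individual's choices is what allows subjective value to be estimated at the individual level and then related to neural signals.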

Although we have emphasized prospect theory as the prevailing approach to risk taking in decision making, there are alternative models that also explain risk behaviors, including expected utility theory and mean–variance frameworks such as portfolio theory. While expected utility theory is a simple model that explains a wide array of human decisions (Burghart et al., 2013), it does not predict loss aversion or probability weighting. Alternatively, portfolio and other risk theories have focused on the variance and skew of risky outcomes (Markowitz, 1991; Raggetti et al., 2017). Mean–variance and expected utility frameworks were difficult to disambiguate with behavior alone, and it was hypothesized that neural information might give rise to better insights into the computations of risk (Tobler & Weber, 2014). However, current decision-neuroscience research has been unable to distinguish a leading model, as neural signals consistent with both expected utility (Gilaie-Dotan et al., 2014; Levy et al., 2011; Lopez-Guzman et al., 2018) and mean–variance frameworks (Grabenhorst et al., 2019; Holper, Wolf, & Tobler, 2014; Symmonds et al., 2011) have often been observed within the regions typically thought to encode subjective value, such as the VS, aIns, and PFC. Future work may attempt to reconcile mean–variance and expected utility approaches or find signals that are inconsistent with one of these frameworks.
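A small example illustrates why the two frameworks are hard to separate with behavior alone. The sketch assumes a power utility function for expected utility and a linear variance penalty for the mean–variance model; both forms, the parameter values, and the gamble are hypothetical choices made for illustration.

```python
def expected_utility(outcomes, probabilities, rho=0.5):
    """Expected utility with a concave power utility (non-negative outcomes only)."""
    return sum(p * x ** rho for x, p in zip(outcomes, probabilities))

def mean_variance_value(outcomes, probabilities, risk_penalty=0.01):
    """Expected value minus a penalty proportional to outcome variance."""
    mean = sum(p * x for x, p in zip(outcomes, probabilities))
    variance = sum(p * (x - mean) ** 2 for x, p in zip(outcomes, probabilities))
    return mean - risk_penalty * variance

# Hypothetical choice: a 50/50 gamble over 0 or 100 versus a sure 50.
gamble = ([0.0, 100.0], [0.5, 0.5])
sure_thing = ([50.0], [1.0])

eu_prefers_sure = expected_utility(*sure_thing) > expected_utility(*gamble)
mv_prefers_sure = mean_variance_value(*sure_thing) > mean_variance_value(*gamble)
print("expected utility prefers the sure option:", eu_prefers_sure)
print("mean-variance prefers the sure option:", mv_prefers_sure)
```

Both models predict the same risk-averse choice here, even though one attributes it to the curvature of utility and the other to an explicit cost of variance. Distinguishing them requires gambles, or neural measures, for which the two accounts make different predictions.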

While many day-to-day decisions involve risky choices, most do not have explicitly defined probabilities of success. When a person lacks an explicit description of the probability of an outcome, they are experiencing a condition known as ambiguity (Ellsberg, 1961). Individuals tend to prefer options where the probability of different outcomes is known (i.e., risk) compared to options where the probabilities are unknown (i.e., ambiguity), often at the expense of potential rewards (Jia et al., 2020). Although these types of decisions are modeled differently, a large set of research has focused on whether ambiguous decisions are processed similarly to risky decisions or are supported by distinct mechanisms (Hsu et al., 2005; Huettel et al., 2006). Activation unique to ambiguity, as opposed to risk, has been associated with the amygdala, PCC, temporal gyrus, and lateral PFC (I. Levy et al., 2010; Pushkarskaya et al., 2015). Although most individuals are averse to ambiguity, familiarity with a task can greatly decrease this bias (Denison et al., 2018; Grimaldi et al., 2015; Hayden et al., 2010; Lempert et al., 2015). One reason experience may reduce ambiguity aversion is through reimagining ambiguous lotteries as compound lotteries. Compound lotteries are two-stage lotteries where the prize of the first lottery is the opportunity to participate in another lottery. For example, an urn might be filled with winning or losing tickets, determined by flipping a coin. Although the average urn will have equal amounts of winning and losing tickets, individuals seem to be sensitive to the statistical distribution of the urn’s composition, often described as the second-order probability (Halevy, 2007; Klibanoff et al., 2009). Increased sampling of an unknown lottery reduces the variance of these second-order distributions and could reflect the relationship between experience and ambiguity aversion.
Research using fMRI has shown that the PCC and precuneus seem to track the second-order probabilities necessary to represent these compound lotteries (Bach et al., 2011; Paul et al., 2015). This suggests that individuals do represent the complex second-order probabilities assumed by these models. A strong relationship between behaviors in the face of ambiguity and compound lotteries may provide avenues for linking behaviors under risk and ambiguity. However, it is still uncertain whether there is a strong relationship between individual preferences for compound lotteries and ambiguous decisions.
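The idea that sampling narrows a second-order distribution can be sketched with a simple Beta-Bernoulli model. This is an assumption made for illustration only; the cited studies do not necessarily model second-order probabilities this way. Beliefs about an urn's win probability are represented as a Beta distribution, and each observed draw updates its parameters.

```python
def beta_variance(a, b):
    """Variance of a Beta(a, b) distribution."""
    return (a * b) / ((a + b) ** 2 * (a + b + 1))


def second_order_variance(wins, losses, prior_a=1.0, prior_b=1.0):
    """Variance of beliefs about the win probability after sampling.

    Starts from an assumed uniform Beta(1, 1) prior and adds observed counts.
    """
    return beta_variance(prior_a + wins, prior_b + losses)


# Hypothetical sampling histories with an even split of wins and losses.
for wins, losses in [(0, 0), (5, 5), (50, 50)]:
    print(wins + losses, "draws:", second_order_variance(wins, losses))
```

The mean belief stays at one half in every case, yet the spread around it shrinks as draws accumulate, which is one way experience could make an ambiguous lottery resemble a risky one.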

2.4 |. Discounting and self-control

A hallmark of typical decision makers is their tendency to act impulsively. Within decision neuroscience, impulsivity has often been assessed by measuring tradeoffs between current rewards and future rewards, referred to as intertemporal choice. Seminal work by George Ainslie has demonstrated that people tend to devalue rewards hyperbolically at a consistent rate over time (Ainslie, 1975). However, a more recent “as soon as possible” model describes this hyperbolic discounting as relative to the soonest possible reward (Kable & Glimcher, 2010). While there is debate over which model best represents discounting behavior, it remains unclear how variations in self-control best explain intertemporal choices (Scheres et al., 2013). A significant question at the end of the preceding decade about temporal discounting was whether there was a value signal unique to discounting (Carter et al., 2010). Most of the recent advances in the field of intertemporal choice have been in acquiring a more nuanced understanding of what drives these decisions.
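The difference between the two discounting accounts can be illustrated with a short sketch. The standard hyperbolic form is V = A / (1 + kD); the "as soon as possible" variant below simply measures delay from the soonest available reward, which is a simplified reading of that model. The discount rate `k`, the reward amounts, and the delays are hypothetical values chosen for illustration.

```python
def hyperbolic_value(amount, delay, k=0.05):
    """Standard hyperbolic discounting: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def asap_value(amount, delay, soonest_delay, k=0.05):
    """Simplified ASAP variant: delay is measured from the soonest available reward."""
    return hyperbolic_value(amount, delay - soonest_delay, k)


def prefers_later(value_fn, front_delay, gap=10.0):
    """True if 120 units at front_delay + gap is valued above 100 units at front_delay."""
    return value_fn(120.0, front_delay + gap) > value_fn(100.0, front_delay)


# Standard hyperbolic model: preference flips when both rewards are pushed back.
print(prefers_later(hyperbolic_value, front_delay=0.0))
print(prefers_later(hyperbolic_value, front_delay=100.0))

# ASAP variant: the same choice is evaluated relative to the sooner reward.
asap_at_100 = lambda amount, delay: asap_value(amount, delay, soonest_delay=100.0)
print(prefers_later(asap_at_100, front_delay=100.0))
```

With these assumed values, the standard model predicts a preference reversal once a common front-end delay is added, whereas the ASAP variant predicts the same choice regardless of that delay.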

New findings have established clearer roles of prefrontal and subcortical regions in regulating discounting decisions, characterizing the ventral striatum (VS) as the driver of impulsivity, dlPFC as the brakes, and the vmPFC as the central arbiter between the two with a slight bias toward acting as the brakes. Subcortical structures exert an important role in impulsive decisions, with heightened activity in the dorsal (Hamilton et al., 2020) and ventral striatum (de Water et al., 2017) among adolescents predicting impulsive choices. Framing intertemporal choice problems sequentially highlights opportunity costs and is associated with decreased impulsivity (Magen et al., 2008), which may be due to lower activity within the striatum in response to immediate rewards and a reduced activation of the dlPFC to facilitate the choice of larger later rewards (Magen et al., 2014). These results suggest that the valuation process is significantly modulated by projections from reward-related subcortical structures. Further, some recent evidence suggests that intertemporal valuation converges in the vmPFC, consistent with its characterization as a valuation hub in other domains such as explore–exploit dilemmas and empathetic choices described elsewhere in the review. For example, discounting tendencies varied in several discounting tasks for different age groups but remained consistent when reduced to subjective value mapped in the vmPFC (Seaman et al., 2018).

However, recent investigations into the dlPFC and vmPFC in discounting decisions have yielded mixed findings with regard to their roles as the “brakes” and the “central hub,” respectively. One investigation found that increased temporal discounting was linked to greater connectivity between the dlPFC and the vmPFC; however, it was increased activity in the vmPFC during decisions that was associated with a greater tendency to delay rewards (Hare et al., 2014). Stimulation of both the vmPFC (Cho et al., 2015) and the dlPFC (He et al., 2016; Shen et al., 2016; Xiong et al., 2019) increased choices of larger, later rewards, and disruption of the lateral prefrontal cortex increased impulsive choices (Figner et al., 2010), suggesting that the dlPFC and the vmPFC together may serve to counter impulsivity. Surprisingly, another investigation found that stimulating the dlPFC (Kekic et al., 2014) did not increase choices of delayed rewards. Nonetheless, the overall direction of recent studies suggests that increased activation of the dlPFC is both necessary and sufficient to influence the delay of gratification and may be interpreted as a mirror to an impulsive drive, though the vmPFC may also serve a modulating role in impulsivity above and beyond integrating the signal between the VS and the dlPFC. This interpretation is consistent with earlier work suggesting that while the VS was more sensitive to the magnitude of a reward, the dlPFC was more sensitive to the delay of rewards (Ballard & Knutson, 2009), with increased dlPFC activation associated with shorter delays. In sum, the weight of the evidence suggests that the vmPFC remains the central hub of the valuation process, as it integrates signals from both the dlPFC and VS, though it may also exert some “braking” influence of its own.

The substantial role of the vmPFC in regulating intertemporal choice suggests that this brain region has an important role in computing the value of current and future rewards. However, integrating research in intertemporal choice, valuation, and emotion suggests that the vmPFC calculates value across many types of decisions, diminishing the likelihood that there is a value signal unique to discounting choices. Taken together, in the past decade researchers have increased the spatial specificity of findings in the vmPFC and the dlPFC, characterized their connectivity with reward regions, and developed a causal understanding of these relationships, which sheds light on the valuation of current versus future rewards.

2.5 |. Valuation

One of the foundational ideas within decision neuroscience is that choice-related processes about actions and goods reduce to a single common value (Padoa-Schioppa, 2011; Wunderlich et al., 2012). To this end, many fMRI studies have looked for evidence of a brain region or network responsible for a “common currency” value signal. Early work looked for brain regions that encode subjective value independent of a task (reward learning, risk, temporal discounting, etc.) and the type of good (monetary, food, social, information, etc.), with results often converging on the vmPFC (Camille et al., 2011; McNamee et al., 2013; Smith & Huettel, 2010; Winecoff et al., 2013) and VS (Krastev et al., 2016; Tang et al., 2012; Vassena et al., 2014). Although some studies implicated the OFC as a possible source of a common currency (Charpentier et al., 2018; D. J. Levy & Glimcher, 2012; Padoa-Schioppa & Conen, 2017), other accounts suggest that the OFC’s role is more specialized in the comparison process or representing the state space for decisions (Blanchard et al., 2015; Rudebeck & Murray, 2011; Wilson, Takahashi, et al., 2014).

To identify separate value signals, researchers have assessed the roles of the OFC in both social and general value processing, leveraged multivariate pattern approaches, and investigated connectivity between regions, with mixed results. These methods have found patterns of responses that code for multiple values (Kobayashi & Hsu, 2019) and some that code specific reward values (Smith et al., 2016; Wake & Izuma, 2017). A multivariate analysis of the OFC during food-based decisions found that the overall subjective value of a particular food item is represented in patterns of neural activity in both medial and lateral parts of the OFC, even though only the lateral OFC represented the nutritional attributes of the item (Suzuki et al., 2017). These findings suggested that signals from lateral OFC were integrated into medial OFC to compute a common subjective value of the food item. Likewise, the computation of multiple values integrated into a common signal is necessary for social decisions (Ebner et al., 2018; Izuma et al., 2008; Rademacher et al., 2010; Wake & Izuma, 2017). Although these studies face many difficulties, such as identifying a common currency while also accounting for nonvalue variables like attention, affect, salience, and premotor activation (O’Doherty, 2014; Rigney et al., 2018), their results have identified some of the organizing principles that describe how these signals are integrated (Suzuki & O’Doherty, 2020). To help overcome these difficulties, some models represent value as an emergent process that incorporates these variables by integrating value from numerous regions (Hunt & Hayden, 2017). This value integration process compares attributes of the assets under consideration and assigns attribute salience in order to guide choice behavior (Hunt et al., 2014). Understanding and characterizing the consistent patterns of competition in this hierarchy for real-world stimuli could be a persistent challenge for the field.

Another major vein of research has explored the role of choice on the neural signals of value and preference. While some efforts have tried to separate value from choice (Louie & Glimcher, 2010), increasing evidence suggests that the act of choosing may itself have its own value (Ly et al., 2019). One way this has been studied is by presenting participants with situations that either do or do not afford agency. Using this approach, when participants were able to make their own choices in a gambling game, they reported more favorable ratings and showed increased VS activation compared to watching a computer make selections for them (Leotti & Delgado, 2014). Strikingly, given the choice between playing themselves and allowing the computer to play in their place, participants strongly preferred gambles with agency even when this imposed significant financial costs on themselves, with the degree of cost tracked by activation of the vmPFC (Wang & Delgado, 2019). Other studies have reported increased activation across multiple value-related regions for items that were previously chosen during difficult decisions (Jarcho et al., 2011). In particular, activity in the vmPFC, VS, and inferior frontal gyrus was associated with increased preference for chosen items after decisions were made.

Another method to study the value of choice has been to increase the number of choices available to a participant. In these tasks, as the number of choices increased, BOLD responses in the VS and ACC followed an inverted U shape suggesting a choice overload (Reutskaja et al., 2018). This pattern continued when participants were asked to reconsider their choices, initially increasing value for items chosen from a small set but decreasing again for items chosen from larger sets, where vlPFC activation reflected the influence of choice set size on revaluation (Fujiwara et al., 2017). Another study noted increased connectivity between vlPFC and PCC when individuals have more choice, particularly if they exhibited greater self-reported reward sensitivity (Cho et al., 2016). The combination of results displaying the value of control and choice overload presents an opportunity to understand when and why choice modulates value signals.

Another recent major trend has been the application of models that describe sensory perception, particularly drift-diffusion models (DDM; Ratcliff, 2002; Smith, 2000) and divisive normalization (Heeger, 1992; Louie et al., 2015) to value-based decision making. DDM and divisive normalization both draw parallels between valuation and perceptual cognition and attempt to explain when and why people have trouble discriminating between different values. These models are not mutually exclusive and work has been done to investigate how these effects may interact (Otto & Vassena, 2020). Although we hope that the following descriptions give the reader an intuition about these two models and why they have been so widely applied, the full extent of their contributions in risky decision making (Ma & Jazayeri, 2014; Peters & D’Esposito, 2020) and reward learning (Bavard et al., 2018; Pedersen et al., 2017) cannot be contained here. Over the last decade, both DDM and divisive normalization have been applied in similar areas including multi-attribute choice (Chang et al., 2019; Fisher, 2017), temporal effects of value (Clay et al., 2017; Zimmermann et al., 2018), and investigating the role of attention in decision making (Gluth et al., 2020; Webb et al., 2020).

Drift-diffusion models describe the valuation process as an accumulation of evidence between alternatives. As relative evidence accumulates for an option, it drives a decision toward a decision threshold associated with that option. A choice is made when the evidence passes the decision threshold. DDM has been used to account for the effects of timing and attention in the valuation process (Gluth et al., 2020; Krajbich et al., 2012; Mormann et al., 2010). The DDM estimates four parameters: the starting point bias (pre-existing preferences for one response), the drift rate (evidence accumulation), the decision threshold (linked to speed/accuracy trade-offs), and the nondecision time (Clay et al., 2017; Lerche & Voss, 2017). Results from fMRI suggest that evidence accumulation in value-based decisions is reflected in the posterior-medial frontal cortex (Pisauro et al., 2017). Consistent with its role in integrating the evidence prior to reaching a decision, this region exhibits task-dependent coupling with the vmPFC and the striatum, brain areas known to encode the subjective value of decision alternatives (Polanía et al., 2015; van Vugt et al., 2012). Additionally, stimulation of the dlPFC affected the strength of evidence accumulation, suggesting a causal relationship between activity in these areas and the accumulation process (Maier et al., 2020).
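The accumulation process and its four parameters can be made concrete with a small simulation. The sketch below is a generic two-boundary random walk using a simple Euler step, not the implementation used in any of the cited studies; the function name, the default values, and the choice to express the starting point as a fraction of the threshold are all assumptions made for illustration.

```python
import random


def simulate_ddm(drift, threshold, start_bias=0.5, non_decision_time=0.3,
                 noise_sd=1.0, dt=0.001, max_time=10.0, rng=None):
    """Simulate one trial of a two-boundary drift-diffusion process.

    drift: mean rate of evidence accumulation (positive favors the upper option)
    threshold: distance between the lower (0) and upper boundaries
    start_bias: starting point as a fraction of the threshold (0.5 = unbiased)
    non_decision_time: time added for encoding and motor processes, in seconds
    Returns (choice, response_time); choice is None if no boundary was reached.
    """
    rng = rng or random.Random()
    evidence = start_bias * threshold
    elapsed = 0.0
    while 0.0 < evidence < threshold and elapsed < max_time:
        # Deterministic drift plus Gaussian noise scaled by sqrt(dt).
        evidence += drift * dt + noise_sd * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        elapsed += dt
    if evidence >= threshold:
        choice = "upper"
    elif evidence <= 0.0:
        choice = "lower"
    else:
        choice = None
    return choice, elapsed + non_decision_time


# Hypothetical parameter values: a positive drift should favor the upper option.
rng = random.Random(0)
trials = [simulate_ddm(drift=2.0, threshold=1.0, rng=rng) for _ in range(200)]
upper_rate = sum(choice == "upper" for choice, _ in trials) / len(trials)
mean_rt = sum(rt for _, rt in trials) / len(trials)
print(f"proportion upper: {upper_rate:.2f}, mean RT: {mean_rt:.2f} s")
```

Raising `threshold` in this sketch trades speed for accuracy, while shifting `start_bias` away from 0.5 favors one response before any evidence arrives.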

Decision neuroscience has taken advantage of drift-diffusion and other sequential sampling models, extending these processes to examine the role of temporal dynamics (Luzardo et al., 2017), attention (Krajbich & Rangel, 2011), and multiple attributes (Fisher, 2021) in economic decisions. Through exploring the effects of time on decisions, researchers have shown that these processes are remarkably flexible. When given less time to make a decision, individuals experience a decreased decision threshold and increased noise in the slope of their drift process (Milosavljevic et al., 2010). Other results have indicated that the evidence accumulation process can begin at various times in a process guided by attention (Maier et al., 2020). Some drift-diffusion models have explicitly included the influence of attention in decision making, suggesting that evidence accumulation is amplified when certain features are being attended to (Krajbich & Rangel, 2011) and visual attention is crucial when choosing among large sets and familiar stimuli (S. M. Smith & Krajbich, 2019). Incorporating both visual attention and varied temporal dynamics to DDM indicated that shifts in visual gaze under increased time pressure predicted more selfish choices among participants (Teoh et al., 2020). Taken together, DDM has been effectively applied across varying time horizons and in applications assessing the effects of attention on decision making.

However, it has been challenging to extend DDM toward decisions where people consider two or more attributes underlying the binary choices presented to them. For example, a person choosing between two cars may incorporate trade-offs between price, mileage, and horsepower when deciding which car they want to buy. While attentional DDM tends to model evidence accumulation to decide between binary alternatives (e.g., car one and car two), other models have described the evidence accumulation process as occurring over attributes (e.g., price, mileage, and horsepower) instead (Bhatia, 2013; Trueblood et al., 2014; Tsetsos et al., 2012). To investigate how people accumulate evidence over attributes rather than alternatives, researchers have introduced additional irrelevant alternatives to classic DDM problems, which reduced choice accuracy or even biased choices (Chau et al., 2020; Kaptein et al., 2016). Such results suggest that there are a variety of contextual distractor effects on decision making (Busemeyer et al., 2019). While one recent experiment was unable to reproduce the distractor effect, instead indicating that high-value distractors attracted greater attention and slowed responses (Gluth et al., 2020), a reanalysis of the same dataset employing an alternate model of divisive normalization suggested that high-value distractors biased choices by splitting attention in multi-attribute decisions (Webb et al., 2020). Taken together, DDM has had somewhat mixed success when integrated with multi-attribute models, though it has been successfully extended to account for attention and temporal dynamics.

Another neural model of value-based decision making is divisive normalization. The divisive normalization model was originally built to describe how the efficient coding of visual information affects perception. This model has since been applied to show how decision values are neurally coded in a given context relative to all of the options considered. Specifically, divisive normalization describes the encoding of an asset’s subjective value through a process that re-scales value proportionally to the sum of all values in the current choice set plus some constant (Louie et al., 2015). Activity from neural recordings in the lateral intraparietal cortex of rhesus monkeys was shown to reflect these normalized decision values, as the value-related neural activity of each option was proportional to the values of other available alternatives (Louie et al., 2011). In addition, context-based fluctuations in value coding within the vmPFC and OFC seem to follow the divisive normalization rule and can explain a variety of irrational behaviors such as the decoy effect (Louie et al., 2011, 2013; Rangel & Clithero, 2012). In sum, many argue that divisive normalization is optimal for the biological coding of decision values across multiple domains (Landry & Webb, 2021; Louie et al., 2015; Steverson et al., 2019; Tsetsos et al., 2016).
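The re-scaling described above can be sketched in a few lines. The form used here, each value divided by a constant plus the sum of all values in the choice set, follows the verbal description in the text; the parameter names `sigma` and `gain` and the example values are assumptions chosen for illustration, and published models often include additional terms.

```python
def divisive_normalization(values, sigma=1.0, gain=1.0):
    """Re-scale each value by a constant plus the sum of all values in the set."""
    denominator = sigma + sum(values)
    return [gain * v / denominator for v in values]


# Hypothetical choice sets: the same two targets, with and without a third option.
two_options = divisive_normalization([10.0, 8.0])
with_distractor = divisive_normalization([10.0, 8.0, 6.0])

gap_without = two_options[0] - two_options[1]
gap_with = with_distractor[0] - with_distractor[1]
print(f"coded gap without distractor: {gap_without:.3f}")
print(f"coded gap with distractor:    {gap_with:.3f}")
```

Adding a third option shrinks the coded difference between the two original options, which is one route by which an irrelevant alternative could make them harder to discriminate.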

Recent extensions of divisive normalization, like those of DDM, have focused on multi-attribute choice, temporal effects, and the role of attention. In multi-attribute choice, divisive normalization has been linked to the decoy effect and other irrational considerations of nonchosen options (Dumbalska et al., 2020; Soltani et al., 2012). This inclusion of irrelevant alternatives in the choice process has been shown to arise from the simple neural dynamics of normalization circuits in Caenorhabditis elegans, a model organism whose neural circuitry has been fully described (Cohen et al., 2019). However, normalization occurs not only over concurrent alternatives, but also over time. This temporal normalization decreases an item’s coded value after recently experiencing high-value items and increases the coded value after experiencing many low-value items (Khaw et al., 2017). Lastly, divisive normalization has been expanded to incorporate the modulation of choice by attentional processes (Reynolds & Heeger, 2009). Some have suggested that while attentional modulation may primarily act on sensory areas, value-related modulation may drive decision-related activity in regions like the lateral intraparietal cortex (Louie et al., 2011). However, demonstrations of how reward affects early visual processing have suggested that value and top-down attention engage overlapping mechanisms of neuronal selection (Stanişor et al., 2013).

The extensions we have described across DDM and divisive normalization help to highlight not only the utility of computational models to describe various cognitive processes but also the underlying mechanisms of attention and value, which have been of considerable interest to decision neuroscience as a whole. Despite advances in understanding the underlying processes of valuation, there needs to be further work to thoroughly disentangle the neural mechanisms affecting choice. Better descriptions of reward processing mechanisms may provide a foundation to understand real-world behaviors. However, despite the critical importance of valuation in the decision-making process, other factors such as emotional and social influences should be considered to understand human decision processes more fully.

3 |. EMOTIONAL AND SOCIAL MODULATORS OF DECISION MAKING

The effects of emotions and social influence on decision making constitute their own perennial topics for researchers interested in decision neuroscience, developing into the fields of affective and social neuroscience, respectively. Both domains made significant inroads into understanding behavior during the 2000s and 2010s but were left with questions about whether cognition and emotion should remain distinct elements in psychology (Dalgleish et al., 2009) and how to integrate recent findings on the physiological elements responsible for social behavior (Norman et al., 2010). Most of this work has revolved around the interaction of value-related regions such as the VS and socially engaged areas (Figure 3) like the right temporoparietal junction (rTPJ). However, the significant overlap of activation in regions like the vmPFC suggests that there is a degree of integration of social, value, and emotion-related information (Delgado et al., 2016). Nonetheless, recent findings suggest that emotion- and social-related regions such as the ACC and rTPJ can modulate the function of value-related areas like the vmPFC during social and emotional decisions (Lockwood et al., 2015; Morelli et al., 2015; Smith, Lohrenz, et al., 2014; van den Bos et al., 2014). Recent research has made significant inroads into characterizing how neural representations of emotional and social information modulate the function of canonically cognitive regions (Smith et al., 2015). Both conceptual and empirical challenges remain in integrating these models with the broader literature, and it is still unclear to what degree these factors interact with traditional reward processes.

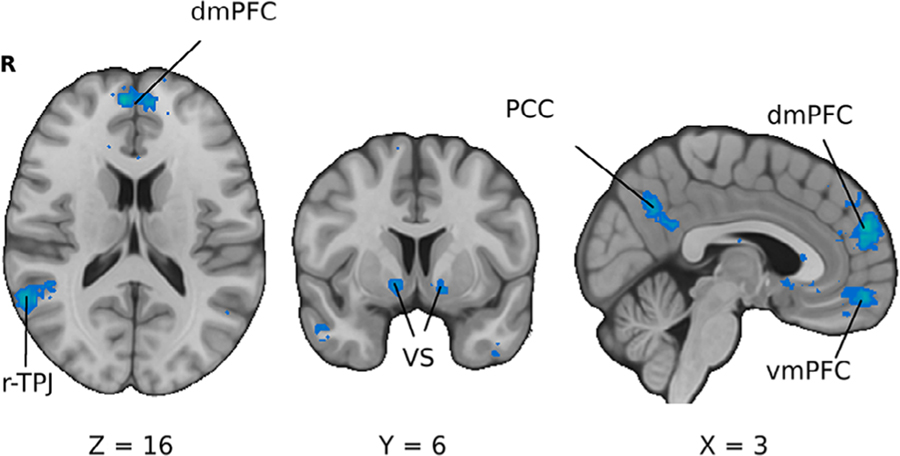

FIGURE 3.

Brain regions associated with social processes. The association test for an automated meta-analysis of social interactions provided by the Neurosynth platform. Key regions highlighted include the ventromedial prefrontal cortex (vmPFC), dorsomedial prefrontal cortex (dmPFC), ventral striatum (VS), and right temporoparietal junction (rTPJ).

3.1 |. Emotion

Although many have viewed emotions and rationality as conflicting processes in the past, they are now seen as collaborative aspects of real-life decision making, and each may either augment or interfere with optimal decision making across decision frames (Li et al., 2017). Many consequential real-life situations involve making decisions in emotional contexts, such as decisions under stress and decisions where one’s choices affect others. As a result, there has been extensive research in decision neuroscience into factors such as stress and the experience of empathy for others, as these factors can modulate preferences related to emotional decision making and substantially affect one’s choices.

In particular, there has been extensive research on how stress modulates seemingly optimal decision making, with mixed findings. Acute stress impairs the ability to persist in the face of setbacks perceived as uncontrollable (Bhanji et al., 2016), leads people to make riskier, less valuable choices (Wemm & Wulfert, 2017), contributes to participants overexploiting resources (Lenow et al., 2017), and leads to less model-based decisions when working memory is taxed (Otto et al., 2013). Yet it has been challenging to isolate patterns in how stress affects behavior, as it sometimes hinders and at other times helps optimal decision making. For example, moderate physiological stress may help participants make more optimal choices, augmenting learning to maximize long-term rewards from experience (Byrne et al., 2019). These stress effects seem to directly affect the core circuitry of reward processing, with regions including the dorsal striatum and OFC showing a decreased sensitivity to monetary outcomes in individuals who had just performed a cold pressor task (Porcelli et al., 2012). Taken together, these conflicting results suggest that stress may act as a polarizing variable, eliciting stronger behaviors in somewhat unpredictable ways across several domains of research.

Assessing how stress affects economic measures of individual differences such as risk, uncertainty, and ambiguity aversion has led to mixed results. Although meta-analytic methods have suggested that stress increases risk-taking behavior (Starcke & Brand, 2016), stress has shown highly mixed effects on decision making under uncertainty. Some results show only an increased tolerance to ambiguity (FeldmanHall et al., 2016), while others find an overall increase in risk taking without ambiguity mediating this effect (Buckert et al., 2014). Others still have shown that acute physiological stress has no effect on risk attitudes, loss aversion, or choice consistency (Sokol-Hessner et al., 2016). These inconsistent results may reflect methodological variability across studies but also a nonlinear effect of stress on behavior in general (Peifer et al., 2014; Porcelli & Delgado, 2017; Salehi et al., 2010; Schilling et al., 2013). A possible explanation worth investigating is that acute stress may increase optimal behavior related to risk, uncertainty, and ambiguity in the short term but degrade optimal choices over the longer term. Taken together, the mixed behavioral results present a puzzle for future researchers seeking to link the effects of stress across various domains of choice.

Although not an emotion itself, empathy, or the ability to recognize, understand, and share the thoughts and feelings of another, can substantially impact decision making and ties together both social and emotional influences on choice behavior (Lockwood et al., 2016). Empathy is associated with generosity (Park et al., 2017), which is both an intuitive link and a quantitative measure of behavior. People who acted generously had slower reaction times (Hutcherson et al., 2015), greater self-reported happiness (Park et al., 2017), and stronger activation in the TPJ, VS (Hutcherson et al., 2015; Park et al., 2017), and the vmPFC (Hutcherson et al., 2015). Empathy has been further delineated into affective empathy, described as inferring the experience or feeling of others’ emotions, and cognitive empathy, which can be described as mentalizing or taking the perspective of another (Kerr-Gaffney et al., 2019; Zaki et al., 2012). Empathy requires considerable cognitive effort (Cameron et al., 2019), and while people act prosocially, they would rather exert more effort to benefit themselves (Lockwood et al., 2017), suggesting that people differentiate the value of helping others from that of selfishly benefiting themselves. Studies attempting to separate affective and cognitive empathy using fMRI have shown that affective empathy was associated with activation in the anterior insula (aIns; Masten et al., 2011), whereas cognitive empathy was associated with activation in the TPJ, suggesting two separate mechanisms for altruistic behavior (Tusche et al., 2016).

There are several theorized mechanisms that seem to drive cognitive and affective empathetic behavior, including mentalizing and emotionally experiencing the pain of others, respectively. One mechanism that may impact the mentalizing component of cognitive empathy includes value computations for the self versus others in the rostral dmPFC for modeled choices, and vmPFC for choices about to be executed (Nicolle et al., 2012). Further, watching others experiencing rewards is associated with trait empathy (Lockwood et al., 2015) and is encoded in the OFC and ACC in rhesus monkeys (Chang et al., 2013), and the ACC (Lockwood et al., 2015), vmPFC, and the VS in humans (Morelli et al., 2015). With respect to affective empathy, the driving mechanism may be experiencing the pain of others, which is associated with social pain regions such as the dACC and the aIns (Masten et al., 2011). Further, greater gray matter density in the aIns was also correlated with higher affective empathy scores (Eres et al., 2015). Next, the ACC has been robustly associated with various elements of social pain, which include self-reported distress and rejection (Rotge et al., 2015; Woo et al., 2014), further reinforcing its importance in the experience of affective empathy.

However, one common region that shares activation between vicarious and personal reward is the vmPFC (Harris et al., 2018; Morelli et al., 2015), suggesting that value associated with most empathic decisions may be computed in this region. Further, participants with vmPFC lesions gave less money to those who were suffering (Beadle et al., 2018), indicating that the vmPFC may be critical for regulating empathetic behavior. While distinctions are still made for cognitive and affective processes, the commonality of the vmPFC in both systems indicates that a connectivity-based approach across a gradient of activation may be a more accurate representation rather than a dual system of cognition versus emotion. Future directions include developing a causal understanding of empathetic mechanisms using brain stimulation methods. For example, one unresolved question is whether value in the vmPFC moderates affective processes, or is value assessed first, with empathetic processes moderating the subsequent value of a decision? Nonetheless, current findings indicate a more complex and robust understanding of both cognitive and affective empathy, and their respective neural correlates in both cortical and subcortical regions of the brain.

3.2 |. Social context

Many decisions occur in social situations, which, along with emotional experiences, can substantially modulate our choices. Social reinforcement is a potent reward in its own right (Distefano et al., 2018; Hackel et al., 2017; Wake & Izuma, 2017) and helps to explain human aversion to inequality (Fehr & Schurtenberger, 2018; Tricomi et al., 2010). Moreover, the presence of peers influences adolescents’ choices (Powers et al., 2018; Somerville et al., 2019; Van Hoorn et al., 2017), which more generally seems to be the product of modulated social value signals (Fareri et al., 2012). Applying social contexts to decision making adds a crucial dimension to understanding human choices, as social situations such as being in the presence of a peer or negotiating at an auction can substantially affect behavior in ways that seem normatively inconsistent with economic models (Somerville et al., 2019; Van Hoorn et al., 2017). Moreover, while canonical decision-making models (e.g., reward learning) can certainly describe social behavior, violations of social expectations can attenuate these mechanisms (FeldmanHall et al., 2018), suggesting that social processes involve a separate and distinct decision-making system. Assessing how social situations affect behavior and how they are neurally represented can substantively inform how social context modulates choices, such as when people decide to trust others.

One important aspect that may explain social behaviors includes how social rewards are encoded in the brain, with people deriving unique reward value from social interactions (Lin et al., 2012). Studies have shown that peers can enhance impulsivity (O’Brien et al., 2011) and risky choice among adolescents, an effect tied to increased striatal responses to reward (Chein et al., 2011). In contrast, the presence of a mother can have the opposite effect, reducing risk-taking behavior among adolescents through blunted striatal responses and enhanced connectivity between the striatum and vlPFC (Telzer et al., 2015). The VS also responds to social rewards, such as social acceptance (Distefano et al., 2018; Wake & Izuma, 2017), inequity (Tricomi et al., 2010), rewards given to in-group versus out-group members (Hackel et al., 2017), and social comparison (Bault et al., 2011). The VS plays a critical role in integrating social information by coding social context and rewards (Báez-Mendoza & Schultz, 2013). For example, striatal reward value signals are enhanced during rewarding experiences shared with a friend (D. Fareri et al., 2012), when self-disclosing to others (Tamir et al., 2015; Tamir & Mitchell, 2012), and when receiving positive feedback in social interactions (Jones et al., 2011; Simon et al., 2014; Sip et al., 2015). Receipt of social reward modulates connectivity between regions comprising reward circuitry (e.g., striatum and vmPFC) and social brain regions such as the TPJ (Smith, Clithero, et al., 2014; van den Bos et al., 2014).

Socially rewarding situations, such as trusting another person, evoke activation in regions that overlap with the default mode network (DMN), including the vmPFC, PCC, and TPJ (Acikalin et al., 2017; Mars et al., 2012). Building on these findings, recent work has shown that there is enhanced connectivity between the DMN, superior frontal gyrus, and superior parietal lobule when experiencing reciprocated trust from close social others (Fareri et al., 2020). These findings suggest that the DMN helps to integrate signals of the relative importance of positive experiences with close others and strangers. A meta-analysis found that activation related to social conformity converges on regions including the VS, dmPFC, and aIns (Wu et al., 2016). Integrating these findings suggests that social contexts are inherently rewarding and that social reward circuitry, especially involving the VS, has robust impacts on social behavior (Bhanji & Delgado, 2014).

Social situations such as negotiations, or factors like trust and dishonesty, often require imagining the thoughts of one’s social partner. This act of mentalizing involves the temporal–parietal junction (TPJ), as shown in tasks involving perspective taking (Martin et al., 2020), with the disruption of the TPJ decreasing the perceived harm of hurting others (Young et al., 2010). Further, the TPJ has been associated with the discounting of delayed and prosocial rewards (Soutschek et al., 2016, 2020), the prediction of social actions (Carter et al., 2012), and even the development of social relationships between players during a public goods game (Bault et al., 2015). While many of these findings reflect increased activation in the TPJ for close social others, there have also been reports of reduced r-TPJ activity after negative interactions with a friend (Park & Young, 2020). Overall, the weight of recent research points toward the TPJ playing a strong role in social contexts.

However, while the TPJ plays a strong role in social situations, other regions such as the amygdala, VS, and vmPFC also play an important modulating role in social contexts. For example, small self-serving instances of dishonesty can escalate over time, with a resulting reduction in amygdala response (Garrett et al., 2016). Moreover, a recent finding suggested that the computation of social value in the VS and mPFC, contingent upon social closeness with a partner, drives collaboration in a trust task (Fareri et al., 2015). Nonetheless, while manipulations of strategic games with human and computer opponents often show increased activation across numerous regions including the vmPFC and PCC (Kätsyri et al., 2013), the TPJ showed the most distinctive social bias (Carter et al., 2012). During these social decisions, the TPJ has been shown to functionally couple with the vmPFC and influence the encoding of subjective value, with reduced TPJ activation after negative interactions associated with smaller decreases in social closeness (Makwana et al., 2015; Strombach et al., 2015). Although many investigations point toward the TPJ as necessary for social decisions, it remains unclear whether this region computes social-specific information or contributes toward generalized perspective taking that is integrated into other processes during social decisions.

Further work is needed to clarify the precise role of the TPJ and other brain regions in mentalizing and social closeness, and their associations with experiencing trust. Perceived social distance and the perceived trustworthiness of others may affect how people interpret the fairness of another person’s actions and how they act in their own self-interest. In social interactions and bargaining situations, people have strong attitudes toward fairness, which may be explained through inequity aversion and a perceived value for punishing norm violators (Mendes et al., 2018). Rejecting a partner who has acted unfairly in the Ultimatum Game has been associated with increased nucleus accumbens activation (Strobel et al., 2011; White et al., 2013), indicating that choosing to punish can itself be rewarding, and with increased activity in the dmPFC and bilateral aIns (Bellucci et al., 2018; White et al., 2013), which together form elements of a punishment network. Moreover, transcranial direct current stimulation to the lateral PFC significantly affected both voluntary and sanction-based compliance in a variant of the Ultimatum Game (Ruff et al., 2013), suggesting that compliance may be due to fear of punishment.

Another explanation for discrepancies between Dictator and Ultimatum Game choices may be variability in participants’ strategic reasoning, potentially mediated by emotional intelligence (Sazhin et al., 2020). Furthermore, social norms tend to be more strongly enforced when a third party, rather than oneself, was perceived to be treated unfairly (FeldmanHall et al., 2014), suggesting that the variability in individual choices in the Ultimatum Game may incorporate factors other than punishment to enforce social norms. However, the aIns was found to be involved in decisions associated with harm done to self, whereas the amygdala was associated with punishment done to others (Stallen et al., 2018). Since unfair choices seem to reliably elicit aIns responses in the Ultimatum Game, the fear of punishment seems to at least partially explain this behavior. Nonetheless, a reliable finding is that cortical regions are recruited in balancing social rewards, with the vmPFC encoding immediate expected rewards in a public goods game as an individual utility while the lateral frontopolar cortex encodes the group value (Park et al., 2019). Taken together, these results indicate that people delicately balance social rewards and the threats of punishment in their choices, and recent research has begun to identify the respective neural correlates of these decisional processes.

In summary, recent evidence has converged on several mechanisms to explain decisional processes in emotional and social contexts. One common theme is that situations involving empathy and trust consistently engage the VS and vmPFC. These networks are further mediated by the TPJ, aIns, and ACC depending on the emotional or social context. Current and future work should assess whether these factors should continue to be viewed simply as modulators of decision making or whether they should constitute unique parameters in a decision-making model. While these factors have shown robust effects in many studies, it remains unclear how much more variance they explain when integrated with canonical decision-making models associated with RPE and valuation. Moreover, it remains unclear how strong these effects are outside of the lab. Finally, research into the role of social dynamics in decision making has placed a large emphasis on adolescents, especially in the realm of peer influence. These sample biases and the reliance on college-aged individuals heighten the need for understanding individual and demographic differences in decision making. These and future findings could provide a deeper understanding of bargaining behavior, which is relevant for negotiations and auctions; inform clinical research into emotional and social disorders; and lead to better predictions of social behavior.

4 |. INDIVIDUAL VARIABILITY: AGE DIFFERENCES AND CLINICAL EXTENSIONS

Understanding individual variability in decision making is a central goal of decision neuroscience (Smith & Delgado, 2015; K. S. Wang et al., 2016; Yoon et al., 2012). In particular, the field has made progress toward linking typical neurological development across the lifespan (Samanez-Larkin & Knutson, 2015) with changes in decision making as well as applications to psychopathology (Baskin-Sommers & Foti, 2015). Research on changes across the lifespan has focused on topics ranging from the seemingly hasty decisions of adolescence to how decisions may reflect symptoms of mild cognitive impairment associated with old age (Lempert et al., 2020). In addition to strides made toward characterizing how responses to decision variables change across the lifespan, the field of computational psychiatry (Huys et al., 2016) has helped to incorporate decision neuroscience into contemporary models of psychiatric or mental disorders. In particular, computational psychiatry has focused on formal models of brain function to understand the mechanisms of psychopathology (Friston et al., 2014; Gillan et al., 2015; Montague et al., 2012).

Clinical applications hold promise for the practical utility of decision-neuroscience research. For example, task-dependent connectivity between the striatum and medial prefrontal cortex (MPFC) has been linked to prosocial value (Distefano et al., 2018; Hackel et al., 2017; Sul et al., 2015; Wake & Izuma, 2017). Other work has shown that prosocial decisions may be linked to gender differences in striatal activation and social preferences (Soutschek et al., 2017). Beyond social decision making and social preferences, understanding individual variability can also provide insight into how people perceive advertising campaigns (Venkatraman et al., 2015), with ventral striatal activation being a relatively strong predictor for how different people rate various 30-s TV advertisements. Further, a recent study demonstrated that the effect of price on an individual’s expectations and perceptions of product quality, referred to as the marketing placebo effect, was linked to gray matter density in the striatum and insula (Plassmann & Weber, 2015). A deeper understanding of consumer preferences and behavior is of great interest to marketers and may inform more effective targeting strategies for advertising campaigns.

Although these examples illustrate how decision neuroscience has begun to characterize individual variability in decision making in healthy adults, a wealth of other studies have extended this basic approach to consider variation across the lifespan (Mohr et al., 2010; Van Duijvenvoorde & Crone, 2013). One important area of research relates to adolescent decision making (Bjork & Pardini, 2015; Blakemore & Robbins, 2012; Hartley & Somerville, 2015). For instance, an influential early study suggested that adolescent risky choice is driven by tolerance to ambiguity (Tymula et al., 2012). Risk-taking behavior in adolescents has been linked to heightened reward sensitivity and VS reactivity (Braams et al., 2015; Somerville et al., 2010) and also to reduced functional connectivity between the MPFC and vlPFC (Qu et al., 2015). Building on these findings, recent work has shown that increased cognitive control and integration of future-oriented thoughts reduce impulsivity in adolescents (van den Bos et al., 2015). Taken together, these findings have generally supported a model wherein adolescent risk-taking behavior and impulsivity are characterized by an imbalance of prefrontal and subcortical activity (Meyer & Bucci, 2016; Somerville et al., 2011) [but see (Romer et al., 2017) for an alternative perspective].

Yet, studies on adolescent decision making have extended these findings by considering individual differences in response to social reward and social context (Steinberg, 2008). For example, one study demonstrated that participants who showed increased VS responses to monetary rewards for their family—a prosocial reward—exhibited decreased risk-taking behavior 1 year later (Telzer et al., 2013). Given that much of adolescent risk-taking occurs in the presence of peers, other work has examined the role of social context in risk-taking behavior. Recent work has demonstrated that individual differences in adolescent risk-taking behavior are linked to peer conflict, with greater risk-taking behavior among adolescents with more peer conflict and low social support (Telzer et al., 2014). Although these studies are beginning to shine a light on the neurocognitive mechanisms that shape risky behavior among adolescents, large-scale longitudinal datasets are needed to examine individual differences in neurodevelopmental trajectories (Foulkes & Blakemore, 2018).

Some decision-making processes change as people age into middle and older adulthood (Mohr et al., 2010). Over the past decade, a host of studies within decision neuroscience have sought to characterize age differences in decision making in the laboratory and the real world (Samanez-Larkin, 2015). These studies have provided critical insights into the cognitive, affective, motivational, and neurobiological factors that shape decision making among older adults (Samanez-Larkin & Knutson, 2015). For example, older adults exhibit reduced striatal responses to reward prediction errors but not reward outcomes (Samanez-Larkin et al., 2014). These differences in reward learning appear to be mediated by individual differences in frontostriatal white matter integrity (Samanez-Larkin et al., 2012) and could also be linked to reduced dopaminergic receptors and transporters (Karrer et al., 2017). In addition, older adults are generally less risk-seeking than younger adults, though there is significant variation across domains and individuals (Josef et al., 2016). Building on these results, a recent study demonstrated that reduced temporal discounting in older adults is associated with richer perception-based details of autobiographical memory, an effect that may be linked to entorhinal cortical thickness (Lempert et al., 2020).