Abstract

Background

Increased mobile phone penetration allows the interviewing of respondents using interactive voice response surveys in low- and middle-income countries. However, there has been little investigation of the best type of incentive to obtain data from a representative sample in these countries.

Objective

We assessed the effect of different airtime incentive options on the cooperation and response rates of an interactive voice response survey in Bangladesh and Uganda.

Methods

The open-label randomized controlled trial had three arms: (1) no incentive (control), (2) promised airtime incentive of 50 Bangladeshi Taka (US $0.60; 1 BDT is approximately equivalent to US $0.012) or 5000 Ugandan Shilling (US $1.35; 1 UGX is approximately equivalent to US $0.00028), and (3) lottery incentive (500 BDT and 100,000 UGX), in which the odds of winning were 1:20. Fully automated random-digit dialing was used to sample eligible participants aged ≥18 years. The risk ratios (RRs) with 95% confidence intervals for primary outcomes of response and cooperation rates were obtained using log-binomial regression.

Results

Between June 14 and July 14, 2017, a total of 546,746 phone calls were made in Bangladesh, with 1165 complete interviews being conducted. Between March 26 and April 22, 2017, a total of 178,572 phone calls were made in Uganda, with 1248 complete interviews being conducted. Cooperation rates were significantly higher for the promised incentive (Bangladesh: 39.3%; RR 1.38, 95% CI 1.24-1.55, P<.001; Uganda: 59.9%; RR 1.47, 95% CI 1.33-1.62, P<.001) and the lottery incentive arms (Bangladesh: 36.6%; RR 1.28, 95% CI 1.15-1.45, P<.001; Uganda: 54.6%; RR 1.34, 95% CI 1.21-1.48, P<.001) than those for the control arm (Bangladesh: 28.4%; Uganda: 40.9%). Similarly, response rates were significantly higher for the promised incentive (Bangladesh: 26.5%; RR 1.26, 95% CI 1.14-1.39, P<.001; Uganda: 41.2%; RR 1.27, 95% CI 1.16-1.39, P<.001) and lottery incentive arms (Bangladesh: 24.5%; RR 1.17, 95% CI 1.06-1.29, P=.002; Uganda: 37.9%; RR 1.17, 95% CI 1.06-1.29, P=.001) than those for the control arm (Bangladesh: 21.0%; Uganda: 32.4%).

Conclusions

Promised or lottery airtime incentives improved survey participation and facilitated a large sample within a short period in 2 countries.

Trial Registration

ClinicalTrials.gov NCT03773146; http://clinicaltrials.gov/ct2/show/NCT03773146

Keywords: mobile phone survey, interactive voice response survey, survey, interactive voice response, non-communicable disease, surveillance, airtime incentive, response rate, cooperation rate, communicable disease, Uganda, Bangladesh, low income, middle income, LMIC, Africa, incentive, RCT, randomized controlled trial, lottery, cooperation, participation

Introduction

It is well evidenced that low- and middle-income countries are undergoing demographic and epidemiologic transitions; there is an increasing burden from noncommunicable diseases such as hypertension, diabetes, stroke, and other diseases [1,2]. Four mostly modifiable risk factors primarily contribute to this high noncommunicable disease burden—unhealthy diets, physical inactivity, tobacco use, and excessive alcohol consumption [3,4]. Continuous surveillance and monitoring of these risk factors are crucial to prevent and control noncommunicable diseases [5]. However, collecting data for noncommunicable disease risk factor surveillance is challenging in low- and middle-income countries due to the level of effort, time, and money required for face-to-face interviews and associated data management, analysis, and reporting [6].

High-income countries implement telephone interviews to obtain population-level estimates for health-related outcomes [7,8]. Participation in telephone surveys has declined in recent years in high-income countries, and other survey methods (eg, web-based) are also used to collect health-related data. Although most low- and middle-income countries do not have the infrastructure for conducting landline- or web-based surveys, increased access and ownership of mobile phones in low- and middle-income countries provide the opportunity to use mobile phone numbers for household surveys [9]. Throughout the COVID-19 pandemic, mobile phone surveys have been used to collect data on a broad range of topics [10-13].

There are several options for delivering mobile phone surveys: SMS text messaging, call center interviews by a human operator, and interactive voice response [14]. Interactive voice response is a mobile phone survey method wherein respondents use their mobile phone keypad to select answers from prespecified options (eg, “press 1 if you are male; press 2 if you are female”). Incentives for mobile phone surveys, often delivered as cash, coupons, vouchers, or airtime balances, have been shown to increase survey participation [15]. Incentives could also be considered compensation for the time spent by participants, and they may reduce the time required for data collection by recruiting the required number of participants in a shorter period. In high-income countries, where there is a larger body of evidence on a range of different survey types, providing an incentive beforehand typically produces better survey response than promised or lottery incentives [16-18]; however, overall findings have been mixed, and some studies [19,20] show that providing incentives does not improve participation. Past studies [21-24] from low- and middle-income countries have shown similarly mixed results. Studies [21,22] have also shown that delivering incentives to everyone, rather than using a lottery, can increase participation and reduce cost; there have been a limited number of studies [23,24] examining the impact of different incentive amounts on overall survey cost, and investigating these factors would help in understanding the feasibility of mobile phone surveys for future data collection. We aimed to fill these gaps in the literature by assessing the effect of different airtime incentive approaches on the cooperation, response, contact, and refusal rates of an interactive voice response survey for noncommunicable disease behavioral risk factors.

Methods

Study Design

We conducted a randomized controlled trial in Bangladesh (an area of approximately 148,000 km² with an estimated population of 160 million [25]) and Uganda (an area of approximately 241,000 km² with an estimated population of 40 million [25]). In 2017, mobile phone subscription rates were 83 and 55 subscribers per 100 people in Bangladesh and Uganda, respectively [9].

In this trial, incentives were delivered as airtime (ie, mobile phone balance). Participants were randomized to 1 of 3 study arms: no incentive (control arm), a promised airtime incentive of 50 Bangladeshi Taka (US $0.60; 1 BDT is approximately equivalent to US $0.012) or 5000 Ugandan Shilling (US $1.35; 1 UGX is approximately equivalent to US $0.00028) for completing the interactive voice response survey, or lottery incentive (500 BDT and 100,000 UGX), wherein the odds of winning were 1:20. The conduct, analysis, and reporting of results were performed in accordance with Consolidated Standards of Reporting Trials guidelines [26].

Participants

Participants were sampled using a fully automated random-digit dialing method [27]. Briefly, the country codes along with the 3-digit sequence specific to the mobile network operator were used as the base for potential mobile phone numbers. The remaining 7 digits were generated randomly. Respondents who self-reported being aged 18 years or older were eligible for the trial. The survey was programmed to have a designated local number appear on the respondent’s caller ID screen.
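The number construction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `random_mobile_number`, the fixed seed, and the operator prefix `171` are hypothetical placeholders, not the platform's actual implementation or the list of prefixes that was sampled.

```python
import random


def random_mobile_number(country_code: str, operator_prefix: str,
                         rng: random.Random, n_random_digits: int = 7) -> str:
    """Build one candidate number: country code + operator prefix + random digits."""
    tail = "".join(str(rng.randrange(10)) for _ in range(n_random_digits))
    return f"+{country_code}{operator_prefix}{tail}"


# Fixed seed only so that the sketch is reproducible.
rng = random.Random(2017)

# "880" is the country code for Bangladesh; "171" is a placeholder operator
# prefix chosen for illustration (an assumption, not taken from the study).
sample = [random_mobile_number("880", "171", rng) for _ in range(5)]
```

Each generated string is only a candidate: as the Results show, most randomly generated numbers never connect, which is why several hundred thousand calls were needed per country.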

Randomization and Masking

The automated randomization process was performed within the interactive voice response platform to cover all mobile phone networks in both countries. Participants were randomized after selecting the survey language but prior to consent (Figure S1 in Multimedia Appendix 1). Due to the nature of the study design, participants were informed about the incentive during the survey introduction and, therefore, were not blinded to study arm allocation. Statisticians involved in data cleaning and analysis were blinded to participant allocation.
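A minimal sketch of automated allocation is shown below. It assumes simple (unrestricted) randomization with equal probability for each arm; the text does not state the exact allocation scheme used by the platform, so the function `assign_arm` and the equal-probability choice are assumptions made for illustration.

```python
import random

ARMS = ("control", "promised", "lottery")


def assign_arm(rng: random.Random) -> str:
    """Allocate one respondent to a study arm with equal probability (assumed)."""
    return rng.choice(ARMS)


# Allocation happens once per call, after language selection and before consent.
rng = random.Random(42)  # seed fixed only for reproducibility of the sketch
allocations = [assign_arm(rng) for _ in range(3000)]
counts = {arm: allocations.count(arm) for arm in ARMS}
```

Under simple randomization the arm sizes are close to, but not exactly, equal, which is consistent with the unequal numbers of complete interviews per arm reported in Table 1.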

Procedures

The overall procedures were similar in both countries. Interactive voice response surveys were sent only once to each phone number, and calls were made between 8 AM and 8 PM local time. Respondents who picked up the phone were instructed to select a language from a list of languages: Bangla or English in Bangladesh and Luganda, Luo, Runyakitara, or English in Uganda. Candidate participants listened to a description of the survey objectives and requirements for the incentive (ie, survey completion) as applicable (Table S1 and Figure S1 in Multimedia Appendix 1). Participants were told that they would not incur any expenses by answering the survey. Age-eligibility was confirmed (ie, “Are you 18 years or older? If yes, press 1; if no, press 3”). Age-eligible candidates were provided the consent disclosure statement and asked to authorize their participation by pressing the 1 button on the mobile phone. Participants answered demographic and noncommunicable disease questions, and only those who completed the survey received the incentive. Participants were instructed to press the star key to repeat any questions.

Demographic data on age, gender, education, and location were collected to perform subgroup analysis (ie, to identify differences in participation by those characteristics). Noncommunicable disease questions were grouped into 5 modules: tobacco use, alcohol consumption, dietary habits (including consumption of fruits, vegetables, and salt), physical activity, and medical conditions (including hypertension and diabetes). Because respondents could end the interview before finishing all modules, the order of the noncommunicable disease modules was randomized to minimize attrition and to ensure that data reporting errors were as randomly distributed as possible. Questions within a module were not randomized in order to maintain skip patterns. The questionnaire was adapted from standardized surveys [28], and initial cognitive testing and user groups were conducted at Johns Hopkins University with people who identified themselves as being from a low- or middle-income country [29]. A series of key informant interviews and focus group discussions were also conducted in each country to assess the comprehensibility and accuracy of translated questionnaires and to improve the usability of the interactive voice response platform.
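The module-level randomization can be illustrated as follows. The module names follow the text, but the question identifiers (`t1`, `a1`, and so on) and the function `survey_order` are hypothetical; the sketch only shows the idea of shuffling modules while keeping the question order inside each module fixed.

```python
import random

# Module names follow the text; question IDs are hypothetical placeholders.
MODULES = {
    "tobacco": ["t1", "t2", "t3"],
    "alcohol": ["a1", "a2"],
    "diet": ["d1", "d2", "d3"],
    "physical_activity": ["p1", "p2"],
    "medical_conditions": ["m1", "m2"],
}


def survey_order(rng: random.Random) -> list[tuple[str, str]]:
    """Shuffle the module order; keep question order within each module."""
    module_order = list(MODULES)
    rng.shuffle(module_order)
    return [(module, q) for module in module_order for q in MODULES[module]]


order = survey_order(random.Random(7))
```

Because only the outer list is shuffled, skip patterns that depend on earlier answers within a module still work for every respondent.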

Ethical Approval

The institutional review boards of the Johns Hopkins Bloomberg School of Public Health; the Makerere University School of Public Health; the Uganda National Council for Science and Technology; and the Institute of Epidemiology, Disease Control and Research approved the study protocol (number NCT03773146). The study was registered (NCT03773146), and the study protocol has been published elsewhere [30].

Outcomes

The primary outcomes of this trial were response rate 4 and cooperation rate 1, as defined by the American Association for Public Opinion Research (Table S2 in Multimedia Appendix 1) [31]. Secondary outcomes were contact rate 2 and refusal rate 2 [31]. Complete interviews were defined as respondents who answered at least 4 of the 5 noncommunicable disease modules. Partial interviews were defined as respondents who answered between 1 and 3 noncommunicable disease modules. Noninterviews included refusals (ie, participants who ended the survey at the consent question) and break-offs (ie, participants who were 18 years or older but did not complete a noncommunicable disease module). The cooperation rate was calculated as the number of complete interviews divided by the sum of complete interviews, partial interviews, and noninterviews; people who immediately hung up or who did not answer the age question were not included in the denominator. The response rate was calculated as the number of complete and partial interviews divided by the sum of complete and partial interviews, refusals, break-offs, and the estimated number of age-eligible calls among those with unknown eligibility (individuals who initiated the survey but did not answer the age question). The estimated proportion of unknown-eligibility calls that were age-eligible (e) was obtained from the proportion of participants who responded to the age-screening question and indicated they were 18 years or older. Calls were classified as ineligible if the individual indicated an age below 18 years or did not pick up the phone. As a secondary analysis, contact, refusal, and response rates were calculated without applying e to the calls with unknown eligibility.
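The rate definitions can be checked against the disposition counts reported in Table 2. The sketch below is an illustration, not the authors' analysis code: the class `Dispositions` is hypothetical, the cooperation and response formulas follow the text, and the refusal and contact formulas are assumptions inferred from the fact that they reproduce the Table 2 values for the Bangladesh control arm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dispositions:
    """Final call dispositions for one study arm (counts of calls)."""
    complete: int              # answered at least 4 of the 5 modules
    partial: int               # answered 1 to 3 modules
    refusal: int               # ended the survey at the consent question
    break_off: int             # age-eligible, but no module completed
    est_eligible_unknown: int  # e x (calls with unknown age-eligibility)

    @property
    def known_eligible(self) -> int:
        return self.complete + self.partial + self.refusal + self.break_off

    @property
    def all_eligible(self) -> int:
        return self.known_eligible + self.est_eligible_unknown

    def cooperation_rate(self) -> float:
        return self.complete / self.known_eligible

    def response_rate(self) -> float:
        return (self.complete + self.partial) / self.all_eligible

    def refusal_rate(self) -> float:
        # Assumed: refusals and break-offs both count as refusals here.
        return (self.refusal + self.break_off) / self.all_eligible

    def contact_rate(self) -> float:
        # Assumed: every known-eligible respondent counts as contacted.
        return self.known_eligible / self.all_eligible


# Bangladesh control arm, counts taken from Table 2.
bangladesh_control = Dispositions(393, 282, 402, 306, 1833)
```

For this arm the four methods return approximately 0.284, 0.210, 0.220, and 0.430, which match the cooperation, response, refusal, and contact rates of 28.4%, 21.0%, 22.0%, and 43.0% in Table 2.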

Statistical Analysis

Demographic characteristics of complete interviews were described by study arms and compared using chi-square tests. Using the control arm as the reference category, risk ratios (RR) and 95% confidence intervals were calculated for contact, response, refusal, and cooperation rates with log-binomial regression [32]. To assess any potential effect modification of incentives on cooperation rates, the log-binomial models were extended and interaction terms with education, gender, age, and location were tested. We did not assess any effect modification for response rates because its equation included disposition codes for Unknown (participants who did not answer any of the demographic questions).
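When the only covariate is the study arm, the risk ratio from a log-binomial model equals the ratio of the observed proportions, so the reported estimates can be approximated by hand. The sketch below uses a Wald interval on the log scale; that choice, and the function name `risk_ratio`, are assumptions for illustration rather than a reproduction of the Stata output.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def risk_ratio(events_trt: int, n_trt: int, events_ref: int, n_ref: int,
               level: float = 0.95) -> tuple[float, float, float]:
    """Crude risk ratio with a Wald confidence interval on the log scale."""
    rr = (events_trt / n_trt) / (events_ref / n_ref)
    # Standard error of log(RR) for two independent binomial proportions.
    se = sqrt(1 / events_trt - 1 / n_trt + 1 / events_ref - 1 / n_ref)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return rr, exp(log(rr) - z * se), exp(log(rr) + z * se)


# Bangladesh cooperation rate: promised incentive (413/1051) vs control (393/1383).
rr, lower, upper = risk_ratio(413, 1051, 393, 1383)
```

Rounded to 2 decimals, this gives 1.38 (1.24-1.55), in line with the cooperation rate risk ratio reported for the promised incentive arm in Bangladesh.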

We calculated pooled risk ratios for different incentive arms using random-effects meta-analysis [33]. The heterogeneity statistic (ie, I²) was estimated using the Mantel-Haenszel method. The I² statistic indicates the proportion of variability in effect that resulted from heterogeneity instead of chance or sampling error. A lower I² statistic suggests lower heterogeneity. We calculated the direct delivery cost per complete survey, which included the cost of airtime used to deliver the survey and the incentive amount, as applicable. We summed the total call durations by arm and multiplied by the per-minute airtime cost (US $0.04 in Bangladesh and US $0.10 in Uganda) to produce the estimated cost per completed survey. Stata (version 14.0; StataCorp LLC) was used to analyze data. An α=.05 was assumed for all tests of statistical significance.
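To make the pooling step concrete, the sketch below combines the two country-level risk ratios for the promised incentive with a DerSimonian-Laird random-effects estimator and inverse-variance weights. This is a swapped-in, commonly used method chosen for illustration: the text cites a Mantel-Haenszel approach for heterogeneity, so the result should be close to, but not necessarily identical to, the published pooled estimate. The function names are hypothetical, and the Uganda denominators (788 and 891) are derived by summing the Table 2 disposition counts.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def log_rr(events_trt: int, n_trt: int, events_ref: int, n_ref: int):
    """Log risk ratio and its approximate variance for one country."""
    y = log((events_trt / n_trt) / (events_ref / n_ref))
    v = 1 / events_trt - 1 / n_trt + 1 / events_ref - 1 / n_ref
    return y, v


def pool_random_effects(estimates, level: float = 0.95):
    """DerSimonian-Laird pooled RR, its CI, and I-squared (in percent)."""
    ys = [y for y, _ in estimates]
    vs = [v for _, v in estimates]
    w = [1 / v for v in vs]
    fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, ys))
    df = len(ys) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    w_star = [1 / (v + tau2) for v in vs]
    pooled = sum(wi * yi for wi, yi in zip(w_star, ys)) / sum(w_star)
    se = sqrt(1 / sum(w_star))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return exp(pooled), exp(pooled - z * se), exp(pooled + z * se), i2


# Cooperation, promised incentive vs control; counts derived from Table 2.
bangladesh = log_rr(413, 1051, 393, 1383)
uganda = log_rr(472, 788, 364, 891)
pooled_rr, pooled_lo, pooled_hi, i2 = pool_random_effects([bangladesh, uganda])
```

With only 2 countries the between-country variance is estimated very imprecisely, so the pooled interval mostly reflects within-country sampling error.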

Sample Size

We used the same assumptions to calculate required sample sizes for the trial in both countries. Assuming a 30% cooperation rate in the control arm, a 5% type 1 error, and 80% power, 376 participants were required to complete the interview in each study arm in order to detect a 10% difference between control and incentive arms. The total required sample size (ie, complete surveys) was 1128 in each country. As recommended [34], we did not inflate the sample for multiple comparisons.
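The figure of 376 per arm is consistent with a standard two-sided comparison of 2 proportions (30% vs 40%) with a continuity correction. The sketch below shows that calculation; the formula choice, the reading of the 10% difference as an absolute difference of 10 percentage points, and the function name `n_per_arm` are assumptions, because the text does not state which formula or software option was used.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_arm(p0: float, p1: float, alpha: float = 0.05, power: float = 0.80,
              continuity: bool = True) -> int:
    """Sample size per arm for comparing two independent proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p0 + p1) / 2
    diff = abs(p1 - p0)
    n = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
         + z_beta * sqrt(p0 * (1 - p0) + p1 * (1 - p1))) ** 2 / diff ** 2
    if continuity:
        # Continuity correction (Fleiss) applied to the uncorrected size.
        n = n / 4 * (1 + sqrt(1 + 4 / (n * diff))) ** 2
    return ceil(n)


required = n_per_arm(0.30, 0.40)  # 376 per arm
total = 3 * required              # 1128 complete surveys per country
```

Without the continuity correction the same inputs give a smaller number per arm, so the correction appears necessary to reproduce the reported 376.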

Results

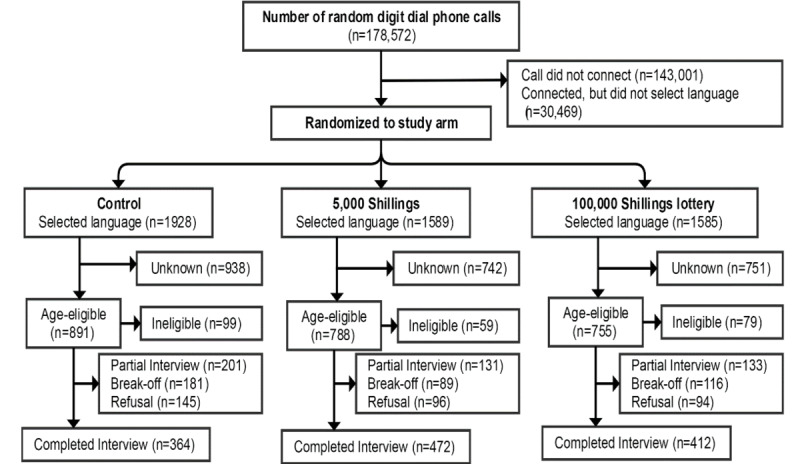

From June 14, 2017, to July 14, 2017, a total of 1165 complete interviews were obtained from 546,746 phone calls in Bangladesh (Figure 1). In Uganda, 178,572 calls were made between March 26 and April 22, 2017, to obtain 1248 complete interviews (Figure 2). In both countries, the sociodemographic characteristics of complete interviews were similar across study arms (Table 1). Of 1165 respondents in Bangladesh, 89.4% (n=1042) were male. Of 1248 respondents in Uganda, 76.0% (n=948) were male. Most respondents were aged 18 to 29 years: 74.4% (867/1165) and 71.0% (886/1248) in Bangladesh and Uganda, respectively.

Figure 1.

Consolidated Standards of Reporting Trials diagram of study participants in Bangladesh.

Figure 2.

Consolidated Standards of Reporting Trials diagram of study participants in Uganda.

Table 1.

Demographic characteristics of complete interviews by study arm.

| Characteristic | Bangladesh (n=1165), n (%) or n | | | Uganda (n=1248), n (%) or n | | |
|---|---|---|---|---|---|---|
| | Control (n=393) | Promised incentive (n=413) | Lottery incentive (n=359) | Control (n=364) | Promised incentive (n=472) | Lottery incentive (n=412) |
| Sex | | | | | | |
| Male | 353 (89.8) | 369 (89.4) | 320 (89.1) | 276 (77.5) | 357 (77.6) | 315 (78.7) |
| Female | 39 (9.9) | 44 (10.7) | 37 (10.3) | 80 (22.5) | 103 (22.4) | 85 (21.3) |
| Other | 1 (0.3) | 0 (0.0) | 2 (0.6) | N/A^a | N/A | N/A |
| Missing | 0 | 0 | 0 | 8 | 12 | 12 |
| Age group (years) | | | | | | |
| 18-29 | 301 (76.6) | 291 (70.5) | 275 (76.6) | 257 (72.2) | 326 (71.0) | 303 (75.6) |
| 30-49 | 75 (19.1) | 109 (26.4) | 71 (19.8) | 91 (25.6) | 124 (27.0) | 92 (23.0) |
| 50-69 | 10 (2.5) | 9 (2.2) | 9 (2.5) | 6 (1.7) | 7 (1.5) | 3 (0.8) |
| 70+ | 7 (1.8) | 4 (1.0) | 4 (1.1) | 2 (0.6) | 2 (0.4) | 3 (0.8) |
| Missing | 0 | 0 | 0 | 8 | 13 | 11 |
| Education attempted | | | | | | |
| None | 29 (22.3) | 34 (25.4) | 28 (20.7) | 65 (18.3) | 63 (13.5) | 59 (14.5) |
| Primary | 100 (76.9) | 100 (74.6) | 107 (79.3) | 83 (23.4) | 114 (24.4) | 107 (26.3) |
| Secondary | N/A | N/A | N/A | 146 (41.1) | 209 (44.8) | 169 (41.5) |
| Tertiary or higher | N/A | N/A | N/A | 61 (17.2) | 81 (17.3) | 72 (17.7) |
| Refused | 1 (0.3) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) |
| Missing | 263 | 279 | 224 | 9 | 5 | 5 |
| Location | | | | | | |
| Urban | 225 (57.2) | 222 (53.8) | 180 (50.1) | 178 (49.7) | 250 (54.1) | 227 (56.3) |
| Rural | 165 (42.0) | 191 (46.2) | 179 (49.9) | 180 (50.3) | 212 (45.9) | 176 (43.7) |
| Refused | 3 (0.8) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) |
| Missing | 0 | 0 | 0 | 6 | 10 | 9 |
| Language | | | | | | |
| Bangla | 390 (99.2) | 410 (99.3) | 355 (98.9) | N/A | N/A | N/A |
| English | 3 (0.8) | 3 (0.7) | 4 (1.1) | 56 (15.4) | 68 (14.4) | 66 (16.0) |
| Luganda | N/A | N/A | N/A | 213 (58.5) | 260 (55.2) | 248 (60.2) |
| Luo | N/A | N/A | N/A | 36 (9.9) | 50 (10.6) | 29 (7.0) |
| Runyakitara | N/A | N/A | N/A | 59 (16.2) | 93 (19.8) | 69 (16.8) |
| Missing | 0 | 0 | 0 | 0 | 1 | 0 |

^a N/A: not applicable.

The sociodemographic characteristics of respondents with complete and partial interviews were similar in both countries, with the exception of a significant difference in age distribution in Bangladesh (P=.002); complete interviews had a higher proportion of respondents aged 18 to 29 years than partial interviews (Table S3 in Multimedia Appendix 1). The median time spent completing the interactive voice response survey was 15 minutes 8 seconds (IQR 14 minutes 8 seconds to 16 minutes 15 seconds) and 13 minutes 38 seconds (IQR 12 minutes 39 seconds to 14 minutes 45 seconds) in Bangladesh and Uganda, respectively. The direct costs of airtime and, where applicable, incentives per complete interview were US $3.89 and US $3.16 in the control arm, US $3.90 and US $3.91 in the promised incentive arm, and US $4.05 and US $4.12 in the lottery incentive arm, in Bangladesh and Uganda, respectively (Table 2).

Table 2.

Disposition codes and survey rates by study arm.

| Disposition or rate | Bangladesh | | | Uganda | | |
|---|---|---|---|---|---|---|
| | Control | Promised incentive | Lottery incentive | Control | Promised incentive | Lottery incentive |
| Complete interview, n | 393 | 413 | 359 | 364 | 472 | 412 |
| Partial interview, n | 282 | 175 | 185 | 201 | 131 | 133 |
| Refusal, n | | | | | | |
| Refusal | 402 | 296 | 265 | 145 | 96 | 94 |
| Break-off | 306 | 167 | 171 | 181 | 89 | 116 |
| Unknown other, n | 2615 | 1671 | 1769 | 938 | 742 | 751 |
| Estimated unknown^a | 1833 | 1171 | 1240 | 854 | 675 | 684 |
| Ineligible, n | | | | | | |
| Under age | 587 | 465 | 402 | 99 | 59 | 79 |
| Call did not connect^b | 152,494 | 152,494 | 152,495 | 47,667 | 47,667 | 47,667 |
| Connected, but no language selection^b | 26,114 | 26,113 | 26,113 | 10,156 | 10,156 | 10,157 |
| Average cost (US $) per complete interview^c | 3.89 | 3.90 | 4.05 | 3.16 | 3.91 | 4.12 |
| Contact rate, % | 43.00 | 47.30 | 44.10 | 51.10 | 53.90 | 52.50 |
| Risk ratio (95% CI) | Ref | 1.10 (1.04-1.17) | 1.03 (0.97-1.09) | Ref | 1.05 (0.99-1.13) | 1.03 (0.96-1.10) |
| P value | Ref | .002 | .40 | Ref | .11 | .43 |
| Response rate, % | 21.00 | 26.50 | 24.50 | 32.40 | 41.20 | 37.90 |
| Risk ratio (95% CI) | Ref | 1.26 (1.14-1.39) | 1.17 (1.06-1.29) | Ref | 1.27 (1.16-1.39) | 1.17 (1.06-1.29) |
| P value | Ref | <.001 | .002 | Ref | <.001 | .001 |
| Refusal rate, % | 22.00 | 20.80 | 19.60 | 18.70 | 12.70 | 14.60 |
| Risk ratio (95% CI) | Ref | 0.95 (0.85-1.05) | 0.89 (0.80-0.99) | Ref | 0.68 (0.57-0.80) | 0.78 (0.67-0.92) |
| P value | Ref | .30 | .04 | Ref | <.001 | .002 |
| Cooperation rate, % | 28.40 | 39.30 | 36.60 | 40.90 | 59.90 | 54.60 |
| Risk ratio (95% CI) | Ref | 1.38 (1.24-1.55) | 1.28 (1.15-1.45) | Ref | 1.47 (1.33-1.62) | 1.34 (1.21-1.48) |
| P value | Ref | <.001 | <.001 | Ref | <.001 | <.001 |

^a Estimated proportion of unknown cases that were age-eligible was 70.1% for Bangladesh and 91.0% for Uganda.

^b Evenly distributed to each study arm due to randomization occurring after language selection.

^c Only includes cost of the call based on time participants spent on the survey plus airtime incentive, as applicable.

In Bangladesh, cooperation and response rates were significantly higher in the promised incentive arm (cooperation: 413/1051, 39.3%; RR 1.38, 95% CI 1.24-1.55, P<.001; response: 588/2222, 26.5%; RR 1.26, 95% CI 1.14-1.39, P<.001) and in the lottery arm (cooperation: 359/980, 36.6%; RR 1.28, 95% CI 1.15-1.45, P<.001; response: 544/2220, 24.5%; RR 1.17, 95% CI 1.06-1.29, P=.002) compared with those for the control arm (cooperation: 393/1383, 28.4%; response: 675/3216, 21.0%). In Uganda, the cooperation and response rates were higher than those in Bangladesh. Rates were significantly higher in the promised (cooperation: RR 1.47, 95% CI 1.33-1.62, P<.001; response: RR 1.27, 95% CI 1.16-1.39, P<.001) and lottery arms (cooperation: RR 1.34, 95% CI 1.21-1.48, P<.001; response: RR 1.17, 95% CI 1.06-1.29, P=.001) compared with those for the control arm. In both countries, cooperation and response rates were similar when using equations that did not include the estimated proportion of age-eligible participants in the unknown disposition code (Table S4 in Multimedia Appendix 1). In both countries, subgroup analyses showed that participant gender, age, education, and location did not modify the intervention’s effect on cooperation rate (Tables S5 and S6 in Multimedia Appendix 1).

Pooling Bangladesh and Uganda participants showed that the promised incentive (pooled RR 1.42, 95% CI 1.32-1.53, P<.001) and lottery incentive (pooled RR 1.31, 95% CI 1.21-1.41, P<.001) significantly improved the cooperation rate compared with no incentive (Figure 3). Similarly, response rates were significantly higher in the promised incentive (pooled RR 1.26, 95% CI 1.18-1.35, P<.001) and lottery incentive (pooled RR 1.17, 95% CI 1.09-1.25, P<.001, I²=0.0%) arms compared with that in the control arm. Overall, any incentive significantly improved cooperation rates by 37% (pooled RR 1.37, 95% CI 1.29-1.44, P<.001) and response rates by 22% (pooled RR 1.22, 95% CI 1.18-1.28, P<.001), and these results were highly consistent (cooperation: I²=12.1%, P=.33; response: I²=0.0%, P=.47).

Figure 3.

Pooled risk ratios for cooperation and response rate by study arm.

Discussion

In this study, the promised and lottery incentive arms had higher response and cooperation rates than control arms (ie, no incentive arm) in both Bangladesh and Uganda. Given that the pooled analysis showed that the incentives were highly consistent at increasing these survey rates in two culturally and geographically distinct low- and middle-income countries, the provision of airtime incentives may be a useful mechanism to increase interactive voice response survey participation in other low-resource settings.

Very few studies [4,35] have examined the use of airtime incentives in low- and middle-income countries. Our study adds to the growing body of literature on the impact of incentive amount on mobile phone surveys in these countries. A previous study [4] similarly found that both promised and lottery airtime incentives significantly improved the completion rate of a random-digit dialing interactive voice response survey in Zimbabwe. In Mozambique, the lottery incentive, but not the promised incentive, increased completion rates [35].

A study [36] from Honduras also found that providing either US $1 or $5 of airtime significantly improved response rates compared with the no incentive arm. Another study [22] showed that providing an airtime incentive of at least 50 BDT in Bangladesh and 5000 UGX in Uganda improved survey participation compared to people without any incentive and also reduced the number of incomplete interviews.

Our interactive voice response survey data collection was quick and inexpensive. In Bangladesh, we collected 1165 complete interviews in 21 days at a cost of approximately US $4.00 per complete interview. In Uganda, 1248 complete interviews were collected in 18 days at a cost under US $4.00 per complete interview. Our findings are similar to those from a random-digit dialing interactive voice response survey in Ghana that collected 9469 complete interviews in 27 days at a cost of US $4.95 per complete interview [37]. The average cost of a completed interview is much lower than the average cost of a comparable household survey, which indicates that mobile phone surveys could be cost-effective compared to household surveys. For instance, Lietz and colleagues [38] estimated the average cost per completed interview of the Nouna Health and Demographic Survey in rural Burkina Faso as approximately US $25. Although the specific objectives of that survey were broader and required a longer amount of time than our survey, future studies should compare the average cost of conducting an interview in such mobile phone surveys. In Bangladesh, our use of an airtime incentive to motivate participants to complete the interview was cost-neutral compared to the control arm. The cost savings were due to the decreased number of partial interviews and, therefore, fewer phone calls. We did not see a similar finding in Uganda, where the promised (US $1.35) and lottery (US $28) incentive amounts were higher than those in Bangladesh (promised: US $0.60; lottery: US $6.00). The difference in promised incentive amount may also account for some differences in participation rate by country. Specifically, people may not initiate a survey if the promised incentive amount appears low, which would ultimately reduce participation. Future work could manipulate the odds of winning the lottery and its amount to ensure the incentive is cost-neutral or even cost-saving [39].

Our cooperation and response rates were calculated in a standardized manner using American Association for Public Opinion Research guidelines [31], which allows for comparison with other studies. In a nationally administered random-digit dialing interactive voice response survey, with persons ≥18 years in Ghana, in which no incentives were provided, contact (39%) and response (31%) rates were similar to those observed in our control arms for Bangladesh and Uganda [37]. However, we observed higher refusal and lower cooperation rates in Bangladesh (refusal: 22%; cooperation: 28%) and Uganda (refusal: 19%; cooperation: 41%) than what was observed in Ghana (refusal: 7%; cooperation: 59%). These differences may be explained by variations in the eligibility criteria, length of survey, and the classification of disposition codes for complete, break-offs, refusals, and partial interviews. For instance, L’Engle and colleagues [37] defined complete interviews as responding to all survey questions, while we defined complete as 4 out of 5 modules.

There is a range of ethical considerations in mobile phone surveys [40]. Our survey started with an introduction that included the purpose of the study, the sponsoring agency, the time commitment, and a statement that the data would be kept confidential. Participants were offered an opportunity to consent to the survey by pressing a button on their mobile phone and were allowed to refuse to answer any question. Additional studies that evaluate alternative ways to consent participants are needed to maximize participants’ understanding of the study [41]. Additionally, there has been considerable discussion on the ethics of incentives and health research [42,43]. Our use of incentives was informed by in-country stakeholders; the amounts used were less than a day’s working wage and were not paired with risky or unsafe behavior. Nonetheless, we believe it is important to acknowledge that efforts to optimize the use of incentives, in general, should not be informed by cost-effectiveness considerations alone. Incentives that insufficiently reflect response burden, or that, perhaps in rare cases, have the potential to unduly influence or induce participation, ought to be avoided.

We observed a higher proportion of male, young (ie, 18 to 29 years old), or urban residents compared to the general population in both countries. This finding was similar to those of random-digit dialing interactive voice response surveys conducted in Afghanistan, Ethiopia, Ghana, Mozambique, Tanzania, and Zimbabwe [35,37,44]. Male gender, younger age, higher education, and urban residence have been found to be associated with mobile phone ownership in low- and middle-income countries, including East Africa [45] and Bangladesh [46]. This raises concerns about the ability to generate nationally representative estimates (ie, the generalizability of the findings). Advances in sampling and statistical methodology may be required for such estimates. Quota sampling could be used to ensure a more equal distribution of sociodemographic characteristics [47]. Others have found that weighted estimates of noncommunicable disease indicators collected via mobile phone survey approximate data collected through household surveys [48].

This study has several strengths. First, the randomization was automated and embedded within the interactive voice response platform. This safeguarded against misallocation of participants to study arms, which could bias response and cooperation rates. Second, we employed standardized protocols and questionnaires in both countries and used the same technology platform to deliver interactive voice response surveys, which allows for cross-country comparisons. Lastly, our sampling frame consisted of all known mobile network operators in each country, thereby minimizing potential selection bias.

In addition to underrepresentation from some sociodemographic populations, this study has some limitations. First, there was a substantial number of phone calls in Bangladesh and Uganda where we were unable to determine the status of the phone numbers. Calling people randomly can also reduce response. We could not determine if the phone numbers we called were active or inactive numbers [49]. As randomization to study arm occurred after participants picked up the phone, we chose to designate these phone calls as nonworking numbers. This decision inflates our contact, response, and refusal rates, but has no effect on the cooperation rate. Second, although not an issue in Bangladesh where 99% of the respondents took the interactive voice response survey in Bangla, our survey was only available in 3 of the 6 major language groups in Uganda [50]. This might lead to some selection error due to unavailability of the preferred language and would have larger implications for nationally representative surveys [35]. We did not check the quality of collected data as that was not the main purpose of this study; future studies should investigate that.

We investigated the response, contact, and cooperation rates of 2 different incentive structures compared with providing no incentive in 2 geographically and linguistically distinct countries. We observed that providing either type of incentive enhanced survey participation and minimized associated costs.

Acknowledgments

This study was made possible by the generous support of Bloomberg Philanthropies (grant 41388.01) to AAH and the people of Australia through the Department of Foreign Affairs and Trade. The contents are the responsibility of the authors and do not necessarily reflect the views of Bloomberg Philanthropies or the Government of Australia. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

We are thankful to our collaborators and research teams at the Institute of Epidemiology, Disease Control and Research, Bangladesh, and Makerere University School of Public Health, Uganda. We are also thankful to Viamo (formerly VOTOmobile) and colleagues Kevin Schuster and Tom Mortimore for their assistance with the interactive voice response platform. Our thanks also go to our research collaborators in the noncommunicable disease component at the Centers for Disease Control and Prevention, the Centers for Disease Control and Prevention Foundation, and the World Health Organization, as well as to all those who played a part in developing initial versions of the questionnaires used; who participated in translating survey instruments, collecting data during the formative phase, and making audio recordings for interactive voice response; or who contributed in any way in both countries.

Abbreviations

- BDT: Bangladeshi Taka

- RR: risk ratio

- UGX: Ugandan Shilling

Supporting information.

CONSORT eHEALTH Checklist (V 1.6.1).

Data Availability

All data files are available from the OpenICPSR repository database (accession number: ICPSR 107284).

Footnotes

Conflicts of Interest: None declared.

References

- 1. Boutayeb A. The double burden of communicable and non-communicable diseases in developing countries. Trans R Soc Trop Med Hyg. 2006 Mar;100(3):191–9. doi: 10.1016/j.trstmh.2005.07.021.

- 2. Bygbjerg IC. Double burden of noncommunicable and infectious diseases in developing countries. Science. 2012 Sep 21;337(6101):1499–501. doi: 10.1126/science.1223466.

- 3. Global status report on noncommunicable diseases 2010. World Health Organization Institutional Repository for Information Sharing. [2022-04-19]. https://apps.who.int/iris/bitstream/handle/10665/148114/9789241564854_eng.pdf

- 4. Global Burden of Disease Collaborators. Global, regional, and national comparative risk assessment of 79 behavioural, environmental and occupational, and metabolic risks or clusters of risks in 188 countries, 1990–2013: a systematic analysis for the Global Burden of Disease Study 2013. Br Dent J. 2015 Oct 9;219(7):329–329. doi: 10.1038/sj.bdj.2015.751.

- 5. Global action plan for the prevention and control of noncommunicable diseases 2013-2020. World Health Organization. 2013. [2018-08-24]. https://www.who.int/publications/i/item/9789241506236

- 6. Calba C, Goutard FL, Hoinville L, Hendrikx P, Lindberg A, Saegerman C, Peyre M. Surveillance systems evaluation: a systematic review of the existing approaches. BMC Public Health. 2015;15:448. doi: 10.1186/s12889-015-1791-5. http://www.biomedcentral.com/1471-2458/15/448

- 7. Pickens CM, Pierannunzi C, Garvin W, Town M. Surveillance for certain health behaviors and conditions among states and selected local areas - behavioral risk factor surveillance system, United States, 2015. MMWR Surveill Summ. 2018 Jun 29;67(9):1–90. doi: 10.15585/mmwr.ss6709a1. http://europepmc.org/abstract/MED/29953431

- 8. Healy J, Rodriguez-Lainz A, Elam-Evans LD, Hill HA, Reagan-Steiner S, Yankey D. Vaccination coverage among foreign-born and U.S.-born adolescents in the United States: successes and gaps - National Immunization Survey-Teen, 2012-2014. Vaccine. 2018 Mar 20;36(13):1743–1750. doi: 10.1016/j.vaccine.2018.02.052. http://europepmc.org/abstract/MED/29483032

- 9. Measuring the information society report 2017. International Telecommunication Union. 2017. [2022-01-01]. https://www.itu.int/en/ITU-D/Statistics/Pages/publications/mis2017.aspx

- 10. Byonanebye DM, Nabaggala MS, Naggirinya AB, Lamorde M, Oseku E, King R, Owarwo N, Laker E, Orama R, Castelnuovo B, Kiragga A, Parkes-Ratanshi R. An interactive voice response software to improve the quality of life of people living with HIV in Uganda: randomized controlled trial. JMIR Mhealth Uhealth. 2021 Feb 11;9(2):e22229. doi: 10.2196/22229. https://mhealth.jmir.org/2021/2/e22229/

- 11. Akhtar Z, Mah-E-Muneer S, Rashid MM, Ahmed MS, Islam MA, Chowdhury S, Khan Z, Hassan MZ, Islam K, Parveen S, Debnath N, Rahman M, Chowdhury F. Antibiotics use and its knowledge in the community: a mobile phone survey during the COVID-19 pandemic in Bangladesh. Antibiotics (Basel). 2021 Aug 29;10(9):1052. doi: 10.3390/antibiotics10091052. https://www.mdpi.com/resolver?pii=antibiotics10091052

- 12. Lamichhane A, Rana S, Shrestha K, Paudyal R, Malla P, Upadhyaya S, Uprety D, Gurung J, Satow E. Violence and sexual and reproductive health service disruption among girls and young women during COVID-19 pandemic in Nepal: a cross-sectional study using interactive voice response survey. PLoS One. 2021 Dec 8;16(12):e0260435. doi: 10.1371/journal.pone.0260435. https://dx.plos.org/10.1371/journal.pone.0260435

- 13. Zheng Y, Wang W, Zhong Y, Wu F, Zhu Z, Tham Y, Lamoureux E, Xiao L, Zhu E, Liu H, Jin L, Liang L, Luo L, He M, Morgan I, Congdon N, Liu Y. A peer-to-peer live-streaming intervention for children during COVID-19 homeschooling to promote physical activity and reduce anxiety and eye strain: cluster randomized controlled trial. J Med Internet Res. 2021 Apr 30;23(4):e24316. doi: 10.2196/24316. https://www.jmir.org/2021/4/e24316/

- 14. Hyder AA, Wosu AC, Gibson DG, Labrique AB, Ali J, Pariyo GW. Noncommunicable disease risk factors and mobile phones: a proposed research agenda. J Med Internet Res. 2017 May 05;19(5):e133. doi: 10.2196/jmir.7246. https://www.jmir.org/2017/5/e133/

- 15. Greenleaf AR, Gibson DG, Khattar C, Labrique AB, Pariyo GW. Building the evidence base for remote data collection in low- and middle-income countries: comparing reliability and accuracy across survey modalities. J Med Internet Res. 2017 May 05;19(5):e140. doi: 10.2196/jmir.7331. https://www.jmir.org/2017/5/e140/

- 16. Singer E, Ye C. The use and effects of incentives in surveys. Ann Am Acad Pol Soc Sci. 2012 Nov 26;645(1):112–141. doi: 10.1177/0002716212458082.

- 17. Brick J, Montaquila J, Hagedorn M, Roth S, Chapman C. Implications for RDD design from an incentive experiment. J Off Stat. 2005;21(4):571–589. https://www.scb.se/contentassets/ca21efb41fee47d293bbee5bf7be7fb3/implications-for-rdd-design-from-an-incentive-experiment.pdf

- 18. Smith MG, Witte M, Rocha S, Basner M. Effectiveness of incentives and follow-up on increasing survey response rates and participation in field studies. BMC Med Res Methodol. 2019 Dec 05;19(1):230. doi: 10.1186/s12874-019-0868-8. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/s12874-019-0868-8

- 19. Gjøstein DK, Huitfeldt A, Løberg M, Adami H, Garborg K, Kalager M, Bretthauer M. Incentives and participation in a medical survey. Tidsskr Nor Laegeforen. 2016 Jul;136(12-13):1082–7. doi: 10.4045/tidsskr.15.1096. http://tidsskriftet.no/article/3500377

- 20. Pieper D, Kotte N, Ober P. The effect of a voucher incentive on a survey response rate in the clinical setting: a quasi-randomized controlled trial. BMC Med Res Methodol. 2018 Aug 16;18(1):86. doi: 10.1186/s12874-018-0544-4. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/s12874-018-0544-4

- 21. Chamie G, Ndyabakira A, Marson KG, Emperador DM, Kamya MR, Havlir DV, Kwarisiima D, Thirumurthy H. A pilot randomized trial of incentive strategies to promote HIV retesting in rural Uganda. PLoS One. 2020 May 29;15(5):e0233600. doi: 10.1371/journal.pone.0233600. https://dx.plos.org/10.1371/journal.pone.0233600

- 22. Gibson DG, Wosu AC, Pariyo GW, Ahmed S, Ali J, Labrique AB, Khan IA, Rutebemberwa E, Flora MS, Hyder AA. Effect of airtime incentives on response and cooperation rates in non-communicable disease interactive voice response surveys: randomised controlled trials in Bangladesh and Uganda. BMJ Glob Health. 2019;4(5):e001604. doi: 10.1136/bmjgh-2019-001604. https://gh.bmj.com/lookup/pmidlookup?view=long&pmid=31565406

- 23. Göritz A. Incentives in web studies: methodological issues and a review. Int J Internet Sci. 2006;1(1):58–70. http://www.websm.org/uploadi/editor/1145983752Goeritz_2006_Incentives_in_Web_Studies.pdf

- 24. Gibson DG, Pereira A, Farrenkopf BA, Labrique AB, Pariyo GW, Hyder AA. Mobile phone surveys for collecting population-level estimates in low- and middle-income countries: a literature review. J Med Internet Res. 2017 May 05;19(5):e139. doi: 10.2196/jmir.7428. https://www.jmir.org/2017/5/e139/

- 25. The world factbook. Central Intelligence Agency. 2019. [2019-07-22]. https://www.cia.gov/the-world-factbook/

- 26. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. BMC Med. 2010 Mar 24;8:18. doi: 10.1186/1741-7015-8-18. https://bmcmedicine.biomedcentral.com/articles/10.1186/1741-7015-8-18

- 27. Waksberg J. Sampling methods for random digit dialing. J Am Stat Assoc. 1978 Mar;73(361):40–46. doi: 10.1080/01621459.1978.10479995.

- 28. The STEPS instrument and support materials. World Health Organization. 2018. [2018-08-24]. https://www.who.int/teams/noncommunicable-diseases/surveillance/systems-tools/steps

- 29. Gibson DG, Farrenkopf BA, Pereira A, Labrique AB, Pariyo GW. The development of an interactive voice response survey for noncommunicable disease risk factor estimation: technical assessment and cognitive testing. J Med Internet Res. 2017 May 05;19(5):e112. doi: 10.2196/jmir.7340. https://www.jmir.org/2017/5/e112/

- 30. Gibson DG, Pariyo GW, Wosu AC, Greenleaf AR, Ali J, Ahmed S, Labrique AB, Islam K, Masanja H, Rutebemberwa E, Hyder AA. Evaluation of mechanisms to improve performance of mobile phone surveys in low- and middle-income countries: research protocol. JMIR Res Protoc. 2017 May 05;6(5):e81. doi: 10.2196/resprot.7534. https://www.researchprotocols.org/2017/5/e81/

- 31. The American Association for Public Opinion Research. Standard definitions: final dispositions of case codes and outcome rates for surveys. Encyclopedia Survey Research Methods. 2016. [2018-01-01]. https://www.aapor.org/aapor_main/media/publications/standard-definitions20169theditionfinal.pdf

- 32. Wacholder S. Binomial regression in GLIM: estimating risk ratios and risk differences. Am J Epidemiol. 1986 Jan;123(1):174–84. doi: 10.1093/oxfordjournals.aje.a114212.

- 33. Deeks J, Altman D, Bradburn M. Statistical methods for examining heterogeneity and combining results from several studies in meta-analysis. In: Egger M, Smith G, Altman D, editors. Systematic Reviews in Health Care: Meta‐Analysis in Context, Second edition. London, United Kingdom: BMJ Publishing Group; 2008. p. 312.

- 34. Rothman KJ. No adjustments are needed for multiple comparisons. Epidemiology. 1990;1(1):43–46. doi: 10.1097/00001648-199001000-00010.

- 35. Leo B, Morello R, Mellon J, Peixoto T, Davenport S. Do mobile surveys work in poor countries. International Household Survey Network. 2012. [2018-10-05]. https://www.cgdev.org/sites/default/files/CGD-Working-Paper-398-Mobile-Phones.pdf

- 36. Ballivian A, Azevedo J, Durbin W. Using mobile phones for high frequency data collection. In: Toninelli D, Pinter R, de Pedraza P, editors. Mobile Research Methods: Opportunities and Challenges of Mobile Research Methodologies. London: Ubiquity Press; 2013.

- 37. L'Engle K, Sefa E, Adimazoya EA, Yartey E, Lenzi R, Tarpo C, Heward-Mills NL, Lew K, Ampeh Y. Survey research with a random digit dial national mobile phone sample in Ghana: methods and sample quality. PLoS One. 2018;13(1):e0190902. doi: 10.1371/journal.pone.0190902. https://dx.plos.org/10.1371/journal.pone.0190902

- 38. Lietz H, Lingani M, Sié A, Sauerborn R, Souares A, Tozan Y. Measuring population health: costs of alternative survey approaches in the Nouna Health and Demographic Surveillance System in rural Burkina Faso. Glob Health Action. 2015 Aug 07;8(1):28330. doi: 10.3402/gha.v8.28330. http://europepmc.org/abstract/MED/26257048

- 39. Gajic A, Cameron D, Hurley J. The cost-effectiveness of cash versus lottery incentives for a web-based, stated-preference community survey. Eur J Health Econ. 2012 Dec;13(6):789–99. doi: 10.1007/s10198-011-0332-0.

- 40. Ali J, Labrique AB, Gionfriddo K, Pariyo G, Gibson DG, Pratt B, Deutsch-Feldman M, Hyder AA. Ethics considerations in global mobile phone-based surveys of noncommunicable diseases: a conceptual exploration. J Med Internet Res. 2017 May 05;19(5):e110. doi: 10.2196/jmir.7326. https://www.jmir.org/2017/5/e110/

- 41. Ali J, DiStefano MJ, Coates McCall I, Gibson DG, Al Kibria GM, Pariyo GW, Labrique AB, Hyder AA. Ethics of mobile phone surveys to monitor non-communicable disease risk factors in low- and middle-income countries: a global stakeholder survey. Glob Public Health. 2019 Aug 10;14(8):1167–1181. doi: 10.1080/17441692.2019.1566482.

- 42. Mduluza T, Midzi N, Duruza D, Ndebele P. Study participants incentives, compensation and reimbursement in resource-constrained settings. BMC Med Ethics. 2013;14 Suppl 1:S4. doi: 10.1186/1472-6939-14-S1-S4. https://www.biomedcentral.com/1472-6939/14/S1/S4

- 43. Lunze K, Paasche-Orlow MK. Financial incentives for healthy behavior: ethical safeguards for behavioral economics. Am J Prev Med. 2013 Jun;44(6):659–65. doi: 10.1016/j.amepre.2013.01.035.

- 44. Pariyo GW, Greenleaf AR, Gibson DG, Ali J, Selig H, Labrique AB, Al Kibria GM, Khan IA, Masanja H, Flora MS, Ahmed S, Hyder AA. Does mobile phone survey method matter? Reliability of computer-assisted telephone interviews and interactive voice response non-communicable diseases risk factor surveys in low and middle income countries. PLoS One. 2019 Apr 10;14(4):e0214450. doi: 10.1371/journal.pone.0214450. https://dx.plos.org/10.1371/journal.pone.0214450

- 45. Khatun F, Heywood AE, Hanifi SMA, Rahman MS, Ray PK, Liaw S, Bhuiya A. Gender differentials in readiness and use of mHealth services in a rural area of Bangladesh. BMC Health Serv Res. 2017 Aug 18;17(1):573–11. doi: 10.1186/s12913-017-2523-6. https://bmchealthservres.biomedcentral.com/articles/10.1186/s12913-017-2523-6

- 46. Zurovac D, Otieno G, Kigen S, Mbithi AM, Muturi A, Snow RW, Nyandigisi A. Ownership and use of mobile phones among health workers, caregivers of sick children and adult patients in Kenya: cross-sectional national survey. Global Health. 2013 May 14;9:20. doi: 10.1186/1744-8603-9-20. https://globalizationandhealth.biomedcentral.com/articles/10.1186/1744-8603-9-20

- 47. Labrique A, Blynn E, Ahmed S, Gibson D, Pariyo G, Hyder AA. Health surveys using mobile phones in developing countries: automated active strata monitoring and other statistical considerations for improving precision and reducing biases. J Med Internet Res. 2017 May 05;19(5):e121. doi: 10.2196/jmir.7329. http://www.jmir.org/2017/5/e121/

- 48. Mahfoud Z, Ghandour L, Ghandour B, Mokdad AH, Sibai AM. Cell phone and face-to-face interview responses in population-based surveys. Field Methods. 2014 Jul 01;27(1):39–54. doi: 10.1177/1525822X14540084.

- 49. Lau C, di Tada N. Identifying non-working phone numbers in Africa for response rate calculations. Surv Pract. 2017 Dec 28:1–3. doi: 10.29115/sp-2018-0020.

- 50. Guwatudde D, Mutungi G, Wesonga R, Kajjura R, Kasule H, Muwonge J, Ssenono V, Bahendeka SK. The epidemiology of hypertension in Uganda: findings from the National Non-Communicable Diseases Risk Factor Survey. PLoS One. 2015 Sep 25;10(9):e0138991–13. doi: 10.1371/journal.pone.0138991. https://dx.plos.org/10.1371/journal.pone.0138991
