Abstract

Motivation

A dictionary of k-mers is a data structure that stores a set of n distinct k-mers and supports membership queries. This data structure is at the heart of many important tasks in computational biology. High-throughput sequencing of DNA can produce very large k-mer sets, on the order of billions of strings; in such cases, the memory consumption and query efficiency of the data structure are a concrete challenge.

Results

To tackle this problem, we describe a compressed and associative dictionary for k-mers, that is: a data structure where strings are represented in compact form and each of them is associated with a unique integer identifier in the range $[0, n)$. We show that some statistical properties of k-mer minimizers can be exploited by minimal perfect hashing to substantially improve the space/time trade-off of the dictionary compared to the best-known solutions.

Availability and implementation

The C++ implementation of the dictionary is available at https://github.com/jermp/sshash.

Supplementary information

Supplementary data are available at Bioinformatics online.

1 Introduction

A k-mer is a string of length k over the DNA alphabet $\{A, C, G, T\}$. Software tools based on k-mers are in widespread use in Bioinformatics. Many large-scale analyses of DNA share the elementary need of determining the exact membership of k-mers to a given set $\mathcal{S}$, i.e. they rely on the space/time efficiency of a dictionary data structure for k-mers (Chikhi et al., 2021). This work proposes such an efficient dictionary. More precisely, the problem we study here is defined as follows. Given a large string over the DNA alphabet (e.g. a genome or a pan-genome), let $\mathcal{S}$ be the set of all its distinct k-mers, with $n = |\mathcal{S}|$. A dictionary for $\mathcal{S}$ is a data structure that supports the following two operations:

Lookup(g): for any k-mer g, returns a unique integer $0 \le i < n$ if $g \in \mathcal{S}$ (i is the ‘identifier’ of g in $\mathcal{S}$) or i = −1 if $g \notin \mathcal{S}$;

Access(i): for any $0 \le i < n$, extracts the k-mer g for which Lookup(g) = i.

By means of the Lookup query, the dictionary is able to answer membership queries in an exact way (rather than approximate) and to associate satellite information to k-mers (such as abundances). Thanks to the Access query, the original set $\mathcal{S}$ can be reconstructed, meaning that the dictionary is a self-index for $\mathcal{S}$.

In sequence analysis tasks, it is very often the case that we are given a pattern P of length $|P| \ge k$ and we are interested in answering membership to $\mathcal{S}$ for all the k-mers read consecutively from P, i.e. for all its $|P| - k + 1$ substrings of length k. For example, we may decide that the whole pattern P is present in a genome if the number of k-mers of P that belong to $\mathcal{S}$ is at least $\theta \cdot (|P| - k + 1)$, for a prescribed coverage threshold $\theta$ (Bingmann et al., 2019; Solomon and Kingsford, 2016). In other words, Lookup queries are often issued for consecutive k-mers (one being the previous shifted to the right by one symbol) (Robidou and Peterlongo, 2021). While it is obviously possible to perform $|P| - k + 1$ independent Lookup queries for a pattern of length $|P|$, it also seems profitable to answer ‘Is the current k-mer a member of $\mathcal{S}$?’ more efficiently knowing that the previous k-mer shares k−1 symbols with the current one. We regard this latter scenario as that of streaming queries.
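The coverage criterion above can be sketched in a few lines. This is only an illustration: a plain Python `set` stands in for the dictionary, and the names `kmers`, `pattern_is_present` and `theta`, as well as the toy reference string and the threshold value used in the example, are assumptions made here and do not come from the paper.

```python
def kmers(pattern, k):
    """Yield the len(pattern) - k + 1 consecutive k-mers of `pattern`."""
    for i in range(len(pattern) - k + 1):
        yield pattern[i:i + k]


def pattern_is_present(pattern, kmer_set, k, theta):
    """True if at least theta * (|P| - k + 1) k-mers of `pattern` are in `kmer_set`.

    `kmer_set` is a plain Python set used as a stand-in for a k-mer dictionary.
    """
    total = len(pattern) - k + 1
    if total <= 0:
        return False  # the pattern is shorter than k: no k-mer to query
    hits = sum(1 for g in kmers(pattern, k) if g in kmer_set)
    return hits >= theta * total


# Toy example (hypothetical data): index the 5-mers of a short string.
reference = "ACGTTGCATGGA"
S = set(kmers(reference, 5))
```

With this toy set, `pattern_is_present("CGTTGCAT", S, 5, 0.8)` holds because all four 5-mers of the pattern occur in the reference, whereas a pattern sharing only one 5-mer with it is rejected.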

Therefore, our objective is to support Lookup, Access and streaming membership queries as efficiently as possible in compressed space. (The data structure is static: insertions/deletions of k-mers are not supported.)

As a first introductory remark we shall mention that the algorithmic literature about the so-called compressed string dictionary problem is rich in solutions, e.g. based on Front-Coding, tries, hashing or combinations of such techniques (see the survey by Martínez-Prieto et al. (2016)). However, these solutions are unlikely to be competitive for the specialized version of the problem we tackle here because they are relevant for ‘generic’ strings that usually: (i) have variable length; (ii) are drawn from larger alphabets (e.g. ASCII); and (iii) do not exhibit particular properties that can aid compression. Instead, k-mers are fixed-length strings; their alphabet of representation is very small (just 2 bits per alphabet symbol are sufficient); and since k-mers are extracted consecutively from DNA, two consecutive strings overlap by k−1 symbols that are redundant and should not be represented twice in the dictionary. This motivates the study of specialized solutions for k-mers.

These properties are elegantly captured by the de Bruijn graph representation of $\mathcal{S}$, a graph whose nodes are the k-mers in $\mathcal{S}$ and whose edges model the string overlaps between the k-mers. Using this formalism, it is possible to reduce the redundancy of the symbols in $\mathcal{S}$ by considering paths in the graph and their corresponding strings. We will better formalize this point in Section 2.

For the scope of this work, it is sufficient to point out that: (i) many algorithms have been proposed to build de Bruijn graphs (dBGs) efficiently (Chikhi et al., 2016; Khan et al., 2021; Khan and Patro, 2021) from which these paths can be extracted for indexing purposes; (ii) not surprisingly, essentially all state-of-the-art dictionaries for k-mers—that we briefly review in Section 3—are based on the principle of indexing such collections of paths (Almodaresi et al., 2018; Chikhi et al., 2014; Marchet et al., 2021; Rahman and Medvedev, 2020). We also follow this direction.

However, we note that existing dictionary data structures either represent such paths with an FM-index (Ferragina and Manzini, 2000) (or one of its many variants), hence retain highly compressed space but very slow query time in practice or, vice versa, resort to hashing for fast evaluation but take much more space (Almodaresi et al., 2018; Marchet et al., 2021). It is, therefore, desirable to have a good balance between these two extremes.

For this reason, we show that we can still enjoy the query efficiency of hashing while taking small space—significantly less space than prior schemes based on (minimal and perfect) hashing. More specifically, we show how two statistical properties of k-mer minimizers—precisely, those of being sparse and skewly distributed in DNA sequences—can be better exploited to derive an efficient dictionary based on minimal perfect hashing and compact encodings (Section 4). We evaluate the proposed data structure over sets of billions of k-mers, under different query distributions and modalities, and exhibit a substantial performance improvement compared to prior solutions for the problem (Section 5). Our C++ implementation of the dictionary is available at https://github.com/jermp/sshash.

2 Preliminaries

In this section, we give some preliminary remarks to better support the exposition in Sections 3 and 4. Let $\mathcal{S}$ be the collection of the n distinct k-mers extracted from a given, large, string (or set of strings). This string can be, e.g. the genome of an organism.

Throughout the article, we consider two k-mers that are the reverse complement of each other to be identical.

Path covering the de Bruijn graph. A dBG for $\mathcal{S}$ is a directed graph where the set of nodes is $\mathcal{S}$ and a directed edge from node u to v exists if and only if the last k−1 symbols of u are equal to the first k−1 symbols of v. It follows that a path traversing q nodes corresponds to a string of length $k + q - 1$ spelled out by the path, obtained by ‘glueing’ all the nodes’ k-mers in order.

A disjoint-node path cover for the dBG is a set of paths where each node belongs to exactly one path, e.g. a set of unitigs, maximal unitigs, or maximal stitched unitigs (Rahman and Medvedev, 2020) (also known as simplitigs (Břinda et al., 2021)).

The strings spelled by such a cover form the natural basis for a space-efficient dictionary because: (i) by considering paths in the graph, the number of symbols in the cover is less than the number of symbols in the original set $\mathcal{S}$ and, (ii) by being a disjoint-node path cover we are guaranteed that there are no duplicate k-mers in the cover. Therefore, we assume from now on that a path cover has been computed for $\mathcal{S}$ as the input for our problem.

Minimizers and super-k-mers. Given a k-mer g, an integer $m \le k$, and a total order relation R on all m-length strings, the smallest m-mer of g according to R is called the minimizer of g. R could be, e.g. the simple lexicographic order. Instead, here we use the random order given by a hash function h, chosen from a universal family. Therefore, simply put, the minimizer of g is the m-mer of g that minimizes the value of h (sometimes called a ‘random’ minimizer).

Minimizers are very popular in sequence analysis, such as for seed-and-extend algorithms, because of the following empirical property: consecutive k-mers tend to have the same minimizer (Roberts et al., 2004; Schleimer et al., 2003). This means that there are far fewer distinct minimizers than k-mers: approximately $(k - m + 2)/2$ times fewer minimizers than k-mers (independently of the sequence length), if m is not very small compared to k (more precisely, see Zheng et al. (2020, Theorem 3)). For example, if k = 31 and m = 20, we should expect to see about $6.5\times$ fewer minimizers than k-mers.

Given a string S of length at least k (e.g. a path in a dBG), we call a super-k-mer of S a maximal sequence of consecutive k-mers having the same minimizer (Li et al., 2013).
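The definitions of minimizer and super-k-mer can be illustrated with a small sketch. It is not the SSHash implementation: the hash `h` (BLAKE2b from the Python standard library) is an arbitrary stand-in for the random order, ties are broken by leftmost position, and a super-k-mer is taken here to be a maximal run of consecutive k-mers sharing the same minimizer occurrence (an assumption of this sketch, which caps each run at k − m + 1 k-mers). The function names are hypothetical.

```python
import hashlib


def h(mmer):
    """Deterministic pseudo-random order on m-mers (stand-in hash function)."""
    return hashlib.blake2b(mmer.encode(), digest_size=8).digest()


def minimizer_pos(kmer, m):
    """Position, within `kmer`, of the leftmost m-mer that minimizes h."""
    return min(range(len(kmer) - m + 1), key=lambda i: (h(kmer[i:i + m]), i))


def super_kmers(s, k, m):
    """Parse `s` into super-k-mers.

    Returns a list of (minimizer, start, num_kmers): `start` is the position
    in `s` of the first base of the super-k-mer and `num_kmers` the number of
    consecutive k-mers it spans.
    """
    out = []
    prev = None  # absolute position of the previous k-mer's minimizer
    for start in range(len(s) - k + 1):
        pos = start + minimizer_pos(s[start:start + k], m)
        if pos == prev:
            mini, first, count = out[-1]
            out[-1] = (mini, first, count + 1)  # extend the current run
        else:
            out.append((s[pos:pos + m], start, 1))  # open a new super-k-mer
            prev = pos
    return out
```

A super-k-mer spanning c k-mers corresponds to the substring of `s` of length c + k − 1 starting at `start`.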

Minimal perfect hashing. Given a set of n distinct keys, a function f that maps bijectively the keys into the integer range $[0, n)$ is called a minimal perfect hash function (MPHF) for the set. The function is allowed to return an arbitrary value in $[0, n)$ for any key that does not belong to the set, hence it can be realized in small space, in practice 2–3 bits/key (albeit $\log_2 e \approx 1.44$ bits/key are sufficient in theory (Mehlhorn, 1982)). Many efficient algorithms have been proposed to build MPHFs from static sets that scale well to large values of n and retain practically-constant evaluation time. In this paper, we use PTHash (Pibiri and Trani, 2021a,b) for its very fast evaluation time, usually better than other techniques, and good space effectiveness.

Elias–Fano encoding. Given a monotone integer sequence $S[0..n)$ whose largest (last) element is less than or equal to a known quantity U, the Elias–Fano encoding represents S in at most $n\lceil \log_2(U/n) \rceil + 2n$ bits (Elias, 1974; Fano, 1971). With o(n) extra bits it is possible to decode any $S[i]$ in constant time and support successor queries in $O(1 + \log(U/n))$ time. We point the interested reader to the survey by Pibiri and Venturini (2021, Section 3.4) for a complete description and discussion of the encoding.
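To make the space bound tangible, the following is a textbook-style sketch of Elias–Fano, assuming a non-empty non-decreasing sequence with all elements at most U. It stores the low bits verbatim and the high parts in unary, and decodes by a linear scan; the o(n)-bit select structure that gives constant-time access is deliberately left out. All function names are hypothetical.

```python
import math


def ef_encode(seq, U):
    """Encode a non-empty, non-decreasing sequence with elements <= U."""
    n = len(seq)
    l = max(0, (U // n).bit_length() - 1)  # floor(log2(U / n)) when U >= n
    mask = (1 << l) - 1
    low = [x & mask for x in seq]  # l low bits per element
    high, prev = [], 0
    for x in seq:
        hi = x >> l
        high.extend([0] * (hi - prev))  # one 0 per unit of increase
        high.append(1)                  # one 1 per element
        prev = hi
    return l, low, high


def ef_access(l, low, high, i):
    """Decode the i-th element (linear scan in place of a select structure)."""
    ones = -1
    for pos, bit in enumerate(high):
        if bit:
            ones += 1
            if ones == i:
                return ((pos - i) << l) | low[i]  # zeros before the i-th 1 = high part
    raise IndexError(i)


def ef_bits(l, low, high):
    """Bits used by the two arrays of the encoding."""
    return l * len(low) + len(high)


def ef_bound(n, U):
    """The bound n * ceil(log2(U / n)) + 2n stated in the text (for U >= n)."""
    return n * math.ceil(math.log2(U / n)) + 2 * n
```

For instance, the sequence [3, 4, 7, 13, 14, 15, 21, 43] with U = 43 is encoded with l = 2 low bits per element.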

Elias–Fano has been recently used as a key ingredient of many compressed, practical, data structures (see, e.g. Perego et al., 2021; Pibiri and Venturini, 2017, 2019).

3 Related work

As anticipated in Section 1, most existing solutions for exact membership queries are based on indexing paths of the dBG (see also Section 2), such as its unitigs or maximal (possibly, stitched) unitigs. These approaches have also been summarized in the recent survey by Chikhi et al. (2021, Section 4.2), hence we give a rather cursory overview here.

The paths can be represented using an FM-index (Ferragina and Manzini, 2000) for very compact space (Chikhi et al., 2014; Rahman and Medvedev, 2020). The practical efficiency of the FM-index mainly depends on how many samples of the suffix-array are kept in the index.

Other approaches resort to hashing for fast lookup queries. For example, Bifrost (Holley and Melsted, 2020) uses a hash table of minimizers whose values are the locations of the minimizers in the unitigs. The index was designed to be dynamic, hence allowing insertion/removal of k-mers and consequent re-computation of the unitigs. The dynamic nature of Bifrost makes it consume higher space compared to static approaches using compressed hash representations and succinct data structures, like Pufferfish (Almodaresi et al., 2018) and Blight (Marchet et al., 2021). Hence, it is regarded as out of scope for this work.

Pufferfish (Almodaresi et al., 2018) associates to each k-mer its location in the unitigs using an MPHF and a vector of absolute positions. The authors also propose a sparse version of the index where the vector of positions is sampled to improve space usage at the expense of query time. Blight (Marchet et al., 2021) is another associative dictionary based on minimal perfect hashing. All the super-k-mers having the same minimizer are grouped together into an index partition and a separate MPHF is built for all the k-mers in the partition. Since the k-mers’ offsets are relative to a given partition, the space usage is improved compared to Pufferfish. To further reduce space, a k-mer is associated to the segment of $2^b$ super-k-mers to which it belongs, for a given $b \ge 0$. This reduces the space of the dictionary by b bits per k-mer but a lookup needs to scan (at most) $2^b$ super-k-mers. Very importantly, Pufferfish and Blight are also optimized for streaming membership queries.

4 Sparse and skew hashing

In this section, we describe our main contribution: an exact, associative and compressed dictionary data structure for k-mers, supporting fast Lookup, Access and streaming queries. From a high-level point of view, the dictionary is obtained via a careful combination of minimal perfect hashing and compact encodings. In particular, we show how two important properties of minimizers—those of being sparse (Section 4.1) and skewly distributed (Section 4.2) in DNA strings—can be exploited to achieve an efficient dictionary. We aim at a good trade-off between dictionary space and query efficiency.

Recall from Section 2 that the dictionary is built from a collection of paths covering a dBG (e.g. the maximal stitched unitigs), that is: a collection of strings, each of length at least k symbols, with no duplicate k-mers. For ease of notation, we indicate with p the number of paths in the collection and with N their cumulative length (the total number of DNA bases in the input). The number of (distinct) k-mers is, therefore, $n = N - p(k-1)$.

4.1 Sparse hashing

The starting point for our development is based on the well-known empirical property of minimizers in that consecutive k-mers are likely to have the same minimizer. Thus, instead of working with individual k-mers, we focus on maximal sequences of k-mers having the same minimizer, the so-called super-k-mers (see Section 2). Super-k-mers are useful for the following two reasons.

As super-k-mers are likely to span several consecutive k-mers, we expect to see far fewer super-k-mers than k-mers: approximately $(k - m + 2)/2$ times fewer for a large-enough minimizer length m. Informally, this property allows a space usage proportional to the number of super-k-mers, thus sparsifying the dictionary.

A super-k-mer of length K (in bases) contains $K - k + 1$ k-mers, so it is a space-efficient representation for its constituent k-mers: it takes $2K/(K - k + 1)$ bits/k-mer instead of the trivial cost of 2k bits/k-mer.

Therefore, our refined ambition is to index the super-k-mers of the input using minimizers. Although this can simply be achieved via hashing the minimizers, i.e. by concatenating all the super-k-mers having the same minimizer (like in the Blight index (Marchet et al., 2021)), we claim that this approach is very wasteful in terms of space. In fact, note that each super-k-mer has a fixed cost of $2(k-1)$ bits for representing the ‘tail’ of its string (its last k−1 symbols). This fixed cost is only well amortized (say, negligibly small) when the length of the super-k-mer is much larger than k−1, in other words, when the super-k-mer contains many more k-mers than k−1. While possible in some extreme cases (e.g. the same minimizer repeats in sequence), it is not usually so for the values of k and m used in concrete applications; actually, a super-k-mer is more likely to contain only a handful of k-mers (about $(k - m + 2)/2$ on average, by the estimate recalled in Section 2).

If z indicates the number of super-k-mers in the input, then the space of this simple solution would be, at least, $2 + 2(k-1)z/n$ bits/k-mer (extra space is then needed to accelerate the queries). For example, consider the whole human genome with k = 31 and m = 20. There are more than $396 \times 10^6$ super-k-mers for, roughly, $2.5 \times 10^9$ distinct k-mers. Therefore, partitioning the strings according to super-k-mers would cost at least $2 + 60 \cdot z/n \approx 11.50$ bits/k-mer. As we will better see in Section 5, our dictionary can be tuned to take, overall, 8.28 bits/k-mer in this case (or less).

Thus, it is of utmost importance to not break the strings according to super-k-mers if space-efficiency is a concern. Instead, we identify a super-k-mer in the strings, whose total length is N, with an absolute offset of $\lceil \log_2 N \rceil$ bits. To be precise, an offset is the position, in the concatenation of the strings, of the first base of a super-k-mer. Since k should be chosen large enough to allow good k-mer specificity, $2(k-1)$ will be much larger than $\lceil \log_2 N \rceil$ in practice, even for the largest genomes. For example, we use k = 31 in our experiments, as done in many other works (Almodaresi et al., 2018; Bingmann et al., 2019; Marchet et al., 2021; Rahman and Medvedev, 2020), so that $2(k-1) = 60$, whereas $\lceil \log_2 N \rceil$ is around 30–33 for collections with billions of k-mers (see also Table 2). The use of absolute offsets can almost halve the space overhead for the indexing of super-k-mers in such cases. The space saving is even larger for larger k.

Table 2.

Some basic statistics for the datasets used in the experiments, for k = 31, such as number of: k-mers (n), paths (p), and bases (N)

| Dataset | n | p | N | $\lceil \log_2 N \rceil$ |
|---|---|---|---|---|
| Cod | 502 465 200 | 2 406 681 | 574 665 630 | 30 |
| Kestrel | 1 150 399 205 | 682 344 | 1 170 869 525 | 31 |
| Human | 2 505 445 761 | 13 014 641 | 2 895 884 991 | 32 |
| Bacterial | 5 350 807 438 | 26 449 008 | 6 144 277 678 | 33 |
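As a sanity check on Table 2, the short sketch below recomputes, for each dataset, the number of k-mers from the relation n = N − p(k − 1) (each path of length ℓ contributes ℓ − k + 1 k-mers) and the number of bits of an absolute offset, ⌈log2 N⌉. The helper names are hypothetical; the figures are copied from the table.

```python
K = 31

# dataset -> (n, p, N, bits in the last column), copied from Table 2
TABLE2 = {
    "Cod": (502_465_200, 2_406_681, 574_665_630, 30),
    "Kestrel": (1_150_399_205, 682_344, 1_170_869_525, 31),
    "Human": (2_505_445_761, 13_014_641, 2_895_884_991, 32),
    "Bacterial": (5_350_807_438, 26_449_008, 6_144_277_678, 33),
}


def num_kmers(N, p, k):
    """k-mers in p strings of cumulative length N: every string loses k - 1 positions."""
    return N - p * (k - 1)


def offset_bits(N):
    """ceil(log2(N)) computed with integer arithmetic (valid for N >= 2)."""
    return (N - 1).bit_length()


for name, (n, p, N, bits) in TABLE2.items():
    print(name, num_kmers(N, p, K) == n, offset_bits(N) == bits)
```

Both relations hold for all four rows, which also confirms that an offset (30 to 33 bits) is roughly half the 2(k − 1) = 60 bits of a super-k-mer tail.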

Dictionary layout and compression. Based on the above discussion, we now detail the different components of our dictionary data structure.

Strings. The p strings in the input are written one after the other in a vector of 2N bits (2 bits per input base). We also keep in a sorted integer sequence of length p the endpoints of the strings, to avoid the detection of alien k-mers (k-mers that span the boundary between two consecutive strings). This sequence, Endpoints, is compressed with Elias–Fano and takes at most $p\lceil \log_2(N/p) \rceil + 2p + o(p)$ bits.

Minimizers. Let $\mathcal{M}$ be the set of all distinct minimizers seen in the input, with $M = |\mathcal{M}|$, and z the number of super-k-mers. Clearly, we have $z \ge M$ because a minimizer can appear more than once in the input. Given a minimizer r, let us call the bucket of the minimizer r, $B_r$, the set of all the super-k-mers that have minimizer r. We build an MPHF f for $\mathcal{M}$. The MPHF provides us an addressable space of size M: for a minimizer r, the value $f(r) \in [0, M)$ is the ‘bucket identifier’ of r. We keep an array Sizes of length M + 1, where $\mathit{Sizes}[f(r) + 1] = |B_r|$ is the size of the bucket of r, and $\mathit{Sizes}[0] = 0$. We then take the prefix-sums of Sizes, i.e. we replace $\mathit{Sizes}[i]$ with $\mathit{Sizes}[i] + \mathit{Sizes}[i-1]$ for all i > 0. Therefore, for a given minimizer r, $\mathit{Sizes}[f(r)]$ now indicates that there are $\mathit{Sizes}[f(r)]$ super-k-mers before bucket $B_r$ in the order given by f. The MPHF costs roughly 3 bits per minimizer; the Sizes array is compressed with Elias–Fano too and takes about $M\lceil \log_2(z/M) \rceil + 2M$ bits.

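Offsets. The absolute offsets of the super-k-mers into the strings are stored in an array, Offsets, of length z, in the order given by f. For a minimizer r such that $f(r) = i$, its offsets are written consecutively (and in sorted order) in $\mathit{Offsets}[\mathit{Sizes}[i], \mathit{Sizes}[i+1])$. Note that, by construction, $|B_r| = \mathit{Sizes}[i+1] - \mathit{Sizes}[i]$. The space for the Offsets array is $z\lceil \log_2 N \rceil$ bits.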

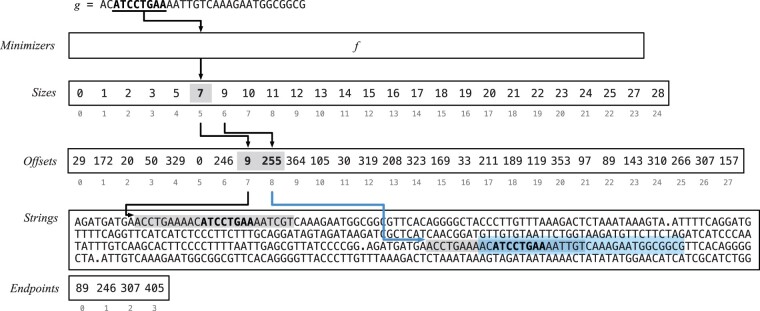

Figure 1 illustrates the different components of the dictionary and provides a concrete example for an input collection of four strings. Next, we describe how the Lookup and Access queries are supported.

Fig. 1.

A schematic representation of the proposed dictionary data structure. The input of the example contains p = 4 strings (pictorially separated by a ‘.’ symbol, but practically by the Endpoints array) for a total of N = 405 bases, and $n = N - p(k-1) = 285$ k-mers for k = 31. There are M = 24 minimizers for m = 8 and z = 28 super-k-mers, thus the Sizes and Offsets arrays have length, respectively, M + 1 = 25 and z = 28. All minimizers have bucket size equal to 1 except for 3 of them (i.e. AACCTGAA, ATCCTGAA, TGTCAAAG) that have bucket size equal to 2. The picture also shows an example of Lookup for the k-mer g = ACATCCTGAAAATTGTCAAAGAATGGCGGCG, whose minimizer r = ATCCTGAA is highlighted in bold font. The flow of the algorithm is represented by the arrows. First, the function f returns the identifier of r as f(r) = 5. Then the bucket size of r is computed: in this case, we have $\mathit{Sizes}[6] - \mathit{Sizes}[5] = 2$, indicating that there are 2 super-k-mers to consider. The offsets of the super-k-mers are retrieved as $\mathit{Offsets}[\mathit{Sizes}[5]]$ and $\mathit{Offsets}[\mathit{Sizes}[5] + 1]$. The two super-k-mers are scanned in Strings starting at these two offsets, respectively. (At most $k - m + 1 = 24$ k-mers are considered in each super-k-mer, as highlighted by the gray box. See the Supplementary Material for a discussion about this point.) Lastly, the k-mer g is found at position w = 8 in the second super-k-mer, i.e. at offset t + 8, where t is the offset of that super-k-mer. Since there are two strings before the one containing g, then j = 2, and we have to discard $j(k-1) = 60$ invalid ranks for the calculation of the identifier i of g. Therefore, we return $i = t + 8 - 60$.

Lookup. We first recall that the Lookup query takes as input a k-mer g and returns a unique identifier i for g: $0 \le i < n$ if g is found in the dictionary, or i = −1 otherwise. The Lookup algorithm is as follows.

We compute the minimizer r of g and its bucket identifier as f(r). Then, we locate the super-k-mers in its bucket $B_r$ by retrieving the corresponding offsets from $\mathit{Offsets}[b, e)$, where $b = \mathit{Sizes}[f(r)]$ and $e = \mathit{Sizes}[f(r) + 1]$. For every offset t in $\mathit{Offsets}[b, e)$, we scan the super-k-mer starting at position t of Strings, comparing its k-mers to the query g. If g is not found, we just return −1. Instead, if g is found in position w in the super-k-mer, we return the ‘identifier’ i of g as $i = t + w - j(k-1)$, where j < p is the number of strings before the one containing the offset t (this quantity is computed from the Endpoints array).

Refer to Figure 1 for an example of Lookup and to the Supplementary Material for further technical details.
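The layout and the Lookup algorithm described in this section can be put together in a compact sketch. It is a simplification written for illustration, not the SSHash code: strings are plain Python strings rather than 2-bit vectors, nothing is compressed with Elias–Fano, a Python `dict` stands in for the MPHF f (so, unlike a real MPHF, it can reject unknown minimizers directly), reverse complements are ignored, and super-k-mers are runs of k-mers sharing the same minimizer occurrence. All names are hypothetical.

```python
from bisect import bisect_right
import hashlib


def h(mmer):
    """Stand-in hash giving a pseudo-random order on m-mers."""
    return hashlib.blake2b(mmer.encode(), digest_size=8).digest()


def minimizer_pos(kmer, m):
    """Position of the leftmost m-mer of `kmer` that minimizes h."""
    return min(range(len(kmer) - m + 1), key=lambda i: (h(kmer[i:i + m]), i))


def build(paths, k, m):
    """Build Strings, Endpoints, f, Sizes and Offsets from the input paths."""
    strings = "".join(paths)
    endpoints, buckets, base = [], {}, 0
    for s in paths:
        prev = None  # absolute position of the previous minimizer occurrence
        for start in range(len(s) - k + 1):
            pos = base + start + minimizer_pos(s[start:start + k], m)
            if pos != prev:  # a new super-k-mer begins at this k-mer
                buckets.setdefault(strings[pos:pos + m], []).append(base + start)
                prev = pos
        base += len(s)
        endpoints.append(base)
    f = {r: i for i, r in enumerate(buckets)}  # dict used in place of an MPHF
    sizes, offsets = [0], []
    for r in buckets:  # same order as f
        offsets.extend(buckets[r])
        sizes.append(len(offsets))  # prefix sums of the bucket sizes
    return {"strings": strings, "endpoints": endpoints, "f": f,
            "sizes": sizes, "offsets": offsets, "k": k, "m": m}


def lookup(d, g):
    """Return the identifier of k-mer g, or -1 if g is not in the dictionary."""
    k, m = d["k"], d["m"]
    p = minimizer_pos(g, m)
    r = g[p:p + m]
    if r not in d["f"]:
        return -1
    b, e = d["sizes"][d["f"][r]], d["sizes"][d["f"][r] + 1]
    for t in d["offsets"][b:e]:
        j = bisect_right(d["endpoints"], t)  # strings before the one containing t
        end = d["endpoints"][j]              # end of the string containing t
        for w in range(k - m + 1):           # at most k - m + 1 k-mers per super-k-mer
            if t + w + k > end:
                break                        # never read across a string boundary
            if d["strings"][t + w:t + w + k] == g:
                return t + w - j * (k - 1)
    return -1
```

For example, with `d = build(["ACGTTGCATGGA", "TTACCGGATCA"], 5, 3)`, the 15 k-mers of the two strings receive the identifiers 0 to 14 in order of appearance, and the alien k-mer `TGGAT` (which only exists across the boundary between the two strings) is reported as absent.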

Double strandedness. A detail of crucial importance for the Lookup algorithm is double strandedness. A k-mer and its reverse complement are considered to be identical. This means that if a k-mer g is not found by the Lookup algorithm, there can still be the possibility for its reverse complement to be found in Strings. Therefore, the actual Lookup routine will first search for g and, only if g is not found, will also search for the reverse complement of g. This effectively doubles the query time for Lookup in the worst case.

To guarantee that a Lookup will always inspect one single bucket, we use a different minimizer computation (during both query and dictionary construction): we select as minimizer the minimum between the minimizer of g and that of its reverse complement. In this way, it is guaranteed that two k-mers being the reverse complements of each other always belong to the same bucket.
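A minimal sketch of this canonical minimizer selection follows. It assumes that ‘minimum’ is taken with respect to the same hash order h used for regular minimizers, and uses BLAKE2b as an arbitrary stand-in for h; the function names are hypothetical.

```python
import hashlib

COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(s):
    """Reverse complement of a DNA string over the alphabet ACGT."""
    return s.translate(COMPLEMENT)[::-1]


def h(mmer):
    """Stand-in hash giving a pseudo-random order on m-mers."""
    return hashlib.blake2b(mmer.encode(), digest_size=8).digest()


def minimizer(kmer, m):
    """The m-mer of `kmer` with the smallest hash value."""
    return min((kmer[i:i + m] for i in range(len(kmer) - m + 1)), key=h)


def canonical_minimizer(kmer, m):
    """Smaller (by h) of the minimizers of `kmer` and of its reverse complement."""
    return min(minimizer(kmer, m), minimizer(reverse_complement(kmer), m), key=h)
```

Because the selection is symmetric in a k-mer and its reverse complement, both always map to the same minimizer and hence to the same bucket.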

This different minimizer selection actually changes the parsing of super-k-mers from the input during the construction of the dictionary. We refer to this parsing modality as canonical henceforth, in contrast to the regular modality we assumed so far. When this modality is chosen, we expect to see an increase in the number of distinct minimizers used (on average, the minimizers of g and of its reverse complement have equal probability of being the minimum one) for a higher space usage, but faster query time.

We will explore the space/time trade-off between the regular and canonical modalities in Section 5.1.

Access. The Access query retrieves the k-mer string g given its identifier i. This identifier represents the rank of g in Strings but, since the strings have variable lengths and their last k−1 symbols do not correspond to valid ranks, we cannot directly access Strings at position i. Instead, we have to compute the offset t corresponding to the k-mer g of rank i. Therefore, we perform a binary search for i in Endpoints to determine t and return the k symbols of Strings starting at position t.
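The rank-to-offset conversion can be sketched as follows, assuming that Endpoints stores the cumulative end position of each string and that identifiers are assigned in order of appearance, so that a k-mer of rank i lying in the string preceded by j other strings starts at offset t = i + j(k − 1). Strings is a plain Python string here and the function name is hypothetical.

```python
def access(strings, endpoints, k, i):
    """Return the k-mer of identifier i.

    strings:   concatenation of the input strings
    endpoints: cumulative end positions of the input strings
    """
    p = len(endpoints)
    n = endpoints[-1] - p * (k - 1)  # total number of k-mers
    if not 0 <= i < n:
        raise IndexError(i)
    # Binary search for the first string whose k-mers include rank i:
    # the first j + 1 strings contain endpoints[j] - (j + 1) * (k - 1) k-mers.
    lo, hi = 0, p - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if i < endpoints[mid] - (mid + 1) * (k - 1):
            hi = mid
        else:
            lo = mid + 1
    j = lo               # number of strings before the one containing the k-mer
    t = i + j * (k - 1)  # skip the k - 1 invalid positions of each previous string
    return strings[t:t + k]
```

With the two strings `ACGTTGCATGGA` and `TTACCGGATCA` (endpoints 12 and 23) and k = 5, identifier 7 maps to offset 7 and identifier 8 to offset 12, the first base of the second string.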

Lastly, in this section, we point out that the minimizer length m controls a space/time trade-off for the proposed dictionary data structure. Small values of m create fewer and longer super-k-mers, thus lowering the space for the smaller values of z and M. On the other hand, m should not be chosen too small to avoid the scan of many super-k-mers at query time. We will experimentally show the trade-off in Section 5.1. Next, we take a deeper look at lookup time.

4.2 Skew hashing

The efficiency of the Lookup query depends on the number of super-k-mers in the bucket of a minimizer, which we refer to as the ‘size’ of the bucket. Since a minimizer can appear multiple times in the input strings, nothing prevents its bucket size from growing unbounded. For example, on the human genome with m = 20 (and even more so for smaller values of m), the largest bucket is large enough that a query inspecting it would be very slow in practice.

To avoid the burden of these heavy buckets, i.e. to guarantee that a Lookup inspects a constant number of super-k-mers in the worst case, we exploit another important property of minimizers: the distribution of the bucket size is (very) skewed for sufficiently large m. That is, most minimizers appear just once and relatively few of them repeat many times—an observation also made in several previous works (see, e.g. Chikhi et al., 2014; Jain et al., 2020).

Table 1 shows an example of such distribution for an initial subset of the k-mers (for k = 31) of the human genome. Similar values were obtained for other genomes. (See the tables for other values of n in Supplementary Material.) More precisely, a value in the table represents the fraction of buckets having size s, for $s = 1, \ldots, 5$ (only the first 5 sizes are shown for conciseness). The important thing to observe in the example is that, for m > 17, the distribution is very skewed, e.g. most buckets (more than 94%) contain just 1 super-k-mer. As we want to take advantage of this distribution, we proceed as follows.

Table 1.

Bucket size distribution (%) for k = 31 and the first k-mers of the human genome, by varying minimizer length m

| Size/m | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 13.7 | 19.8 | 29.7 | 42.4 | 61.5 | 79.5 | 89.8 | 94.4 | 96.3 | 97.1 | 97.5 |
| 2 | 7.5 | 10.6 | 14.4 | 17.7 | 19.4 | 13.6 | 7.3 | 3.9 | 2.4 | 1.7 | 1.4 |
| 3 | 5.2 | 7.3 | 8.8 | 10.4 | 8.4 | 3.7 | 1.4 | 0.8 | 0.5 | 0.4 | 0.4 |
| 4 | 4.0 | 5.5 | 6.0 | 7.0 | 4.1 | 1.3 | 0.5 | 0.3 | 0.2 | 0.2 | 0.2 |
| 5 | 3.2 | 4.4 | 4.5 | 5.0 | 2.2 | 0.6 | 0.3 | 0.2 | 0.1 | 0.1 | 0.1 |

We fix two quantities $\ell$ and L, with $0 \le \ell < L$. By virtue of the skew distribution, we have that the number of buckets whose size is larger than $2^\ell$ is small for a proper choice of $\ell$, as well as the number of k-mers belonging to such buckets. For example, with m = 20 and $\ell = 2$, we see from Table 1 that we have $100 - (97.1 + 1.7 + 0.4 + 0.2) = 0.6\%$ of buckets with more than $2^\ell = 4$ super-k-mers. This allows us to build an MPHF to speed up Lookup but only for a small fraction of the total k-mers, in marked contrast with prior schemes (reviewed in Section 3) that build the function over the entire set of k-mers. For ease of exposition, in the following we assume that $2^L < \max$, where max is the largest bucket size (the corner case for $2^L \ge \max$ is straightforward to handle). For $i = \ell, \ldots, L$, let $\mathcal{K}_i$ be the set of all the k-mers belonging to buckets of size s, with s such that

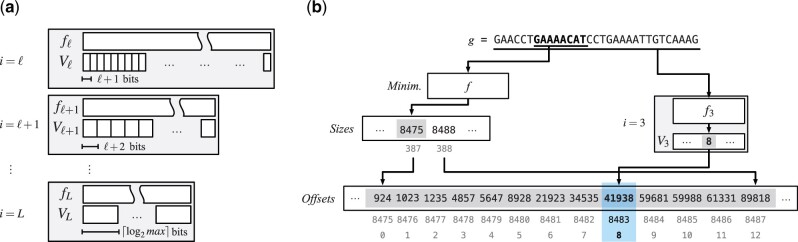

We build an MPHF $f_i$ for each set $\mathcal{K}_i$. Now, given a k-mer $g \in \mathcal{K}_i$ with i < L, we know that it belongs to a bucket containing at most $2^{i+1}$ super-k-mers. Therefore, we can store the identifier of the super-k-mer containing g in a vector, $V_i$, at position $f_i(g)$. Importantly, each integer in $V_i$ requires just i + 1 bits to be represented ($V_L$ is formed by $\lceil \log_2 \max \rceil$-bit integers). Figure 2a illustrates this structure.

Fig. 2.

A schematic view of the skew index component of the dictionary (a), comprising partitions $i = \ell, \ldots, L$, each consisting of an MPHF $f_i$ and a compact vector $V_i$. Let us consider an example (b) of Lookup for g = GAACCTGAAAACATCCTGAAAATTGTCAAAG. Suppose that the bucket for the minimizer contains s = 13 super-k-mers (whose offsets are shown in the picture), thus it belongs to partition i = 3 because $2^3 < 13 \le 2^4$. (Each integer in $V_3$ is less than $2^4 = 16$, so it can be coded in 4 bits.) Now, also suppose that g is located in the 9-th super-k-mer of the bucket (i.e. that of index 8). It would then be time-consuming to fully scan the 8 super-k-mers before the 9-th. Therefore, we retrieve 8 (the index of the 9-th super-k-mer, where g is located) from $V_3[f_3(g)]$ and know that g has to be searched for in the super-k-mer whose offset is the one of index 8 in the bucket.

We point out that, again thanks to the skew distribution, only a small fraction of the k-mers is expected to fall into these partitions. Therefore, for a proper choice of $\ell$ and L, we expect this additional skew index component of the dictionary to take little space, while granting very fast searches.

To make a concrete example, let us consider the human genome and the skew index with L = 12 and a fixed $\ell < L$. So we form $L - \ell + 1$ partitions; each partition is made up of an MPHF and a compact vector. Each MPHF $f_i$ can be tuned to take a few bits per key (see Section 2), whereas we spend i + 1 bits per integer in $V_i$, $\ell \le i < L$. (As already mentioned, the largest bucket size max is known for m = 20, thus we spend $\lceil \log_2 \max \rceil$ bits per integer in $V_L$.) The crucial point is that we have 0.016% of buckets that comprise more than $2^\ell$ super-k-mers, for just 1.86% of the total k-mers. For this reason, the skew index costs less than 0.21 bits/k-mer over a total of 8.28 bits/k-mer (see also Supplementary Fig. S1a).

Accelerated lookup. Using the skew index to accelerate Lookup is simple. As for regular Lookup, we compute the minimizer r of g and the quantities $b = \mathit{Sizes}[f(r)]$ and $e = \mathit{Sizes}[f(r) + 1]$. Therefore, we know that the bucket of r has size $s = e - b$. If $s \le 2^\ell$, then the bucket is ‘small’ and we proceed as already explained in Section 4.1. Otherwise, we know that g, if present in the dictionary, belongs to the partition i of the skew index determined by s that, as per our description above, has MPHF $f_i$ and compact vector $V_i$. Thus, we retrieve the super-k-mer identifier $q = V_i[f_i(g)]$ and finally search for g in the super-k-mer whose offset is $\mathit{Offsets}[b + q]$. (Note that if $q \ge s$, then g cannot belong to the dictionary.) In conclusion, although the skew index performs 2 additional accesses per Lookup, one for $f_i$ and one for $V_i$, it limits the number of super-k-mers inspected in Strings to at most $2^\ell$. (To handle reverse complements, we may have to repeat the process also for the reverse complement of g.)

Figure 2b shows a concrete example of Lookup.
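The choice of the partition for a given bucket size can be sketched as below. The sketch assumes the partitioning rule suggested by the example of Figure 2, namely that partition i (for i < L) collects the buckets of size s with 2^i < s ≤ 2^(i+1), and that the last partition L collects all the larger ones; the function names and the parameter values used in the usage note are hypothetical.

```python
def skew_partition(s, ell, L):
    """Partition index for a bucket of size s, or None for a 'small' bucket.

    Assumed rule: 2**i < s <= 2**(i + 1) for ell <= i < L,
    and partition L for every s > 2**L.
    """
    if s <= (1 << ell):
        return None                # small bucket: scan it as in Section 4.1
    i = (s - 1).bit_length() - 1   # largest i such that 2**i < s
    return min(i, L)


def bits_per_entry(i, L, max_size):
    """Bits of each super-k-mer identifier stored in the vector of partition i."""
    if i < L:
        return i + 1                      # identifiers are smaller than 2**(i + 1)
    return (max_size - 1).bit_length()    # ceil(log2(max_size)) for the last partition
```

With, say, `ell = 2` and `L = 12`, a bucket of 13 super-k-mers falls into partition 3, whose identifiers take 4 bits each, in agreement with the example of Figure 2b.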

4.3 Streaming queries

The Lookup algorithm we have described in the previous section is context-less, i.e. it does not take advantage of the specific, consecutive, query order issued by sequence analysis tasks. As already mentioned in Section 1, given a string P of length $|P| \ge k$, we are interested in determining the result of Lookup for all the k-mers read consecutively from P. We would like to do it faster than just performing $|P| - k + 1$ independent lookups. Therefore, in this section, we describe some important optimizations for streaming lookup queries that work well with the proposed dictionary data structure. The general idea is to cache some extra information about the result for the current k-mer g to speed up the computation for the next k-mer in P, say $g_{nx}$.

The algorithm keeps track of the minimizer r of g and the position j at which the last match was found in Strings, i.e. if g belongs to the dictionary, then it starts at position j of Strings for some j. These two variables make up a state information that is updated during the execution of the algorithm. Given that consecutive k-mers are likely to share the same minimizers, we compare r to the minimizer of $g_{nx}$, say $r_{nx}$.

If $r_{nx} = r$, then we know that $g_{nx}$ belongs to the same bucket $B_r$ of g, thus we avoid recomputing f and spare the accesses to both Sizes and Offsets. Also, if g was actually found in the dictionary (therefore, starting at position j), good chances are that $g_{nx}$ is found at position j + 1. If so, we refer to the latter matching case as an extension. Intuitively, if the algorithm ‘extends’ frequently, i.e. most matches in P are determined by just looking at consecutive k-mers in Strings, then fast evaluation is retained. If the algorithm does not extend from g to $g_{nx}$, i.e. $g_{nx}$ is not found at position j + 1, then we scan the bucket $B_r$. Therefore, if present in the dictionary, $g_{nx}$ will be found at some other position $j_{nx}$. So we update the state by setting $j = j_{nx}$.

If $r_{nx} \ne r$, then we proceed as for a regular Lookup query, locating the new bucket and searching for $g_{nx}$. We then set $r = r_{nx}$ and $j = j_{nx}$.

Of course it can happen that the minimizer r does not belong to the set $\mathcal{M}$ of minimizers indexed by the dictionary. Recall from Section 4.1 that we build the MPHF f for the set $\mathcal{M}$ of all the distinct minimizers in the input. In this case, we are sure that any k-mer g whose minimizer $r \notin \mathcal{M}$ is not to be found in the dictionary. By definition, however, we are not able to detect if $r \notin \mathcal{M}$ using the MPHF f. That is, f will still locate a bucket and all the k-mers in the bucket will have (the same) minimizer, different from r. Therefore, when searching for g, we first compare r with the minimizer of the first k-mer read in the bucket: if they are different, we know that $r \notin \mathcal{M}$ and g does not belong to the dictionary. In the case when $r \notin \mathcal{M}$, the algorithm still caches the last seen minimizer because if $r_{nx} = r$ then also $r_{nx} \notin \mathcal{M}$ and $g_{nx}$ cannot belong to the dictionary.

In conclusion—as long as the minimizer is the same—either the algorithm works locally in the same bucket, or safely skips the search.
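The state updates described above can be illustrated with a small Python sketch. It is a toy model under loud assumptions, not the SSHash implementation: Strings is a single string, a Python dict stands in for the MPHF f together with Sizes and Offsets, minimizers are chosen lexicographically, and reverse complements are ignored. The names `ToyDictionary` and `streaming_query` are hypothetical.

```python
def minimizer(kmer, m):
    # Lexicographically smallest m-mer (assumption: real tools usually
    # order m-mers by a hash value instead).
    return min(kmer[i:i + m] for i in range(len(kmer) - m + 1))


class ToyDictionary:
    """Single-string stand-in for Strings plus a minimizer -> positions map.

    The Python dict plays the role of the MPHF f with Sizes and Offsets.
    """

    def __init__(self, strings, k, m):
        self.strings, self.k, self.m = strings, k, m
        self.buckets = {}
        for pos in range(len(strings) - k + 1):
            r = minimizer(strings[pos:pos + k], m)
            self.buckets.setdefault(r, []).append(pos)

    def scan_bucket(self, r, kmer):
        # Position of kmer inside the bucket of r, or -1 if it is not there.
        for pos in self.buckets.get(r, []):
            if self.strings[pos:pos + self.k] == kmer:
                return pos
        return -1


def streaming_query(d, pattern):
    """Return (hits, extensions) over all k-mers of pattern, in order."""
    hits = extensions = 0
    r, j = None, -1  # state: last minimizer and position of the last match
    for i in range(len(pattern) - d.k + 1):
        g_nx = pattern[i:i + d.k]
        r_nx = minimizer(g_nx, d.m)
        if r_nx == r and j >= 0 and d.strings[j + 1:j + 1 + d.k] == g_nx:
            j += 1  # extension: the next k-mer sits right after the last match
            extensions += 1
        else:
            # Same bucket when r_nx == r, a newly located bucket otherwise.
            j = d.scan_bucket(r_nx, g_nx)
        r = r_nx
        hits += j >= 0
    return hits, extensions


d = ToyDictionary("ACGTACGGTCA", k=5, m=3)
print(streaming_query(d, "GTACGGT"))  # prints (3, 2)
```

In the example, the first k-mer is found by scanning its bucket and the next two are resolved by extension, which is the cheap case the algorithm aims for.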

Another convenient piece of information to cache in the state of the algorithm is the orientation of the last match, i.e. whether the last queried k-mer g was found in the dictionary as g or as its reverse complement. In fact, if g was found as g, then gnx is also likely to be found as gnx and extension should be tried in forward direction (say, from lower to higher offsets in Strings). But if g was found as its reverse complement, then it is more efficient to try to extend the match in backward direction, hence effectively iterating backwards in Strings. To see why, suppose (for ease of exposition) that the whole string P is present in Strings but in its reverse complement form. Then the first k-mer g of P will be found, as its reverse complement, in the last position of the located ‘region’ of Strings, say at some position j. Any attempt to extend the match in forward direction (from j to j + 1) will then fail, and any subsequent gnx will be searched for by re-scanning the bucket. That is, we end up scanning the bucket once for each k-mer of P, i.e. |P| − k + 1 times, with a correspondingly large number of k-mer comparisons. To prevent this quadratic behavior in case of reverse complemented patterns, we try to directly extend the match for gnx moving from j to j − 1.
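The direction-aware extension step can be sketched in Python as follows. This is only an illustration under stated assumptions: Strings is modeled as one plain string, and the helper names `reverse_complement` and `try_extend` are hypothetical, not taken from the SSHash code.

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(kmer):
    # Complement every base, then reverse the string.
    return kmer.translate(COMPLEMENT)[::-1]


def try_extend(strings, k, j, found_forward, g_nx):
    """Try to extend the last match at position j to the next k-mer g_nx.

    If the last match was in forward orientation, look at j + 1; if it was
    found as a reverse complement, look at j - 1 instead.
    Returns the new position, or -1 if the extension fails.
    """
    nxt = j + 1 if found_forward else j - 1
    if nxt < 0 or nxt + k > len(strings):
        return -1
    target = g_nx if found_forward else reverse_complement(g_nx)
    return nxt if strings[nxt:nxt + k] == target else -1


# The pattern P = "GACGTT" is the reverse complement of Strings = "AACGTC".
strings, k = "AACGTC", 3
j = 3  # the first k-mer of P, "GAC", matches as "GTC" at the last position
for g_nx in ["ACG", "CGT", "GTT"]:
    j = try_extend(strings, k, j, found_forward=False, g_nx=g_nx)
    print(g_nx, "->", j)  # positions 2, 1, 0: every step extends backwards
```

With forward-only extension, each of these three k-mers would instead trigger a new bucket scan.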

5 Experiments

In this section, we benchmark the proposed dictionary data structure—which we refer to as SSHash in the following—and compare it against the indexes reviewed in Section 3. For all our experiments, we fix k to 31.

Our implementation of SSHash is written in C++17 and available at https://github.com/jermp/sshash. For the experiments we report here, the code was compiled with gcc 11.2.0 under Ubuntu 19.10 (Linux kernel 5.3.0, 64 bits), using the flags -O3 and -march=native. We do not explicitly use any SIMD instruction in our codebase.

We use a server machine equipped with an Intel i9-9940X processor (clocked at 3.30 GHz) and 128 GB of RAM. The reported timings were collected using a single core of the processor. All dictionaries were fully loaded in internal memory before running the experiments. The SSHash dictionaries were also built entirely in internal memory.

Datasets. We downloaded some DNA collections (in .fasta format) and built the compacted dBG using the tool BCALM (v2) (Chikhi et al., 2016), without any k-mer filtering, to extract the maximal unitigs. We then ran the tool UST (Rahman and Medvedev, 2020) to compute the corresponding path covers. Table 2 reports the basic statistics of the path covers. In particular, we used: the whole genomes of the Atlantic cod (Gadus morhua) and the common kestrel (Falco tinnunculus), the whole GRCh38 human genome (Homo sapiens), and a collection of more than 8000 bacterial genomes from Almodaresi et al. (2018).

At the code repository https://github.com/jermp/sshash we provide further instructions on how to download and prepare the datasets for indexing.

5.1 Tuning

Before comparing SSHash against other dictionaries, we first benchmark SSHash in isolation to fix a suitable choice for m and quantify the impact of the different parsing modalities (regular vs. canonical) that we introduced in Section 4.1. Following our discussion in Section 4.2, we use and L = 12 for all SSHash dictionaries.

To measure query time, we use 10^6 queries and report the mean of 5 measurements. For positive lookups, i.e. those for k-mers present in the dictionary, we sampled 10^6 k-mers uniformly at random from each collection and use them as queries. Very importantly, 50% of them were transformed into their reverse complements to make sure we benchmark the dictionaries in the most general case. For negative lookups, we simply use randomly generated k-mer strings. For Access, we generated 10^6 integers uniformly at random from the valid identifier range of each collection and extract the corresponding k-mer strings.
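A workload of this kind could be generated along the following lines. The Python sketch is a hedged illustration, not the benchmark code used for the experiments: the function names are hypothetical, sampling is done with replacement, and exactly half of the positive queries are reverse complemented (both are assumptions, since the text does not specify these details).

```python
import random
import time

COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(kmer):
    return kmer.translate(COMPLEMENT)[::-1]


def positive_queries(kmers, num_queries, seed=0):
    """Sample k-mers from the collection and reverse complement half of them."""
    rng = random.Random(seed)
    sample = rng.choices(kmers, k=num_queries)  # with replacement (assumption)
    flipped = set(rng.sample(range(num_queries), num_queries // 2))
    return [reverse_complement(q) if i in flipped else q
            for i, q in enumerate(sample)]


def negative_queries(k, num_queries, seed=0):
    """Random strings over ACGT; for k = 31 a chance hit is very unlikely."""
    rng = random.Random(seed)
    return ["".join(rng.choices("ACGT", k=k)) for _ in range(num_queries)]


def mean_ns_per_query(lookup, queries, runs=5):
    """Mean over `runs` measurements of the average time per query, in ns."""
    per_run = []
    for _ in range(runs):
        start = time.perf_counter_ns()
        for q in queries:
            lookup(q)
        per_run.append((time.perf_counter_ns() - start) / len(queries))
    return sum(per_run) / runs


# Toy usage with a Python set standing in for the dictionary.
collection = ["ACGTA", "CCGTA", "GGATC"]
index = set(collection)
queries = positive_queries(collection, num_queries=10)
print(mean_ns_per_query(lambda q: q in index or reverse_complement(q) in index, queries))
```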

Access and iteration time. We first recall that the time for Access (and thus, that for iteration) does not depend on m. The average Access time is, instead, affected by the size of the data structure, i.e. by n and p: Access is on average faster than Lookup since the wanted string is accessed directly, rather than searched for in the dictionary. Iterating through all k-mers in the dictionary is very fast and even independent of n: on average, it costs 20–22 ns/k-mer. Therefore, for the rest of this section, we entirely focus on lookup time.

Space and lookup time. With the help of Supplementary Table S1 (see also the example at page 5), we choose some suitable ranges of m for the different dataset sizes. The space/time trade-off obtained by varying m in such ranges, for both regular and canonical parsing modalities, is shown in Table 3. As we discussed in Section 4.1, and as is apparent from the table, m controls a trade-off between dictionary size and lookup time: the smaller the value of m, the more compact the dictionary, but also the slower the lookup (and vice versa). While it is difficult to tell precisely by how much the space will grow when moving from m to m + 1, in Table 3 the space grows by roughly 0.2–0.6 bits/k-mer, for both regular and canonical parsing. The canonical parsing modality costs roughly 1.1–1.6 bits/k-mer more than the regular one for the same value of m because more distinct minimizers are used. However, the canonical version improves lookup time significantly (especially for negative queries), by a factor of roughly 1.3–1.9× in Table 3, because only one bucket per query is inspected in the worst case, rather than two as in the regular modality.

Table 3.

Space in bits/k-mer (bpk) and Lookup time (indicated by Lkp+ for positive queries; by Lkp– for negative) in average ns/k-mer for regular and canonical SSHash dictionaries by varying minimizer length m

| Dataset | Parsing | m | bpk | Lkp+ | Lkp– |
|---|---|---|---|---|---|
| Cod | Regular | 15 | 6.60 | 1236 | 1267 |
| Cod | Regular | 16 | 6.82 | 1100 | 1174 |
| Cod | Regular | 17 | 6.98 | 1045 | 1158 |
| Cod | Regular | 18 | 7.21 | 1015 | 1157 |
| Cod | Canonical | 15 | 7.68 | 945 | 768 |
| Cod | Canonical | 16 | 7.92 | 834 | 690 |
| Cod | Canonical | 17 | 8.18 | 786 | 672 |
| Cod | Canonical | 18 | 8.47 | 755 | 658 |
| Kestrel | Regular | 16 | 6.19 | 1137 | 1323 |
| Kestrel | Regular | 17 | 6.48 | 1042 | 1265 |
| Kestrel | Regular | 18 | 6.79 | 1005 | 1245 |
| Kestrel | Regular | 19 | 7.12 | 997 | 1240 |
| Kestrel | Canonical | 16 | 7.30 | 882 | 781 |
| Kestrel | Canonical | 17 | 7.68 | 790 | 722 |
| Kestrel | Canonical | 18 | 8.09 | 743 | 696 |
| Kestrel | Canonical | 19 | 8.51 | 730 | 691 |
| Human | Regular | 17 | 7.44 | 1591 | 1668 |
| Human | Regular | 18 | 7.67 | 1459 | 1573 |
| Human | Regular | 19 | 7.95 | 1406 | 1547 |
| Human | Regular | 20 | 8.28 | 1338 | 1530 |
| Human | Canonical | 17 | 8.76 | 1150 | 936 |
| Human | Canonical | 18 | 9.04 | 1054 | 881 |
| Human | Canonical | 19 | 9.39 | 990 | 854 |
| Human | Canonical | 20 | 9.80 | 958 | 838 |
| Bacterial | Regular | 18 | 7.42 | 1535 | 1867 |
| Bacterial | Regular | 19 | 7.80 | 1425 | 1813 |
| Bacterial | Regular | 20 | 8.22 | 1389 | 1780 |
| Bacterial | Regular | 21 | 8.70 | 1368 | 1774 |
| Bacterial | Canonical | 18 | 8.75 | 1129 | 1043 |
| Bacterial | Canonical | 19 | 9.22 | 1051 | 995 |
| Bacterial | Canonical | 20 | 9.75 | 1028 | 947 |
| Bacterial | Canonical | 21 | 10.34 | 998 | 956 |

Note: For each dataset, we indicate promising configurations in bold font.
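The trade-offs visible in Table 3 can be quantified with a few lines of Python. The sketch below only re-derives summary figures from the table entries (copied verbatim); the function names are hypothetical and the ranges it prints are our arithmetic, not additional measurements.

```python
# (bpk, Lkp+, Lkp-) for the four values of m of each dataset, from Table 3.
TABLE3 = {
    "Cod": {
        "regular": [(6.60, 1236, 1267), (6.82, 1100, 1174), (6.98, 1045, 1158), (7.21, 1015, 1157)],
        "canonical": [(7.68, 945, 768), (7.92, 834, 690), (8.18, 786, 672), (8.47, 755, 658)],
    },
    "Kestrel": {
        "regular": [(6.19, 1137, 1323), (6.48, 1042, 1265), (6.79, 1005, 1245), (7.12, 997, 1240)],
        "canonical": [(7.30, 882, 781), (7.68, 790, 722), (8.09, 743, 696), (8.51, 730, 691)],
    },
    "Human": {
        "regular": [(7.44, 1591, 1668), (7.67, 1459, 1573), (7.95, 1406, 1547), (8.28, 1338, 1530)],
        "canonical": [(8.76, 1150, 936), (9.04, 1054, 881), (9.39, 990, 854), (9.80, 958, 838)],
    },
    "Bacterial": {
        "regular": [(7.42, 1535, 1867), (7.80, 1425, 1813), (8.22, 1389, 1780), (8.70, 1368, 1774)],
        "canonical": [(8.75, 1129, 1043), (9.22, 1051, 995), (9.75, 1028, 947), (10.34, 998, 956)],
    },
}


def canonical_overheads():
    """Extra bits/k-mer of canonical over regular parsing, for the same m."""
    return [c[0] - r[0]
            for rows in TABLE3.values()
            for r, c in zip(rows["regular"], rows["canonical"])]


def speedups(column):
    """Regular/canonical lookup time ratio; column 1 is Lkp+, column 2 is Lkp-."""
    return [r[column] / c[column]
            for rows in TABLE3.values()
            for r, c in zip(rows["regular"], rows["canonical"])]


def growth_per_step():
    """Increase in bits/k-mer when moving from m to m + 1."""
    return [b[0] - a[0]
            for rows in TABLE3.values()
            for series in rows.values()
            for a, b in zip(series, series[1:])]


for name, values in [("overhead (bpk)", canonical_overheads()),
                     ("speedup Lkp+", speedups(1)),
                     ("speedup Lkp-", speedups(2)),
                     ("growth per step (bpk)", growth_per_step())]:
    print(f"{name}: {min(values):.2f} to {max(values):.2f}")
```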

Since we seek a good balance between dictionary space and lookup time, in light of the results reported in Table 3, we choose m as follows. For Cod and Kestrel: m = 17 with regular parsing; m = 16 with canonical parsing; for Human and Bacterial: m = 20 with regular parsing; m = 19 with canonical parsing. In the following, we assume these values of m are used and omit the indication from the tables.

In general, we observe that a good value for m satisfies , i.e. m should be chosen as to have—at least—as many possible minimizers as the number of bases in the input. It is therefore recommended to use or .
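Reading the criterion above as "the number 4^m of possible minimizers should be at least the number of bases in the input" (our interpretation of the sentence), the threshold value of m is easy to compute. The Python helper below is a hypothetical illustration; the input size in the example is a placeholder, not a figure from the datasets, and the function does not encode the specific recommendation given in the text.

```python
def smallest_m(num_bases):
    """Smallest m such that 4**m >= num_bases (integer arithmetic only)."""
    m = 0
    while 4 ** m < num_bases:
        m += 1
    return m


# Placeholder input size: 3 billion bases.
print(smallest_m(3_000_000_000))  # prints 16, since 4**15 < 3e9 <= 4**16
```

Values of m slightly above this threshold trade a little space for faster lookups, in line with the trend reported in Table 3.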

5.2 Comparison against other dictionaries

In this section, we compare SSHash against the following state-of-the-art dictionaries that we briefly reviewed in Section 3:

dBG-FM (Chikhi et al., 2014)—An implementation of the popular FM-index tailored for DNA. This implementation is widely used as an exact membership data structure for k-mers (Chikhi et al., 2014; Rahman and Medvedev, 2020), also in the ABySS assembler (Simpson et al., 2009; Jackman et al., 2017). The implementation has a main trade-off parameter (a sampling factor) that we vary as s = 32, 64, 128.

Pufferfish (Almodaresi et al., 2018)—We test both the dense and sparse versions of the index. The sparse version was obtained with parameters s = 9 and e = 4 as used in the original paper.

Blight (Marchet et al., 2021)—We test the index with sampling rate b = 0, 2, 4 and minimizer length m = 10 as suggested in the paper. We recall that a sampling rate of b > 0 reduces the index space by b bits/k-mer at the expense of query time.

We use the C++ implementations from the respective authors: links to the various GitHub libraries are provided in the References. All sources were compiled using the same compilation flags as used for SSHash.

Space and lookup time. We first consider the space of the dictionaries, reported in Table 4. The space of SSHash is significantly better than that of the other approaches based on minimal perfect hashing: going by the bits/k-mer in Table 4, it is roughly 2× (or more) better than Blight, and roughly 3–6× better than Pufferfish. This is so primarily because these approaches build an MPHF for the entire set of k-mers, hence associate a positional information (e.g. in the reference genome) to each k-mer in the input. We point out that, unlike for Blight, this is expected for Pufferfish dense since it was designed exactly for the purpose of reference mapping. (The shaded rows in Table 4, i.e. the second row of each Pufferfish variant, account for the space needed by Pufferfish to only support Lookup, i.e. discarding the color information in its colored dBG structure.)

Table 4.

Dictionary space in total GB and average bits/k-mer (bpk)

| Dictionary | Cod (GB) | Cod (bpk) | Kestrel (GB) | Kestrel (bpk) | Human (GB) | Human (bpk) | Bacterial (GB) | Bacterial (bpk) |
|---|---|---|---|---|---|---|---|---|
| dBG-FM, s = 128 | 0.22 | 3.48 | 0.44 | 3.07 | n/a | n/a | n/a | n/a |
| dBG-FM, s = 64 | 0.27 | 4.38 | 0.55 | 3.86 | n/a | n/a | n/a | n/a |
| dBG-FM, s = 32 | 0.39 | 6.16 | 0.78 | 5.43 | n/a | n/a | n/a | n/a |
| Pufferfish, sparse | 1.75 | 27.80 | 3.69 | 25.66 | 8.87 | 28.32 | 18.91 | 28.28 |
| Pufferfish, sparse (Lookup only) | 1.49 | 23.70 | 3.37 | 23.40 | 7.50 | 23.96 | 16.09 | 24.06 |
| Pufferfish, dense | 2.69 | 42.76 | 5.97 | 41.54 | 14.11 | 45.04 | 30.70 | 45.89 |
| Pufferfish, dense (Lookup only) | 2.43 | 38.66 | 5.65 | 39.28 | 12.74 | 40.68 | 27.88 | 41.68 |
| Blight, b = 4 | 0.91 | 14.53 | 2.16 | 15.00 | 5.04 | 16.11 | 11.40 | 17.04 |
| Blight, b = 2 | 1.04 | 16.57 | 2.45 | 17.04 | 5.67 | 18.13 | 12.74 | 19.05 |
| Blight, b = 0 | 1.17 | 18.61 | 2.74 | 19.06 | 6.32 | 20.17 | 14.12 | 21.11 |
| SSHash, regular | 0.44 | 6.98 | 0.93 | 6.48 | 2.59 | 8.28 | 5.50 | 8.22 |
| SSHash, canonical | 0.50 | 7.92 | 1.00 | 7.30 | 2.94 | 9.39 | 6.17 | 9.22 |

The dBG-FM index is, not surprisingly, the most compact, thanks to the compression power of the Burrows–Wheeler transform (BWT) (Burrows and Wheeler, 1994). (We were unable to build the index correctly on the larger Human and Bacterial datasets.) While dBG-FM is several times smaller than Pufferfish and Blight, note that its smallest version tested (for s = 128) is only about 2× smaller than regular SSHash (Table 4), and this gap diminishes at higher sampling rates. For example, dBG-FM for s = 32 is only about 1.1–1.2× smaller than regular SSHash. However, SSHash answers lookup queries much faster than dBG-FM, as shown in Table 5. A lookup query in the dBG-FM index is implemented as a classic count query on an FM-index (see the paper by Ferragina and Manzini (2000) for details) which, for a pattern of length k, generates at least k cache-misses. This cost is even higher for the handling of reverse complements, which may induce two distinct count queries.
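The space comparison can be made concrete by taking ratios of the bits/k-mer figures in Table 4. The following Python sketch copies a subset of the table and is purely illustrative arithmetic; the helper names are hypothetical, and the conversion from GB to a number of k-mers assumes 1 GB = 10^9 bytes, which is an assumption on our part (it is consistent across the rows of the table).

```python
# Average bits/k-mer from Table 4, in the order Cod, Kestrel, Human, Bacterial.
BPK = {
    "Pufferfish, sparse": [27.80, 25.66, 28.32, 28.28],
    "Pufferfish, dense": [42.76, 41.54, 45.04, 45.89],
    "Blight, b = 4": [14.53, 15.00, 16.11, 17.04],
    "Blight, b = 0": [18.61, 19.06, 20.17, 21.11],
    "SSHash, regular": [6.98, 6.48, 8.28, 8.22],
}
# dBG-FM was built only on Cod and Kestrel.
DBGFM = {"dBG-FM, s = 128": [3.48, 3.07], "dBG-FM, s = 32": [6.16, 5.43]}


def ratios(larger, smaller):
    """Element-wise ratio; zip stops at the shorter list."""
    return [a / b for a, b in zip(larger, smaller)]


def estimated_num_kmers(size_gb, bits_per_kmer):
    """Number of k-mers implied by a size in GB (1 GB = 10**9 bytes, assumed)."""
    return size_gb * 8e9 / bits_per_kmer


sshash = BPK["SSHash, regular"]
for name, bpk in BPK.items():
    if name != "SSHash, regular":
        print(name, [f"{x:.2f}x" for x in ratios(bpk, sshash)])
for name, bpk in DBGFM.items():
    print("SSHash, regular vs", name, [f"{x:.2f}x" for x in ratios(sshash, bpk)])
print(f"Human: about {estimated_num_kmers(2.59, 8.28):.3g} k-mers")
```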

Table 5.

Dictionary Lookup time in average ns/k-mer

| Dictionary | Cod (Lkp+) | Cod (Lkp–) | Kestrel (Lkp+) | Kestrel (Lkp–) | Human (Lkp+) | Human (Lkp–) | Bacterial (Lkp+) | Bacterial (Lkp–) |
|---|---|---|---|---|---|---|---|---|
| dBG-FM, s = 128 | 22 980 | 16 501 | 23 934 | 16 764 | n/a | n/a | n/a | n/a |
| dBG-FM, s = 64 | 15 013 | 10 919 | 15 929 | 11 462 | n/a | n/a | n/a | n/a |
| dBG-FM, s = 32 | 11 386 | 7929 | 11 703 | 8073 | n/a | n/a | n/a | n/a |
| Pufferfish, sparse | 1110 | 700 | 5456 | 769 | 13 656 | 862 | 27 748 | 983 |
| Pufferfish, dense | 624 | 439 | 635 | 485 | 720 | 519 | 816 | 582 |
| Blight, b = 4 | 2520 | 2751 | 2743 | 3104 | 2820 | 3329 | 3105 | 3913 |
| Blight, b = 2 | 1800 | 1643 | 1916 | 1820 | 2008 | 1975 | 2095 | 2146 |
| Blight, b = 0 | 1571 | 1317 | 1692 | 1472 | 1780 | 1610 | 1859 | 1751 |
| SSHash, regular | 1045 | 1158 | 1042 | 1265 | 1338 | 1530 | 1389 | 1780 |
| SSHash, canonical | 834 | 690 | 882 | 781 | 990 | 854 | 1051 | 995 |

We observe that SSHash regular is as fast as (or faster than) Blight’s fastest version, for b = 0, and faster than the versions with higher b. SSHash canonical is always much faster than Blight. Pufferfish dense is instead faster than SSHash thanks to its simpler lookup procedure, which just needs to retrieve the absolute offset of a k-mer using hashing and check the k-mer against the reference string. However, we point out that: (i) this higher Lookup efficiency comes at a significant penalty in space effectiveness compared to SSHash, and (ii) the sparse variant’s performance degrades on larger datasets.

Streaming membership query time. We now consider streaming membership queries. Pufferfish and Blight are also optimized to answer this kind of stateful query. To query the dictionaries, we use some reads (in .fastq format) downloaded from the European Nucleotide Archive and related to each dataset. Cod: run accession SRR12858649 with 2 041 092 reads, each of length 110 bases; Kestrel: run accession SRR11449743 with 14 647 106 reads, each of length 125 bases; Human: run accession SRR5833294 with 34 129 891 reads, each of length 76 bases; Bacterial: run accession SRR5901135 with 4 628 576 reads of variable length (a sequencing run of Escherichia coli).

We look up every k-mer read in sequence from the query files. For all the indexes, we just count the number of returned results rather than saving them to a vector. The results are reported in Table 6.

Table 6.

Query time for streaming membership queries for various dictionaries

(a) high-hit workload. Query files and hit rates: Cod, SRR12858649 (81.37% hits); Kestrel, SRR11449743 (74.60% hits); Human, SRR5833294 (91.65% hits); Bacterial, SRR5901135 (87.79% hits).

| Dictionary | Cod (Tot) | Cod (Avg) | Kestrel (Tot) | Kestrel (Avg) | Human (Tot) | Human (Avg) | Bacterial (Tot) | Bacterial (Avg) |
|---|---|---|---|---|---|---|---|---|
| Pufferfish, sparse | 0.6 | 214 | 14.1 | 609 | 17.0 | 651 | 9.1 | 691 |
| Pufferfish, dense | 0.2 | 92 | 8.5 | 368 | 10.5 | 402 | 5.3 | 404 |
| Blight, b = 4 | 2.1 | 766 | 32.5 | 1400 | 27.3 | 1041 | 11.4 | 864 |
| Blight, b = 2 | 1.2 | 453 | 16.6 | 714 | 17.5 | 670 | 8.6 | 648 |
| Blight, b = 0 | 0.8 | 282 | 10.8 | 464 | 11.5 | 440 | 5.8 | 434 |
| SSHash, regular | 0.5 | 166 | 6.2 | 267 | 8.2 | 311 | 3.0 | 223 |
| SSHash, canonical | 0.3 | 111 | 5.1 | 219 | 6.7 | 253 | 2.4 | 184 |

(b) low-hit workload. Query files and hit rates: Cod, SRR11449743 (0.659% hits); Kestrel, SRR12858649 (0.484% hits); Human, SRR5901135 (0.002% hits); Bacterial, SRR5833294 (0.086% hits).

| Dictionary | Cod (Tot) | Cod (Avg) | Kestrel (Tot) | Kestrel (Avg) | Human (Tot) | Human (Avg) | Bacterial (Tot) | Bacterial (Avg) |
|---|---|---|---|---|---|---|---|---|
| Pufferfish, sparse | 14.6 | 627 | 0.9 | 312 | 11.3 | 855 | 25.5 | 975 |
| Pufferfish, dense | 8.7 | 374 | 0.2 | 92 | 5.8 | 435 | 13.6 | 518 |
| Blight, b = 4 | 72.2 | 3112 | 6.6 | 2407 | 35.7 | 2704 | 253.2 | 9675 |
| Blight, b = 2 | 45.9 | 1978 | 3.0 | 1115 | 19.1 | 1445 | 117.7 | 4498 |
| Blight, b = 0 | 18.1 | 780 | 1.8 | 655 | 14.4 | 1088 | 32.2 | 1232 |
| SSHash, regular | 10.7 | 463 | 0.9 | 314 | 6.2 | 463 | 14.3 | 544 |
| SSHash, canonical | 5.1 | 220 | 0.4 | 155 | 2.5 | 183 | 6.4 | 244 |

Note: The query time is reported as total time in minutes (Tot) and as average ns/k-mer (Avg). We also indicate the query file (SRR number) and the percentage of hits. Both high-hit (more than 70% hits) and low-hit (less than 1% hits) workloads are considered.
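The two quantities reported in Table 6 are tied together by the number of k-mers queried. As a rough consistency check, the Python sketch below recomputes the total time from the average, assuming that every read of length l contributes l − k + 1 k-mers with k = 31 (an assumption: reads containing invalid characters, if any, would contribute fewer). The function names are hypothetical.

```python
def num_kmers(num_reads, read_length, k=31):
    """k-mers in a set of fixed-length reads, l - k + 1 per read (assumed)."""
    return num_reads * max(0, read_length - k + 1)


def total_minutes(avg_ns_per_kmer, kmers):
    """Total query time in minutes implied by an average in ns/k-mer."""
    return avg_ns_per_kmer * kmers / 1e9 / 60


# Read counts and lengths as stated for the query files.
cod = num_kmers(2_041_092, 110)       # SRR12858649
kestrel = num_kmers(14_647_106, 125)  # SRR11449743

# SSHash regular, high-hit workload: 166 and 267 ns/k-mer in Table 6a.
print(f"Cod: {total_minutes(166, cod):.2f} min")          # table reports 0.5
print(f"Kestrel: {total_minutes(267, kestrel):.2f} min")  # table reports 6.2
```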

In general terms, we see that SSHash is either comparable to or faster than Pufferfish and Blight. This holds true for both high-hit workloads (more than 70% hits, i.e. k-mers present in the dictionary) and low-hit workloads (less than 1% hits). It is important to benchmark the dictionaries under these two different query scenarios as both situations are meaningful in practice. (In our experiments, low-hit workloads are obtained by querying the dictionaries using a different query file, as indicated in Table 6.) Indeed, observe that while Pufferfish’s performance is robust under both scenarios, Blight’s query time significantly degrades when most queries are negative, especially for b > 0. Regular SSHash is also noticeably slower for low-hit workloads than for high-hit workloads. This is expected, however, because almost all queries exhaustively inspect two buckets per k-mer, as we explained in Section 4.1. Note that its performance is anyway better than Blight’s and not much worse than Pufferfish’s (dense variant). The canonical version of SSHash protects against this behavior in case of low-hit workloads and, in fact, is generally the fastest dictionary.

Another meaningful point to mention is that SSHash does not allocate extra memory at query time, i.e. only the memory of the index, as reported in Table 4, is retained (the memory for the state information maintained by the streaming algorithm described in Section 4.3 is constant). Pufferfish also does not allocate extra memory. Blight, instead, consumes more memory at query time than that required by its index layout on disk. For example, to perform the queries on the Human dataset in Table 6a, Blight with b = 0 uses a maximum resident set size of 7.51 GB compared to the 6.32 GB taken by its index on disk (23.98 versus 20.17 bits/k-mer). This effect is even more pronounced for higher values of b.
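The bits/k-mer figure quoted for the resident set size follows from the on-disk figures by simple proportion. The short Python check below is illustrative arithmetic only; it assumes 1 GB = 10^9 bytes and derives the number of k-mers from the rounded values in the text, so the result matches the quoted 23.98 only up to rounding.

```python
def bits_per_kmer(size_gb, num_kmers):
    """Bits per k-mer for a structure of size_gb gigabytes (10**9 bytes each)."""
    return size_gb * 8e9 / num_kmers


# Number of k-mers implied by Blight (b = 0) on Human: 6.32 GB at 20.17 bits/k-mer.
human_kmers = 6.32 * 8e9 / 20.17

# Same index measured by its maximum resident set size at query time.
print(f"{bits_per_kmer(7.51, human_kmers):.2f} bits/k-mer")
```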

Construction time. Table 7 reports the time and internal memory used to build the dictionaries. The dBG-FM index needs to build the BWT of the input prior to indexing. This step can be very time consuming for large collections such as the ones of practical interest. Thus, another important advantage of schemes based on hashing compared to BWT-based indexes is that they require significantly less time to build. This is evident from the results reported in the table.

Table 7.

Dictionary construction times in minutes (using a single processing thread) and peak internal memory used during construction in GB (Blight’s performance was the same for all values of b in the experiment)

| Dictionary | Cod (Min) | Cod (GB) | Kestrel (Min) | Kestrel (GB) | Human (Min) | Human (GB) | Bacterial (Min) | Bacterial (GB) |
|---|---|---|---|---|---|---|---|---|
| dBG-FM, s = 128 | 28.5 | 0.5 | 100.0 | 0.7 | n/a | n/a | n/a | n/a |
| dBG-FM, s = 64 | 28.5 | 0.6 | 100.0 | 0.9 | n/a | n/a | n/a | n/a |
| dBG-FM, s = 32 | 28.5 | 0.7 | 100.0 | 1.1 | n/a | n/a | n/a | n/a |
| Pufferfish, sparse | 15.5 | 3.3 | 35.2 | 6.7 | 86.0 | 19.4 | 200.8 | 40.1 |
| Pufferfish, dense | 13.0 | 2.8 | 29.2 | 5.9 | 70.7 | 14.0 | 173.2 | 30.4 |
| Blight | 5.0 | 3.3 | 11.0 | 7.0 | 25.0 | 7.5 | 50.0 | 15.8 |
| SSHash, regular | 1.5 | 2.6 | 3.8 | 5.7 | 12.5 | 15.4 | 29.6 | 33.4 |
| SSHash, canonical | 2.0 | 2.8 | 4.4 | 5.8 | 16.2 | 17.3 | 36.0 | 36.6 |

Due to space constraints, we do not describe SSHash’s construction algorithm in this article. We just point out that the construction is efficient; indeed, SSHash took much less time to build on the test collections than both Blight and Pufferfish. Its memory usage is comparable to that of Pufferfish, while Blight scales better in this regard (e.g. by retaining less internal memory on Human and Bacterial) as it partially uses external memory. In future work, we will adapt the SSHash dictionary construction to use external memory too.

We also note that SSHash canonical consistently takes more time and space to build than the regular variant: this is a direct consequence of the denser sampling of minimizers.

6 Conclusions and future work

We have studied the compressed dictionary problem for k-mers and proposed a solution, SSHash, based on a careful orchestration of minimal perfect hashing and compact encodings. In particular, SSHash is an exact and associative k-mer dictionary designed to deliver good practical performance. From a technical perspective, SSHash exploits the sparseness and the skewed distribution of k-mer minimizers to achieve compact space, while allowing fast lookup queries.

We tested SSHash on collections of billions of k-mers and compared it against other indexes, under different query workloads (high- versus low-hit) and modalities (random versus streaming). Our implementation of SSHash is written in C++ and open source.

Compared to BWT-based indexes (like the dBG-FM index), SSHash is more than one order of magnitude faster at lookup for roughly 1.1–2.4× larger space, depending on the configuration (Table 4). Compared to prior schemes based on minimal perfect hashing (like Pufferfish and Blight), SSHash is significantly more compact (roughly 2–6× in Table 4, depending on the configuration) without sacrificing query efficiency. Indeed, SSHash is also the fastest dictionary for streaming membership queries. For these reasons, we believe that SSHash embodies a superior space/time trade-off for the problem tackled in this work.

Several avenues for future work are possible. We mention some promising ones. First, we will engineer the dictionary construction to use multi-threading and external memory. Parallel query processing is also interesting; since SSHash is a read-only data structure, its queries are amenable to parallelism. We could also add support for other types of queries, such as navigational queries (Chikhi et al., 2014) that, given a k-mer g, ask to enumerate all the extensions of g (i.e. in both forward and backward direction) that are present in the dictionary. Another promising direction could adapt the SSHash data structure to also store the abundances of k-mers, which is a separate but related problem in the literature (Italiano et al., 2021; Shibuya et al., 2021). Based on the observation that consecutive k-mers tend to have the same or very similar abundance we expect to add a small extra space to SSHash to store this information. In this article, we focused on minimizers for their simplicity and practical efficiency but one could also explore the effects of replacing the minimizers with other types of string sampling mechanisms (Loukides and Pissis, 2021; Sahlin, 2021). Lastly, we also plan to study the approximate version of the dictionary problem where it is allowed to tolerate a prescribed false positive rate.

Supplementary Material

Acknowledgments

I thank Rossano Venturini for useful discussions on this work. I also thank the reviewers for their valuable suggestions that led to an improved presentation of the article.

Data availability

The data used in this work can be downloaded and pre-processed using the instructions at https://github.com/jermp/sshash#datasets.

Funding

This work was partially supported by the projects: MobiDataLab (EU H2020 RIA, grant agreement No 101006879) and OK-INSAID (MIUR-PON 2018, grant agreement No ARS01_00917).

Conflict of Interest: none declared.

References

- Almodaresi F. et al. (2018) A space and time-efficient index for the compacted colored de Bruijn graph. Bioinformatics, 34, i169–i177.

- Bingmann T. et al. (2019) COBS: a compact bit-sliced signature index. In: International Symposium on String Processing and Information Retrieval. Springer, pp. 285–303.

- Břinda K. et al. (2021) Simplitigs as an efficient and scalable representation of de Bruijn graphs. Genome Biol., 22, 1–24.

- Burrows M., Wheeler D. (1994) A block-sorting lossless data compression algorithm. In: Digital SRC Research Report.

- Chikhi R. et al. (2014) On the representation of de Bruijn graphs. In: International Conference on Research in Computational Molecular Biology. Springer, pp. 35–55. https://github.com/jts/dbgfm.

- Chikhi R. et al. (2016) Compacting de Bruijn graphs from sequencing data quickly and in low memory. Bioinformatics, 32, i201–i208.

- Chikhi R. et al. (2021) Data structures to represent a set of k-long DNA sequences. ACM Comput. Surv., 54, 1–22.

- Elias P. (1974) Efficient storage and retrieval by content and address of static files. J. ACM, 21, 246–260.

- Fano R.M. (1971) On the Number of Bits Required to Implement an Associative Memory. Memorandum 61, Computer Structures Group, MIT.

- Ferragina P., Manzini G. (2000) Opportunistic data structures with applications. In: Proceedings 41st Annual Symposium on Foundations of Computer Science. IEEE, pp. 390–398.

- Holley G., Melsted P. (2020) Bifrost: highly parallel construction and indexing of colored and compacted de Bruijn graphs. Genome Biol., 21, 1–20.

- Italiano G. et al. (2021) Compressed weighted de Bruijn graphs. In: 32nd Annual Symposium on Combinatorial Pattern Matching (CPM 2021). pp. 1–16.

- Jackman S.D. et al. (2017) ABySS 2.0: resource-efficient assembly of large genomes using a bloom filter. Genome Res., 27, 768–777.

- Jain C. et al. (2020) Weighted minimizer sampling improves long read mapping. Bioinformatics, 36, i111–i118.

- Khan J., Patro R. (2021) Cuttlefish: fast, parallel and low-memory compaction of de Bruijn graphs from large-scale genome collections. Bioinformatics, 37(Suppl_1), i177–i186.

- Khan J. et al. (2021) Scalable, ultra-fast, and low-memory construction of compacted de Bruijn graphs with cuttlefish 2. bioRxiv.

- Li Y. et al. (2013) Memory efficient minimum substring partitioning. Proc. VLDB Endow., 6, 169–180.

- Loukides G., Pissis S.P. (2021) Bidirectional string anchors: a new string sampling mechanism. In: 29th Annual European Symposium on Algorithms (ESA 2021). 2021, pp. 1–64.

- Marchet C. et al. (2021) Blight: efficient exact associative structure for k-mers. Bioinformatics, 37, 2858–2865.

- Martínez-Prieto M.A. et al. (2016) Practical compressed string dictionaries. Inf. Syst., 56, 73–108.

- Mehlhorn K. (1982) On the program size of perfect and universal hash functions. In: 23rd Annual Symposium on Foundations of Computer Science. IEEE, pp. 170–175.

- Perego R. et al. (2021) Compressed indexes for fast search of semantic data. IEEE Trans. Knowl. Data Eng., 33, 3187–3198.

- Pibiri G.E., Trani R. (2021a) PTHash: Revisiting FCH minimal perfect hashing. In: SIGIR ’21: The 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, Canada, July 11-15, 2021. ACM, pp. 1339–1348.

- Pibiri G.E., Trani R. (2021b) Parallel and external-memory construction of minimal perfect hash functions with PTHash. CoRR, abs/2106.02350.

- Pibiri G.E., Venturini R. (2017) Clustered Elias-Fano indexes. ACM Trans. Inf. Syst., 36, 2:1–2:33.

- Pibiri G.E., Venturini R. (2019) Handling massive N-gram datasets efficiently. ACM Trans. Inf. Syst., 37, 25:1–25:41.

- Pibiri G.E., Venturini R. (2021) Techniques for inverted index compression. ACM Comput. Surv., 53, 125:1–125:36.

- Rahman A., Medvedev P. (2020) Representation of k-mer sets using spectrum-preserving string sets. In: International Conference on Research in Computational Molecular Biology. Springer, pp. 152–168. https://github.com/medvedevgroup/UST.

- Roberts M. et al. (2004) Reducing storage requirements for biological sequence comparison. Bioinformatics, 20, 3363–3369.

- Robidou L., Peterlongo P. (2021) findere: fast and precise approximate membership query. In: String Processing and Information Retrieval. Springer International Publishing, Cham, pp. 151–163. ISBN 978-3-030-86692-1.

- Sahlin K. (2021) Effective sequence similarity detection with strobemers. Genome Res., 31, 2080–2094.

- Schleimer S. et al. (2003) Winnowing: local algorithms for document fingerprinting. In: Proceedings of the 2003 ACM SIGMOD international conference on Management of data. pp. 76–85.

- Shibuya Y. et al. (2021) Space-efficient representation of genomic k-mer count tables. In: 21st International Workshop on Algorithms in Bioinformatics (WABI 2021), Vol. 201. pp. 8–1.

- Simpson J.T. et al. (2009) ABySS: a parallel assembler for short read sequence data. Genome Res., 19, 1117–1123.

- Solomon B., Kingsford C. (2016) Fast search of thousands of short-read sequencing experiments. Nat. Biotechnol., 34, 300–302.

- Zheng H. et al. (2020) Improved design and analysis of practical minimizers. Bioinformatics, 36, i119–i127.
