Summary

Several reviews have examined artificial intelligence (AI) techniques for improving pregnancy outcomes, but none has focused on ultrasound images. This survey explores how AI can assist with fetal growth monitoring via ultrasound images. We report our findings following the PRISMA guidelines. We conducted a comprehensive search of eight bibliographic databases; of 1269 studies, 107 were included. 2D ultrasound images were used more often (88) than 3D and 4D ultrasound images (19). Classification was the most used method (42), followed by segmentation (31), classification integrated with segmentation (16), and other miscellaneous methods such as object detection, regression, and reinforcement learning (18). The most commonly studied areas within the pregnancy domain were the fetus head (43), fetus body (31), fetus heart (13), fetus abdomen (10), and fetus face (10). This survey will promote the development of improved AI models for fetal clinical applications.

Subject areas: Health informatics, Diagnostic technique in health technology, Medical imaging, Artificial intelligence

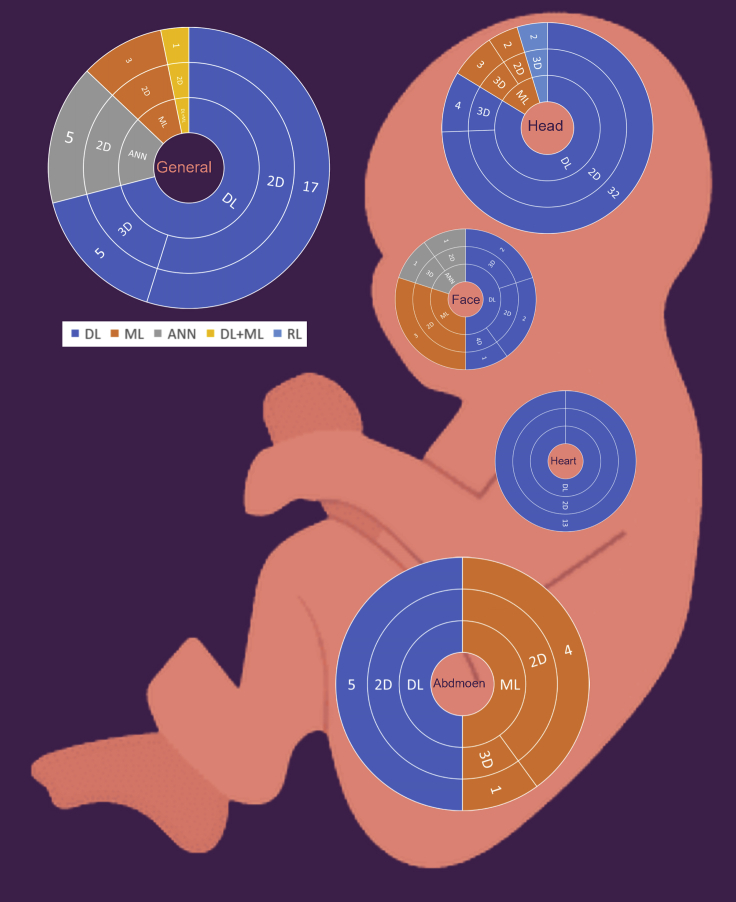

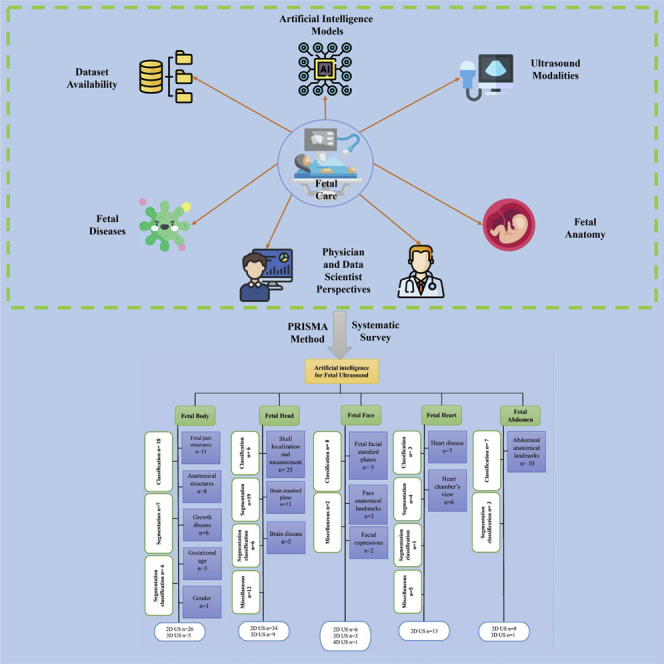

Graphical abstract

Highlights

- Artificial intelligence studies to monitor fetal development via ultrasound images
- Fetal issues categorized into five categories: general, head, heart, face, abdomen
- The most used AI techniques are classification, segmentation, object detection, and RL
- The research and practical implications are included

Introduction

Background

Artificial intelligence (AI) is a broad discipline that aims to replicate, via artificial methods, the inherent intelligence shown by people (Hassabis et al., 2017). Recently, AI techniques have been widely utilized in the medical sector (Miller and Brown, 2018). Historically, AI techniques were standalone systems with no direct link to medical imaging. With the development of new technology, the idea of ‘joint decision-making’ between people and AI offers the potential to boost performance in the area of medical imaging (Savadjiev et al., 2019).

In computer science, machine learning (ML), deep learning (DL), artificial neural networks (ANN), and reinforcement learning (RL) are subsets of AI that are used to perform different tasks on medical images, such as classification, segmentation, object identification, and regression (Fatima and Pasha, 2017; Kim et al., 2019b; Shahid et al., 2019). Diagnosis using computer-aided detection (CAD) has moved toward becoming an AI-automated process in medical imaging (Castiglioni et al., 2021), which covers most medical imaging data such as X-ray radiography, fluoroscopy, MRI, medical ultrasonography or ultrasound, endoscopy, elastography, tactile imaging, and thermography (Alzubaidi et al., 2021a, 2021b; Fujita, 2020). However, digitized medical images come with a plethora of new information, possibilities, and challenges. AI techniques are able to address some of these challenges by showing impressive accuracy and sensitivity in identifying imaging abnormalities. These techniques promise to enhance tissue-based detection and characterization, with the potential to improve the diagnosis of diseases (Tang, 2020).

At present, the use of AI techniques in medical images has been discussed in depth across many medical disciplines, including identifying cardiovascular abnormalities, detecting fractures and other musculoskeletal injuries, aiding in diagnosing neurological diseases, reducing thoracic complications and conditions, screening for common cancers, and many other prognosis and diagnosis tasks (Castiglioni et al., 2021; Cheikh et al., 2020; Deo, 2015; Handelman et al., 2018; Miotto et al., 2017). Furthermore, AI techniques have shown promising findings when applied to prenatal medical images, such as monitoring fetal development at each stage of pregnancy, predicting placental health, and identifying potential complications (Balayla and Shrem, 2019; Chen et al., 2021a; Iftikhar et al., 2020; Raef and Ferdousi, 2019). AI techniques may assist with detecting several fetal diseases and adverse pregnancy outcomes with complex etiologies and pathogeneses, such as amniotic band syndrome, congenital diaphragmatic hernia, congenital high airway obstruction syndrome, fetal bowel obstruction, gastroschisis, omphalocele, pulmonary sequestration, and sacrococcygeal teratoma (Correa et al., 2016; Larsen et al., 2013; Sen, 2017). Therefore, more research is needed to understand the role of AI techniques in the early stage of pregnancy, in order to prevent and reduce unfavorable outcomes, to provide an understanding of fetal anomalies and illnesses throughout pregnancy, and to drastically reduce the need for more invasive diagnostic procedures that may be harmful to the fetus (Chen et al., 2021b).

Various imaging modalities (e.g., ultrasound, MRI, and computed tomography (CT)) are available and can be utilized by AI techniques during pregnancy (Kim et al., 2019b). In the medical literature, ultrasound imaging has become popular and is used during all pregnancy trimesters. Ultrasound is crucial for making diagnoses, along with tracking fetal growth and development. In addition, ultrasound can provide precise fetal anatomical information as well as high-quality images and increased diagnostic accuracy (Avola et al., 2021; Chen et al., 2021a). Ultrasound has numerous benefits and few limitations. The first major benefit is cost: the device is inexpensive to acquire, particularly when compared to other instruments used on the same organs, such as MRI or positron emission tomography (PET). Unlike other acquisition equipment for comparable purposes, ultrasound scanners are also portable and long-lasting. The second major benefit is that, unlike MRI and CT, the ultrasound machine does not pose any health concerns for pregnant women, as the produced signals are entirely safe for both the mother and the fetus (Whitworth et al., 2015). Although the literature exploring the benefits of AI technologies for ultrasound imaging diagnostics in prenatal care has grown, more research is needed to understand the different roles AI can have in diagnostic prenatal care using ultrasound images.

Research objective

The use of AI techniques on ultrasound images of pregnant women has not been thoroughly reviewed. Early reviews have attempted to summarize the use of AI in the prenatal period (Al-yousif et al., 2021; Arjunan and Thomas, 2020; Avola et al., 2021; Davidson and Boland, 2021; Garcia-Canadilla et al., 2020; Hartkopf et al., 2019; Kaur et al., 2017; Komatsu et al., 2021a; Shiney et al., 2017; Torrents-Barrena et al., 2019). However, these reviews are not comprehensive because they only target specific issues such as fetal cardiac function (Garcia-Canadilla et al., 2020), Down syndrome (Arjunan and Thomas, 2020), and fetal CNS (Hartkopf et al., 2019). Similarly, other reviews have not focused on AI techniques and ultrasound images as the primary intervention (Kaur et al., 2017; Shiney et al., 2017; Torrents-Barrena et al., 2019). Two reviews covered general AI techniques utilized in the prenatal period through ultrasound, but they did not focus on the fetus as the primary population (Avola et al., 2021; Davidson and Boland, 2021). Another systematic review (Al-yousif et al., 2021) addressed AI classification technologies for fetal health, but it only targeted cardiotocography. Another review (Komatsu et al., 2021a) introduced various areas of medical AI research, including obstetrics, but its main focus was on US imaging in general. Therefore, a comprehensive survey is necessary to understand the role of AI technologies for the entire prenatal care period using ultrasound images. This work will be the first comprehensive study that systematically reviews how AI techniques can assist during pregnancy screenings of fetal development and improve fetal growth monitoring.
In this survey we aim to: (1) describe the application of AI techniques to different fetal spatial ultrasound dimensions; (2) discuss how different methodologies are used (classification, segmentation, object detection, regression, and RL); (3) highlight dataset acquisition and availability; and (4) identify practical and research implications and gaps for future research.

Results

Search results

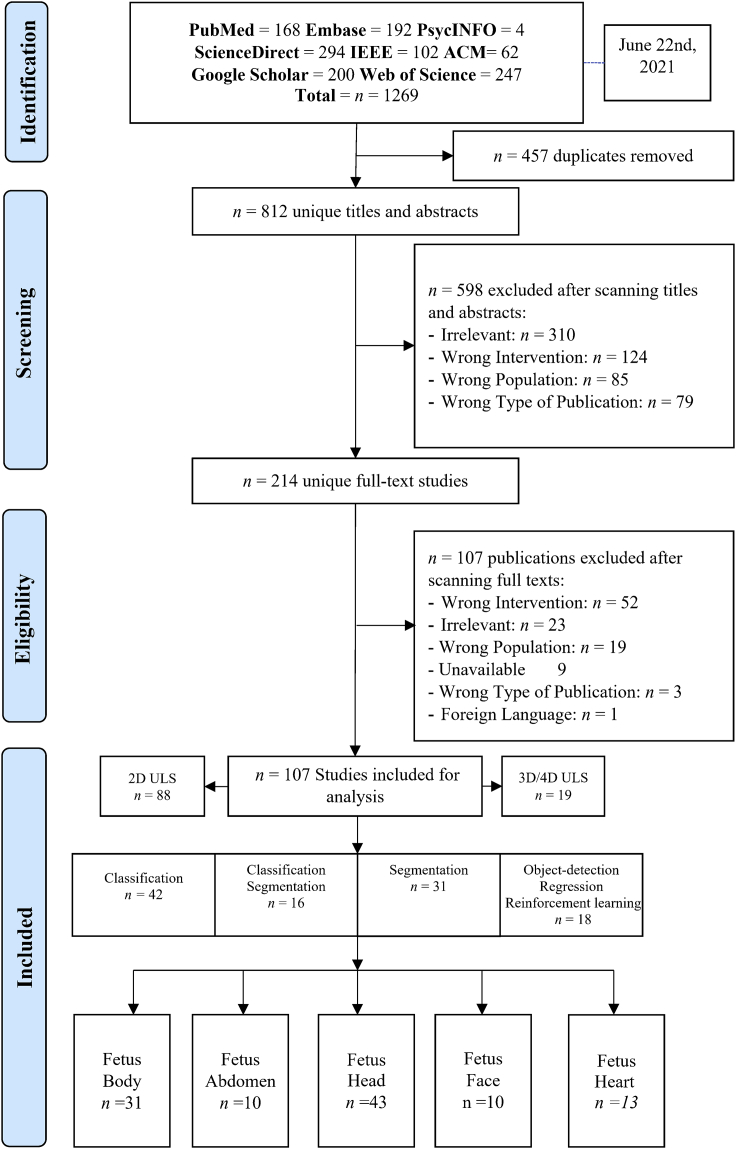

As shown in Figure 1, the search yielded a total of 1269 citations. After excluding 457 duplicates, there were 812 unique titles and abstracts. A total of 598 citations were excluded after evaluating the titles and abstracts. After full-text screening, 107 of the remaining 214 papers were excluded. The narrative synthesis therefore includes a total of 107 studies. Across screening steps, both authors recorded the reason for excluding each study to ensure the reliability of the work. The total number of excluded studies was n = 704. These studies did not meet the selection criteria for the following reasons: (1) irrelevant, (2) wrong intervention, (3) wrong population, (4) wrong publication type, (5) unavailable, and (6) foreign language article. These terms are defined in Table 1.
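The screening flow described above can be checked with a few subtractions. The following is a minimal sketch that only restates the counts reported in this paragraph; the variable names are illustrative and not taken from the original study.

```python
# Counts as reported in the search-results paragraph.
identified = 1269               # citations returned by the search
duplicates = 457                # removed before screening
excluded_title_abstract = 598   # excluded on title/abstract
excluded_full_text = 107        # excluded after full-text screening

unique_records = identified - duplicates
full_text_assessed = unique_records - excluded_title_abstract
included = full_text_assessed - excluded_full_text

print(unique_records, full_text_assessed, included)
```

Each intermediate value corresponds to one stage of the flow: unique records, papers assessed in full text, and studies included in the narrative synthesis.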

Figure 1.

Literature map showing the selected studies in red dot and recommended studies in black dot

Table 1.

Definition of the excluded terminologies

| Terminologies | Definition |
|---|---|
| Irrelevant | |
| Wrong intervention | |
| Wrong population | |
| Wrong publication type | |
| Unavailable | |
| Foreign language | |

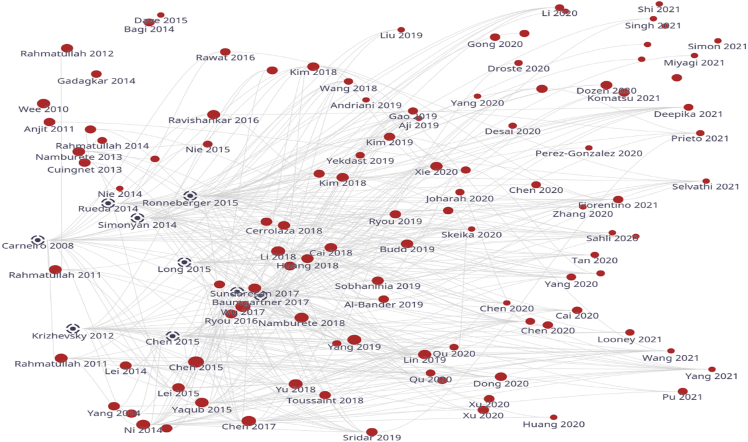

Assessment of bibliometrics analysis

To validate the selected studies, we used a bibliometric analysis tool (Kokol et al., 2021) that explores the literature through citations and automatically recommends articles highly related to our scope. This made it unlikely that we missed any relevant study. Figure 1 provides a citation map of the selected studies over time. The map shows that all selected studies, published from 2010 to 2021, are relevant, and it recommends nine further studies that may be relevant to our scope based on the interactive citation map. However, these studies were excluded because they did not meet our inclusion criteria. Therefore, we concluded that our search was comprehensive and included most of the relevant studies.

Identification of result themes

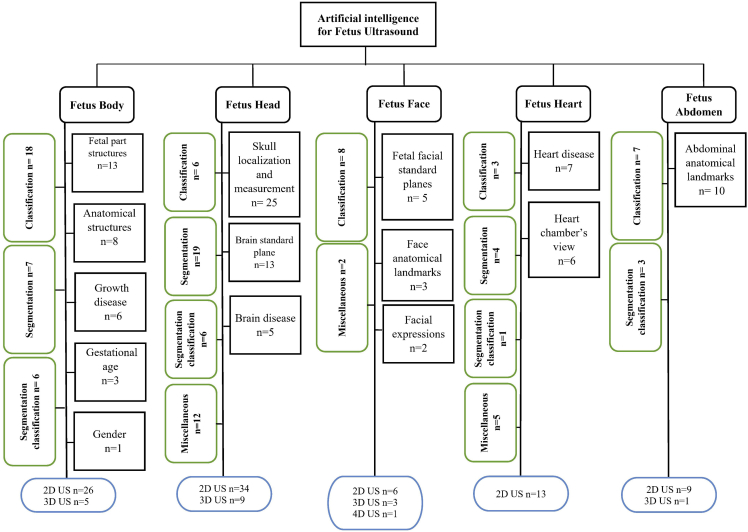

In Figure 2, we grouped the studies by the fetal organ that each study addresses: (1) fetus head (n = 43, 40.18%), (2) fetus body (n = 31, 28.97%), (3) fetus heart (n = 13, 12.14%), (4) fetus face (n = 10, 9.34%), and (5) fetus abdomen (n = 10, 9.34%). Each group is divided into sub-groups as detailed in Figure 3. Most of the studies used 2D US (n = 88, 82.24%), whereas 3D/4D US was rare (n = 19, 17.75%). AI tasks are grouped by the most common use: classification (n = 42, 39.25%), segmentation (n = 31, 28.97%), classification combined with segmentation (n = 16, 14.95%), and miscellaneous tasks comprising object detection, regression, and RL (n = 18, 16.82%).
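The percentages in this paragraph can be recomputed from the study counts. The sketch below assumes that shares are truncated, rather than rounded, to two decimals, since that appears to match the reported values (for example, 43/107 is about 40.187%, reported as 40.18%). The helper name `share` is hypothetical.

```python
TOTAL_STUDIES = 107

def share(count, total=TOTAL_STUDIES):
    """Percentage of `total`, truncated (not rounded) to two decimals."""
    # Integer arithmetic avoids floating-point surprises when truncating.
    return (count * 10000 // total) / 100

task_counts = {
    "classification": 42,
    "segmentation": 31,
    "classification + segmentation": 16,
    "miscellaneous (object detection, regression, RL)": 18,
}

# The four task groups should account for every included study.
assert sum(task_counts.values()) == TOTAL_STUDIES

for task, n in task_counts.items():
    print(f"{task}: n = {n}, {share(n):.2f}%")
```

The same helper can be applied to the organ groups and imaging modalities listed in the paragraph.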

Figure 2.

PRISMA diagram showing our literature search inclusion process

Figure 3.

Summary of AI methods implemented on fetal ultrasound images

Definition of result themes

Ultrasound imaging modalities

A total of 88 studies (82.24%) utilized 2D US images, so a brief description of 2D ultrasound follows. All ultrasounds use sound waves to produce an image. The conventional ultrasound picture of a growing fetus is a 2D image. The fetus’s internal organs may be seen with a 2D ultrasound, which provides outlines and flat-looking pictures. 2D ultrasounds have been widely available for a long time and have a very good safety record. These devices do not pose the same dangers as X-rays because they employ non-ionizing sound waves rather than ionizing radiation (Avola et al., 2021). Ultrasounds are typically conducted at least once during pregnancy, most often between 18 and 22 weeks in the second trimester (Bethune et al., 2013). This examination, also known as a level two ultrasound or anatomy scan, is performed to monitor the fetus’s development. Ultrasounds may be used to examine a variety of things during pregnancy, including: (1) how the fetus is developing; (2) the gestational age of the fetus; (3) any abnormalities within the uterus, ovaries, cervix, or placenta; (4) the number of fetuses; (5) any difficulties the fetus may be experiencing; (6) the fetus’s heart rate; (7) fetal growth and location in the uterus; (8) amniotic fluid level; (9) sex assignment; (10) signs of congenital defects; and (11) signs of Down syndrome (Driscoll and Gross, 2009; Masselli et al., 2014).

A total of 18 studies (16.8%) utilized 3D ultrasound, so a brief description of 3D ultrasound follows. The use of three-dimensional (3D) ultrasound images has grown in recent years. Although 3D ultrasounds may be beneficial in identifying facial or skeletal abnormalities (Huang and Zeng, 2017), 2D ultrasounds are more commonly utilized in medical settings because they can clearly reveal the interior organs of a growing fetus. A 3D ultrasound picture is created by putting together several 2D images obtained from various angles. Many parents like 3D images because they believe they can see their baby’s face more clearly than they can with flat 2D images.

Despite this, the Food and Drug Administration (FDA) does not recommend using a 3D ultrasound for entertainment purposes (Abramowicz, 2010). The “as low as reasonably achievable” (ALARA) principle directs that ultrasound be used solely in a clinical setting in order to reduce exposure to heat and radiation (Abramowicz, 2015). Although ultrasound is generally thought to be harmless, there is insufficient data to determine what the effects of long-term exposure to ultrasound may be on a fetus or a pregnant woman. There is no way of knowing how long a session will take or whether the ultrasound equipment will work correctly in non-clinical situations such as places that offer “keepsake” photos (Dowdy, 2016).

4D ultrasound is identical to 3D ultrasound, except that the image it creates is updated constantly, comparable to a moving image. This sort of ultrasound is usually performed for fun rather than for medical reasons. As previously mentioned, because ultrasound is medical equipment that should only be used for medical purposes (Kurjak et al., 2007), the FDA does not advocate getting ultrasounds for enjoyment or bonding purposes. Unless their doctor or midwife has recommended it as part of prenatal care, pregnant patients should avoid non-medical locations that provide ultrasounds (Dowdy, 2016). Although there is no proven risk, both 3D and 4D ultrasounds employ higher-than-normal amounts of ultrasound energy, and the sessions are longer than with 2D, which may have adverse fetal effects (Mack, 2017; Payan et al., 2018).

AI image processing task

A total of 42 studies (39.25%) utilized classification, which is the process of assigning one or more labels to an image and is one of the most fundamental problems in computer vision and pattern recognition. It has many applications, including image and video retrieval, video surveillance, web content analysis, human-computer interaction, and biometrics. Feature coding is an important part of image classification, and several coding methods have been developed in recent years. In general, image classification entails extracting image features before classifying them (Naeem and Bin-Salem, 2021). As a result, the main aspect of image classification is understanding how to extract and evaluate image features (Wang et al., 2020).
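The two-stage idea described above (extract features, then classify them) can be illustrated with a minimal sketch. It is not a method from any of the reviewed studies: the features (mean and variance of pixel intensity), the nearest-centroid classifier, the toy 3x3 images, and all function names are assumptions chosen only to keep the example small.

```python
from statistics import mean, pvariance

def extract_features(image):
    """Hand-crafted features of a 2D grayscale image (list of rows)."""
    pixels = [p for row in image for p in row]
    return (mean(pixels), pvariance(pixels))

def fit_centroids(images, labels):
    """Average the feature vectors of each class."""
    grouped = {}
    for image, label in zip(images, labels):
        grouped.setdefault(label, []).append(extract_features(image))
    return {
        label: tuple(mean(dim) for dim in zip(*feats))
        for label, feats in grouped.items()
    }

def classify(image, centroids):
    """Assign the label whose centroid is closest in feature space."""
    feats = extract_features(image)

    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(feats, centroid))

    return min(centroids, key=lambda label: sq_dist(centroids[label]))

# Synthetic training data: patches with a bright centre vs. dark patches.
bright = [[[0, 0, 0], [0, 9, 0], [0, 0, 0]], [[1, 0, 1], [0, 8, 0], [1, 0, 1]]]
dark = [[[0, 0, 0], [0, 1, 0], [0, 0, 0]], [[1, 0, 0], [0, 0, 0], [0, 0, 1]]]
centroids = fit_centroids(bright + dark, ["structure"] * 2 + ["background"] * 2)

prediction = classify([[0, 1, 0], [0, 9, 0], [0, 0, 0]], centroids)
print(prediction)
```

Deep learning classifiers replace the hand-crafted `extract_features` step with learned features, but the extract-then-classify structure is the same.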

A total of 31 studies (28.97%) utilized segmentation, which is one of the most complex tasks in medical image processing and involves distinguishing the pixels of organs or lesions from the background in medical images such as CT or MRI scans (Bin-Salem et al., 2022). A number of researchers have suggested different automatic segmentation methods using existing technology (Asgari Taghanaki et al., 2021). Earlier systems were built with traditional techniques such as edge detection filters and mathematical algorithms (Bali and Singh, 2015). After this, machine learning techniques that extract hand-crafted features were the dominant method for a substantial period. The main difficulty in creating such systems has always been designing and extracting these features, and the complexity of these methods has been seen as a substantial barrier to deployment. In the 2000s, deep learning methods began to grow in popularity because advances in technology gave them significantly better performance in image processing tasks. Deep learning methods have emerged as a top choice for image segmentation, particularly medical image segmentation, because of their promising capabilities (Hesamian et al., 2019).
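To make the notion of pixel-level segmentation concrete, the sketch below labels pixels by simple intensity thresholding and compares the result with a reference mask. Thresholding stands in here for the traditional techniques mentioned above; it is not an edge detection filter or a method from the reviewed studies. The Dice overlap used for the comparison, the toy image, and the threshold value are likewise assumptions made for illustration.

```python
def threshold_segment(image, threshold):
    """Label a pixel 1 (foreground) if its intensity exceeds `threshold`, else 0."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]

def dice(mask_a, mask_b):
    """Dice overlap 2|A and B| / (|A| + |B|) between two binary masks."""
    a = [p for row in mask_a for p in row]
    b = [p for row in mask_b for p in row]
    intersection = sum(x & y for x, y in zip(a, b))
    total = sum(a) + sum(b)
    return 1.0 if total == 0 else 2 * intersection / total

# Synthetic 4x4 "image": a bright organ-like region on a dark background.
image = [
    [10, 12, 11, 10],
    [11, 80, 85, 12],
    [10, 82, 30, 11],
    [12, 11, 10, 10],
]
# Hypothetical expert annotation of the same region.
reference = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

predicted = threshold_segment(image, threshold=50)
score = dice(predicted, reference)
print(f"Dice overlap: {score:.3f}")
```

The low-contrast pixel (intensity 30) is missed by the fixed threshold, which hints at why hand-tuned rules were gradually replaced by learned models.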

A total of 12 studies (11.21%) utilized object detection, which is the process of locating and classifying objects. In biomedical images, a detection method determines the regions where the patient’s lesions are situated, expressed as box coordinates. There are two kinds of deep learning-based object detection. The first kind comprises region proposal-based algorithms. Using a selective search technique, this approach extracts different kinds of patches from input images. The trained model then determines whether each patch contains objects and classifies them according to their region of interest (ROI). The region proposal network, in particular, was created to speed up the detection process. The second kind performs object detection with a regression-based algorithm as a one-stage network. These methods use image pixels to directly predict bounding box coordinates and class probabilities within entire images (Kim et al., 2019b).
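Because detections are expressed as box coordinates, a predicted box is commonly compared with an annotated box using intersection over union (IoU). The text above does not name this measure, so its use here is an assumption, as are the `(x_min, y_min, x_max, y_max)` box convention and the example coordinates.

```python
def box_area(box):
    """Area of an axis-aligned box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return max(0, x_max - x_min) * max(0, y_max - y_min)

def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    overlap = (
        max(box_a[0], box_b[0]),
        max(box_a[1], box_b[1]),
        min(box_a[2], box_b[2]),
        min(box_a[3], box_b[3]),
    )
    intersection = box_area(overlap)  # zero when the boxes do not overlap
    union = box_area(box_a) + box_area(box_b) - intersection
    return intersection / union if union else 0.0

predicted_roi = (10, 10, 50, 50)   # hypothetical detector output
annotated_roi = (30, 30, 70, 70)   # hypothetical expert annotation
overlap_score = iou(predicted_roi, annotated_roi)
print(f"IoU: {overlap_score:.3f}")
```

Both region proposal-based and one-stage detectors ultimately produce boxes of this form, so the same comparison applies to either kind.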

A total of 2 studies (1.8%) utilized RL. The concept behind RL is that an artificial agent learns by interacting with its surroundings. It enables agents to autonomously identify the best behavior to exhibit in each situation in order to optimize performance on specified metrics. The basic concept underlying RL is made up of several components. The RL agent is the process’s decision-maker, and it tries to perform an action that is recognized by the environment. Depending on the action performed, the agent receives a reward or penalty from its surroundings. RL agents use exploration and exploitation to determine which actions provide the most reward. In addition, the agent obtains information about the state of the environment (Sahba et al., 2006). For medical image analysis applications, RL provides a powerful framework. RL has been effectively utilized in a variety of applications, including landmark localization, object detection, and registration using image-based parameter inference. RL has also been shown to be a viable option for handling complex optimization problems such as parameter tuning, augmentation strategy selection, and neural architecture search. Existing RL applications for medical imaging can be split into three categories: parametric medical image analysis, medical image optimization, and numerous miscellaneous applications (Zhou et al., 2021).
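The agent, environment, reward, and exploration/exploitation components described above can be sketched with tabular Q-learning on a toy one-dimensional landmark localization task. Everything in this example is an assumption made for illustration: the seven-position "image", the target position, the reward of +1 for moving closer and -1 otherwise, and the hyperparameters. It is not the setup of either reviewed RL study.

```python
import random

POSITIONS = 7        # toy 1D "image" with positions 0..6
TARGET = 5           # hypothetical landmark position
ACTIONS = (-1, +1)   # move left or move right

def step(position, action):
    """Environment: move the agent and reward it for getting closer to the target."""
    new_position = min(max(position + action, 0), POSITIONS - 1)
    closer = abs(new_position - TARGET) < abs(position - TARGET)
    reward = 1.0 if closer else -1.0
    return new_position, reward, new_position == TARGET

def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(POSITIONS) for a in ACTIONS}
    starts = [s for s in range(POSITIONS) if s != TARGET]
    for _ in range(episodes):
        state = rng.choice(starts)
        for _ in range(20):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # exploration
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploitation
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q

q_table = train()
greedy_policy = {
    s: max(ACTIONS, key=lambda a: q_table[(s, a)])
    for s in range(POSITIONS) if s != TARGET
}
print(greedy_policy)
```

After training, the greedy policy read from the Q-table indicates, for each starting position, which direction the agent prefers in order to reach the landmark.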

Description of included studies

As shown in Table 2, about half of the studies (n = 53, 49.53%) were journal articles, whereas the other half were found in conference proceedings (n = 43, 40.18%) and book chapters (n = 11, 10.28%). Studies were published between 2010 and 2021. The largest share of studies was published in 2020 (n = 30, 28.03%), followed by 2021 (n = 19, 17.75%), 2019 (n = 14, 13.08%), 2018 (n = 11, 10.28%), 2014 (n = 9, 8.41%), 2017 (n = 6, 5.60%), 2015 (n = 6, 5.60%), 2016 (n = 4, 3.73%), 2013 (n = 3, 2.80%), 2011 (n = 3, 2.80%), 2012 (n = 1, 0.93%), and 2010 (n = 1, 0.93%). With regard to the fetal organs examined, fetus skull localization and measurement was reported in 25 studies (23.36%), followed by fetal part structures (n = 13, 12.14%) and brain standard plane (n = 13, 12.14%). Abdominal anatomical landmarks were reported in 10 studies (9.34%). In addition, fetus body anatomical structures were reported in 8 studies (7.47%), heart disease in 7 studies (6.54%), growth disease in 6 studies (5.60%), and brain disease in 5 studies (4.67%). The view of heart chambers was reported in 6 studies (5.60%), fetal facial standard planes in 5 studies (4.67%), gestational age in 3 studies (2.80%), face anatomical landmarks in 3 studies (2.80%), facial expressions in 2 studies (1.86%), and gender identification in only 1 study (0.93%). Most of the studies were conducted in China (n = 41, 38.31%), followed by the UK (n = 25, 23.36%) and India (n = 14, 13.08%). Surprisingly, some institutes focused mainly on this field and contributed a high number of studies. For example, 20 studies (18.69%) were conducted at the University of Oxford, UK, and 13 (12.14%) were conducted at Shenzhen University, China.

Table 2.

Characteristics of the included studies (n = 107)

| Characteristics | Number of studies |
|---|---|
| Type of publication | (n, %) |
| Journal article | (53, 49.53) |
| Conference proceedings | (43, 40.18) |
| Book chapter | (11, 10.28) |
| Year of publication | |
| 2021 | (19, 17.75) |
| 2020 | (30, 28.03) |
| 2019 | (14, 13.08) |
| 2018 | (11, 10.28) |
| 2017 | (6, 5.60) |
| 2016 | (4, 3.73) |
| 2015 | (6, 5.60) |
| 2014 | (9, 8.41) |
| 2013 | (3, 2.80) |
| 2012 | (1, 0.93) |
| 2011 | (3, 2.80) |
| 2010 | (1, 0.93) |
| Fetal Organ | |
| Fetus body | (31, 28.97) |
| Fetal part structures | (13, 12.14) |
| Anatomical structures | (8, 7.47) |
| Growth disease | (6, 5.60) |
| Gestational age | (3, 2.80) |
| Gender | (1, 0.93) |
| Fetus head | (43, 40.18) |
| Skull localization and measurement | (25, 23.36) |
| Brain standard plane | (13, 12.14) |
| Brain disease | (5, 4.67) |
| Fetus face | (10, 9.34) |
| Fetal facial standard planes | (5, 4.67) |
| Face anatomical landmarks | (3, 2.80) |
| Facial expressions | (2, 1.86) |
| Fetus heart | (13, 12.14) |
| Heart disease | (7, 6.54) |
| Heart chambers view | (6, 5.60) |
| Fetus abdomen | (10, 9.34) |
| Abdominal anatomical landmarks | (10, 9.34) |
| Publication Country and top institute | |
| China | (41, 38.31) |
| Shenzhen University | (13, 12.14) |
| Beihang University | (5, 4.67) |
| Chinese University of Hong Kong | (4, 3.73) |
| Hebei University of Technology | (2, 1.86) |
| Fudan University | (2, 1.86) |
| Shanghai Jiao Tong University | (2, 1.86) |
| South China University of Technology | (2, 1.86) |
| Other institutes | (11, 10.28) |
| UK | (25, 23.36) |
| University of Oxford | (20, 18.69) |
| Imperial College London | (4, 3.73) |
| King’s College, London | (1, 0.93) |
| India | (14, 13.08) |
| Japan | (4, 3.73) |
| Indonesia | (4, 3.73) |
| USA | (3, 2.80) |
| South Korea | (3, 2.80) |
| Iran | (3, 2.80) |
| Australia | (1, 0.93) |
| Canada | (1, 0.93) |
| Mexico | (1, 0.93) |
| France | (1, 0.93) |
| Italy | (1, 0.93) |
| Tunisia | (1, 0.93) |
| Spain | (1, 0.93) |
| Iraq | (1, 0.93) |
| Brazil | (1, 0.93) |
| Malaysia | (1, 0.93) |

Artificial intelligence for fetus ultrasound

Fetus body

As shown in Table 2, the overall purpose of 31 studies (28.97%) was to identify general characteristics of the fetus (e.g., gestational age, gender) or diseases such as intrauterine growth restriction (IUGR). In addition, most of these studies aimed to identify the fetus itself in the uterus, or fetal part structures, during different trimesters. The first trimester runs from week 1 to the end of week 12, the second trimester from week 13 to the end of week 26, and the third trimester from week 27 to the end of the pregnancy. We also report a performance comparison between the various techniques for each fetus body group, including objective, backbone methods, optimization, fetal age, best obtained result, and observations (see Table 3).
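The trimester boundaries defined above can be written as a small lookup, which is convenient when reading the fetal age ranges reported for each study. This is a minimal sketch; the function names are hypothetical.

```python
def trimester(gestational_week):
    """Map a gestational week (starting at 1) to its trimester."""
    if gestational_week < 1:
        raise ValueError("gestational week must be >= 1")
    if gestational_week <= 12:
        return 1   # weeks 1 to 12
    if gestational_week <= 26:
        return 2   # weeks 13 to 26
    return 3       # week 27 to the end of the pregnancy

def trimesters_spanned(first_week, last_week):
    """Trimesters covered by an inclusive range of gestational weeks."""
    return sorted({trimester(week) for week in range(first_week, last_week + 1)})

print(trimesters_spanned(18, 22))
print(trimesters_spanned(18, 40))
```

For instance, a study reporting fetal ages of 18 to 22 weeks falls entirely in the second trimester, whereas a range of 18 to 40 weeks spans the second and third.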

Table 3.

Articles published using AI to improve fetus body monitoring: objective, backbone methods, optimization, fetal age, and AI tasks

| Study | Objective | Backbone Methods/Framework | Optimization/Extractor methods | Fetal age | AI tasks |
|---|---|---|---|---|---|
| Fetal Part Structures | | | | | |
| (Maraci et al., 2015) | To identify the fetal skull, heart and abdomen from ultrasound images | SVM as the classifier | Gaussian Mixture Model (GMM); Fisher Vector (FV) | 26th week | Classification |
| (Liu et al., 2021) | To segment the seven key structures of the neonatal hip joint | Neonatal Hip Bone Segmentation Network (NHBSNet) | Feature Extraction Module; Enhanced Dual Attention Module (EDAM); Two-Class Feature Fusion Module (2-Class FFM); Coordinate Convolution Output Head (CCOH) | 16 - 25 weeks | Segmentation |
| (Rahmatullah et al., 2014) | To segment organs head, femur, and humerus in ultrasound images using multilayer super pixel images features | Simple Linear Iterative Clustering (SLIC); Random forest | Unary pixel shape feature image moment | N/A | Segmentation |
| (Weerasinghe et al., 2021) | To automate kidney segmentation using fully convolutional neural networks | FCNN: U-Net & UNET++ | N/A | 20 to 40 weeks | Segmentation |
| (Burgos-Artizzu et al., 2020) | To evaluate the maturity of current Deep Learning classification techniques for their application in a real maternal-fetal clinical environment | CNN DenseNet-169 | N/A | 18 to 40 weeks | Classification |
| (Cai et al., 2020) | To use the learnt visual attention maps to guide standard plane detection on all three standard biometry planes: ACP, HCP and FLP | Temporal SonoEyeNet (TSEN); Temporal attention module: Convolutional LSTM; Video classification module: Recurrent Neural Networks (RNNs)+ | CNN feature extractor: VGG-16 | N/A | Classification |
| (Ryou et al., 2019) | To support first trimester fetal assessment of multiple fetal anatomies including both visualization and the measurements from a single 3D ultrasound scan | Multi-Task Fully Convolutional Network (FCN); U-Net | N/A | 11 to 14 weeks | Segmentation, classification |
| (Sridar et al., 2019) | To automatically classify 14 different fetal structures in 2D fetal ultrasound images by fusing information from both cropped regions of fetal structures and the whole image | Support vector machine (SVM) + Decision fusion | Fine-tuning AlexNet CNN | 18 to 20 weeks | Classification |
| (Chen et al., 2017) | To automatically identify different standard planes from US images | T-RNN framework: LSTM | Features extracted using J-CNN classifier | 18 to 40 weeks | Classification |
| (Cai et al., 2018) | To classify abdominal fetal ultrasound video frames into standard AC planes or background | M-SEN architecture Discriminator CNN | Generator CNN | N/A | Classification |
| (Gao and Noble, 2019) | To detect multiple fetal structures in free-hand ultrasound | CNN Attention Gated LSTM | Class Activation Mapping (CAM) | 28 to 40 weeks | Classification |
| (Yaqub et al., 2015) | To extract features from regions inside the images where meaningful structures exist | Guided Random Forests | Probabilistic Boosting Tree (PBT) | 18 to 22 weeks | Classification |
| (Chen et al., 2015) | To detect standard planes from US videos | T-RNN LSTM (Transferred RNN) | Spatio-Temporal Feature J-CNN | 18 to 40 weeks | Classification |
| Anatomical Structures | | | | | |
| (Yang et al., 2019) | To propose the first and fully automatic framework in the field to simultaneously segment fetus, gestational sac and placenta | 3D FCN + RNN hierarchical deep supervision mechanism (HiDS) | BiLSTM module denoted as FB-nHiDS | 10 - 14 weeks | Segmentation |
| (Looney et al., 2021) | To segment the placenta, amniotic fluid, and fetus | FCNN | N/A | 11 - 19 weeks | Segmentation |
| (Li et al., 2017) | To segment the amniotic fluid and fetal tissues in fetal US images | The encoder-decoder network based on VGG16 | N/A | 22nd week | Segmentation |
| (Ryou et al., 2016) | To localize the fetus and extract the best fetal biometry planes for the head and abdomen from first trimester 3D fetal US images | CNN | Structured Random Forests | 11 - 13 weeks | Classification |
| (Toussaint et al., 2018) | To detect and localize fetal anatomical regions in 2D US images | ResNet18 | Soft Proposal Layer (SP) | 22 - 32 weeks | Classification |
| (Ravishankar et al., 2016) | To reliably estimate abdominal circumference | CNN + Gradient Boosting Machine (GBM) | Histogram of Oriented Gradient (HoG) | 15 - 40 weeks | Classification |
| (Wee et al., 2010) | To detect and recognize the fetal NT based on 2D ultrasound images by using artificial neural network techniques | Artificial Neural Network (ANN) | Multilayer Perceptron (MLP) Network; Bidirectional Iterations Forward Propagations Method (BIFP) | N/A | Classification |
| (Liu et al., 2019) | To detect NT region | U-Net NT Segmentation; PCA NT Thickness Measurement | VGG16 NT Region Detection | 4 - 12 weeks | Segmentation |
| Growth disease | | | | | |
| (Bagi and Shreedhara, 2014) | To propose the biometric measurement and classification of IUGR, using OpenGL concepts for extracting the feature values and ANN model is designed for diagnosis and classification | ANN Radial Basis Function (RBF) | OpenGL | 12–40 weeks | Classification |
| (Selvathi and Chandralekha, 2021) | To find the region of interest (ROI) of the fetal biometric and organ region in the US image | DCNN AlexNet | N/A | 16 - 27 weeks | Classification |
| (Rawat et al., 2016) | To detect fetal abnormality in 2D US images | ANN + Multilayered perceptron neural networks (MLPNN) | Gradient vector flow (GVF); Median Filtering | 14 - 40 weeks | Classification, segmentation |
| (Gadagkar and Shreedhara, 2014) | To develop a computer-aided diagnosis and classification tool for extracting ultrasound sonographic features and classify IUGR fetuses | ANN | Two-Step Splitting Method (TSSM) for Reaction-Diffusion (RD) | 12–40 weeks | Classification, segmentation |
| (Andriani and Mardhiyah, 2019) | To develop an automatic classification algorithm on the US examination result using Convolutional Neural Network in Blighted Ovum detection | CNN | N/A | N/A | Classification |
| (Yekdast, 2019) | To propose an intelligent system based on combination of ConvNet and PSO for Down syndrome diagnosis | CNN | Particle Swarm Optimization (PSO) | N/A | Classification |
| (Maraci et al., 2020) | To automatically detect and measure the transcerebellar diameter (TCD) in the fetal brain, which enables the estimation of fetal gestational age (GA) | CNN FCN | N/A | 16 - 26 weeks | Classification, segmentation |
| (Chen et al., 2020a) | To accurately estimate the gestational age from the fetal lung region of US images | U-NET | N/A | 24 - 40 weeks | Classification, segmentation |
| (Prieto et al., 2021) | To classify, segment, and measure several fetal structures for the purpose of GA estimation | U-NET RESTNET | Residual UNET (RUNET) | 16th week | Classification, segmentation |
| (Maysanjaya et al., 2014) | To measure the accuracy of Learning Vector Quantization (LVQ) to classify the gender of the fetus in the US image | ANN | Learning Vector Quantization (LVQ); Moment invariants | N/A | Classification |

Fetal part structures

Classification

For identification and localization of fetal part structures, image classification was widely used (n = 9, 8.41%). Only 2D US images were utilized for classification purposes. Studies (Gao and Noble, 2019; Maraci et al., 2015; Yaqub et al., 2015) used a classification task to identify and locate the fetal skull, heart, and abdomen from 2D US images in the second trimester. Besides that, in (Cai et al., 2018), classification was used to locate the exact abdominal circumference plane (ACP), and in (Cai et al., 2020), this was extended to locating the head circumference plane (HCP), the ACP, and the femur length plane (FLP). Furthermore, studies (Chen et al., 2015, 2017; Sridar et al., 2019), which covered the second and third trimesters, used multi-class classification to identify and locate various parts including (1) the fetal abdominal standard plane (FASP); (2) the fetal face axial standard plane (FFASP); and (3) the fetal four-chamber view standard plane (FFVSP) of the heart. Study (Burgos-Artizzu et al., 2020) located the mother’s cervix in addition to the fetal abdomen, brain, femur, and thorax.
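The multi-class step described above can be illustrated with a minimal sketch. It assumes a trained network has already produced one raw score per plane; the score values are made up for illustration, and the function names `softmax` and `classify_plane` are hypothetical rather than taken from any of the cited studies.

```python
import math

# Plane labels taken from the text; the scores used below are illustrative only.
PLANE_LABELS = ["FASP", "FFASP", "FFVSP"]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_plane(scores, labels=PLANE_LABELS):
    """Return the most probable label together with its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Hypothetical network outputs for a single 2D US frame.
label, prob = classify_plane([0.2, 2.5, -1.0])
print(label, round(prob, 3))
```

The frame is assigned to whichever plane has the highest probability; a practical system would likely also need a "non-standard plane" class or a confidence threshold, which this sketch omits.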

Segmentation

An image segmentation task was used in (n = 3, 2.80%) studies for the purpose of fetal part structure identification. In (Liu et al., 2021), 2D US imaging was used to segment the neonatal hip bone, including seven key structures: (1) chondro-osseous border (CB), (2) femoral head (FH), (3) synovial fold (SF), (4) joint capsule and perichondrium (JCP), (5) labrum (La), (6) cartilaginous roof (CR), and (7) bony roof (BR). Only one study (Weerasinghe et al., 2021) provided a segmentation model that was able to segment the fetus kidney using 3D US in the third trimester. Segmentation was used to locate the fetal head, femur, and humerus using 2D US, as seen in (Rahmatullah et al., 2014).

Classification and segmentation

In (Ryou et al., 2019), whole-fetus segmentation followed by classification was used to locate the head, abdomen (in sagittal view), and limbs (in axial view) using 3D US images taken in the first trimester.

Anatomical part structures

Classification

For identification and localization of anatomical structures, image classification was used in (n = 4, 3.73%) studies. Only one study (Ryou et al., 2016) used 3D US images, and the other studies used 2D US (Ravishankar et al., 2016; Toussaint et al., 2018; Wee et al., 2010). In (Ryou et al., 2016), the whole fetus was localized in the sagittal plane and a classifier was then applied to the axial images to localize one of three classes (head, body, and non-fetal) during the second trimester. Multi-class classification was utilized in (Toussaint et al., 2018) to localize the head, spine, thorax, abdomen, limbs, and placenta in the third trimester. Moreover, binary classification was used in (Ravishankar et al., 2016) to identify abdomen versus non-abdomen. In (Wee et al., 2010), a classification method was used to detect and measure nuchal translucency (NT) at the beginning of the second trimester. Monitoring NT combined with maternal age can provide effective insight for Down syndrome screening.

Segmentation

An image segmentation task was used in (n = 4, 3.73%) studies for the purpose of anatomical structure identification. In (Looney et al., 2021; Yang et al., 2019), 3D US images were utilized to segment the fetus, gestational sac, amniotic fluid, and placenta at the beginning of the second trimester. However, in (Li et al., 2017), 2D US was used to segment amniotic fluid and the fetus in the late trimester. Finally, 2D US in (Liu et al., 2019) was utilized to segment and measure NT in the first trimester of pregnancy.

Growth disease diagnosis

Classification

For diagnosis of fetal growth disease, image classification was conducted in (n = 4, 3.73%) studies using 2D US. In (Bagi and Shreedhara, 2014), binary classification was used for early diagnosis of intrauterine growth restriction (IUGR) in the third trimester of pregnancy. The features considered to determine a diagnosis of IUGR are gestational age (GA), biparietal diameter (BPD), abdominal circumference (AC), head circumference (HC), and femur length (FL). In addition, binary classification was used in the second trimester of pregnancy to identify normal versus abnormal fetus growth, as seen in (Selvathi and Chandralekha, 2021). In (Andriani and Mardhiyah, 2019), binary classification was used to identify the normal growth of the ovum by distinguishing blighted ovum from healthy ovum. Lastly, binary classification was used to distinguish between healthy fetuses and those with Down syndrome (Yekdast, 2019).

Classification and segmentation

For diagnosis of fetal growth disease, image classification along with segmentation was used in (n = 2, 1.86%) studies using 2D US. In (Gadagkar and Shreedhara, 2014; Rawat et al., 2016), segmentation of the region of interest (ROI) was followed by classification to diagnose IUGR (normal versus abnormal). This was done using US images taken in both the second and third trimesters of pregnancy. The classification relied on the following measurements: (1) fetal abdominal circumference (AC), (2) head circumference (HC), (3) BPD, and (4) femur length (FL).

Gestational age (GA) estimation

Classification and segmentation

Both classification and segmentation tasks were used to estimate GA in (n = 3, 2.80%) studies using 2D US taken in the second and third trimesters of pregnancy. In (Maraci et al., 2020), the trans-cerebellar diameter (TCD) measurement was used to estimate GA in weeks. The TC plane frames are extracted from the US images using classification. Segmentation then localizes the TC structure and performs automated TCD estimation, from which the GA can thereafter be estimated via an equation. In (Chen et al., 2020a), 2D US was used to estimate GA based on the fetal lung region in the second and third trimesters. In the first stage, segmentation learns to recognize the fetal lung region in the ultrasound images. Classification is then used to accurately estimate the gestational age from the fetal lung region of the ultrasound images. Several fetal structures were used to estimate GA in (Prieto et al., 2021), which focused on the second trimester. In this study, 2D US images were classified into four categories: head (BPD and HC), abdomen (AC), femur (FL), and fetus (crown-rump length: CRL). Then, the regions of interest (i.e., head, abdomen, and femur) were segmented and the results of biometry measurements were used to estimate GA.
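The measure-then-estimate step described for the TCD pipeline can be sketched as follows. This is an illustration under stated assumptions, not the method of any cited study: the binary mask stands in for a segmentation output, the diameter is taken as the largest distance between foreground pixels, and the GA equation is deliberately left as a caller-supplied function because the source does not give it. The names `max_diameter_mm` and `estimate_ga_weeks` are hypothetical.

```python
import math
from itertools import combinations


def max_diameter_mm(mask, spacing_mm):
    """Largest distance (in mm) between any two foreground pixels of a binary mask.

    mask: list of rows with 0/1 entries; spacing_mm: (row_spacing, col_spacing).
    Brute force over pixel pairs, which is acceptable for a small toy mask.
    """
    points = [(r * spacing_mm[0], c * spacing_mm[1])
              for r, row in enumerate(mask)
              for c, value in enumerate(row) if value]
    if len(points) < 2:
        return 0.0
    return max(math.dist(p, q) for p, q in combinations(points, 2))


def estimate_ga_weeks(diameter_mm, formula):
    """Apply a caller-supplied GA formula; no clinical formula is built in."""
    return formula(diameter_mm)


# Toy 3x5 mask standing in for a segmented TC structure (hypothetical data).
toy_mask = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
]
tcd = max_diameter_mm(toy_mask, spacing_mm=(0.5, 0.5))
# Placeholder formula for illustration only, NOT a validated clinical equation.
ga = estimate_ga_weeks(tcd, formula=lambda d: 10.0 + d)
print(tcd, ga)
```

Keeping the formula as a parameter makes the dependence explicit: the accuracy of the GA estimate rests on both the segmentation quality and whichever published biometric equation is plugged in.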

Gender identification

Classification

Binary classification was used in (Maysanjaya et al., 2014) to identify the gender of the fetus. Image preprocessing, image segmentation, and feature extraction (shape description) were used to obtain the feature values for gender classification. This task is categorized as classification because the segmentation task is not clearly defined.
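As an illustration of the shape-description step, the sketch below computes the first Hu moment invariant of a binary shape mask. The table lists "moment invariants" for this study, but whether Hu's formulation was the one used is an assumption here, and the function name `first_hu_invariant` is hypothetical.

```python
def first_hu_invariant(mask):
    """First Hu moment invariant (eta20 + eta02) of a binary shape mask."""
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    area = len(pixels)  # zeroth moment m00 of a binary image
    if area == 0:
        raise ValueError("mask has no foreground pixels")
    # Measuring from the centroid makes the descriptor translation invariant.
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    # Normalised central moments: eta_pq = mu_pq / m00 ** (1 + (p + q) / 2),
    # so for second-order moments the divisor is m00 squared.
    return (mu20 + mu02) / area ** 2


shape = [
    [1, 1, 1],
    [1, 0, 0],
]
shifted = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 0, 0],
]
print(first_hu_invariant(shape), first_hu_invariant(shifted))
```

The same shape at a different position yields the same value, which is what makes such descriptors usable as classifier inputs regardless of where the structure appears in the image.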

Fetus head

As shown in (Table 2), the primary purpose of (n = 43, 40.18%) studies is to identify and localize the fetus skull (e.g., head circumference), brain standard planes (e.g., lateral sulcus (LS), thalamus (T), choroid plexus (CP), cavum septi pellucidi (CSP)), or brain diseases (e.g., hydrocephalus (premature GA), ventriculomegaly, CNS malformations). The following subsection discusses each category based on the implemented task. (Table 4) presents a comparison between the various techniques for each fetus head group (including objective, backbone methods, optimization, fetal age, best obtained result, and observations) and provides an overview of the methodology and observations for the studies in each group.

Table 4.

Articles published using AI to improve fetus head monitoring: objective, backbone methods, optimization, fetal age, and AI tasks

| Study | Objective | Backbone Methods/Framework | Optimization/Extractor methods | Fetal age | AI tasks |

|---|---|---|---|---|---|

| Skull localization and measurement | |||||

| (Sobhaninia et al., 2020) | To localize the fetal head region in US imaging | Multi-scale mini-LinkNet network | N/A | 12 - 40 weeks | Segmentation |

| (Nie et al., 2015b) | To locate the fetal head from 3D ultrasound images using shape model | AdaBoost | Shape Model Marginal Space Haar-like features | 11 - 14 weeks | Classification |

| (Nie et al., 2015a) | To detect fetal head | Deep Belief Network (DBN) Restricted Boltzmann Machines | Hough transform Histogram Equalization | 11 - 14 weeks | Classification |

| (Aji et al., 2019) | To semantically segment fetal head from maternal and other fetal tissue | U-NET | Ellipse fitting | 12 - 20 weeks | Segmentation |

| (Droste et al., 2020) | To automatically discover and localize anatomical landmarks; measure the HC, TV, and the TC | CNN | Saliency maps | 13 - 26 weeks | Miscellaneous |

| (Desai et al., 2020) | To demonstrate the effectiveness of hybrid method to segment fetal head | DU-Net | Scattering Coefficients (SC) | 13 - 26 weeks | Segmentation |

| (Brahma et al., 2021) | To segment fetal head using Network Binarization | Depthwise Separable Convolutional Neural Networks DSCNNs. | Network Binarization | 12 - 40 weeks | Segmentation |

| (Qiao and Zulkernine, 2020) | To segment the fetal skull boundary and fetal skull for fetal HC measurement | U-NET | Squeeze and Excitation (SE) blocks | 12 - 40 weeks | Segmentation |

| (Sobhaninia et al., 2019) | To automatically segment and estimate HC ellipse. | Multi-Task network based on Link-Net architecture (MTLN) | Ellipse Tuner | 12 - 40 weeks | Segmentation |

| (Zhang et al., 2020b) | To capture more information with multiple-channel convolution from US images | Multiple-Channel and Atrous MA-Net | Encoder and Decoder Module | N/A | Segmentation |

| (Zeng et al., 2021) | To automatically segment fetal ultrasound image and HC biometry | Deeply Supervised Attention-Gated (DAG) V-Net | Attention-Gated Module | 12 - 40 weeks | Segmentation |

| (Perez-Gonzalez et al., 2020) | To compound a new US volume containing the whole brain anatomy | U-NET + Incidence Angle Maps (IAM) | CNN Normalized Mutual Information (NMI) | 13 - 26 weeks | Segmentation |

| (Zhang et al., 2020a) | To directly measure the head circumference, without having to resort the handcrafted features or manually labeled segmented images. | CNN regressor (Reg-Resnet50) | N/A | 12 - 40 weeks | Segmentation |

| (Fiorentino et al., 2021) | To propose region-CNN for head localization and centering, and a regression CNN to accurately delineate the HC | CNN regressor (U-net) | Tiny-YOLOv2 | 12 - 40 weeks | Miscellaneous |

| (Li et al., 2020) | To present a novel end-to-end deep learning network to automatically measure the fetal HC, biparietal diameter (BPD), and occipitofrontal diameter (OFD) length from 2D US images | FCNN (SAPNet) | Regression network | 12 - 40 weeks | Miscellaneous |

| (Al-Bander et al., 2020) | To segment fetal head from US images | FCN | Faster R-CNN | 12 - 40 weeks | Miscellaneous |

| (Fathimuthu Joharah and Mohideen, 2020) | To deal with a completely computerized detection device of next fetal head composition | Multi-Task network based on Link-Net architecture (MTLN) | Hadamard Transform (HT) ANN FeedForward (NFFE) Classifier | N/A | Miscellaneous |

| (Li et al., 2018) | To measure HC automatically | Random Forest Classifier | Haar-like features ElliFit method | 18 - 33 weeks | Classification |

| (Sinclair et al., 2018) | To determine measurements of fetal HC and BPD | FCN | N/A | 18 - 22 weeks | Segmentation |

| (Yang et al., 2020b) | To segment the whole fetal head in US volumes | Hybrid attention scheme (HAS) | 3D U-NET + Encoder and Decoder architecture for dense labeling | 20 - 31 weeks | Segmentation |

| (Xu et al., 2021) | To segment fetal head using a flexibly plug-and-play module called vector self-attention layer (VSAL) | CNN | Vector Self-Attention Layer (VSAL) Context Aggregation Loss (CAL) | 12 - 40 weeks | Segmentation |

| (Cerrolaza et al., 2018) | To provide automatic framework for skull segmentation in fetal 3D US | Two-Stage Cascade CNN (2S-CNN) U-NET | Incidence Angle Map Shadow Casting Map | 20 - 36 weeks | Segmentation |

| (Skeika et al., 2020) | To segment 2D ultrasound images of fetal skulls based on a V-Net architecture | Fully Convolutional Neural Network Combination (VNet-c) | N/A | 12 - 40 weeks | Segmentation |

| (Namburete and Noble, 2013) | To segment the cranial pixels in an ultrasound image using a random forest classifier | Random Forest Classifier | Simple Linear Iterative Clustering (SLIC) Haar Features | 25 - 34 weeks | Segmentation |

| (Budd et al., 2019) | To automatically estimate fetal HC | U-Net | Monte-Carlo Dropout | 18 - 22 weeks | Segmentation |

| Brain standard plane | |||||

| (Qu et al., 2020a) | To automatically recognize six standard planes of fetal brains. | CNN + Transfer learning DCNN | N/A | 18 - 22 weeks 40th week | Classification |

| (Cuingnet et al., 2013) | To help the clinician or sonographer obtain these planes of interest by finding the fetal head alignment in 3D US | Random forest classifier | Shape model and template deformation algorithm Hough transform | 19 - 24 weeks | Classification segmentation |

| (Singh et al., 2021b) | To segment the fetal cerebellum from 2D US images | U-NET +ResNet (ResU-NET-C) | N/A | 18 - 20 weeks | Segmentation |

| (Yang et al., 2021b) | To detect multiple planes simultaneously in challenging 3D US datasets | Multi-Agent Reinforcement Learning (MARL) | RNN Neural Architecture Search (NAS) Gradient-based Differentiable Architecture Sampler (GDAS) | 19 - 31 weeks | Miscellaneous |

| (Lin et al., 2019b) | To detect standard plane and quality assessment | Multi-task learning Framework Faster Regional CNN (MF R-CNN) | N/A | 14 - 28 weeks | Miscellaneous |

| (Kim et al., 2019a) | To tackle the automated problem of fetal biometry measurement with a high degree of accuracy and reliability | U-Net, CNN | Bounding-box regression (object-detection) | N/A | Miscellaneous |

| (Lin et al., 2019a) | To determine the standard plane in US images | Faster R-CNN | Region Proposal Network (RPN) | 14 - 28 weeks | Miscellaneous |

| (Namburete et al., 2018) | To address the problem of 3D fetal brain localization, structural segmentation, and alignment to a referential coordinate system | Multi-Task FCN | Slice-Wise Classification | 18 - 34 weeks | Classification segmentation |

| (Huang et al., 2018) | To simultaneously localize multiple brain structures in 3D fetal US | View-based Projection Networks (VP-Nets) | U-Net CNN | 20 - 29 weeks | Classification segmentation |

| (Qu et al., 2020b) | To automatically identify six fetal brain standard planes (FBSPs) from the non-standard planes. | Differential-CNN | Modified feature map | 16 - 34 weeks | Classification |

| (Wang et al., 2019) | To obtain the desired position of the gate and Middle Cerebral Artery (MCA) | MCANet | Dilated Residual Network (DRN) Dense Upsampling Convolution (DUC) block | 28 - 40 weeks | Segmentation |

| (Yaqub et al., 2013) | To segment four important fetal brain structures in 3D US | Random Decision Forests (RDF) | Generalized Haar-features | 18 - 26 weeks | Segmentation |

| (Yang et al., 2021a) | To automatically localize fetal brain standard planes in 3D US | Dueling Deep Q Networks (DDQN) | RNN-based Active Termination (AT) (LSTM) | 19 - 31 weeks | Miscellaneous |

| (Liu et al., 2020) | To evaluate the feasibility of CNN-based DL algorithms predicting the fetal lateral ventricular width from prenatal US images. | ResNet50 | Faster R-CNN Class Activation Mapping (CAM) | 22 - 26 weeks | Miscellaneous |

| (Sahli et al., 2020) | To recognize and separate the studied US data into two categories: healthy (HL) and hydrocephalus (HD) subjects | CNN | N/A | 20 - 22 weeks | Classification |

| (Chen et al., 2020b) | To automatically measure fetal lateral ventricles (LVs) in 2D US images | Mask R-CNN | Feature Pyramid Networks (FPN) Region Proposal Network (RPN) | N/A | Miscellaneous |

| (Xie et al., 2020b) | To apply binary classification for central nervous system (CNS) malformations in standard fetal US brain images in axial planes | CNN | Split-view Segmentation | 18 - 32 weeks | Classification segmentation |

| (Xie et al., 2020a) | To develop computer-aided diagnosis algorithms for five common fetal brain abnormalities. | Deep convolutional neural networks (DCNNs) VGG-net | U-net Gradient-Weighted Class Activation Mapping (Grad-CAM) | 18 - 32 weeks | Classification segmentation |

Skull localization and measurement

Classification

Classification tasks for skull localization and HC measurement are rarely used, as seen in only (n = 3, 2.80%) studies. Studies (Li et al., 2018; Nie et al., 2015a) used classification to localize the region of interest (ROI) and identify the fetus head based on 2D US taken in the first and second trimesters respectively. In one study (Nie et al., 2015b), 3D US images taken in the first trimester were used to detect the fetal head.

Segmentation

Most studies on skull localization and HC measurement (n = 16, 14.81%) used a segmentation task. 2D US was used in 16 studies and one study used 3D US. Various network architectures were used to segment and locate the skull and measure HC, as seen in (Aji et al., 2019; Brahma et al., 2021; Budd et al., 2019; Desai et al., 2020; Namburete and Noble, 2013; Perez-Gonzalez et al., 2020; Qiao and Zulkernine, 2020; Skeika et al., 2020; Sobhaninia et al., 2019, 2020; Xu et al., 2021; Zeng et al., 2021; Zhang et al., 2020b). Besides identifying HC, in (Sinclair et al., 2018) segmentation was also used to find fetal BPD. Further investigation shows that the work in (Cerrolaza et al., 2018) was the first investigation of whole fetal head segmentation in 3D US. The segmentation network for skull localization in (Xu et al., 2021) was also tested on different datasets to identify a view of the four heart chambers.
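Several of the segmentation studies in Table 4 list an ellipse-fitting step before HC is reported. As a minimal sketch of how a circumference can be derived once an ellipse has been fitted to the segmented skull, the code below uses Ramanujan's first approximation for the perimeter of an ellipse. The use of this particular approximation, the pixel size, and the semi-axis values are assumptions for illustration; the cited studies may compute HC differently.

```python
import math


def ellipse_circumference(semi_major, semi_minor):
    """Approximate ellipse perimeter with Ramanujan's first formula."""
    a, b = semi_major, semi_minor
    return math.pi * (3 * (a + b) - math.sqrt((3 * a + b) * (a + 3 * b)))


def head_circumference_mm(semi_major_px, semi_minor_px, pixel_size_mm):
    """Scale pixel semi-axes to millimetres, then take the perimeter."""
    return ellipse_circumference(semi_major_px * pixel_size_mm,
                                 semi_minor_px * pixel_size_mm)


# Hypothetical fitted ellipse: semi-axes in pixels and an assumed pixel size.
hc = head_circumference_mm(semi_major_px=300, semi_minor_px=240, pixel_size_mm=0.2)
print(round(hc, 1))
```

For a circle (equal semi-axes) the formula reduces exactly to 2πr, which is a convenient sanity check on any implementation.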

Miscellaneous

In addition to segmentation, other methods were used to locate and identify the fetus skull in (n = 6, 5.60%) studies. As seen in (Li et al., 2020), regression was used with segmentation to identify HC, BPD, and occipitofrontal diameter (OFD). Besides this, in (Droste et al., 2020), a neural network was trained as a saliency predictor, and the predicted saliency maps were used to measure HC and identify the alignment of both the transventricular (TV) and transcerebellar (TC) planes. In addition, an object detection task was used with segmentation in (Al-Bander et al., 2020) to locate the fetal head at the end of the first trimester. In (Fiorentino et al., 2021), object detection was used for head localization and centering, and regression was used to delineate the HC accurately. In (Fathimuthu Joharah and Mohideen, 2020), classification and segmentation tasks were combined in a multi-task network to identify head composition.
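The localize-then-center idea described for the detection-based pipelines can be sketched as a crop around a detected bounding box. This is only an illustration of the centering step under assumed conventions: the box format `(x_min, y_min, x_max, y_max)`, the function name `centered_crop`, and the toy image are hypothetical, and the detector itself is not shown.

```python
def centered_crop(image, box, size):
    """Crop a size x size window centred on a bounding box, clamped to the image.

    image: list of rows; box: (x_min, y_min, x_max, y_max) in pixel coordinates.
    """
    height, width = len(image), len(image[0])
    if size > min(height, width):
        raise ValueError("crop size exceeds image dimensions")
    center_x = (box[0] + box[2]) // 2
    center_y = (box[1] + box[3]) // 2
    # Shift the window back inside the image when the box lies near a border.
    left = min(max(center_x - size // 2, 0), width - size)
    top = min(max(center_y - size // 2, 0), height - size)
    return [row[left:left + size] for row in image[top:top + size]]


# 6x6 toy image whose pixel value encodes its position (row * 10 + column).
toy_image = [[r * 10 + c for c in range(6)] for r in range(6)]
crop = centered_crop(toy_image, box=(3, 3, 5, 5), size=4)
print(crop[0])
```

Passing the centered crop to a second-stage regressor or segmenter means that stage sees the head at a roughly fixed position and scale, which is the usual motivation for a two-stage design.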

Brain standard plane

Classification

Classification was used in (n = 2, 1.86%) studies to identify the brain standard plane, and both studies used 2D US images taken in the second and third trimesters. In (Qu et al., 2020a, 2020b), two different classification network architectures were used to identify six fetal brain standard planes. These planes include the horizontal transverse section of the thalamus, the horizontal transverse section of the lateral ventricle, the transverse section of the cerebellum, the mid-sagittal plane, the paracentral sagittal section, and the coronal section of the anterior horn of the lateral ventricles.

Segmentation

The segmentation task was used in (n = 3, 2.80%) studies to identify the brain standard plane. Two of these studies used 2D US images and one used 3D US images. In (Singh et al., 2021b), 2D US images taken in the second trimester were used to segment fetal cerebellum structures. In addition, in (Wang et al., 2019), 2D US images taken in the third trimester were used to segment the fetal middle cerebral artery (MCA). The authors in (Yaqub et al., 2013) utilized 3D US images in the second trimester and formulated the segmentation as a classification problem to identify the following classes: background, choroid plexus (CP), lateral posterior ventricle cavity (PVC), cavum septum pellucidi (CSP), and cerebellum (CER).
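Formulating segmentation as a classification problem means assigning a class to every pixel (or voxel) independently and reading the resulting label map as the segmentation. The sketch below shows that idea on a 2D toy example, assuming per-class score maps are already available; the scores are made up, the function name `label_map` is hypothetical, and the classifier that would produce the scores (a random decision forest in the cited study) is not shown.

```python
# Class names follow the structures listed in the text.
CLASSES = ["background", "CP", "PVC", "CSP", "CER"]


def label_map(score_maps):
    """Assign each pixel the index of its highest-scoring class.

    score_maps: one 2D list of scores per class, all with the same shape.
    """
    rows, cols = len(score_maps[0]), len(score_maps[0][0])
    return [[max(range(len(score_maps)), key=lambda k: score_maps[k][r][c])
             for c in range(cols)]
            for r in range(rows)]


# Hypothetical scores for a 1x2 image: one score map per class.
scores = [
    [[0.90, 0.10]],  # background
    [[0.05, 0.10]],  # CP
    [[0.02, 0.10]],  # PVC
    [[0.02, 0.60]],  # CSP
    [[0.01, 0.10]],  # CER
]
labels = label_map(scores)
names = [[CLASSES[k] for k in row] for row in labels]
print(names)
```

Because each pixel is labelled on its own, approaches of this kind typically rely on context-aware features or a post-processing step to obtain spatially coherent regions.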

Classification and segmentation

Classification with segmentation is employed in (n = 3, 2.80%) studies to identify the brain standard plane. These studies all used 3D US images. In (Namburete et al., 2018), both tasks were used to identify brain alignment based on skull boundaries and then to perform head segmentation, eye localization, and prediction of brain orientation in the second and third trimesters. Moreover, in (Huang et al., 2018), segmentation followed by a classification task was used to detect the CSP, thalamus (Tha), lateral ventricles (LV), cerebellum (CE), and cisterna magna (CM) in the second trimester. Segmentation and classification are applied to 3D US in (Cuingnet et al., 2013) to identify the skull, mid-sagittal plane, and orbits of the eyes in the second trimester of pregnancy.

Miscellaneous

For brain standard plane identification in (n = 5, 4.62%) studies, methods other than those previously mentioned were used, including segmentation with object detection, object detection, classification with object detection, and RL. As seen in (Yang et al., 2021a), the RL-based technique was employed for the first time on 3D US images to localize standard brain planes, including trans-thalamic (TT) and trans-cerebellar (TC), in the second and third trimesters. RL is used in (Yang et al., 2021b) to localize the following: mid-sagittal (S), transverse (T), and coronal (C) planes in volumes, as well as the trans-thalamic (TT), trans-ventricular (TV), and trans-cerebellar (TC) planes. An object detection architecture is utilized on 2D US images in (Lin et al., 2019a) to localize the trans-thalamic plane in the second trimester of pregnancy, including LS, T, CP, CSP, and third ventricle (TV). In (Lin et al., 2019b), classification with object detection was used to detect LS, T, CP, CSP, TV, and brain midline (BM). In contrast, (Kim et al., 2019a) used classification with object detection to localize the cavum septum pellucidum (CSP), ambient cistern (AC), and cerebellum, as well as to measure HC and BPD.

Brain disease

Classification

The binary classification technique utilized 2D US images taken in the second trimester as seen in (Sahli et al., 2020) to detect hydrocephalus disease using premature GA.

Classification and segmentation

Studies (Xie et al., 2020a, 2020b) both used 2D US images taken in the second and third trimesters to segment the craniocerebral region and identify abnormalities or specific diseases. As seen in (Xie et al., 2020b), CNS malformations were detected using binary classification. Moreover, in (Xie et al., 2020a), multi-class classification was used to identify the following problems: TV planes contained occurrences of ventriculomegaly and hydrocephalus, and TC planes contained occurrences of Blake pouch cyst (BPC).

Miscellaneous

In (Chen et al., 2020b; Liu et al., 2020), different methods were used to detect ventriculomegaly based on 2D US images taken in the second trimester. In (Liu et al., 2020), object detection and regression first identify fetal brain ultrasound images from standard axial planes as normal or abnormal. Second, regression was used to find the lateral ventricular regions of images with a large lateral ventricular width. The width was then predicted with a modest error based on these regions. Furthermore, (Chen et al., 2020b) was the first study to propose an object-detection-based automatic measurement approach for fetal lateral ventricles (LVs) based on 2D US images. The approach can both distinguish and locate the fetal LV automatically as well as measure the LV’s scope quickly and accurately.

Fetus face

As shown in (Table 2), the primary purpose of (n = 10, 9.34%) studies was to identify and localize fetus face features such as the fetal facial standard plane (FFSP) (i.e., axial, coronal, and sagittal plane), face anatomical landmarks (e.g., nasal bone), and facial expressions (i.e., sad, happy). The following subsection discusses each category based on the implemented task. (Table 5) presents comparisons between the various techniques for each fetus face group, including objective, backbone methods, optimization, fetal age, best obtained result, and observations.

Table 5.

Articles published using AI to improve fetus face monitoring: objective, backbone methods, optimization, fetal age, and AI tasks

| Study | Objective | Backbone Methods/Framework | Optimization/Extractor methods | Fetal age | AI tasks |

|---|---|---|---|---|---|

| Fetal facial standard plane (FFSP) | |||||

| (Lei et al., 2014) | To address the issue of recognition of standard planes (i.e., axial, coronal and sagittal planes) in the fetal US image | SVM classifier | AdaBoost (to detect the region of interest, ROI) Dense Scale Invariant Feature Transform (DSIFT) Aggregating vectors for feature extraction Fisher vector (FV) Gaussian Mixture Model (GMM) | 20 - 36 weeks | Classification |

| (Yu et al., 2016) | To automatically recognize the FFSP from US images | Deep convolutional networks (DCNN) | N/A | 20 - 36 weeks | Classification |

| (Yu et al., 2018) | To automatically recognize FFSP via a deep convolutional neural network (DCNN) architecture | DCNN | t-Distributed Stochastic Neighbor Embedding (t-SNE) | 20 - 36 weeks | Classification |

| (Lei et al., 2015) | To automatically recognize the fetal facial standard planes (FFSPs) | SVM classifier | Root scale invariant feature transform (RootSIFT) Gaussian mixture model (GMM) Fisher Vector (FV) Principal Component Analysis (PCA) | 20 - 36 weeks | Classification |

| (Wang et al., 2021) | To automatically recognize and classify FFSPs | SVM classifier | Local Binary Pattern (LBP) Histogram of Oriented Gradient (HOG) | 20 - 24 weeks | Classification |

| Face anatomical landmarks | |||||

| (Singh et al., 2021a) | To detect position and orientation of facial region and landmarks | SFFD-Net (Samsung Fetal Face Detection Network) multi-class segmented | N/A | 14 - 30 weeks | Miscellaneous |

| (Chen et al., 2020c) | To detect landmarks in 3D fetal facial US volumes | CNN Backbone Network | Region Proposal Network (RPN) Bounding-box regression | N/A | Miscellaneous |

| (Anjit and Rishidas, 2011) | To detect nasal bone for US of fetus | Back Propagation Neural Network (BPNN) | Discrete Cosine Transform (DCT) Daubechies D4 Wavelet transform | 11 - 13 weeks | Miscellaneous |

| Facial expressions | |||||

| (Dave and Nadiad, 2015) | To recognize facial expressions from 3D US | ANN | Histogram equalization Thresholding Morphing Sampling Clustering Local Binary Pattern (LBP) Minimum Redundancy and Maximum Relevance (MRMR) | N/A | Classification |

| (Miyagi et al., 2021) | To recognize fetal facial expressions that are considered as being related to the brain development of fetuses | CNN | N/A | 19 - 38 weeks | Classification |

Fetal facial standard plane (FFSP)

Classification

Classification was used to classify the FFSP using 2D US images in (n = 5, 4.67%) studies. Using 2D US images taken in the second and third trimesters, four studies (Lei et al., 2014, 2015; Yu et al., 2016, 2018) identified the ocular axial planes (OAP), the median sagittal planes (MSP), and the nasolabial coronal planes (NCP). In addition, the authors in (Wang et al., 2021) were able to identify the FFSP using 2D US images taken in the second trimester.

Face anatomical landmarks

Miscellaneous

Different methods were used to identify face anatomical landmarks in (n = 3, 2.80%) studies. In (Singh et al., 2021a), 3D US images taken in the second and third trimesters were segmented to identify background, face mask (excluding facial structures), eyes, nose, and lips. In (Chen et al., 2020c), an object detection method was used on 3D US images to detect the left fetal eye, middle eyebrow, right eye, nose, and chin. Furthermore, in (Anjit and Rishidas, 2011), classification was applied to 2D US images taken in the first and second trimesters to detect the nasal bone. This was done to enhance the detection rate of Down syndrome.

Facial expressions

Classification

For the first time, 4D US images were utilized with a multi-class classification method to classify fetal facial expressions into Sad, Normal, and Happy, as seen in (Dave and Nadiad, 2015). Study (Miyagi et al., 2021) used 2D US images in the second and third trimesters to classify fetal facial expressions into eye blinking, mouthing without any expression, scowling, and yawning.

Fetus heart

As shown in (Table 2), the primary purpose of (n = 13, 14.04%) studies is to identify and localize fetus heart diseases and the fetus heart chambers view. The following subsection discusses each category based on the implemented task. (Table 6) presents comparisons between the various techniques for each fetus heart group, including objective, backbone methods, optimization, fetal age, best obtained result, and observations.

Table 6.

Articles published using AI to improve fetus heart monitoring: Objective, backbone methods, optimization, fetal age, and AI tasks

| Study | Objective | Backbone Methods/Framework | Optimization/Extractor methods | Fetal age | AI tasks |

|---|---|---|---|---|---|

| Heart disease | |||||

| (Yang et al., 2020a) | To perform multi-disease segmentation and multi-class semantic segmentation of the five key components | U-NET + DeepLabV3+ | N/A | N/A | Segmentation |

| (Gong et al., 2020) | To recognize and judge fetal congenital heart disease (FHD) development | DGACNN Framework | CNN Wasserstein GAN + Gradient Penalty (WGAN-GP) DANomaly Faster-RCNN | 18–39 weeks | Miscellaneous |

| (Dozen et al., 2020) | To segment the ventricular septum in US | Cropping-Segmentation-Calibration (CSC) | YOLOv2 cropping module U-NET segmentation Module VGG-backbone Calibration Module | 18-28 weeks | Miscellaneous |

| (Komatsu et al., 2021b) | To detect cardiac substructures and structural abnormalities in fetal US videos | Supervised Object detection with Normal data Only (SONO) | CNN YOLOv2 | 18-34 weeks | Miscellaneous |

| (Arnaout et al., 2021) | To identify recommended cardiac views and distinguish between normal hearts and complex CHD and to calculate standard fetal cardiothoracic measurements | Ensemble of Neural Networks | ResNet and U-Net Grad-CAM | 18-24 weeks | Classification segmentation |

| (Dinesh Simon and Kavitha, 2021) | To learn the features of Echogenic Intracardiac Focus (EIF) that can cause Down Syndrome (DS) whereas testing phase classifies the EIF into DS positive or DS negative based | Multi-scale Quantized Convolution Neural Network (MSQCNN) | Cross-Correlation Technique (CCT) Enhanced Learning Vector Quantiser (ELVQ) | 24–26 weeks | Classification |

| (Tan et al., 2020) | To perform automated diagnosis of hypoplastic left heart syndrome (HLHS) | SonoNet (VGG16) | N/A | 18–22 weeks | Classification |

| Heart chamber’s view | |||||

| (Xu et al., 2020b) | To perform automated segmentation of cardiac structures | CU-NET | Structural Similarity Index Measure (SSIM) | N/A | Segmentation |

| (Xu et al., 2020a) | To accurately segment seven important anatomical structures in the A4C view | DW-Net | Dilated Convolutional Chain (DCC) module W-Net module based on the concept of stacked U-Net | N/A | Segmentation |

| (Dong et al., 2020) | To automatically quality control the fetal US cardiac four-chamber plane | Three CNN-based Framework | Basic-CNN, a variant of SqueezeNet; Deep-CNN with DenseNet-161 as basic architecture; the ARVBNet for real-time object detection | 14 - 28 weeks | Miscellaneous |

| (Pu et al., 2021) | To localize the end-systolic (ES) and end-diastolic (ED) from ultrasound | Hybrid CNN based framework | YOLOv3 Maximum Difference Fusion (MDF) Transferred CNN | 18 - 36 weeks | Miscellaneous |

| (Sundaresan et al., 2017) | To detect the fetal heart and classify each individual frame as belonging to one of the standard viewing planes | FCN | N/A | 20 - 35 weeks | Segmentation |

| (Patra et al., 2017) | To jointly predict the visibility, view plane, and location of the fetal heart in US videos | Multi-Task CNN | Hierarchical Temporal Encoding (HTE) | 20 - 35 weeks | Classification |

Heart disease

Classification

Two studies used classification methods to identify heart diseases based on 2D US images taken in the second trimester. In (Dinesh Simon and Kavitha, 2021), a binary classification task was used to distinguish Down syndrome from normal fetuses by identifying echogenic intracardiac foci (EIF). In (Tan et al., 2020), a binary classification task was used to distinguish hypoplastic left heart syndrome (HLHS) from healthy cases based on the four-chamber heart (4CH), left ventricular outflow tract (LVOT), and right ventricular outflow tract (RVOT) views.

Segmentation

Multi-class segmentation of 2D US images was used to identify heart diseases, including hypoplastic left heart syndrome (HLHS), total anomalous pulmonary venous connection (TAPVC), pulmonary atresia with intact ventricular septum (PA/IVS), endocardial cushion defect (ECD), fetal cardiac rhabdomyoma (FCR), and Ebstein’s anomaly (EA) (Yang et al., 2020a).

Classification and segmentation

Classification and segmentation were used together to detect congenital heart disease (CHD) based on 2D US images taken in the second trimester (Arnaout et al., 2021). Cases were classified as normal hearts or CHD. This classification relied on identifying five views of the heart used in fetal CHD screening: three-vessel trachea (3VT), three-vessel view (3VV), left-ventricular outflow tract (LVOT), axial four chambers (A4C), and abdomen (ABDO).

Miscellaneous

Several other methods were proposed to identify heart disease from 2D US images. Classification with object detection was utilized in (Gong et al., 2020; Komatsu et al., 2021b). In (Gong et al., 2020), fetal congenital heart disease (FHD) was detected in the second and third trimesters based on how quickly the fetus grows between gestational weeks and how the shape of the four chambers of the heart changes over time. In (Komatsu et al., 2021b), classification with object detection was used to detect cardiac substructures and structural abnormalities in the second and third trimesters. Lastly, segmentation with object detection was used to locate the ventricular septum in 2D US images in the second trimester (Dozen et al., 2020).

Heart chambers view

Classification

In (Patra et al., 2017), 2D US images taken in the second and third trimesters were used in a classification task that localizes the four chambers (4C), the left ventricular outflow tract (LVOT), the three vessels (3V), and the background (BG).

Segmentation

Segmentation was used in (n = 3, 2.80%) studies based on 2D US images. In two studies (Xu et al., 2020a, 2020b), seven critical anatomical structures in the apical four-chamber (A4C) view were segmented, including: left atrium (LA), right atrium (RA), left ventricle (LV), right ventricle (RV), descending aorta (DAO), epicardium (EP) and thorax. In another study (Sundaresan et al., 2017), segmentation was used to locate four-chamber views (4C), left ventricular outflow tract view (LVOT), and three-vessel view (3V) in the second and third trimesters.

Miscellaneous

2D US images were used in (n = 2, 1.86%) studies, and both studies utilized classification with object detection. In (Dong et al., 2020), which used second-trimester images, the first classification task separated cardiac four-chamber planes (CFPs) (i.e., apical, bottom, and parasternal CFPs) from non-CFPs. The second task classified the CFPs in terms of the zoom and gain of the 2D US images. In addition, object detection was utilized to detect anatomical structures in the CFPs, including left atrial pulmonary vein angle (PVA), apex cordis and moderator band (ACMB), and multiple ribs (MRs). In (Pu et al., 2021), object detection was used in the second and third trimesters to extract attention regions for improving classification performance and determining the four-chamber view, including detection of the end-systolic (ES) and end-diastolic (ED) frames.

Fetus abdomen

As shown in (Table 2), the primary purpose of (n = 10, 10.8%) studies was to identify and localize the fetus’s abdomen, including abdominal anatomical landmarks (i.e., stomach bubble (SB), umbilical vein (UV), and spine (SP)). The following subsection discusses this category based on the implemented task. Table 7 compares the techniques used for the fetus abdomen, including objective, backbone methods, optimization, fetal age, best obtained result, and observations.

Table 7.

Articles published using AI to improve fetus abdomen monitoring: objective, backbone methods, optimization, fetal age, and AI tasks

| Study | Objective | Backbone Methods/Framework | Optimization/Extractor methods | Fetal age | AI tasks |

|---|---|---|---|---|---|

| Abdominal anatomical landmarks | |||||

| (Rahmatullah et al., 2011b) | To automatically detect two anatomical landmarks in an abdominal image plane: the stomach bubble (SB) and the umbilical vein (UV) | AdaBoost | Haar-like feature | 14 - 19 weeks | Classification |

| (Yang et al., 2014) | To localize fetal abdominal standard plane (FASP) from US including SB, UV, and spine (SP) | Random Forests Classifier + SVM | Haar-like feature; Radial Component-Based Model (RCM) | 18 - 40 weeks | Classification |

| (Kim et al., 2018) | To classify ultrasound images (SB, amniotic fluid (AF), and UV) and to obtain an initial estimate of the AC | Initial Estimation CNN + U-Net | Hough transform | N/A | Classification segmentation |

| (Jang et al., 2017) | To classify ultrasound images (SB, AF, and UV) and measure AC | CNN | Hough transform | 20 - 34 weeks | Classification segmentation |

| (Wu et al., 2017) | To find the region of interest (ROI) of the fetal abdominal region in the US image | Fetal US Image Quality Assessment (FUIQA) | L-CNN is able to localize the fetal abdominal ROI; AlexNet; C-CNN then further analyzes the identified ROI; DCNN to duplicate the US images for the RGB channels; rotating | 16 - 40 weeks | Classification |

| (Ni et al., 2014) | To localize the fetal abdominal standard plane from ultrasound | Random forest classifier + SVM classifier | Radial Component-based Model (RCM); Vessel Probability Map (VPM); Haar-like features | 18 - 40 weeks | Classification |

| (Deepika et al., 2021) | To design and implement a novel framework for diagnosing prenatal US images | Defending Against Child Death (DACD) | CNN; U-Net; Hough-man transformation | N/A | Classification segmentation |

| (Rahmatnllah et al., 2012) | To detect important landmarks employed in manual scoring of ultrasound images | AdaBoost | Haar-like feature | 18 - 37 weeks | Classification |

| (Rahmatullah et al., 2011a) | To automatically select the standard plane from the fetal US volume for the application of fetal biometry measurement | AdaBoost | One Combined Trained Classifier (1CTC); Two Separately Trained Classifiers (2STC); Haar-like feature | 20 - 28 weeks | Classification |

| (Chen et al., 2014) | To localize the FASP from US images | DCNN | Fine-Tuning with Knowledge Transfer; Barnes-Hut Stochastic Neighbor Embedding (BH-SNE) | 18 - 40 weeks | Classification |

Abdominal anatomical landmarks

Classification

A classification task was used in (n = 7, 6.54%) studies to localize abdominal anatomical landmarks. 2D US images were used in six studies (Chen et al., 2014; Ni et al., 2014; Rahmatnllah et al., 2012; Rahmatullah et al., 2011b; Wu et al., 2017; Yang et al., 2014) and 3D US images in only one study (Rahmatullah et al., 2011a). In (Rahmatnllah et al., 2012; Rahmatullah et al., 2011a, 2011b; Wu et al., 2017), various classifiers were utilized to localize the stomach bubble (SB) and umbilical vein (UV): in the first and second trimesters in (Rahmatullah et al., 2011a, 2011b), and in the second and third trimesters in (Rahmatnllah et al., 2012; Wu et al., 2017). Furthermore, the works in (Chen et al., 2014; Ni et al., 2014; Yang et al., 2014) located the spine (SP) in addition to SB and UV, also in the second and third trimesters.
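Several of the studies in Table 7 pair a boosted classifier with Haar-like features. As a rough illustration of what such a feature computes, the sketch below evaluates a two-rectangle Haar-like feature with an integral image. The function names and the toy image are assumptions made for illustration only; they are not taken from any of the cited studies, which use larger feature pools and trained classifiers on top.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_haar(ii, top, left, height, width):
    """Left-half sum minus right-half sum (responds to vertical edges)."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum


# Toy 4x4 "image" (hypothetical): bright left half, dark right half.
toy = [[9, 9, 1, 1] for _ in range(4)]
ii = integral_image(toy)
print(two_rect_haar(ii, 0, 0, 4, 4))  # 72 - 8 = 64
```

A large response indicates a strong intensity contrast between the two halves of the window, which is the kind of cue a boosted classifier can combine across many windows and scales.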

Classification and segmentation

2D US images taken at unspecified gestational ages were used in (Deepika et al., 2021; Kim et al., 2018). In (Kim et al., 2018), SB, UV, and AF were localized; in addition, abdominal circumference (AC), spine position, and bone regions were estimated. In (Deepika et al., 2021), 2D US images were classified into normal versus abnormal fetuses based on fetal image features such as AF, SB, UV, and SA. Jang et al. (2017) located the SB and the portal section of the UV, and observed amniotic fluid (AF), in the second and third trimesters.

Dataset analysis

Fetus body

In this area, ethical and legal concerns presented the greatest barriers to comprehensive research; as a result, datasets were generally not available to the research community. The fetal body category contains five subsections (fetal part structure, anatomical structure, growth disease, gestational age, and gender identification). Of the available studies (n = 31, 28.9%), one dataset related to identifying fetal part structure was intended to be made available online (Yaqub et al., 2015). Unfortunately, this dataset has not yet been released by the author. The only public dataset available online for fetal structure classification was released by Burgos-Artizzu et al. (2020). The dataset contains a total of 12,400 2D fetal ultrasound images: brain 143, trans-cerebellum 714, trans-thalamic 1638, trans-ventricular 597, abdomen 711, cervix 1626, femur 1040, thorax 1718, and 4213 unclassified fetal images.

Acquiring a large volume of data was also challenging in most of the studies; therefore, data augmentation was applied in (n = 11, 10.2%) studies (Andriani and Mardhiyah, 2019; Burgos-Artizzu et al., 2020; Cai et al., 2018; Gao and Noble, 2019; Li et al., 2017; Maraci et al., 2020; Ryou et al., 2019; Weerasinghe et al., 2021; Yang et al., 2019). The augmented images were employed to boost classification and segmentation performance. The SimpleITK library (Lowekamp et al., 2013) was used for augmentation in (Weerasinghe et al., 2021). Because data samples remained limited even with augmentation, k-fold cross-validation was utilized in (n = 8, 7.4%) studies (Bagi and Shreedhara, 2014; Cai et al., 2020; Gao and Noble, 2019; Maysanjaya et al., 2014; Rahmatullah et al., 2014; Ryou et al., 2016; Wee et al., 2010; Yaqub et al., 2015). Cross-validation also helps to mitigate issues such as overfitting and bias in dataset selection. Only one software tool, ITK-SNAP (Yushkevich et al., 2006), was reported for segmenting structures in 3D images (Weerasinghe et al., 2021).
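To make the k-fold idea concrete, the following is a minimal sketch of how sample indices can be partitioned so that every image is used for validation exactly once. It is not the procedure of any particular study; the function name `k_fold_indices`, the sample count, and the value of k are assumptions chosen for illustration.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


# Hypothetical example: 10 images split into 5 folds.
folds = list(k_fold_indices(n_samples=10, k=5))
for train_idx, val_idx in folds:
    print(len(train_idx), len(val_idx))
```

With ultrasound data, several images often come from the same pregnancy, so in practice the split would usually be made over patients rather than individual images to avoid leakage between training and validation folds.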

Fetus head

This section contains three subsections (skull localization, brain standard planes, and brain disease). Although (n = 43, 40.18%) studies fall into this category, we found only one public online dataset, the HC18 grand challenge (van den Heuvel et al., 2018). This dataset was used in (n = 13, 12.14%) studies (Aji et al., 2019; Al-Bander et al., 2020; Brahma et al., 2021; Desai et al., 2020; Fiorentino et al., 2021; Li et al., 2020; Qiao and Zulkernine, 2020; Skeika et al., 2020; Sobhaninia et al., 2019, 2020; Xu et al., 2021; Zeng et al., 2021; Zhang et al., 2020a). It contains 2D US images collected from 551 pregnant women at different pregnancy trimesters, with 999 images in the training set and 335 in the test set; a sonographer manually annotated the HC. Image augmentation was applied in (n = 9, 8.41%) studies (Al-Bander et al., 2020; Brahma et al., 2021; Fiorentino et al., 2021; Li et al., 2020; Skeika et al., 2020; Sobhaninia et al., 2019, 2020; Zeng et al., 2021; Zhang et al., 2020a), and cross-validation was employed in (Zhang et al., 2020a).
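As an illustration of image augmentation for a segmentation dataset, the sketch below applies the same geometric transform to an image and its annotation mask so that the two stay aligned. The specific transforms (horizontal flip and 90-degree rotation) and the helper names are assumptions for illustration; the studies cited above do not necessarily use these exact operations.

```python
def hflip(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]


def rot90(grid):
    """Rotate a 2D grid 90 degrees clockwise."""
    return [list(col) for col in zip(*grid[::-1])]


def augment_pair(image, mask):
    """Return the original (image, mask) pair plus flipped and rotated copies.

    The same transform is applied to both, so the mask still outlines
    the same pixels in the transformed image.
    """
    pairs = [(image, mask)]
    pairs.append((hflip(image), hflip(mask)))
    pairs.append((rot90(image), rot90(mask)))
    return pairs


# Hypothetical 2x2 image and mask.
image = [[1, 2], [3, 4]]
mask = [[0, 1], [0, 0]]
for aug_image, aug_mask in augment_pair(image, mask):
    print(aug_image, aug_mask)
```

Flips and rotations leave the length of an annotated contour unchanged, so a circumference label remains valid after such transforms; scaling or elastic deformations would require the label to be recomputed.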

Table 8 highlights the best results achieved, despite the challenges of recording the outcomes of the selected studies.

Table 8.

Comparison between studies that utilized the HC18 dataset

| Study | DSC | HD | DF | ADF |

|---|---|---|---|---|

| (Sobhaninia et al., 2020) | 0.926 | 3.53 | 0.94 | 2.39 |

| (Aji et al., 2019) | N/A | N/A | 14.9% | N/A |

| (Desai et al., 2020) | 0.973 | 1.58 | N/A | N/A |

| (Brahma et al., 2021) | 0.968 | N/A | N/A | N/A |

| (Qiao and Zulkernine, 2020) | 0.973 | N/A | N/A | 2.69 |

| (Sobhaninia et al., 2019) | 0.968 | 1.72 | 1.13 | 2.12 |

| (Zeng et al., 2021) | 0.979 | 1.27 | 0.09 | 1.77 |

| (Fiorentino et al., 2021) | 0.977 | 1.32 | 0.21 | 1.90 |

| (Li et al., 2020) | 0.977 | 0.47 | N/A | 2.03 |

| (Al-Bander et al., 2020) | 0.977 | 1.39 | 1.49 | 2.33 |

| (Xu et al., 2021) | 0.971 | 3.23 | N/A | N/A |

| (Skeika et al., 2020) | 0.979 | N/A | N/A | N/A |

DSC, Dice similarity coefficient; ACC, Accuracy; Pre, Precision; HD, Hausdorff distance; DF, Difference; ADF, Absolute Difference; IoU, Intersection over Union; mPA, mean Pixel Accuracy.
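For readers less familiar with the metrics in Table 8, the following sketch shows one common way to compute them. It assumes segmentations are represented as sets of (row, column) pixel coordinates and that DF and ADF are the signed and absolute differences between a predicted and a reference HC value; the function names and toy data are hypothetical, and individual studies may differ in details such as units or whether HD is computed on contours rather than filled regions.

```python
import math


def dice(pred, truth):
    """Dice similarity coefficient between two sets of (row, col) pixels."""
    if not pred and not truth:
        return 1.0
    return 2 * len(pred & truth) / (len(pred) + len(truth))


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets (brute force)."""
    def directed(src, dst):
        return max(min(math.dist(p, q) for q in dst) for p in src)
    return max(directed(a, b), directed(b, a))


def hc_difference(hc_pred, hc_true):
    """Signed difference (DF) and absolute difference (ADF) of two HC values."""
    df = hc_pred - hc_true
    return df, abs(df)


# Toy data: a 4x4 square and the same square shifted right by one pixel.
truth = {(r, c) for r in range(4) for c in range(4)}
pred = {(r, c) for r in range(4) for c in range(1, 5)}

print(dice(pred, truth))            # 2 * 12 / (16 + 16) = 0.75
print(hausdorff(pred, truth))       # 1.0 (in pixels)
print(hc_difference(175.0, 177.5))  # (-2.5, 2.5)
```

Distances here are in pixels; reporting them in physical units would additionally require the pixel spacing of each image. Higher DSC is better, while lower HD and ADF are better, which is worth keeping in mind when reading across the columns of Table 8.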

Fetus face